CS 704 Advanced Computer Architecture Lecture 26 Memory

- Slides: 44

CS 704 Advanced Computer Architecture Lecture 26 Memory Hierarchy Design (Concept of Caching and Principle of Locality) Prof. Dr. M. Ashraf Chughtai

Today’s Topics

- Recap: Storage trends and memory hierarchy
- Concept of Cache Memory
- Principle of Locality
- Cache Addressing Techniques
- RAM vs. Cache Transaction
- Summary

MAC/VU-Advanced Computer Architecture Lecture 26 Memory Hierarchy (2)

Recap: Storage Devices

- Design features of semiconductor memories: SRAM and DRAM
- Magnetic disk storage

Recap: Speed and Cost per Byte

- DRAM is slow but cheap relative to SRAM; it serves as the main memory of the processor, holding a moderately large amount of data and instructions
- Disk storage is the slowest and cheapest; it serves as secondary storage, holding the bulk of data and instructions

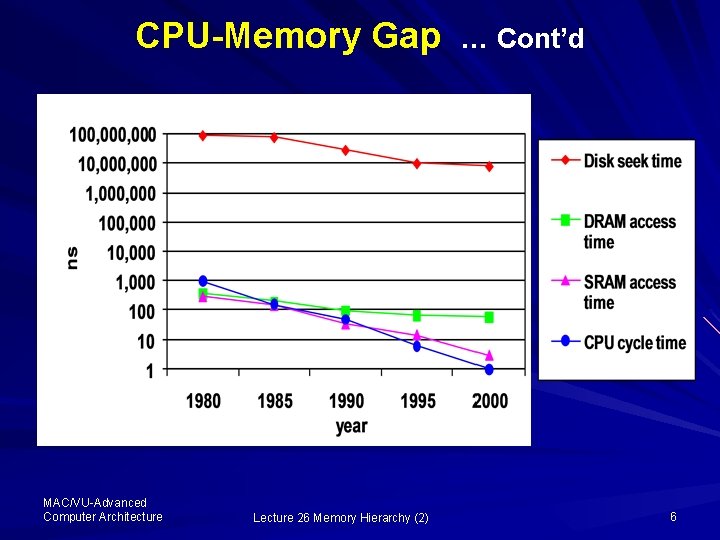

Recap: CPU-Memory Access Time

- The gap between the speed of the processor and the speed of DRAM and disk (compared with that of SRAM) is widening very fast over time

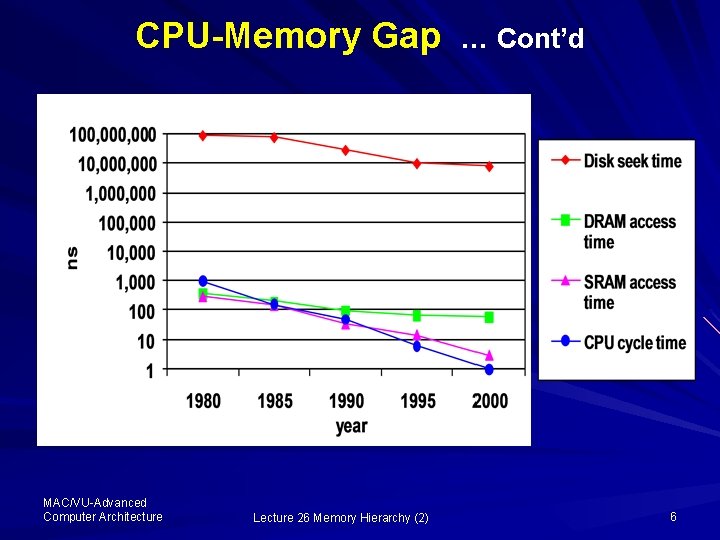

CPU-Memory Gap … Cont’d

Memory Hierarchy Principles

To make the speeds of DRAM and the CPU complement each other, organize memory in a hierarchy based on:

- the Concept of Caching; and
- the Principle of Locality

1: Concept of Caching

A cache is a staging area, or temporary place, used to:

- store a frequently used subset of the data or instructions from the relatively cheaper, larger and slower memory; and
- avoid having to go to the main memory every time this information is needed

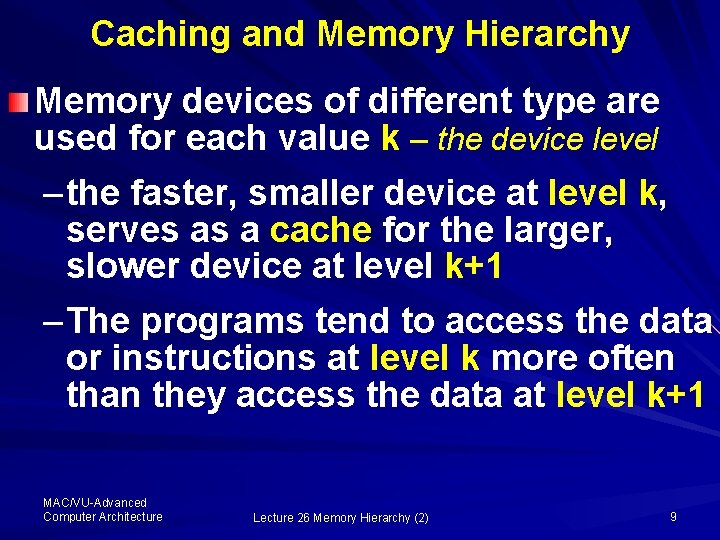

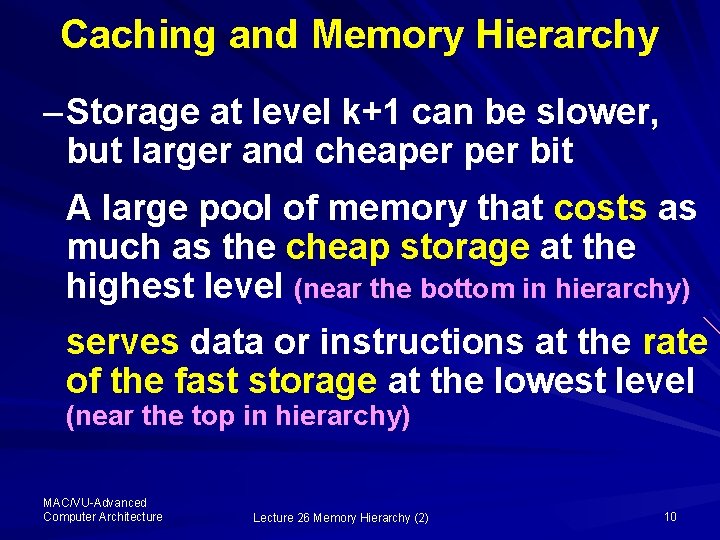

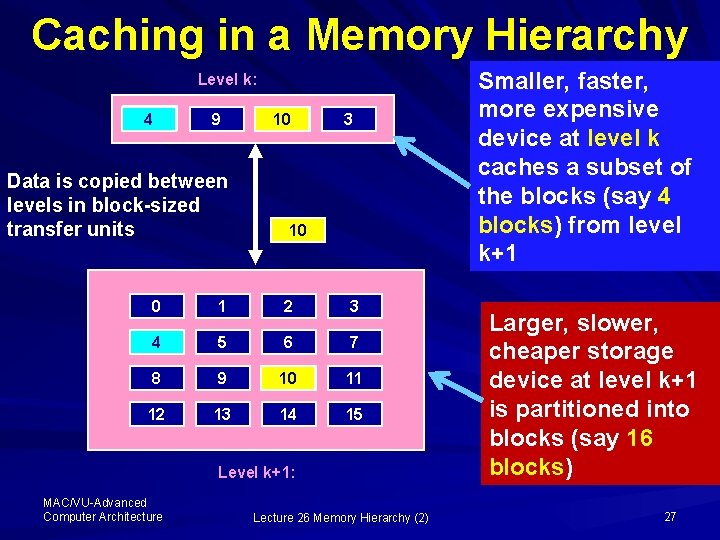

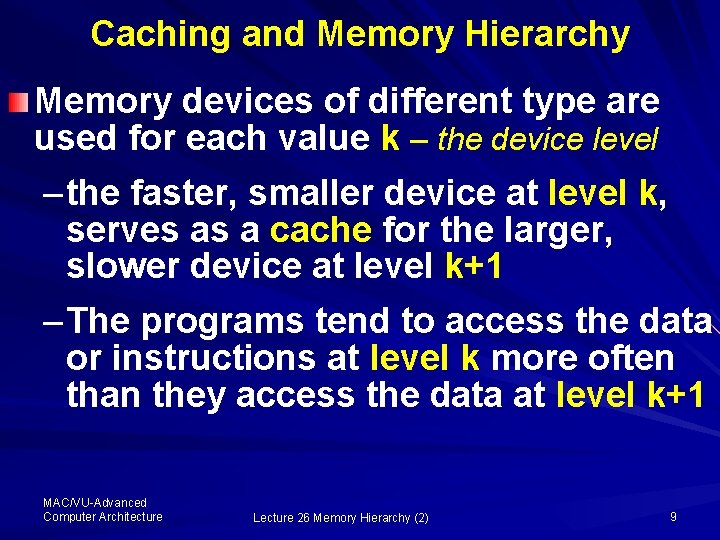

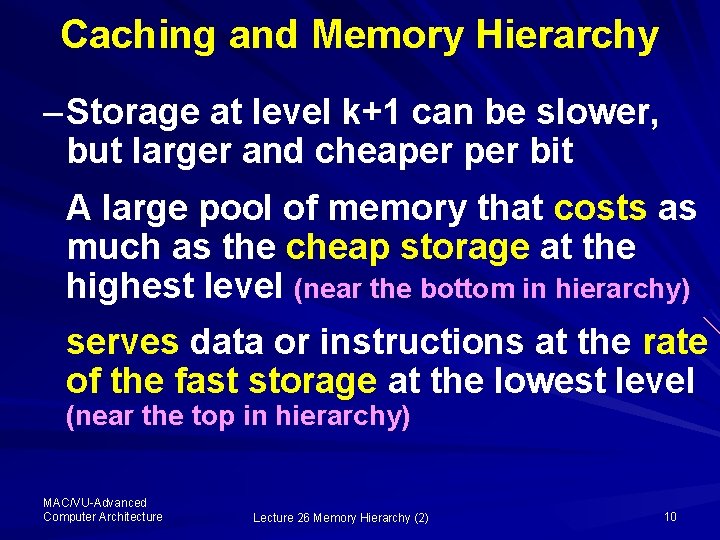

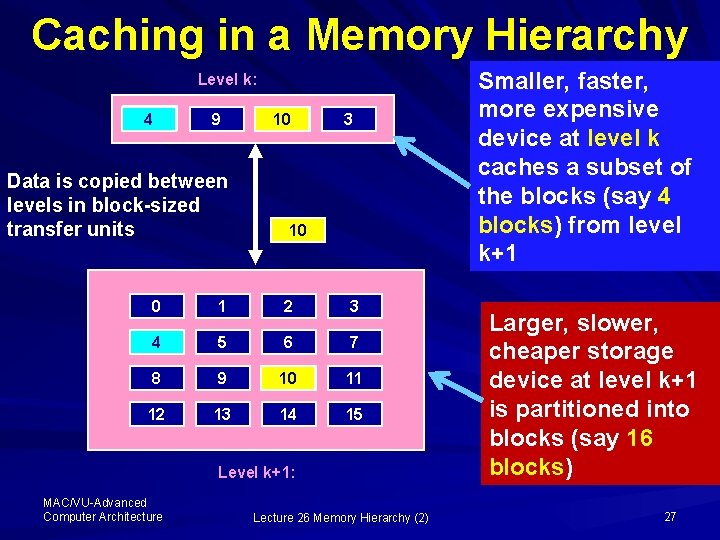

Caching and Memory Hierarchy

Memory devices of a different type are used at each level k (the device level):

- the faster, smaller device at level k serves as a cache for the larger, slower device at level k+1
- programs tend to access the data or instructions at level k more often than they access those at level k+1

Caching and Memory Hierarchy

- Storage at level k+1 can be slower, but it is larger and cheaper per bit
- A large pool of memory that costs about as much as the cheap storage at the highest level (near the bottom of the hierarchy) serves data or instructions at nearly the rate of the fast storage at the lowest level (near the top of the hierarchy)

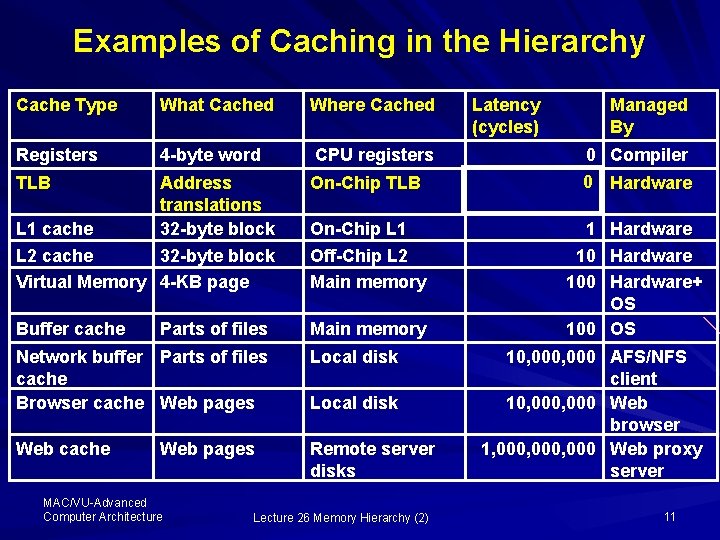

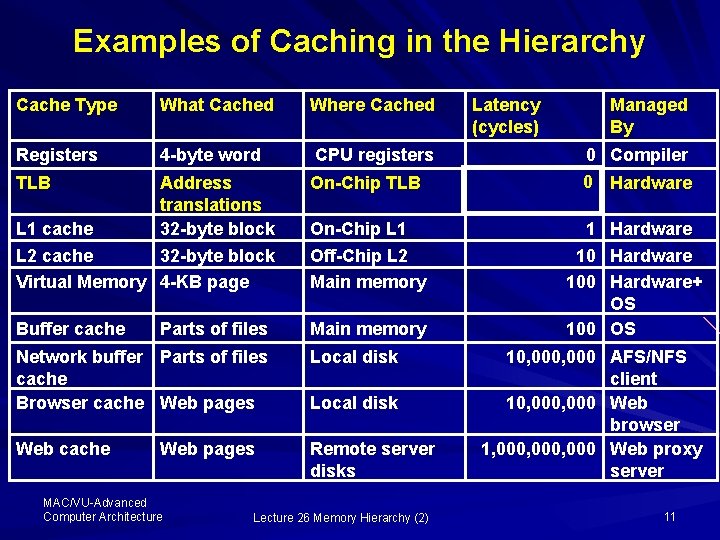

Examples of Caching in the Hierarchy

| Cache Type | What Cached | Where Cached | Latency (cycles) | Managed By |
|---|---|---|---|---|
| Registers | 4-byte word | CPU registers | 0 | Compiler |
| TLB | Address translations | On-chip TLB | 0 | Hardware |
| L1 cache | 32-byte block | On-chip L1 | | Hardware |
| L2 cache | 32-byte block | Off-chip L2 | | Hardware |
| Virtual memory | 4-KB page | Main memory | 100 | Hardware + OS |
| Buffer cache | Parts of files | Main memory | 100 | OS |
| Network buffer cache | Parts of files | Local disk | 10,000 | AFS/NFS client |
| Browser cache | Web pages | Local disk | 10,000 | Web browser |
| Web cache | Web pages | Remote server disks | 1,000,000 | Web proxy server |

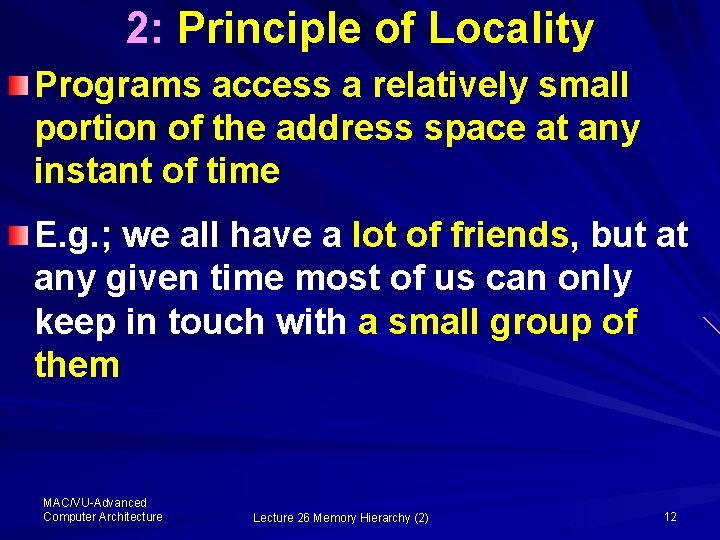

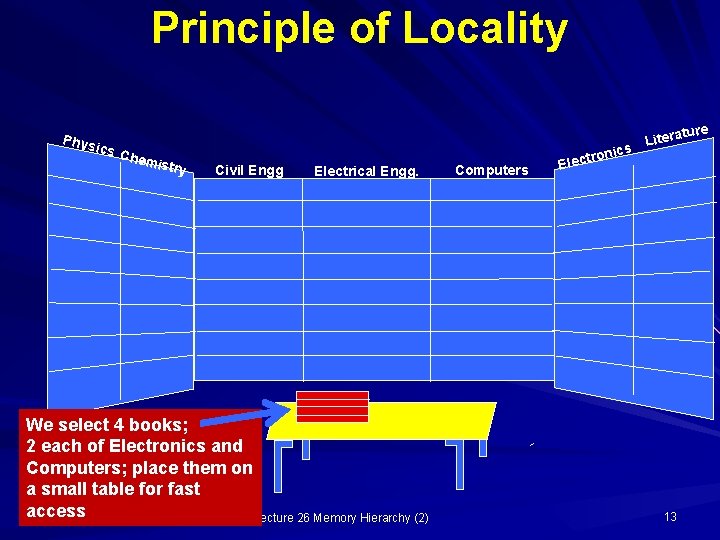

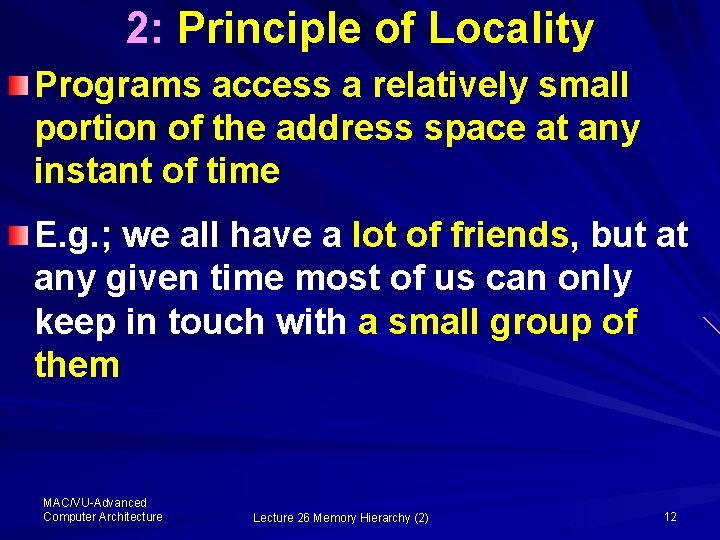

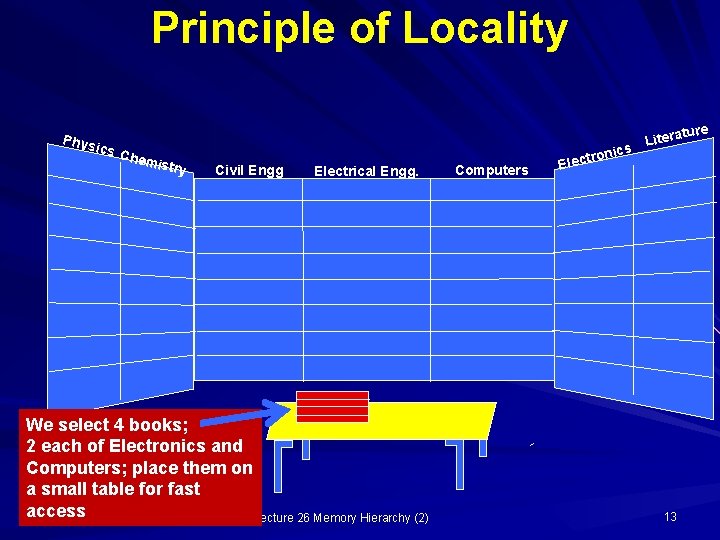

2: Principle of Locality

Programs access a relatively small portion of the address space at any instant of time. E.g., we all have a lot of friends, but at any given time most of us can keep in touch with only a small group of them.

Principle of Locality

[Figure: library shelves labelled Physics, Chemistry, Civil Engg., Electrical Engg., Electronics, Computers and Literature]

We select 4 books, 2 each on Electronics and Computers, and place them on a small table for fast access.

Types of Locality: Temporal and Spatial

- Temporal locality is locality in time: if an item is referenced, it will tend to be referenced again soon

Types of Locality

- Spatial locality is locality in space: if an item is referenced, items whose addresses are close by will tend to be referenced soon

A well-written program tends to use data and instructions that are either:

- near those it has used recently (spatial locality); or
- themselves recently referenced (temporal locality)
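A minimal C sketch of spatial locality in practice (the array size and the function names `sum_rows` and `sum_cols` are illustrative, not from the lecture). Both functions add up the same elements, but C stores a 2-D array row by row, so `sum_rows` steps through adjacent addresses, while `sum_cols` jumps `COLS` elements between consecutive references and, for large arrays, typically touches a different cache block on almost every access.

```c
#include <assert.h>   /* assert(), used by the checks in the usage note */
#include <stddef.h>

#define ROWS 4        /* illustrative array dimensions */
#define COLS 8

/* Row-major traversal: consecutive iterations read adjacent
   addresses (stride of one int), so good spatial locality. */
long sum_rows(int a[ROWS][COLS])
{
    long sum = 0;
    for (size_t i = 0; i < ROWS; i++)
        for (size_t j = 0; j < COLS; j++)
            sum += a[i][j];
    return sum;
}

/* Column-major traversal of the same array: consecutive iterations
   are COLS ints apart, so poor spatial locality. */
long sum_cols(int a[ROWS][COLS])
{
    long sum = 0;
    for (size_t j = 0; j < COLS; j++)
        for (size_t i = 0; i < ROWS; i++)
            sum += a[i][j];
    return sum;
}
```

For example, with `int a[ROWS][COLS] = {{1, 2}, {3}};` both calls return 6; only the order of the memory references differs.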

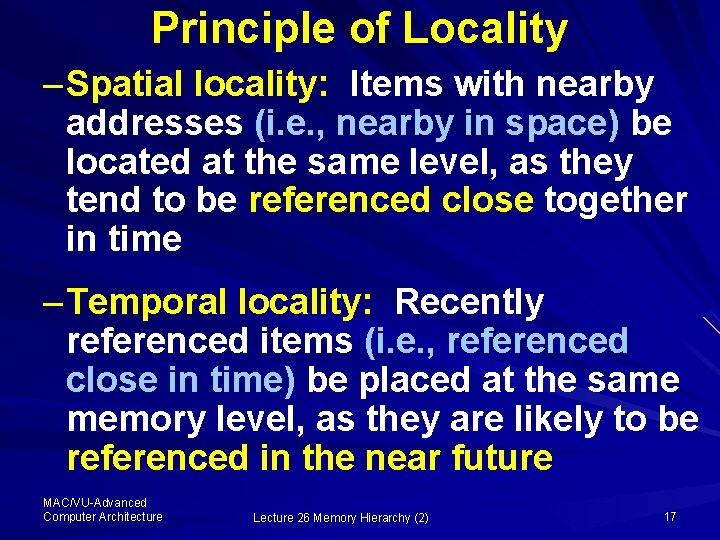

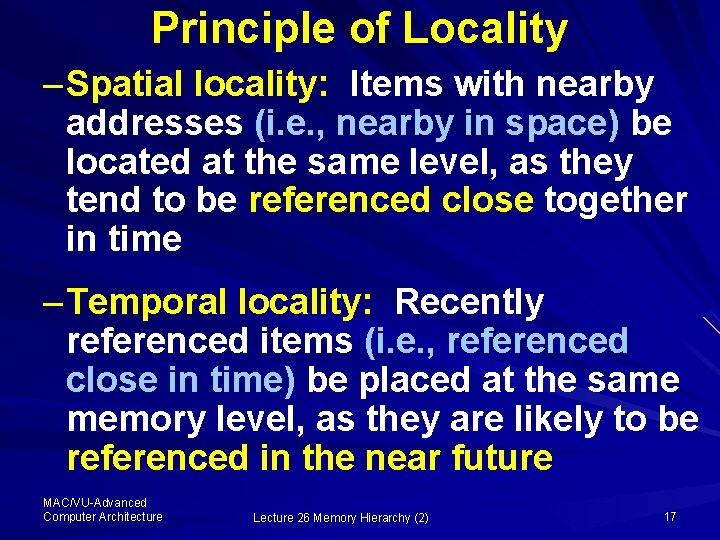

Principle of Locality

- Spatial locality: items with nearby addresses (i.e., nearby in space) should be located at the same level, as they tend to be referenced close together in time
- Temporal locality: recently referenced items (i.e., referenced close in time) should be placed at the same memory level, as they are likely to be referenced again in the near future

Locality Example: Program

`sum = 0; for (i = 0; i < n; i++) sum += a[i]; return sum;`

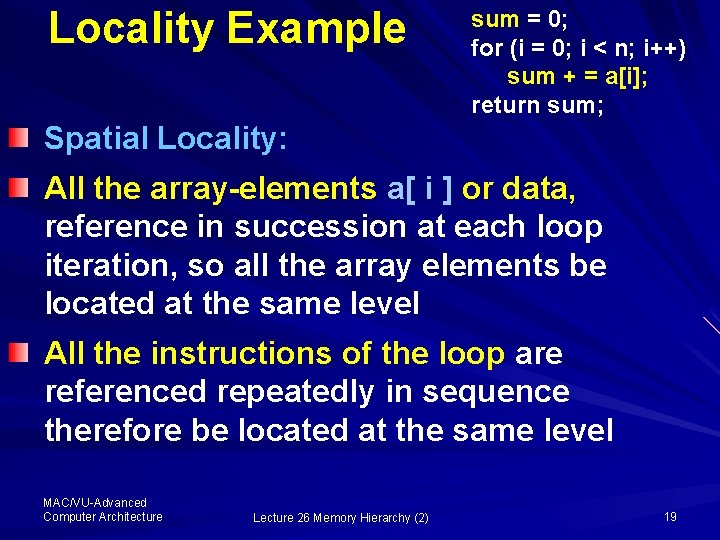

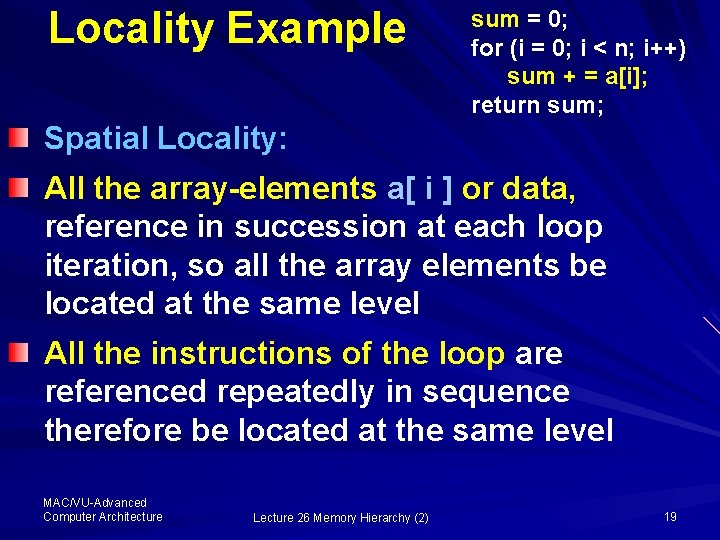

Locality Example: `sum = 0; for (i = 0; i < n; i++) sum += a[i]; return sum;`

Spatial Locality:

- Data: the array elements a[i] are referenced in succession, one per loop iteration, so all the array elements should be located at the same level
- Instructions: the instructions of the loop are referenced in sequence, so they should be located at the same level
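The payoff of spatial locality in this loop can be estimated with a small C sketch. It assumes (illustrative assumptions, not values from the lecture) that each `a[i]` is a 4-byte `int`, that a cache block holds 32 bytes, that elements never straddle a block boundary, and that the cache starts empty; the hypothetical function `count_block_fetches` walks the addresses the loop would reference and counts how often a new block has to be brought in.

```c
#include <assert.h>
#include <stddef.h>

/* Count the block fetches (misses) caused by reading n consecutive
   elements of elem_size bytes starting at byte address start, assuming
   an initially empty cache and that a fetched block stays cached while
   the scan is inside it. */
size_t count_block_fetches(size_t start, size_t n,
                           size_t elem_size, size_t block_size)
{
    assert(elem_size > 0 && block_size > 0);
    size_t fetches = 0;
    size_t current_block = 0;
    for (size_t i = 0; i < n; i++) {
        size_t addr  = start + i * elem_size;  /* address of a[i] */
        size_t block = addr / block_size;      /* block holding a[i] */
        if (fetches == 0 || block != current_block) {
            fetches++;                         /* new block: a miss */
            current_block = block;
        }
    }
    return fetches;
}
```

For instance, `count_block_fetches(0, 16, 4, 32)` returns 2: the 16 references cost two fetches, and the other 14 hit because each fetch brought in 8 neighbouring elements.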

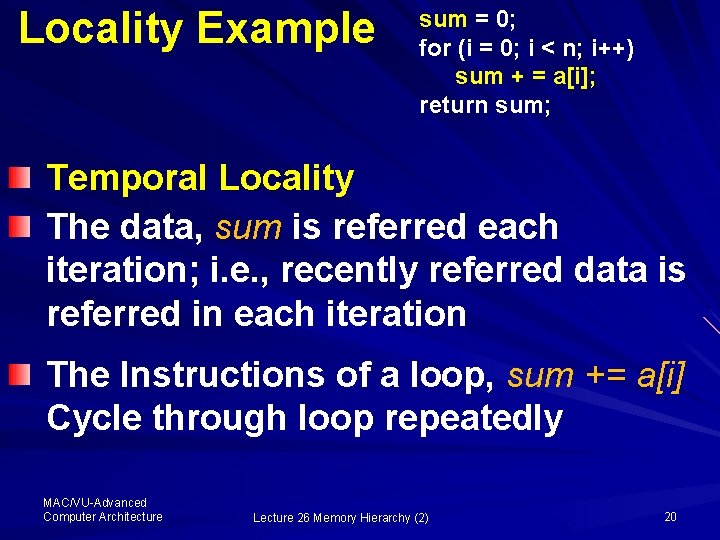

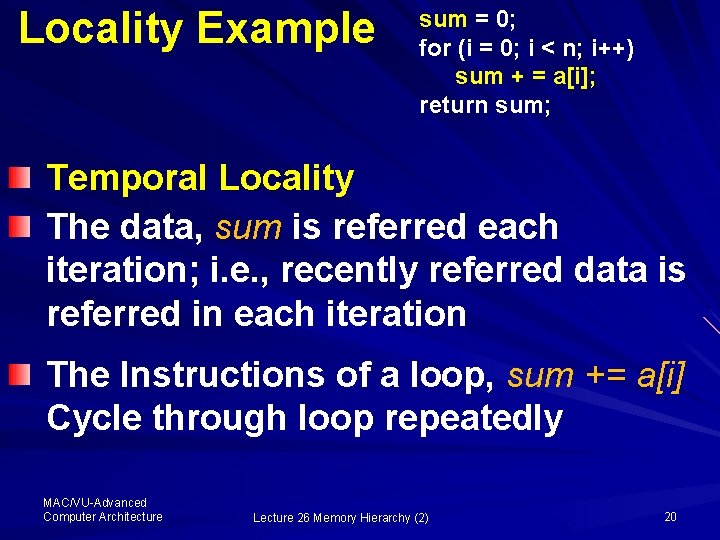

Locality Example: `sum = 0; for (i = 0; i < n; i++) sum += a[i]; return sum;`

Temporal Locality:

- Data: the variable sum is referenced in each iteration; i.e., recently referenced data is referenced again in every iteration
- Instructions: the instructions of the loop body, sum += a[i], are executed repeatedly as the program cycles through the loop

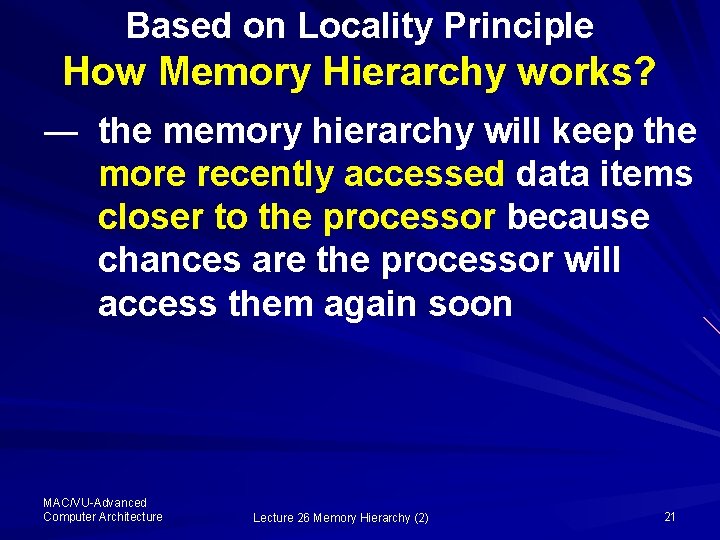

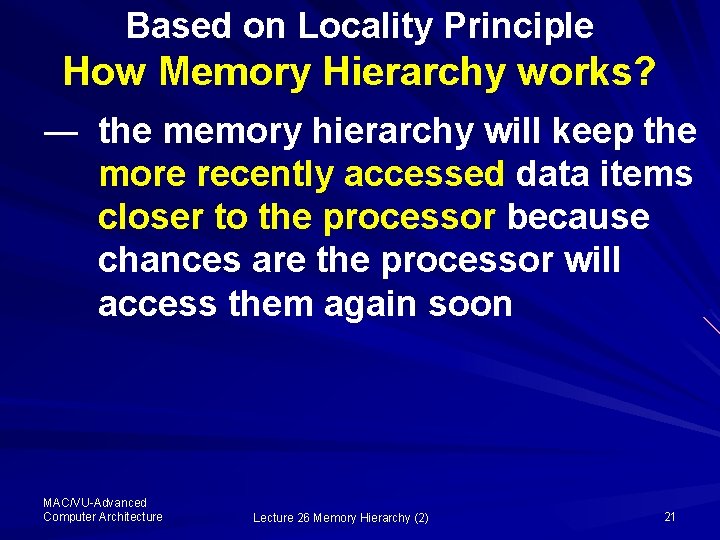

How Does the Memory Hierarchy Work? (Based on the Locality Principle)

- The memory hierarchy keeps the more recently accessed data items closer to the processor, because chances are the processor will access them again soon (temporal locality)

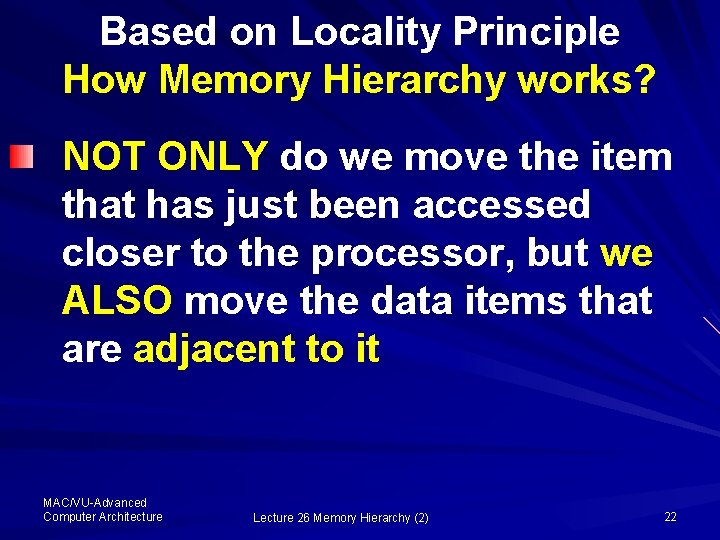

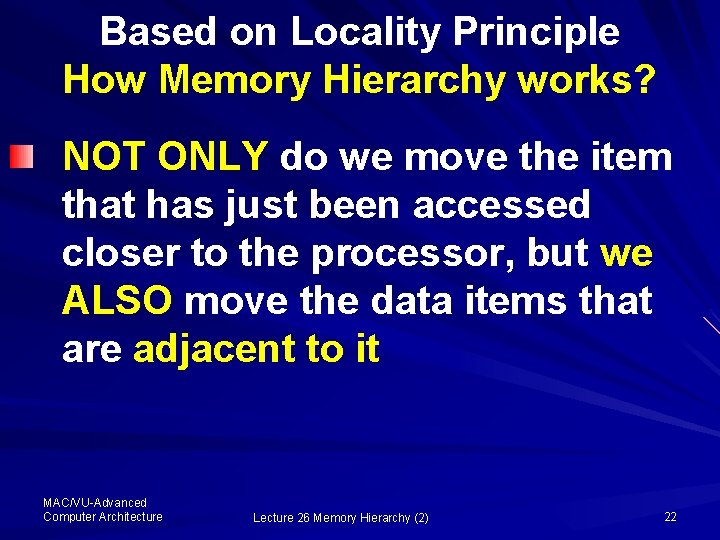

How Does the Memory Hierarchy Work? (Based on the Locality Principle)

- NOT ONLY do we move the item that has just been accessed closer to the processor, but we ALSO move the data items that are adjacent to it (spatial locality)

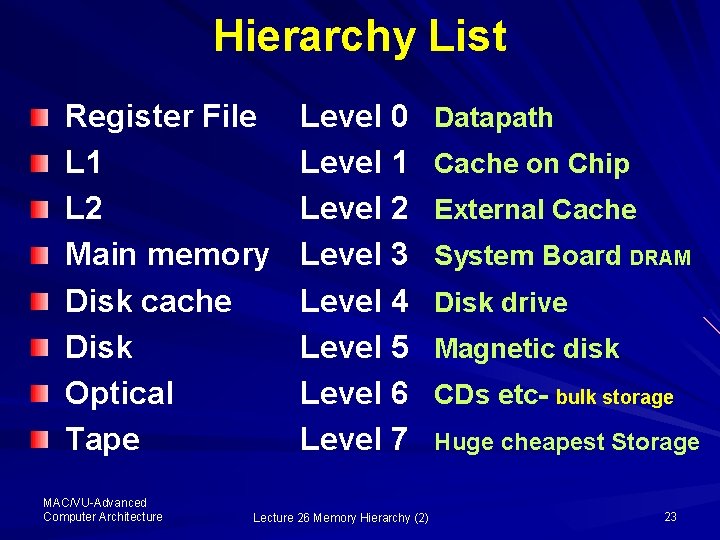

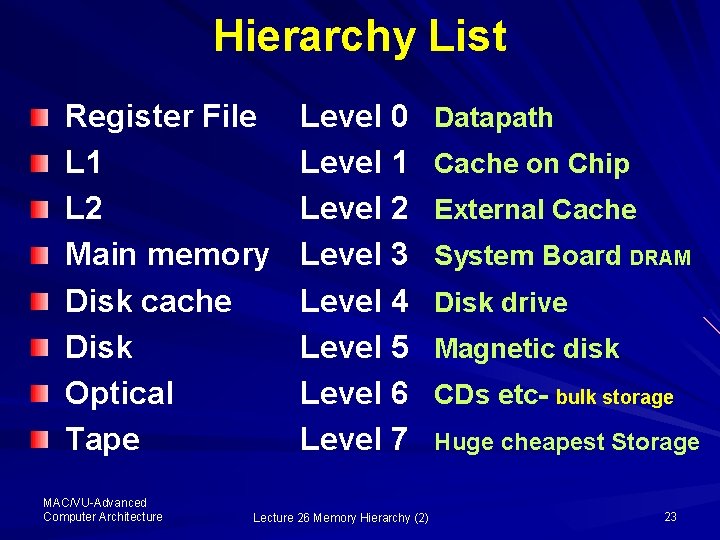

Hierarchy List

| Level | Device | Description |
|---|---|---|
| 0 | Register file | Datapath |
| 1 | L1 | Cache on chip |
| 2 | L2 | External cache |
| 3 | Main memory | System board DRAM |
| 4 | Disk cache | Disk drive |
| 5 | Disk | Magnetic disk |
| 6 | Optical | CDs etc.; bulk storage |
| 7 | Tape | Huge, cheapest storage |

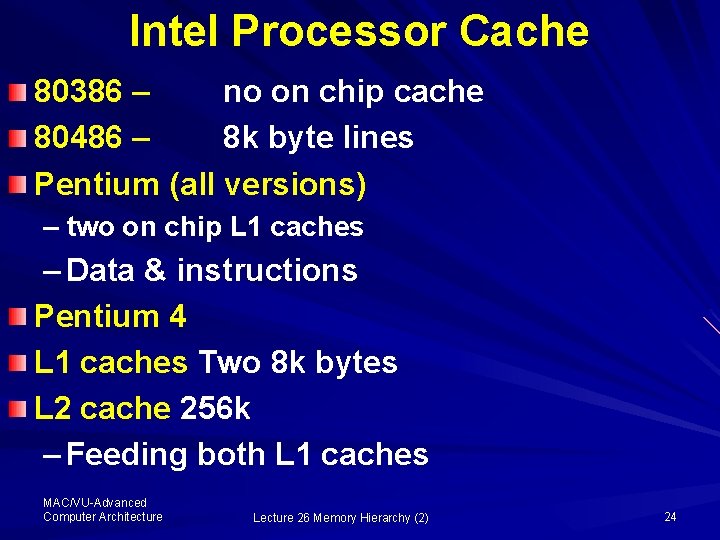

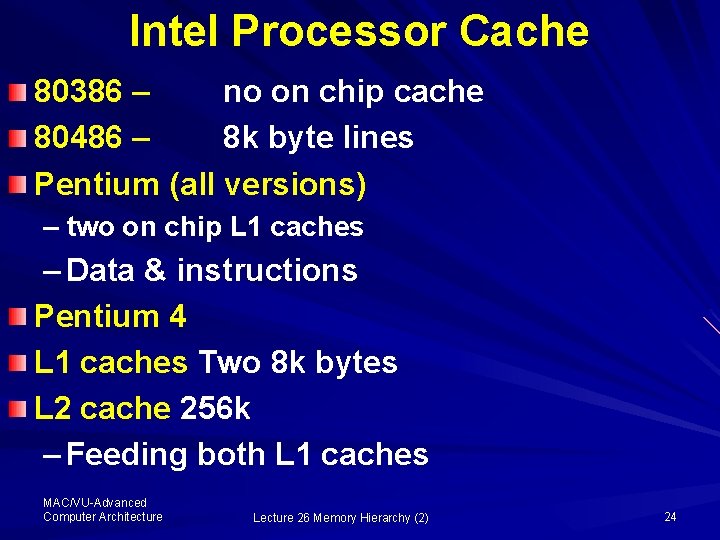

Intel Processor Cache

- 80386: no on-chip cache
- 80486: 8 KB on-chip cache
- Pentium (all versions): two on-chip L1 caches, one for data and one for instructions
- Pentium 4:
  - L1 caches: two 8 KB caches
  - L2 cache: 256 KB, feeding both L1 caches

Cache Devices

- The cache device is a small SRAM that is made directly accessible to the processor
- DRAM, which is accessible by the cache as well as by the user or programmer, is placed at the next higher level as the main memory
- Larger storage, such as disk, is placed away from the main memory

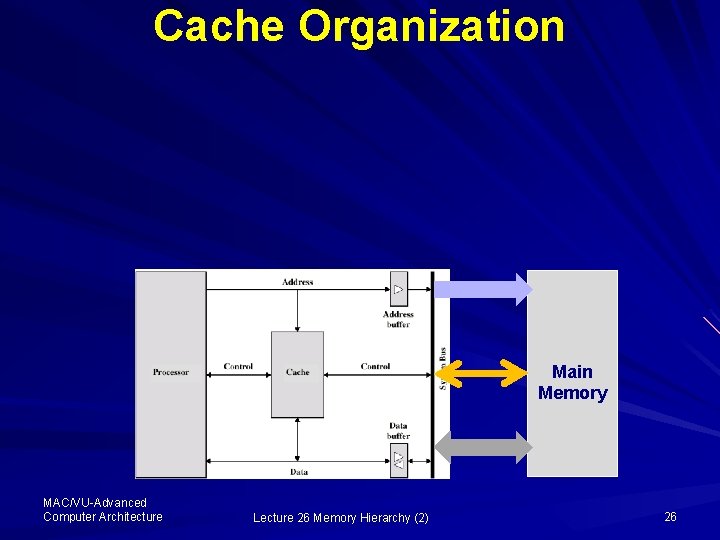

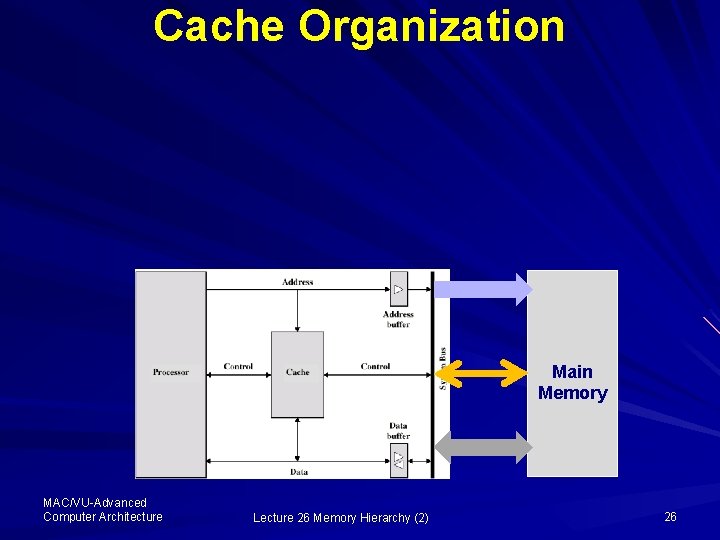

Cache Organization

[Figure: cache and main memory]

Caching in a Memory Hierarchy

[Figure: level k holds 4 blocks; level k+1 holds blocks 0 to 15; blocks 4 and 10 are shown being copied between the levels]

- The smaller, faster, more expensive device at level k caches a subset of the blocks (say 4 blocks) from level k+1
- The larger, slower, cheaper storage device at level k+1 is partitioned into blocks (say 16 blocks)
- Data is copied between levels in block-sized transfer units

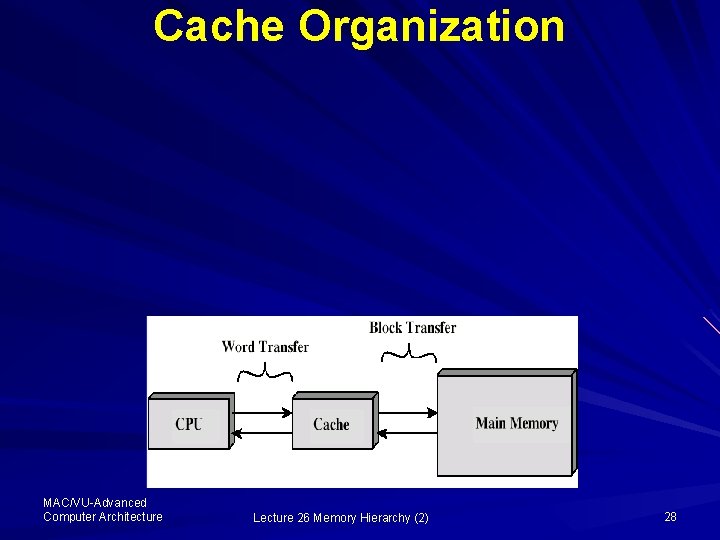

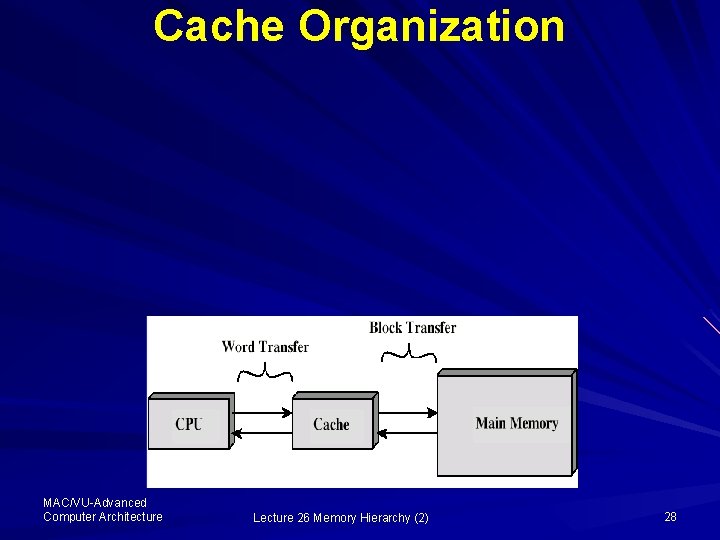

Cache Organization

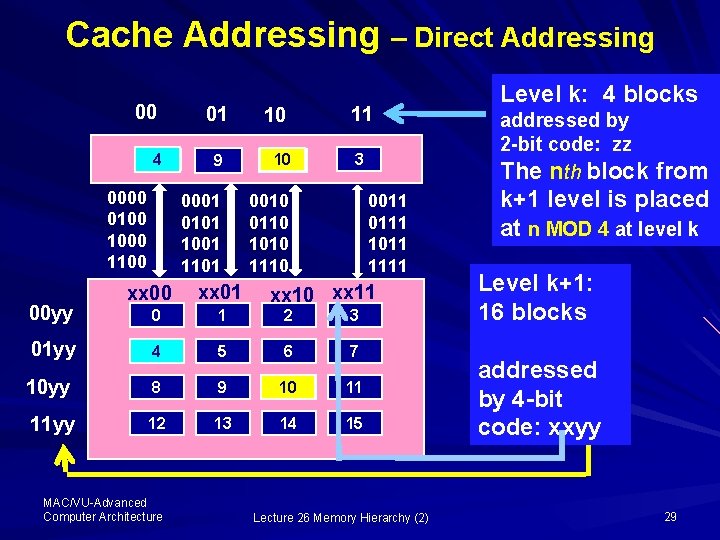

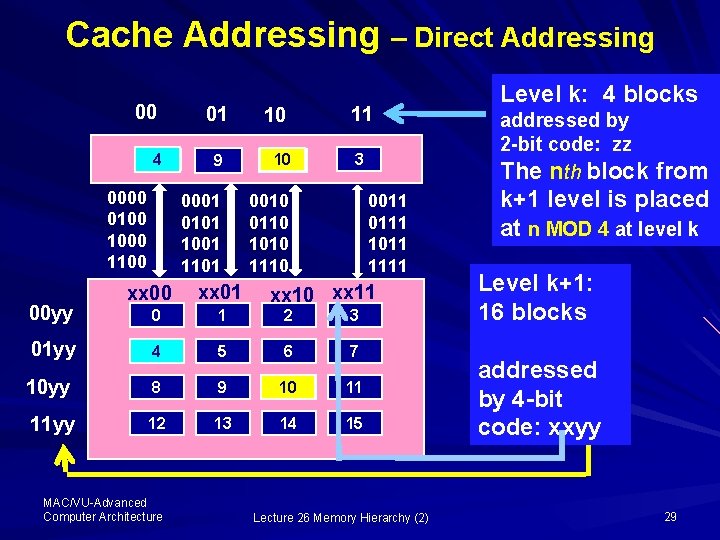

Cache Addressing – Direct Addressing

- Level k: 4 blocks, addressed by a 2-bit code zz (00, 01, 10, 11)
- Level k+1: 16 blocks (0 to 15), addressed by a 4-bit code xxyy (0000 to 1111)
- The nth block from level k+1 is placed at location n MOD 4 at level k; i.e., block xxyy goes to the level-k location yy

[Figure: level-k locations 00, 01, 10 and 11, each able to hold any of the level-(k+1) blocks xx00, xx01, xx10 and xx11 respectively]
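A minimal C sketch of the n MOD 4 rule; the names `level_k_location` and `high_bits_xx` are hypothetical. Because 4 is a power of two, n MOD 4 is just the two low-order bits yy of the 4-bit block number xxyy, and the remaining high-order bits xx are what distinguish the blocks competing for the same location.

```c
#include <assert.h>

/* Direct addressing as on the slide: level k+1 has 16 blocks
   (4-bit number xxyy) and level k has 4 blocks (2-bit location). */
#define LEVEL_K_BLOCKS 4u

/* Location at level k where block n of level k+1 must be placed:
   n MOD 4, i.e. the low-order bits yy of n. */
unsigned level_k_location(unsigned n)
{
    assert(n < 16u);                 /* level k+1 has blocks 0..15 */
    return n % LEVEL_K_BLOCKS;       /* same as n & 0x3 */
}

/* The high-order bits xx tell which of the four level-(k+1) blocks
   sharing that location is actually there. */
unsigned high_bits_xx(unsigned n)
{
    assert(n < 16u);
    return n / LEVEL_K_BLOCKS;       /* same as n >> 2 */
}
```

For example, block 14 (1110) goes to location 2 (10) with xx = 3 (11), and blocks 0, 4, 8 and 12 all compete for location 0.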

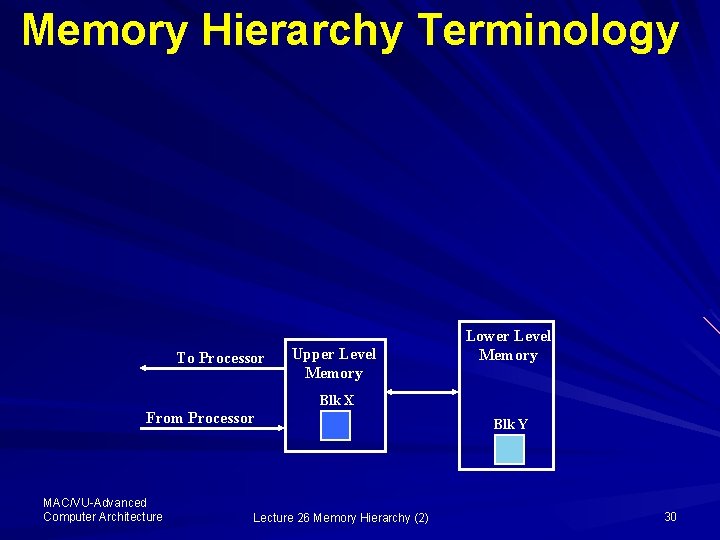

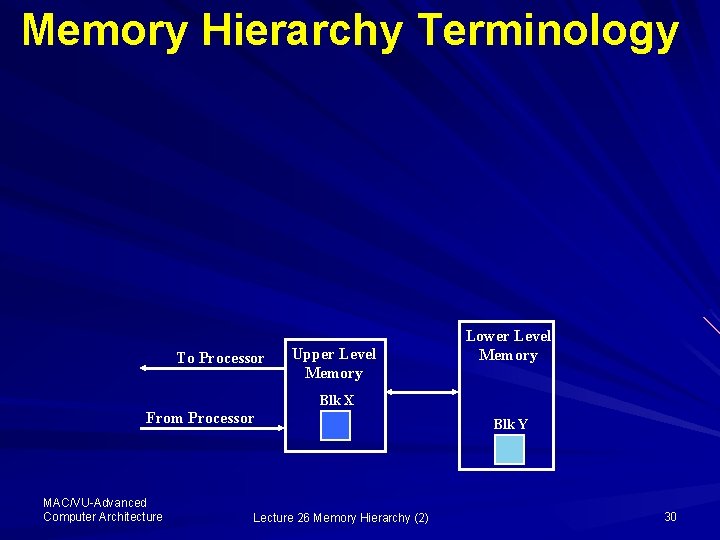

Memory Hierarchy Terminology

[Figure: data flows to and from the processor through the upper-level memory, which holds Blk X; blocks move between the upper-level memory and the lower-level memory, which holds Blk Y]

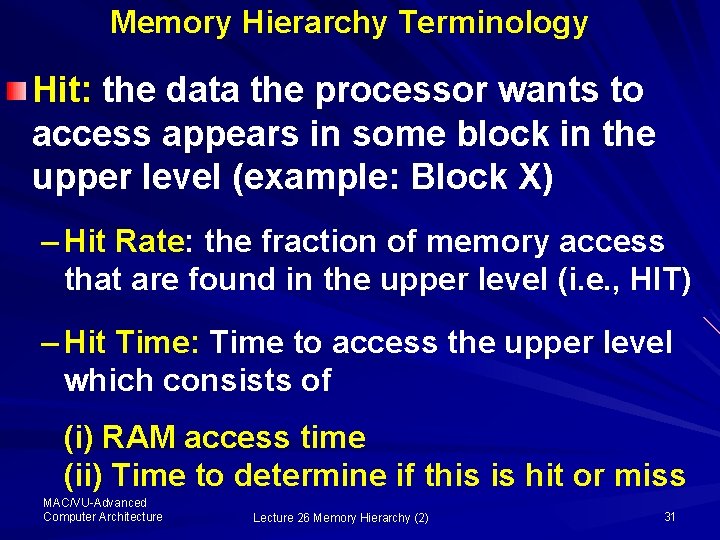

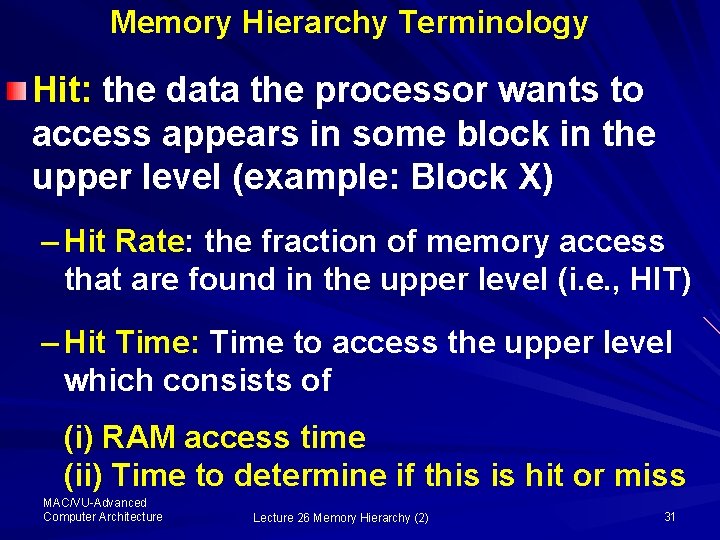

Memory Hierarchy Terminology

- Hit: the data the processor wants to access appears in some block in the upper level (example: Block X)
  - Hit Rate: the fraction of memory accesses found in the upper level
  - Hit Time: the time to access the upper level, which consists of (i) the RAM access time and (ii) the time to determine whether the access is a hit or a miss

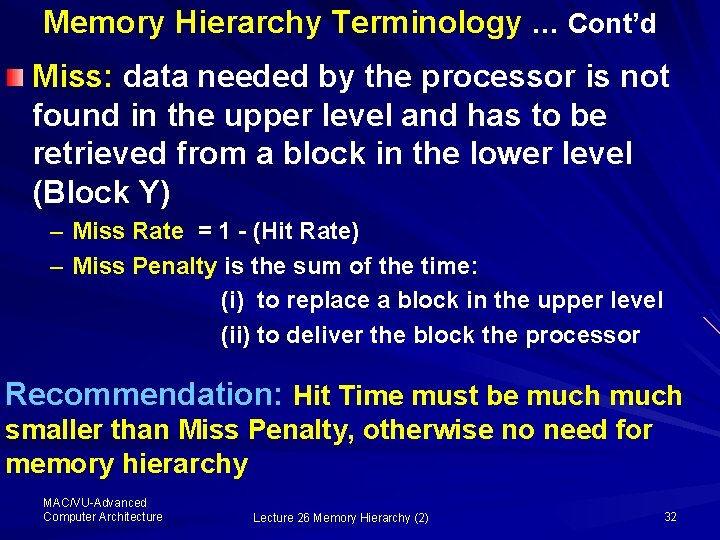

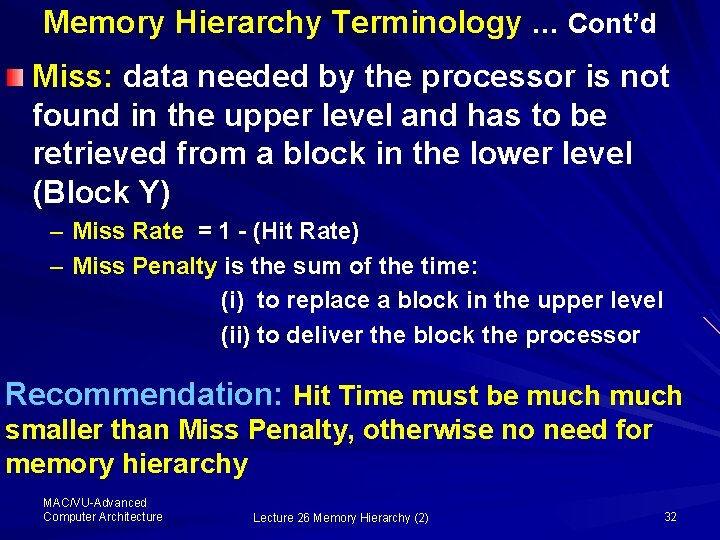

Memory Hierarchy Terminology … Cont’d

- Miss: the data needed by the processor is not found in the upper level and has to be retrieved from a block in the lower level (Block Y)
  - Miss Rate = 1 - (Hit Rate)
  - Miss Penalty is the sum of the time (i) to replace a block in the upper level and (ii) to deliver the block to the processor
- Recommendation: the hit time must be much smaller than the miss penalty; otherwise there is no need for a memory hierarchy
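The two rate definitions translate directly into code. The C sketch below (hypothetical function names) computes them from a count of hits and total accesses; for example, 3 hits out of 4 accesses gives a hit rate of 0.75 and a miss rate of 0.25.

```c
#include <assert.h>

/* Hit rate = hits / accesses */
double hit_rate(unsigned long hits, unsigned long accesses)
{
    assert(accesses > 0 && hits <= accesses);
    return (double)hits / (double)accesses;
}

/* Miss rate = 1 - hit rate */
double miss_rate(unsigned long hits, unsigned long accesses)
{
    return 1.0 - hit_rate(hits, accesses);
}
```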

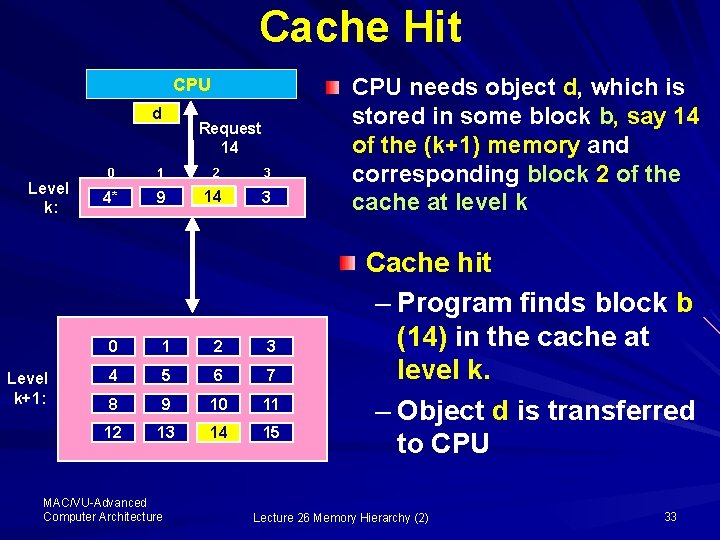

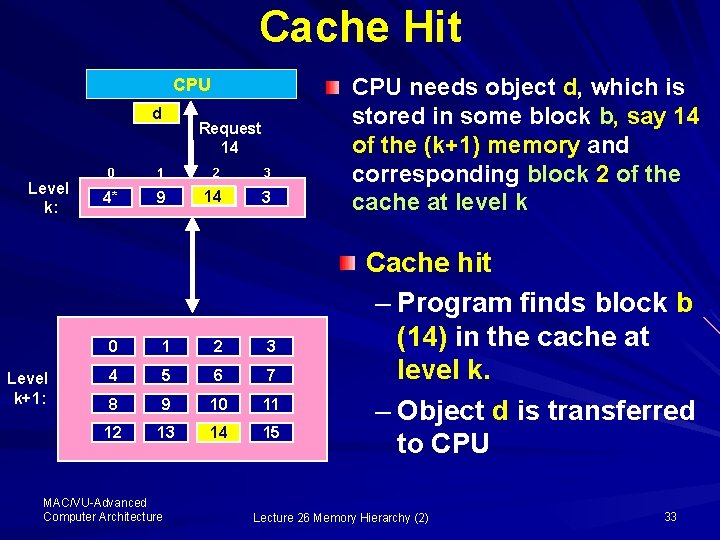

Cache Hit

[Figure: the CPU requests object d (Request 14); level k holds blocks 4*, 9, 14 and 3 in locations 0 to 3; level k+1 holds blocks 0 to 15]

The CPU needs object d, which is stored in some block b, say block 14 of the level k+1 memory, and correspondingly in block 2 of the cache at level k.

Cache hit:

- The program finds block b (14) in the cache at level k
- Object d is transferred to the CPU

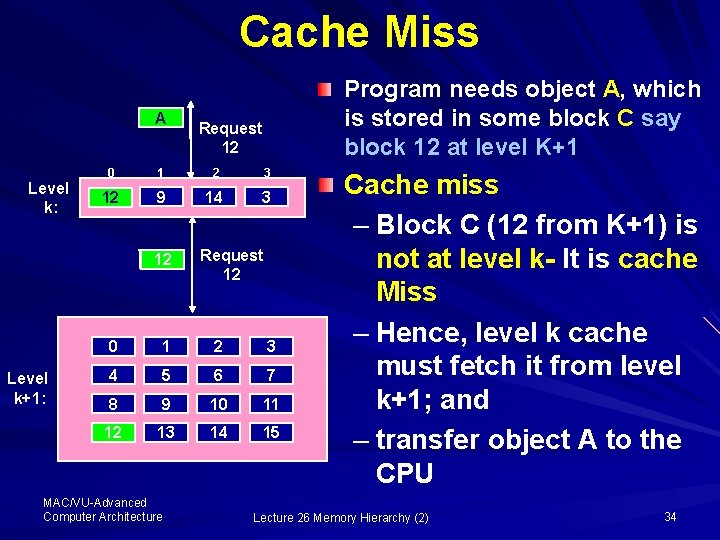

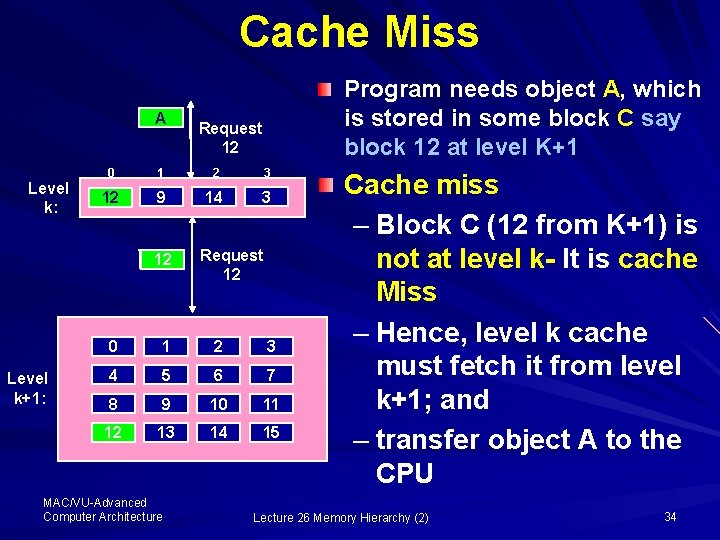

Cache Miss

[Figure: the request for block 12 misses at level k; block 12 is fetched from level k+1 into location 0 of level k, which then holds blocks 12, 9, 14 and 3]

The program needs object A, which is stored in some block C, say block 12 at level k+1.

Cache miss:

- Block C (12 of level k+1) is not at level k, so it is a cache miss
- Hence, the level-k cache must fetch it from level k+1; and
- transfer object A to the CPU
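The hit and miss walkthroughs can be replayed with a small C sketch. It assumes direct addressing (block b goes to location b MOD 4, as on the direct-addressing slide) and starts level k with blocks 4, 9, 14 and 3 as in the figures; `cache_k` and `request_block` are hypothetical names. Request 14 hits in location 2, while request 12 misses and is loaded into location 0.

```c
#include <assert.h>
#include <stdbool.h>

#define K_BLOCKS 4   /* level k holds 4 blocks, as in the figures */

/* level_k[slot] records which level-(k+1) block occupies that slot;
   -1 can mark an empty slot. */
typedef struct {
    int level_k[K_BLOCKS];
} cache_k;

/* Handle a request for block b of level k+1 using direct addressing
   (slot = b mod 4). Returns true on a hit. On a miss the block is
   fetched from level k+1 and overwrites whatever was in the slot. */
bool request_block(cache_k *c, int b)
{
    assert(b >= 0);
    int slot = b % K_BLOCKS;
    if (c->level_k[slot] == b)
        return true;              /* cache hit: serve from level k */
    c->level_k[slot] = b;         /* cache miss: fetch from level k+1 */
    return false;
}
```

Usage: with `cache_k c = { {4, 9, 14, 3} };`, `request_block(&c, 14)` returns true, `request_block(&c, 12)` returns false and leaves `c.level_k[0] == 12`, and a second request for 12 then hits.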

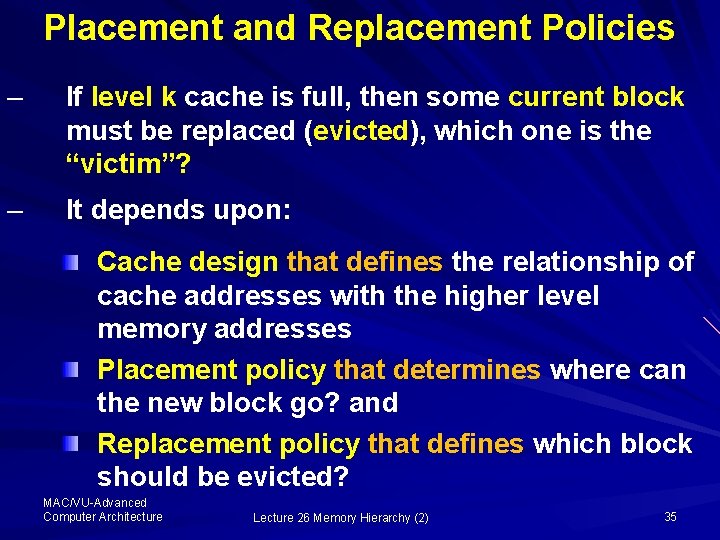

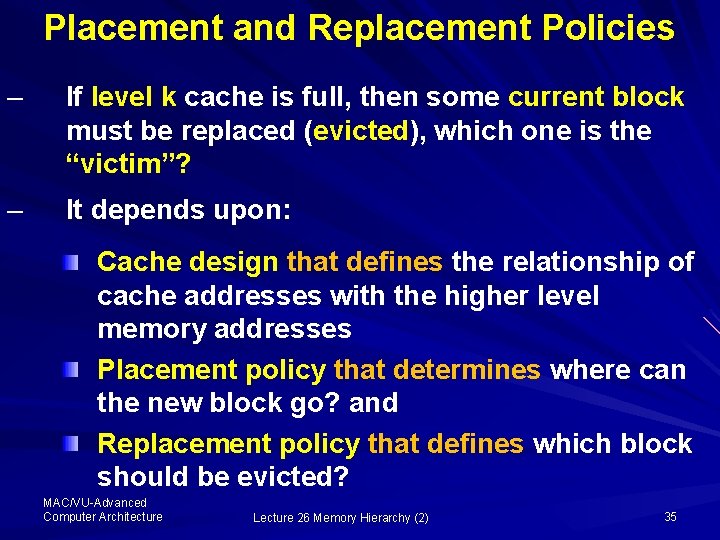

Placement and Replacement Policies

- If the level-k cache is full, some current block must be replaced (evicted). Which one is the “victim”?
- It depends upon:
  - the cache design, which defines the relationship of cache addresses to the higher-level memory addresses;
  - the placement policy, which determines where the new block can go; and
  - the replacement policy, which defines which block should be evicted

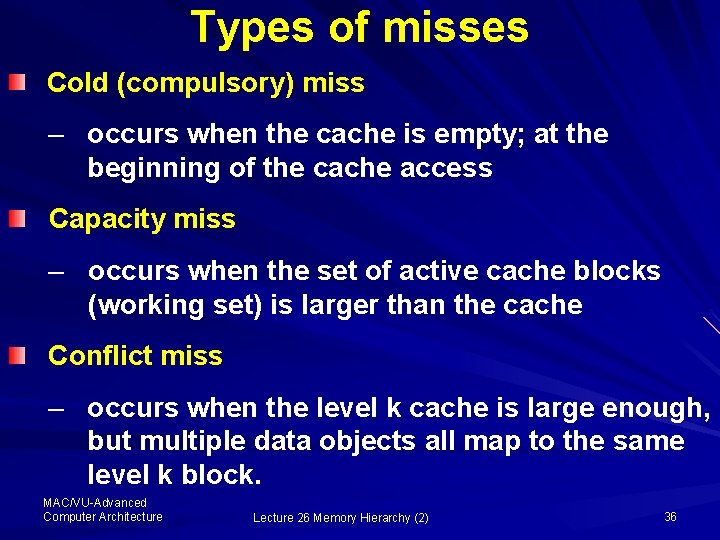

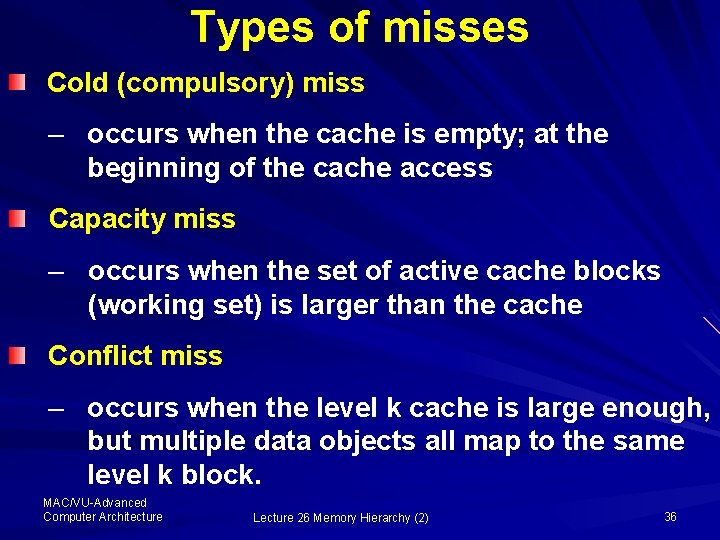

Types of Misses

- Cold (compulsory) miss: occurs when the cache is empty, at the beginning of cache access
- Capacity miss: occurs when the set of active cache blocks (the working set) is larger than the cache
- Conflict miss: occurs when the level-k cache is large enough, but multiple data objects all map to the same level-k block

Conflict Miss: Example … Cont’d

If the placement policy is based on direct addressing, then:

- Block n at level k+1 must be placed in block (n mod 4) at level k
- In this case, referencing blocks 0, 8, ... would miss every time: since 0 mod 4 = 8 mod 4 = 0, both blocks 0 and 8 of level k+1 are placed at location 00 at level k, so each reference evicts the other block
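A minimal C sketch that counts misses for a sequence of block references under the same direct-addressing rule (4 level-k locations, location n mod 4, initially empty cache); the function name `count_misses` is hypothetical. It shows that alternating between blocks 0 and 8 misses on every reference even though three locations stay empty.

```c
#include <assert.h>
#include <stddef.h>

#define K_BLOCKS 4
#define EMPTY (-1)

/* Count misses for a sequence of level-(k+1) block references,
   using direct addressing (block n -> location n mod 4) and an
   initially empty level-k cache. */
size_t count_misses(const int *refs, size_t len)
{
    int level_k[K_BLOCKS] = {EMPTY, EMPTY, EMPTY, EMPTY};
    size_t misses = 0;
    for (size_t i = 0; i < len; i++) {
        assert(refs[i] >= 0);
        int loc = refs[i] % K_BLOCKS;
        if (level_k[loc] != refs[i]) {
            misses++;                /* cold or conflict miss */
            level_k[loc] = refs[i];  /* evict the previous occupant */
        }
    }
    return misses;
}
```

With references 0, 8, 0, 8, 0, 8 all six miss (the first two are cold misses, the rest conflict misses); with 0, 1, 0, 1, 0, 1, which use different locations, only the two cold misses occur.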

Cache Design

We have observed that more than one block from the level-(k+1) memory (say, the main memory), having N blocks, may be placed at the same location in the level-k memory (say, the cache), having M blocks: block n is placed at location n MOD M.

Hence, a tag must be associated with each block in the level-k (cache) memory to identify its position in the level-(k+1) memory (main memory).
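A minimal C sketch of a tag check for a direct-mapped level-k memory with M blocks (M = 4 here; `line_t`, `lookup`, `place` and `block_number` are hypothetical names). Block n goes to line n MOD M and the tag stored with that line is n DIV M, so the pair (tag, line) identifies exactly which level-(k+1) block is present. The valid bit, which appears in the later cache organization figures, marks whether the line holds a block at all.

```c
#include <assert.h>
#include <stdbool.h>

#define M_BLOCKS 4u   /* number of level-k blocks (lines); illustrative */

typedef struct {
    bool     valid;   /* line holds a block */
    unsigned tag;     /* which level-(k+1) block it is: n / M */
} line_t;

/* Look up block n: select line n % M, then compare tags. */
bool lookup(const line_t lines[M_BLOCKS], unsigned n)
{
    const line_t *l = &lines[n % M_BLOCKS];
    return l->valid && l->tag == n / M_BLOCKS;
}

/* Place block n in its line, recording its tag. */
void place(line_t lines[M_BLOCKS], unsigned n)
{
    lines[n % M_BLOCKS].valid = true;
    lines[n % M_BLOCKS].tag   = n / M_BLOCKS;
}

/* Rebuild the level-(k+1) block number from tag and line index. */
unsigned block_number(unsigned tag, unsigned line)
{
    assert(line < M_BLOCKS);
    return tag * M_BLOCKS + line;
}
```

For example, after `place(lines, 8)`, line 0 holds tag 2, so `lookup(lines, 8)` is true while `lookup(lines, 0)` and `lookup(lines, 4)` are false even though they map to the same line.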

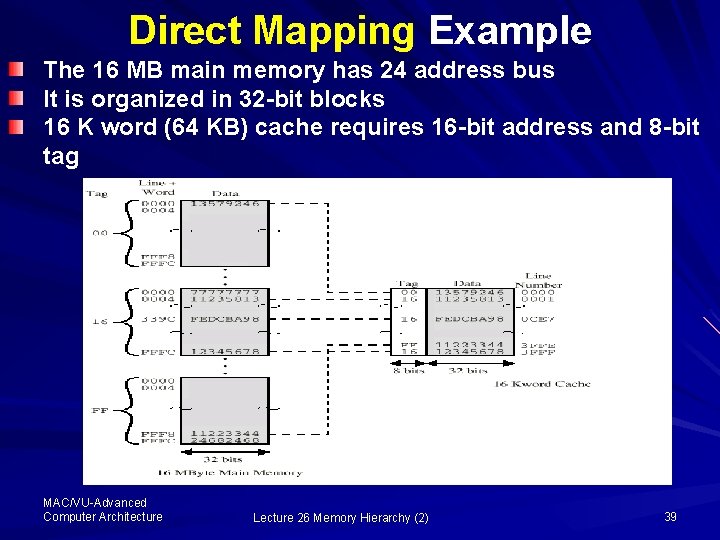

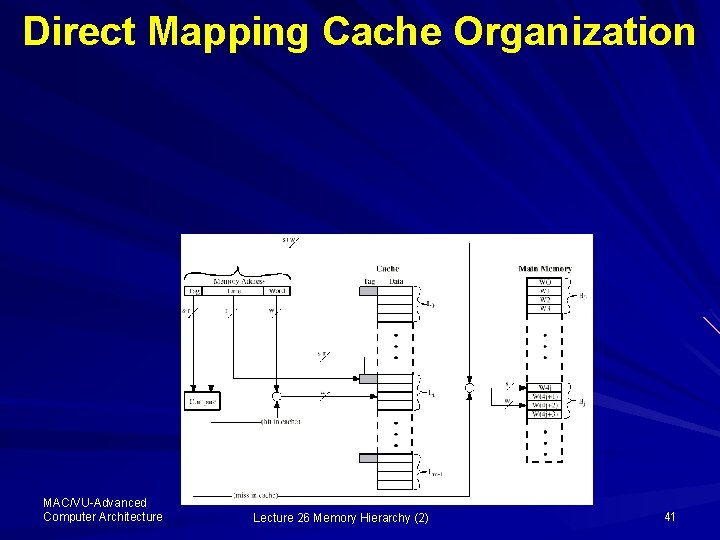

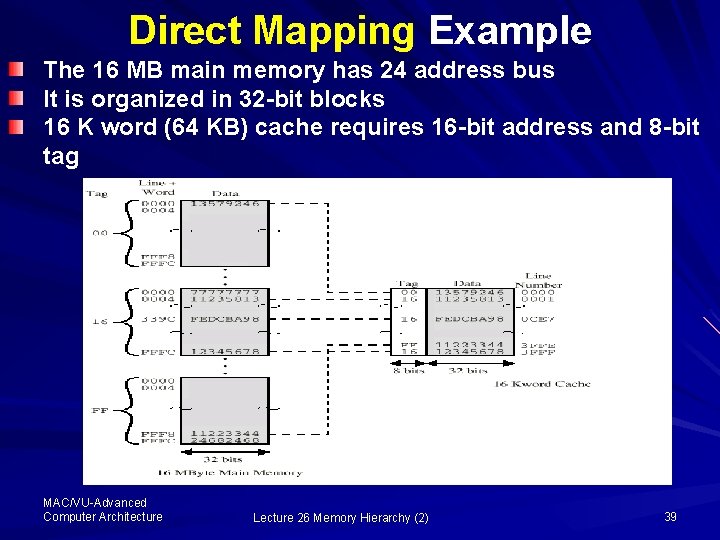

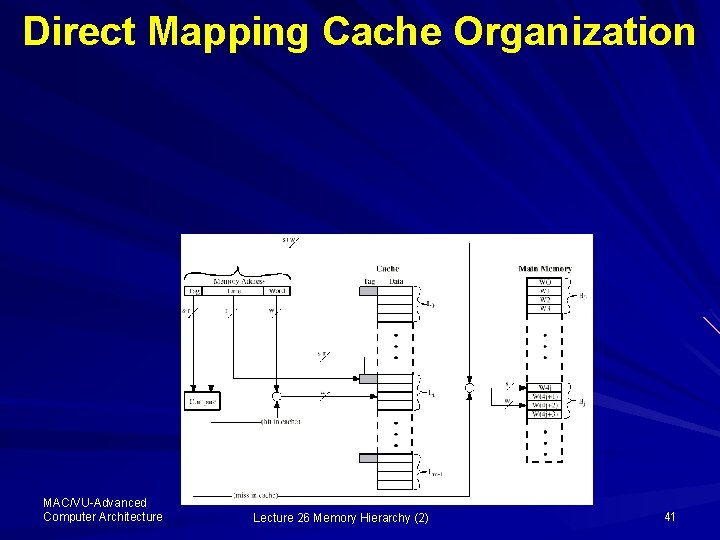

Direct Mapping Example

- The 16 MB main memory has a 24-bit address bus (2^24 bytes = 16 MB)
- It is organized in 32-bit (4-byte) blocks
- A 16K-word (64 KB) cache requires a 16-bit address and an 8-bit tag (24 - 16 = 8)
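The arithmetic in this example can be checked with a small C sketch. It assumes byte-addressable memory and power-of-two sizes; `field_widths` and `log2_exact` are hypothetical helper names. The address width comes from the memory size, the word field from the block size, the index (line) field from the number of cache lines, and the tag is whatever remains.

```c
#include <assert.h>
#include <stdint.h>

typedef struct {
    unsigned address, tag, index, word;   /* widths in bits */
} fields_t;

/* log2 of a power of two */
static unsigned log2_exact(uint64_t x)
{
    assert(x != 0 && (x & (x - 1)) == 0);  /* must be a power of two */
    unsigned bits = 0;
    while (x > 1) { x >>= 1; bits++; }
    return bits;
}

/* Field widths of a byte address for a direct-mapped cache. */
fields_t field_widths(uint64_t mem_bytes, uint64_t cache_bytes,
                      uint64_t block_bytes)
{
    fields_t f;
    f.address = log2_exact(mem_bytes);                 /* 16 MB -> 24 */
    f.word    = log2_exact(block_bytes);               /* 4 B -> 2 */
    f.index   = log2_exact(cache_bytes / block_bytes); /* 16K lines -> 14 */
    f.tag     = f.address - f.index - f.word;          /* 24 - 14 - 2 = 8 */
    return f;
}
```

`field_widths(1ull << 24, 1ull << 16, 4)` gives 24, 2, 14 and 8 bits; the 1 KB cache with 32-byte blocks discussed later gives 5 byte-select bits, 5 index bits and a 22-bit tag for a 32-bit address.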

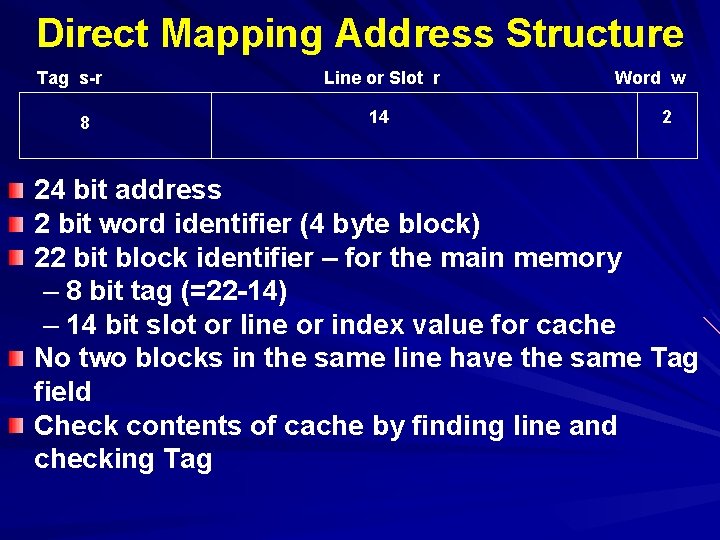

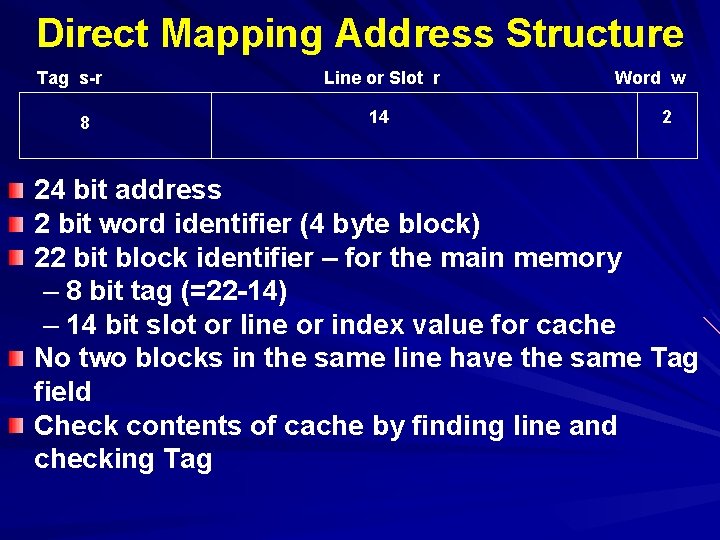

Direct Mapping Address Structure

| Tag (s - r) | Line or Slot (r) | Word (w) |
|---|---|---|
| 8 | 14 | 2 |

- 24-bit address
- 2-bit word identifier (4-byte block)
- 22-bit block identifier for the main memory, split into:
  - an 8-bit tag (= 22 - 14)
  - a 14-bit slot, line or index value for the cache
- No two blocks that map to the same line have the same tag field
- The contents of the cache are checked by finding the line and then checking the tag
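Splitting an address into these fields is a pair of shifts and masks. A minimal C sketch using the slide's widths (8-bit tag, 14-bit line, 2-bit word); the type `dm_addr_t` and function `split_address` are hypothetical names.

```c
#include <assert.h>
#include <stdint.h>

/* Field widths from the slide: 8-bit tag, 14-bit line, 2-bit word. */
#define WORD_BITS 2u
#define LINE_BITS 14u
#define TAG_BITS  8u

typedef struct { uint32_t tag, line, word; } dm_addr_t;

/* Split a 24-bit main-memory address into tag | line | word. */
dm_addr_t split_address(uint32_t addr)
{
    assert(addr < (1u << (TAG_BITS + LINE_BITS + WORD_BITS)));
    dm_addr_t a;
    a.word = addr & ((1u << WORD_BITS) - 1u);
    a.line = (addr >> WORD_BITS) & ((1u << LINE_BITS) - 1u);
    a.tag  = addr >> (WORD_BITS + LINE_BITS);
    return a;
}
```

For example, address 0x16339C (an arbitrary value) splits into tag 0x16, line 0x0CE7 and word 0: the cache reads line 0x0CE7 and compares its stored tag with 0x16.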

Direct Mapping Cache Organization

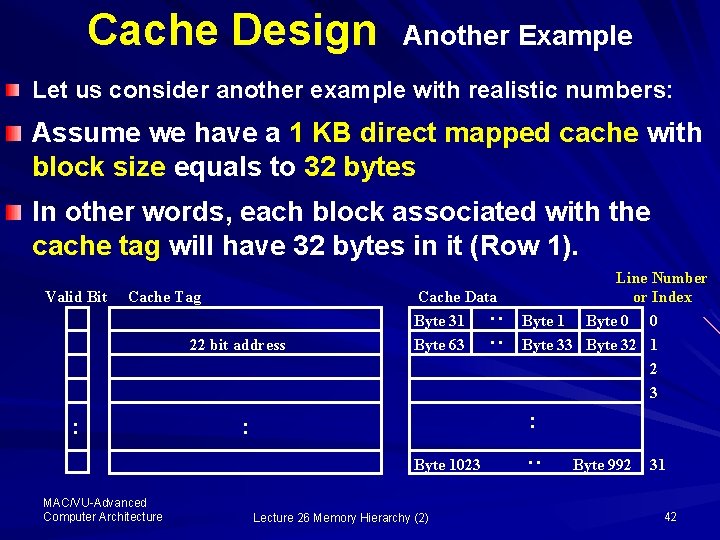

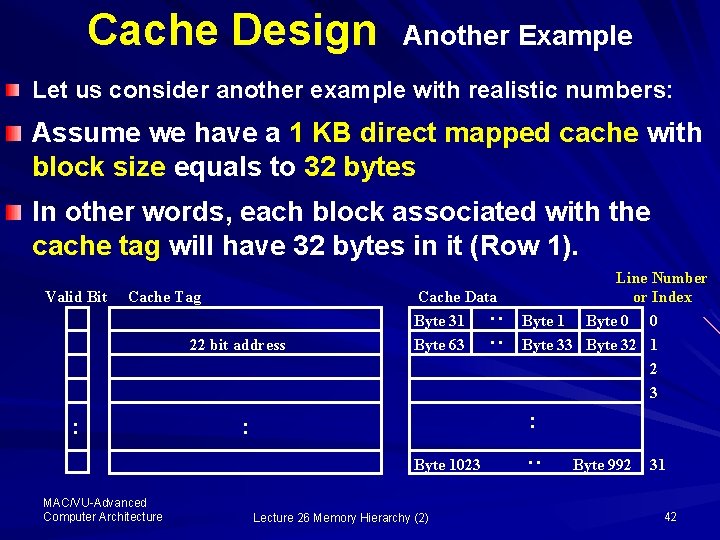

Cache Design: Another Example

Let us consider another example with realistic numbers. Assume a 1 KB direct-mapped cache with a block size of 32 bytes; in other words, each block associated with a cache tag holds 32 bytes (Row 1).

[Figure: 32 cache lines (line number or index 0 to 31), each with a valid bit, a 22-bit cache tag and 32 bytes of cache data: bytes 0 to 31 in line 0, bytes 32 to 63 in line 1, ..., bytes 992 to 1023 in line 31]

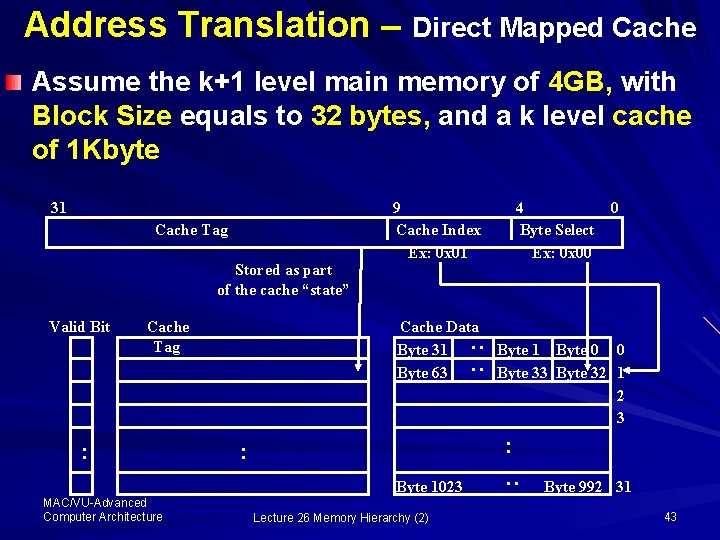

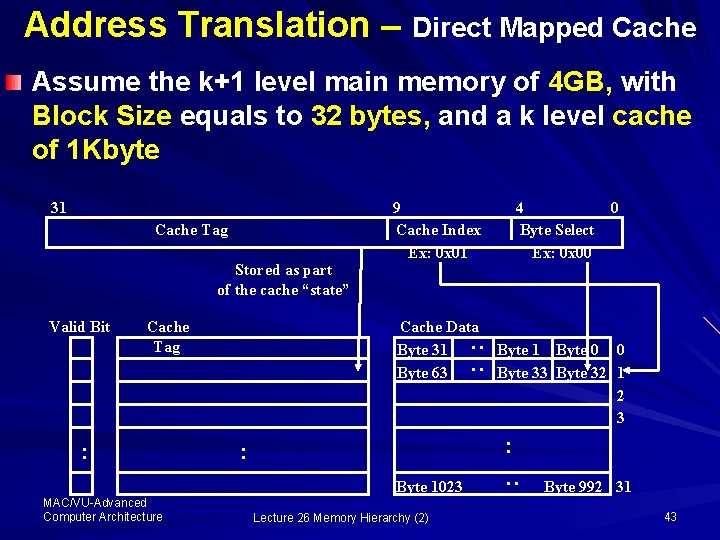

Address Translation – Direct Mapped Cache

Assume a level-(k+1) main memory of 4 GB (hence 32-bit addresses), a block size of 32 bytes, and a level-k cache of 1 KB.

[Figure: the 32-bit address is divided into the cache tag (bits 31 to 10), stored as part of the cache “state”; the cache index (bits 9 to 5, e.g., 0x01); and the byte select (bits 4 to 0, e.g., 0x00). The cache has 32 lines (0 to 31), each with a valid bit, a cache tag and 32 bytes of cache data (bytes 0 to 31 in line 0, bytes 32 to 63 in line 1, ..., bytes 992 to 1023 in line 31)]

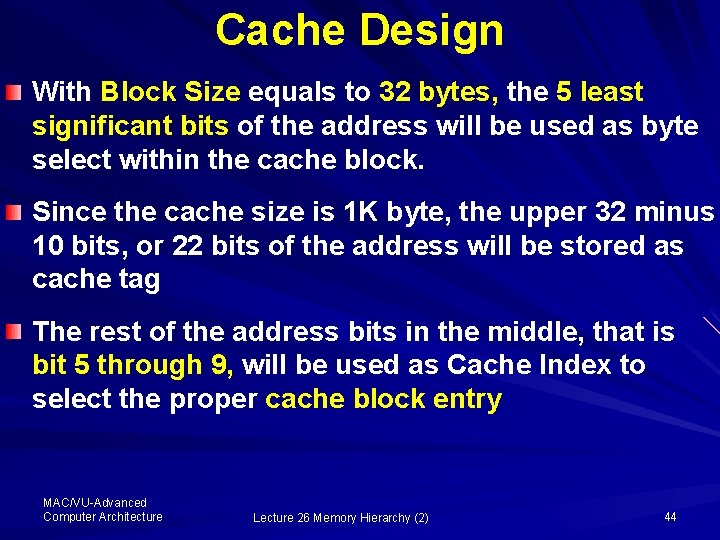

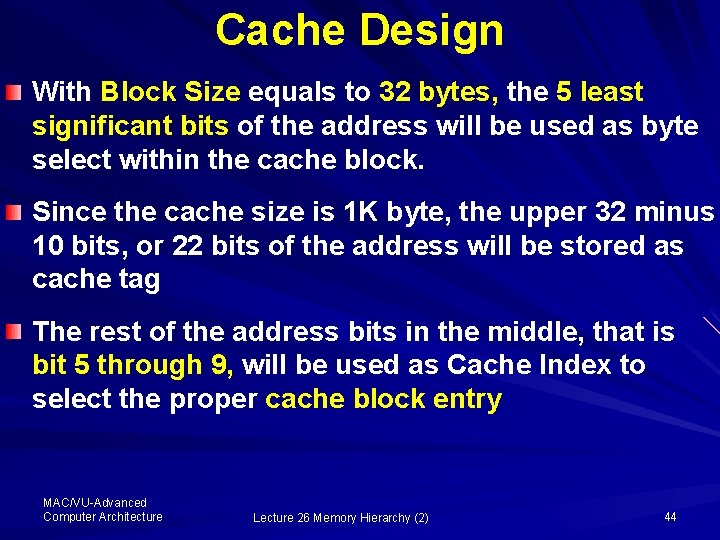

Cache Design

- With a block size of 32 bytes, the 5 least significant bits of the address (bits 0 through 4) are used as the byte select within the cache block
- Since the cache size is 1 KB (2^10 bytes), the upper 32 - 10 = 22 bits of the address are stored as the cache tag
- The remaining address bits in the middle, bits 5 through 9, are used as the cache index to select the proper cache block entry
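The bit slicing just described can be written as three masks and shifts. A minimal C sketch for the 1 KB, 32-byte-block cache with 32-bit addresses; the function names `byte_select`, `cache_index` and `cache_tag` are hypothetical. An address such as 0x00000020 gives byte select 0x00 and cache index 0x01, matching the example values in the figure.

```c
#include <assert.h>   /* assert(), used by the checks in the usage note */
#include <stdint.h>

/* 1 KB direct-mapped cache, 32-byte blocks, 32-bit addresses. */
#define BYTE_SELECT_BITS 5u  /* 32-byte block: bits 4..0 */
#define INDEX_BITS       5u  /* 1 KB / 32 B = 32 lines: bits 9..5 */

uint32_t byte_select(uint32_t addr)
{
    return addr & ((1u << BYTE_SELECT_BITS) - 1u);
}

uint32_t cache_index(uint32_t addr)
{
    return (addr >> BYTE_SELECT_BITS) & ((1u << INDEX_BITS) - 1u);
}

/* The remaining 32 - 10 = 22 high-order bits form the tag. */
uint32_t cache_tag(uint32_t addr)
{
    return addr >> (BYTE_SELECT_BITS + INDEX_BITS);
}
```

For example, `cache_tag(0x400u)` is 1 and `cache_index(0x400u)` is 0: address 0x400 is one full cache size (1 KB) past address 0, so it maps to the same line 0 with a different tag.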