CS 704 Advanced Computer Architecture Lecture 30 Memory

- Slides: 51

CS 704 Advanced Computer Architecture
Lecture 30
Memory Hierarchy Design: Cache Performance Enhancement (Reducing Miss Rate)
Prof. Dr. M. Ashraf Chughtai

Today’s Topics
- Recap: Reducing Miss Penalty
- Classification of Cache Misses
- Reducing Cache Miss Rate
- Summary

MAC/VU-Advanced Computer Architecture Lecture 30 Memory Hierarchy (6) 2

Recap: Improving Cache Performance
Cache performance can be improved by reducing:
- the miss penalty
- the miss rate
- the miss penalty or miss rate via parallelism
- the time to hit in the cache

Recap: Reducing Miss Penalty
- Multilevel caches
- Critical word first and early restart
- Priority to read misses over writes
- Merging write buffers
- Victim caches

Recap: Reducing Miss Penalty
- Multilevel caches: "the more the merrier"
- Critical word first and early restart: "intolerance"; reduces the miss penalty
- Priority to read misses over write misses: "favoritism"
- Merging write buffer: "acquaintance"
- Victim cache: "salvage"

Recap: Reducing Miss Penalty
- Multilevel caches reduce the miss penalty
- Next: reducing the miss rate, covering cache misses and the methods to reduce the miss rate

Cache Misses
- Compulsory misses (cold-start or first-reference misses)
- Capacity misses
- Conflict misses (collision or interference misses)

Cache Misses - Classification
- Compulsory miss: the very first access to a block cannot hit, so the block must be brought into the cache.
- Capacity miss: the cache cannot hold all the blocks the program needs, so blocks are discarded and later retrieved. These are the misses that occur even in a fully associative cache.

Cache Misses - Classification
- Conflict miss: in a direct-mapped or set-associative cache, many blocks map to the same block frame or set, so a block can be discarded and later retrieved even when other parts of the cache are free.
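To make the conflict case concrete, here is a minimal sketch of how a byte address is mapped to a set. The block size, the number of sets, and all function names are illustrative assumptions, not values taken from the lecture.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical cache geometry, chosen only for illustration:
   128 sets of 32-byte blocks (4 KB if direct mapped). */
enum { BLOCK_BYTES = 32, NUM_SETS = 128 };

/* Block address: the byte address with the block offset removed. */
uint32_t block_address(uint32_t byte_addr) {
    return byte_addr / BLOCK_BYTES;
}

/* Set index: (block address) mod (number of sets). */
uint32_t set_index(uint32_t byte_addr) {
    return block_address(byte_addr) % NUM_SETS;
}

/* Two addresses compete for the same set when they lie in
   different blocks but share a set index. */
int may_conflict(uint32_t a, uint32_t b) {
    return block_address(a) != block_address(b)
        && set_index(a) == set_index(b);
}
```

With this geometry, addresses 0 and 4096 fall in different blocks but share set 0, so in a direct-mapped cache each one evicts the other.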

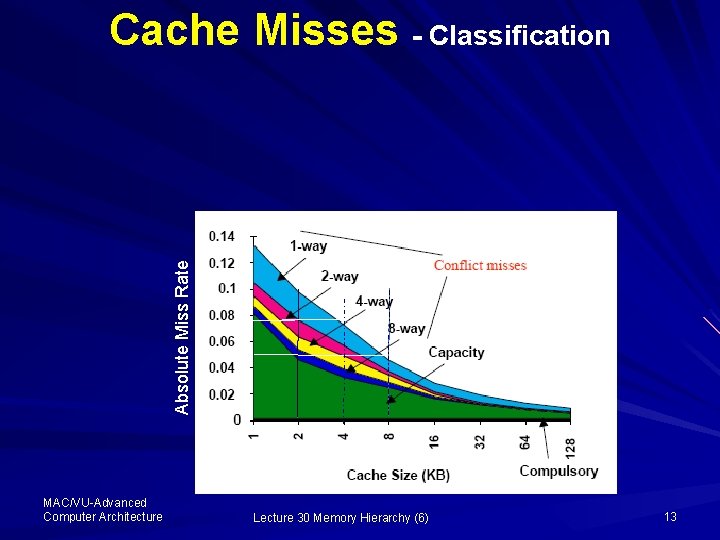

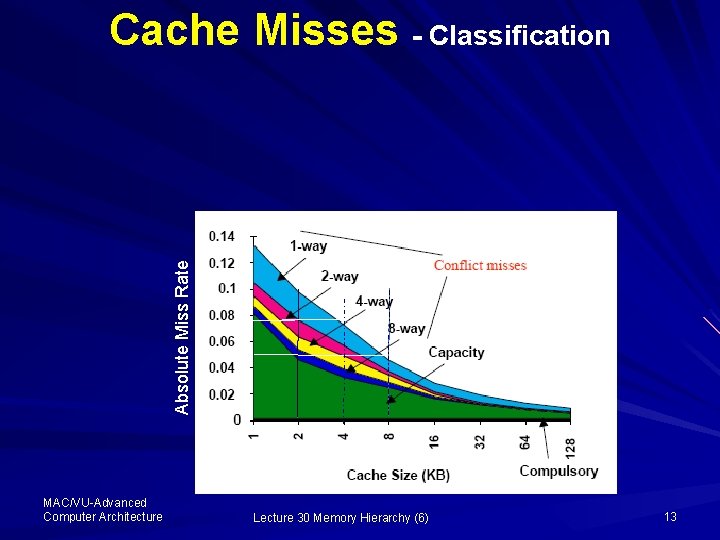

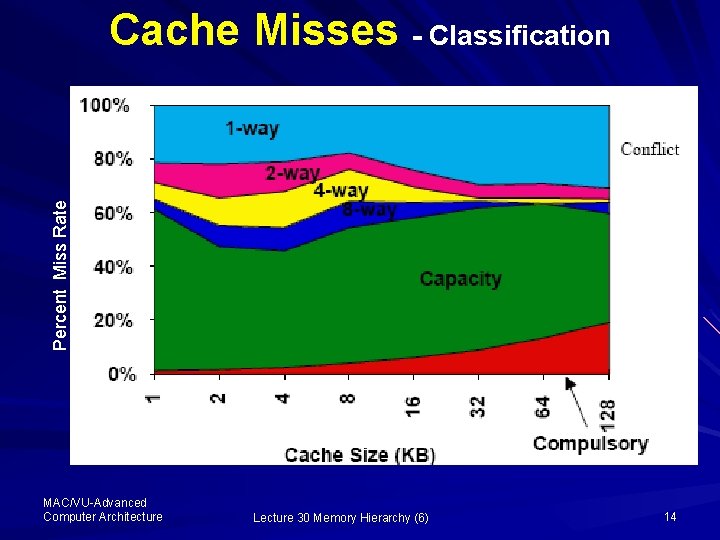

Cache Misses - Classification: Absolute Miss Rate

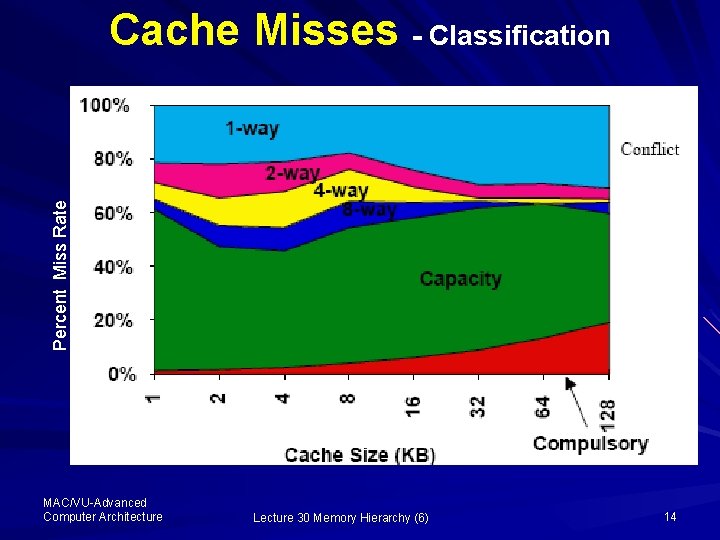

Cache Misses - Classification: Percent Miss Rate

Reducing Miss Rate
1. Larger Block Size
2. Larger Caches
3. Higher Associativity
4. Way Prediction and Pseudo-associativity
5. Compiler Optimization

1: Larger Block Size
- Larger blocks reduce the miss rate by exploiting spatial locality
- A larger block brings in more neighboring data or instructions on each miss

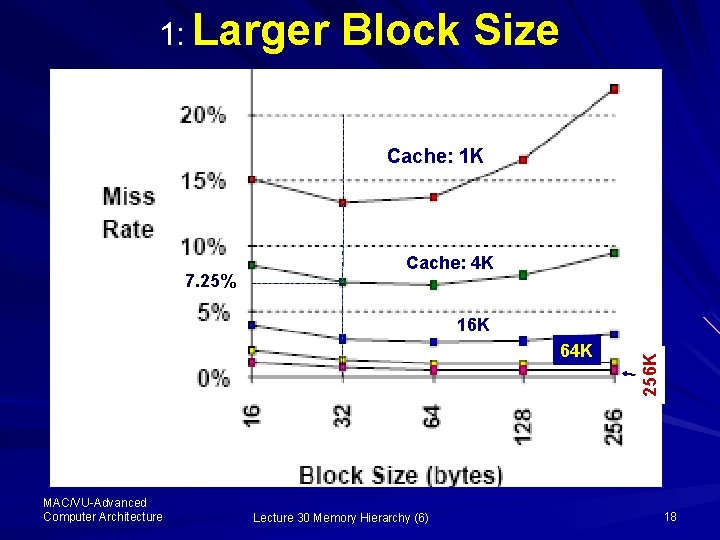

1: Larger Block Size
- In a small cache, larger blocks may increase the miss rate, because fewer blocks fit in the cache

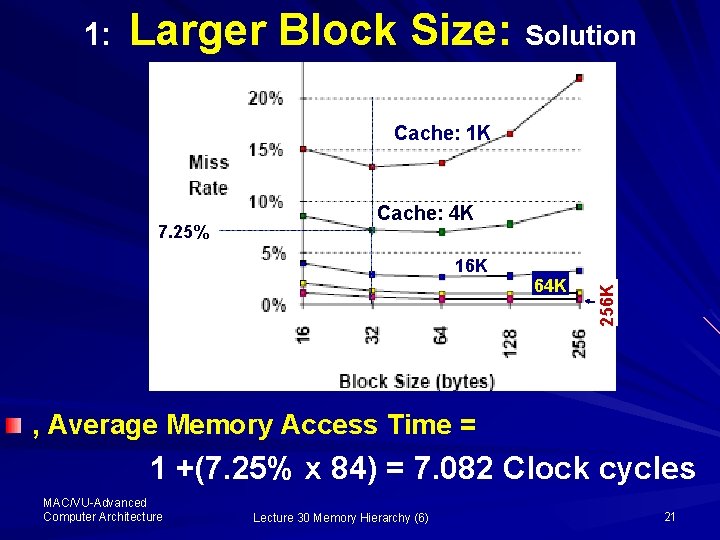

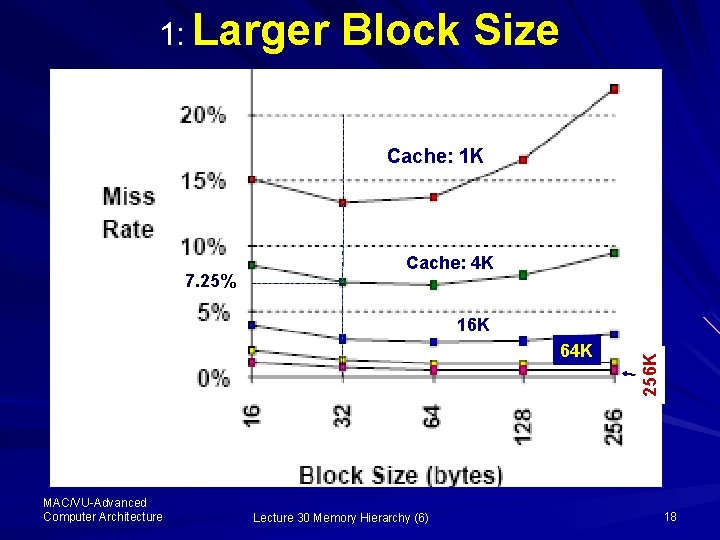

1: Larger Block Size
[Figure: miss rate versus block size, with one curve per cache size (1 K, 4 K, 64 K, 256 K); one point is labeled 7.25%]

1: Larger Block Size
Assumptions:
- The memory system takes 80 clock cycles of overhead
- It then delivers 16 bytes every 2 clock cycles
- The hit time is 1 clock cycle

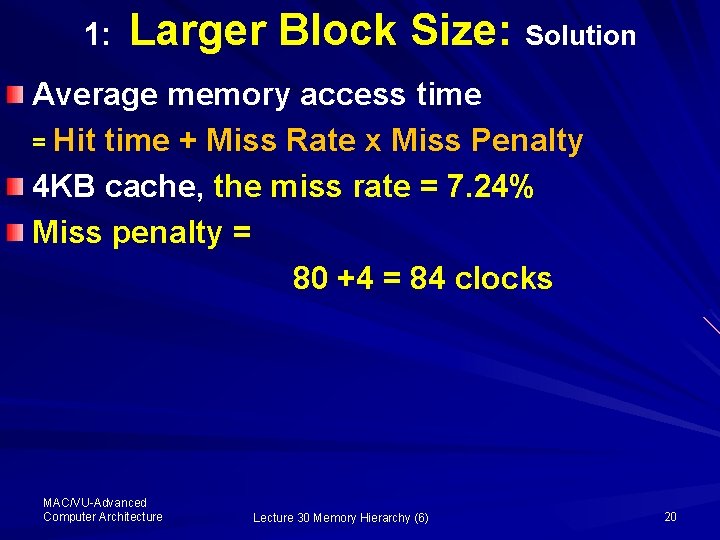

1: Larger Block Size: Solution
Average memory access time = Hit time + Miss rate × Miss penalty
For a 4 KB cache with a 32-byte block, the miss rate = 7.24%
Miss penalty = 80 + 4 = 84 clock cycles (two 16-byte transfers at 2 clock cycles each)

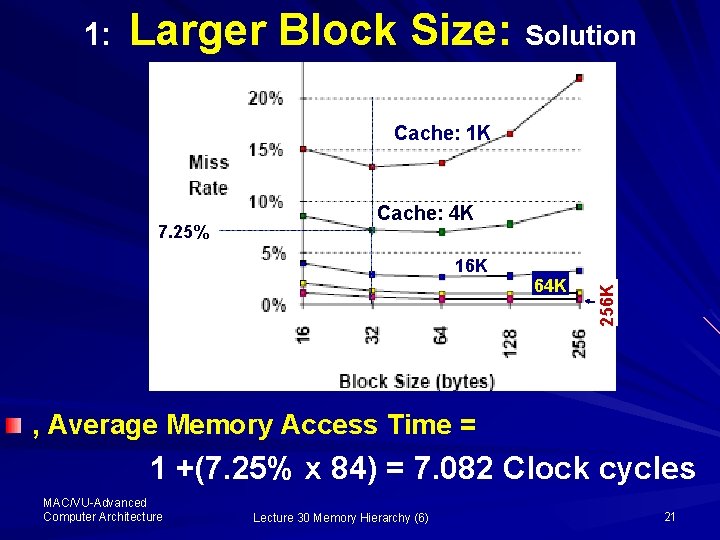

1: Larger Block Size: Solution
For the 4 K cache: Average memory access time = 1 + (7.24% × 84) = 7.082 clock cycles
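The arithmetic in this solution can be sketched as two small functions. The function and parameter names are assumptions made for illustration; the numbers in the usage note come from the slides (80 clock cycles of overhead, 16 bytes every 2 clock cycles, 1-cycle hit time, 7.24% miss rate).

```c
#include <assert.h>

/* Miss penalty in clock cycles: fixed overhead plus transfer time.
   The memory delivers bytes_per_transfer bytes every
   cycles_per_transfer clock cycles. */
double miss_penalty(int block_bytes, int overhead_cycles,
                    int bytes_per_transfer, int cycles_per_transfer) {
    assert(block_bytes > 0 && bytes_per_transfer > 0);
    /* Round up to a whole number of transfers. */
    int transfers = (block_bytes + bytes_per_transfer - 1) / bytes_per_transfer;
    return overhead_cycles + (double)transfers * cycles_per_transfer;
}

/* Average memory access time = hit time + miss rate x miss penalty. */
double amat(double hit_time, double miss_rate, double penalty) {
    return hit_time + miss_rate * penalty;
}
```

For example, `miss_penalty(32, 80, 16, 2)` gives 84 clock cycles, and `amat(1.0, 0.0724, 84.0)` gives about 7.082 clock cycles, matching the figure above.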

1: Larger Block Size: Solution
See Table 5.18, p. 428

1: Larger Block Size: Solution
- The best block size depends on both the latency and the bandwidth of the lower-level memory
- High latency and high bandwidth favor larger blocks

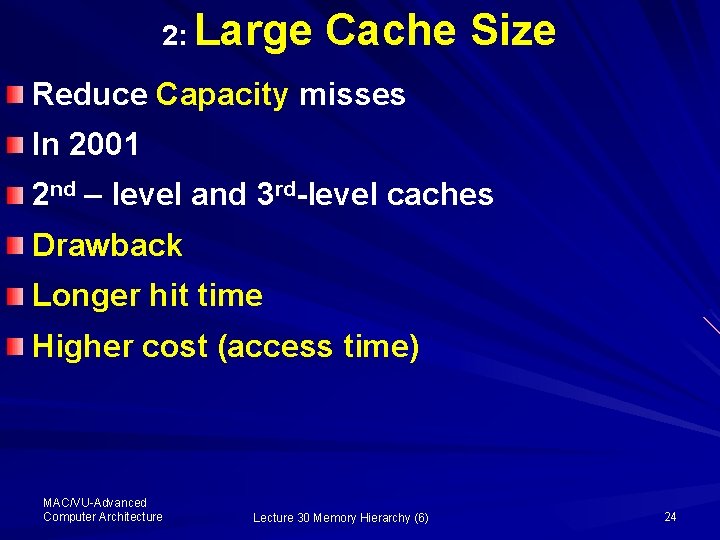

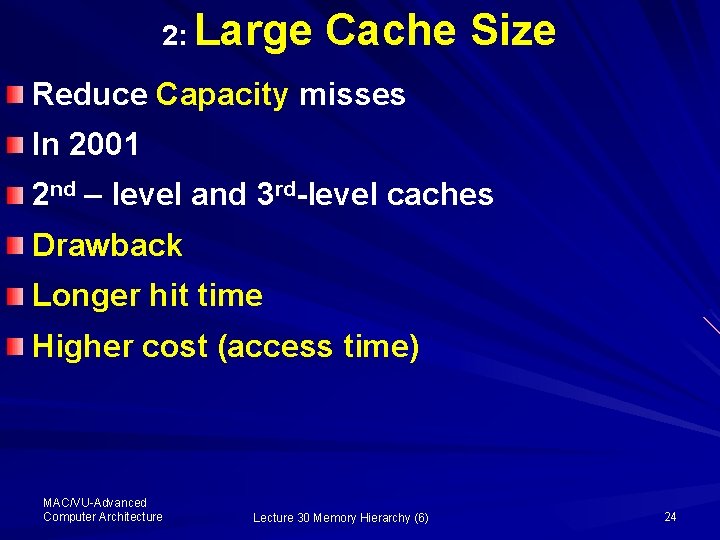

2: Large Cache Size
- Reduces capacity misses
- In 2001: popular for second-level and third-level caches
- Drawbacks: longer hit time (access time) and higher cost

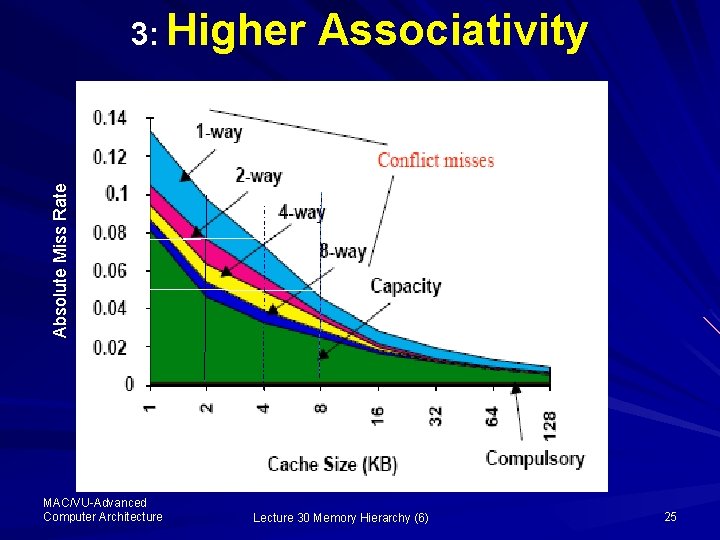

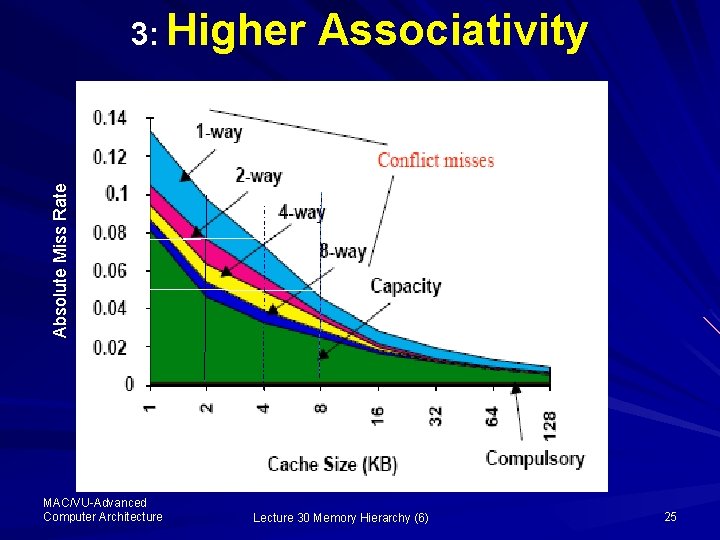

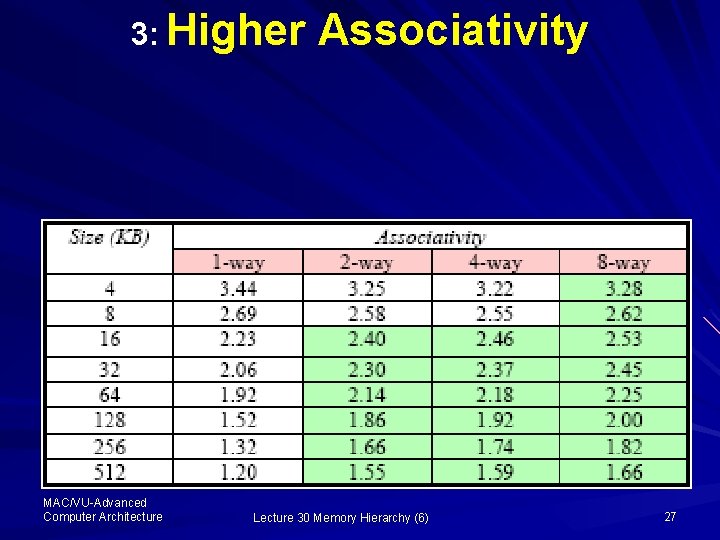

3: Higher Associativity
Absolute miss rate

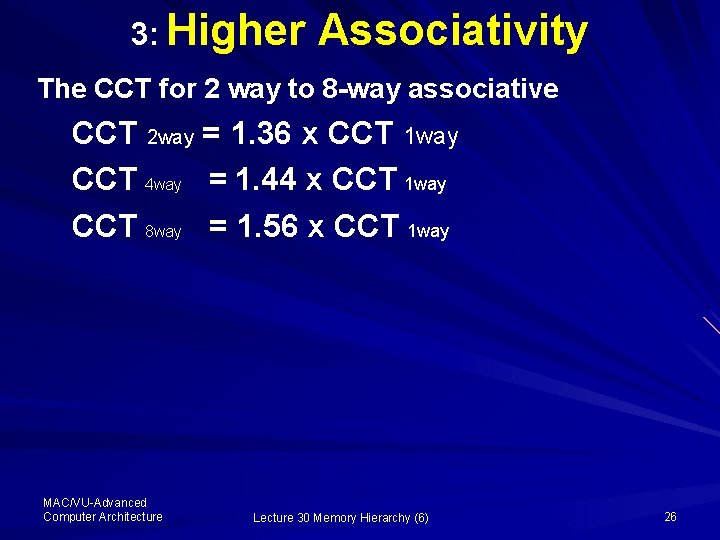

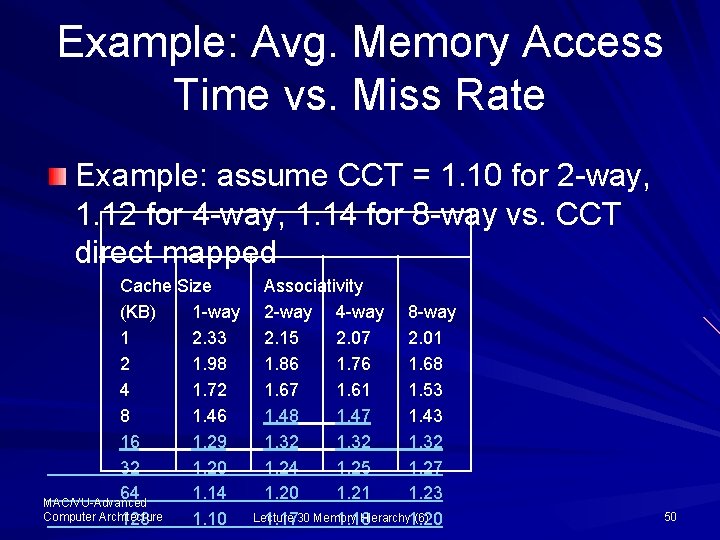

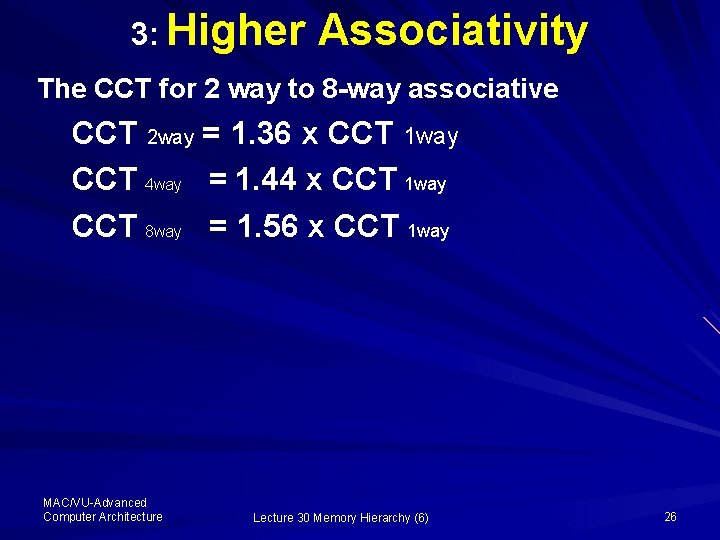

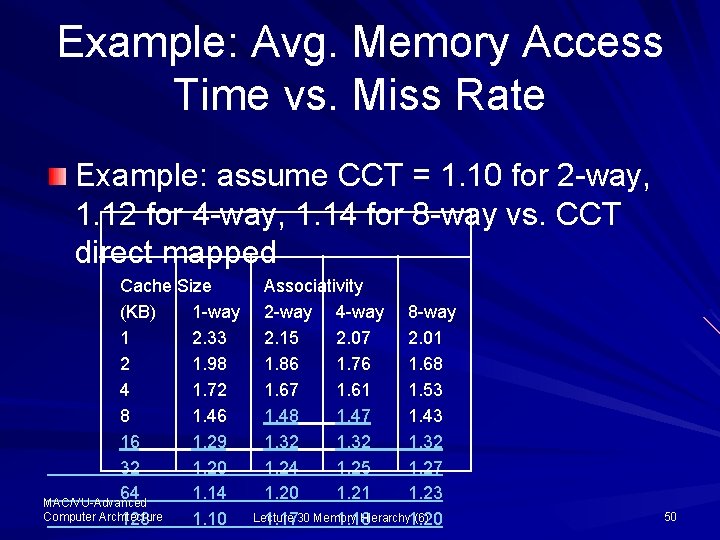

3: Higher Associativity
Clock cycle time (CCT) for 2-way to 8-way set-associative caches, relative to direct mapped (1-way):
- CCT 2-way = 1.36 × CCT 1-way
- CCT 4-way = 1.44 × CCT 1-way
- CCT 8-way = 1.56 × CCT 1-way
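The trade-off behind these factors can be sketched as follows: higher associativity lowers the miss rate but stretches the clock cycle, so it only pays off when the miss-rate gain outweighs the slower hit. This is a rough sketch; the function names are hypothetical, and the assumption that the miss penalty is expressed in direct-mapped clock cycles is mine, not the lecture's.

```c
#include <assert.h>

/* Average memory access time in units of the direct-mapped clock cycle.
   cct_factor   : clock cycle time relative to direct mapped (1.36 for 2-way)
   hit_cycles   : hit time in clock cycles of the cache being evaluated
   miss_penalty : assumed to be given in direct-mapped clock cycles */
double amat_scaled(double cct_factor, double hit_cycles,
                   double miss_rate, double miss_penalty) {
    assert(cct_factor >= 1.0 && miss_rate >= 0.0 && miss_rate <= 1.0);
    return cct_factor * hit_cycles + miss_rate * miss_penalty;
}

/* Returns 1 when the n-way cache has the lower average access time,
   assuming a 1-cycle hit in both caches. */
int higher_assoc_wins(double cct_factor, double miss_rate_1way,
                      double miss_rate_nway, double miss_penalty) {
    return amat_scaled(cct_factor, 1.0, miss_rate_nway, miss_penalty)
         < amat_scaled(1.0, 1.0, miss_rate_1way, miss_penalty);
}
```

With made-up miss rates and a 25-cycle penalty, a drop from 10% to 8% justifies the 1.36 factor (3.36 versus 3.50), while a drop from 2% to 1.5% does not (1.735 versus 1.50).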

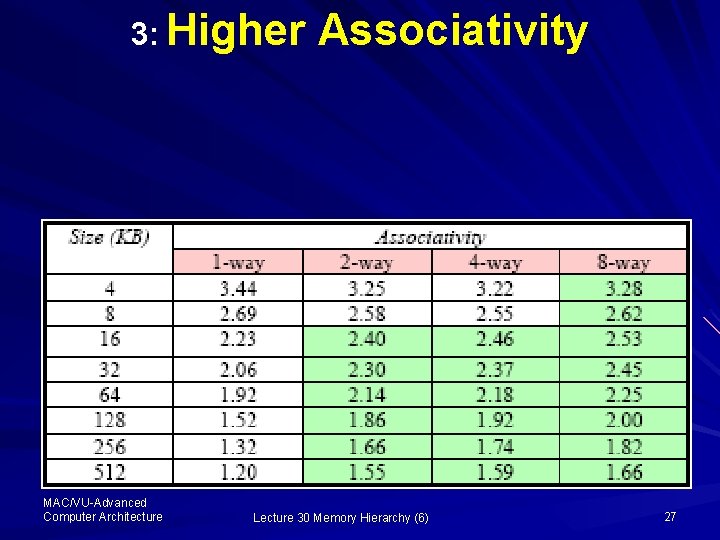

3: Higher Associativity

4: Way Prediction and Pseudo-associativity
- Goal: the fast hit time of direct-mapped caches together with the lower conflict misses of set-associative caches
- Both techniques reduce conflict misses

4: Way Prediction and Pseudo-associativity
Way prediction: predict which block in a set the next access will use.
Steps:
1. Extra bits are kept in the cache to predict the way of the next access

4: Way Prediction and Pseudo-associativity
2-way prediction and 4-way prediction
2. The multiplexer is set early to select the predicted block, and only a single tag is compared

4: Way Prediction and Pseudo-associativity
- On a misprediction, the other blocks are checked for matches in subsequent clock cycles
- Alpha 21264: latency of 1 clock cycle when the prediction is correct, 3 clock cycles when it is not
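The way-prediction steps can be sketched for a 2-way set-associative cache with one prediction bit per set. This is a simplified model under stated assumptions: the geometry, the names, and the replacement choice are hypothetical, and only the 1-cycle and 3-cycle hit latencies come from the Alpha 21264 figures on the slide.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

enum { WP_SETS = 64, WP_BLOCK_BYTES = 32 };   /* hypothetical geometry */

/* Return codes: hits report their latency in clock cycles,
   WP_MISS is a sentinel meaning the block was not in the cache. */
enum { WP_MISS = 0, WP_FAST_HIT = 1, WP_SLOW_HIT = 3 };

typedef struct {
    uint32_t tag[WP_SETS][2];
    uint8_t  valid[WP_SETS][2];
    uint8_t  predicted_way[WP_SETS];  /* the extra prediction bit per set */
} WayPredCache;

void wp_init(WayPredCache *c) { memset(c, 0, sizeof *c); }

int wp_access(WayPredCache *c, uint32_t byte_addr) {
    uint32_t block = byte_addr / WP_BLOCK_BYTES;
    uint32_t set = block % WP_SETS;
    uint32_t tag = block / WP_SETS;
    int first = c->predicted_way[set];
    int other = 1 - first;

    /* Step 1: compare only the tag of the predicted way. */
    if (c->valid[set][first] && c->tag[set][first] == tag)
        return WP_FAST_HIT;

    /* Step 2: misprediction, so check the other way and retrain. */
    if (c->valid[set][other] && c->tag[set][other] == tag) {
        c->predicted_way[set] = (uint8_t)other;
        return WP_SLOW_HIT;
    }

    /* Miss: fill the non-predicted way (an arbitrary replacement
       choice made for this sketch) and predict it next time. */
    c->tag[set][other] = tag;
    c->valid[set][other] = 1;
    c->predicted_way[set] = (uint8_t)other;
    return WP_MISS;
}
```

Two addresses that share a set, such as 0 and 2048 here, can both stay resident; alternating between them costs a slow hit rather than a miss.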

4: Way Prediction and Pseudo-associativity
Pseudo-associative (column-associative) caches:
- A hit proceeds as in a direct-mapped cache
- On a miss, a second cache entry (the "pseudo-set") is checked before going to the next lower level

4: Way Prediction and Pseudo-associativity
Pseudo-associative caches: performance
- A hit in the pseudo-set is a "slower hit": faster than a miss but slower than a regular hit
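A pseudo-associative lookup can be sketched as a direct-mapped cache with a second probe. The sketch assumes the common scheme of finding the pseudo-set by inverting the most significant index bit, and of swapping the two entries on a slow hit; the geometry and names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

enum { PA_ENTRIES = 64, PA_BLOCK_BYTES = 32 };           /* hypothetical geometry */
enum { PA_MISS = 0, PA_FAST_HIT = 1, PA_SLOW_HIT = 2 };  /* outcome codes */

typedef struct {
    uint32_t block[PA_ENTRIES];  /* full block address, used as the tag */
    uint8_t  valid[PA_ENTRIES];
} PseudoAssocCache;

void pa_init(PseudoAssocCache *c) { memset(c, 0, sizeof *c); }

int pa_access(PseudoAssocCache *c, uint32_t byte_addr) {
    uint32_t block = byte_addr / PA_BLOCK_BYTES;
    uint32_t primary = block % PA_ENTRIES;
    uint32_t pseudo = primary ^ (PA_ENTRIES / 2);  /* flip the top index bit */

    /* Regular direct-mapped probe. */
    if (c->valid[primary] && c->block[primary] == block)
        return PA_FAST_HIT;

    /* Second probe in the pseudo-set; on a hit, swap the entries so
       the next access to this block is a fast hit. */
    if (c->valid[pseudo] && c->block[pseudo] == block) {
        uint32_t old_block = c->block[primary];
        uint8_t old_valid = c->valid[primary];
        c->block[primary] = block;
        c->valid[primary] = 1;
        c->block[pseudo] = old_block;
        c->valid[pseudo] = old_valid;
        return PA_SLOW_HIT;
    }

    /* Miss: demote the old primary entry to the pseudo-set and
       install the new block in the primary entry. */
    c->block[pseudo] = c->block[primary];
    c->valid[pseudo] = c->valid[primary];
    c->block[primary] = block;
    c->valid[primary] = 1;
    return PA_MISS;
}
```

In a plain direct-mapped cache, alternating between addresses 0 and 2048 would miss every time with this geometry; here the alternation produces slow hits instead.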

5: Compiler Optimization
Compiler optimizations reduce both data cache misses and instruction cache misses.

5: Compiler Optimization
Instruction code optimization:
- Reordering the procedures
- Using cache-line alignment

5: Compiler Optimization
Code reordering:
- Determines likely conflicts and reorders the code to reduce them
Cache-line alignment:
- Decreases cache misses
- Places the entry point at the beginning of a cache block

5: Compiler Optimization
Data misses are reduced by improving the spatial and temporal locality of array calculations.

5: Compiler Optimization
- Loop interchange
- Blocking

5: Compiler Optimization: Loop Interchange
- Some programs have nested loops that access data in memory in non-sequential order
- In the example, j runs from 0 to 100 and i from 0 to 5000; exchanging the nesting of the two loops makes the accesses sequential

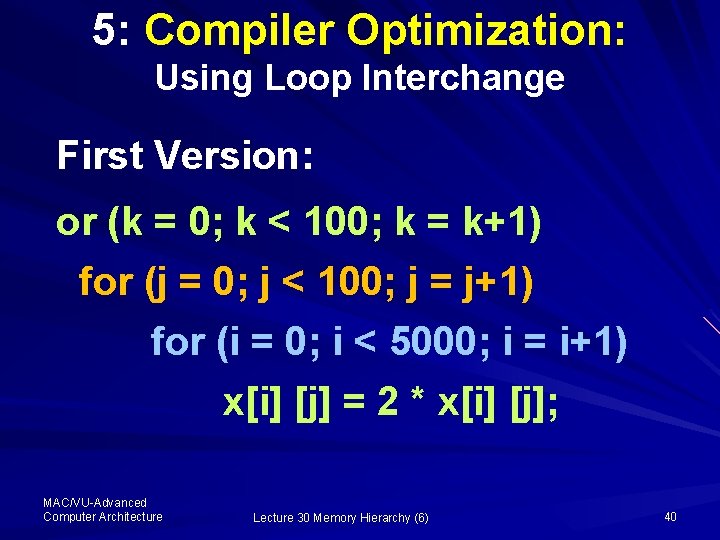

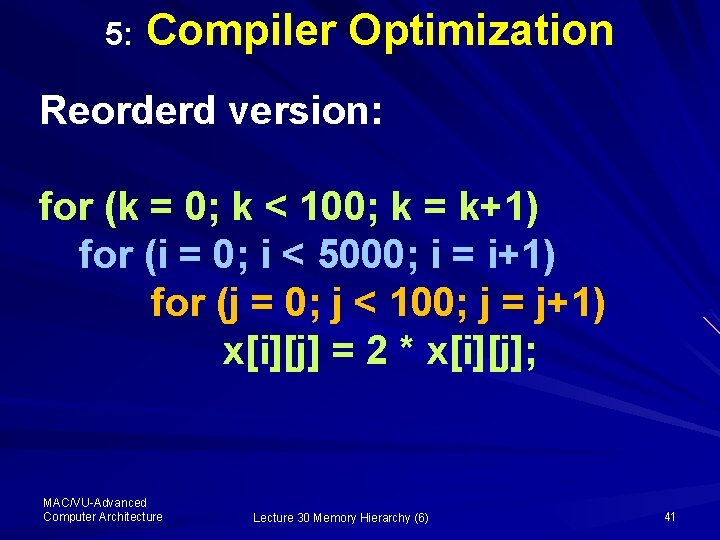

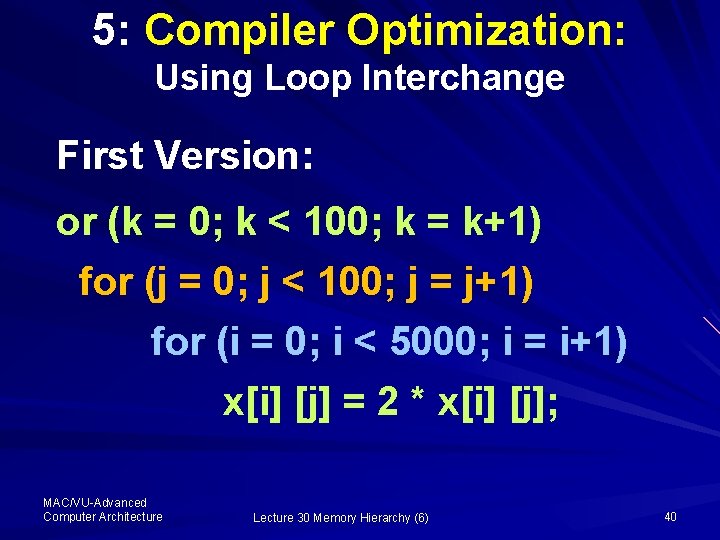

5: Compiler Optimization: Using Loop Interchange
First version:

    for (k = 0; k < 100; k = k+1)
        for (j = 0; j < 100; j = j+1)
            for (i = 0; i < 5000; i = i+1)
                x[i][j] = 2 * x[i][j];   /* inner loop strides through memory by 100 words */

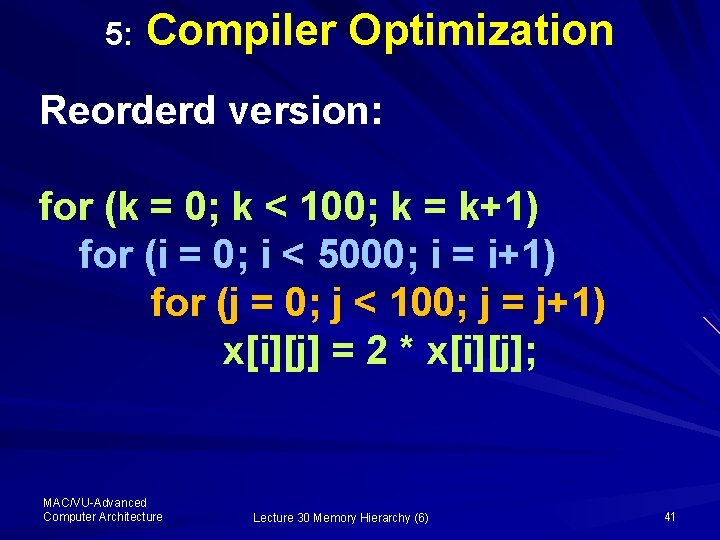

5: Compiler Optimization: Using Loop Interchange
Reordered version:

    for (k = 0; k < 100; k = k+1)
        for (i = 0; i < 5000; i = i+1)
            for (j = 0; j < 100; j = j+1)
                x[i][j] = 2 * x[i][j];   /* inner loop touches consecutive words */
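The effect of the interchange can be checked with a small simulation that counts misses for one pass over x in each loop order. This is a sketch under stated assumptions: a 2 KB direct-mapped cache with 32-byte blocks, 4-byte elements, the array placed at address 0, and one access counted per element are all choices made here, not parameters from the lecture.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

enum { ROWS = 5000, COLS = 100 };                /* array shape from the example */
enum { ELEM_BYTES = 4 };                         /* assumed element size */
enum { SIM_BLOCK_BYTES = 32, SIM_BLOCKS = 64 };  /* assumed 2 KB direct-mapped cache */

typedef struct {
    uint32_t tag[SIM_BLOCKS];
    uint8_t  valid[SIM_BLOCKS];
    long     misses;
} SimCache;

/* Access one byte address, counting a miss when the block is absent. */
void sim_touch(SimCache *c, uint32_t byte_addr) {
    uint32_t block = byte_addr / SIM_BLOCK_BYTES;
    uint32_t idx = block % SIM_BLOCKS;
    uint32_t tag = block / SIM_BLOCKS;
    if (!c->valid[idx] || c->tag[idx] != tag) {
        c->valid[idx] = 1;
        c->tag[idx] = tag;
        c->misses++;
    }
}

/* Byte address of x[i][j] in row-major layout, array base at 0. */
uint32_t addr_of(int i, int j) {
    return (uint32_t)(i * COLS + j) * ELEM_BYTES;
}

/* First version: j outer, i inner (stride of one full row). */
long misses_column_order(void) {
    SimCache c;
    memset(&c, 0, sizeof c);
    for (int j = 0; j < COLS; j++)
        for (int i = 0; i < ROWS; i++)
            sim_touch(&c, addr_of(i, j));
    return c.misses;
}

/* Reordered version: i outer, j inner (consecutive addresses). */
long misses_row_order(void) {
    SimCache c;
    memset(&c, 0, sizeof c);
    for (int i = 0; i < ROWS; i++)
        for (int j = 0; j < COLS; j++)
            sim_touch(&c, addr_of(i, j));
    return c.misses;
}
```

In the reordered version each 32-byte block is missed exactly once, giving 2,000,000 / 32 = 62,500 misses; the first version revisits blocks after they have been evicted and therefore misses more often.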

5: Compiler Optimization: Using Blocking
- Blocking improves temporal locality
- Example: a program that performs matrix multiplication

5: Compiler Optimization: Using Blocking
- Row-major order: the array is stored row by row
- Column-major order: the array is stored column by column
- Iteration pattern for matrix multiplication

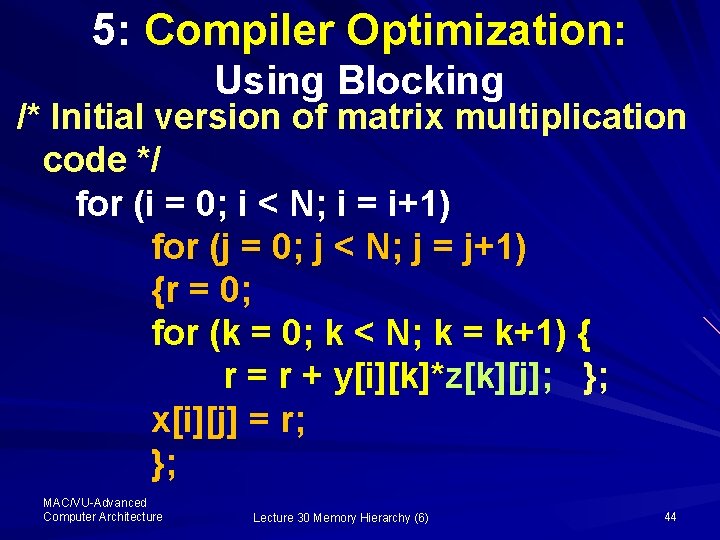

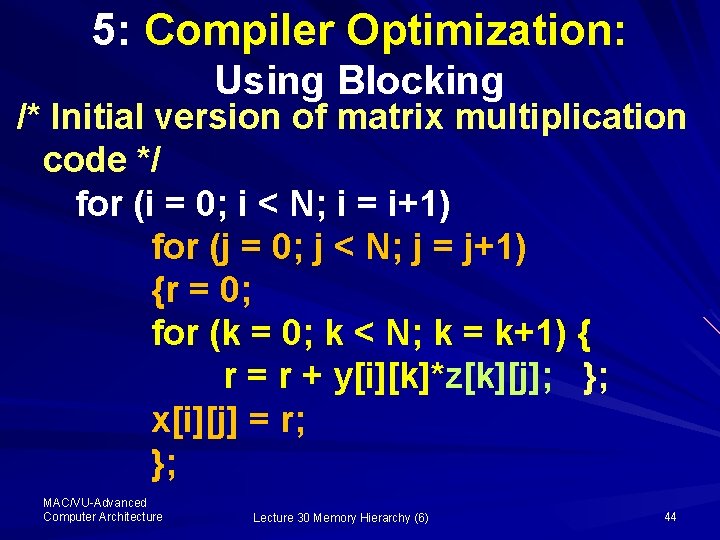

5: Compiler Optimization: Using Blocking

    /* Initial version of matrix multiplication code */
    for (i = 0; i < N; i = i+1)
        for (j = 0; j < N; j = j+1) {
            r = 0;
            for (k = 0; k < N; k = k+1)
                r = r + y[i][k] * z[k][j];
            x[i][j] = r;
        }

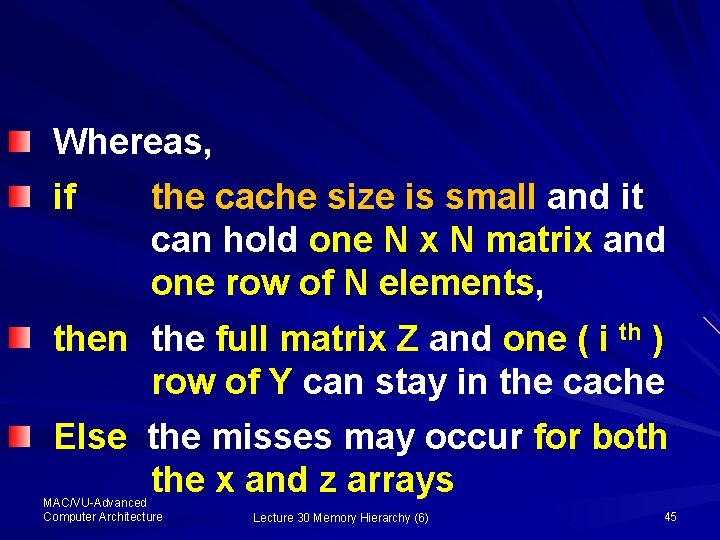

If the cache is small but can still hold one N x N matrix and one row of N elements, then the full matrix z and the i-th row of y can stay in the cache. With less capacity than that, misses may occur for both the x and z arrays.

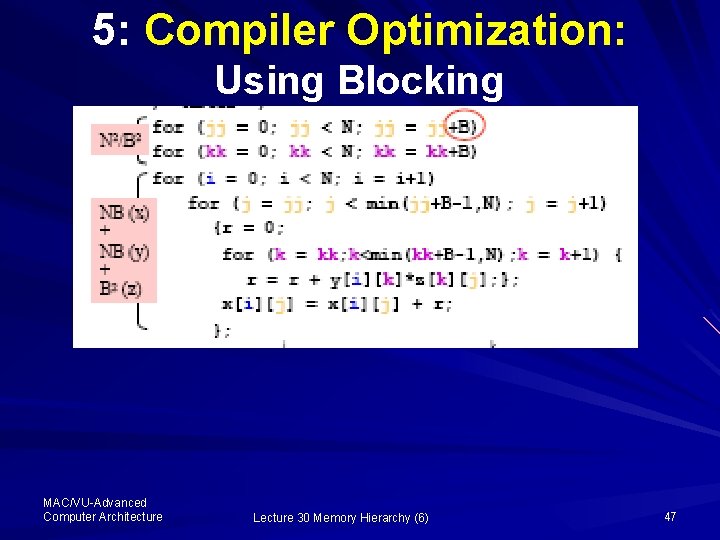

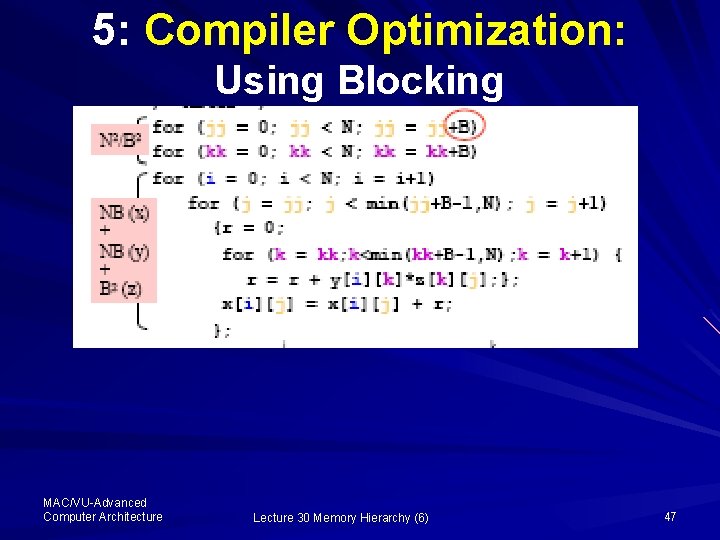

5: Compiler Optimization: Using Blocking
The blocking factor B is chosen so that one row of B elements and one B x B submatrix fit in the cache. This ensures that the y and z blocks are resident in the cache.
In the modified code, the two inner loops compute in steps of size B rather than over the full length of x and z.

5: Compiler Optimization: Using Blocking

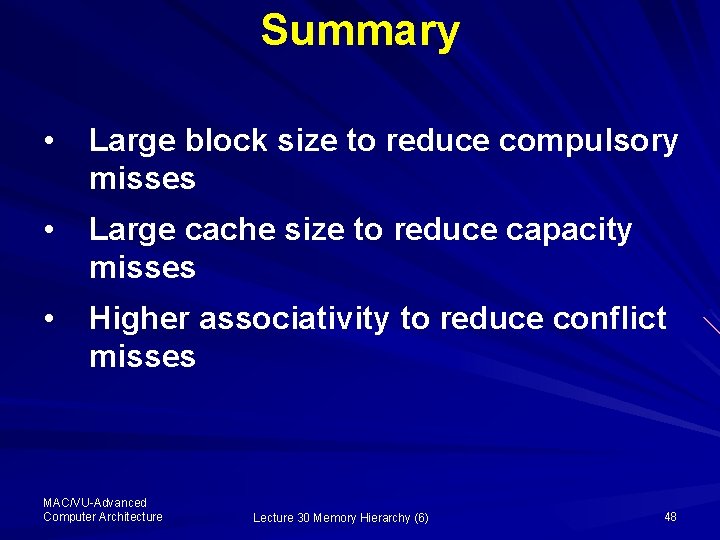
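Since the blocked code on this slide survives only as an image, here is a sketch of what blocked matrix multiplication typically looks like in the standard textbook form. It is not a transcription of the slide; the sizes N = 8 and B = 4 and the function names are assumptions chosen for illustration.

```c
#include <assert.h>

enum { N = 8, B = 4 };   /* hypothetical sizes; B is the blocking factor */

static int min_int(int a, int b) { return a < b ? a : b; }

/* Blocked version: the jj and kk loops select a B-wide strip of z
   columns and a B-deep strip of y columns, so the same B x B block
   of z is reused for every row i before moving on.
   x must be zero-initialized, because partial sums are accumulated. */
void matmul_blocked(double x[N][N], double y[N][N], double z[N][N]) {
    for (int jj = 0; jj < N; jj += B)
        for (int kk = 0; kk < N; kk += B)
            for (int i = 0; i < N; i++)
                for (int j = jj; j < min_int(jj + B, N); j++) {
                    double r = 0;
                    for (int k = kk; k < min_int(kk + B, N); k++)
                        r += y[i][k] * z[k][j];
                    x[i][j] += r;
                }
}

/* Unblocked reference, same structure as the initial version. */
void matmul_naive(double x[N][N], double y[N][N], double z[N][N]) {
    for (int i = 0; i < N; i++)
        for (int j = 0; j < N; j++) {
            double r = 0;
            for (int k = 0; k < N; k++)
                r += y[i][k] * z[k][j];
            x[i][j] = r;
        }
}
```

Both functions compute the same product. The blocked one only changes the order in which the partial products are accumulated, which is why x has to start at zero.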

Summary
- Larger block size reduces compulsory misses
- Larger cache size reduces capacity misses
- Higher associativity reduces conflict misses

Summary
- Way prediction checks one section of the cache for a hit and, on a miss, checks the rest of the cache
- The final technique, compiler optimization through loop interchange and blocking, is a software approach to improving cache performance
Next time we will talk about enhancing performance by having the processor and memory operate in parallel. Till then,

Example: Avg. Memory Access Time vs. Miss Rate
Assume CCT = 1.10 for 2-way, 1.12 for 4-way, and 1.14 for 8-way, relative to the CCT of a direct-mapped cache.

| Cache Size (KB) | 1-way | 2-way | 4-way | 8-way |
|---|---|---|---|---|
| 1 | 2.33 | 2.15 | 2.07 | 2.01 |
| 2 | 1.98 | 1.86 | 1.76 | 1.68 |
| 4 | 1.72 | 1.67 | 1.61 | 1.53 |
| 8 | 1.46 | 1.48 | 1.47 | 1.43 |
| 16 | 1.29 | 1.32 | 1.32 | 1.32 |
| 32 | 1.20 | 1.24 | 1.25 | 1.27 |
| 64 | 1.14 | 1.20 | 1.21 | 1.23 |
| 128 | 1.10 | 1.17 | 1.18 | 1.20 |

Allah Hafiz