2160710 Distributed Operating System Unit6 Distributed Shared Memory

Prof. Rekha K. Karangiya, 9727747317, Rekha.karangiya@darshan.ac.in

Topics to be covered
§ Introduction
§ General architecture of DSM systems
§ Design and implementation issues of DSM
§ Granularity
§ Structure of shared memory space
§ Consistency models
§ Replacement strategy
§ Thrashing
Unit 6: Distributed Shared Memory 2 Darshan Institute of Engineering & Technology

Introduction to DSM
§ Distributed shared memory (DSM) is one of the basic paradigms for interprocess communication.
§ The shared-memory paradigm provides a shared address space to the processes in a system.
§ Processes access data in the shared address space through the following two basic primitives:
• data = Read(address)
• Write(address, data)
§ Read returns the data item referenced by address.
§ Write sets the contents referenced by address to the value of data.
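The two primitives can be pictured with a minimal single-process Python sketch. The class name `SharedAddressSpace` and the word-addressed list are assumptions made for illustration only; a real DSM system spreads this address space over the memories of many nodes.

```python
class SharedAddressSpace:
    """Toy, single-process model of the DSM programming interface (hypothetical)."""

    def __init__(self, size):
        # a flat array of words, all initially 0
        self._words = [0] * size

    def read(self, address):
        # data = Read(address): return the data item referenced by address
        return self._words[address]

    def write(self, address, data):
        # Write(address, data): set the contents referenced by address to data
        self._words[address] = data


space = SharedAddressSpace(16)
space.write(3, 42)
value = space.read(3)
```

After the write, `space.read(3)` returns 42 and untouched addresses still read 0.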

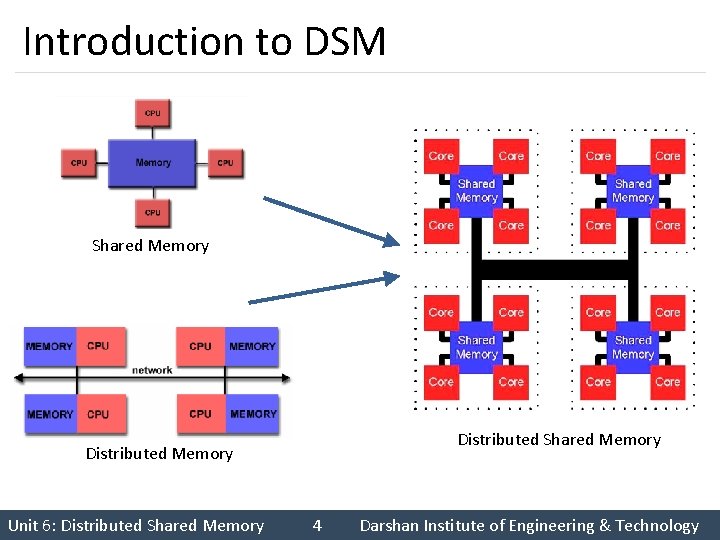

Introduction to DSM
(Figure: shared memory versus distributed memory.)

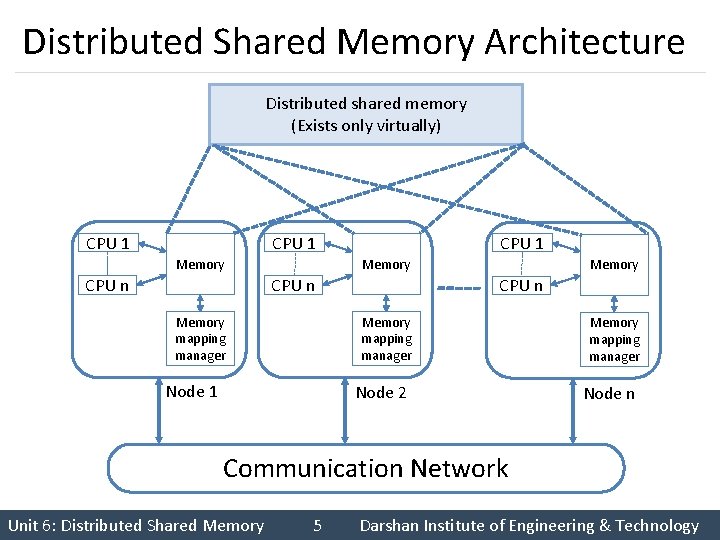

Distributed Shared Memory Architecture
(Figure: nodes 1 to n, each with one or more CPUs, a local memory and a memory-mapping manager, connected by a communication network; the distributed shared memory exists only virtually.)

Distributed Shared Memory Architecture
§ Each node of the system consists of one or more CPUs and a memory unit.
§ Nodes are connected by a high-speed communication network.
§ A simple message-passing system allows nodes to exchange information.
§ The main memory of individual nodes is used to cache pieces of the shared memory space.
§ A memory-mapping manager routine maps local memory to the shared virtual memory.

Distributed Shared Memory Architecture
§ The shared memory of DSM exists only virtually.
§ The shared memory space is partitioned into blocks.
§ Data caching is used in a DSM system to reduce network latency.
§ Data blocks migrate from one node to another on demand, but no communication is visible to the user processes.
§ If the data is not available in local memory, a network block fault is generated.
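The block-fault path described above can be sketched in Python. This is a simplified model under stated assumptions: `BLOCK_SIZE`, the class `DsmNode` and the `remote_blocks` dictionary (standing in for blocks held elsewhere on the network) are all hypothetical, and ownership, migration and writes are deliberately left out.

```python
BLOCK_SIZE = 4  # words per block; an arbitrary value chosen for illustration


class DsmNode:
    """Hypothetical memory-mapping manager for one node.

    `remote_blocks` stands in for the rest of the system: it maps a block
    number to that block's contents held somewhere else on the network.
    """

    def __init__(self, remote_blocks):
        self.remote_blocks = remote_blocks
        self.cache = {}        # blocks currently cached in this node's main memory
        self.block_faults = 0  # number of network block faults taken so far

    def _locate(self, address):
        # the shared address space is partitioned into fixed-size blocks
        block_no, offset = divmod(address, BLOCK_SIZE)
        if block_no not in self.cache:
            # network block fault: fetch the whole block into local memory
            self.block_faults += 1
            self.cache[block_no] = list(self.remote_blocks[block_no])
        return self.cache[block_no], offset

    def read(self, address):
        block, offset = self._locate(address)
        return block[offset]


node = DsmNode({0: [10, 11, 12, 13], 1: [20, 21, 22, 23]})
first = node.read(5)   # faults in block 1
second = node.read(6)  # same block, served from the local cache
```

Because the whole block is cached, the second read in the same block costs no further network traffic.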

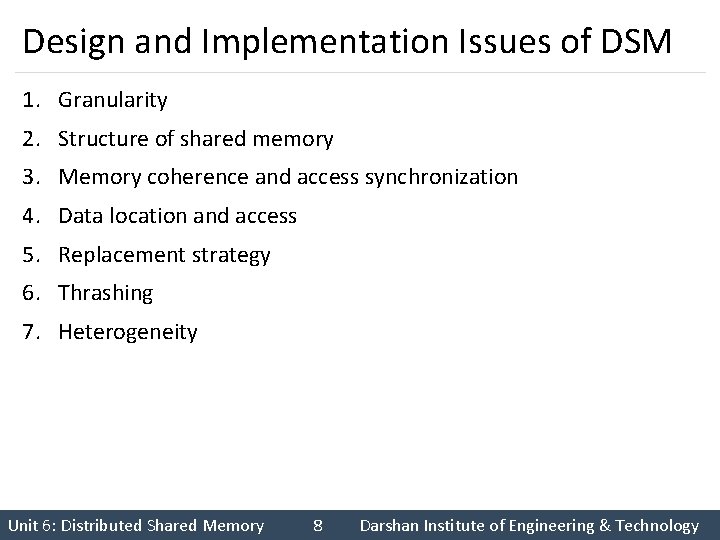

Design and Implementation Issues of DSM
1. Granularity
2. Structure of shared memory
3. Memory coherence and access synchronization
4. Data location and access
5. Replacement strategy
6. Thrashing
7. Heterogeneity

Granularity
§ Granularity refers to the block size, that is, the unit of data transfer across the network.
§ Selecting a proper block size is an important part of the design of a DSM system.
§ The criteria for choosing the granularity parameter are as follows:
1. Factors influencing block size selection
I. Paging overhead
II. Directory size
III. Thrashing
IV. False sharing
2. Using page size as block size

Criteria for Choosing Granularity Parameter
1. Factors influencing block size selection
• Sending a large packet of data is not much more expensive than sending a small one.
I. Paging overhead
• A process is likely to access a large region of its shared address space in a small amount of time.
• Therefore, the paging overhead is lower for a large block size than for a small block size, because one transfer brings in data that would otherwise cost several faults.

Criteria for Choosing Granularity Parameter
II. Directory size
• The larger the block size, the smaller the directory.
• This ultimately results in reduced directory management overhead for larger block sizes.
III. Thrashing
• Thrashing may occur when data items in the same data block are being updated by multiple nodes at the same time.
• Although the problem may occur with any block size, it is more likely with larger block sizes.
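The directory-size argument is simple arithmetic: the directory needs one entry per block. A small sketch, assuming a hypothetical 64 MiB shared address space and a handful of example block sizes:

```python
def directory_entries(shared_bytes, block_bytes):
    """One directory entry per block; a partial last block still needs an entry."""
    return -(-shared_bytes // block_bytes)  # ceiling division


SHARED_SPACE = 64 * 1024 * 1024  # hypothetical 64 MiB shared address space
sizes = {block: directory_entries(SHARED_SPACE, block) for block in (256, 1024, 4096, 16384)}
# {256: 262144, 1024: 65536, 4096: 16384, 16384: 4096}
```

Each fourfold increase in block size cuts the directory to a quarter of its previous size.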

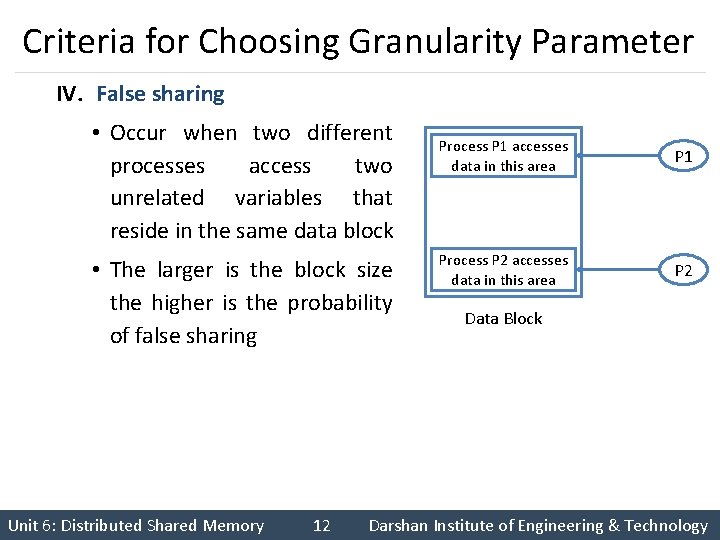

Criteria for Choosing Granularity Parameter
IV. False sharing
• False sharing occurs when two different processes access two unrelated variables that reside in the same data block.
• The larger the block size, the higher the probability of false sharing.
(Figure: one data block in which process P1 accesses data in one area and process P2 accesses data in another area.)
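Whether two variables are falsely shared depends only on whether they fall into the same block. The sketch below assumes blocks are aligned at multiples of the block size starting from address 0; the two addresses are hypothetical.

```python
def falsely_shared(addr_p1, addr_p2, block_size):
    """True when two unrelated variables land in the same block (block numbers match)."""
    return addr_p1 // block_size == addr_p2 // block_size


# hypothetical byte addresses of a variable used only by P1 and one used only by P2
X_ADDR, Y_ADDR = 100, 900
result = {size: falsely_shared(X_ADDR, Y_ADDR, size) for size in (256, 1024, 4096)}
# {256: False, 1024: True, 4096: True}
```

With 256-byte blocks the variables live in different blocks; from 1024 bytes upward they share one, so writes by P1 and P2 would contend for the same block.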

Criteria for Choosing Granularity Parameter
2. Using page size as block size
• Using the page size as the block size of a DSM system has the following advantages:
I. It allows the use of existing page fault schemes to trigger a DSM page fault.
II. It allows access right control to be integrated into the memory management unit.
III. A page-sized block does not impose undue communication overhead at the time of a network page fault.
IV. Page size is a suitable data entity unit with respect to memory contention.

Structure of Shared Memory Space
§ Structure defines the abstract view of the shared memory space.
§ The structure and granularity of a DSM system are closely related.
§ Three approaches for structuring the shared memory space of DSM are:
1. No structuring
2. Structuring by data type
3. Structuring as a database

Approach for Structuring Shared Memory Space
1. No structuring
• The shared memory space is simply a linear array of words.
• Advantages:
I. Any suitable page size can be chosen as the unit of sharing, and a fixed grain (block) size may be used for all applications.
II. Such a DSM system is simple and easy to design.
2. Structuring by data type
• The shared memory space is structured either as a collection of objects or as a collection of variables in the source language.
• The granularity in such a DSM system is an object or a variable.

Approach for Structuring Shared Memory Space
• The DSM system uses a variable grain (block) size to match the size of the object or variable being accessed by the application.
3. Structuring as a database
• The shared memory is structured like a database.
• The shared memory space is ordered as an associative memory called a tuple space.
• Processes select tuples by specifying the number of their fields and their values or types.
• The DSM system uses a variable grain (block) size to match the size of the tuples.
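Selecting tuples by field count and by value or type can be shown with a small Linda-style tuple space. This is a hypothetical, non-blocking sketch: the operation names `out`, `rd` and `in_` follow Linda's naming (with `in` renamed because it is a Python keyword), but here `rd` and `in_` return `None` instead of blocking when nothing matches.

```python
class TupleSpace:
    """Hypothetical, non-blocking Linda-style tuple space (associative memory)."""

    def __init__(self):
        self._tuples = []

    def out(self, *fields):
        # place a tuple in the shared space
        self._tuples.append(tuple(fields))

    @staticmethod
    def _matches(tup, pattern):
        if len(tup) != len(pattern):  # the number of fields must agree
            return False
        for value, wanted in zip(tup, pattern):
            if isinstance(wanted, type):
                if not isinstance(value, wanted):  # field selected by type
                    return False
            elif value != wanted:                  # field selected by value
                return False
        return True

    def rd(self, *pattern):
        # return (but keep) the first matching tuple, or None if there is none
        for tup in self._tuples:
            if self._matches(tup, pattern):
                return tup
        return None

    def in_(self, *pattern):
        # like rd, but also removes the matching tuple from the space
        tup = self.rd(*pattern)
        if tup is not None:
            self._tuples.remove(tup)
        return tup


space = TupleSpace()
space.out("point", 3, 4)
space.out("name", "dsm")
```

For example, `space.rd("point", int, int)` matches the first tuple by one value and two types, while `space.rd("point", int)` matches nothing because the field count differs.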

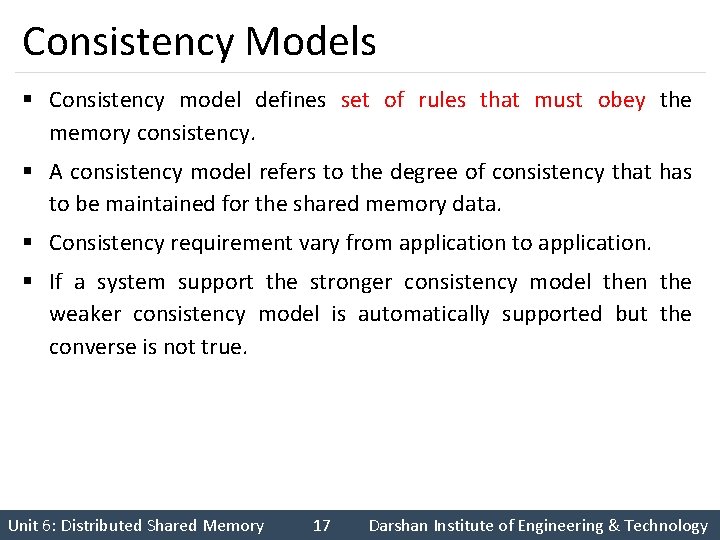

Consistency Models
§ A consistency model is a set of rules that applications must obey to get the degree of memory consistency that the model guarantees.
§ A consistency model refers to the degree of consistency that has to be maintained for the shared memory data.
§ Consistency requirements vary from application to application.
§ If a system supports a stronger consistency model, then weaker consistency models are automatically supported, but the converse is not true.

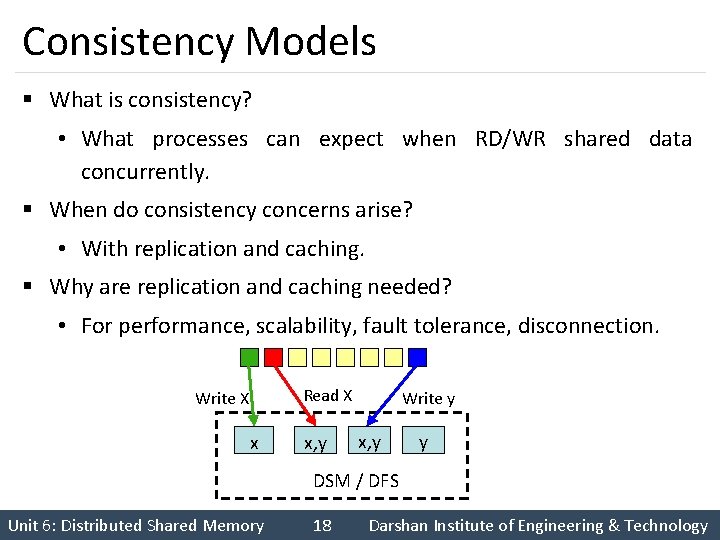

Consistency Models
§ What is consistency?
• What processes can expect when they read and write shared data concurrently.
§ When do consistency concerns arise?
• With replication and caching.
§ Why are replication and caching needed?
• For performance, scalability, fault tolerance and disconnected operation.
(Figure: processes reading and writing items x and y replicated in a DSM/DFS.)

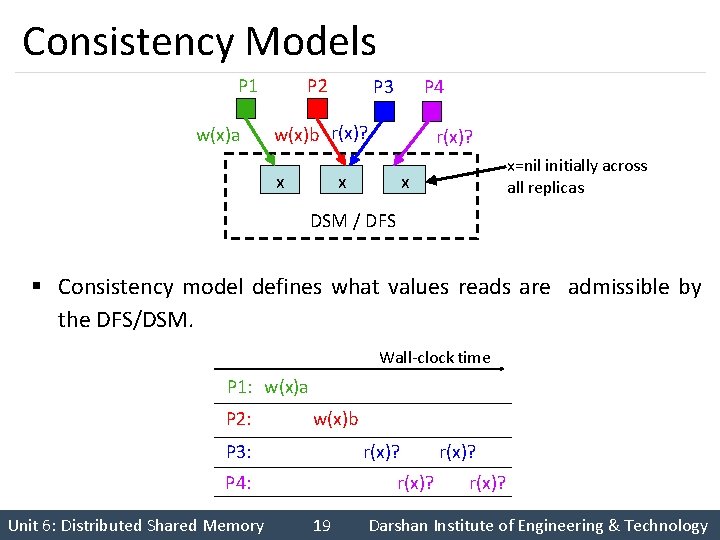

Consistency Models
§ Example: x = nil initially across all replicas. P1 performs w(x)a and P2 performs w(x)b; later, in wall-clock time, P3 and P4 each perform r(x)?.
§ A consistency model defines which values of such reads are admissible by the DFS/DSM.

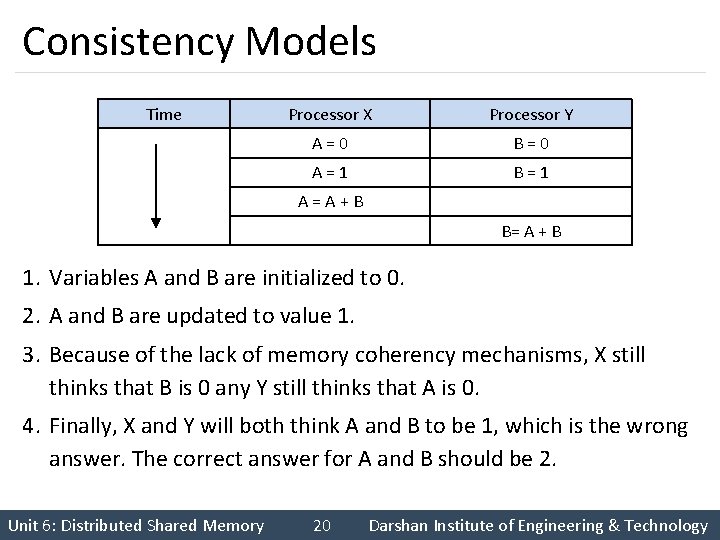

Consistency Models
(Example over time: Processor X executes A=1 and then A=A+B; Processor Y executes B=1 and then B=A+B.)
1. Variables A and B are initialized to 0.
2. A and B are updated to the value 1.
3. Because of the lack of memory coherence mechanisms, X still thinks that B is 0 and Y still thinks that A is 0.
4. Finally, X and Y both compute A and B to be 1, which is the wrong answer. The correct answer for A and B should be 2, since each sum should use the other processor's update to 1.
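The example can be replayed with one replica of {A, B} per processor. This is a hypothetical simulation, assuming (as in the timeline above) that both sums read their replicas before either result is written back; the `coherent` flag stands in for a coherence mechanism that propagates every write to the other replica.

```python
def run(coherent):
    """Replay the A/B example with one replica of {A, B} per processor."""
    x_copy = {"A": 0, "B": 0}  # Processor X's replica
    y_copy = {"A": 0, "B": 0}  # Processor Y's replica

    def write(local, remote, var, value):
        local[var] = value
        if coherent:
            remote[var] = value  # a coherence mechanism propagates the update

    write(x_copy, y_copy, "A", 1)      # X: A = 1
    write(y_copy, x_copy, "B", 1)      # Y: B = 1
    new_a = x_copy["A"] + x_copy["B"]  # X reads its replica for A = A + B
    new_b = y_copy["A"] + y_copy["B"]  # Y reads its replica for B = A + B
    write(x_copy, y_copy, "A", new_a)
    write(y_copy, x_copy, "B", new_b)
    return x_copy["A"], y_copy["B"]


without_coherence = run(coherent=False)  # (1, 1): each processor used a stale 0
with_coherence = run(coherent=True)      # (2, 2)
```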

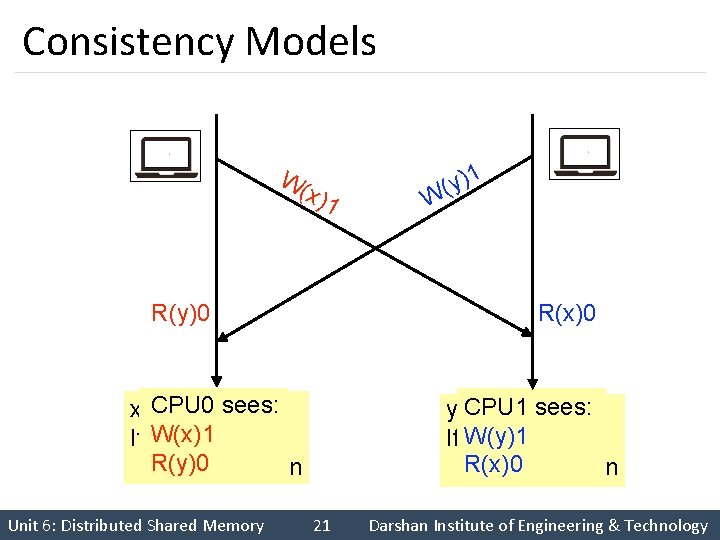

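Consistency Models
§ Example: CPU 0 executes W(x)1 and then, if y == 0, enters its critical section; CPU 1 executes W(y)1 and then, if x == 0, enters its critical section.
§ CPU 0 sees x = 1 but reads R(y)0; CPU 1 sees y = 1 but reads R(x)0.
§ Because neither CPU's write has yet become visible to the other, both CPUs enter the critical section at the same time.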

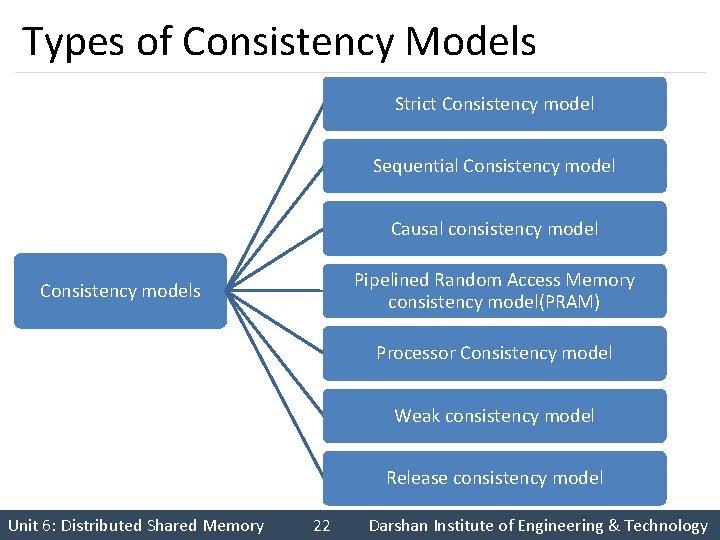

Types of Consistency Models
§ Strict consistency model
§ Sequential consistency model
§ Causal consistency model
§ Pipelined Random Access Memory consistency model (PRAM)
§ Processor consistency model
§ Weak consistency model
§ Release consistency model

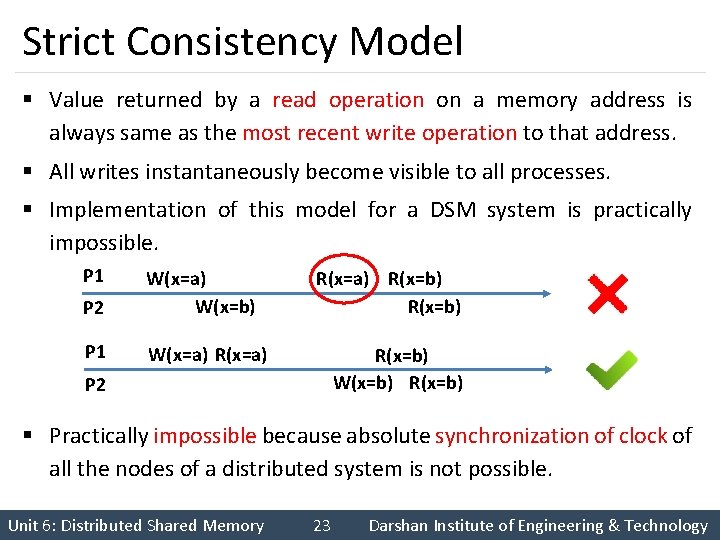

Strict Consistency Model
§ The value returned by a read operation on a memory address is always the same as the value written by the most recent write operation to that address.
§ All writes instantaneously become visible to all processes.
§ Implementation of this model for a DSM system is practically impossible, because absolute synchronization of the clocks of all the nodes of a distributed system is not possible.
(Figure: two example timelines for P1 and P2 involving W(x=a), W(x=b), R(x=a) and R(x=b).)

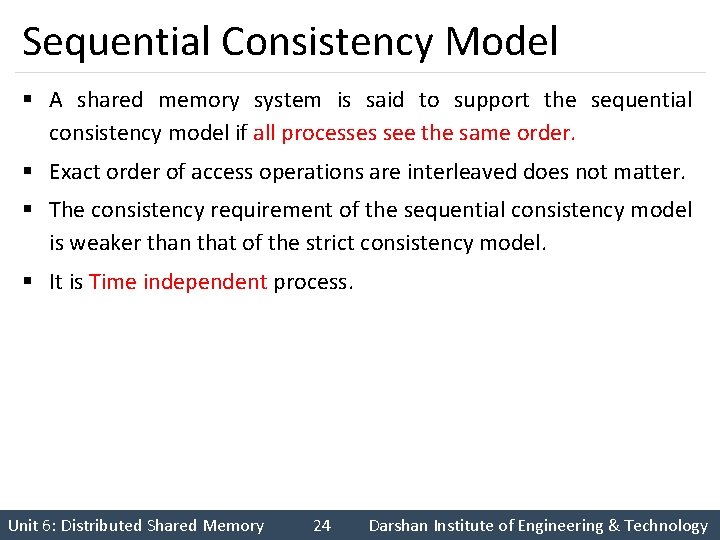

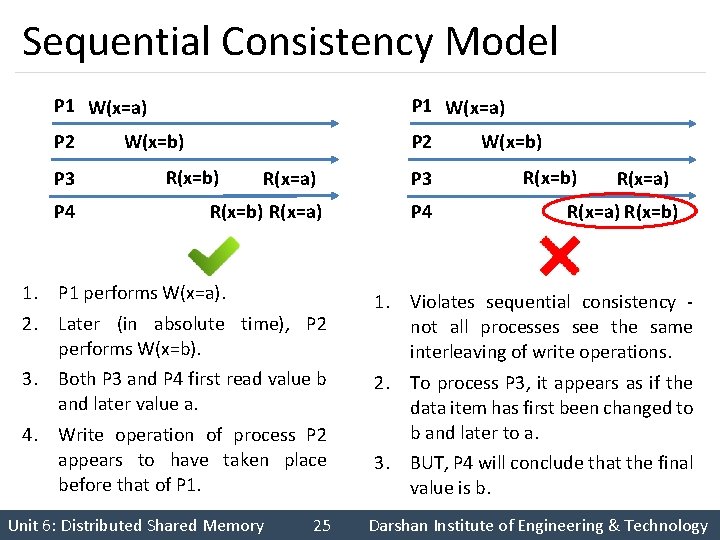

Sequential Consistency Model
§ A shared memory system is said to support the sequential consistency model if all processes see the same order of all memory access operations on the shared memory.
§ The exact order in which the access operations are interleaved does not matter, as long as all processes see the same interleaving and each process's operations appear in its program order.
§ The consistency requirement of the sequential consistency model is weaker than that of the strict consistency model.
§ It does not depend on absolute (wall-clock) time.

Sequential Consistency Model
Example 1 (sequentially consistent):
P1: W(x=a)
P2: W(x=b)
P3: R(x=b) R(x=a)
P4: R(x=b) R(x=a)
1. P1 performs W(x=a).
2. Later (in absolute time), P2 performs W(x=b).
3. Both P3 and P4 first read the value b and later the value a.
4. The write operation of process P2 appears to have taken place before that of P1.
Example 2 (not sequentially consistent):
P1: W(x=a)
P2: W(x=b)
P3: R(x=b) R(x=a)
P4: R(x=a) R(x=b)
1. This violates sequential consistency because not all processes see the same interleaving of write operations.
2. To process P3, it appears as if the data item has first been changed to b and later to a.
3. But P4 will conclude that the final value is b.
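For slide-sized examples, the definition can be checked mechanically. The sketch below is a brute-force search (exponential in the number of operations, so only for tiny histories) over a single shared variable x; the function name and the `("W", value)` / `("R", value)` encoding are assumptions made for illustration. It looks for one interleaving that keeps every process's program order and makes every read return the latest written value.

```python
def sequentially_consistent(histories, initial=None):
    """Brute-force check for one shared variable x.

    histories: one list per process of ("W", value) or ("R", value)
    operations, in program order. Returns True if some single interleaving
    that keeps each process's program order makes every read return the
    value of the latest preceding write.
    """

    def search(positions, current):
        if all(pos == len(ops) for pos, ops in zip(positions, histories)):
            return True  # every operation has been placed
        for i, ops in enumerate(histories):
            if positions[i] == len(ops):
                continue
            kind, value = ops[positions[i]]
            if kind == "R" and value != current:
                continue  # this read cannot be explained at this point
            advanced = positions[:i] + (positions[i] + 1,) + positions[i + 1:]
            if search(advanced, value if kind == "W" else current):
                return True
        return False

    return search(tuple(0 for _ in histories), initial)


example_1 = [[("W", "a")], [("W", "b")], [("R", "b"), ("R", "a")], [("R", "b"), ("R", "a")]]
example_2 = [[("W", "a")], [("W", "b")], [("R", "b"), ("R", "a")], [("R", "a"), ("R", "b")]]
```

Example 1 is explained by the order W(x=b), R(x=b), R(x=b), W(x=a), R(x=a), R(x=a); for Example 2 no such order exists, because P3 needs W(x=b) before W(x=a) and P4 needs the opposite.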

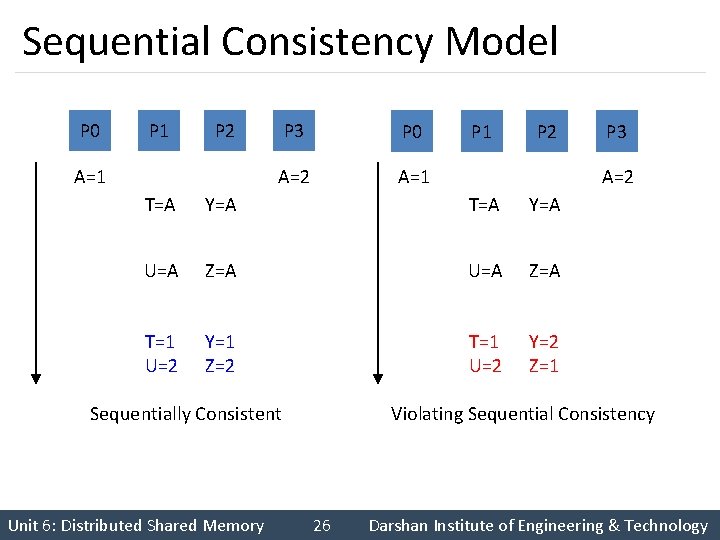

Sequential Consistency Model
§ Example: P0 executes A=1; P1 executes A=2; P2 executes T=A and then U=A; P3 executes Y=A and then Z=A.
• Sequentially consistent: T=1, U=2, Y=1, Z=2 (both readers see A change from 1 to 2).
• Violating sequential consistency: T=1, U=2, Y=2, Z=1 (P2 sees 1 then 2, while P3 sees 2 then 1).

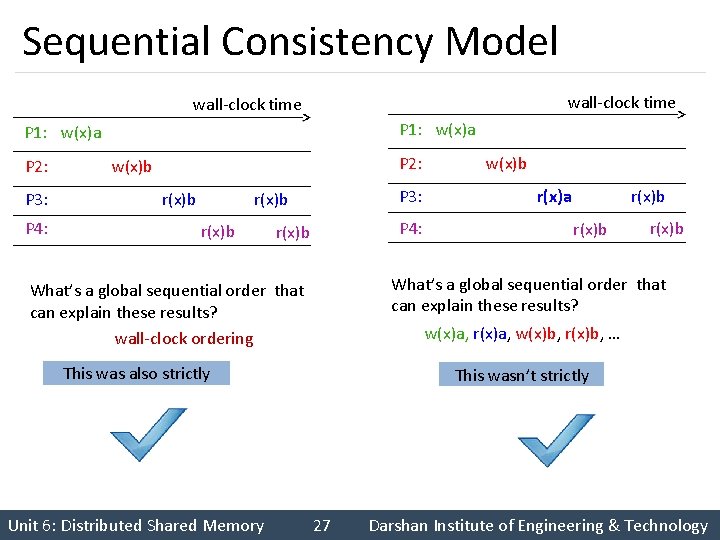

Sequential Consistency Model
(Figure: wall-clock timelines for P1 to P4, with w(x)a by P1, w(x)b by P2, and reads r(x)a and r(x)b by the other processes.)
§ Question: what global sequential order can explain these results?
§ In the first execution, the wall-clock ordering w(x)a, r(x)a, w(x)b, r(x)b, … explains the results, so that execution was also strictly consistent.
§ The second execution can be explained by a global sequential order, but not by the wall-clock order around w(x)b, so it was not strictly consistent.

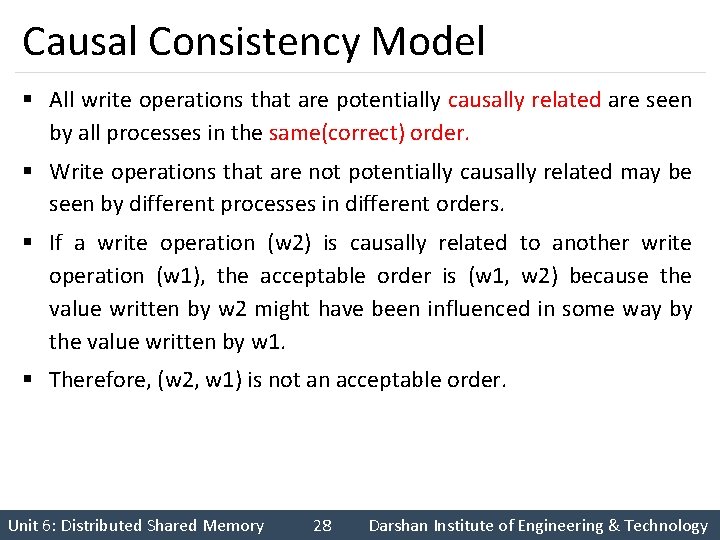

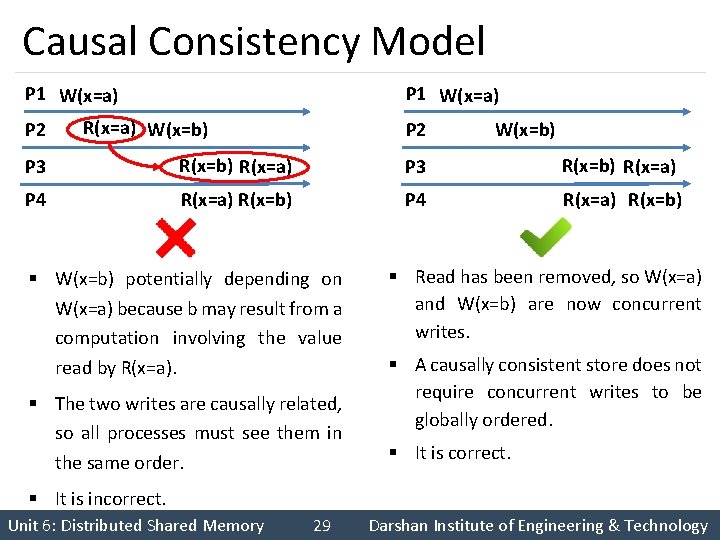

Causal Consistency Model
§ All write operations that are potentially causally related are seen by all processes in the same (correct) order.
§ Write operations that are not potentially causally related may be seen by different processes in different orders.
§ If a write operation w2 is causally related to another write operation w1, the acceptable order is (w1, w2), because the value written by w2 might have been influenced in some way by the value written by w1.
§ Therefore, (w2, w1) is not an acceptable order.

Causal Consistency Model
Example 1 (incorrect):
P1: W(x=a)
P2: R(x=a) W(x=b)
P3: R(x=b) R(x=a)
P4: R(x=a) R(x=b)
§ W(x=b) potentially depends on W(x=a), because b may result from a computation involving the value read by R(x=a).
§ The two writes are causally related, so all processes must see them in the same order; P3 sees b before a, so this execution violates causal consistency.
Example 2 (correct):
P1: W(x=a)
P2: W(x=b)
P3: R(x=b) R(x=a)
P4: R(x=a) R(x=b)
§ The read has been removed, so W(x=a) and W(x=b) are now concurrent writes.
§ A causally consistent store does not require concurrent writes to be globally ordered, so this execution is correct.
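One common way to decide whether two writes are potentially causally related is to stamp them with vector clocks. The slides do not prescribe a mechanism, so treat this as an assumed technique: a write ticks the writer's own entry, and reading a value merges the writer's clock into the reader's clock. The helper names are hypothetical.

```python
def tick(clock, i):
    """Process i performs a local event (here: a write)."""
    updated = list(clock)
    updated[i] += 1
    return tuple(updated)


def merge(local, received):
    """Reading a value merges the writer's clock into the reader's clock."""
    return tuple(max(a, b) for a, b in zip(local, received))


def happened_before(c1, c2):
    return all(a <= b for a, b in zip(c1, c2)) and c1 != c2


def concurrent(c1, c2):
    return not happened_before(c1, c2) and not happened_before(c2, c1)


P1, P2 = 0, 1
w_a = tick((0, 0), P1)              # P1: W(x=a)             -> (1, 0)
p2_clock = merge((0, 0), w_a)       # P2: R(x=a) sees w_a    -> (1, 0)
w_b_dependent = tick(p2_clock, P2)  # P2: W(x=b) after read  -> (1, 1)
w_b_independent = tick((0, 0), P2)  # P2: W(x=b), no read    -> (0, 1)
```

In Example 1, `w_a` happened before `w_b_dependent`, so every process must see a before b; in Example 2, `w_a` and `w_b_independent` are concurrent, so either order is allowed.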

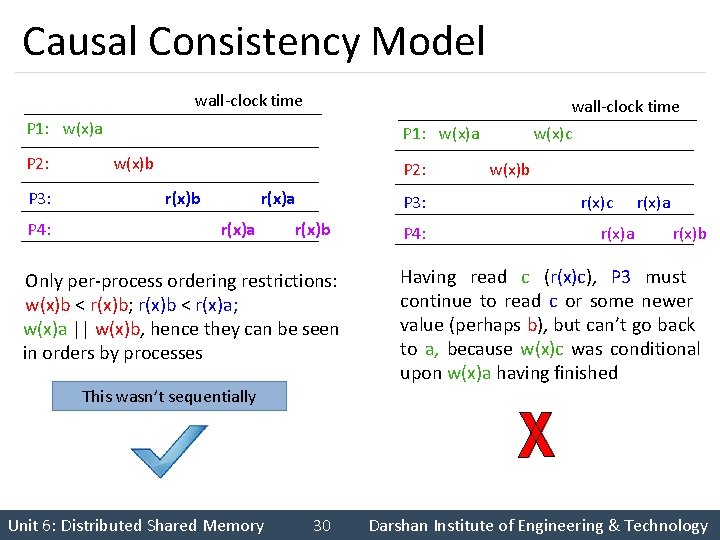

Causal Consistency Model
(Figure: wall-clock timelines for P1 to P4 with writes w(x)a, w(x)b and w(x)c and reads r(x)a, r(x)b and r(x)c.)
§ Only per-process ordering restrictions apply, for example w(x)b < r(x)b and r(x)b < r(x)a.
§ w(x)a || w(x)b (the writes are concurrent), hence they can be seen in different orders by different processes.
§ Having read c (r(x)c), P3 must continue to read c or some newer value (perhaps b), but cannot go back to a, because w(x)c was conditional upon w(x)a having finished.
§ The execution shown was not sequentially consistent, although it is causally consistent.

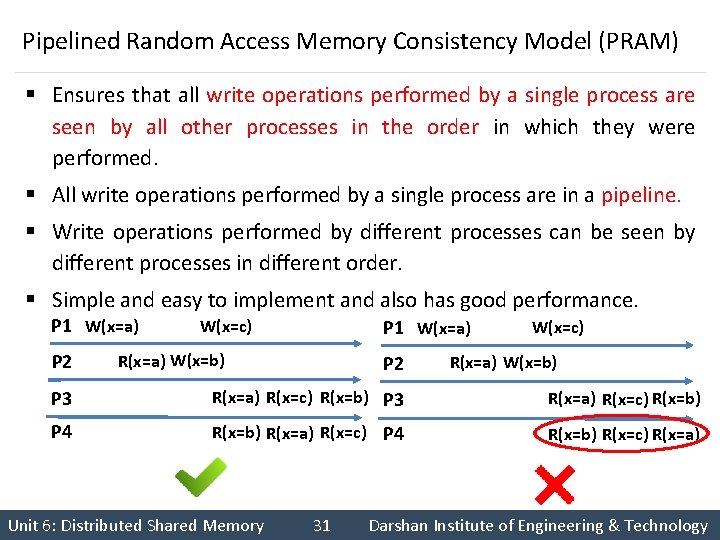

Pipelined Random Access Memory Consistency Model (PRAM)
§ Ensures that all write operations performed by a single process are seen by all other processes in the order in which they were performed.
§ All write operations performed by a single process are in a pipeline.
§ Write operations performed by different processes can be seen by different processes in different orders.
§ It is simple, easy to implement and also has good performance.
(Figure: example executions with writes W(x=a), W(x=b) and W(x=c) by P1 and P2, read in different orders by P3 and P4.)
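A PRAM replica only has to respect each writer's own order. The sketch below assumes every write carries the writer's name and a per-writer sequence number (an assumption, not something the slides specify); the replica buffers a writer's updates that arrive early and never reorders them, while updates from different writers interleave in arrival order.

```python
from collections import defaultdict


class PramReplica:
    """Hypothetical replica under PRAM: each writer's updates are applied in that
    writer's program order; updates from different writers may interleave freely."""

    def __init__(self):
        self.memory = {}
        self.next_seq = defaultdict(int)  # writer -> next sequence number expected
        self.pending = defaultdict(dict)  # writer -> {seq: (var, value)} arrived early
        self.seen = []                    # values in the order this replica applied them

    def receive(self, writer, seq, var, value):
        self.pending[writer][seq] = (var, value)
        # apply every buffered update from this writer that is now in order
        while self.next_seq[writer] in self.pending[writer]:
            var_, value_ = self.pending[writer].pop(self.next_seq[writer])
            self.memory[var_] = value_
            self.seen.append(value_)
            self.next_seq[writer] += 1


replica = PramReplica()
replica.receive("P1", 1, "x", "b")  # arrives before P1's first write: buffered
replica.receive("P2", 0, "x", "c")  # different writer: applied immediately
replica.receive("P1", 0, "x", "a")  # unblocks P1's pipeline: a, then b
```

This replica sees c, a, b; another replica could legitimately see a, b, c, but no replica may see b before a.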

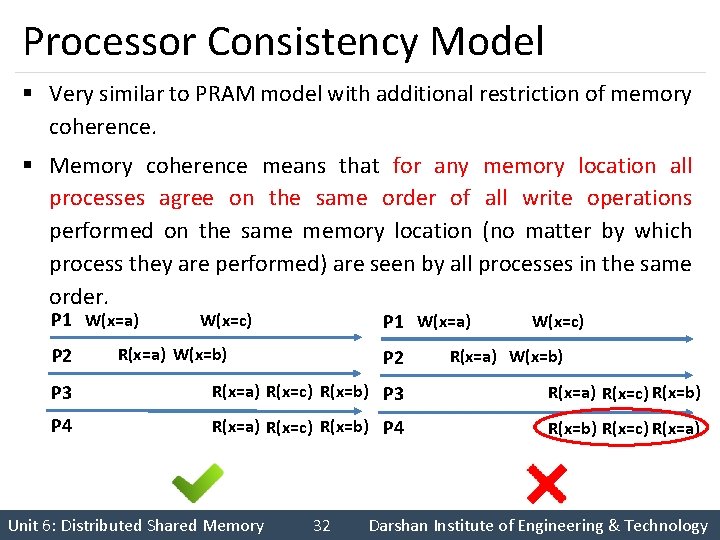

Processor Consistency Model
§ Very similar to the PRAM model, with the additional restriction of memory coherence.
§ Memory coherence means that, for any memory location, all write operations performed on that location (no matter which process performs them) are seen by all processes in the same order.
(Figure: example execution with writes W(x=a), W(x=b) and W(x=c) by P1 and P2, read by P3 and P4.)

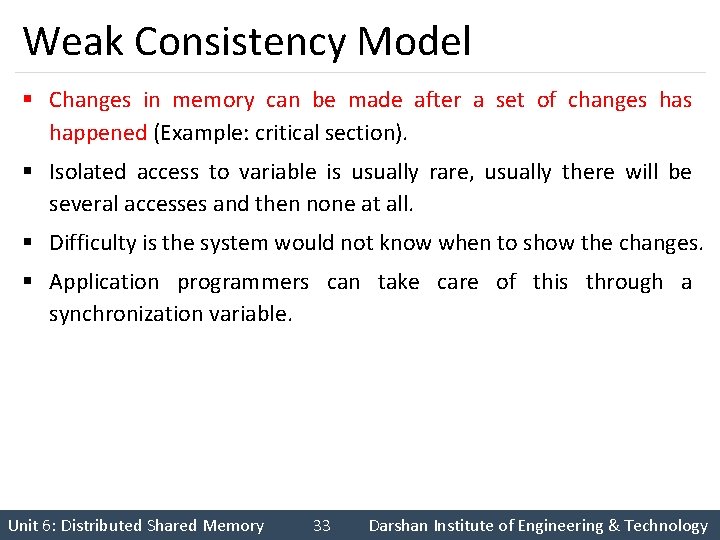

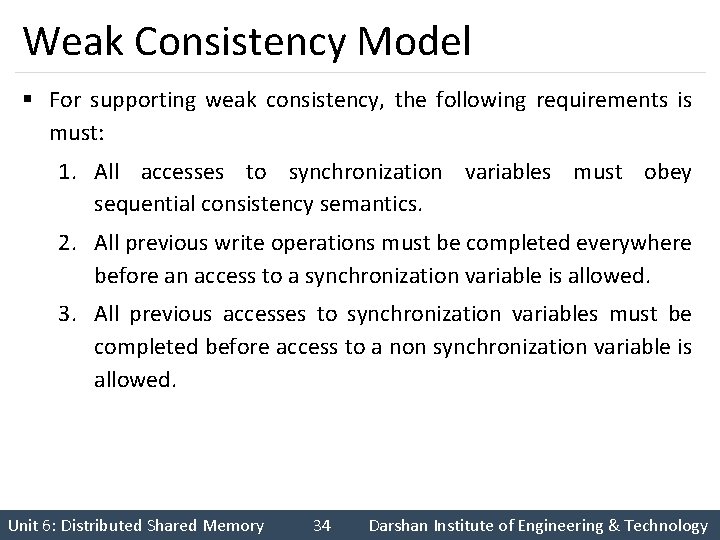

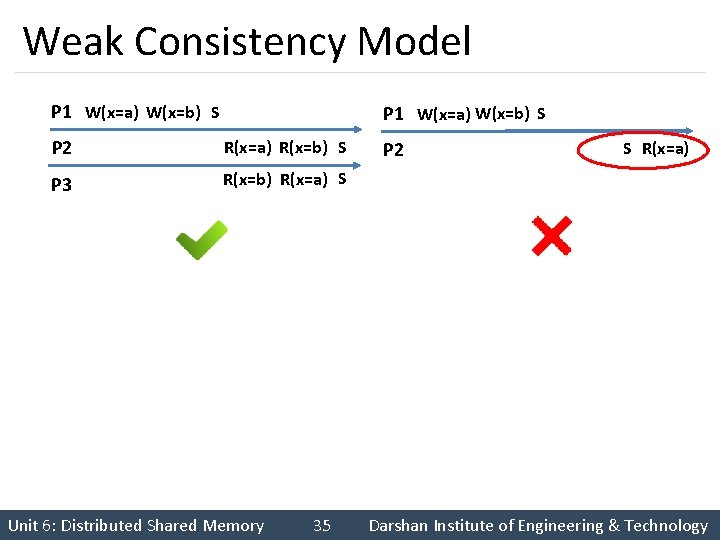

Weak Consistency Model
§ Changes to memory need to be made visible only after a set of changes has happened (for example, at the end of a critical section).
§ Isolated accesses to a variable are rare; usually there are several accesses and then none at all.
§ The difficulty is that the system does not know when to make the changes visible.
§ Application programmers can take care of this through a synchronization variable.

Weak Consistency Model
§ For supporting weak consistency, the following requirements must be met:
1. All accesses to synchronization variables must obey sequential consistency semantics.
2. All previous write operations must be completed everywhere before an access to a synchronization variable is allowed.
3. All previous accesses to synchronization variables must be completed before an access to a non-synchronization variable is allowed.
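Requirements 2 and 3 can be modeled in a single process. In this hypothetical sketch, `SharedStore` stands in for memory as seen once writes have propagated everywhere, and `synchronize()` stands for an access to a synchronization variable. Requirement 1 holds trivially here because only one synchronization runs at a time.

```python
class SharedStore:
    """Stands in for memory as seen after all completed writes have propagated."""

    def __init__(self):
        self.data = {}


class WeakNode:
    """Hypothetical node under weak consistency: ordinary writes stay local until
    the node accesses a synchronization variable via synchronize()."""

    def __init__(self, store):
        self.store = store
        self.local = dict(store.data)
        self.pending = {}  # writes not yet made visible to other nodes

    def write(self, var, value):
        self.local[var] = value
        self.pending[var] = value

    def read(self, var):
        return self.local.get(var)

    def synchronize(self):
        # requirement 2: complete all previous writes everywhere first
        self.store.data.update(self.pending)
        self.pending.clear()
        # requirement 3: later ordinary accesses see every completed write
        self.local = dict(self.store.data)


store = SharedStore()
p1, p2 = WeakNode(store), WeakNode(store)
p1.write("x", "a")
p1.write("x", "b")
before_sync = p2.read("x")  # None: P1's writes are not yet visible
p1.synchronize()
p2.synchronize()
after_sync = p2.read("x")   # "b"
```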

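Weak Consistency Model
§ Valid sequence:
P1: W(x=a) W(x=b) S
P2: R(x=a) R(x=b) S
P3: R(x=b) R(x=a) S
• Before synchronizing, P2 and P3 may see P1's writes in different orders.
§ Invalid sequence:
P2: S R(x=a)
• After P2 synchronizes, it must see the latest value b written by P1 before P1's synchronization.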

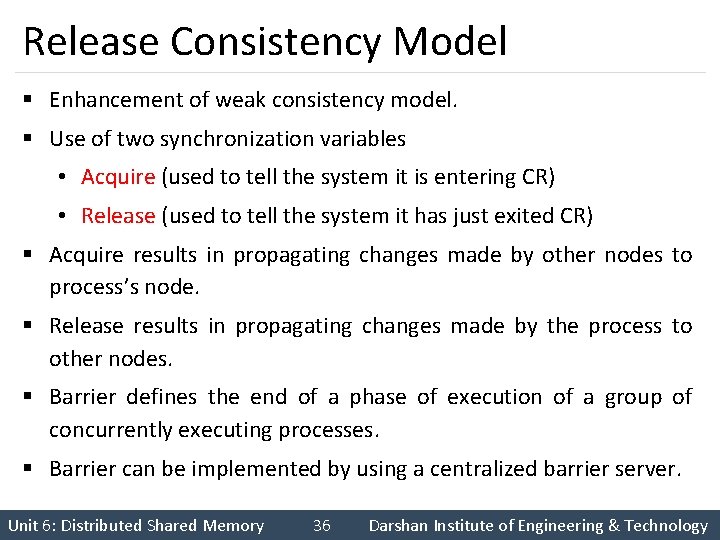

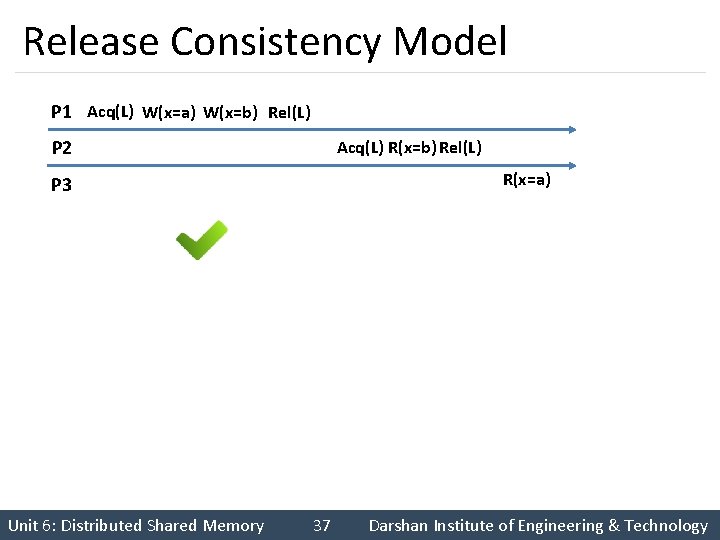

Release Consistency Model
§ An enhancement of the weak consistency model.
§ Uses two synchronization operations:
• Acquire (used to tell the system that a process is entering a critical region, CR)
• Release (used to tell the system that a process has just exited a CR)
§ An acquire results in propagating changes made by other nodes to the process's node.
§ A release results in propagating changes made by the process to other nodes.
§ A barrier defines the end of a phase of execution of a group of concurrently executing processes.
§ A barrier can be implemented by using a centralized barrier server.

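Release Consistency Model
P1: Acq(L) W(x=a) W(x=b) Rel(L)
P2: Acq(L) R(x=b) Rel(L)
P3: R(x=a)
§ P2 acquires the lock after P1 releases it, so it must read the latest value b.
§ P3 does not acquire the lock, so it is not guaranteed to see P1's writes and may still read an older value such as a.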
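The acquire/release propagation rules can be sketched as follows. This is a hypothetical, single-process model of eager release consistency: `home` stands in for the up-to-date memory reachable over the network, and the lock's mutual exclusion is not modeled, only what each operation propagates.

```python
class ReleaseConsistentNode:
    """Hypothetical node under (eager) release consistency. `home` stands in for the
    up-to-date memory reachable over the network; mutual exclusion is not modeled."""

    def __init__(self, home):
        self.home = home
        self.local = dict(home)  # possibly stale local copy
        self.dirty = {}          # writes made inside the current critical region

    def acquire(self):
        # Acq(L): bring in changes that other nodes have released
        self.local = dict(self.home)

    def write(self, var, value):
        self.local[var] = value
        self.dirty[var] = value

    def read(self, var):
        return self.local.get(var)

    def release(self):
        # Rel(L): propagate this node's changes to the other nodes
        self.home.update(self.dirty)
        self.dirty.clear()


home = {"x": None}
p1, p2, p3 = (ReleaseConsistentNode(home) for _ in range(3))
p1.acquire()
p1.write("x", "a")
p1.write("x", "b")
p1.release()
p2.acquire()
seen_by_p2 = p2.read("x")  # "b": P2 acquired after P1's release
seen_by_p3 = p3.read("x")  # None: P3 never acquires, so it keeps a stale value
```

In this model P3's stale value is the initial one; in the slide's execution it happens to be the intermediate value a, which release consistency equally permits.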

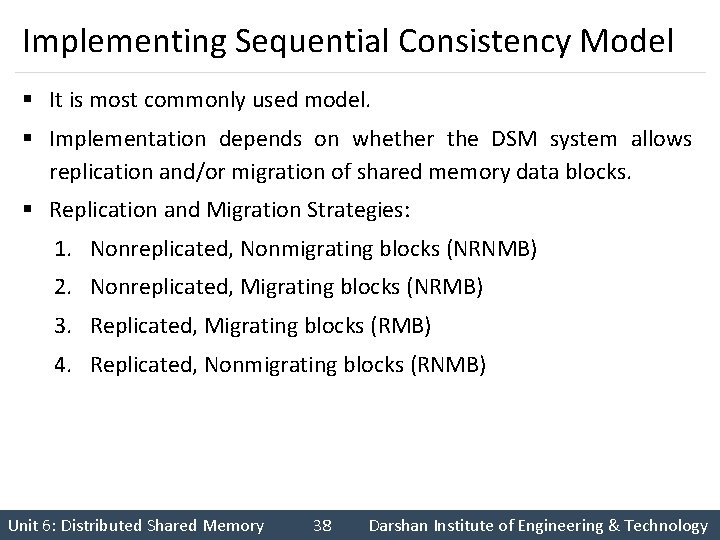

Implementing Sequential Consistency Model
§ It is the most commonly used model.
§ Its implementation depends on whether the DSM system allows replication and/or migration of shared memory data blocks.
§ Replication and migration strategies:
1. Nonreplicated, nonmigrating blocks (NRNMB)
2. Nonreplicated, migrating blocks (NRMB)
3. Replicated, migrating blocks (RMB)
4. Replicated, nonmigrating blocks (RNMB)

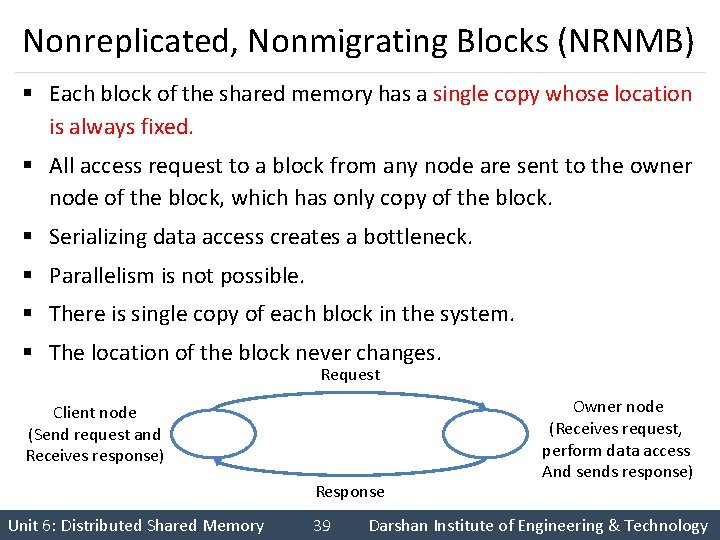

Nonreplicated, Nonmigrating Blocks (NRNMB)
§ Each block of the shared memory has a single copy whose location is always fixed.
§ All access requests to a block from any node are sent to the owner node of the block, which holds the only copy of the block.
§ Serializing data access at the owner node creates a bottleneck.
§ Parallelism is not possible.
(Figure: the client node sends a request and receives the response; the owner node receives the request, performs the data access and sends the response.)

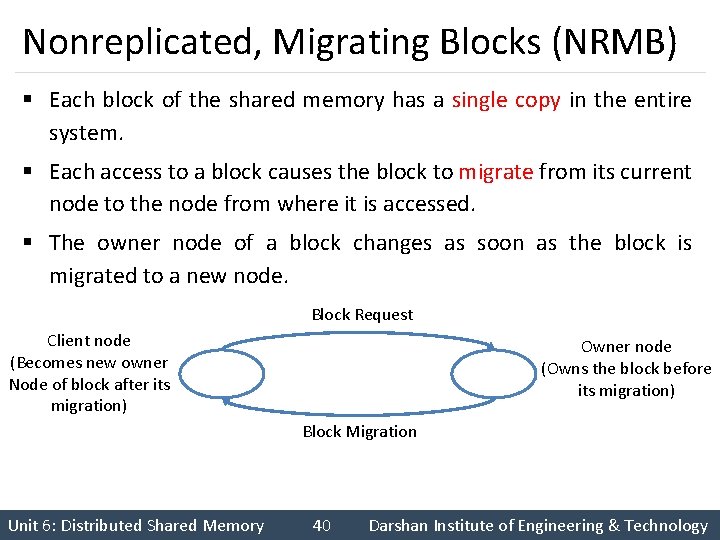

Nonreplicated, Migrating Blocks (NRMB)
§ Each block of the shared memory has a single copy in the entire system.
§ Each access to a block causes the block to migrate from its current node to the node from which it is accessed.
§ The owner node of a block changes as soon as the block is migrated to a new node.
(Figure: the client node sends a block request to the owner node, which owns the block before its migration; after the block migrates, the client node becomes the new owner.)
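The NRMB ownership rule fits in a few lines. The class `NRMBSystem` is a hypothetical bookkeeping sketch: it records only who holds the single copy of each block and counts migrations, without moving any data.

```python
class NRMBSystem:
    """Hypothetical NRMB bookkeeping: exactly one copy of each block exists,
    and whichever node accesses a block becomes its new owner."""

    def __init__(self, initial_owner):
        self.owner = dict(initial_owner)  # block_no -> node holding the only copy
        self.migrations = 0

    def access(self, node, block_no):
        if self.owner[block_no] != node:
            # block fault: the single copy migrates and ownership moves with it
            self.owner[block_no] = node
            self.migrations += 1
        return self.owner[block_no]


system = NRMBSystem({0: "N1"})
system.access("N2", 0)  # block 0 migrates from N1 to N2
system.access("N2", 0)  # local access now, no migration
system.access("N1", 0)  # migrates back to N1
```

If two nodes keep alternating accesses to the same block, every access causes a migration, which is exactly the ping-pong pattern discussed under thrashing.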

Data Locating in the NRMB Strategy
§ Since there is a single copy of each block and the location of a block keeps changing dynamically, the current holder of a block must be located on each fault.
§ The following methods can be used to locate a block:
1. Broadcasting
2. Centralized server algorithm
3. Fixed distributed server algorithm
4. Dynamic distributed server algorithm

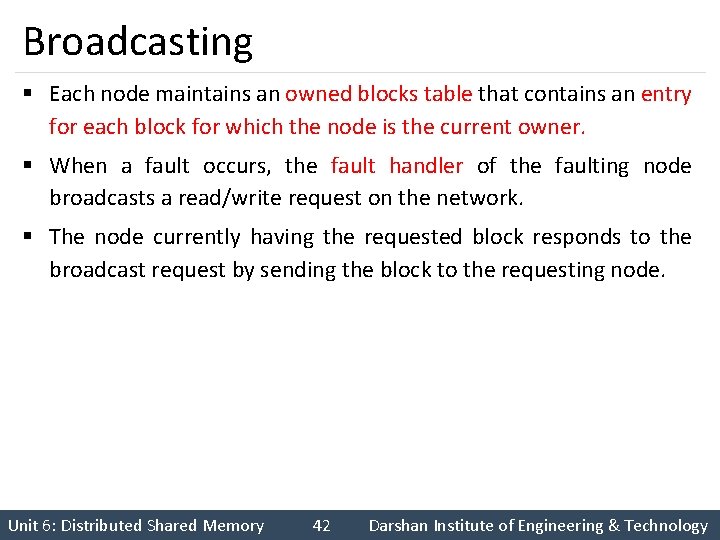

Broadcasting
§ Each node maintains an owned blocks table that contains an entry for each block for which the node is the current owner.
§ When a fault occurs, the fault handler of the faulting node broadcasts a read/write request on the network.
§ The node currently having the requested block responds to the broadcast request by sending the block to the requesting node.
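The broadcasting scheme can be sketched with each node's owned blocks table as a set. The names `Node` and `broadcast_fault` are hypothetical; the function returns the previous owner and the number of nodes that had to receive the broadcast.

```python
class Node:
    def __init__(self, name, owned=()):
        self.name = name
        self.owned_blocks = set(owned)  # owned blocks table


def broadcast_fault(nodes, requester, block_no):
    """The faulting node broadcasts its request; only the current owner answers by
    sending the block, after which the requester becomes the new owner."""
    receivers = [n for n in nodes if n is not requester]
    owner = next((n for n in receivers if block_no in n.owned_blocks), None)
    if owner is None:
        raise KeyError(f"no node owns block {block_no}")
    owner.owned_blocks.discard(block_no)
    requester.owned_blocks.add(block_no)
    return owner.name, len(receivers)  # every other node had to receive the broadcast


n1, n2, n3 = Node("N1", {0}), Node("N2", {1}), Node("N3")
answer = broadcast_fault([n1, n2, n3], n3, 1)  # ("N2", 2)
```

Only one node answers, but every node must process every broadcast, so the cost of a fault grows with the number of nodes.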

Broadcasting
(Figure: nodes 1 to M, each holding an owned blocks table with an entry for each block for which that node is the current owner; block addresses in the table change dynamically.)

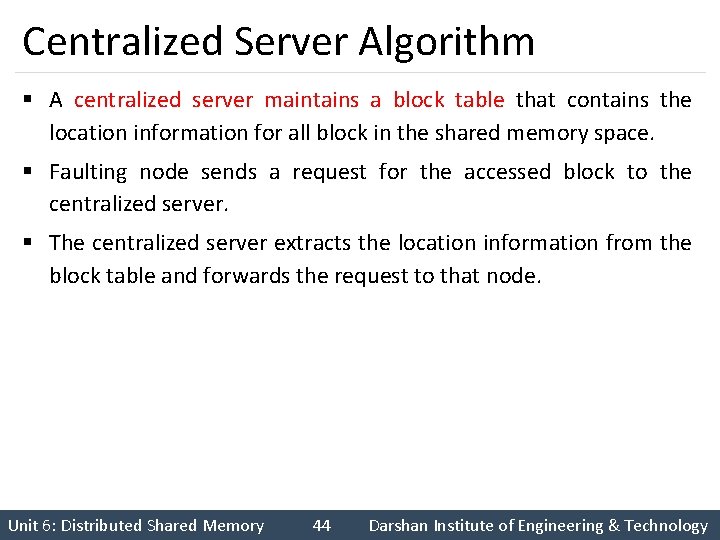

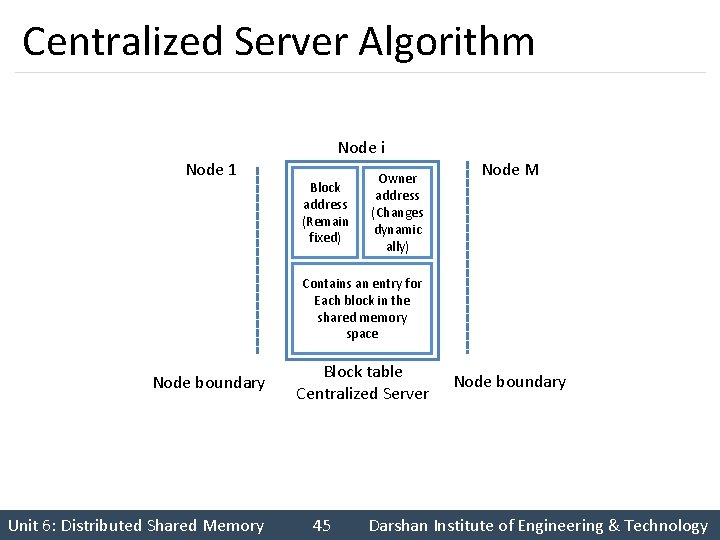

Centralized Server Algorithm
§ A centralized server maintains a block table that contains the location information for all blocks in the shared memory space.
§ The faulting node sends a request for the accessed block to the centralized server.
§ The centralized server extracts the location information from the block table and forwards the request to that node; because the block then migrates, the server updates the entry to record the faulting node as the new owner.
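A minimal sketch of the centralized server's role, assuming the block table is a dictionary from block number to current owner (the class and method names are hypothetical):

```python
class CentralizedServer:
    """Hypothetical centralized server for NRMB. The block table holds an entry for
    every block: the block address is fixed, the owner changes as blocks migrate."""

    def __init__(self, block_table):
        self.block_table = dict(block_table)  # block_no -> current owner node

    def handle_fault(self, requester, block_no):
        owner = self.block_table[block_no]
        # forward the request to the owner; the block then migrates to the requester
        self.block_table[block_no] = requester
        return owner


server = CentralizedServer({0: "N1", 1: "N2"})
forwarded_to = server.handle_fault("N3", 1)  # "N2"
```

Every fault in the system passes through this one table, which is why the server becomes a bottleneck and a single point of failure.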

Centralized Server Algorithm
(Figure: a centralized server holds a block table with an entry for each block in the shared memory space; the block address remains fixed and the owner node changes dynamically.)

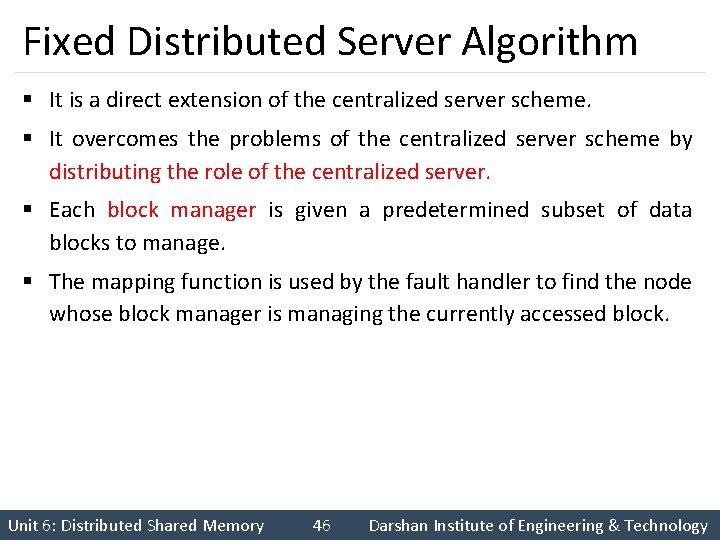

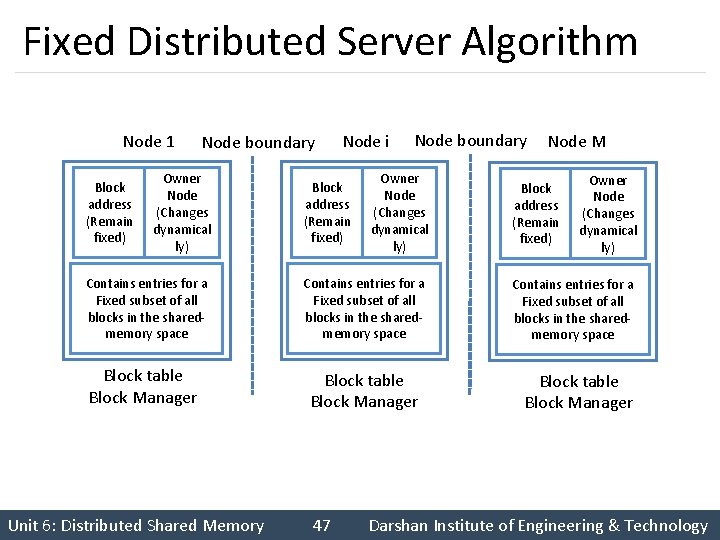

Fixed Distributed Server Algorithm
§ It is a direct extension of the centralized server scheme.
§ It overcomes the problems of the centralized server scheme (a single point of failure and a performance bottleneck) by distributing the role of the centralized server among several block managers.
§ Each block manager is given a predetermined subset of data blocks to manage.
§ A mapping function is used by the fault handler to find the node whose block manager is managing the currently accessed block.
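The only new ingredient compared with the centralized server is the mapping function. The slides do not fix one, so the sketch below assumes a simple round-robin mapping (`block_no % NUM_MANAGERS`); all names are hypothetical.

```python
NUM_MANAGERS = 3  # hypothetical number of block managers


def manager_for(block_no):
    """Hypothetical mapping function: spread blocks over the managers round-robin."""
    return block_no % NUM_MANAGERS


# each manager's block table covers only its fixed subset of blocks
block_tables = [{} for _ in range(NUM_MANAGERS)]


def register(block_no, owner):
    block_tables[manager_for(block_no)][block_no] = owner


def handle_fault(requester, block_no):
    table = block_tables[manager_for(block_no)]  # the fault handler applies the mapping function
    owner = table[block_no]
    table[block_no] = requester                  # the block migrates to the faulting node
    return owner


for b in range(6):
    register(b, "N0")
previous_owner = handle_fault("N2", 4)
```

Block 4 is managed by manager 1, so only that manager's table is consulted and updated; the other managers are unaffected.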

Fixed Distributed Server Algorithm
(Figure: each of nodes 1 to M runs a block manager whose block table contains entries for a fixed subset of all blocks in the shared memory space; block addresses remain fixed and owner nodes change dynamically.)

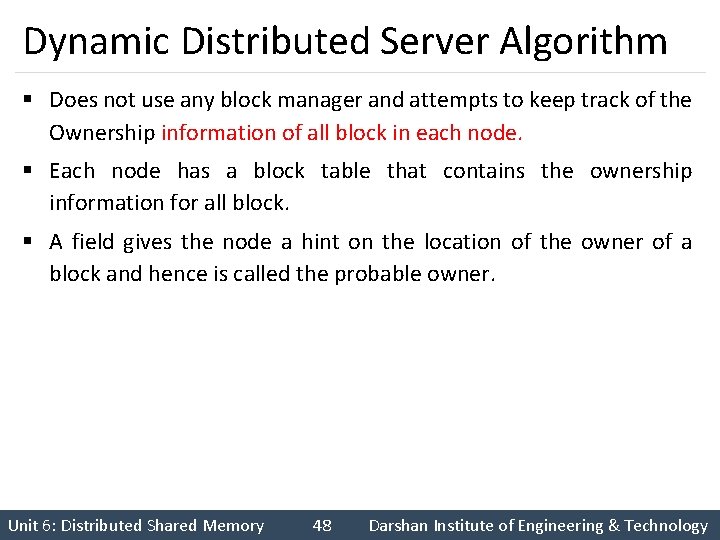

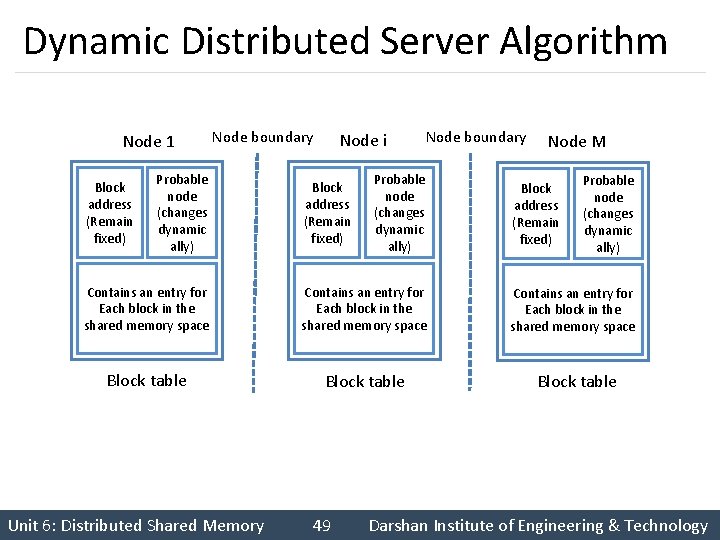

Dynamic Distributed Server Algorithm
§ Does not use any block manager; instead, each node attempts to keep track of the ownership information of all blocks.
§ Each node has a block table that contains the ownership information for all blocks.
§ This information is only a hint about the location of the owner of a block, so the field is called the probable owner.
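Locating a block through probable-owner hints means following a chain of hints until a node that really owns the block is reached. The sketch below assumes one common variant in which every node that handled the request afterwards records the requester as the probable owner; the function name and the table layout are hypothetical.

```python
def locate_and_migrate(prob_owner, requester, block_no):
    """prob_owner[node][block_no] is that node's hint about the block's owner;
    the true owner's hint points to itself. Returns (owner, forwarded_messages)."""
    if prob_owner[requester][block_no] == requester:
        return requester, 0  # requester already owns the block
    path = []
    node = prob_owner[requester][block_no]
    while prob_owner[node][block_no] != node:
        path.append(node)  # this node is not the owner: forward along its hint
        node = prob_owner[node][block_no]
    owner = node
    # the block migrates to the requester; every node that handled the request
    # (and the old owner) now records the requester as the probable owner
    for visited in path + [owner]:
        prob_owner[visited][block_no] = requester
    prob_owner[requester][block_no] = requester
    return owner, len(path) + 1


hints = {"A": {0: "B"}, "B": {0: "C"}, "C": {0: "D"}, "D": {0: "D"}}
first = locate_and_migrate(hints, "A", 0)   # ("D", 3): A -> B -> C -> D
second = locate_and_migrate(hints, "C", 0)  # ("A", 1): C's hint was updated to A
```

Updating the hints along the path shortens later searches: after the first fault, C reaches the new owner in a single message.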

Dynamic Distributed Server Algorithm
(Figure: each of nodes 1 to M holds a block table with an entry for each block in the shared memory space; the block address remains fixed and the probable owner node changes dynamically.)

Replicated, Migrating Blocks (RMB)
§ To increase parallelism, most DSM systems replicate blocks.
§ With replicated blocks, read operations can be carried out in parallel at multiple nodes.
§ Write operations become more expensive, because all replicas must be invalidated or updated to maintain consistency.
§ Two basic protocols that may be used for ensuring sequential consistency in this case are:
1. Write-invalidate
2. Write-update

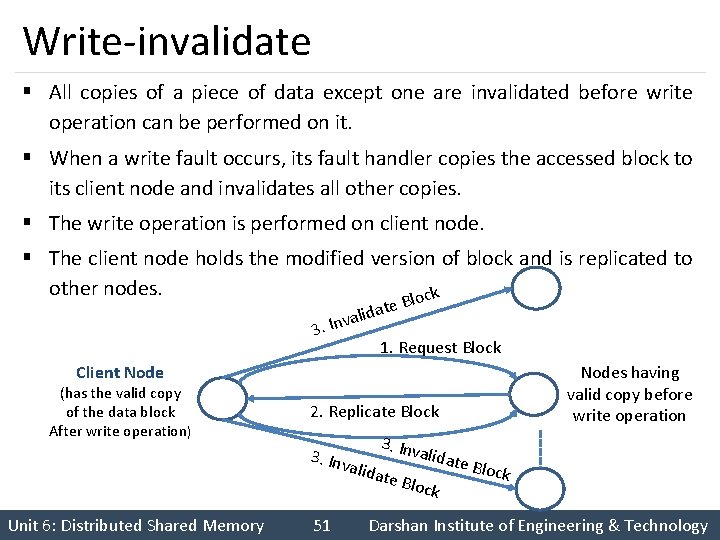

Write-invalidate
§ All copies of a piece of data except one are invalidated before a write operation can be performed on it.
§ When a write fault occurs, the fault handler copies the accessed block from one of the nodes having a valid copy to its own (client) node and invalidates all other copies.
§ The write operation is then performed on the client node.
§ The client node now holds the only, modified copy of the block; the block is replicated to other nodes again when they next access it.
(Figure: 1. the client node requests the block; 2. the block is replicated to the client node; 3. the nodes that had a valid copy before the write operation invalidate it, so only the client node has a valid copy after the write.)
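The write-invalidate rules for one block can be sketched by tracking the set of nodes with a valid copy (often called the copy set). The class `WriteInvalidateBlock` is hypothetical bookkeeping only; no messages are actually sent.

```python
class WriteInvalidateBlock:
    """Hypothetical bookkeeping for one replicated block under write-invalidate:
    any number of nodes may hold read copies, but a write leaves only one."""

    def __init__(self, owner, data):
        self.owner = owner
        self.copy_set = {owner}  # nodes that currently hold a valid copy
        self.data = data

    def read(self, node):
        # read fault (if any): replicate the block to the reading node
        self.copy_set.add(node)
        return self.data

    def write(self, node, data):
        # write fault: bring the block to `node`, invalidate every other copy
        invalidated = self.copy_set - {node}
        self.copy_set = {node}
        self.owner = node
        self.data = data
        return invalidated


block = WriteInvalidateBlock("N1", "v0")
block.read("N2")
block.read("N3")
invalidated = block.write("N2", "v1")  # N1 and N3 lose their copies
```

A later `block.read("N3")` replicates the modified block to N3 again.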

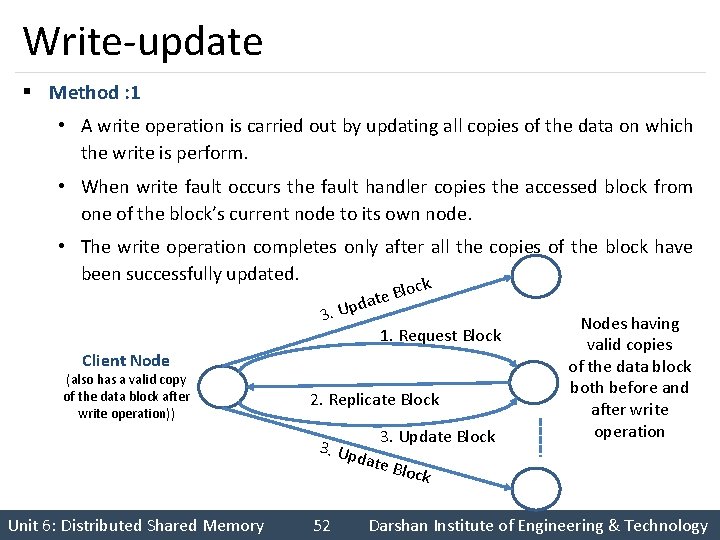

Write-update
§ Method 1:
• A write operation is carried out by updating all copies of the data on which the write is performed.
• When a write fault occurs, the fault handler copies the accessed block from one of the block's current nodes to its own node.
• The write operation completes only after all the copies of the block have been successfully updated.
(Figure: 1. the client node requests the block; 2. the block is replicated to the client node; 3. all nodes having valid copies are updated, so they hold valid copies both before and after the write operation.)
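Method 1 can be sketched in the same style as the write-invalidate example, but a write now updates every copy instead of discarding them. `WriteUpdateBlock` is hypothetical, and the return value of `write` (the number of copies updated) stands in for waiting until all updates have succeeded.

```python
class WriteUpdateBlock:
    """Hypothetical bookkeeping for one replicated block under write-update."""

    def __init__(self, replicas, data):
        self.copies = {node: data for node in replicas}  # node -> its copy

    def read(self, node):
        if node not in self.copies:
            # read fault: copy the block from any current holder
            self.copies[node] = next(iter(self.copies.values()))
        return self.copies[node]

    def write(self, node, data):
        self.read(node)             # a write fault also brings a copy here first
        for holder in self.copies:  # update every copy of the block
            self.copies[holder] = data
        return len(self.copies)     # the write completes once all copies are updated


block = WriteUpdateBlock(["N1", "N2"], "v0")
updated = block.write("N3", "v1")  # N1, N2 and N3 all hold "v1"
```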

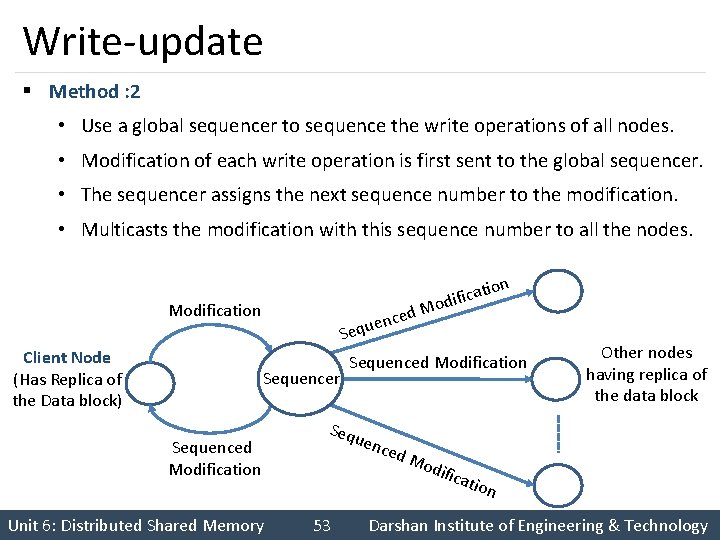

Write-update
§ Method 2:
• Use a global sequencer to sequence the write operations of all nodes.
• The modification of each write operation is first sent to the global sequencer.
• The sequencer assigns the next sequence number to the modification.
• It then multicasts the modification with this sequence number to all the nodes.
(Figure: the client node, which has a replica of the data block, sends a modification to the sequencer; the sequencer sends the sequenced modification to the client node and to the other nodes having a replica of the data block.)
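The global sequencer can be sketched in Python. The class names are hypothetical; the key point is that every replica applies modifications strictly in sequence-number order and buffers any that arrive ahead of a gap, so all replicas apply the same writes in the same order.

```python
import itertools


class Replica:
    """A node holding a replica; applies modifications strictly in sequence order."""

    def __init__(self):
        self.expected = 1  # next sequence number this replica may apply
        self.held = {}     # modifications that arrived ahead of a gap
        self.applied = []

    def deliver(self, seq, modification):
        self.held[seq] = modification
        while self.expected in self.held:
            self.applied.append(self.held.pop(self.expected))
            self.expected += 1


class Sequencer:
    """Hypothetical global sequencer: numbers each modification, then multicasts it."""

    def __init__(self, replicas):
        self.replicas = replicas
        self._numbers = itertools.count(1)

    def submit(self, modification):
        seq = next(self._numbers)      # assign the next sequence number
        for replica in self.replicas:  # multicast to every node with a replica
            replica.deliver(seq, modification)
        return seq


r1, r2 = Replica(), Replica()
sequencer = Sequencer([r1, r2])
sequencer.submit("x=1")  # e.g. from one node
sequencer.submit("x=2")  # e.g. from another node
```

If the multicast for sequence number 2 reached a replica before number 1, that replica would hold it until number 1 arrived, preserving the global order.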

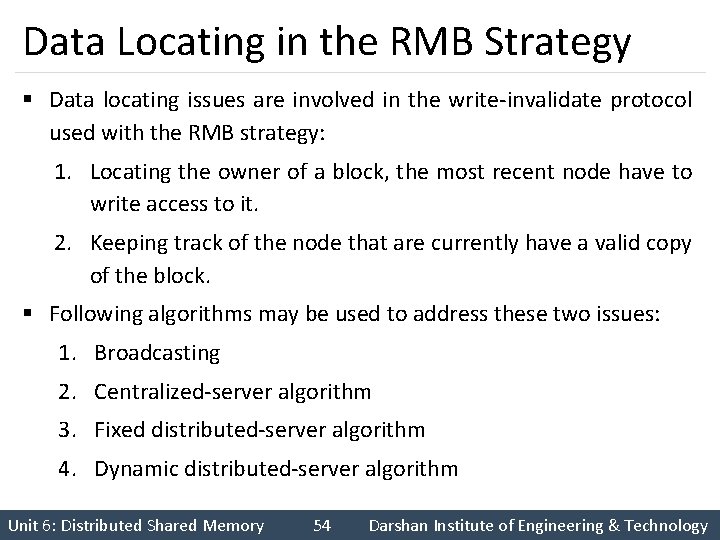

Data Locating in the RMB Strategy
§ The following data locating issues are involved in the write-invalidate protocol used with the RMB strategy:
1. Locating the owner of a block, that is, the node that most recently had write access to it.
2. Keeping track of the nodes that currently have a valid copy of the block.
§ The following algorithms may be used to address these two issues:
1. Broadcasting
2. Centralized-server algorithm
3. Fixed distributed-server algorithm
4. Dynamic distributed-server algorithm

Replicated, Nonmigrating Blocks (RNMB)
§ A shared memory block may be replicated at multiple nodes of the system, but the location of each replica is fixed.
§ All replicas of a block are kept consistent by updating them all in case of a write access.
§ Sequential consistency is ensured by using a global sequencer to sequence the write operations of all nodes.

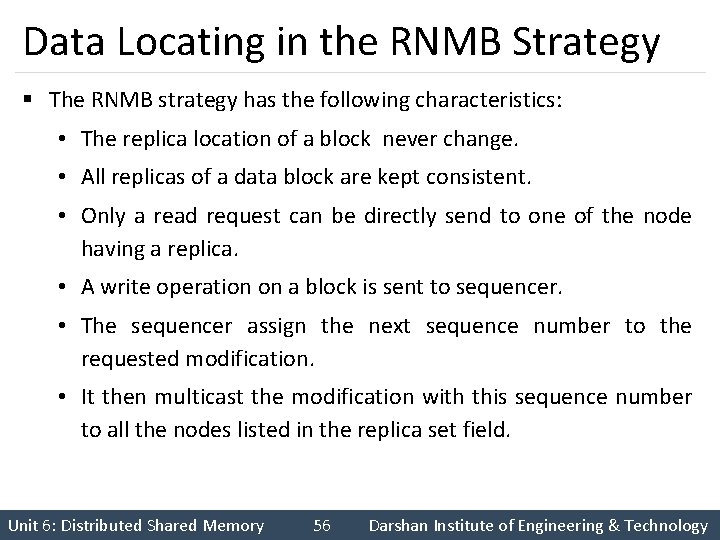

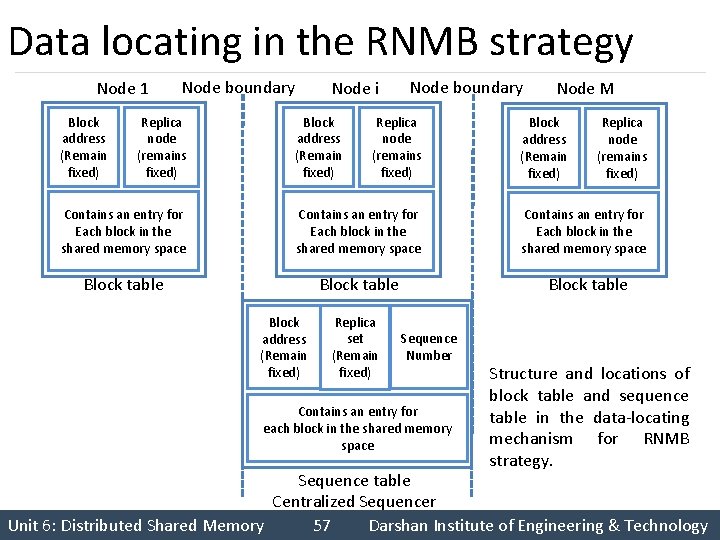

Data Locating in the RNMB Strategy
§ The RNMB strategy has the following characteristics:
• The replica locations of a block never change.
• All replicas of a data block are kept consistent.
• Only read requests can be sent directly to one of the nodes having a replica.
• A write operation on a block is sent to the sequencer.
• The sequencer assigns the next sequence number to the requested modification.
• It then multicasts the modification with this sequence number to all the nodes listed in the replica set field of the block.

Data locating in the RNMB strategy
(Figure: Structure and locations of block table and sequence table in the data-locating mechanism for RNMB strategy. Each of nodes 1 to M has a block table with an entry for each block in the shared memory space, giving the block address and a replica node, both fixed; a centralized sequencer keeps a sequence table with an entry for each block, giving the block address, the replica set and the sequence number.)

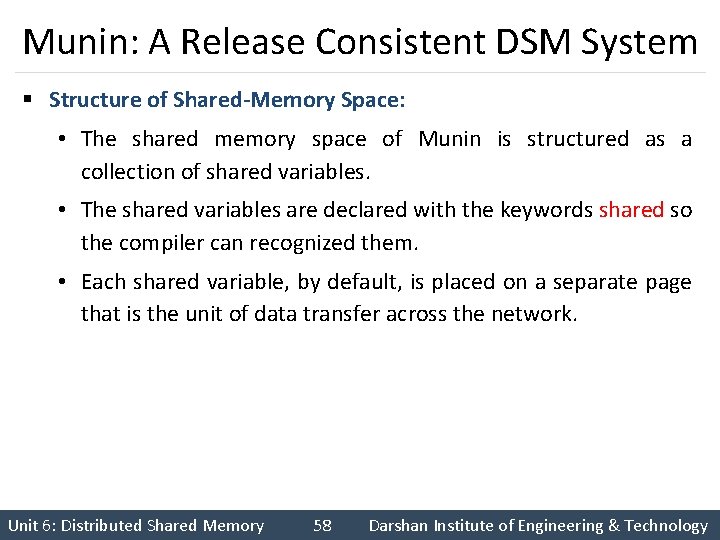

Munin: A Release Consistent DSM System
§ Structure of shared-memory space:
• The shared memory space of Munin is structured as a collection of shared variables.
• The shared variables are declared with the keyword shared so that the compiler can recognize them.
• Each shared variable, by default, is placed on a separate page, which is the unit of data transfer across the network.

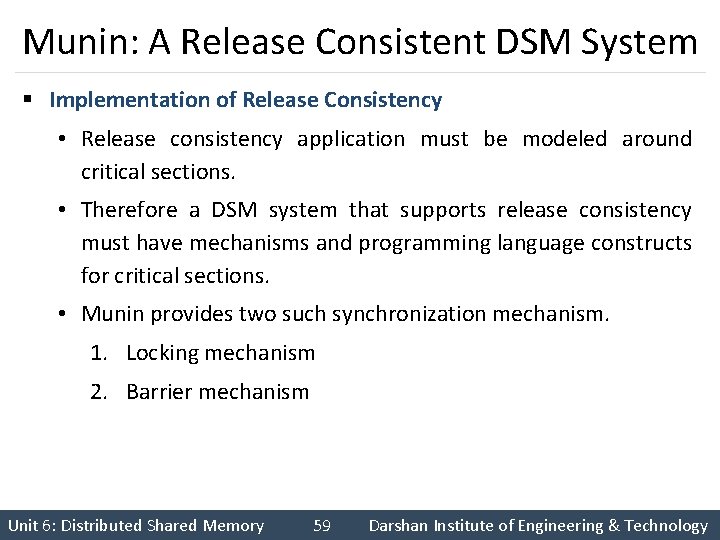

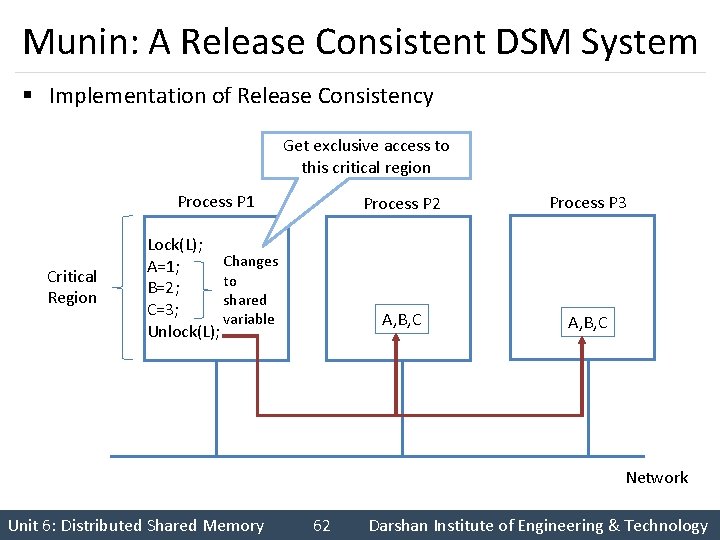

Munin: A Release Consistent DSM System
§ Implementation of release consistency:
• Applications that use release consistency must be modeled around critical sections.
• Therefore, a DSM system that supports release consistency must have mechanisms and programming language constructs for critical sections.
• Munin provides two such synchronization mechanisms:
1. Locking mechanism
2. Barrier mechanism

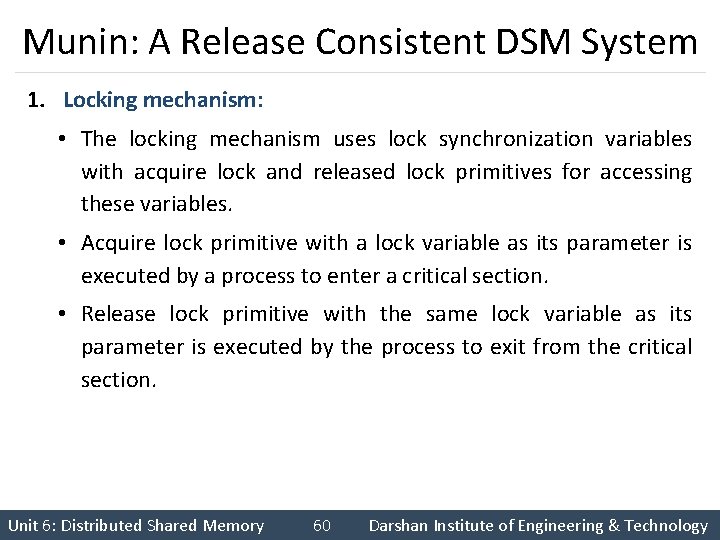

Munin: A Release Consistent DSM System
1. Locking mechanism:
• The locking mechanism uses lock synchronization variables, with acquire lock and release lock primitives for accessing these variables.
• The acquire lock primitive, with a lock variable as its parameter, is executed by a process to enter a critical section.
• The release lock primitive, with the same lock variable as its parameter, is executed by the process to exit from the critical section.

Munin: A Release Consistent DSM System
2. Barrier mechanism:
• The barrier mechanism uses barrier synchronization variables with a Wait at Barrier primitive for accessing these variables.
• Barriers are implemented by using the centralized barrier server mechanism.
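A centralized barrier server can be sketched with Python threads standing in for processes. This is not Munin's code: `CentralBarrierServer` and `wait_at_barrier` are hypothetical names, built on the standard `threading.Condition`. Each call blocks until the configured number of processes have arrived; the last arrival ends the phase and wakes everyone.

```python
import threading


class CentralBarrierServer:
    """Hypothetical centralized barrier server: wait_at_barrier() blocks each caller
    until `parties` processes have arrived, then releases them all together."""

    def __init__(self, parties):
        self.parties = parties
        self.arrived = 0
        self.phase = 0  # incremented each time the barrier opens
        self.cond = threading.Condition()

    def wait_at_barrier(self):
        with self.cond:
            my_phase = self.phase
            self.arrived += 1
            if self.arrived == self.parties:
                # last arrival: end of this phase, release everyone
                self.arrived = 0
                self.phase += 1
                self.cond.notify_all()
            else:
                while self.phase == my_phase:
                    self.cond.wait()


server = CentralBarrierServer(parties=3)
events = []
events_lock = threading.Lock()


def worker(i):
    with events_lock:
        events.append(("phase 1", i))
    server.wait_at_barrier()
    with events_lock:
        events.append(("phase 2", i))


threads = [threading.Thread(target=worker, args=(i,)) for i in range(3)]
for t in threads:
    t.start()
for t in threads:
    t.join()
```

No worker records a phase-2 event until all three have finished phase 1. Within a single Python process, the standard `threading.Barrier` offers the same behaviour; the explicit class only mirrors the server-based design.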

Munin: A Release Consistent DSM System
§ Implementation of release consistency (example):
Process P1:
Lock(L);   (get exclusive access to this critical region)
A=1; B=2; C=3;   (changes to shared variables)
Unlock(L);
(Figure: the changes to the shared variables A, B and C made by P1 reach processes P2 and P3 over the network.)

Block Replacement Strategy
§ A DSM system allows shared memory blocks to be dynamically migrated or replicated.
§ The following issues must be addressed when the available space for caching shared data fills up at a node:
1. Which block should be replaced to make space for a newly required block?
2. Where should the replaced block be placed?

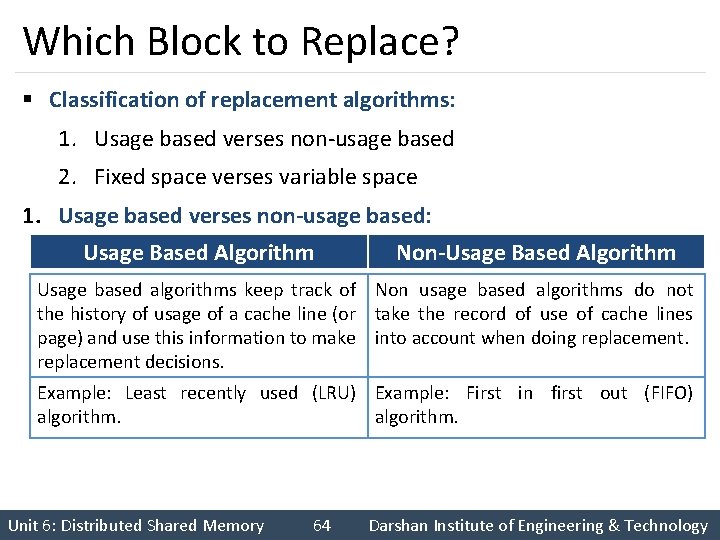

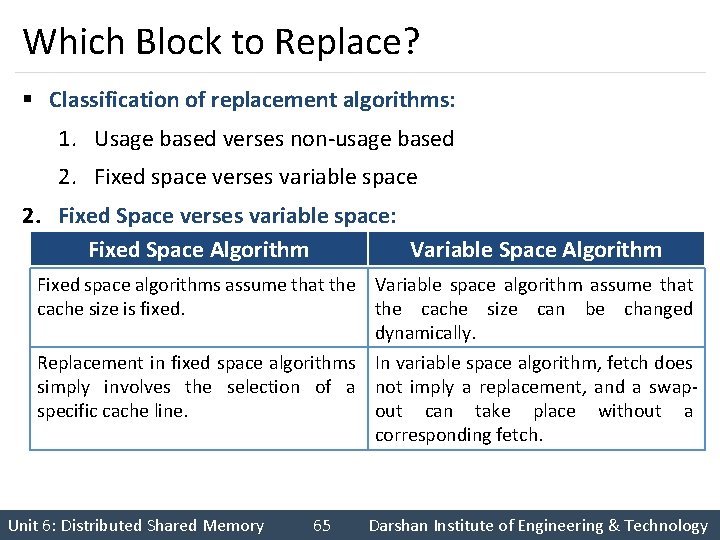

Which Block to Replace?
§ Classification of replacement algorithms:
1. Usage based versus non-usage based
2. Fixed space versus variable space
§ 1. Usage based versus non-usage based:
• Usage based algorithms keep track of the history of usage of a cache line (or page) and use this information to make replacement decisions. Example: least recently used (LRU) algorithm.
• Non-usage based algorithms do not take the record of use of cache lines into account when doing replacement. Example: first in first out (FIFO) algorithm.
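The difference between a usage based and a non-usage based policy shows up when the two are run on the same sequence of block references. The reference string and cache capacity below are arbitrary assumptions; the functions count block faults for LRU (using the standard `collections.OrderedDict`) and for FIFO.

```python
from collections import OrderedDict, deque


def lru_faults(refs, capacity):
    cache = OrderedDict()
    faults = 0
    for block in refs:
        if block in cache:
            cache.move_to_end(block)       # record the use: most recently used at the end
        else:
            faults += 1
            if len(cache) == capacity:
                cache.popitem(last=False)  # evict the least recently used block
            cache[block] = True
    return faults


def fifo_faults(refs, capacity):
    cache, arrival_order = set(), deque()
    faults = 0
    for block in refs:
        if block not in cache:             # hits do not change anything
            faults += 1
            if len(cache) == capacity:
                cache.discard(arrival_order.popleft())  # evict the oldest arrival
            cache.add(block)
            arrival_order.append(block)
    return faults


refs = [1, 2, 3, 1, 4, 1, 5, 1]  # block 1 is used over and over
lru = lru_faults(refs, 3)    # 5
fifo = fifo_faults(refs, 3)  # 6
```

FIFO evicts block 1 simply because it arrived first, even though it is the most heavily used block; LRU keeps it because it tracks usage.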

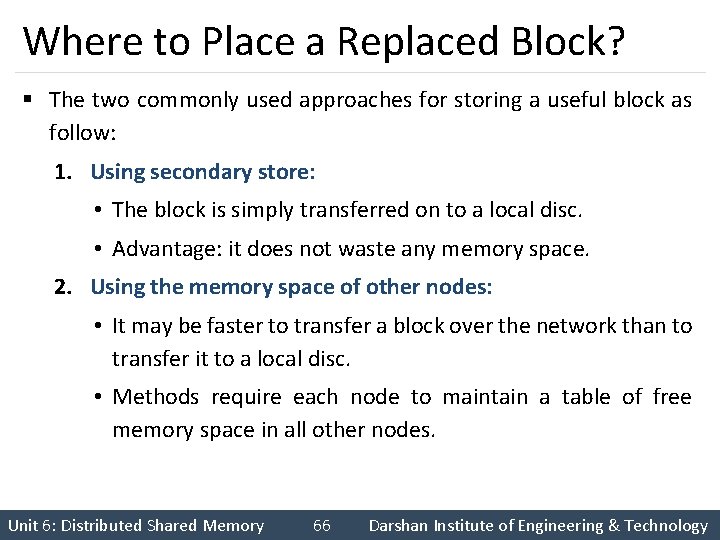

Which Block to Replace?
§ 2. Fixed space versus variable space:
• Fixed space algorithms assume that the cache size is fixed; replacement simply involves the selection of a specific cache line.
• Variable space algorithms assume that the cache size can be changed dynamically; a fetch does not imply a replacement, and a swap-out can take place without a corresponding fetch.

Where to Place a Replaced Block?
§ The two commonly used approaches for storing a useful block are as follows:
1. Using secondary store:
• The block is simply transferred onto a local disk.
• Advantage: it does not waste any memory space.
2. Using the memory space of other nodes:
• It may be faster to transfer a block over the network than to transfer it to a local disk.
• This method requires each node to maintain a table of free memory space in all other nodes.

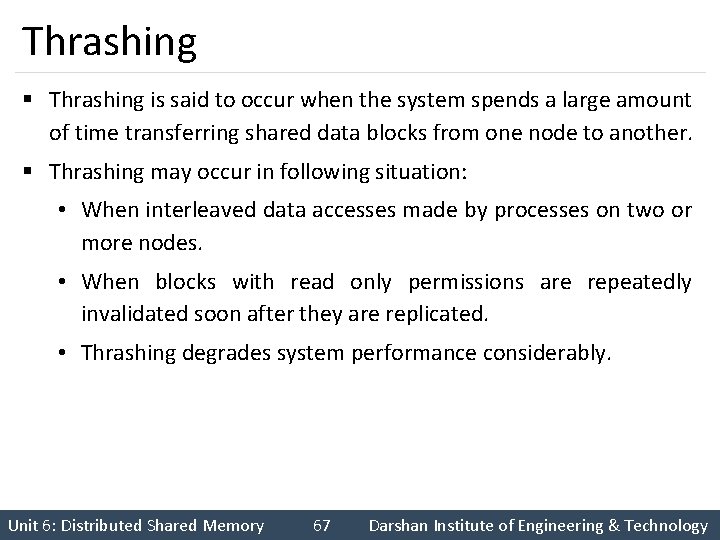

Thrashing
§ Thrashing is said to occur when the system spends a large amount of time transferring shared data blocks from one node to another.
§ Thrashing may occur in the following situations:
• When interleaved data accesses made by processes on two or more nodes cause a data block to move back and forth between nodes in quick succession.
• When blocks with read-only permissions are repeatedly invalidated soon after they are replicated.
§ Thrashing degrades system performance considerably.

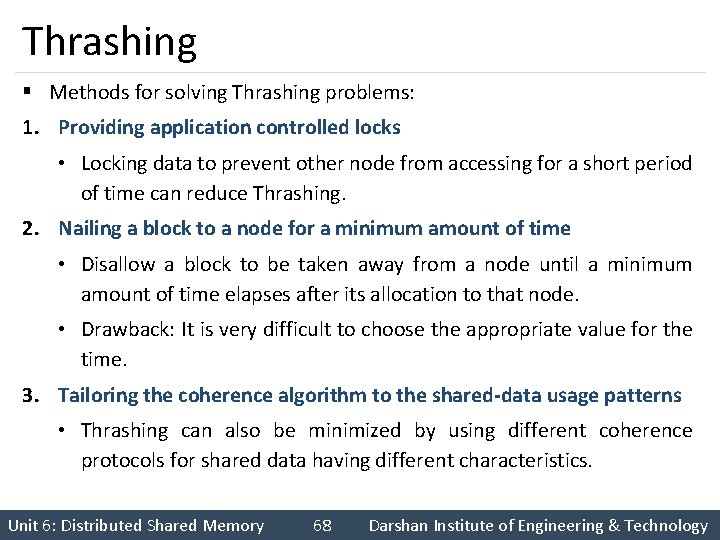

Thrashing
§ Methods for solving thrashing problems:
1. Providing application-controlled locks
• Locking data to prevent other nodes from accessing it for a short period of time can reduce thrashing.
2. Nailing a block to a node for a minimum amount of time
• A block is not allowed to be taken away from a node until a minimum amount of time has elapsed after its allocation to that node.
• Drawback: it is very difficult to choose the appropriate value for this time.
3. Tailoring the coherence algorithm to the shared-data usage patterns
• Thrashing can also be minimized by using different coherence protocols for shared data having different characteristics.
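Method 2 (nailing) can be sketched with an explicit clock value passed in, so the behaviour is deterministic. `NailedBlock` and `min_hold` are hypothetical names; `min_hold` is exactly the value the drawback above says is hard to choose.

```python
class NailedBlock:
    """Hypothetical block that cannot be taken from its owner until
    `min_hold` time units have elapsed since it was allocated there."""

    def __init__(self, owner, min_hold, now):
        self.owner = owner
        self.min_hold = min_hold  # the hard-to-choose tuning parameter
        self.acquired_at = now

    def request(self, node, now):
        """Return True if `node` gets the block, False if it must wait and retry."""
        if node == self.owner:
            return True
        if now - self.acquired_at < self.min_hold:
            return False  # still nailed to the current owner
        self.owner = node
        self.acquired_at = now
        return True


block = NailedBlock("N1", min_hold=10, now=0)
early = block.request("N2", now=3)  # False: N1 keeps the block
later = block.request("N2", now=10)  # True: the block moves to N2
```

Too small a `min_hold` lets the block ping-pong between nodes again; too large a value makes other nodes wait even when the owner no longer needs the block.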

Advantages & Disadvantages of DSM
§ Advantages
• Scales well with a large number of nodes.
• Message passing is hidden.
• Can handle complex and large databases without replication or sending the data to processes.
• Generally cheaper than using a multiprocessor system.
• Provides a large virtual memory space.
• Programs are more portable due to common programming interfaces.
• Programs written for shared memory multiprocessors can be run on DSM systems with minimum changes.

Advantages & Disadvantages of DSM
§ Disadvantages
• Generally slower to access than non-distributed shared memory.
• Must provide additional protection against simultaneous accesses to shared data.

End of Unit-6

- Slides: 71