Operating Systems: Processes, Threads, and Scheduling

- Slides: 54

Operating Systems: Processes, Threads, and Scheduling

Ref: http://userhome.brooklyn.cuny.edu/irudowdky/Operating.Systems.htm & Silberschatz, Galvin, & Gagne, Operating System Concepts, 7th ed., Wiley (ch. 1-3)

Processes

In embedded systems, process management, including enabling processes to meet hard or soft deadlines, is of paramount importance, so we need to understand the key concepts in this area.

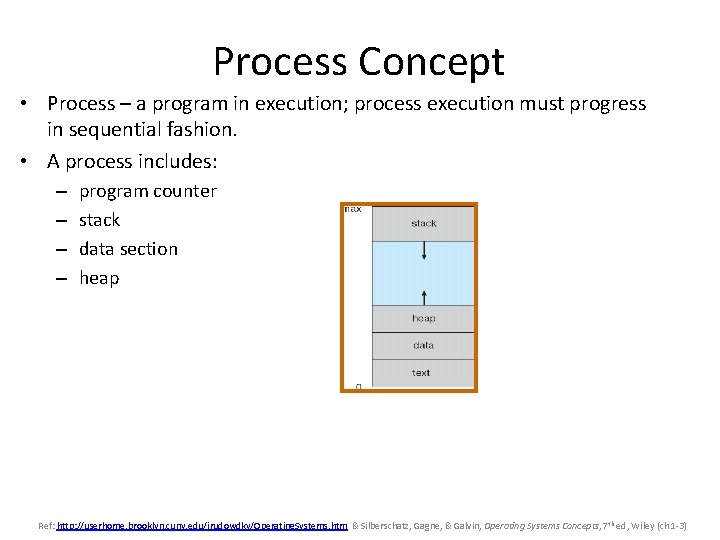

Process Concept

- Process: a program in execution; process execution must progress in sequential fashion.
- A process includes:
  - program counter
  - stack
  - data section
  - heap

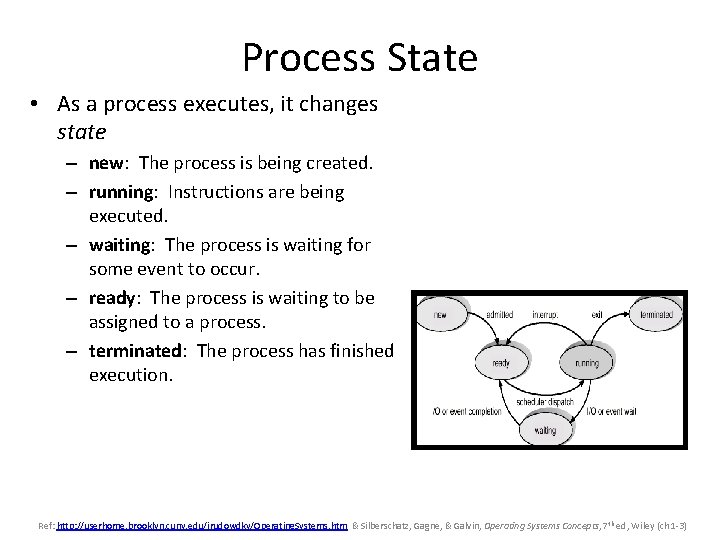

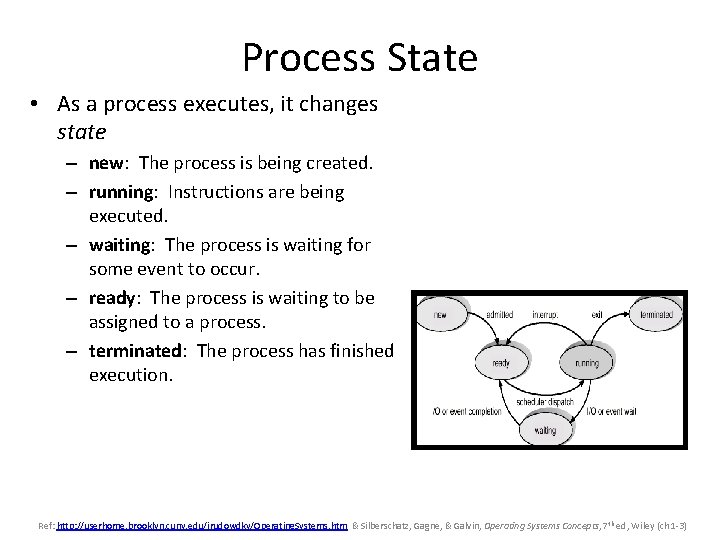

Process State

- As a process executes, it changes state:
  - new: the process is being created.
  - running: instructions are being executed.
  - waiting: the process is waiting for some event to occur.
  - ready: the process is waiting to be assigned to a processor.
  - terminated: the process has finished execution.
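The state diagram behind this slide can be encoded as a small transition check. This is an illustration, not taken from the source: the enum names and `valid_transition()` are hypothetical, and the allowed moves follow the usual textbook diagram (new to ready, ready to running, running to ready, waiting, or terminated, and waiting to ready).

```c
#include <stdbool.h>

/* Hypothetical encoding of the five process states from the slide. */
typedef enum { NEW, READY, RUNNING, WAITING, TERMINATED } proc_state;

/* Returns true if the state diagram allows moving from `from` to `to`. */
bool valid_transition(proc_state from, proc_state to) {
    switch (from) {
    case NEW:        return to == READY;          /* admitted */
    case READY:      return to == RUNNING;        /* scheduler dispatch */
    case RUNNING:    return to == READY           /* interrupt / preemption */
                         || to == WAITING         /* I/O or event wait */
                         || to == TERMINATED;     /* exit */
    case WAITING:    return to == READY;          /* I/O or event completion */
    case TERMINATED: return false;                /* no way out */
    }
    return false;
}
```

Note that a waiting process cannot go straight back to running: when its event occurs it re-enters the ready queue and must be dispatched again.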

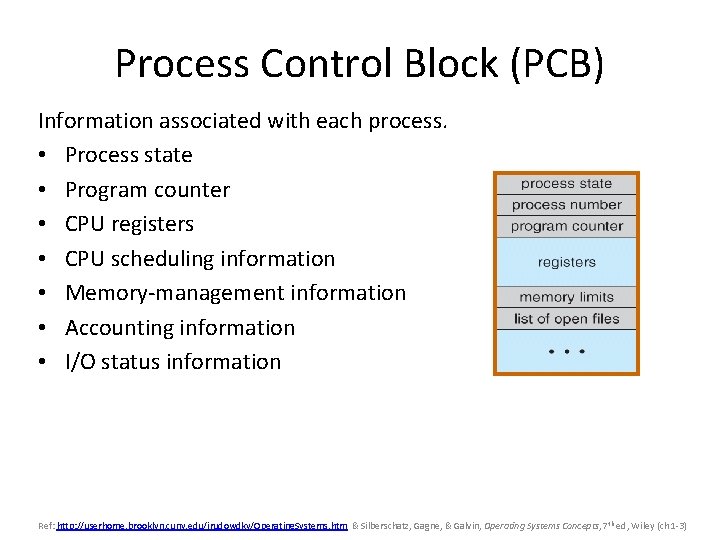

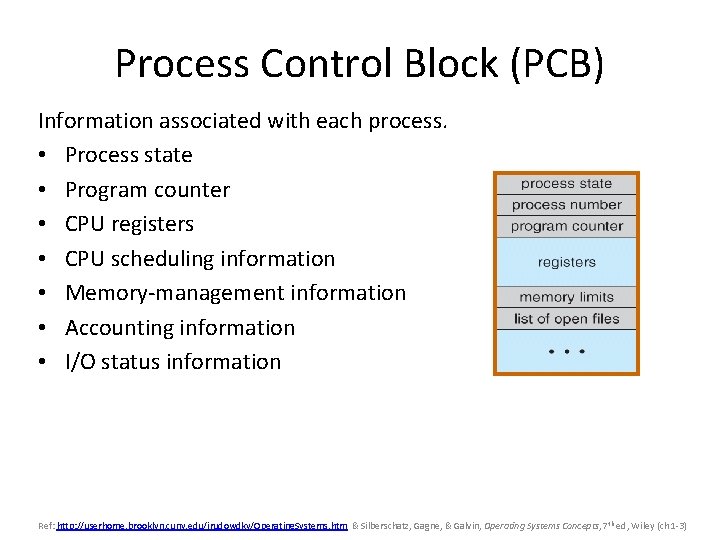

Process Control Block (PCB)

Information associated with each process:

- Process state
- Program counter
- CPU registers
- CPU scheduling information
- Memory-management information
- Accounting information
- I/O status information

CPU Switch From Process to Process

The PCB of the running process is saved when that process is removed from the CPU and another process takes its place (a context switch).

Schedulers

- Long-term scheduler (or job scheduler): selects which processes should be brought into the ready queue.
- Short-term scheduler (or CPU scheduler): selects which process should be executed next and allocates the CPU.

Context Switch

- When the CPU switches to another process, an interrupt occurs and a kernel routine runs to save the context (PCB) of the currently running process.
- The system must save the state of the old process and load the saved state of the new process.
- Context-switch time is overhead; the system does no useful work while switching.
- The time depends on hardware support.
  - It varies from 1 to 1000 microseconds.
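A context switch can be simulated in user space to show what gets saved and restored. This is only a model: a real kernel does the switch in assembly and saves far more state, and `cpu_context`, `pcb`, `NREGS`, and `context_switch()` are hypothetical names. The PCB below keeps only a few of the fields from the PCB slide.

```c
#define NREGS 8   /* hypothetical size of the register file */

enum pcb_state { PCB_READY, PCB_RUNNING };

/* Toy CPU context: a program counter plus a few general-purpose registers. */
typedef struct {
    unsigned long pc;
    unsigned long regs[NREGS];
} cpu_context;

/* A stripped-down PCB with only a few of the fields listed on the PCB slide. */
typedef struct {
    int pid;
    enum pcb_state state;
    cpu_context saved;     /* saved program counter and CPU registers */
} pcb;

/* Simulated context switch: save the CPU state into the outgoing process's
   PCB, then load the incoming process's saved state onto the CPU. */
void context_switch(cpu_context *cpu, pcb *old, pcb *next) {
    old->saved = *cpu;          /* save state of the old process */
    old->state = PCB_READY;     /* e.g. preempted: running -> ready */
    *cpu = next->saved;         /* load saved state of the new process */
    next->state = PCB_RUNNING;  /* dispatched: ready -> running */
}
```

Everything between saving `old` and loading `next` is pure overhead, which is why the slide counts context-switch time as time in which no useful work is done.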

Process Creation

- Parent processes create child processes, which in turn create other processes, forming a tree of processes.
- Each process is assigned a unique process identifier (pid).
- Resource sharing (possible approaches):
  - Parent and children share all resources.
  - Children share a subset of the parent's resources.
  - Parent and child share no resources.
- Execution (possible approaches):
  - Parent and children execute concurrently.
  - Parent waits until some or all of its children terminate.

Process Creation (Cont.)

- Address space
  - Child is a duplicate of the parent (UNIX):
    - The child has a copy of the parent's address space, which enables easy communication between the two.
    - The child's memory space is then replaced with a new program, which is executed. The parent can wait for the child to complete or create more processes.
  - Child has a program loaded into it directly (DEC VMS).
  - Windows NT supports both models.

Process Creation (Cont.)

- UNIX example
  - The fork() system call creates a new process that has a copy of the address space of the original process.
    - This simplifies parent-child communication.
    - Both processes continue execution after the fork().
  - The execlp() system call is used after a fork() to replace the process's memory space with a new program.
    - It loads a binary file into memory and starts its execution.
  - The parent can then create more child processes, or issue a wait() system call to move itself off the ready queue until the child completes.
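A minimal sketch of the fork()/execlp()/wait pattern on a POSIX system. `spawn_and_wait()` is a hypothetical helper; it uses `waitpid()`, a variant of `wait()`, so that the parent waits for this particular child, and it returns the child's exit status.

```c
#include <stdio.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Fork a child that replaces its memory image with `prog` (looked up in
   PATH), then wait for it. Returns the child's exit status, or -1 on error. */
int spawn_and_wait(const char *prog) {
    pid_t pid = fork();
    if (pid < 0) {                        /* fork failed: no child exists */
        perror("fork");
        return -1;
    }
    if (pid == 0) {                       /* child: load a new program */
        execlp(prog, prog, (char *)NULL);
        perror("execlp");                 /* reached only if exec failed */
        _exit(127);
    }
    int status;                           /* parent: block until child ends */
    if (waitpid(pid, &status, 0) < 0) {
        perror("waitpid");
        return -1;
    }
    return WIFEXITED(status) ? WEXITSTATUS(status) : -1;
}
```

For example, `spawn_and_wait("true")` should return 0 on a typical UNIX system. If `execlp()` fails (say, the program is not on the PATH), the child calls `_exit()` rather than `exit()` so it does not flush stdio buffers inherited from the parent; the status 127 is simply a convention borrowed from shells for "command not found".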

Process Termination

- A process executes its last statement and asks the operating system to delete it by using the exit() system call.
  - The process may return a status value to its parent via wait().
  - The process's resources are deallocated by the operating system.
- A parent may terminate the execution of its child processes (e.g., with kill() in UNIX or TerminateProcess() in Win32) when:
  - the child has exceeded its allocated resources,
  - the task assigned to the child is no longer required, or
  - the parent is exiting.
- Some operating systems do not allow a child to continue if its parent terminates (cascading termination).

Process Termination

- In UNIX, a process can be terminated via the exit system call.
  - The parent can wait for the termination of a child with the wait system call.
  - wait returns the process identifier of a terminated child, so that the parent can tell which of its children has terminated.
  - If a parent terminates, all its children are assigned the init process as their new parent.
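The sketch below illustrates that `wait()` returns the pid of whichever child terminated, so the parent can match it against the pids it got from `fork()`. The helper name `reap_children()`, the child count, and the exit codes 10 + i are illustrative assumptions.

```c
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

#define NCHILD 3   /* hypothetical number of children */

/* Forks NCHILD children; child i exits at once with status 10 + i.
   The parent calls wait() NCHILD times and uses each returned pid to
   identify the child. Returns how many (pid, status) pairs matched. */
int reap_children(void) {
    pid_t pids[NCHILD];
    for (int i = 0; i < NCHILD; i++) {
        pids[i] = fork();
        if (pids[i] < 0) return -1;          /* fork failed */
        if (pids[i] == 0) _exit(10 + i);     /* child i terminates */
    }
    int matched = 0;
    for (int n = 0; n < NCHILD; n++) {
        int status;
        pid_t done = wait(&status);          /* blocks until some child ends */
        if (done < 0) return -1;
        for (int i = 0; i < NCHILD; i++)     /* which child was it? */
            if (pids[i] == done && WIFEXITED(status) &&
                WEXITSTATUS(status) == 10 + i)
                matched++;
    }
    return matched;
}
```

The children may finish in any order; the pid returned by `wait()` is what lets the parent tell them apart.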

Cooperating Processes

- An independent process cannot affect or be affected by the execution of another process.
- A cooperating process can affect or be affected by the execution of another process.
- Advantages of process cooperation:
  - Information sharing
  - Computation speed-up via parallel subtasks
  - Modularity, by dividing system functions into separate processes
  - Convenience: even an individual user may want to edit, print, and compile in parallel

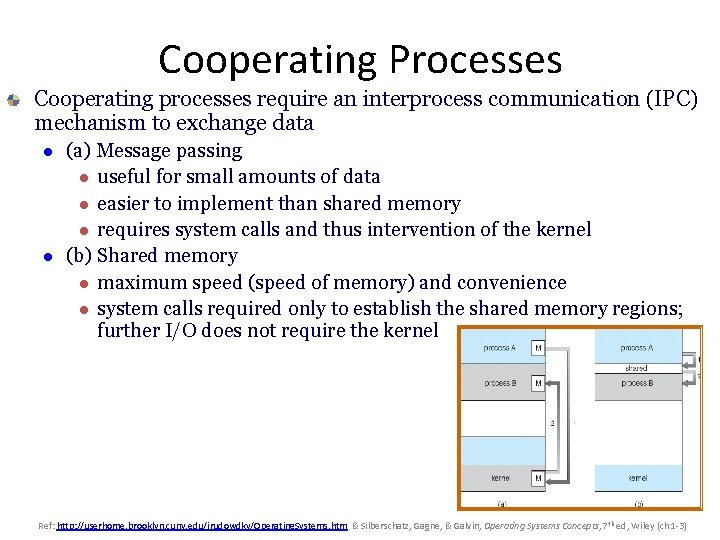

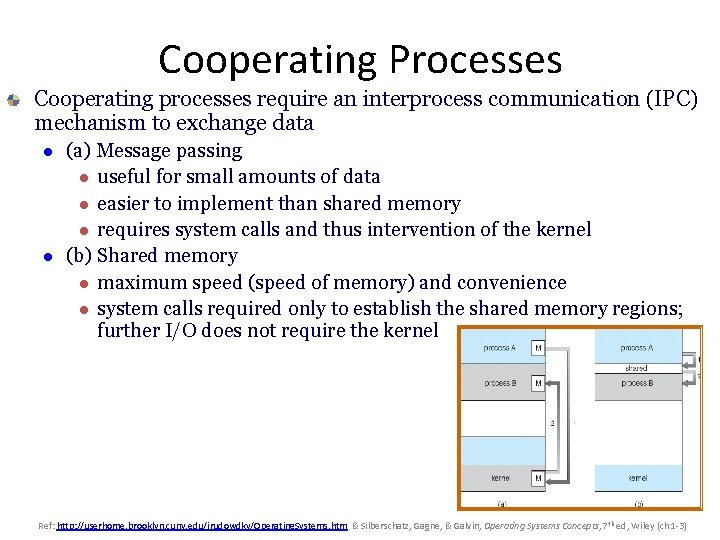

Cooperating Processes

Cooperating processes require an interprocess communication (IPC) mechanism to exchange data:

- (a) Message passing
  - useful for small amounts of data
  - easier to implement than shared memory
  - requires system calls and thus intervention of the kernel
- (b) Shared memory
  - maximum speed (the speed of memory) and convenience
  - system calls are required only to establish the shared-memory regions; further reads and writes do not require the kernel

Producer-Consumer Problem

- A paradigm for cooperating processes: a producer process produces information that is consumed by a consumer process.
- A shared buffer enables the producer and consumer to run concurrently.
  - e.g., a print program produces characters that are consumed by the print driver.
  - An unbounded buffer places no practical limit on the size of the buffer.
  - A bounded buffer assumes a fixed buffer size: the producer must wait if the buffer is full, and the consumer must wait if it is empty.

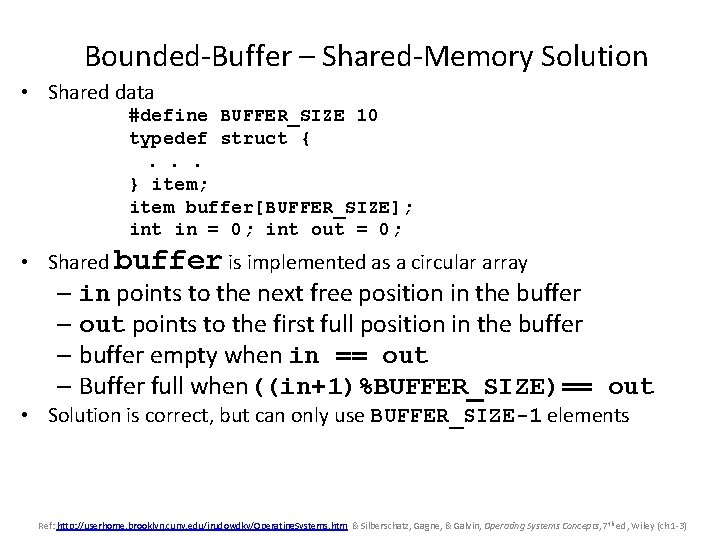

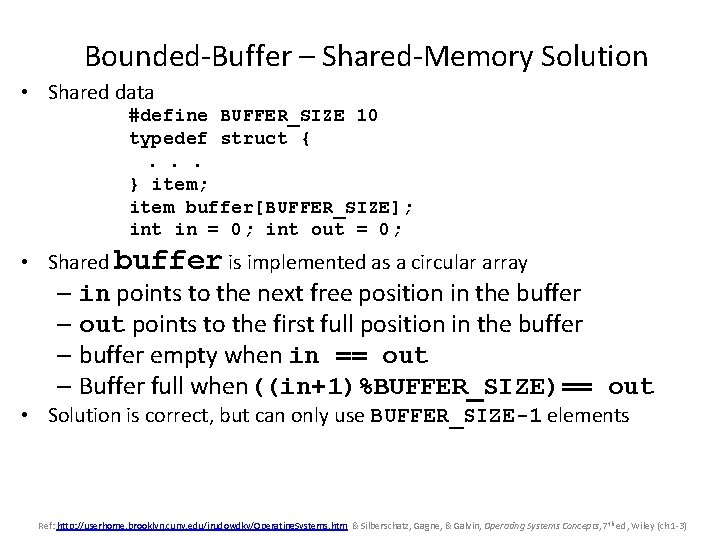

Bounded-Buffer: Shared-Memory Solution

Shared data:

```c
#define BUFFER_SIZE 10

typedef struct {
    /* ... */
} item;

item buffer[BUFFER_SIZE];
int in = 0;    /* next free position */
int out = 0;   /* first full position */
```

- The shared buffer is implemented as a circular array:
  - in points to the next free position in the buffer.
  - out points to the first full position in the buffer.
  - The buffer is empty when in == out.
  - The buffer is full when ((in + 1) % BUFFER_SIZE) == out.
- The solution is correct, but can use only BUFFER_SIZE - 1 elements.

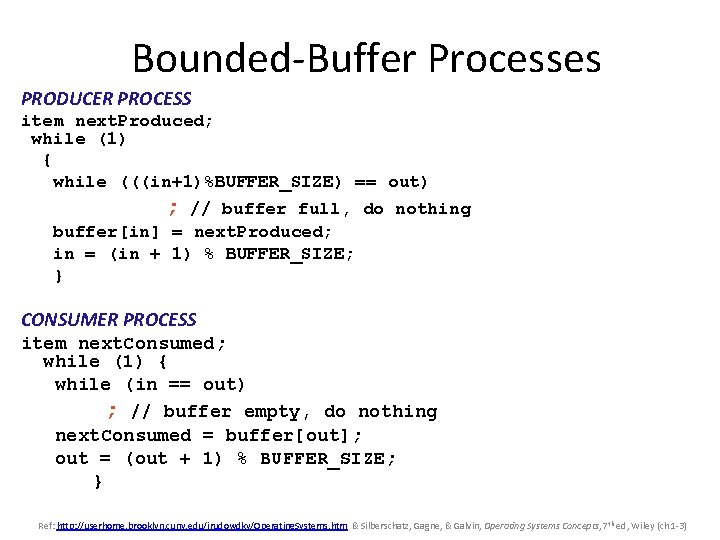

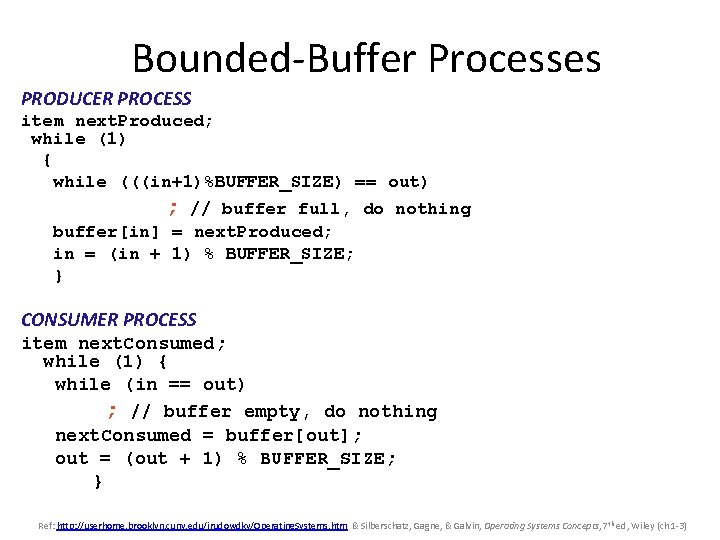

Bounded-Buffer Processes

```c
/* PRODUCER PROCESS */
item nextProduced;
while (1) {
    /* produce an item in nextProduced */
    while (((in + 1) % BUFFER_SIZE) == out)
        ;   /* buffer full, do nothing */
    buffer[in] = nextProduced;
    in = (in + 1) % BUFFER_SIZE;
}

/* CONSUMER PROCESS */
item nextConsumed;
while (1) {
    while (in == out)
        ;   /* buffer empty, do nothing */
    nextConsumed = buffer[out];
    out = (out + 1) % BUFFER_SIZE;
    /* consume the item in nextConsumed */
}
```
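The producer and consumer loops share in and out as ordinary ints. If they run as two threads of one C program, that is a data race, which the C11 memory model treats as undefined behavior. Below is a sketch of the same one-producer, one-consumer busy-waiting scheme made well-defined with C11 `<stdatomic.h>` acquire/release operations. It uses POSIX threads instead of processes, and `N_ITEMS`, `run_bounded_buffer()`, and the int item type are assumptions. Each spin loop calls `sched_yield()` so a waiting thread does not burn its whole time slice.

```c
#include <pthread.h>
#include <sched.h>
#include <stdatomic.h>

#define BUFFER_SIZE 10
#define N_ITEMS 1000            /* hypothetical number of items to transfer */
typedef int item;               /* assumption: an item is just an int */

static item buffer[BUFFER_SIZE];
static atomic_int in = 0;       /* written only by the producer */
static atomic_int out = 0;      /* written only by the consumer */

static void *producer(void *arg) {
    (void)arg;
    for (item next_produced = 1; next_produced <= N_ITEMS; next_produced++) {
        int i = atomic_load_explicit(&in, memory_order_relaxed);
        while ((i + 1) % BUFFER_SIZE ==
               atomic_load_explicit(&out, memory_order_acquire))
            sched_yield();      /* buffer full: poll, but let others run */
        buffer[i] = next_produced;
        /* release: the item is visible before the new value of in */
        atomic_store_explicit(&in, (i + 1) % BUFFER_SIZE, memory_order_release);
    }
    return NULL;
}

static void *consumer(void *arg) {
    long *sum = arg;
    for (int k = 0; k < N_ITEMS; k++) {
        int o = atomic_load_explicit(&out, memory_order_relaxed);
        while (o == atomic_load_explicit(&in, memory_order_acquire))
            sched_yield();      /* buffer empty: poll, but let others run */
        item next_consumed = buffer[o];
        /* release: the slot is read before the producer may reuse it */
        atomic_store_explicit(&out, (o + 1) % BUFFER_SIZE, memory_order_release);
        *sum += next_consumed;
    }
    return NULL;
}

/* Runs one producer and one consumer thread; returns the sum consumed.
   Error handling for pthread_create() is omitted for brevity. */
long run_bounded_buffer(void) {
    long sum = 0;
    pthread_t p, c;
    pthread_create(&p, NULL, producer, NULL);
    pthread_create(&c, NULL, consumer, &sum);
    pthread_join(p, NULL);
    pthread_join(c, NULL);
    return sum;
}
```

The consumer should see 1 + 2 + ... + 1000 = 500500. The scheme relies on there being exactly one producer and one consumer, so each index has a single writer; with more threads on either side a lock or semaphores would be needed.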

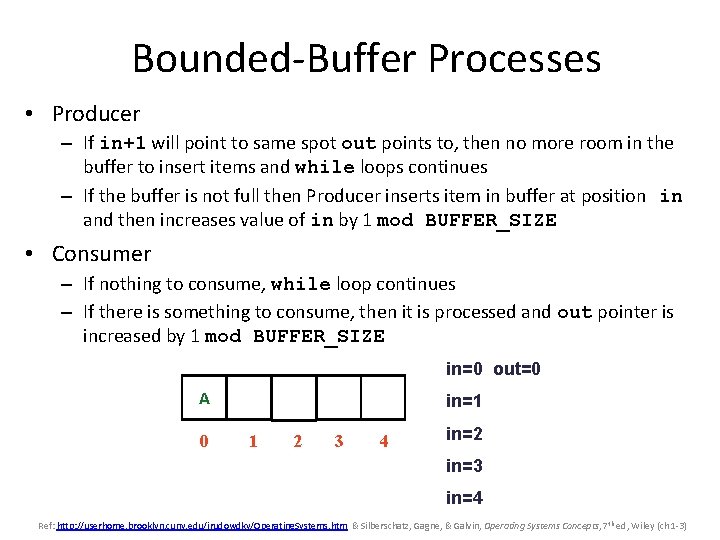

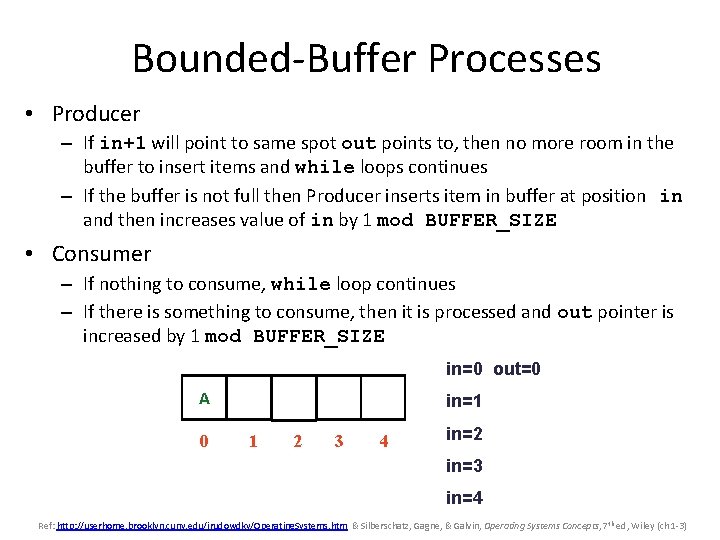

Bounded-Buffer Processes

- Producer
  - If in + 1 (mod BUFFER_SIZE) would point to the same slot that out points to, there is no more room in the buffer to insert items, and the while loop keeps spinning.
  - If the buffer is not full, the producer inserts the item at position in and then increments in by 1 mod BUFFER_SIZE.
- Consumer
  - If there is nothing to consume, the while loop keeps spinning.
  - If there is something to consume, the item is removed and out is incremented by 1 mod BUFFER_SIZE.

[Figure: a five-slot buffer (indices 0-4) with out = 0 while in advances from 0 as items are inserted.]

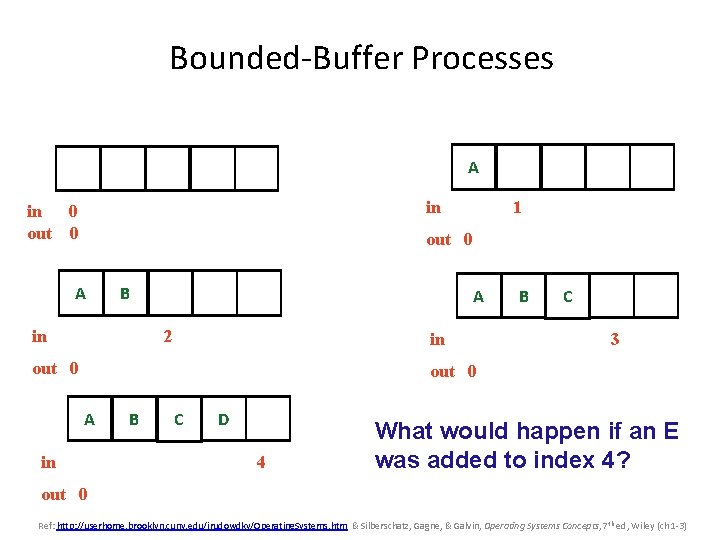

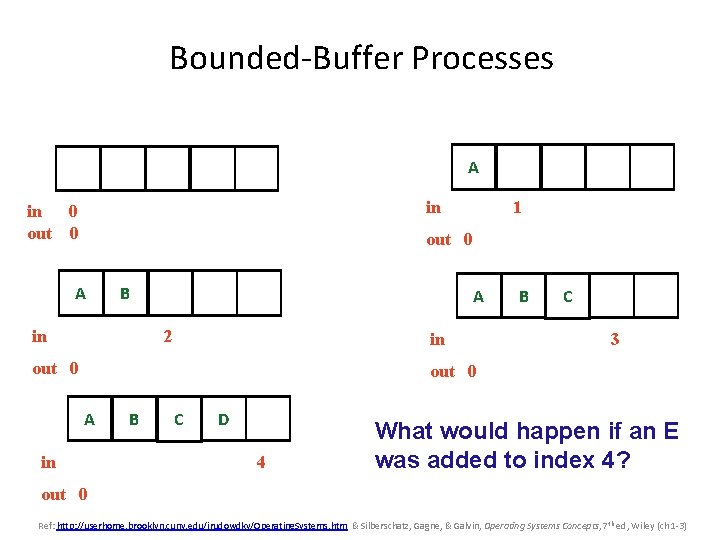

Bounded-Buffer Processes

[Figure: the five-slot buffer filling step by step with out fixed at 0: after A, in = 1; after B, in = 2; after C, in = 3; after D, in = 4.]

What would happen if an E were added at index 4?
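The walk-through uses a five-slot buffer. A non-blocking version of the producer and consumer steps makes it easy to replay; `ring`, `try_insert()`, and `try_remove()` are hypothetical names, and items are assumed to be single characters.

```c
#include <stdbool.h>

#define BUFFER_SIZE 5             /* matches the five slots in the figure */
typedef char item;                /* assumption: items are letters */

typedef struct {
    item buffer[BUFFER_SIZE];
    int in, out;
} ring;

/* Producer step without spinning: returns false where the slide's
   producer would sit in its "buffer full" loop. */
bool try_insert(ring *r, item x) {
    if ((r->in + 1) % BUFFER_SIZE == r->out)
        return false;             /* full */
    r->buffer[r->in] = x;
    r->in = (r->in + 1) % BUFFER_SIZE;
    return true;
}

/* Consumer step without spinning: returns false when the buffer is empty. */
bool try_remove(ring *r, item *x) {
    if (r->in == r->out)
        return false;             /* empty */
    *x = r->buffer[r->out];
    r->out = (r->out + 1) % BUFFER_SIZE;
    return true;
}
```

This answers the slide's question. With A-D in slots 0-3, in = 4 and out = 0, so (in + 1) % 5 == out and the producer must wait: E is not added. If it were, in would wrap to 0 and equal out, which is exactly the "empty" test, so a full buffer would look empty. That is why only BUFFER_SIZE - 1 slots are usable. Once the consumer removes A, E goes into slot 4 and in wraps to 0.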

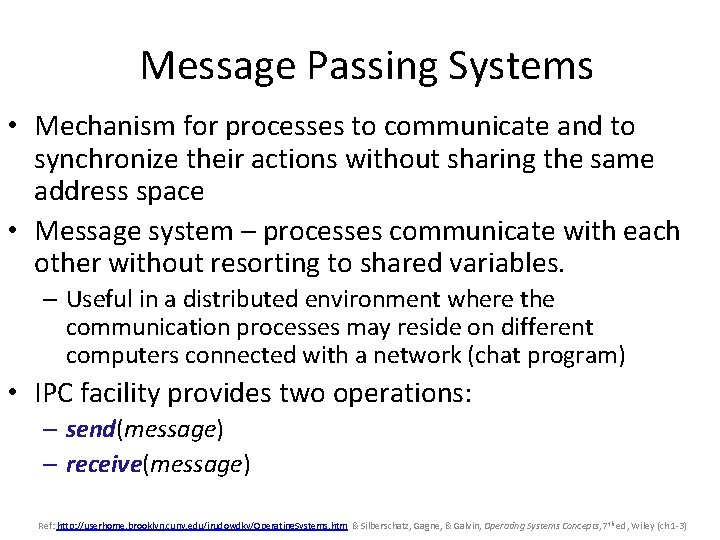

Message Passing Systems

- A mechanism for processes to communicate and to synchronize their actions without sharing the same address space.
- Message system: processes communicate with each other without resorting to shared variables.
  - Useful in a distributed environment, where the communicating processes may reside on different computers connected by a network (e.g., a chat program).
- The IPC facility provides two operations:
  - send(message)
  - receive(message)
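A UNIX pipe is one concrete message-passing link: the two processes share no variables, and every `write()` and `read()` is a system call, so the kernel carries the data. The sketch maps send() to `write()` and receive() to `read()`; `pipe_round_trip()` is a hypothetical helper, not part of any standard API.

```c
#include <string.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* The child sends `msg` through a pipe; the parent receives it into `out`
   (capacity `outsz`, NUL-terminated). Returns bytes received, or -1. */
ssize_t pipe_round_trip(const char *msg, char *out, size_t outsz) {
    int fd[2];
    if (outsz == 0 || pipe(fd) < 0) return -1;
    pid_t pid = fork();
    if (pid < 0) { close(fd[0]); close(fd[1]); return -1; }
    if (pid == 0) {                                  /* child: the sender */
        close(fd[0]);                                /* unused read end */
        ssize_t w = write(fd[1], msg, strlen(msg));  /* send(message) */
        close(fd[1]);
        _exit(w < 0 ? 1 : 0);
    }
    close(fd[1]);                                    /* parent: the receiver */
    size_t total = 0;
    ssize_t n;
    while (total < outsz - 1 &&                      /* receive(message) until EOF */
           (n = read(fd[0], out + total, outsz - 1 - total)) > 0)
        total += (size_t)n;
    close(fd[0]);
    waitpid(pid, NULL, 0);                           /* reap the child */
    out[total] = '\0';
    return (ssize_t)total;
}
```

The parent keeps reading until `read()` returns 0, which happens once the child has closed its write end; this is how the receiver learns the message is complete.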

Message Passing Systems

- If processes P and Q wish to communicate, they need to:
  - establish a communication link between them
  - exchange messages via send/receive
- Implementation of the communication link
  - physical properties
    - shared memory
    - hardware bus
  - logical properties
    - direct or indirect communication
    - symmetric or asymmetric communication
    - automatic or explicit buffering
    - send by copy or send by reference
    - fixed-size or variable-size messages

Synchronization

- Message passing may be either blocking or non-blocking.
- Blocking is considered synchronous:
  - Send: the sending process is blocked until the message is received by the receiving process or mailbox.
  - Receive: the receiver blocks until a message is available.
- Non-blocking is considered asynchronous:
  - Send: the sending process sends the message and resumes operation.
  - Receive: the receiver retrieves either a valid message or a null.
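With a pipe, the receive side can be made blocking or non-blocking. By default `read()` on an empty pipe blocks the caller (a synchronous receive); with `O_NONBLOCK` set through `fcntl()`, it returns immediately with `EAGAIN`, which plays the role of the "null" result. The helper names below are hypothetical.

```c
#include <errno.h>
#include <fcntl.h>
#include <unistd.h>

/* Switch a descriptor (e.g. the read end of a pipe) to non-blocking mode.
   Returns -1 on failure. */
int make_nonblocking(int fd) {
    int flags = fcntl(fd, F_GETFL);
    if (flags < 0) return -1;
    return fcntl(fd, F_SETFL, flags | O_NONBLOCK);
}

/* Non-blocking receive of a one-byte message.
   Returns 1 and stores the byte if a message was waiting, 0 if the link
   is empty (the "null" result), or -1 on end-of-file or a real error. */
int try_receive(int fd, char *c) {
    ssize_t n = read(fd, c, 1);
    if (n == 1) return 1;
    if (n < 0 && (errno == EAGAIN || errno == EWOULDBLOCK)) return 0;
    return -1;
}
```

One caveat: a blocking `write()` to a pipe returns as soon as the data is copied into the kernel's pipe buffer, not when the receiver reads it, so a pipe send is not the fully synchronous send described on this slide.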

Buffering

- Whether the communication link is direct or indirect, messages exchanged by communicating processes reside in a temporary queue.
- The queue of messages attached to the link is implemented in one of three ways:
  1. Zero capacity: 0 messages. The sender must wait for the receiver (rendezvous).
  2. Bounded capacity: a finite length of n messages. The sender must wait if the link is full.
  3. Unbounded capacity: infinite length. The sender never waits.
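A pipe is also an example of a bounded-capacity link. The sketch fills a pipe whose write end is non-blocking until `write()` reports `EAGAIN`, which is the point where a blocking sender would have to wait. `measure_pipe_capacity()` is a hypothetical name; the exact capacity is system-dependent (the Linux pipe(7) manual page gives 65,536 bytes by default on systems with 4 KiB pages), so the code assumes no particular value.

```c
#include <errno.h>
#include <fcntl.h>
#include <unistd.h>

/* Writes one-byte messages into a fresh pipe until it is full.
   Returns the number of bytes the link held, or -1 on error. */
long measure_pipe_capacity(void) {
    int fd[2];
    if (pipe(fd) < 0) return -1;
    int flags = fcntl(fd[1], F_GETFL);
    if (flags < 0 || fcntl(fd[1], F_SETFL, flags | O_NONBLOCK) < 0) {
        close(fd[0]);
        close(fd[1]);
        return -1;
    }
    long total = 0;
    char byte = 'm';
    for (;;) {
        ssize_t n = write(fd[1], &byte, 1);      /* send one message */
        if (n == 1) { total++; continue; }
        if (n < 0 && (errno == EAGAIN || errno == EWOULDBLOCK))
            break;                               /* link full: sender would wait */
        total = -1;                              /* unexpected error */
        break;
    }
    close(fd[0]);
    close(fd[1]);
    return total;
}
```

Nobody reads from the pipe here, so the loop stops exactly when the kernel's buffer is full; with a blocking write end, the same loop would instead suspend the sender at that point.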

Threads

- Overview
- Multithreading Models
- Thread Libraries
- Thread Pools

Overview

- Many software packages are multithreaded:
  - Web browser: one thread displays images while another retrieves data from the network.
  - Word processor: threads for displaying graphics, reading keystrokes from the user, and performing spelling and grammar checking in the background.
  - Web server: instead of creating a process when a request is received, which is time-consuming and resource-intensive, the server creates a thread to service the request.
- A thread is sometimes called a lightweight process.
  - It is composed of a thread ID, a program counter, a register set, and a stack.
  - It shares with the other threads belonging to the same process its code section, data section, and other OS resources (e.g., open files).
  - A process that has multiple threads can do more than one task at a time.

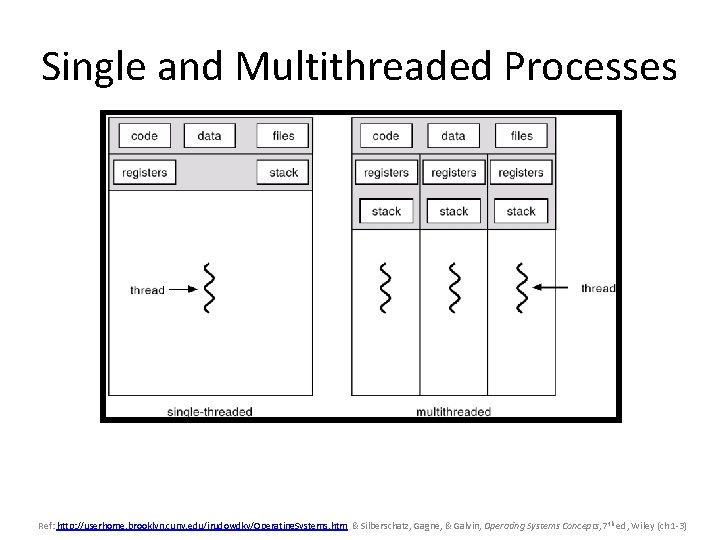

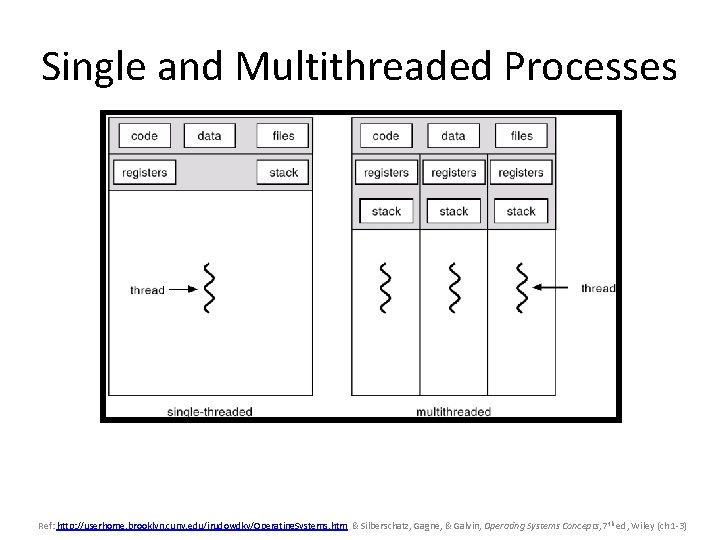

Single and Multithreaded Processes

User Threads

- Thread management is done by a user-level threads library, without the intervention of the kernel.
  - Fast to create and manage.
  - If the kernel is single-threaded, any user-level thread performing a blocking system call will cause the entire process to block.
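The core mechanism of a user-level thread library is switching between stacks in user space, without the kernel choosing who runs. The sketch uses the `<ucontext.h>` functions `getcontext()`, `makecontext()`, and `swapcontext()`; they were removed from POSIX in the 2008 edition, but glibc on Linux still provides them. Everything else (`thread1`, `thread2`, `run_user_threads()`, the 64 KiB stacks) is illustrative. The kernel sees a single thread the whole time, which is also why a blocking system call in either "thread" would stop both.

```c
#include <ucontext.h>

#define STACK_SIZE (64 * 1024)   /* hypothetical per-thread stack size */

static ucontext_t main_ctx, t1_ctx, t2_ctx;
static char t1_stack[STACK_SIZE], t2_stack[STACK_SIZE];
static char trace[8];            /* records the order in which code ran */
static int tlen;

static void thread1(void) {
    trace[tlen++] = 'a';
    swapcontext(&t1_ctx, &t2_ctx);   /* yield: save thread 1, resume thread 2 */
    trace[tlen++] = 'c';
}                                    /* on return: continue at uc_link (main) */

static void thread2(void) {
    trace[tlen++] = 'b';
}                                    /* on return: continue at uc_link (thread 1) */

/* Creates two user-level threads, runs them, and returns the trace. */
const char *run_user_threads(void) {
    tlen = 0;
    getcontext(&t1_ctx);
    t1_ctx.uc_stack.ss_sp = t1_stack;
    t1_ctx.uc_stack.ss_size = sizeof t1_stack;
    t1_ctx.uc_link = &main_ctx;      /* where thread 1 goes when it returns */
    makecontext(&t1_ctx, thread1, 0);

    getcontext(&t2_ctx);
    t2_ctx.uc_stack.ss_sp = t2_stack;
    t2_ctx.uc_stack.ss_size = sizeof t2_stack;
    t2_ctx.uc_link = &t1_ctx;        /* when thread 2 finishes, resume thread 1 */
    makecontext(&t2_ctx, thread2, 0);

    swapcontext(&main_ctx, &t1_ctx); /* "dispatch" thread 1 */
    trace[tlen] = '\0';
    return trace;
}
```

The returned trace should be "abc": thread 1 runs, yields to thread 2, and resumes when thread 2 returns through its `uc_link`. Every switch here is an ordinary function call in user space, which is why user threads are fast to create and manage.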

Kernel Threads

- Supported by the kernel.
  - Slower to create and manage than user threads.
  - If a thread performs a blocking system call, the kernel can schedule another thread in the application for execution.
  - In multiprocessor environments, the kernel can schedule threads on multiple processors.
- Examples: Windows 95/98/NT/2000, Solaris, Tru64 UNIX, BeOS, Linux

Multithreading Models

- Many-to-One
- One-to-One
- Many-to-Many

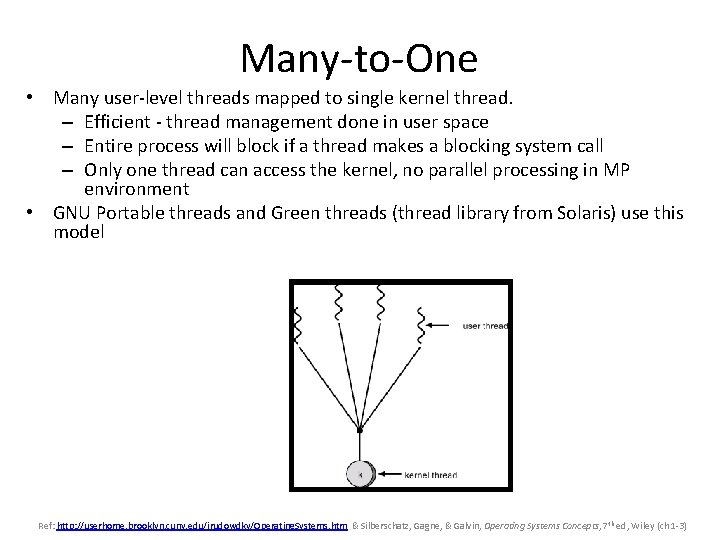

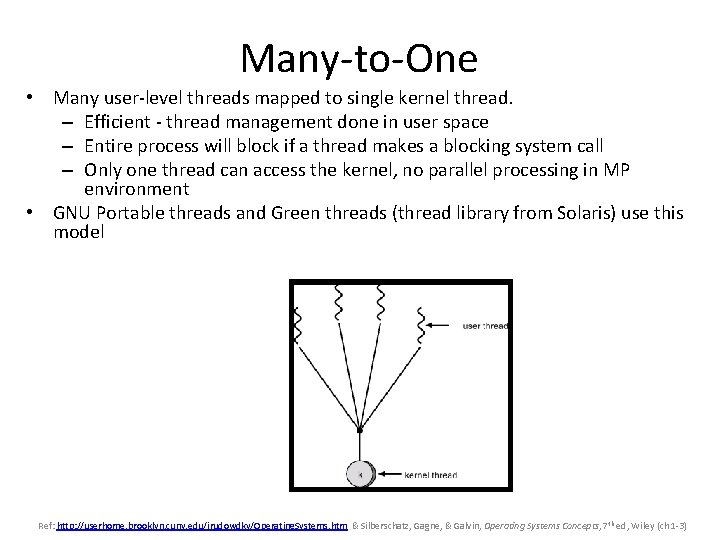

Many-to-One

- Many user-level threads are mapped to a single kernel thread.
  - Efficient: thread management is done in user space.
  - The entire process will block if a thread makes a blocking system call.
  - Only one thread can access the kernel at a time, so there is no parallel processing in an MP (multiprocessor) environment.
- GNU Portable Threads and Green threads (a thread library from Solaris) use this model.

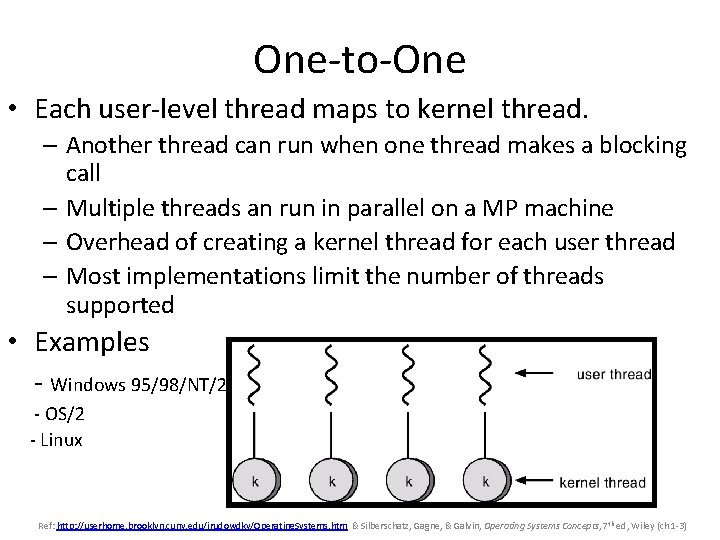

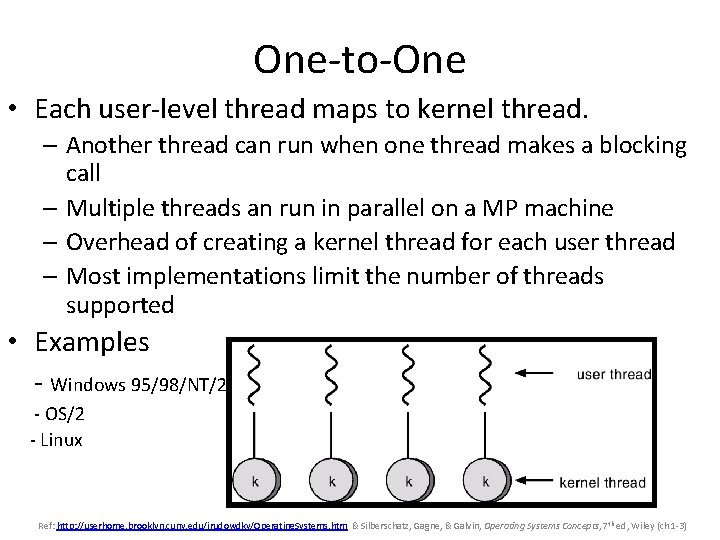

One-to-One

- Each user-level thread maps to a kernel thread.
  - Another thread can run when one thread makes a blocking call.
  - Multiple threads can run in parallel on an MP machine.
  - Overhead of creating a kernel thread for each user thread.
  - Most implementations limit the number of threads supported.
- Examples: Windows 95/98/NT/2000, OS/2, Linux

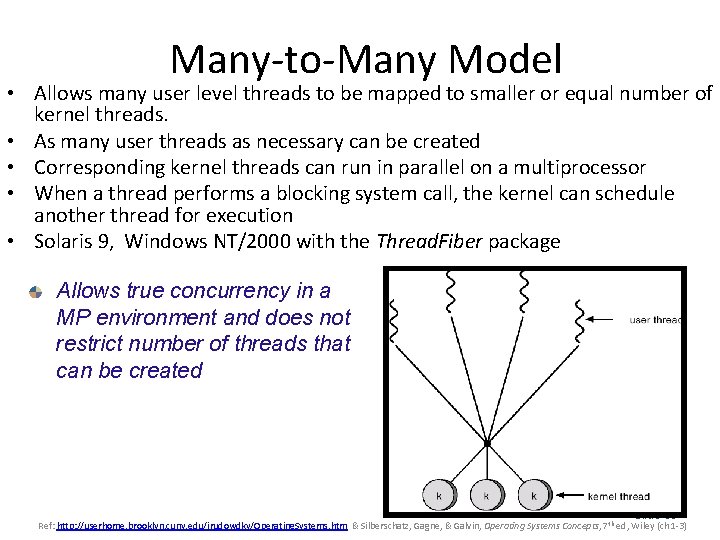

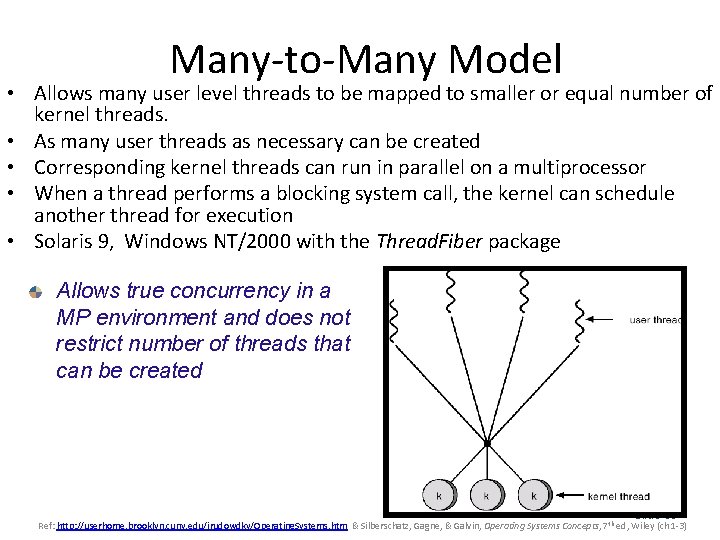

Many-to-Many Model

- Allows many user-level threads to be mapped to a smaller or equal number of kernel threads.
- As many user threads as necessary can be created.
- The corresponding kernel threads can run in parallel on a multiprocessor.
- When a thread performs a blocking system call, the kernel can schedule another thread for execution.
- Examples: Solaris prior to version 9, Windows NT/2000 with the ThreadFiber package.

Allows true concurrency in an MP environment and does not restrict the number of threads that can be created.

Thread Libraries

- A thread library provides an API for creating and managing threads.
  - User-level library: no kernel support; it lives strictly in user space, so no system calls are involved.
  - Kernel-level library: directly supported by the OS; all code and data structures for the library exist in kernel space.
    - An API call typically invokes a system call.
- Three main libraries are in use:
  - POSIX (Portable Operating System Interface) threads
  - Win32
  - Java

Pthreads

- Pthreads refers to the POSIX standard (IEEE 1003.1c) defining an API for thread creation and synchronization.
- Pthreads is an IEEE and Open Group certified product.
  - The Open Group is a vendor-neutral and technology-neutral consortium, whose vision of Boundaryless Information Flow™ will enable access to integrated information, within and among enterprises, based on open standards and global interoperability.
  - This is a specification for thread behavior, not an implementation.
  - It is implemented by Solaris, Linux, Mac OS X, and Tru64.
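A minimal Pthreads sketch in the spirit of the textbook's summation example: one worker thread computes 1 + 2 + ... + upper while the creating thread waits with `pthread_join()`. `struct sum_task`, `runner()`, and `threaded_sum()` are hypothetical names; `pthread_attr_init()`, `pthread_create()`, `pthread_exit()`, and `pthread_join()` are the standard POSIX calls. On older glibc versions, link with `-pthread`.

```c
#include <pthread.h>

/* Data handed to the worker thread (hypothetical layout). */
struct sum_task {
    long upper;    /* input: sum the integers 1..upper */
    long result;   /* output: written by the worker */
};

/* Thread start routine: the signature pthread_create() expects. */
static void *runner(void *param) {
    struct sum_task *t = param;
    long sum = 0;
    for (long i = 1; i <= t->upper; i++)
        sum += i;
    t->result = sum;
    pthread_exit(NULL);
}

/* Creates one worker with default attributes and waits for it.
   Returns the computed sum, or -1 if the thread could not be created. */
long threaded_sum(long upper) {
    pthread_t tid;
    pthread_attr_t attr;
    struct sum_task task = { .upper = upper, .result = 0 };
    pthread_attr_init(&attr);                 /* default attributes */
    if (pthread_create(&tid, &attr, runner, &task) != 0) {
        pthread_attr_destroy(&attr);
        return -1;
    }
    pthread_join(tid, NULL);                  /* wait for the worker */
    pthread_attr_destroy(&attr);
    return task.result;
}
```

`task` lives on the creating thread's stack, which is safe only because that thread does not return before `pthread_join()` completes; the worker reaches it through the shared address space.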

CPU Scheduling

- Basic Concepts
- Scheduling Criteria
- Scheduling Algorithms
- Real-Time Scheduling
- Algorithm Evaluation

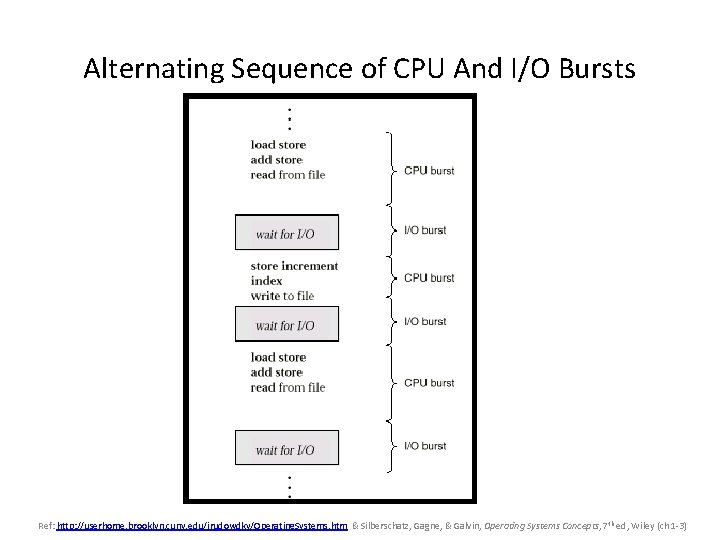

Basic Concepts

- Maximum CPU utilization is obtained with multiprogramming.
- CPU-I/O burst cycle: process execution consists of a cycle of CPU execution and I/O wait.
- Scheduling is central to OS design.
  - The CPU and almost all computer resources are scheduled before use.

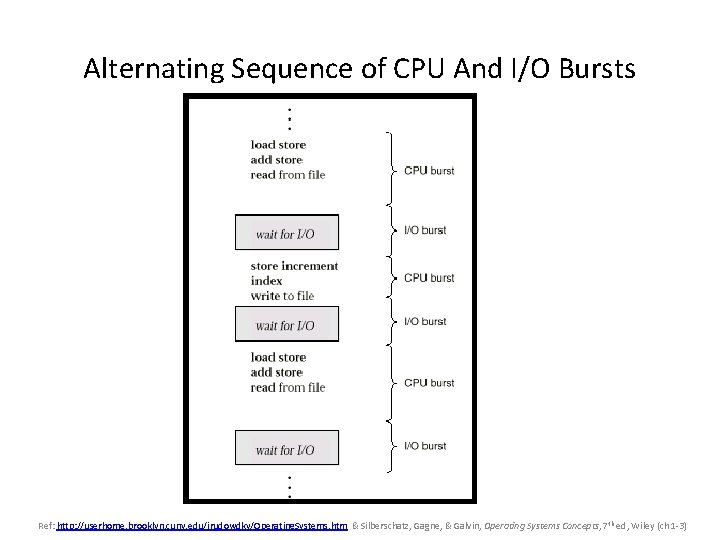

Alternating Sequence of CPU and I/O Bursts

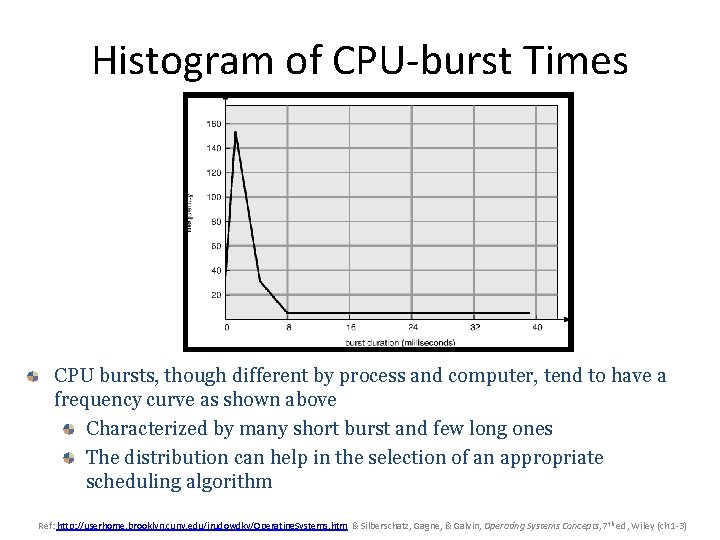

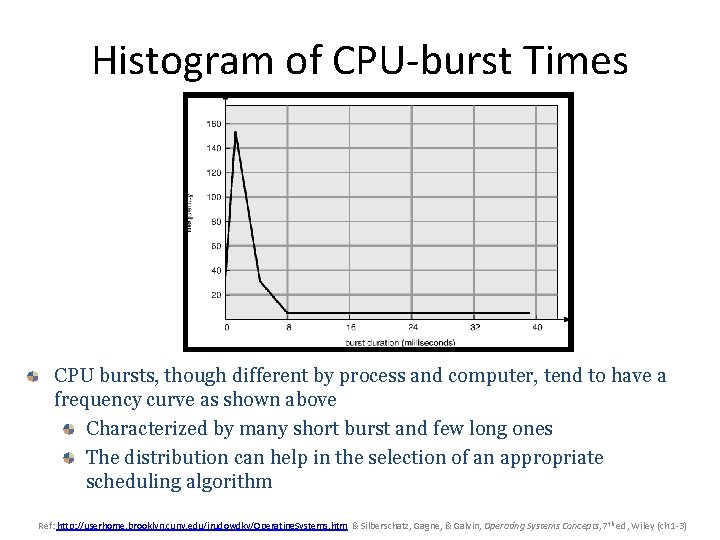

Histogram of CPU-burst Times

CPU bursts, though they differ by process and computer, tend to have a frequency curve like the one shown above: many short bursts and few long ones. This distribution can help in selecting an appropriate scheduling algorithm.

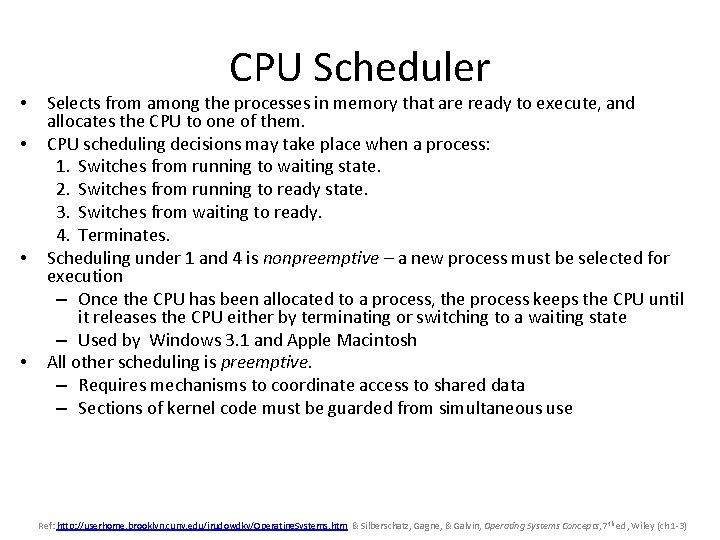

CPU Scheduler

- Selects from among the processes in memory that are ready to execute, and allocates the CPU to one of them.
- CPU scheduling decisions may take place when a process:
  1. switches from running to waiting state,
  2. switches from running to ready state,
  3. switches from waiting to ready, or
  4. terminates.
- Scheduling under 1 and 4 is nonpreemptive: a new process must be selected for execution.
  - Once the CPU has been allocated to a process, the process keeps the CPU until it releases it, either by terminating or by switching to the waiting state.
  - Used by Windows 3.1 and the Apple Macintosh (before Mac OS X).
- All other scheduling is preemptive.
  - Requires mechanisms to coordinate access to shared data.
  - Sections of kernel code must be guarded from simultaneous use.

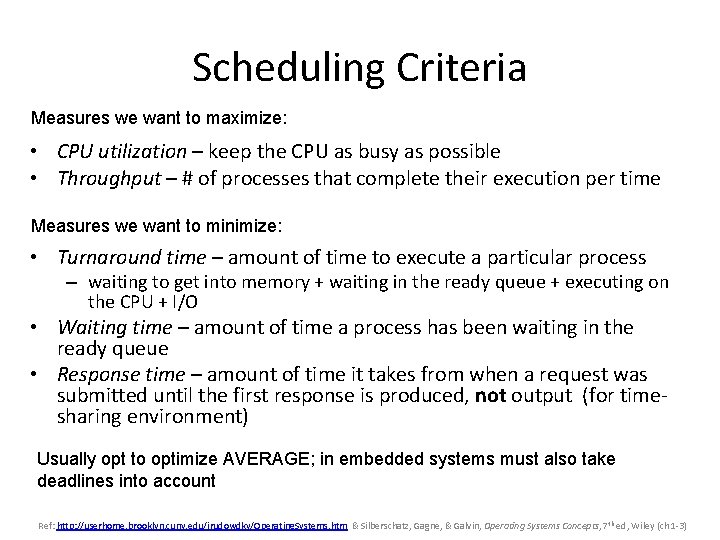

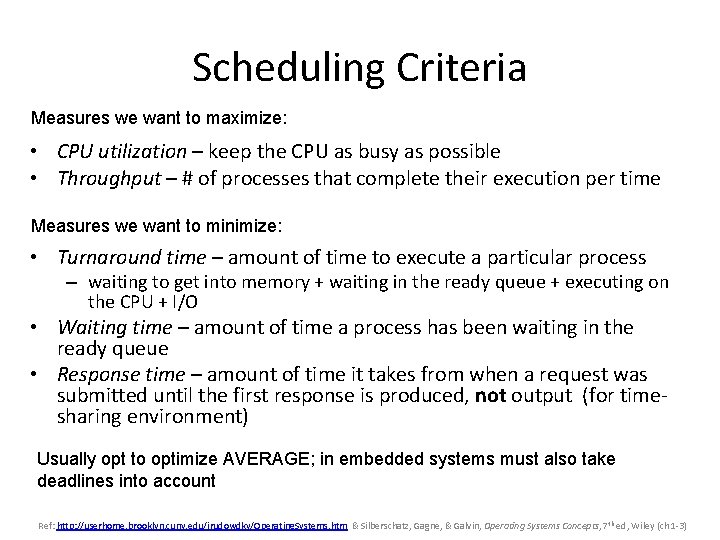

Scheduling Criteria

Measures we want to maximize:

- CPU utilization: keep the CPU as busy as possible.
- Throughput: the number of processes that complete their execution per time unit.

Measures we want to minimize:

- Turnaround time: the amount of time to execute a particular process (waiting to get into memory + waiting in the ready queue + executing on the CPU + doing I/O).
- Waiting time: the amount of time a process has spent waiting in the ready queue.
- Response time: the amount of time from when a request was submitted until the first response is produced, not the final output (for time-sharing environments).

We usually optimize the AVERAGE; in embedded systems we must also take deadlines into account.
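For a process i, write A_i for its arrival time, B_i for its total CPU burst, S_i for the first time it is dispatched, and C_i for its completion time (symbols introduced here, not from the slides). For a CPU-only job that does no I/O and is already in memory, the criteria reduce to the following, with response time approximated by the first dispatch:

```latex
\begin{aligned}
\text{turnaround}_i &= C_i - A_i \\
\text{waiting}_i    &= (C_i - A_i) - B_i \\
\text{response}_i   &\approx S_i - A_i \\
\text{throughput}   &= \frac{\text{number of completed processes}}{\text{elapsed time}}
\end{aligned}
```

For example, P1 in the preemptive SJF example in this section arrives at 0 with a 7-unit burst and completes at 16, so its turnaround is 16 and its waiting time is 16 - 7 = 9.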

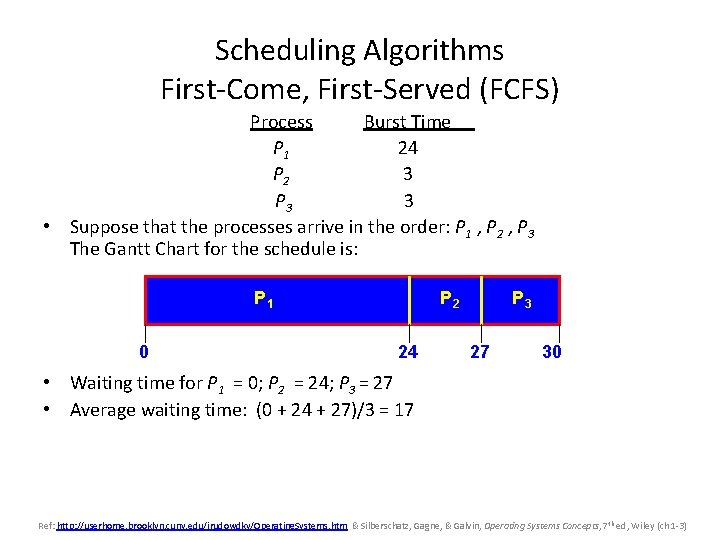

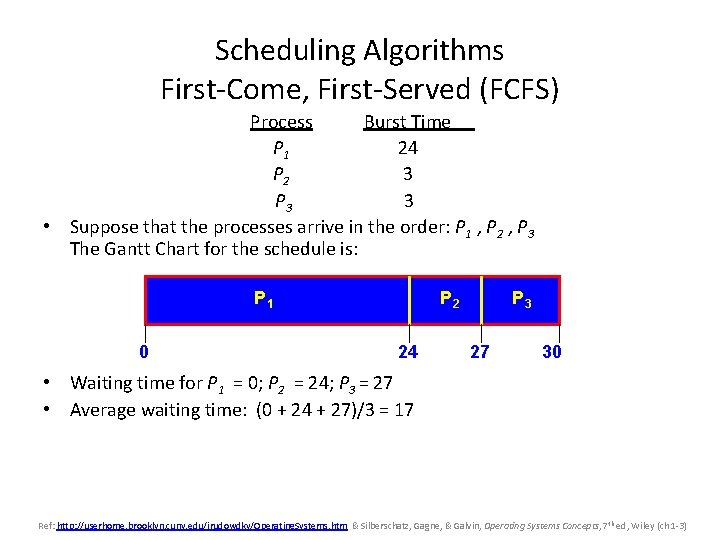

Scheduling Algorithms: First-Come, First-Served (FCFS)

| Process | Burst Time |
|---------|------------|
| P1 | 24 |
| P2 | 3 |
| P3 | 3 |

Suppose that the processes arrive in the order P1, P2, P3. The Gantt chart for the schedule is:

| P1 | P2 | P3 |
|----|----|----|
| 0-24 | 24-27 | 27-30 |

- Waiting time for P1 = 0; P2 = 24; P3 = 27
- Average waiting time: (0 + 24 + 27)/3 = 17

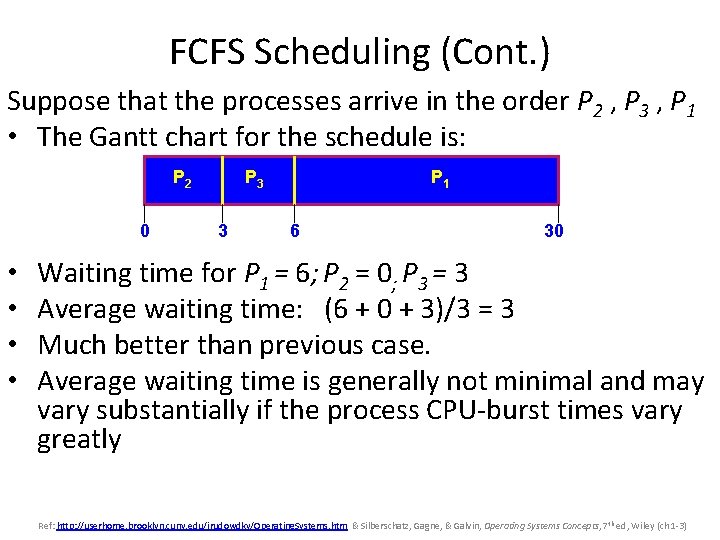

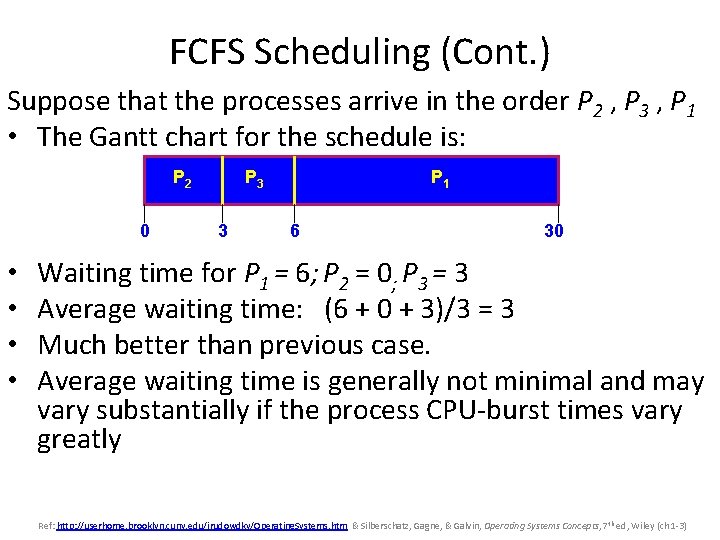

FCFS Scheduling (Cont.)

Suppose that the processes arrive in the order P2, P3, P1. The Gantt chart for the schedule is:

| P2 | P3 | P1 |
|----|----|----|
| 0-3 | 3-6 | 6-30 |

- Waiting time for P1 = 6; P2 = 0; P3 = 3
- Average waiting time: (6 + 0 + 3)/3 = 3
- Much better than the previous case.
- The average waiting time under FCFS is generally not minimal and may vary substantially if the processes' CPU-burst times vary greatly.
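Both FCFS orders can be checked with a few lines of code, assuming (as on the slides) that all processes arrive at time 0 and are served in array order. `fcfs_avg_wait()` is a hypothetical helper.

```c
#include <stddef.h>

/* FCFS with every process arriving at time 0: each process waits for the
   bursts of everything queued ahead of it. Returns the average waiting time. */
double fcfs_avg_wait(const int burst[], size_t n) {
    long elapsed = 0, total_wait = 0;
    for (size_t i = 0; i < n; i++) {
        total_wait += elapsed;   /* process i starts when earlier ones finish */
        elapsed += burst[i];
    }
    return n ? (double)total_wait / n : 0.0;
}
```

With bursts {24, 3, 3} it returns 17, and with {3, 3, 24} it returns 3, matching the two Gantt charts: putting the long job first makes both short jobs wait for it.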

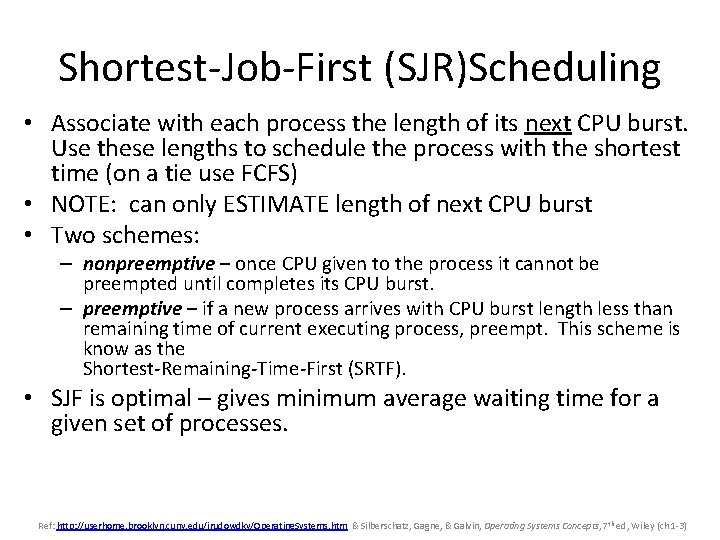

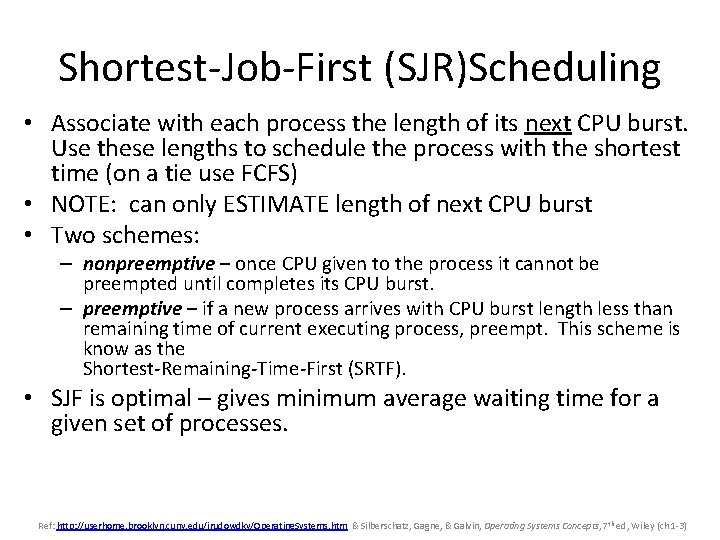

Shortest-Job-First (SJF) Scheduling

- Associate with each process the length of its next CPU burst, and use these lengths to schedule the process with the shortest time (on a tie, use FCFS).
- NOTE: we can only ESTIMATE the length of the next CPU burst.
- Two schemes:
  - nonpreemptive: once the CPU is given to a process, it cannot be preempted until it completes its CPU burst.
  - preemptive: if a new process arrives with a CPU burst length less than the remaining time of the currently executing process, preempt. This scheme is known as Shortest-Remaining-Time-First (SRTF).
- SJF is optimal: it gives the minimum average waiting time for a given set of processes.
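The slides do not say how the next burst is estimated. One common estimator, also covered in the Silberschatz text, is an exponential average of measured bursts: tau_(n+1) = alpha * t_n + (1 - alpha) * tau_n, where t_n is the length of the n-th burst, tau_n its prediction, and 0 <= alpha <= 1. The function name and parameter layout below are assumptions.

```c
/* Exponential averaging of CPU-burst lengths.
   actual[0..n-1] are measured bursts, alpha in [0, 1] weights the most
   recent burst, tau0 is the initial guess. Writes n + 1 predictions into
   pred[], with pred[0] = tau0 and pred[i + 1] the prediction after burst i. */
void predict_bursts(const double actual[], int n, double alpha, double tau0,
                    double pred[]) {
    pred[0] = tau0;
    for (int i = 0; i < n; i++)
        pred[i + 1] = alpha * actual[i] + (1.0 - alpha) * pred[i];
}
```

With alpha = 0.5, an initial guess of 10, and measured bursts 6, 4, 6, 4, 13, 13, 13, the predictions are 10, 8, 6, 6, 5, 9, 11, 12: the estimate lags the actual bursts but follows their trend. alpha = 0 ignores history entirely in favor of the initial guess, and alpha = 1 uses only the last burst.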

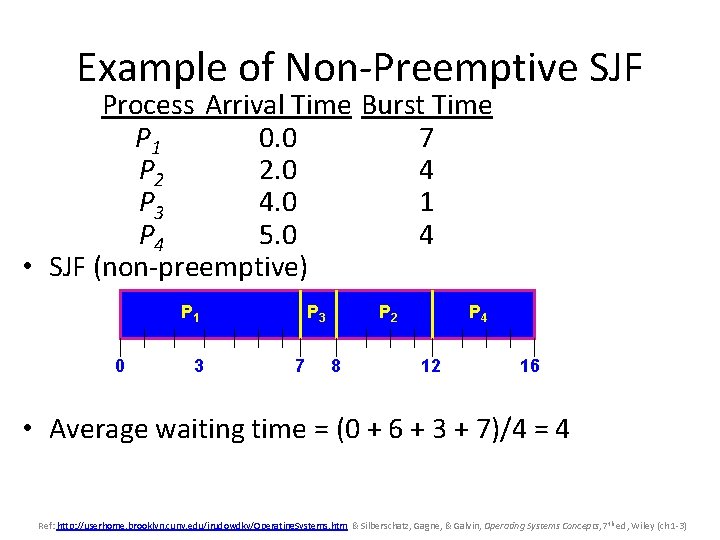

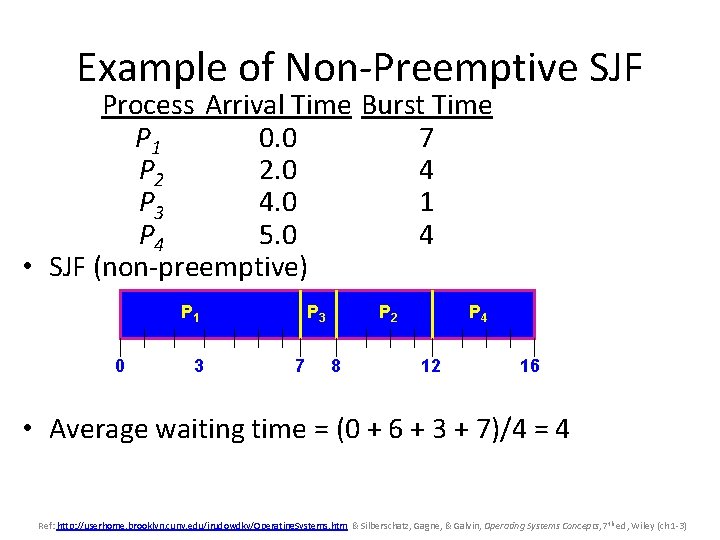

Example of Non-Preemptive SJF

| Process | Arrival Time | Burst Time |
|---------|--------------|------------|
| P1 | 0.0 | 7 |
| P2 | 2.0 | 4 |
| P3 | 4.0 | 1 |
| P4 | 5.0 | 4 |

SJF (non-preemptive):

| P1 | P3 | P2 | P4 |
|----|----|----|----|
| 0-7 | 7-8 | 8-12 | 12-16 |

Average waiting time = (0 + 6 + 3 + 7)/4 = 4

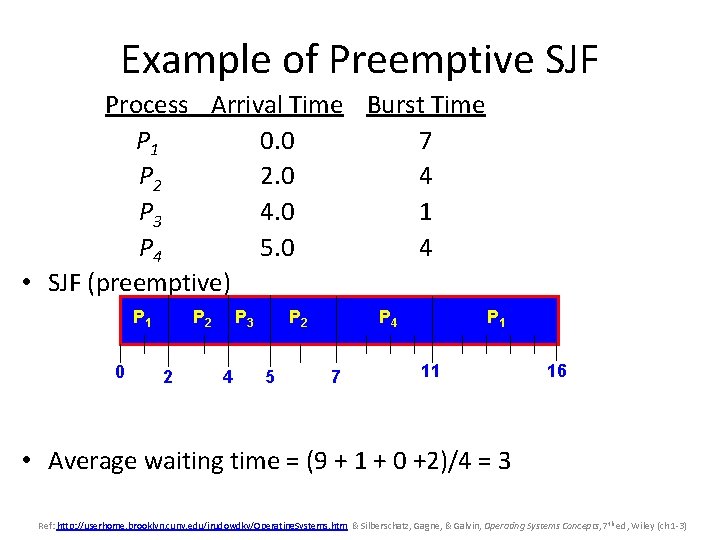

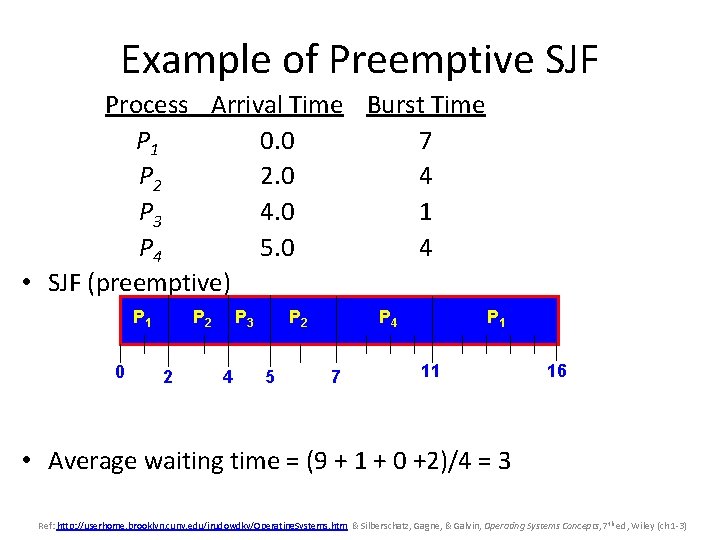

Example of Preemptive SJF

| Process | Arrival Time | Burst Time |
|---------|--------------|------------|
| P1 | 0.0 | 7 |
| P2 | 2.0 | 4 |
| P3 | 4.0 | 1 |
| P4 | 5.0 | 4 |

SJF (preemptive):

| P1 | P2 | P3 | P2 | P4 | P1 |
|----|----|----|----|----|----|
| 0-2 | 2-4 | 4-5 | 5-7 | 7-11 | 11-16 |

Average waiting time = (9 + 1 + 0 + 2)/4 = 3
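Both SJF examples can be reproduced with a unit-time simulation. `sjf_avg_wait()` is a hypothetical helper; it assumes integer arrival and burst times, breaks ties in favor of the earlier arrival (FCFS, as the SJF slide says), and, unlike a real scheduler, knows every burst length exactly. With `preemptive` set it behaves as SRTF.

```c
#include <stdbool.h>

#define MAXP 16   /* hypothetical upper bound on the number of processes */

/* Unit-time SJF simulation. With preemptive == true this is SRTF: at every
   tick the arrived process with the least remaining time runs. Otherwise the
   chosen process keeps the CPU until its burst ends. Ties go to the earlier
   arrival. Returns the average waiting time, or -1 on bad input. */
double sjf_avg_wait(const int arrival[], const int burst[], int n, bool preemptive) {
    if (n <= 0 || n > MAXP) return -1.0;
    int remaining[MAXP], finish[MAXP];
    for (int i = 0; i < n; i++) {
        if (burst[i] <= 0) return -1.0;
        remaining[i] = burst[i];
    }
    int done = 0, now = 0, current = -1;
    while (done < n) {
        if (preemptive || current < 0) {          /* (re)select a process */
            current = -1;
            for (int i = 0; i < n; i++) {
                if (arrival[i] > now || remaining[i] == 0) continue;
                if (current < 0 || remaining[i] < remaining[current] ||
                    (remaining[i] == remaining[current] &&
                     arrival[i] < arrival[current]))
                    current = i;
            }
        }
        now++;                                    /* one time unit passes */
        if (current < 0) continue;                /* nothing ready: CPU idle */
        if (--remaining[current] == 0) {          /* burst finished */
            finish[current] = now;
            done++;
            current = -1;
        }
    }
    long total_wait = 0;
    for (int i = 0; i < n; i++)
        total_wait += finish[i] - arrival[i] - burst[i];
    return (double)total_wait / n;
}
```

For the arrival and burst times in these examples it should return 4 for the non-preemptive case and 3 for SRTF.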

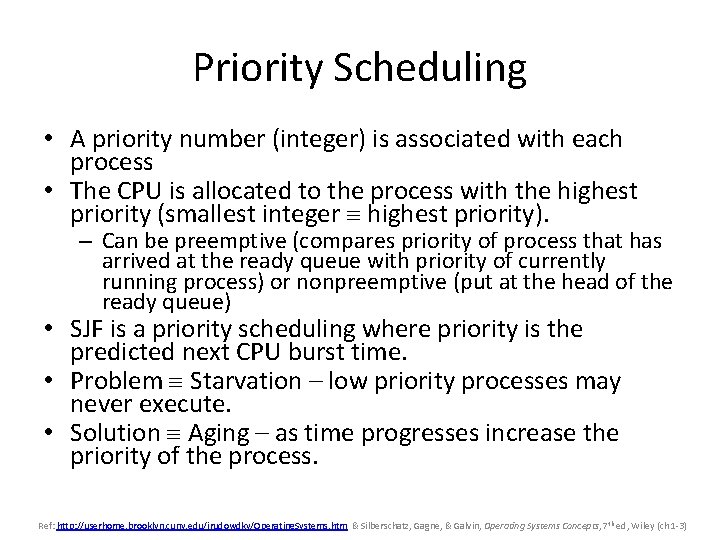

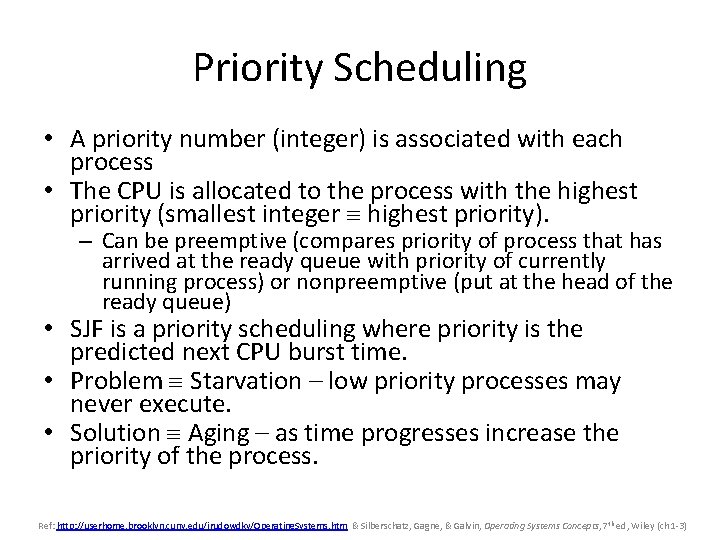

Priority Scheduling

- A priority number (integer) is associated with each process.
- The CPU is allocated to the process with the highest priority (smallest integer = highest priority).
  - Can be preemptive (the priority of a process arriving at the ready queue is compared with that of the currently running process) or nonpreemptive (the new process is put at the head of the ready queue).
- SJF is priority scheduling where the priority is the predicted next CPU burst time.
- Problem: starvation. Low-priority processes may never execute.
- Solution: aging. As time progresses, increase the priority of the process.

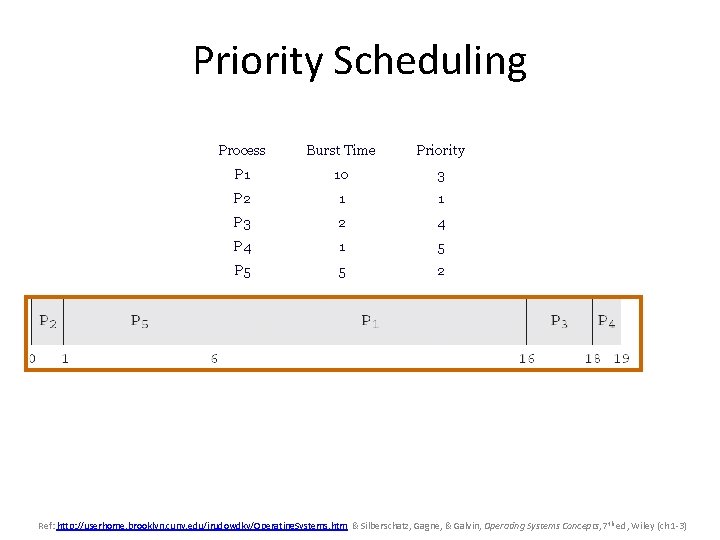

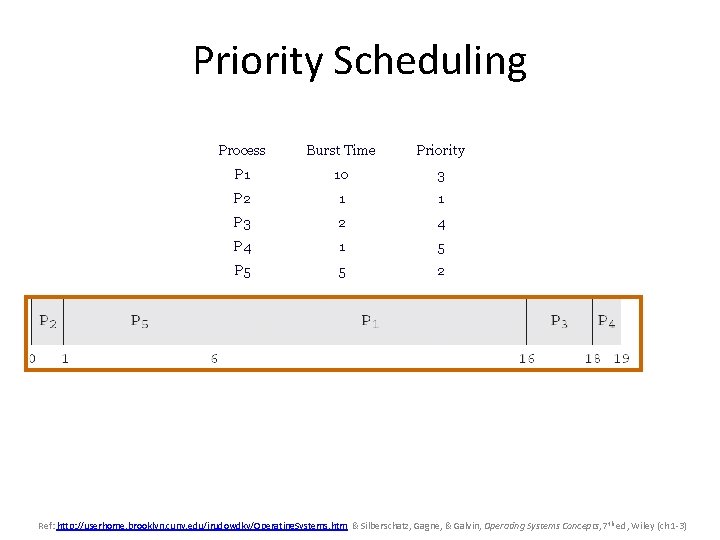

Priority Scheduling

| Process | Burst Time | Priority |
|---------|------------|----------|
| P1 | 10 | 3 |
| P2 | 1 | 1 |
| P3 | 2 | 4 |
| P4 | 1 | 5 |
| P5 | 5 | 2 |
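The table gives no arrival times. Assuming that all five processes arrive at time 0 and scheduling is non-preemptive, the order is P2, P5, P1, P3, P4 (P2 0-1, P5 1-6, P1 6-16, P3 16-18, P4 18-19), with waiting times 6, 0, 16, 18, 1 for P1-P5 and an average of 41/5 = 8.2. The sketch below computes this; `priority_avg_wait()` is a hypothetical helper.

```c
#define MAXP 16   /* hypothetical upper bound on the number of processes */

/* Non-preemptive priority scheduling, assuming every process arrives at time 0.
   A smaller number means a higher priority; ties keep table order (FCFS).
   Stores each waiting time in waits[] and returns the average, or -1 on bad input. */
double priority_avg_wait(const int burst[], const int prio[], int n, int waits[]) {
    if (n <= 0 || n > MAXP) return -1.0;
    int scheduled[MAXP] = {0};
    long now = 0, total = 0;
    for (int k = 0; k < n; k++) {
        int best = -1;
        for (int i = 0; i < n; i++)   /* highest-priority process not yet run */
            if (!scheduled[i] && (best < 0 || prio[i] < prio[best]))
                best = i;
        scheduled[best] = 1;
        waits[best] = (int)now;       /* it has waited since time 0 */
        now += burst[best];           /* then runs to completion */
        total += waits[best];
    }
    return (double)total / n;
}
```

P4, with the lowest priority, runs last even though its burst is one unit; if higher-priority work kept arriving, it could wait indefinitely, which is the starvation problem that aging addresses.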

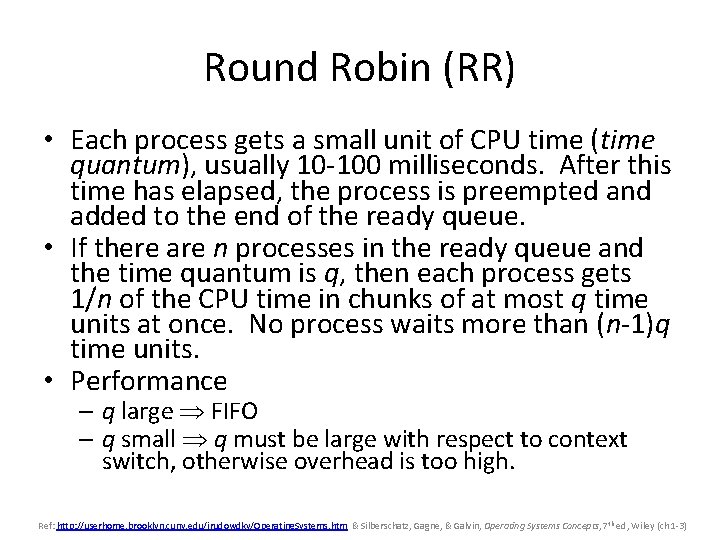

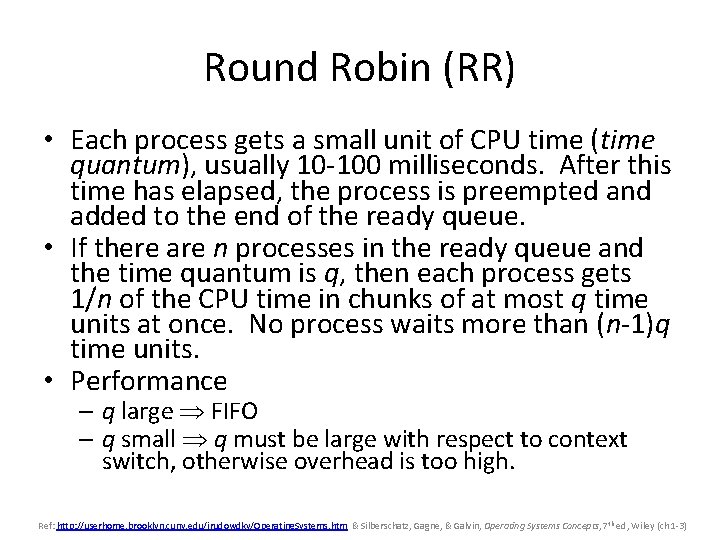

Round Robin (RR)

- Each process gets a small unit of CPU time (time quantum), usually 10-100 milliseconds. After this time has elapsed, the process is preempted and added to the end of the ready queue.
- If there are n processes in the ready queue and the time quantum is q, then each process gets 1/n of the CPU time in chunks of at most q time units at once. No process waits more than (n-1)q time units.
- Performance
  - q large: RR behaves like FIFO (FCFS).
  - q small: q must be large with respect to the context-switch time; otherwise the overhead is too high.

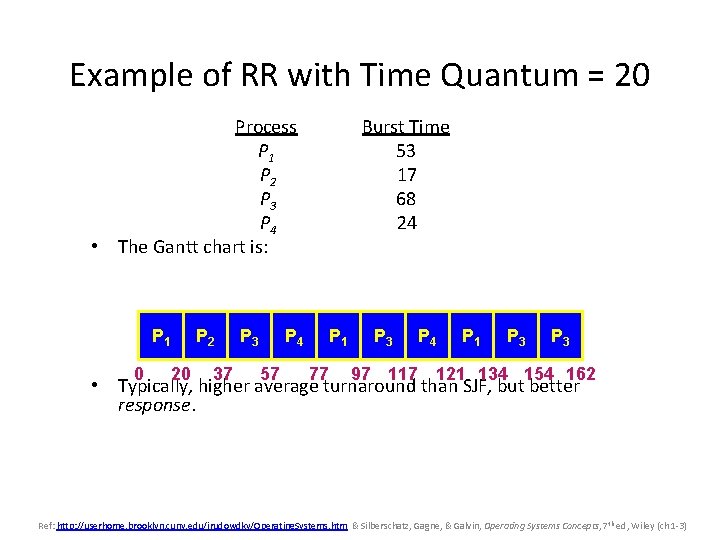

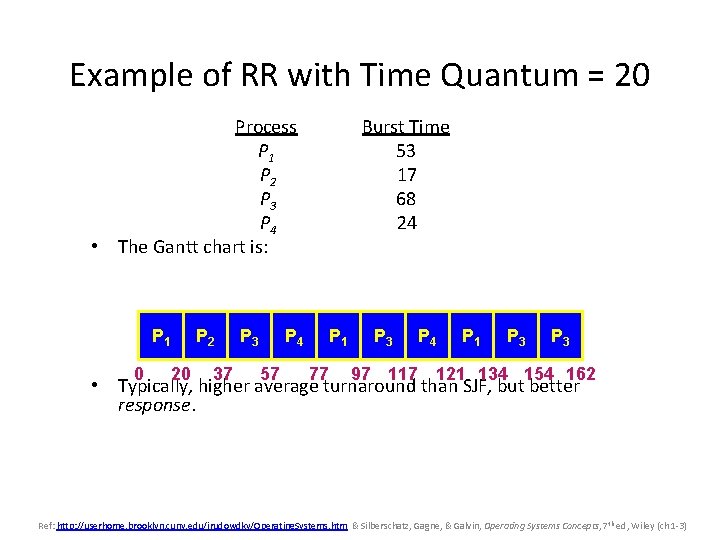

Example of RR with Time Quantum = 20

| Process | Burst Time |
|---------|------------|
| P1 | 53 |
| P2 | 17 |
| P3 | 68 |
| P4 | 24 |

The Gantt chart is:

| P1 | P2 | P3 | P4 | P1 | P3 | P4 | P1 | P3 | P3 |
|----|----|----|----|----|----|----|----|----|----|
| 0-20 | 20-37 | 37-57 | 57-77 | 77-97 | 97-117 | 117-121 | 121-134 | 134-154 | 154-162 |

- Typically, RR gives a higher average turnaround time than SJF, but better response time.
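The Gantt chart follows from simulating a FIFO ready queue. The sketch assumes all four processes arrive at time 0 in the order P1-P4 and ignores context-switch time; `rr_avg_wait()` is a hypothetical helper that also reports completion times.

```c
#define MAXP 16   /* hypothetical upper bound on the number of processes */

/* Round robin with quantum q; all processes arrive at time 0 in index order.
   Records completion times in finish[] and returns the average waiting
   time (completion - burst, since arrival is 0), or -1 on bad input. */
double rr_avg_wait(const int burst[], int n, int q, int finish[]) {
    if (n <= 0 || n > MAXP || q <= 0) return -1.0;
    int remaining[MAXP], queue[MAXP], head = 0, count = n;
    for (int i = 0; i < n; i++) { remaining[i] = burst[i]; queue[i] = i; }
    long now = 0;
    while (count > 0) {
        int p = queue[head];                     /* front of the ready queue */
        head = (head + 1) % MAXP;
        count--;
        int slice = remaining[p] < q ? remaining[p] : q;
        now += slice;                            /* run for at most q units */
        remaining[p] -= slice;
        if (remaining[p] > 0) {                  /* quantum expired: requeue */
            queue[(head + count) % MAXP] = p;
            count++;
        } else {
            finish[p] = (int)now;                /* burst complete */
        }
    }
    long total = 0;
    for (int i = 0; i < n; i++) total += finish[i] - burst[i];
    return (double)total / n;
}
```

For bursts {53, 17, 68, 24} and q = 20 it gives completion times 134, 37, 162, and 121, an average waiting time of 73, and an average turnaround of 113.5. For comparison, non-preemptive SJF on the same bursts (all at time 0) gives an average turnaround of 78.5, which illustrates the slide's point about turnaround.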

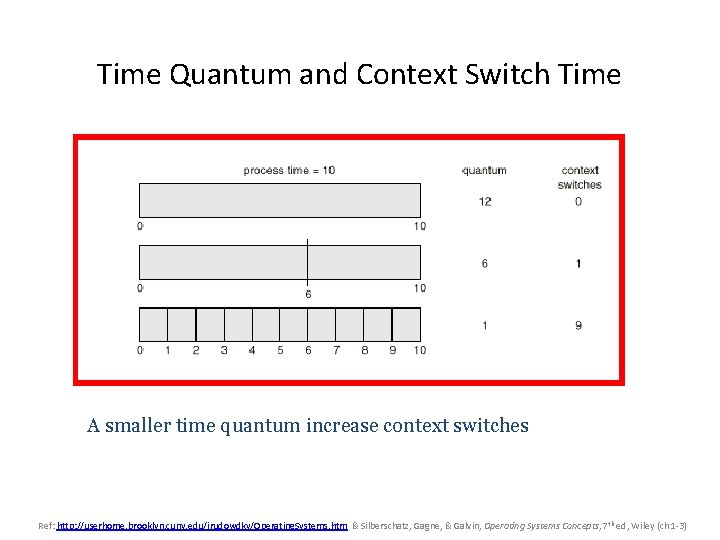

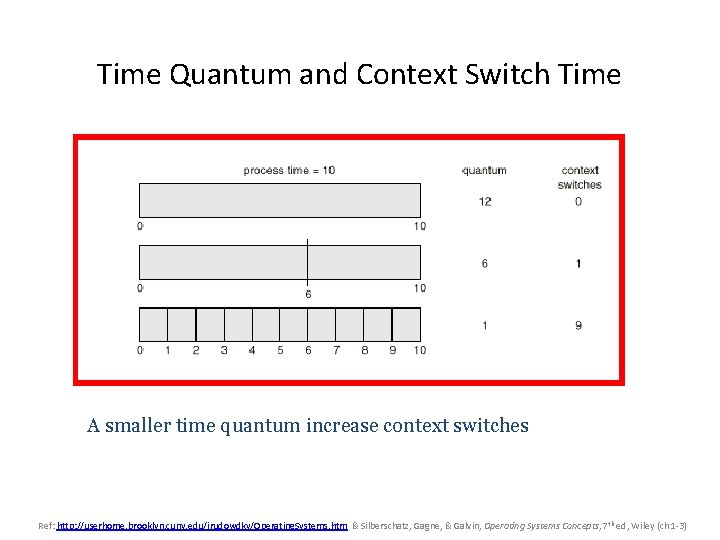

Time Quantum and Context Switch Time

A smaller time quantum increases the number of context switches.
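For a single process the effect is a one-line formula: with a burst of B time units and quantum q, the quantum expires ceil(B/q) - 1 times before the burst finishes, and each expiry costs a context switch (assuming other processes are waiting to run). `rr_switches()` is a hypothetical helper.

```c
/* Context switches a single process incurs under round robin with
   quantum q: one per quantum that expires before its burst is done,
   i.e. ceil(burst / q) - 1, computed with integer arithmetic. */
int rr_switches(int burst, int q) {
    if (burst <= 0 || q <= 0) return 0;
    return (burst + q - 1) / q - 1;
}
```

For a 10-unit burst, q = 12 gives 0 switches, q = 6 gives 1, and q = 1 gives 9.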

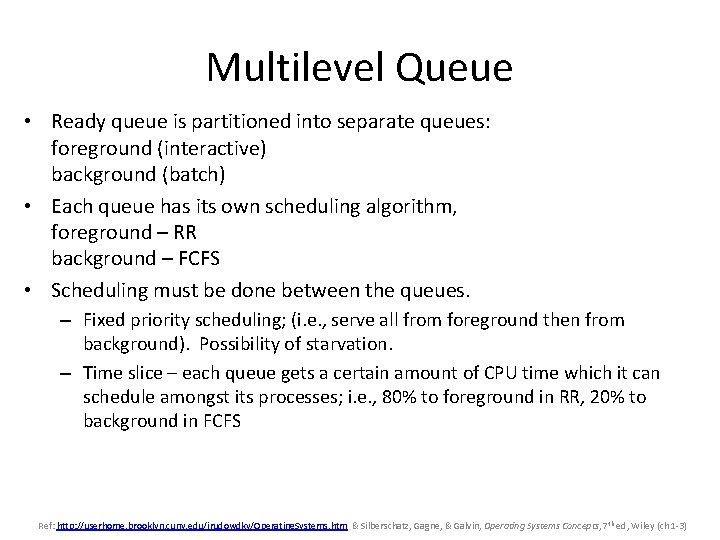

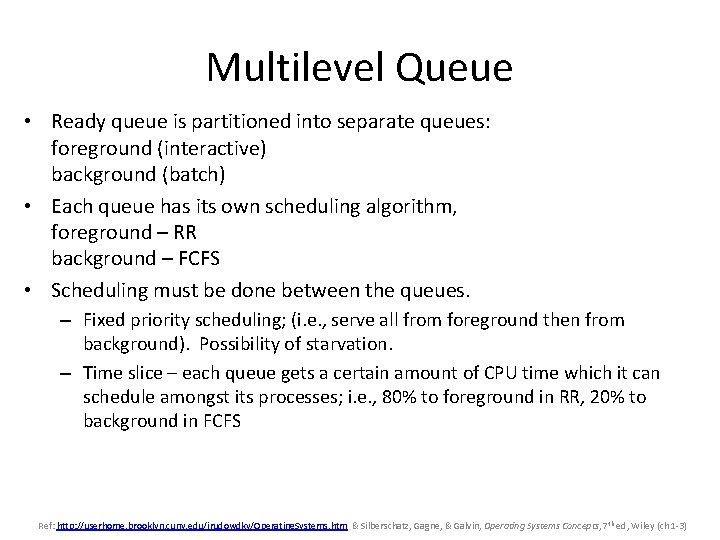

Multilevel Queue

- The ready queue is partitioned into separate queues:
  - foreground (interactive)
  - background (batch)
- Each queue has its own scheduling algorithm:
  - foreground: RR
  - background: FCFS
- Scheduling must also be done between the queues:
  - Fixed-priority scheduling (i.e., serve all from foreground, then from background). Possibility of starvation.
  - Time slice: each queue gets a certain amount of CPU time, which it can schedule among its processes; e.g., 80% to foreground in RR and 20% to background in FCFS.

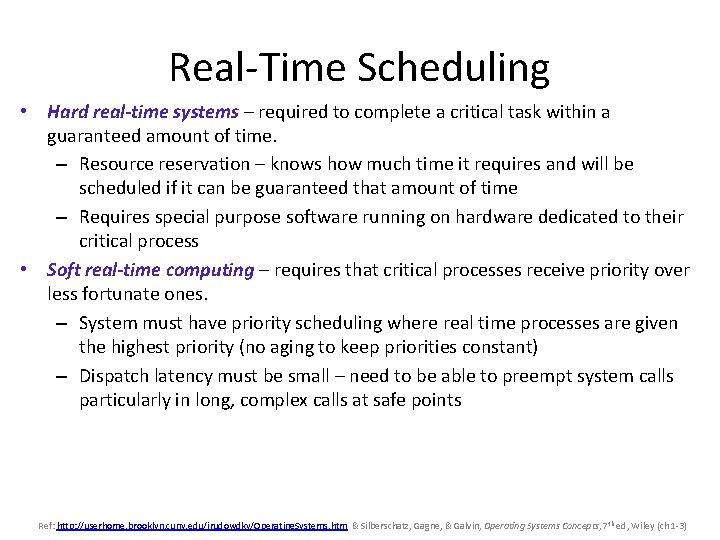

Real-Time Scheduling

- Hard real-time systems: required to complete a critical task within a guaranteed amount of time.
  - Resource reservation: a task states how much time it requires and is scheduled only if that amount of time can be guaranteed.
  - Requires special-purpose software running on hardware dedicated to the critical process.
- Soft real-time computing: requires that critical processes receive priority over less fortunate ones.
  - The system must have priority scheduling in which real-time processes are given the highest priority (no aging, to keep priorities constant).
  - Dispatch latency must be small: the kernel needs to be able to preempt system calls, particularly long, complex ones, at safe points.

Algorithm Evaluation

- Deterministic modeling: takes a particular predetermined workload and defines the performance of each algorithm for that workload.
  - Requires too much exact knowledge; the results cannot be generalized.
- Queueing models
  - Arrival and service distributions are often unrealistic.
  - The classes of algorithms and distributions they can handle are limited.
- Simulation
  - The distribution of jobs may not be accurate; use trace tapes of real system activity.
  - Expensive to run and time-consuming; writing and testing the simulator is a major task.
- Implementation: code the algorithm and actually evaluate it in the OS.
  - Expensive to code and modify the OS; users are unhappy with a changing environment.
  - Users take advantage of knowledge of the scheduling algorithm and run different types of programs (e.g., if interactive or smaller programs are given higher priority).
- Best would be to be able to change the parameters used by the scheduler to reflect expected future use (separation of mechanism and policy), but this is not common practice.