Shared Memory and Shared Memory Consistency

- Slides: 34

- What is the difference between eager and lazy implementations of relaxed consistency?
- Which models for relaxed consistency are used in shared MPSoC and multicore nowadays?
- What is page-based shared virtual memory?
- What are the implications of relaxed memory consistency models for parallel software?
- How should software be written to preserve consistency?

Josip Popovic, Multiprocessors, ELG 7187, Fall 2010

Announcements

Cadence Palladium:

- Massively parallel Boolean computing engine and processor-based architecture
- Two chips/modules in an MCM, each with 700+ cores
- 36 x 1400 cores
- Parallel software: ASIC netlist

Introduction

Scalable MP systems need to hide shared memory data latencies. A number of relevant techniques have been proposed and accepted in industry:

- Coherent Caches (CC): data latency is hidden by caching data close to a processor
- Relaxed Memory Consistency: allows processor/compiler optimizations (reordering of memory accesses and buffering/pipelining of some memory accesses)
- Multithreading: program threads are context switched when a long memory access takes place
- Data Pre-fetching: long-latency memory accesses are issued long before the data is needed

Shared Memory

- Parallel processor architectures use shared memory as a way of exchanging data among individual processors in order to:
  - avoid redundant copies, or
  - communicate (for example, synchronization events). PS: shared memory versus messages
- Most contemporary multiprocessor systems provide complex cache coherence protocols to ensure that all processor caches are up to date with the most recent data.
- Having a cache-coherent system does not guarantee correct operation (due to memory access bypassing, etc.).
- Parallel programming requires memory consistency.
- While cache coherence is a HW-based protocol, memory consistency protocols are implemented in SW or in a combination of (mostly) SW and HW.
- Cache coherence is less restrictive than memory consistency: if shared memory is consistent, its cache is coherent.

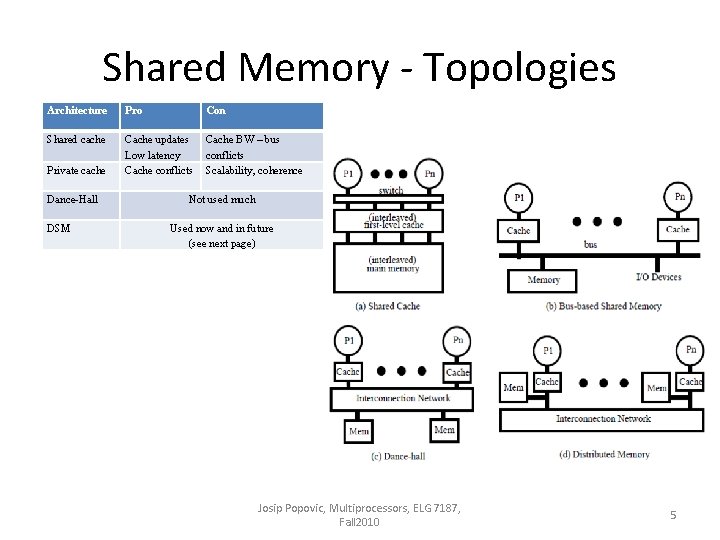

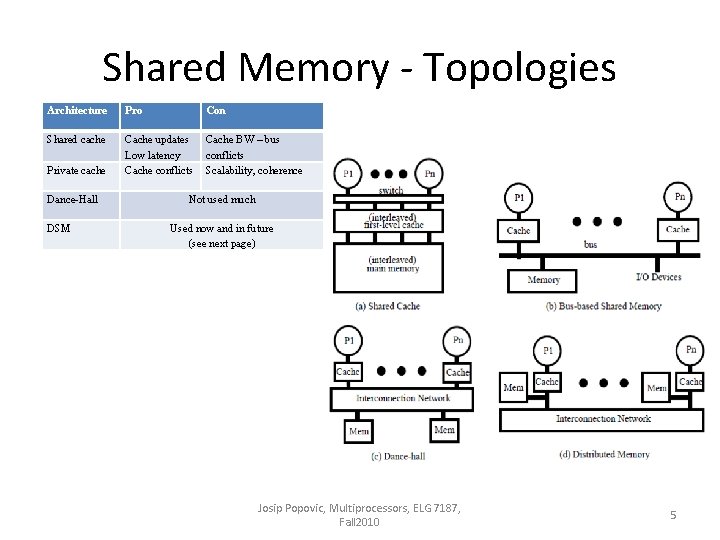

Shared Memory - Topologies

Architecture, with Pro and Con:

- Shared cache. Pro: cache updates, low latency. Con: cache conflicts, cache BW
- Private cache. Con: bus conflicts, scalability, coherence
- Dance-Hall: not used much
- DSM: used now and in future (see next page)

Distributed Shared Memory

Distributed Memory Pros

- Better memory utilization if one or more processors are idle (load balancing)
- Better memory bandwidth due to multiport memory accesses
- Speed of access to local memory

Distributed Memory Cons

- Different memory latencies (local shared versus distant shared)
- Memory consistency is complicated
- Cache coherence is complicated (QPI example)

Memory Consistency Model

- “A memory consistency model for a shared address space specifies constraints on the order in which memory operations must appear to be performed (i.e. to become visible to the processors) with respect to one another. This includes operations to the same locations or to different locations, and by the same process or different processes, so memory consistency subsumes coherence.” David Culler, Jaswinder Pal Singh, Anoop Gupta, “Parallel Computer Architecture: A Hardware/Software Approach”

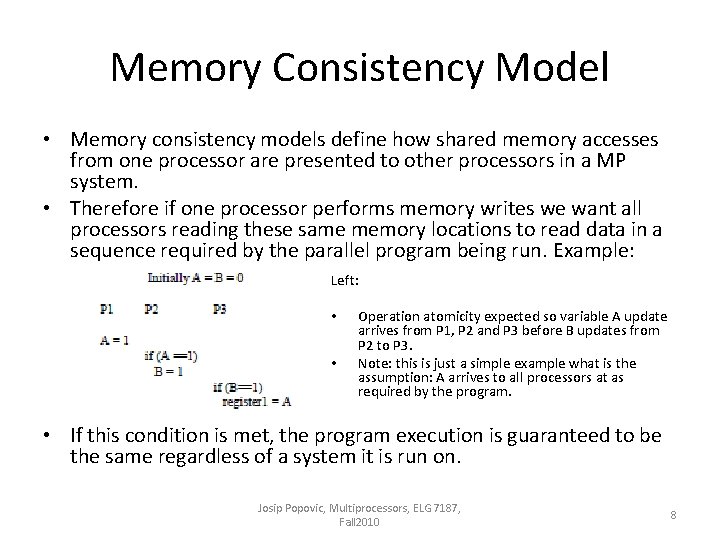

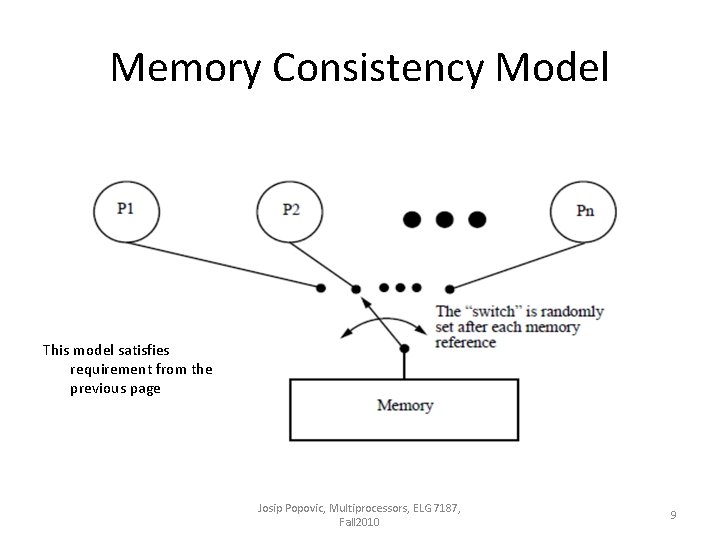

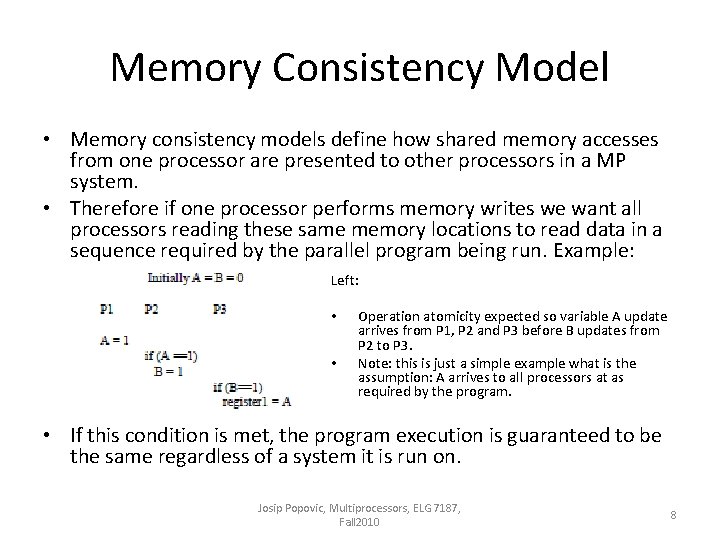

Memory Consistency Model

- Memory consistency models define how shared memory accesses from one processor are presented to the other processors in an MP system.
- Therefore, if one processor performs memory writes, we want all processors reading these same memory locations to read the data in the sequence required by the parallel program being run.

Example (left):

- Operation atomicity is expected, so the update of variable A from P1 arrives at P2 and P3 before the update of B from P2 arrives at P3.
- Note: this is just a simple example. The assumption is that A arrives at all processors as required by the program.
- If this condition is met, the program execution is guaranteed to be the same regardless of the system it is run on.

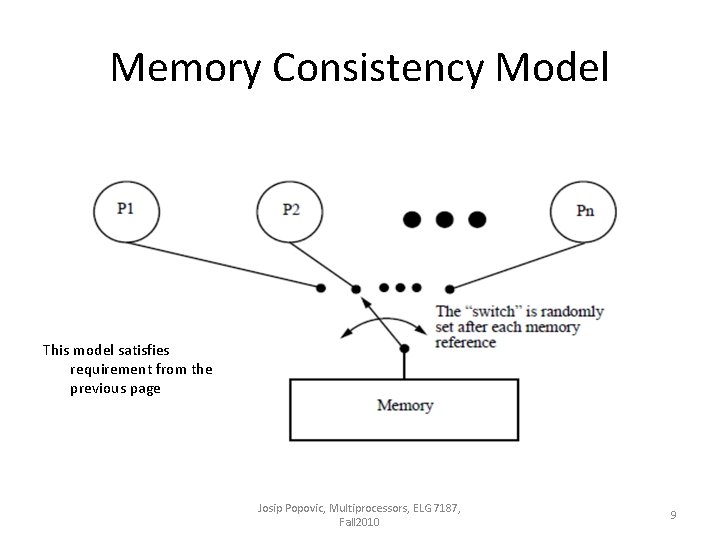

Memory Consistency Model

This model satisfies the requirement from the previous page.

Sequential Consistency

- No reordering of memory operations from the same processor is allowed.
- After a write operation is issued, the issuing processor waits for the write to complete before issuing its next operation (consider a write cache miss).
- No read operation can return a variable written by a write operation while that write is still updating all the copies of the variable (e.g., in caches).

Sequential Consistency !!!

- SC significantly reduces the processor optimization space.
- These optimizations are regularly used in uniprocessors and compilers.

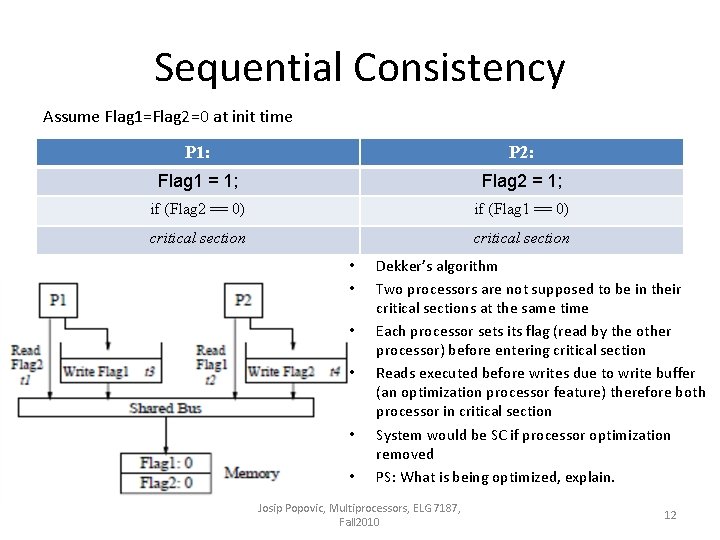

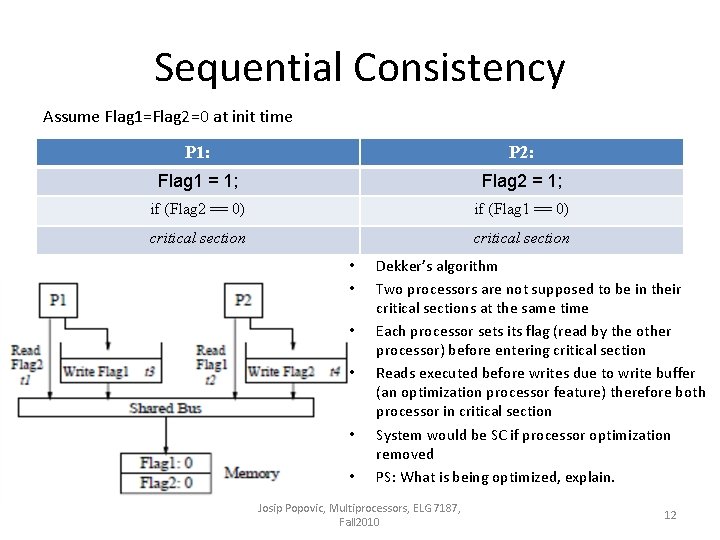

Sequential Consistency

Assume Flag1 = Flag2 = 0 at init time.

P1: Flag1 = 1; if (Flag2 == 0) critical section

P2: Flag2 = 1; if (Flag1 == 0) critical section

- Dekker’s algorithm
- Two processors are not supposed to be in their critical sections at the same time.
- Each processor sets its flag (read by the other processor) before entering its critical section.
- Reads are executed before writes because of the write buffer (a processor optimization feature), therefore both processors can be in the critical section.
- The system would be SC if the processor optimization were removed.

PS: What is being optimized? Explain.
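
As an illustration of the fragment above, here is a minimal C11 sketch, assuming a POSIX threads environment. The names `count_violations`, `in_cs1` and `in_cs2` are hypothetical helpers and do not come from the slides. Plain `atomic_store` and `atomic_load` default to sequentially consistent ordering, so the write to one flag cannot be overtaken by the read of the other flag, and the two threads should never both enter.

```c
#include <pthread.h>
#include <stdatomic.h>

/* Flags from the slide. atomic_store/atomic_load use memory_order_seq_cst
   by default, which forbids the read-before-write reordering. */
static atomic_int flag1, flag2;
/* Set to 1 when a thread entered its critical section (hypothetical
   bookkeeping, only read by the parent after pthread_join). */
static int in_cs1, in_cs2;

static void *p1(void *unused) {
    (void)unused;
    atomic_store(&flag1, 1);
    if (atomic_load(&flag2) == 0)
        in_cs1 = 1;               /* critical section */
    return NULL;
}

static void *p2(void *unused) {
    (void)unused;
    atomic_store(&flag2, 1);
    if (atomic_load(&flag1) == 0)
        in_cs2 = 1;               /* critical section */
    return NULL;
}

/* Hypothetical helper: runs the fragment `trials` times and returns how
   often both threads were in the critical section together. */
int count_violations(int trials) {
    int violations = 0;
    for (int i = 0; i < trials; i++) {
        pthread_t a, b;
        atomic_store(&flag1, 0);
        atomic_store(&flag2, 0);
        in_cs1 = in_cs2 = 0;
        pthread_create(&a, NULL, p1, NULL);
        pthread_create(&b, NULL, p2, NULL);
        pthread_join(a, NULL);
        pthread_join(b, NULL);
        if (in_cs1 && in_cs2)
            violations++;
    }
    return violations;
}
```

Replacing the default ordering with `memory_order_relaxed` would permit the write-buffer behaviour described on the slide, so a violation could then be observed on some hardware.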

Sequential Consistency

- Considering the hardware optimization limitations that SC imposes (see above), we can conclude that the SC model is too restrictive and that other memory consistency models need to be considered.
- A number of relaxed memory consistency models have been documented in research communities and/or implemented in commercial products.
- Relaxed memory consistency can be implemented in SW, in HW, or in a combination of both.

Distributed Shared Memory (DSM)

- Distributed Shared Memory (DSM) is a topology in which a cluster of memory instances is presented to SW running on a multiprocessor system as a single shared memory.
- There are two distinct ways of achieving memory consistency: HW based or SW based.
- Of course, it is possible, and often required, to combine HW (complex) and SW (not always efficient) methods.

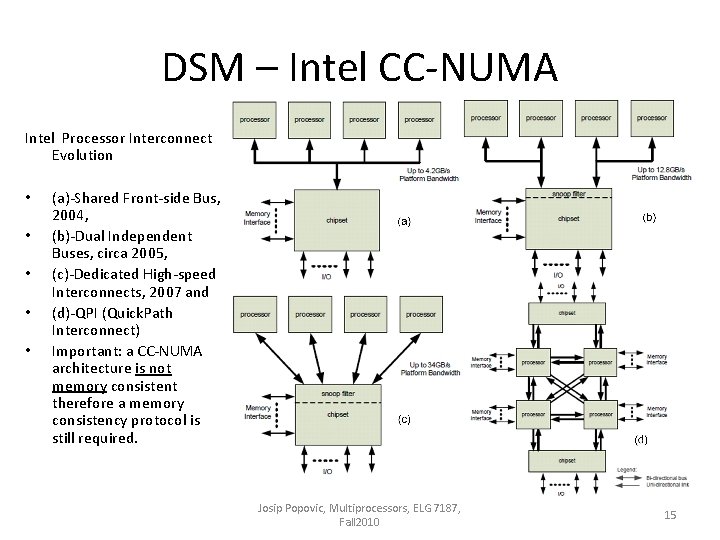

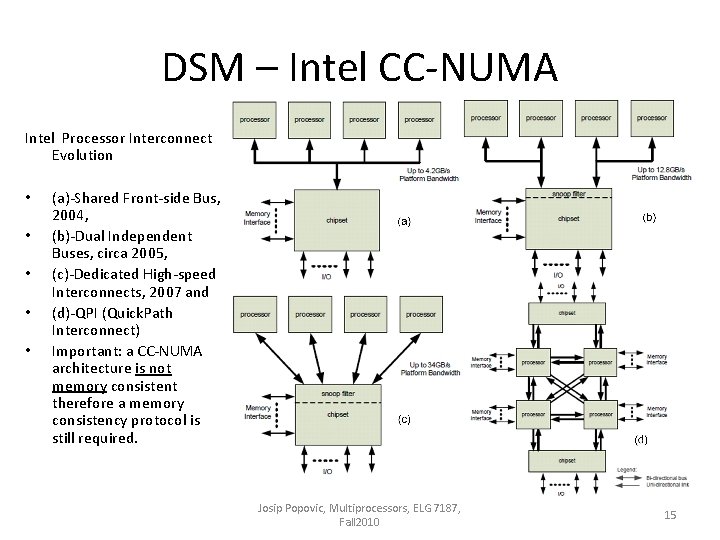

DSM – Intel CC-NUMA

Intel Processor Interconnect Evolution

- (a) Shared Front-side Bus, 2004
- (b) Dual Independent Buses, circa 2005
- (c) Dedicated High-speed Interconnects, 2007
- (d) QPI (QuickPath Interconnect)

Important: a CC-NUMA architecture is not memory consistent, therefore a memory consistency protocol is still required.

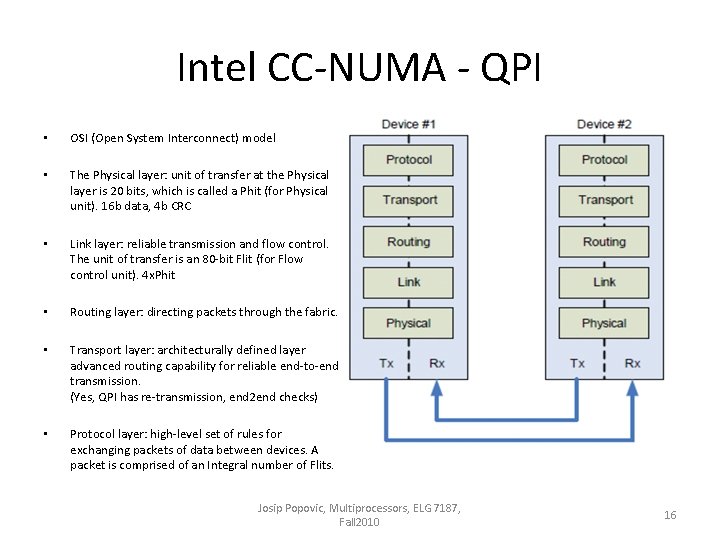

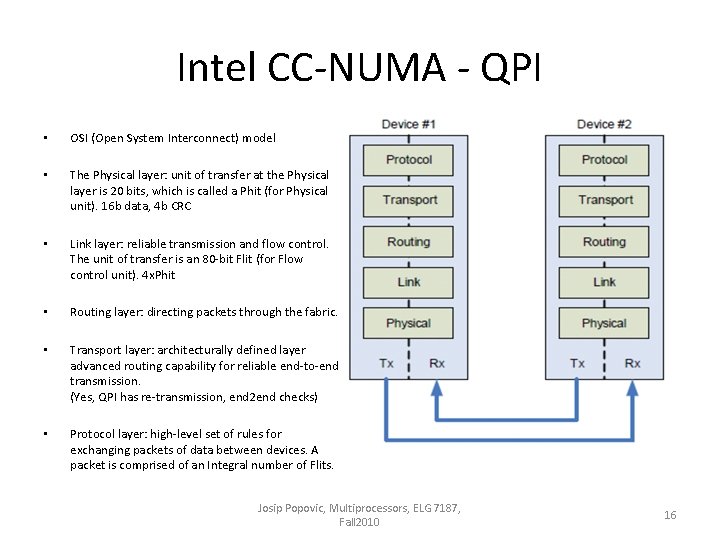

Intel CC-NUMA - QPI

- OSI (Open System Interconnect) model
- Physical layer: the unit of transfer is 20 bits, called a Phit (for Physical unit): 16 b data, 4 b CRC.
- Link layer: reliable transmission and flow control. The unit of transfer is an 80-bit Flit (for Flow control unit), i.e. 4 x Phit.
- Routing layer: directing packets through the fabric.
- Transport layer: architecturally defined layer with advanced routing capability for reliable end-to-end transmission. (Yes, QPI has re-transmission and end-to-end checks.)
- Protocol layer: high-level set of rules for exchanging packets of data between devices. A packet comprises an integral number of Flits.

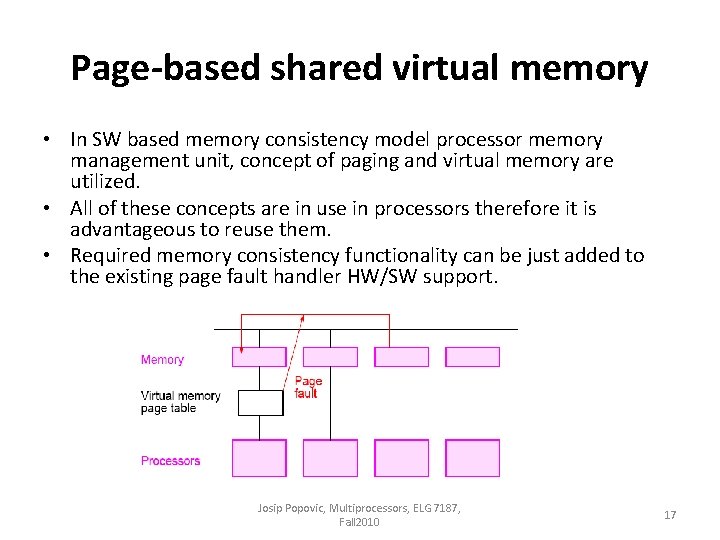

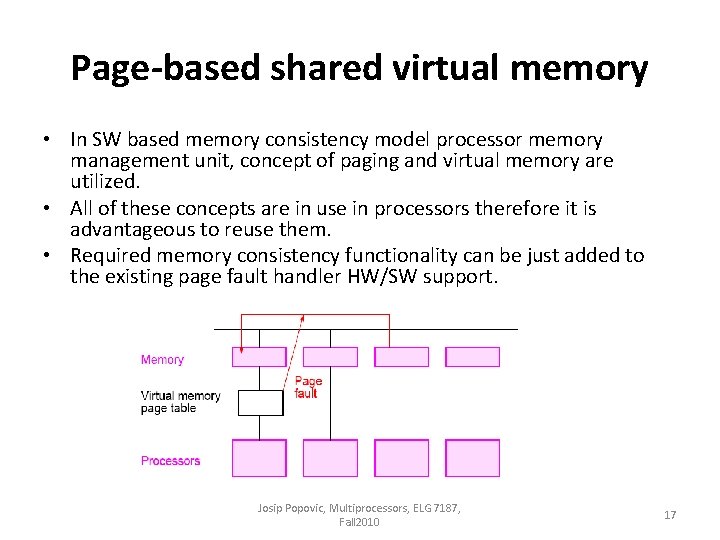

Page-based shared virtual memory

- A SW-based memory consistency model makes use of the processor memory management unit and the concepts of paging and virtual memory.
- All of these concepts are already in use in processors, therefore it is advantageous to reuse them.
- The required memory consistency functionality can simply be added to the existing page fault handler HW/SW support.

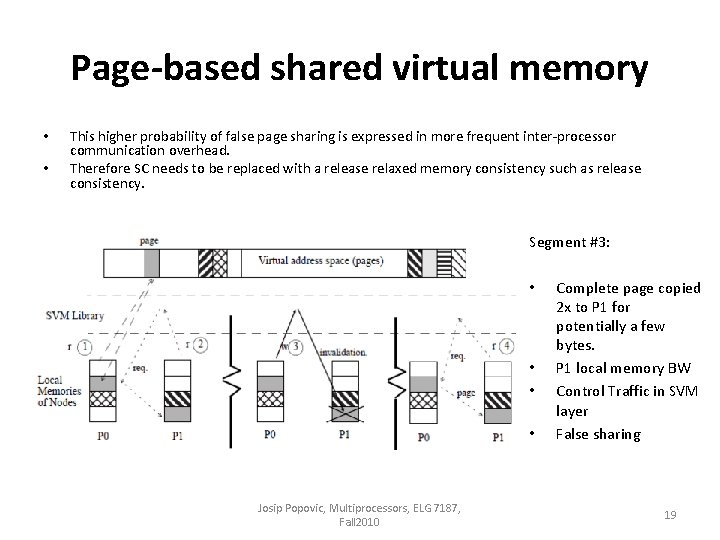

Page-based shared virtual memory

- The advantage of this method is that little HW support is required, since it is built on top of the existing infrastructure.
- Due to the page size, the probability of false data sharing is higher.
- Different processors may use the same virtual address although their physical addresses are different.
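
To make the page-size effect concrete, the following small C sketch checks whether two addresses fall into the same sharing unit. The helper names `unit_of` and `shares_unit` are hypothetical, and the 4096-byte page and 64-byte cache line used in the usage note are assumed sizes, not values from the slides.

```c
#include <stddef.h>
#include <stdint.h>

/* Index of the sharing unit (page or cache line) an address falls in. */
static uintptr_t unit_of(uintptr_t addr, size_t unit_size) {
    return addr / unit_size;
}

/* Returns 1 if two different variables live in the same sharing unit,
   which makes them candidates for false sharing at that granularity. */
int shares_unit(uintptr_t a, uintptr_t b, size_t unit_size) {
    return unit_of(a, unit_size) == unit_of(b, unit_size);
}
```

For example, two variables at addresses 0x1000 and 0x1040 do not share a 64-byte cache line, but they do share a 4096-byte page, so a page-based protocol treats a write to one as a write to the page holding the other.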

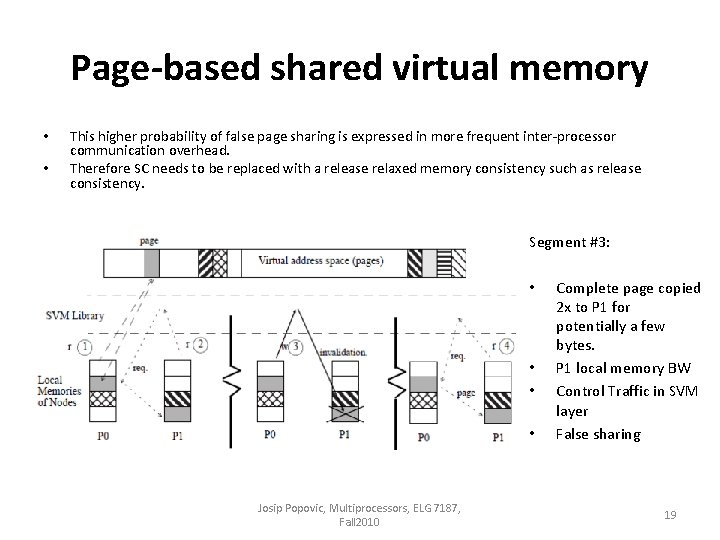

Page-based shared virtual memory

- This higher probability of false page sharing shows up as more frequent inter-processor communication overhead.
- Therefore SC needs to be replaced with a relaxed memory consistency model such as release consistency.

Segment #3:

- Complete page copied 2x to P1 for potentially a few bytes
- P1 local memory BW
- Control traffic in SVM layer
- False sharing

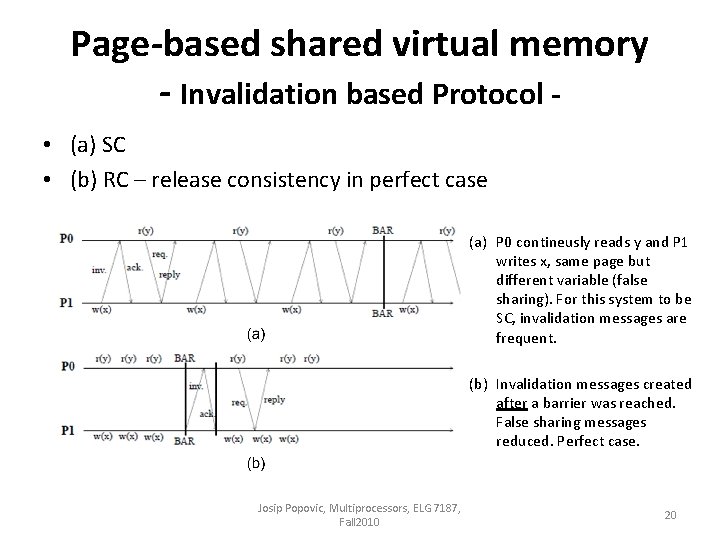

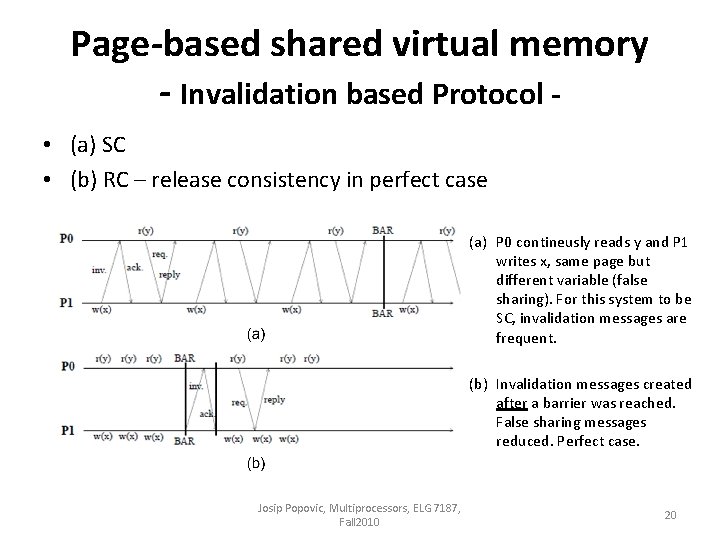

Page-based shared virtual memory - Invalidation based Protocol

- (a) SC: P0 continuously reads y and P1 writes x; both are on the same page but are different variables (false sharing). For this system to be SC, invalidation messages are frequent.
- (b) RC (release consistency), perfect case: invalidation messages are created only after a barrier is reached, so false sharing messages are reduced.

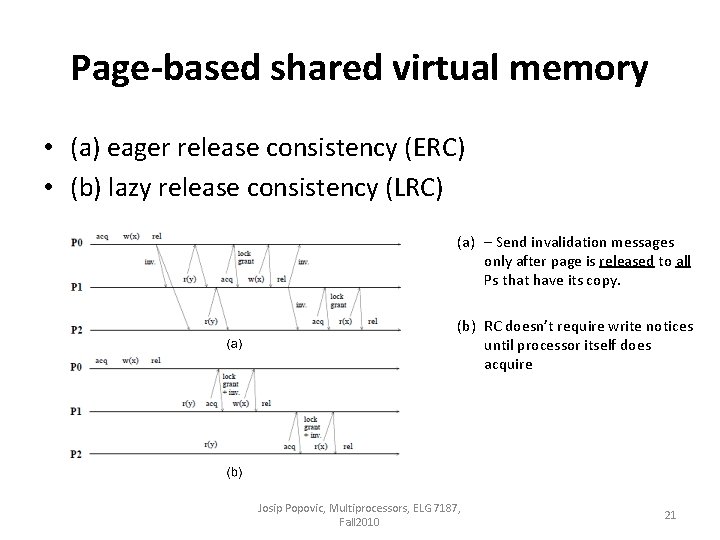

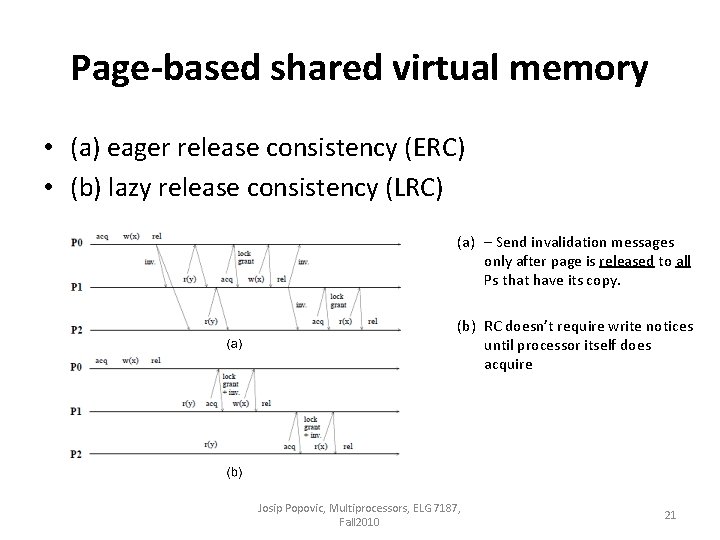

Page-based shared virtual memory

- (a) Eager release consistency (ERC): invalidation messages are sent, only when the page is released, to all processors that have a copy of it.
- (b) Lazy release consistency (LRC): write notices are not required until a processor itself performs an acquire.
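
The difference in message traffic can be sketched with a deliberately simplified counting model in C. It assumes that every other processor holds a copy of the page and that the trace lists, in order, which processor performs each acquire/write/release on one lock. The function names and the trace format are hypothetical, and real protocols exchange more than one message per notice.

```c
#include <stddef.h>

/* Eager RC (simplified): every release sends an invalidation to each of
   the other nprocs - 1 processors, all assumed to hold a copy. */
unsigned eager_messages(size_t releases, unsigned nprocs) {
    return (unsigned)releases * (nprocs - 1);
}

/* Lazy RC (simplified): a write notice travels only to the next acquirer,
   and only when that acquirer differs from the previous releaser. */
unsigned lazy_messages(const int *owner, size_t n) {
    unsigned count = 0;
    for (size_t i = 1; i < n; i++)
        if (owner[i] != owner[i - 1])
            count++;
    return count;
}
```

With the trace P0, P0, P1, P1, P2 on a 4-processor system, this model gives 15 invalidations for the eager variant and 2 write notices for the lazy one.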

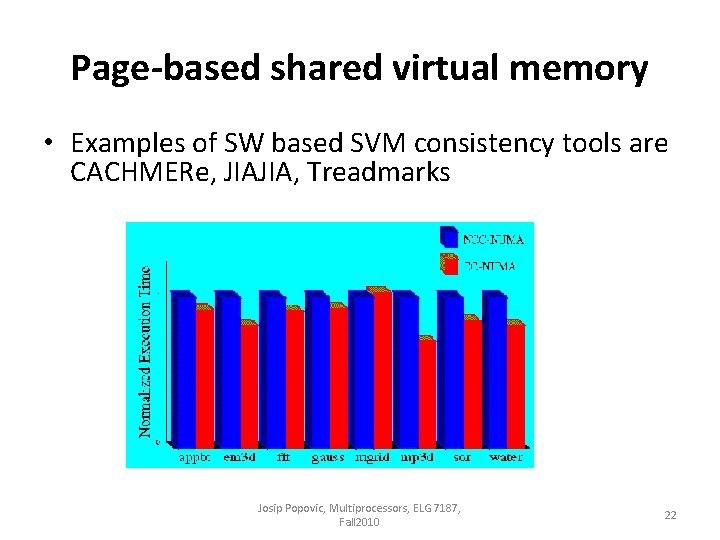

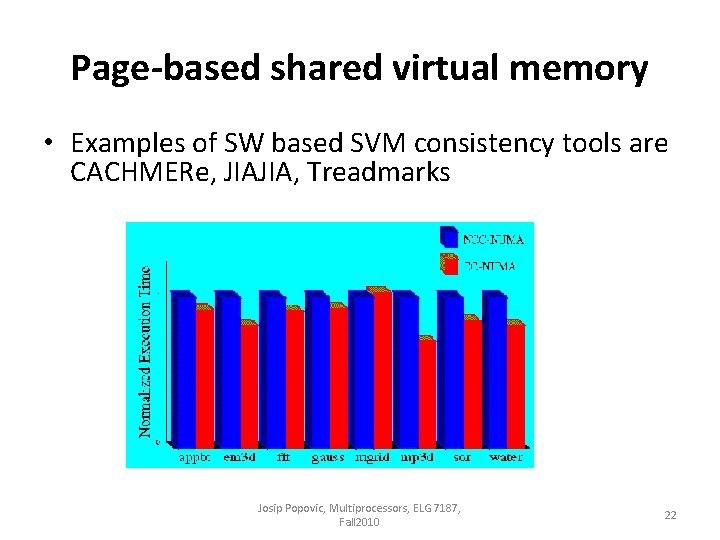

Page-based shared virtual memory

- Examples of SW-based SVM consistency tools are Cashmere, JIAJIA and TreadMarks.

TreadMarks

- Virtual DSM SW created at Rice University
- Supports running parallel programs on a network of computers
- Provides a global shared address space across different computers, allowing programmers to focus on the application rather than on message passing
- Data exchange between computers is based on UDP/IP
- Uses the lazy release memory consistency model, as well as barrier and lock synchronization, critical sections, etc.
- Some of the available functions:
  - void Tmk_barrier(unsigned id);
  - void Tmk_lock_acquire(unsigned id);
  - void Tmk_lock_release(unsigned id);
  - char *Tmk_malloc(unsigned size);
  - void Tmk_free(char *ptr);

Lazy Release Consistency for HW Coherent Processors

- FYI: a cache coherence protocol based on the lazy release consistency model

Relaxed memory consistency models for parallel software

- Relaxation models allow us to use processor/compiler optimizations.
- There are 3 sets of relaxation models:
  - in the first, only a read is allowed to bypass previous incomplete writes;
  - in the second, we allow the above, plus writes can pass previous writes;
  - in the third, we allow all of the above, plus reads or writes can bypass previous reads.
- When appropriate!!!

Relaxed memory consistency models for parallel software

All Program Order Relaxation:

- Weak Ordering Consistency (WC)
- Release Consistency (RC)
- RC variants (eager, lazy)

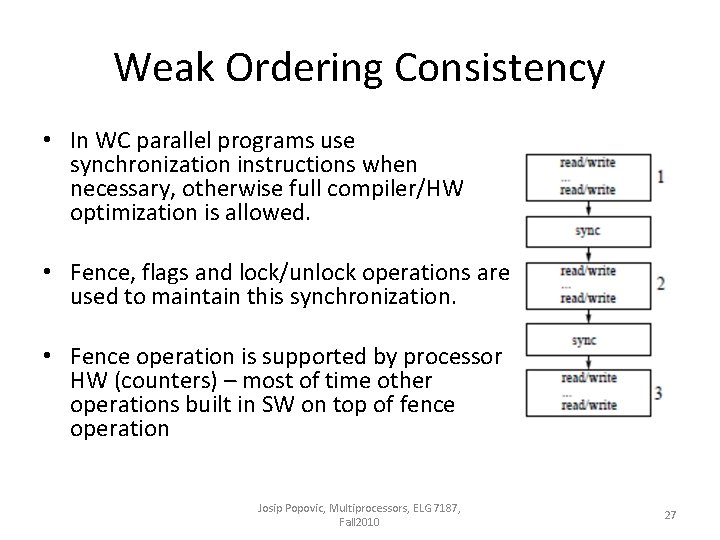

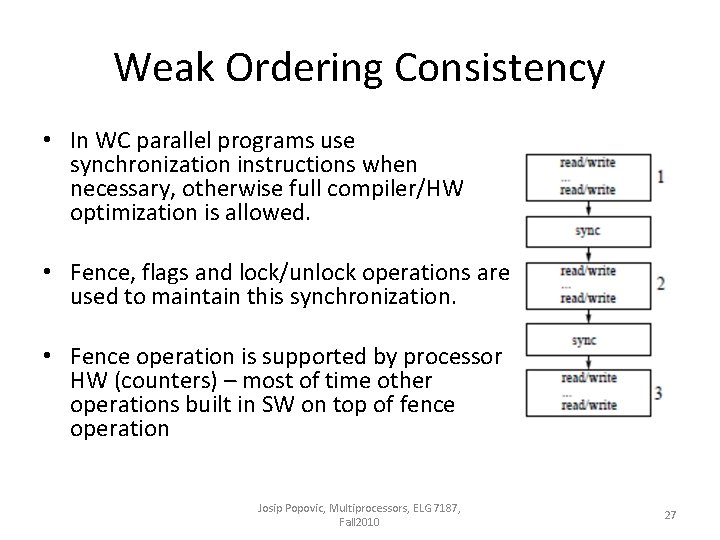

Weak Ordering Consistency

- In WC, parallel programs use synchronization instructions when necessary; otherwise full compiler/HW optimization is allowed.
- Fence, flag and lock/unlock operations are used to maintain this synchronization.
- The fence operation is supported by processor HW (counters); most of the time the other operations are built in SW on top of the fence operation.

Weak Ordering Consistency

- There are 3 basic fence operations: lfence (load fence), sfence (store fence) and mfence (memory fence). In the case of the Intel x86 architecture, the mfence operation is defined as:
- “Performs a serializing operation on all load-from-memory and store-to-memory instructions that were issued prior the MFENCE instruction. This serializing operation guarantees that every load and store instruction that precedes in program order the MFENCE instruction is globally visible before any load or store instruction that follows the MFENCE instruction is globally visible. The MFENCE instruction is ordered with respect to all load and store instructions, other MFENCE instructions, any SFENCE and LFENCE instructions, and any serializing instructions (such as the CPUID instruction).”
- In summary, a system is WC memory consistent if it is:
  - a cache coherent system,
  - running programs with mfence (or a similar operation that makes sure all memory operations on the processor are executed, when required), and
  - using shared variable synchronization instructions.
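
The fence-plus-flag pattern summarized above can be sketched portably with C11 atomics, assuming POSIX threads. `atomic_thread_fence(memory_order_seq_cst)` is a full fence; on x86, compilers commonly implement it with MFENCE or an equivalent locked instruction, but that mapping is an assumption about the toolchain. The names `payload`, `ready` and `run_fence_demo` are hypothetical.

```c
#include <pthread.h>
#include <stdatomic.h>

static int payload;          /* ordinary shared data */
static atomic_int ready;     /* synchronization flag */

static void *producer(void *unused) {
    (void)unused;
    payload = 42;
    /* Full fence: the data write is ordered before the flag write. */
    atomic_thread_fence(memory_order_seq_cst);
    atomic_store_explicit(&ready, 1, memory_order_relaxed);
    return NULL;
}

static void *consumer(void *result) {
    while (atomic_load_explicit(&ready, memory_order_relaxed) == 0)
        ;                    /* spin until the flag is set */
    /* Full fence: the flag read is ordered before the data read. */
    atomic_thread_fence(memory_order_seq_cst);
    *(int *)result = payload;
    return NULL;
}

/* Hypothetical driver: returns the value the consumer observed. */
int run_fence_demo(void) {
    int seen = 0;
    pthread_t p, c;
    payload = 0;
    atomic_store(&ready, 0);
    pthread_create(&c, NULL, consumer, &seen);
    pthread_create(&p, NULL, producer, NULL);
    pthread_join(p, NULL);
    pthread_join(c, NULL);
    return seen;
}
```

Between the fences, the compiler and hardware remain free to reorder ordinary accesses, which is the optimization freedom that WC is meant to preserve.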

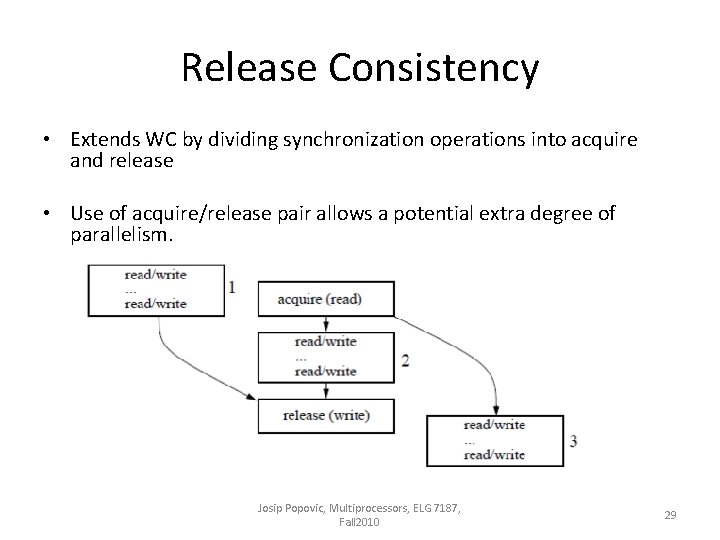

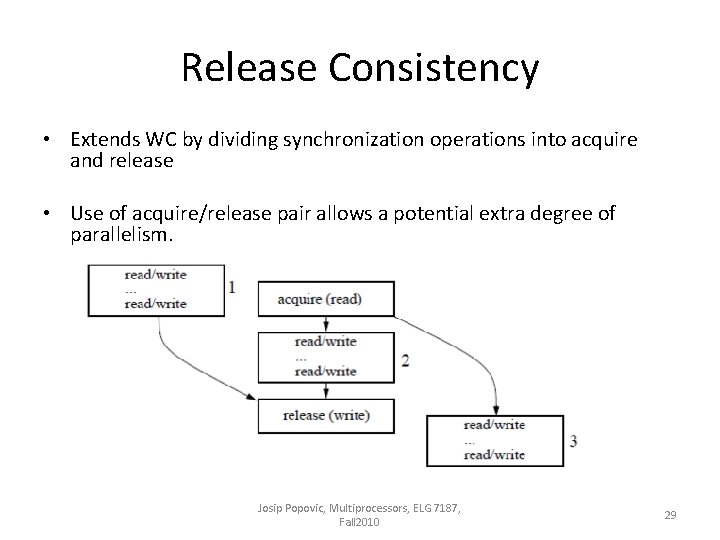

Release Consistency

- Extends WC by dividing synchronization operations into acquire and release.
- Use of an acquire/release pair allows a potential extra degree of parallelism.
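
A minimal sketch of the acquire/release split, assuming C11 atomics and POSIX threads: a spinlock whose lock operation uses acquire ordering and whose unlock uses release ordering. The names `rc_acquire`, `rc_release` and `run_rc_demo` are hypothetical. Accesses inside the critical section cannot move outside it, while unrelated accesses before the acquire or after the release may still be reordered.

```c
#include <pthread.h>
#include <stdatomic.h>

static atomic_flag rc_lock = ATOMIC_FLAG_INIT;
static long counter;         /* shared data protected by rc_lock */

/* Acquire: later accesses may not be moved before this point. */
static void rc_acquire(void) {
    while (atomic_flag_test_and_set_explicit(&rc_lock, memory_order_acquire))
        ;                    /* spin while another thread holds the lock */
}

/* Release: earlier accesses may not be moved after this point. */
static void rc_release(void) {
    atomic_flag_clear_explicit(&rc_lock, memory_order_release);
}

static void *worker(void *arg) {
    long n = *(long *)arg;
    for (long i = 0; i < n; i++) {
        rc_acquire();
        counter++;           /* critical section */
        rc_release();
    }
    return NULL;
}

/* Hypothetical driver: two threads each add per_thread increments. */
long run_rc_demo(long per_thread) {
    pthread_t a, b;
    counter = 0;
    pthread_create(&a, NULL, worker, &per_thread);
    pthread_create(&b, NULL, worker, &per_thread);
    pthread_join(a, NULL);
    pthread_join(b, NULL);
    return counter;
}
```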

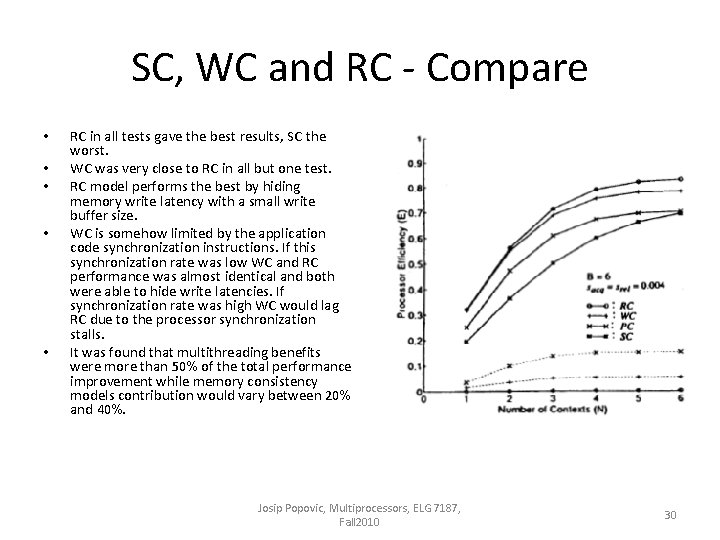

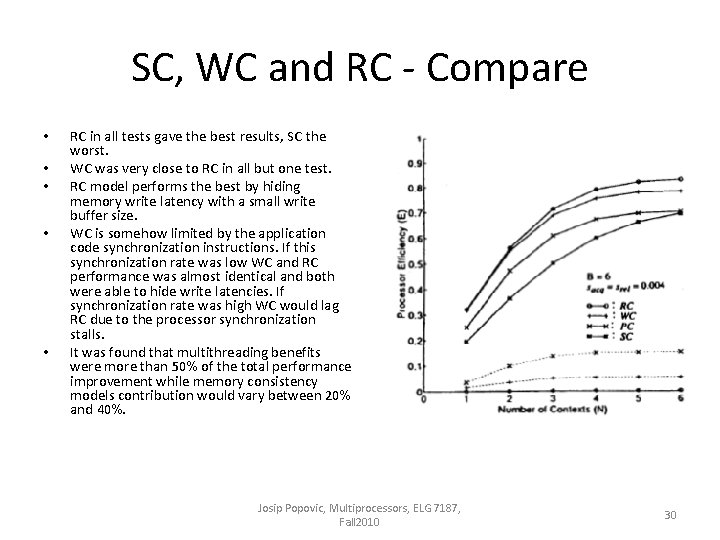

SC, WC and RC - Compare

- RC gave the best results in all tests, SC the worst. WC was very close to RC in all but one test.
- The RC model performs best by hiding memory write latency with a small write buffer size. WC is somewhat limited by the synchronization instructions in the application code. If the synchronization rate was low, WC and RC performance was almost identical and both were able to hide write latencies. If the synchronization rate was high, WC would lag RC due to processor synchronization stalls.
- It was found that multithreading benefits were more than 50% of the total performance improvement, while the contribution of memory consistency models varied between 20% and 40%.

Contemporary relaxed consistency used in shared MPSoC and multicore

- ARM provides, for its embedded MPSoC, a memory consistency model that is between TSO (Total Store Ordering) and PSO (Partial Store Ordering).
- TSO allows a read to bypass an earlier write, while PSO additionally allows write-to-write reordering (not complete WC).
- ARM's model is supported by SW synchronizations and barriers.

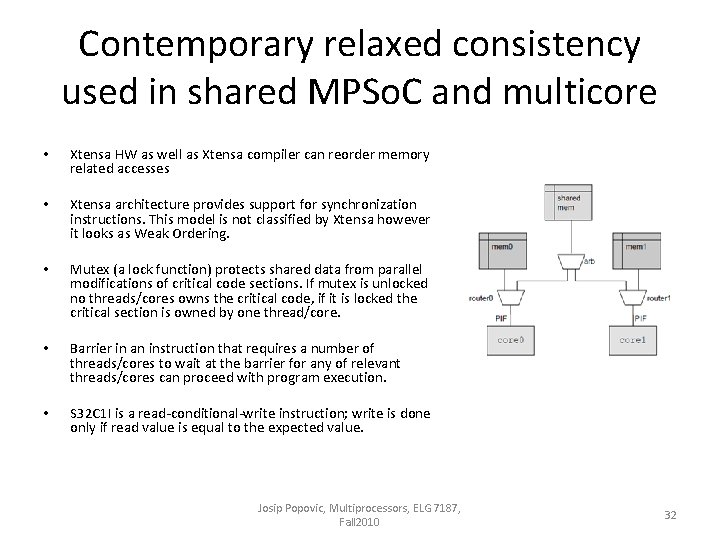

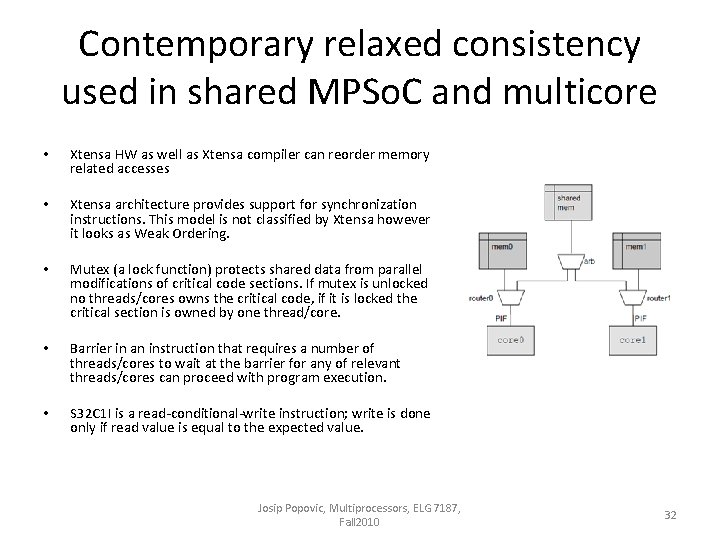

Contemporary relaxed consistency used in shared MPSoC and multicore

- Xtensa HW, as well as the Xtensa compiler, can reorder memory accesses.
- The Xtensa architecture provides support for synchronization instructions. This model is not classified by Xtensa; however, it looks like Weak Ordering.
- A mutex (a lock function) protects shared data from parallel modification in critical code sections. If the mutex is unlocked, no thread/core owns the critical section; if it is locked, the critical section is owned by one thread/core.
- A barrier requires a number of threads/cores to wait at the barrier before any of the relevant threads/cores can proceed with program execution.
- S32C1I is a read-conditional-write instruction; the write is done only if the value read is equal to the expected value.
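
The conditional-write behaviour described for S32C1I can be approximated in portable C11 with a compare-and-exchange. This is a sketch under stated assumptions, not the Xtensa library: `compare_and_store`, `mutex_try_lock` and `mutex_unlock` are hypothetical names, and the encoding 0 = unlocked, 1 = locked is assumed.

```c
#include <stdatomic.h>
#include <stdint.h>

/* Writes new_value only if *addr currently equals expected.
   Returns the value that was read from *addr. */
uint32_t compare_and_store(_Atomic uint32_t *addr, uint32_t expected,
                           uint32_t new_value) {
    uint32_t observed = expected;
    /* On failure, observed is overwritten with the current value. */
    atomic_compare_exchange_strong(addr, &observed, new_value);
    return observed;
}

/* Mutex built on top: returns 1 if the lock was taken, 0 if it was
   already owned by another thread/core. */
int mutex_try_lock(_Atomic uint32_t *m) {
    return compare_and_store(m, 0, 1) == 0;
}

void mutex_unlock(_Atomic uint32_t *m) {
    atomic_store(m, 0);
}
```

A caller would typically loop on `mutex_try_lock` until it returns 1, enter the critical section, and then call `mutex_unlock`.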

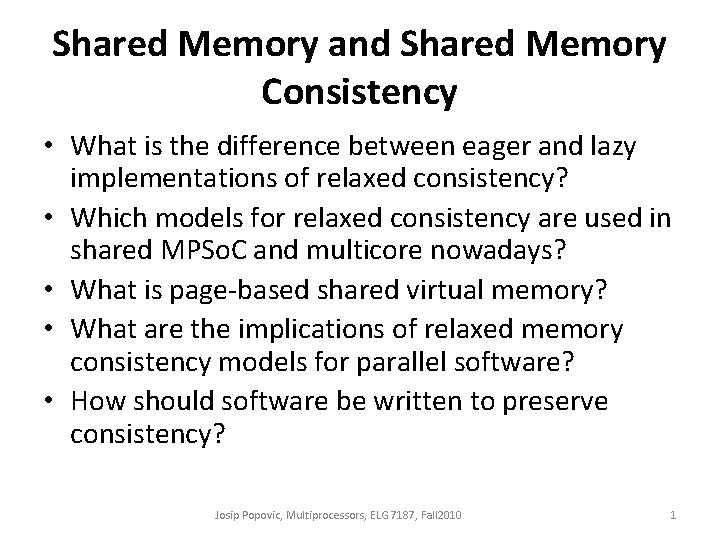

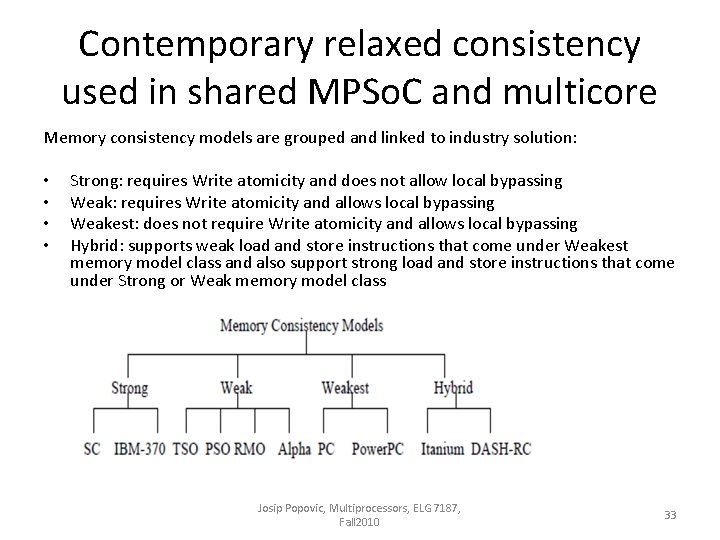

Contemporary relaxed consistency used in shared MPSoC and multicore

Memory consistency models are grouped and linked to industry solutions:

- Strong: requires write atomicity and does not allow local bypassing
- Weak: requires write atomicity and allows local bypassing
- Weakest: does not require write atomicity and allows local bypassing
- Hybrid: supports weak load and store instructions that come under the Weakest memory model class, and also supports strong load and store instructions that come under the Strong or Weak memory model class

References

- David Culler, Jaswinder Pal Singh, Anoop Gupta, "Parallel Computer Architecture: A Hardware/Software Approach", Morgan Kaufmann
- Sarita V. Adve, Kourosh Gharachorloo, "Shared Memory Consistency Model, A Tutorial", Digital Western Research Laboratory, Research Report 95/7
- Sarita V. Adve, Mark D. Hill, "Weak Ordering - A New Definition", Computer Sciences Department, University of Wisconsin, Madison, Wisconsin 53706
- M. Dubois, C. Scheurich and F. A. Briggs, "Memory Access Buffering in Multiprocessors", Proc. Thirteenth Annual International Symposium on Computer Architecture 14.2 (June 1986), 434-442
- C. Amza, "TreadMarks: Shared memory computing on networks of workstations", IEEE Computer, vol. 29 (2), February 1996, pp. 18-28
- P. Keleher, "Lazy Release Consistency for Distributed Shared Memory", Ph.D. Thesis, Dept. of Computer Science, Rice University, 1995
- Yong-Kim Chong and Kai Hwang, Fellow, IEEE, "Performance Analysis of Four Memory Consistency Models for Multithreaded Multiprocessors"
- Leslie Lamport, "How to make a multiprocessor computer that correctly executes multiprocess programs", IEEE Transactions on Computers, C-28(9): 690–691, September 1979
- Leonidas I. Kontothanassis, Michael L. Scott, Ricardo Bianchini, "Lazy Release Consistency for Hardware-Coherent Multiprocessors", Department of Computer Science, University of Rochester, December 1994
- Kunle Olukotun, Lance Hammond, and James Laudon, "Chip Multiprocessor Architecture: Techniques to Improve Throughput and Latency", Morgan & Claypool, 2007
- Xtensa Inc, Application Note, "Implementing a Memory-Based Mutex and Barrier Synchronization Library", July 2007
- John Goodacre, http://www.mpsoc-forum.org/2003/slides/MPSoC_ARM_MP_Architecture.pdf, MPSoC – System Architecture, ARM Multiprocessing, ARM Ltd. U.K.
- Page Based Distributed Shared Memory, http://cs.gmu.edu/cne/modules/dsm/yellow/page_dsm.html
- Intel, http://www.intel.com/technology/quickpath/
- UNC Charlotte, http://coitweb.uncc.edu/~abw/parallel/par_prog/resources.htm
- University of California Irvine, http://www.ics.uci.edu/~javid/dsm.html
- Prosenjit Chatterjee, "Formal Specification and Verification of Memory Consistency Models of Shared Memory Multiprocessors", Master of Science Thesis, The University of Utah, 2009
- Kourosh Gharachorloo, Daniel Lenoski, James Laudon, Phillip Gibbons, Anoop Gupta, and John Hennessy, "Memory Consistency and Event Ordering in Scalable Shared-Memory Multiprocessors", Computer Systems Laboratory, Stanford University, CA 94305