CS 704 Advanced Computer Architecture Lecture 31 Memory Hierarchy Design



CS 704 Advanced Computer Architecture, Lecture 31: Memory Hierarchy Design. Cache Performance Enhancement (Reducing Miss Penalty/Rate via Parallelism, and Reducing Hit Time). Prof. Dr. M. Ashraf Chughtai

Today’s Topics

- Recap: Reducing Miss Rate
- Reducing Miss Penalty or Miss Rate using Parallelism
- Reducing Hit Time
- Summary – Cache Optimization

MAC/VU-Advanced Computer Architecture Lecture 31 Memory Hierarchy (7) 2

Recap: 3 C Model and Reducing Miss Rate

- Large block size to reduce compulsory misses
- Large cache size to reduce capacity misses
- Higher associativity to reduce conflict misses

Recap: 3 C Model and Reducing Miss Rate. In addition, we discussed the way-prediction technique. It first checks one section (way) of the cache for a hit and, on a miss, checks the rest of the cache. At the end, we discussed compiler-based techniques for optimizing cache performance, namely loop interchange and blocking.

Recap: 3 C Model and Reducing Miss Rate. Today, we will talk about other ways to enhance cache performance. These methods include:

1. Reducing miss penalty or miss rate via parallelism: overlapping the execution of instructions with activities in the memory hierarchy
2. Reducing the hit time

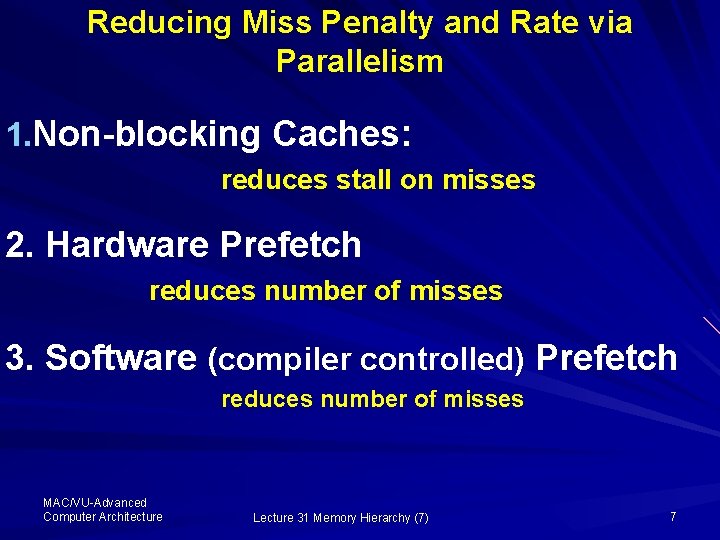

Reducing Miss Penalty or Rate via Parallelism. The basic idea is to reduce the miss penalty or miss rate by allowing multiple outstanding memory operations. This overlaps memory-hierarchy activity with the instruction execution that takes place in the processor. It can be accomplished using three different techniques.

Reducing Miss Penalty and Rate via Parallelism

1. Non-blocking caches: reduce stalls on misses
2. Hardware prefetch: reduces the number of misses
3. Software (compiler-controlled) prefetch: reduces the number of misses

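Non-blocking Caches. In a pipelined computer with out-of-order execution, the CPU need not stall on a data cache miss. Because the instruction and data caches are decoupled, the CPU can keep fetching instructions from the instruction cache while it waits for the data cache to return the missing data.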

Non-blocking Caches. A non-blocking (lockup-free) cache continues to supply cache hits while a miss is being serviced. This is called “hit under miss”.

Non-blocking Caches. “Hit under multiple miss”, or “miss under miss”, goes further. It overlaps several outstanding misses, which lowers the effective miss penalty even more.

Non-blocking Caches: Complexity. Consider a miss to address 1000 followed by a miss to address 1032 while the first miss is still outstanding. The cache controller must now track several misses at once, so its complexity increases.
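The sketch below makes the controller's extra bookkeeping concrete. It is a minimal Python model of a non-blocking cache that keeps a small table of outstanding misses keyed by block address. Several details are illustrative assumptions, not a description of any real controller:

- the class name `NonBlockingCache`
- the 32-byte block size
- the limit of 2 outstanding misses

Such tables are often called miss status holding registers (MSHRs).

```python
class NonBlockingCache:
    """Toy lockup-free cache: tracks outstanding misses per block (MSHR-like)."""

    def __init__(self, block_size=32, max_outstanding=2):
        self.block_size = block_size
        self.max_outstanding = max_outstanding
        self.resident = set()      # block numbers currently in the cache
        self.outstanding = {}      # block number -> addresses waiting for it

    def access(self, addr):
        """Classify one access: 'hit', 'primary miss', 'secondary miss' or 'stall'."""
        block = addr // self.block_size
        if block in self.resident:
            return "hit"                      # served even while misses are pending
        if block in self.outstanding:
            self.outstanding[block].append(addr)
            return "secondary miss"           # merged with the request already in flight
        if len(self.outstanding) >= self.max_outstanding:
            return "stall"                    # no free entry: the cache must block
        self.outstanding[block] = [addr]
        return "primary miss"                 # a new memory request is issued

    def fill(self, block):
        """Memory returns `block`; every access waiting on it completes."""
        self.resident.add(block)
        return self.outstanding.pop(block)


cache = NonBlockingCache()
cache.resident.add(0)                 # assume block 0 (addresses 0-31) is cached
for addr in (1000, 4, 1032, 1020, 2000):
    print(addr, cache.access(addr))
print("filled block 31 for", cache.fill(1000 // 32))
```

With 32-byte blocks, addresses 1000 and 1032 fall in different blocks (31 and 32), so the second access is a miss under miss. The access to 4 is a hit under miss. Address 1020 shares block 31 with 1000 and is merged into that pending miss. The access to 2000 stalls because both table entries are already in use.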

Non-blocking Caches. To benefit, the memory system must be able to service multiple misses at the same time. The Pentium Pro is an example of a processor that supports multiple outstanding misses.

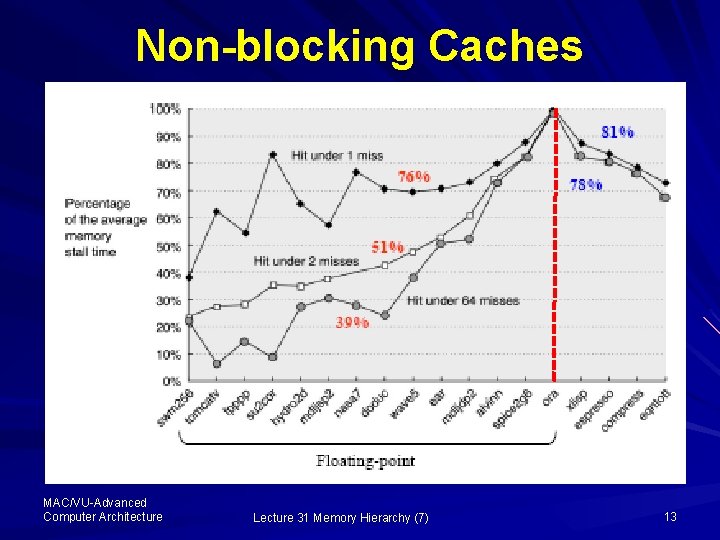

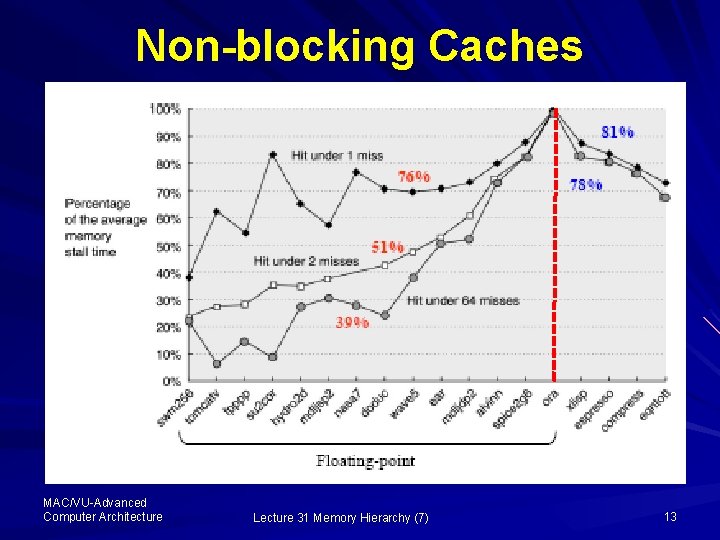

Non-blocking Caches

Hardware Prefetch: Reduces Misses. On a miss, the hardware fetches 2 blocks. The requested block is placed in the cache, and the next consecutive block is placed in a “stream buffer”. A later request for that block can then be satisfied if it is available in the stream buffer.

Hardware Prefetch: Reduces Misses. If the requested block is present in the stream buffer:

- the original cache request is cancelled,
- the block is read from the stream buffer, and
- the next prefetch request is issued.

Only requests that miss in both the cache and the stream buffer go to memory as ‘demand misses’.
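The stream-buffer mechanism can be modelled in a few lines. This Python sketch makes the following assumptions:

- a direct-mapped cache of 8 blocks of 16 bytes each
- a single stream buffer holding the next 4 sequential blocks
- hypothetical names for illustration: the class `StreamBufferCache` and its counters

It is a simplified model, not a cycle-accurate one.

```python
from collections import deque


class StreamBufferCache:
    """Direct-mapped cache with one sequential stream buffer (simplified sketch)."""

    def __init__(self, num_blocks=8, block_size=16, depth=4):
        self.num_blocks = num_blocks
        self.block_size = block_size
        self.depth = depth
        self.lines = [None] * num_blocks   # block number held by each cache line
        self.stream = deque()              # prefetched block numbers, head on the left
        self.hits = self.stream_hits = self.demand_misses = 0

    def access(self, addr):
        block = addr // self.block_size
        line = block % self.num_blocks
        if self.lines[line] == block:
            self.hits += 1
        elif self.stream and self.stream[0] == block:
            # Found in the stream buffer: cancel the memory request, move the
            # block into the cache and prefetch the next sequential block.
            self.stream_hits += 1
            self.stream.popleft()
            self.lines[line] = block
            self.stream.append((self.stream[-1] if self.stream else block) + 1)
        else:
            # Demand miss: fetch from memory and restart the stream after it.
            self.demand_misses += 1
            self.lines[line] = block
            self.stream = deque(range(block + 1, block + 1 + self.depth))


cache = StreamBufferCache()
for addr in range(0, 1024, 4):      # sequential walk over 64 blocks of 16 bytes
    cache.access(addr)
print(cache.hits, cache.stream_hits, cache.demand_misses)
```

For a purely sequential walk, only the very first access is a demand miss, because every later block is found at the head of the stream buffer. A non-sequential pattern gets no help, since the buffer only prefetches the next consecutive blocks. Walking the same blocks in reverse is one example.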

Hardware Prefetch: Reduces Misses. Results reported by Jouppi in 1990 for stream buffers:

- 15% to 25% of misses
- 4-block stream buffer: 43% for stream fetching; 50% at the same address
- 16-block stream buffer: 72% of misses

Hardware Prefetch: Reduces Misses. Stream buffers also work efficiently for data caches when the hardware identifies streams of accesses (Palacharla & Kessler, 1994).

Hardware Prefetch: Reduces Misses. With 8 stream buffers, Palacharla & Kessler captured 50% to 70% of all misses for a processor with 64 KB, 4-way set-associative caches.

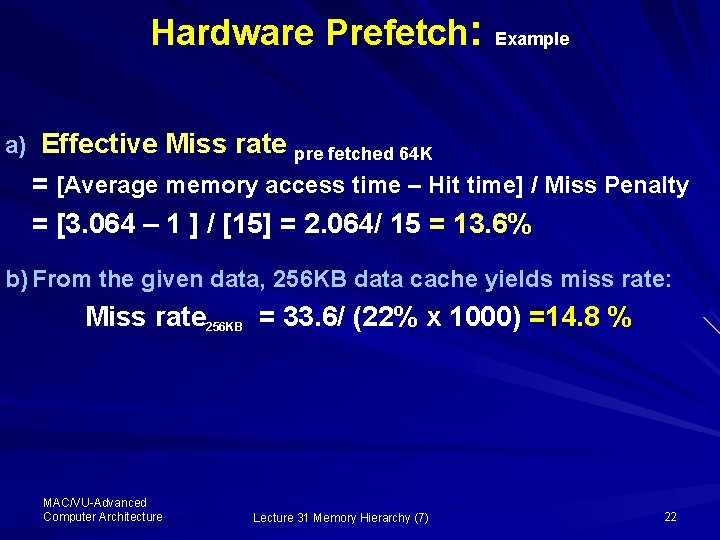

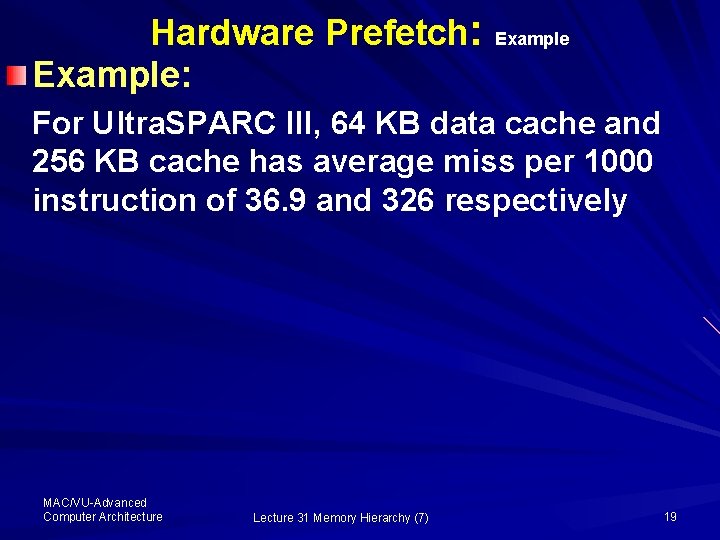

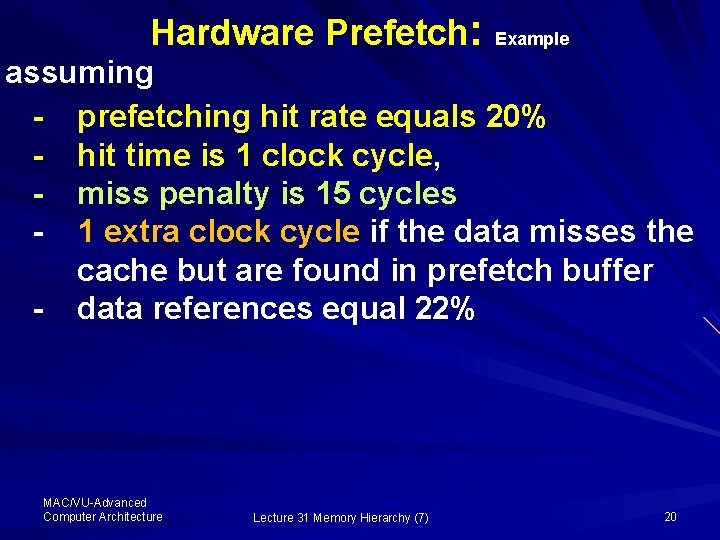

Hardware Prefetch: Example. For the UltraSPARC III, a 64 KB data cache averages 36.9 misses per 1000 instructions, and a 256 KB data cache averages 32.6. What is the effective miss rate of the 64 KB cache with prefetching, and how does it compare with the miss rate of the 256 KB cache?

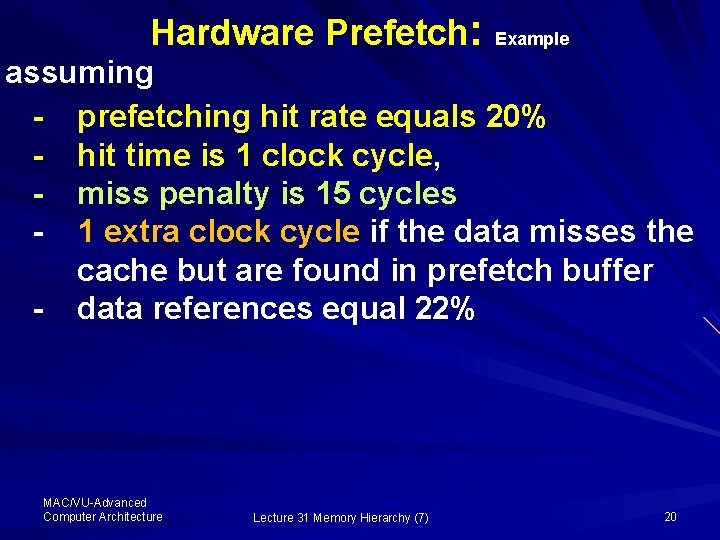

Hardware Prefetch: Example. Assume:

- the prefetch hit rate (fraction of misses found in the prefetch buffer) is 20%,
- the hit time is 1 clock cycle,
- the miss penalty is 15 clock cycles,
- 1 extra clock cycle is needed if the data miss the cache but are found in the prefetch buffer, and
- data references are 22% of instructions (22 per 100 instructions).

![Hardware Prefetch: Example, slide 21](https://slidetodoc.com/presentation_image/432929bb204bda5bd52ba02432ef765c/image-21.jpg)

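Hardware Prefetch: Example

1: Miss rate of the 64 KB data cache:

$$\text{Miss rate} = \frac{\text{Misses per 1000 instructions}/1000}{\text{Data references per instruction}} = \frac{36.9/1000}{22/100} = \frac{36.9}{220} \approx 16.7\%$$

2: Average memory access time with prefetching:

$$\text{AMAT}_{\text{prefetch}} = \text{Hit time} + \text{Miss rate} \times \text{Prefetch hit rate} \times 1 + \text{Miss rate} \times (1 - \text{Prefetch hit rate}) \times \text{Miss penalty}$$

$$\text{AMAT}_{\text{prefetch}} = 1 + (16.7\% \times 20\% \times 1) + (16.7\% \times 80\% \times 15) \approx 3.046 \text{ clock cycles}$$

The value 3.046 uses the unrounded miss rate 36.9/220. With the rounded 16.7%, the result is about 3.037.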

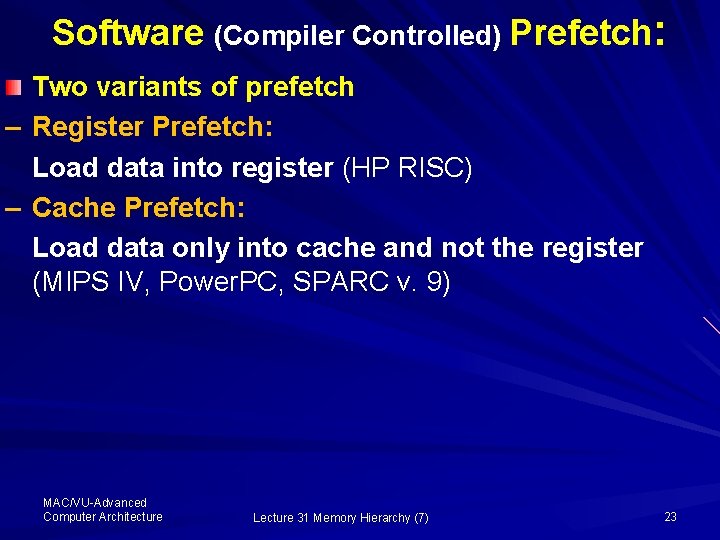

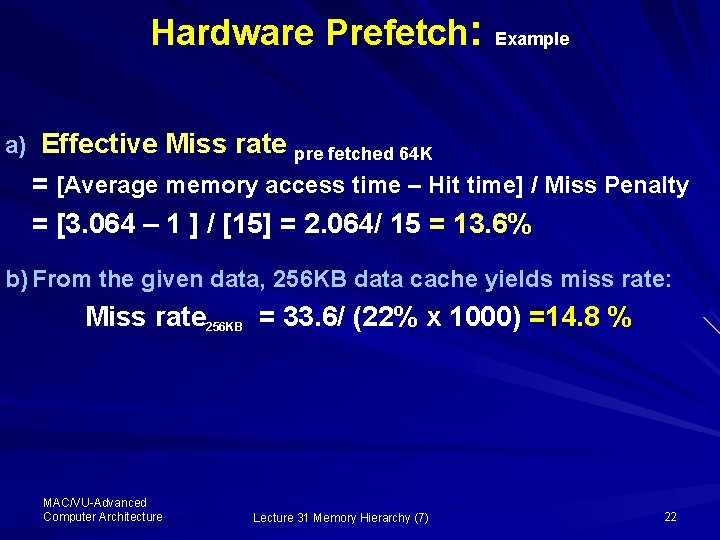

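Hardware Prefetch: Example

a) Effective miss rate of the 64 KB cache with prefetching:

$$\text{Effective miss rate} = \frac{\text{AMAT}_{\text{prefetch}} - \text{Hit time}}{\text{Miss penalty}} = \frac{3.046 - 1}{15} = \frac{2.046}{15} \approx 13.6\%$$

b) From the given data, the 256 KB data cache (without prefetching) yields:

$$\text{Miss rate}_{256\,\text{KB}} = \frac{32.6}{22\% \times 1000} = \frac{32.6}{220} \approx 14.8\%$$

Since 13.6% < 14.8%, the 64 KB cache with prefetching has a lower effective miss rate than a 256 KB cache without prefetching.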
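The arithmetic of this example can be checked with a short Python script. The function names are hypothetical. The inputs are the ones assumed in the example:

- 36.9 and 32.6 misses per 1000 instructions
- 22% data references
- 20% prefetch hit rate
- 1-cycle hit time
- 1 extra cycle on a prefetch-buffer hit
- 15-cycle miss penalty

```python
def miss_rate(misses_per_1000_instr, data_refs_per_instr):
    """Misses per data reference, from misses per 1000 instructions."""
    return (misses_per_1000_instr / 1000) / data_refs_per_instr


def amat_with_prefetch(hit_time, mr, prefetch_hit_rate, prefetch_extra, miss_penalty):
    """Hit time + misses caught by the prefetch buffer + misses that go to memory."""
    return (hit_time
            + mr * prefetch_hit_rate * prefetch_extra
            + mr * (1 - prefetch_hit_rate) * miss_penalty)


HIT_TIME, MISS_PENALTY = 1, 15
mr_64k = miss_rate(36.9, 0.22)
amat_64k = amat_with_prefetch(HIT_TIME, mr_64k, 0.20, 1, MISS_PENALTY)
effective_mr_64k = (amat_64k - HIT_TIME) / MISS_PENALTY
mr_256k = miss_rate(32.6, 0.22)

print(f"64 KB miss rate:       {mr_64k:.1%}")
print(f"AMAT with prefetching: {amat_64k:.3f} cycles")
print(f"Effective miss rate:   {effective_mr_64k:.1%}")
print(f"256 KB miss rate:      {mr_256k:.1%}")
```

Python's `.1%` formatting rounds 36.9/220 ≈ 0.1677 up, so it shows 16.8% where the slide writes 16.7%. The other results match the slide: about 3.046 cycles, 13.6% and 14.8%.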

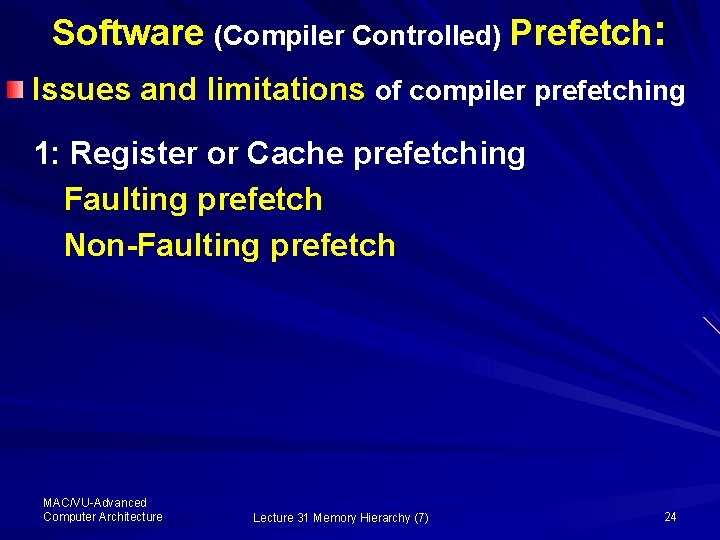

Software (Compiler Controlled) Prefetch: There are two variants of prefetch:

- Register prefetch: loads data into a register (HP PA-RISC)
- Cache prefetch: loads data only into the cache, not into a register (MIPS IV, PowerPC, SPARC V9)

Software (Compiler Controlled) Prefetch: Issues and limitations of compiler prefetching

1: Register or cache prefetching. Either kind can be faulting or non-faulting. A faulting prefetch can raise an exception on a virtual address fault or protection violation. A non-faulting prefetch simply turns into a no-op instead.

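Software (Compiler Controlled) Prefetch:

2: Effective compiler prefetching is semantically invisible. A non-faulting cache prefetch does not change register or memory contents, and it cannot cause virtual memory faults.

3: Prefetching makes sense only if the caches do not stall while prefetched data are outstanding, i.e., the cache must be non-blocking.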
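A toy Python model can illustrate why non-faulting cache prefetches are safe for a compiler to insert. The following are all assumptions for illustration:

- the page and block sizes
- the class `ToyMemorySystem`
- the exception `PageFault`

A normal load from an unmapped page raises an exception, just like a faulting register prefetch. The prefetch, by contrast, silently becomes a no-op. A loop that prefetches one block ahead can therefore run past the end of its data without faulting.

```python
PAGE_SIZE = 4096   # assumed page size in bytes
BLOCK_SIZE = 64    # assumed cache block size in bytes


class PageFault(Exception):
    """Raised by a normal (faulting) load from an unmapped page."""


class ToyMemorySystem:
    def __init__(self, mapped_pages):
        self.mapped_pages = set(mapped_pages)
        self.cached_blocks = set()

    def _mapped(self, addr):
        return addr // PAGE_SIZE in self.mapped_pages

    def load(self, addr):
        # A normal load behaves like a faulting register prefetch.
        if not self._mapped(addr):
            raise PageFault(hex(addr))
        self.cached_blocks.add(addr // BLOCK_SIZE)
        return 0  # placeholder data value

    def prefetch(self, addr):
        # Non-faulting cache prefetch: it only warms the cache and quietly
        # becomes a no-op when the address would fault.
        if self._mapped(addr):
            self.cached_blocks.add(addr // BLOCK_SIZE)
            return True
        return False


mem = ToyMemorySystem(mapped_pages=[0])
# The loop prefetches one block ahead; its last prefetch falls off the mapped page.
for addr in range(0, PAGE_SIZE, BLOCK_SIZE):
    mem.prefetch(addr + BLOCK_SIZE)
    mem.load(addr)
```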

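Software (Compiler Controlled) Prefetch

4: Issuing the prefetch instructions incurs instruction overhead. The compiler must therefore ensure that this overhead does not exceed the benefit, for example by prefetching only references that are likely to miss.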
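One way to picture where a compiler might issue prefetches is to generate the operation sequence for a simple loop. This Python sketch is hypothetical. Its assumptions are:

- the helper names
- a rule of thumb for the prefetch distance: miss latency divided by cycles per loop iteration, rounded up
- one prefetch per cache block, which limits the instruction overhead

These are not a specific compiler's algorithm. Python itself cannot emit prefetch instructions; the sketch only models where they would be placed.

```python
import math


def prefetch_distance(miss_latency_cycles, cycles_per_iteration):
    """Assumed rule of thumb: run far enough ahead to hide the miss latency."""
    return math.ceil(miss_latency_cycles / cycles_per_iteration)


def prefetching_loop(n, distance, elems_per_block):
    """Operation sequence for `for i in range(n): use(a[i])` with prefetches."""
    ops = []
    # Prologue: prefetch the blocks touched by the first `distance` iterations.
    for i in range(0, min(distance, n), elems_per_block):
        ops.append(("prefetch", i))
    for i in range(n):
        j = i + distance
        # One prefetch per cache block (not per element) limits instruction overhead.
        if j < n and j % elems_per_block == 0:
            ops.append(("prefetch", j))
        ops.append(("use", i))
    return ops


d = prefetch_distance(miss_latency_cycles=100, cycles_per_iteration=12)
ops = prefetching_loop(n=64, distance=d, elems_per_block=8)
prefetches = sum(1 for op, _ in ops if op == "prefetch")
print("distance:", d, "prefetch instructions:", prefetches, "of", len(ops), "operations")
```

Prefetching once per block rather than once per element cuts the overhead here from 64 prefetch instructions to 8. Every element is still in a block prefetched before its first use.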

Summary: Reducing Cache Miss Penalty or Miss Rate via Parallelism. Non-blocking caches exploit instruction-level parallelism to overlap misses with useful work. This requires more bandwidth behind the cache to service multiple outstanding misses.

Summary: Reducing Cache Miss Penalty or Miss Rate via Parallelism. Hardware and software prefetching bring data into the cache before they are requested, reducing the number of misses.

Final Component to Reduce Average Memory Access Time: Hit Time. So far we have discussed:

- five techniques to reduce the miss penalty,
- five methods to reduce the miss rate, and
- three approaches to reduce the miss penalty or miss rate via parallelism.

The remaining component of the average memory access time is the hit time.

Reducing Hit Time. Hit time is critical because it affects the clock rate of the processor: the cache access time often limits the clock cycle. The four commonly used techniques are:

1. Small and simple caches
2. Avoiding address translation during indexing
3. Pipelined cache access
4. Trace caches

1: Reducing hit time: Small and Simple Caches. While discussing cache design, we observed that the most time-consuming operation in a cache hit is comparing the long address tag. This operation requires a larger circuit, which is generally slower than a smaller one. An obvious way to keep the hit time small is therefore to keep the cache small and simple.

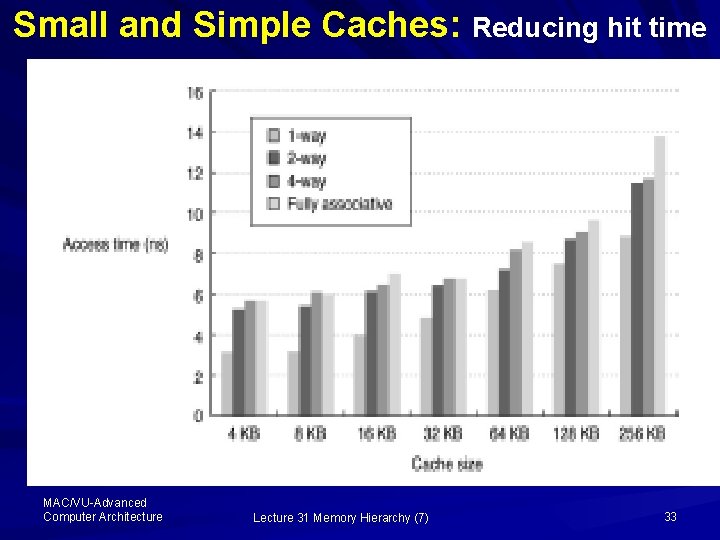

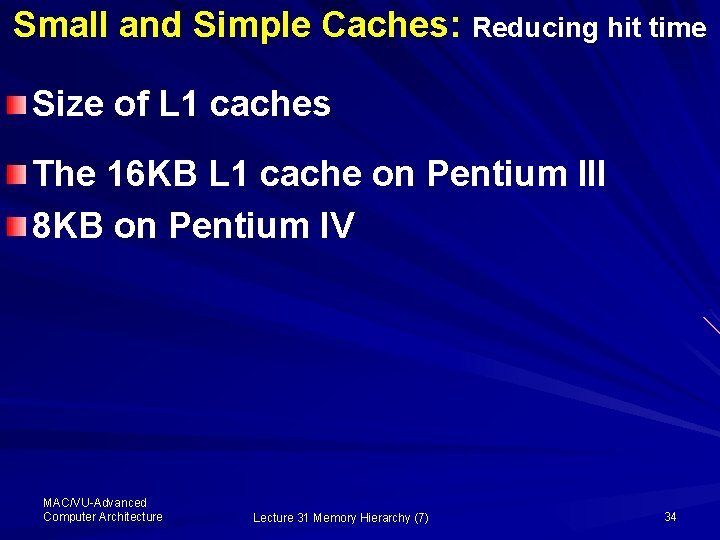

Small and Simple Caches: Reducing hit time. The cache should be kept:

- small, so that it fits on the same chip as the processor and avoids the time penalty of going off chip; and
- simple, such as direct-mapped, so that the tag comparison can be overlapped with data transmission.

The graph shows how cache size and complexity (i.e., associativity and number of read/write ports) affect access time.
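The cost of the tag comparison can be made concrete by computing how an address is split for a given cache. This Python sketch assumes 32-bit addresses and power-of-two sizes; the function `address_fields` is hypothetical. Compare a 16 KB direct-mapped cache with a 16 KB 4-way set-associative cache. Higher associativity means wider tags and one comparator per way, which is part of why direct-mapped caches hit faster.

```python
def address_fields(cache_bytes, block_bytes, ways, address_bits=32):
    """Tag/index/offset widths for a set-associative cache (power-of-two sizes)."""
    sets = cache_bytes // (block_bytes * ways)
    offset_bits = block_bytes.bit_length() - 1   # log2(block size)
    index_bits = sets.bit_length() - 1           # log2(number of sets)
    tag_bits = address_bits - index_bits - offset_bits
    return {"sets": sets, "offset": offset_bits, "index": index_bits,
            "tag": tag_bits, "tag_comparators": ways}


for ways in (1, 4):
    print(f"16 KB, 32 B blocks, {ways}-way:", address_fields(16 * 1024, 32, ways))
```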

Small and Simple Caches: Reducing hit time

Small and Simple Caches: Reducing hit time. The need for a fast hit also limits the size of L1 caches. The L1 data cache is 16 KB on the Pentium III but only 8 KB on the Pentium 4.

2: Reducing hit time: Avoiding Address Translation During Indexing. Even a small and simple cache must translate the virtual address from the CPU into a physical address, normally through the Translation Look-aside Buffer (TLB).

2: Reducing hit time: Avoiding Address Translation During Indexing. In a virtual memory system there are two levels of address mapping:

- virtual memory address to main memory (physical) address, and
- main memory address to cache.

2: Reducing hit time: Avoiding Address Translation. One option is to use the virtual address directly for the cache. Caches indexed and tagged this way are called virtual caches, as opposed to physical caches, which use physical addresses. A virtual cache eliminates address translation from a cache hit, following Amdahl's law (make the common case fast). With virtual caches, however, we have to consider two issues.

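2: Reducing hit time: Avoiding Address Translation. Limitation: virtually addressed caches are not always used. The reasons are:

- Protection: page-level protection is checked as part of the virtual-to-physical address translation, and it must still be enforced.
- Page address: each process maps its virtual pages to its own physical pages.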

2: Reducing hit time: Avoiding Address Translation

- Physical address difference: on a process switch, the same virtual address refers to a different physical address, so a virtual cache must be flushed.
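A small Python model shows why a purely virtual cache must be flushed on a process switch. The addresses, page tables and class name are made up for illustration. To keep the sketch short, translation is modelled per address rather than per page. Two processes use the same virtual address 0x1000 for different physical locations. Without a flush, the second process hits on the first process's data.

```python
class VirtualCache:
    """Toy cache indexed and tagged by virtual address only."""

    def __init__(self, flush_on_switch):
        self.flush_on_switch = flush_on_switch
        self.lines = {}                       # virtual address -> cached value

    def read(self, va, page_table, memory):
        if va in self.lines:
            return self.lines[va]             # hit: no translation needed
        value = memory[page_table[va]]        # miss: translate, then fetch
        self.lines[va] = value
        return value

    def context_switch(self):
        if self.flush_on_switch:
            self.lines.clear()


memory = {0x8000: "A's data", 0x9000: "B's data"}   # physical memory (hypothetical)
proc_a = {0x1000: 0x8000}                           # process A's mapping (hypothetical)
proc_b = {0x1000: 0x9000}                           # same VA, different PA

for flush in (False, True):
    cache = VirtualCache(flush_on_switch=flush)
    cache.read(0x1000, proc_a, memory)                # process A runs first
    cache.context_switch()
    print("flush" if flush else "no flush", "->", cache.read(0x1000, proc_b, memory))
```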

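2: Reducing hit time: Avoiding Address Translation

- Aliases (synonyms): two different virtual addresses, for example in two processes sharing data, may map to the same physical address. The same data can then be cached in two places.
- The hardware solution to synonyms is illustrated for a 2-way set-associative cache: the hardware guarantees that every cache block has a unique physical address.
- The software solution is to force aliases to share some address bits (page coloring).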
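Whether synonyms can arise depends on how many set-index bits lie above the page offset. This Python sketch computes those bits and checks the page-coloring condition: two aliases must agree in those bits, which guarantees they map to the same set. The function names are hypothetical, and all sizes must be powers of two.

```python
def alias_bits(cache_bytes, ways, page_bytes):
    """Set-index bits taken from the virtual page number (0 means no synonym problem)."""
    way_bytes = cache_bytes // ways              # bytes covered by index + offset bits
    return max(0, (way_bytes.bit_length() - 1) - (page_bytes.bit_length() - 1))


def same_color(va1, va2, cache_bytes, ways, page_bytes):
    """Page-coloring check: do two aliases agree in the index bits above the page offset?"""
    way_bytes = cache_bytes // ways
    return (va1 % way_bytes) // page_bytes == (va2 % way_bytes) // page_bytes


print(alias_bits(64 * 1024, 2, 8 * 1024))    # 64 KB, 2-way, 8 KB pages
print(alias_bits(16 * 1024, 4, 4 * 1024))    # 16 KB, 4-way, 4 KB pages
print(same_color(0x2000, 0xA000, 64 * 1024, 2, 8 * 1024))
```

Consider a 16 KB 4-way cache with 4 KB pages. It indexes using page-offset bits only, so it can be indexed with the virtual address without synonym problems. The 64 KB 2-way cache has 2 index bits above the page offset, so aliases must agree in those bits.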

3: Reducing hit time: Pipelined Cache Access. Pipelining the cache access spreads the latency of a first-level cache hit over multiple clock cycles. The result is a fast clock cycle time, which increases the bandwidth of instruction fetch, at the cost of hits that take more clock cycles.

3: Reducing hit time: Pipelined Cache Access. For example, accessing the instruction cache takes:

- 1 clock cycle on the Pentium,
- 2 clock cycles on the Pentium Pro through Pentium III, and
- 4 clock cycles on the Pentium 4.
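The trade-off of pipelining the cache can be sketched numerically. This Python model assumes that the cache access splits into equal stages with no latch overhead, which is an idealisation. Under that assumption, the clock period shrinks by the number of stages, while each hit takes that many cycles. The function names and the 2 ns access time are invented. The stage counts 1, 2 and 4 only echo the cycle counts above.

```python
def unpipelined_time(n_accesses, access_ns):
    """Clock period equals the full cache access time; one access per cycle."""
    return n_accesses * access_ns


def pipelined_time(n_accesses, access_ns, stages):
    """Access split into equal stages (latch overhead ignored): the period shrinks,
    each hit takes `stages` cycles, but a new access can start every cycle."""
    if n_accesses == 0:
        return 0.0
    period = access_ns / stages
    return (stages + n_accesses - 1) * period


for stages in (1, 2, 4):
    print(stages, "stage(s):", pipelined_time(100, 2.0, stages), "ns for 100 hits")
```

A single hit still takes 2 ns in every case, now spread over more (shorter) cycles. What improves is the rate at which back-to-back accesses complete.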

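4: Reducing hit time: Trace Caches. Multiple-issue processors need to fetch more than one instruction per cycle. A trace cache finds a dynamic sequence of executed instructions, including taken branches, and loads it into a cache block. Ordinary caches instead store instructions in static address order.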

4: Reducing hit time: Trace Caches. A trace cache combines branch prediction and instruction prefetching, because the branch prediction is folded into the cache. Each block holds only instructions that are actually executed, so there are no wasted words and conflicts are avoided.

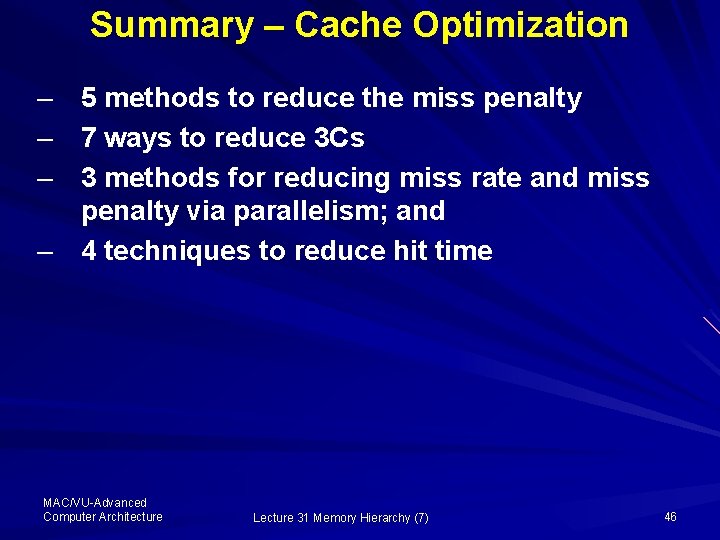

4: Reducing hit time: Trace Caches. Demerits:

- A trace cache complicates the address mapping. Addresses are no longer aligned to power-of-2 multiples of the word size, so a more elaborate address-mapping mechanism is required.
- The same instruction may be stored multiple times when it appears in more than one trace.
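How a trace cache groups instructions can be sketched in Python. The following are assumptions for illustration:

- the input format, a list of `(address, is_branch)` pairs
- the limits of 6 instructions or 3 branches per trace
- the function name `build_traces`

Two executions of the same if/else code produce two traces. The instructions common to both paths are stored twice, which is the duplication demerit listed for trace caches.

```python
from collections import Counter


def build_traces(stream, max_len=6, max_branches=3):
    """Split a dynamic stream of (address, is_branch) pairs into traces."""
    traces, current, branches = [], [], 0
    for pc, is_branch in stream:
        current.append(pc)
        branches += int(is_branch)
        # A trace ends when it is full or has used up its branch budget.
        if len(current) == max_len or branches == max_branches:
            traces.append(tuple(current))
            current, branches = [], 0
    if current:
        traces.append(tuple(current))
    return traces


# Hypothetical code: 0x04 is a conditional branch, 0x0C jumps over the else
# part to 0x14, and 0x18 is a loop branch. Each path is executed once.
not_taken = [(0x00, False), (0x04, True), (0x08, False),
             (0x0C, True), (0x14, False), (0x18, True)]
taken = [(0x00, False), (0x04, True), (0x10, False), (0x14, False), (0x18, True)]

traces = build_traces(not_taken + taken)
copies = Counter(pc for trace in set(traces) for pc in trace)
for trace in traces:
    print([hex(pc) for pc in trace])
print("stored twice:", sorted(hex(pc) for pc, n in copies.items() if n > 1))
```

The second trace places 0x10 directly after 0x04 because it follows the dynamic path. Its blocks are therefore not aligned to static addresses.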

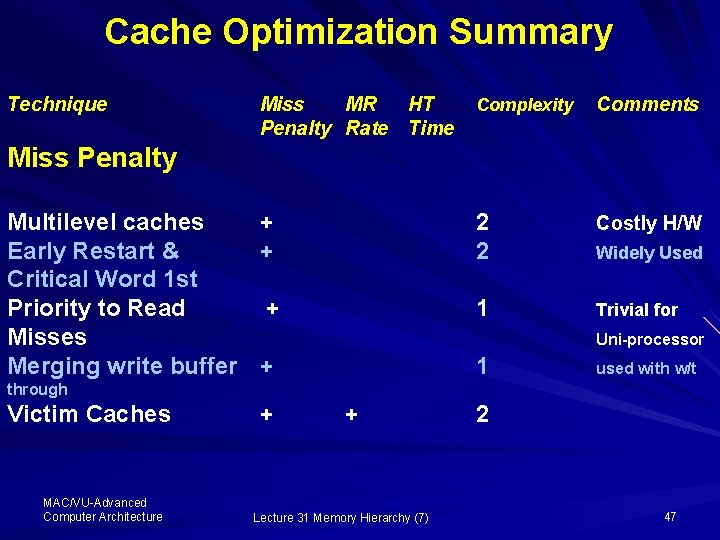

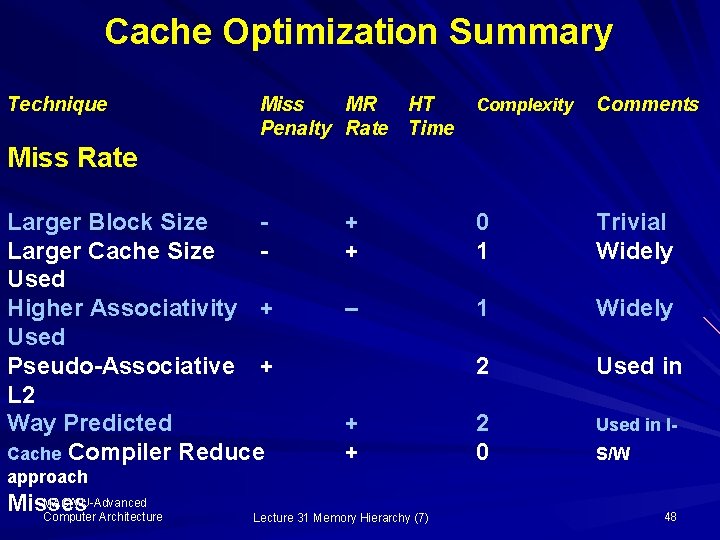

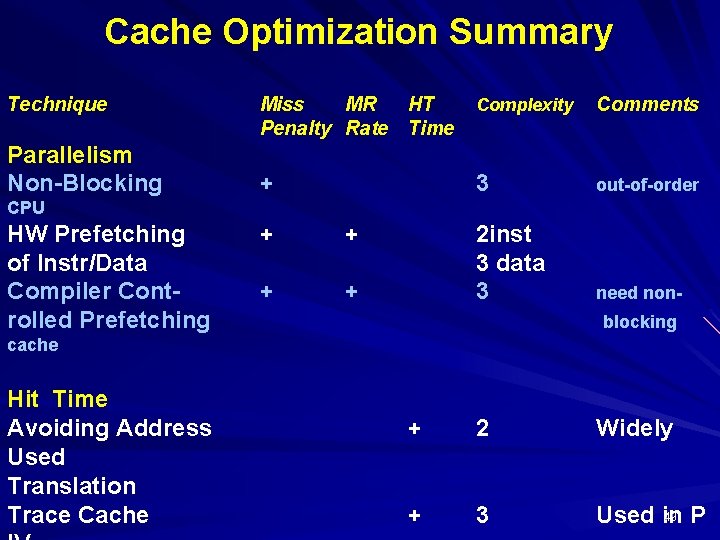

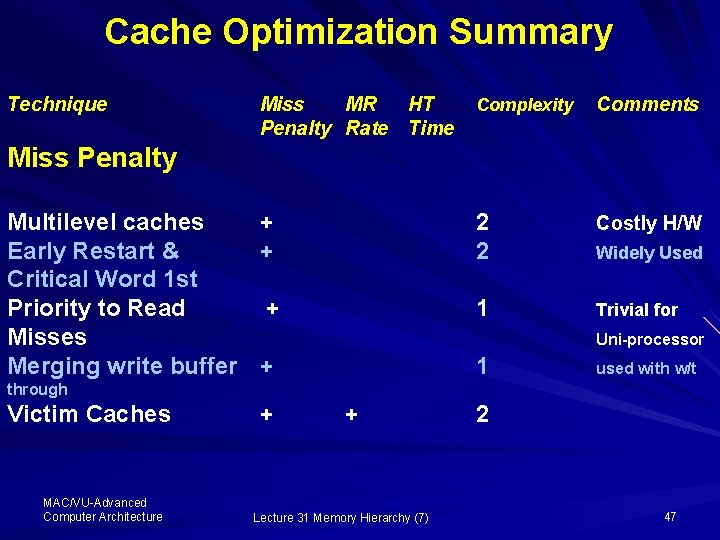

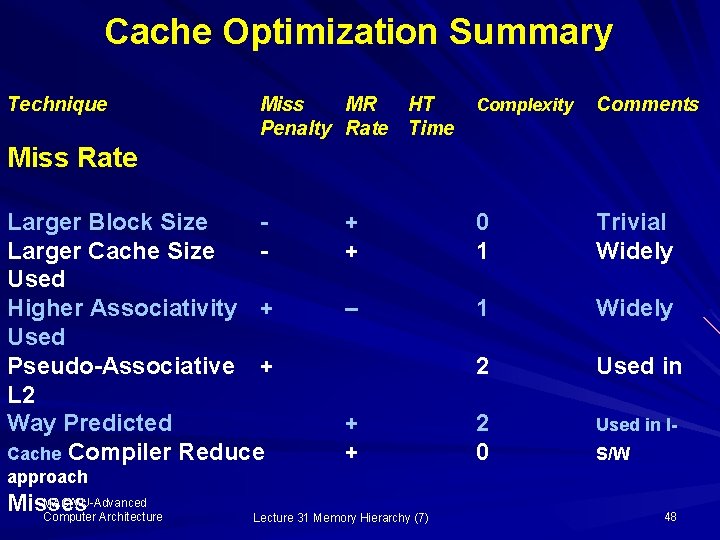

Summary – Cache Optimization

- 5 methods to reduce the miss penalty
- 7 ways to reduce the 3 Cs
- 3 methods for reducing miss rate and miss penalty via parallelism, and
- 4 techniques to reduce hit time

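Cache Optimization Summary. In the table, + means the technique improves the factor and – means it hurts it. Hardware complexity ranges from 0 (easiest) to 3 (hardest).

| Technique | Miss penalty | Miss rate | Hit time | HW complexity | Comments |
|---|---|---|---|---|---|
| *Miss penalty* | | | | | |
| Multilevel caches | + | | | 2 | Costly H/W |
| Early restart & critical word 1st | + | | | 2 | Widely used |
| Priority to read misses | + | | | 1 | Trivial for uni-processor |
| Merging write buffer | + | | | 1 | Used with write-through |
| Victim caches | + | + | | 2 | |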

Cache Optimization Summary (continued)

| Technique | Miss penalty | Miss rate | Hit time | HW complexity | Comments |
|---|---|---|---|---|---|
| *Miss rate* | | | | | |
| Larger block size | – | + | | 0 | Trivial |
| Larger cache size | | + | | 1 | Widely used |
| Higher associativity | | + | | 1 | Widely used |
| Pseudo-associative | | + | | 2 | Used in L2 |
| Way-predicted cache | | + | | 2 | Used in I-… |
| Compiler techniques to reduce misses | | + | | 0 | S/W approach |

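Cache Optimization Summary (continued)

| Technique | Miss penalty | Miss rate | Hit time | HW complexity | Comments |
|---|---|---|---|---|---|
| *Parallelism* | | | | | |
| Non-blocking caches | + | | | 3 | Used with out-of-order CPUs |
| HW prefetching of instructions/data | + | + | | 2 (instr.), 3 (data) | |
| Compiler-controlled prefetching | + | + | | 3 | Needs non-blocking cache |
| *Hit time* | | | | | |
| Avoiding address translation | | | + | 2 | Widely used |
| Trace cache | | | + | 3 | Used in P… |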

Assalam-o-Alaikum and Allah Hafiz (peace be upon you, and may God protect you)