Recap 1: Complex Instruction Set Computing (CISC)


- Slides: 52

Recap 1

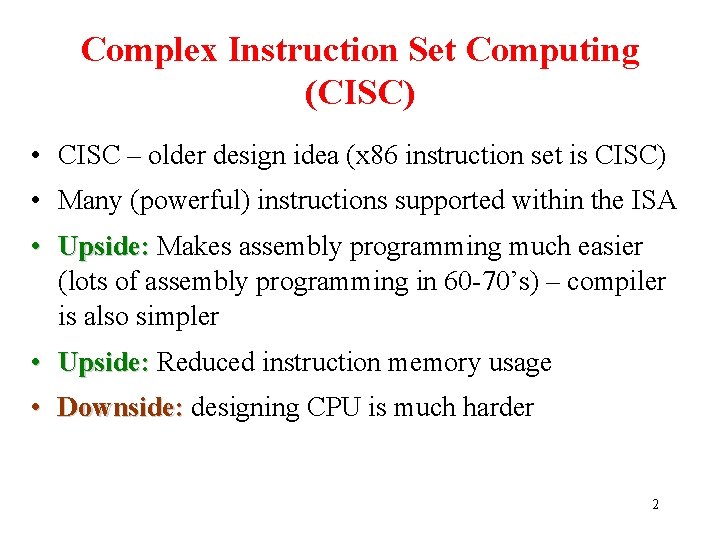

Complex Instruction Set Computing (CISC)

- CISC: the older design idea (the x86 instruction set is CISC)
- Many powerful instructions are supported within the ISA
- Upside: makes assembly programming much easier (there was a lot of assembly programming in the 1960s and 1970s), and the compiler is also simpler
- Upside: reduced instruction memory usage
- Downside: designing the CPU is much harder

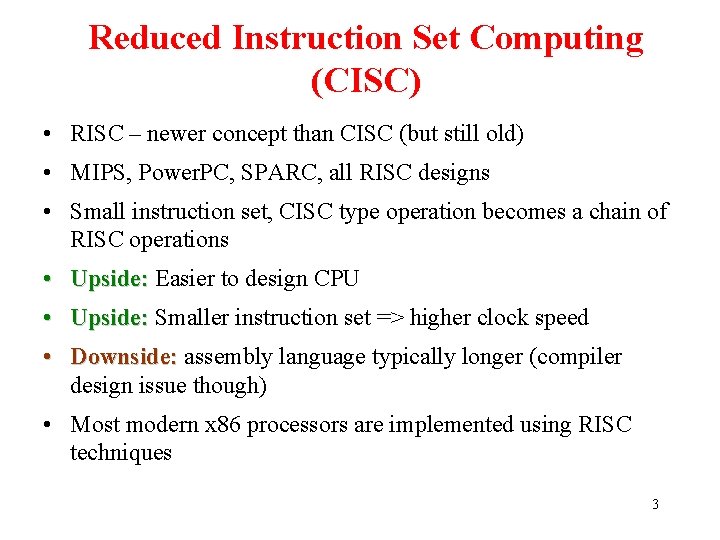

Reduced Instruction Set Computing (RISC)

- RISC: a newer concept than CISC (but still old)
- MIPS, PowerPC and SPARC are all RISC designs
- Small instruction set: a CISC-style operation becomes a chain of RISC operations
- Upside: the CPU is easier to design
- Upside: a smaller, simpler instruction set allows a higher clock speed
- Downside: assembly programs are typically longer (though this is mostly a compiler design issue)
- Most modern x86 processors are implemented using RISC techniques

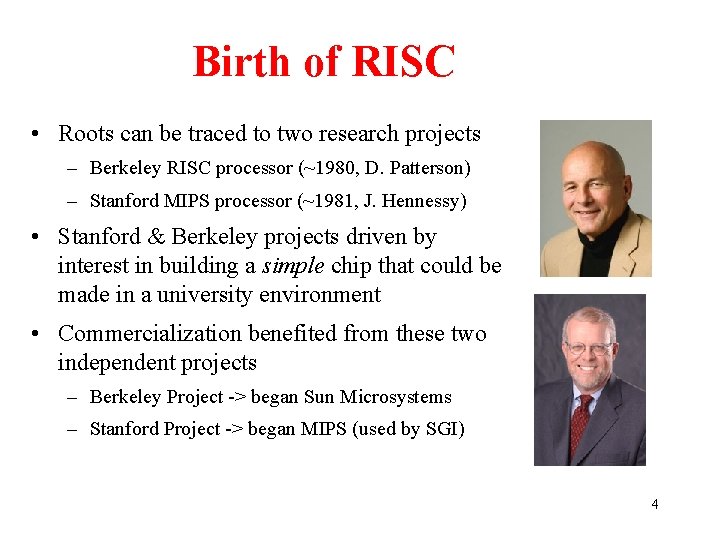

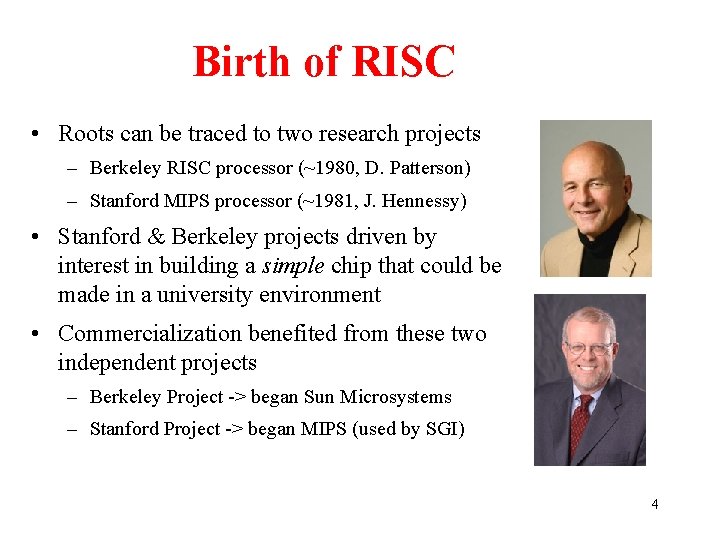

Birth of RISC

- Roots can be traced to two research projects:
  - Berkeley RISC processor (~1980, D. Patterson)
  - Stanford MIPS processor (~1981, J. Hennessy)
- Both projects were driven by interest in building a simple chip that could be made in a university environment
- Commercialization benefited from these two independent projects:
  - Berkeley project -> basis of Sun Microsystems' SPARC architecture
  - Stanford project -> MIPS Computer Systems (MIPS processors were later used by SGI)

Who "won"?

- Modern x86 processors are RISC-CISC hybrids:
  - The x86 ISA is translated in hardware into simpler, RISC-like internal operations
  - The designs are very complicated, with lots of scheduling hardware
- MIPS, Sun SPARC and DEC Alpha are much truer implementations of the RISC ideal
- A modern test of how RISC-like a design is: is it a load/store architecture, i.e., is memory accessed only through explicit LOAD and STORE instructions?

Modern RISC processors

- Complexity has nonetheless increased significantly
- Superscalar execution (where the CPU has multiple functional units of the same type, e.g. two add units) requires complex circuitry to control the scheduling of operations
- What if we could remove the scheduling complexity by using a smart compiler?

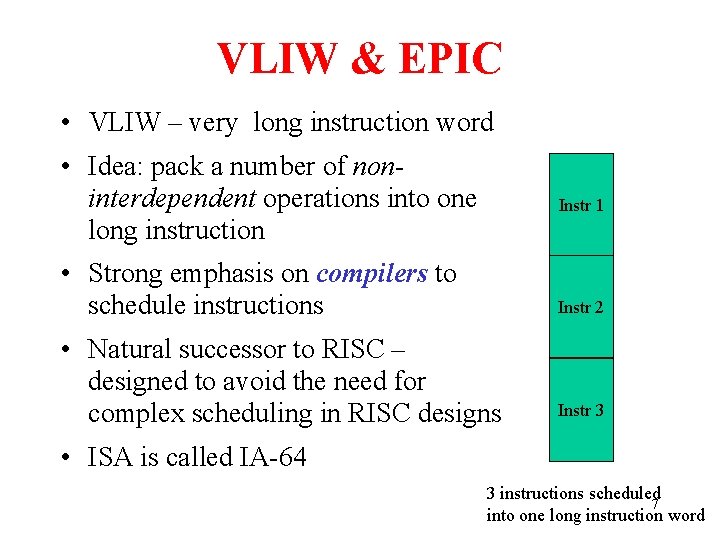

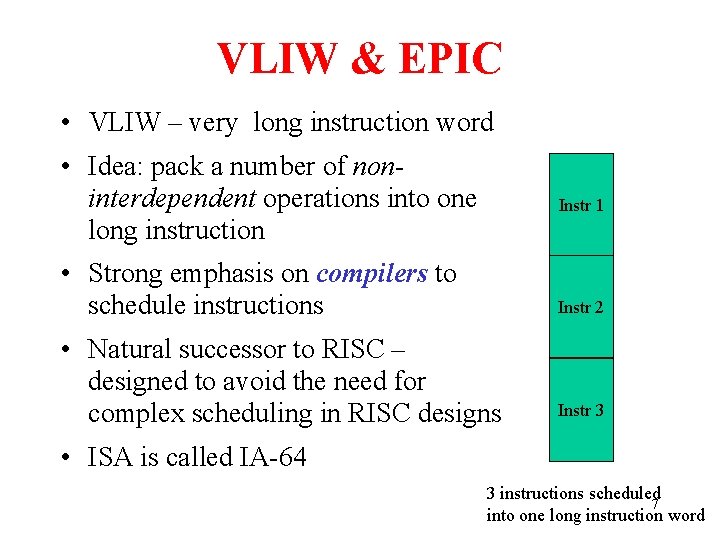

VLIW & EPIC

- VLIW: very long instruction word
- Idea: pack a number of non-interdependent operations into one long instruction
- Strong emphasis on the compiler to schedule instructions
- A natural successor to RISC, designed to avoid the need for complex scheduling hardware in RISC designs
- The EPIC ISA is called IA-64

(Figure: Instr 1, Instr 2 and Instr 3, three instructions scheduled into one long instruction word.)

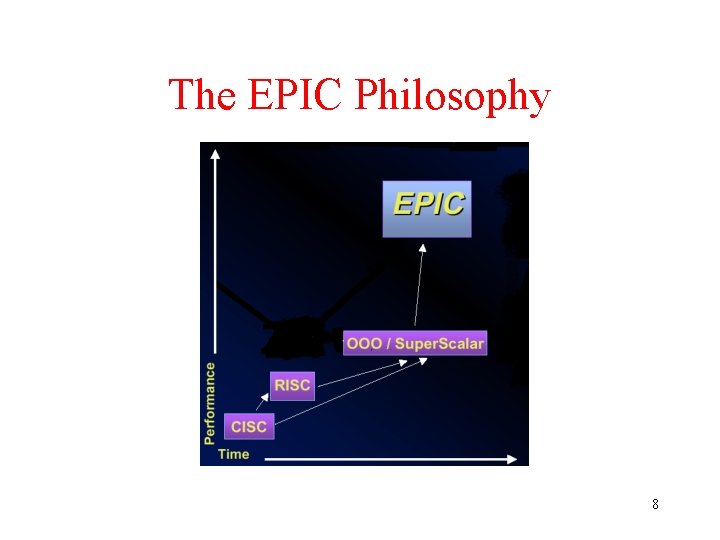

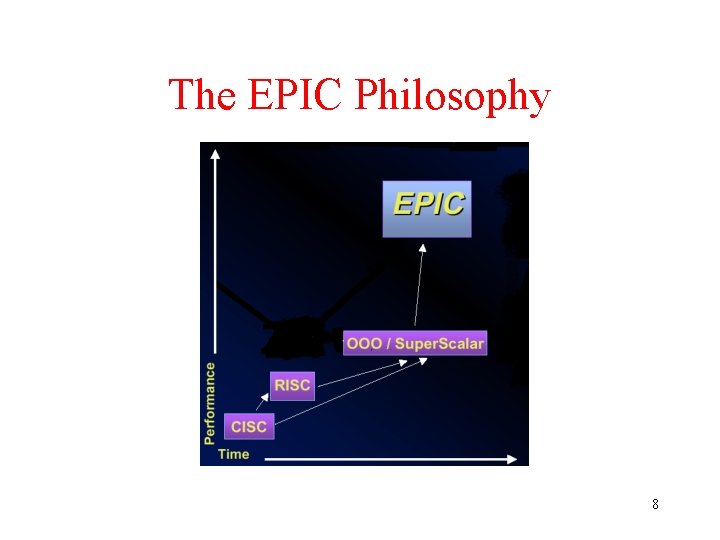

The EPIC Philosophy

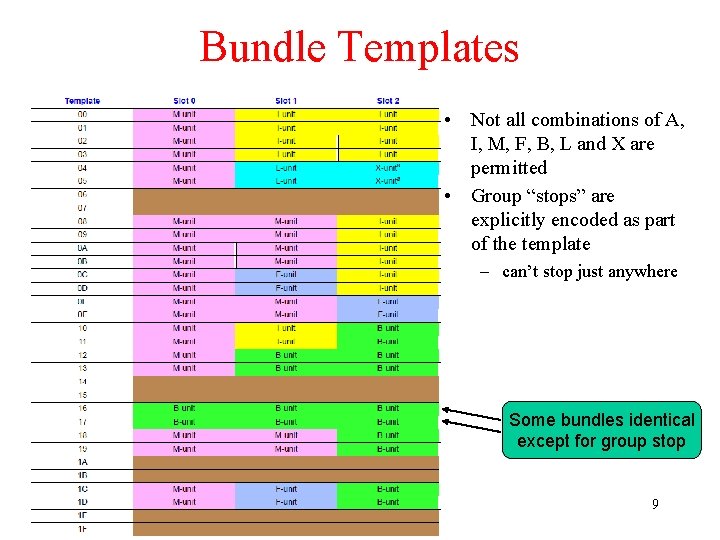

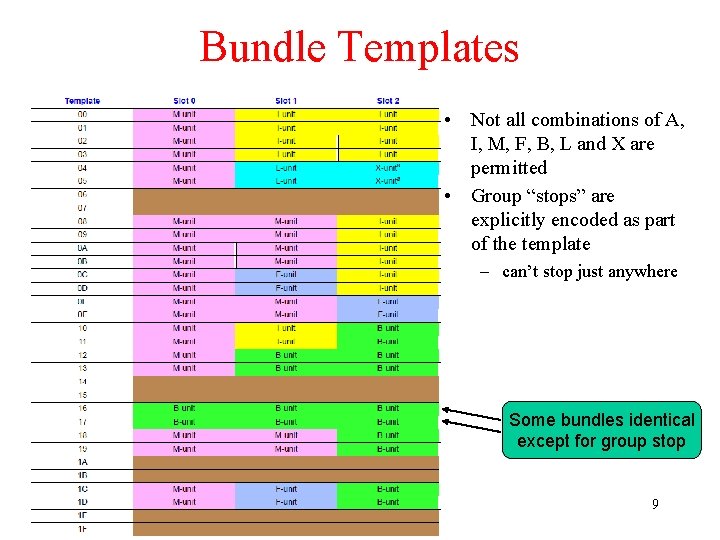

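Bundle Templates

- Not all combinations of the instruction types A (integer ALU), I (non-ALU integer), M (memory), F (floating point), B (branch) and L+X (extended) are permitted in a bundle
- Group "stops" are explicitly encoded as part of the template, so a stop can't be placed just anywhere
- Some templates are identical except for the position of a group stop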

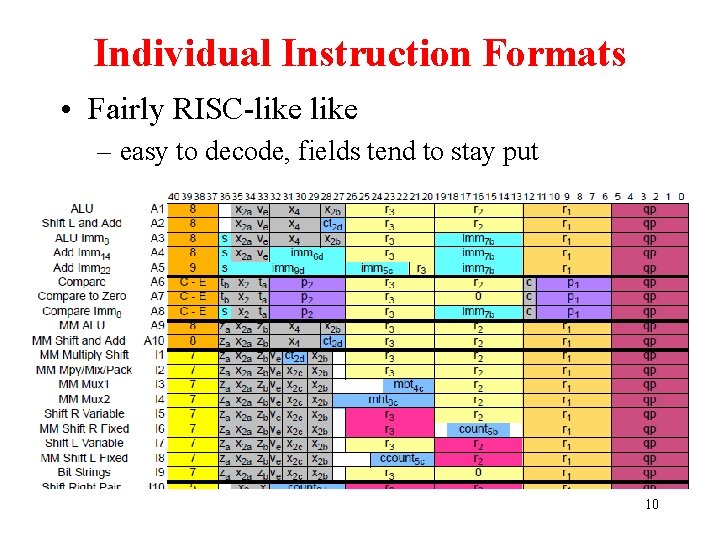

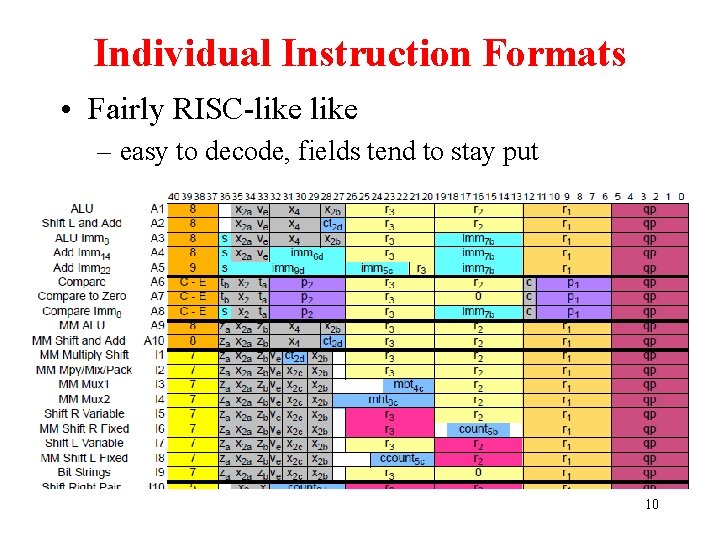

Individual Instruction Formats

- Fairly RISC-like: easy to decode, and fields tend to stay in the same positions

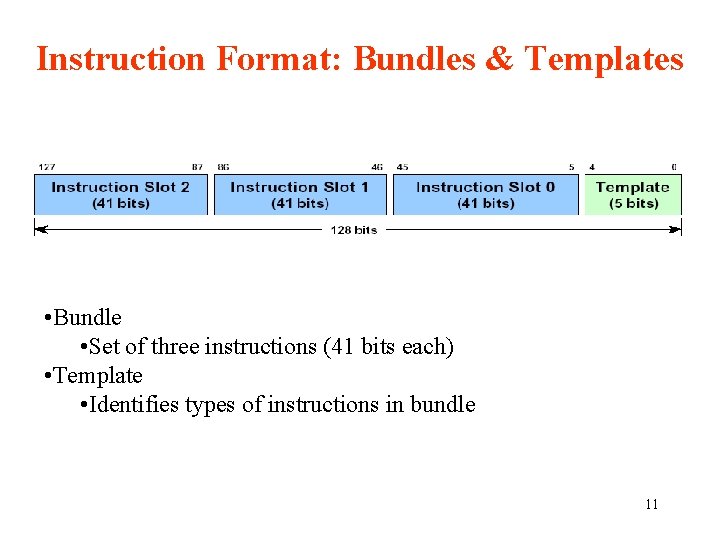

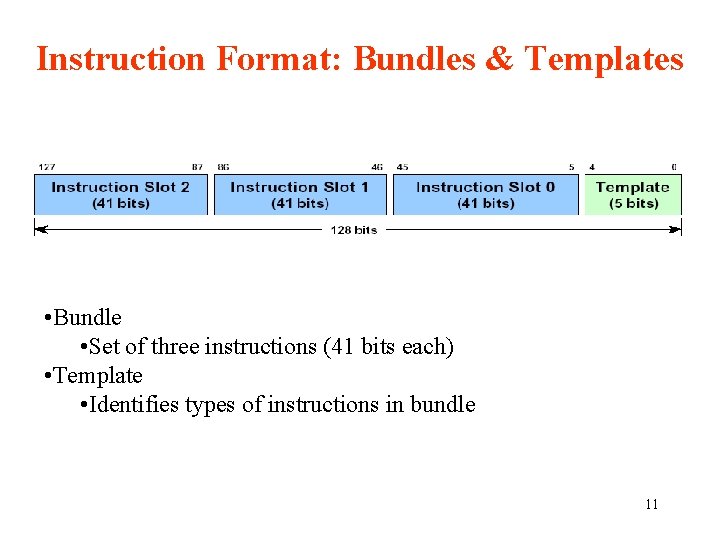

Instruction Format: Bundles & Templates

- Bundle: a set of three instructions (41 bits each) plus a template, 128 bits in total
- Template: identifies the types of the instructions in the bundle (5 bits, since 3 × 41 + 5 = 128)

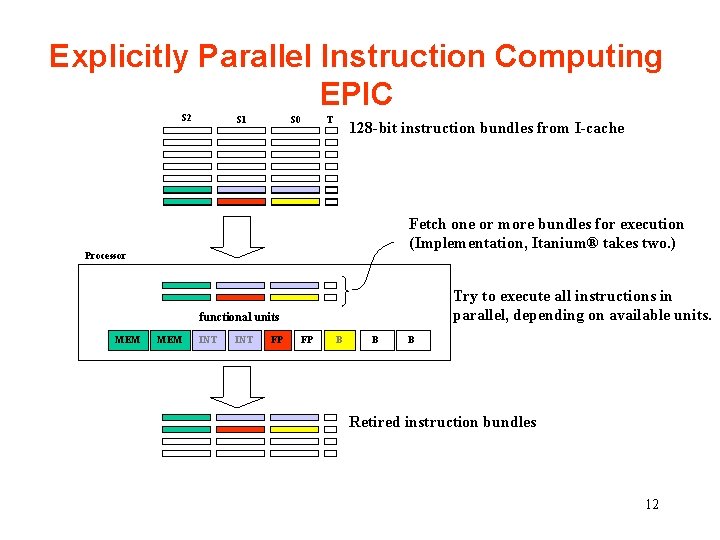

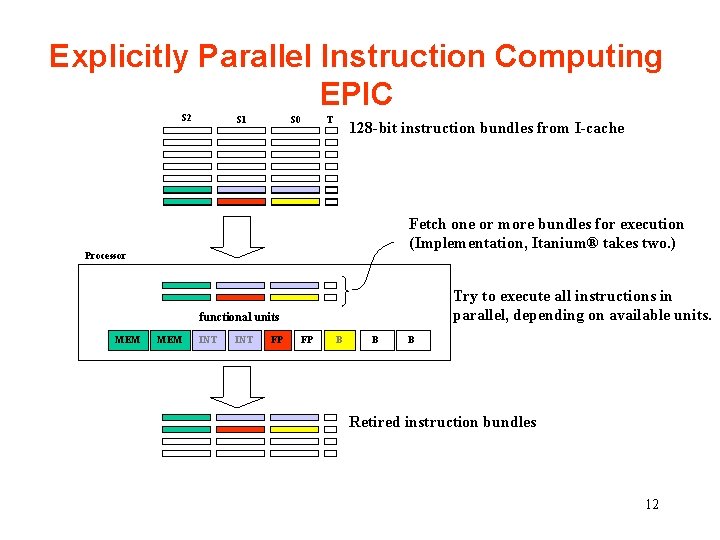

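Explicitly Parallel Instruction Computing (EPIC)

(Figure: a 128-bit instruction bundle with slots S2, S1, S0 and template T flows from the I-cache to the processor's functional units MEM, INT, FP, FP, B, B, B, and then out as retired instruction bundles.)

- 128-bit instruction bundles are fetched from the I-cache
- One or more bundles are fetched for execution (the Itanium® implementation takes two)
- The processor tries to execute all instructions in parallel, depending on the available functional units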

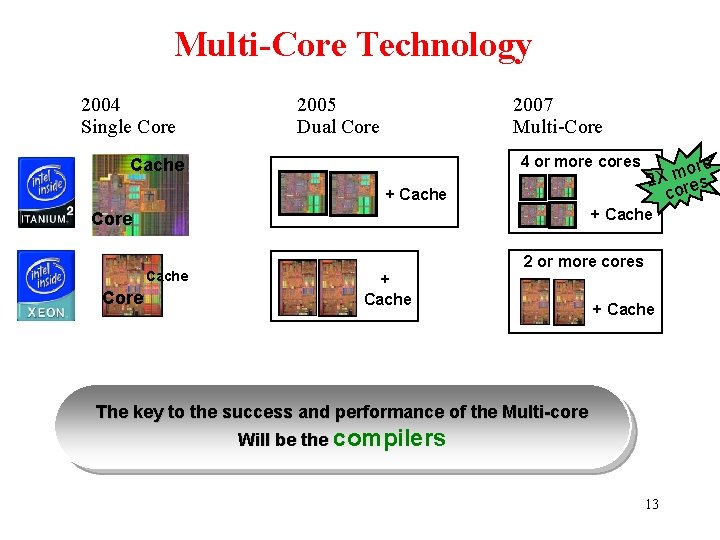

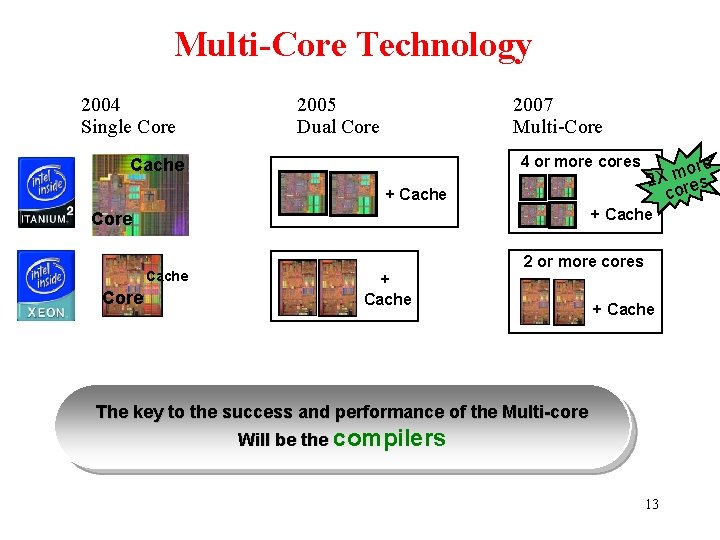

Multi-Core Technology

(Figure: timeline showing a single core in 2004, dual core in 2005 and multi-core with 4 or more cores in 2007, each drawn with its cache.)

The key to the success and performance of multi-core processors will be the compilers.

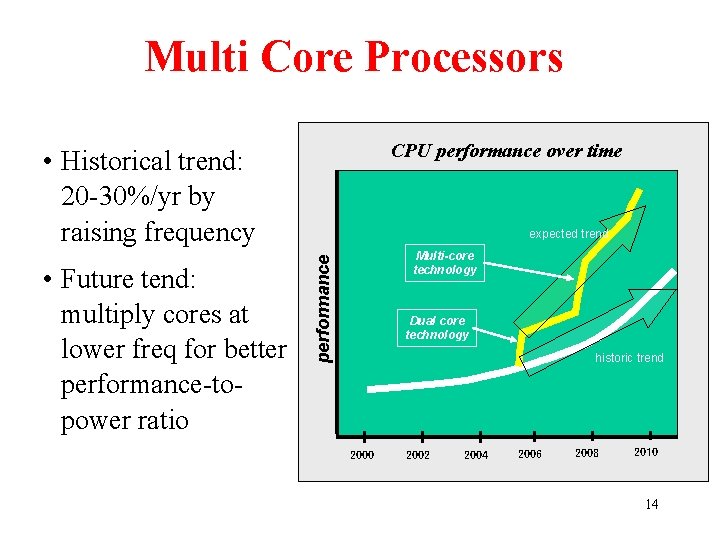

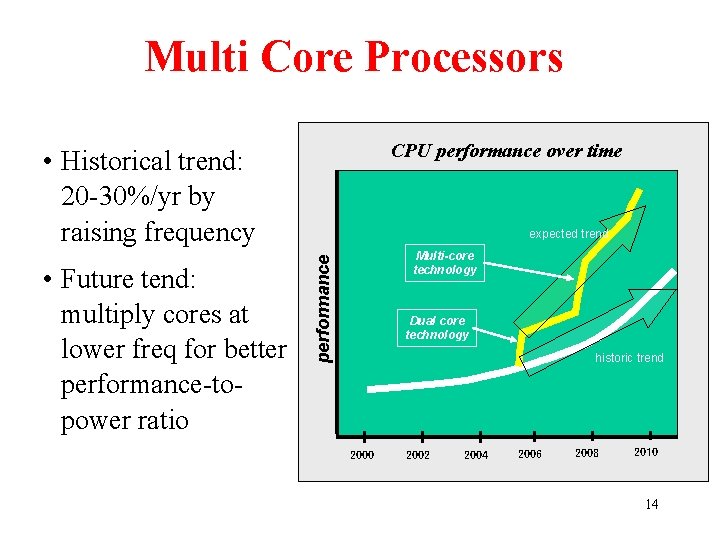

Multi-Core Processors

(Figure: CPU performance over time, 2000 to 2010, comparing the historic trend with the expected trend for dual-core and multi-core technology.)

- Historical trend: performance grew 20-30% per year by raising the clock frequency
- Future trend: multiply the number of cores at a lower frequency for a better performance-to-power ratio

64-Bit Processors

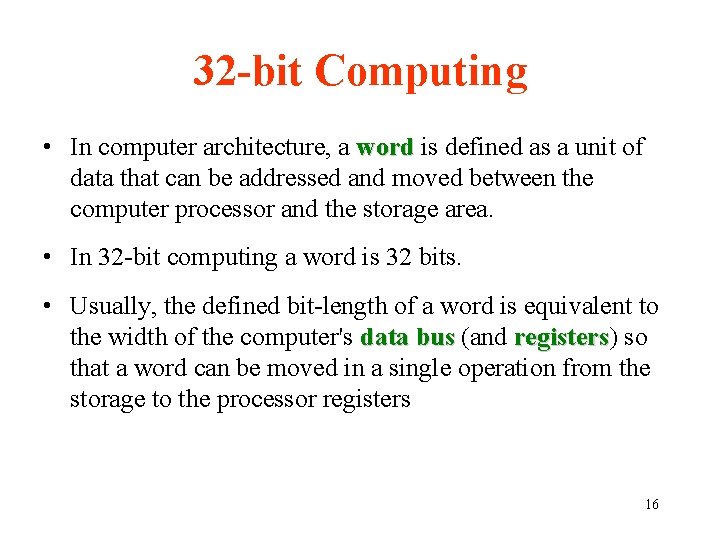

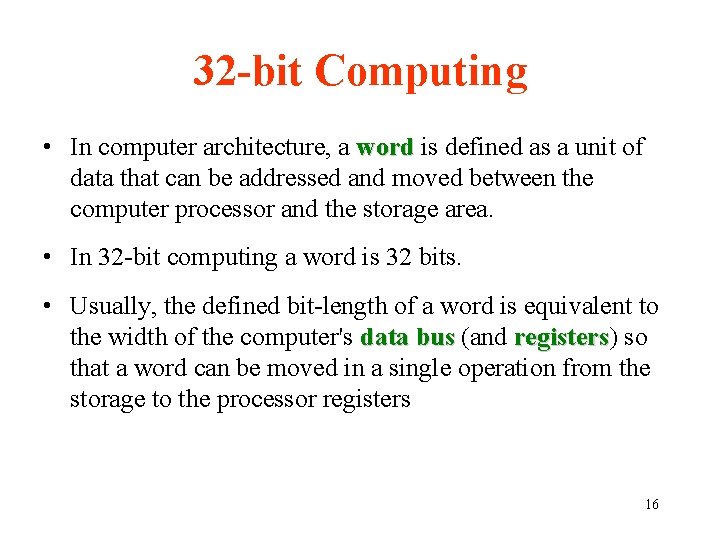

32-bit Computing

- In computer architecture, a word is a unit of data that can be addressed and moved between the processor and the storage area.
- In 32-bit computing, a word is 32 bits.
- Usually, the word length equals the width of the computer's data bus and registers, so that a word can be moved from storage to the processor registers in a single operation.

32-bit Computing

- In a 32-bit microprocessor:
  - The general-purpose registers are 32 bits wide.
  - $2^{32}$ bytes = 4 GB of memory can be addressed.

64-bit Computing

- The simplest definition: the processing word of the architecture is widened to 64 bits
- The addressable memory increases from 4 GB to $2^{64}$ bytes, about 18 billion GB
- Registers are extended to 64 bits
- Integer and address data up to 64 bits in length can now be operated on
- $2^{64} \approx 1.8 \times 10^{19}$ integers can be represented with 64 bits, vs. $2^{32} \approx 4.3 \times 10^{9}$ with 32 bits
- The dynamic range has increased by a factor of $2^{64} / 2^{32} = 2^{32} \approx 4.3$ billion

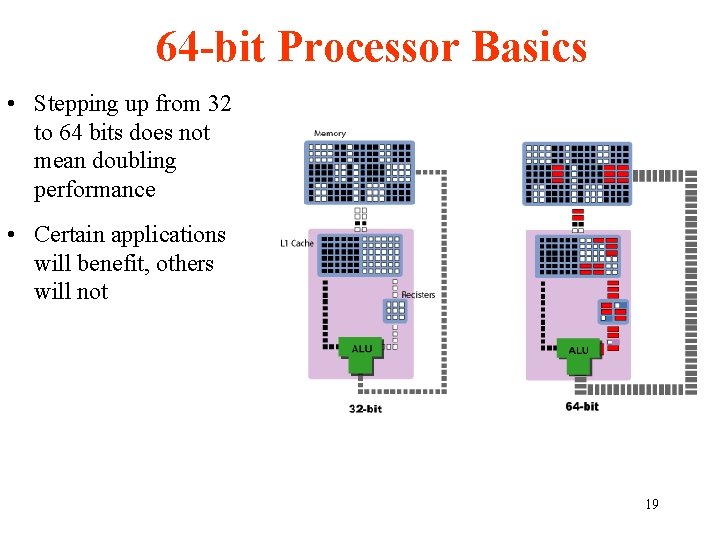

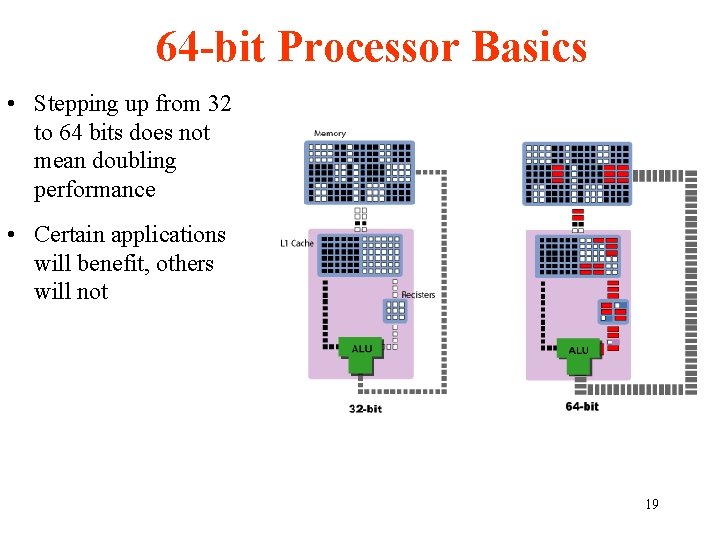

64-bit Processor Basics

- Stepping up from 32 to 64 bits does not double performance
- Certain applications will benefit, others will not

What Applications Can Benefit Most From 64-bit?

- Large databases
- Business and scientific simulation and modeling programs
- Highly graphics-intensive software (CAD, 3-D games)
- Cryptography
- Etc.

Benefits of 64-bit Computing

- Applications can store vast amounts of data in main memory.
- Complex calculations can be carried out with high precision.
- Data is manipulated and instructions are executed in chunks twice as large as in 32-bit computing.

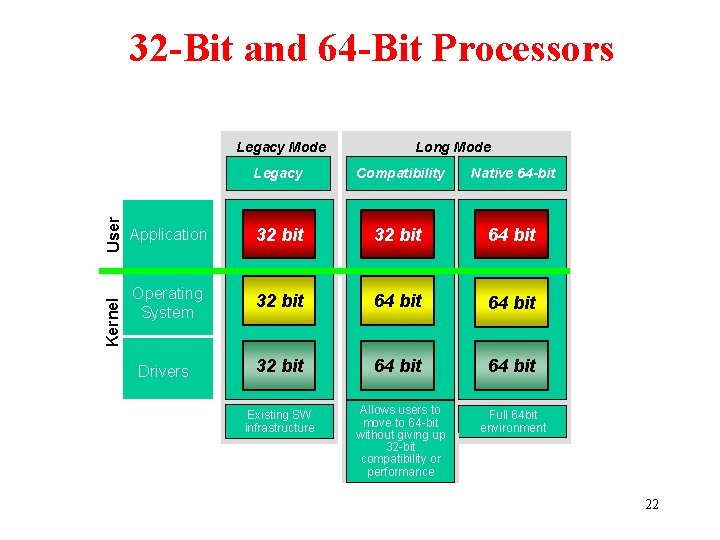

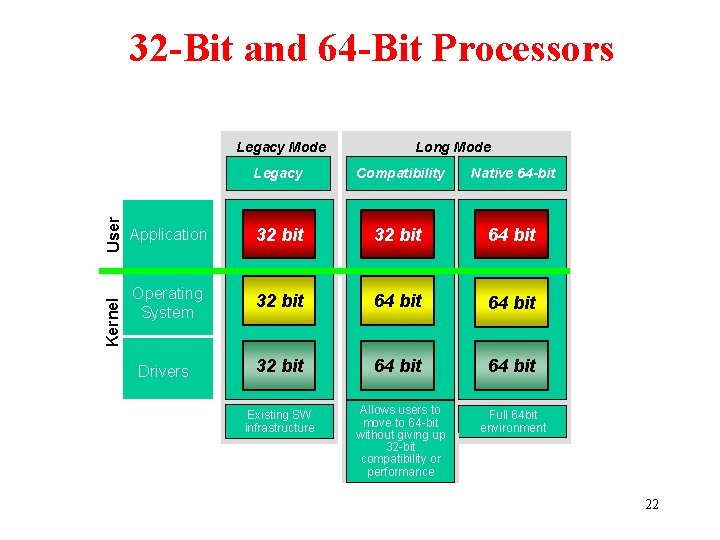

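32-Bit and 64-Bit Processors

(Figure: software layers under each x86-64 operating mode, with the application in user space and the operating system and drivers in the kernel.)

- Legacy mode: 32-bit applications, 32-bit operating system and 32-bit drivers; the existing software infrastructure
- Long mode, compatibility: 32-bit applications running on a 64-bit operating system with 64-bit drivers
- Long mode, native 64-bit: 64-bit applications, operating system and drivers; a full 64-bit environment
- This allows users to move to 64-bit without giving up 32-bit compatibility or performance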

The Role of Compilers

Compiler and ISA

- Almost all programming for desktop and server applications is done in high-level languages (HLLs)
- Most instructions executed are the output of a compiler
- So the ISA and the compiler cannot practically be designed in isolation from each other

Goal of the Compiler

- Primary goal is correctness
- Second goal is speed of the object code
- Others:
  - Speed of compilation
  - Ease of providing debug support
  - Interoperability among languages

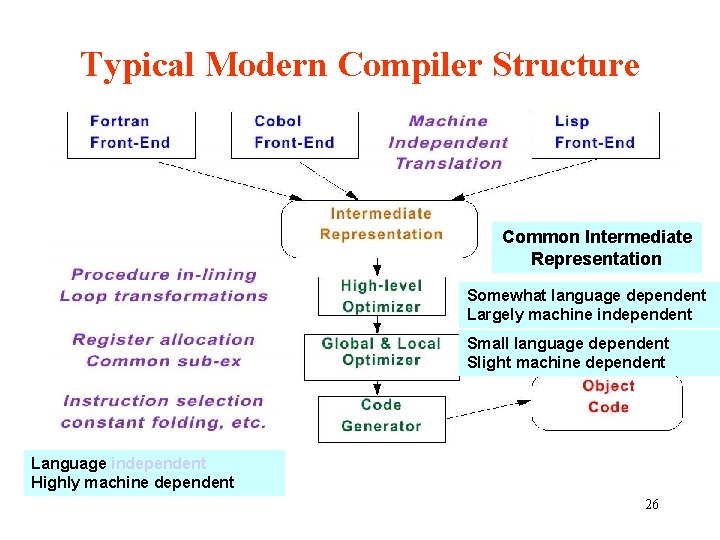

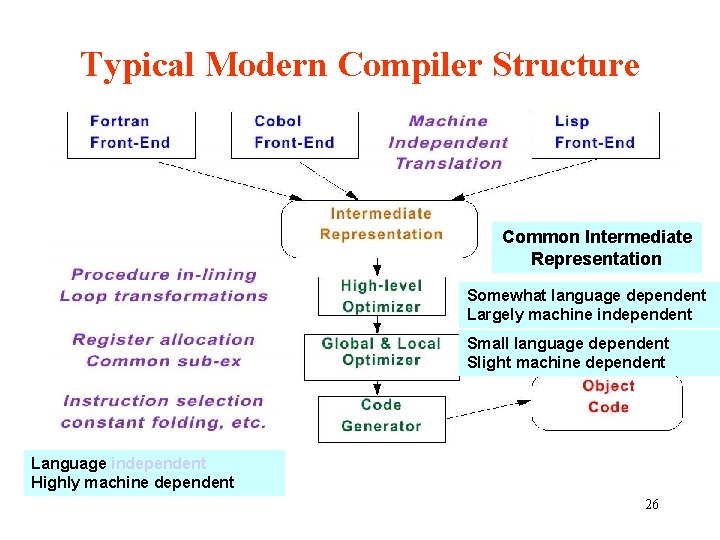

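Typical Modern Compiler Structure

(Figure: compiler passes communicating through a common intermediate representation, each labeled with its language and machine dependencies.)

- High-level optimizations: somewhat language dependent, largely machine independent
- Global optimizer: small language dependencies, slight machine dependencies
- Code generator: language independent, highly machine dependent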

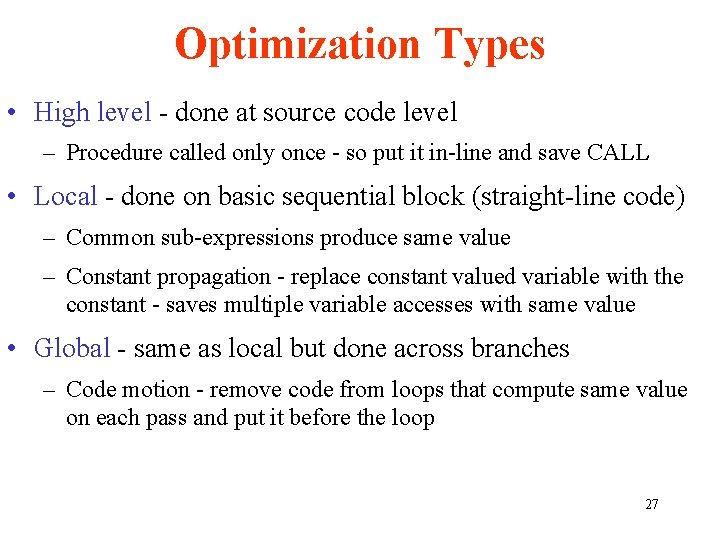

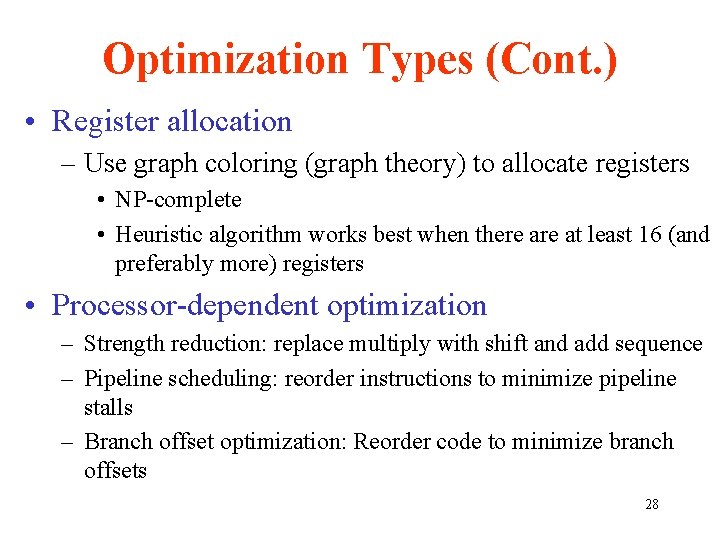

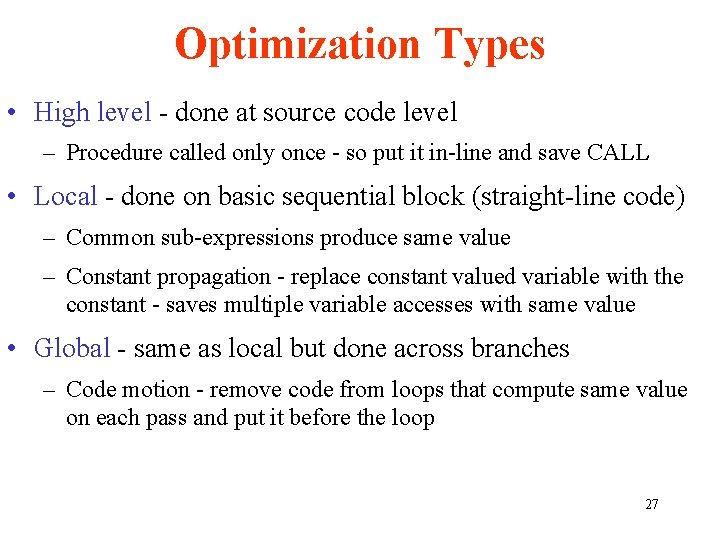

Optimization Types

- High level: done at the source-code level
  - A procedure that is called only once can be put in-line, saving the CALL
- Local: done within a basic block (straight-line code)
  - Common subexpression elimination: expressions that produce the same value are computed only once
  - Constant propagation: replace a constant-valued variable with the constant, saving repeated accesses to a variable whose value is already known
- Global: the same as local, but done across branches
  - Code motion: take code that computes the same value on every pass through a loop, move it out of the loop and place it before the loop

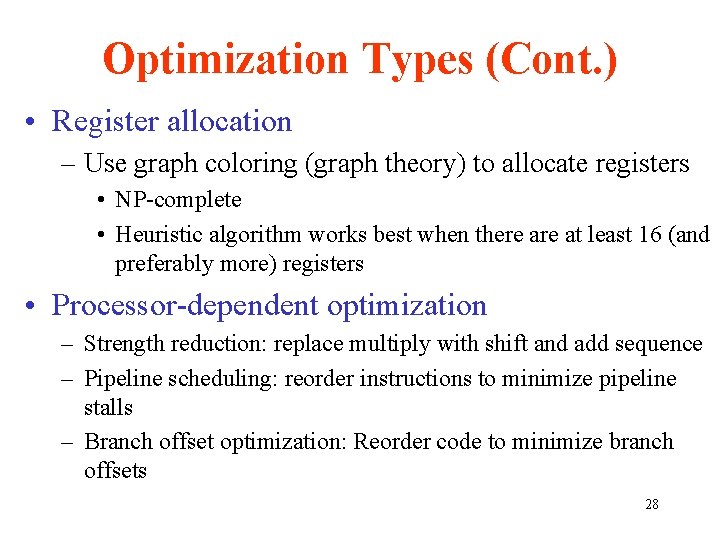

Optimization Types (Cont.)

- Register allocation
  - Uses graph coloring (graph theory) to allocate registers
  - Optimal graph coloring is NP-complete
  - Heuristic algorithms work best when there are at least 16 (and preferably more) registers
- Processor-dependent optimizations
  - Strength reduction: replace a multiply with a shift-and-add sequence
  - Pipeline scheduling: reorder instructions to minimize pipeline stalls
  - Branch offset optimization: reorder code to minimize branch offsets

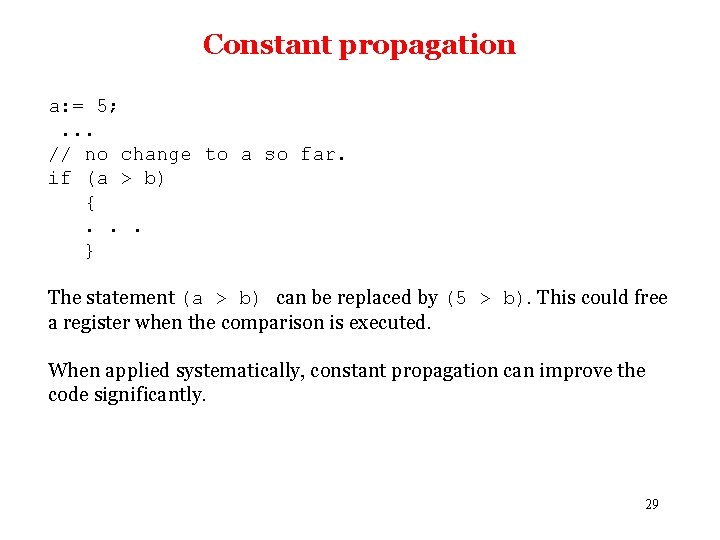

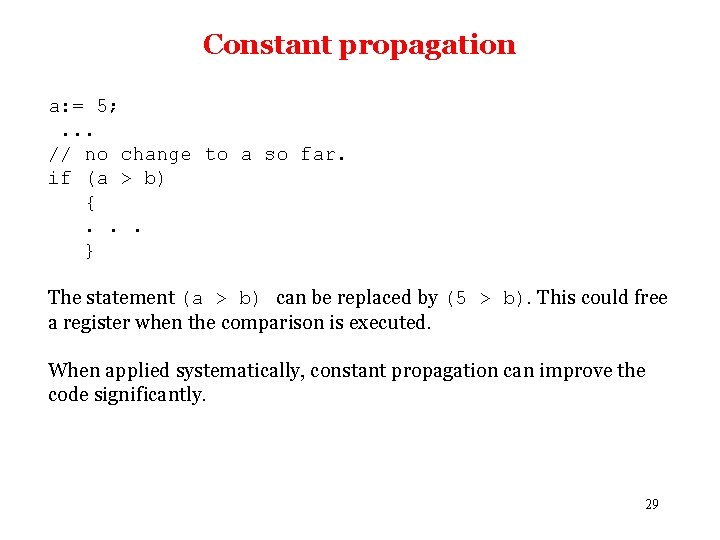

Constant propagation

Consider `a := 5; ... // no change to a so far` followed by `if (a > b) { ... }`. The test `(a > b)` can be replaced by `(5 > b)`. This could free a register when the comparison is executed. When applied systematically, constant propagation can improve the code significantly.

![Strength reduction example](https://slidetodoc.com/presentation_image_h/0663bb177565ab80ff6ba8379f61c624/image-30.jpg)

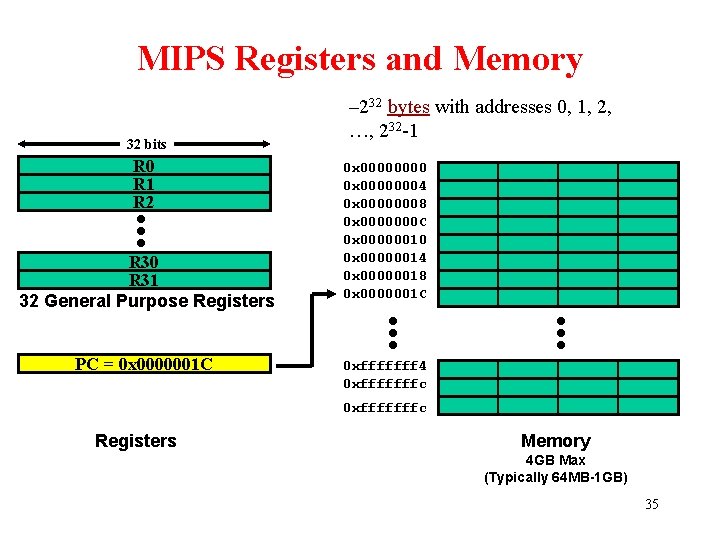

Strength reduction

Examples: `for (j = 0; j <= n; ++j) A[j] = 2*j;` and `for (i = 0; 4*i <= n; ++i) A[4*i] = 0;`

An optimizing compiler can replace the multiplication by 4 with an addition of 4 on each iteration. This is an example of strength reduction. In general, multiplying a loop index by a constant can be replaced by repeatedly adding that constant.

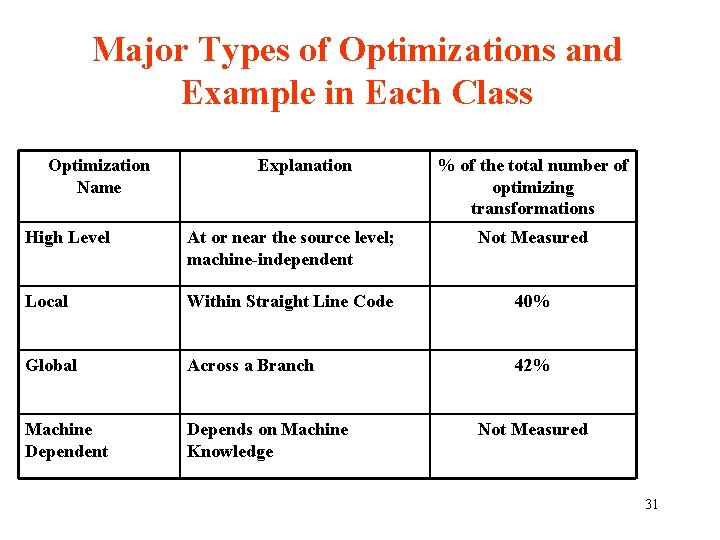

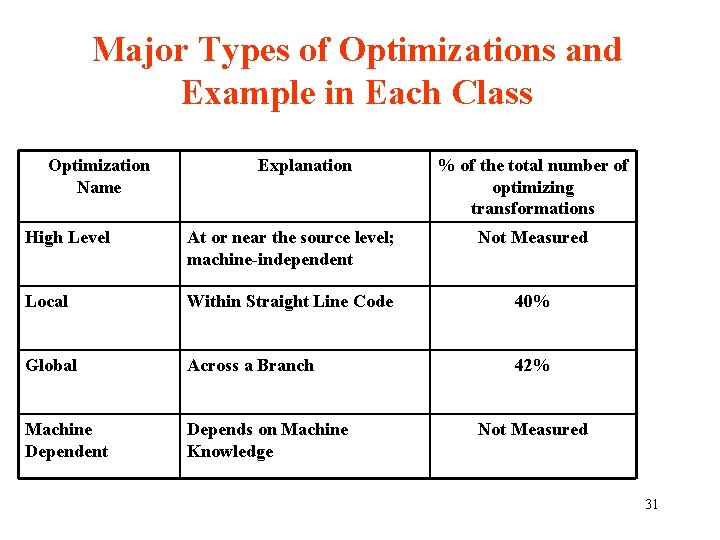

Major Types of Optimizations and Example in Each Class

| Optimization name | Explanation | % of the total number of optimizing transformations |
|---|---|---|
| High level | At or near the source level; machine-independent | Not measured |
| Local | Within straight-line code | 40% |
| Global | Across a branch | 42% |
| Machine dependent | Depends on machine knowledge | Not measured |

MIPS: Case Study of Instruction Set Architecture

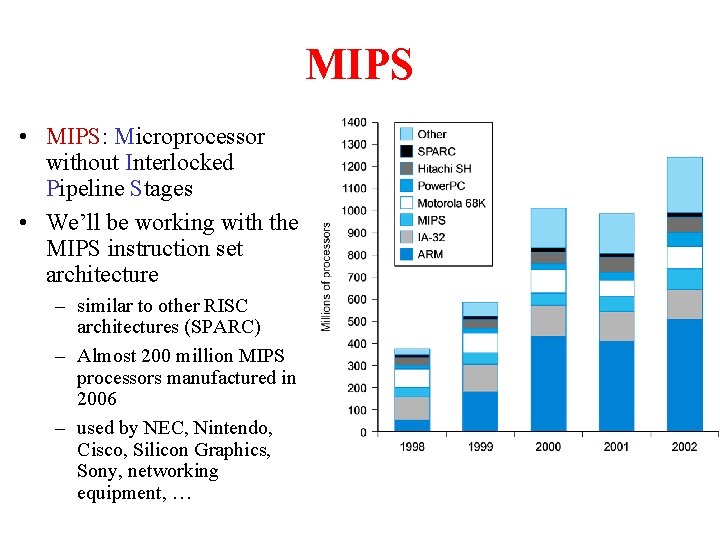

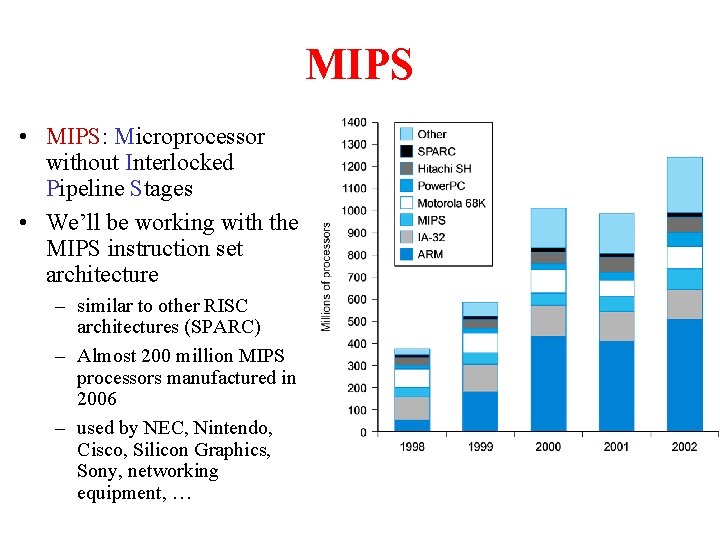

MIPS

- MIPS: Microprocessor without Interlocked Pipeline Stages
- We'll be working with the MIPS instruction set architecture
  - Similar to other RISC architectures (e.g. SPARC)
  - Almost 200 million MIPS processors were manufactured in 2006
  - Used by NEC, Nintendo, Cisco, Silicon Graphics, Sony, networking equipment, …

MIPS Design Principles

1. Simplicity favors regularity
   - Keep all instructions a single size
   - Always require three register operands in arithmetic instructions
2. Smaller is faster
   - MIPS has only 32 registers rather than many more
3. Good design makes good compromises
   - Compromise between providing larger addresses and constants in instructions and keeping all instructions the same length
4. Make the common case fast
   - PC-relative addressing for conditional branches
   - Immediate addressing for constant operands

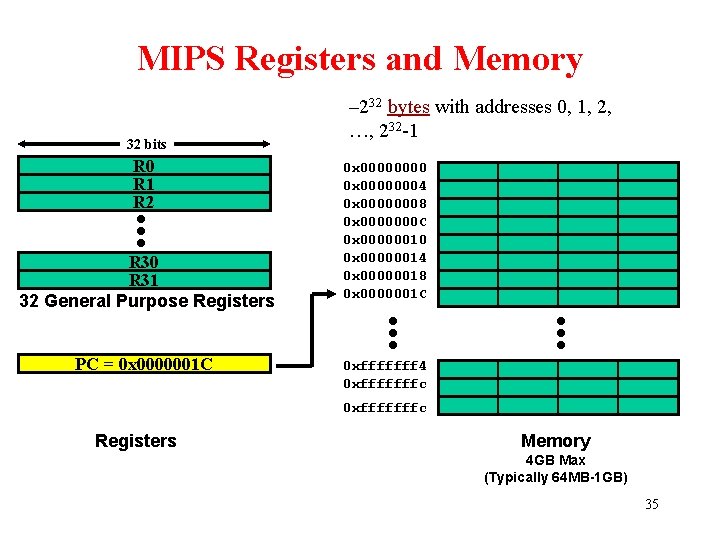

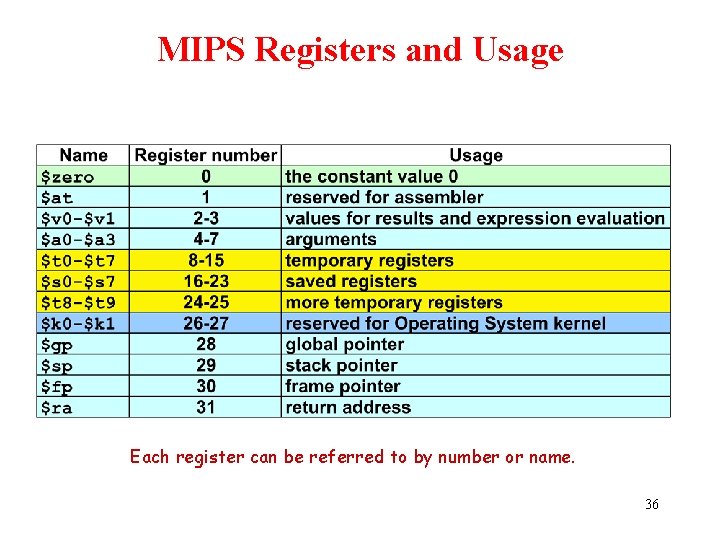

MIPS Registers and Memory

- Registers: 32 general-purpose registers, R0 to R31, each 32 bits wide, plus the program counter (shown holding PC = 0x0000001C)
- Memory: $2^{32}$ bytes with addresses 0, 1, 2, …, $2^{32} - 1$, organized as 32-bit words at addresses 0x00000000, 0x00000004, 0x00000008, …, 0xfffffffc
- 4 GB maximum (typically 64 MB to 1 GB installed)

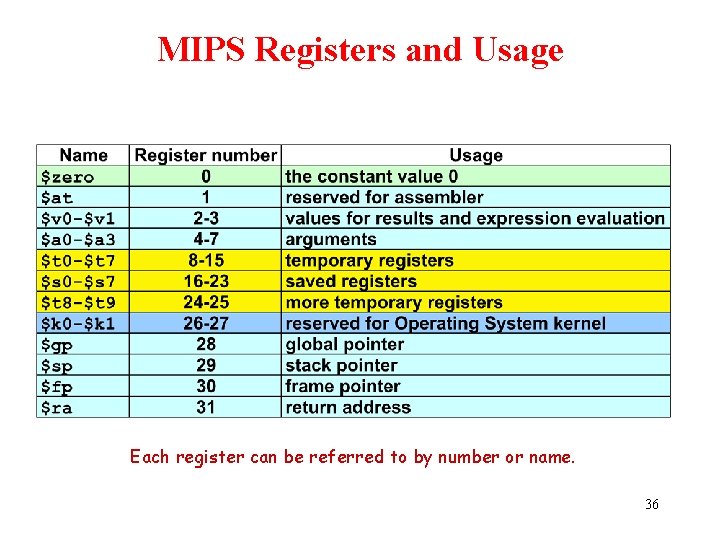

MIPS Registers and Usage

Each register can be referred to by number or by name.

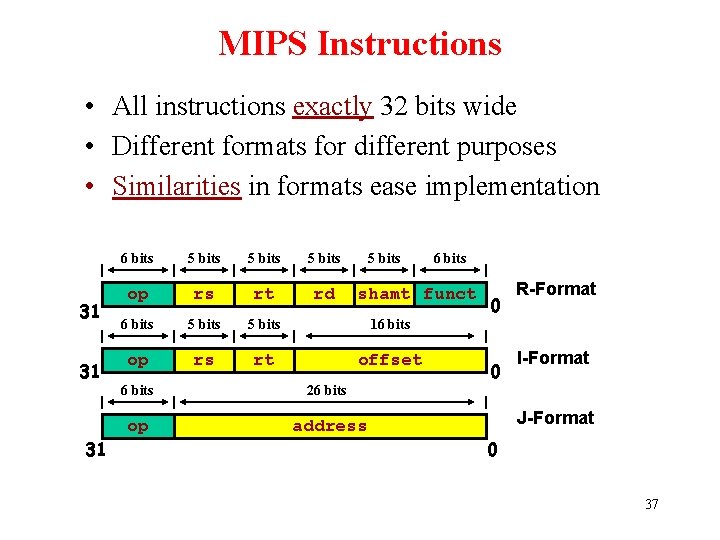

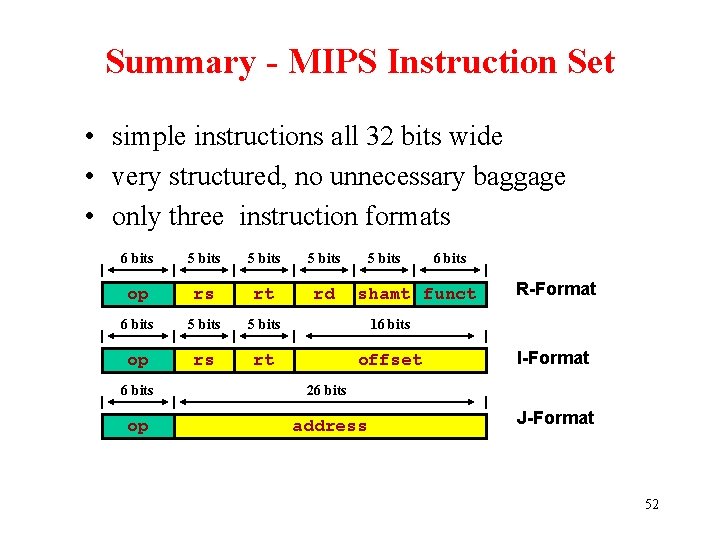

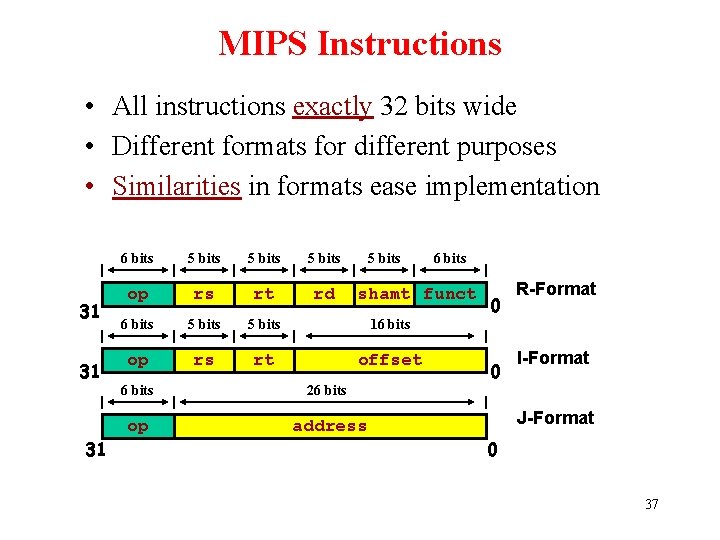

MIPS Instructions

- All instructions are exactly 32 bits wide
- Different formats for different purposes
- Similarities in the formats ease implementation

Formats (bit 31 on the left, bit 0 on the right):

- R-Format: op (6 bits) | rs (5 bits) | rt (5 bits) | rd (5 bits) | shamt (5 bits) | funct (6 bits)
- I-Format: op (6 bits) | rs (5 bits) | rt (5 bits) | offset (16 bits)
- J-Format: op (6 bits) | address (26 bits)

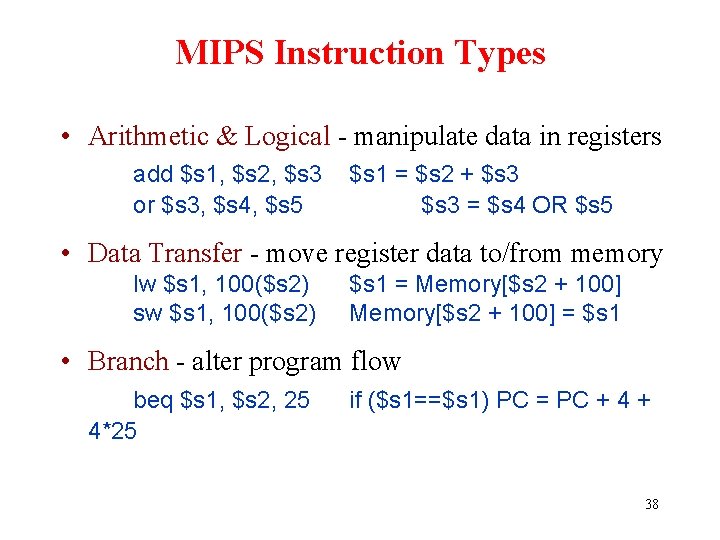

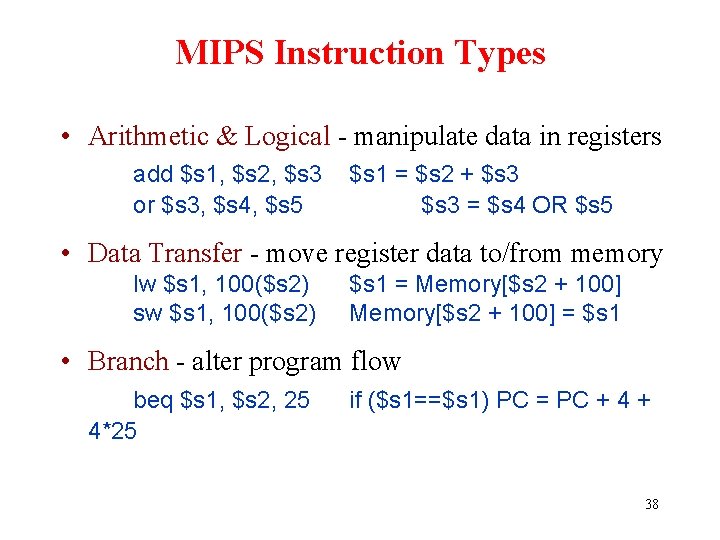

MIPS Instruction Types

- Arithmetic & logical: manipulate data in registers
  - `add $s1, $s2, $s3` means `$s1 = $s2 + $s3`
  - `or $s3, $s4, $s5` means `$s3 = $s4 OR $s5`
- Data transfer: move register data to/from memory
  - `lw $s1, 100($s2)` means `$s1 = Memory[$s2 + 100]`
  - `sw $s1, 100($s2)` means `Memory[$s2 + 100] = $s1`
- Branch: alter program flow
  - `beq $s1, $s2, 25` means `if ($s1 == $s2) PC = PC + 4 + 4*25`

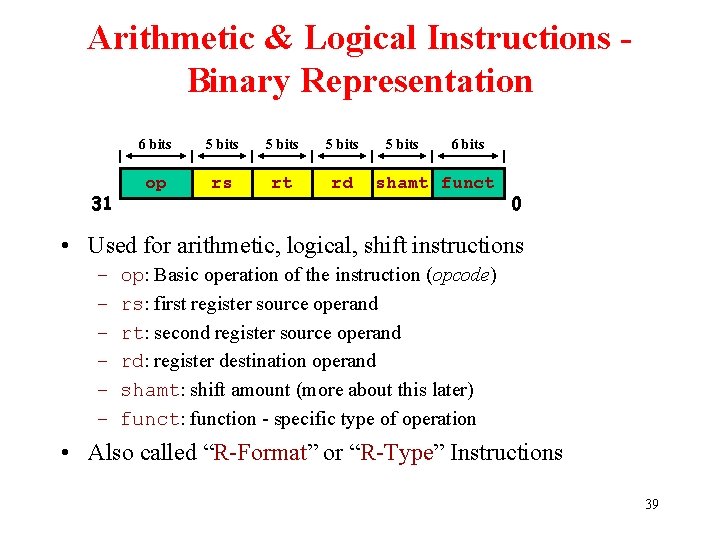

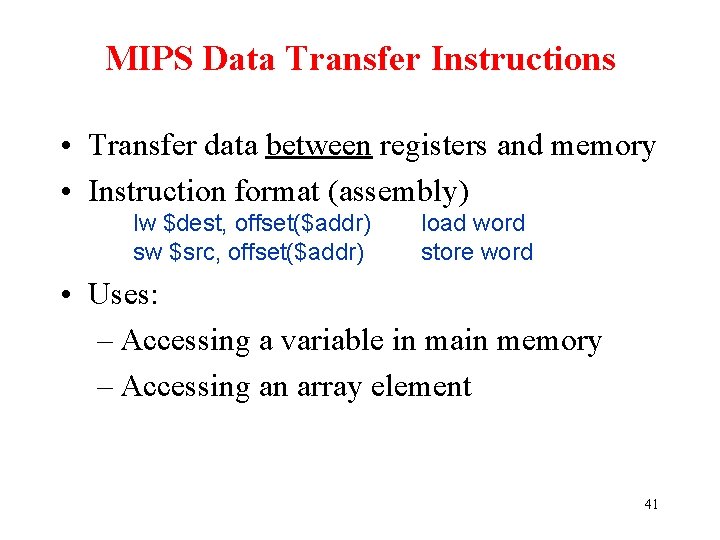

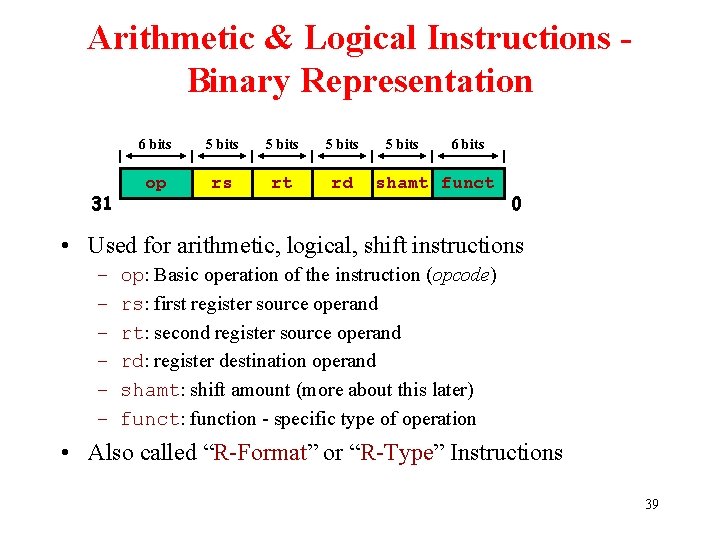

Arithmetic & Logical Instructions: Binary Representation

R-format layout (bit 31 to bit 0): op (6 bits) | rs (5 bits) | rt (5 bits) | rd (5 bits) | shamt (5 bits) | funct (6 bits)

- Used for arithmetic, logical and shift instructions
  - op: basic operation of the instruction (opcode)
  - rs: first register source operand
  - rt: second register source operand
  - rd: register destination operand
  - shamt: shift amount (more about this later)
  - funct: function; selects the specific type of operation
- Also called "R-Format" or "R-Type" instructions

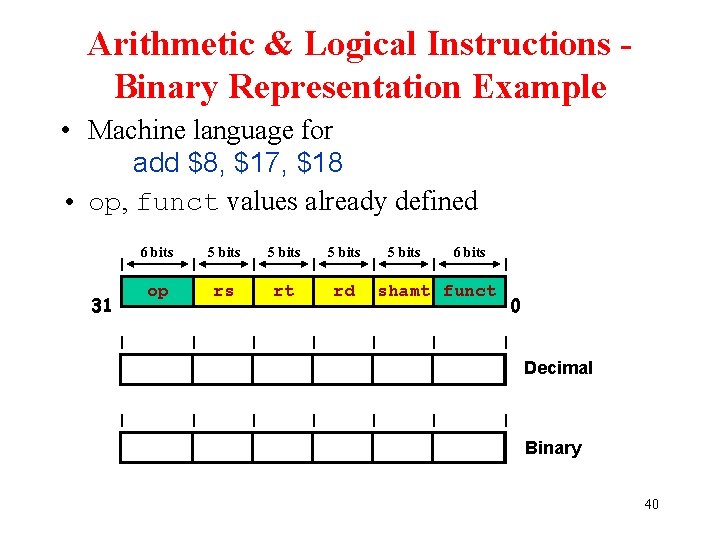

Arithmetic & Logical Instructions: Binary Representation Example

- Machine language for `add $8, $17, $18`
- The op and funct values are already defined (op = 0, funct = 32)

| | op (6 bits) | rs (5 bits) | rt (5 bits) | rd (5 bits) | shamt (5 bits) | funct (6 bits) |
|---|---|---|---|---|---|---|
| Decimal | 0 | 17 | 18 | 8 | 0 | 32 |
| Binary | 000000 | 10001 | 10010 | 01000 | 00000 | 100000 |

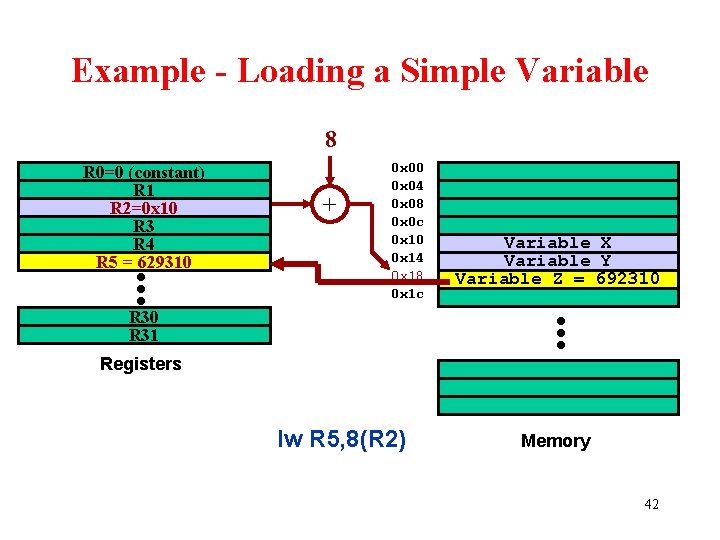

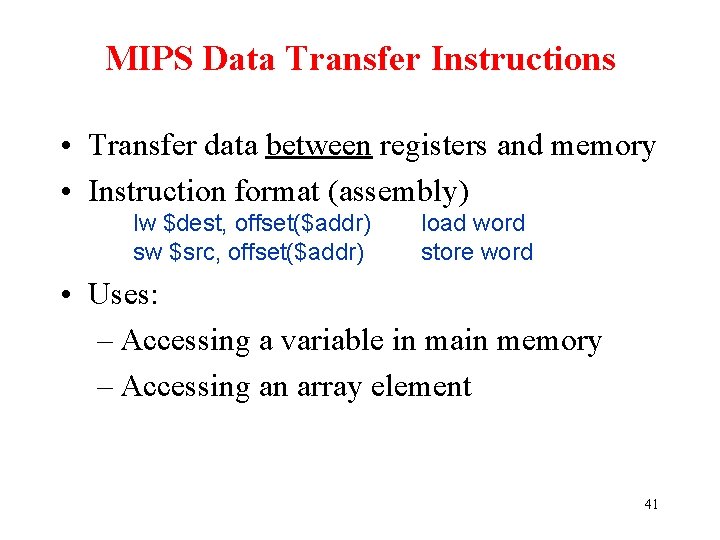

MIPS Data Transfer Instructions

- Transfer data between registers and memory
- Instruction format (assembly):
  - `lw $dest, offset($addr)`: load word
  - `sw $src, offset($addr)`: store word
- Uses:
  - Accessing a variable in main memory
  - Accessing an array element

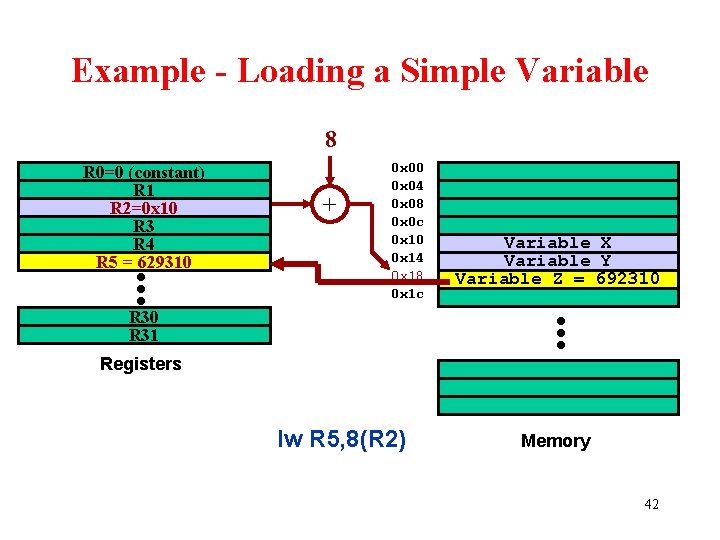

Example: Loading a Simple Variable

`lw R5, 8(R2)`, with R0 = 0 (constant) and R2 = 0x10: the effective address is 0x10 + 8 = 0x18, which holds Variable Z = 6923₁₀, so after the load R5 = 6923₁₀.

(Figure: registers R0 to R31 and memory words at addresses 0x00, 0x04, 0x08, 0x0c, 0x10, 0x14, 0x18 and 0x1c holding Variables X, Y and Z, with Z at 0x18.)

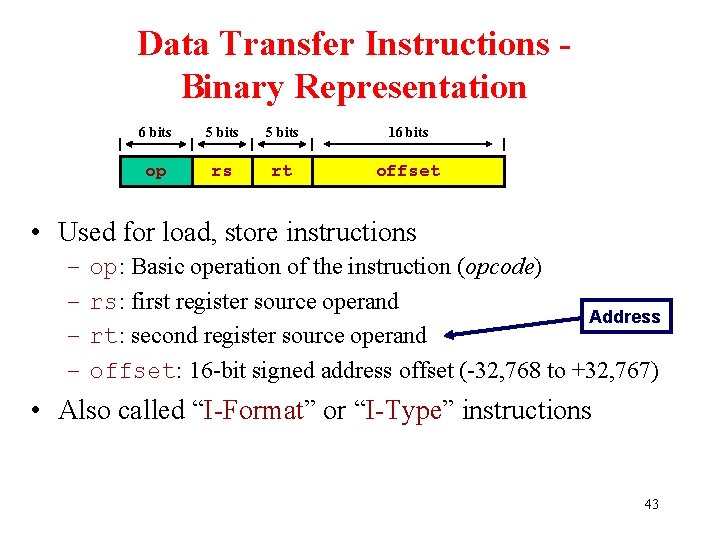

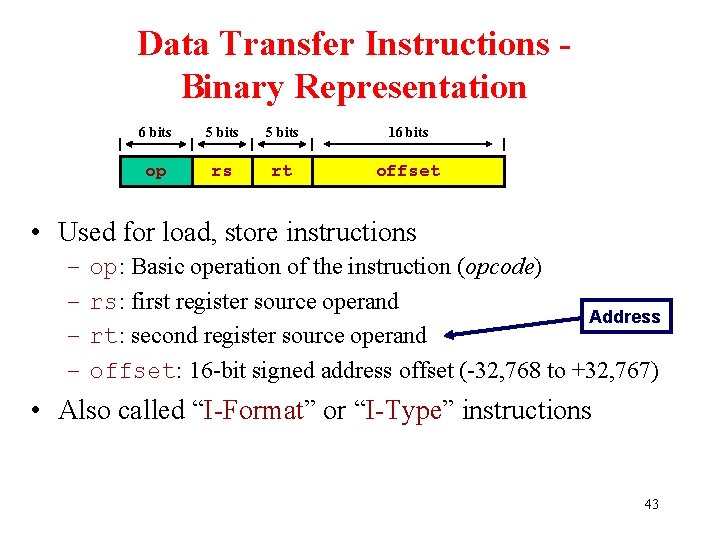

Data Transfer Instructions: Binary Representation

I-format layout: op (6 bits) | rs (5 bits) | rt (5 bits) | offset (16 bits)

- Used for load and store instructions
  - op: basic operation of the instruction (opcode)
  - rs: register holding the base address
  - rt: register that receives the loaded value (lw) or supplies the value to store (sw)
  - offset: 16-bit signed address offset (-32,768 to +32,767)
- Also called "I-Format" or "I-Type" instructions

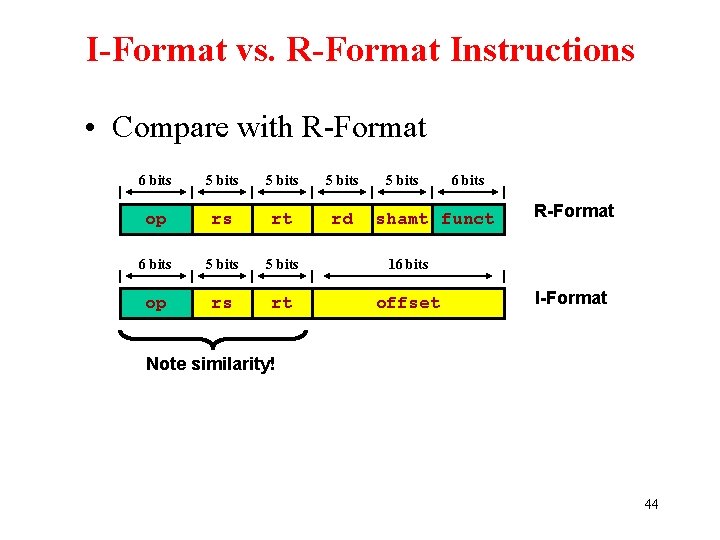

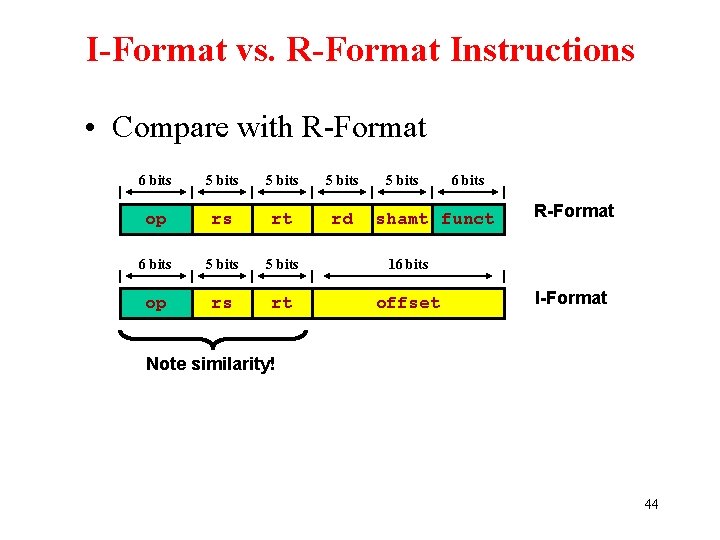

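I-Format vs. R-Format Instructions

- R-Format: op (6 bits) | rs (5 bits) | rt (5 bits) | rd (5 bits) | shamt (5 bits) | funct (6 bits)
- I-Format: op (6 bits) | rs (5 bits) | rt (5 bits) | offset (16 bits)
- Note the similarity: op, rs and rt occupy the same positions in both formats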

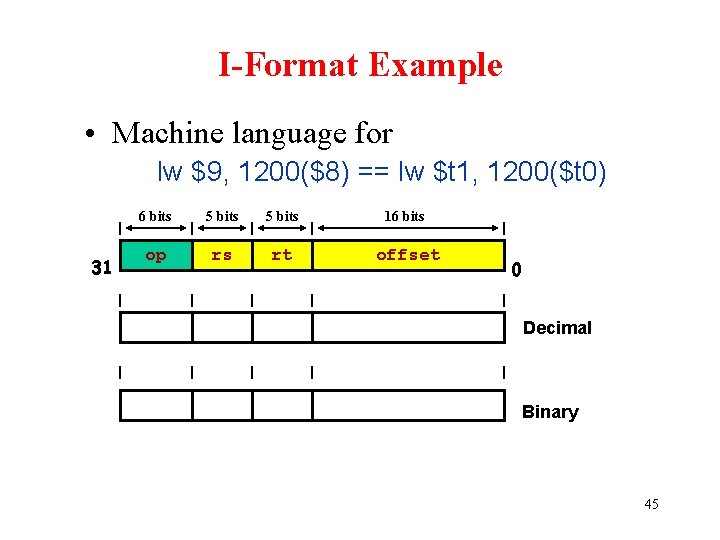

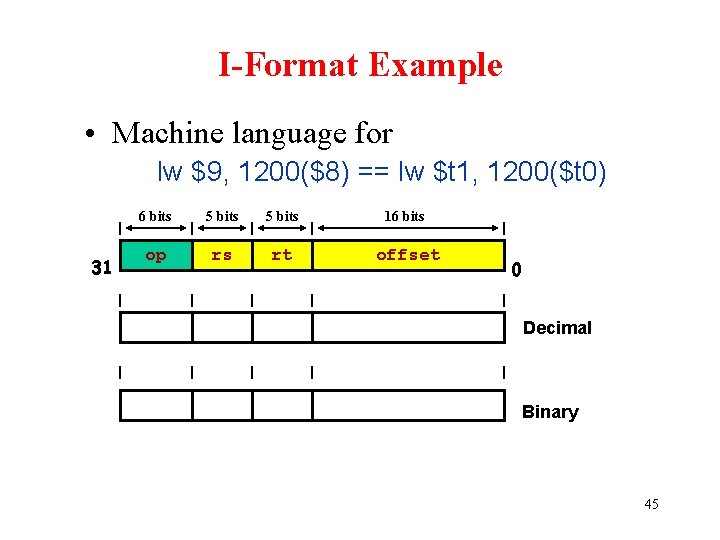

I-Format Example

- Machine language for `lw $9, 1200($8)`, i.e. `lw $t1, 1200($t0)`

| | op (6 bits) | rs (5 bits) | rt (5 bits) | offset (16 bits) |
|---|---|---|---|---|
| Decimal | 35 | 8 | 9 | 1200 |
| Binary | 100011 | 01000 | 01001 | 0000010010110000 |

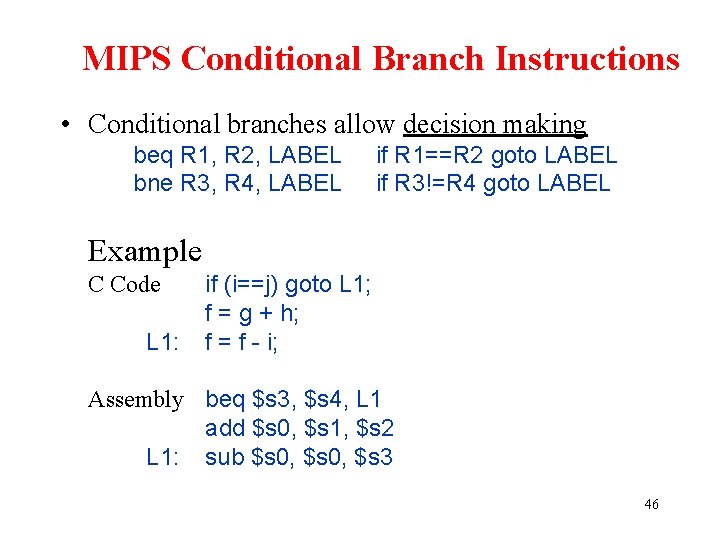

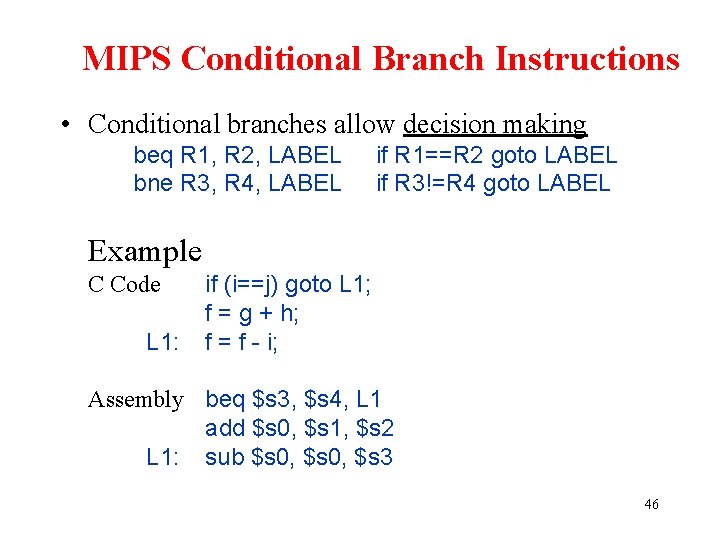

MIPS Conditional Branch Instructions

- Conditional branches allow decision making:
  - `beq R1, R2, LABEL` means if R1 == R2 goto LABEL
  - `bne R3, R4, LABEL` means if R3 != R4 goto LABEL
- Example, with f, g, h, i and j in `$s0` to `$s4`:
  - C code: `if (i == j) goto L1; f = g + h; L1: f = f - i;`
  - Assembly: `beq $s3, $s4, L1`, then `add $s0, $s1, $s2`, then `L1: sub $s0, $s0, $s3`

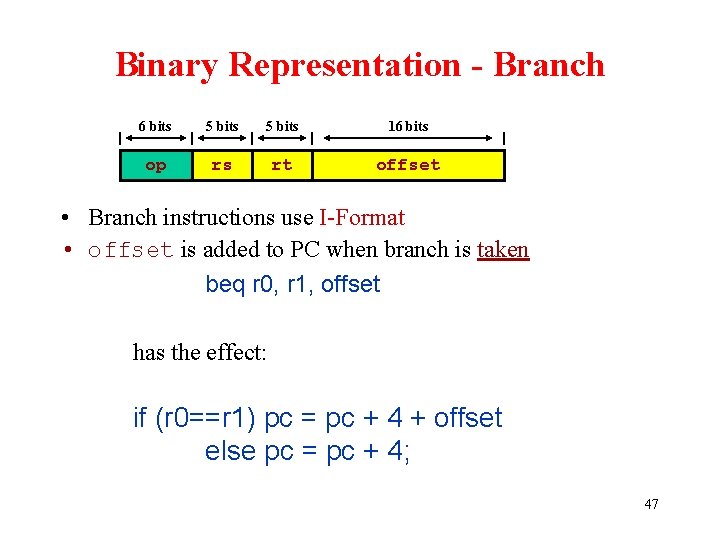

Binary Representation: Branch

- Branch instructions use the I-format: op (6 bits) | rs (5 bits) | rt (5 bits) | offset (16 bits)
- The offset counts words: when the branch is taken, it is sign-extended, multiplied by 4 and added to PC + 4
- `beq r0, r1, offset` has the effect: `if (r0 == r1) pc = pc + 4 + offset*4; else pc = pc + 4;`

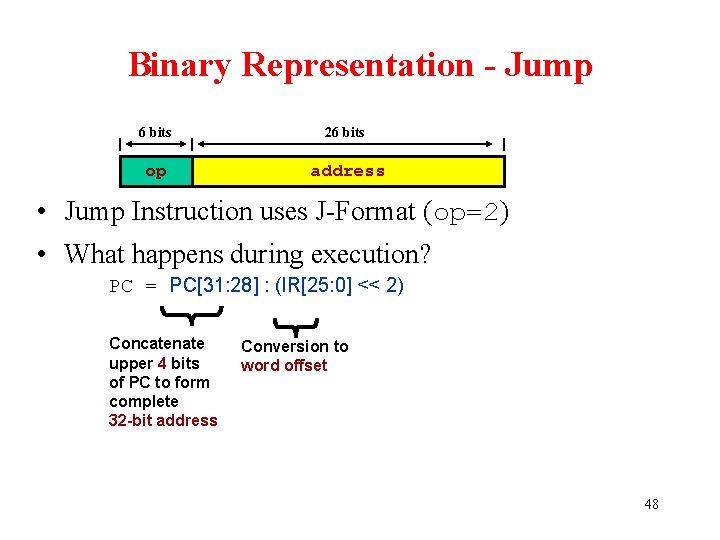

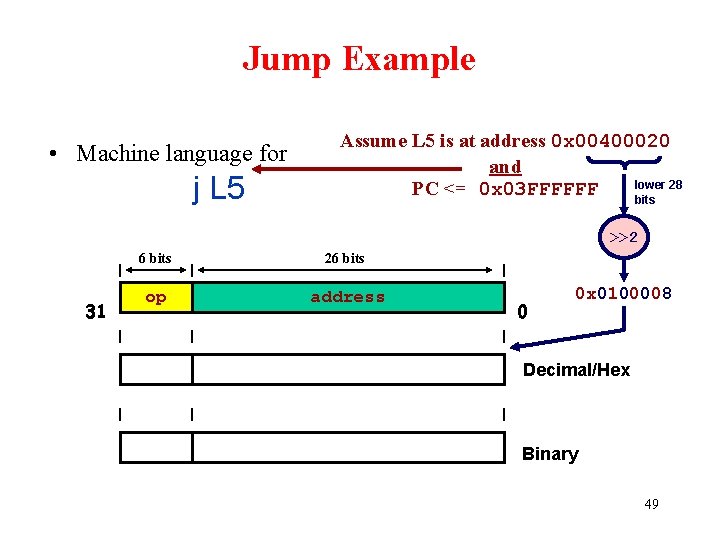

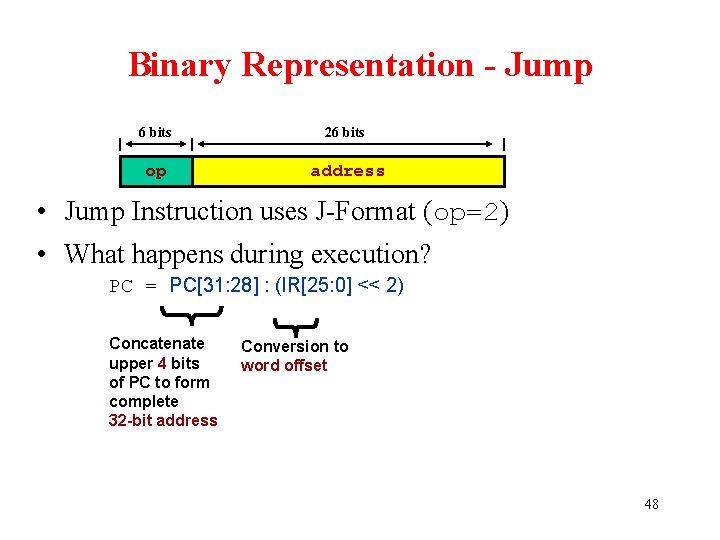

Binary Representation: Jump

- The jump instruction uses the J-format (op = 2): op (6 bits) | address (26 bits)
- What happens during execution? `PC = PC[31:28] : (IR[25:0] << 2)`
  - Shifting left by 2 converts the word address back to a byte address
  - Concatenating the upper 4 bits of the PC forms the complete 32-bit address

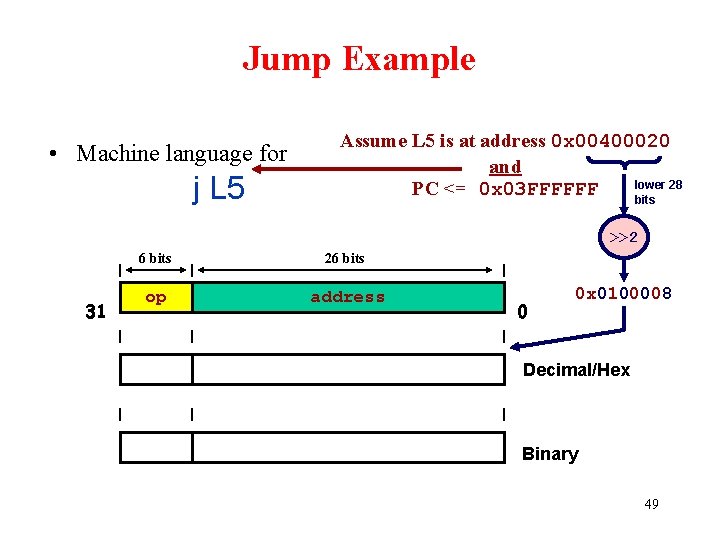

Jump Example

- Machine language for `j L5`
- Assume L5 is at address 0x00400020. The address field holds the lower 28 bits of the target shifted right by 2, so it is at most 0x03FFFFFF

| | op (6 bits) | address (26 bits) |
|---|---|---|
| Decimal/Hex | 2 | 0x0100008 |
| Binary | 000010 | 00000100000000000000001000 |

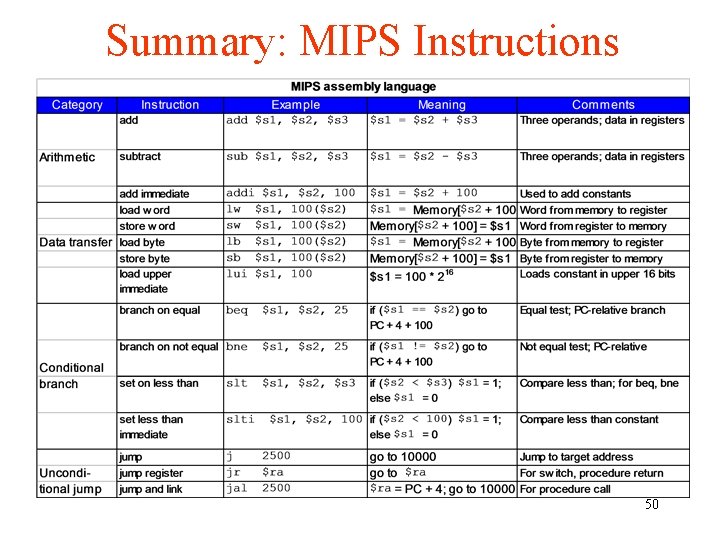

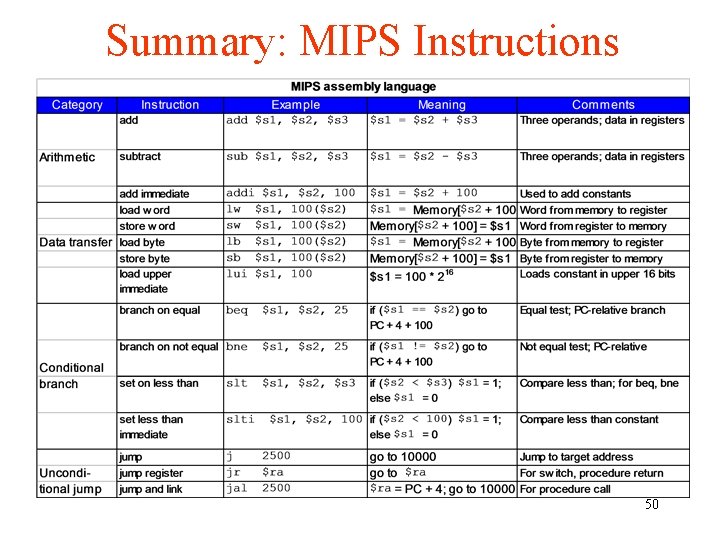

Summary: MIPS Instructions

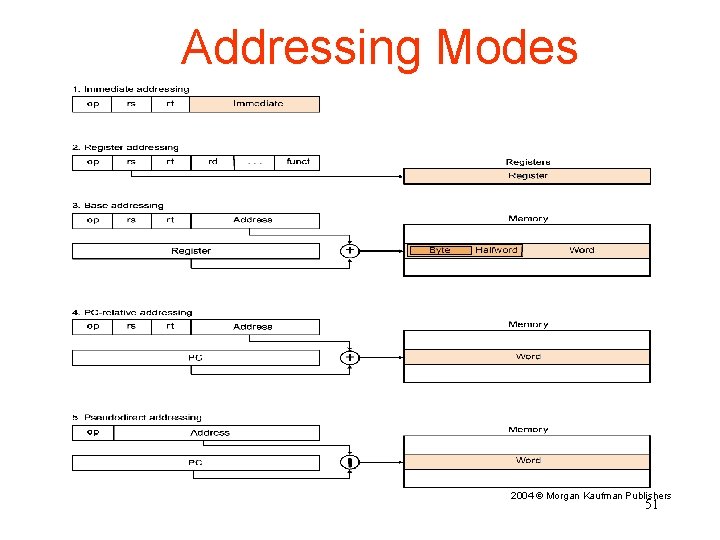

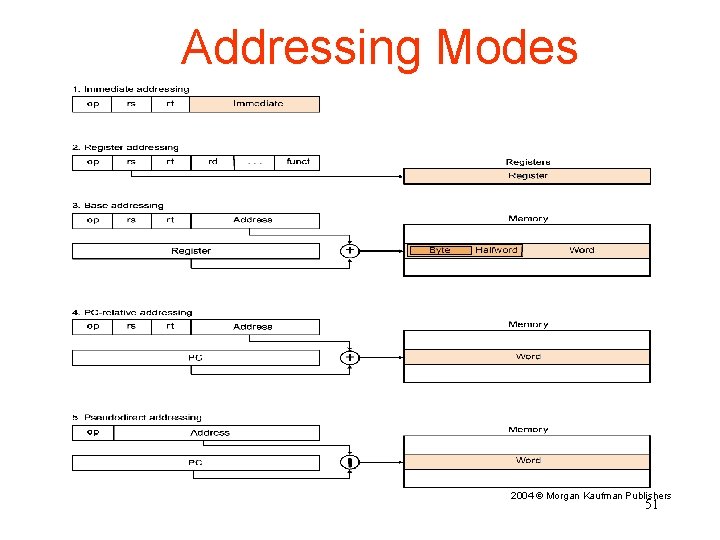

Addressing Modes (figure © 2004 Morgan Kaufmann Publishers)

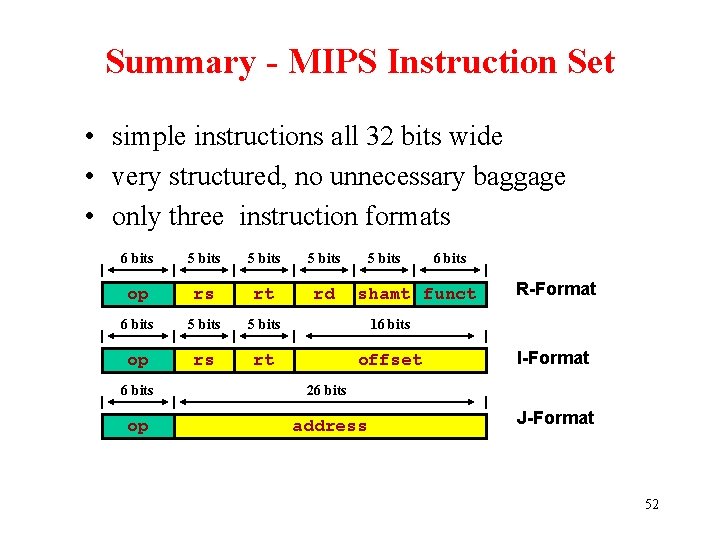

Summary: MIPS Instruction Set

- Simple instructions, all 32 bits wide
- Very structured, no unnecessary baggage
- Only three instruction formats:
  - R-Format: op (6 bits) | rs (5 bits) | rt (5 bits) | rd (5 bits) | shamt (5 bits) | funct (6 bits)
  - I-Format: op (6 bits) | rs (5 bits) | rt (5 bits) | offset (16 bits)
  - J-Format: op (6 bits) | address (26 bits)