Architecture Overview Introduction to CUDA Programming Andreas Moshovos

![Handling Large Input Data Sets – 1 D Example • Recall grid. Dim. [xy] Handling Large Input Data Sets – 1 D Example • Recall grid. Dim. [xy]](https://slidetodoc.com/presentation_image_h/3659670336575a6af2617c0e3efd0713/image-81.jpg)

- Slides: 81

Architecture Overview Introduction to CUDA Programming Andreas Moshovos Winter 2009 Updated Winter 2002 Most slides/material from: UIUC course by Wen-Mei Hwu and David Kirk Real World Technologies by David Kanter Hot. Chips 22 Presentation by C. M. Wittenbrink, E. Kilgariff and A. Prabhu

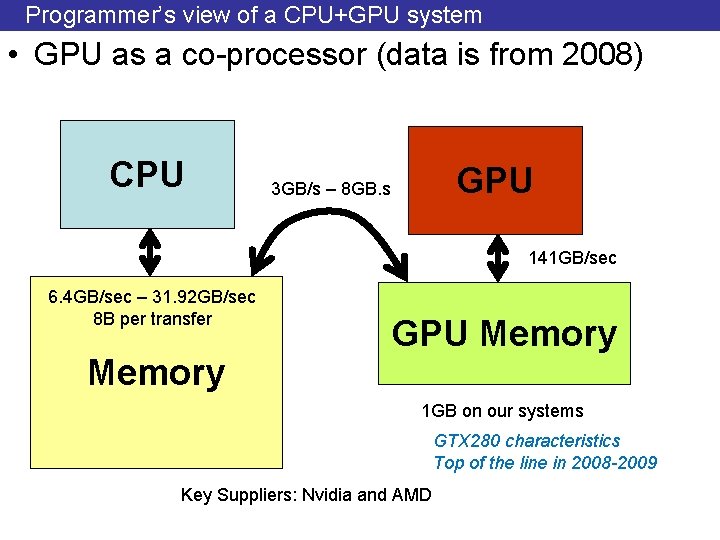

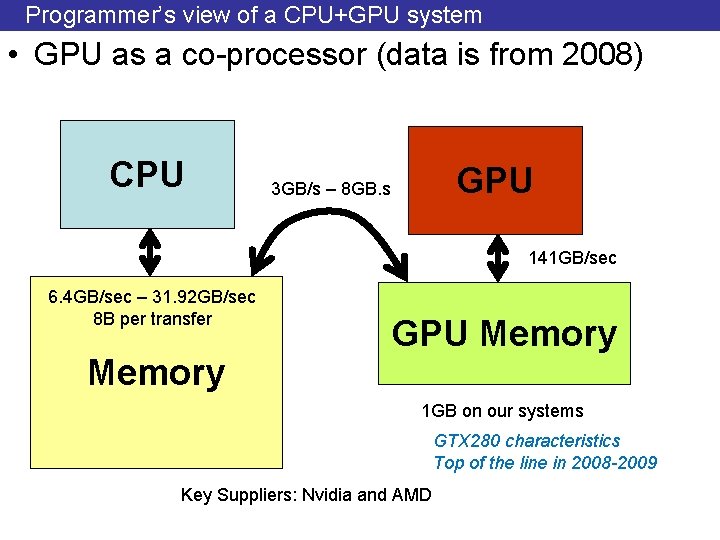

Programmer’s view of a CPU+GPU system • GPU as a co-processor (data is from 2008) CPU GPU 3 GB/s – 8 GB. s 141 GB/sec 6. 4 GB/sec – 31. 92 GB/sec 8 B per transfer Memory GPU Memory 1 GB on our systems GTX 280 characteristics Top of the line in 2008 -2009 Key Suppliers: Nvidia and AMD

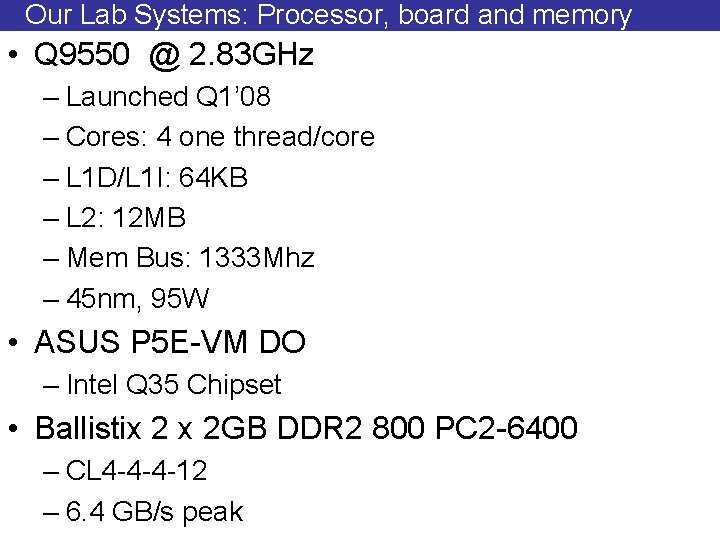

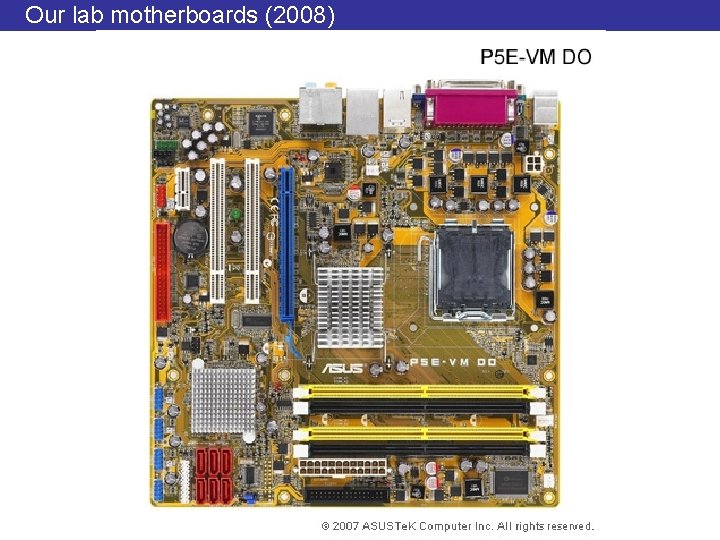

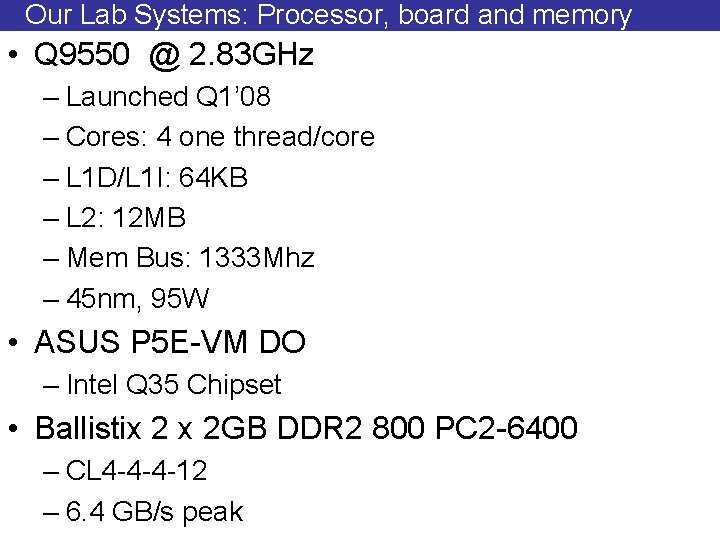

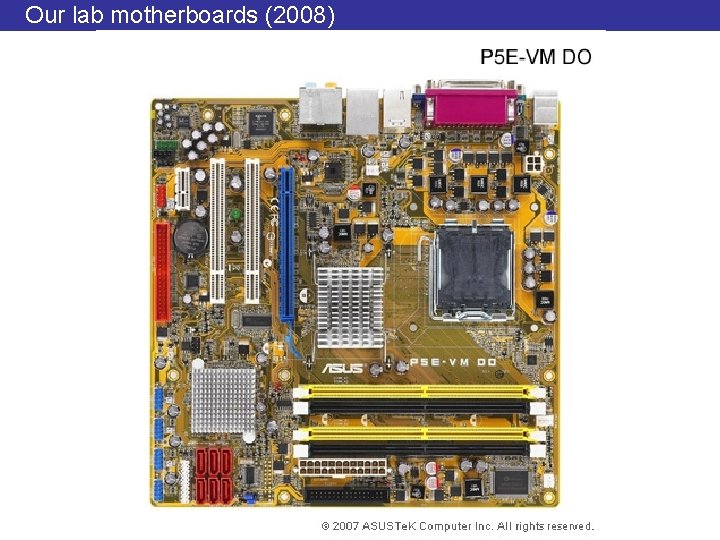

Our Lab Systems: Processor, board and memory • Q 9550 @ 2. 83 GHz – Launched Q 1’ 08 – Cores: 4 one thread/core – L 1 D/L 1 I: 64 KB – L 2: 12 MB – Mem Bus: 1333 Mhz – 45 nm, 95 W • ASUS P 5 E-VM DO – Intel Q 35 Chipset • Ballistix 2 x 2 GB DDR 2 800 PC 2 -6400 – CL 4 -4 -4 -12 – 6. 4 GB/s peak

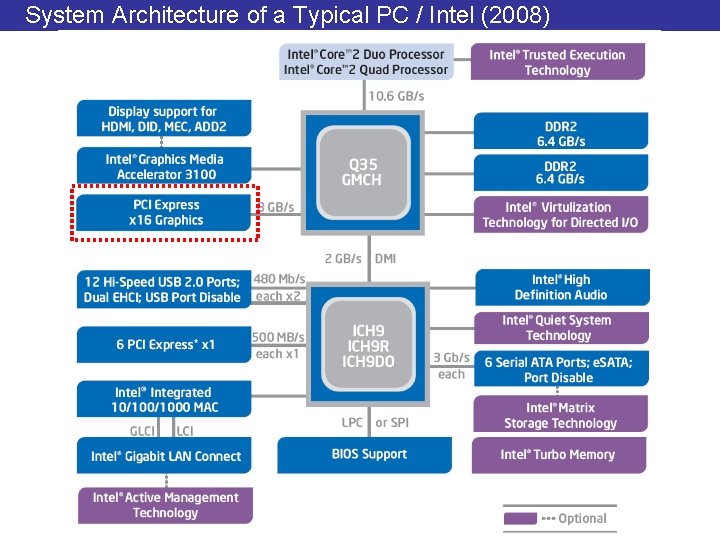

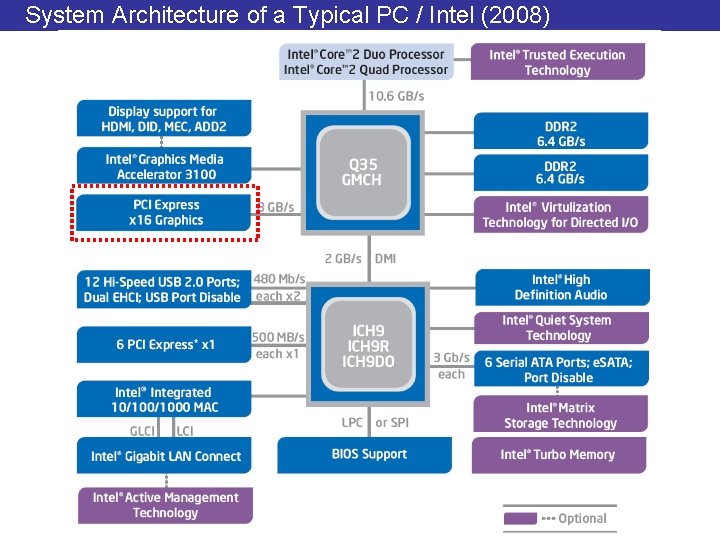

System Architecture of a Typical PC / Intel (2008)

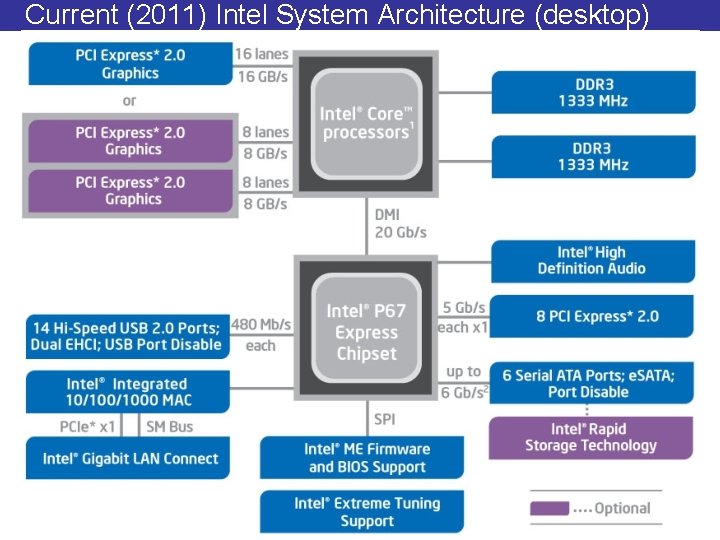

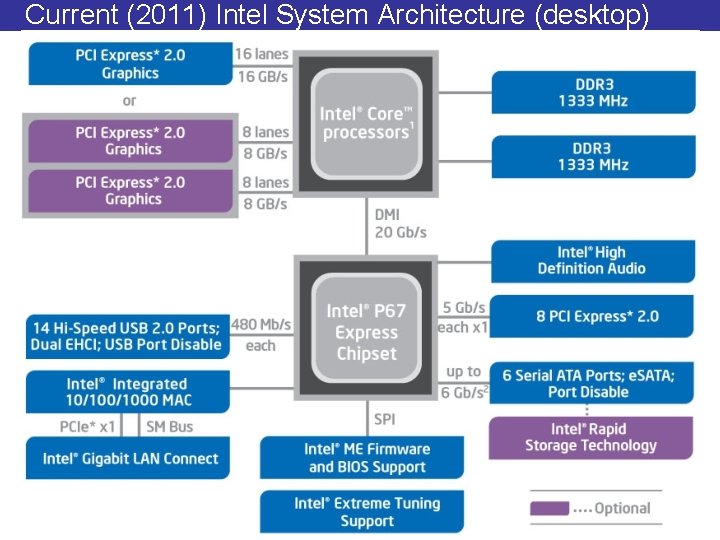

Current (2011) Intel System Architecture (desktop)

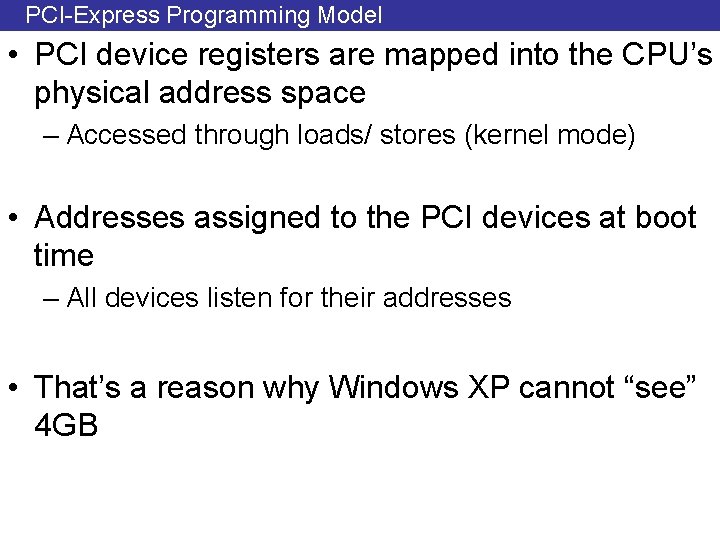

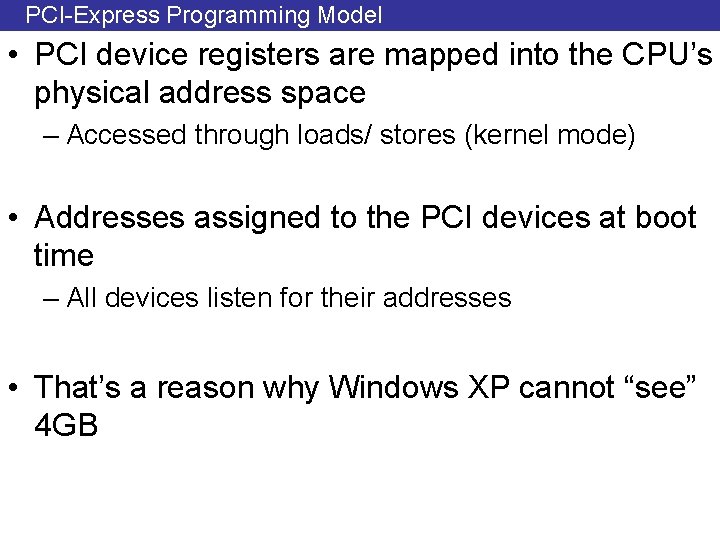

PCI-Express Programming Model • PCI device registers are mapped into the CPU’s physical address space – Accessed through loads/ stores (kernel mode) • Addresses assigned to the PCI devices at boot time – All devices listen for their addresses • That’s a reason why Windows XP cannot “see” 4 GB

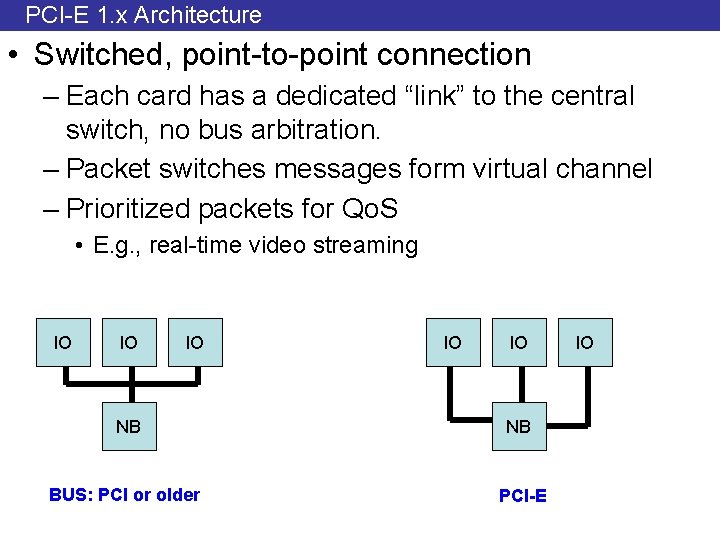

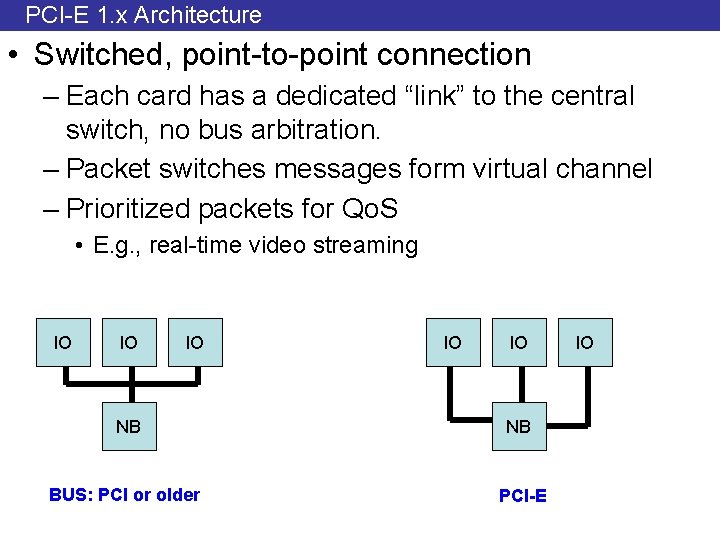

PCI-E 1. x Architecture • Switched, point-to-point connection – Each card has a dedicated “link” to the central switch, no bus arbitration. – Packet switches messages form virtual channel – Prioritized packets for Qo. S • E. g. , real-time video streaming IO IO IO NB NB BUS: PCI or older PCI-E IO

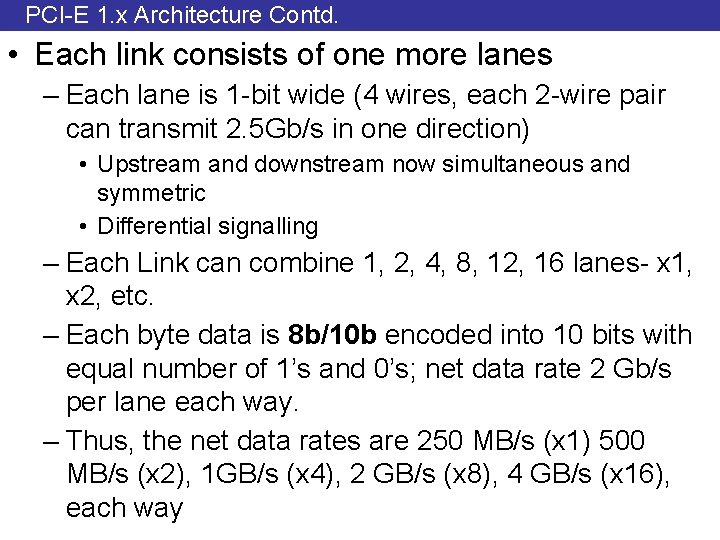

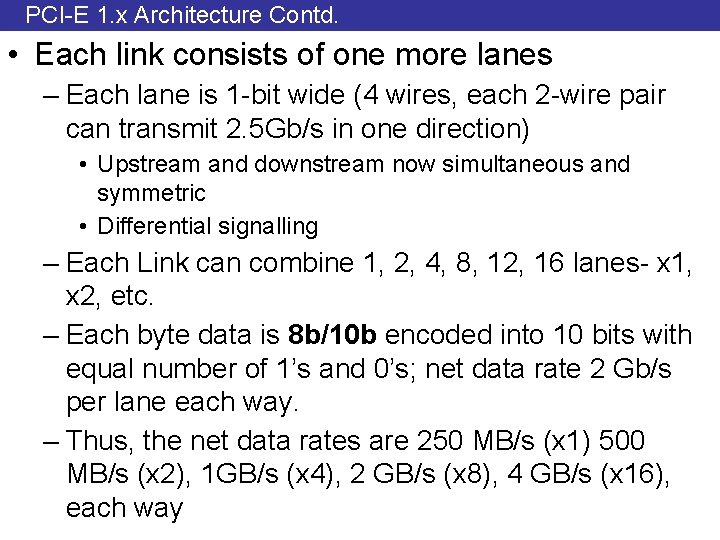

PCI-E 1. x Architecture Contd. • Each link consists of one more lanes – Each lane is 1 -bit wide (4 wires, each 2 -wire pair can transmit 2. 5 Gb/s in one direction) • Upstream and downstream now simultaneous and symmetric • Differential signalling – Each Link can combine 1, 2, 4, 8, 12, 16 lanes- x 1, x 2, etc. – Each byte data is 8 b/10 b encoded into 10 bits with equal number of 1’s and 0’s; net data rate 2 Gb/s per lane each way. – Thus, the net data rates are 250 MB/s (x 1) 500 MB/s (x 2), 1 GB/s (x 4), 2 GB/s (x 8), 4 GB/s (x 16), each way

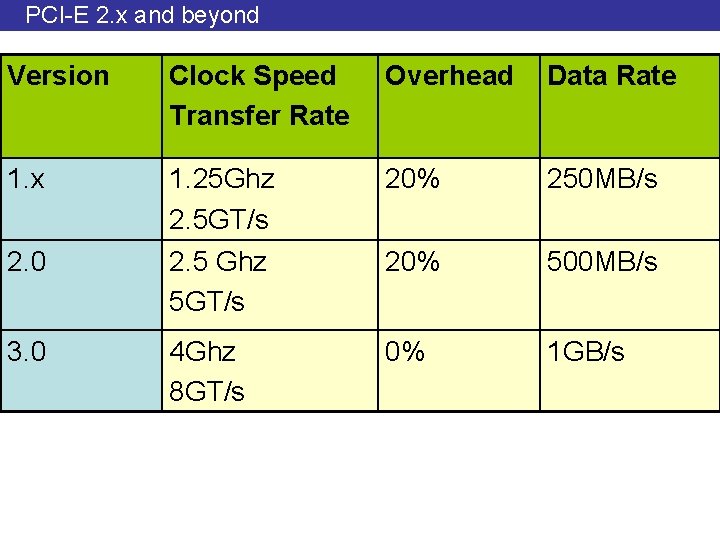

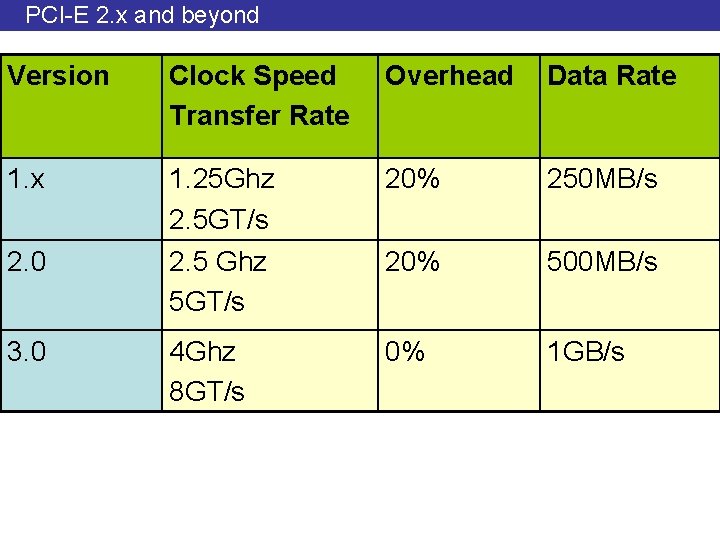

PCI-E 2. x and beyond Version Clock Speed Transfer Rate Overhead Data Rate 1. x 1. 25 Ghz 2. 5 GT/s 20% 250 MB/s 2. 0 2. 5 Ghz 5 GT/s 20% 500 MB/s 3. 0 4 Ghz 8 GT/s 0% 1 GB/s

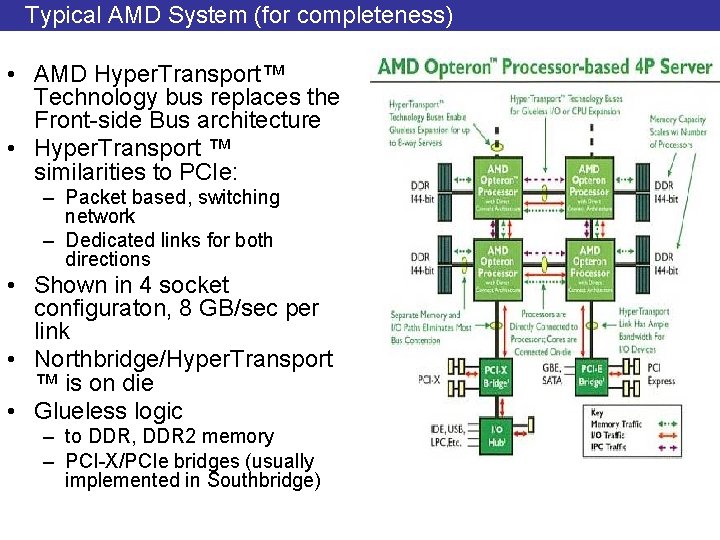

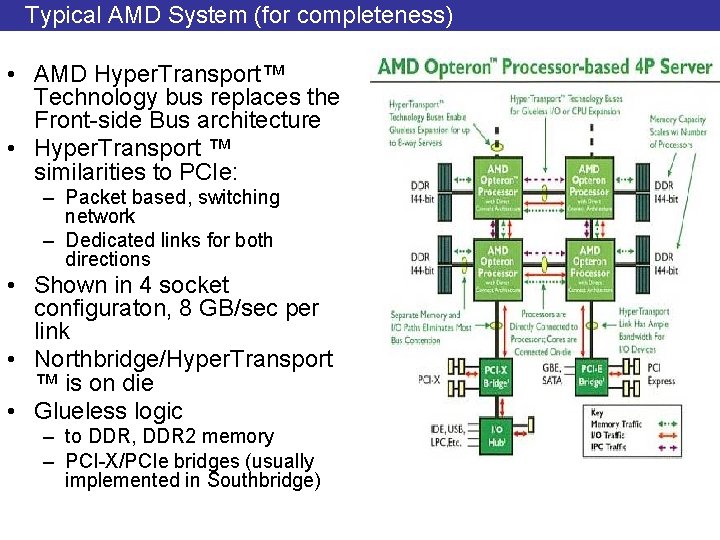

Typical AMD System (for completeness) • AMD Hyper. Transport™ Technology bus replaces the Front-side Bus architecture • Hyper. Transport ™ similarities to PCIe: – Packet based, switching network – Dedicated links for both directions • Shown in 4 socket configuraton, 8 GB/sec per link • Northbridge/Hyper. Transport ™ is on die • Glueless logic – to DDR, DDR 2 memory – PCI-X/PCIe bridges (usually implemented in Southbridge)

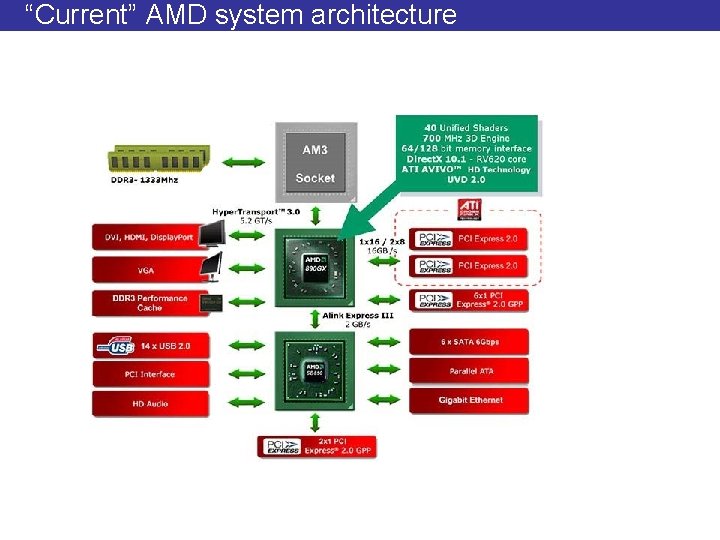

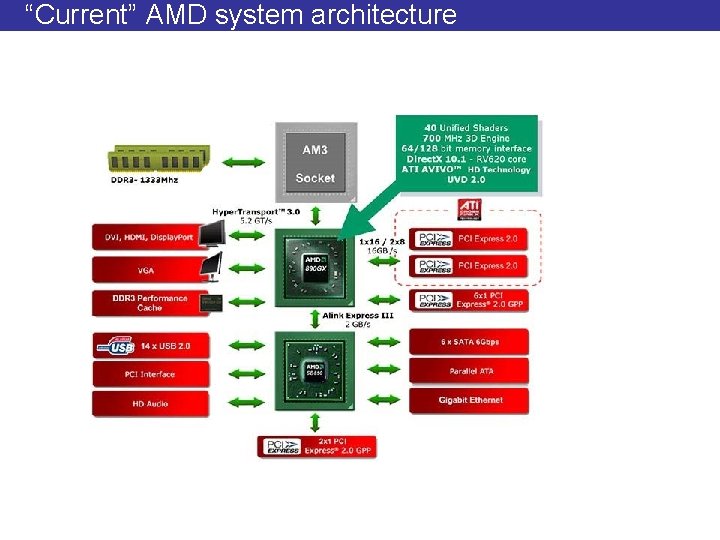

“Current” AMD system architecture

Our lab motherboards (2008)

Typical motherboard today (2012)

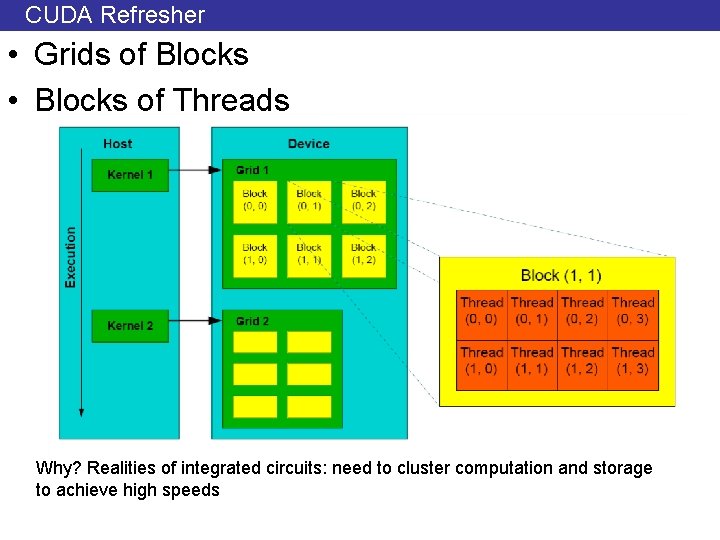

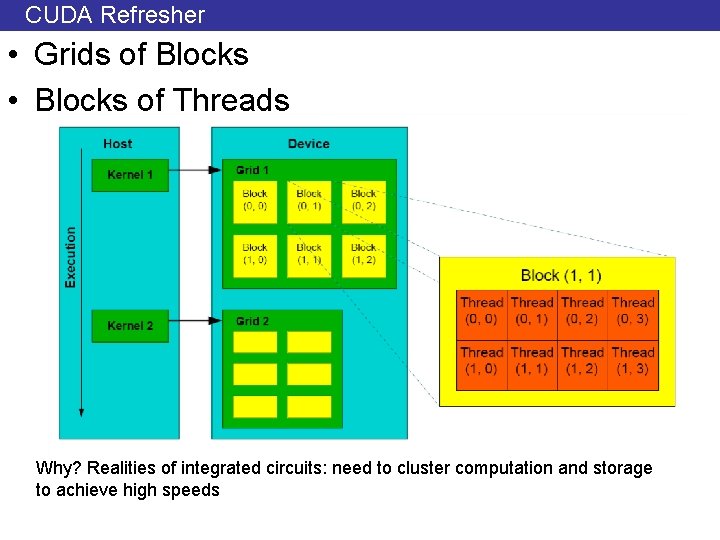

CUDA Refresher • Grids of Blocks • Blocks of Threads Why? Realities of integrated circuits: need to cluster computation and storage to achieve high speeds

Execution model guarantees • Only that threads will execute • Says nothing about the order • Extreme cases: – #1: All threads run in parallel – #2: All threads run sequentially • Interleaving at synchronization points • A CUDA program can run: – On the CPU – On a GPU with 1 and on one with N units • Different models/price points

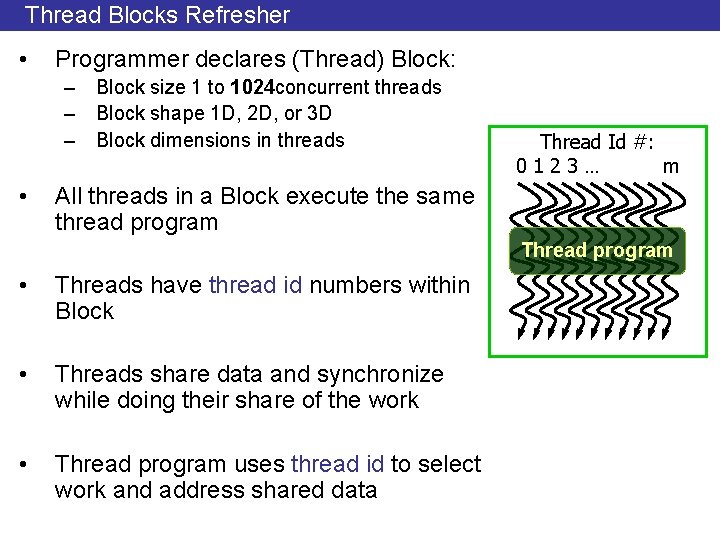

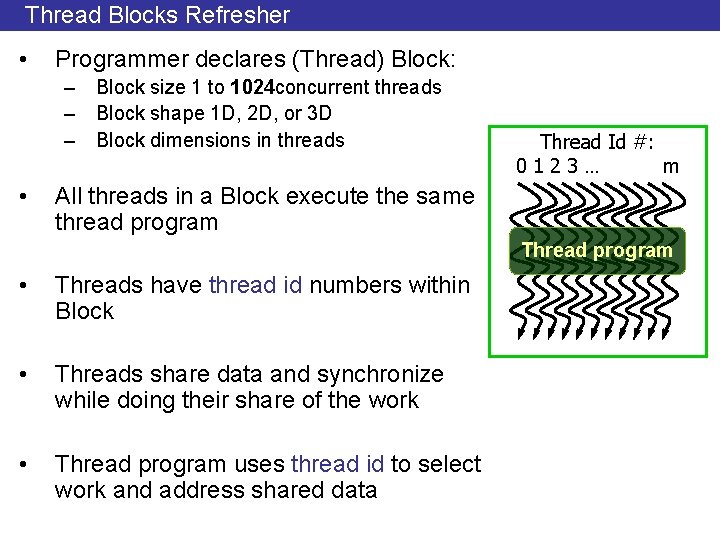

Thread Blocks Refresher • Programmer declares (Thread) Block: – Block size 1 to 1024 concurrent threads – Block shape 1 D, 2 D, or 3 D – Block dimensions in threads • Thread Id #: 0123… m All threads in a Block execute the same thread program Thread program • Threads have thread id numbers within Block • Threads share data and synchronize while doing their share of the work • Thread program uses thread id to select work and address shared data

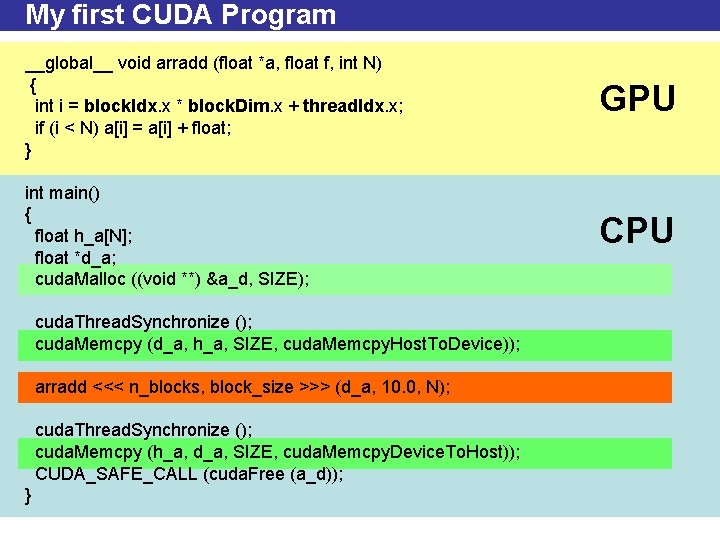

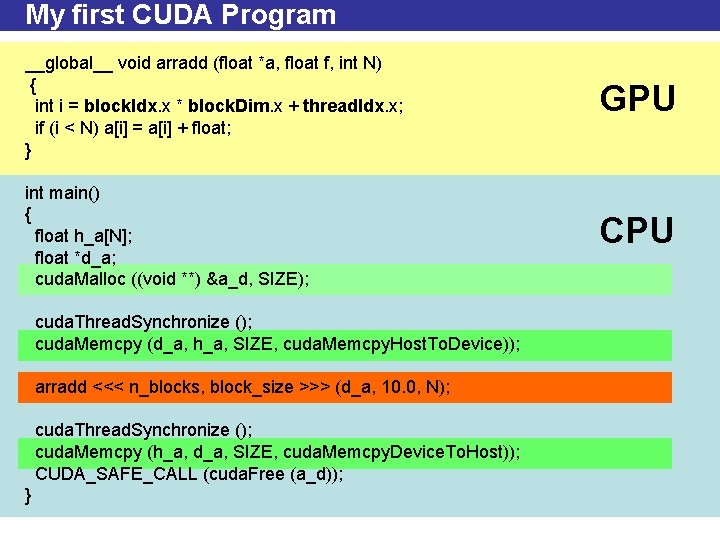

My first CUDA Program __global__ void arradd (float *a, float f, int N) { int i = block. Idx. x * block. Dim. x + thread. Idx. x; if (i < N) a[i] = a[i] + float; } GPU int main() { float h_a[N]; float *d_a; cuda. Malloc ((void **) &a_d, SIZE); CPU cuda. Thread. Synchronize (); cuda. Memcpy (d_a, h_a, SIZE, cuda. Memcpy. Host. To. Device)); arradd <<< n_blocks, block_size >>> (d_a, 10. 0, N); cuda. Thread. Synchronize (); cuda. Memcpy (h_a, d_a, SIZE, cuda. Memcpy. Device. To. Host)); CUDA_SAFE_CALL (cuda. Free (a_d)); }

Architecture Goals • Use multithreading to hide DRAM latency • Support fine-grain parallel processing • Virtualize the processors to achieve scalability – Multiple blocks and threads per processor • Simplify programming. – Develop program for one thread • Conventional Processors – Latency optimized – ILP – Caches 99% hit rate • GPU – Caches 90% or less. Not a good option – Throughput optimized – ILP + TLP

GF 100 Specifications • 3 Billion Transistors in 40 nm process (TSMC) • Up to 512 CUDA / unified shader cores • 384 -bit GDDR 5 memory interface • 6 GB capacity • Ge. Force GTX 480: Graphics Enthusiast

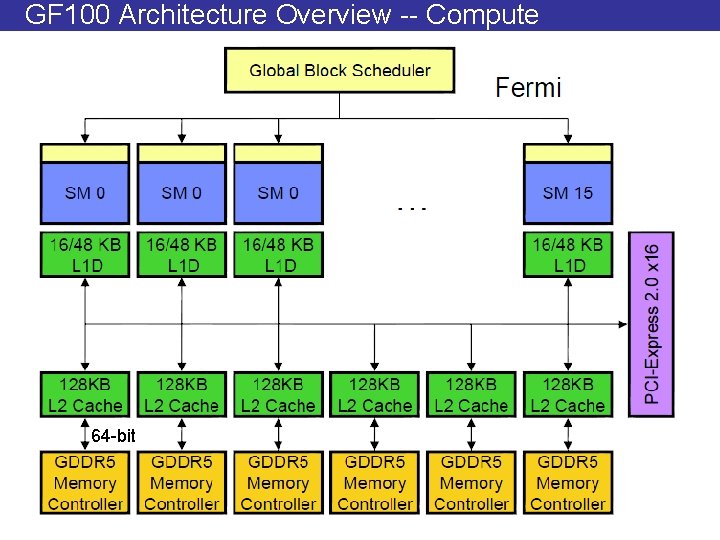

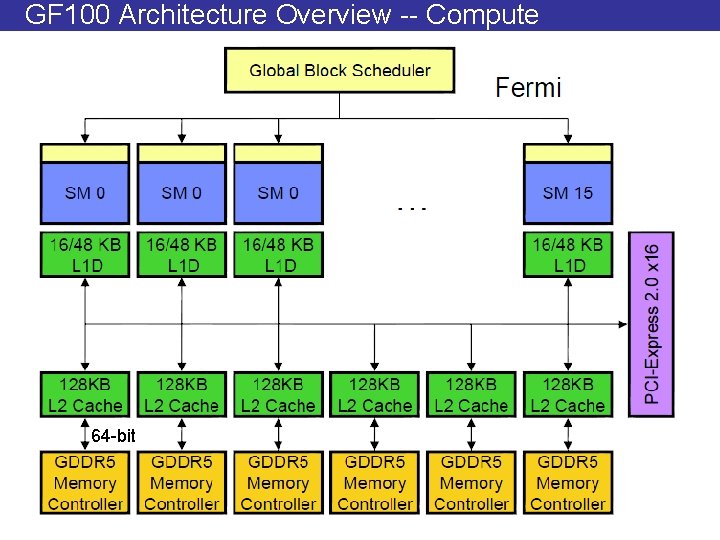

GF 100 Architecture Overview -- Compute 64 -bit

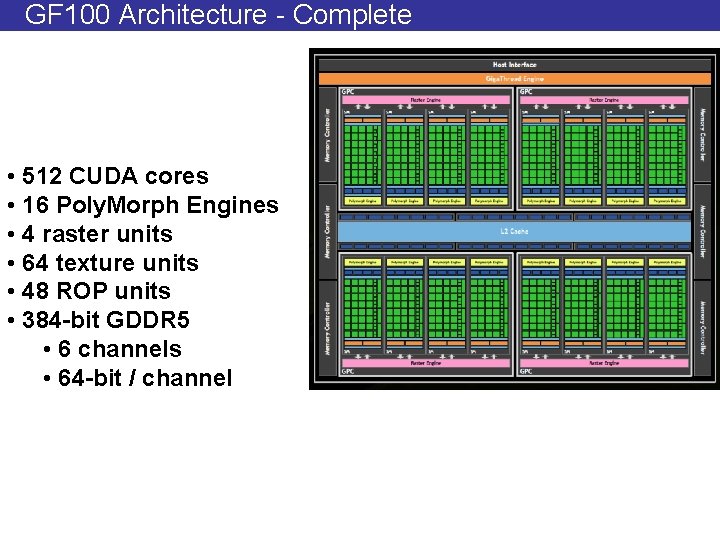

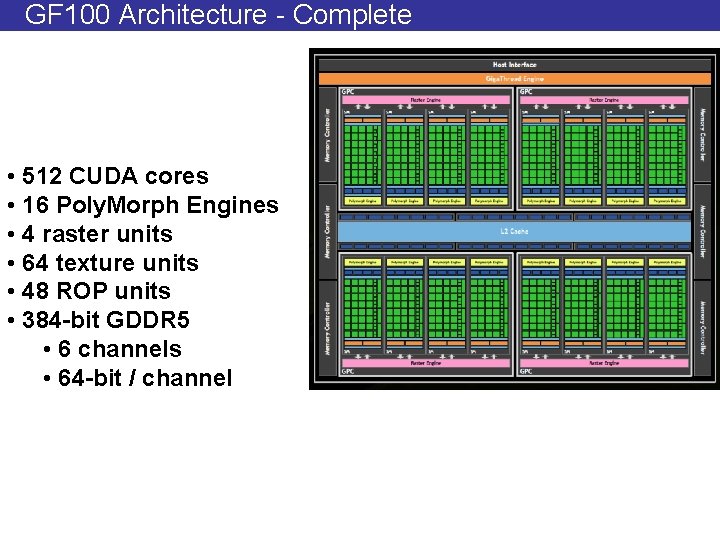

GF 100 Architecture - Complete • 512 CUDA cores • 16 Poly. Morph Engines • 4 raster units • 64 texture units • 48 ROP units • 384 -bit GDDR 5 • 6 channels • 64 -bit / channel

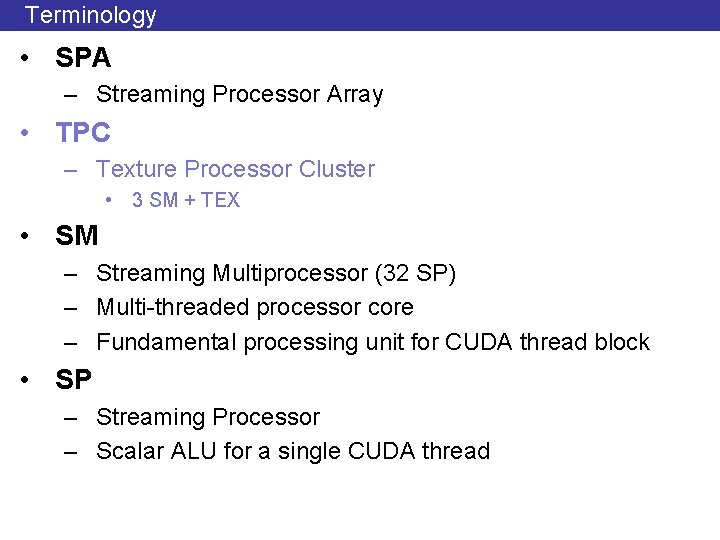

Terminology • SPA – Streaming Processor Array • TPC – Texture Processor Cluster • 3 SM + TEX • SM – Streaming Multiprocessor (32 SP) – Multi-threaded processor core – Fundamental processing unit for CUDA thread block • SP – Streaming Processor – Scalar ALU for a single CUDA thread

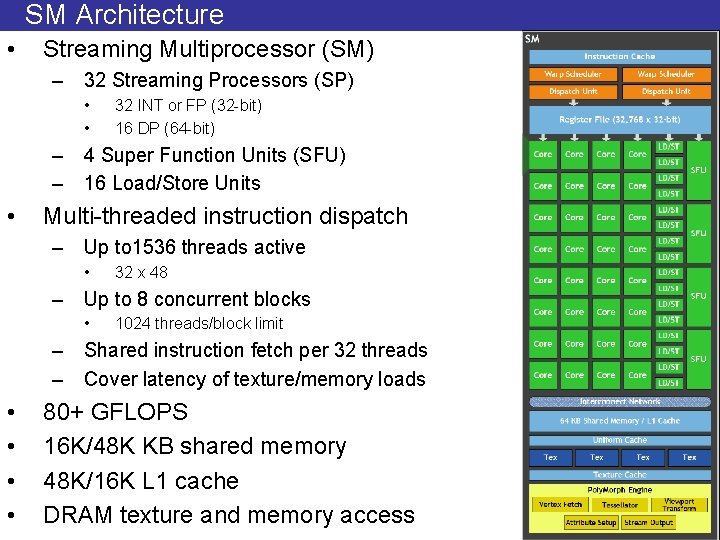

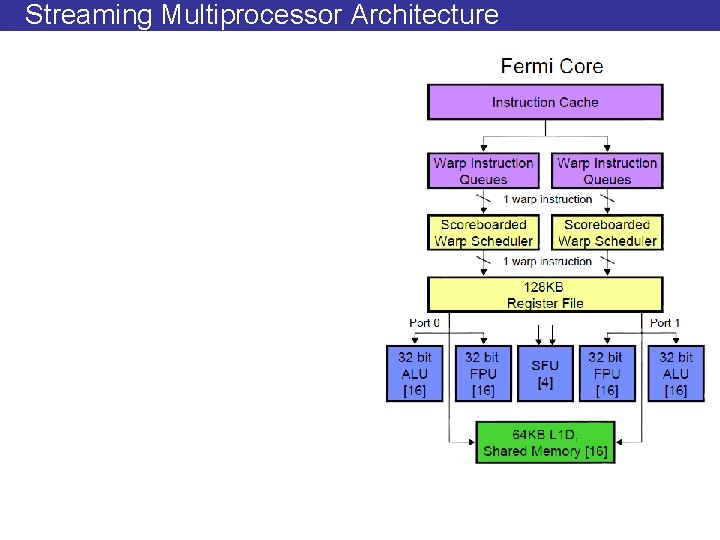

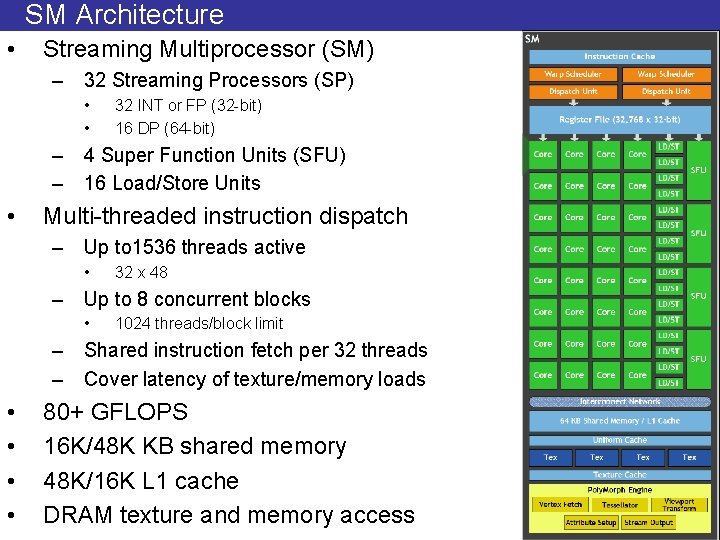

SM Architecture • Streaming Multiprocessor (SM) – 32 Streaming Processors (SP) • • 32 INT or FP (32 -bit) 16 DP (64 -bit) – 4 Super Function Units (SFU) – 16 Load/Store Units • Multi-threaded instruction dispatch – Up to 1536 threads active • 32 x 48 – Up to 8 concurrent blocks • 1024 threads/block limit – Shared instruction fetch per 32 threads – Cover latency of texture/memory loads • • 80+ GFLOPS 16 K/48 K KB shared memory 48 K/16 K L 1 cache DRAM texture and memory access

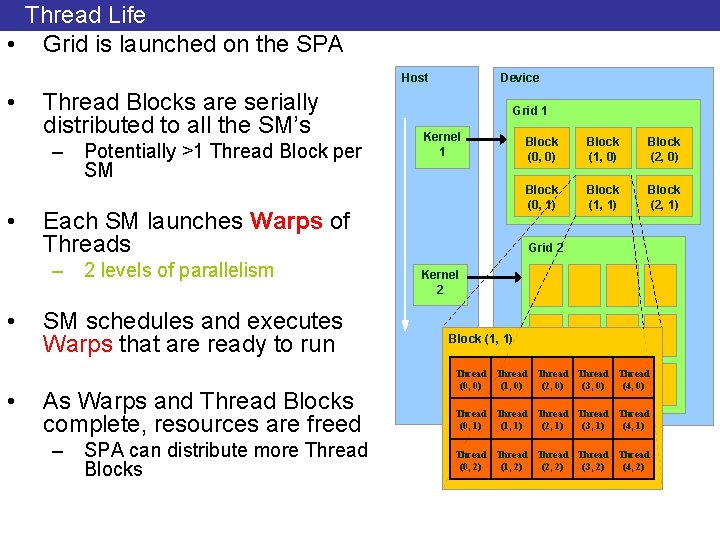

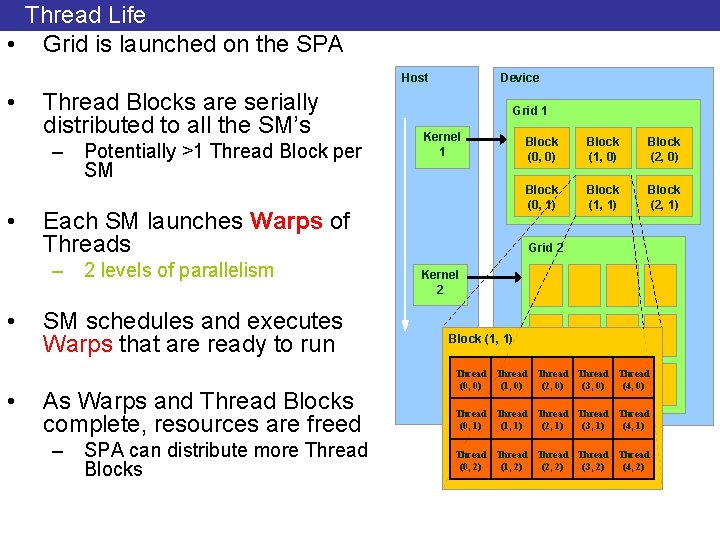

Thread Life • Grid is launched on the SPA Host • Thread Blocks are serially distributed to all the SM’s – Potentially >1 Thread Block per SM • • Grid 1 Kernel 1 Each SM launches Warps of Threads – 2 levels of parallelism • Device SM schedules and executes Warps that are ready to run As Warps and Thread Blocks complete, resources are freed – SPA can distribute more Thread Blocks Block (0, 0) Block (1, 0) Block (2, 0) Block (0, 1) Block (1, 1) Block (2, 1) Grid 2 Kernel 2 Block (1, 1) Thread Thread (0, 0) (1, 0) (2, 0) (3, 0) (4, 0) Thread Thread (0, 1) (1, 1) (2, 1) (3, 1) (4, 1) Thread Thread (0, 2) (1, 2) (2, 2) (3, 2) (4, 2)

Cooperative Thread Array • Break Blocks into warps • Allocate Resources – Registers, Shared Mem, Barriers • Then allocate for execution

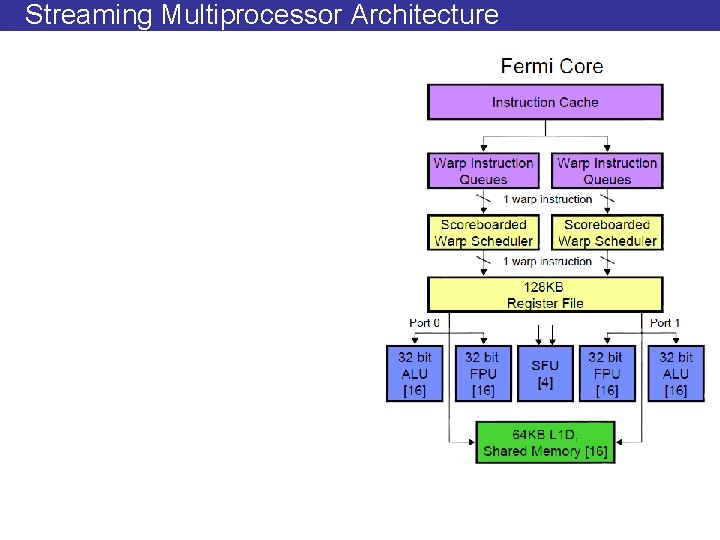

Streaming Multiprocessor Architecture

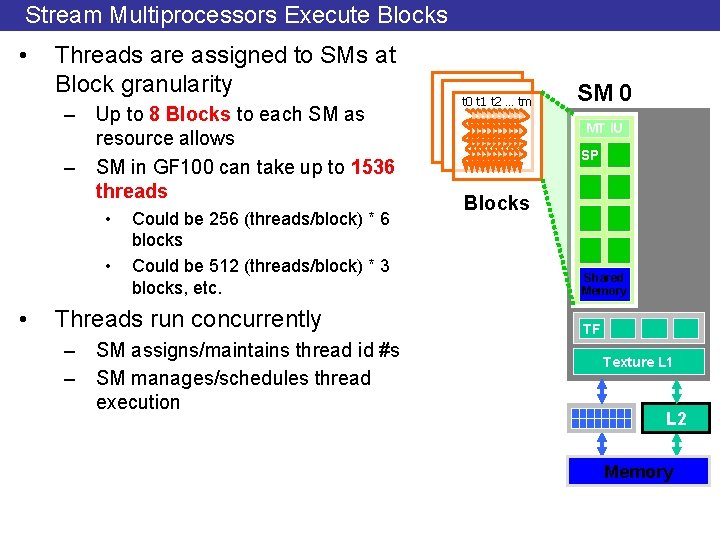

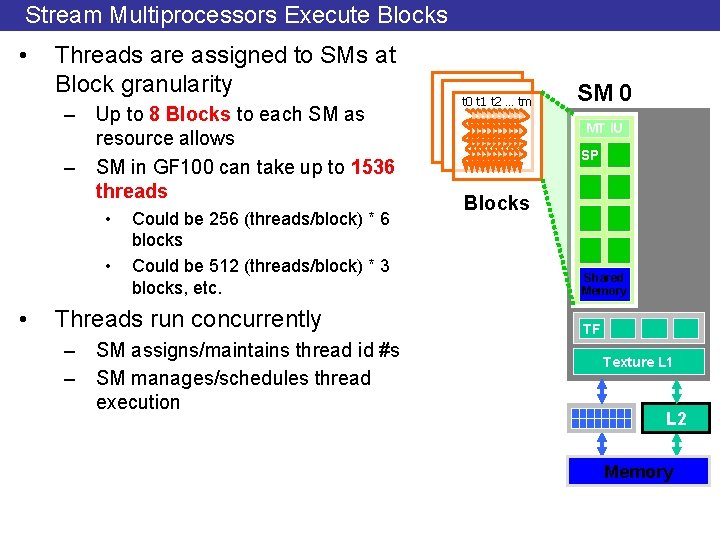

Stream Multiprocessors Execute Blocks • Threads are assigned to SMs at Block granularity – Up to 8 Blocks to each SM as resource allows – SM in GF 100 can take up to 1536 threads • • • Could be 256 (threads/block) * 6 blocks Could be 512 (threads/block) * 3 blocks, etc. Threads run concurrently – SM assigns/maintains thread id #s – SM manages/schedules thread execution t 0 t 1 t 2 … tm SM 0 MT IU SP Blocks Shared Memory TF Texture L 1 L 2 Memory

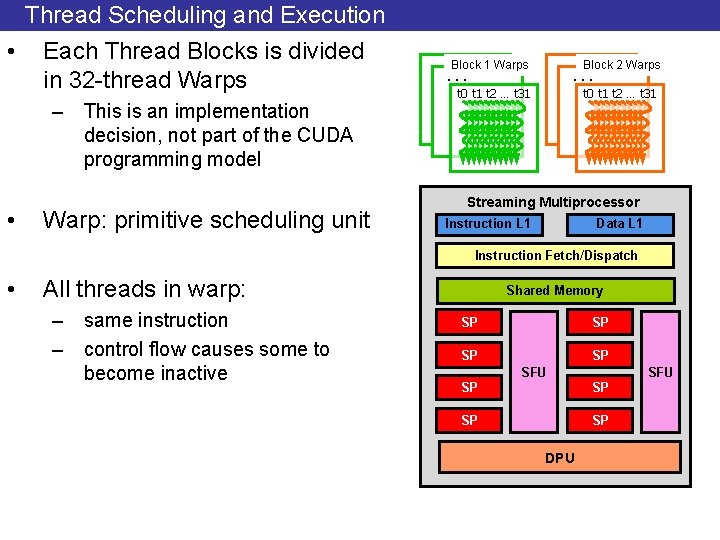

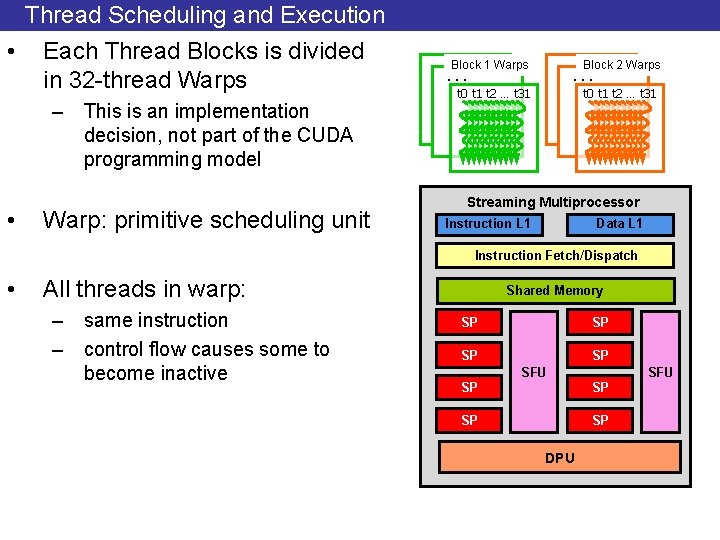

Thread Scheduling and Execution • Each Thread Blocks is divided in 32 -thread Warps – This is an implementation decision, not part of the CUDA programming model • Warp: primitive scheduling unit Block 1 Warps … …Block 2 Warps t 0 t 1 t 2 … t 31 … … Streaming Multiprocessor Instruction L 1 Data L 1 Instruction Fetch/Dispatch • All threads in warp: – same instruction – control flow causes some to become inactive Shared Memory SP SP SFU SP SP DPU

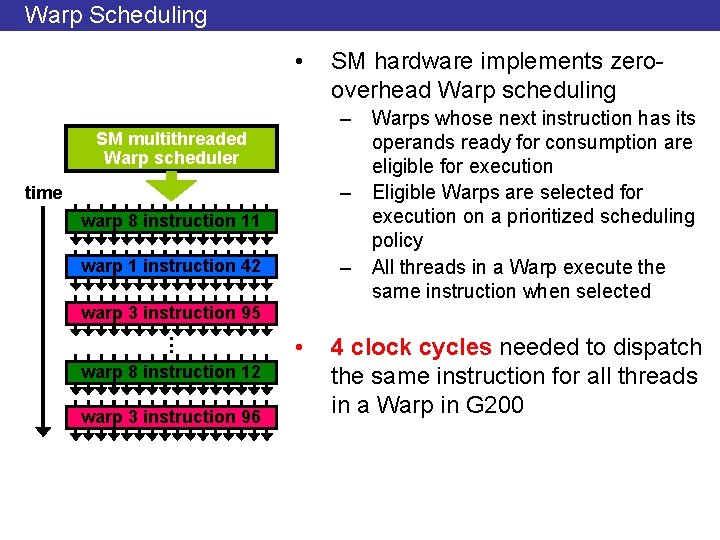

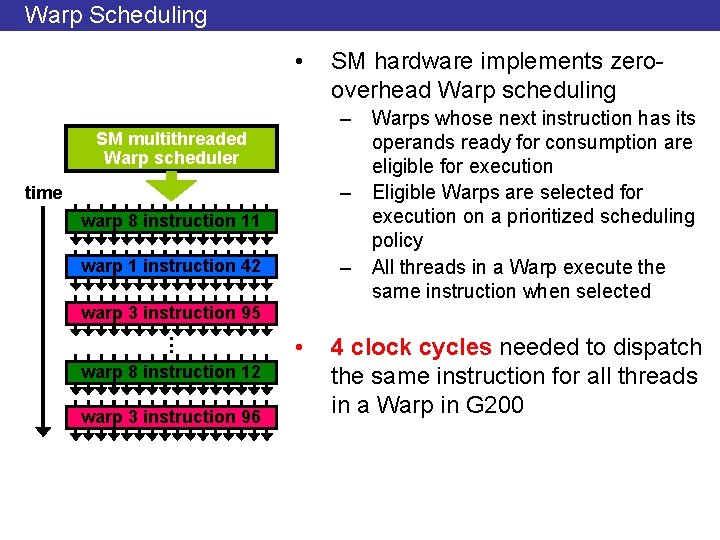

Warp Scheduling • – Warps whose next instruction has its operands ready for consumption are eligible for execution – Eligible Warps are selected for execution on a prioritized scheduling policy – All threads in a Warp execute the same instruction when selected SM multithreaded Warp scheduler time warp 8 instruction 11 warp 1 instruction 42 warp 3 instruction 95. . . warp 8 instruction 12 warp 3 instruction 96 SM hardware implements zerooverhead Warp scheduling • 4 clock cycles needed to dispatch the same instruction for all threads in a Warp in G 200

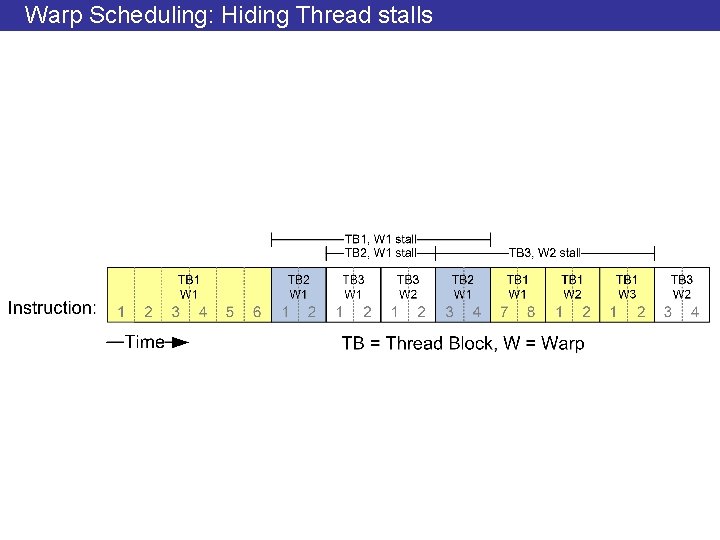

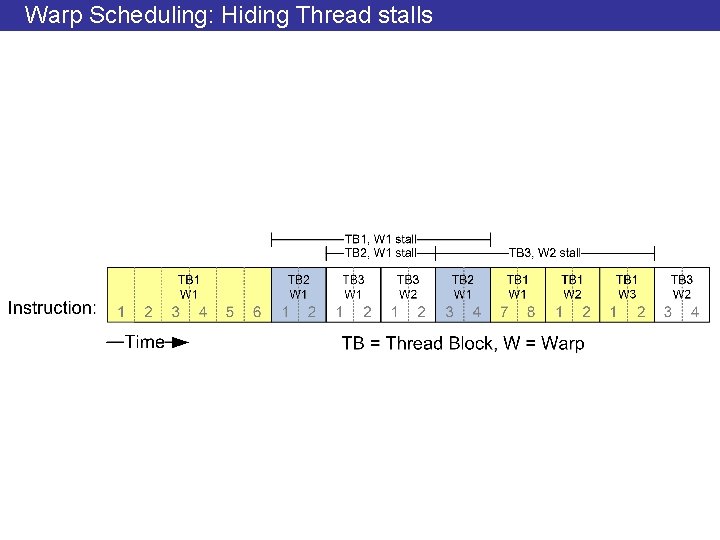

Warp Scheduling: Hiding Thread stalls

How many warps are there? • If 3 blocks are assigned to an SM and each Block has 256 threads, how many Warps are there in an SM? – Each Block is divided into 256/32 = 8 Warps – There are 8 * 3 = 24 Warps – At any point in time, only one of the 24 Warps will be selected for instruction fetch and execution.

Warp Scheduling Ramifications • If one global memory access is needed for every 4 instructions • A minimal of 13 Warps are needed to fully tolerate a 200 -cycle memory latency • Why? – Need to hide 200 cycles every four instructions – Every Warp occupies 4 cycles during which the same instruction executes – Every 4 insts a thread stalls – Every 16 cycles a thread stalls – 200/16 =12. 5 or at least 13 warps

Granularity Considerations: Block & Thread limits per SM • For Matrix Multiplication or any 2 D-type of computation, should I use 8 X 8, 16 X 16 or 32 X 32 tiles? – For 8 X 8, we have 64 threads per Block. • Thread/SM limit = 1024 up to 16 Blocks. • Blocks/SM limit = 8 only 512 threads will go into each SM – For 16 X 16, we have 256 threads per Block. • Thread/SM limit = 1024 up to 4 Blocks. • Blocks/SM limit = 8 full capacity unless other resource considerations overrule. – For 32 X 32, we have 1024 threads per Block. • Thread/block limit = 1024 Not even one can fit into an SM.

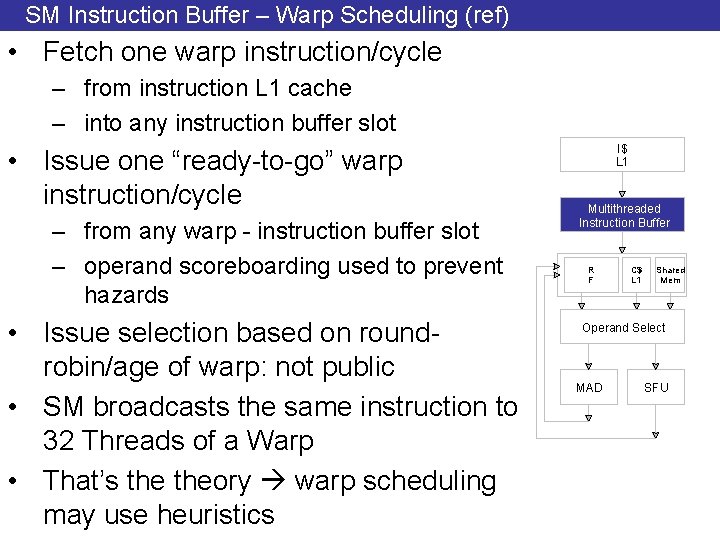

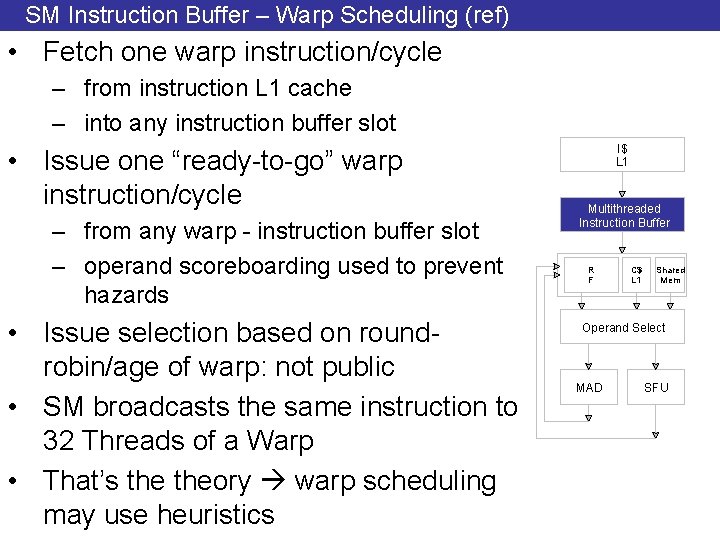

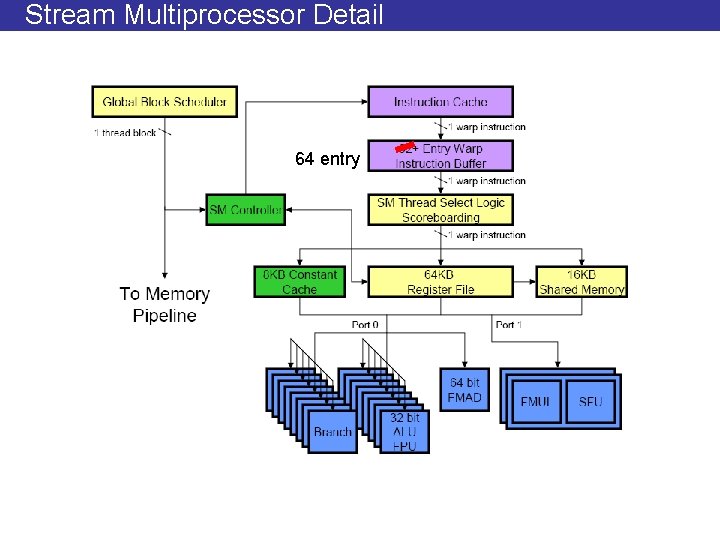

SM Instruction Buffer – Warp Scheduling (ref) • Fetch one warp instruction/cycle – from instruction L 1 cache – into any instruction buffer slot • Issue one “ready-to-go” warp instruction/cycle – from any warp - instruction buffer slot – operand scoreboarding used to prevent hazards • Issue selection based on roundrobin/age of warp: not public • SM broadcasts the same instruction to 32 Threads of a Warp • That’s theory warp scheduling may use heuristics I$ L 1 Multithreaded Instruction Buffer R F C$ L 1 Shared Mem Operand Select MAD SFU

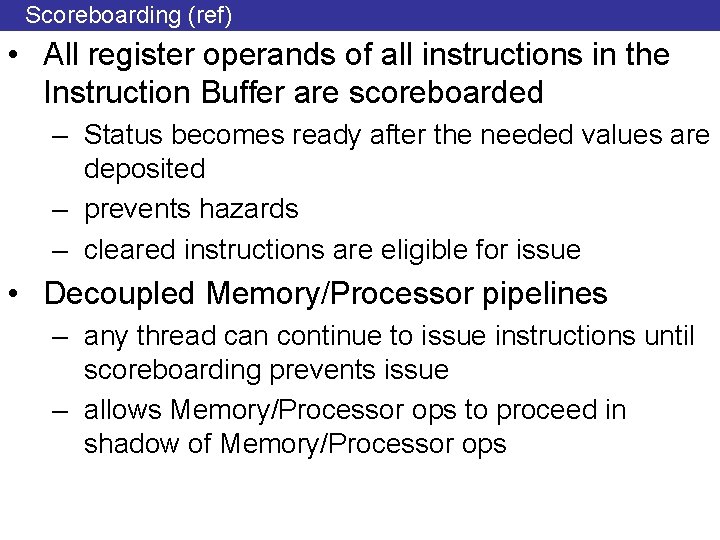

Scoreboarding (ref) • All register operands of all instructions in the Instruction Buffer are scoreboarded – Status becomes ready after the needed values are deposited – prevents hazards – cleared instructions are eligible for issue • Decoupled Memory/Processor pipelines – any thread can continue to issue instructions until scoreboarding prevents issue – allows Memory/Processor ops to proceed in shadow of Memory/Processor ops

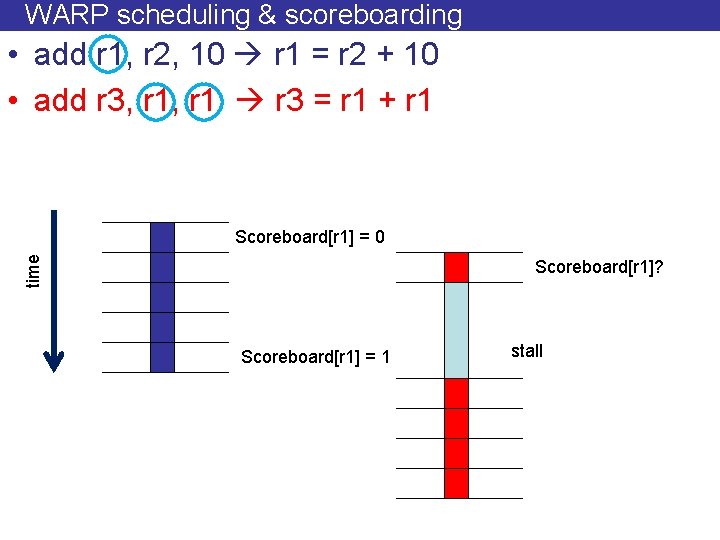

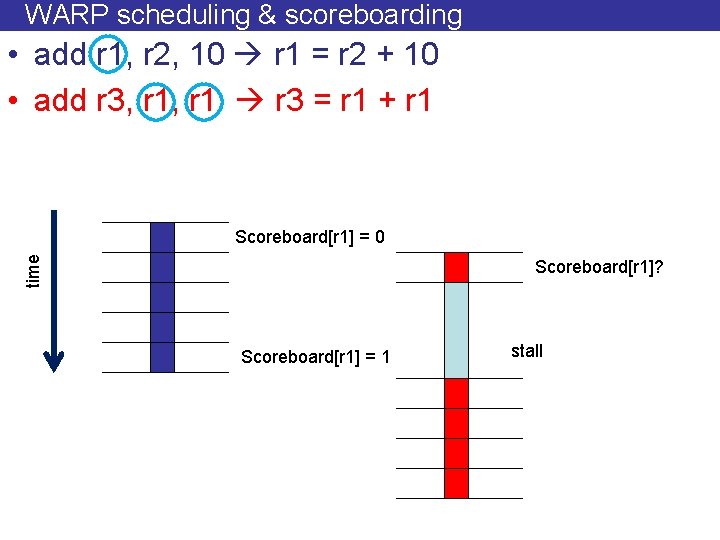

WARP scheduling & scoreboarding • add r 1, r 2, 10 r 1 = r 2 + 10 • add r 3, r 1 r 3 = r 1 + r 1 time Scoreboard[r 1] = 0 Scoreboard[r 1]? Scoreboard[r 1] = 1 stall

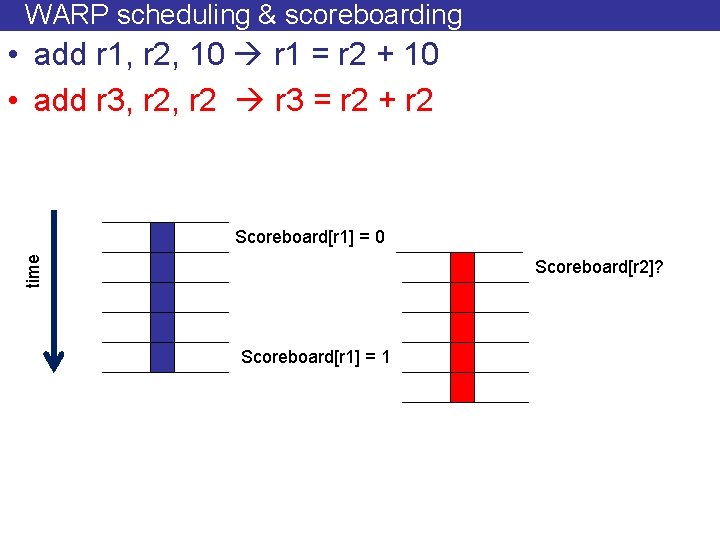

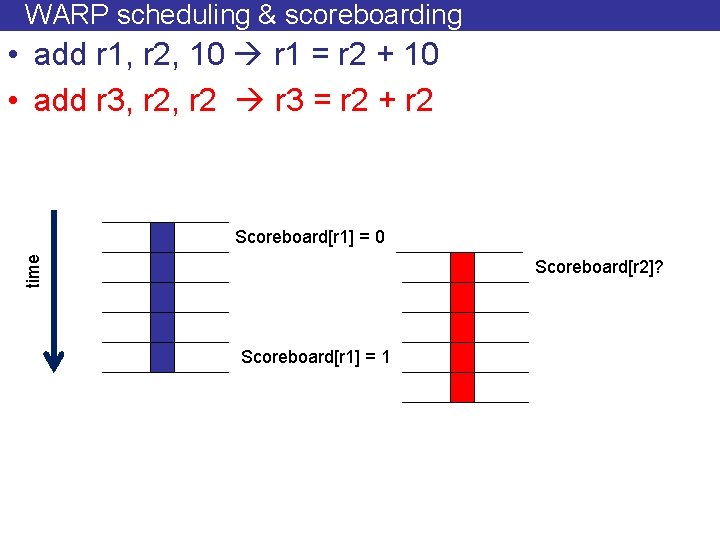

WARP scheduling & scoreboarding • add r 1, r 2, 10 r 1 = r 2 + 10 • add r 3, r 2 r 3 = r 2 + r 2 time Scoreboard[r 1] = 0 Scoreboard[r 2]? Scoreboard[r 1] = 1

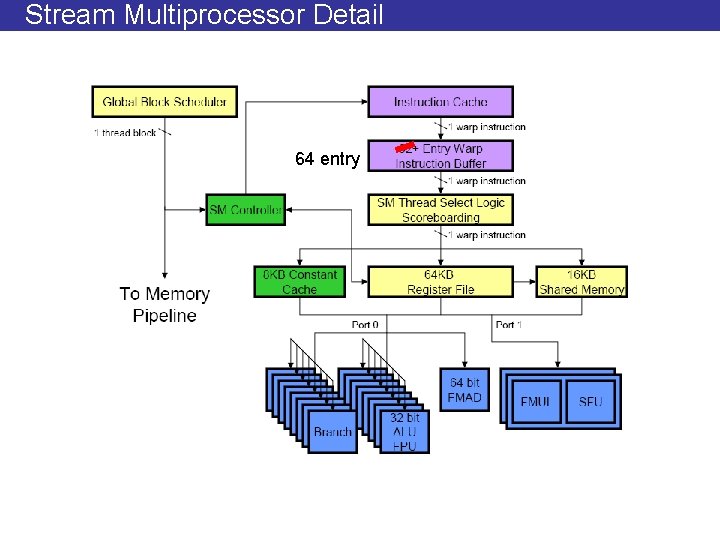

Stream Multiprocessor Detail 64 entry

Scalar Units • • • 32 bit ALU and Multiply-Add IEEE Single-Precision Floating-Point Integer Latency is 4 cycles FP: Na. N, Denormals become signed 0. Round to nearest even

Special Function Units • Transcendental function evaluation and perpixel attribute interpolation • Function evaluator: – rcp, rsqrt, log 2, exp 2, sin, cos approximations – Uses quadratic interpolation based on Enhanced Minimax Approximation – 1 scalar result per cycle • Latency is 16 cycles • Some are synthesized: 32 cycles or so

Memory System Goals • • • High-Bandwidth As much parallelism as possible wide. 512 pins in G 200 / Many DRAM chips fast signalling. max data rate per pin. maximize utilization – Multiple bins of memory requests – Coalesce requests to get as wide as possible – Goal to use every cycle to transfer from/to memory • Compression: lossless and lossy • Caches where it makes sense. Small

DRAM considerations • multiple banks per chip – 4 -8 typical • 2^N rows. – 16 K typical • 2^M cols – 8 K typical • Timing contraints – 10~ cycles for row – 4 cycles within row • DDR – 1 Ghz --> 2 Gbit/pin – 32 -bit --> 8 bytes clock • GPU to memory: many traffic generators – no correlation if greedy scheduling – separate heaps / coalesce accesses • Longer latency

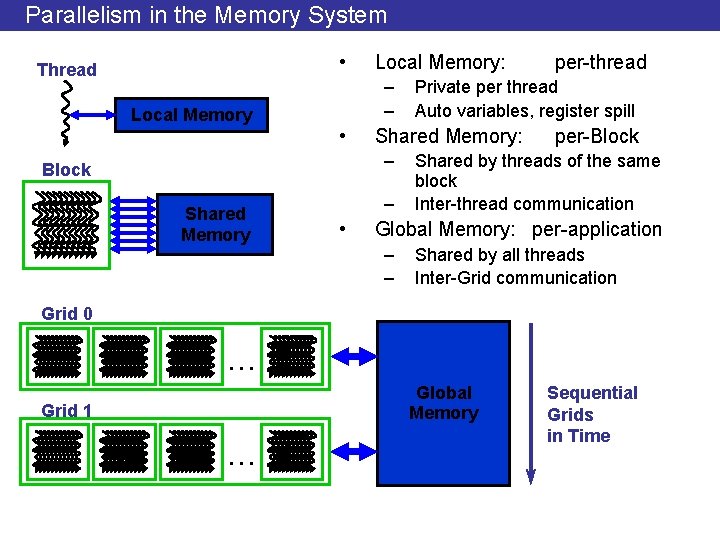

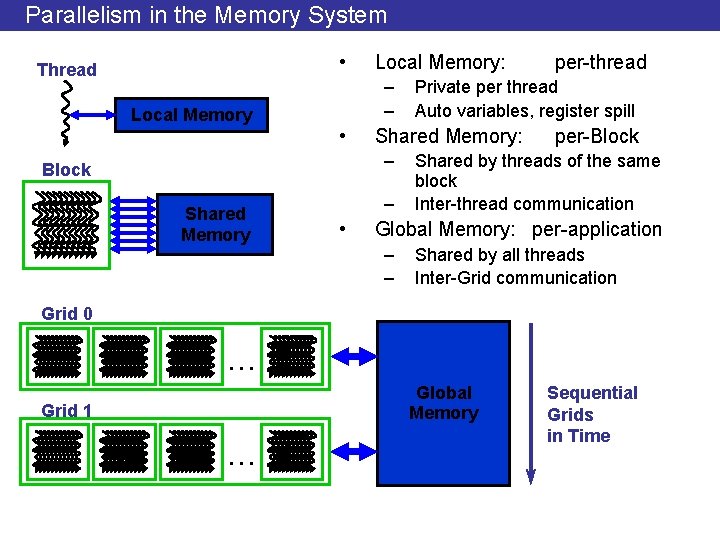

Parallelism in the Memory System • Thread Local Memory: – – • Shared Memory – • Private per thread Auto variables, register spill Shared Memory: – Block per-thread per-Block Shared by threads of the same block Inter-thread communication Global Memory: per-application – – Shared by all threads Inter-Grid communication Grid 0. . . Global Memory Grid 1. . . Sequential Grids in Time

SM Memory Architecture • Threads in a Block share data & results – In Memory and Shared Memory – Synchronize at barrier instruction • Per-Block Shared Memory Allocation – Keeps data close to processor – Minimize trips to global Memory – SM Shared Memory dynamically allocated to Blocks, one of the limiting resources

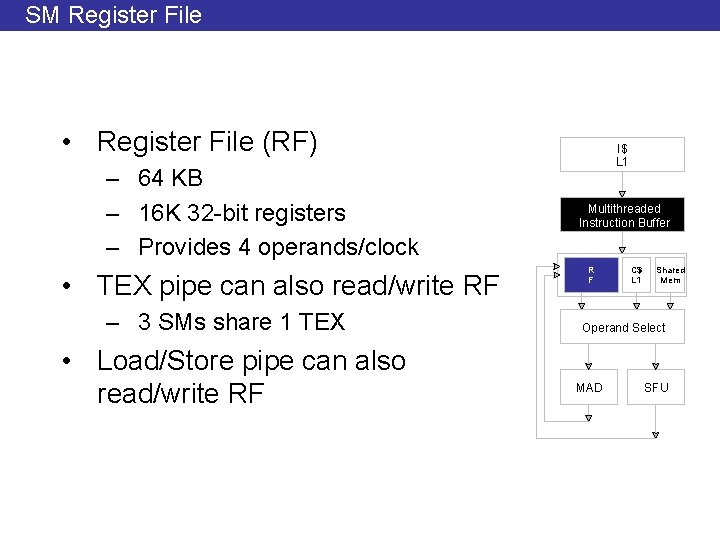

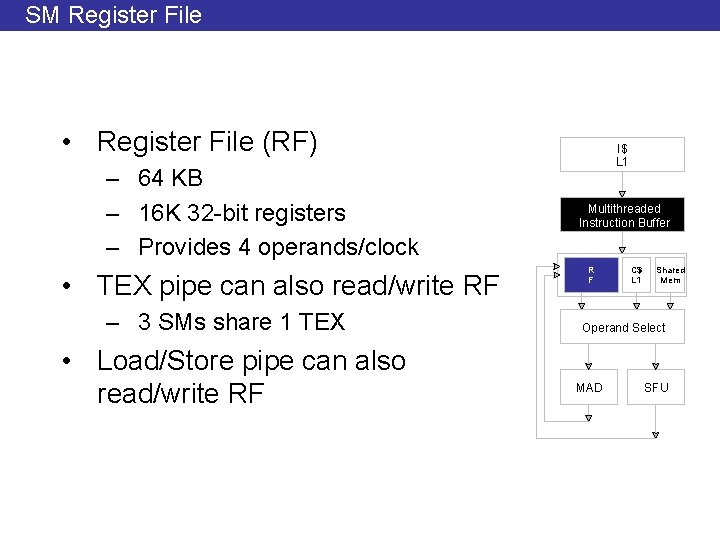

SM Register File • Register File (RF) – 64 KB – 16 K 32 -bit registers – Provides 4 operands/clock • TEX pipe can also read/write RF – 3 SMs share 1 TEX • Load/Store pipe can also read/write RF I$ L 1 Multithreaded Instruction Buffer R F C$ L 1 Shared Mem Operand Select MAD SFU

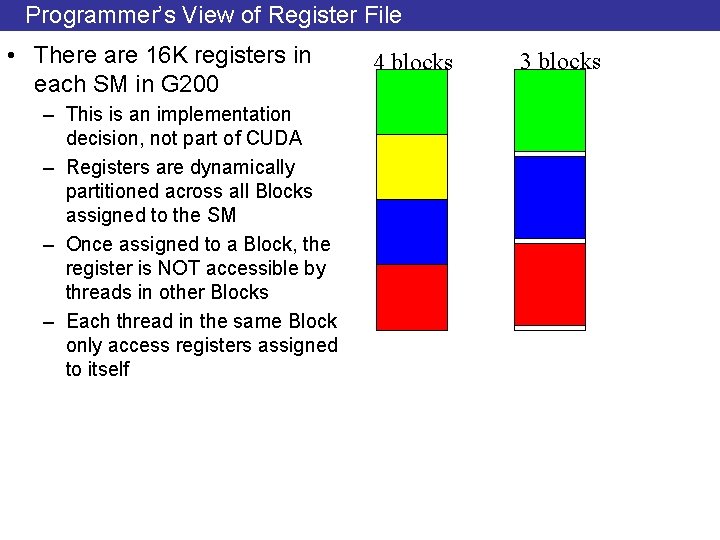

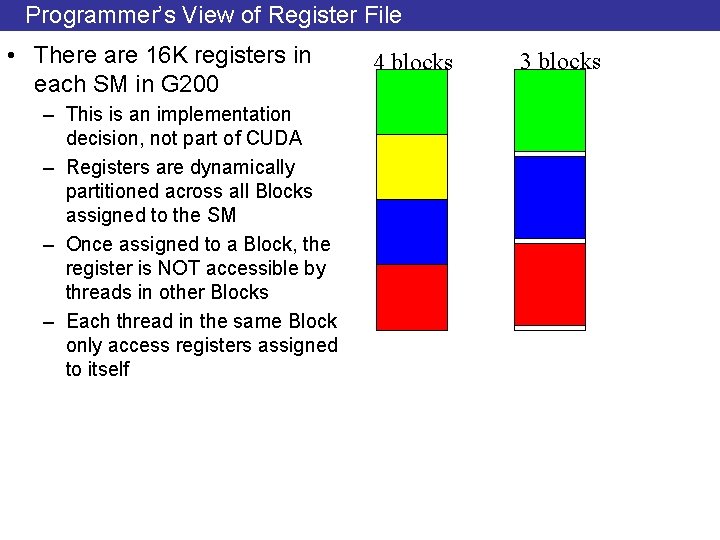

Programmer’s View of Register File • There are 16 K registers in each SM in G 200 – This is an implementation decision, not part of CUDA – Registers are dynamically partitioned across all Blocks assigned to the SM – Once assigned to a Block, the register is NOT accessible by threads in other Blocks – Each thread in the same Block only access registers assigned to itself 4 blocks 3 blocks

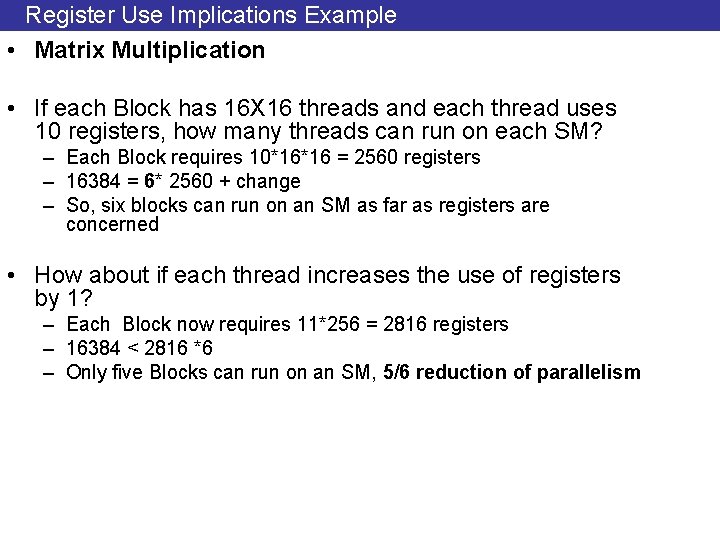

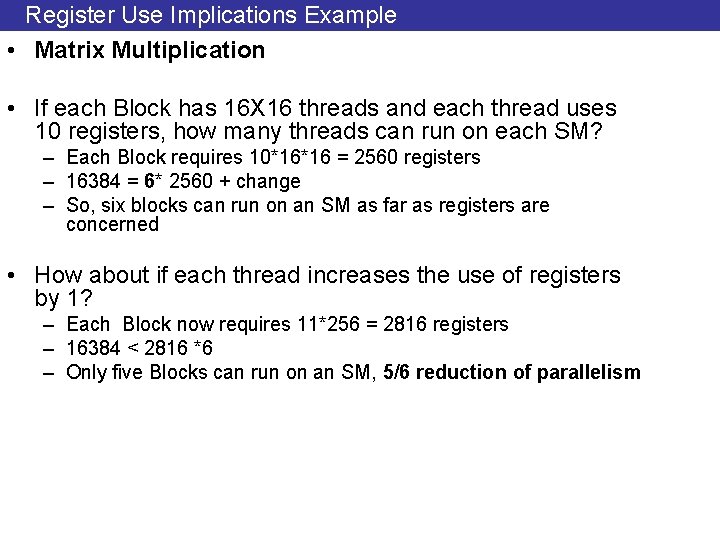

Register Use Implications Example • Matrix Multiplication • If each Block has 16 X 16 threads and each thread uses 10 registers, how many threads can run on each SM? – Each Block requires 10*16*16 = 2560 registers – 16384 = 6* 2560 + change – So, six blocks can run on an SM as far as registers are concerned • How about if each thread increases the use of registers by 1? – Each Block now requires 11*256 = 2816 registers – 16384 < 2816 *6 – Only five Blocks can run on an SM, 5/6 reduction of parallelism

Dynamic Partitioning • Dynamic partitioning gives more flexibility to compilers/programmers – One can run a smaller number of threads that require many registers each or a large number of threads that require few registers each • This allows for finer grain threading than traditional CPU threading models. – The compiler can tradeoff between instruction-level parallelism and thread level parallelism

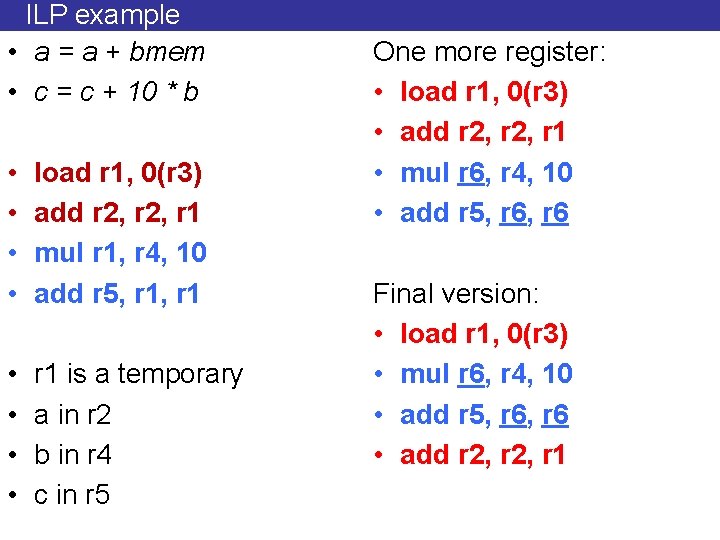

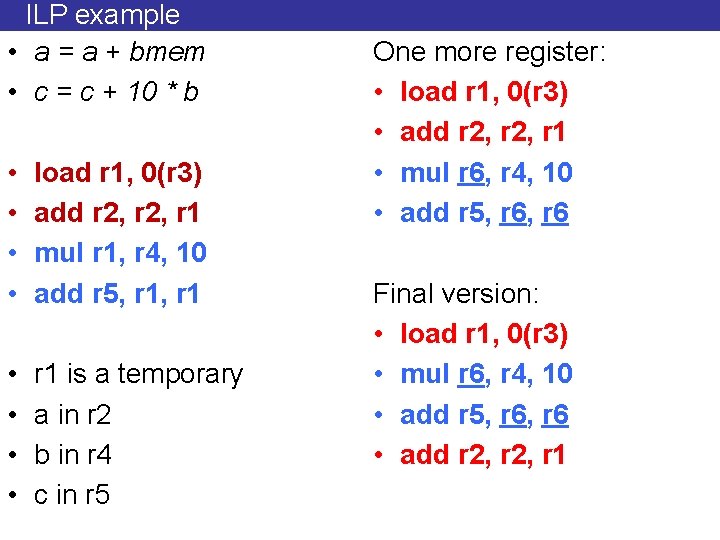

ILP example • a = a + bmem • c = c + 10 * b • • load r 1, 0(r 3) add r 2, r 1 mul r 1, r 4, 10 add r 5, r 1 • • r 1 is a temporary a in r 2 b in r 4 c in r 5 One more register: • load r 1, 0(r 3) • add r 2, r 1 • mul r 6, r 4, 10 • add r 5, r 6 Final version: • load r 1, 0(r 3) • mul r 6, r 4, 10 • add r 5, r 6 • add r 2, r 1

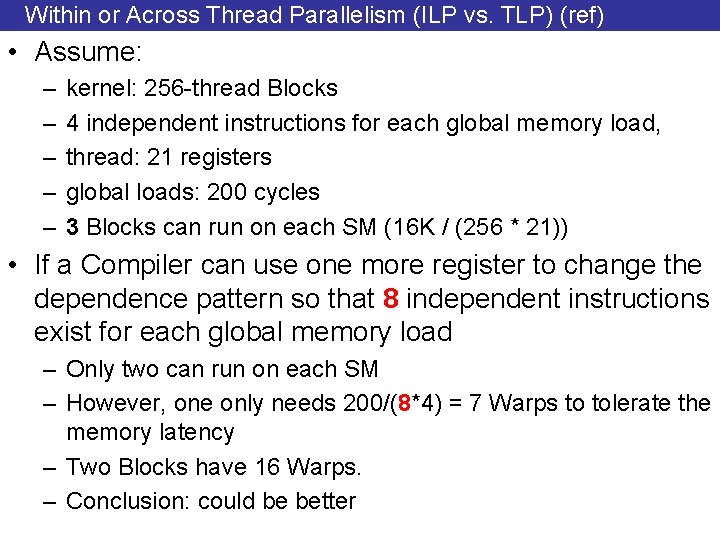

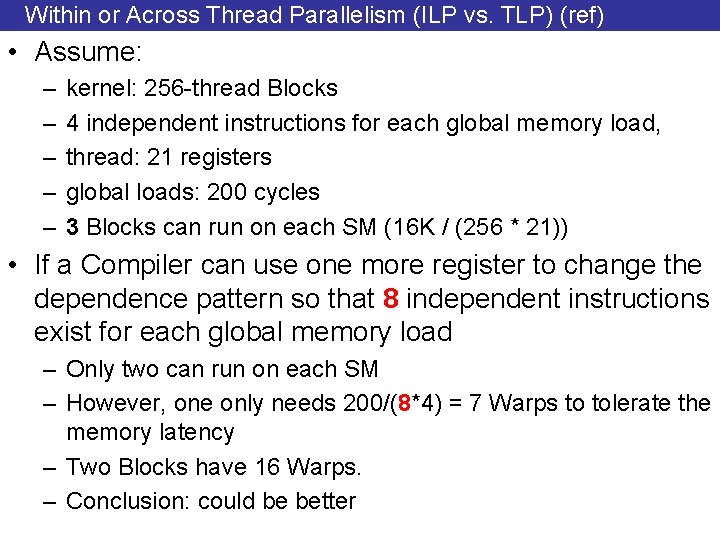

Within or Across Thread Parallelism (ILP vs. TLP) (ref) • Assume: – – – kernel: 256 -thread Blocks 4 independent instructions for each global memory load, thread: 21 registers global loads: 200 cycles 3 Blocks can run on each SM (16 K / (256 * 21)) • If a Compiler can use one more register to change the dependence pattern so that 8 independent instructions exist for each global memory load – Only two can run on each SM – However, one only needs 200/(8*4) = 7 Warps to tolerate the memory latency – Two Blocks have 16 Warps. – Conclusion: could be better

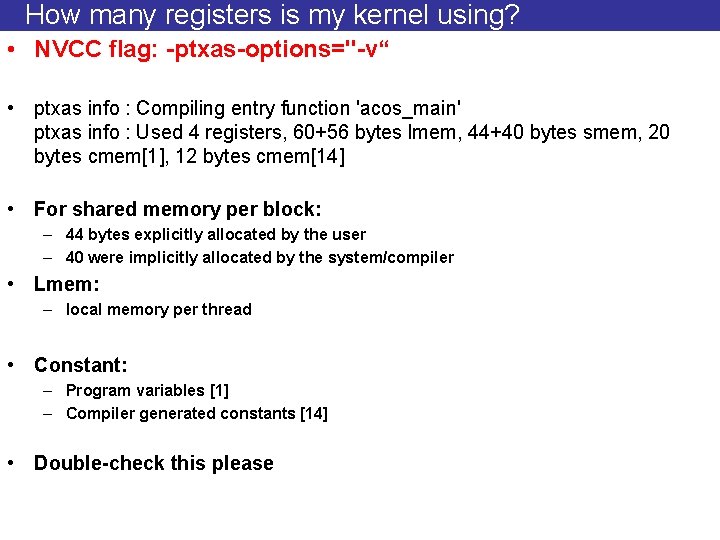

How many registers is my kernel using? • NVCC flag: -ptxas-options="-v“ • ptxas info : Compiling entry function 'acos_main' ptxas info : Used 4 registers, 60+56 bytes lmem, 44+40 bytes smem, 20 bytes cmem[1], 12 bytes cmem[14] • For shared memory per block: – 44 bytes explicitly allocated by the user – 40 were implicitly allocated by the system/compiler • Lmem: – local memory per thread • Constant: – Program variables [1] – Compiler generated constants [14] • Double-check this please

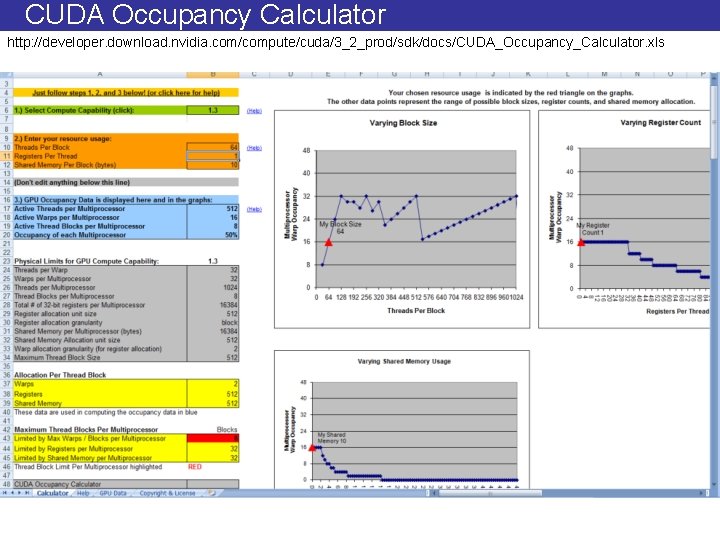

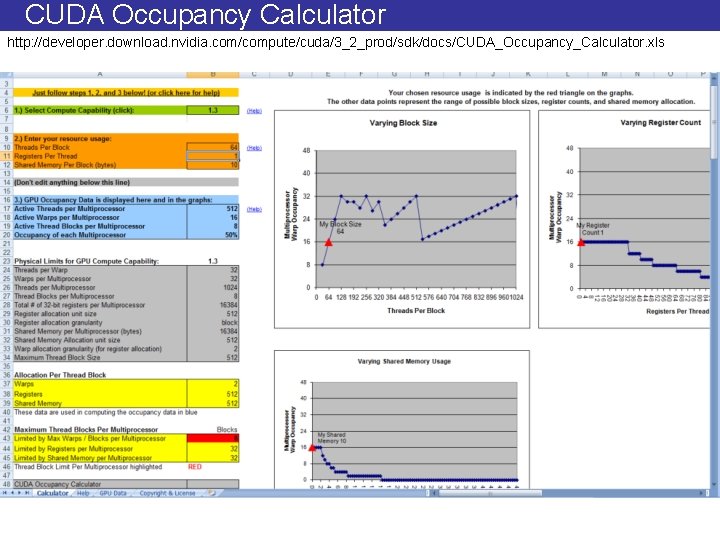

CUDA Occupancy Calculator http: //developer. download. nvidia. com/compute/cuda/3_2_prod/sdk/docs/CUDA_Occupancy_Calculator. xls

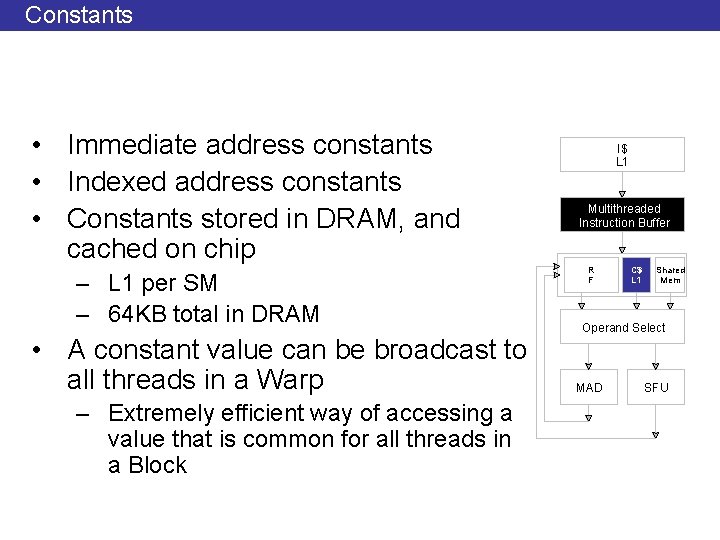

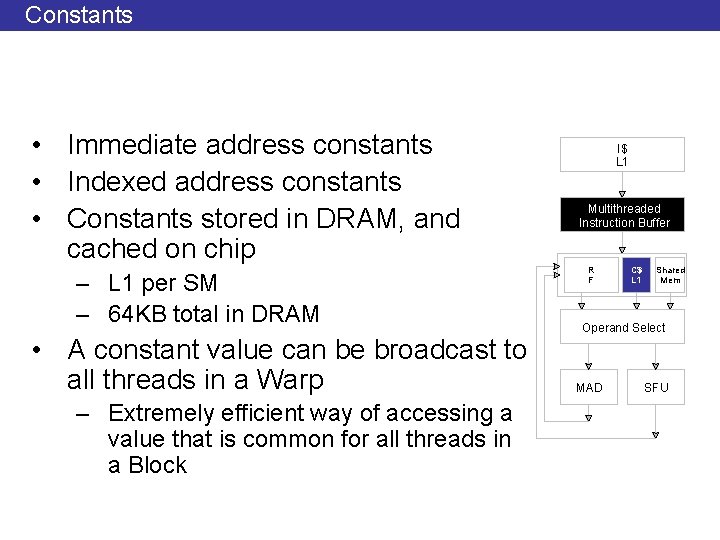

Constants • Immediate address constants • Indexed address constants • Constants stored in DRAM, and cached on chip – L 1 per SM – 64 KB total in DRAM • A constant value can be broadcast to all threads in a Warp – Extremely efficient way of accessing a value that is common for all threads in a Block I$ L 1 Multithreaded Instruction Buffer R F C$ L 1 Shared Mem Operand Select MAD SFU

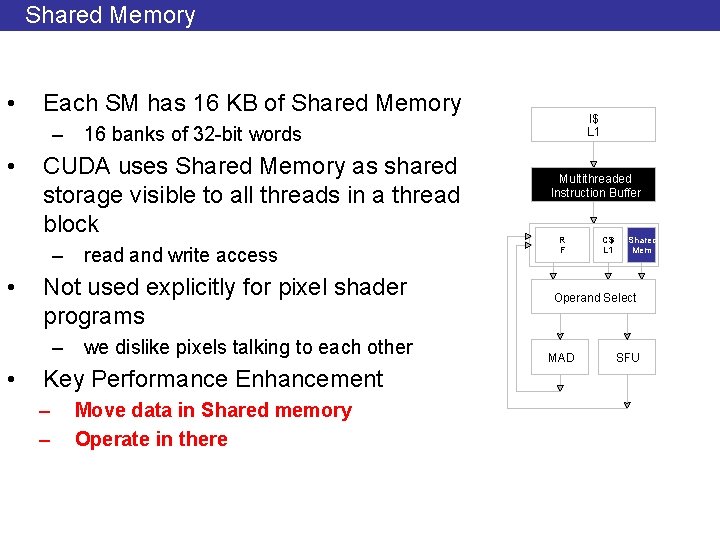

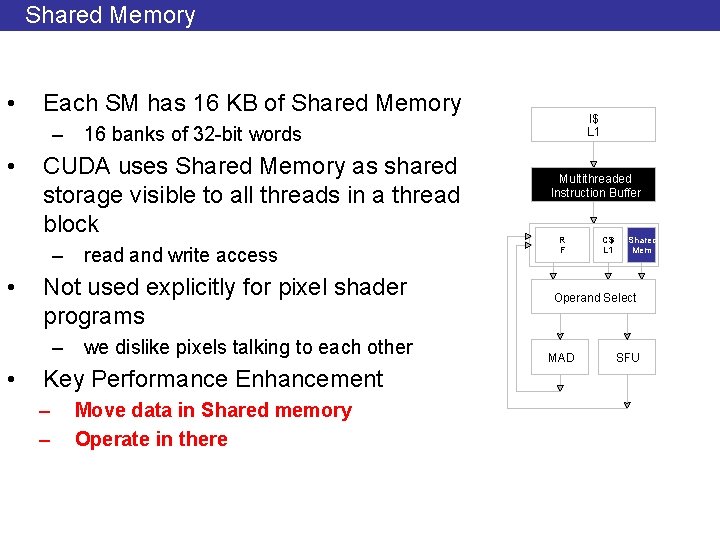

Shared Memory • Each SM has 16 KB of Shared Memory I$ L 1 – 16 banks of 32 -bit words • CUDA uses Shared Memory as shared storage visible to all threads in a thread block – read and write access • Not used explicitly for pixel shader programs – we dislike pixels talking to each other • Key Performance Enhancement – – Move data in Shared memory Operate in there Multithreaded Instruction Buffer R F C$ L 1 Shared Mem Operand Select MAD SFU

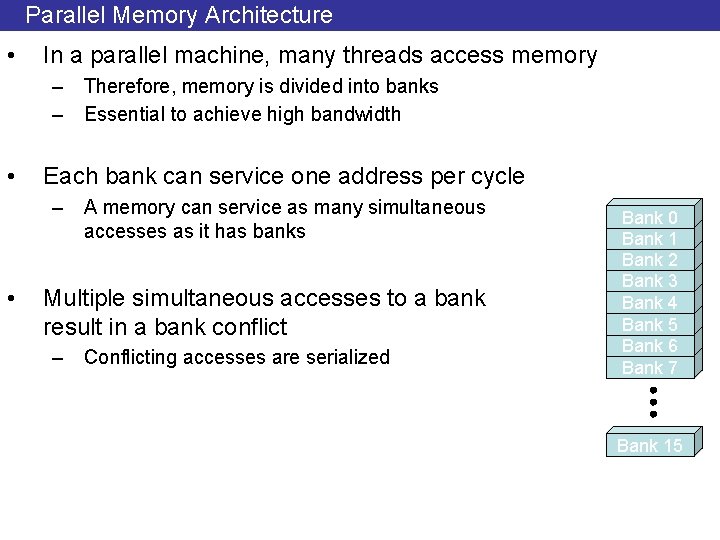

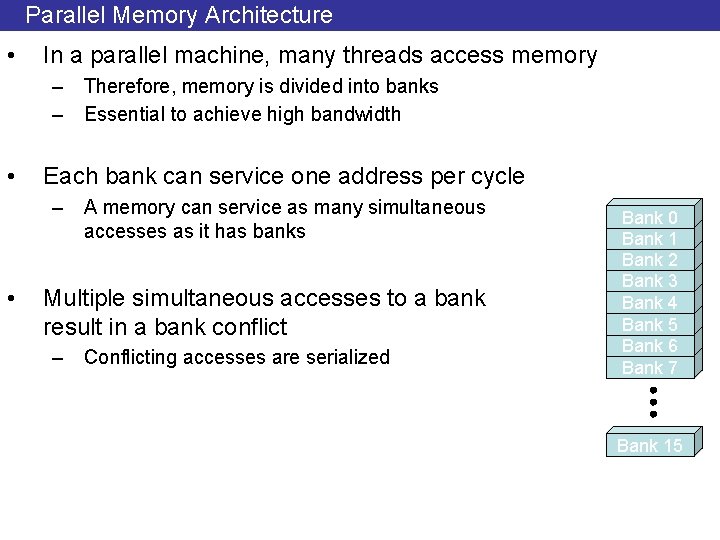

Parallel Memory Architecture • In a parallel machine, many threads access memory – Therefore, memory is divided into banks – Essential to achieve high bandwidth • Each bank can service one address per cycle – A memory can service as many simultaneous accesses as it has banks • Multiple simultaneous accesses to a bank result in a bank conflict – Conflicting accesses are serialized Bank 0 Bank 1 Bank 2 Bank 3 Bank 4 Bank 5 Bank 6 Bank 7 Bank 15

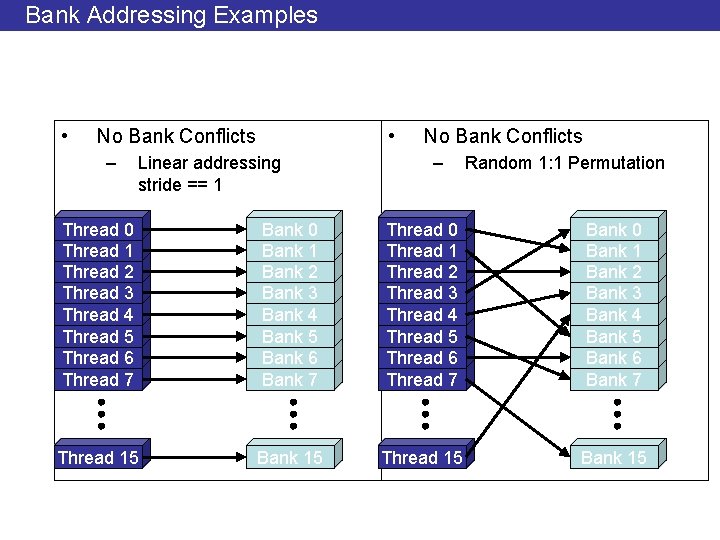

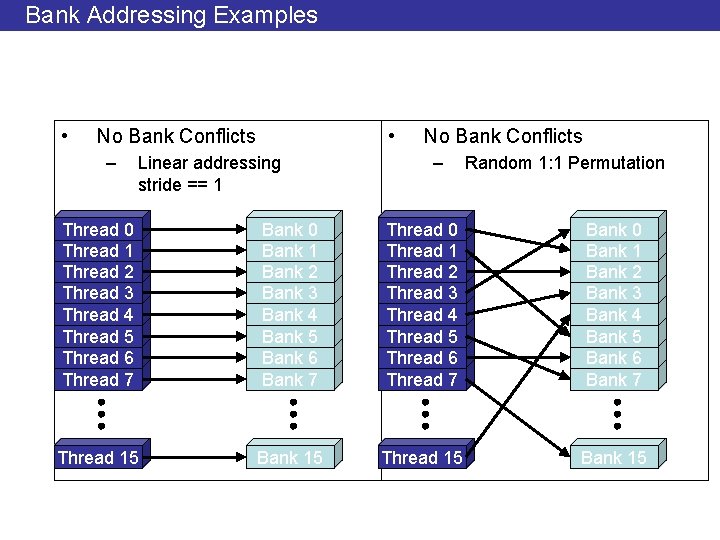

Bank Addressing Examples • • No Bank Conflicts – Linear addressing stride == 1 No Bank Conflicts – Random 1: 1 Permutation Thread 0 Thread 1 Thread 2 Thread 3 Thread 4 Thread 5 Thread 6 Thread 7 Bank 0 Bank 1 Bank 2 Bank 3 Bank 4 Bank 5 Bank 6 Bank 7 Thread 15 Bank 15

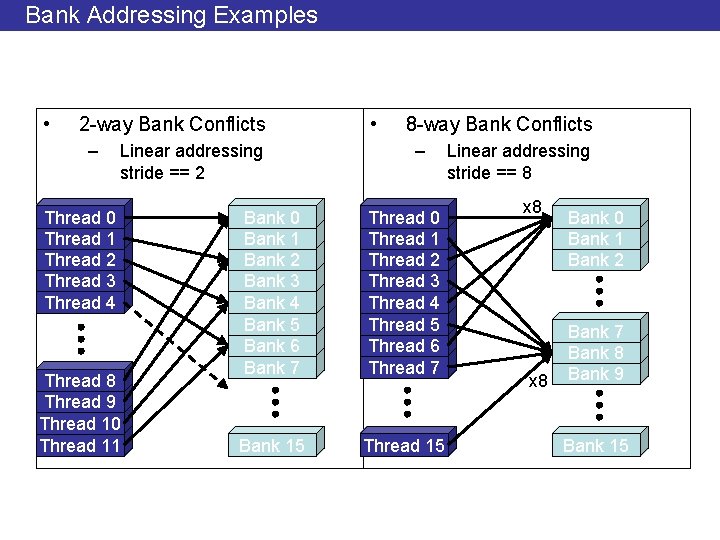

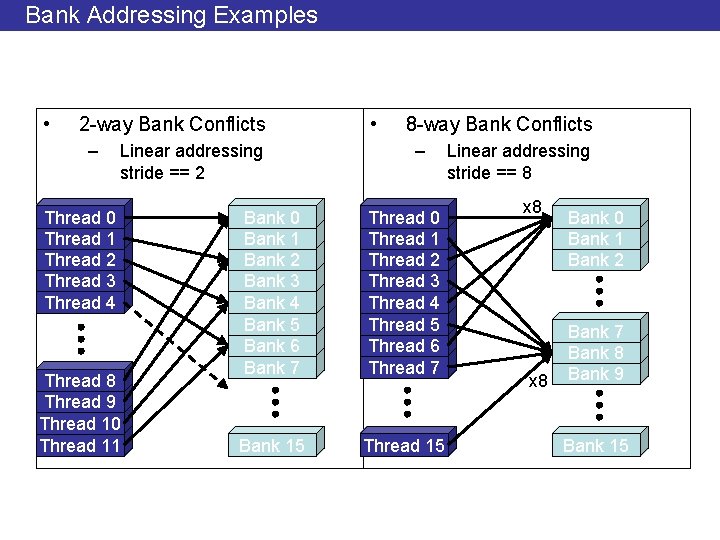

Bank Addressing Examples • 2 -way Bank Conflicts – Linear addressing stride == 2 Thread 0 Thread 1 Thread 2 Thread 3 Thread 4 Thread 8 Thread 9 Thread 10 Thread 11 • 8 -way Bank Conflicts – Bank 0 Bank 1 Bank 2 Bank 3 Bank 4 Bank 5 Bank 6 Bank 7 Thread 0 Thread 1 Thread 2 Thread 3 Thread 4 Thread 5 Thread 6 Thread 7 Bank 15 Thread 15 Linear addressing stride == 8 x 8 Bank 0 Bank 1 Bank 2 Bank 7 Bank 8 Bank 9 Bank 15

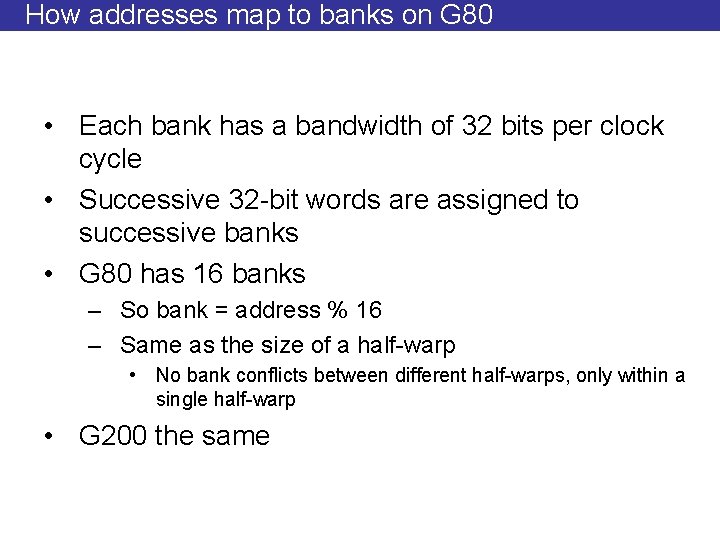

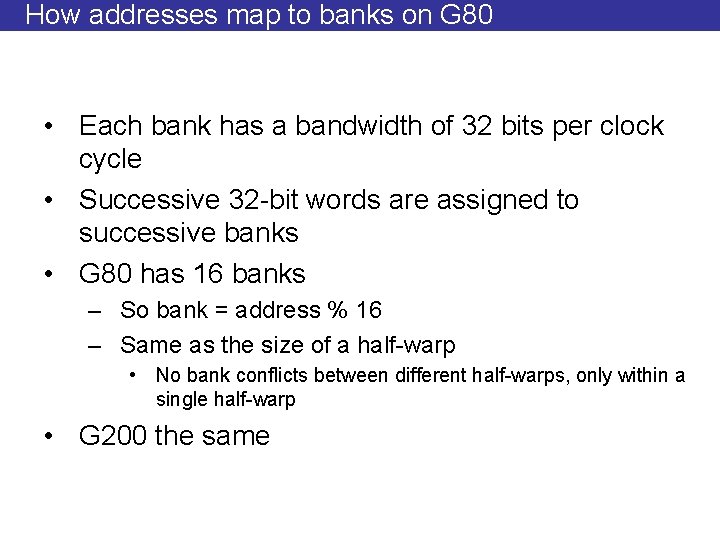

How addresses map to banks on G 80 • Each bank has a bandwidth of 32 bits per clock cycle • Successive 32 -bit words are assigned to successive banks • G 80 has 16 banks – So bank = address % 16 – Same as the size of a half-warp • No bank conflicts between different half-warps, only within a single half-warp • G 200 the same

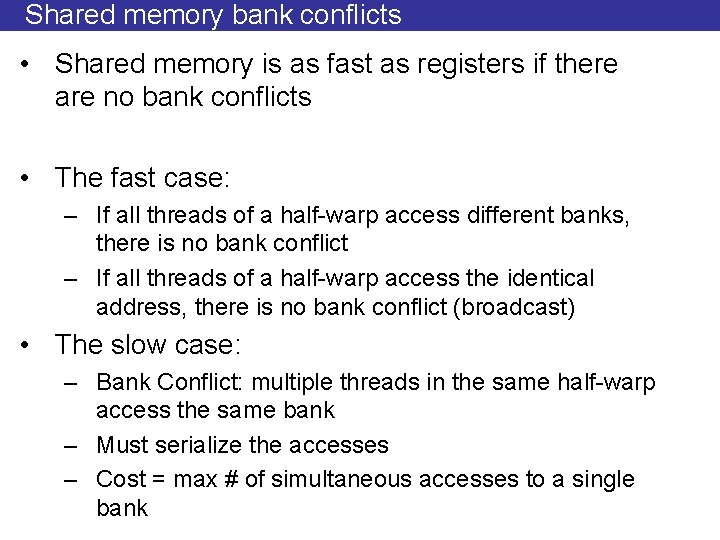

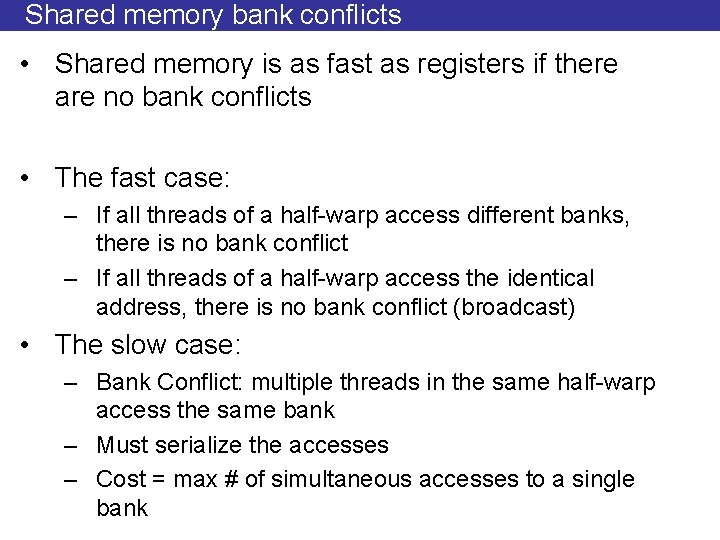

Shared memory bank conflicts • Shared memory is as fast as registers if there are no bank conflicts • The fast case: – If all threads of a half-warp access different banks, there is no bank conflict – If all threads of a half-warp access the identical address, there is no bank conflict (broadcast) • The slow case: – Bank Conflict: multiple threads in the same half-warp access the same bank – Must serialize the accesses – Cost = max # of simultaneous accesses to a single bank

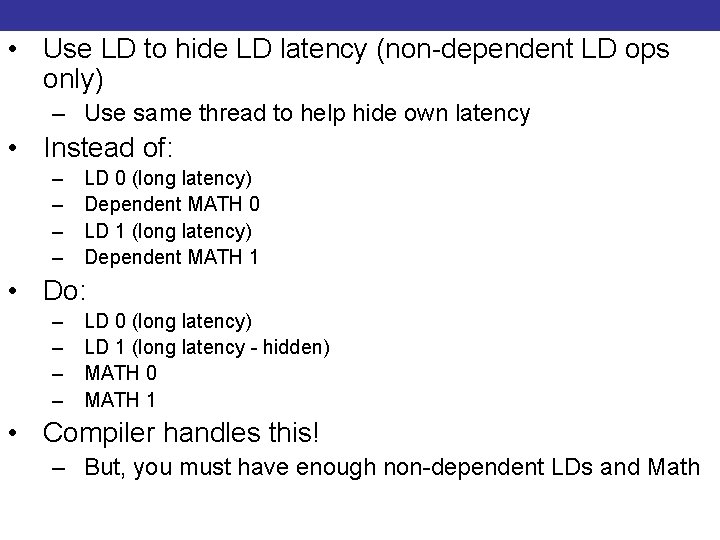

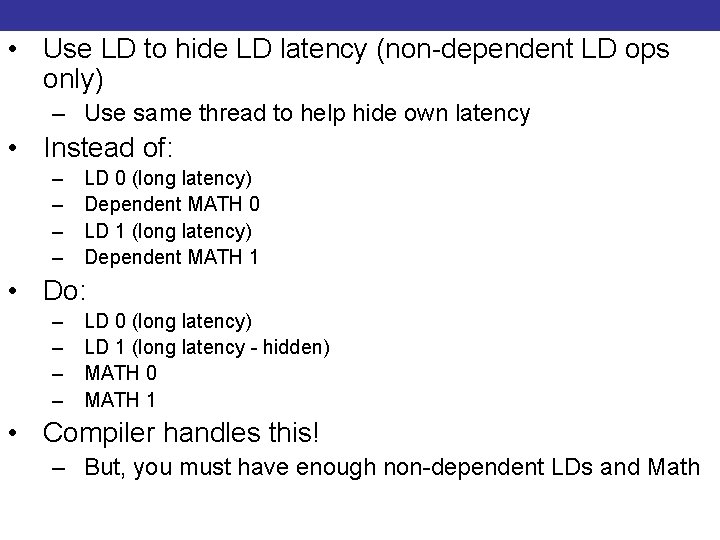

Load/Store (Memory read/write) • Use LD to hide LD latency (non-dependent LD ops only) Clustering/Batching – Use same thread to help hide own latency • Instead of: – – LD 0 (long latency) Dependent MATH 0 LD 1 (long latency) Dependent MATH 1 • Do: – – LD 0 (long latency) LD 1 (long latency - hidden) MATH 0 MATH 1 • Compiler handles this! – But, you must have enough non-dependent LDs and Math

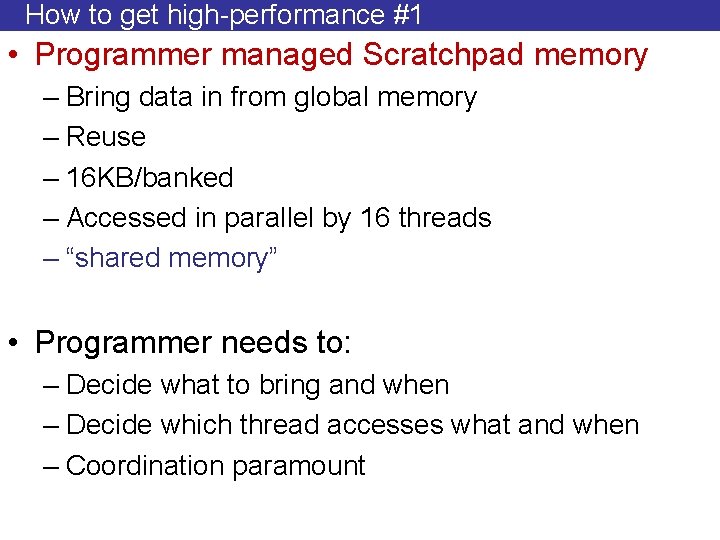

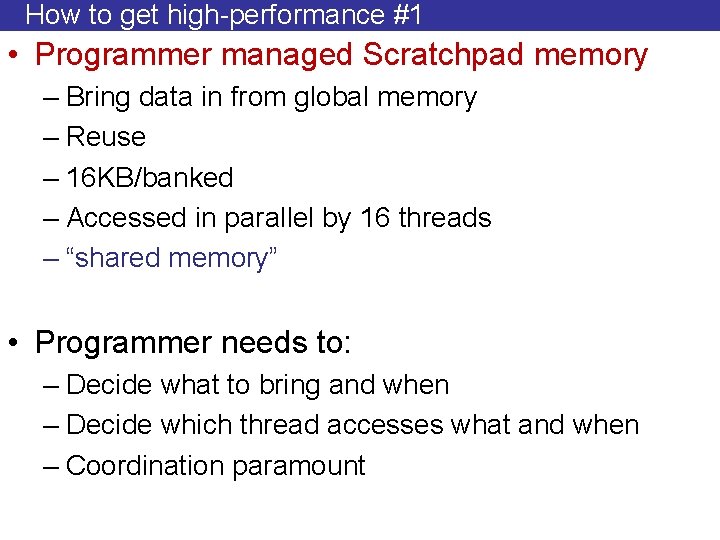

How to get high-performance #1 • Programmer managed Scratchpad memory – Bring data in from global memory – Reuse – 16 KB/banked – Accessed in parallel by 16 threads – “shared memory” • Programmer needs to: – Decide what to bring and when – Decide which thread accesses what and when – Coordination paramount

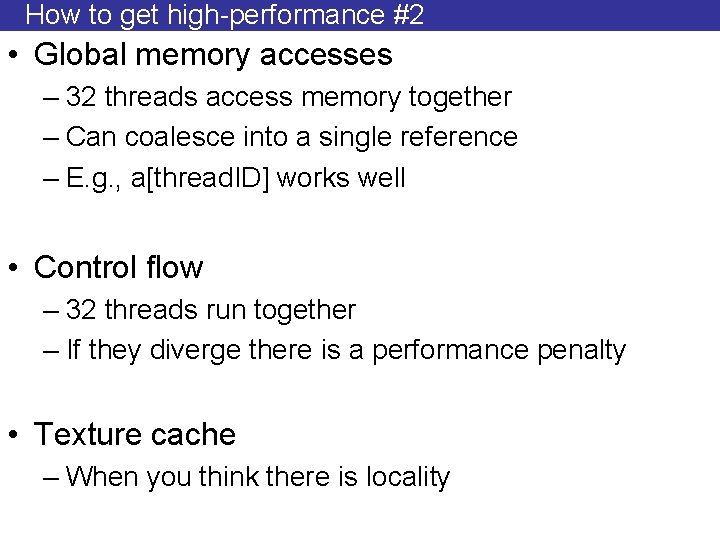

How to get high-performance #2 • Global memory accesses – 32 threads access memory together – Can coalesce into a single reference – E. g. , a[thread. ID] works well • Control flow – 32 threads run together – If they diverge there is a performance penalty • Texture cache – When you think there is locality

Numerical Accuracy • Can do FP – Mostly OK some minor discrepancies • Can do DP – 1/8 the bandwidth – Better on newer hardware • Mixed methods – Break numbers into two single-precision values • Must carefully check for stability/correctness • Will get better w/ next generation hardware

Are GPUs really that much faster than CPUs • 50 x – 200 x speedups typically reported • Recent work found – Not enough effort goes into optimizing code for CPUs – Intel paper (ISCA 2010) • http: //portal. acm. org/ft_gateway. cfm? id=1816021&type=p df • But: – The learning curve and expertise needed for CPUs is much larger – Then, so is the potential and flexibility

Predefined Vector Datatypes • Can be used both in host and in device code. – [u]char[1. . 4], [u]short[1. . 4], [u]int[1. . 4], [u]long[1. . 4], float[1. . 4] • Structures accessed with. x, . y, . z, . w fields • default constructors, “make_TYPE (…)”: – float 4 f 4 = make_float 4 (1 f, 10 f, 1. 2 f, 0. 5 f); • dim 3 – type built on uint 3 – Used to specify dimensions – Default value is (1, 1, 1)

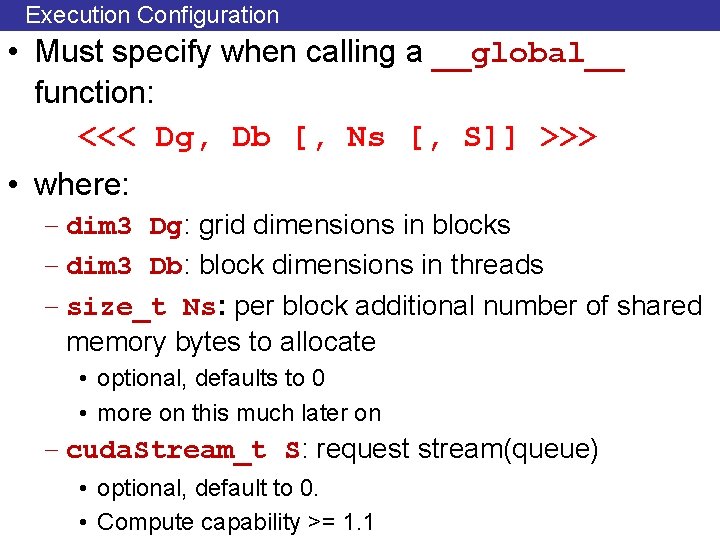

Execution Configuration • Must specify when calling a __global__ function: <<< Dg, Db [, Ns [, S]] >>> • where: – dim 3 Dg: grid dimensions in blocks – dim 3 Db: block dimensions in threads – size_t Ns: per block additional number of shared memory bytes to allocate • optional, defaults to 0 • more on this much later on – cuda. Stream_t S: request stream(queue) • optional, default to 0. • Compute capability >= 1. 1

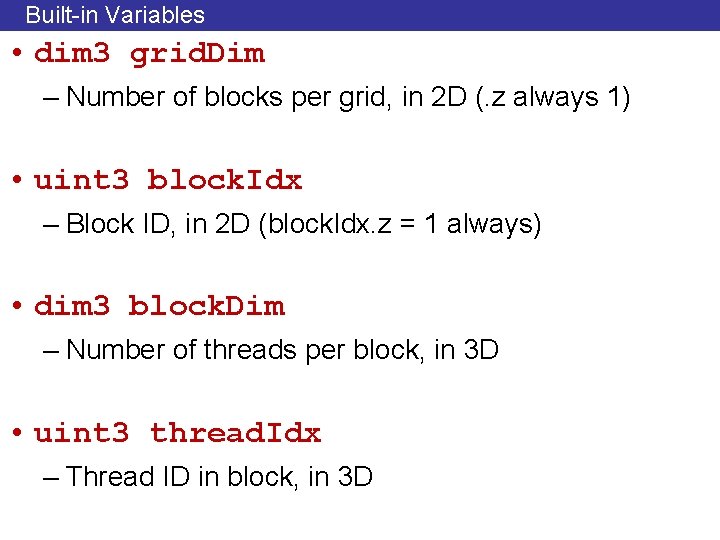

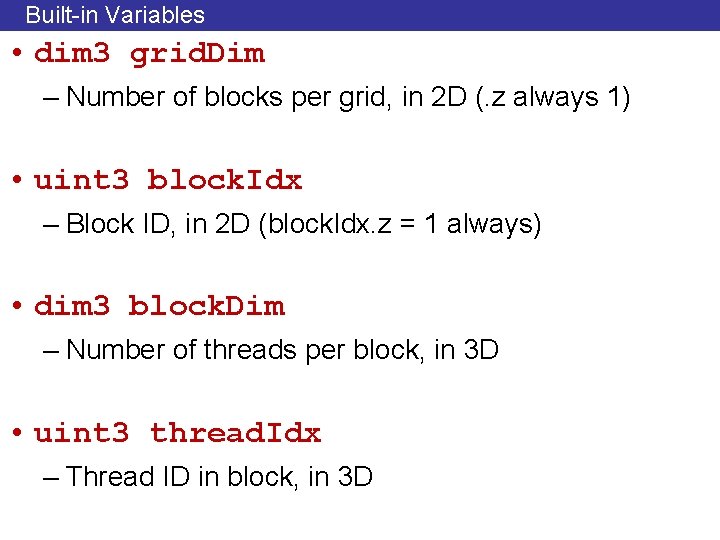

Built-in Variables • dim 3 grid. Dim – Number of blocks per grid, in 2 D (. z always 1) • uint 3 block. Idx – Block ID, in 2 D (block. Idx. z = 1 always) • dim 3 block. Dim – Number of threads per block, in 3 D • uint 3 thread. Idx – Thread ID in block, in 3 D

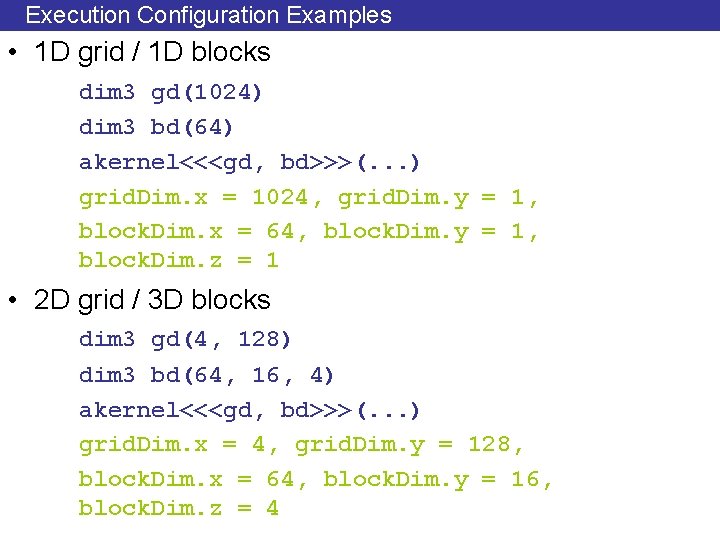

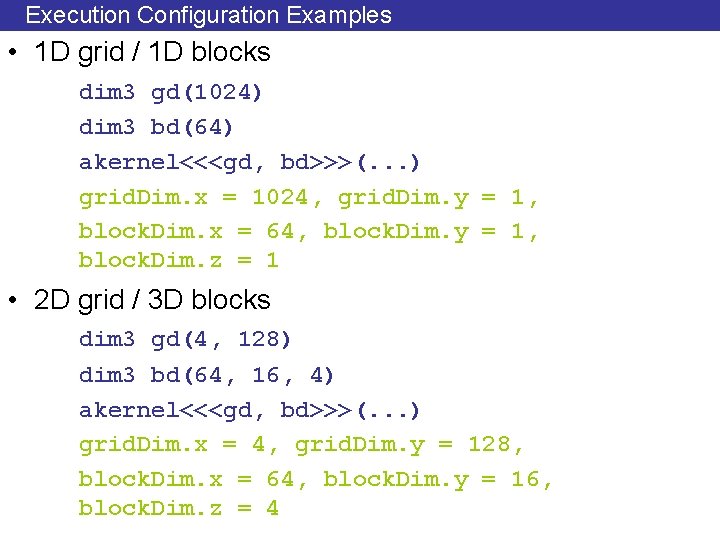

Execution Configuration Examples • 1 D grid / 1 D blocks dim 3 gd(1024) dim 3 bd(64) akernel<<<gd, bd>>>(. . . ) grid. Dim. x = 1024, grid. Dim. y = 1, block. Dim. x = 64, block. Dim. y = 1, block. Dim. z = 1 • 2 D grid / 3 D blocks dim 3 gd(4, 128) dim 3 bd(64, 16, 4) akernel<<<gd, bd>>>(. . . ) grid. Dim. x = 4, grid. Dim. y = 128, block. Dim. x = 64, block. Dim. y = 16, block. Dim. z = 4

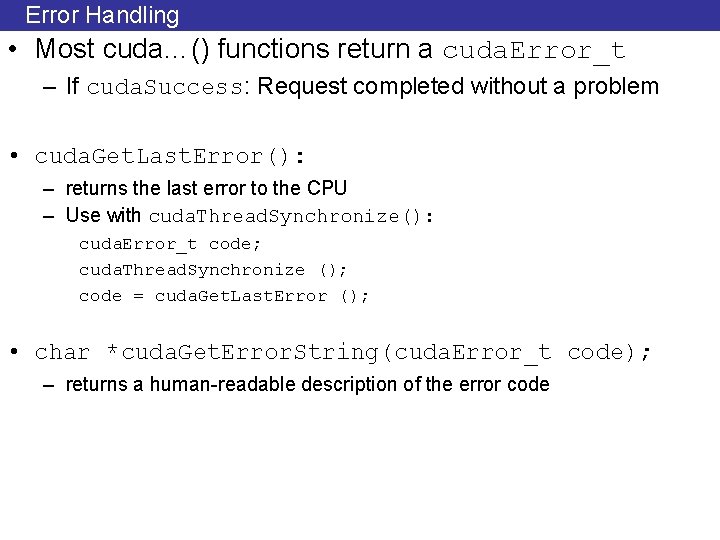

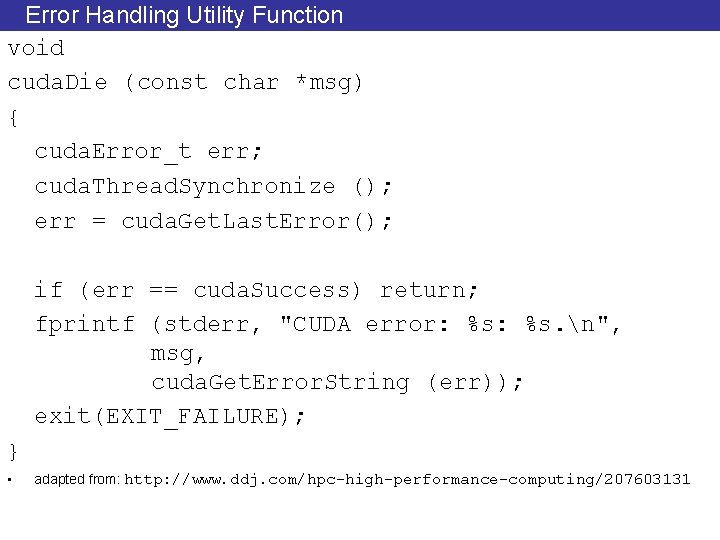

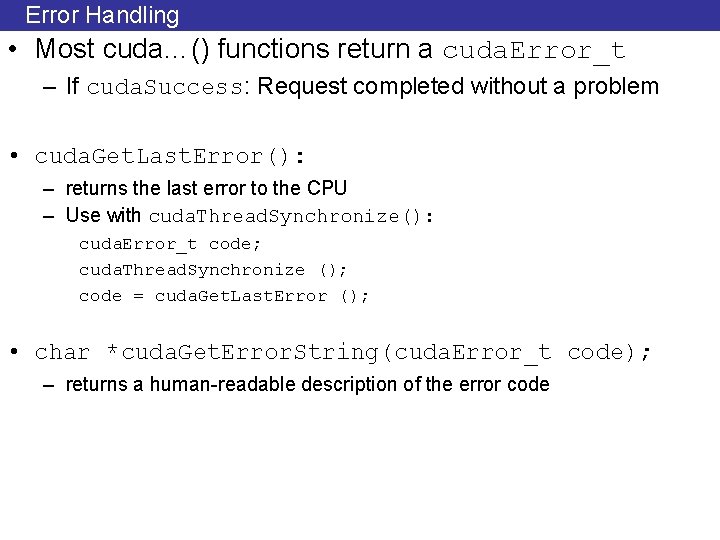

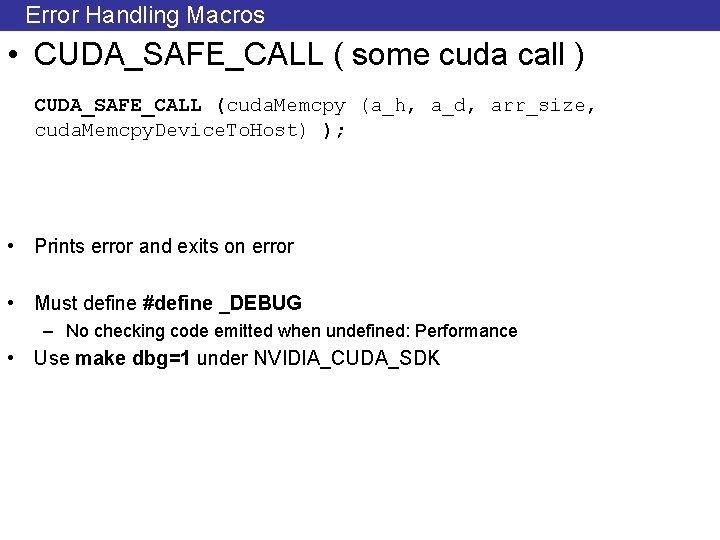

Error Handling • Most cuda…() functions return a cuda. Error_t – If cuda. Success: Request completed without a problem • cuda. Get. Last. Error(): – returns the last error to the CPU – Use with cuda. Thread. Synchronize(): cuda. Error_t code; cuda. Thread. Synchronize (); code = cuda. Get. Last. Error (); • char *cuda. Get. Error. String(cuda. Error_t code); – returns a human-readable description of the error code

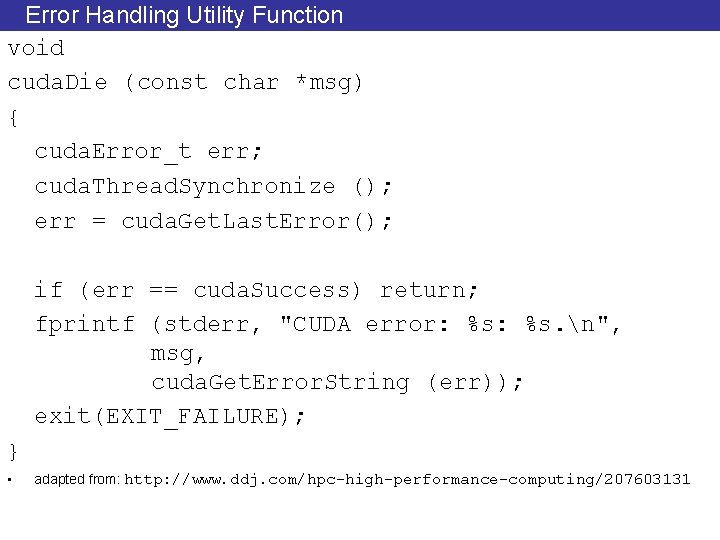

Error Handling Utility Function void cuda. Die (const char *msg) { cuda. Error_t err; cuda. Thread. Synchronize (); err = cuda. Get. Last. Error(); if (err == cuda. Success) return; fprintf (stderr, "CUDA error: %s. n", msg, cuda. Get. Error. String (err)); exit(EXIT_FAILURE); } • adapted from: http: //www. ddj. com/hpc-high-performance-computing/207603131

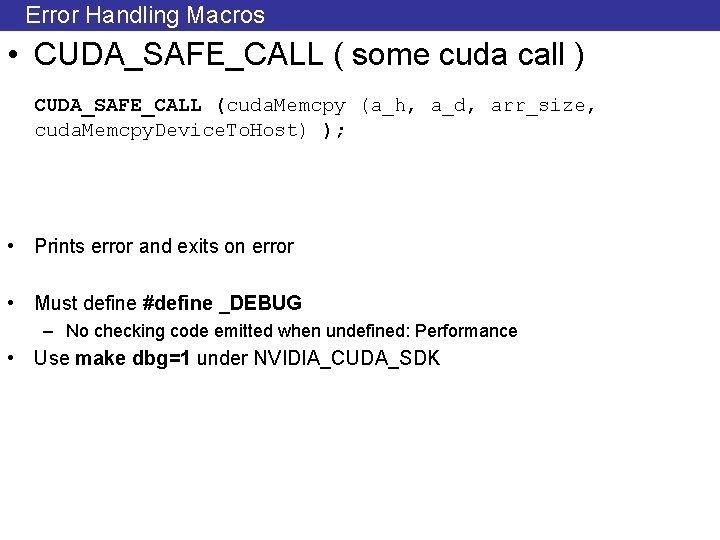

Error Handling Macros • CUDA_SAFE_CALL ( some cuda call ) CUDA_SAFE_CALL (cuda. Memcpy (a_h, a_d, arr_size, cuda. Memcpy. Device. To. Host) ); • Prints error and exits on error • Must define #define _DEBUG – No checking code emitted when undefined: Performance • Use make dbg=1 under NVIDIA_CUDA_SDK

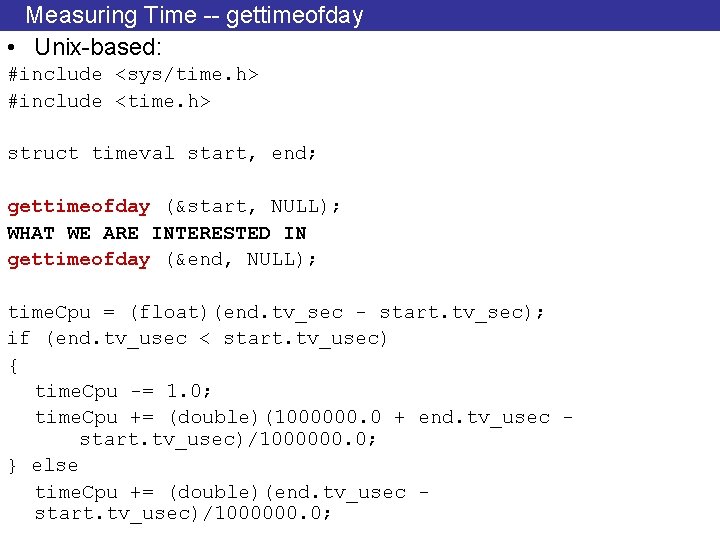

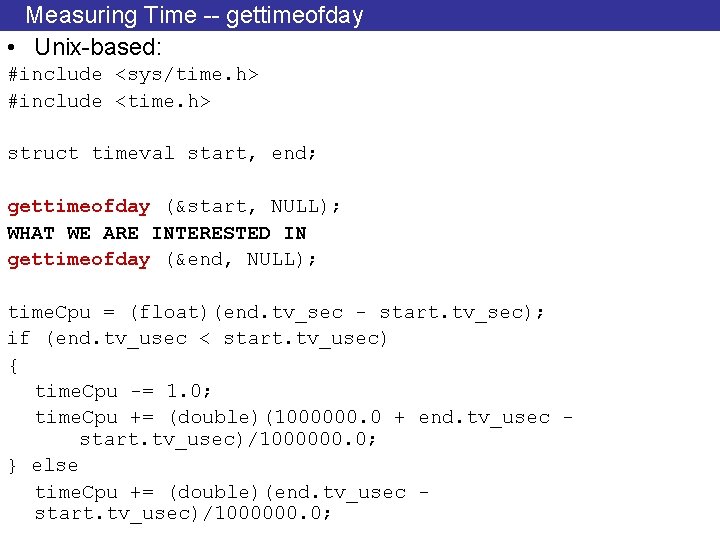

Measuring Time -- gettimeofday • Unix-based: #include <sys/time. h> #include <time. h> struct timeval start, end; gettimeofday (&start, NULL); WHAT WE ARE INTERESTED IN gettimeofday (&end, NULL); time. Cpu = (float)(end. tv_sec - start. tv_sec); if (end. tv_usec < start. tv_usec) { time. Cpu -= 1. 0; time. Cpu += (double)(1000000. 0 + end. tv_usec - start. tv_usec)/1000000. 0; } else time. Cpu += (double)(end. tv_usec - start. tv_usec)/1000000. 0;

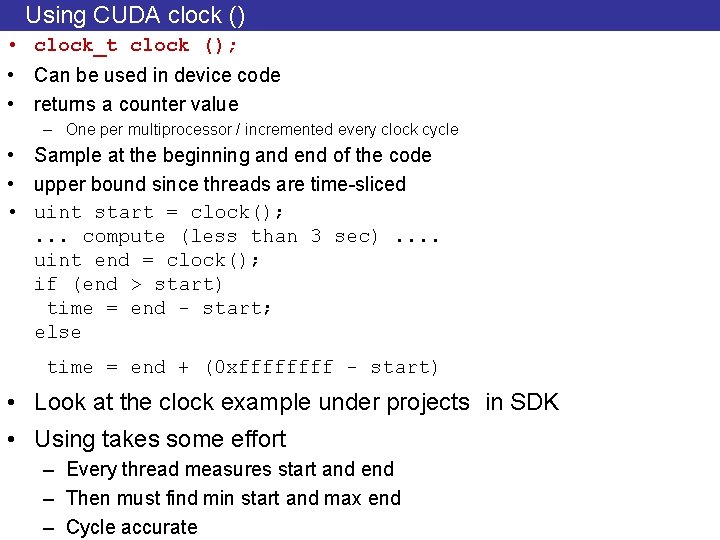

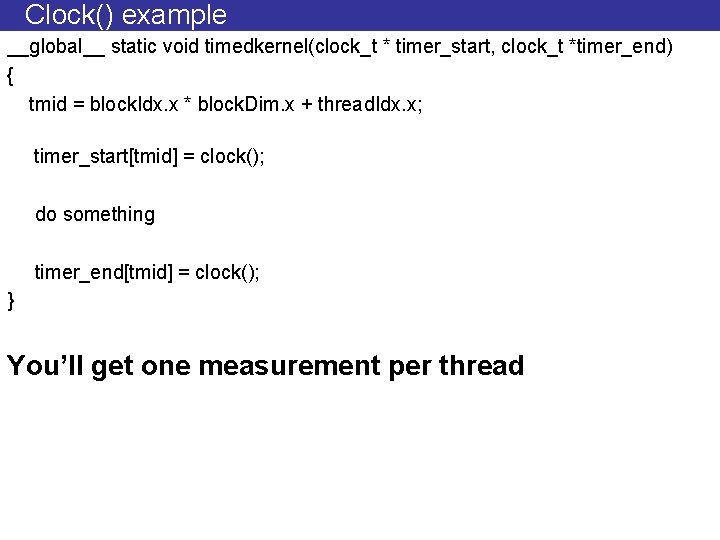

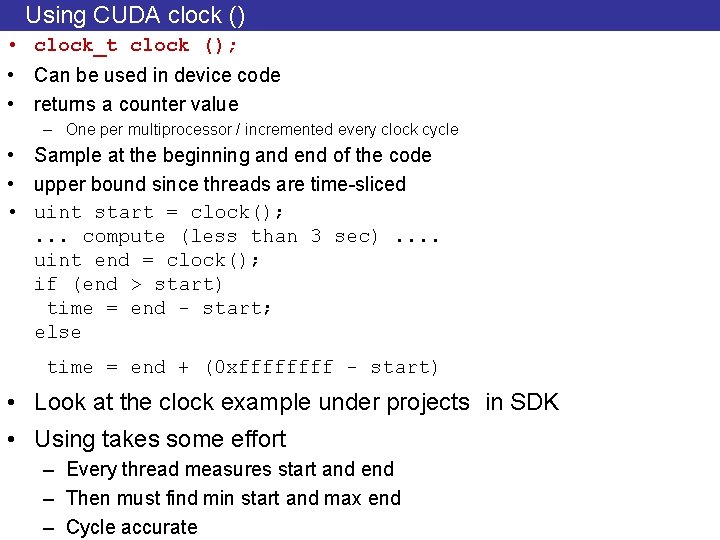

Using CUDA clock () • clock_t clock (); • Can be used in device code • returns a counter value – One per multiprocessor / incremented every clock cycle • Sample at the beginning and end of the code • upper bound since threads are time-sliced • uint start = clock(); . . . compute (less than 3 sec). . uint end = clock(); if (end > start) time = end - start; else time = end + (0 xffff - start) • Look at the clock example under projects in SDK • Using takes some effort – Every thread measures start and end – Then must find min start and max end – Cycle accurate

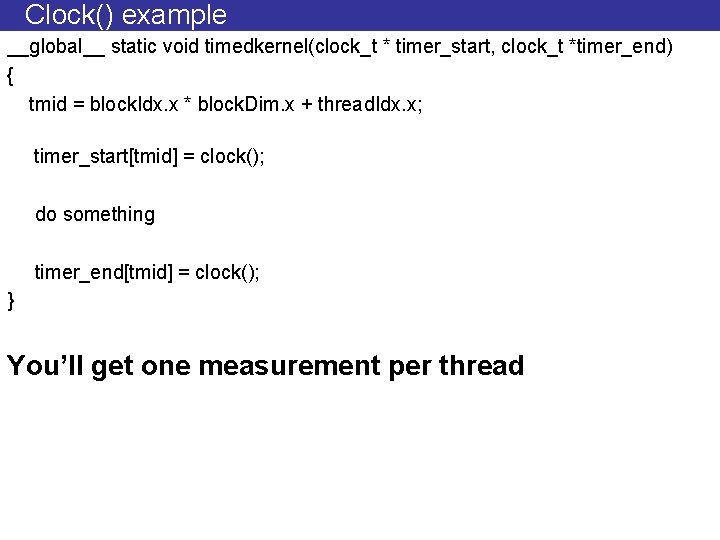

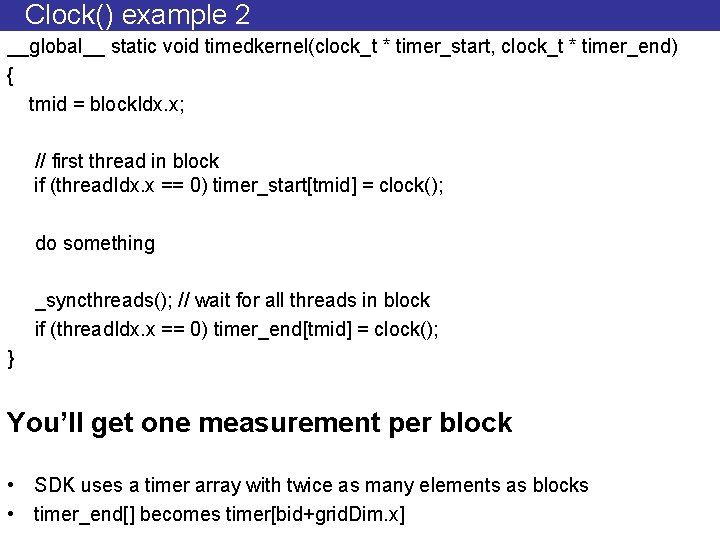

Clock() example __global__ static void timedkernel(clock_t * timer_start, clock_t *timer_end) { tmid = block. Idx. x * block. Dim. x + thread. Idx. x; timer_start[tmid] = clock(); do something timer_end[tmid] = clock(); } You’ll get one measurement per thread

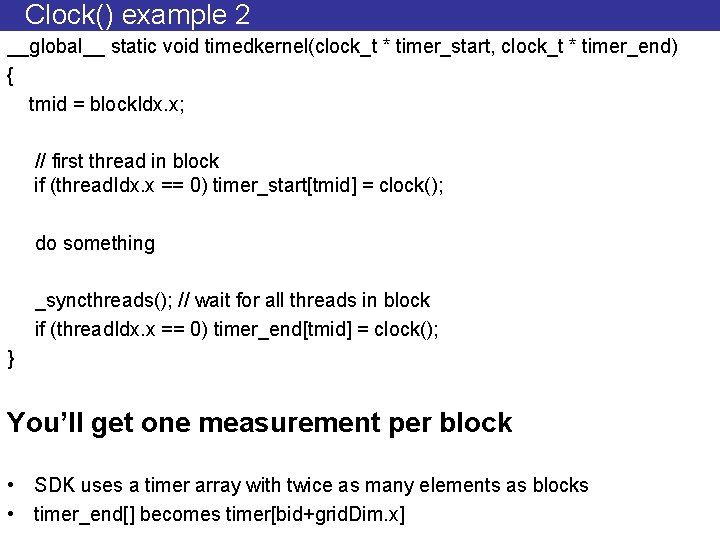

Clock() example 2 __global__ static void timedkernel(clock_t * timer_start, clock_t * timer_end) { tmid = block. Idx. x; // first thread in block if (thread. Idx. x == 0) timer_start[tmid] = clock(); do something _syncthreads(); // wait for all threads in block if (thread. Idx. x == 0) timer_end[tmid] = clock(); } You’ll get one measurement per block • SDK uses a timer array with twice as many elements as blocks • timer_end[] becomes timer[bid+grid. Dim. x]

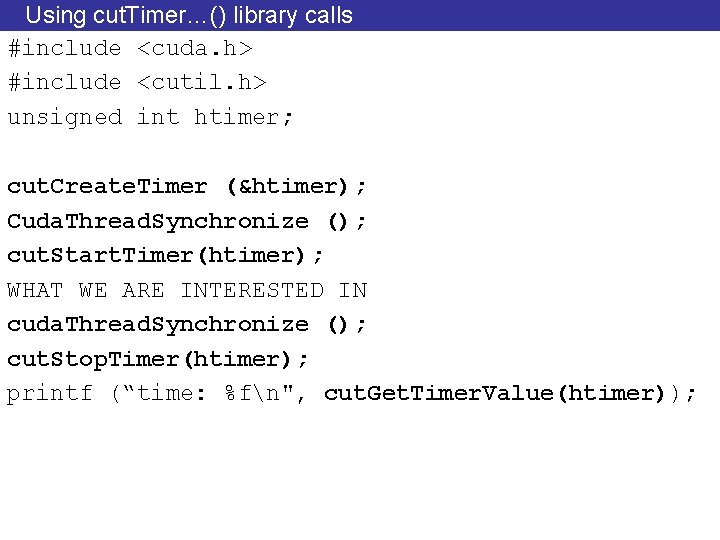

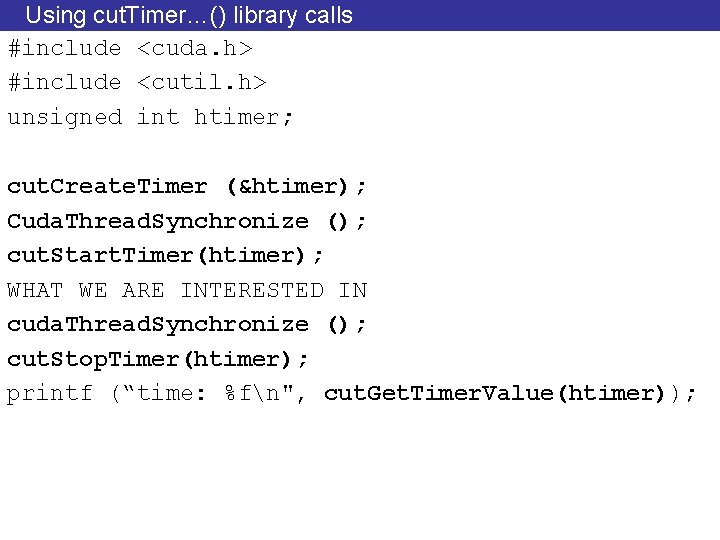

Using cut. Timer…() library calls #include <cuda. h> #include <cutil. h> unsigned int htimer; cut. Create. Timer (&htimer); Cuda. Thread. Synchronize (); cut. Start. Timer(htimer); WHAT WE ARE INTERESTED IN cuda. Thread. Synchronize (); cut. Stop. Timer(htimer); printf (“time: %fn", cut. Get. Timer. Value(htimer));

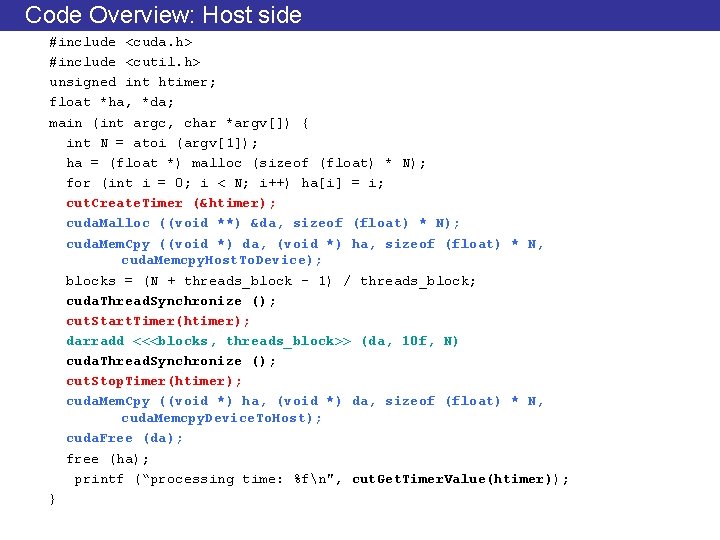

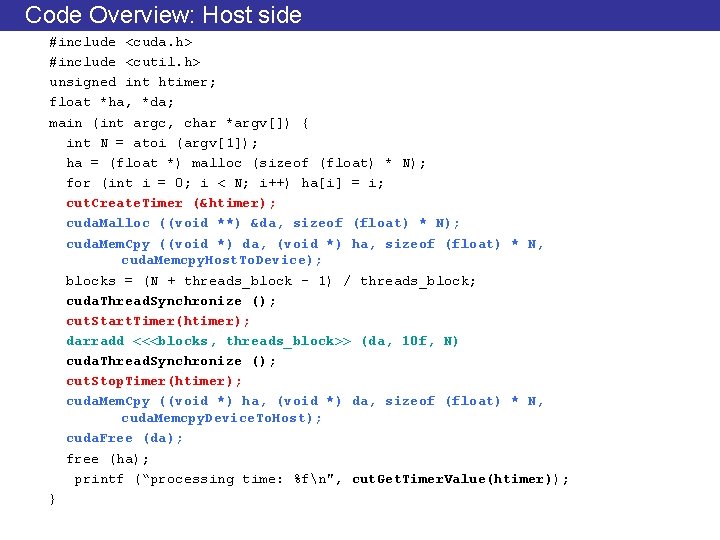

Code Overview: Host side #include <cuda. h> #include <cutil. h> unsigned int htimer; float *ha, *da; main (int argc, char *argv[]) { int N = atoi (argv[1]); ha = (float *) malloc (sizeof (float) * N); for (int i = 0; i < N; i++) ha[i] = i; cut. Create. Timer (&htimer); cuda. Malloc ((void **) &da, sizeof (float) * N); cuda. Mem. Cpy ((void *) da, (void *) ha, sizeof (float) * N, cuda. Memcpy. Host. To. Device); blocks = (N + threads_block – 1) / threads_block; cuda. Thread. Synchronize (); cut. Start. Timer(htimer); darradd <<<blocks, threads_block>> (da, 10 f, N) cuda. Thread. Synchronize (); cut. Stop. Timer(htimer); cuda. Mem. Cpy ((void *) ha, (void *) da, sizeof (float) * N, cuda. Memcpy. Device. To. Host); cuda. Free (da); free (ha); printf (“processing time: %fn", cut. Get. Timer. Value(htimer)); }

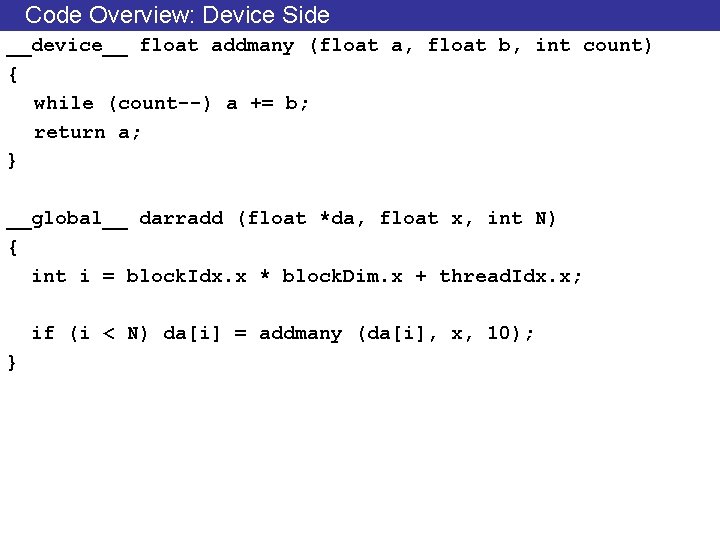

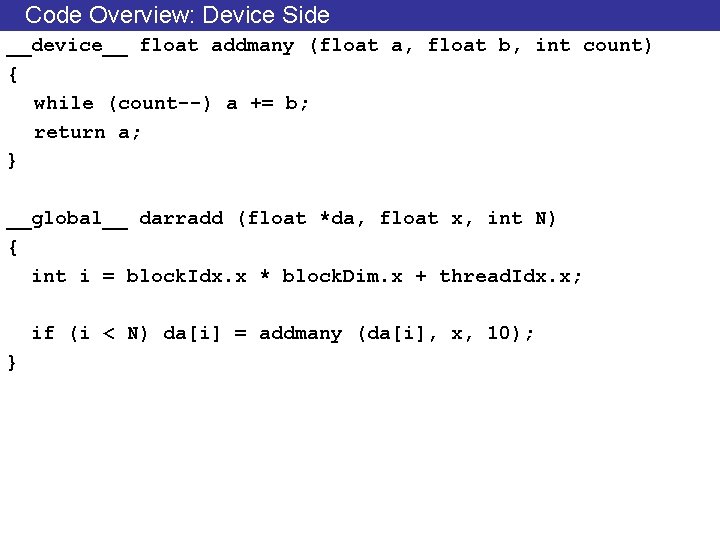

Code Overview: Device Side __device__ float addmany (float a, float b, int count) { while (count--) a += b; return a; } __global__ darradd (float *da, float x, int N) { int i = block. Idx. x * block. Dim. x + thread. Idx. x; if (i < N) da[i] = addmany (da[i], x, 10); }

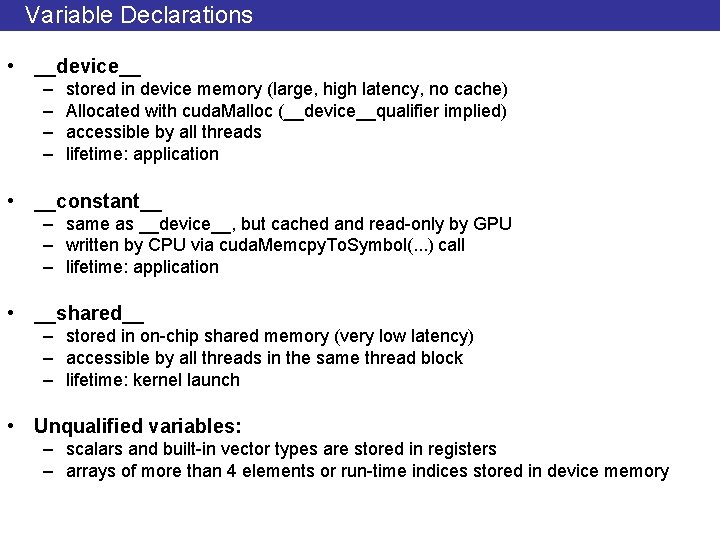

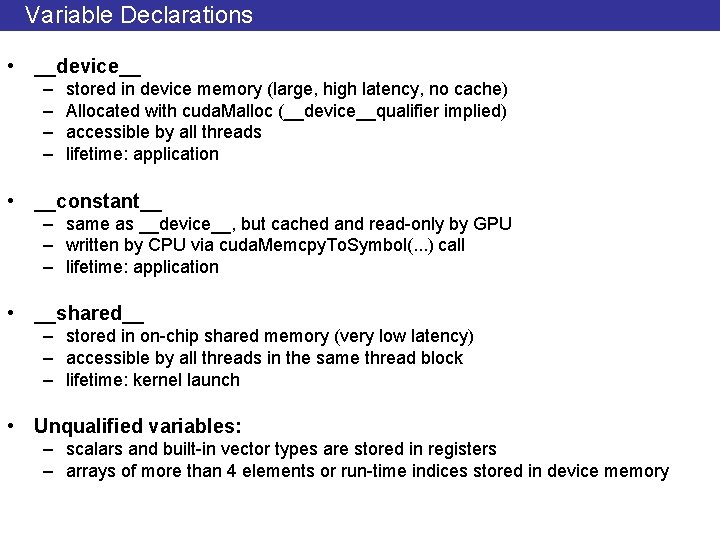

Variable Declarations • __device__ – – stored in device memory (large, high latency, no cache) Allocated with cuda. Malloc (__device__qualifier implied) accessible by all threads lifetime: application • __constant__ – same as __device__, but cached and read-only by GPU – written by CPU via cuda. Memcpy. To. Symbol(. . . ) call – lifetime: application • __shared__ – stored in on-chip shared memory (very low latency) – accessible by all threads in the same thread block – lifetime: kernel launch • Unqualified variables: – scalars and built-in vector types are stored in registers – arrays of more than 4 elements or run-time indices stored in device memory

Measurement Methodology • You will not get exactly the same time measurements every time – Other processes running / external events (e. g. , network activity) – Cannot control – “Non-determinism” • Must take sufficient samples – say 10 or more – There is theory on what the number of samples must be • Measure average • Will discuss this next time or will provide a handout online

![Handling Large Input Data Sets 1 D Example Recall grid Dim xy Handling Large Input Data Sets – 1 D Example • Recall grid. Dim. [xy]](https://slidetodoc.com/presentation_image_h/3659670336575a6af2617c0e3efd0713/image-81.jpg)

Handling Large Input Data Sets – 1 D Example • Recall grid. Dim. [xy] <= 65535 • Host calls kernel multiple times: float *dac = da; // starting offset for current kernel while (n_blocks) { int bn = n_blocks; int elems; // array elements processed in this kernel if (bn > 65535) bn = 65535; elems = bn * block_size; darradd <<<bn, block_size>>> (dac, 10. 0 f, elems); n_blocks -= bn; dac += elems; } • Better alternative: – Each thread processes multiple elements