CS 179 GPU Programming Lecture 8 More CUDA

![CUDA Arrays § Final Notes: § Coordinates can be normalized to [0, 1] if CUDA Arrays § Final Notes: § Coordinates can be normalized to [0, 1] if](https://slidetodoc.com/presentation_image/481b973b2f5f07ade3d43a1b184dddf4/image-15.jpg)

![Device Properties § char name[256]: ASCII identifier of GPU § size_t total. Global. Mem: Device Properties § char name[256]: ASCII identifier of GPU § size_t total. Global. Mem:](https://slidetodoc.com/presentation_image/481b973b2f5f07ade3d43a1b184dddf4/image-21.jpg)

![Device Properties § int max. Grid. Size[3]: maximum grid sizes § size_t total. Constant. Device Properties § int max. Grid. Size[3]: maximum grid sizes § size_t total. Constant.](https://slidetodoc.com/presentation_image/481b973b2f5f07ade3d43a1b184dddf4/image-22.jpg)

![Using Streams § Example: cuda. Stream_t stream[2]; for (int i = 0; i < Using Streams § Example: cuda. Stream_t stream[2]; for (int i = 0; i <](https://slidetodoc.com/presentation_image/481b973b2f5f07ade3d43a1b184dddf4/image-30.jpg)

- Slides: 31

CS 179: GPU Programming Lecture 8: More CUDA Runtime

Today § CUDA arrays for textures § CUDA runtime § Helpful CUDA functions

CUDA Arrays § Recall texture memory § Used to store large data § Stored on GPU § Accessible to all blocks, threads

CUDA Arrays § Used Texture memory for buffers (lab 3) § Allows vertex data to remain on GPU § How else can we access texture memory? § CUDA arrays

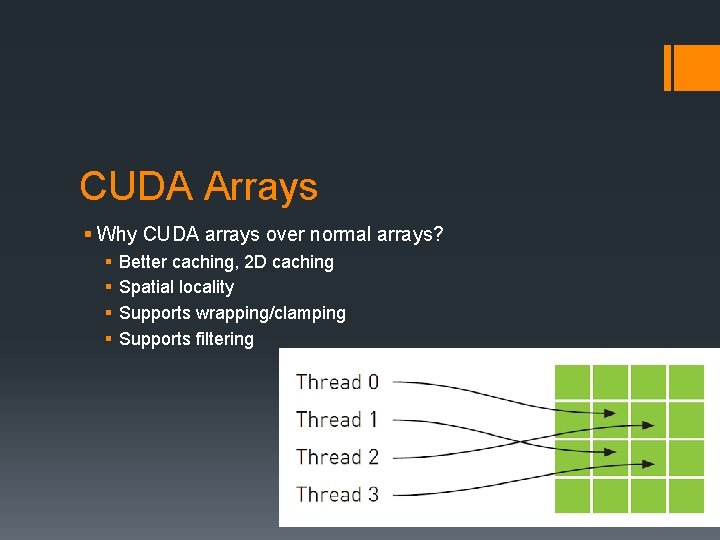

CUDA Arrays § Why CUDA arrays over normal arrays? § § Better caching, 2 D caching Spatial locality Supports wrapping/clamping Supports filtering

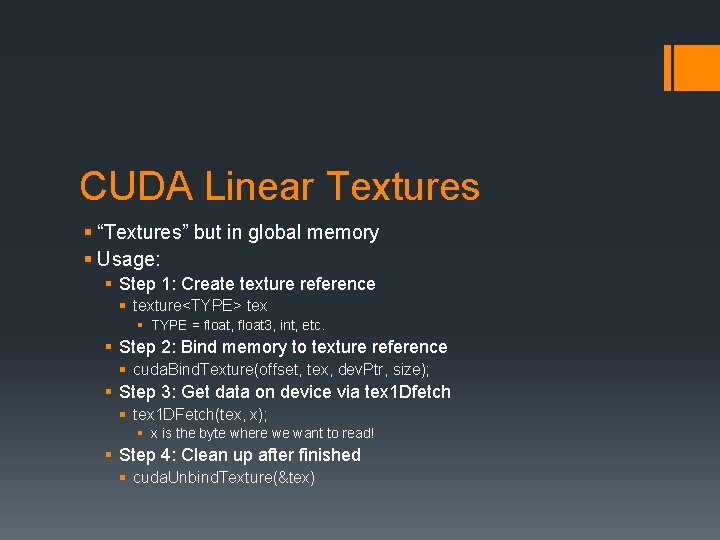

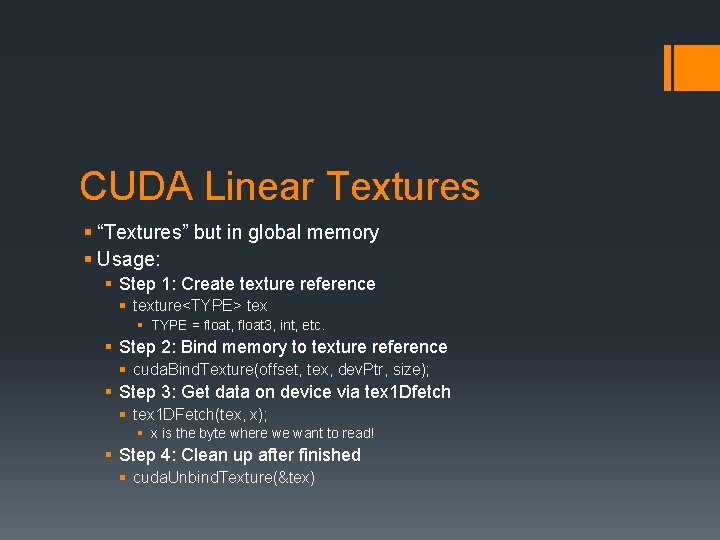

CUDA Linear Textures § “Textures” but in global memory § Usage: § Step 1: Create texture reference § texture<TYPE> tex § TYPE = float, float 3, int, etc. § Step 2: Bind memory to texture reference § cuda. Bind. Texture(offset, tex, dev. Ptr, size); § Step 3: Get data on device via tex 1 Dfetch § tex 1 DFetch(tex, x); § x is the byte where we want to read! § Step 4: Clean up after finished § cuda. Unbind. Texture(&tex)

CUDA Linear Textures § Texture reference properties: § tex. Ref<type, dim, mode> § type = float, int, float 3, etc. § dim = # of dimensions (1, 2, or 3) § mode = § cuda. Read. Mode. Element. Type: standard read § cuda. Read. Mode. Normalized. Float: maps 0 ->0. 0, 255 ->1. 0 for ints->floats

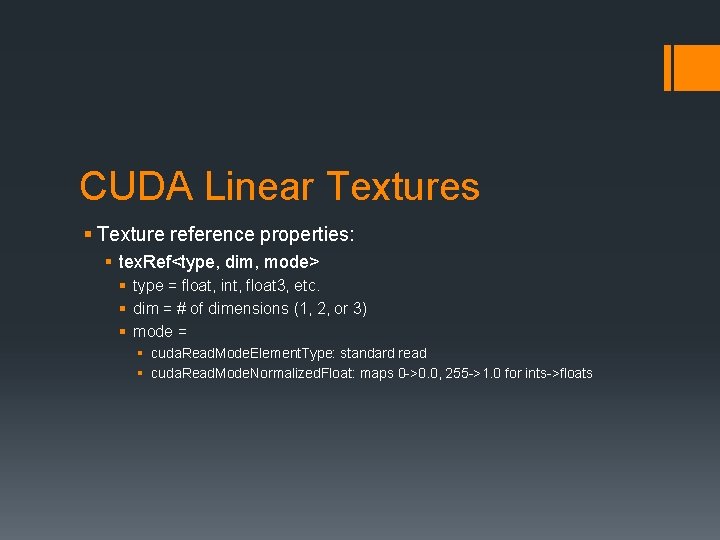

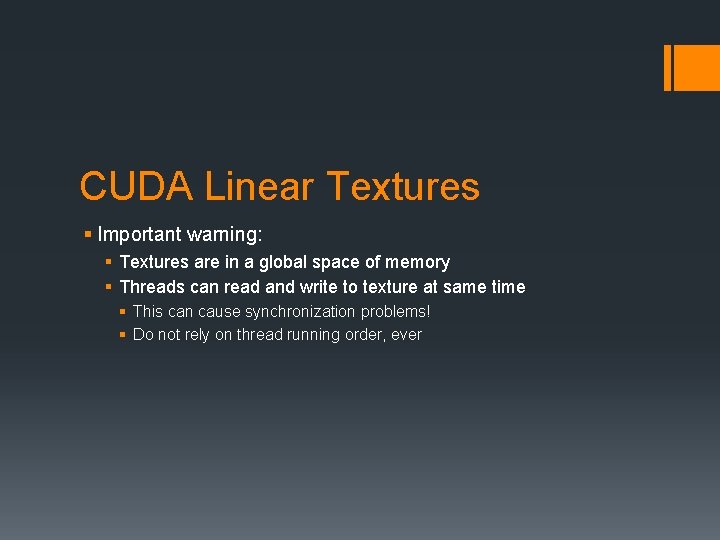

CUDA Linear Textures § Important warning: § Textures are in a global space of memory § Threads can read and write to texture at same time § This can cause synchronization problems! § Do not rely on thread running order, ever

CUDA Linear Textures § Other limitations: § Only 1 D, can make indexing and caching a bit less convenient § Pitch may be not ideal for 2 D array § Not read-write § Solution: CUDA arrays

CUDA Arrays § Live in texture memory space § Access via texture fetches

CUDA Arrays § Step 1: Create channel description § § Tells us texture attributes cuda. Create. Channel. Desc(int x, int y, int z, int w, enum mode) x, y, z, w are number of bytes per component mode is cuda. Channel. Format. Kind. Float, etc.

CUDA Arrays § Step 2: Allocate memory § Must be done dynamically § Use cuda. Malloc. Array(cuda. Array **array, struct desc, int size) § Most global memory functions work with CUDA arrays too § cuda. Memcpy. To. Array, etc.

CUDA Arrays § Step 3: Create texture reference § texture<TYPE, dim, mode> tex. Ref -- just as before § Parameters must match channel description where applicable § Step 4: Edit texture settings § Settings are encoded as tex. Ref struct members

CUDA Arrays § Step 5: Bind the texture reference to array § cuda. Bind. Texture. To. Array(tex. Ref, array) § Step 6: Access texture § Similar to before, now we have more options: § tex 1 DFetch(tex. Ref, x) § tex 2 DFetch(tex. Ref, x, y)

![CUDA Arrays Final Notes Coordinates can be normalized to 0 1 if CUDA Arrays § Final Notes: § Coordinates can be normalized to [0, 1] if](https://slidetodoc.com/presentation_image/481b973b2f5f07ade3d43a1b184dddf4/image-15.jpg)

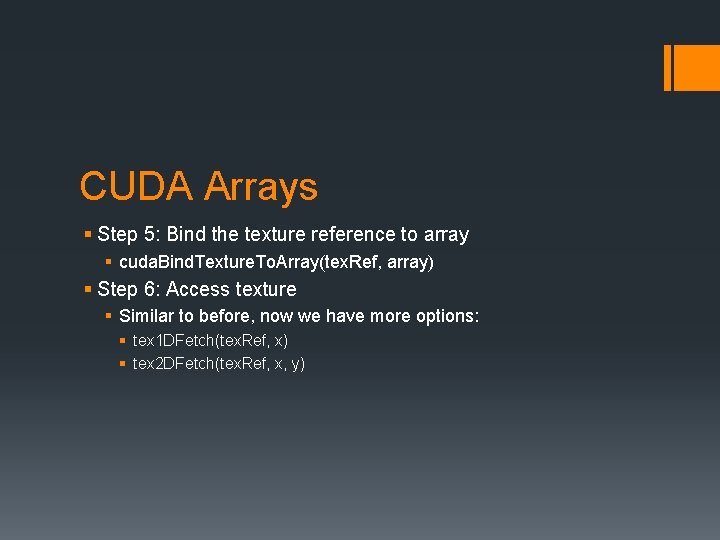

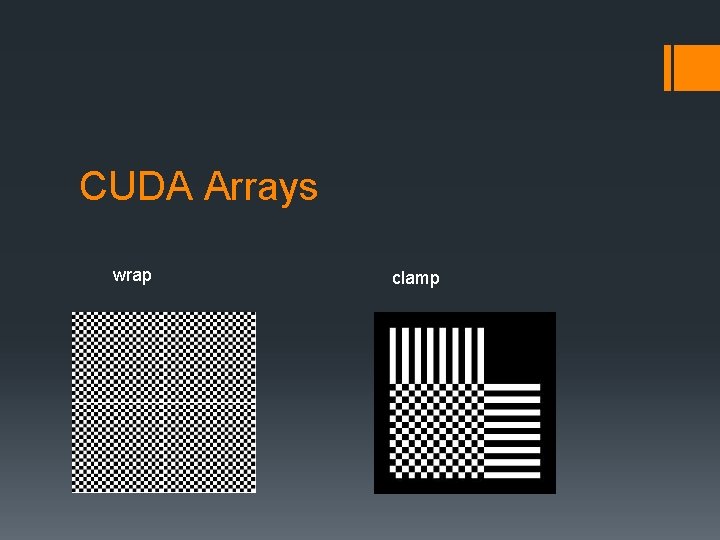

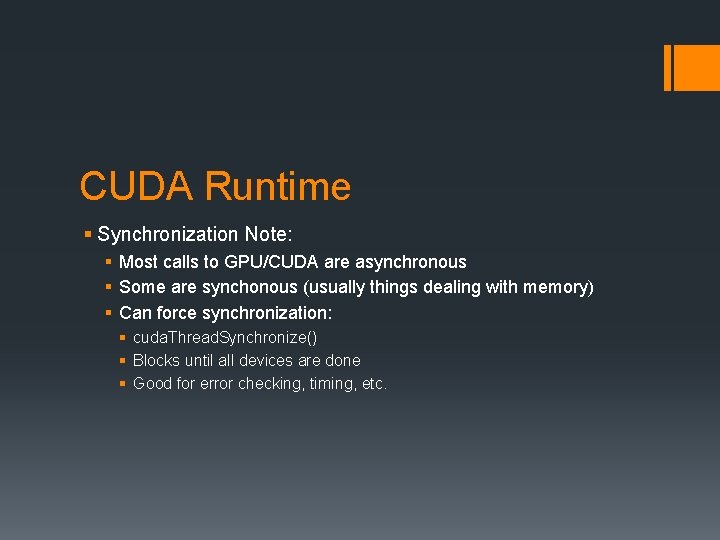

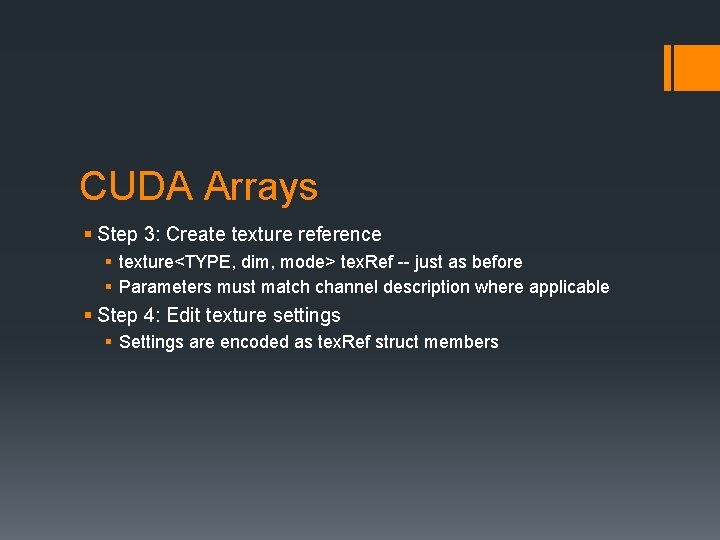

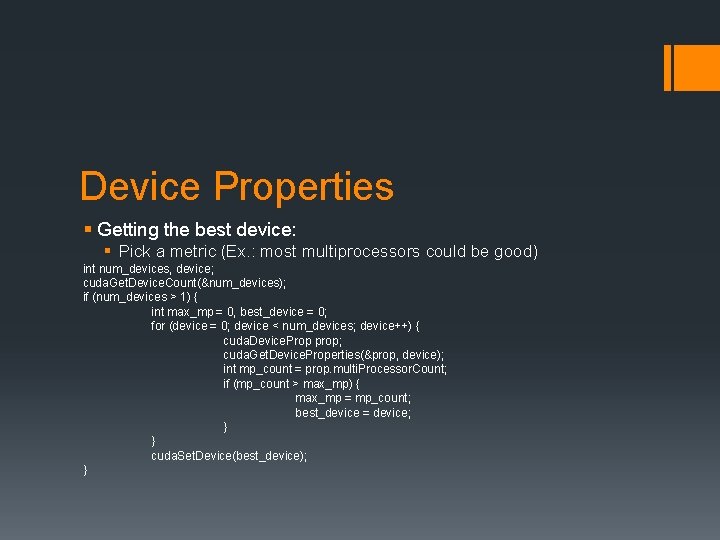

CUDA Arrays § Final Notes: § Coordinates can be normalized to [0, 1] if in float mode § Filter modes: nearest point or linear § Tells CUDA how to blend texture § Wrap vs. clamp: § Wrap: out of bounds accesses wrap around to other side § Ex. : (1. 5, 0. 5) -> (0. 5, 0. 5) § Clamp: out of bounds accesses set to border value § Ex. : (1. 5, 0. 5) -> (1. 0, 0. 5)

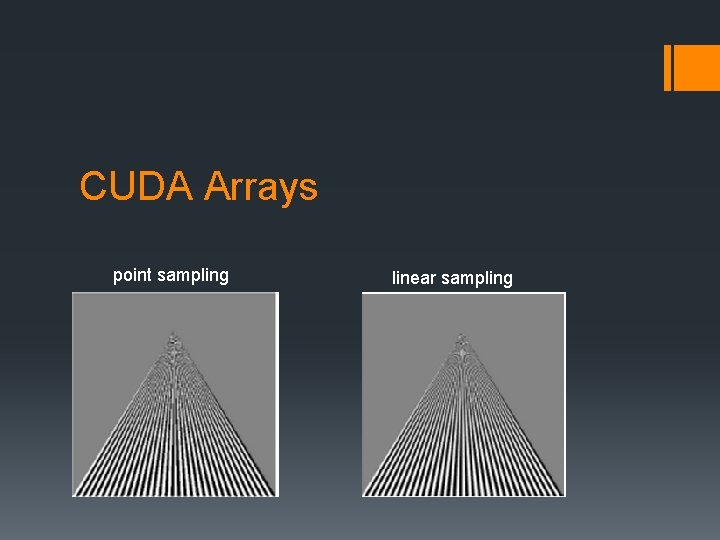

CUDA Arrays point sampling linear sampling

CUDA Arrays wrap clamp

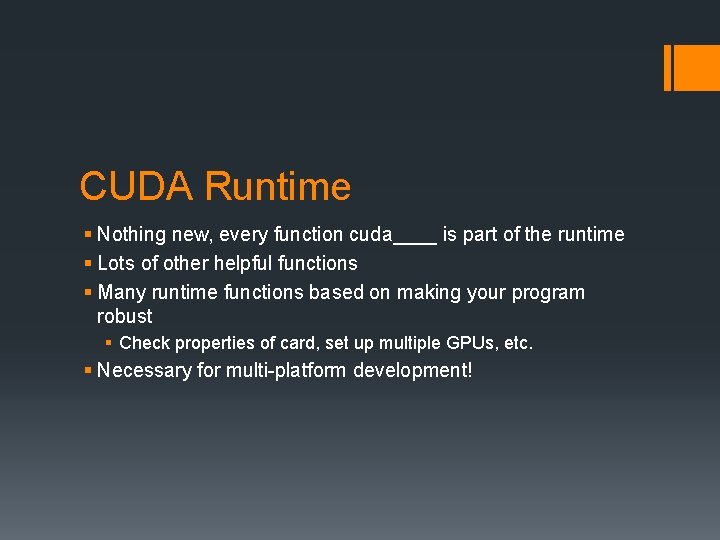

CUDA Runtime § Nothing new, every function cuda____ is part of the runtime § Lots of other helpful functions § Many runtime functions based on making your program robust § Check properties of card, set up multiple GPUs, etc. § Necessary for multi-platform development!

CUDA Runtime § Starting the runtime: § Simply call a cuda_____ function! § CUDA can waste a lot of resources § Stop CUDA with cuda. Thread. Exit() § Called automatically on CPU exit, but you may want to call earlier

CUDA Runtime § Getting devices and properties: § cuda. Get. Device. Count(int * n); § Returns # of CUDA-capable devices § Can use to check if machine is CUDA-capable! § cuda. Set. Device(int n) § Sets device n to the currently used device § cuda. Get. Device. Properties(struct *dev. Prop prop, int n); § Loads data from device n into prop

![Device Properties char name256 ASCII identifier of GPU sizet total Global Mem Device Properties § char name[256]: ASCII identifier of GPU § size_t total. Global. Mem:](https://slidetodoc.com/presentation_image/481b973b2f5f07ade3d43a1b184dddf4/image-21.jpg)

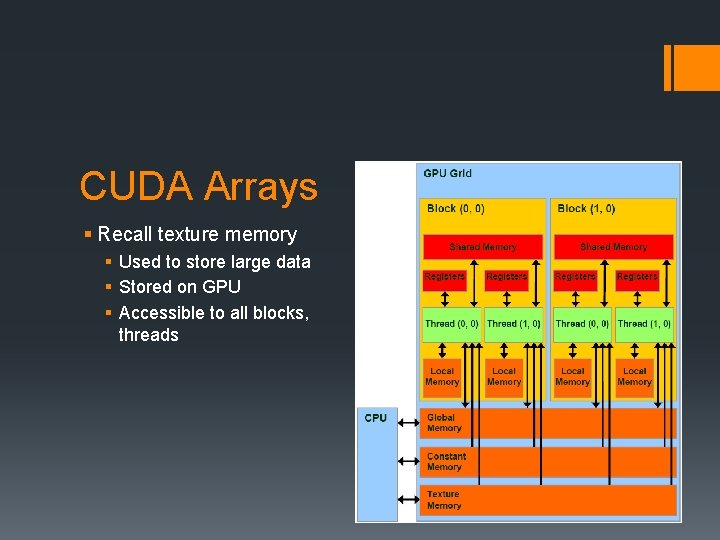

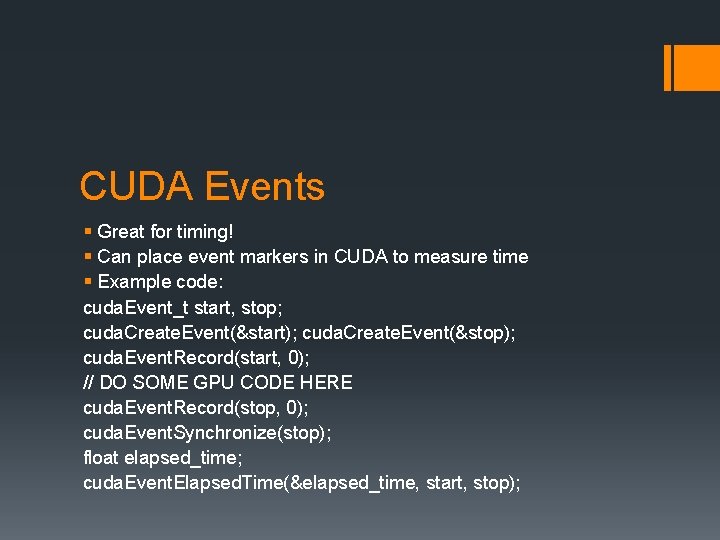

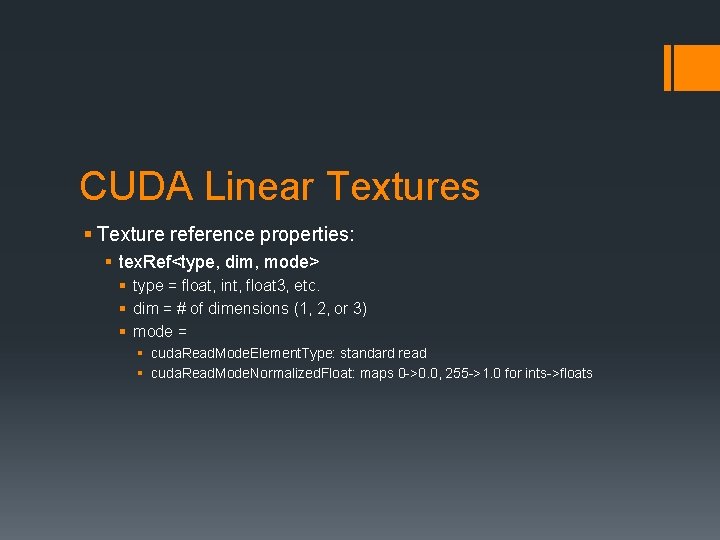

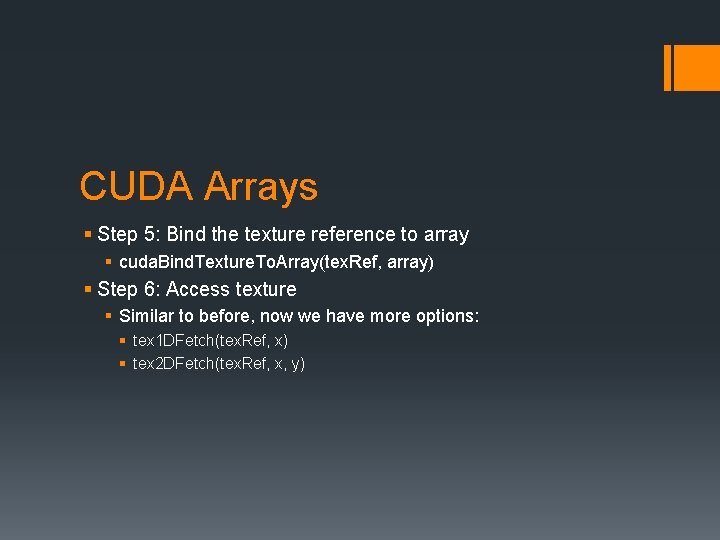

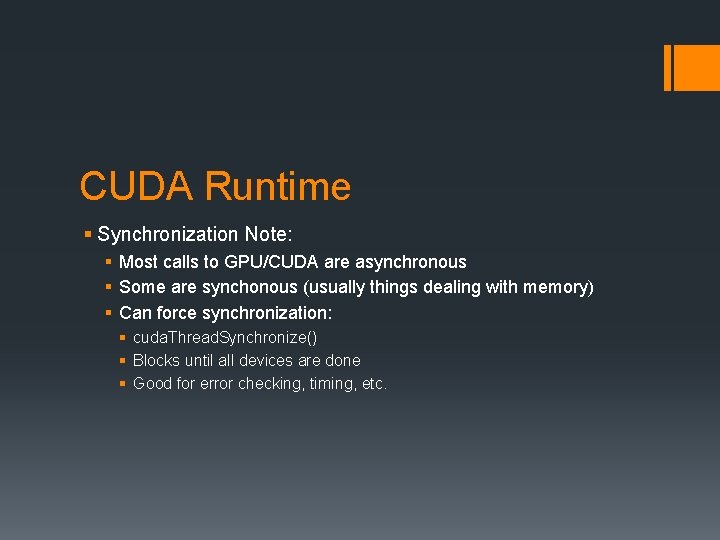

Device Properties § char name[256]: ASCII identifier of GPU § size_t total. Global. Mem: Total global memory available § size_t shared. Mem. Per. Block: Shared memory available per multiprocessor § int regs. Per. Block: How many registers we have per block § int warp. Size: size of our warps § size_t mem. Pitch: maximum pitch allowed for array allocation § int max. Threads. Per. Block: maximum number of threads/block § int max. Threads. Dim[3]: maximum sizes of a block

![Device Properties int max Grid Size3 maximum grid sizes sizet total Constant Device Properties § int max. Grid. Size[3]: maximum grid sizes § size_t total. Constant.](https://slidetodoc.com/presentation_image/481b973b2f5f07ade3d43a1b184dddf4/image-22.jpg)

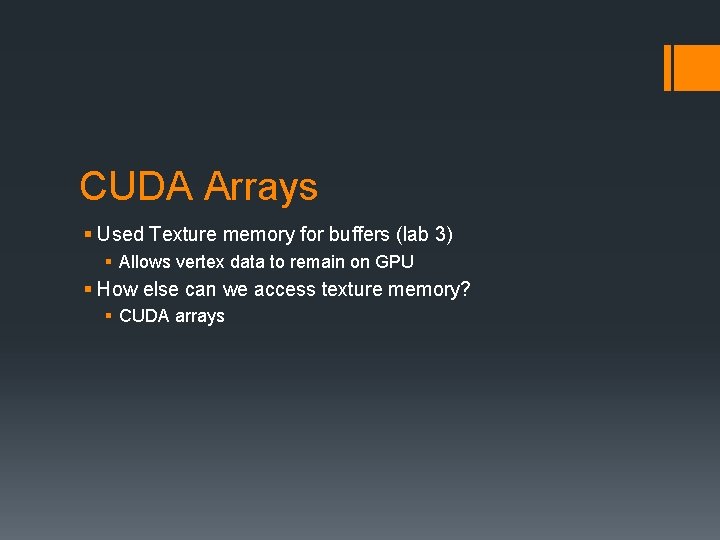

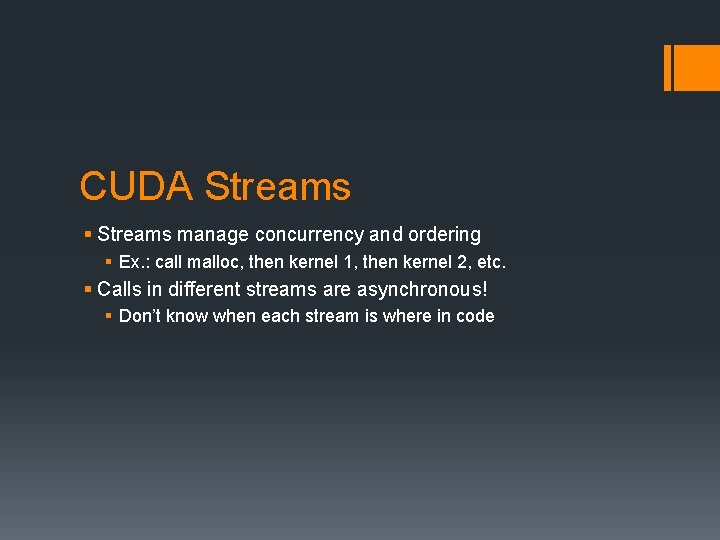

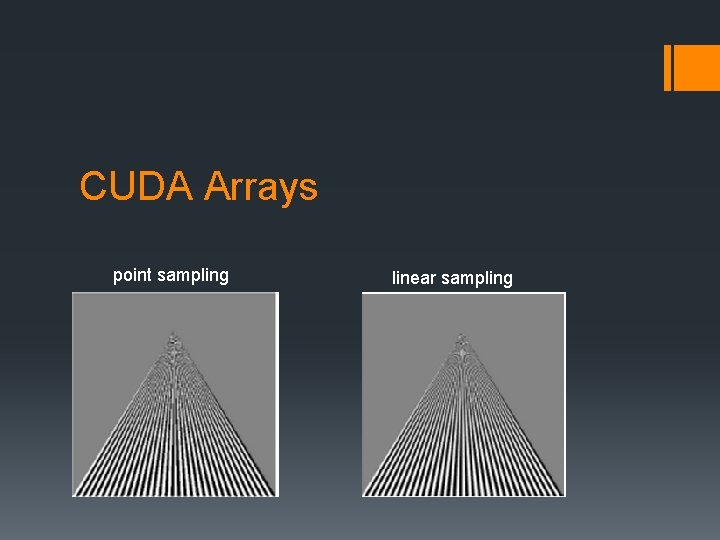

Device Properties § int max. Grid. Size[3]: maximum grid sizes § size_t total. Constant. Memory: maximum available constant memory § int major, int minor: major and minor versions of CUDA support § int clock. Rate: clock rate of device in k. Hz § size_t texture. Alignment: memory alignment required for textures § int device. Overlap: Does this device allow for memory copying while kernel is running? (0 = no, 1 = yes) § int multiprocessor. Count: # of multiprocessors on device

Device Properties § Uses? § Actually get values for memory, instead of guessing § Program to be accessible for multiple systems § Can get the best device

Device Properties § Getting the best device: § Pick a metric (Ex. : most multiprocessors could be good) int num_devices, device; cuda. Get. Device. Count(&num_devices); if (num_devices > 1) { int max_mp = 0, best_device = 0; for (device = 0; device < num_devices; device++) { cuda. Device. Prop prop; cuda. Get. Device. Properties(&prop, device); int mp_count = prop. multi. Processor. Count; if (mp_count > max_mp) { max_mp = mp_count; best_device = device; } } cuda. Set. Device(best_device); }

Device Properties § We can also use this to launch multiple GPUs § Each GPU must have its own host thread § Multithread on CPU, each thread calls different device § Set device on thread using cuda. Set. Device(n);

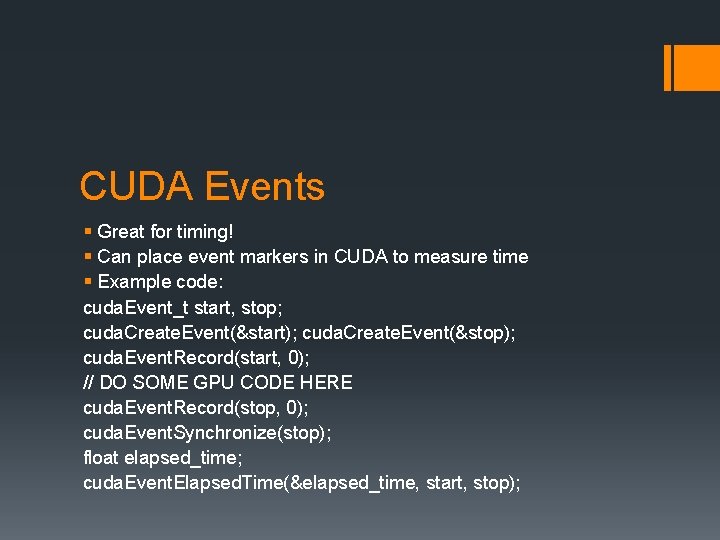

CUDA Runtime § Synchronization Note: § Most calls to GPU/CUDA are asynchronous § Some are synchonous (usually things dealing with memory) § Can force synchronization: § cuda. Thread. Synchronize() § Blocks until all devices are done § Good for error checking, timing, etc.

CUDA Events § Great for timing! § Can place event markers in CUDA to measure time § Example code: cuda. Event_t start, stop; cuda. Create. Event(&start); cuda. Create. Event(&stop); cuda. Event. Record(start, 0); // DO SOME GPU CODE HERE cuda. Event. Record(stop, 0); cuda. Event. Synchronize(stop); float elapsed_time; cuda. Event. Elapsed. Time(&elapsed_time, start, stop);

CUDA Streams § Streams manage concurrency and ordering § Ex. : call malloc, then kernel 1, then kernel 2, etc. § Calls in different streams are asynchronous! § Don’t know when each stream is where in code

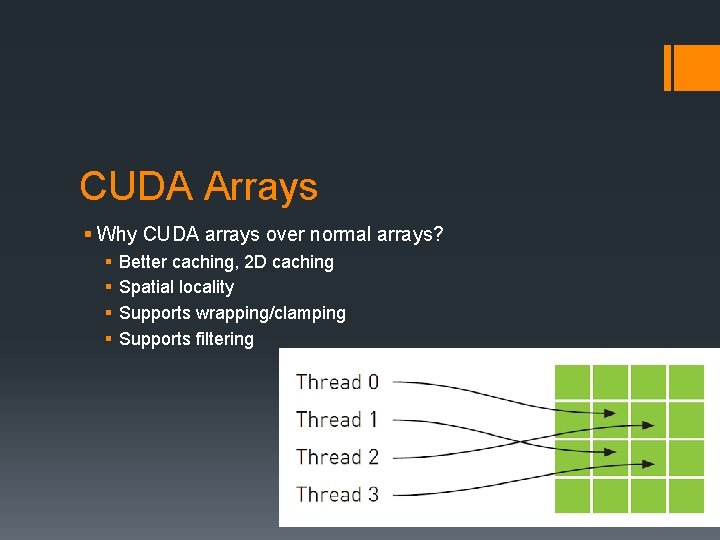

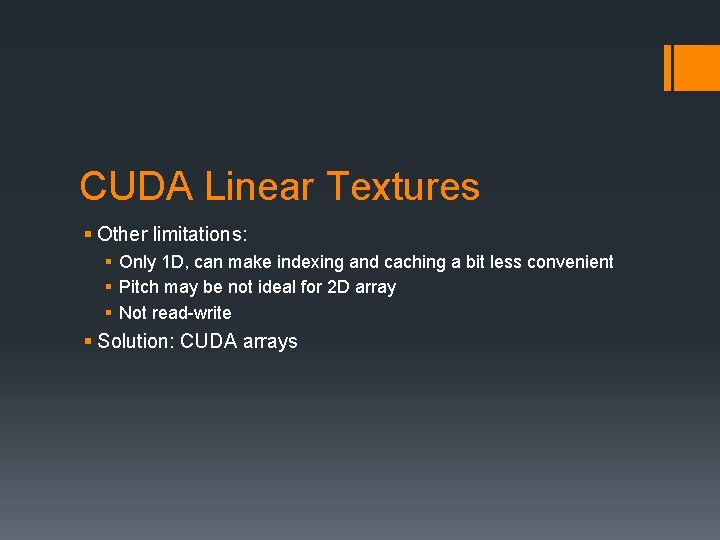

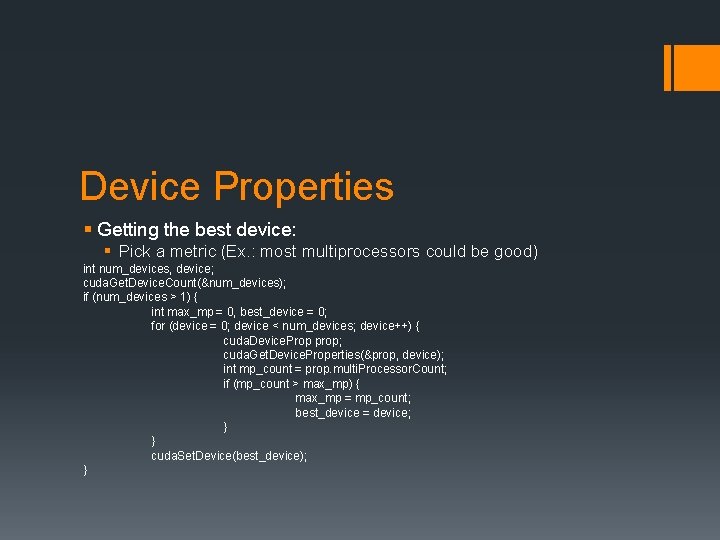

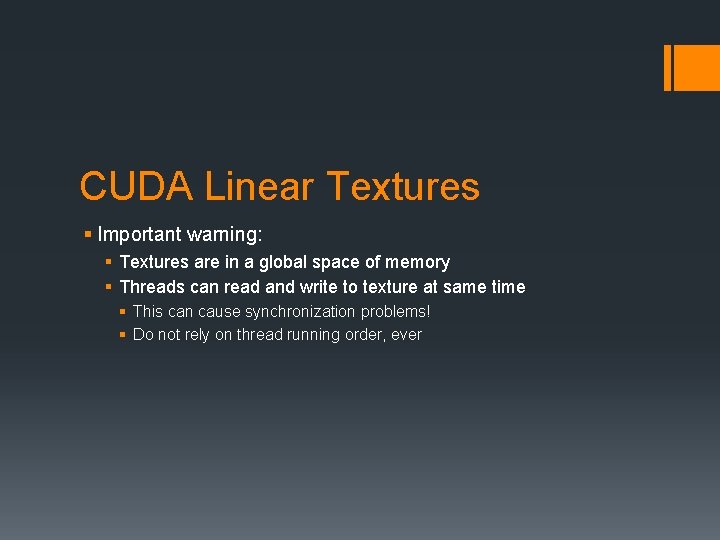

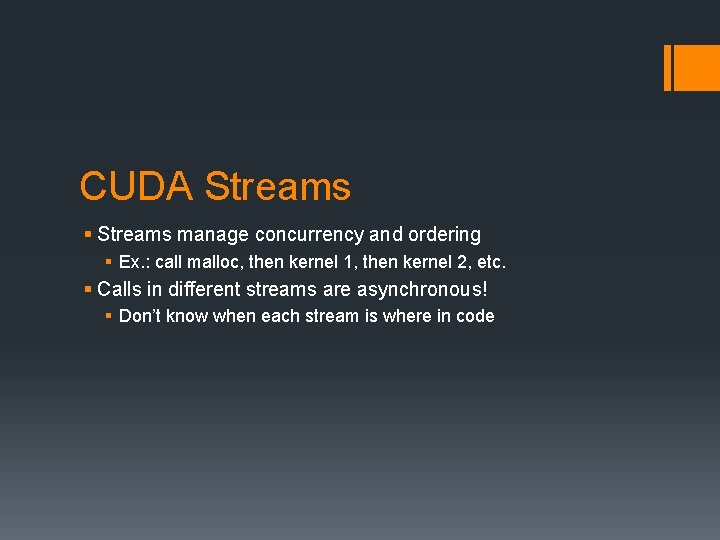

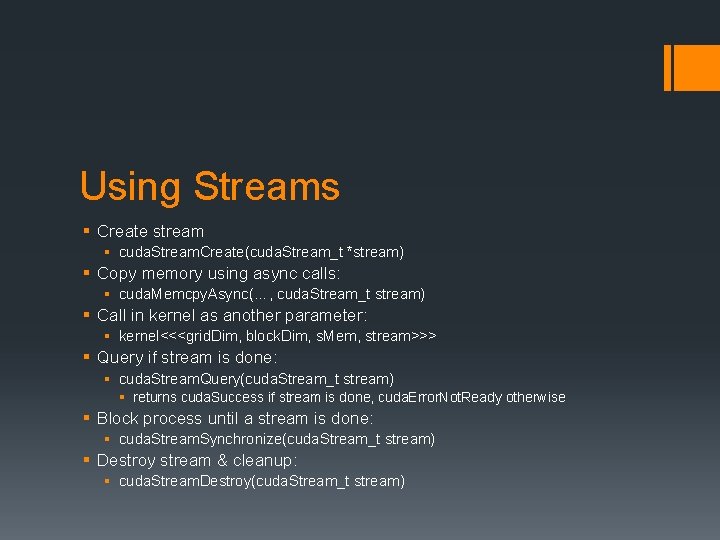

Using Streams § Create stream § cuda. Stream. Create(cuda. Stream_t *stream) § Copy memory using async calls: § cuda. Memcpy. Async(…, cuda. Stream_t stream) § Call in kernel as another parameter: § kernel<<<grid. Dim, block. Dim, s. Mem, stream>>> § Query if stream is done: § cuda. Stream. Query(cuda. Stream_t stream) § returns cuda. Success if stream is done, cuda. Error. Not. Ready otherwise § Block process until a stream is done: § cuda. Stream. Synchronize(cuda. Stream_t stream) § Destroy stream & cleanup: § cuda. Stream. Destroy(cuda. Stream_t stream)

![Using Streams Example cuda Streamt stream2 for int i 0 i Using Streams § Example: cuda. Stream_t stream[2]; for (int i = 0; i <](https://slidetodoc.com/presentation_image/481b973b2f5f07ade3d43a1b184dddf4/image-30.jpg)

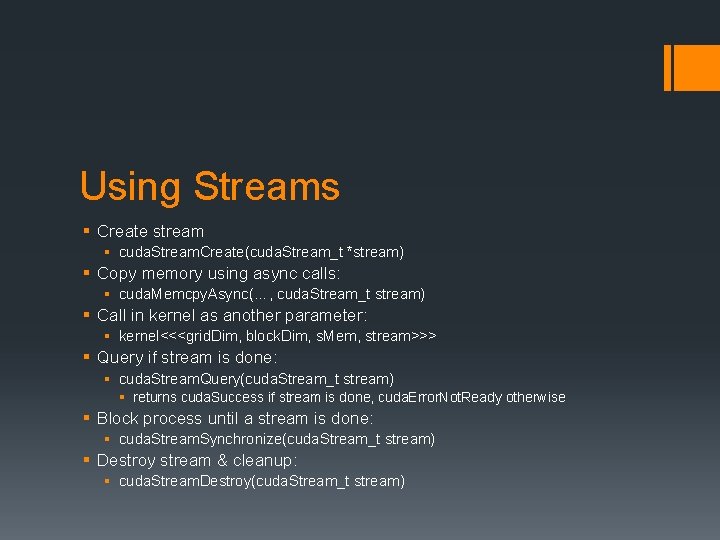

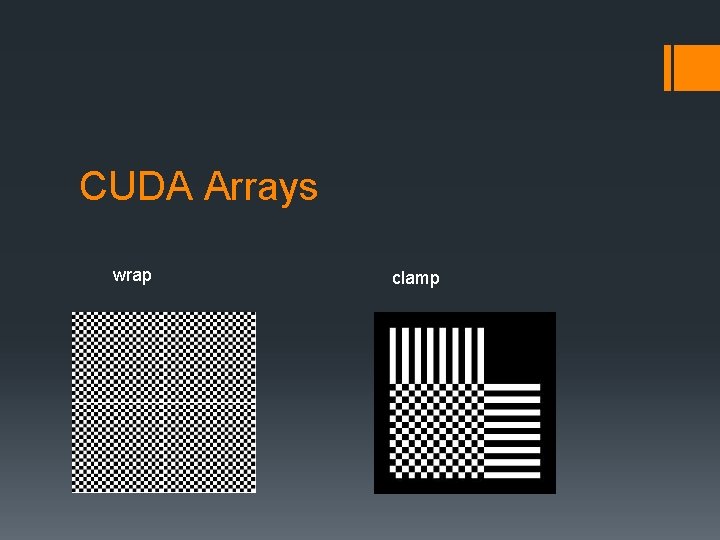

Using Streams § Example: cuda. Stream_t stream[2]; for (int i = 0; i < 2; ++i) cuda. Stream. Create(&stream[i]); for (int i = 0; i < 2; ++i) cuda. Memcpy. Async(input. Dev. Ptr + i * size, host. Ptr + i * size, cuda. Memcpy. Host. To. Device, stream[i]); for (int i = 0; i < 2; ++i) my. Kernel<<<100, 512, 0, stream[i]>>>(output. Dev. Ptr + i * size, input. Dev. Ptr + i * size, size); for (int i = 0; i < 2; ++i) cuda. Memcpy. Async(host. Ptr + i * size, output. Dev. Ptr + i * size, cuda. Memcpy. Device. To. Host, stream[i]); cuda. Thread. Synchronize(); size,

Next Time § Lab 4 Recitation: § 3 D Textures § Pixel Buffer Objects (PBOs) § Fractals!