Network Motif Discovery: A GPU Approach. Wenqing Lin

- Slides: 25

Network Motif Discovery: A GPU Approach
Wenqing Lin†,‡, Xiaokui Xiao‡, Xing Xie§, Xiao-Li Li†

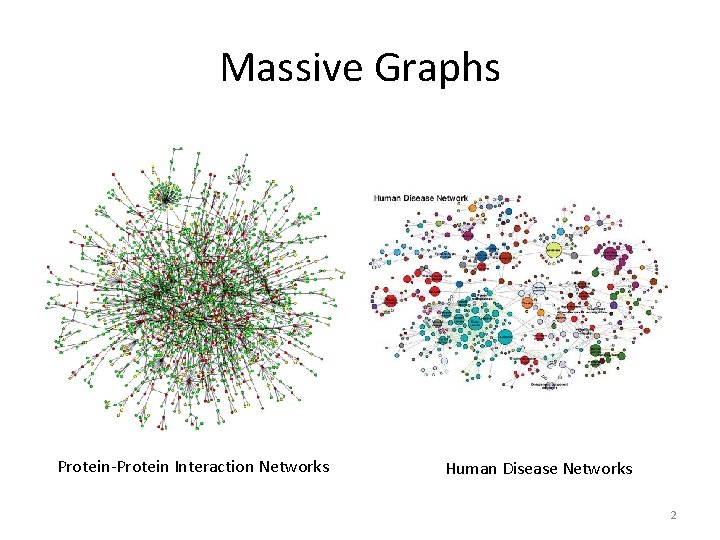

Massive Graphs
• Protein-Protein Interaction Networks
• Human Disease Networks

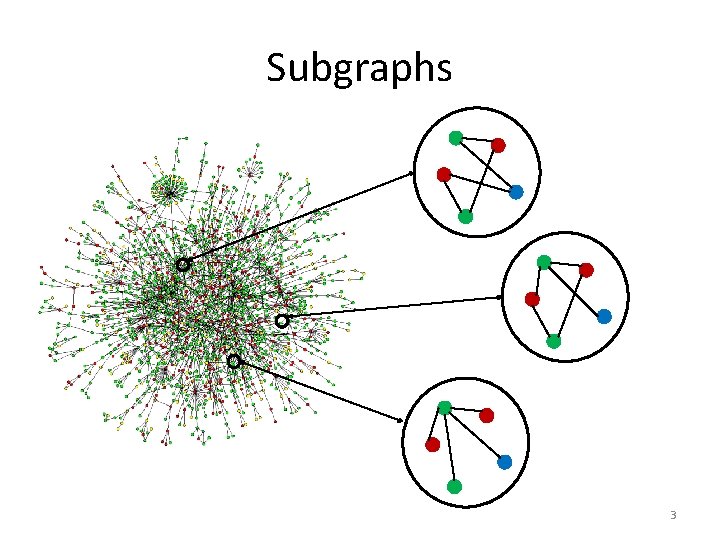

Subgraphs

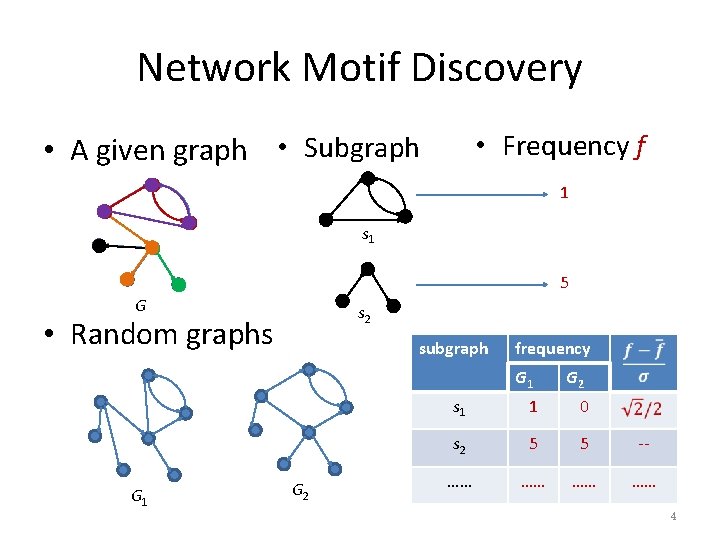

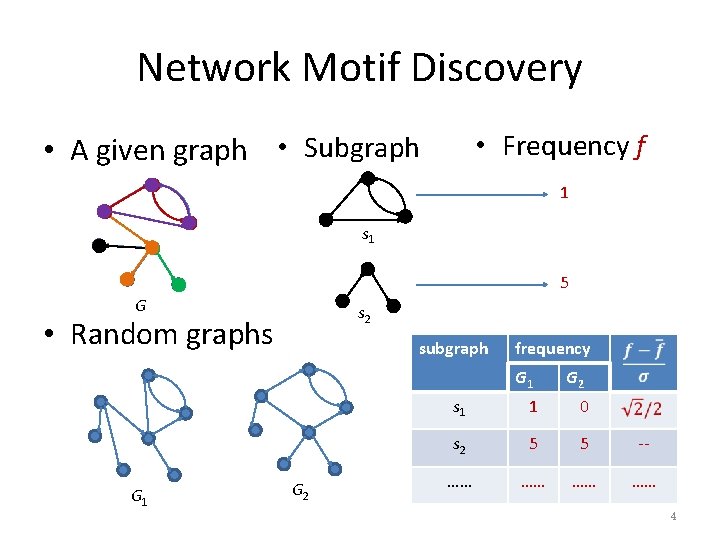

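Network Motif Discovery
• A given graph G
• Frequency f of each subgraph in G: s1 has f = 1, s2 has f = 5
• Random graphs G1, G2, ……

| subgraph | frequency in G1 | frequency in G2 |
|----------|-----------------|-----------------|
| s1       | 1               | 0               |
| s2       | 5               | 5               |
| ……       | ……              | ……              |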

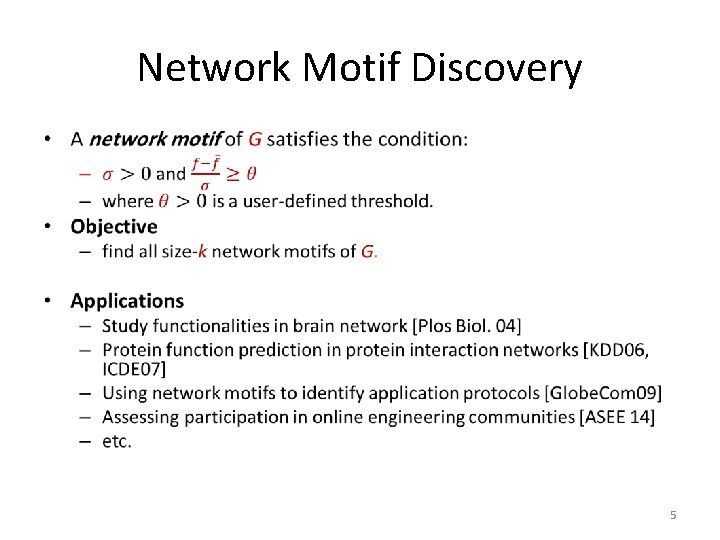

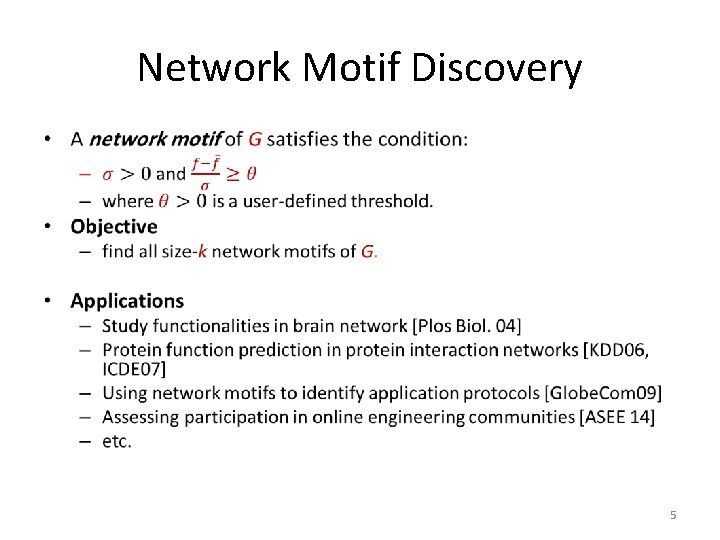

Network Motif Discovery

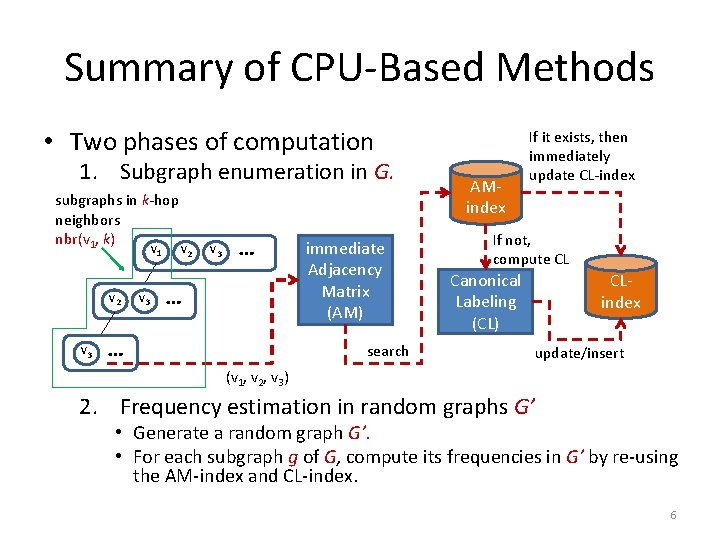

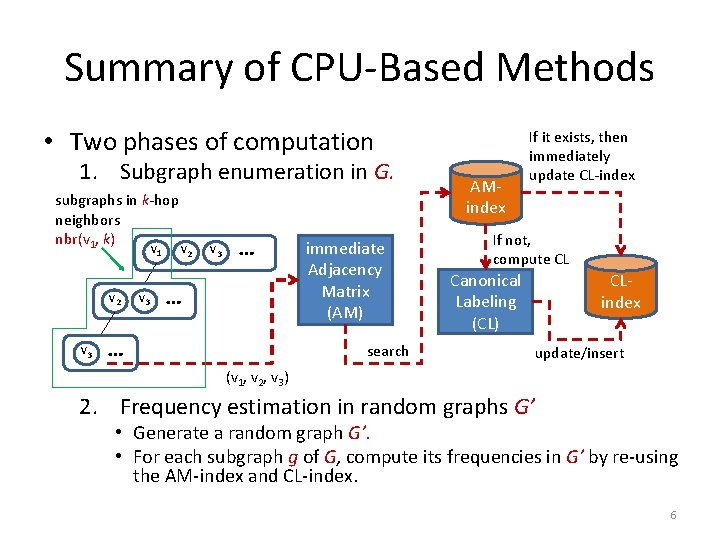

Summary of CPU-Based Methods
• Two phases of computation
1. Subgraph enumeration in G.
– Enumerate subgraphs in the k-hop neighbors nbr(v1, k) of a vertex v1, e.g., (v1, v2, v3).
– Build the Adjacency Matrix (AM) of each subgraph and search for it in the AM-index.
– If it exists, then immediately update the CL-index.
– If not, compute the Canonical Labeling (CL), then update/insert it in the CL-index.
2. Frequency estimation in random graphs G’.
– Generate a random graph G’.
– For each subgraph g of G, compute its frequency in G’ by re-using the AM-index and CL-index.

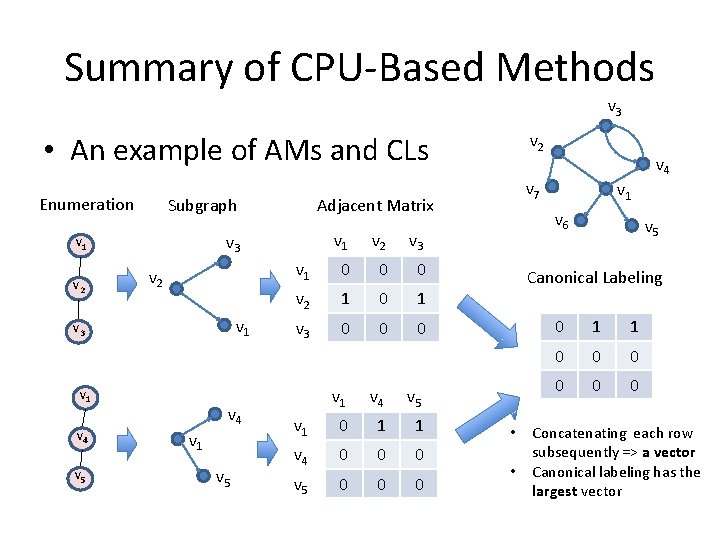

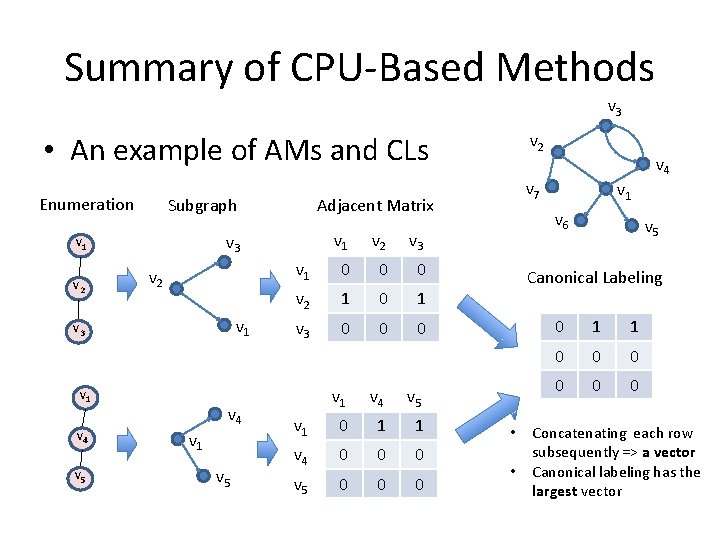

Summary of CPU-Based Methods
• An example of AMs and CLs

Adjacency Matrix of the enumerated subgraph (v1, v2, v3):

|    | v1 | v2 | v3 |
|----|----|----|----|
| v1 | 0  | 0  | 0  |
| v2 | 1  | 0  | 1  |
| v3 | 0  | 0  | 0  |

Adjacency Matrix of the enumerated subgraph (v1, v4, v5):

|    | v1 | v4 | v5 |
|----|----|----|----|
| v1 | 0  | 1  | 1  |
| v4 | 0  | 0  | 0  |
| v5 | 0  | 0  | 0  |

Canonical Labeling (rows): 0 1 1 / 0 0 0 / 0 0 0

• Concatenating the rows in order yields a vector.
• The canonical labeling is the ordering that yields the largest vector.
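
The AM and CL example above, together with the index lookup from the previous slide, can be sketched in Python as follows. This is a brute-force illustration under stated assumptions, not the implementation used by any of the CPU-based methods: the names `am_vector`, `canonical_label`, `record_subgraph`, `am_index`, and `cl_index` are hypothetical, the indexes are plain dictionaries, and the canonical labeling is found by trying every vertex ordering, which is only practical for very small subgraphs.

```python
from itertools import permutations

def am_vector(am):
    """Concatenate the rows of an adjacency matrix into one vector."""
    return tuple(bit for row in am for bit in row)

def canonical_label(am):
    """Return the largest row-concatenated vector over all vertex orderings."""
    n = len(am)
    return max(
        tuple(am[p[i]][p[j]] for i in range(n) for j in range(n))
        for p in permutations(range(n))
    )

# Hypothetical stand-ins for the two indexes named on the slides.
am_index = {}  # AM vector -> canonical label
cl_index = {}  # canonical label -> number of enumerated subgraphs

def record_subgraph(am):
    """Look the AM up first; compute the canonical labeling only on a miss."""
    vec = am_vector(am)
    if vec not in am_index:
        am_index[vec] = canonical_label(am)
    label = am_index[vec]
    cl_index[label] = cl_index.get(label, 0) + 1
    return label

# The two adjacency matrices from the slide.
am_v1_v2_v3 = [[0, 0, 0], [1, 0, 1], [0, 0, 0]]
am_v1_v4_v5 = [[0, 1, 1], [0, 0, 0], [0, 0, 0]]

label_a = record_subgraph(am_v1_v2_v3)
label_b = record_subgraph(am_v1_v4_v5)
print(label_a == label_b, cl_index[label_a])
```

Both matrices describe the same structure (one vertex pointing to two others), so they map to the same canonical labeling and share one entry in the CL-index, while the AM-index keeps two entries.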

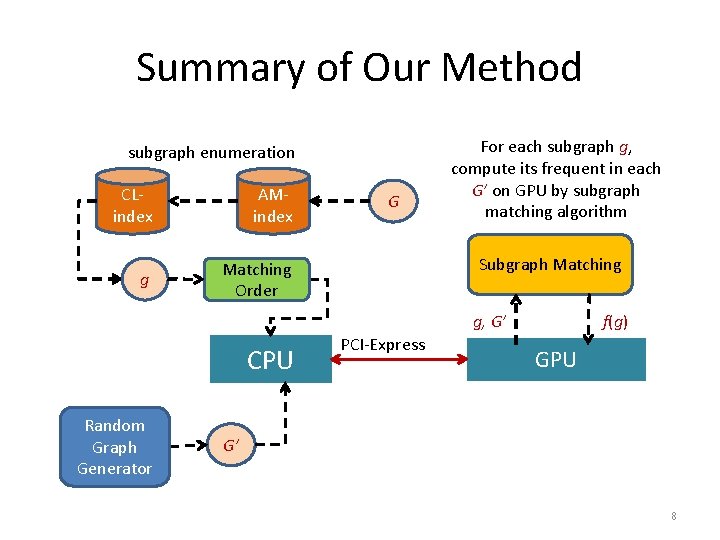

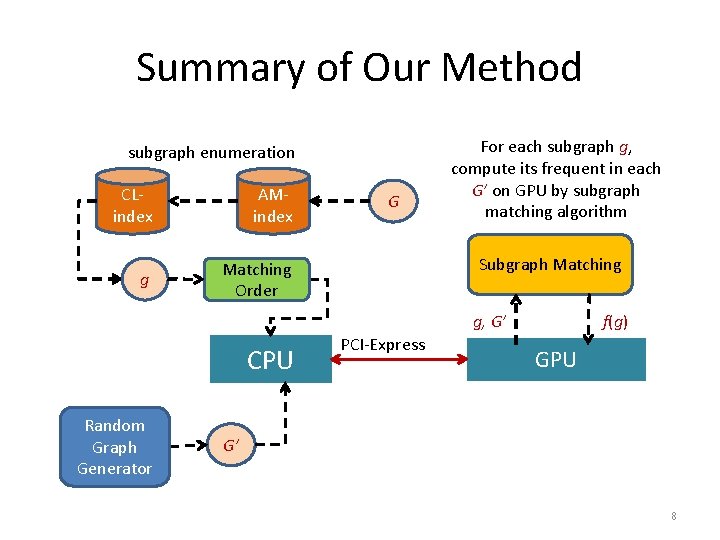

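Summary of Our Method
• CPU: subgraph enumeration in G, using the CL-index and AM-index, yields each subgraph g and its matching order.
• CPU: a random graph generator produces the random graphs G’.
• g and G’ are passed to the GPU over PCI-Express.
• GPU: for each subgraph g, compute its frequency in each G’ by a subgraph matching algorithm.
• The frequency f(g) is passed back over PCI-Express.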

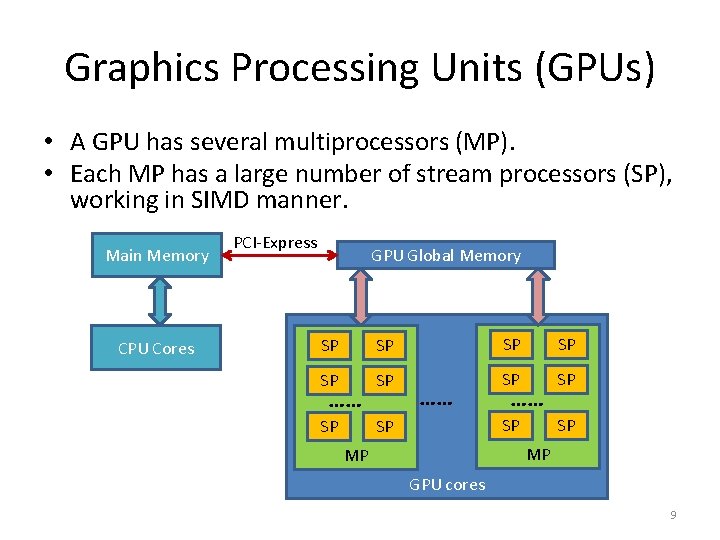

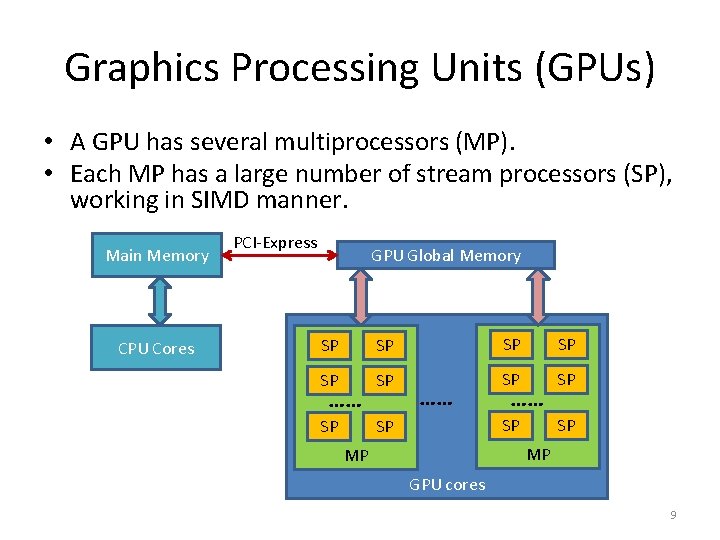

Graphics Processing Units (GPUs)
• A GPU has several multiprocessors (MP).
• Each MP has a large number of stream processors (SP), working in a SIMD manner.
Diagram: CPU cores and main memory connect over PCI-Express to the GPU global memory, which serves the MPs; each MP contains SPs (the GPU cores).

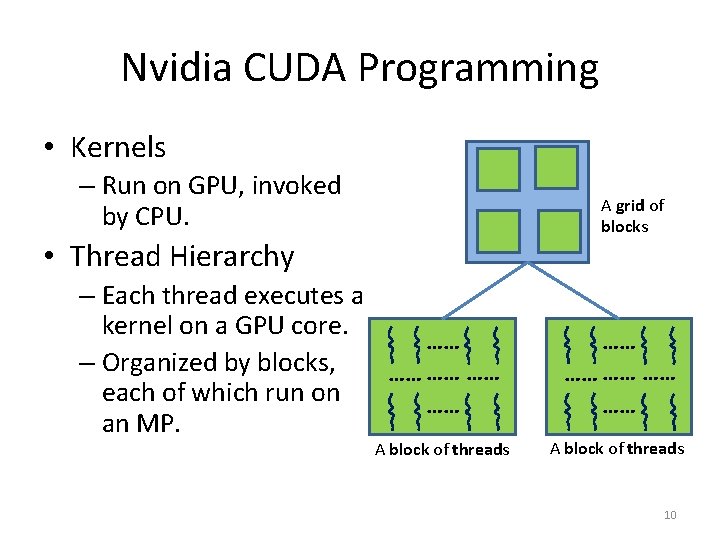

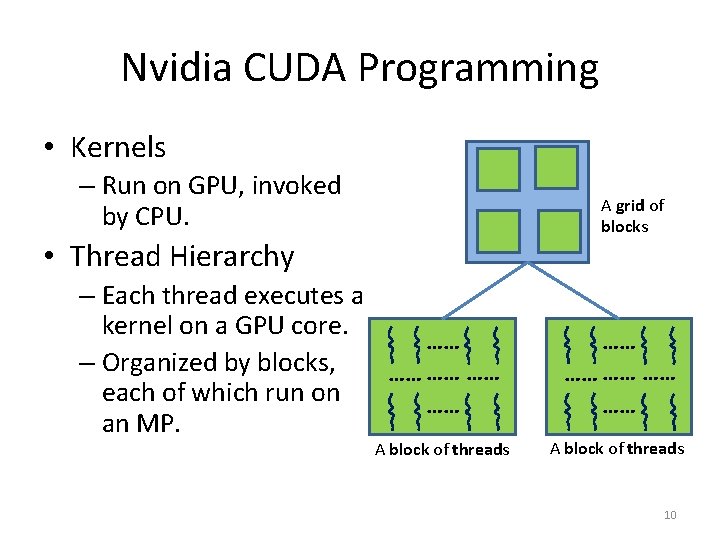

Nvidia CUDA Programming
• Kernels
– Run on GPU, invoked by CPU.
• Thread Hierarchy
– Each thread executes a kernel on a GPU core.
– Organized by blocks, each of which runs on an MP.
Diagram: a grid of blocks, each block being a block of threads.

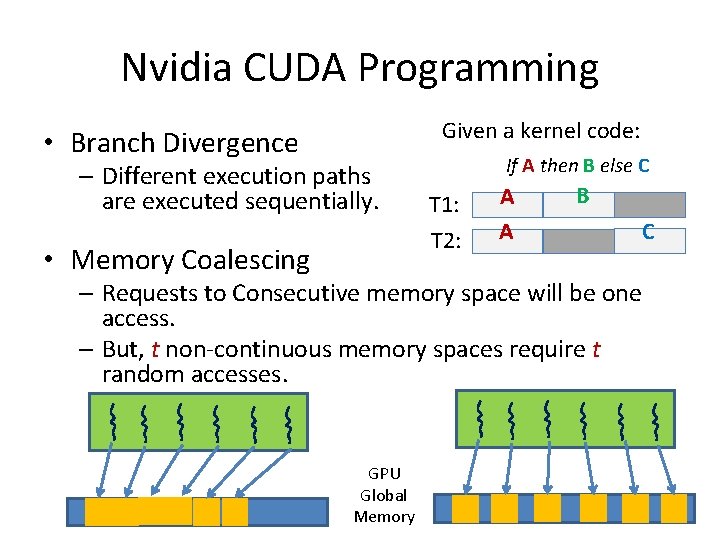

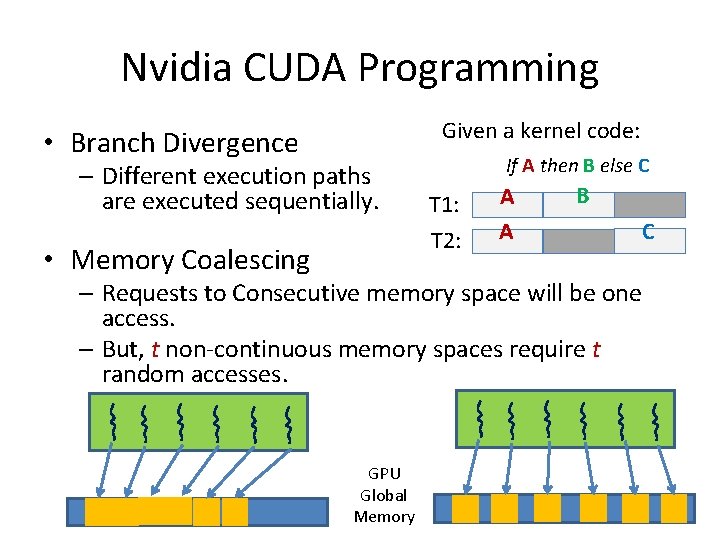

Nvidia CUDA Programming
• Branch Divergence
– Different execution paths are executed sequentially.
– Given a kernel code "If A then B else C": thread T1 executes A, B; thread T2 executes A, C.
• Memory Coalescing
– Requests to consecutive memory space will be one access.
– But t non-contiguous memory spaces require t random accesses.
Diagram: GPU global memory.
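
The two effects on this slide can be made concrete with a small CPU-side simulation in Python. It is only an illustrative sketch: the group of 32 threads, the 4-byte word, and the 128-byte memory segment are assumptions chosen for the example rather than figures from the slides, and the function names are hypothetical.

```python
def memory_transactions(addresses, segment_bytes=128):
    """Count the distinct aligned segments touched by one group of threads.

    Assumption: every segment touched costs one access to global memory.
    """
    return len({addr // segment_bytes for addr in addresses})

def divergent_passes(conditions):
    """Count sequential passes: one per distinct branch outcome in the group."""
    return len(set(conditions))

THREADS = 32  # assumed group size
WORD = 4      # assumed bytes per element

# Thread i reads element i: all addresses fall into one segment.
consecutive = [i * WORD for i in range(THREADS)]
# Thread i reads an element 4096 bytes away from its neighbor's.
scattered = [i * 4096 for i in range(THREADS)]

print(memory_transactions(consecutive))        # coalesced case
print(memory_transactions(scattered))          # one access per thread
print(divergent_passes([True] * THREADS))      # every thread takes branch B
print(divergent_passes([True, False] * 16))    # B and C run one after the other
```

Under these assumptions the consecutive pattern needs a single access while the scattered pattern needs one per thread, which matches the "t random accesses" statement on the slide.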

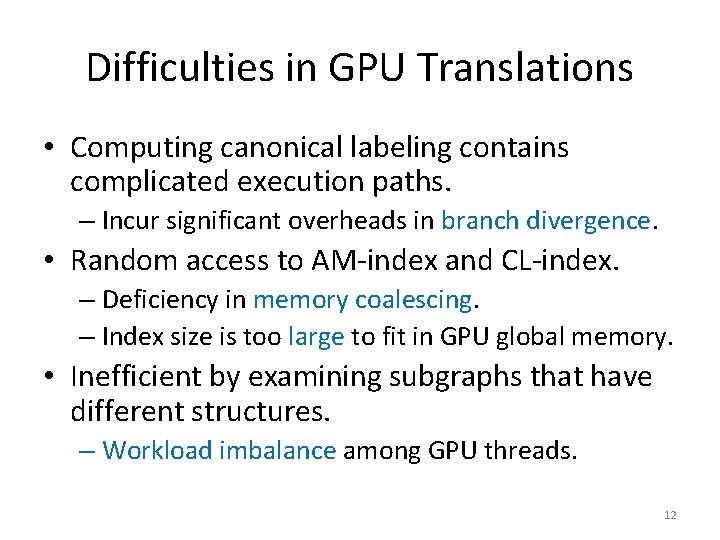

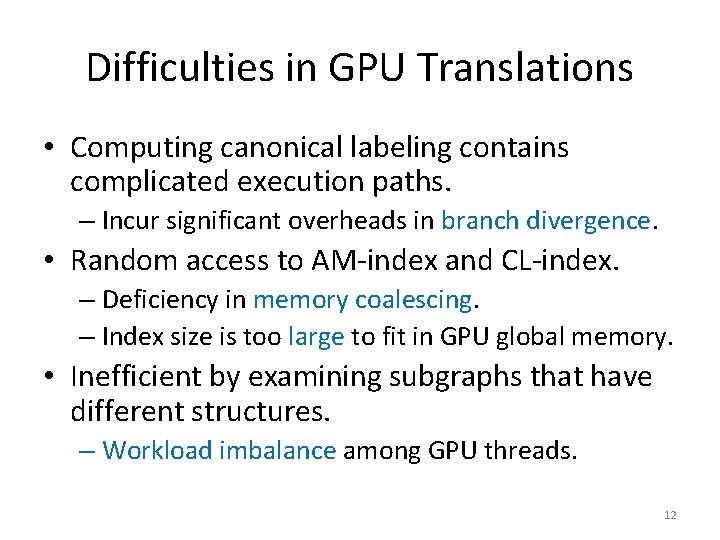

Difficulties in GPU Translations
• Computing canonical labeling involves complicated execution paths.
– Incurs significant overhead from branch divergence.
• Random access to the AM-index and CL-index.
– Poor memory coalescing.
– Index size is too large to fit in GPU global memory.
• Examining subgraphs that have different structures is inefficient.
– Workload imbalance among GPU threads.

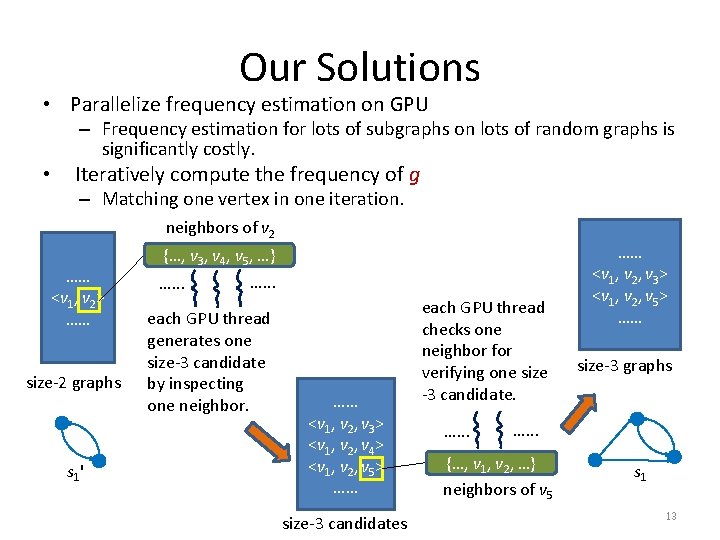

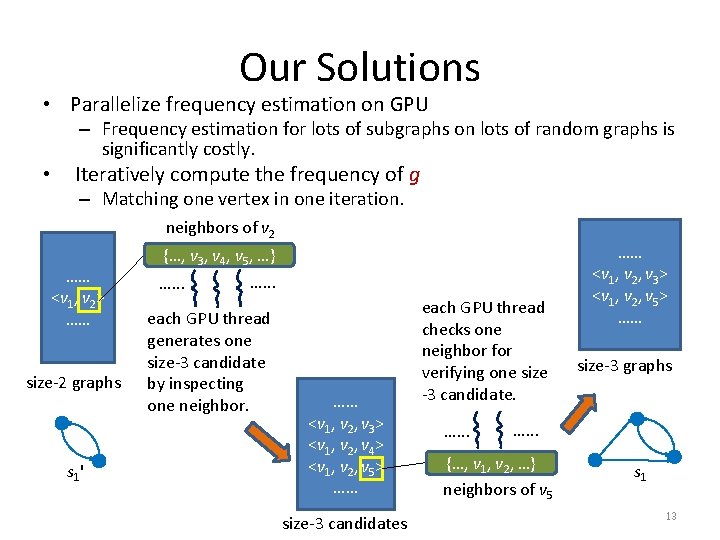

Our Solutions
• Parallelize frequency estimation on GPU
– Frequency estimation for many subgraphs on many random graphs is very costly.
• Iteratively compute the frequency of g
– Matching one vertex in one iteration.
Example (extending size-2 graphs to size-3 graphs):
– size-2 graphs (pattern s1'): ……, <v1, v2>, ……
– neighbors of v2: {…, v3, v4, v5, …}; each GPU thread generates one size-3 candidate by inspecting one neighbor.
– size-3 candidates: ……, <v1, v2, v3>, <v1, v2, v4>, <v1, v2, v5>, ……
– neighbors of v5: {…, v1, v2, …}; each GPU thread checks one neighbor for verifying one size-3 candidate.
– size-3 graphs (pattern s1): ……, <v1, v2, v3>, <v1, v2, v5>, ……
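
The generate-then-verify iteration on this slide can be sketched sequentially in Python. This is a minimal sketch under several assumptions that are not in the slides: the pattern is a set of directed edges over vertices 0..k-1 listed in matching order, every pattern vertex after the first is adjacent to an earlier one, matches are induced, and the result counts ordered embeddings (so symmetric patterns are counted once per symmetry). All function names are hypothetical, and each list element stands in for the work one GPU thread would do.

```python
def build_graph(edges):
    """Store out- and in-neighbor sets for every vertex of a directed graph."""
    out, inn = {}, {}
    for a, b in edges:
        for v in (a, b):
            out.setdefault(v, set())
            inn.setdefault(v, set())
        out[a].add(b)
        inn[b].add(a)
    return {"out": out, "in": inn}

def extend_matches(partials, pattern, graph, i):
    """Grow every size-i partial match by one vertex (pattern vertex i)."""
    # An earlier pattern vertex adjacent to vertex i anchors the neighbor scan.
    anchor = next(j for j in range(i) if (j, i) in pattern or (i, j) in pattern)
    # Generation: one work item per (partial match, neighbor of the anchor).
    candidates = [
        m + (u,)
        for m in partials
        for u in sorted(graph["out"][m[anchor]] | graph["in"][m[anchor]])
        if u not in m
    ]
    # Verification: edges to every earlier vertex must agree with the pattern.
    return [
        c for c in candidates
        if all(
            ((j, i) in pattern) == (c[i] in graph["out"][c[j]])
            and ((i, j) in pattern) == (c[j] in graph["out"][c[i]])
            for j in range(i)
        )
    ]

def count_embeddings(pattern, k, graph):
    """Match one pattern vertex per iteration, starting from single vertices."""
    partials = [(v,) for v in sorted(graph["out"])]
    for i in range(1, k):
        partials = extend_matches(partials, pattern, graph, i)
    return len(partials)

# Pattern: vertex 0 points to vertices 1 and 2.
pattern = {(0, 1), (0, 2)}
graph = build_graph([(1, 4), (1, 5), (2, 1), (2, 3)])
embeddings = count_embeddings(pattern, 3, graph)
print(embeddings)
```

In this toy graph the pattern occurs around vertex 1 (with 4 and 5) and around vertex 2 (with 1 and 3); each occurrence is found in two orderings, so dividing by the pattern's two symmetries would give two distinct occurrences.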

Roadmap
• Preliminaries
• Solution Overview
• Optimizations
• Experimental Evaluation
• Conclusion

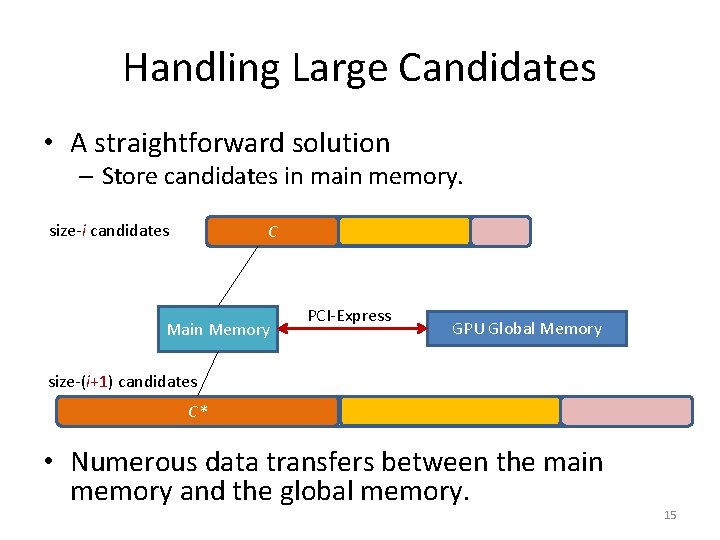

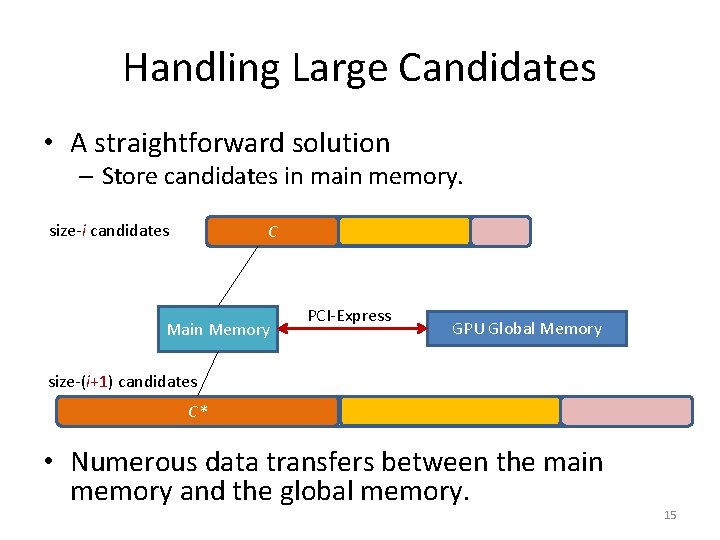

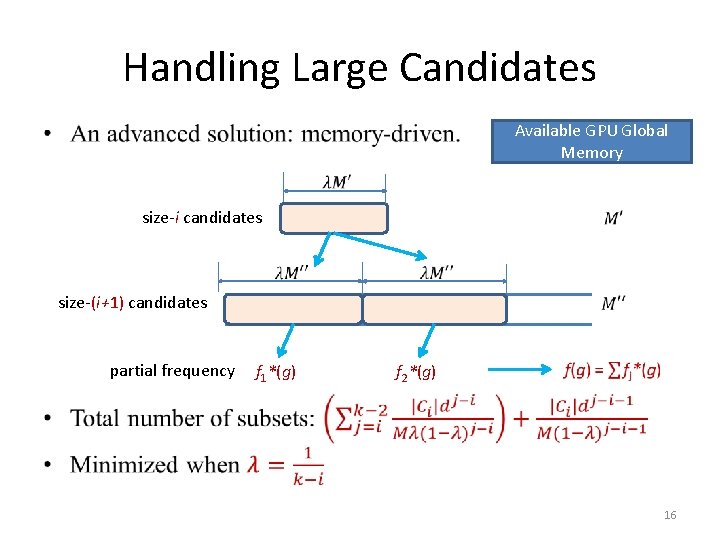

Handling Large Candidates
• A straightforward solution
– Store candidates in main memory.
• Numerous data transfers between the main memory and the global memory.
Diagram: size-i candidates C in main memory are moved over PCI-Express to the GPU global memory, which produces size-(i+1) candidates C*.

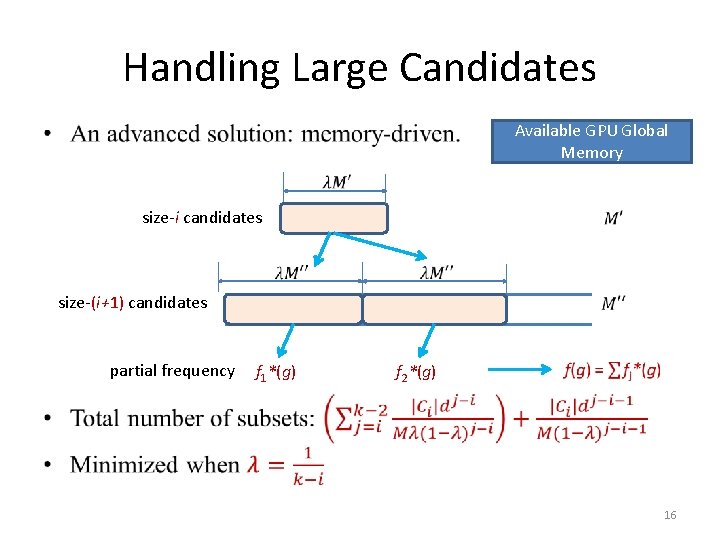

Handling Large Candidates
Diagram: the available GPU global memory holds size-i candidates and size-(i+1) candidates; the results are partial frequencies f1*(g), f2*(g).
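
The slide's text did not survive extraction, so the following Python sketch is only one plausible reading of the diagram: split the candidate set whenever its next level would not fit in the available memory, finish each part separately, and add up the partial frequencies. The function `partial_frequency`, the `fanout` estimate, and the `budget` parameter are all hypothetical names introduced for illustration, and the toy expansion rule stands in for real candidate generation.

```python
def partial_frequency(cands, expand, fanout, depth, budget):
    """Count complete matches reachable from `cands` in `depth` more steps.

    `fanout(c)` is an assumed upper bound on how many candidates `c` expands
    into; when the next level would exceed `budget`, the set is halved and
    the two partial frequencies are summed.
    """
    if depth == 0:
        return len(cands)
    if len(cands) > 1 and sum(fanout(c) for c in cands) > budget:
        mid = len(cands) // 2
        return (partial_frequency(cands[:mid], expand, fanout, depth, budget)
                + partial_frequency(cands[mid:], expand, fanout, depth, budget))
    next_level = [e for c in cands for e in expand(c)]
    return partial_frequency(next_level, expand, fanout, depth - 1, budget)

# Toy expansion rule: append any larger integer below N.
N = 5

def expand(c):
    return [c + (j,) for j in range(c[-1] + 1, N)]

def fanout(c):
    return N - 1 - c[-1]

start = [(i,) for i in range(N)]
tight = partial_frequency(start, expand, fanout, 2, budget=3)
loose = partial_frequency(start, expand, fanout, 2, budget=1000)
print(tight, loose)
```

The total is the same for a tight and a loose budget; only the number of splits changes. A single candidate whose own expansion exceeds the budget is still expanded in this sketch, so the budget is a target rather than a hard limit.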

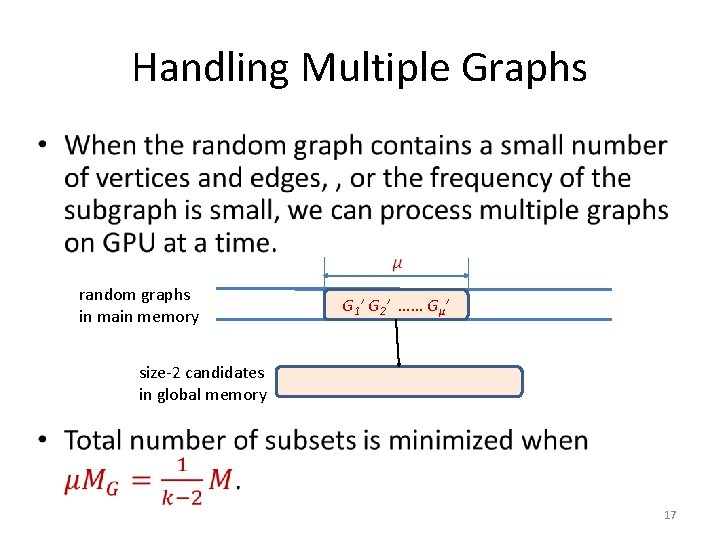

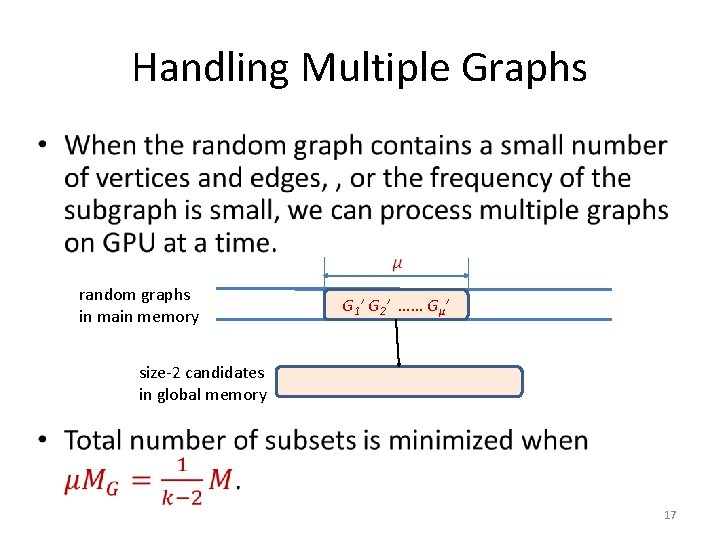

Handling Multiple Graphs
Diagram: random graphs G1’, G2’, ……, Gμ’ in main memory; size-2 candidates in global memory.

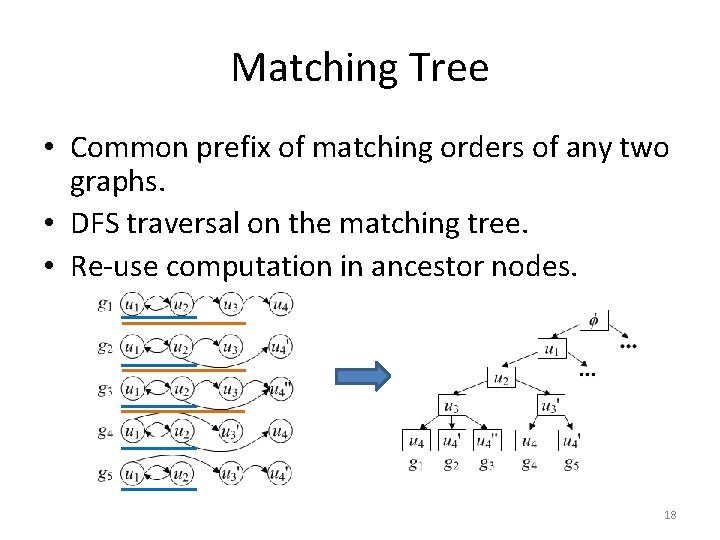

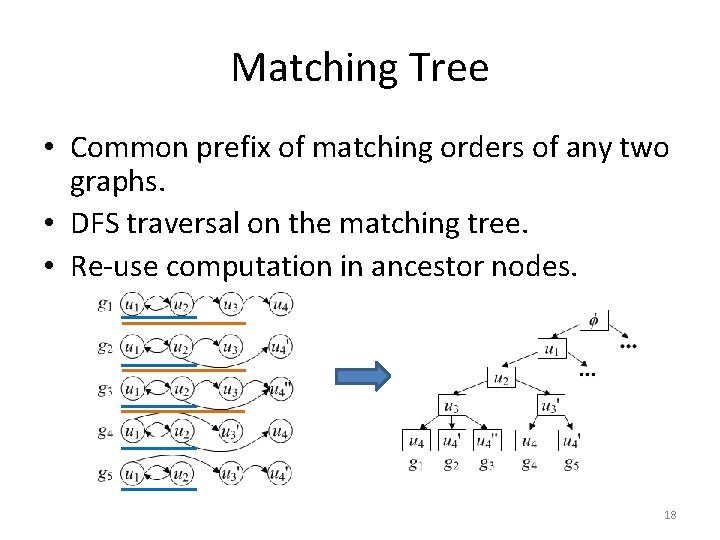

Matching Tree
• Common prefix of matching orders of any two graphs.
• DFS traversal on the matching tree.
• Re-use computation in ancestor nodes.
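
The three bullets above describe a prefix tree over matching orders. A minimal Python sketch follows, assuming each matching order is a sequence of opaque step labels; the labels `a` to `e`, the nested-dictionary tree, and the functions `build_matching_tree`, `apply_step`, and `dfs` are hypothetical and stand in for whatever a real matching step computes.

```python
def build_matching_tree(orders):
    """Merge matching orders into a prefix tree stored as nested dicts."""
    root = {}
    for order in orders:
        node = root
        for step in order:
            node = node.setdefault(step, {})
    return root

calls = []  # records every step that is actually computed

def apply_step(state, step):
    """Placeholder for one matching step; here it only extends the prefix."""
    calls.append(step)
    return state + (step,)

def dfs(node, state, seen):
    """Depth-first traversal: each child starts from its ancestor's result."""
    for step, child in node.items():
        new_state = apply_step(state, step)  # computed once per tree node
        seen.append(new_state)
        dfs(child, new_state, seen)

# Three subgraphs whose matching orders share prefixes.
orders = [("a", "b", "c"), ("a", "b", "d"), ("a", "e")]
tree = build_matching_tree(orders)

seen = []
dfs(tree, (), seen)
print(len(calls), sum(len(o) for o in orders))
```

Processing the three orders independently would take eight steps, while the traversal of the tree takes five, because the shared prefixes `a` and `a, b` are computed once and reused by their descendants.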

Roadmap
• Preliminaries
• Solution Overview
• Optimizations
• Experimental Evaluation
• Conclusion

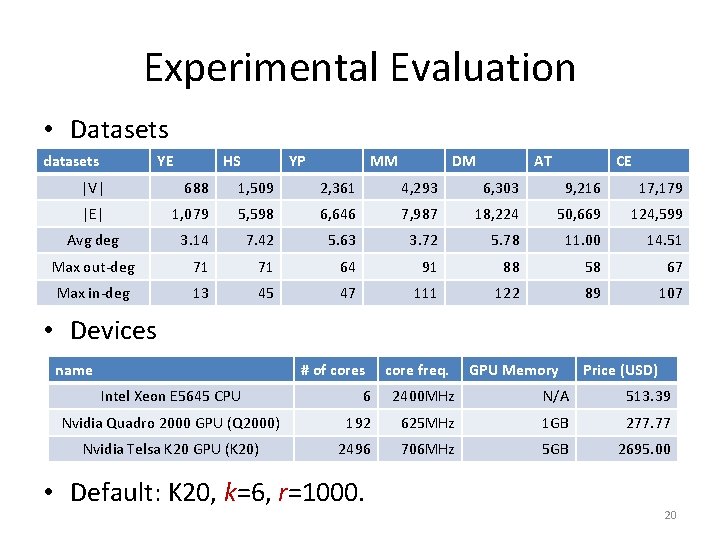

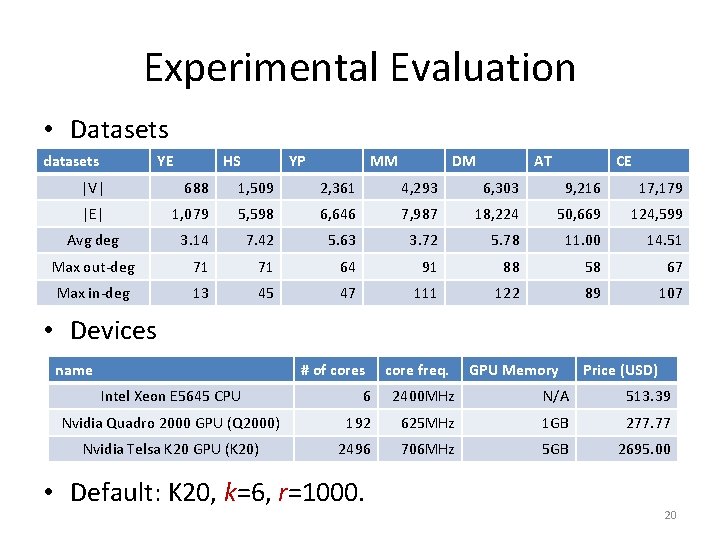

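Experimental Evaluation
• Datasets

| dataset | \|V\| | \|E\| | Avg deg | Max out-deg | Max in-deg |
|---------|--------|---------|---------|-------------|------------|
| YE      | 688    | 1,079   | 3.14    | 71          | 13         |
| HS      | 1,509  | 5,598   | 7.42    | 71          | 45         |
| YP      | 2,361  | 6,646   | 5.63    | 64          | 47         |
| MM      | 4,293  | 7,987   | 3.72    | 91          | 111        |
| DM      | 6,303  | 18,224  | 5.78    | 88          | 122        |
| AT      | 9,216  | 50,669  | 11.00   | 58          | 89         |
| CE      | 17,179 | 124,599 | 14.51   | 67          | 107        |

• Devices

| name                           | # of cores | core freq. | GPU Memory | Price (USD) |
|--------------------------------|------------|------------|------------|-------------|
| Intel Xeon E5645 CPU           | 6          | 2400 MHz   | N/A        | 513.39      |
| Nvidia Quadro 2000 GPU (Q2000) | 192        | 625 MHz    | 1 GB       | 277.77      |
| Nvidia Tesla K20 GPU (K20)     | 2496       | 706 MHz    | 5 GB       | 2695.00     |

• Default: K20, k=6, r=1000.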

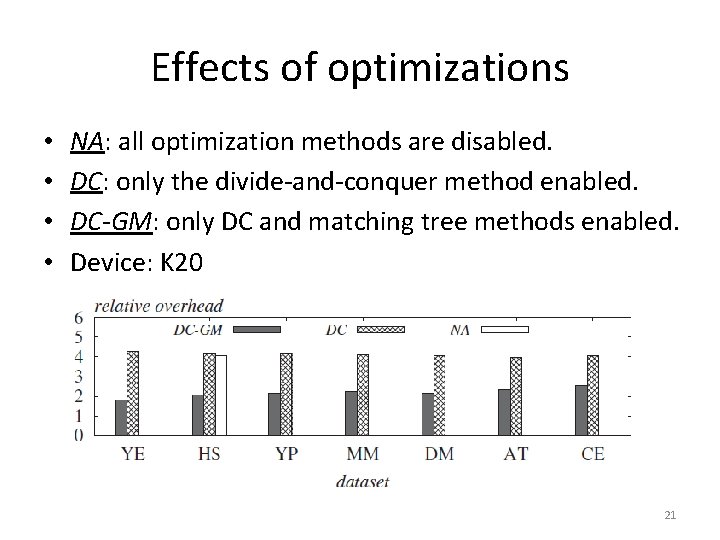

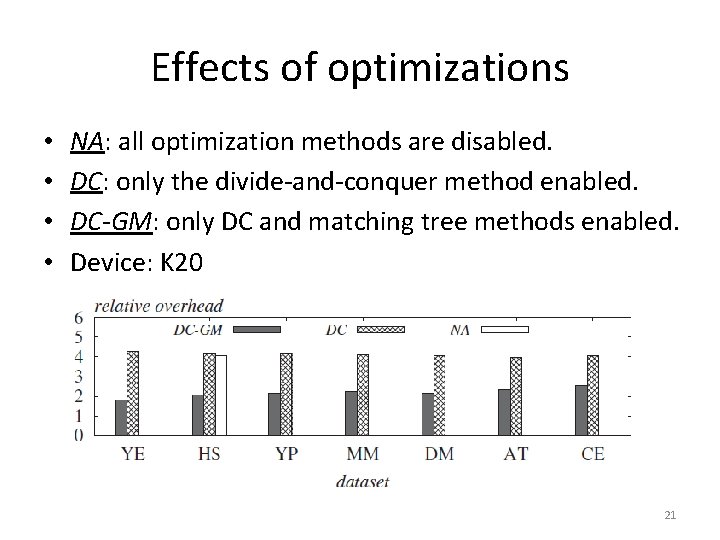

Effects of optimizations
• NA: all optimization methods are disabled.
• DC: only the divide-and-conquer method enabled.
• DC-GM: only DC and matching tree methods enabled.
• Device: K20

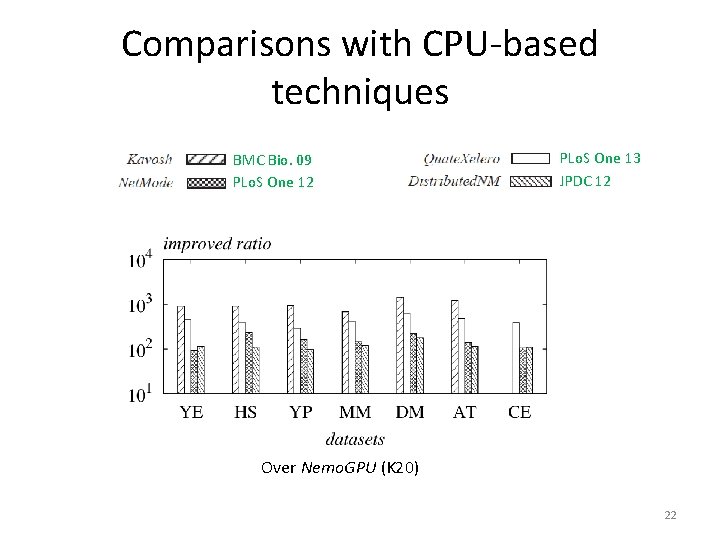

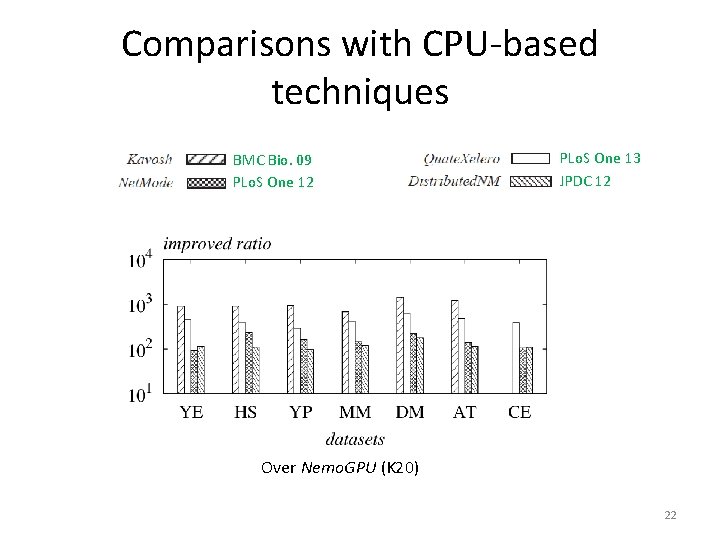

Comparisons with CPU-based techniques
Figure labels: BMC Bio. 09, PLoS One 12, PLoS One 13, JPDC 12, Over NemoGPU (K20)

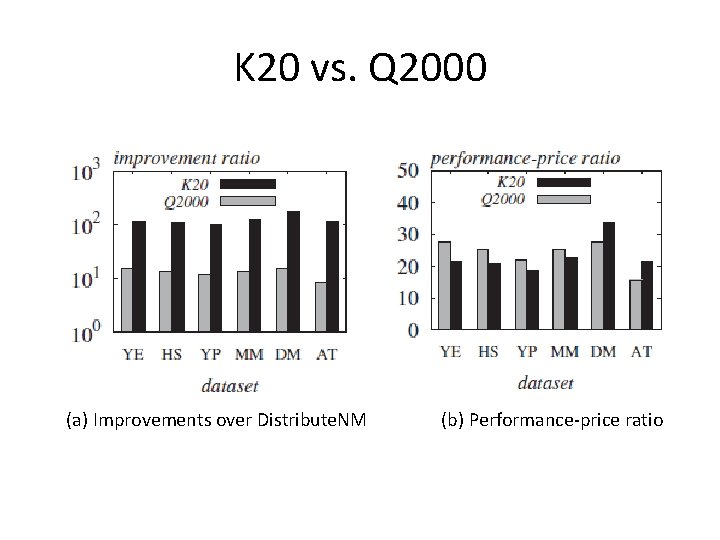

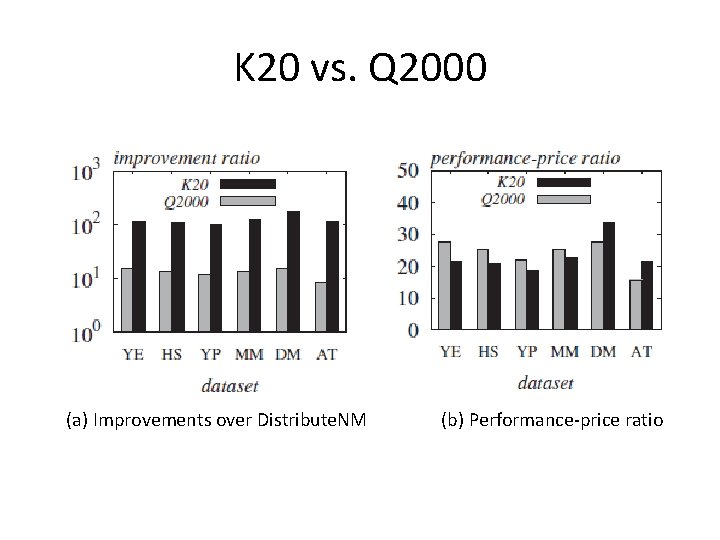

K20 vs. Q2000
(a) Improvements over DistributeNM
(b) Performance-price ratio

Conclusion
• The first GPU-based solution to the problem of network motif discovery.
• Mitigate GPUs’ limitations in terms of the computation power per GPU core.
• A number of efficient and effective optimization techniques.
• Several orders of magnitude more cost-effective than CPU-based approaches.

Thank you!