cujpeg: A Simple JPEG Encoder With CUDA Technology

Dongyue Mou and Zeng Xing

Outline:
- JPEG Algorithm
- Traditional Encoder
- What's new in cujpeg
- Benchmark
- Conclusion

JPEG Algorithm: JPEG is a commonly used method for image compression. The JPEG encoding algorithm consists of 7 steps:

1. Divide the image into 8 x 8 blocks
2. [R, G, B] to [Y, Cb, Cr] conversion
3. Downsampling (optional)
4. FDCT (Forward Discrete Cosine Transform)
5. Quantization
6. Serialization in zig-zag style
7. Entropy encoding (Run Length Coding & Huffman coding)

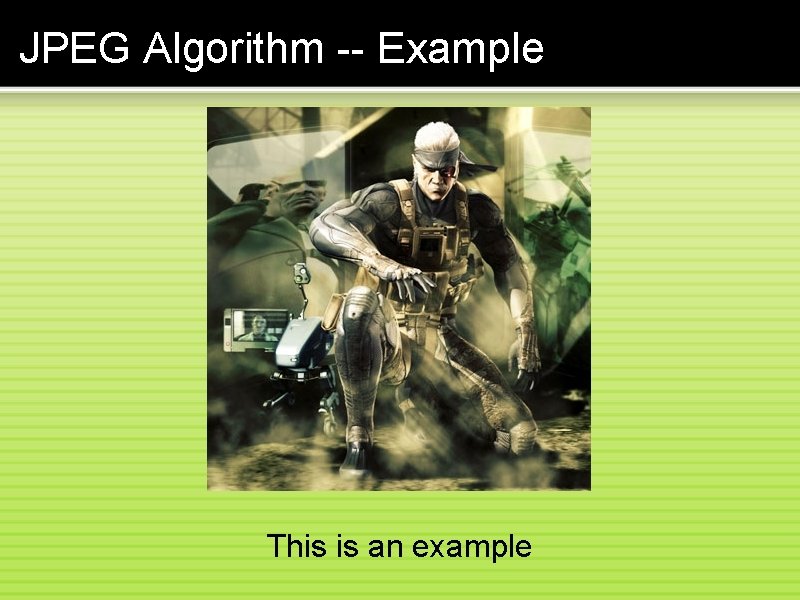

JPEG Algorithm: Example. This is an example.

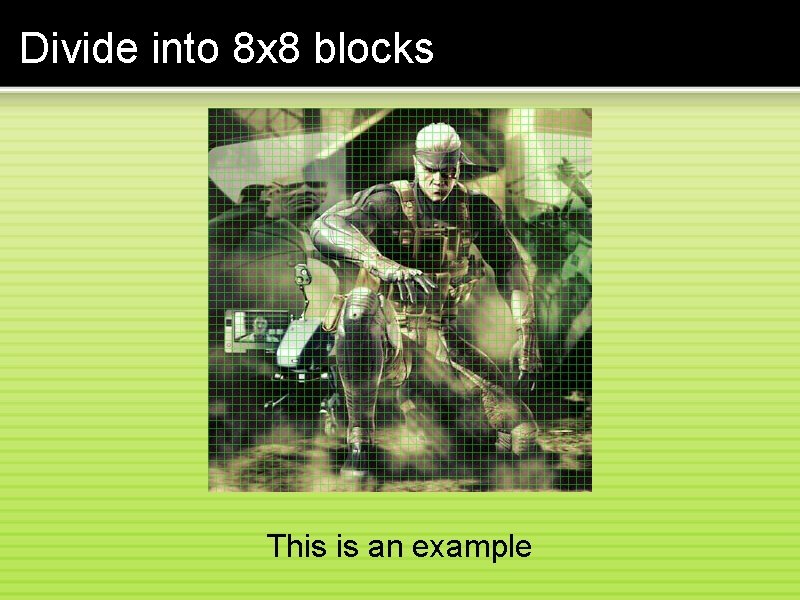

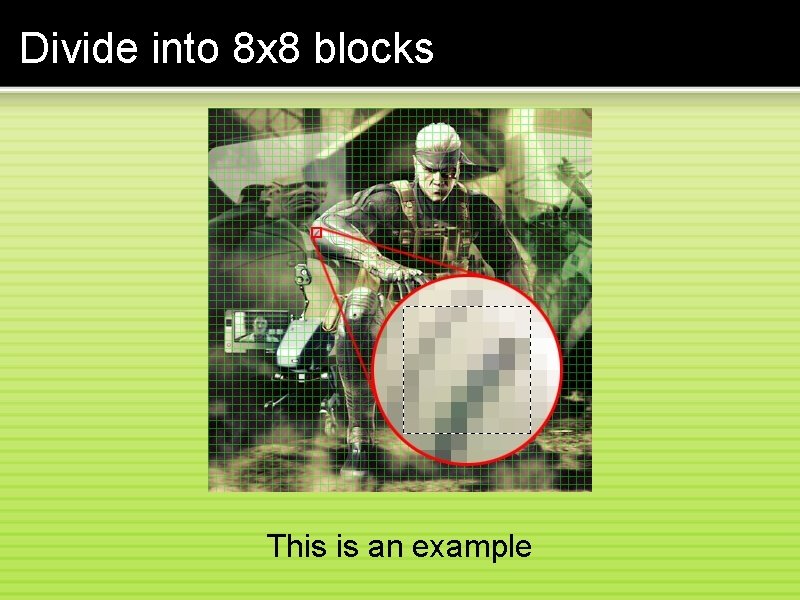

Divide into 8 x 8 blocks: This is an example.
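
The block division step can be sketched in plain host-side C++ (which also compiles as CUDA host code). This is a minimal sketch, assuming one 8-bit plane stored row-major with `width` x `height` samples; the helper names `blocksFor` and `extractBlock` are hypothetical, and so is the edge rule: partial blocks at the right and bottom edges are filled by repeating the last column/row, which is one common choice rather than something the slides specify.

```cpp
#include <algorithm>
#include <cstdint>
#include <vector>

// Number of 8 x 8 blocks needed to cover n samples (rounded up).
inline int blocksFor(int n) { return (n + 7) / 8; }

// Copies block (bx, by) of a row-major 8-bit plane into out[64] (row-major).
// Samples past the right or bottom edge repeat the last column/row
// (hypothetical padding rule chosen for this sketch).
void extractBlock(const std::vector<std::uint8_t>& plane, int width, int height,
                  int bx, int by, std::uint8_t out[64]) {
    for (int y = 0; y < 8; ++y) {
        int sy = std::min(by * 8 + y, height - 1);
        for (int x = 0; x < 8; ++x) {
            int sx = std::min(bx * 8 + x, width - 1);
            out[y * 8 + x] = plane[sy * width + sx];
        }
    }
}
```

A 10 x 9 image, for example, needs `blocksFor(10) x blocksFor(9)` = 2 x 2 blocks, three of which are partly padded.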

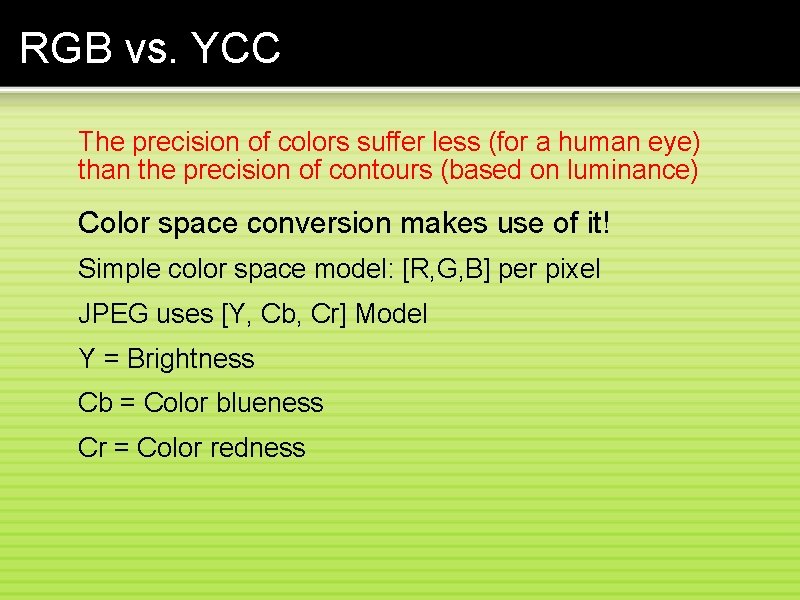

RGB vs. YCC: To the human eye, a loss of color precision is less noticeable than a loss of contour precision (contours are carried by the luminance), and color space conversion takes advantage of this. The simple color space model stores [R, G, B] per pixel; JPEG uses the [Y, Cb, Cr] model:
- Y = brightness
- Cb = color blueness
- Cr = color redness

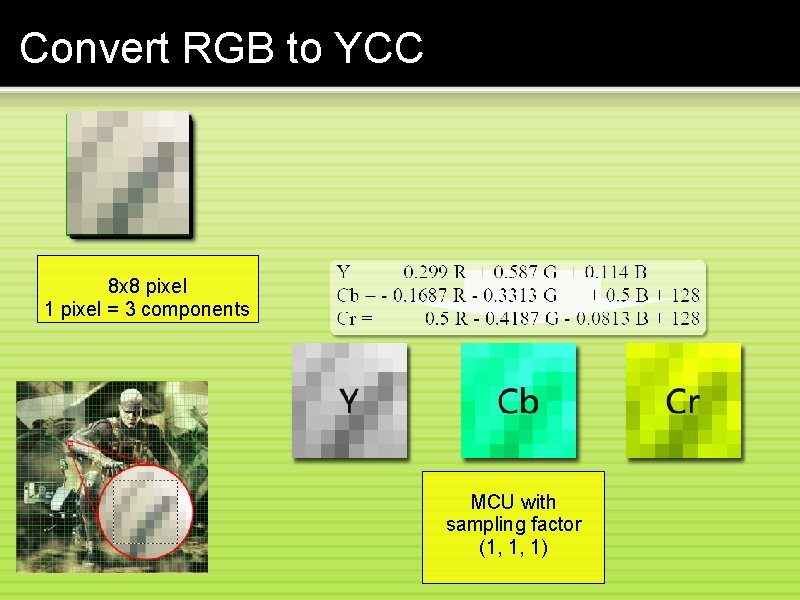

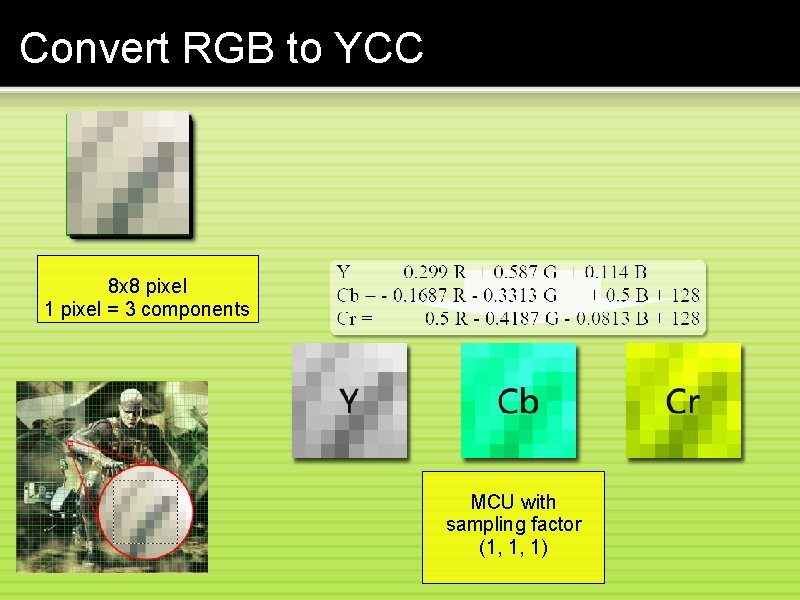

Convert RGB to YCC: 8 x 8 pixels, 1 pixel = 3 components. MCU with sampling factor (1, 1, 1).
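
The per-pixel conversion is a small linear transform. Below is a minimal host-side C++ sketch using the JFIF coefficients (the same set the cujpeg kernel uses for its color conversion). It is an assumption of this sketch that Y is level-shifted by -128 and Cb/Cr are left centered on 0, so that all three components land roughly in [-128, 127] and can go straight into the FDCT; the names `YCbCr` and `rgbToYcc` are hypothetical.

```cpp
// Level-shifted JFIF RGB -> YCbCr conversion for one pixel.
// Y is shifted by -128; Cb and Cr are already centered on 0.
struct YCbCr { float y, cb, cr; };

inline YCbCr rgbToYcc(int r, int g, int b) {
    YCbCr p;
    p.y  =  0.299f    * r + 0.587f    * g + 0.114f    * b - 128.0f;
    p.cb = -0.168736f * r - 0.331264f * g + 0.5f      * b;
    p.cr =  0.5f      * r - 0.418688f * g - 0.081312f * b;
    return p;
}
```

Each row of coefficients for Cb and Cr sums to zero, so any gray pixel (r = g = b) maps to Cb = Cr = 0 and only carries brightness.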

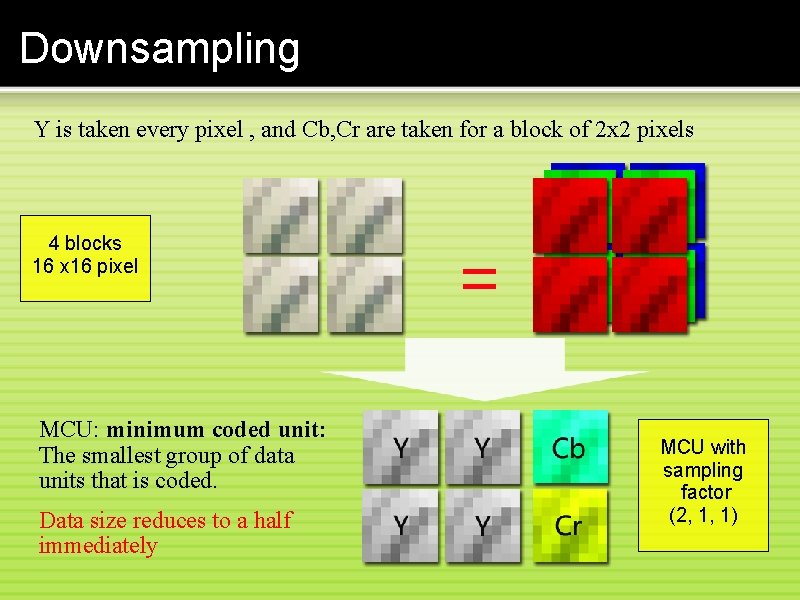

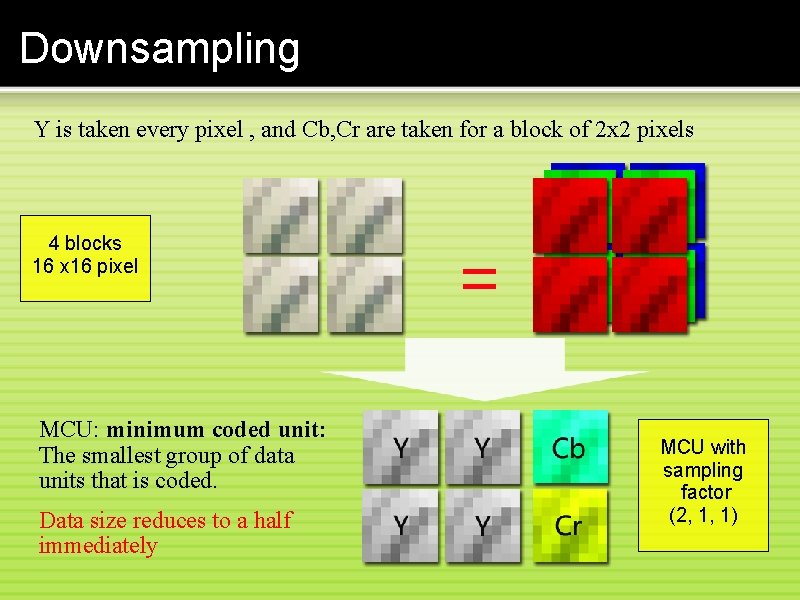

Downsampling: Y is taken for every pixel, while Cb and Cr are taken once per 2 x 2 block of pixels, so a 16 x 16 pixel MCU has 4 Y blocks. MCU (minimum coded unit): the smallest group of data units that is coded. The data size is immediately halved. MCU with sampling factor (2, 1, 1).
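
Taking one Cb/Cr value per 2 x 2 pixels can be done by averaging or by simply picking one sample; the slides do not say which. The host-side C++ sketch below assumes averaging and an even plane size; `downsample2x2` is a hypothetical name.

```cpp
#include <vector>

// 2 x 2 chroma downsampling by averaging (assumed method).
// plane is row-major, width x height, both assumed even.
std::vector<float> downsample2x2(const std::vector<float>& plane, int width, int height) {
    std::vector<float> out((width / 2) * (height / 2));
    for (int y = 0; y < height / 2; ++y)
        for (int x = 0; x < width / 2; ++x) {
            const float* row0 = &plane[(2 * y) * width + 2 * x];  // top-left of the 2 x 2 cell
            const float* row1 = row0 + width;                     // the row below it
            out[y * (width / 2) + x] = 0.25f * (row0[0] + row0[1] + row1[0] + row1[1]);
        }
    return out;
}
```

For one 16 x 16 MCU this leaves 4 Y blocks plus one Cb and one Cr block, i.e. 6 x 64 = 384 samples instead of 3 x 256 = 768, which is the halving of the data size mentioned above.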

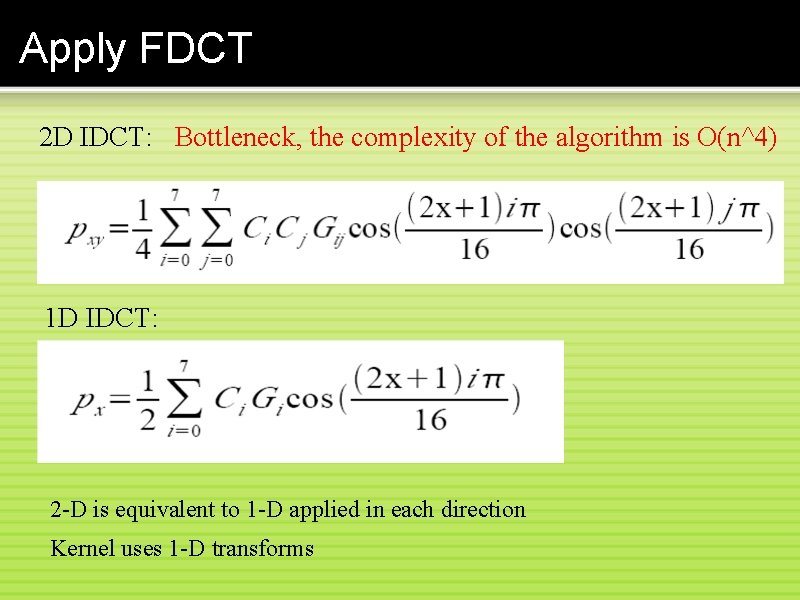

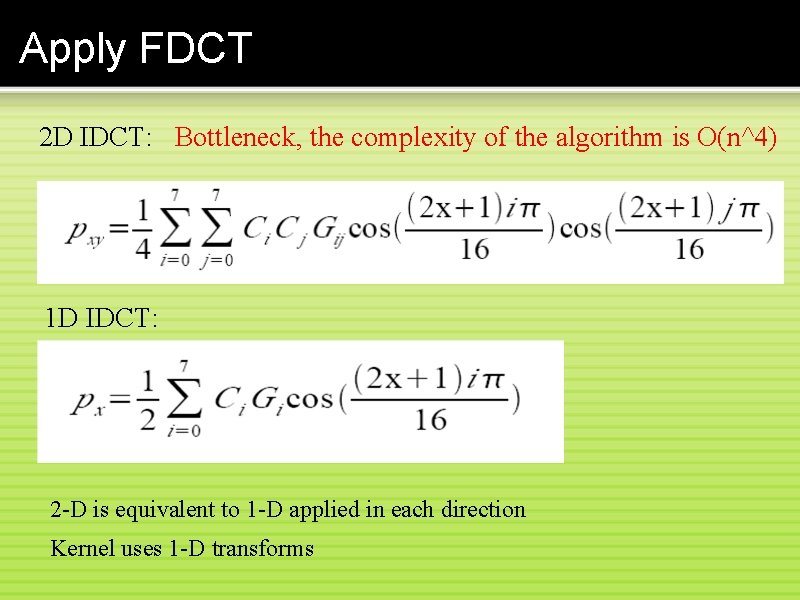

Apply FDCT:
- 2-D DCT: the bottleneck; the complexity of the direct algorithm is O(n^4).
- 1-D DCT: the 2-D DCT is equivalent to a 1-D DCT applied in each direction (rows, then columns).
- The kernel uses 1-D transforms.
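
The separability claim can be checked directly. This host-side C++ sketch computes the orthonormal 8 x 8 FDCT once with the direct 2-D formula (64 outputs x 64 terms) and once as 8 row transforms followed by 8 column transforms (16 transforms x 8 outputs x 8 terms). The function names are illustrative and not taken from cujpeg; it uses plain cosine sums rather than the faster butterfly the kernel uses.

```cpp
#include <cmath>

// Orthonormal 8-point DCT-II scale: sqrt(1/8) for k = 0, sqrt(2/8) = 0.5 otherwise.
inline double dctScale(int k) { return k == 0 ? std::sqrt(0.125) : 0.5; }

// Direct 2-D FDCT: every output sums over all 64 inputs.
void dct2dDirect(const double in[64], double out[64]) {
    const double pi = std::acos(-1.0);
    for (int v = 0; v < 8; ++v)
        for (int u = 0; u < 8; ++u) {
            double s = 0.0;
            for (int y = 0; y < 8; ++y)
                for (int x = 0; x < 8; ++x)
                    s += in[y * 8 + x] * std::cos((2 * x + 1) * u * pi / 16)
                                       * std::cos((2 * y + 1) * v * pi / 16);
            out[v * 8 + u] = dctScale(u) * dctScale(v) * s;
        }
}

// 1-D DCT of 8 values spaced `step` apart (1 = a row, 8 = a column), in place.
void dct1d(double* data, int step) {
    const double pi = std::acos(-1.0);
    double tmp[8];
    for (int k = 0; k < 8; ++k) {
        double s = 0.0;
        for (int n = 0; n < 8; ++n)
            s += data[n * step] * std::cos((2 * n + 1) * k * pi / 16);
        tmp[k] = dctScale(k) * s;
    }
    for (int k = 0; k < 8; ++k) data[k * step] = tmp[k];
}

// Separable 2-D FDCT: transform all rows, then all columns.
void dct2dSeparable(double block[64]) {
    for (int row = 0; row < 8; ++row) dct1d(block + row * 8, 1);
    for (int col = 0; col < 8; ++col) dct1d(block + col, 8);
}
```

Both versions give the same coefficients; for a constant block of value c, only the DC coefficient is nonzero and equals 8c.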

![Apply FDCT: shift from [0, 255] to [-128, 127], DCT result, meaning of each position in the DCT result matrix](https://slidetodoc.com/presentation_image_h/eca8c81c91e8002c785461a44fbb6dac/image-12.jpg)

Apply FDCT: Shift operation from [0, 255] to [-128, 127]. DCT result. Meaning of each position in the DCT result matrix.

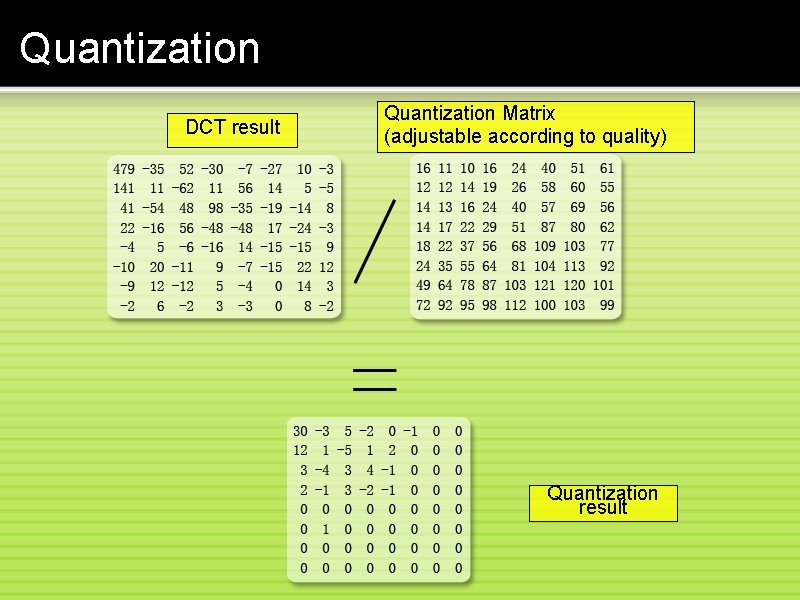

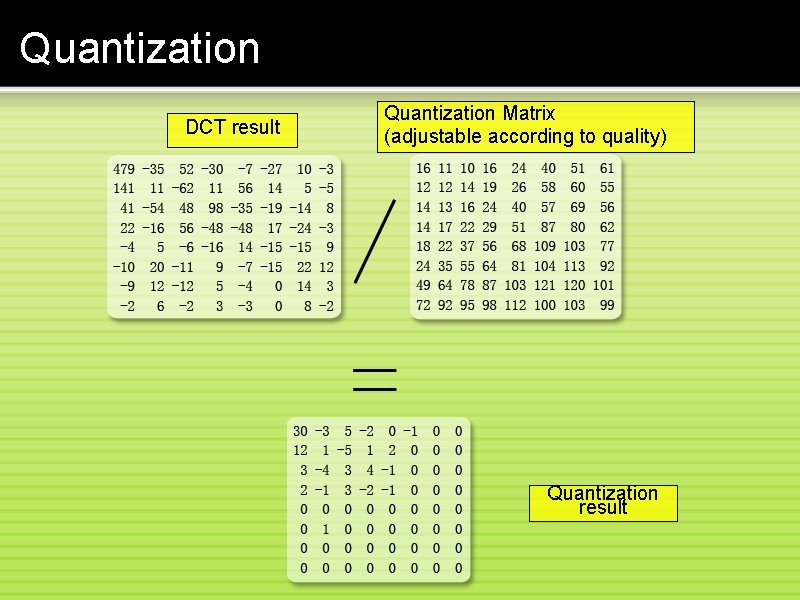

Quantization: DCT result, quantization matrix (adjustable according to quality), quantization result.
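
One common way to make the quantization matrix "adjustable according to quality" is the libjpeg convention: scale the standard Annex K luminance table by a quality-dependent factor, then divide each DCT coefficient by its table entry and round. The host-side C++ sketch below follows that convention; whether cujpeg scales its tables the same way is an assumption, and `scaleTable`/`quantize` are hypothetical names. The cujpeg kernel multiplies by precomputed reciprocals (`myDivQLine`) instead of dividing, which gives the same result up to float rounding.

```cpp
#include <algorithm>
#include <cmath>

// Luminance quantization table from Annex K of the JPEG standard (row-major).
const int kBaseLuma[64] = {
    16, 11, 10, 16,  24,  40,  51,  61,
    12, 12, 14, 19,  26,  58,  60,  55,
    14, 13, 16, 24,  40,  57,  69,  56,
    14, 17, 22, 29,  51,  87,  80,  62,
    18, 22, 37, 56,  68, 109, 103,  77,
    24, 35, 55, 64,  81, 104, 113,  92,
    49, 64, 78, 87, 103, 121, 120, 101,
    72, 92, 95, 98, 112, 100, 103,  99};

// Scale the base table for quality 1..100 (libjpeg-style scaling, clamped to 1..255).
void scaleTable(int quality, int table[64]) {
    quality = std::max(1, std::min(quality, 100));
    int scale = quality < 50 ? 5000 / quality : 200 - 2 * quality;
    for (int i = 0; i < 64; ++i)
        table[i] = std::max(1, std::min((kBaseLuma[i] * scale + 50) / 100, 255));
}

// Divide each coefficient by its table entry and round to the nearest integer
// (std::lround also rounds negative coefficients correctly).
void quantize(const float dct[64], const int table[64], int out[64]) {
    for (int i = 0; i < 64; ++i)
        out[i] = static_cast<int>(std::lround(dct[i] / table[i]));
}
```

At quality 50 the base table is used unchanged; at quality 80 its first entry drops from 16 to 6, so fewer coefficients are quantized to zero and the output grows.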

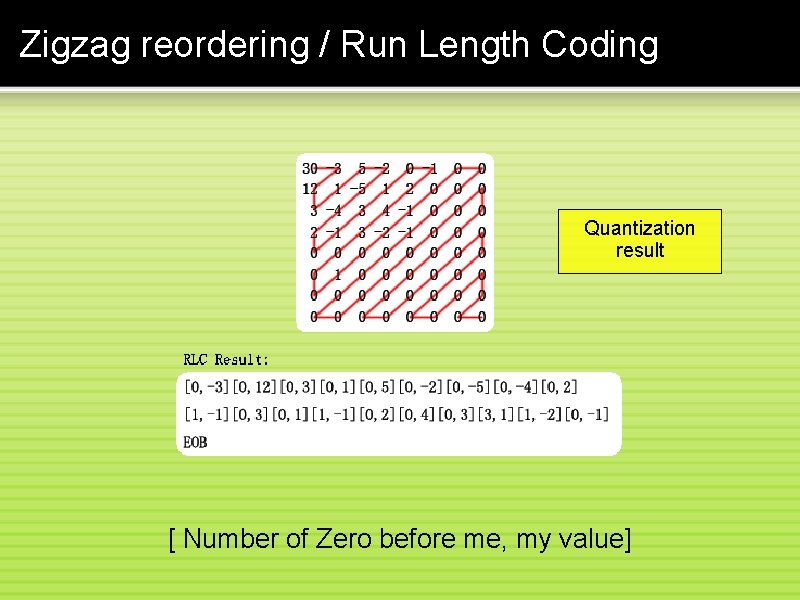

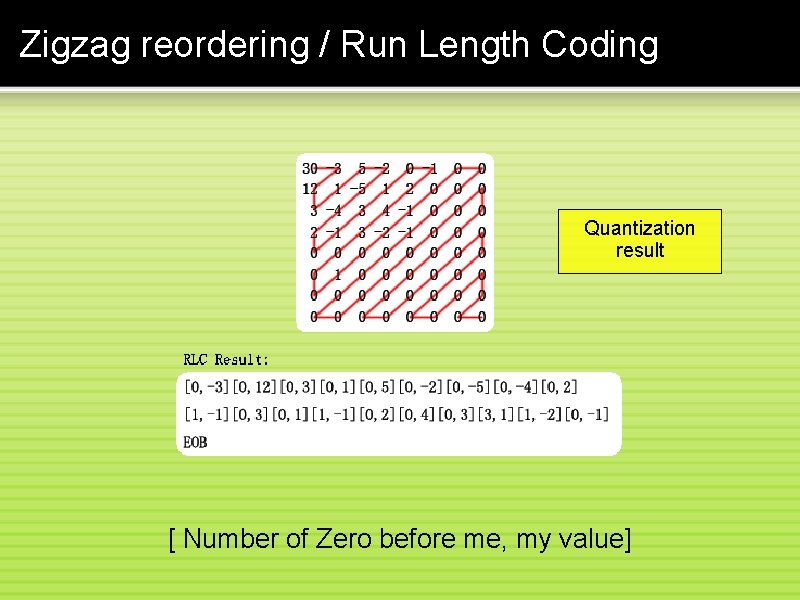

Zigzag reordering / Run Length Coding: the quantization result is read in zig-zag order and coded as pairs [number of zeros before me, my value].
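
Zig-zag order walks the anti-diagonals of the 8 x 8 block, alternating direction, so that low frequencies come first and the zeros produced by quantization cluster at the end. The host-side C++ sketch below generates that order and run-length codes the 63 AC coefficients into [zeros before me, my value] pairs. It follows the baseline JPEG conventions for the special symbols: a run of 16 zeros is emitted as (15, 0) (ZRL), a block that ends in zeros gets (0, 0) (EOB), and the DC coefficient is skipped because baseline JPEG codes it separately as a difference from the previous block's DC. The function names are hypothetical.

```cpp
#include <utility>
#include <vector>

// zigzagOrder()[i] = row-major index of the i-th coefficient in zig-zag order.
std::vector<int> zigzagOrder() {
    std::vector<int> order;
    for (int s = 0; s < 15; ++s) {                 // s = row + col (anti-diagonal)
        int lo = s < 8 ? 0 : s - 7, hi = s < 8 ? s : 7;
        if (s % 2 == 1)                            // odd: top-right -> bottom-left
            for (int r = lo; r <= hi; ++r) order.push_back(r * 8 + (s - r));
        else                                       // even: bottom-left -> top-right
            for (int r = hi; r >= lo; --r) order.push_back(r * 8 + (s - r));
    }
    return order;
}

// Run-length code the AC coefficients (zig-zag positions 1..63) of a
// quantized block given in row-major order.
std::vector<std::pair<int, int>> runLengthAC(const int block[64]) {
    static const std::vector<int> zz = zigzagOrder();
    std::vector<std::pair<int, int>> out;
    int run = 0;
    for (int i = 1; i < 64; ++i) {
        int v = block[zz[i]];
        if (v == 0) { ++run; continue; }
        while (run > 15) { out.push_back({15, 0}); run -= 16; }  // ZRL
        out.push_back({run, v});
        run = 0;
    }
    if (run > 0) out.push_back({0, 0});                          // EOB
    return out;
}
```

A block whose only nonzero AC values are -3, 12 and 3 in the first three zig-zag positions codes as [0, -3] [0, 12] [0, 3] EOB, the RLC result used in the Huffman example.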

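Huffman encoding: each value is replaced by its group number G and the G "real saved" bits that identify it within the group:

| Values | G | Real saved values |
|---|---|---|
| 0 | 0 | (none) |
| -1, 1 | 1 | 0, 1 |
| -3, -2, 2, 3 | 2 | 00, 01, 10, 11 |
| -7, -6, -5, -4, 4, 5, 6, 7 | 3 | 000, 001, 010, 011, 100, 101, 110, 111 |
| ... | ... | ... |
| -32767 ... -16384, 16384 ... 32767 | 15 | ... |

RLC result: [0, -3] [0, 12] [0, 3] ... EOB

After the group number is added: [0, 2, 00b] [0, 4, 1100b] [0, 2, 11b] ... EOB

First Huffman coding (i.e. for [0, 2, 00b]): the pair [0, 2] is replaced by its Huffman code, looked up e.g. in the AC chrominance table: [0, 2, 00b] => [100b, 00b].

Total input: 512 bits (64 coefficients x 8 bits); output: 113 bits.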
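
The group number and the saved bits in the table above can be computed without a lookup: G is the bit length of |v|, and the saved bits are v itself for positive values and the low G bits of v - 1 for negative values. A minimal host-side C++ sketch (the names `category` and `extraBits` are hypothetical; the Huffman table lookup itself is not shown):

```cpp
#include <cstdint>

// Group number G: number of bits needed to represent |v| (0 for v == 0).
int category(int v) {
    int mag = v < 0 ? -v : v;
    int g = 0;
    while (mag > 0) { ++g; mag >>= 1; }
    return g;
}

// The G "real saved" bits: v for v > 0, the low G bits of v - 1 for v < 0.
std::uint32_t extraBits(int v) {
    int g = category(v);
    if (g == 0) return 0;
    std::uint32_t bits = static_cast<std::uint32_t>(v < 0 ? v - 1 : v);
    return bits & ((1u << g) - 1u);
}
```

For the RLC example, -3 gives G = 2 with bits 00b, 12 gives G = 4 with bits 1100b, and 3 gives G = 2 with bits 11b.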

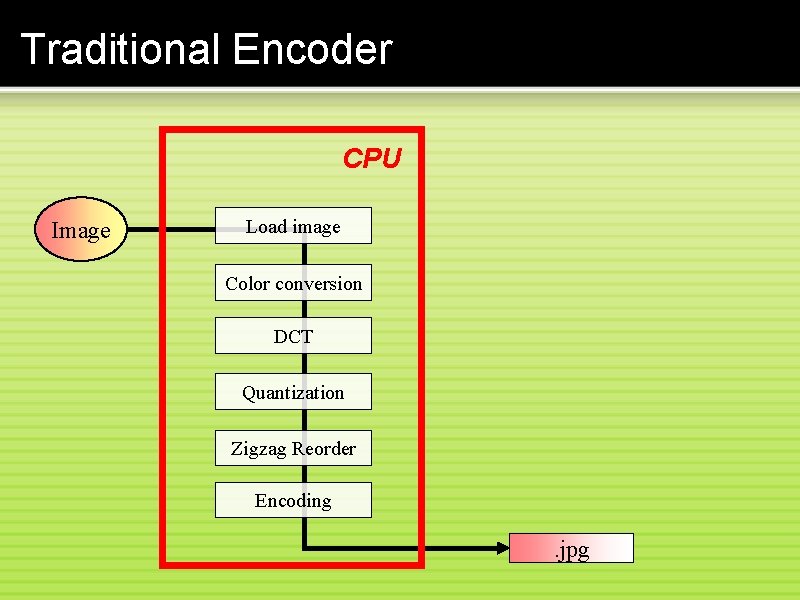

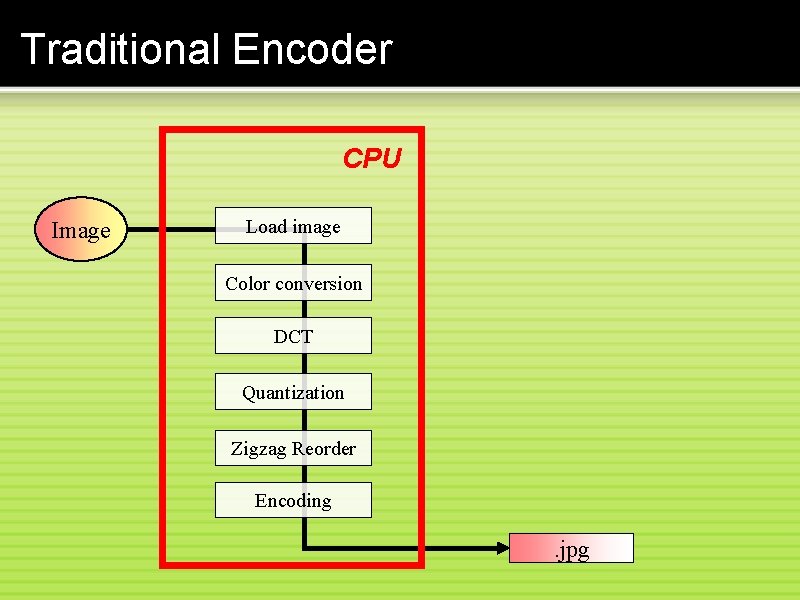

Traditional Encoder (all stages on the CPU): Image → Load image → Color conversion → DCT → Quantization → Zigzag reorder → Encoding → .jpg

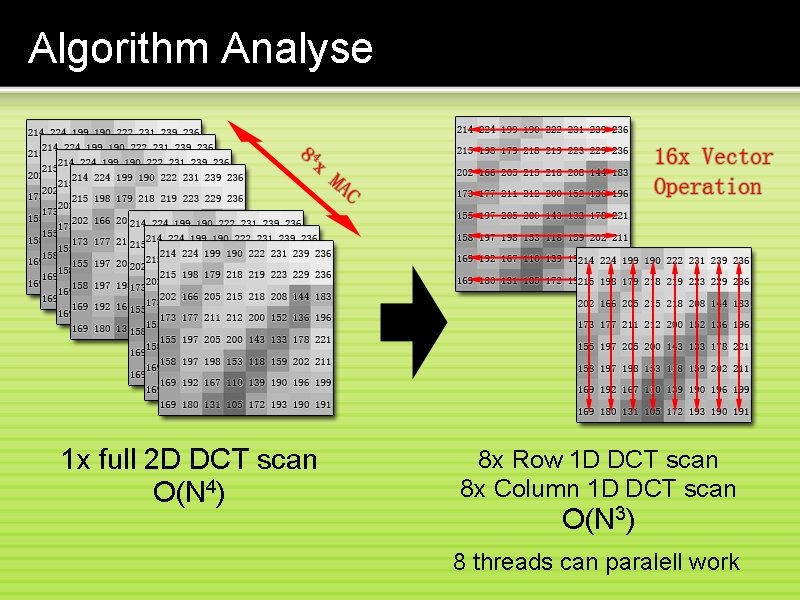

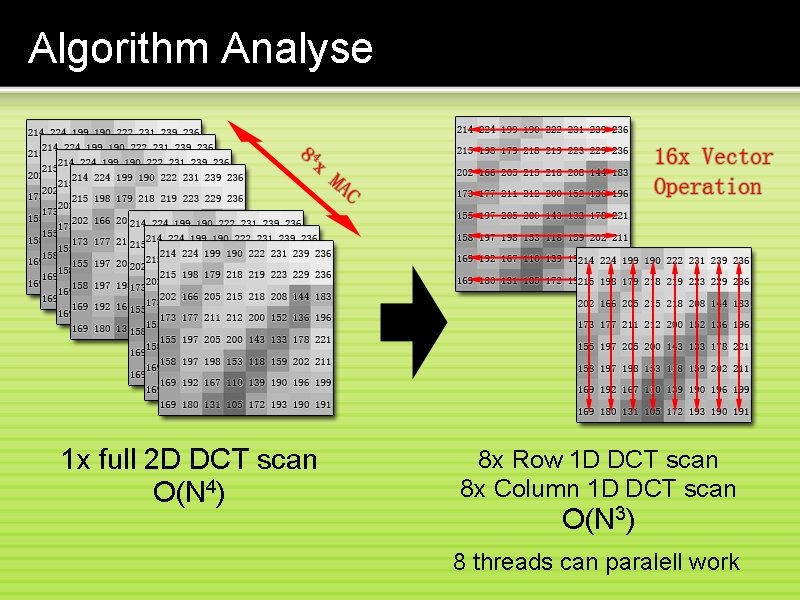

Algorithm Analysis:
- 1 x full 2-D DCT scan: O(N^4)
- 8 x row 1-D DCT scan + 8 x column 1-D DCT scan: O(N^3)
- 8 threads can work in parallel
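
Counting multiply-adds for one 8 x 8 block (N = 8) makes the gap concrete. This is a naive count of cosine-sum terms; a butterfly implementation of the 1-D transform needs fewer still:

$$
\underbrace{N^2}_{\text{outputs}} \cdot \underbrace{N^2}_{\text{terms each}} = N^4 = 4096
\qquad \text{vs.} \qquad
\underbrace{2N}_{\text{row and column passes}} \cdot \underbrace{N}_{\text{outputs}} \cdot \underbrace{N}_{\text{terms each}} = 2N^3 = 1024
$$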

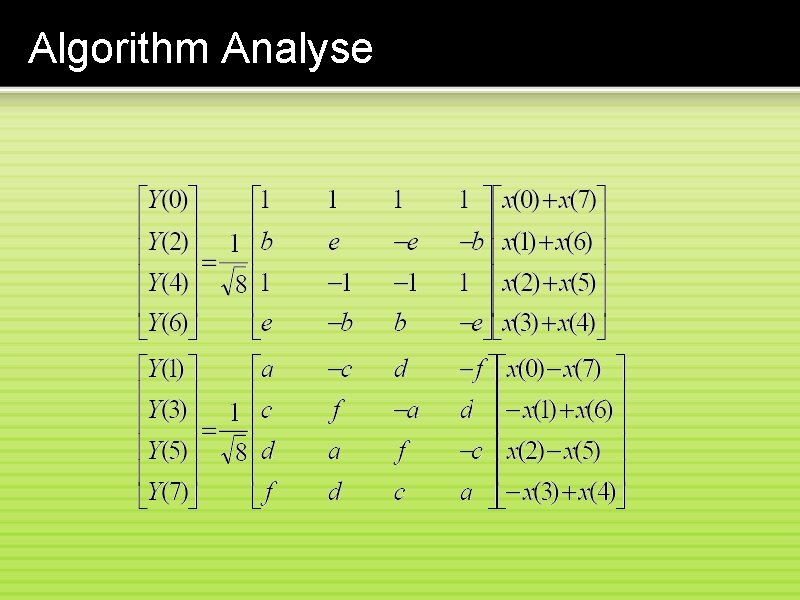

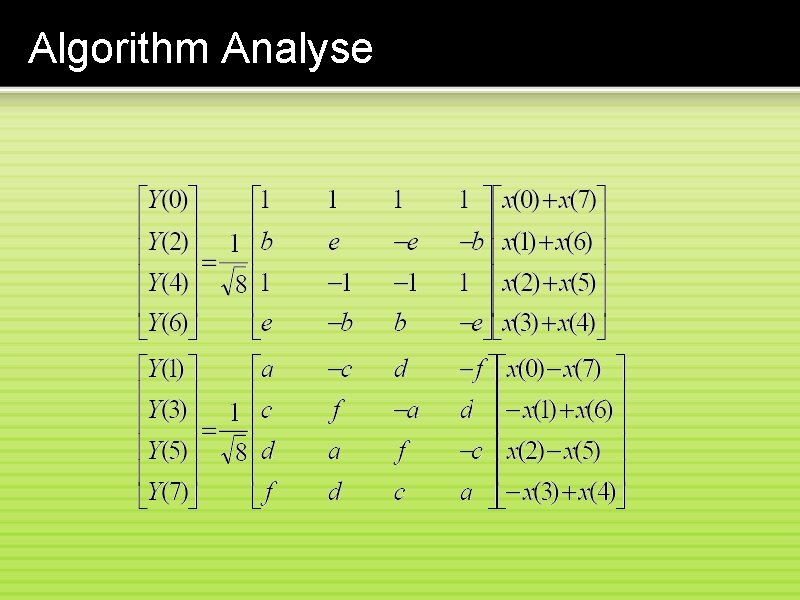

Algorithm Analysis

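DCT In Place:

```cuda
// Butterfly constants: C_x = sqrt(2) * cos(angle), C_norm = 1 / sqrt(8)
#define C_a    1.387039845322148f  // sqrt(2) * cos(    pi / 16)
#define C_b    1.306562964876377f  // sqrt(2) * cos(    pi /  8)
#define C_c    1.175875602419359f  // sqrt(2) * cos(3 * pi / 16)
#define C_d    0.785694958387102f  // sqrt(2) * cos(5 * pi / 16)
#define C_e    0.541196100146197f  // sqrt(2) * cos(3 * pi /  8)
#define C_f    0.275899379282943f  // sqrt(2) * cos(7 * pi / 16)
#define C_norm 0.3535533905932737f // 1 / sqrt(8)

// In-place 8-point DCT of Vect0[0], Vect0[Step], ..., Vect0[7 * Step]
__device__ void vectorDCTInPlace(float *Vect0, int Step)
{
    float *Vect1 = Vect0 + Step, *Vect2 = Vect1 + Step;
    float *Vect3 = Vect2 + Step, *Vect4 = Vect3 + Step;
    float *Vect5 = Vect4 + Step, *Vect6 = Vect5 + Step;
    float *Vect7 = Vect6 + Step;

    // Sums and differences of mirrored inputs
    float X07P = (*Vect0) + (*Vect7);
    float X16P = (*Vect1) + (*Vect6);
    float X25P = (*Vect2) + (*Vect5);
    float X34P = (*Vect3) + (*Vect4);

    float X07M = (*Vect0) - (*Vect7);
    float X61M = (*Vect6) - (*Vect1);
    float X25M = (*Vect2) - (*Vect5);
    float X43M = (*Vect4) - (*Vect3);

    float X07P34PP = X07P + X34P;
    float X07P34PM = X07P - X34P;
    float X16P25PP = X16P + X25P;
    float X16P25PM = X16P - X25P;

    // Even coefficients come from the sums, odd coefficients from the differences
    (*Vect0) = C_norm * (X07P34PP + X16P25PP);
    (*Vect2) = C_norm * (C_b * X07P34PM + C_e * X16P25PM);
    (*Vect4) = C_norm * (X07P34PP - X16P25PP);
    (*Vect6) = C_norm * (C_e * X07P34PM - C_b * X16P25PM);

    (*Vect1) = C_norm * (C_a * X07M - C_c * X61M + C_d * X25M - C_f * X43M);
    (*Vect3) = C_norm * (C_c * X07M + C_f * X61M - C_a * X25M + C_d * X43M);
    (*Vect5) = C_norm * (C_d * X07M + C_a * X61M + C_f * X25M - C_c * X43M);
    (*Vect7) = C_norm * (C_f * X07M + C_d * X61M + C_c * X25M + C_a * X43M);
}

// Sequential version: 8 row transforms (stride 1), then 8 column transforms (stride 8)
__device__ void blockDCTInPlace(float *block)
{
    for (int row = 0; row < 64; row += 8)
        vectorDCTInPlace(block + row, 1);
    for (int col = 0; col < 8; col++)
        vectorDCTInPlace(block + col, 8);
}

// Parallel version: 8 threads per block, each transforms one row, then one column
__device__ void parallelDCTInPlace(float *block)
{
    int col = threadIdx.x % 8;
    int row = col * 8;
    __syncthreads();
    vectorDCTInPlace(block + row, 1);  // row pass
    __syncthreads();                   // all rows done before columns are read
    vectorDCTInPlace(block + col, 8);  // column pass
    __syncthreads();
}
```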
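
Because the butterfly above is easy to get subtly wrong, a host-side check is useful. The C++ sketch below is a plain host copy of the `vectorDCTInPlace` arithmetic (the name `vectorDCTInPlaceHost` is hypothetical) compared against the direct orthonormal 8-point DCT-II formula `dct1dReference`, also hypothetical. It reads the inputs into a local array first, which does not change the result since each input is read before any output is written.

```cpp
#include <cmath>

// Same constants as the kernel: C_x = sqrt(2) * cos(angle), C_norm = 1 / sqrt(8).
const float C_a = 1.387039845f, C_b = 1.306562965f, C_c = 1.175875602f;
const float C_d = 0.785694958f, C_e = 0.541196100f, C_f = 0.275899379f;
const float C_norm = 0.353553391f;

// Host copy of the butterfly: 8 values spaced `step` apart, transformed in place.
void vectorDCTInPlaceHost(float* v, int step) {
    float x[8];
    for (int i = 0; i < 8; ++i) x[i] = v[i * step];
    float X07P = x[0] + x[7], X16P = x[1] + x[6], X25P = x[2] + x[5], X34P = x[3] + x[4];
    float X07M = x[0] - x[7], X61M = x[6] - x[1], X25M = x[2] - x[5], X43M = x[4] - x[3];
    float X07P34PP = X07P + X34P, X07P34PM = X07P - X34P;
    float X16P25PP = X16P + X25P, X16P25PM = X16P - X25P;
    v[0 * step] = C_norm * (X07P34PP + X16P25PP);
    v[2 * step] = C_norm * (C_b * X07P34PM + C_e * X16P25PM);
    v[4 * step] = C_norm * (X07P34PP - X16P25PP);
    v[6 * step] = C_norm * (C_e * X07P34PM - C_b * X16P25PM);
    v[1 * step] = C_norm * (C_a * X07M - C_c * X61M + C_d * X25M - C_f * X43M);
    v[3 * step] = C_norm * (C_c * X07M + C_f * X61M - C_a * X25M + C_d * X43M);
    v[5 * step] = C_norm * (C_d * X07M + C_a * X61M + C_f * X25M - C_c * X43M);
    v[7 * step] = C_norm * (C_f * X07M + C_d * X61M + C_c * X25M + C_a * X43M);
}

// Reference: direct orthonormal 8-point DCT-II, computed in double precision.
void dct1dReference(const float* in, float* out) {
    const double pi = std::acos(-1.0);
    for (int k = 0; k < 8; ++k) {
        double s = 0.0;
        for (int n = 0; n < 8; ++n) s += in[n] * std::cos((2 * n + 1) * k * pi / 16);
        out[k] = static_cast<float>((k == 0 ? std::sqrt(0.125) : 0.5) * s);
    }
}
```

Checking both strides (1 for a row, 8 for a column) covers the two calls made by `blockDCTInPlace` and `parallelDCTInPlace`.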

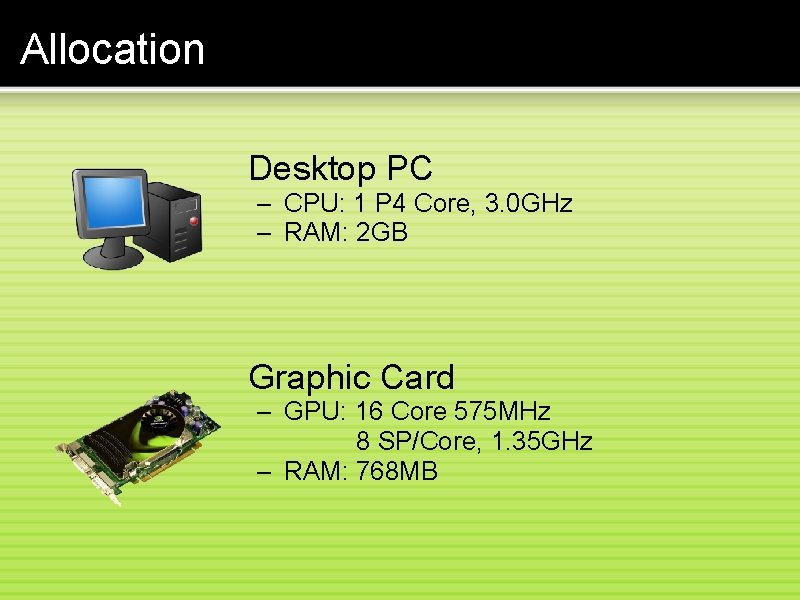

Allocation:
- Desktop PC
  - CPU: 1 P4 core, 3.0 GHz
  - RAM: 2 GB
- Graphics card
  - GPU: 16 cores at 575 MHz, 8 SPs per core at 1.35 GHz
  - RAM: 768 MB

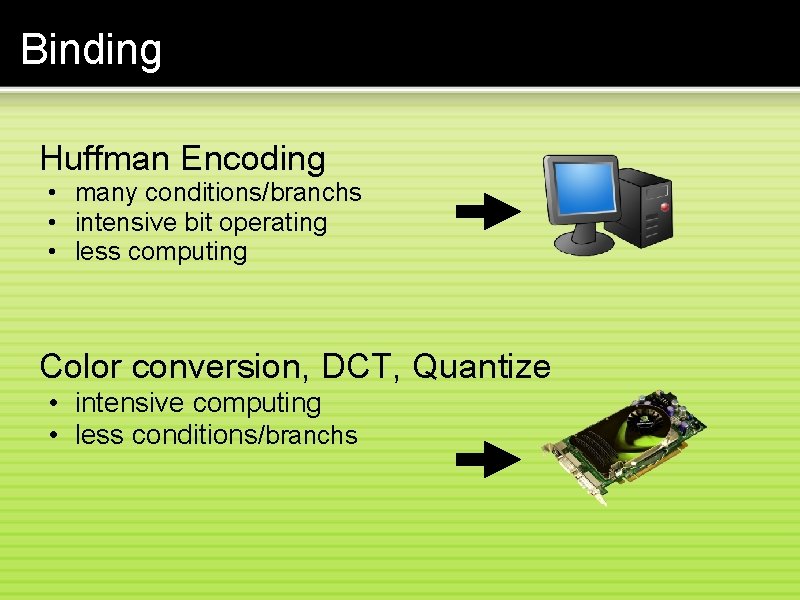

Binding:
- Huffman encoding: many conditions/branches, intensive bit operations, little computation.
- Color conversion, DCT, quantization: intensive computation, few conditions/branches.

Binding:
- Hardware: 16 KB shared memory. Problem: 1 MCU holds 768 bytes of data (3 blocks x 64 floats x 4 bytes). Result: at most 21 MCUs per CUDA block.
- Hardware: at most 512 threads per CUDA block. Problem: 1 MCU contains 3 blocks, and 1 block needs 8 threads. Result: 1 MCU needs 24 threads, so 1 CUDA block = 21 x 24 = 504 threads.
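
The binding arithmetic can be written out as compile-time constants. This C++ sketch assumes each MCU is held in shared memory as 3 blocks of 64 four-byte floats (768 bytes), which is consistent with the 16128 bytes of shared memory per block in the occupancy table; all constant names are hypothetical.

```cpp
// Per-MCU needs with sampling factor (1, 1, 1): 3 blocks of 64 floats, 8 threads per block.
constexpr int kBlocksPerMcu  = 3;
constexpr int kThreadsPerBlk = 8;
constexpr int kBytesPerMcu   = kBlocksPerMcu * 64 * static_cast<int>(sizeof(float));  // 768
constexpr int kThreadsPerMcu = kBlocksPerMcu * kThreadsPerBlk;                         // 24

// Hardware limits quoted on the slide.
constexpr int kSharedBytes = 16 * 1024;
constexpr int kMaxThreads  = 512;

// Each limit caps the number of MCUs per CUDA block; the smaller cap wins.
constexpr int kMcusBySmem    = kSharedBytes / kBytesPerMcu;    // 21
constexpr int kMcusByThreads = kMaxThreads / kThreadsPerMcu;   // 21
constexpr int kMcusPerBlock  = kMcusBySmem < kMcusByThreads ? kMcusBySmem : kMcusByThreads;

constexpr int kThreadsPerCudaBlock = kMcusPerBlock * kThreadsPerMcu;  // 504
constexpr int kSmemPerCudaBlock    = kMcusPerBlock * kBytesPerMcu;    // 16128
```

Both limits happen to allow exactly 21 MCUs, so neither resource is left with room for a 22nd.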

cujpeg Encoder: the image is loaded on the CPU; color conversion, DCT, quantization and zigzag reorder run on the GPU; encoding to .jpg runs on the CPU.

cujpeg Encoder, memory layout: the image is loaded into host memory and copied into a CUDA array that the kernel reads through texture memory; color conversion, in-place DCT, quantization and reordering work in shared memory; the result is written to global memory and copied back to host memory for encoding into the .jpg file.

```cuda
// Host: upload the image into a CUDA array and bind it to the texture TexSrc
cudaMallocArray(&textureCache, &channel, scanlineSize, imgHeight);
cudaMemcpy2DToArray(textureCache, 0, 0, image, imageStride, imageWidth, imageHeight,
                    cudaMemcpyHostToDevice);  // (src, src pitch, width in bytes, height)
cudaBindTextureToArray(TexSrc, textureCache, channel);
cudaMalloc((void **)&ResultDevice, ResultSize);

// Device, color conversion: fetch B, G, R samples from the texture, convert to Y, Cb, Cr
int b = tex2D(TexSrc, TexPosX++, TexPosY);
int g = tex2D(TexSrc, TexPosX++, TexPosY);
int r = tex2D(TexSrc, TexPosX += 6, TexPosY);
float y  =  0.299f    * r + 0.587f    * g + 0.114f    * b - 128.0f + 0.5f;
float cb = -0.168736f * r - 0.331264f * g + 0.500f    * b + 0.5f;
float cr =  0.500f    * r - 0.418688f * g - 0.081312f * b + 0.5f;
myDCTLine[Offset + i]       = y;   // Y block
myDCTLine[Offset + 64 + i]  = cb;  // Cb block
myDCTLine[Offset + 128 + i] = cr;  // Cr block

// Device, quantize and reorder: multiply by the reciprocal quantizer, round to
// nearest (also correct for negative coefficients), store at the zig-zag position
for (int i = 0; i < BLOCK_WIDTH; i++)
    myDestBlock[myZLine[i]] = __float2int_rn(myDCTLine[i] * myDivQLine[i]);

// Host: copy the quantized, reordered coefficients back for Huffman encoding
cudaMemcpy(ResultHost, ResultDevice, ResultSize, cudaMemcpyDeviceToHost);
```

Scheduling, for each MCU:
- RGB data → YCC blocks (Y, Cb, Cr): 24 threads each convert 2 pixels, then 8 threads each convert the remaining 2 pixels.
- YCC blocks → DCT blocks: each of the 24 threads does 1 row-vector DCT and 1 column-vector DCT.
- DCT blocks → quantized/reordered data: each of the 24 threads quantizes 8 scalar values.
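
One way to check that this schedule covers every pixel and coefficient exactly once is to simulate the thread-to-work mapping on the host. The exact index assignment is not given on the slide, so the mapping in this C++ sketch is hypothetical: thread t converts pixels 2t and 2t + 1, threads 0 to 7 additionally convert pixels 48 + 2t and 49 + 2t, and for the DCT and quantization thread t works on vector t % 8 of block t / 8.

```cpp
#include <vector>

// Hypothetical mapping for one MCU: 24 threads, 3 blocks (Y, Cb, Cr) of 8 x 8.
struct Work { int block; int vec; };

// DCT: thread t transforms row/column vector t % 8 of block t / 8.
inline Work dctWork(int t) { return {t / 8, t % 8}; }

// Color conversion: 64 pixels; 24 threads x 2 pixels, then 8 threads x 2 more.
std::vector<int> pixelsConverted(int t) {
    std::vector<int> px = {2 * t, 2 * t + 1};
    if (t < 8) { px.push_back(48 + 2 * t); px.push_back(49 + 2 * t); }
    return px;
}

// Quantization: thread t handles the 8 coefficients of row t % 8 in block t / 8.
// Returns an index into the MCU's 3 x 64 coefficients.
inline int coefIndex(int t, int i) {
    Work w = dctWork(t);
    return w.block * 64 + w.vec * 8 + i;
}
```

With this assignment, 24 x 2 + 8 x 2 = 64 pixels and 24 x 8 = 192 = 3 x 64 coefficients are each touched exactly once.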

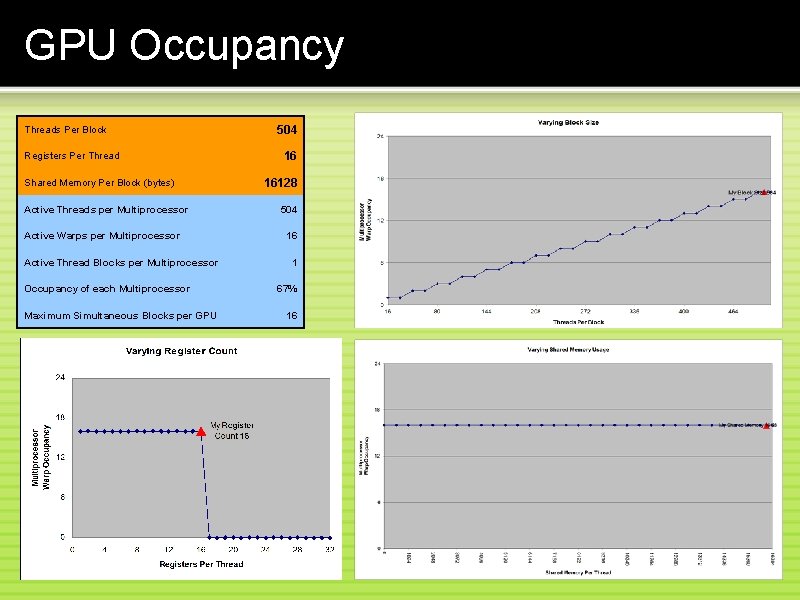

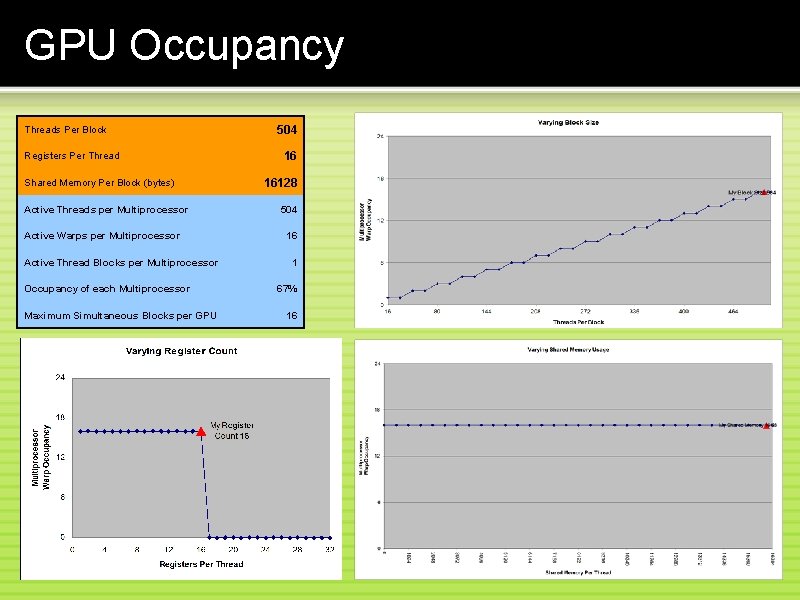

GPU Occupancy:

| Metric | Value |
|---|---|
| Threads per block | 504 |
| Registers per thread | 16 |
| Shared memory per block (bytes) | 16128 |
| Active threads per multiprocessor | 504 |
| Active warps per multiprocessor | 16 |
| Active thread blocks per multiprocessor | 1 |
| Occupancy of each multiprocessor | 67% |
| Maximum simultaneous blocks per GPU | 16 |
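
The occupancy figures follow from the per-multiprocessor limits of the GPU. This C++ sketch assumes compute capability 1.0 limits (24 warps, 8 blocks, 8192 registers and 16 KB of shared memory per multiprocessor) and ignores allocation granularity, so it is a simplified version of what an occupancy calculator does; the names are hypothetical.

```cpp
#include <algorithm>

// Assumed compute capability 1.0 limits per multiprocessor.
constexpr int kWarpSize       = 32;
constexpr int kMaxWarpsPerSM  = 24;     // 768 threads
constexpr int kMaxBlocksPerSM = 8;
constexpr int kRegsPerSM      = 8192;
constexpr int kSmemPerSM      = 16384;

struct Occupancy { int warpsPerBlock; int blocksPerSM; double occupancy; };

// Resident blocks = the tightest of the warp, register and shared-memory limits.
Occupancy computeOccupancy(int threadsPerBlock, int regsPerThread, int smemPerBlock) {
    int warps  = (threadsPerBlock + kWarpSize - 1) / kWarpSize;   // round up to whole warps
    int blocks = kMaxBlocksPerSM;
    blocks = std::min(blocks, kMaxWarpsPerSM / warps);
    blocks = std::min(blocks, kRegsPerSM / (regsPerThread * threadsPerBlock));
    blocks = std::min(blocks, kSmemPerSM / smemPerBlock);
    return {warps, blocks, static_cast<double>(blocks * warps) / kMaxWarpsPerSM};
}
```

For 504 threads, 16 registers per thread and 16128 bytes of shared memory, every limit allows just one block, giving 16 of 24 warps (67%). With 16 multiprocessors that is at most 16 simultaneous blocks on the GPU.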

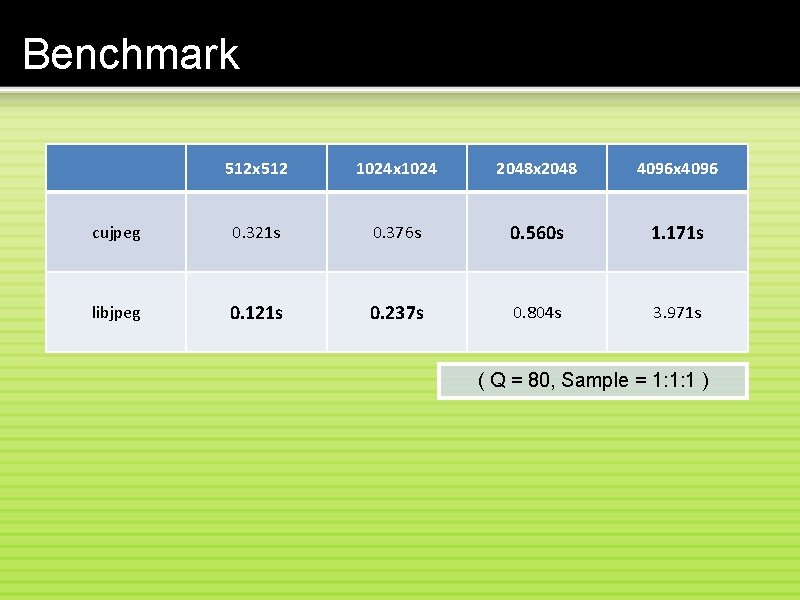

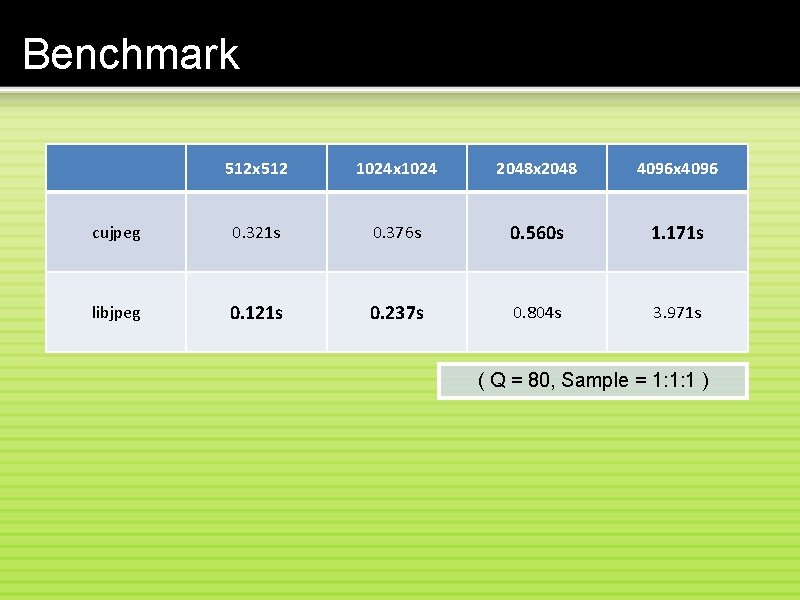

Benchmark (Q = 80, sampling = 1:1:1):

| Image size | 512 x 512 | 1024 x 1024 | 2048 x 2048 | 4096 x 4096 |
|---|---|---|---|---|
| cujpeg | 0.321 s | 0.376 s | 0.560 s | 1.171 s |
| libjpeg | 0.121 s | 0.237 s | 0.804 s | 3.971 s |
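
The relative speed is easier to read as a ratio. This small C++ sketch divides the libjpeg time by the cujpeg time for each image size, using the numbers from the table above (the names are hypothetical).

```cpp
#include <array>

// Timings from the benchmark table, in seconds (Q = 80, sampling 1:1:1).
constexpr std::array<double, 4> kCujpeg  = {0.321, 0.376, 0.560, 1.171};
constexpr std::array<double, 4> kLibjpeg = {0.121, 0.237, 0.804, 3.971};

// Speedup of cujpeg over libjpeg per image size (> 1 means cujpeg is faster).
std::array<double, 4> speedups() {
    std::array<double, 4> s{};
    for (int i = 0; i < 4; ++i) s[i] = kLibjpeg[i] / kCujpeg[i];
    return s;
}
```

The ratios are roughly 0.38, 0.63, 1.44 and 3.39: cujpeg is slower than libjpeg up to 1024 x 1024 and overtakes it from 2048 x 2048 on.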

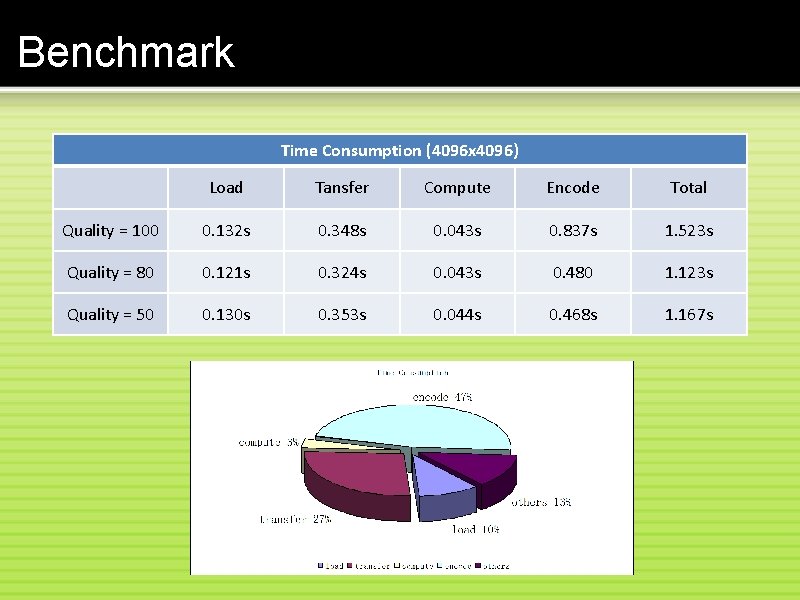

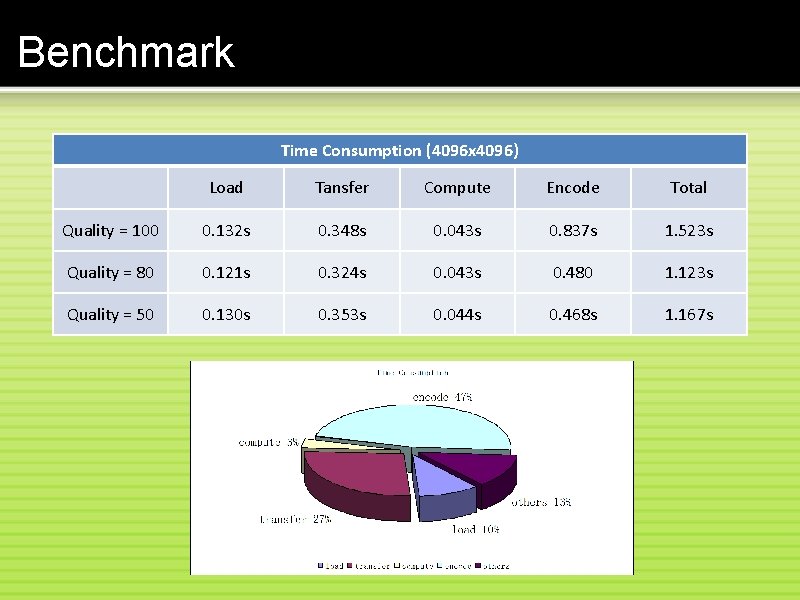

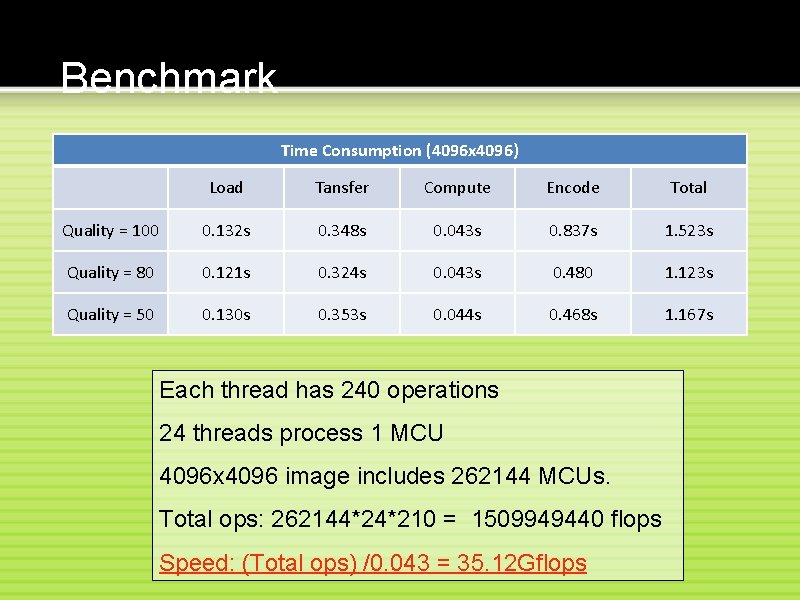

Benchmark: Time consumption (4096 x 4096):

| Quality | Load | Transfer | Compute | Encode | Total |
|---|---|---|---|---|---|
| 100 | 0.132 s | 0.348 s | 0.043 s | 0.837 s | 1.523 s |
| 80 | 0.121 s | 0.324 s | 0.043 s | 0.480 s | 1.123 s |
| 50 | 0.130 s | 0.353 s | 0.044 s | 0.468 s | 1.167 s |

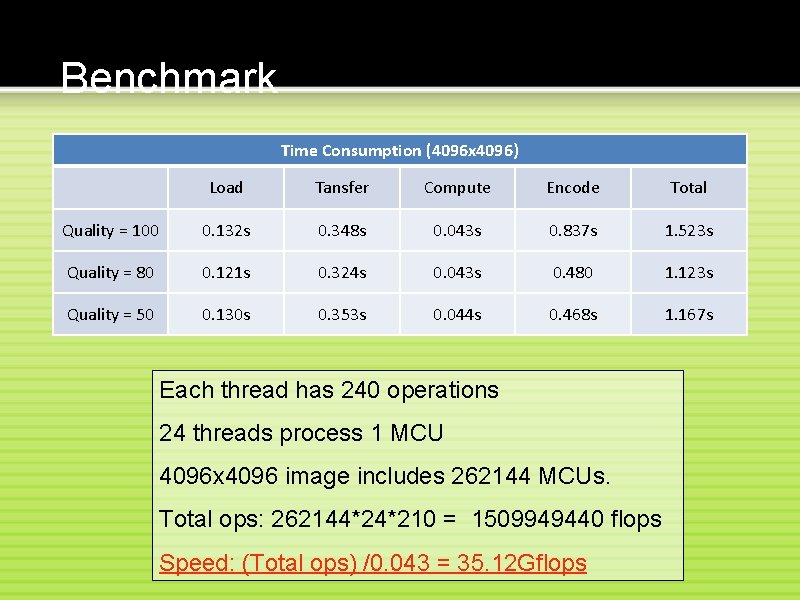

Benchmark: Compute throughput (4096 x 4096). Each thread performs 240 operations, and 24 threads process 1 MCU. A 4096 x 4096 image contains 262144 MCUs (4096 x 4096 / 64). Total operations: 262144 x 24 x 240 = 1509949440 flops. Speed: total operations / 0.043 s = 35.12 Gflops.
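
The throughput figure can be reproduced from the counts above. This C++ sketch takes the 240 operations per thread, 24 threads per MCU and the 0.043 s compute time from the slides; the constant names are hypothetical.

```cpp
// Throughput estimate for a 4096 x 4096 image with 8 x 8 MCUs (sampling 1:1:1).
constexpr long long kMcus          = (4096LL * 4096LL) / 64;   // 262144
constexpr long long kThreadsPerMcu = 24;
constexpr long long kOpsPerThread  = 240;
constexpr long long kTotalOps      = kMcus * kThreadsPerMcu * kOpsPerThread;
constexpr double    kComputeSec    = 0.043;   // "Compute" column of the timing table

// Operations per second, in units of 10^9.
double gflops() { return kTotalOps / kComputeSec / 1e9; }
```

Only the 0.043 s kernel time enters this figure; the transfer and encoding times, which dominate the total, are not part of it.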

Conclusion: CUDA can clearly accelerate JPEG compression. The overall performance depends on the system speed. Possible improvements:
- More bandwidth
- A better encoding routine
- Support for downsampling