Programming Massively Parallel Graphics Processors Andreas Moshovos Winter

![• Sequential Execution Model int a[N]; // N is large for (i =0; • Sequential Execution Model int a[N]; // N is large for (i =0;](https://slidetodoc.com/presentation_image_h/d822c9e8a3872084f2a81cbcff41a8aa/image-4.jpg)

![• Data Parallel Execution Model / SIMD int a[N]; // N is large • Data Parallel Execution Model / SIMD int a[N]; // N is large](https://slidetodoc.com/presentation_image_h/d822c9e8a3872084f2a81cbcff41a8aa/image-5.jpg)

![• Single Program Multiple Data / SPMD int a[N]; // N is large • Single Program Multiple Data / SPMD int a[N]; // N is large](https://slidetodoc.com/presentation_image_h/d822c9e8a3872084f2a81cbcff41a8aa/image-6.jpg)

- Slides: 29

Programming Massively Parallel Graphics Processors Andreas Moshovos Winter 2009

• Goals: – Graphics Processors – Learn how program GPUs – Learn how to get performance out of GPUs • Understand GPU architecture and limitations – CUDA: Compute Unified Device Architecture/NVidia • How: – Weekly assignments for the first few weeks – A large team project • Ideal Scenario: – Non-ECE Non-CS people will team up with CS/ECE and attack an interesting problem

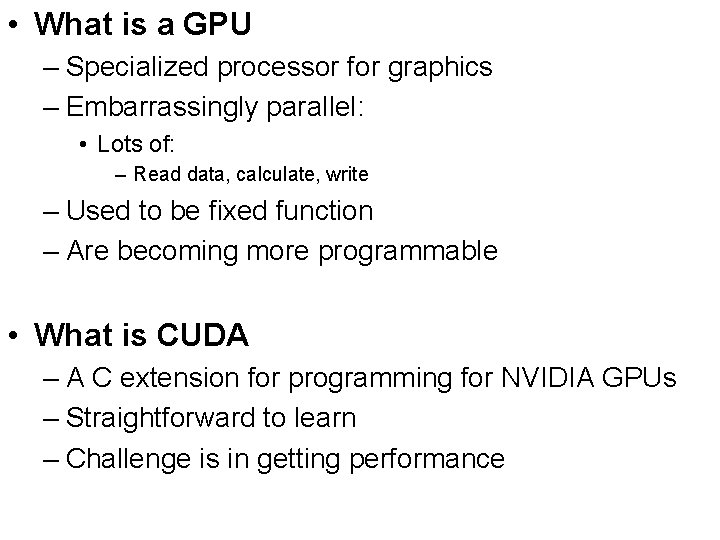

• What is a GPU – Specialized processor for graphics – Embarrassingly parallel: • Lots of: – Read data, calculate, write – Used to be fixed function – Are becoming more programmable • What is CUDA – A C extension for programming for NVIDIA GPUs – Straightforward to learn – Challenge is in getting performance

![Sequential Execution Model int aN N is large for i 0 • Sequential Execution Model int a[N]; // N is large for (i =0;](https://slidetodoc.com/presentation_image_h/d822c9e8a3872084f2a81cbcff41a8aa/image-4.jpg)

• Sequential Execution Model int a[N]; // N is large for (i =0; i < N; i++) time a[i] = a[i] * fade; Flow of control / Thread One instruction at the time Optimizations possible at the machine level

![Data Parallel Execution Model SIMD int aN N is large • Data Parallel Execution Model / SIMD int a[N]; // N is large](https://slidetodoc.com/presentation_image_h/d822c9e8a3872084f2a81cbcff41a8aa/image-5.jpg)

• Data Parallel Execution Model / SIMD int a[N]; // N is large for all elements do in parallel time a[index] = a[index] * fade;

![Single Program Multiple Data SPMD int aN N is large • Single Program Multiple Data / SPMD int a[N]; // N is large](https://slidetodoc.com/presentation_image_h/d822c9e8a3872084f2a81cbcff41a8aa/image-6.jpg)

• Single Program Multiple Data / SPMD int a[N]; // N is large for all elements do in parallel time if (a[i] > threshold) a[i]*= fade;

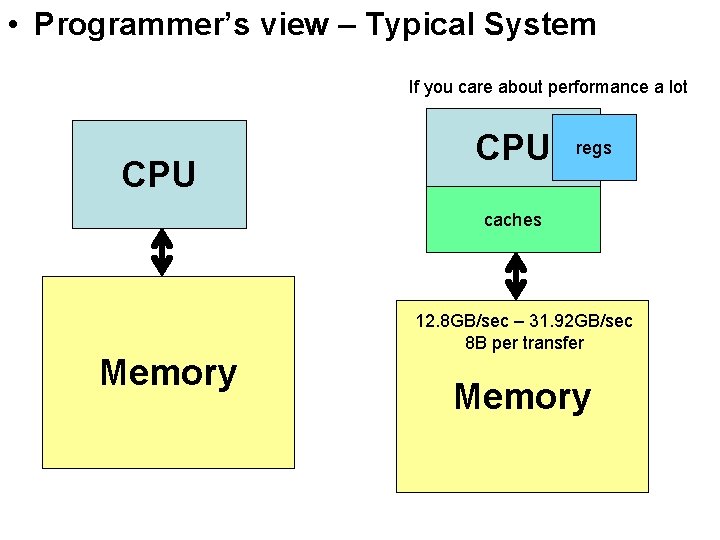

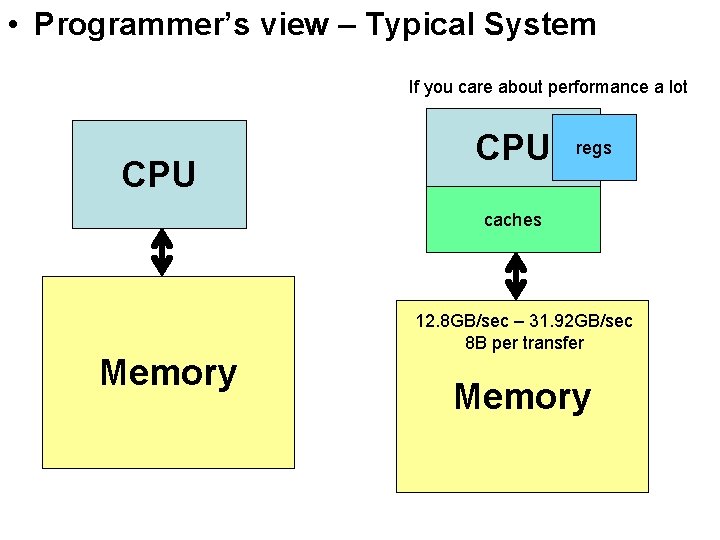

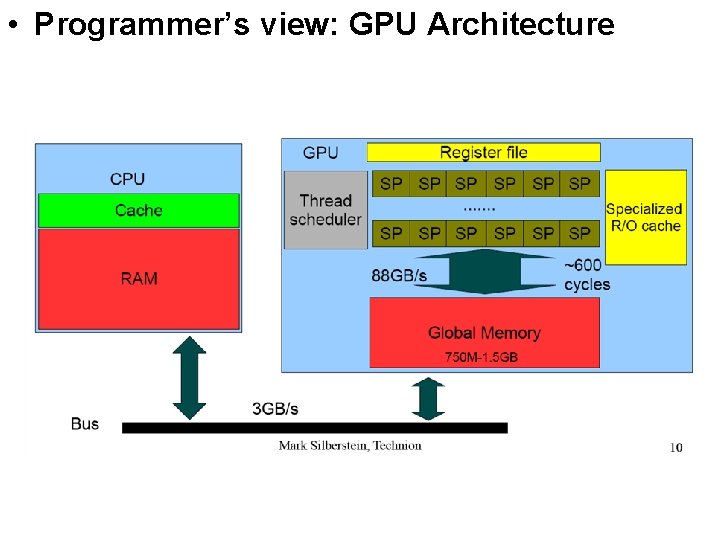

• Programmer’s view – Typical System If you care about performance a lot CPU regs caches 12. 8 GB/sec – 31. 92 GB/sec 8 B per transfer Memory

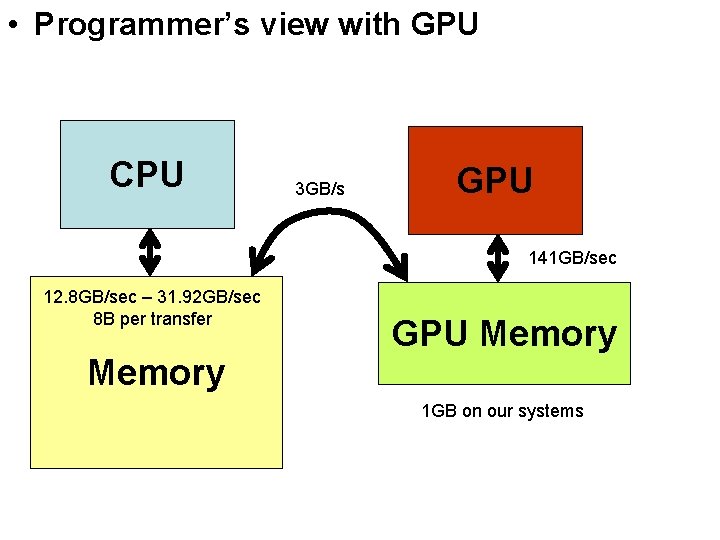

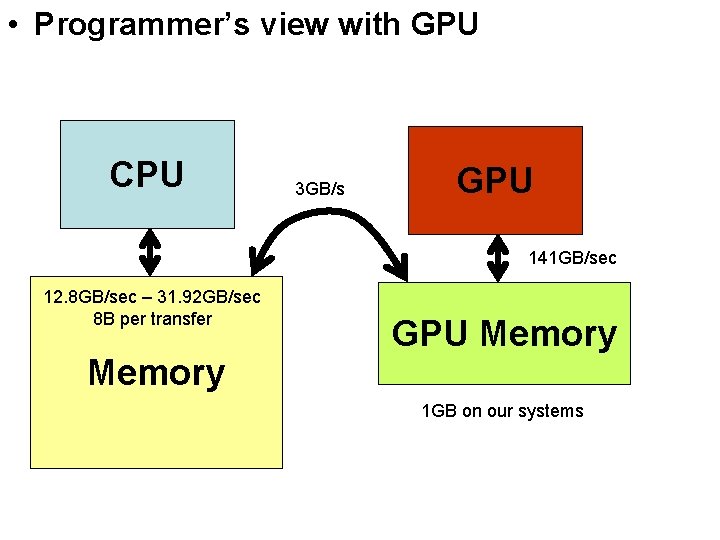

• Programmer’s view with GPU CPU 3 GB/s GPU 141 GB/sec 12. 8 GB/sec – 31. 92 GB/sec 8 B per transfer Memory GPU Memory 1 GB on our systems

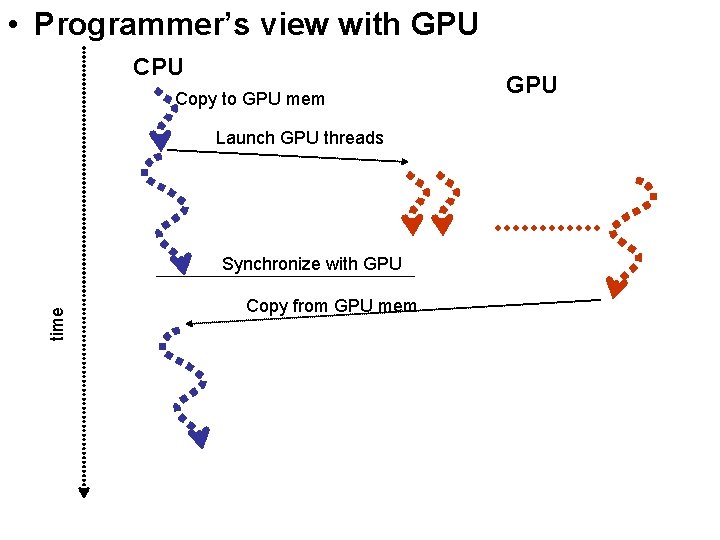

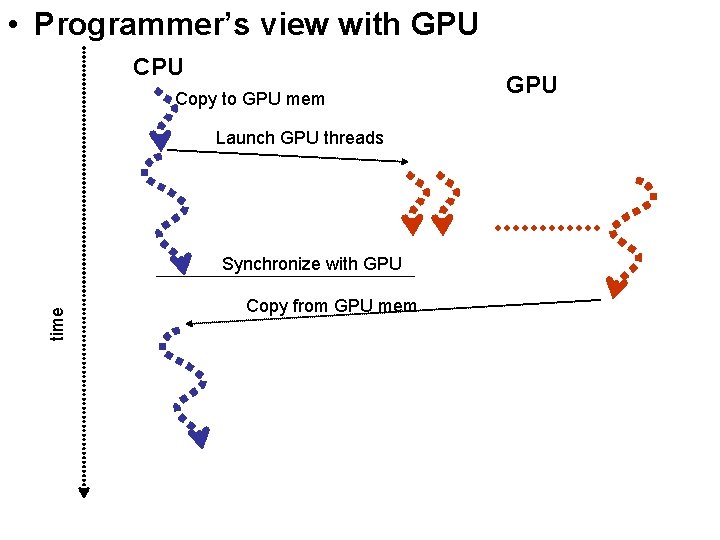

• Programmer’s view with GPU Copy to GPU mem Launch GPU threads time Synchronize with GPU Copy from GPU mem GPU

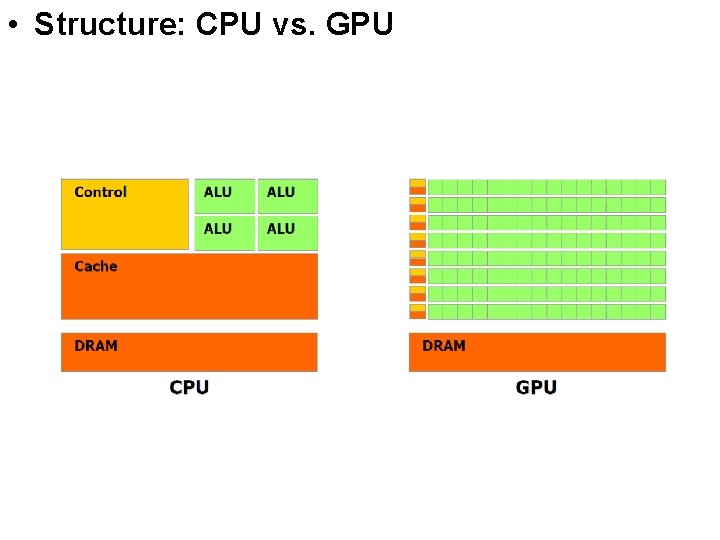

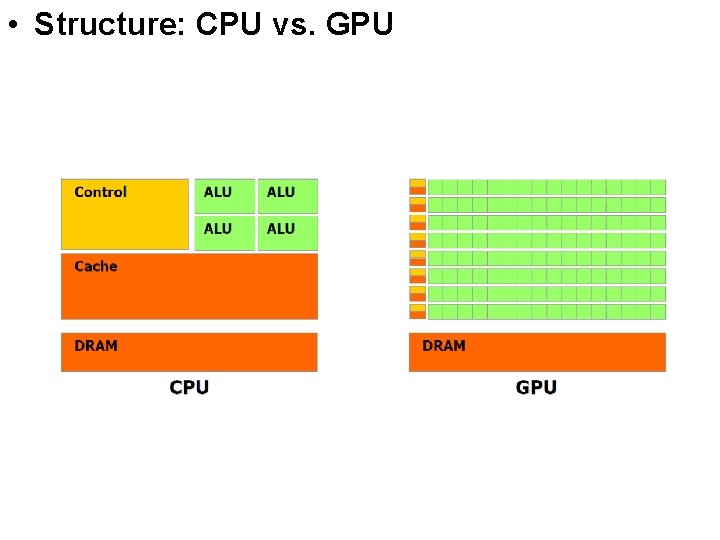

• Structure: CPU vs. GPU

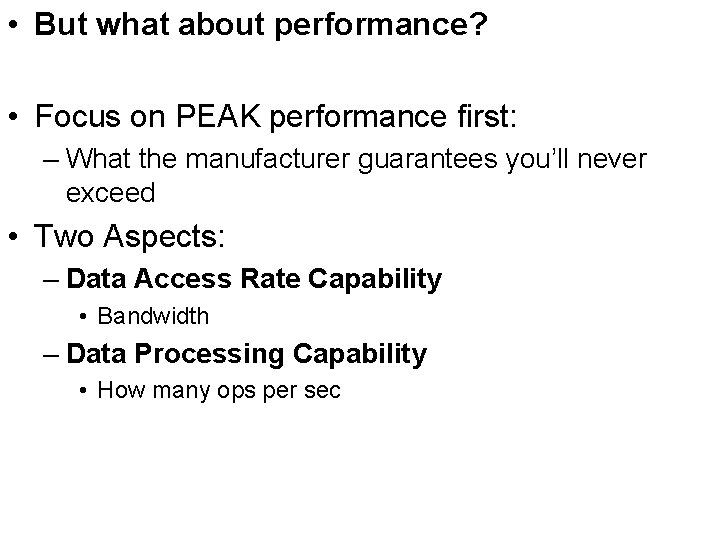

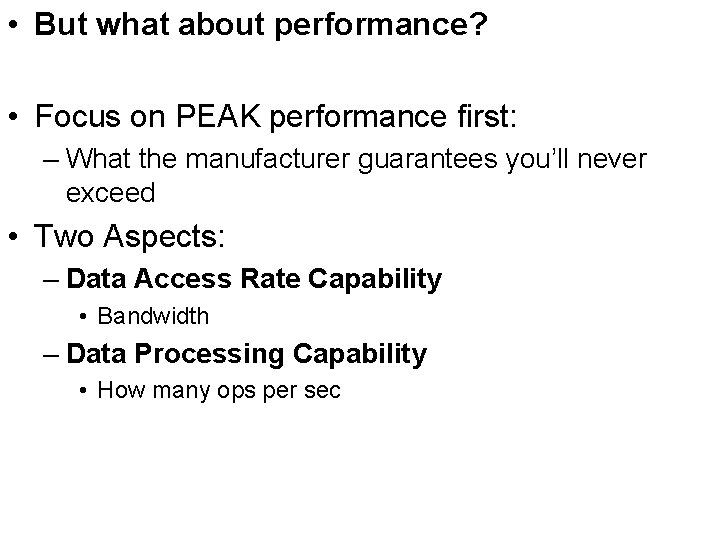

• But what about performance? • Focus on PEAK performance first: – What the manufacturer guarantees you’ll never exceed • Two Aspects: – Data Access Rate Capability • Bandwidth – Data Processing Capability • How many ops per sec

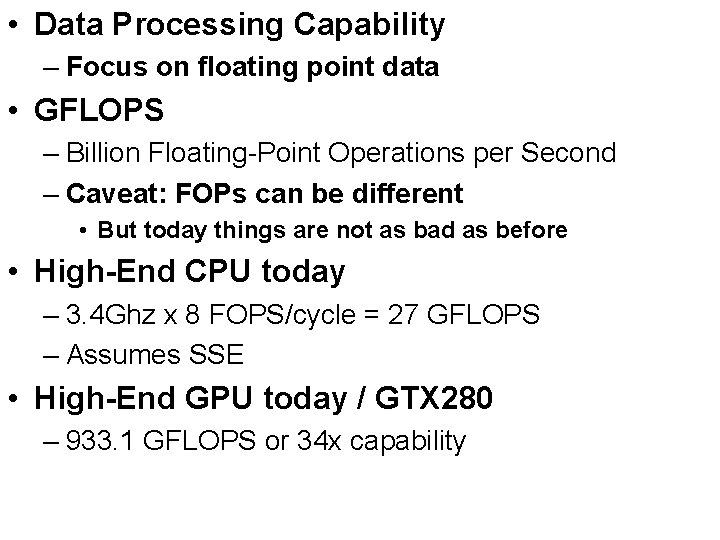

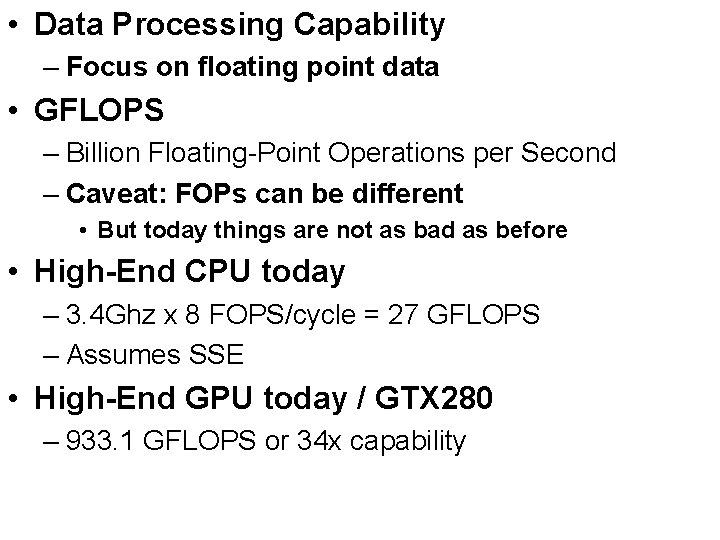

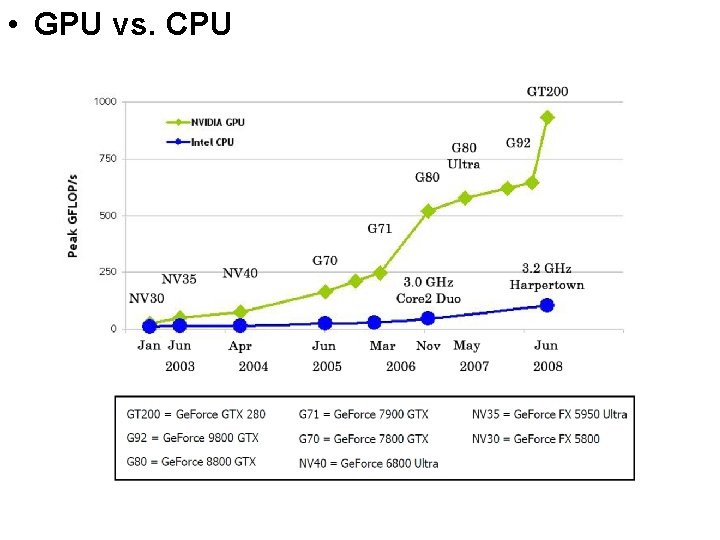

• Data Processing Capability – Focus on floating point data • GFLOPS – Billion Floating-Point Operations per Second – Caveat: FOPs can be different • But today things are not as bad as before • High-End CPU today – 3. 4 Ghz x 8 FOPS/cycle = 27 GFLOPS – Assumes SSE • High-End GPU today / GTX 280 – 933. 1 GFLOPS or 34 x capability

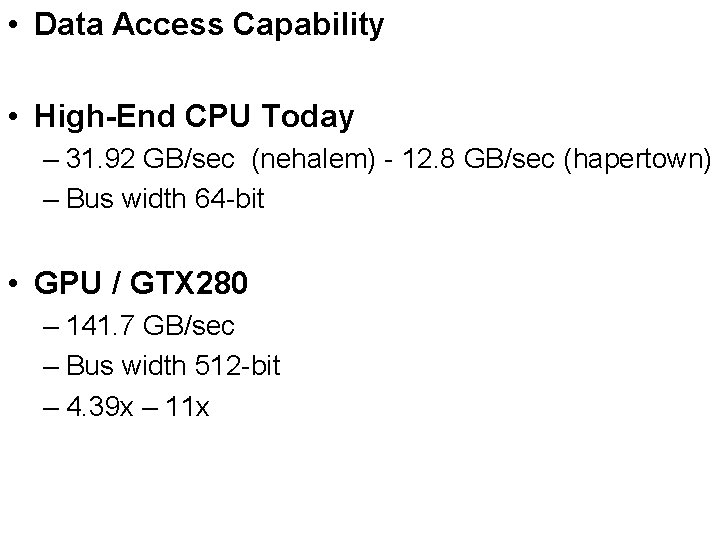

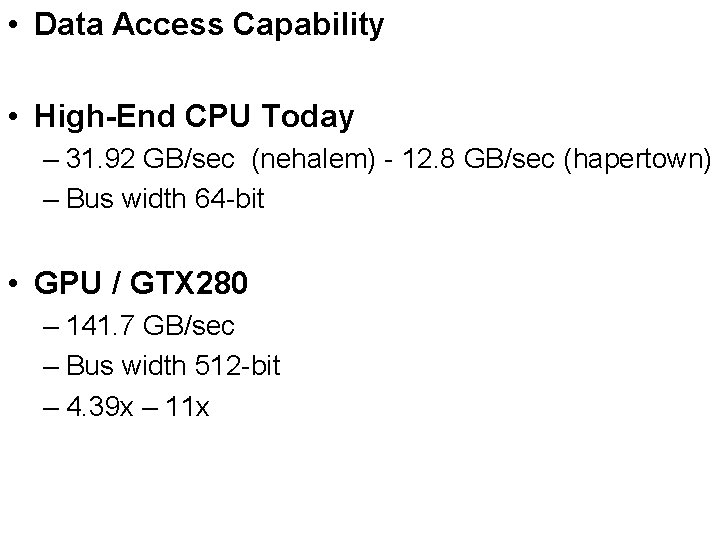

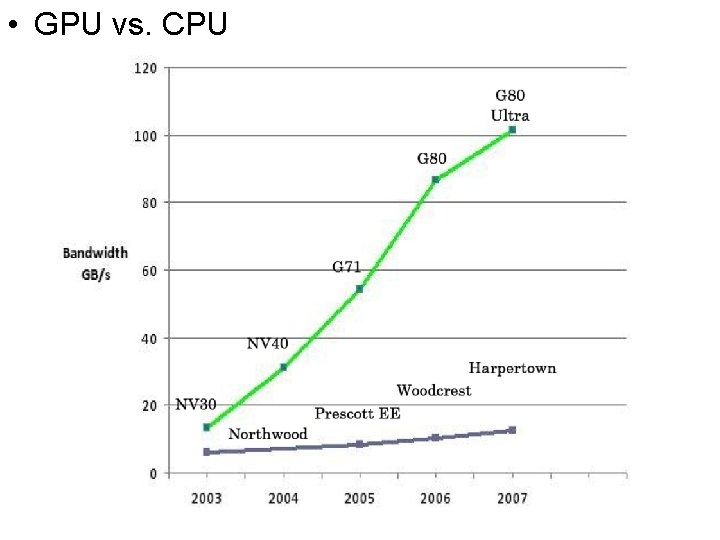

• Data Access Capability • High-End CPU Today – 31. 92 GB/sec (nehalem) - 12. 8 GB/sec (hapertown) – Bus width 64 -bit • GPU / GTX 280 – 141. 7 GB/sec – Bus width 512 -bit – 4. 39 x – 11 x

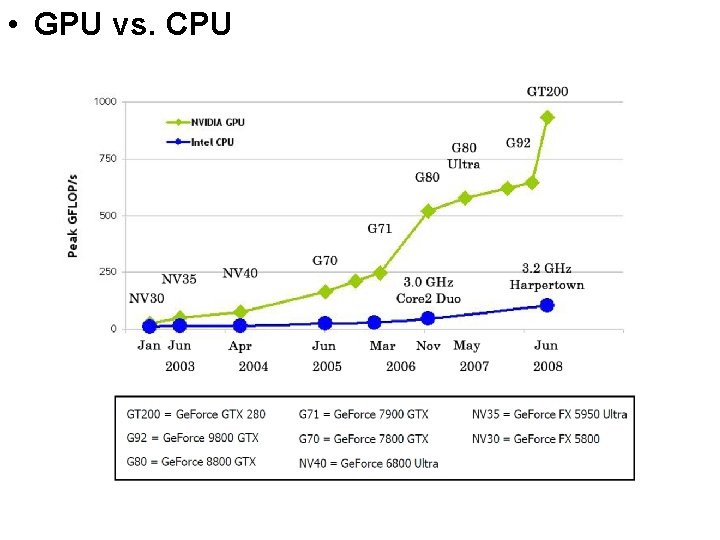

• GPU vs. CPU

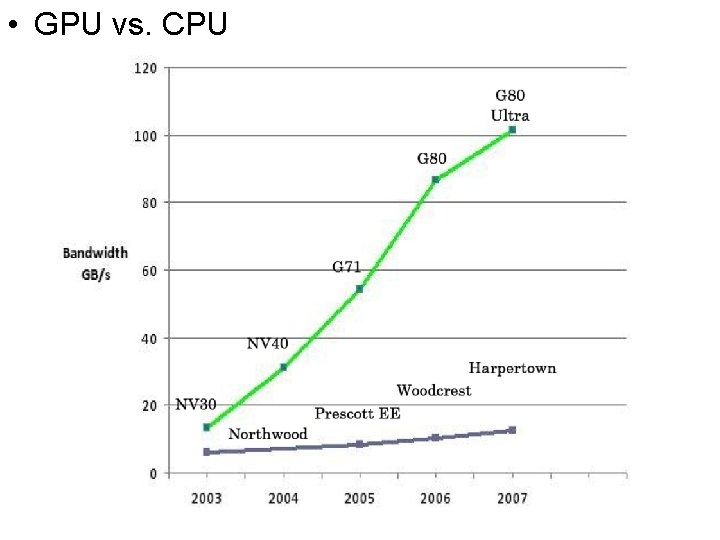

• GPU vs. CPU

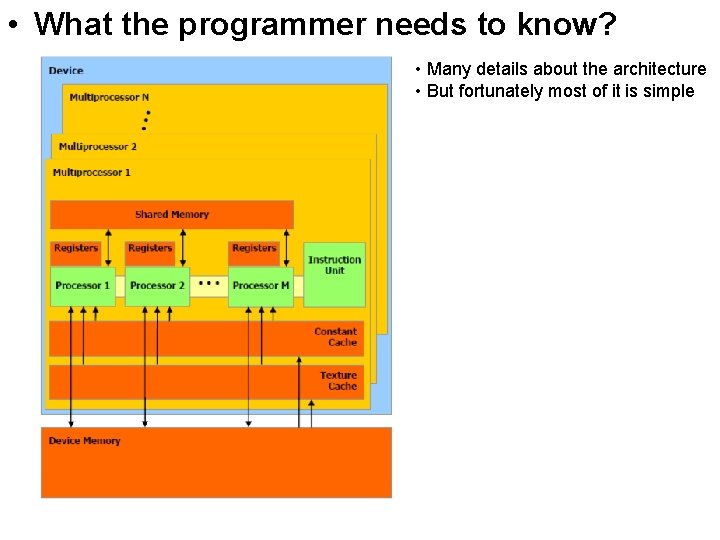

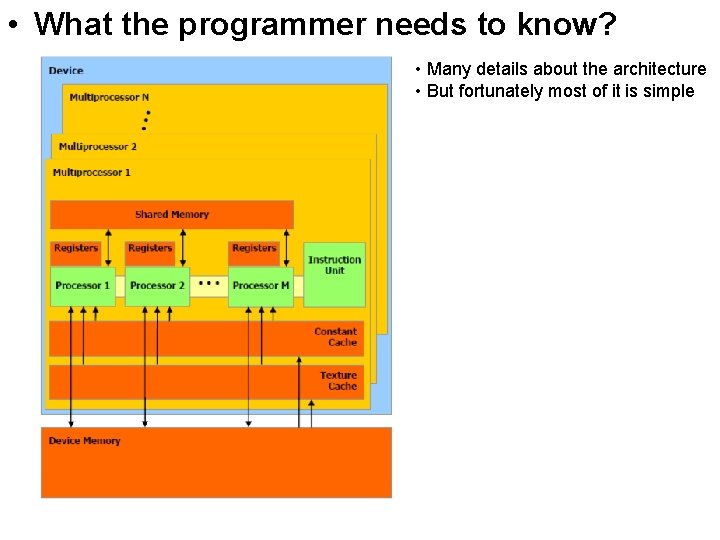

• What the programmer needs to know? • Many details about the architecture • But fortunately most of it is simple

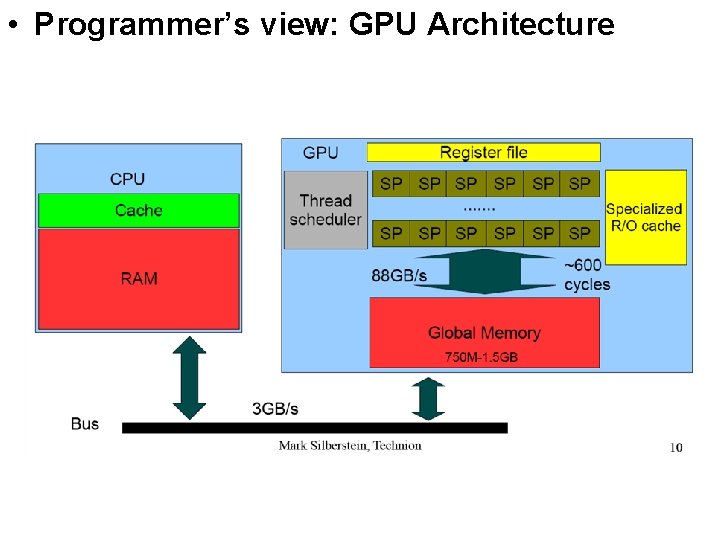

• Programmer’s view: GPU Architecture

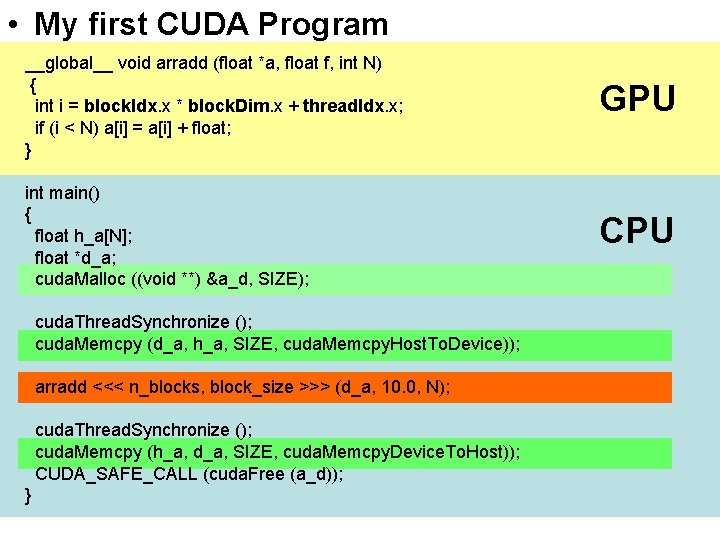

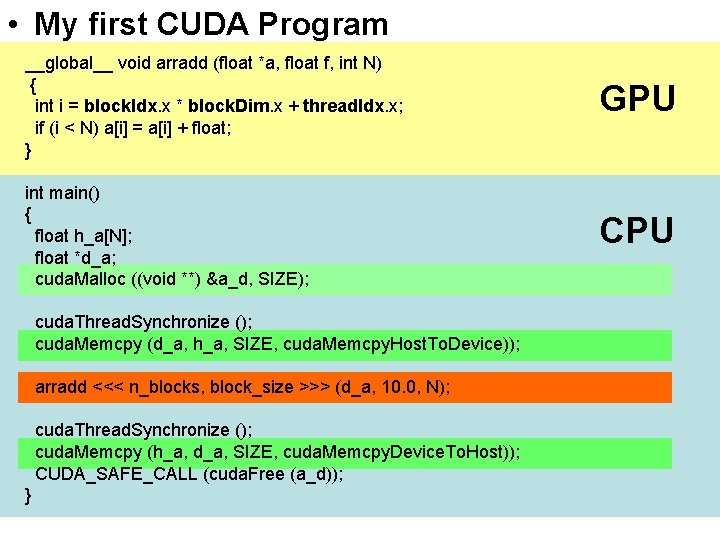

• My first CUDA Program __global__ void arradd (float *a, float f, int N) { int i = block. Idx. x * block. Dim. x + thread. Idx. x; if (i < N) a[i] = a[i] + float; } GPU int main() { float h_a[N]; float *d_a; cuda. Malloc ((void **) &a_d, SIZE); CPU cuda. Thread. Synchronize (); cuda. Memcpy (d_a, h_a, SIZE, cuda. Memcpy. Host. To. Device)); arradd <<< n_blocks, block_size >>> (d_a, 10. 0, N); cuda. Thread. Synchronize (); cuda. Memcpy (h_a, d_a, SIZE, cuda. Memcpy. Device. To. Host)); CUDA_SAFE_CALL (cuda. Free (a_d)); }

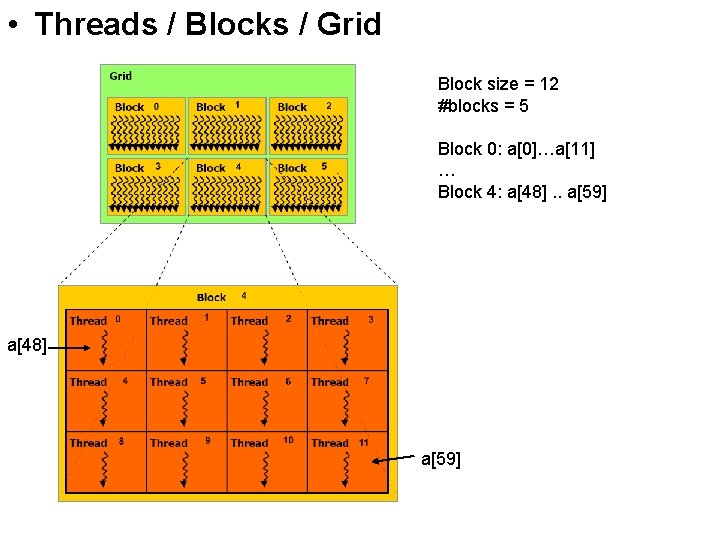

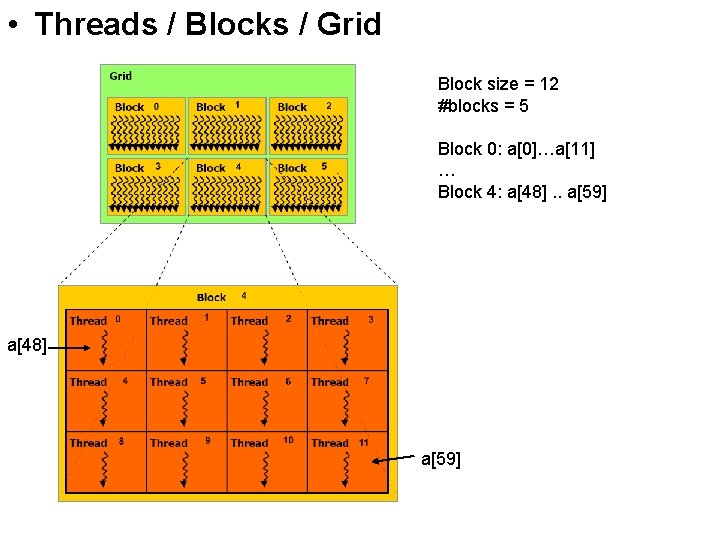

• Threads / Blocks / Grid Block size = 12 #blocks = 5 Block 0: a[0]…a[11] … Block 4: a[48]. . a[59] a[48] a[59]

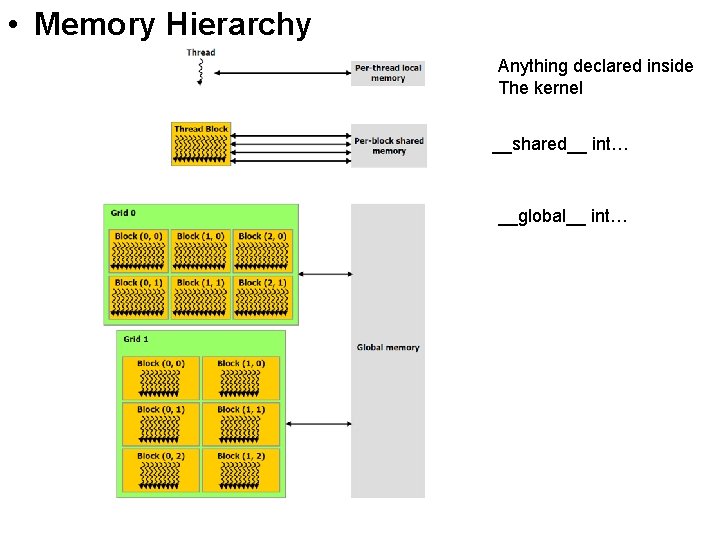

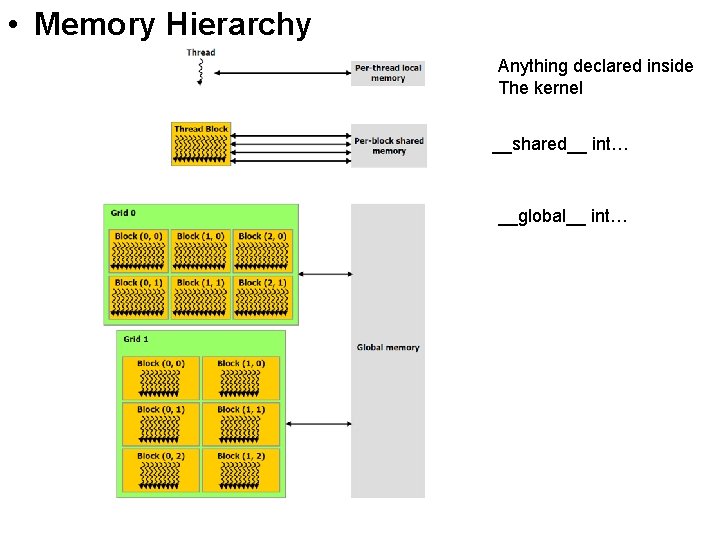

• Memory Hierarchy Anything declared inside The kernel __shared__ int… __global__ int…

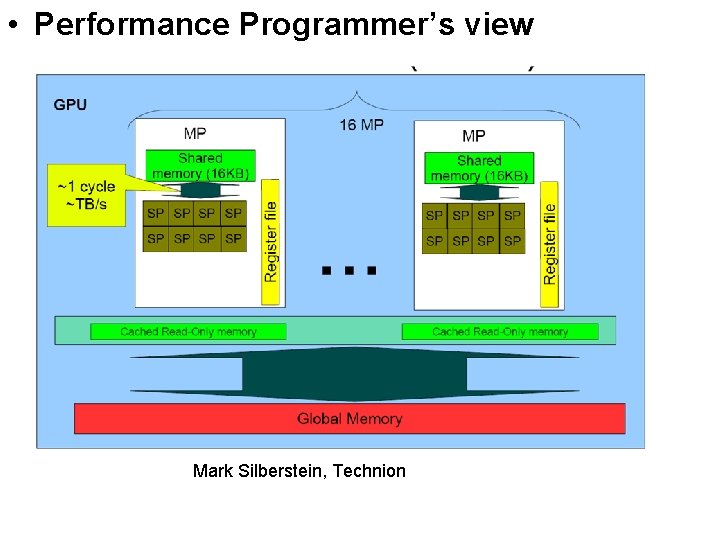

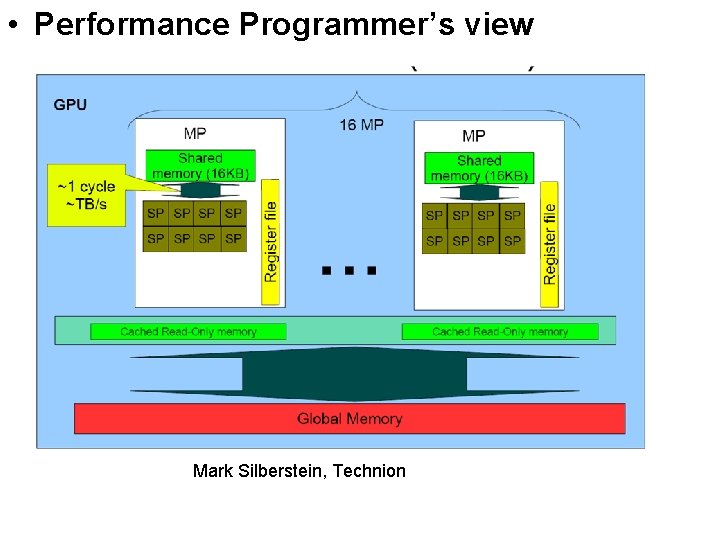

• Performance Programmer’s view Mark Silberstein, Technion

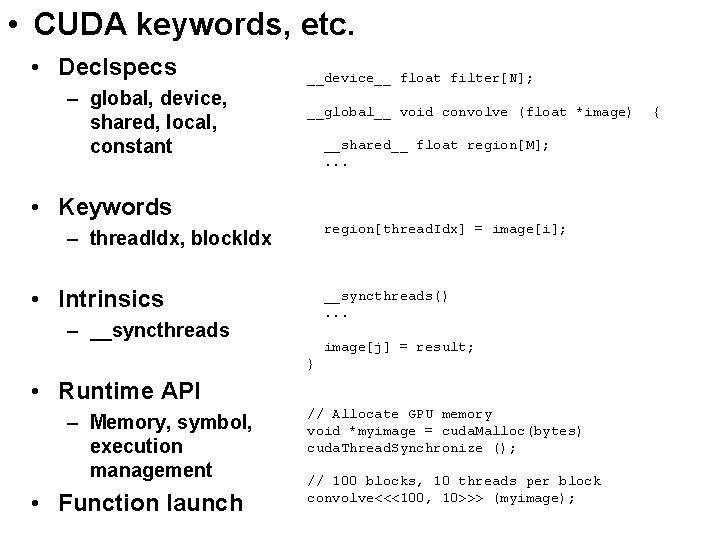

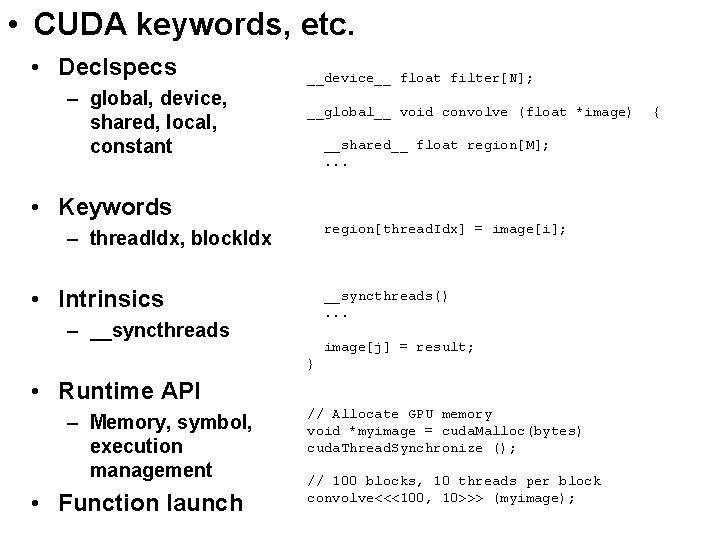

• CUDA keywords, etc. • Declspecs – global, device, shared, local, constant __device__ float filter[N]; __global__ void convolve (float *image) __shared__ float region[M]; . . . • Keywords region[thread. Idx] = image[i]; – thread. Idx, block. Idx • Intrinsics __syncthreads(). . . – __syncthreads image[j] = result; } • Runtime API – Memory, symbol, execution management • Function launch // Allocate GPU memory void *myimage = cuda. Malloc(bytes) cuda. Thread. Synchronize (); // 100 blocks, 10 threads per block convolve<<<100, 10>>> (myimage); {

• Floating-Point Caveats • Single precisions floating point support is not 100% IEEE 754 – No denormals, fixed rounding modes – Must check that SNR remains acceptable – But there are lots of SP FP units • GTX 280 supports double precision – But there are very few of these units

• Development Process • Course Specific • Get an account on the eecg network – Fill in your name/ID/current e-mail on the list • Wait until confirmation is received • Machines – ug 51. eecg through ug 75. eecg. utoronto. ca – SF 2204 – Keycode: _______

• • • Development Process Once you are on ugxx machine source /cad 1/CUDA/cuda. csh That will create a NVIDIA_CUDA_SDK Go in and type “make dbg=1” This builds several examples under bin/linux/debug • The source code is in the projects subdir • We’ll post a handout soon on the course website

• • • Development Process Create a xxxx. cu file Compile it with nvcc Makefile is provided by the SDK Nvcc is a preprocessor

• So, why would Parallel Processing work? • Parallel Processing and Programming has been around for a while • Golden age was the 80 s • Didn’t work – Programming is hard – Hardware was expensive – Single processor performance was doubling every 18 months • Why would it work now? – Cost / Single processor • Not a done deal at all Programming is still hard

• Course Staff • • Andreas Moshovos EA 310, 416 -946 -7373 moshovos@eecg. toronto. edu www. eecg. toronto. edu/~moshovos TA Hassan Shojania hassan@eecg. toronto

• Course Structure • Till the end of February / weekly assignments – CUDA programming – GTX 280 architecture – CUDA performance – Floating Point • March / Project Proposal and work – Case studies – General Parallel Programming guidelines • April – Project Presentations • Make up lectures?