CUDA Programming Introduction to CUDA Programming Andreas Moshovos

![Sequential Execution Model int a[N]; // N is large for (i =0; i < Sequential Execution Model int a[N]; // N is large for (i =0; i <](https://slidetodoc.com/presentation_image_h2/dbc3d7552ba65bd68a3f73ca073bf0aa/image-9.jpg)

![Data Parallel Execution Model / SIMD int a[N]; // N is large for all Data Parallel Execution Model / SIMD int a[N]; // N is large for all](https://slidetodoc.com/presentation_image_h2/dbc3d7552ba65bd68a3f73ca073bf0aa/image-10.jpg)

![Single Program Multiple Data / SPMD int a[N]; // N is large for all Single Program Multiple Data / SPMD int a[N]; // N is large for all](https://slidetodoc.com/presentation_image_h2/dbc3d7552ba65bd68a3f73ca073bf0aa/image-11.jpg)

![Target Applications int a[N]; // N is large for all elements of an array Target Applications int a[N]; // N is large for all elements of an array](https://slidetodoc.com/presentation_image_h2/dbc3d7552ba65bd68a3f73ca073bf0aa/image-20.jpg)

![CUDA API: Example int a[N]; for (i =0; i < N; i++) a[i] = CUDA API: Example int a[N]; for (i =0; i < N; i++) a[i] =](https://slidetodoc.com/presentation_image_h2/dbc3d7552ba65bd68a3f73ca073bf0aa/image-51.jpg)

![1. Allocate CPU Data container float *ha; main (int argc, char *argv[]) { int 1. Allocate CPU Data container float *ha; main (int argc, char *argv[]) { int](https://slidetodoc.com/presentation_image_h2/dbc3d7552ba65bd68a3f73ca073bf0aa/image-53.jpg)

![__device__ Example • Add x to a[i] multiple times __device__ float addmany (float a, __device__ Example • Add x to a[i] multiple times __device__ float addmany (float a,](https://slidetodoc.com/presentation_image_h2/dbc3d7552ba65bd68a3f73ca073bf0aa/image-68.jpg)

![Handling Large Input Data Sets – 1 D Example • Recall grid. Dim. [xy] Handling Large Input Data Sets – 1 D Example • Recall grid. Dim. [xy]](https://slidetodoc.com/presentation_image_h2/dbc3d7552ba65bd68a3f73ca073bf0aa/image-89.jpg)

- Slides: 93

CUDA Programming Introduction to CUDA Programming Andreas Moshovos Winter 2009 Some slides/material from: UIUC course by Wen-Mei Hwu and David Kirk UCSB course by Andrea Di Blas Universitat Jena by Waqar Saleem NVIDIA by Simon Green

What computation machine do we want? You can have one wish. Wish anything you want Yeah! I want a … Genie cartoon is from www. cane. com http: //www. pdclipart. org

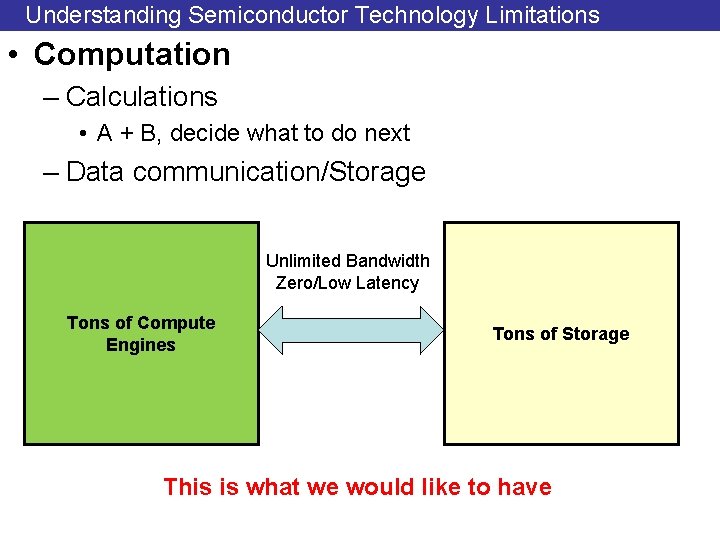

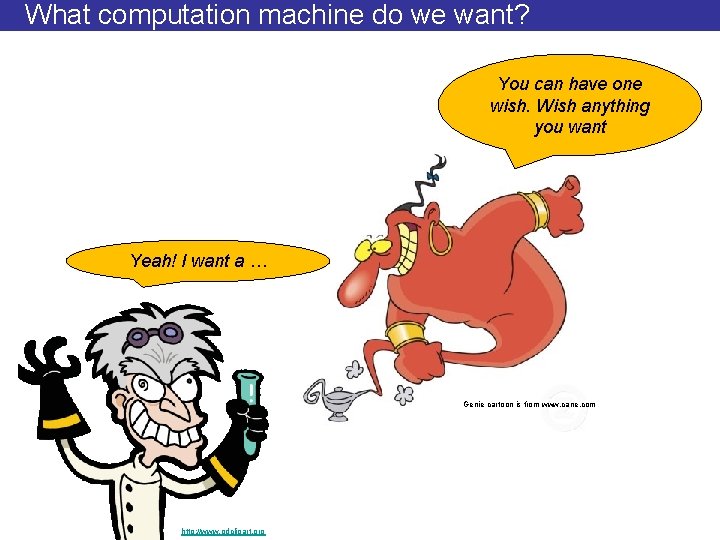

Understanding Semiconductor Technology Limitations • Computation – Calculations • A + B, decide what to do next – Data communication/Storage Unlimited Bandwidth Zero/Low Latency Tons of Compute Engines Tons of Storage This is what we would like to have

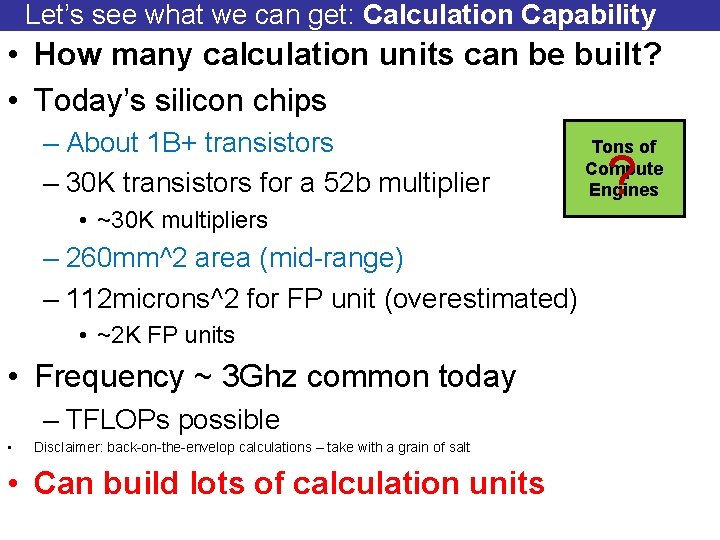

Let’s see what we can get: Calculation Capability • How many calculation units can be built? • Today’s silicon chips – About 1 B+ transistors – 30 K transistors for a 52 b multiplier • ~30 K multipliers – 260 mm^2 area (mid-range) – 112 microns^2 for FP unit (overestimated) • ~2 K FP units • Frequency ~ 3 Ghz common today – TFLOPs possible • Disclaimer: back-on-the-envelop calculations – take with a grain of salt • Can build lots of calculation units Tons of Compute Engines ?

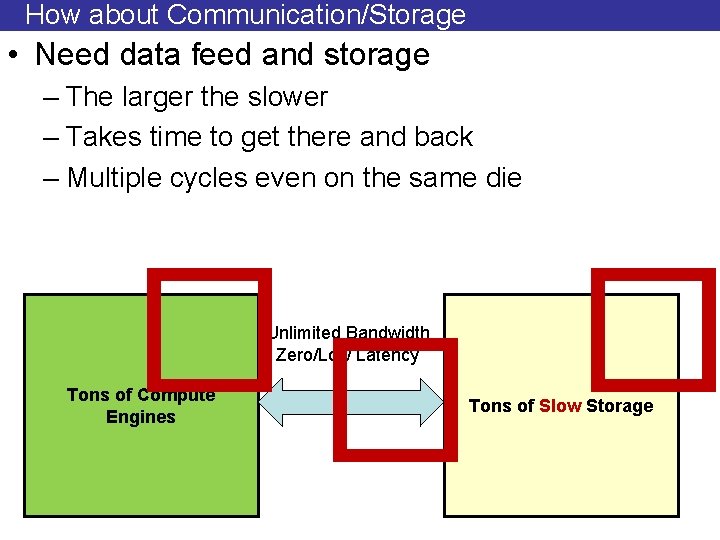

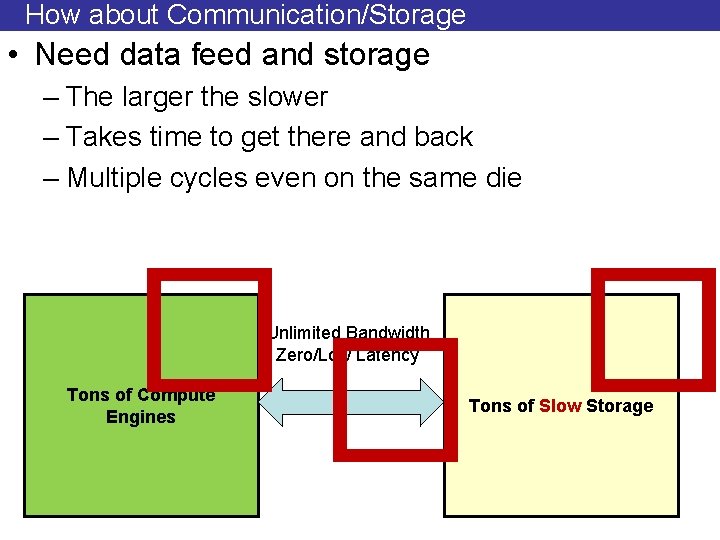

How about Communication/Storage • Need data feed and storage – The larger the slower – Takes time to get there and back – Multiple cycles even on the same die �� � Unlimited Bandwidth Zero/Low Latency Tons of Compute Engines Tons of Slow Storage

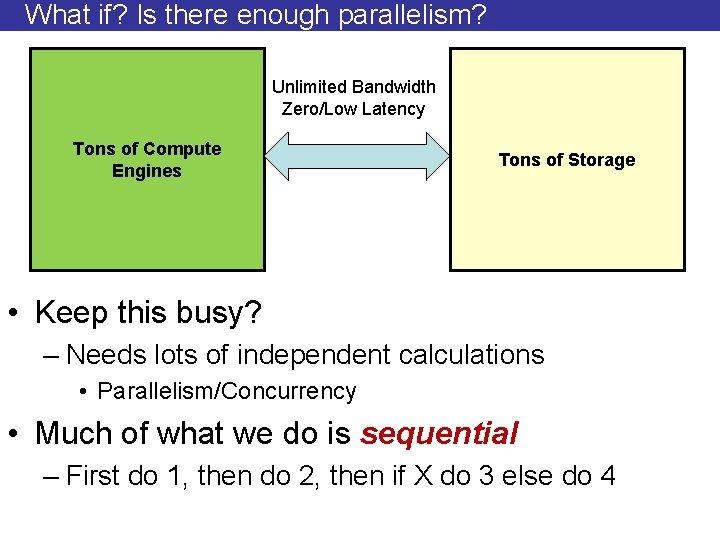

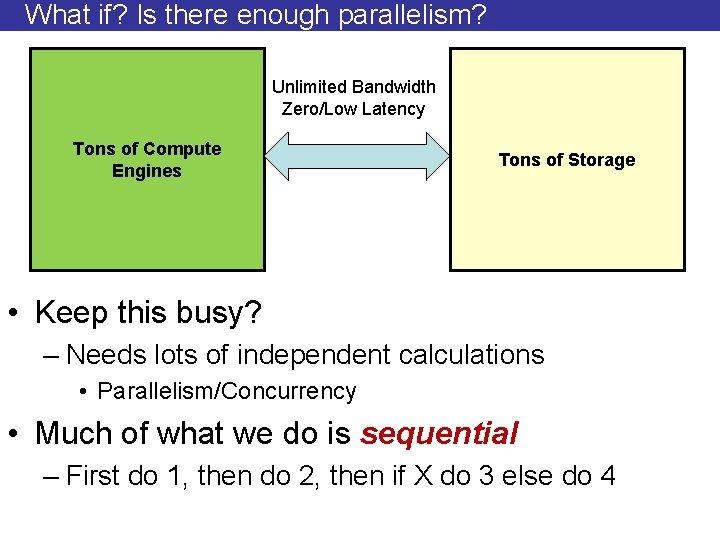

What if? Is there enough parallelism? Unlimited Bandwidth Zero/Low Latency Tons of Compute Engines Tons of Storage • Keep this busy? – Needs lots of independent calculations • Parallelism/Concurrency • Much of what we do is sequential – First do 1, then do 2, then if X do 3 else do 4

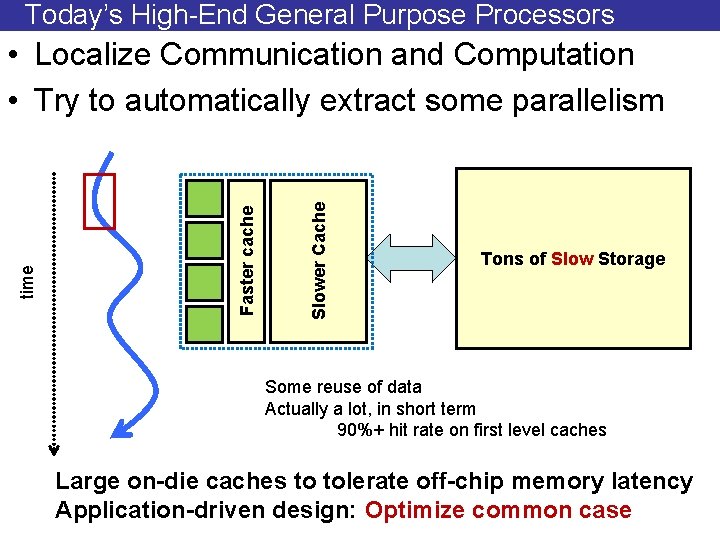

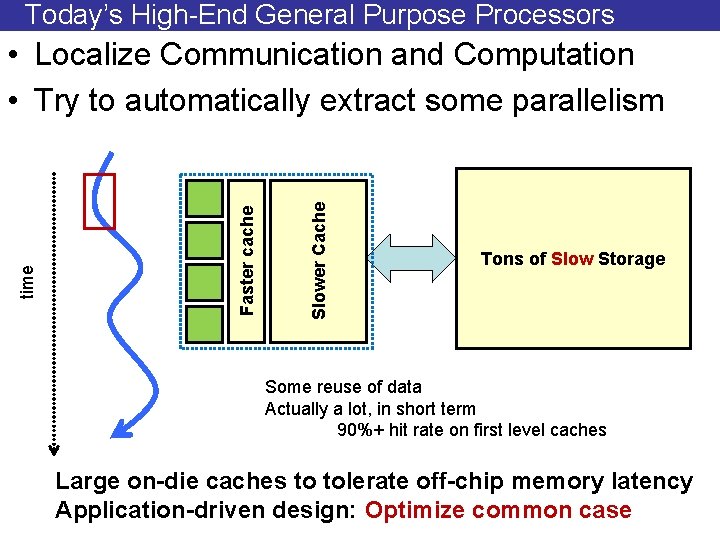

Today’s High-End General Purpose Processors Slower Cache Faster cache time • Localize Communication and Computation • Try to automatically extract some parallelism Tons of Slow Storage Some reuse of data Actually a lot, in short term 90%+ hit rate on first level caches Large on-die caches to tolerate off-chip memory latency Application-driven design: Optimize common case

Some things are naturally parallel

![Sequential Execution Model int aN N is large for i 0 i Sequential Execution Model int a[N]; // N is large for (i =0; i <](https://slidetodoc.com/presentation_image_h2/dbc3d7552ba65bd68a3f73ca073bf0aa/image-9.jpg)

Sequential Execution Model int a[N]; // N is large for (i =0; i < N; i++) time a[i] = a[i] * fade; Flow of control / Thread One instruction at the time Optimizations possible at the machine level

![Data Parallel Execution Model SIMD int aN N is large for all Data Parallel Execution Model / SIMD int a[N]; // N is large for all](https://slidetodoc.com/presentation_image_h2/dbc3d7552ba65bd68a3f73ca073bf0aa/image-10.jpg)

Data Parallel Execution Model / SIMD int a[N]; // N is large for all elements do in parallel time a[i] = a[i] * fade; This has been tried before: ILLIAC III, UIUC, 1966 http: //ieeexplore. ieee. org/xpls/abs_all. jsp? arnumber=4038028&tag=1 http: //ed-thelen. org/comp-hist/vs-illiac-iv. html

![Single Program Multiple Data SPMD int aN N is large for all Single Program Multiple Data / SPMD int a[N]; // N is large for all](https://slidetodoc.com/presentation_image_h2/dbc3d7552ba65bd68a3f73ca073bf0aa/image-11.jpg)

Single Program Multiple Data / SPMD int a[N]; // N is large for all elements do in parallel time if (a[i] > threshold) a[i]*= fade; Code is statically identical across all threads Execution path may differ The model used in today’s Graphics Processors

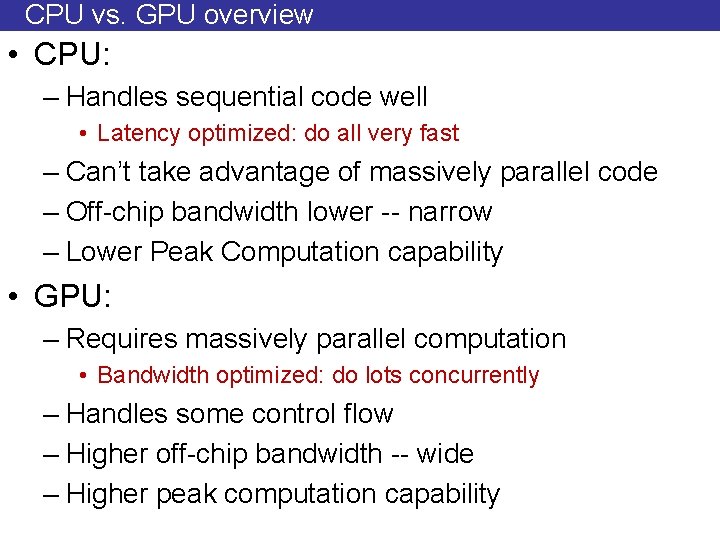

CPU vs. GPU overview • CPU: – Handles sequential code well • Latency optimized: do all very fast – Can’t take advantage of massively parallel code – Off-chip bandwidth lower -- narrow – Lower Peak Computation capability • GPU: – Requires massively parallel computation • Bandwidth optimized: do lots concurrently – Handles some control flow – Higher off-chip bandwidth -- wide – Higher peak computation capability

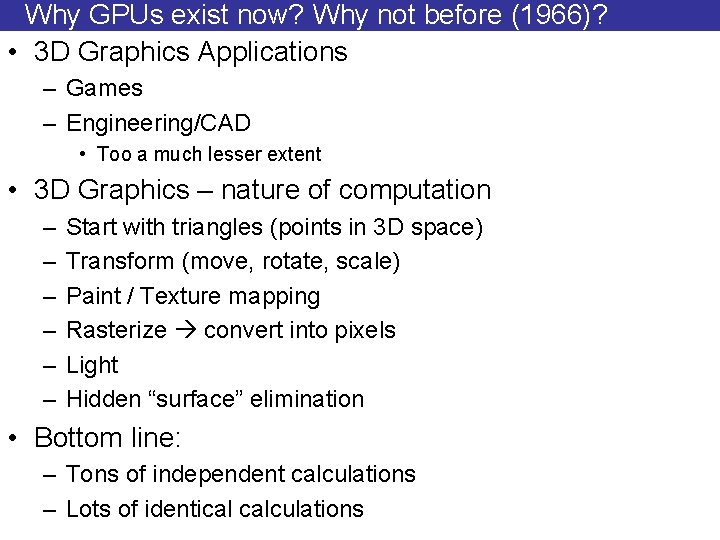

Why GPUs exist now? Why not before (1966)? • 3 D Graphics Applications – Games – Engineering/CAD • Too a much lesser extent • 3 D Graphics – nature of computation – – – Start with triangles (points in 3 D space) Transform (move, rotate, scale) Paint / Texture mapping Rasterize convert into pixels Light Hidden “surface” elimination • Bottom line: – Tons of independent calculations – Lots of identical calculations

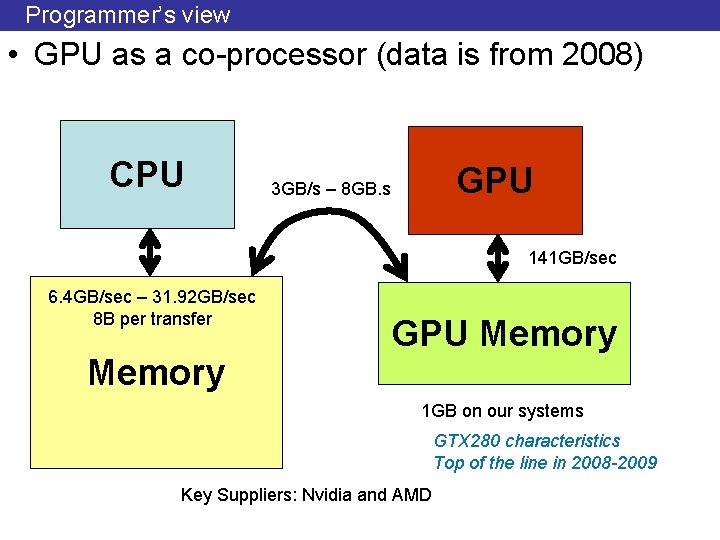

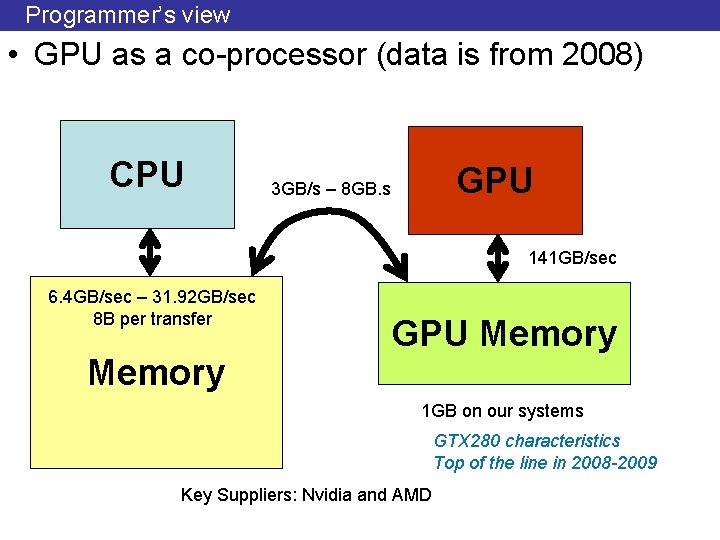

Programmer’s view • GPU as a co-processor (data is from 2008) CPU GPU 3 GB/s – 8 GB. s 141 GB/sec 6. 4 GB/sec – 31. 92 GB/sec 8 B per transfer Memory GPU Memory 1 GB on our systems GTX 280 characteristics Top of the line in 2008 -2009 Key Suppliers: Nvidia and AMD

But what about performance? • Focus on PEAK performance first: – What the manufacturer guarantees you’ll never exceed • Two Aspects: – Data Access Rate Capability • Bandwidth – Data Processing Capability • How many ops per sec

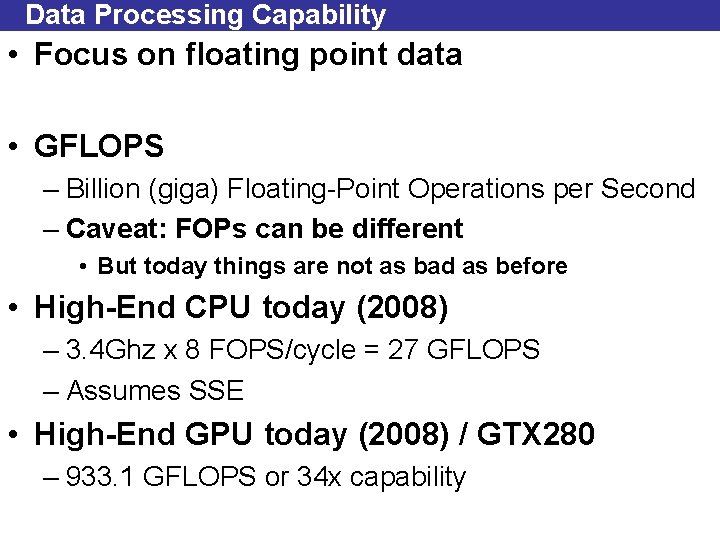

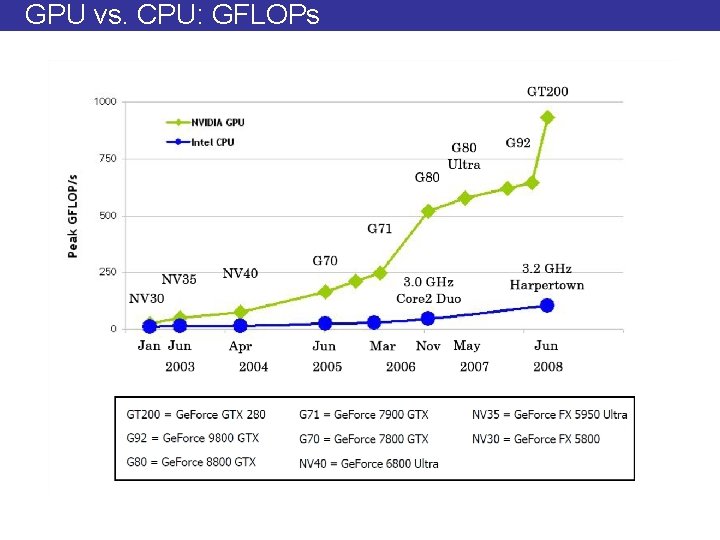

Data Processing Capability • Focus on floating point data • GFLOPS – Billion (giga) Floating-Point Operations per Second – Caveat: FOPs can be different • But today things are not as bad as before • High-End CPU today (2008) – 3. 4 Ghz x 8 FOPS/cycle = 27 GFLOPS – Assumes SSE • High-End GPU today (2008) / GTX 280 – 933. 1 GFLOPS or 34 x capability

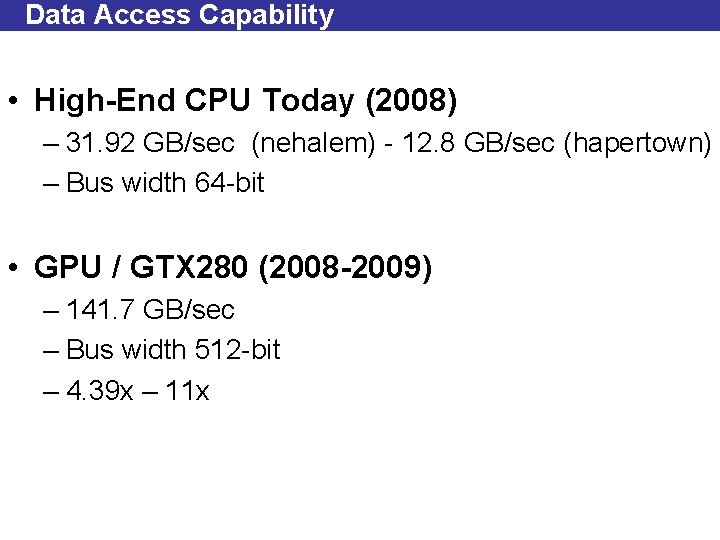

Data Access Capability • High-End CPU Today (2008) – 31. 92 GB/sec (nehalem) - 12. 8 GB/sec (hapertown) – Bus width 64 -bit • GPU / GTX 280 (2008 -2009) – 141. 7 GB/sec – Bus width 512 -bit – 4. 39 x – 11 x

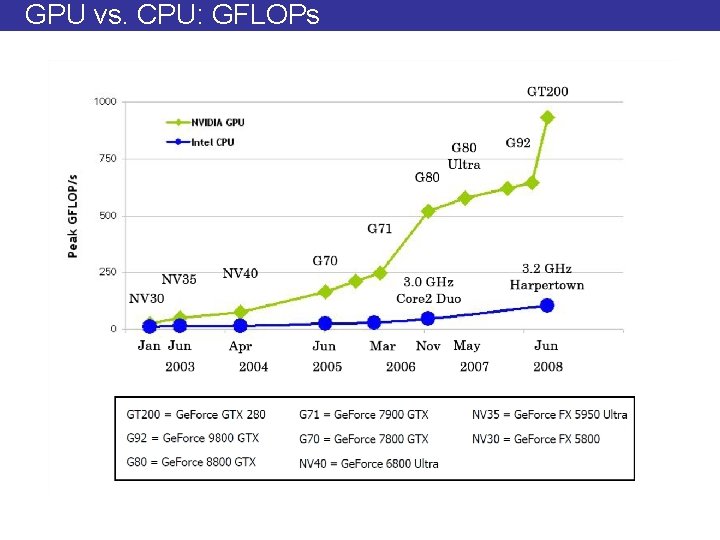

GPU vs. CPU: GFLOPs

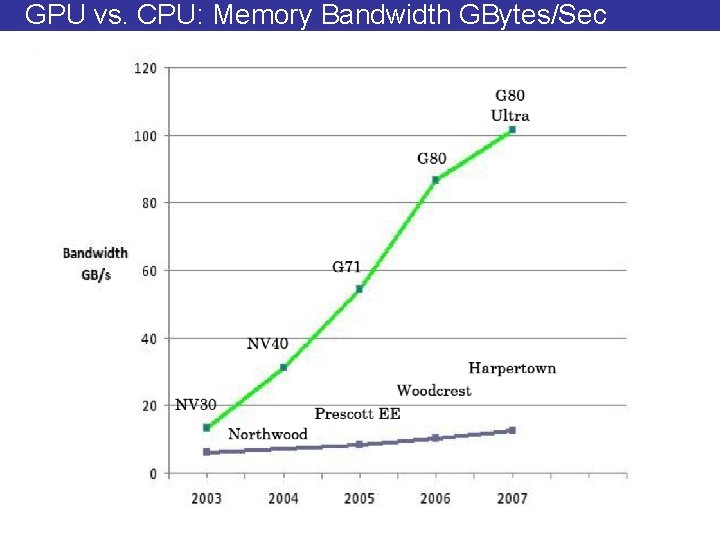

GPU vs. CPU: Memory Bandwidth GBytes/Sec

![Target Applications int aN N is large for all elements of an array Target Applications int a[N]; // N is large for all elements of an array](https://slidetodoc.com/presentation_image_h2/dbc3d7552ba65bd68a3f73ca073bf0aa/image-20.jpg)

Target Applications int a[N]; // N is large for all elements of an array a[i] = a[i] * fade Kernel • Lots of independent computations – CUDA threads need not be completely independent THREAD

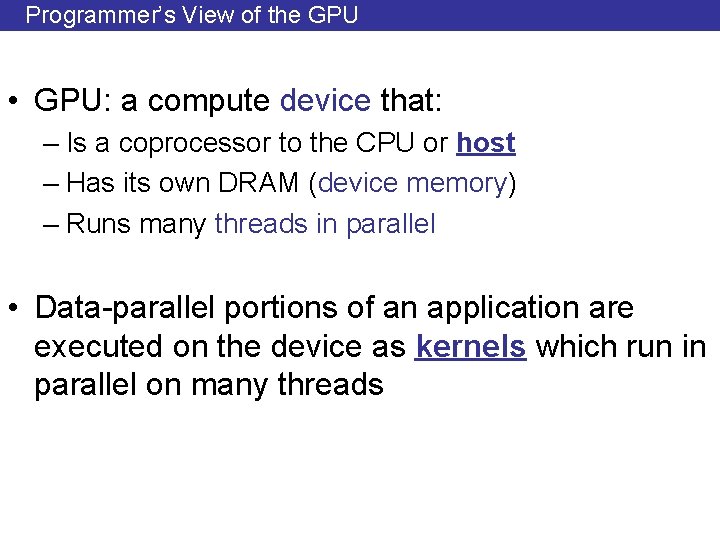

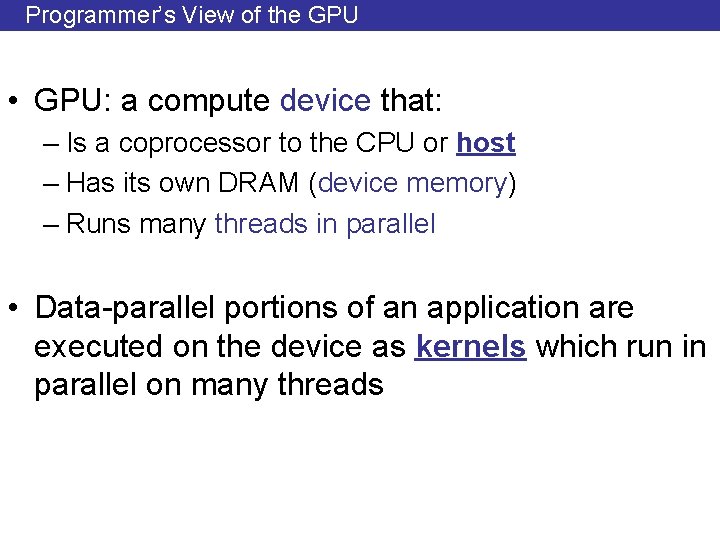

Programmer’s View of the GPU • GPU: a compute device that: – Is a coprocessor to the CPU or host – Has its own DRAM (device memory) – Runs many threads in parallel • Data-parallel portions of an application are executed on the device as kernels which run in parallel on many threads

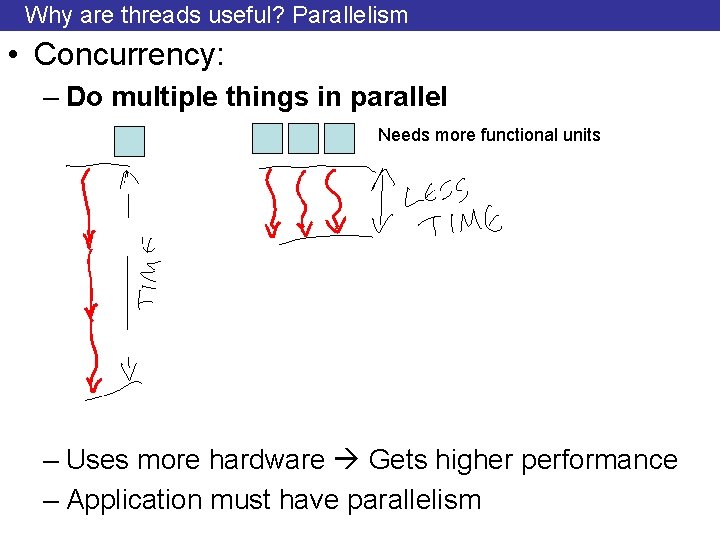

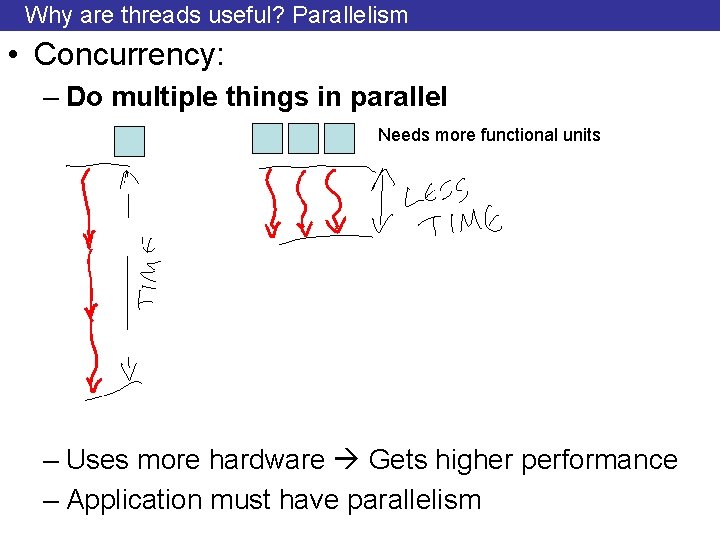

Why are threads useful? Parallelism • Concurrency: – Do multiple things in parallel Needs more functional units – Uses more hardware Gets higher performance – Application must have parallelism

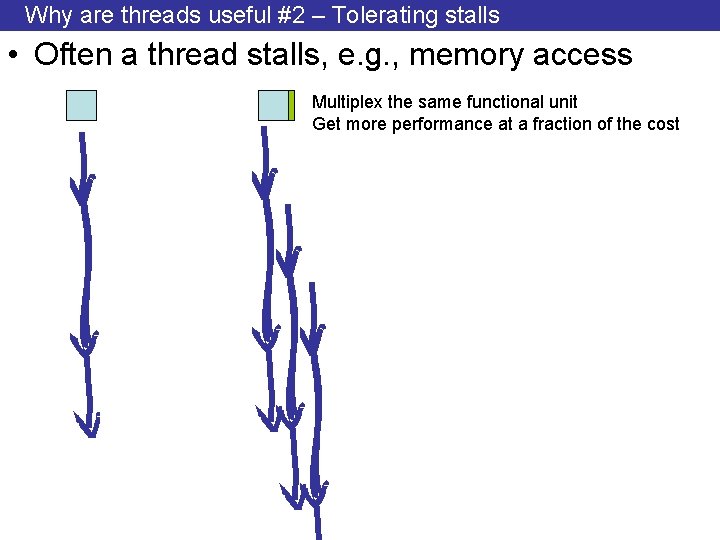

Why are threads useful #2 – Tolerating stalls • Often a thread stalls, e. g. , memory access Multiplex the same functional unit Get more performance at a fraction of the cost

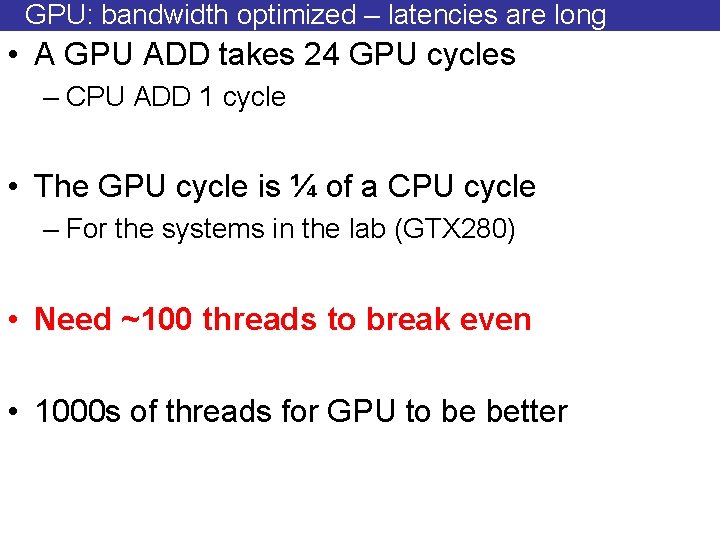

GPU: bandwidth optimized – latencies are long • A GPU ADD takes 24 GPU cycles – CPU ADD 1 cycle • The GPU cycle is ¼ of a CPU cycle – For the systems in the lab (GTX 280) • Need ~100 threads to break even • 1000 s of threads for GPU to be better

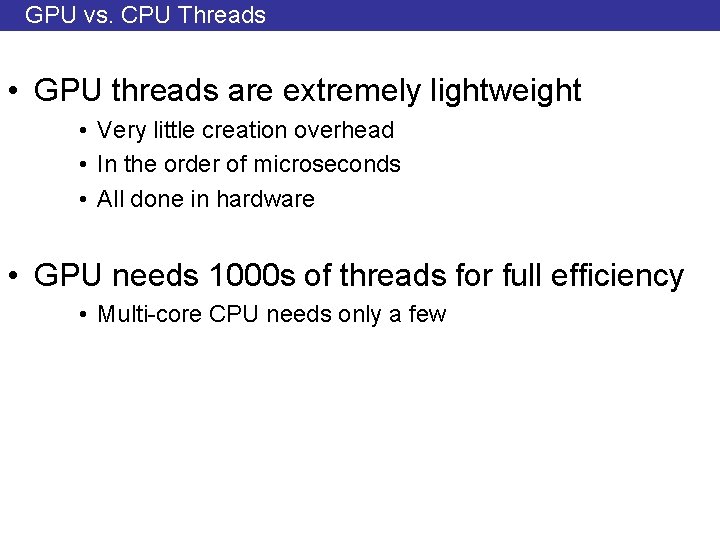

GPU vs. CPU Threads • GPU threads are extremely lightweight • Very little creation overhead • In the order of microseconds • All done in hardware • GPU needs 1000 s of threads for full efficiency • Multi-core CPU needs only a few

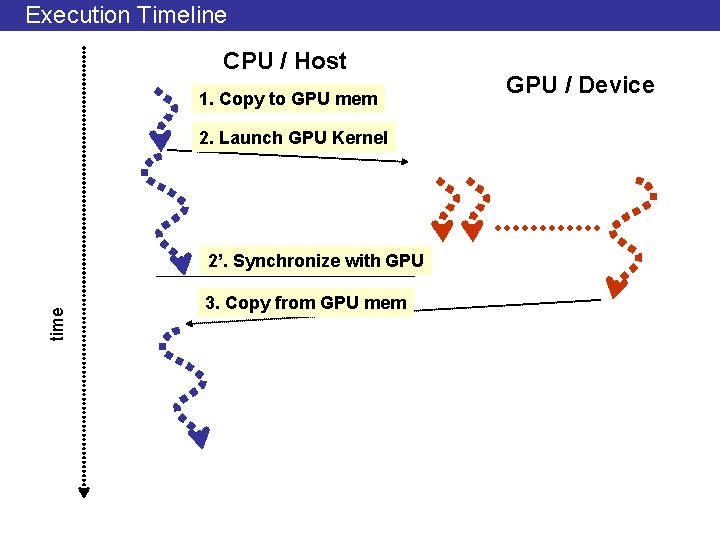

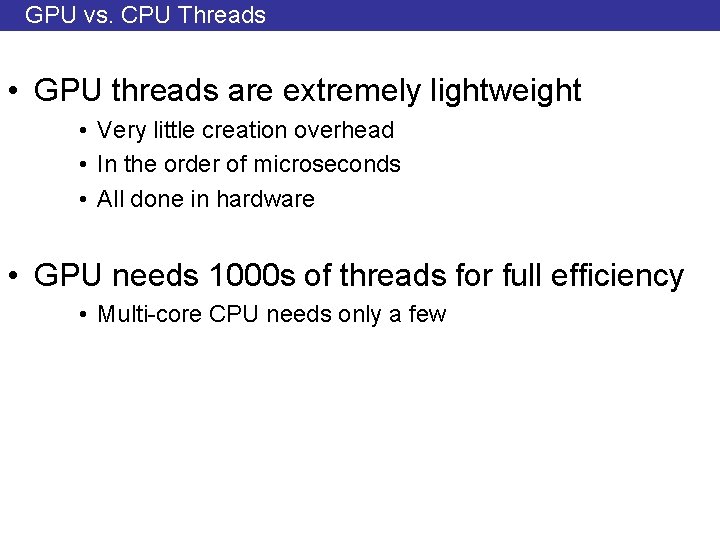

Execution Timeline CPU / Host 1. Copy to GPU mem 2. Launch GPU Kernel time 2’. Synchronize with GPU 3. Copy from GPU mem GPU / Device

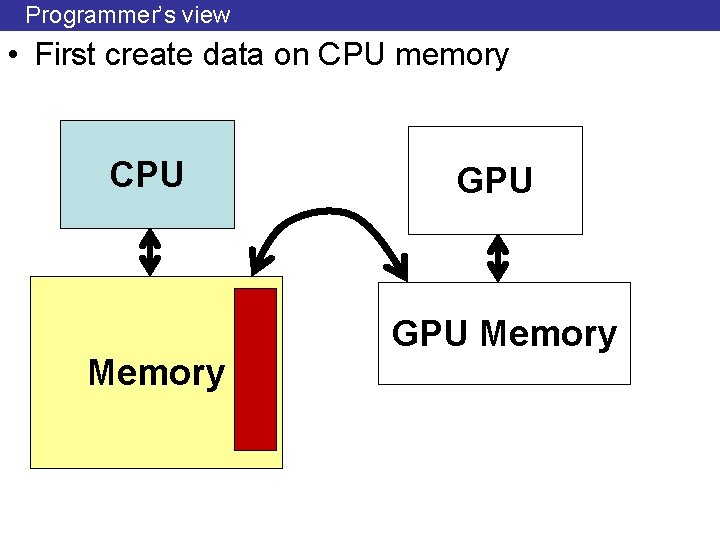

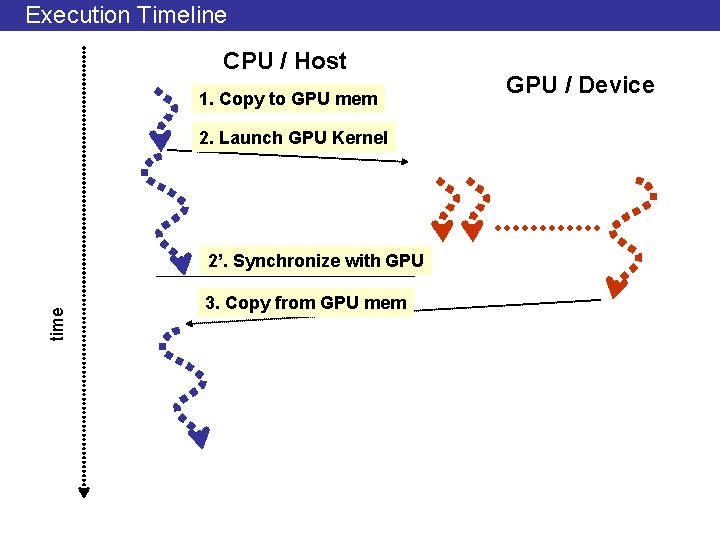

Programmer’s view • First create data on CPU memory CPU Memory GPU Memory

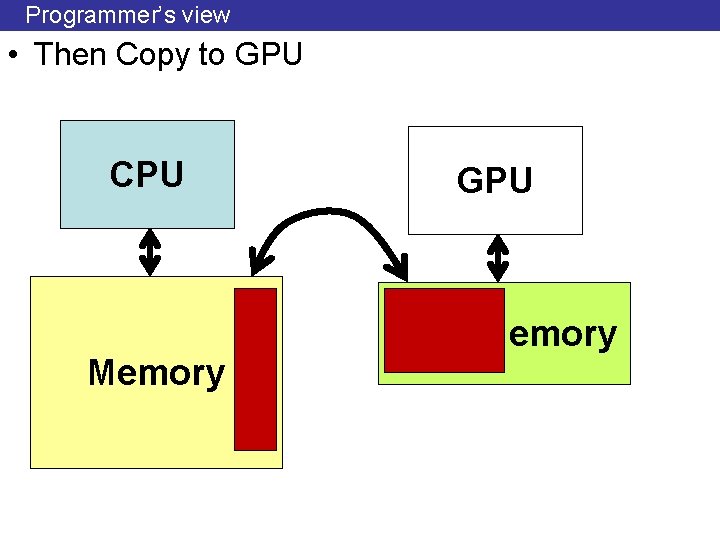

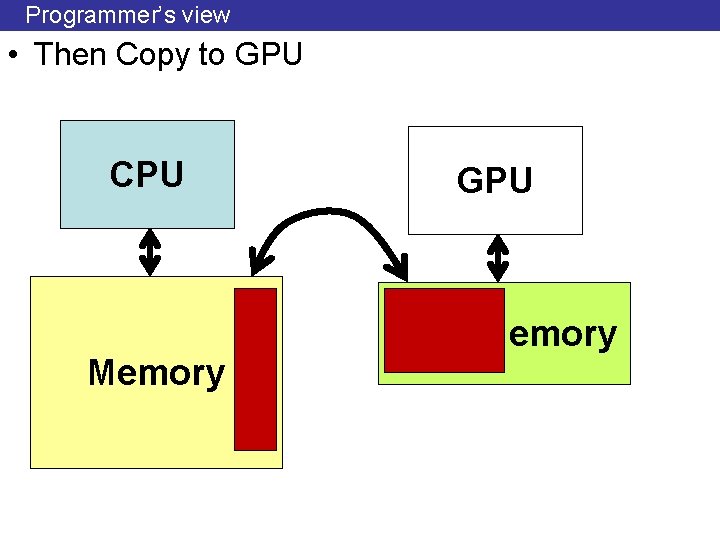

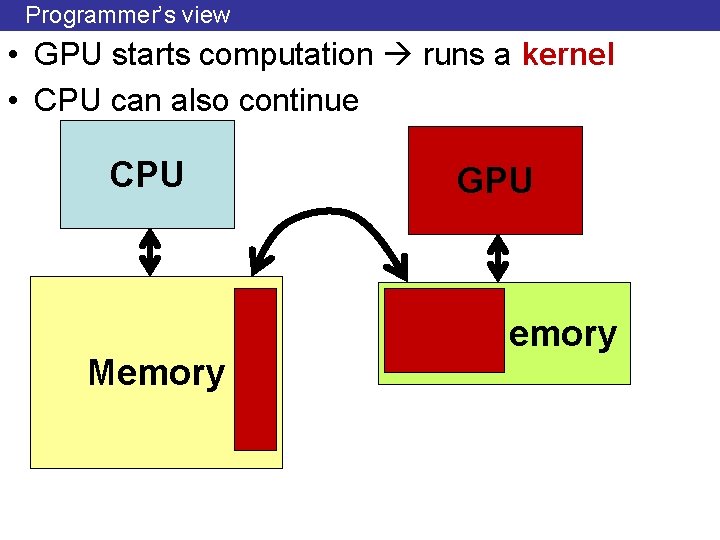

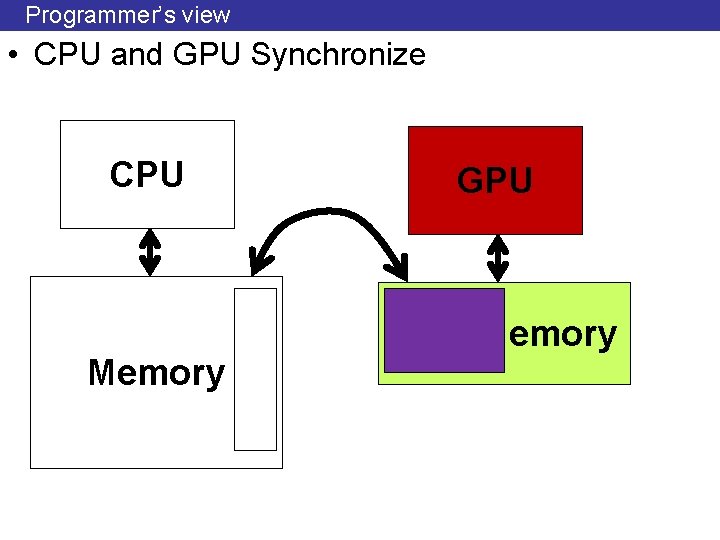

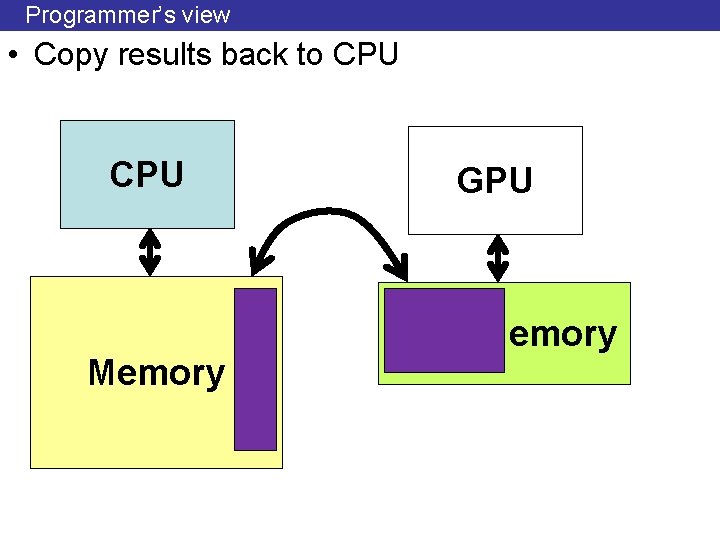

Programmer’s view • Then Copy to GPU CPU Memory GPU Memory

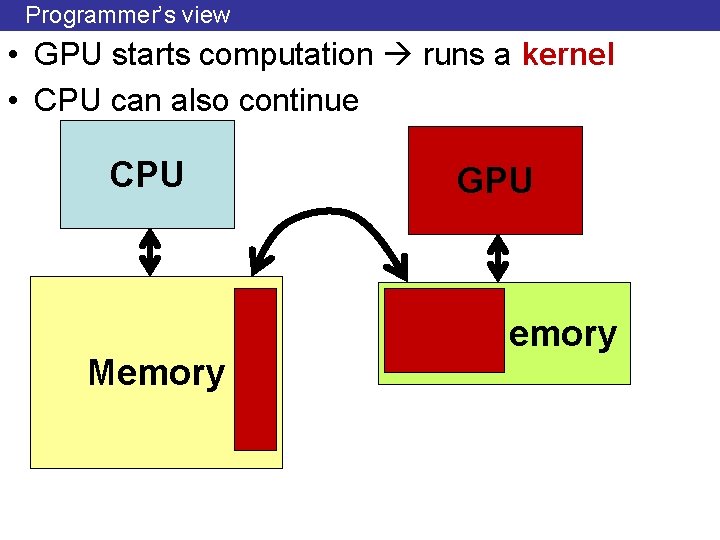

Programmer’s view • GPU starts computation runs a kernel • CPU can also continue CPU Memory GPU Memory

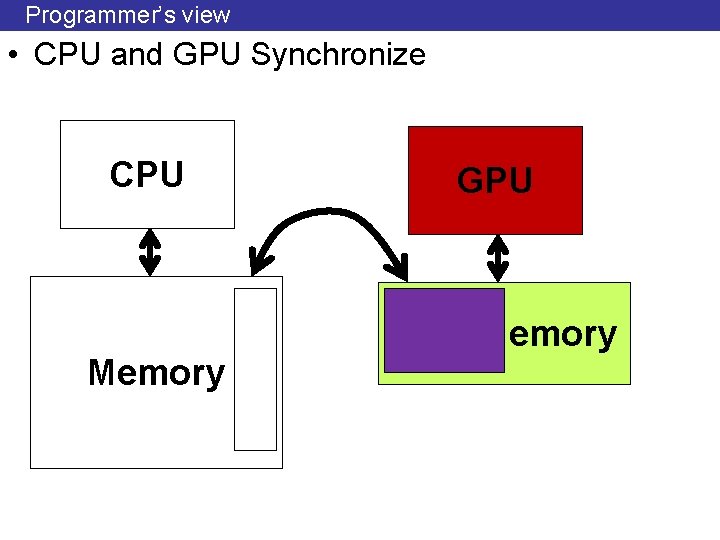

Programmer’s view • CPU and GPU Synchronize CPU Memory GPU Memory

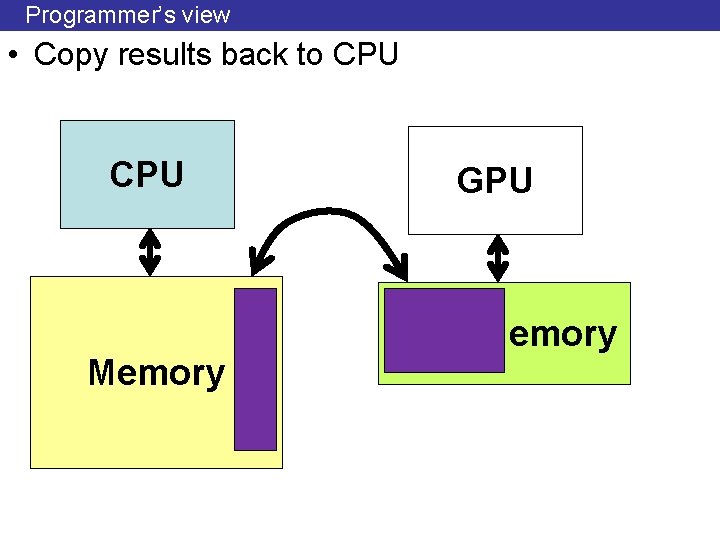

Programmer’s view • Copy results back to CPU Memory GPU Memory

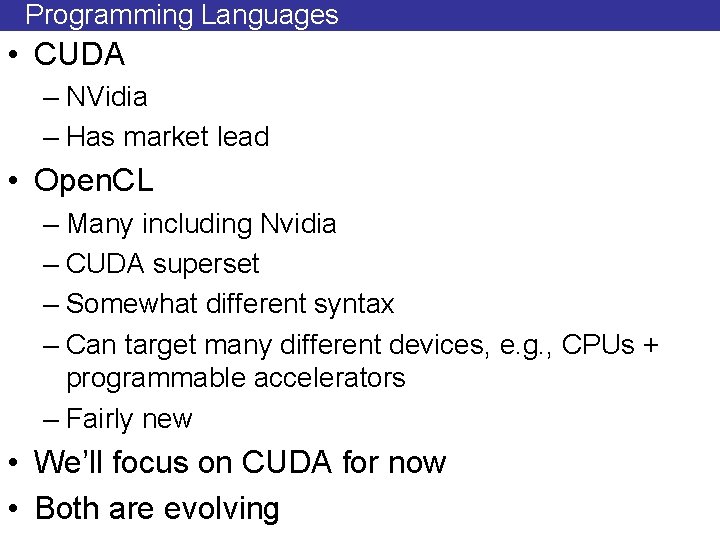

Programming Languages • CUDA – NVidia – Has market lead • Open. CL – Many including Nvidia – CUDA superset – Somewhat different syntax – Can target many different devices, e. g. , CPUs + programmable accelerators – Fairly new • We’ll focus on CUDA for now • Both are evolving

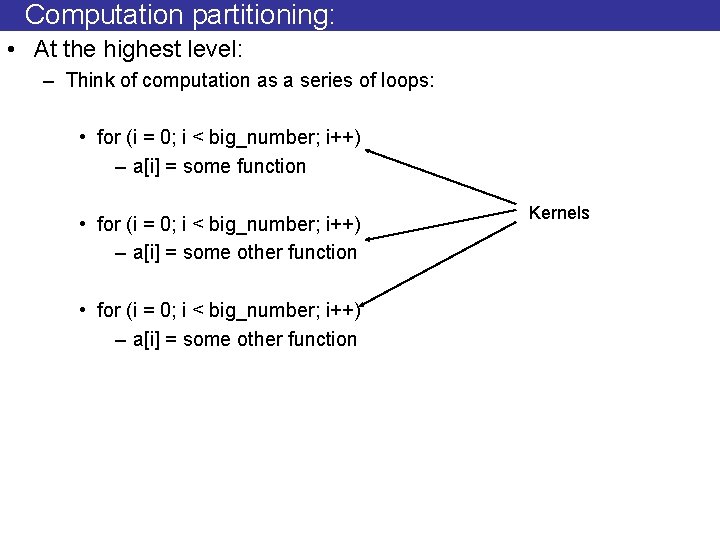

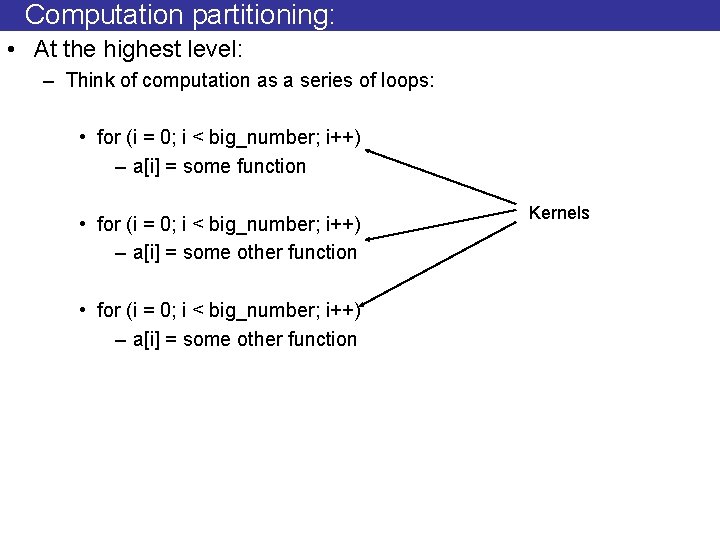

Computation partitioning: • At the highest level: – Think of computation as a series of loops: • for (i = 0; i < big_number; i++) – a[i] = some function • for (i = 0; i < big_number; i++) – a[i] = some other function Kernels

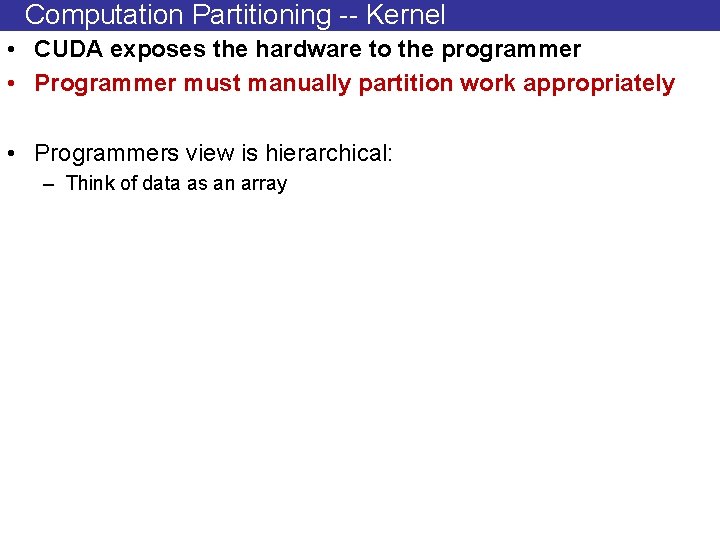

Computation Partitioning -- Kernel • CUDA exposes the hardware to the programmer • Programmer must manually partition work appropriately • Programmers view is hierarchical: – Think of data as an array

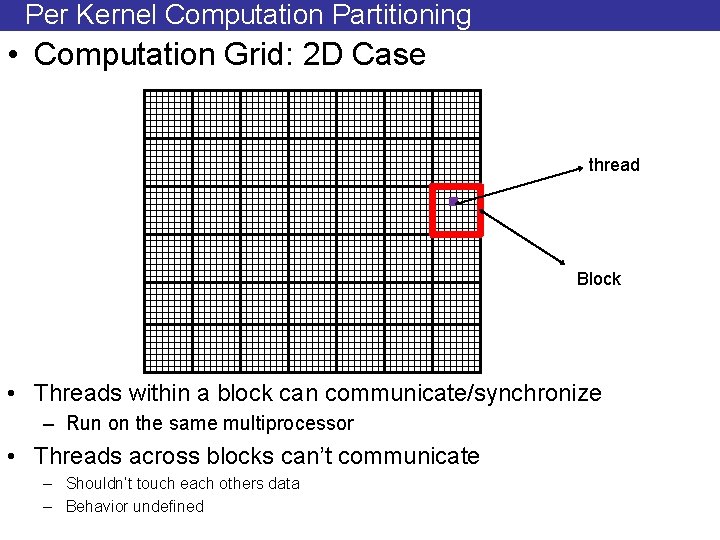

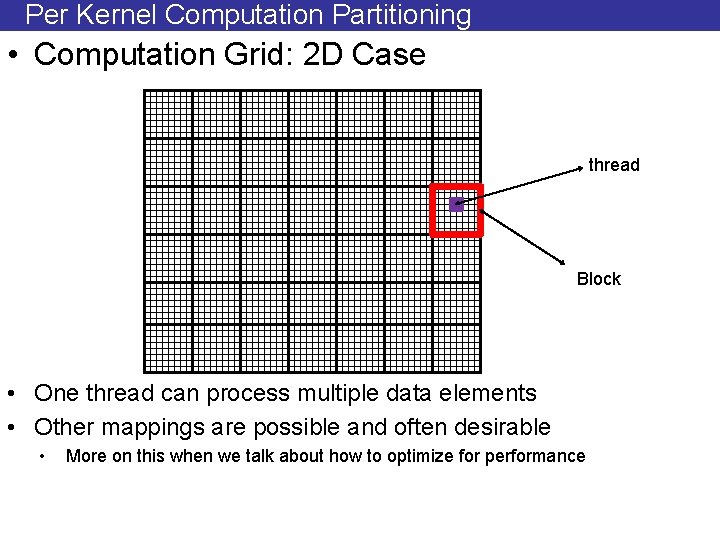

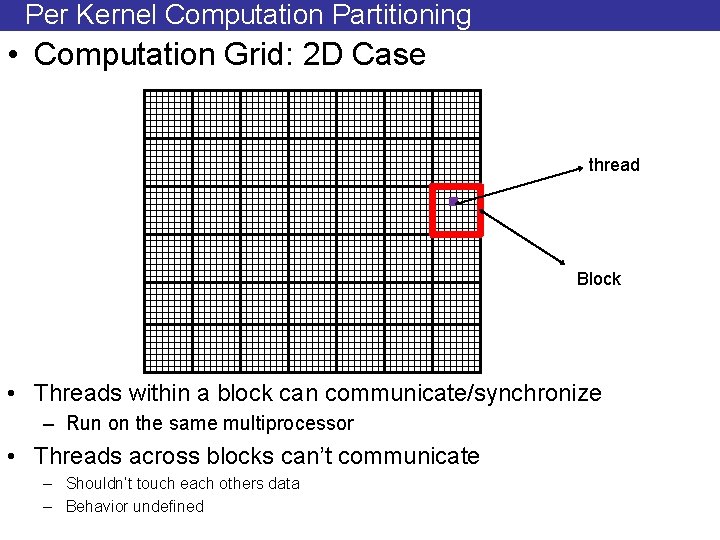

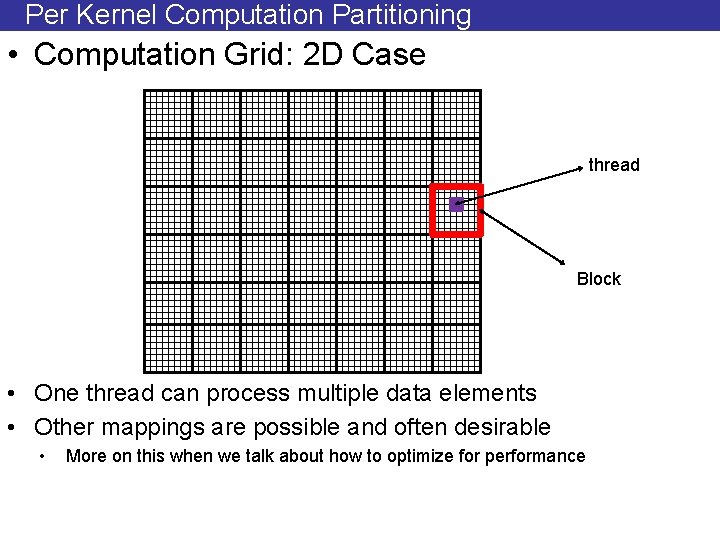

Per Kernel Computation Partitioning • Computation Grid: 2 D Case thread Block • Threads within a block can communicate/synchronize – Run on the same multiprocessor • Threads across blocks can’t communicate – Shouldn’t touch each others data – Behavior undefined

Per Kernel Computation Partitioning • Computation Grid: 2 D Case thread Block • One thread can process multiple data elements • Other mappings are possible and often desirable • More on this when we talk about how to optimize for performance

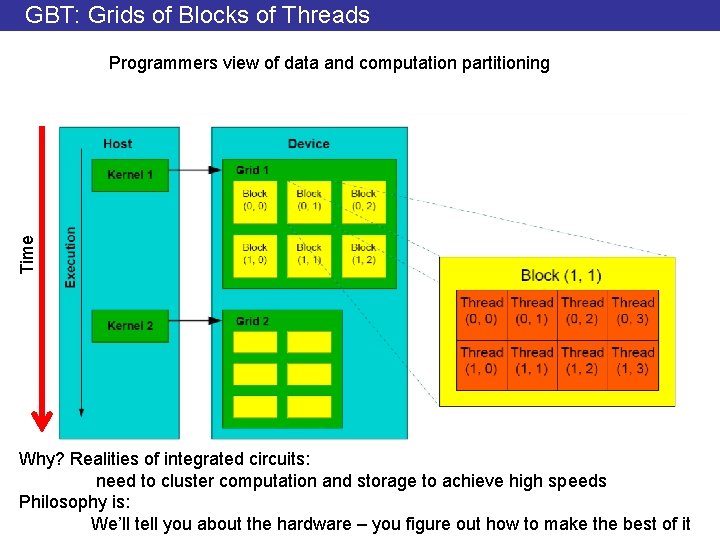

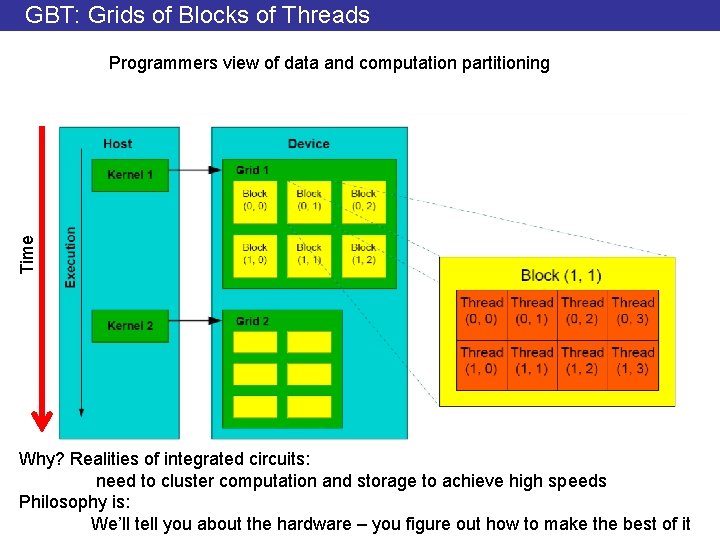

GBT: Grids of Blocks of Threads Time Programmers view of data and computation partitioning Why? Realities of integrated circuits: need to cluster computation and storage to achieve high speeds Philosophy is: We’ll tell you about the hardware – you figure out how to make the best of it

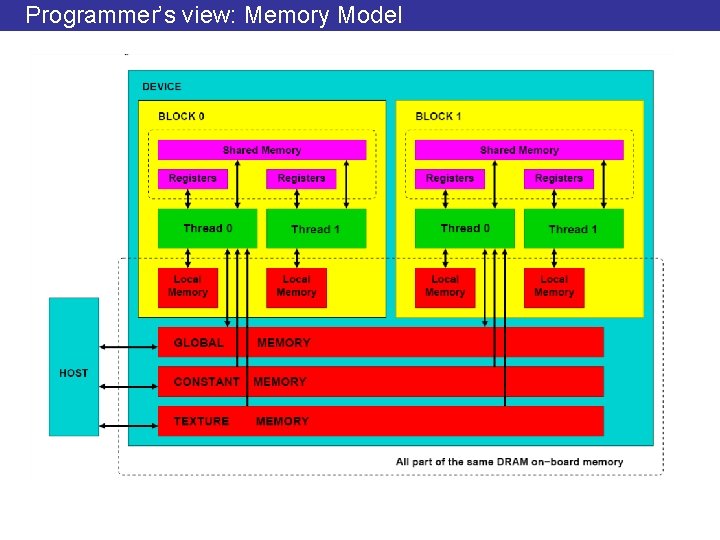

Programmer’s view: Memory Model

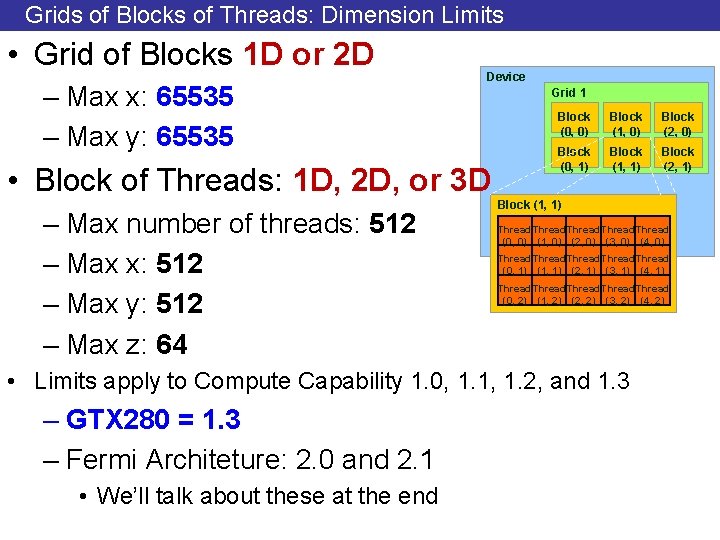

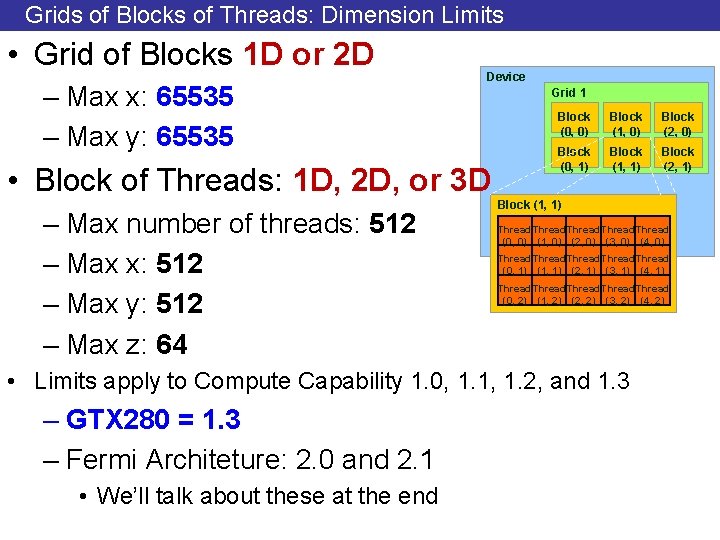

Grids of Blocks of Threads: Dimension Limits • Grid of Blocks 1 D or 2 D – Max x: 65535 – Max y: 65535 Device • Block of Threads: 1 D, 2 D, or 3 D – Max number of threads: 512 – Max x: 512 – Max y: 512 – Max z: 64 Grid 1 Block (0, 0) Block (1, 0) Block (2, 0) Block (0, 1) Block (1, 1) Block (2, 1) Block (1, 1) Thread Thread (0, 0) (1, 0) (2, 0) (3, 0) (4, 0) Thread Thread (0, 1) (1, 1) (2, 1) (3, 1) (4, 1) Thread Thread (0, 2) (1, 2) (2, 2) (3, 2) (4, 2) • Limits apply to Compute Capability 1. 0, 1. 1, 1. 2, and 1. 3 – GTX 280 = 1. 3 – Fermi Architeture: 2. 0 and 2. 1 • We’ll talk about these at the end

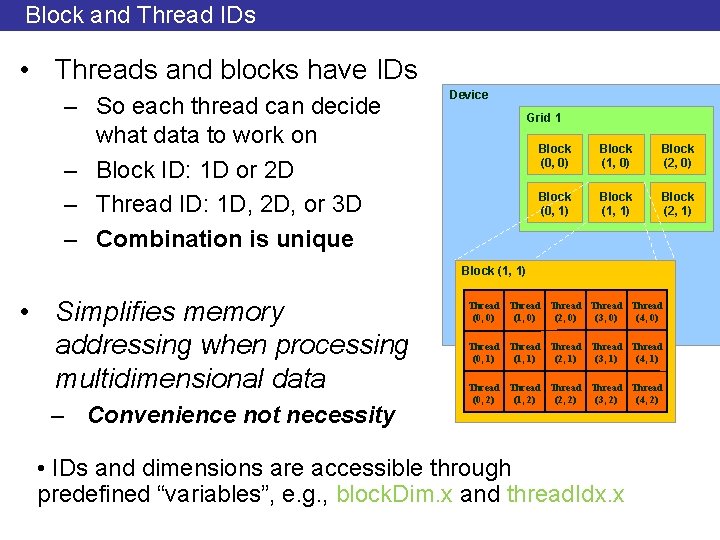

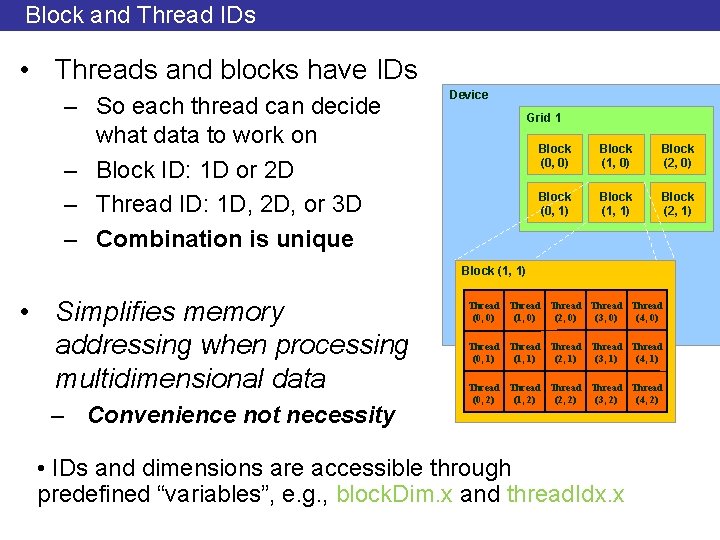

Block and Thread IDs • Threads and blocks have IDs – So each thread can decide what data to work on – Block ID: 1 D or 2 D – Thread ID: 1 D, 2 D, or 3 D – Combination is unique Device Grid 1 Block (0, 0) Block (1, 0) Block (2, 0) Block (0, 1) Block (1, 1) Block (2, 1) Block (1, 1) • Simplifies memory addressing when processing multidimensional data – Convenience not necessity Thread Thread (0, 0) (1, 0) (2, 0) (3, 0) (4, 0) Thread Thread (0, 1) (1, 1) (2, 1) (3, 1) (4, 1) Thread Thread (0, 2) (1, 2) (2, 2) (3, 2) (4, 2) • IDs and dimensions are accessible through predefined “variables”, e. g. , block. Dim. x and thread. Idx. x

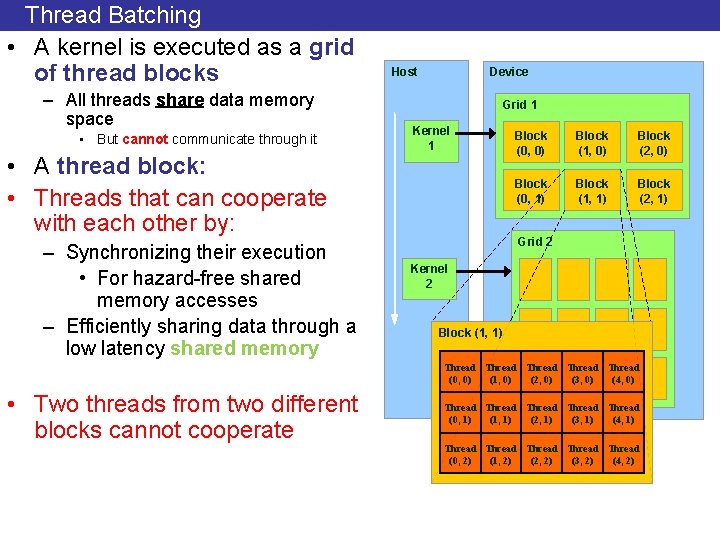

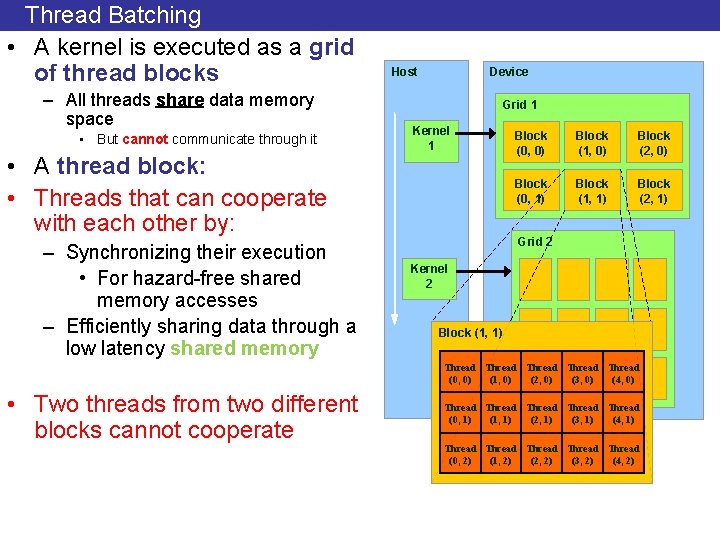

Thread Batching • A kernel is executed as a grid of thread blocks – All threads share data memory space • But cannot communicate through it • A thread block: • Threads that can cooperate with each other by: – Synchronizing their execution • For hazard-free shared memory accesses – Efficiently sharing data through a low latency shared memory Host Device Grid 1 Kernel 1 Block (0, 0) Block (1, 0) Block (2, 0) Block (0, 1) Block (1, 1) Block (2, 1) Grid 2 Kernel 2 Block (1, 1) Thread Thread (0, 0) (1, 0) (2, 0) (3, 0) (4, 0) • Two threads from two different blocks cannot cooperate Thread Thread (0, 1) (1, 1) (2, 1) (3, 1) (4, 1) Thread Thread (0, 2) (1, 2) (2, 2) (3, 2) (4, 2)

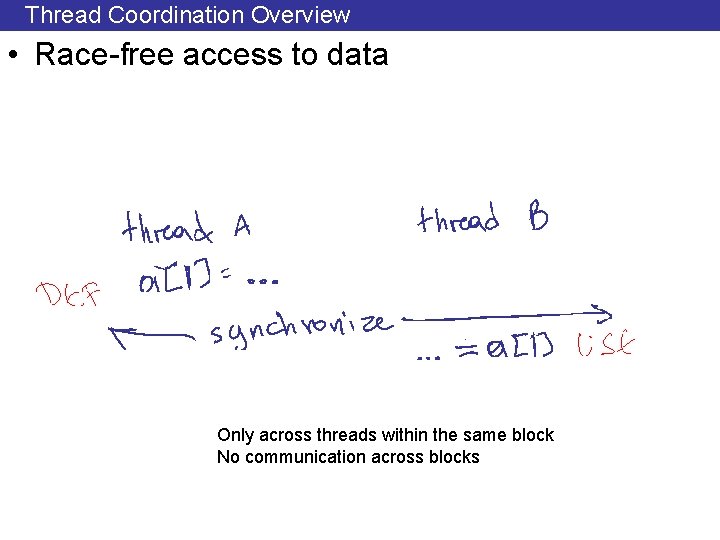

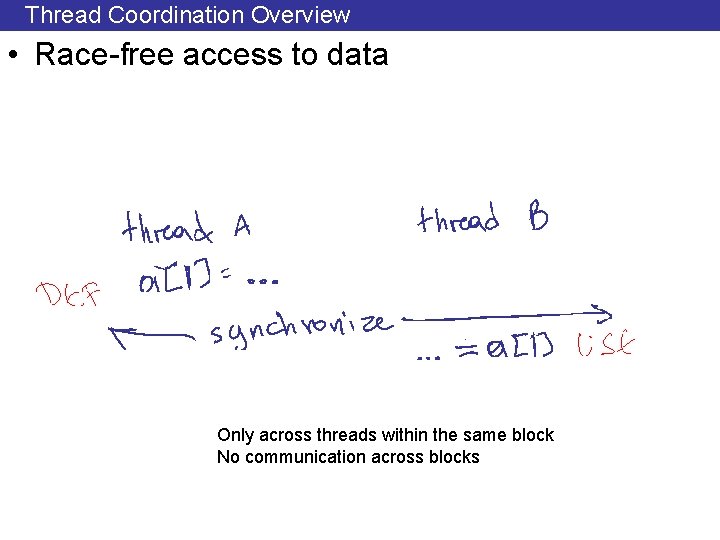

Thread Coordination Overview • Race-free access to data Only across threads within the same block No communication across blocks

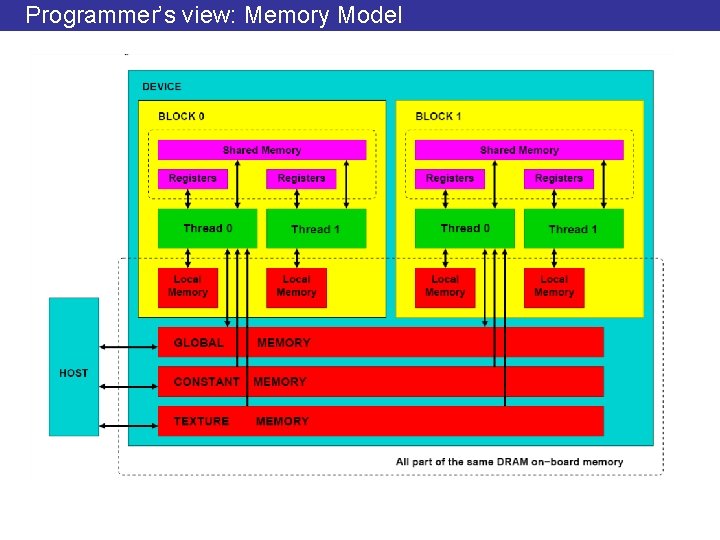

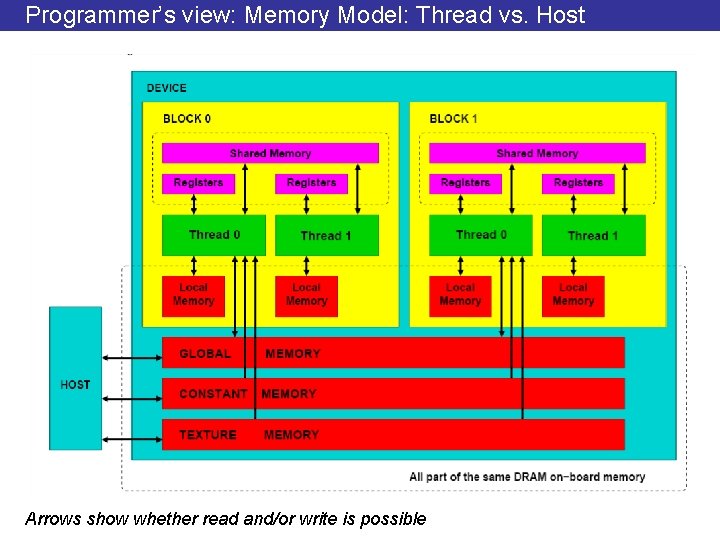

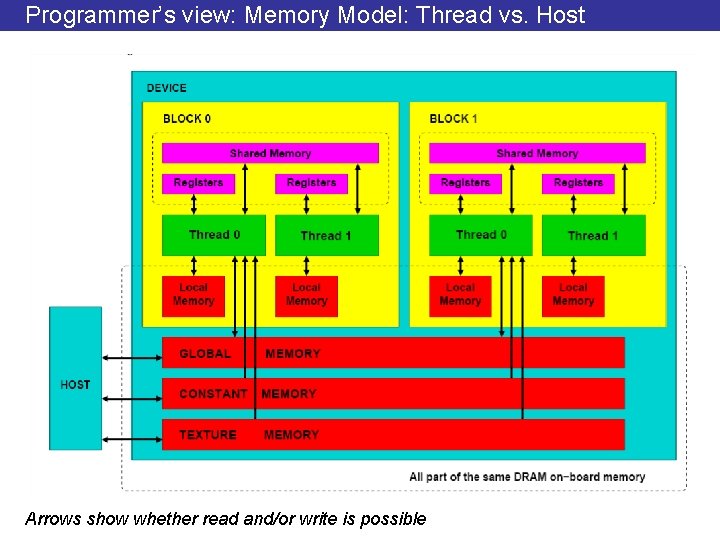

Programmer’s view: Memory Model: Thread vs. Host Arrows show whether read and/or write is possible

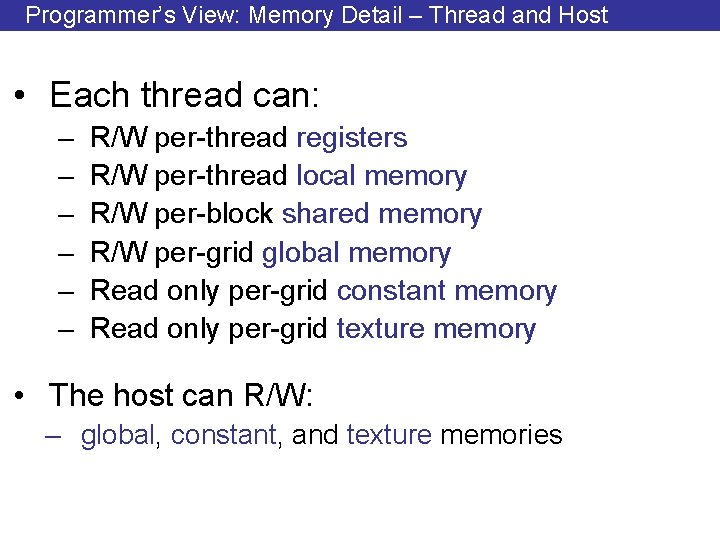

Programmer’s View: Memory Detail – Thread and Host • Each thread can: – – – R/W per-thread registers R/W per-thread local memory R/W per-block shared memory R/W per-grid global memory Read only per-grid constant memory Read only per-grid texture memory • The host can R/W: – global, constant, and texture memories

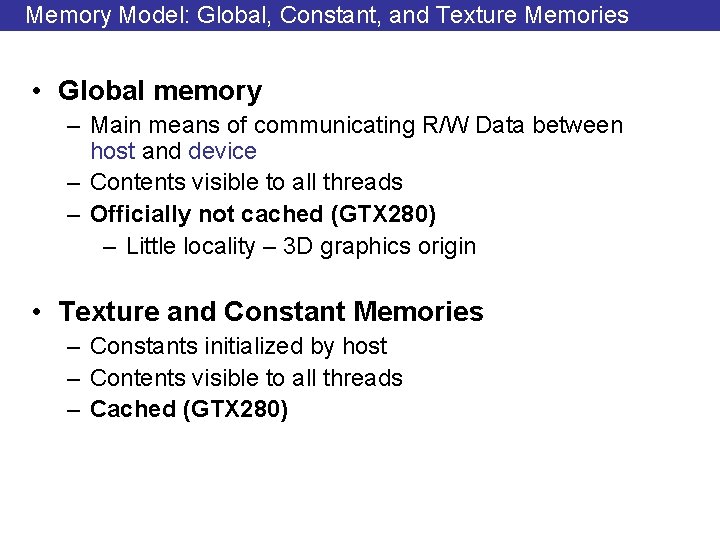

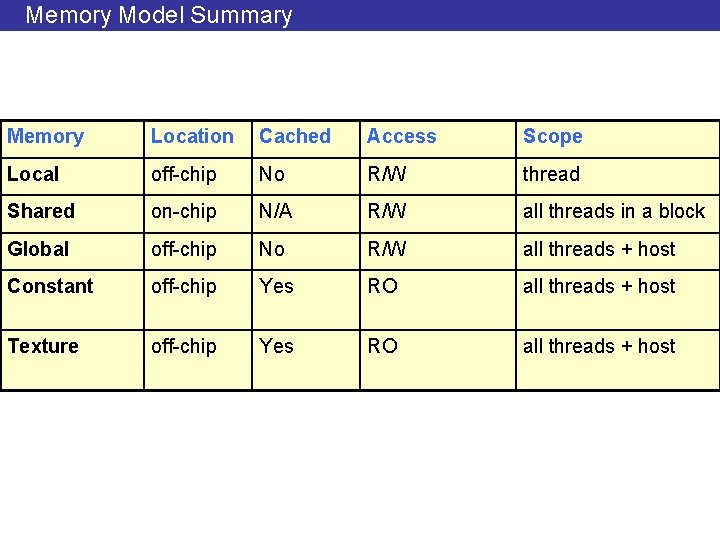

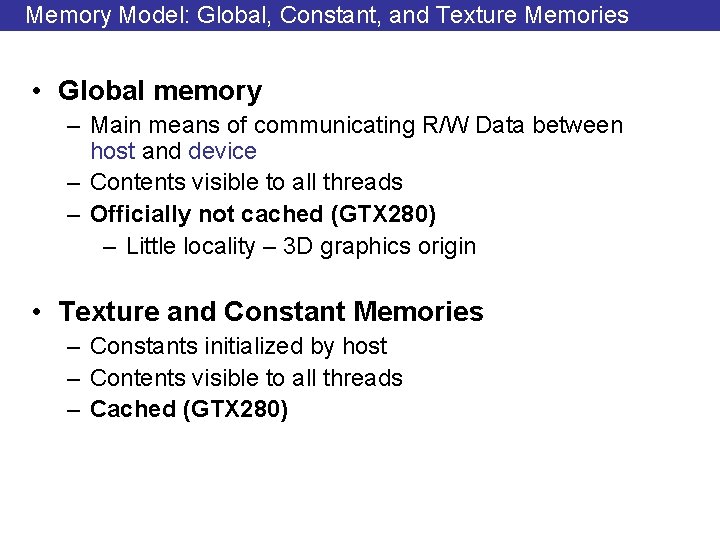

Memory Model: Global, Constant, and Texture Memories • Global memory – Main means of communicating R/W Data between host and device – Contents visible to all threads – Officially not cached (GTX 280) – Little locality – 3 D graphics origin • Texture and Constant Memories – Constants initialized by host – Contents visible to all threads – Cached (GTX 280)

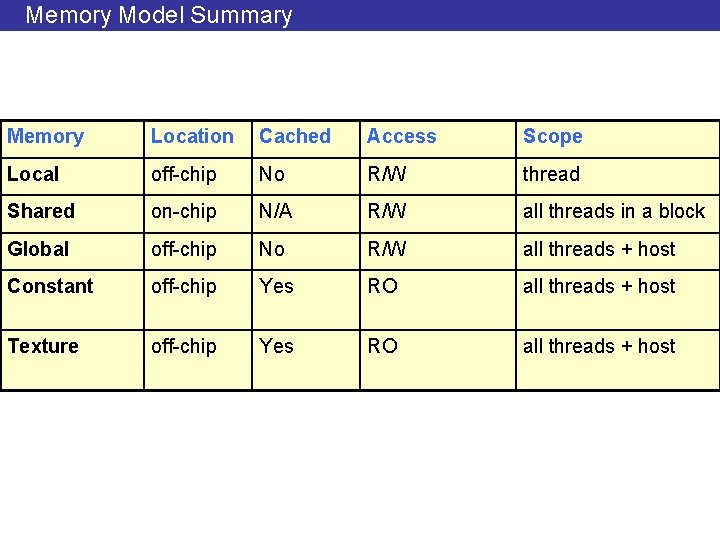

Memory Model Summary Memory Location Cached Access Scope Local off-chip No R/W thread Shared on-chip N/A R/W all threads in a block Global off-chip No R/W all threads + host Constant off-chip Yes RO all threads + host Texture off-chip Yes RO all threads + host

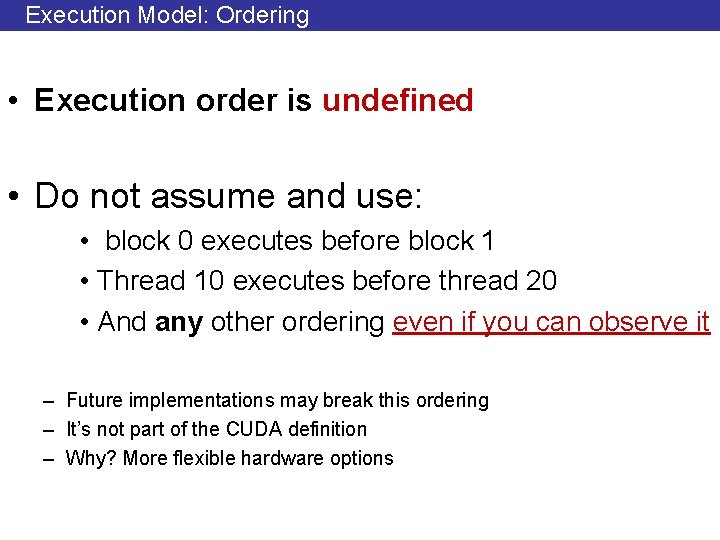

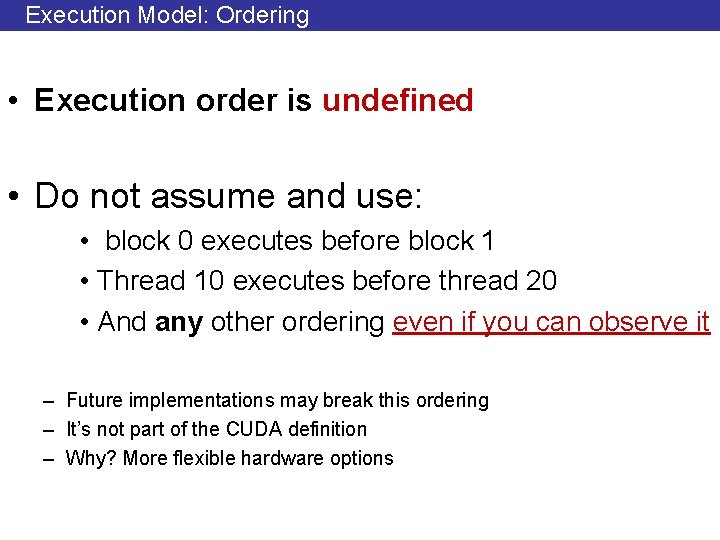

Execution Model: Ordering • Execution order is undefined • Do not assume and use: • block 0 executes before block 1 • Thread 10 executes before thread 20 • And any other ordering even if you can observe it – Future implementations may break this ordering – It’s not part of the CUDA definition – Why? More flexible hardware options

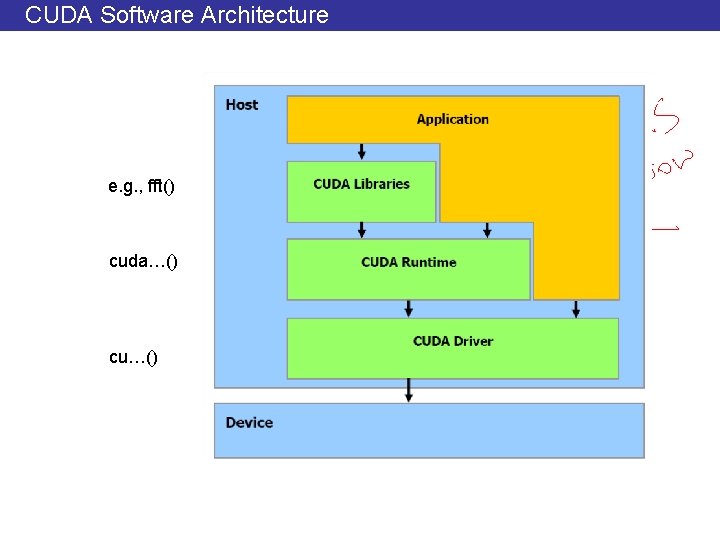

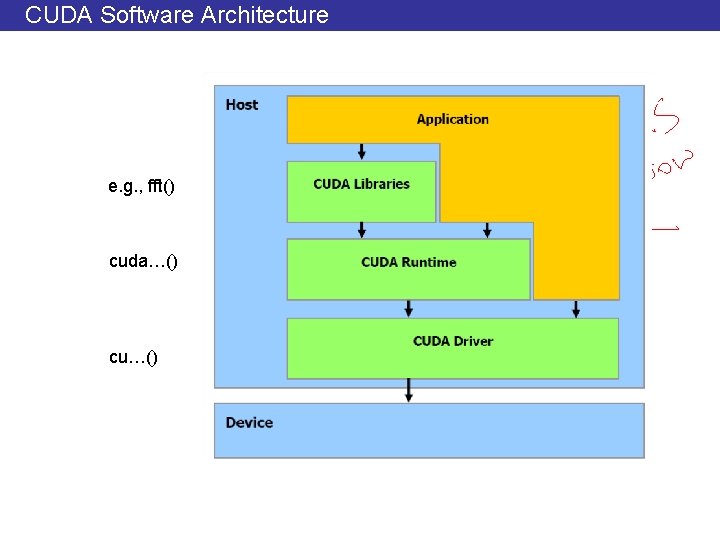

CUDA Software Architecture e. g. , fft() cuda…() cu…()

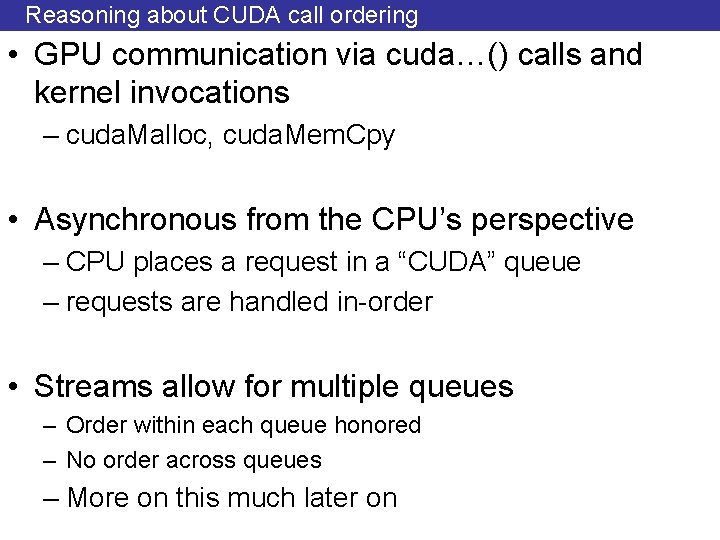

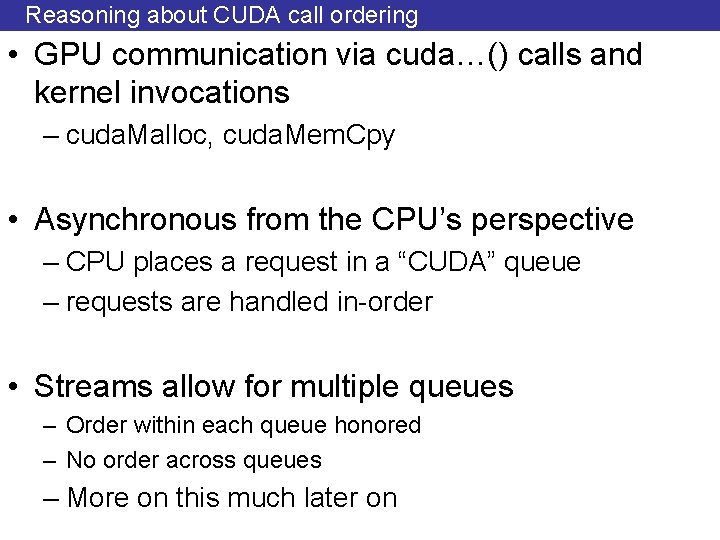

Reasoning about CUDA call ordering • GPU communication via cuda…() calls and kernel invocations – cuda. Malloc, cuda. Mem. Cpy • Asynchronous from the CPU’s perspective – CPU places a request in a “CUDA” queue – requests are handled in-order • Streams allow for multiple queues – Order within each queue honored – No order across queues – More on this much later on

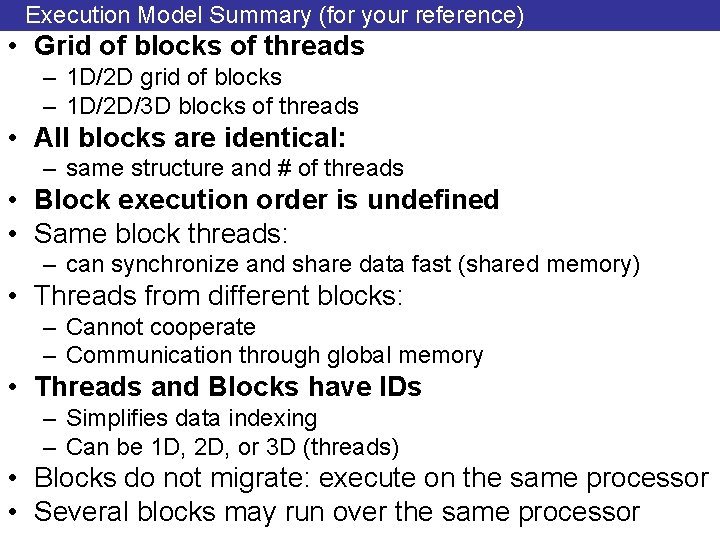

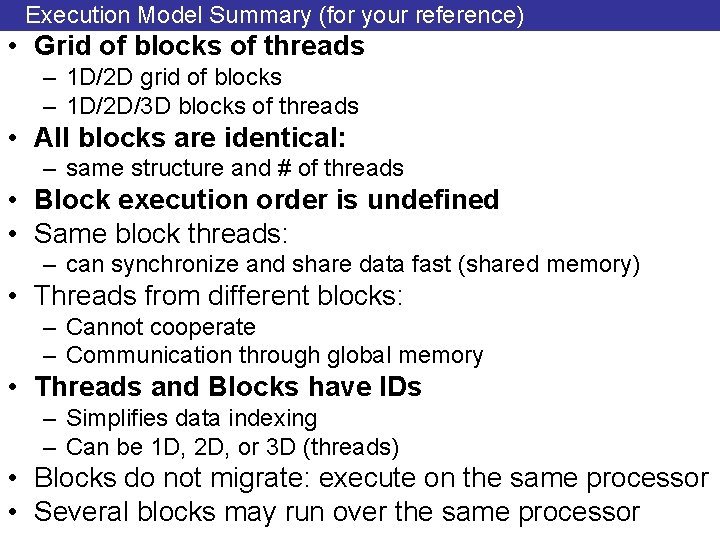

Execution Model Summary (for your reference) • Grid of blocks of threads – 1 D/2 D grid of blocks – 1 D/2 D/3 D blocks of threads • All blocks are identical: – same structure and # of threads • Block execution order is undefined • Same block threads: – can synchronize and share data fast (shared memory) • Threads from different blocks: – Cannot cooperate – Communication through global memory • Threads and Blocks have IDs – Simplifies data indexing – Can be 1 D, 2 D, or 3 D (threads) • Blocks do not migrate: execute on the same processor • Several blocks may run over the same processor

![CUDA API Example int aN for i 0 i N i ai CUDA API: Example int a[N]; for (i =0; i < N; i++) a[i] =](https://slidetodoc.com/presentation_image_h2/dbc3d7552ba65bd68a3f73ca073bf0aa/image-51.jpg)

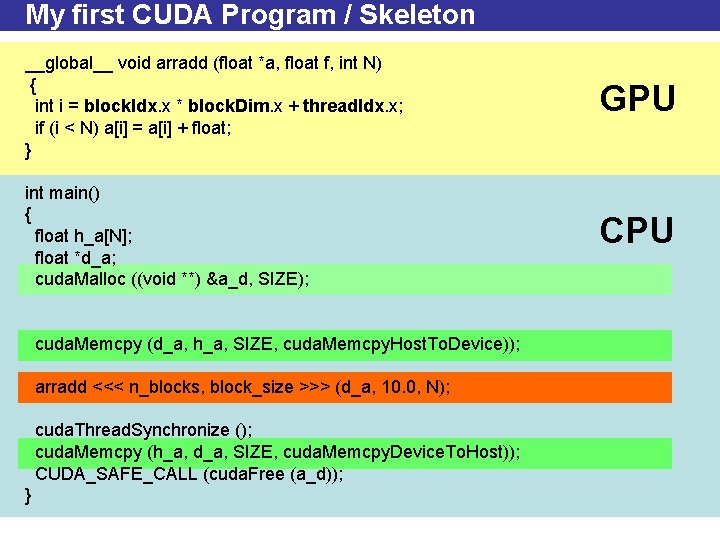

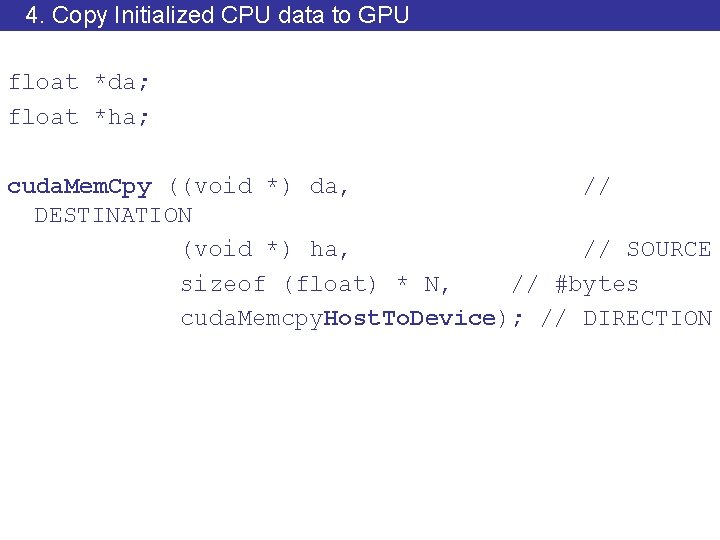

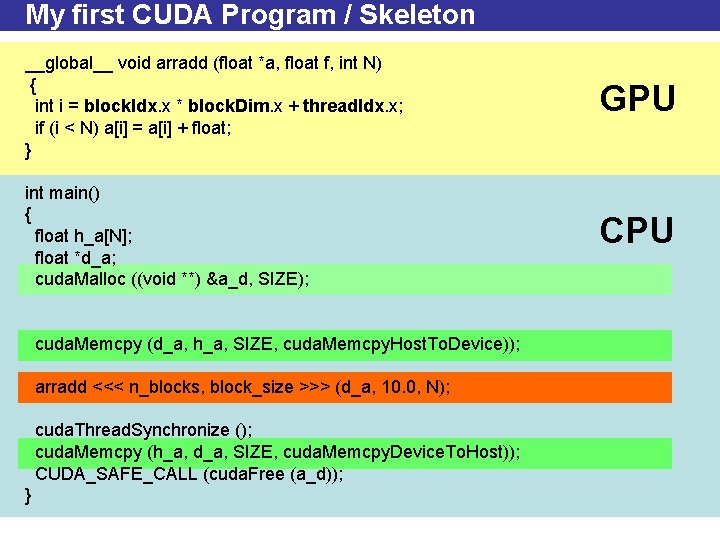

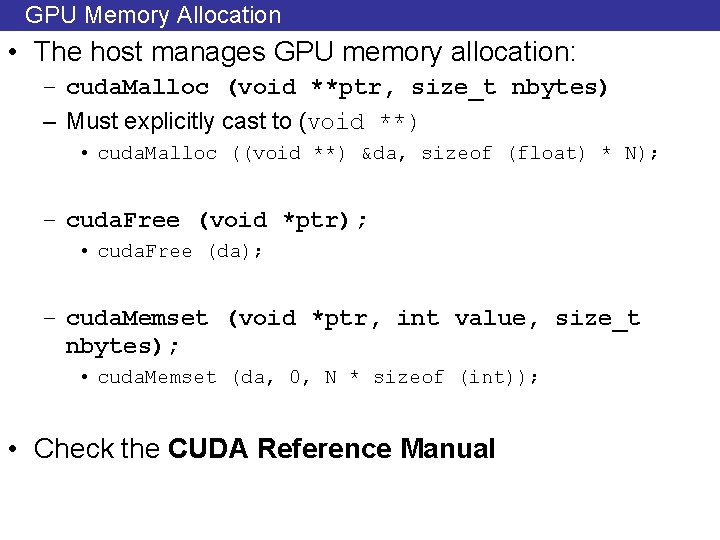

CUDA API: Example int a[N]; for (i =0; i < N; i++) a[i] = a[i] + x; 1. 2. 3. 4. 5. 6. 7. 8. 9. Allocate CPU Data Structure Initialize Data on CPU Allocate GPU Data Structure Copy Data from CPU to GPU Define Execution Configuration Run Kernel CPU synchronizes with GPU Copy Data from GPU to CPU De-allocate GPU and CPU memory

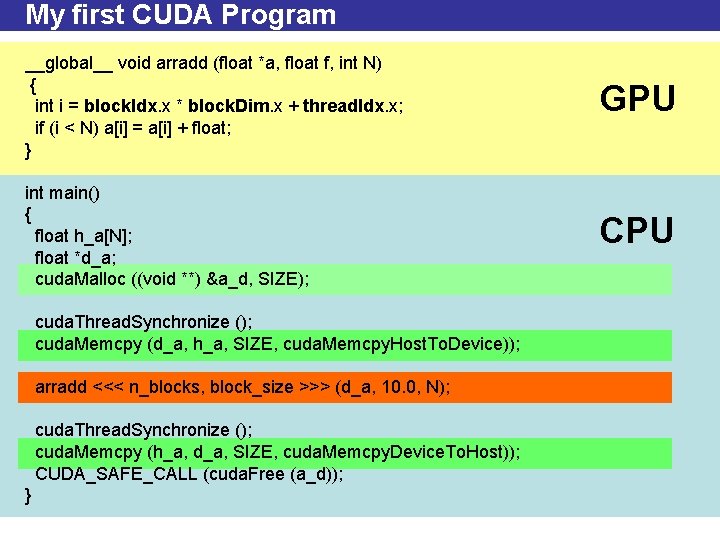

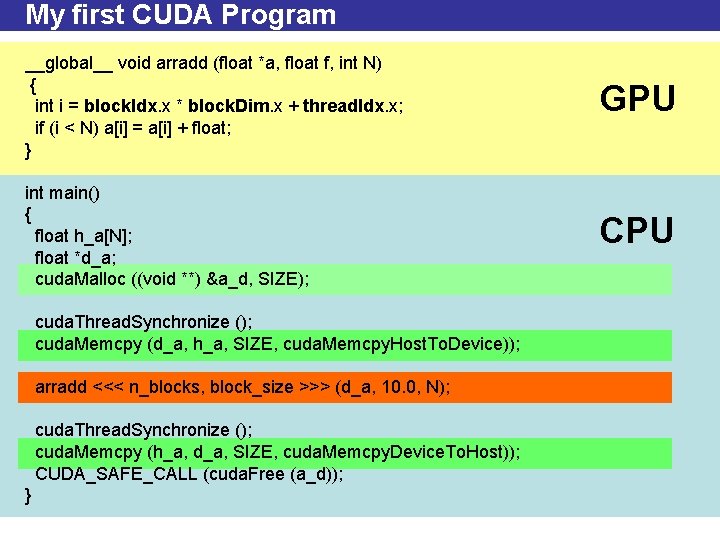

My first CUDA Program / Skeleton __global__ void arradd (float *a, float f, int N) { int i = block. Idx. x * block. Dim. x + thread. Idx. x; if (i < N) a[i] = a[i] + float; } GPU int main() { float h_a[N]; float *d_a; cuda. Malloc ((void **) &a_d, SIZE); CPU cuda. Memcpy (d_a, h_a, SIZE, cuda. Memcpy. Host. To. Device)); arradd <<< n_blocks, block_size >>> (d_a, 10. 0, N); cuda. Thread. Synchronize (); cuda. Memcpy (h_a, d_a, SIZE, cuda. Memcpy. Device. To. Host)); CUDA_SAFE_CALL (cuda. Free (a_d)); }

![1 Allocate CPU Data container float ha main int argc char argv int 1. Allocate CPU Data container float *ha; main (int argc, char *argv[]) { int](https://slidetodoc.com/presentation_image_h2/dbc3d7552ba65bd68a3f73ca073bf0aa/image-53.jpg)

1. Allocate CPU Data container float *ha; main (int argc, char *argv[]) { int N = atoi (argv[1]); ha = (float *) malloc (sizeof (float) * N); . . . } No memory allocated on the GPU side • Pinned memory allocation results in faster CPU to/from GPU copies • But pinned memory cannot be paged-out • cuda. Malloc. Host (…)

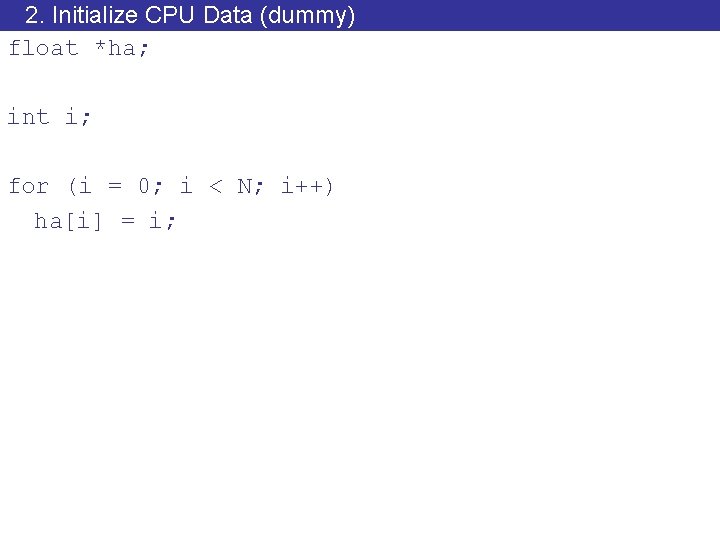

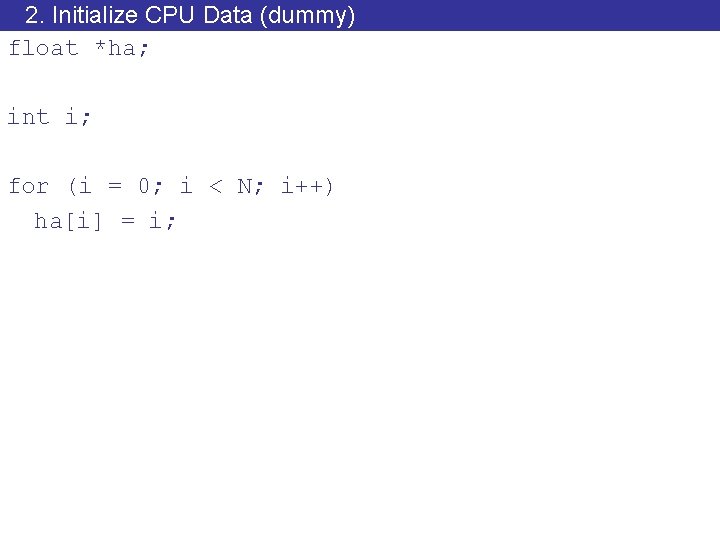

2. Initialize CPU Data (dummy) float *ha; int i; for (i = 0; i < N; i++) ha[i] = i;

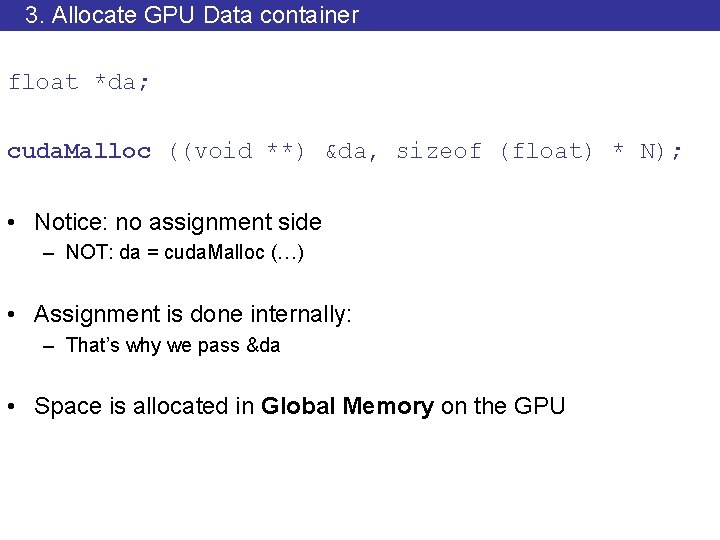

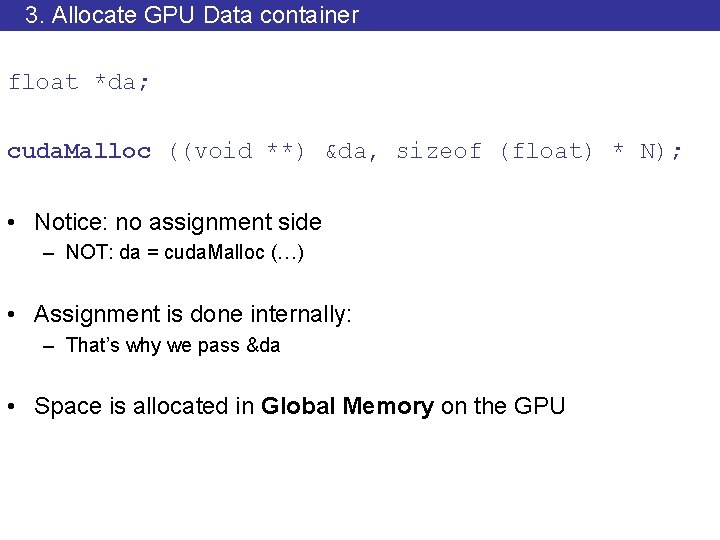

3. Allocate GPU Data container float *da; cuda. Malloc ((void **) &da, sizeof (float) * N); • Notice: no assignment side – NOT: da = cuda. Malloc (…) • Assignment is done internally: – That’s why we pass &da • Space is allocated in Global Memory on the GPU

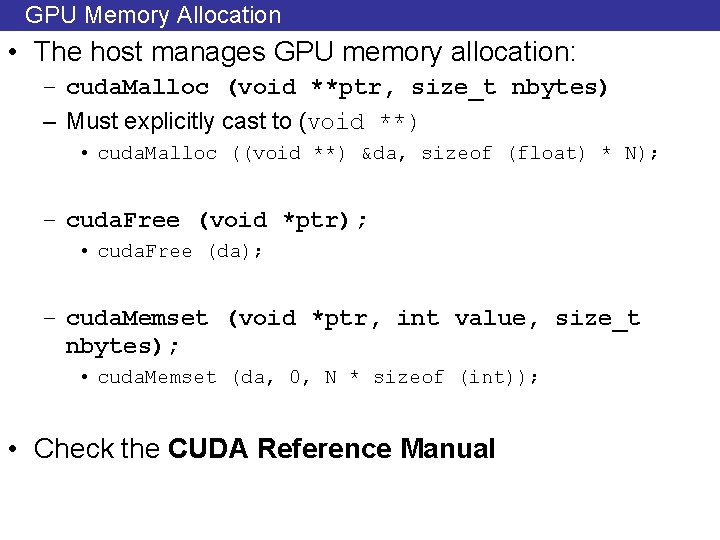

GPU Memory Allocation • The host manages GPU memory allocation: – cuda. Malloc (void **ptr, size_t nbytes) – Must explicitly cast to (void **) • cuda. Malloc ((void **) &da, sizeof (float) * N); – cuda. Free (void *ptr); • cuda. Free (da); – cuda. Memset (void *ptr, int value, size_t nbytes); • cuda. Memset (da, 0, N * sizeof (int)); • Check the CUDA Reference Manual

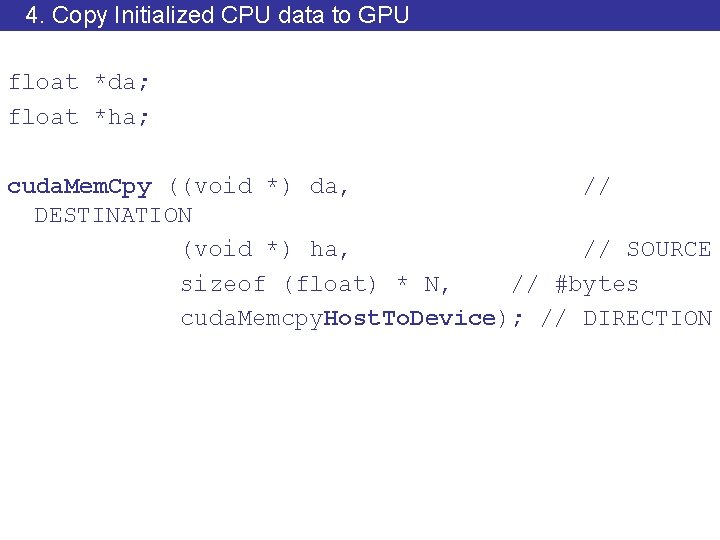

4. Copy Initialized CPU data to GPU float *da; float *ha; cuda. Mem. Cpy ((void *) da, // DESTINATION (void *) ha, // SOURCE sizeof (float) * N, // #bytes cuda. Memcpy. Host. To. Device); // DIRECTION

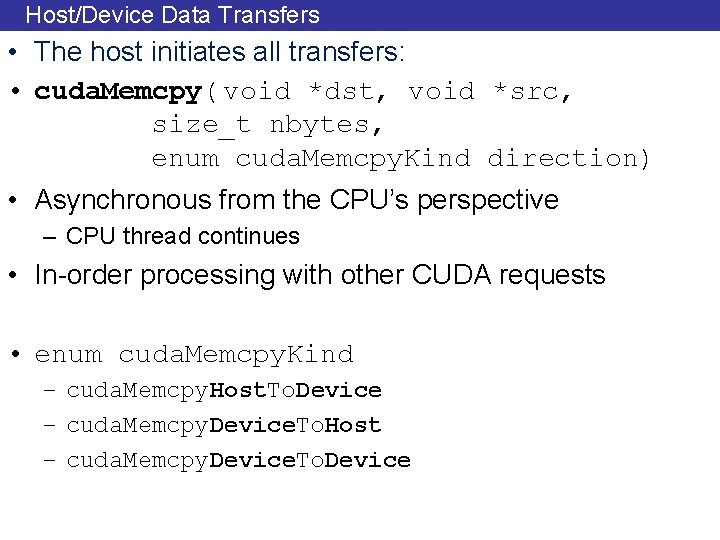

Host/Device Data Transfers • The host initiates all transfers: • cuda. Memcpy( void *dst, void *src, size_t nbytes, enum cuda. Memcpy. Kind direction) • Asynchronous from the CPU’s perspective – CPU thread continues • In-order processing with other CUDA requests • enum cuda. Memcpy. Kind – cuda. Memcpy. Host. To. Device – cuda. Memcpy. Device. To. Host – cuda. Memcpy. Device. To. Device

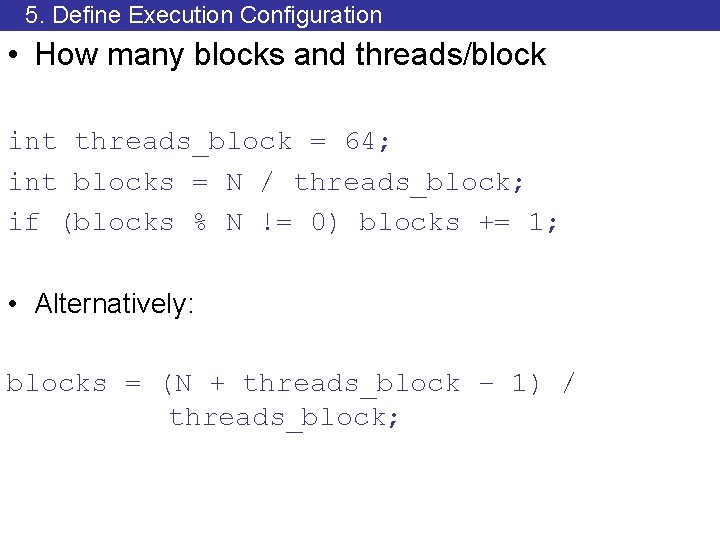

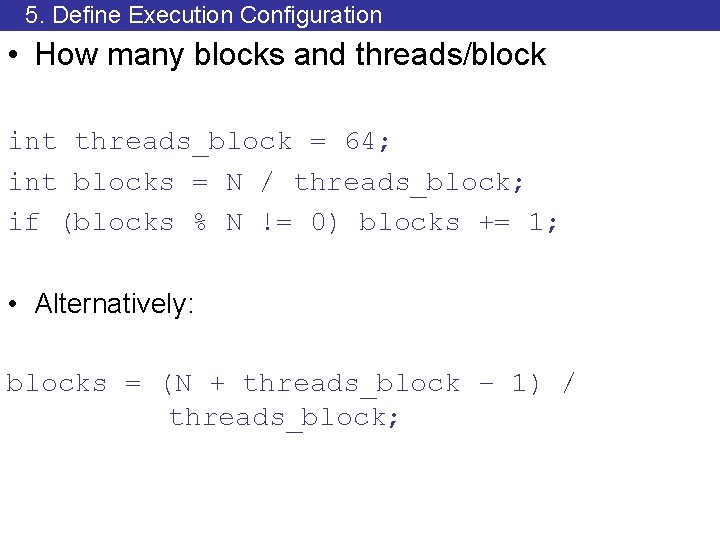

5. Define Execution Configuration • How many blocks and threads/block int threads_block = 64; int blocks = N / threads_block; if (blocks % N != 0) blocks += 1; • Alternatively: blocks = (N + threads_block – 1) / threads_block;

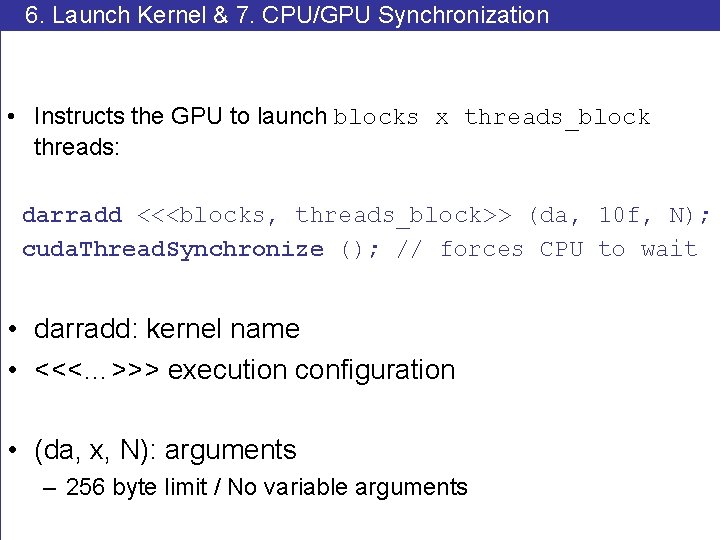

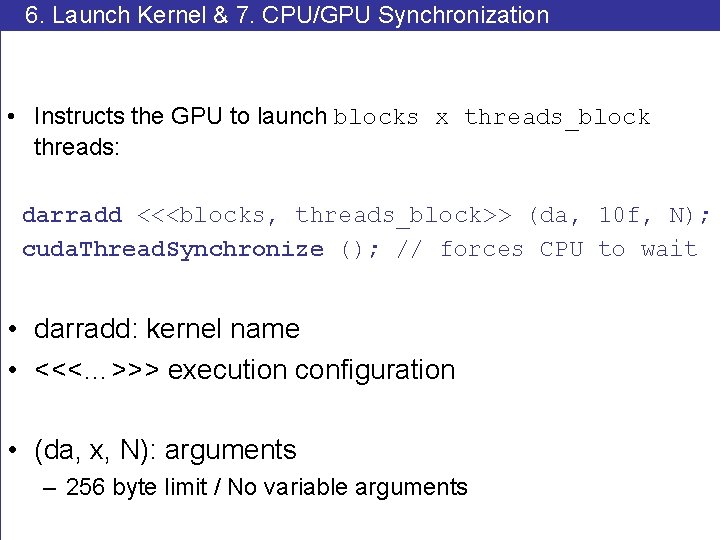

6. Launch Kernel & 7. CPU/GPU Synchronization • Instructs the GPU to launch blocks x threads_block threads: darradd <<<blocks, threads_block>> (da, 10 f, N); cuda. Thread. Synchronize (); // forces CPU to wait • darradd: kernel name • <<<…>>> execution configuration • (da, x, N): arguments – 256 byte limit / No variable arguments

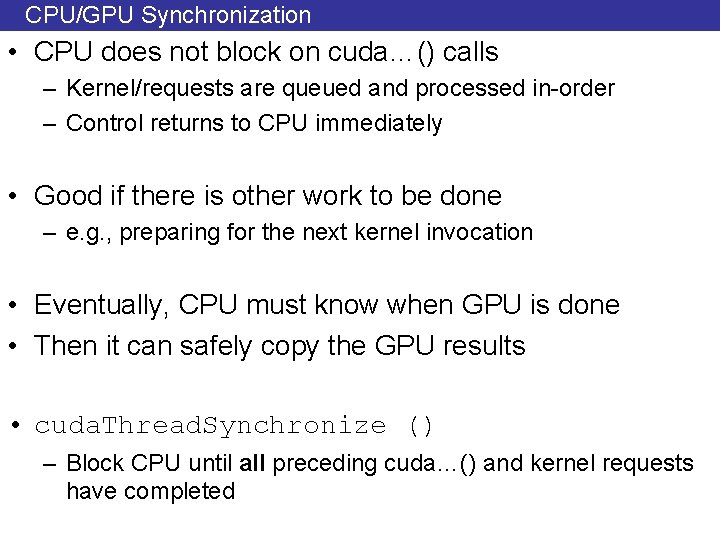

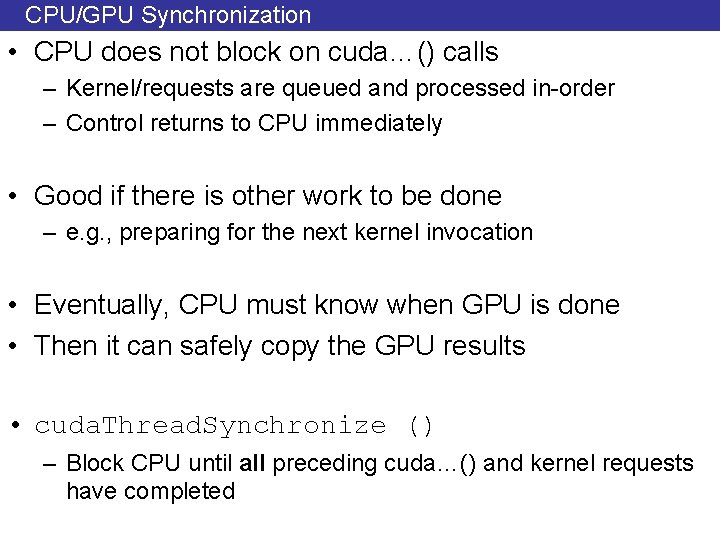

CPU/GPU Synchronization • CPU does not block on cuda…() calls – Kernel/requests are queued and processed in-order – Control returns to CPU immediately • Good if there is other work to be done – e. g. , preparing for the next kernel invocation • Eventually, CPU must know when GPU is done • Then it can safely copy the GPU results • cuda. Thread. Synchronize () – Block CPU until all preceding cuda…() and kernel requests have completed

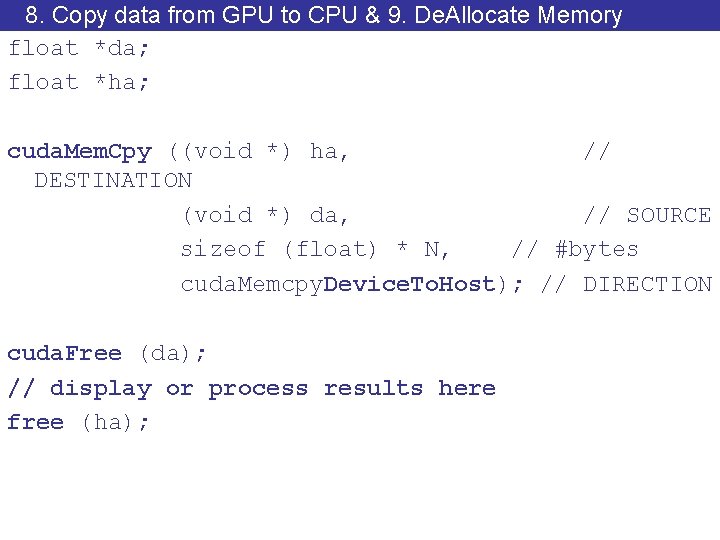

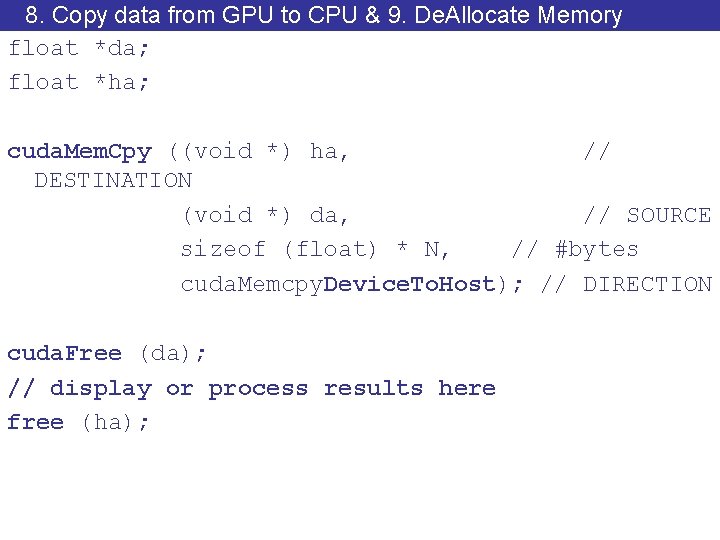

8. Copy data from GPU to CPU & 9. De. Allocate Memory float *da; float *ha; cuda. Mem. Cpy ((void *) ha, // DESTINATION (void *) da, // SOURCE sizeof (float) * N, // #bytes cuda. Memcpy. Device. To. Host); // DIRECTION cuda. Free (da); // display or process results here free (ha);

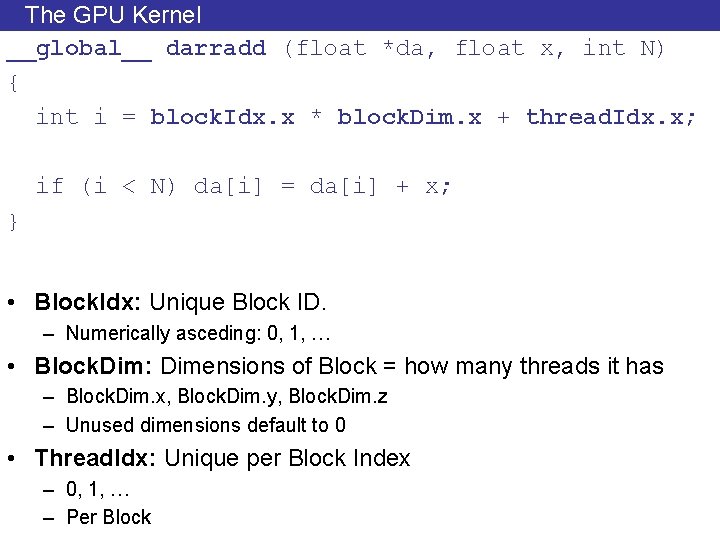

The GPU Kernel __global__ darradd (float *da, float x, int N) { int i = block. Idx. x * block. Dim. x + thread. Idx. x; if (i < N) da[i] = da[i] + x; } • Block. Idx: Unique Block ID. – Numerically asceding: 0, 1, … • Block. Dim: Dimensions of Block = how many threads it has – Block. Dim. x, Block. Dim. y, Block. Dim. z – Unused dimensions default to 0 • Thread. Idx: Unique per Block Index – 0, 1, … – Per Block

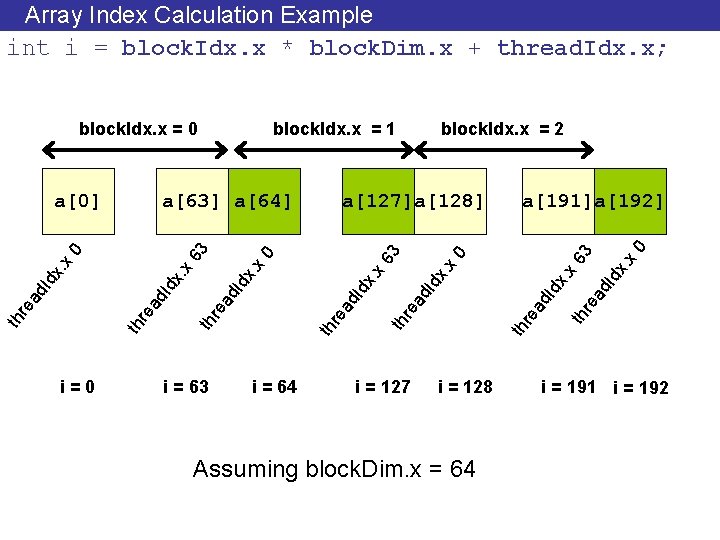

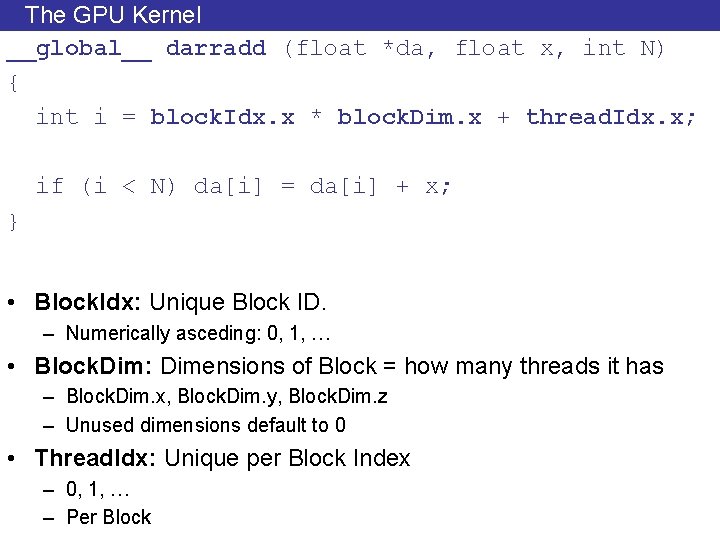

Array Index Calculation Example int i = block. Idx. x * block. Dim. x + thread. Idx. x; i = 127 0 x. th th re ad Id x x. Id ad re x 63 0 Id ad th ad re th i = 64 x. x x. Id Id ad re th i = 63 a[191]a[192] x 0 x. x x. Id ad re th a[127]a[128] x 0 x x. Id ad re th i=0 block. Idx. x = 2 63 a[63] a[64] 63 a[0] block. Idx. x = 1 re block. Idx. x = 0 i = 128 Assuming block. Dim. x = 64 i = 191 i = 192

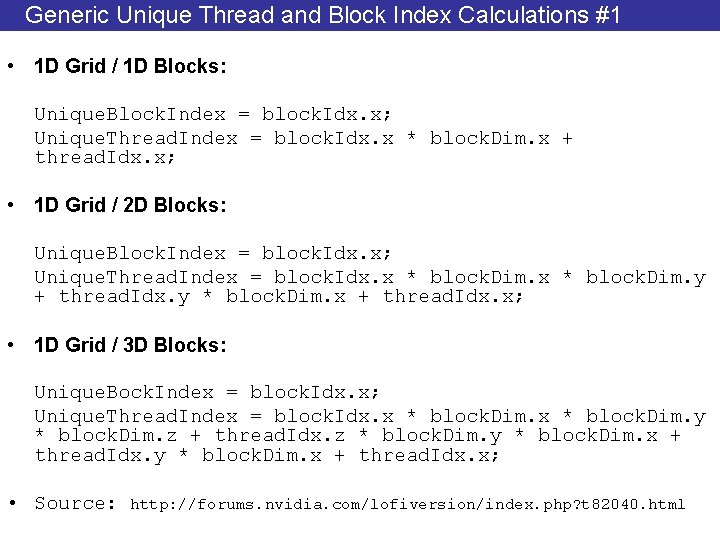

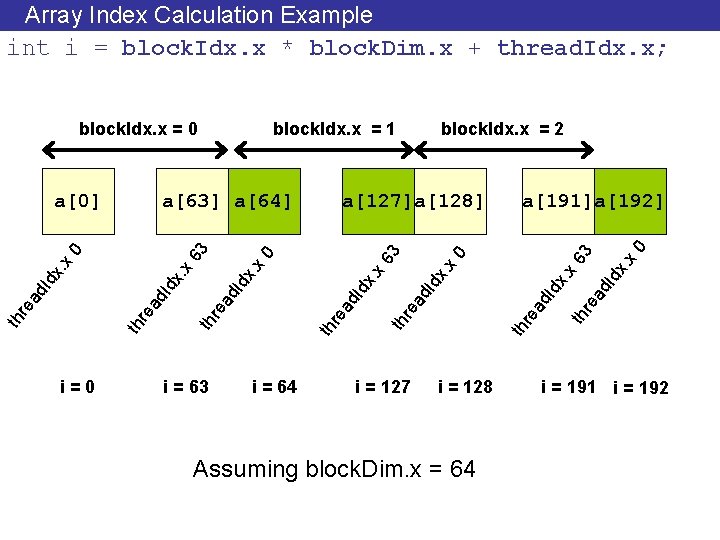

Generic Unique Thread and Block Index Calculations #1 • 1 D Grid / 1 D Blocks: Unique. Block. Index = block. Idx. x; Unique. Thread. Index = block. Idx. x * block. Dim. x + thread. Idx. x; • 1 D Grid / 2 D Blocks: Unique. Block. Index = block. Idx. x; Unique. Thread. Index = block. Idx. x * block. Dim. y + thread. Idx. y * block. Dim. x + thread. Idx. x; • 1 D Grid / 3 D Blocks: Unique. Bock. Index = block. Idx. x; Unique. Thread. Index = block. Idx. x * block. Dim. y * block. Dim. z + thread. Idx. z * block. Dim. y * block. Dim. x + thread. Idx. x; • Source: http: //forums. nvidia. com/lofiversion/index. php? t 82040. html

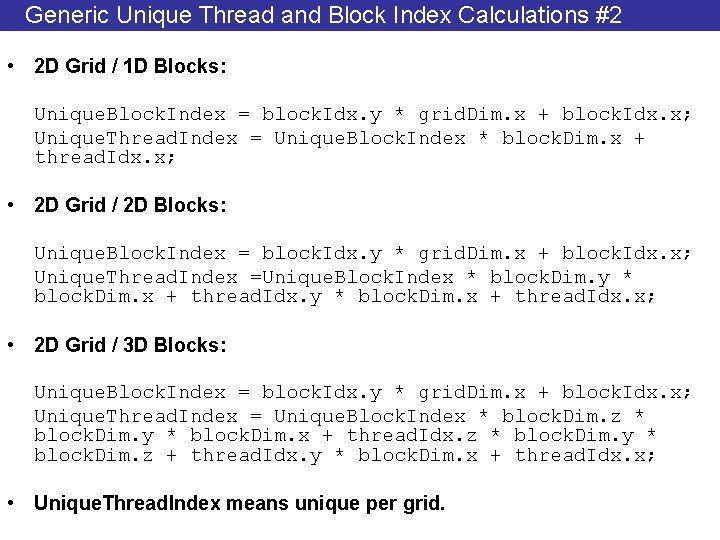

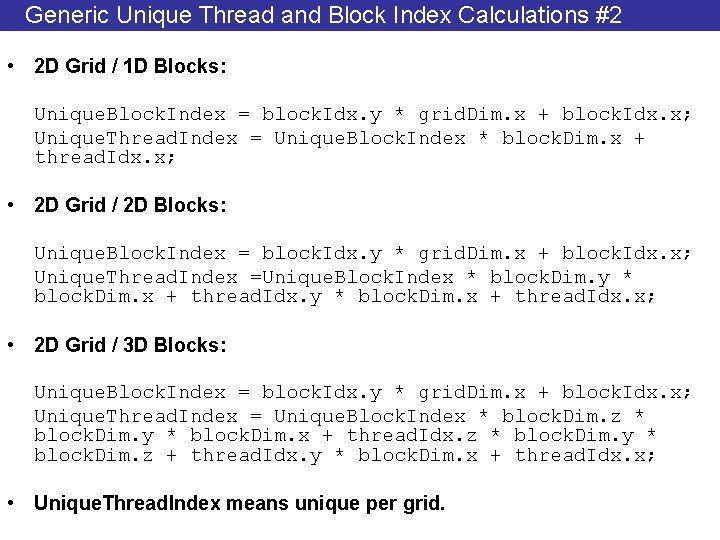

Generic Unique Thread and Block Index Calculations #2 • 2 D Grid / 1 D Blocks: Unique. Block. Index = block. Idx. y * grid. Dim. x + block. Idx. x; Unique. Thread. Index = Unique. Block. Index * block. Dim. x + thread. Idx. x; • 2 D Grid / 2 D Blocks: Unique. Block. Index = block. Idx. y * grid. Dim. x + block. Idx. x; Unique. Thread. Index =Unique. Block. Index * block. Dim. y * block. Dim. x + thread. Idx. x; • 2 D Grid / 3 D Blocks: Unique. Block. Index = block. Idx. y * grid. Dim. x + block. Idx. x; Unique. Thread. Index = Unique. Block. Index * block. Dim. z * block. Dim. y * block. Dim. x + thread. Idx. z * block. Dim. y * block. Dim. z + thread. Idx. y * block. Dim. x + thread. Idx. x; • Unique. Thread. Index means unique per grid.

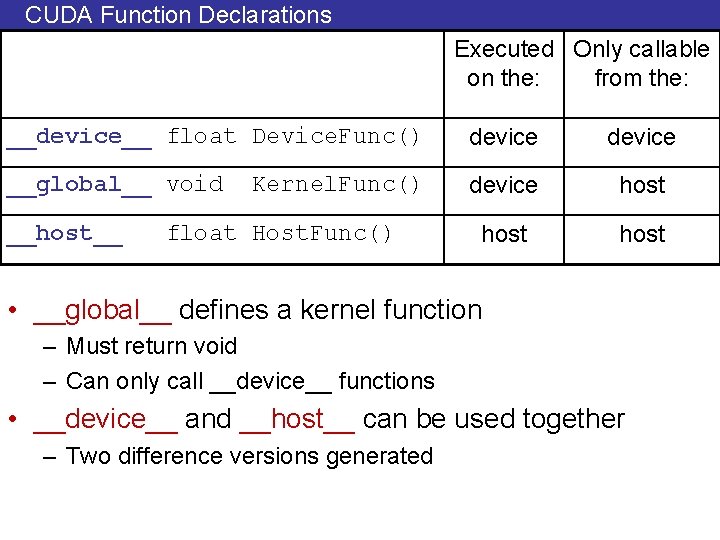

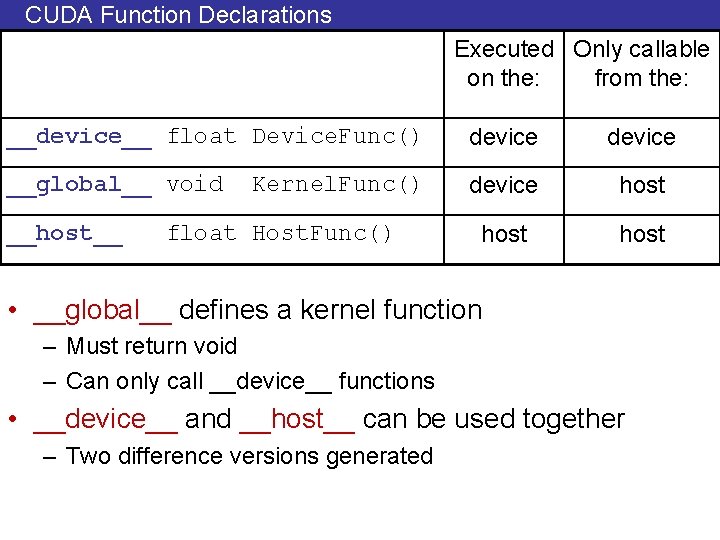

CUDA Function Declarations Executed Only callable on the: from the: __device__ float Device. Func() device __global__ void device host __host__ Kernel. Func() float Host. Func() • __global__ defines a kernel function – Must return void – Can only call __device__ functions • __device__ and __host__ can be used together – Two difference versions generated

![device Example Add x to ai multiple times device float addmany float a __device__ Example • Add x to a[i] multiple times __device__ float addmany (float a,](https://slidetodoc.com/presentation_image_h2/dbc3d7552ba65bd68a3f73ca073bf0aa/image-68.jpg)

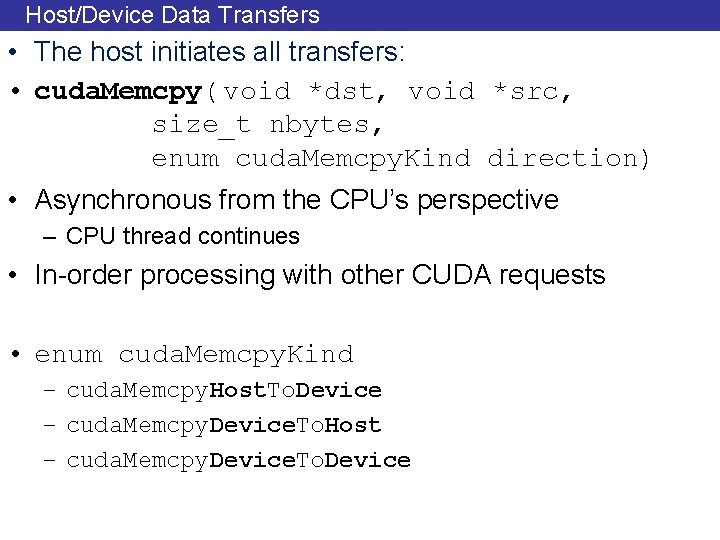

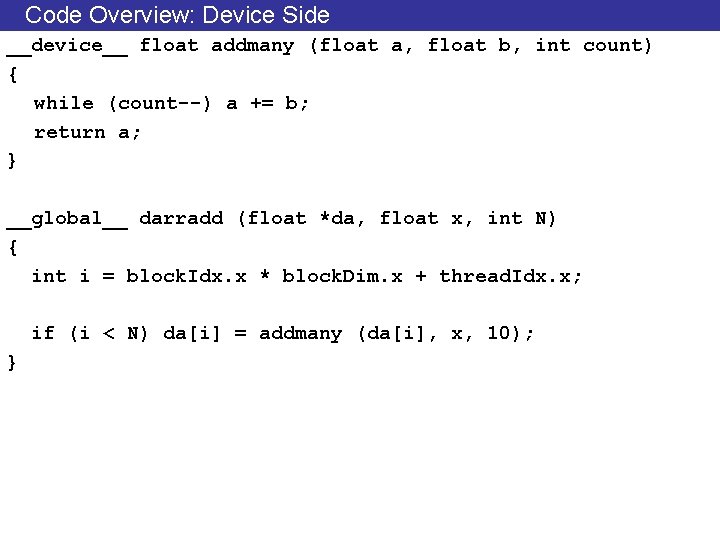

__device__ Example • Add x to a[i] multiple times __device__ float addmany (float a, float b, int count) { while (count--) a += b; return a; } __global__ darradd (float *da, float x, int N) { int i = block. Idx. x * block. Dim. x + thread. Idx. x; if (i < N) da[i] = addmany (da[i], x, 10); }

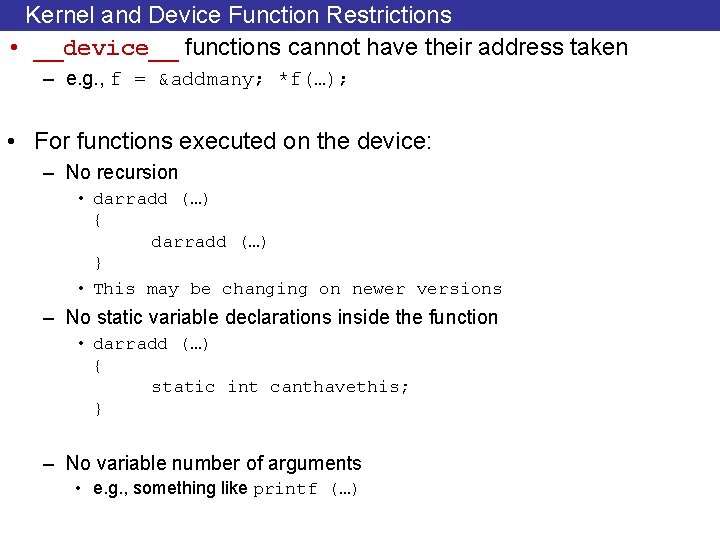

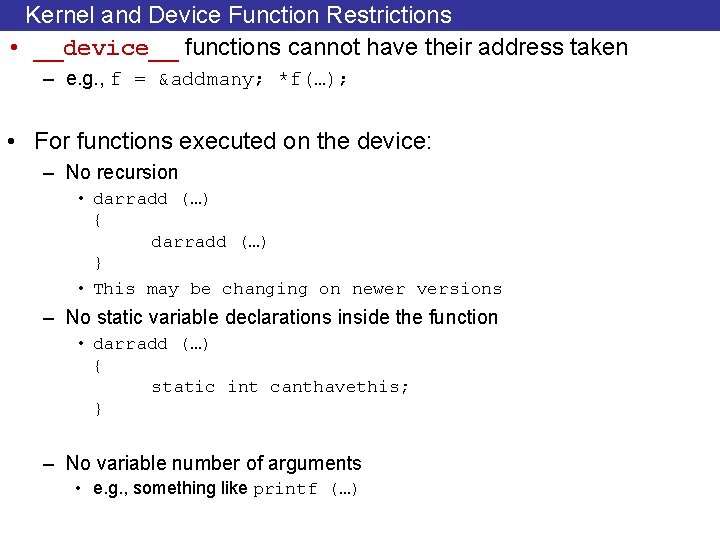

Kernel and Device Function Restrictions • __device__ functions cannot have their address taken – e. g. , f = &addmany; *f(…); • For functions executed on the device: – No recursion • darradd (…) { darradd (…) } • This may be changing on newer versions – No static variable declarations inside the function • darradd (…) { static int canthavethis; } – No variable number of arguments • e. g. , something like printf (…)

My first CUDA Program __global__ void arradd (float *a, float f, int N) { int i = block. Idx. x * block. Dim. x + thread. Idx. x; if (i < N) a[i] = a[i] + float; } GPU int main() { float h_a[N]; float *d_a; cuda. Malloc ((void **) &a_d, SIZE); CPU cuda. Thread. Synchronize (); cuda. Memcpy (d_a, h_a, SIZE, cuda. Memcpy. Host. To. Device)); arradd <<< n_blocks, block_size >>> (d_a, 10. 0, N); cuda. Thread. Synchronize (); cuda. Memcpy (h_a, d_a, SIZE, cuda. Memcpy. Device. To. Host)); CUDA_SAFE_CALL (cuda. Free (a_d)); }

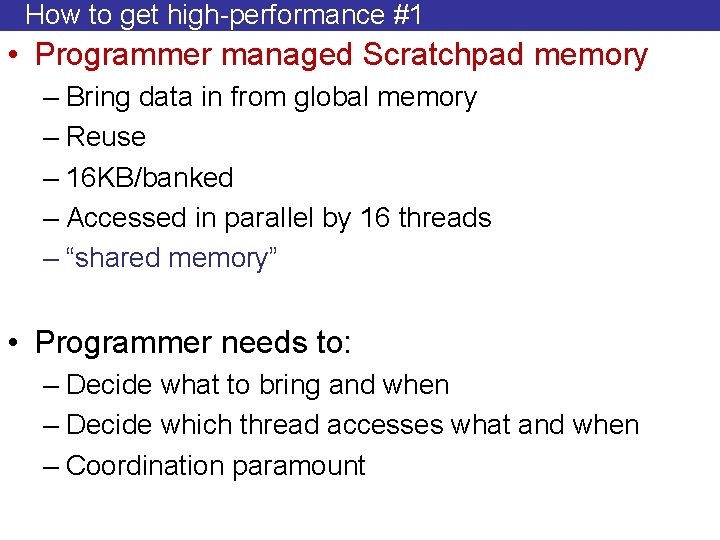

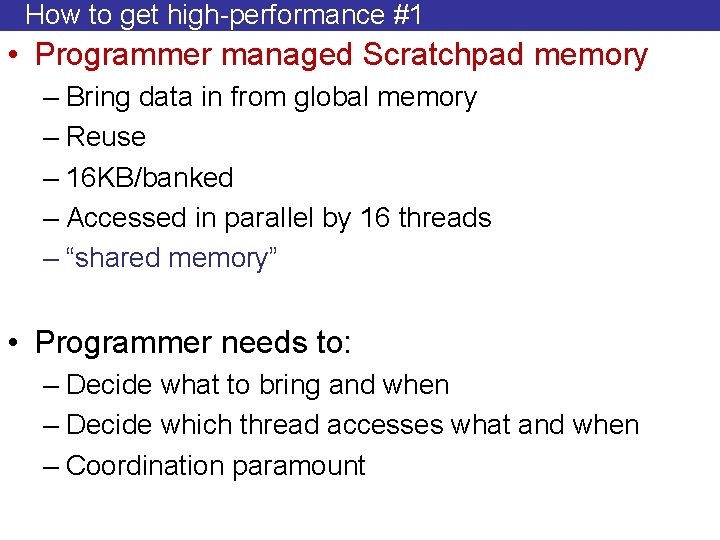

How to get high-performance #1 • Programmer managed Scratchpad memory – Bring data in from global memory – Reuse – 16 KB/banked – Accessed in parallel by 16 threads – “shared memory” • Programmer needs to: – Decide what to bring and when – Decide which thread accesses what and when – Coordination paramount

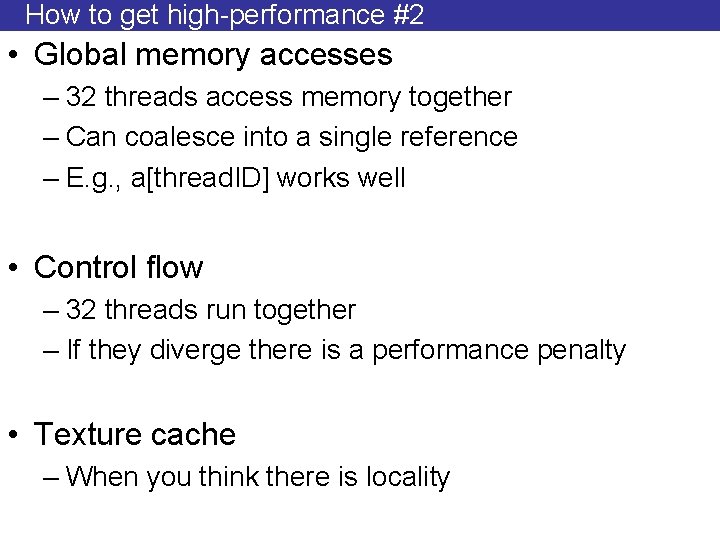

How to get high-performance #2 • Global memory accesses – 32 threads access memory together – Can coalesce into a single reference – E. g. , a[thread. ID] works well • Control flow – 32 threads run together – If they diverge there is a performance penalty • Texture cache – When you think there is locality

Numerical Accuracy • Can do FP – Mostly OK some minor discrepancies • Can do DP – 1/8 the bandwidth – Better on newer hardware • Mixed methods – Break numbers into two single-precision values • Must carefully check for stability/correctness • Will get better w/ next generation hardware

Are GPUs really that much faster than CPUs • 50 x – 200 x speedups typically reported • Recent work found – Not enough effort goes into optimizing code for CPUs – Intel paper (ISCA 2010) • http: //portal. acm. org/ft_gateway. cfm? id=1816021&type=p df • But: – The learning curve and expertise needed for CPUs is much larger – Then, so is the potential and flexibility

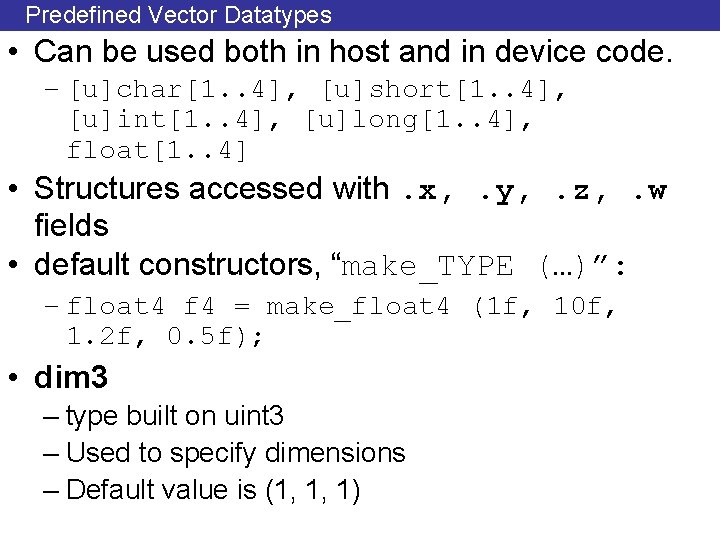

Predefined Vector Datatypes • Can be used both in host and in device code. – [u]char[1. . 4], [u]short[1. . 4], [u]int[1. . 4], [u]long[1. . 4], float[1. . 4] • Structures accessed with. x, . y, . z, . w fields • default constructors, “make_TYPE (…)”: – float 4 f 4 = make_float 4 (1 f, 10 f, 1. 2 f, 0. 5 f); • dim 3 – type built on uint 3 – Used to specify dimensions – Default value is (1, 1, 1)

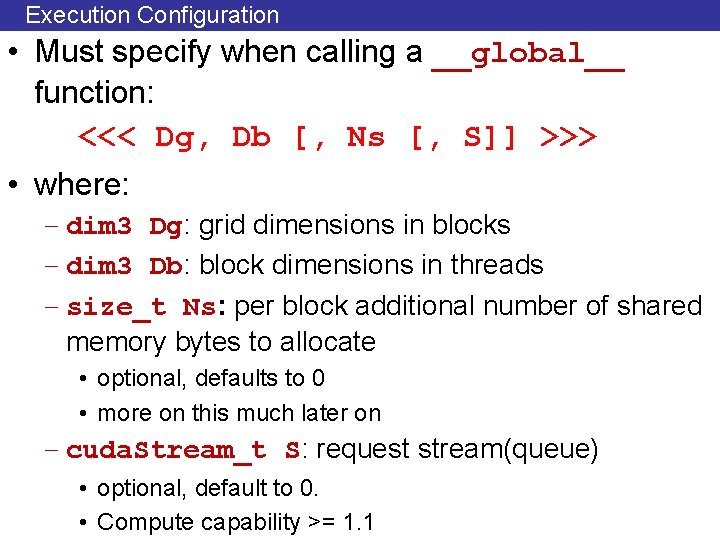

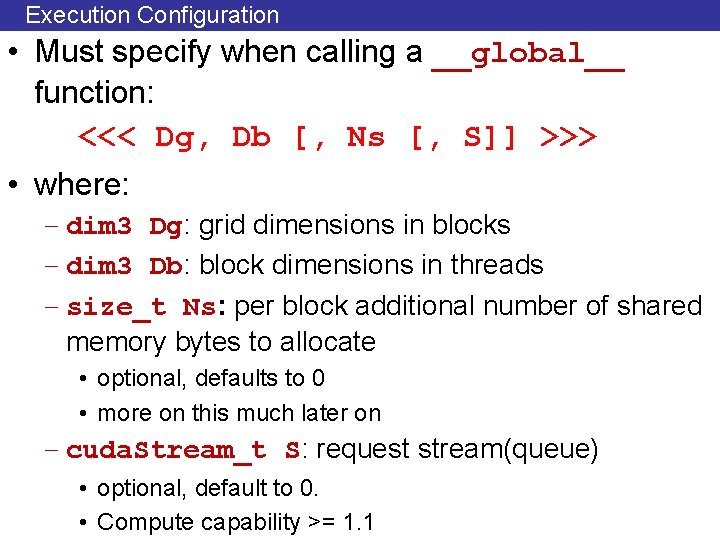

Execution Configuration • Must specify when calling a __global__ function: <<< Dg, Db [, Ns [, S]] >>> • where: – dim 3 Dg: grid dimensions in blocks – dim 3 Db: block dimensions in threads – size_t Ns: per block additional number of shared memory bytes to allocate • optional, defaults to 0 • more on this much later on – cuda. Stream_t S: request stream(queue) • optional, default to 0. • Compute capability >= 1. 1

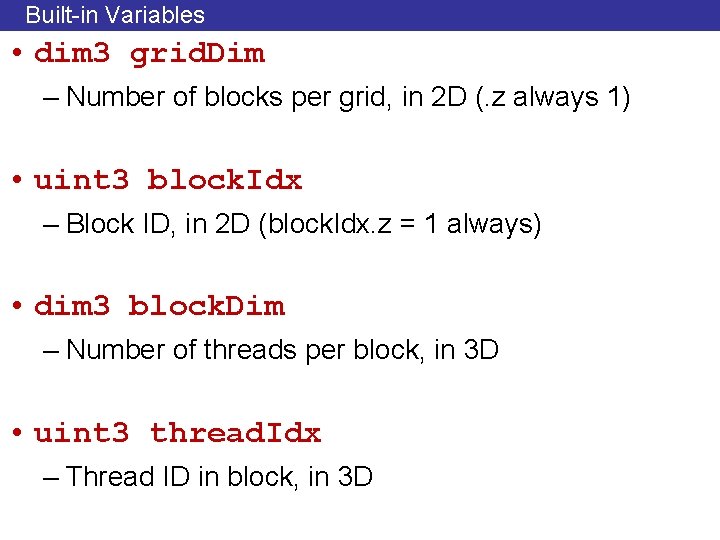

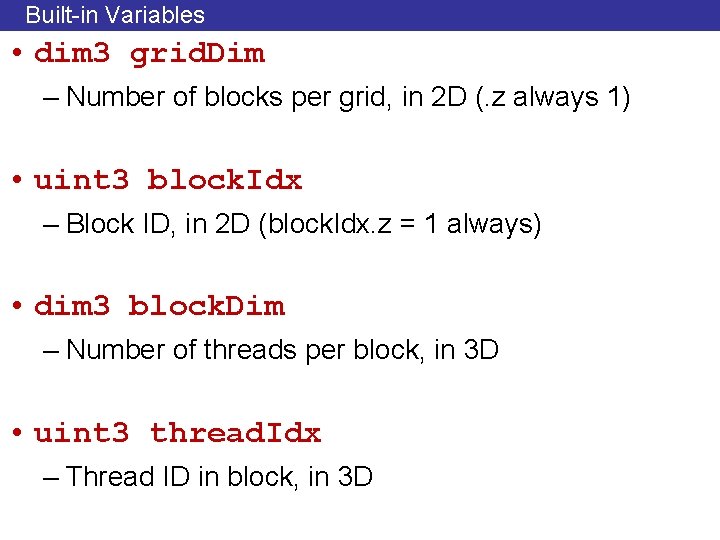

Built-in Variables • dim 3 grid. Dim – Number of blocks per grid, in 2 D (. z always 1) • uint 3 block. Idx – Block ID, in 2 D (block. Idx. z = 1 always) • dim 3 block. Dim – Number of threads per block, in 3 D • uint 3 thread. Idx – Thread ID in block, in 3 D

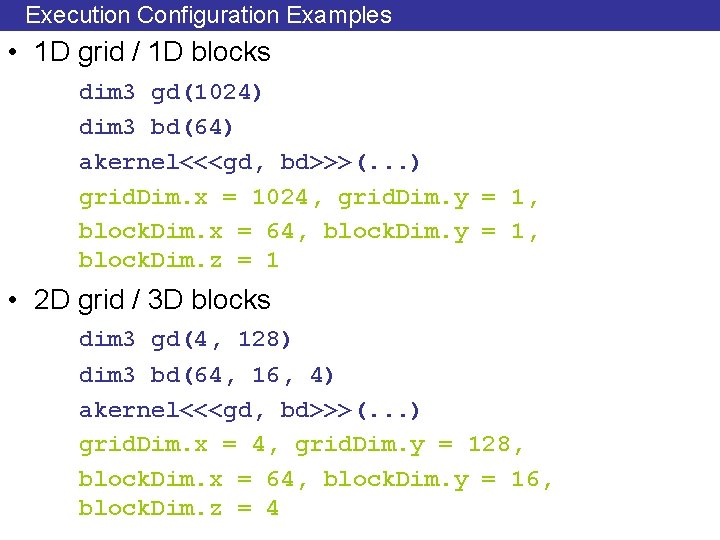

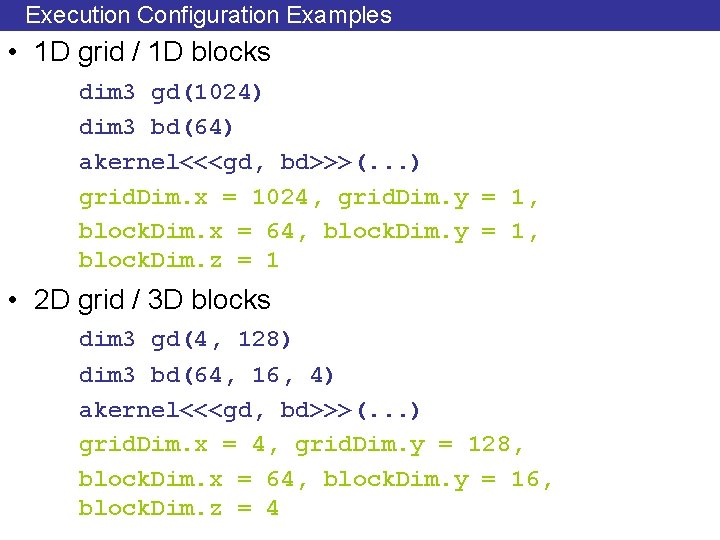

Execution Configuration Examples • 1 D grid / 1 D blocks dim 3 gd(1024) dim 3 bd(64) akernel<<<gd, bd>>>(. . . ) grid. Dim. x = 1024, grid. Dim. y = 1, block. Dim. x = 64, block. Dim. y = 1, block. Dim. z = 1 • 2 D grid / 3 D blocks dim 3 gd(4, 128) dim 3 bd(64, 16, 4) akernel<<<gd, bd>>>(. . . ) grid. Dim. x = 4, grid. Dim. y = 128, block. Dim. x = 64, block. Dim. y = 16, block. Dim. z = 4

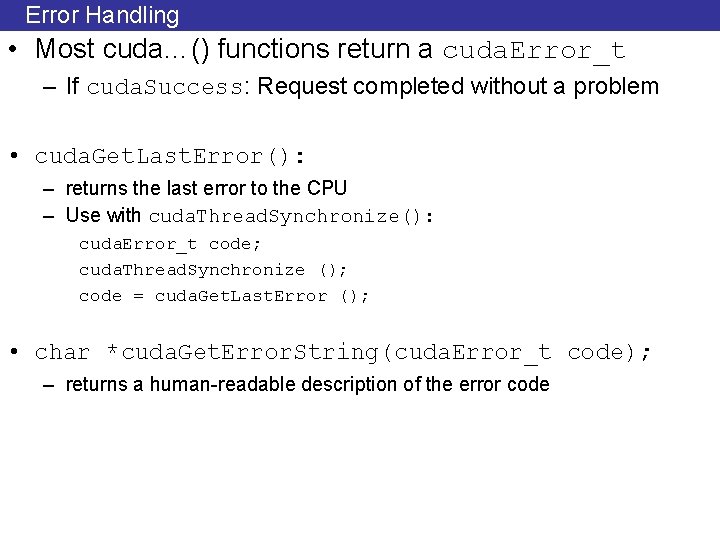

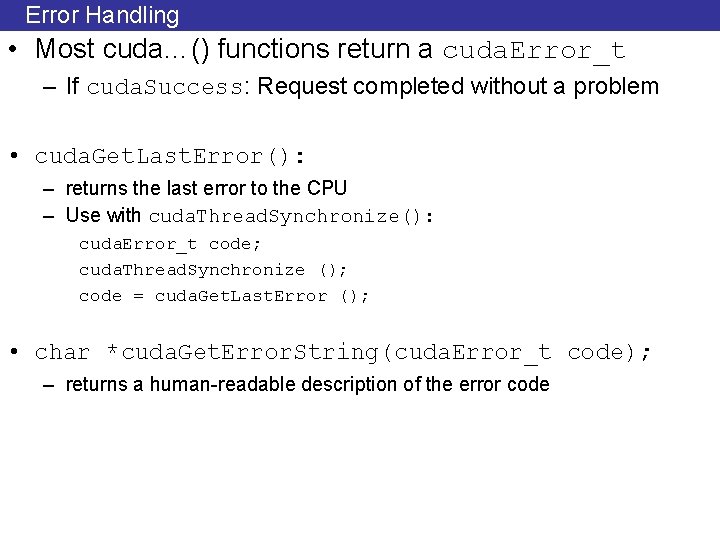

Error Handling • Most cuda…() functions return a cuda. Error_t – If cuda. Success: Request completed without a problem • cuda. Get. Last. Error(): – returns the last error to the CPU – Use with cuda. Thread. Synchronize(): cuda. Error_t code; cuda. Thread. Synchronize (); code = cuda. Get. Last. Error (); • char *cuda. Get. Error. String(cuda. Error_t code); – returns a human-readable description of the error code

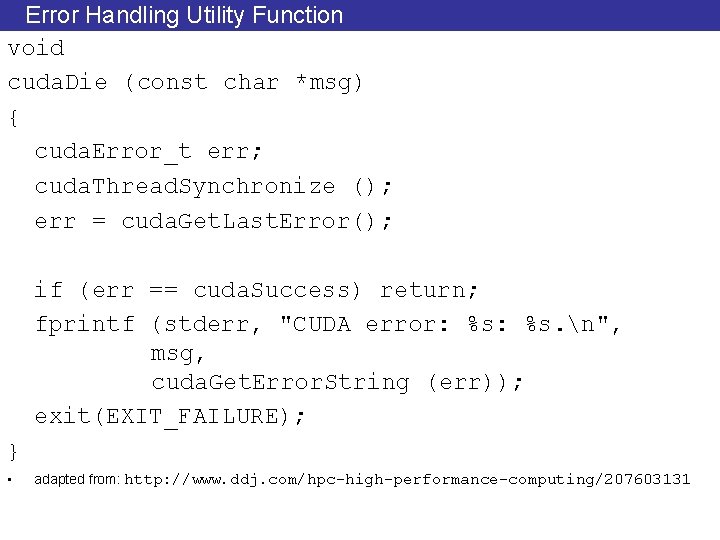

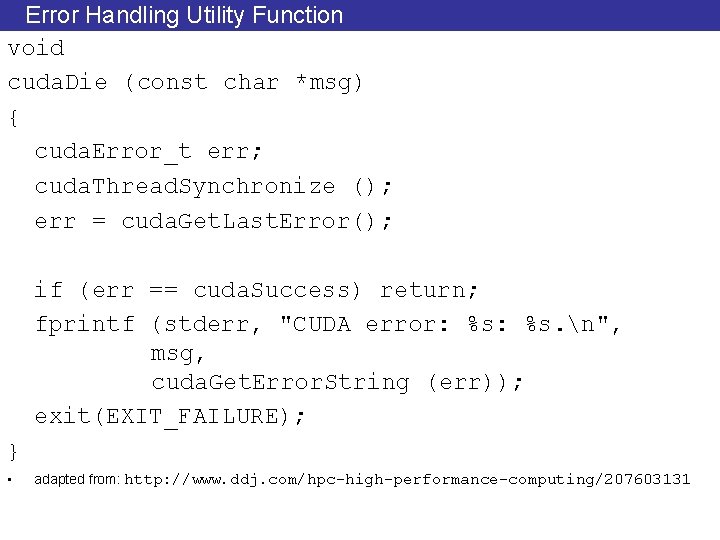

Error Handling Utility Function void cuda. Die (const char *msg) { cuda. Error_t err; cuda. Thread. Synchronize (); err = cuda. Get. Last. Error(); if (err == cuda. Success) return; fprintf (stderr, "CUDA error: %s. n", msg, cuda. Get. Error. String (err)); exit(EXIT_FAILURE); } • adapted from: http: //www. ddj. com/hpc-high-performance-computing/207603131

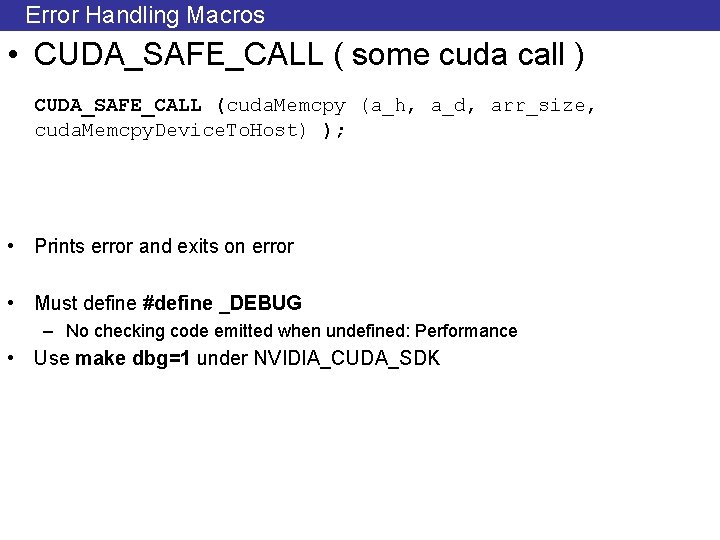

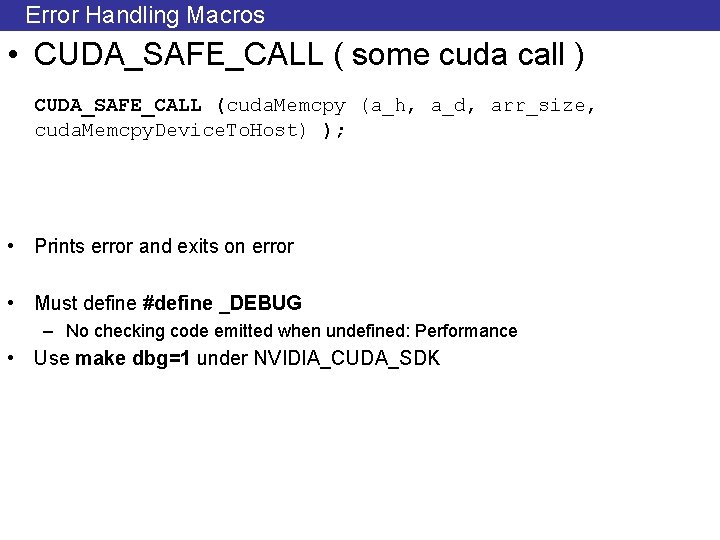

Error Handling Macros • CUDA_SAFE_CALL ( some cuda call ) CUDA_SAFE_CALL (cuda. Memcpy (a_h, a_d, arr_size, cuda. Memcpy. Device. To. Host) ); • Prints error and exits on error • Must define #define _DEBUG – No checking code emitted when undefined: Performance • Use make dbg=1 under NVIDIA_CUDA_SDK

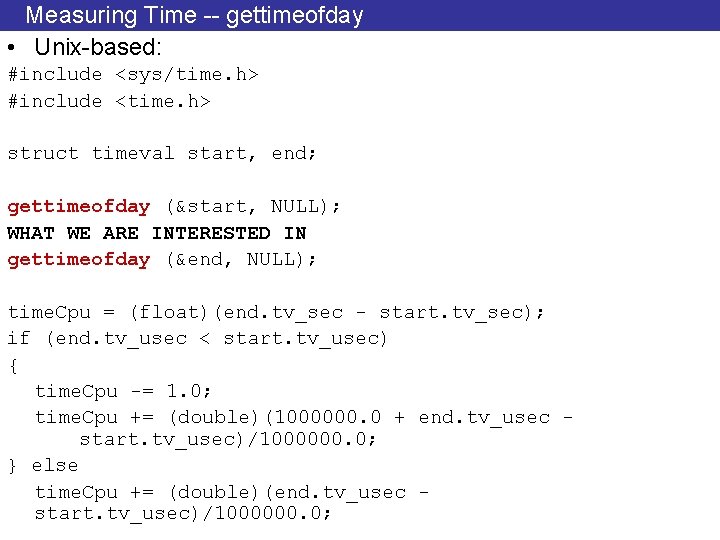

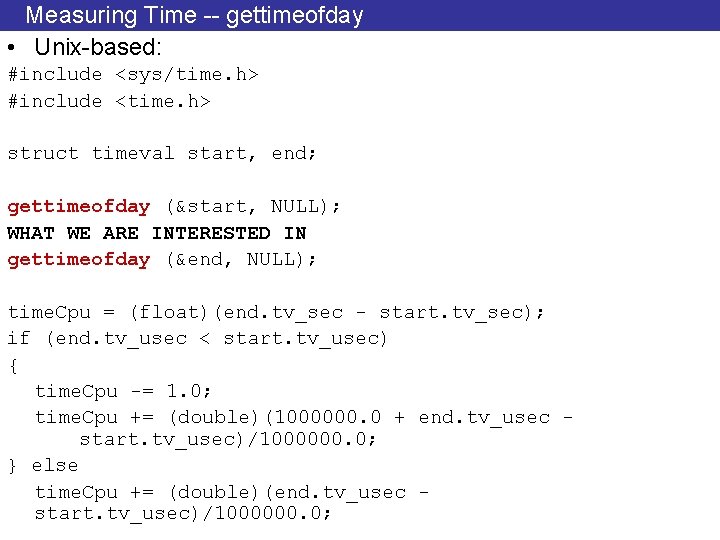

Measuring Time -- gettimeofday • Unix-based: #include <sys/time. h> #include <time. h> struct timeval start, end; gettimeofday (&start, NULL); WHAT WE ARE INTERESTED IN gettimeofday (&end, NULL); time. Cpu = (float)(end. tv_sec - start. tv_sec); if (end. tv_usec < start. tv_usec) { time. Cpu -= 1. 0; time. Cpu += (double)(1000000. 0 + end. tv_usec start. tv_usec)/1000000. 0; } else time. Cpu += (double)(end. tv_usec start. tv_usec)/1000000. 0;

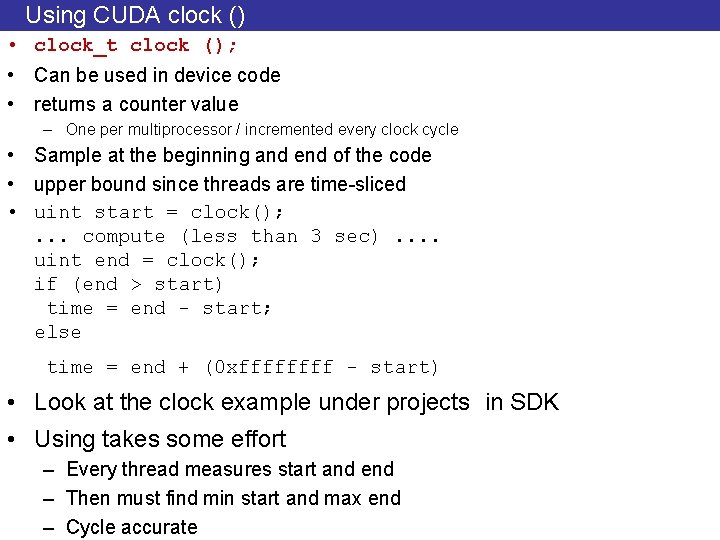

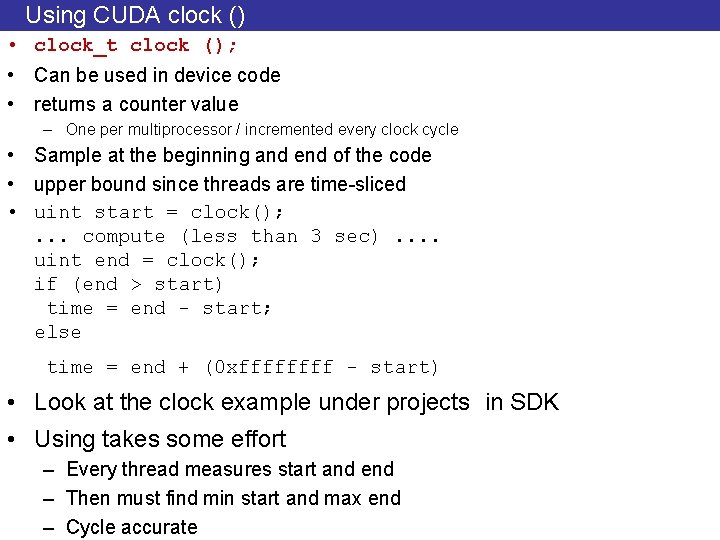

Using CUDA clock () • clock_t clock (); • Can be used in device code • returns a counter value – One per multiprocessor / incremented every clock cycle • Sample at the beginning and end of the code • upper bound since threads are time-sliced • uint start = clock(); . . . compute (less than 3 sec). . uint end = clock(); if (end > start) time = end - start; else time = end + (0 xffff - start) • Look at the clock example under projects in SDK • Using takes some effort – Every thread measures start and end – Then must find min start and max end – Cycle accurate

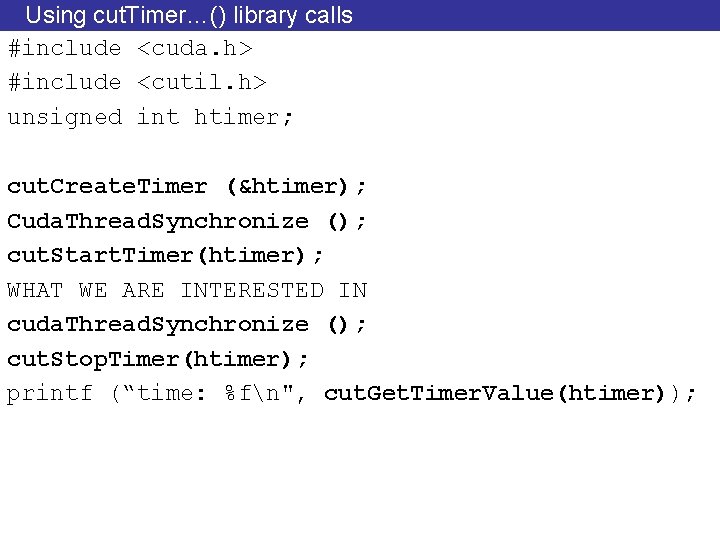

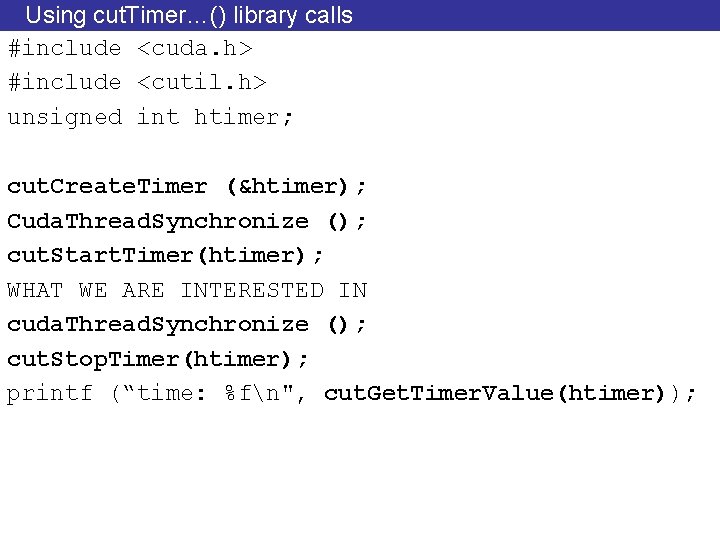

Using cut. Timer…() library calls #include <cuda. h> #include <cutil. h> unsigned int htimer; cut. Create. Timer (&htimer); Cuda. Thread. Synchronize (); cut. Start. Timer(htimer); WHAT WE ARE INTERESTED IN cuda. Thread. Synchronize (); cut. Stop. Timer(htimer); printf (“time: %fn", cut. Get. Timer. Value(htimer));

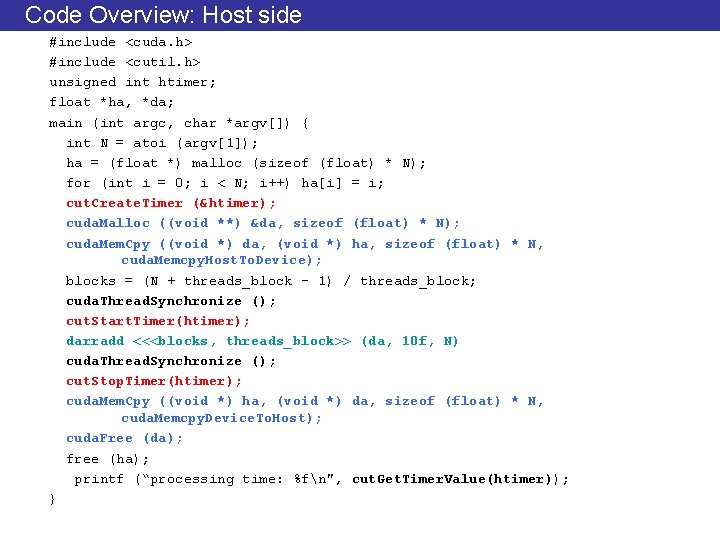

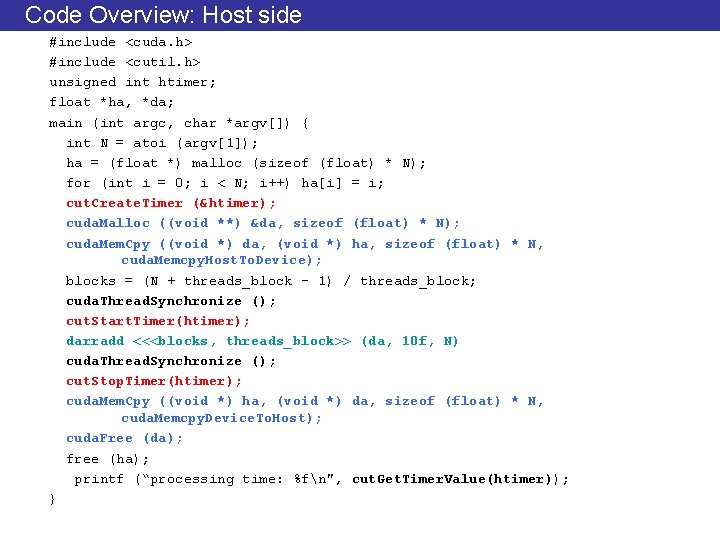

Code Overview: Host side #include <cuda. h> #include <cutil. h> unsigned int htimer; float *ha, *da; main (int argc, char *argv[]) { int N = atoi (argv[1]); ha = (float *) malloc (sizeof (float) * N); for (int i = 0; i < N; i++) ha[i] = i; cut. Create. Timer (&htimer); cuda. Malloc ((void **) &da, sizeof (float) * N); cuda. Mem. Cpy ((void *) da, (void *) ha, sizeof (float) * N, cuda. Memcpy. Host. To. Device); blocks = (N + threads_block – 1) / threads_block; cuda. Thread. Synchronize (); cut. Start. Timer(htimer); darradd <<<blocks, threads_block>> (da, 10 f, N) cuda. Thread. Synchronize (); cut. Stop. Timer(htimer); cuda. Mem. Cpy ((void *) ha, (void *) da, sizeof (float) * N, cuda. Memcpy. Device. To. Host); cuda. Free (da); free (ha); printf (“processing time: %fn", cut. Get. Timer. Value(htimer)); }

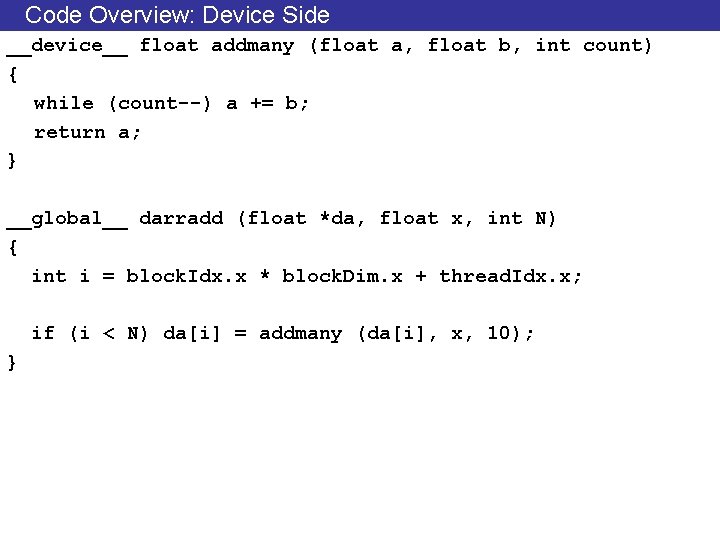

Code Overview: Device Side __device__ float addmany (float a, float b, int count) { while (count--) a += b; return a; } __global__ darradd (float *da, float x, int N) { int i = block. Idx. x * block. Dim. x + thread. Idx. x; if (i < N) da[i] = addmany (da[i], x, 10); }

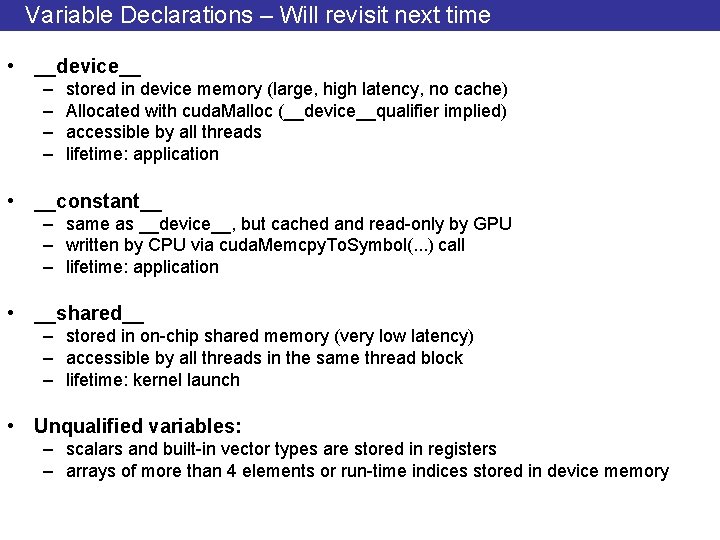

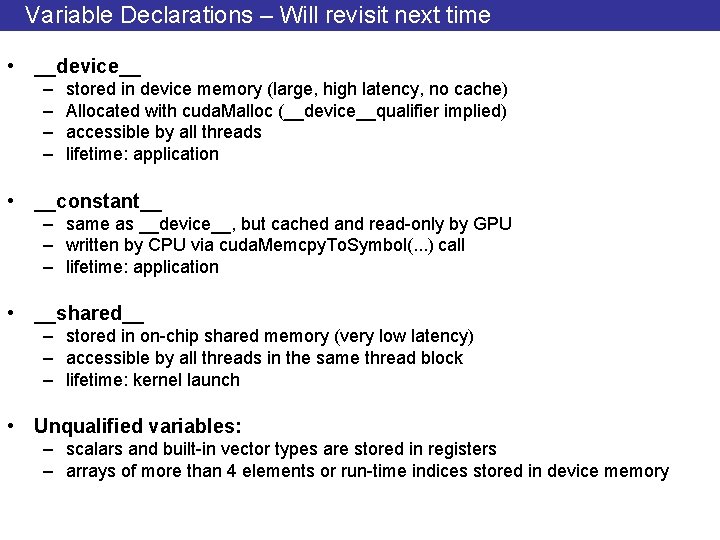

Variable Declarations – Will revisit next time • __device__ – – stored in device memory (large, high latency, no cache) Allocated with cuda. Malloc (__device__qualifier implied) accessible by all threads lifetime: application • __constant__ – same as __device__, but cached and read-only by GPU – written by CPU via cuda. Memcpy. To. Symbol(. . . ) call – lifetime: application • __shared__ – stored in on-chip shared memory (very low latency) – accessible by all threads in the same thread block – lifetime: kernel launch • Unqualified variables: – scalars and built-in vector types are stored in registers – arrays of more than 4 elements or run-time indices stored in device memory

Measurement Methodology • You will not get exactly the same time measurements every time – Other processes running / external events (e. g. , network activity) – Cannot control – “Non-determinism” • Must take sufficient samples – say 10 or more – There is theory on what the number of samples must be • Measure average • Will discuss this next time or will provide a handout online

![Handling Large Input Data Sets 1 D Example Recall grid Dim xy Handling Large Input Data Sets – 1 D Example • Recall grid. Dim. [xy]](https://slidetodoc.com/presentation_image_h2/dbc3d7552ba65bd68a3f73ca073bf0aa/image-89.jpg)

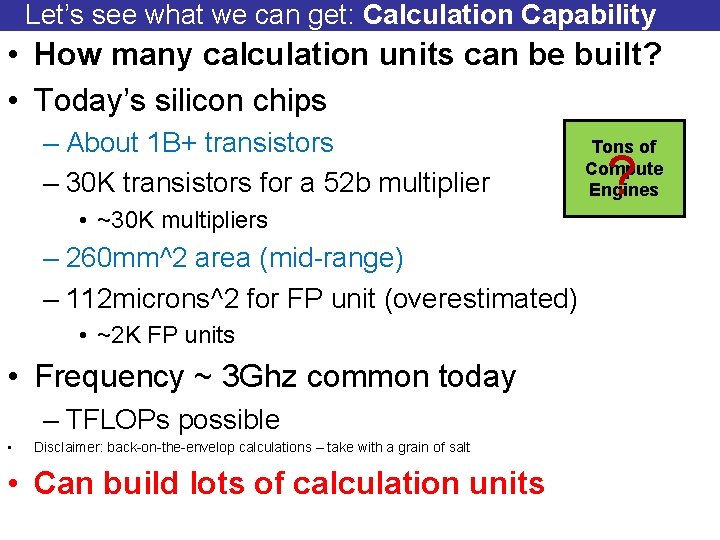

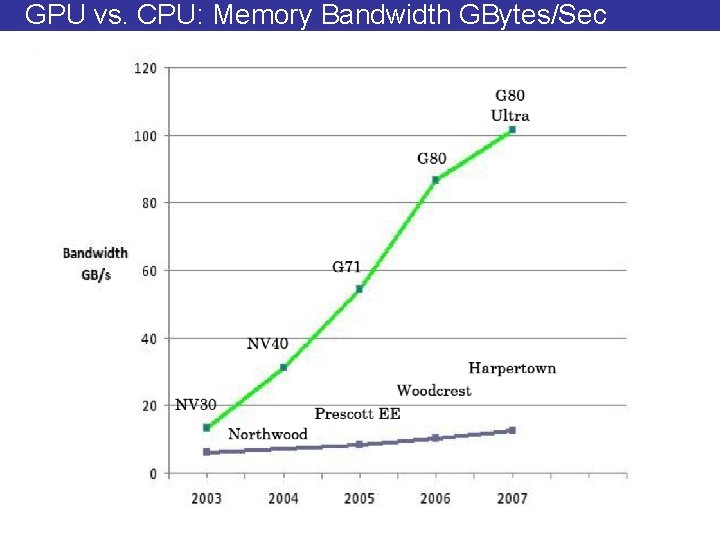

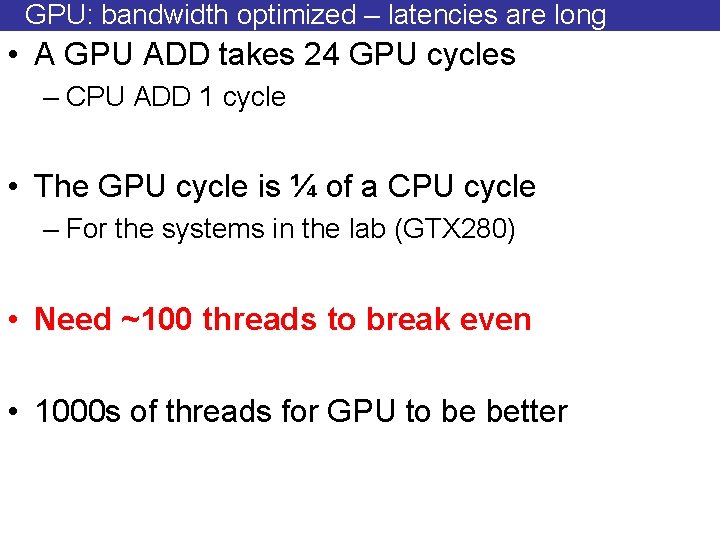

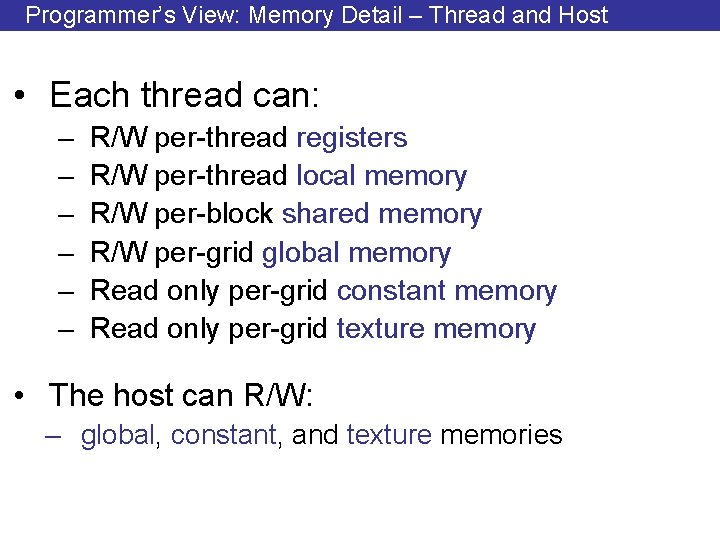

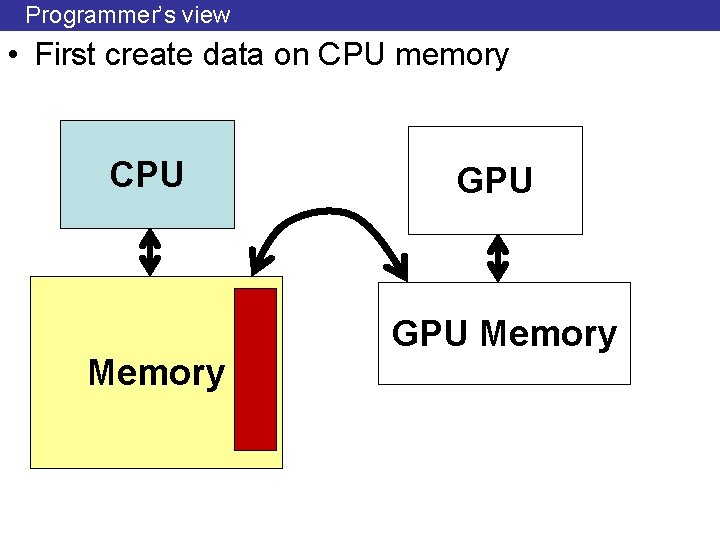

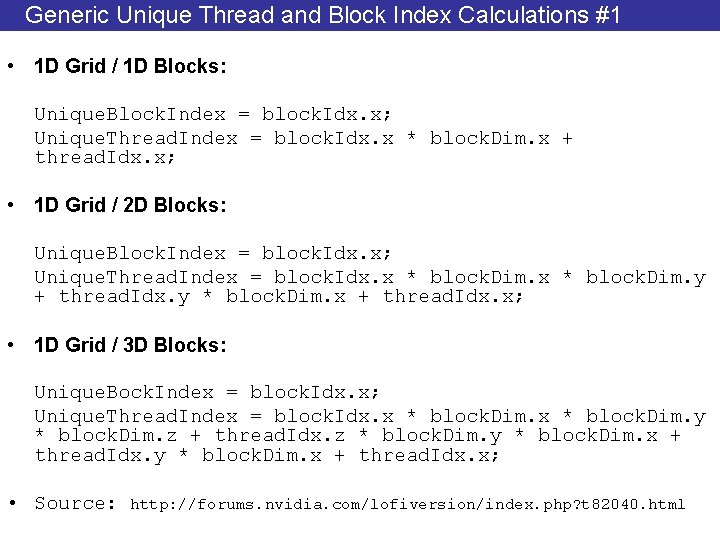

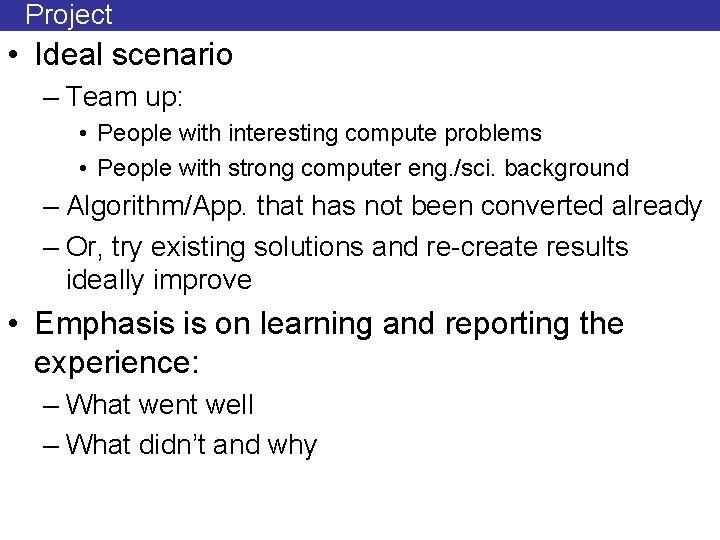

Handling Large Input Data Sets – 1 D Example • Recall grid. Dim. [xy] <= 65535 • Host calls kernel multiple times: float *dac = da; // starting offset for current kernel while (n_blocks) { int bn = n_blocks; int elems; // array elements processed in this kernel if (bn > 65535) bn = 65535; elems = bn * block_size; darradd <<<bn, block_size>>> (dac, 10. 0 f, elems); n_blocks -= bn; dac += elems; } • Better alternative: – Each thread processes multiple elements

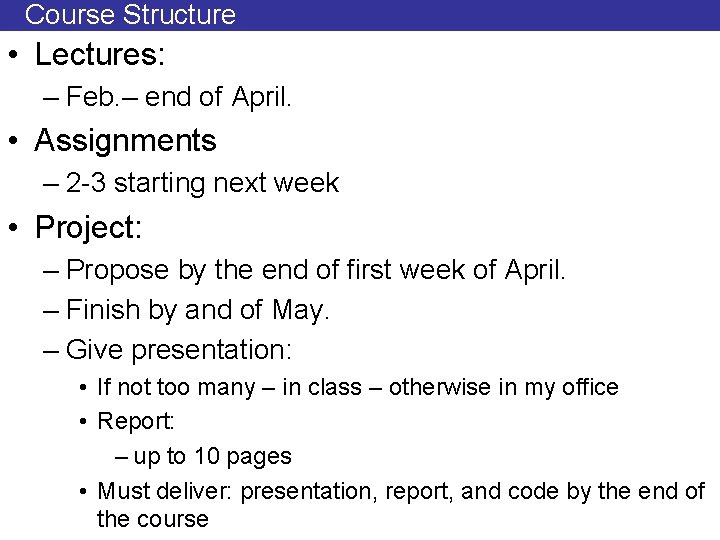

Course Structure • Lectures: – Feb. – end of April. • Assignments – 2 -3 starting next week • Project: – Propose by the end of first week of April. – Finish by and of May. – Give presentation: • If not too many – in class – otherwise in my office • Report: – up to 10 pages • Must deliver: presentation, report, and code by the end of the course

Project • Ideal scenario – Team up: • People with interesting compute problems • People with strong computer eng. /sci. background – Algorithm/App. that has not been converted already – Or, try existing solutions and re-create results ideally improve • Emphasis is on learning and reporting the experience: – What went well – What didn’t and why

Material • Programming Massively Parallel Processors: A Hands-on Approach – D. Kirk and W. -M. Hwu – http: //www. elsevierdirect. com/morgan_kaufmann/kirk/ • The Open. CL Programming Book: Parallel Programming for Multi. Core CPU and GPU, R. Tsuchiyama, T. Nakamura, and T. Lizuka, – http: //www. fixstars. com/en/company/books/opencl/ • We’ll cover CUDA for GTX 280 • At the end we’ll talk about the newest Fermi architecture and AMD’s offerings

TO DO today • www. eecg. toronto. edu/~moshovos/CUDA 11 – not ready yet – Will be posting lecture notes – Try CUDA 10 for recent set of slides • Signup sheet for accounts? – Name, e-mail – E-mail me at moshovos@eecg. toronto. edu – Subject: CUDA 11: • Time? Is this slot OK for everyone? – May be post a doodle to check what other times might work?