Measuring Performance These notes introduce Timing Program Execution

![K 20 bandwidth. Test (cci-grid 08) [abw@cci-grid 08 ~]$ bandwidth. Test [CUDA Bandwidth Test] K 20 bandwidth. Test (cci-grid 08) [abw@cci-grid 08 ~]$ bandwidth. Test [CUDA Bandwidth Test]](https://slidetodoc.com/presentation_image_h/1999d83350c41106eb6f41f9742a8681/image-16.jpg)

![C 2050 bandwidth. Test (cci-grid 07) [abw@cci-grid 07 ~]$ bandwidth. Test [CUDA Bandwidth Test] C 2050 bandwidth. Test (cci-grid 07) [abw@cci-grid 07 ~]$ bandwidth. Test [CUDA Bandwidth Test]](https://slidetodoc.com/presentation_image_h/1999d83350c41106eb6f41f9742a8681/image-17.jpg)

- Slides: 23

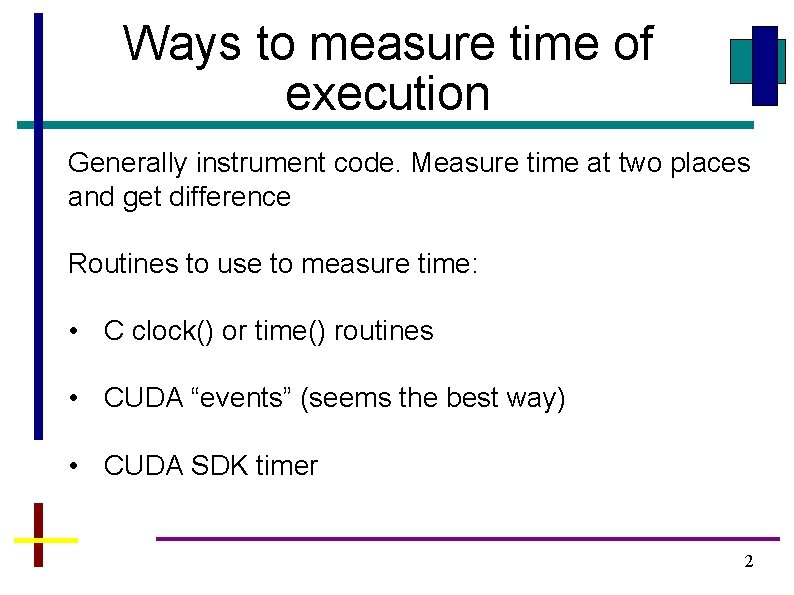

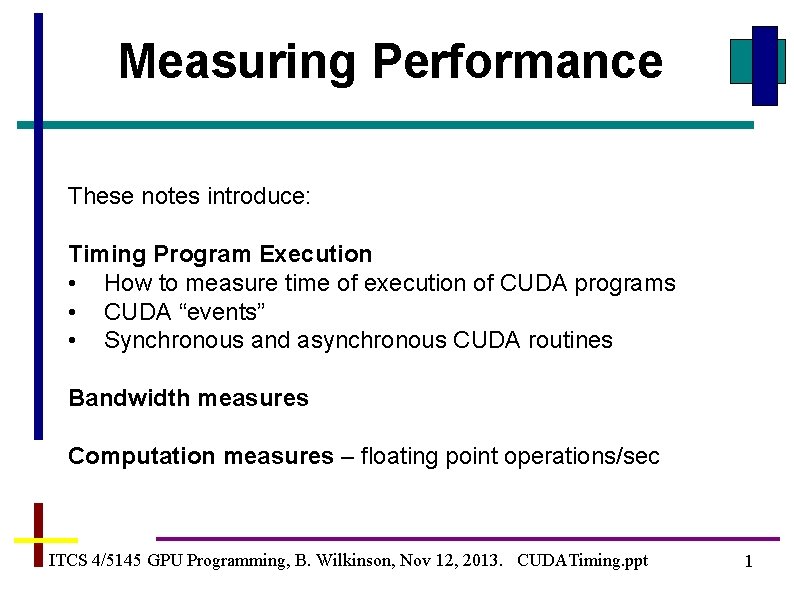

Measuring Performance These notes introduce: Timing Program Execution • How to measure time of execution of CUDA programs • CUDA “events” • Synchronous and asynchronous CUDA routines Bandwidth measures Computation measures – floating point operations/sec ITCS 4/5145 GPU Programming, B. Wilkinson, Nov 12, 2013. CUDATiming. ppt 1

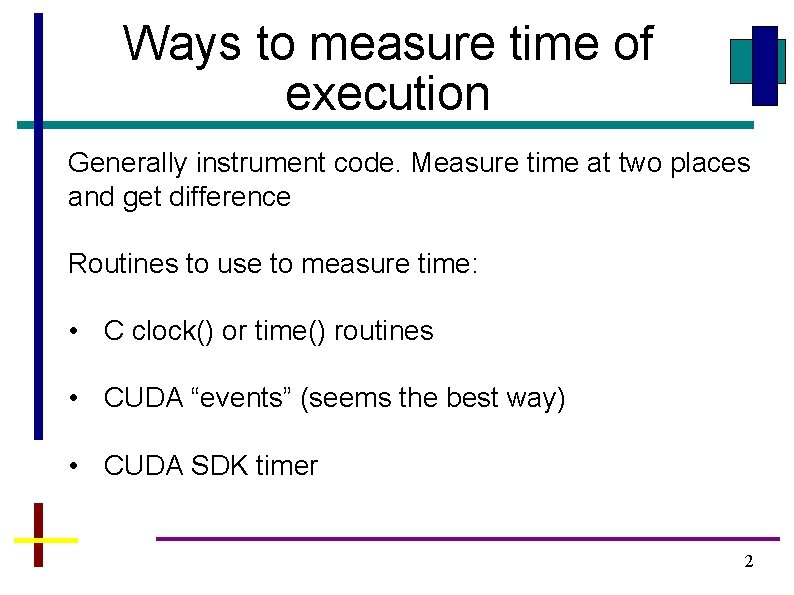

Ways to measure time of execution Generally instrument code. Measure time at two places and get difference Routines to use to measure time: • C clock() or time() routines • CUDA “events” (seems the best way) • CUDA SDK timer 2

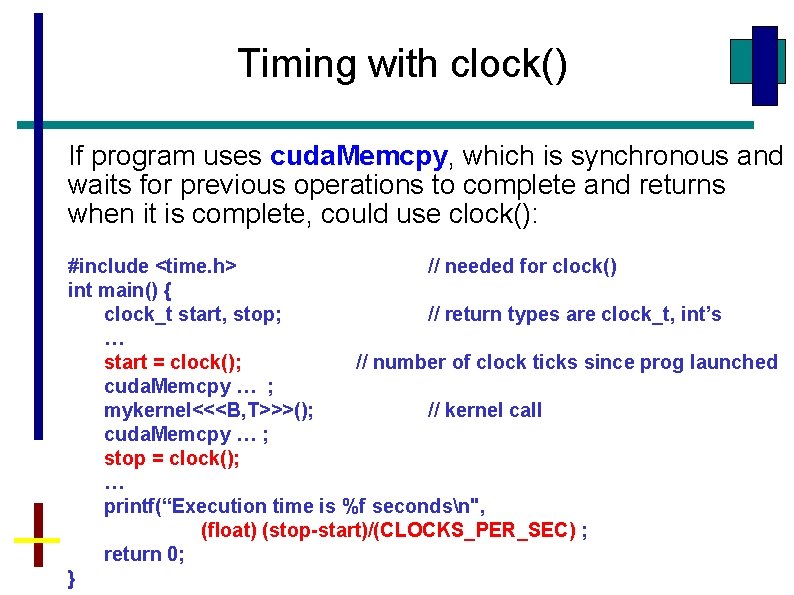

Timing with clock() If program uses cuda. Memcpy, which is synchronous and waits for previous operations to complete and returns when it is complete, could use clock(): #include <time. h> // needed for clock() int main() { clock_t start, stop; // return types are clock_t, int’s … start = clock(); // number of clock ticks since prog launched cuda. Memcpy … ; mykernel<<<B, T>>>(); // kernel call cuda. Memcpy … ; stop = clock(); … printf(“Execution time is %f secondsn", (float) (stop-start)/(CLOCKS_PER_SEC) ; return 0; 3 }

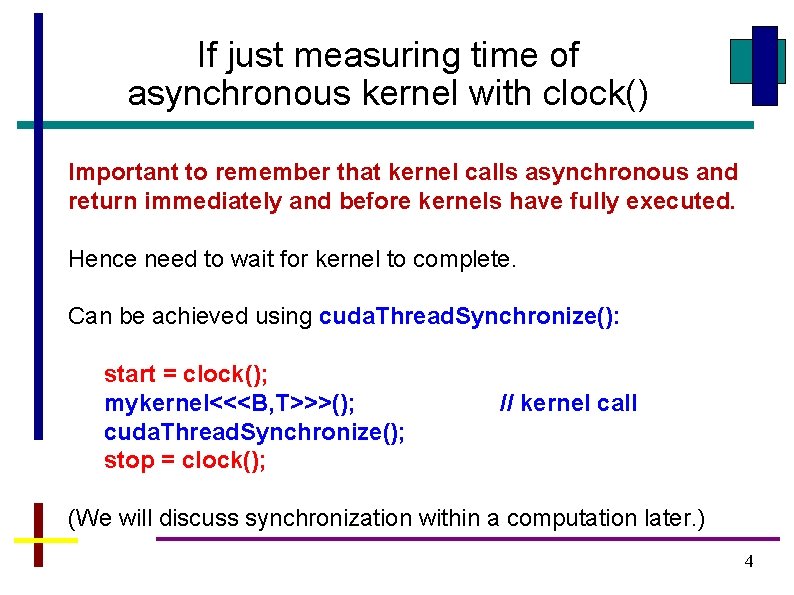

If just measuring time of asynchronous kernel with clock() Important to remember that kernel calls asynchronous and return immediately and before kernels have fully executed. Hence need to wait for kernel to complete. Can be achieved using cuda. Thread. Synchronize(): start = clock(); mykernel<<<B, T>>>(); cuda. Thread. Synchronize(); stop = clock(); // kernel call (We will discuss synchronization within a computation later. ) 4

CUDA event timer In general, better to use CUDA event timer. First need to create event objects. cuda. Event_t event 1; cuda. Event. Create(&event 1); cuda. Event_t event 2; cuda. Event. Create(&event 2); creates two “event” objects, event 1 and event 2. 5

Recording Events cuda. Event. Record(event 1, 0) record an “event” into default “stream” (0). Device will record a timestamp for the event when it reaches that event in the stream, that is, after all preceding operations have completed. (Default stream 0 will mean completed in CUDA context) NOTE: This operation is asynchronous and may return before recording event! 6

Making event actually recorded cuda. Event. Synchronize(event 1) -- waits until named event actually recorded. Event recorded when all work done by threads to complete prior to specified event (Not strictly be necessary if synchronous CUDA call in code. ) 7

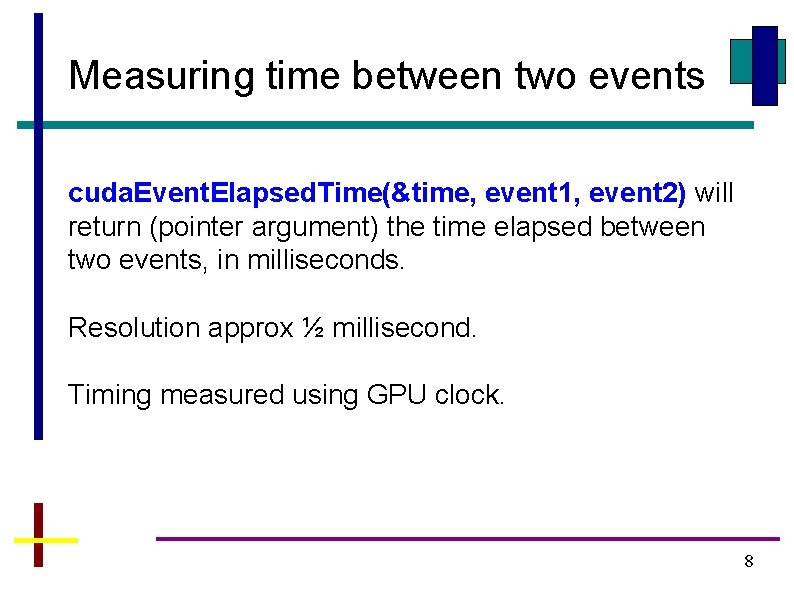

Measuring time between two events cuda. Event. Elapsed. Time(&time, event 1, event 2) will return (pointer argument) the time elapsed between two events, in milliseconds. Resolution approx ½ millisecond. Timing measured using GPU clock. 8

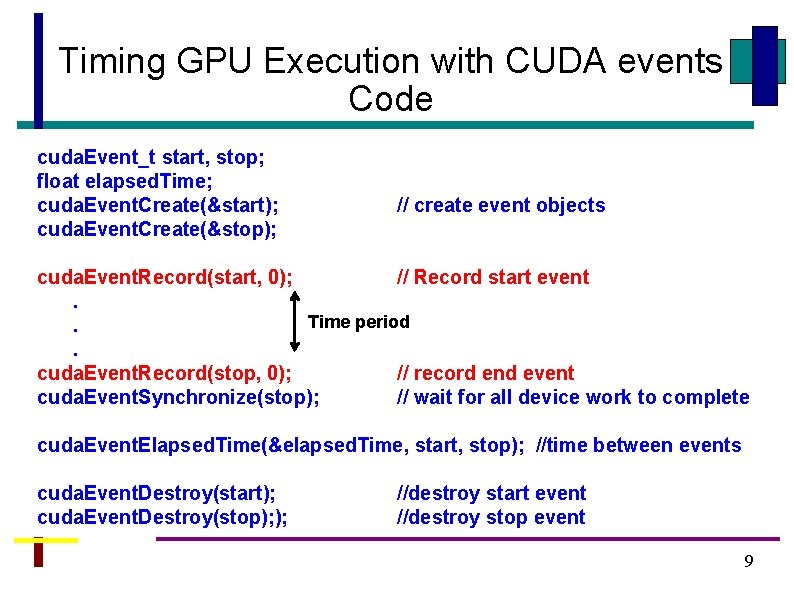

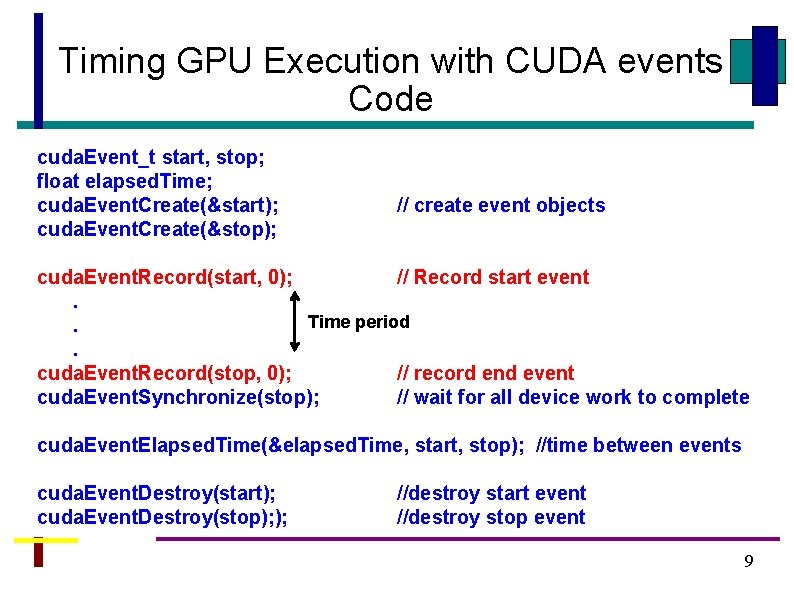

Timing GPU Execution with CUDA events Code cuda. Event_t start, stop; float elapsed. Time; cuda. Event. Create(&start); cuda. Event. Create(&stop); // create event objects cuda. Event. Record(start, 0); // Record start event. Time period. . cuda. Event. Record(stop, 0); // record end event cuda. Event. Synchronize(stop); // wait for all device work to complete cuda. Event. Elapsed. Time(&elapsed. Time, start, stop); //time between events cuda. Event. Destroy(start); cuda. Event. Destroy(stop); ); //destroy start event //destroy stop event 9

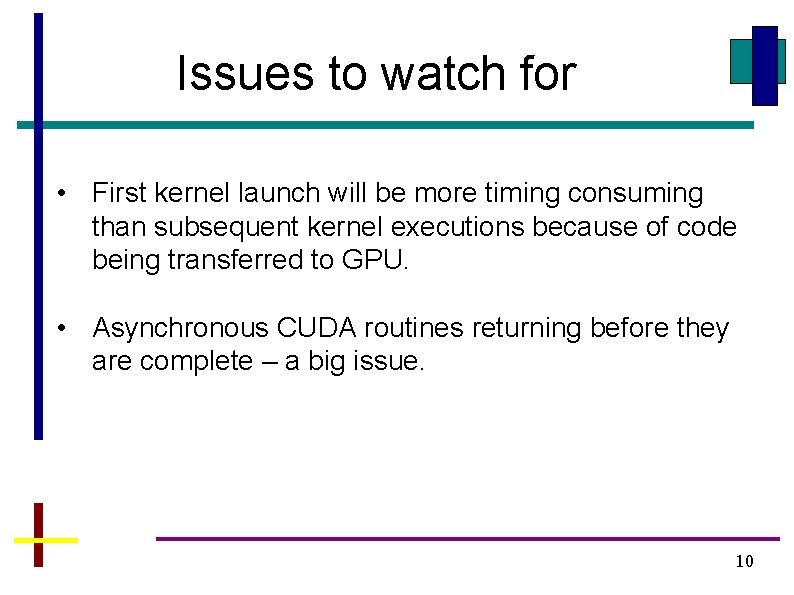

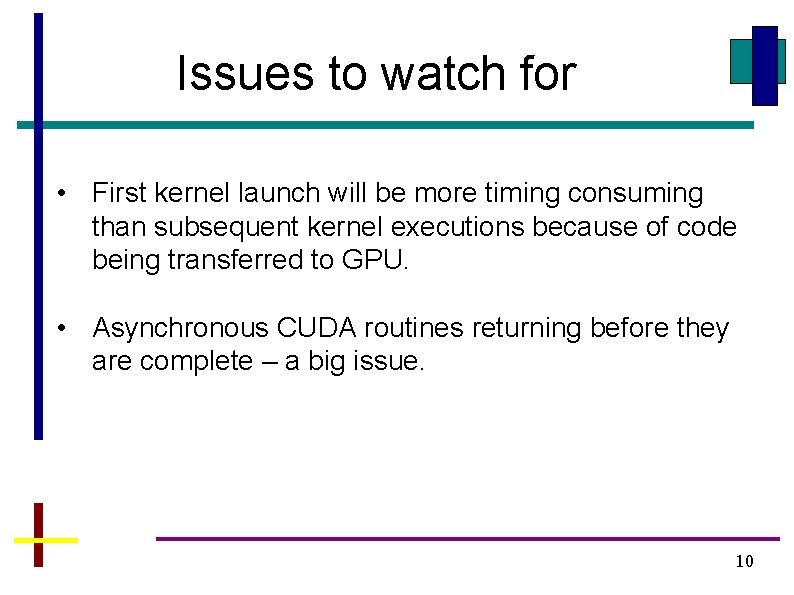

Issues to watch for • First kernel launch will be more timing consuming than subsequent kernel executions because of code being transferred to GPU. • Asynchronous CUDA routines returning before they are complete – a big issue. 10

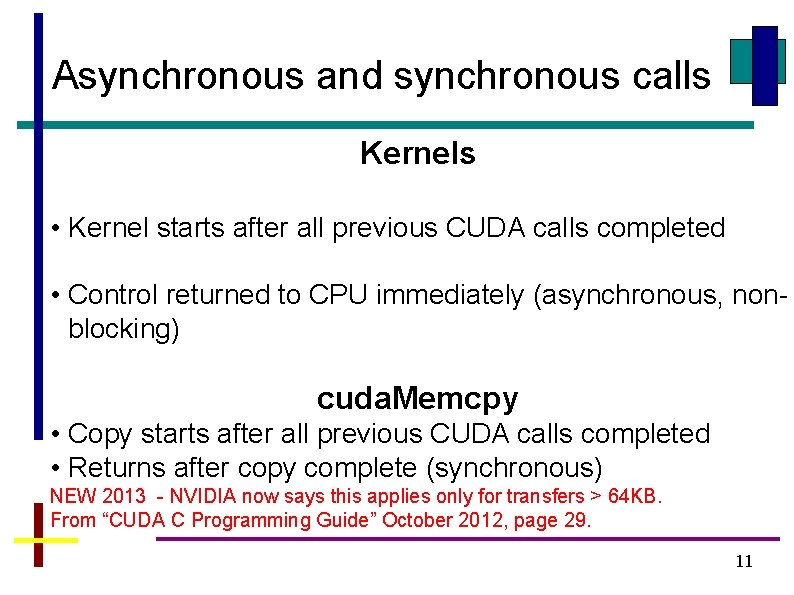

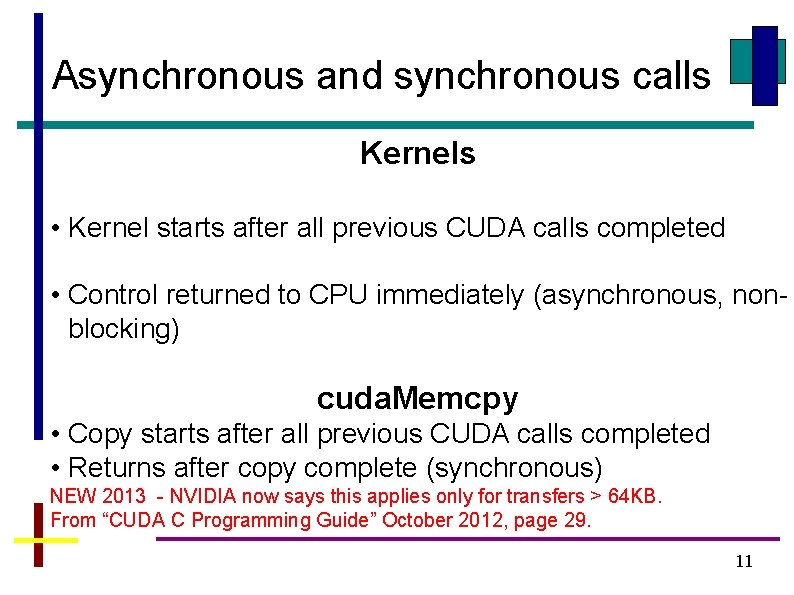

Asynchronous and synchronous calls Kernels • Kernel starts after all previous CUDA calls completed • Control returned to CPU immediately (asynchronous, nonblocking) cuda. Memcpy • Copy starts after all previous CUDA calls completed • Returns after copy complete (synchronous) NEW 2013 - NVIDIA now says this applies only for transfers > 64 KB. From “CUDA C Programming Guide” October 2012, page 29. 11

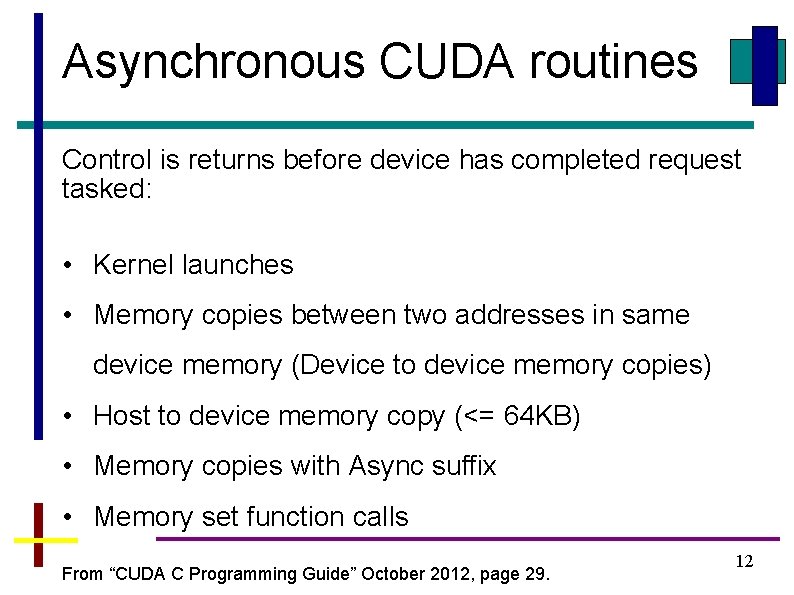

Asynchronous CUDA routines Control is returns before device has completed request tasked: • Kernel launches • Memory copies between two addresses in same device memory (Device to device memory copies) • Host to device memory copy (<= 64 KB) • Memory copies with Async suffix • Memory set function calls From “CUDA C Programming Guide” October 2012, page 29. 12

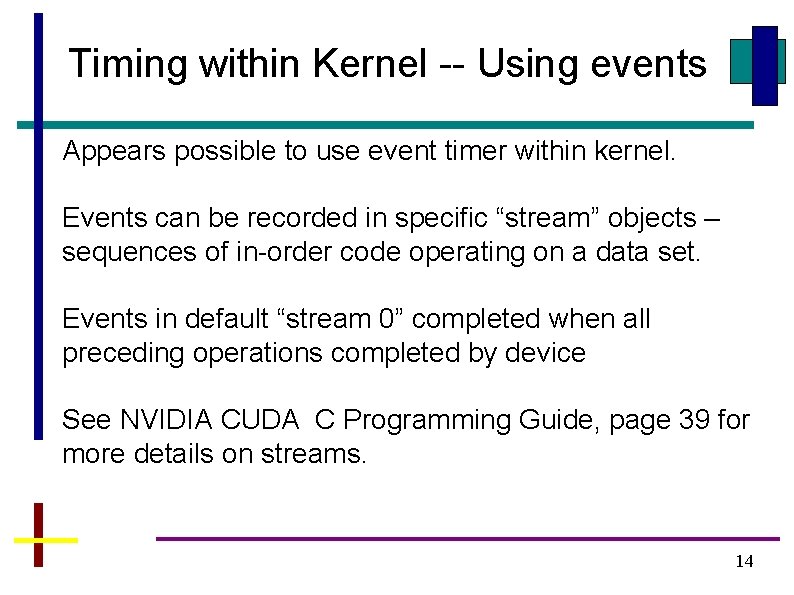

Timing within Kernel -- Using clock() Possible to use clock() within kernel See NVIDIA CUDA C Programming Guide, page 115: “B. 10 Time Function clock_t clock(); when executed in device code, returns the value of a per-multiprocessor counter that is incremented every clock cycle. Sampling this counter at the beginning and at the end of a kernel, taking the difference of the two samples, and recording the result per thread provides a measure for each thread of the number of clock cycles taken by the device to completely execute thread, but not of the number of clock cycles the device actually spent executing thread instructions. The former number is greater that the latter since threads are time sliced. ” 13

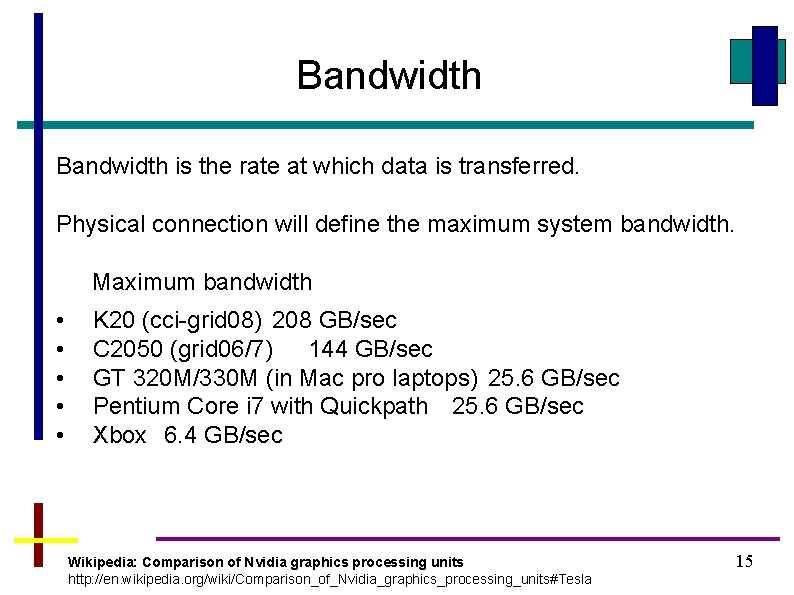

Timing within Kernel -- Using events Appears possible to use event timer within kernel. Events can be recorded in specific “stream” objects – sequences of in-order code operating on a data set. Events in default “stream 0” completed when all preceding operations completed by device See NVIDIA CUDA C Programming Guide, page 39 for more details on streams. 14

Bandwidth is the rate at which data is transferred. Physical connection will define the maximum system bandwidth. Maximum bandwidth • • • K 20 (cci-grid 08) 208 GB/sec C 2050 (grid 06/7) 144 GB/sec GT 320 M/330 M (in Mac pro laptops) 25. 6 GB/sec Pentium Core i 7 with Quickpath 25. 6 GB/sec Xbox 6. 4 GB/sec Wikipedia: Comparison of Nvidia graphics processing units http: //en. wikipedia. org/wiki/Comparison_of_Nvidia_graphics_processing_units#Tesla 15

![K 20 bandwidth Test ccigrid 08 abwccigrid 08 bandwidth Test CUDA Bandwidth Test K 20 bandwidth. Test (cci-grid 08) [abw@cci-grid 08 ~]$ bandwidth. Test [CUDA Bandwidth Test]](https://slidetodoc.com/presentation_image_h/1999d83350c41106eb6f41f9742a8681/image-16.jpg)

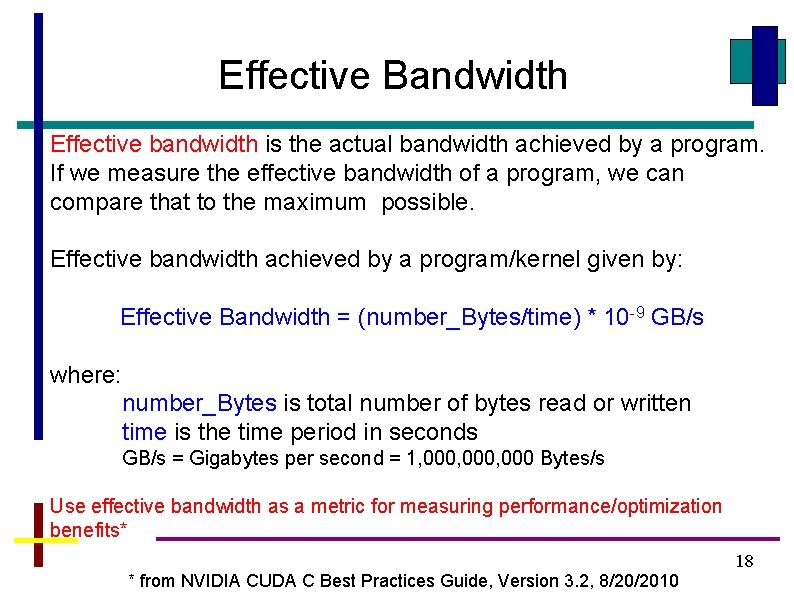

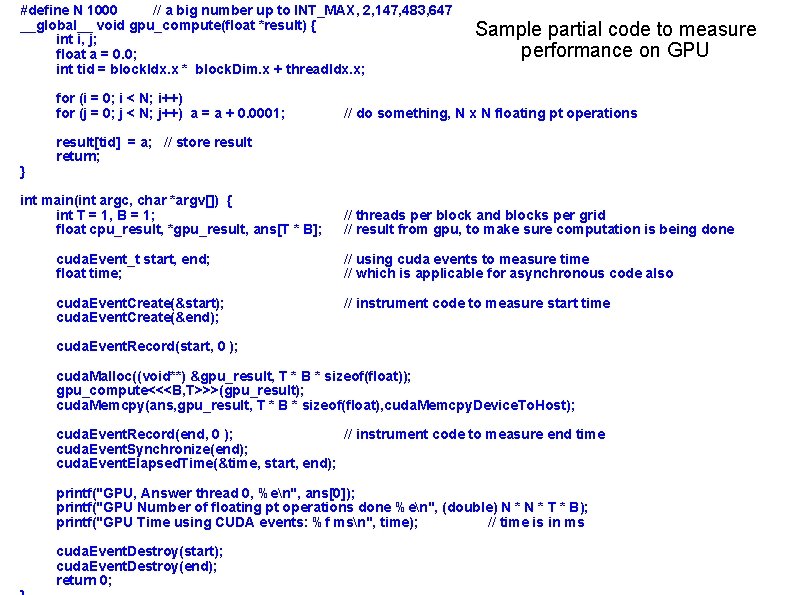

K 20 bandwidth. Test (cci-grid 08) [abw@cci-grid 08 ~]$ bandwidth. Test [CUDA Bandwidth Test] - Starting. . . Running on. . . Device 0: Tesla K 20 c Quick Mode Host to Device Bandwidth, 1 Device(s) PINNED Memory Transfers Transfer Size (Bytes) Bandwidth(MB/s) 33554432 5760. 5 Device to Host Bandwidth, 1 Device(s) PINNED Memory Transfers Transfer Size (Bytes) Bandwidth(MB/s) 33554432 6389. 0 Device to Device Bandwidth, 1 Device(s) PINNED Memory Transfers Transfer Size (Bytes) Bandwidth(MB/s) 33554432 143343. 5 Result = PASS [abw@cci-grid 08 ~]$ 16

![C 2050 bandwidth Test ccigrid 07 abwccigrid 07 bandwidth Test CUDA Bandwidth Test C 2050 bandwidth. Test (cci-grid 07) [abw@cci-grid 07 ~]$ bandwidth. Test [CUDA Bandwidth Test]](https://slidetodoc.com/presentation_image_h/1999d83350c41106eb6f41f9742a8681/image-17.jpg)

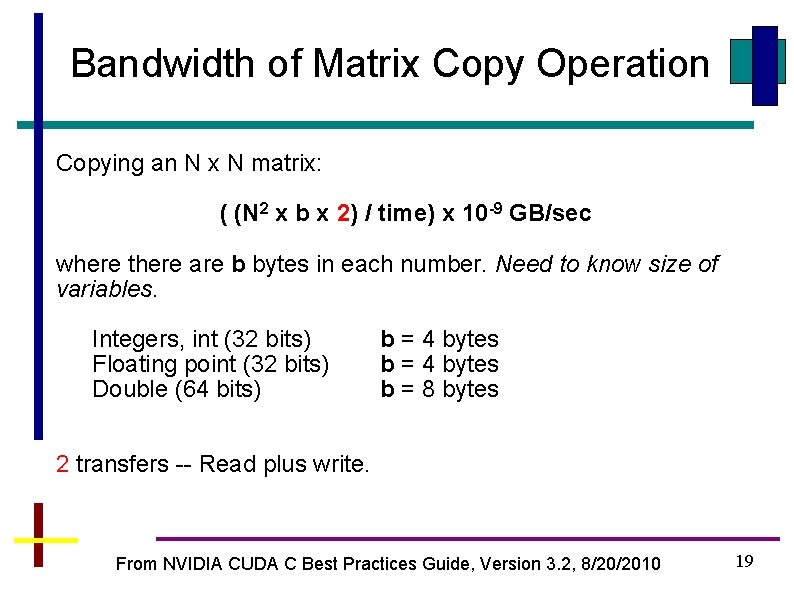

C 2050 bandwidth. Test (cci-grid 07) [abw@cci-grid 07 ~]$ bandwidth. Test [CUDA Bandwidth Test] - Starting. . . Running on. . . Device 0: Tesla C 2050 Quick Mode Host to Device Bandwidth, 1 Device(s) PINNED Memory Transfers Transfer Size (Bytes) Bandwidth(MB/s) 33554432 5697. 6 Device to Host Bandwidth, 1 Device(s) PINNED Memory Transfers Transfer Size (Bytes) Bandwidth(MB/s) 33554432 4934. 0 Device to Device Bandwidth, 1 Device(s) PINNED Memory Transfers Transfer Size (Bytes) Bandwidth(MB/s) 33554432 103795. 3 Result = PASS [abw@cci-grid 07 ~]$ 17

Effective Bandwidth Effective bandwidth is the actual bandwidth achieved by a program. If we measure the effective bandwidth of a program, we can compare that to the maximum possible. Effective bandwidth achieved by a program/kernel given by: Effective Bandwidth = (number_Bytes/time) * 10 -9 GB/s where: number_Bytes is total number of bytes read or written time is the time period in seconds GB/s = Gigabytes per second = 1, 000, 000 Bytes/s Use effective bandwidth as a metric for measuring performance/optimization benefits* 18 * from NVIDIA CUDA C Best Practices Guide, Version 3. 2, 8/20/2010

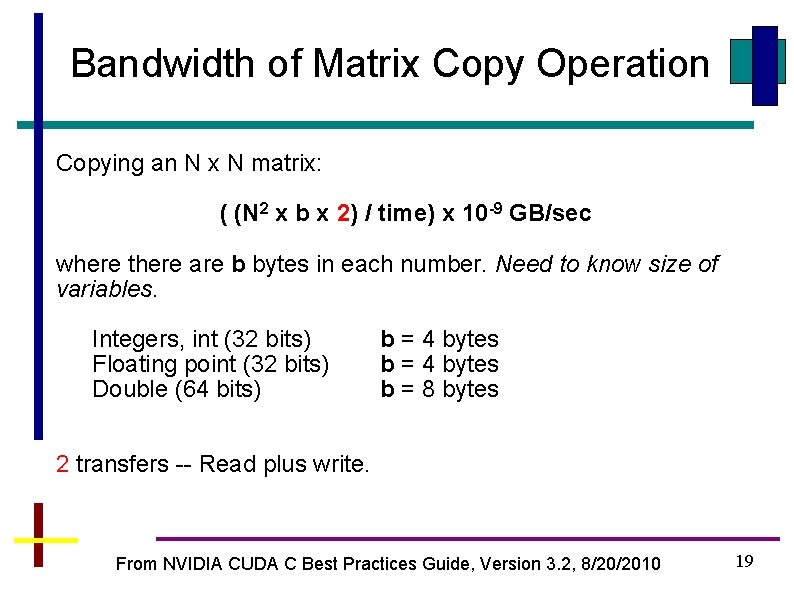

Bandwidth of Matrix Copy Operation Copying an N x N matrix: ( (N 2 x b x 2) / time) x 10 -9 GB/sec where there are b bytes in each number. Need to know size of variables. Integers, int (32 bits) Floating point (32 bits) Double (64 bits) b = 4 bytes b = 8 bytes 2 transfers -- Read plus write. From NVIDIA CUDA C Best Practices Guide, Version 3. 2, 8/20/2010 19

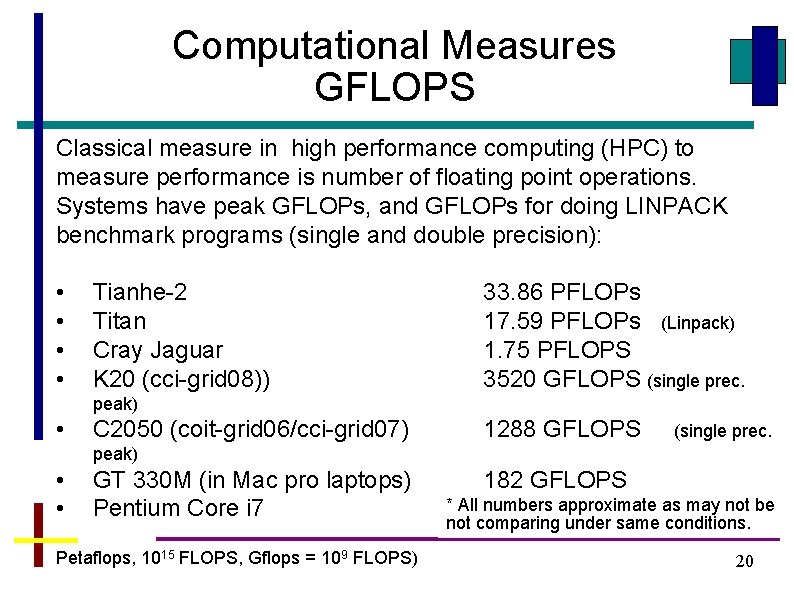

Computational Measures GFLOPS Classical measure in high performance computing (HPC) to measure performance is number of floating point operations. Systems have peak GFLOPs, and GFLOPs for doing LINPACK benchmark programs (single and double precision): • • Tianhe-2 Titan Cray Jaguar K 20 (cci-grid 08)) 33. 86 PFLOPs 17. 59 PFLOPs (Linpack) 1. 75 PFLOPS 3520 GFLOPS (single prec. peak) • C 2050 (coit-grid 06/cci-grid 07) 1288 GFLOPS (single prec. peak) • • GT 330 M (in Mac pro laptops) Pentium Core i 7 Petaflops, 1015 FLOPS, Gflops = 109 FLOPS) 182 GFLOPS * All numbers approximate as may not be 40 -55 GFLOPS not comparing under same conditions. 20

Actual FLOPS Measured using standard benchmark programs such as LINPACK If measure it on your program, can see how close it get to the peak (which presumably is doing only floating point operations). 21

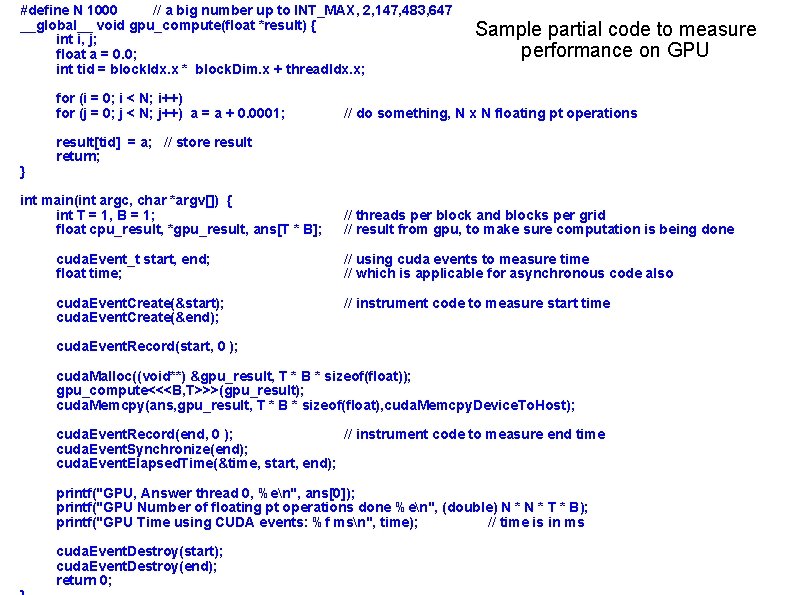

#define N 1000 // a big number up to INT_MAX, 2, 147, 483, 647 __global__ void gpu_compute(float *result) { int i, j; float a = 0. 0; int tid = block. Idx. x * block. Dim. x + thread. Idx. x; for (i = 0; i < N; i++) for (j = 0; j < N; j++) a = a + 0. 0001; } Sample partial code to measure performance on GPU // do something, N x N floating pt operations result[tid] = a; // store result return; int main(int argc, char *argv[]) { int T = 1, B = 1; float cpu_result, *gpu_result, ans[T * B]; // threads per block and blocks per grid // result from gpu, to make sure computation is being done cuda. Event_t start, end; float time; // using cuda events to measure time // which is applicable for asynchronous code also cuda. Event. Create(&start); cuda. Event. Create(&end); // instrument code to measure start time cuda. Event. Record(start, 0 ); cuda. Malloc((void**) &gpu_result, T * B * sizeof(float)); gpu_compute<<<B, T>>>(gpu_result); cuda. Memcpy(ans, gpu_result, T * B * sizeof(float), cuda. Memcpy. Device. To. Host); cuda. Event. Record(end, 0 ); // instrument code to measure end time cuda. Event. Synchronize(end); cuda. Event. Elapsed. Time(&time, start, end); printf("GPU, Answer thread 0, %en", ans[0]); printf("GPU Number of floating pt operations done %en", (double) N * T * B); printf("GPU Time using CUDA events: %f msn", time); // time is in ms cuda. Event. Destroy(start); cuda. Event. Destroy(end); return 0; 22

Questions