Lecture 11: Data Transfer and CUDA Streams

© David Kirk/NVIDIA and Wen-mei W. Hwu, 2007-2012. ECE 408/CS 483, ECE 498 AL, University of Illinois, Urbana-Champaign

Objective
• To learn more advanced features of the CUDA APIs for data transfer and kernel launch
  – Task parallelism for overlapping data transfer with kernel computation
  – CUDA streams

Serialized Data Transfer and GPU Computation
• So far, the way we use cudaMemcpy serializes data transfer and GPU computation

[Timeline figure: Trans. A, Trans. B, Vector Add, Transfer C, one after another along the time axis. During each transfer only one PCIe direction is used and the GPU is idle; during Vector Add, PCIe is idle.]

Device Overlap
• Some CUDA devices support device overlap
  – Simultaneously execute a kernel while performing a copy between device and host memory

    int dev_count;
    cudaDeviceProp prop;
    cudaGetDeviceCount(&dev_count);
    for (int i = 0; i < dev_count; i++) {
        cudaGetDeviceProperties(&prop, i);
        if (prop.deviceOverlap) …
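The query on this slide can be turned into a small standalone program. The sketch below is not the lecture's code: it assumes a toolkit where `cudaDeviceProp` exposes `asyncEngineCount`, the field the runtime documentation recommends in place of the deprecated `deviceOverlap` flag. A value of 0 means copies and kernels cannot overlap, 1 means one copy direction can overlap with kernel execution, and 2 means both directions can.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

int main() {
    int dev_count = 0;
    if (cudaGetDeviceCount(&dev_count) != cudaSuccess) {
        fprintf(stderr, "no usable CUDA device found\n");
        return 1;
    }
    for (int i = 0; i < dev_count; i++) {
        cudaDeviceProp prop;
        if (cudaGetDeviceProperties(&prop, i) != cudaSuccess) continue;
        // asyncEngineCount is the number of copy engines that can run
        // concurrently with kernel execution on this device.
        printf("device %d (%s): asyncEngineCount = %d, copy/kernel overlap %s\n",
               i, prop.name, prop.asyncEngineCount,
               prop.asyncEngineCount > 0 ? "supported" : "not supported");
    }
    return 0;
}
```

Compile with `nvcc` and run it once per machine before relying on any of the overlap techniques in the rest of the lecture.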

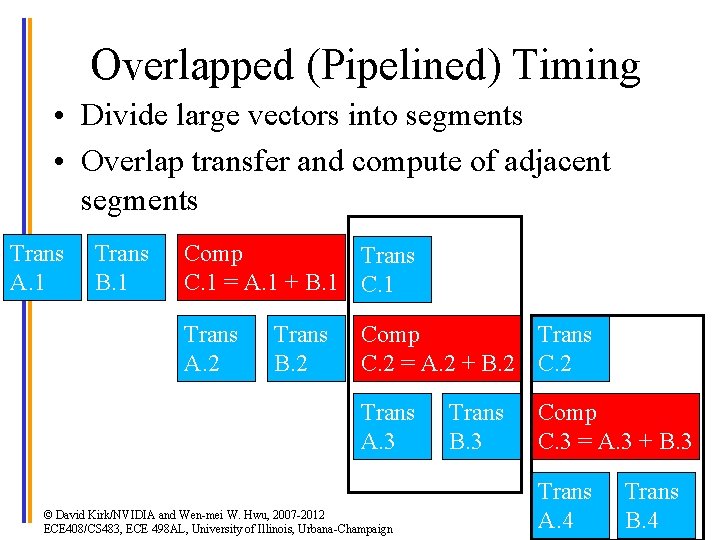

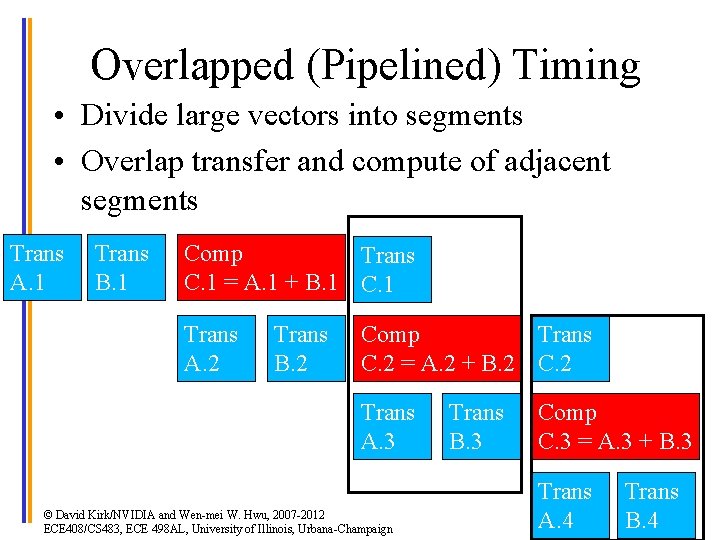

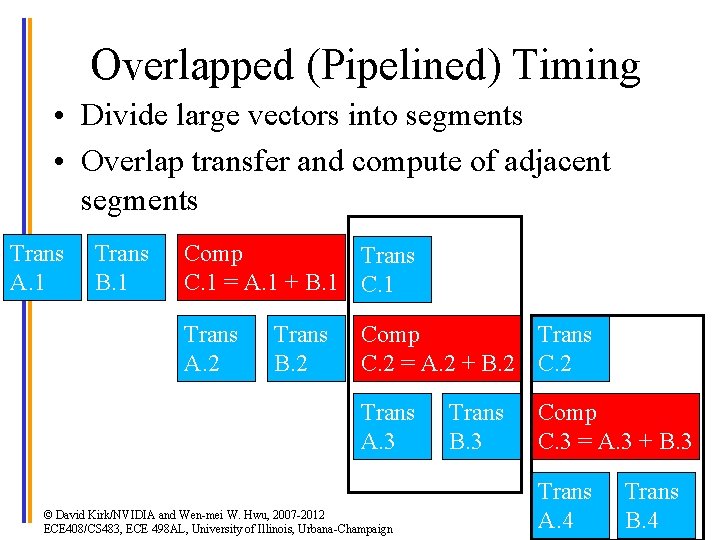

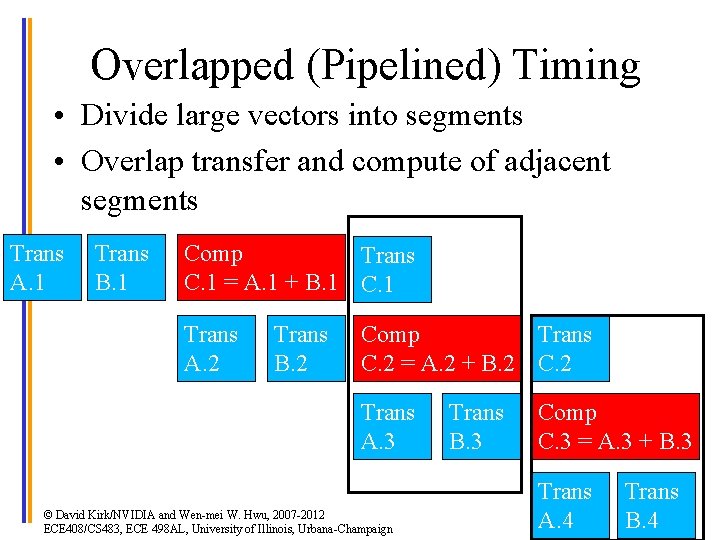

Overlapped (Pipelined) Timing
• Divide large vectors into segments
• Overlap transfer and compute of adjacent segments

[Timeline figure: one row per segment, each row shifted right so that its transfers overlap the previous segment's compute]
  Segment 1: Trans A.1, Trans B.1, Comp C.1 = A.1 + B.1, Trans C.1
  Segment 2: Trans A.2, Trans B.2, Comp C.2 = A.2 + B.2, Trans C.2
  Segment 3: Trans A.3, Trans B.3, Comp C.3 = A.3 + B.3
  Segment 4: Trans A.4, Trans B.4

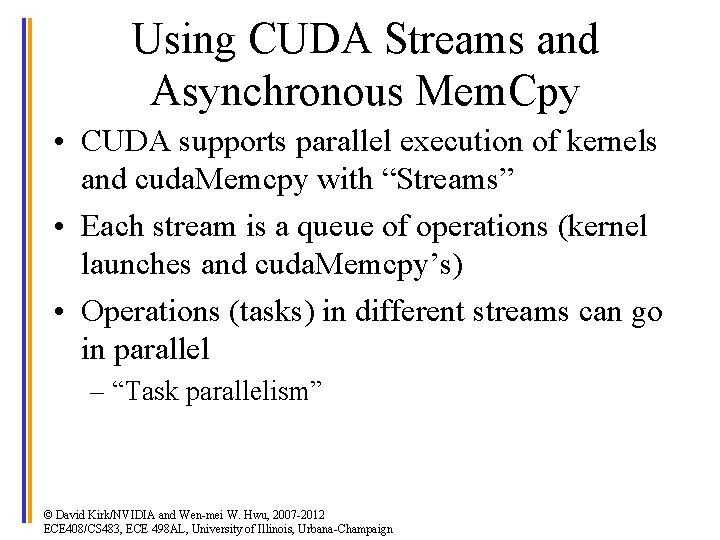

Using CUDA Streams and Asynchronous MemCpy
• CUDA supports parallel execution of kernels and cudaMemcpy with “Streams”
• Each stream is a queue of operations (kernel launches and cudaMemcpy calls)
• Operations (tasks) in different streams can go in parallel
  – “Task parallelism”

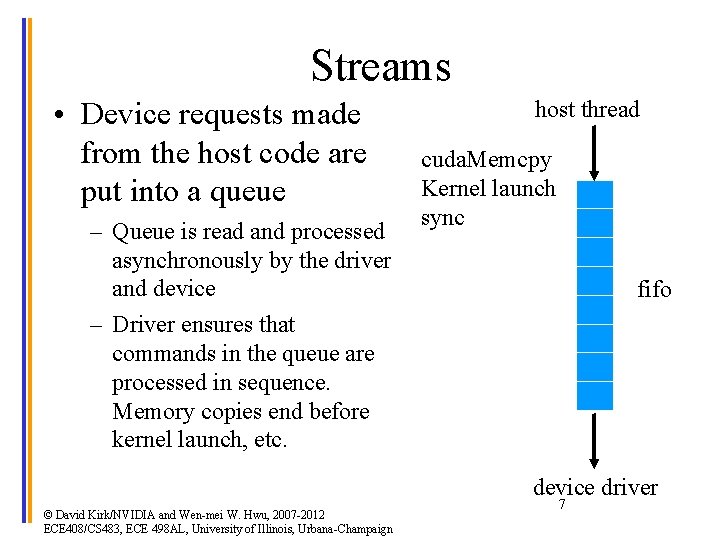

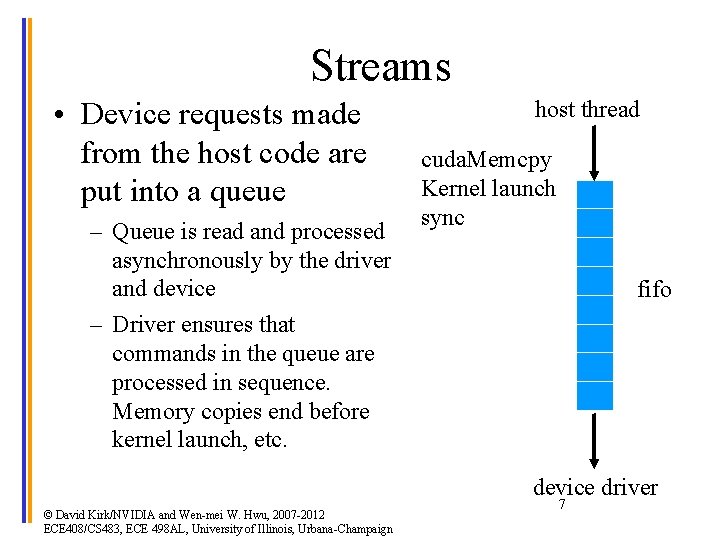

Streams
• Device requests made from the host code are put into a queue
  – Queue is read and processed asynchronously by the driver and device
  – Driver ensures that commands in the queue are processed in sequence: memory copies end before kernel launch, etc.

[Figure: the host thread places cudaMemcpy, kernel launch, and sync requests into a FIFO that is drained by the device driver.]
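A minimal sketch of the single-queue behaviour described above, using the default stream. The kernel name `addOne` and the values are illustrative assumptions, not part of the lecture. The kernel launch returns to the host immediately, but the device-to-host copy queued after it does not begin until the kernel has finished, so the host reads the updated value.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Hypothetical one-thread kernel, just to have something in the queue.
__global__ void addOne(int *x) { *x += 1; }

int main() {
    int h = 41;
    int *d = nullptr;
    if (cudaMalloc((void **)&d, sizeof(int)) != cudaSuccess) return 1;

    // Queued first: host-to-device copy.
    cudaMemcpy(d, &h, sizeof(int), cudaMemcpyHostToDevice);
    // Queued second: the launch call itself returns without waiting.
    addOne<<<1, 1>>>(d);
    // Queued third: processed only after the kernel ahead of it completes,
    // and the call blocks the host until the copy is done.
    cudaError_t err = cudaMemcpy(&h, d, sizeof(int), cudaMemcpyDeviceToHost);

    if (err != cudaSuccess) {
        fprintf(stderr, "CUDA error: %s\n", cudaGetErrorString(err));
        return 1;
    }
    printf("value read back after the kernel: %d\n", h);
    cudaFree(d);
    return 0;
}
```

Because everything goes through one queue, nothing here can overlap; that is the limitation the next slide addresses with multiple streams.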

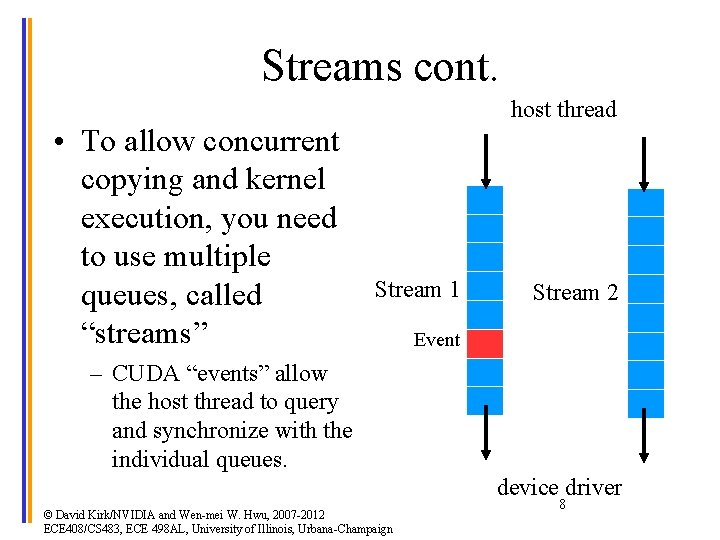

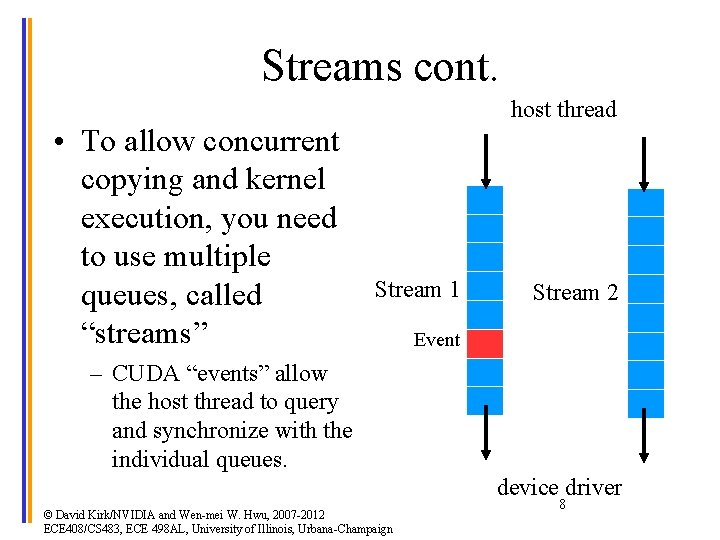

Streams cont.
• To allow concurrent copying and kernel execution, you need to use multiple queues, called “streams”
  – CUDA “events” allow the host thread to query and synchronize with the individual queues.

[Figure: the host thread feeds Stream 1 and Stream 2, with an Event marker placed in a stream; both streams are drained by the device driver.]
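As a rough illustration of how events let the host query and synchronize with one stream, the sketch below records an event after a batch of work, polls it once without blocking, then waits on it. The kernel `scale`, the buffer size, and the use of `cudaMallocHost` for pinned host memory are assumptions made for this example; the runtime calls (`cudaEventRecord`, `cudaEventQuery`, `cudaEventSynchronize`, `cudaEventElapsedTime`) are standard CUDA runtime API functions.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Hypothetical kernel used only to give the stream some work.
__global__ void scale(float *x, int n, float s) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) x[i] *= s;
}

int main() {
    const int n = 1 << 20;
    const size_t bytes = n * sizeof(float);
    float *h = nullptr, *d = nullptr;
    if (cudaMallocHost((void **)&h, bytes) != cudaSuccess) return 1;  // pinned host buffer
    if (cudaMalloc((void **)&d, bytes) != cudaSuccess) return 1;
    for (int i = 0; i < n; ++i) h[i] = 1.0f;

    cudaStream_t stream;
    cudaEvent_t start, done;
    cudaStreamCreate(&stream);
    cudaEventCreate(&start);
    cudaEventCreate(&done);

    // Everything below is only enqueued; the host does not wait here.
    cudaEventRecord(start, stream);
    cudaMemcpyAsync(d, h, bytes, cudaMemcpyHostToDevice, stream);
    scale<<<(n + 255) / 256, 256, 0, stream>>>(d, n, 2.0f);
    cudaMemcpyAsync(h, d, bytes, cudaMemcpyDeviceToHost, stream);
    cudaEventRecord(done, stream);

    // Query: non-blocking check of whether the stream has reached `done`.
    cudaError_t status = cudaEventQuery(done);
    printf("right after enqueue: %s\n",
           status == cudaErrorNotReady ? "stream still busy" : "stream finished");

    // Synchronize: block the host until the stream has reached `done`.
    cudaEventSynchronize(done);
    float ms = 0.0f;
    cudaEventElapsedTime(&ms, start, done);
    printf("copy + kernel + copy took %.3f ms\n", ms);

    cudaEventDestroy(start);
    cudaEventDestroy(done);
    cudaStreamDestroy(stream);
    cudaFree(d);
    cudaFreeHost(h);
    return 0;
}
```

The host is free to do other work between the query and the synchronize call; only `cudaEventSynchronize` blocks.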

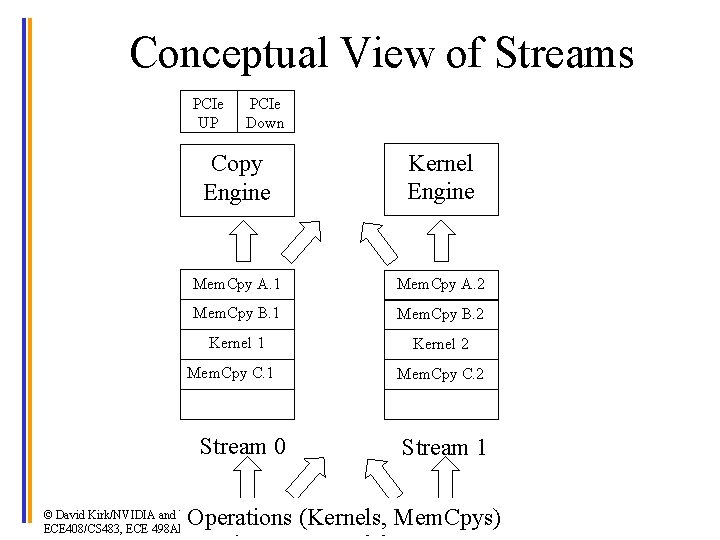

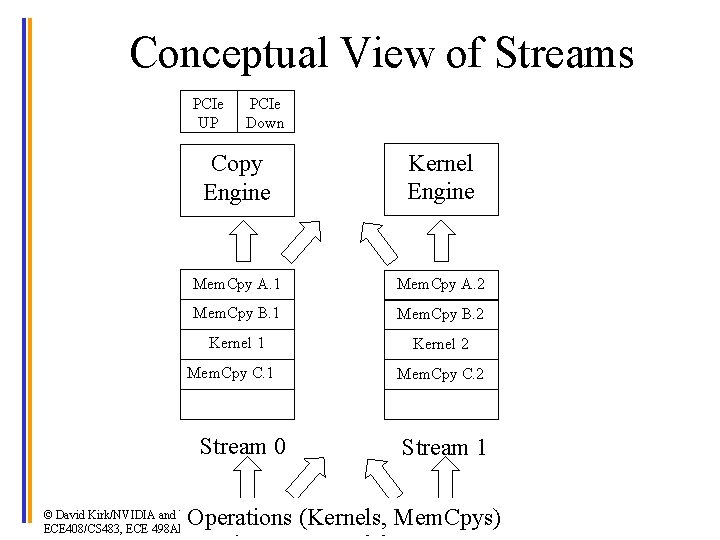

Conceptual View of Streams

[Figure: operations (kernels, MemCpys) from two streams feed a Copy Engine (PCIe up / PCIe down) and a Kernel Engine.]
  Stream 0: MemCpy A.1, MemCpy B.1, Kernel 1, MemCpy C.1
  Stream 1: MemCpy A.2, MemCpy B.2, Kernel 2, MemCpy C.2

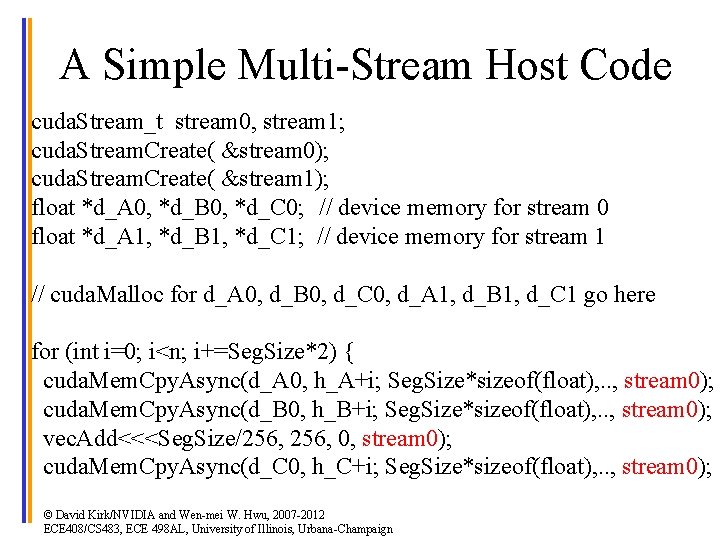

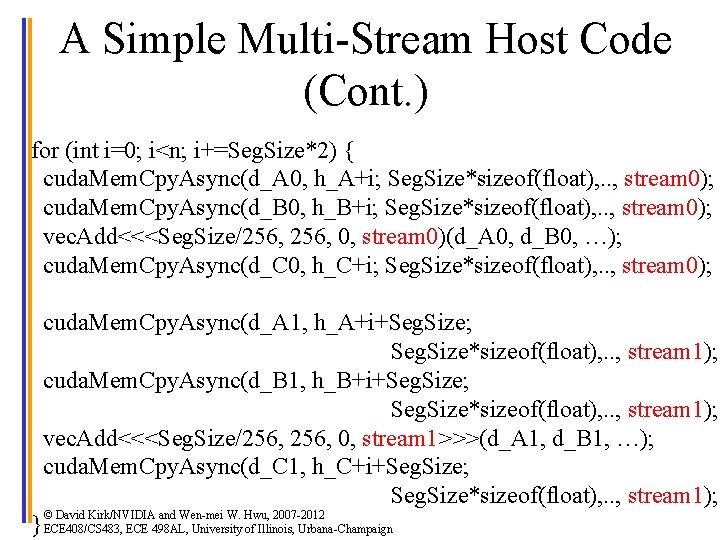

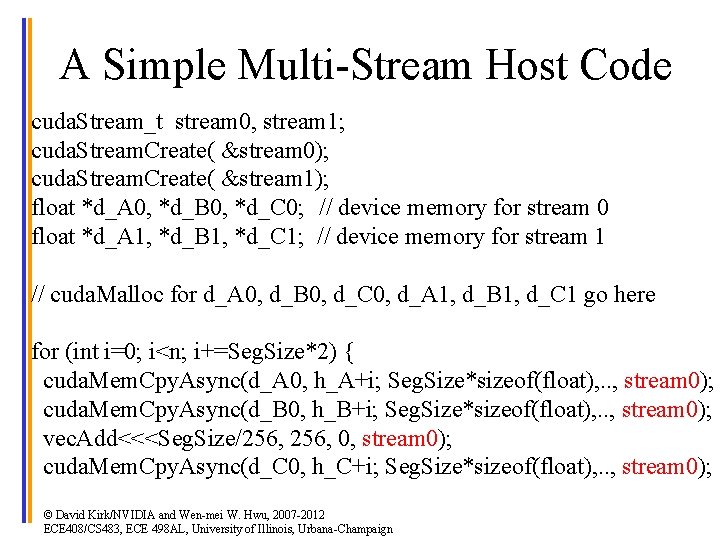

A Simple Multi-Stream Host Code

    cudaStream_t stream0, stream1;
    cudaStreamCreate(&stream0);
    cudaStreamCreate(&stream1);
    float *d_A0, *d_B0, *d_C0; // device memory for stream 0
    float *d_A1, *d_B1, *d_C1; // device memory for stream 1
    // cudaMalloc for d_A0, d_B0, d_C0, d_A1, d_B1, d_C1 go here

    for (int i = 0; i < n; i += SegSize*2) {
        // copy one segment of A and B to the device, add, copy C back
        cudaMemcpyAsync(d_A0, h_A+i, SegSize*sizeof(float), .., stream0);
        cudaMemcpyAsync(d_B0, h_B+i, SegSize*sizeof(float), .., stream0);
        vecAdd<<<SegSize/256, 256, 0, stream0>>>(d_A0, d_B0, …);
        cudaMemcpyAsync(h_C+i, d_C0, SegSize*sizeof(float), .., stream0);
        // the stream 1 half of the loop body is on the next slide

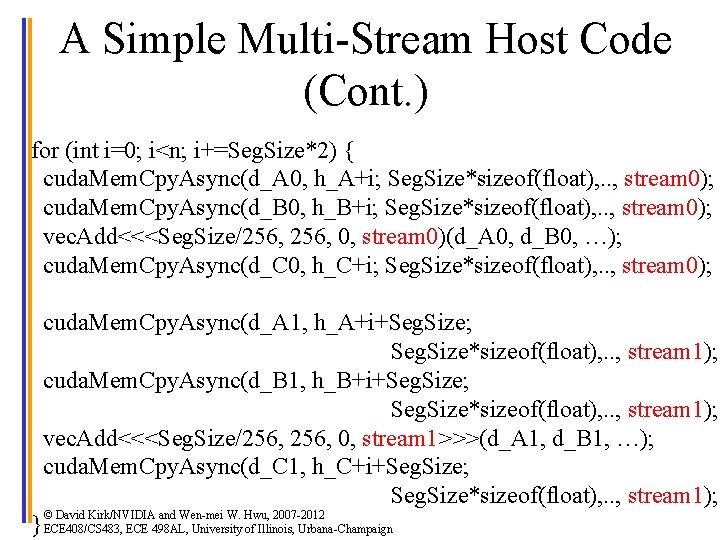

A Simple Multi-Stream Host Code (Cont.)

    for (int i = 0; i < n; i += SegSize*2) {
        // segment i in stream 0
        cudaMemcpyAsync(d_A0, h_A+i, SegSize*sizeof(float), .., stream0);
        cudaMemcpyAsync(d_B0, h_B+i, SegSize*sizeof(float), .., stream0);
        vecAdd<<<SegSize/256, 256, 0, stream0>>>(d_A0, d_B0, …);
        cudaMemcpyAsync(h_C+i, d_C0, SegSize*sizeof(float), .., stream0);
        // segment i+SegSize in stream 1
        cudaMemcpyAsync(d_A1, h_A+i+SegSize, SegSize*sizeof(float), .., stream1);
        cudaMemcpyAsync(d_B1, h_B+i+SegSize, SegSize*sizeof(float), .., stream1);
        vecAdd<<<SegSize/256, 256, 0, stream1>>>(d_A1, d_B1, …);
        cudaMemcpyAsync(h_C+i+SegSize, d_C1, SegSize*sizeof(float), .., stream1);
    }

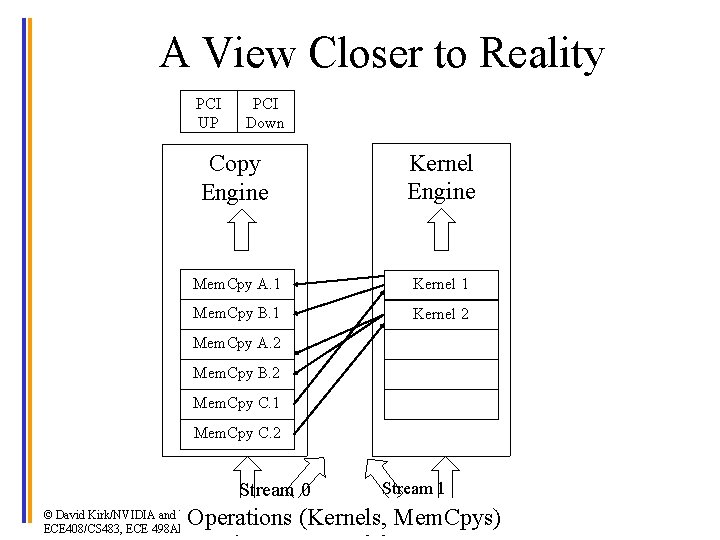

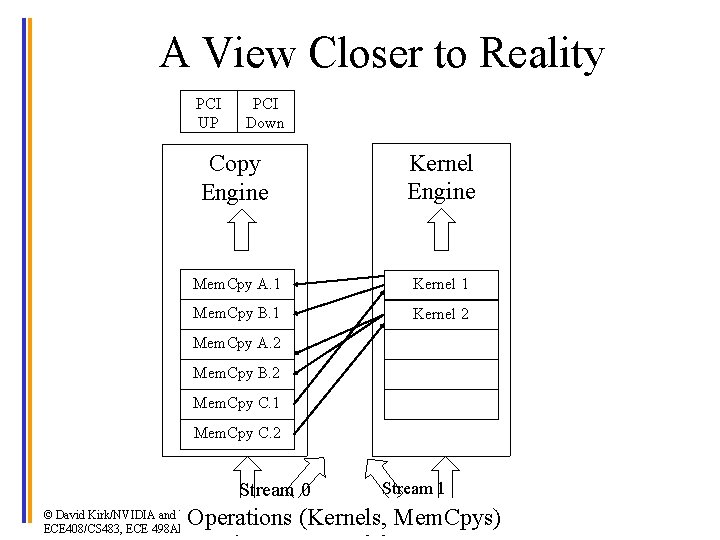

A View Closer to Reality

[Figure: operations (kernels, MemCpys) from Stream 0 and Stream 1 are merged into one queue per engine, in the order the host issued them.]
  Copy Engine (PCIe up / PCIe down): MemCpy A.1, MemCpy B.1, MemCpy C.1, MemCpy A.2, MemCpy B.2, MemCpy C.2
  Kernel Engine: Kernel 1, Kernel 2

MemCpy C.1 cannot start until Kernel 1 finishes, so it holds up MemCpy A.2 and MemCpy B.2 behind it in the copy engine queue.

Another Multi-Stream Host Code (Cont.)

    for (int i = 0; i < n; i += SegSize*2) {
        // issue all input copies first, for both streams
        cudaMemcpyAsync(d_A0, h_A+i, SegSize*sizeof(float), .., stream0);
        cudaMemcpyAsync(d_B0, h_B+i, SegSize*sizeof(float), .., stream0);
        cudaMemcpyAsync(d_A1, h_A+i+SegSize, SegSize*sizeof(float), .., stream1);
        cudaMemcpyAsync(d_B1, h_B+i+SegSize, SegSize*sizeof(float), .., stream1);
        // then both kernels
        vecAdd<<<SegSize/256, 256, 0, stream0>>>(d_A0, d_B0, …);
        vecAdd<<<SegSize/256, 256, 0, stream1>>>(d_A1, d_B1, …);
        // then both result copies
        cudaMemcpyAsync(h_C+i, d_C0, SegSize*sizeof(float), .., stream0);
        cudaMemcpyAsync(h_C+i+SegSize, d_C1, SegSize*sizeof(float), .., stream1);
    }

A View Closer to Reality

[Figure: the same per-engine queues as before, filled in the order issued by the reordered host code.]
  Copy Engine (PCIe up / PCIe down): MemCpy A.1, MemCpy B.1, MemCpy A.2, MemCpy B.2, MemCpy C.1, MemCpy C.2
  Kernel Engine: Kernel 1, Kernel 2

With this issue order, MemCpy A.2 and MemCpy B.2 sit ahead of MemCpy C.1 in the copy engine queue, so they can overlap with Kernel 1.
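The slide code leaves out allocation, the copy direction arguments, and the kernel itself. The following is one possible complete version of the reordered (copies first, then kernels, then result copies) loop, written as a sketch under stated assumptions rather than as the lecture's reference code: the `CHECK` macro, the values of `n` and `SegSize`, the array-of-two-streams layout, and the `vecAdd` signature are all choices made for this example. It also allocates the host arrays with `cudaMallocHost`, since asynchronous copies generally need page-locked (pinned) host memory to overlap with kernel execution.

```cuda
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Hypothetical helper: abort with a message if a runtime call fails.
#define CHECK(call)                                                \
    do {                                                           \
        cudaError_t err_ = (call);                                 \
        if (err_ != cudaSuccess) {                                 \
            fprintf(stderr, "%s:%d: %s\n", __FILE__, __LINE__,     \
                    cudaGetErrorString(err_));                     \
            exit(EXIT_FAILURE);                                    \
        }                                                          \
    } while (0)

__global__ void vecAdd(const float *A, const float *B, float *C, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) C[i] = A[i] + B[i];
}

int main() {
    const int SegSize = 1 << 18;   // assumed segment length, a multiple of 256
    const int n = 16 * SegSize;    // assumed vector length, a multiple of 2*SegSize
    const size_t segBytes = SegSize * sizeof(float);

    // Pinned host memory so the async copies can overlap with kernels.
    float *h_A, *h_B, *h_C;
    CHECK(cudaMallocHost((void **)&h_A, n * sizeof(float)));
    CHECK(cudaMallocHost((void **)&h_B, n * sizeof(float)));
    CHECK(cudaMallocHost((void **)&h_C, n * sizeof(float)));
    for (int i = 0; i < n; ++i) { h_A[i] = (float)i; h_B[i] = 2.0f * i; }

    // One stream and one set of segment-sized device buffers per stream.
    cudaStream_t stream[2];
    float *d_A[2], *d_B[2], *d_C[2];
    for (int s = 0; s < 2; ++s) {
        CHECK(cudaStreamCreate(&stream[s]));
        CHECK(cudaMalloc((void **)&d_A[s], segBytes));
        CHECK(cudaMalloc((void **)&d_B[s], segBytes));
        CHECK(cudaMalloc((void **)&d_C[s], segBytes));
    }

    for (int i = 0; i < n; i += 2 * SegSize) {
        // Input copies for both streams go into the copy queue first...
        for (int s = 0; s < 2; ++s) {
            int off = i + s * SegSize;
            CHECK(cudaMemcpyAsync(d_A[s], h_A + off, segBytes,
                                  cudaMemcpyHostToDevice, stream[s]));
            CHECK(cudaMemcpyAsync(d_B[s], h_B + off, segBytes,
                                  cudaMemcpyHostToDevice, stream[s]));
        }
        // ...then both kernels...
        for (int s = 0; s < 2; ++s)
            vecAdd<<<SegSize / 256, 256, 0, stream[s]>>>(d_A[s], d_B[s], d_C[s], SegSize);
        // ...then both result copies, so they do not block the next inputs.
        for (int s = 0; s < 2; ++s)
            CHECK(cudaMemcpyAsync(h_C + i + s * SegSize, d_C[s], segBytes,
                                  cudaMemcpyDeviceToHost, stream[s]));
    }
    CHECK(cudaGetLastError());
    CHECK(cudaDeviceSynchronize());  // wait for both streams to drain

    int errors = 0;
    for (int i = 0; i < n; ++i)
        if (h_C[i] != h_A[i] + h_B[i]) ++errors;
    printf("%d mismatches out of %d elements\n", errors, n);

    for (int s = 0; s < 2; ++s) {
        cudaFree(d_A[s]); cudaFree(d_B[s]); cudaFree(d_C[s]);
        cudaStreamDestroy(stream[s]);
    }
    cudaFreeHost(h_A); cudaFreeHost(h_B); cudaFreeHost(h_C);
    return errors != 0;
}
```

Reusing the same device buffers on every loop iteration is safe here because operations within one stream run in issue order: the next input copy into `d_A[s]` is queued behind the previous kernel and result copy in the same stream. Whether the copies and kernels actually overlap depends on the device; a profiler timeline is the practical way to check.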

Overlapped (Pipelined) Timing
• Divide large vectors into segments
• Overlap transfer and compute of adjacent segments

[Timeline figure: one row per segment, each row shifted right so that its transfers overlap the previous segment's compute]
  Segment 1: Trans A.1, Trans B.1, Comp C.1 = A.1 + B.1, Trans C.1
  Segment 2: Trans A.2, Trans B.2, Comp C.2 = A.2 + B.2, Trans C.2
  Segment 3: Trans A.3, Trans B.3, Comp C.3 = A.3 + B.3
  Segment 4: Trans A.4, Trans B.4

Hyper Queue
• Provide multiple real queues for each engine
• Allow much more concurrency by allowing some streams to make progress for an engine while others are blocked

Fermi (and older) Concurrency

[Figure: Stream 1 (A -- B -- C), Stream 2 (P -- Q -- R), and Stream 3 (X -- Y -- Z) are multiplexed into a single hardware work queue: A--B--C P--Q--R X--Y--Z]

Fermi allows 16-way concurrency
  – Up to 16 grids can run at once
  – But CUDA streams multiplex into a single queue
  – Overlap only at stream edges

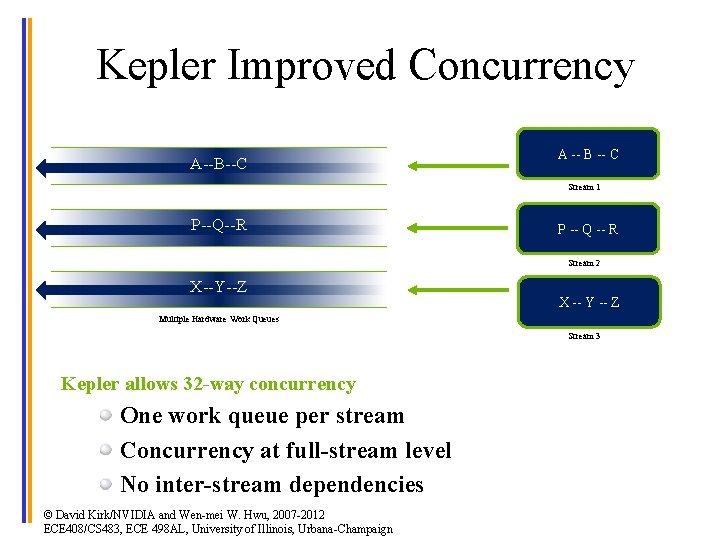

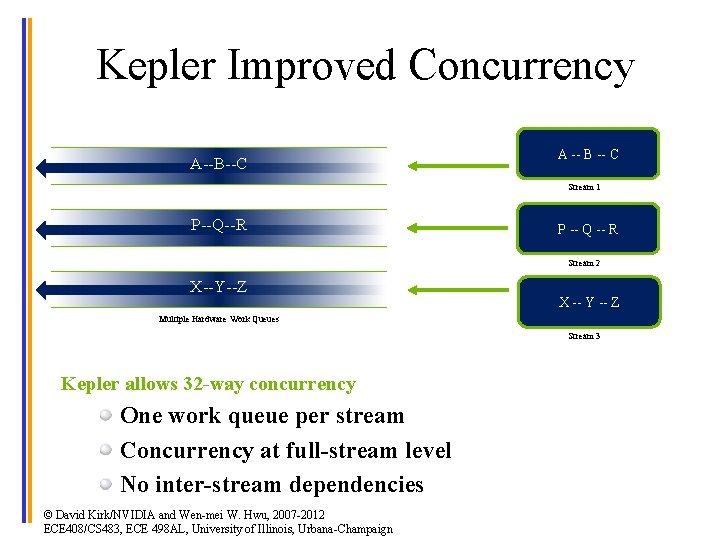

Kepler Improved Concurrency

[Figure: Stream 1 (A -- B -- C), Stream 2 (P -- Q -- R), and Stream 3 (X -- Y -- Z) each feed their own queue among multiple hardware work queues.]

Kepler allows 32-way concurrency
  – One work queue per stream
  – Concurrency at full-stream level
  – No inter-stream dependencies

ANY QUESTIONS?