Parallel Prefix Sum (Scan) with CUDA


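Exclusive Scan • Input: a binary associative operator $\oplus$ with identity $I$, and an array of $n$ elements $[a_0, a_1, \ldots, a_{n-1}]$ • Output: $[I,\ a_0,\ (a_0 \oplus a_1),\ \ldots,\ (a_0 \oplus a_1 \oplus \cdots \oplus a_{n-2})]$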
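As a worked illustration (the input below is an example chosen here, not taken from the slides), take $\oplus$ to be addition, so $I = 0$. Each output element combines all inputs strictly before it:

$$
\mathrm{out}_0 = I, \qquad \mathrm{out}_i = a_0 \oplus a_1 \oplus \cdots \oplus a_{i-1} \quad (1 \le i \le n-1)
$$

$$
[3,\ 1,\ 7,\ 0,\ 4,\ 1,\ 6,\ 3] \;\longmapsto\; [0,\ 3,\ 4,\ 11,\ 11,\ 15,\ 16,\ 22]
$$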

Sequential Scan • Complexity: O(n)

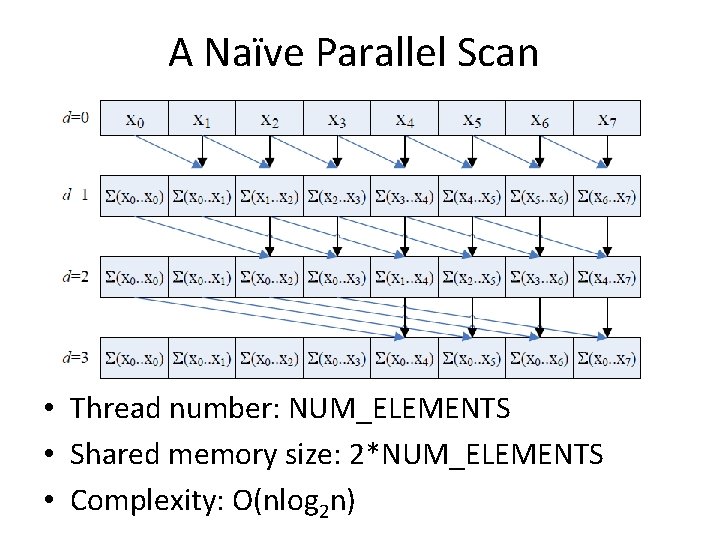

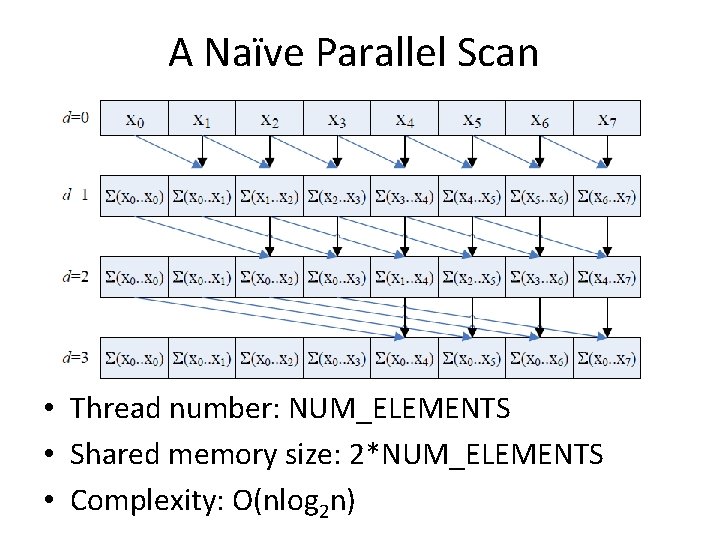
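A minimal sketch of the sequential scan, assuming integer addition as $\oplus$ and 0 as $I$ (the function name `exclusive_scan_seq` is hypothetical). It makes one pass over the input, which is where the O(n) complexity comes from:

```cpp
#include <cstddef>
#include <vector>

// Sequential exclusive scan with + as the operator and 0 as the identity I.
// One pass over the input, so the work is O(n).
std::vector<int> exclusive_scan_seq(const std::vector<int>& in) {
    std::vector<int> out(in.size());
    int running = 0;  // identity element I for addition
    for (std::size_t i = 0; i < in.size(); ++i) {
        out[i] = running;  // sum of in[0..i-1]
        running += in[i];
    }
    return out;
}
```

This is the CPU reference that the GPU versions are compared against in the timing results.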

A Naïve Parallel Scan • Number of threads: NUM_ELEMENTS • Shared memory size: 2*NUM_ELEMENTS • Complexity: $O(n \log_2 n)$

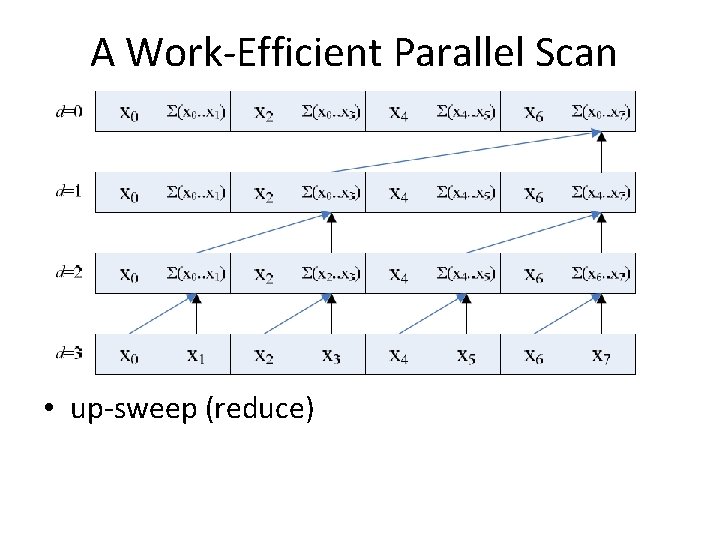

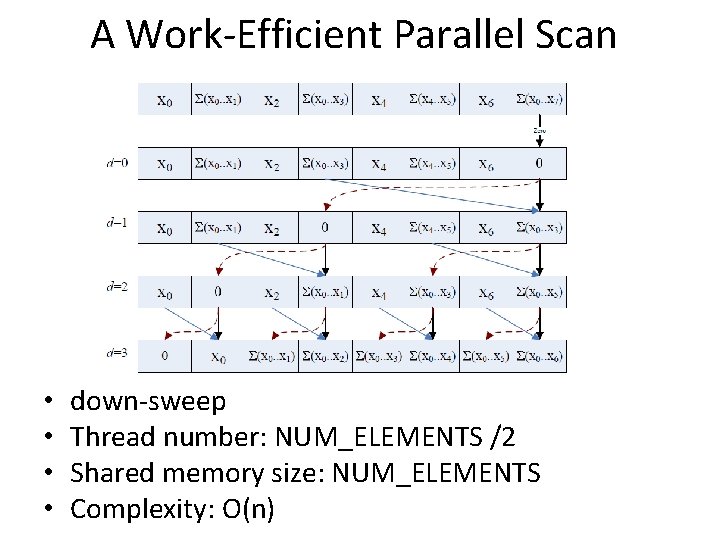

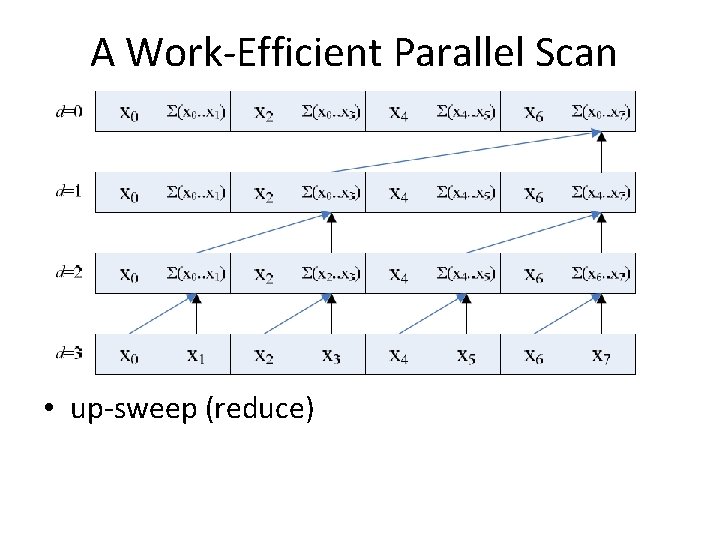
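The naive scheme can be sketched as a serial C++ simulation rather than a CUDA kernel: the inner `thid` loop stands in for the NUM_ELEMENTS threads, and the two halves of `temp` stand in for the 2*NUM_ELEMENTS shared-memory double buffer. The function name `naive_scan_sim` is hypothetical, and the sketch assumes + as the operator:

```cpp
#include <cstddef>
#include <vector>

// Serial simulation of the naive (double-buffered) parallel exclusive scan.
// Each iteration of the inner `thid` loop models one of n threads; the two
// halves of `temp` model the 2*n-element shared-memory double buffer.
std::vector<int> naive_scan_sim(const std::vector<int>& in) {
    const std::size_t n = in.size();
    std::vector<int> temp(2 * n);
    std::size_t pout = 0, pin = 1;
    // Exclusive scan: shift the input right by one and put the identity (0) first.
    for (std::size_t thid = 0; thid < n; ++thid)
        temp[pout * n + thid] = (thid > 0) ? in[thid - 1] : 0;
    // About log2(n) steps, each touching all n elements: O(n log2 n) additions.
    for (std::size_t offset = 1; offset < n; offset *= 2) {
        pout = 1 - pout;  // swap the double-buffer roles
        pin = 1 - pout;
        for (std::size_t thid = 0; thid < n; ++thid) {  // one "parallel" step
            if (thid >= offset)
                temp[pout * n + thid] = temp[pin * n + thid] + temp[pin * n + thid - offset];
            else
                temp[pout * n + thid] = temp[pin * n + thid];
        }
        // On the GPU, a __syncthreads() barrier would separate the steps.
    }
    return std::vector<int>(temp.begin() + pout * n, temp.begin() + pout * n + n);
}
```

The double buffer is what makes each step safe in parallel: every thread reads only from `pin` and writes only to `pout`, so no thread overwrites a value another thread still needs in the same step.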

A Work-Efficient Parallel Scan • up-sweep (reduce)

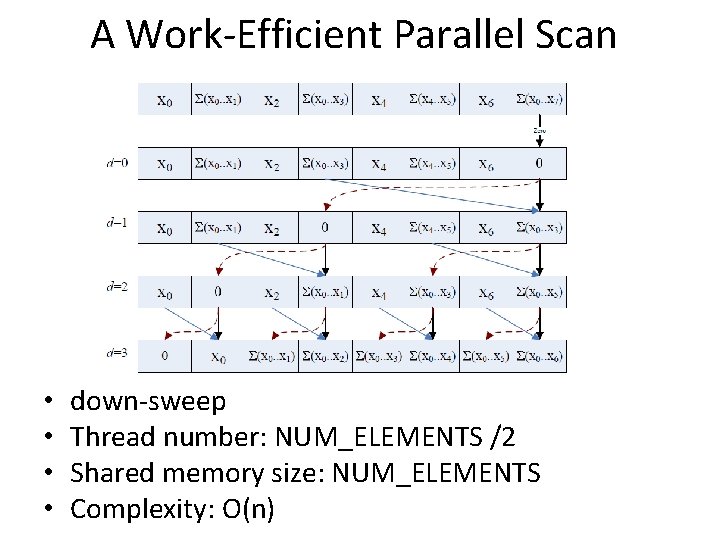
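The up-sweep can be sketched as a serial simulation that builds partial sums up a balanced binary tree in place. This assumes a power-of-two length and + as the operator; `up_sweep_sim` is a hypothetical name, and the inner loop models the `d` threads active at each tree level:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Serial simulation of the up-sweep (reduce) phase of the work-efficient scan.
// Each pass of the inner loop models one of the d active threads.
void up_sweep_sim(std::vector<int>& temp) {
    const std::size_t n = temp.size();
    assert(n > 0 && (n & (n - 1)) == 0);  // power-of-two length
    std::size_t offset = 1;
    for (std::size_t d = n >> 1; d > 0; d >>= 1) {  // one tree level per iteration
        for (std::size_t thid = 0; thid < d; ++thid) {
            std::size_t ai = offset * (2 * thid + 1) - 1;
            std::size_t bi = offset * (2 * thid + 2) - 1;
            temp[bi] += temp[ai];  // partial sum moves up the tree
        }
        offset *= 2;
    }
    // temp[n - 1] now holds the sum of all n elements.
}
```

Across all levels this performs n/2 + n/4 + ... + 1 = n - 1 additions, and for `{3, 1, 7, 0, 4, 1, 6, 3}` it leaves `{3, 4, 7, 11, 4, 5, 6, 25}`, with the total 25 in the last slot.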

A Work-Efficient Parallel Scan • Down-sweep • Number of threads: NUM_ELEMENTS/2 • Shared memory size: NUM_ELEMENTS • Complexity: O(n)

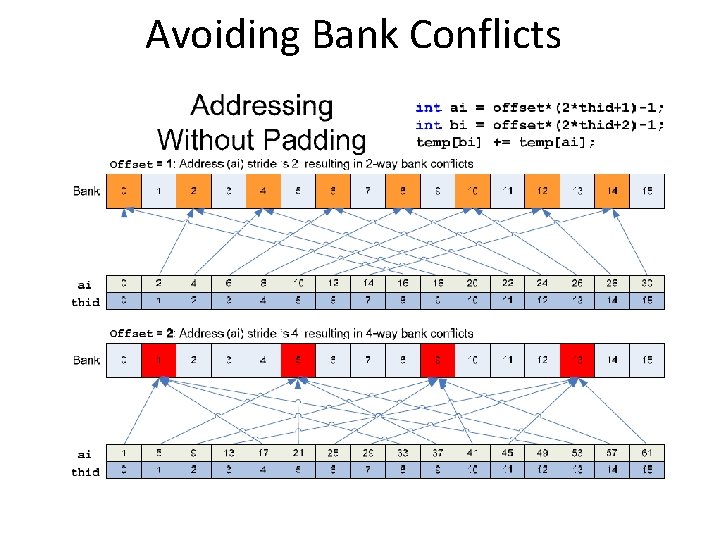

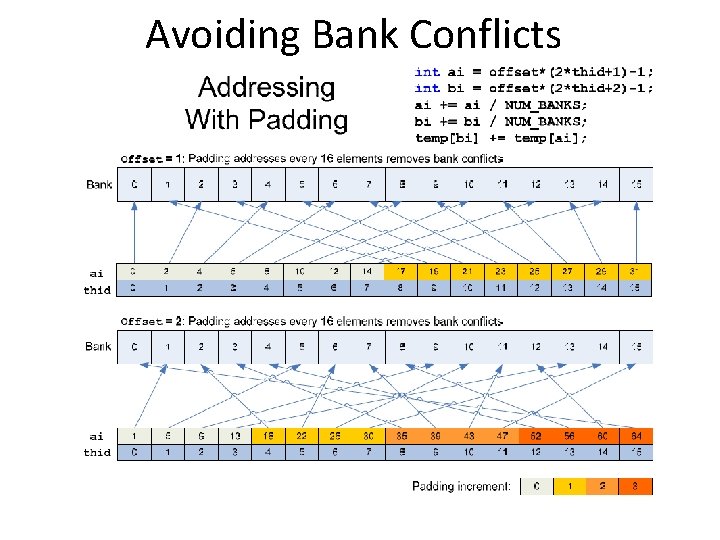

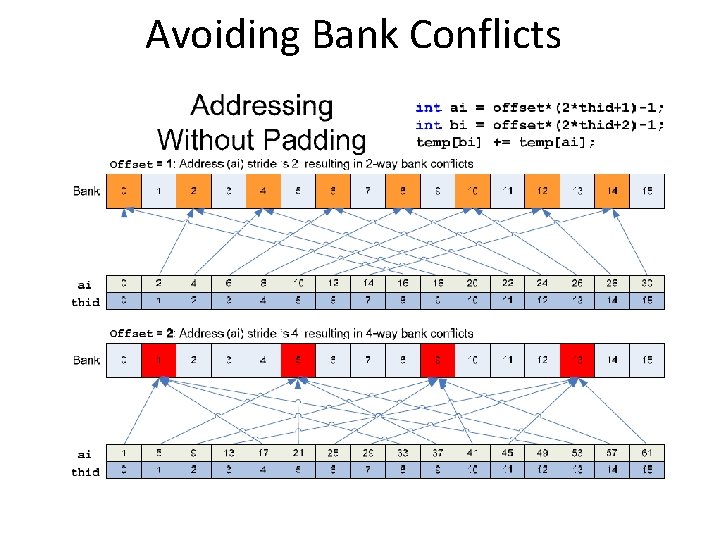

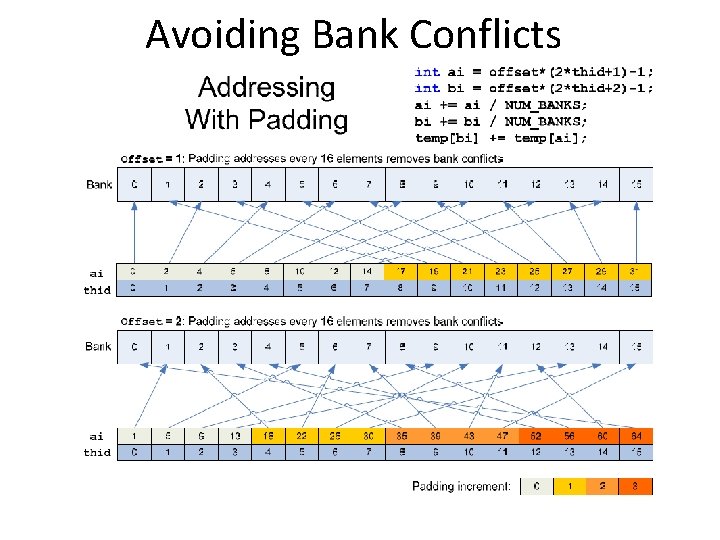

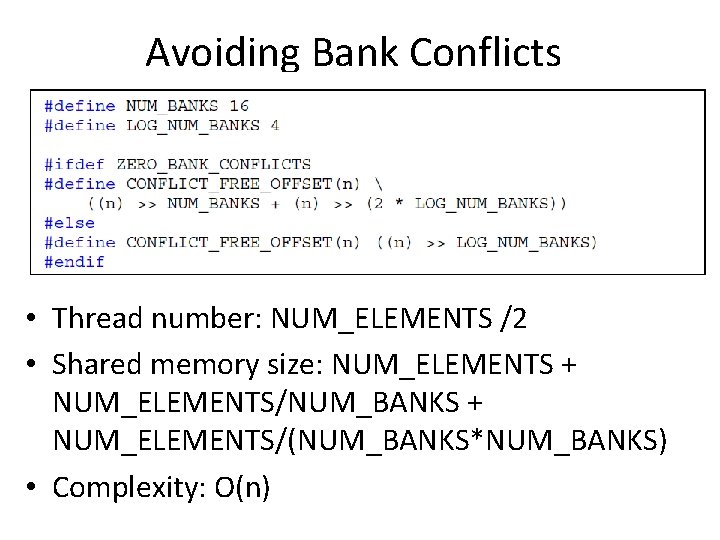
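Putting both phases together gives a serial sketch of the full work-efficient exclusive scan, done in place on a power-of-two array with + as the operator (`work_efficient_scan_sim` is a hypothetical name). At most n/2 threads are active in any step, matching the NUM_ELEMENTS/2 thread count, and the total is n - 1 additions per phase, 2(n - 1) overall:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Serial simulation of the work-efficient (up-sweep + down-sweep) exclusive
// scan, in place on a power-of-two array. Inner loops model the active threads.
void work_efficient_scan_sim(std::vector<int>& temp) {
    const std::size_t n = temp.size();
    assert(n > 0 && (n & (n - 1)) == 0);  // power-of-two length
    std::size_t offset = 1;
    // Up-sweep (reduce): build partial sums up the tree.
    for (std::size_t d = n >> 1; d > 0; d >>= 1) {
        for (std::size_t thid = 0; thid < d; ++thid) {
            std::size_t ai = offset * (2 * thid + 1) - 1;
            std::size_t bi = offset * (2 * thid + 2) - 1;
            temp[bi] += temp[ai];
        }
        offset *= 2;
    }
    // Down-sweep: clear the root to the identity, then push sums down the tree.
    temp[n - 1] = 0;
    for (std::size_t d = 1; d < n; d *= 2) {
        offset >>= 1;
        for (std::size_t thid = 0; thid < d; ++thid) {
            std::size_t ai = offset * (2 * thid + 1) - 1;
            std::size_t bi = offset * (2 * thid + 2) - 1;
            int t = temp[ai];
            temp[ai] = temp[bi];  // left child receives the parent's value
            temp[bi] += t;        // right child receives parent + old left child
        }
    }
}
```

Because the array is scanned in place, a single NUM_ELEMENTS-sized shared buffer suffices, unlike the 2*NUM_ELEMENTS double buffer of the naive version.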

Avoiding Bank Conflicts


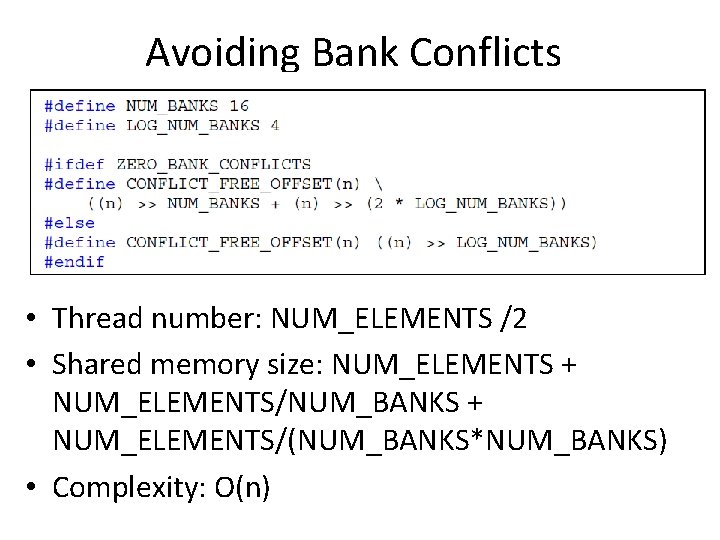

Avoiding Bank Conflicts • Number of threads: NUM_ELEMENTS/2 • Shared memory size: NUM_ELEMENTS + NUM_ELEMENTS/NUM_BANKS + NUM_ELEMENTS/(NUM_BANKS*NUM_BANKS) • Complexity: O(n)

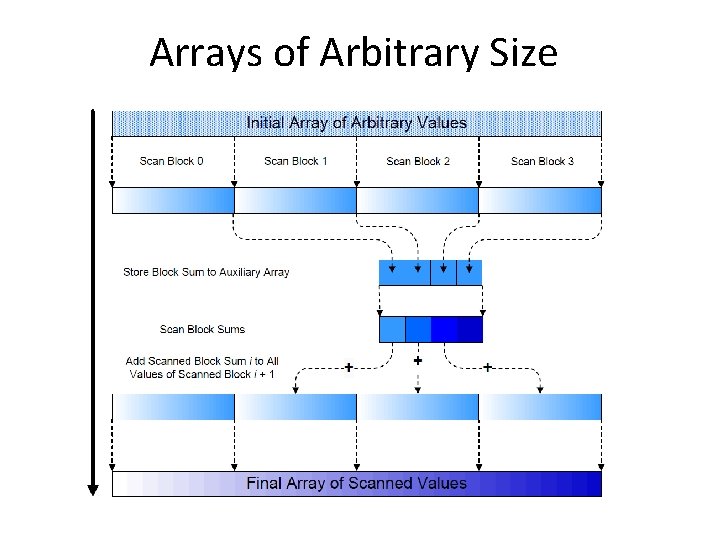

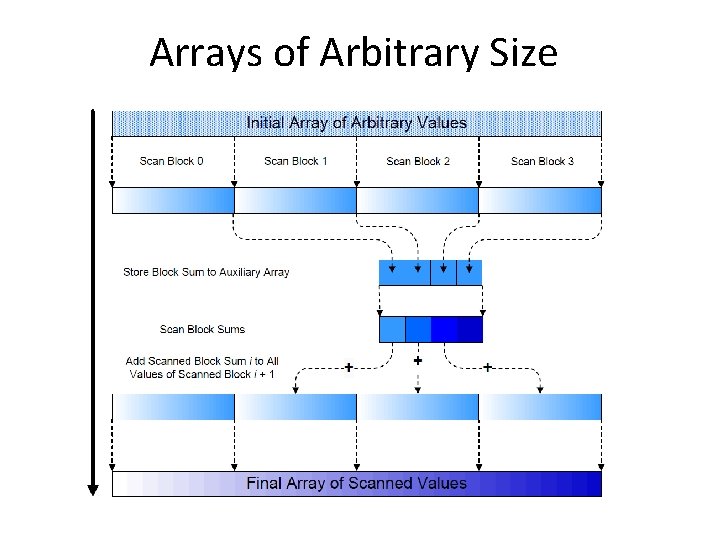

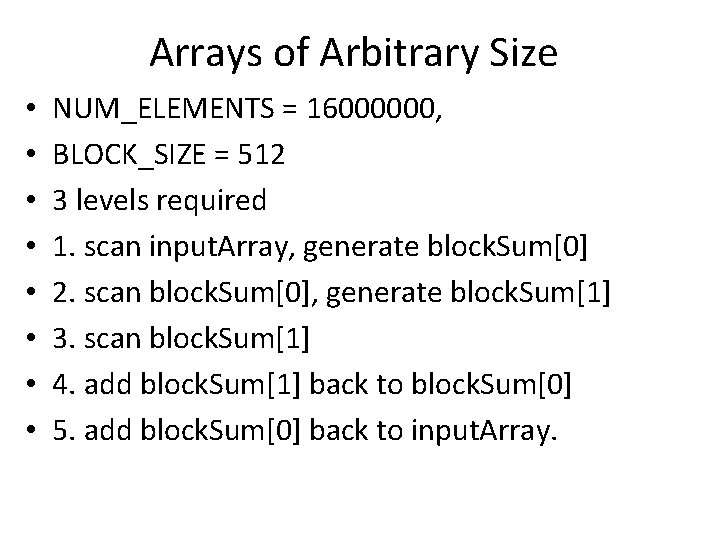
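A small host-side model shows where the two padding terms in the shared-memory size come from and what they buy. It assumes 16 banks, with conflicts counted per half-warp of 16 threads, as on G80/GT200-class GPUs (later architectures use 32 banks). The names `conflict_free_offset` and `max_threads_per_bank` are hypothetical. Each index `ai` read during an up-sweep step is padded by ai/NUM_BANKS + ai/(NUM_BANKS*NUM_BANKS), and the model counts how many threads of a half-warp land in the same bank:

```cpp
#include <algorithm>
#include <vector>

// Assumed hardware parameters (G80/GT200-style shared memory).
constexpr int NUM_BANKS = 16;
constexpr int HALF_WARP = 16;

// Extra words inserted before index n; summed over the array this is the
// NUM_ELEMENTS/NUM_BANKS + NUM_ELEMENTS/(NUM_BANKS*NUM_BANKS) padding.
constexpr int conflict_free_offset(int n) {
    return n / NUM_BANKS + n / (NUM_BANKS * NUM_BANKS);
}

// Largest number of threads in one half-warp that hit the same bank when
// thread `thid` reads temp[ai], with ai = offset*(2*thid+1)-1 as in the up-sweep.
int max_threads_per_bank(int offset, bool padded) {
    std::vector<int> hits(NUM_BANKS, 0);
    for (int thid = 0; thid < HALF_WARP; ++thid) {
        int ai = offset * (2 * thid + 1) - 1;
        if (padded) ai += conflict_free_offset(ai);
        ++hits[ai % NUM_BANKS];
    }
    return *std::max_element(hits.begin(), hits.end());
}
```

Under these assumptions, the unpadded accesses for offsets 1, 2 and 4 give 2-, 4- and 8-way bank conflicts, while the padded accesses put every thread of the half-warp in a different bank.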

Arrays of Arbitrary Size

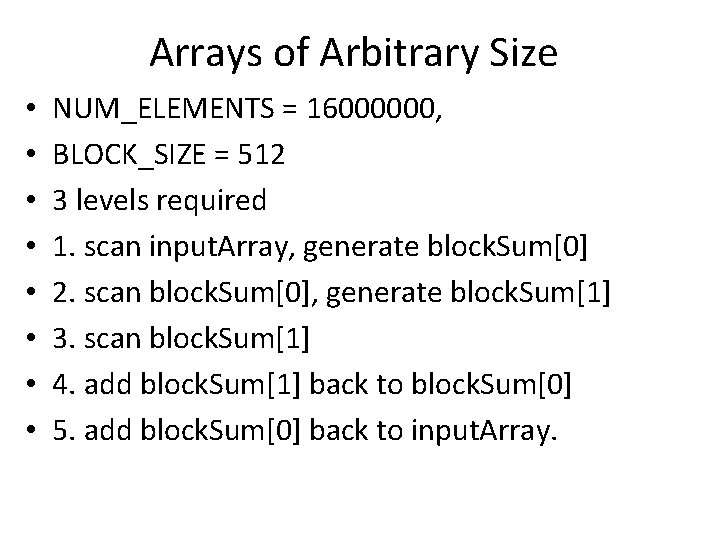

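Arrays of Arbitrary Size • NUM_ELEMENTS = 16000000, BLOCK_SIZE = 512 • Each block scans 2*BLOCK_SIZE = 1024 elements, so the input yields 16000000/1024 = 15625 block sums, those need ⌈15625/1024⌉ = 16 blocks, and the 16 resulting sums fit in a single block. Hence 3 levels are required:
1. Scan inputArray, generating blockSum[0].
2. Scan blockSum[0], generating blockSum[1].
3. Scan blockSum[1].
4. Add blockSum[1] back to blockSum[0].
5. Add blockSum[0] back to inputArray.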
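The five steps above can be sketched as a recursive serial simulation. Here `chunk` plays the role of 2*BLOCK_SIZE, and a plain sequential loop stands in for the per-block work-efficient kernel (an assumption for brevity). The names `scan_multilevel` and `scan_levels` are hypothetical. The recursion visits the levels in the same order as the list: scan the data and record block sums, scan the block sums (recursing if needed), then add the scanned sums back:

```cpp
#include <cstddef>
#include <vector>

// Recursive multi-level exclusive scan, simulated serially.
// `chunk` stands for 2*BLOCK_SIZE, the number of elements one block scans.
void scan_multilevel(std::vector<long long>& data, std::size_t chunk) {
    const std::size_t n = data.size();
    const std::size_t num_blocks = (n + chunk - 1) / chunk;
    std::vector<long long> block_sum(num_blocks);
    // Scan each block independently and record its total (blockSum[level]).
    for (std::size_t b = 0; b < num_blocks; ++b) {
        long long running = 0;
        for (std::size_t i = b * chunk; i < n && i < (b + 1) * chunk; ++i) {
            long long v = data[i];
            data[i] = running;
            running += v;
        }
        block_sum[b] = running;
    }
    if (num_blocks <= 1) return;  // a single block needs no further level
    // Scan the block sums, recursing if they span several blocks.
    scan_multilevel(block_sum, chunk);
    // Add each block's scanned offset back to its elements.
    for (std::size_t i = 0; i < n; ++i)
        data[i] += block_sum[i / chunk];
}

// Number of scan levels for n elements when each block scans `chunk` elements.
int scan_levels(std::size_t n, std::size_t chunk) {
    int levels = 1;
    while (n > chunk) {
        n = (n + chunk - 1) / chunk;
        ++levels;
    }
    return levels;
}
```

With the slide's numbers, `scan_levels(16000000, 1024)` returns 3, matching the three levels listed above.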

Testing and analysis • 2. Copy and paste the performance results when run without arguments, including the host CPU and CUDA GPU processing times and the speedup. • Processing 16000000 elements... • Host CPU Processing time: 98.755997 (ms) • G80 CUDA Processing time: 13.595000 (ms) • Speedup: 7.264141X

Testing and analysis • Describe how you handled arrays whose size is not a power of two, and how you minimized shared memory bank conflicts. Also describe any other performance-enhancing optimizations you added. • Extend the array to a multiple of 2*BLOCK_SIZE • Get rid of outArray

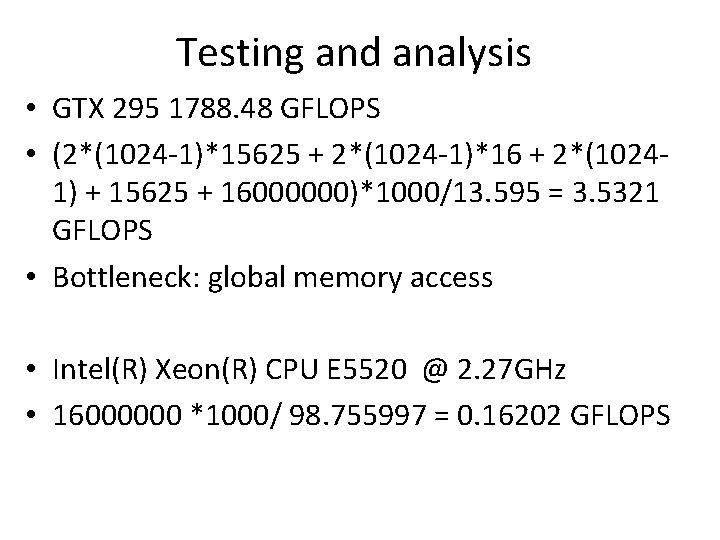
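One way to extend the array to a multiple of 2*BLOCK_SIZE is to zero-pad it, sketched below under the assumption that the operator is + (so the padding value 0 is the identity and the extra tail elements do not change the scan of the real elements). The names `padded_length` and `pad_for_scan` are hypothetical:

```cpp
#include <cstddef>
#include <vector>

constexpr std::size_t BLOCK_SIZE = 512;                  // value from the slides
constexpr std::size_t ELEMS_PER_BLOCK = 2 * BLOCK_SIZE;  // each thread handles two elements

// Round n up to the next multiple of 2*BLOCK_SIZE.
std::size_t padded_length(std::size_t n) {
    return (n + ELEMS_PER_BLOCK - 1) / ELEMS_PER_BLOCK * ELEMS_PER_BLOCK;
}

// Copy the input into a buffer whose tail is filled with the identity (0).
std::vector<int> pad_for_scan(const std::vector<int>& in) {
    std::vector<int> buf(in);
    buf.resize(padded_length(in.size()), 0);
    return buf;
}
```

For the slide's input, 16000000 is already a multiple of 1024 (15625 blocks), so no padding is added at the first level; padding matters for the block-sum arrays (15625 and 16 entries) and for arbitrary input sizes.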

Testing and analysis • How do the measured FLOPS rates for the CPU and GPU kernels compare with each other, and with the theoretical performance limits of each architecture? For your GPU implementation, discuss which bottlenecks are likely to limit your code's performance.

Testing and analysis • GTX 295 theoretical peak: 1788.48 GFLOPS • GPU: $\dfrac{\left(2(1024-1)\cdot 15625 + 2(1024-1)\cdot 16 + 2(1024-1) + 15625 + 16000000\right)\ \text{ops}}{13.595\ \text{ms}} \approx 3.5321\ \text{GFLOPS}$ • Bottleneck: global memory access • CPU: Intel(R) Xeon(R) CPU E5520 @ 2.27GHz • $\dfrac{16000000\ \text{ops}}{98.755997\ \text{ms}} \approx 0.16202\ \text{GFLOPS}$
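The GPU operation count can be reproduced term by term with a short calculation. Each 1024-element block costs 2*(1024-1) additions (n - 1 in the up-sweep plus n - 1 in the down-sweep), and the two add-back passes cost one addition per element of blockSum[0] and of the input. The helper names `gpu_ops` and `gflops` are hypothetical:

```cpp
// Operation count for the 3-level GPU scan, following the slide's formula.
double gpu_ops() {
    const double per_block = 2.0 * (1024 - 1);  // up-sweep + down-sweep additions
    return per_block * 15625   // level 1: 15625 blocks over the input
         + per_block * 16      // level 2: 16 blocks over blockSum[0]
         + per_block           // level 3: one block over blockSum[1]
         + 15625               // add blockSum[1] back to blockSum[0]
         + 16000000;           // add blockSum[0] back to the input
}

// GFLOPS from an operation count and an elapsed time in milliseconds.
double gflops(double ops, double ms) {
    return ops / (ms * 1e-3) / 1e9;
}
```

This gives 48,019,157 operations, so `gflops(gpu_ops(), 13.595)` is about 3.532 and `gflops(16000000, 98.755997)` is about 0.162, matching the figures above. The GPU result is roughly 0.2% of the 1788.48 GFLOPS peak, consistent with a kernel that does one addition per memory access and is therefore bound by global memory rather than arithmetic.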