WarpLevel Divergence in GPUs Characterization Impact and Mitigation

![Micro-benchmarking • Results > Using CUDA device [0]: Ge. Force GTX 480 > Detected Micro-benchmarking • Results > Using CUDA device [0]: Ge. Force GTX 480 > Detected](https://slidetodoc.com/presentation_image/1aede0f372772ac9bc91dab6c6cd4193/image-14.jpg)

- Slides: 29

Warp-Level Divergence in GPUs: Characterization, Impact, and Mitigation Ping Xiang, Yi Yang, Huiyang Zhou The 20 th IEEE International Symposium On High Performance Computer Architecture, Orlando, Florida, USA 1

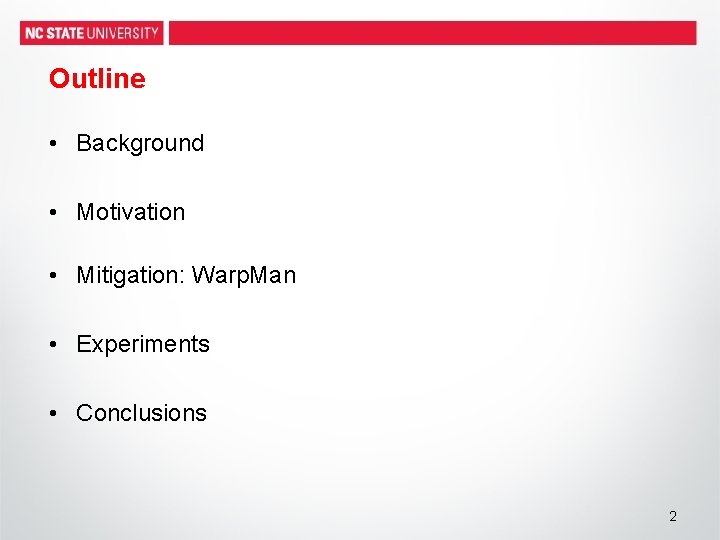

Outline • Background • Motivation • Mitigation: Warp. Man • Experiments • Conclusions 2

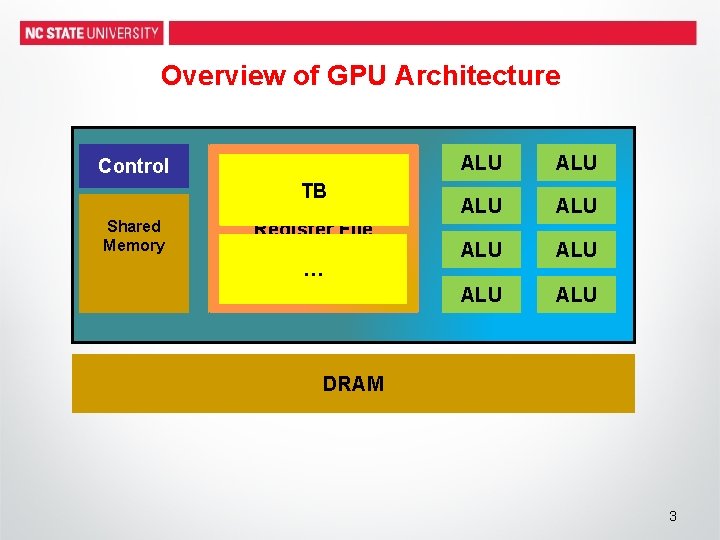

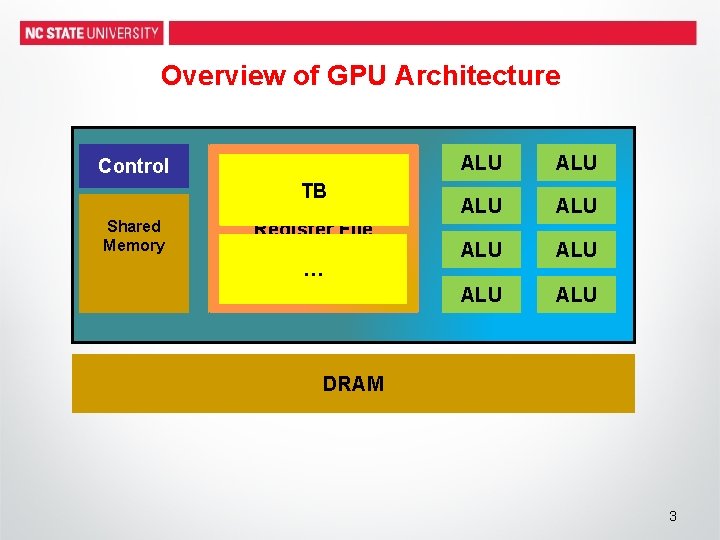

Overview of GPU Architecture Control Shared Memory Cache Warp Threads Warp TB Warp Register File … ALU ALU DRAM 3

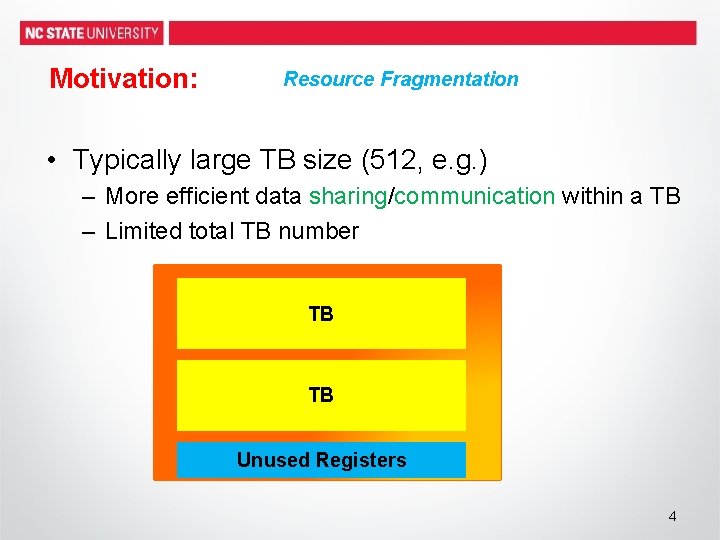

Motivation: Resource Fragmentation • Typically large TB size (512, e. g. ) – More efficient data sharing/communication within a TB – Limited total TB number TB Register File TB Unused Registers 4

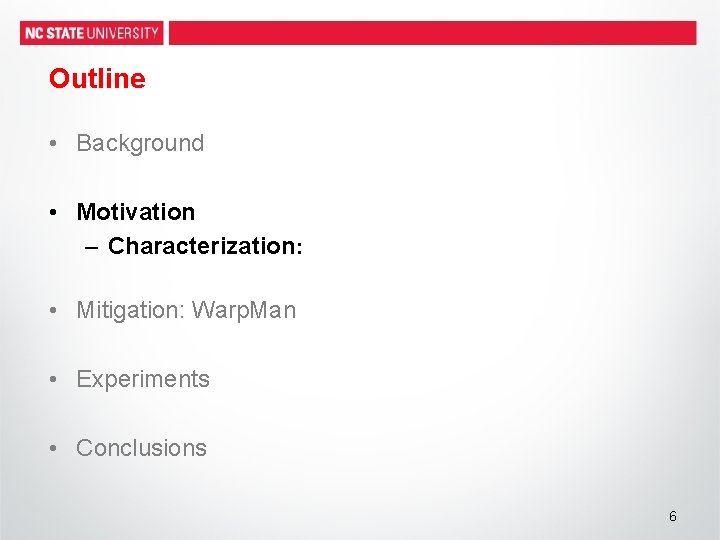

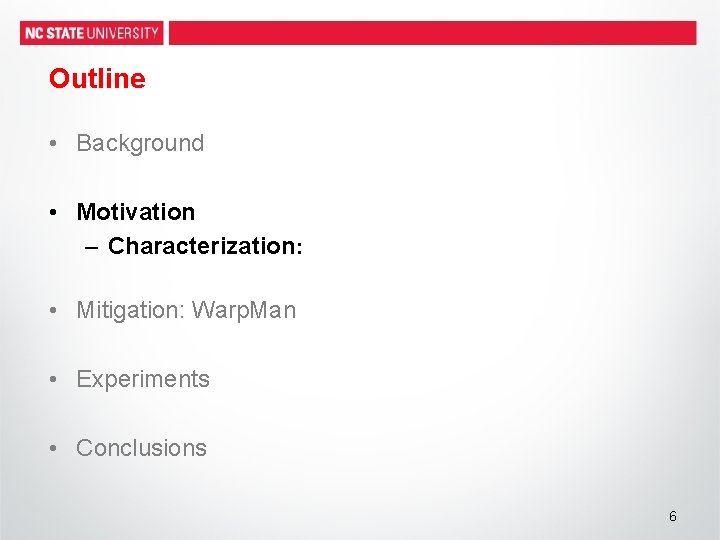

Motivation Warp-Level Divergence: warps within the same TB don’t finish at the same time Warp 1 Warp 2 Finished Warp 3 Finished Warp 4 …. Resources cannot be released promptly TB Unused Resources 5

Outline • Background • Motivation – Characterization: • Mitigation: Warp. Man • Experiments • Conclusions 6

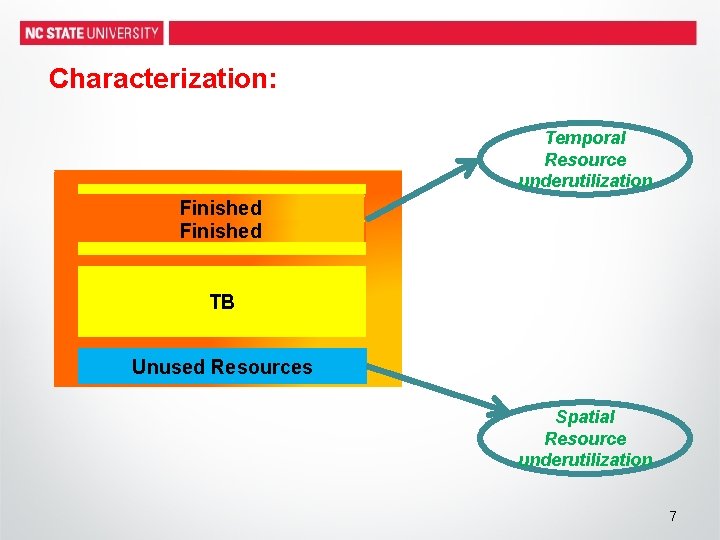

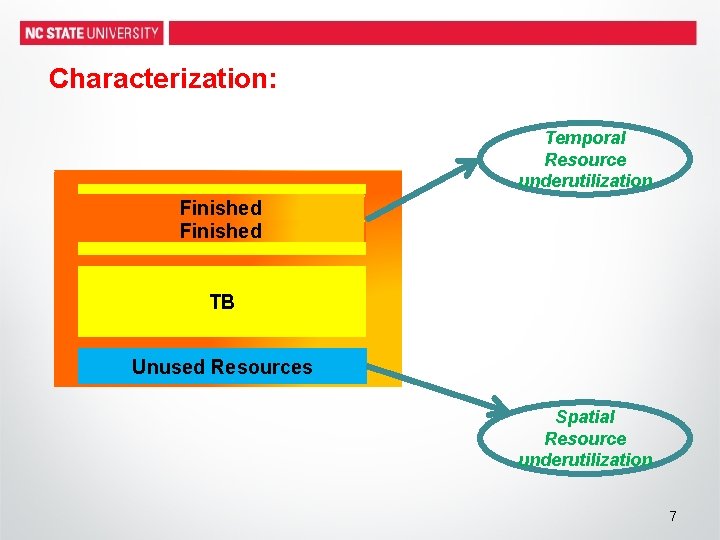

Characterization: Temporal Resource underutilization Finished TB Finished Register File TB Unused Resources Spatial Resource underutilization 7

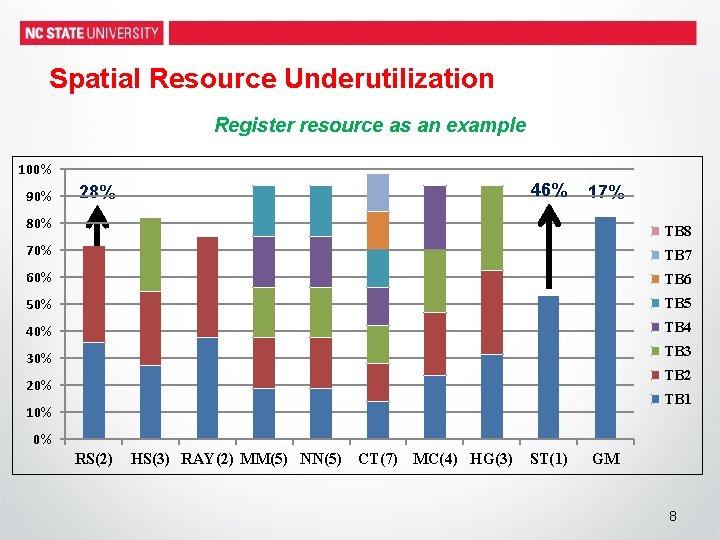

Spatial Resource Underutilization Register resource as an example 100% 90% 46% 28% 17% 80% TB 8 70% TB 7 60% TB 6 50% TB 5 40% TB 4 30% TB 3 TB 2 20% TB 1 10% 0% RS(2) HS(3) RAY(2) MM(5) NN(5) CT(7) MC(4) HG(3) ST(1) GM 8

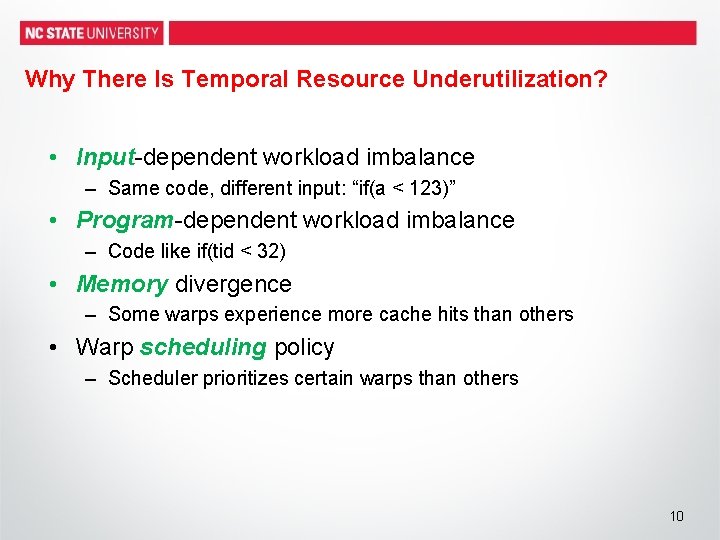

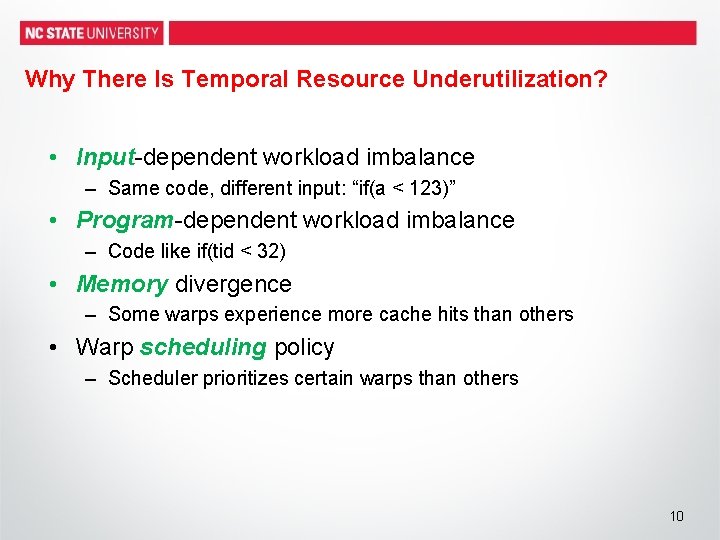

Temporal Resource Underutilization RTRU: ratio of temporal resource underutilization • Case Study: Ray Tracing – 6 warps per TB – Study TB 0 as an example Warp Num. Warp Level Divergence for RAY 5 4 RTRU = 49. 7% 3 2 1 0 0 5000 10000 15000 20000 25000 Cycle 9

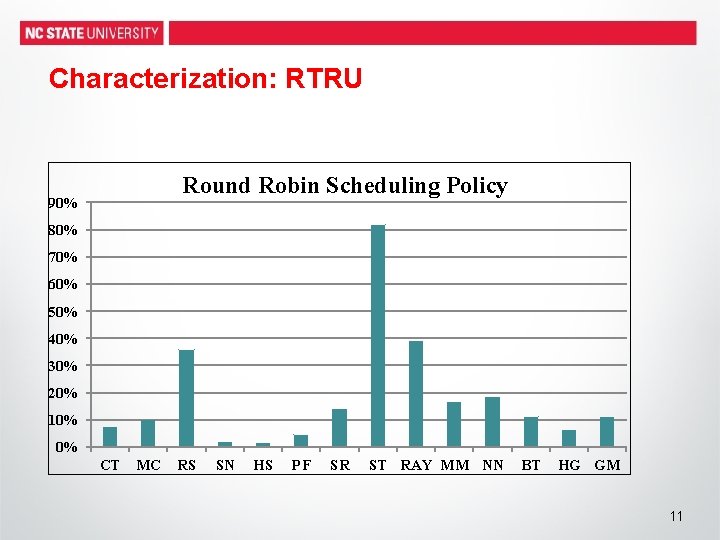

Why There Is Temporal Resource Underutilization? • Input-dependent workload imbalance – Same code, different input: “if(a < 123)” • Program-dependent workload imbalance – Code like if(tid < 32) • Memory divergence – Some warps experience more cache hits than others • Warp scheduling policy – Scheduler prioritizes certain warps than others 10

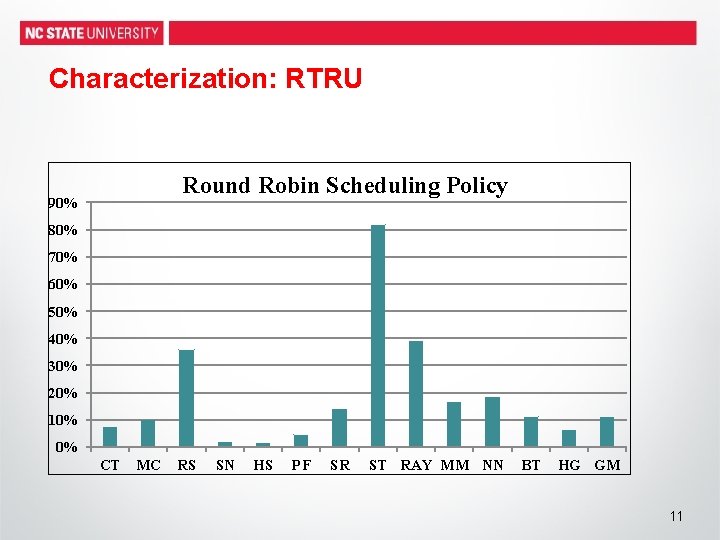

Characterization: RTRU Round Robin Scheduling Policy 90% 80% 70% 60% 50% 40% 30% 20% 10% 0% CT MC RS SN HS PF SR ST RAY MM NN BT HG GM 11

Outline • Background • Motivation – Characterization: – Micro-benchmarking • Mitigation: Warp. Man • Experiments • Conclusions 12

Micro-benchmark • Code • • • • runs on both GTX 480 and GTX 680 1. __global__ void TB_resource_kernel(…, bool call = false){ 2. if(call) bloat. Occupancy(start, size); . . . 3. clock_t start_clock = clock(); 4. if(tid < 32){ //tid is the threadid within a TB 5 clock_offset = 0; 6. while( clock_offset < clock_count ) { 7. clock_offset = clock() - start_clock; 8. } 9. } 10. clock_t end_clock = clock(); 11. d_o[index] = start_clock; //index is the global thread id 12. d_e[index] = end_clock; 13. } 13

![Microbenchmarking Results Using CUDA device 0 Ge Force GTX 480 Detected Micro-benchmarking • Results > Using CUDA device [0]: Ge. Force GTX 480 > Detected](https://slidetodoc.com/presentation_image/1aede0f372772ac9bc91dab6c6cd4193/image-14.jpg)

Micro-benchmarking • Results > Using CUDA device [0]: Ge. Force GTX 480 > Detected Compute SM 3. 0 hardware with 8 multi-processors. … CTA 250 Warp 0: start 80, end 81 CTA 269 Warp 0: start 80, end 81 CTA 272 Warp 0: start 80, end 81 CTA 283 Warp 0: start 80, end 81 CTA 322 Warp 0: start 80, end 81 CTA 329 Warp 0: start 80, end 81 … 14

Outline • Background • Motivation • Mitigation: Warp. Man • Experiments • Conclusions 15

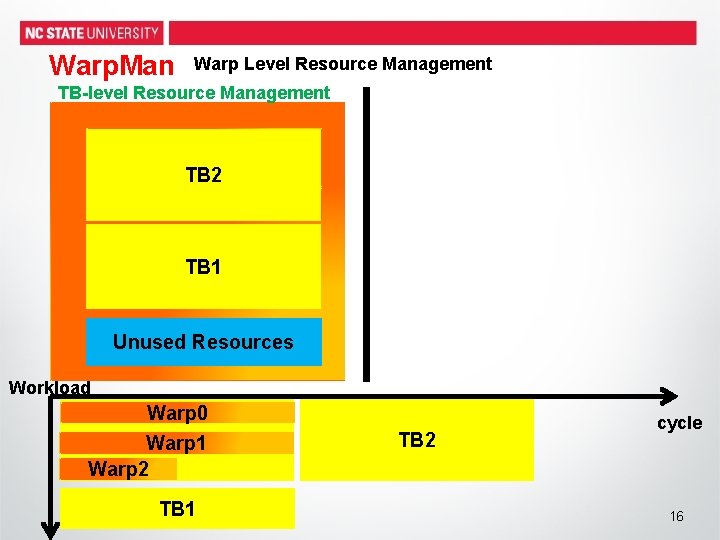

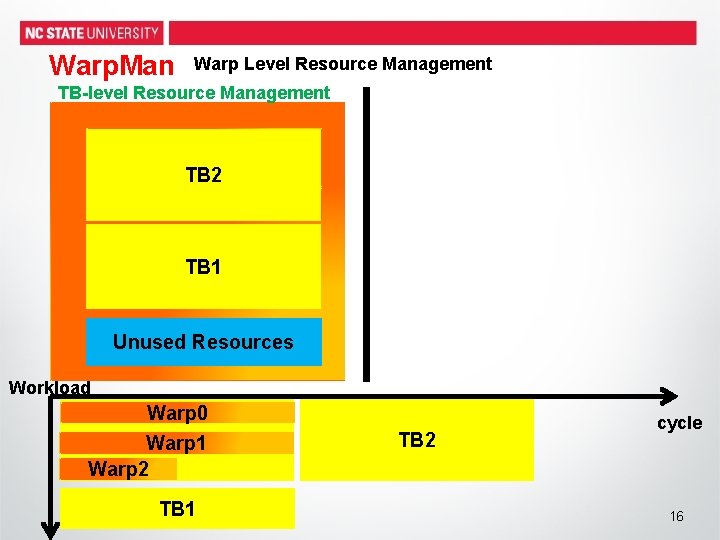

Warp. Man Warp Level Resource Management TB-level Resource Management Finished Warp 0 TB 0 Warp 1 Finished TB 2 Finished Warp 2 SM TB 1 Unused Resources Workload Warp 0 TB 0 Warp 1 Warp 2 TB 1 TB 2 cycle 16

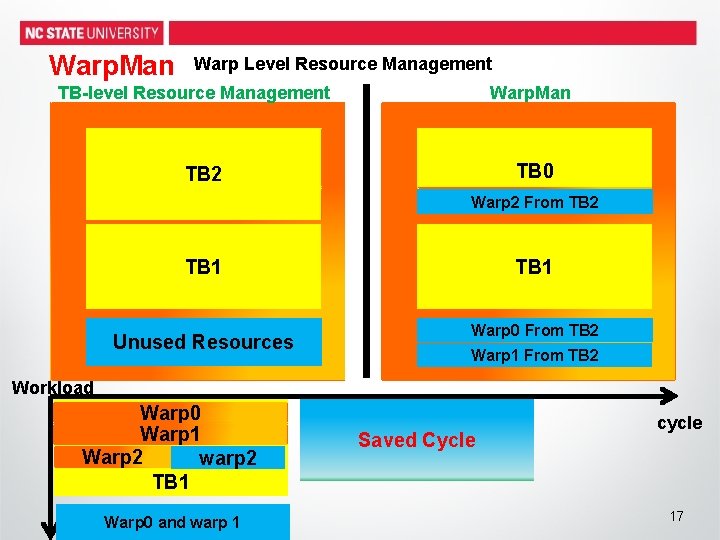

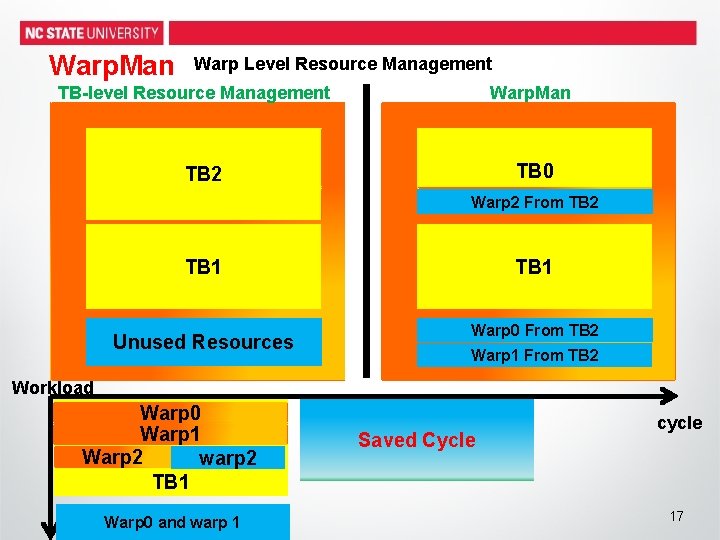

Warp. Man Warp Level Resource Management TB-level Resource Management Warp. Man Finished Warp TB 0 Warp Finished TB 2 TB 0 Finished Warp Finished Released Resource Warp 2 From TB 2 SM TB 1 Unused Resources Warp 0 From TB 2 Warp 1 From TB 2 Workload Warp 0 TB 0 Warp 1 Warp 2 warp 2 TB 1 Warp 0 and warp 1 Saved Cycle cycle 17

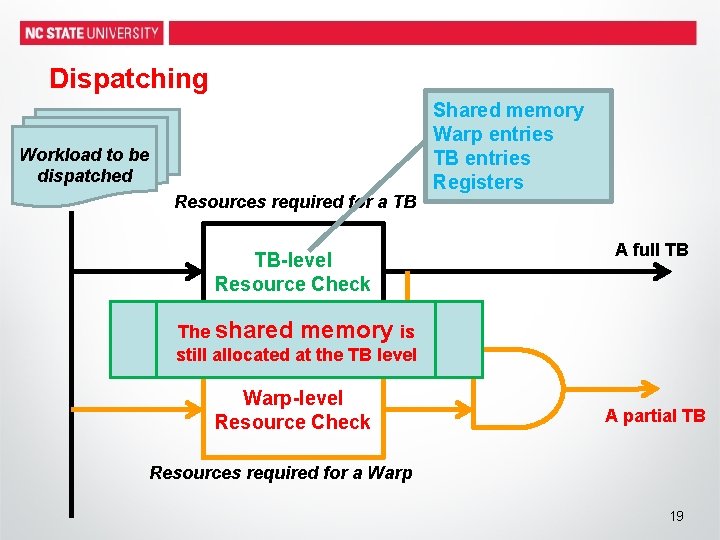

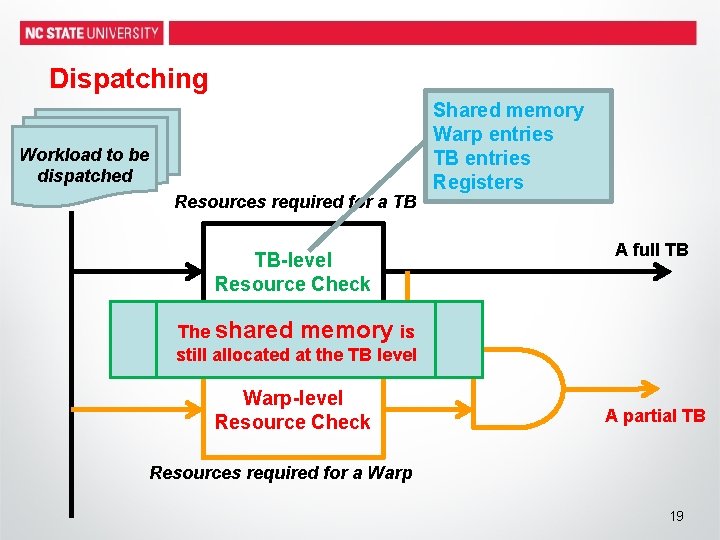

Warp. Man ---- Design • Dispatch logic – Traditional TB-level dispatching logic – Add partial TB dispatch logic • Workload buffer – Store the dispatched but not running partial TBs 18

Dispatching Workload to be dispatched Resources required for a TB TB-level Resource Check Shared memory Warp entries TB entries Registers A full TB The shared memory is still allocated at the TB level Warp-level Resource Check A partial TB Resources required for a Warp 19

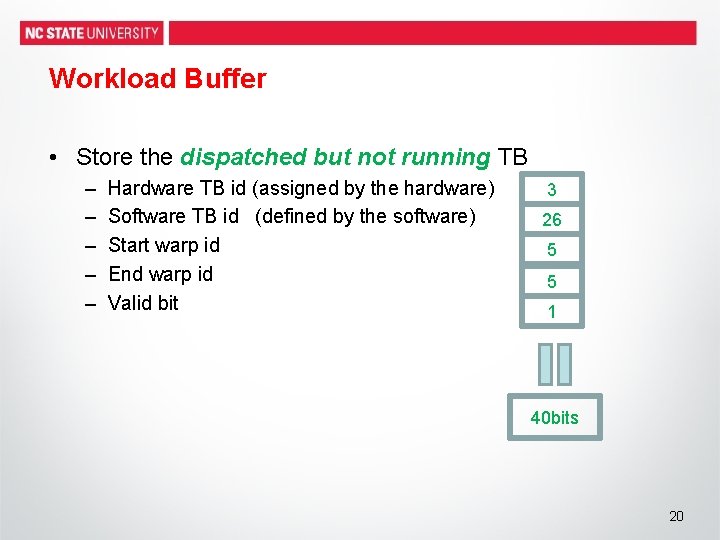

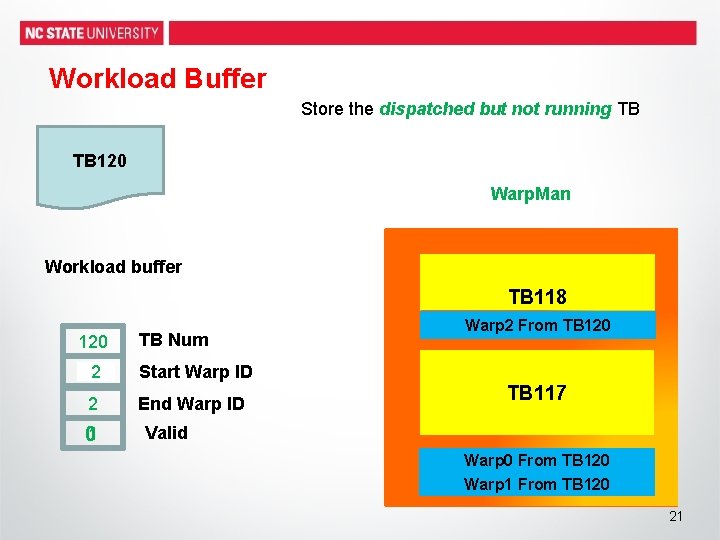

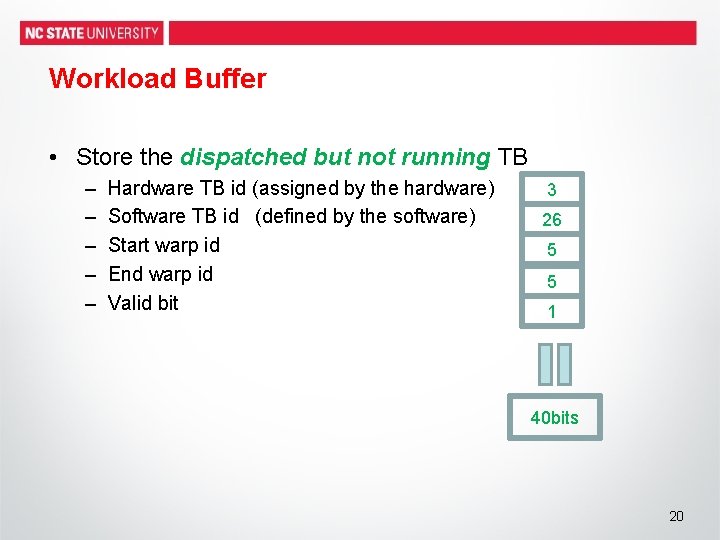

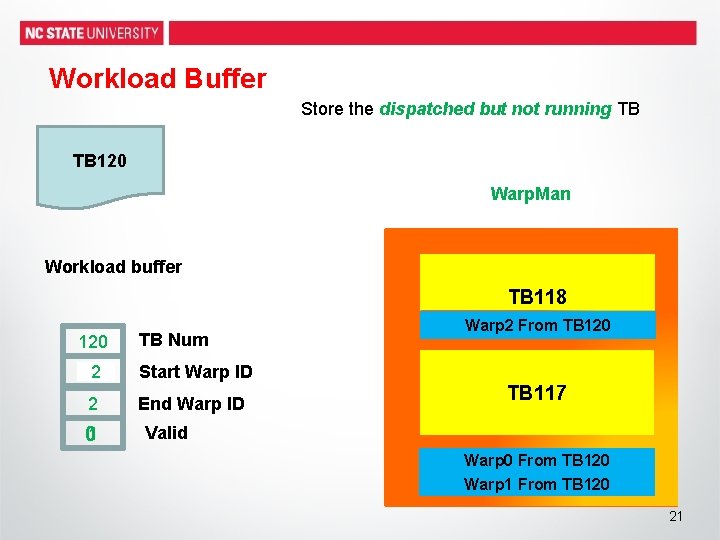

Workload Buffer • Store the dispatched but not running TB – – – Hardware TB id (assigned by the hardware) Software TB id (defined by the software) Start warp id End warp id Valid bit 3 26 5 5 1 40 bits 20

Workload Buffer Store the dispatched but not running TB TB 120 Warp. Man Workload buffer 120 TB Num 01 2 Start Warp ID 2 End Warp ID 01 TB 118 Warp 2 From TB 120 Finished SM TB 117 Valid Warp 0 From TB 120 Warp 1 From TB 120 Unused Resources 21

Outline • Background • Motivation: • Mitigation: Warp. Man • Experiments • Conclusions 22

Methodology • Use GPUWattch for both timing and energy evaluation • Baseline Architecture: (GTX 480) – – 15 SMs, with SIMD size of 32, running at 1. 4 Ghz Max TBs per SM is 8, Max threads per SM is 1536 Scheduling policy: round robin / two level 16 KB L 1 cache, 48 KB shared memory. 128 KB regs • Applications from: • Nvidia CUDA SDK • Rodinia Benchmark Suit • GPGPUsim 23

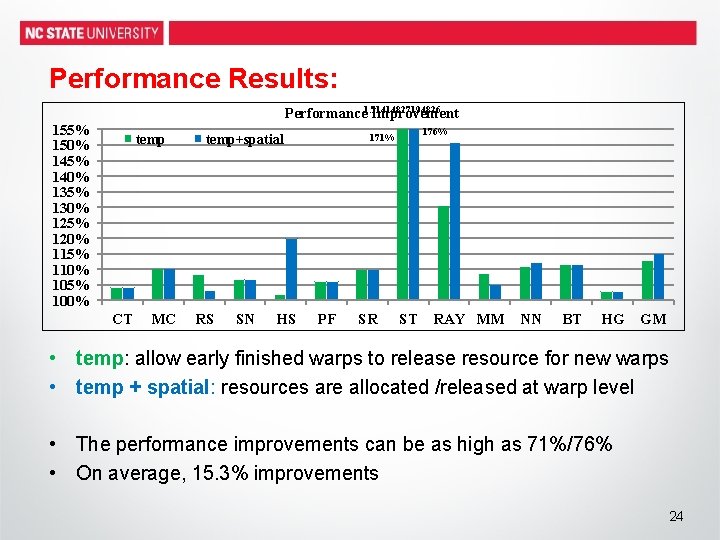

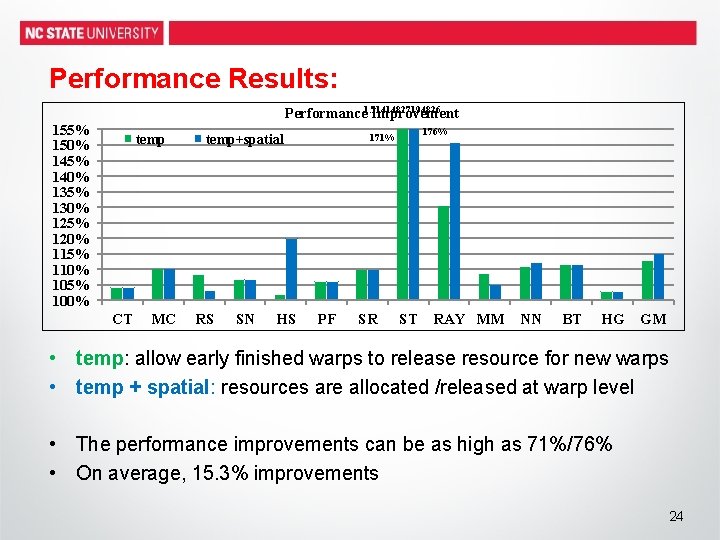

Performance Results: Performance 1. 71414827194826 Improvement 155% 150% 145% 140% 135% 130% 125% 120% 115% 110% 105% 100% temp CT MC temp+spatial RS SN HS 176% 171% PF SR ST RAY MM NN BT HG GM • temp: allow early finished warps to release resource for new warps • temp + spatial: resources are allocated /released at warp level • The performance improvements can be as high as 71%/76% • On average, 15. 3% improvements 24

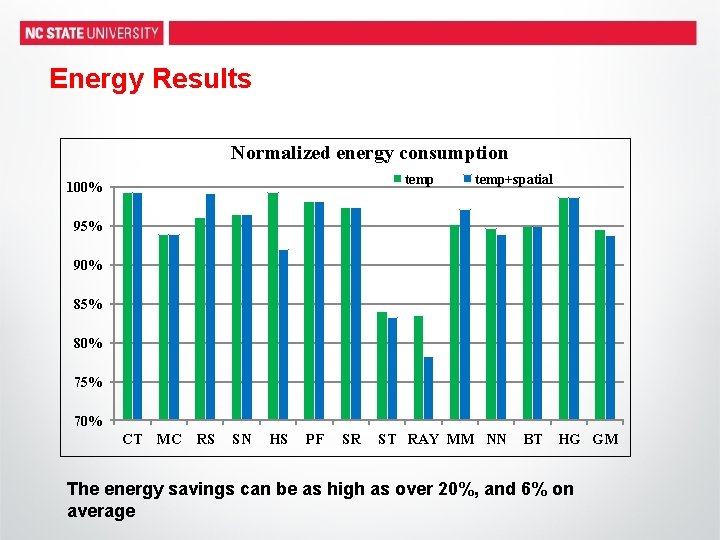

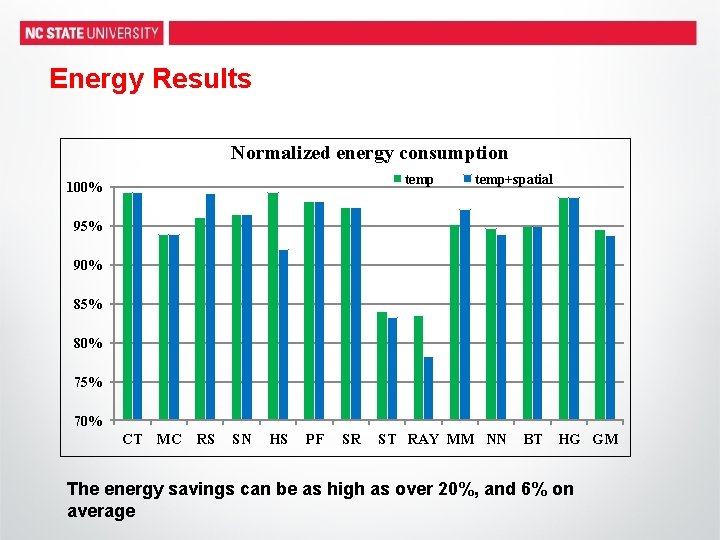

Energy Results Normalized energy consumption temp 100% temp+spatial 95% 90% 85% 80% 75% 70% CT MC RS SN HS PF SR ST RAY MM NN BT HG GM The energy savings can be as high as over 20%, and 6% on average

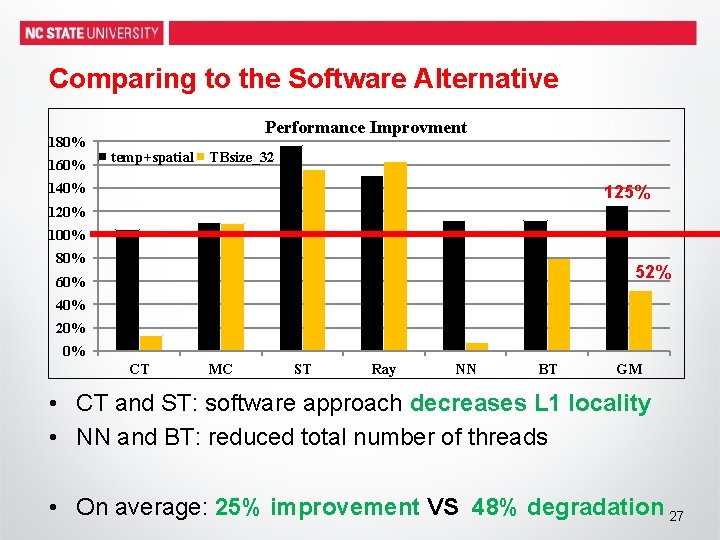

A Software Alternative a smaller TB size • Change the software to have a smaller TB size • Change the hardware to enable more concurrent TBs • Inefficient shared memory usage / synchronization • Decrease the data locality • More as we proceed to the experimental results… 26

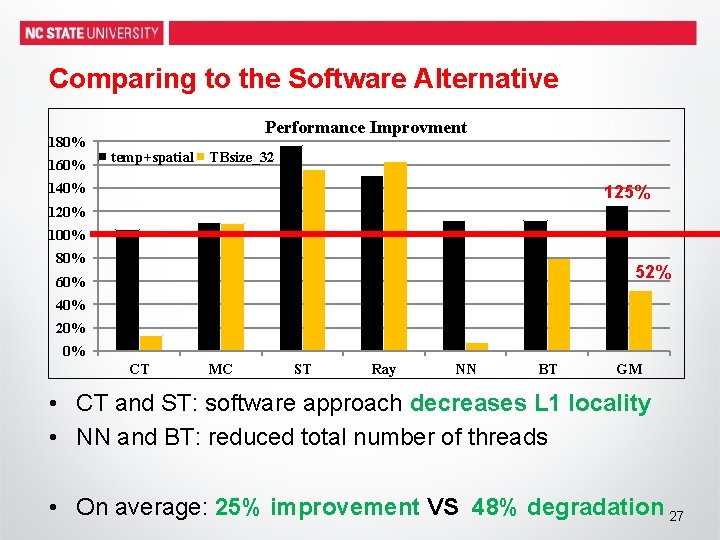

Comparing to the Software Alternative 180% 160% Performance Improvment temp+spatial TBsize_32 140% 125% 120% 100% 80% 52% 60% 40% 20% 0% CT MC ST Ray NN BT GM • CT and ST: software approach decreases L 1 locality • NN and BT: reduced total number of threads • On average: 25% improvement VS 48% degradation 27

Related Work • Resource underutilization due to branch divergence or threadlevel divergence has been well studied. • Yi Yang et al [Pact-21] targets at the shared memory resource management and is complementary to our proposed Warp. Man scheme. • D. Tarjan, et al [US Patent-2009], proposes to use virtual register table to manage physical register file to enable more concurrent TBs 28

Conclusion • We highlight the limitations of TB-level resource management • we characterize warp-level divergence and reveal the fundamental reasons for such divergent behavior; • we propose Warp. Man and show that it can be implemented with minor hardware changes • we show that our proposed solution is highly effective and achieves significant performance improvements and energy savings Questions? 29