Packet Shader A GPUAccelerated Software Router Sangjin Han

- Slides: 95

Packet. Shader: A GPU-Accelerated Software Router Sangjin Han† In collaboration with: Keon Jang †, Kyoung. Soo Park‡, Sue Moon † Advanced Networking Lab, CS, KAIST Networked and Distributed Computing Systems Lab, EE, KAIST † ‡ 2010 Sep.

Packet. Shader: A GPU-Accelerated Software Router High-performance Our prototype: 40 Gbps on a single box 2010 Sep. 2

Software Router § Despite its name, not limited to IP routing § You can implement whatever you want on it. § Driven by software § Flexible § Friendly development environments § Based on commodity hardware § Cheap § Fast evolution 2010 Sep. 3

Now 10 Gigabit NIC is a commodity § From $200 – $300 per port § Great opportunity for software routers 2010 Sep. 4

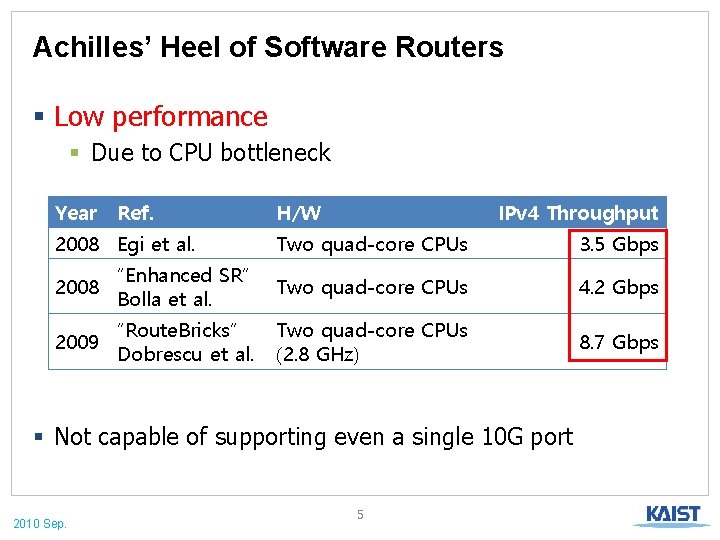

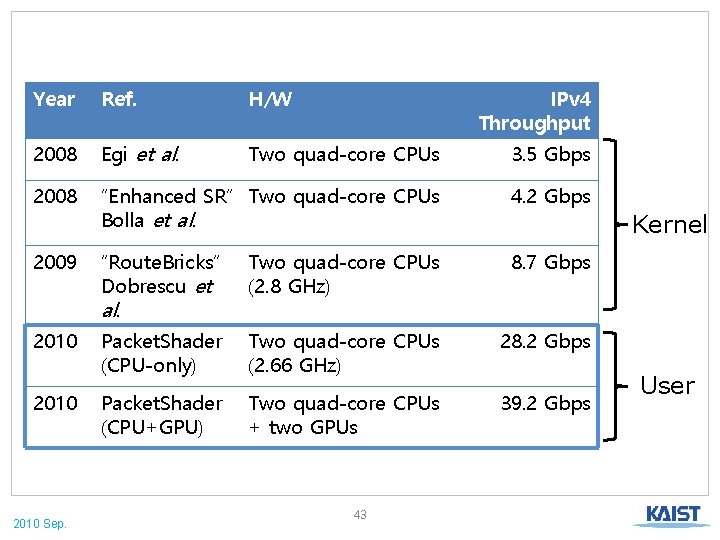

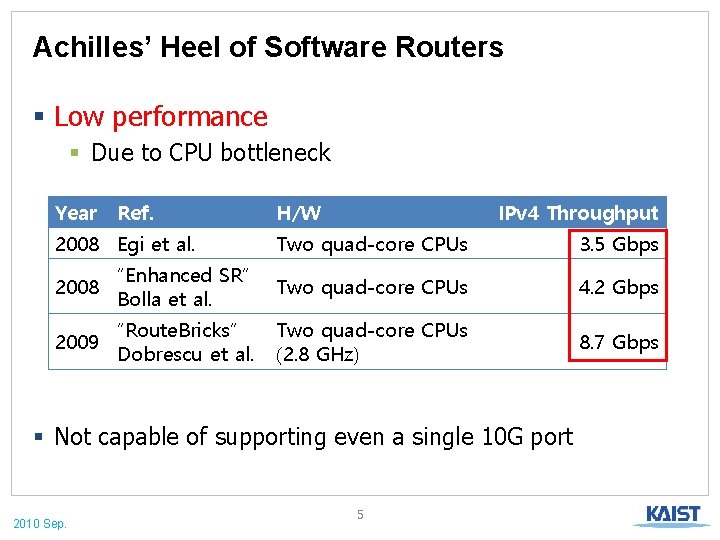

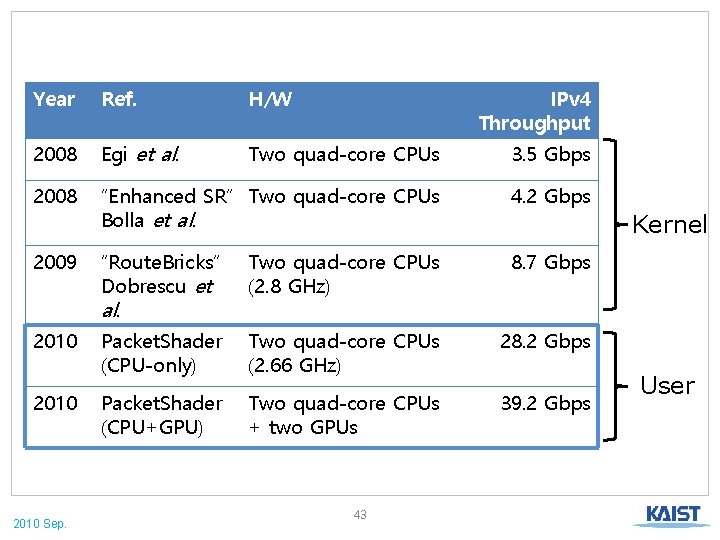

Achilles’ Heel of Software Routers § Low performance § Due to CPU bottleneck Year Ref. 2008 Egi et al. H/W IPv 4 Throughput Two quad-core CPUs 3. 5 Gbps 2008 “Enhanced SR” Bolla et al. Two quad-core CPUs 4. 2 Gbps 2009 “Route. Bricks” Dobrescu et al. Two quad-core CPUs (2. 8 GHz) 8. 7 Gbps § Not capable of supporting even a single 10 G port 2010 Sep. 5

CPU BOTTLENECK 2010 Sep. 6

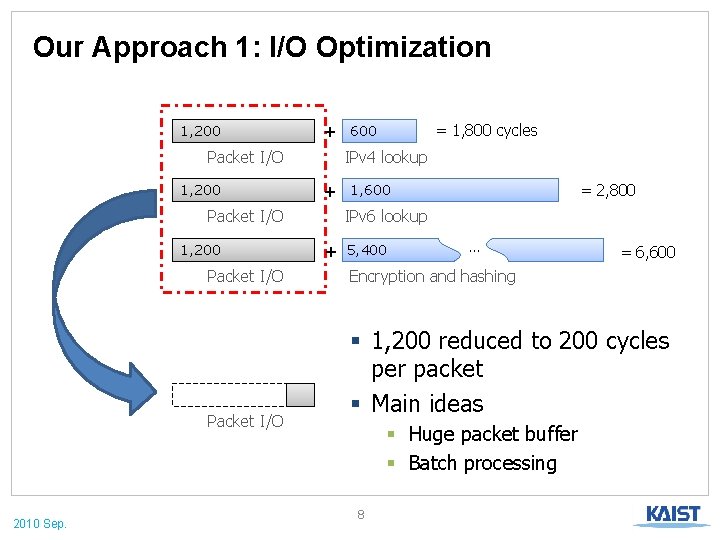

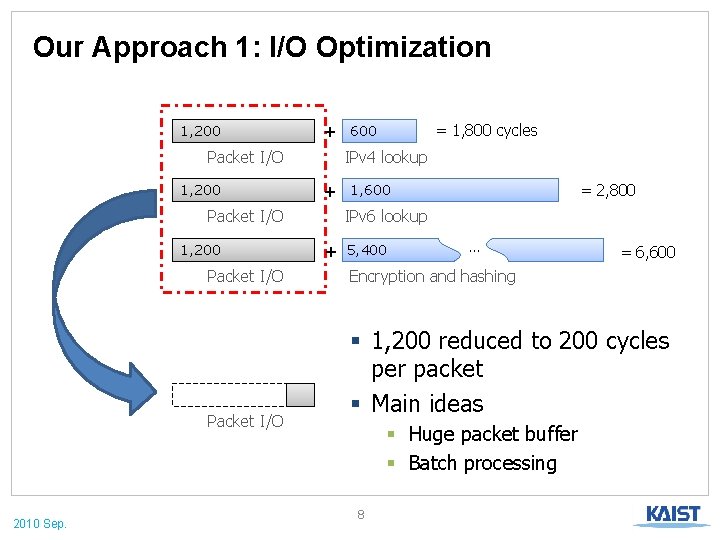

Per-Packet CPU Cycles for 10 G IPv 4 Cycles needed 1, 200 Packet I/O IPv 6 1, 200 Packet I/O 600 = 1, 800 cycles IPv 4 lookup + Packet I/O IPsec Your budget + = 2, 800 1, 600 IPv 6 lookup + 5, 400 … = 6, 600 Encryption and hashing 1, 400 cycles 10 G, min-sized packets, dual quad-core 2. 66 GHz CPUs (in x 86, cycle numbers are from Route. Bricks [Dobrescu 09] and ours) 2010 Sep. 7

Our Approach 1: I/O Optimization 1, 200 + Packet I/O 1, 200 IPv 4 lookup + Packet I/O 1, 200 Packet I/O 2010 Sep. = 1, 800 cycles 600 = 2, 800 1, 600 IPv 6 lookup + 5, 400 … = 6, 600 Encryption and hashing § 1, 200 reduced to 200 cycles per packet § Main ideas § Huge packet buffer § Batch processing 8

Our Approach 2: GPU Offloading + Packet I/O IPv 4 lookup + Packet I/O 1, 600 IPv 6 lookup + Packet I/O 600 5, 400 Encryption and hashing § GPU Offloading for § Memory-intensive or § Compute-intensive operations § Main topic of this talk 2010 Sep. … 9

WHAT IS GPU? 2010 Sep. 10

GPU = Graphics Processing Unit § The heart of graphics cards § Mainly used for real-time 3 D game rendering § Massively-parallel processing capacity (Ubisoft’s AVARTAR, from http: //ubi. com) 2010 Sep. 11

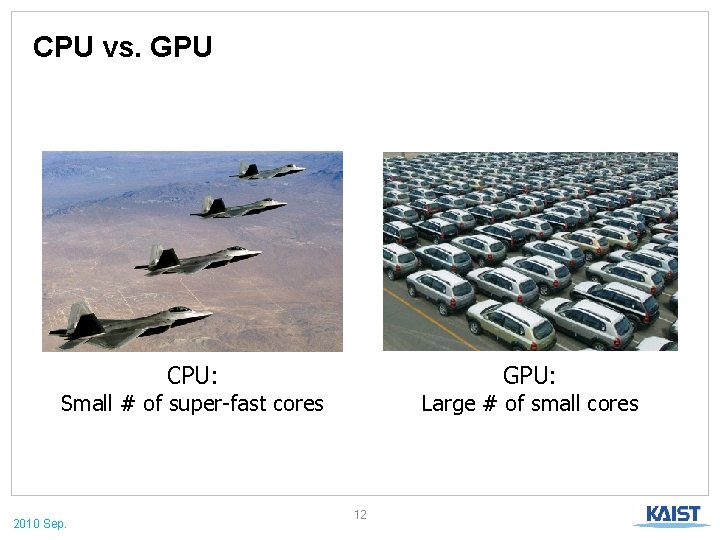

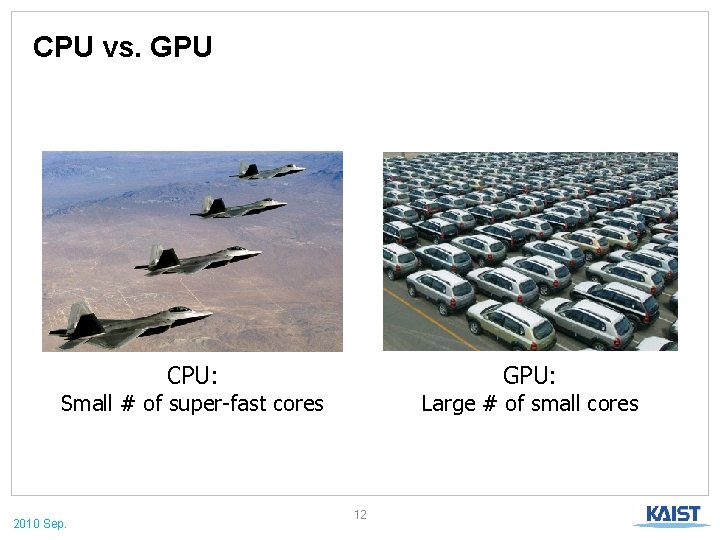

CPU vs. GPU CPU: GPU: Small # of super-fast cores 2010 Sep. Large # of small cores 12

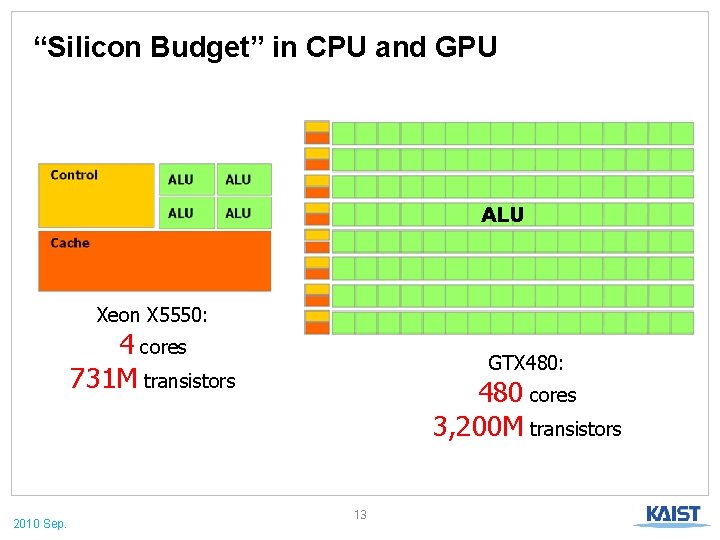

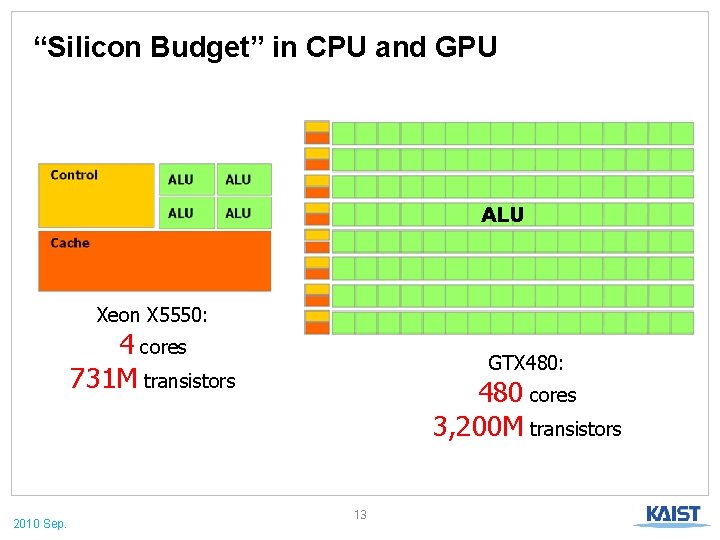

“Silicon Budget” in CPU and GPU ALU Xeon X 5550: 4 cores 731 M transistors 2010 Sep. GTX 480: 480 cores 3, 200 M transistors 13

GPU FOR PACKET PROCESSING 2010 Sep. 14

Advantages of GPU for Packet Processing 1. Raw computation power 2. Memory access latency 3. Memory bandwidth § Comparison between § Intel X 5550 CPU § NVIDIA GTX 480 GPU 2010 Sep. 15

(1/3) Raw Computation Power § Compute-intensive operations in software routers § Hashing, encryption, pattern matching, network coding, compression, etc. § GPU can help! Instructions/sec CPU: 43× 109 = 2. 66 (GHz) × 4 (# of cores) × 4 (4 -way superscalar) 2010 Sep. < 16 GPU: 672× 109 = 1. 4 (GHz) × 480 (# of cores)

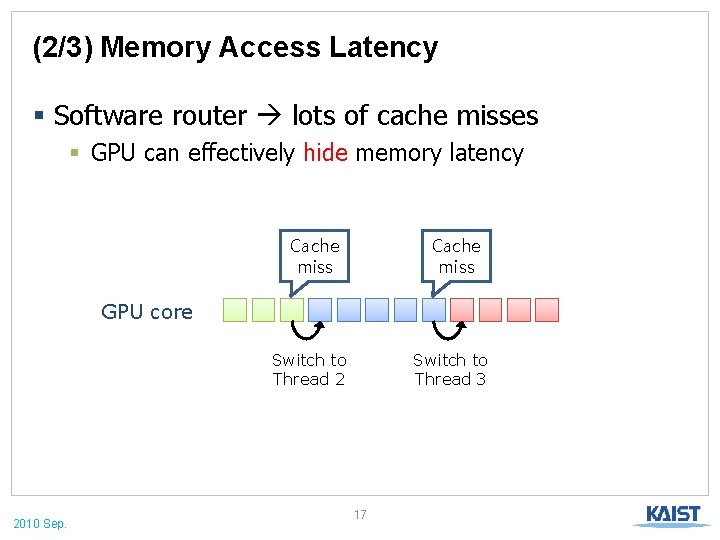

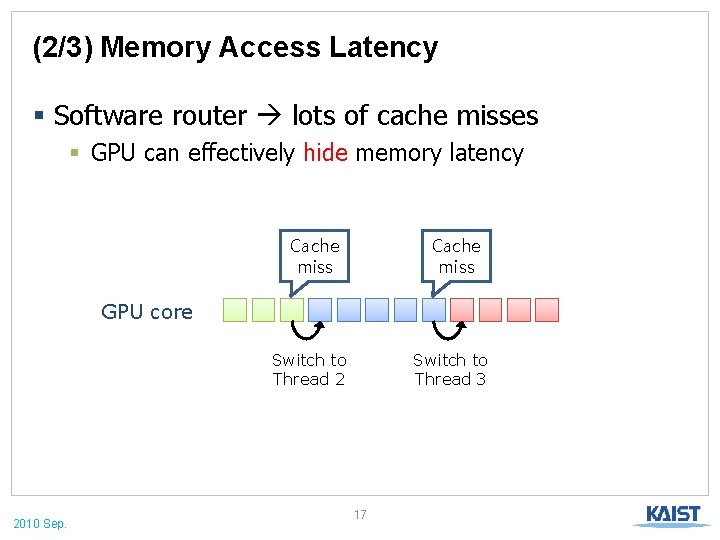

(2/3) Memory Access Latency § Software router lots of cache misses § GPU can effectively hide memory latency Cache miss GPU core Switch to Thread 2 2010 Sep. Switch to Thread 3 17

(3/3) Memory Bandwidth CPU’s memory bandwidth (theoretical): 32 GB/s 2010 Sep. 18

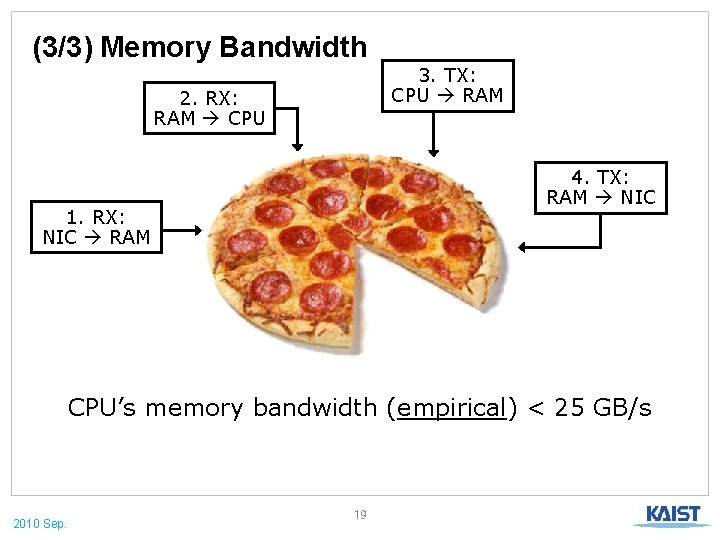

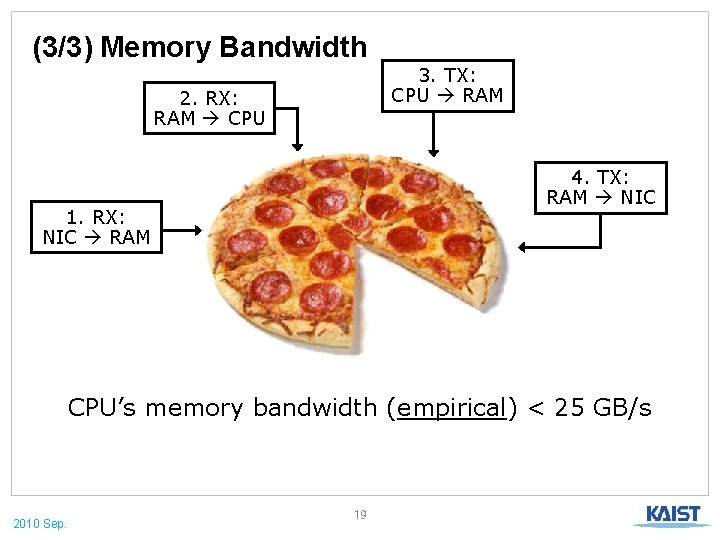

(3/3) Memory Bandwidth 3. TX: CPU RAM 2. RX: RAM CPU 4. TX: RAM NIC 1. RX: NIC RAM CPU’s memory bandwidth (empirical) < 25 GB/s 2010 Sep. 19

(3/3) Memory Bandwidth Your budget for packet processing can be less 10 GB/s 2010 Sep. 20

(3/3) Memory Bandwidth Your budget for packet processing can be less 10 GB/s GPU’s memory bandwidth: 174 GB/s 2010 Sep. 21

HOW TO USE GPU 2010 Sep. 22

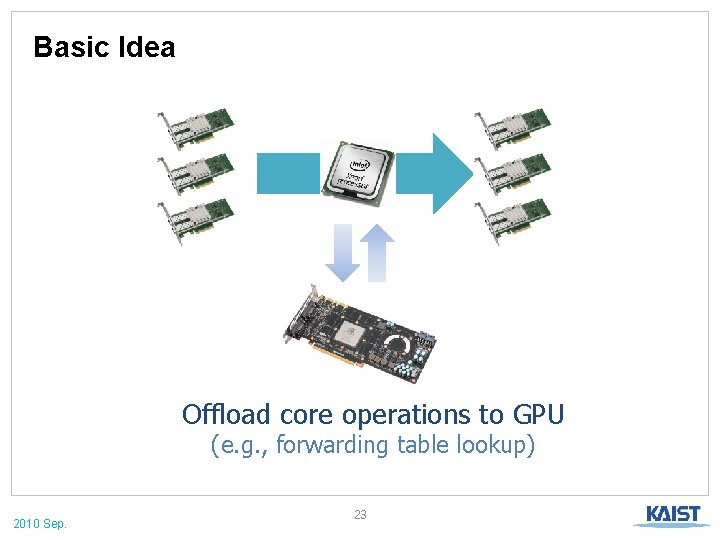

Basic Idea Offload core operations to GPU (e. g. , forwarding table lookup) 2010 Sep. 23

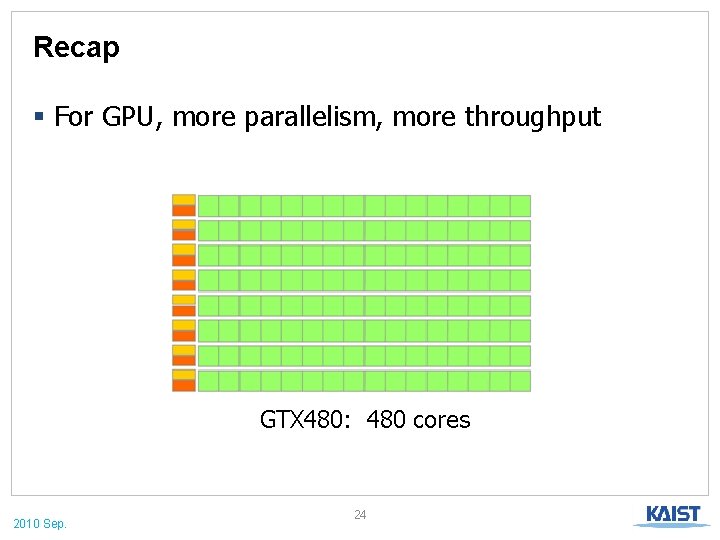

Recap § For GPU, more parallelism, more throughput GTX 480: 480 cores 2010 Sep. 24

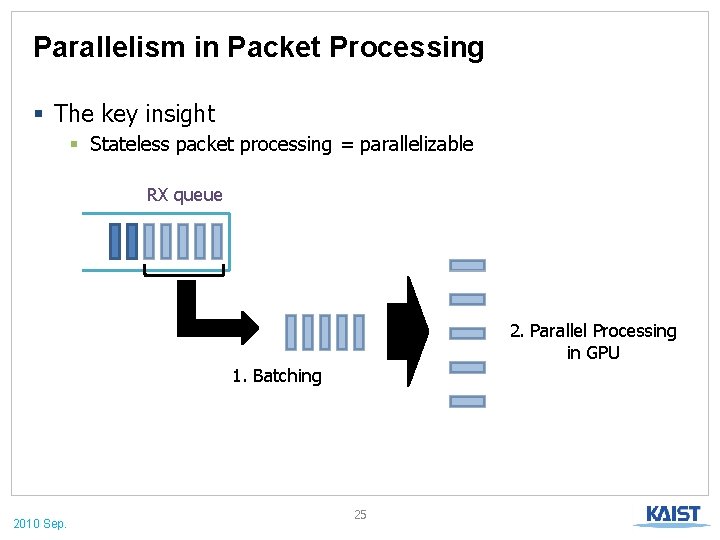

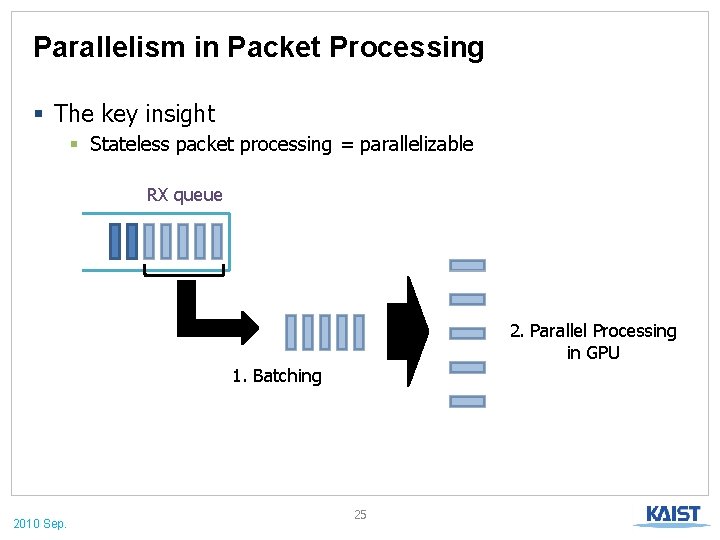

Parallelism in Packet Processing § The key insight § Stateless packet processing = parallelizable RX queue 2. Parallel Processing in GPU 1. Batching 2010 Sep. 25

Batching Long Latency? § Fast link = enough # of packets in a small time window § 10 Gb. E link § up to 1, 000 packets only in 67μs § Much less time with 40 or 100 Gb. E 2010 Sep. 26

PACKETSHADER DESIGN 2010 Sep. 27

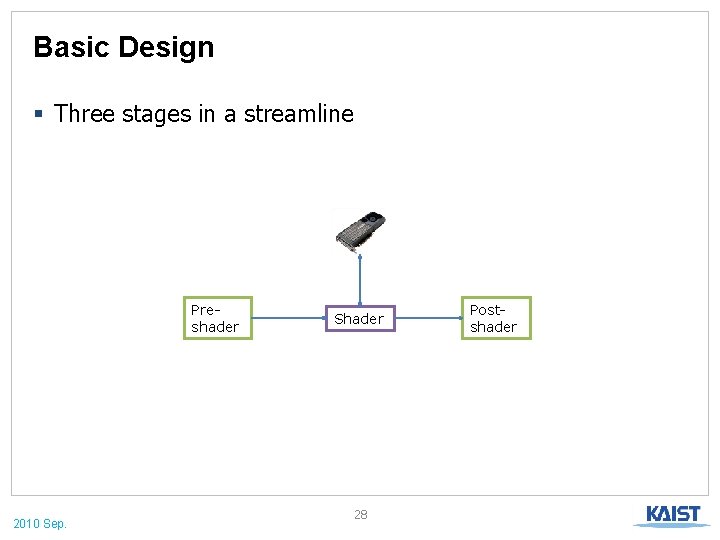

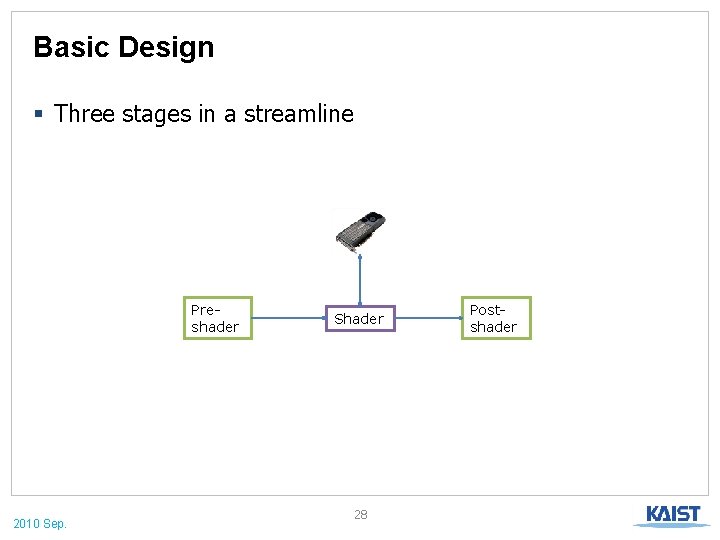

Basic Design § Three stages in a streamline Preshader 2010 Sep. Shader 28 Postshader

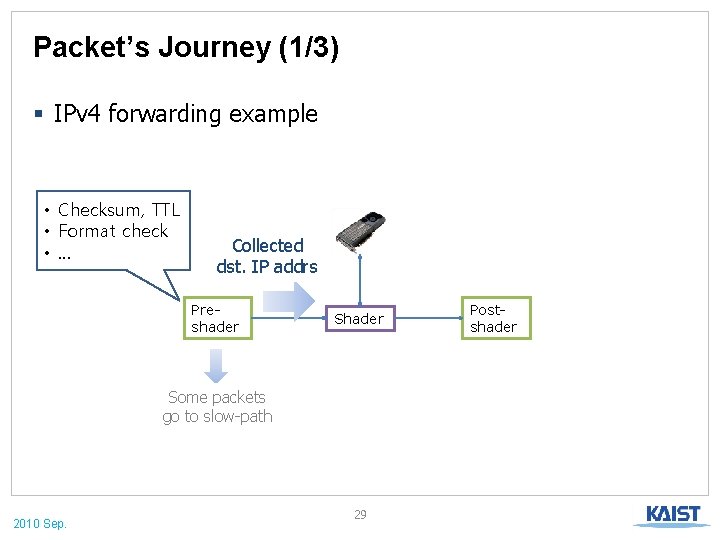

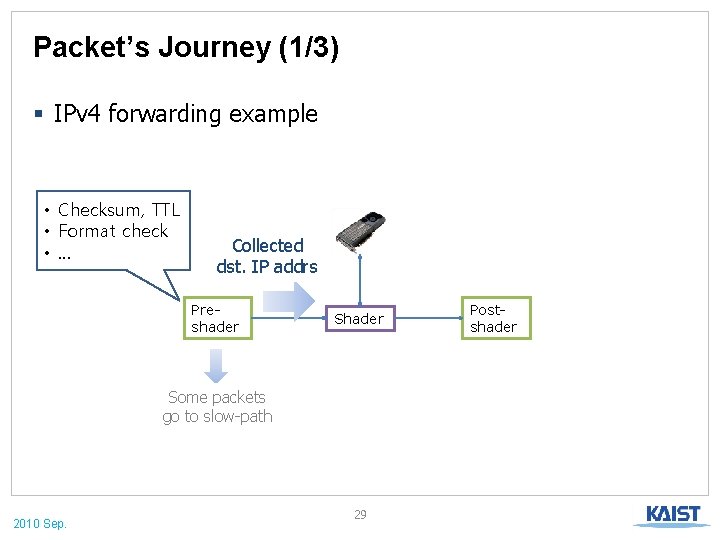

Packet’s Journey (1/3) § IPv 4 forwarding example • Checksum, TTL • Format check • … Collected dst. IP addrs Preshader Some packets go to slow-path 2010 Sep. 29 Postshader

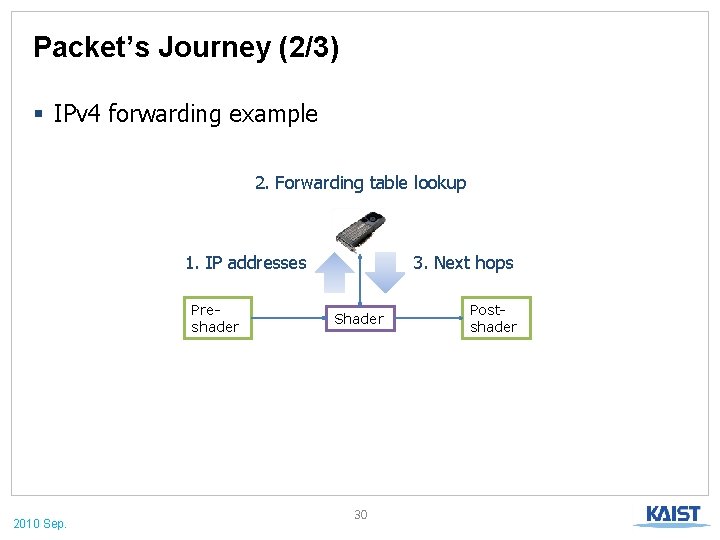

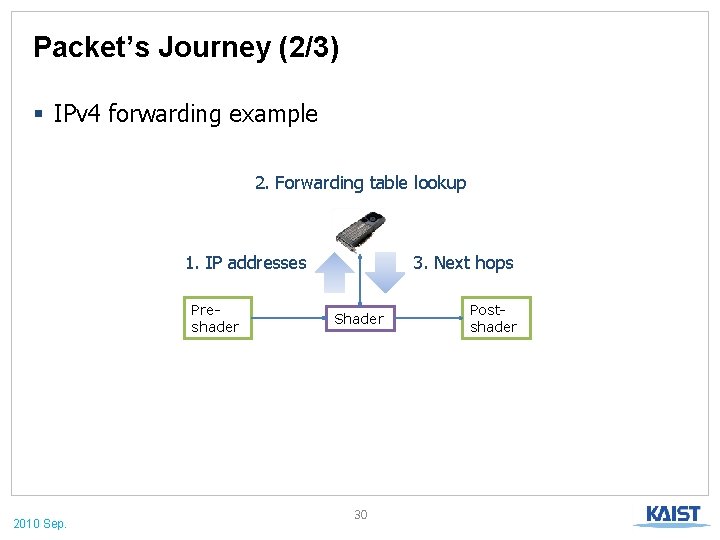

Packet’s Journey (2/3) § IPv 4 forwarding example 2. Forwarding table lookup 1. IP addresses Preshader 2010 Sep. 3. Next hops Shader 30 Postshader

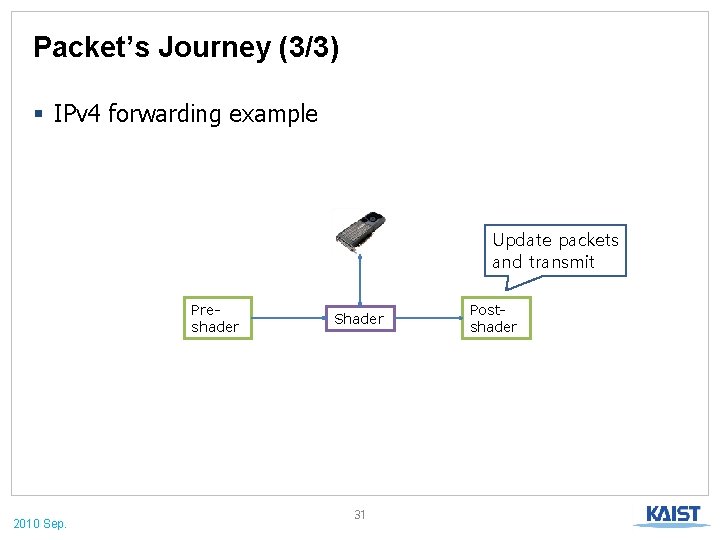

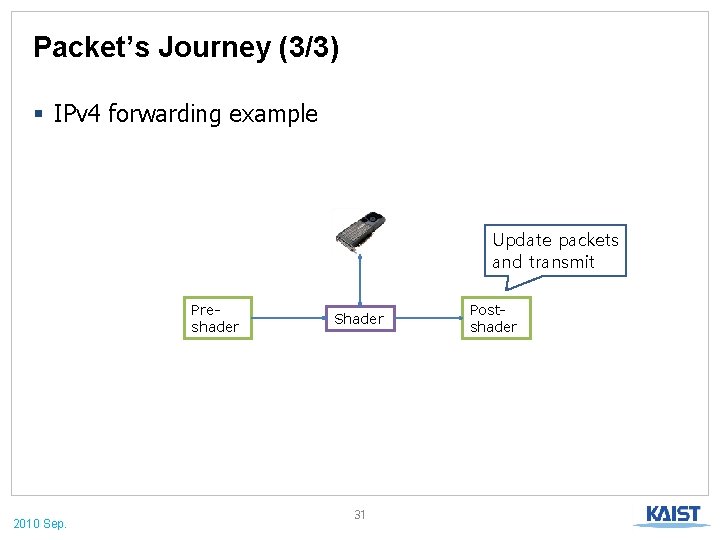

Packet’s Journey (3/3) § IPv 4 forwarding example Update packets and transmit Preshader 2010 Sep. Shader 31 Postshader

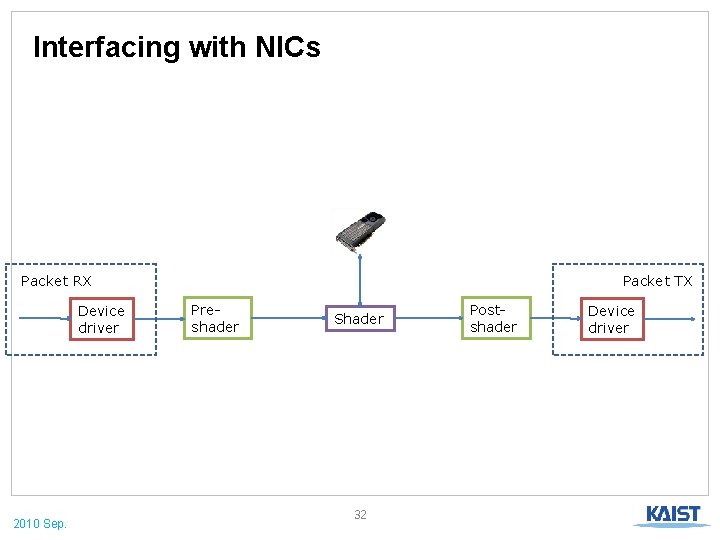

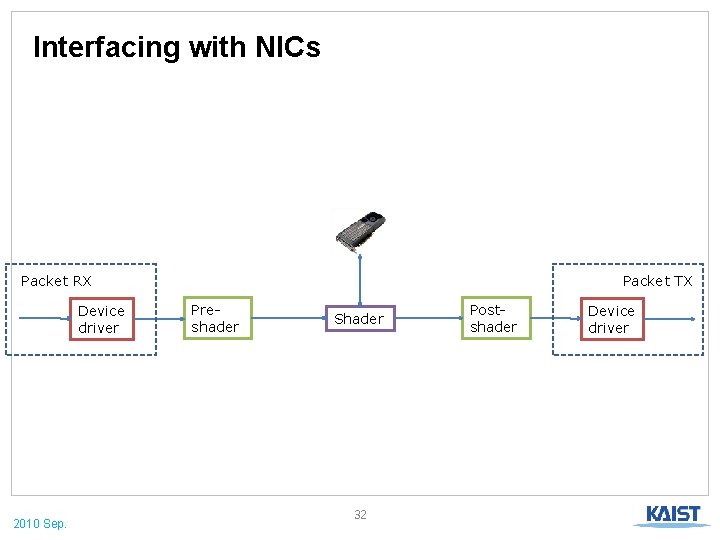

Interfacing with NICs Packet RX Device driver 2010 Sep. Packet TX Preshader Shader 32 Postshader Device driver

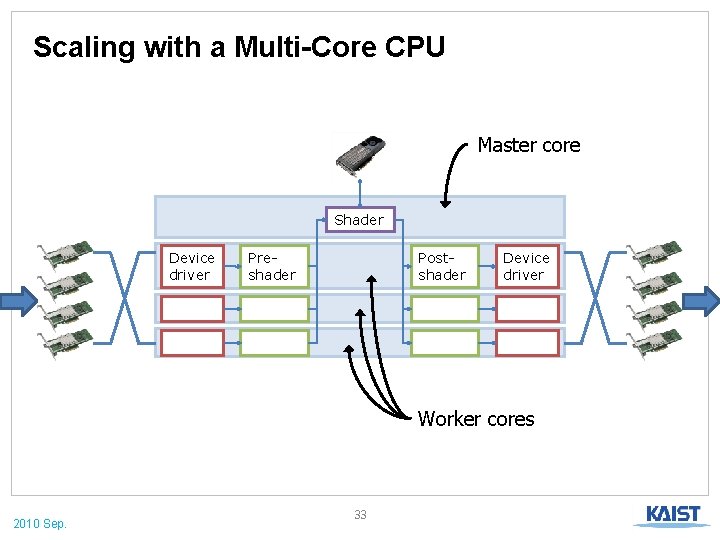

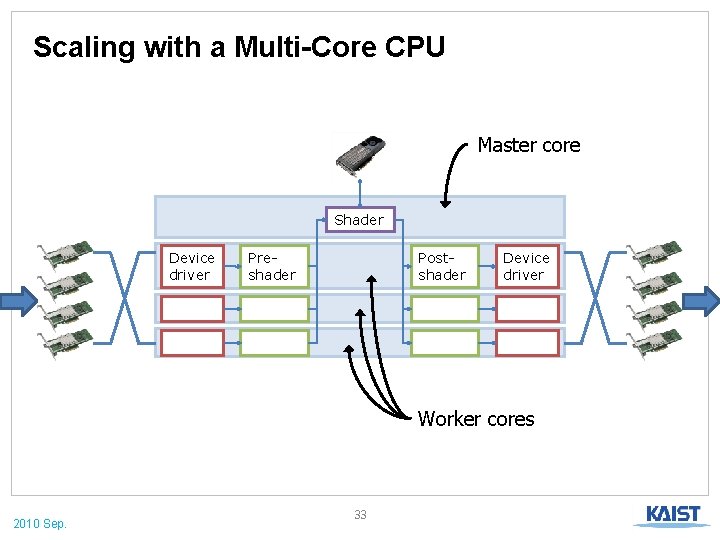

Scaling with a Multi-Core CPU Master core Shader Device driver Preshader Postshader Device driver Worker cores 2010 Sep. 33

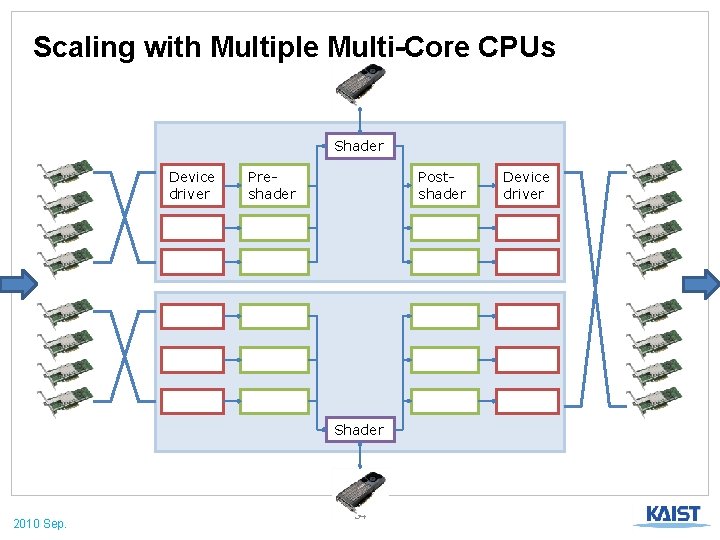

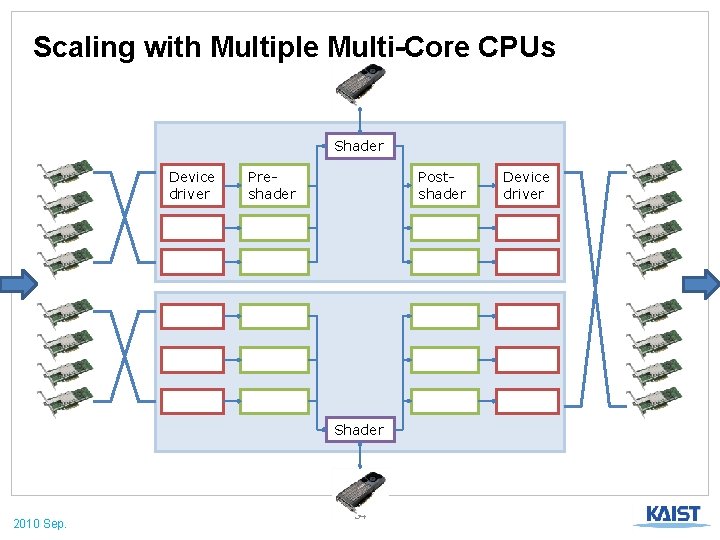

Scaling with Multiple Multi-Core CPUs Shader Device driver Preshader Postshader Shader 2010 Sep. 34 Device driver

EVALUATION 2010 Sep. 35

Hardware Setup CPU: Total 8 CPU cores Quad-core, 2. 66 GHz Total 80 Gbps NIC: Dual-port 10 Gb. E GPU: Total 960 cores 480 cores, 1. 4 GHz 2010 Sep. 36

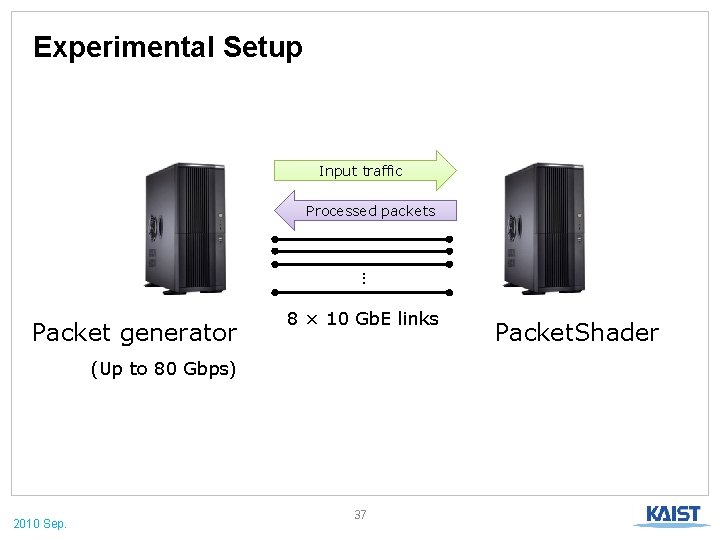

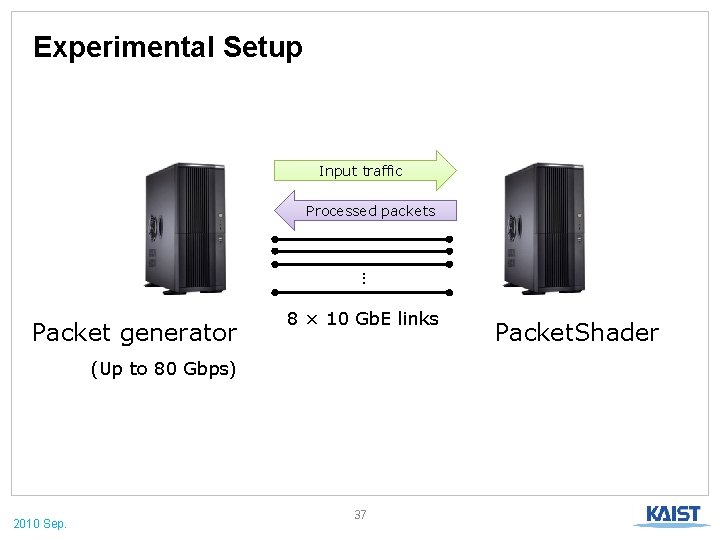

Experimental Setup Input traffic Processed packets … Packet generator 8 × 10 Gb. E links (Up to 80 Gbps) 2010 Sep. 37 Packet. Shader

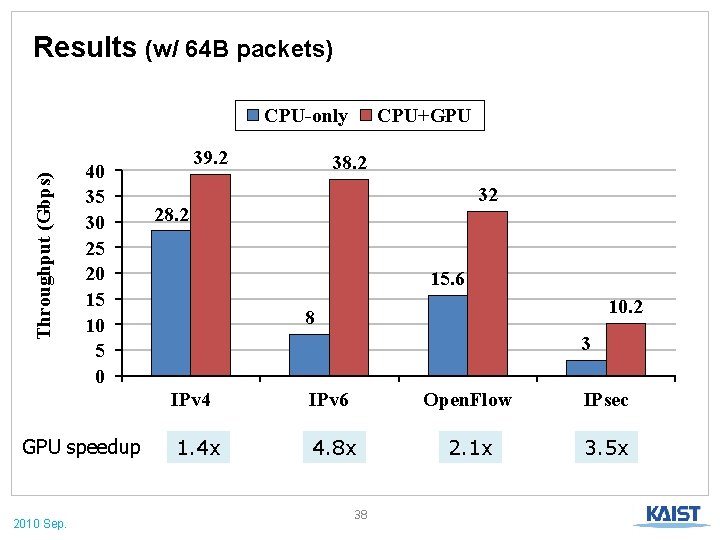

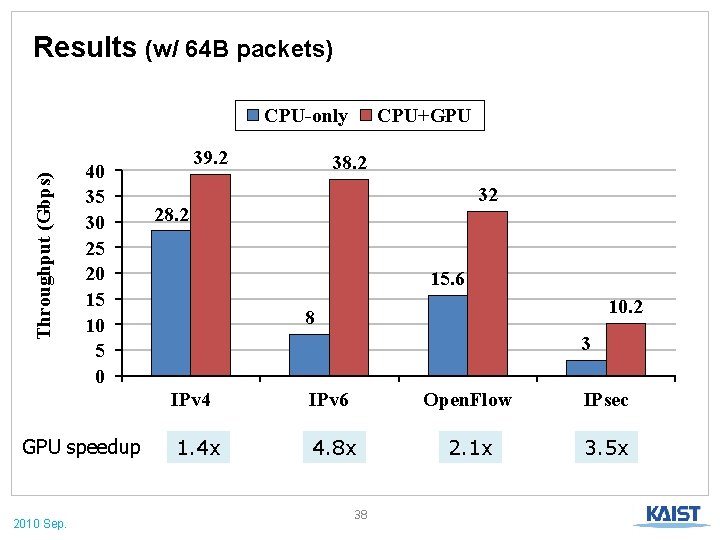

Results (w/ 64 B packets) Throughput (Gbps) CPU-only 40 35 30 25 20 15 10 5 0 GPU speedup 2010 Sep. 39. 2 CPU+GPU 38. 2 32 28. 2 15. 6 10. 2 8 3 IPv 4 IPv 6 Open. Flow IPsec 1. 4 x 4. 8 x 2. 1 x 3. 5 x 38

Example 1: IPv 6 forwarding § Longest prefix matching on 128 -bit IPv 6 addresses § Algorithm: binary search on hash tables [Waldvogel 97] § 7 hashings + 7 memory accesses … Prefix length 2010 Sep. … 1 64 39 … 80 … 96 128

Example 1: IPv 6 forwarding Throughput (Gbps) CPU-only 45 40 35 30 25 20 15 10 5 0 64 128 Bounded by motherboard IO capacity CPU+GPU 256 512 1024 Packet size (bytes) (Routing table was randomly generated with 200 K entries) 2010 Sep. 40 1514

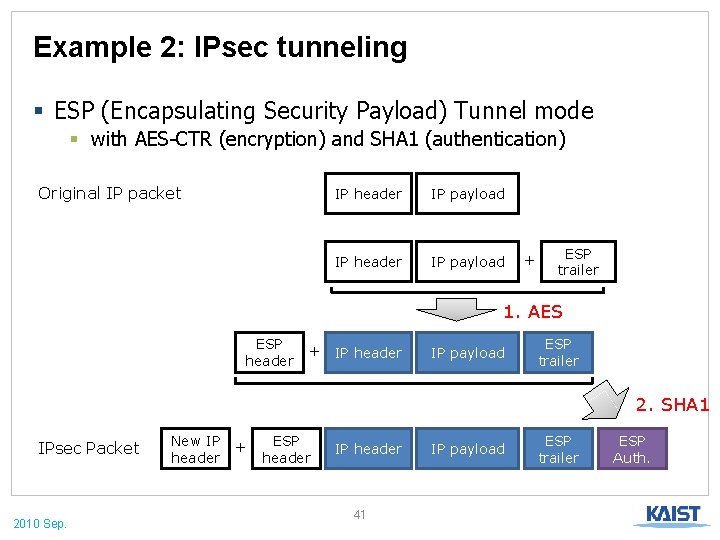

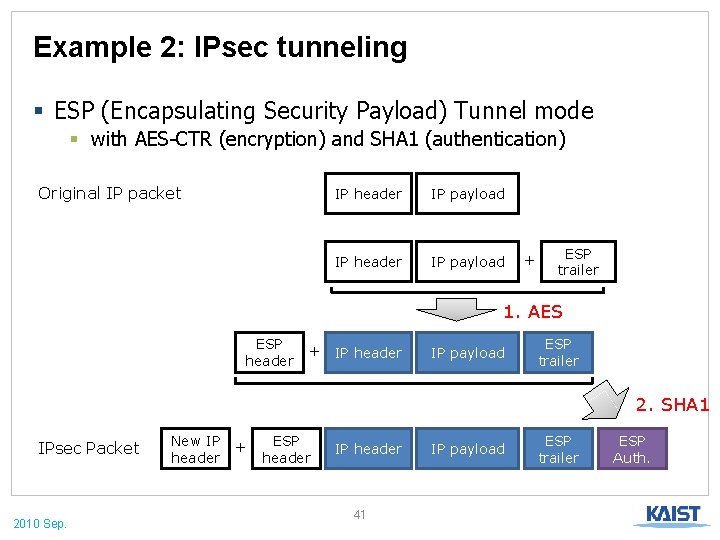

Example 2: IPsec tunneling § ESP (Encapsulating Security Payload) Tunnel mode § with AES-CTR (encryption) and SHA 1 (authentication) Original IP packet IP header IP payload + ESP trailer 1. AES ESP header + IP header IP payload ESP trailer 2. SHA 1 IPsec Packet 2010 Sep. New IP header + ESP header IP header 41 IP payload ESP trailer ESP Auth.

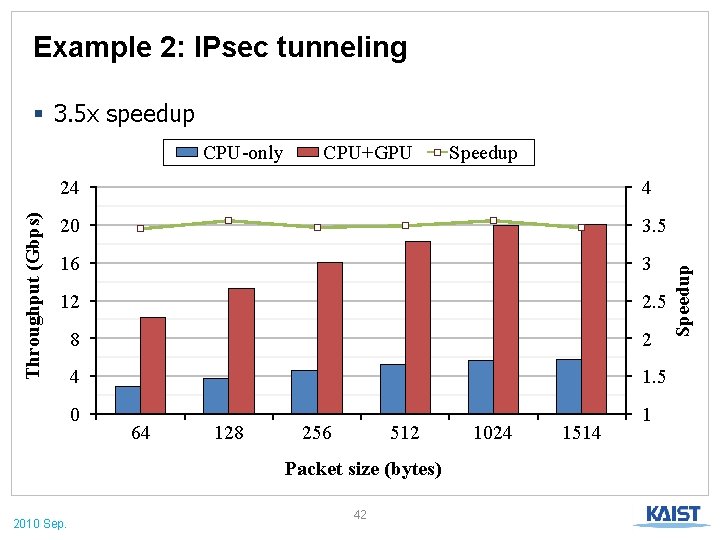

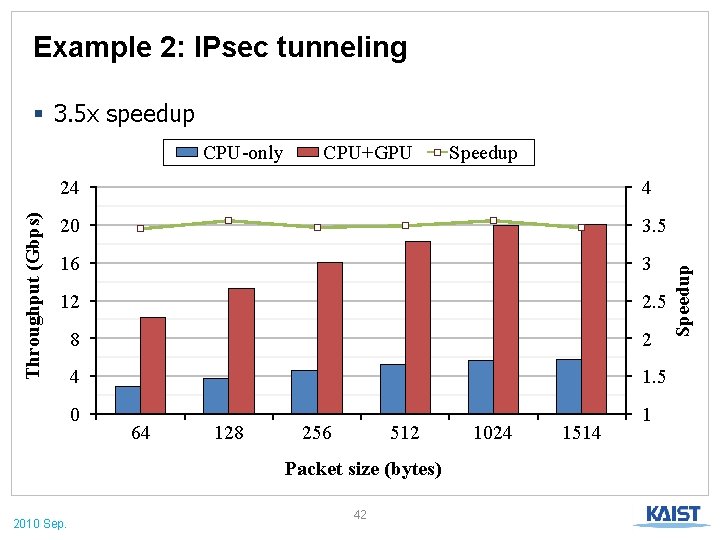

Example 2: IPsec tunneling § 3. 5 x speedup CPU+GPU Speedup 24 4 20 3. 5 16 3 12 2. 5 8 2 4 1. 5 0 1 64 128 256 512 Packet size (bytes) 2010 Sep. 42 1024 1514 Speedup Throughput (Gbps) CPU-only

Year Ref. H/W 2008 Egi et al. Two quad-core CPUs 3. 5 Gbps 2008 “Enhanced SR” Two quad-core CPUs Bolla et al. 4. 2 Gbps 2009 “Route. Bricks” Dobrescu et al. Two quad-core CPUs (2. 8 GHz) 8. 7 Gbps 2010 Packet. Shader (CPU-only) Two quad-core CPUs (2. 66 GHz) 28. 2 Gbps Packet. Shader (CPU+GPU) Two quad-core CPUs + two GPUs 39. 2 Gbps 2010 Sep. IPv 4 Throughput 43 Kernel User

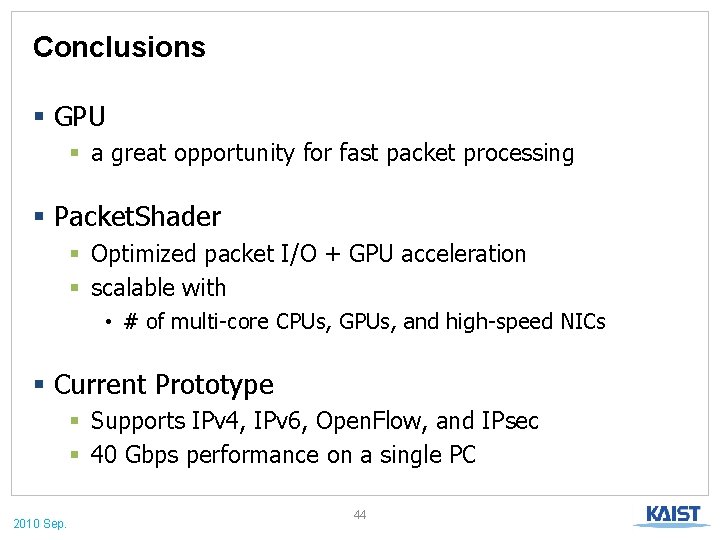

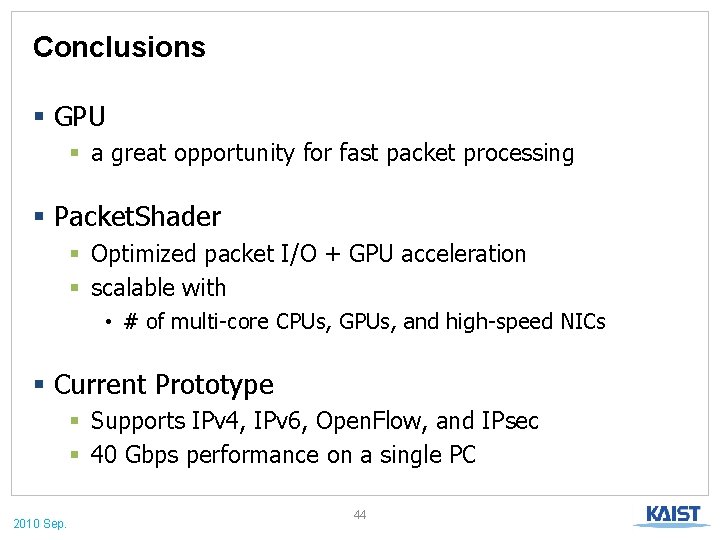

Conclusions § GPU § a great opportunity for fast packet processing § Packet. Shader § Optimized packet I/O + GPU acceleration § scalable with • # of multi-core CPUs, GPUs, and high-speed NICs § Current Prototype § Supports IPv 4, IPv 6, Open. Flow, and IPsec § 40 Gbps performance on a single PC 2010 Sep. 44

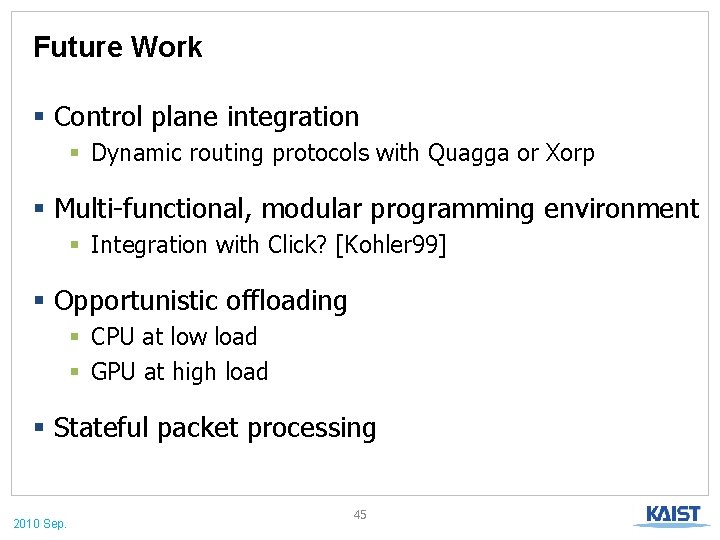

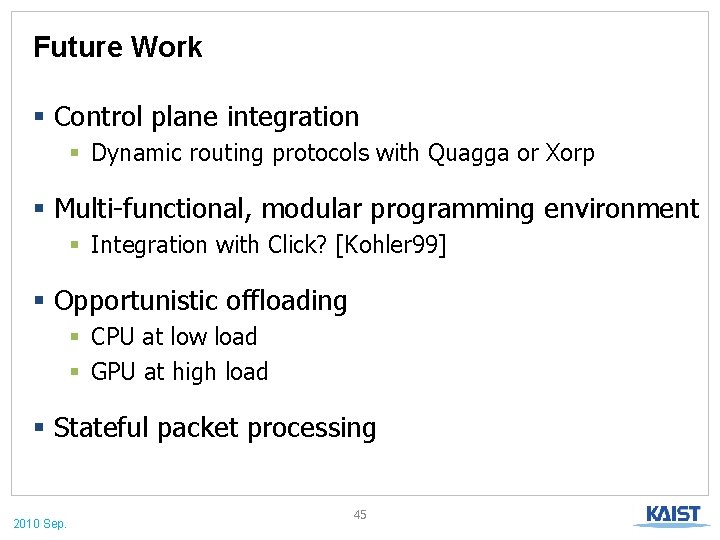

Future Work § Control plane integration § Dynamic routing protocols with Quagga or Xorp § Multi-functional, modular programming environment § Integration with Click? [Kohler 99] § Opportunistic offloading § CPU at low load § GPU at high load § Stateful packet processing 2010 Sep. 45

THANK YOU § Questions? (in CLEAR, SLOW, and EASY ENGLISH, please) § Personal AD (for professors): § I am a prospective grad student. § Going to apply this fall for my Ph. D study. § Less personal AD (for all): § Keon will present SSLShader in the poster session! 2010 Sep. 46

BACKUP SLIDES 2010 Sep. 47

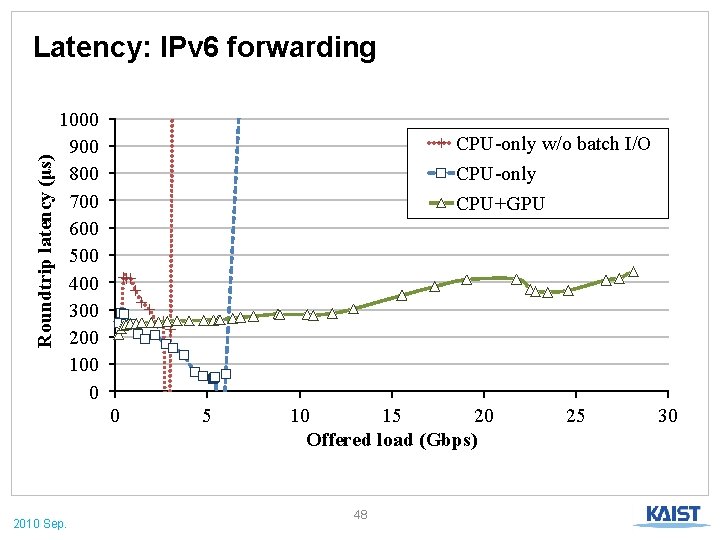

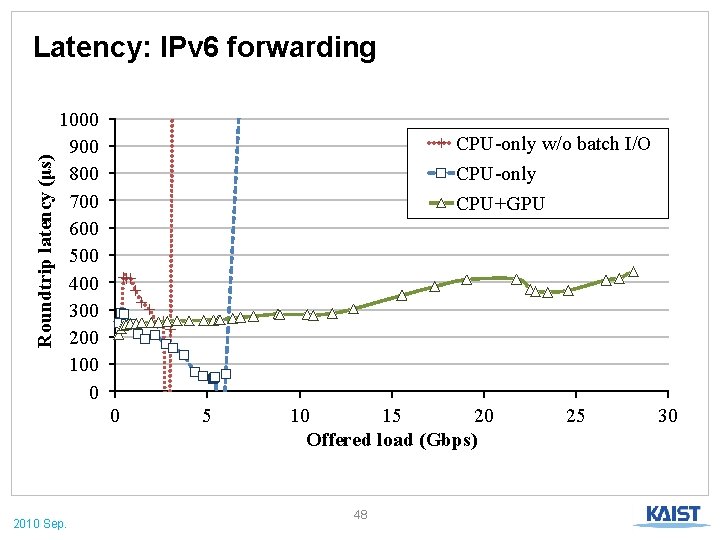

Roundtrip latency (μs) Latency: IPv 6 forwarding 1000 900 800 700 600 500 400 300 200 100 0 CPU-only w/o batch I/O CPU-only CPU+GPU 0 2010 Sep. 5 10 15 20 Offered load (Gbps) 48 25 30

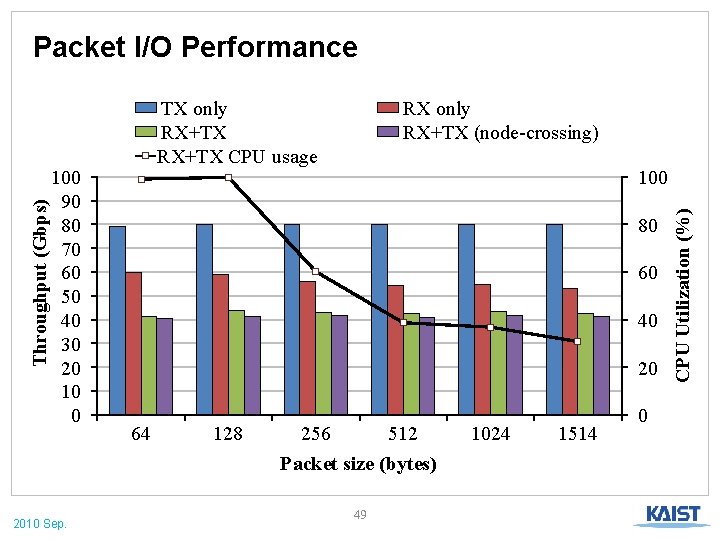

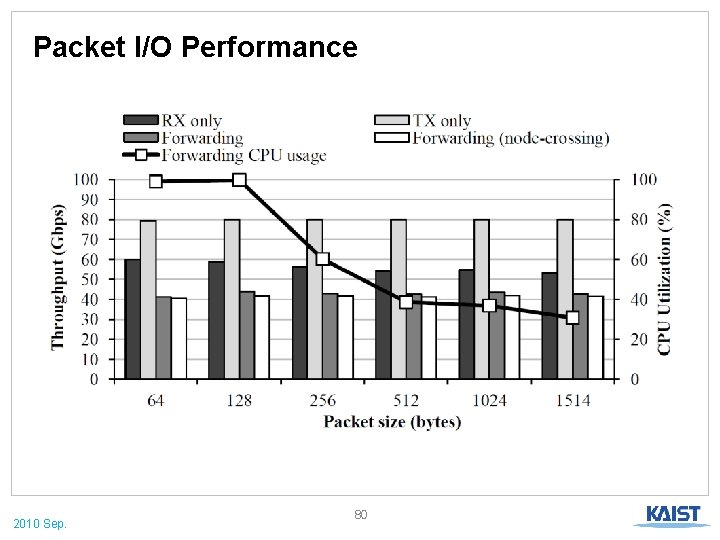

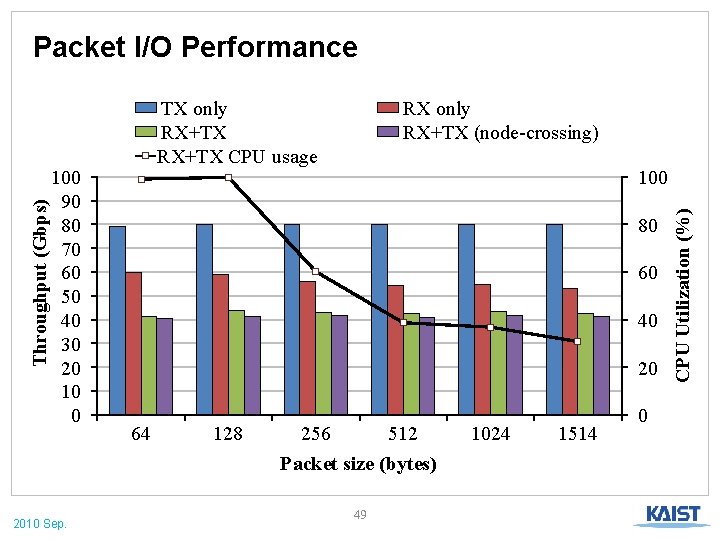

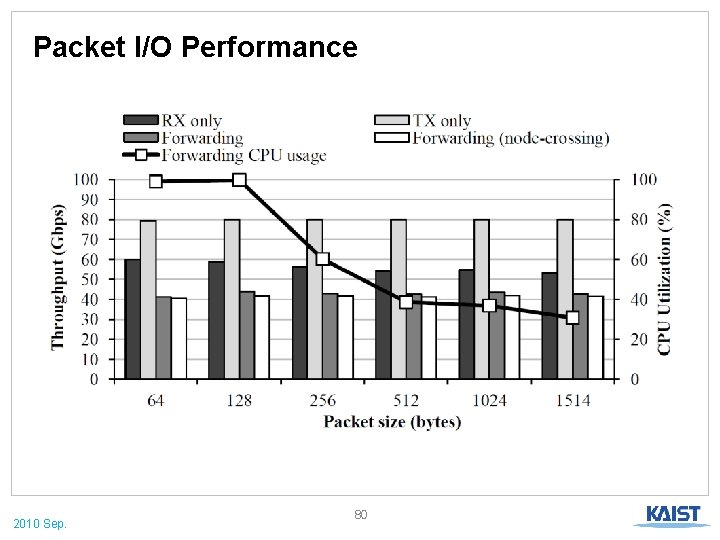

100 90 80 70 60 50 40 30 20 10 0 TX only RX+TX CPU usage RX only RX+TX (node-crossing) 100 80 60 40 20 64 128 256 512 Packet size (bytes) 2010 Sep. 49 1024 1514 0 CPU Utilization (%) Throughput (Gbps) Packet I/O Performance

Throughput (Gbps) IPv 4 Forwarding Performance 45 40 35 30 25 20 15 10 5 0 CPU-only CPU+GPU 64 128 256 512 Packet size (bytes) 2010 Sep. 50 1024 1514

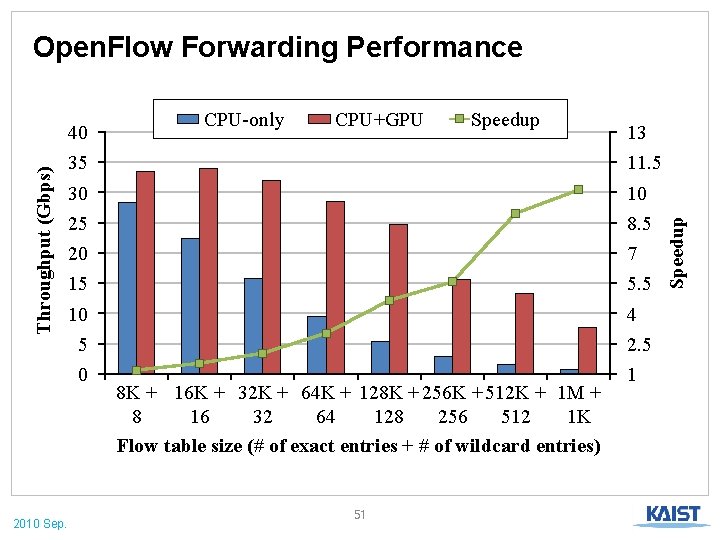

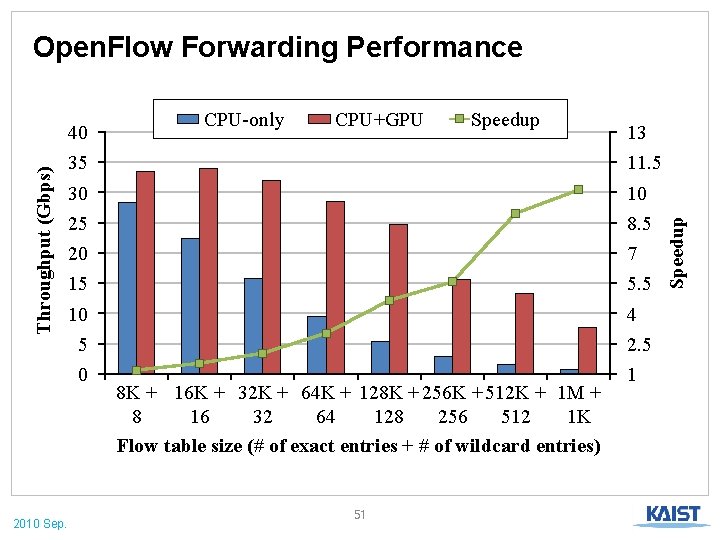

Open. Flow Forwarding Performance 2010 Sep. CPU-only CPU+GPU Speedup 13 35 11. 5 30 10 25 8. 5 20 7 15 5. 5 10 4 5 2. 5 0 1 8 K + 16 K + 32 K + 64 K + 128 K + 256 K + 512 K + 1 M + 8 16 32 64 128 256 512 1 K Flow table size (# of exact entries + # of wildcard entries) 51 Speedup Throughput (Gbps) 40

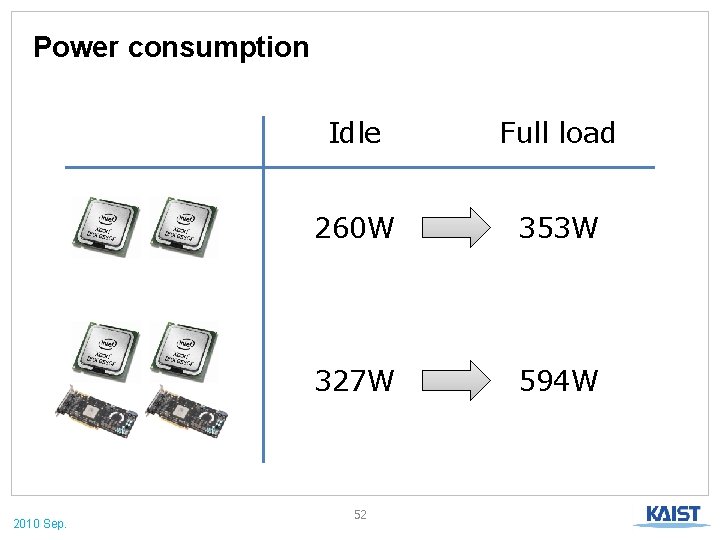

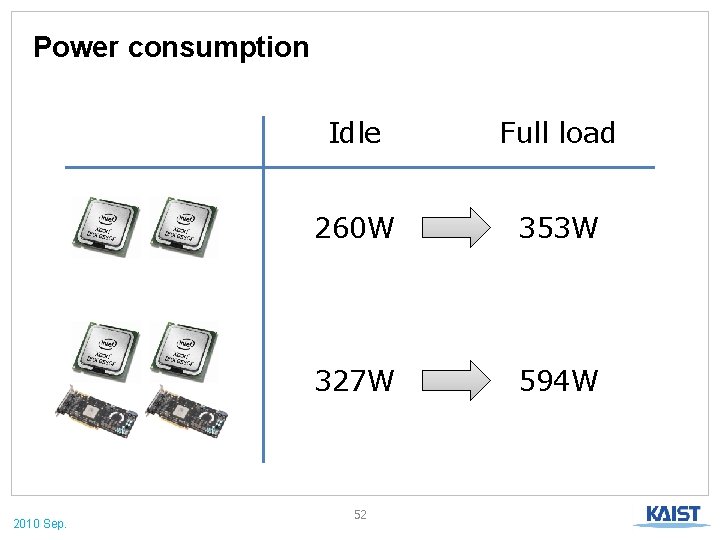

Power consumption 2010 Sep. Idle Full load 260 W 353 W 327 W 594 W 52

Packet Reordering? 3. No reordering in GPU processing § Intra-flow: “No” Shader Device driver 1. Packets from the same flow go to the same worker Preshader 2. FIFO in the worker § Inter-flow: “Possibly yes” § Common in the Internet and not harmful 2010 Sep. Postshader 53 Device driver

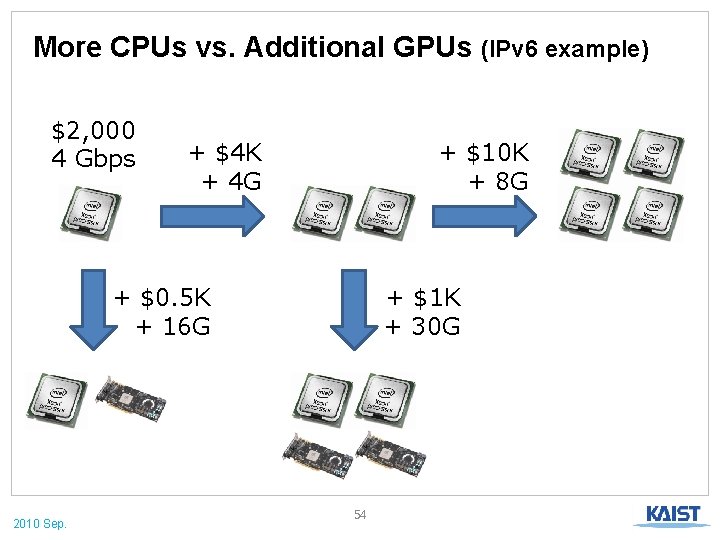

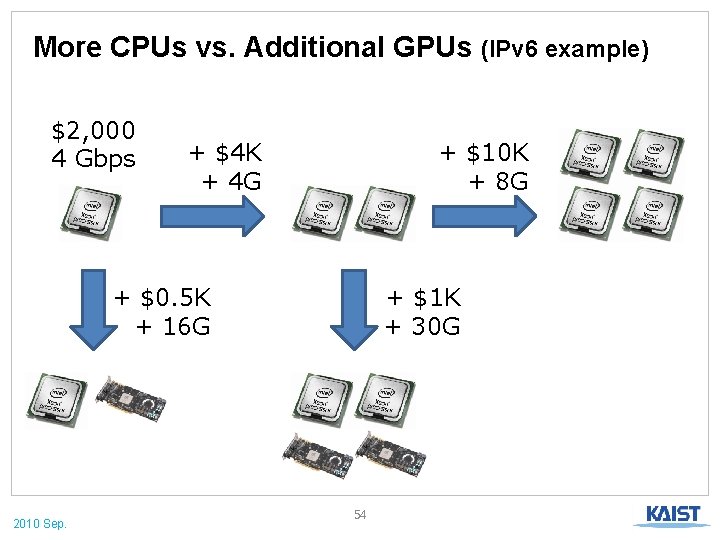

More CPUs vs. Additional GPUs (IPv 6 example) $2, 000 4 Gbps + $4 K + 4 G + $10 K + 8 G + $0. 5 K + 16 G 2010 Sep. + $1 K + 30 G 54

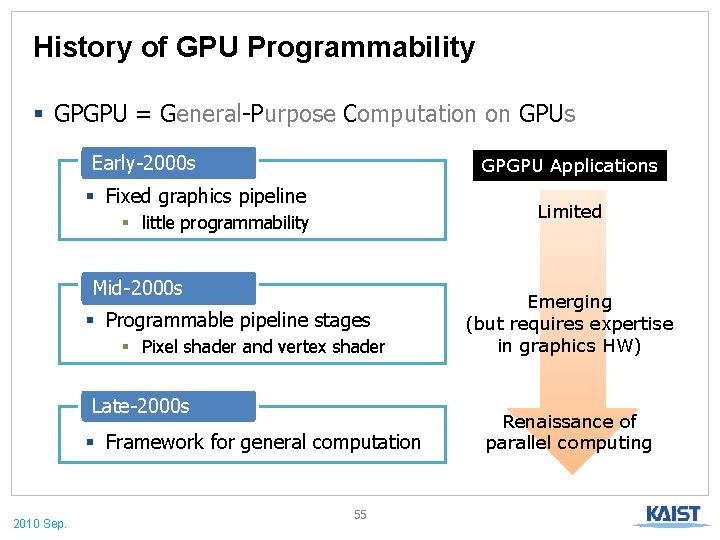

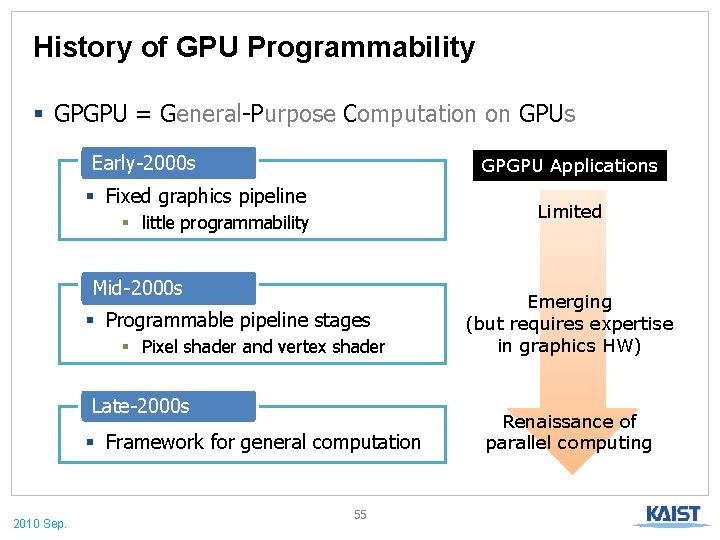

History of GPU Programmability § GPGPU = General-Purpose Computation on GPUs Early-2000 s GPGPU Applications § Fixed graphics pipeline Limited § little programmability Mid-2000 s § Programmable pipeline stages § Pixel shader and vertex shader Late-2000 s § Framework for general computation 2010 Sep. 55 Emerging (but requires expertise in graphics HW) Renaissance of parallel computing

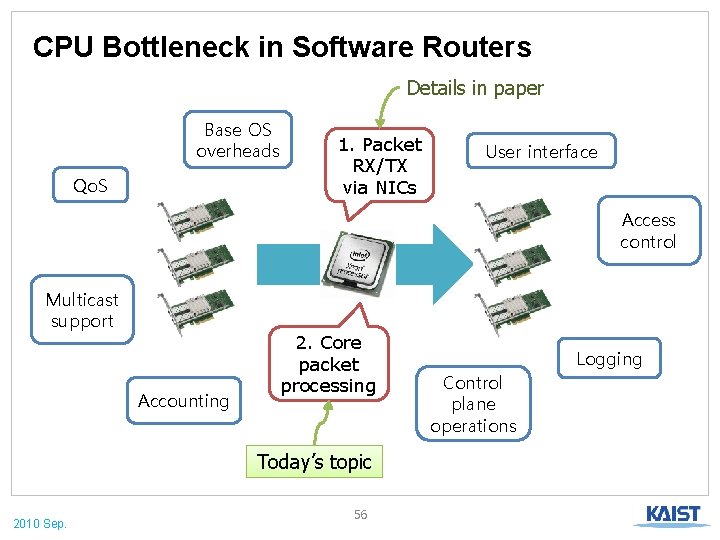

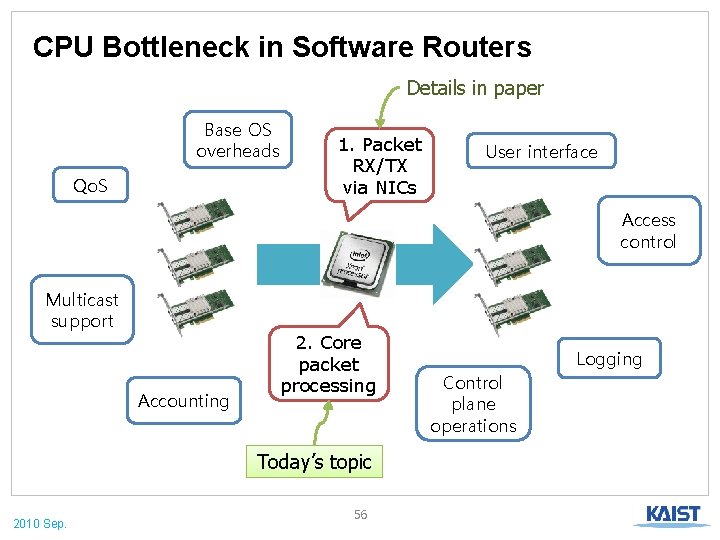

CPU Bottleneck in Software Routers Details in paper Base OS overheads Qo. S 1. Packet RX/TX via NICs User interface Access control Multicast support Accounting 2. Core packet processing Today’s topic 2010 Sep. 56 Control plane operations Logging

Hardware Setup Two CPUs RAM RAM CPU 0 CPU 1 IOH 0 IOH 1 NIC 0, 1 NIC 4, 5 NIC 2, 3 Node 0 10 G port RAM RAM NIC 6, 7 GPU 0 GPU 1 PCIe x 16 PCIe x 8 Node 1 QPI 86% 14% Four dual-port NICs and two graphics cards 2010 Sep. System total cost $7, 000 57 GPU cost $500 × 2 = $1, 000

Applications of (Fast) Software Routers § For well-known protocols (e. g. , IP routing) § Cost-effective replacement of traditional routers § For complex applications (e. g. , intrusion detection) § PC-based network equipments § For Future Internet § Core component for deployment of new protocols § Into the real world beyond labs 2010 Sep. 58

Applications of Software Routers Limitations (due to low performance) § For well-known protocols (e. g. , IP routing) § Cost-effective replacement of traditional routers § Far behind the performance of traditional routers § For complex applications (e. g. , intrusion detection) § PC-based network appliances § Limited deployment only in enterprise networks § For Future Internet § Core component for deployment of new protocols § Hard to get into real world beyond labs 2010 Sep. 59

OLD SLIDES 2010 Sep. 60

Overview Packet. Shader is a … 1. Scalable, • with multiple cores, CPUs, NICs, and GPUs 2. High-Performance • 40+Gbps. The world’s first multi-10 G software router. 3. Framework for • we implemented IPv 4 and IPv 6 routing, Open. Flow, and IPsec on it 4. General Packet Processing • 2010 Sep. provides a viable way towards high-performance software routers 61

In this talk, we do not address the details of History of software routers or GPU & CUDA programming 2010 Sep. 62

INTRODUCTION 2010 Sep. 63

Software Routers § Flexible, but slow § Built on commodity PCs and software § Rather than ASICs, FPGAs, or network processors (NPs). § Good: Full programmability § To meet the ever-increasing demands for flexible traffic handling § Bad: Low performance § Known to be 1~5 Gbps § 8. 3 Gbps by Route. Bricks for min-sized packets (SOSP ‘ 09) § CPU is the bottleneck • Packet I/O, packet processing, control plane, statistics, user-level interface, etc. ) § We claim that GPU can be useful for packet processing. 2010 Sep. 64

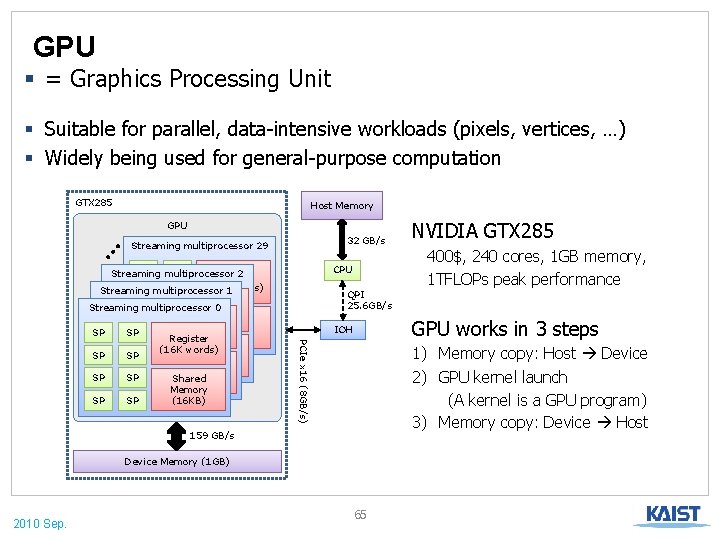

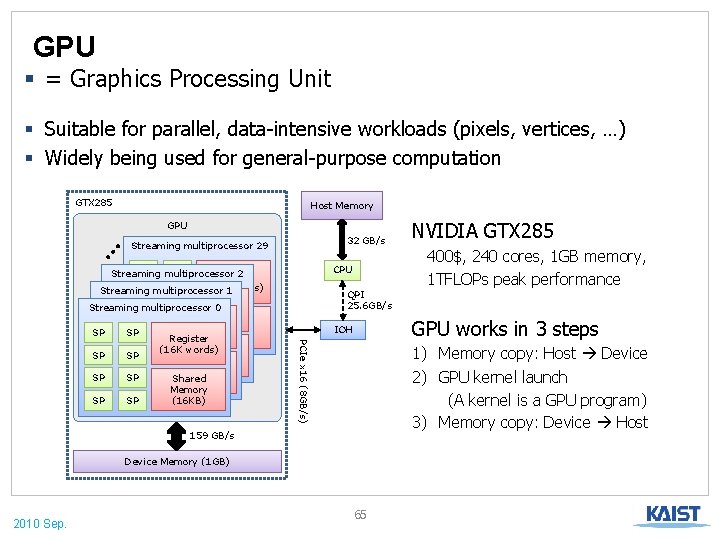

GPU § = Graphics Processing Unit § Suitable for parallel, data-intensive workloads (pixels, vertices, …) § Widely being used for general-purpose computation GTX 285 Host Memory GPU 32 GB/s Streaming multiprocessor 29 CPU QPI 25. 6 GB/s 1) Memory copy: Host Device 2) GPU kernel launch (A kernel is a GPU program) 3) Memory copy: Device Host 159 GB/s Device Memory (1 GB) 2010 Sep. 400$, 240 cores, 1 GB memory, 1 TFLOPs peak performance GPU works in 3 steps IOH PCIe x 16 (8 GB/s) SP multiprocessor SP Streaming 2 Register (16 K words) Streaming 1 SP multiprocessor SP SP SP Register Streaming multiprocessor 0 SP SP SP(16 K words) Shared Register SP SP Memory SP SP (16 K words) Register SP SP SP (16 KB) SP SP Shared (16 K words) SP SP Memory SP SP Shared SP SP (16 KB) Memory SP SP (16 KB) NVIDIA GTX 285 65

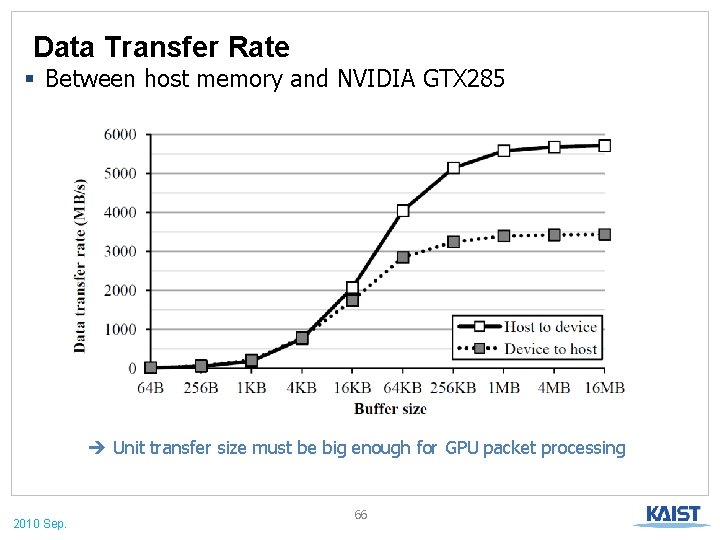

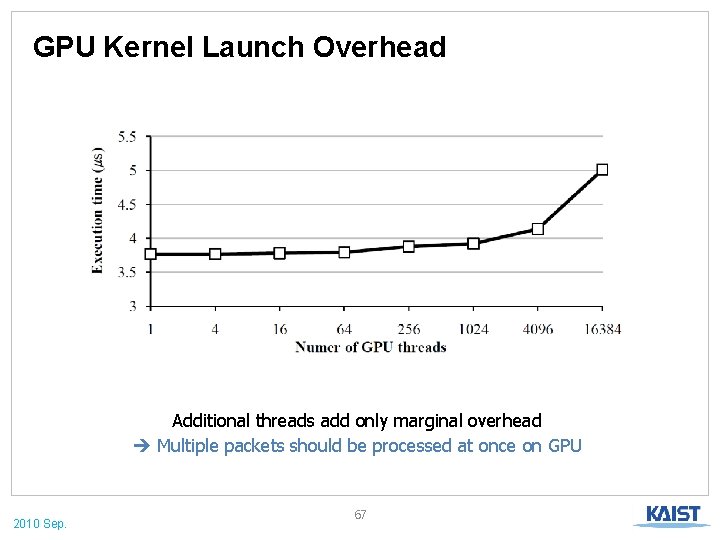

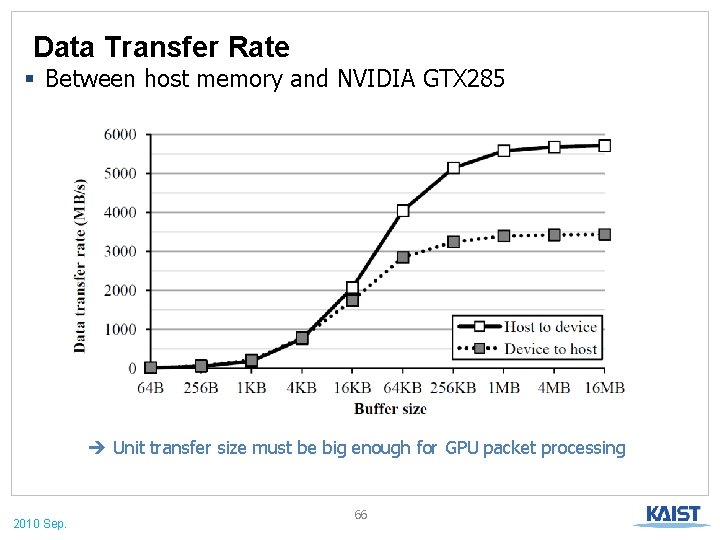

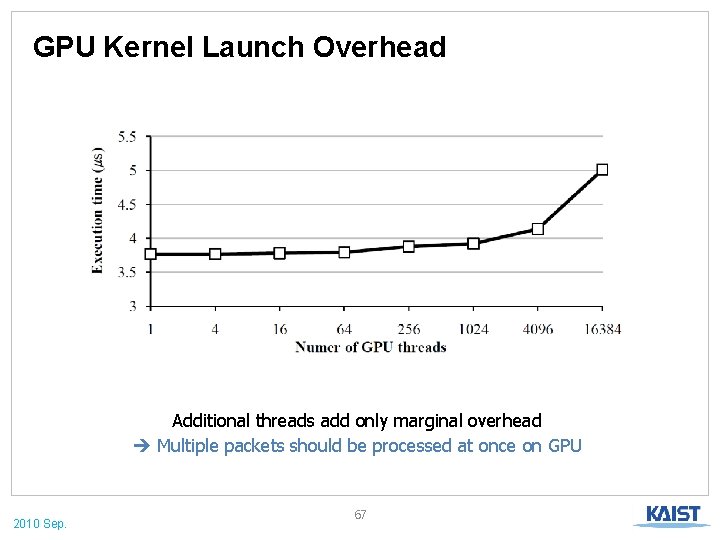

Data Transfer Rate § Between host memory and NVIDIA GTX 285 Unit transfer size must be big enough for GPU packet processing 2010 Sep. 66

GPU Kernel Launch Overhead Additional threads add only marginal overhead Multiple packets should be processed at once on GPU 2010 Sep. 67

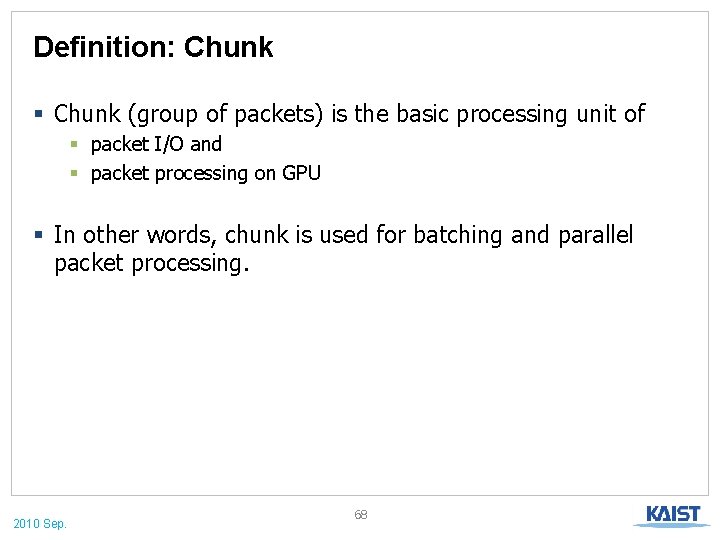

Definition: Chunk § Chunk (group of packets) is the basic processing unit of § packet I/O and § packet processing on GPU § In other words, chunk is used for batching and parallel packet processing. 2010 Sep. 68

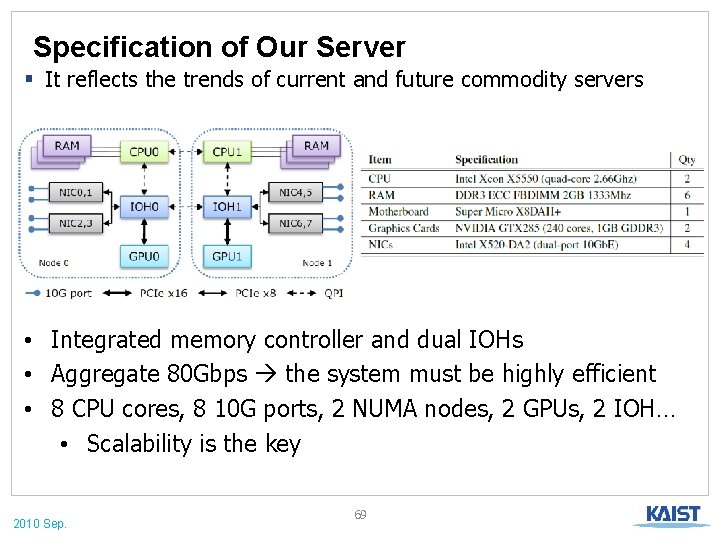

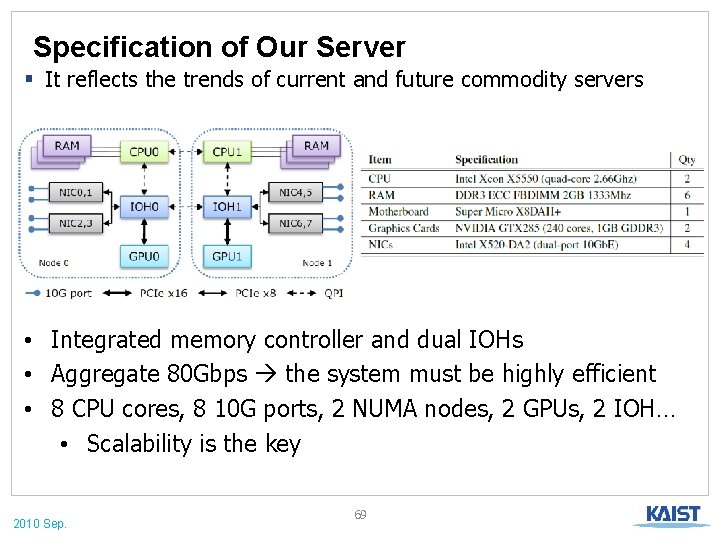

Specification of Our Server § It reflects the trends of current and future commodity servers • Integrated memory controller and dual IOHs • Aggregate 80 Gbps the system must be highly efficient • 8 CPU cores, 8 10 G ports, 2 NUMA nodes, 2 GPUs, 2 IOH… • Scalability is the key 2010 Sep. 69

GPU Acceleration Alone Is Not Enough § Amdahl’s law says… IPsec Packet I/O IPv 4 routing Packet Processing 0 2000 4000 6000 8000 10000 12000 14000 16000 CPU cycles • To accelerate compute-intensive workload such as IPsec, packet processing should be more efficient. • Can be done with GPUs. • To accelerate IO-intensive workload such as IPv 4 routing, packet I/O should be more effective. • So we implement highly-optimized packet I/O engine. 2010 Sep. 70

OPTIMIZING PACKET I/O ENGINE 2010 Sep. 71

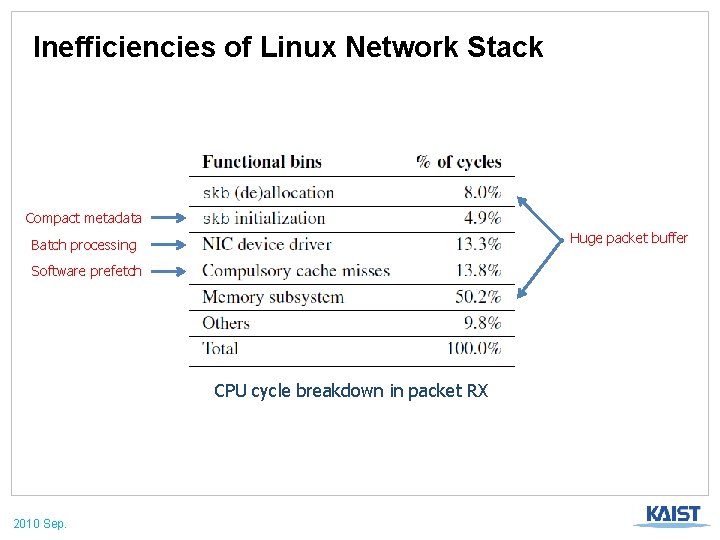

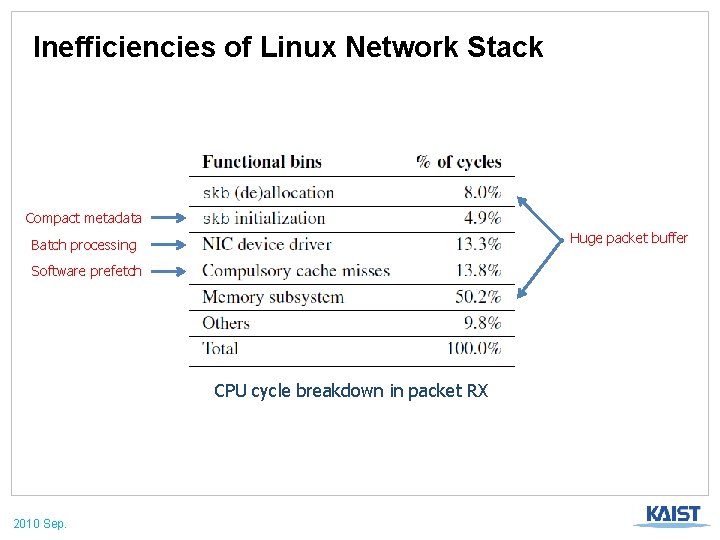

Inefficiencies of Linux Network Stack Compact metadata Huge packet buffer Batch processing Software prefetch CPU cycle breakdown in packet RX 2010 Sep.

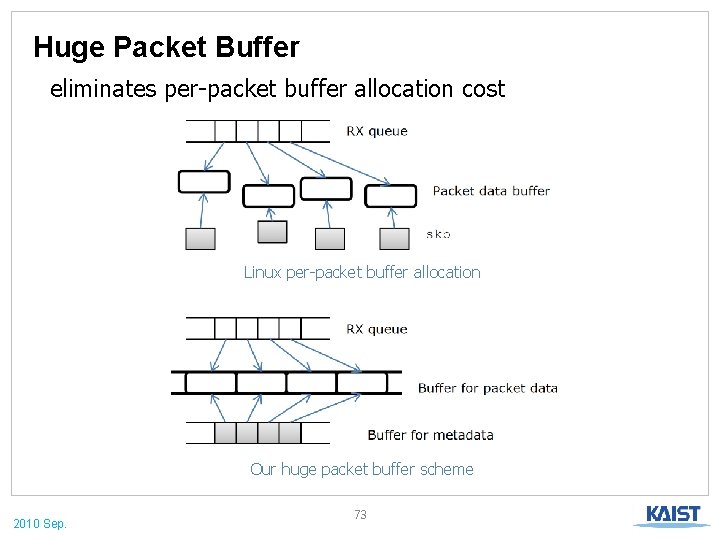

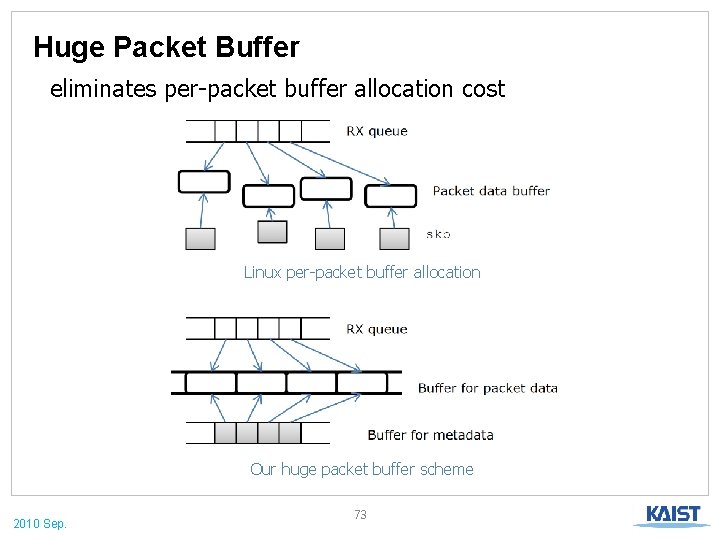

Huge Packet Buffer eliminates per-packet buffer allocation cost Linux per-packet buffer allocation Our huge packet buffer scheme 2010 Sep. 73

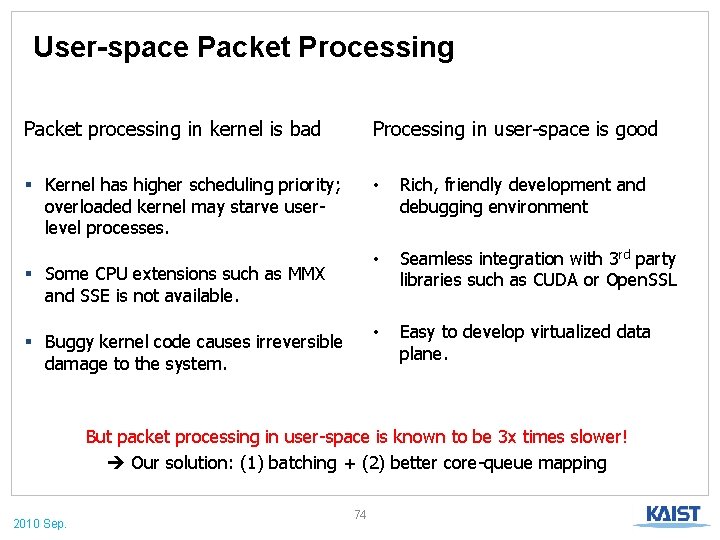

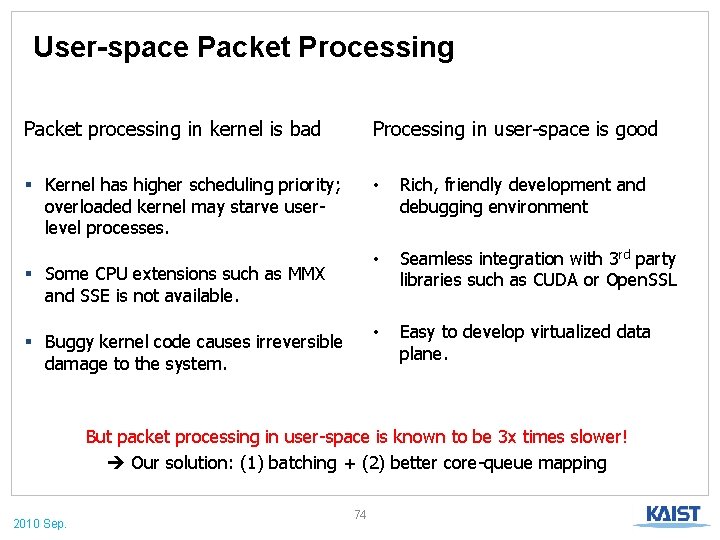

User-space Packet Processing Packet processing in kernel is bad Processing in user-space is good § Kernel has higher scheduling priority; overloaded kernel may starve userlevel processes. • Rich, friendly development and debugging environment • Seamless integration with 3 rd party libraries such as CUDA or Open. SSL • Easy to develop virtualized data plane. § Some CPU extensions such as MMX and SSE is not available. § Buggy kernel code causes irreversible damage to the system. But packet processing in user-space is known to be 3 x times slower! Our solution: (1) batching + (2) better core-queue mapping 2010 Sep. 74

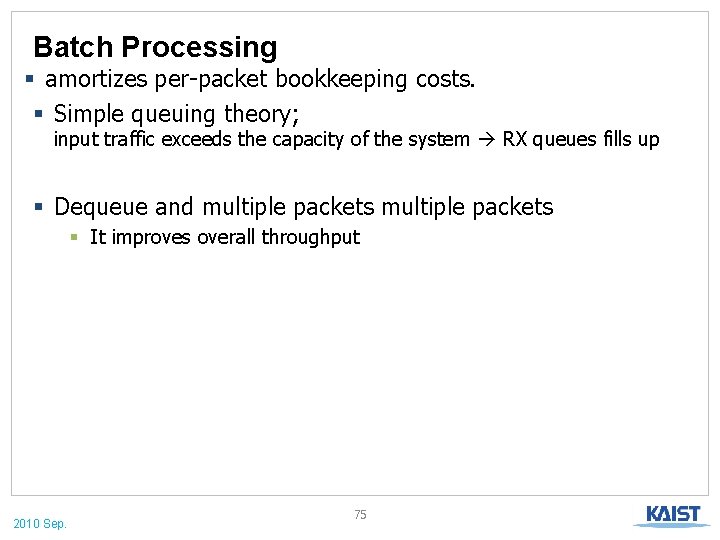

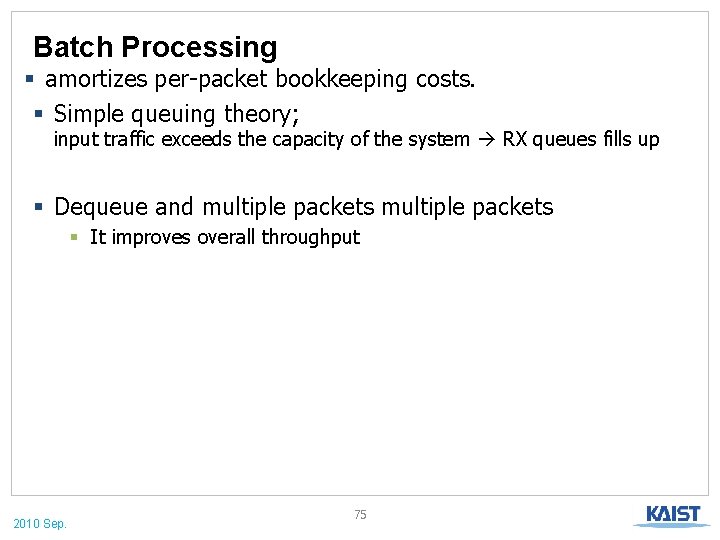

Batch Processing § amortizes per-packet bookkeeping costs. § Simple queuing theory; input traffic exceeds the capacity of the system RX queues fills up § Dequeue and multiple packets § It improves overall throughput 2010 Sep. 75

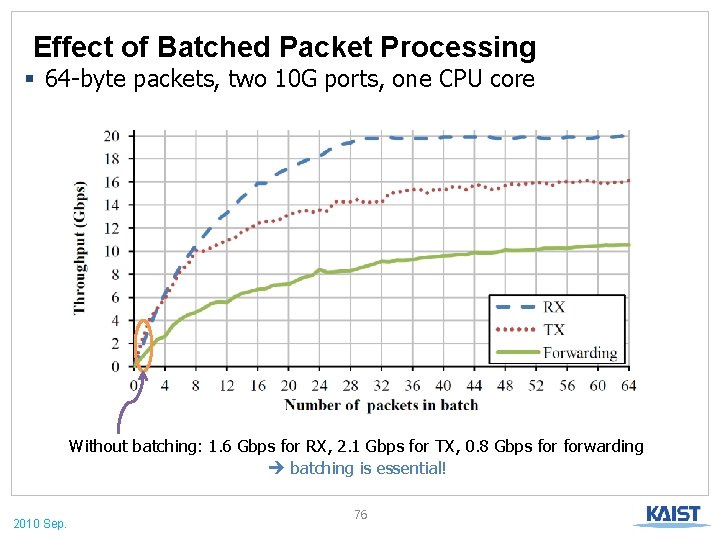

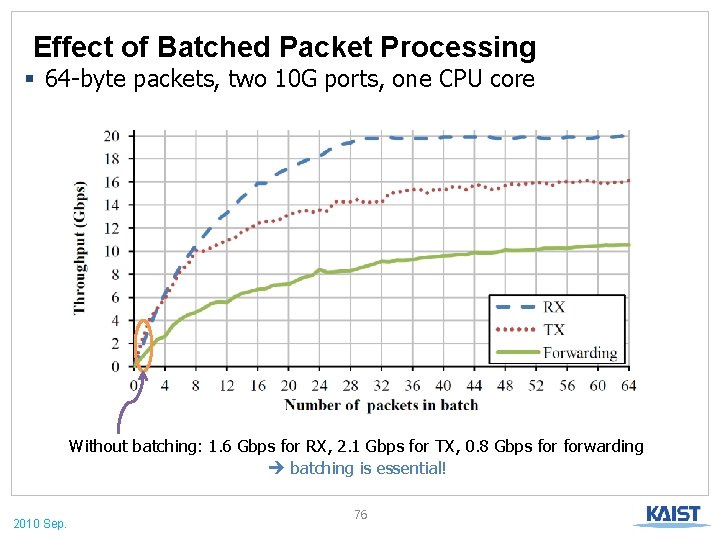

Effect of Batched Packet Processing § 64 -byte packets, two 10 G ports, one CPU core Without batching: 1. 6 Gbps for RX, 2. 1 Gbps for TX, 0. 8 Gbps forwarding batching is essential! 2010 Sep. 76

NUMA –aware RSS § RSS (Receive-Side Scaling) default behavior § RSS-enabled NICs distribute incoming packets into all CPU cores. § To save bandwidth between NUMA nodes, we prevent packets from crossing the NUMA boundary. CPU cores 2010 Sep. CPU cores IOH IOH NIC NIC 77

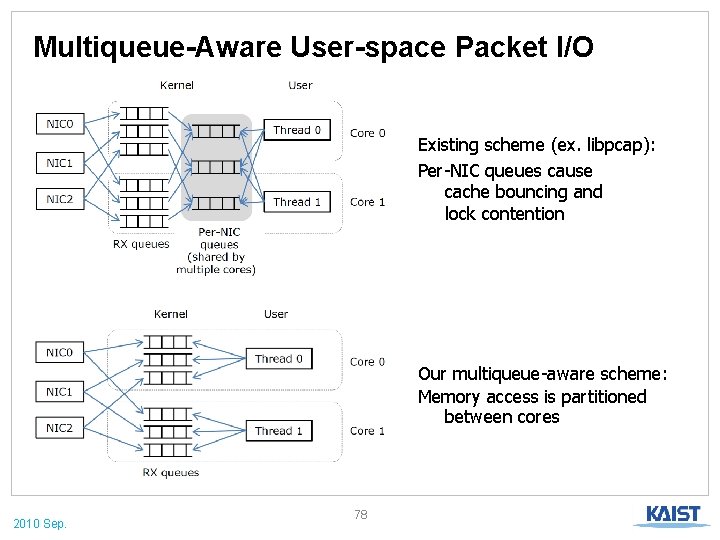

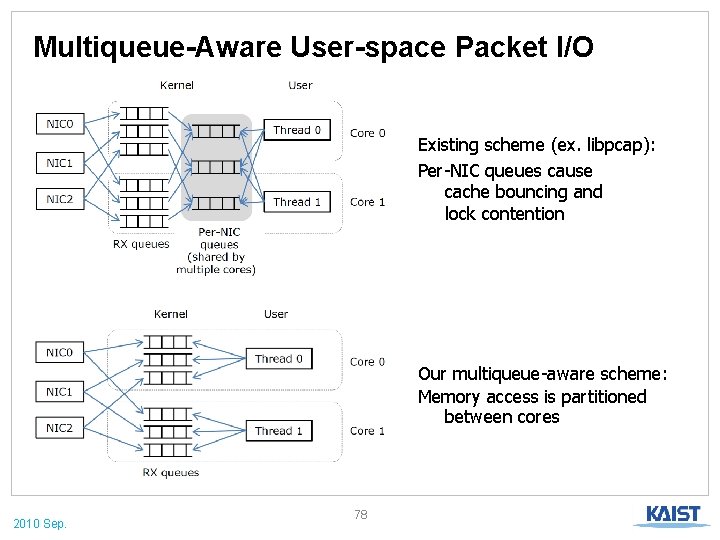

Multiqueue-Aware User-space Packet I/O Existing scheme (ex. libpcap): Per-NIC queues cause cache bouncing and lock contention Our multiqueue-aware scheme: Memory access is partitioned between cores 2010 Sep. 78

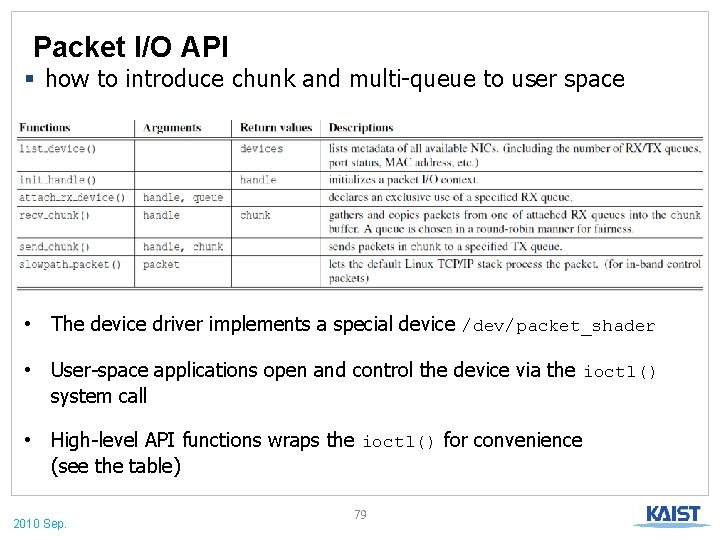

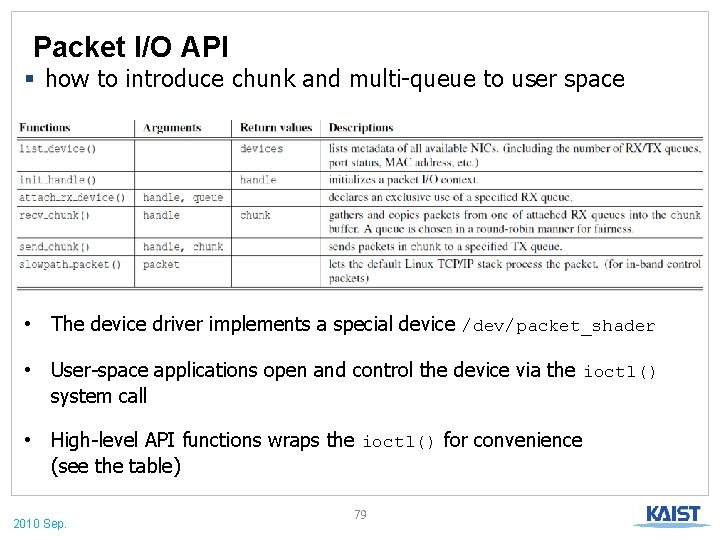

Packet I/O API § how to introduce chunk and multi-queue to user space • The device driver implements a special device /dev/packet_shader • User-space applications open and control the device via the ioctl() system call • High-level API functions wraps the ioctl() for convenience (see the table) 2010 Sep. 79

Packet I/O Performance 2010 Sep. 80

PACKETSHADER 2010 Sep. 81

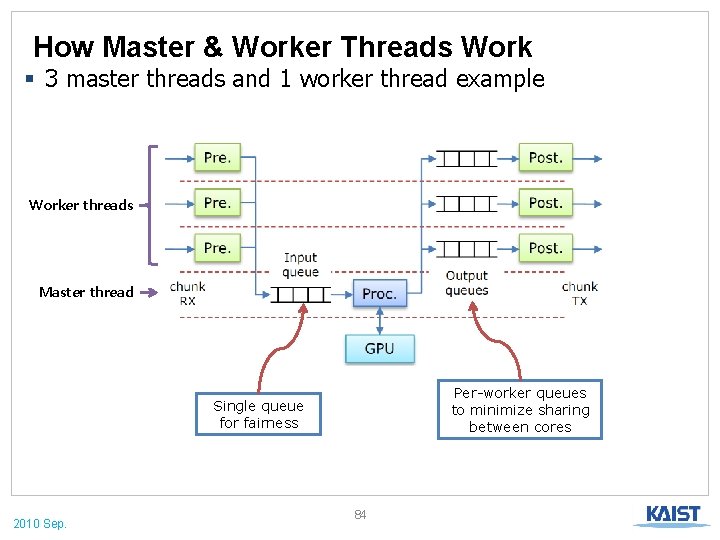

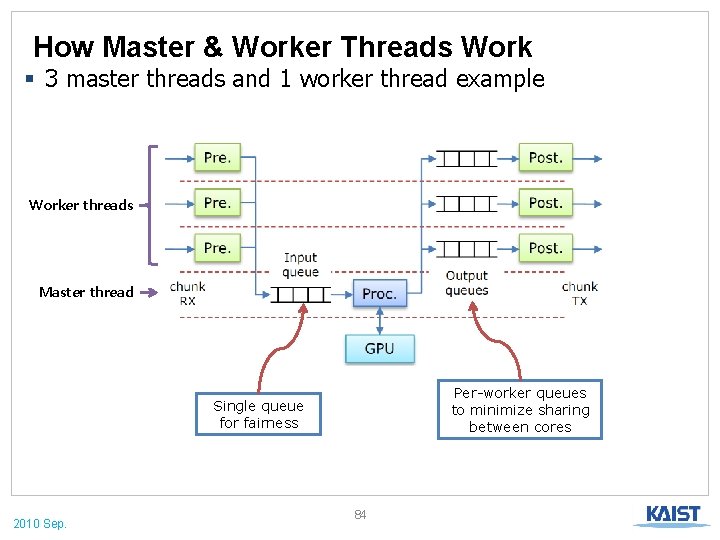

Master-Worker Scheme § In a CPU, we have 4 cores. § Each core has one dedicated thread. § 3 cores run worker threads and 1 core for master thread § Worker thread § performs packet I/O and use the master thread as a proxy § Master thread § accelerates packet processing with GPU. 2010 Sep. 82

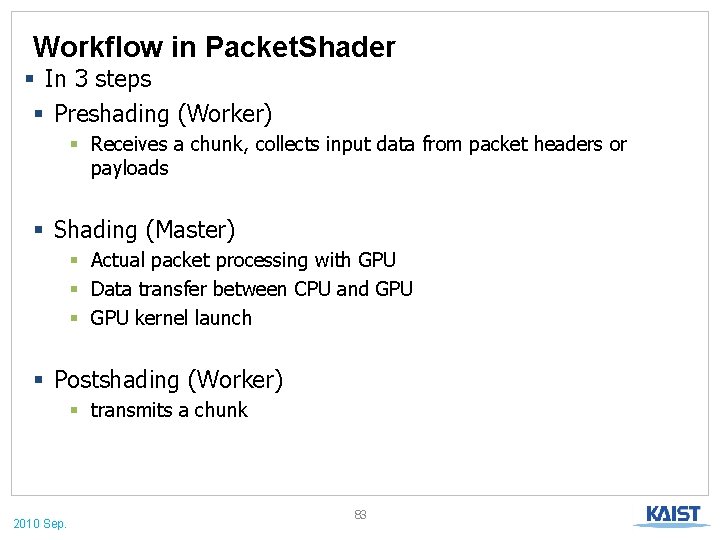

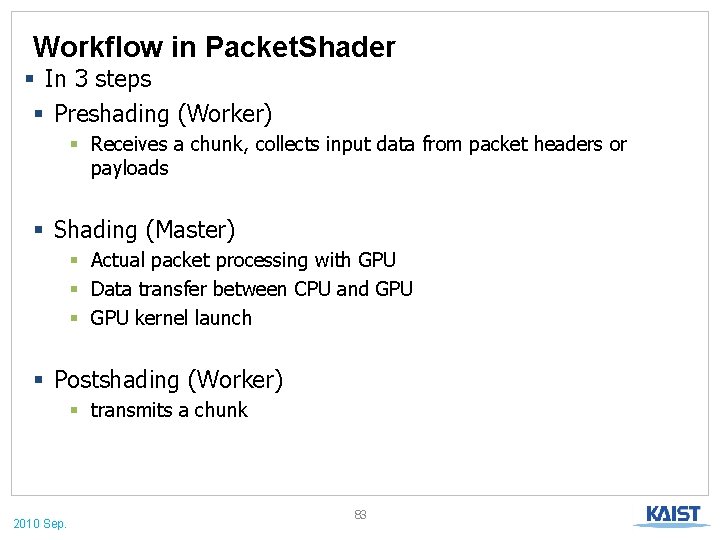

Workflow in Packet. Shader § In 3 steps § Preshading (Worker) § Receives a chunk, collects input data from packet headers or payloads § Shading (Master) § Actual packet processing with GPU § Data transfer between CPU and GPU § GPU kernel launch § Postshading (Worker) § transmits a chunk 2010 Sep. 83

How Master & Worker Threads Work § 3 master threads and 1 worker thread example Worker threads Master thread Per-worker queues to minimize sharing between cores Single queue for fairness 2010 Sep. 84

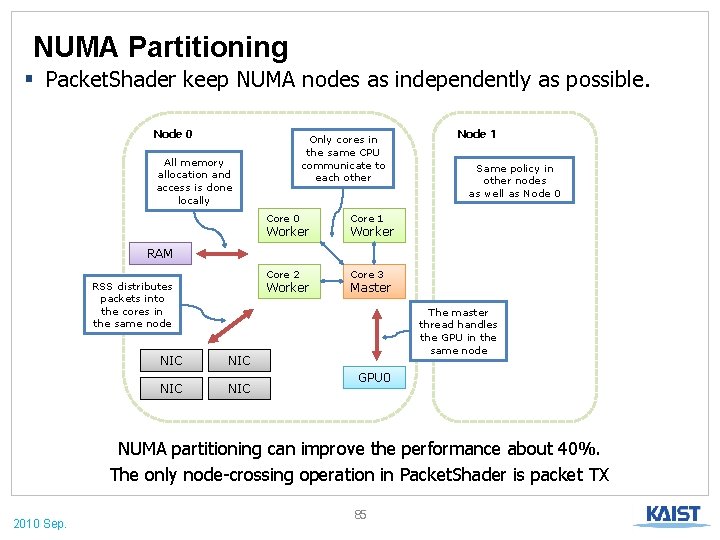

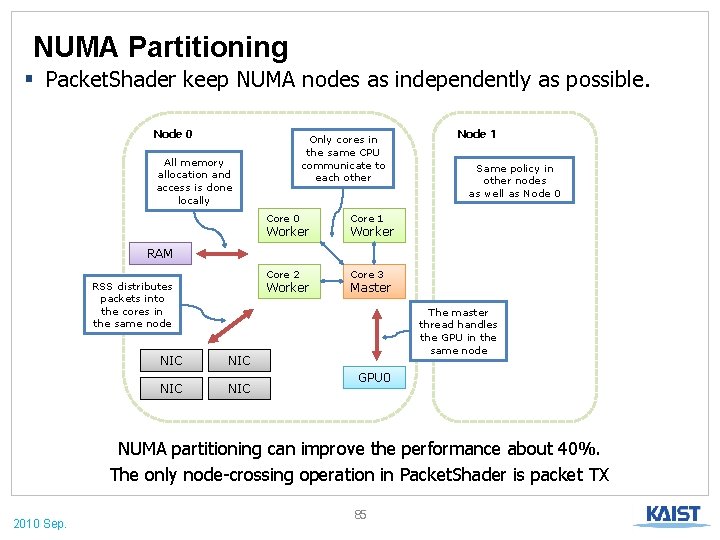

NUMA Partitioning § Packet. Shader keep NUMA nodes as independently as possible. Node 0 Only cores in the same CPU communicate to each other All memory allocation and access is done locally Core 0 Core 1 Core 2 Core 3 Worker Node 1 Same policy in other nodes as well as Node 0 Worker RAM RSS distributes packets into the cores in the same node Worker NIC NIC Master The master thread handles the GPU in the same node GPU 0 NUMA partitioning can improve the performance about 40%. The only node-crossing operation in Packet. Shader is packet TX 2010 Sep. 85

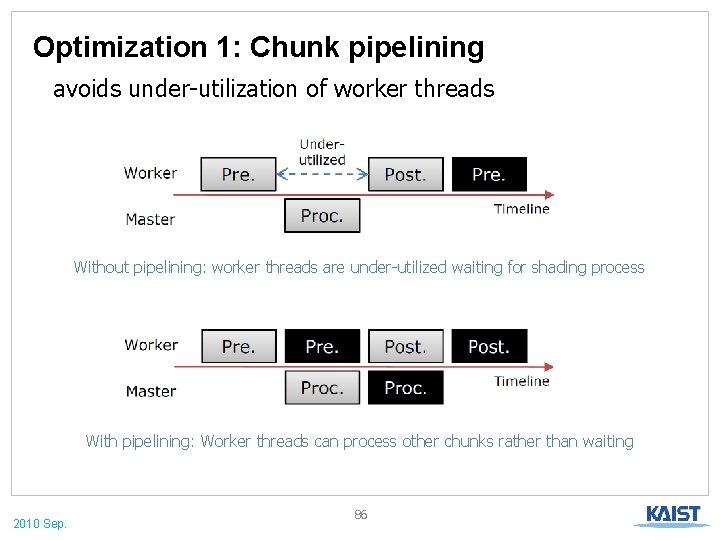

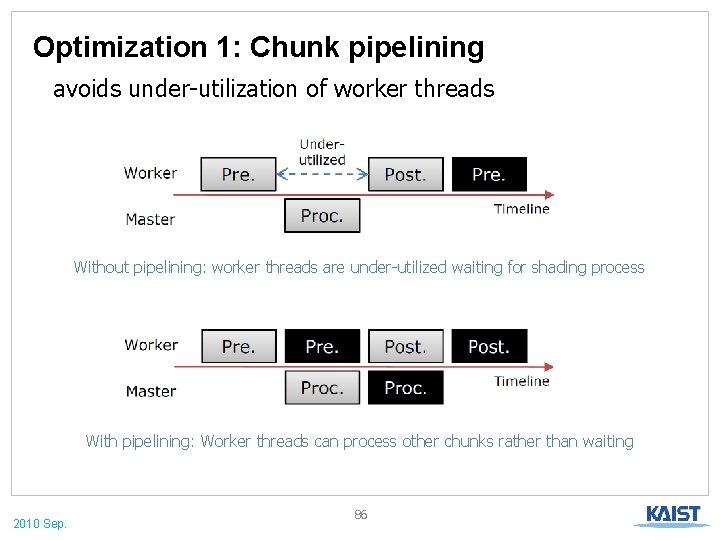

Optimization 1: Chunk pipelining avoids under-utilization of worker threads Without pipelining: worker threads are under-utilized waiting for shading process With pipelining: Worker threads can process other chunks rather than waiting 2010 Sep. 86

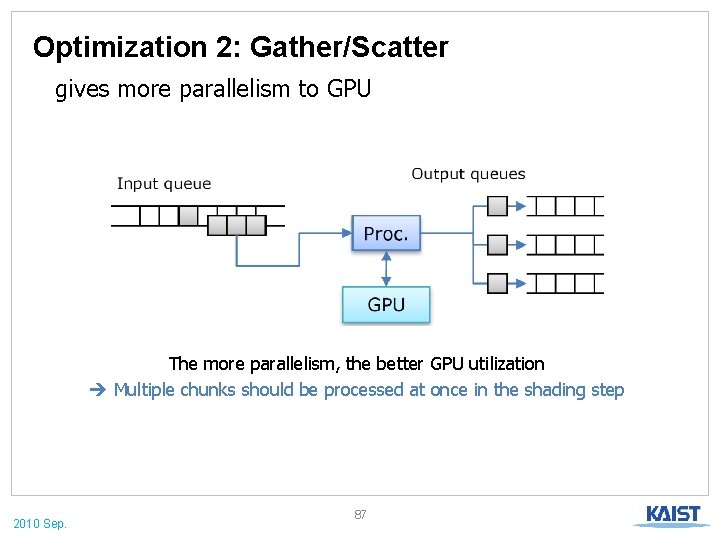

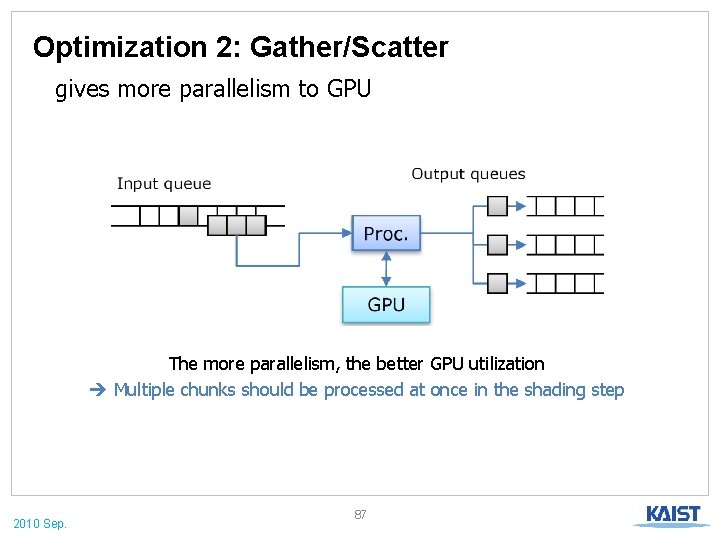

Optimization 2: Gather/Scatter gives more parallelism to GPU The more parallelism, the better GPU utilization Multiple chunks should be processed at once in the shading step 2010 Sep. 87

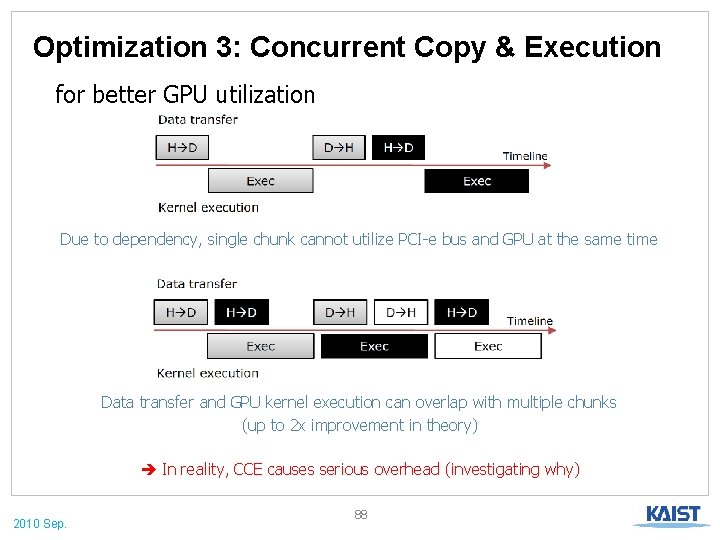

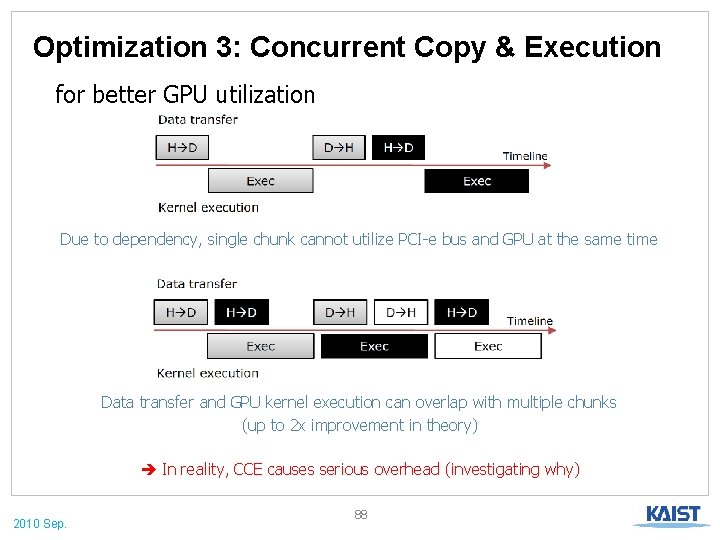

Optimization 3: Concurrent Copy & Execution for better GPU utilization Due to dependency, single chunk cannot utilize PCI-e bus and GPU at the same time Data transfer and GPU kernel execution can overlap with multiple chunks (up to 2 x improvement in theory) In reality, CCE causes serious overhead (investigating why) 2010 Sep. 88

APPLICATIONS 2010 Sep. 89

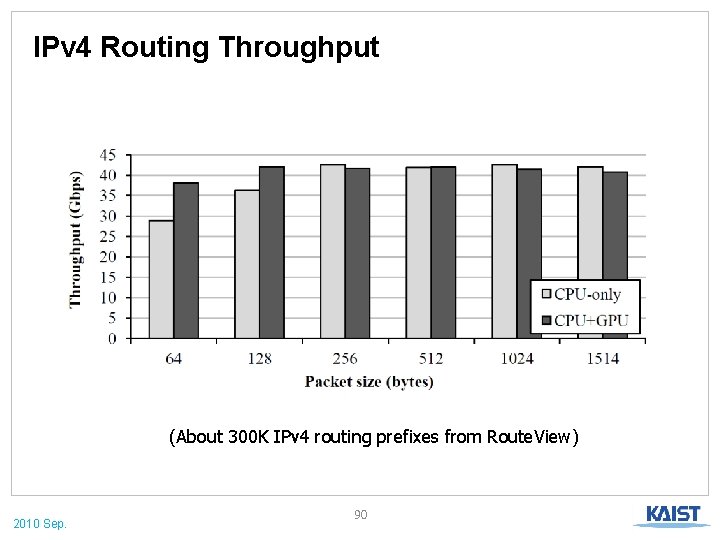

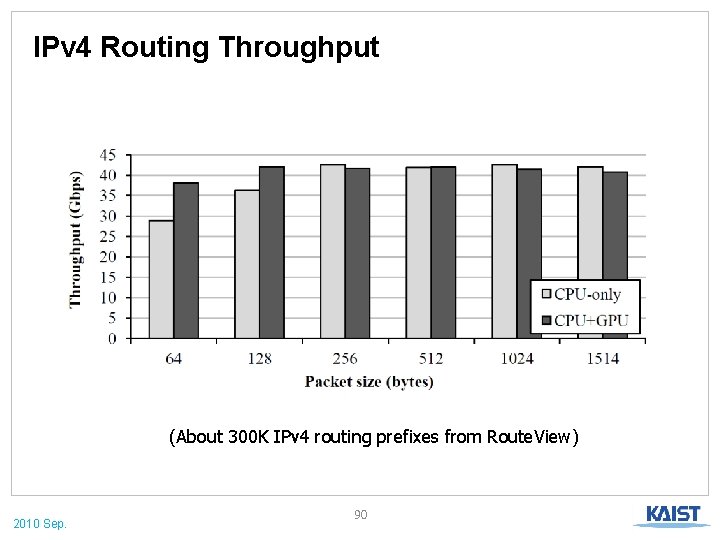

IPv 4 Routing Throughput (About 300 K IPv 4 routing prefixes from Route. View) 2010 Sep. 90

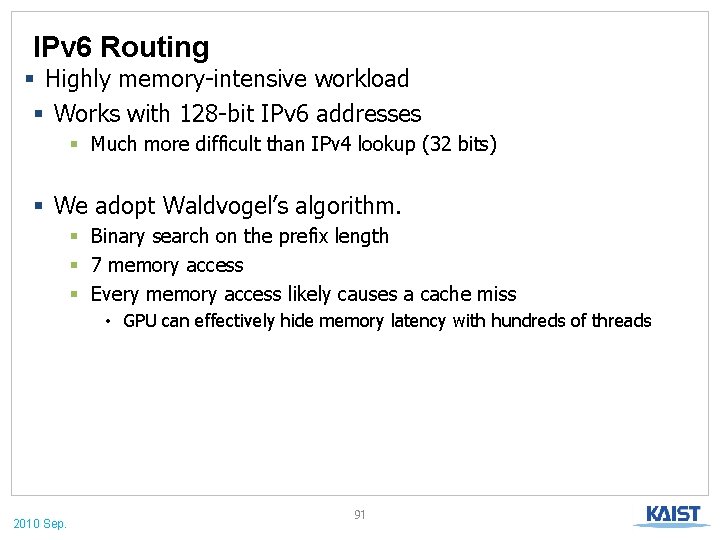

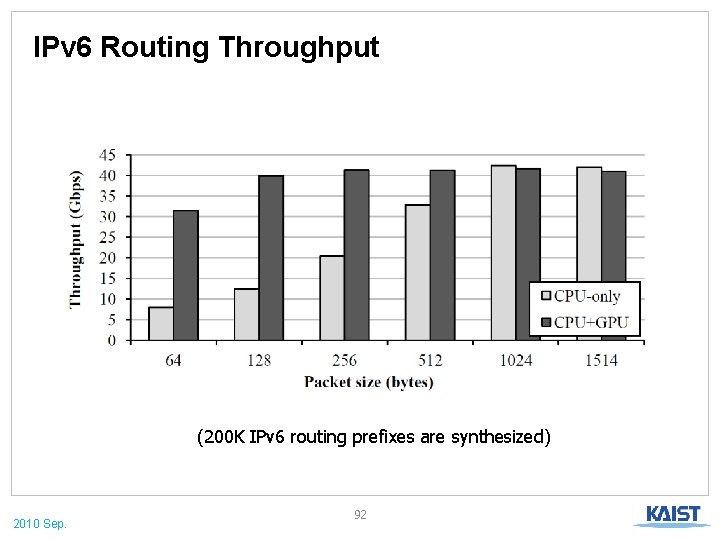

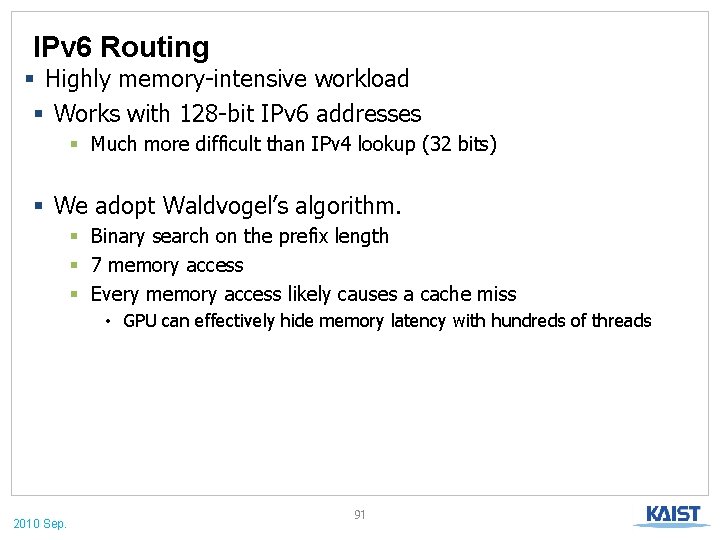

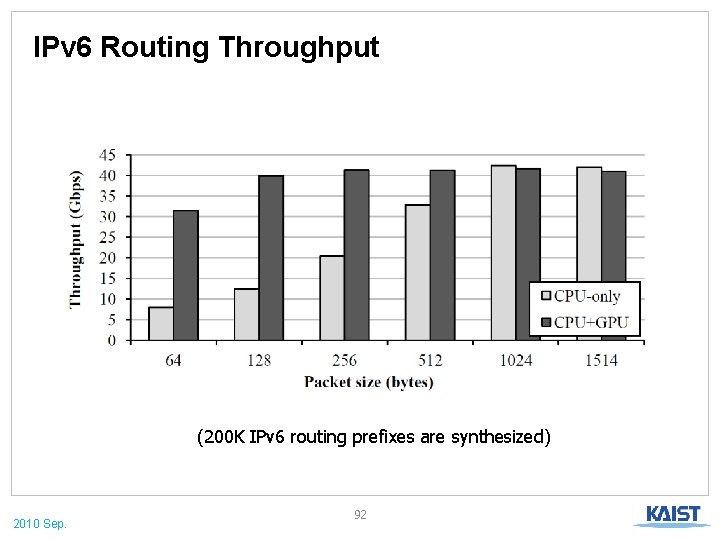

IPv 6 Routing § Highly memory-intensive workload § Works with 128 -bit IPv 6 addresses § Much more difficult than IPv 4 lookup (32 bits) § We adopt Waldvogel’s algorithm. § Binary search on the prefix length § 7 memory access § Every memory access likely causes a cache miss • GPU can effectively hide memory latency with hundreds of threads 2010 Sep. 91

IPv 6 Routing Throughput (200 K IPv 6 routing prefixes are synthesized) 2010 Sep. 92

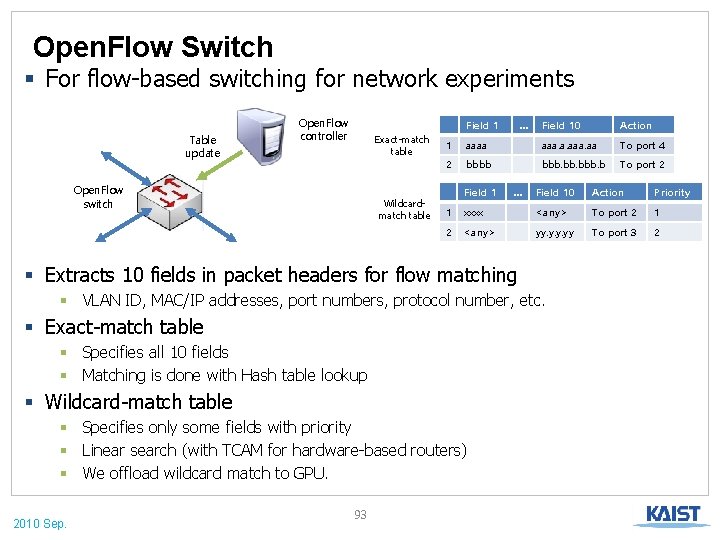

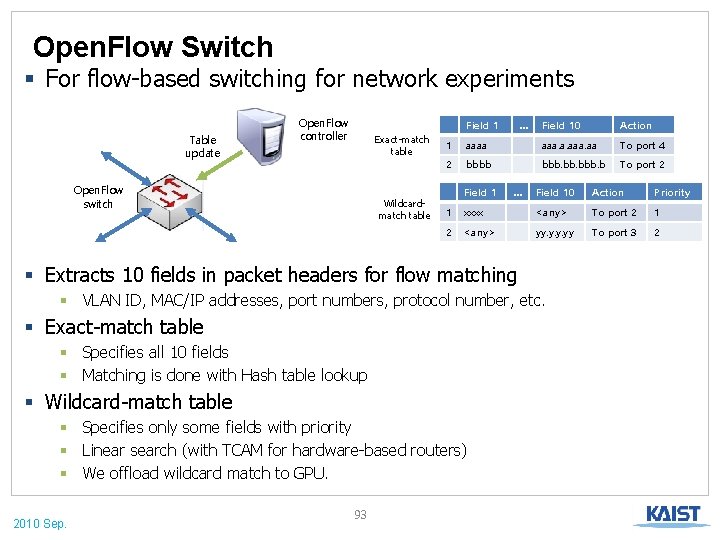

Open. Flow Switch § For flow-based switching for network experiments Table update Open. Flow controller Field 1 Exact-match table Open. Flow switch Wildcardmatch table … Field 10 Action 1 aaaa aaa. a. aa To port 4 2 bbbb bbb. b To port 2 Field 1 … Field 10 Action Priority 1 xxxx <any> To port 2 1 2 <any> yy. yy To port 3 2 § Extracts 10 fields in packet headers for flow matching § VLAN ID, MAC/IP addresses, port numbers, protocol number, etc. § Exact-match table § Specifies all 10 fields § Matching is done with Hash table lookup § Wildcard-match table § Specifies only some fields with priority § Linear search (with TCAM for hardware-based routers) § We offload wildcard match to GPU. 2010 Sep. 93

Open. Flow Throughput § With 64 -byte packets, against various flow table sizes 2010 Sep. 94

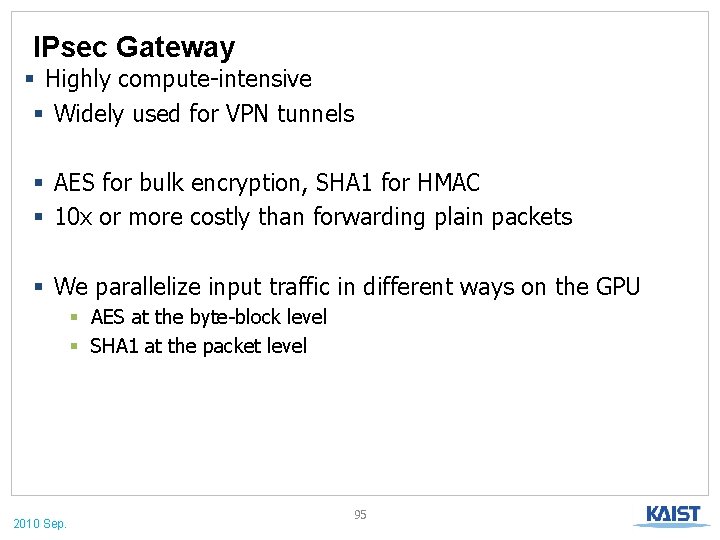

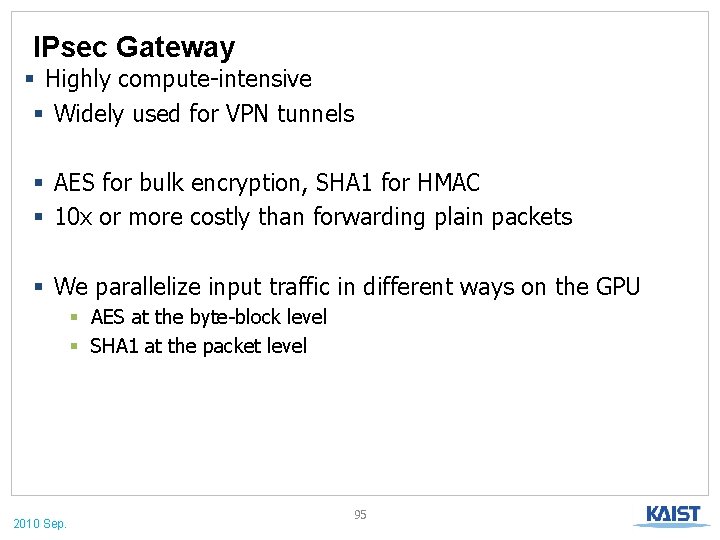

IPsec Gateway § Highly compute-intensive § Widely used for VPN tunnels § AES for bulk encryption, SHA 1 for HMAC § 10 x or more costly than forwarding plain packets § We parallelize input traffic in different ways on the GPU § AES at the byte-block level § SHA 1 at the packet level 2010 Sep. 95