MECEE 4520 Data Science for Mechanical Systems Lecture

- Slides: 54

MECEE 4520: Data Science for Mechanical Systems
Lecture #5: Data Pipelines For ML 2
Instructor: Josh Browne, Ph.D.
Guest speaker: Alejandro Mesa
February 20, 2019

Course Logistics
● Office hours (OH) this week: tomorrow (Thursday), 1:30 PM to 3:00 PM (Mech E lab)
● Final paper/project abstract assignment: due 11:59 PM on Sunday, Mar. 3
  ○ One-page (300-word max) abstract
  ○ Can work in groups of 1-3, depending on topic
● Final paper/project due dates:
  ○ Last day of finals (Friday, May 17th) for non-graduating students
  ○ First day of finals (Friday, May 10th) for graduating students
● Next week's lecture: wildcard w/ Dr. Joel Moxley

Let's talk about the homework

Goals
● In this section we will cover:
  ○ Data retention
  ○ Data exploration
  ○ Tools for designing and deploying data pipelines in production
  ○ Methodologies for training Machine Learning models
  ○ Methodologies for deploying Machine Learning models
● At the end of this section you should know:
  ○ Best practices for data retention
  ○ How data pipelines are built in production
  ○ How Machine Learning models are built and deployed in production

Any questions from last week?

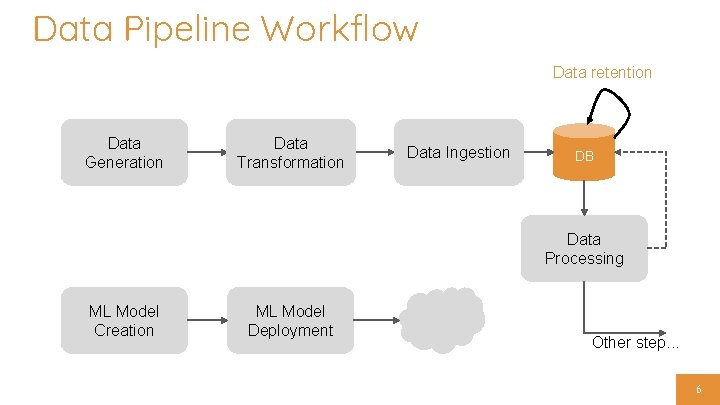

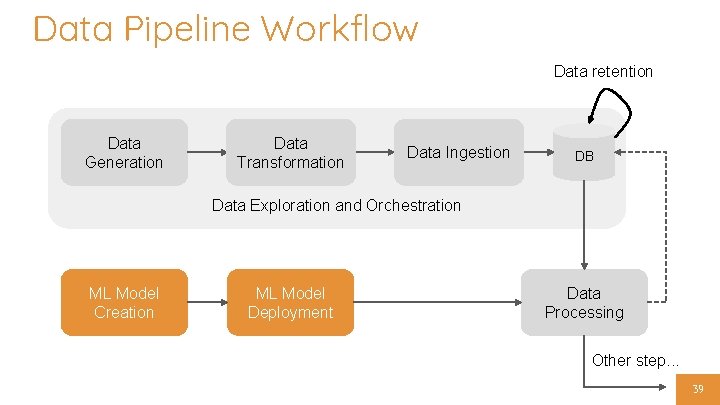

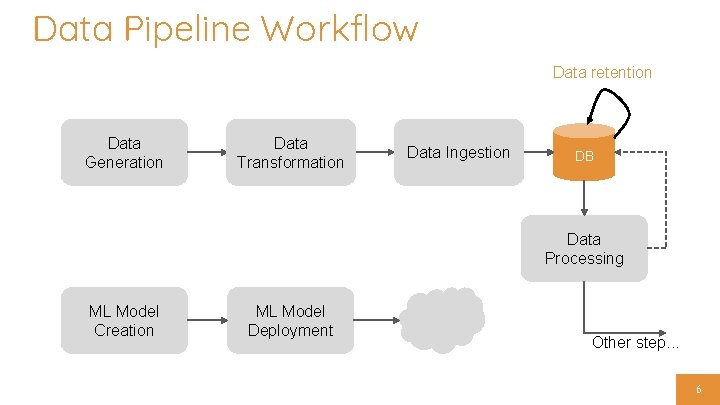

Data Pipeline Workflow (diagram stages: Data retention, Data Generation, Data Transformation, Data Ingestion, DB, Data Processing, ML Model Creation, ML Model Deployment, other steps...)

So, when do we delete old data?

Data Retention
So, when do I delete old data?
● It depends on the data
  ○ If it's training data, almost never. Back it up instead.
  ○ If it's operational data, have a roll-over policy

Data Retention
What's a roll-over policy?
● Identify how far back you want to look
  ○ Days? Months? Years?
● Remove or back up any data older than the roll-over window
  ○ Your mileage may vary
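A roll-over policy boils down to comparing each record's timestamp against a cutoff of "now minus the window". The sketch below assumes records are dicts with a hypothetical `timestamp` field; it only splits them, leaving the decision to back up or remove the expired ones to the caller.

```python
from datetime import datetime, timedelta

def apply_rollover(records, window, now):
    """Split records into (keep, expired) using a roll-over window.

    records: iterable of dicts with a hypothetical "timestamp" datetime field.
    window:  timedelta, how far back we want to look.
    now:     datetime used as the reference point.
    """
    records = list(records)  # allow any iterable; we scan it twice
    cutoff = now - window
    keep = [r for r in records if r["timestamp"] >= cutoff]
    expired = [r for r in records if r["timestamp"] < cutoff]
    return keep, expired

# Example: a 30-day window evaluated on the lecture date.
records = [
    {"id": 1, "timestamp": datetime(2019, 2, 19)},
    {"id": 2, "timestamp": datetime(2019, 1, 1)},
]
keep, expired = apply_rollover(records, timedelta(days=30), now=datetime(2019, 2, 20))
# keep holds id 1; expired holds id 2 (candidates for backup, then removal)
```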

Data Retention
● Never truly delete data
  ○ Back it up first
  ○ Keep backups for as long as you can
  ○ Remove data from the pipeline
● Why?
  ○ You may need it to train other, newer, better models
  ○ You may be able to sell it as a data set. Let's get rich!
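A minimal sketch of "back it up first, then remove it from the pipeline" at the file level. A local directory stands in for a backup store such as S3 or Azure Storage, and the function name and paths are hypothetical; the size comparison is a cheap sanity test, not a full integrity check.

```python
import shutil
from pathlib import Path

def archive_then_remove(path, backup_dir):
    """Copy a data file to backup storage, then remove it from the pipeline directory."""
    path, backup_dir = Path(path), Path(backup_dir)
    backup_dir.mkdir(parents=True, exist_ok=True)
    target = backup_dir / path.name
    shutil.copy2(path, target)  # back it up first (copy2 also keeps timestamps)
    # Cheap sanity check before deleting anything; not a full integrity check.
    if target.stat().st_size != path.stat().st_size:
        raise OSError(f"backup of {path} looks incomplete; original kept")
    path.unlink()  # only now remove it from the pipeline
    return target
```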

Data Retention
Keep data for as long as we can
● Isn't that expensive?
  ○ Not really. Storage is very cheap these days
  ○ Services like AWS S3 or Azure Storage can help, and they are relatively cheap
  ○ For example: AWS charges $0.023 per GB per month (S3 Standard storage, 2019 pricing)
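As a rough check of the "storage is cheap" claim, the per-GB price quoted above scales linearly with volume. This sketch uses only that quoted price; real bills also include request and data-transfer charges, and storage prices change over time.

```python
# Price quoted on the slide for AWS S3 (USD per GB per month, 2019); check current pricing.
PRICE_PER_GB_MONTH = 0.023

def monthly_storage_cost(gigabytes: float, price_per_gb: float = PRICE_PER_GB_MONTH) -> float:
    """Storage-only cost estimate; ignores request, transfer, and tiered-pricing effects."""
    return gigabytes * price_per_gb

monthly = monthly_storage_cost(1000)
print(f"1,000 GB: ${monthly:.2f}/month, ${12 * monthly:.2f}/year")
```

At that price, 1,000 GB costs about $23 per month, or about $276 per year.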

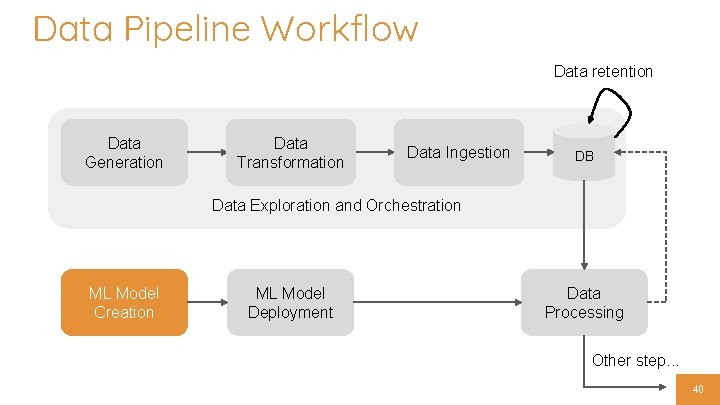

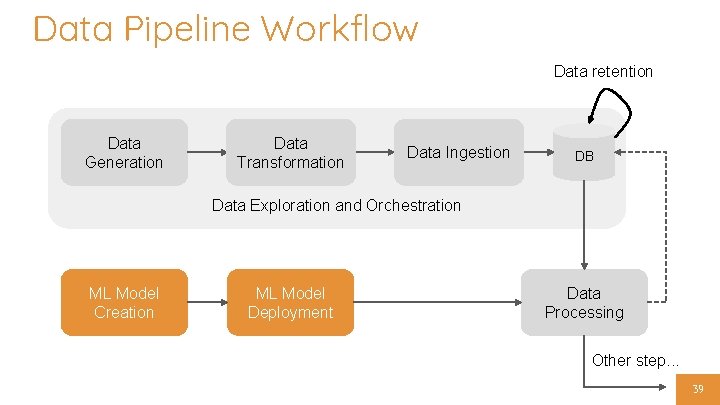

Data Pipeline Workflow (diagram stages: Data retention, Data Generation, Data Transformation, Data Ingestion, DB, Data Exploration and Orchestration, Data Processing, ML Model Creation, ML Model Deployment, other steps...)

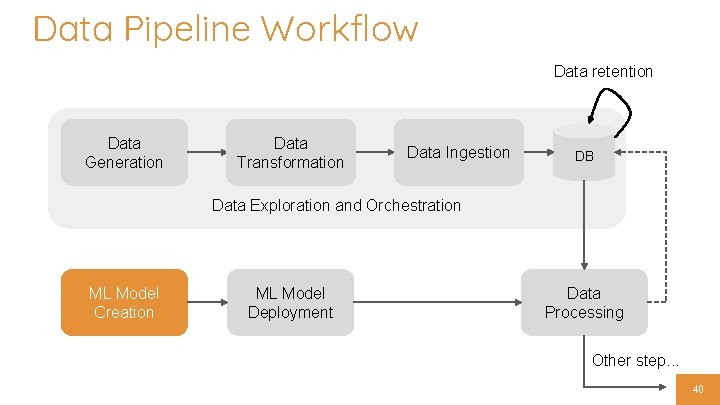


So, how do we explore the data?

Data Exploration
● Is your data set small?
  ○ Use programming constructs locally
    ■ Remember MapReduce from last week?
    ■ Remember Jupyter Notebooks?
  ○ Use NumPy, SciPy and Pandas locally for numerical data
● Not small?
  ○ Use Hadoop or Spark

Data Exploration: NumPy, SciPy and Pandas
● NumPy: Math library that adds support for multidimensional arrays and matrices, along with a large collection of high-level math functions that operate on them
● SciPy: Scientific computing library built on NumPy, with support for optimization, linear algebra, integration, interpolation, special functions, FFT, and signal and image processing
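A small example of the array-level math these libraries make easy: finding the dominant frequency of a vibration-like signal with NumPy's FFT. The signal, the 100 Hz sampling rate, and the 5 Hz and 20 Hz components are made up for illustration; SciPy's `scipy.fft` and `scipy.signal` modules offer richer versions of the same tools.

```python
import numpy as np

fs = 100.0                 # sampling rate in Hz (assumed)
n = 100                    # one second of samples
t = np.arange(n) / fs      # sample times in seconds

# Made-up signal: a strong 5 Hz component plus a weaker 20 Hz component.
signal = np.sin(2 * np.pi * 5 * t) + 0.5 * np.sin(2 * np.pi * 20 * t)

spectrum = np.abs(np.fft.rfft(signal))      # magnitude of the one-sided FFT
freqs = np.fft.rfftfreq(n, d=1 / fs)        # frequency (Hz) of each FFT bin
dominant_hz = freqs[np.argmax(spectrum)]    # bin with the largest magnitude

print(f"dominant frequency: {dominant_hz} Hz")
```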

Data Exploration: NumPy, SciPy and Pandas
● Pandas: Provides high-performance, easy-to-use data structures for data manipulation and analysis. It offers data structures for manipulating numerical tables and time series.
  ○ Built on NumPy

Local Exploration Example
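A sketch of what a local exploration session might look like in Pandas, using a tiny made-up table of temperature readings (the `machine` and `temp_c` columns are hypothetical). In a Jupyter Notebook, each step would usually be its own cell.

```python
import pandas as pd

# Made-up readings; in practice you would load a file, e.g. with pd.read_csv(...).
df = pd.DataFrame({
    "machine": ["pump-1", "pump-1", "fan-2", "fan-2"],
    "temp_c": [71.0, 89.0, 95.0, 60.0],
})

print(df.head())                 # first rows: a quick look at the raw data
print(df["temp_c"].describe())   # count, mean, std, min, quartiles, max

# Per-machine summary: mean and max temperature.
per_machine = df.groupby("machine")["temp_c"].agg(["mean", "max"])
print(per_machine)
```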

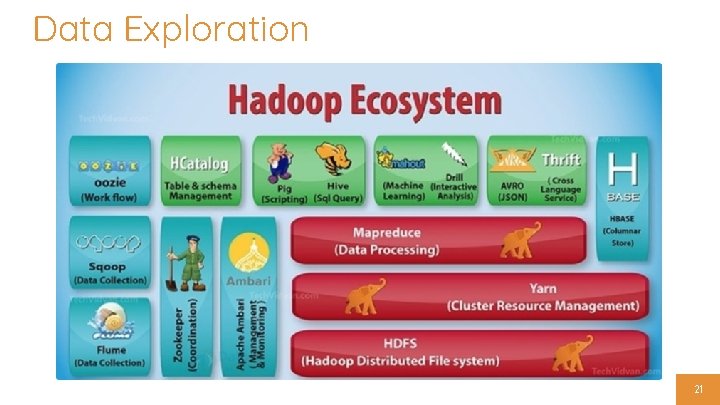

Data Exploration: Hadoop
● High-performance, big-data computational framework
● Uses the MapReduce programming model
● Uses a cluster of computers for parallel execution
● Uses the Hadoop Distributed File System (HDFS) for storing and replicating the data across the cluster
● Useful only for batch-processing jobs
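To recall the MapReduce model that Hadoop runs at scale, here is a single-process word count that mimics its three phases. It is an in-memory illustration only, not Hadoop's API; on a real cluster, the map and reduce calls run on different nodes and the shuffle moves data between them.

```python
from collections import defaultdict
from itertools import chain

def map_phase(line):
    """Mapper: emit (word, 1) for every word in a line."""
    return [(word.lower(), 1) for word in line.split()]

def shuffle_phase(pairs):
    """Shuffle: group all values by key (the framework does this between phases)."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped

def reduce_phase(grouped):
    """Reducer: combine the values for each key."""
    return {key: sum(values) for key, values in grouped.items()}

def word_count(lines):
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    return reduce_phase(shuffle_phase(mapped))

print(word_count(["pump motor pump", "Motor valve"]))
```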

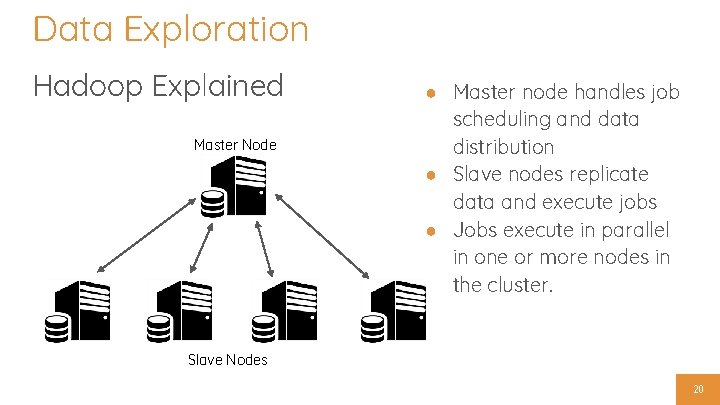

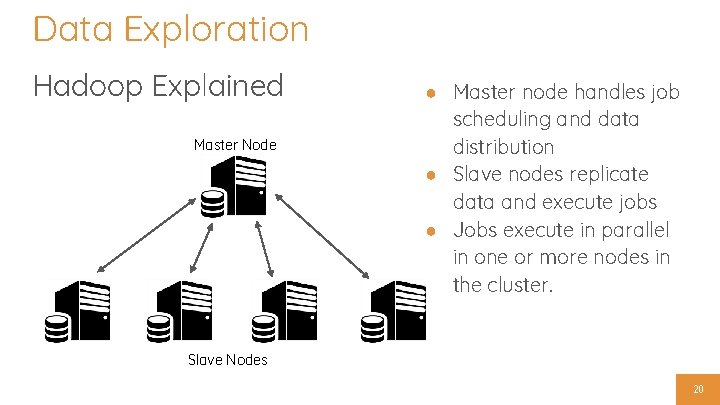

Data Exploration: Hadoop Explained
(diagram: a master node connected to slave nodes)
● The master node handles job scheduling and data distribution
● Slave nodes replicate data and execute jobs
● Jobs execute in parallel on one or more nodes in the cluster

Data Exploration

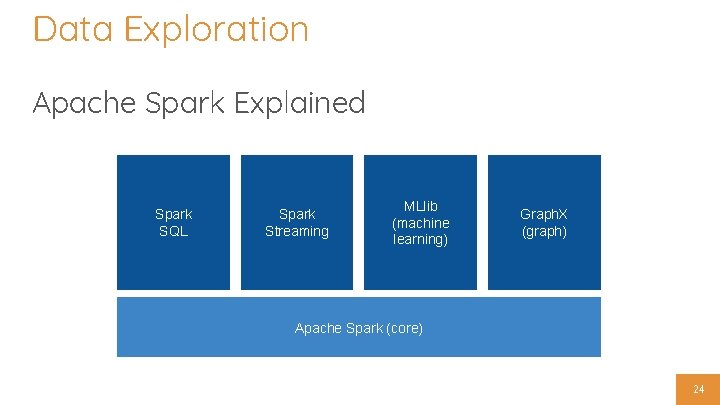

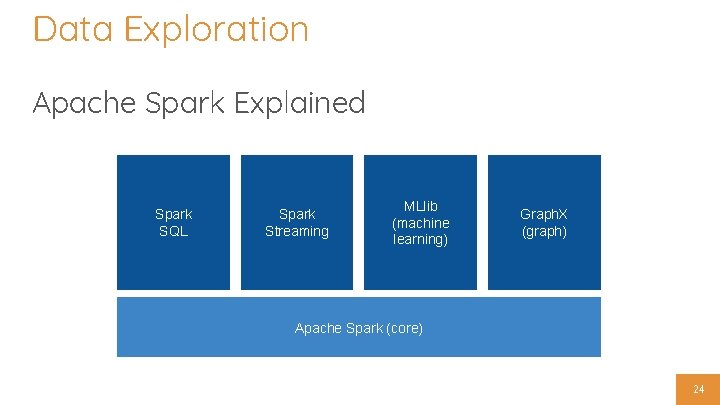

Data Exploration: Apache Spark
● General-purpose distributed engine for big data analytics and machine learning
  ○ Up to 100x faster than Hadoop MapReduce for some in-memory workloads
  ○ Uses memory for fast data loading and caching
  ○ Can use MapReduce, but has other constructs such as Spark SQL, Spark Streaming, MLlib and GraphX

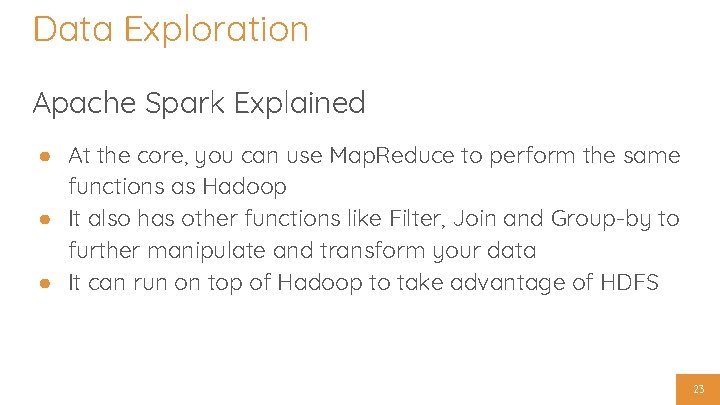

Data Exploration: Apache Spark Explained
● At the core, you can use MapReduce to perform the same functions as Hadoop
● It also has other functions like Filter, Join and Group-by to further manipulate and transform your data
● It can run on top of Hadoop to take advantage of HDFS
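The filter, join, and group-by steps can be illustrated with plain Python on a few made-up records (the machine IDs, sites, and 80 °C threshold are hypothetical). PySpark's DataFrame API exposes the same steps as `filter`, `join`, and `groupBy`, but distributes them across a cluster; this sketch runs locally and needs no Spark installation.

```python
from collections import Counter

# Hypothetical records: (machine_id, temperature_c) readings and a machine -> site lookup.
readings = [("pump-1", 71.0), ("pump-1", 88.0), ("pump-1", 90.0), ("fan-2", 95.0), ("fan-2", 60.0)]
sites = [("pump-1", "Lab A"), ("fan-2", "Lab B")]

def hot_readings_per_site(readings, sites, threshold):
    # Filter: keep only readings above the threshold.
    hot = [(machine, temp) for machine, temp in readings if temp > threshold]
    # Join: attach each machine's site (inner join on machine_id).
    site_of = dict(sites)
    joined = [(machine, temp, site_of[machine]) for machine, temp in hot if machine in site_of]
    # Group-by: count hot readings per site.
    return dict(Counter(site for _, _, site in joined))

print(hot_readings_per_site(readings, sites, threshold=80.0))
```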

Data Exploration: Apache Spark Explained
(diagram: the Spark SQL, Spark Streaming, MLlib (machine learning) and GraphX (graph) libraries sit on top of Apache Spark (core))

Spark Examples

Break
Source: https://www.andrew.cmu.edu/user/lakoglu/courses/95869/S17/index.htm

Data Exploration: Hadoop vs Spark?
● If computer memory is a constraint, use Hadoop
● Otherwise, use Spark
  ○ Easier to set up
  ○ More performant
  ○ Has more capabilities out of the box
● There are many other big data frameworks, but these two are the most popular

Data Exploration: Structured Data
● Use a petabyte-scale data warehouse solution like AWS Redshift
● You can take advantage of SQL to perform queries
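Because a warehouse like Redshift is queried with SQL, the shape of a typical aggregate query can be tried locally first. This sketch uses Python's built-in `sqlite3` module as a stand-in for a warehouse connection, with a hypothetical `readings` table; Redshift's SQL dialect differs from SQLite's in many details.

```python
import sqlite3

def average_temp_per_machine(rows):
    """Load rows into an in-memory table and aggregate them with SQL."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE readings (machine TEXT, temp_c REAL)")
        conn.executemany("INSERT INTO readings VALUES (?, ?)", rows)
        return conn.execute(
            "SELECT machine, AVG(temp_c) AS avg_temp "
            "FROM readings GROUP BY machine ORDER BY machine"
        ).fetchall()
    finally:
        conn.close()

rows = [("pump-1", 71.0), ("pump-1", 89.0), ("fan-2", 95.0), ("fan-2", 60.0)]
print(average_temp_per_machine(rows))
```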

Data Orchestration: Manual Program Execution
● Run program 1
● Run program 2
● …
OMG... I'm getting carpal tunnel

Data Orchestration: Use Linux Cron
● Linux Cron is a simple scheduler to run tasks
  ○ Good enough for small pipelines
  ○ No restart capabilities for long-running jobs
  ○ Need Linux experience to configure
  ○ Windows has a similar scheduler (Task Scheduler)

Data Orchestration: Cron Example
Source: https://www.howtogeek.com/101288/how-to-schedule-tasks-on-linux-an-introduction-to-crontab-files/
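A crontab entry has five schedule fields (minute, hour, day of month, month, day of week) followed by the command, e.g. `30 2 * * 1 /usr/bin/python3 /home/me/etl.py` to run at 02:30 every Monday (the script path is hypothetical). The sketch below shows how those fields are matched against a point in time; it supports only `*` and single numbers (day of week 0-6, Sunday = 0) and is not a full cron parser.

```python
from datetime import datetime

def _field_matches(field: str, value: int) -> bool:
    # Only "*" or a single integer are supported in this sketch.
    return field == "*" or int(field) == value

def cron_matches(expr: str, when: datetime) -> bool:
    """Return True if the five-field schedule `expr` fires at minute `when`."""
    minute, hour, dom, month, dow = expr.split()
    cron_dow = (when.weekday() + 1) % 7  # cron: 0 = Sunday; Python: 0 = Monday
    if not (_field_matches(minute, when.minute)
            and _field_matches(hour, when.hour)
            and _field_matches(month, when.month)):
        return False
    if dom != "*" and dow != "*":
        # Classic cron rule: if both day fields are restricted, either one may match.
        return _field_matches(dom, when.day) or _field_matches(dow, cron_dow)
    return _field_matches(dom, when.day) and _field_matches(dow, cron_dow)

print(cron_matches("30 2 * * 1", datetime(2019, 2, 25, 2, 30)))  # a Monday at 02:30
```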

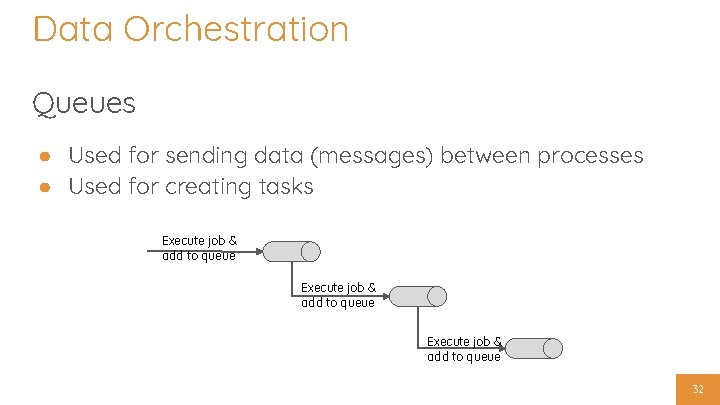

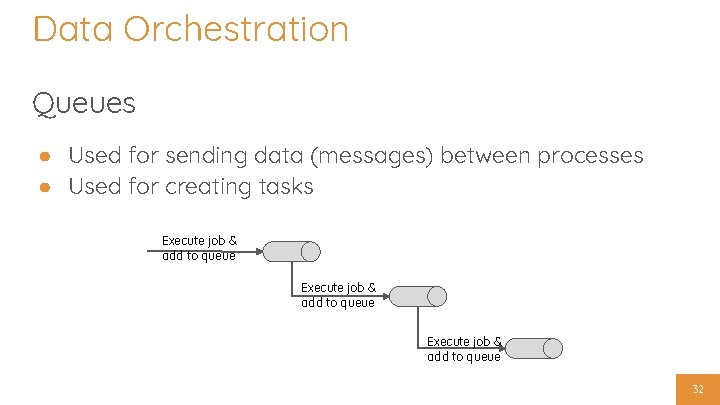

Data Orchestration: Queues
● Used for sending data (messages) between processes
● Used for creating tasks
(diagram: execute job & add to queue)
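A minimal producer/consumer sketch of queue-based orchestration using Python's standard `queue` and `threading` modules. Squaring a number stands in for a real job, and `None` is used as a sentinel that tells each worker to stop; across machines, a message broker would play the role of `queue.Queue`.

```python
import queue
import threading

def worker(tasks: queue.Queue, results: queue.Queue) -> None:
    """Pull jobs until the None sentinel arrives."""
    while True:
        item = tasks.get()
        if item is None:  # sentinel: no more work for this worker
            break
        results.put(item * item)  # stand-in for a real job

def run_jobs(items, num_workers: int = 2):
    tasks, results = queue.Queue(), queue.Queue()
    threads = [threading.Thread(target=worker, args=(tasks, results)) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for item in items:        # producer: enqueue the jobs
        tasks.put(item)
    for _ in threads:         # one sentinel per worker
        tasks.put(None)
    for t in threads:
        t.join()
    collected = []
    while not results.empty():  # safe here: all workers have exited
        collected.append(results.get())
    return sorted(collected)    # completion order is not guaranteed

print(run_jobs([1, 2, 3, 4]))
```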

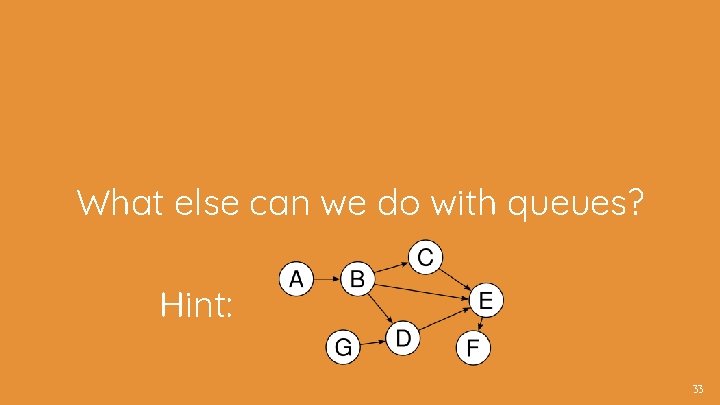

What else can we do with queues? Hint:

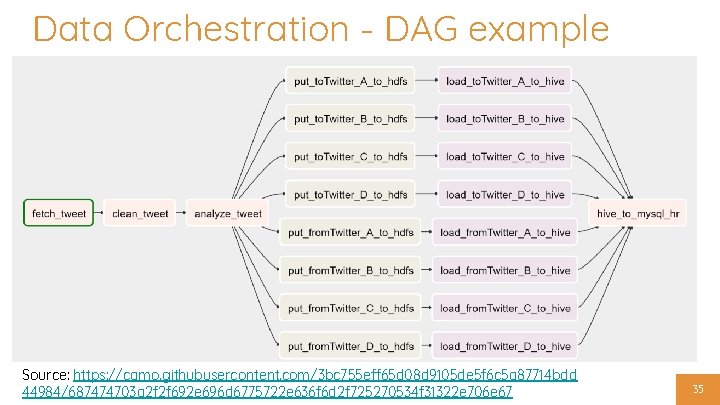

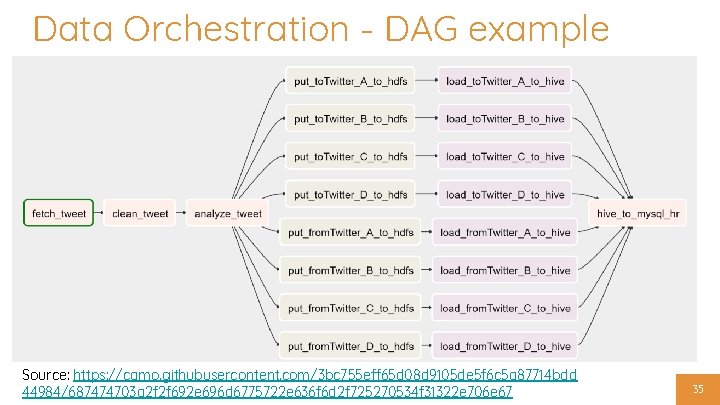

Data Orchestration: Directed Acyclic Graph (DAG)
● Finite directed graph with no directed cycles
● Each node in the graph is a task
● The output of a task can be the input of another task
● We can orchestrate complex ETL jobs that have dependencies on previous steps
(tool: Apache Airflow)
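The core of DAG orchestration is running tasks in an order that respects their dependencies, i.e., a topological sort. The sketch below uses Kahn's algorithm on a hypothetical ETL-plus-training pipeline; it illustrates the idea only and is not how Airflow's scheduler is implemented.

```python
from collections import deque
from typing import Dict, List

def topological_order(upstream: Dict[str, List[str]]) -> List[str]:
    """Return tasks in an order where every task runs after its upstream tasks.

    upstream maps each task to the tasks it depends on. Raises ValueError on a cycle.
    """
    tasks = set(upstream) | {u for deps in upstream.values() for u in deps}
    indegree = {t: 0 for t in tasks}        # number of unfinished upstream tasks
    downstream = {t: [] for t in tasks}     # edges: upstream -> downstream
    for task, deps in upstream.items():
        for dep in deps:
            indegree[task] += 1
            downstream[dep].append(task)
    ready = deque(sorted(t for t in tasks if indegree[t] == 0))
    order = []
    while ready:
        task = ready.popleft()
        order.append(task)
        for nxt in sorted(downstream[task]):
            indegree[nxt] -= 1
            if indegree[nxt] == 0:
                ready.append(nxt)
    if len(order) != len(tasks):
        raise ValueError("dependency cycle detected: this is not a DAG")
    return order

# Hypothetical pipeline: each task lists the tasks it depends on.
pipeline = {
    "extract": [],
    "transform": ["extract"],
    "load": ["transform"],
    "train_model": ["load"],
    "report": ["load"],
}
print(topological_order(pipeline))
```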

Data Orchestration: DAG Example
Source: https://camo.githubusercontent.com/3bc755eff65d08d9105de5f6c5a87714bdd44984/687474703a2f2f692e696d6775722e636f6d2f725270534f31322e706e67

Airflow Example

Data Orchestration: Streams
● Real-time processing of data
● Used to derive insight from our data within a short period of time (usually seconds)
● Example: Send an alert if a temperature rises above a certain threshold
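The temperature-alert example can be sketched as a generator that inspects each reading as it arrives and emits an alert when the value crosses the threshold. The reading format `(timestamp, temperature)` and the 80.0 threshold are assumptions for illustration.

```python
from typing import Iterable, Iterator, Tuple

def threshold_alerts(readings: Iterable[Tuple[float, float]], threshold: float) -> Iterator[str]:
    """Yield an alert message for every reading above the threshold, as it arrives."""
    for timestamp, temp in readings:
        if temp > threshold:
            yield f"ALERT t={timestamp}: temperature {temp} exceeds {threshold}"

# A list stands in for a live stream; a generator reading from a broker would work the same way.
stream = [(0, 70.0), (1, 85.5), (2, 79.9)]
for alert in threshold_alerts(stream, threshold=80.0):
    print(alert)
```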

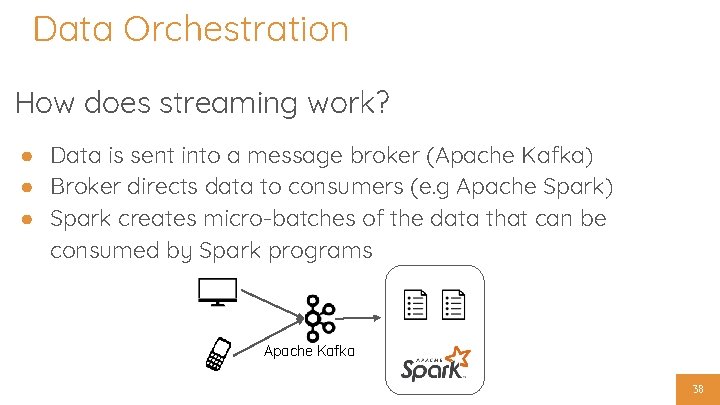

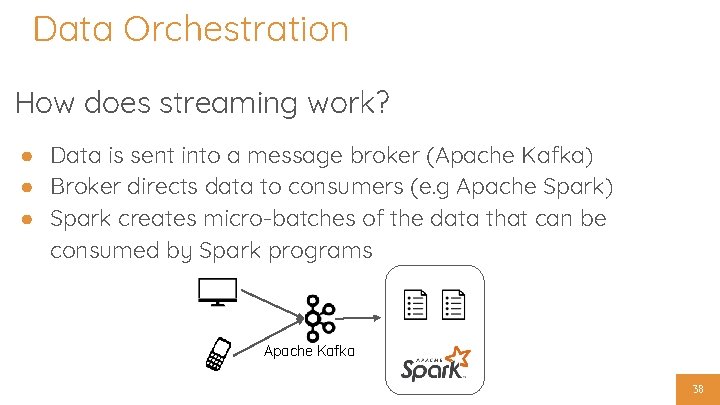

Data Orchestration: How does streaming work?
● Data is sent into a message broker (Apache Kafka)
● The broker directs data to consumers (e.g., Apache Spark)
● Spark creates micro-batches of the data that can be consumed by Spark programs
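Spark Streaming groups incoming records into micro-batches by time interval. The sketch below mimics that grouping for events that arrive in timestamp order; it is a conceptual illustration, not Spark's API, and unlike Spark it skips intervals in which no events arrive.

```python
def micro_batches(events, interval):
    """Group (timestamp_seconds, value) events, in timestamp order, into fixed time intervals.

    Yields (interval_start, [values]) once an event falls into a later interval.
    """
    current_key, batch = None, []
    for ts, value in events:
        key = int(ts // interval)  # index of the interval this event belongs to
        if current_key is not None and key != current_key:
            yield current_key * interval, batch  # previous interval is complete
            batch = []
        current_key = key
        batch.append(value)
    if batch:
        yield current_key * interval, batch  # flush the last (possibly partial) batch

events = [(0.1, "a"), (0.5, "b"), (1.2, "c"), (1.9, "d"), (2.05, "e")]
for start, batch in micro_batches(events, interval=1.0):
    print(start, batch)
```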



ML Model Creation (flowchart steps: Create Model, Compile Model Source, Hyperparameters, Build Model, Run & Test, then the decision "Is it good enough?" leading to Deploy, Rework, or Scrap)
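The build, run & test, "good enough?" loop can be sketched with NumPy polynomial fits, treating the polynomial degree as the hyperparameter. The data, the even/odd train/validation split, and the RMSE threshold are all illustrative assumptions.

```python
import numpy as np

def create_and_evaluate(x, y, degrees, max_rmse):
    """Try each hyperparameter value; return the first model that is good enough."""
    x_train, y_train = x[::2], y[::2]    # illustrative split: even indices train
    x_val, y_val = x[1::2], y[1::2]      # odd indices validate
    for degree in degrees:               # hyperparameter: polynomial degree
        coeffs = np.polyfit(x_train, y_train, degree)   # build model
        residuals = np.polyval(coeffs, x_val) - y_val   # run & test
        rmse = float(np.sqrt(np.mean(residuals ** 2)))
        if rmse <= max_rmse:                            # good enough?
            return "deploy", degree, rmse
    return "rework", None, None  # nothing passed: rework (or scrap) the approach

x = np.linspace(-3, 3, 30)
y = 2 * x ** 2 + 1  # made-up, noise-free data
print(create_and_evaluate(x, y, degrees=[1, 2, 3], max_rmse=0.1))
```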


ML Model Deployment Workflow
Specify Performance Requirements → Create model container → Deploy & Monitor

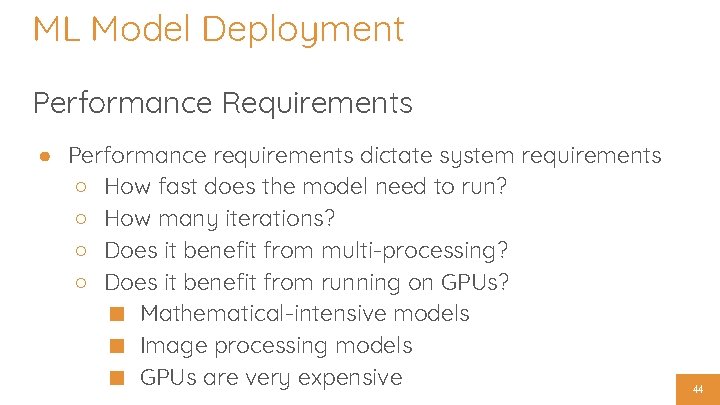

ML Model Deployment: Performance Requirements
● Performance requirements dictate system requirements
  ○ How fast does the model need to run?
  ○ How many iterations?
  ○ Does it benefit from multiprocessing?
  ○ Does it benefit from running on GPUs?
    ■ Math-intensive models
    ■ Image processing models
    ■ GPUs are very expensive
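Answering "how fast does the model need to run?" starts with measuring it. This sketch times a stand-in `predict` function with `time.perf_counter` and reports a nearest-rank 95th-percentile latency in milliseconds; the function and the 10 ms budget are illustrative assumptions.

```python
import math
import time

def p95_latency_ms(predict, inputs, repeats=5):
    """Time each call to predict() and return the nearest-rank 95th percentile in ms."""
    samples = []
    for _ in range(repeats):
        for x in inputs:
            start = time.perf_counter()
            predict(x)
            samples.append((time.perf_counter() - start) * 1000.0)
    if not samples:
        raise ValueError("need at least one input to time")
    samples.sort()
    rank = math.ceil(0.95 * len(samples))  # nearest-rank method
    return samples[rank - 1]

# Hypothetical stand-in model and latency budget.
def predict(x):
    return 2.0 * x + 1.0

budget_ms = 10.0
p95 = p95_latency_ms(predict, range(100))
print(f"p95 = {p95:.4f} ms, within budget: {p95 <= budget_ms}")
```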

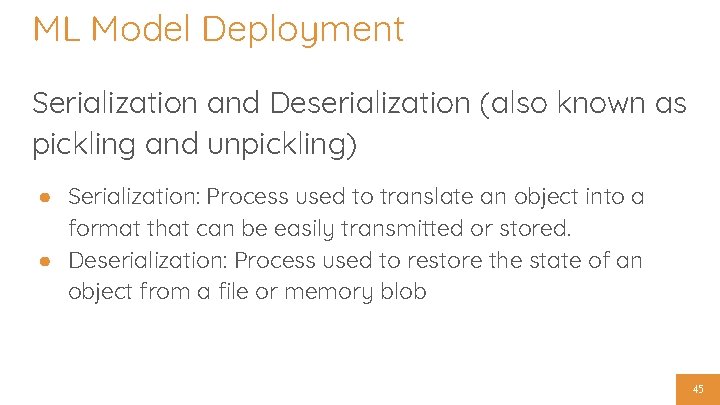

ML Model Deployment: Serialization and Deserialization (in Python, also known as pickling and unpickling)
● Serialization: Process used to translate an object into a format that can be easily transmitted or stored
● Deserialization: Process used to restore the state of an object from a file or memory blob
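In Python, the standard `pickle` module does the serialization and deserialization. The `LinearModel` class here is a hypothetical stand-in for a trained model. Only unpickle data from sources you trust: loading a pickle can execute arbitrary code.

```python
import pickle
from dataclasses import dataclass

@dataclass
class LinearModel:
    """Hypothetical stand-in for a trained model."""
    coef: float
    intercept: float

    def predict(self, x: float) -> float:
        return self.coef * x + self.intercept

model = LinearModel(coef=2.0, intercept=1.0)
blob = pickle.dumps(model)      # serialize: object -> bytes (store in a file, cache, etc.)
restored = pickle.loads(blob)   # deserialize: bytes -> equivalent object
print(restored.predict(3.0))
```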

ML Model Deployment: To pickle or not to pickle?
● Is the model going to be loaded once (at startup) and used many times? Don't pickle
● Is the model going to be loaded multiple times during program execution? Pickle!
  ○ We can store a serialized model in memory
  ○ Which database can help us with this?

ML Model Deployment: Creating a model container
● Model container != Docker container
● Is the model going to be consumed in-program?
● Is the model going to be consumed externally?

ML Model Deployment: Running in-program
● The model is part of the program's source code
● The output of the model is used internally within the program
● Usually loaded once (at startup) and used multiple times
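One common way to get "loaded once, used many times" inside a program is to cache the loader. This sketch uses `functools.lru_cache`; the hard-coded coefficients are a hypothetical placeholder for an expensive load such as reading a model file from disk.

```python
from functools import lru_cache

@lru_cache(maxsize=1)
def load_model():
    """Runs once; later calls return the cached model object."""
    # Hypothetical placeholder for an expensive load (e.g., reading a model file).
    return {"coef": 2.0, "intercept": 1.0}

def predict(x: float) -> float:
    model = load_model()  # cheap after the first call
    return model["coef"] * x + model["intercept"]

print([predict(x) for x in (1.0, 2.0, 3.0)])  # load_model() body ran only once
```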

ML Model Deployment: Exposed externally
● The program does not consume the model, but simply exposes it as a service
● External applications consume this service to make predictions
● Usually done through an API
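A minimal sketch of exposing a model as an HTTP API using only Python's standard library. The `/predict` route, the JSON payload `{"x": ...}`, and the linear "model" are hypothetical; production services typically use a web framework behind a proper application server.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

COEF, INTERCEPT = 2.0, 1.0  # hypothetical model parameters

def predict(x: float) -> float:
    return COEF * x + INTERCEPT

class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        try:
            length = int(self.headers.get("Content-Length", 0))
            x = float(json.loads(self.rfile.read(length))["x"])
        except (ValueError, KeyError, TypeError):
            self.send_error(400, 'expected JSON like {"x": 3}')
            return
        body = json.dumps({"prediction": predict(x)}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging in this sketch

def start_server(port: int = 0) -> HTTPServer:
    """Serve in a background thread; port 0 lets the OS pick a free port."""
    server = HTTPServer(("127.0.0.1", port), PredictHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

For example, after `start_server(8000)`, running `curl -X POST -d '{"x": 3}' http://127.0.0.1:8000/predict` should return `{"prediction": 7.0}`.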

Model Execution Example

ML Model Deployment: The act of deploying a model
● There are many services that can run your model:
  ○ AWS SageMaker
  ○ AWS Lambda
  ○ Spark MLlib
  ○ Run an API service on Heroku
  ○ Roll your own

Monitoring: Closing the Loop
● Once the model is deployed, monitor it for:
  ○ Systemic failures (e.g., exceptions)
  ○ Incorrect predictions
    ■ Are you still getting the expected accuracy?
  ○ Performance
    ■ Is it running fast enough?
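Checking "are you still getting the expected accuracy?" can be automated by tracking outcomes over a sliding window once ground truth becomes available. The class name, window size, and accuracy floor below are illustrative assumptions.

```python
from collections import deque

class AccuracyMonitor:
    """Tracks prediction outcomes over a sliding window and flags degraded accuracy."""

    def __init__(self, window: int, min_accuracy: float):
        self.outcomes = deque(maxlen=window)  # oldest outcomes fall off automatically
        self.min_accuracy = min_accuracy

    def record(self, predicted, actual) -> None:
        self.outcomes.append(predicted == actual)

    def degraded(self) -> bool:
        # Only judge once the window is full, to avoid alerts on tiny samples.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return sum(self.outcomes) / len(self.outcomes) < self.min_accuracy

monitor = AccuracyMonitor(window=4, min_accuracy=0.75)
for predicted, actual in [(1, 1), (0, 0), (1, 0), (1, 0)]:
    monitor.record(predicted, actual)
print("degraded:", monitor.degraded())  # 2 of 4 correct is below the 0.75 floor
```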

Monitoring: Closing the Loop
How do we monitor the model?
● Send application logs to a log aggregator
● Analyze your logs using some of the methods learned in the last two lectures
● Potentially create an ML model to detect failures in the logs
  ○ But don't we now have to monitor this new model?
    ■ Yes. It's a vicious cycle!
    ■ Luckily, there are professional services for that
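A first step in log analysis is counting errors per component. This sketch assumes a hypothetical log line format `LEVEL component: message`; a real log aggregator would have its own format and query language.

```python
import re
from collections import Counter

# Hypothetical log format: "LEVEL component: message"
LOG_LINE = re.compile(r"^(?P<level>[A-Z]+)\s+(?P<component>[\w.-]+):")

def error_counts(lines):
    """Count ERROR lines per component; lines that don't match the format are skipped."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and match.group("level") == "ERROR":
            counts[match.group("component")] += 1
    return counts

logs = [
    "INFO model-api: served prediction in 12 ms",
    "ERROR model-api: ValueError in predict()",
    "ERROR ingest: connection timeout",
    "ERROR model-api: ValueError in predict()",
]
print(error_counts(logs).most_common())
```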

The End
Questions?