MECEE 4520 Data Science for Mechanical Systems LECTURE

- Slides: 40

MECEE 4520: Data Science for Mechanical Systems LECTURE 9: REINFORCEMENT LEARNING

Lecture outline
◦ Introduction to the reinforcement learning setting
◦ Markov decision processes (MDPs)
◦ Reward, value, and policy
◦ Examples of RL in board games and video games
◦ Intro to Q-learning

Recommended RL references
Books:
• Sutton and Barto (2018): http://incompleteideas.net/book/the-book-2nd.html
• Szepesvári (2018): https://sites.ualberta.ca/~szepesva/RLBook.html
Lectures:
• David Silver UCL lectures (2015): http://www0.cs.ucl.ac.uk/staff/d.silver/web/Teaching.html
Articles:
• Arxiv Sanity Preserver (great for deep learning too!): http://www.arxiv-sanity.com/
• DeepMind Blog: https://deepmind.com/blog/
• OpenAI Blog: https://openai.com/blog/

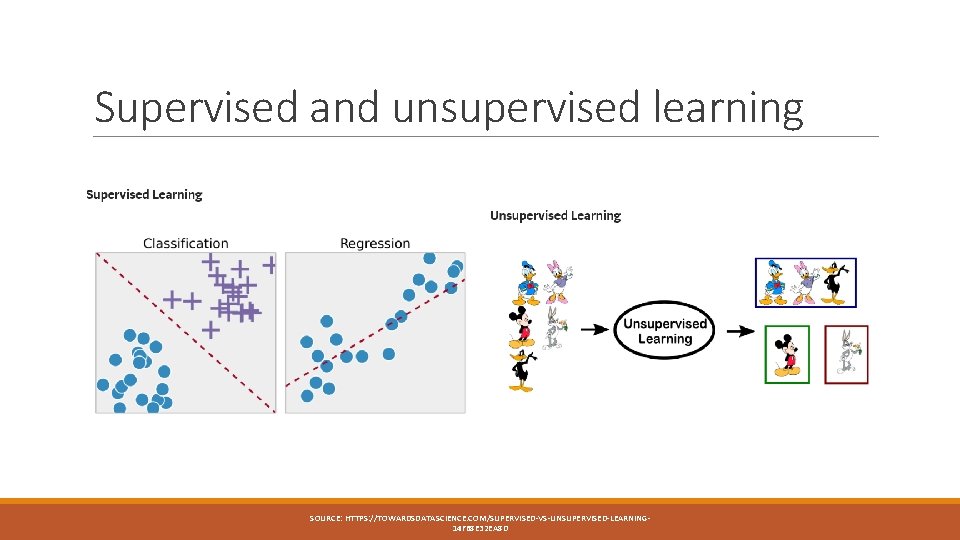

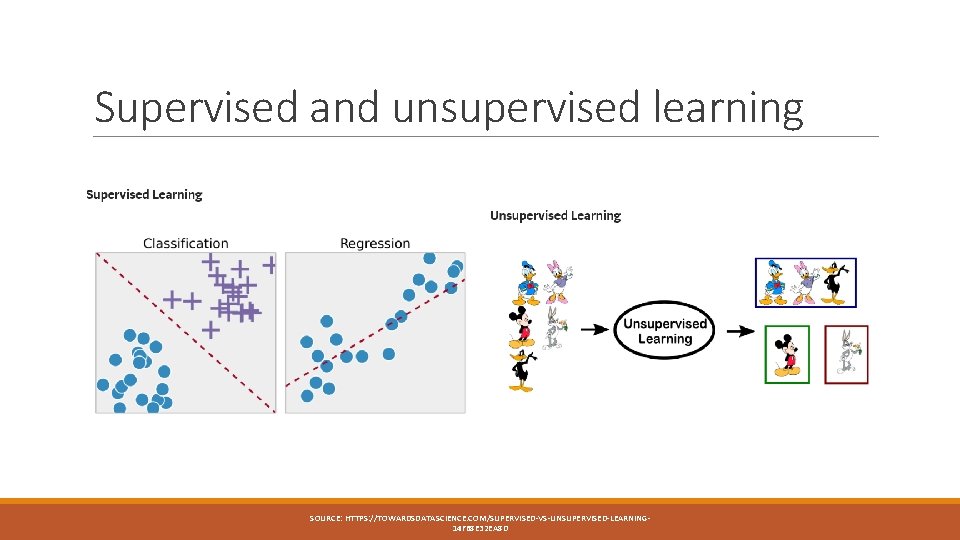

Supervised and unsupervised learning
SOURCE: HTTPS://TOWARDSDATASCIENCE.COM/SUPERVISED-VS-UNSUPERVISED-LEARNING-14F68E32EA8D

Sequential decision making
Imagine we design a robotic arm to pick up objects and place them in some desired location.
◦ We want an algorithm to learn to perform this task automatically.
Is this a supervised learning task? Another type of learning?

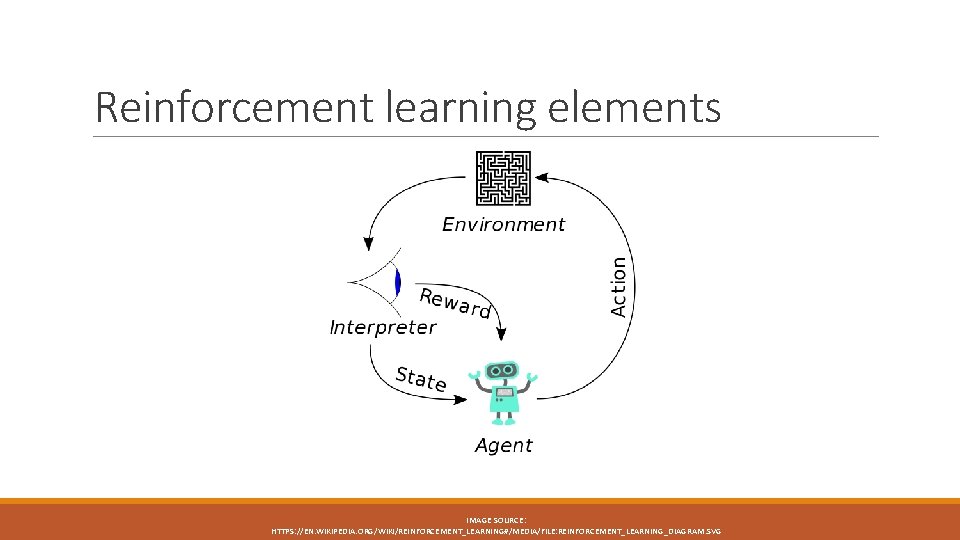

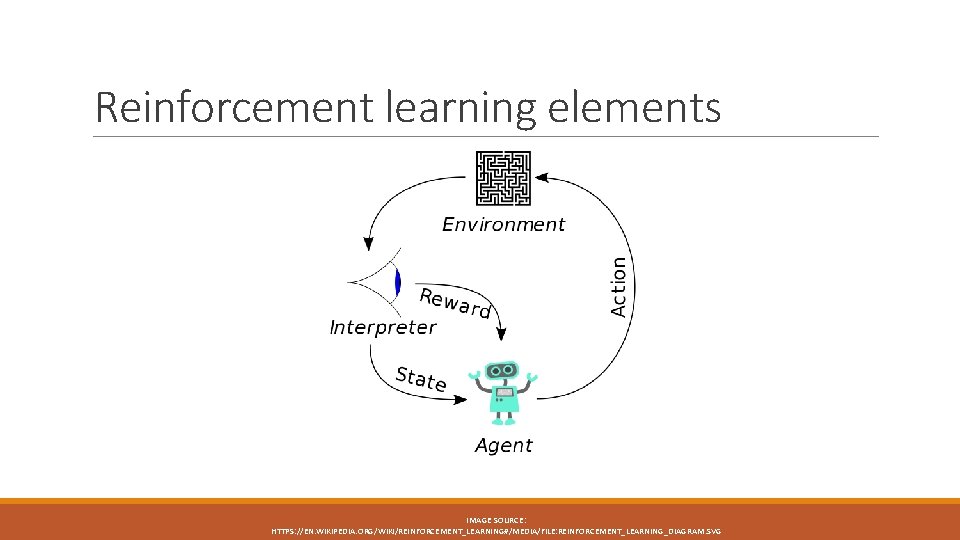

Reinforcement learning elements
IMAGE SOURCE: HTTPS://EN.WIKIPEDIA.ORG/WIKI/REINFORCEMENT_LEARNING#/MEDIA/FILE:REINFORCEMENT_LEARNING_DIAGRAM.SVG

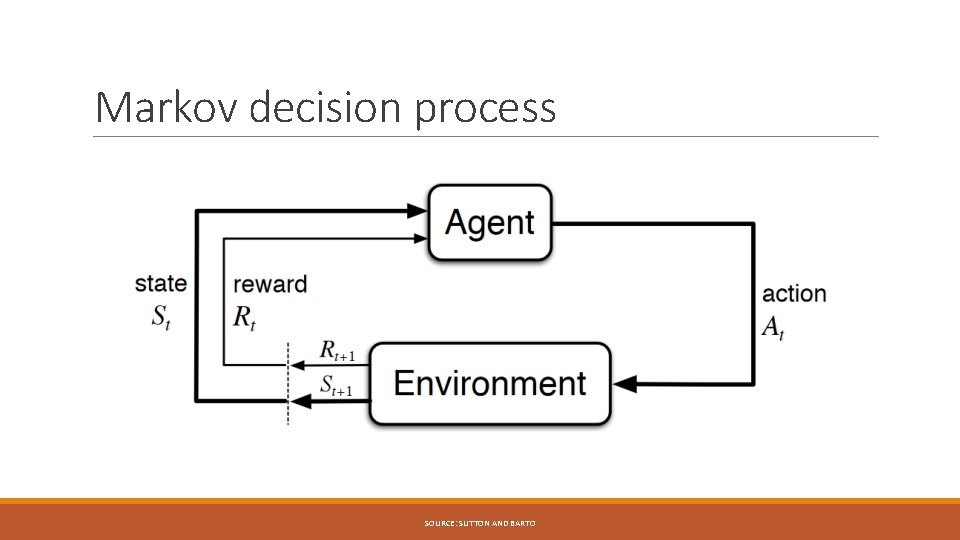

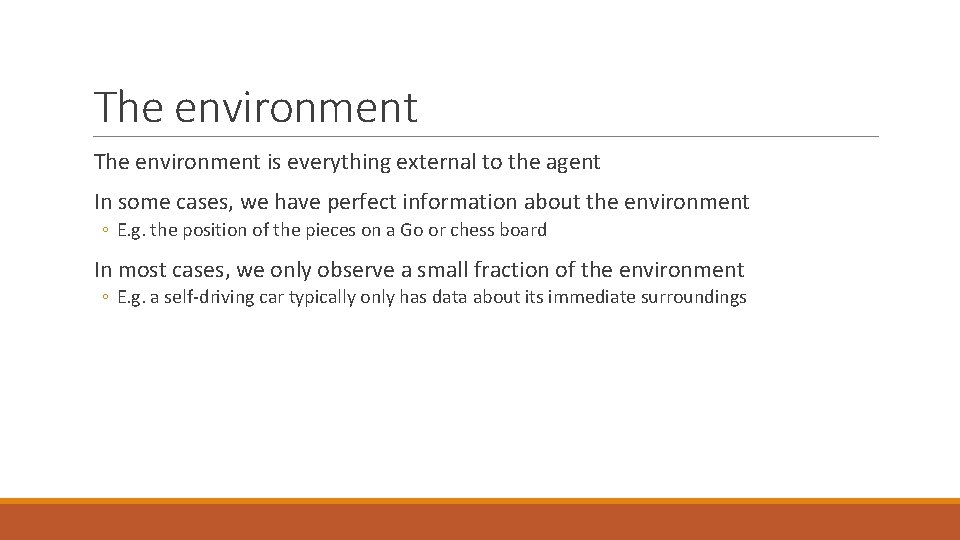

The environment is everything external to the agent.
In some cases, we have perfect information about the environment.
◦ E.g. the position of the pieces on a Go or chess board
In most cases, we only observe a small fraction of the environment.
◦ E.g. a self-driving car typically only has data about its immediate surroundings

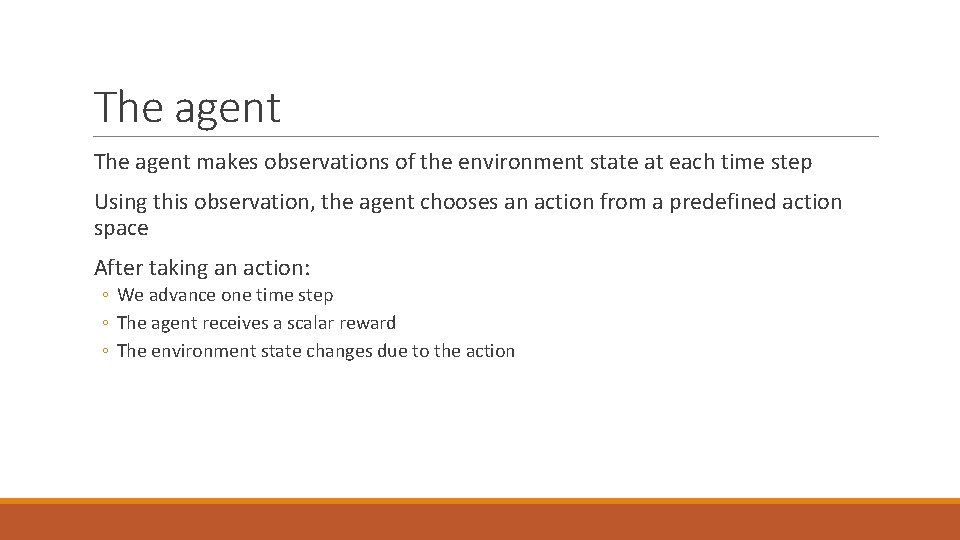

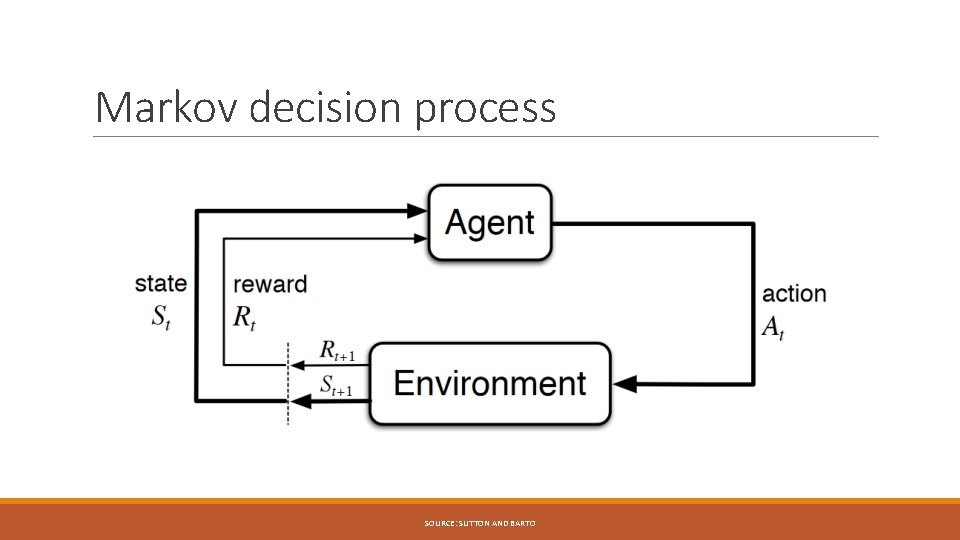

The agent makes observations of the environment state at each time step.
Using this observation, the agent chooses an action from a predefined action space.
After taking an action:
◦ We advance one time step
◦ The agent receives a scalar reward
◦ The environment state changes due to the action
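The observe, act, reward cycle above can be sketched in a few lines of Python. Everything here is an illustrative assumption rather than part of the lecture: `CorridorEnv` is a hypothetical toy environment (a corridor of five cells with a goal at the right end), and `run_episode`, `random_policy`, and `always_right` are made-up names.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: cells 0..4, goal at the last cell."""

    def __init__(self, length=5):
        self.length = length
        self.position = 0

    def reset(self):
        self.position = 0
        return self.position  # here the observation is the full state

    def step(self, action):
        # action is -1 (left) or +1 (right); position is clipped to the corridor
        self.position = min(max(self.position + action, 0), self.length - 1)
        done = self.position == self.length - 1
        reward = 10.0 if done else -1.0  # scalar reward after every action
        return self.position, reward, done


def run_episode(env, policy, max_steps=100):
    """Run one episode; return a list of (state, action, reward) tuples."""
    trajectory = []
    state = env.reset()
    for _ in range(max_steps):
        action = policy(state)                       # agent chooses an action
        next_state, reward, done = env.step(action)  # environment responds
        trajectory.append((state, action, reward))
        state = next_state                           # advance one time step
        if done:
            break
    return trajectory


def random_policy(state):
    return random.choice([-1, 1])


def always_right(state):
    return 1


if __name__ == "__main__":
    for name, policy in [("random", random_policy), ("always right", always_right)]:
        steps = run_episode(CorridorEnv(), policy)
        total = sum(r for _, _, r in steps)
        print(name, "steps:", len(steps), "total reward:", total)
```

The policy is just a function from observation to action, which keeps the agent and the environment cleanly separated.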

The reward
After every action taken by the agent, a scalar reward is received.
◦ Can be positive or negative
The goal of the agent is to maximize the cumulative reward G.
◦ Typically, future rewards are discounted
◦ Often the cumulative reward will be dominated by future rewards
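A common way to discount is to weight the reward received k steps in the future by γ^k, with a discount factor 0 ≤ γ ≤ 1, so that G = r_1 + γ r_2 + γ² r_3 + … A minimal sketch of that calculation follows; the function name `discounted_return` and the example rewards are assumptions made for illustration.

```python
def discounted_return(rewards, gamma=0.9):
    """Return r_1 + gamma * r_2 + gamma**2 * r_3 + ... for a list of rewards."""
    g = 0.0
    # Work backwards so each step applies G_t = r_{t+1} + gamma * G_{t+1}
    for r in reversed(rewards):
        g = r + gamma * g
    return g


if __name__ == "__main__":
    rewards = [-1.0, -1.0, -1.0, 10.0]  # small step costs, large final reward
    for gamma in (0.0, 0.5, 1.0):
        print("gamma =", gamma, "G =", discounted_return(rewards, gamma))
```

With γ = 0 only the immediate reward counts; with γ = 1 the large final reward dominates the return.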

Robot arm example
What are the agent, environment, and reward?
SOURCE: HTTPS://OPENAI.COM/BLOG/LEARNING-DEXTERITY/

Toy self-driving car example
What are the agent, environment, and reward?
SOURCE: HTTPS://METACAR-PROJECT.COM/

Markov state
A Markov state is a state that contains all of the information available in the full state history.
Examples
◦ The position and momentum of a particle (and the potential it is in) in classical mechanics
◦ The position of all pieces on a chess board
◦ A robot vacuum cleaner with sensor data about its immediate surroundings (and perhaps some representation of where it has recently been)
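Stated as an equation, with S_t denoting the state at time step t (notation assumed here, following David Silver's lectures), the Markov property says that conditioning on the full history adds nothing beyond the current state:

$$
\Pr\left(S_{t+1} \mid S_t\right) = \Pr\left(S_{t+1} \mid S_1, S_2, \dots, S_t\right)
$$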

Markov decision process SOURCE: SUTTON AND BARTO

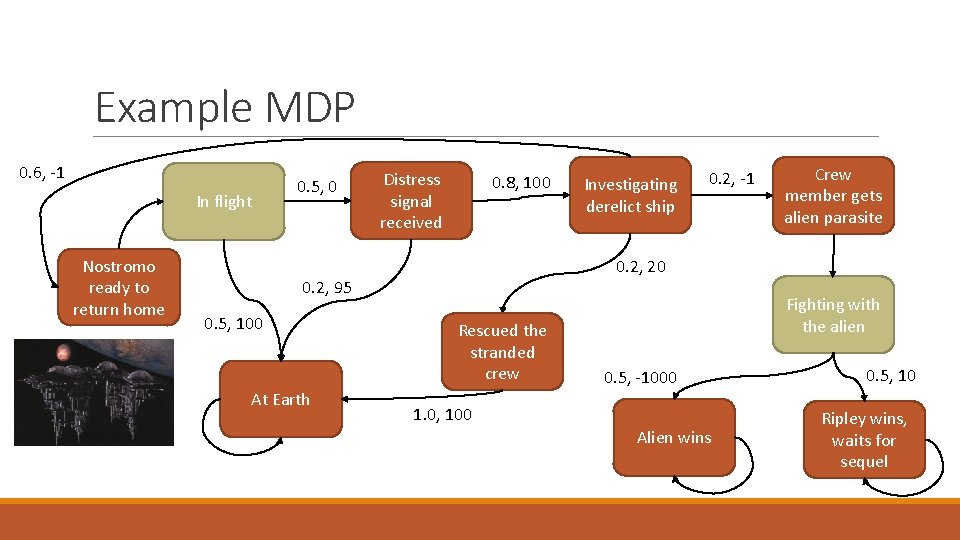

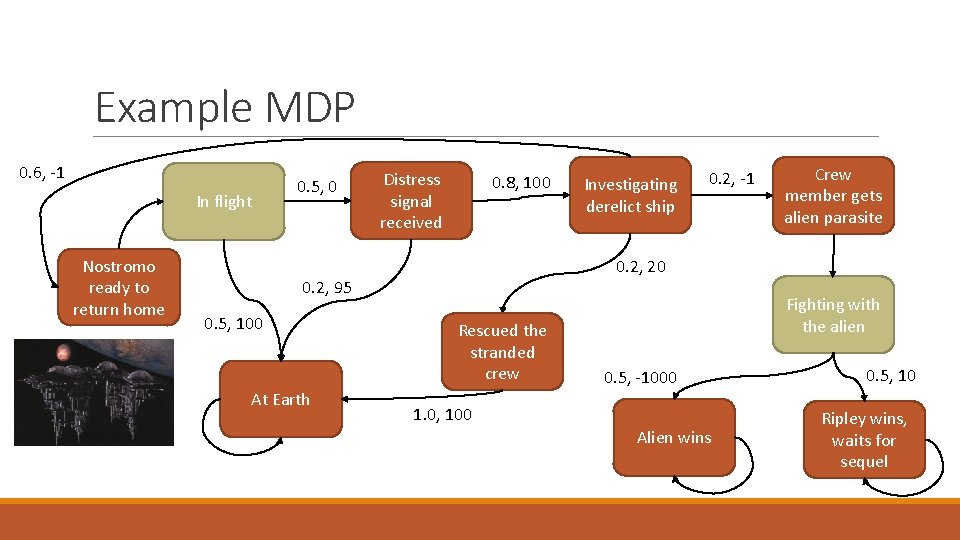

Example MDP
[Diagram: state-transition graph; each edge is labeled "probability, reward"]
States: Nostromo ready to return home; In flight; Distress signal received; At Earth; Investigating derelict ship; Crew member gets alien parasite; Rescued the stranded crew; Fighting with the alien; Alien wins; Ripley wins, waits for sequel
Edge labels: 0.6, -1; 0.5, 0; 0.8, 100; 0.2, -1; 0.2, 20; 0.2, 95; 0.5, 100; 0.5, -1000; 1.0, 100; 0.5, 10

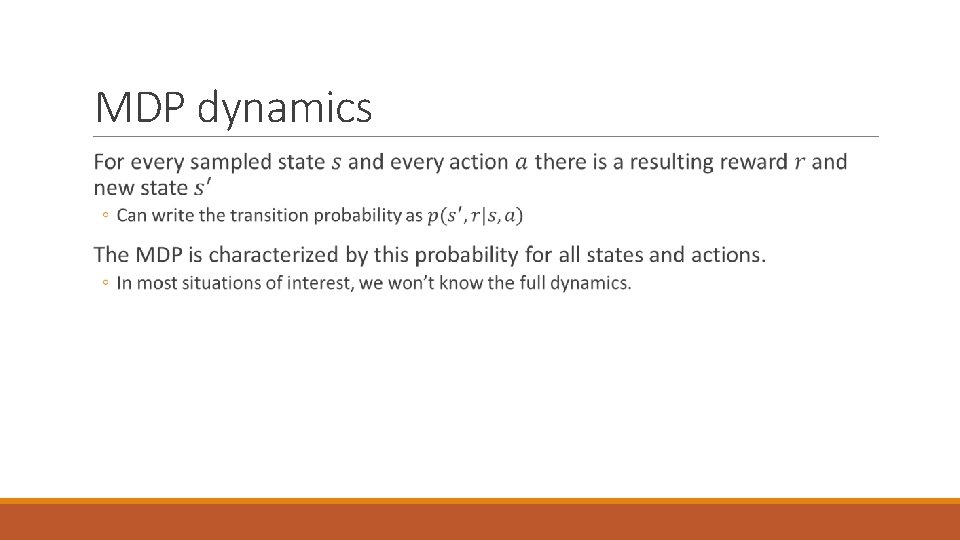

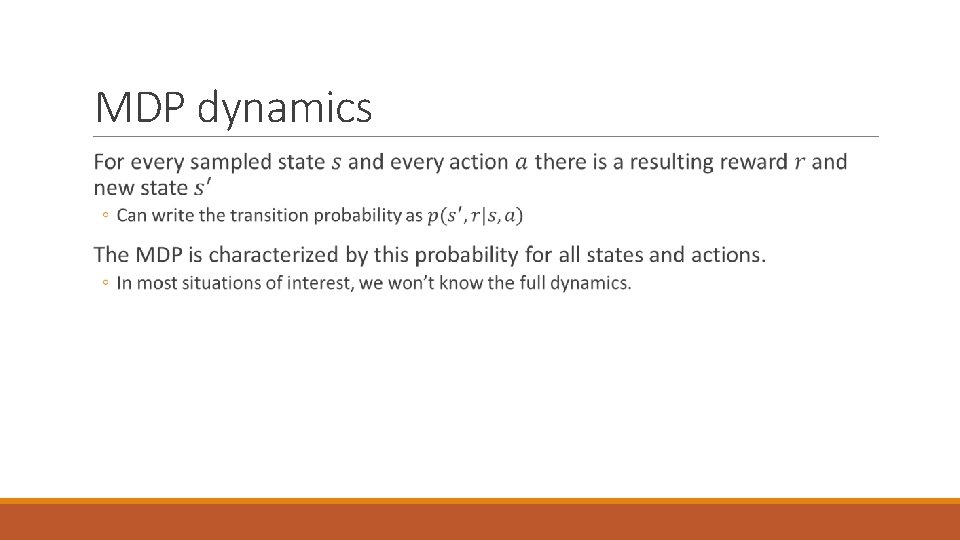

MDP dynamics
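In Sutton and Barto's notation (assumed here), the dynamics of a finite MDP are given by a single function that assigns a probability to each next state s' and reward r, given the current state s and action a:

$$
p(s', r \mid s, a) = \Pr\left(S_{t+1} = s',\ R_{t+1} = r \mid S_t = s,\ A_t = a\right),
\qquad
\sum_{s'} \sum_{r} p(s', r \mid s, a) = 1
$$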

Mastering Atari games
RL state of the art ~2013-2015

Agent trajectories

Cumulative rewards

Policy

Exploration vs. exploitation
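One widely used way to balance the two is an ε-greedy rule: with probability ε take a random action (explore), otherwise take the action with the highest current value estimate (exploit). The sketch below is a generic illustration, not necessarily the rule used in the lecture; the function name `epsilon_greedy` and the example estimates are assumptions.

```python
import random


def epsilon_greedy(action_values, epsilon, rng=random):
    """Pick an action from a dict mapping action -> estimated value."""
    if rng.random() < epsilon:
        # Explore: ignore the estimates and choose uniformly at random
        return rng.choice(list(action_values))
    # Exploit: choose the action with the highest estimated value
    return max(action_values, key=action_values.get)


if __name__ == "__main__":
    estimates = {"left": 0.2, "right": 1.5, "stay": -0.3}  # made-up values
    picks = [epsilon_greedy(estimates, epsilon=0.1) for _ in range(1000)]
    print({a: picks.count(a) for a in estimates})
```

Setting ε = 0 gives a purely greedy agent, while ε = 1 gives a purely random one.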

Break SOURCE: TOBOR THE GREAT

Mastering Go
DeepMind’s AlphaGo algorithm used imitation learning and reinforcement learning to beat Go world champion Lee Sedol in 2016.

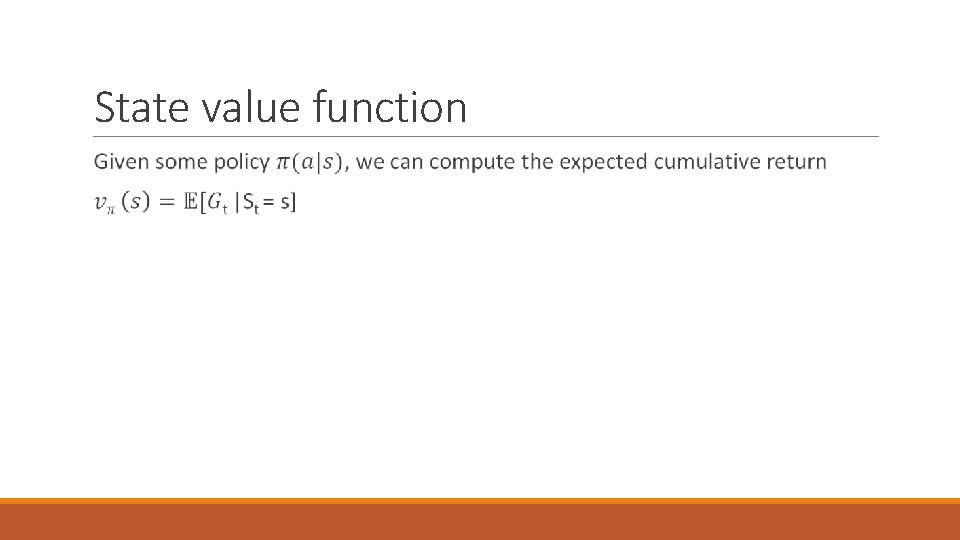

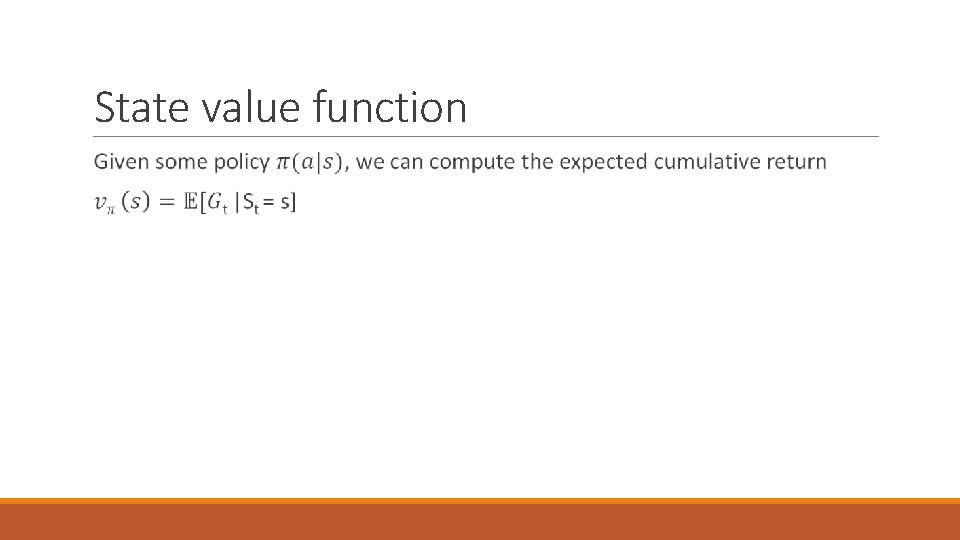

State value function
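In Sutton and Barto's notation (assumed here), the state value function of a policy π is the expected discounted return when starting in state s and following π thereafter:

$$
v_\pi(s) = \mathbb{E}_\pi\left[ G_t \mid S_t = s \right],
\qquad
G_t = \sum_{k=0}^{\infty} \gamma^{k} R_{t+k+1}
$$

Here γ is the discount factor and R_{t+k+1} is the reward received k+1 steps after time t.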

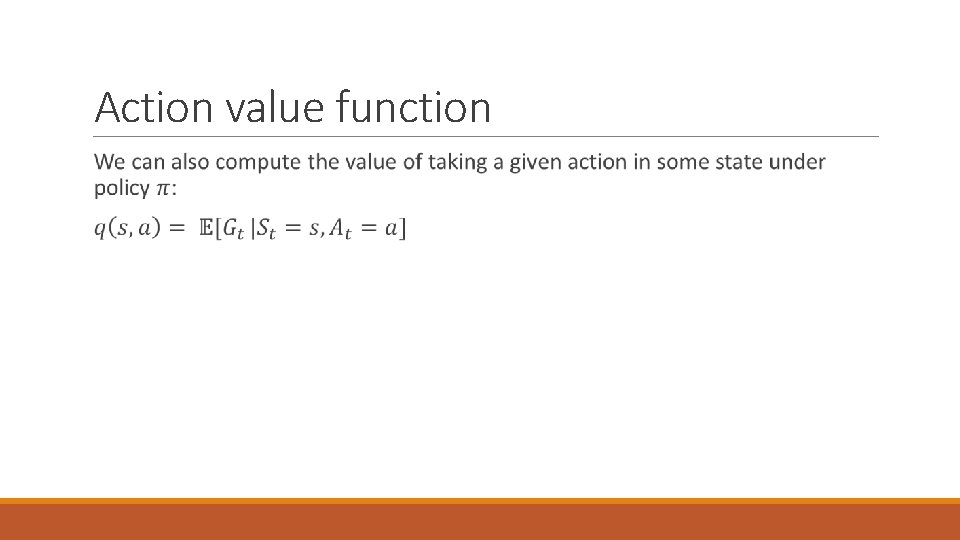

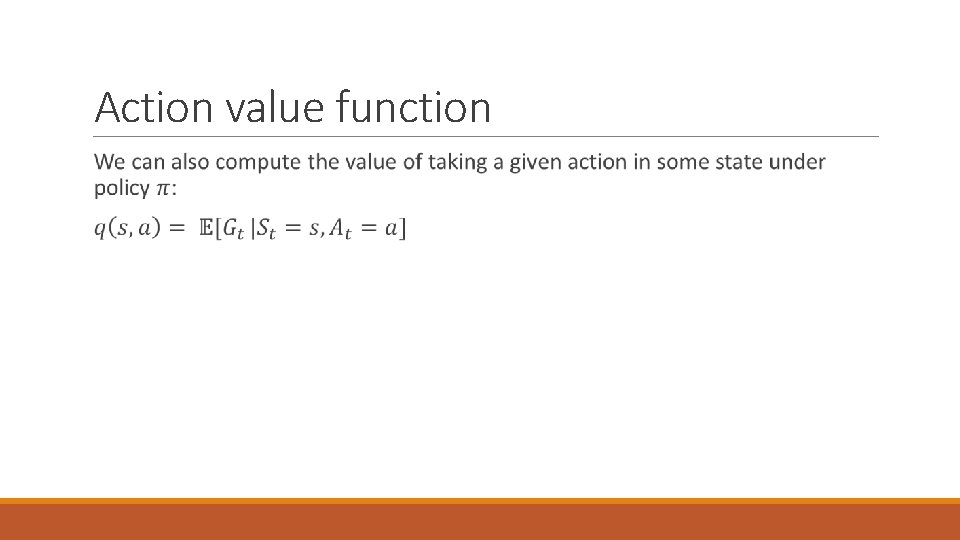

Action value function
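In Sutton and Barto's notation (assumed here), the action value function of a policy π is the expected discounted return G_t when starting in state s, taking action a, and following π thereafter:

$$
q_\pi(s, a) = \mathbb{E}_\pi\left[ G_t \mid S_t = s,\ A_t = a \right]
$$

Averaging over the actions the policy would choose recovers the value of the state itself, writing v_π(s) for the expected return from s under π:

$$
v_\pi(s) = \sum_{a} \pi(a \mid s)\, q_\pi(s, a)
$$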

Bellman equation
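A standard form of the Bellman equation for the state value of a policy π, written in Sutton and Barto's notation (assumed here), relates the value of a state to the values of its successor states. Here π(a | s) is the probability of choosing action a in state s, p(s', r | s, a) is the probability of moving to s' with reward r, and γ is the discount factor:

$$
v_\pi(s) = \sum_{a} \pi(a \mid s) \sum_{s',\, r} p(s', r \mid s, a) \left[ r + \gamma\, v_\pi(s') \right]
$$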

Optimal value functions and policies
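In Sutton and Barto's notation (assumed here), the optimal value functions are the best values achievable by any policy, and an optimal policy acts greedily with respect to the optimal action values:

$$
v_*(s) = \max_\pi v_\pi(s),
\qquad
q_*(s, a) = \max_\pi q_\pi(s, a),
\qquad
\pi_*(s) = \arg\max_{a} q_*(s, a)
$$

The optimal action values satisfy the Bellman optimality equation, where p(s', r | s, a) is the probability of moving to s' with reward r and γ is the discount factor:

$$
q_*(s, a) = \sum_{s',\, r} p(s', r \mid s, a) \left[ r + \gamma \max_{a'} q_*(s', a') \right]
$$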

Unknown MDP dynamics
In everything prior to this, we have assumed some explicit knowledge of the transition dynamics. Will that always be true? …No.

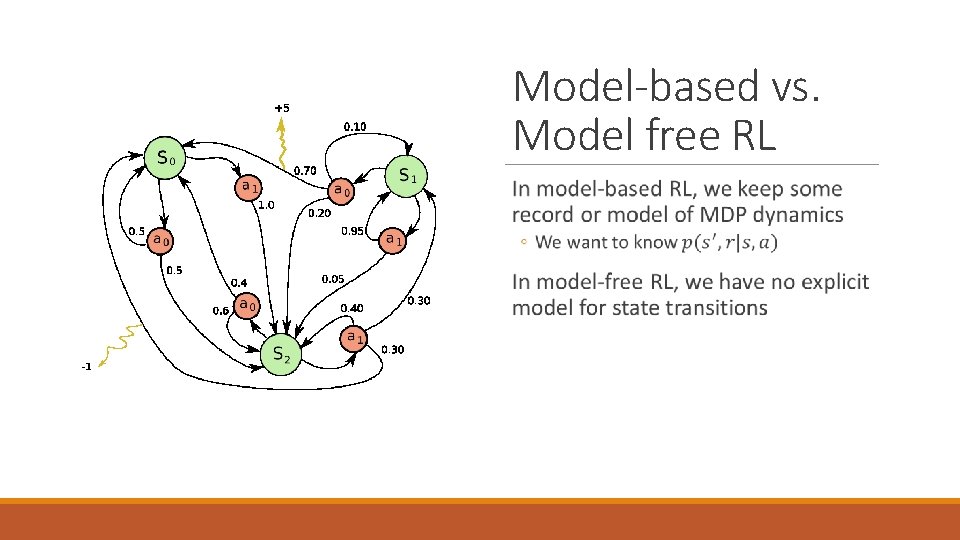

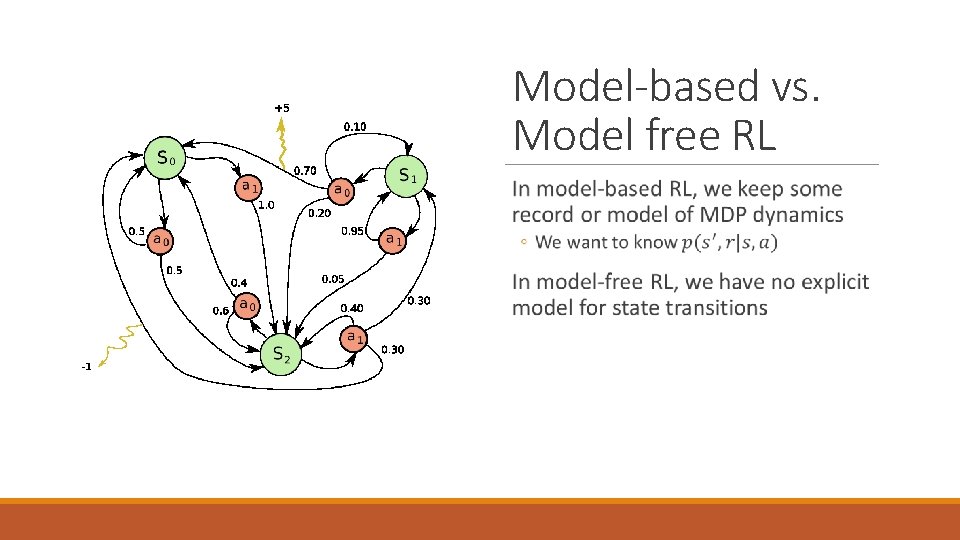

Model-based vs. model-free RL

Mastering board games from scratch
In the first victory of an RL agent over a Go world champion, there was an initial imitation learning step using human expert data. Here AlphaZero masters chess, shogi, and Go from scratch (late 2017).

Choosing a policy
So far we have discussed:
◦ Agent
◦ Environment / State
◦ Reward
◦ State transitions
◦ Value functions (under some policy)
How does the agent choose a policy? Or improve a policy?

One solution: the q function

Determining the q function
How could we potentially learn or estimate the q function?
If we have complete knowledge of the MDP dynamics, we could learn the q function by iterating over all states.
◦ This is a nontrivial problem even if you are handed the MDP dynamics for free
We could attempt to learn the MDP dynamics by taking sample trajectories to estimate values.
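When the dynamics are fully known, one standard way to iterate over all states is Q-value iteration: repeatedly apply the Bellman optimality backup to every state-action pair until the values stop changing. The sketch below assumes a small tabular MDP. The data layout (`dynamics[state][action]` as a list of `(probability, next_state, reward)` tuples), the function name, and `TOY_MDP` are all hypothetical choices made for illustration.

```python
def q_value_iteration(dynamics, gamma=0.9, tol=1e-8, max_sweeps=10_000):
    """Compute optimal action values for a known tabular MDP.

    dynamics[state][action] is a list of (probability, next_state, reward).
    A state with no actions is treated as terminal (value 0).
    """
    q = {s: {a: 0.0 for a in actions} for s, actions in dynamics.items()}
    for _ in range(max_sweeps):
        biggest_change = 0.0
        for s, actions in dynamics.items():
            for a, outcomes in actions.items():
                # Bellman optimality backup: expected reward plus the
                # discounted value of the best action in the next state
                new_value = sum(
                    p * (r + gamma * max(q[s2].values(), default=0.0))
                    for p, s2, r in outcomes
                )
                biggest_change = max(biggest_change, abs(new_value - q[s][a]))
                q[s][a] = new_value
        if biggest_change < tol:
            break
    return q


# Hypothetical two-state MDP: a safe action with a small sure reward and a
# risky action that either pays off or loops back at a cost.
TOY_MDP = {
    "start": {
        "safe": [(1.0, "end", 1.0)],
        "risky": [(0.5, "end", 10.0), (0.5, "start", -2.0)],
    },
    "end": {},
}

if __name__ == "__main__":
    for state, values in q_value_iteration(TOY_MDP).items():
        print(state, values)
```

Each sweep touches every state-action pair and every possible outcome, which is why this becomes impractical for large state spaces even when the dynamics are handed over for free.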

Or…
We can use a statistical estimator to predict action values
SOURCE: HTTPS://WWW.LATIMES.COM/SPORTSNOW/LA-SP-EVEL-KNIEVEL-COLISEUM-20170217-STORY.HTML
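The simplest estimator of this kind is a lookup table that is nudged toward each observed sample, which is the tabular Q-learning update. The sketch below is offered as one possible illustration, not as the estimator the slide has in mind; the function name, the step size `alpha`, and the table layout keyed by `(state, action)` are assumptions.

```python
from collections import defaultdict


def q_learning_update(q, state, action, reward, next_state, next_actions,
                      alpha=0.1, gamma=0.9):
    """Apply one tabular Q-learning update in place; return the new estimate.

    q maps (state, action) -> estimated action value.
    next_actions lists the actions available in next_state (empty if terminal).
    """
    # Value of the best action in the next state, 0 if the episode ended
    best_next = max((q[(next_state, a)] for a in next_actions), default=0.0)
    td_target = reward + gamma * best_next
    # Move the current estimate a fraction alpha toward the sampled target
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


if __name__ == "__main__":
    q = defaultdict(float)  # unseen pairs start at 0
    q[("s1", "a")] = 2.0
    print(q_learning_update(q, "s0", "a", 1.0, "s1", ["a", "b"],
                            alpha=0.5, gamma=0.5))
```

No transition probabilities appear anywhere in the update; only sampled transitions are needed.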

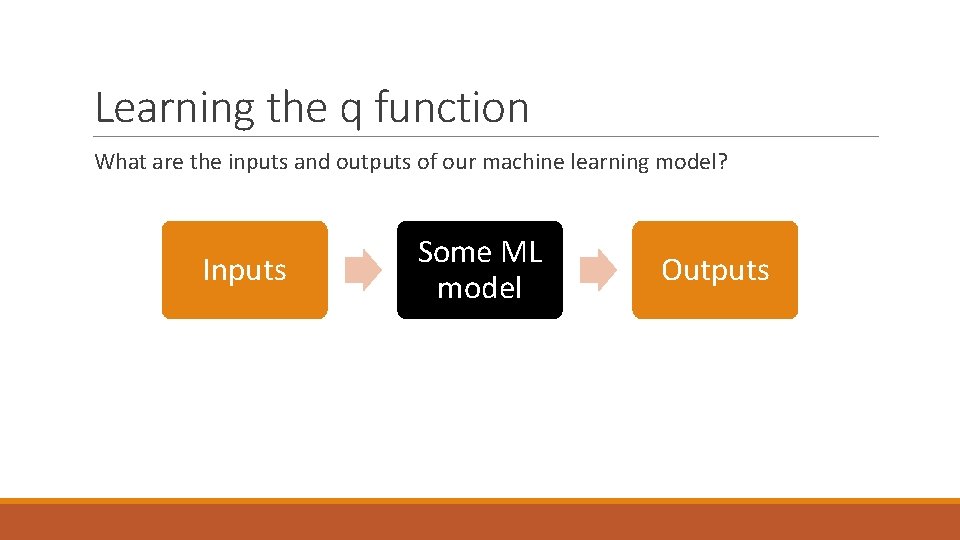

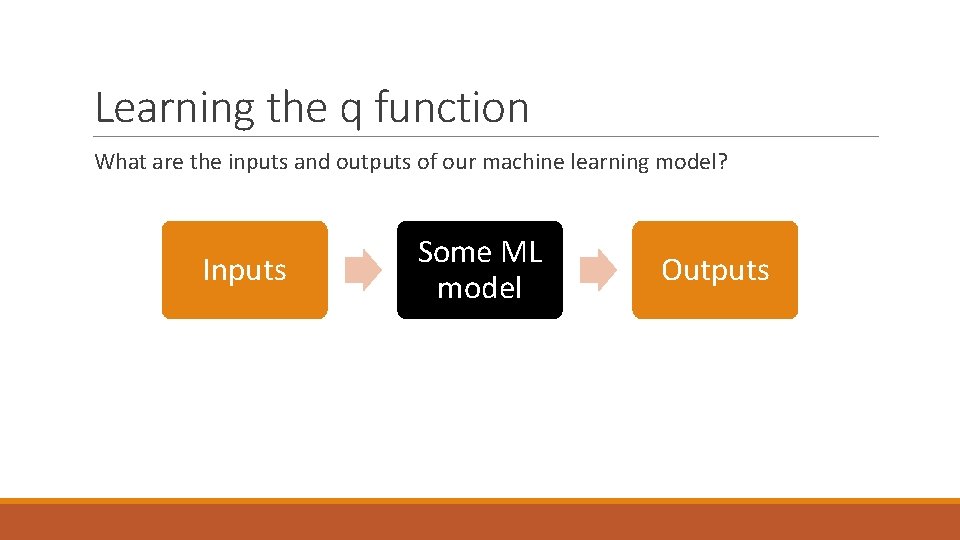

Learning the q function
What are the inputs and outputs of our machine learning model?
Inputs → Some ML model → Outputs

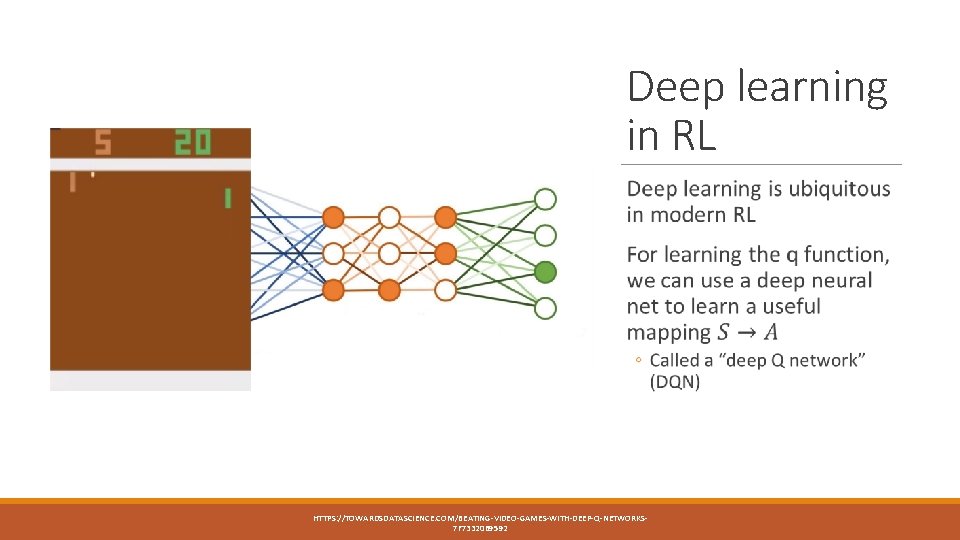

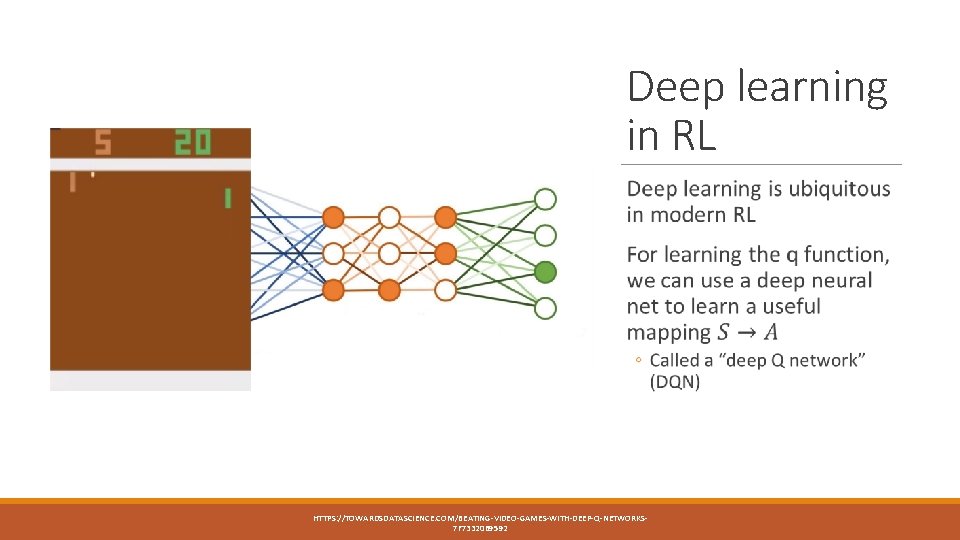

Deep learning in RL
HTTPS://TOWARDSDATASCIENCE.COM/BEATING-VIDEO-GAMES-WITH-DEEP-Q-NETWORKS-7F73320B9592

Training the DQN
What constitutes a training example for our model?
How many outputs should our DQN have?
At what point do we compute the loss?
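One common answer to the first question is that a training example is a single transition (state, action, reward, next state, done flag), stored in a replay buffer and sampled at random during training. The sketch below illustrates that idea; the names `Transition` and `ReplayBuffer` and the buffer capacity are assumptions, not taken from the lecture.

```python
import random
from collections import deque, namedtuple

# One training example: what the agent saw, did, received, and saw next
Transition = namedtuple(
    "Transition", ["state", "action", "reward", "next_state", "done"]
)


class ReplayBuffer:
    """Fixed-size store of past transitions; the oldest are dropped first."""

    def __init__(self, capacity):
        self.memory = deque(maxlen=capacity)

    def push(self, state, action, reward, next_state, done):
        self.memory.append(Transition(state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Random sampling breaks up the correlation between consecutive steps
        return random.sample(list(self.memory), batch_size)

    def __len__(self):
        return len(self.memory)


if __name__ == "__main__":
    buffer = ReplayBuffer(capacity=1000)
    for t in range(5):
        buffer.push(state=t, action=1, reward=-1.0, next_state=t + 1, done=(t == 4))
    print(buffer.sample(batch_size=2))
```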

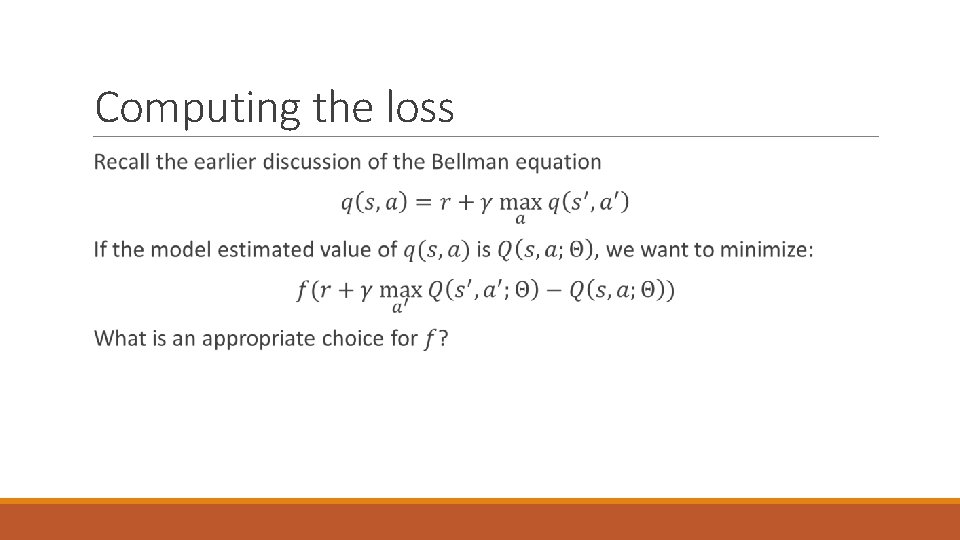

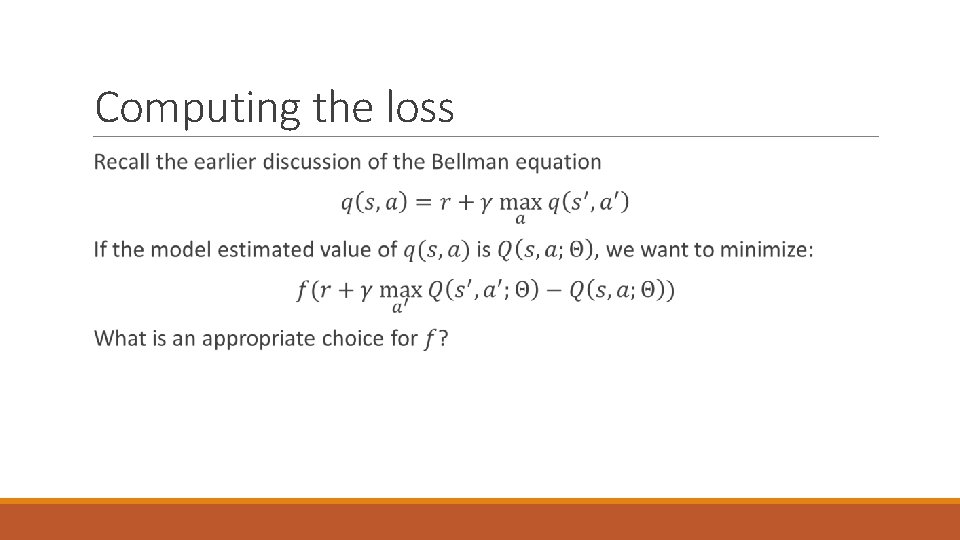

Computing the loss
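A typical choice, assumed here rather than taken from the slide, is to regress the network's prediction for the action actually taken toward a bootstrapped target: the reward plus the discounted maximum predicted value in the next state, with no bootstrap term if the episode ended. The sketch below uses plain Python lists and a mean squared error; the function names and numbers are illustrative, and practical DQN implementations add refinements such as a separate target network.

```python
def td_targets(rewards, next_q_values, dones, gamma=0.99):
    """Build one regression target per transition in a batch.

    next_q_values[i] holds the predicted value of every action in the
    next state of transition i.
    """
    targets = []
    for r, next_q, done in zip(rewards, next_q_values, dones):
        # No future value to bootstrap from once the episode has ended
        bootstrap = 0.0 if done else gamma * max(next_q)
        targets.append(r + bootstrap)
    return targets


def mse_loss(predicted, targets):
    """Mean squared error between predicted action values and targets."""
    return sum((p - t) ** 2 for p, t in zip(predicted, targets)) / len(targets)


if __name__ == "__main__":
    rewards = [1.0, 0.0]
    next_q = [[0.0, 2.0], [5.0, 1.0]]  # made-up network outputs
    dones = [False, True]
    predicted = [1.0, 1.0]             # predictions for the actions taken
    targets = td_targets(rewards, next_q, dones, gamma=0.5)
    print("targets:", targets, "loss:", mse_loss(predicted, targets))
```

The loss can only be computed after the reward and next state have been observed, since both enter the target.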

Mastering StarCraft 2
The DeepMind AlphaStar agent defeated world-class players at StarCraft 2 (Dec 2018). Unlike the case of board games, this involves a partially observable environment, continuous control, and complicated game dynamics (e.g. economy, unit and building upgrades).

How does an agent gain experience?
How were the agents in the examples throughout the lecture able to gain experience?
How would we train our agent for some possible engineering tasks?
◦ Self-driving car
◦ Drilling or mining
◦ Chemical process automation

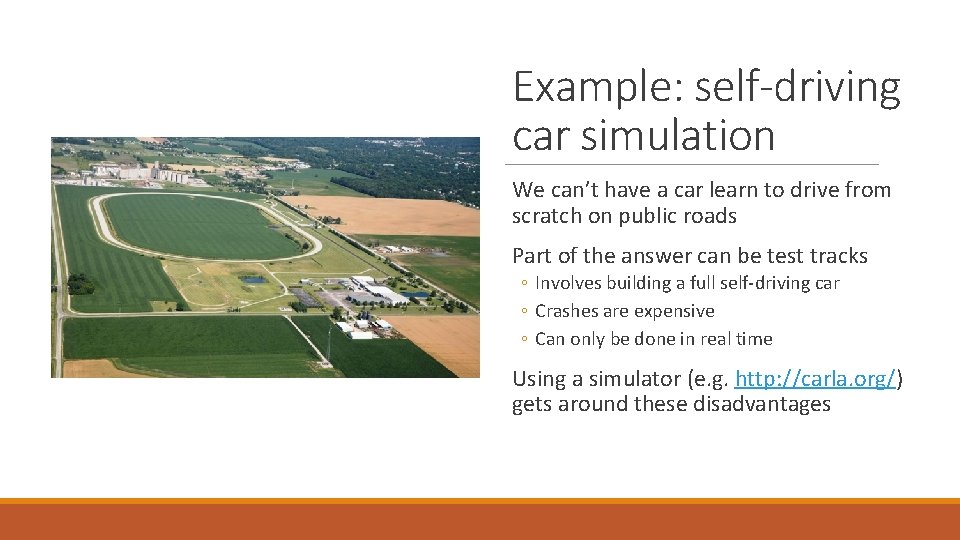

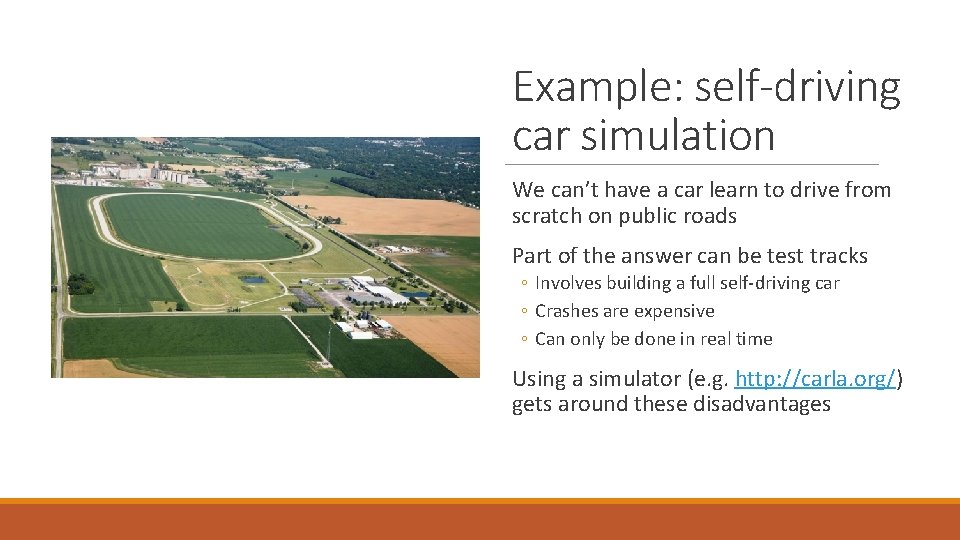

Example: self-driving car simulation
We can’t have a car learn to drive from scratch on public roads.
Part of the answer can be test tracks:
◦ Involves building a full self-driving car
◦ Crashes are expensive
◦ Can only be done in real time
Using a simulator (e.g. http://carla.org/) gets around these disadvantages.