MECEE 4520 Data Science for Mechanical Systems Lecture

- Slides: 62

MECEE 4520: Data Science for Mechanical Systems
Lecture #4: Data Pipelines For ML 1
Instructor: Josh Browne, Ph.D.
Guest speaker: Alejandro Mesa
February 13, 2019

Course Logistics
● HW #1 graded
● HW #2 (I haven’t checked them yet)
● HW #3 – will be assigned this evening, and will be due in two weeks
  ○ You are encouraged to work in groups of 2-3 for HW #3 and submit a single assignment
● My OH tomorrow from 4 PM to 5 PM
● Guest lecturer Alejandro Mesa – alejandro.mesa@rho.ai

Alejandro Mesa
alejandro.mesa@rho.ai
● UCONN - B.S. Computer Science - 2005
● RPI - M.S. Management - 2008
● Columbia - M.S. Computer Science - 2012
● United Technologies Corp. - Sr. Software Engineer - 2005-2011
● Prometheus Research - Sr. Software Engineer - 2011
● Rho AI - Lead Software Architect - 2012

Any questions about databases?

Goals
● In this section we will cover:
  ○ Methodologies for data generation
  ○ Methodologies for data transformation
  ○ Methodologies for data ingestion
  ○ Sources of data
● At the end of this section you should:
  ○ Be able to extract, transform and load (ETL) data
  ○ Understand which tools can be used for ETL

So... why should we care about data?

First some facts...
According to Domo: "Over 2.5 quintillion bytes of data are created every single day, and it’s only going to grow from there. By 2020, it’s estimated that 1.7 MB of data will be created every second for every person on earth."
Source: DOMO
1 quintillion: 1,000,000,000,000,000,000 (10^18)

First some facts...
According to Science Daily: "90% of the world's data has been created in the last two years"
Source: Science Daily

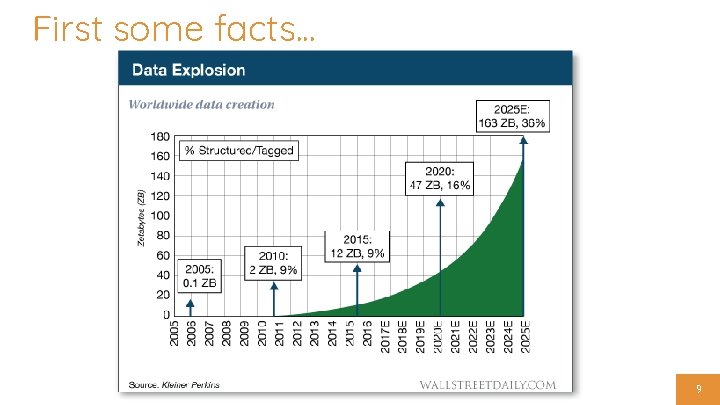

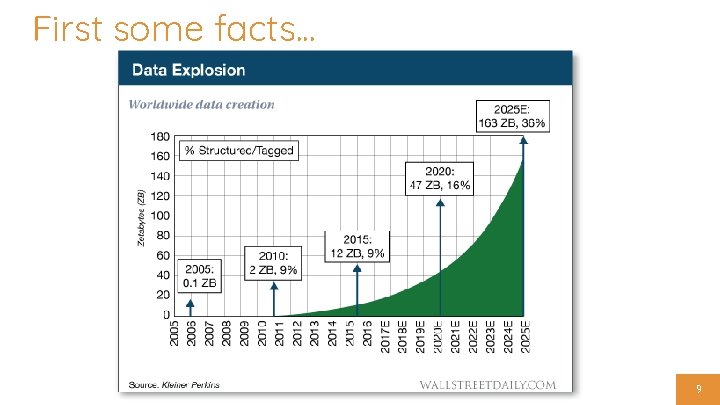

First some facts...

So... why should we care about data?
Data is everywhere! It’s being used daily to drive business decisions.

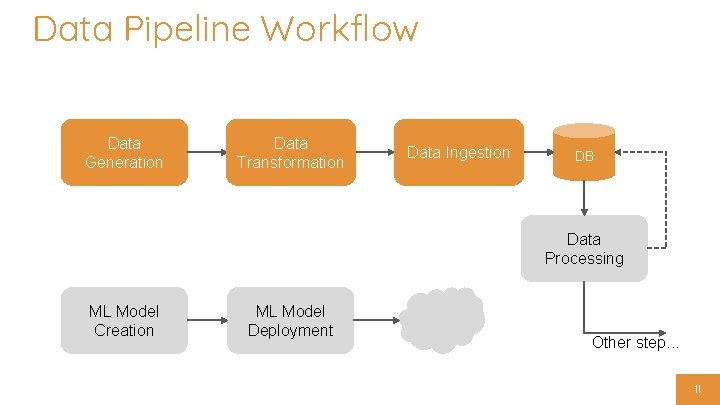

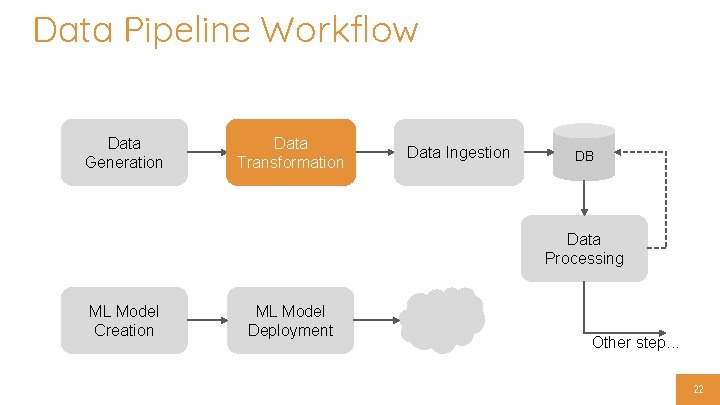

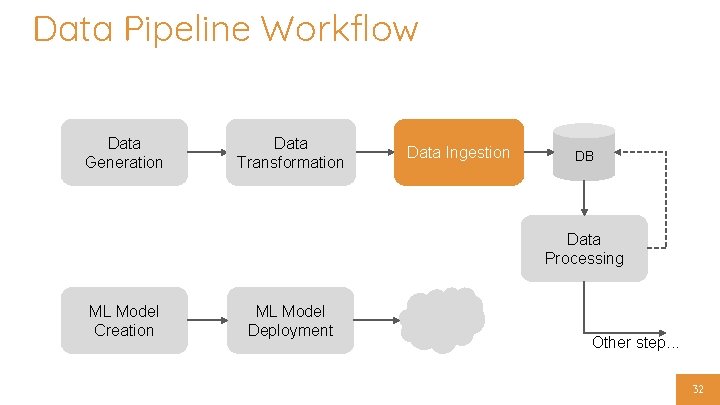

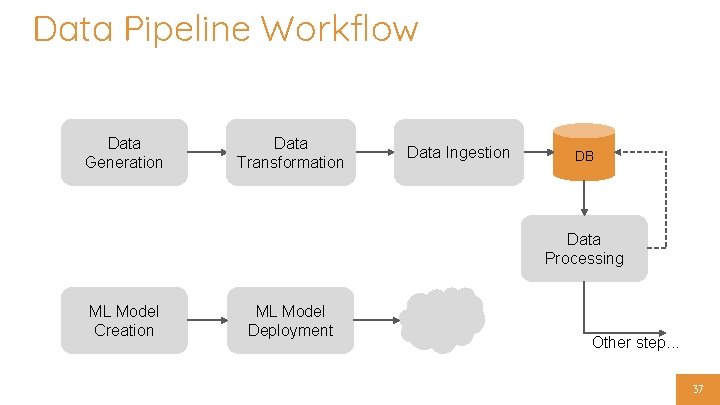

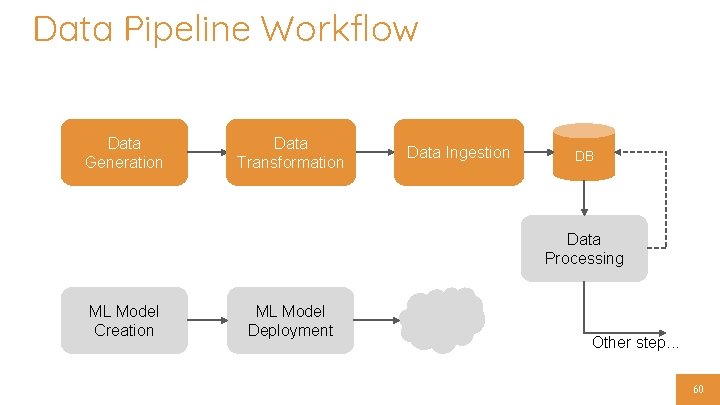

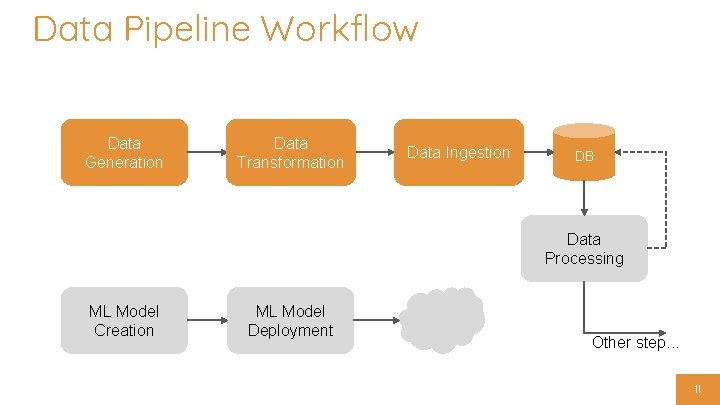

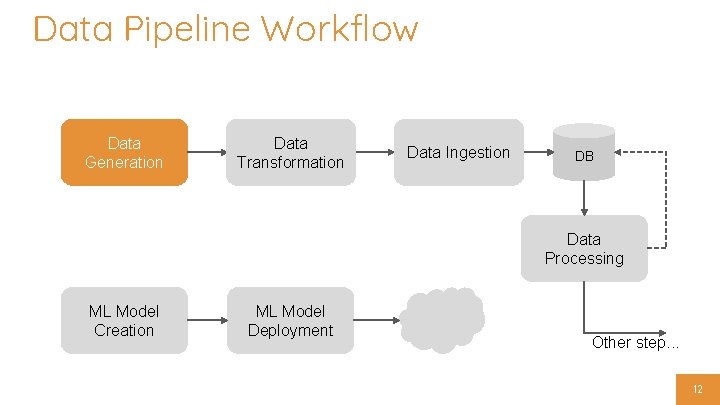

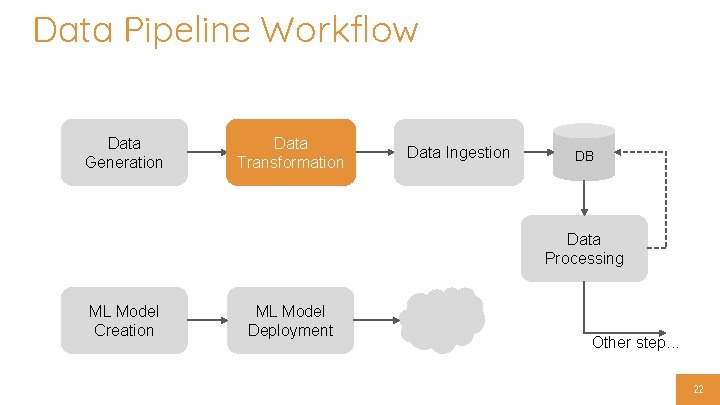

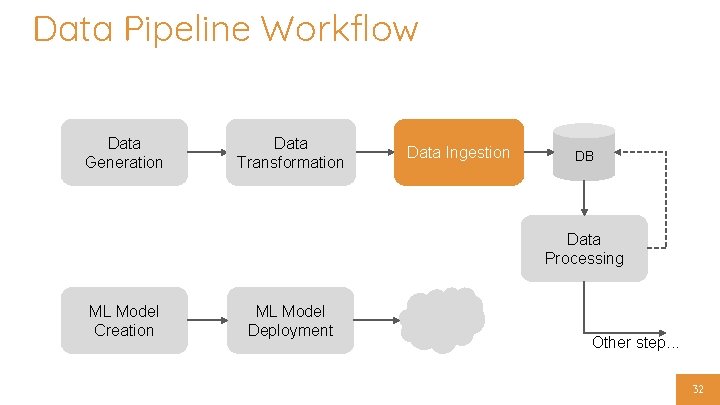

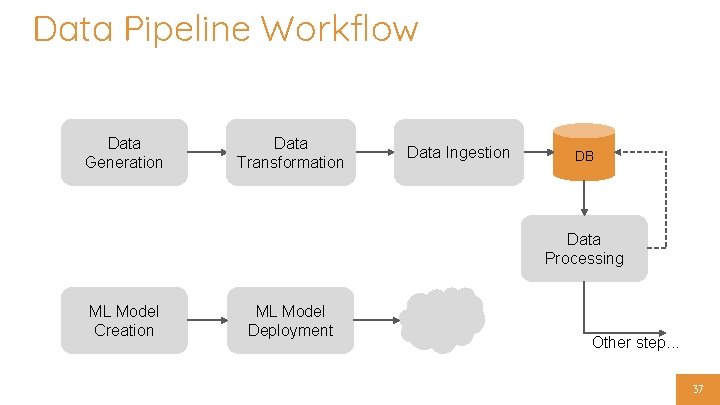

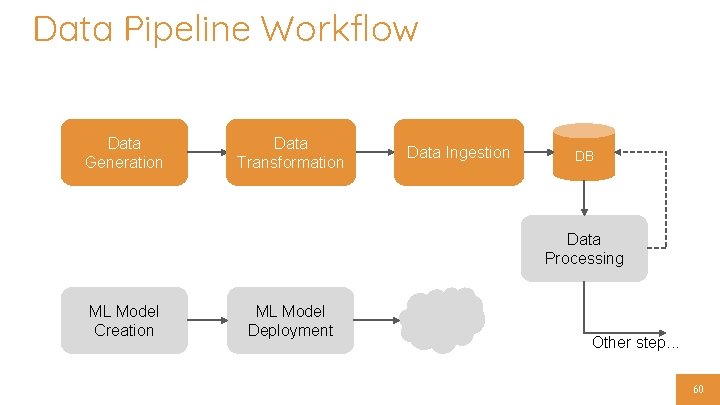

Data Pipeline Workflow
Data Generation → Data Transformation → Data Ingestion → DB → Data Processing → ML Model Creation → ML Model Deployment → Other step...

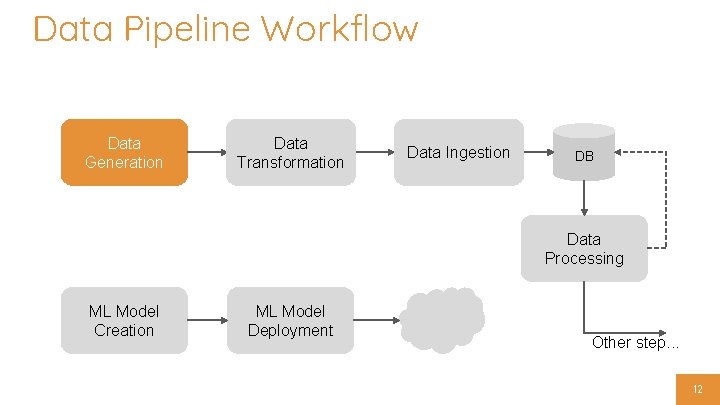


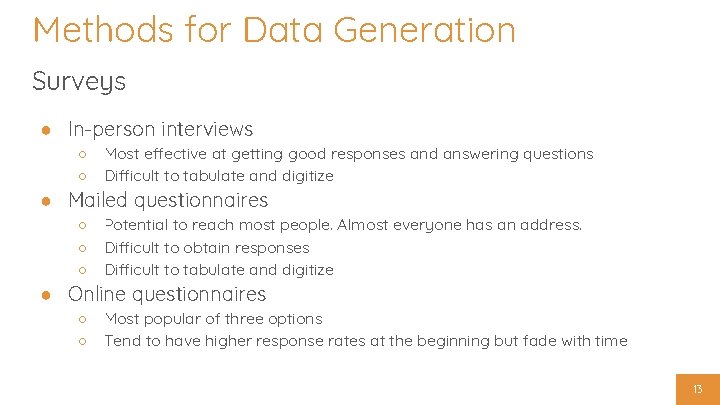

Methods for Data Generation
Surveys
● In-person interviews
  ○ Most effective at getting good responses and answering questions
  ○ Difficult to tabulate and digitize
● Mailed questionnaires
  ○ Potential to reach most people. Almost everyone has an address.
  ○ Difficult to obtain responses
  ○ Difficult to tabulate and digitize
● Online questionnaires
  ○ Most popular of the three options
  ○ Tend to have higher response rates at the beginning but fade with time

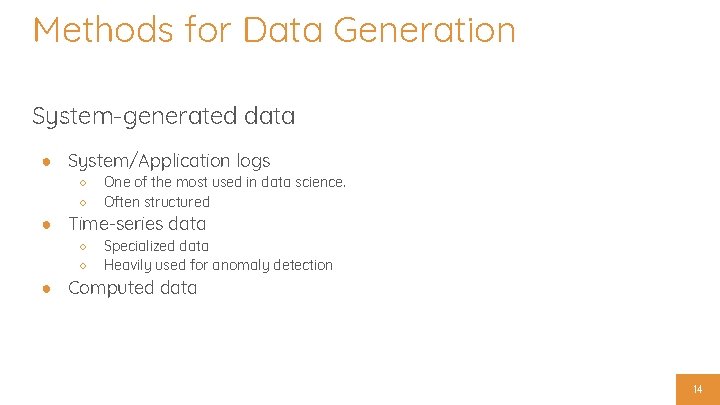

Methods for Data Generation
System-generated data
● System/Application logs
  ○ One of the most used in data science
  ○ Often structured
● Time-series data
  ○ Specialized data
  ○ Heavily used for anomaly detection
● Computed data

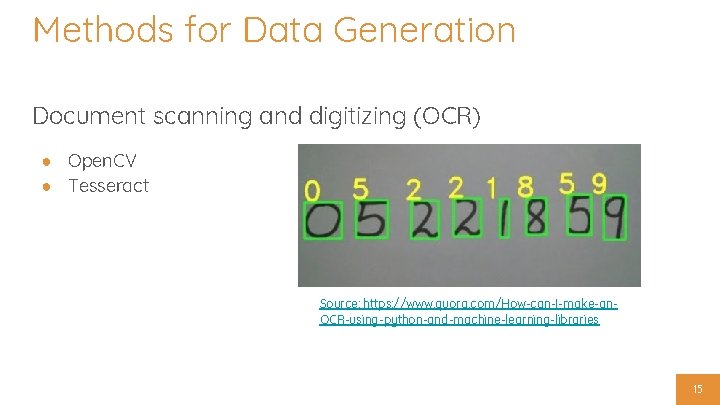

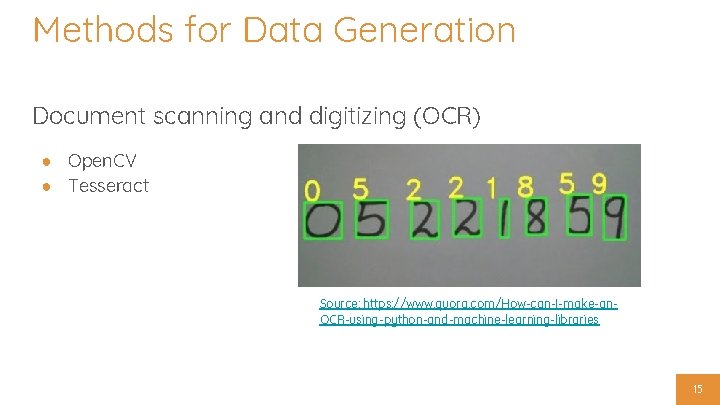

Methods for Data Generation
Document scanning and digitizing (OCR)
● OpenCV
● Tesseract
Source: https://www.quora.com/How-can-I-make-an-OCR-using-python-and-machine-learning-libraries

Methods for Data Generation
Web scraping
● High-activation
● Requires domain knowledge
Tools and frameworks:
● Octoparse, ParseHub
● Scraperapi, Scrapy
● Selenium, Puppeteer
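As a minimal sketch of what a scraper does, the snippet below parses an HTML string and collects link targets and link text using only Python's standard library. The sample HTML and the `LinkCollector` class are hypothetical names made up for illustration. A real scraper would fetch pages over HTTP and would more likely rely on one of the frameworks listed above.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collects (href, text) pairs for every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []      # finished (href, text) pairs
        self._href = None    # href of the <a> tag currently open, if any
        self._text = []      # text fragments seen inside that tag

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


# Hypothetical page content; in practice this would come from an HTTP response.
SAMPLE_HTML = """
<html><body>
  <h1>Open data</h1>
  <a href="https://data.worldbank.org/">World Bank</a>
  <a href="https://opendata.cityofnewyork.us">NYC <b>Open</b> Data</a>
</body></html>
"""

collector = LinkCollector()
collector.feed(SAMPLE_HTML)
for href, text in collector.links:
    print(href, "->", text)
```

The domain knowledge mentioned on the slide shows up in the parser: knowing which tags and attributes carry the data you want is specific to each site.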

Scraper exercise

Methods for Data Generation
Data labeling
● Annotate text to extract metadata
● Annotate text or images for classification
● Classify text for sentiment analysis
Tools and frameworks:
● Prodigy
● Doccano (alternative to Prodigy)
● Brat (alternative to Prodigy)
● Labelbox
● Amazon Mechanical Turk

Labeling exercise

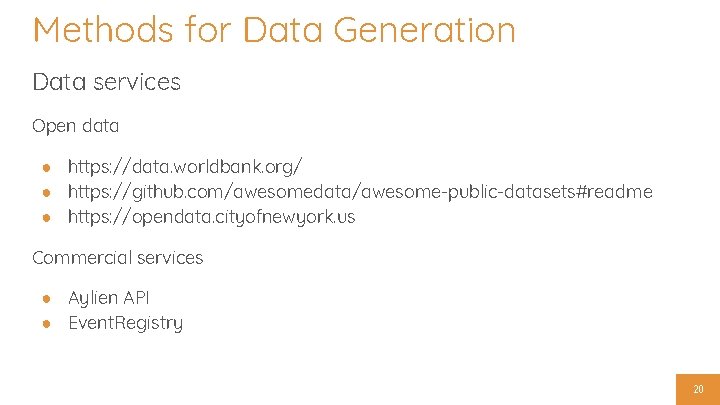

Methods for Data Generation
Data services
Open data
● https://data.worldbank.org/
● https://github.com/awesomedata/awesome-public-datasets#readme
● https://opendata.cityofnewyork.us
Commercial services
● Aylien API
● EventRegistry

Break
Source: http://dilbert.com/strip/2014-05-07


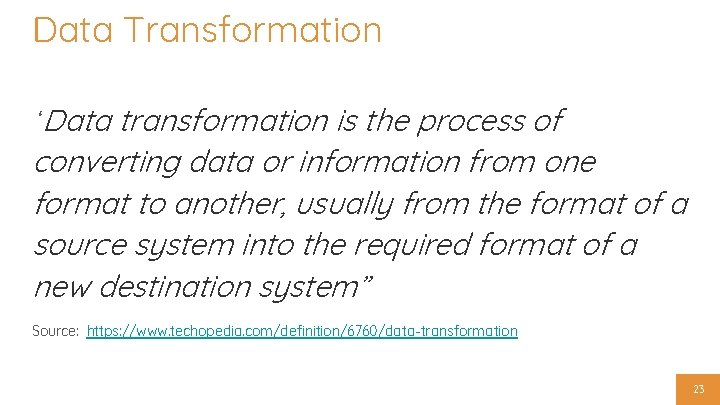

Data Transformation
“Data transformation is the process of converting data or information from one format to another, usually from the format of a source system into the required format of a new destination system”
Source: https://www.techopedia.com/definition/6760/data-transformation

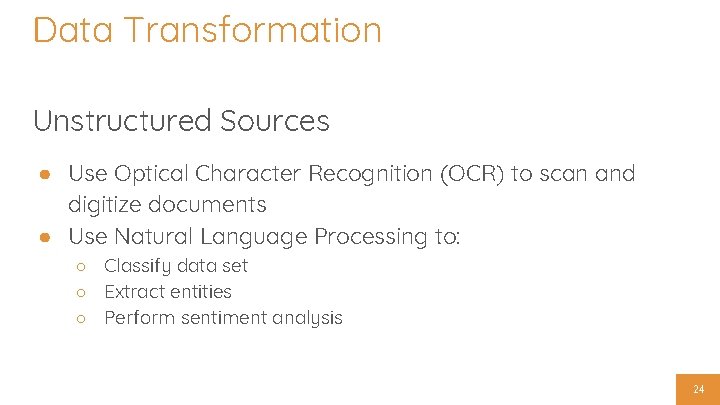

Data Transformation
Unstructured Sources
● Use Optical Character Recognition (OCR) to scan and digitize documents
● Use Natural Language Processing to:
  ○ Classify data set
  ○ Extract entities
  ○ Perform sentiment analysis

Google NLP example

Data Transformation
Structured Sources
● You can use MapReduce to summarize and reduce your data
● Use Machine Learning models to learn new information about the data
● Compute new data based on existing values
● Feature scaling: rescale values to a common range to normalize the data set

Data Transformation
MapReduce
● Programming model for processing and generating large data sets
  ○ Map: operation that filters or sorts the input data set
  ○ Reduce: summarizes the data set (counting, yielding frequencies, etc.)
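The two phases can be sketched in plain Python with the classic word-count example. This is a single-process illustration under simplifying assumptions, not a distributed implementation: the `map_phase`, `shuffle` and `reduce_phase` names and the sample documents are made up here, and the grouping step between map and reduce is something a MapReduce framework would normally handle for you.

```python
from collections import defaultdict
from functools import reduce


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one input document."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle(pairs):
    """Group emitted values by key, as a framework does between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: summarize all values for one key (here, by summing the counts)."""
    return key, reduce(lambda a, b: a + b, values)


# Hypothetical input documents.
documents = ["the pump failed", "the valve failed", "the pump restarted"]

mapped = [pair for doc in documents for pair in map_phase(doc)]
counts = dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())
print(counts)
```

Because each document is mapped independently and each key is reduced independently, both phases can be spread across many machines, which is what makes the model suitable for large data sets.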

MapReduce exercise

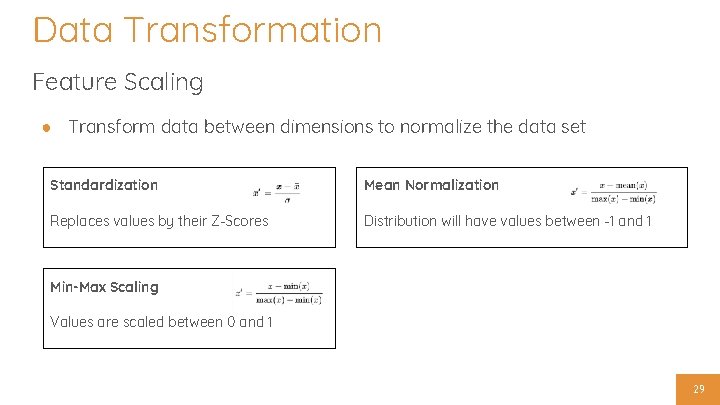

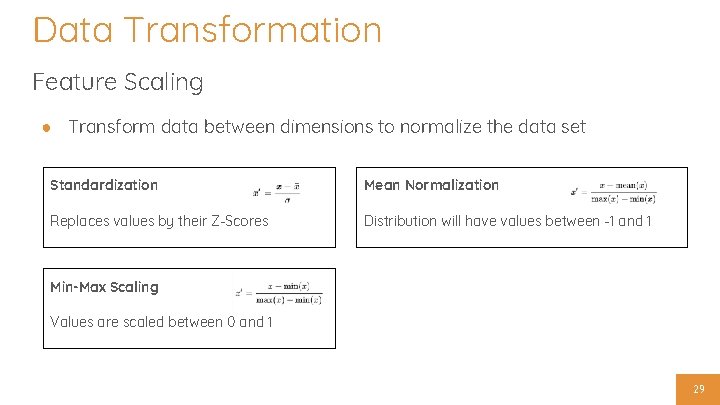

Data Transformation
Feature Scaling
● Rescale feature values to a common range to normalize the data set
  ○ Standardization: replaces values by their Z-scores
  ○ Mean Normalization: distribution will have values between -1 and 1
  ○ Min-Max Scaling: values are scaled between 0 and 1
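The three scalings can be written out with the standard library as a rough sketch. The function names and the sample temperatures are illustrative assumptions, and standardization here uses the population standard deviation (`pstdev`); a library such as scikit-learn would normally be used in practice.

```python
from statistics import mean, pstdev


def standardize(values):
    """Z-score: (x - mean) / standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    return [(x - mu) / sigma for x in values]


def mean_normalize(values):
    """(x - mean) / (max - min); results fall between -1 and 1."""
    mu, span = mean(values), max(values) - min(values)
    return [(x - mu) / span for x in values]


def min_max_scale(values):
    """(x - min) / (max - min); results fall between 0 and 1."""
    low, span = min(values), max(values) - min(values)
    return [(x - low) / span for x in values]


# Hypothetical sensor readings. A constant column would divide by zero
# in all three functions and needs special handling in real code.
temperatures = [80, 82, 85, 90, 93]

print(standardize(temperatures))
print(mean_normalize(temperatures))
print(min_max_scale(temperatures))
```

After standardization the values have mean 0 and standard deviation 1, while min-max scaling pins the smallest value to 0 and the largest to 1.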

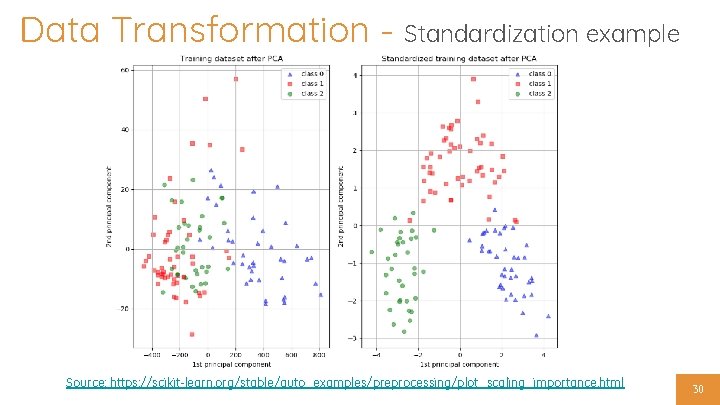

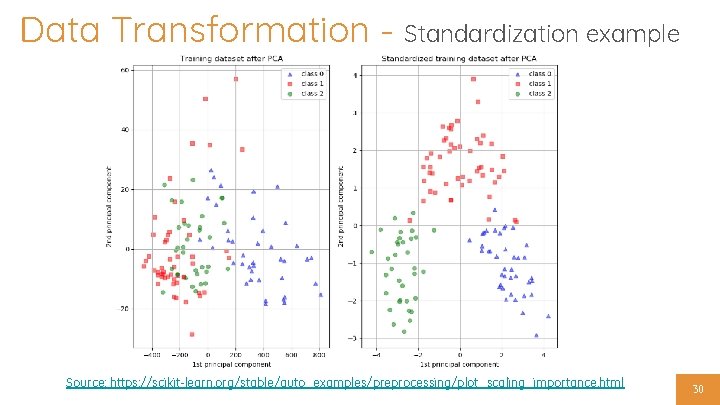

Data Transformation - Standardization example
Source: https://scikit-learn.org/stable/auto_examples/preprocessing/plot_scaling_importance.html

Data Transformation
More on feature scaling
● https://scikit-learn.org/stable/auto_examples/preprocessing/plot_scaling_importance.html
● https://medium.com/greyatom/why-how-and-when-to-scale-your-features-4b30ab09db5e
● http://ece.eng.umanitoba.ca/undergraduate/ECE4850T02/Lecture%20Slides/Feature%20Scaling.pdf


Methods for Data Ingestion
Batch Processing
● Usually executed manually
● Used for:
  ○ Initializing new data sets or seeding a database
  ○ One-off analysis
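A batch load that seeds a database might look like the following sketch. It assumes an in-memory SQLite database and a small inline CSV; the `readings` table and the `CSV_DATA` constant are hypothetical names, and a real job would read an exported file from disk.

```python
import csv
import io
import sqlite3

# Hypothetical CSV export; a real batch job would read a file from disk.
CSV_DATA = """timestamp,parameter,value
2019-02-01 01:00,temperature,80
2019-02-01 01:00,humidity,50
2019-02-01 02:00,temperature,82
2019-02-01 02:00,humidity,53
"""

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE readings (timestamp TEXT, parameter TEXT, value REAL)")

# Read and convert the whole file before touching the database.
rows = [
    (r["timestamp"], r["parameter"], float(r["value"]))
    for r in csv.DictReader(io.StringIO(CSV_DATA))
]

# One transaction for the whole batch: either every row is loaded or none is.
with conn:
    conn.executemany("INSERT INTO readings VALUES (?, ?, ?)", rows)

loaded = conn.execute("SELECT COUNT(*) FROM readings").fetchone()[0]
print(loaded)
```

Wrapping the insert in a single transaction keeps a failed run from leaving a half-seeded table behind, which matters when the job is re-run by hand.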

Methods for Data Ingestion
Direct Ingestion
● Data generator automatically inserts data into data store
● Synchronous execution
● Commonly used in data scraping

Methods for Data Ingestion
Asynchronous Ingestion
● Primarily done through queues
● Asynchronous job scheduling and ingestion of data
● Queues have tasks to be executed, but not the data to be ingested
● Data must reside on a temporary store before being ingested
● Used for multi-step ingestion (e.g. OCR)
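The split between the queue (tasks only) and the temporary store (the data itself) can be sketched with a thread and a temporary directory. Everything here is an illustrative stand-in: `staging` plays the temporary store, `database` plays the real data store, and a production system would use a message broker and separate worker processes.

```python
import queue
import tempfile
import threading
from pathlib import Path

staging = Path(tempfile.mkdtemp())   # temporary store holding the raw data
tasks = queue.Queue()                # the queue holds task descriptions only
database = []                        # stand-in for the real data store


def producer(name, payload):
    """Write the payload to the temporary store, then enqueue a reference to it."""
    path = staging / name
    path.write_text(payload)
    tasks.put({"action": "ingest", "path": str(path)})


def worker():
    """Pull tasks until a None sentinel arrives; load each referenced file."""
    while True:
        task = tasks.get()
        if task is None:
            break
        path = Path(task["path"])
        database.append(path.read_text().strip())
        path.unlink()                # data leaves the temporary store once ingested


thread = threading.Thread(target=worker)
thread.start()
producer("scan-001.txt", "invoice 42\n")   # hypothetical scanned documents
producer("scan-002.txt", "invoice 43\n")
tasks.put(None)                            # tell the worker to stop
thread.join()
print(database)
```

For a multi-step flow such as OCR, the worker would write its intermediate result back to the temporary store and enqueue a follow-up task instead of appending to the database directly.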

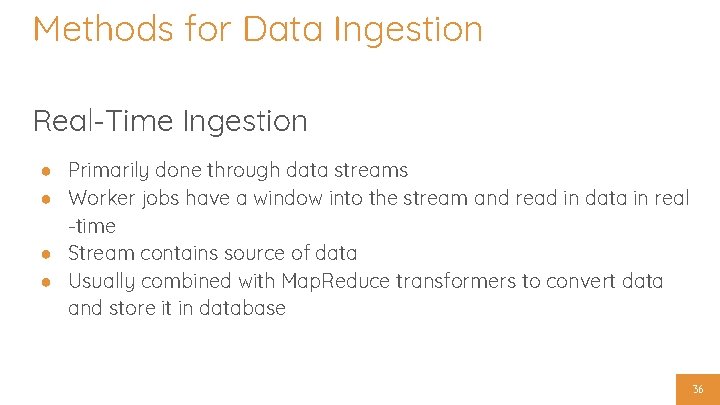

Methods for Data Ingestion
Real-Time Ingestion
● Primarily done through data streams
● Worker jobs have a window into the stream and read in data in real-time
● Stream contains source of data
● Usually combined with MapReduce transformers to convert data and store it in database
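The idea of a worker holding a window into a stream can be approximated with a generator and a fixed-size deque. This is only a sketch: `sensor_stream` is a hypothetical source standing in for a real streaming system, and the per-window mean stands in for whatever transformation is applied before storage.

```python
from collections import deque


def sensor_stream():
    """Hypothetical stream source; a real one would be a streaming service."""
    for value in [80, 82, 85, 90, 93, 91]:
        yield value


def windowed_means(stream, size):
    """Keep a window of the last `size` readings and emit its mean once full."""
    window = deque(maxlen=size)
    for reading in stream:
        window.append(reading)       # the oldest reading drops out automatically
        if len(window) == size:
            yield sum(window) / size


# Each emitted value is what would be written to the database.
stored = list(windowed_means(sensor_stream(), size=3))
print(stored)
```

Unlike the queue-based approach, the data itself travels in the stream, so the worker never needs to look it up in a temporary store.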


Data Types
Unstructured Data
● Usually composed of free-form text
● It has minimal metadata associated with it
● Difficult to parse, transform and ingest
Examples
● News articles
● Documents
● Tweets

Can anyone tell me which database should be used to store this data?

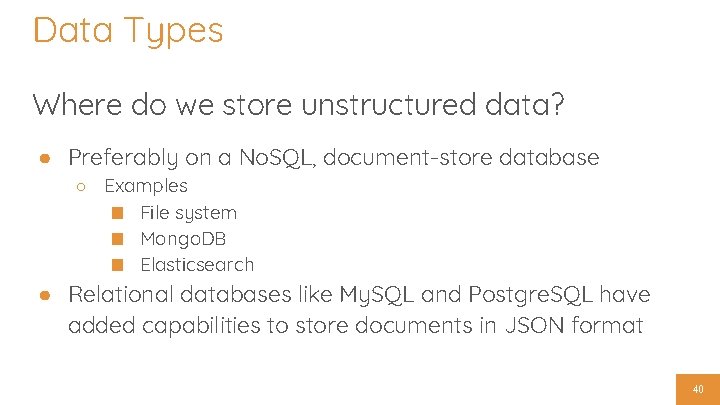

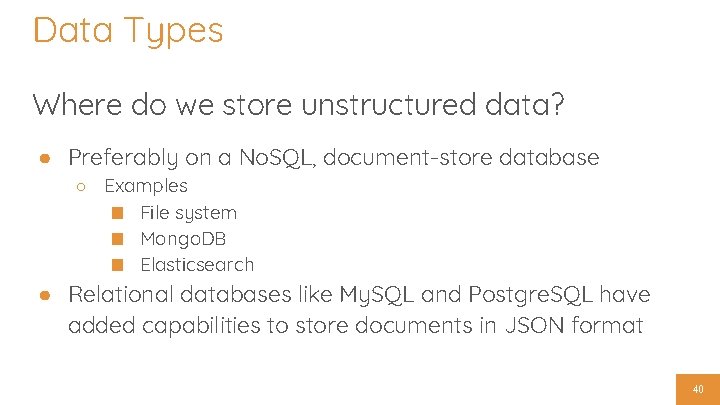

Data Types
Where do we store unstructured data?
● Preferably on a NoSQL, document-store database
  ○ Examples
    ■ File system
    ■ MongoDB
    ■ Elasticsearch
● Relational databases like MySQL and PostgreSQL have added capabilities to store documents in JSON format

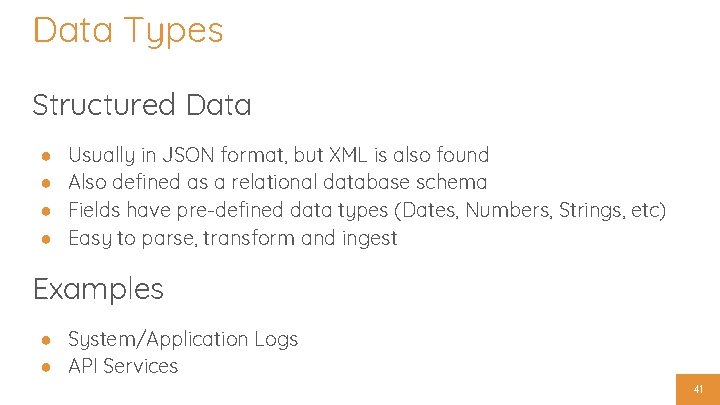

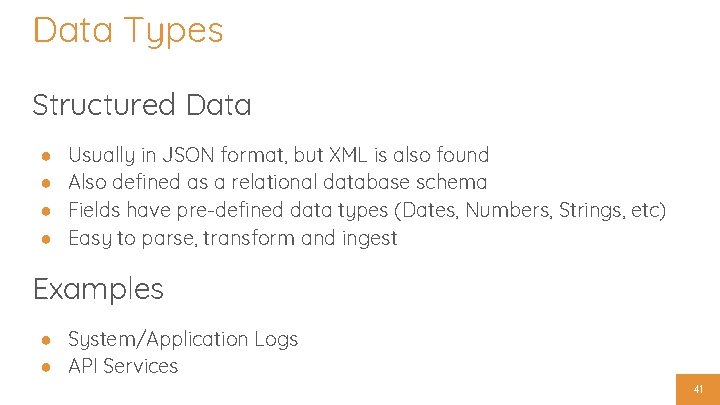

Data Types
Structured Data
● Usually in JSON format, but XML is also found
● Also defined as a relational database schema
● Fields have pre-defined data types (Dates, Numbers, Strings, etc.)
● Easy to parse, transform and ingest
Examples
● System/Application Logs
● API Services
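Because the field types are known in advance, parsing a structured record is mostly a matter of converting each field. The sketch below assumes a hypothetical JSON log line with `timestamp`, `level` and `status` fields; the `LogRecord` class and the field names are illustrative, not taken from any particular system.

```python
import json
from dataclasses import dataclass
from datetime import datetime


@dataclass
class LogRecord:
    timestamp: datetime   # Date
    level: str            # String
    status: int           # Number


def parse_record(line):
    """Parse one JSON log line into a record with typed fields."""
    raw = json.loads(line)
    return LogRecord(
        timestamp=datetime.strptime(raw["timestamp"], "%Y-%m-%dT%H:%M:%S"),
        level=str(raw["level"]),
        status=int(raw["status"]),
    )


# Hypothetical application log entry.
line = '{"timestamp": "2019-02-13T16:00:00", "level": "ERROR", "status": 500}'
record = parse_record(line)
print(record)
```

A missing field or a value of the wrong type raises an exception at parse time, which is one reason structured data is easier to ingest reliably than free-form text.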

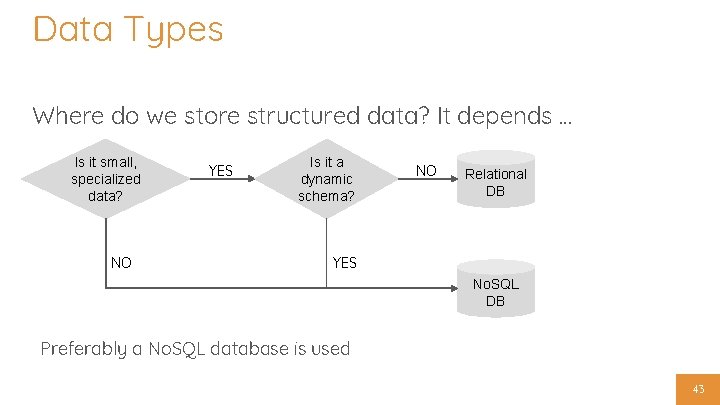

Can anyone tell me which database should be used to store this data?

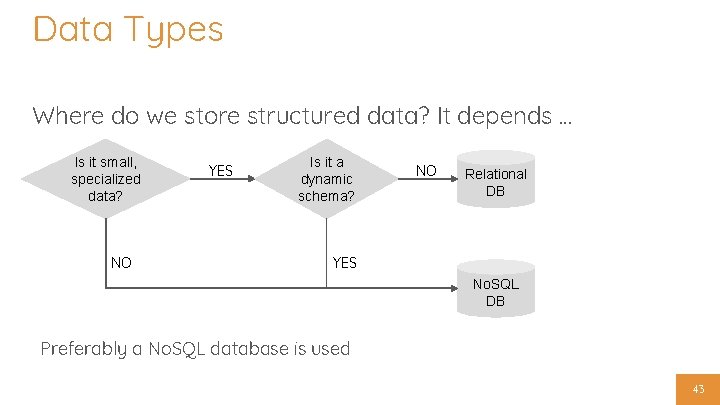

Data Types
Where do we store structured data? It depends...
● Is it small, specialized data?
  ○ NO: NoSQL DB
  ○ YES: Is it a dynamic schema?
    ■ NO: Relational DB
    ■ YES: NoSQL DB
● Preferably a NoSQL database is used

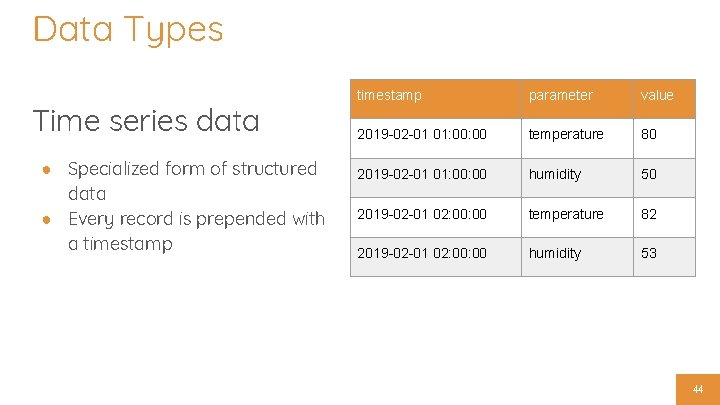

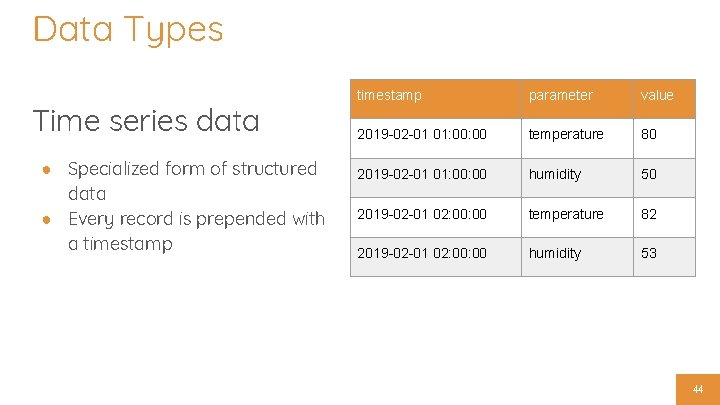

Data Types
Time series data
● Specialized form of structured data
● Every record is prepended with a timestamp

| timestamp | parameter | value |
| --- | --- | --- |
| 2019-02-01 01:00 | temperature | 80 |
| 2019-02-01 01:00 | humidity | 50 |
| 2019-02-01 02:00 | temperature | 82 |
| 2019-02-01 02:00 | humidity | 53 |

Can anyone tell me which database should be used to store this data?

Can we store time-series data in a relational database?

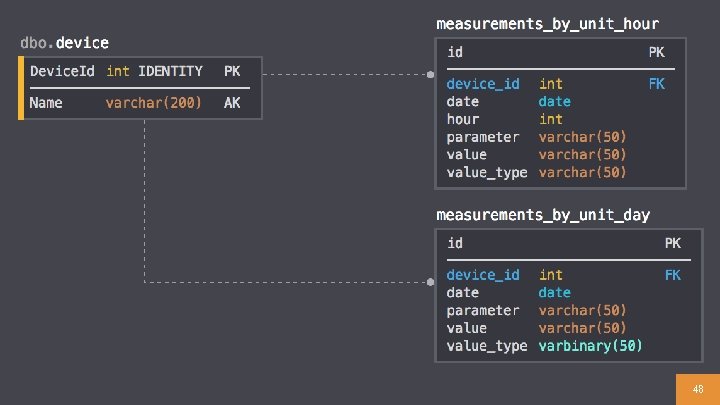

Time Series Data
Can we store time-series data in a relational database?
● Yes, we can!?

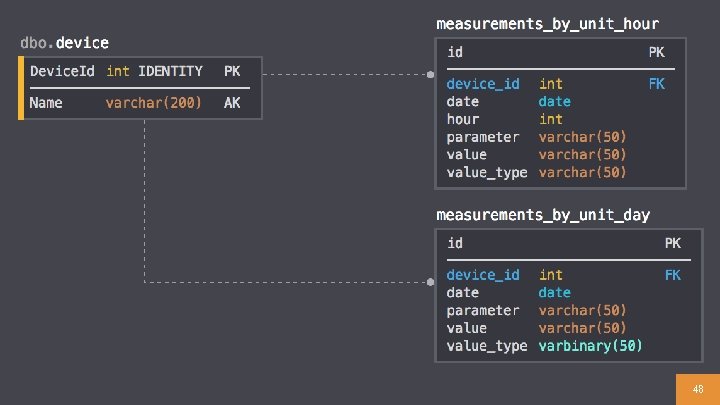

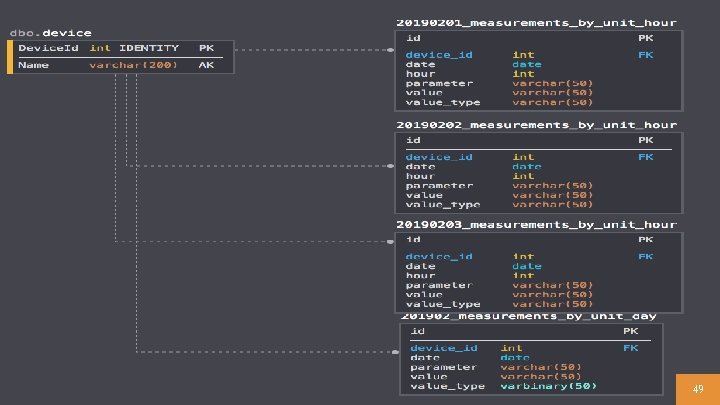


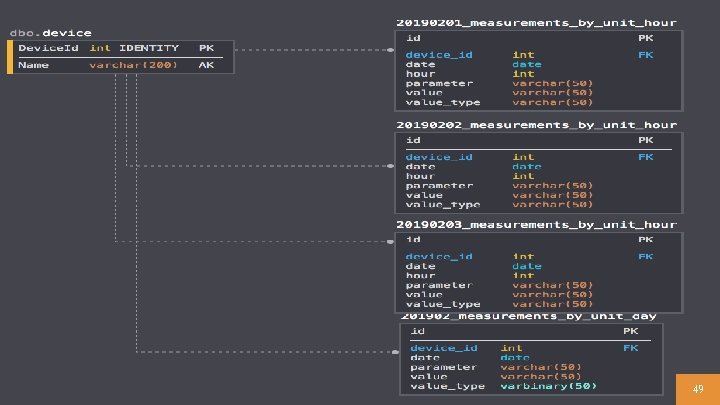


Time Series Data
Can we store time-series data in a relational database?
● Yes, we can … but we really shouldn’t
  ○ Data model has to be updated constantly
  ○ Relational databases don’t scale horizontally
  ○ Queries will get slow as the data grows

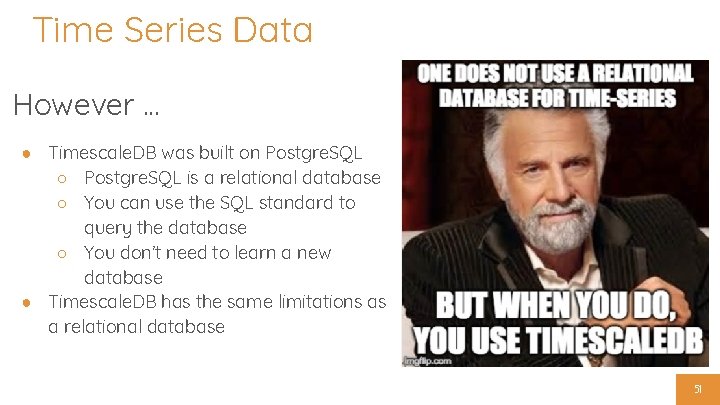

Time Series Data
However...
● TimescaleDB was built on PostgreSQL
  ○ PostgreSQL is a relational database
  ○ You can use the SQL standard to query the database
  ○ You don’t need to learn a new database
● TimescaleDB has the same limitations as a relational database

Can we store time-series data in a document store?

Time Series Data
Can we store time-series data in a document store?
● Yes, we can! People have done it in MongoDB and Elasticsearch
● If you can control how data is sharded, then yes!
● But … there are better databases for it

Time Series Databases - Time series data
● Data is stored partitioned by date components (Year, Month, Date, Time or a combination of these)
● Data is aggregated in time slots (minutes, hours, days, months, years, etc.)
● Usually built as NoSQL data stores, but not always
● Data is stored so that only small partitions are loaded for a given query
  ○ Why?
    ■ Data sets are too big to hold in memory
    ■ It is more performant to load only the data you need
    ■ People tend to look at the data in smaller sets (hours, days, weeks, months, etc.)
    ■ CAP theorem (Consistency, Availability and Partition Tolerance)
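Partitioning by date and aggregating per time slot can be illustrated with a dictionary keyed by (day, parameter). This is a toy model of the storage layout, not how any particular time-series database is implemented; the readings reuse the temperature and humidity values shown earlier plus one hypothetical extra day.

```python
from collections import defaultdict
from datetime import datetime

readings = [
    ("2019-02-01 01:00", "temperature", 80),
    ("2019-02-01 01:00", "humidity", 50),
    ("2019-02-01 02:00", "temperature", 82),
    ("2019-02-01 02:00", "humidity", 53),
    ("2019-02-02 01:00", "temperature", 78),   # hypothetical extra reading
]

# Partition key: (date, parameter). A query for one day touches one partition.
partitions = defaultdict(list)
for stamp, parameter, value in readings:
    ts = datetime.strptime(stamp, "%Y-%m-%d %H:%M")
    partitions[(ts.date().isoformat(), parameter)].append((ts, value))


def daily_mean(day, parameter):
    """Aggregate one time slot (a day) by reading only its partition."""
    values = [v for _, v in partitions[(day, parameter)]]
    return sum(values) / len(values)


print(daily_mean("2019-02-01", "temperature"))
```

The query for February 1 never looks at the February 2 partition, which is the property that keeps queries fast as the total data set grows.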

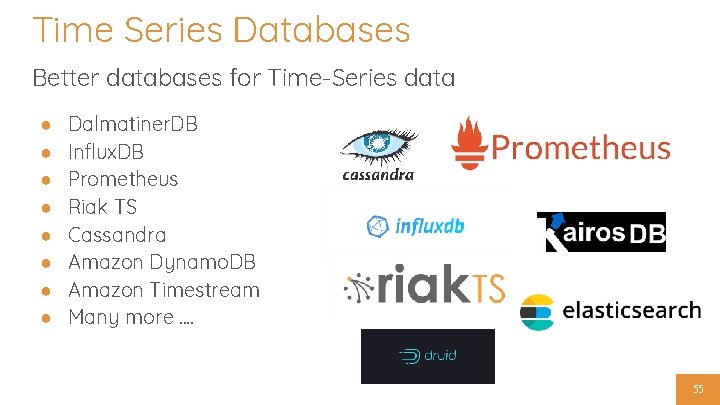

Time Series Databases
Better databases for Time-Series data
● DalmatinerDB
● InfluxDB
● Prometheus
● Riak TS
● Cassandra
● Amazon DynamoDB
● Amazon Timestream
● Many more...

Applied Example: IoT

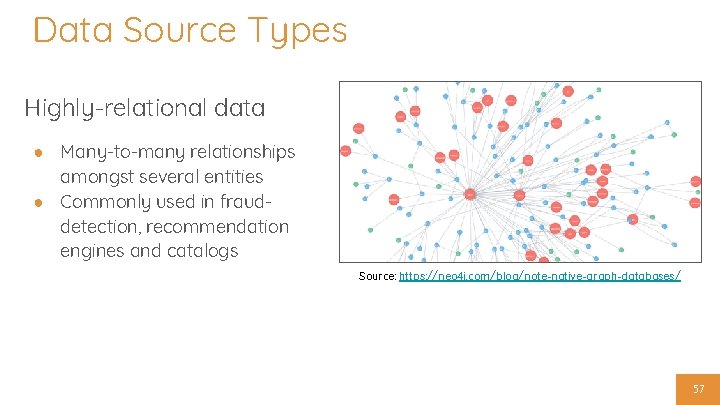

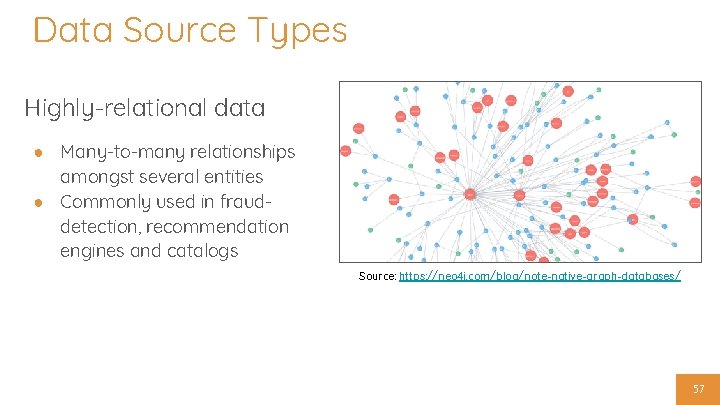

Data Source Types
Highly-relational data
● Many-to-many relationships amongst several entities
● Commonly used in fraud detection, recommendation engines and catalogs
Source: https://neo4j.com/blog/note-native-graph-databases/

Can we use a relational database to store this data? How about a NoSQL database?

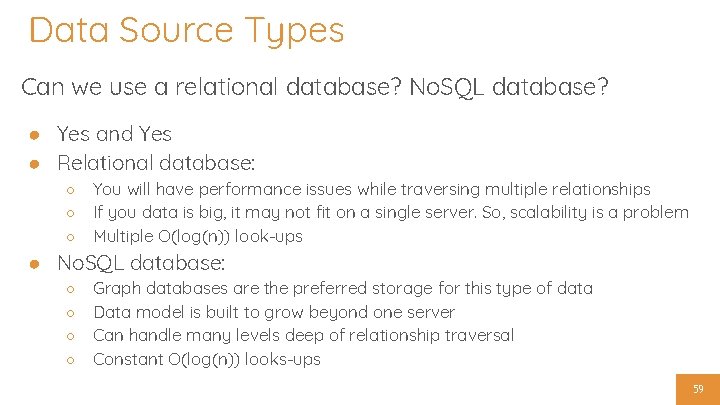

Data Source Types
Can we use a relational database? NoSQL database?
● Yes and Yes
● Relational database:
  ○ You will have performance issues while traversing multiple relationships
  ○ If your data is big, it may not fit on a single server, so scalability is a problem
  ○ Multiple O(log(n)) look-ups
● NoSQL database:
  ○ Graph databases are the preferred storage for this type of data
  ○ Data model is built to grow beyond one server
  ○ Can handle many levels deep of relationship traversal
  ○ Constant-time O(1) look-ups
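To see why traversal is cheap when each entity stores its own relationships, consider this small breadth-first search over an adjacency map. It is a sketch of the idea only: the `follows` graph and the names in it are invented, and a Python dictionary stands in for the way a graph database keeps neighbors next to each node.

```python
from collections import deque

# Hypothetical "who follows whom" graph stored as adjacency lists.
follows = {
    "ana": ["ben", "cho"],
    "ben": ["dev"],
    "cho": ["dev", "eli"],
    "dev": ["fay"],
    "eli": [],
    "fay": [],
}


def within_hops(graph, start, max_hops):
    """Breadth-first traversal returning every node reachable in <= max_hops."""
    seen = {start: 0}                     # node -> number of hops from start
    frontier = deque([start])
    while frontier:
        node = frontier.popleft()
        if seen[node] == max_hops:
            continue                      # do not expand past the hop limit
        for neighbor in graph[node]:      # direct adjacency lookup, no join
            if neighbor not in seen:
                seen[neighbor] = seen[node] + 1
                frontier.append(neighbor)
    del seen[start]
    return seen


print(within_hops(follows, "ana", 2))
```

In a relational schema the same question would need one self-join on a relationship table per hop, with an index look-up each time, which is where the performance issues listed above come from.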


Questions?

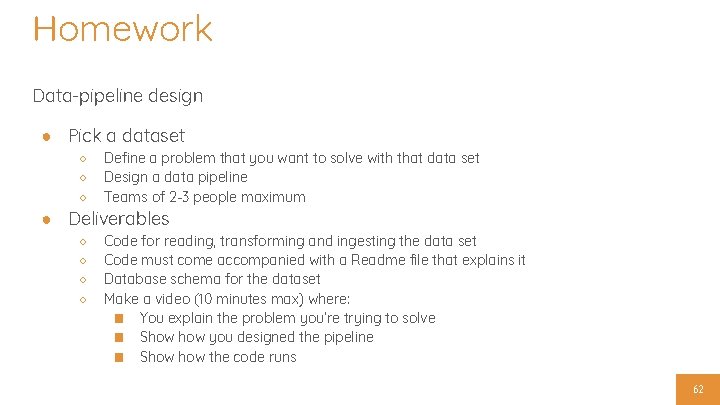

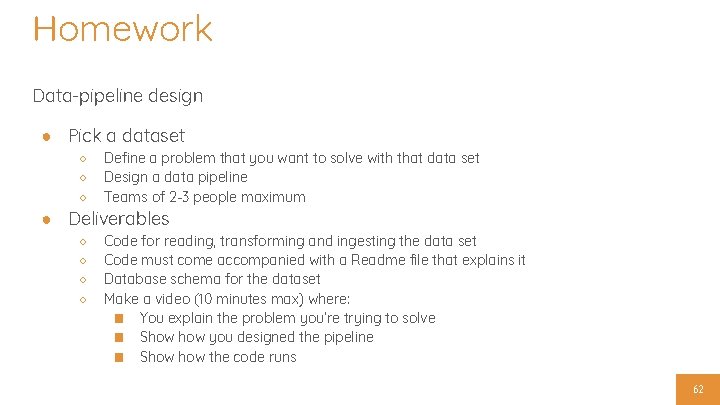

Homework
Data-pipeline design
● Pick a dataset
  ○ Define a problem that you want to solve with that data set
  ○ Design a data pipeline
  ○ Teams of 2-3 people maximum
● Deliverables
  ○ Code for reading, transforming and ingesting the data set
  ○ Code must come accompanied with a Readme file that explains it
  ○ Database schema for the dataset
  ○ Make a video (10 minutes max) where:
    ■ You explain the problem you’re trying to solve
    ■ Show how you designed the pipeline
    ■ Show the code runs