Lecture Slides for INTRODUCTION TO Machine Learning, ETHEM ALPAYDIN

- Slides: 63

Lecture Slides for INTRODUCTION TO Machine Learning
ETHEM ALPAYDIN
© The MIT Press, 2004
alpaydin@boun.edu.tr
http://www.cmpe.boun.edu.tr/~ethem/i2ml
Lecture Notes for E Alpaydın 2004 Introduction to Machine Learning © The MIT Press (V1.1)

CHAPTER 1: Introduction

What Is Machine Learning?
- Machine learning is programming computers to optimize a performance criterion using example data or past experience.

Why do we need Machine Learning?
- With advances in computer technology, we can now store and process large amounts of data, and access it from physically distant locations over a computer network.
  - Google => Special Mail List

Data Mining
- Application of machine learning methods to large databases is called data mining.
  - Finance (banks): credit applications, fraud detection, stock market
  - Manufacturing: optimization, control, troubleshooting
  - Medicine, telecommunications, and the World Wide Web

Artificial Intelligence
- Machine learning is not just a database problem; it is also a part of artificial intelligence.
- To be intelligent, a system that is in a changing environment should have the ability to learn.
- If the system can learn and adapt to such changes, the system designer need not foresee and provide solutions for all possible situations.

Pattern Recognition
- Recognizing faces: this is a task we do effortlessly; every day we recognize family members and friends by looking at their faces or their photographs, despite differences in pose, lighting, hair style, and so forth.
  - But we do it unconsciously and are unable to explain how we do it.
  - Because we are not able to explain our expertise, we cannot write the computer program.

Examples of Machine Learning Applications
- Learning associations
- Classification (supervised)
- Regression (supervised)
- Unsupervised learning
- Reinforcement learning

Learning Associations
- Association rules: P(chips | beer) = 0.7
  - 70 percent of customers who buy beer also buy chips.

Classification
- Classification
- Discrimination
  - Saving vs. income => low-risk/high-risk
- Prediction
- Pattern recognition
  - Optical character recognition
  - Face recognition
  - Medical diagnosis
  - Speech recognition
- Knowledge extraction
- Compression
- Outlier detection
  - Fraud

Regression
- Regression
  - Supervised learning

Supervised Learning
- In supervised learning, the aim is to learn a mapping from the input to an output whose correct values are provided by a supervisor.

Unsupervised Learning
- In unsupervised learning, there is no such supervisor and we only have input data.
- The aim is to find the regularities in the input.

Unsupervised Learning
- Density estimation
  - In statistics
- Clustering
  - Image compression
  - Bioinformatics
    - DNA => RNA => Protein
    - DNA in our genome is the “blueprint of life” and is a sequence of bases, namely A, G, C, and T.
    - Motif

Reinforcement Learning
- In some applications, the output of the system is a sequence of actions. In such a case, a single action is not important; what is important is the policy, that is, the sequence of correct actions to reach the goal.
- Game playing

Why “Learn”?
- Machine learning is programming computers to optimize a performance criterion using example data or past experience.
- There is no need to “learn” to calculate payroll.
- Learning is used when:
  - Human expertise does not exist (navigating on Mars)
  - Humans are unable to explain their expertise (speech recognition)
  - Solution changes in time (routing on a computer network)
  - Solution needs to be adapted to particular cases (user biometrics)

What We Talk About When We Talk About “Learning”
- Learning general models from data of particular examples
- Data is cheap and abundant (data warehouses, data marts); knowledge is expensive and scarce.
- Example in retail: from customer transactions to consumer behavior: people who bought “Da Vinci Code” also bought “The Five People You Meet in Heaven” (www.amazon.com)
- Build a model that is a good and useful approximation to the data.

Data Mining
- Retail: market basket analysis, customer relationship management (CRM)
- Finance: credit scoring, fraud detection
- Manufacturing: optimization, troubleshooting
- Medicine: medical diagnosis
- Telecommunications: quality of service optimization
- Bioinformatics: motifs, alignment
- Web mining: search engines
- ...

What is Machine Learning?
- Optimize a performance criterion using example data or past experience.
- Role of statistics: inference from a sample
- Role of computer science: efficient algorithms to
  - Solve the optimization problem
  - Represent and evaluate the model for inference

Learning
Definition: A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience.

Learning Problem
Learning: improving with experience at some task
- Improve over task T
- With respect to performance measure P
- Based on experience E

Example: learn to play checkers:
- T: play checkers
- P: percentage of games won in a tournament
- E: opportunity to play against itself

Learning to play checkers
- T: play checkers
- P: percentage of games won
- What experience?
- What exactly should be learned?
- How shall it be represented?
- What specific algorithm to learn it?

Type of Training Experience
- Direct or indirect?
  - Direct: board state -> correct move
  - Indirect: outcome of a complete game
  - Credit assignment problem
- Teacher or not?
  - Teacher selects board states
  - Learner can select board states
- Is training experience representative of performance goal?
  - Training: playing against itself
  - Performance evaluated: playing against world champion

Choose Target Function
- ChooseMove: $B \rightarrow M$ (board state $\rightarrow$ move)
  - Maps a legal board state to a legal move
- Evaluate: $B \rightarrow V$ (board state $\rightarrow$ board value)
  - Assigns a numerical score to any given board state, such that better board states obtain a higher score
  - Select the best move by evaluating all successor states of legal moves and picking the one with the maximal score

Possible Definition of Target Function
- If b is a final board state that is won, then V(b) = 100.
- If b is a final board state that is lost, then V(b) = -100.
- If b is a final board state that is drawn, then V(b) = 0.
- If b is not a final board state, then V(b) = V(b'), where b' is the best final board state that can be achieved starting from b and playing optimally until the end of the game.
- Gives correct values but is not operational

State Space Search
- $V(b) = ?$
- $V(b) = \max_i V(b_i)$
- $m_1: b \rightarrow b_1$, $m_2: b \rightarrow b_2$, $m_3: b \rightarrow b_3$

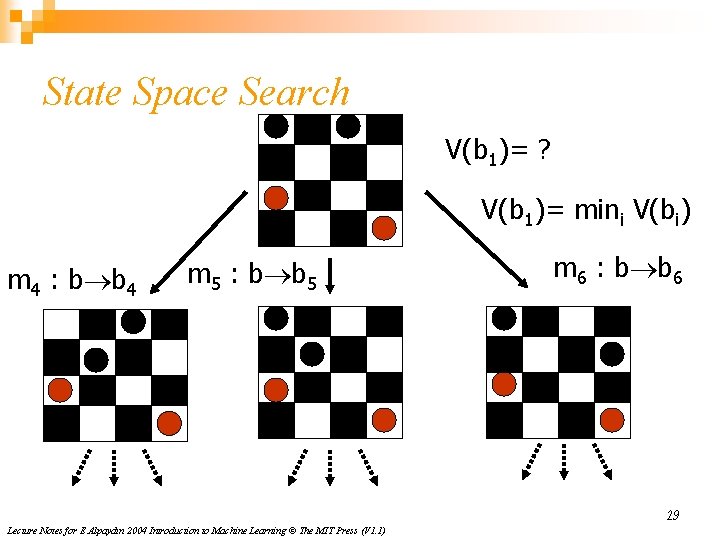

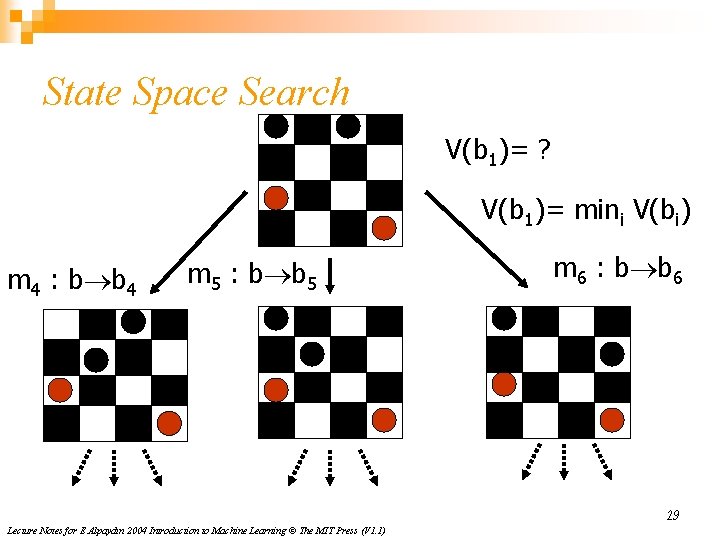

State Space Search
- $V(b_1) = ?$
- $V(b_1) = \min_i V(b_i)$
- $m_4: b \rightarrow b_4$, $m_5: b \rightarrow b_5$, $m_6: b \rightarrow b_6$

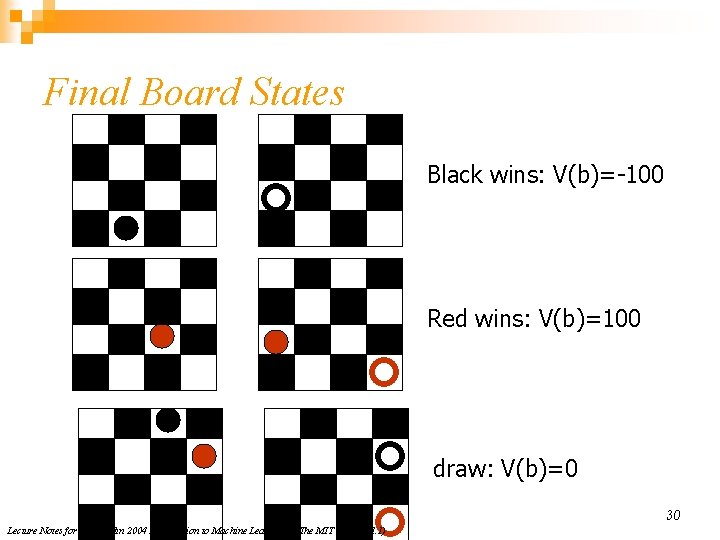

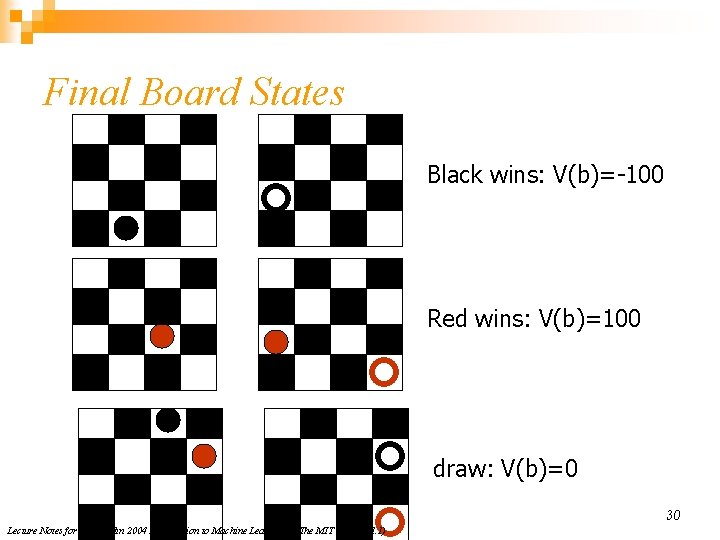

Final Board States
- Black wins: V(b) = -100
- Red wins: V(b) = 100
- Draw: V(b) = 0
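
The backed-up values on the two state-space-search slides (a maximum over successors on the learner's move, a minimum on the opponent's) and the final-board values above combine into a recursive minimax evaluation. This is a minimal sketch under stated assumptions: the game tree, the board names `b7` to `b10`, and all leaf values are hypothetical, and Red is assumed to be the maximizing player.

```python
# Hypothetical game tree: internal boards map to their successor boards.
# Red (maximizer) moves at b; Black (minimizer) moves at b1, b2, b3.
TREE = {
    "b": ["b1", "b2", "b3"],   # moves m1, m2, m3
    "b1": ["b4", "b5", "b6"],  # moves m4, m5, m6
    "b2": ["b7", "b8"],
    "b3": ["b9", "b10"],
}
# Hypothetical final boards: +100 Red wins, -100 Black wins, 0 draw.
FINAL_VALUES = {"b4": 100, "b5": 0, "b6": 100, "b7": -100, "b8": 100, "b9": 100, "b10": 100}


def value(board: str, red_to_move: bool) -> int:
    """Back up V(b): max over successors when Red moves, min when Black moves."""
    if board in FINAL_VALUES:
        return FINAL_VALUES[board]
    successor_values = [value(s, not red_to_move) for s in TREE[board]]
    return max(successor_values) if red_to_move else min(successor_values)


def choose_move(board: str) -> str:
    """Red picks the successor with the maximal backed-up value (Black moves next)."""
    return max(TREE[board], key=lambda s: value(s, red_to_move=False))


print(value("b", red_to_move=True))  # 100
print(choose_move("b"))              # b3
```

Note that b1 contains two winning leaves, yet its backed-up value is 0 because Black, moving at b1, picks the draw.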

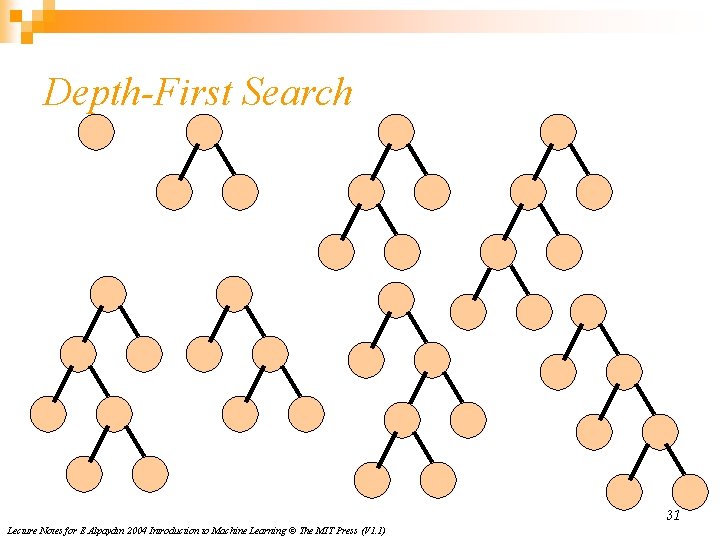

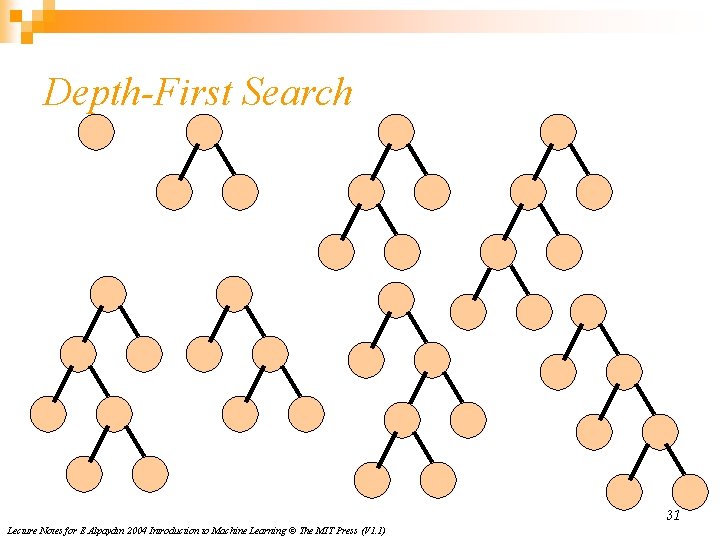

Depth-First Search

Breadth-First Search
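
The two search orders differ only in which frontier node is expanded next: depth-first search takes the most recently generated node (a stack), breadth-first search the oldest one (a queue). A minimal sketch over a small hypothetical tree of board states:

```python
from collections import deque

# Hypothetical search tree given as successor lists.
TREE = {"b": ["b1", "b2"], "b1": ["b3", "b4"], "b2": ["b5", "b6"]}


def depth_first(root: str) -> list[str]:
    """Visit order when the frontier is a stack (LIFO)."""
    order, stack = [], [root]
    while stack:
        node = stack.pop()
        order.append(node)
        # Push children in reverse so the leftmost child is expanded first.
        stack.extend(reversed(TREE.get(node, [])))
    return order


def breadth_first(root: str) -> list[str]:
    """Visit order when the frontier is a queue (FIFO)."""
    order, queue = [], deque([root])
    while queue:
        node = queue.popleft()
        order.append(node)
        queue.extend(TREE.get(node, []))
    return order


print(depth_first("b"))    # ['b', 'b1', 'b3', 'b4', 'b2', 'b5', 'b6']
print(breadth_first("b"))  # ['b', 'b1', 'b2', 'b3', 'b4', 'b5', 'b6']
```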

Number of Board States
- Tic-Tac-Toe: $\#\text{board states} < \frac{9!}{5!\,4!} + \frac{9!}{1!\,4!\,4!} + \dots + \frac{9!}{2!\,4!\,3!} + \dots + 9 = 6045$
- 4 x 4 checkers (no queens): $\#\text{board states} = ?$
  - $\#\text{board states} < \frac{8 \cdot 7 \cdot 6 \cdot 5 \cdot 2^2}{2!\,2!} = 1680$
- Regular checkers (8 x 8 board, 8 pieces each): $\#\text{board states} < \frac{32! \cdot 2^{16}}{8!\,8!\,16!} = 5.07 \cdot 10^{17}$
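
The three bounds can be reproduced with a few lines of arithmetic. This sketch assumes X moves first (so the number of X marks equals the number of O marks or exceeds it by one) and excludes the empty board. For 8 x 8 checkers it assumes 32 playable squares holding 8 red pieces, 8 black pieces, and 16 empty squares, with the factor 2^16 read as each piece being either a man or a king. The 4 x 4 value uses the slide's formula as given.

```python
from math import factorial


def multinomial(*counts: int) -> int:
    """Ways to place pieces of each kind (counts) on sum(counts) squares."""
    result = factorial(sum(counts))
    for c in counts:
        result //= factorial(c)  # each intermediate quotient is an exact integer
    return result


def tictactoe_bound() -> int:
    """Sum 9!/(x! o! e!) over non-empty boards with #X == #O or #X == #O + 1."""
    total = 0
    for x in range(1, 6):      # number of X marks (X moves first)
        for o in (x - 1, x):   # number of O marks
            if x + o <= 9:
                total += multinomial(x, o, 9 - x - o)
    return total


# 4 x 4 checkers without queens, using the slide's formula as given.
checkers_4x4 = 8 * 7 * 6 * 5 * 2**2 // (factorial(2) * factorial(2))
# 8 x 8 board: 8 red, 8 black, 16 empty squares; each of the 16 pieces man or king.
checkers_8x8 = multinomial(8, 8, 16) * 2**16

print(tictactoe_bound())      # 6045
print(checkers_4x4)           # 1680
print(f"{checkers_8x8:.2e}")  # 5.07e+17
```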

Choose Representation of Target Function
- Table look-up
- Collection of rules
- Neural networks
- Polynomial function of board features
- Trade-off in choosing an expressive representation:
  - Approximation accuracy
  - Number of training examples to learn the target function

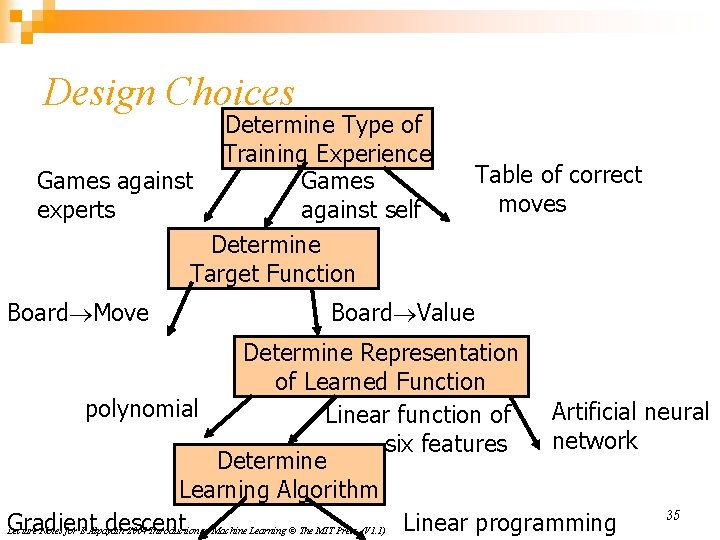

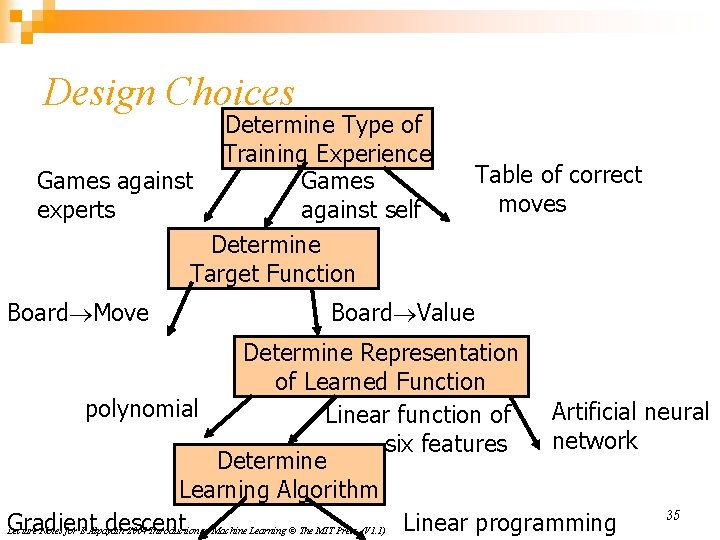

Design Choices
- Determine type of training experience: games against experts; games against self; table of correct moves
- Determine target function: board → move; board → value
- Determine representation of learned function: polynomial; linear function of six features; artificial neural network
- Determine learning algorithm: gradient descent; linear programming
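
One path through these choices, a linear function of six board features trained by gradient descent, can be sketched with the least-mean-squares (LMS) weight update: for each training board, every weight moves in proportion to the error between the training value and the current estimate. This is a minimal sketch, not the slides' own implementation. The feature values, the training values `v_train` (generated here from a hidden linear rule so the fit can be checked), and the learning rate are all hypothetical.

```python
import random

# Hypothetical setup: each board is described by six numeric features x1..x6, and the
# training values come from a hidden linear rule so that the learned weights can be checked.
rng = random.Random(0)
TRUE_W = [0.5, 1.0, -1.0, 2.0, -2.0, 0.5, -0.5]  # w0 (bias) followed by w1..w6


def v_hat(w: list[float], x: list[float]) -> float:
    """Linear evaluation function: V^(b) = w0 + w1*x1 + ... + w6*x6."""
    return w[0] + sum(wi * xi for wi, xi in zip(w[1:], x))


boards = [[rng.uniform(0, 3) for _ in range(6)] for _ in range(200)]
training = [(x, v_hat(TRUE_W, x)) for x in boards]

w = [0.0] * 7
eta = 0.01  # learning rate (assumed value)
for epoch in range(200):
    for x, v_train in training:
        error = v_train - v_hat(w, x)
        w[0] += eta * error                # bias term, i.e. x0 = 1
        for i, xi in enumerate(x):
            w[i + 1] += eta * error * xi   # LMS rule: w_i <- w_i + eta * error * x_i

print([round(wi, 2) for wi in w])
```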

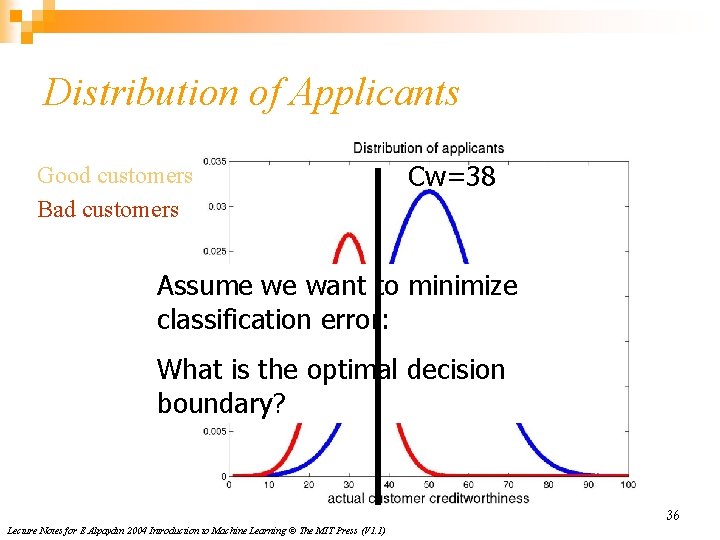

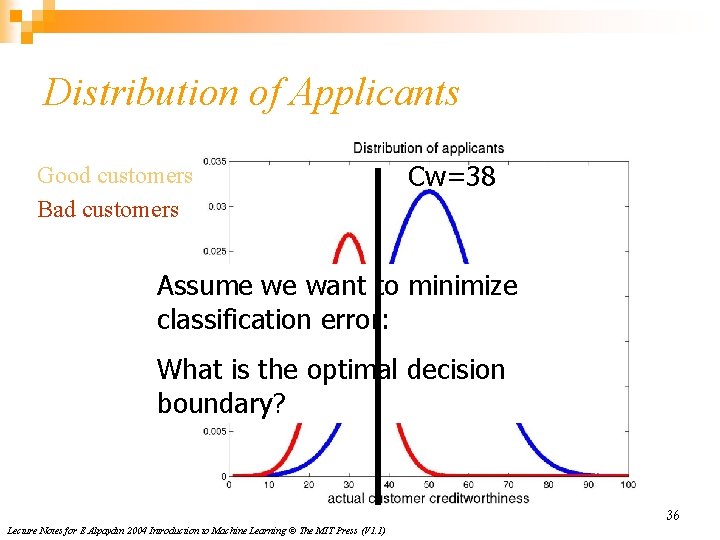

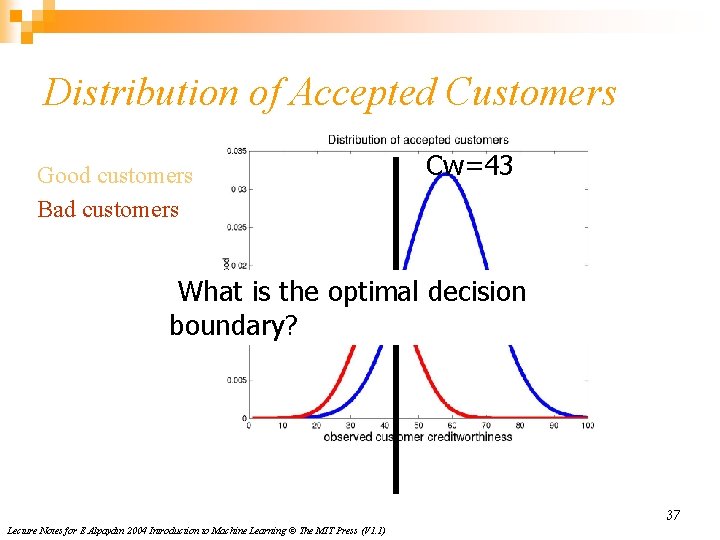

Distribution of Applicants
(Figure: distributions of good customers and bad customers; Cw = 38)
- Assume we want to minimize classification error: what is the optimal decision boundary?

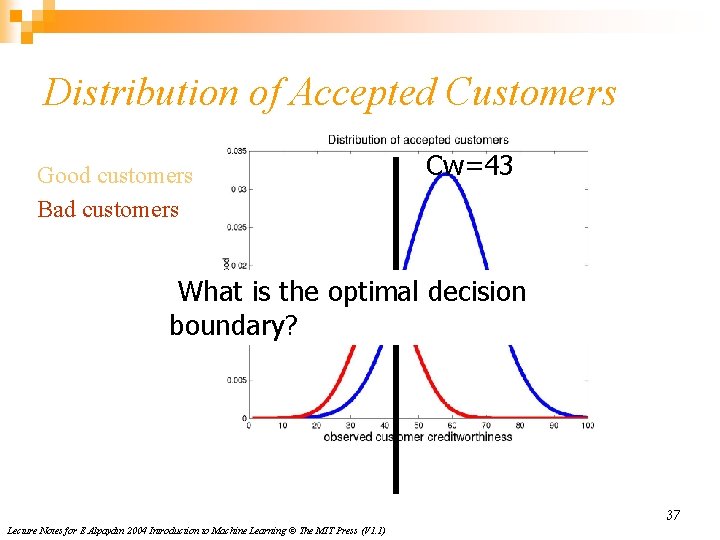

Distribution of Accepted Customers
(Figure: distributions of good customers and bad customers; Cw = 43)
- What is the optimal decision boundary?

Target Function
Customer record:

| income | owns house | credit history | age | employed | accept |
|---|---|---|---|---|---|
| $40000 | yes | good | 38 | full-time | yes |
| $25000 | no | excellent | 25 | part-time | no |
| $50000 | no | poor | 55 | unemployed | no |

- T: customer data → accept/reject
- T: customer data → probability good customer
- T: customer data → expected utility/profit

Learning methods
- Decision rules:
  - If income < $30,000 then reject
- Bayesian network:
  - P(good | income, credit history, ...)
- Neural network
- Nearest neighbor:
  - Take the same decision as for the customer in the database that is most similar to the applicant
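
The nearest-neighbor method can be sketched on the three customer records from the target-function table. This is a minimal sketch under an assumed encoding: only income (in thousands of dollars) and age are used, compared with plain Euclidean distance, and the applicants being scored are hypothetical.

```python
import math

# The three customer records from the target-function table, reduced to two numeric
# features: income in thousands of dollars and age. This encoding (and dropping the
# other attributes) is an assumption made for the sketch.
RECORDS = [
    ((40.0, 38.0), "yes"),  # 40000, age 38 -> accepted
    ((25.0, 25.0), "no"),   # 25000, age 25 -> rejected
    ((50.0, 55.0), "no"),   # 50000, age 55 -> rejected
]


def nearest_neighbor_accept(applicant: tuple[float, float]) -> str:
    """Return the accept decision of the stored customer closest to the applicant."""
    _, decision = min(RECORDS, key=lambda record: math.dist(record[0], applicant))
    return decision


print(nearest_neighbor_accept((42.0, 40.0)))  # yes
print(nearest_neighbor_accept((27.0, 30.0)))  # no
```

In practice the attributes would be scaled to comparable ranges and the categorical ones (owns house, credit history, employed) encoded; this sketch omits both.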

Learning Problem Examples
- Obstacle avoidance behavior of a mobile robot
  - Task T: navigate robot safely through an environment
  - Performance measure P: ?
  - Experience E: ?
  - Target function: ?

Performance Measure P:
- P: Maximize time until collision with obstacle
- P: Maximize distance travelled until collision with obstacle
- P: Minimize rotational velocity, maximize translational velocity
- P: Minimize error between control action of a human operator and robot controller in the same situation

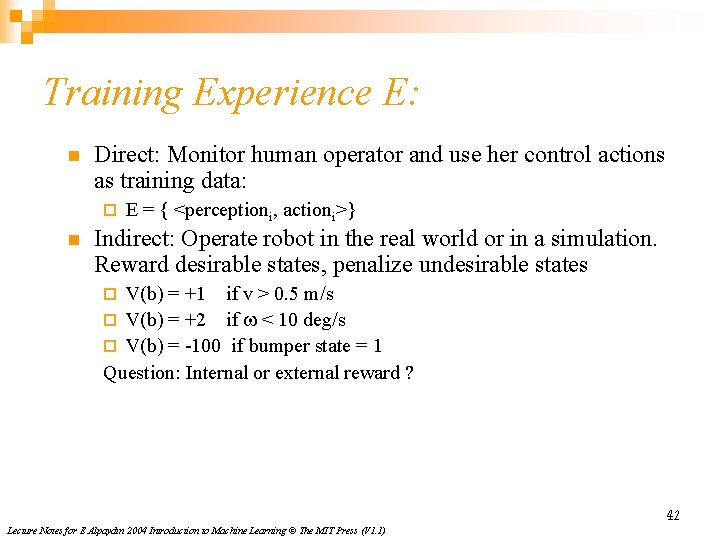

Training Experience E:
- Direct: monitor a human operator and use her control actions as training data:
  - $E = \{\langle \text{perception}_i, \text{action}_i \rangle\}$
- Indirect: operate robot in the real world or in a simulation. Reward desirable states, penalize undesirable states:
  - V(b) = +1 if v > 0.5 m/s
  - V(b) = +2 if < 10 deg/s
  - V(b) = -100 if bumper state = 1
- Question: internal or external reward?

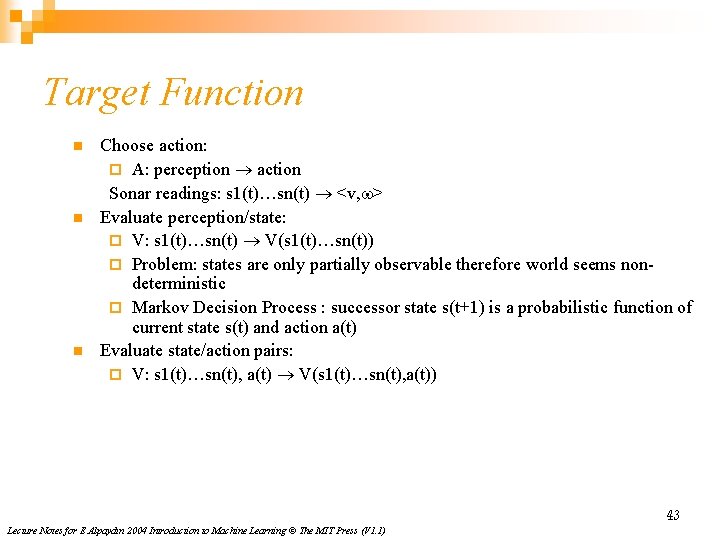

Target Function
- Choose action:
  - A: perception → action
  - Sonar readings: $s_1(t) \dots s_n(t) \rightarrow \langle v, \ \rangle$
- Evaluate perception/state:
  - $V: s_1(t) \dots s_n(t) \rightarrow V(s_1(t) \dots s_n(t))$
  - Problem: states are only partially observable, therefore the world seems nondeterministic
  - Markov decision process: successor state $s(t+1)$ is a probabilistic function of current state $s(t)$ and action $a(t)$
- Evaluate state/action pairs:
  - $V: s_1(t) \dots s_n(t), a(t) \rightarrow V(s_1(t) \dots s_n(t), a(t))$

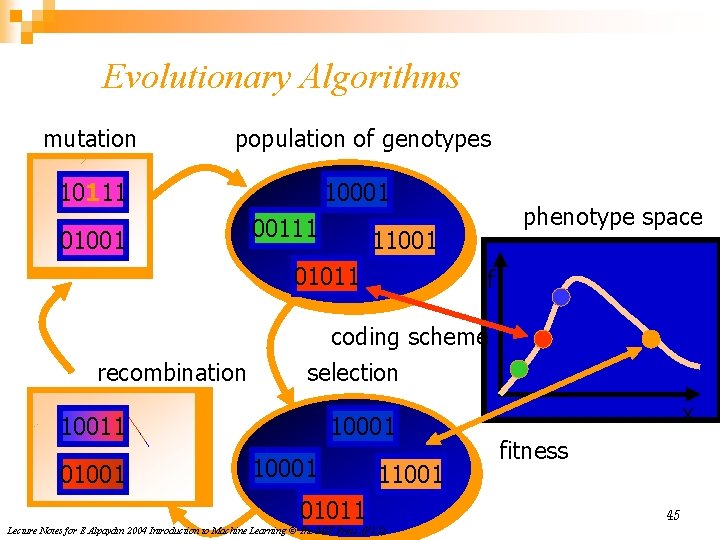

Learning Methods
- Neural networks
  - Require direct training experience
- Reinforcement learning
  - Indirect training experience
- Evolutionary algorithms
  - Indirect training experience

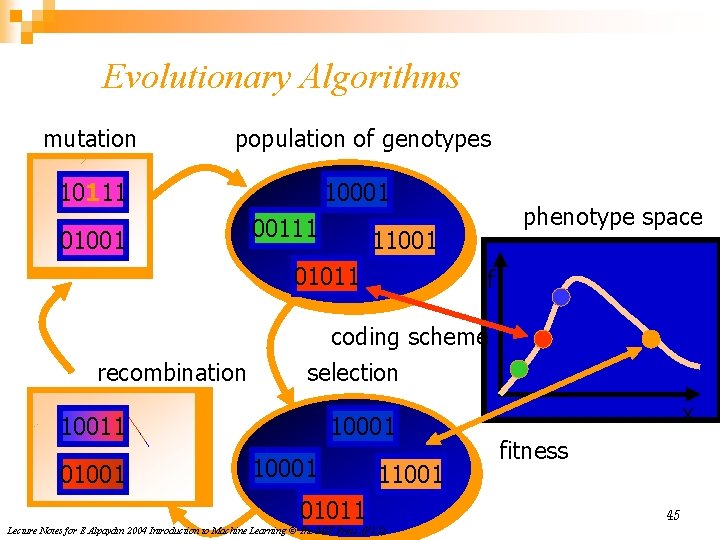

Evolutionary Algorithms
(Figure: a population of genotypes, bit strings such as 10111, 10001, 01001, 00111, 11001, and 01011, is mapped by a coding scheme into phenotype space, where each phenotype x is assigned a fitness f(x); selection, recombination, and mutation act on the population of genotypes.)
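
The loop in the figure (selection, recombination, mutation, repeated over generations) can be sketched as a small genetic algorithm over 5-bit genotypes, starting from the bit strings shown in the figure. The fitness function (the genotype read as a binary integer), tournament selection, one-point crossover, the mutation rate, and the elitism step are all assumptions chosen for illustration.

```python
import random

rng = random.Random(1)
LENGTH, POP_SIZE, GENERATIONS = 5, 6, 30


def fitness(genotype: str) -> int:
    """Hypothetical fitness f(x): the phenotype x is the bit string read as an integer."""
    return int(genotype, 2)


def select(population: list[str]) -> str:
    """Tournament selection: the fitter of two randomly drawn genotypes."""
    a, b = rng.sample(population, 2)
    return a if fitness(a) >= fitness(b) else b


def recombine(p1: str, p2: str) -> str:
    """One-point crossover, e.g. 10|011 + 01|001 -> 10001."""
    cut = rng.randint(1, LENGTH - 1)
    return p1[:cut] + p2[cut:]


def mutate(genotype: str, rate: float = 0.1) -> str:
    """Flip each bit independently with probability `rate`."""
    return "".join(
        ("1" if bit == "0" else "0") if rng.random() < rate else bit for bit in genotype
    )


# Initial population: the genotypes shown in the figure.
population = ["10111", "10001", "01001", "00111", "11001", "01011"]
initial_best = max(map(fitness, population))
for _ in range(GENERATIONS):
    elite = max(population, key=fitness)  # elitism: carry the best genotype over unchanged
    children = [
        mutate(recombine(select(population), select(population)))
        for _ in range(POP_SIZE - 1)
    ]
    population = [elite] + children

best = max(population, key=fitness)
print(best, fitness(best))
```

Elitism guarantees the best fitness never decreases from one generation to the next; without it, mutation could destroy the best genotype found so far.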

Applications
- Association
- Supervised learning
  - Classification
  - Regression
- Unsupervised learning
- Reinforcement learning

Learning Associations
- Basket analysis: P(Y | X), the probability that somebody who buys X also buys Y, where X and Y are products/services.
- Example: P(chips | beer) = 0.7
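
P(Y | X) can be estimated from transaction data as a ratio of counts: among the baskets that contain X, the fraction that also contain Y. A minimal sketch with hypothetical baskets, chosen so the estimate matches the 0.7 in the example:

```python
# Hypothetical transactions: each basket is the set of products one customer bought.
baskets = (
    [{"beer", "chips"} for _ in range(7)]
    + [{"beer", "bread"} for _ in range(3)]
    + [{"chips", "milk"} for _ in range(5)]
)


def conditional_probability(y: str, x: str, transactions: list[set[str]]) -> float:
    """Estimate P(Y | X) as (#baskets containing X and Y) / (#baskets containing X)."""
    with_x = [basket for basket in transactions if x in basket]
    if not with_x:
        raise ValueError(f"no basket contains {x!r}")
    return sum(y in basket for basket in with_x) / len(with_x)


print(conditional_probability("chips", "beer", baskets))  # 0.7
```

The rule is directional: with these same baskets, P(beer | chips) is 7/12, not 0.7.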

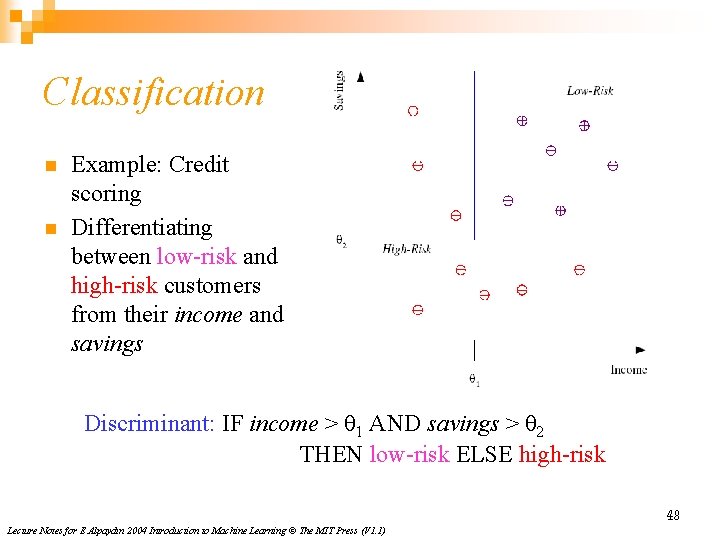

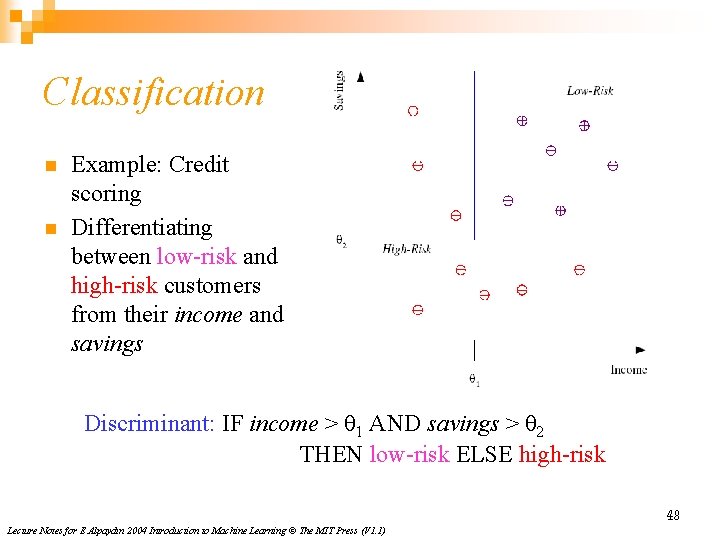

Classification
- Example: credit scoring
- Differentiating between low-risk and high-risk customers from their income and savings
- Discriminant: IF income > $\theta_1$ AND savings > $\theta_2$ THEN low-risk ELSE high-risk
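
The discriminant itself is easy to write down; the thresholds θ1 and θ2 are what has to be learned. This sketch assumes a hypothetical set of labeled customers (income and savings in thousands) and picks the threshold pair with the fewest training errors by exhaustive search over the observed values, which is one simple fitting choice among many, not necessarily the one the slides have in mind.

```python
from itertools import product

# Hypothetical labeled customers: (income, savings, label), amounts in thousands.
CUSTOMERS = [
    (20, 2, "high-risk"), (35, 1, "high-risk"), (25, 8, "high-risk"),
    (45, 10, "low-risk"), (60, 15, "low-risk"), (50, 7, "low-risk"),
    (70, 3, "high-risk"), (55, 12, "low-risk"),
]


def discriminant(income: float, savings: float, theta1: float, theta2: float) -> str:
    """IF income > theta1 AND savings > theta2 THEN low-risk ELSE high-risk."""
    return "low-risk" if income > theta1 and savings > theta2 else "high-risk"


def fit_thresholds(data):
    """Return (theta1, theta2) with the fewest training errors; candidates are observed values."""
    incomes = sorted({inc for inc, _, _ in data})
    savings = sorted({sav for _, sav, _ in data})

    def errors(t1, t2):
        return sum(discriminant(inc, sav, t1, t2) != label for inc, sav, label in data)

    return min(product(incomes, savings), key=lambda t: errors(*t))


theta1, theta2 = fit_thresholds(CUSTOMERS)
print(theta1, theta2)                          # 25 3
print(discriminant(65, 9, theta1, theta2))     # low-risk
```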

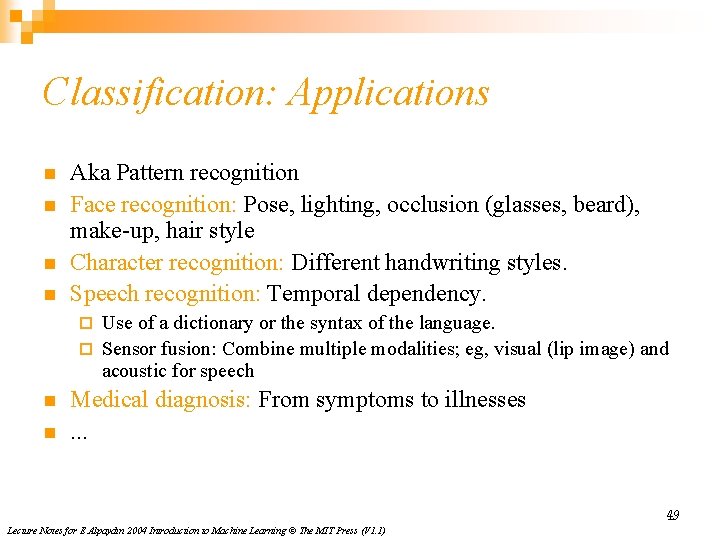

Classification: Applications
- Also known as pattern recognition
- Face recognition: pose, lighting, occlusion (glasses, beard), make-up, hair style
- Character recognition: different handwriting styles
- Speech recognition: temporal dependency
  - Use of a dictionary or the syntax of the language
  - Sensor fusion: combine multiple modalities, e.g., visual (lip image) and acoustic for speech
- Medical diagnosis: from symptoms to illnesses
- ...

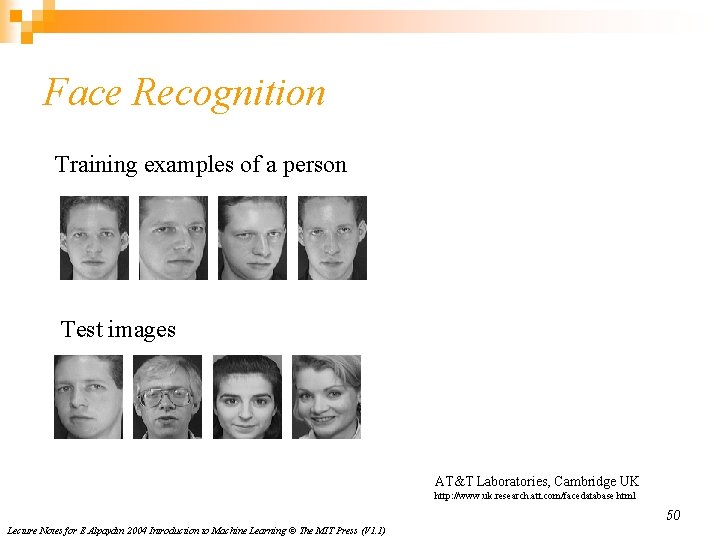

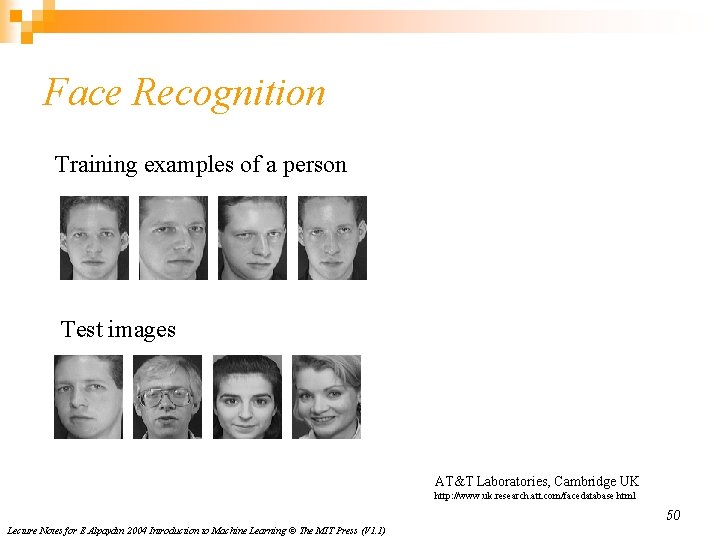

Face Recognition
(Figure: training examples of a person; test images)
AT&T Laboratories, Cambridge UK, http://www.uk.research.att.com/facedatabase.html

Regression
- Example: price of a used car
- x: car attributes; y: price
- $y = g(x \mid \theta)$, where $g(\cdot)$ is the model and $\theta$ its parameters
- $y = wx + w_0$
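
For the linear model y = wx + w0 with a single input, the least-squares parameters have a closed form: w = Σ(x − x̄)(y − ȳ) / Σ(x − x̄)² and w0 = ȳ − w·x̄. The sketch below assumes one hypothetical attribute (car age in years) and made-up prices in thousands; with several car attributes, x would become a vector.

```python
# Hypothetical used-car data: x = car age in years, y = price in thousands.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [18.0, 16.0, 14.5, 12.0, 10.5]


def fit_line(x: list[float], y: list[float]) -> tuple[float, float]:
    """Least-squares estimates of w and w0 in y = w*x + w0."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    w = sxy / sxx
    w0 = mean_y - w * mean_x
    return w, w0


w, w0 = fit_line(xs, ys)
print(f"y = {w:.2f}x + {w0:.2f}")                           # y = -1.90x + 19.90
print(f"predicted price, 6-year-old car: {w * 6 + w0:.2f}")  # 8.50
```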

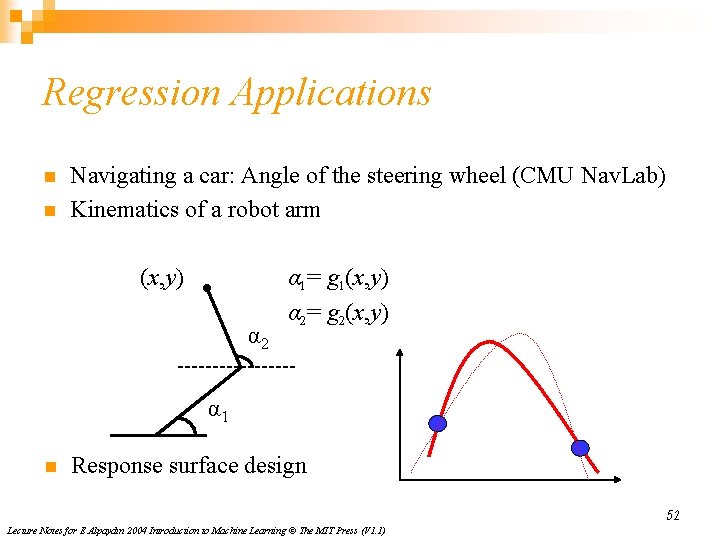

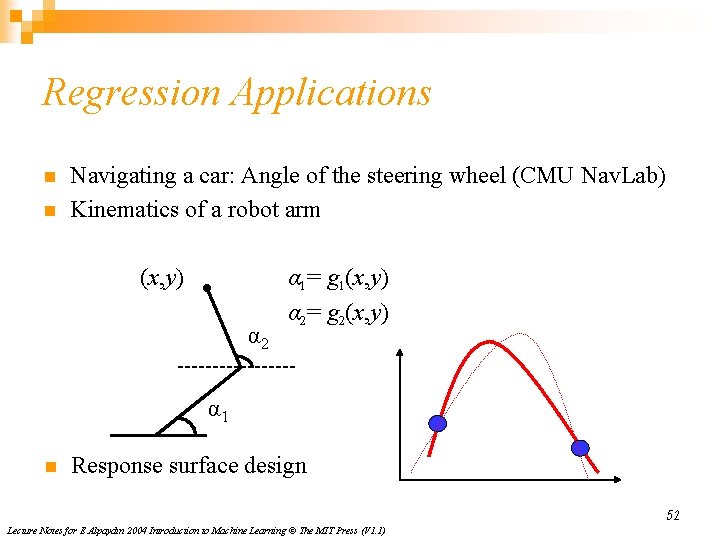

Regression Applications
- Navigating a car: angle of the steering wheel (CMU NavLab)
- Kinematics of a robot arm: $(x, y)$, $\alpha_1 = g_1(x, y)$, $\alpha_2 = g_2(x, y)$
- Response surface design

Supervised Learning: Uses
- Prediction of future cases: use the rule to predict the output for future inputs
- Knowledge extraction: the rule is easy to understand
- Compression: the rule is simpler than the data it explains
- Outlier detection: exceptions that are not covered by the rule, e.g., fraud

Unsupervised Learning
- Learning “what normally happens”
- No output
- Clustering: grouping similar instances
- Example applications
  - Customer segmentation in CRM
  - Image compression: color quantization
  - Bioinformatics: learning motifs
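
Clustering can be sketched with k-means (Lloyd's algorithm), a standard method the slide does not name: alternately assign each instance to its nearest center and move each center to the mean of its instances. The two-feature customer data below (think of spending and visit frequency) is hypothetical, standing in for customer segmentation.

```python
import math
import random


def k_means(points, k, iterations=100, seed=0):
    """Plain k-means: alternate the assignment step and the mean-update step."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)  # initialize with k distinct data points
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest center.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda j: math.dist(p, centers[j]))
            clusters[nearest].append(p)
        # Update step: move each center to its cluster mean (keep it if the cluster is empty).
        new_centers = [
            tuple(sum(c) / len(cluster) for c in zip(*cluster)) if cluster else centers[j]
            for j, cluster in enumerate(clusters)
        ]
        if new_centers == centers:  # converged: assignments will not change again
            break
        centers = new_centers
    return centers, clusters


# Hypothetical customers: (spending, visits), two obvious segments.
customers = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
centers, clusters = k_means(customers, k=2)
print(sorted(len(c) for c in clusters))  # [3, 3]
```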

Reinforcement Learning
- Learning a policy: a sequence of outputs
- No supervised output but delayed reward
- Credit assignment problem
- Game playing
- Robot in a maze
- Multiple agents, partial observability, ...
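
The delayed-reward setting can be sketched with tabular Q-learning, a standard reinforcement-learning algorithm that the slide does not name. The "maze" is a hypothetical five-cell corridor with the goal at one end; the only reward arrives on reaching the goal, and the discounted bootstrapped update is what assigns credit to the earlier moves. The discount factor, learning rate, and episode counts are assumed values.

```python
import random

# Hypothetical corridor "maze": states 0..4, the goal is state 4, actions move left or right.
N_STATES, GOAL = 5, 4
ACTIONS = (-1, 1)        # move left, move right
GAMMA, ALPHA = 0.9, 0.5  # discount factor and learning rate (assumed values)


def step(state: int, action: int) -> tuple[int, float]:
    """Deterministic move with walls at both ends; reward 1 only when the goal is reached."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    return nxt, (1.0 if nxt == GOAL else 0.0)


def q_learning(episodes: int = 500, seed: int = 0) -> dict:
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        s = 0
        for _ in range(100):          # cap the episode length
            a = rng.choice(ACTIONS)   # purely random exploration (Q-learning is off-policy)
            nxt, r = step(s, a)
            # The goal is terminal; elsewhere, bootstrap from the best next-state value,
            # which is how the delayed reward propagates back to earlier moves.
            target = r if nxt == GOAL else r + GAMMA * max(q[(nxt, b)] for b in ACTIONS)
            q[(s, a)] += ALPHA * (target - q[(s, a)])
            s = nxt
            if s == GOAL:
                break
    return q


q = q_learning()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)}
print(policy)  # every non-goal state should map to 1 (move right)
```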

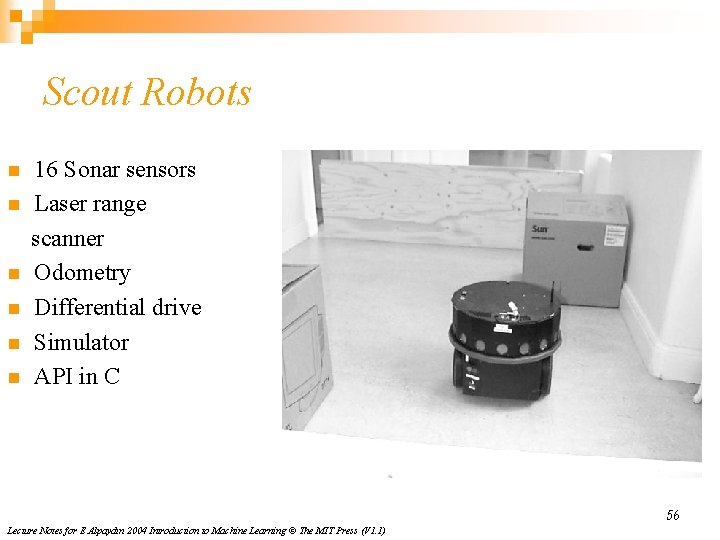

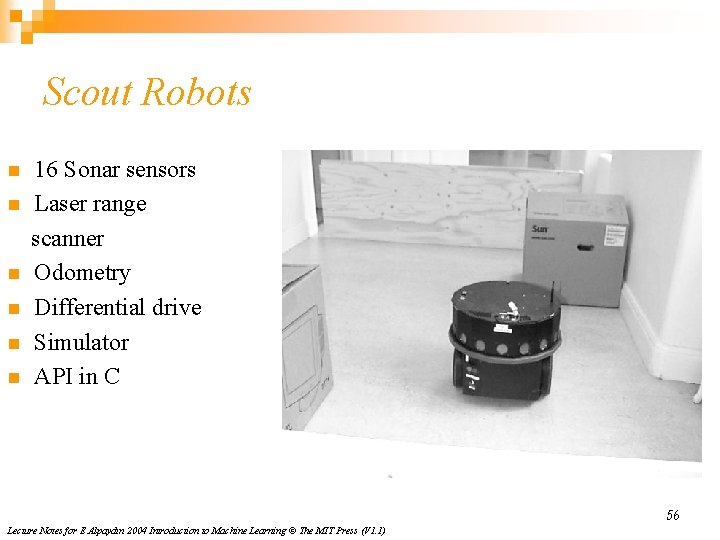

Scout Robots
- 16 sonar sensors
- Laser range scanner
- Odometry
- Differential drive
- Simulator
- API in C

LEGO Mindstorms
- Touch sensor
- Light sensor
- Rotation sensor
- Video cam
- Motors

Issues in Machine Learning
- What algorithms can approximate functions well, and when?
- How does the number of training examples influence accuracy?
- How does the complexity of hypothesis representation impact it?
- How does noisy data influence accuracy?
- What are the theoretical limits of learnability?

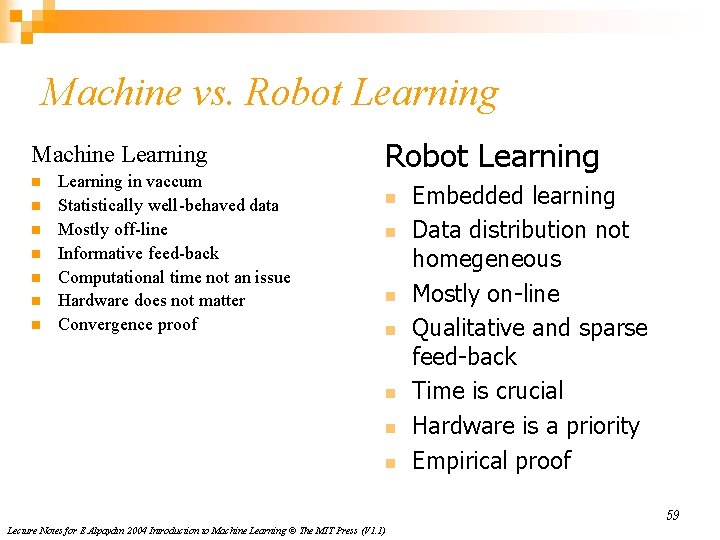

Machine vs. Robot Learning

| Machine Learning | Robot Learning |
|---|---|
| Learning in vacuum | Embedded learning |
| Statistically well-behaved data | Data distribution not homogeneous |
| Mostly off-line | Mostly on-line |
| Informative feedback | Qualitative and sparse feedback |
| Computational time not an issue | Time is crucial |
| Hardware does not matter | Hardware is a priority |
| Convergence proof | Empirical proof |

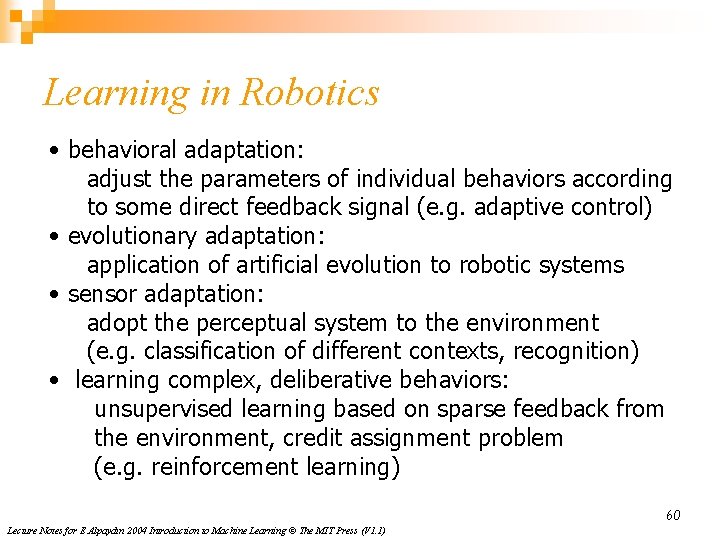

Learning in Robotics
- Behavioral adaptation: adjust the parameters of individual behaviors according to some direct feedback signal (e.g., adaptive control)
- Evolutionary adaptation: application of artificial evolution to robotic systems
- Sensor adaptation: adapt the perceptual system to the environment (e.g., classification of different contexts, recognition)
- Learning complex, deliberative behaviors: unsupervised learning based on sparse feedback from the environment, credit assignment problem (e.g., reinforcement learning)

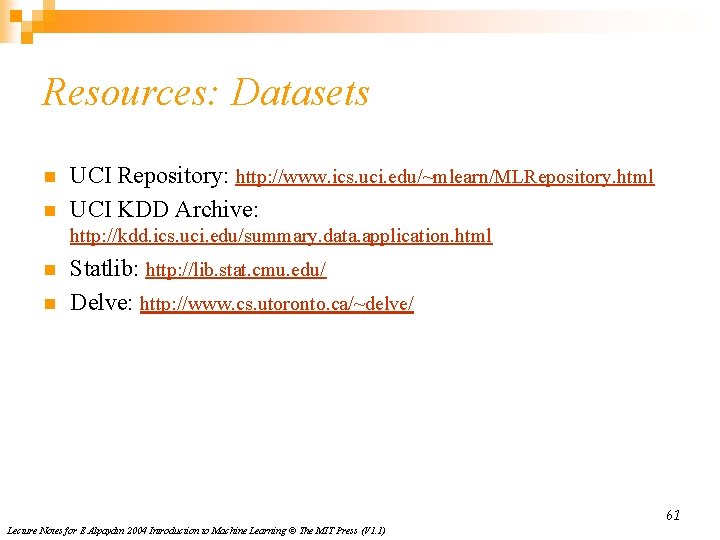

Resources: Datasets
- UCI Repository: http://www.ics.uci.edu/~mlearn/MLRepository.html
- UCI KDD Archive: http://kdd.ics.uci.edu/summary.data.application.html
- Statlib: http://lib.stat.cmu.edu/
- Delve: http://www.cs.utoronto.ca/~delve/

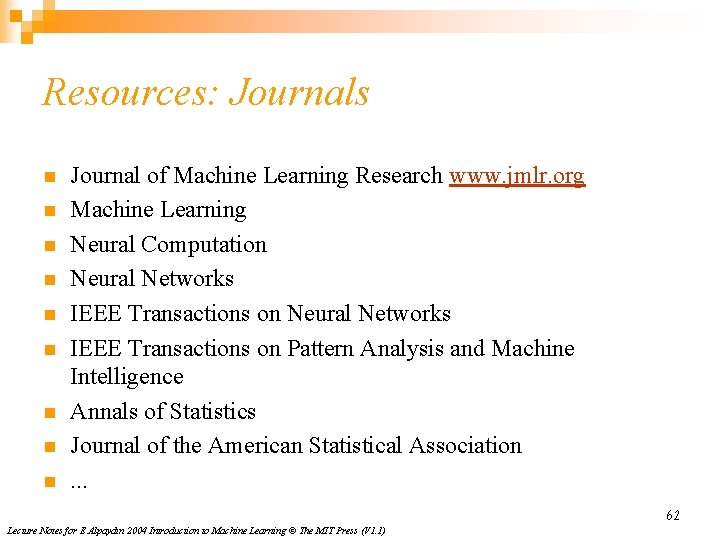

Resources: Journals
- Journal of Machine Learning Research, www.jmlr.org
- Machine Learning
- Neural Computation
- Neural Networks
- IEEE Transactions on Pattern Analysis and Machine Intelligence
- Annals of Statistics
- Journal of the American Statistical Association
- ...

Resources: Conferences
- International Conference on Machine Learning (ICML)
  - ICML 05: http://icml.ais.fraunhofer.de/
- European Conference on Machine Learning (ECML)
  - ECML 05: http://ecmlpkdd05.liacc.up.pt/
- Neural Information Processing Systems (NIPS)
  - NIPS 05: http://nips.cc/
- Uncertainty in Artificial Intelligence (UAI)
  - UAI 05: http://www.cs.toronto.edu/uai2005/
- Computational Learning Theory (COLT)
  - COLT 05: http://learningtheory.org/colt2005/
- International Joint Conference on Artificial Intelligence (IJCAI)
  - IJCAI 05: http://ijcai05.csd.abdn.ac.uk/
- International Conference on Neural Networks (Europe)
  - ICANN 05: http://www.ibspan.waw.pl/ICANN-2005/
- ...