Lecture Slides for INTRODUCTION TO Machine Learning ETHEM

- Slides: 23

Lecture Slides for INTRODUCTION TO Machine Learning
ETHEM ALPAYDIN © The MIT Press, 2004
alpaydin@boun.edu.tr
http://www.cmpe.boun.edu.tr/~ethem/i2ml

Lecture Notes for E Alpaydın 2004 Introduction to Machine Learning © The MIT Press (V1.1)

CHAPTER 3: Bayesian Decision Theory

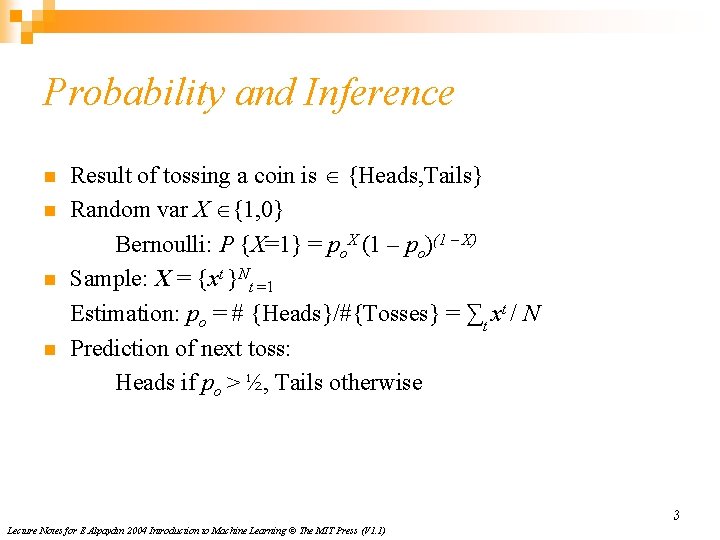

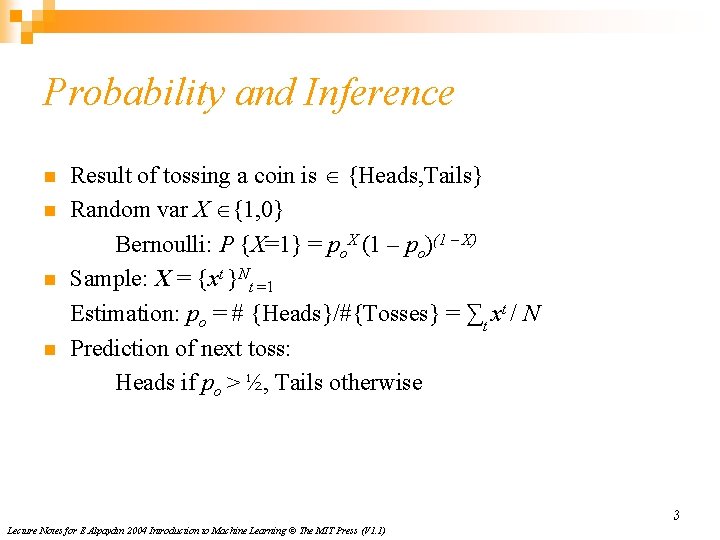

Probability and Inference

- Result of tossing a coin $\in$ {Heads, Tails}
- Random variable $X \in \{1, 0\}$
- Bernoulli: $P\{X = 1\} = p_o$, so $P(X) = p_o^{X} (1 - p_o)^{1 - X}$
- Sample: $\mathcal{X} = \{x^t\}_{t=1}^{N}$
- Estimation: $p_o = \#\{\text{Heads}\} / \#\{\text{Tosses}\} = \sum_t x^t / N$
- Prediction of next toss: Heads if $p_o > 1/2$, Tails otherwise

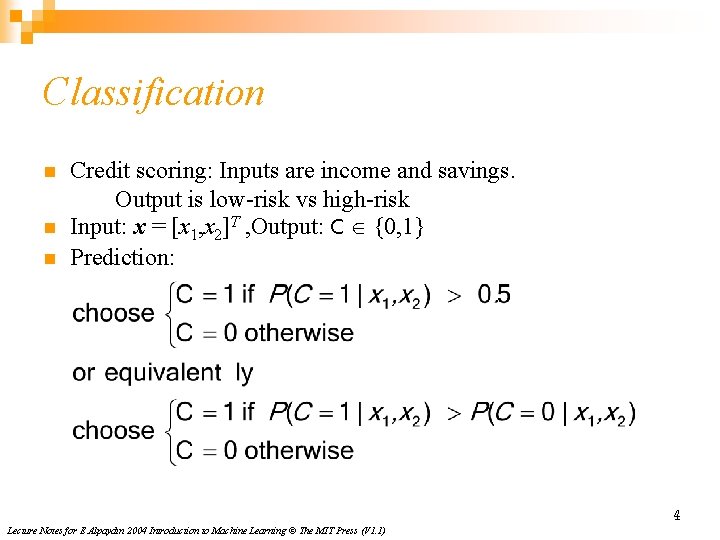

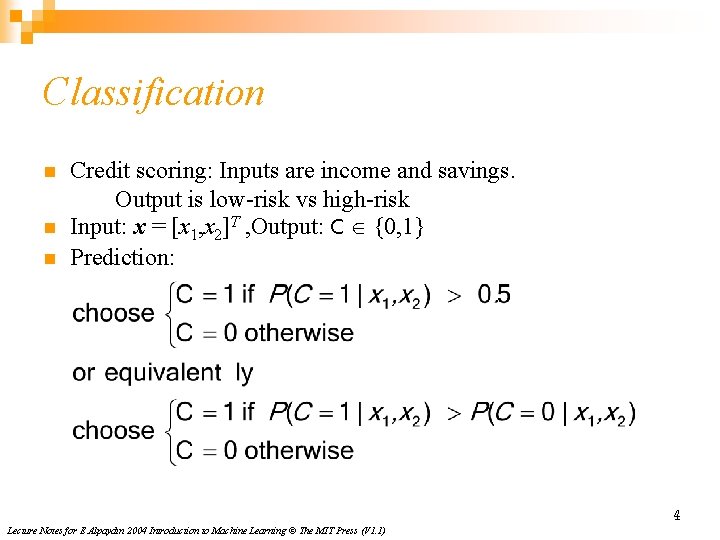
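The estimation and prediction steps on this slide can be sketched in a few lines of Python. This is a minimal illustration, not code from the lecture; the function names and the sample of tosses are hypothetical.

```python
def estimate_p(sample):
    """Estimate p_o as the fraction of 1s (Heads) in the sample: sum_t x^t / N."""
    if not sample:
        raise ValueError("sample must contain at least one toss")
    return sum(sample) / len(sample)


def predict_next(p_hat):
    """Predict Heads if the estimate exceeds 1/2, Tails otherwise."""
    return "Heads" if p_hat > 0.5 else "Tails"


# Hypothetical sample: 1 = Heads, 0 = Tails (6 heads out of 9 tosses).
tosses = [1, 0, 1, 1, 0, 1, 1, 0, 1]
p_hat = estimate_p(tosses)
prediction = predict_next(p_hat)
print(p_hat, prediction)
```

With six heads in nine tosses the estimate is above 1/2, so the prediction for the next toss is Heads.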

Classification

- Credit scoring: Inputs are income and savings. Output is low-risk vs high-risk
- Input: $\mathbf{x} = [x_1, x_2]^T$, Output: $C \in \{0, 1\}$
- Prediction:

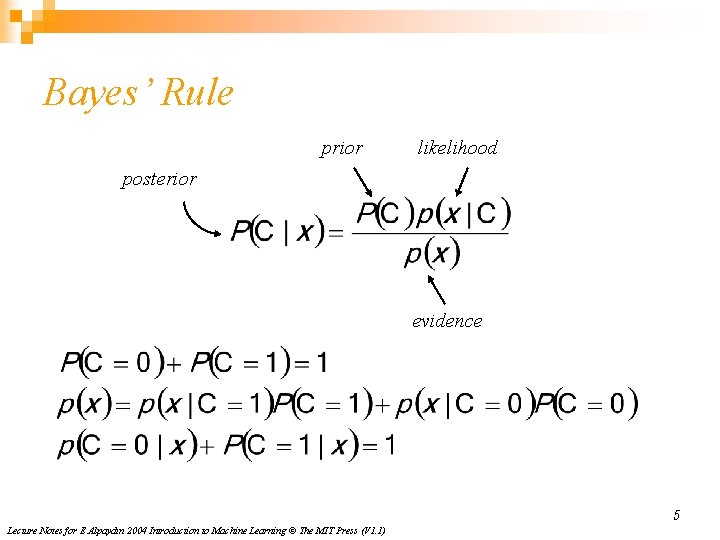

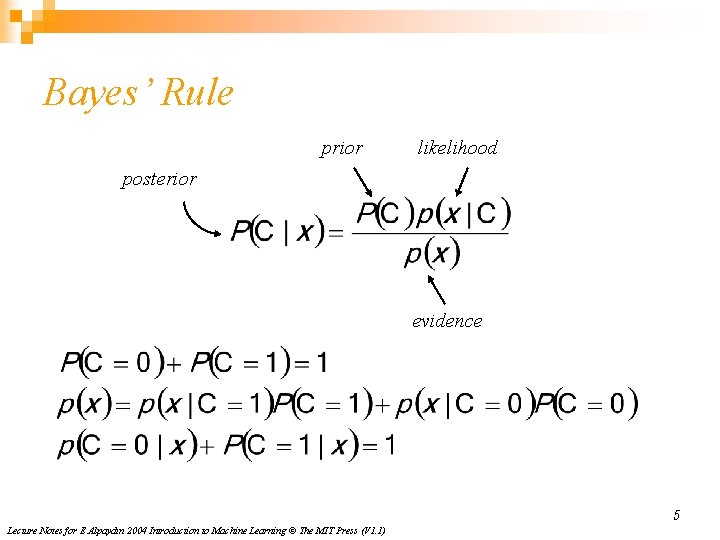

Bayes’ Rule

$P(C \mid x) = \dfrac{P(C)\, p(x \mid C)}{p(x)}$, that is, posterior = prior × likelihood / evidence

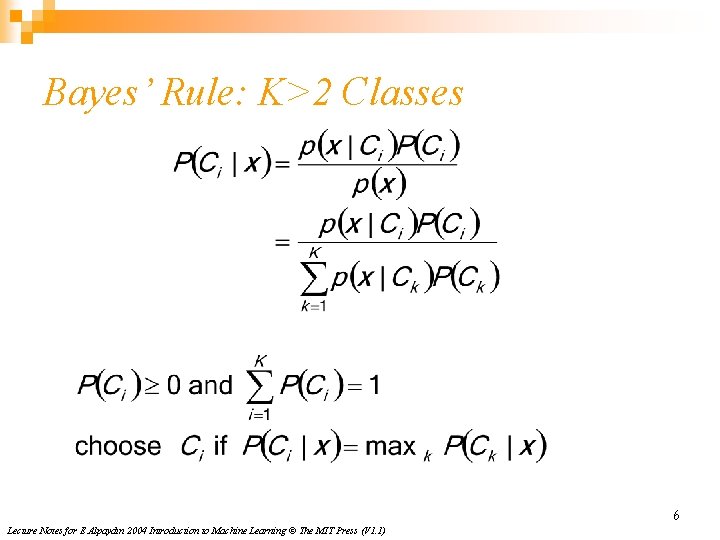

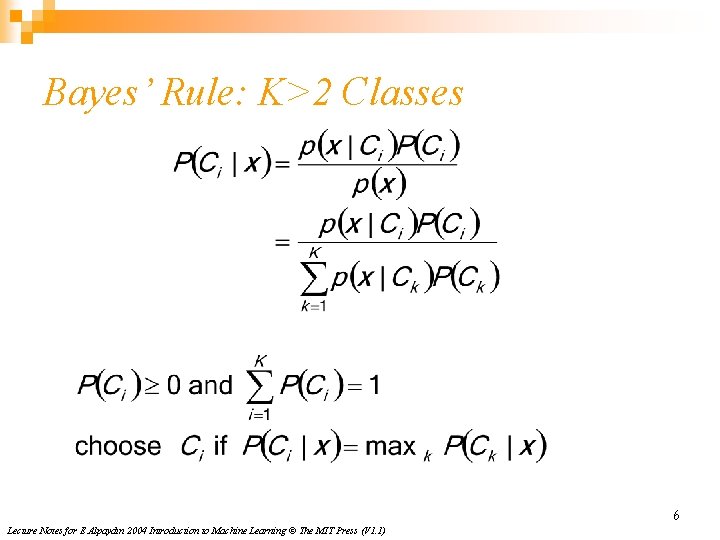

Bayes’ Rule: K>2 Classes

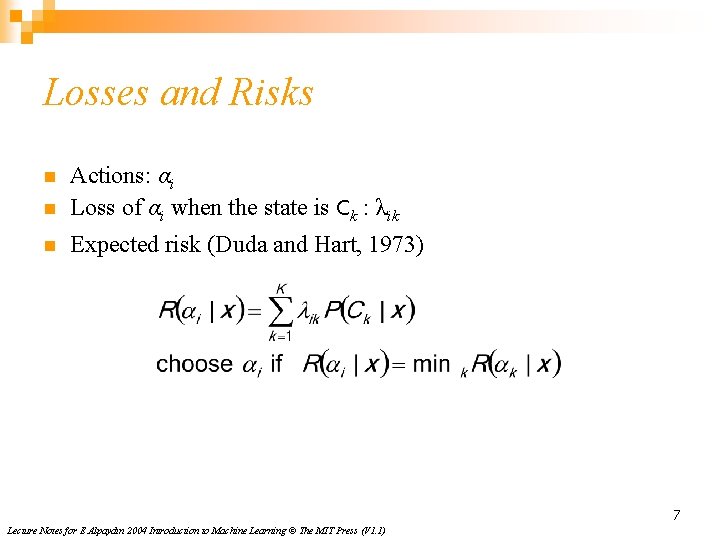

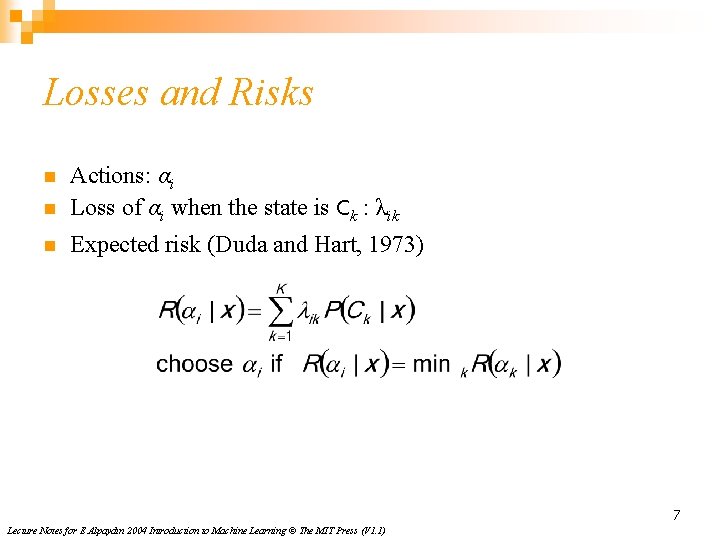
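The formula for this slide survives only as an image, so the sketch below assumes the standard K-class form of Bayes' rule: the posterior of each class is its prior times its likelihood, divided by the evidence summed over all classes. The function name and the numbers are hypothetical.

```python
def posteriors(priors, likelihoods):
    """Return P(C_i | x) for every class, given P(C_i) and p(x | C_i)."""
    joint = [p * l for p, l in zip(priors, likelihoods)]
    evidence = sum(joint)  # p(x) = sum_k p(x | C_k) P(C_k)
    if evidence == 0:
        raise ValueError("evidence p(x) is zero; the posterior is undefined")
    return [j / evidence for j in joint]


# Hypothetical three-class problem.
priors = [0.5, 0.3, 0.2]
likelihoods = [0.1, 0.4, 0.5]
post = posteriors(priors, likelihoods)

# Choose the class with the highest posterior.
best = max(range(len(post)), key=post.__getitem__)
print(post, best)
```

Here the class with the largest prior is not the one chosen: the likelihoods shift the posterior toward the second class.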

Losses and Risks

- Actions: $\alpha_i$
- Loss of $\alpha_i$ when the state is $C_k$: $\lambda_{ik}$
- Expected risk (Duda and Hart, 1973)

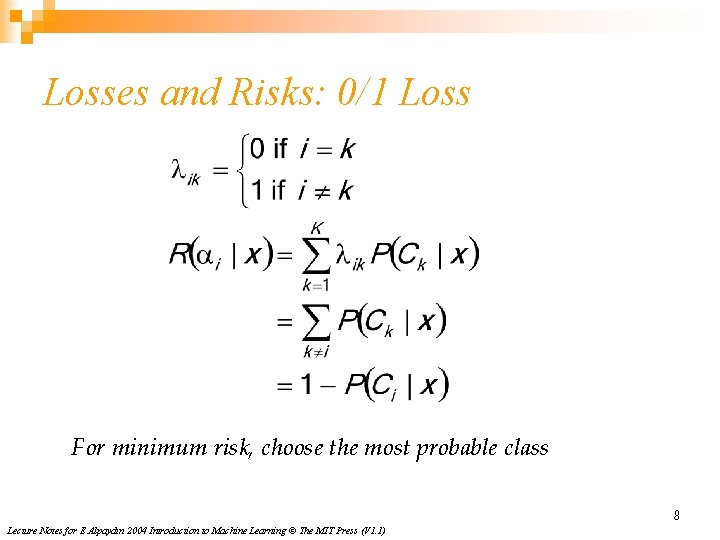

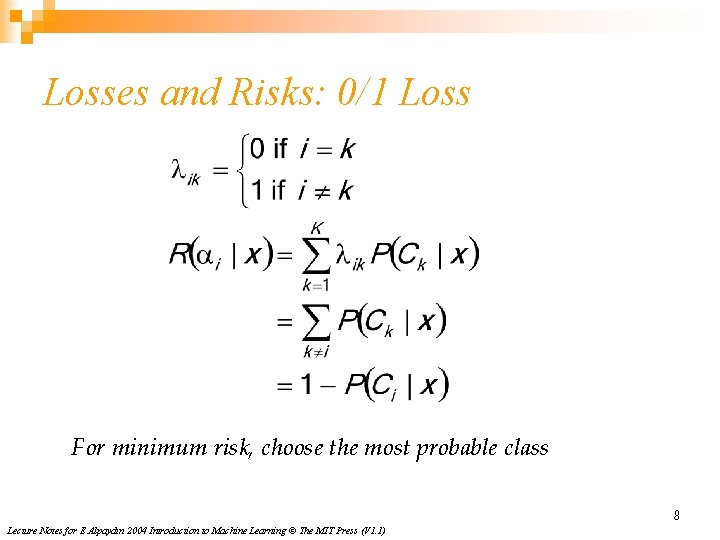
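As a rough sketch of the expected-risk rule, assuming the usual definition R(α_i | x) = Σ_k λ_ik P(C_k | x) and the decision "take the action with minimum risk": the loss matrix and posterior below are hypothetical and chosen so that the minimum-risk action differs from the most probable class.

```python
def expected_risks(loss, post):
    """R(alpha_i | x) = sum_k loss[i][k] * P(C_k | x), for every action i."""
    return [sum(l_ik * p_k for l_ik, p_k in zip(row, post)) for row in loss]


def best_action(loss, post):
    """Return (index of the minimum-risk action, list of all risks)."""
    risks = expected_risks(loss, post)
    return min(range(len(risks)), key=risks.__getitem__), risks


# Hypothetical losses: rows are actions, columns are true states.
loss = [
    [0, 10],  # action 0: very costly when the true state is C_1
    [1, 0],   # action 1: mildly costly when the true state is C_0
]
post = [0.8, 0.2]  # hypothetical posteriors P(C_0 | x), P(C_1 | x)

action, risks = best_action(loss, post)
print(action, risks)
```

Although C_0 is the more probable state, the asymmetric losses make action 1 the lower-risk choice.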

Losses and Risks: 0/1 Loss

For minimum risk, choose the most probable class

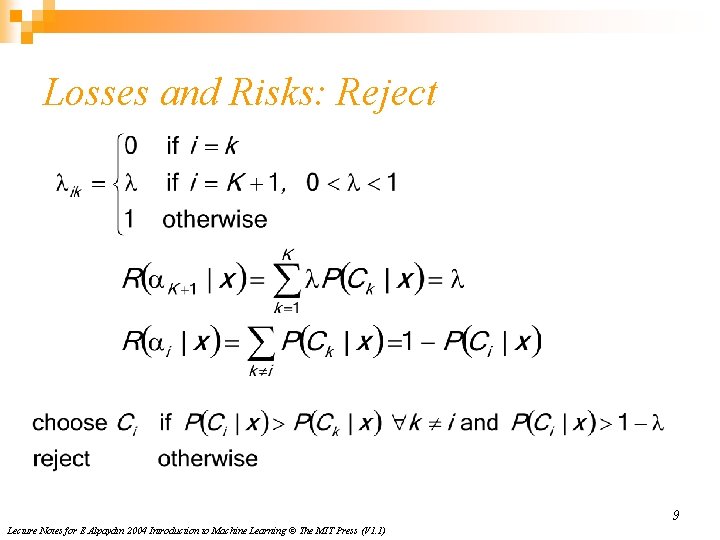

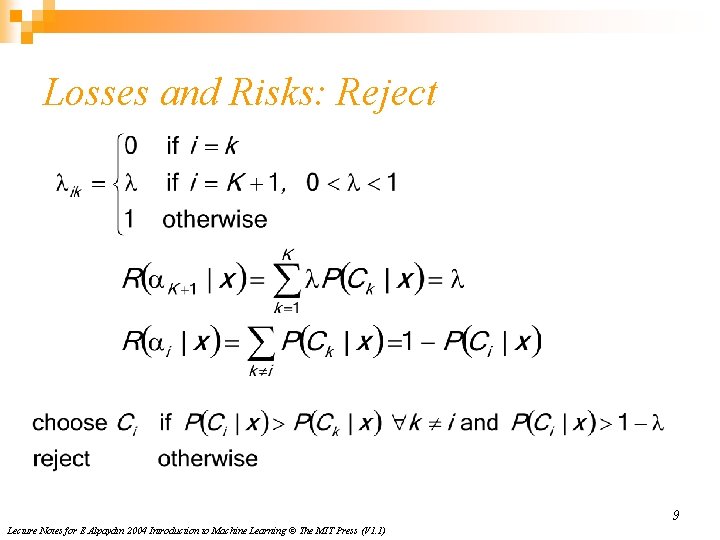
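The step behind "choose the most probable class" can be written out as follows, assuming the standard 0/1 loss (zero for a correct decision, one for any error) and the expected-risk definition used for general losses:

```latex
\lambda_{ik} =
\begin{cases}
0 & \text{if } i = k \\
1 & \text{if } i \neq k
\end{cases}
\qquad
R(\alpha_i \mid x) = \sum_{k=1}^{K} \lambda_{ik} \, P(C_k \mid x)
                   = \sum_{k \neq i} P(C_k \mid x)
                   = 1 - P(C_i \mid x)
```

Minimizing $R(\alpha_i \mid x)$ is therefore the same as maximizing $P(C_i \mid x)$.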

Losses and Risks: Reject

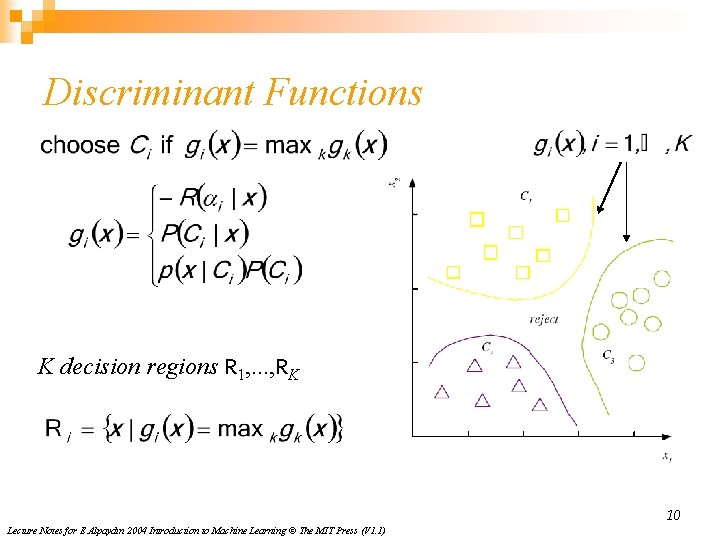

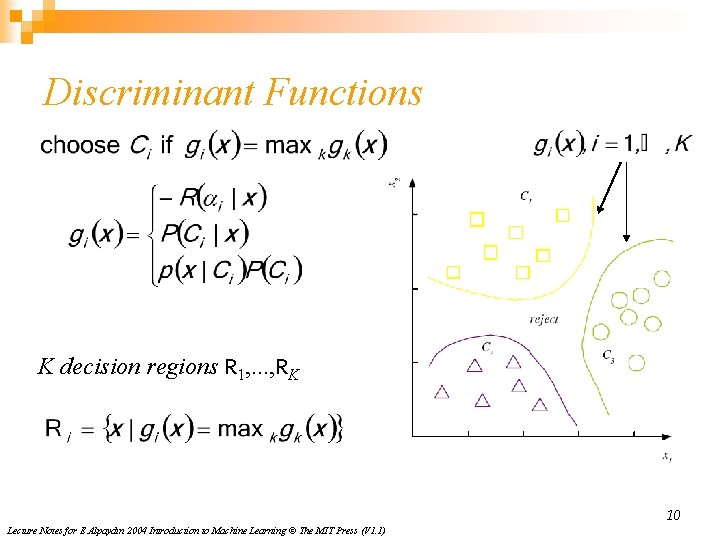
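The reject rule itself is only in the slide image. The sketch below assumes the common formulation: 0/1 loss for class decisions plus an extra reject action with a constant loss strictly between 0 and 1, so that rejecting is preferred whenever the best class is not certain enough. The function name and parameter are hypothetical.

```python
def decide_with_reject(post, reject_loss):
    """Return the index of the chosen class, or None to reject.

    Assumes 0/1 loss for class decisions and a constant loss `reject_loss`
    (strictly between 0 and 1) for the reject action.
    """
    if not 0 < reject_loss < 1:
        raise ValueError("reject_loss is assumed to lie strictly between 0 and 1")
    best = max(range(len(post)), key=post.__getitem__)
    # Risk of choosing `best` is 1 - P(C_best | x); risk of rejecting is reject_loss.
    return best if 1 - post[best] < reject_loss else None


confident = decide_with_reject([0.9, 0.1], reject_loss=0.2)
uncertain = decide_with_reject([0.6, 0.4], reject_loss=0.2)
print(confident, uncertain)
```

A smaller `reject_loss` makes rejection cheaper and so demands a higher posterior before committing to a class.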

Discriminant Functions

$K$ decision regions $R_1, \ldots, R_K$

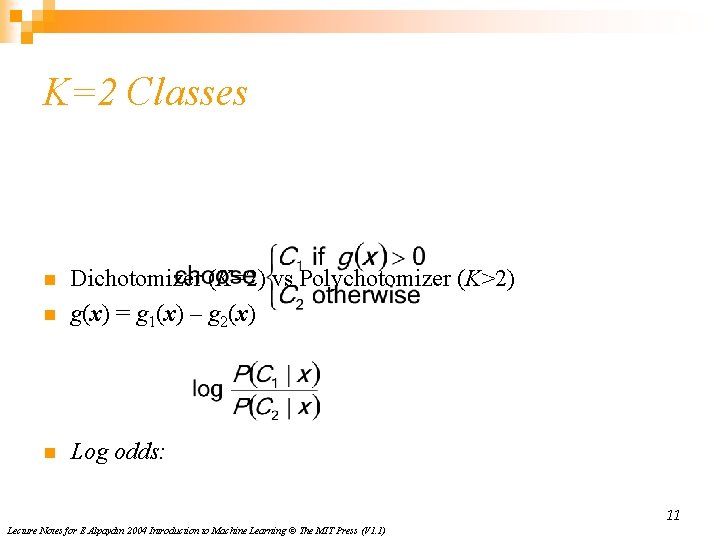

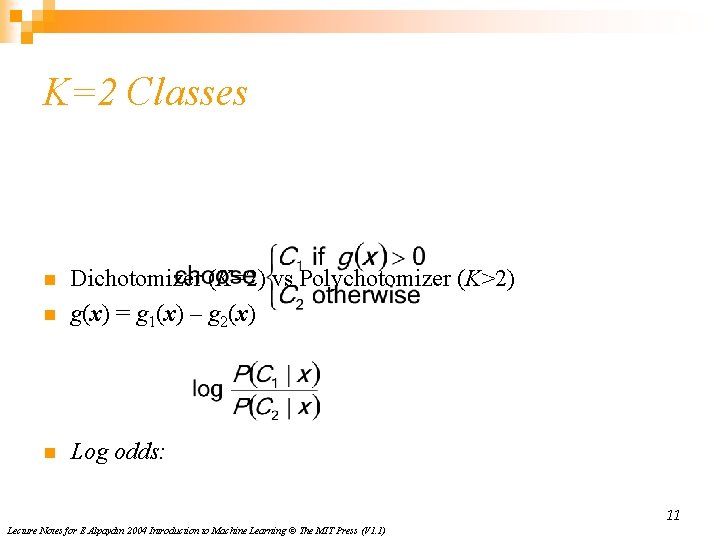

K=2 Classes

- Dichotomizer ($K = 2$) vs Polychotomizer ($K > 2$)
- $g(x) = g_1(x) - g_2(x)$
- Log odds:

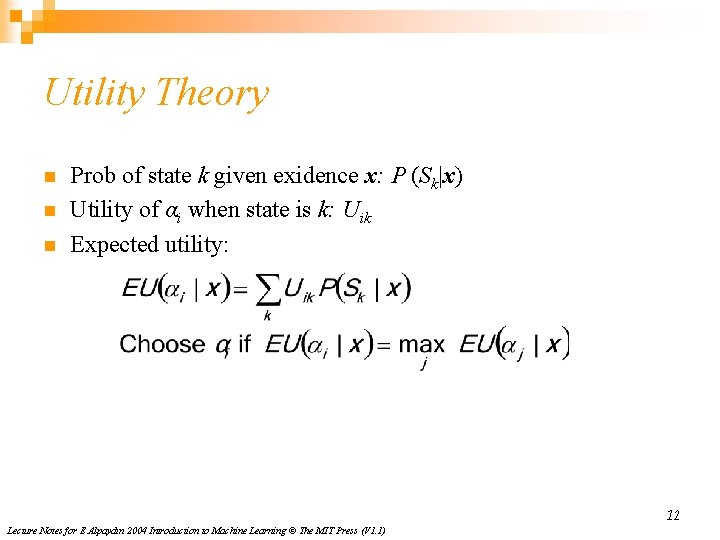

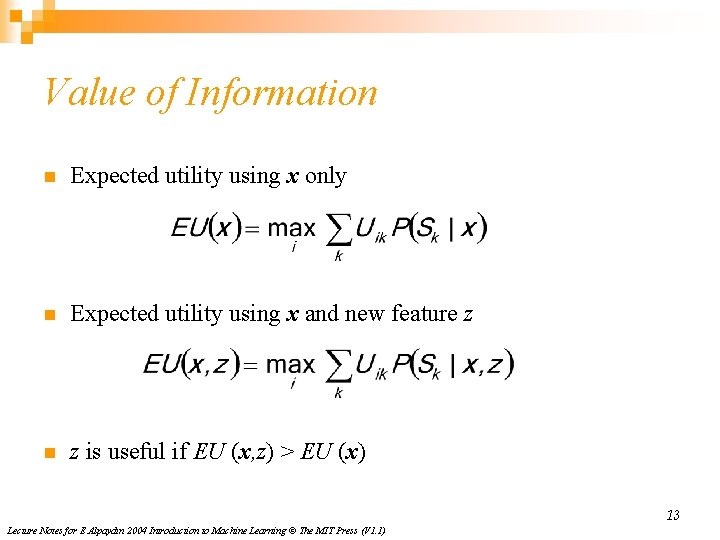

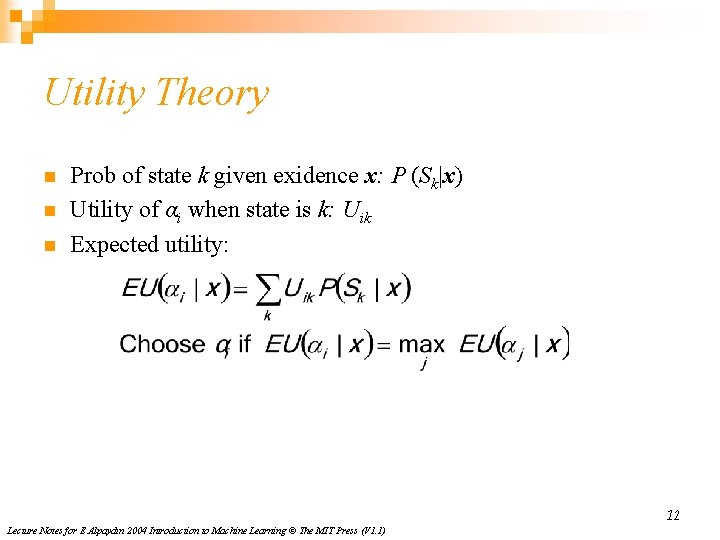
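A minimal sketch of a dichotomizer built on the log odds, assuming g_i(x) = log P(C_i | x), so that g(x) = g_1(x) - g_2(x) = log [P(C_1 | x) / P(C_2 | x)] and the decision is C_1 when g(x) > 0. The function names are hypothetical.

```python
import math


def log_odds(p1):
    """g(x) = log(P(C1 | x) / P(C2 | x)), with P(C2 | x) = 1 - P(C1 | x)."""
    if not 0 < p1 < 1:
        raise ValueError("p1 must lie strictly between 0 and 1")
    return math.log(p1 / (1 - p1))


def dichotomize(p1):
    """Choose C1 if g(x) > 0, C2 otherwise."""
    return "C1" if log_odds(p1) > 0 else "C2"


print(dichotomize(0.7), dichotomize(0.3))
```

The sign of the log odds carries the decision, and its magnitude indicates how far the posterior is from the 1/2 boundary.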

Utility Theory

- Probability of state $k$ given evidence $x$: $P(S_k \mid x)$
- Utility of $\alpha_i$ when state is $k$: $U_{ik}$
- Expected utility:

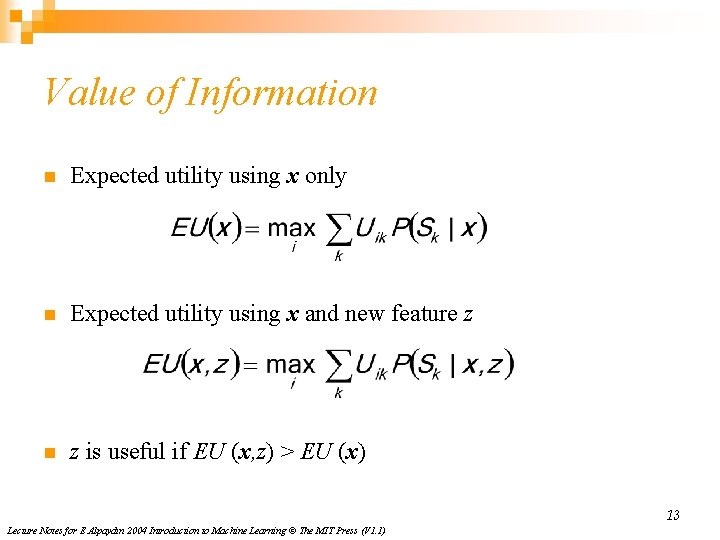
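In the notation of this slide, and assuming the usual definition (utility weighted by the probability of each state), the expected utility and the resulting decision rule read:

```latex
EU(\alpha_i \mid x) = \sum_{k} U_{ik} \, P(S_k \mid x)
\qquad
\text{choose } \alpha_i \text{ if } EU(\alpha_i \mid x) = \max_{j} EU(\alpha_j \mid x)
```

This mirrors the expected-risk rule, with maximization of utility in place of minimization of loss.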

Value of Information

- Expected utility using $x$ only
- Expected utility using $x$ and new feature $z$
- $z$ is useful if $EU(x, z) > EU(x)$

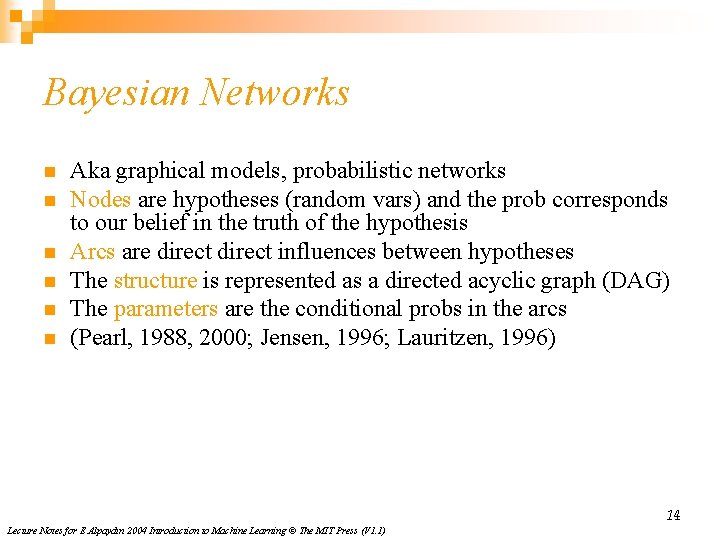

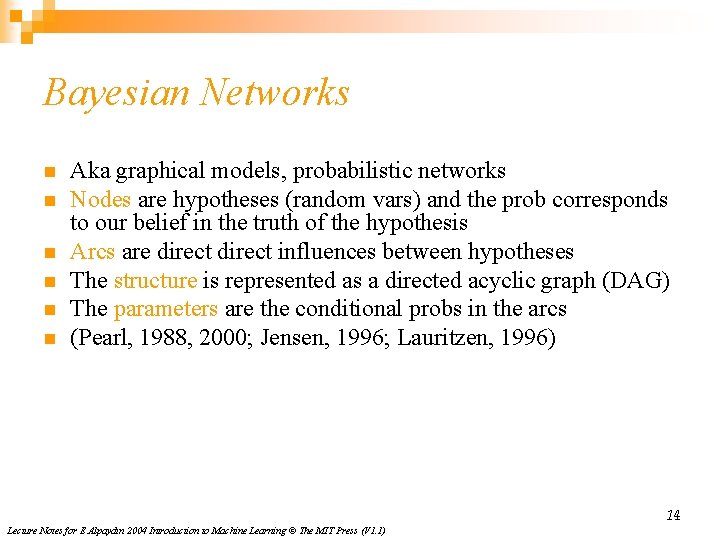

Bayesian Networks

- Aka graphical models, probabilistic networks
- Nodes are hypotheses (random variables), and the probability corresponds to our belief in the truth of the hypothesis
- Arcs are direct influences between hypotheses
- The structure is represented as a directed acyclic graph (DAG)
- The parameters are the conditional probabilities in the arcs (Pearl, 1988, 2000; Jensen, 1996; Lauritzen, 1996)

Causes and Bayes’ Rule

Figure labels: diagnostic, causal

Diagnostic inference: Knowing that the grass is wet, what is the probability that rain is the cause?

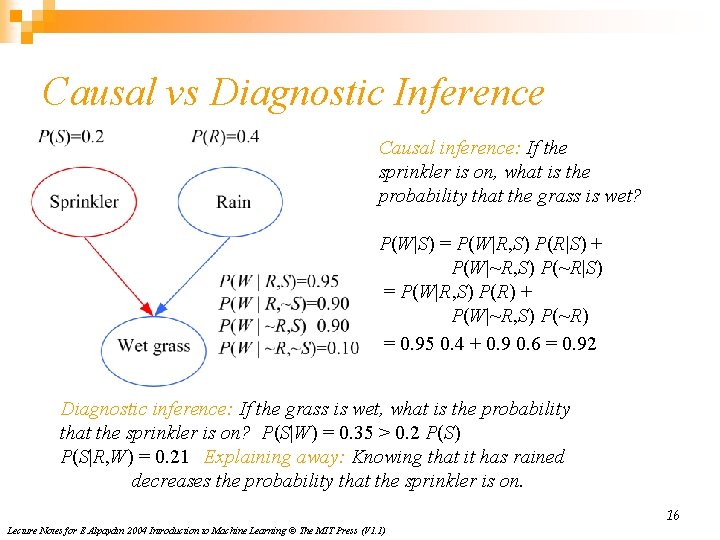

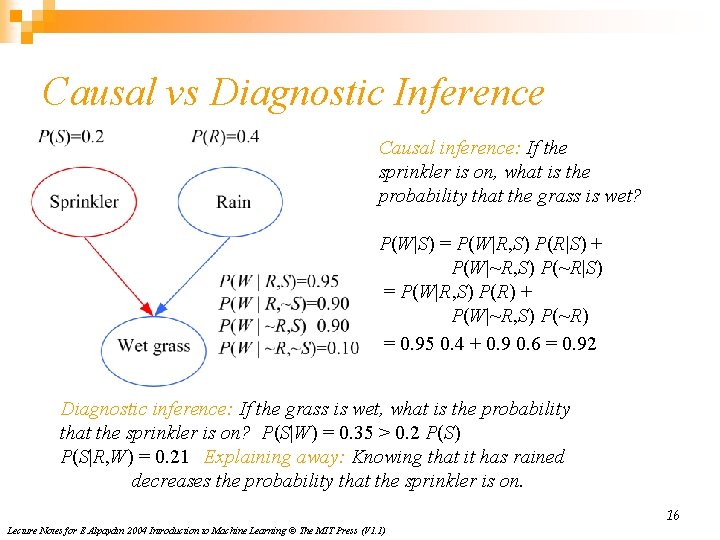

Causal vs Diagnostic Inference

Causal inference: If the sprinkler is on, what is the probability that the grass is wet?

$P(W \mid S) = P(W \mid R, S) P(R \mid S) + P(W \mid {\sim}R, S) P({\sim}R \mid S)$

$= P(W \mid R, S) P(R) + P(W \mid {\sim}R, S) P({\sim}R)$

$= 0.95 \times 0.4 + 0.9 \times 0.6 = 0.92$

Diagnostic inference: If the grass is wet, what is the probability that the sprinkler is on? $P(S \mid W) = 0.35 > 0.2 = P(S)$, and $P(S \mid R, W) = 0.21$.

Explaining away: Knowing that it has rained decreases the probability that the sprinkler is on.

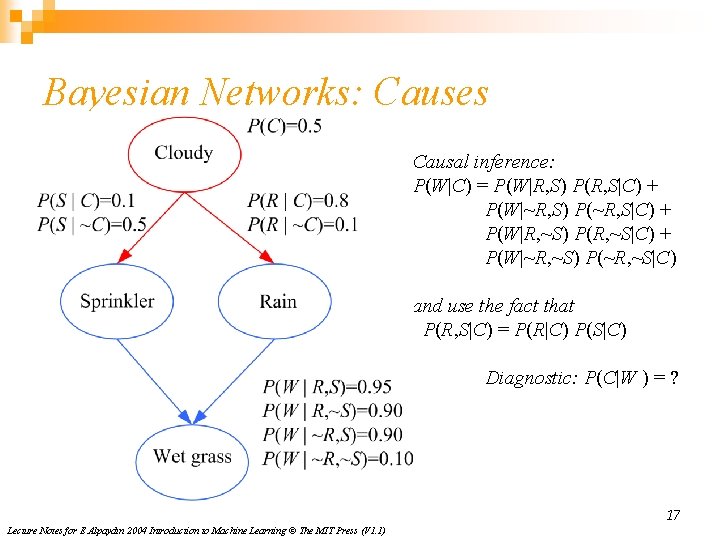

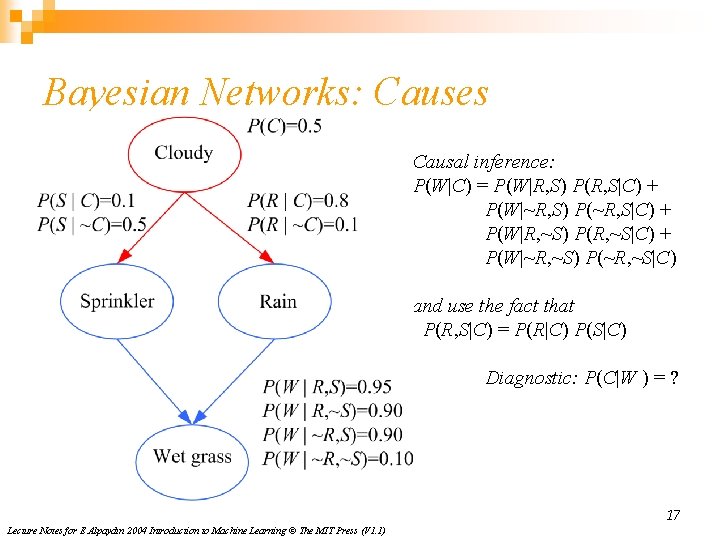
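The numbers on this slide can be checked with a short script. P(R) = 0.4, P(S) = 0.2, P(W | R, S) = 0.95 and P(W | ~R, S) = 0.9 are read from the slide text; the two remaining table entries, P(W | R, ~S) = 0.90 and P(W | ~R, ~S) = 0.10, are not in the text and are assumptions, chosen because they reproduce the slide's 0.35 and 0.21. The helper names are hypothetical.

```python
P_R = 0.4  # P(R): rain (from the slide)
P_S = 0.2  # P(S): sprinkler on (from the slide)

# P(W | R, S), keyed by (rain, sprinkler).
P_W_GIVEN = {
    (True, True): 0.95,    # from the slide
    (False, True): 0.90,   # from the slide
    (True, False): 0.90,   # ASSUMED: not in the slide text
    (False, False): 0.10,  # ASSUMED: not in the slide text
}


def p(flag, prob_true):
    """Probability that a binary variable takes the value `flag`."""
    return prob_true if flag else 1 - prob_true


def p_w_given_s(s):
    """P(W | S=s), summing out rain (rain and sprinkler are independent here)."""
    return sum(P_W_GIVEN[(r, s)] * p(r, P_R) for r in (True, False))


# Causal inference: P(W | S).
causal = p_w_given_s(True)

# Diagnostic inference: P(S | W) = P(W | S) P(S) / P(W).
p_w = sum(p_w_given_s(s) * p(s, P_S) for s in (True, False))
diagnostic = causal * P_S / p_w

# Explaining away: P(S | R, W) = P(W | R, S) P(S) / P(W | R).
p_w_given_r = sum(P_W_GIVEN[(True, s)] * p(s, P_S) for s in (True, False))
explained = P_W_GIVEN[(True, True)] * P_S / p_w_given_r

print(round(causal, 2), round(diagnostic, 2), round(explained, 2))
```

Under these assumed table entries, learning that it rained pulls the sprinkler probability from about 0.35 back toward its prior of 0.2.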

Bayesian Networks: Causes

Causal inference:

$P(W \mid C) = P(W \mid R, S) P(R, S \mid C) + P(W \mid {\sim}R, S) P({\sim}R, S \mid C) + P(W \mid R, {\sim}S) P(R, {\sim}S \mid C) + P(W \mid {\sim}R, {\sim}S) P({\sim}R, {\sim}S \mid C)$

and use the fact that $P(R, S \mid C) = P(R \mid C) P(S \mid C)$

Diagnostic: $P(C \mid W) = ?$

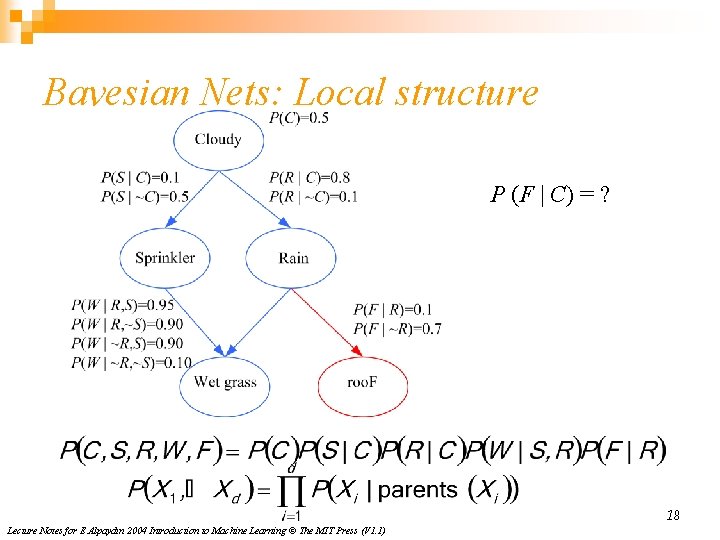

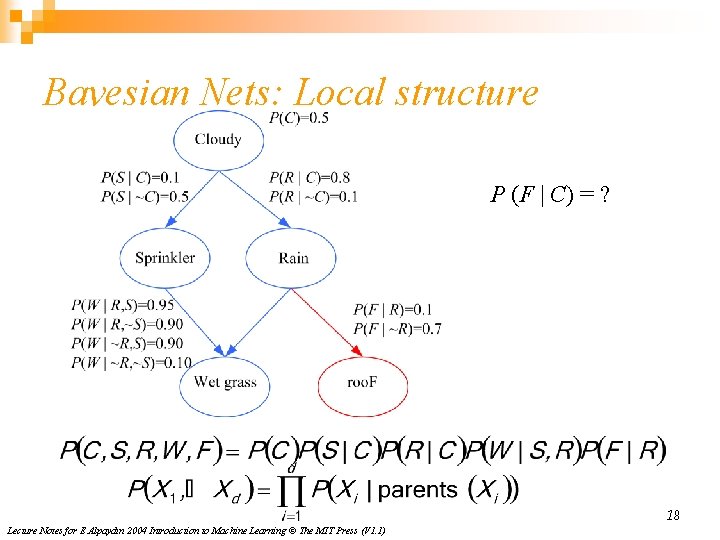
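The four-term sum for P(W | C) translates directly into a loop over the values of R and S. In this sketch the conditional probabilities P(R | C) = 0.8 and P(S | C) = 0.1, and the table entries for ~S, are hypothetical values for illustration; the network's actual tables are in the slide figure and are not reproduced in the text.

```python
from itertools import product


def p_w_given_c(p_r_c, p_s_c, p_w_rs):
    """P(W | C) = sum over r, s of P(W | r, s) P(r | C) P(s | C).

    Uses the factorization P(R, S | C) = P(R | C) P(S | C).
    """
    total = 0.0
    for r, s in product((True, False), repeat=2):
        p_r = p_r_c if r else 1 - p_r_c
        p_s = p_s_c if s else 1 - p_s_c
        total += p_w_rs[(r, s)] * p_r * p_s
    return total


# P(W | R, S), keyed by (rain, sprinkler). HYPOTHETICAL table for illustration.
p_w_rs = {
    (True, True): 0.95,
    (False, True): 0.90,
    (True, False): 0.90,
    (False, False): 0.10,
}

# HYPOTHETICAL values for P(R | C) and P(S | C).
result = p_w_given_c(p_r_c=0.8, p_s_c=0.1, p_w_rs=p_w_rs)
print(round(result, 2))
```

The diagnostic query P(C | W) would additionally need P(W | ~C) and the prior P(C), combined through Bayes' rule.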

Bayesian Nets: Local structure

$P(F \mid C) = ?$

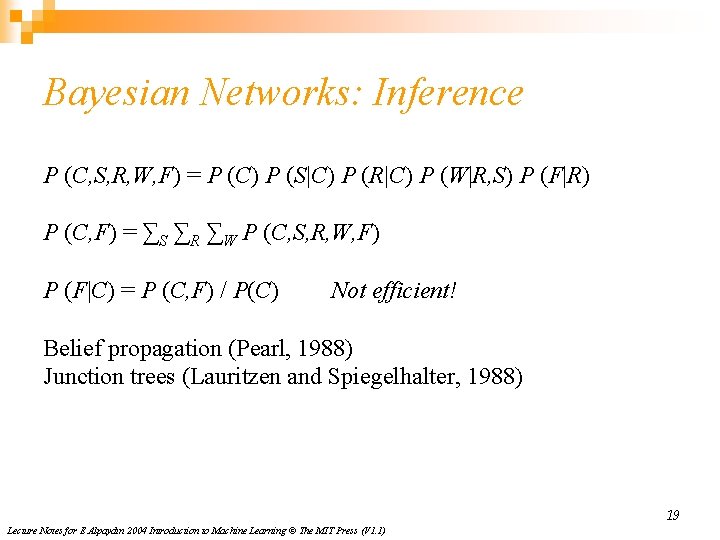

Bayesian Networks: Inference

$P(C, S, R, W, F) = P(C) P(S \mid C) P(R \mid C) P(W \mid R, S) P(F \mid R)$

$P(C, F) = \sum_S \sum_R \sum_W P(C, S, R, W, F)$

$P(F \mid C) = P(C, F) / P(C)$

Not efficient!

- Belief propagation (Pearl, 1988)
- Junction trees (Lauritzen and Spiegelhalter, 1988)

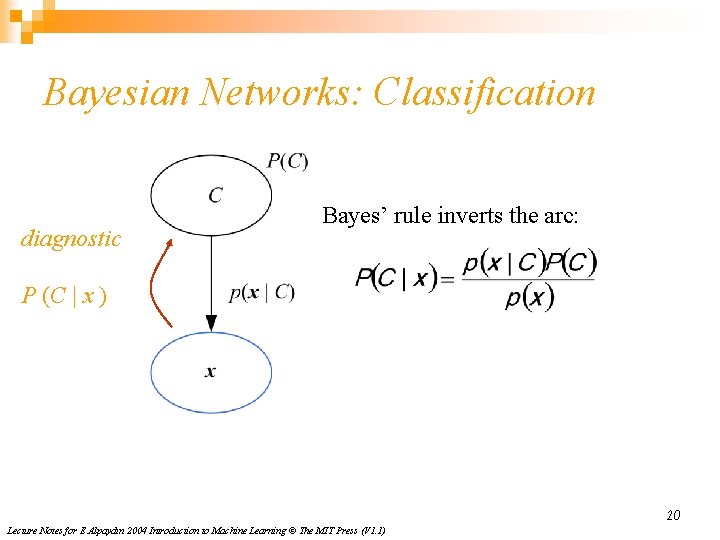

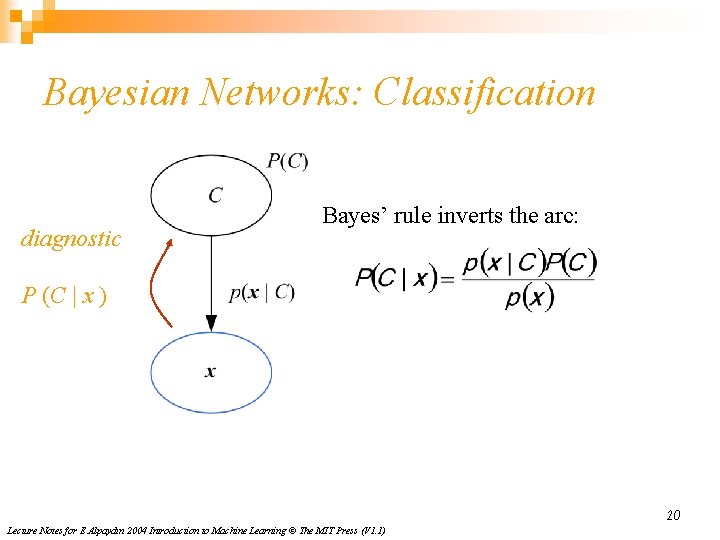
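The brute-force computation on this slide can be sketched by enumerating every assignment of the summed-out variables. All table values below are hypothetical; only the factorization of the joint comes from the slide. The helper names are also hypothetical.

```python
from itertools import product

# HYPOTHETICAL tables; each entry is P(variable = True | parents).
P_C = 0.5
P_S_GIVEN_C = {True: 0.1, False: 0.5}
P_R_GIVEN_C = {True: 0.8, False: 0.1}
P_W_GIVEN_RS = {(True, True): 0.95, (True, False): 0.90,
                (False, True): 0.90, (False, False): 0.10}
P_F_GIVEN_R = {True: 0.7, False: 0.05}


def bern(p_true, value):
    """Probability that a binary variable with P(True) = p_true equals `value`."""
    return p_true if value else 1 - p_true


def joint(c, s, r, w, f):
    """P(C, S, R, W, F) = P(C) P(S|C) P(R|C) P(W|R,S) P(F|R)."""
    return (bern(P_C, c)
            * bern(P_S_GIVEN_C[c], s)
            * bern(P_R_GIVEN_C[c], r)
            * bern(P_W_GIVEN_RS[(r, s)], w)
            * bern(P_F_GIVEN_R[r], f))


def p_f_given_c(c=True, f=True):
    """P(F | C) = P(C, F) / P(C), with P(C, F) summed over S, R and W."""
    p_cf = sum(joint(c, s, r, w, f)
               for s, r, w in product((True, False), repeat=3))
    return p_cf / bern(P_C, c)


value = p_f_given_c()
print(round(value, 2))
```

The sum visits 2^3 terms here, and the count doubles with every extra binary variable, which is the inefficiency the slide points to. In this network the same answer follows from summing over R alone, since F depends on C only through R.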

Bayesian Networks: Classification

Figure label: diagnostic

Bayes’ rule inverts the arc: $P(C \mid x)$

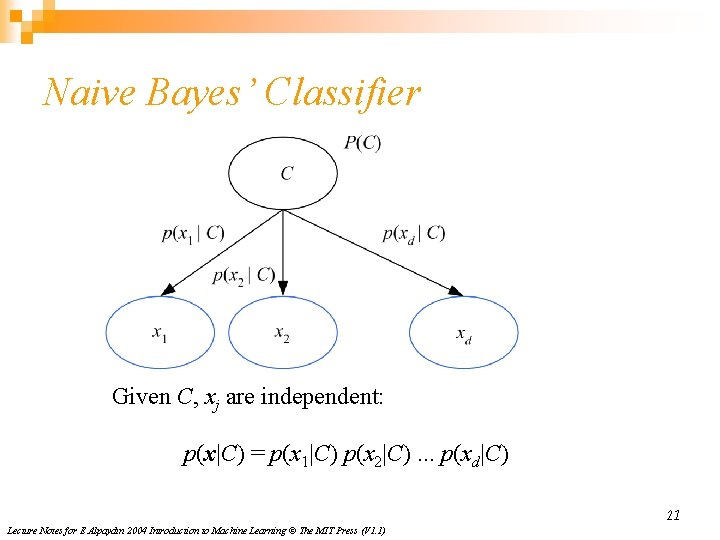

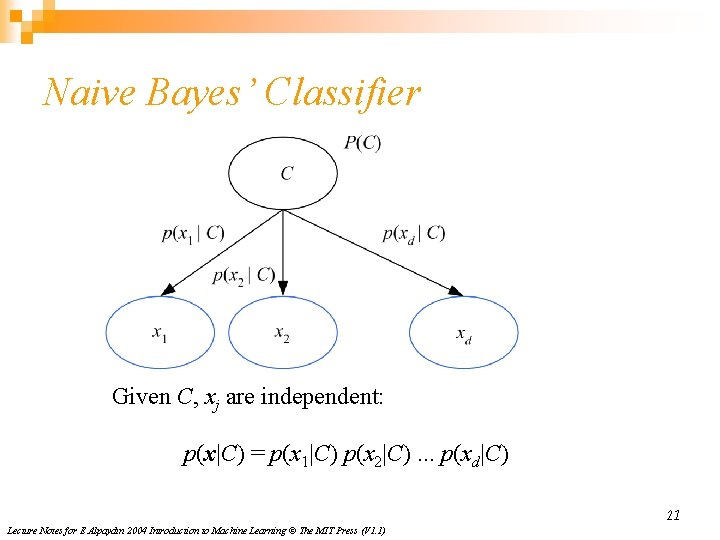

Naive Bayes’ Classifier

Given $C$, the $x_j$ are independent:

$p(\mathbf{x} \mid C) = p(x_1 \mid C) \, p(x_2 \mid C) \cdots p(x_d \mid C)$

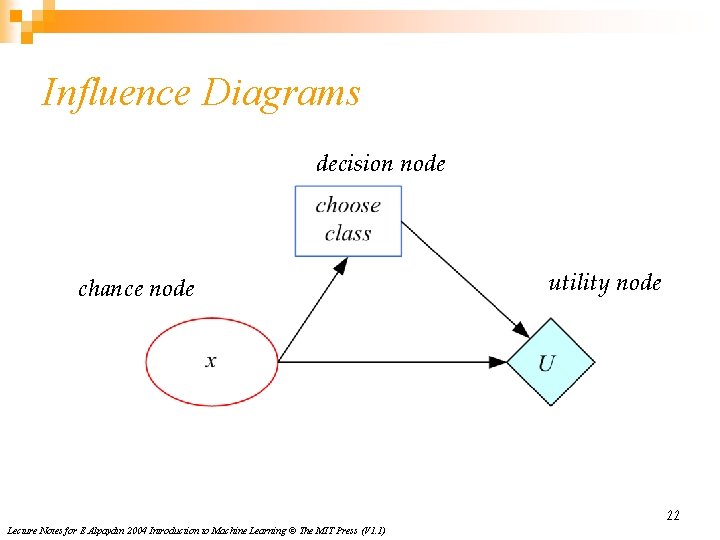

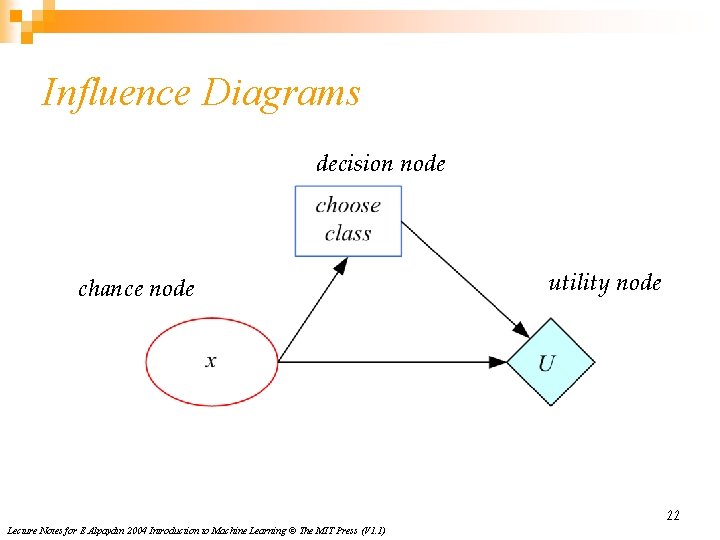
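A minimal sketch of classification under the naive Bayes factorization, assuming binary features with Bernoulli class-conditional probabilities (the slide does not fix a feature model). The priors and feature probabilities are hypothetical and must lie strictly between 0 and 1 so the logarithms are defined.

```python
import math


def naive_bayes_posteriors(priors, feature_probs, x):
    """Return P(C=c | x) for each class c.

    priors[c]           = P(C=c)
    feature_probs[c][j] = P(x_j = 1 | C=c)   (assumed Bernoulli features)
    x                   = list of 0/1 feature values
    """
    log_joint = []
    for prior, probs in zip(priors, feature_probs):
        # log P(C) + sum_j log p(x_j | C): the product of per-feature terms.
        total = math.log(prior)
        for p_j, x_j in zip(probs, x):
            total += math.log(p_j if x_j else 1 - p_j)
        log_joint.append(total)
    # Normalize in a numerically stable way (subtract the max before exp).
    m = max(log_joint)
    unnorm = [math.exp(v - m) for v in log_joint]
    z = sum(unnorm)
    return [u / z for u in unnorm]


# Hypothetical two-class, two-feature problem.
priors = [0.6, 0.4]
feature_probs = [[0.2, 0.7], [0.9, 0.4]]
post = naive_bayes_posteriors(priors, feature_probs, x=[1, 0])
print(post)
```

Working in log space avoids underflow when the number of features d is large, since the product of many small probabilities becomes a sum.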

Influence Diagrams

Figure legend: decision node, chance node, utility node

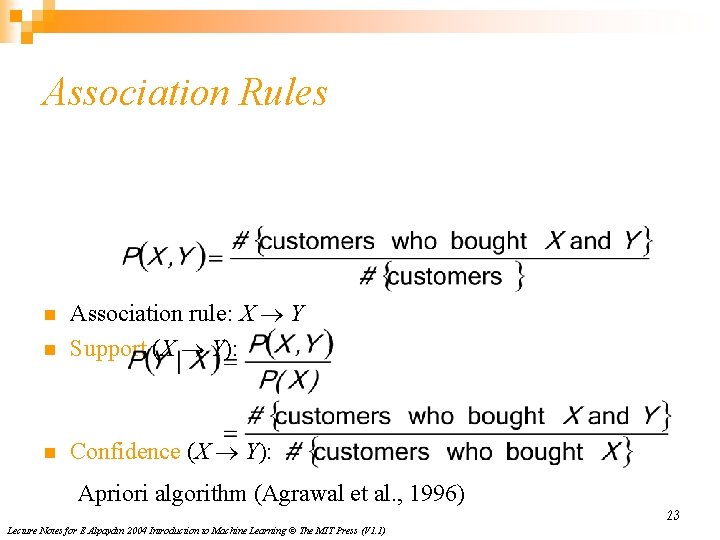

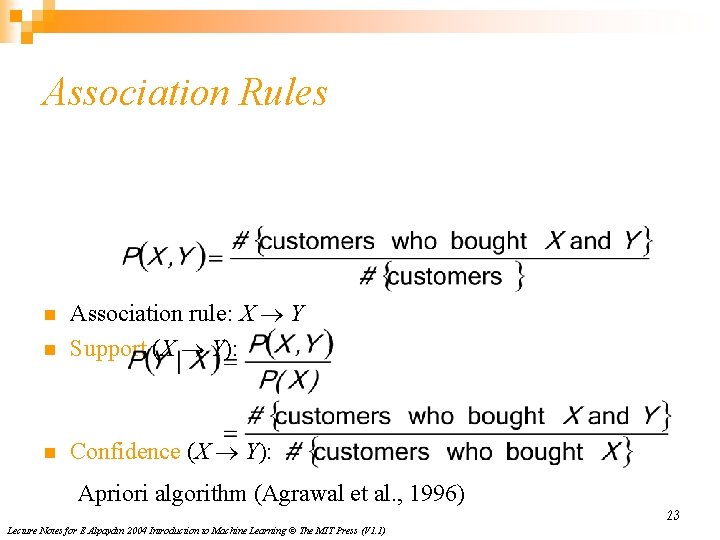

Association Rules

- Association rule: $X \rightarrow Y$
- Support ($X \rightarrow Y$):
- Confidence ($X \rightarrow Y$):
- Apriori algorithm (Agrawal et al., 1996)
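The support and confidence formulas are only in the slide images. The sketch below assumes the usual definitions: support is the fraction of transactions containing both X and Y, and confidence is the fraction of transactions containing X that also contain Y. The function names and basket data are hypothetical, and this is not the Apriori algorithm itself, only the two measures it relies on.

```python
def support(transactions, x, y):
    """Fraction of all transactions that contain every item of X and of Y."""
    both = set(x) | set(y)
    return sum(both <= t for t in transactions) / len(transactions)


def confidence(transactions, x, y):
    """Among transactions containing X, the fraction that also contain Y."""
    n_x = sum(set(x) <= t for t in transactions)
    if n_x == 0:
        raise ValueError("X never occurs, so confidence is undefined")
    both = set(x) | set(y)
    return sum(both <= t for t in transactions) / n_x


# Hypothetical market baskets.
baskets = [
    {"milk", "bread"},
    {"milk", "diapers"},
    {"milk", "bread", "eggs"},
    {"bread"},
]

sup = support(baskets, {"milk"}, {"bread"})
conf = confidence(baskets, {"milk"}, {"bread"})
print(sup, conf)
```

Two of the four baskets contain both milk and bread, and two of the three milk baskets also contain bread.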