Lecture Slides for INTRODUCTION TO MACHINE LEARNING 3rd EDITION

- Slides: 15

Lecture Slides for INTRODUCTION TO MACHINE LEARNING 3rd EDITION, ETHEM ALPAYDIN © The MIT Press, 2014 (alpaydin@boun.edu.tr, http://www.cmpe.boun.edu.tr/~ethem/i2ml3e). Modified by Prof. Carolina Ruiz for CS 539 Machine Learning at WPI.

CHAPTER 3: BAYESIAN DECISION THEORY

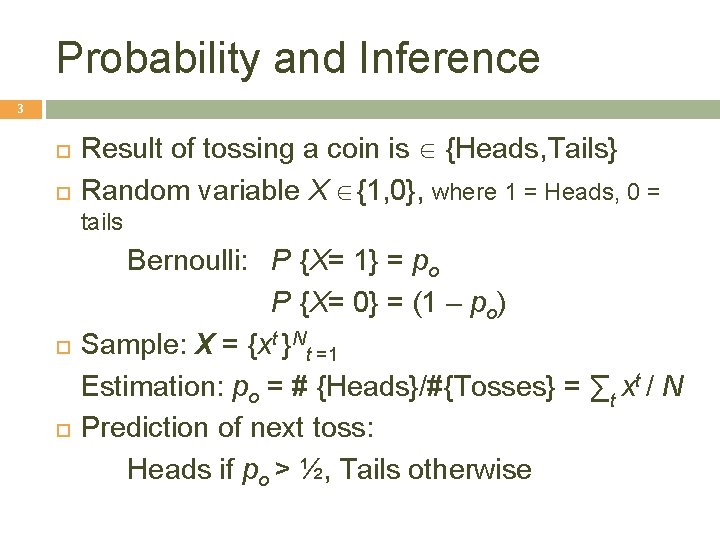

Probability and Inference

- The result of tossing a coin is one of {Heads, Tails}.
- Random variable $X \in \{1, 0\}$, where 1 = Heads and 0 = Tails.
- Bernoulli: $P\{X = 1\} = p_o$ and $P\{X = 0\} = 1 - p_o$.
- Sample: $\mathcal{X} = \{x^t\}_{t=1}^{N}$.
- Estimation: $p_o = \#\{\text{Heads}\} / \#\{\text{Tosses}\} = \sum_t x^t / N$.
- Prediction of next toss: Heads if $p_o > 1/2$, Tails otherwise.
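
A minimal Python sketch of the estimate and prediction rule above, assuming the sample is given as a list of 0/1 outcomes (1 = Heads); the function names are hypothetical.

```python
def estimate_p_o(sample):
    """Estimate p_o = #{Heads} / #{Tosses} = sum_t x^t / N from 0/1 outcomes."""
    if not sample:
        raise ValueError("need at least one toss")
    return sum(sample) / len(sample)


def predict_next_toss(p_o):
    """Predict Heads if p_o > 1/2, Tails otherwise (a tie at 1/2 gives Tails)."""
    return "Heads" if p_o > 0.5 else "Tails"


sample = [1, 0, 1, 1, 0, 1]  # hypothetical tosses: 4 Heads, 2 Tails
p_o = estimate_p_o(sample)
print(p_o, predict_next_toss(p_o))
```

Note that the rule follows the slide literally: an estimate of exactly 1/2 predicts Tails.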

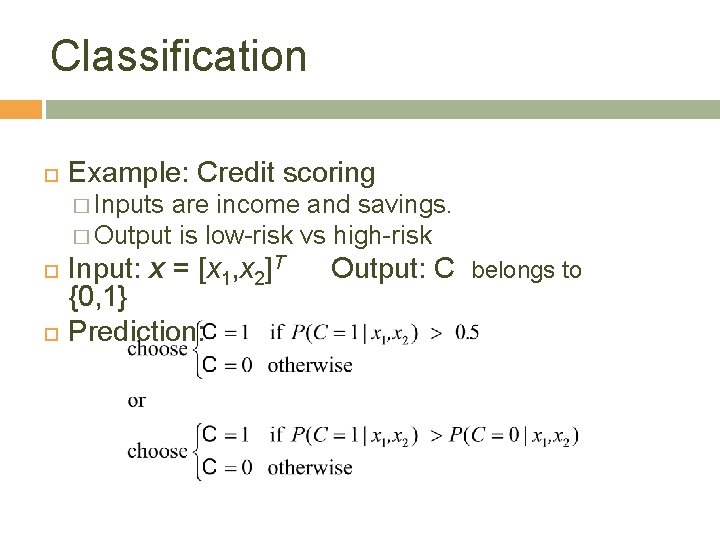

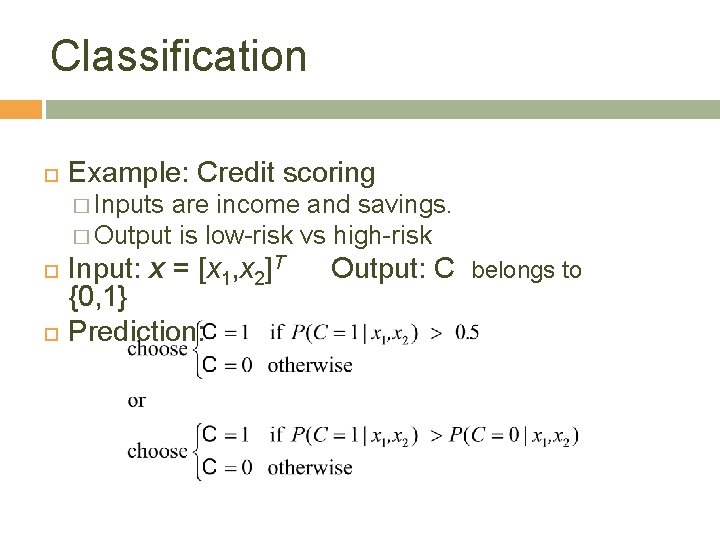

Classification Example: Credit scoring

- Inputs are income and savings.
- Output is low-risk vs. high-risk.
- Input: $x = [x_1, x_2]^T$. Output: $C \in \{0, 1\}$.
- Prediction: choose $C = 1$ if $P(C = 1 \mid x_1, x_2) > 0.5$, and $C = 0$ otherwise.

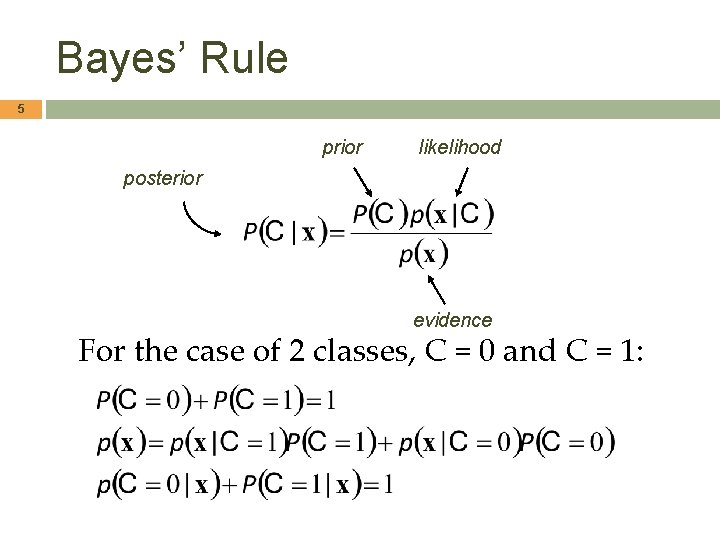

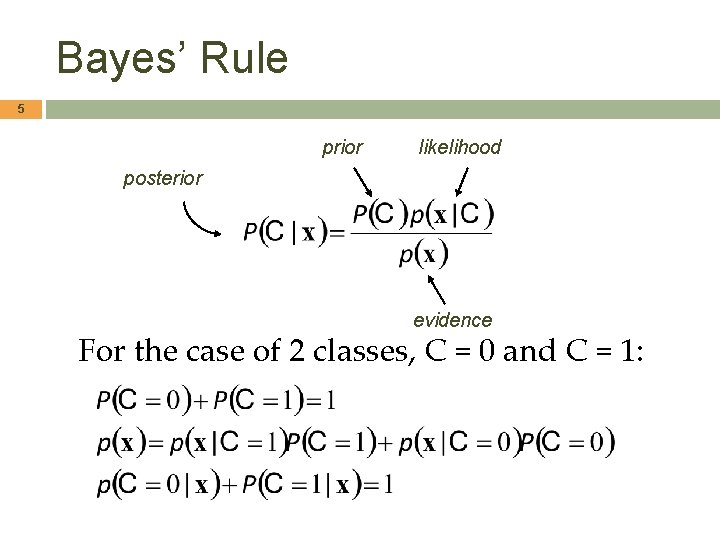

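Bayes' Rule

$$P(C \mid x) = \frac{P(C)\,p(x \mid C)}{p(x)}, \qquad \text{posterior} = \frac{\text{prior} \times \text{likelihood}}{\text{evidence}}$$

For the case of 2 classes, $C = 0$ and $C = 1$:

$$P(C = 0) + P(C = 1) = 1$$

$$p(x) = p(x \mid C = 1)\,P(C = 1) + p(x \mid C = 0)\,P(C = 0)$$

$$P(C = 0 \mid x) + P(C = 1 \mid x) = 1$$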
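
The two-class computation can be sketched in Python as follows, assuming the prior $P(C = 1)$ and the likelihoods $p(x \mid C = 0)$ and $p(x \mid C = 1)$ at a given $x$ are already known numbers, and picking $C = 1$ when its posterior exceeds 0.5. The function names and example values are hypothetical.

```python
def two_class_posteriors(prior_1, lik_0, lik_1):
    """Return (P(C=0|x), P(C=1|x)) from P(C=1), p(x|C=0), p(x|C=1)."""
    prior_0 = 1.0 - prior_1                        # P(C=0) + P(C=1) = 1
    evidence = lik_0 * prior_0 + lik_1 * prior_1   # p(x)
    if evidence <= 0:
        raise ValueError("p(x) must be positive")
    post_1 = lik_1 * prior_1 / evidence            # Bayes' rule
    return 1.0 - post_1, post_1                    # posteriors sum to 1


def choose_class(prior_1, lik_0, lik_1):
    """Choose C=1 if P(C=1|x) > 0.5, C=0 otherwise."""
    _, post_1 = two_class_posteriors(prior_1, lik_0, lik_1)
    return 1 if post_1 > 0.5 else 0


print(two_class_posteriors(0.5, 0.2, 0.6), choose_class(0.5, 0.2, 0.6))
print(two_class_posteriors(0.1, 0.2, 0.6), choose_class(0.1, 0.2, 0.6))
```

With the same likelihoods, lowering the prior $P(C = 1)$ from 0.5 to 0.1 flips the decision, which is the role the prior plays in the rule.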

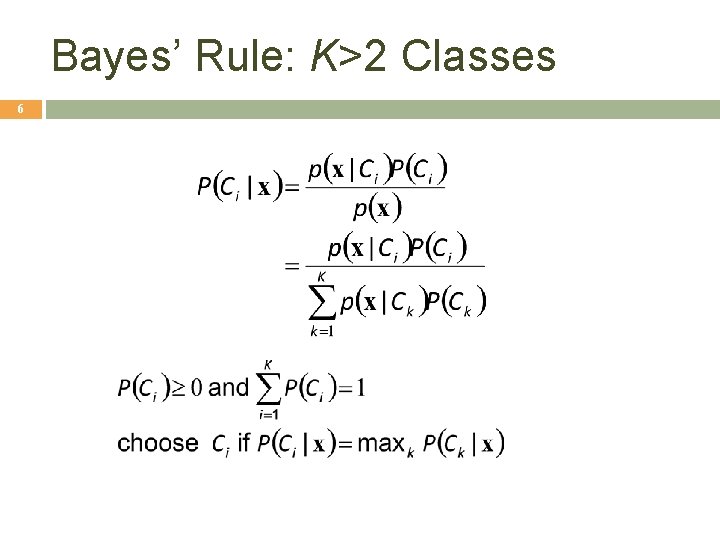

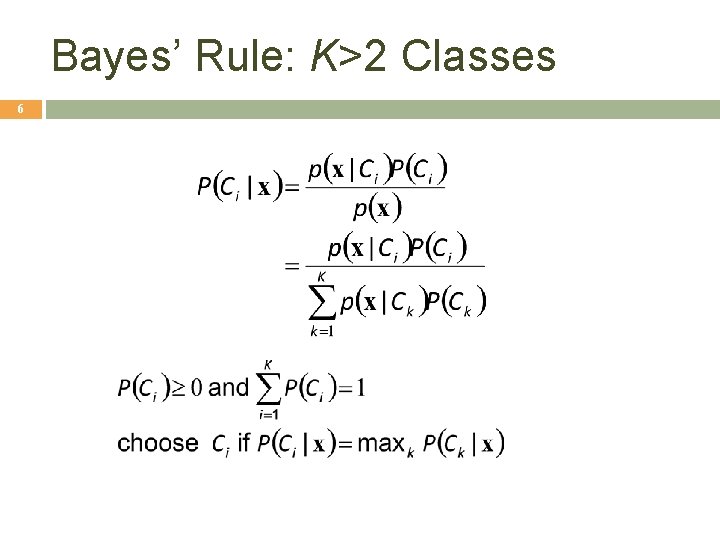

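Bayes' Rule: K > 2 Classes

$$P(C_i \mid x) = \frac{p(x \mid C_i)\,P(C_i)}{p(x)} = \frac{p(x \mid C_i)\,P(C_i)}{\sum_{k=1}^{K} p(x \mid C_k)\,P(C_k)}$$

with $P(C_i) \ge 0$ and $\sum_{i=1}^{K} P(C_i) = 1$. Choose $C_i$ if $P(C_i \mid x) = \max_k P(C_k \mid x)$.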
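
A minimal sketch of the K-class rule, assuming priors and likelihood values at one $x$ are given as equal-length lists; class indices are 0-based here, and the names and numbers are hypothetical.

```python
def posteriors(priors, likelihoods):
    """P(C_i|x) = p(x|C_i) P(C_i) / sum_k p(x|C_k) P(C_k)."""
    if len(priors) != len(likelihoods):
        raise ValueError("need one prior and one likelihood per class")
    if min(priors) < 0 or abs(sum(priors) - 1.0) > 1e-9:
        raise ValueError("priors must be non-negative and sum to 1")
    joint = [lik * prior for lik, prior in zip(likelihoods, priors)]
    evidence = sum(joint)  # p(x)
    return [j / evidence for j in joint]


def bayes_choice(priors, likelihoods):
    """Choose C_i with the maximum posterior; returns a 0-based index."""
    post = posteriors(priors, likelihoods)
    return max(range(len(post)), key=post.__getitem__)


print(posteriors([0.2, 0.3, 0.5], [0.5, 0.4, 0.1]))
print(bayes_choice([0.2, 0.3, 0.5], [0.5, 0.4, 0.1]))
```

Here the class with the largest prior (0.5) still loses, because its likelihood at this $x$ is small.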

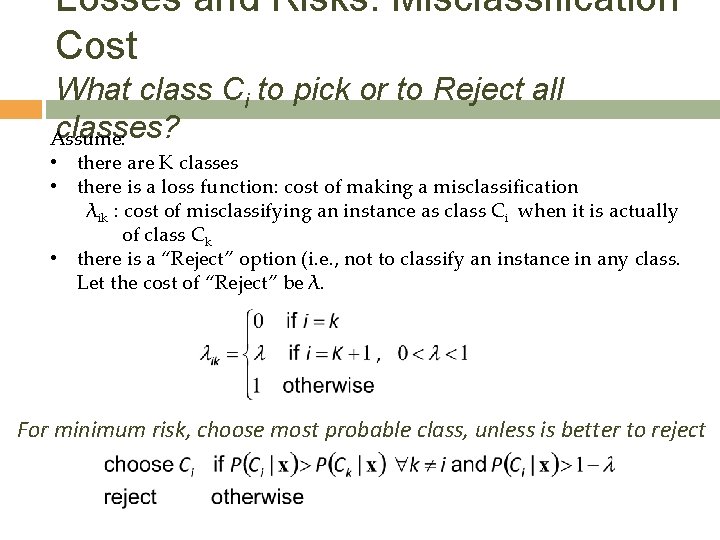

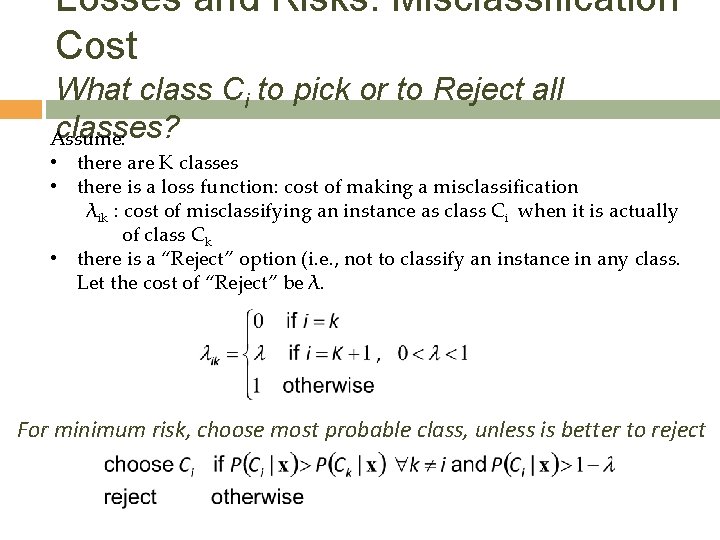

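Losses and Risks: Misclassification Cost

Which class $C_i$ should we pick, or should we reject all classes? Assume:

- there are $K$ classes;
- there is a loss function: $\lambda_{ik}$ is the cost of misclassifying an instance as class $C_i$ when it is actually of class $C_k$;
- there is a "Reject" option (i.e., not classifying an instance into any class), with cost $\lambda$.

The expected risk of choosing $C_i$ (action $\alpha_i$) is $R(\alpha_i \mid x) = \sum_{k=1}^{K} \lambda_{ik} P(C_k \mid x)$. For minimum risk, choose the class with the lowest expected risk, unless it is better to reject (i.e., unless $\lambda$ is lower still). With equal (0/1) misclassification costs, this means choosing the most probable class.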
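
A Python sketch of the minimum-risk rule, assuming the posteriors $P(C_k \mid x)$ are already computed and the losses are given as a $K \times K$ nested list with `loss[i][k]` $= \lambda_{ik}$. The function names are hypothetical, and ties are broken toward the lower class index.

```python
def expected_risks(posteriors, loss):
    """R(alpha_i|x) = sum_k loss[i][k] * P(C_k|x) for each action i."""
    K = len(posteriors)
    return [sum(loss[i][k] * posteriors[k] for k in range(K)) for i in range(K)]


def min_risk_decision(posteriors, loss, reject_cost=None):
    """Return the 0-based index of the class with the lowest expected risk,
    or "reject" if a reject option exists and its cost is strictly lower."""
    risks = expected_risks(posteriors, loss)
    best = min(range(len(risks)), key=risks.__getitem__)
    if reject_cost is not None and reject_cost < risks[best]:
        return "reject"
    return best


zero_one = [[0, 1, 1], [1, 0, 1], [1, 1, 0]]
post = [0.5, 0.3, 0.2]
print(expected_risks(post, zero_one))
print(min_risk_decision(post, zero_one), min_risk_decision(post, zero_one, 0.4))
```

With 0/1 loss the risk of choosing $C_i$ is $1 - P(C_i \mid x)$, so the sketch picks the most probable class unless $\lambda$ is below that value.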

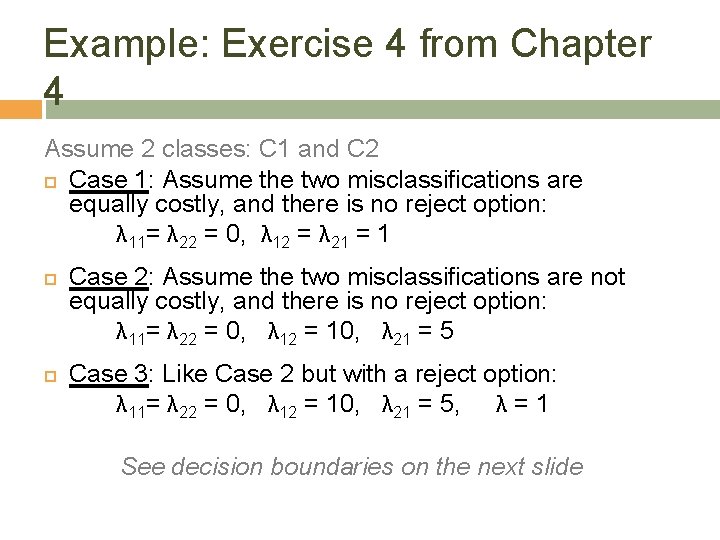

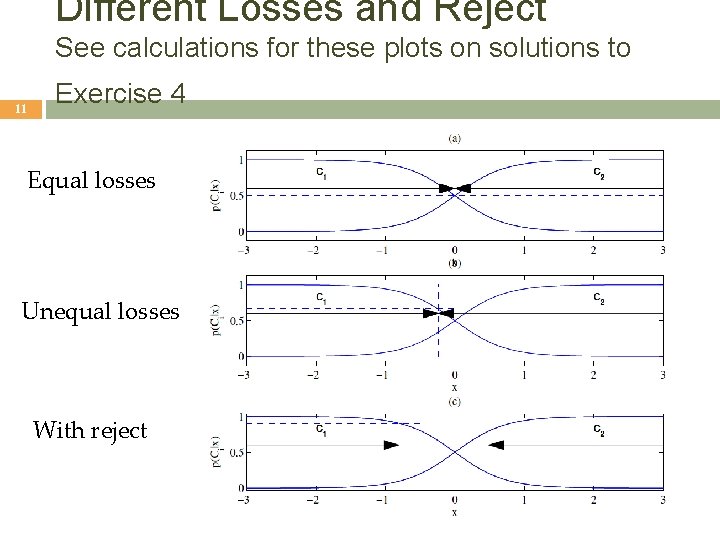

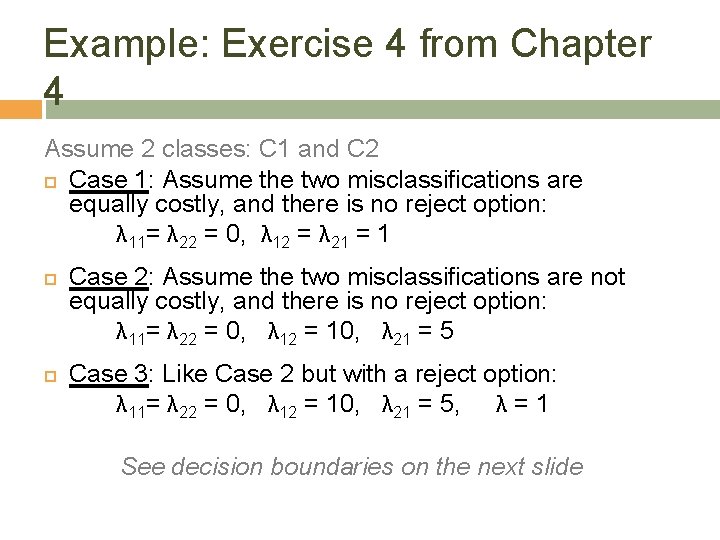

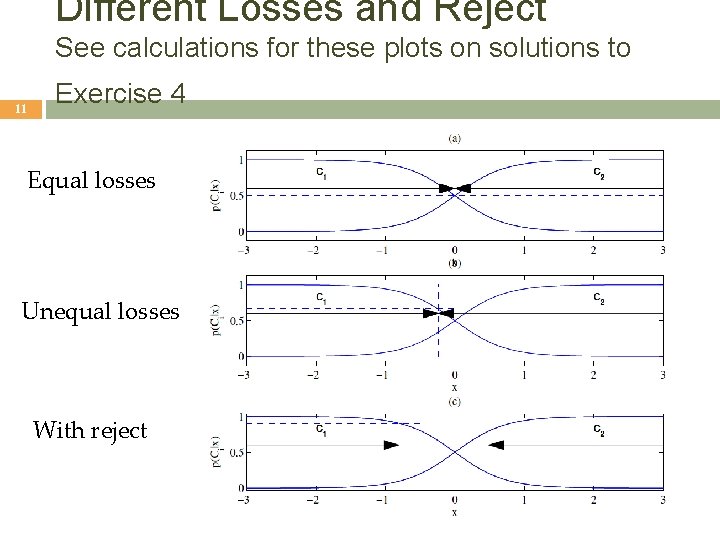

Example: Exercise 4 from Chapter 3

Assume 2 classes, $C_1$ and $C_2$.

- Case 1: the two misclassifications are equally costly, and there is no reject option: $\lambda_{11} = \lambda_{22} = 0$, $\lambda_{12} = \lambda_{21} = 1$.
- Case 2: the two misclassifications are not equally costly, and there is no reject option: $\lambda_{11} = \lambda_{22} = 0$, $\lambda_{12} = 10$, $\lambda_{21} = 5$.
- Case 3: like Case 2, but with a reject option: $\lambda_{11} = \lambda_{22} = 0$, $\lambda_{12} = 10$, $\lambda_{21} = 5$, $\lambda = 1$.

See the decision boundaries on the next slide.
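
For two classes, writing $p = P(C_1 \mid x)$, the risks are $R(\alpha_1 \mid x) = \lambda_{11} p + \lambda_{12}(1 - p)$ and $R(\alpha_2 \mid x) = \lambda_{21} p + \lambda_{22}(1 - p)$. Working through the algebra: Case 1 picks $C_1$ when $p > 1/2$; Case 2 picks $C_1$ when $10(1 - p) < 5p$, i.e., $p > 2/3$; Case 3 picks $C_1$ when $10(1 - p) < 1$ ($p > 0.9$), picks $C_2$ when $5p < 1$ ($p < 0.2$), and rejects in between. The sketch below evaluates the three cases at a few values of $p$; it is an illustration with a hypothetical helper `decide`, not the posted solution.

```python
def decide(p1, lam12, lam21, reject_cost=None):
    """Two-class minimum-risk decision with lambda_11 = lambda_22 = 0.

    p1 is P(C1|x); lam12 is the cost of choosing C1 when the class is C2,
    lam21 the cost of choosing C2 when the class is C1. Ties go to C2.
    """
    risk_c1 = lam12 * (1.0 - p1)   # R(alpha_1|x)
    risk_c2 = lam21 * p1           # R(alpha_2|x)
    best, best_risk = ("C1", risk_c1) if risk_c1 < risk_c2 else ("C2", risk_c2)
    if reject_cost is not None and reject_cost < best_risk:
        return "reject"
    return best


cases = {
    "Case 1": dict(lam12=1, lam21=1),
    "Case 2": dict(lam12=10, lam21=5),
    "Case 3": dict(lam12=10, lam21=5, reject_cost=1),
}
for name, params in cases.items():
    print(name, [decide(p, **params) for p in (0.1, 0.5, 0.6, 0.7, 0.95)])
```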

Different Losses and Reject

See the calculations for these plots in the solutions to Exercise 4. Plot panels: Equal losses; Unequal losses; With reject.

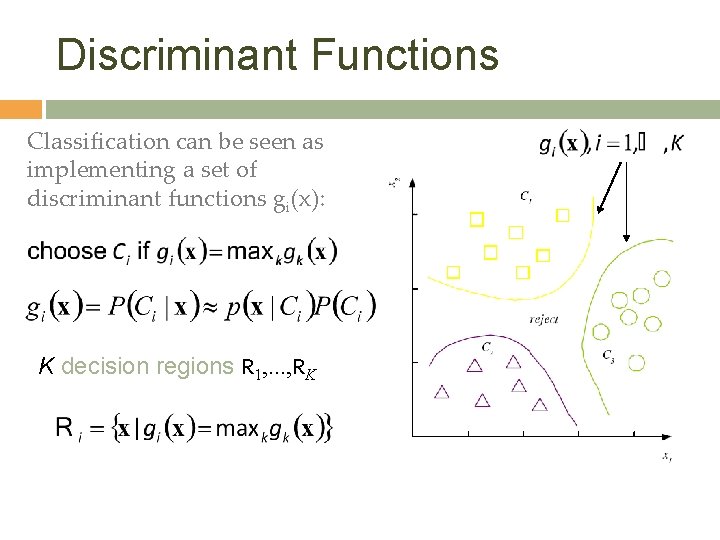

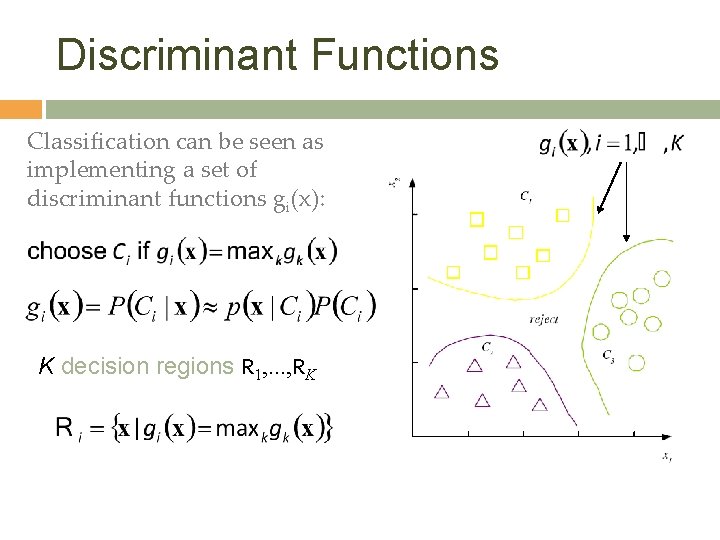

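Discriminant Functions

Classification can be seen as implementing a set of discriminant functions $g_i(x)$, $i = 1, \dots, K$: choose $C_i$ if $g_i(x) = \max_k g_k(x)$. The discriminants divide the input space into $K$ decision regions $\mathcal{R}_1, \dots, \mathcal{R}_K$, where $\mathcal{R}_i = \{x \mid g_i(x) = \max_k g_k(x)\}$.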
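
As an illustration only, the sketch below uses $g_i(x) = p(x \mid C_i) P(C_i)$ with hypothetical one-dimensional Gaussian class likelihoods; the Gaussian choice, means, and priors are assumptions, not part of the slide. Any monotone transformation of these scores, such as taking the log, yields the same decision regions.

```python
import math


def gaussian_pdf(x, mean, std):
    """Density of a univariate normal N(mean, std^2) at x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


def make_discriminant(mean, std, prior):
    """g_i(x) = p(x|C_i) P(C_i) for an assumed Gaussian likelihood."""
    return lambda x: gaussian_pdf(x, mean, std) * prior


def classify(x, discriminants):
    """Choose C_i if g_i(x) = max_k g_k(x); returns a 0-based index i."""
    scores = [g(x) for g in discriminants]
    return max(range(len(scores)), key=scores.__getitem__)


# Hypothetical classes with equal priors and equal spread:
# the boundary between the two decision regions is x = 2.
gs = [make_discriminant(0.0, 1.0, 0.5), make_discriminant(4.0, 1.0, 0.5)]
print([classify(x, gs) for x in (-1.0, 1.9, 2.1, 5.0)])
```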

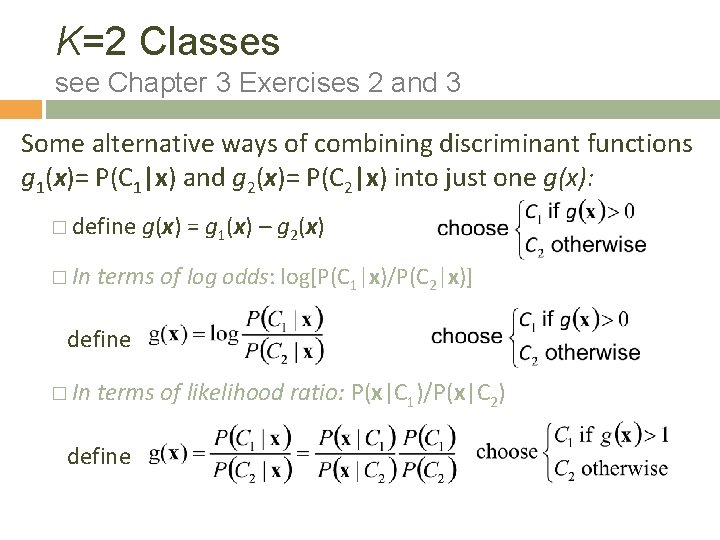

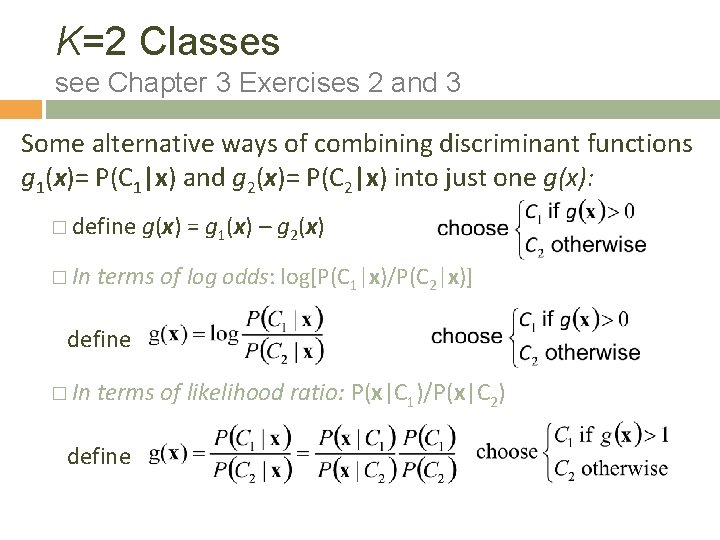

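K = 2 Classes (see Chapter 3 Exercises 2 and 3)

Some alternative ways of combining the discriminant functions $g_1(x) = P(C_1 \mid x)$ and $g_2(x) = P(C_2 \mid x)$ into just one $g(x)$:

- Define $g(x) = g_1(x) - g_2(x)$, and choose $C_1$ if $g(x) > 0$, $C_2$ otherwise.
- In terms of log odds: define $g(x) = \log [P(C_1 \mid x) / P(C_2 \mid x)]$, and choose $C_1$ if $g(x) > 0$.
- In terms of likelihood ratio: define $g(x) = p(x \mid C_1) / p(x \mid C_2)$, and choose $C_1$ if $g(x) > P(C_2) / P(C_1)$.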
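
A small numerical check, with hypothetical priors and likelihood values at a single $x$, that the three combined discriminants agree. Since $\log [P(C_1 \mid x)/P(C_2 \mid x)] = \log [p(x \mid C_1)/p(x \mid C_2)] + \log [P(C_1)/P(C_2)]$, a positive log odds is the same event as a likelihood ratio above $P(C_2)/P(C_1)$. The sketch assumes all priors and likelihoods are strictly positive.

```python
import math


def combined_decisions(prior_1, lik_1, lik_2):
    """Decide C1 vs C2 three ways; returns a tuple of booleans (True = C1)."""
    prior_2 = 1.0 - prior_1
    evidence = lik_1 * prior_1 + lik_2 * prior_2
    g1 = lik_1 * prior_1 / evidence          # P(C1|x)
    g2 = lik_2 * prior_2 / evidence          # P(C2|x)
    by_difference = g1 - g2 > 0              # g(x) = g1(x) - g2(x)
    by_log_odds = math.log(g1 / g2) > 0      # g(x) = log[P(C1|x) / P(C2|x)]
    # g(x) = p(x|C1) / p(x|C2), compared with the threshold P(C2) / P(C1)
    by_likelihood_ratio = lik_1 / lik_2 > prior_2 / prior_1
    return by_difference, by_log_odds, by_likelihood_ratio


print(combined_decisions(0.5, 0.6, 0.2))
print(combined_decisions(0.1, 0.6, 0.2))
```

In the second call the likelihood ratio is 3, but the threshold $P(C_2)/P(C_1)$ is 9, so all three forms choose $C_2$.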

Association Rules

- Association rule: X → Y
- People who buy/click/visit/enjoy X are also likely to buy/click/visit/enjoy Y.
- A rule implies association, not necessarily causation.

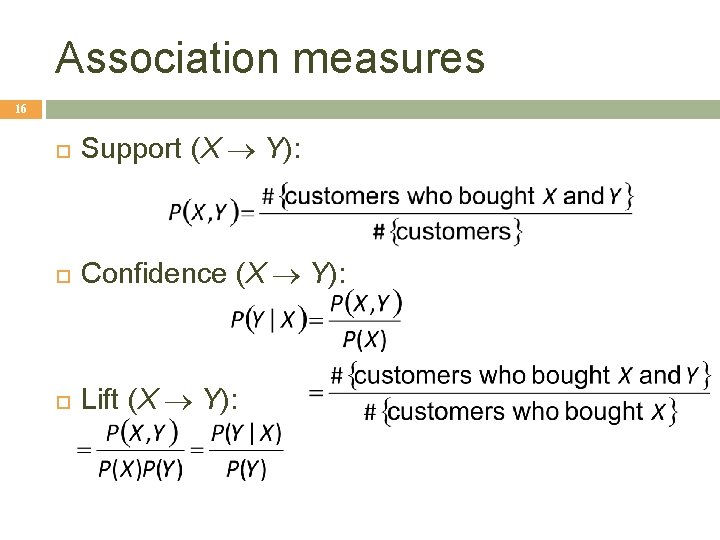

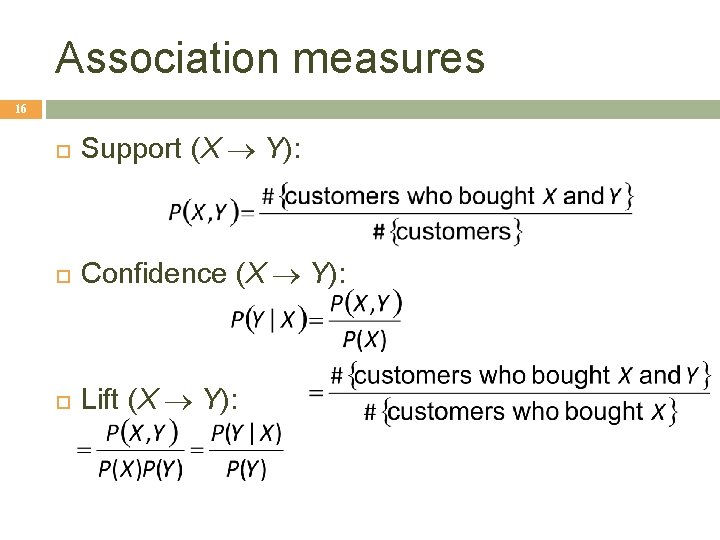

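Association measures

- Support (X → Y): $P(X, Y) = \dfrac{\#\{\text{customers who bought } X \text{ and } Y\}}{\#\{\text{customers}\}}$
- Confidence (X → Y): $P(Y \mid X) = \dfrac{P(X, Y)}{P(X)} = \dfrac{\#\{\text{customers who bought } X \text{ and } Y\}}{\#\{\text{customers who bought } X\}}$
- Lift (X → Y): $\dfrac{P(X, Y)}{P(X)\,P(Y)} = \dfrac{P(Y \mid X)}{P(Y)}$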
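
The three measures can be computed directly by counting, as in this sketch. It assumes each transaction is a set of item names and that X and Y are sets of items; the baskets are made-up data, and `confidence` raises `ZeroDivisionError` if X never occurs.

```python
def count(transactions, items):
    """Number of transactions that contain every item in `items`."""
    items = set(items)
    return sum(1 for t in transactions if items <= set(t))


def support(transactions, x, y):
    """Support(X -> Y) = P(X, Y)."""
    return count(transactions, set(x) | set(y)) / len(transactions)


def confidence(transactions, x, y):
    """Confidence(X -> Y) = P(Y|X) = P(X, Y) / P(X)."""
    return count(transactions, set(x) | set(y)) / count(transactions, x)


def lift(transactions, x, y):
    """Lift(X -> Y) = P(X, Y) / (P(X) P(Y)) = P(Y|X) / P(Y)."""
    p_y = count(transactions, y) / len(transactions)
    return confidence(transactions, x, y) / p_y


# Hypothetical shopping baskets.
baskets = [{"milk", "bread"}, {"milk", "diapers", "beer"},
           {"bread", "butter"}, {"milk", "bread", "butter"}]
print(support(baskets, {"milk"}, {"bread"}),
      confidence(baskets, {"milk"}, {"bread"}),
      lift(baskets, {"milk"}, {"bread"}))
```

A lift above 1 means X and Y occur together more often than they would if independent; in this toy data the lift of milk → bread is 8/9, slightly below 1.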

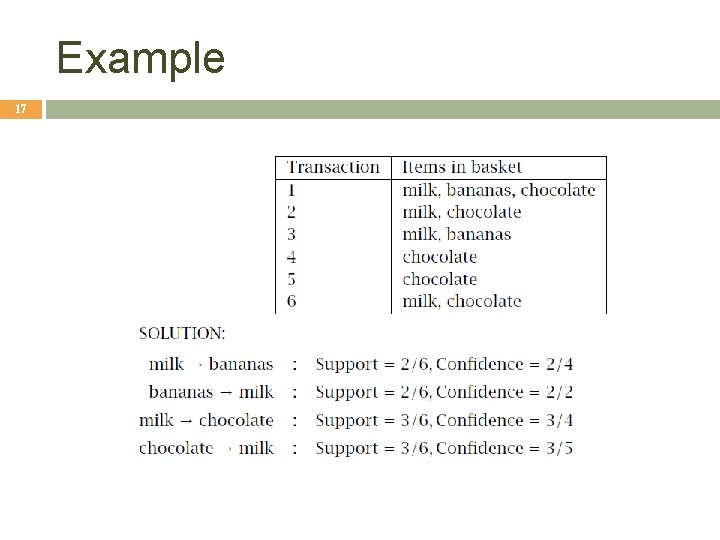

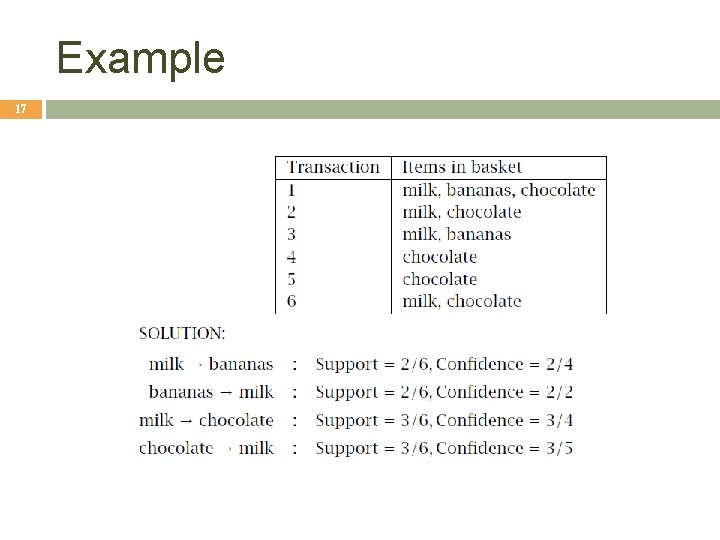

Example

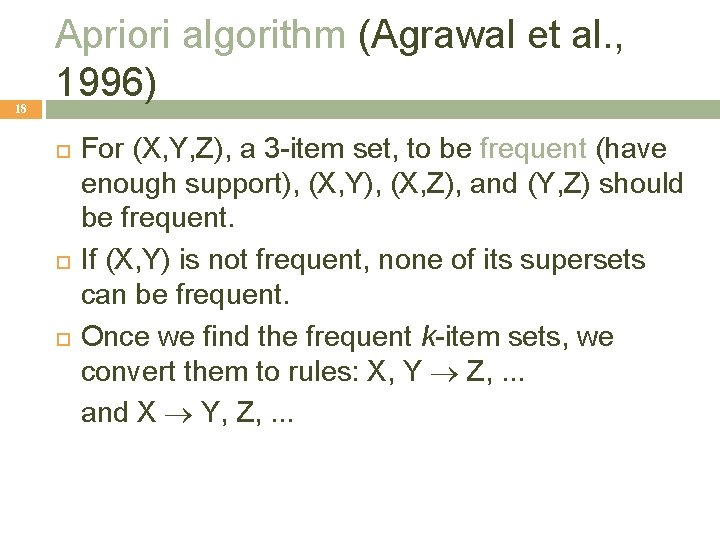

Apriori algorithm (Agrawal et al., 1996)

- For (X, Y, Z), a 3-item set, to be frequent (have enough support), (X, Y), (X, Z), and (Y, Z) must all be frequent.
- If (X, Y) is not frequent, none of its supersets can be frequent.
- Once we find the frequent k-item sets, we convert them to rules: X, Y → Z, ... and X → Y, Z, ...
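
A compact Python sketch of the two Apriori ideas above, assuming transactions are sets of item names and thresholds are fractions of transactions. Candidate k-item sets are built only from frequent (k−1)-item sets and pruned if any (k−1)-subset is infrequent; each frequent set is then split into rules X → Y whose confidence passes a threshold. The function names, data, and thresholds are illustrative, and this is a plain textbook-style version, not the paper's optimized implementation.

```python
from itertools import combinations


def apriori(transactions, min_support):
    """Return {frozenset(itemset): support} for every frequent itemset."""
    tsets = [frozenset(t) for t in transactions]
    n = len(tsets)

    def support(itemset):
        # Fraction of transactions that contain every item in itemset.
        return sum(1 for t in tsets if itemset <= t) / n

    # Level 1: frequent single items.
    singletons = {frozenset([item]) for t in tsets for item in t}
    level = {s: support(s) for s in singletons}
    level = {s: v for s, v in level.items() if v >= min_support}
    frequent = dict(level)

    k = 2
    while level:
        candidates = set()
        # Join: merge two frequent (k-1)-item sets whose union has k items.
        for a, b in combinations(list(level), 2):
            union = a | b
            if len(union) != k:
                continue
            # Prune: every (k-1)-subset must itself be frequent.
            if all(frozenset(sub) in level for sub in combinations(union, k - 1)):
                candidates.add(union)
        level = {c: support(c) for c in candidates}
        level = {c: v for c, v in level.items() if v >= min_support}
        frequent.update(level)
        k += 1
    return frequent


def rules(frequent, min_confidence):
    """Turn frequent itemsets into (X, Y, confidence) rules X -> Y."""
    found = []
    for itemset, supp in frequent.items():
        for r in range(1, len(itemset)):
            for lhs in combinations(itemset, r):
                x = frozenset(lhs)
                # Every subset of a frequent set is frequent, so frequent[x] exists.
                conf = supp / frequent[x]
                if conf >= min_confidence:
                    found.append((x, itemset - x, conf))
    return found


baskets = [{"milk", "bread"}, {"milk", "diapers", "beer"},
           {"bread", "butter"}, {"milk", "bread", "butter"}]
freq = apriori(baskets, min_support=0.5)
for x, y, conf in rules(freq, min_confidence=0.6):
    print(sorted(x), "->", sorted(y), round(conf, 2))
```

In this toy data (milk, butter) is not frequent, so the 3-item candidate (milk, bread, butter) is pruned without counting its support.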
