Lecture Slides for INTRODUCTION TO Machine Learning ETHEM

- Slides: 22

Lecture Slides for INTRODUCTION TO Machine Learning
ETHEM ALPAYDIN © The MIT Press, 2004
alpaydin@boun.edu.tr
http://www.cmpe.boun.edu.tr/~ethem/i2ml
Lecture Notes for E Alpaydın 2004 Introduction to Machine Learning © The MIT Press (V1.1)

CHAPTER 8: Nonparametric Methods

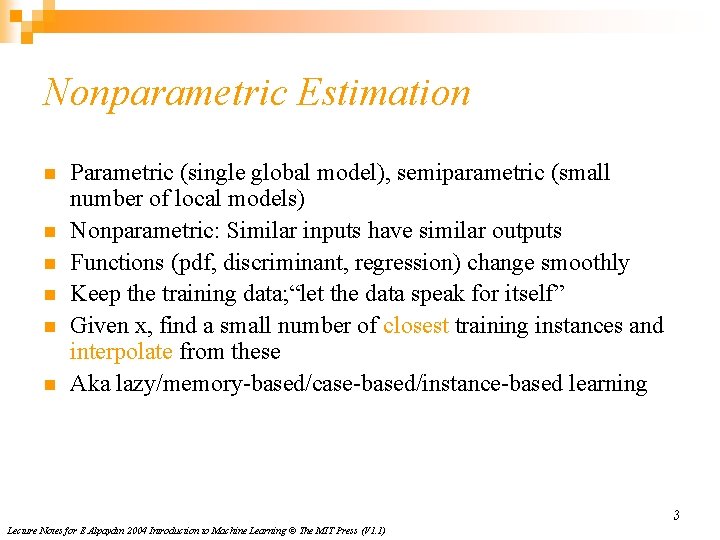

Nonparametric Estimation

- Parametric (single global model), semiparametric (small number of local models)
- Nonparametric: Similar inputs have similar outputs
- Functions (pdf, discriminant, regression) change smoothly
- Keep the training data; “let the data speak for itself”
- Given x, find a small number of closest training instances and interpolate from these
- Aka lazy/memory-based/case-based/instance-based learning

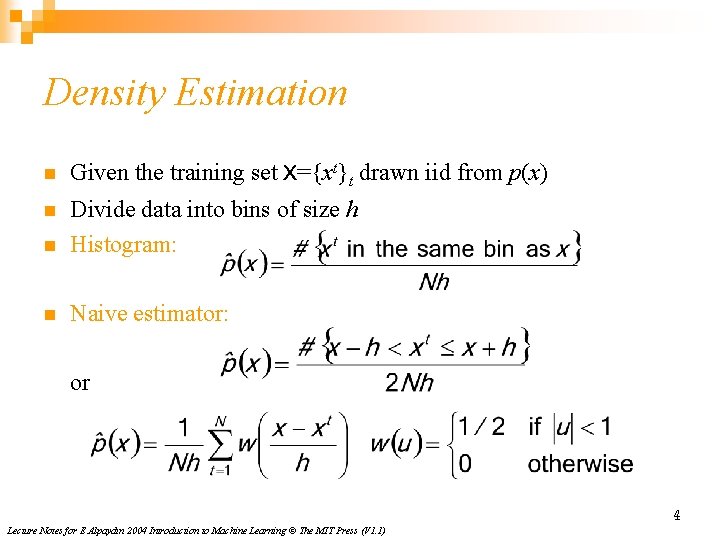

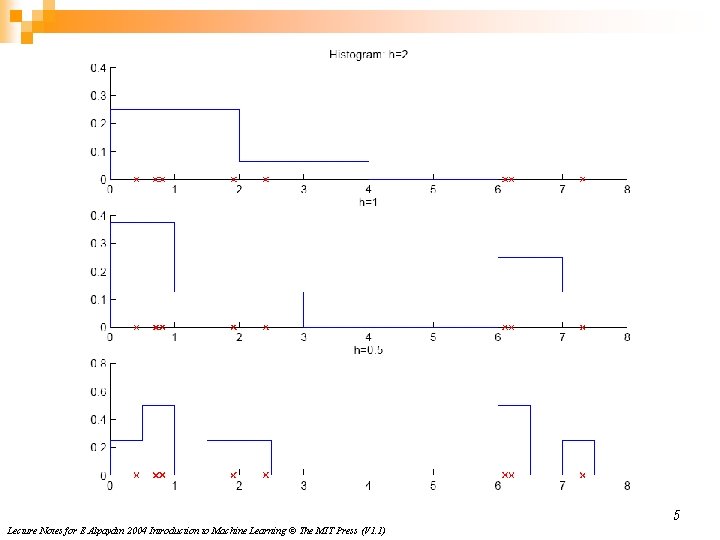

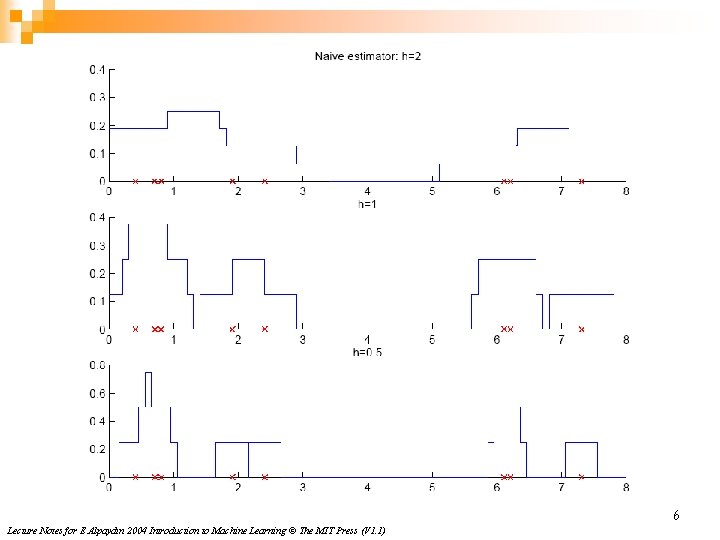

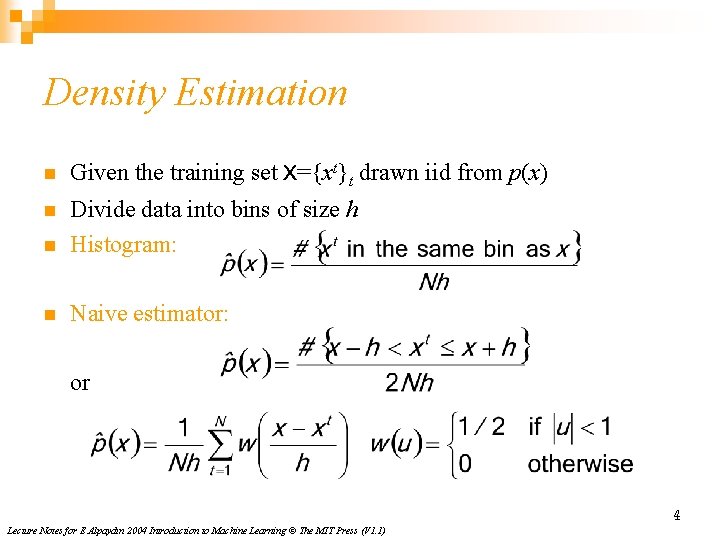

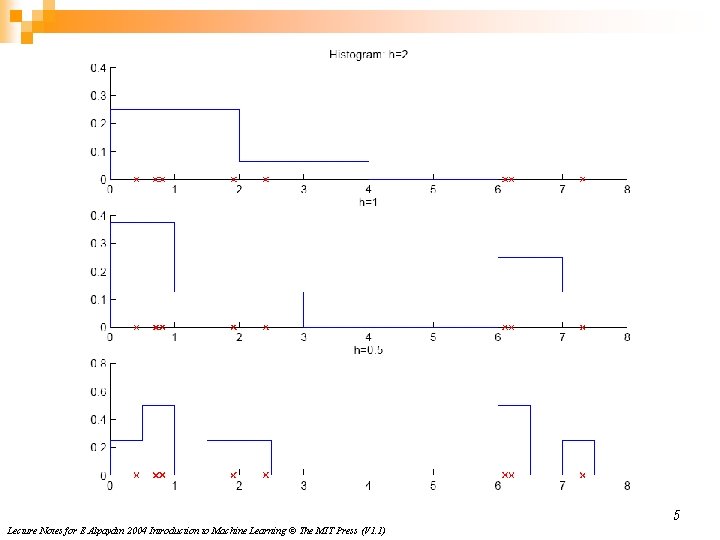

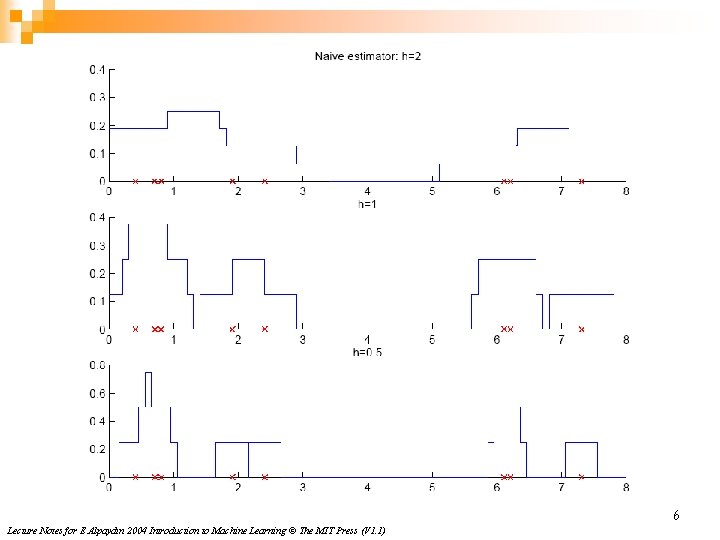

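Density Estimation

- Given the training set $X=\{x^t\}_t$ drawn iid from $p(x)$
- Divide data into bins of size $h$
- Histogram: $\hat{p}(x) = \dfrac{\#\{x^t \text{ in the same bin as } x\}}{Nh}$
- Naive estimator: $\hat{p}(x) = \dfrac{\#\{x - h < x^t \le x + h\}}{2Nh}$, or $\hat{p}(x) = \dfrac{1}{Nh}\sum_{t=1}^{N} w\!\left(\dfrac{x - x^t}{h}\right)$ with $w(u) = 1/2$ if $|u| < 1$ and $0$ otherwise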
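The histogram and naive estimators can be sketched in a few lines of plain Python. This is a minimal illustration, not the author's code: the function names, the sample data, and the `origin` parameter (where the first bin starts) are assumptions made for the example. The histogram counts the training points that fall in the same bin as x and divides by N·h; the naive estimator counts the points within distance h of x and divides by 2·N·h.

```python
import math


def histogram_density(x, data, h, origin=0.0):
    """Histogram estimate: points in the same bin as x, divided by N * h.

    `origin` is an assumed parameter fixing where bin 0 starts.
    """
    bin_index = math.floor((x - origin) / h)
    count = sum(1 for xt in data if math.floor((xt - origin) / h) == bin_index)
    return count / (len(data) * h)


def naive_density(x, data, h):
    """Naive estimate: points in (x - h, x + h], divided by 2 * N * h."""
    count = sum(1 for xt in data if x - h < xt <= x + h)
    return count / (2 * len(data) * h)


# Hypothetical one-dimensional sample, used only for illustration.
data = [0.5, 1.2, 1.4, 2.1, 2.3, 2.6, 3.8, 4.4]
print(histogram_density(2.0, data, h=1.0))
print(naive_density(2.0, data, h=1.0))
```

Unlike the histogram, the naive estimator does not depend on an origin, because the bin is always centered on the query point.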


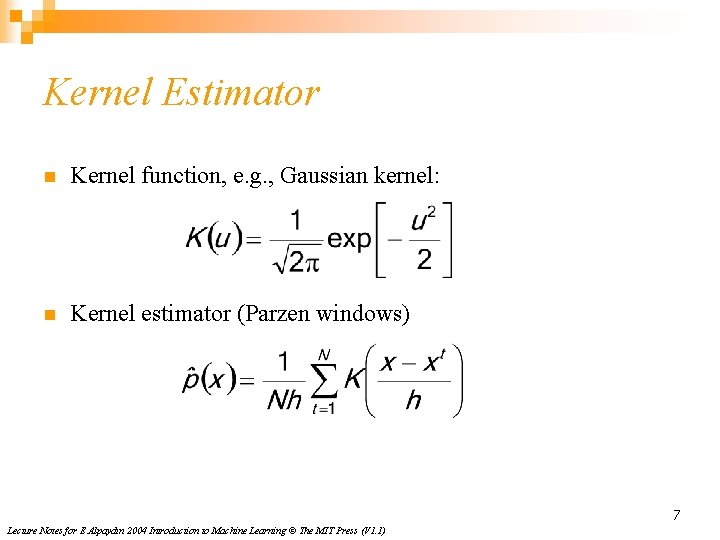

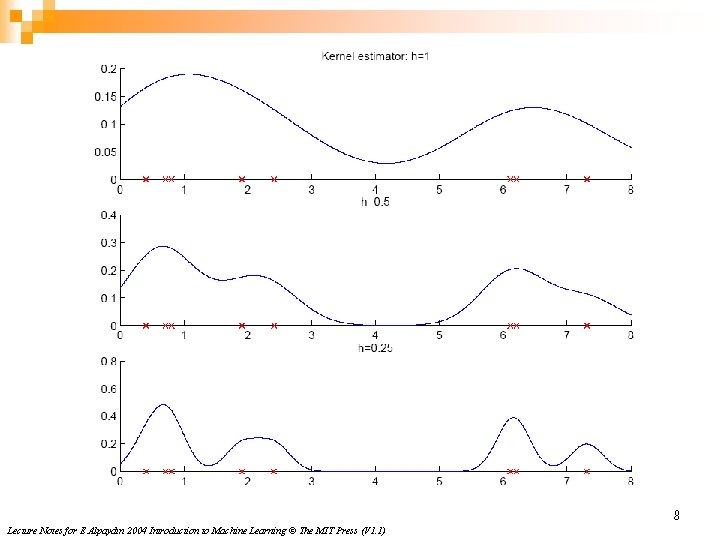

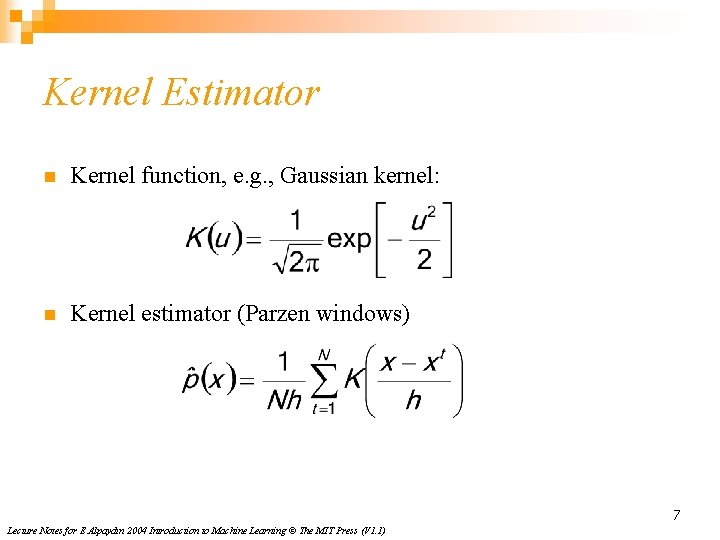

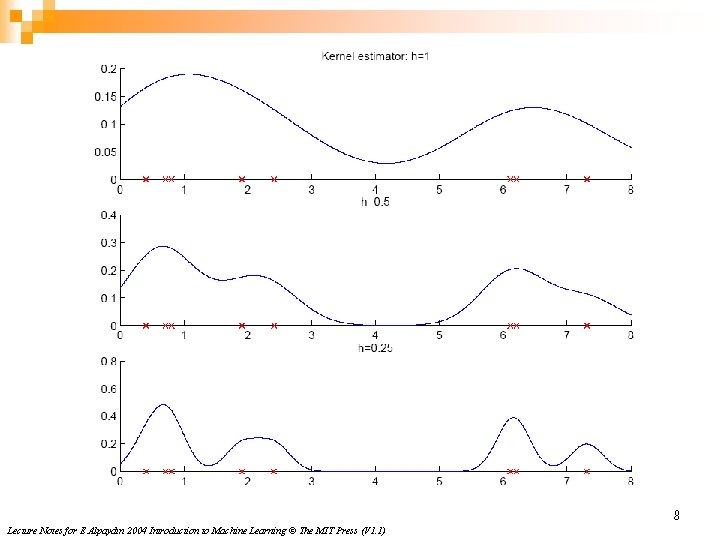

Kernel Estimator

- Kernel function, e.g., Gaussian kernel: $K(u) = \dfrac{1}{\sqrt{2\pi}} \exp\!\left[-\dfrac{u^2}{2}\right]$
- Kernel estimator (Parzen windows): $\hat{p}(x) = \dfrac{1}{Nh}\sum_{t=1}^{N} K\!\left(\dfrac{x - x^t}{h}\right)$
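A Parzen-window estimate with a Gaussian kernel might look like the sketch below. The function names and sample data are assumptions for illustration. Each training point contributes a Gaussian bump of width h centered on itself, and the estimate is the average of those bumps scaled by 1/h.

```python
import math


def gaussian_kernel(u):
    """Standard Gaussian kernel K(u) = exp(-u^2 / 2) / sqrt(2 * pi)."""
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)


def kernel_density(x, data, h):
    """Kernel (Parzen window) estimate: (1 / (N * h)) * sum_t K((x - x_t) / h)."""
    return sum(gaussian_kernel((x - xt) / h) for xt in data) / (len(data) * h)


# Hypothetical one-dimensional sample, used only for illustration.
data = [0.5, 1.2, 1.4, 2.1, 2.3, 2.6, 3.8, 4.4]
for query in (1.0, 2.0, 3.0):
    print(query, kernel_density(query, data, h=0.5))
```

Because the Gaussian kernel is smooth and integrates to one, the resulting estimate is itself a smooth density, in contrast to the piecewise-constant histogram and naive estimators.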


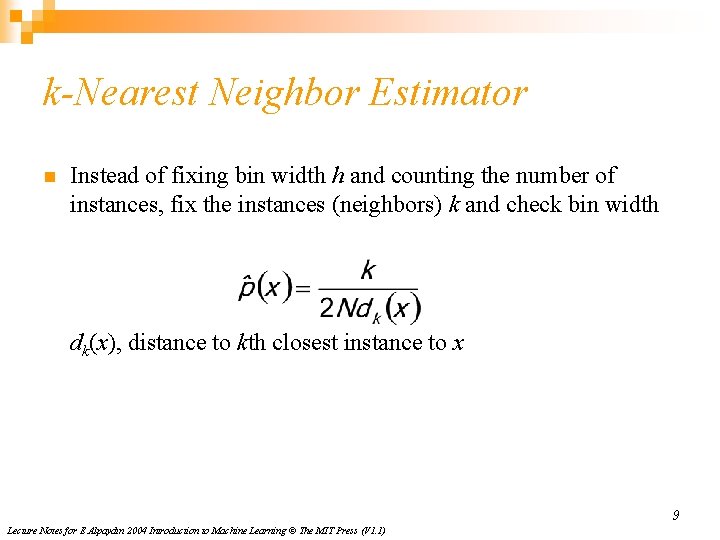

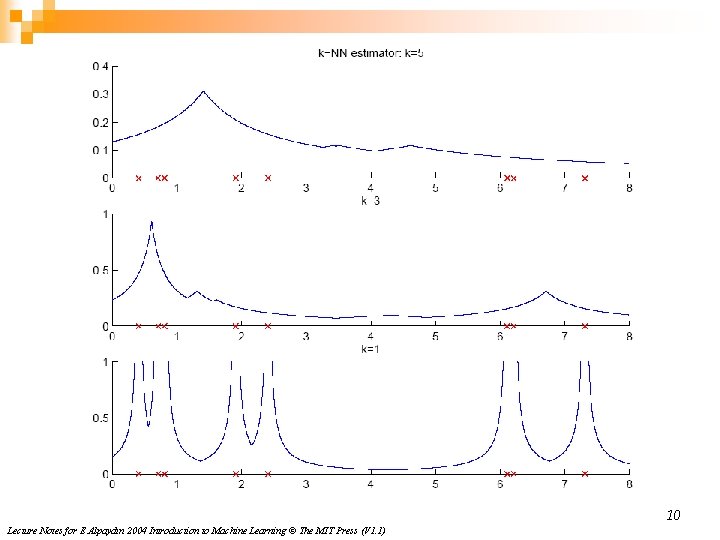

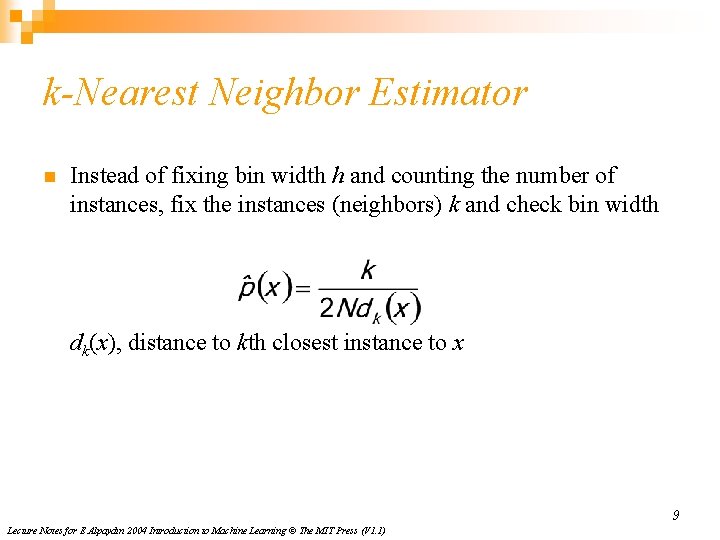

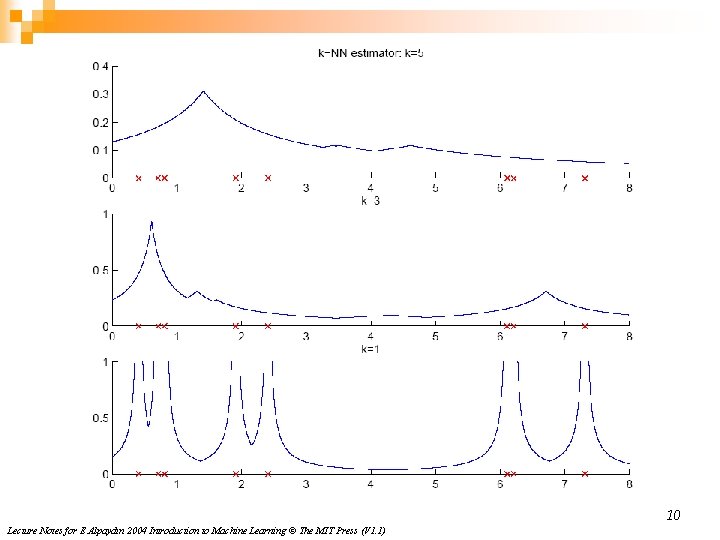

k-Nearest Neighbor Estimator

- Instead of fixing bin width $h$ and counting the number of instances, fix the number of instances (neighbors) $k$ and check the bin width: $\hat{p}(x) = \dfrac{k}{2N d_k(x)}$, where $d_k(x)$ is the distance to the $k$th closest instance to $x$
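The k-NN density estimate can be sketched as follows, assuming one-dimensional data and 1 ≤ k ≤ N. The function name, the sample data, and the choice to return infinity when the kth distance is zero are assumptions of this example. The estimate is k / (2·N·d_k(x)), so the effective bin width adapts to the local density of the data.

```python
import math


def knn_density(x, data, k):
    """k-NN estimate: k / (2 * N * d_k(x)), d_k = distance to the k-th neighbor."""
    distances = sorted(abs(x - xt) for xt in data)
    d_k = distances[k - 1]
    if d_k == 0.0:
        # Assumption: x coincides with at least k training points.
        return math.inf
    return k / (2 * len(data) * d_k)


# Hypothetical one-dimensional sample, used only for illustration.
data = [0.5, 1.2, 1.4, 2.1, 2.3, 2.6, 3.8, 4.4]
print(knn_density(2.0, data, k=3))
```

Note that this estimate is not a proper density: its tails decay like 1/|x|, so it does not integrate to one.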


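Multivariate Data

- Kernel density estimator: $\hat{p}(\mathbf{x}) = \dfrac{1}{Nh^d}\sum_{t=1}^{N} K\!\left(\dfrac{\mathbf{x} - \mathbf{x}^t}{h}\right)$
- Multivariate Gaussian kernel, spheric: $K(\mathbf{u}) = \left(\dfrac{1}{\sqrt{2\pi}}\right)^{d} \exp\!\left[-\dfrac{\|\mathbf{u}\|^2}{2}\right]$
- Multivariate Gaussian kernel, ellipsoid: $K(\mathbf{u}) = \dfrac{1}{(2\pi)^{d/2}|\mathbf{S}|^{1/2}} \exp\!\left[-\dfrac{1}{2}\mathbf{u}^T\mathbf{S}^{-1}\mathbf{u}\right]$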
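For d-dimensional inputs, a kernel density estimate with the spheric Gaussian kernel could be written as below. Function names and data are assumptions for illustration, and only the spheric case is shown; the ellipsoid case would additionally need a covariance matrix and its inverse. The only changes from the univariate case are the squared Euclidean norm inside the kernel and the h^d normalization.

```python
import math


def spheric_gaussian_kernel(u):
    """Spheric Gaussian kernel in d dimensions: (2 * pi)^(-d/2) * exp(-||u||^2 / 2)."""
    d = len(u)
    squared_norm = sum(ui * ui for ui in u)
    return math.exp(-0.5 * squared_norm) / (2.0 * math.pi) ** (d / 2)


def multivariate_kernel_density(x, data, h):
    """Estimate (1 / (N * h^d)) * sum_t K((x - x_t) / h) for d-dimensional points."""
    d = len(x)
    total = 0.0
    for xt in data:
        u = [(xi - xti) / h for xi, xti in zip(x, xt)]
        total += spheric_gaussian_kernel(u)
    return total / (len(data) * h ** d)


# Hypothetical two-dimensional sample, used only for illustration.
data = [(0.0, 0.0), (0.5, 0.2), (1.0, 1.1), (3.0, 3.2)]
print(multivariate_kernel_density((0.5, 0.5), data, h=1.0))
```

The spheric kernel treats all dimensions alike, so inputs on very different scales are usually normalized first.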

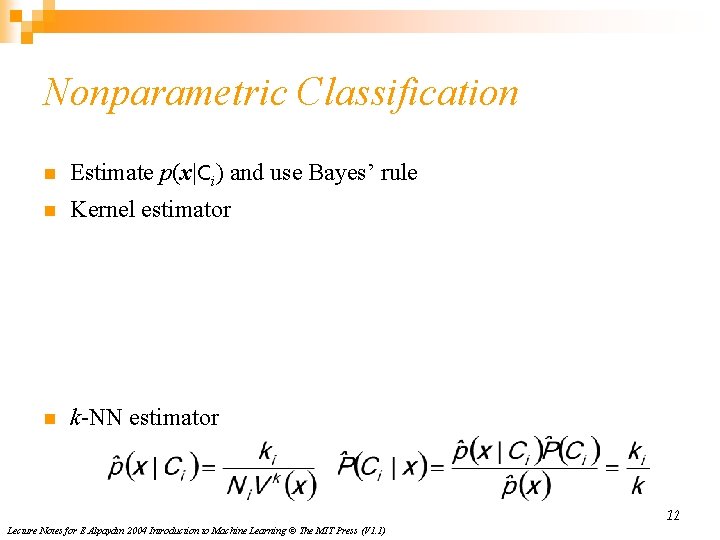

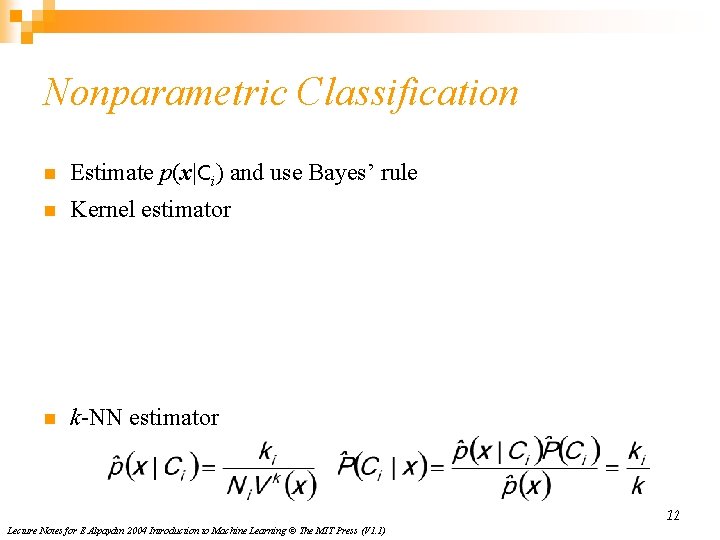

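Nonparametric Classification

- Estimate $p(\mathbf{x}|C_i)$ and use Bayes’ rule
- Kernel estimator: $\hat{p}(\mathbf{x}|C_i) = \dfrac{1}{N_i h^d}\sum_{t=1}^{N} K\!\left(\dfrac{\mathbf{x} - \mathbf{x}^t}{h}\right) r_i^t$ and $\hat{P}(C_i) = \dfrac{N_i}{N}$, giving the discriminant $g_i(\mathbf{x}) = \hat{p}(\mathbf{x}|C_i)\hat{P}(C_i) = \dfrac{1}{N h^d}\sum_{t=1}^{N} K\!\left(\dfrac{\mathbf{x} - \mathbf{x}^t}{h}\right) r_i^t$, where $r_i^t = 1$ if $\mathbf{x}^t \in C_i$ and $0$ otherwise
- k-NN estimator: $\hat{p}(\mathbf{x}|C_i) = \dfrac{k_i}{N_i V^k(\mathbf{x})}$ and $\hat{P}(C_i|\mathbf{x}) = \dfrac{\hat{p}(\mathbf{x}|C_i)\hat{P}(C_i)}{\hat{p}(\mathbf{x})} = \dfrac{k_i}{k}$, where $k_i$ of the $k$ nearest neighbors belong to $C_i$ and $V^k(\mathbf{x})$ is the volume of the hypersphere reaching the $k$th neighbor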
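With the k-NN estimator plugged into Bayes' rule, the posterior of a class reduces to the fraction of the k nearest neighbors that carry its label. The sketch below assumes Euclidean distance and a list-based data layout; the function name and data are hypothetical. Ties between classes are broken by whichever class is encountered first among the neighbors.

```python
import math
from collections import Counter


def knn_classify(x, samples, labels, k):
    """Return (predicted class, posterior estimates k_i / k) for query x."""
    # Indices of the training points, ordered by Euclidean distance to x.
    order = sorted(range(len(samples)), key=lambda t: math.dist(x, samples[t]))
    votes = Counter(labels[t] for t in order[:k])
    posteriors = {label: count / k for label, count in votes.items()}
    predicted = max(posteriors, key=posteriors.get)
    return predicted, posteriors


# Hypothetical two-class sample, used only for illustration.
samples = [(0, 0), (0, 1), (1, 0), (5, 5), (5, 6), (6, 5)]
labels = ["a", "a", "a", "b", "b", "b"]
print(knn_classify((0.5, 0.5), samples, labels, k=3))
print(knn_classify((3.0, 3.0), samples, labels, k=5))
```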

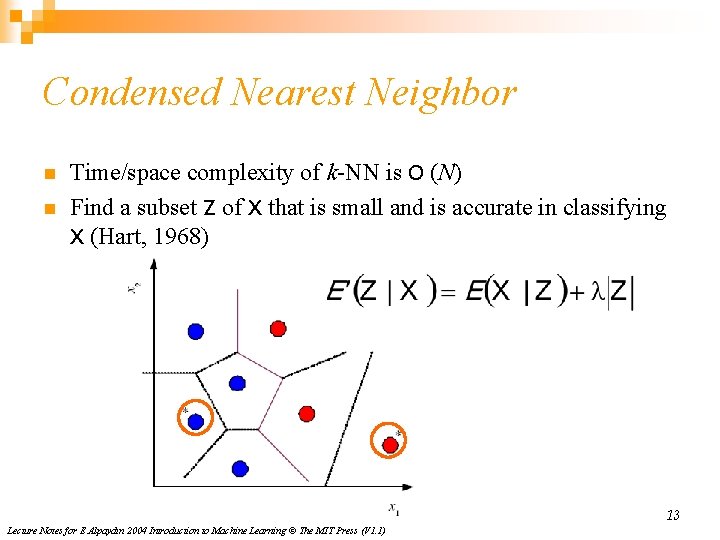

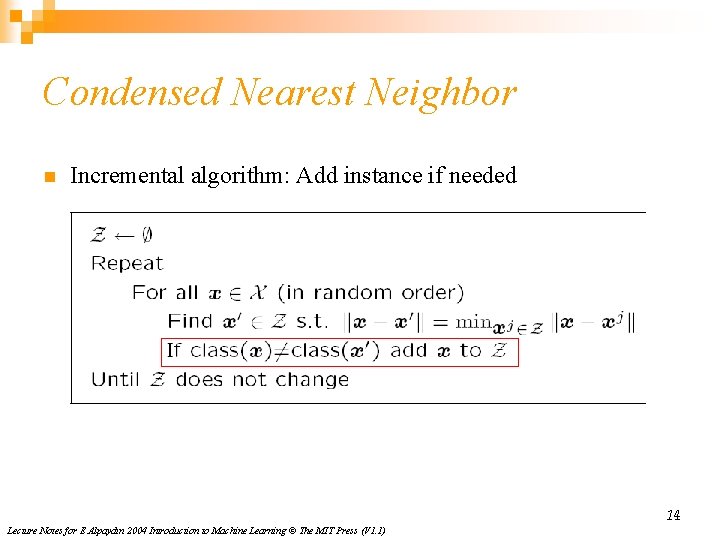

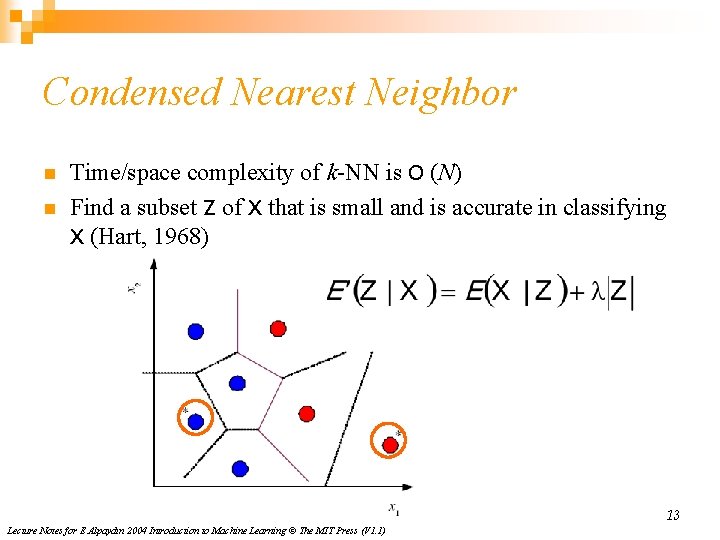

Condensed Nearest Neighbor

- Time/space complexity of k-NN is O(N)
- Find a subset Z of X that is small and is accurate in classifying X (Hart, 1968)

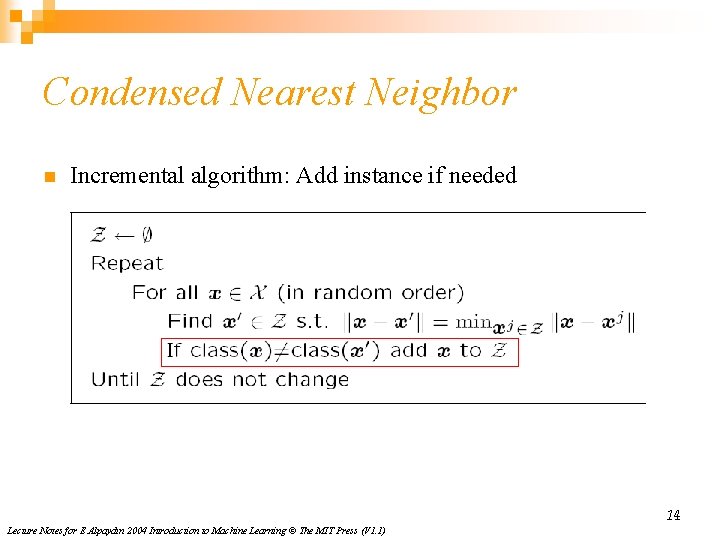

Condensed Nearest Neighbor

- Incremental algorithm: Add instance if needed
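One common reading of the incremental idea is sketched below: scan the training set in random order, classify each instance with 1-NN using the instances stored so far, store it only if it is misclassified, and repeat until a full pass adds nothing. This is an illustration under those assumptions, not necessarily the exact procedure on the slide; the function name, the `seed` parameter, and the data are hypothetical.

```python
import math
import random


def condensed_nearest_neighbor(samples, labels, seed=0):
    """Return sorted indices of a subset Z that 1-NN-classifies the training set.

    Passes over the data in random order; an instance is stored only when
    its nearest stored neighbor has a different label.
    """
    rng = random.Random(seed)
    order = list(range(len(samples)))
    stored = []
    changed = True
    while changed:
        changed = False
        rng.shuffle(order)
        for t in order:
            if t in stored:
                continue
            if not stored:
                # The first instance seen is always stored.
                stored.append(t)
                changed = True
                continue
            nearest = min(stored, key=lambda z: math.dist(samples[t], samples[z]))
            if labels[nearest] != labels[t]:
                stored.append(t)
                changed = True
    return sorted(stored)


# Hypothetical two-class sample with well-separated clusters.
samples = [(0, 0), (0, 1), (1, 0), (1, 1), (5, 5), (5, 6), (6, 5), (6, 6)]
labels = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(condensed_nearest_neighbor(samples, labels))
```

The result depends on the order in which instances are visited, so different seeds can yield different subsets; the procedure is a greedy heuristic and does not guarantee the smallest consistent subset.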

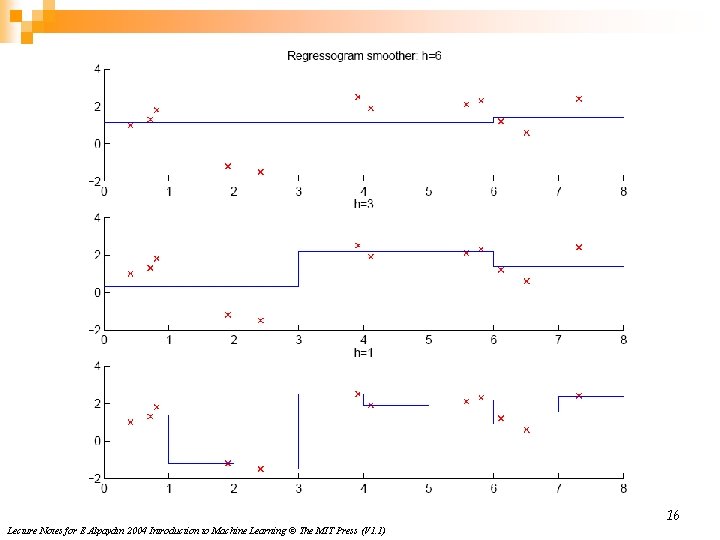

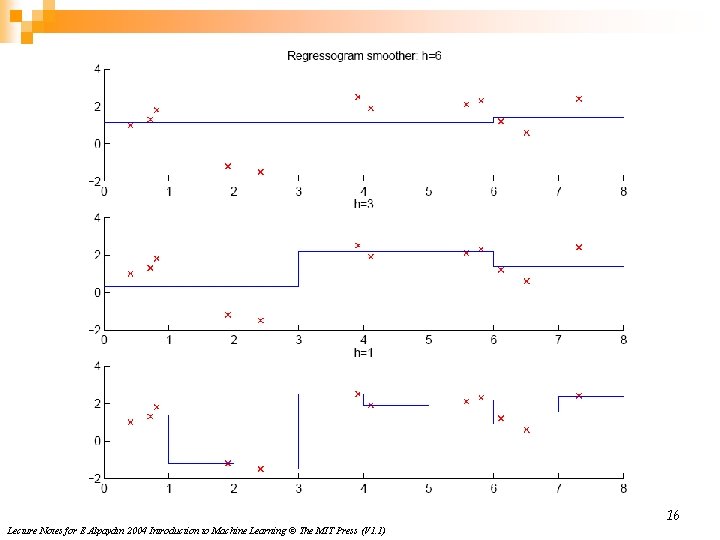

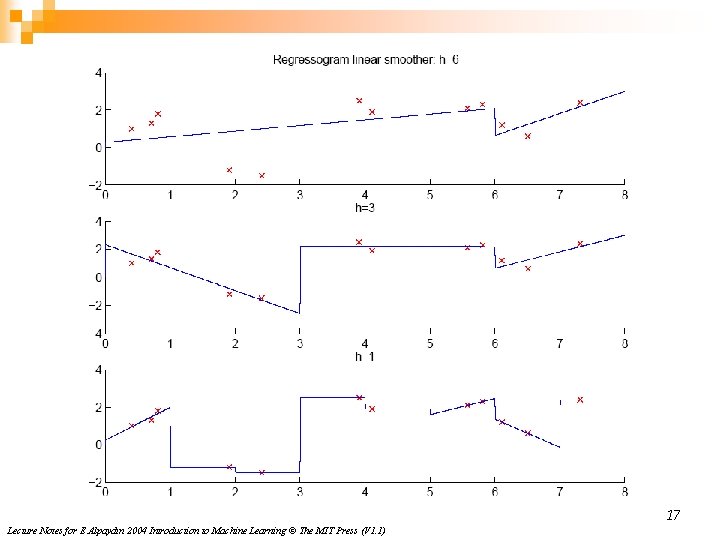

Nonparametric Regression

- Aka smoothing models
- Regressogram: $\hat{g}(x) = \dfrac{\sum_{t=1}^{N} b(x, x^t)\, r^t}{\sum_{t=1}^{N} b(x, x^t)}$, where $b(x, x^t) = 1$ if $x^t$ is in the same bin as $x$ and $0$ otherwise
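A regressogram is the regression counterpart of the histogram: the prediction at x is the average response of the training points that fall in the same bin as x. In the sketch below, the function name, the `origin` parameter, the sample data, and the choice to return `None` for an empty bin are assumptions for illustration.

```python
import math


def regressogram(x, xs, rs, h, origin=0.0):
    """Average the responses rs of the inputs xs that share a bin of width h with x."""
    bin_index = math.floor((x - origin) / h)
    in_bin = [r for xt, r in zip(xs, rs)
              if math.floor((xt - origin) / h) == bin_index]
    if not in_bin:
        # Assumption: an empty bin has no defined estimate.
        return None
    return sum(in_bin) / len(in_bin)


# Hypothetical inputs and responses, used only for illustration.
xs = [0.5, 1.2, 1.4, 2.1, 2.3, 2.6]
rs = [1.0, 2.0, 4.0, 3.0, 5.0, 7.0]
print(regressogram(2.5, xs, rs, h=1.0))
print(regressogram(1.0, xs, rs, h=1.0))
```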


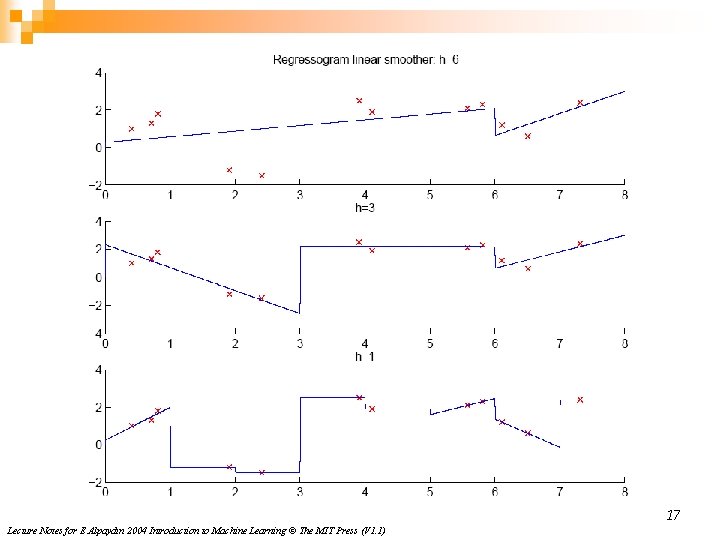

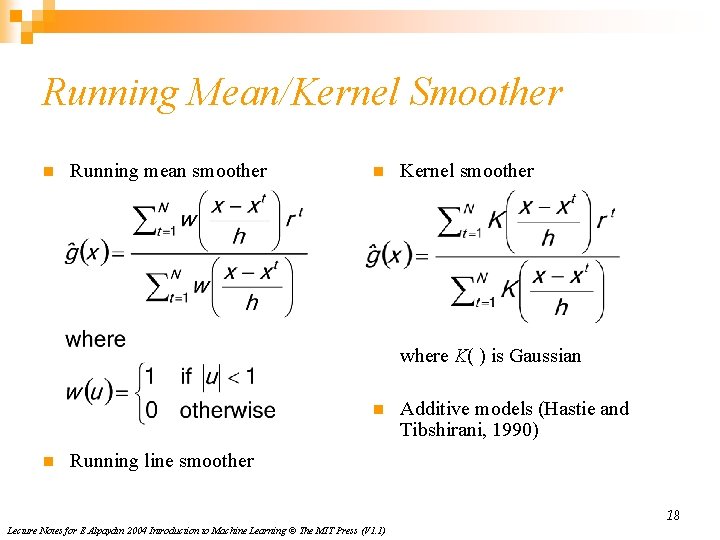

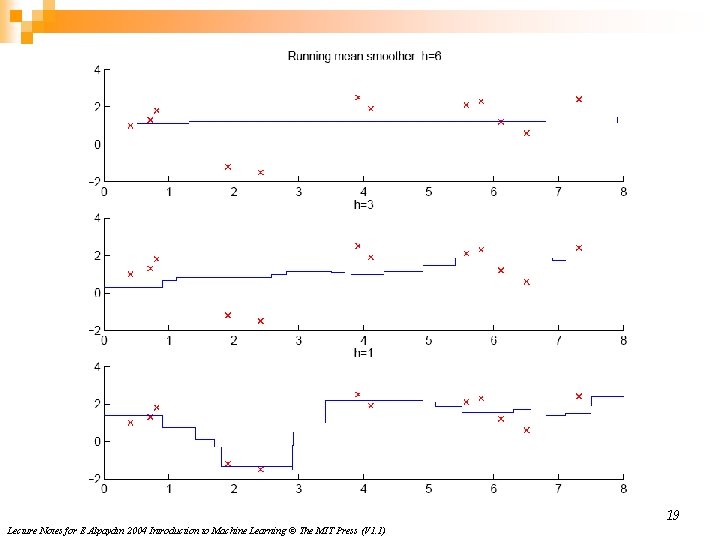

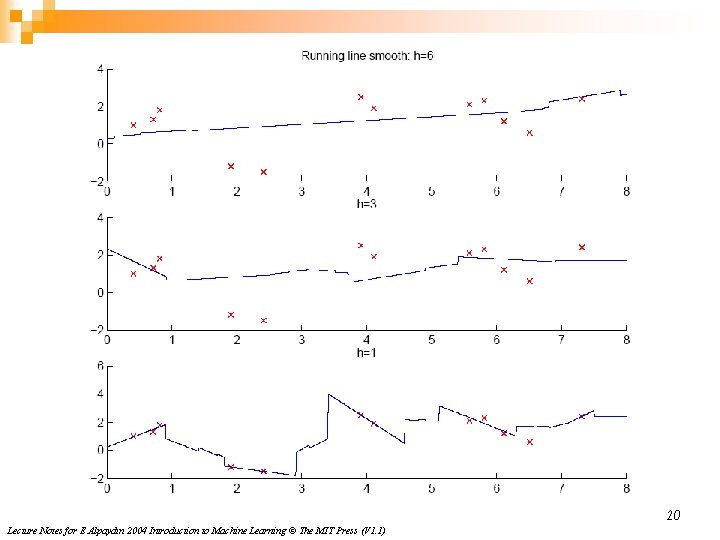

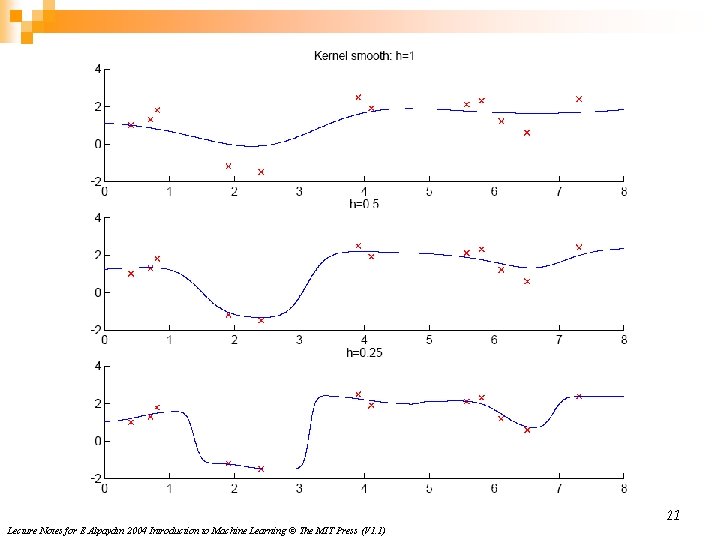

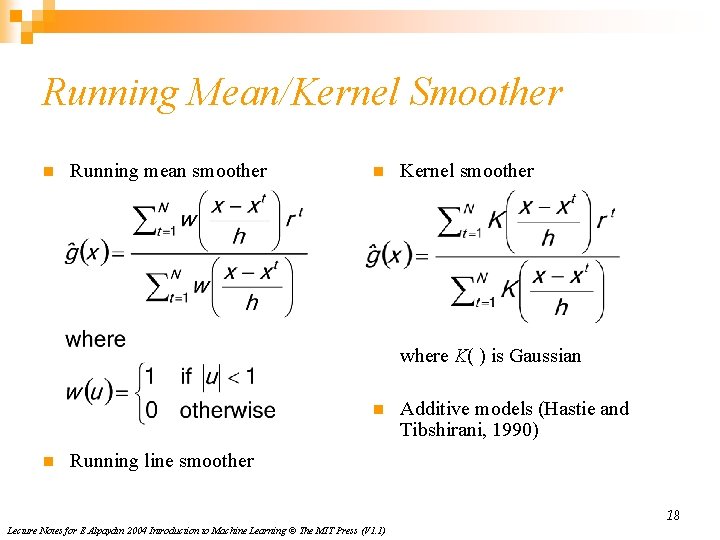

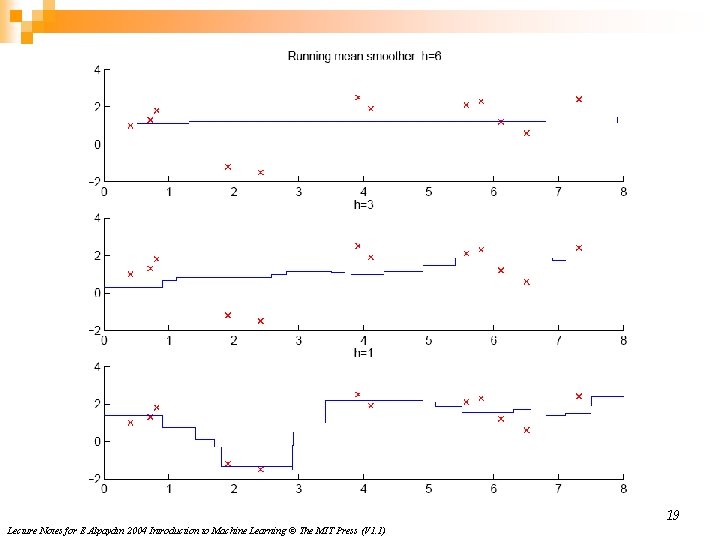

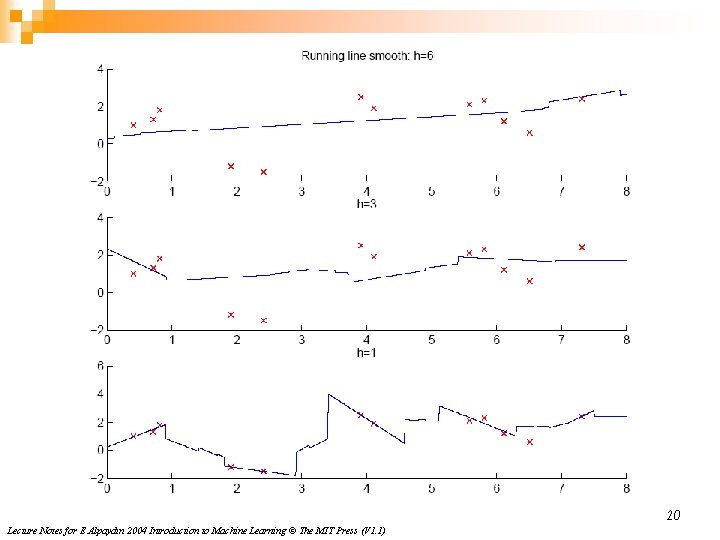

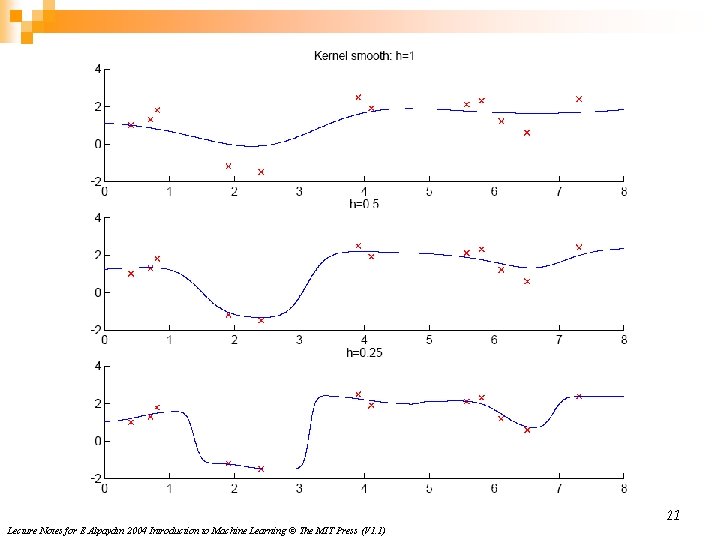

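Running Mean/Kernel Smoother

- Running mean smoother: $\hat{g}(x) = \dfrac{\sum_{t=1}^{N} w\!\left(\frac{x - x^t}{h}\right) r^t}{\sum_{t=1}^{N} w\!\left(\frac{x - x^t}{h}\right)}$, where $w(u) = 1$ if $|u| < 1$ and $0$ otherwise
- Kernel smoother: $\hat{g}(x) = \dfrac{\sum_{t=1}^{N} K\!\left(\frac{x - x^t}{h}\right) r^t}{\sum_{t=1}^{N} K\!\left(\frac{x - x^t}{h}\right)}$, where $K(\cdot)$ is Gaussian
- Additive models (Hastie and Tibshirani, 1990)
- Running line smoother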
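The running mean and kernel smoothers are both weighted averages of the training responses; they differ only in the weight function. A minimal sketch follows, with function names, data, and the `None` return for undefined estimates chosen for this example. The running mean gives weight 1 to points within distance h of x and 0 to the rest, while the kernel smoother uses Gaussian weights that decay with distance. The Gaussian normalizing constant cancels in the ratio, so it is omitted.

```python
import math


def running_mean_smoother(x, xs, rs, h):
    """Average the responses of all training points with |x - x_t| / h < 1."""
    near = [r for xt, r in zip(xs, rs) if abs(x - xt) / h < 1.0]
    if not near:
        return None  # assumption: no estimate when the window is empty
    return sum(near) / len(near)


def kernel_smoother(x, xs, rs, h):
    """Gaussian-weighted average of the responses (normalization cancels out)."""
    weights = [math.exp(-0.5 * ((x - xt) / h) ** 2) for xt in xs]
    total = sum(weights)
    if total == 0.0:
        return None  # assumption: all weights underflowed to zero
    return sum(w * r for w, r in zip(weights, rs)) / total


# Hypothetical inputs and responses, used only for illustration.
xs = [0.5, 1.2, 1.4, 2.1, 2.3, 2.6]
rs = [1.0, 2.0, 4.0, 3.0, 5.0, 7.0]
print(running_mean_smoother(2.0, xs, rs, h=0.5))
print(kernel_smoother(2.0, xs, rs, h=0.5))
```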


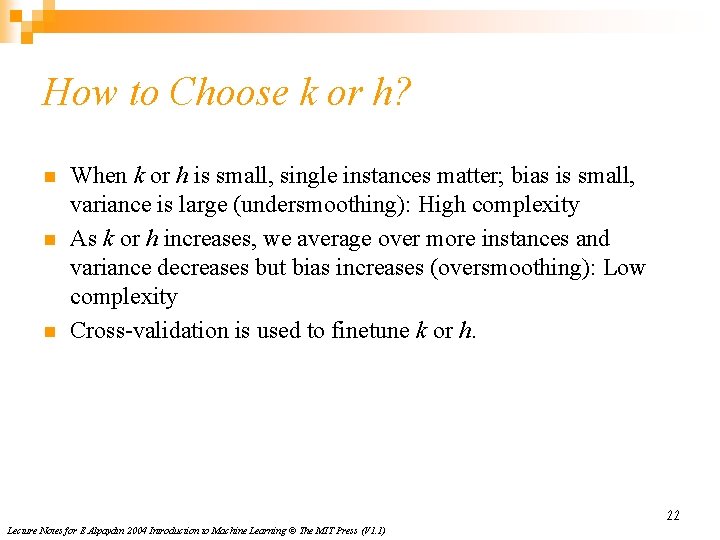

How to Choose k or h?

- When k or h is small, single instances matter; bias is small, variance is large (undersmoothing): High complexity
- As k or h increases, we average over more instances and variance decreases but bias increases (oversmoothing): Low complexity
- Cross-validation is used to fine-tune k or h.
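As one way to make the cross-validation step concrete, the sketch below picks the bandwidth h of a Gaussian kernel smoother by leave-one-out cross-validation on squared error. The choice of leave-one-out, the candidate grid, the synthetic data, and the function names are all assumptions of this example; the same pattern applies to choosing k for k-NN.

```python
import math


def kernel_smoother(x, xs, rs, h):
    """Gaussian-weighted average of the responses rs at query x."""
    weights = [math.exp(-0.5 * ((x - xt) / h) ** 2) for xt in xs]
    total = sum(weights)
    if total == 0.0:
        # Assumption: fall back to the global mean if all weights underflow.
        return sum(rs) / len(rs)
    return sum(w * r for w, r in zip(weights, rs)) / total


def loo_cv_error(xs, rs, h):
    """Mean squared error when each point is predicted from all the others."""
    squared_errors = []
    for i in range(len(xs)):
        rest_x = xs[:i] + xs[i + 1:]
        rest_r = rs[:i] + rs[i + 1:]
        prediction = kernel_smoother(xs[i], rest_x, rest_r, h)
        squared_errors.append((rs[i] - prediction) ** 2)
    return sum(squared_errors) / len(squared_errors)


def choose_bandwidth(xs, rs, candidates):
    """Return the candidate h with the smallest leave-one-out error."""
    return min(candidates, key=lambda h: loo_cv_error(xs, rs, h))


# Synthetic data: a sine curve plus a small deterministic perturbation.
xs = [0.1 * i for i in range(50)]
rs = [math.sin(x) + 0.1 * math.sin(37.0 * i) for i, x in enumerate(xs)]
candidates = [0.05, 0.2, 0.5, 2.0, 10.0]
best_h = choose_bandwidth(xs, rs, candidates)
print(best_h, loo_cv_error(xs, rs, best_h))
```

A very large h averages over nearly the whole data set and oversmooths, while a very small h tracks individual points; the validation error is what arbitrates between the two.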