Lecture Slides for INTRODUCTION TO Machine Learning ETHEM

- Slides: 21

Lecture Slides for INTRODUCTION TO Machine Learning
ETHEM ALPAYDIN © The MIT Press, 2004
alpaydin@boun.edu.tr
http://www.cmpe.boun.edu.tr/~ethem/i2ml
Lecture Notes for E Alpaydın 2004 Introduction to Machine Learning © The MIT Press (V1.1)

CHAPTER 6: Dimensionality Reduction

Why Reduce Dimensionality?
1. Reduces time complexity: less computation
2. Reduces space complexity: fewer parameters
3. Saves the cost of observing the feature
4. Simpler models are more robust on small datasets
5. More interpretable; simpler explanation
6. Data visualization (structure, groups, outliers, etc.) if plotted in 2 or 3 dimensions

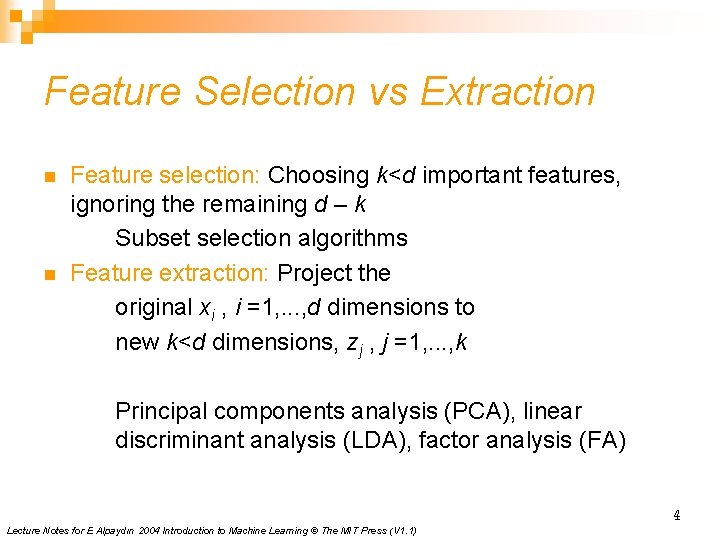

Feature Selection vs Extraction
- Feature selection: choosing k < d important features and ignoring the remaining d - k. Subset selection algorithms.
- Feature extraction: projecting the original $x_i$, i = 1, ..., d dimensions to new k < d dimensions, $z_j$, j = 1, ..., k. Principal components analysis (PCA), linear discriminant analysis (LDA), factor analysis (FA).

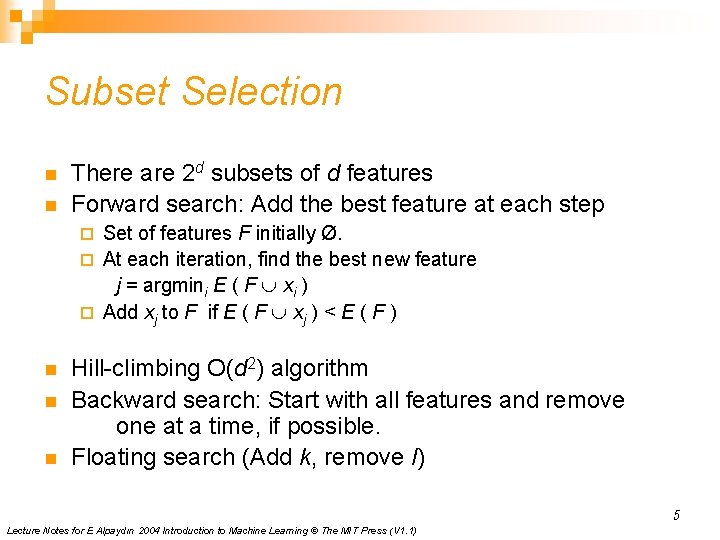

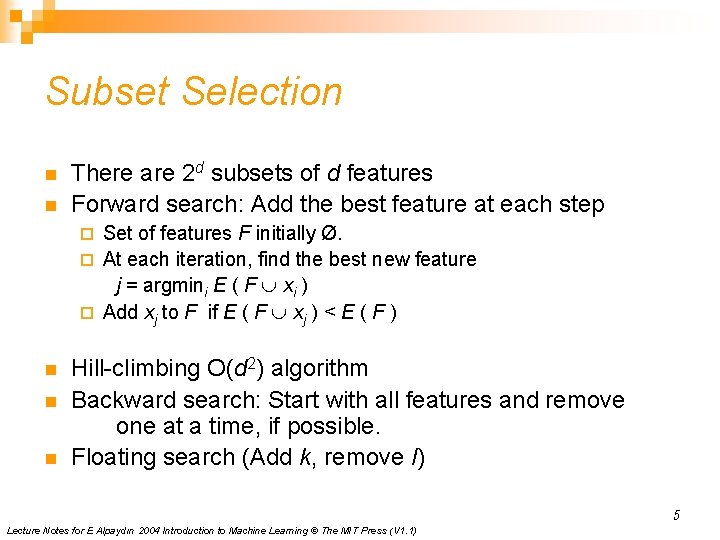

Subset Selection
- There are $2^d$ subsets of d features
- Forward search: add the best feature at each step
  - Set of features F is initially Ø.
  - At each iteration, find the best new feature $j = \arg\min_i E(F \cup x_i)$
  - Add $x_j$ to F if $E(F \cup x_j) < E(F)$
- Hill-climbing $O(d^2)$ algorithm
- Backward search: start with all features and remove one at a time, if possible.
- Floating search (add k, remove l)
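The forward search can be sketched as follows. This is a minimal illustration, not the slides' own code: it assumes the error E(F) is the validation mean squared error of a least-squares fit, and the names `validation_error` and `forward_search` as well as the synthetic data are hypothetical.

```python
import numpy as np

def validation_error(X_tr, y_tr, X_va, y_va, features):
    """E(F): validation MSE of a least-squares fit using only `features` (assumed error measure)."""
    idx = np.asarray(features, dtype=int)
    # An intercept column is always present, so E(empty set) is the error of predicting the mean.
    A_tr = np.hstack([X_tr[:, idx], np.ones((len(X_tr), 1))])
    A_va = np.hstack([X_va[:, idx], np.ones((len(X_va), 1))])
    coef, *_ = np.linalg.lstsq(A_tr, y_tr, rcond=None)
    return float(np.mean((y_va - A_va @ coef) ** 2))

def forward_search(X_tr, y_tr, X_va, y_va):
    """Greedy forward selection: add the best feature while the error decreases."""
    d = X_tr.shape[1]
    F = []                                            # F is initially empty
    best_err = validation_error(X_tr, y_tr, X_va, y_va, F)
    while len(F) < d:
        candidates = [i for i in range(d) if i not in F]
        errs = [validation_error(X_tr, y_tr, X_va, y_va, F + [i]) for i in candidates]
        j = candidates[int(np.argmin(errs))]          # j = argmin_i E(F ∪ x_i)
        if min(errs) >= best_err:                     # stop when E(F ∪ x_j) is not below E(F)
            break
        F.append(j)
        best_err = min(errs)
    return F, best_err

# Synthetic data: only features 0 and 3 carry signal.
rng = np.random.default_rng(0)
X = rng.normal(size=(300, 5))
y = 3.0 * X[:, 0] - 2.0 * X[:, 3] + 0.1 * rng.normal(size=300)
F, err = forward_search(X[:200], y[:200], X[200:], y[200:])
```

Each pass evaluates at most d candidates and there are at most d passes, which is where the $O(d^2)$ count of error evaluations comes from.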

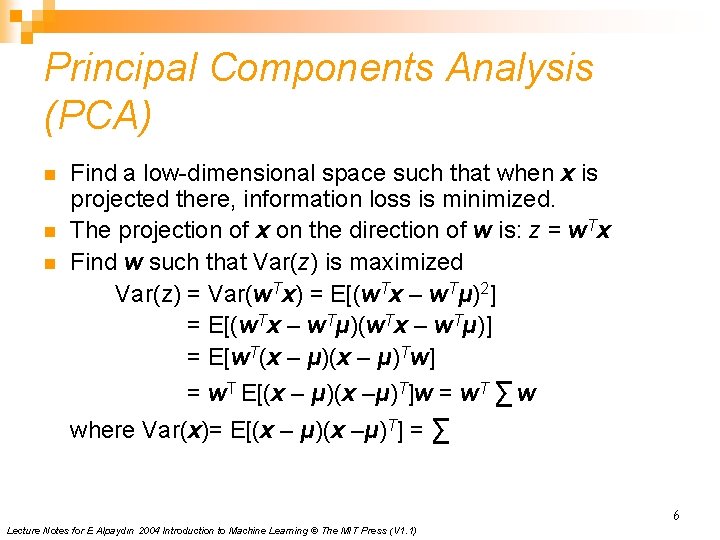

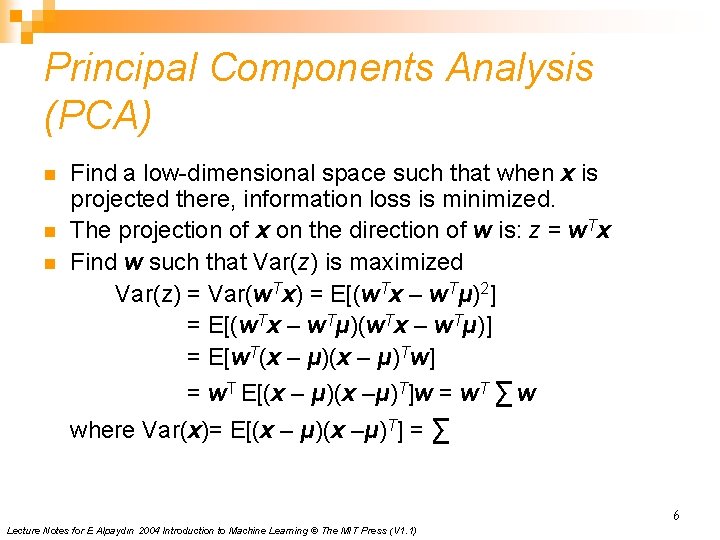

Principal Components Analysis (PCA)
- Find a low-dimensional space such that when x is projected there, information loss is minimized.
- The projection of x on the direction of w is: $z = w^T x$
- Find w such that Var(z) is maximized:

$$\mathrm{Var}(z) = \mathrm{Var}(w^T x) = E[(w^T x - w^T \mu)^2] = E[(w^T x - w^T \mu)(w^T x - w^T \mu)] = E[w^T (x - \mu)(x - \mu)^T w] = w^T E[(x - \mu)(x - \mu)^T] w = w^T \Sigma w$$

where $\mathrm{Var}(x) = E[(x - \mu)(x - \mu)^T] = \Sigma$

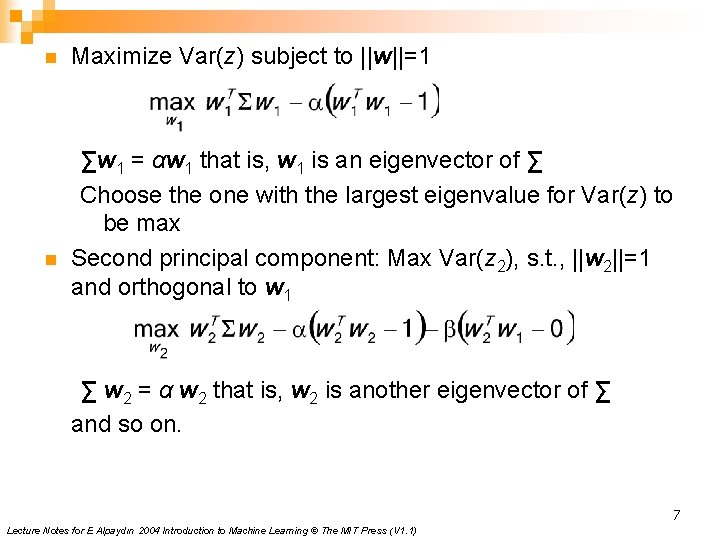

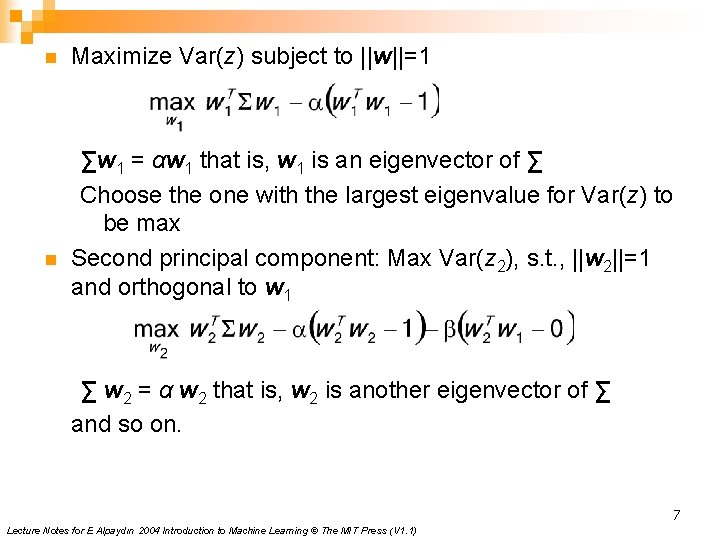

- Maximize Var(z) subject to $\|w\| = 1$
  $\Sigma w_1 = \alpha w_1$, that is, $w_1$ is an eigenvector of $\Sigma$. Choose the one with the largest eigenvalue for Var(z) to be maximum.
- Second principal component: maximize $\mathrm{Var}(z_2)$ subject to $\|w_2\| = 1$ and $w_2$ orthogonal to $w_1$
  $\Sigma w_2 = \alpha w_2$, that is, $w_2$ is another eigenvector of $\Sigma$, and so on.
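The step from the constrained maximization to the eigenvector equation can be worked out with a Lagrange multiplier. The following is a sketch of the standard argument, using $\alpha$ as the multiplier for the unit-norm constraint:

$$
\begin{aligned}
&\max_{w_1}\; w_1^T \Sigma w_1 - \alpha\,(w_1^T w_1 - 1) \\
&\frac{\partial}{\partial w_1}:\quad 2\Sigma w_1 - 2\alpha w_1 = 0 \;\Rightarrow\; \Sigma w_1 = \alpha w_1 \\
&\mathrm{Var}(z_1) = w_1^T \Sigma w_1 = \alpha\, w_1^T w_1 = \alpha
\end{aligned}
$$

Since the variance of the projection equals the eigenvalue $\alpha$, the variance is largest when $w_1$ is the eigenvector with the largest eigenvalue.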

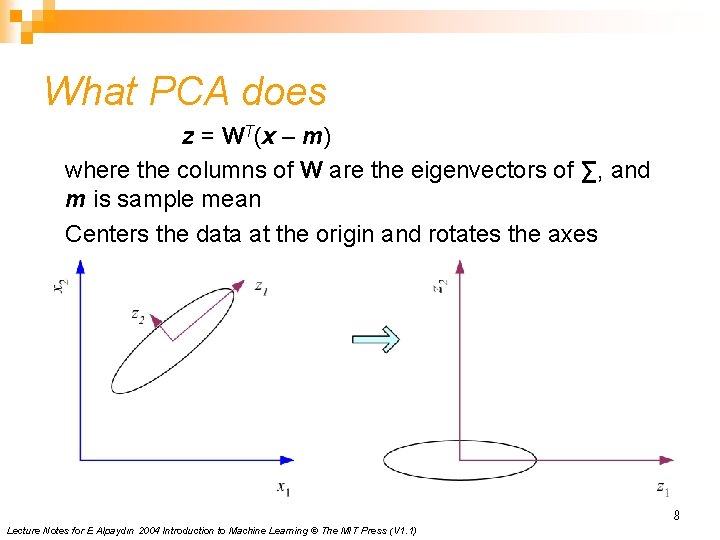

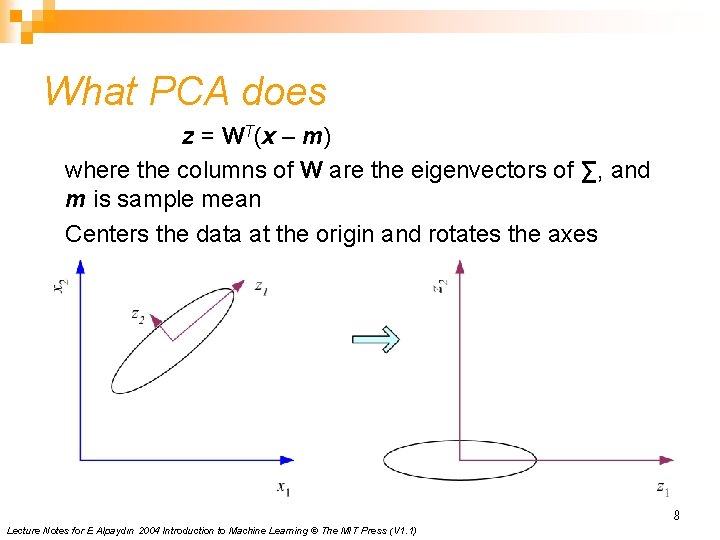

What PCA does
$z = W^T(x - m)$
where the columns of W are the eigenvectors of $\Sigma$, and m is the sample mean.
Centers the data at the origin and rotates the axes.
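A minimal NumPy sketch of this projection, assuming the sample covariance is used as the estimate of $\Sigma$. The function name `pca` and the synthetic data are illustrative, not part of the slides.

```python
import numpy as np

def pca(X, k):
    """Project rows of X onto the k leading eigenvectors of the sample covariance."""
    m = X.mean(axis=0)                        # sample mean
    S = np.cov(X - m, rowvar=False)           # sample estimate of Sigma
    eigvals, eigvecs = np.linalg.eigh(S)      # eigh: S is symmetric; eigenvalues ascending
    order = np.argsort(eigvals)[::-1]         # reorder to descending eigenvalue
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    W = eigvecs[:, :k]                        # columns of W are eigenvectors
    Z = (X - m) @ W                           # z = W^T (x - m), one row per sample
    return Z, W, eigvals, m

# Synthetic correlated 3-D data, shifted away from the origin.
rng = np.random.default_rng(0)
A = np.array([[2.0, 0.5, 0.0], [0.0, 1.0, 0.3], [0.0, 0.0, 0.2]])
X = rng.normal(size=(500, 3)) @ A + np.array([5.0, -1.0, 2.0])
Z, W, eigvals, m = pca(X, k=2)
```

After the projection, the columns of `Z` have zero mean and are uncorrelated, with variances equal to the two largest eigenvalues; this is the "centers and rotates" behaviour described above.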

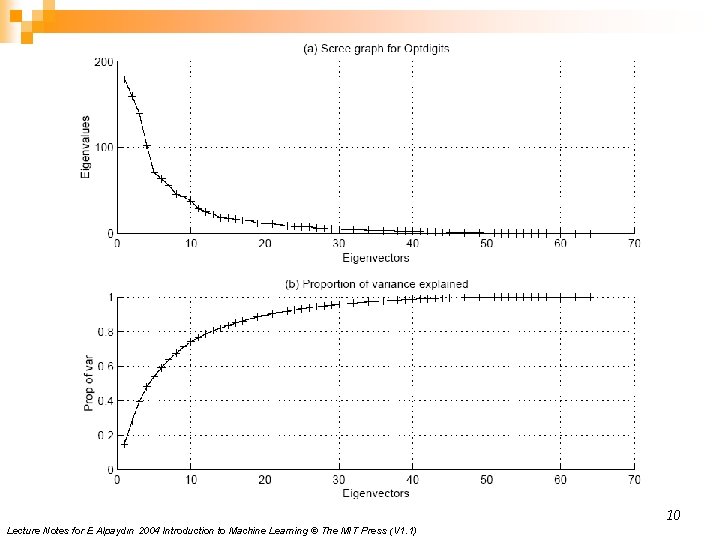

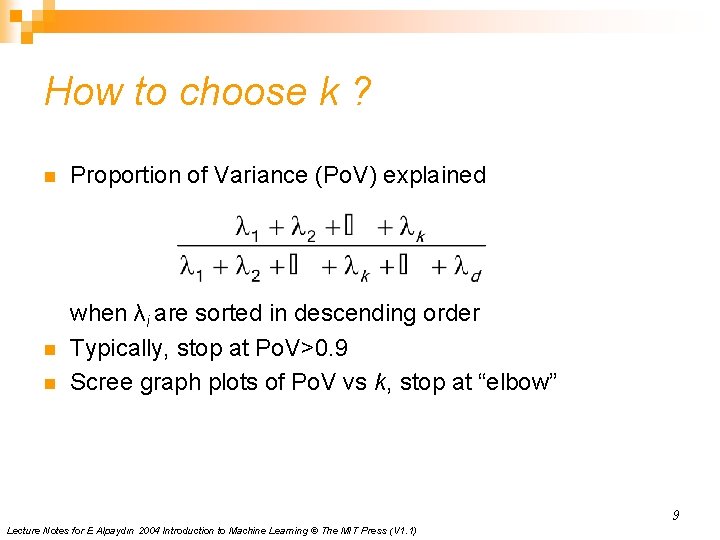

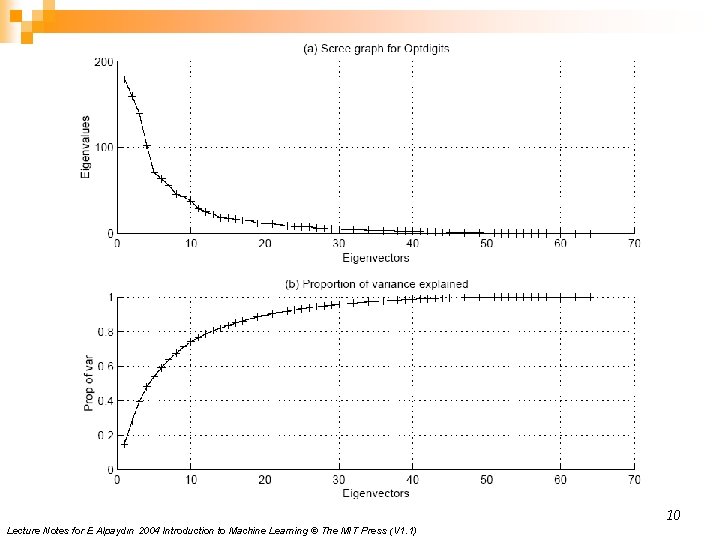

How to choose k?
- Proportion of Variance (PoV) explained: $\dfrac{\lambda_1 + \lambda_2 + \cdots + \lambda_k}{\lambda_1 + \lambda_2 + \cdots + \lambda_k + \cdots + \lambda_d}$ when $\lambda_i$ are sorted in descending order
- Typically, stop at PoV > 0.9
- Scree graph plots PoV vs k; stop at the “elbow”
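The PoV rule can be sketched in a few lines. The helper name `choose_k` and the example eigenvalues are made up for illustration.

```python
import numpy as np

def choose_k(eigvals, threshold=0.9):
    """Return the smallest k whose proportion of variance exceeds `threshold`, plus the PoV curve."""
    lam = np.sort(np.asarray(eigvals, dtype=float))[::-1]   # descending order
    pov = np.cumsum(lam) / lam.sum()                         # PoV for k = 1, ..., d
    k = int(np.argmax(pov > threshold)) + 1                  # first k with PoV > threshold
    return k, pov

# Illustrative eigenvalues (not from the slides).
k, pov = choose_k([6.0, 2.5, 1.0, 0.3, 0.2], threshold=0.9)
```

Here the cumulative proportions are 0.6, 0.85, 0.95, 0.98 and 1.0, so the rule stops at k = 3.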


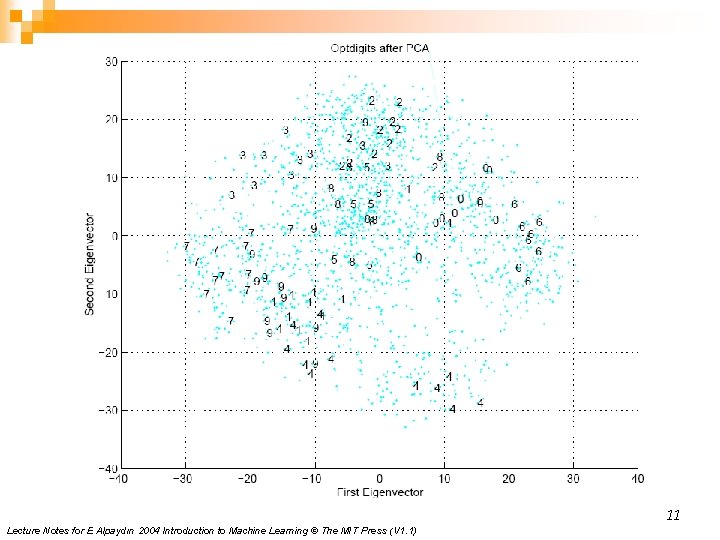

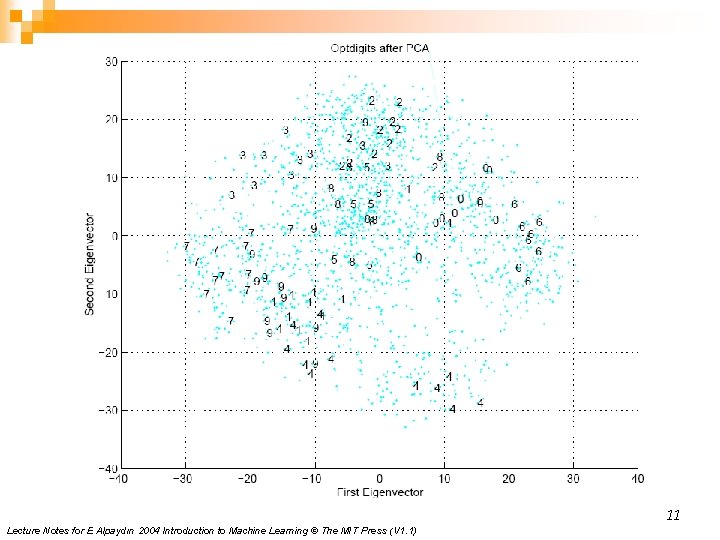


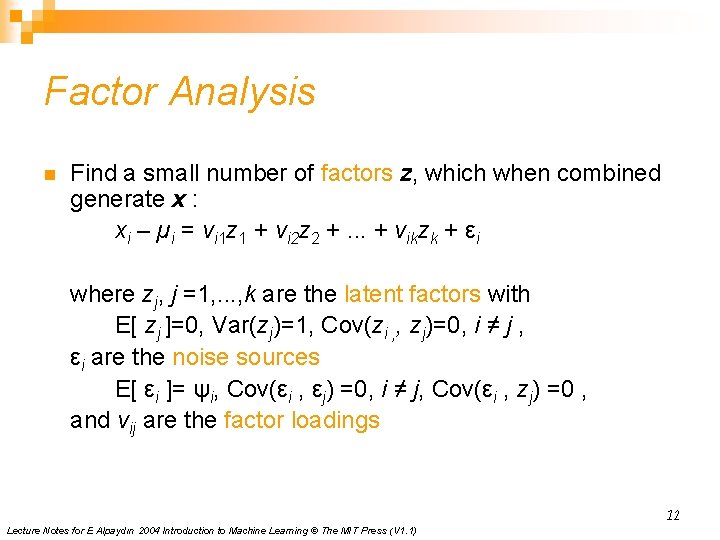

Factor Analysis
- Find a small number of factors z, which when combined generate x:
  $x_i - \mu_i = v_{i1} z_1 + v_{i2} z_2 + \cdots + v_{ik} z_k + \varepsilon_i$
  where $z_j$, j = 1, ..., k are the latent factors with $E[z_j] = 0$, $\mathrm{Var}(z_j) = 1$, $\mathrm{Cov}(z_i, z_j) = 0$, i ≠ j; $\varepsilon_i$ are the noise sources with $E[\varepsilon_i] = 0$, $\mathrm{Var}(\varepsilon_i) = \psi_i$, $\mathrm{Cov}(\varepsilon_i, \varepsilon_j) = 0$, i ≠ j, $\mathrm{Cov}(\varepsilon_i, z_j) = 0$; and $v_{ij}$ are the factor loadings.
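To make the generative model concrete, the sketch below samples from it and compares the sample covariance of x with $V V^T + \Psi$, which is what the stated assumptions imply (unit-variance uncorrelated factors, zero-mean uncorrelated noise with variances $\psi_i$). Gaussian factors and noise are an extra assumption made only for sampling; the loadings, means, and noise variances are arbitrary illustrative values.

```python
import numpy as np

def sample_fa(mu, V, psi, N, rng):
    """Draw N samples from x = mu + V z + eps under the factor analysis assumptions."""
    d, k = V.shape
    Z = rng.normal(size=(N, k))                    # E[z_j] = 0, Var(z_j) = 1, uncorrelated
    eps = rng.normal(size=(N, d)) * np.sqrt(psi)   # zero mean, Var(eps_i) = psi_i, uncorrelated
    return mu + Z @ V.T + eps

rng = np.random.default_rng(0)
mu = np.array([1.0, -2.0, 0.5, 3.0])
V = rng.normal(size=(4, 2))                        # factor loadings v_ij (illustrative)
psi = np.array([0.1, 0.2, 0.3, 0.4])               # noise variances (illustrative)

X = sample_fa(mu, V, psi, N=200_000, rng=rng)
S_sample = np.cov(X, rowvar=False)
S_model = V @ V.T + np.diag(psi)                   # covariance implied by the model
```

With this many samples, `S_sample` and `S_model` agree to within sampling error, which illustrates that the k factors explain the correlations between the inputs and the noise terms only add to the diagonal.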

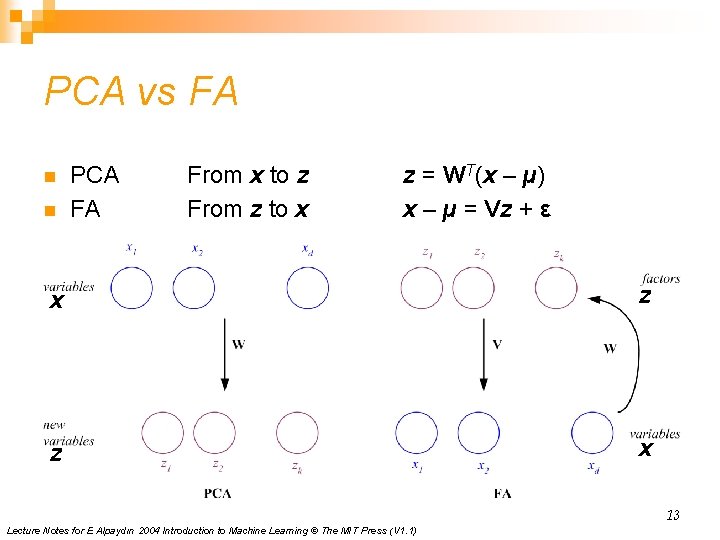

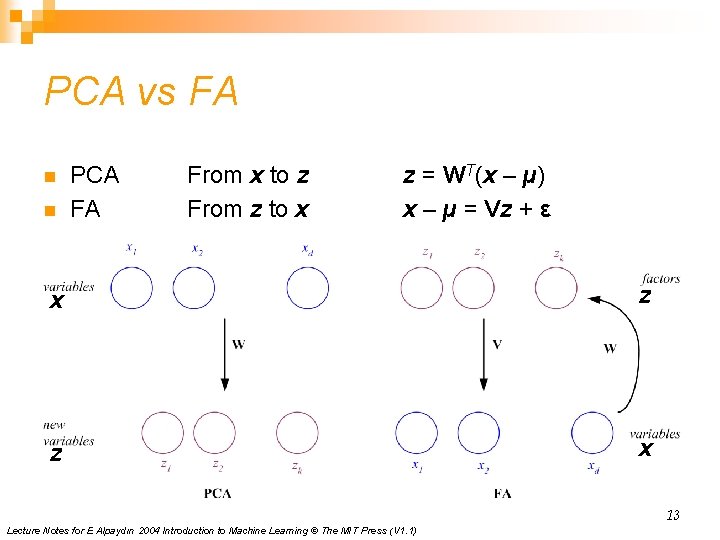

PCA vs FA
- PCA: from x to z, $z = W^T(x - \mu)$
- FA: from z to x, $x - \mu = Vz + \varepsilon$

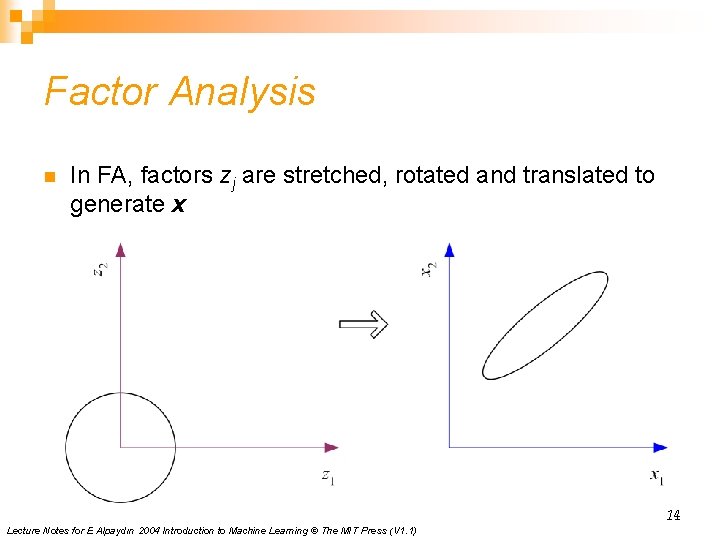

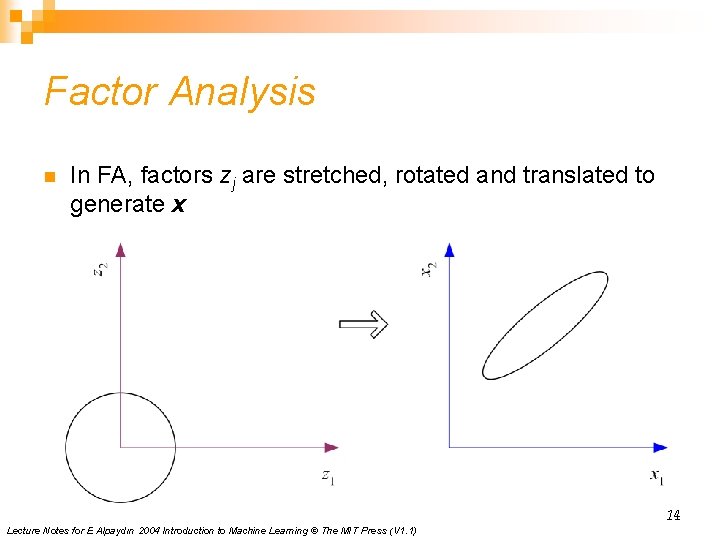

Factor Analysis
- In FA, factors $z_j$ are stretched, rotated and translated to generate x

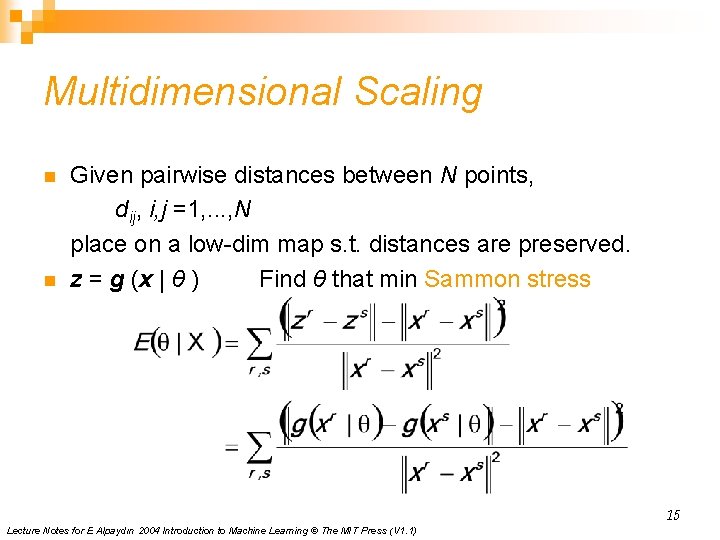

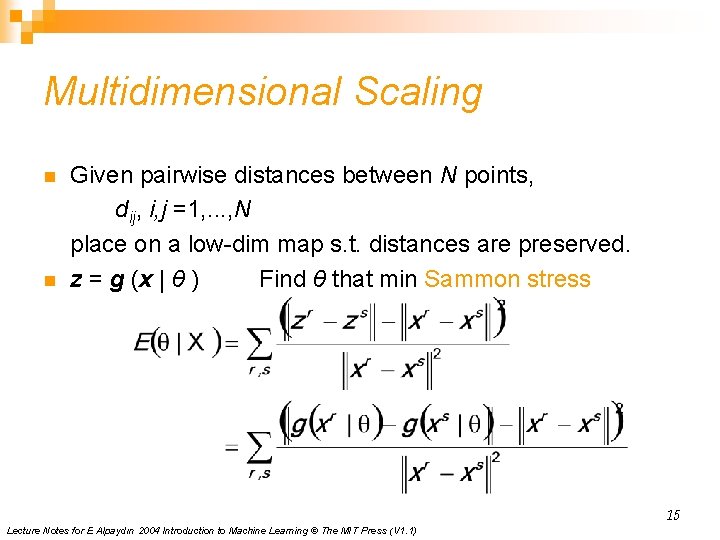

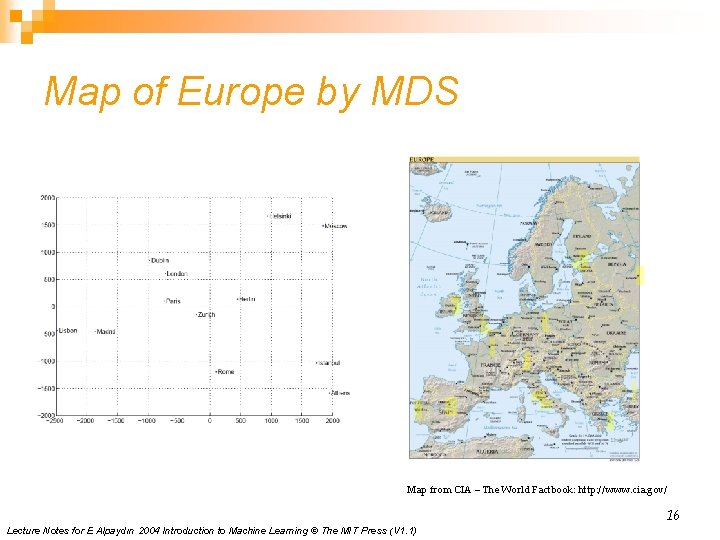

Multidimensional Scaling
- Given pairwise distances between N points, $d_{ij}$, i, j = 1, ..., N, place the points on a low-dimensional map such that the distances are preserved.
- $z = g(x \mid \theta)$: find θ that minimizes the Sammon stress
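As an illustration of recovering a map from pairwise distances alone, the sketch below uses classical (Torgerson) MDS. Note that this is a different, closed-form technique and not the Sammon-stress minimization named above; it is shown because it needs no iterative optimizer. The function name `classical_mds` and the test points are hypothetical.

```python
import numpy as np

def classical_mds(D, k):
    """Classical MDS: embed N points in k dimensions from an N x N Euclidean distance matrix D."""
    N = D.shape[0]
    J = np.eye(N) - np.ones((N, N)) / N          # centering matrix
    B = -0.5 * J @ (D ** 2) @ J                  # double-centered squared distances (Gram matrix)
    eigvals, eigvecs = np.linalg.eigh(B)         # B is symmetric
    order = np.argsort(eigvals)[::-1][:k]        # k largest eigenvalues
    lam = np.clip(eigvals[order], 0.0, None)     # guard against tiny negative round-off
    return eigvecs[:, order] * np.sqrt(lam)      # coordinates, one row per point

def pairwise_distances(P):
    """Euclidean distance between every pair of rows of P."""
    return np.linalg.norm(P[:, None, :] - P[None, :, :], axis=-1)

# Points on a plane; only their distances are given to the algorithm.
rng = np.random.default_rng(0)
P = rng.normal(size=(10, 2))
D = pairwise_distances(P)
Z = classical_mds(D, k=2)
```

The recovered coordinates `Z` match the original points only up to rotation, reflection and translation, but their pairwise distances reproduce `D`, which is the property a distance-preserving map is meant to have.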

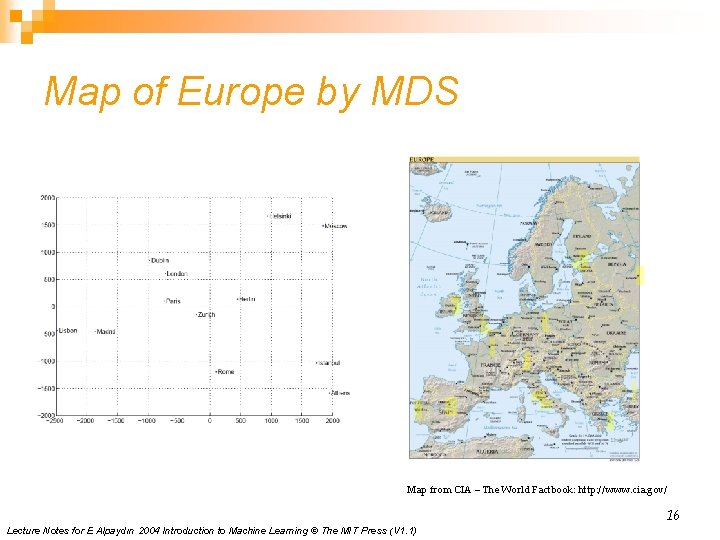

Map of Europe by MDS
Map from CIA – The World Factbook: http://www.cia.gov/

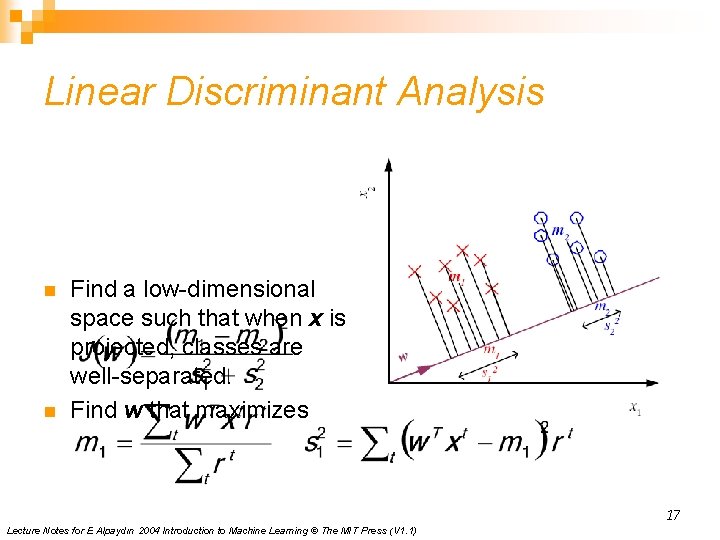

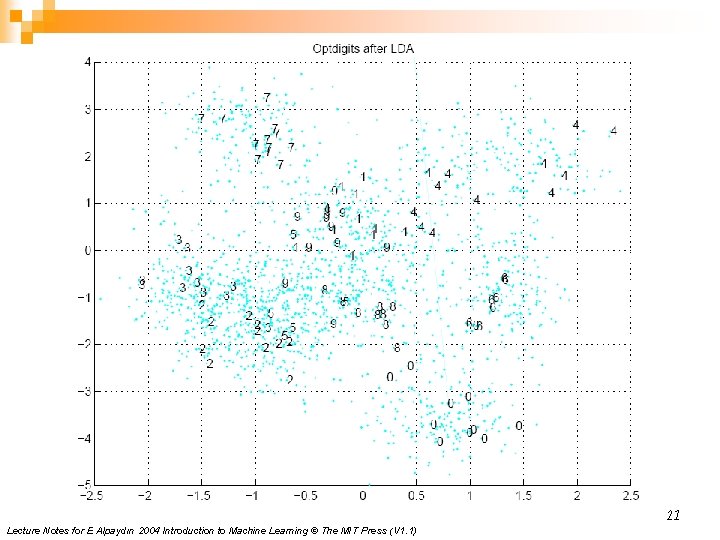

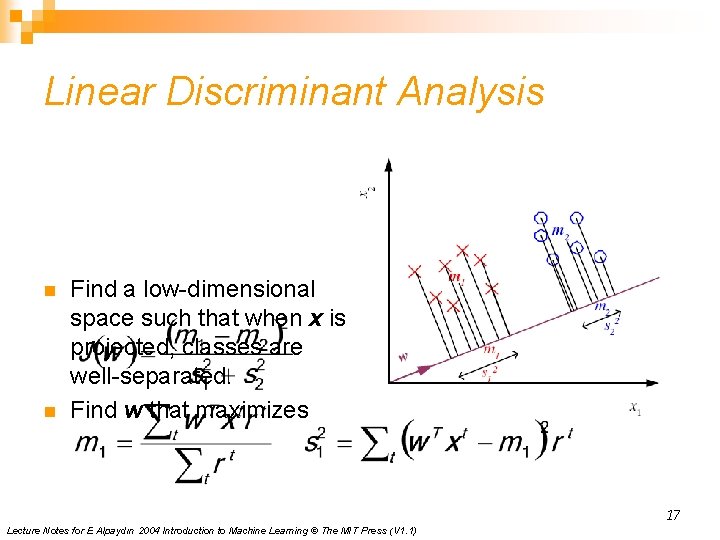

Linear Discriminant Analysis
- Find a low-dimensional space such that when x is projected, classes are well-separated.
- Find w that maximizes

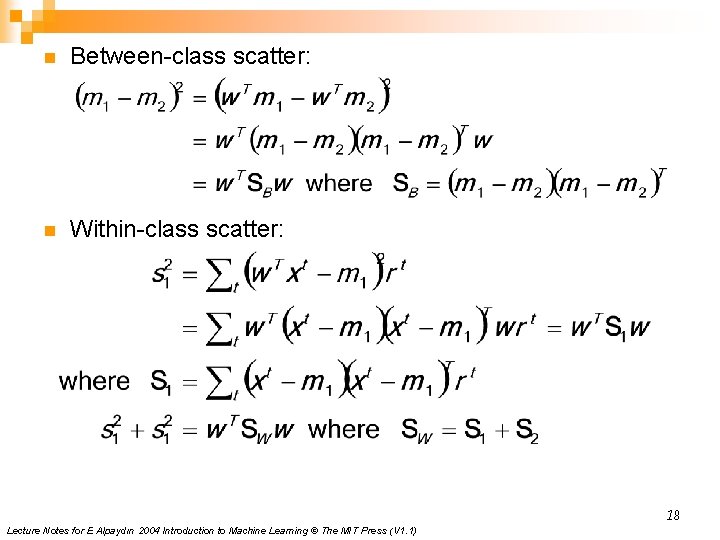

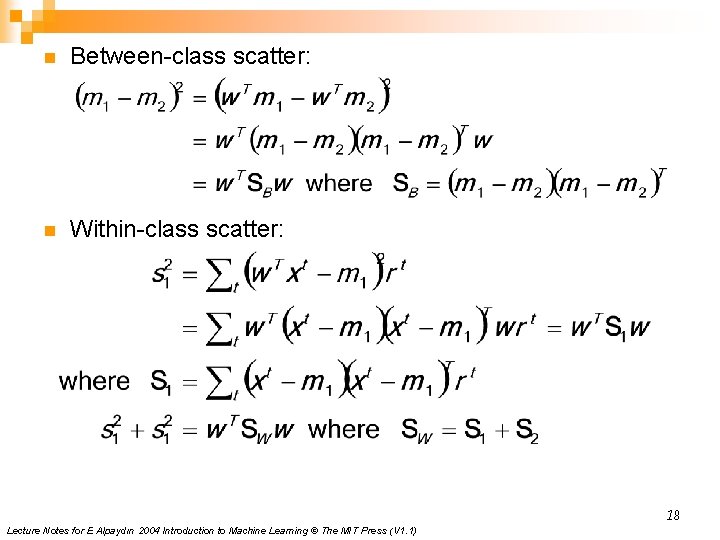

- Between-class scatter:
- Within-class scatter:

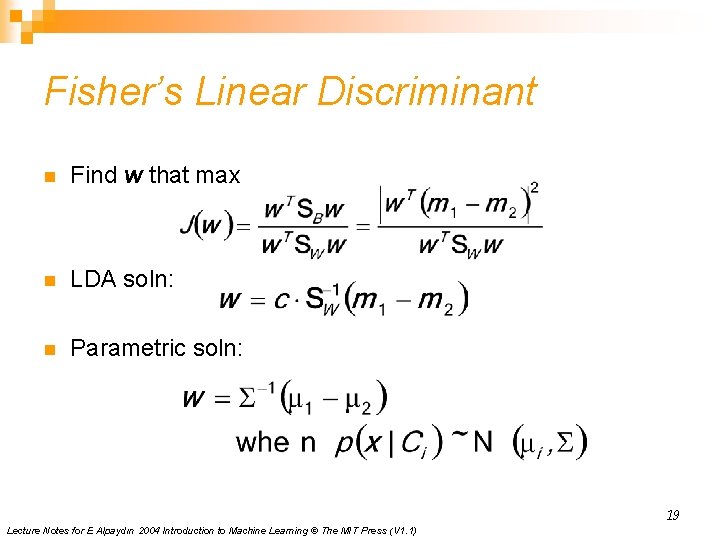

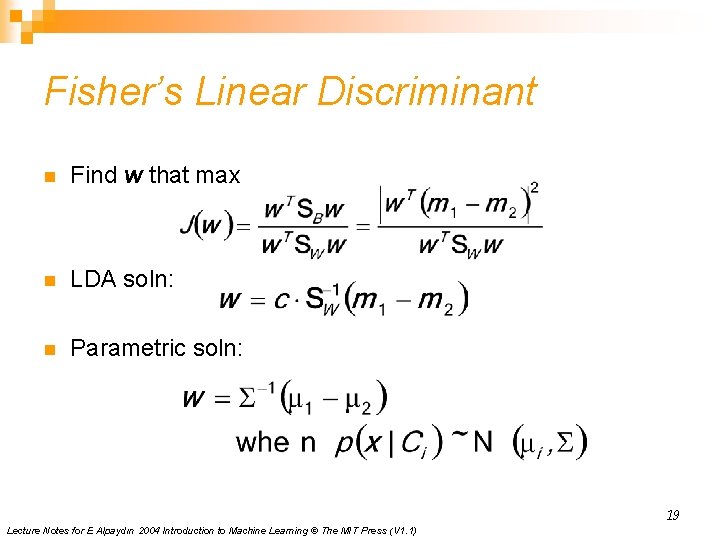

Fisher’s Linear Discriminant
- Find w that maximizes
- LDA solution:
- Parametric solution:
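A two-class sketch in NumPy, assuming the standard form of Fisher's criterion: the squared difference of the projected class means divided by the sum of the projected within-class scatters, whose maximizer is proportional to $S_W^{-1}(m_1 - m_2)$. The function names and the synthetic data are illustrative and not taken from the slides.

```python
import numpy as np

def fisher_direction(X1, X2):
    """Unit vector proportional to S_W^{-1} (m1 - m2), with S_W the within-class scatter."""
    m1, m2 = X1.mean(axis=0), X2.mean(axis=0)
    S_W = (X1 - m1).T @ (X1 - m1) + (X2 - m2).T @ (X2 - m2)
    w = np.linalg.solve(S_W, m1 - m2)            # solve instead of forming the inverse
    return w / np.linalg.norm(w)

def fisher_criterion(w, X1, X2):
    """(difference of projected means)^2 / (sum of projected within-class scatters)."""
    z1, z2 = X1 @ w, X2 @ w
    s1 = np.sum((z1 - z1.mean()) ** 2)
    s2 = np.sum((z2 - z2.mean()) ** 2)
    return (z1.mean() - z2.mean()) ** 2 / (s1 + s2)

# Two synthetic classes in 3-D with different means and a shared elongated shape.
rng = np.random.default_rng(0)
shape = np.array([[2.0, 0.0, 0.0], [0.8, 1.0, 0.0], [0.0, 0.3, 0.5]])
X1 = rng.normal(size=(100, 3)) @ shape + np.array([2.0, 0.0, 0.0])
X2 = rng.normal(size=(100, 3)) @ shape + np.array([0.0, 1.0, 0.0])
w = fisher_direction(X1, X2)
J_w = fisher_criterion(w, X1, X2)
```

The criterion is invariant to the scale of w, so the normalization to unit length is only a convention; any other direction gives a value of the criterion no larger than `J_w`.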

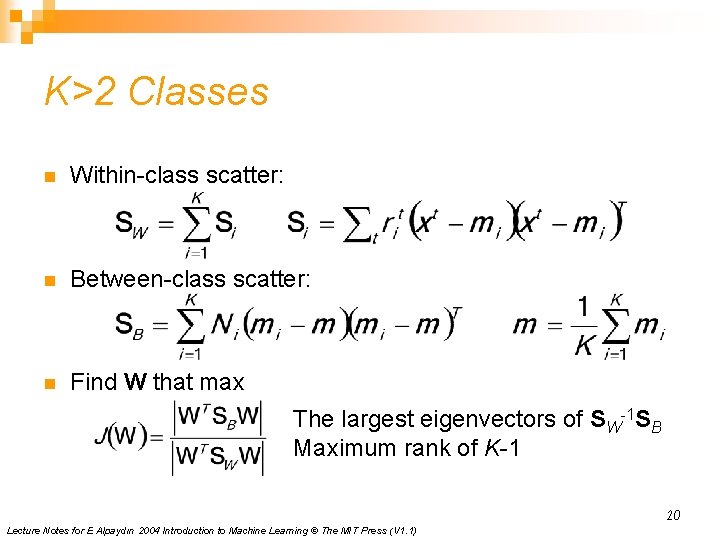

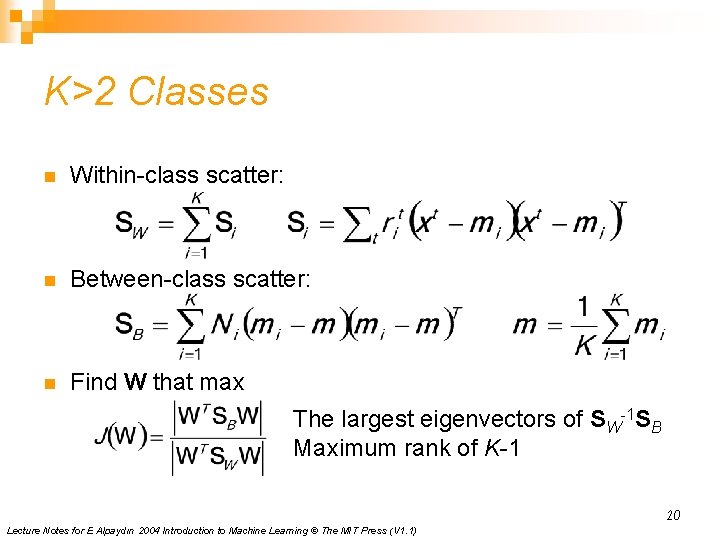

K>2 Classes
- Within-class scatter:
- Between-class scatter:
- Find W that maximizes
  The solution is given by the eigenvectors of $S_W^{-1} S_B$ with the largest eigenvalues; $S_B$ has a maximum rank of K - 1.
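The multi-class case can be sketched as below. The scatter definitions are assumptions, since the slide's formulas did not survive extraction: $S_W$ is taken as the sum of per-class scatter matrices and $S_B$ as the sum over classes of $N_i (m_i - m)(m_i - m)^T$ with m the overall sample mean. The function name `lda_projection` and the data are hypothetical.

```python
import numpy as np

def lda_projection(X, y):
    """Return the K-1 leading eigenvectors of S_W^{-1} S_B and all eigenvalues (descending)."""
    classes = np.unique(y)
    K, d = len(classes), X.shape[1]
    m = X.mean(axis=0)                               # overall mean (assumed definition)
    S_W = np.zeros((d, d))
    S_B = np.zeros((d, d))
    for c in classes:
        Xc = X[y == c]
        mc = Xc.mean(axis=0)
        S_W += (Xc - mc).T @ (Xc - mc)               # within-class scatter
        S_B += len(Xc) * np.outer(mc - m, mc - m)    # between-class scatter
    # S_W^{-1} S_B is not symmetric, so use the general eigen-solver and keep real parts.
    eigvals, eigvecs = np.linalg.eig(np.linalg.solve(S_W, S_B))
    order = np.argsort(eigvals.real)[::-1]
    W = eigvecs[:, order[:K - 1]].real
    return W, eigvals.real[order]

# Three synthetic classes in 4-D with non-collinear means.
rng = np.random.default_rng(0)
means = np.array([[0.0, 0.0, 0.0, 0.0], [3.0, 0.0, 1.0, 0.0], [0.0, 3.0, 0.0, 1.0]])
X = np.vstack([rng.normal(size=(50, 4)) + mu for mu in means])
y = np.repeat(np.arange(3), 50)
W, lda_eigvals = lda_projection(X, y)
Z = X @ W                                            # projected data, K-1 = 2 dimensions
```

With K = 3 classes only two eigenvalues are nonzero (up to round-off), which reflects the K - 1 rank limit on $S_B$ and explains why at most K - 1 discriminant directions are useful.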

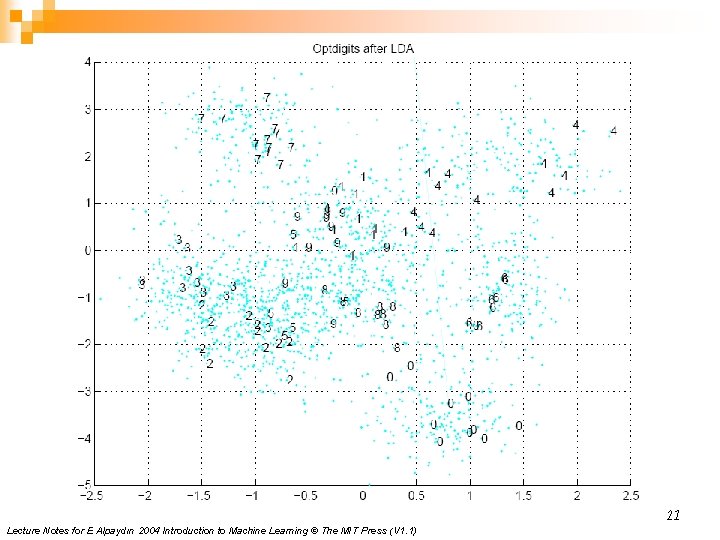
