Evaluating the Impact of Performance-related Pay for teachers


Evaluating the Impact of Performance-related Pay for Teachers in England
Adele Atkinson, Simon Burgess, Bronwyn Croxson, Paul Gregg, Carol Propper, Helen Slater, Deborah Wilson
12 April 2007
www.bris.ac.uk/Depts/CMPO/

Background
• Improving education outcomes is a key priority for governments, but evidence suggests poor returns from simply raising school resources.
• One alternative mechanism is incentives for teachers, but there is relatively little evidence on their impact.
• 1999: the UK government introduced a performance-related pay scheme for teachers (the “Performance Threshold”).
• Performance was assessed across five criteria, including pupil progress (value added).

What we do in this paper
• Quantitative evaluation of the impact of this PRP scheme for teachers on pupil test score gains.
• Design:
  – Longitudinal teacher-level data and a difference-in-difference research design.
  – Link pupils to their teachers for each subject; collect prior attainment data for each pupil.
  – This lets us control for teacher and pupil fixed effects.
  – We also control for differences in teacher experience.
• The incentive scheme had significant effects on pupil progress.

Outline of the talk
• Current evidence (in paper, not here)
• The National Curriculum
• The PRP scheme
• Data
• Evaluation methodology
• Results
• Conclusion

The National Curriculum
• Centralised system of control over national exams and teacher pay scales.
• All pupils are tested at the end of each Key Stage (KS) of the National Curriculum.
• KS1 and KS2 tests are taken at ages 7 and 11; KS3 and KS4 (GCSE) at ages 14 and 16.
• KS1, KS2 and KS3 tests are taken in English, maths and science.
• These subjects are also compulsory at KS4.
• We focus on KS4 and on value added between KS3 and KS4.

The PRP scheme
• 1998 Green Paper from the Labour administration: a range of reforms to education, including a performance-related element to teacher pay.
• The ‘Performance Threshold’ was introduced in 1999/2000; the first applications were made in July 2000.
• The Performance Threshold was itself one element of a larger pay reform, designed to affect teacher effort as well as recruitment and retention.

The PRP scheme II
• Prior to the PRP scheme, all teachers were paid on a unified basic salary scale with 9 full points.
• Position on the scale depended on qualifications and/or experience, with progress through annual increments. Additional management points were also available.
• 1999/2000: approx. 75% of teachers were at the top of the scale, on spine point 9.

The PRP scheme III
• After the reforms, teachers on spine point 9 could apply to pass the Performance Threshold. This had two effects:
  – An annual bonus of £2,000.
  – A move onto the Upper Pay Scale (UPS): additional spine points, each of which was related to performance.

The PRP scheme IV
• To pass the Threshold, teachers had to demonstrate effectiveness in five areas, including pupil progress (value added).
• Application forms were submitted by July 2000 and assessed by the headteacher and an external assessor.
• Initial Threshold payments were funded out of a separate, central budget, with no quota or limit.
• The vast majority of eligible teachers both applied and were awarded the bonus.

Was it incentive pay?
• Wragg et al. (2001) survey of 1,000 schools:
  – In these schools, 88% of the eligible teachers applied, and of these 97% were awarded the bonus.
  – Effectively an unconditional pay increase, implying little effect on teacher effort.
• But:
  – The ex ante (Marsden) survey suggests teachers believed it to be ‘real’.
  – The UPS element was clearly performance related.
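The two survey percentages compound. Because only applicants could be awarded the bonus, the share of all eligible teachers in the surveyed schools who received it is the product of the two rates, roughly 85%. A quick Python check of that arithmetic (the function name is ours, purely illustrative):

```python
def share_of_eligible_awarded(applied_share: float, awarded_share_of_applicants: float) -> float:
    """Fraction of all eligible teachers who received the bonus."""
    # Awards were only made to applicants, so the two shares multiply.
    return applied_share * awarded_share_of_applicants


share = share_of_eligible_awarded(0.88, 0.97)
print(f"{share:.1%}")  # prints 85.4%
```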

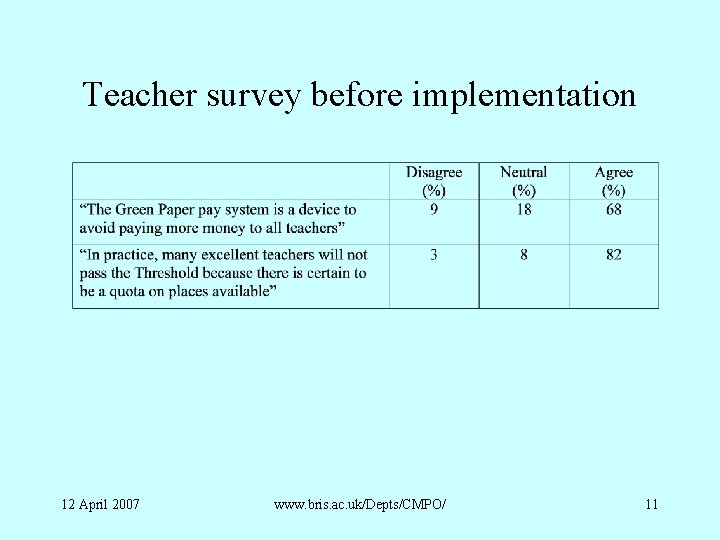

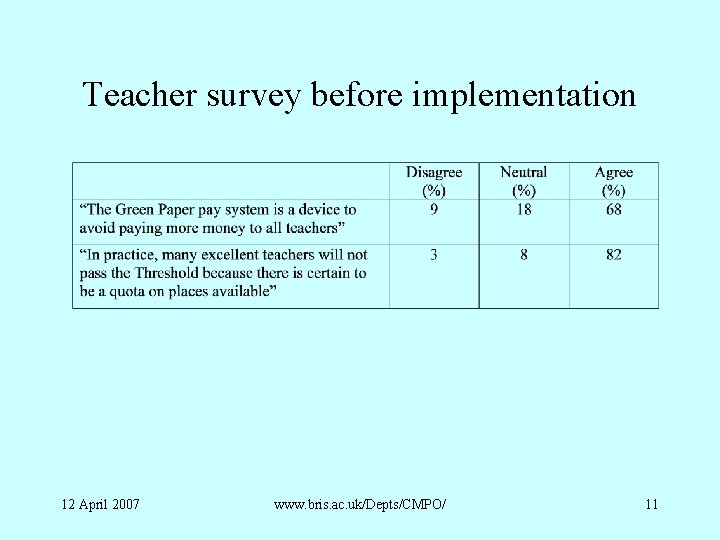

Teacher survey before implementation

Data requirements
• Control for pupil prior attainment to measure progress, or value added:
  – KS3 to GCSE; English, maths, science.
• Longitudinal element:
  – Follow the same teachers through two complete KS3 to GCSE teaching cycles (before and after the scheme was introduced).
• Link pupils to teachers:
  – Obtain class lists direct from schools.
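The linking step can be pictured as a join of school class lists onto pupil attainment records, keeping only pupils with both a KS3 score and a GCSE result so that progress can be measured. This is a minimal Python sketch under assumed data layouts: the dictionary shapes and the function `link_pupils_to_teachers` are hypothetical, not the study's actual files or code.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical layouts (assumptions, not the study's files):
# class_lists maps (teacher_id, subject) -> pupil ids on that teacher's class list;
# scores maps (pupil_id, subject) -> (ks3_score, gcse_score), None where missing.
ClassLists = Dict[Tuple[str, str], List[str]]
Scores = Dict[Tuple[str, str], Tuple[Optional[float], Optional[float]]]
Row = Tuple[str, str, str, float, float]


def link_pupils_to_teachers(class_lists: ClassLists, scores: Scores) -> List[Row]:
    """Return (teacher_id, subject, pupil_id, ks3, gcse) rows for pupils with complete scores."""
    rows: List[Row] = []
    for (teacher_id, subject), pupil_ids in class_lists.items():
        for pupil_id in pupil_ids:
            ks3, gcse = scores.get((pupil_id, subject), (None, None))
            # Progress needs both prior attainment and the outcome.
            if ks3 is None or gcse is None:
                continue
            rows.append((teacher_id, subject, pupil_id, ks3, gcse))
    return rows


class_lists = {("T1", "maths"): ["P1", "P2"], ("T2", "english"): ["P1", "P3"]}
scores = {
    ("P1", "maths"): (6.0, 5.0),
    ("P2", "maths"): (5.0, None),  # no GCSE result, so dropped
    ("P1", "english"): (5.0, 6.0),
    ("P3", "english"): (4.0, 4.0),
}
linked = link_pupils_to_teachers(class_lists, scores)
```

The same pupil can appear under different teachers for different subjects, which is what allows teacher effects to be separated from pupil effects.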

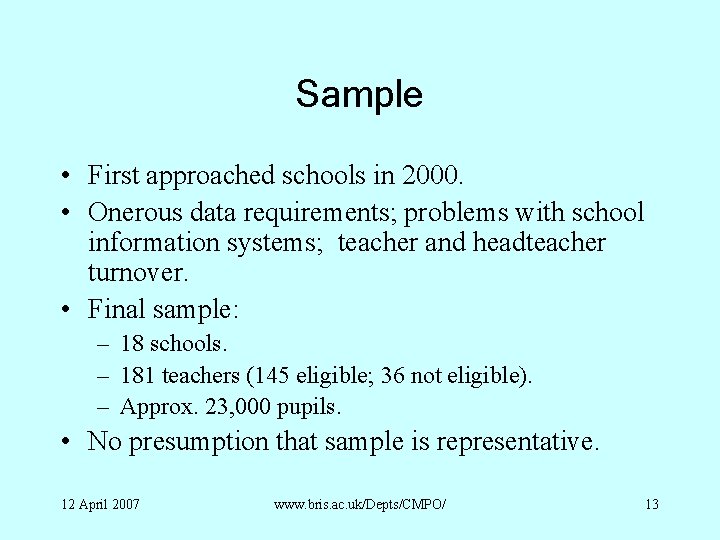

Sample
• Schools were first approached in 2000.
• Onerous data requirements; problems with school information systems; teacher and headteacher turnover.
• Final sample:
  – 18 schools.
  – 181 teachers (145 eligible; 36 not eligible).
  – Approx. 23,000 pupils.
• There is no presumption that the sample is representative.

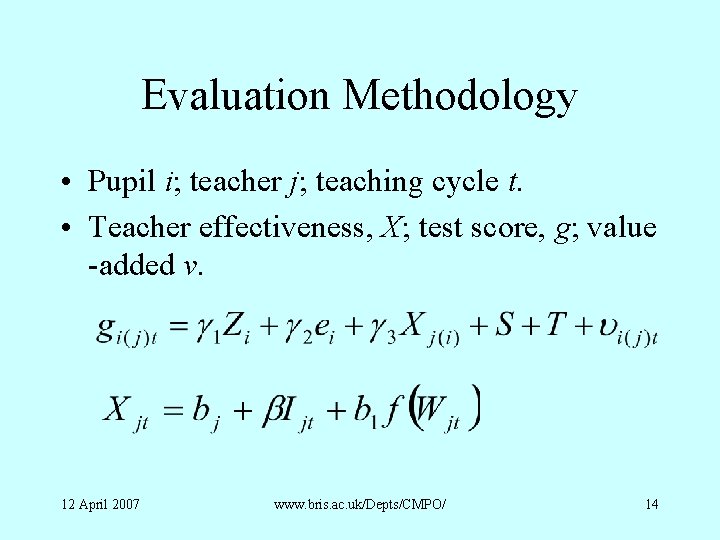

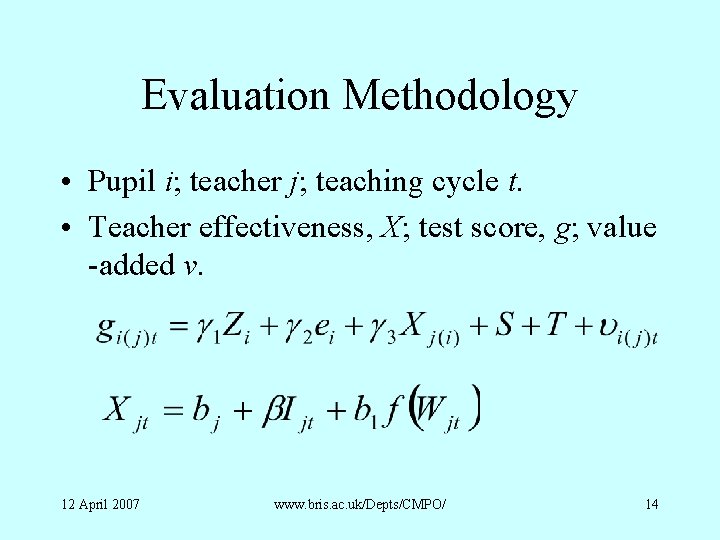

Evaluation Methodology
• Pupil i; teacher j; teaching cycle t.
• Teacher effectiveness X; test score g; value added v.

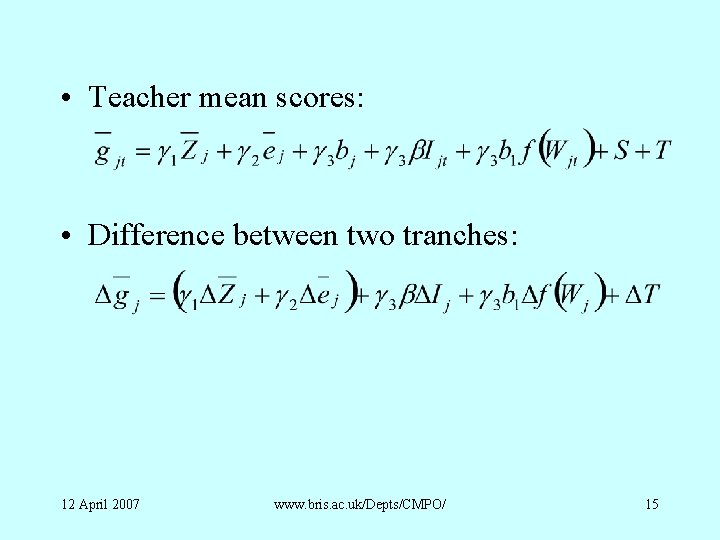

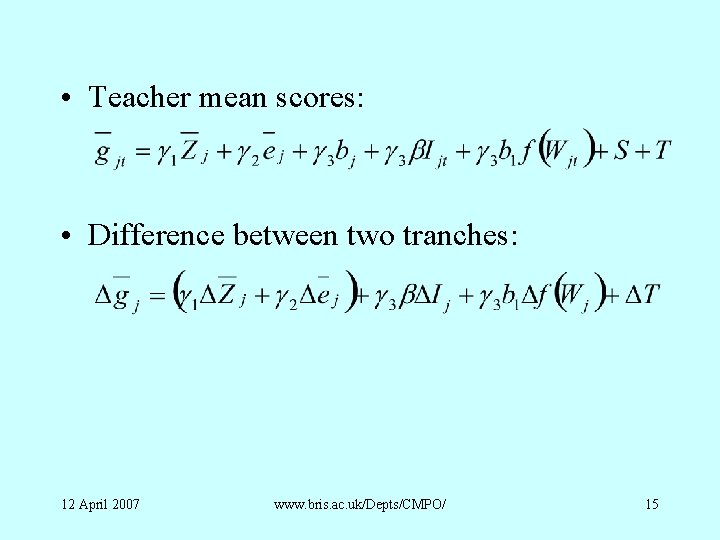

• Teacher mean scores:
• Difference between the two tranches:
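One reading of these two steps, sketched in Python: average each teacher's pupil scores within a teaching cycle, then take the after-minus-before difference. The exact definitions are in the slide equations; the data layout (`(teacher_id, cycle)` keys, cycle 0 before and cycle 1 after the scheme) and the function name are assumptions for illustration.

```python
from statistics import mean
from typing import Dict, List, Tuple


def teacher_mean_changes(scores: Dict[Tuple[str, int], List[float]]) -> Dict[str, float]:
    """Change in each teacher's mean pupil score between cycle 0 (before) and cycle 1 (after)."""
    changes: Dict[str, float] = {}
    for teacher in sorted({teacher for teacher, _ in scores}):
        before = scores.get((teacher, 0))
        after = scores.get((teacher, 1))
        if not before or not after:
            continue  # a teacher must be observed in both tranches
        changes[teacher] = mean(after) - mean(before)
    return changes


scores = {
    ("T1", 0): [5.0, 6.0, 7.0],
    ("T1", 1): [6.0, 7.0, 8.0],
    ("T2", 0): [4.0, 4.0],
    ("T2", 1): [4.0, 5.0],
    ("T3", 0): [3.0],  # only observed before the scheme, so excluded
}
changes = teacher_mean_changes(scores)
```

Differencing within teacher removes any fixed teacher effect, which is the point of following the same teachers across both cycles.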

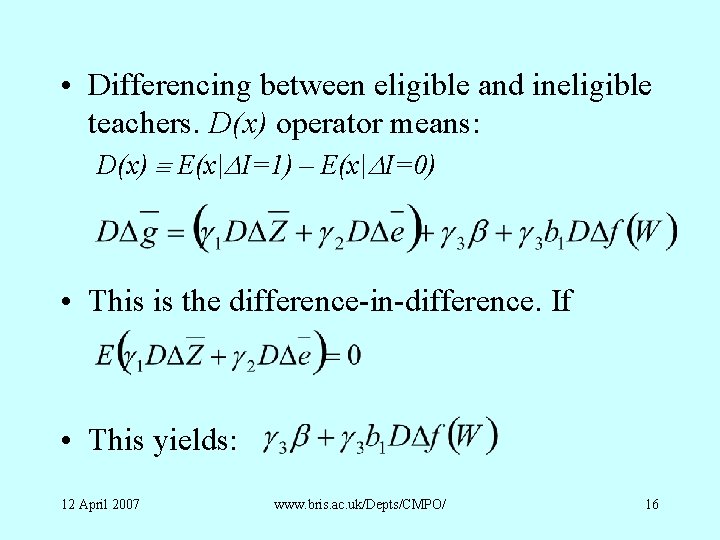

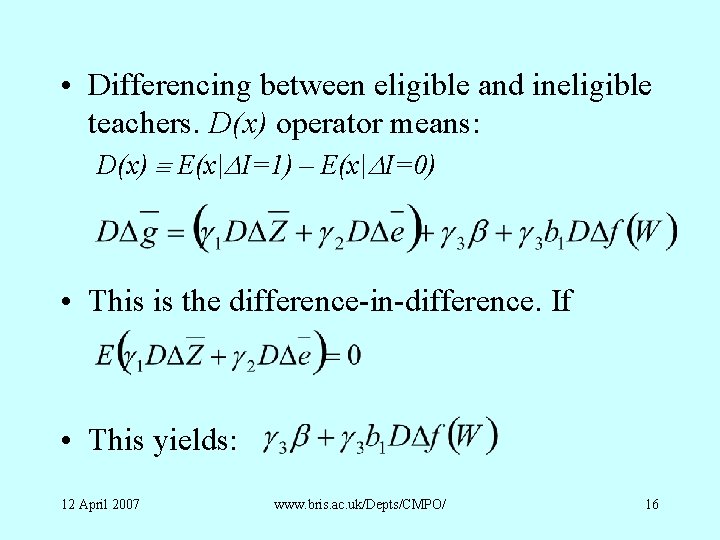

• Differencing between eligible and ineligible teachers. The D(x) operator is defined as $D(x) \equiv E(x \mid DI = 1) - E(x \mid DI = 0)$.
• This is the difference-in-difference. If
• This yields:
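Replacing the expectations with sample means gives a direct sketch of the D operator: average x over eligible teachers (DI = 1) and subtract the average over ineligible teachers (DI = 0). Applied to teacher-mean changes between the two tranches, this is the difference-in-difference. A Python sketch; the function name and the example numbers are hypothetical, not results from the study.

```python
from statistics import mean
from typing import Dict


def d_operator(x: Dict[str, float], eligible: Dict[str, bool]) -> float:
    """Sample analogue of D(x) = E(x | DI = 1) - E(x | DI = 0)."""
    treated = [value for teacher, value in x.items() if eligible[teacher]]
    control = [value for teacher, value in x.items() if not eligible[teacher]]
    return mean(treated) - mean(control)


# Hypothetical teacher-mean changes between the two teaching cycles.
changes = {"T1": 1.0, "T2": 0.5, "T3": 0.25, "T4": 0.25}
eligible = {"T1": True, "T2": True, "T3": False, "T4": False}
did = d_operator(changes, eligible)  # 0.75 - 0.25 = 0.5
```

The ineligible teachers' change absorbs anything common to both groups between cycles (for example, shifts in exam difficulty), leaving the eligible-specific change.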

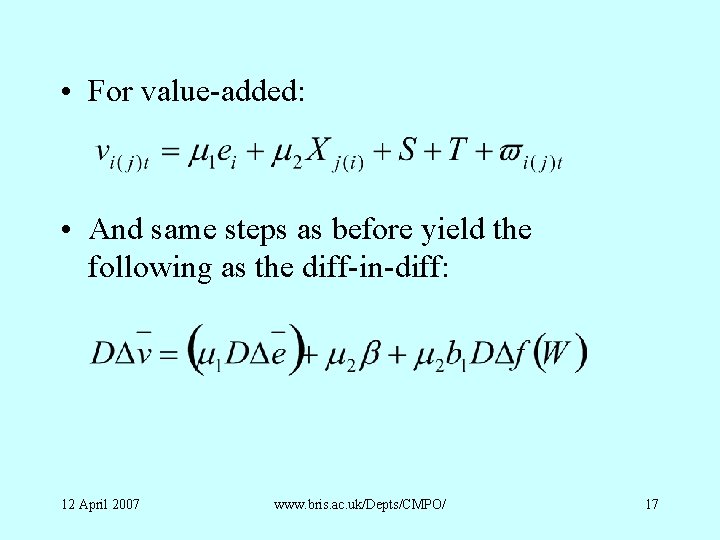

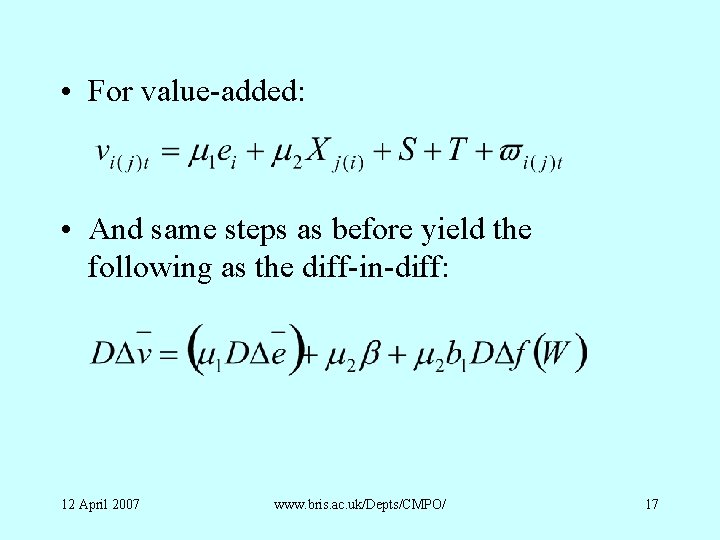

• For value added:
• The same steps as before yield the following diff-in-diff:
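The same machinery carries over to value added. The sketch below assumes, for illustration only, that a pupil's value added is the residual from a pooled least-squares fit of GCSE score on KS3 score; the slide's own definition of v may differ. The record layout and function names are hypothetical.

```python
from statistics import mean
from typing import Dict, List, Tuple

# Hypothetical pupil record: (teacher_id, cycle, eligible, ks3_score, gcse_score),
# where cycle 0 is before the scheme and cycle 1 is after it.
Record = Tuple[str, int, bool, float, float]


def value_added(records: List[Record]) -> List[float]:
    """Residual of GCSE on KS3 from a pooled OLS line (an assumed definition of value added)."""
    ks3 = [r[3] for r in records]
    gcse = [r[4] for r in records]
    k_bar, g_bar = mean(ks3), mean(gcse)
    slope = sum((k - k_bar) * (g - g_bar) for k, g in zip(ks3, gcse)) / sum(
        (k - k_bar) ** 2 for k in ks3
    )
    intercept = g_bar - slope * k_bar
    return [g - (intercept + slope * k) for k, g in zip(ks3, gcse)]


def va_diff_in_diff(records: List[Record]) -> float:
    """Eligible-minus-ineligible difference in the change of teacher-mean value added."""
    va = value_added(records)
    by_teacher_cycle: Dict[Tuple[str, int], List[float]] = {}
    eligible: Dict[str, bool] = {}
    for (teacher, cycle, is_eligible, _, _), v in zip(records, va):
        by_teacher_cycle.setdefault((teacher, cycle), []).append(v)
        eligible[teacher] = is_eligible
    # Only teachers observed in both cycles contribute a change.
    changes = {
        t: mean(by_teacher_cycle[(t, 1)]) - mean(by_teacher_cycle[(t, 0)])
        for t in eligible
        if (t, 0) in by_teacher_cycle and (t, 1) in by_teacher_cycle
    }
    treated = [c for t, c in changes.items() if eligible[t]]
    control = [c for t, c in changes.items() if not eligible[t]]
    return mean(treated) - mean(control)


records: List[Record] = [
    ("T1", 0, True, 4.0, 4.0),
    ("T1", 1, True, 4.0, 6.0),
    ("T2", 0, False, 6.0, 6.0),
    ("T2", 1, False, 6.0, 6.0),
]
did_va = va_diff_in_diff(records)
```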

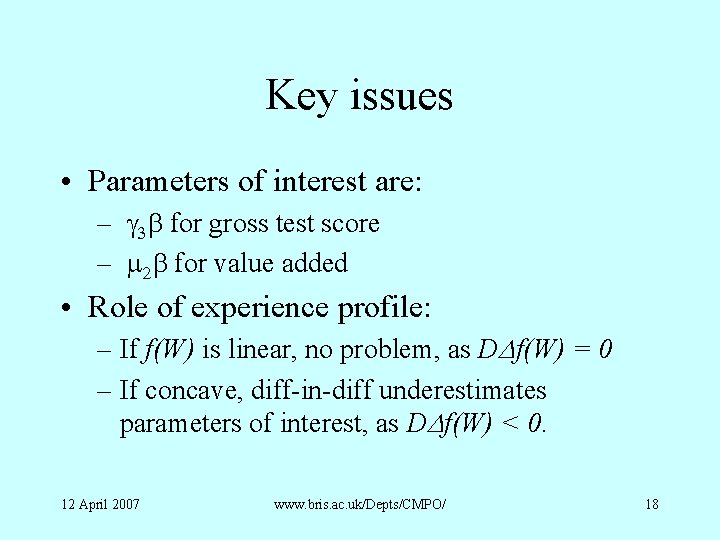

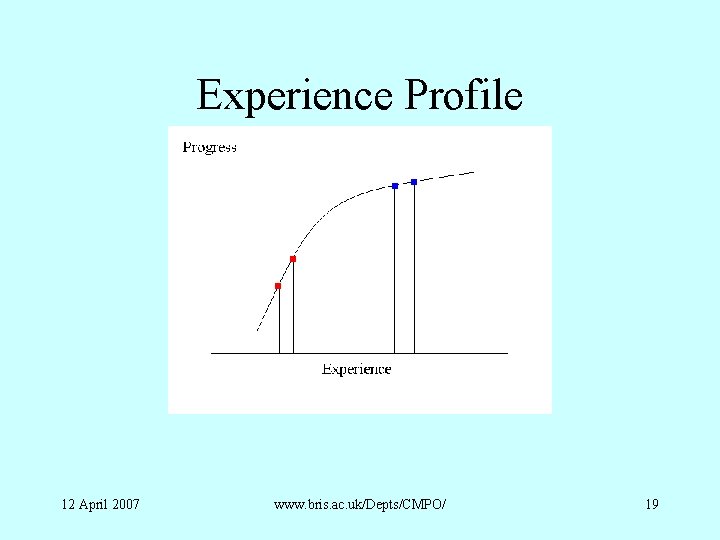

Key issues
• The parameters of interest are:
  – $g_3 b$ for the gross test score
  – $m_2 b$ for value added
• Role of the experience profile:
  – If $f(W)$ is linear, there is no problem, as $DDf(W) = 0$.
  – If it is concave, the diff-in-diff underestimates the parameters of interest, as $DDf(W) < 0$.
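A small numerical illustration of why the shape of f(W) matters. Eligible teachers sit at the top of the pay scale and so are more experienced than ineligible ones. Over the same gap between teaching cycles, a linear profile gives both groups the same experience-driven gain, while a concave profile gives the more experienced group a smaller gain, so DDf(W) is negative. The profiles, experience levels and gap below are hypothetical values chosen for illustration, not estimates from the paper.

```python
import math
from typing import Callable


def dd_experience(
    f: Callable[[float], float], w_eligible: float, w_ineligible: float, gap: float
) -> float:
    """DDf(W): change in f over the gap for eligible teachers minus the change for ineligible ones."""
    d_eligible = f(w_eligible + gap) - f(w_eligible)
    d_ineligible = f(w_ineligible + gap) - f(w_ineligible)
    return d_eligible - d_ineligible


def linear_profile(w: float) -> float:
    return 1.0 + 0.25 * w  # hypothetical linear experience-effectiveness profile


def concave_profile(w: float) -> float:
    return math.log1p(w)  # hypothetical concave profile: log(1 + years of experience)


# Hypothetical experience (years): eligible 15, ineligible 3, two years between cycles.
print(dd_experience(linear_profile, 15.0, 3.0, 2.0))   # prints 0.0
print(dd_experience(concave_profile, 15.0, 3.0, 2.0))  # negative, about -0.29
```

Because the ineligible (less experienced) teachers improve more with experience alone under concavity, subtracting their change understates the eligible teachers' response to the scheme.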

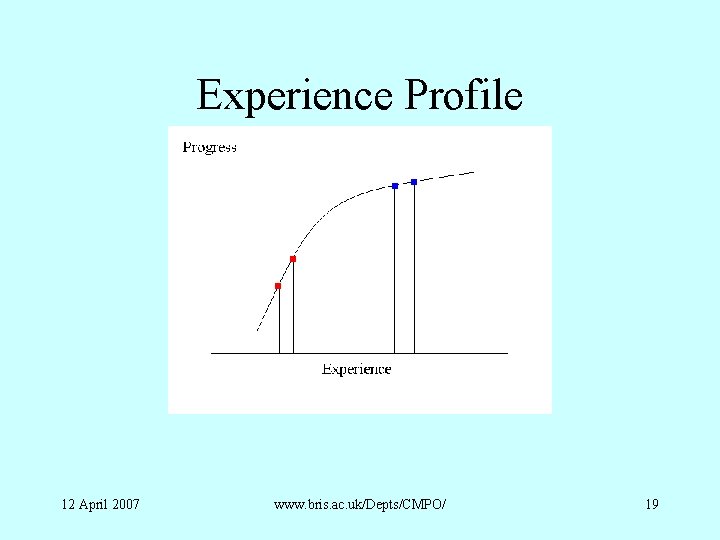

Experience Profile

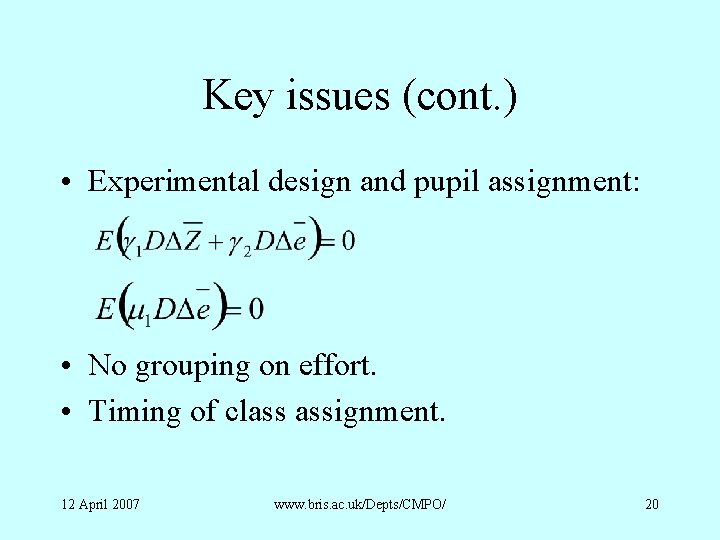

Key issues (cont.)
• Experimental design and pupil assignment:
  – No grouping on effort.
  – Timing of class assignment.

Results
• Difference-in-difference results
• Regressions
• Robustness checks
• Interpretation and evaluation

Table 2: D-in-D analysis: GCSEs

Table 3: D-in-D VA means

Experience Difference
• We potentially need to control for systematic differences in experience; the ideal would be a non-parametrically defined experience-effectiveness profile.
• There is not enough data to do that, so we define a ‘novice’ teacher dummy picking out the teachers with the least experience.

Table 4: GCSE Analysis

Table 5: Value Added Analysis

Table 6: Subject Differences

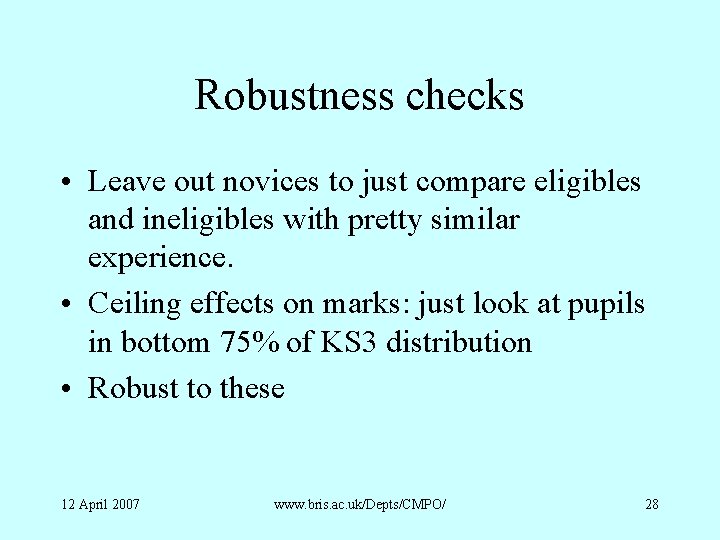

Robustness checks
• Leave out novices, to compare only eligible and ineligible teachers with fairly similar experience.
• Ceiling effects on marks: look only at pupils in the bottom 75% of the KS3 distribution.
• The results are robust to these checks.
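The ceiling-effects check amounts to restricting the sample by prior attainment before re-running the analysis. A minimal Python sketch, assuming pupils are given as `(pupil_id, ks3_score)` pairs and using the standard library's `statistics.quantiles` (default exclusive method) for the 75th percentile; the tie rule (keep pupils at or below the cut point) and the function name are our assumptions.

```python
from statistics import quantiles
from typing import List, Tuple


def bottom_three_quarters(pupils: List[Tuple[str, float]]) -> List[Tuple[str, float]]:
    """Keep pupils whose KS3 score is at or below the 75th percentile of the KS3 distribution."""
    ks3_scores = [score for _, score in pupils]
    q75 = quantiles(ks3_scores, n=4)[2]  # third quartile cut point
    return [(pid, score) for pid, score in pupils if score <= q75]


pupils = [(f"P{i}", float(i)) for i in range(1, 9)]  # hypothetical KS3 scores 1..8
kept = bottom_three_quarters(pupils)
```

Dropping the top quarter removes pupils who are close to the maximum grade already, whose measured gains are mechanically capped.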

Evaluation
• One standard deviation in the teacher-mean change is 1.29 for GCSE and 0.58 for VA.
  – The coefficients on eligibility are 0.890 for the GCSE change and 0.422 for the VA change.
  – As percentages of a standard deviation, these are 69% and 73%.
• Alternatively, the eligibility coefficient is 67% of the novice-teacher coefficient for the GCSE change, and 78% for the VA change.
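The percentages follow from dividing each eligibility coefficient by the standard deviation of the corresponding teacher-mean change. A quick Python check (the function name is illustrative):

```python
def share_of_sd(coefficient: float, sd: float) -> float:
    """A coefficient expressed as a fraction of one standard deviation of the outcome."""
    return coefficient / sd


gcse_share = share_of_sd(0.890, 1.29)  # eligibility coefficient, GCSE change
va_share = share_of_sd(0.422, 0.58)    # eligibility coefficient, VA change
print(f"GCSE: {gcse_share:.0%}, VA: {va_share:.0%}")  # prints GCSE: 69%, VA: 73%
```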

Conclusions
• Rich data, and a research design which controls for teacher and pupil effects.
• Results: around 0.5 of a GCSE grade per pupil.
• Caveats:
  – Whether it was incentive pay, and the shape of the experience-effectiveness profile.
  – Extra effort or effort diversion?

Additional slides

Table 2: Summary teacher stats

Table 3: Summary pupil stats

Table 4: Comparative stats

Data requested from schools

Table 12: Distributional Impacts

Table 13: Robustness checks

Kim kroll teachers pay teachers

Kim kroll teachers pay teachers Salyersville grade school

Salyersville grade school School teachers pay and conditions document

School teachers pay and conditions document Teachers pay teachersz

Teachers pay teachersz What is the subject of the announcement

What is the subject of the announcement Demotivators and edward deci

Demotivators and edward deci How to calculate gross pay

How to calculate gross pay How much did wanda pay in taxes this pay period

How much did wanda pay in taxes this pay period Designing pay levels mix and pay structures

Designing pay levels mix and pay structures Argument för teckenspråk som minoritetsspråk

Argument för teckenspråk som minoritetsspråk Novell typiska drag

Novell typiska drag Fimbrietratt

Fimbrietratt Trög för kemist

Trög för kemist Magnetsjukhus

Magnetsjukhus Samlade siffror för tryck

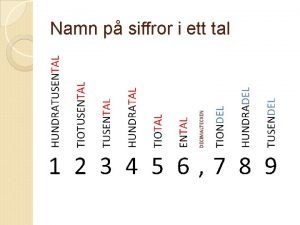

Samlade siffror för tryck Personalliggare bygg undantag

Personalliggare bygg undantag Toppslätskivling effekt

Toppslätskivling effekt Redogör för vad psykologi är

Redogör för vad psykologi är Borra hål för knoppar

Borra hål för knoppar En lathund för arbete med kontinuitetshantering

En lathund för arbete med kontinuitetshantering Mat för unga idrottare

Mat för unga idrottare Gumman cirkel

Gumman cirkel Bris för vuxna

Bris för vuxna Etik och ledarskap etisk kod för chefer

Etik och ledarskap etisk kod för chefer Offentlig förvaltning

Offentlig förvaltning Plagg i rom

Plagg i rom Humanitr

Humanitr Datorkunskap för nybörjare

Datorkunskap för nybörjare Steg för steg rita

Steg för steg rita Ministerstyre för och nackdelar

Ministerstyre för och nackdelar Tillitsbaserad ledning

Tillitsbaserad ledning Tack för att ni lyssnade bild

Tack för att ni lyssnade bild Bästa kameran för astrofoto

Bästa kameran för astrofoto Bunden och fri form

Bunden och fri form Nyckelkompetenser för livslångt lärande

Nyckelkompetenser för livslångt lärande Personlig tidbok fylla i

Personlig tidbok fylla i Modell för handledningsprocess

Modell för handledningsprocess Borstål, egenskaper

Borstål, egenskaper Orubbliga rättigheter

Orubbliga rättigheter Bamse för de yngsta

Bamse för de yngsta Verktyg för automatisering av utbetalningar

Verktyg för automatisering av utbetalningar