SGO 2.0: From Compliance to Quality

- Slides: 74

SGO 2.0: From Compliance to Quality

Increasing SGO Quality through Better Assessments and Target Setting

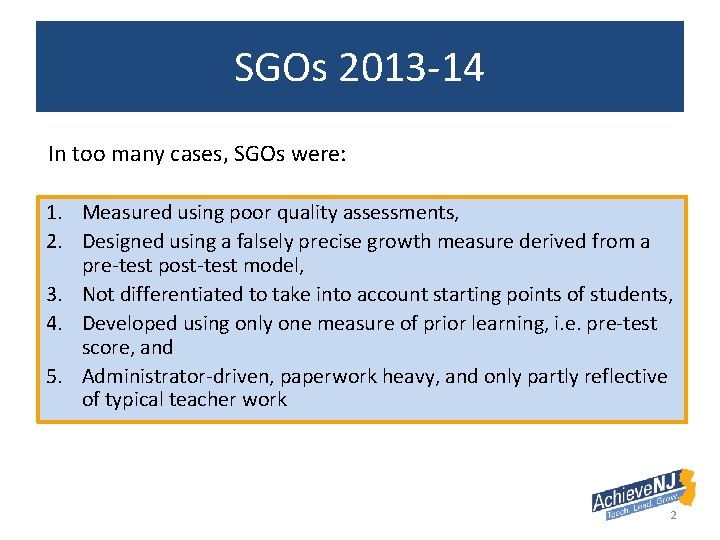

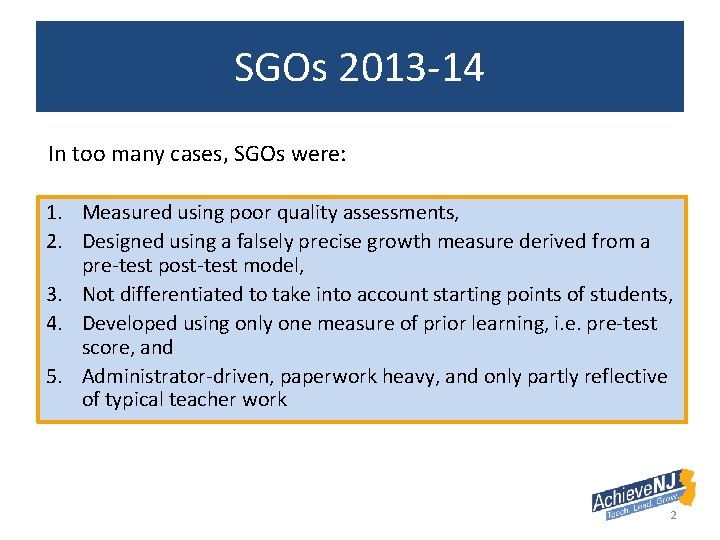

SGOs 2013-14

In too many cases, SGOs were:

1. Measured using poor-quality assessments,
2. Designed using a falsely precise growth measure derived from a pre-test/post-test model,
3. Not differentiated to take into account the starting points of students,
4. Developed using only one measure of prior learning, i.e., the pre-test score, and
5. Administrator-driven, paperwork-heavy, and only partly reflective of typical teacher work.

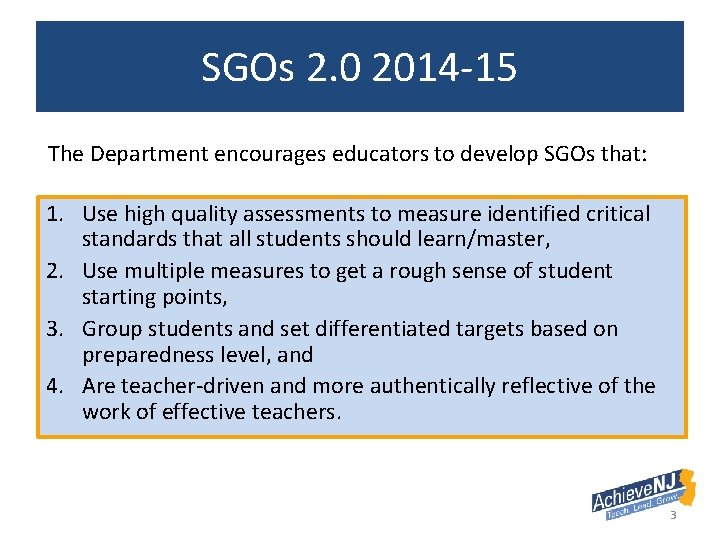

SGOs 2.0, 2014-15

The Department encourages educators to develop SGOs that:

1. Use high-quality assessments to measure identified critical standards that all students should learn/master,
2. Use multiple measures to get a rough sense of student starting points,
3. Group students and set differentiated targets based on preparedness level, and
4. Are teacher-driven and more authentically reflective of the work of effective teachers.

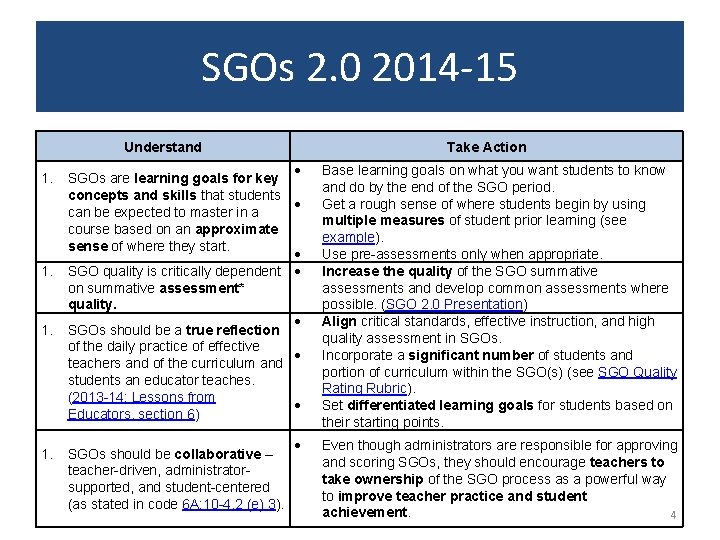

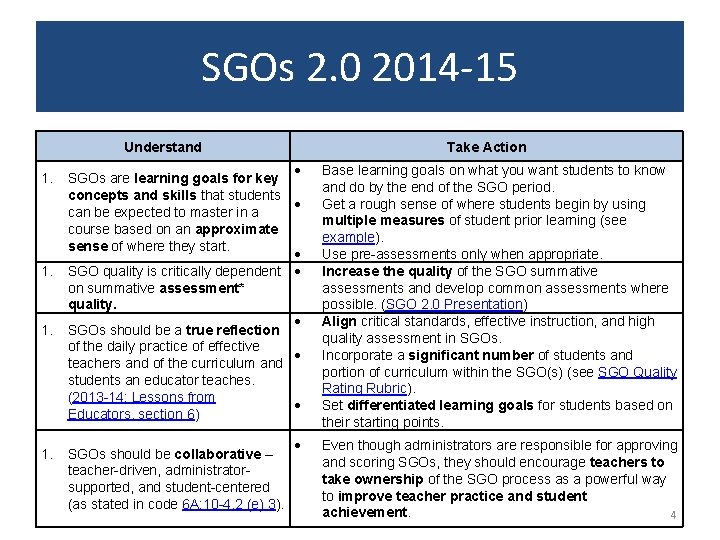

SGOs 2.0, 2014-15

Understand:

1. SGOs are learning goals for key concepts and skills that students can be expected to master in a course based on an approximate sense of where they start.
2. SGO quality is critically dependent on summative assessment* quality.
3. SGOs should be a true reflection of the daily practice of effective teachers and of the curriculum and students an educator teaches. (2013-14: Lessons from Educators, section 6)
4. SGOs should be collaborative: teacher-driven, administrator-supported, and student-centered (as stated in code 6A:10-4.2(e)3).

Take Action:

- Base learning goals on what you want students to know and do by the end of the SGO period.
- Get a rough sense of where students begin by using multiple measures of student prior learning (see example). Use pre-assessments only when appropriate.
- Increase the quality of the SGO summative assessments and develop common assessments where possible. (SGO 2.0 Presentation)
- Align critical standards, effective instruction, and high-quality assessment in SGOs.
- Incorporate a significant number of students and portion of curriculum within the SGO(s) (see SGO Quality Rating Rubric).
- Set differentiated learning goals for students based on their starting points.
- Even though administrators are responsible for approving and scoring SGOs, they should encourage teachers to take ownership of the SGO process as a powerful way to improve teacher practice and student achievement.

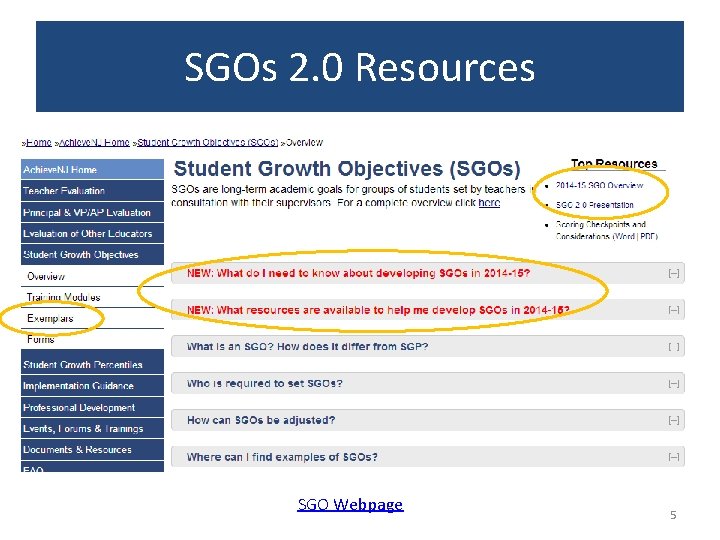

SGOs 2.0 Resources: SGO Webpage

Part 1: Clarify what SGOs are and what they are not.

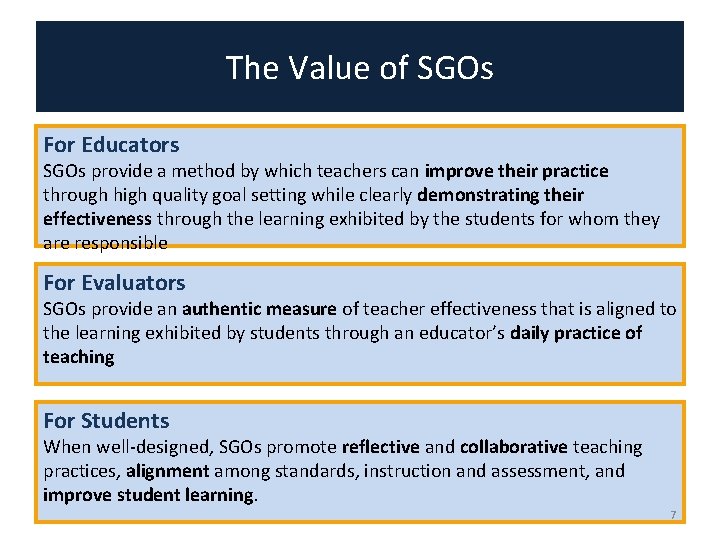

The Value of SGOs

- For Educators: SGOs provide a method by which teachers can improve their practice through high-quality goal setting while clearly demonstrating their effectiveness through the learning exhibited by the students for whom they are responsible.
- For Evaluators: SGOs provide an authentic measure of teacher effectiveness that is aligned to the learning exhibited by students through an educator’s daily practice of teaching.
- For Students: When well designed, SGOs promote reflective and collaborative teaching practices and alignment among standards, instruction, and assessment, and they improve student learning.

What SGOs Are, and What They Are Not: Misconception #1

- Misconception: SGOs need to be a significant addition to the work of a teacher.
- Reality: SGOs should be a reflection of what effective teachers typically do.

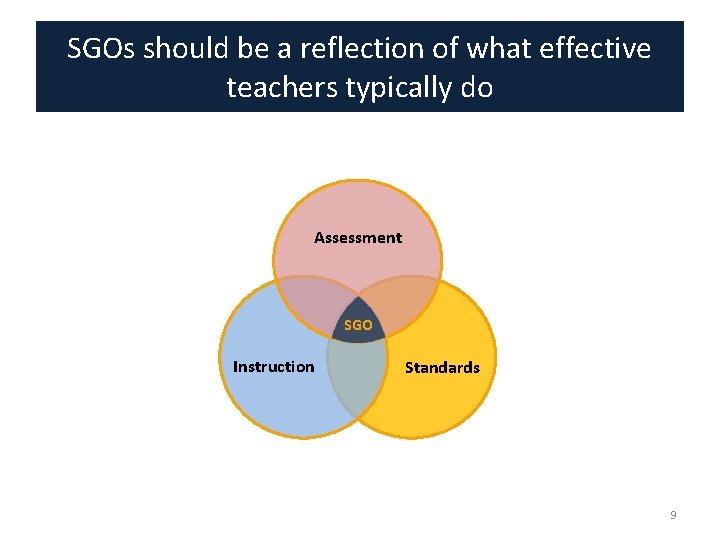

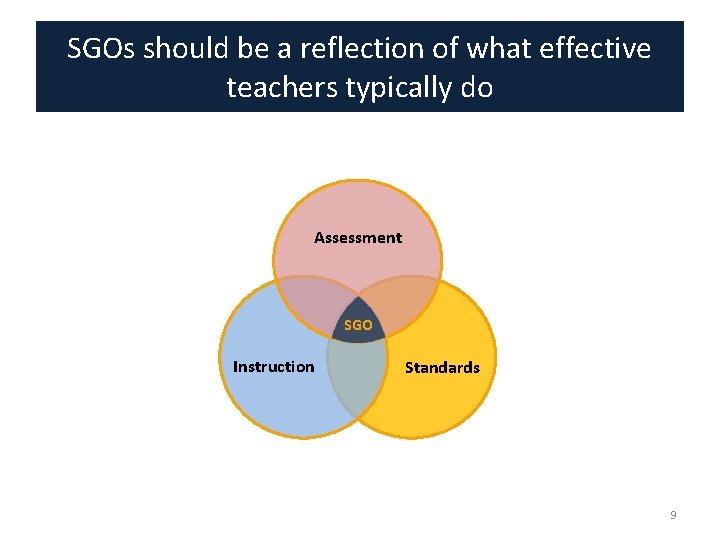

SGOs should be a reflection of what effective teachers typically do. (Diagram: SGO connected to Standards, Instruction, and Assessment.)

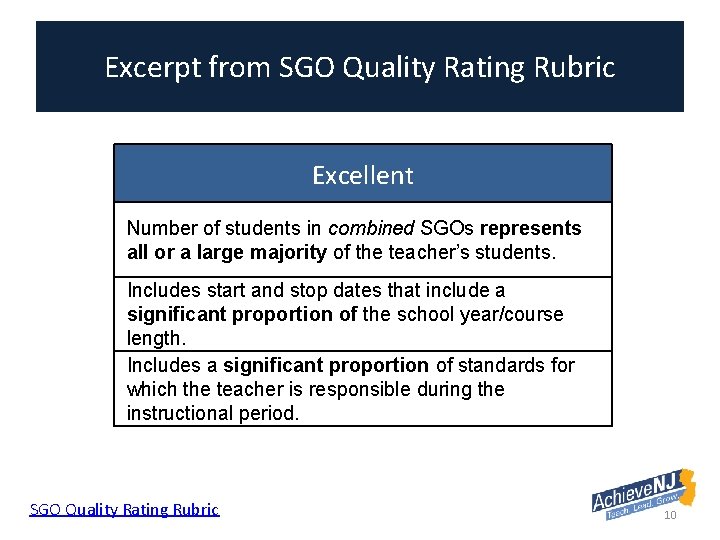

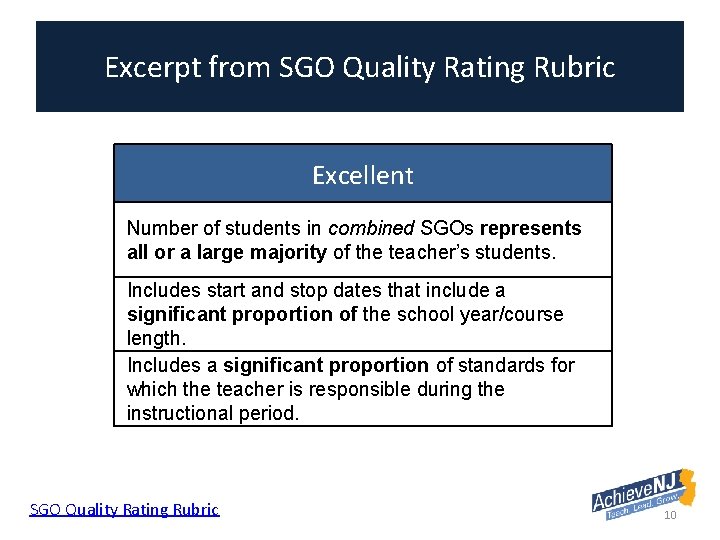

Excerpt from the SGO Quality Rating Rubric (Excellent rating):

- Number of students in combined SGOs represents all or a large majority of the teacher’s students.
- Includes start and stop dates that cover a significant proportion of the school year/course length.
- Includes a significant proportion of standards for which the teacher is responsible during the instructional period.

Source: SGO Quality Rating Rubric

General and Specific SGOs

- General: Captures a significant proportion of the students and key standards for a given course or subject area. Most teachers will be setting this type of SGO.
- Specific: Focuses on a particular subgroup of students and/or specific content or skill. For teachers whose general SGO already includes all of their students, or those who receive an SGP.

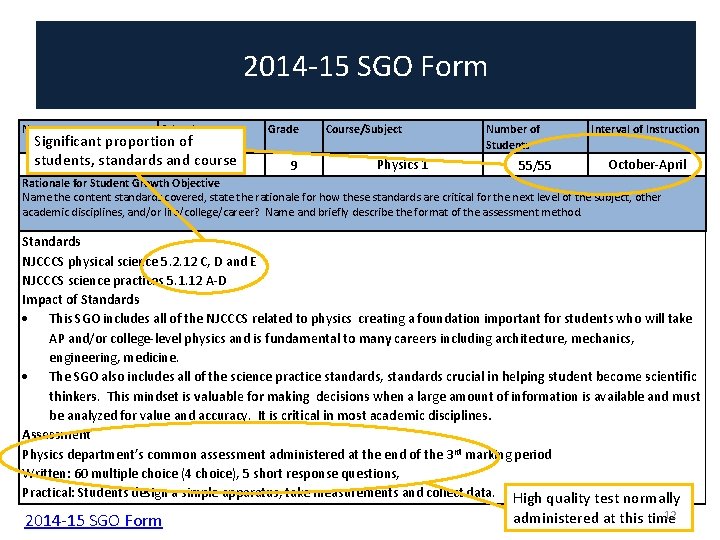

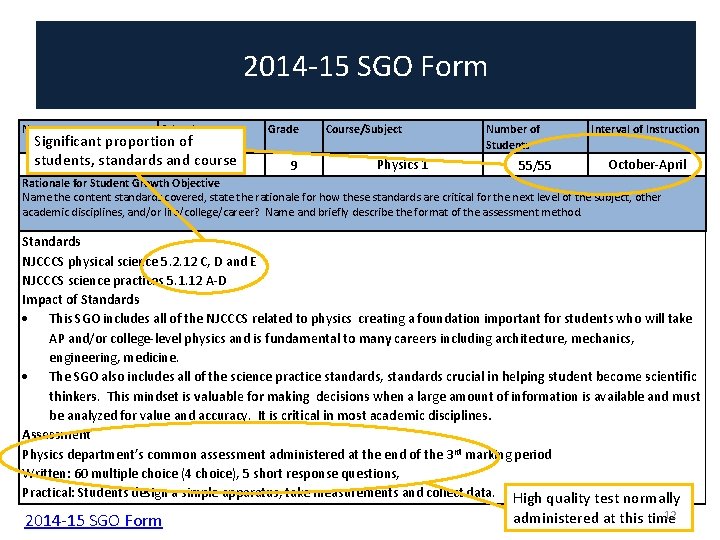

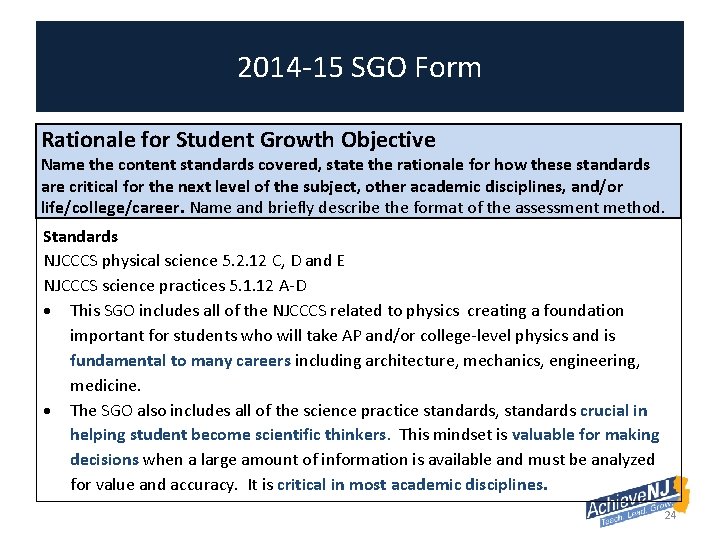

2014-15 SGO Form (callout: significant proportion of students, standards, and course)

- Name:
- School:
- Grade: 9
- Course/Subject: Physics 1
- Number of Students: 55/55
- Interval of Instruction: October–April

Rationale for Student Growth Objective: Name the content standards covered, state the rationale for how these standards are critical for the next level of the subject, other academic disciplines, and/or life/college/career. Name and briefly describe the format of the assessment method.

- Standards: NJCCCS physical science 5.2.12 C, D, and E; NJCCCS science practices 5.1.12 A–D
- Impact of Standards: This SGO includes all of the NJCCCS related to physics, creating a foundation important for students who will take AP and/or college-level physics, and is fundamental to many careers, including architecture, mechanics, engineering, and medicine. The SGO also includes all of the science practice standards, which are crucial in helping students become scientific thinkers. This mindset is valuable for making decisions when a large amount of information is available and must be analyzed for value and accuracy. It is critical in most academic disciplines.
- Assessment: The physics department’s common assessment, administered at the end of the 3rd marking period. Written: 60 multiple-choice (4-choice) questions and 5 short-response questions. Practical: Students design a simple apparatus, take measurements, and collect data. (Callout: high-quality test normally administered at this time.)

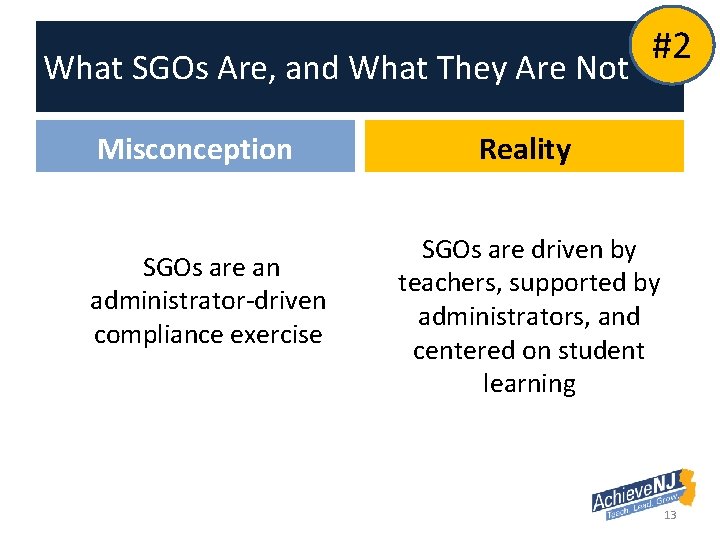

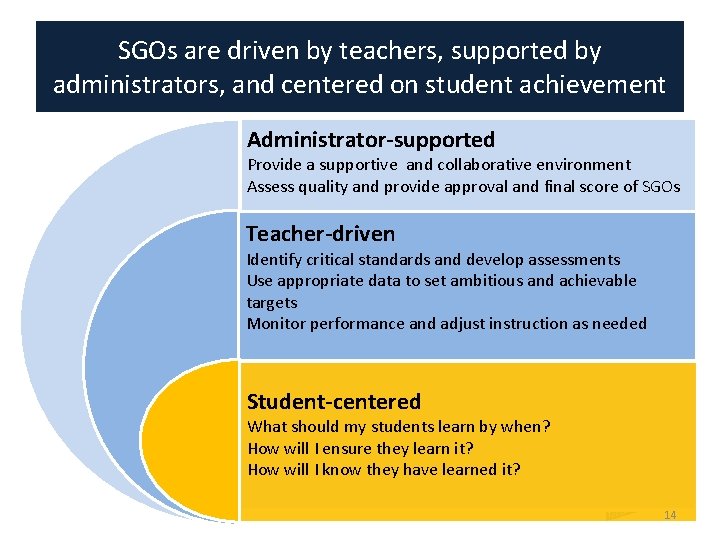

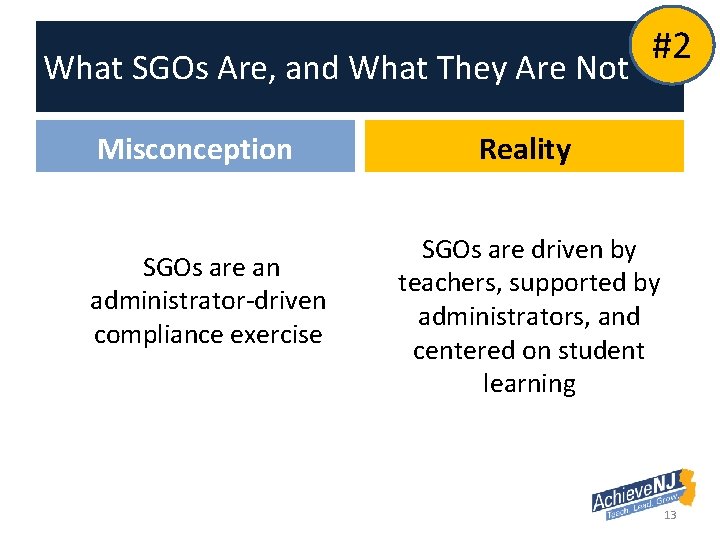

What SGOs Are, and What They Are Not: Misconception #2

- Misconception: SGOs are an administrator-driven compliance exercise.
- Reality: SGOs are driven by teachers, supported by administrators, and centered on student learning.

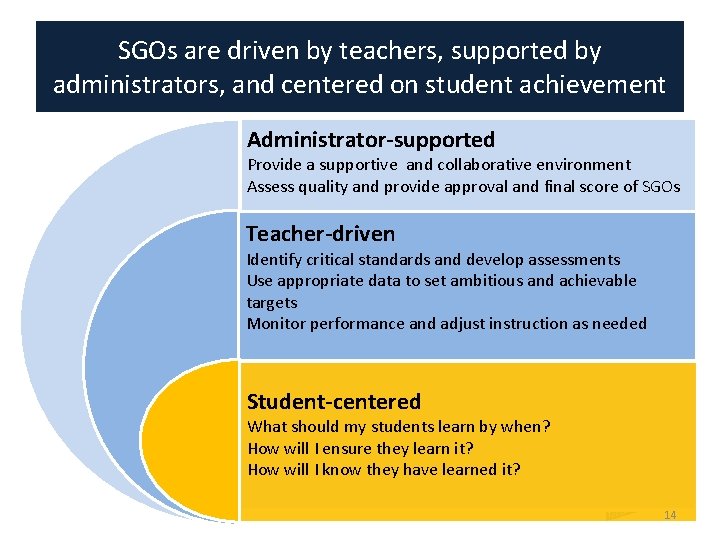

SGOs are driven by teachers, supported by administrators, and centered on student achievement.

- Administrator-supported: Provide a supportive and collaborative environment. Assess quality and provide approval and final score of SGOs.
- Teacher-driven: Identify critical standards and develop assessments. Use appropriate data to set ambitious and achievable targets. Monitor performance and adjust instruction as needed.
- Student-centered: What should my students learn by when? How will I ensure they learn it? How will I know they have learned it?

Part 2: Develop a foundational understanding of how to develop and choose high-quality assessments.

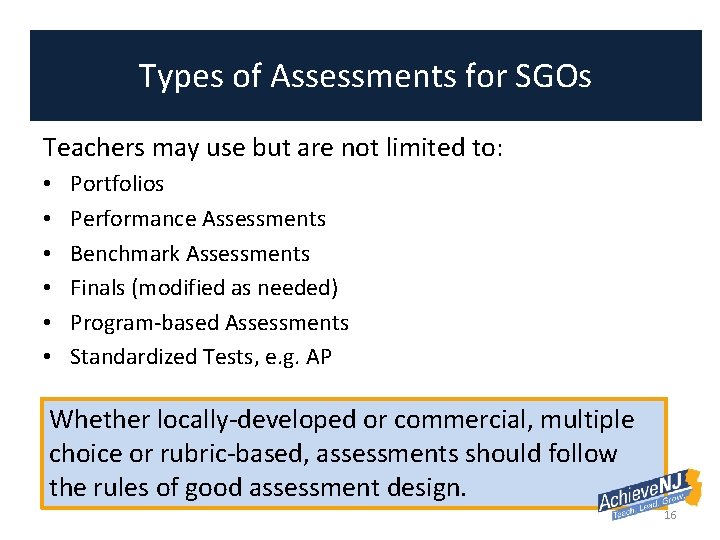

Types of Assessments for SGOs

Teachers may use, but are not limited to:

- Portfolios
- Performance assessments
- Benchmark assessments
- Finals (modified as needed)
- Program-based assessments
- Standardized tests, e.g., AP

Whether locally developed or commercial, multiple-choice or rubric-based, assessments should follow the rules of good assessment design.

What Does Good Assessment Look Like?

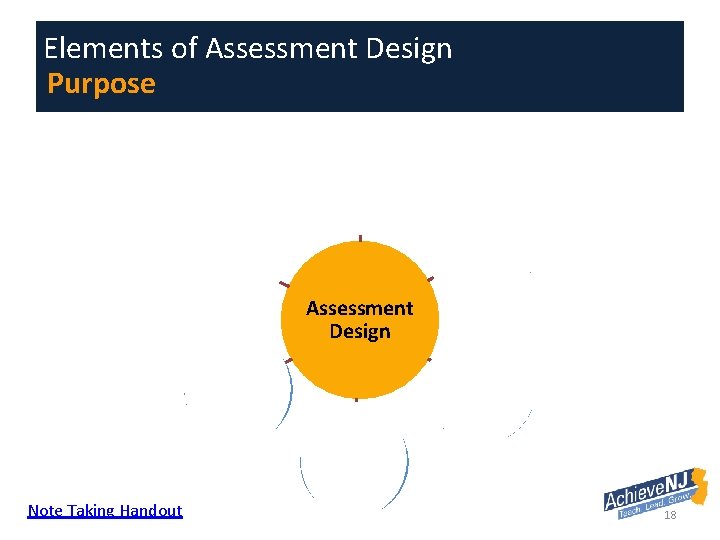

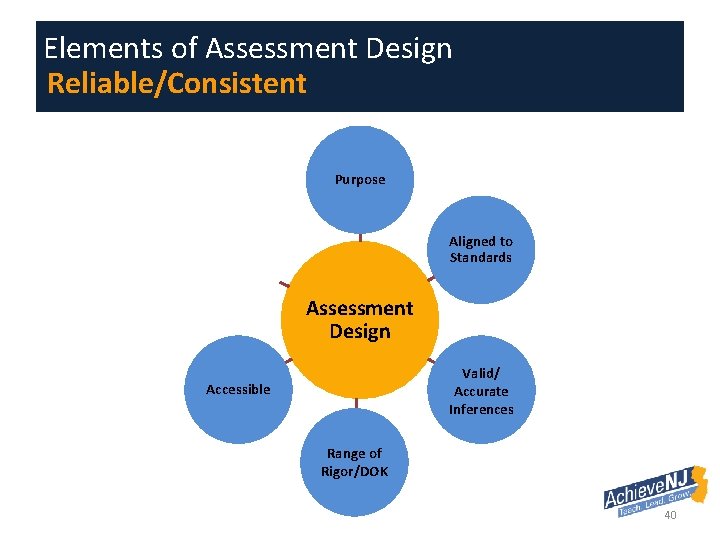

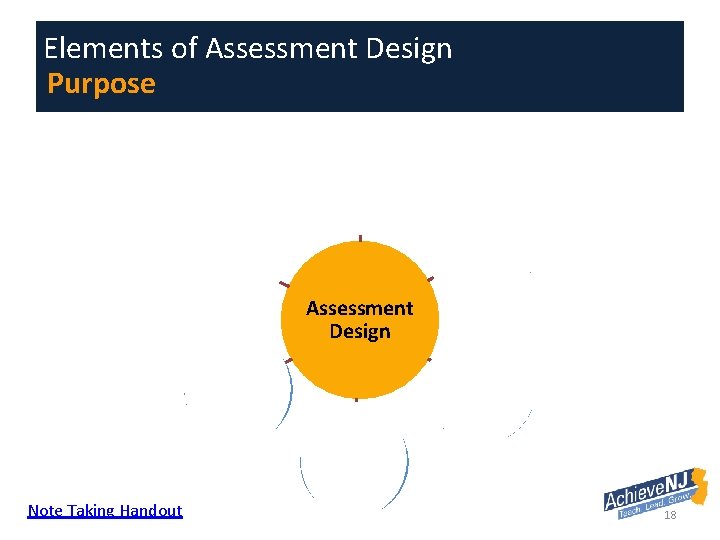

Elements of Assessment Design: Purpose, Alignment to Standards, Rigor/DOK, Reliability/Consistency, Accessibility, and Validity/Accuracy (Note-Taking Handout)

Elements of Assessment Design: Purpose (Begin with the End in Mind)

SGO assessments are measures of how well our students have met the learning goals we have set for them.

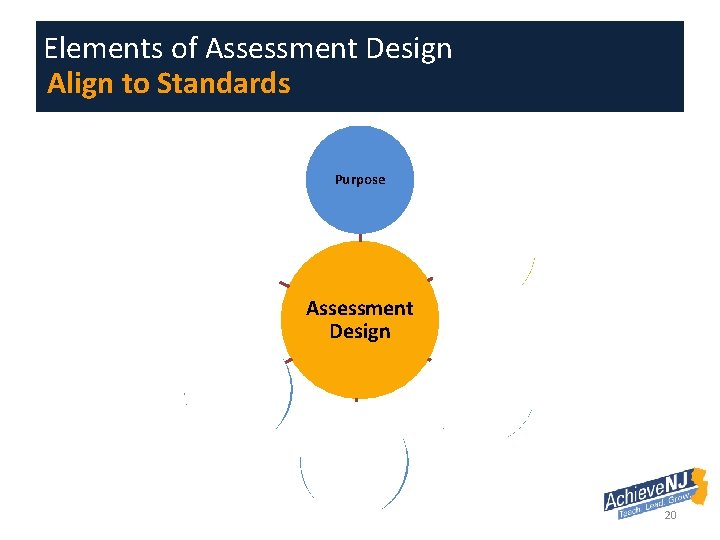

Elements of Assessment Design: Align to Standards (diagram highlighting Align to Standards among Purpose, Rigor/DOK, Reliable/Consistent, Accessible, and Valid/Accurate)

Elements of Assessment Design: Align to Standards

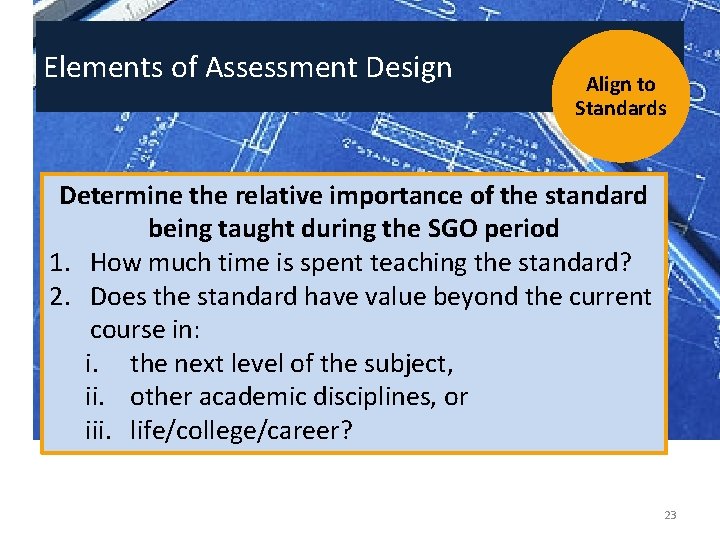

Elements of Assessment Design: Align to Standards

Given limited resources, especially time, on which standards do we focus our SGOs and assessments?

Elements of Assessment Design: Align to Standards

Determine the relative importance of the standard being taught during the SGO period:

1. How much time is spent teaching the standard?
2. Does the standard have value beyond the current course in:
   - (i) the next level of the subject,
   - (ii) other academic disciplines, or
   - (iii) life/college/career?

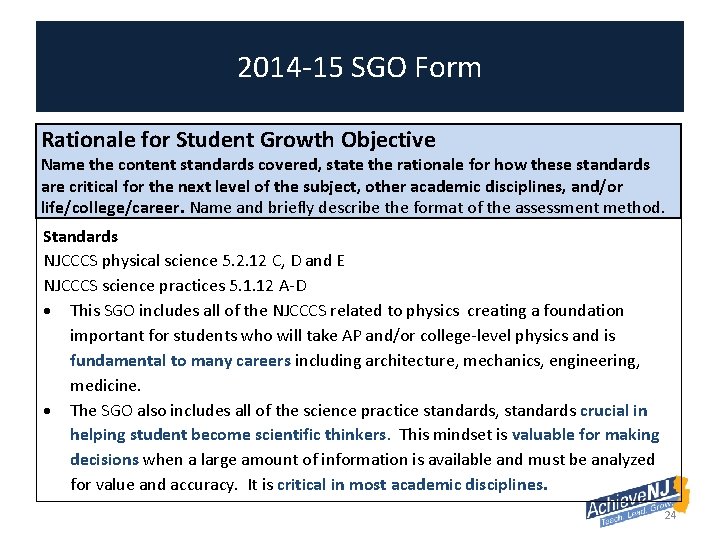

2014-15 SGO Form: Rationale for Student Growth Objective

Name the content standards covered, state the rationale for how these standards are critical for the next level of the subject, other academic disciplines, and/or life/college/career. Name and briefly describe the format of the assessment method.

- Standards: NJCCCS physical science 5.2.12 C, D, and E; NJCCCS science practices 5.1.12 A–D
- Impact of Standards: This SGO includes all of the NJCCCS related to physics, creating a foundation important for students who will take AP and/or college-level physics, and is fundamental to many careers, including architecture, mechanics, engineering, and medicine. The SGO also includes all of the science practice standards, which are crucial in helping students become scientific thinkers. This mindset is valuable for making decisions when a large amount of information is available and must be analyzed for value and accuracy. It is critical in most academic disciplines.

Using Commercial Products for SGOs

Elements of Assessment Design: Valid/Accurate Inferences (diagram highlighting Valid/Accurate Inferences among Purpose, Align to Standards, Rigor/DOK, Accessible, and Reliable/Consistent)

Elements of Assessment Design: Valid/Accurate Inferences

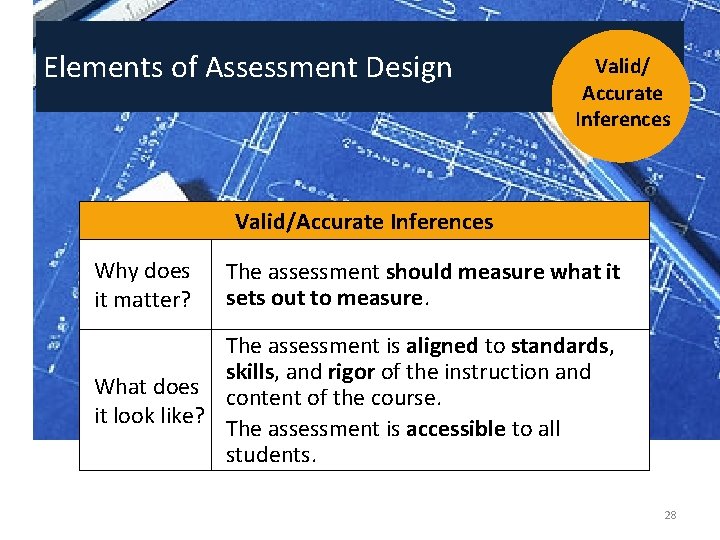

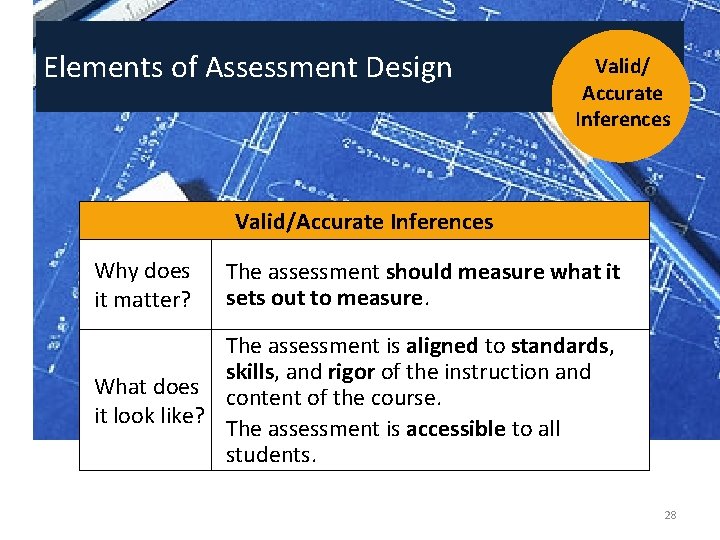

Elements of Assessment Design: Valid/Accurate Inferences

- Why does it matter? The assessment should measure what it sets out to measure.
- What does it look like? The assessment is aligned to the standards, skills, and rigor of the instruction and the content of the course. The assessment is accessible to all students.

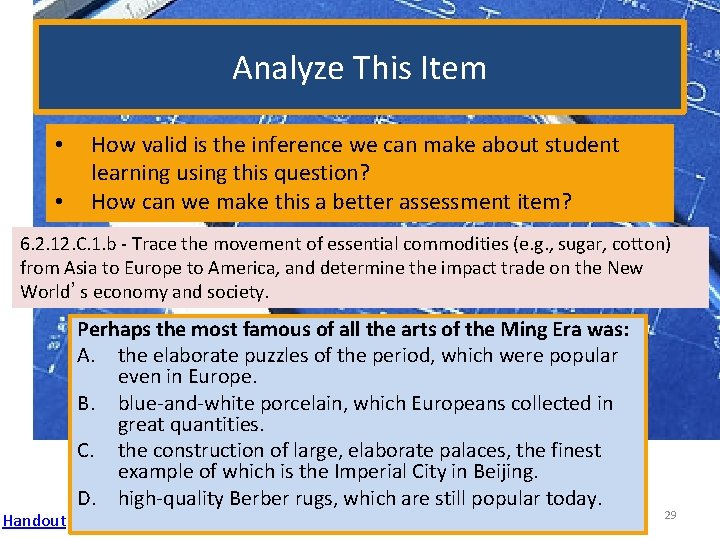

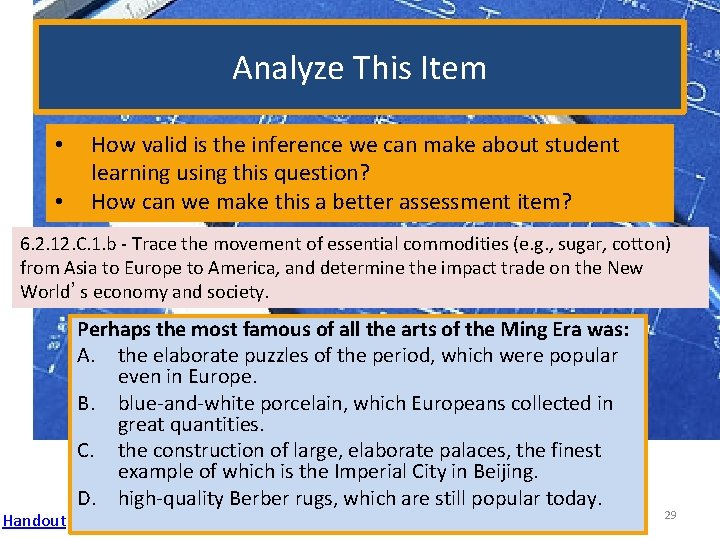

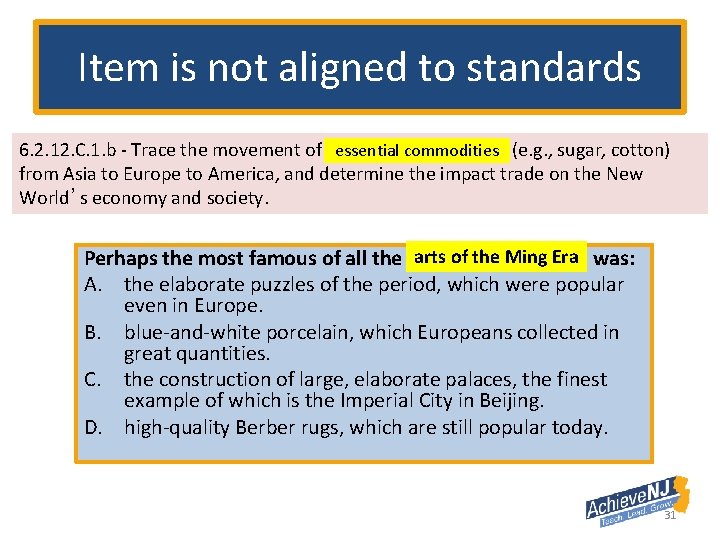

Analyze This Item (Handout)

- How valid is the inference we can make about student learning using this question?
- How can we make this a better assessment item?

Standard 6.2.12.C.1.b: Trace the movement of essential commodities (e.g., sugar, cotton) from Asia to Europe to America, and determine the impact of trade on the New World’s economy and society.

Perhaps the most famous of all the arts of the Ming Era was:

- A. the elaborate puzzles of the period, which were popular even in Europe.
- B. blue-and-white porcelain, which Europeans collected in great quantities.
- C. the construction of large, elaborate palaces, the finest example of which is the Imperial City in Beijing.
- D. high-quality Berber rugs, which are still popular today.


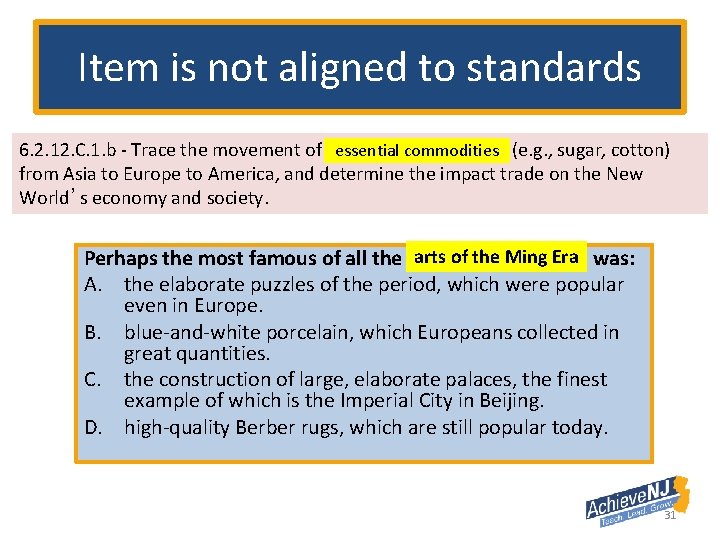

Item is not aligned to standards

Standard 6.2.12.C.1.b: Trace the movement of essential commodities (e.g., sugar, cotton) from Asia to Europe to America, and determine the impact of trade on the New World’s economy and society.

The item asks about the “arts of the Ming Era” (highlighted), not about the movement of commodities or the impact of trade:

Perhaps the most famous of all the arts of the Ming Era was:

- A. the elaborate puzzles of the period, which were popular even in Europe.
- B. blue-and-white porcelain, which Europeans collected in great quantities.
- C. the construction of large, elaborate palaces, the finest example of which is the Imperial City in Beijing.
- D. high-quality Berber rugs, which are still popular today.

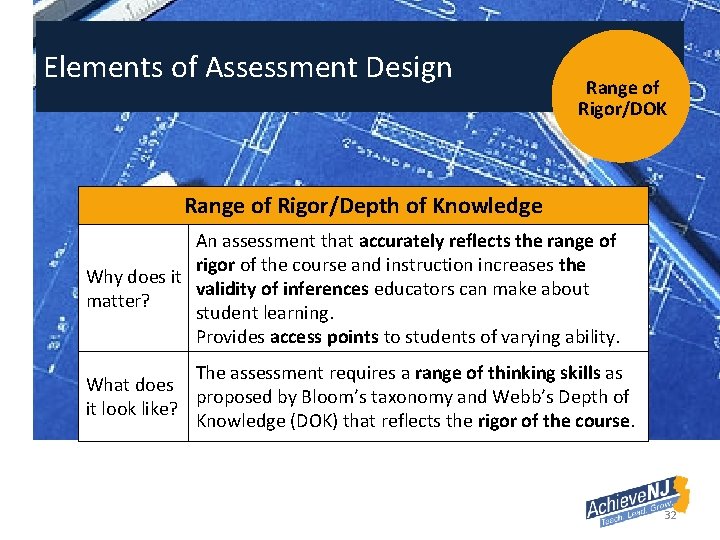

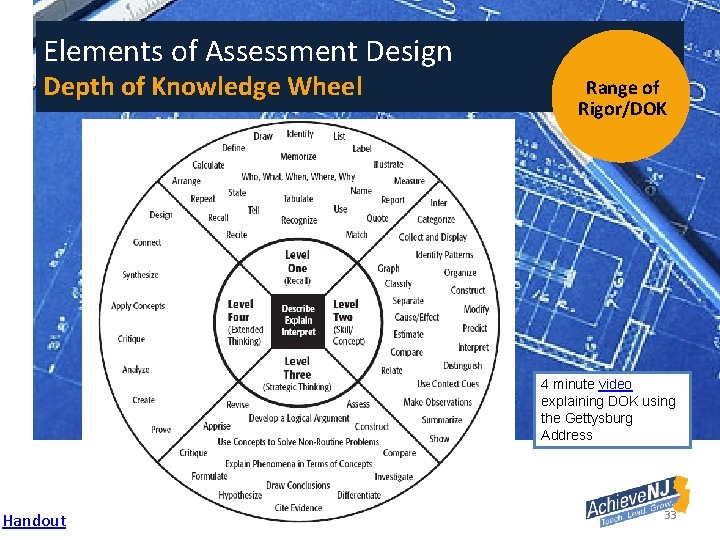

Elements of Assessment Design: Range of Rigor/DOK

- Why does it matter? An assessment that accurately reflects the range of rigor of the course and instruction increases the validity of inferences educators can make about student learning. It provides access points to students of varying ability.
- What does it look like? The assessment requires a range of thinking skills, as proposed by Bloom’s taxonomy and Webb’s Depth of Knowledge (DOK), that reflects the rigor of the course.

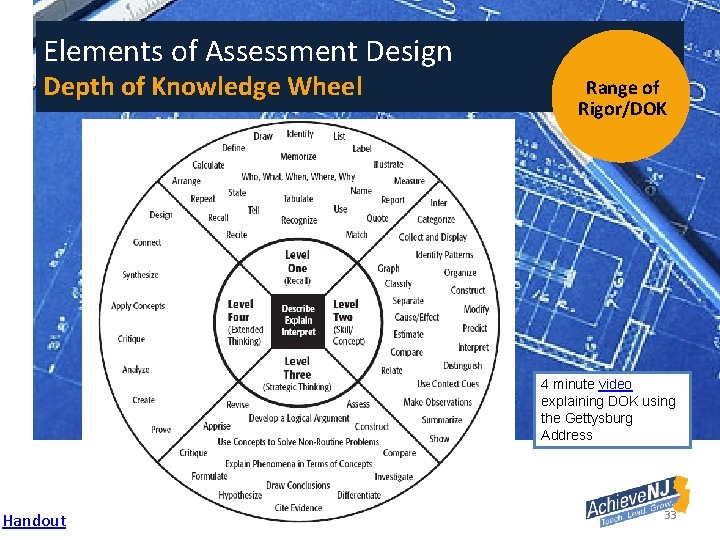

Elements of Assessment Design: Depth of Knowledge Wheel (Range of Rigor/DOK)

4-minute video explaining DOK using the Gettysburg Address (Handout)

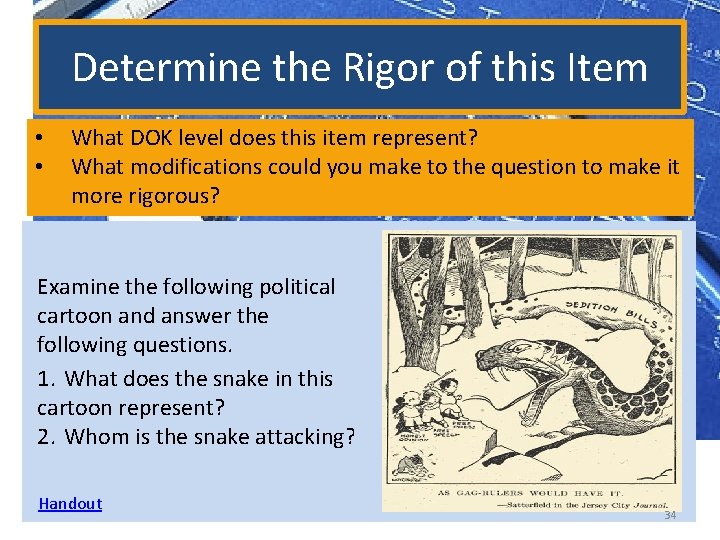

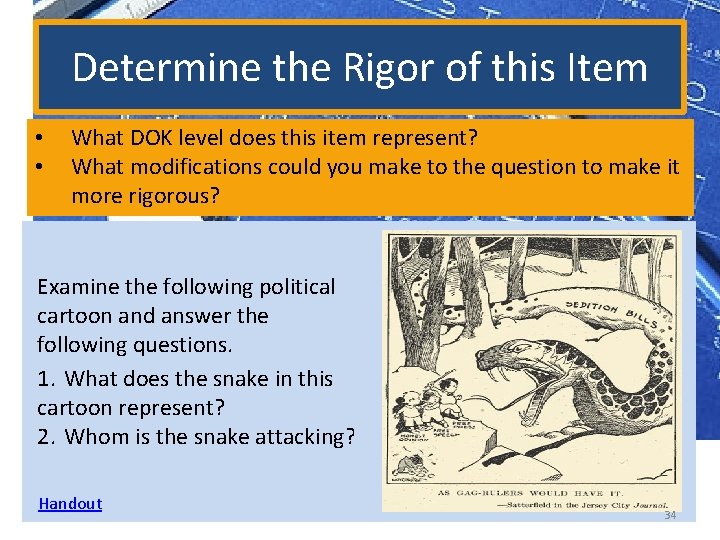

Determine the Rigor of this Item (Handout)

- What DOK level does this item represent?
- What modifications could you make to the question to make it more rigorous?

Examine the following political cartoon and answer the following questions.

1. What does the snake in this cartoon represent?
2. Whom is the snake attacking?

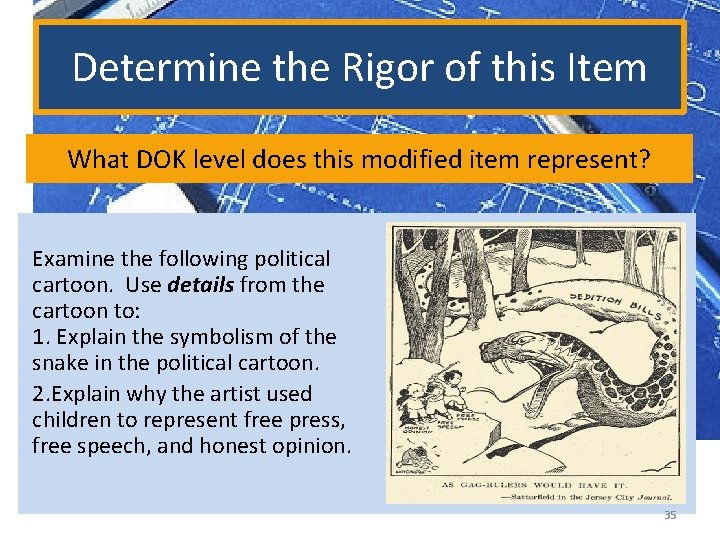

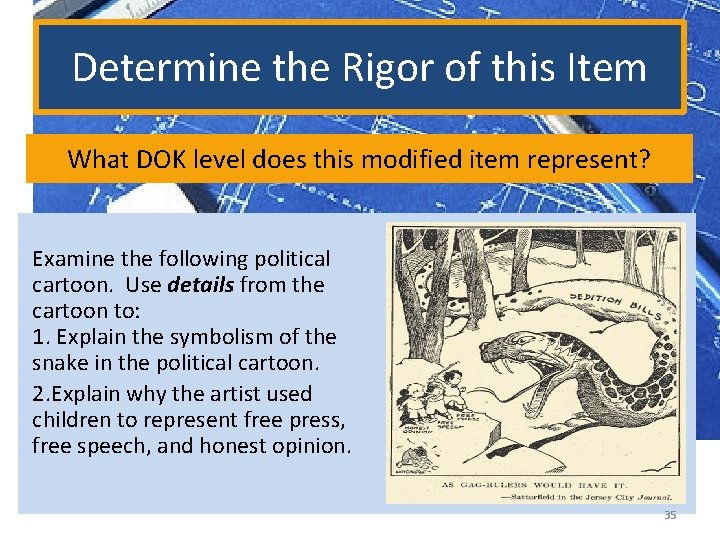

Determine the Rigor of this Item

What DOK level does this modified item represent?

Examine the following political cartoon. Use details from the cartoon to:

1. Explain the symbolism of the snake in the political cartoon.
2. Explain why the artist used children to represent free press, free speech, and honest opinion.

Elements of Assessment Design: NOT Rigor for Rigor’s Sake (Range of Rigor/DOK)

A high-quality assessment has a range of rigor that:

- Is representative of the rigor of the instructional level and content delivered in the course, and
- Provides stretch at both ends of ability levels.

Elements of Assessment Design: Accessible Assessment

- Why does it matter? It promotes similar interpretations of the data and informs sound instructional decisions. It is fair to all students, providing equal access regardless of personal characteristics/background and pre-existing extracurricular knowledge.
- What does it look like? Questions and structure do not disadvantage students from certain groups or those without particular background knowledge. Students with learning plans receive appropriate modifications. Format, wording, and instructions are clear.

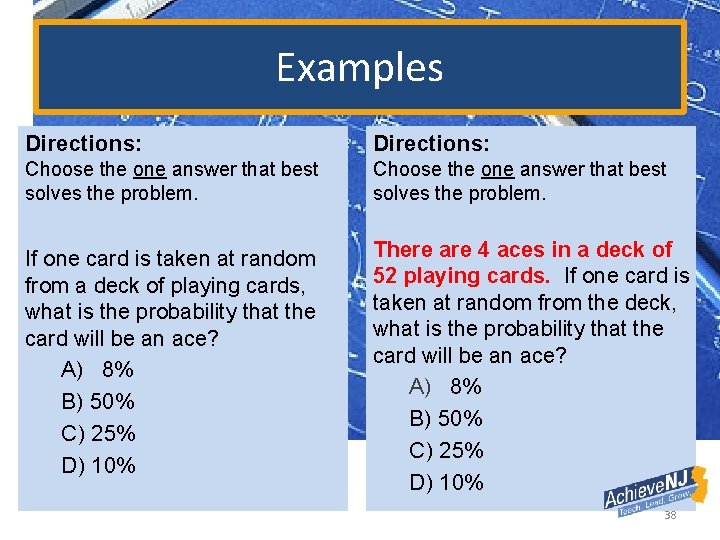

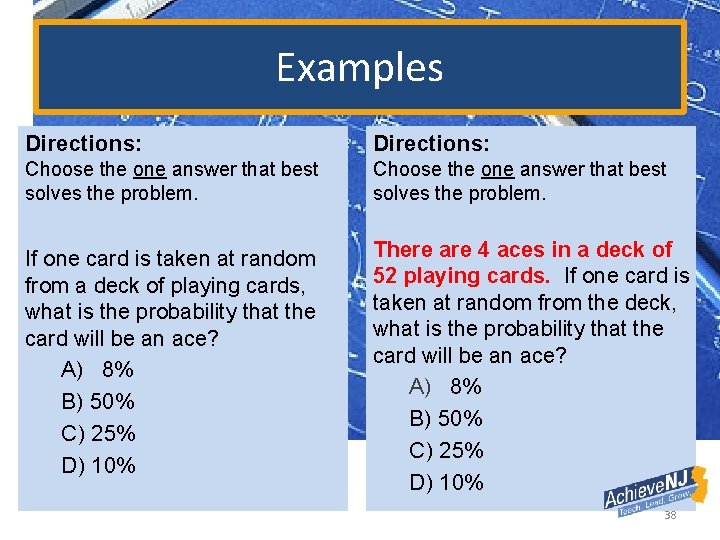

Examples

Directions: Choose the one answer that best solves the problem.

Version 1: If one card is taken at random from a deck of playing cards, what is the probability that the card will be an ace? A) 8% B) 50% C) 25% D) 10%

Version 2: There are 4 aces in a deck of 52 playing cards. If one card is taken at random from the deck, what is the probability that the card will be an ace? A) 8% B) 50% C) 25% D) 10%
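The second version supplies the one fact (4 aces in 52 cards) that the first quietly assumed, so the item measures probability rather than familiarity with playing cards. A minimal Python check of the intended key, using only the numbers printed in the item; `closest_option` is a hypothetical helper, not part of any SGO tool:

```python
from fractions import Fraction

# Answer options from the playing-card item, as whole-number percentages.
OPTIONS = {"A": 8, "B": 50, "C": 25, "D": 10}


def closest_option(probability: Fraction) -> str:
    """Return the option letter whose percentage is nearest the exact probability."""
    percent = probability * 100
    return min(OPTIONS, key=lambda letter: abs(OPTIONS[letter] - percent))


p_ace = Fraction(4, 52)  # 4 aces in a 52-card deck; reduces to 1/13
print(f"P(ace) = {p_ace} = {float(p_ace):.1%}")  # P(ace) = 1/13 = 7.7%
print("Keyed answer:", closest_option(p_ace))     # Keyed answer: A
```

The exact value, about 7.7%, rounds to option A; the distractors (10%, 25%, 50%) sit well away from it.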

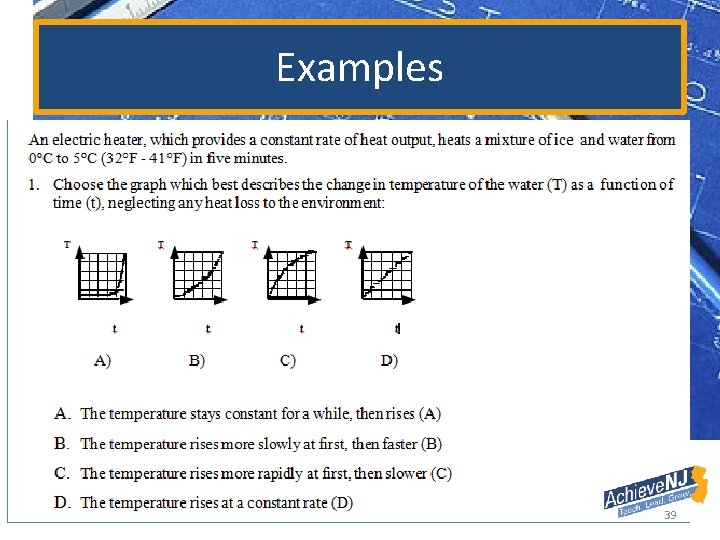

Examples

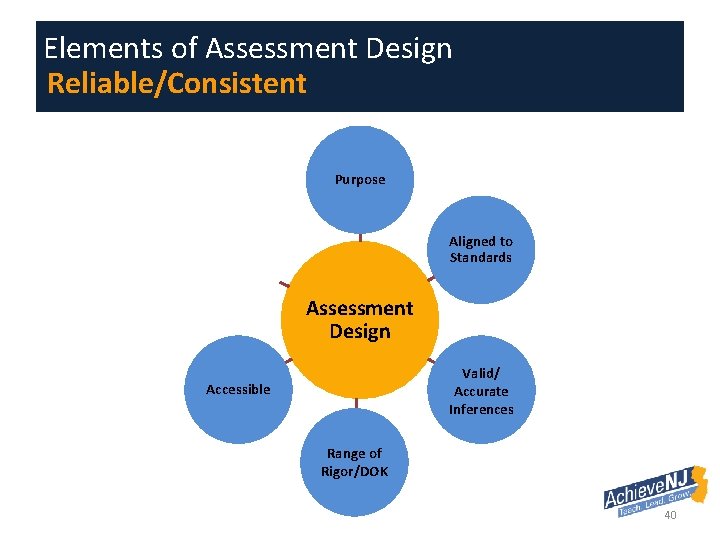

Elements of Assessment Design: Reliable/Consistent (diagram highlighting Reliable/Consistent among Purpose, Aligned to Standards, Valid/Accurate Inferences, Accessible, and Range of Rigor/DOK)

Elements of Assessment Design: Reliable/Consistent (illustration contrasting reliable and unreliable results)

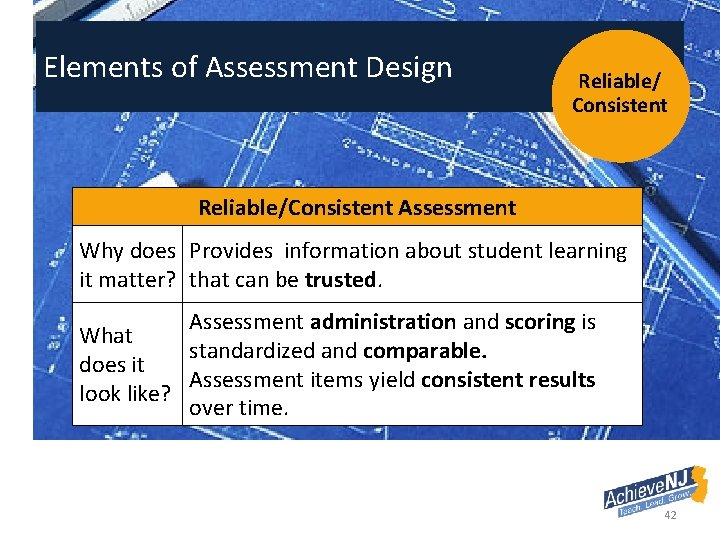

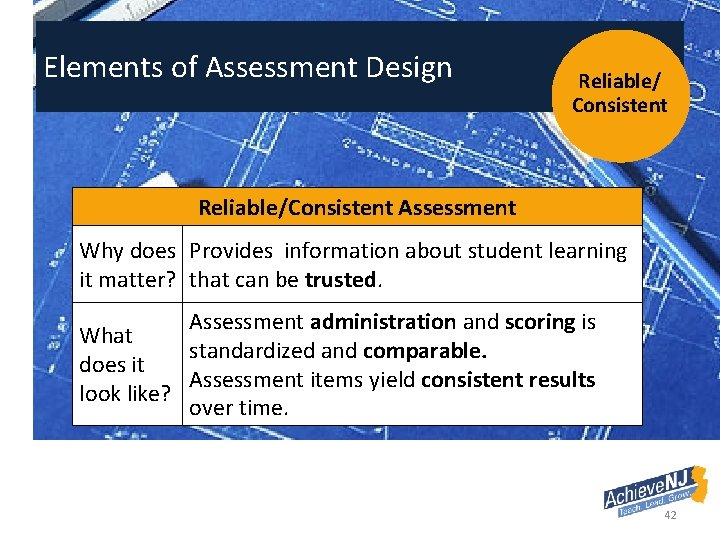

Elements of Assessment Design: Reliable/Consistent Assessment

- Why does it matter? It provides information about student learning that can be trusted.
- What does it look like? Assessment administration and scoring are standardized and comparable. Assessment items yield consistent results over time.

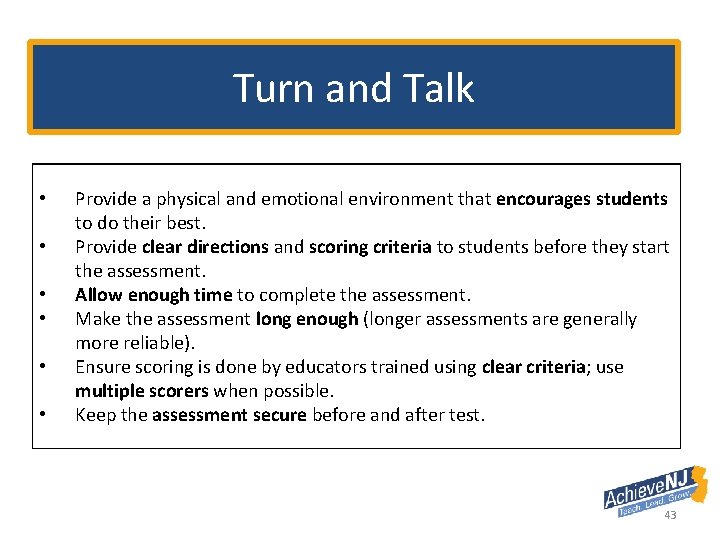

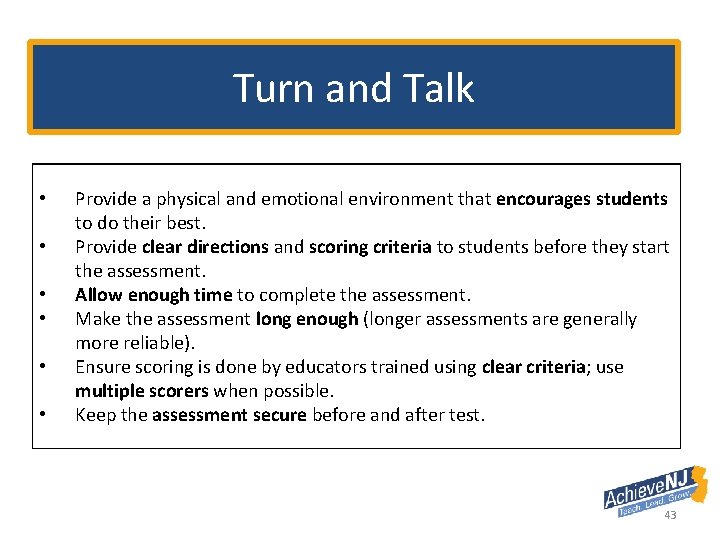

Turn and Talk

- Provide a physical and emotional environment that encourages students to do their best.
- Provide clear directions and scoring criteria to students before they start the assessment.
- Allow enough time to complete the assessment.
- Make the assessment long enough (longer assessments are generally more reliable).
- Ensure scoring is done by educators trained to use clear criteria; use multiple scorers when possible.
- Keep the assessment secure before and after the test.
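The point that longer assessments are generally more reliable is usually quantified with the Spearman-Brown prophecy formula, a standard psychometric result that the slide itself does not cite. It predicts the reliability of a test lengthened by a factor n, using items comparable to the existing ones: ρ* = nρ / (1 + (n − 1)ρ). A minimal Python sketch, assuming a hypothetical starting reliability of 0.60:

```python
def spearman_brown(reliability: float, length_factor: float) -> float:
    """Predicted reliability after changing test length by `length_factor`.

    Assumes the added (or removed) items are comparable to the existing ones,
    which is the core assumption of the Spearman-Brown prophecy formula.
    """
    if not 0.0 <= reliability <= 1.0:
        raise ValueError("reliability must be between 0 and 1")
    if length_factor <= 0:
        raise ValueError("length_factor must be positive")
    return length_factor * reliability / (1 + (length_factor - 1) * reliability)


# Hypothetical test with reliability 0.60: halved, unchanged, doubled, tripled.
for factor in (0.5, 1, 2, 3):
    print(f"{factor}x length -> predicted reliability {spearman_brown(0.60, factor):.2f}")
```

Doubling the length raises the predicted reliability from 0.60 to 0.75, but each further increase buys less, so length is only one lever alongside clear directions, adequate time, and trained scorers.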

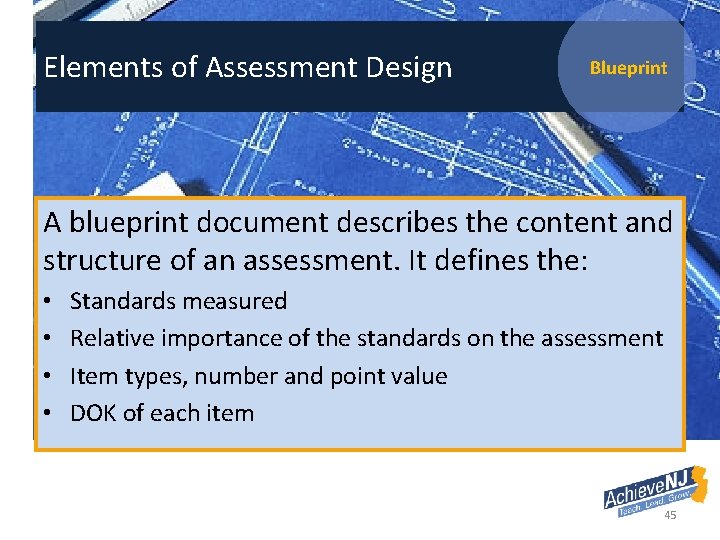

Elements of Assessment Design: Bringing the elements together into a coherent whole (diagram: a Blueprint joins Purpose, Aligned to Standards, Reliable/Consistent, Valid/Accurate Inferences, Accessible, and Range of Rigor/DOK)

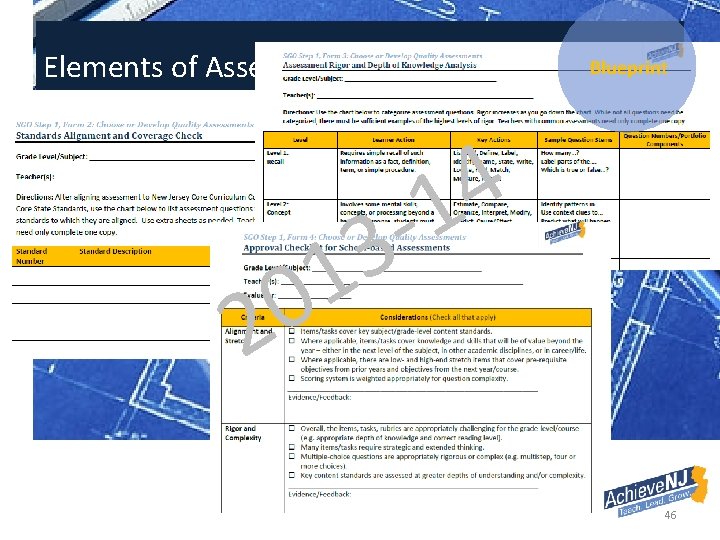

Elements of Assessment Design: Blueprint

A blueprint document describes the content and structure of an assessment. It defines the:

- Standards measured
- Relative importance of the standards on the assessment
- Item types, number, and point value
- DOK of each item

Elements of Assessment Design: Blueprint

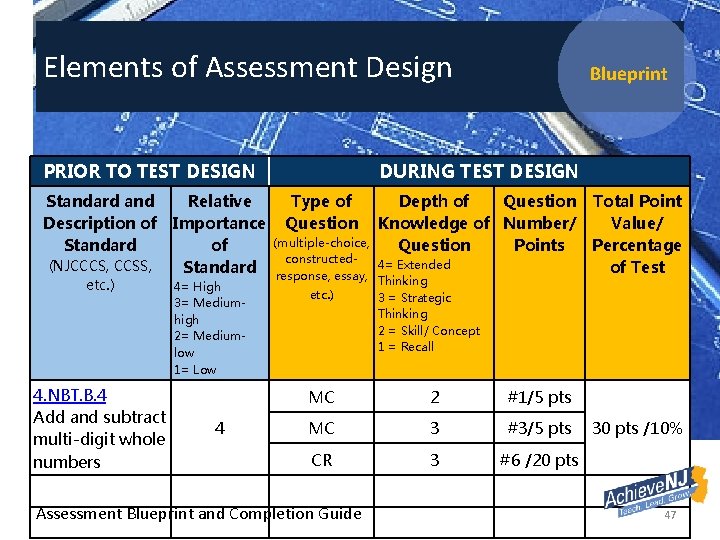

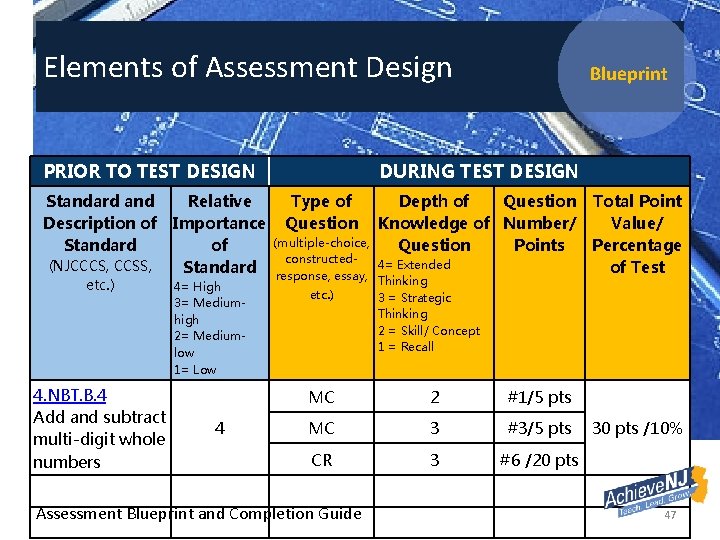

Elements of Assessment Design: Blueprint (from the Assessment Blueprint and Completion Guide)

Prior to test design:

- Standard and description of standard (NJCCCS, CCSS, etc.): 4.NBT.B.4 Add and subtract multi-digit whole numbers
- Relative importance of standard (4 = High, 3 = Medium-high, 2 = Medium-low, 1 = Low): 4

During test design:

| Type of question (multiple-choice, constructed-response, essay, etc.) | Depth of Knowledge (4 = Extended Thinking, 3 = Strategic Thinking, 2 = Skill/Concept, 1 = Recall) | Question number / points |
|---|---|---|
| MC | 2 | #1 / 5 pts |
| MC | 3 | #3 / 5 pts |
| CR | 3 | #6 / 20 pts |

Total point value / percentage of test: 30 pts / 10%
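A blueprint row is essentially a small data structure: one standard, its relative importance, and the items that measure it. The Python sketch below records the 4.NBT.B.4 row and recomputes its point total, its share of the test, and the DOK levels it covers. The class and field names are illustrative rather than taken from any official template, and the 300-point test total is an assumption inferred from “30 pts / 10%”.

```python
from dataclasses import dataclass, field


@dataclass
class BlueprintItem:
    question: int   # question number on the test
    item_type: str  # "MC" = multiple choice, "CR" = constructed response, etc.
    dok: int        # Webb's Depth of Knowledge: 1 Recall ... 4 Extended Thinking
    points: int


@dataclass
class BlueprintRow:
    standard: str
    importance: int  # relative importance: 4 High, 3 Medium-high, 2 Medium-low, 1 Low
    items: list[BlueprintItem] = field(default_factory=list)

    def total_points(self) -> int:
        return sum(item.points for item in self.items)

    def share_of_test(self, test_total_points: int) -> float:
        """Fraction of the whole test's points that measure this standard."""
        return self.total_points() / test_total_points

    def dok_levels(self) -> list[int]:
        """Distinct DOK levels covered, a quick check on range of rigor."""
        return sorted({item.dok for item in self.items})


row = BlueprintRow(
    standard="4.NBT.B.4 Add and subtract multi-digit whole numbers",
    importance=4,
    items=[
        BlueprintItem(question=1, item_type="MC", dok=2, points=5),
        BlueprintItem(question=3, item_type="MC", dok=3, points=5),
        BlueprintItem(question=6, item_type="CR", dok=3, points=20),
    ],
)

TEST_TOTAL_POINTS = 300  # assumption: implied by "30 pts / 10%" on the slide
print(row.total_points(), f"{row.share_of_test(TEST_TOTAL_POINTS):.0%}", row.dok_levels())
# 30 10% [2, 3]
```

Keeping every standard as a row like this makes it easy to compare each standard’s share of points with its relative importance before the test is finalized.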

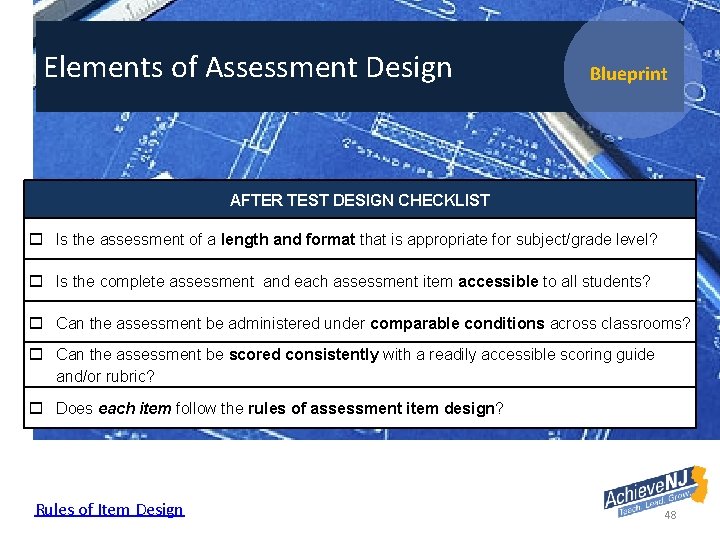

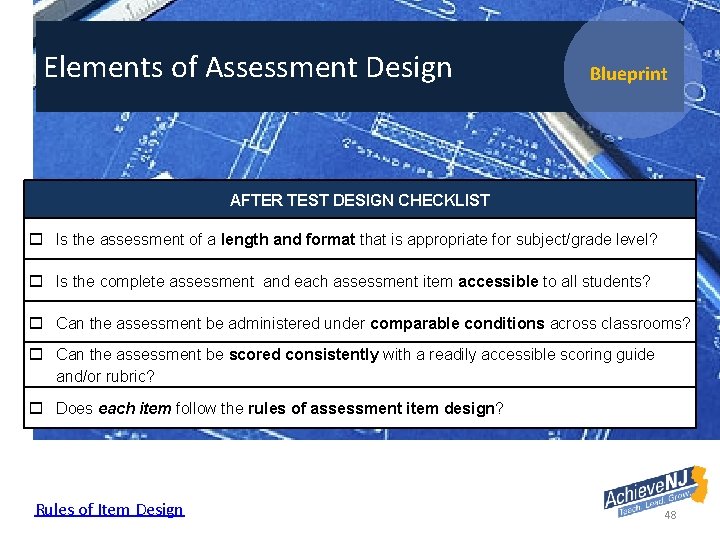

Elements of Assessment Design: Blueprint, After Test Design Checklist

- Is the assessment of a length and format that is appropriate for the subject/grade level?
- Is the complete assessment, and each assessment item, accessible to all students?
- Can the assessment be administered under comparable conditions across classrooms?
- Can the assessment be scored consistently with a readily accessible scoring guide and/or rubric?
- Does each item follow the rules of assessment item design? (Rules of Item Design)

Part 3: Investigate appropriate ways to set targets using readily available student data.

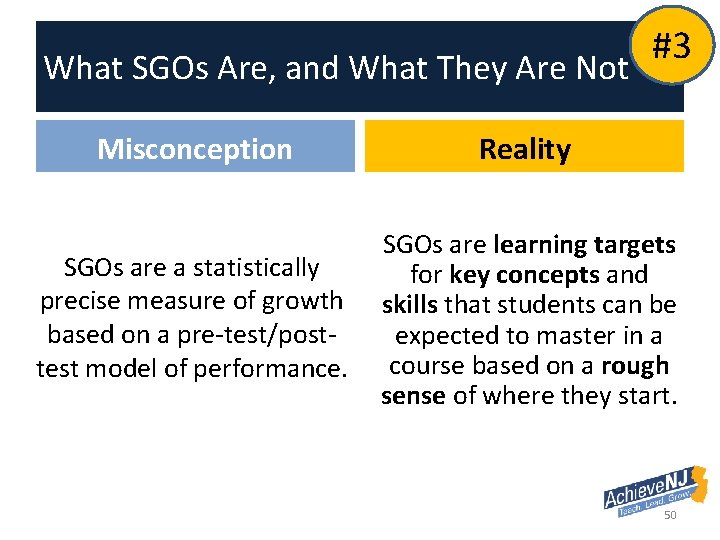

What SGOs Are, and What They Are Not: Misconception #3

- Misconception: SGOs are a statistically precise measure of growth based on a pre-test/post-test model of performance.
- Reality: SGOs are learning targets for key concepts and skills that students can be expected to master in a course based on a rough sense of where they start.

Pre-tests: The Siren Song of Simplicity

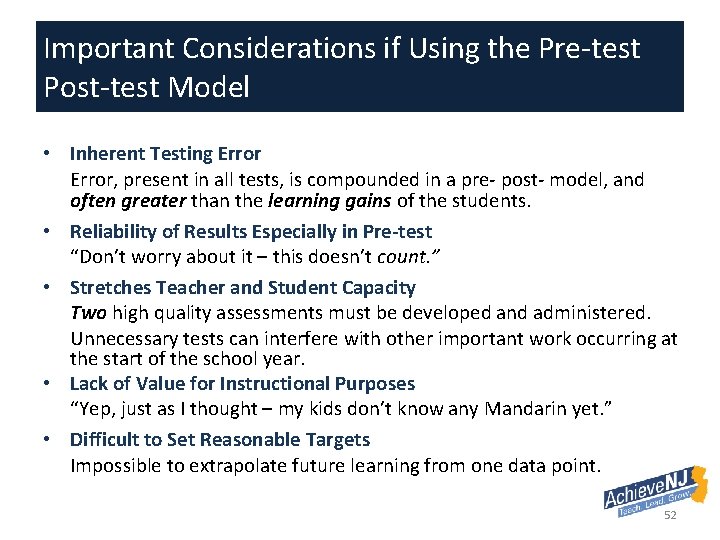

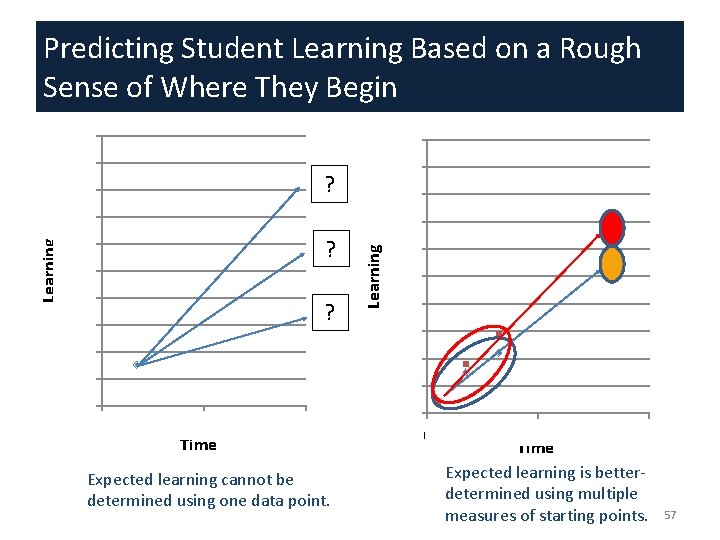

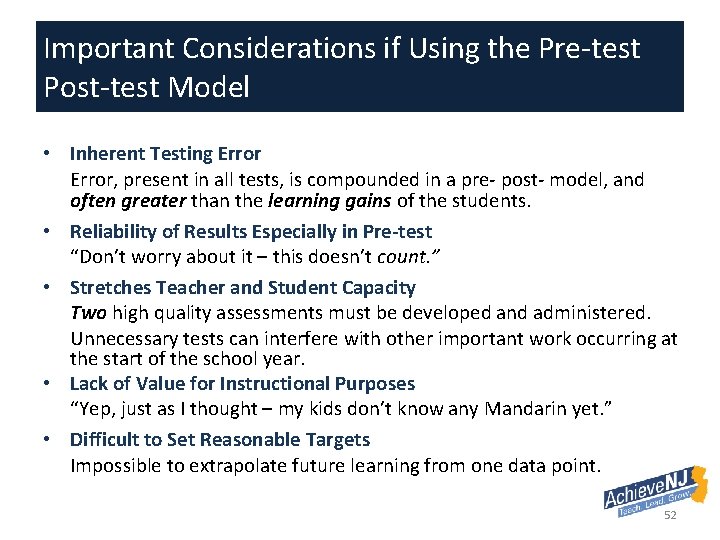

Important Considerations if Using the Pre-test/Post-test Model

- Inherent testing error: Error, present in all tests, is compounded in a pre-/post-test model and is often greater than the learning gains of the students.
- Reliability of results, especially in the pre-test: “Don’t worry about it – this doesn’t count.”
- Stretches teacher and student capacity: Two high-quality assessments must be developed and administered. Unnecessary tests can interfere with other important work occurring at the start of the school year.
- Lack of value for instructional purposes: “Yep, just as I thought – my kids don’t know any Mandarin yet.”
- Difficult to set reasonable targets: It is impossible to extrapolate future learning from one data point.
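The first consideration can be made concrete with a standard measurement result that the slide does not spell out: if the errors on the pre-test and post-test are independent, their variances add, so the standard error of a gain score is sqrt(SE_pre² + SE_post²), larger than either test’s error alone. A minimal Python sketch; the 4-point standard errors and the 8-point gain are hypothetical numbers chosen for illustration:

```python
import math


def gain_standard_error(se_pre: float, se_post: float) -> float:
    """Standard error of (post - pre), assuming the two tests' errors are independent."""
    return math.sqrt(se_pre ** 2 + se_post ** 2)


# Hypothetical example: each test has a standard error of measurement of 4 points.
se_gain = gain_standard_error(4.0, 4.0)
print(f"SE of gain: {se_gain:.2f} points")  # SE of gain: 5.66 points

# A rough 95% band for one student's gain is about +/- 1.96 standard errors.
observed_gain = 8.0  # hypothetical student gain
low, high = observed_gain - 1.96 * se_gain, observed_gain + 1.96 * se_gain
print(f"A gain of {observed_gain:.0f} is consistent with true gains from {low:.1f} to {high:.1f}")
```

With these numbers, an observed 8-point gain cannot be distinguished from no growth at all, which is the sense in which error is “often greater than the learning gains of the students.”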

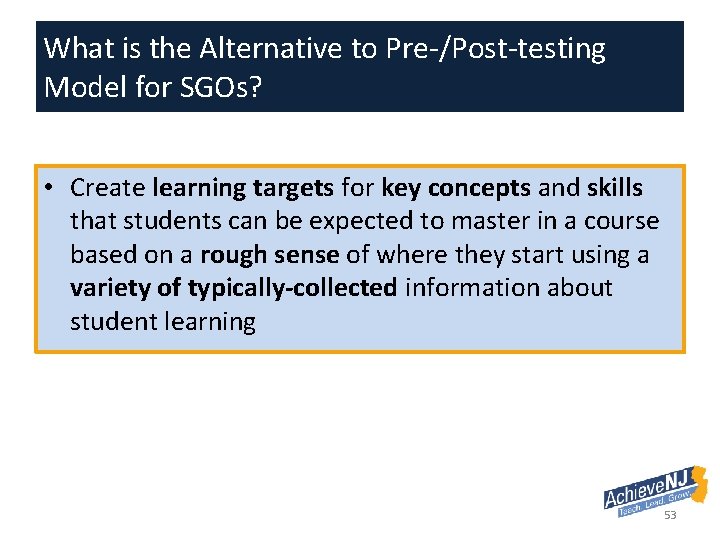

What Is the Alternative to the Pre-/Post-testing Model for SGOs?

- Create learning targets for key concepts and skills that students can be expected to master in a course, based on a rough sense of where they start, using a variety of typically collected information about student learning.

Predict the Final Picture


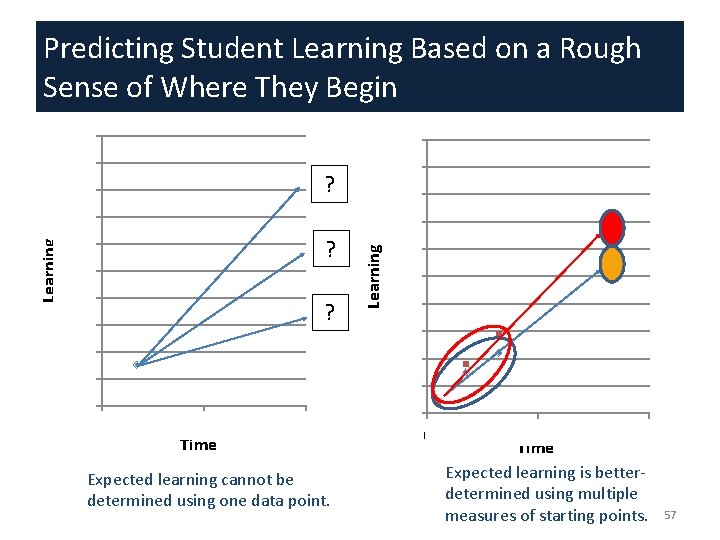

Predicting Student Learning Based on a Rough Sense of Where They Begin (figure: two plots of Learning vs. Time). Left: expected learning cannot be determined using one data point. Right: expected learning is better determined using multiple measures of starting points.

List the information you have used or could potentially use to determine students’ starting points.

List the information you have used or could potentially use to determine students’ starting points.

1. Current grades
2. Recent test performance
3. Previous year’s scores
4. Important markers of future success
5. Well-constructed and administered, high-quality pre-assessments

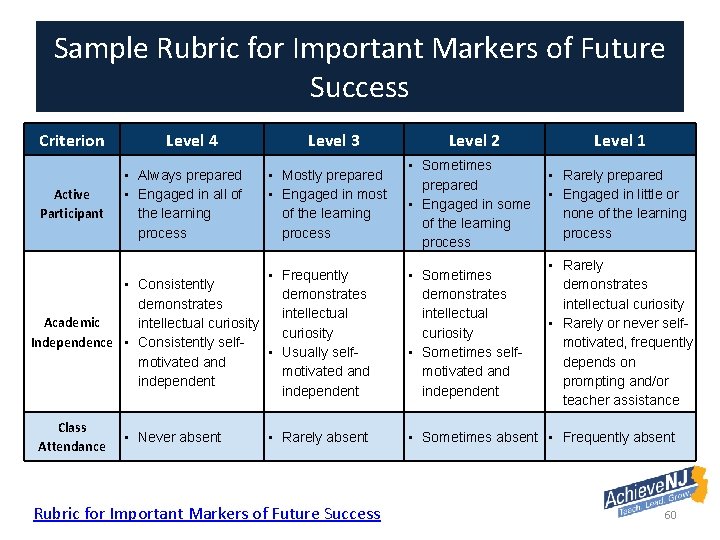

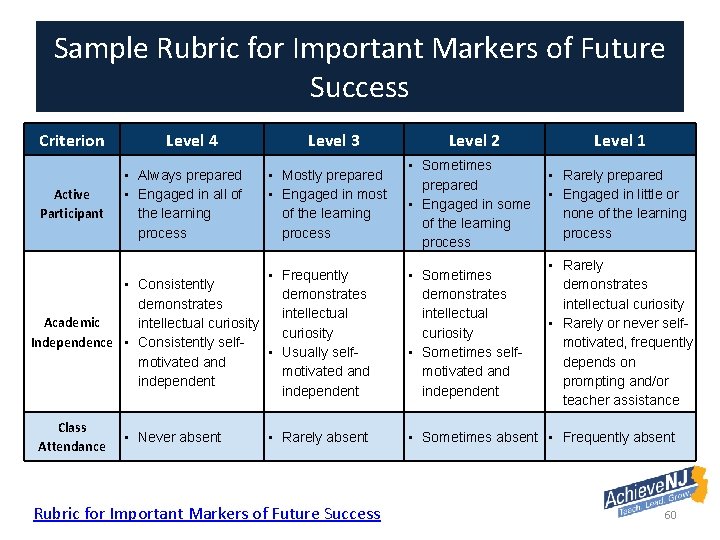

Sample Rubric for Important Markers of Future Success

| Criterion | Level 4 | Level 3 | Level 2 | Level 1 |
|---|---|---|---|---|
| Active Participant | Always prepared; engaged in all of the learning process | Mostly prepared; engaged in most of the learning process | Sometimes prepared; engaged in some of the learning process | Rarely prepared; engaged in little or none of the learning process |
| Academic Independence | Consistently demonstrates intellectual curiosity; consistently self-motivated and independent | Frequently demonstrates intellectual curiosity; usually self-motivated and independent | Sometimes demonstrates intellectual curiosity; sometimes self-motivated and independent | Rarely demonstrates intellectual curiosity; rarely or never self-motivated, frequently depends on prompting and/or teacher assistance |
| Class Attendance | Never absent | Rarely absent | Sometimes absent | Frequently absent |

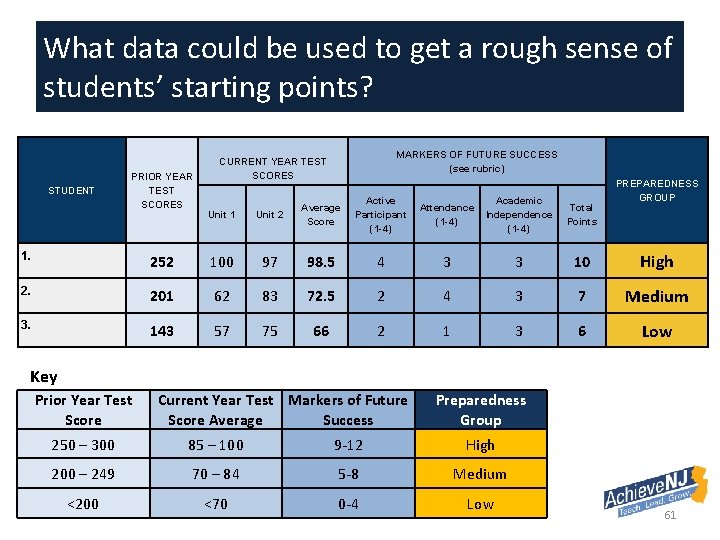

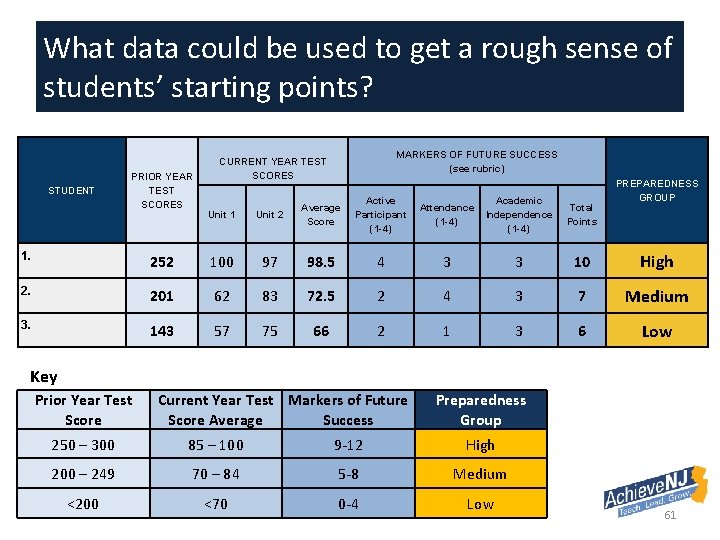

What data could be used to get a rough sense of students’ starting points?

| Student | Prior Year Test Score | Current Year: Unit 1 | Current Year: Unit 2 | Current Year: Average Score | Active Participant (1–4) | Attendance (1–4) | Academic Independence (1–4) | Markers Total Points | Preparedness Group |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 252 | 100 | 97 | 98.5 | 4 | 3 | 3 | 10 | High |
| 2 | 201 | 62 | 83 | 72.5 | 2 | 4 | 3 | 7 | Medium |
| 3 | 143 | 57 | 75 | 66 | 2 | 1 | 3 | 6 | Low |

Markers of future success: see rubric.

Key:

| Preparedness Group | Prior Year Test Score | Current Year Test Score Average | Markers of Future Success |
|---|---|---|---|
| High | 250–300 | 85–100 | 9–12 |
| Medium | 200–249 | 70–84 | 5–8 |
| Low | <200 | <70 | 0–4 |
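The key assigns a band to each measure, but the slide does not say how to combine the three when they disagree (student 3 is Low on two measures and Medium on one). One simple, hypothetical rule is to take the middle (median) band; the Python sketch below assumes that rule and uses the cut scores from the key. Function names are illustrative.

```python
from statistics import median_low

LEVELS = ["Low", "Medium", "High"]


def band(value: float, medium_min: float, high_min: float) -> int:
    """Place one measure in a band: 0 = Low, 1 = Medium, 2 = High."""
    if value >= high_min:
        return 2
    if value >= medium_min:
        return 1
    return 0


def preparedness_group(prior_score: float, current_avg: float, markers_total: int) -> str:
    """Combine three measures of starting point into one preparedness group.

    Cut scores come from the slide's key. Taking the median band is a
    hypothetical rule; the slide leaves the combination to the teacher.
    """
    bands = [
        band(prior_score, medium_min=200, high_min=250),  # prior-year test score
        band(current_avg, medium_min=70, high_min=85),    # current-year unit test average
        band(markers_total, medium_min=5, high_min=9),    # markers of future success (points)
    ]
    return LEVELS[median_low(bands)]


# The three students from the table: (prior score, current average, markers total)
for student, data in enumerate([(252, 98.5, 10), (201, 72.5, 7), (143, 66, 6)], start=1):
    print(student, preparedness_group(*data))
# 1 High
# 2 Medium
# 3 Low
```

The median rule reproduces these three groupings, but it is only a starting point for professional judgment: in the Physics 1 example, student 4 (NJ ASK 241, average 85.5, 1 marker) is placed in the High group, whereas a median of that slide’s bands would give Medium.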

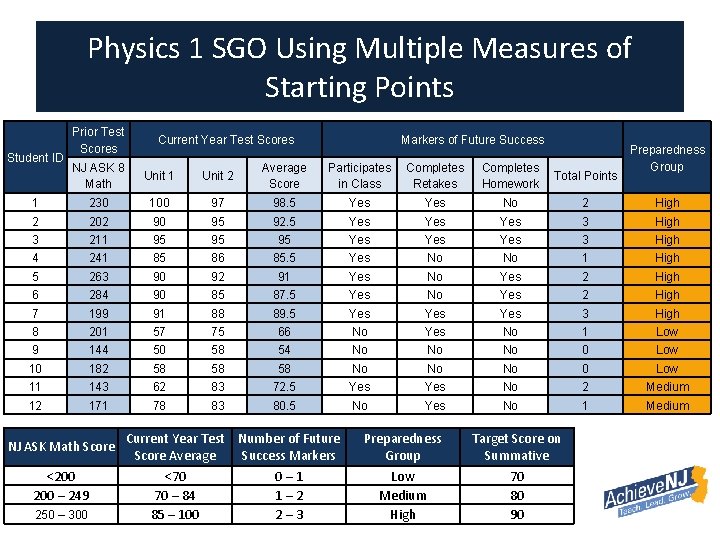

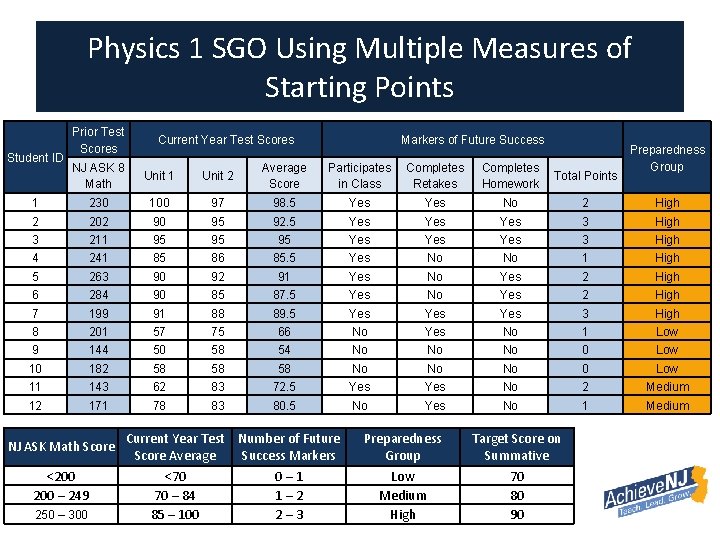

Physics 1 SGO Using Multiple Measures of Starting Points

| Student ID | Prior Test Score: NJ ASK 8 Math | Current Year: Unit 1 | Current Year: Unit 2 | Current Year: Average Score | Markers of Future Success (total points) | Preparedness Group |
|---|---|---|---|---|---|---|
| 1 | 230 | 100 | 97 | 98.5 | 2 | High |
| 2 | 202 | 90 | 95 | 92.5 | 3 | High |
| 3 | 211 | 95 | 95 | 95 | 3 | High |
| 4 | 241 | 85 | 86 | 85.5 | 1 | High |
| 5 | 263 | 90 | 92 | 91 | 2 | High |
| 6 | 284 | 90 | 85 | 87.5 | 2 | High |
| 7 | 199 | 91 | 88 | 89.5 | 3 | High |
| 8 | 201 | 57 | 75 | 66 | 1 | Low |
| 9 | 144 | 50 | 58 | 54 | 0 | Low |
| 10 | 182 | 58 | 58 | 58 | 0 | Low |
| 11 | 143 | 62 | 83 | 72.5 | 2 | Medium |
| 12 | 171 | 78 | 83 | 80.5 | 1 | Medium |

Markers of future success: participates in class, completes retakes, completes homework (one point for each “Yes”).

Key:

| Preparedness Group | NJ ASK Math Score | Current Year Test Score Average | Number of Future Success Markers | Target Score on Summative |
|---|---|---|---|---|
| Low | <200 | <70 | 0–1 | 70 |
| Medium | 200–249 | 70–84 | 1–2 | 80 |
| High | 250–300 | 85–100 | 2–3 | 90 |

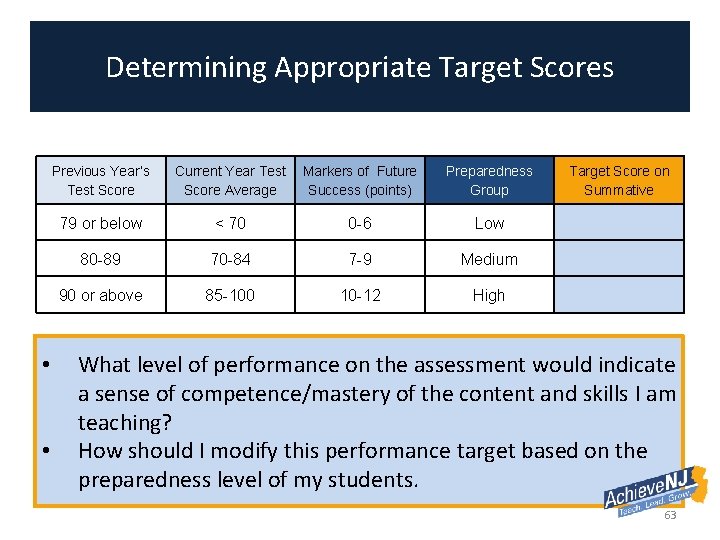

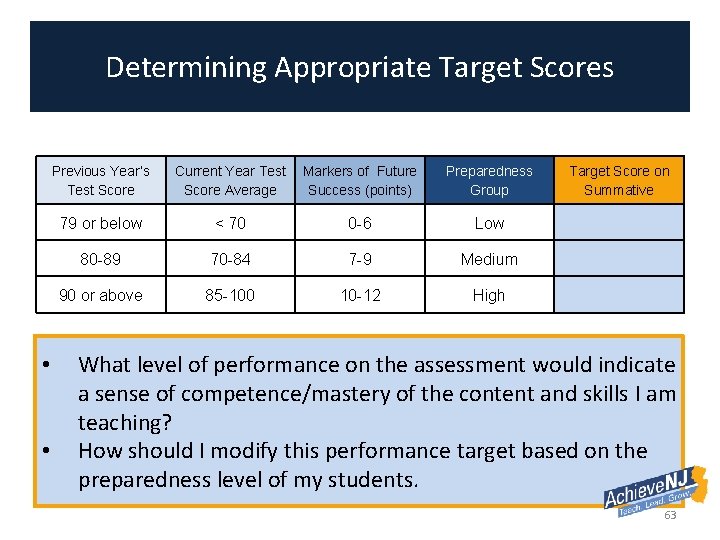

Determining Appropriate Target Scores

| Previous Year’s Test Score | Current Year Test Score Average | Markers of Future Success (points) | Preparedness Group | Target Score on Summative |
|---|---|---|---|---|
| 79 or below | <70 | 0–6 | Low | 70 |
| 80–89 | 70–84 | 7–9 | Medium | 80 |
| 90 or above | 85–100 | 10–12 | High | 90 |

- What level of performance on the assessment would indicate a sense of competence/mastery of the content and skills I am teaching?
- How should I modify this performance target based on the preparedness level of my students?


What is the appropriate role of pre-assessments in SGOs?

- Where improvement in a set of skills is being evaluated
- When assessments are high quality and vertically aligned
- When normally used for instructional purposes
- In combination with other measures to help group students according to preparedness level

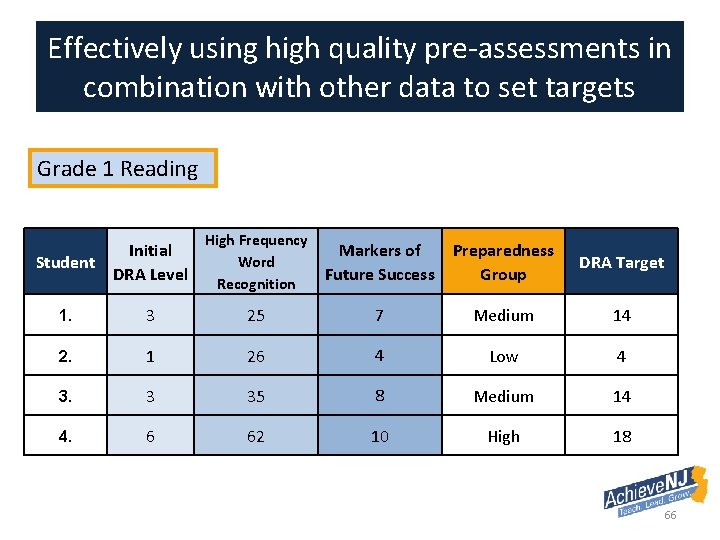

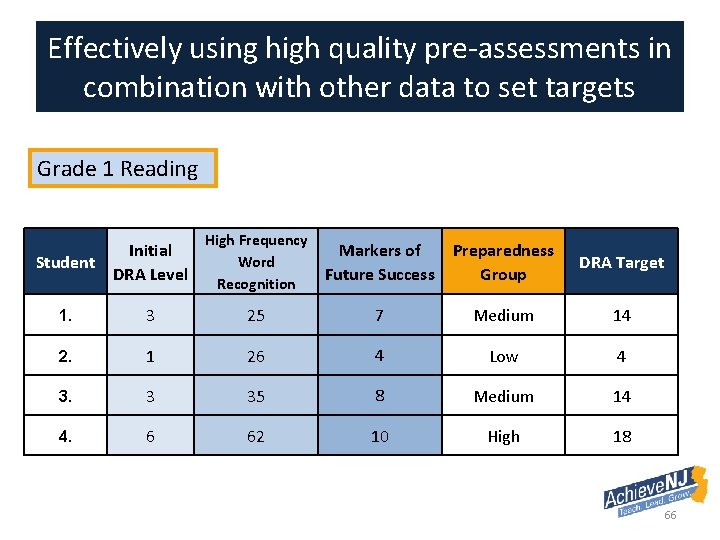

Effectively using high-quality pre-assessments in combination with other data to set targets: Grade 1 Reading

| Student | Initial DRA Level | High Frequency Word Recognition | Markers of Future Success | Preparedness Group | DRA Target |
|---|---|---|---|---|---|
| 1 | 3 | 25 | 7 | Medium | 14 |
| 2 | 1 | 26 | 4 | Low | 4 |
| 3 | 3 | 35 | 8 | Medium | 14 |
| 4 | 6 | 62 | 10 | High | 18 |

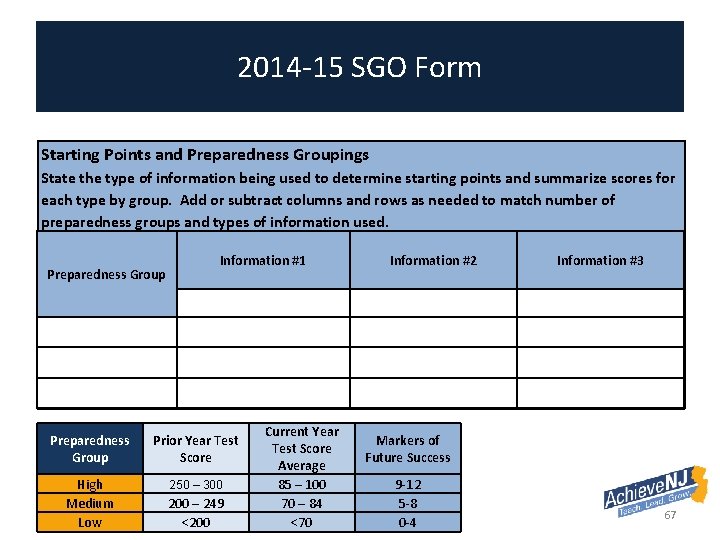

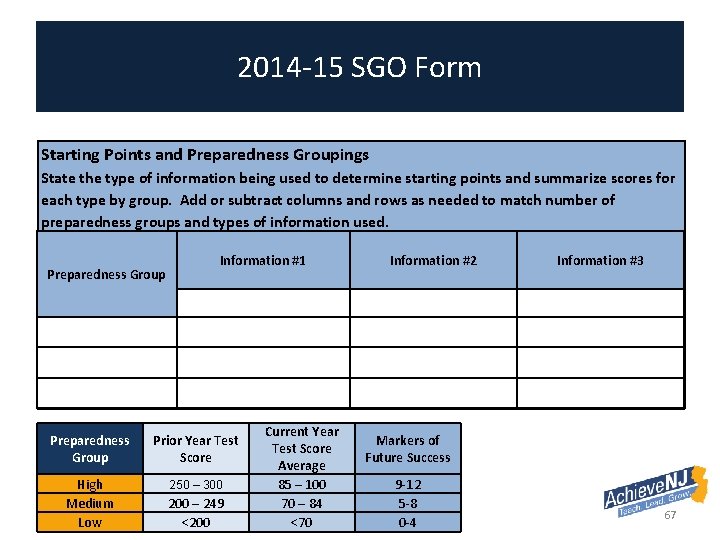

2014-15 SGO Form: Starting Points and Preparedness Groupings

State the type of information being used to determine starting points and summarize scores for each type by group. Add or subtract columns and rows as needed to match the number of preparedness groups and types of information used.

| Preparedness Group | Information #1: Prior Year Test Score | Information #2: Current Year Test Score Average | Information #3: Markers of Future Success |
|---|---|---|---|
| High | 250–300 | 85–100 | 9–12 |
| Medium | 200–249 | 70–84 | 5–8 |
| Low | <200 | <70 | 0–4 |

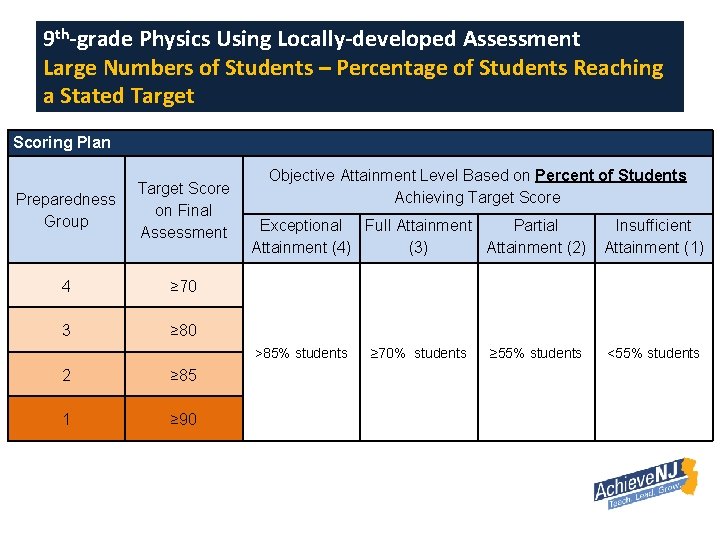

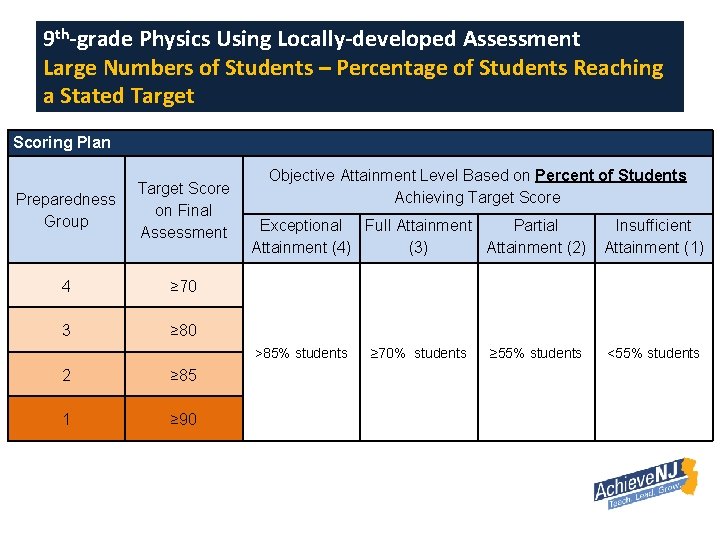

9th-grade Physics Using Locally-developed Assessment: Large Numbers of Students, Percentage of Students Reaching a Stated Target

Scoring plan:

| Preparedness Group | Target Score on Final Assessment |
|---|---|
| 4 | ≥ 70 |
| 3 | ≥ 80 |
| 2 | ≥ 85 |
| 1 | ≥ 90 |

Objective attainment level based on percent of students achieving target score:

| Exceptional Attainment (4) | Full Attainment (3) | Partial Attainment (2) | Insufficient Attainment (1) |
|---|---|---|---|
| >85% of students | ≥ 70% of students | ≥ 55% of students | <55% of students |
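Scoring this plan takes two steps: check each student against the target for his or her preparedness group, then convert the percentage of students who met target into an attainment level. A minimal Python sketch; the four-student roster is hypothetical, the cut points are read from the scoring plan (including the strict “more than 85%” for Exceptional), and the function names are illustrative.

```python
# Target score on the final assessment for each preparedness group (from the scoring plan).
TARGETS = {4: 70, 3: 80, 2: 85, 1: 90}


def percent_meeting_target(students: list[tuple[int, float]]) -> float:
    """students: (preparedness_group, final_score) pairs; returns a percentage 0-100."""
    met = sum(1 for group, score in students if score >= TARGETS[group])
    return 100 * met / len(students)


def attainment_level(percent: float) -> int:
    """Map the percent of students meeting their target to an attainment level."""
    if percent > 85:
        return 4  # Exceptional: more than 85%
    if percent >= 70:
        return 3  # Full
    if percent >= 55:
        return 2  # Partial
    return 1      # Insufficient: below 55%


# Hypothetical roster: (preparedness group, final score)
roster = [(4, 72), (3, 79), (2, 90), (1, 91)]
pct = percent_meeting_target(roster)
print(f"{pct:.0f}% met target -> level {attainment_level(pct)}")  # 75% met target -> level 3
```

A percentage works well here because the roster is large; with only a handful of students, one student changes the percentage by many points.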

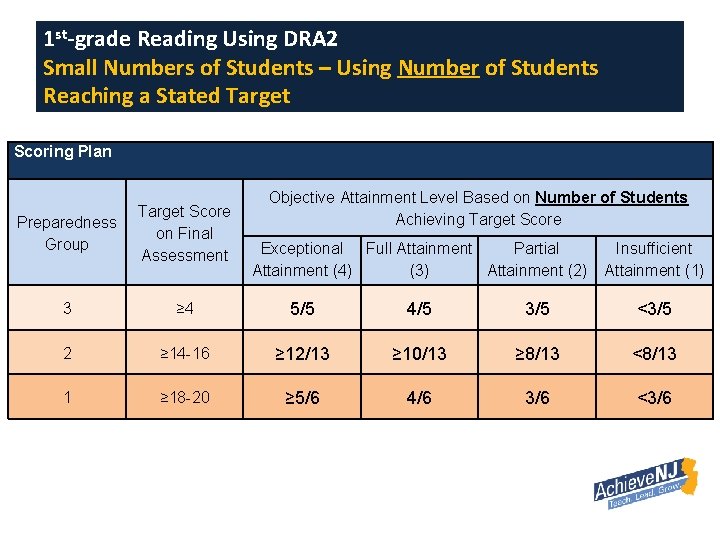

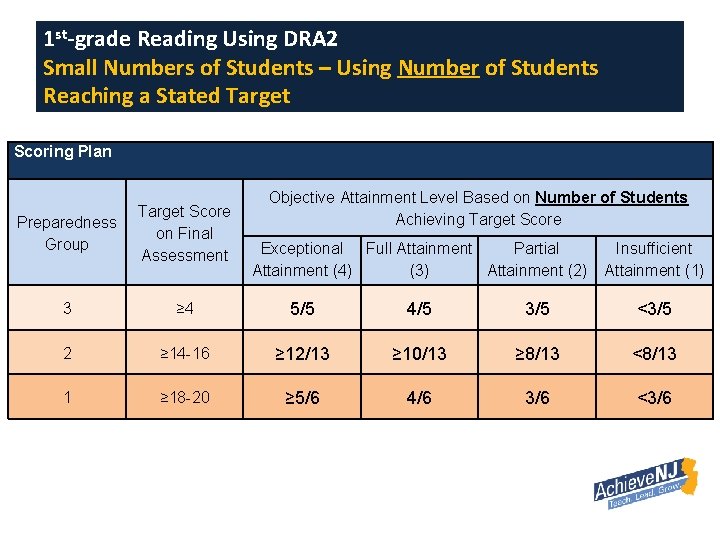

1st-grade Reading Using DRA 2: Small Numbers of Students, Using Number of Students Reaching a Stated Target

Scoring plan: objective attainment level based on number of students achieving target score

| Preparedness Group | Target Score on Final Assessment | Exceptional Attainment (4) | Full Attainment (3) | Partial Attainment (2) | Insufficient Attainment (1) |
|---|---|---|---|---|---|
| 3 | ≥ 4 | 5/5 | 4/5 | 3/5 | <3/5 |
| 2 | ≥ 14–16 | ≥ 12/13 | ≥ 10/13 | ≥ 8/13 | <8/13 |
| 1 | ≥ 18–20 | ≥ 5/6 | 4/6 | 3/6 | <3/6 |
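With small groups, this plan counts students rather than computing percentages, and each preparedness group has its own count thresholds. The Python sketch below encodes those thresholds, reading entries such as “4/5” as minimum counts. It rates each group separately because the slide does not say how group ratings combine into one SGO score; `group_attainment` is a hypothetical name.

```python
# For each preparedness group: (group size, minimum number of students meeting target
# for Exceptional, Full, and Partial attainment), from the scoring plan.
THRESHOLDS = {
    3: (5, 5, 4, 3),
    2: (13, 12, 10, 8),
    1: (6, 5, 4, 3),
}


def group_attainment(group: int, students_meeting_target: int) -> int:
    """Attainment level (4 = Exceptional ... 1 = Insufficient) for one group."""
    size, exceptional, full, partial = THRESHOLDS[group]
    if not 0 <= students_meeting_target <= size:
        raise ValueError("count must be between 0 and the group size")
    if students_meeting_target >= exceptional:
        return 4
    if students_meeting_target >= full:
        return 3
    if students_meeting_target >= partial:
        return 2
    return 1


print(group_attainment(2, 11))  # 11 of 13 met target -> 3
print(group_attainment(1, 6))   # 6 of 6 met target -> 4
```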

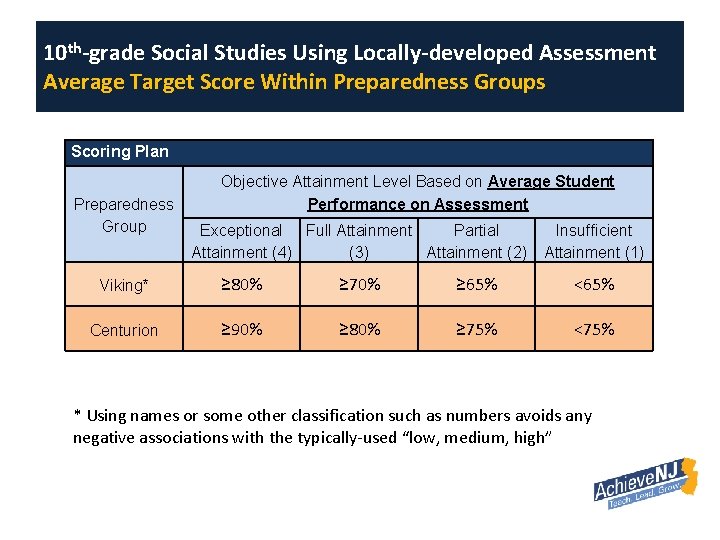

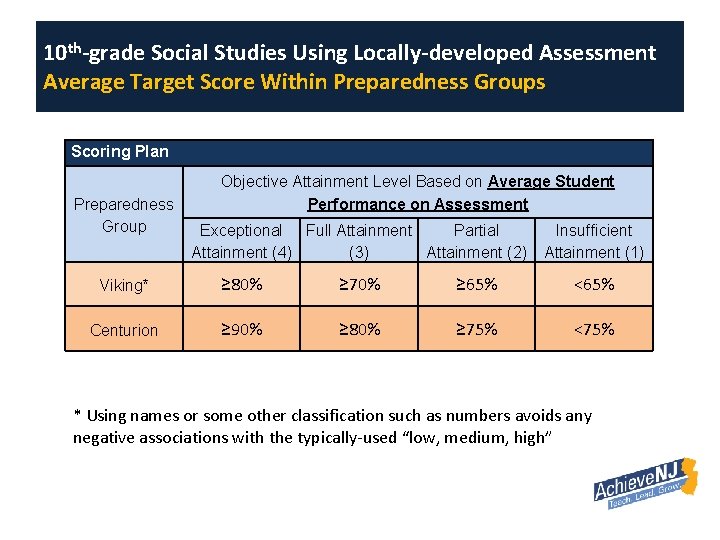

10th-grade Social Studies Using Locally-developed Assessment: Average Target Score Within Preparedness Groups

Scoring plan: objective attainment level based on average student performance on assessment

| Preparedness Group | Exceptional Attainment (4) | Full Attainment (3) | Partial Attainment (2) | Insufficient Attainment (1) |
|---|---|---|---|---|
| Viking* | ≥ 80% | ≥ 70% | ≥ 65% | <65% |
| Centurion | ≥ 90% | ≥ 80% | ≥ 75% | <75% |

\* Using names or some other classification, such as numbers, avoids any negative associations with the typically used “low, medium, high.”
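Here each preparedness group is rated on its average score rather than on how many students reached an individual target. A minimal Python sketch with hypothetical scores; the cut points are copied from the table, and `group_level` is an illustrative name.

```python
from statistics import mean

# Minimum average score (%) for Exceptional (4), Full (3), and Partial (2) attainment.
CUTS = {
    "Viking": (80, 70, 65),
    "Centurion": (90, 80, 75),
}


def group_level(group: str, scores: list[float]) -> int:
    """Attainment level for one preparedness group from its students' average score."""
    average = mean(scores)
    for level, cut in zip((4, 3, 2), CUTS[group]):
        if average >= cut:
            return level
    return 1  # Insufficient: below the Partial cut


print(group_level("Viking", [72, 78]))     # average 75 -> 3
print(group_level("Centurion", [92, 88]))  # average 90 -> 4
```

Averaging is simple to apply, but a few very high scores can mask students who fell well short; that trade-off is worth weighing against plans that count students reaching a target.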

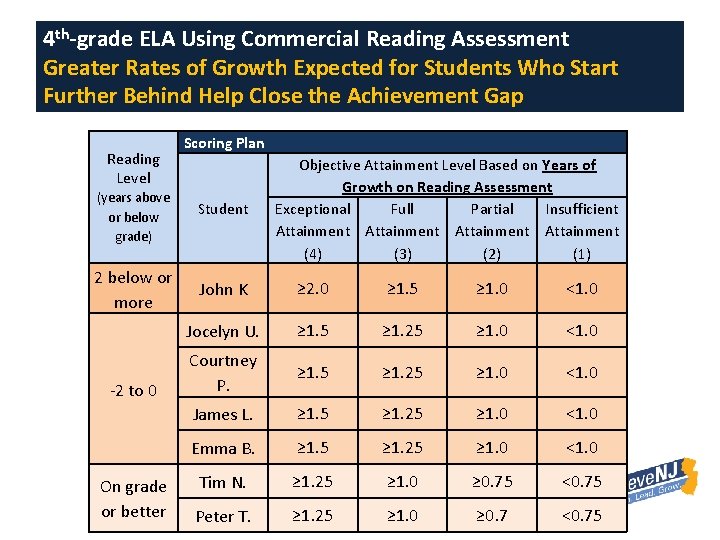

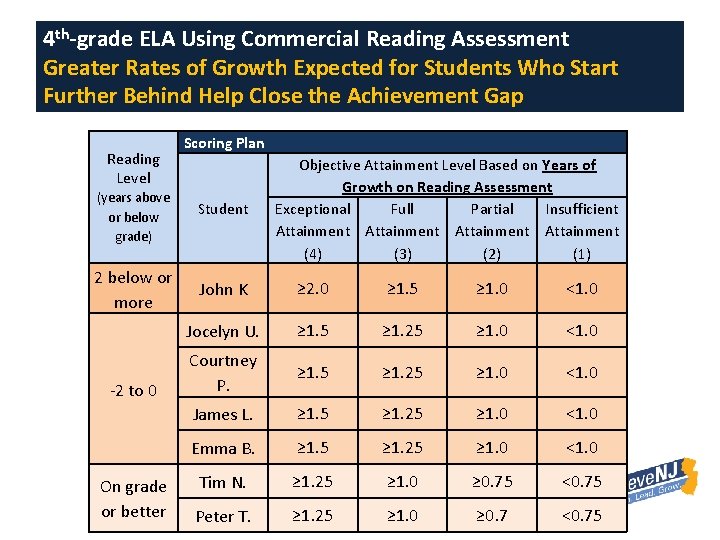

4th-grade ELA Using Commercial Reading Assessment: Greater Rates of Growth Expected for Students Who Start Further Behind Help Close the Achievement Gap

Reading level groups (years above or below grade): 2 or more below; -2 to 0; on grade or better.

Scoring plan: objective attainment level based on years of growth on reading assessment

| Student | Exceptional (4) | Full (3) | Partial (2) | Insufficient (1) |
|---|---|---|---|---|
| John K. | ≥ 2.0 | ≥ 1.5 | ≥ 1.0 | <1.0 |
| Jocelyn U. | ≥ 1.5 | ≥ 1.25 | ≥ 1.0 | <1.0 |
| Courtney P. | ≥ 1.5 | ≥ 1.25 | ≥ 1.0 | <1.0 |
| James L. | ≥ 1.5 | ≥ 1.25 | ≥ 1.0 | <1.0 |
| Emma B. | ≥ 1.5 | ≥ 1.25 | ≥ 1.0 | <1.0 |
| Tim N. | ≥ 1.25 | ≥ 1.0 | ≥ 0.75 | <0.75 |
| Peter T. | ≥ 1.25 | ≥ 1.0 | ≥ 0.75 | <0.75 |
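Because targets here are set per student, the scoring plan is effectively a lookup table keyed by student. A minimal Python sketch of that lookup; the cut points are transcribed from the table, Peter T.’s Partial cut is taken as 0.75 so that it meets his “<0.75” Insufficient cut, and no overall SGO score is computed because the slide does not say how individual levels roll up. The function name is illustrative.

```python
# Minimum years of growth for Exceptional (4), Full (3), and Partial (2) attainment.
GROWTH_CUTS = {
    "John K.": (2.0, 1.5, 1.0),
    "Jocelyn U.": (1.5, 1.25, 1.0),
    "Courtney P.": (1.5, 1.25, 1.0),
    "James L.": (1.5, 1.25, 1.0),
    "Emma B.": (1.5, 1.25, 1.0),
    "Tim N.": (1.25, 1.0, 0.75),
    # Assumption: Partial cut of 0.75, matching the "<0.75" Insufficient cut.
    "Peter T.": (1.25, 1.0, 0.75),
}


def student_level(student: str, years_of_growth: float) -> int:
    """Attainment level for one student from years of growth on the reading assessment."""
    for level, cut in zip((4, 3, 2), GROWTH_CUTS[student]):
        if years_of_growth >= cut:
            return level
    return 1  # Insufficient


print(student_level("John K.", 1.6))  # 3
print(student_level("Tim N.", 0.8))   # 2
```

The same 1.6 years of growth earns John K. a 3 but would earn Jocelyn U. a 4, which is how the plan expects faster growth from students who start further behind.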

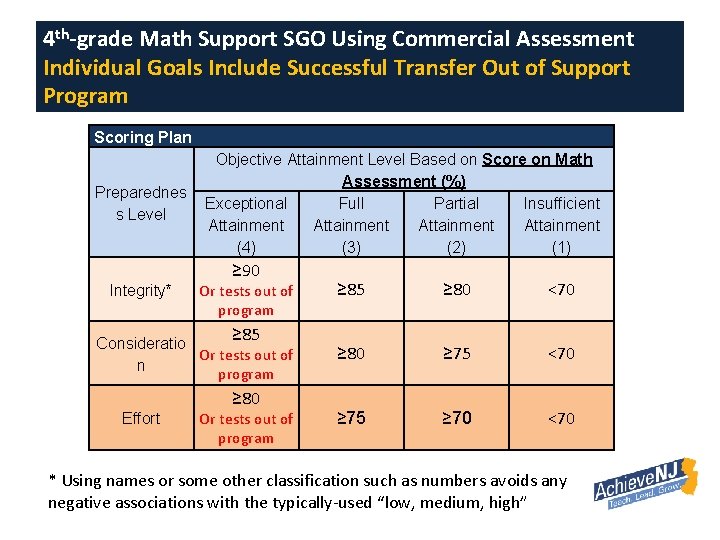

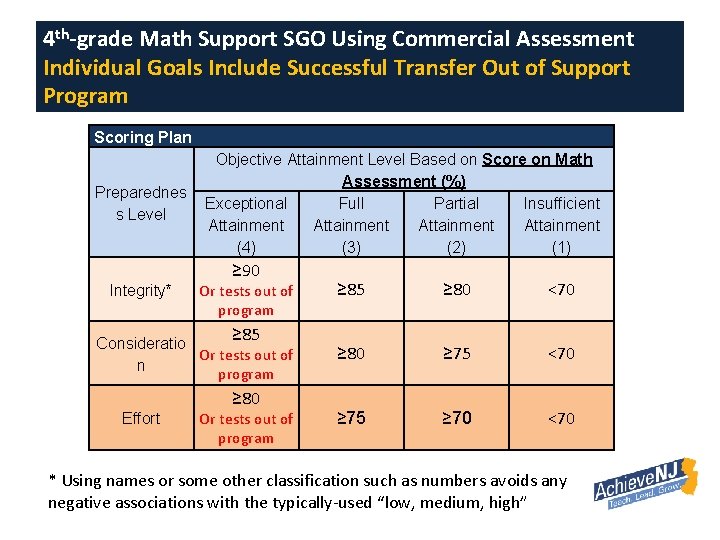

4th-grade Math Support SGO Using Commercial Assessment: Individual Goals Include Successful Transfer Out of Support Program

Scoring plan: objective attainment level based on score on math assessment (%)

| Preparedness Level | Exceptional (4) | Full (3) | Partial (2) | Insufficient (1) |
|---|---|---|---|---|
| Integrity* | ≥ 90 or tests out of program | ≥ 85 | ≥ 80 | <70 |
| Consideration | ≥ 85 or tests out of program | ≥ 80 | ≥ 75 | <70 |
| Effort | ≥ 80 or tests out of program | ≥ 75 | ≥ 70 | <70 |

\* Using names or some other classification, such as numbers, avoids any negative associations with the typically used “low, medium, high.”

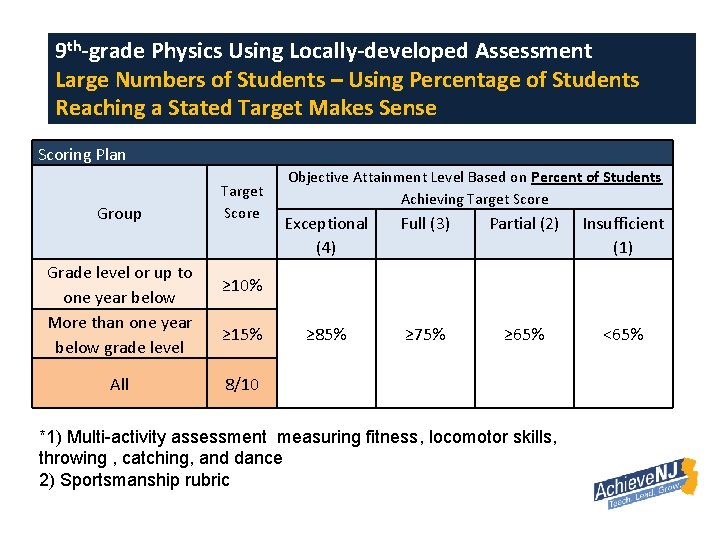

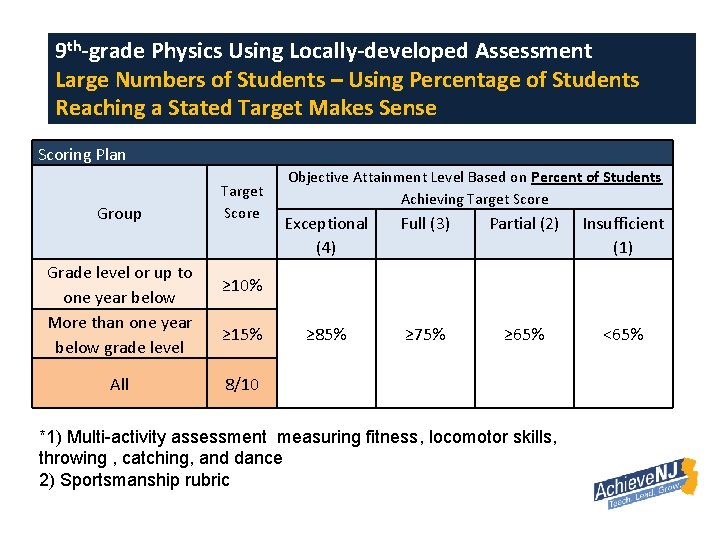

9th-grade Physics Using Locally-developed Assessment: Large Numbers of Students, Using Percentage of Students Reaching a Stated Target Makes Sense

Scoring plan:

- Groups: Grade level or up to one year below; More than one year below grade level; All
- Target scores*: ≥ 10%; ≥ 15%; 8/10
- Objective attainment level based on percent of students achieving target score: Exceptional (4) ≥ 85%; Full (3) ≥ 75%; Partial (2) ≥ 65%; Insufficient (1) <65%

\* 1) Multi-activity assessment measuring fitness, locomotor skills, throwing, catching, and dance; 2) Sportsmanship rubric

Resources

- Updated SGO guidebook and forms
- Expanded SGO library
- Assessment quality webinars
- Teacher practice workshops

Information: www.nj.gov/education/AchieveNJ

Questions: educatorevaluation@doe.state.nj.us, 609-777-3788