Introduction to Convolution Circuit Synthesis

- Slides: 86

Applications of convolution: image processing, speech processing, DSP, and polynomial multiplication in robot control.

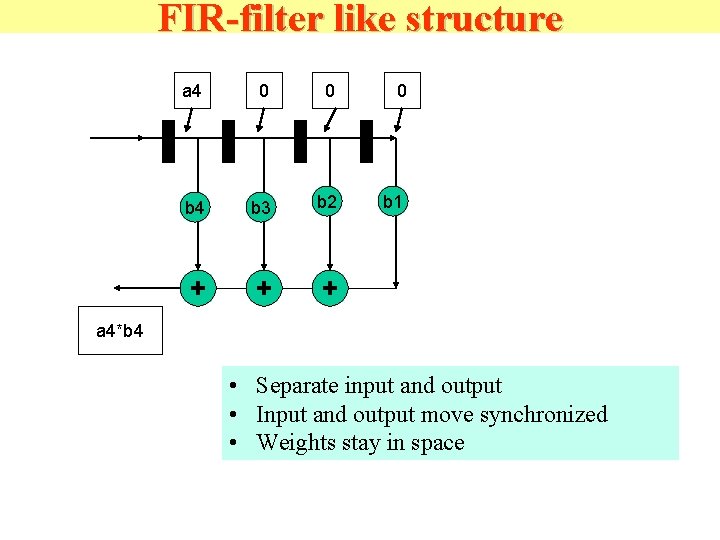

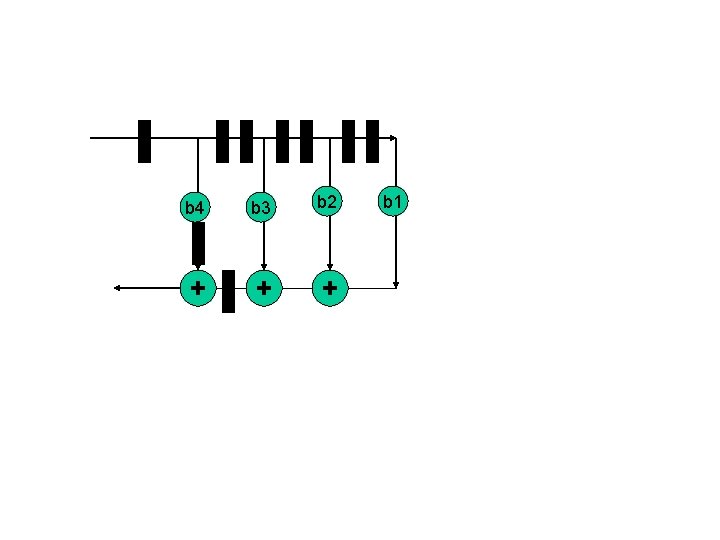

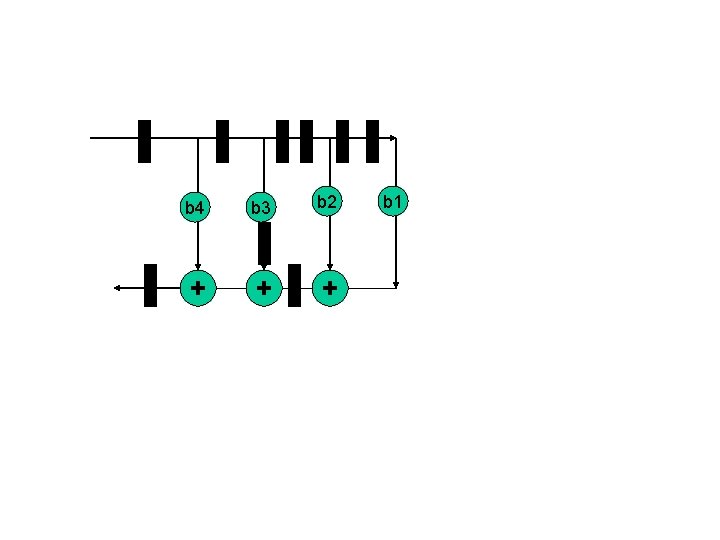

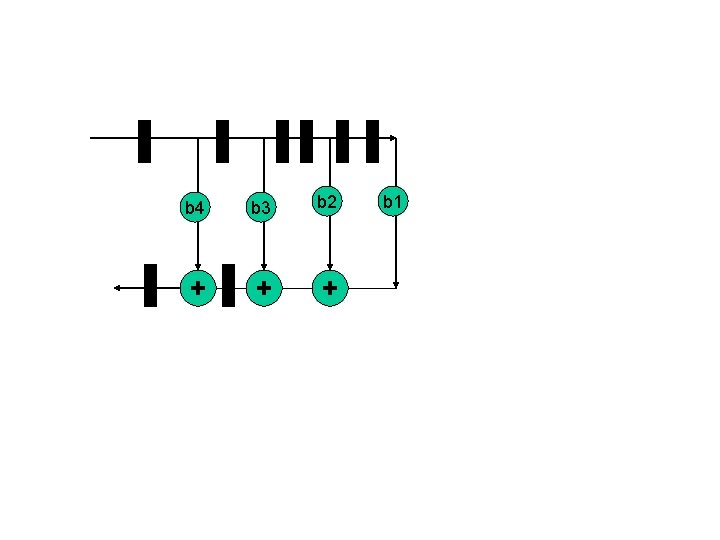

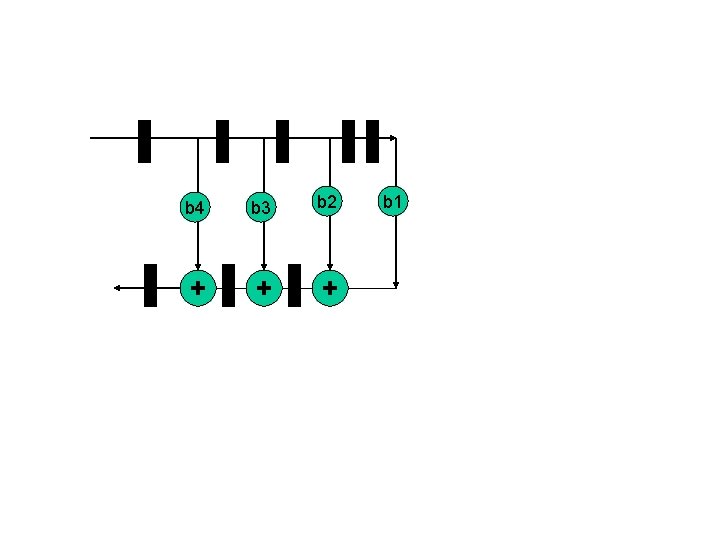

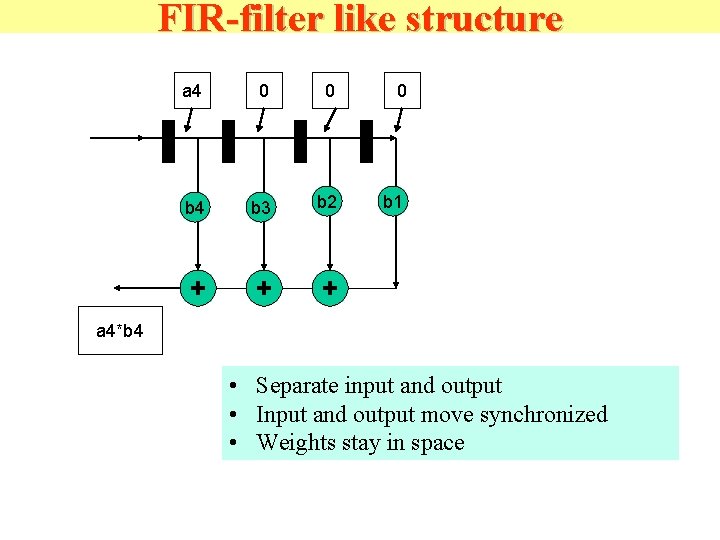

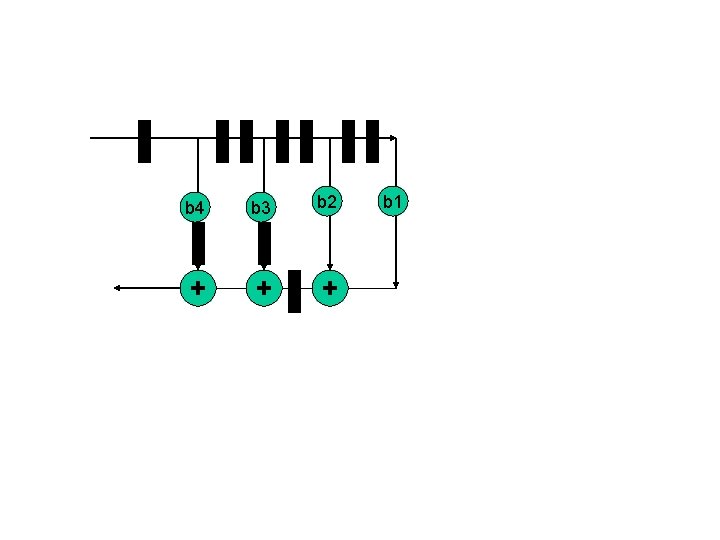

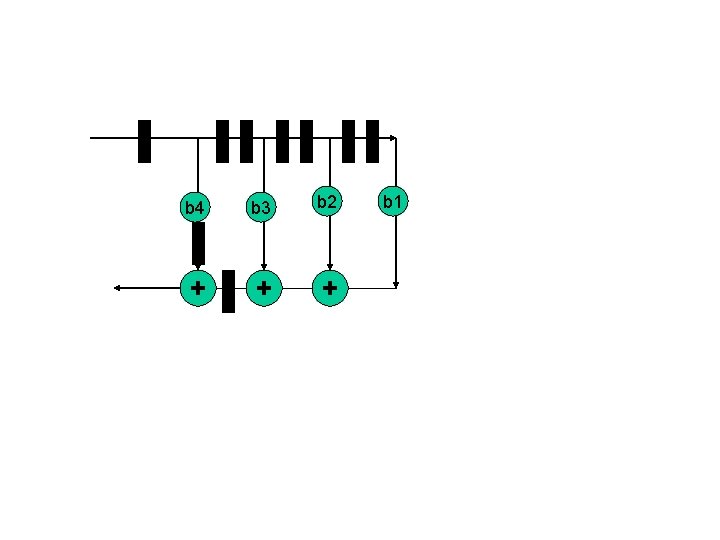

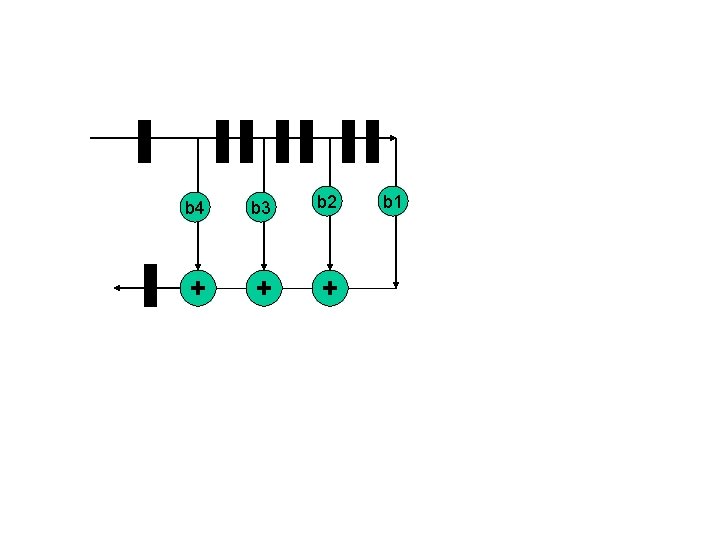

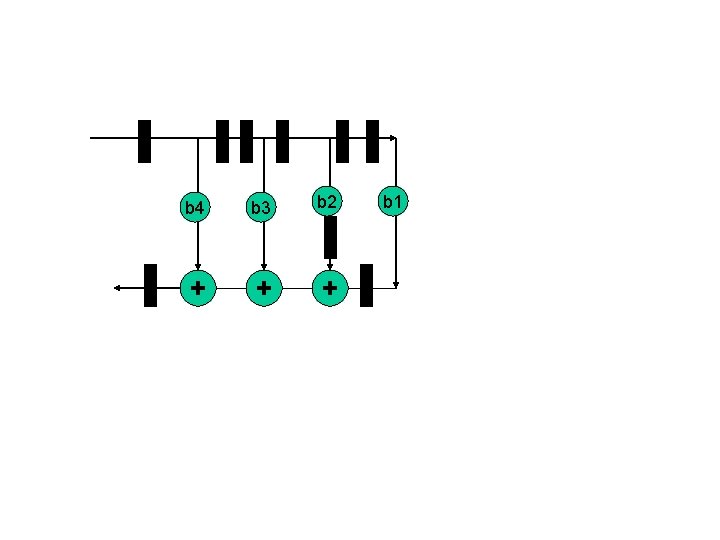

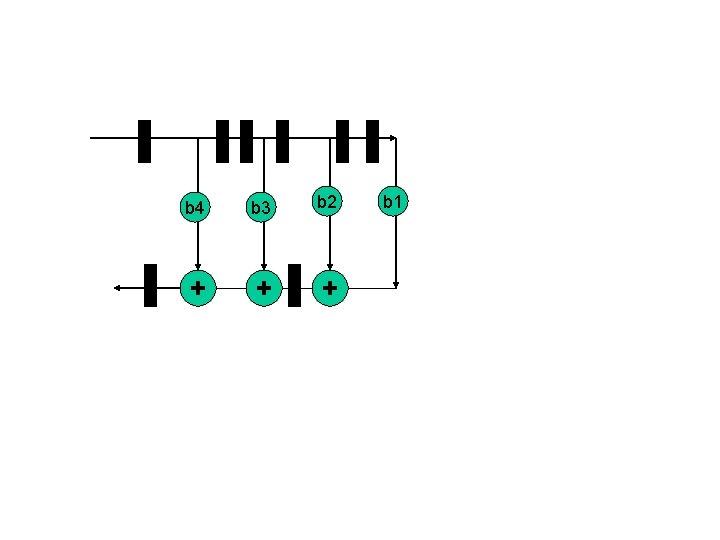

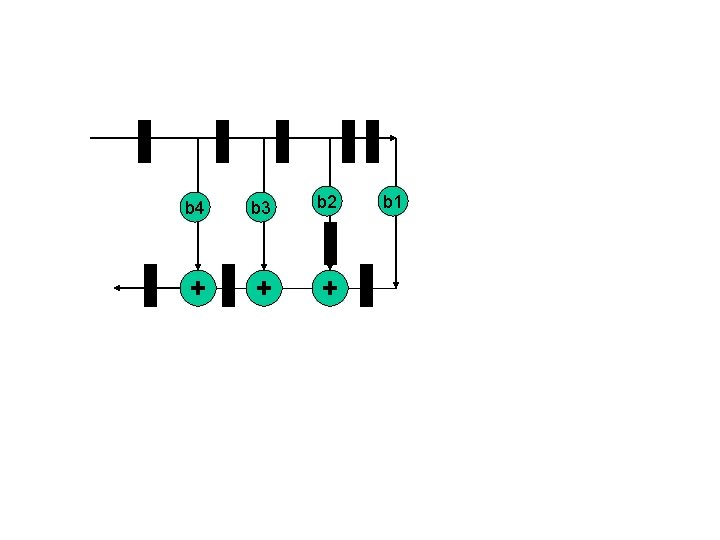

FIR-filter-like structure

- Input and output are separate.
- Input and output move synchronously.
- Weights b4, b3, b2, b1 stay in space.

Snapshot 1: input a4 enters; the input registers hold 0, 0, 0. Output: a4*b4.

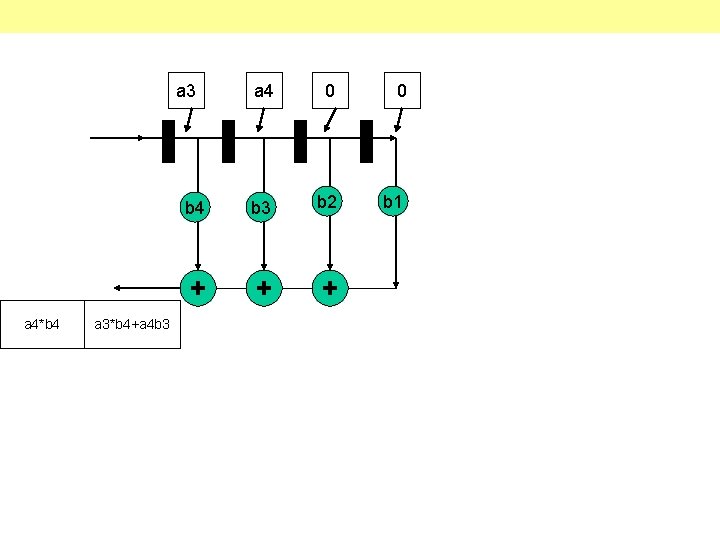

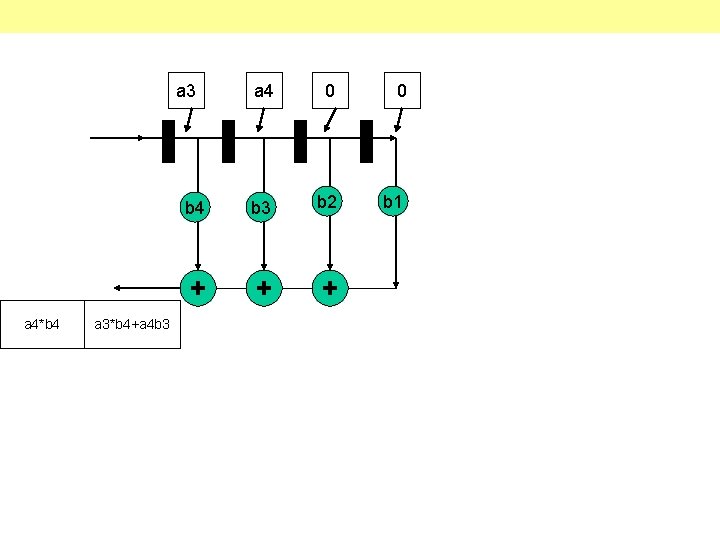

Snapshot 2: input a3 enters; the input registers hold a4, 0, 0. Outputs so far: a4*b4, a3*b4 + a4*b3.

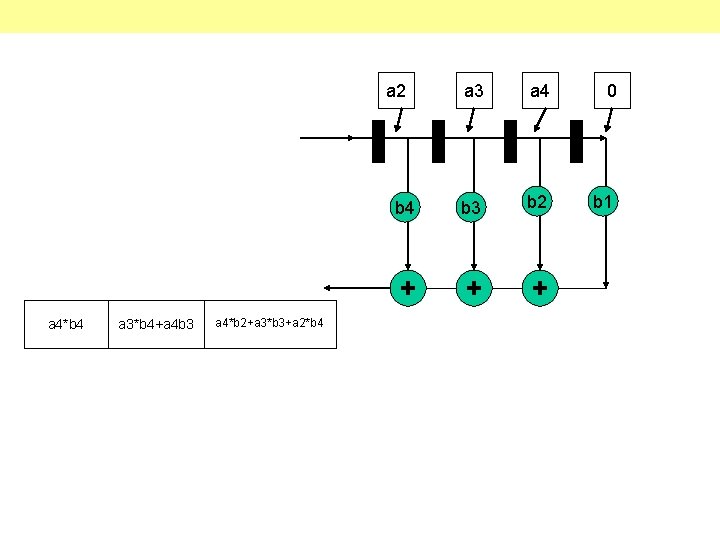

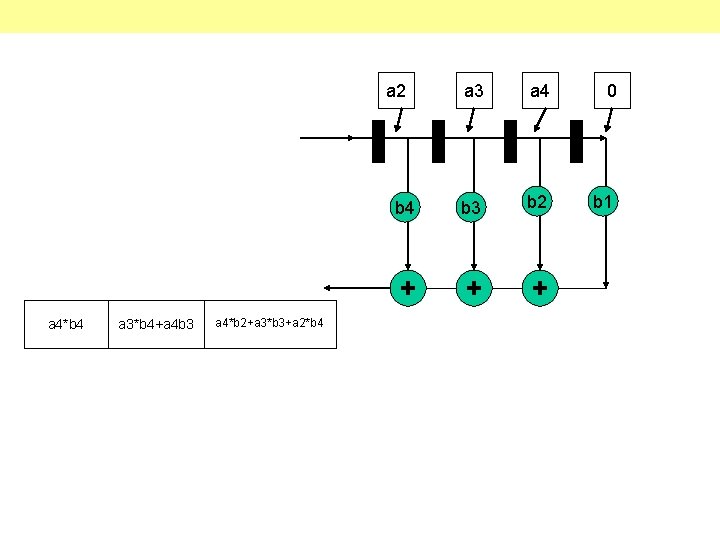

Snapshot 3: input a2 enters; the input registers hold a3, a4, 0. Outputs so far: a4*b4, a3*b4 + a4*b3, a4*b2 + a3*b3 + a2*b4.

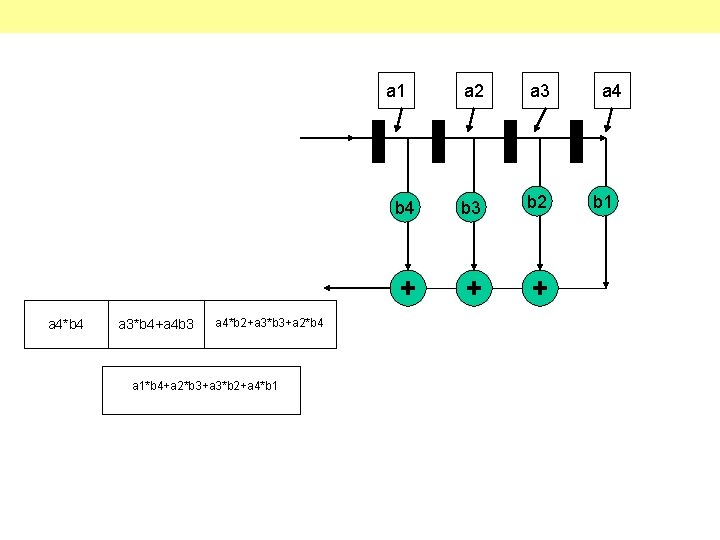

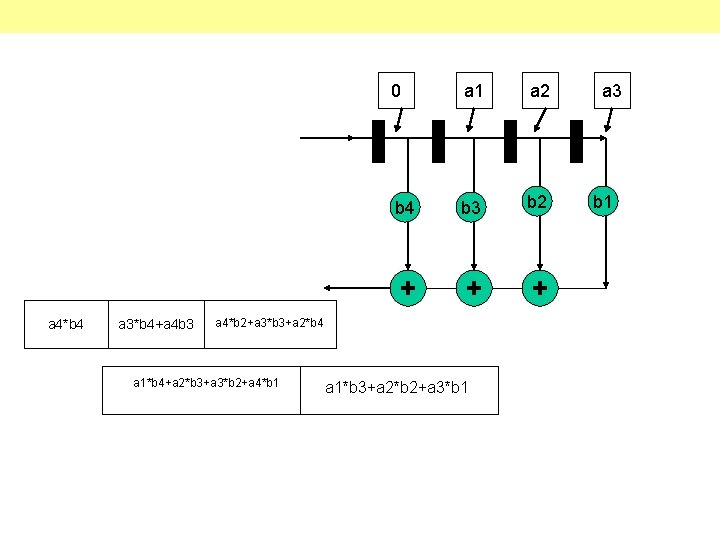

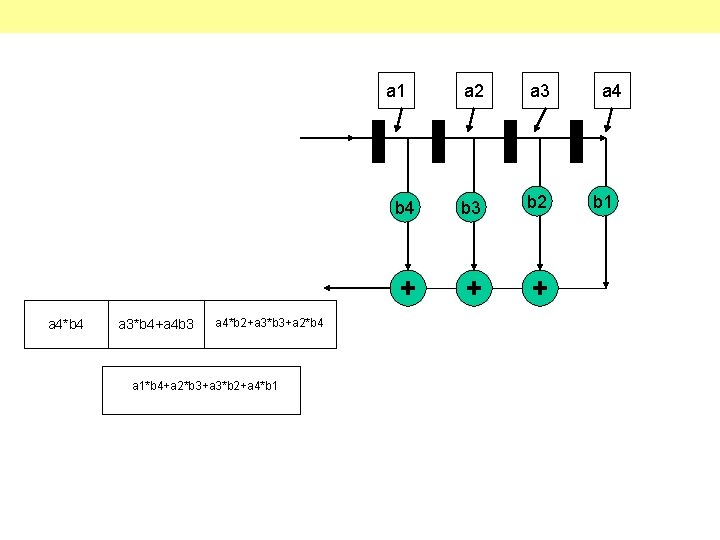

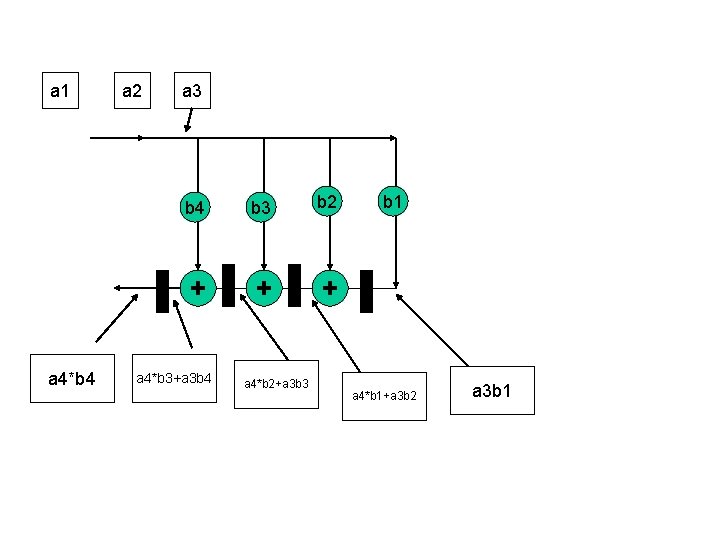

Snapshot 4: input a1 enters; the input registers hold a2, a3, a4. Outputs so far: a4*b4, a3*b4 + a4*b3, a4*b2 + a3*b3 + a2*b4, a1*b4 + a2*b3 + a3*b2 + a4*b1.

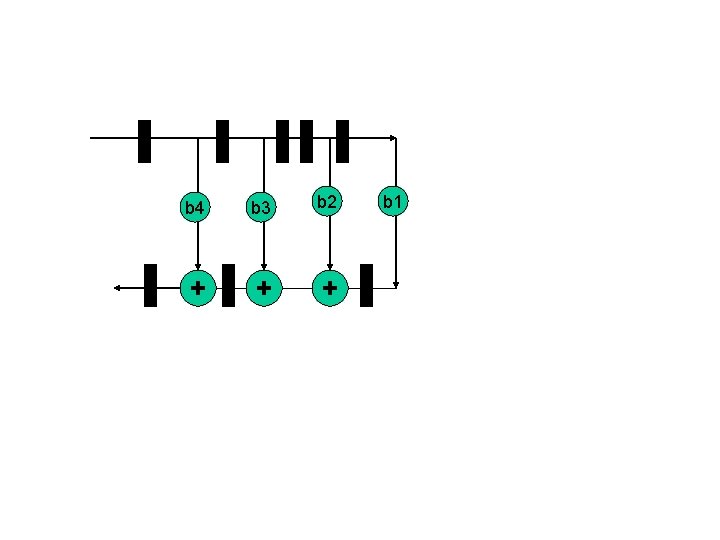

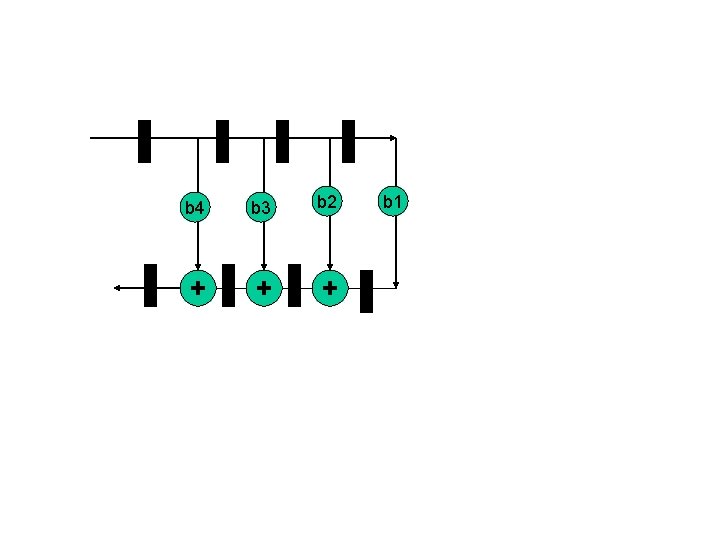

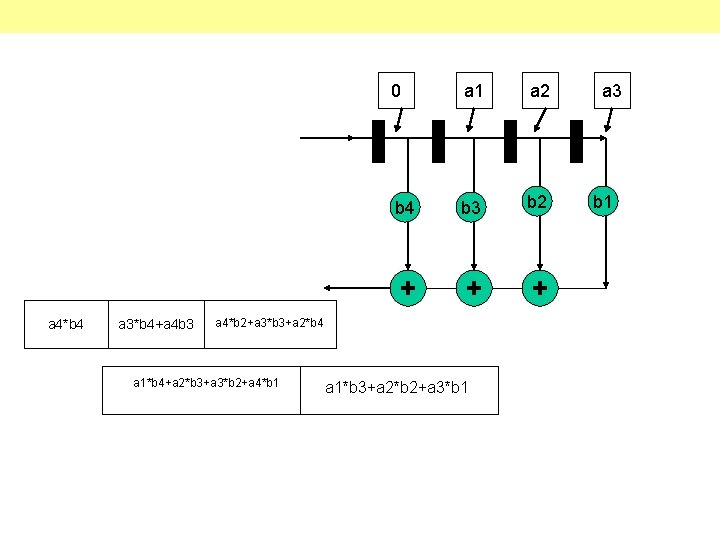

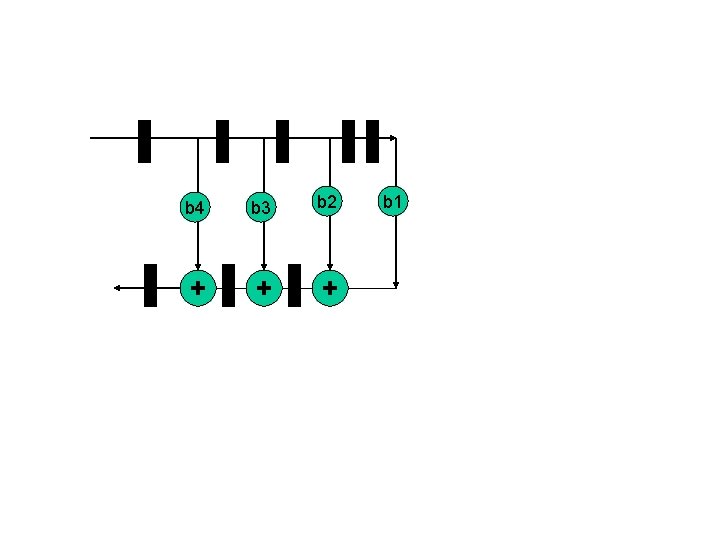

Snapshot 5: input 0 enters; the input registers hold a1, a2, a3. Outputs so far: a4*b4, a3*b4 + a4*b3, a4*b2 + a3*b3 + a2*b4, a1*b4 + a2*b3 + a3*b2 + a4*b1, a1*b3 + a2*b2 + a3*b1.
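The snapshots of the FIR-filter-like structure can be replayed in a few lines of Python. This is only a minimal sketch of the data flow, not the circuit itself: the function name `fir_direct_form` and the numeric values picked for a1..a4 and b1..b4 are assumptions made for illustration.

```python
def fir_direct_form(inputs, weights):
    """Stream `inputs` through an FIR-like structure whose weights stay in place.

    `weights[0]` multiplies the newest sample and `weights[-1]` the oldest one,
    which matches the left-to-right order b4, b3, b2, b1 in the snapshots.
    """
    k = len(weights)
    delay = [0] * (k - 1)                 # input registers, initially zero
    outputs = []
    # Append k-1 zeros so that the last inputs are flushed through the registers.
    for x in list(inputs) + [0] * (k - 1):
        taps = [x] + delay                # current sample, then the delayed ones
        outputs.append(sum(b * t for b, t in zip(weights, taps)))
        delay = [x] + delay[:-1]          # the input moves by one register
    return outputs


# Hypothetical values: a1..a4 = 1, 2, 3, 4 and b1..b4 = 5, 6, 7, 8.
a4_to_a1 = [4, 3, 2, 1]
b4_to_b1 = [8, 7, 6, 5]
product = fir_direct_form(a4_to_a1, b4_to_b1)
print(product)  # first entries are a4*b4 = 32 and a3*b4 + a4*b3 = 52
```

The output list holds the coefficients of the product polynomial, highest degree first, which is why the same structure serves both as an FIR filter and as a polynomial multiplier.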

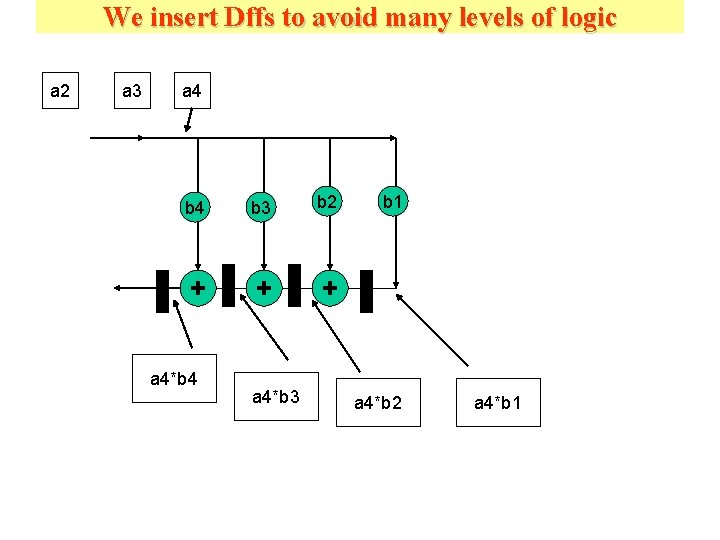

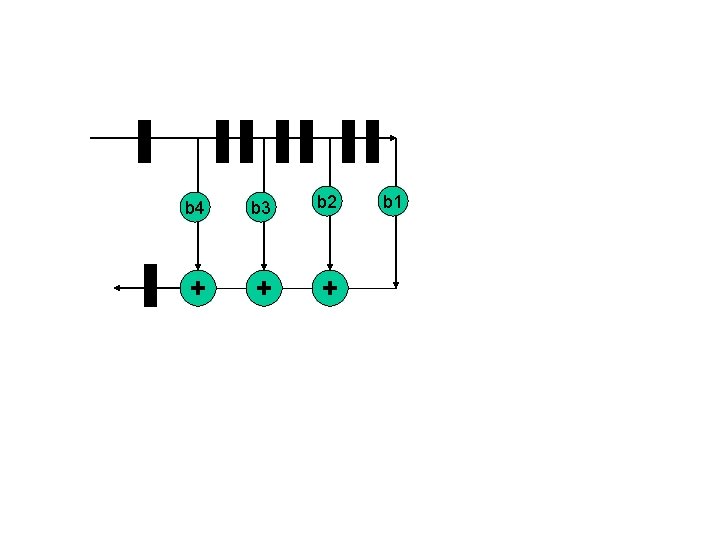

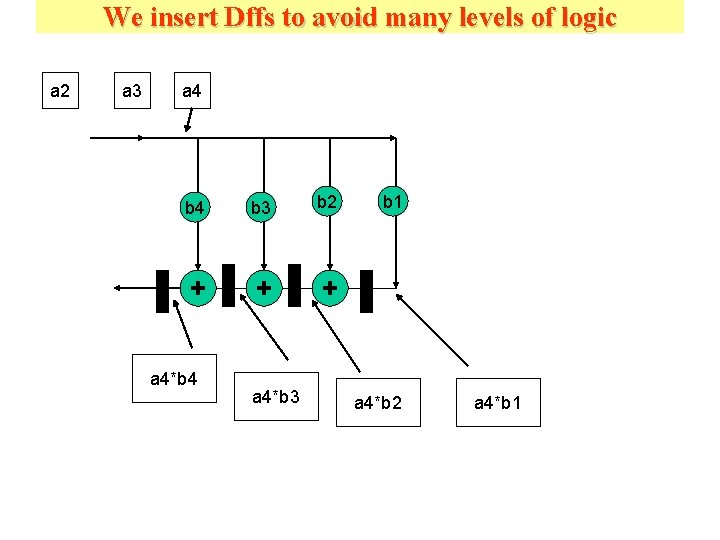

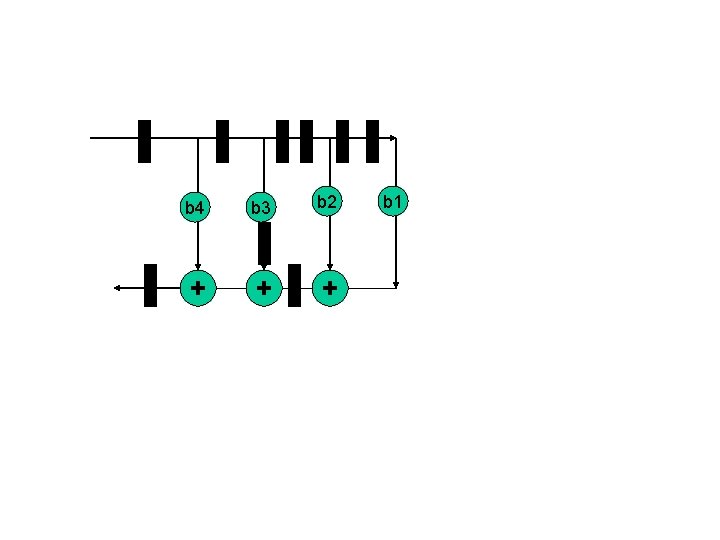

We insert D flip-flops (Dffs) between the adders to avoid many levels of logic.

Snapshot 1: input a4 is applied (a3 and a2 wait). Output: a4*b4. The registers hold a4*b3, a4*b2, a4*b1.

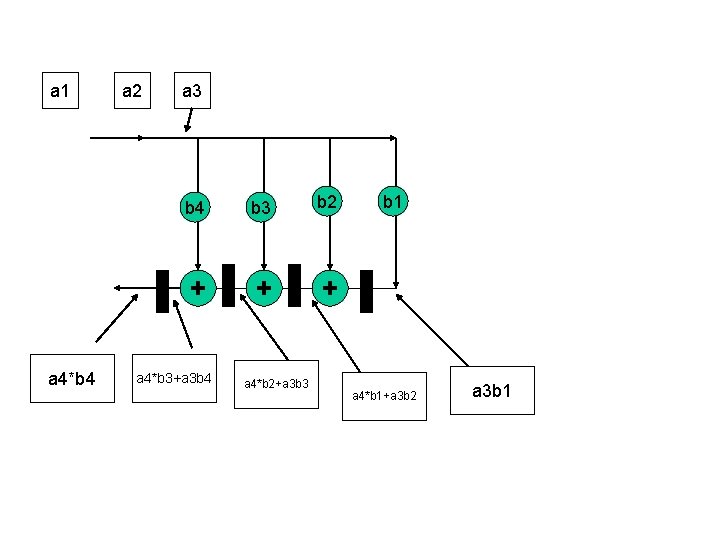

Snapshot 2: input a3 is applied (a2 and a1 wait). Outputs so far: a4*b4, a4*b3 + a3*b4. The registers hold a4*b2 + a3*b3, a4*b1 + a3*b2, a3*b1.

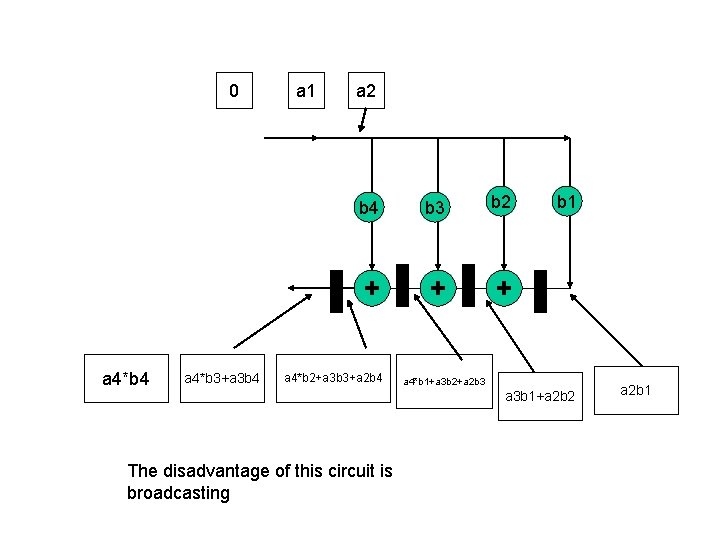

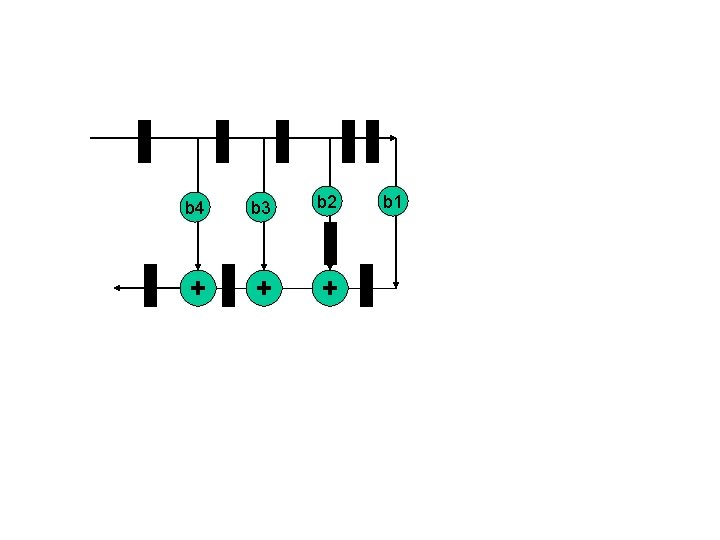

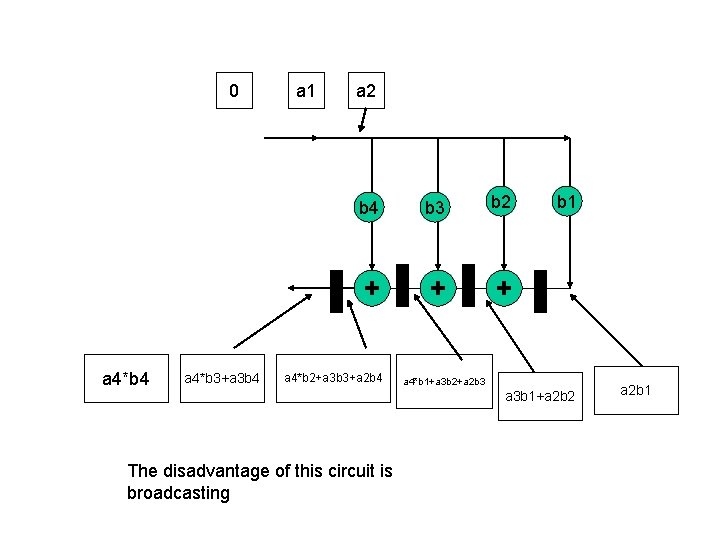

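Snapshot 3: input a2 is applied (a1 and 0 wait). Outputs so far: a4*b4, a4*b3 + a3*b4, a4*b2 + a3*b3 + a2*b4. The registers hold a4*b1 + a3*b2 + a2*b3, a3*b1 + a2*b2, a2*b1.

The disadvantage of this circuit is broadcasting: every input value must reach all multipliers at once.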

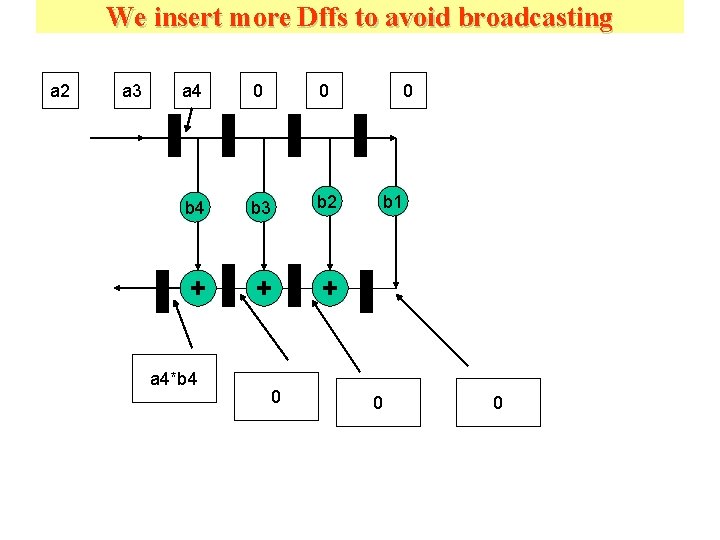

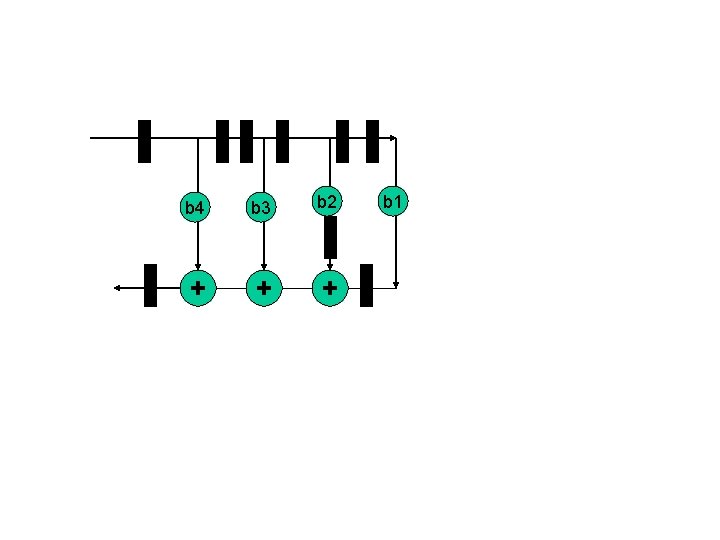

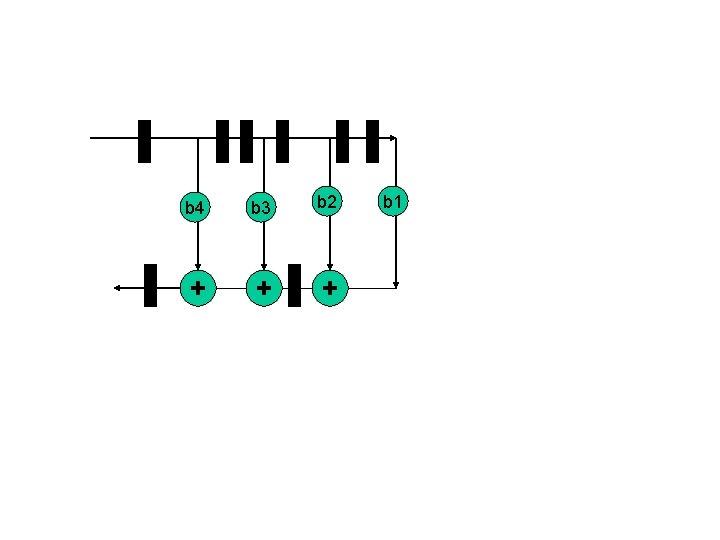

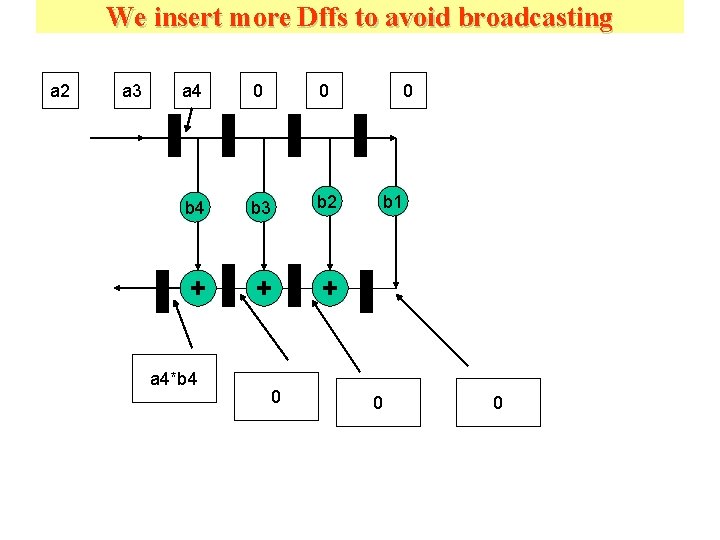

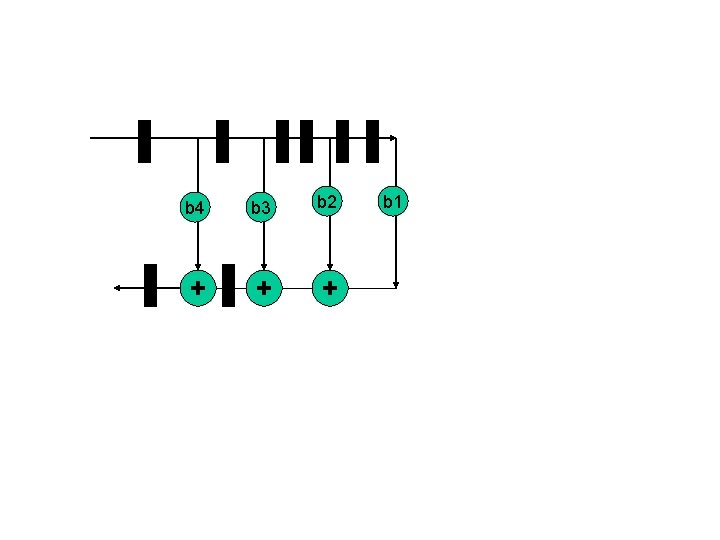

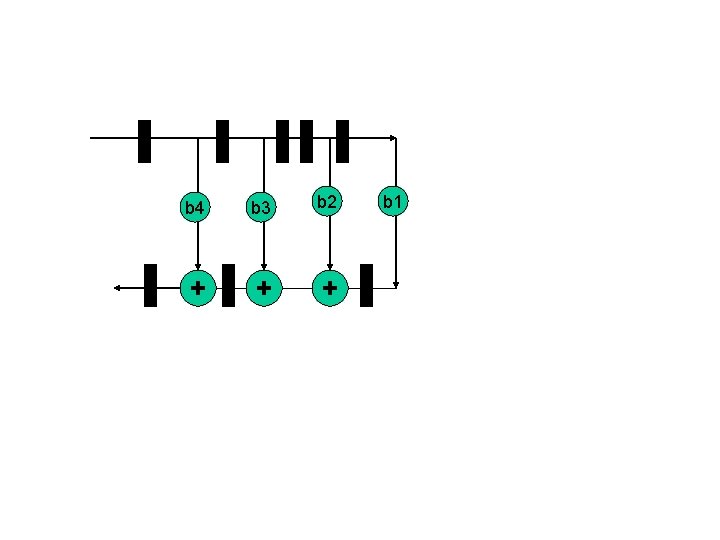

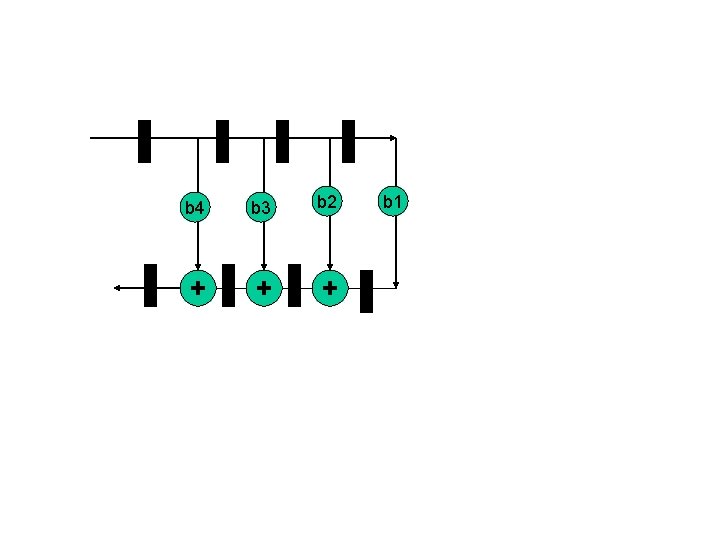

We insert more Dffs, now in the input path, to avoid broadcasting.

Snapshot 1: input a4 enters (a3 and a2 wait); the input registers hold 0, 0. Output: a4*b4. All other registers hold 0.

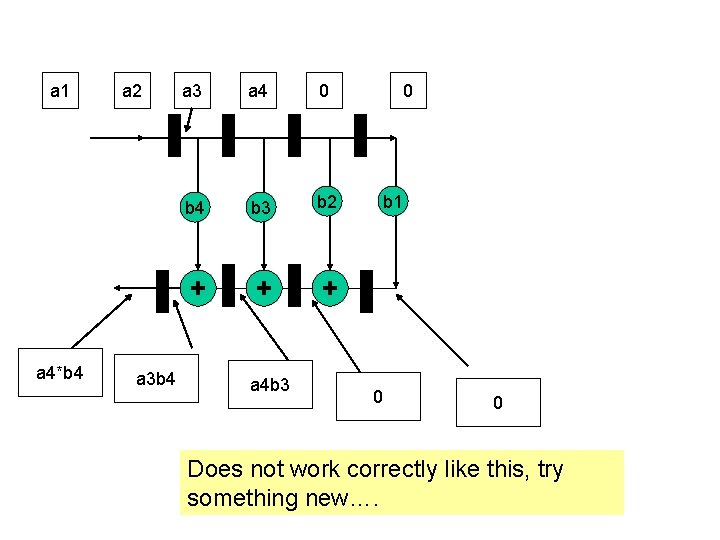

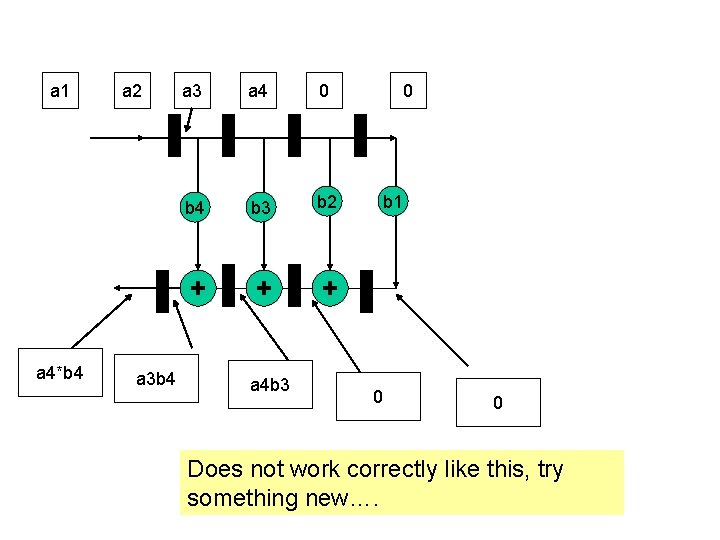

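Snapshot 2: input a3 enters (a2 and a1 wait); the input registers hold a4, 0. Outputs so far: a4*b4, a3*b4. The term a4*b3 is still sitting in a register; the other registers hold 0.

It does not work correctly like this, so we try something new.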

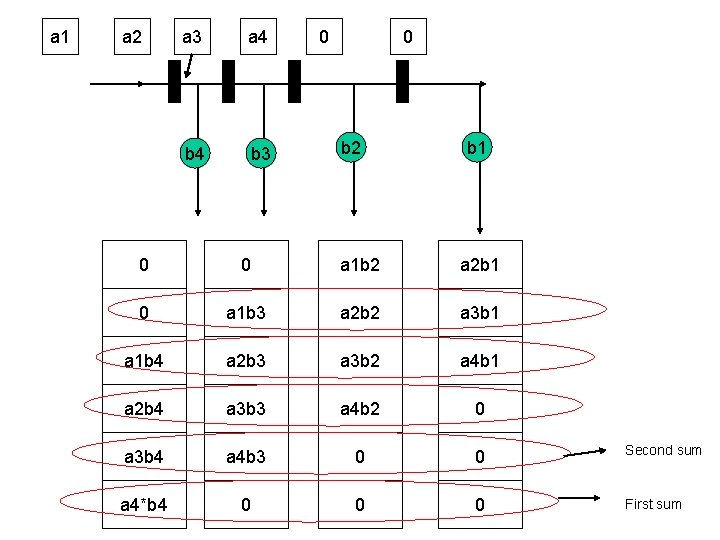

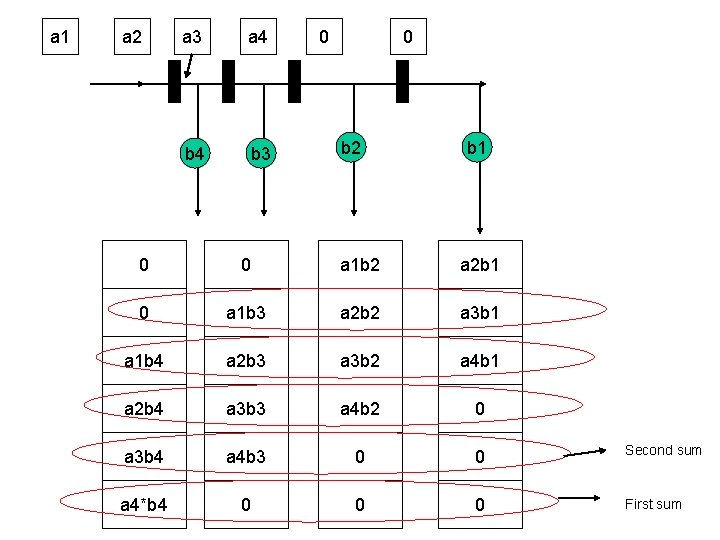

Terms of the successive output sums (inputs a1 to a4, weights b1 to b4):

- First sum: a4*b4, 0, 0, 0
- Second sum: a3*b4, a4*b3, 0, 0
- Third sum: a2*b4, a3*b3, a4*b2, 0
- Fourth sum: a1*b4, a2*b3, a3*b2, a4*b1
- Fifth sum: a1*b3, a2*b2, a3*b1
- Sixth sum: a1*b2, a2*b1, 0

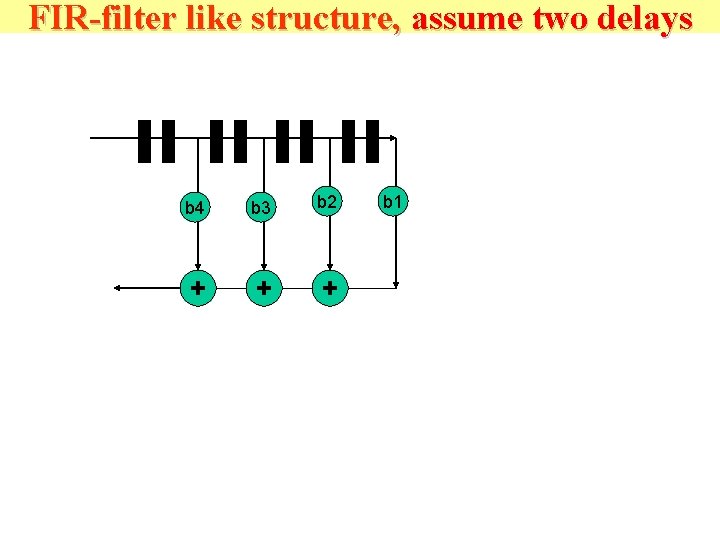

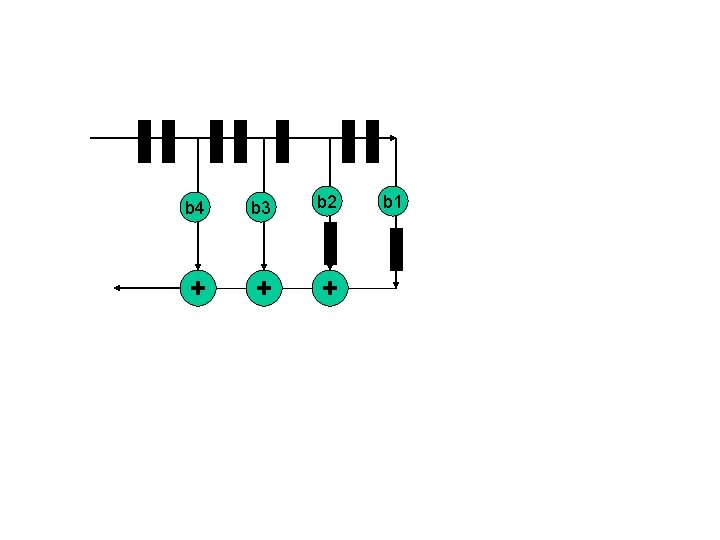

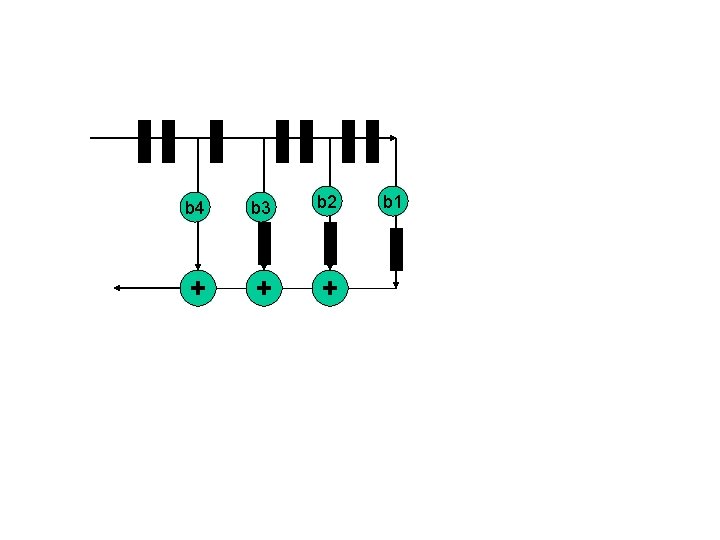

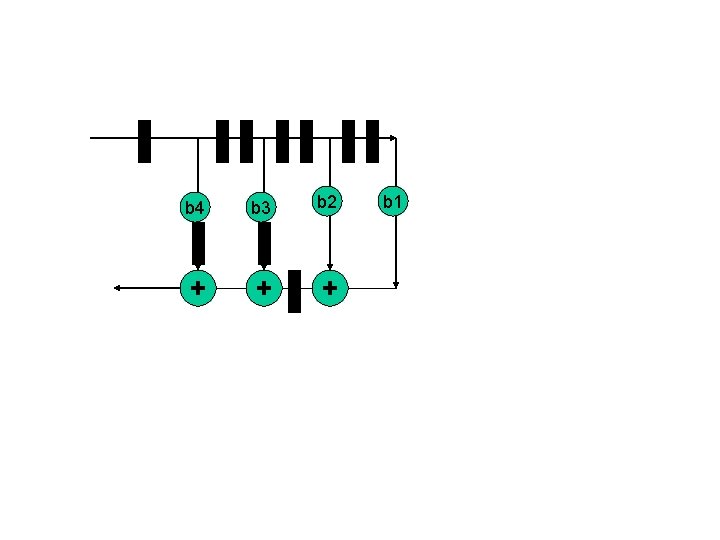

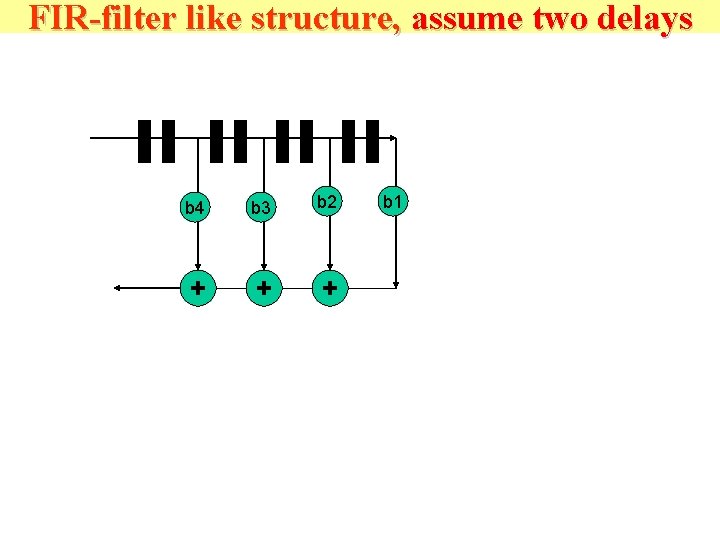

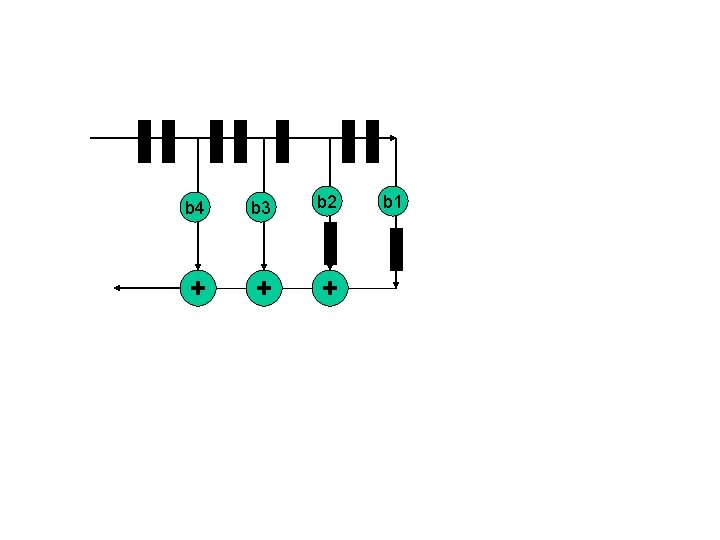

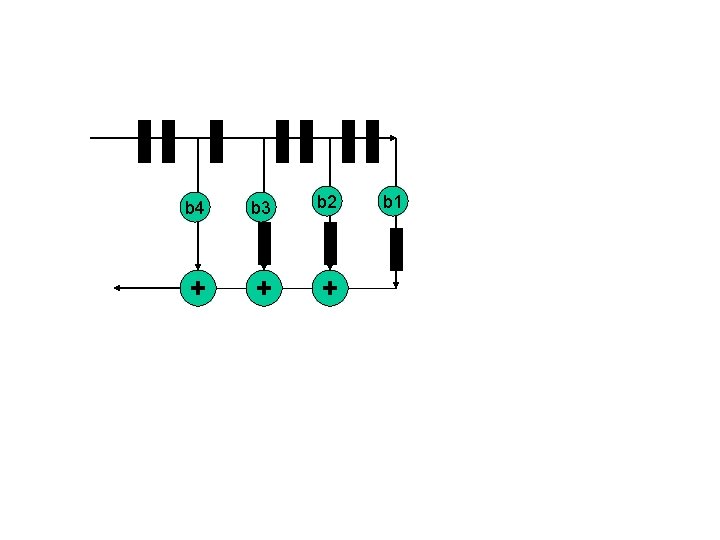

FIR-filter-like structure, assuming two delays: weights b4, b3, b2, b1 and a chain of three adders.

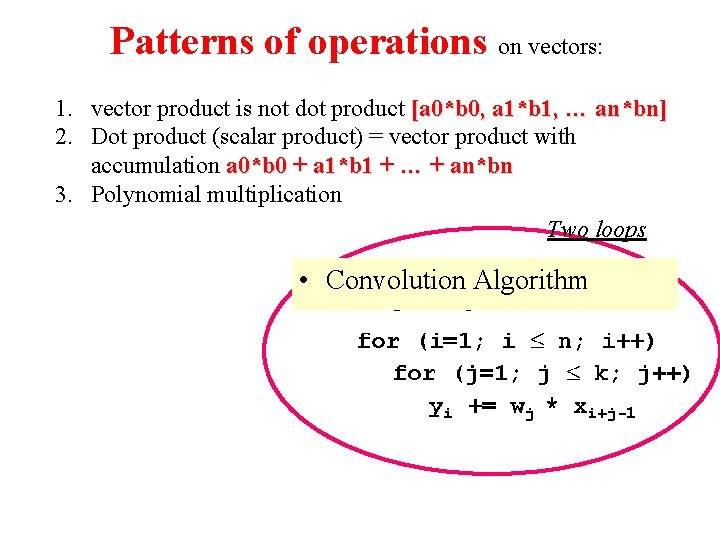

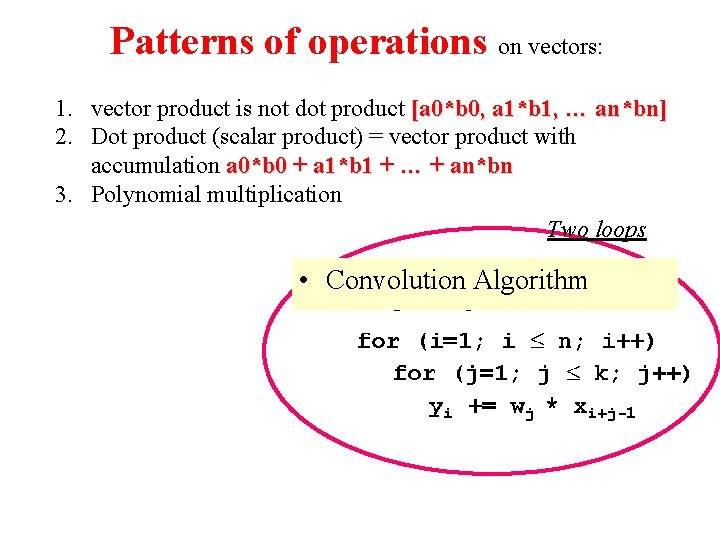

Patterns of operations on vectors:

1. Vector product (elementwise; it is not the dot product): [a0*b0, a1*b1, …, an*bn]
2. Dot product (scalar product) = vector product with accumulation: a0*b0 + a1*b1 + … + an*bn
3. Polynomial multiplication: two loops

Convolution Algorithm
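The three patterns can be written out in plain Python to make the difference concrete. This is a small sketch; the function names are chosen here for illustration and the polynomial coefficients are assumed to be stored lowest degree first.

```python
def vector_product(a, b):
    """Pattern 1: elementwise product, no accumulation."""
    return [x * y for x, y in zip(a, b)]


def dot_product(a, b):
    """Pattern 2: elementwise product with accumulation into one scalar."""
    total = 0
    for x, y in zip(a, b):
        total += x * y
    return total


def polynomial_multiply(a, b):
    """Pattern 3: two nested loops; coefficients are lowest degree first."""
    result = [0] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            result[i + j] += x * y      # x^i * x^j contributes to x^(i+j)
    return result


a = [1, 2, 3]
b = [4, 5, 6]
print(vector_product(a, b))       # [4, 10, 18]
print(dot_product(a, b))          # 32
print(polynomial_multiply(a, b))  # [4, 13, 28, 27, 18]
```

Polynomial multiplication is a convolution of the two coefficient sequences, which is why it leads directly to the convolution algorithm.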

Example 3: FIR Filter or Convolution

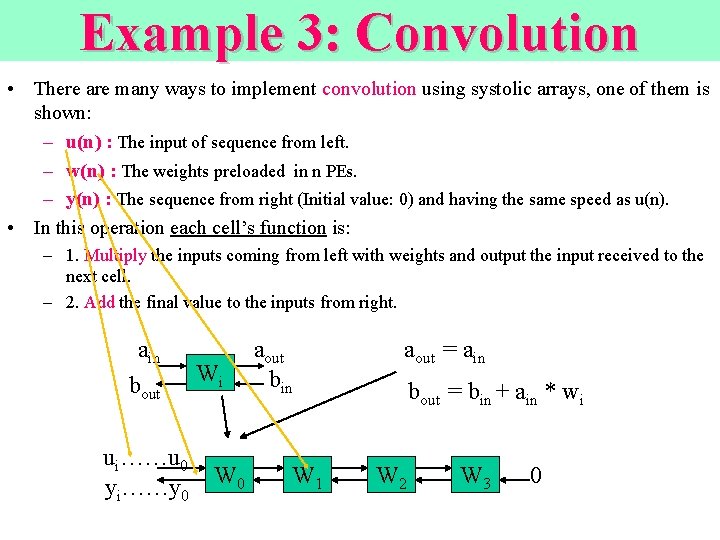

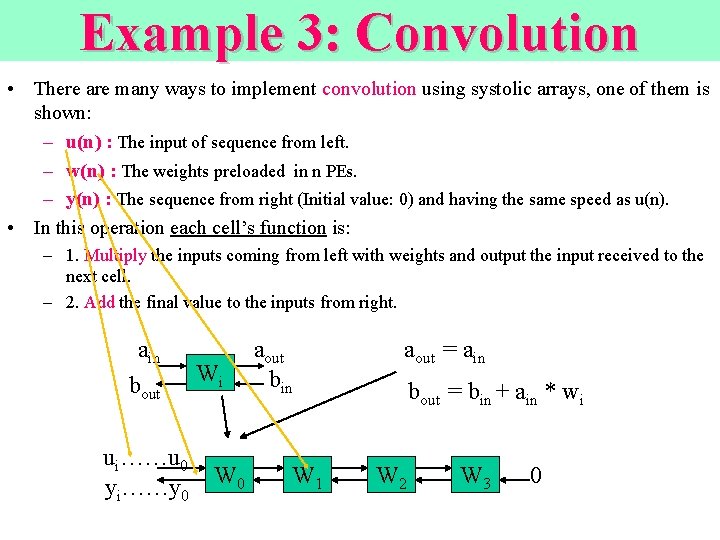

Example 3: Convolution

- There are many ways to implement convolution using systolic arrays; one of them is shown:
  - u(n): the input sequence, entering from the left.
  - w(n): the weights, preloaded in the n PEs.
  - y(n): the sequence entering from the right (initial value: 0) and moving at the same speed as u(n).
- In this operation each cell's function is:
  1. Multiply the input coming from the left by the weight, and pass the received input on to the next cell.
  2. Add the product to the value coming from the right.

Cell with weight Wi, inputs ain and bin, outputs aout and bout: aout = ain, bout = bin + ain * wi.

Array: ui … u0 on the left, yi … y0 on the left, 0 on the right; the cells hold W0, W1, W2, W3.

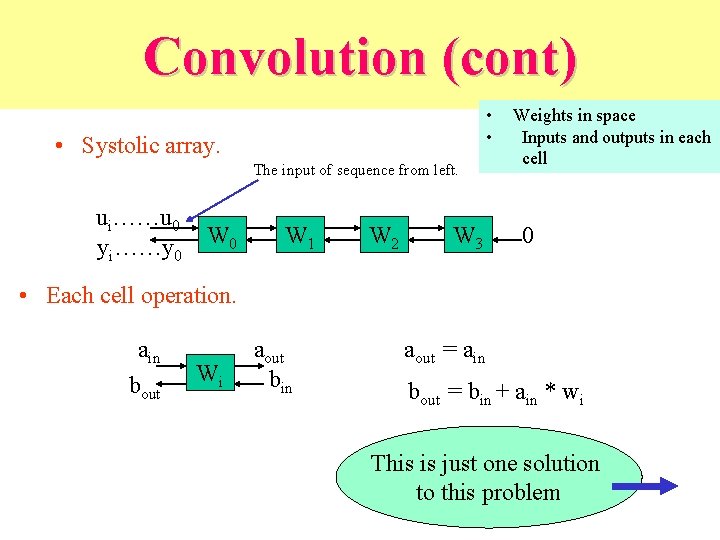

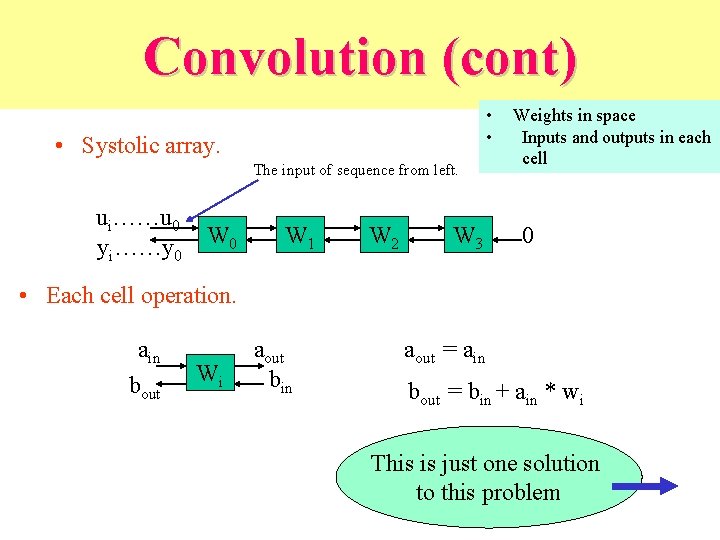

Convolution (cont)

- Systolic array: the input sequence ui … u0 enters from the left, 0 enters from the right, and the sequence yi … y0 moves through the array. The weights W1, W2, W3 stay in space.
- Each cell's operation, with inputs ain and bin and outputs aout and bout: aout = ain, bout = bin + ain * wi.

This is just one solution to this problem.

Convolution

- Can be 1D, 2D, 3D, etc.
- Is very important in many applications.
- Can be implemented efficiently in various architectures.
- Is an excellent example for comparing various computer architectures: SIMD, MIMD, CA, pipelined, systolic.

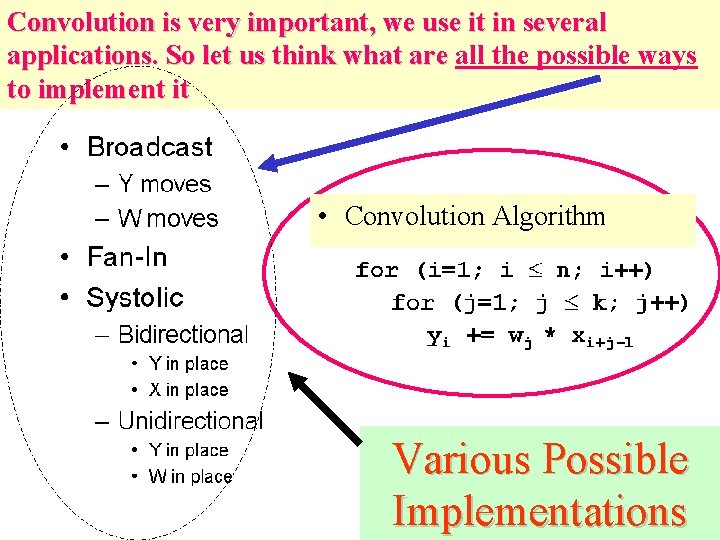

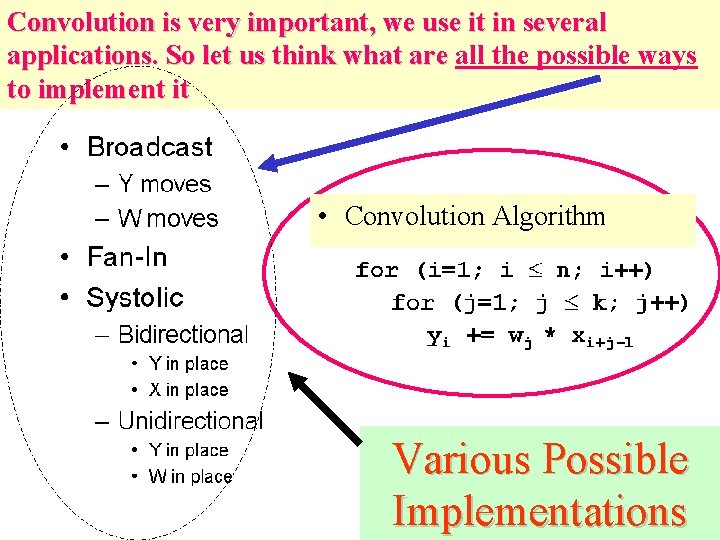

Convolution is very important and we use it in several applications, so let us think about all the possible ways to implement it.

Convolution Algorithm: Various Possible Implementations

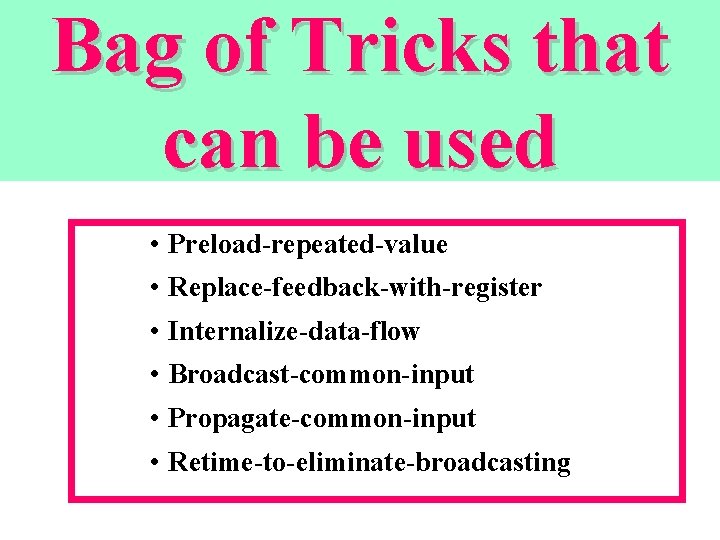

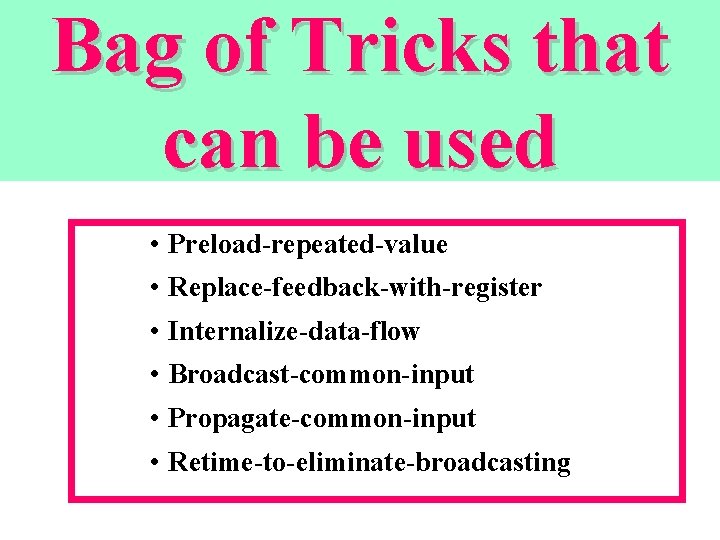

Bag of Tricks that can be used

- Preload-repeated-value
- Replace-feedback-with-register
- Internalize-data-flow
- Broadcast-common-input
- Propagate-common-input
- Retime-to-eliminate-broadcasting

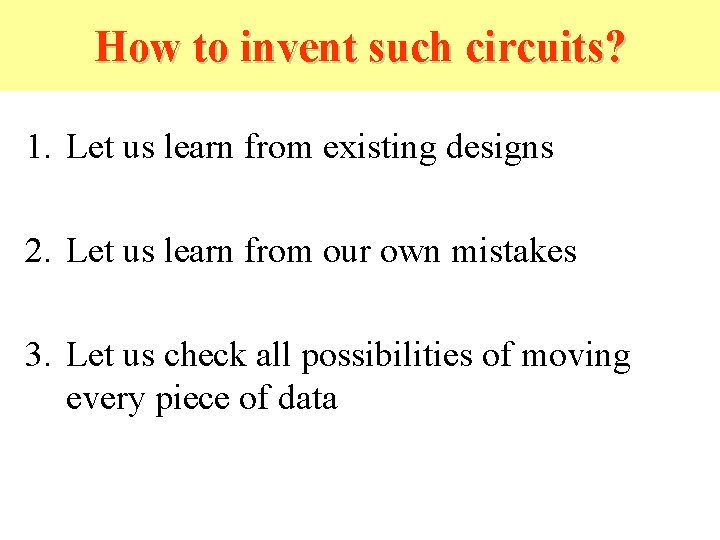

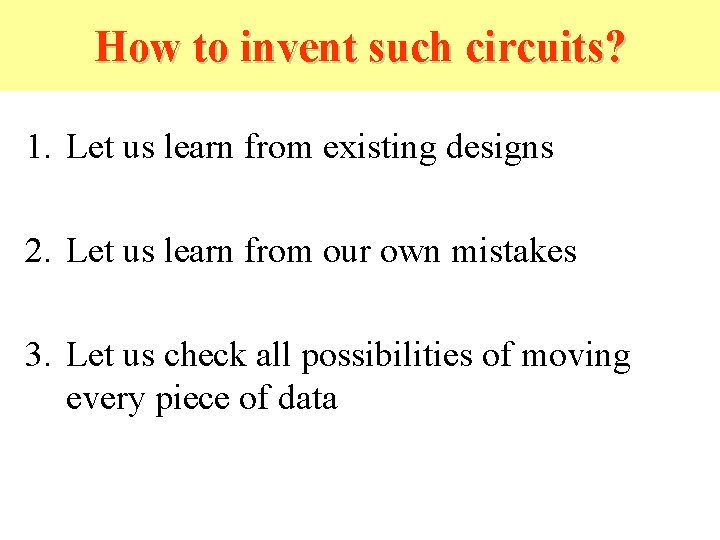

How to invent such circuits?

1. Let us learn from existing designs.
2. Let us learn from our own mistakes.
3. Let us check all possibilities of moving every piece of data.

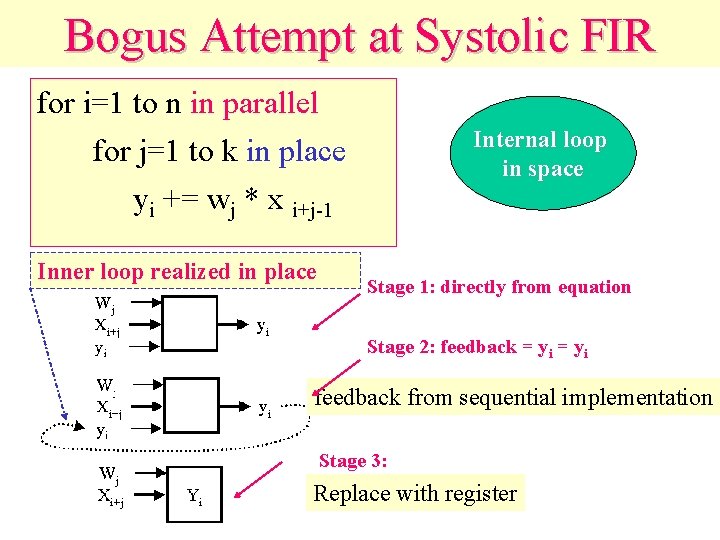

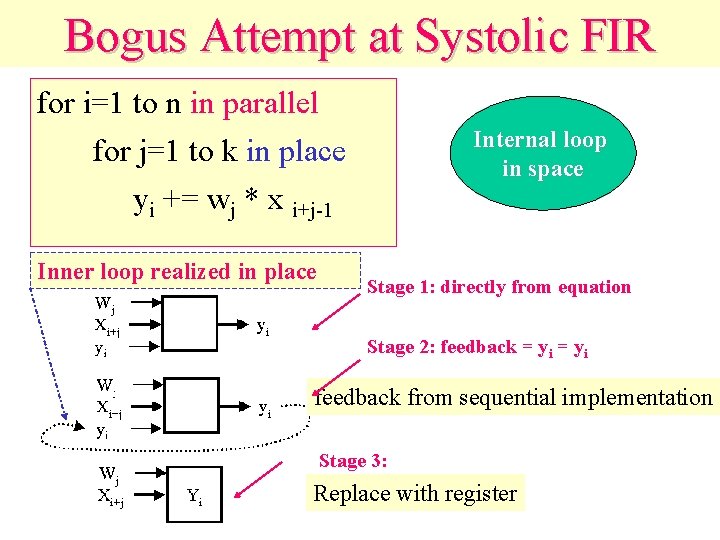

Bogus Attempt at Systolic FIR

for i = 1 to n in parallel: for j = 1 to k in place: y_i += w_j * x_{i+j-1}

Slide labels: internal loop in space; inner loop realized in place.

- Stage 1: directly from the equation.
- Stage 2: feedback of yi, taken from the sequential implementation.
- Stage 3: replace the feedback with a register.

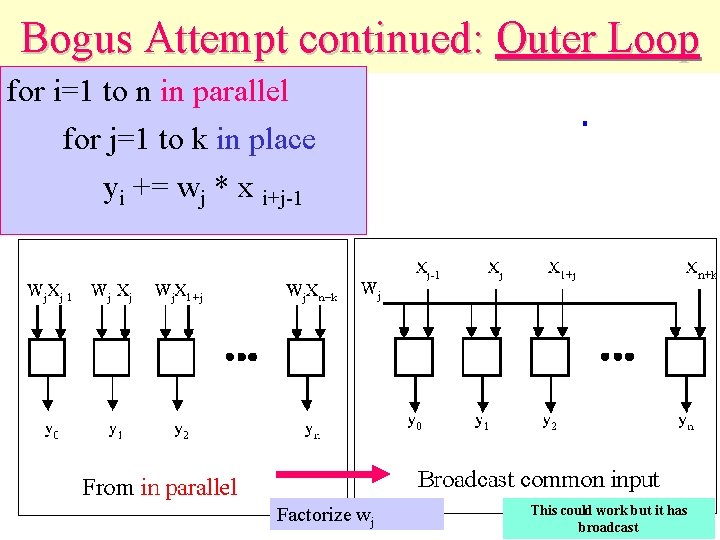

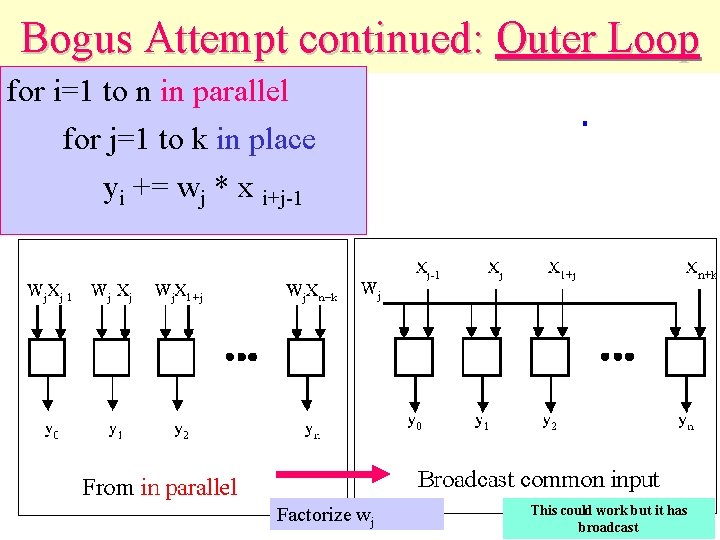

Bogus Attempt continued: Outer Loop

for i = 1 to n in parallel: for j = 1 to k in place: y_i += w_j * x_{i+j-1}

Factorize wj. This could work, but it has broadcast.

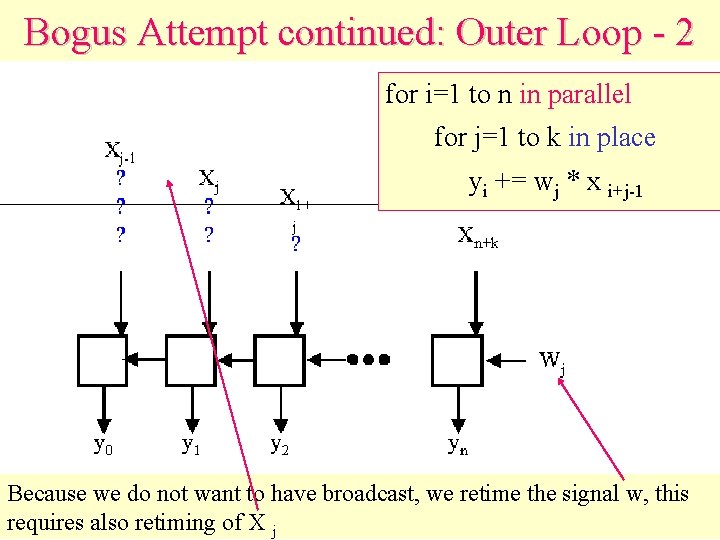

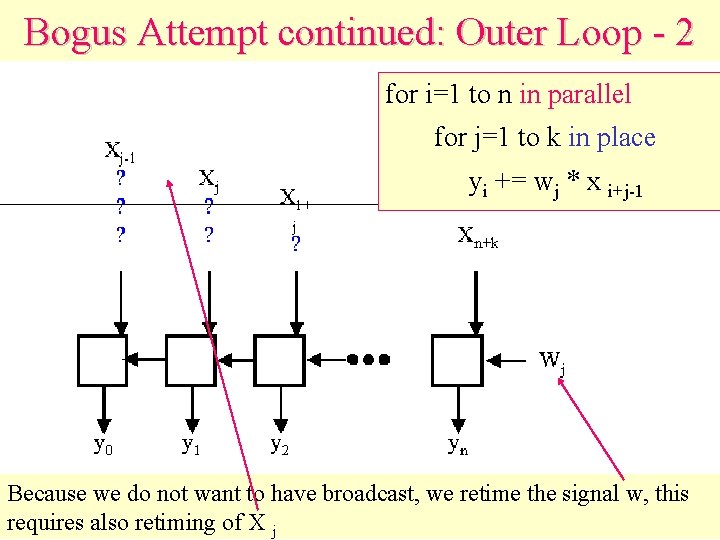

Bogus Attempt continued: Outer Loop - 2

for i = 1 to n in parallel: for j = 1 to k in place: y_i += w_j * x_{i+j-1}

Because we do not want broadcast, we retime the signal w; this also requires retiming of xj.

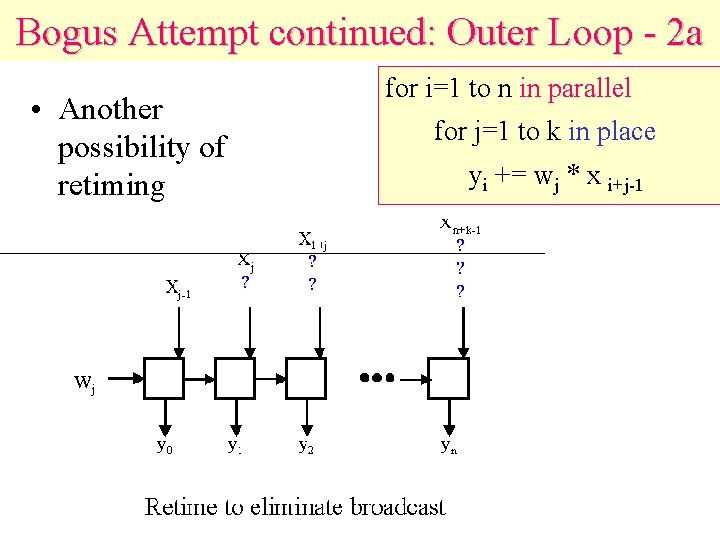

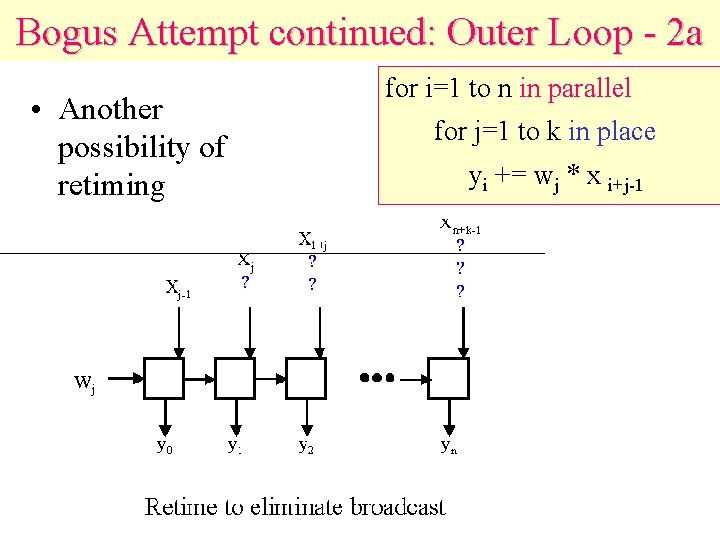

Bogus Attempt continued: Outer Loop - 2a

- Another possibility of retiming.

for i = 1 to n in parallel: for j = 1 to k in place: y_i += w_j * x_{i+j-1}

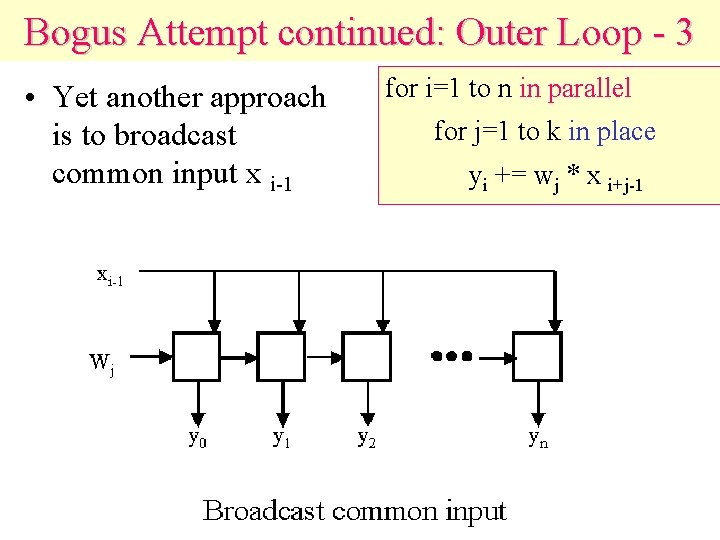

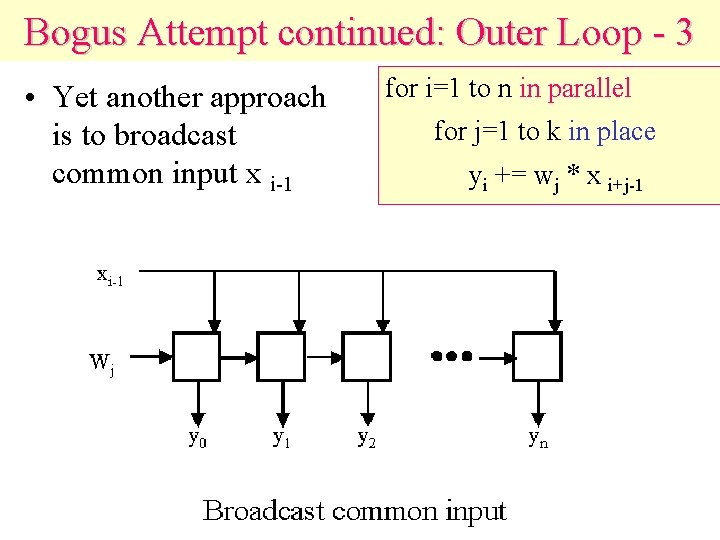

Bogus Attempt continued: Outer Loop - 3

- Yet another approach is to broadcast the common input x_{i-1}.

for i = 1 to n in parallel: for j = 1 to k in place: y_i += w_j * x_{i+j-1}

What have we achieved?

- We showed several possible beginnings of creating architectures.
- They were not successful, but they show the principles.
- We will continue to create architectures; these attempts were not a complete waste of time, because they can be used successfully in similar problems.
- You have to experiment with ideas!

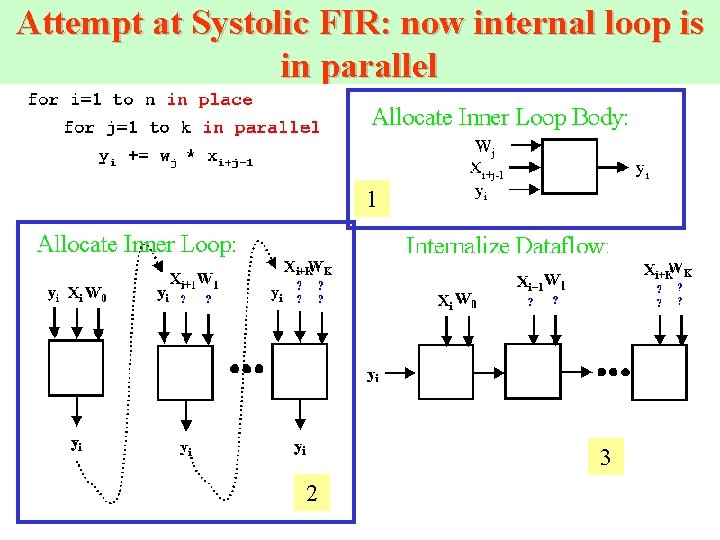

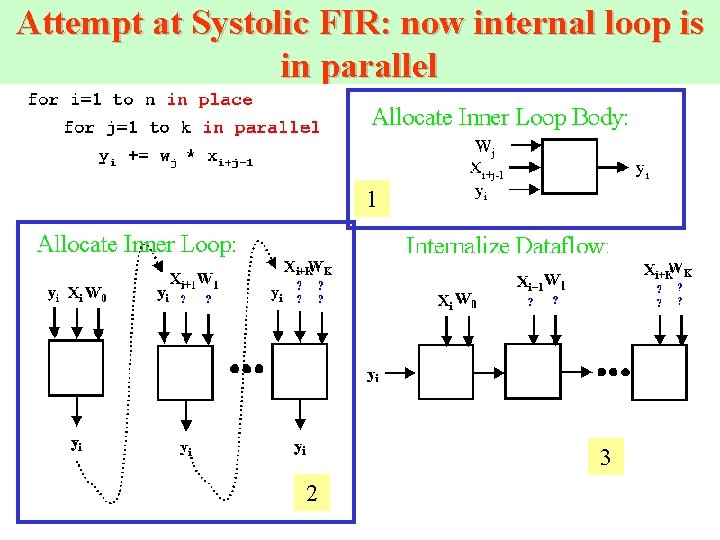

Internal loop in parallel

Attempt at Systolic FIR: now the internal loop is in parallel

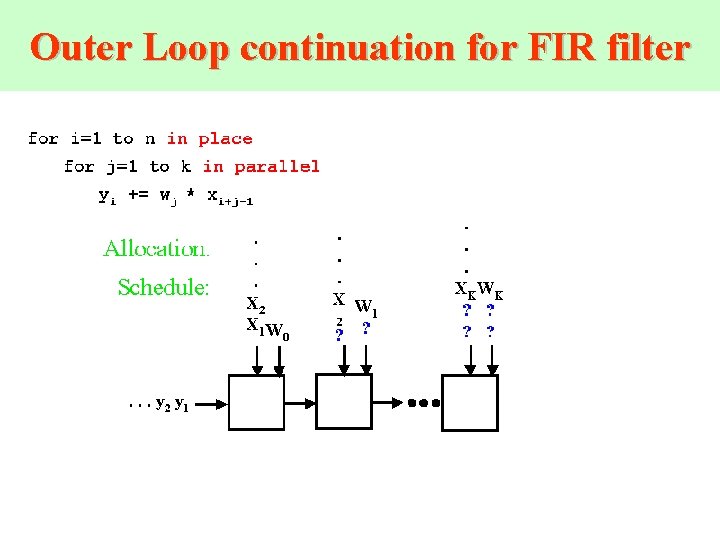

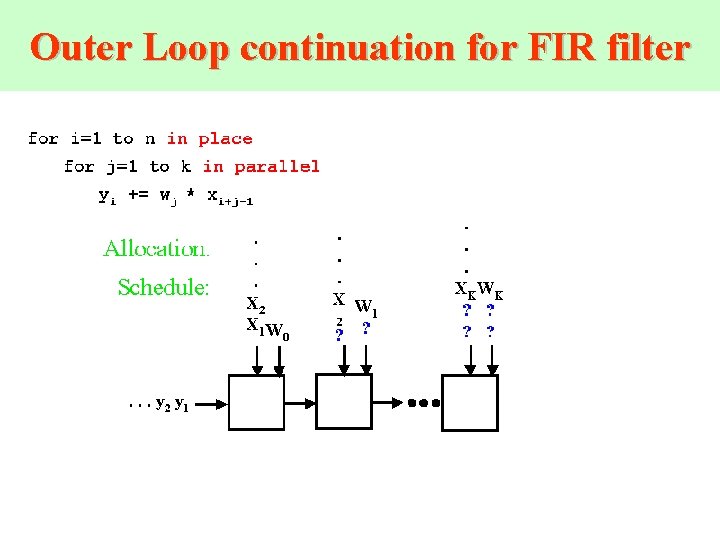

Outer Loop continuation for FIR filter

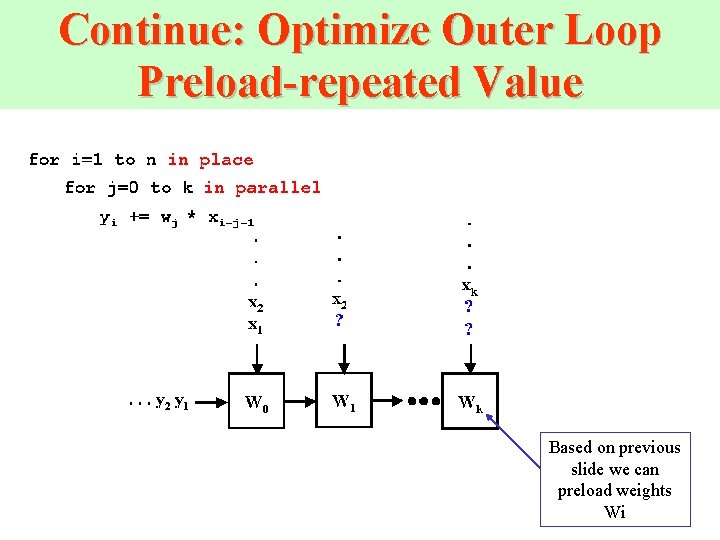

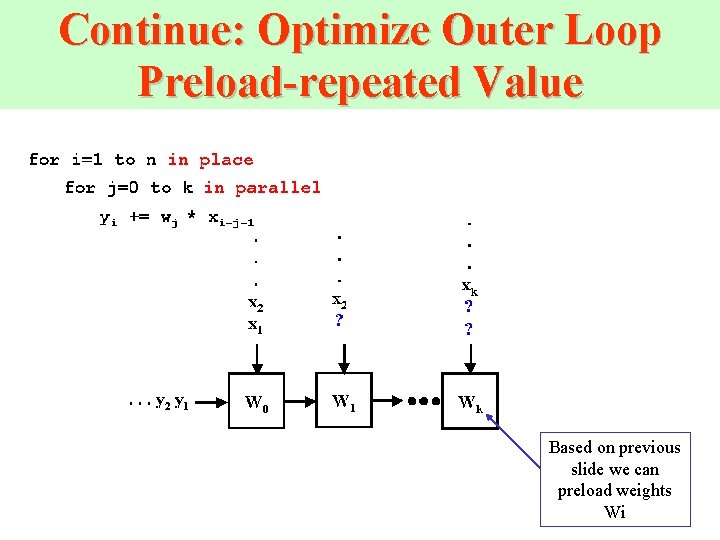

Continue: Optimize Outer Loop

Preload-repeated-value: based on the previous slide, we can preload the weights Wi.

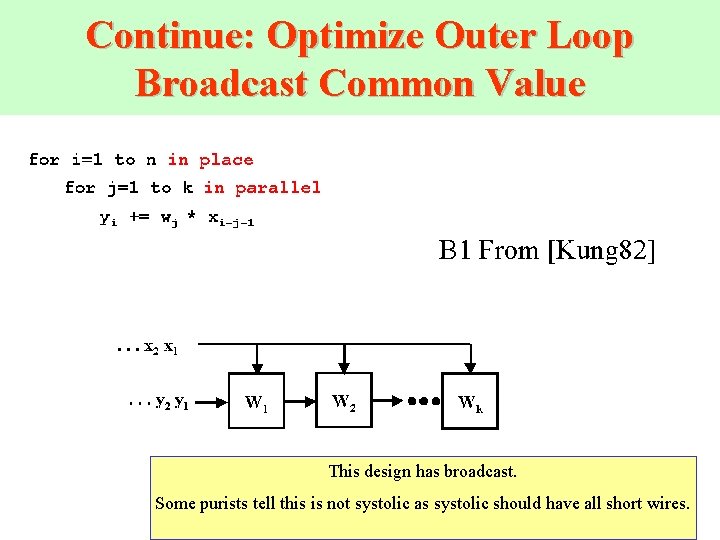

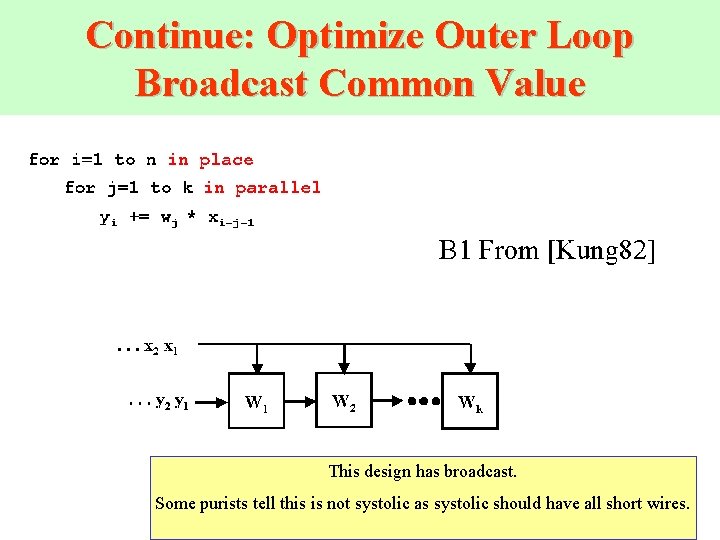

Continue: Optimize Outer Loop

Broadcast-common-value: this design has broadcast. Some purists say that it is not systolic, because a systolic design should have only short wires.

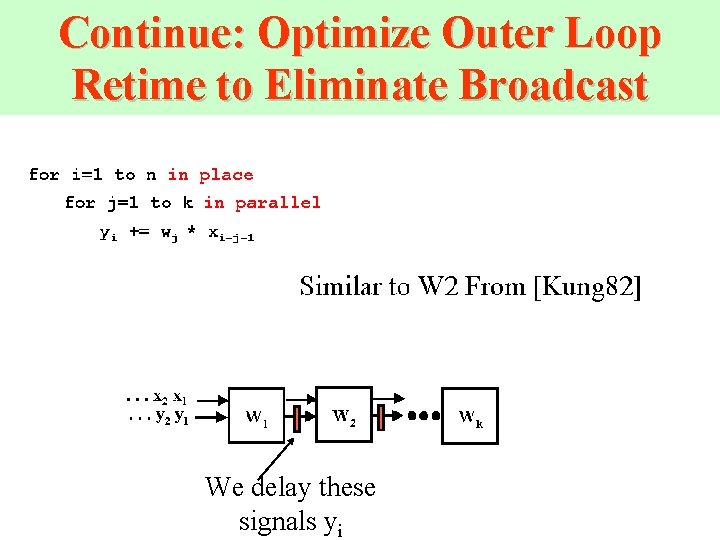

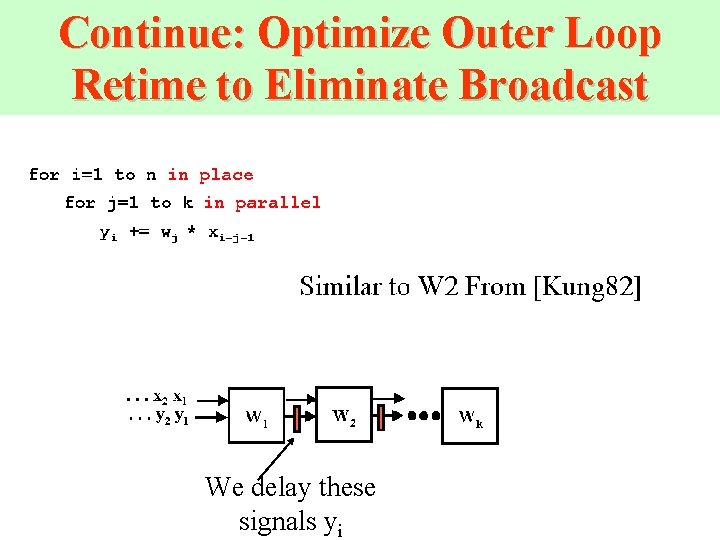

Continue: Optimize Outer Loop

Retime-to-eliminate-broadcast: we delay these signals (yi).

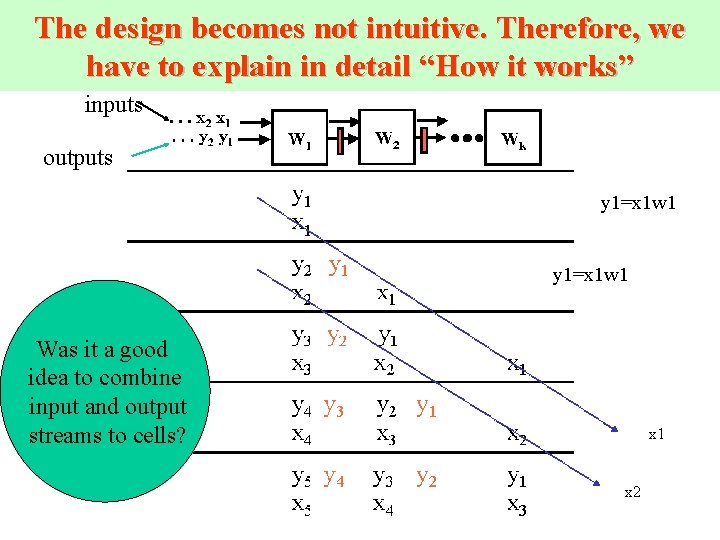

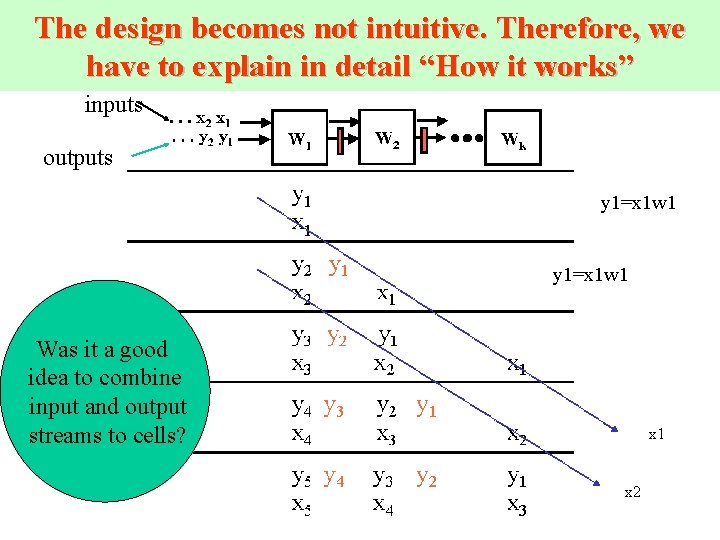

The design becomes unintuitive, so we have to explain in detail how it works. Inputs: x1, x2, …; outputs: y1 = x1 w1, …

Was it a good idea to combine the input and output streams to the cells?

Polynomial Multiplication of 1-D convolution problem

Types of systolic structure

- Convolution problem:
  - weights: $\{w_1, w_2, \dots, w_k\}$
  - inputs: $\{x_1, x_2, \dots, x_n\}$
  - results: $\{y_1, y_2, \dots, y_{n+1-k}\}$
  - $y_i = w_1 x_i + w_2 x_{i+1} + \dots + w_k x_{i+k-1}$ (combining two data streams)
- The grouping follows the work of H. T. Kung; we assume k = 3.

A well-known family of systolic designs for convolution computation

- Given the sequence of weights $\{w_1, w_2, \dots, w_k\}$
- and the input sequence $\{x_1, x_2, \dots, x_n\}$,
- compute the result sequence $\{y_1, y_2, \dots, y_{n+1-k}\}$
- defined by $y_i = w_1 x_i + w_2 x_{i+1} + \dots + w_k x_{i+k-1}$.
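Before comparing hardware designs, it helps to have a sequential reference for this definition. The sketch below evaluates the formula directly with two loops; the name `convolve_reference` is an assumption, and Python's 0-based indices replace the 1-based indices of the formula.

```python
def convolve_reference(w, x):
    """Directly evaluate y_i = w_1*x_i + w_2*x_(i+1) + ... + w_k*x_(i+k-1).

    `w` has k weights and `x` has n inputs; n + 1 - k results are returned.
    Indices are 0-based here, so w[j] pairs with x[i + j].
    """
    k, n = len(w), len(x)
    results = []
    for i in range(n - k + 1):        # outer loop over results
        y = 0
        for j in range(k):            # inner loop over weights
            y += w[j] * x[i + j]
        results.append(y)
    return results


print(convolve_reference([1, 2, 3], [1, 2, 3, 4, 5]))  # [14, 20, 26]
```

With k = 3 weights and n = 5 inputs there are n + 1 - k = 3 results. Every systolic design for this problem has to produce the same sequence; the designs differ only in which of x, w, and y move and which stay.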

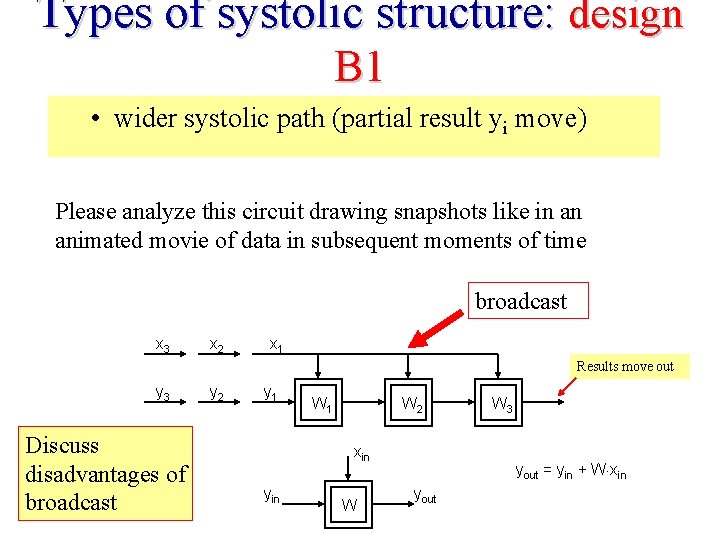

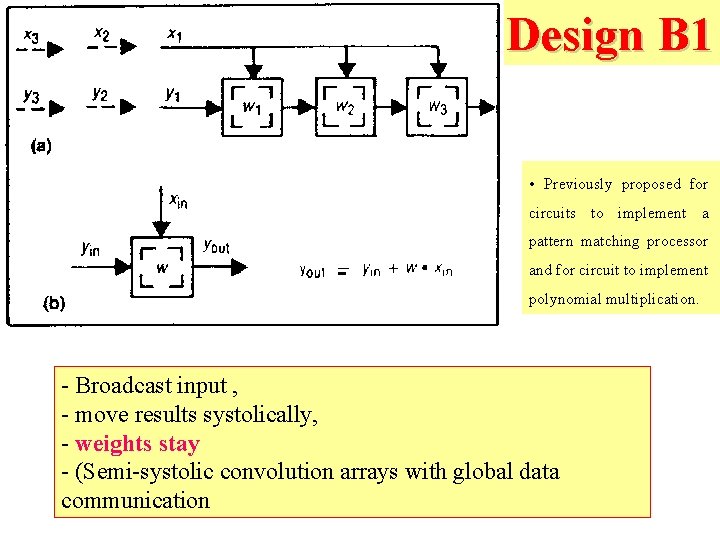

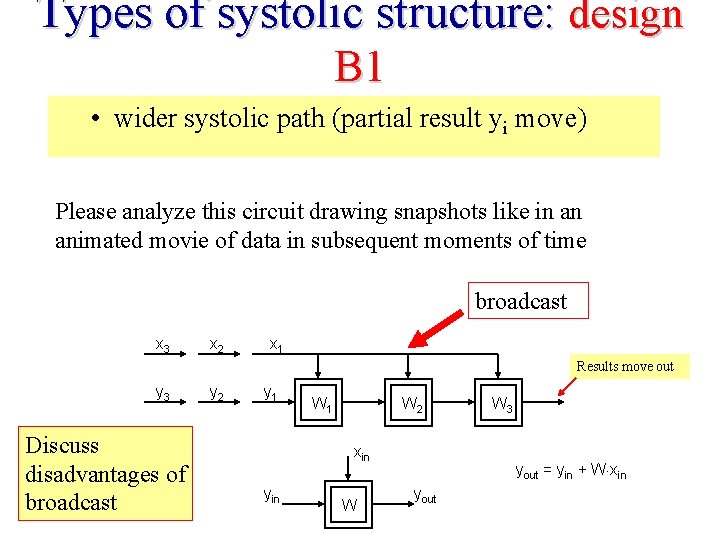

Design B1

- Broadcast inputs
- Move results systolically
- Weights stay

(Semi-systolic convolution arrays with global data communication)

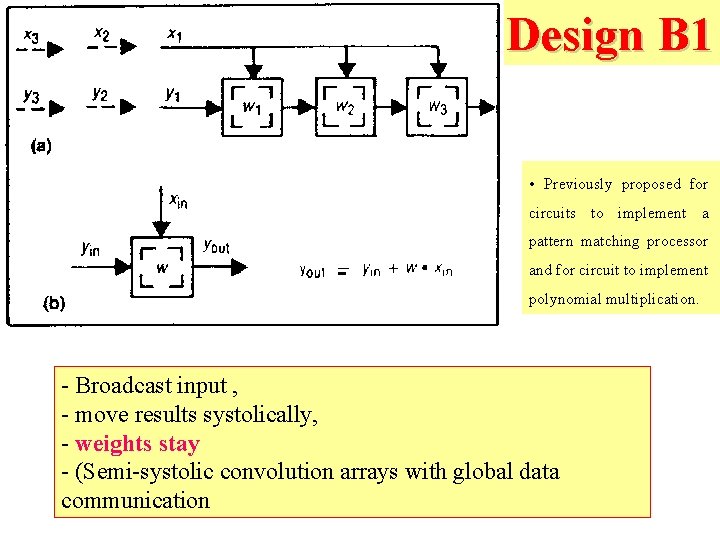

Design B1

- Previously proposed for circuits that implement a pattern-matching processor and for circuits that implement polynomial multiplication.
- Broadcast inputs, move results systolically, weights stay.
- (Semi-systolic convolution arrays with global data communication)

Types of systolic structure: design B1

- Wider systolic path (the partial results yi move).
- The inputs x1, x2, x3 are broadcast; the results y1, y2, y3 move out.
- Cell with weight W, inputs xin and yin, output yout: yout = yin + W × xin.

Please analyze this circuit by drawing snapshots of the data at subsequent moments of time, like the frames of an animated movie. Discuss the disadvantages of broadcast.
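One way to produce such snapshots is a cycle-by-cycle simulation. The following is a minimal sketch of design B1 under stated assumptions: the function name `design_b1` is hypothetical, and the cell that a partial result enters first is assumed to hold w1, the next one w2, and so on.

```python
def design_b1(w, x, trace=False):
    """Design B1: inputs broadcast, results move systolically, weights stay.

    Assumption of this sketch: cell j holds w[j], and a partial result visits
    the cells in the order 0, 1, ..., k-1, one cell per cycle.
    """
    k = len(w)
    y_regs = [0] * k              # y_regs[j] is the value leaving cell j
    results = []
    for t, x_t in enumerate(x):
        # Every cell sees the same broadcast x_t and computes
        # yout = yin + W * xin, where yin comes from its left neighbour.
        y_regs = [(y_regs[j - 1] if j > 0 else 0) + w[j] * x_t
                  for j in range(k)]
        if trace:
            print(f"t={t} broadcast x={x_t} y registers={y_regs}")
        if t >= k - 1:            # the pipeline is full: a finished y moves out
            results.append(y_regs[-1])
    return results


print(design_b1([1, 2, 3], [1, 2, 3, 4, 5], trace=True))
```

Calling it with `trace=True` prints one line per clock cycle, which corresponds to one frame of the "animated movie". The list comprehension reads the old register values before they are overwritten, which is how it models all cells latching at the same clock edge.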

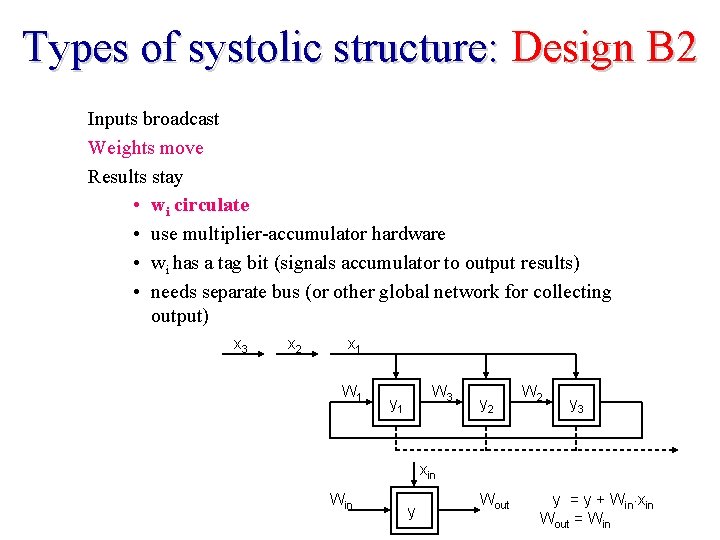

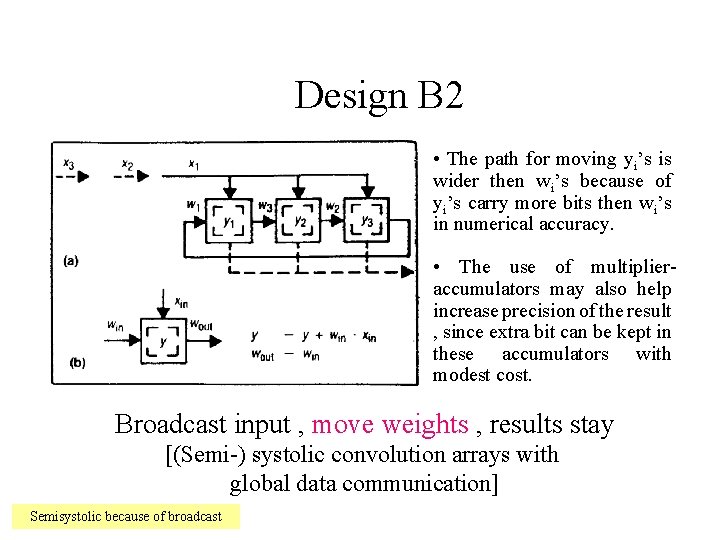

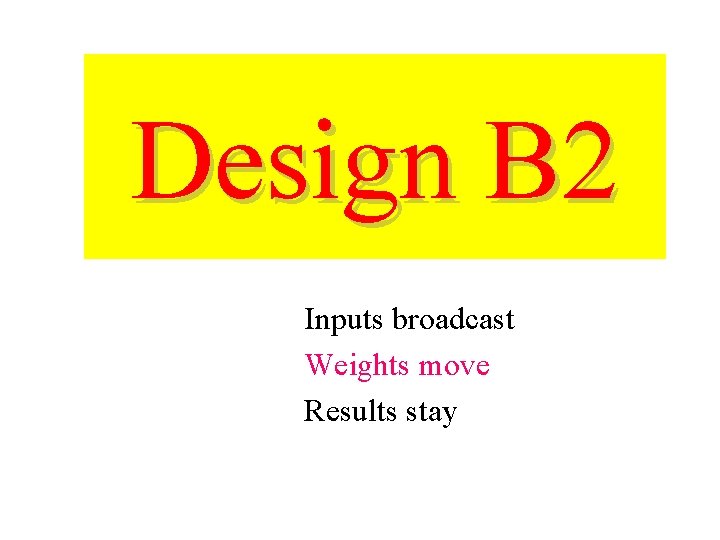

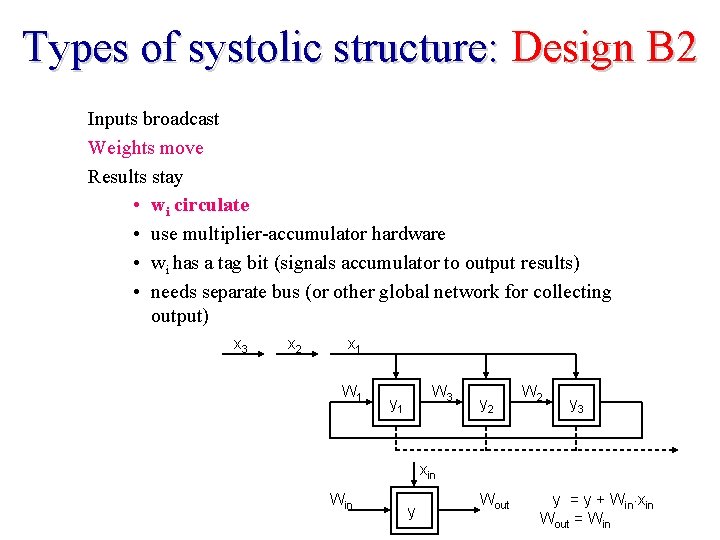

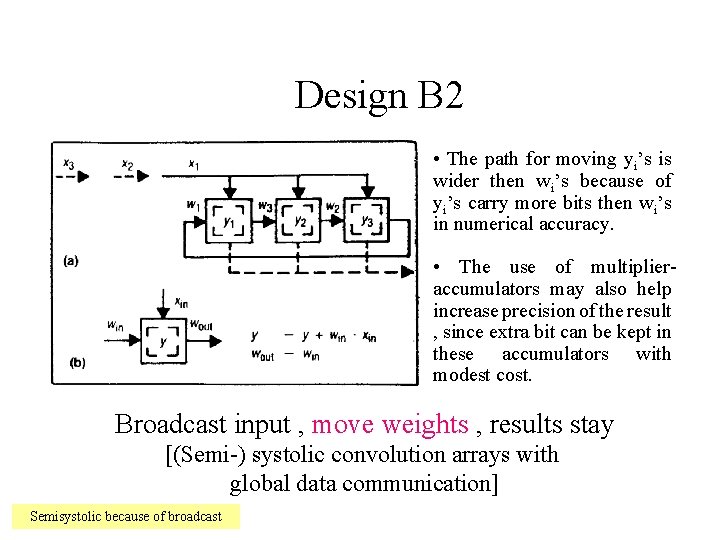

Design B2

- Inputs broadcast
- Weights move
- Results stay

Types of systolic structure: Design B2

- Inputs broadcast, weights move, results stay.
- The wi circulate.
- Uses multiplier-accumulator hardware.
- Each wi has a tag bit (it signals the accumulator to output its result).
- Needs a separate bus (or other global network) for collecting the output.
- The inputs x1, x2, x3 are broadcast; the results y1, y2, y3 stay in the cells.
- Cell with inputs xin and Win, accumulator y, output Wout: y = y + Win × xin, Wout = Win.

Design B2

- The path for moving the yi's is wider than the path for the wi's, because the yi's carry more bits than the wi's for numerical accuracy.
- The use of multiplier-accumulators may also help to increase the precision of the result, since extra bits can be kept in these accumulators at modest cost.
- Broadcast inputs, move weights, results stay.
- (Semi-systolic convolution arrays with global data communication); semi-systolic because of the broadcast.
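Design B2 can be sketched in the same style. This is an illustration under assumptions, not the original circuit: the name `design_b2` is hypothetical, the circulating weights are modelled by index arithmetic instead of an explicit ring of registers, and the tag bit is modelled as "the weight just used was the last one, wk".

```python
def design_b2(w, x):
    """Design B2: inputs broadcast, weights circulate, results stay.

    Cell c accumulates y_c, then y_(c+k), and so on. At cycle t it sees
    weight w[(t - c) % k]; this models the weights moving one cell per cycle.
    """
    k = len(w)
    acc = [0] * k                 # one multiplier-accumulator per cell
    results = []
    for t, x_t in enumerate(x):
        for c in range(k):
            if t < c:
                continue          # the first weight has not reached cell c yet
            j = (t - c) % k       # index of the weight currently in cell c
            if j == 0:
                acc[c] = 0        # a new result starts with weight w_1
            acc[c] += w[j] * x_t  # y = y + Win * xin
            if j == k - 1:        # tag bit on w_k: output the finished result
                results.append(acc[c])
    return results


print(design_b2([1, 2, 3], [1, 2, 3, 4, 5]))  # [14, 20, 26]
```

In each cycle exactly one cell finishes, so the results leave from a different cell every time. That is the reason a separate bus or other global network is needed to collect them.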

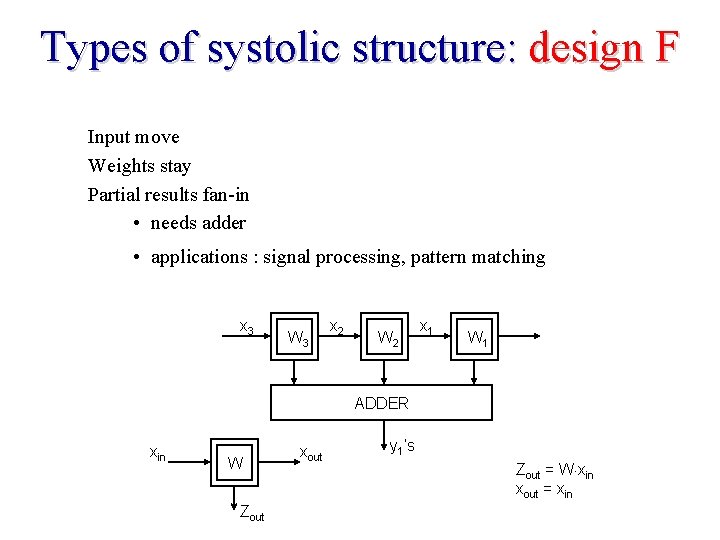

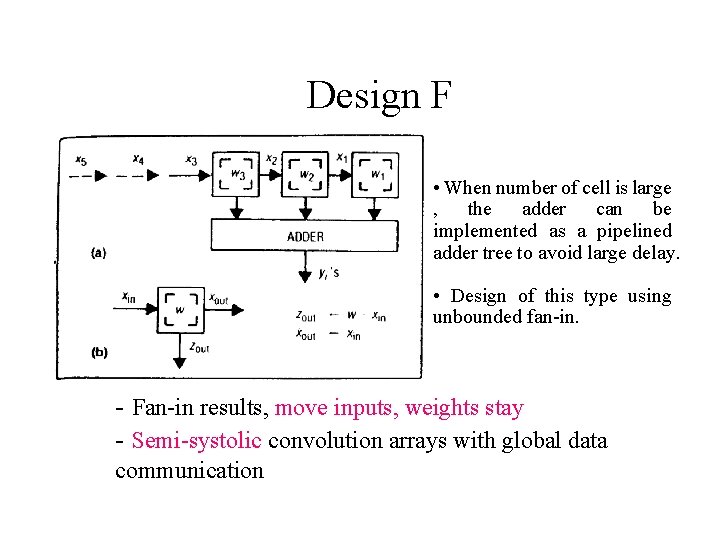

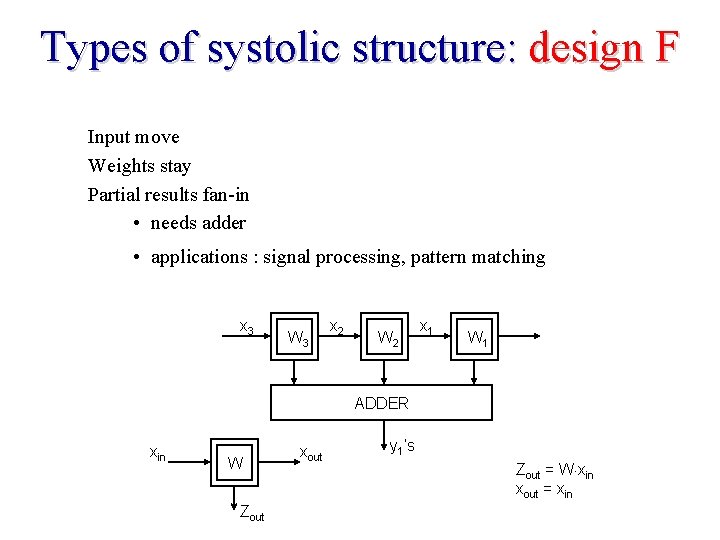

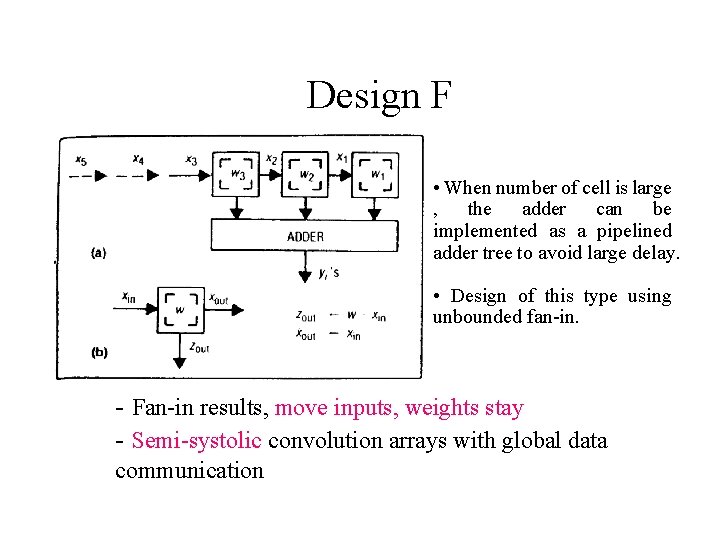

Design F

- Inputs move
- Weights stay
- Partial results fan in (needs an adder)

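Types of systolic structure: design F

- Inputs move, weights stay, partial results fan in.
- Needs an adder.
- Applications: signal processing, pattern matching.
- The inputs x1, x2, x3 move past the weights W3, W2, W1; the cell outputs feed the ADDER, which produces the yi's.
- Cell with weight W, input xin, outputs Zout and xout: Zout = W × xin, xout = xin.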

Design F

- When the number of cells is large, the adder can be implemented as a pipelined adder tree to avoid a large delay.
- A design of this type uses unbounded fan-in.
- Fan-in results, move inputs, weights stay.
- (Semi-systolic convolution arrays with global data communication)
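A sketch of design F follows, assuming a single combinational adder rather than the pipelined adder tree. The name `design_f` and the choice that the newest input sits at the cell holding wk are assumptions of this illustration.

```python
def design_f(w, x):
    """Design F: inputs move, weights stay, partial results fan in to an adder.

    The inputs travel through a shift register with one stage per cell.
    The oldest input in the register meets w[0], the newest meets w[-1].
    """
    k = len(w)
    x_regs = [0] * k
    results = []
    for t, x_t in enumerate(x):
        x_regs = x_regs[1:] + [x_t]       # xout = xin: shift by one cell
        z = [w_j * x_j for w_j, x_j in zip(w, x_regs)]   # Zout = W * xin
        if t >= k - 1:                    # every cell holds a real input now
            results.append(sum(z))        # the global adder fans the Zout in
    return results


print(design_f([1, 2, 3], [1, 2, 3, 4, 5]))  # [14, 20, 26]
```

The call `sum(z)` stands for the adder whose fan-in grows with the number of cells; with a pipelined adder tree the same values would appear a few cycles later.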

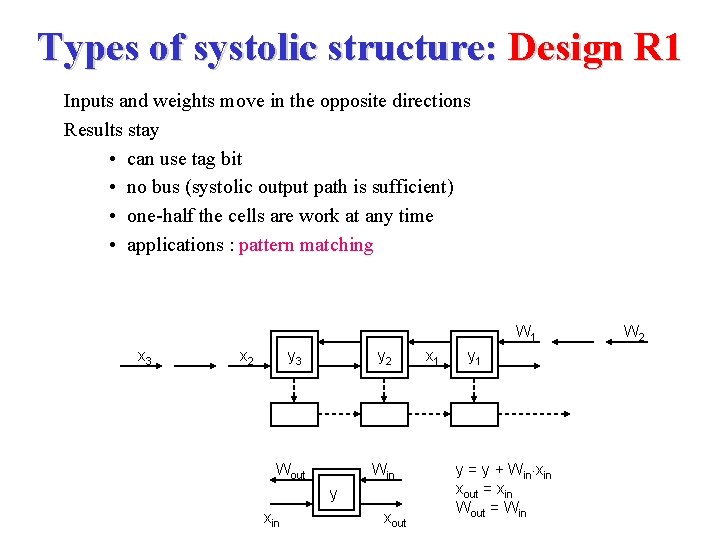

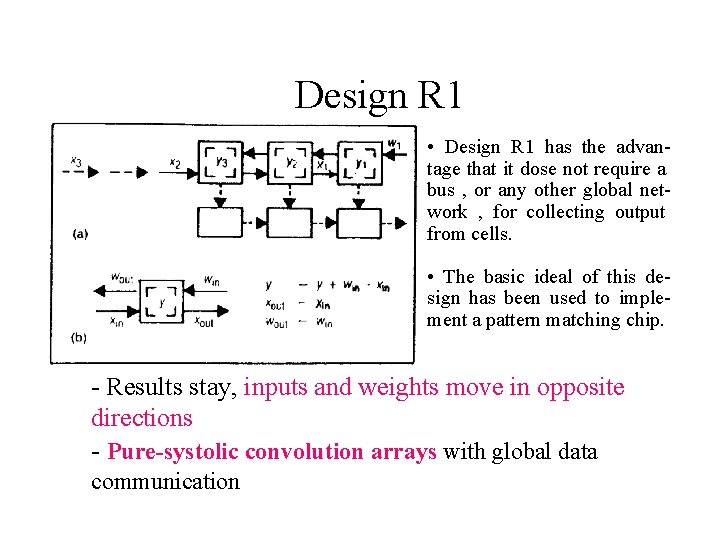

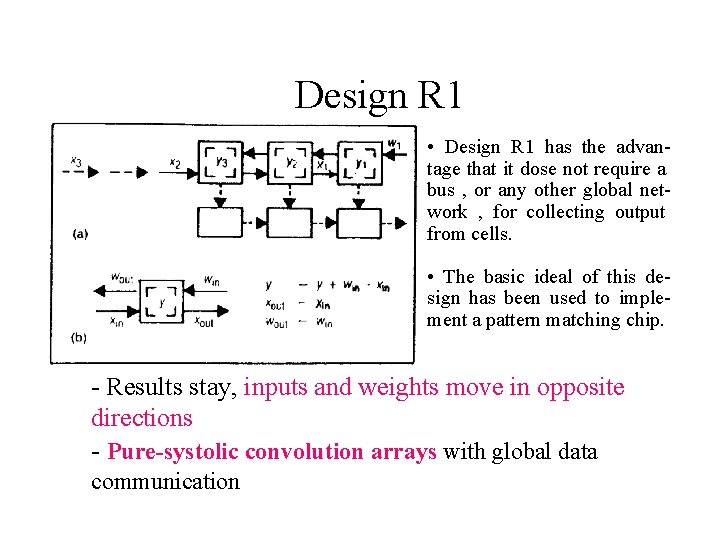

Design R1

- Inputs and weights move in opposite directions.
- Results stay.
- Can use a tag bit.
- No bus (a systolic output path is sufficient).
- Only one-half of the cells work at any time.

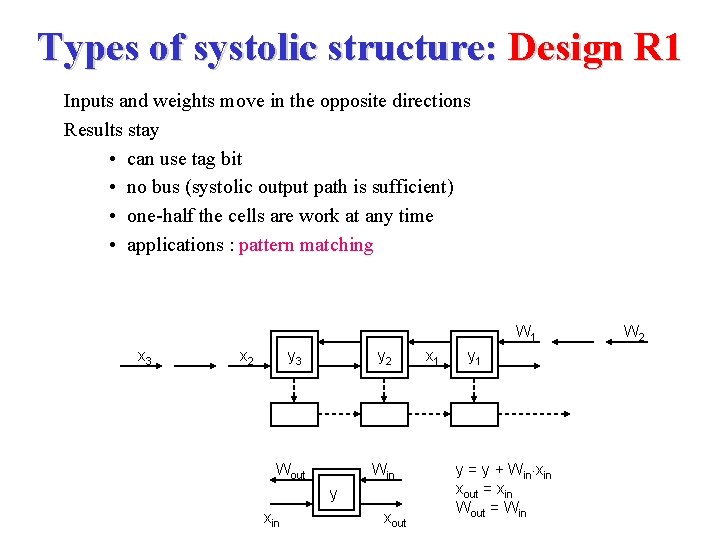

Types of systolic structure: Design R1

- Inputs and weights move in opposite directions; results stay.
- Can use a tag bit.
- No bus (a systolic output path is sufficient).
- Only one-half of the cells work at any time.
- Applications: pattern matching.
- The inputs x1, x2, x3 and the weights W1, W2 move toward each other; the results y2, y3 stay in the cells.
- Cell with inputs xin and Win, accumulator y, outputs xout and Wout: y = y + Win × xin, xout = xin, Wout = Win.

Design R1

- Design R1 has the advantage that it does not require a bus, or any other global network, for collecting the output from the cells.
- The basic idea of this design has been used to implement a pattern-matching chip.
- Results stay, inputs and weights move in opposite directions.
- (Pure-systolic convolution arrays without global data communication)

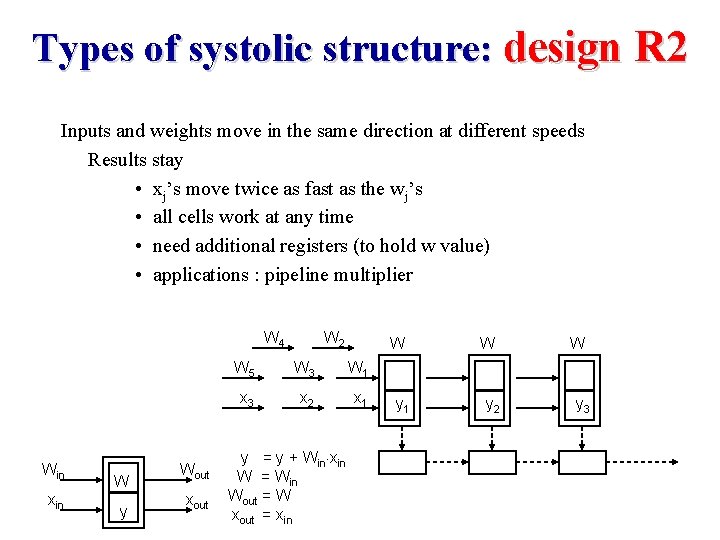

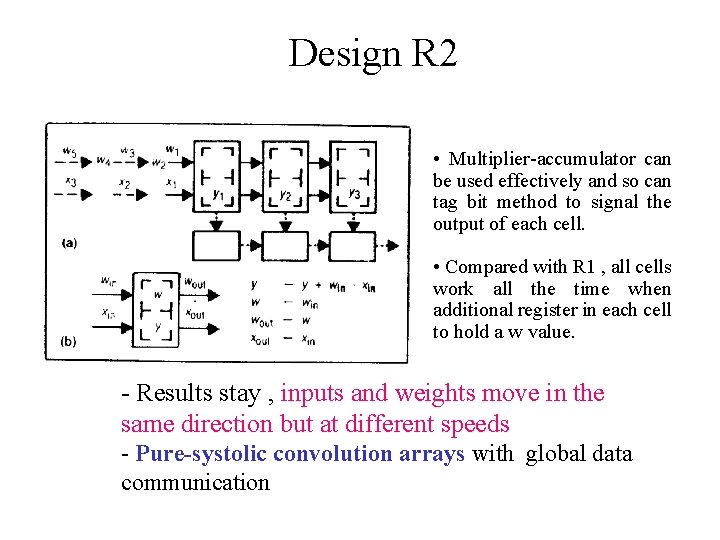

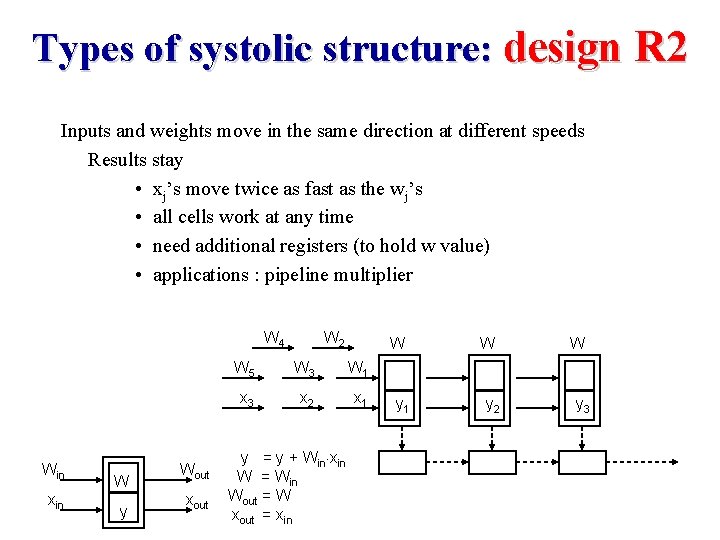

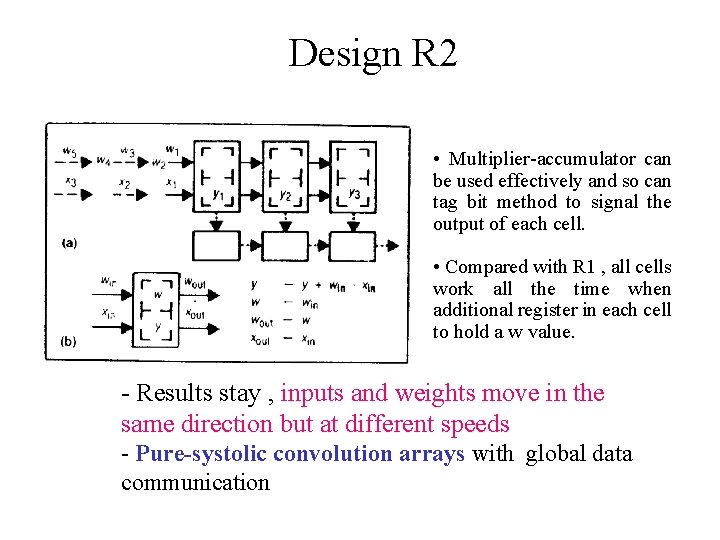

Design R2

- Inputs and weights move in the same direction at different speeds.
- Results stay.
- The xj's move twice as fast as the wj's.
- All cells work at any time.
- Needs additional registers (to hold a w value).

Types of systolic structure: design R2

- Inputs and weights move in the same direction at different speeds; results stay.
- The xj's move twice as fast as the wj's.
- All cells work at any time.
- Needs additional registers (to hold a w value).
- Applications: pipelined multiplier.
- The inputs x1, x2, x3 and the weights W1 to W5 enter from the same side; the results y1, y2, y3 stay in the cells.
- Cell with inputs xin and Win, weight register W, accumulator y, outputs xout and Wout: y = y + Win × xin, W = Win, Wout = W, xout = xin.

Design R2

- A multiplier-accumulator can be used effectively, and so can the tag-bit method for signaling the output of each cell.
- Compared with R1, all cells work all the time, at the cost of an additional register in each cell to hold a w value.
- Results stay, inputs and weights move in the same direction but at different speeds.
- (Pure-systolic convolution arrays without global data communication)

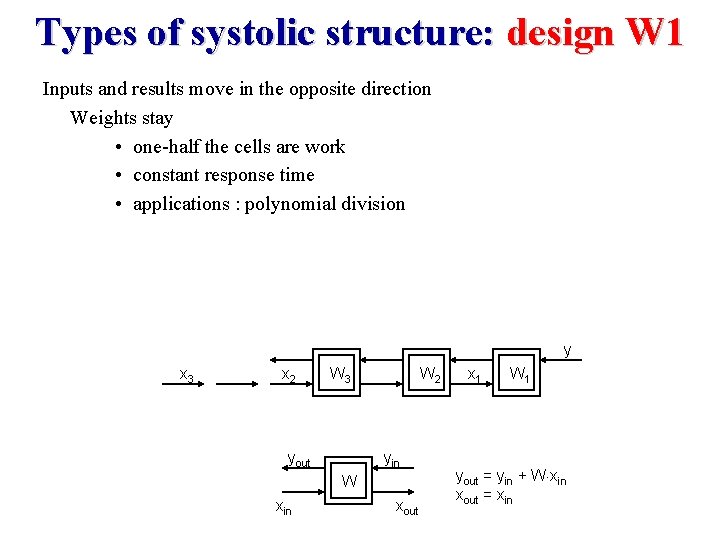

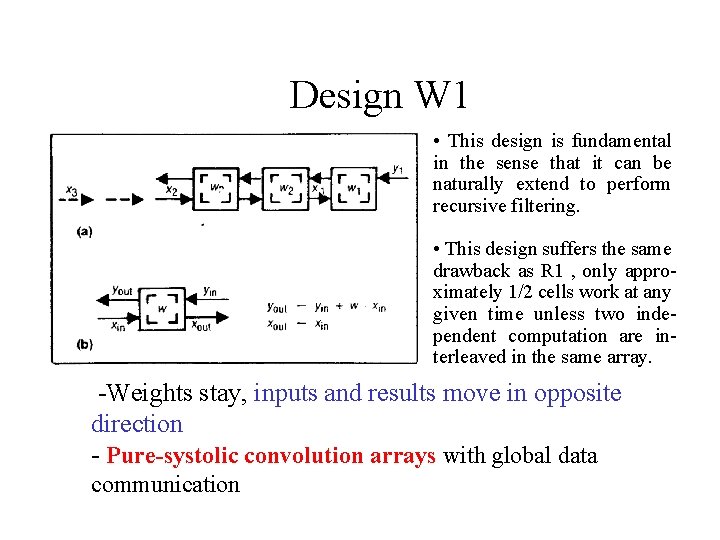

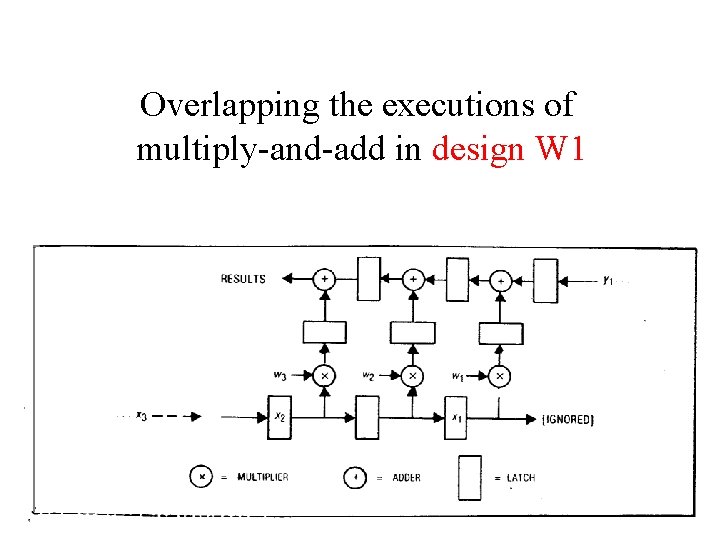

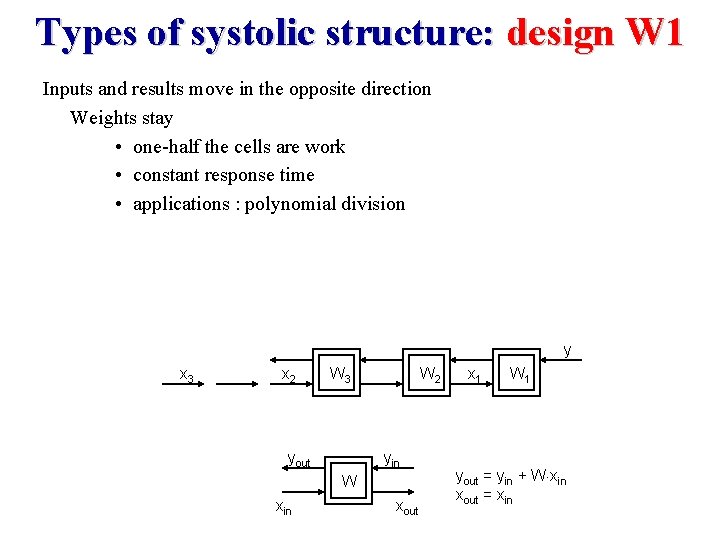

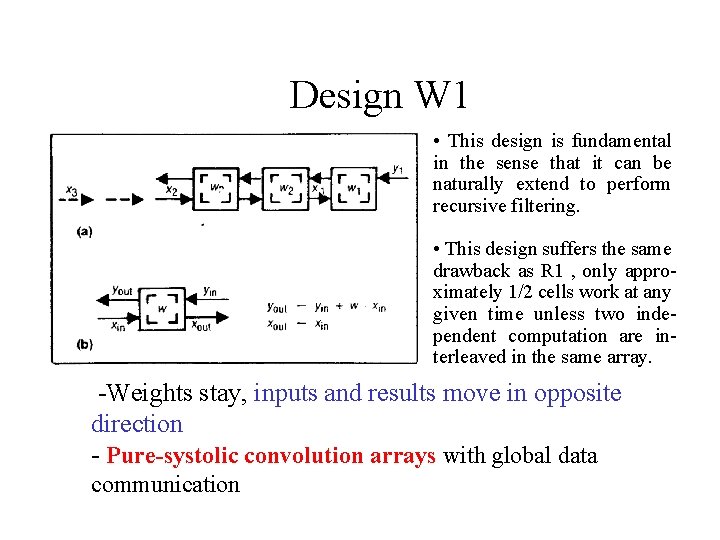

Design W1

- Inputs and results move in opposite directions.
- Weights stay.
- Only one-half of the cells work at any time.
- Constant response time.

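Types of systolic structure: design W1

- Inputs and results move in opposite directions; weights stay.
- Only one-half of the cells work at any time.
- Constant response time.
- Applications: polynomial division.
- The inputs x1, x2, x3 move past the weights W3, W2, W1 in one direction while the results y move in the other.
- Cell with weight W, inputs xin and yin, outputs xout and yout: yout = yin + W × xin, xout = xin.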

Design W1

- This design is fundamental in the sense that it can be naturally extended to perform recursive filtering.
- This design suffers from the same drawback as R1: only approximately one-half of the cells work at any given time, unless two independent computations are interleaved in the same array.
- Weights stay, inputs and results move in opposite directions.
- (Pure-systolic convolution arrays without global data communication)
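The timing of design W1 is easiest to see in a simulation. The sketch below rests on assumptions that are not spelled out on the slide: inputs enter on the left every second cycle with a zero bubble in between, zeros enter on the right as initial results, the leftmost cell holds wk and the rightmost w1, and the name `design_w1` is hypothetical.

```python
def design_w1(w, x):
    """Design W1: weights stay, inputs move right, results move left.

    Consecutive inputs are separated by one empty cycle so that each result
    meets every input it needs; this is why only half of the cells work.
    """
    k, n = len(w), len(x)
    cell_w = list(reversed(w))    # leftmost cell holds w_k, rightmost w_1
    x_at = [0] * k                # input currently seen by each cell
    y_out = [0] * k               # result that left each cell last cycle
    results = []
    for t in range(2 * n - 1):    # the last input enters at t = 2 * (n - 1)
        x_new = x[t // 2] if t % 2 == 0 else 0    # a bubble on odd cycles
        x_at = [x_new] + x_at[:-1]                # xout = xin, moving right
        y_in = y_out[1:] + [0]                    # results move left, 0 enters
        y_out = [y + w_c * x_c                    # yout = yin + W * xin
                 for y, w_c, x_c in zip(y_in, cell_w, x_at)]
        if t % 2 == 0 and t >= 2 * (k - 1):
            results.append(y_out[0])              # a finished result leaves
    return results


print(design_w1([1, 2, 3], [1, 2, 3, 4, 5]))  # [14, 20, 26]
```

In this model each result leaves the array in the same cycle in which its last input enters, independent of k, which illustrates the constant response time. The zero bubbles are also the slots where a second, independent computation could be interleaved.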

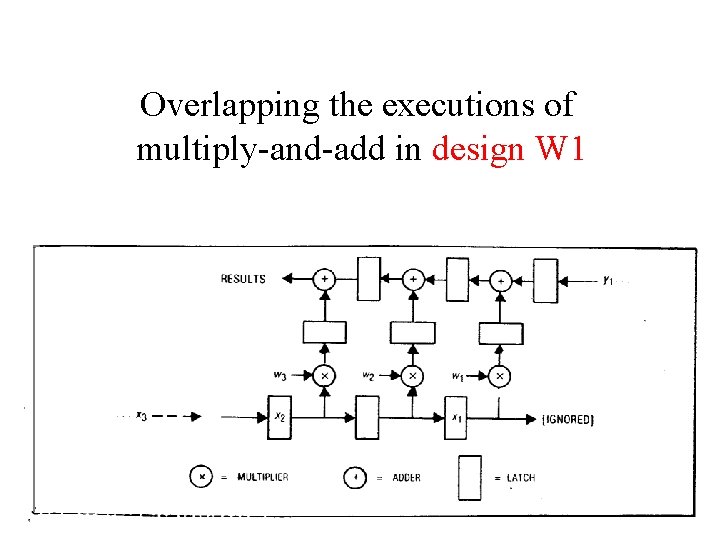

Overlapping the executions of multiply-and-add in design W 1

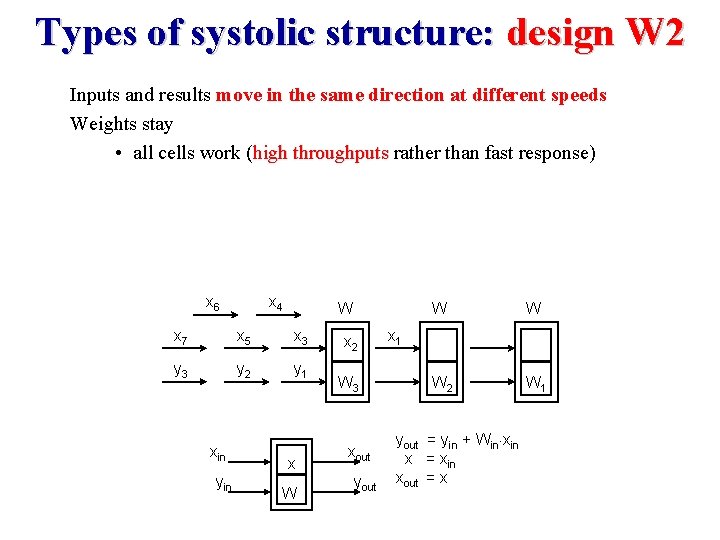

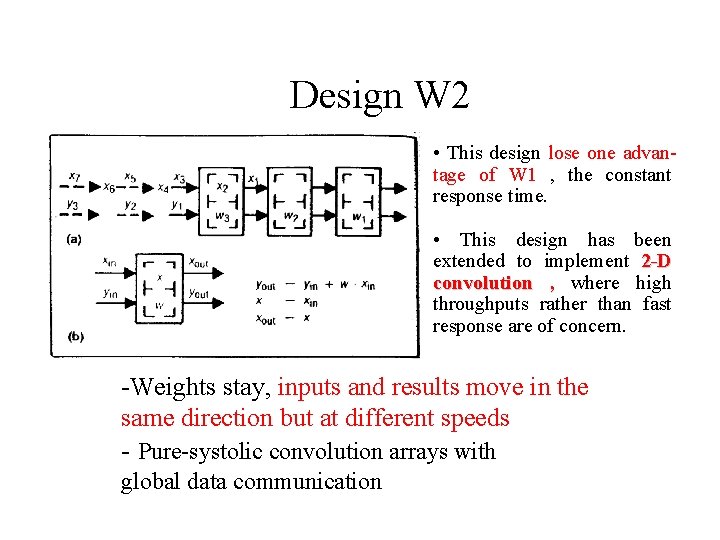

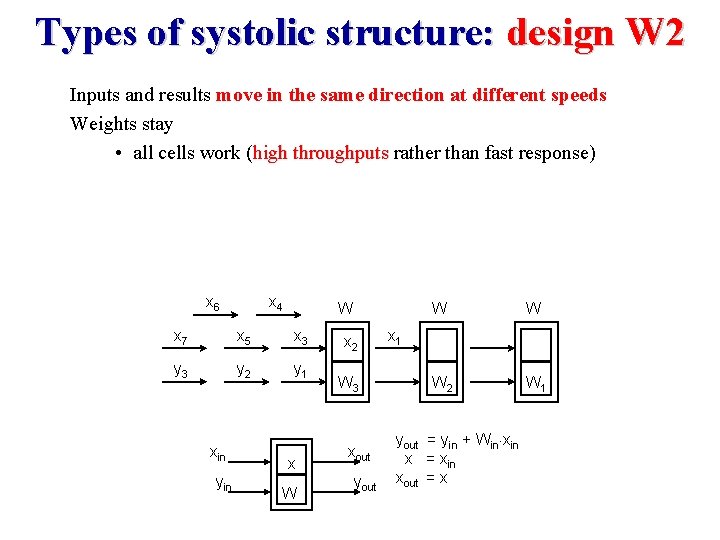

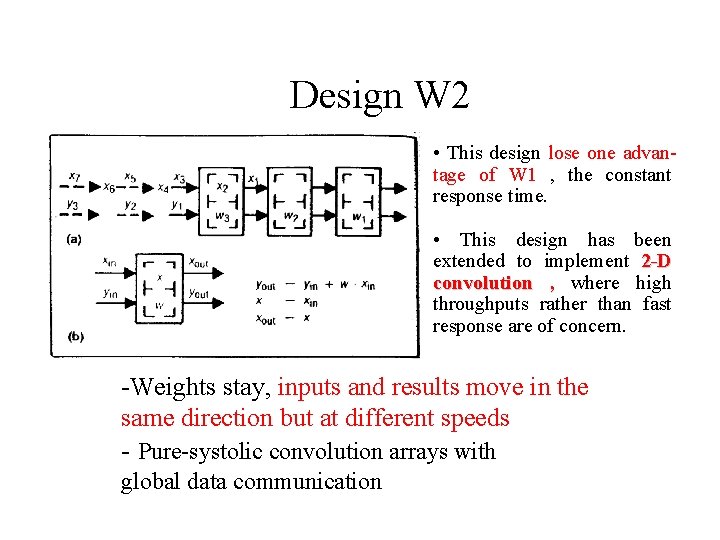

Design W2

- Inputs and results move in the same direction at different speeds.
- Weights stay.
- All cells work (high throughput rather than fast response).

Types of systolic structure: design W2

- Inputs and results move in the same direction at different speeds; weights stay.
- All cells work (high throughput rather than fast response).
- The inputs x1 to x7 and the results y1, y2 move past the weights W3, W2, W1.
- Cell with weight W, input register x, inputs xin and yin, outputs xout and yout: yout = yin + W × xin, x = xin, xout = x.

Design W2

- This design loses one advantage of W1: the constant response time.
- This design has been extended to implement 2-D convolution, where high throughput rather than fast response is of concern.
- Weights stay, inputs and results move in the same direction but at different speeds.
- (Pure-systolic convolution arrays without global data communication)
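A comparable sketch for design W2 is given below. It assumes that each cell delays the input by two cycles (the extra register x of the cell equations) while a result advances one cell per cycle, and that the first cell on the path holds wk and the last one w1. The name `design_w2` is hypothetical.

```python
def design_w2(w, x):
    """Design W2: weights stay, inputs and results move the same way.

    Results advance one cell per cycle; inputs advance one cell every two
    cycles, so cell c sees the input that entered 2 * c cycles earlier.
    """
    k, n = len(w), len(x)
    cell_w = list(reversed(w))        # first cell holds w_k, last cell w_1
    x_line = [0] * (2 * k - 1)        # input delay line, two stages per cell
    y_out = [0] * k                   # result that left each cell last cycle
    results = []
    for t in range(n + k - 1):        # k - 1 extra cycles to drain the array
        x_new = x[t] if t < n else 0
        x_line = [x_new] + x_line[:-1]
        y_in = [0] + y_out[:-1]       # a fresh zero result enters each cycle
        y_out = [y + w_c * x_line[2 * c]          # yout = yin + W * xin
                 for c, (y, w_c) in enumerate(zip(y_in, cell_w))]
        if t >= 2 * (k - 1):
            results.append(y_out[-1])  # a finished result leaves the last cell
    return results


print(design_w2([1, 2, 3], [1, 2, 3, 4, 5]))  # [14, 20, 26]
```

A new input is accepted in every cycle, so all cells work all the time. In this model a result leaves k - 1 cycles after its last input has entered, so the response time grows with the number of weights.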

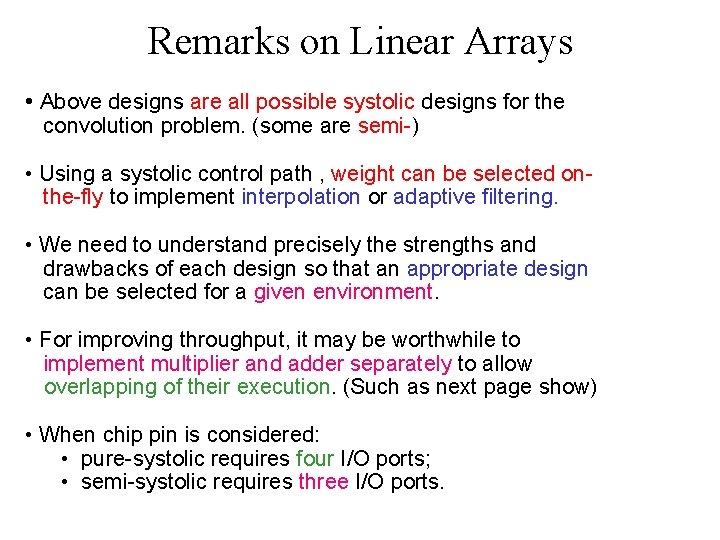

Remarks on Linear Arrays

- The designs above are all the possible systolic designs for the convolution problem (some are semi-systolic).
- Using a systolic control path, weights can be selected on the fly to implement interpolation or adaptive filtering.
- We need to understand precisely the strengths and drawbacks of each design, so that an appropriate design can be selected for a given environment.
- To improve throughput, it may be worthwhile to implement the multiplier and the adder separately, so that their executions can overlap.
- When chip pins are considered:
  - pure-systolic requires four I/O ports;
  - semi-systolic requires three I/O ports.

Retiming of filters

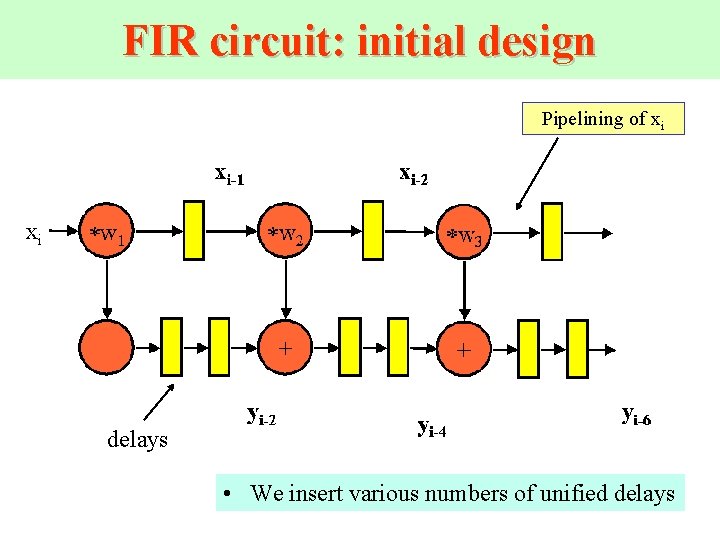

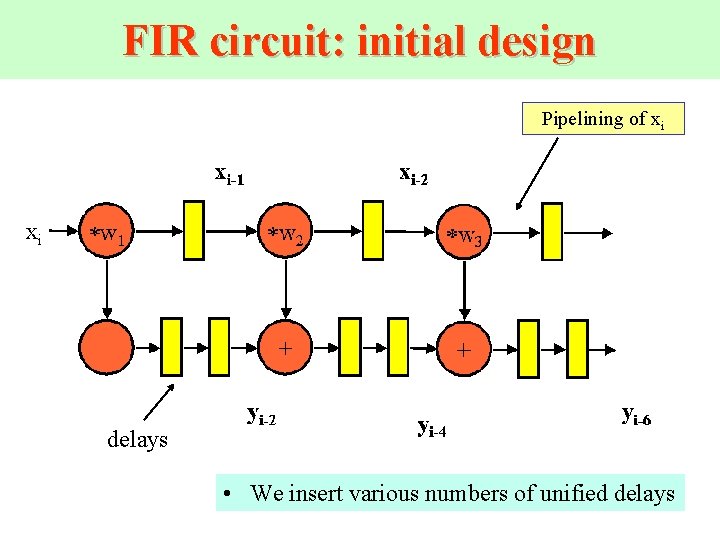

FIR circuit: initial design

Pipelining of xi delays

- We insert various numbers of unified delays.

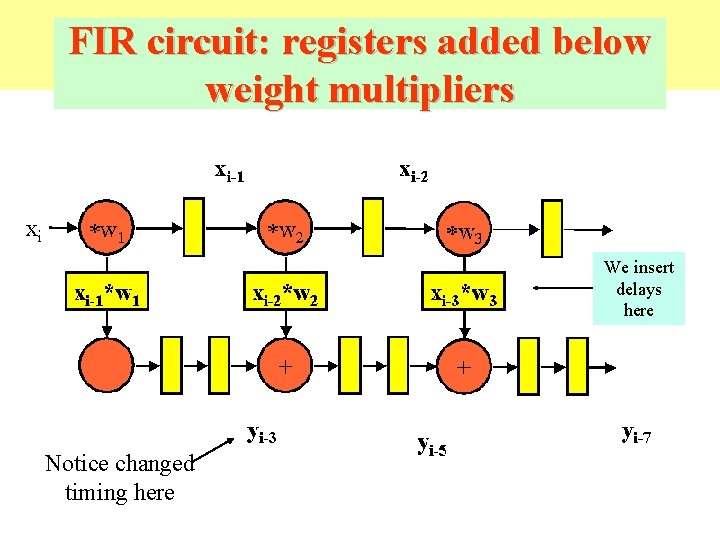

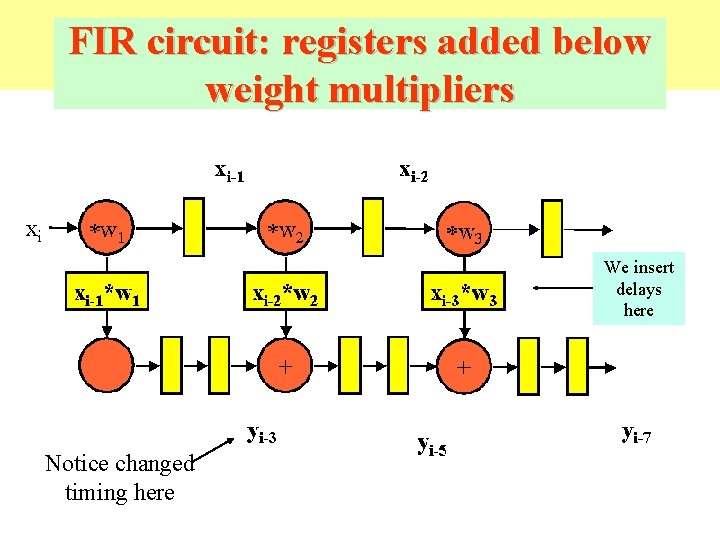

FIR circuit: registers added below the weight multipliers. The figure marks where we insert the delays and where the timing changes.

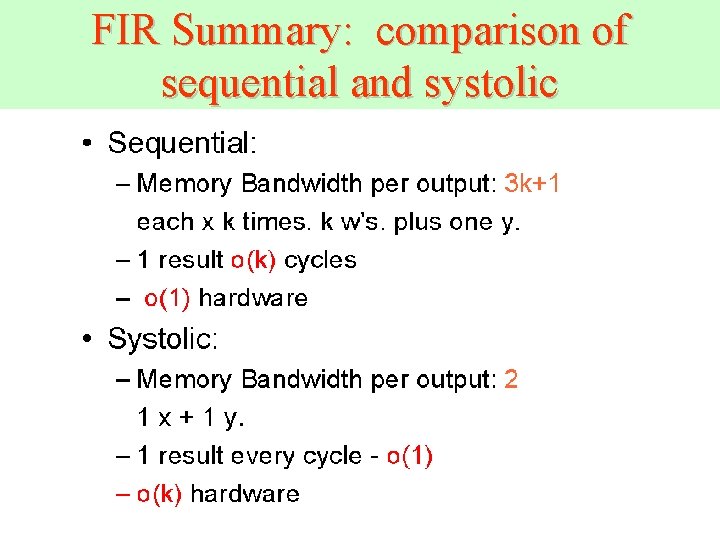

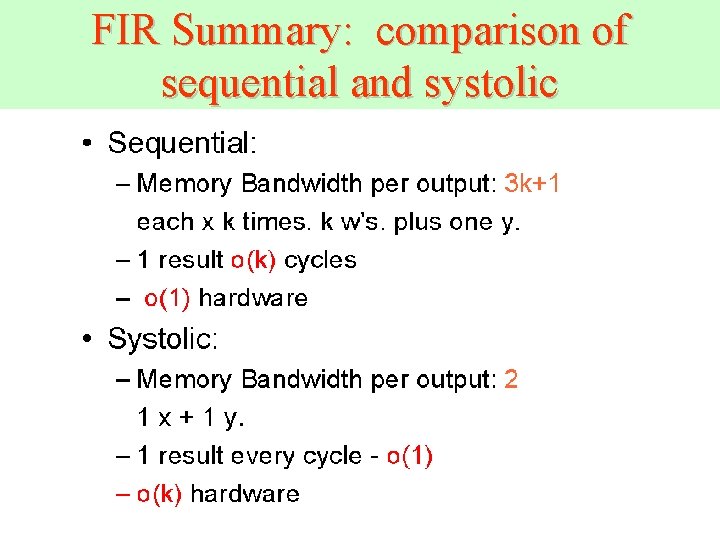

FIR Summary: comparison of sequential and systolic

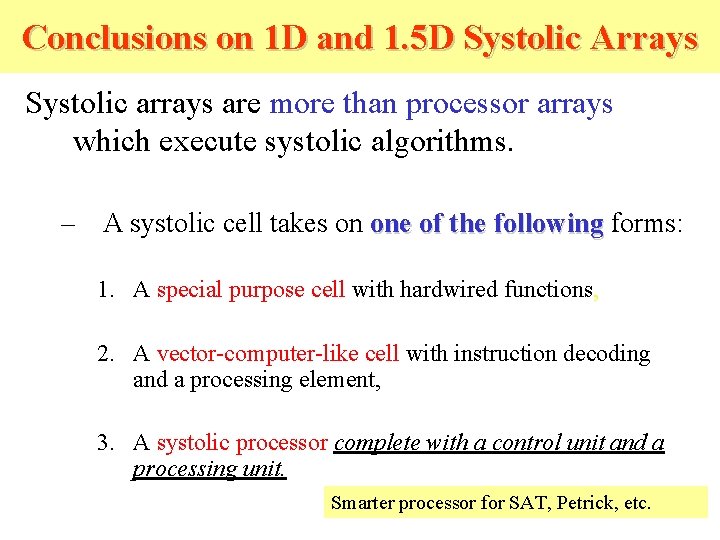

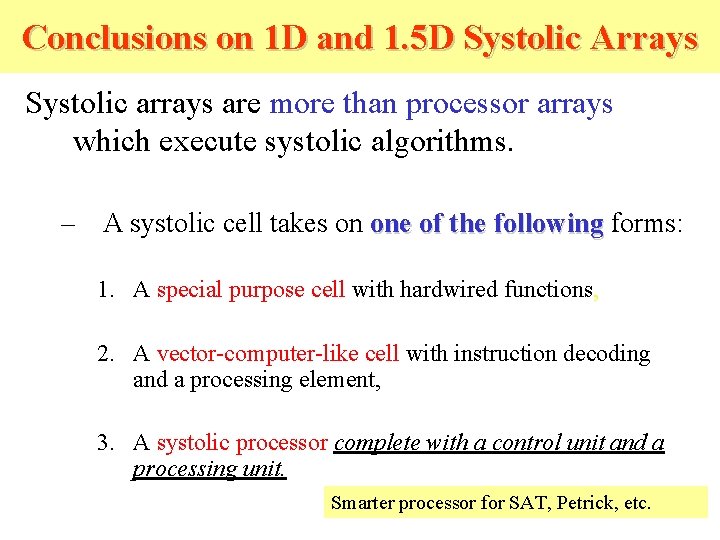

Conclusions on 1D and 1.5D Systolic Arrays

Systolic arrays are more than processor arrays that execute systolic algorithms. A systolic cell takes one of the following forms:

1. A special-purpose cell with hardwired functions.
2. A vector-computer-like cell with instruction decoding and a processing element.
3. A systolic processor complete with a control unit and a processing unit.

Smarter processors for SAT, Petrick, etc.

Large Systolic Arrays as general purpose computers

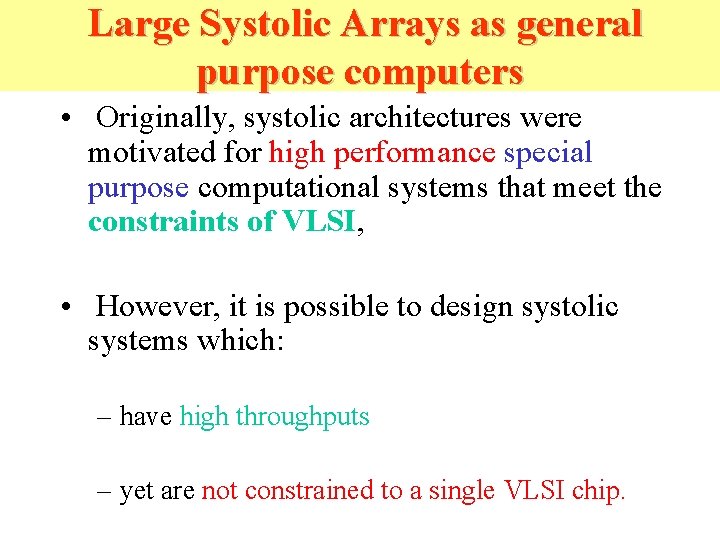

Large Systolic Arrays as general purpose computers

- Originally, systolic architectures were motivated by high-performance special-purpose computational systems that meet the constraints of VLSI.
- However, it is possible to design systolic systems which:
  - have high throughput,
  - yet are not constrained to a single VLSI chip.

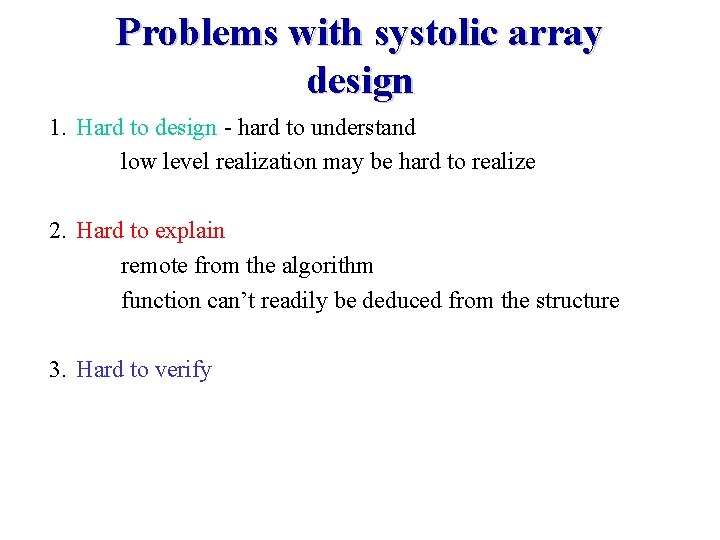

Problems with systolic array design

1. Hard to design: hard to understand, and the low-level realization may be hard to realize.
2. Hard to explain: remote from the algorithm; the function can't readily be deduced from the structure.
3. Hard to verify.

Key architectural issues in designing special-purpose systems

- Simple and regular design: a simple, regular design yields cost-effective special-purpose systems.
- Concurrency and communication: design the algorithm to support high concurrency while employing only simple blocks.
- Balancing computation with I/O: a special-purpose system should be a match to a variety of I/O bandwidths.

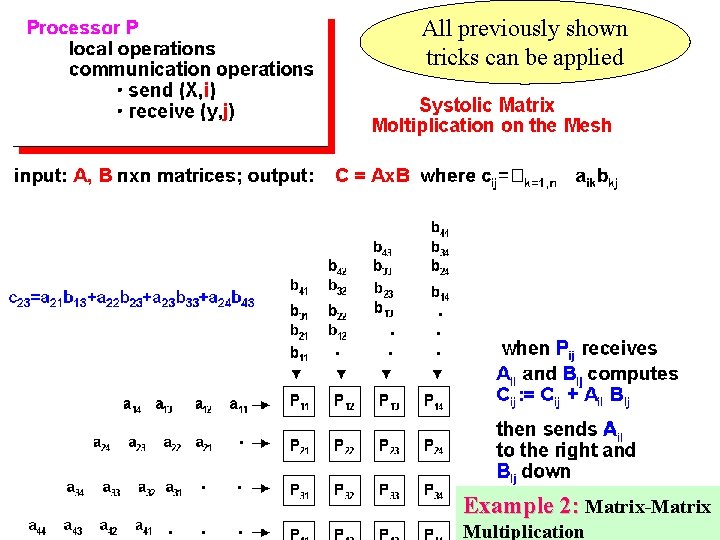

Two Dimensional Systolic Arrays

- In 1978, the first systolic arrays were introduced as a feasible design for special-purpose devices that meet the VLSI constraints.
- These special-purpose devices were able to perform four types of matrix operations at high processing speeds:
  - matrix-vector multiplication,
  - matrix-matrix multiplication,
  - LU-decomposition of a matrix,
  - solution of triangular linear systems.

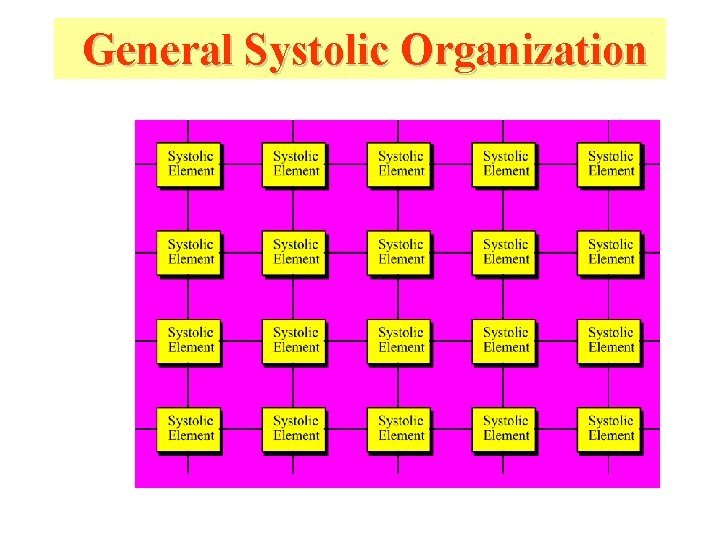

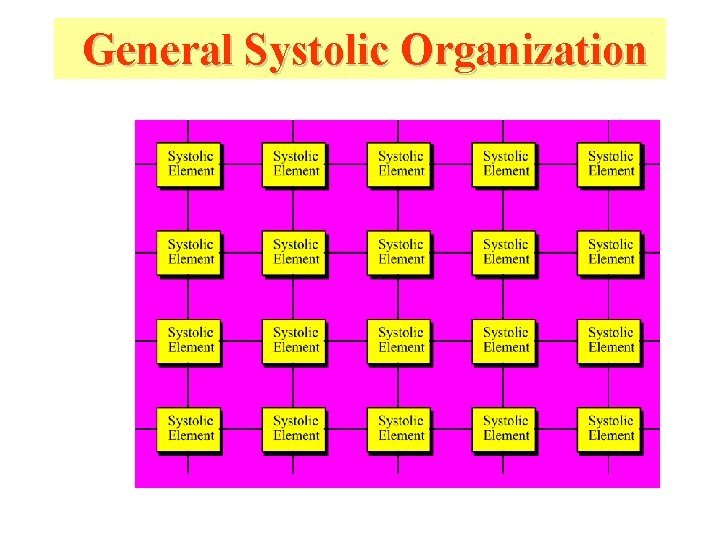

General Systolic Organization

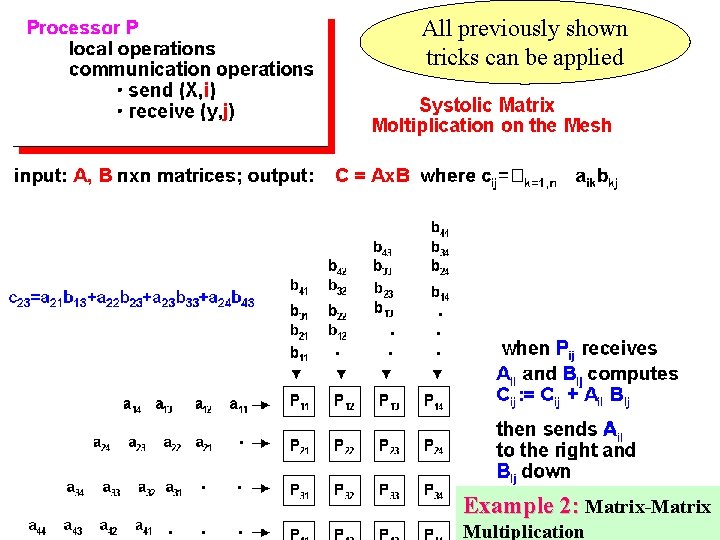

All previously shown tricks can be applied.

Example 2: Matrix-Matrix Multiplication

Sources

1. Seth Copen Goldstein, CMU; A. R. Hurson
2. David E. Culler, UC Berkeley
3. Keller@cs.hmc.edu
4. Syeda Mohsina Afroze and other students of Advanced Logic Synthesis, ECE 572, 1999 and 2000.