Introduction to Convolution Neural Network
Zhihao Zhao

- Slides: 33

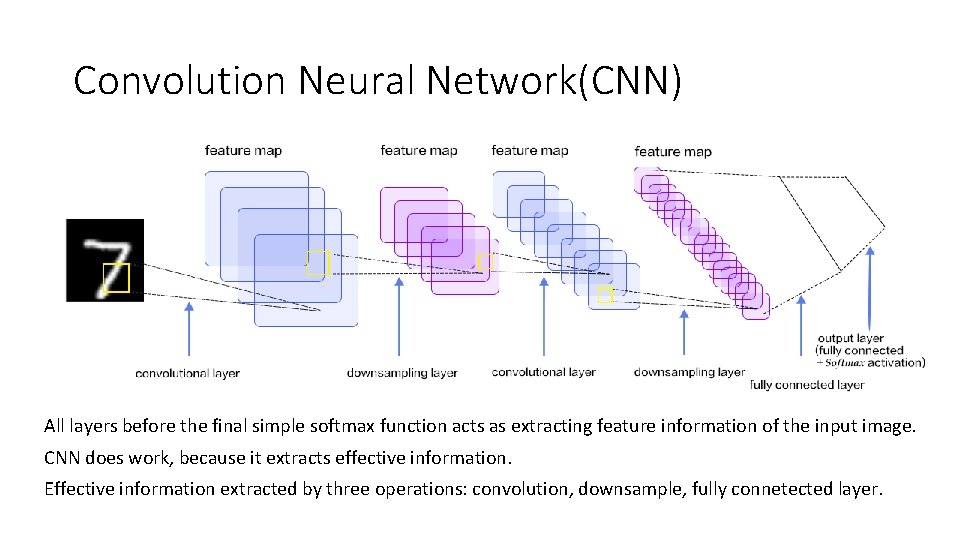

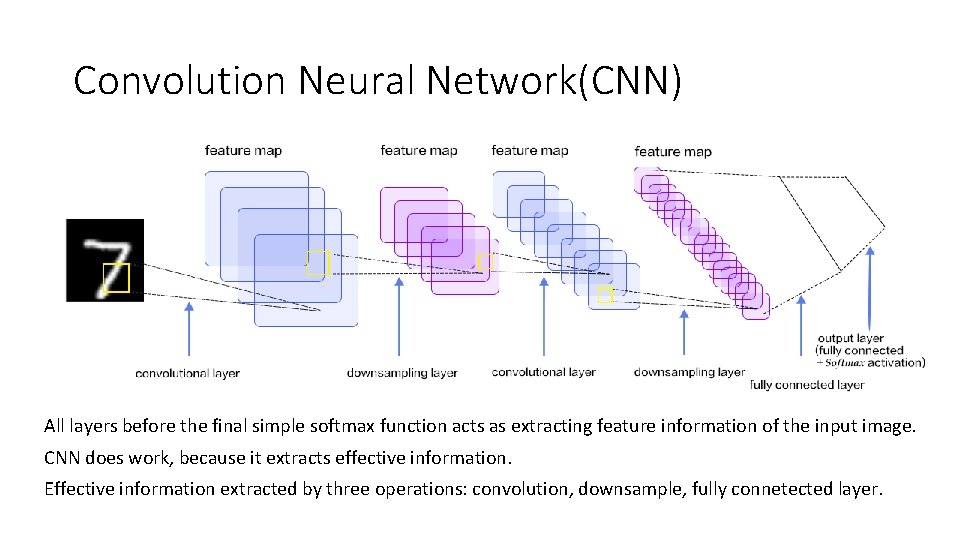

Convolutional Neural Network (CNN)

- All layers before the final, simple softmax function act as a feature extractor for the input image.
- A CNN works because it extracts effective information.
- This information is extracted by three operations: convolution, downsampling, and fully connected layers.

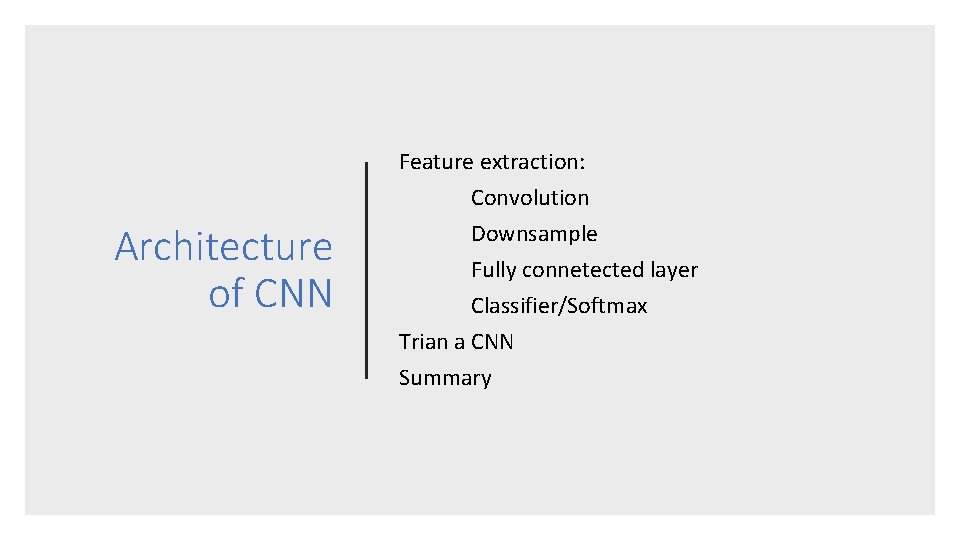

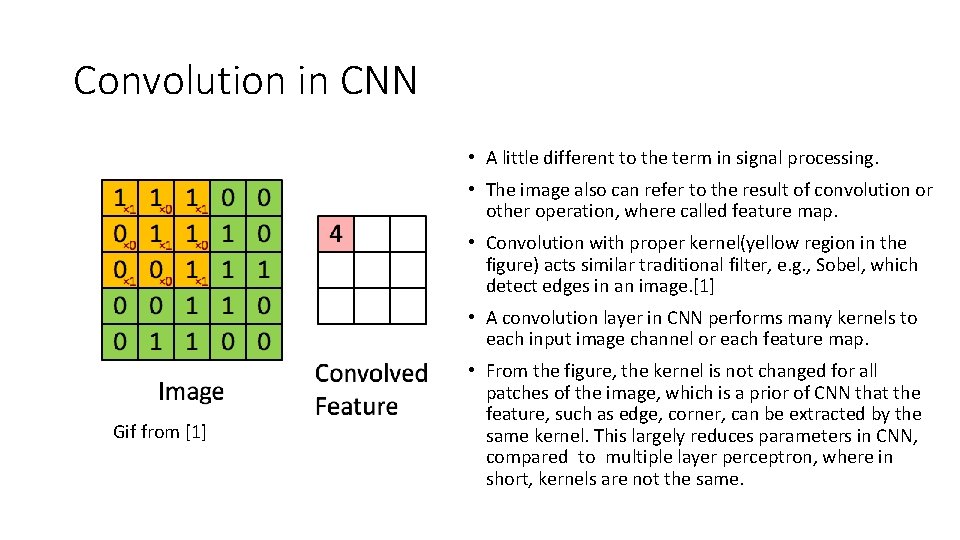

- Architecture of CNN
  - Feature extraction: convolution, downsample, fully connected layer
  - Classifier/Softmax
- Train a CNN
- Summary

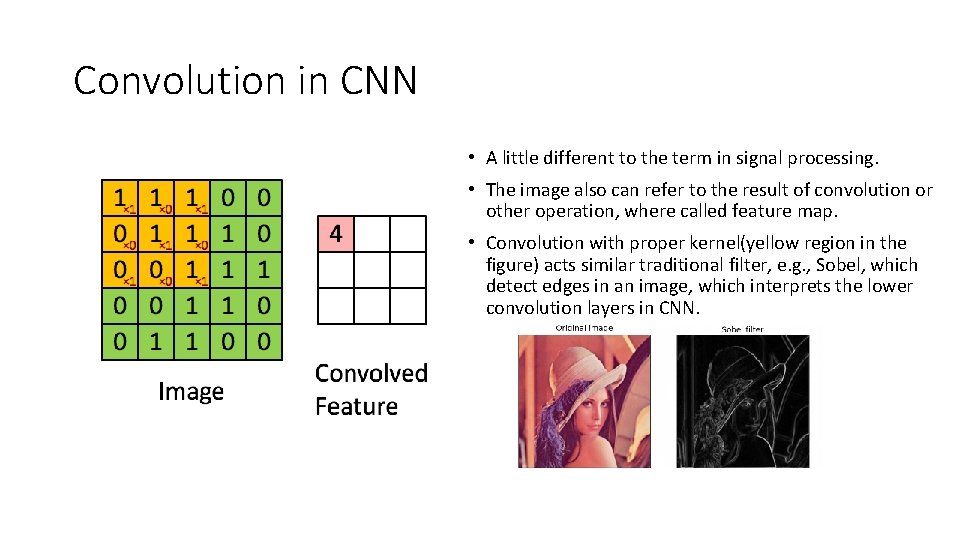

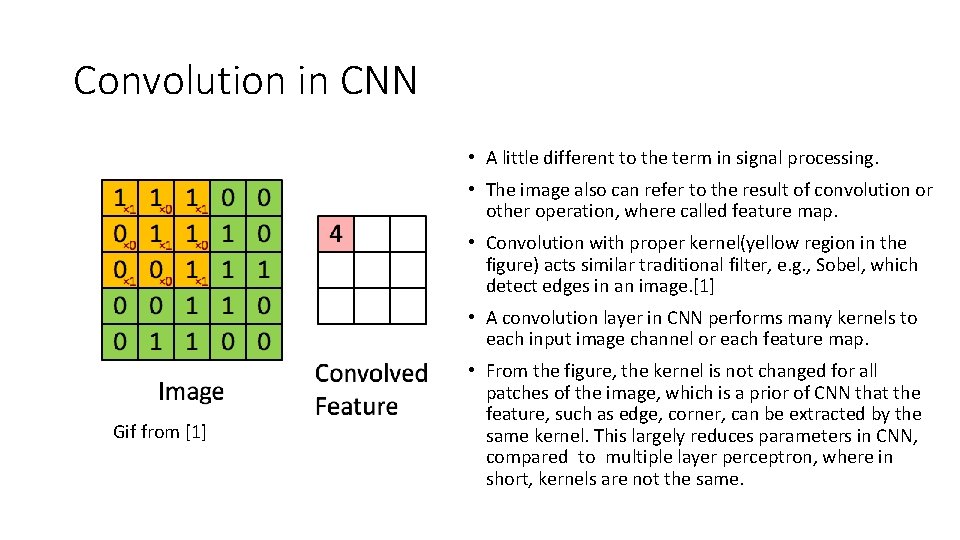

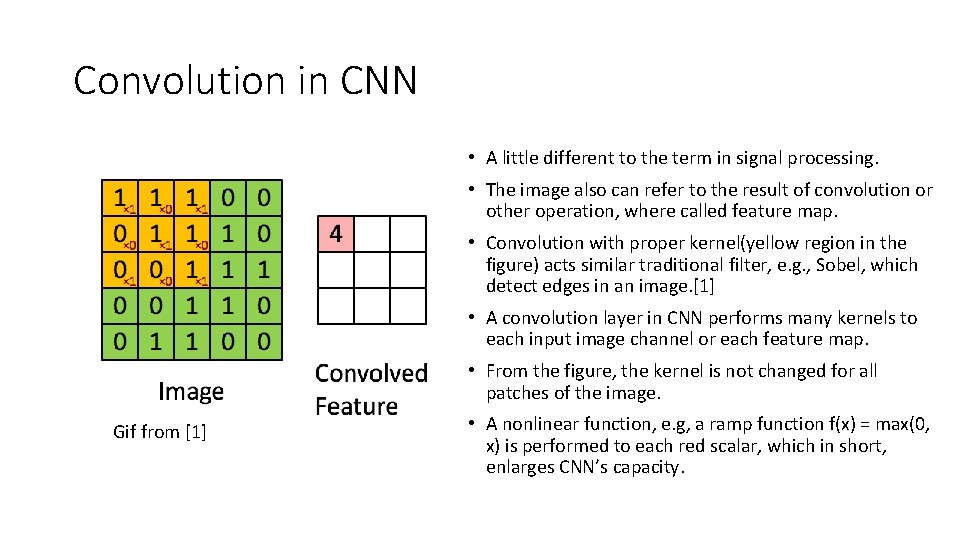

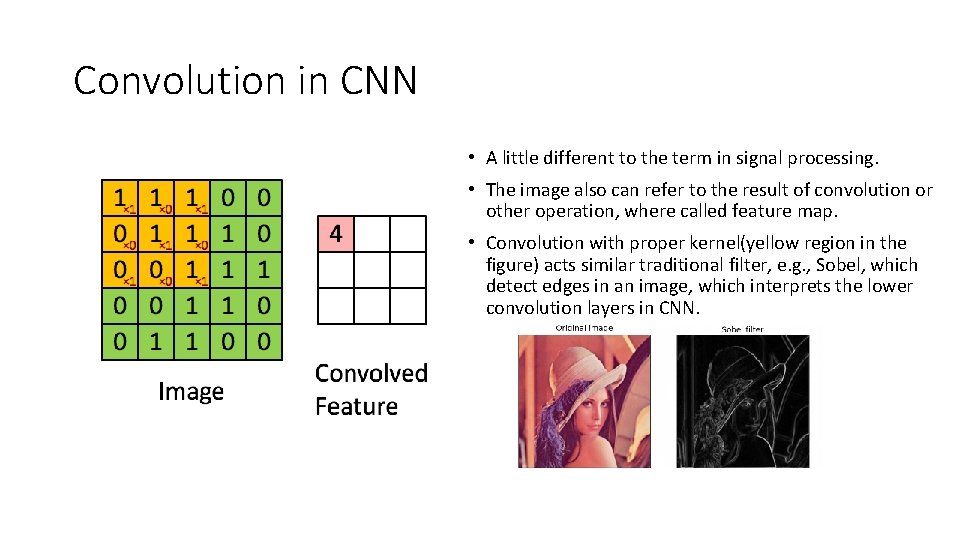

Convolution in CNN • A little different to the term in signal processing. • The image also can refer to the result of convolution or other operation, where called feature map. • Convolution with proper kernel(yellow region in the figure) acts similar traditional filter, e. g. , Sobel, which detect edges in an image, which interprets the lower convolution layers in CNN.

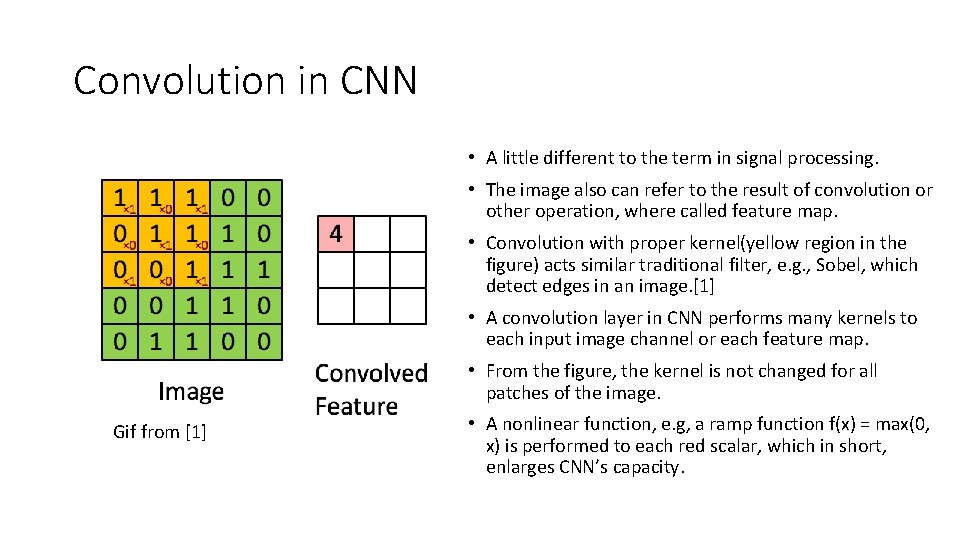

Convolution in CNN • A little different to the term in signal processing. • The image also can refer to the result of convolution or other operation, where called feature map. • Convolution with proper kernel(yellow region in the figure) acts similar traditional filter, e. g. , Sobel, which detect edges in an image. [1] • A convolution layer in CNN performs many kernels to each input image channel or each feature map. Gif from [1] • From the figure, the kernel is not changed for all patches of the image, which is a prior of CNN that the feature, such as edge, corner, can be extracted by the same kernel. This largely reduces parameters in CNN, compared to multiple layer perceptron, where in short, kernels are not the same.

Convolution in CNN • A little different to the term in signal processing. • The image also can refer to the result of convolution or other operation, where called feature map. • Convolution with proper kernel(yellow region in the figure) acts similar traditional filter, e. g. , Sobel, which detect edges in an image. [1] • A convolution layer in CNN performs many kernels to each input image channel or each feature map. • From the figure, the kernel is not changed for all patches of the image. Gif from [1] • A nonlinear function, e. g, a ramp function f(x) = max(0, x) is performed to each red scalar, which in short, enlarges CNN’s capacity.
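The sliding-kernel operation and the ramp function described above can be sketched in plain Python. This is a minimal illustration, not a production implementation: the function names `conv2d_valid` and `relu`, the toy image, and the choice of no padding with stride 1 are all assumptions made for the example.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    As in most CNN code, the kernel is not flipped, so this is cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # The same kernel weights are reused at every patch (weight sharing).
            total = sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            row.append(total)
        feature_map.append(row)
    return feature_map


def relu(feature_map):
    """Apply the ramp function f(x) = max(0, x) to every scalar."""
    return [[max(0, v) for v in row] for row in feature_map]


# Horizontal Sobel kernel: responds to left-to-right intensity changes.
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]

# Toy 4x5 image (an assumption for this example): dark on the left, bright on the right.
toy_image = [[0, 0, 0, 1, 1],
             [0, 0, 0, 1, 1],
             [0, 0, 0, 1, 1],
             [0, 0, 0, 1, 1]]

edges = relu(conv2d_valid(toy_image, SOBEL_X))
print(edges)  # [[0, 4, 4], [0, 4, 4]]
```

The feature map is zero over the flat dark region and positive where the kernel window reaches the bright columns, which is the edge response the Sobel bullet refers to.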

![Downsample](https://slidetodoc.com/presentation_image/c3c3cbd6d4a200f92086002b7e62d33b/image-7.jpg)

Downsample

- For a large input image, the feature maps produced by the convolution layers are still large.
- Downsampling reduces the size of the feature maps. The figure shows an example of max-pooling downsampling.

Picture from [2]
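A max-pooling step like the one in the figure can be sketched as follows. The function name `max_pool2d`, the 2 x 2 window, and the sample values are assumptions for illustration.

```python
def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size block."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            max(
                feature_map[i * size + di][j * size + dj]
                for di in range(size)
                for dj in range(size)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Hypothetical 4x4 feature map; pooling halves each dimension.
feature_map = [[1, 1, 2, 4],
               [5, 6, 7, 8],
               [3, 2, 1, 0],
               [1, 2, 3, 4]]

pooled = max_pool2d(feature_map)
print(pooled)  # [[6, 8], [3, 4]]
```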

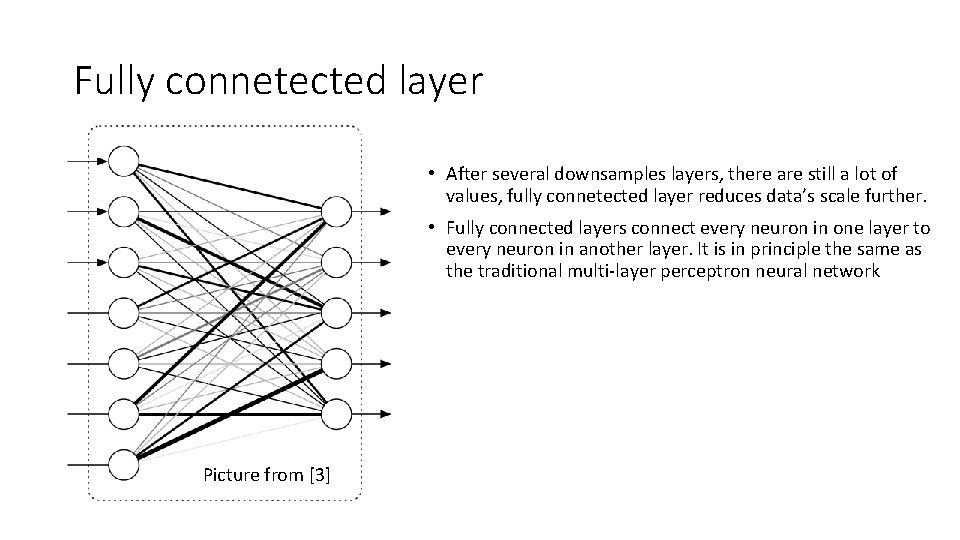

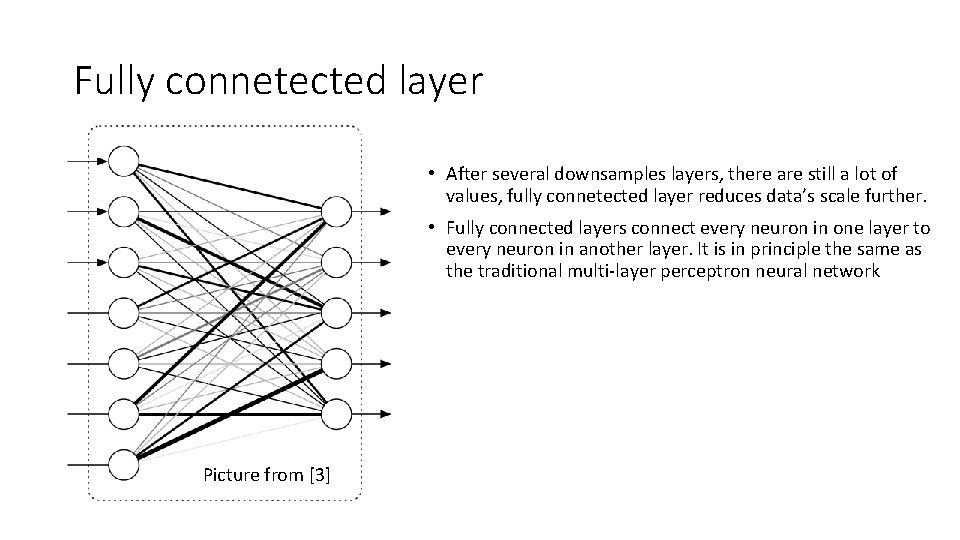

Fully connected layer

- After several downsampling layers there are still many values. Fully connected layers reduce the size of the data further.
- A fully connected layer connects every neuron in one layer to every neuron in the next. In principle it is the same as a traditional multilayer perceptron.

Picture from [3]
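As a rough sketch of "every neuron connected to every neuron", a fully connected layer is a weighted sum over all inputs for each output. The helper names, weights, and biases here are made up for the example.

```python
def flatten(feature_map):
    """Turn a 2D feature map into a flat list so it can feed a fully connected layer."""
    return [value for row in feature_map for value in row]


def fully_connected(inputs, weights, biases):
    """Each output neuron is a weighted sum of all inputs plus a bias."""
    return [
        sum(w * x for w, x in zip(neuron_weights, inputs)) + b
        for neuron_weights, b in zip(weights, biases)
    ]


# Hypothetical values: 4 inputs reduced to 2 outputs.
inputs = flatten([[6, 8], [3, 4]])
weights = [[1, 0, -1, 0],
           [0, 1, 0, -1]]
biases = [0, 1]

outputs = fully_connected(inputs, weights, biases)
print(outputs)  # [3, 5]
```

Unlike the convolution kernel, each output here has its own weight for every input, which is why the parameter count grows quickly with input size.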

![Classifier/Softmax](https://slidetodoc.com/presentation_image/c3c3cbd6d4a200f92086002b7e62d33b/image-9.jpg)

Classifier/Softmax

Picture from [4]
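For reference, the softmax function turns a vector of class scores into probabilities that sum to 1. The sketch below uses the standard formula; the scores are made-up values, and subtracting the maximum is a common numerical-stability step rather than something stated in the slides.

```python
import math


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    largest = max(scores)
    # Subtracting the largest score avoids overflow and does not change the result.
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three classes.
probabilities = softmax([2.0, 1.0, 0.1])
predicted_class = probabilities.index(max(probabilities))
```

The predicted class is simply the index with the highest probability.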

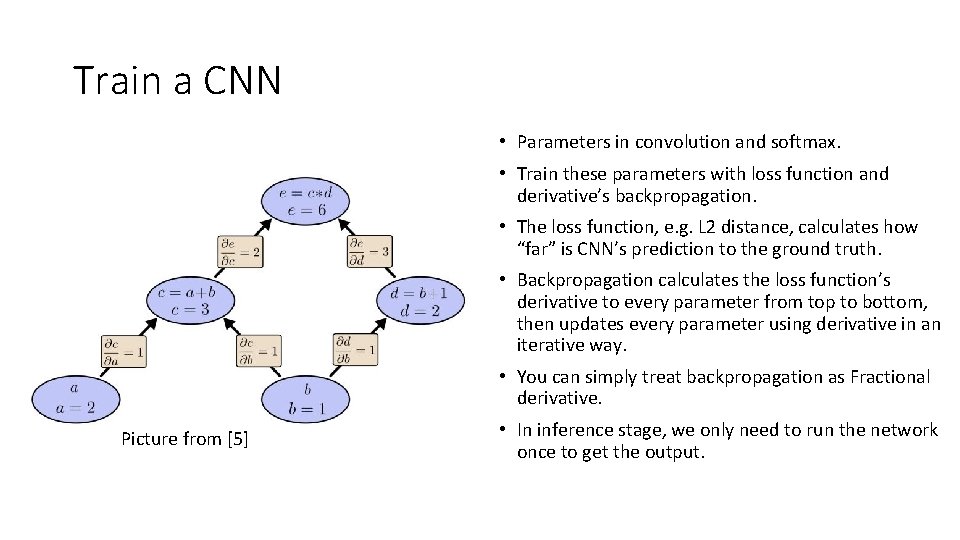

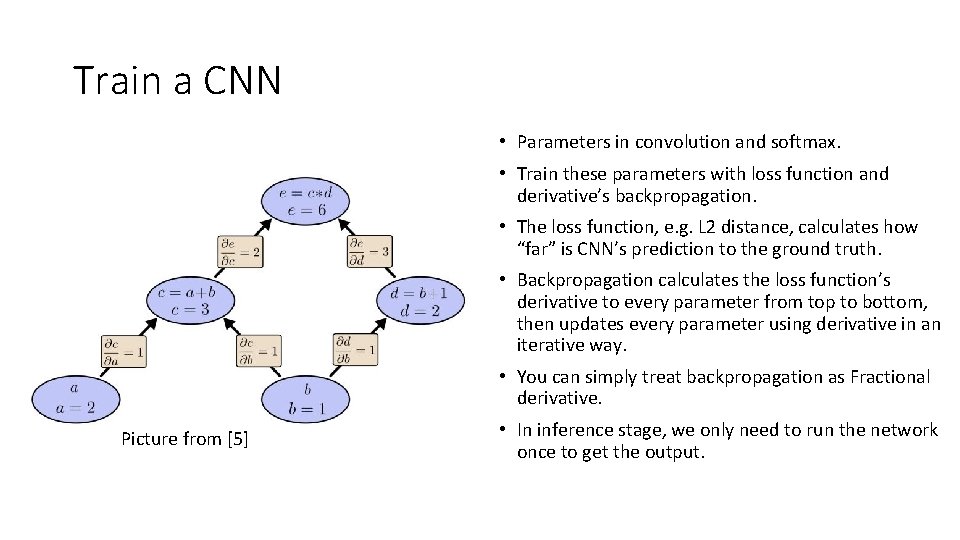

Train a CNN

- The trainable parameters are in the convolution layers, the fully connected layers, and the softmax classifier.
- These parameters are trained by minimizing a loss function, with derivatives computed by backpropagation.
- The loss function, e.g. the L2 distance, measures how far the CNN's prediction is from the ground truth.
- Backpropagation computes the derivative of the loss with respect to every parameter, from the top layer to the bottom, then every parameter is updated iteratively using its derivative.
- You can simply think of backpropagation as the chain rule for derivatives.
- In the inference stage, we only need to run the network once to get the output.

Picture from [5]
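The training loop can be illustrated on a deliberately tiny "network" with two scalar weights. This is only a sketch of the idea (L2 loss, chain rule, iterative update); the model `y = w2 * (w1 * x)`, the learning rate, and the starting values are assumptions chosen for the example, not anything taken from the slides.

```python
def forward(w1, w2, x):
    """Two stacked 'layers': h = w1 * x, then y = w2 * h."""
    h = w1 * x
    y = w2 * h
    return h, y


def l2_loss(y, target):
    """Squared distance between the prediction and the ground truth."""
    return (y - target) ** 2


def backward(w1, w2, x, target):
    """Chain rule from the loss (top) down to each parameter (bottom)."""
    h, y = forward(w1, w2, x)
    dloss_dy = 2.0 * (y - target)
    dloss_dw2 = dloss_dy * h      # y = w2 * h
    dloss_dh = dloss_dy * w2
    dloss_dw1 = dloss_dh * x      # h = w1 * x
    return dloss_dw1, dloss_dw2


def train(w1, w2, x, target, learning_rate=0.05, steps=200):
    """Repeatedly move each parameter a small step against its derivative."""
    for _ in range(steps):
        g1, g2 = backward(w1, w2, x, target)
        w1 -= learning_rate * g1
        w2 -= learning_rate * g2
    return w1, w2


w1, w2 = train(w1=0.5, w2=0.5, x=1.0, target=2.0)
_, prediction = forward(w1, w2, 1.0)  # inference: a single forward pass
```

After training, `prediction` is very close to the target of 2.0, and producing it requires only the forward pass.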

Summary

- Convolution is the crucial part of feature extraction, while downsampling and fully connected layers are comparatively trivial.
- The softmax classifier can be very simple because the extracted features are powerful.
- During training, error derivatives flow backward through the CNN.

High-level computer vision

- Object recognition
- Object detection
- Instance segmentation
- Cool applications

![Object recognition, VGG](https://slidetodoc.com/presentation_image/c3c3cbd6d4a200f92086002b7e62d33b/image-13.jpg)

Object recognition, VGG [6]

VGG network

![Object recognition, ResNet](https://slidetodoc.com/presentation_image/c3c3cbd6d4a200f92086002b7e62d33b/image-14.jpg)

Object recognition, ResNet [7]

- Motivation: deeper networks tend to have better recognition performance.
- With a simple trick (residual, or shortcut, connections), the network can go much deeper while still converging.
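The "simple trick" in ResNet [7] is a shortcut connection that adds a block's input to its output, so the block only has to learn a residual. A minimal sketch follows, using small fully connected layers in place of convolutions to keep it short; all names and weights are illustrative assumptions.

```python
def relu(values):
    return [max(0.0, v) for v in values]


def linear(values, weights):
    """Plain matrix-vector product (bias omitted for brevity)."""
    return [sum(w * v for w, v in zip(row, values)) for row in weights]


def residual_block(x, weights_a, weights_b):
    """Compute relu(F(x) + x), where F is two small layers."""
    branch = linear(relu(linear(x, weights_a)), weights_b)
    # Shortcut connection: the input is added back to the branch output.
    return relu([b + xi for b, xi in zip(branch, x)])


zeros = [[0.0, 0.0], [0.0, 0.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]

# With all-zero weights the block passes its (non-negative) input through unchanged.
passthrough = residual_block([1.0, 2.0], zeros, zeros)
print(passthrough)  # [1.0, 2.0]
```

Because an "empty" block behaves like the identity, stacking more blocks does not have to make the network harder to optimize, which is one common explanation for why very deep residual networks still converge.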

![Object detection](https://slidetodoc.com/presentation_image/c3c3cbd6d4a200f92086002b7e62d33b/image-15.jpg)

Object detection

Picture from [8]

![Object detection, Faster R-CNN](https://slidetodoc.com/presentation_image/c3c3cbd6d4a200f92086002b7e62d33b/image-16.jpg)

Object detection, Faster R-CNN [9]

- Idea: an embedded network (the region proposal network) generates possible object locations, then each image patch at these locations is classified. The network also outputs parameters for refining (scale, shift) each object's location. In short, object detection as classification.
- Region proposals are candidate object bounding boxes (locations).
- ROI pooling (trivial) is just an engineering trick that downsamples region proposals of different sizes to the same size.
- Shortcoming: because of the region proposal network, Faster R-CNN takes 0.05 to 0.2 s per image on a GPU.
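The ROI pooling bullet can be made concrete with a small sketch: regions of different sizes are max-pooled into a grid of fixed size. The function name `roi_max_pool`, the box format `(row0, col0, row1, col1)` with exclusive end coordinates, and the binning rule are assumptions for this example and not the exact procedure of [9].

```python
def roi_max_pool(feature_map, box, out_size=2):
    """Max-pool the region `box` = (row0, col0, row1, col1) to out_size x out_size.

    row1 and col1 are exclusive. The region is split into a grid of roughly
    equal bins and the largest value in each bin is kept.
    """
    row0, col0, row1, col1 = box
    height, width = row1 - row0, col1 - col0

    def bin_edges(start, length, index):
        lo = start + (index * length) // out_size
        hi = start + ((index + 1) * length + out_size - 1) // out_size  # ceiling
        return lo, hi

    pooled = []
    for i in range(out_size):
        r_lo, r_hi = bin_edges(row0, height, i)
        row = []
        for j in range(out_size):
            c_lo, c_hi = bin_edges(col0, width, j)
            row.append(max(feature_map[r][c]
                           for r in range(r_lo, r_hi)
                           for c in range(c_lo, c_hi)))
        pooled.append(row)
    return pooled


# Hypothetical 4x6 feature map whose value encodes its position (10 * row + col).
feature_map = [[10 * r + c for c in range(6)] for r in range(4)]

square_roi = roi_max_pool(feature_map, (0, 0, 4, 4))  # 4x4 region
wide_roi = roi_max_pool(feature_map, (0, 2, 2, 6))    # 2x4 region
print(square_roi)  # [[11, 13], [31, 33]]
print(wide_roi)    # [[3, 5], [13, 15]]
```

Both regions come out as 2 x 2, so the same classifier can be applied to every proposal.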

![Object detection, YOLO](https://slidetodoc.com/presentation_image/c3c3cbd6d4a200f92086002b7e62d33b/image-17.jpg)

Object detection, YOLO [10]

- For easy understanding, let's say region proposals are implicitly discarded, or rather replaced by a grid over the image.
- The network outputs a classification, bounding box information, and a confidence for every grid cell.
- Although a grid cell may contain only part of an object, its predicted bounding box can extend arbitrarily beyond that cell. From this point of view, YOLO doesn't have region proposals.
- An object may fall into multiple grid cells. Simply choose the prediction with the largest confidence. The fancy term for this is non-maximum suppression.
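Non-maximum suppression is commonly implemented as a greedy loop: keep the most confident box, drop the boxes that overlap it heavily, and repeat. The sketch below assumes boxes in the form `(x0, y0, x1, y1, confidence)` and an overlap threshold of 0.5; both are choices made for this example, and it ignores class labels for simplicity.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x0, y0, x1, y1, ...)."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(detections, iou_threshold=0.5):
    """Greedily keep the most confident box and drop boxes that overlap it."""
    remaining = sorted(detections, key=lambda d: d[4], reverse=True)
    kept = []
    while remaining:
        best = remaining.pop(0)
        kept.append(best)
        remaining = [d for d in remaining if iou(best, d) < iou_threshold]
    return kept


# Hypothetical detections: two overlapping boxes on one object, one box elsewhere.
detections = [
    (12, 12, 52, 52, 0.8),
    (10, 10, 50, 50, 0.9),
    (100, 100, 140, 140, 0.7),
]
kept = non_max_suppression(detections)
print(kept)  # [(10, 10, 50, 50, 0.9), (100, 100, 140, 140, 0.7)]
```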

![Instance segmentation](https://slidetodoc.com/presentation_image/c3c3cbd6d4a200f92086002b7e62d33b/image-18.jpg)

Instance segmentation

Picture from [11]

Run your image

![Object segmentation, FCN](https://slidetodoc.com/presentation_image/c3c3cbd6d4a200f92086002b7e62d33b/image-19.jpg)

Object segmentation, FCN [12]

- The first (convolution) network is the same as in object recognition, cut off before the fully connected layers.
- The deconvolution network simply restores the final feature map of the convolution network to a larger size, either by interpolation or by transposed convolution (continued on the next page).
- Sometimes transposed convolution is no better than interpolation even though it has parameters. [paper]
- Segmentation as classification: a classifier is applied at every pixel of the final output.
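"Segmentation as classification" means that, once the network has produced one score map per class, each pixel is labeled independently with its best-scoring class. A minimal sketch, with made-up scores for two classes on a 2 x 2 output:

```python
def segment(score_maps):
    """Label each pixel with the class whose score is highest at that pixel.

    score_maps[c][y][x] is the score of class c at pixel (y, x).
    """
    num_classes = len(score_maps)
    height, width = len(score_maps[0]), len(score_maps[0][0])
    return [
        [
            max(range(num_classes), key=lambda c: score_maps[c][y][x])
            for x in range(width)
        ]
        for y in range(height)
    ]


# Hypothetical scores: class 0 (background) and class 1 (object).
score_maps = [
    [[0.9, 0.2],
     [0.4, 0.1]],
    [[0.1, 0.8],
     [0.6, 0.9]],
]
labels = segment(score_maps)
print(labels)  # [[0, 1], [1, 1]]
```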

![Transposed convolution](https://slidetodoc.com/presentation_image/c3c3cbd6d4a200f92086002b7e62d33b/image-20.jpg)

Transposed convolution [12]

- As the figure shows, transposed convolution is carried out with the same operation as convolution.
- Transposed convolution only guarantees that the output is again a 4 x 4 image. It does not perfectly reverse the corresponding convolution, at least not in terms of numeric values.

Figure panels: Convolution, Transposed convolution
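The size-versus-values point can be checked with a small sketch. It assumes a 3 x 3 kernel with stride 1 and no padding, so a 4 x 4 image shrinks to 2 x 2 and the transposed convolution grows it back to 4 x 4; the kernel size, the all-ones kernel, and the function names are assumptions for the example.

```python
def conv2d_valid(image, kernel):
    """Convolution without padding (kernel not flipped, as usual in CNNs)."""
    kh, kw = len(kernel), len(kernel[0])
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(len(image[0]) - kw + 1)
        ]
        for i in range(len(image) - kh + 1)
    ]


def conv_transpose2d(feature_map, kernel):
    """Each input value scatters a kernel-weighted copy into a larger output."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(feature_map) + kh - 1
    out_w = len(feature_map[0]) + kw - 1
    output = [[0] * out_w for _ in range(out_h)]
    for i, row in enumerate(feature_map):
        for j, value in enumerate(row):
            for di in range(kh):
                for dj in range(kw):
                    output[i + di][j + dj] += value * kernel[di][dj]
    return output


image = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12],
         [13, 14, 15, 16]]
kernel = [[1, 1, 1],
          [1, 1, 1],
          [1, 1, 1]]

small = conv2d_valid(image, kernel)          # 2x2: [[54, 63], [90, 99]]
restored = conv_transpose2d(small, kernel)   # 4x4 again, but different values
print(len(restored), len(restored[0]))       # 4 4
print(restored == image)                     # False
```

The output has the original shape, yet its values (for instance 54 in the top-left corner instead of 1) show that the convolution has not been inverted.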

![Image caption](https://slidetodoc.com/presentation_image/c3c3cbd6d4a200f92086002b7e62d33b/image-21.jpg)

Image caption

Picture from [13]

Run your image

![Image caption](https://slidetodoc.com/presentation_image/c3c3cbd6d4a200f92086002b7e62d33b/image-22.jpg)

Image caption

Picture from [14]

![Generative adversarial network](https://slidetodoc.com/presentation_image/c3c3cbd6d4a200f92086002b7e62d33b/image-23.jpg)

Generative adversarial network

All generated by a neural network!

Picture from [15]

![Image style transfer](https://slidetodoc.com/presentation_image/c3c3cbd6d4a200f92086002b7e62d33b/image-24.jpg)

Image style transfer [16]

Run your own image at www.deepart.io/hire/

![Image matting](https://slidetodoc.com/presentation_image/c3c3cbd6d4a200f92086002b7e62d33b/image-26.jpg)

Image matting [17]

![Recolorization](https://slidetodoc.com/presentation_image/c3c3cbd6d4a200f92086002b7e62d33b/image-27.jpg)

Recolorization [18]

![Derain](https://slidetodoc.com/presentation_image/c3c3cbd6d4a200f92086002b7e62d33b/image-28.jpg)

Derain [19]

![DeepWarp](https://slidetodoc.com/presentation_image/c3c3cbd6d4a200f92086002b7e62d33b/image-29.jpg)

DeepWarp [20]

Test your own at http://163.172.78.19/

![Reference](https://slidetodoc.com/presentation_image/c3c3cbd6d4a200f92086002b7e62d33b/image-30.jpg)

Reference

- [1] http://deeplearning.stanford.edu/wiki/index.php/Feature_extraction_using_convolution
- [2] https://www.quora.com/What-is-max-pooling-in-convolutional-neural-networks
- [3] http://machinethink.net/blog/convolutional-neural-networks-on-the-iphone-with-vggnet/
- [4] https://stats.stackexchange.com/questions/265905/derivative-of-softmax-with-respect-to-weights
- [5] https://www.zhihu.com/question/27239198
- [6] Simonyan, Karen, and Andrew Zisserman. "Very deep convolutional networks for large-scale image recognition." arXiv preprint arXiv:1409.1556 (2014).
- [7] He, Kaiming, et al. "Deep residual learning for image recognition." Proceedings of the IEEE conference on computer vision and pattern recognition. 2016.
- [8] https://becominghuman.ai/explain-object-detection-to-me-like-im-five-bfb5f312cba7

![Reference](https://slidetodoc.com/presentation_image/c3c3cbd6d4a200f92086002b7e62d33b/image-31.jpg)

Reference

- [9] Ren, Shaoqing, et al. "Faster r-cnn: Towards real-time object detection with region proposal networks." Advances in neural information processing systems. 2015.
- [10] Redmon, Joseph, et al. "You only look once: Unified, real-time object detection." Proceedings of the IEEE conference on computer vision and pattern recognition. 2016.
- [11] http://www.robots.ox.ac.uk/~aarnab/
- [12] Long, Jonathan, Evan Shelhamer, and Trevor Darrell. "Fully convolutional networks for semantic segmentation." Proceedings of the IEEE conference on computer vision and pattern recognition. 2015.
- [13] http://www.cs.nthu.edu.tw/~shwu/courses/ml/competitions/02_Image-Caption/02_ImageCaption.html
- [14] http://slides.com/walkingdead526/deck#/
- [15] https://qiita.com/mattya/items/e5bfe5e04b9d2f0bbd47

![Reference](https://slidetodoc.com/presentation_image/c3c3cbd6d4a200f92086002b7e62d33b/image-32.jpg)

Reference

- [16] Gatys, Leon A., Alexander S. Ecker, and Matthias Bethge. "Image style transfer using convolutional neural networks." Computer Vision and Pattern Recognition (CVPR), 2016 IEEE Conference on. IEEE, 2016.
- [17] Xu, Ning, et al. "Deep image matting." Computer Vision and Pattern Recognition (CVPR). 2017.
- [18] Zhang, Richard, Phillip Isola, and Alexei A. Efros. "Colorful image colorization." European Conference on Computer Vision. Springer, Cham, 2016.
- [19] Zhang, He, and Vishal M. Patel. "Density-aware Single Image De-raining using a Multi-stream Dense Network." arXiv preprint arXiv:1802.07412 (2018). (Now CVPR 2018)
- [20] Ganin, Yaroslav, et al. "Deepwarp: Photorealistic image resynthesis for gaze manipulation." European Conference on Computer Vision. Springer, Cham, 2016.

Questions?