Dr Shankar Sastry Chair Electrical Engineering Computer Sciences

- Slides: 92

Dr. Shankar Sastry, Chair, Electrical Engineering & Computer Sciences, University of California, Berkeley

Overview

- Overview of UAV system
- Selection of vehicle platform
- Sensor system
- Model identification
- Hierarchical control system
  - Low-level vehicle stabilization and control
  - Way-point navigation
- Further applications

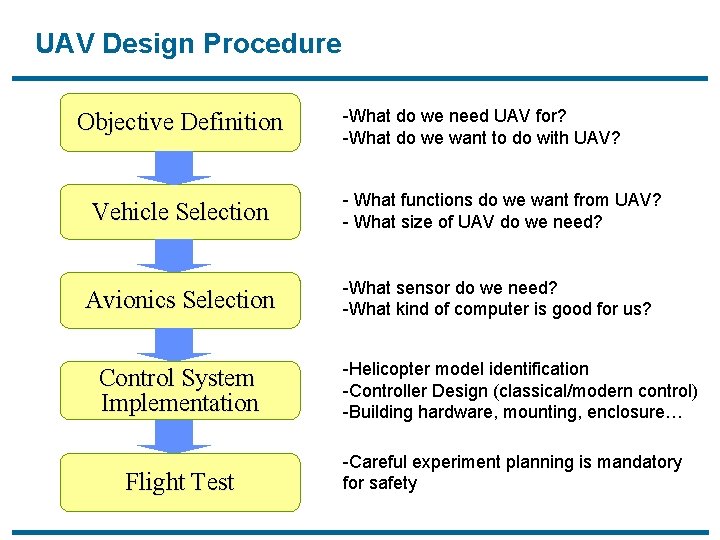

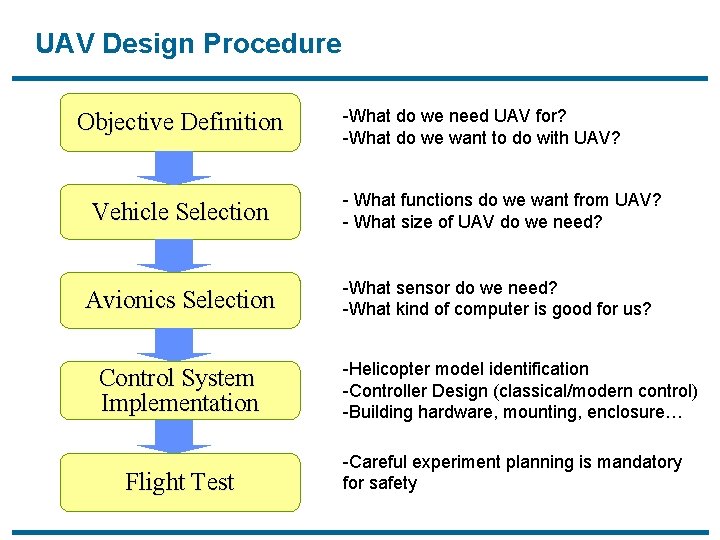

UAV Design Procedure

- Objective definition
  - What do we need the UAV for?
  - What do we want to do with the UAV?
- Vehicle selection
  - What functions do we want from the UAV?
  - What size of UAV do we need?
- Avionics selection
  - What sensors do we need?
  - What kind of computer is good for us?
- Control system implementation
  - Helicopter model identification
  - Controller design (classical/modern control)
  - Building hardware, mounting, enclosure…
- Flight test
  - Careful experiment planning is mandatory for safety

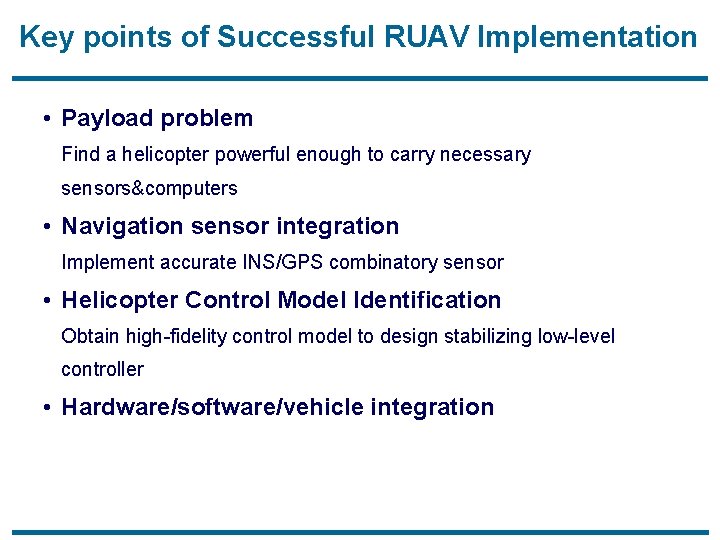

Key points of Successful RUAV Implementation

- Payload problem: find a helicopter powerful enough to carry the necessary sensors and computers
- Navigation sensor integration: implement an accurate combined INS/GPS sensor
- Helicopter control model identification: obtain a high-fidelity control model to design a stabilizing low-level controller
- Hardware/software/vehicle integration

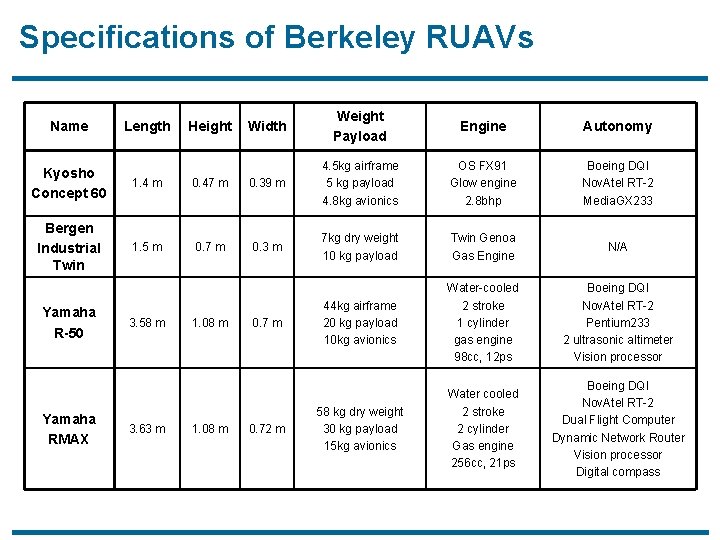

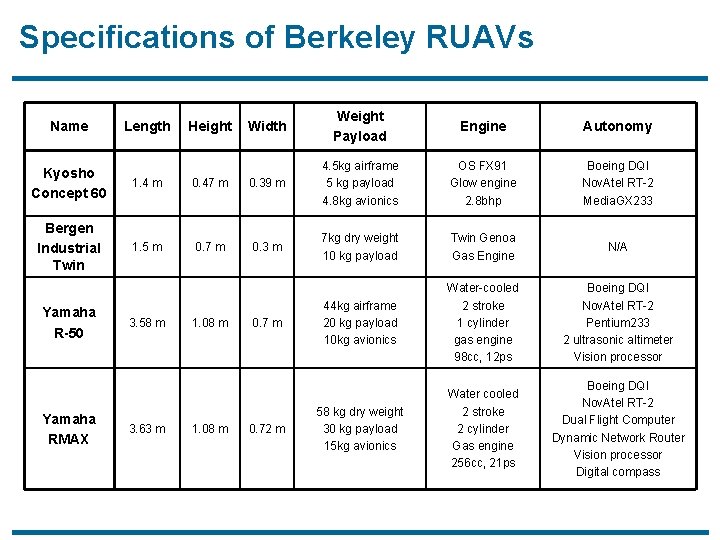

Specifications of Berkeley RUAVs

- Kyosho Concept 60
  - Length 1.4 m, height 0.47 m, width 0.39 m
  - Weight: 4.5 kg airframe, 4.8 kg avionics
  - Payload: 5 kg
  - Engine: OS FX 91 glow engine, 2.8 bhp
  - Autonomy: Boeing DQI, NovAtel RT-2, MediaGX 233
- Bergen Industrial Twin
  - Length 1.5 m, height 0.7 m, width 0.3 m
  - Weight: 7 kg dry weight
  - Payload: 10 kg
  - Engine: Twin Genoa gas engine
  - Autonomy: N/A
- Yamaha R-50
  - Length 3.58 m
  - Weight: 44 kg airframe, 10 kg avionics
  - Payload: 20 kg
  - Engine: water-cooled 2-stroke 1-cylinder gas engine, 98 cc, 12 ps
  - Autonomy: Boeing DQI, NovAtel RT-2, Pentium 233, 2 ultrasonic altimeters, vision processor
- Yamaha RMAX
  - Length 3.63 m, height 1.08 m, width 0.72 m
  - Weight: 58 kg dry weight, 15 kg avionics
  - Payload: 30 kg
  - Engine: water-cooled 2-stroke 2-cylinder gas engine, 256 cc, 21 ps
  - Autonomy: Boeing DQI, NovAtel RT-2, dual flight computer, dynamic network router, vision processor, digital compass

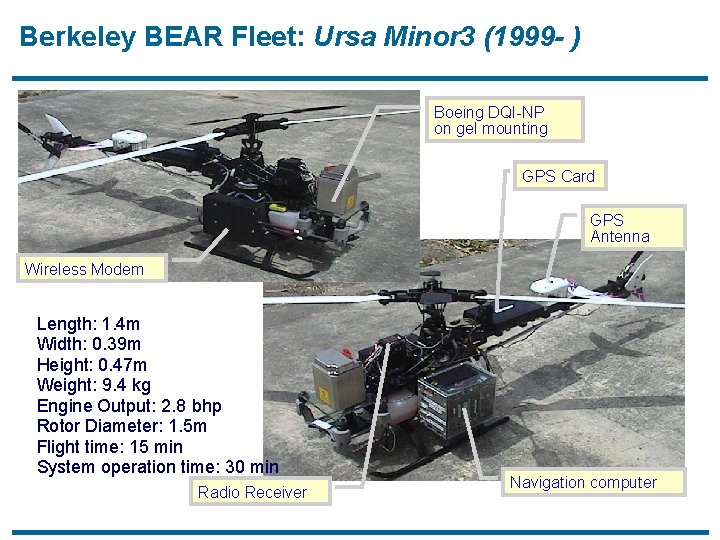

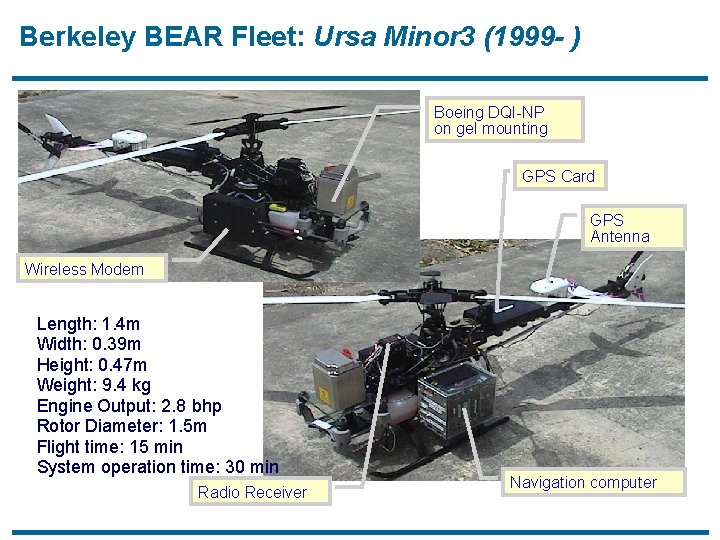

Berkeley BEAR Fleet: Ursa Minor 3 (1999 - )

Labeled components: Boeing DQI-NP on gel mounting, GPS card, GPS antenna, wireless modem, radio receiver, navigation computer

- Length: 1.4 m
- Width: 0.39 m
- Height: 0.47 m
- Weight: 9.4 kg
- Engine output: 2.8 bhp
- Rotor diameter: 1.5 m
- Flight time: 15 min
- System operation time: 30 min

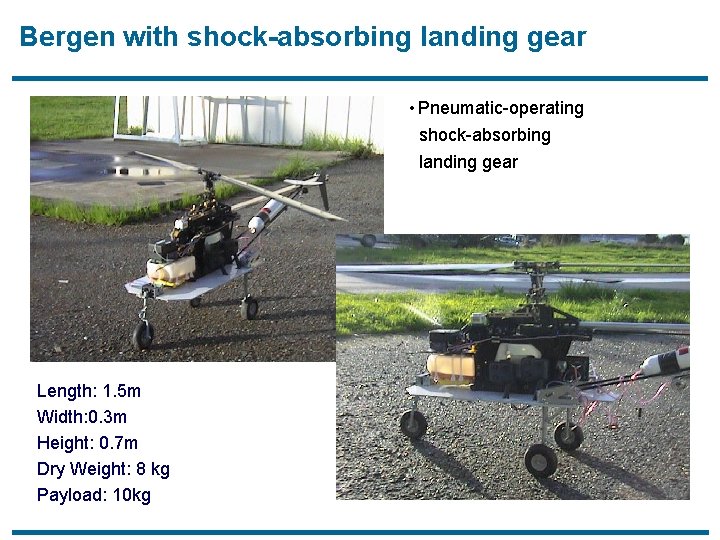

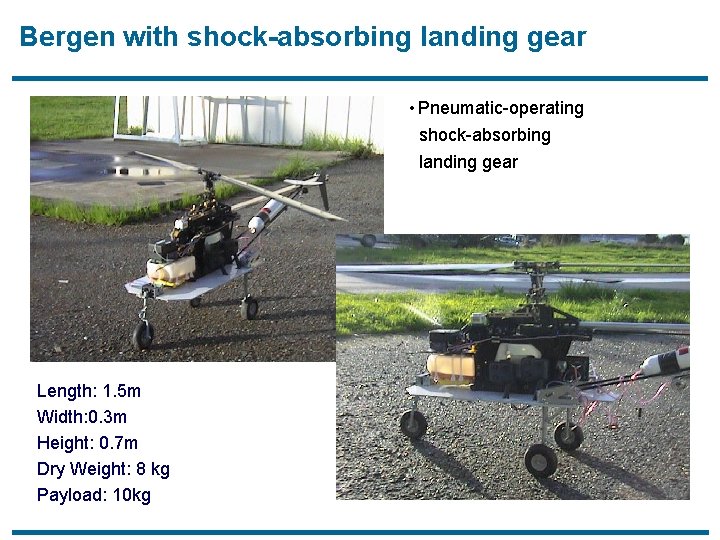

Bergen with shock-absorbing landing gear

- Pneumatically operated shock-absorbing landing gear
- Length: 1.5 m
- Width: 0.3 m
- Height: 0.7 m
- Dry weight: 8 kg
- Payload: 10 kg

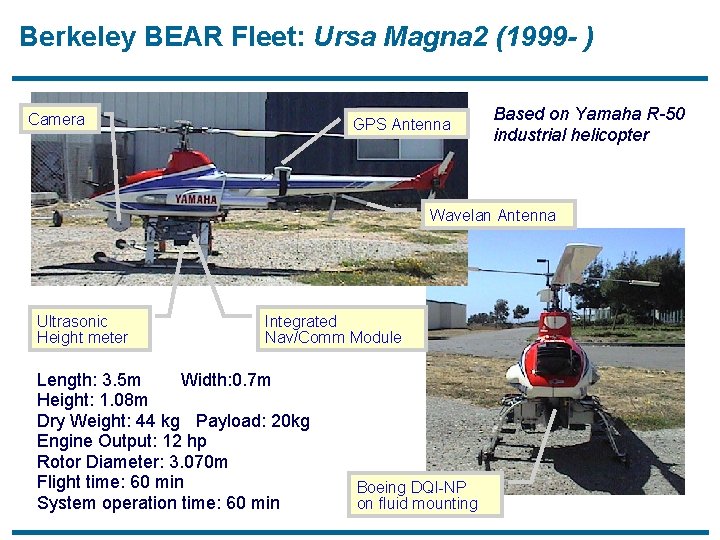

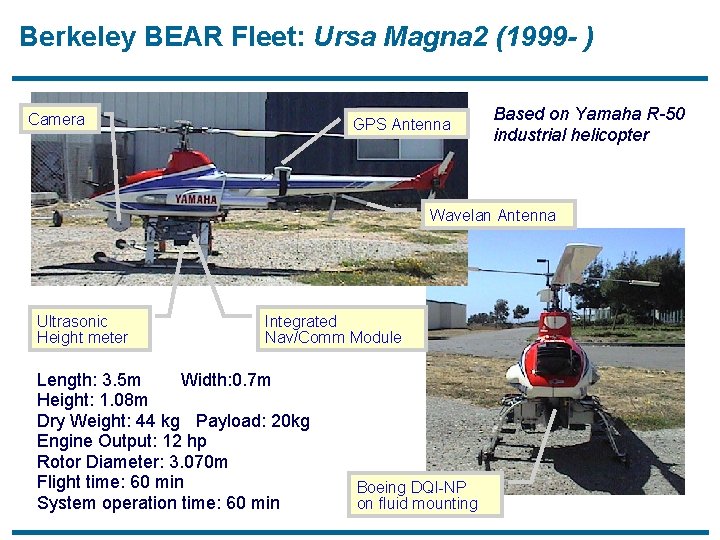

Berkeley BEAR Fleet: Ursa Magna 2 (1999 - )

Based on the Yamaha R-50 industrial helicopter

Labeled components: camera, GPS antenna, WaveLAN antenna, ultrasonic height meter, integrated Nav/Comm module, Boeing DQI-NP on fluid mounting

- Length: 3.5 m
- Width: 0.7 m
- Height: 1.08 m
- Dry weight: 44 kg
- Payload: 20 kg
- Engine output: 12 hp
- Rotor diameter: 3.070 m
- Flight time: 60 min
- System operation time: 60 min

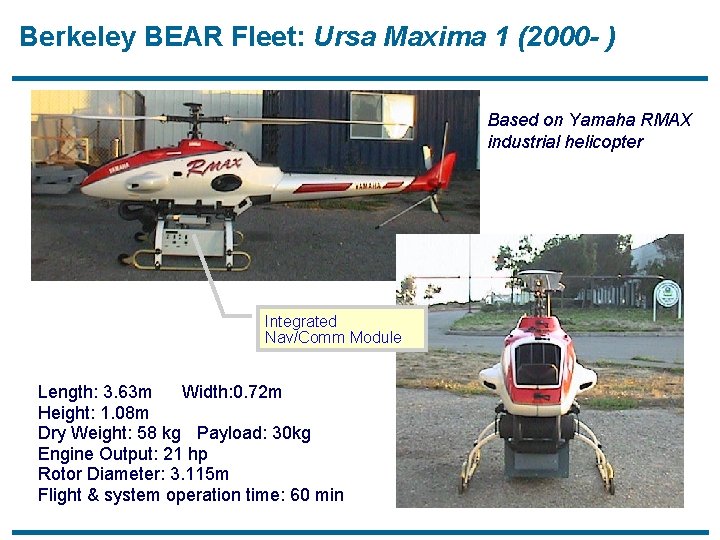

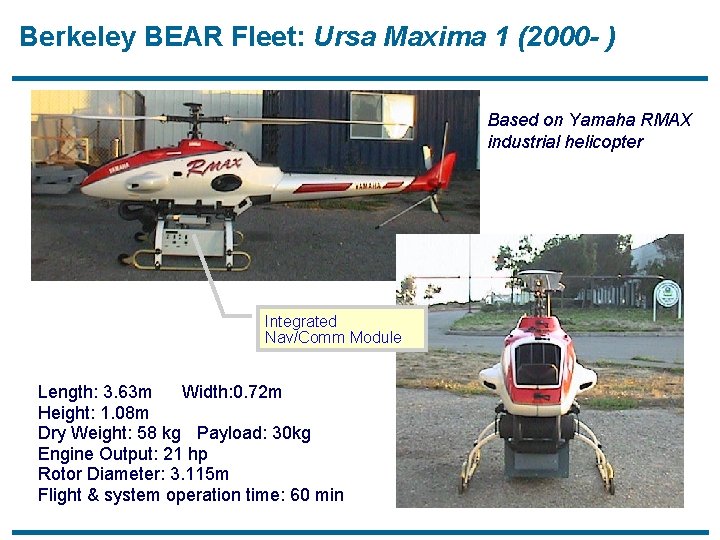

Berkeley BEAR Fleet: Ursa Maxima 1 (2000 - )

Based on the Yamaha RMAX industrial helicopter

Labeled components: integrated Nav/Comm module

- Length: 3.63 m
- Width: 0.72 m
- Height: 1.08 m
- Dry weight: 58 kg
- Payload: 30 kg
- Engine output: 21 hp
- Rotor diameter: 3.115 m
- Flight and system operation time: 60 min

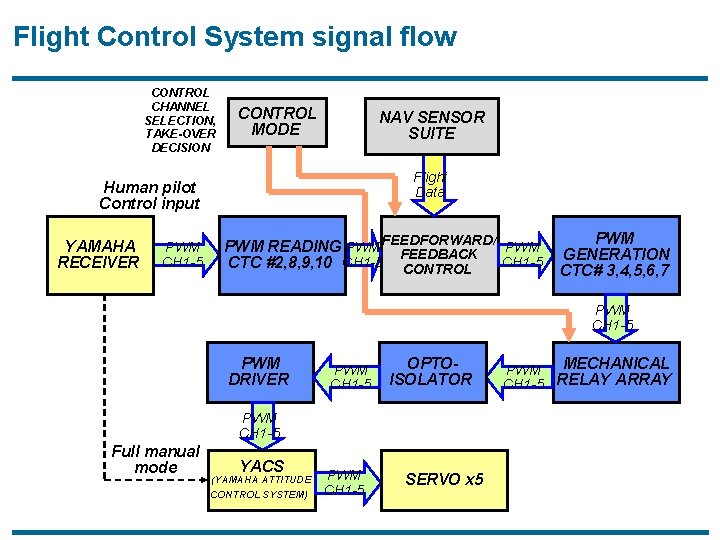

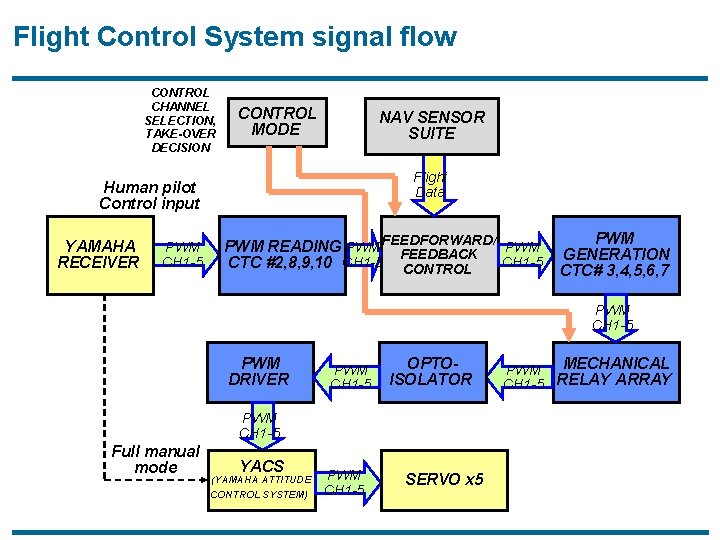

Flight Control System signal flow

Block diagram labels: human pilot control input; Yamaha receiver (PWM CH 1-5); PWM reading (CTC #2, 8, 9, 10); control channel selection and take-over decision; control mode; nav sensor suite (flight data); feedforward/feedback control; PWM generation (CTC #3, 4, 5, 6, 7); PWM driver; optoisolator; relay array; YACS (Yamaha Attitude Control System); mechanical servos x 5; full manual mode. All PWM links carry channels 1-5.

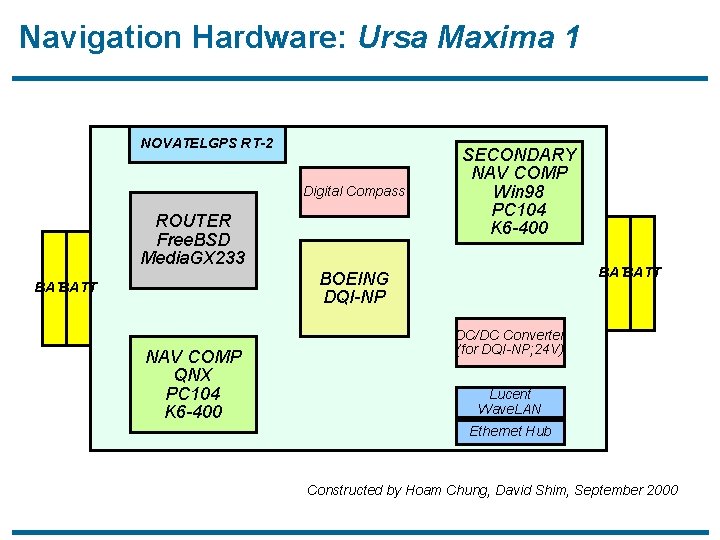

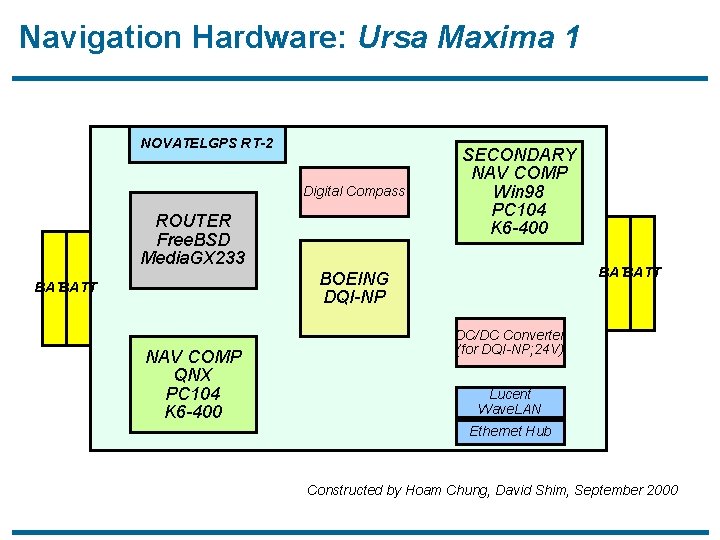

Navigation Hardware: Ursa Maxima 1

- Nav computer: QNX, PC104 K6-400
- Secondary nav computer: Win 98, PC104 K6-400
- Router: FreeBSD, MediaGX 233
- NovAtel GPS RT-2
- Boeing DQI-NP
- Digital compass
- Lucent WaveLAN
- Ethernet hub
- Batteries
- DC/DC converter (for DQI-NP; 24 V)

Constructed by Hoam Chung, David Shim, September 2000

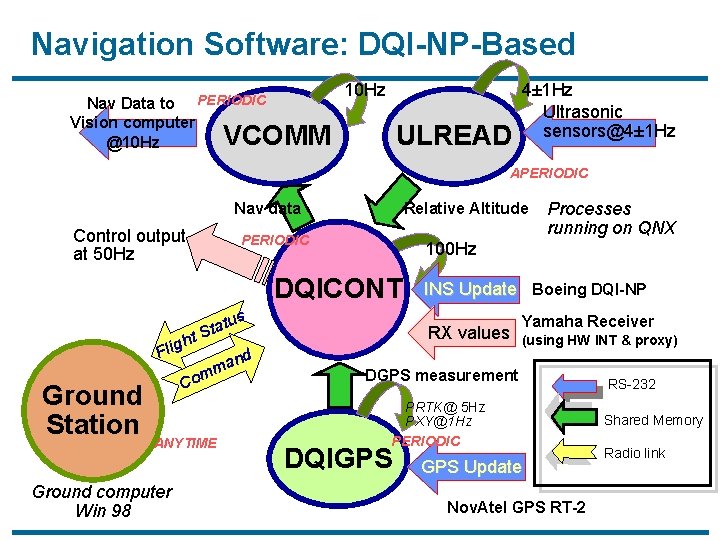

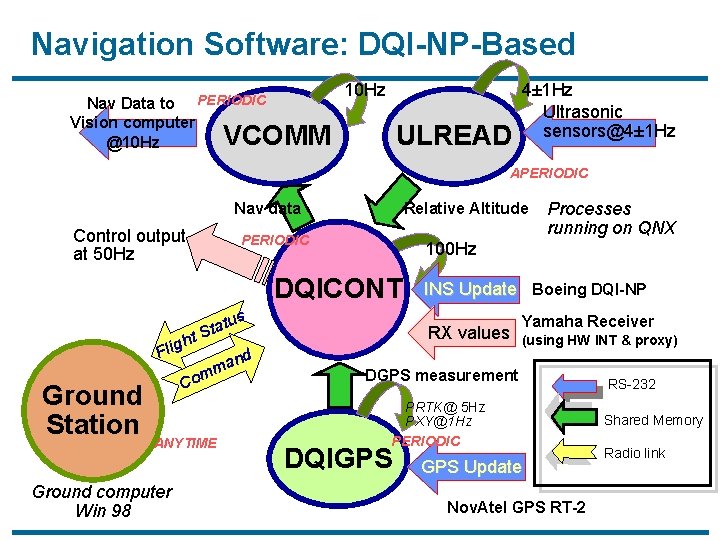

Navigation Software: DQI-NP-Based

Processes running on QNX:

- DQICONT (periodic, 100 Hz): INS update from the Boeing DQI-NP; RX values from the Yamaha receiver (using HW INT & proxy); control output at 50 Hz
- DQIGPS (periodic): DGPS measurement update from the NovAtel GPS RT-2; PRTK @ 5 Hz, PXY @ 1 Hz
- ULREAD (aperiodic, 4±1 Hz): ultrasonic sensors @ 4±1 Hz; relative altitude
- VCOMM (periodic): nav data to the vision computer @ 10 Hz
- Ground station (anytime): flight status to, and commands from, the ground computer (Win 98)

Links: RS-232, shared memory, radio link

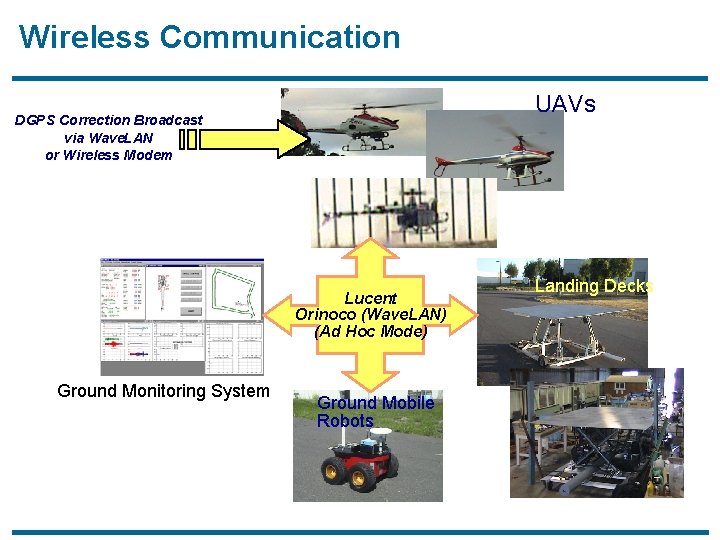

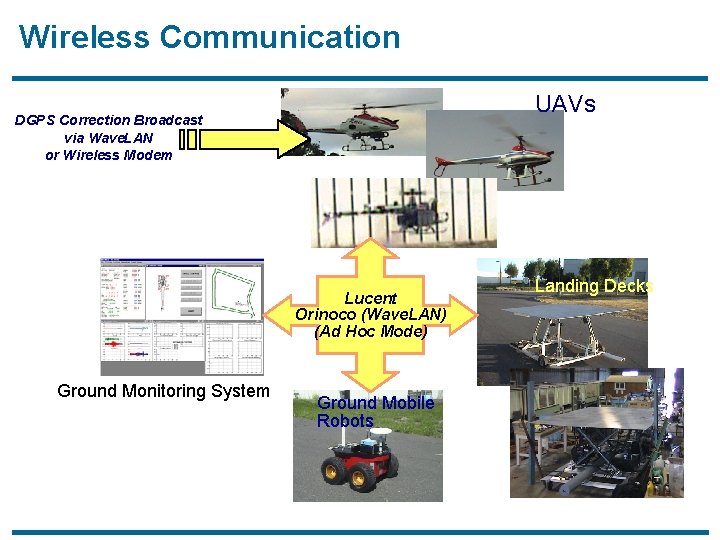

Wireless Communication

- Nodes: UAVs, ground mobile robots, landing decks, ground monitoring system
- Network: Lucent Orinoco (WaveLAN), ad hoc mode
- DGPS correction broadcast via WaveLAN or wireless modem

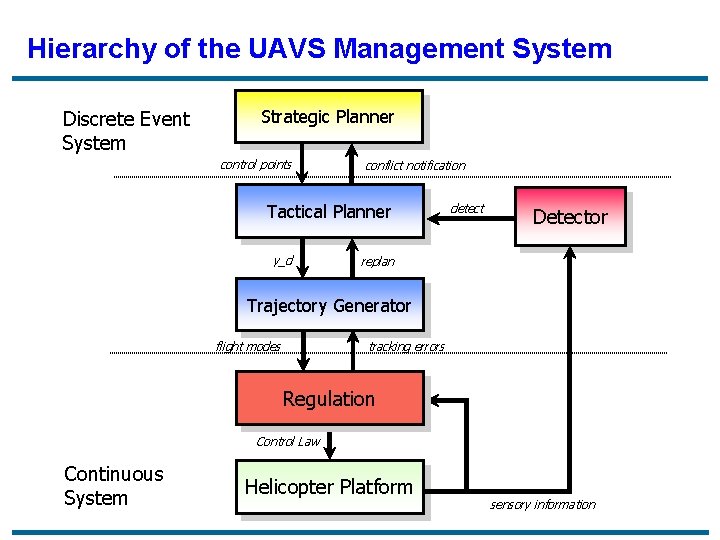

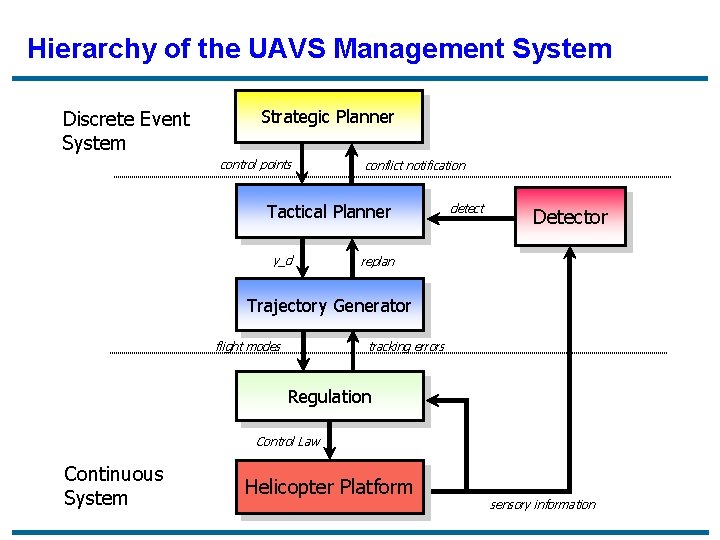

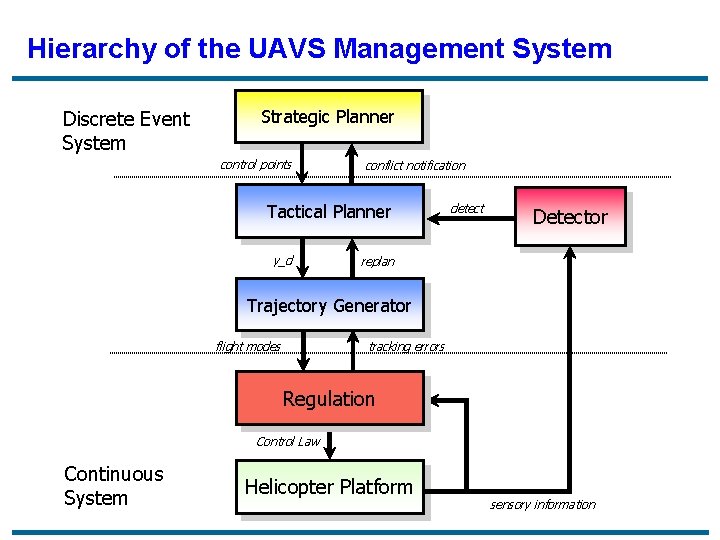

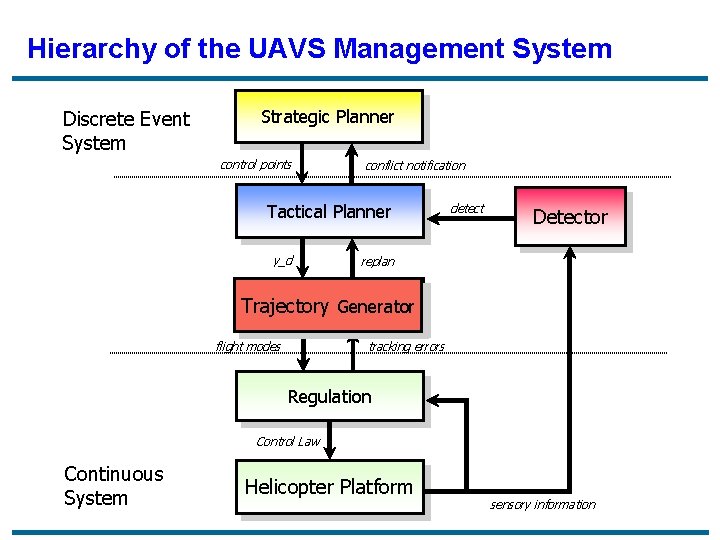

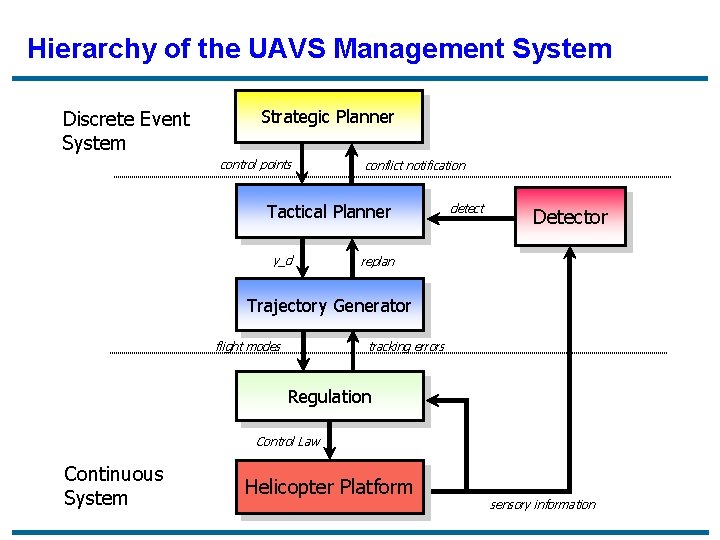

Hierarchy of the UAVS Management System

Block diagram labels, top to bottom:

- Discrete event system
  - Strategic planner
  - Tactical planner (receives control points; returns conflict notification)
  - Detector (detect) and trajectory generator (receives $y_d$; replan)
- Continuous system
  - Regulation / control law (receives flight modes; returns tracking errors)
  - Helicopter platform (returns sensory information)

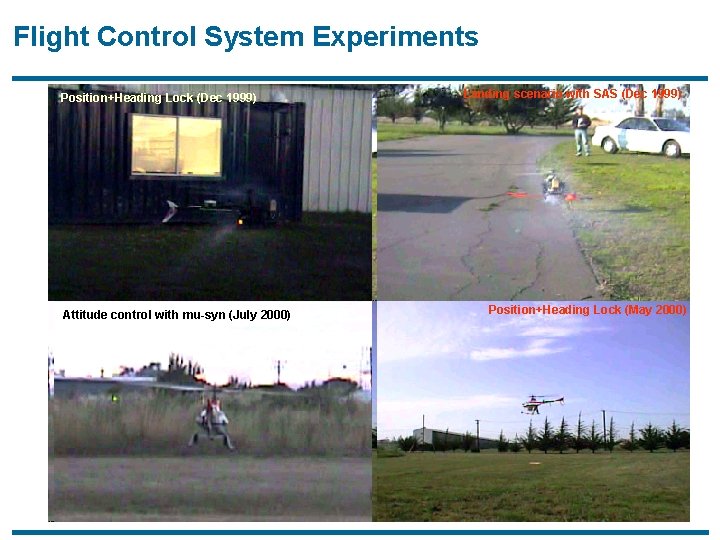

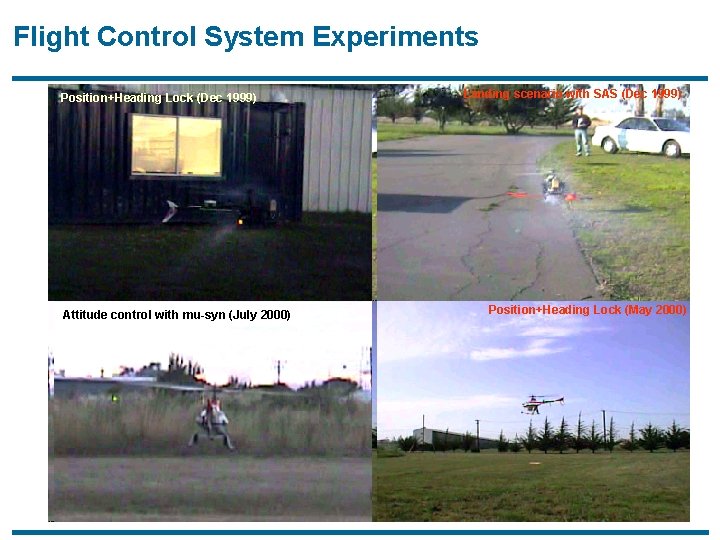

Flight Control System Experiments

- Position + heading lock (Dec 1999)
- Landing scenario with SAS (Dec 1999)
- Position + heading lock (May 2000)
- Attitude control with mu-synthesis (July 2000)

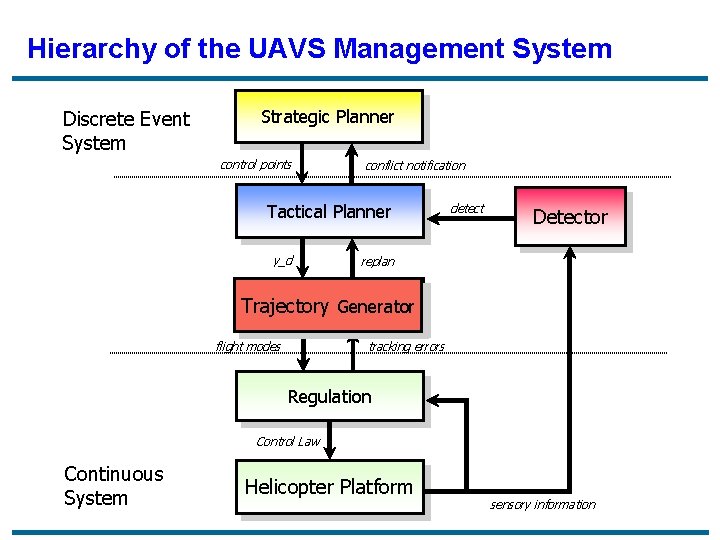


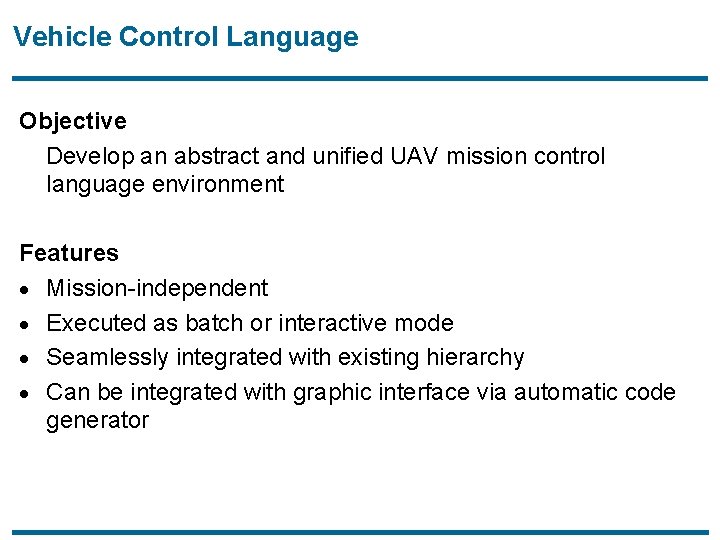

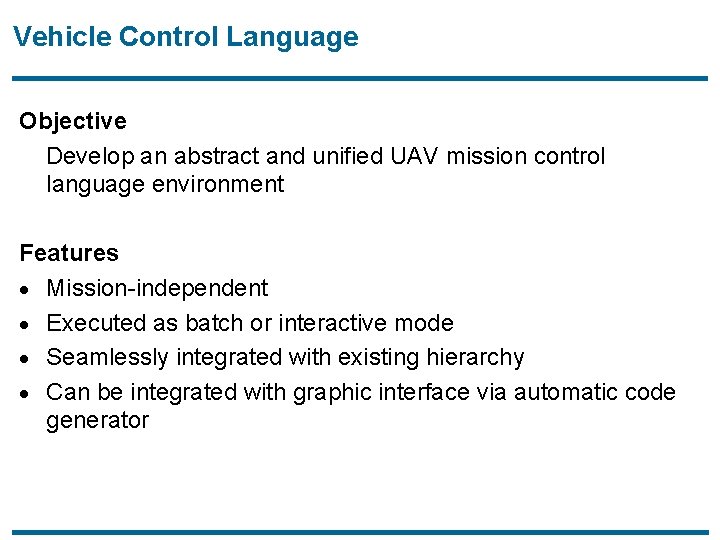

Vehicle Control Language

Objective: develop an abstract and unified UAV mission control language environment

Features:

- Mission-independent
- Executed in batch or interactive mode
- Seamlessly integrated with the existing hierarchy
- Can be integrated with a graphical interface via an automatic code generator

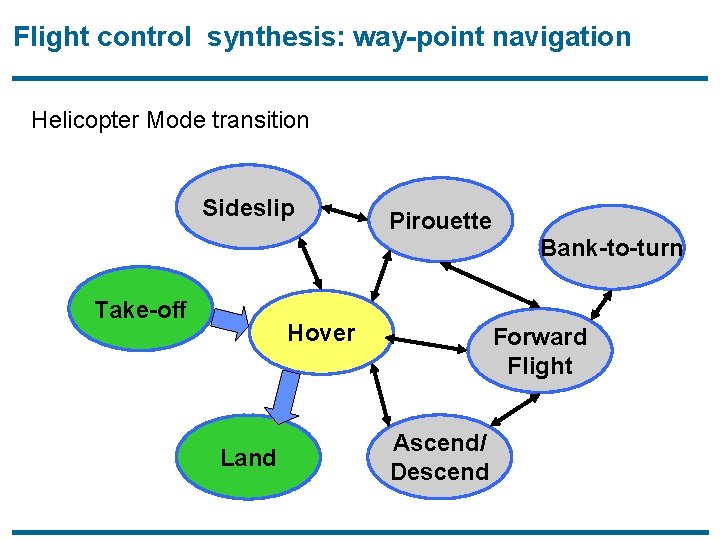

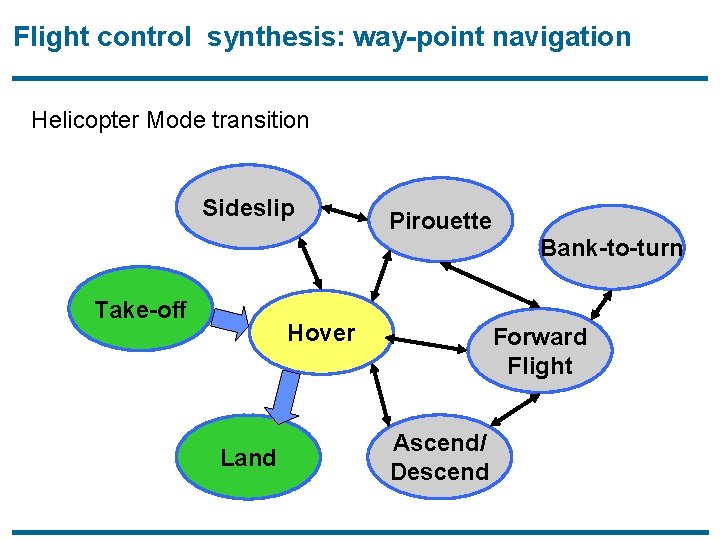

Flight control synthesis: way-point navigation

Helicopter mode transition diagram. Modes: take-off, hover, land, forward flight, ascend/descend, sideslip, pirouette, bank-to-turn.


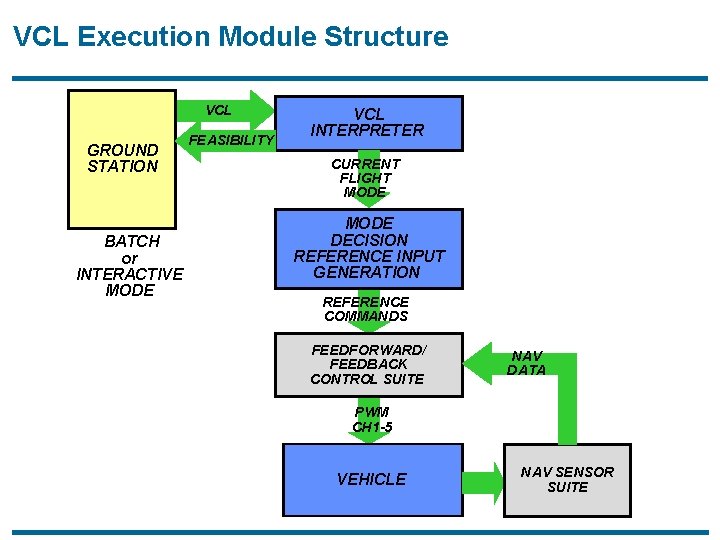

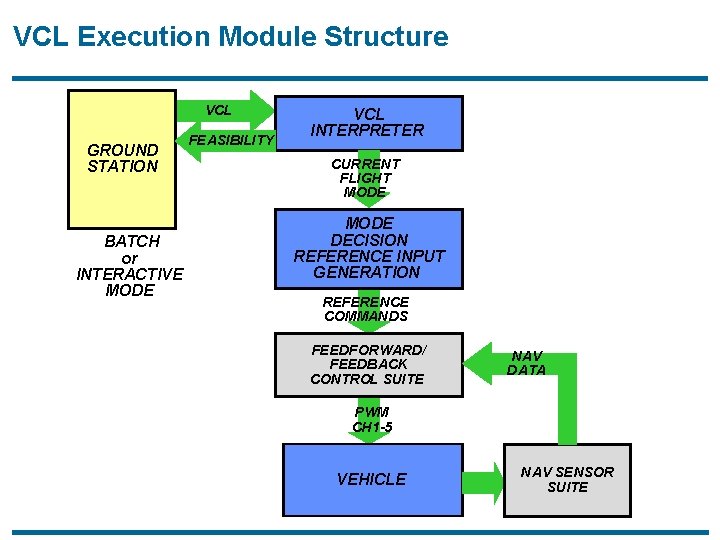

VCL Execution Module Structure

Block diagram labels: ground station; VCL (batch or interactive mode); VCL interpreter; feasibility; current flight mode decision; reference input generation (reference commands); feedforward/feedback control suite; PWM CH 1-5; vehicle; nav sensor suite (nav data).

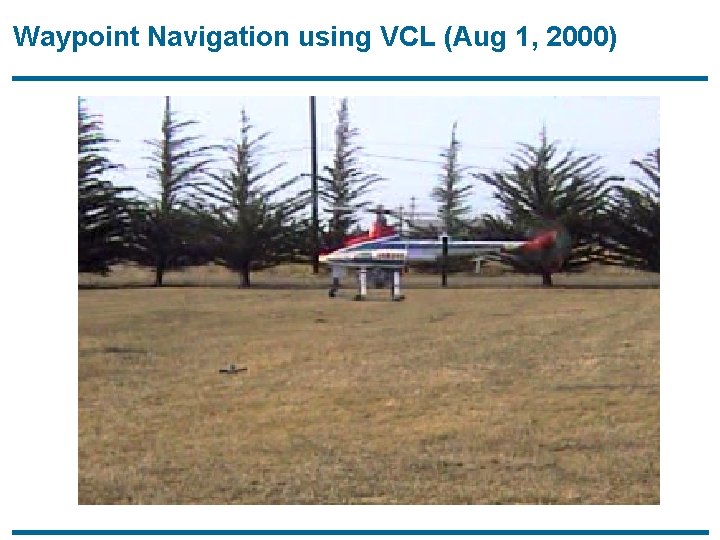

Waypoint Navigation using VCL (Aug 1, 2000)

Vision Based Motion Estimation for UAV Landing Cory Sharp, Omid Shakernia Department of EECS University of California at Berkeley

Outline

- Motivation
- Vision based ego-motion estimation
- Evaluation of motion estimates
- Vision system hardware/software
- Landing target design/tracking
- Active camera control
- Flight videos

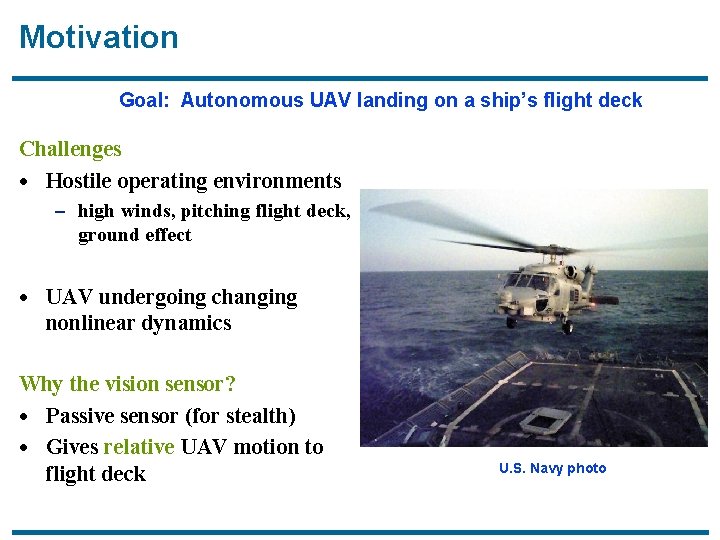

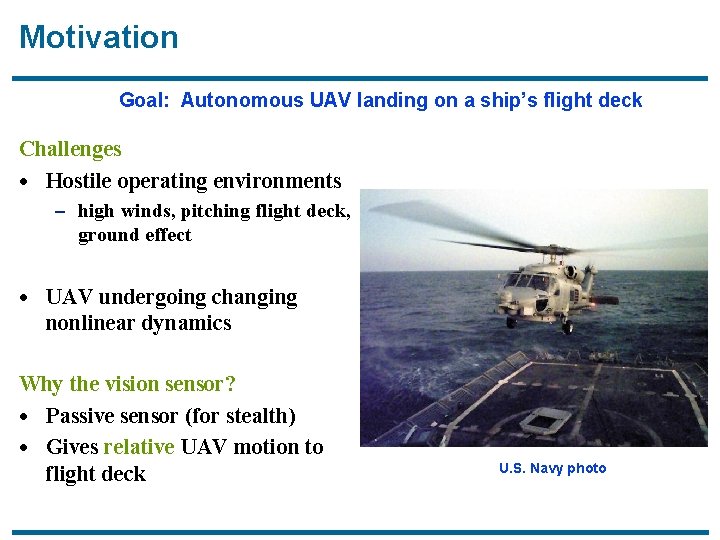

Motivation

Goal: autonomous UAV landing on a ship’s flight deck

Challenges:

- Hostile operating environments: high winds, pitching flight deck, ground effect
- UAV undergoing changing nonlinear dynamics

Why the vision sensor?

- Passive sensor (for stealth)
- Gives relative UAV motion to the flight deck

(U.S. Navy photo)

Objective for Vision Based Landing

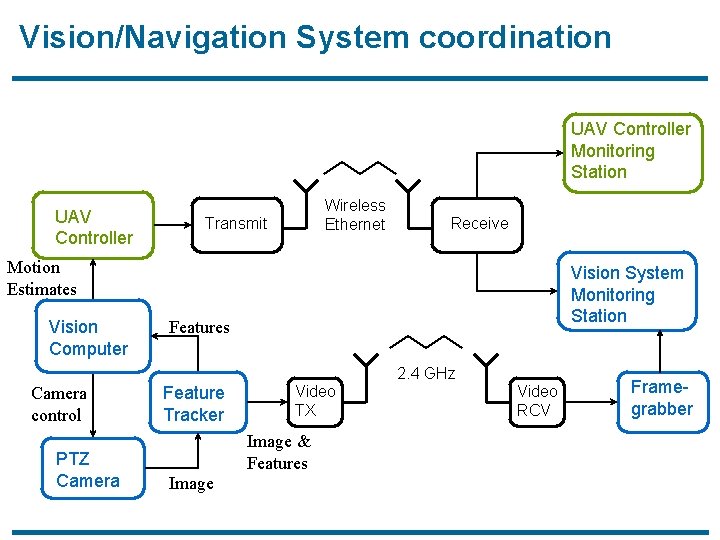

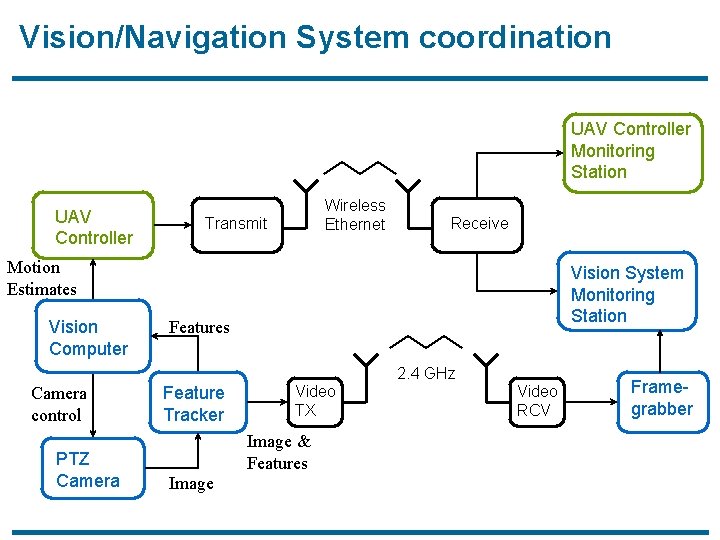

Vision/Navigation System coordination

Block diagram labels:

- UAV controller: exchanges motion estimates with the vision computer
- Vision system: vision computer, framegrabber, feature tracker, PTZ camera with camera control, video TX (2.4 GHz)
- Monitoring station: video RCV, image and features display, linked by wireless Ethernet (transmit/receive)

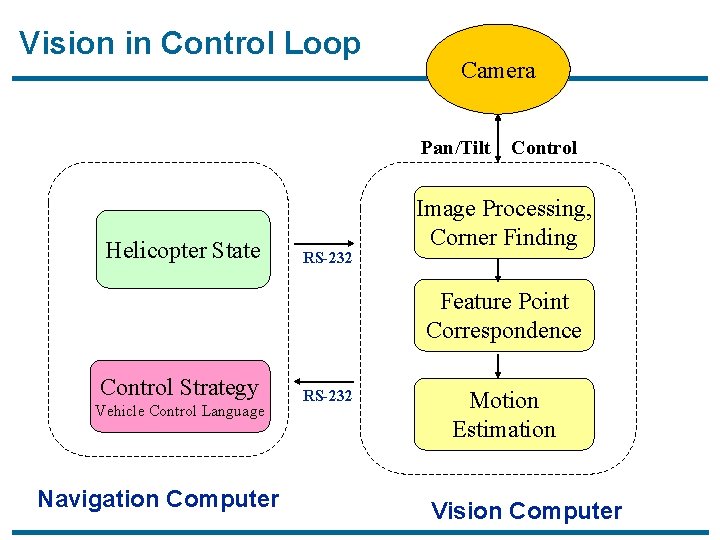

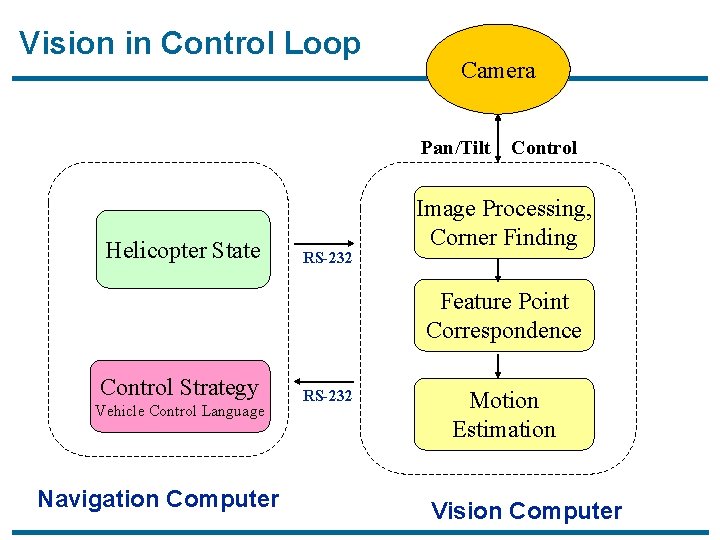

Vision in Control Loop

Block diagram labels:

- Vision computer: image processing and corner finding; feature point correspondence; motion estimation; camera pan/tilt control
- Navigation computer: control strategy; Vehicle Control Language; helicopter state
- Link between the two computers: RS-232

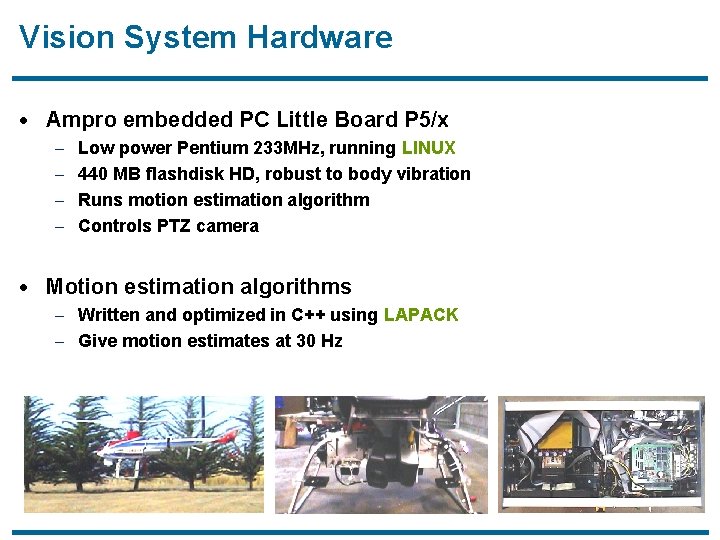

Vision System Hardware

- Ampro embedded PC Little Board P5/x
  - Low-power Pentium 233 MHz, running Linux
  - 440 MB flash disk, robust to body vibration
  - Runs the motion estimation algorithm
  - Controls the PTZ camera
- Motion estimation algorithms
  - Written and optimized in C++ using LAPACK
  - Give motion estimates at 30 Hz

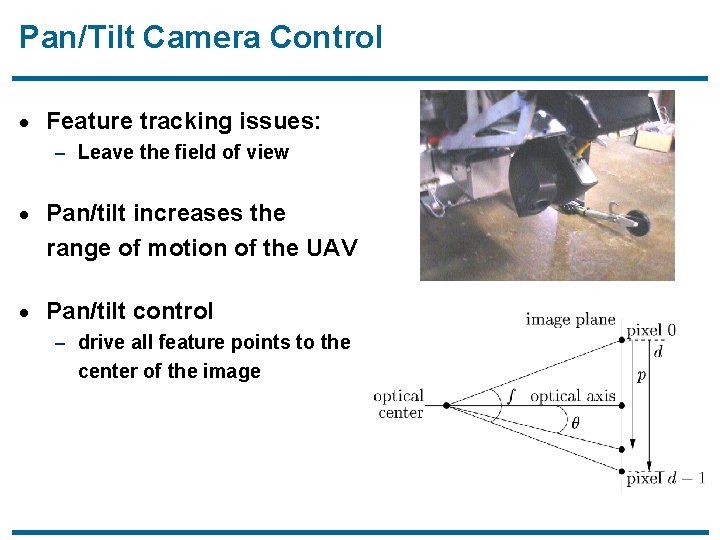

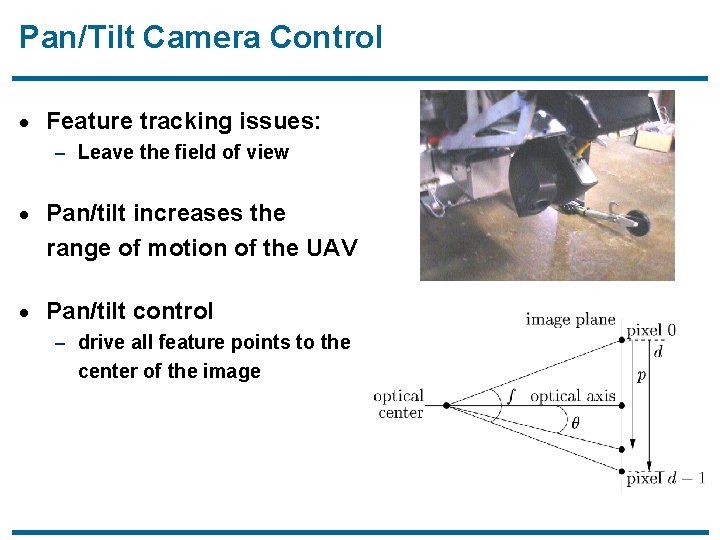

Pan/Tilt Camera Control

- Feature tracking issue: features leave the field of view
- Pan/tilt increases the range of motion of the UAV
- Pan/tilt control: drive all feature points to the center of the image
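The "drive all feature points to the center of the image" rule can be illustrated with a minimal proportional controller on the centroid of the tracked features. This is only a sketch: the slide does not give the control law, so the function name `pan_tilt_command`, the image size, the gain, and the sign convention are all assumptions made here for illustration.

```python
def pan_tilt_command(features, width=640, height=480, gain=0.1):
    """Return (pan_rate, tilt_rate) that pushes the feature centroid
    toward the image center. All parameters are illustrative assumptions."""
    if not features:
        # No tracked features: hold the camera still.
        return 0.0, 0.0
    # Centroid of the tracked feature points, in pixels.
    cx = sum(x for x, _ in features) / len(features)
    cy = sum(y for _, y in features) / len(features)
    # Offset from the image center, normalized to [-1, 1].
    err_x = (cx - width / 2) / (width / 2)
    err_y = (cy - height / 2) / (height / 2)
    # Proportional law: command is proportional to the normalized offset.
    return gain * err_x, gain * err_y


# Features clustered right of center give a positive pan command.
print(pan_tilt_command([(500, 240), (520, 250), (480, 230)]))
```

A real system would also need rate limits and the camera's actual pan/tilt sign conventions, which the slides do not specify.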

Flight Video

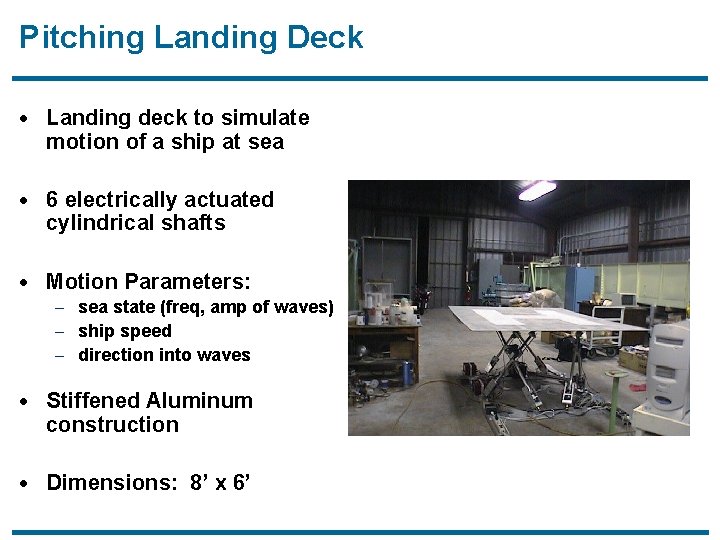

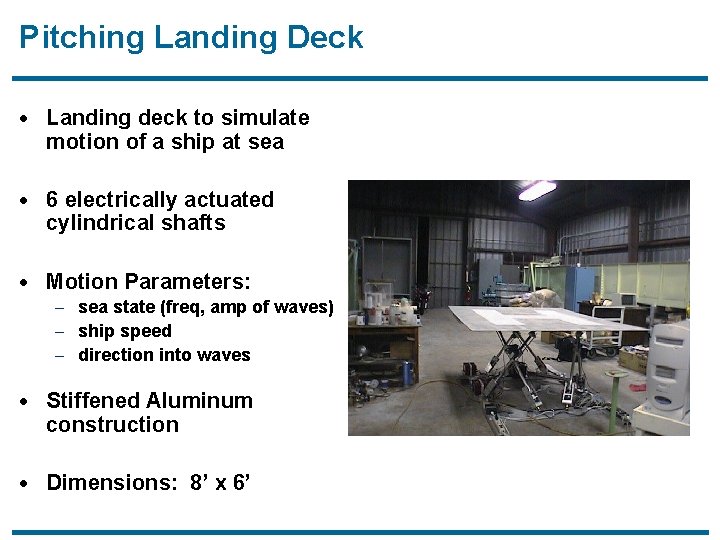

Pitching Landing Deck

- Landing deck to simulate the motion of a ship at sea
- 6 electrically actuated cylindrical shafts
- Motion parameters:
  - Sea state (frequency and amplitude of waves)
  - Ship speed
  - Direction into waves
- Stiffened aluminum construction
- Dimensions: 8’ x 6’

Moving Landing Pad

Landing on Deck

Probabilistic Pursuit-Evasion Games with UGVs and UAVs René Vidal C. Sharp, D. Shim, O. Shakernia, J. Hespanha, J. Kim, S. Rashid, S. Sastry University of California at Berkeley 04/05/01

Outline

- Introduction
- Pursuit-Evasion Games
  - Map building
  - Pursuit policies
- Hierarchical Control Architecture
  - Strategic planner, tactical planner, regulation, sensing, control
  - System, agent and communication architectures
- Architecture Implementation
  - Tactical layer: UGVs, UAVs, hardware, software, sensor fusion
  - Strategic layer: map building, pursuit policies, visual interface
- Experimental Results
  - Evaluation of pursuit policies
  - Pursuit-evasion games with UGVs and UAVs
- Conclusions and Current Research

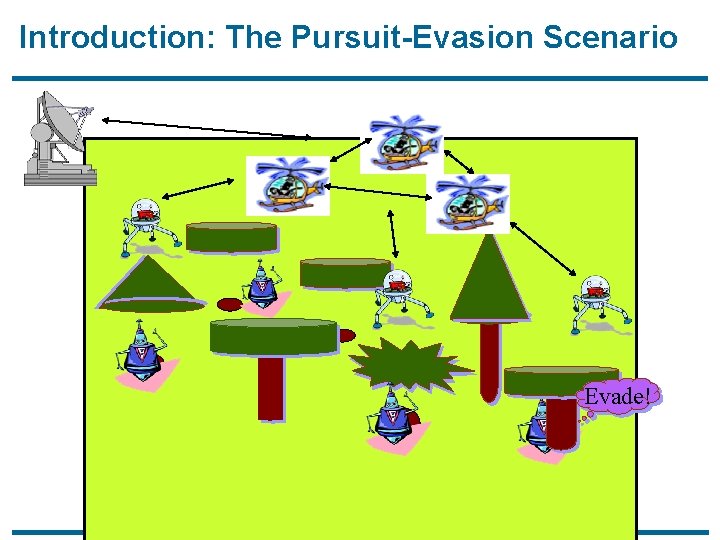

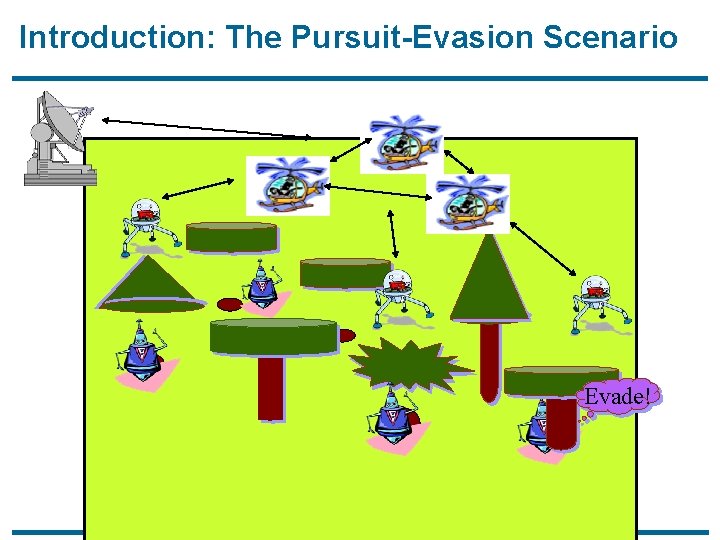

Introduction: The Pursuit-Evasion Scenario Evade!

Introduction: Theoretical Issues

- Probabilistic map building
- Coordinated multi-agent operation
- Networking and intelligent data sharing
- Path planning
- Identification of vehicle dynamics and control
- Sensor integration
- Vision system

Pursuit-Evasion Games

- Consider the approach in Hespanha, Kim and Sastry
  - Multiple pursuers catching one single evader
  - Pursuers can only move to adjacent empty cells
  - Pursuers have perfect knowledge of their current location
  - Sensor model: false positives ($p$) and false negatives ($q$) for evader detection
  - Evader moves randomly to adjacent cells
- Extensions in Rashid and Kim
  - Multiple evaders: each one is recognized individually
  - Supervisory agents: can “fly” over obstacles and evaders, cannot capture
  - Sensor model for obstacle detection as well

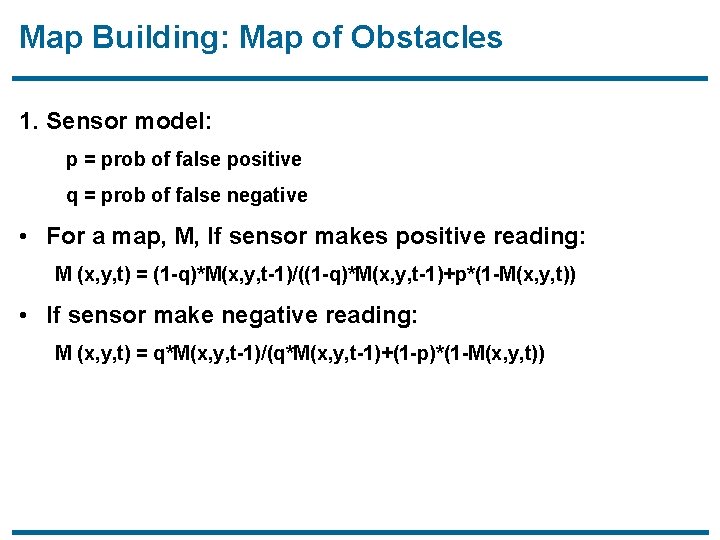

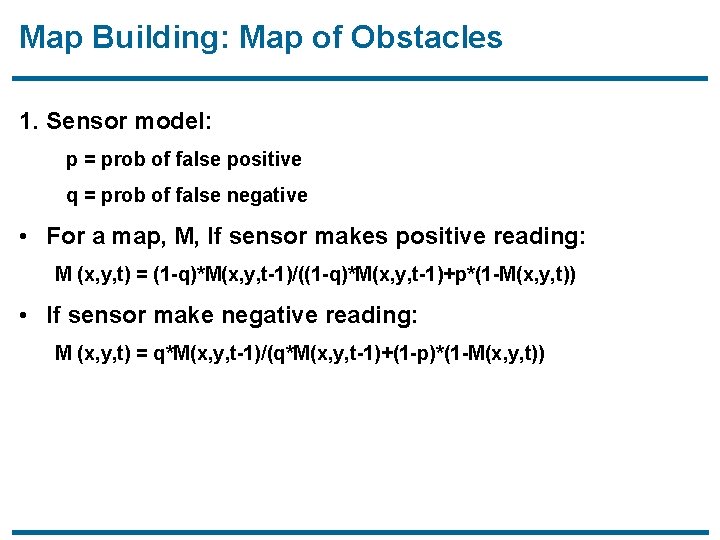

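Map Building: Map of Obstacles

Sensor model: $p$ = probability of a false positive, $q$ = probability of a false negative.

For a map $M$, where $M(x, y, t)$ is the probability that cell $(x, y)$ contains an obstacle after the reading at time $t$:

- If the sensor makes a positive reading:

  $$M(x,y,t) = \frac{(1-q)\,M(x,y,t-1)}{(1-q)\,M(x,y,t-1) + p\,\bigl(1 - M(x,y,t-1)\bigr)}$$

- If the sensor makes a negative reading:

  $$M(x,y,t) = \frac{q\,M(x,y,t-1)}{q\,M(x,y,t-1) + (1-p)\,\bigl(1 - M(x,y,t-1)\bigr)}$$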
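The per-cell update above is Bayes' rule with the previous map value as the prior. A minimal sketch for a single cell follows; the function name `update_obstacle_prob` and the example values of `p` and `q` are assumptions for illustration, and the prior on both sides of the denominator is taken to be the value at time t-1.

```python
def update_obstacle_prob(prior, positive, p, q):
    """Bayes update of the obstacle probability of one cell.

    prior    -- M(x, y, t-1), probability the cell holds an obstacle
    positive -- True if the sensor reported an obstacle
    p, q     -- false-positive and false-negative probabilities
    """
    if positive:
        num = (1 - q) * prior            # true detection
        den = num + p * (1 - prior)      # plus false alarm
    else:
        num = q * prior                  # missed detection
        den = num + (1 - p) * (1 - prior)  # plus correct "empty" reading
    # Keep the prior if the reading has zero probability under the model.
    return num / den if den > 0 else prior


m = 0.5
for reading in (True, True, False):
    m = update_obstacle_prob(m, reading, p=0.1, q=0.2)
    print(round(m, 4))
```

Repeated positive readings push the value toward 1 and negative readings pull it back, at a rate set by how reliable the sensor is.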

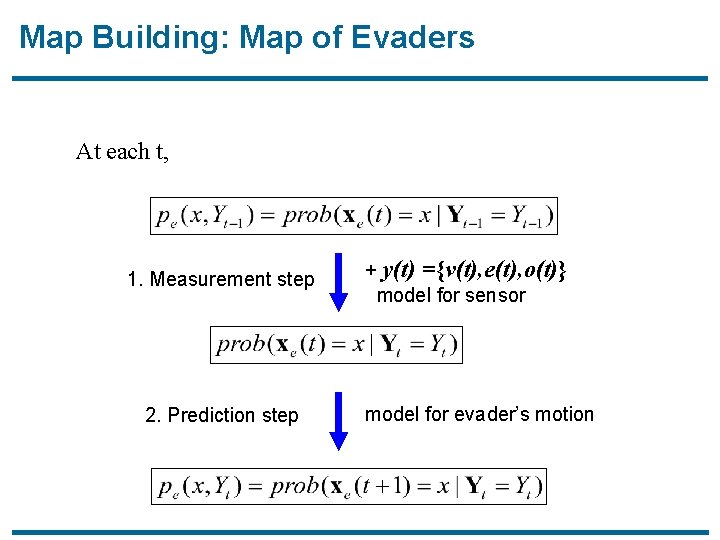

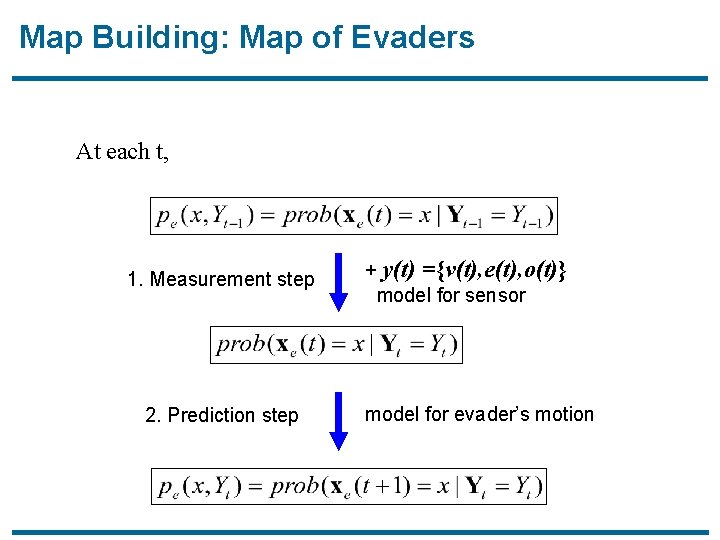

Map Building: Map of Evaders

At each $t$:

1. Measurement step: uses the measurements $y(t) = \{v(t), e(t), o(t)\}$ and the model for the sensor
2. Prediction step: uses the model for the evader’s motion
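The two steps can be sketched as a grid Bayes filter over the evader's position. The slide's own equations did not survive, so everything below is an assumption: a single evader, one sensed cell per step with false-positive rate `p` and false-negative rate `q`, and an evader that either stays put or moves to one of its in-grid 4-neighbours with equal probability. The names `measure` and `predict` are hypothetical.

```python
def measure(belief, cell, detected, p, q):
    """Measurement step: reweight the belief by the sensor likelihood."""
    post = {}
    for x, b in belief.items():
        if x == cell:
            like = (1 - q) if detected else q   # evader really is in the sensed cell
        else:
            like = p if detected else (1 - p)   # evader is somewhere else
        post[x] = like * b
    total = sum(post.values())
    if total == 0:
        return dict(belief)  # reading impossible under the model: keep the prior
    return {x: v / total for x, v in post.items()}


def predict(belief, rows, cols):
    """Prediction step: spread each cell's mass over itself and its neighbours."""
    nxt = {x: 0.0 for x in belief}
    for (r, c), b in belief.items():
        moves = [(r, c)] + [
            (r + dr, c + dc)
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1))
            if 0 <= r + dr < rows and 0 <= c + dc < cols
        ]
        for m in moves:
            nxt[m] += b / len(moves)
    return nxt


belief = {(r, c): 1 / 9 for r in range(3) for c in range(3)}
belief = measure(belief, (1, 1), detected=False, p=0.1, q=0.2)
belief = predict(belief, 3, 3)
print(round(sum(belief.values()), 6))
```

Obstacles and multiple evaders, which the slides mention, are left out to keep the sketch short.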

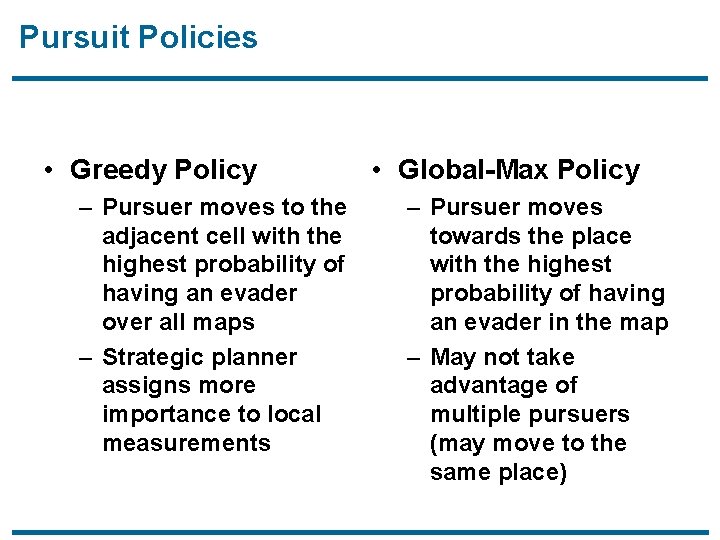

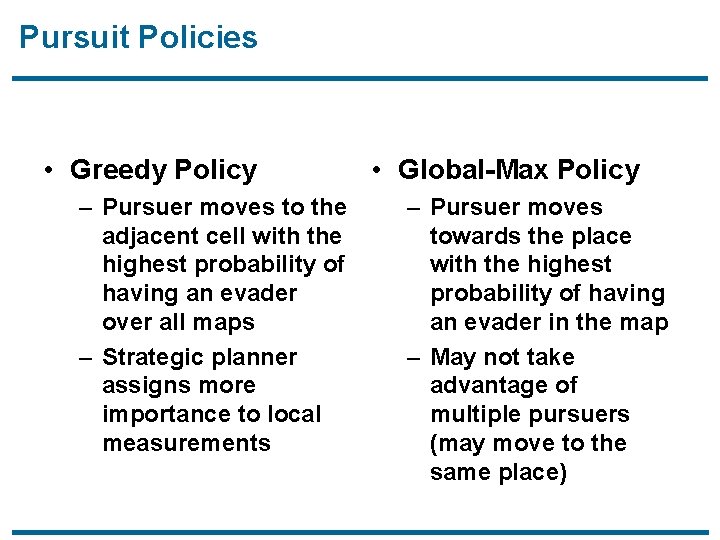

Pursuit Policies

- Greedy policy
  - Pursuer moves to the adjacent cell with the highest probability of having an evader, over all maps
  - Strategic planner assigns more importance to local measurements
- Global-max policy
  - Pursuer moves towards the place with the highest probability of having an evader in the map
  - May not take advantage of multiple pursuers (they may move to the same place)
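The difference between the two policies shows up on a small example. The sketch below assumes a single evader map stored as a dictionary from cell to probability, 4-connected moves, no obstacles, and Manhattan distance for "moving towards" the global maximum; none of these details are stated on the slide, and the function names are hypothetical.

```python
def neighbors(cell, rows, cols):
    """In-grid 4-neighbours of a cell."""
    r, c = cell
    return [
        (r + dr, c + dc)
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1))
        if 0 <= r + dr < rows and 0 <= c + dc < cols
    ]


def greedy_step(belief, cell, rows, cols):
    """Move to the adjacent cell with the highest evader probability."""
    return max(neighbors(cell, rows, cols), key=lambda x: belief[x])


def global_max_step(belief, cell, rows, cols):
    """Move one cell closer to the most probable cell of the whole map."""
    target = max(belief, key=belief.get)
    if target == cell:
        return cell
    return min(
        neighbors(cell, rows, cols),
        key=lambda x: abs(x[0] - target[0]) + abs(x[1] - target[1]),
    )


# 3 x 3 map: most of the mass far away at (2, 2), some nearby at (0, 1).
belief = {(r, c): 0.1 / 7 for r in range(3) for c in range(3)}
belief[(2, 2)] = 0.6
belief[(0, 1)] = 0.3
print(greedy_step(belief, (0, 0), 3, 3))      # (0, 1)
print(global_max_step(belief, (0, 0), 3, 3))  # (1, 0)
```

Greedy reacts to the nearby mass, while global-max heads for the distant peak; with several pursuers running global-max independently, all of them would head for the same peak, which is the drawback noted above.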

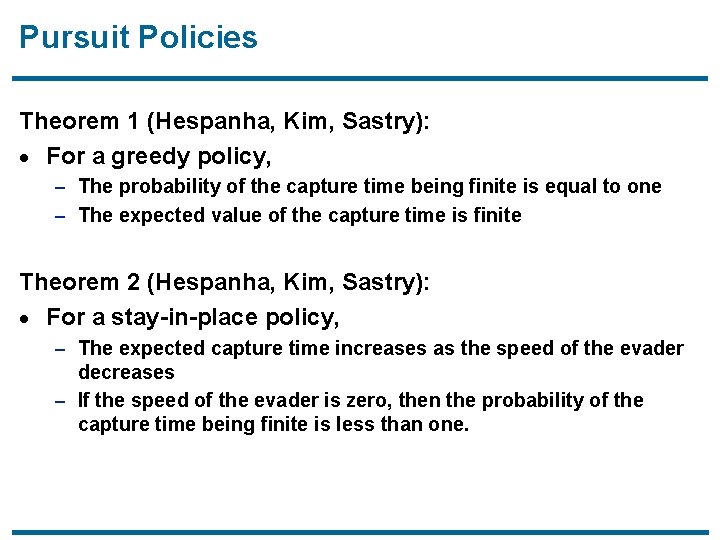

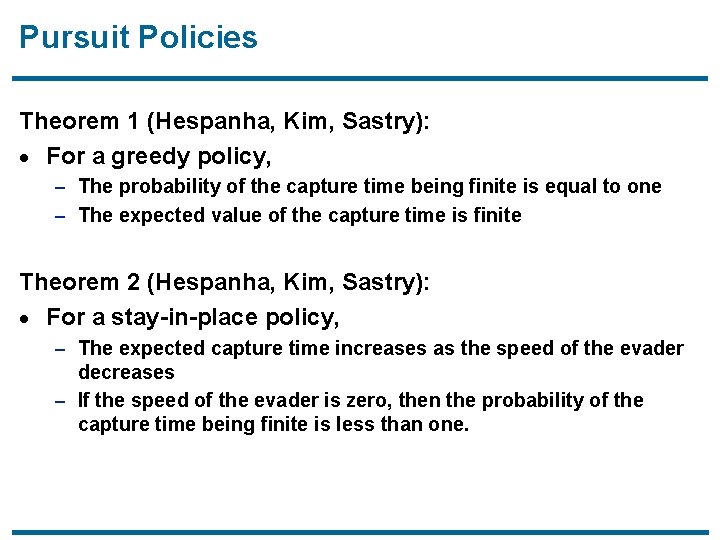

Pursuit Policies

Theorem 1 (Hespanha, Kim, Sastry): for a greedy policy,

- The probability of the capture time being finite is equal to one
- The expected value of the capture time is finite

Theorem 2 (Hespanha, Kim, Sastry): for a stay-in-place policy,

- The expected capture time increases as the speed of the evader decreases
- If the speed of the evader is zero, then the probability of the capture time being finite is less than one

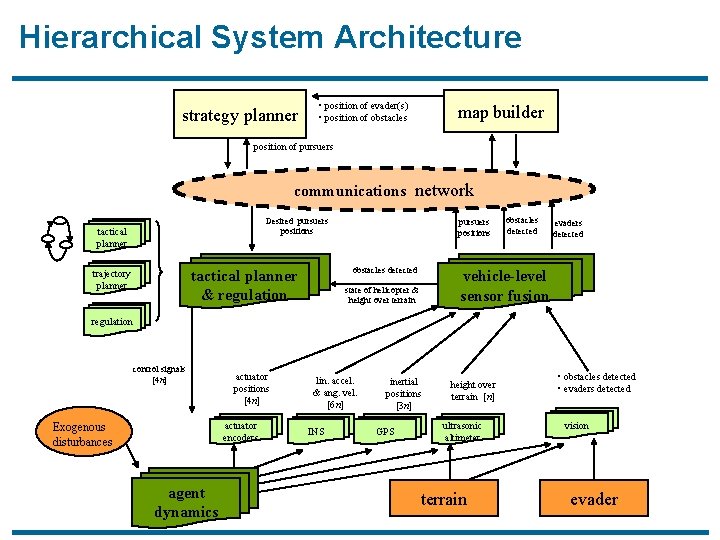

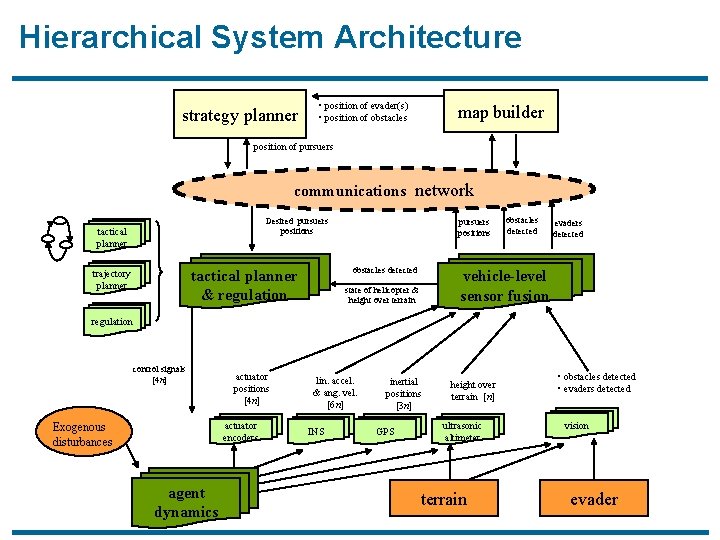

Hierarchical System Architecture

Block diagram labels:

- Strategy planner and map builder: position of evader(s), position of obstacles, position of pursuers
- Communications network: desired pursuers positions (down); pursuers positions, obstacles detected, evaders detected (up)
- Tactical planner & regulation: tactical planner, trajectory planner, regulation, vehicle-level sensor fusion (state of helicopter & height over terrain; obstacles detected)
- Actuation: control signals [4n], actuator positions [4n], actuator encoders
- Agent dynamics, subject to exogenous disturbances
- Sensors: INS (lin. accel. & ang. vel. [6n]), GPS (inertial positions [3n]), ultrasonic altimeter (height over terrain [n]), vision (obstacles detected, evaders detected)
- Environment: terrain, evader

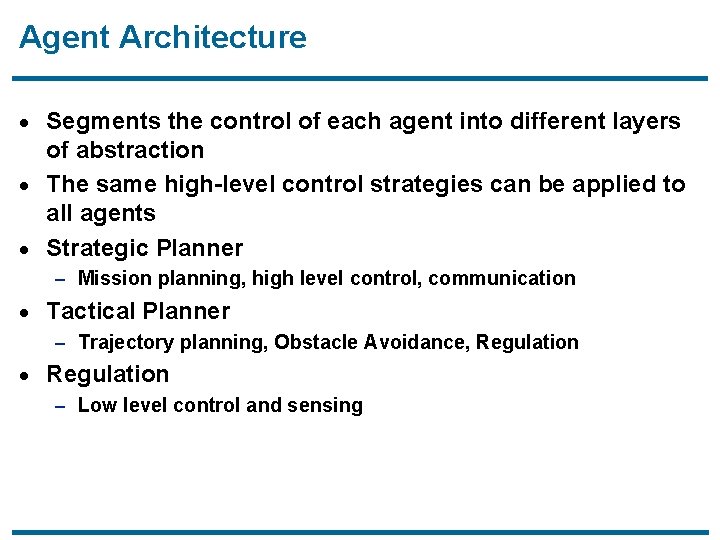

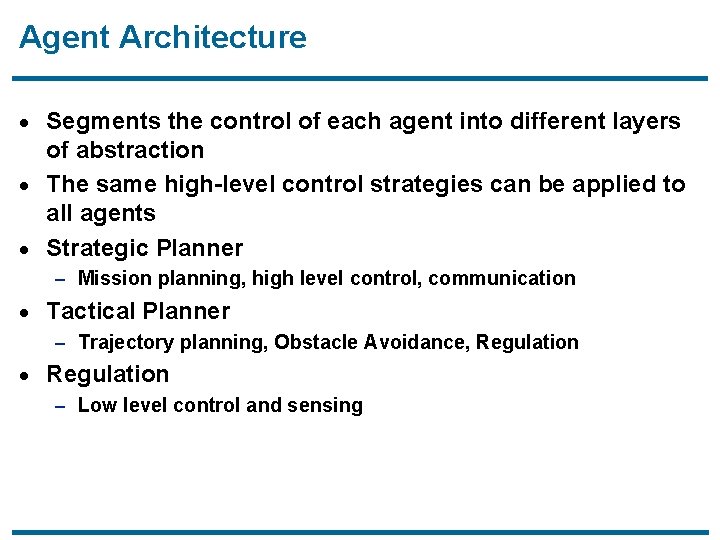

Agent Architecture

- Segments the control of each agent into different layers of abstraction
- The same high-level control strategies can be applied to all agents
- Strategic planner: mission planning, high-level control, communication
- Tactical planner: trajectory planning, obstacle avoidance, regulation
- Regulation: low-level control and sensing

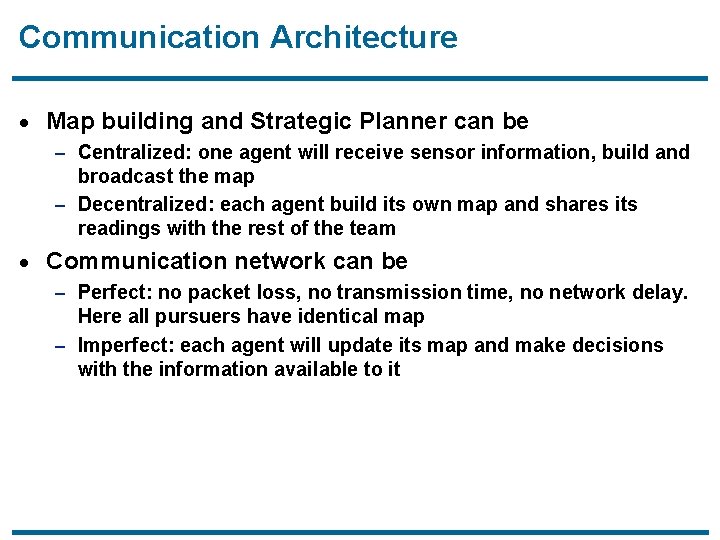

Communication Architecture

- Map building and strategic planner can be
  - Centralized: one agent receives the sensor information, then builds and broadcasts the map
  - Decentralized: each agent builds its own map and shares its readings with the rest of the team
- Communication network can be
  - Perfect: no packet loss, no transmission time, no network delay; here all pursuers have an identical map
  - Imperfect: each agent updates its map and makes decisions with the information available to it

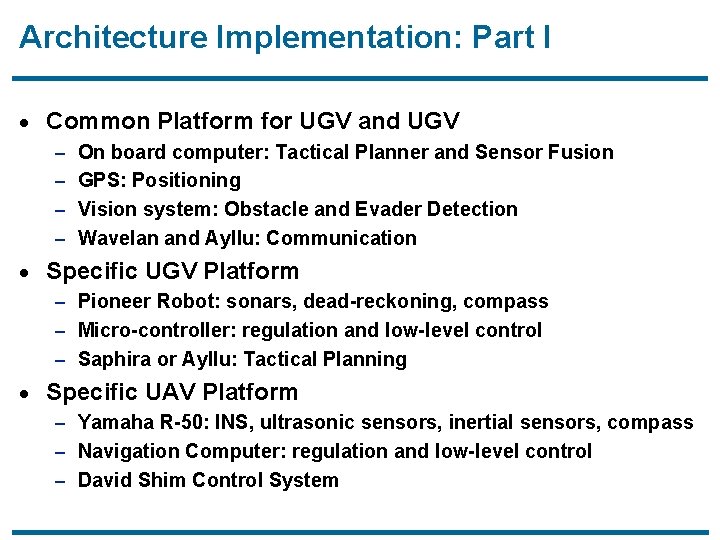

Architecture Implementation: Part I

- Common platform for UGV and UAV
  - On-board computer: tactical planner and sensor fusion
  - GPS: positioning
  - Vision system: obstacle and evader detection
  - WaveLAN and Ayllu: communication
- Specific UGV platform
  - Pioneer robot: sonars, dead-reckoning, compass
  - Micro-controller: regulation and low-level control
  - Saphira or Ayllu: tactical planning
- Specific UAV platform
  - Yamaha R-50: INS, ultrasonic sensors, inertial sensors, compass
  - Navigation computer: regulation and low-level control
  - David Shim control system

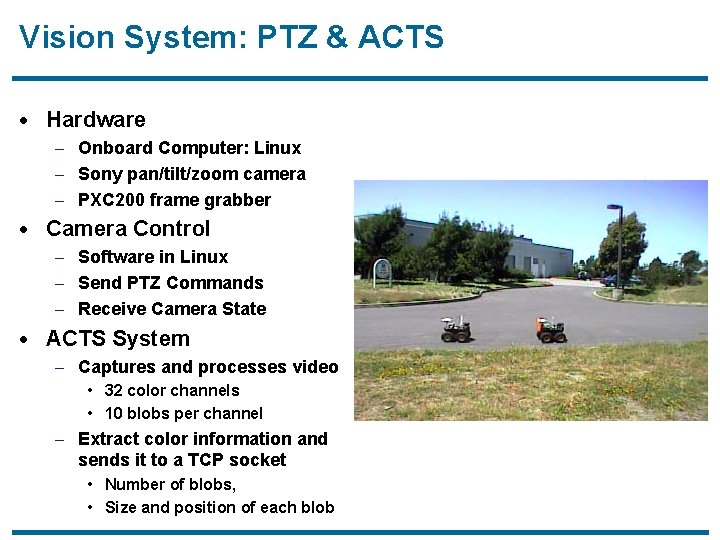

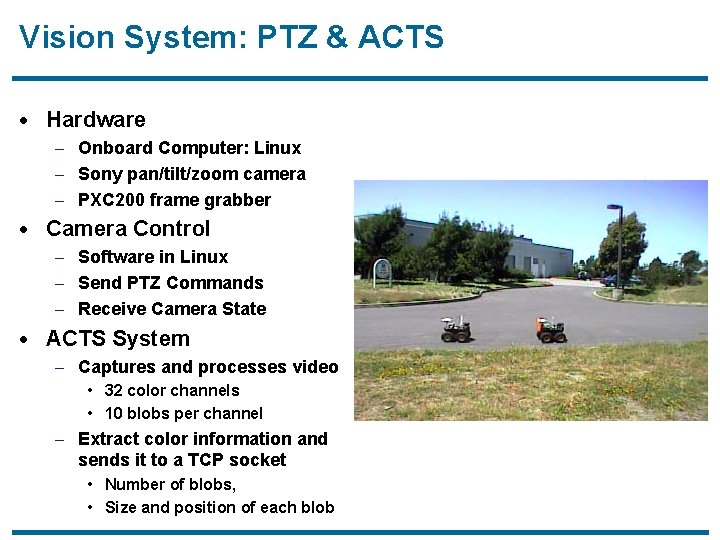

Vision System: PTZ & ACTS

- Hardware
  - Onboard computer: Linux
  - Sony pan/tilt/zoom camera
  - PXC200 frame grabber
- Camera control
  - Software in Linux
  - Sends PTZ commands
  - Receives camera state
- ACTS system
  - Captures and processes video: 32 color channels, 10 blobs per channel
  - Extracts color information and sends it to a TCP socket: number of blobs, size and position of each blob

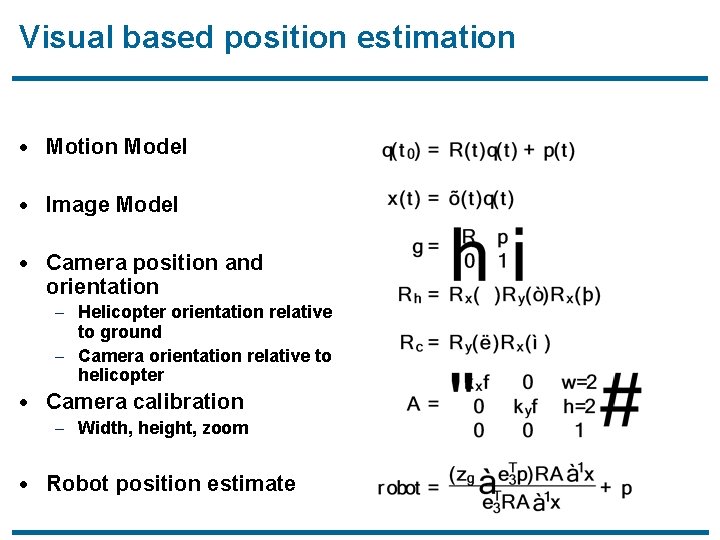

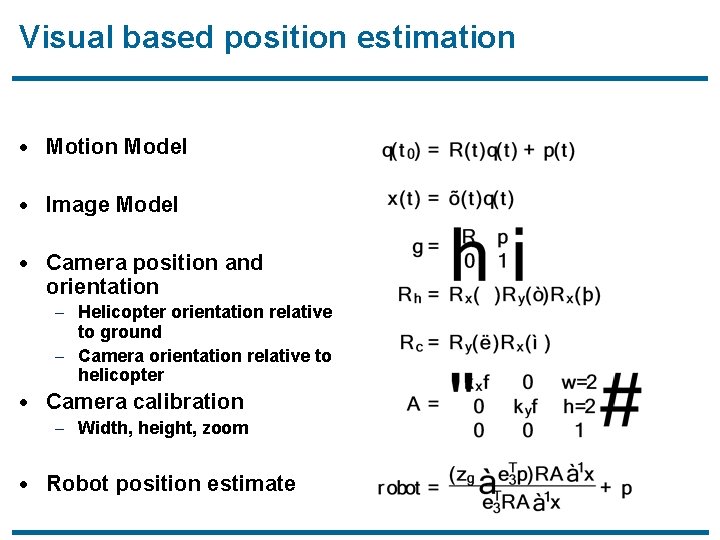

Visual based position estimation

- Motion model
- Image model
- Camera position and orientation
  - Helicopter orientation relative to ground
  - Camera orientation relative to helicopter
- Camera calibration: width, height, zoom
- Robot position estimate

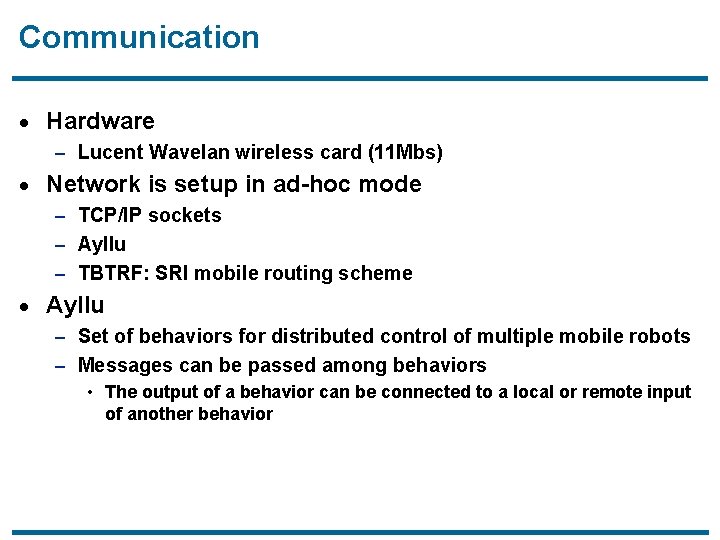

Communication

- Hardware: Lucent WaveLAN wireless card (11 Mbps)
- Network is set up in ad hoc mode
  - TCP/IP sockets
  - Ayllu
  - TBTRF: SRI mobile routing scheme
- Ayllu
  - Set of behaviors for distributed control of multiple mobile robots
  - Messages can be passed among behaviors: the output of a behavior can be connected to a local or remote input of another behavior

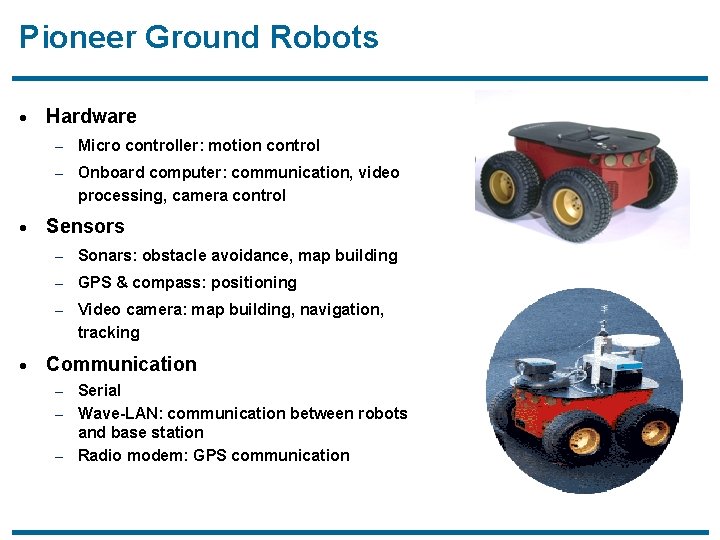

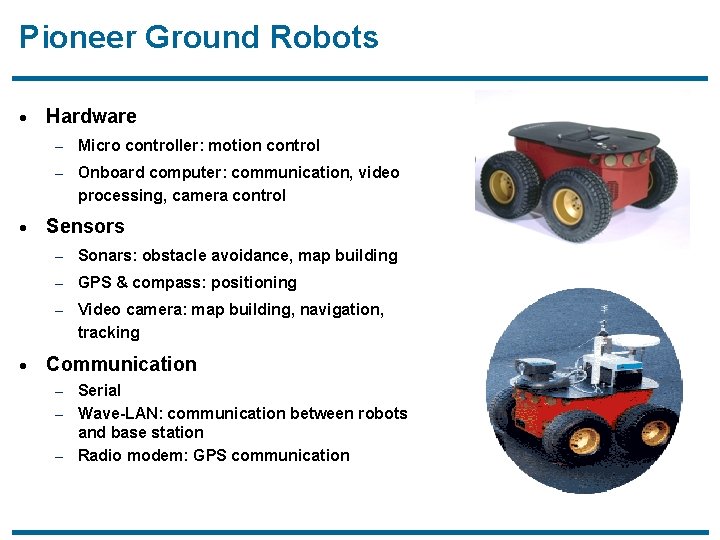

Pioneer Ground Robots

- Hardware
  - Micro-controller: motion control
  - Onboard computer: communication, video processing, camera control
- Sensors
  - Sonars: obstacle avoidance, map building
  - GPS & compass: positioning
  - Video camera: map building, navigation, tracking
- Communication
  - Serial
  - WaveLAN: communication between robots and base station
  - Radio modem: GPS communication

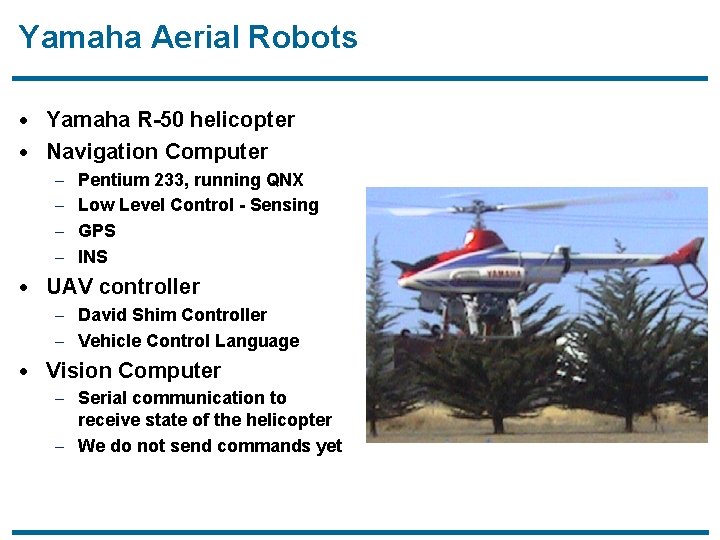

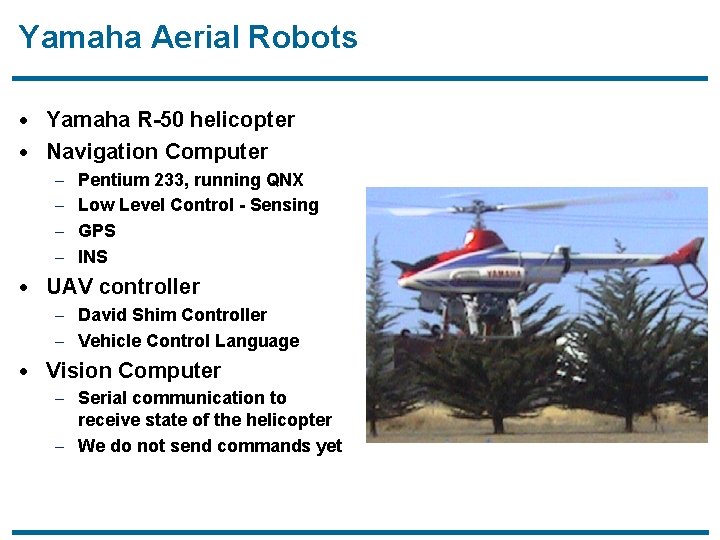

Yamaha Aerial Robots

- Yamaha R-50 helicopter
- Navigation computer
  - Pentium 233, running QNX
  - Low-level control and sensing
  - GPS
  - INS
- UAV controller
  - David Shim controller
  - Vehicle Control Language
- Vision computer
  - Serial communication to receive the state of the helicopter
  - We do not send commands yet

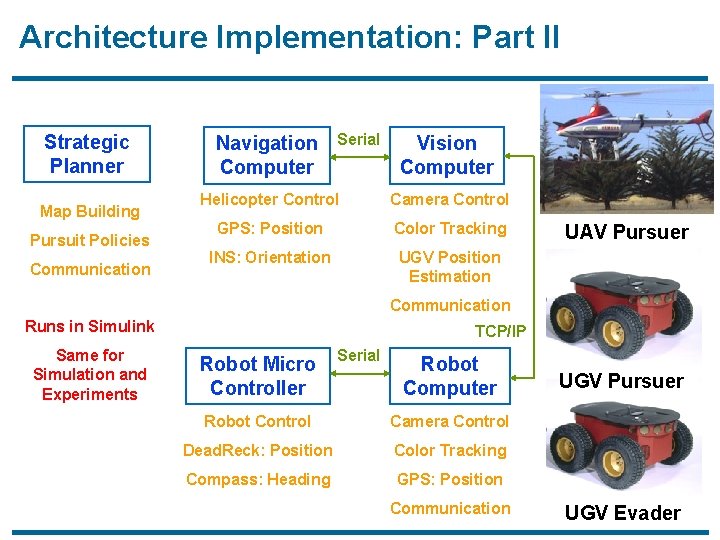

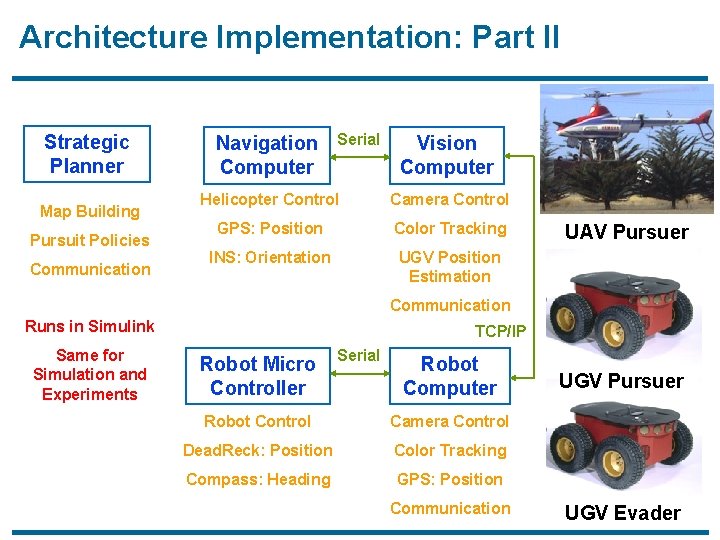

Architecture Implementation: Part II

Block diagram labels:

- Strategic planner: map building, pursuit policies, communication. Runs in Simulink; the same for simulation and experiments. Connected to the agents over TCP/IP.
- UAV pursuer
  - Navigation computer: helicopter control, GPS (position), INS (orientation), communication
  - Vision computer (serial link): camera control, color tracking, UGV position estimation
- UGV pursuer and UGV evader
  - Robot micro-controller: robot control, dead reckoning (position), compass (heading)
  - Robot computer (serial link): camera control, color tracking, GPS (position), communication

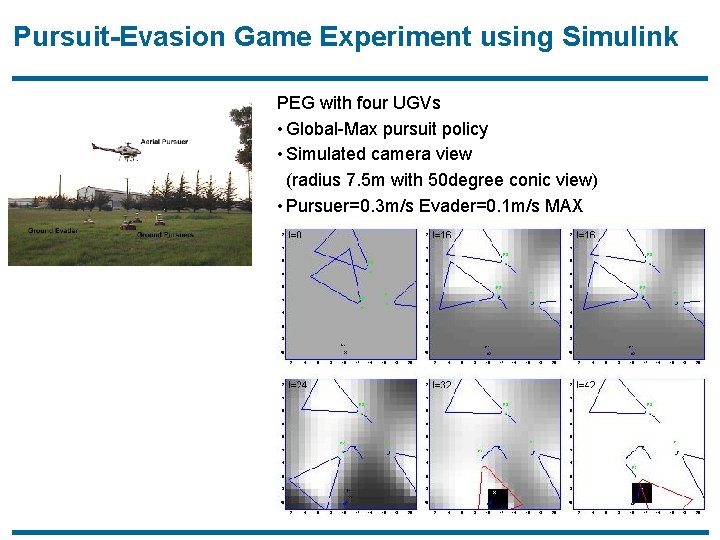

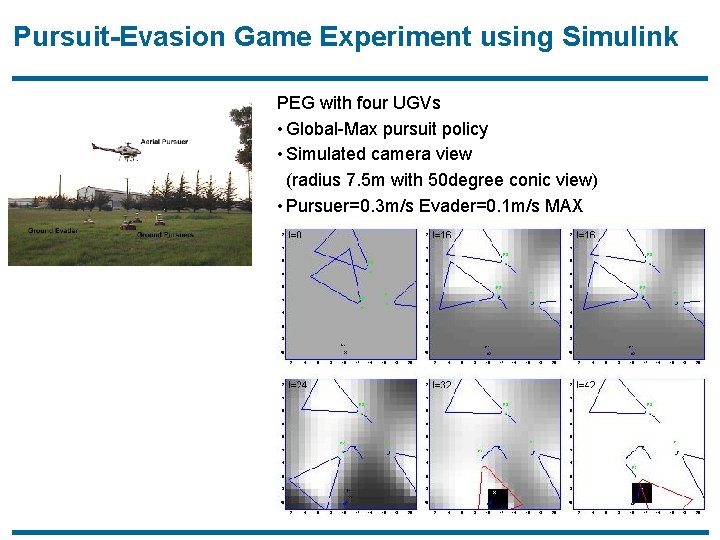

Pursuit-Evasion Game Experiment using Simulink

PEG with four UGVs

- Global-max pursuit policy
- Simulated camera view (radius 7.5 m with 50 degree conic view)
- Pursuer = 0.3 m/s, evader = 0.1 m/s (max)

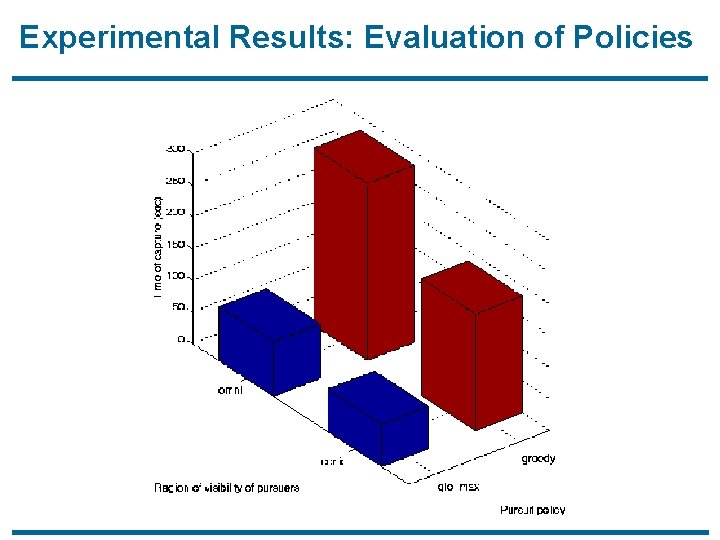

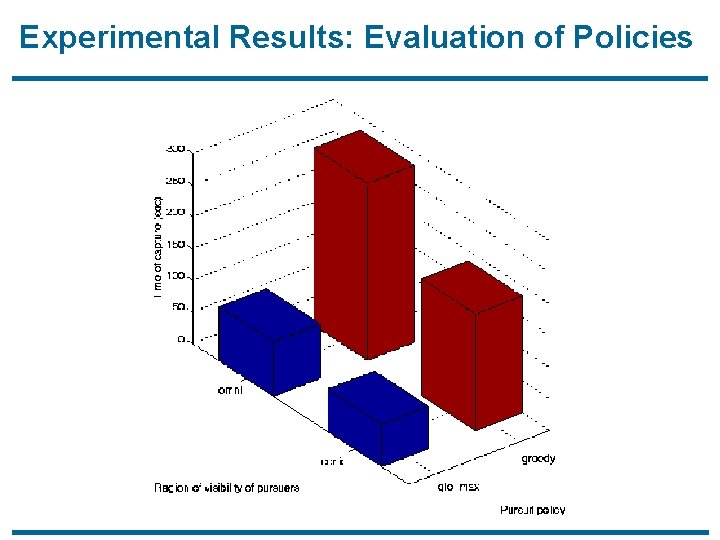

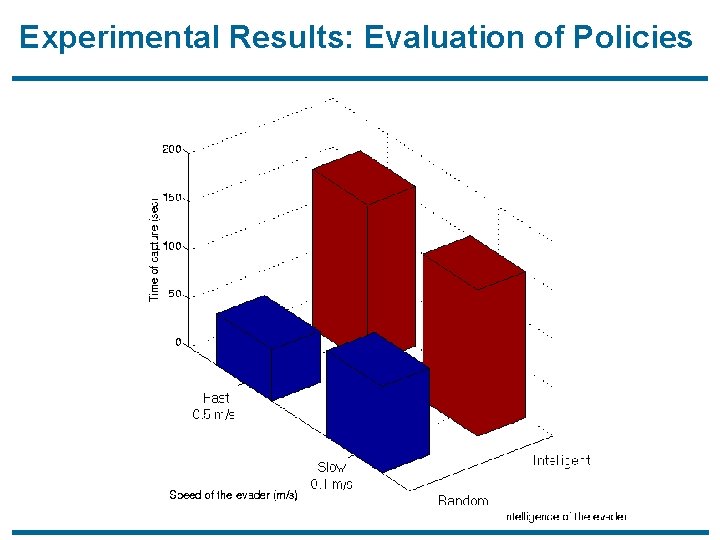

Experimental Results: Evaluation of Policies

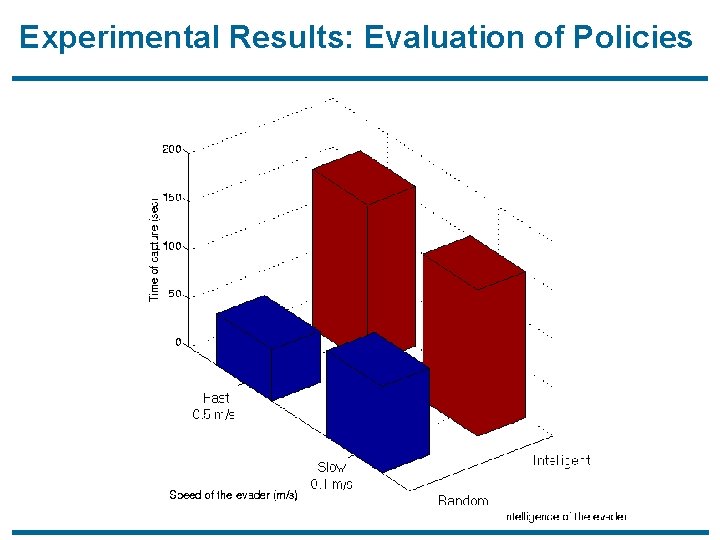

Experimental Results: Evaluation of Policies

Experimental Results: Pursuit Evasion Games with 1 UAV and 2 UGVs (Summer ’00)

Experimental Results: Pursuit Evasion Games with 4 UGVs and 1 UAV (Spring ’01)

Experimental Results: Pursuit Evasion Games with 4 UGVs and 1 UAV (Spring ’01)

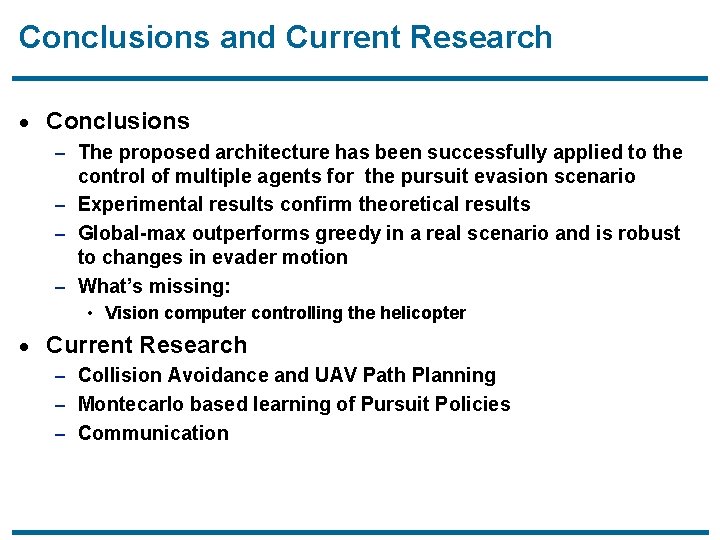

Conclusions and Current Research

- Conclusions
  - The proposed architecture has been successfully applied to the control of multiple agents for the pursuit-evasion scenario
  - Experimental results confirm theoretical results
  - Global-max outperforms greedy in a real scenario and is robust to changes in evader motion
  - What’s missing: vision computer controlling the helicopter
- Current research
  - Collision avoidance and UAV path planning
  - Monte Carlo based learning of pursuit policies
  - Communication

A Probabilistic Framework for Pursuit-Evasion Games with Unmanned Air Vehicles Maria Prandini Univ. of Brescia & UC Berkeley In collaboration with J. Hespanha, J. Kim, and S. Sastry

Key Ideas

- The “mission-level” control of Unmanned Air Vehicles requires a probabilistic framework.
- The problem of coordinating teams of autonomous agents is naturally formulated in a game theoretical setting.
- Exact solutions for these types of problems are often computationally intractable and, in some cases, open research problems.

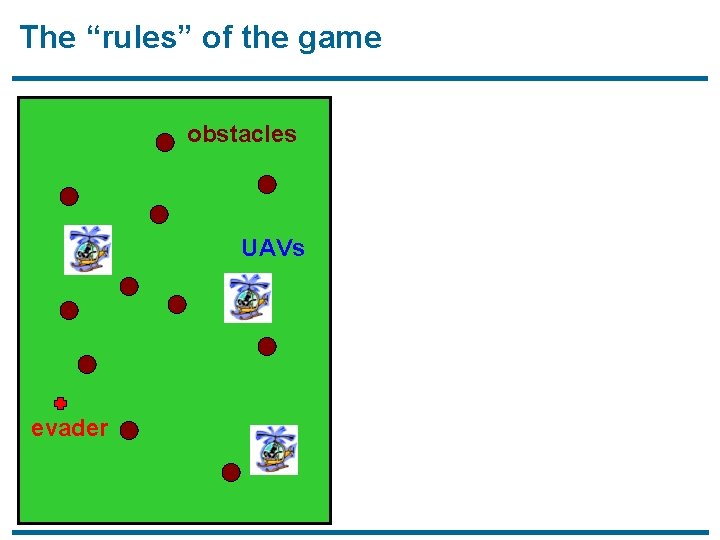


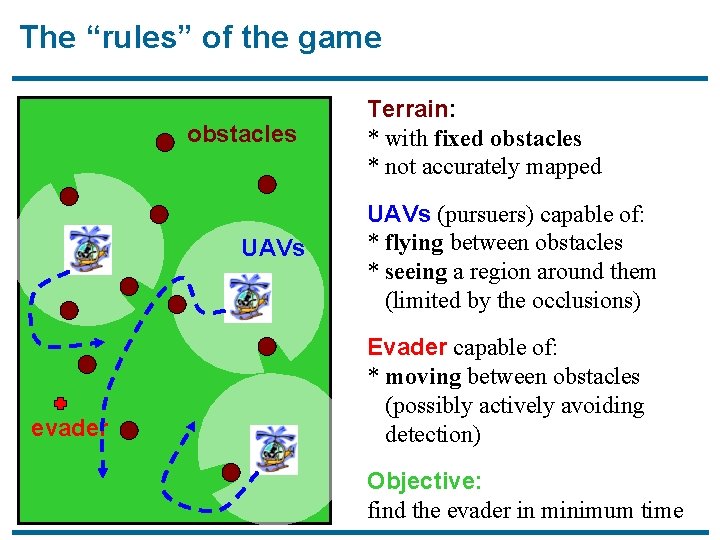

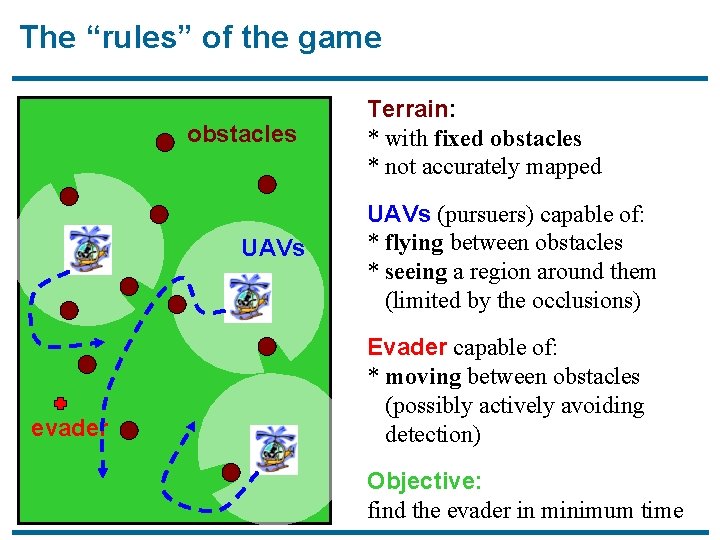

The “rules” of the game

Figure labels: obstacles, UAVs, evader

- Terrain:
  - with fixed obstacles
  - not accurately mapped
- UAVs (pursuers) capable of:
  - flying between obstacles
  - seeing a region around them (limited by the occlusions)
- Evader capable of:
  - moving between obstacles (possibly actively avoiding detection)

Objective: find the evader in minimum time

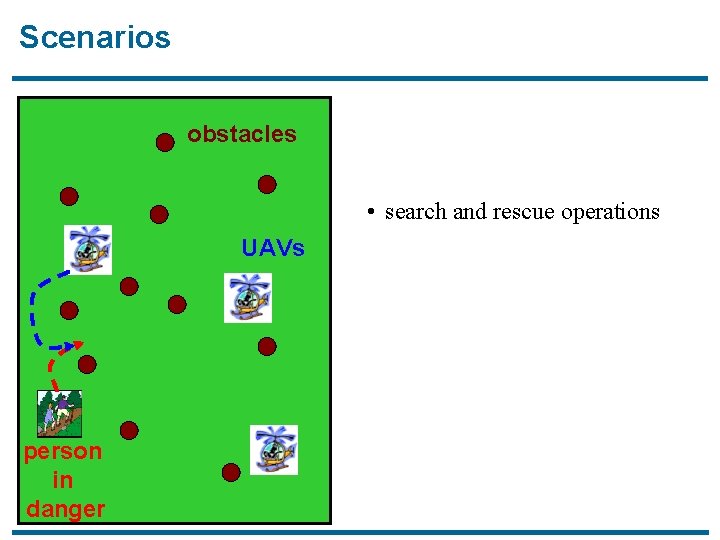

Scenarios obstacles • search and rescue operations UAVs person in danger

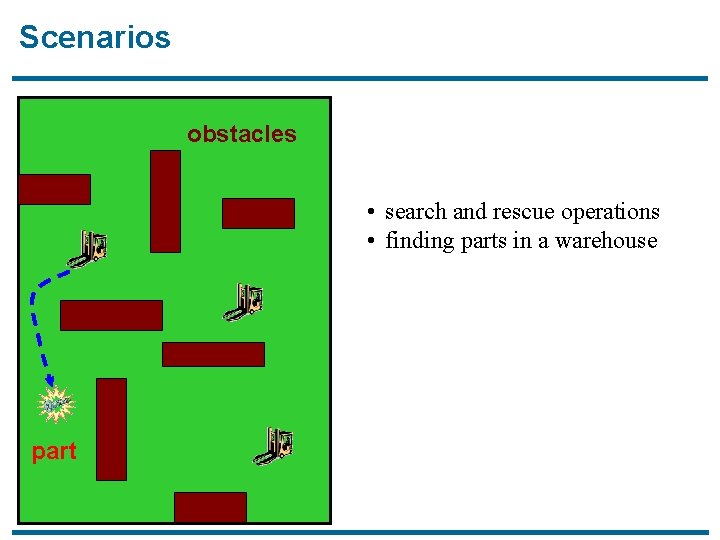

Scenarios obstacles • search and rescue operations • finding parts in a warehouse part

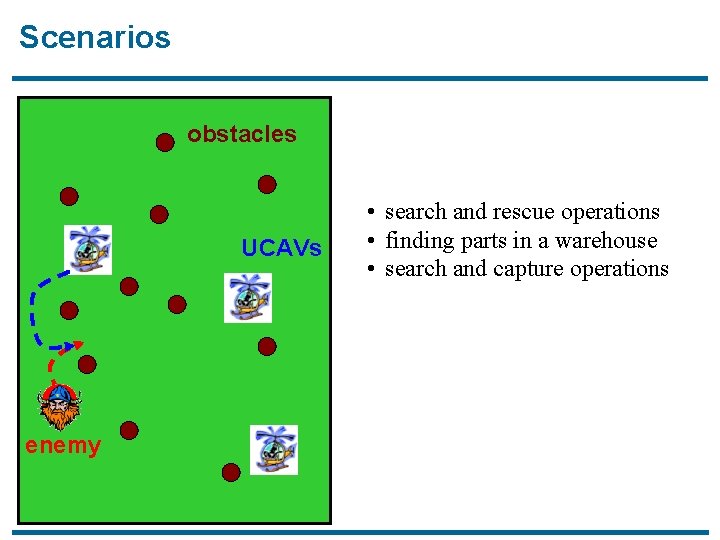

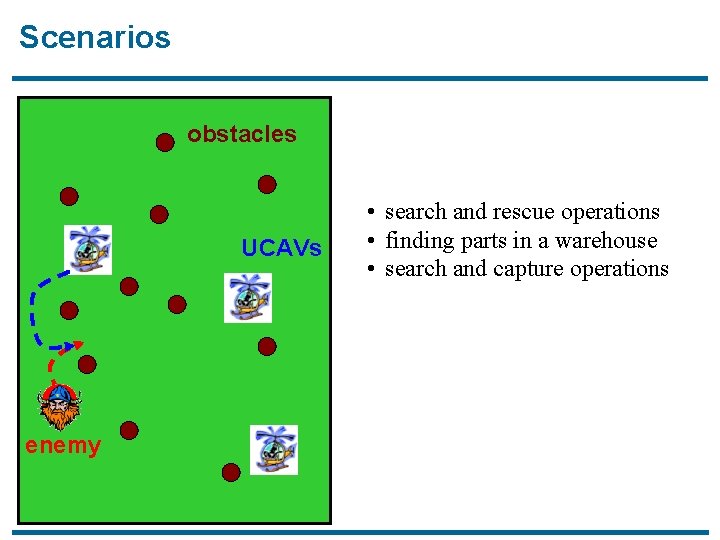

Scenarios obstacles UCAVs enemy • search and rescue operations • finding parts in a warehouse • search and capture operations

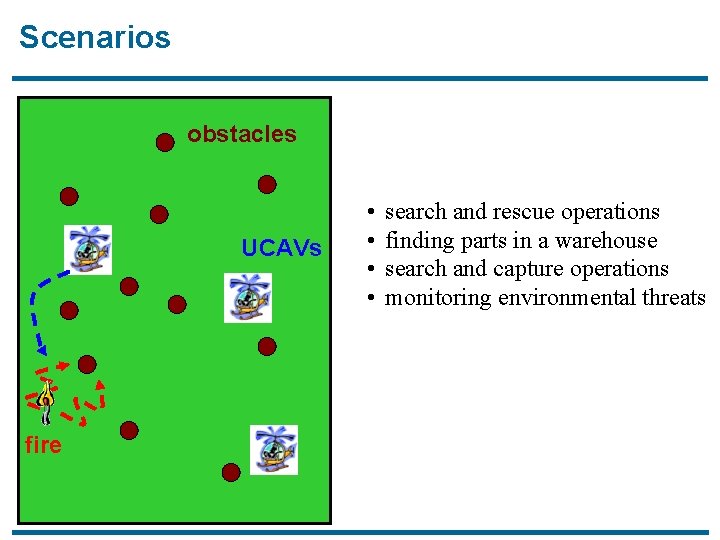

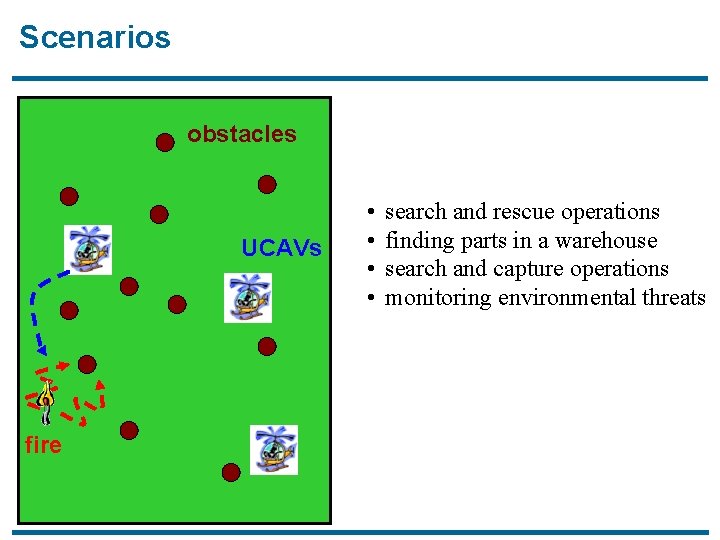

Scenarios

Figure labels: obstacles, UCAVs, fire

- search and rescue operations
- finding parts in a warehouse
- search and capture operations
- monitoring environmental threats

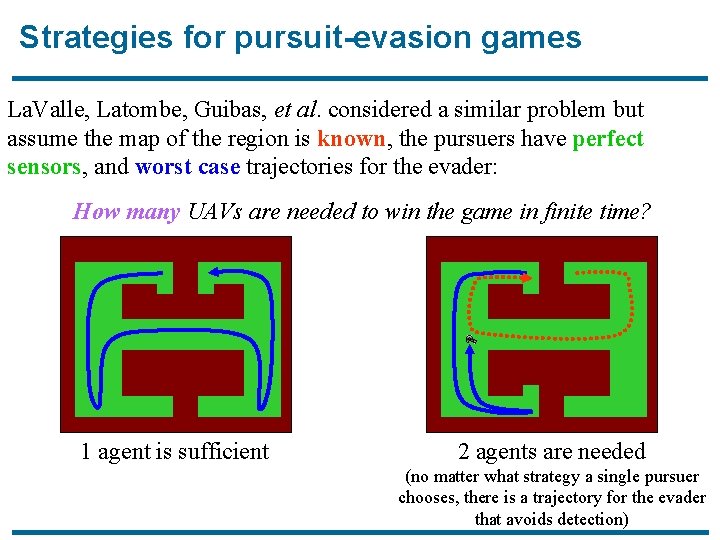

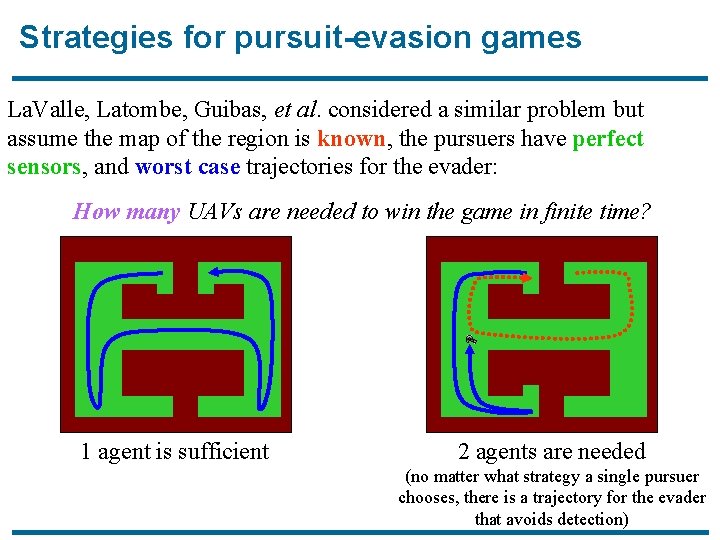

Strategies for pursuit-evasion games

LaValle, Latombe, Guibas, et al. considered a similar problem but assume that the map of the region is known, that the pursuers have perfect sensors, and worst-case trajectories for the evader:

How many UAVs are needed to win the game in finite time?

- First example region: 1 agent is sufficient
- Second example region: 2 agents are needed (no matter what strategy a single pursuer chooses, there is a trajectory for the evader that avoids detection)

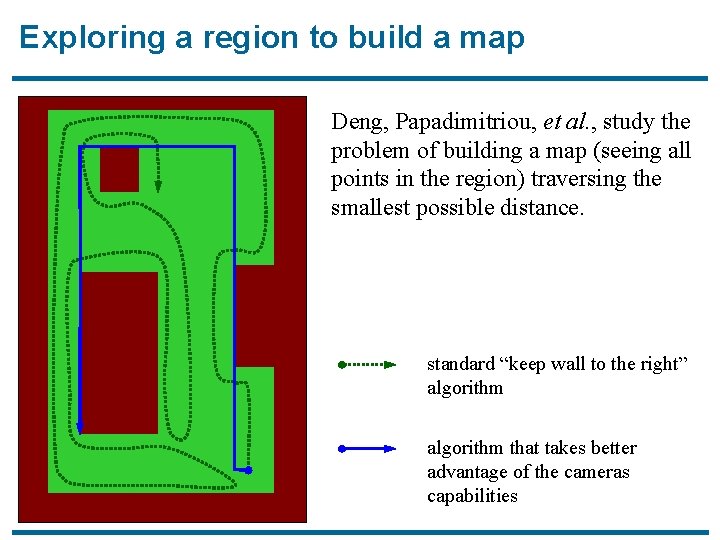

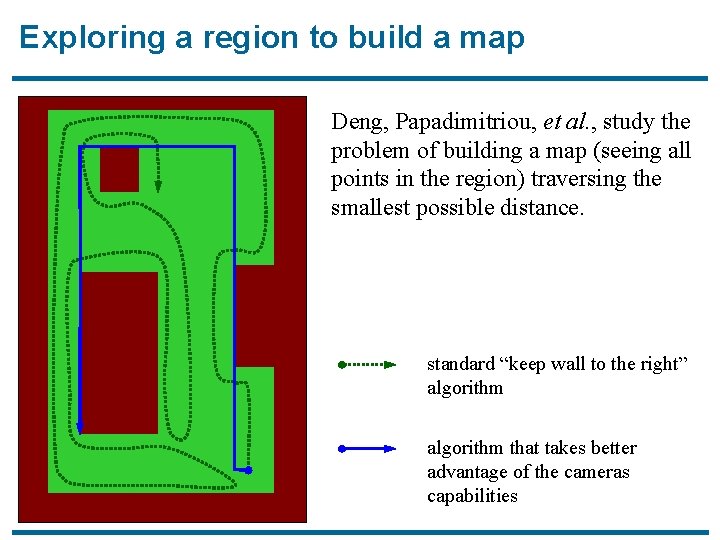

Exploring a region to build a map

Deng, Papadimitriou, et al. study the problem of building a map (seeing all points in the region) while traversing the smallest possible distance.

Figure captions:

- standard “keep wall to the right” algorithm
- algorithm that takes better advantage of the camera’s capabilities

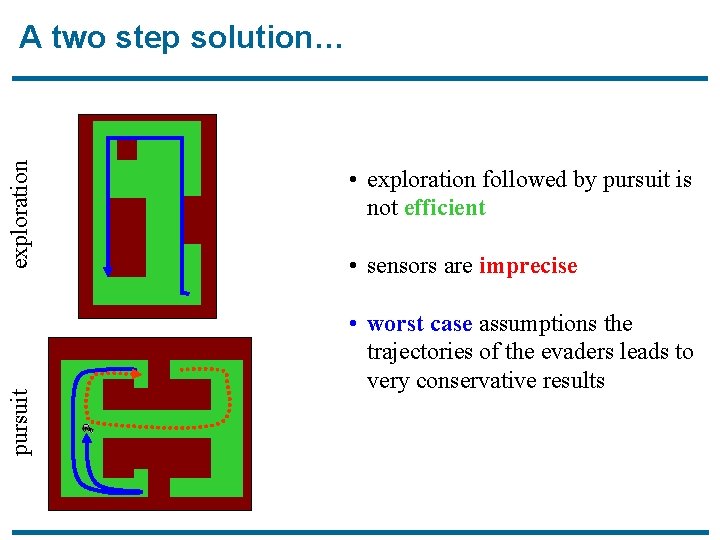

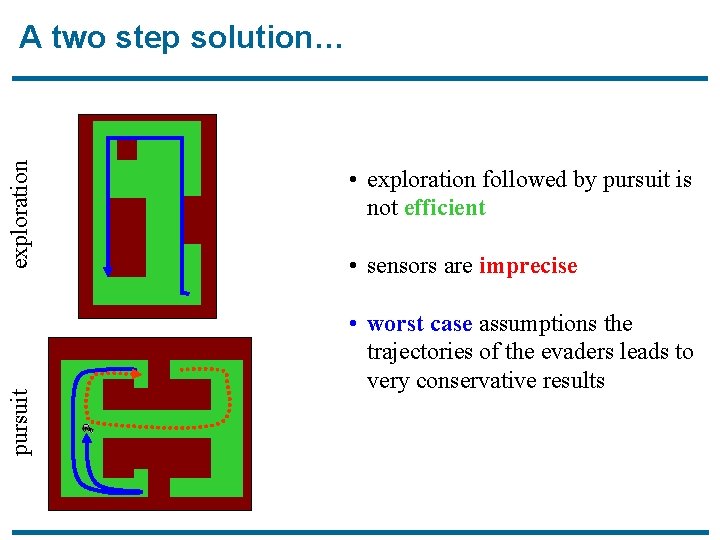

A two step solution… (exploration, then pursuit)

- exploration followed by pursuit is not efficient
- sensors are imprecise
- worst-case assumptions on the trajectories of the evaders lead to very conservative results

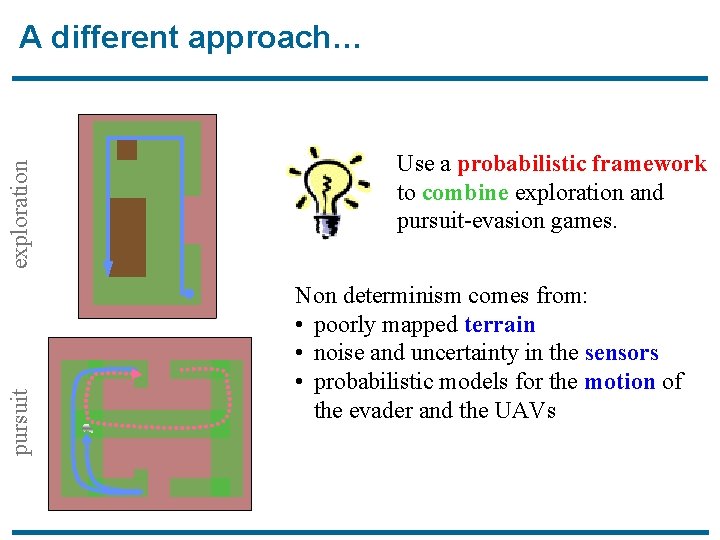

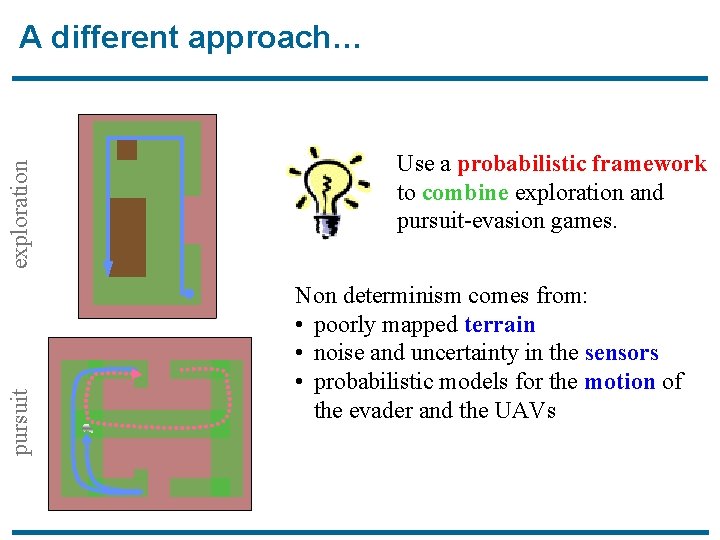

A different approach… (exploration combined with pursuit)

Use a probabilistic framework to combine exploration and pursuit-evasion games.

Non-determinism comes from:

- poorly mapped terrain
- noise and uncertainty in the sensors
- probabilistic models for the motion of the evader and the UAVs

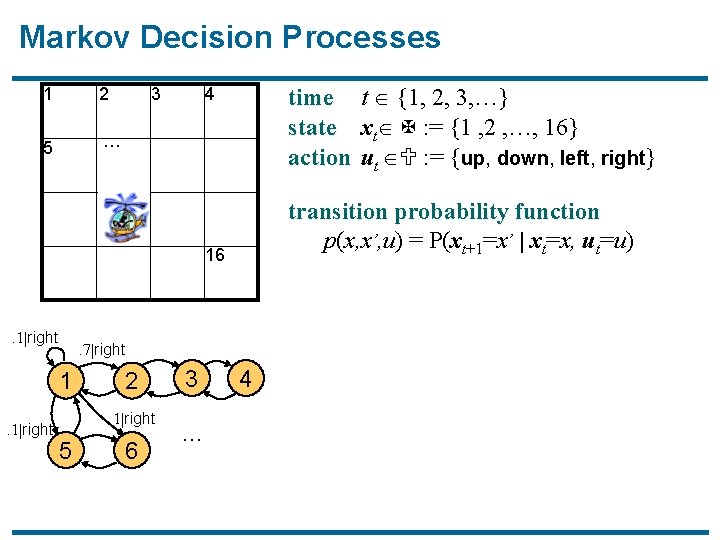

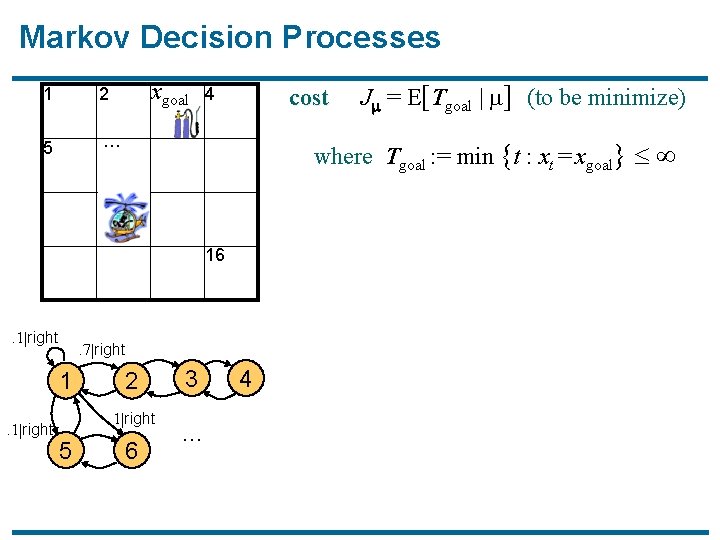

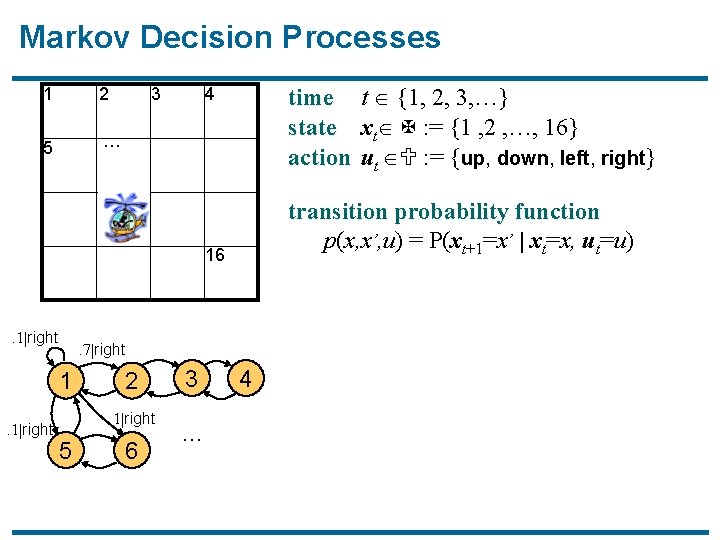

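Markov Decision Processes

- time $t \in \{1, 2, 3, \dots\}$
- state $x_t \in \mathcal{X} := \{1, 2, \dots, 16\}$
- action $u_t \in \mathcal{U} := \{\text{up}, \text{down}, \text{left}, \text{right}\}$
- transition probability function $p(x, x', u) = P(x_{t+1} = x' \mid x_t = x,\ u_t = u)$

Figure: grid of cells numbered 1 to 16, with example transition labels .7|right and .1|right on the arrows leaving a cell.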

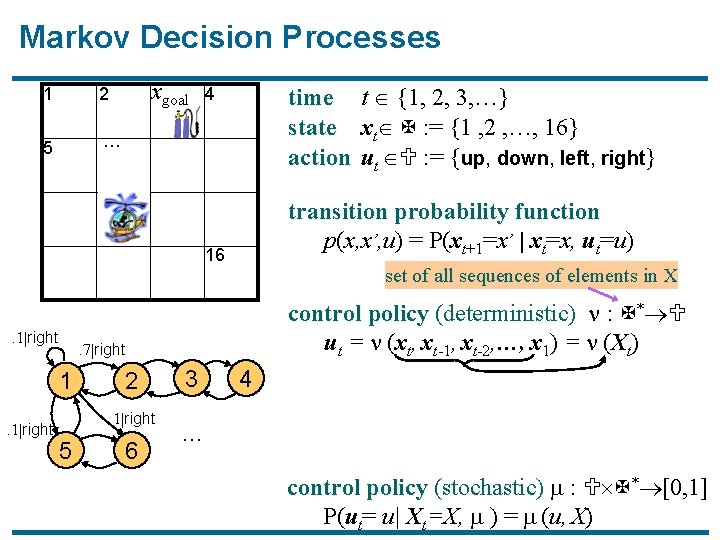

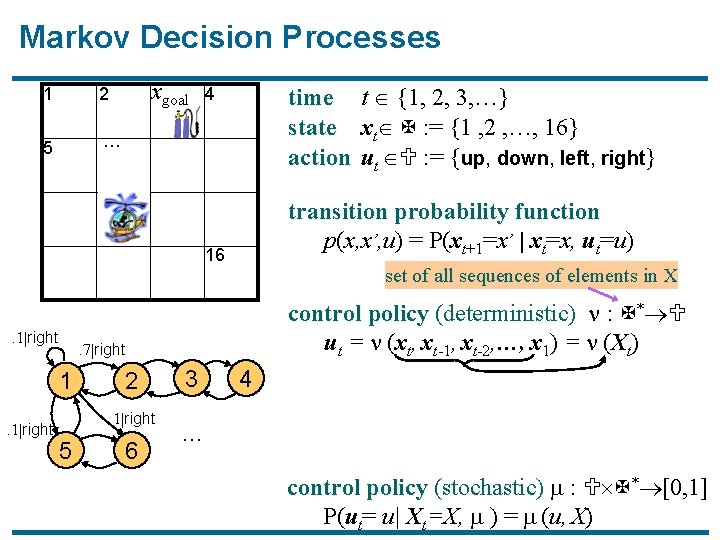

Markov Decision Processes

- time $t \in \{1, 2, 3, \dots\}$
- state $x_t \in \mathcal{X} := \{1, 2, \dots, 16\}$
- action $u_t \in \mathcal{U} := \{\text{up}, \text{down}, \text{left}, \text{right}\}$
- transition probability function $p(x, x', u) = P(x_{t+1} = x' \mid x_t = x,\ u_t = u)$
- control policy (deterministic) $\mu : \mathcal{X}^* \to \mathcal{U}$, with $u_t = \mu(x_t, x_{t-1}, x_{t-2}, \dots, x_1) = \mu(X_t)$, where $\mathcal{X}^*$ is the set of all sequences of elements in $\mathcal{X}$
- control policy (stochastic) $\mu : \mathcal{U} \times \mathcal{X}^* \to [0, 1]$, with $P(u_t = u \mid X_t = X,\ \mu) = \mu(u, X)$ (almost surely)

Figure: grid of cells numbered 1 to 16 with a goal cell $x_{\text{goal}}$ and example transition labels .7|right and .1|right.

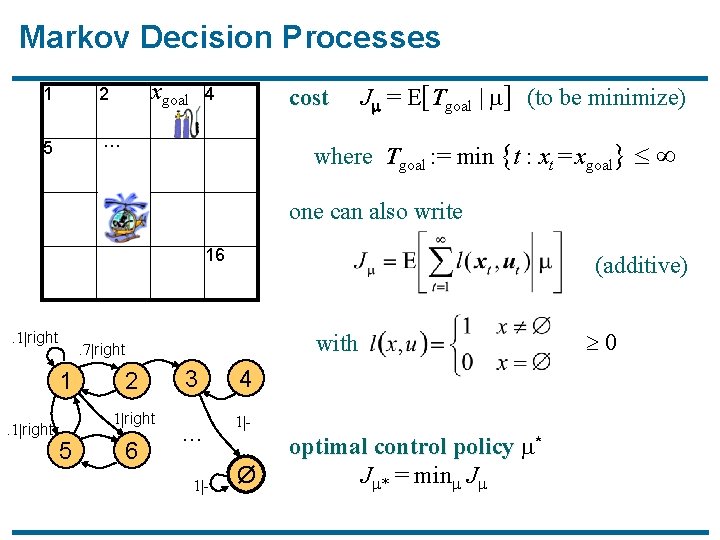

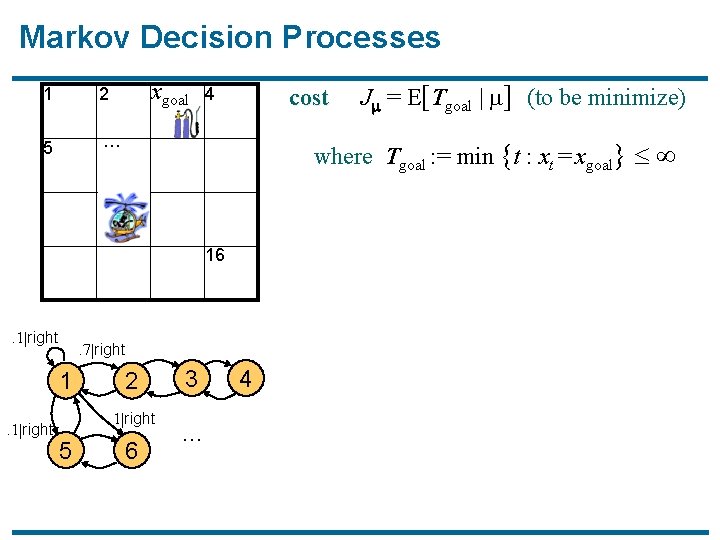

Markov Decision Processes

Cost: $J_\mu = E[T_{\text{goal}} \mid \mu]$ (to be minimized), where $T_{\text{goal}} := \min\{t : x_t = x_{\text{goal}}\}$.

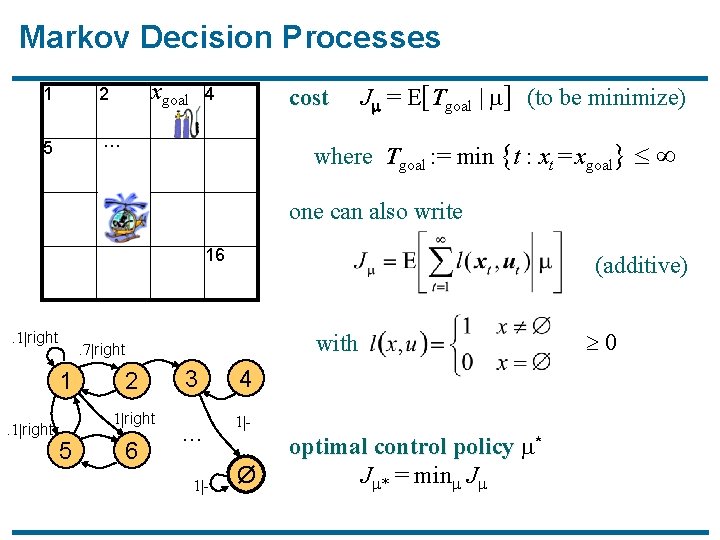

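Markov Decision Processes

Cost: $J_\mu = E[T_{\text{goal}} \mid \mu]$ (to be minimized), where $T_{\text{goal}} := \min\{t : x_t = x_{\text{goal}}\}$.

One can also write the cost in additive form, using an absorbing state Ø that is entered from $x_{\text{goal}}$ with probability 1 under any action (label 1|- in the figure).

Optimal control policy $\mu^*$: $J_{\mu^*} = \min_\mu J_\mu$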
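For a fully observed MDP of this size, the minimum expected time to reach the goal can be computed by value iteration. The sketch below is an illustration under assumptions that the slides only hint at: a 4 x 4 grid, the commanded move succeeding with probability 0.7 and each of the other three moves happening with probability 0.1 (suggested by the .7|right and .1|right labels), and a move off the grid leaving the state unchanged. It returns the expected number of remaining steps from each cell, not counting the initial time index.

```python
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def step(state, move, n):
    """Apply a move; bumping into the border leaves the state unchanged."""
    r, c = state[0] + move[0], state[1] + move[1]
    return (r, c) if 0 <= r < n and 0 <= c < n else state


def value_iteration(n=4, goal=(3, 3), p_intended=0.7, tol=1e-9):
    """Return (V, policy): expected steps to the goal and a minimizing action."""
    states = [(r, c) for r in range(n) for c in range(n)]
    p_other = (1 - p_intended) / 3
    V = {s: 0.0 for s in states}
    policy = {}
    while True:
        delta = 0.0
        for s in states:
            if s == goal:
                continue  # no further cost once the goal is reached
            best_u, best_v = None, float("inf")
            for u in MOVES:
                # One step of cost plus the expected cost-to-go.
                exp = 1.0
                for a, mv in MOVES.items():
                    prob = p_intended if a == u else p_other
                    exp += prob * V[step(s, mv, n)]
                if exp < best_v:
                    best_u, best_v = u, exp
            delta = max(delta, abs(best_v - V[s]))
            V[s] = best_v
            policy[s] = best_u
        if delta < tol:
            return V, policy


V, policy = value_iteration()
print(round(V[(0, 0)], 2), policy[(3, 2)])
```

The same recursion is what becomes intractable once the state is only partially observed and an opponent is added, as in the slides that follow.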

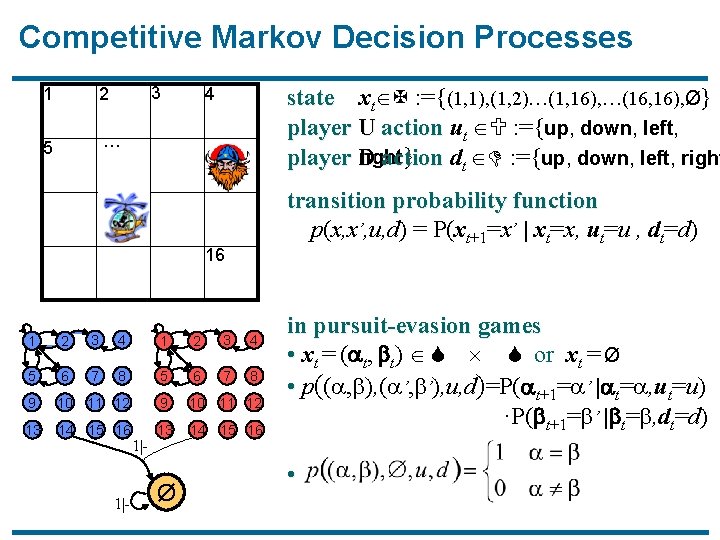

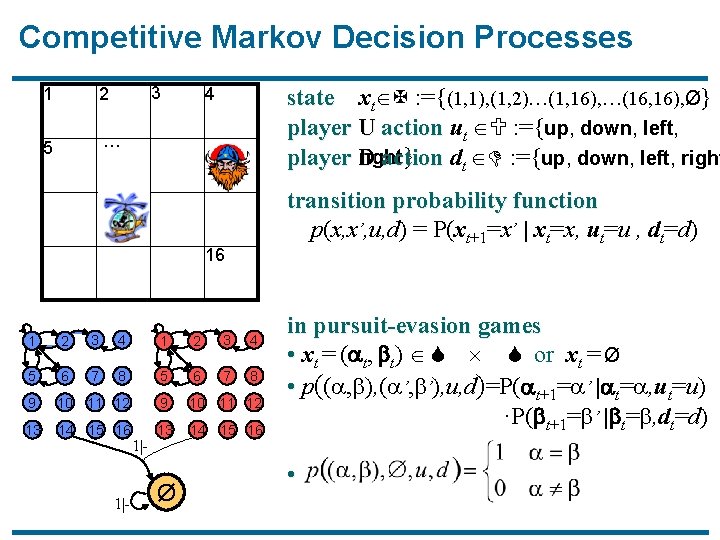

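Competitive Markov Decision Processes

- state $x_t \in \mathcal{X} := \{(1,1), (1,2), \dots, (1,16), \dots, (16,16), \text{Ø}\}$
- player U action $u_t \in \mathcal{U} := \{\text{up}, \text{down}, \text{left}, \text{right}\}$
- player D action $d_t \in \mathcal{D} := \{\text{up}, \text{down}, \text{left}, \text{right}\}$
- transition probability function $p(x, x', u, d) = P(x_{t+1} = x' \mid x_t = x,\ u_t = u,\ d_t = d)$

In pursuit-evasion games:

- $x_t = (\alpha_t, \beta_t) \in \mathcal{S} \times \mathcal{S}$ or $x_t = \text{Ø}$, where $\alpha_t$ and $\beta_t$ are the positions controlled by players U and D
- $p\bigl((\alpha, \beta), (\alpha', \beta'), u, d\bigr) = P(\alpha_{t+1} = \alpha' \mid \alpha_t = \alpha,\ u_t = u) \cdot P(\beta_{t+1} = \beta' \mid \beta_t = \beta,\ d_t = d)$

Figure: grid of cells numbered 1 to 16, with the absorbing state Ø entered with probability 1 under any action (label 1|-).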

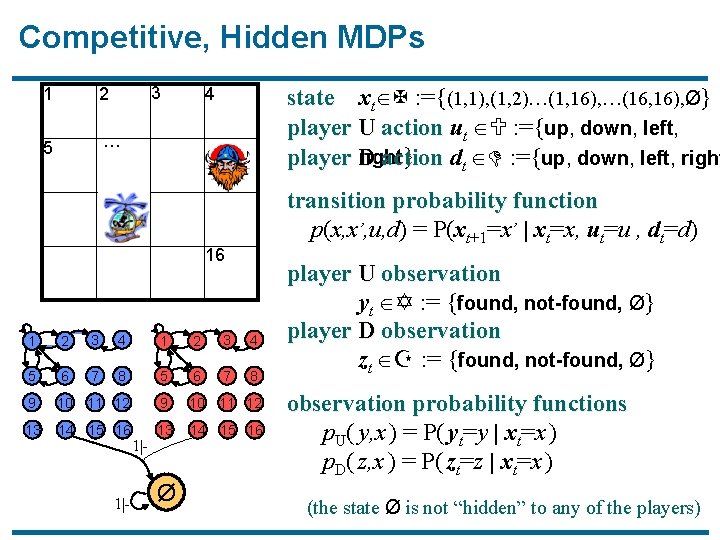

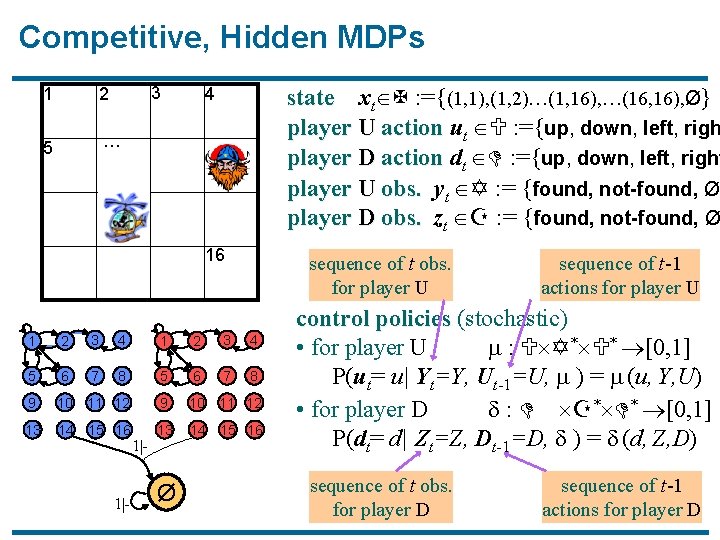

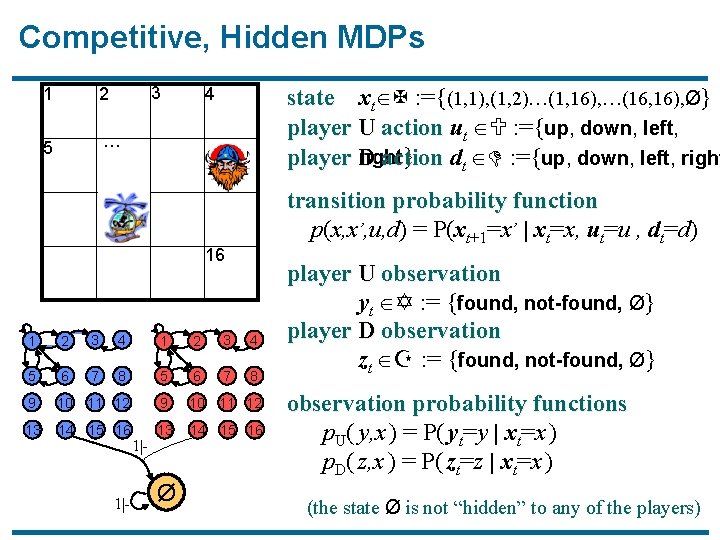

Competitive, Hidden MDPs

- state $x_t \in \mathcal{X} := \{(1,1), (1,2), \dots, (1,16), \dots, (16,16), \text{Ø}\}$
- player U action $u_t \in \mathcal{U} := \{\text{up}, \text{down}, \text{left}, \text{right}\}$
- player D action $d_t \in \mathcal{D} := \{\text{up}, \text{down}, \text{left}, \text{right}\}$
- transition probability function $p(x, x', u, d) = P(x_{t+1} = x' \mid x_t = x,\ u_t = u,\ d_t = d)$
- player U observation $y_t \in \mathcal{Y} := \{\text{found}, \text{not-found}, \text{Ø}\}$
- player D observation $z_t \in \mathcal{Z} := \{\text{found}, \text{not-found}, \text{Ø}\}$
- observation probability functions $p_U(y, x) = P(y_t = y \mid x_t = x)$ and $p_D(z, x) = P(z_t = z \mid x_t = x)$

(The state Ø is not “hidden” to any of the players.)

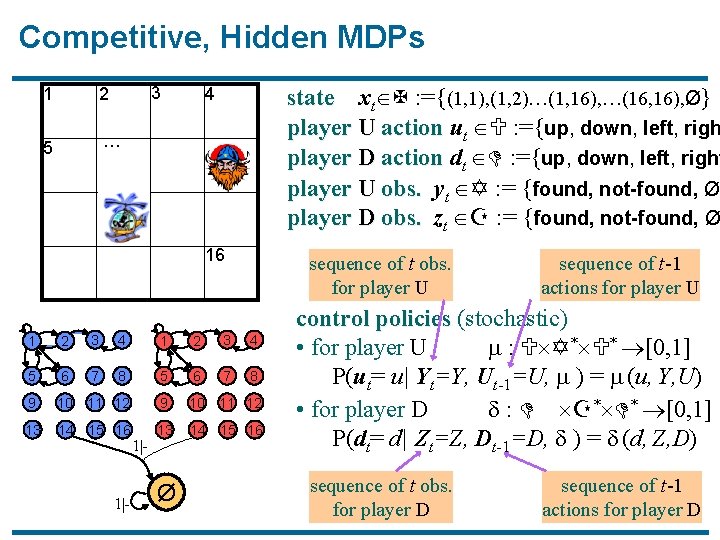

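Competitive, Hidden MDPs

- state $x_t \in \mathcal{X} := \{(1,1), (1,2), \dots, (1,16), \dots, (16,16), \text{Ø}\}$
- player U action $u_t \in \mathcal{U} := \{\text{up}, \text{down}, \text{left}, \text{right}\}$
- player D action $d_t \in \mathcal{D} := \{\text{up}, \text{down}, \text{left}, \text{right}\}$
- player U observation $y_t \in \mathcal{Y} := \{\text{found}, \text{not-found}, \text{Ø}\}$
- player D observation $z_t \in \mathcal{Z} := \{\text{found}, \text{not-found}, \text{Ø}\}$

Control policies (stochastic):

- for player U: $\mu : \mathcal{U} \times \mathcal{Y}^* \times \mathcal{U}^* \to [0, 1]$, with $P(u_t = u \mid Y_t = Y,\ U_{t-1} = U,\ \mu) = \mu(u, Y, U)$, where $Y_t$ is the sequence of $t$ observations and $U_{t-1}$ the sequence of $t-1$ actions for player U
- for player D: $\delta : \mathcal{D} \times \mathcal{Z}^* \times \mathcal{D}^* \to [0, 1]$, with $P(d_t = d \mid Z_t = Z,\ D_{t-1} = D,\ \delta) = \delta(d, Z, D)$, where $Z_t$ is the sequence of $t$ observations and $D_{t-1}$ the sequence of $t-1$ actions for player D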

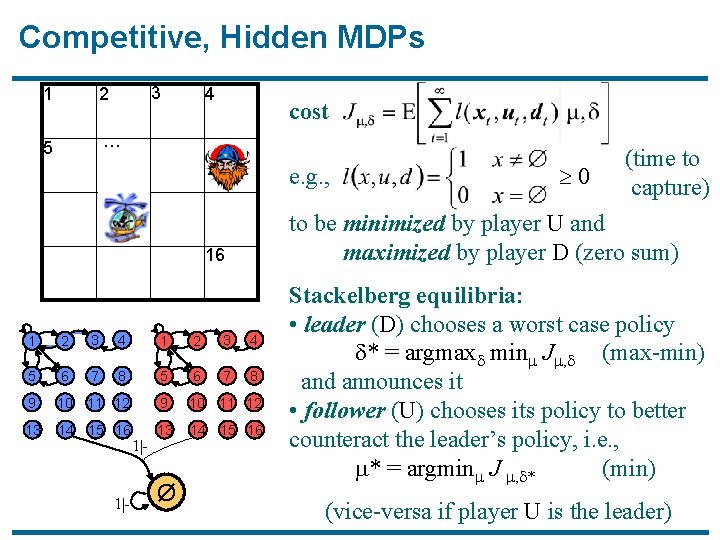

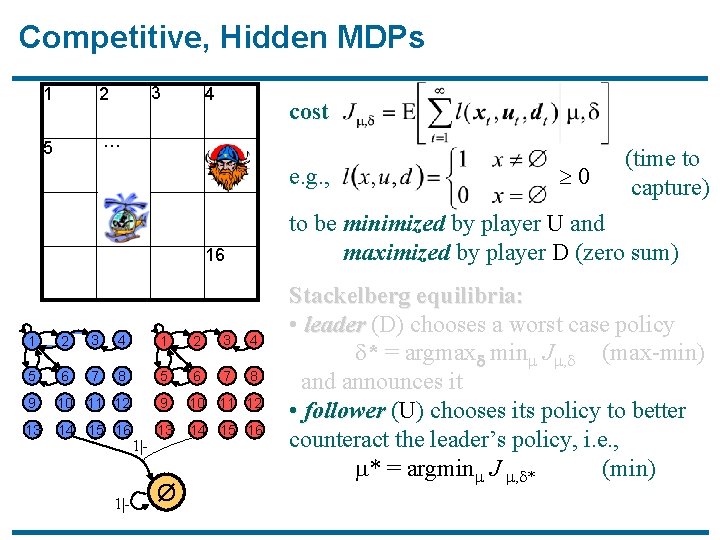

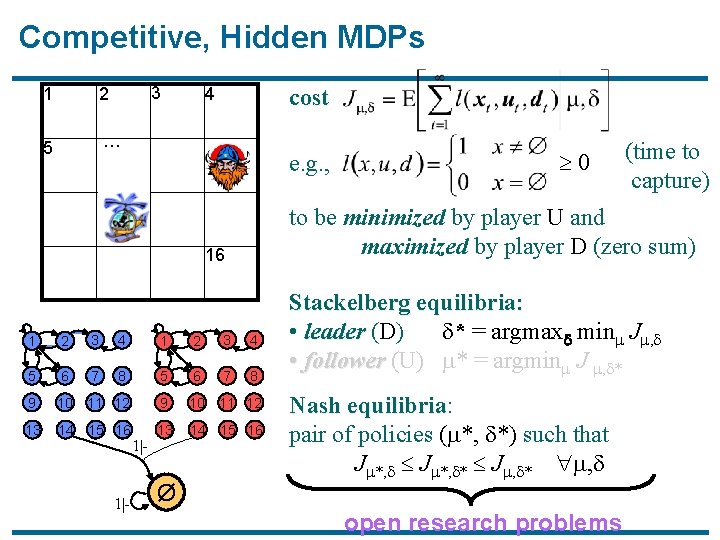

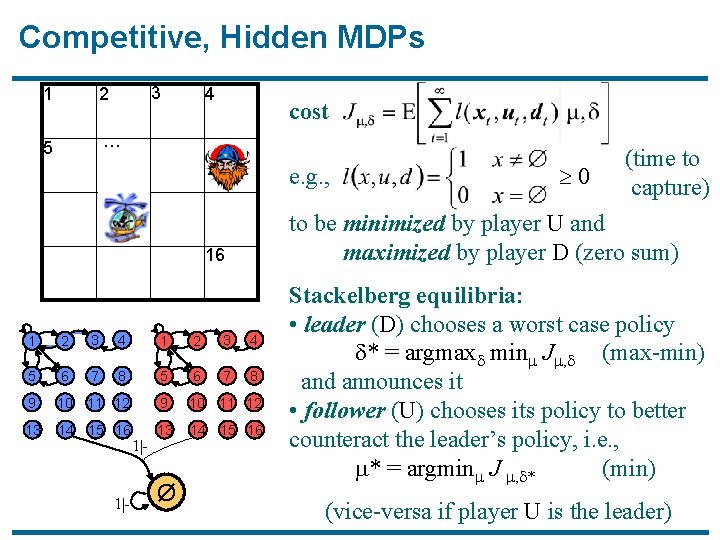

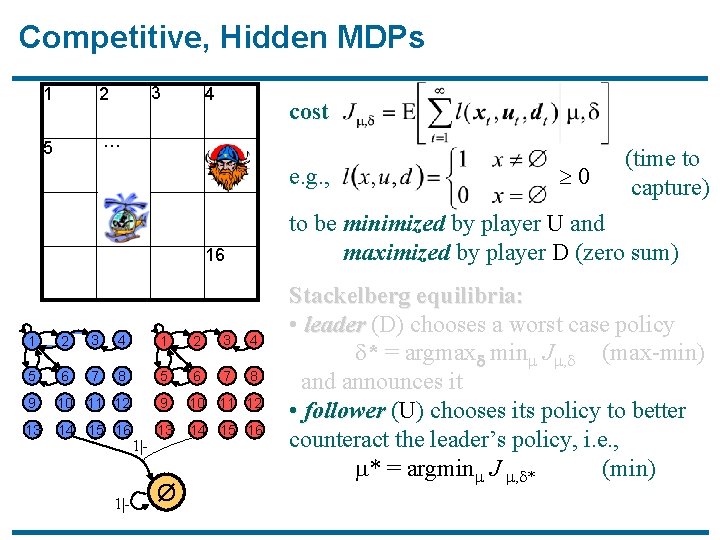

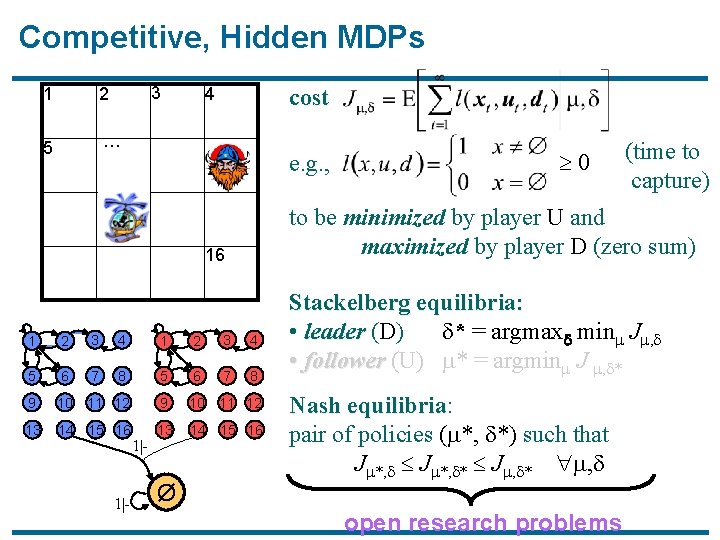

Competitive, Hidden MDPs

Cost $J_{\mu,\delta}$, e.g., the expected time to capture, to be minimized by player U and maximized by player D (zero sum).

Stackelberg equilibria:

- the leader (D) chooses a worst-case policy $\delta^* = \arg\max_\delta \min_\mu J_{\mu,\delta}$ (max-min) and announces it
- the follower (U) chooses its policy to better counteract the leader’s policy, i.e., $\mu^* = \arg\min_\mu J_{\mu,\delta^*}$ (min)

(vice-versa if player U is the leader)

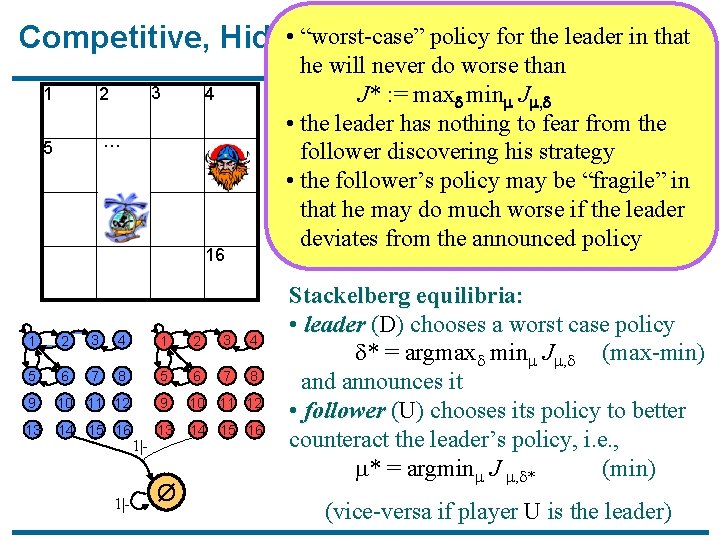

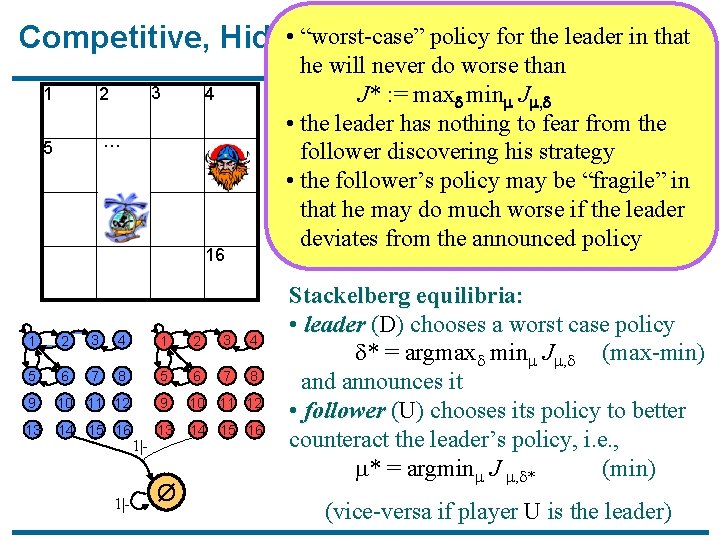

Competitive, Hidden MDPs

Cost $J_{\mu,\delta}$, e.g., the expected time to capture, to be minimized by player U and maximized by player D (zero sum).

Stackelberg equilibria:

- the leader (D) chooses a worst-case policy $\delta^* = \arg\max_\delta \min_\mu J_{\mu,\delta}$ (max-min) and announces it
- the follower (U) chooses its policy to better counteract the leader’s policy, i.e., $\mu^* = \arg\min_\mu J_{\mu,\delta^*}$ (min)

(vice-versa if player U is the leader)

Remarks:

- “worst-case” policy for the leader, in that he will never do worse than $J^* := \max_\delta \min_\mu J_{\mu,\delta}$
- the leader has nothing to fear from the follower discovering his strategy
- the follower’s policy may be “fragile”, in that he may do much worse if the leader deviates from the announced policy

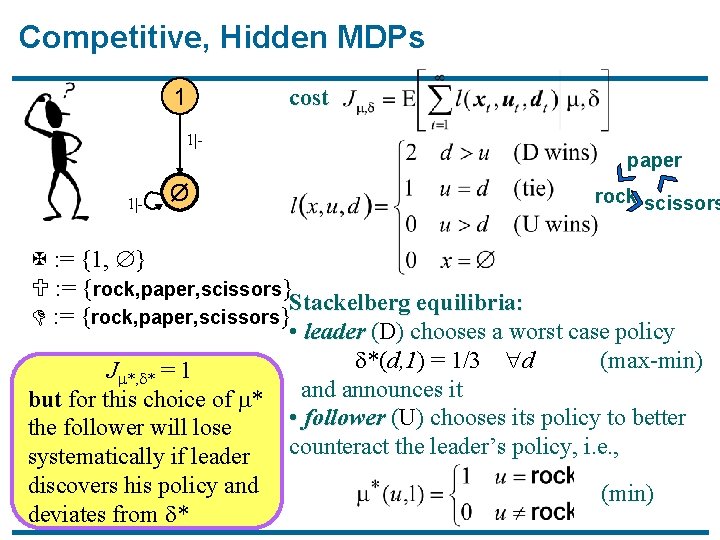

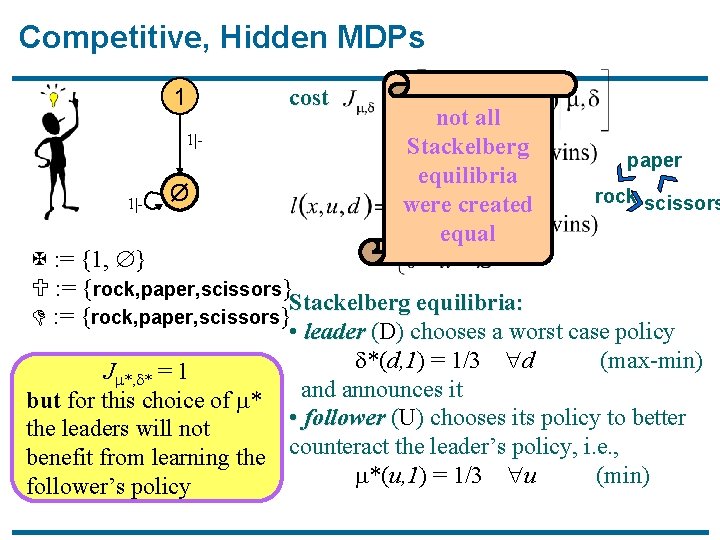

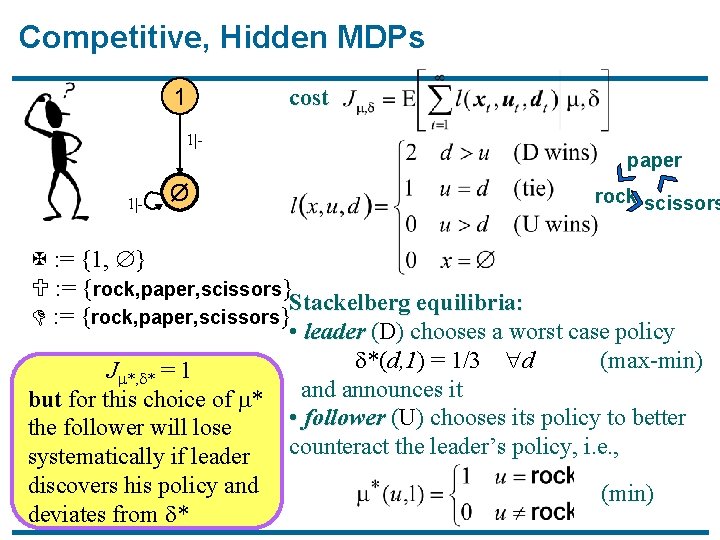

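Competitive, Hidden MDPs

Example (rock, paper, scissors): $\mathcal{X} := \{1, \text{Ø}\}$, $\mathcal{U} := \{\text{rock}, \text{paper}, \text{scissors}\}$, $\mathcal{D} := \{\text{rock}, \text{paper}, \text{scissors}\}$; state 1 leads to Ø with probability 1 under any action.

Stackelberg equilibria:

- the leader (D) chooses a worst-case policy, $\delta^*(d, 1) = 1/3\ \ \forall d$ (max-min), with $J_{\mu^*,\delta^*} = 1$, and announces it
- the follower (U) chooses its policy to better counteract the leader’s policy, i.e., $\mu^* = \arg\min_\mu J_{\mu,\delta^*}$ (min)

But for this choice of $\mu^*$ the follower will lose systematically if the leader discovers his policy and deviates from $\delta^*$.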

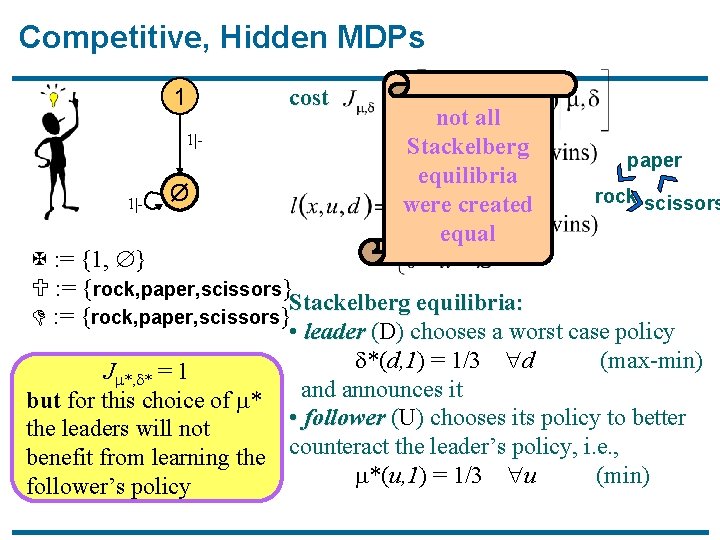

Competitive, Hidden MDPs

Not all Stackelberg equilibria were created equal.

Example (rock, paper, scissors): $\mathcal{X} := \{1, \text{Ø}\}$, $\mathcal{U} := \{\text{rock}, \text{paper}, \text{scissors}\}$, $\mathcal{D} := \{\text{rock}, \text{paper}, \text{scissors}\}$.

Stackelberg equilibria:

- the leader (D) chooses a worst-case policy, $\delta^*(d, 1) = 1/3\ \ \forall d$ (max-min), with $J_{\mu^*,\delta^*} = 1$, and announces it
- the follower (U) chooses its policy to better counteract the leader’s policy, i.e., $\mu^*(u, 1) = 1/3\ \ \forall u$ (min)

For this choice of $\mu^*$ the leader will not benefit from learning the follower’s policy.

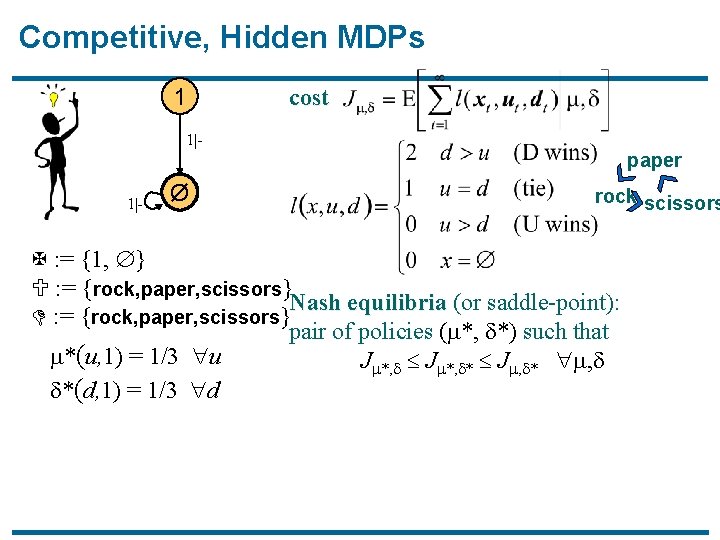

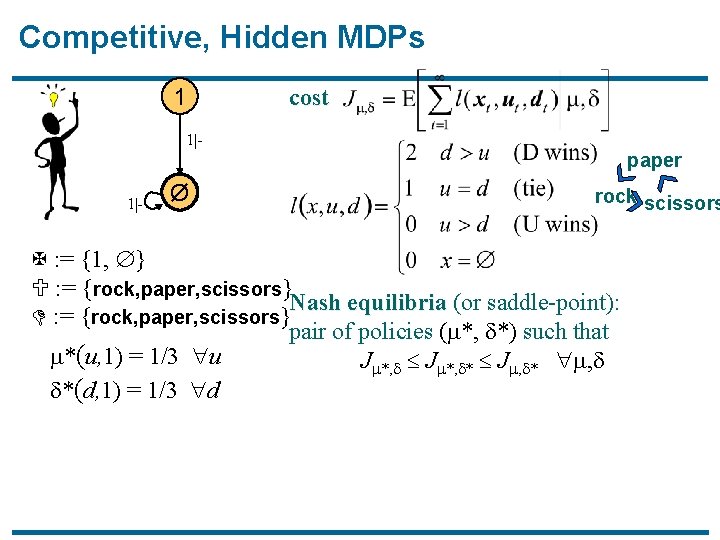

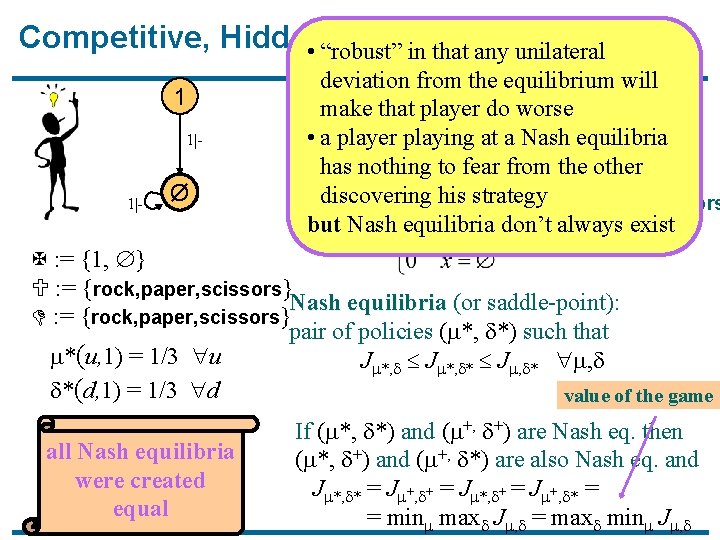

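Competitive, Hidden MDPs

Example (rock, paper, scissors): $\mathcal{X} := \{1, \text{Ø}\}$, $\mathcal{U} := \{\text{rock}, \text{paper}, \text{scissors}\}$, $\mathcal{D} := \{\text{rock}, \text{paper}, \text{scissors}\}$.

Nash equilibria (or saddle-point): pair of policies $(\mu^*, \delta^*)$ such that

$$J_{\mu^*,\delta} \le J_{\mu^*,\delta^*} \le J_{\mu,\delta^*} \quad \forall \mu, \delta$$

Here: $\mu^*(u, 1) = 1/3\ \ \forall u$, $\delta^*(d, 1) = 1/3\ \ \forall d$.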

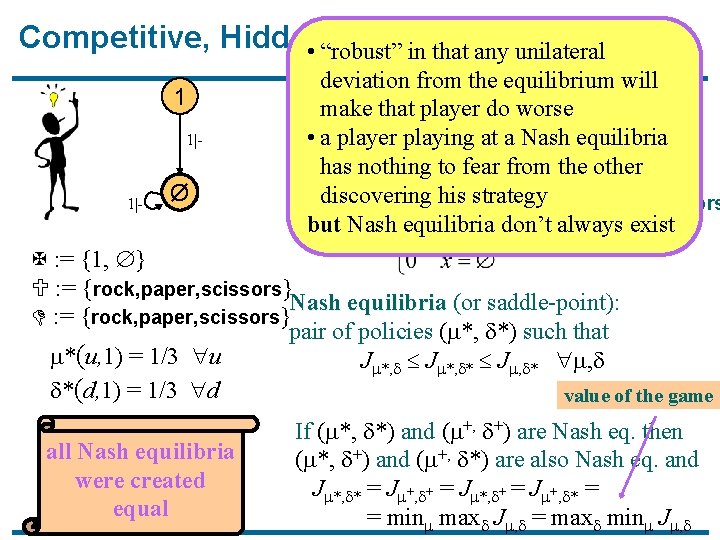

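Competitive, Hidden MDPs

Example (rock, paper, scissors): $\mathcal{X} := \{1, \text{Ø}\}$, $\mathcal{U} := \{\text{rock}, \text{paper}, \text{scissors}\}$, $\mathcal{D} := \{\text{rock}, \text{paper}, \text{scissors}\}$.

Nash equilibria (or saddle-point): pair of policies $(\mu^*, \delta^*)$ such that

$$J_{\mu^*,\delta} \le J_{\mu^*,\delta^*} \le J_{\mu,\delta^*} \quad \forall \mu, \delta$$

Here: $\mu^*(u, 1) = 1/3\ \ \forall u$, $\delta^*(d, 1) = 1/3\ \ \forall d$.

Remarks:

- “robust”, in that any unilateral deviation from the equilibrium will make that player do worse
- a player playing at a Nash equilibrium has nothing to fear from the other discovering his strategy
- but Nash equilibria don’t always exist

All Nash equilibria were created equal: if $(\mu^*, \delta^*)$ and $(\mu^+, \delta^+)$ are Nash equilibria, then $(\mu^*, \delta^+)$ and $(\mu^+, \delta^*)$ are also Nash equilibria and

$$J_{\mu^*,\delta^*} = J_{\mu^+,\delta^+} = J_{\mu^*,\delta^+} = J_{\mu^+,\delta^*} = \min_\mu \max_\delta J_{\mu,\delta} = \max_\delta \min_\mu J_{\mu,\delta}$$

(the value of the game).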
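The saddle-point property of the uniform policies can be checked numerically. The cost function of the slide's example did not survive extraction, so the sketch below assumes the textbook assignment: cost +1 to player U when U loses, -1 when U wins, 0 for a draw. With that assumed matrix the value of the game is 0, which need not match the value quoted on the slide. Because the expected cost is linear in each player's mixed policy, checking pure deviations is enough.

```python
from fractions import Fraction

ACTIONS = ["rock", "paper", "scissors"]
BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}


def cost(u, d):
    """Assumed cost to player U (the minimizer): -1 win, 0 draw, +1 loss."""
    if u == d:
        return 0
    return -1 if BEATS[u] == d else 1


def expected_cost(mu, delta):
    """Expected cost when U plays mixed policy mu and D plays delta."""
    return sum(mu[u] * delta[d] * cost(u, d) for u in ACTIONS for d in ACTIONS)


def pure(a):
    """Mixed policy that always plays action a."""
    return {b: Fraction(int(a == b)) for b in ACTIONS}


uniform = {a: Fraction(1, 3) for a in ACTIONS}
value = expected_cost(uniform, uniform)

# Saddle point: D cannot raise the cost, U cannot lower it, by deviating alone.
is_saddle = all(
    expected_cost(uniform, pure(d)) <= value <= expected_cost(pure(u), uniform)
    for u in ACTIONS
    for d in ACTIONS
)

# With pure policies only, min-max and max-min differ: no pure saddle point.
pure_minmax = min(max(cost(u, d) for d in ACTIONS) for u in ACTIONS)
pure_maxmin = max(min(cost(u, d) for u in ACTIONS) for d in ACTIONS)

print(value, is_saddle, pure_minmax, pure_maxmin)
```

The gap between the pure min-max and max-min illustrates why the equilibrium has to be sought among stochastic policies.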


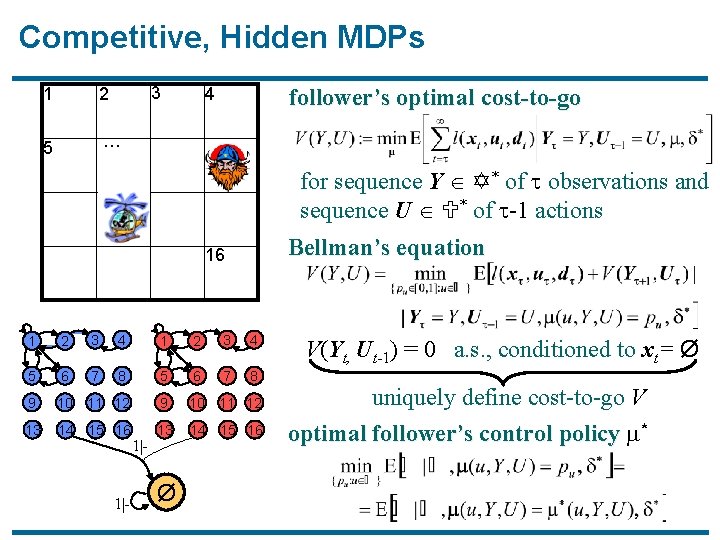

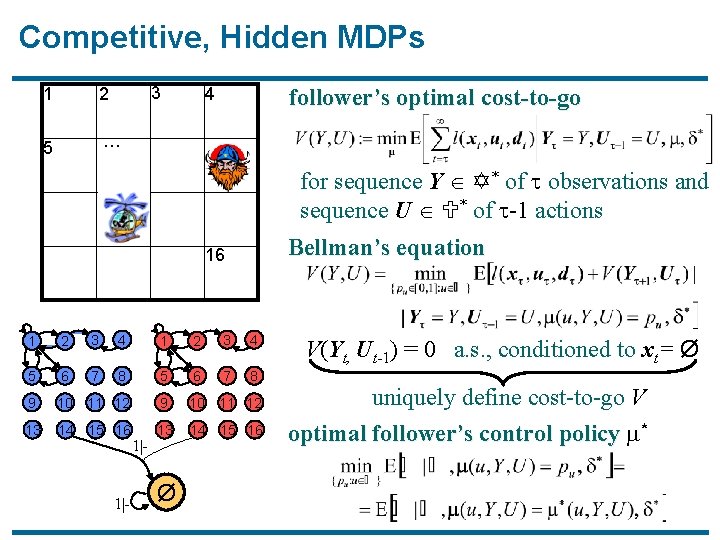

Competitive, Hidden MDPs

Follower’s optimal cost-to-go $V(Y, U)$, for a sequence $Y \in \mathcal{Y}^*$ of $\tau$ observations and a sequence $U \in \mathcal{U}^*$ of $\tau - 1$ actions.

Bellman’s equation, together with the boundary condition

$$V(Y_t, U_{t-1}) = 0 \quad \text{a.s., conditioned on } x_t = \text{Ø},$$

uniquely defines the cost-to-go $V$, and from it the optimal follower’s control policy $\mu^*$.

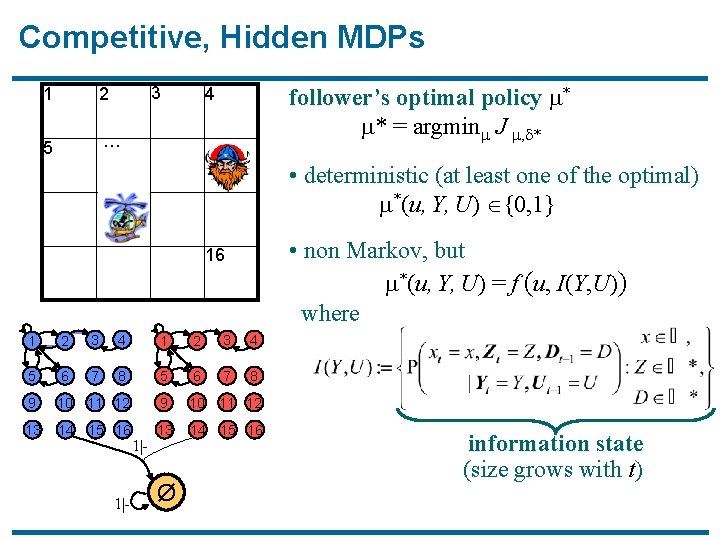

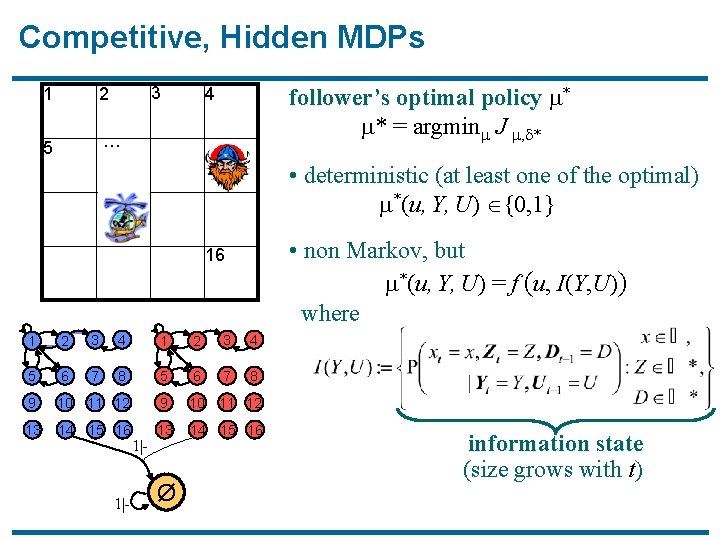

Competitive, Hidden MDPs

Follower’s optimal policy $\mu^* = \arg\min_\mu J_{\mu,\delta^*}$:

- deterministic (at least one of the optimal policies): $\mu^*(u, Y, U) \in \{0, 1\}$
- non-Markov, but $\mu^*(u, Y, U) = f\bigl(u, I(Y, U)\bigr)$, where $I(Y, U)$ is the information state (its size grows with $t$)

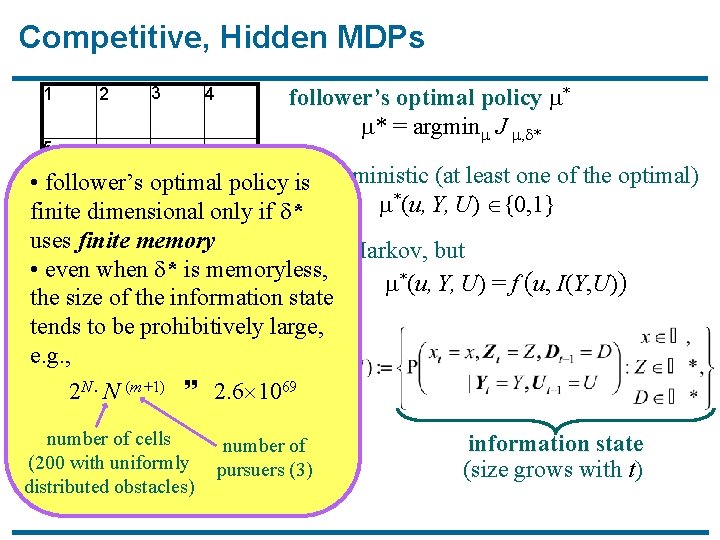

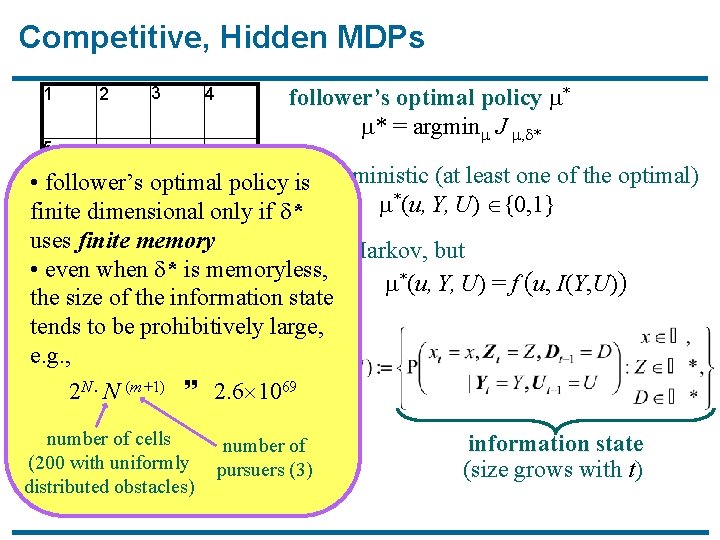

Competitive, Hidden MDPs

Follower’s optimal policy $\mu^* = \arg\min_\mu J_{\mu,\delta^*}$:

- deterministic (at least one of the optimal policies): $\mu^*(u, Y, U) \in \{0, 1\}$
- non-Markov, but $\mu^*(u, Y, U) = f\bigl(u, I(Y, U)\bigr)$, where $I(Y, U)$ is the information state (its size grows with $t$)

Remarks:

- the follower’s optimal policy is finite dimensional only if $\delta^*$ uses finite memory
- even when $\delta^*$ is memoryless, the size of the information state tends to be prohibitively large, e.g., $N^{m+1} \, 2^N \approx 2.6 \times 10^{69}$, where $N$ is the number of cells (200, with uniformly distributed obstacles) and $m$ is the number of pursuers (3)
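The order of magnitude quoted on this slide can be reproduced with exact integer arithmetic. The formula used below, N^(m+1) · 2^N, is a reading of the garbled slide text rather than something the source states cleanly; it is consistent with the figures that survive (200 cells, 3 pursuers, 2.6 and an exponent of 69). One plausible interpretation, also an assumption, is joint positions of m pursuers and one evader times all obstacle configurations.

```python
def information_state_count(num_cells, num_pursuers):
    """N^(m+1) * 2^N, the assumed size formula (see the hedge above)."""
    return num_cells ** (num_pursuers + 1) * 2 ** num_cells


count = information_state_count(num_cells=200, num_pursuers=3)
print(f"{count:.2e}")  # 2.57e+69
print(len(str(count)), "decimal digits")
```

Even this modest 200-cell example is far beyond anything that can be tabulated, which is the point the slide makes about exact solutions.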

Competitive, Hidden MDPs

Cost $J_{\mu,\delta}$, e.g., the expected time to capture, to be minimized by player U and maximized by player D (zero sum).

Stackelberg equilibria:

- leader (D): $\delta^* = \arg\max_\delta \min_\mu J_{\mu,\delta}$
- follower (U): $\mu^* = \arg\min_\mu J_{\mu,\delta^*}$

Nash equilibria: pair of policies $(\mu^*, \delta^*)$ such that $J_{\mu^*,\delta} \le J_{\mu^*,\delta^*} \le J_{\mu,\delta^*}$ for all $\mu, \delta$.

Both remain open research problems.

Conclusions

- The “mission-level” control of Unmanned Air Vehicles requires a probabilistic framework.
- The problem of coordinating teams of autonomous agents is naturally formulated in a game theoretical setting.
- Exact solutions for these types of problems are often computationally intractable and, in some cases, open research problems.

Some References

- Kumar & Varaiya, Stochastic Systems. Prentice Hall, 1986.
- Fudenberg & Tirole, Game Theory. MIT Press, 1993.
- Basar & Olsder, Dynamic Noncooperative Game Theory, 2nd ed. SIAM, 1999.
- Bertsekas, Dynamic Programming and Optimal Control, vols. 1 & 2. Athena Scientific, 1995.
- Filar & Vrieze, Competitive Markov Decision Processes. Springer-Verlag, 1997.
- Patek & Bertsekas, Stochastic Shortest Path Games. SIAM J. Control Optim., 37(3):804-824, 1999.
- See also papers by Michael L. Littman, Junling Hu, and Michael P. Wellman on reinforcement learning in Markov games.
- Intelligent Control Architectures for Unmanned Air Vehicles Home Page: http://robotics.eecs.berkeley.edu/~sastry/ONRhomepage.html
- Software Enabled Control Home Page: