
- Slides: 24

Optimizing Collective Communication for Multicore

By Rajesh Nishtala

EECS (Electrical Engineering and Computer Sciences), Berkeley Par Lab (Parallel Computing Laboratory)

What Are Collectives

- An operation called by all threads together to perform globally coordinated communication
  - May involve a modest amount of computation, e.g. to combine values as they are communicated
  - Can be extended to teams (or communicators), in which case they operate on a predefined subset of the threads
- Focus on collectives in Single Program Multiple Data (SPMD) programming models

Some Collectives

- Barrier (MPI_Barrier())
  - A thread cannot exit a call to a barrier until all other threads have called the barrier
- Broadcast (MPI_Bcast())
  - A root thread sends a copy of an array to all the other threads
- Reduce-To-All (MPI_Allreduce())
  - Each thread contributes an operand to an arithmetic operation across all the threads
  - The result is then broadcast to all the threads
- Exchange (MPI_Alltoall())
  - For all i, j < N, thread i copies the jth piece of its input array to the ith slot of an output array located on thread j
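The data movement behind Exchange and Reduce-To-All can be pinned down with a small sequential reference model. This is only a sketch of the semantics, not a parallel implementation and not how any MPI library does it: the function names are hypothetical, and each "thread" is modeled as one row of a row-major matrix.

```c
#include <assert.h>
#include <stddef.h>

/* Sequential reference model (an assumption for illustration):
 * row t of each n x n row-major matrix plays the role of thread t's array. */

/* Exchange: piece j of thread i's input lands in slot i of thread j's output. */
void exchange_reference(size_t n, const int *in, int *out)
{
    assert(in != NULL && out != NULL && in != out);
    for (size_t i = 0; i < n; i++)
        for (size_t j = 0; j < n; j++)
            out[j * n + i] = in[i * n + j];
}

/* Reduce-To-All with "+": every thread receives the sum of all operands. */
void allreduce_sum_reference(size_t n, const int *operand, int *result)
{
    assert(operand != NULL && result != NULL);
    int sum = 0;
    for (size_t i = 0; i < n; i++)
        sum += operand[i];          /* combine one operand per thread */
    for (size_t i = 0; i < n; i++)
        result[i] = sum;            /* the result is delivered to every thread */
}
```

Seen this way, Exchange is a transpose of the thread-by-piece matrix, which is why every thread must talk to every other thread.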

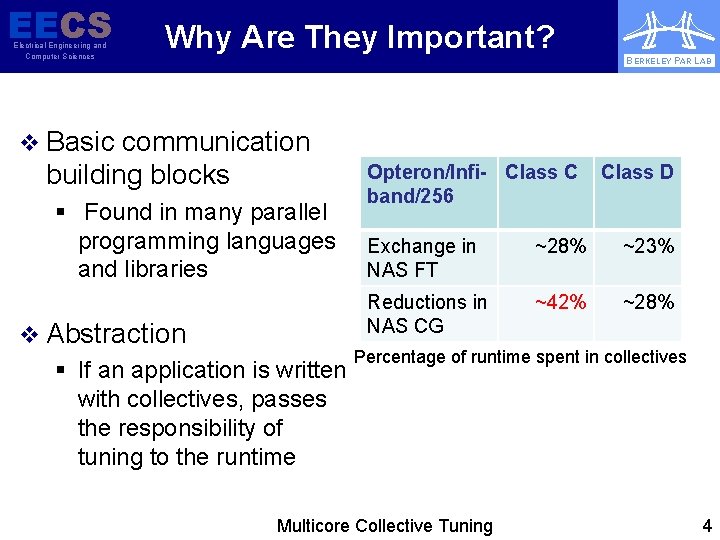

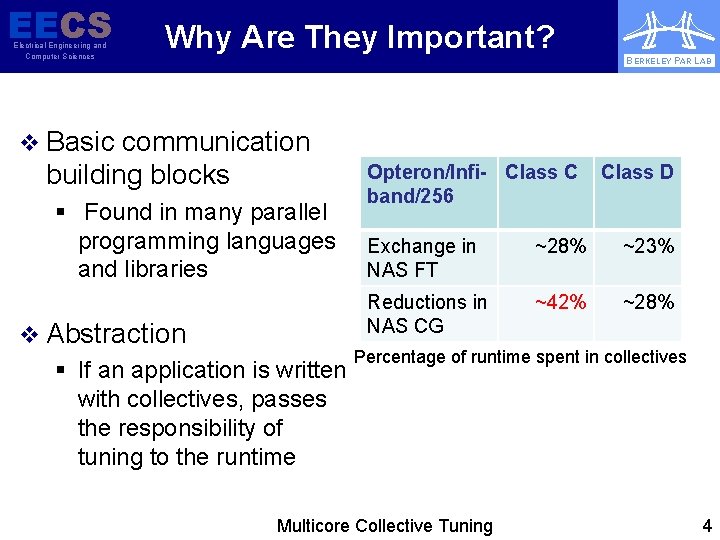

Why Are They Important?

- Basic communication building blocks
  - Found in many parallel programming languages and libraries
- Abstraction
  - An application written with collectives passes the responsibility of tuning to the runtime

Percentage of runtime spent in collectives (Opteron/InfiniBand/256):

| | Class C | Class D |
|---|---|---|
| Exchange in NAS FT | ~28% | ~23% |
| Reductions in NAS CG | ~42% | ~28% |

Experimental Setup

- Platforms
  - Sun Niagara 2
    - 1 socket of 8 multi-threaded cores
    - Each core supports 8 hardware thread contexts for 64 total threads
  - Intel Clovertown
    - 2 "traditional" quad-core sockets
  - Blue Gene/P
    - 1 quad-core socket
- MPI for inter-process communication
  - Shared memory MPICH2 1.0.7

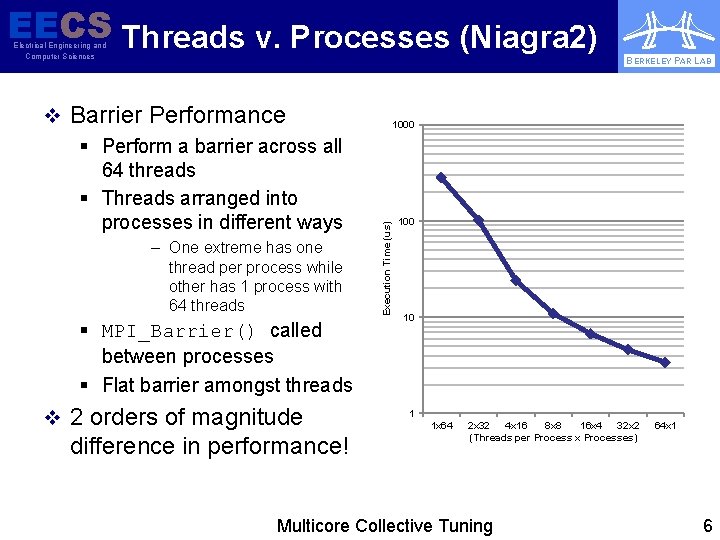

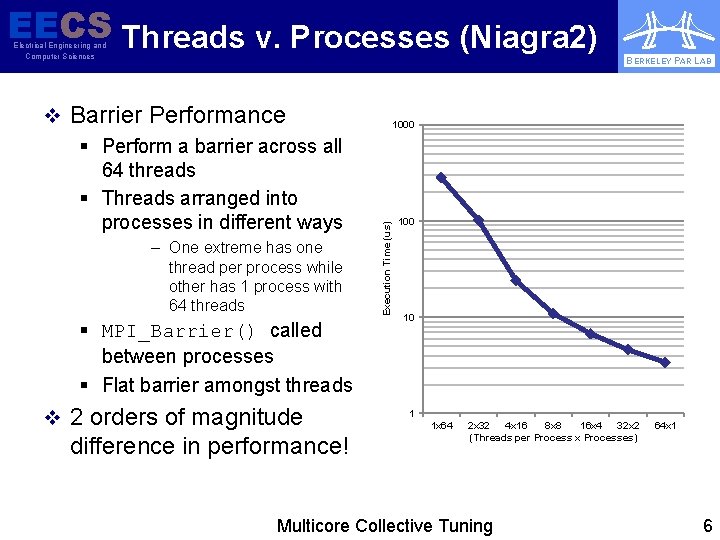

Threads v. Processes (Niagara 2)

- Barrier performance
  - Perform a barrier across all 64 threads
  - Threads arranged into processes in different ways
    - One extreme has one thread per process, while the other has 1 process with 64 threads
  - MPI_Barrier() called between processes
  - Flat barrier amongst threads
- 2 orders of magnitude difference in performance!

[Figure: Execution Time (us) versus configuration (Threads per Process x Processes): 1 x 64, 2 x 32, 4 x 16, 8 x 8, 16 x 4, 32 x 2, 64 x 1]

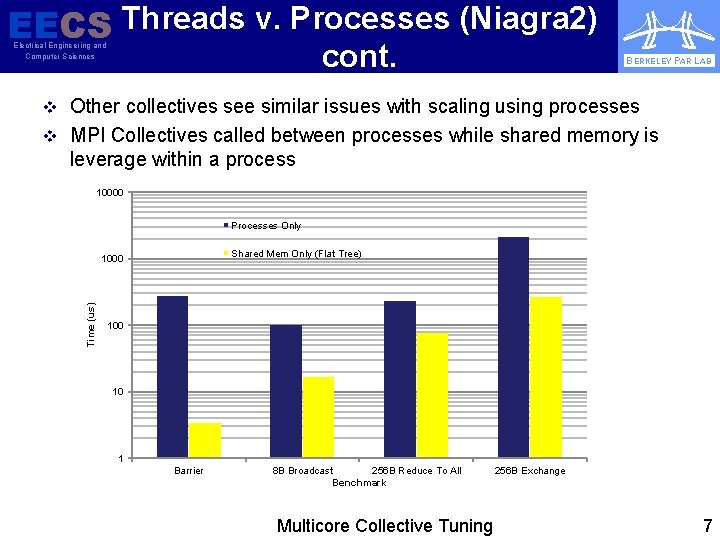

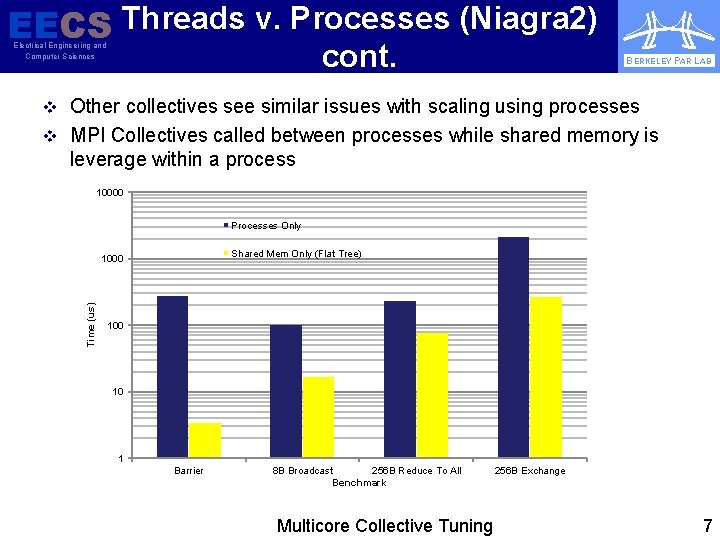

Threads v. Processes (Niagara 2) cont.

- Other collectives see similar issues with scaling using processes
- MPI collectives are called between processes, while shared memory is leveraged within a process

[Figure: Time (us) for Processes Only versus Shared Mem Only (Flat Tree); benchmarks: Barrier, 8 B Broadcast, 256 B Reduce To All, 256 B Exchange]

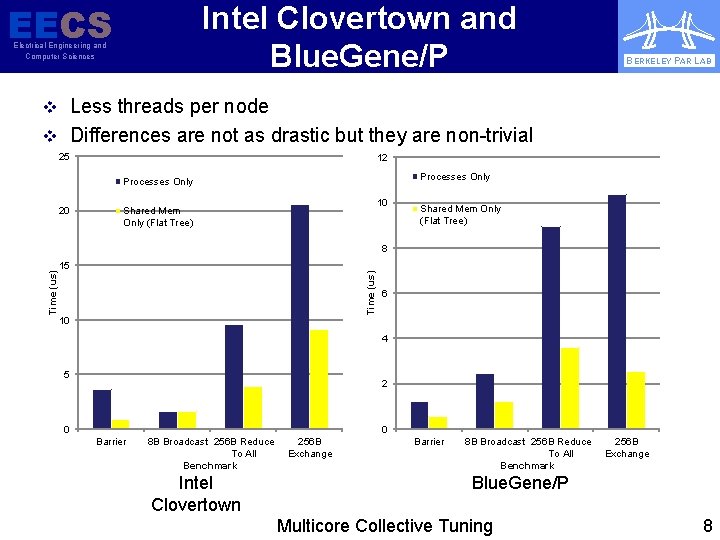

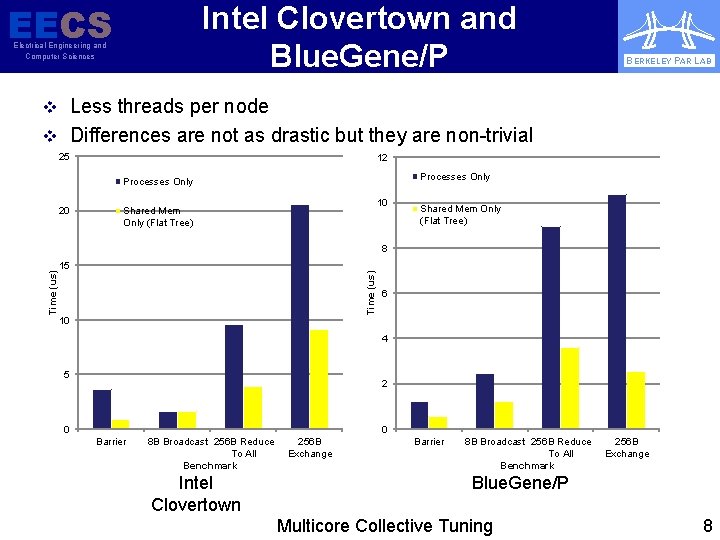

Intel Clovertown and Blue Gene/P

- Fewer threads per node
- Differences are not as drastic, but they are non-trivial

[Figure: Time (us) for Processes Only versus Shared Mem Only (Flat Tree), one panel each for Intel Clovertown and Blue Gene/P; benchmarks: Barrier, 8 B Broadcast, 256 B Reduce To All, 256 B Exchange]

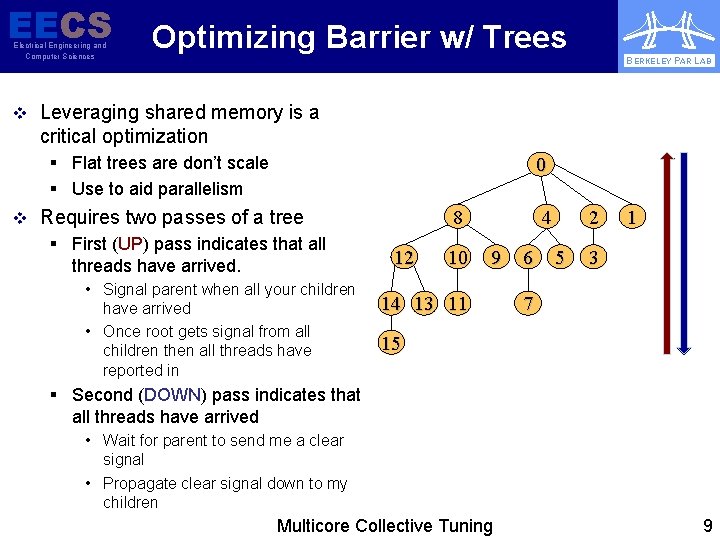

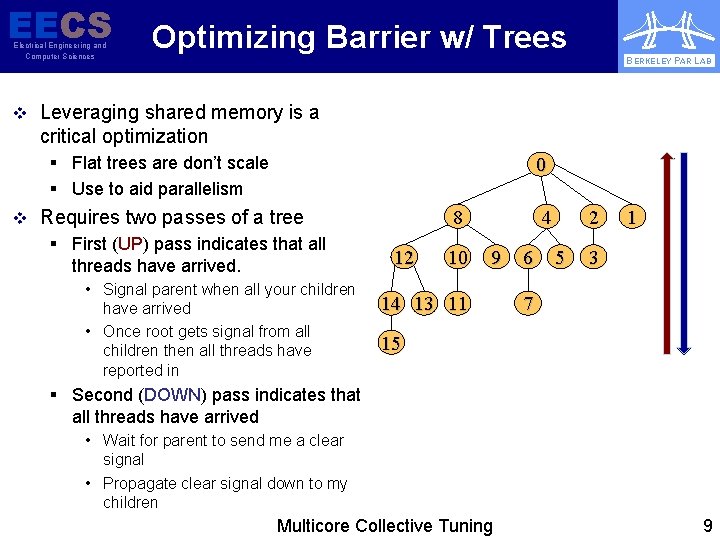

Optimizing Barrier w/ Trees

- Leveraging shared memory is a critical optimization
  - Flat trees don't scale
  - Use trees to aid parallelism
- Requires two passes of a tree
  - First (UP) pass tells the root that all threads have arrived
    - Signal parent when all your children have arrived
    - Once the root gets the signal from all its children, all threads have reported in
  - Second (DOWN) pass tells every thread that all threads have arrived
    - Wait for parent to send me a clear signal
    - Propagate clear signal down to my children

[Figure: example tree over 16 threads (0 to 15), rooted at thread 0]
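The two passes can be sketched with POSIX threads and C11 atomics. This is a minimal illustration under several assumptions that are not from the slides: a heap-style tree with a fixed `RADIX` (parent of `i` is `(i - 1) / RADIX`) rather than a k-nomial tree, a 64-byte cache line, monotonically increasing epoch numbers instead of two alternating flag sets, and a `sched_yield()` in the spin loop so the demo also behaves on oversubscribed machines. All names are hypothetical.

```c
#include <assert.h>
#include <pthread.h>
#include <sched.h>
#include <stdatomic.h>
#include <stdint.h>

#define MAX_THREADS 64
#define RADIX 2        /* tree fan-out; an arbitrary choice for this sketch */
#define CACHE_LINE 64  /* assumed cache-line size */

/* One flag per cache line, each written by exactly one thread. */
typedef struct { _Alignas(CACHE_LINE) atomic_uint value; } padded_flag_t;

static padded_flag_t arrived[MAX_THREADS];  /* set by a thread, read by its parent   */
static padded_flag_t released[MAX_THREADS]; /* set by a thread, read by its children */

static void spin_until(atomic_uint *flag, unsigned epoch)
{
    while (atomic_load_explicit(flag, memory_order_acquire) < epoch)
        sched_yield();
}

/* `epoch` must start at 1 and grow by 1 on each call, identically on all threads. */
void tree_barrier(int me, int nthreads, unsigned epoch)
{
    assert(me >= 0 && me < nthreads && nthreads <= MAX_THREADS);
    /* UP pass: wait for each child's subtree, then report my own subtree. */
    for (int c = RADIX * me + 1; c <= RADIX * me + RADIX && c < nthreads; c++)
        spin_until(&arrived[c].value, epoch);
    atomic_store_explicit(&arrived[me].value, epoch, memory_order_release);
    /* DOWN pass: wait for my parent's clear signal, then pass it on. */
    if (me != 0)
        spin_until(&released[(me - 1) / RADIX].value, epoch);
    atomic_store_explicit(&released[me].value, epoch, memory_order_release);
}

/* Demo harness: counts rounds in which a thread saw a stale shared counter. */
static atomic_int counter, errors;
static int demo_threads, demo_rounds;

static void *worker(void *arg)
{
    int me = (int)(intptr_t)arg;
    unsigned epoch = 0;
    for (int r = 0; r < demo_rounds; r++) {
        atomic_fetch_add(&counter, 1);
        tree_barrier(me, demo_threads, ++epoch);
        if (atomic_load(&counter) != demo_threads * (r + 1))
            atomic_fetch_add(&errors, 1);
        tree_barrier(me, demo_threads, ++epoch); /* keep rounds from overlapping */
    }
    return NULL;
}

int run_tree_barrier_demo(int nthreads, int rounds)
{
    assert(nthreads >= 1 && nthreads <= MAX_THREADS && rounds >= 0);
    pthread_t tid[MAX_THREADS];
    for (int i = 0; i < MAX_THREADS; i++) {
        atomic_store(&arrived[i].value, 0);
        atomic_store(&released[i].value, 0);
    }
    atomic_store(&counter, 0);
    atomic_store(&errors, 0);
    demo_threads = nthreads;
    demo_rounds = rounds;
    for (int i = 0; i < nthreads; i++)
        pthread_create(&tid[i], NULL, worker, (void *)(intptr_t)i);
    for (int i = 0; i < nthreads; i++)
        pthread_join(tid[i], NULL);
    return atomic_load(&errors);
}
```

Calling `run_tree_barrier_demo(8, 200)` should return 0 if the barrier holds every thread until all have arrived. Swapping `RADIX` changes the tree depth and the number of flags each parent polls, which is the knob the later slides tune per architecture.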

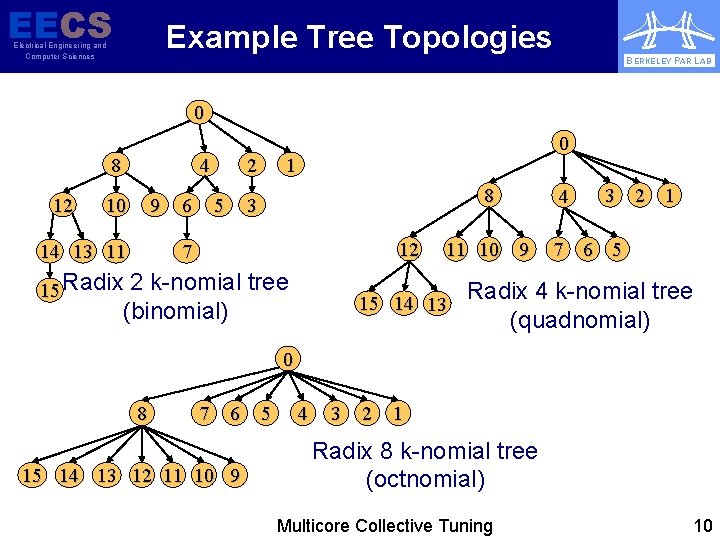

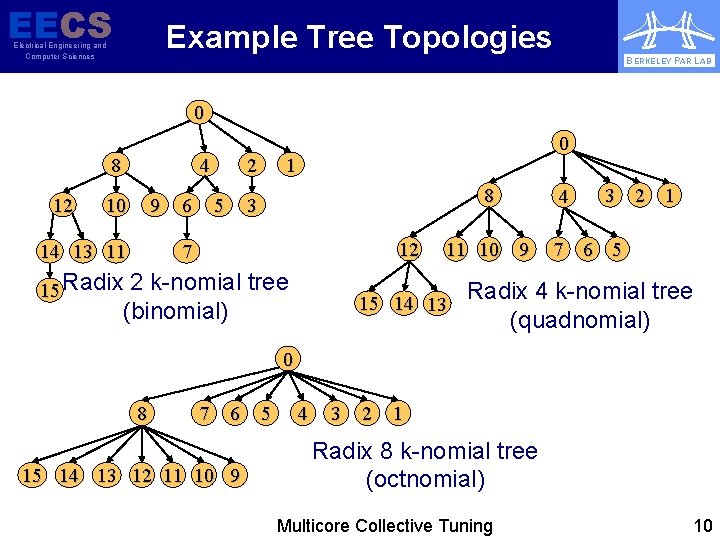

Example Tree Topologies

- Radix 2 k-nomial tree (binomial)
- Radix 4 k-nomial tree (quadnomial)
- Radix 8 k-nomial tree (octnomial)

[Figures: each tree is drawn over 16 threads (0 to 15), rooted at thread 0]
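One common way to compute a k-nomial tree rooted at rank 0 is to work on the base-k digits of the rank: the parent is the rank with its least-significant non-zero digit cleared. The sketch below uses that rule, which reproduces the parent/child relationships of the 16-thread trees drawn on this slide. The function names are hypothetical, and the slides do not say how the actual implementation builds its trees.

```c
#include <assert.h>

/* Parent of `rank` in a radix-k k-nomial tree rooted at 0, or -1 for the root.
 * Clears the least-significant non-zero base-`radix` digit of `rank`. */
int knomial_parent(int rank, int radix)
{
    assert(rank >= 0 && radix >= 2);
    if (rank == 0)
        return -1;
    int stride = 1;
    while ((rank / stride) % radix == 0)
        stride *= radix;
    return rank - ((rank / stride) % radix) * stride;
}

/* Writes the children of `rank` into `out` and returns how many there are.
 * `out` needs room for (radix - 1) entries per base-`radix` digit of nranks. */
int knomial_children(int rank, int radix, int nranks, int *out)
{
    assert(rank >= 0 && rank < nranks && radix >= 2 && out != 0);
    int count = 0;
    for (int stride = 1; stride < nranks; stride *= radix) {
        /* Stop at the lowest non-zero digit of rank: higher digits belong
         * to siblings and ancestors, not to this rank's subtree. */
        if (rank != 0 && (rank / stride) % radix != 0)
            break;
        for (int j = 1; j < radix; j++) {
            int child = rank + j * stride;
            if (child < nranks)
                out[count++] = child;
        }
    }
    return count;
}
```

With 16 ranks, the root has children 1, 2, 4, 8 at radix 2; 1, 2, 3, 4, 8, 12 at radix 4; and 1 through 8 at radix 8. A larger radix gives a shallower tree at the cost of more children per parent.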

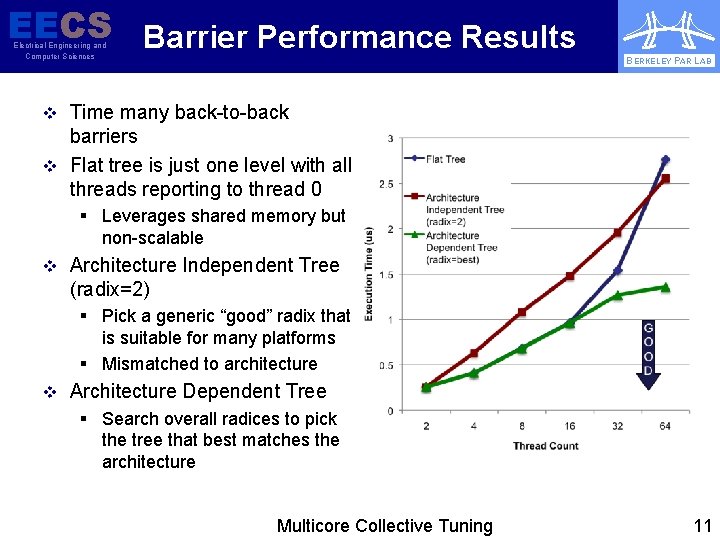

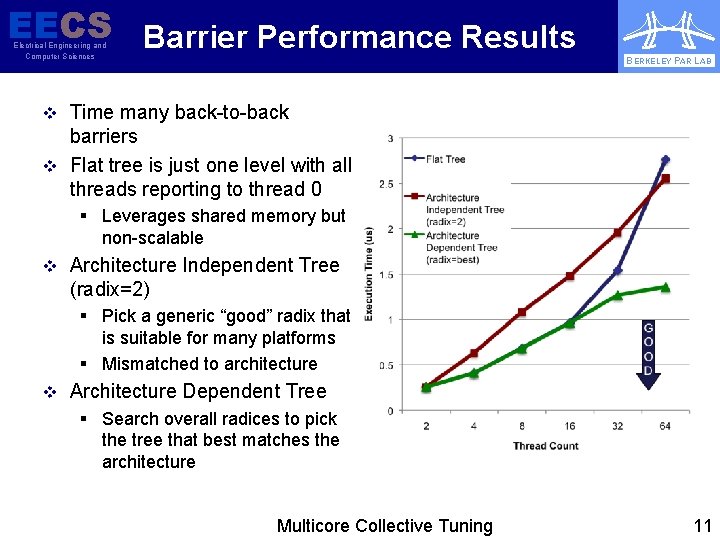

Barrier Performance Results

- Time many back-to-back barriers
- Flat tree is just one level, with all threads reporting to thread 0
  - Leverages shared memory but is non-scalable
- Architecture Independent Tree (radix=2)
  - Pick a generic "good" radix that is suitable for many platforms
  - Mismatched to architecture
- Architecture Dependent Tree
  - Search over all radices to pick the tree that best matches the architecture

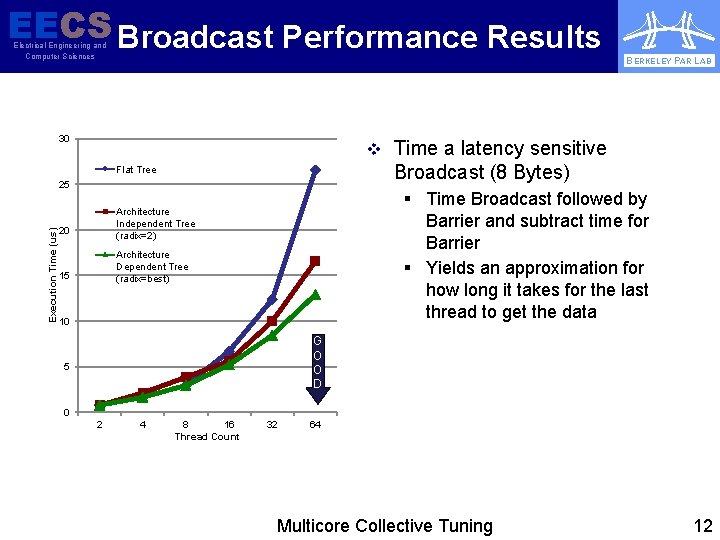

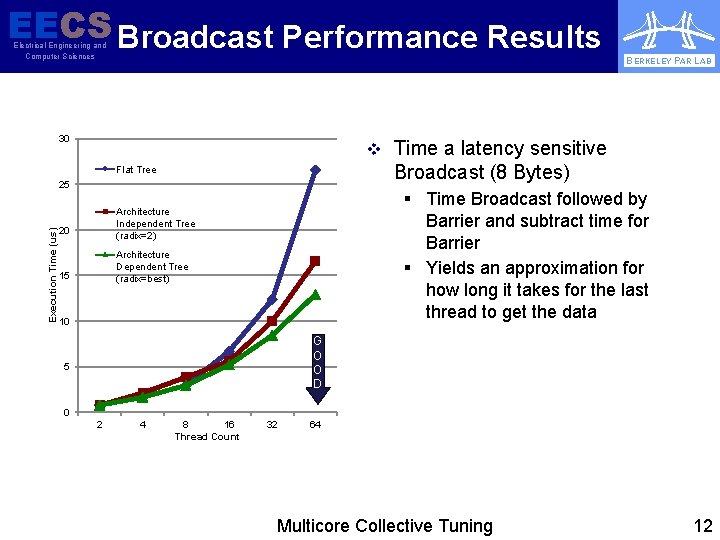

Broadcast Performance Results

- Time a latency-sensitive Broadcast (8 Bytes)
  - Time Broadcast followed by Barrier and subtract time for Barrier
  - Yields an approximation for how long it takes for the last thread to get the data

[Figure: Execution Time (us) versus Thread Count (2 to 64) for Flat Tree, Architecture Independent Tree (radix=2), and Architecture Dependent Tree (radix=best)]
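The subtraction in this methodology is simple enough to write down. The helper below is a hypothetical sketch: it assumes both loops were timed over the same number of iterations and that the totals are already in microseconds.

```c
#include <assert.h>

/* Estimated per-operation broadcast latency in microseconds:
 * (time of N x [Broadcast + Barrier]) minus (time of N x [Barrier]), over N. */
double broadcast_latency_us(double bcast_plus_barrier_total_us,
                            double barrier_total_us, int iterations)
{
    assert(iterations > 0);
    return (bcast_plus_barrier_total_us - barrier_total_us) / iterations;
}
```

For example, if 100 Broadcast-plus-Barrier iterations take 3000 us and 100 Barrier-only iterations take 1000 us, the estimate is 20 us per Broadcast. The result is an approximation because the two operations can partly overlap.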

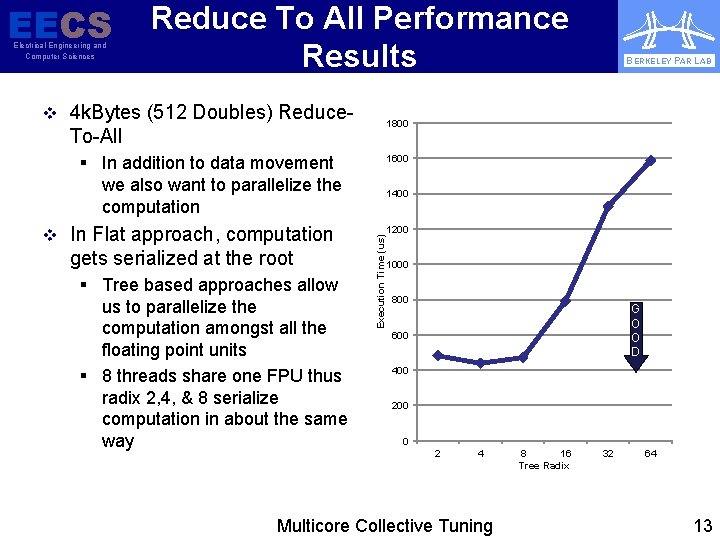

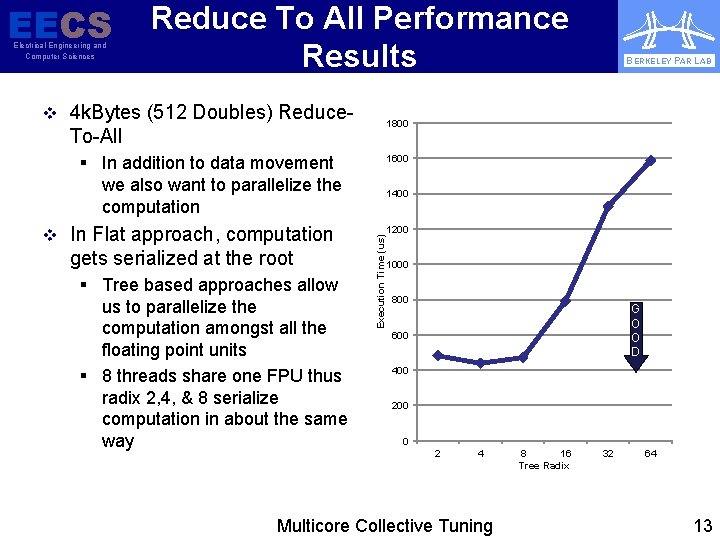

Reduce To All Performance Results

- 4 kBytes (512 Doubles) Reduce-To-All
  - In addition to data movement we also want to parallelize the computation
- In the flat approach, computation gets serialized at the root
  - Tree-based approaches allow us to parallelize the computation amongst all the floating point units
  - 8 threads share one FPU, thus radix 2, 4, and 8 serialize computation in about the same way

[Figure: Execution Time (us) versus Tree Radix (2 to 64)]
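The serialization at the root can be made concrete by counting how many combine steps each rank performs. The sketch below is a sequential simulation, not the measured implementation: it assumes a k-nomial tree rooted at rank 0, uses hypothetical names, and treats the largest per-rank combine count as a rough proxy for serialized computation. It does not model the shared FPU mentioned above. Choosing a radix at least as large as the rank count degenerates into the flat tree.

```c
#include <assert.h>

#define MAX_RANKS 64

/* Parent in a radix-k k-nomial tree rooted at 0; requires rank >= 1. */
static int parent_of(int rank, int radix)
{
    int stride = 1;
    while ((rank / stride) % radix == 0)
        stride *= radix;
    return rank - ((rank / stride) % radix) * stride;
}

/* Sums vals[0..nranks-1] along the tree. Stores in *max_combines the largest
 * number of additions any single rank had to perform. */
double tree_reduce_sum(const double *vals, int nranks, int radix, int *max_combines)
{
    assert(vals != 0 && max_combines != 0);
    assert(nranks >= 1 && nranks <= MAX_RANKS && radix >= 2);
    double partial[MAX_RANKS];
    int combines[MAX_RANKS] = {0};
    for (int r = 0; r < nranks; r++)
        partial[r] = vals[r];
    /* Children always have larger ranks than their parent, so walking the
     * ranks downward folds every subtree before it is forwarded upward. */
    for (int r = nranks - 1; r >= 1; r--) {
        int p = parent_of(r, radix);
        partial[p] += partial[r];
        combines[p]++;
    }
    *max_combines = 0;
    for (int r = 0; r < nranks; r++)
        if (combines[r] > *max_combines)
            *max_combines = combines[r];
    return partial[0];
}
```

With 64 ranks, the busiest rank performs 63 additions in the flat case (radix 64), 14 at radix 8, and 6 at radix 2, while the total work stays at 63 additions in every case.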

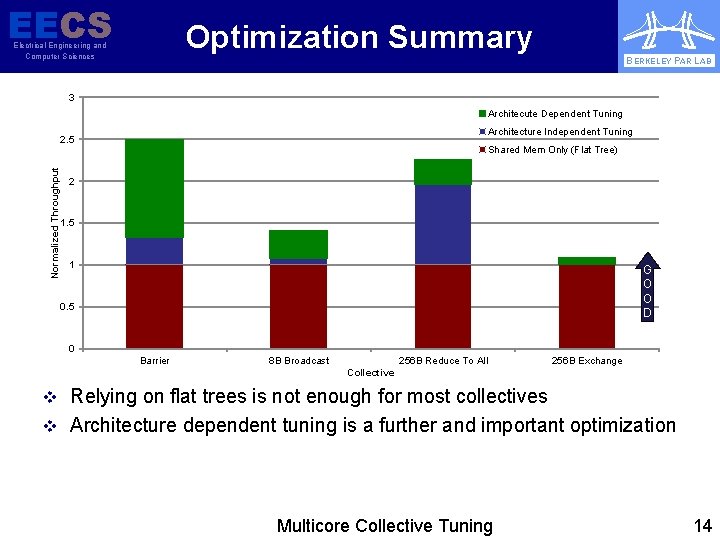

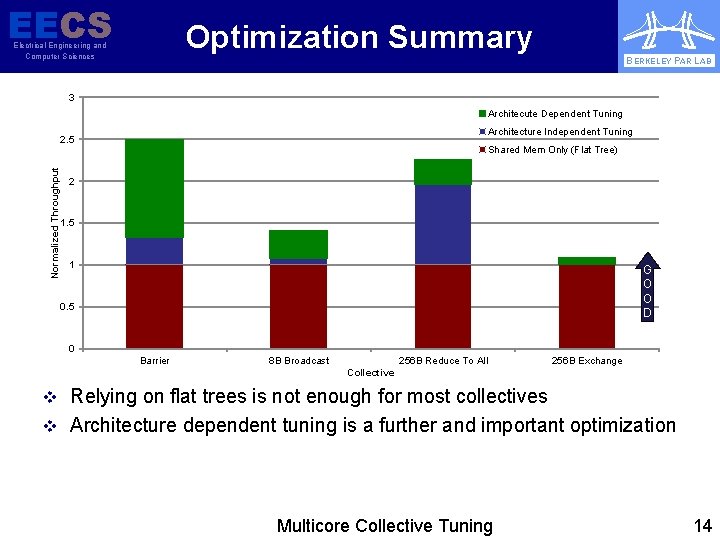

Optimization Summary

- Relying on flat trees is not enough for most collectives
- Architecture dependent tuning is a further and important optimization

[Figure: Normalized Throughput for Architecture Dependent Tuning, Architecture Independent Tuning, and Shared Mem Only (Flat Tree); collectives: Barrier, 8 B Broadcast, 256 B Reduce To All, 256 B Exchange]

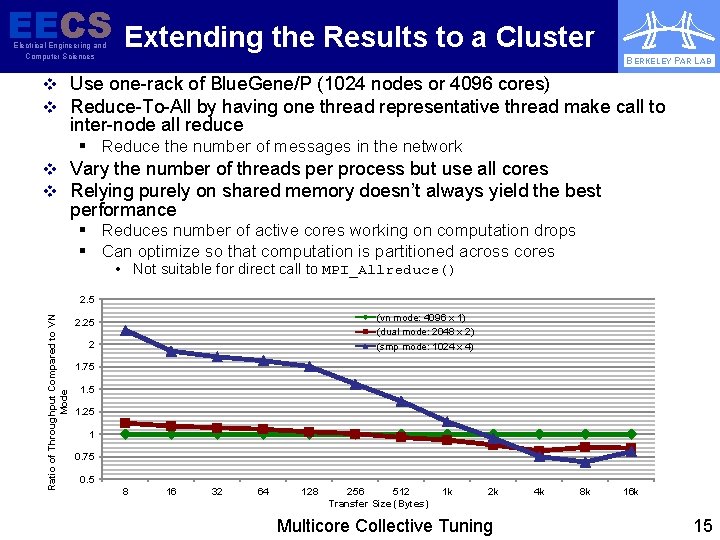

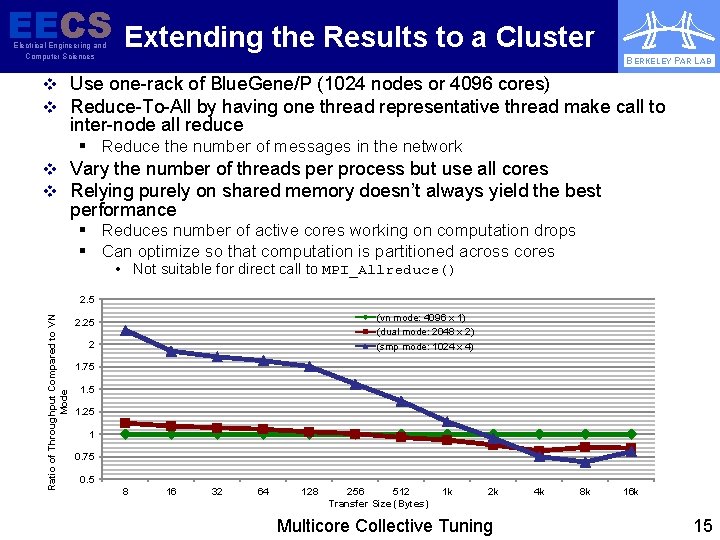

Extending the Results to a Cluster

- Use one rack of Blue Gene/P (1024 nodes or 4096 cores)
- Reduce-To-All by having one representative thread make the call to the inter-node allreduce
  - Reduces the number of messages in the network
- Vary the number of threads per process but use all cores
- Relying purely on shared memory doesn't always yield the best performance
  - The number of active cores working on the computation drops
  - Can optimize so that computation is partitioned across cores
    - Not suitable for a direct call to MPI_Allreduce()

[Figure: Ratio of Throughput Compared to VN Mode versus Transfer Size (Bytes), 8 to 16k; series: vn mode (4096 x 1), dual mode (2048 x 2), smp mode (1024 x 4)]
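The representative-thread scheme can be modeled as three steps: combine within a node, reduce across nodes, then share the result within each node. The code below is a sequential sketch of that structure only. The function name is hypothetical, the inter-node step is a plain loop standing in for a real MPI_Allreduce() call, and nothing here reflects Blue Gene/P specifics.

```c
#include <assert.h>

/* in/out hold one value per thread, grouped by node (node-major order).
 * Returns how many participants took part in the inter-node reduction. */
int hierarchical_allreduce_sum(int nodes, int threads_per_node,
                               const double *in, double *out)
{
    assert(nodes >= 1 && threads_per_node >= 1 && in != 0 && out != 0);
    double global = 0.0;
    int participants = 0;
    for (int n = 0; n < nodes; n++) {
        /* Step 1: the threads of node n combine their values in shared memory. */
        double node_sum = 0.0;
        for (int t = 0; t < threads_per_node; t++)
            node_sum += in[n * threads_per_node + t];
        /* Step 2: only the node's representative joins the inter-node
         * reduction (a stand-in for the network allreduce). */
        global += node_sum;
        participants++;
    }
    /* Step 3: each representative shares the result with its node's threads. */
    for (int i = 0; i < nodes * threads_per_node; i++)
        out[i] = global;
    return participants;
}
```

With 4 nodes of 4 threads, only 4 participants touch the network instead of 16, which is the message reduction the slide refers to. The cost is that Step 1 as written leaves the other threads idle during the combine.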

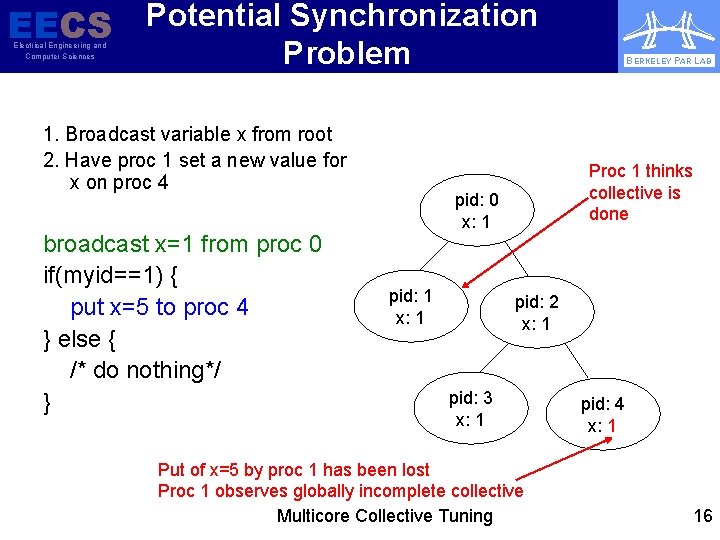

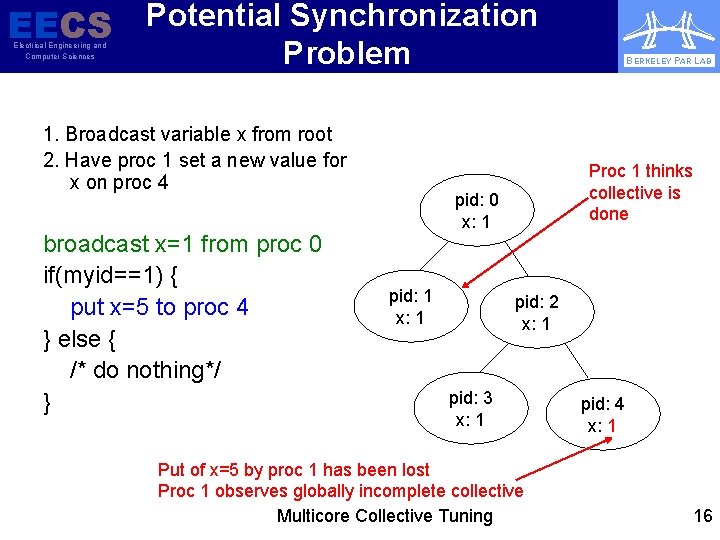

Potential Synchronization Problem

1. Broadcast variable x from root
2. Have proc 1 set a new value for x on proc 4

    broadcast x=1 from proc 0
    if (myid == 1) {
        put x=5 to proc 4
    } else {
        /* do nothing */
    }

- Proc 1 thinks the collective is done
- Proc 1 observes a globally incomplete collective
- The put of x=5 by proc 1 has been lost

[Figure: value of x on procs 0 to 4 as the broadcast of x=1 and the put of x=5 proceed]

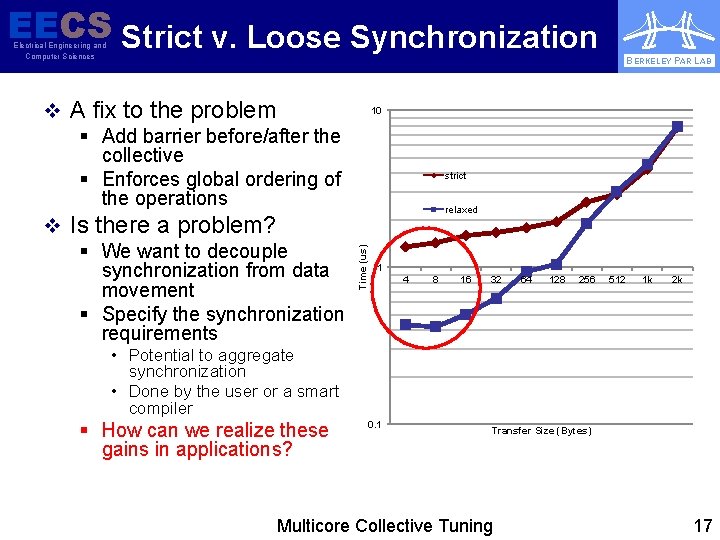

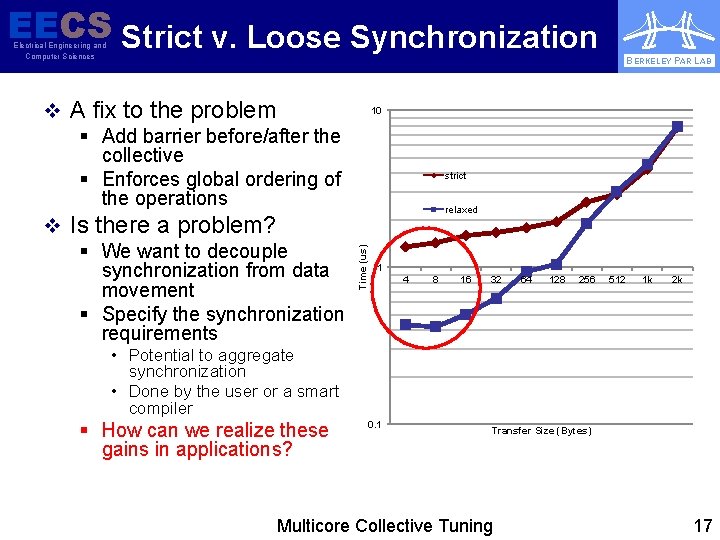

Strict v. Loose Synchronization

- A fix to the problem
  - Add barrier before/after the collective
  - Enforces global ordering of the operations
- Is there a problem?
  - We want to decouple synchronization from data movement
  - Specify the synchronization requirements
    - Potential to aggregate synchronization
    - Done by the user or a smart compiler
  - How can we realize these gains in applications?

[Figure: Time (us) versus Transfer Size (Bytes), 4 to 2k, for strict and relaxed synchronization]

Conclusions

- Moving from processes to threads is a crucial optimization for single-node collective communication
- Can use tree-based collectives to realize better performance, even for collectives on one node
- Picking the tree that best matches the architecture yields the best performance
- Multicore adds to the (auto)tuning space for collective communication
- Shared memory semantics allow us to create new loosely synchronized collectives

Questions?

Backup Slides

Threads and Processes

- Threads
  - A sequence of instructions and an execution stack
  - Communication between threads goes through a common, shared address space
    - No OS/network involvement needed
    - Reasoning about inter-thread communication can be tricky
- Processes
  - A set of threads and an associated memory space
  - All threads within a process share an address space
  - Communication between processes must be managed through the OS
    - Inter-process communication is explicit but may be slow
    - More expensive to switch between processes

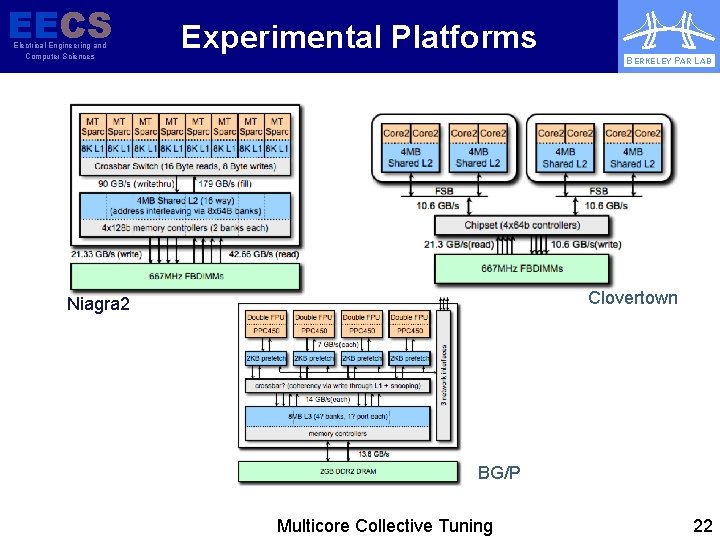

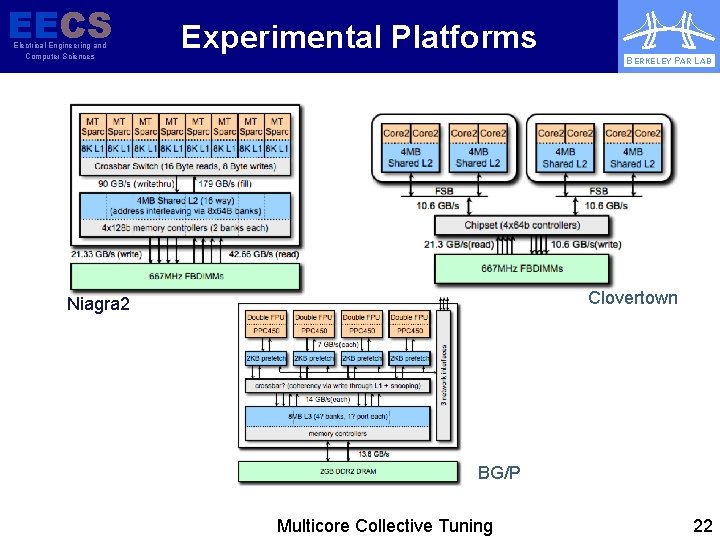

Experimental Platforms

[Figures: Clovertown, Niagara 2, BG/P]

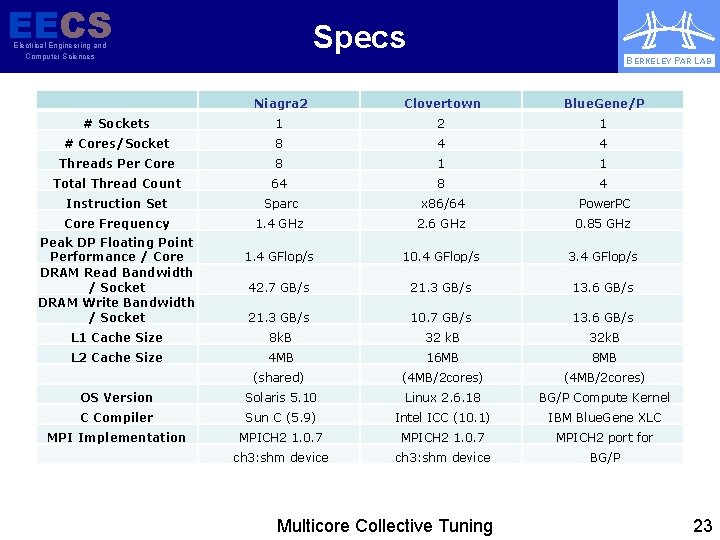

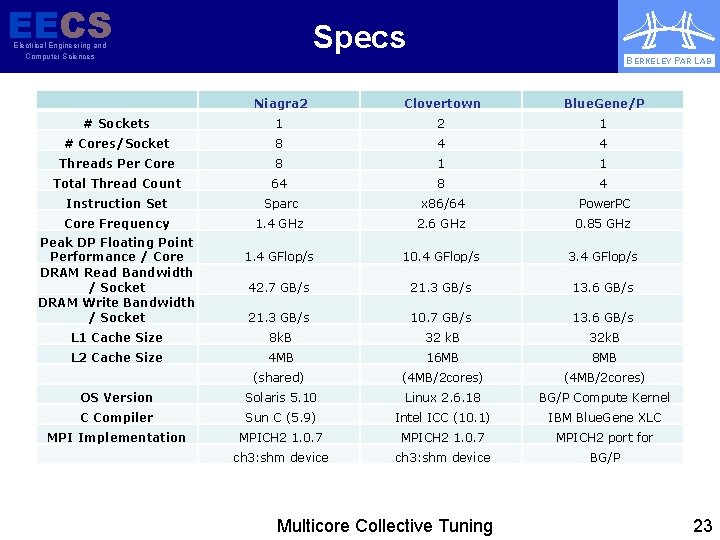

Specs

| | Niagara 2 | Clovertown | Blue Gene/P |
|---|---|---|---|
| # Sockets | 1 | 2 | 1 |
| # Cores/Socket | 8 | 4 | 4 |
| Threads Per Core | 8 | 1 | 1 |
| Total Thread Count | 64 | 8 | 4 |
| Instruction Set | SPARC | x86/64 | PowerPC |
| Core Frequency | 1.4 GHz | 2.6 GHz | 0.85 GHz |
| Peak DP Floating Point Performance / Core | 1.4 GFlop/s | 10.4 GFlop/s | 3.4 GFlop/s |
| DRAM Read Bandwidth / Socket | 42.7 GB/s | 21.3 GB/s | 13.6 GB/s |
| DRAM Write Bandwidth / Socket | 21.3 GB/s | 10.7 GB/s | 13.6 GB/s |
| L1 Cache Size | 8 kB | 32 kB | |
| L2 Cache Size | 4 MB (shared) | 16 MB (4 MB/2 cores) | 8 MB |
| OS Version | Solaris 5.10 | Linux 2.6.18 | BG/P Compute Kernel |
| C Compiler | Sun C (5.9) | Intel ICC (10.1) | IBM BlueGene XLC |
| MPI Implementation | MPICH2 1.0.7, ch3:shm device | MPICH2 1.0.7, ch3:shm device | MPICH2 port for BG/P |

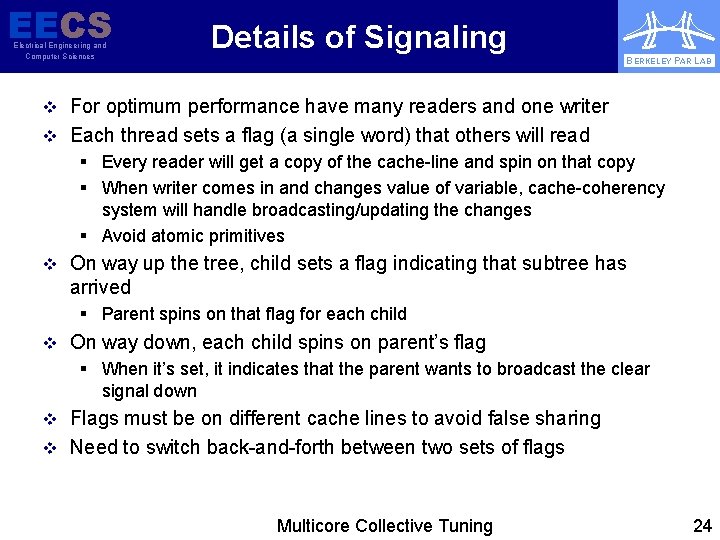

Details of Signaling

- For optimum performance, have many readers and one writer
- Each thread sets a flag (a single word) that others will read
  - Every reader will get a copy of the cache line and spin on that copy
  - When the writer comes in and changes the value of the variable, the cache-coherency system will handle broadcasting/updating the changes
  - Avoid atomic primitives
- On the way up the tree, a child sets a flag indicating that its subtree has arrived
  - Parent spins on that flag for each child
- On the way down, each child spins on its parent's flag
  - When it's set, it indicates that the parent wants to broadcast the clear signal down
- Flags must be on different cache lines to avoid false sharing
- Need to switch back-and-forth between two sets of flags
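The flag layout and the alternation between two flag sets might look like the following sketch. Several details are assumptions, not taken from the slides: a 64-byte cache line, eight threads, and a scheme in which barrier number `phase` uses set `phase & 1` and each set toggles the value it writes on every reuse, so a stale flag from two phases ago is never mistaken for a fresh one. Plain C11 atomic loads and stores stand in for the single-word flag writes; no read-modify-write primitives are used. All names are hypothetical.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stdint.h>

#define CACHE_LINE 64  /* assumed cache-line size */
#define NTHREADS 8     /* arbitrary thread count for this sketch */

/* Padding each flag to a full cache line avoids false sharing. */
typedef struct { _Alignas(CACHE_LINE) atomic_uint value; } padded_flag_t;

/* Two sets of flags; each flag has exactly one writer (its owning thread). */
static padded_flag_t flags[2][NTHREADS];

/* Value that means "signaled" in a given phase: 1, 1, 0, 0, 1, 1, ... */
static unsigned expected_value(unsigned phase)
{
    return ((phase >> 1) & 1u) ^ 1u;
}

/* Writer side: a single plain store by the owning thread. */
void signal_flag(int me, unsigned phase)
{
    assert(me >= 0 && me < NTHREADS);
    atomic_store_explicit(&flags[phase & 1u][me].value,
                          expected_value(phase), memory_order_release);
}

/* Reader side: a plain load; any number of readers may poll the same flag. */
bool flag_is_set(int who, unsigned phase)
{
    assert(who >= 0 && who < NTHREADS);
    return atomic_load_explicit(&flags[phase & 1u][who].value,
                                memory_order_acquire) == expected_value(phase);
}

/* Spin on the locally cached copy until the writer's store becomes visible. */
void wait_flag(int who, unsigned phase)
{
    while (!flag_is_set(who, phase))
        ;
}

/* Layout check: two threads' flags never share a cache line. */
bool on_distinct_cache_lines(int a, int b)
{
    uintptr_t pa = (uintptr_t)&flags[0][a];
    uintptr_t pb = (uintptr_t)&flags[0][b];
    return pa / CACHE_LINE != pb / CACHE_LINE;
}
```

The second set matters because a fast thread can enter the next barrier while slower threads are still reading flags from the current one. Writing into the other set keeps those in-flight reads intact.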