Topic-Sensitive SourceRank: Extending SourceRank for Performing Context-Sensitive Search over Deep-Web

- Slides: 50

Topic-Sensitive SourceRank: Extending SourceRank for Performing Context-Sensitive Search over Deep-Web

MS Thesis Defense
Manishkumar Jha

Committee Members: Dr. Subbarao Kambhampati, Dr. Huan Liu, Dr. Hasan Davulcu

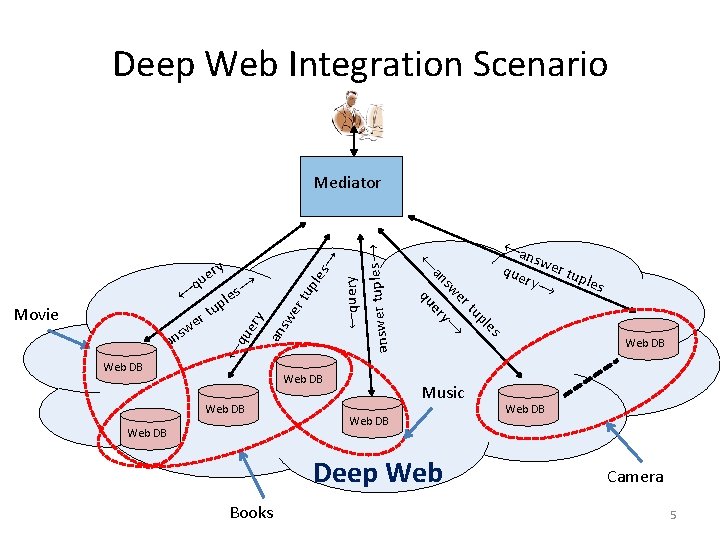

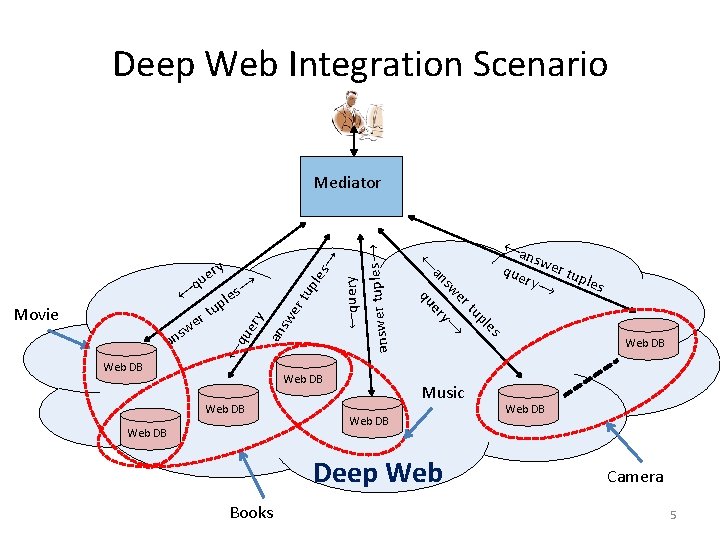

Deep Web Integration Scenario
• Millions of sources containing structured tuples
• Autonomous, uncontrolled collection
• Access is limited to query-forms
• Contains information spanning multiple topics
[Diagram: a mediator sends queries to the Web DBs of the deep web and receives answer tuples in return]

Source quality and SourceRank
• Source quality
  – Deep-web is adversarial
  – Source quality is a major issue over deep-web
• SourceRank
  – SourceRank [1] provides a measure for assessing source quality based on source trustworthiness and result importance

[1] SourceRank: Relevance and Trust Assessment for Deep Web Sources Based on Inter-Source Agreement, WWW, 2011

… But source quality is topic-sensitive
• Sources might have data corresponding to multiple topics, and their importance may vary across topics
  – Example: Barnes & Noble might be quite good as a book source but not as good as a movie source
• SourceRank will fail to capture this fact
• These issues were noted for the surface web, but they are much more critical for the deep web, as sources are even more likely to cross topics

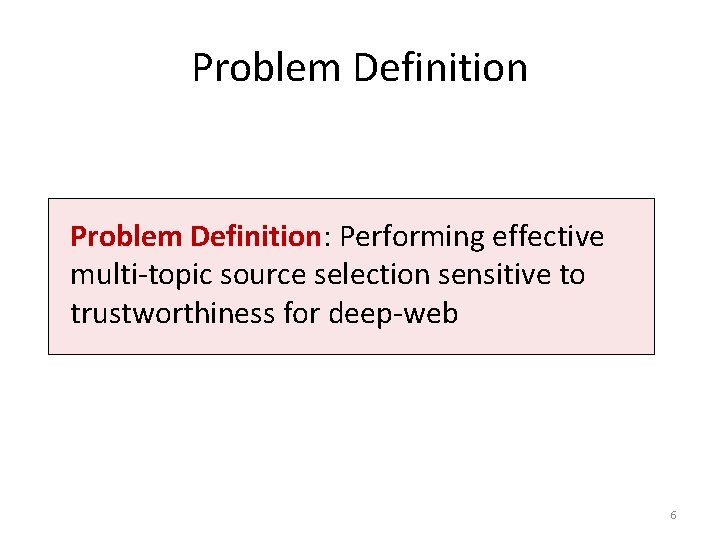

Deep Web Integration Scenario
[Diagram: a mediator exchanges queries and answer tuples with deep-web Web DBs grouped by topic: Music, Books, Movie and Camera]

Problem Definition: Performing effective multi-topic source selection sensitive to trustworthiness for deep-web

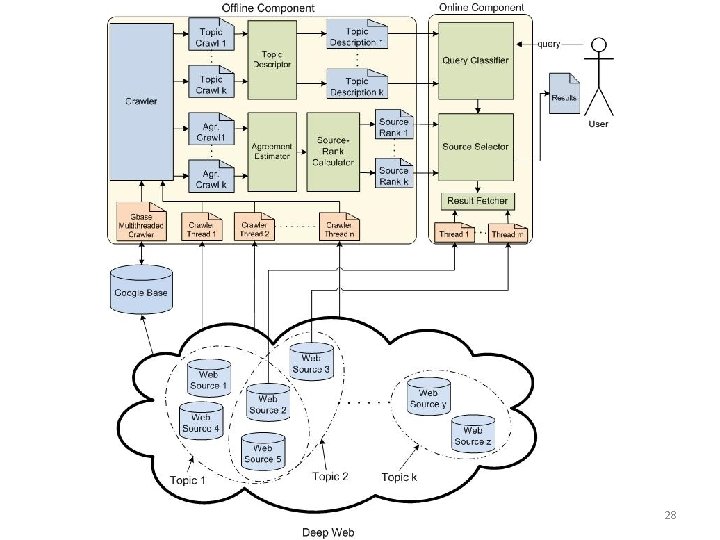

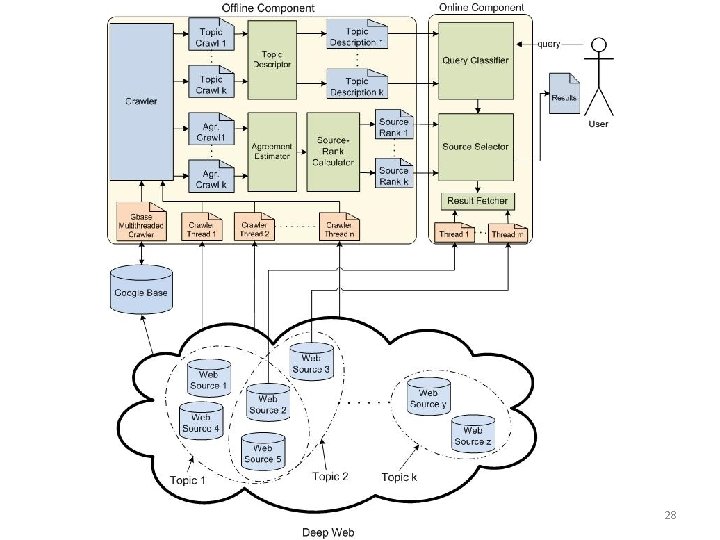

Our solution – Topic-sensitive SourceRank
• Compute multiple topic-sensitive SourceRanks
• At query time, use the query-topic to combine these rankings into a composite importance ranking
• Challenges
  – Computing topic-sensitive SourceRanks
  – Identifying the query-topic
  – Combining topic-sensitive SourceRanks

Agenda
• SourceRank
• Topic-sensitive SourceRank
• Experiments and Results
• Conclusion


SourceRank Computation
• Assesses source quality based on trustworthiness and result importance
• Introduces a domain-agnostic, agreement-based technique for implicitly creating an endorsement structure between deep-web sources
• Agreement between the answer sets returned in response to the same queries manifests as a form of implicit endorsement

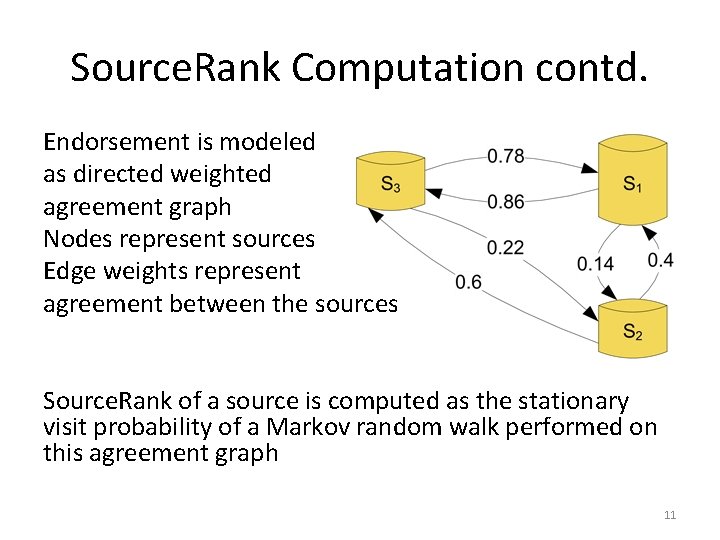

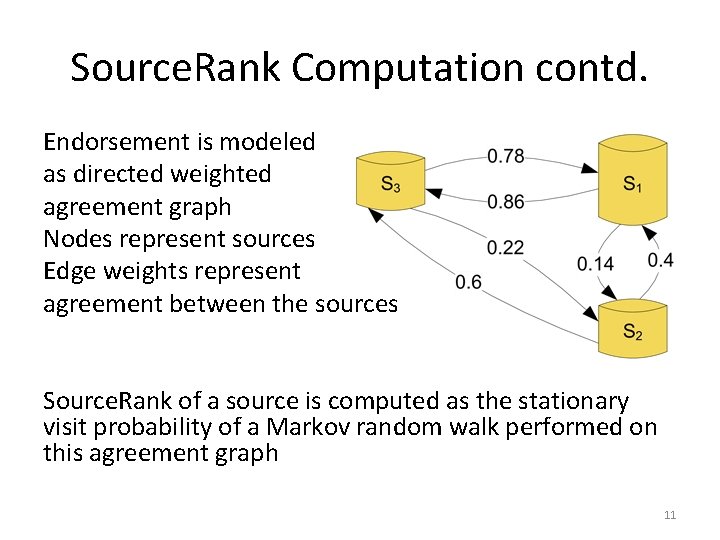

SourceRank Computation contd.
• Endorsement is modeled as a directed, weighted agreement graph
  – Nodes represent sources
  – Edge weights represent agreement between the sources
• The SourceRank of a source is computed as the stationary visit probability of a Markov random walk performed on this agreement graph
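The random walk on the agreement graph can be sketched with a simple power iteration. This is only an illustration, not the thesis implementation: the function name `source_rank`, the `damping` teleport factor and the uniform handling of sources without outgoing edges are assumptions introduced here.

```python
def source_rank(agreement, damping=0.85, iterations=100):
    """Approximate stationary visit probabilities of a random walk.

    agreement[u][v] is the weight of the directed edge u -> v.
    `damping` (teleport factor) is an assumed smoothing parameter.
    """
    nodes = sorted(set(agreement) | {v for nbrs in agreement.values() for v in nbrs})
    n = len(nodes)
    rank = {node: 1.0 / n for node in nodes}
    for _ in range(iterations):
        # Every node receives the teleport share first.
        nxt = {node: (1.0 - damping) / n for node in nodes}
        for u in nodes:
            out = agreement.get(u, {})
            total = sum(out.values())
            if total == 0:
                # Assumption: a source with no outgoing agreement
                # spreads its probability mass uniformly.
                for v in nodes:
                    nxt[v] += damping * rank[u] / n
            else:
                # Follow each edge in proportion to its agreement weight.
                for v, weight in out.items():
                    nxt[v] += damping * rank[u] * weight / total
        rank = nxt
    return rank


# Hypothetical three-source graph: "a" is endorsed by both "b" and "c".
ranks = source_rank({"a": {"b": 1.0}, "b": {"a": 1.0}, "c": {"a": 1.0}})
```

In this toy graph the source endorsed by the most agreement weight ends up with the highest visit probability, which is the behaviour the slide describes.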


Trust-based measure for multi-topic deep-web
• Issues with SourceRank for multi-topic deep-web
  – Produces a single importance ranking
  – Is query-agnostic
• We propose Topic-sensitive SourceRank (TSR) for effectively performing multi-topic source selection sensitive to trustworthiness
• TSR overcomes the drawbacks of SourceRank

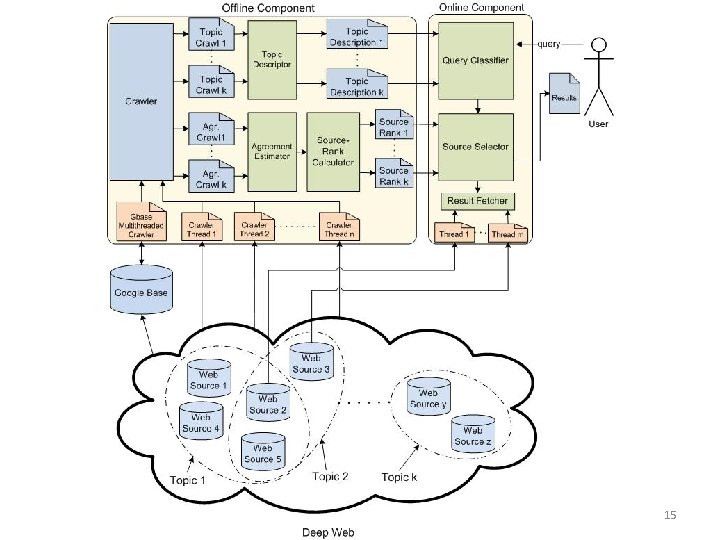

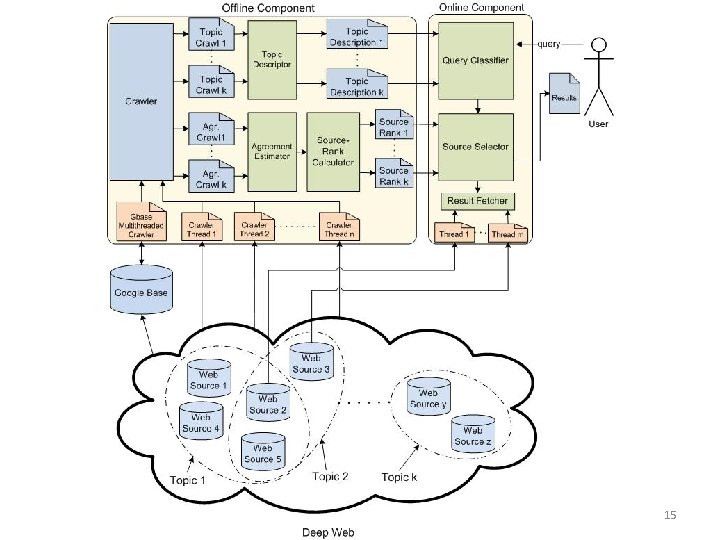

Topic-sensitive SourceRank Overview
• Instead of creating a single importance ranking, multiple importance rankings are created
  – Each importance ranking is biased towards a particular topic
• At query time, using query information and the individual topic-specific importance rankings, compute a composite importance ranking biased towards the query


Challenges for TSR
• Computing topic-specific importance rankings is not trivial
• Inferring query information
  – Identifying the query-topic
  – Computing the composite importance ranking

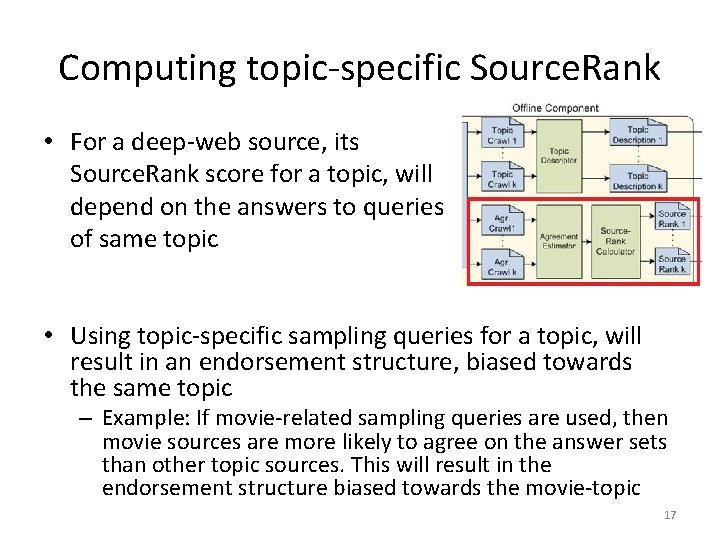

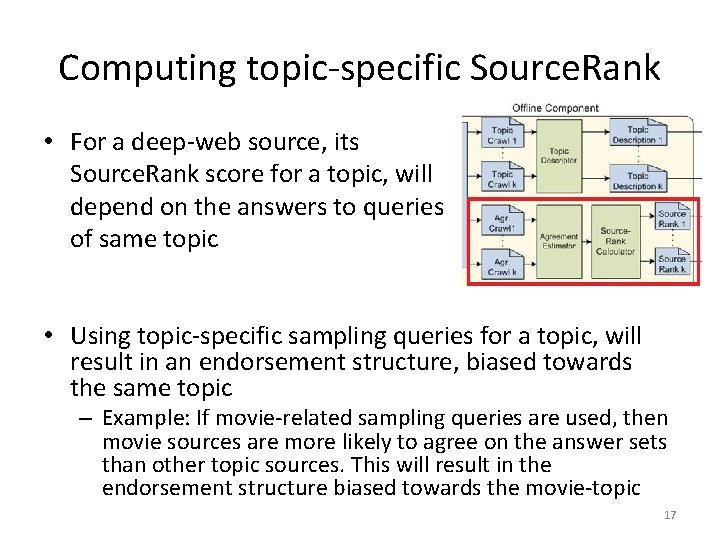

Computing topic-specific SourceRank
• For a deep-web source, its SourceRank score for a topic depends on its answers to queries of the same topic
• Using topic-specific sampling queries for a topic results in an endorsement structure biased towards that topic
  – Example: if movie-related sampling queries are used, then movie sources are more likely to agree on the answer sets than sources of other topics. This results in an endorsement structure biased towards the movie topic

Computing topic-specific SourceRank contd.
• SourceRank computed on the biased agreement graph for a topic captures the topic-specific source importance ranking for that topic

Topic-specific sampling queries
• Publicly available online directories such as ODP and Yahoo Directory provide hand-constructed topic hierarchies
• These directories, along with the links posted under each topic, are a good source for obtaining topic-specific sampling queries

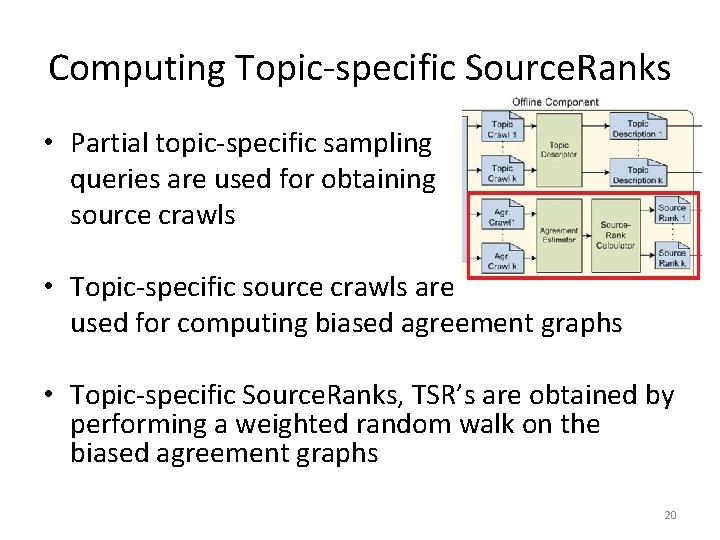

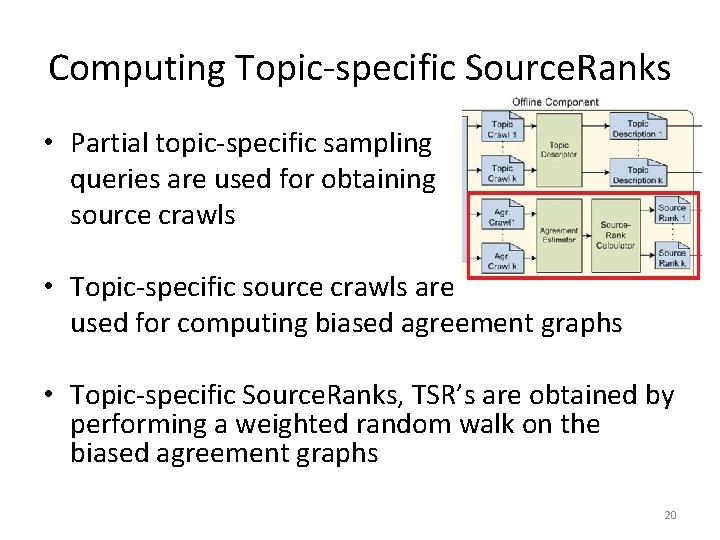

Computing Topic-specific SourceRanks
• Partial topic-specific sampling queries are used for obtaining source crawls
• Topic-specific source crawls are used for computing biased agreement graphs
• Topic-specific SourceRanks (TSRs) are obtained by performing a weighted random walk on the biased agreement graphs
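The step from topic-specific crawls to biased agreement graphs can be sketched as below. This is a minimal illustration under stated assumptions: the layout of `crawls`, the function names, and the use of Jaccard overlap as the agreement measure are all hypothetical stand-ins, since the slides do not specify how agreement between answer sets is scored.

```python
from itertools import permutations


def jaccard(answers_a, answers_b):
    """Stand-in agreement measure (assumption): overlap of two answer sets."""
    a, b = set(answers_a), set(answers_b)
    return len(a & b) / len(a | b) if (a or b) else 0.0


def biased_agreement_graphs(crawls):
    """Build one agreement graph per topic.

    crawls[topic][source][query] is the list of answers a source returned
    for a sampling query of that topic (hypothetical data layout).
    """
    graphs = {}
    for topic, by_source in crawls.items():
        graph = {source: {} for source in by_source}
        for u, v in permutations(by_source, 2):
            # Only sampling queries answered by both sources are compared.
            shared = set(by_source[u]) & set(by_source[v])
            if not shared:
                continue
            weight = sum(jaccard(by_source[u][q], by_source[v][q]) for q in shared) / len(shared)
            if weight > 0:
                graph[u][v] = weight
        graphs[topic] = graph
    return graphs


# Hypothetical crawl for movie sampling queries: two movie sources agree,
# a book source answering the same query does not.
crawls = {
    "movie": {
        "m1": {"godfather": ["The Godfather", "The Godfather Part II"]},
        "m2": {"godfather": ["The Godfather", "The Godfather Part II"]},
        "b1": {"godfather": ["The Godfather (novel)"]},
    }
}
graphs = biased_agreement_graphs(crawls)
```

Each per-topic graph would then be handed to the random walk to obtain that topic's TSR scores.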

Query Processing
• Query processing involves
  – Computing query-topic sensitive importance scores
  – Source selection

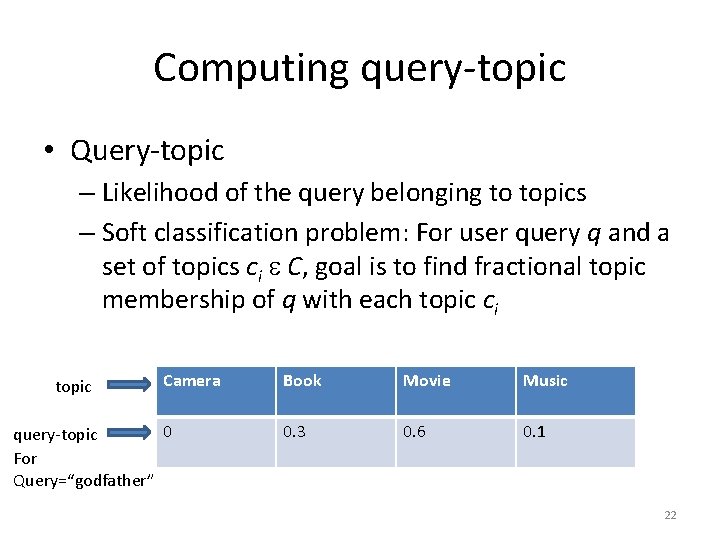

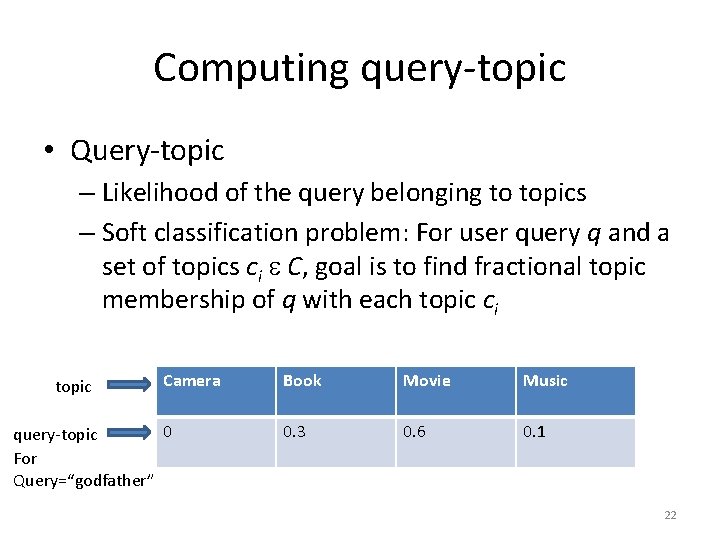

Computing query-topic
• Query-topic
  – Likelihood of the query belonging to topics
  – Soft classification problem: for a user query $q$ and a set of topics $c_i \in C$, the goal is to find the fractional topic membership of $q$ with each topic $c_i$
• Example: query-topic for query = “godfather”

| Topic | Camera | Book | Movie | Music |
|---|---|---|---|---|
| Query-topic | 0 | 0.3 | 0.6 | 0.1 |

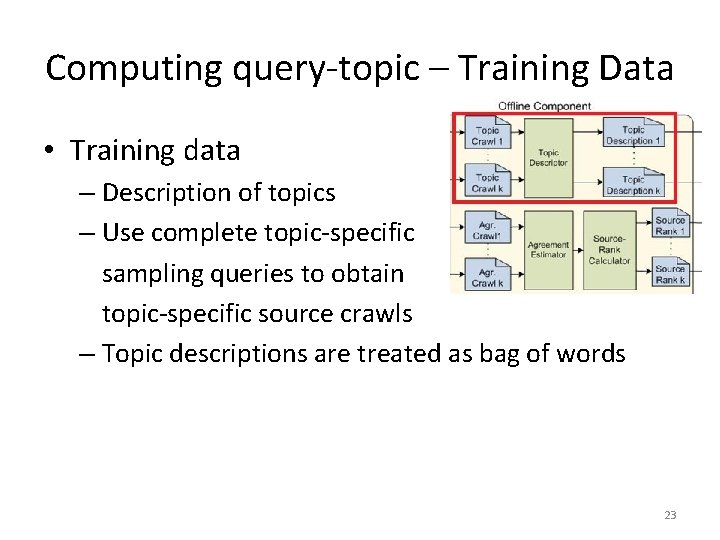

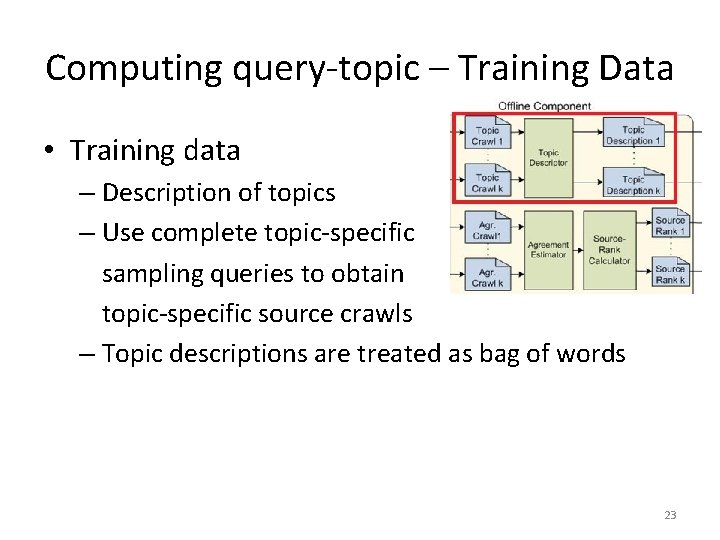

Computing query-topic – Training Data
• Training data
  – Description of topics
  – Use the complete topic-specific sampling queries to obtain topic-specific source crawls
  – Topic descriptions are treated as bags of words

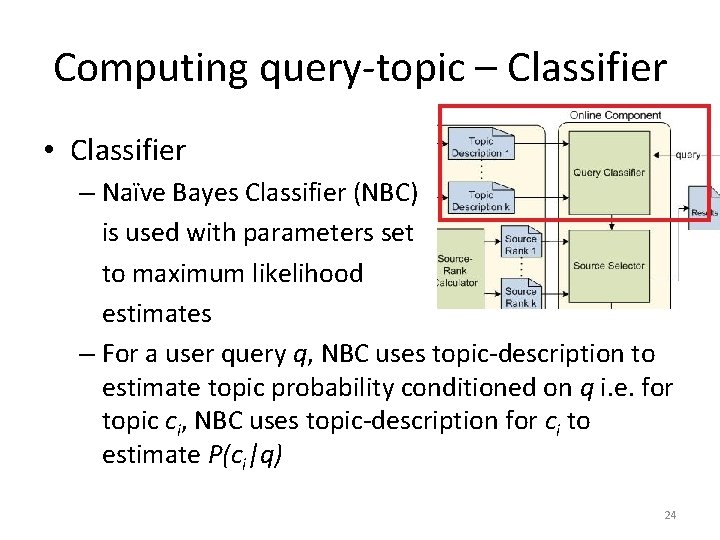

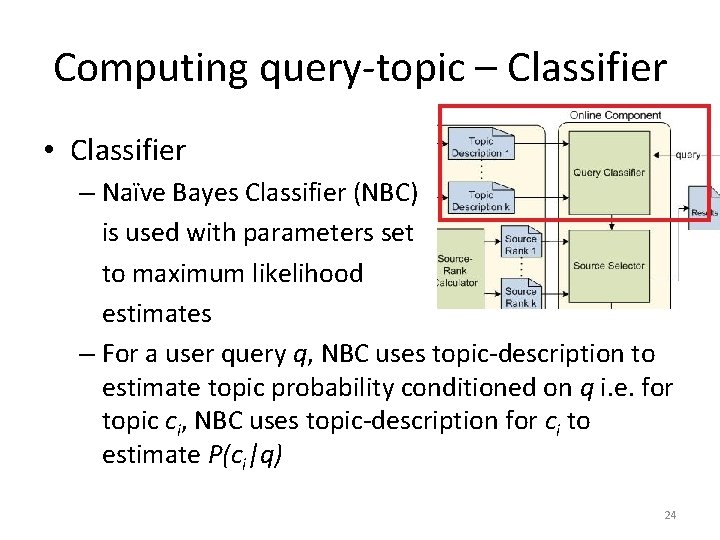

Computing query-topic – Classifier
• Classifier
  – Naïve Bayes Classifier (NBC) is used, with parameters set to maximum likelihood estimates
  – For a user query $q$, NBC uses the topic descriptions to estimate the topic probability conditioned on $q$, i.e. for topic $c_i$, NBC uses the topic description for $c_i$ to estimate $P(c_i \mid q)$

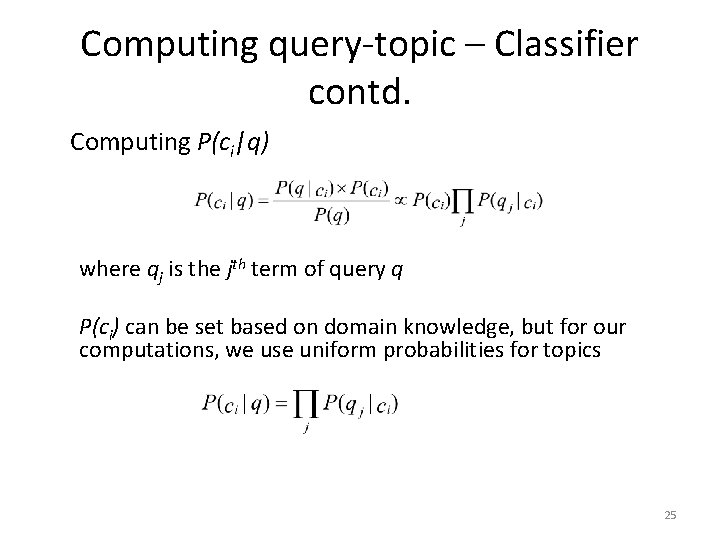

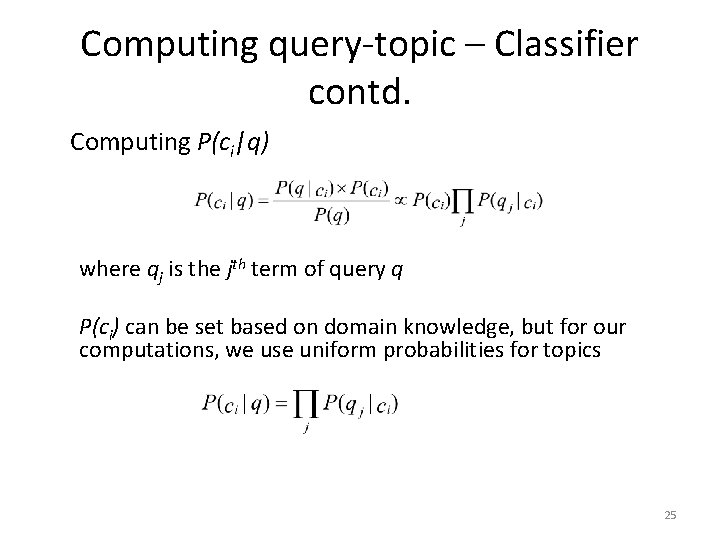

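Computing query-topic – Classifier contd.
• Computing $P(c_i \mid q)$:

$$P(c_i \mid q) = \frac{P(q \mid c_i)\, P(c_i)}{P(q)} \propto P(c_i) \prod_j P(q_j \mid c_i)$$

  where $q_j$ is the $j$th term of query $q$
• $P(c_i)$ can be set based on domain knowledge, but for our computations we use uniform probabilities for topics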
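A small sketch of this soft classification follows, assuming bag-of-words topic descriptions and uniform topic priors as stated on the slide. The function names and the toy descriptions are hypothetical. The slides mention maximum likelihood estimates; the add-one `smoothing` term is an assumption added here so that a query term unseen in a topic description does not zero out that topic.

```python
import math
from collections import Counter


def train_topic_models(topic_descriptions):
    """topic_descriptions maps a topic to its description as a list of words."""
    vocabulary = {w for words in topic_descriptions.values() for w in words}
    models = {t: (Counter(words), len(words)) for t, words in topic_descriptions.items()}
    return models, len(vocabulary)


def query_topic(query, models, vocab_size, smoothing=1.0):
    """Return the fractional topic membership P(c_i | q) for every topic."""
    log_scores = {}
    for topic, (counts, total) in models.items():
        # Uniform prior P(c_i): identical for all topics, so it is omitted.
        score = 0.0
        for term in query.lower().split():
            # Smoothed estimate of P(q_j | c_i); smoothing is an assumption.
            score += math.log((counts[term] + smoothing) / (total + smoothing * vocab_size))
        log_scores[topic] = score
    # Normalise in a numerically stable way so the memberships sum to 1.
    peak = max(log_scores.values())
    unnormalised = {t: math.exp(s - peak) for t, s in log_scores.items()}
    z = sum(unnormalised.values())
    return {t: v / z for t, v in unnormalised.items()}


# Hypothetical, tiny topic descriptions.
descriptions = {
    "movie": ["godfather", "dvd", "godfather", "film"],
    "book": ["godfather", "novel", "paperback", "author"],
    "camera": ["canon", "lens", "zoom", "digital"],
}
models, vocab_size = train_topic_models(descriptions)
membership = query_topic("godfather", models, vocab_size)
```

With these toy descriptions the query "godfather" leans towards the movie topic, then book, then camera.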

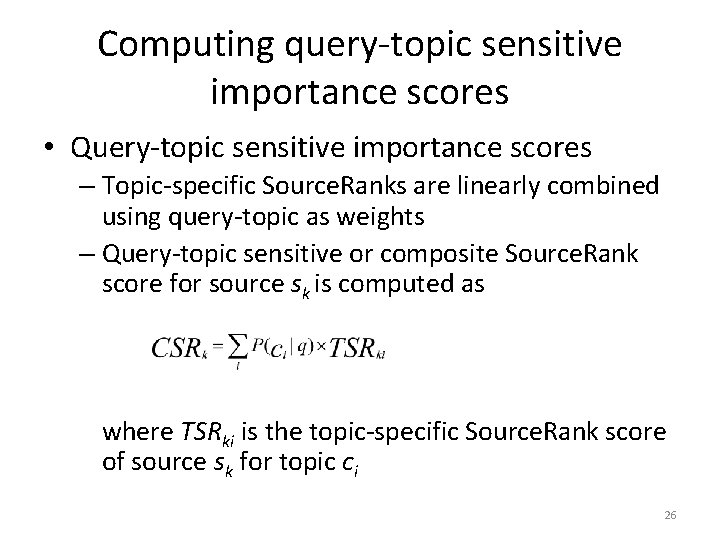

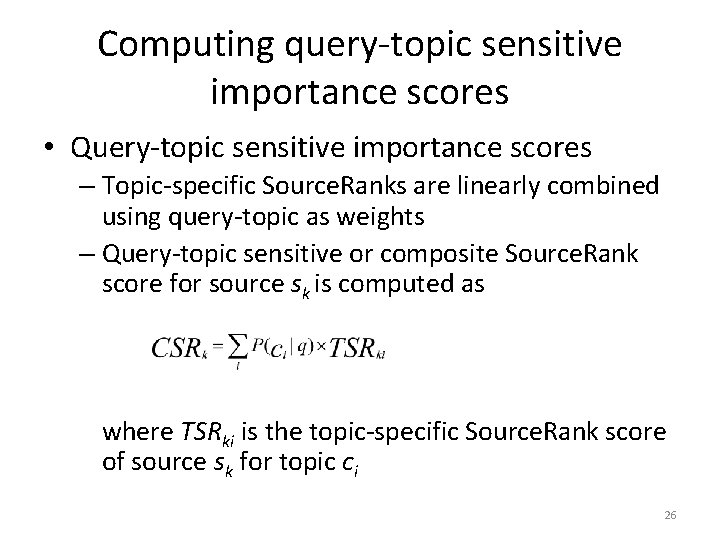

Computing query-topic sensitive importance scores
• Query-topic sensitive importance scores
  – Topic-specific SourceRanks are linearly combined using the query-topic as weights
  – The query-topic sensitive, or composite, SourceRank score for source $s_k$ is computed as

$$CSR_k = \sum_i P(c_i \mid q) \times TSR_{ki}$$

  where $TSR_{ki}$ is the topic-specific SourceRank score of source $s_k$ for topic $c_i$
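The linear combination can be illustrated with the "godfather" query-topic from the earlier slide. The function name and the TSR values for the two sources are made up for illustration only.

```python
def composite_source_rank(query_topic, tsr):
    """Weight each topic-specific SourceRank by the query's topic membership.

    query_topic[topic] is P(c_i | q); tsr[source][topic] is TSR_ki.
    """
    return {
        source: sum(query_topic.get(topic, 0.0) * score for topic, score in per_topic.items())
        for source, per_topic in tsr.items()
    }


# Query-topic for "godfather" as given on the slide.
godfather_topic = {"camera": 0.0, "book": 0.3, "movie": 0.6, "music": 0.1}
# Hypothetical topic-specific SourceRank scores for two sources.
tsr_scores = {
    "s1": {"movie": 0.5, "book": 0.1},
    "s2": {"movie": 0.1, "book": 0.5},
}
csr = composite_source_rank(godfather_topic, tsr_scores)
```

Here `s1`, which is stronger on the movie topic, receives the higher composite score because the query leans towards movies.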

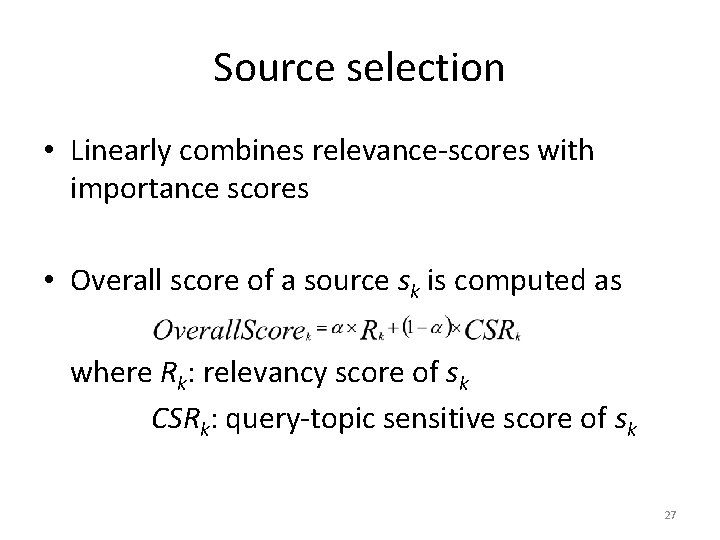

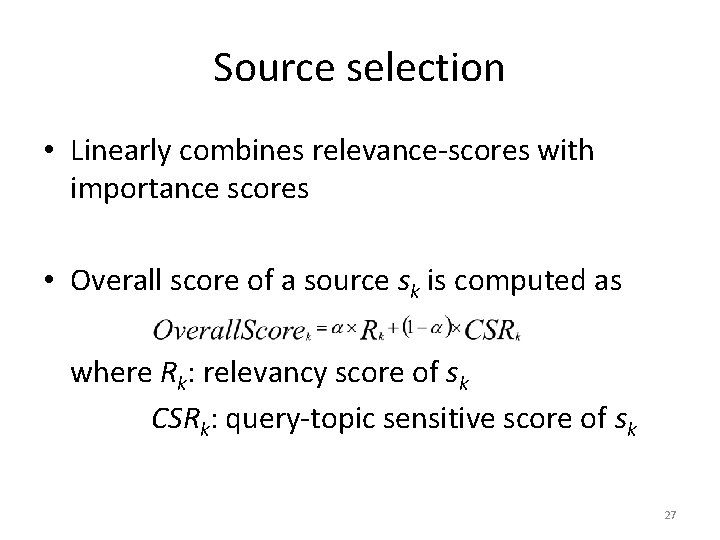

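Source selection
• Linearly combines relevance scores with importance scores
• The overall score of a source $s_k$ is computed as

$$OverallScore_k = \alpha \times R_k + (1 - \alpha) \times CSR_k$$

  where
  – $R_k$: relevance score of $s_k$
  – $CSR_k$: query-topic sensitive score of $s_k$
  – $\alpha$: weight assigned to the relevance score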
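A sketch of source selection, assuming the linear combination takes the form α·R_k + (1 − α)·CSR_k, which matches the convention on the results slides where TSR(0.1) stands for 0.9 × CORI + 0.1 × TSR. The defaults α = 0.9 and k = 10 are the values reported as best in the experiments; the function name and the scores are hypothetical.

```python
def select_sources(relevance, csr, alpha=0.9, k=10):
    """Rank sources by alpha * R_k + (1 - alpha) * CSR_k and keep the top k."""
    scores = {
        source: alpha * relevance[source] + (1.0 - alpha) * csr.get(source, 0.0)
        for source in relevance
    }
    # Sort by descending score; ties are broken by source name (assumption).
    ranked = sorted(scores, key=lambda source: (-scores[source], source))
    return ranked[:k]


# Hypothetical relevance and composite importance scores.
relevance = {"a": 0.5, "b": 0.4, "c": 0.1}
importance = {"a": 0.0, "b": 1.0, "c": 0.2}
top_two = select_sources(relevance, importance, alpha=0.9, k=2)
```

In this toy case source `b` overtakes the slightly more relevant `a` because of its much higher importance score.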



Experimental setup
• Experiments were conducted on a multi-topic deep-web environment consisting of four representative topics: camera, book, movie and music
• Source dataset
  – Sources were collected via Google Base
  – Google Base was probed with 40 queries containing a mix of camera names and book, movie and music album titles
  – A total of 1440 sources were collected: 276 camera, 556 book, 572 movie and 281 music sources

Sampling queries
• Generated using publicly available online listings
• Used 200 titles or names in each topic
• Randomly selected cameras from pbase.com, books from the New York Times best sellers, movies from ODP and music albums from Wikipedia’s top-100, 1986-2010

Test queries
• Contained a mix of queries from all four topics
• Do not overlap with the sampling queries
• Generated by randomly removing words from camera names and book, movie and music album titles with 0.5 probability
• The number of test queries varied across topics to obtain the required (0.95) statistical significance
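The test-query generation step can be sketched as follows. The function name is hypothetical, and the fallback that keeps one word when every word would be dropped is an assumption, since the slide does not say how empty queries were handled.

```python
import random


def make_test_query(title, drop_probability=0.5, rng=None):
    """Drop each word of a title independently with the given probability."""
    rng = rng or random.Random()
    words = title.split()
    kept = [word for word in words if rng.random() >= drop_probability]
    if not kept and words:
        # Assumption: never emit an empty query; keep one random word.
        kept = [rng.choice(words)]
    return " ".join(kept)


title = "Pirates of the Caribbean Dead Man's Chest"
query = make_test_query(title, rng=random.Random(0))
```

Passing a seeded `random.Random` makes the generated queries reproducible across runs.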

Query similarity based measure – CORI
• CORI
  – Source statistics were collected using the highest document frequency terms
  – Sources were selected using the same parameters as found optimal in the CORI paper

Query similarity based measure – Google Base
• Google Base
  – Two versions of Google Base were used
  – Gbase on dataset: Google Base search is restricted to our crawled sources
  – Gbase: Google Base search with no restrictions, i.e. it considers all sources in Google Base

Agreement based measures – USR
• Undifferentiated SourceRank, USR
  – SourceRank extended to multi-topic deep-web
  – A single agreement graph is computed using the entire set of sampling queries
  – The USR of sources is computed based on a random walk on this graph

Agreement based measures – DSR
• Oracular source selection, DSR
  – Assumes that a perfect classification of sources and user queries is available, i.e. each source and test query is manually labeled with its domain association
  – Creates agreement graphs and SourceRanks for a domain including only sources in that domain
  – For each test query, sources ranking high in the domain corresponding to the test query are used

Result merging, ranking and relevance evaluation
• Top-k sources are selected
• Google Base is made to query only these top-k sources
• Experimented with different values of k and found k = 10 to be optimal
• Google Base’s tuple ranking was used to rank the resulting tuples and return the top-5 results in response to test queries

Result merging, ranking and relevance evaluation contd.
• The top-5 results returned were manually classified as relevant or irrelevant
• Result classification was rule based
  – Example: if the test query is “pirates caribbean chest” and the original movie name is “Pirates of the Caribbean: Dead Man’s Chest”, then the result is classified as relevant if the result entity refers to the same movie (DVD, Blu-ray etc.) and as irrelevant otherwise
• To avoid author bias, results from different source selection methods were merged in a single file so that the evaluator does not know which method each result came from during classification
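The top-5 precision used in the following results can be sketched as below. The function names and the judgments are hypothetical, and dividing by k even when fewer than k results are returned is an assumption, since the slides do not state how short result lists were treated.

```python
def precision_at_k(judgments, k=5):
    """Fraction of the top-k results judged relevant.

    judgments is a list of booleans in ranked order (True = relevant).
    Assumption: missing results count as irrelevant, so we divide by k.
    """
    if k <= 0:
        return 0.0
    return sum(judgments[:k]) / k


def mean_precision_at_k(judgments_by_query, k=5):
    """Average top-k precision over all test queries."""
    if not judgments_by_query:
        return 0.0
    total = sum(precision_at_k(j, k) for j in judgments_by_query.values())
    return total / len(judgments_by_query)


# Hypothetical manual relevance judgments for two test queries.
judged = {
    "pirates caribbean chest": [True, True, False, False, True],
    "godfather": [False, True, False, False, False],
}
mean_p5 = mean_precision_at_k(judged)
```

For these made-up judgments the per-query precisions are 0.6 and 0.2, giving a mean top-5 precision of 0.4.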

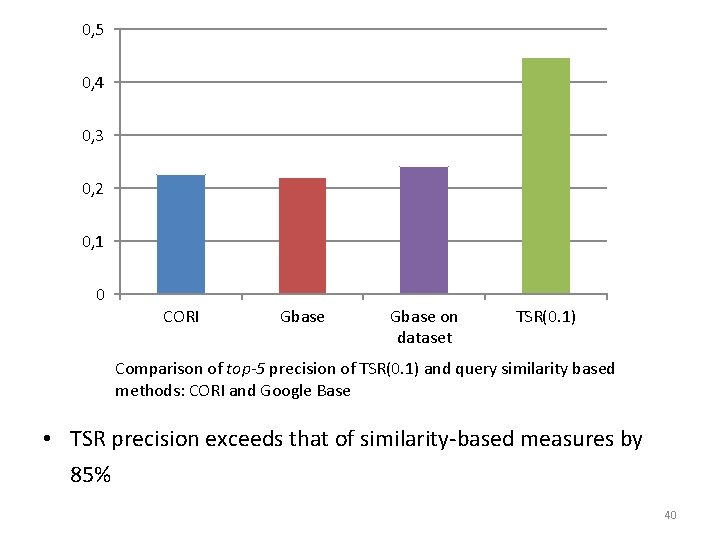

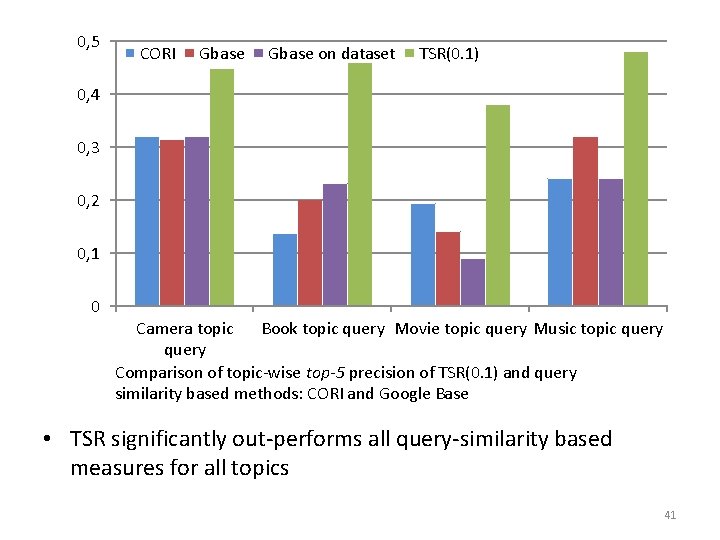

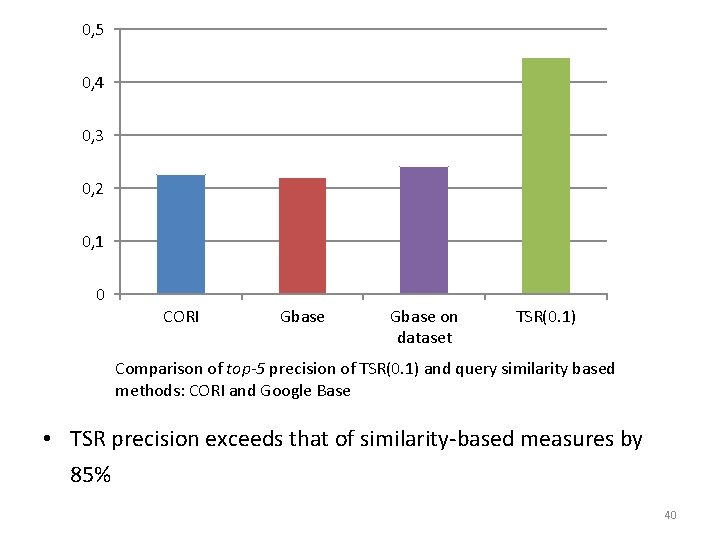

Results
• TSR was compared with the baseline source selection methods
• Agreement based measures (TSR, USR and DSR) were combined with the query-similarity based CORI measure. The combination is denoted by the name of the agreement based measure and the weight assigned to it, $1 - \alpha$
  – Example: TSR(0.1) represents 0.9 × CORI + 0.1 × TSR
• We experimented with different values of $\alpha$ and found that $\alpha = 0.9$ gives the best precision for TSR-based source selection, i.e. TSR(0.1)
• The higher weight of CORI compared to TSR compensates for the fact that TSR scores have higher dispersion than CORI scores

[Figure: Comparison of top-5 precision of TSR(0.1) and query similarity based methods: CORI and Google Base. Legend: CORI, Gbase on dataset, TSR(0.1)]
• TSR precision exceeds that of similarity-based measures by 85%

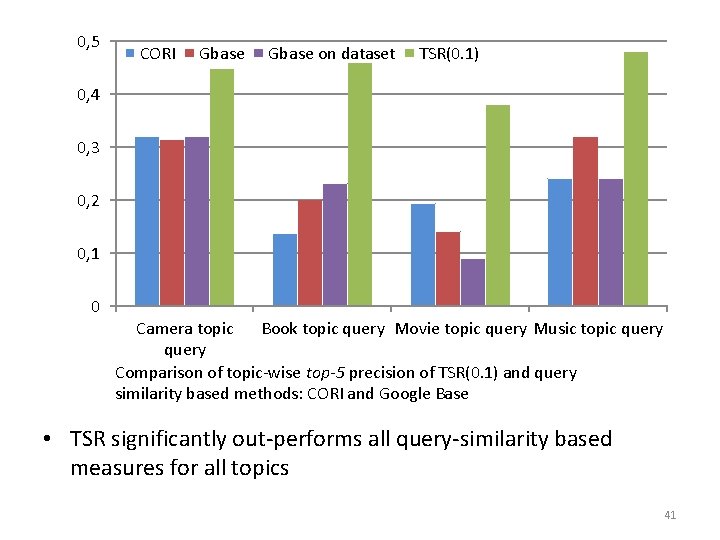

[Figure: Comparison of topic-wise top-5 precision of TSR(0.1) and query similarity based methods: CORI and Google Base. Legend: CORI, Gbase on dataset, TSR(0.1); groups: camera, book, movie and music topic queries]
• TSR significantly outperforms all query-similarity based measures for all topics

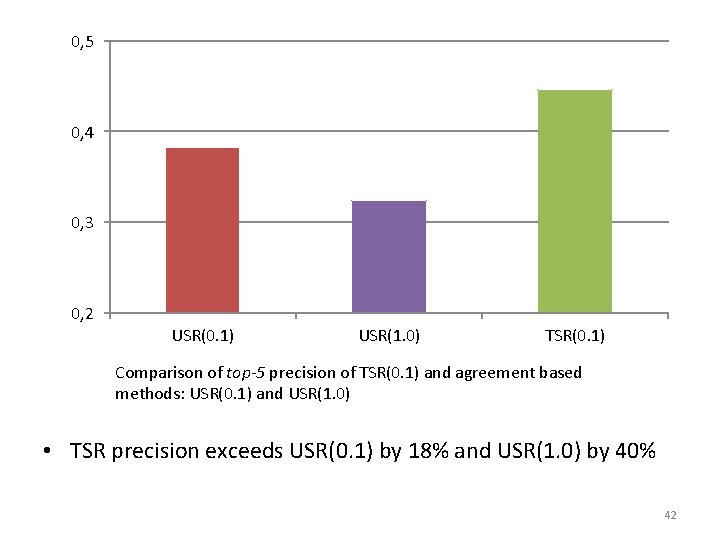

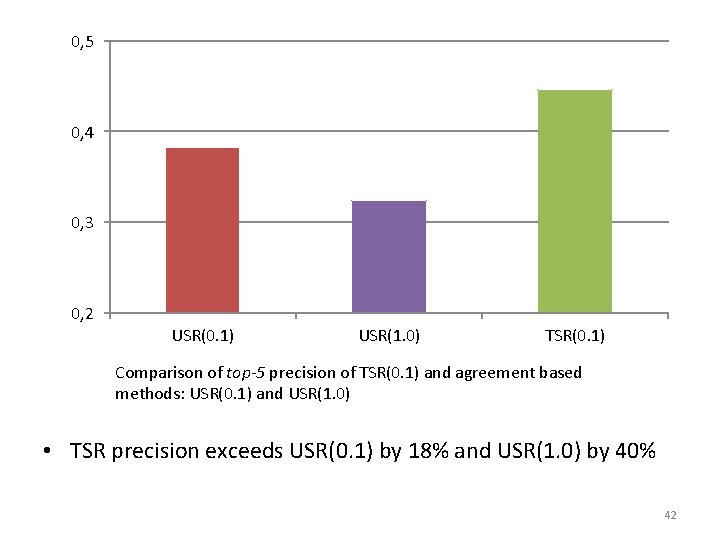

[Figure: Comparison of top-5 precision of TSR(0.1) and agreement based methods: USR(0.1) and USR(1.0)]
• TSR precision exceeds USR(0.1) by 18% and USR(1.0) by 40%

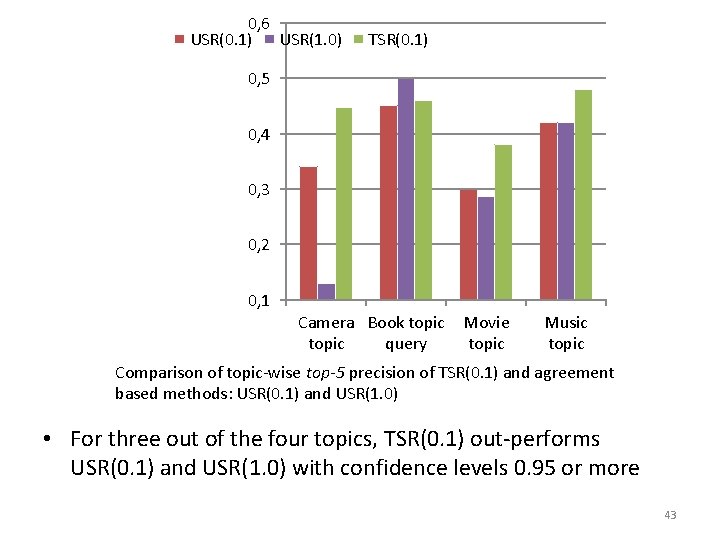

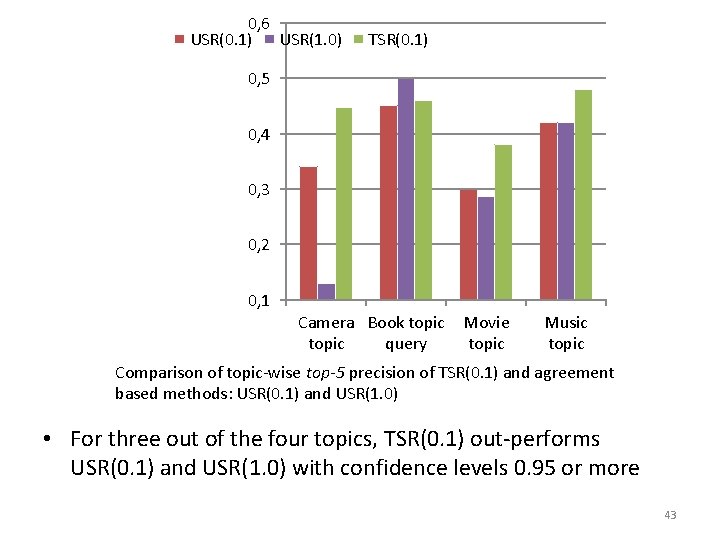

[Figure: Comparison of topic-wise top-5 precision of TSR(0.1) and agreement based methods: USR(0.1) and USR(1.0). Groups: camera, book, movie and music topic queries]
• For three out of the four topics, TSR(0.1) outperforms USR(0.1) and USR(1.0) with confidence levels of 0.95 or more

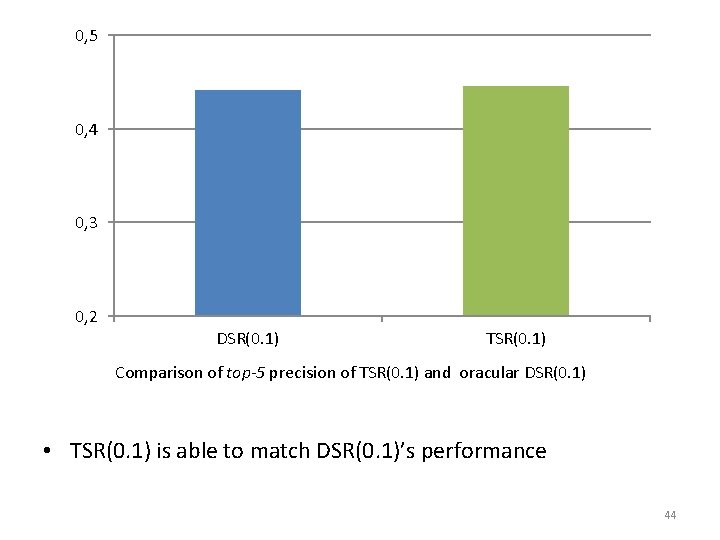

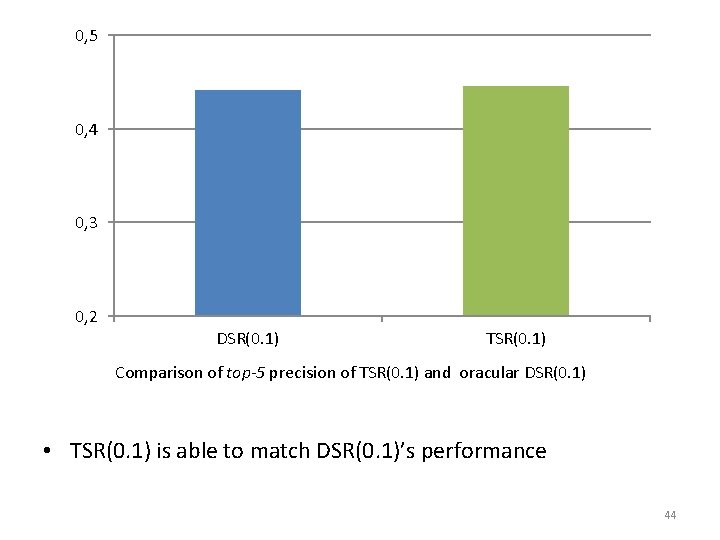

[Figure: Comparison of top-5 precision of TSR(0.1) and oracular DSR(0.1)]
• TSR(0.1) is able to match DSR(0.1)’s performance

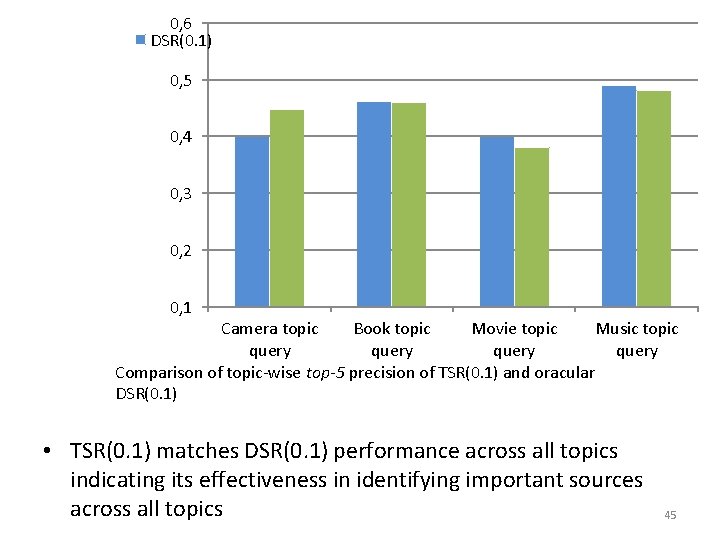

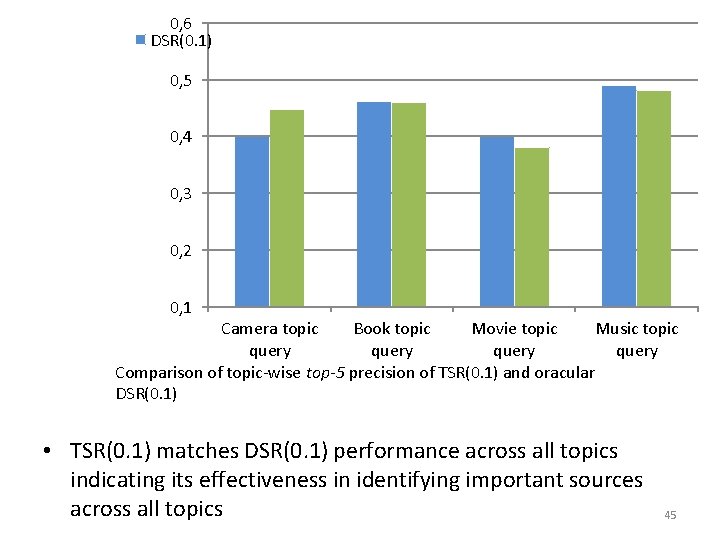

[Figure: Comparison of topic-wise top-5 precision of TSR(0.1) and oracular DSR(0.1). Groups: camera, book, movie and music topic queries]
• TSR(0.1) matches DSR(0.1)’s performance across all topics, indicating its effectiveness in identifying important sources across all topics


Conclusion
• We attempted multi-topic source selection sensitive to trustworthiness and importance for the deep-web
• We introduced topic-sensitive SourceRank (TSR)
• Our experiments on more than a thousand deep-web sources show that a TSR-based approach is highly effective in extending SourceRank to multi-topic deep-web

Conclusion contd.
• TSR outperforms query-similarity based measures by around 85% in precision
• TSR results in statistically significant precision improvements over other baseline agreement-based methods
• Comparison with the oracular DSR approach reveals the effectiveness of TSR for topic-specific query and source classification and subsequent source selection

Paper submitted to COMAD’11

Questions?