SUSE Manager as a Platform for KVM Virtualization


Case Study

Cleber Paiva de Souza <cleber@ssys.com.br>
Gabriel Dieterich Cavalcante <gabriel@ssys.com.br>
S-SYS Sistemas e Soluções Tecnológicas

Who we are

• S-SYS was founded in 2014.
• SUSE partner since its foundation.
• Formed by experienced professionals certified in all SUSE products (SCA, SCE and SCI).

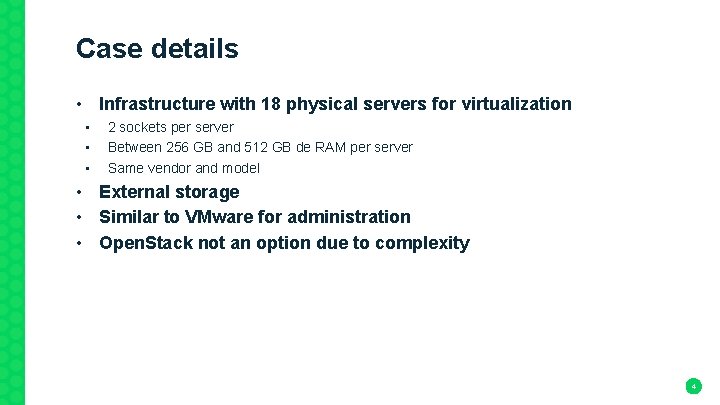

Case details
• Infrastructure with 18 physical servers for virtualization:
  • 2 sockets per server
  • Between 256 GB and 512 GB of RAM per server
  • Same vendor and model
• External storage
• Administration similar to VMware
• OpenStack not an option due to its complexity

SUSE Manager

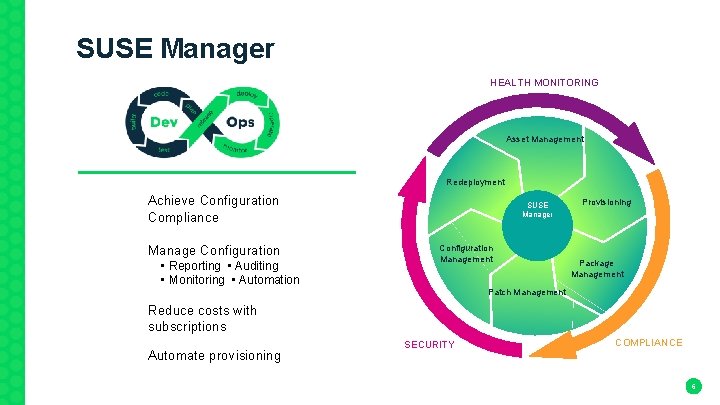

Diagram: SUSE Manager capabilities (asset management, provisioning and redeployment, package management, patch management, configuration management and compliance, health monitoring, security compliance, reporting, auditing, monitoring and automation; reduce costs with subscriptions, automate provisioning).

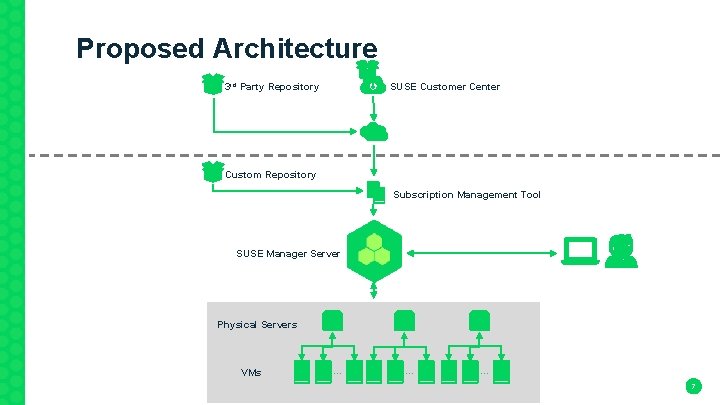

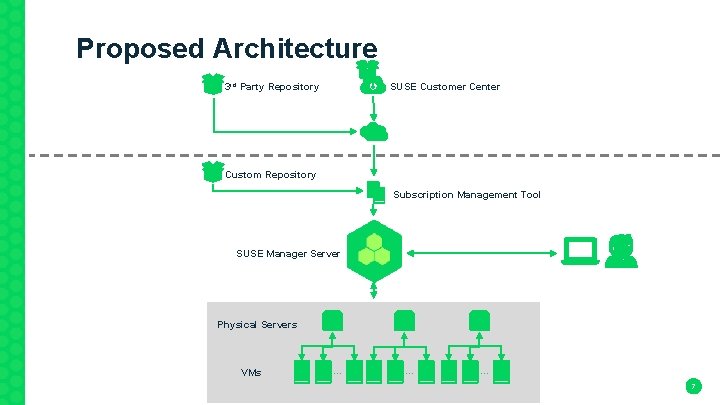

Proposed Architecture

Diagram components: SUSE Customer Center, 3rd-party repository, custom repository, Subscription Management Tool, SUSE Manager Server, physical servers and VMs.

Salt

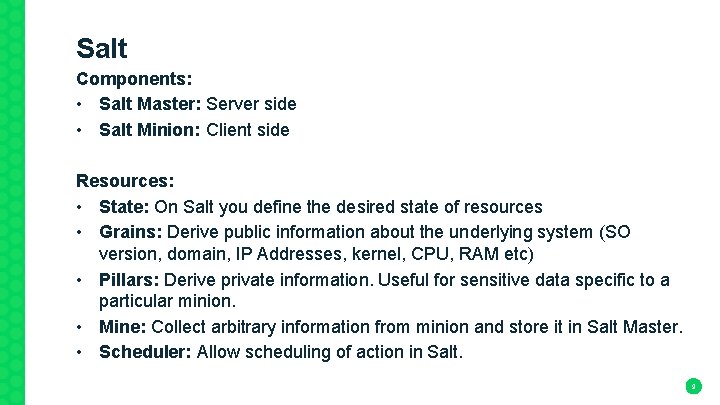

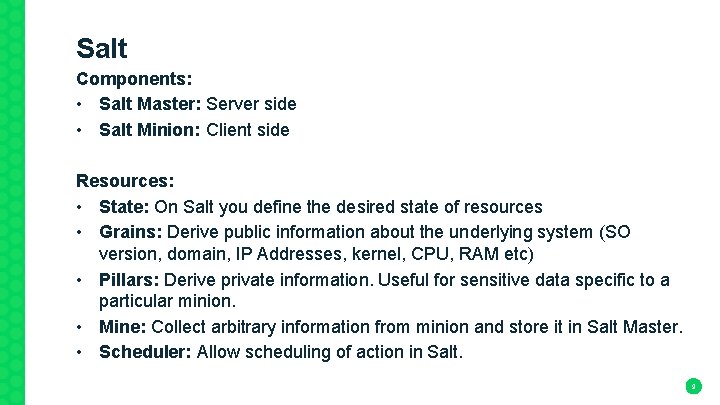

Components:
• Salt Master: server side
• Salt Minion: client side

Resources:
• State: in Salt you define the desired state of resources
• Grains: public information derived from the underlying system (OS version, domain, IP addresses, kernel, CPU, RAM, etc.)
• Pillars: private information, useful for sensitive data specific to a particular minion
• Mine: collects arbitrary information from minions and stores it on the Salt Master
• Scheduler: allows actions to be scheduled in Salt
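The following minimal sketch shows grains and pillars side by side in one state. The state ID `motd_file` and the target file are hypothetical and not part of the kvm_cluster formula. `ha_cluster:name` is the cluster-name field of the HA form described later. It is read with a fallback value, so the state also renders on minions that have no such pillar.

```yaml
# Hypothetical example state (not part of the kvm_cluster formula).
# grains[...]: facts the minion reports about itself.
# pillar.get(...): data the master assigns to this minion ('unassigned' if absent).
motd_file:
  file.managed:
    - name: /etc/motd
    - contents: |
        Host: {{ grains['fqdn'] }} ({{ grains['osfullname'] }} {{ grains['osrelease'] }})
        Cluster: {{ salt['pillar.get']('ha_cluster:name', 'unassigned') }}
```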

SUSE Manager Configuration

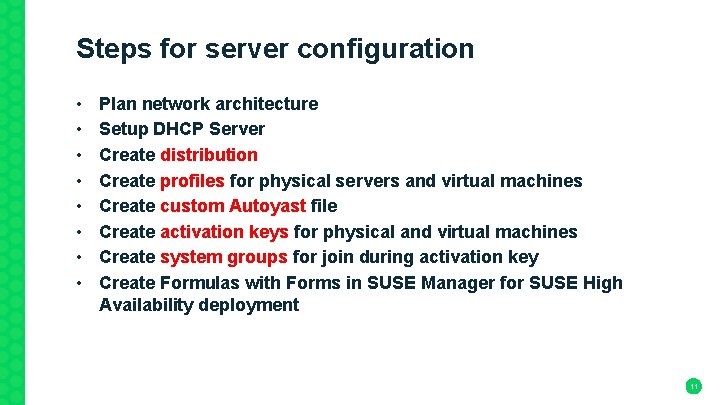

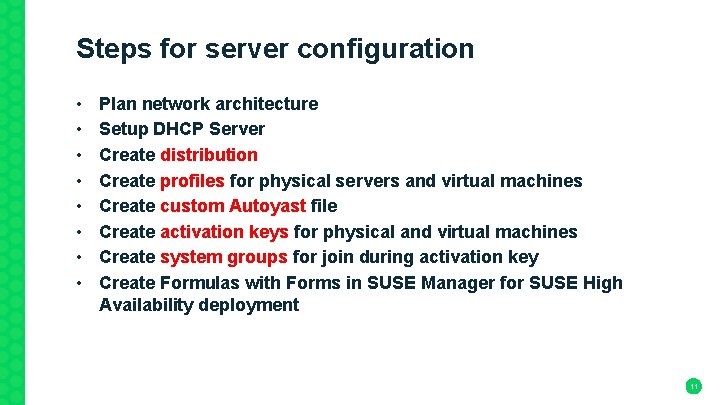

Steps for server configuration
• Plan the network architecture
• Set up a DHCP server
• Create a distribution
• Create profiles for physical servers and virtual machines
• Create a custom AutoYaST file
• Create activation keys for physical and virtual machines
• Create system groups that systems join through their activation key
• Create Formulas with Forms in SUSE Manager for the SUSE High Availability deployment

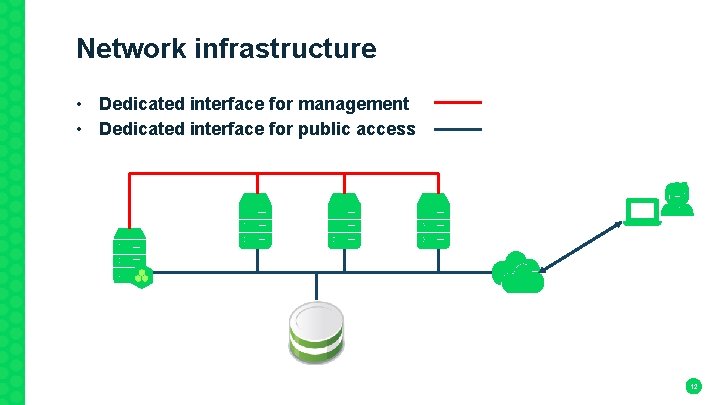

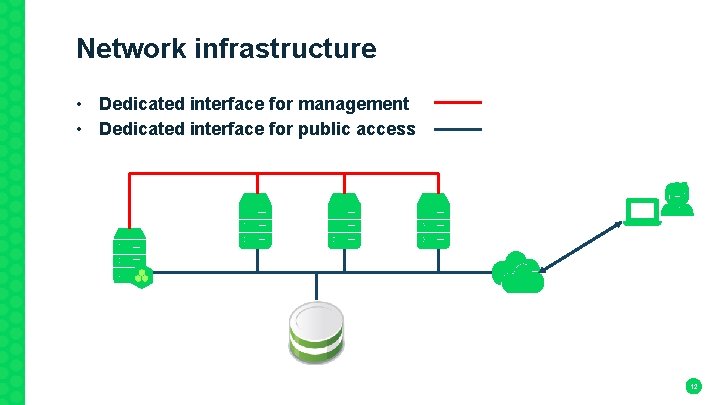

Network infrastructure
• Dedicated interface for management
• Dedicated interface for public access

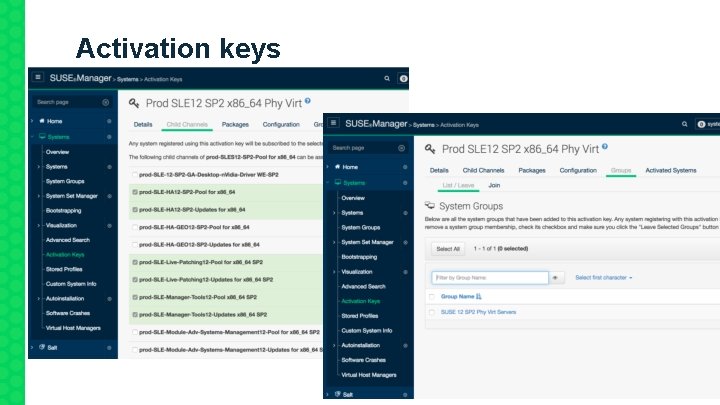

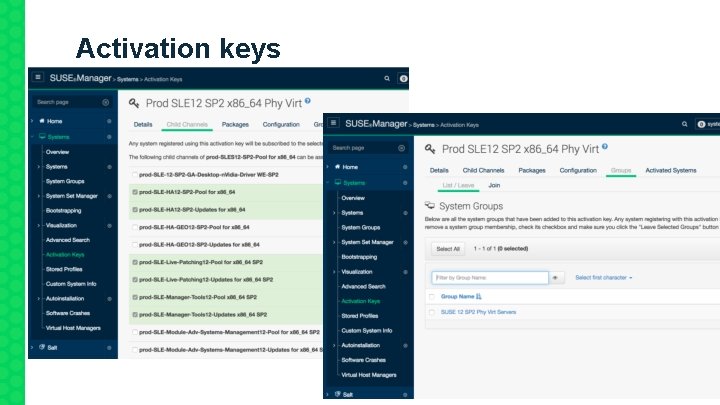

Activation keys

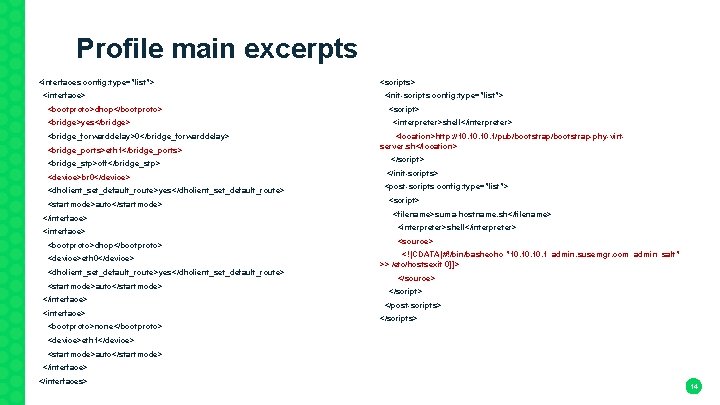

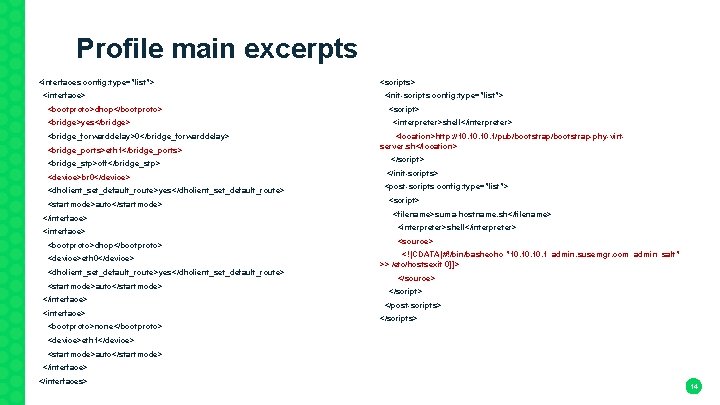

Main excerpts of the AutoYaST profile

Network interfaces:

    <interfaces config:type="list">
      <interface>
        <bootproto>dhcp</bootproto>
        <bridge>yes</bridge>
        <bridge_forwarddelay>0</bridge_forwarddelay>
        <bridge_ports>eth1</bridge_ports>
        <bridge_stp>off</bridge_stp>
        <device>br0</device>
        <dhclient_set_default_route>yes</dhclient_set_default_route>
        <startmode>auto</startmode>
      </interface>
      <interface>
        <bootproto>dhcp</bootproto>
        <device>eth0</device>
        <dhclient_set_default_route>yes</dhclient_set_default_route>
        <startmode>auto</startmode>
      </interface>
      <interface>
        <bootproto>none</bootproto>
        <device>eth1</device>
        <startmode>auto</startmode>
      </interface>
    </interfaces>

Scripts:

    <scripts>
      <init-scripts config:type="list">
        <script>
          <interpreter>shell</interpreter>
          <location>http://10.10.1/pub/bootstrap-phy-virtserver.sh</location>
        </script>
      </init-scripts>
      <post-scripts config:type="list">
        <script>
          <filename>suma-hostname.sh</filename>
          <interpreter>shell</interpreter>
          <source>
    <![CDATA[#!/bin/bash
    echo "10.10.1 admin.susemgr.com admin salt" >> /etc/hosts
    exit 0
    ]]>
          </source>
        </script>
      </post-scripts>
    </scripts>
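After a machine is registered as a Salt minion, a state could also maintain the `/etc/hosts` entry that `suma-hostname.sh` writes. The sketch below assumes Salt's `host.present` state. The ID `suma_hosts_entry` is hypothetical, and 192.0.2.10 is a placeholder for the SUSE Manager server address. Unlike `echo ... >>`, the state does not add a duplicate line when it runs again.

```yaml
# Hypothetical Salt counterpart of the suma-hostname.sh post-script.
# 192.0.2.10 is a placeholder: replace it with the SUSE Manager server address.
suma_hosts_entry:
  host.present:
    - ip: 192.0.2.10
    - names:
      - admin.susemgr.com
      - admin
      - salt
```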

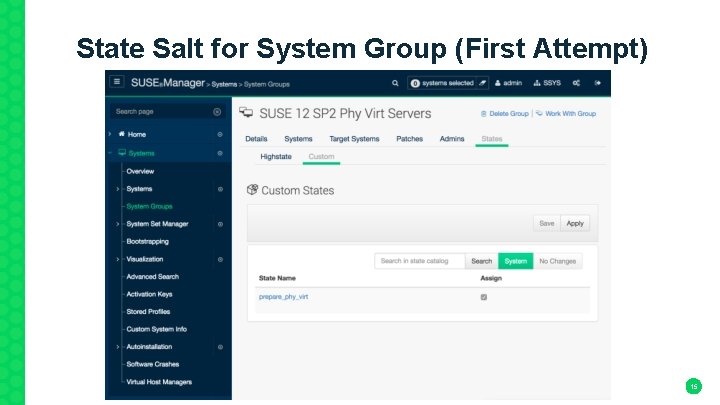

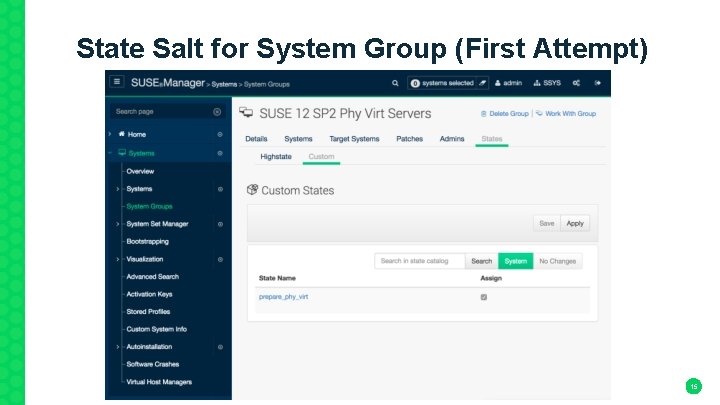

Salt State for System Group (First Attempt)

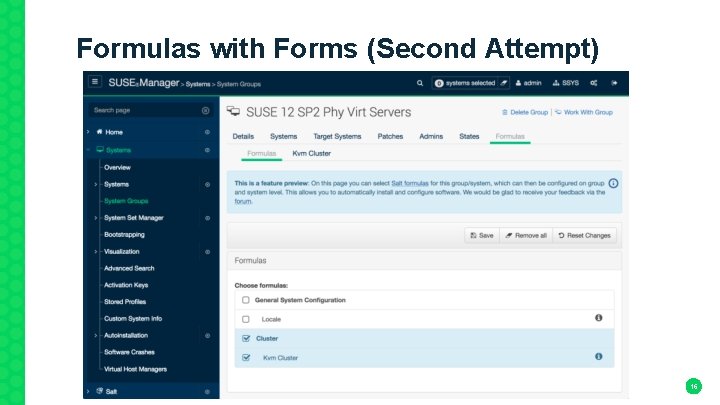

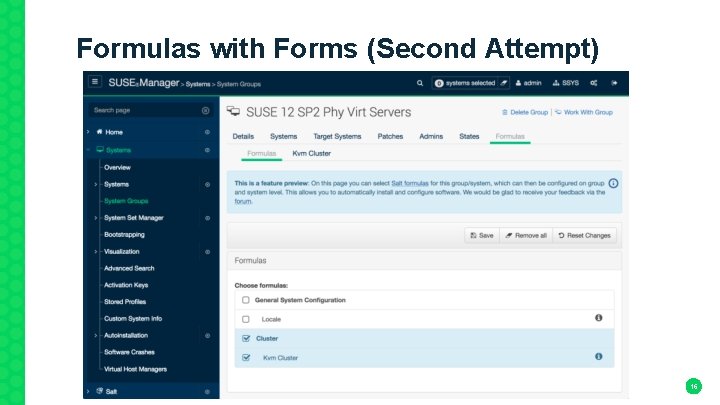

Formulas with Forms (Second Attempt)

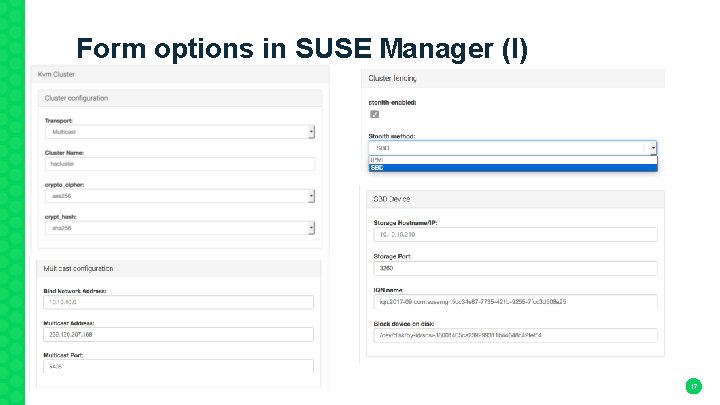

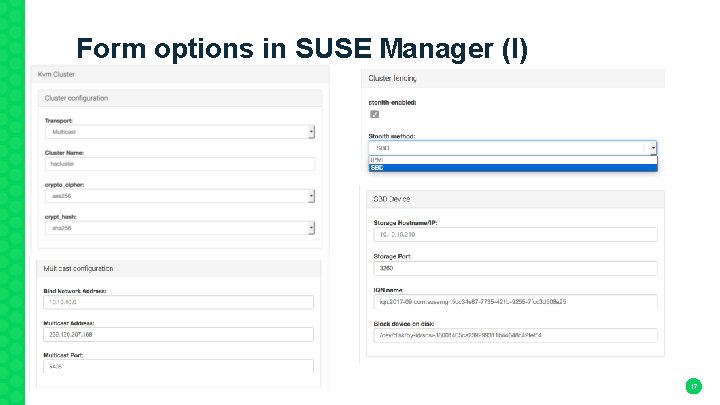

Form options in SUSE Manager (I)

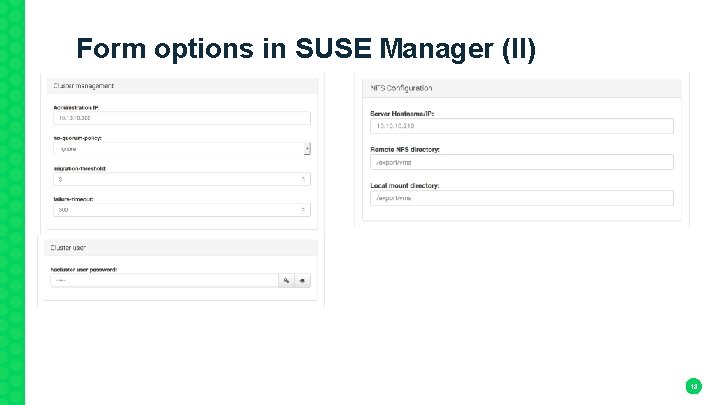

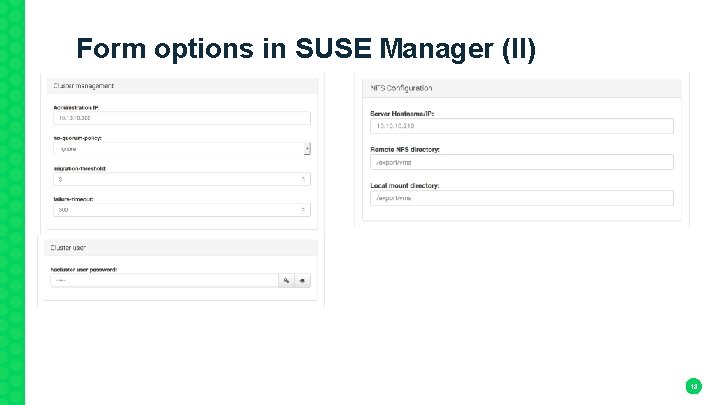

Form options in SUSE Manager (II)

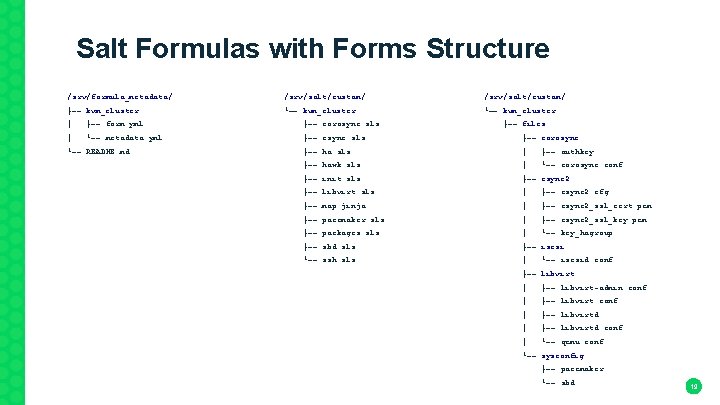

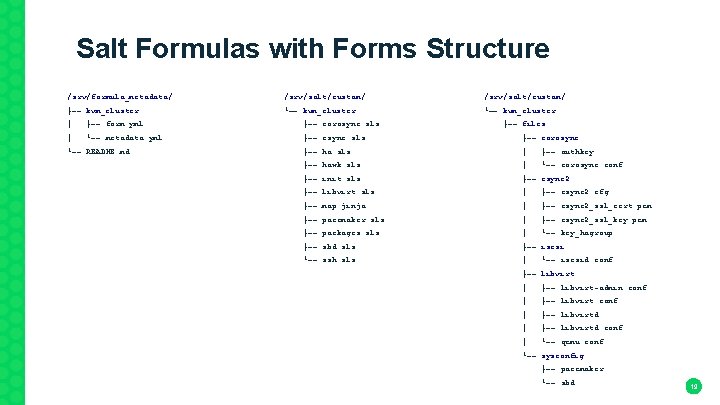

Salt Formulas with Forms structure

    /srv/formula_metadata/
    ├── kvm_cluster
    │   ├── form.yml
    │   └── metadata.yml
    └── README.md

    /srv/salt/custom/
    └── kvm_cluster
        ├── corosync.sls
        ├── csync.sls
        ├── files
        │   ├── corosync
        │   │   ├── authkey
        │   │   └── corosync.conf
        │   ├── csync2
        │   │   ├── csync2.cfg
        │   │   ├── csync2_ssl_cert.pem
        │   │   ├── csync2_ssl_key.pem
        │   │   └── key_hagroup
        │   ├── iscsi
        │   │   └── iscsid.conf
        │   ├── libvirt
        │   │   ├── libvirt-admin.conf
        │   │   ├── libvirtd.conf
        │   │   └── qemu.conf
        │   └── sysconfig
        │       ├── pacemaker
        │       └── sbd
        ├── ha.sls
        ├── hawk.sls
        ├── init.sls
        ├── libvirt.sls
        ├── map.jinja
        ├── pacemaker.sls
        ├── packages.sls
        ├── sbd.sls
        └── ssh.sls

Excerpt from form.yml

    ha_cluster:
      $name: "Cluster configuration"
      $type: group
      $scope: group

      transport_method:
        $name: "Transport"
        $type: select
        $values:
          - Multicast
        $default: Multicast

      name:
        $name: "Cluster Name"
        $type: text
        $default: "hacluster"
        $help: "Name used by the cluster."

      crypto_cipher:
        $name: "crypto_cipher"
        $type: select
        $help: "Cipher used to secure messages."
        $values:
          - none
          - 3des
          - aes128
          - aes192
          - aes256
        $default: aes256

      crypto_hash:
        $name: "crypto_hash"
        $type: select
        $help: "Hash algorithm to use to check message integrity."
        $visibleIf: ha_cluster$crypto_cipher != none
        $values:
          - none
          - md5
          - sha1
          - sha256
          - sha384
          - sha512
        $default: sha256

    ha_multicast:
      $name: "Multicast configuration"
      $type: group
      $scope: group
      $visibleIf: ha_cluster$transport_method == Multicast

      bind_interface:
        $name: "Bind Network Address"
        $type: text
        $placeholder: 10.10.0

      interface:
        $name: "Multicast Address"
        $type: text
        $default: 239.120.207.168

      port:
        $name: "Multicast Port"
        $type: text
        $default: 5405

    ...

Files available for download.
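For reference, here is a rough sketch of the pillar data this form might produce when left at its defaults. It is an illustration under assumptions. `bind_interface` has no default in the excerpt, so 192.0.2.0 is a placeholder. The text fields are shown as quoted strings on the assumption that form text input is stored as strings. The formula's `map.jinja` loads these groups with `salt['pillar.get']('ha_cluster')` and `salt['pillar.get']('ha_multicast')`, so a template can then refer to values such as `{{ cluster.name }}`.

```yaml
# Hypothetical pillar produced by the form (defaults; bind_interface is a placeholder).
ha_cluster:
  transport_method: Multicast
  name: hacluster
  crypto_cipher: aes256
  crypto_hash: sha256
ha_multicast:
  bind_interface: "192.0.2.0"
  interface: "239.120.207.168"
  port: "5405"
```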

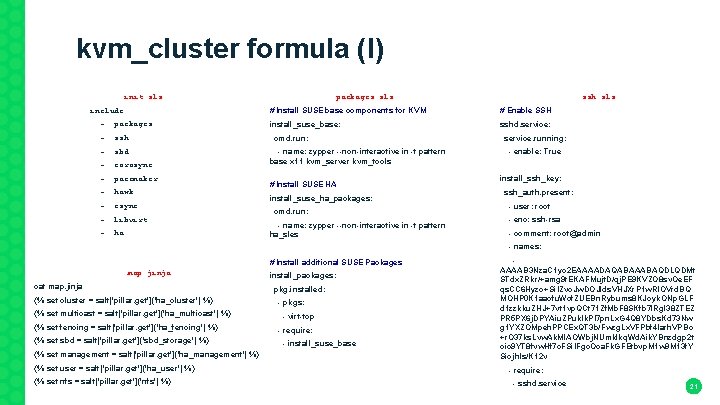

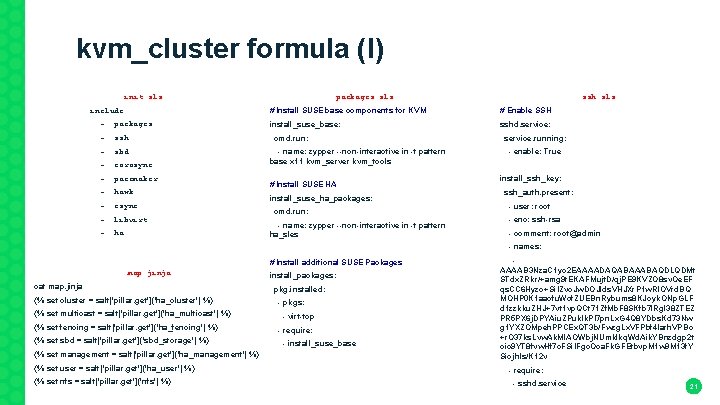

kvm_cluster formula (I)

init.sls:

    include:
      - .packages
      - .ssh
      - .sbd
      - .corosync
      - .pacemaker
      - .hawk
      - .csync
      - .libvirt
      - .ha

packages.sls:

    # Install SUSE base components for KVM
    install_suse_base:
      cmd.run:
        - name: zypper --non-interactive in -t pattern base x11 kvm_server kvm_tools

    # Install SUSE HA
    install_suse_ha_packages:
      cmd.run:
        - name: zypper --non-interactive in -t pattern ha_sles

    # Install additional SUSE Packages
    install_packages:
      pkg.installed:
        - pkgs:
          - virt-top
        - require:
          - install_suse_base

ssh.sls:

    # Enable SSH
    sshd.service:
      service.running:
        - enable: True

    install_ssh_key:
      ssh_auth.present:
        - user: root
        - enc: ssh-rsa
        - comment: root@admin
        - names:
          # root@admin public key, truncated in this excerpt
          - AAAAB3NzaC1yc2EAAAADAQABAAABAQDLQDMt...
        - require:
          - sshd.service

map.jinja:

    {% set cluster = salt['pillar.get']('ha_cluster') %}
    {% set multicast = salt['pillar.get']('ha_multicast') %}
    {% set fencing = salt['pillar.get']('ha_fencing') %}
    {% set sbd = salt['pillar.get']('sbd_storage') %}
    {% set management = salt['pillar.get']('ha_management') %}
    {% set user = salt['pillar.get']('ha_user') %}
    {% set nfs = salt['pillar.get']('nfs') %}


kvm_cluster formula (II)

sbd.sls:

    {% set sbd = salt['pillar.get']('sbd_storage') %}

    iscsi.service:
      service.running:
        - enable: True
        - reload: True
        - require:
          - install_suse_base
        - watch:
          - file: /etc/iscsid.conf
      file.managed:
        - name: /etc/iscsid.conf
        - source: salt://kvm_cluster/files/iscsid.conf
        - user: root
        - group: root
        - mode: 600

    # Enable SBD service and check SBD device for setup
    sbd_file:
      file.managed:
        - name: /etc/sysconfig/sbd
        - source: salt://kvm_cluster/files/sysconfig/sbd
        - template: jinja
        - user: root
        - group: root
        - mode: 644

    sbd_block_device:
      cmd.run:
        - name: iscsiadm -m discovery -t sendtargets -p {{ sbd.ip }} && iscsiadm -m node --targetname {{ sbd.iqn_name }} --portal {{ sbd.ip }}:{{ sbd.port }} --login
        - unless: test -b {{ sbd.block_device }}
        - require:
          - iscsi.service

    sbd_block_device_format:
      cmd.run:
        - name: sbd -d {{ sbd.block_device }} create
        - unless: sbd -d {{ sbd.block_device }} list
        - require:
          - iscsi.service
          - sbd_block_device


kvm_cluster formula (III)

ha.sls (beginning):

    {% set fencing = salt['pillar.get']('ha_fencing') %}
    {% set sbd = salt['pillar.get']('sbd_storage') %}
    {% set management = salt['pillar.get']('ha_management') %}
    {% set user = salt['pillar.get']('ha_user') %}
    {% set nfs = salt['pillar.get']('nfs') %}

    # Cluster configuration

    # Cluster user and group
    ha_group:
      group.present:
        - name: haclient
        - gid: 90

    ha_user:
      user.present:
        - name: hacluster
        - fullname: heartbeat processes
        - shell: /bin/bash
        - password: {{ user.password }}
        - hash_password: True
        - uid: 90
        - groups:
          - haclient
        - require:
          - install_suse_ha_packages
          - ha_group

    # Tuning cluster settings, runs only on the DC (designated controller)
    {% set clusterdc = salt['cmd.run']('crmadmin -q -D 1>/dev/null') %}
    {% if grains['fqdn'] == clusterdc %}
    ha_default_resource_stickiness:
      cmd.run:
        - name: crm configure property default-resource-stickiness=1000
        - unless: test $(crm configure get_property default-resource-stickiness) -gt 0
        - require:
          - pacemaker.service

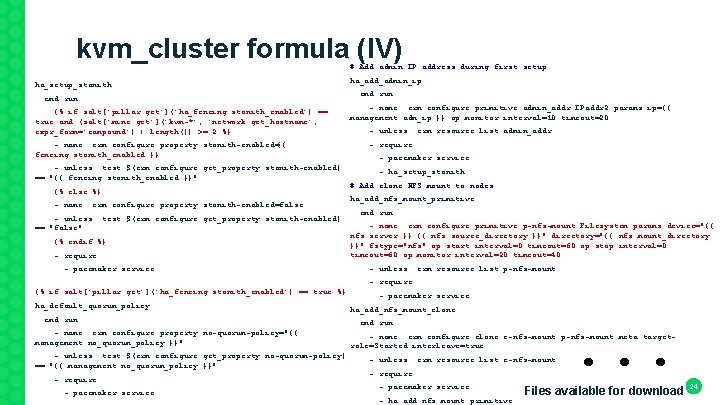

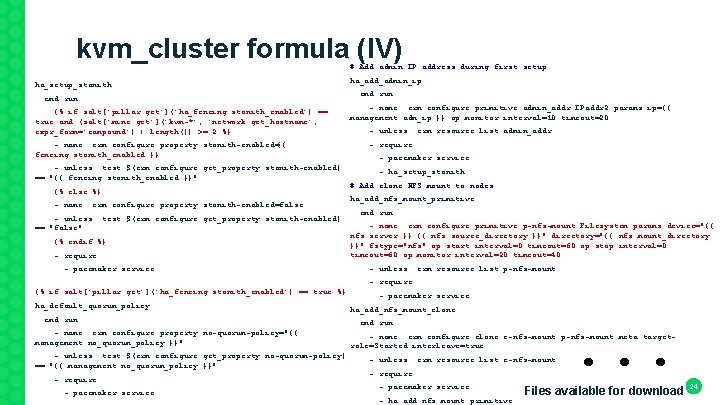

kvm_cluster formula (IV)

    # Add admin IP address during first setup
    ha_add_admin_ip:
      cmd.run:
        - name: crm configure primitive admin_addr IPaddr2 params ip={{ management.adm_ip }} op monitor interval=10 timeout=20
        - unless: crm resource list admin_addr
        - require:
          - pacemaker.service
          - ha_setup_stonith

    # Add clone NFS mount to nodes
    ha_add_nfs_mount_primitive:
      cmd.run:
        - name: crm configure primitive p-nfs-mount Filesystem params device="{{ nfs.server }}:{{ nfs.source_directory }}" directory="{{ nfs.mount_directory }}" fstype="nfs" op start interval=0 timeout=60 op stop interval=0 timeout=60 op monitor interval=20 timeout=40
        - unless: crm resource list p-nfs-mount
        - require:
          - pacemaker.service

    ha_add_nfs_mount_clone:
      cmd.run:
        - name: crm configure clone c-nfs-mount p-nfs-mount meta target-role=Started interleave=true
        - unless: crm resource list c-nfs-mount
        - require:
          - pacemaker.service
          - ha_add_nfs_mount_primitive

    # pillar.get uses ':' to descend into nested keys
    ha_setup_stonith:
      cmd.run:
    {% if salt['pillar.get']('ha_fencing:stonith_enabled') == true and (salt['mine.get']('kvm-*', 'network.get_hostname', expr_form='compound') | length()) >= 2 %}
        - name: crm configure property stonith-enabled={{ fencing.stonith_enabled }}
        - unless: test $(crm configure get_property stonith-enabled) == "{{ fencing.stonith_enabled }}"
    {% else %}
        - name: crm configure property stonith-enabled=false
        - unless: test $(crm configure get_property stonith-enabled) == "false"
    {% endif %}
        - require:
          - pacemaker.service

    {% if salt['pillar.get']('ha_fencing:stonith_enabled') == true %}
    ha_default_quorum_policy:
      cmd.run:
        - name: crm configure property no-quorum-policy="{{ management.no_quorum_policy }}"
        - unless: test $(crm configure get_property no-quorum-policy) == "{{ management.no_quorum_policy }}"
    ...

Files available for download.
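The states above read several pillar groups through `map.jinja`. The sketch below shows what that pillar might contain. The keys are the ones the templates reference (`sbd.ip`, `sbd.port`, `sbd.iqn_name`, `sbd.block_device`, `management.adm_ip`, and so on). Every value is a hypothetical placeholder. `stonith_enabled` should be a YAML boolean because the `{% if %}` tests compare it with `true`. A quoted `"true"` string would not match.

```yaml
# Hypothetical pillar values; every address, name and path here is a placeholder.
sbd_storage:
  ip: 192.0.2.20
  port: 3260
  iqn_name: iqn.2017-11.com.example:sbd
  block_device: /dev/disk/by-path/ip-192.0.2.20:3260-iscsi-iqn.2017-11.com.example:sbd-lun-0
ha_fencing:
  stonith_enabled: true        # boolean: the formula tests it with == true
ha_management:
  adm_ip: 192.0.2.30
  no_quorum_policy: stop
nfs:
  server: 192.0.2.40
  source_directory: /export/vms
  mount_directory: /var/lib/libvirt/images
ha_user:
  password: changeme           # plain text here; user.present hashes it (hash_password: True)
```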


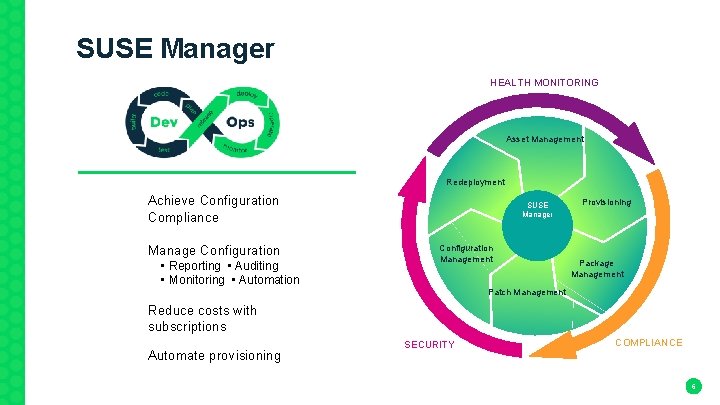

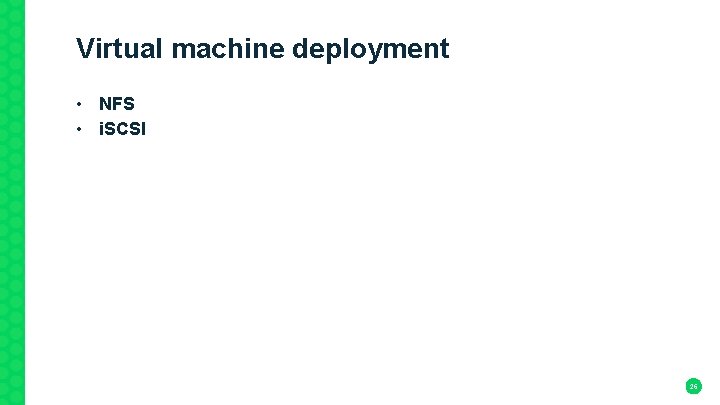

Tricks

/etc/salt/master.d/mine.conf:

    mine_interval: 5

/srv/pillar/mine.sls:

    mine_functions:
      network.get_hostname: []
      network.ip_addrs:
        - br1

/srv/pillar/top.sls:

    base:
      'kvm-*':
        - mine
        - schedule

/srv/pillar/schedule.sls:

    schedule:
      highstate:
        function: state.highstate
        minutes: 5
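Here is one way to consume the mine data collected above. The sketch is a hypothetical state, not part of the published formula. It keeps an `/etc/hosts` entry for every `kvm-*` minion, using the first `br1` address that `network.ip_addrs` reported. `mine.get` returns a dictionary keyed by minion ID. Minions that have not reported any address yet are skipped. With the 5-minute highstate schedule, newly added nodes would be picked up on a later run.

```yaml
# Hypothetical state: one /etc/hosts entry per kvm-* minion, built from mine data.
# mine.get returns {minion_id: [addresses on br1, ...]}.
{% set node_addrs = salt['mine.get']('kvm-*', 'network.ip_addrs') %}
{% for node, addrs in node_addrs.items() if addrs %}
hosts_entry_{{ node }}:
  host.present:
    - name: {{ node }}
    - ip: {{ addrs[0] }}
{% endfor %}
```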

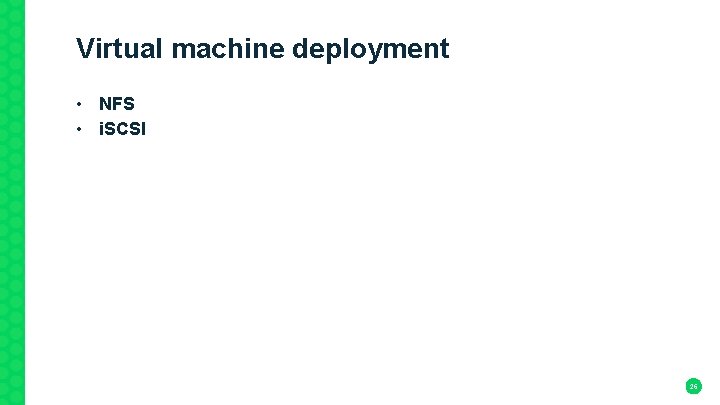

Virtual machine deployment
• NFS
• iSCSI

Orchestrating virtual machines on SUSE HA
• Salt-cloud:
  • Provider for libvirt available since October 2016 (https://github.com/saltstack/salt/pull/36709)
  • Released in open-source Salt version 2017.7.0
  • Unfortunately not yet available in SUSE Manager (Salt 2016.11.4)
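On a Salt release that ships the libvirt cloud driver (2017.7.0 or later, per the slide), a provider and profile could look roughly like the sketch below. The names `kvm-libvirt` and `kvm-vm-profile`, the template domain `sles12sp2-template`, the host name and the credentials are all hypothetical. The option names follow the driver's documented examples, but check them against the Salt version actually in use.

```yaml
# /etc/salt/cloud.providers.d/libvirt.conf (hypothetical provider name and host)
kvm-libvirt:
  driver: libvirt
  url: qemu+ssh://root@kvm-01.example.com/system

# /etc/salt/cloud.profiles.d/libvirt.conf (hypothetical profile)
kvm-vm-profile:
  provider: kvm-libvirt
  base_domain: sles12sp2-template   # existing libvirt domain to clone
  ip_source: qemu-agent             # or ip-learning
  clone_strategy: quick             # or full
  ssh_username: root
  password: changeme
  minion:
    master: 192.0.2.10              # placeholder Salt master address
```

A VM would then be created with `salt-cloud -p kvm-vm-profile vm01`.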

Demo

Some errors found
• 7018334 - SLES 12 SP2 AutoYaST deployment via SUSE Manager fails with "language not found" error

Other SUSECon sessions
• CAS118766 - A Leading US Sporting Goods Retailer Scores with SUSE Manager
• CAS121409 - Using the Power of Salt alongside SUSE Manager 3
• CAS122700 - SUSE Manager & SUSE Linux Experience
• FUT128677 - SUSE in the Retail Environment
• HO127304 - Get Your Hands on SUSE Manager: Manage Your Containers, VMs and Systems with One Tool
• TUT119026 - One-click Deployment with SUSE Manager
• TUT126043 - SUSE Manager 3.1 Under the Hood: An Overview from Support, Internal Diagrams, and Salt Integration

Files for download
All files are available at http://www.ssys.com.br/susecon/cas127420/
The kvm_cluster formula is available at https://github.com/s-sys/kvm_cluster_formula

Questions?

Thank You

Unpublished Work of SUSE LLC. All Rights Reserved. This work is an unpublished work and contains confidential, proprietary and trade secret information of SUSE LLC. Access to this work is restricted to SUSE employees who have a need to know to perform tasks within the scope of their assignments. No part of this work may be practiced, performed, copied, distributed, revised, modified, translated, abridged, condensed, expanded, collected, or adapted without the prior written consent of SUSE. Any use or exploitation of this work without authorization could subject the perpetrator to criminal and civil liability.

General Disclaimer
This document is not to be construed as a promise by any participating company to develop, deliver, or market a product. It is not a commitment to deliver any material, code, or functionality, and should not be relied upon in making purchasing decisions. SUSE makes no representations or warranties with respect to the contents of this document, and specifically disclaims any express or implied warranties of merchantability or fitness for any particular purpose. The development, release, and timing of features or functionality described for SUSE products remains at the sole discretion of SUSE. Further, SUSE reserves the right to revise this document and to make changes to its content, at any time, without obligation to notify any person or entity of such revisions or changes. All SUSE marks referenced in this presentation are trademarks or registered trademarks of Novell, Inc. in the United States and other countries. All third-party trademarks are the property of their respective owners.