Server Virtualization

- Slides: 12

Server Virtualization

1. Virtualization
2. Platform Virtualization
3. Parallel Processing
4. Vector Processing
5. Symmetric Multiprocessing
6. Massively Parallel Processors

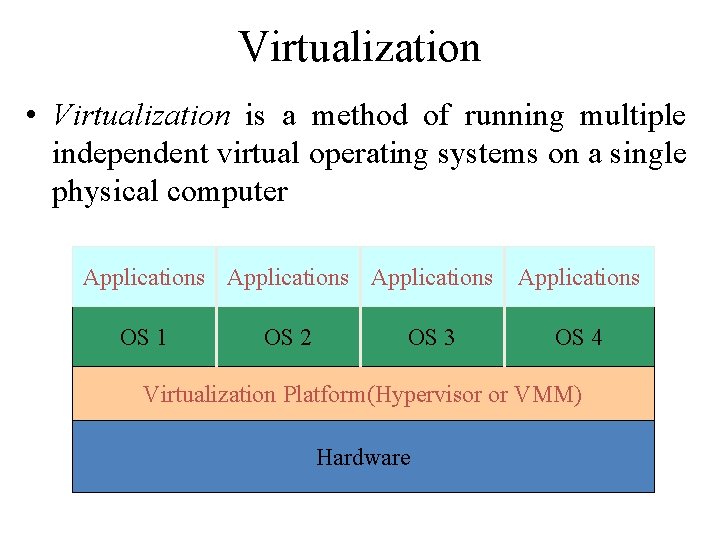

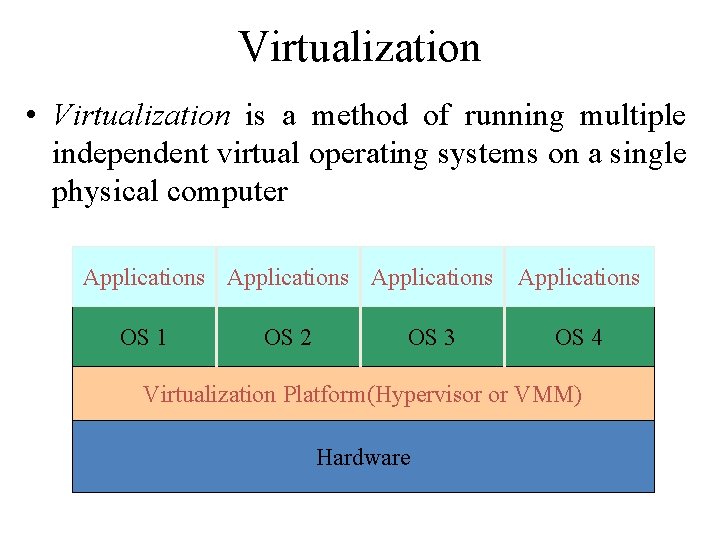

Virtualization

- Virtualization is a method of running multiple independent virtual operating systems on a single physical computer.

[Figure: layered stack, top to bottom: applications; operating systems OS 1 to OS 4; virtualization platform (hypervisor or VMM); hardware.]

Platform Virtualization

- The creation and management of virtual machines is often called platform virtualization.
- Platform virtualization is performed on a given computer (the hardware platform) by software called a control program.
- The control program creates a simulated environment called a virtual computer.
- Software that runs on the virtual computer is called guest software.
- Guest software often requires access to specific peripheral devices in order to function, so the virtualized platform must support guest interfaces to those devices.

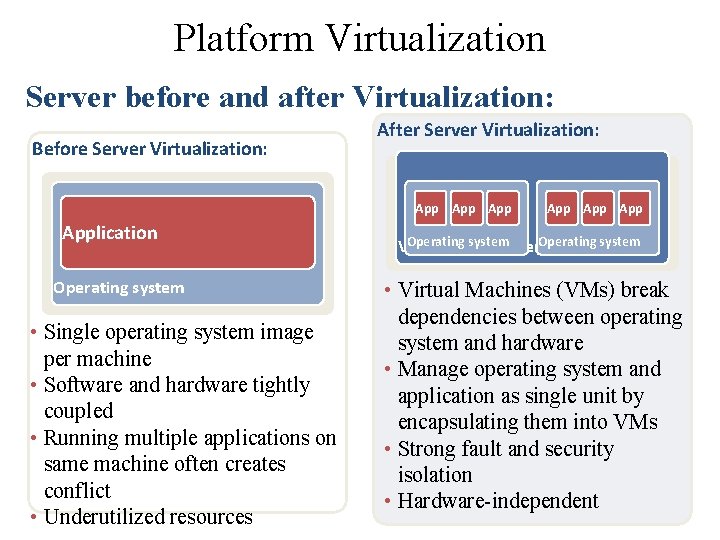

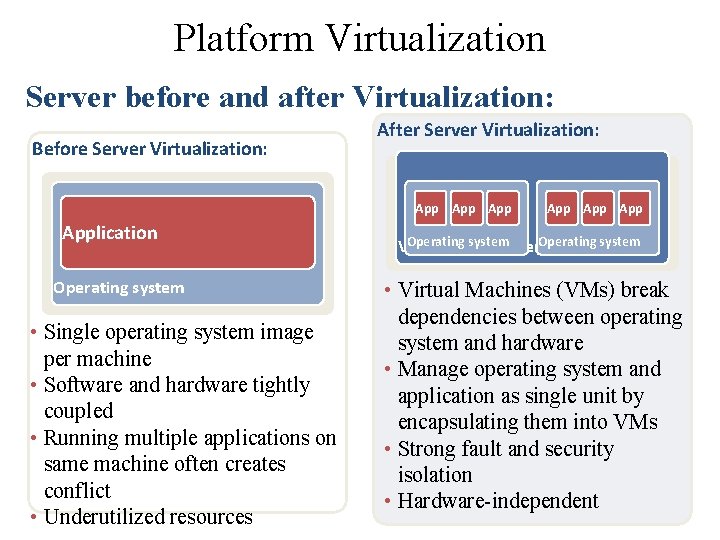

Platform Virtualization

Server before and after virtualization:

Before server virtualization (diagram: applications on a single operating system):

- Single operating system image per machine
- Software and hardware tightly coupled
- Running multiple applications on the same machine often creates conflict
- Underutilized resources

After server virtualization (diagram: applications on separate operating systems, above a virtualization layer):

- Virtual machines (VMs) break dependencies between operating system and hardware
- Manage operating system and application as a single unit by encapsulating them into VMs
- Strong fault and security isolation
- Hardware-independent

Parallel Processing

- Parallel processing is the simultaneous execution of program instructions that have been allocated across multiple processors, with the objective of running a program in less time.
- Early forms of parallel processing:
  1. Interleaved execution of programs
  2. Multiprogramming: round-robin scheduling
  3. Multiprocessing: two or more processors share a common workload
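Round-robin scheduling, listed above as an early form of multiprogramming, can be sketched as a small simulation. This is a minimal illustration only: the function name `round_robin`, the job names, and the time quantum are hypothetical and do not come from the slides.

```python
from collections import deque


def round_robin(jobs, quantum):
    """Simulate round-robin multiprogramming on a single processor.

    jobs maps a job name to the units of work it still needs.
    Returns (finish order, timeline of (job, units run) slices).
    """
    ready = deque(jobs.items())
    timeline = []
    finished = []
    while ready:
        name, remaining = ready.popleft()
        run = min(quantum, remaining)      # run for at most one quantum
        timeline.append((name, run))
        remaining -= run
        if remaining > 0:
            ready.append((name, remaining))  # back of the queue
        else:
            finished.append(name)
    return finished, timeline


# Hypothetical workload: three jobs with different lengths.
finished, timeline = round_robin({"A": 5, "B": 2, "C": 4}, quantum=2)
print(finished)   # ['B', 'C', 'A']
print(timeline)
```

The jobs are interleaved on one processor rather than executed simultaneously, which is why multiprogramming is treated as a precursor to true multiprocessing.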

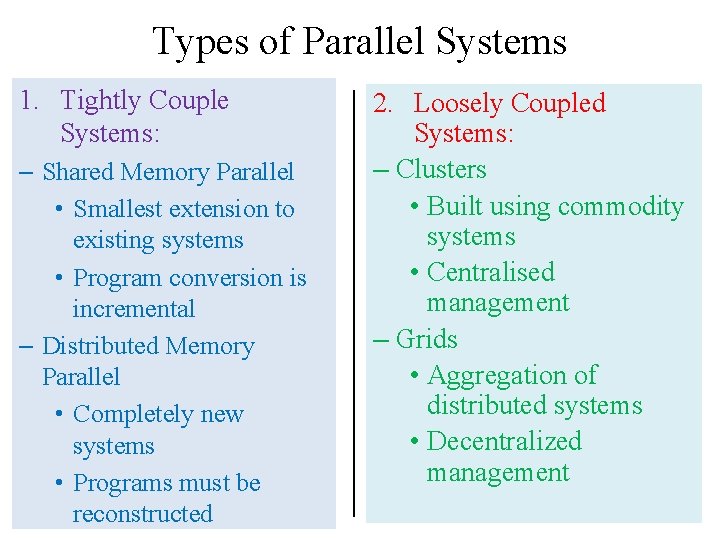

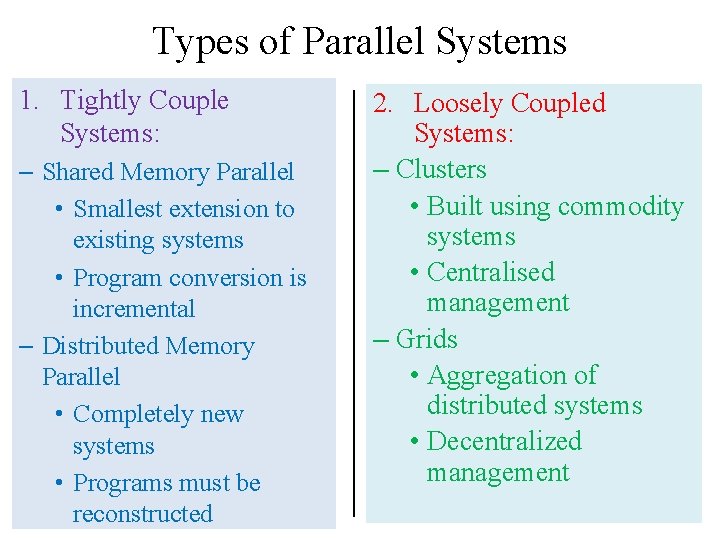

Types of Parallel Systems

1. Tightly coupled systems:
   - Shared-memory parallel
     - Smallest extension to existing systems
     - Program conversion is incremental
   - Distributed-memory parallel
     - Completely new systems
     - Programs must be reconstructed
2. Loosely coupled systems:
   - Clusters
     - Built using commodity systems
     - Centralized management
   - Grids
     - Aggregation of distributed systems
     - Decentralized management

Vector Processing

- A vector processor is one that can perform an operation on entire vectors with a single instruction.
- A vector compiler will attempt to translate loops into single vector instructions.
- Example: suppose we have the following do loop:

        do 10 i = 1, n
           X(i) = Y(i) + Z(i)
     10 continue

- The compiler treats X, Y, and Z as vectors of length n and issues one vector add instruction in place of the n scalar adds.
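The loop translation described above can be sketched by counting instructions in a simulation. This is only an illustration under stated assumptions: `VECTOR_LENGTH` is a hypothetical maximum vector size (the slides do not give one), and the chunked loop stands in for real vector hardware. When the vector length is at least n, the whole loop becomes a single vector add, as in the slide's example.

```python
VECTOR_LENGTH = 64  # assumed maximum elements per vector instruction (hypothetical)


def scalar_add(y, z):
    """Scalar loop: one add instruction per element."""
    x = []
    instructions = 0
    for i in range(len(y)):
        x.append(y[i] + z[i])
        instructions += 1
    return x, instructions


def vector_add(y, z, vl=VECTOR_LENGTH):
    """Vectorized loop: one simulated vector add per chunk of up to vl elements."""
    x = []
    instructions = 0
    for start in range(0, len(y), vl):
        chunk_y = y[start:start + vl]
        chunk_z = z[start:start + vl]
        # Stands in for a single vector add instruction over the whole chunk.
        x.extend(a + b for a, b in zip(chunk_y, chunk_z))
        instructions += 1
    return x, instructions


n = 200
y = list(range(n))
z = [2 * v for v in y]

x_scalar, scalar_count = scalar_add(y, z)
x_vector, vector_count = vector_add(y, z)
x_single, single_count = vector_add(y, z, vl=n)  # vector long enough for all n

print(scalar_count, vector_count, single_count)  # 200 4 1
```

Both versions compute the same result; only the number of instructions issued differs.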

Vector Processing Examples

- Vector processing was used in the supercomputers of the 1970s.
- The first successful implementations of vector processing were the Control Data Corporation (CDC) STAR-100 and the Texas Instruments Advanced Scientific Computer (ASC).
- Both were imperfect implementations. For example, the CDC STAR-100 required a considerable amount of time simply to decode the vector instructions before calculation could begin.
- As a result, only a very specific set of computations could be sped up in this fashion.

Symmetric Multiprocessing (SMP)

- Two or more processors (64 today)
- Each processor is equally capable
- Shared-everything architecture
- All processors share all the global resources available
- A single copy of the OS runs on these systems
- Issues:
  - Scalability
  - Data propagation time increases in proportion to the number of processors added to SMP systems
- Examples: UltraSPARC II, Alpha ES, Generic Itanium, Opteron, Xeon, …

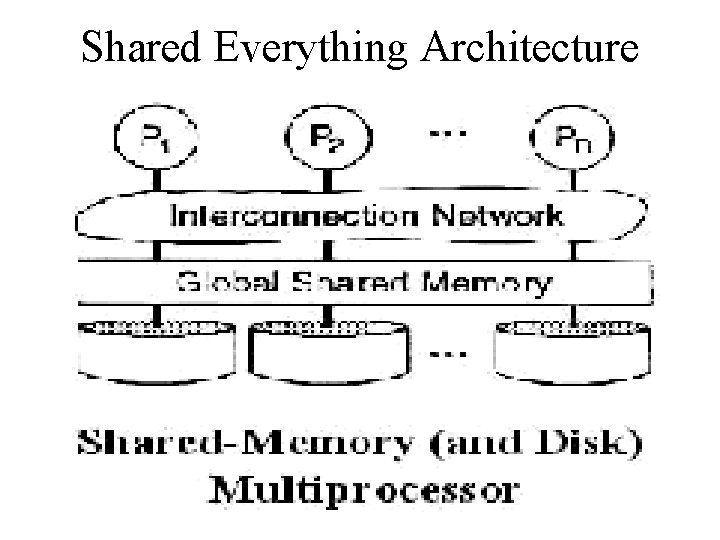

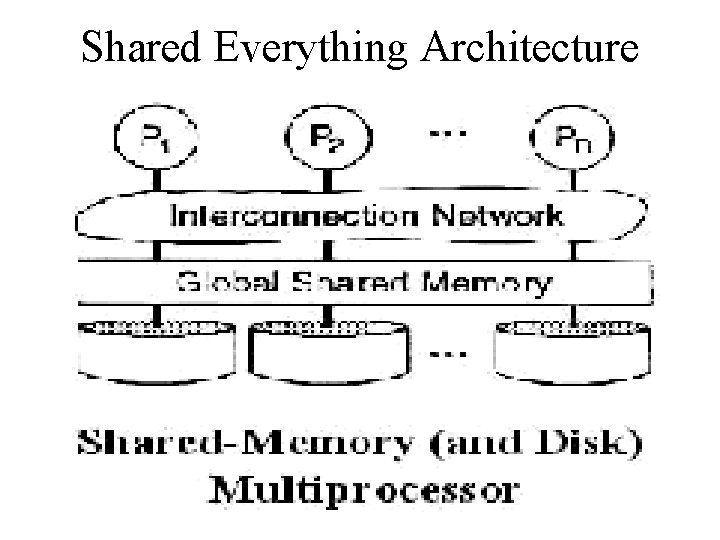

Shared Everything Architecture
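As a rough software analogy for the shared-everything architecture, the sketch below lets several workers update one shared variable. It makes loud assumptions: Python threads stand in for processors, and `NUM_PROCESSORS`, `INCREMENTS_PER_WORKER`, and the counter are hypothetical names chosen for illustration, not a model of real SMP hardware.

```python
import threading

NUM_PROCESSORS = 4            # assumed number of workers standing in for CPUs
INCREMENTS_PER_WORKER = 1000  # hypothetical amount of work per worker

shared_counter = 0            # one copy of the data, visible to every worker
counter_lock = threading.Lock()


def worker():
    """Update the single shared counter, as every processor in an SMP could."""
    global shared_counter
    for _ in range(INCREMENTS_PER_WORKER):
        # Every worker contends for the same lock to update the same memory.
        with counter_lock:
            shared_counter += 1


threads = [threading.Thread(target=worker) for _ in range(NUM_PROCESSORS)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(shared_counter)  # 4000
```

Because every update goes through the same lock and the same memory, adding workers adds contention, which loosely mirrors the scalability issue noted for SMP systems.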

Massively Parallel Processors

- A large parallel processing system with a shared-nothing architecture
- Consists of several hundred nodes with a high-speed interconnection network/switch
- Each node consists of a main memory and one or more processors
  - Runs a separate copy of the OS
- Advantages: scalability, price
- Examples: Connection Machine CM-1 and CM-2, GAPP (Geometric Array Parallel Processor)

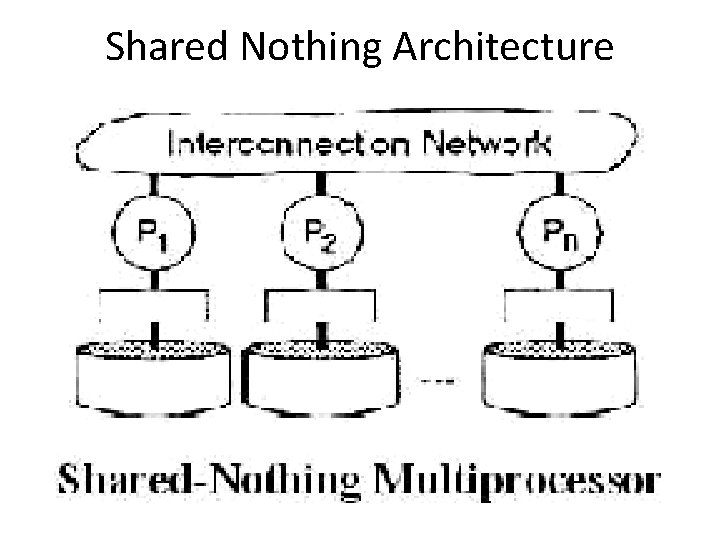

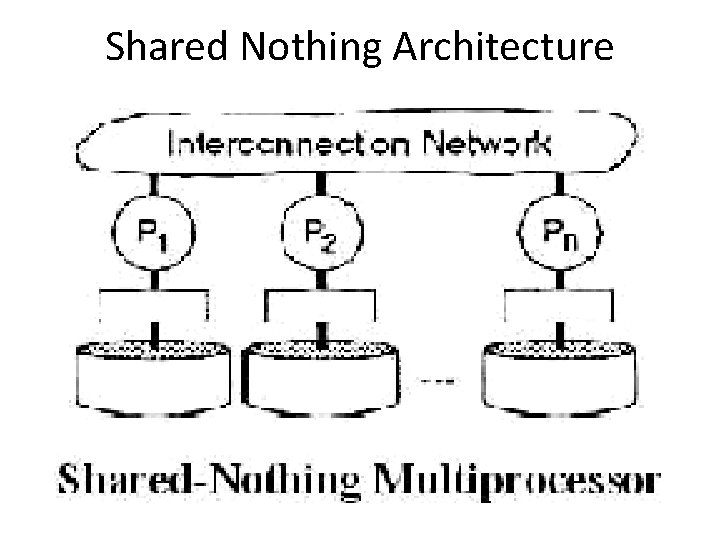

Shared Nothing Architecture
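The shared-nothing idea can be sketched in the same spirit. Here each simulated node works only on its own partition of the data and communicates solely by sending a message. The assumptions are significant: threads stand in for nodes, a `queue.Queue` stands in for the interconnection network, and the names `node`, `interconnect`, and `num_nodes` are hypothetical.

```python
import queue
import threading


def node(node_id, local_data, outbox):
    """A node owns its data privately and reports only a partial result."""
    partial_sum = sum(local_data)        # computed from node-local data only
    outbox.put((node_id, partial_sum))   # a message is the only communication


data = list(range(1, 101))               # 1..100, total 5050
num_nodes = 4                            # assumed node count for the sketch

# Partition the data so each node holds a disjoint slice.
partitions = [data[i::num_nodes] for i in range(num_nodes)]

interconnect = queue.Queue()             # stands in for the network/switch
workers = [
    threading.Thread(target=node, args=(i, part, interconnect))
    for i, part in enumerate(partitions)
]
for w in workers:
    w.start()
for w in workers:
    w.join()

# Collect one message per node and combine the partial results.
partials = dict(interconnect.get() for _ in range(num_nodes))
total = sum(partials.values())
print(total)  # 5050
```

No node reads another node's partition, so adding nodes does not add contention for shared memory; the cost moves to the messages on the interconnect.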