
- Slides: 49

Stan Posey, NVIDIA, Santa Clara, CA, USA; sposey@nvidia.com

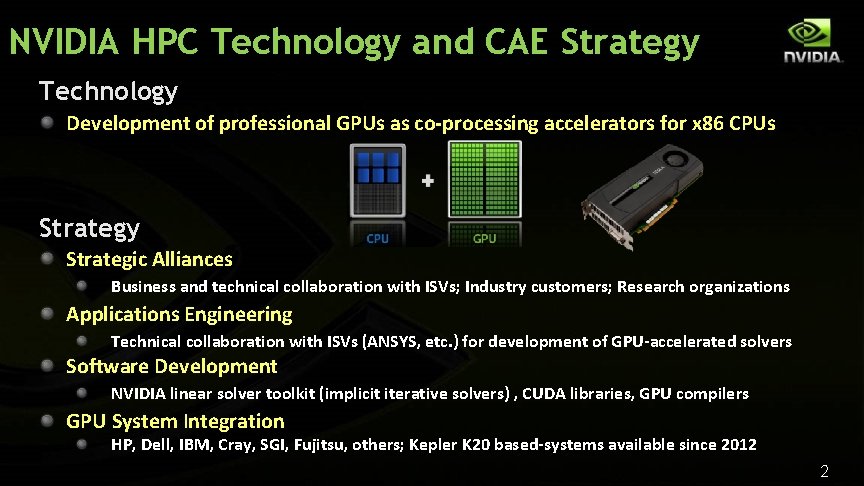

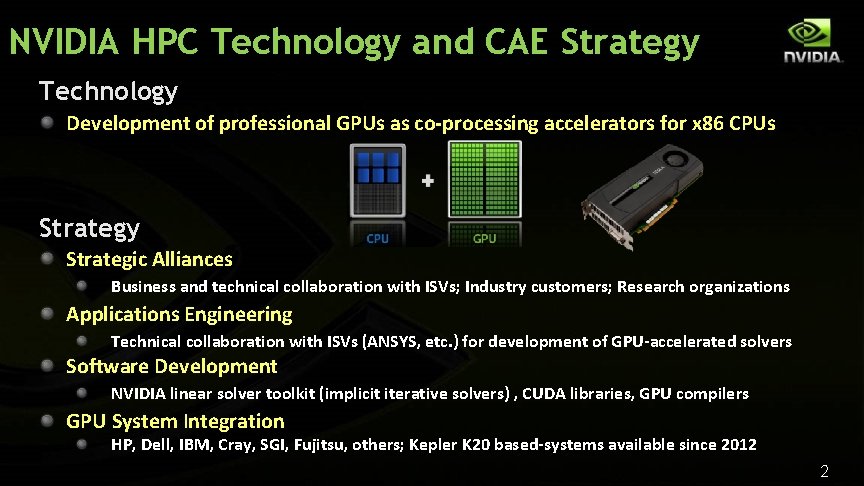

NVIDIA HPC Technology and CAE Strategy

- Technology: development of professional GPUs as co-processing accelerators for x86 CPUs
- Strategic alliances: business and technical collaboration with ISVs, industry customers, and research organizations
- Applications engineering: technical collaboration with ISVs (ANSYS, etc.) to develop GPU-accelerated solvers
- Software development: NVIDIA linear solver toolkit (implicit iterative solvers), CUDA libraries, GPU compilers
- GPU system integration: HP, Dell, IBM, Cray, SGI, Fujitsu, and others; Kepler K20-based systems available since 2012

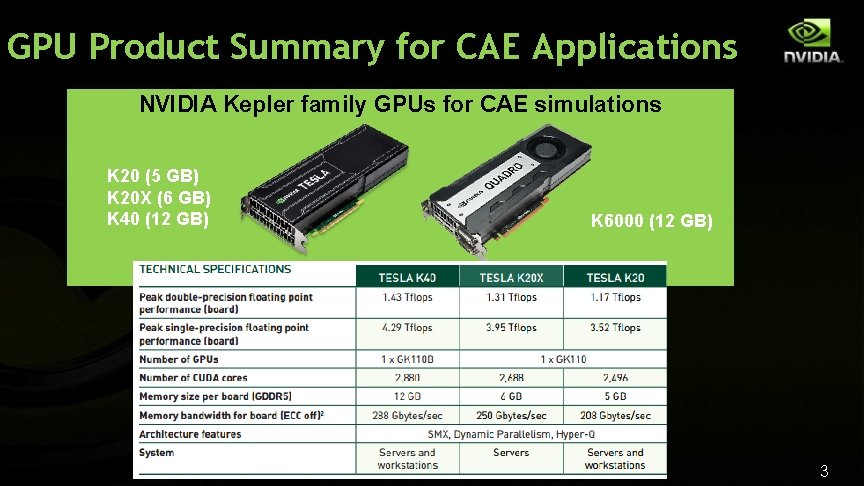

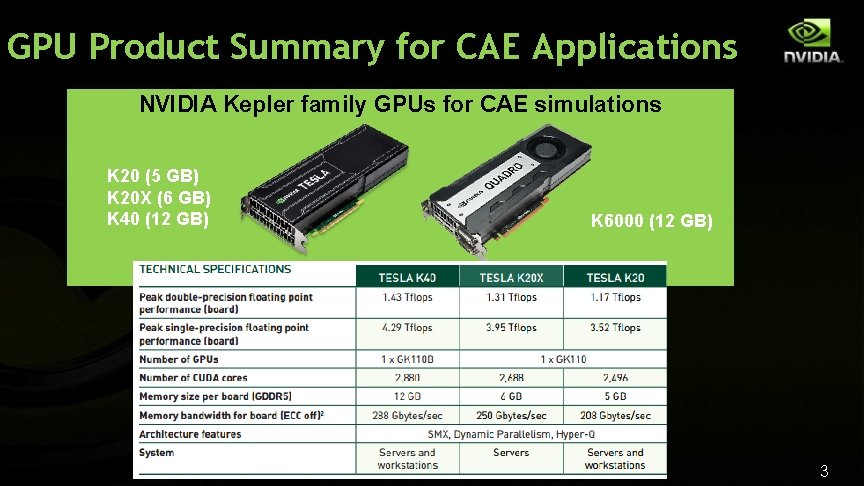

GPU Product Summary for CAE Applications

NVIDIA Kepler-family GPUs for CAE simulations: Tesla K20 (5 GB), Tesla K20X (6 GB), Tesla K40 (12 GB), and Quadro K6000 (12 GB).

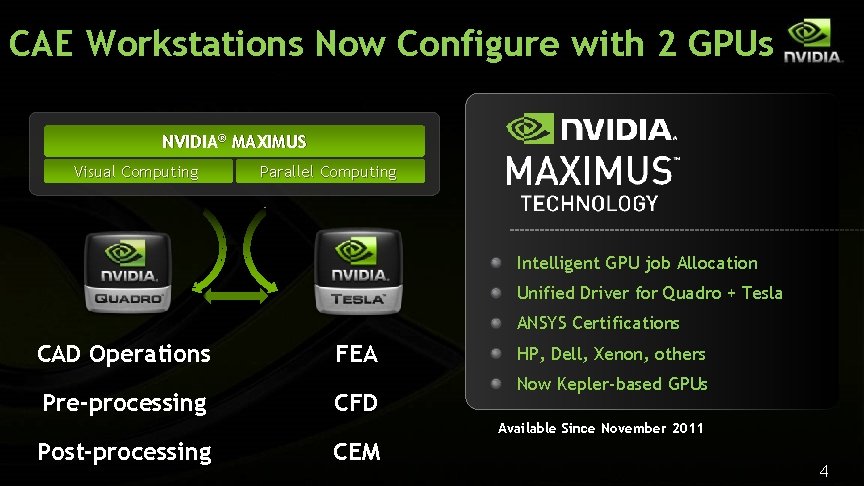

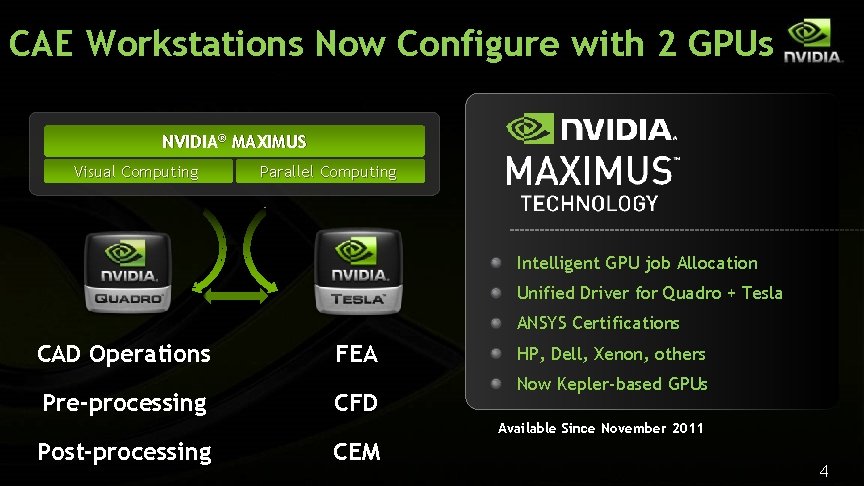

CAE Workstations Now Configured with 2 GPUs: NVIDIA® MAXIMUS

- Visual computing: CAD operations, pre-processing, post-processing
- Parallel computing: FEA, CFD, CEM
- Intelligent GPU job allocation
- Unified driver for Quadro + Tesla
- ANSYS certifications
- Available since November 2011 from HP, Dell, Xenon, and others; now with Kepler-based GPUs

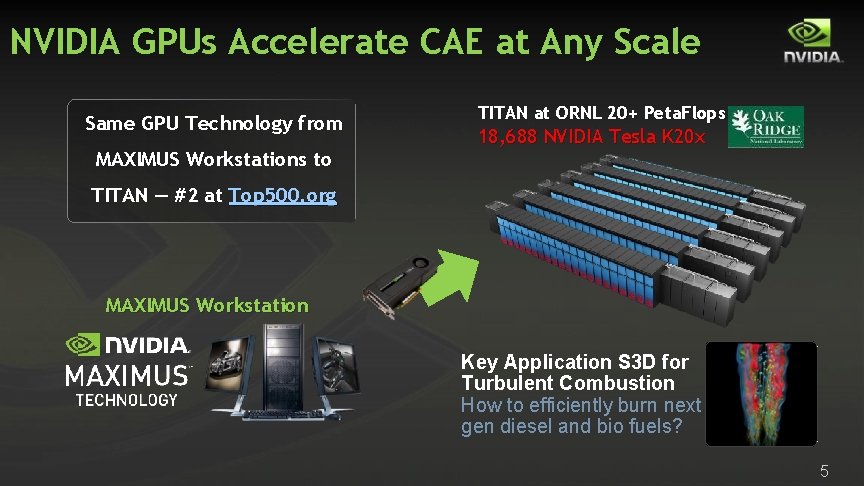

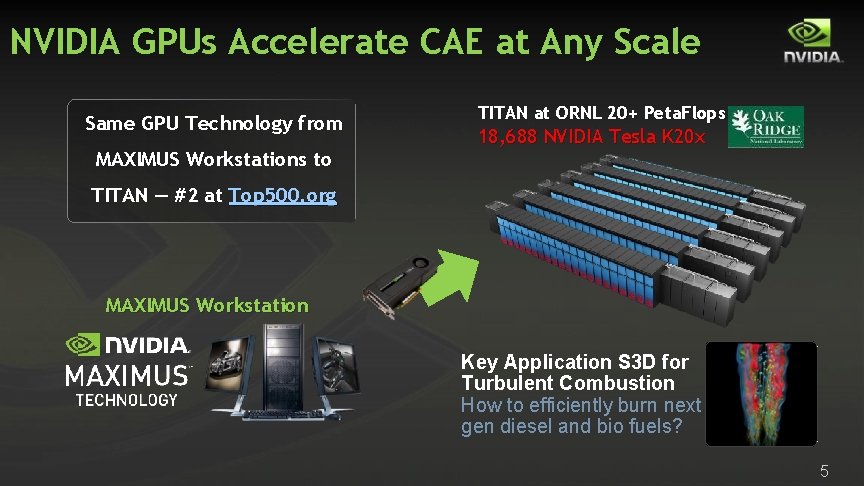

NVIDIA GPUs Accelerate CAE at Any Scale

The same GPU technology spans MAXIMUS workstations and TITAN at ORNL.

- TITAN: 20+ PetaFLOPS, 18,688 NVIDIA Tesla K20X GPUs; #2 on Top500.org
- Key application: S3D for turbulent combustion (how to efficiently burn next-generation diesel and bio fuels?)

NVIDIA Use of CAE in Product Engineering

- ANSYS Icepak: active and passive cooling of IC packages
- ANSYS Mechanical: large-deflection bending of PCBs
- ANSYS Mechanical: comfort and fit of 3D emitter glasses
- ANSYS Mechanical: shock and vibration of solder ball assemblies

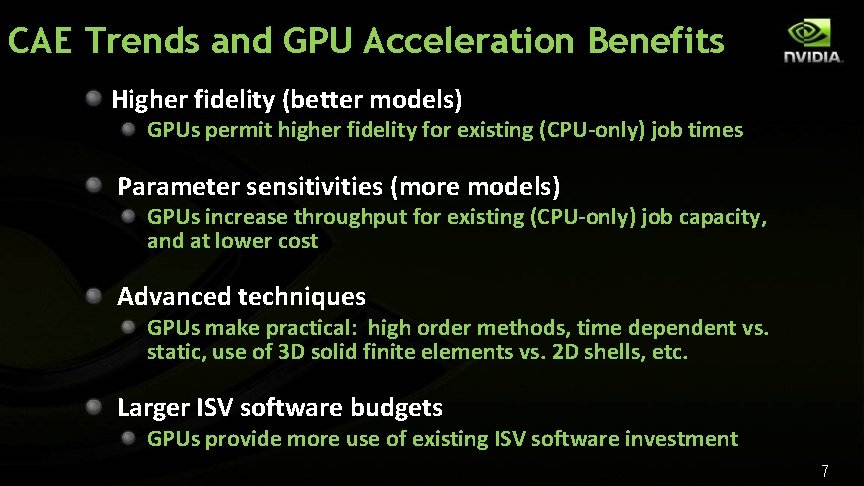

CAE Trends and GPU Acceleration Benefits

- Higher fidelity (better models): GPUs permit higher fidelity within existing (CPU-only) job times
- Parameter sensitivities (more models): GPUs increase throughput over existing (CPU-only) job capacity, and at lower cost
- Advanced techniques: GPUs make high-order methods, time-dependent rather than static analysis, 3D solid finite elements instead of 2D shells, etc. practical
- Larger ISV software budgets: GPUs provide more use of the existing ISV software investment

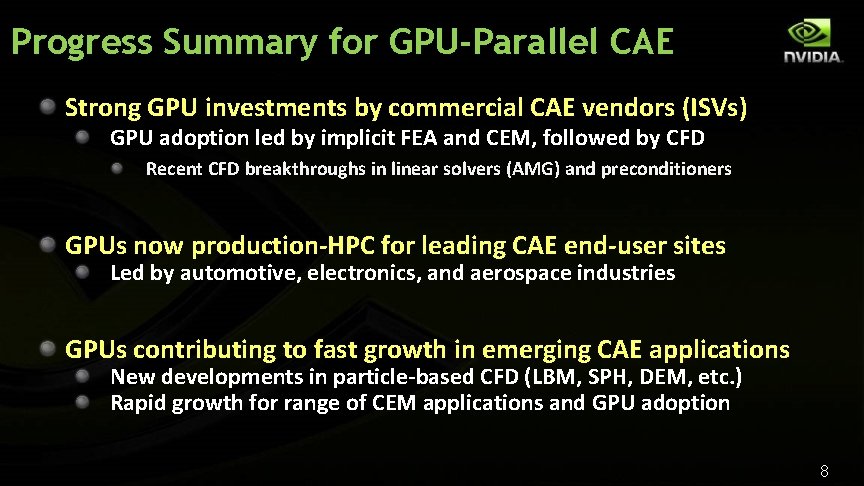

Progress Summary for GPU-Parallel CAE

- Strong GPU investments by commercial CAE vendors (ISVs)
  - GPU adoption led by implicit FEA and CEM, followed by CFD
  - Recent CFD breakthroughs in linear solvers (AMG) and preconditioners
- GPUs now production HPC at leading CAE end-user sites
  - Led by the automotive, electronics, and aerospace industries
- GPUs contributing to fast growth in emerging CAE applications
  - New developments in particle-based CFD (LBM, SPH, DEM, etc.)
  - Rapid growth across a range of CEM applications and GPU adoption

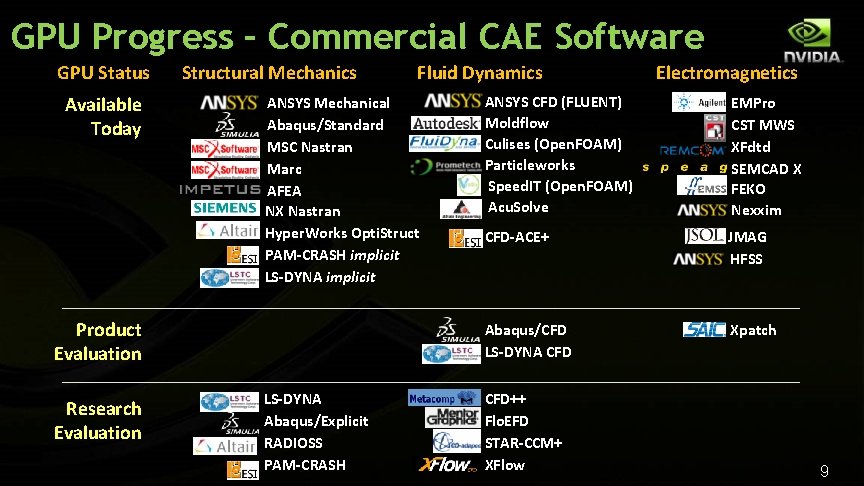

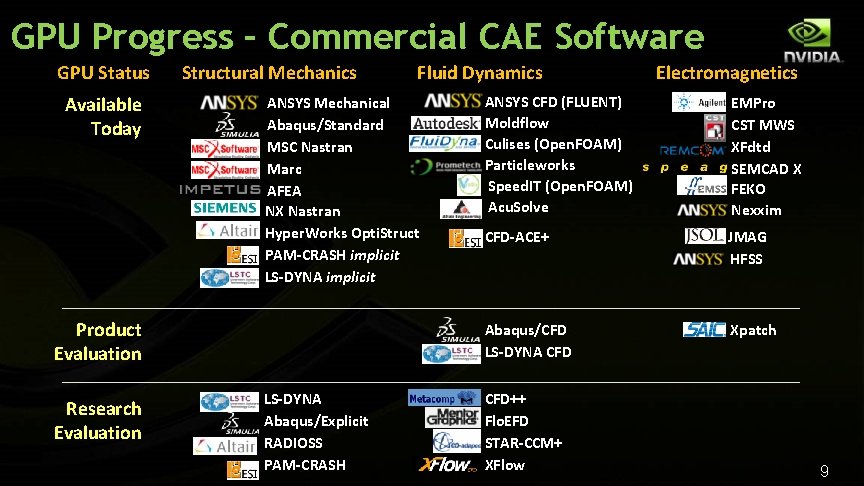

GPU Progress: Commercial CAE Software

GPU status categories: available today, product evaluation, and research evaluation.

- Structural mechanics: ANSYS Mechanical, Abaqus/Standard, MSC Nastran, Marc, AFEA, NX Nastran, HyperWorks OptiStruct, PAM-CRASH implicit, LS-DYNA implicit, LS-DYNA, Abaqus/Explicit, RADIOSS, PAM-CRASH
- Fluid dynamics: ANSYS CFD (FLUENT), Moldflow, Culises (OpenFOAM), Particleworks, SpeedIT (OpenFOAM), AcuSolve, CFD-ACE+, Abaqus/CFD, LS-DYNA CFD, CFD++, FloEFD, STAR-CCM+, XFlow
- Electromagnetics: EMPro, CST MWS, XFdtd, SEMCAD X, FEKO, Nexxim, JMAG, HFSS, Xpatch

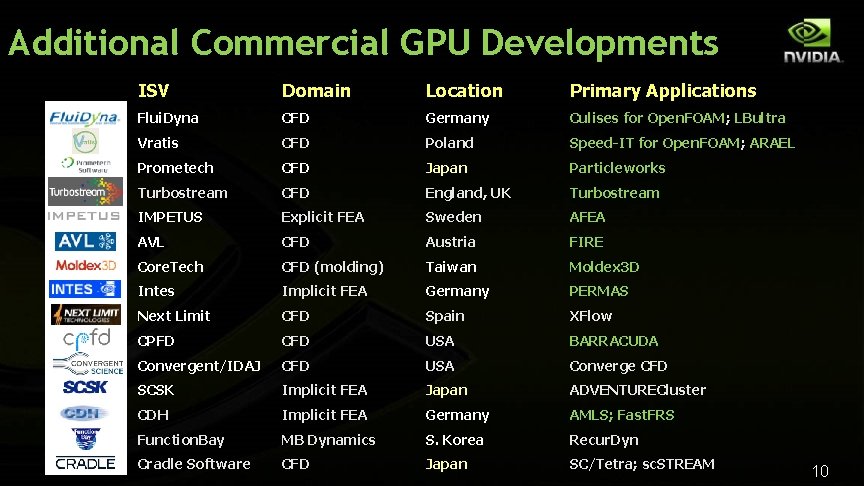

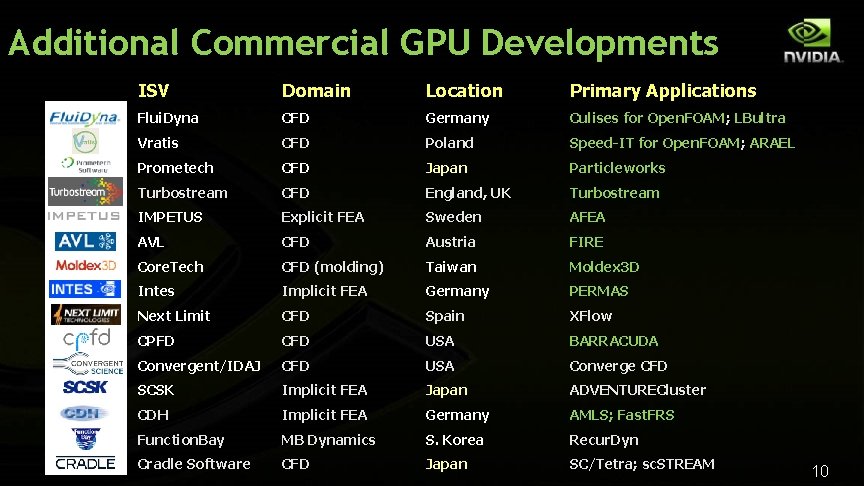

Additional Commercial GPU Developments

| ISV | Domain | Location | Primary Applications |
|---|---|---|---|
| FluiDyna | CFD | Germany | Culises for OpenFOAM; LBultra |
| Vratis | CFD | Poland | Speed-IT for OpenFOAM; ARAEL |
| Prometech | CFD | Japan | Particleworks |
| Turbostream | CFD | England, UK | Turbostream |
| IMPETUS | Explicit FEA | Sweden | AFEA |
| AVL | CFD | Austria | FIRE |
| CoreTech | CFD (molding) | Taiwan | Moldex3D |
| Intes | Implicit FEA | Germany | PERMAS |
| Next Limit | CFD | Spain | XFlow |
| CPFD | CFD | USA | BARRACUDA |
| Convergent/IDAJ | CFD | USA | Converge CFD |
| SCSK | Implicit FEA | Japan | ADVENTURECluster |
| CDH | Implicit FEA | Germany | AMLS; FastFRS |
| FunctionBay | MB dynamics | S. Korea | RecurDyn |
| Cradle Software | CFD | Japan | SC/Tetra; scSTREAM |

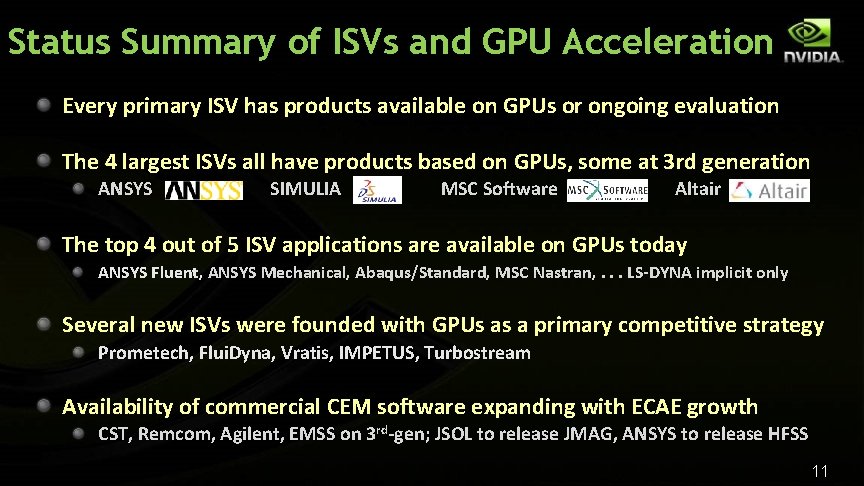

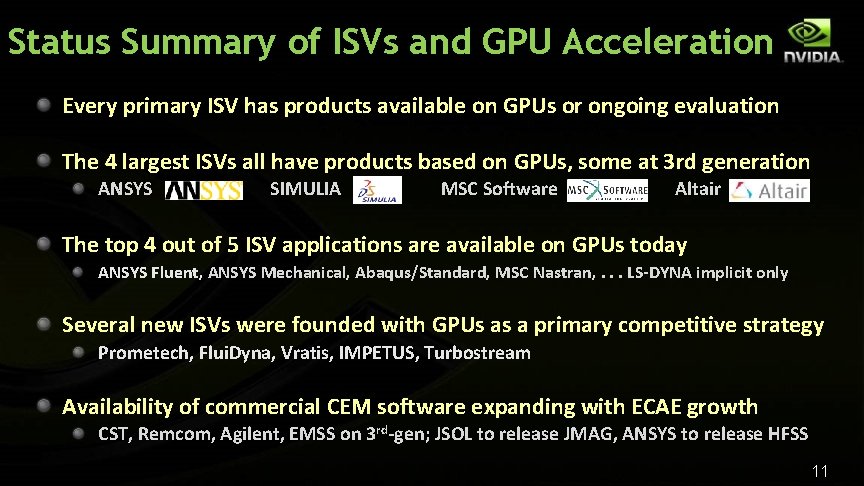

Status Summary of ISVs and GPU Acceleration

- Every primary ISV has products available on GPUs or under evaluation
- The 4 largest ISVs all have products based on GPUs, some at 3rd generation: ANSYS, SIMULIA, MSC Software, Altair
- 4 of the top 5 ISV applications are available on GPUs today: ANSYS Fluent, ANSYS Mechanical, Abaqus/Standard, MSC Nastran, ... LS-DYNA implicit only
- Several new ISVs were founded with GPUs as a primary competitive strategy: Prometech, FluiDyna, Vratis, IMPETUS, Turbostream
- Availability of commercial CEM software is expanding with ECAE growth: CST, Remcom, Agilent, and EMSS on 3rd generation; JSOL to release JMAG, ANSYS to release HFSS

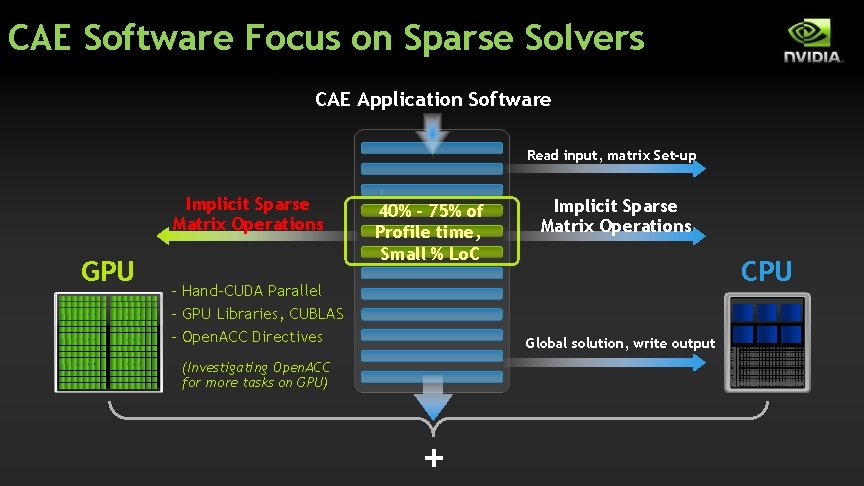

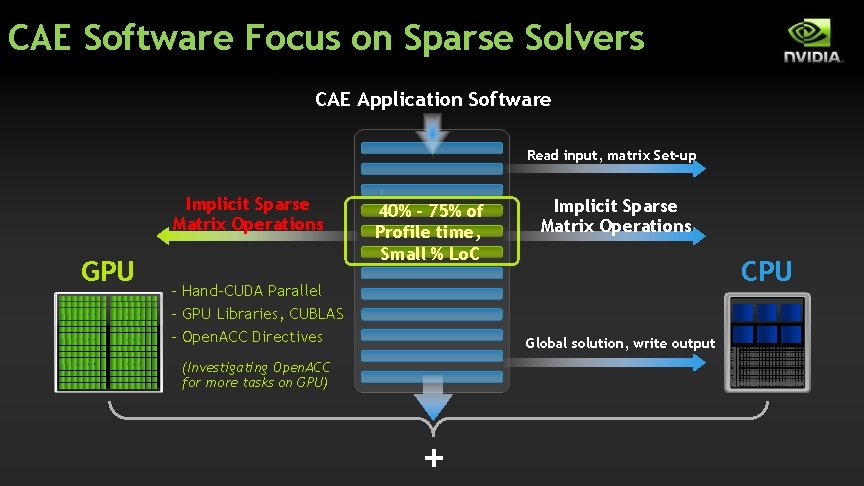

CAE Software Focus on Sparse Solvers

Typical flow of a CAE application:

1. Read input, matrix set-up (CPU)
2. Implicit sparse matrix operations (GPU): 40% to 75% of profile time but a small % of lines of code; implemented with hand-written CUDA, GPU libraries such as CUBLAS, or OpenACC directives
3. Global solution, write output (CPU; OpenACC is being investigated to move more tasks to the GPU)

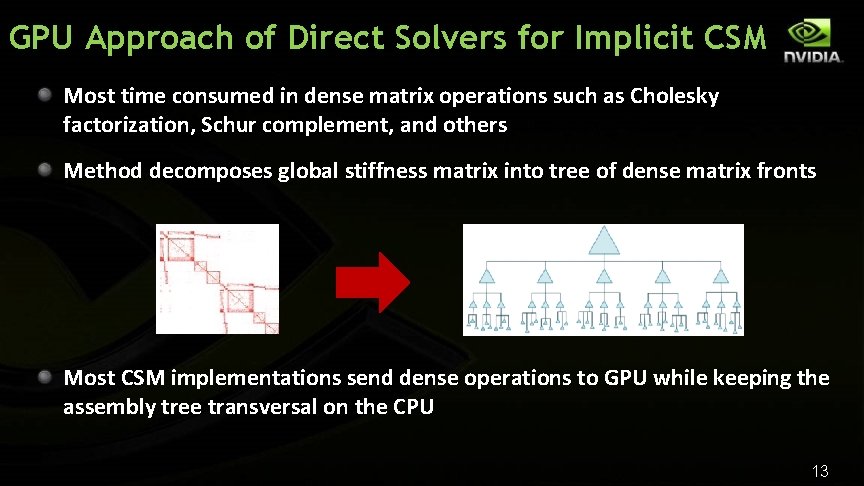

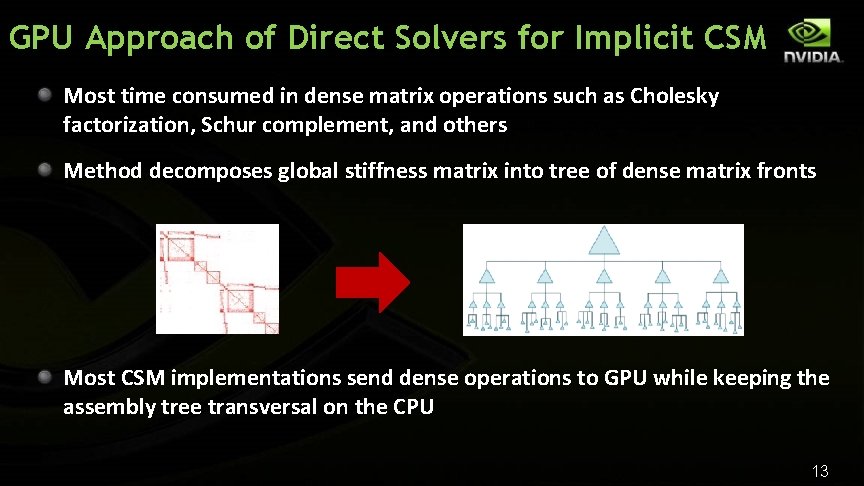

GPU Approach of Direct Solvers for Implicit CSM

- Most time is consumed in dense matrix operations such as Cholesky factorization, Schur complement updates, and others
- The method decomposes the global stiffness matrix into a tree of dense matrix fronts
- Most CSM implementations send the dense operations to the GPU while keeping the assembly-tree traversal on the CPU
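The dense work at a single front can be sketched as follows. This is a minimal pure-Python illustration, assuming a symmetric positive-definite front whose first k variables are fully summed; the function name partial_factor_front is hypothetical, and a production solver would call tuned dense kernels (for example, CUBLAS on the GPU) for each of the three steps instead of Python loops.

```python
import math


def partial_factor_front(F, k):
    """Eliminate the first k (fully summed) variables of a dense SPD front F.

    Returns (L11, L21, S) with F11 = L11 L11^T, L21 = F21 L11^-T and the
    Schur complement S = F22 - L21 L21^T, i.e. the update block that a
    multifrontal solver passes to the parent front in the assembly tree.
    """
    n = len(F)
    m = n - k
    # 1) Dense Cholesky of the k x k pivot block F11.
    L11 = [[0.0] * k for _ in range(k)]
    for j in range(k):
        L11[j][j] = math.sqrt(F[j][j] - sum(L11[j][p] ** 2 for p in range(j)))
        for i in range(j + 1, k):
            s = F[i][j] - sum(L11[i][p] * L11[j][p] for p in range(j))
            L11[i][j] = s / L11[j][j]
    # 2) Triangular solve L21 L11^T = F21, one row at a time.
    L21 = [[0.0] * k for _ in range(m)]
    for r in range(m):
        for j in range(k):
            s = F[k + r][j] - sum(L21[r][p] * L11[j][p] for p in range(j))
            L21[r][j] = s / L11[j][j]
    # 3) Schur complement update (the GEMM-like bulk of the work).
    S = [[F[k + r][k + c] - sum(L21[r][p] * L21[c][p] for p in range(k))
          for c in range(m)] for r in range(m)]
    return L11, L21, S


L11, L21, S = partial_factor_front([[4.0, 2.0, 2.0],
                                    [2.0, 5.0, 3.0],
                                    [2.0, 3.0, 6.0]], k=1)
print(L11, L21, S)
```

Eliminating one pivot from this 3 x 3 front gives L11 = [[2.0]], L21 = [[1.0], [1.0]], and the Schur complement [[4.0, 2.0], [2.0, 5.0]], which would then be assembled into the parent front.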

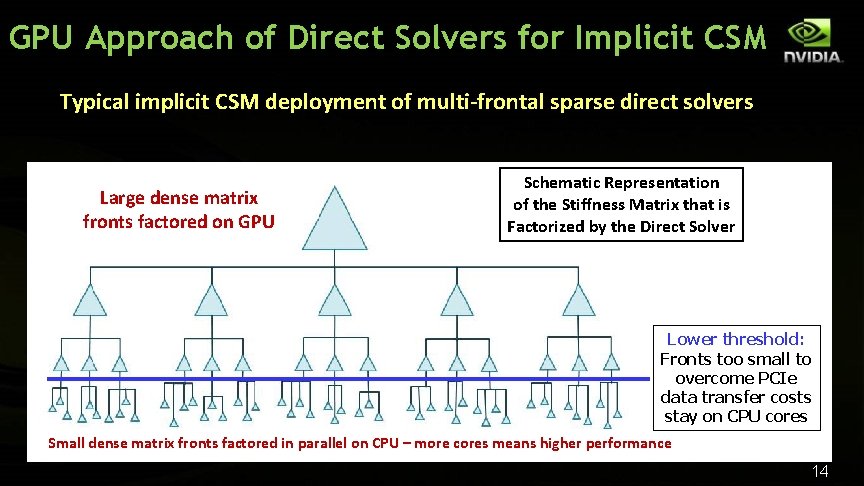

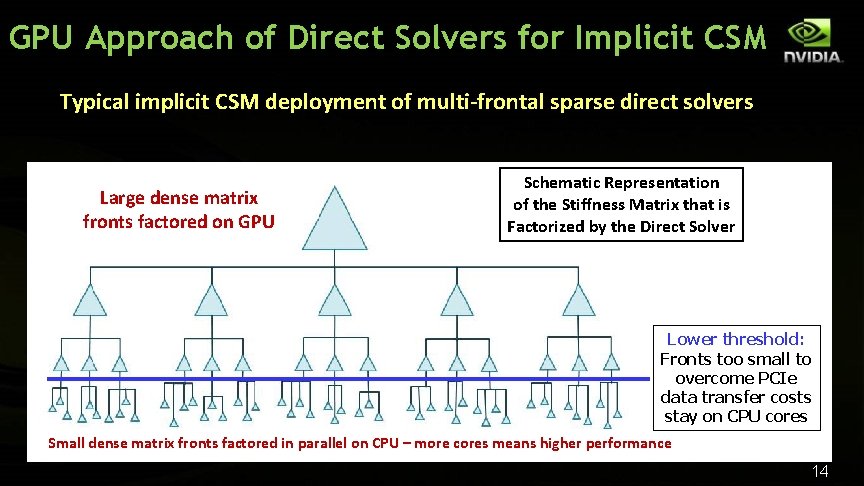

GPU Approach of Direct Solvers for Implicit CSM

Typical implicit CSM deployment of multifrontal sparse direct solvers (figure: schematic representation of the stiffness matrix that is factorized by the direct solver):

- Large dense matrix fronts are factored on the GPU
- Lower threshold: fronts too small to overcome PCIe data transfer costs stay on the CPU cores
- Small dense matrix fronts are factored in parallel on the CPU; more cores mean higher performance
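The lower threshold is essentially a cost comparison between computing a front on the CPU and paying the PCIe round trip to compute it on the GPU. The sketch below assumes textbook dense flop counts and made-up device rates; front_flops, choose_device, and the parameters pcie_gbytes_per_s, gpu_gflops, and cpu_gflops are hypothetical placeholders, not measured Kepler or Xeon figures, and real solvers tune this cutoff empirically.

```python
def front_flops(n, k):
    """Approximate flops to eliminate k pivots from an n x n symmetric front:
    pivot-block Cholesky (k^3/3), triangular solve (m*k^2), Schur update (m^2*k)."""
    m = n - k
    return k ** 3 / 3 + m * k ** 2 + m * m * k


def choose_device(n, k, pcie_gbytes_per_s=6.0, gpu_gflops=1000.0, cpu_gflops=100.0):
    """Return "GPU" if GPU compute plus the PCIe round trip beats CPU compute.

    The default rates are hypothetical placeholders, not measured figures.
    """
    flops = front_flops(n, k)
    bytes_moved = 2 * 8 * n * n            # front to the GPU and back, 8-byte doubles
    t_cpu = flops / (cpu_gflops * 1e9)
    t_gpu = flops / (gpu_gflops * 1e9) + bytes_moved / (pcie_gbytes_per_s * 1e9)
    return "GPU" if t_gpu < t_cpu else "CPU"


print(choose_device(100, 50))      # small front
print(choose_device(5000, 2500))   # large front
```

With these placeholder rates the 100 x 100 front stays on the CPU and the 5,000 x 5,000 front goes to the GPU: the O(n^3) arithmetic grows faster than the O(n^2) transfer, so only large fronts amortize the PCIe cost.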

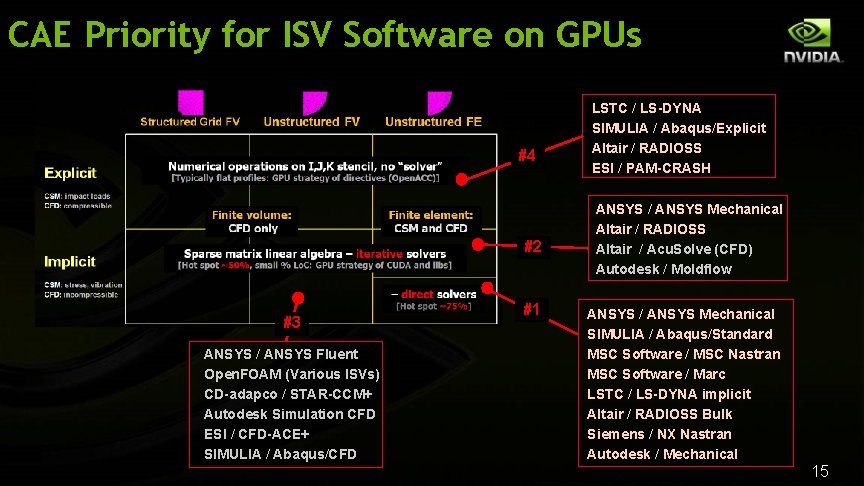

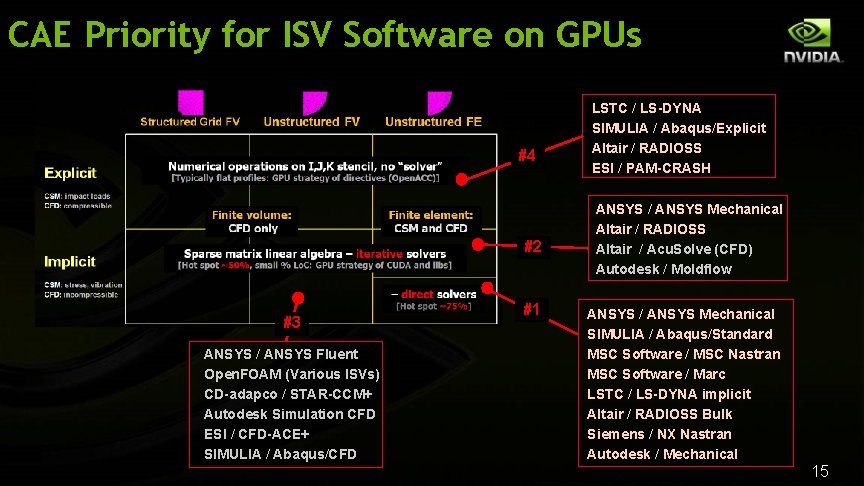

CAE Priority for ISV Software on GPUs

Four application groups, ranked #1 to #4:

- CFD: ANSYS / ANSYS Fluent; OpenFOAM (various ISVs); CD-adapco / STAR-CCM+; Autodesk Simulation CFD; ESI / CFD-ACE+; SIMULIA / Abaqus/CFD
- Explicit structural: LSTC / LS-DYNA; SIMULIA / Abaqus/Explicit; Altair / RADIOSS; ESI / PAM-CRASH
- ANSYS / ANSYS Mechanical; Altair / RADIOSS; Altair / AcuSolve (CFD); Autodesk / Moldflow
- Implicit structural: ANSYS / ANSYS Mechanical; SIMULIA / Abaqus/Standard; MSC Software / MSC Nastran; MSC Software / Marc; LSTC / LS-DYNA implicit; Altair / RADIOSS Bulk; Siemens / NX Nastran; Autodesk / Mechanical

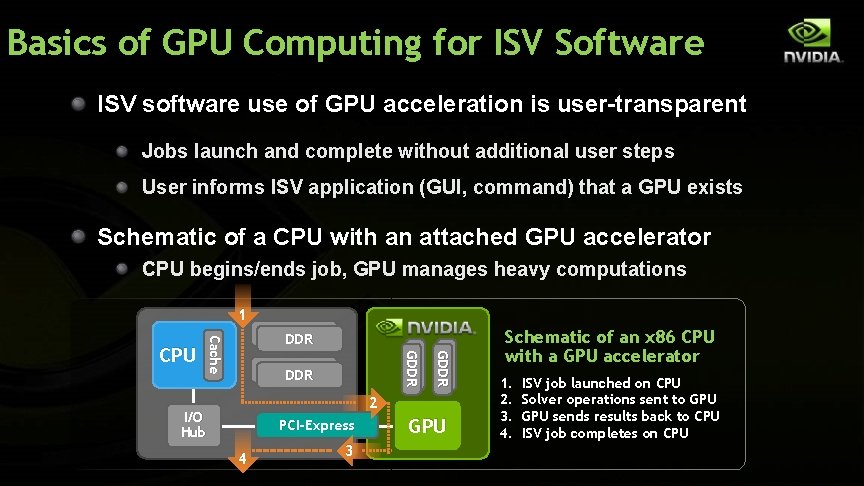

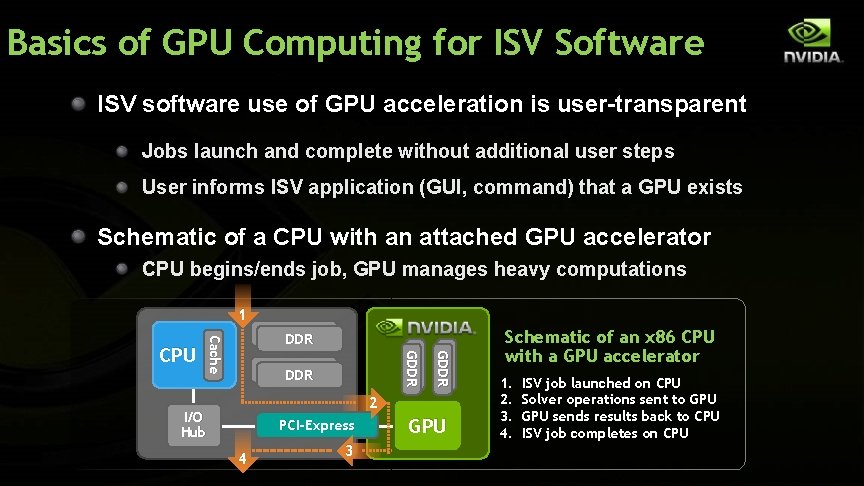

Basics of GPU Computing for ISV Software

- ISV software use of GPU acceleration is user-transparent
  - Jobs launch and complete without additional user steps
  - The user informs the ISV application (GUI, command) that a GPU exists
- Schematic of an x86 CPU with an attached GPU accelerator: the CPU (with cache and DDR memory) connects through an I/O hub and PCI-Express to the GPU (with GDDR memory). The CPU begins and ends the job; the GPU manages the heavy computations:
  1. ISV job launched on CPU
  2. Solver operations sent to GPU
  3. GPU sends results back to CPU
  4. ISV job completes on CPU

Computational Fluid Dynamics: ANSYS Fluent

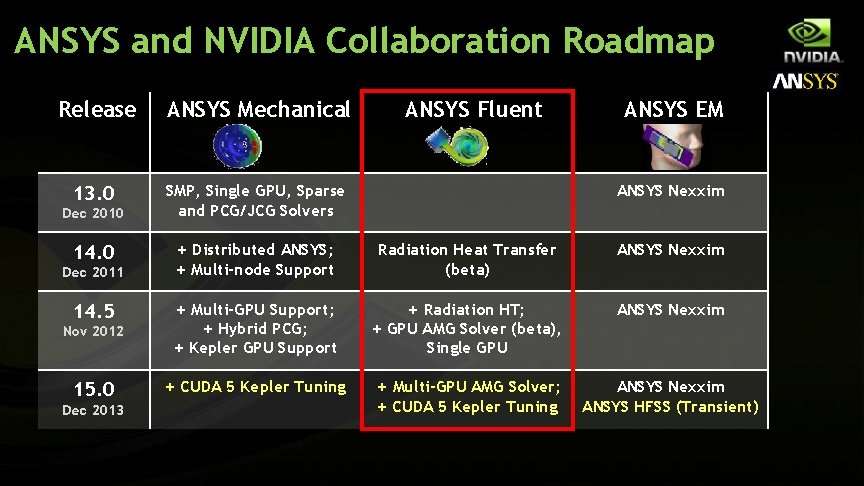

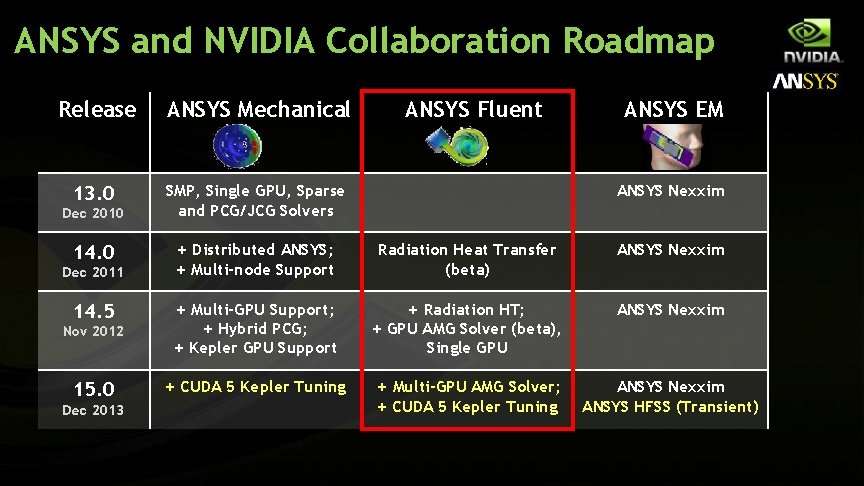

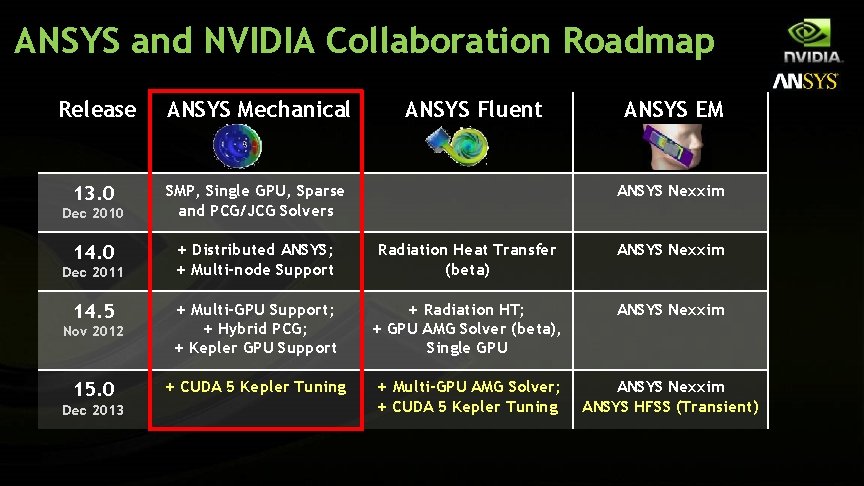

ANSYS and NVIDIA Collaboration Roadmap

| Release | ANSYS Mechanical | ANSYS Fluent | ANSYS EM |
|---|---|---|---|
| 13.0 (Dec 2010) | SMP, single GPU, sparse and PCG/JCG solvers | | ANSYS Nexxim |
| 14.0 (Dec 2011) | + Distributed ANSYS; + multi-node support | Radiation heat transfer (beta) | ANSYS Nexxim |
| 14.5 (Nov 2012) | + Multi-GPU support; + hybrid PCG; + Kepler GPU support | + Radiation HT; + GPU AMG solver (beta), single GPU | ANSYS Nexxim |
| 15.0 (Dec 2013) | + CUDA 5 Kepler tuning | + Multi-GPU AMG solver; + CUDA 5 Kepler tuning | ANSYS Nexxim; ANSYS HFSS (Transient) |

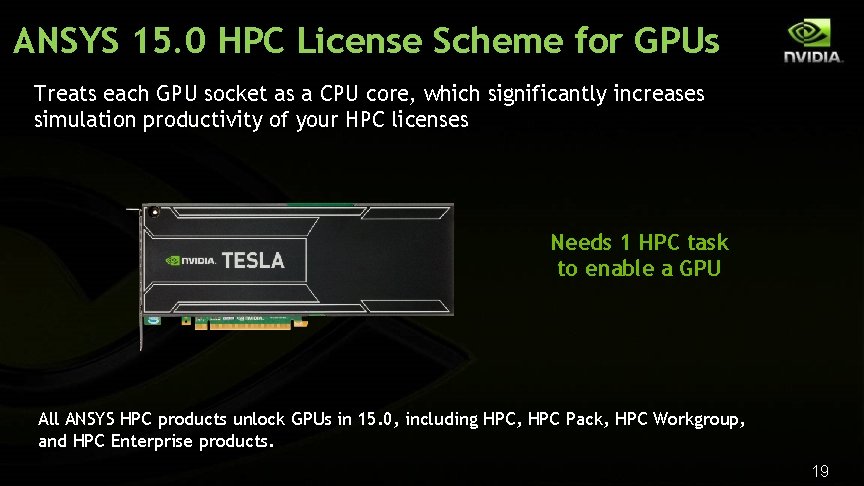

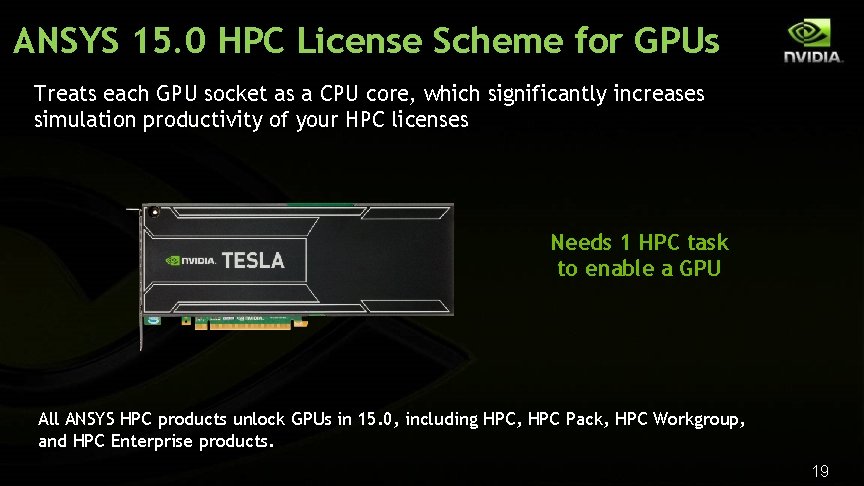

ANSYS 15.0 HPC License Scheme for GPUs

- Treats each GPU socket as a CPU core, which significantly increases the simulation productivity of your HPC licenses
- Needs 1 HPC task to enable a GPU
- All ANSYS HPC products unlock GPUs in 15.0, including HPC, HPC Pack, HPC Workgroup, and HPC Enterprise
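The accounting rule reduces to simple arithmetic: each GPU consumes one licensed parallel task, just as a CPU core would. The sketch below assumes the task counts implied by the ANSYS Mechanical benchmarks later in this deck (3 tasks with one HPC license, giving 2 CPU cores + 1 GPU; 8 tasks with one HPC Pack, giving 7 CPU cores + 1 GPU); license_split is a hypothetical helper, not an ANSYS tool.

```python
def license_split(parallel_tasks, gpus):
    """Split licensed parallel tasks into (cpu_cores, gpus), assuming each GPU
    consumes one task exactly as a CPU core would."""
    if gpus < 0 or gpus >= parallel_tasks:
        raise ValueError("at least one task must remain for a CPU core")
    return parallel_tasks - gpus, gpus


print(license_split(3, 1))   # one HPC license (assumed 3 tasks)
print(license_split(8, 1))   # one HPC Pack (assumed 8 tasks)
```

The two calls reproduce the "2 CPU cores + GPU" and "7 CPU cores + GPU" configurations benchmarked later in the deck.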

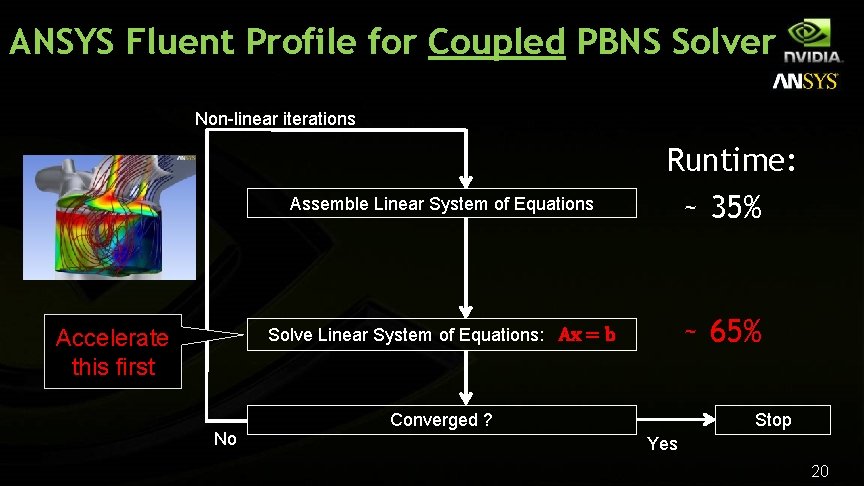

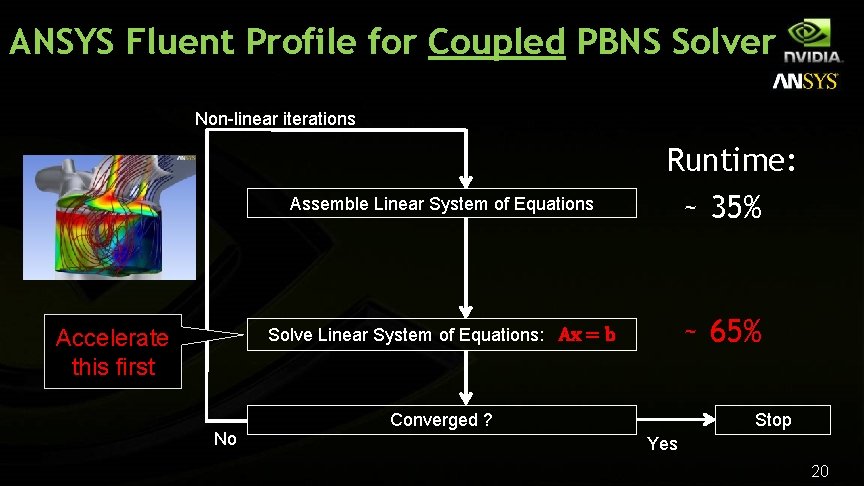

ANSYS Fluent Profile for Coupled PBNS Solver

Each non-linear iteration:

1. Assemble the linear system of equations (~35% of runtime)
2. Solve the linear system of equations Ax = b (~65% of runtime; accelerate this first)
3. Converged? If no, return to step 1; if yes, stop.
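Because only the linear solve is offloaded, the ~35% assembly share caps the overall gain. A minimal Amdahl's-law sketch, using the ~65% solve fraction from the profile above (amdahl_speedup is an illustrative helper name, not part of any ANSYS tool):

```python
def amdahl_speedup(accel_fraction, accel_factor):
    """Overall speedup when accel_fraction of the runtime is sped up by
    accel_factor and the remainder runs unchanged (Amdahl's law)."""
    return 1.0 / ((1.0 - accel_fraction) + accel_fraction / accel_factor)


# ~65% of each coupled-PBNS iteration is the linear solve (profile above).
for solver_gain in (2.0, 4.0, 10.0):
    overall = amdahl_speedup(0.65, solver_gain)
    print(f"solver {solver_gain:.0f}x faster -> iteration {overall:.2f}x faster")

print(f"upper bound with an infinitely fast solver: {1 / (1 - 0.65):.2f}x")
```

A 2x, 4x, or 10x faster solver gives roughly 1.48x, 1.95x, and 2.41x per iteration, and no solver speedup can exceed about 2.86x while assembly stays on the CPU.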

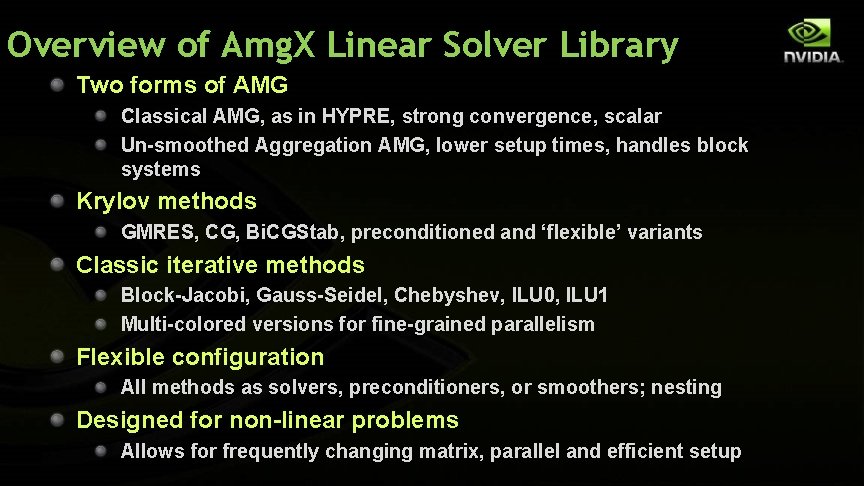

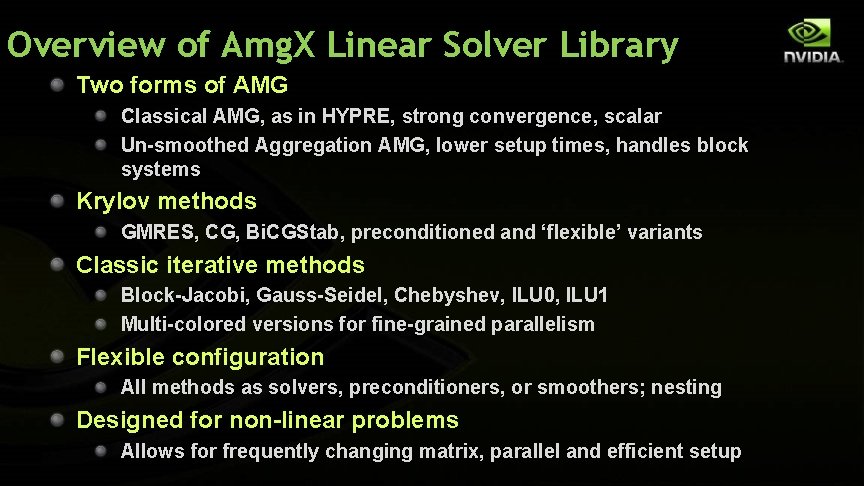

Overview of AmgX Linear Solver Library

- Two forms of AMG
  - Classical AMG, as in HYPRE: strong convergence, scalar
  - Un-smoothed aggregation AMG: lower setup times, handles block systems
- Krylov methods: GMRES, CG, BiCGStab, with preconditioned and "flexible" variants
- Classic iterative methods: block-Jacobi, Gauss-Seidel, Chebyshev, ILU0, ILU1
  - Multi-colored versions for fine-grained parallelism
- Flexible configuration: all methods usable as solvers, preconditioners, or smoothers, with nesting
- Designed for non-linear problems: allows a frequently changing matrix, with parallel and efficient setup
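The idea that any method can serve as a solver or as a preconditioner can be illustrated with a Krylov method that accepts its preconditioner as a plug-in. This is a minimal dense pure-Python sketch of preconditioned CG with a point-Jacobi preconditioner (block-Jacobi with 1 x 1 blocks); it is not the AmgX API, and pcg and jacobi_preconditioner are illustrative names. In a library such as AmgX, the same preconditioner slot could hold an AMG cycle instead.

```python
def pcg(A, b, precond, tol=1e-10, max_iters=200):
    """Preconditioned conjugate gradient for a dense SPD matrix A (list of lists).

    precond(r) must return an approximation of A^-1 r; any solver or
    smoother can be plugged in here. Returns (x, iterations_used).
    """
    n = len(b)

    def matvec(v):
        return [sum(A[i][j] * v[j] for j in range(n)) for i in range(n)]

    def dot(u, v):
        return sum(ui * vi for ui, vi in zip(u, v))

    x = [0.0] * n
    r = list(b)                 # residual b - A x for x = 0
    z = precond(r)              # preconditioned residual
    p = list(z)                 # first search direction
    rz = dot(r, z)
    for it in range(1, max_iters + 1):
        Ap = matvec(p)
        alpha = rz / dot(p, Ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        if dot(r, r) ** 0.5 < tol:
            return x, it
        z = precond(r)
        rz_new = dot(r, z)
        beta = rz_new / rz
        p = [zi + beta * pi for zi, pi in zip(z, p)]
        rz = rz_new
    return x, max_iters


def jacobi_preconditioner(A):
    """Point-Jacobi (block-Jacobi with 1x1 blocks): scale by the inverse diagonal."""
    diag = [A[i][i] for i in range(len(A))]
    return lambda r: [ri / d for ri, d in zip(r, diag)]


A = [[4.0, 1.0, 0.0], [1.0, 3.0, 1.0], [0.0, 1.0, 2.0]]
b = [1.0, 2.0, 3.0]
x, iters = pcg(A, b, jacobi_preconditioner(A))
print(x, iters)
```

For this 3 x 3 system the exact solution is [2/9, 1/9, 13/9]; swapping jacobi_preconditioner for another callable changes the preconditioner without touching the Krylov loop, which is the nesting property the slide describes.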

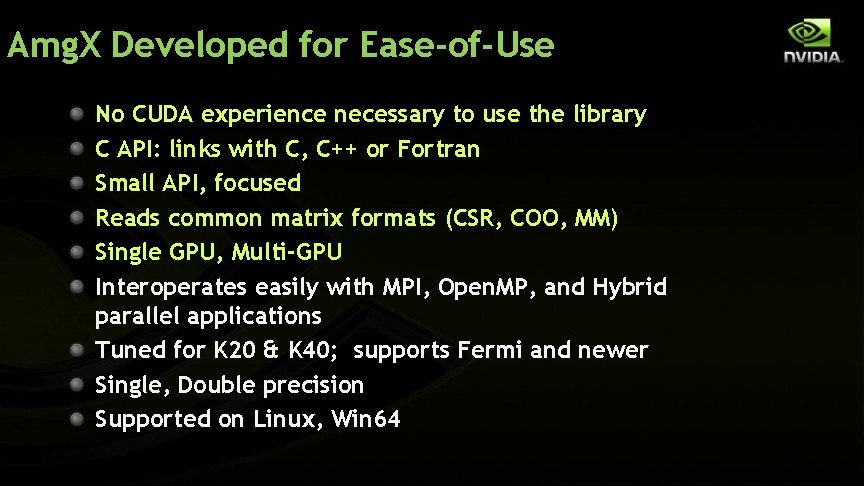

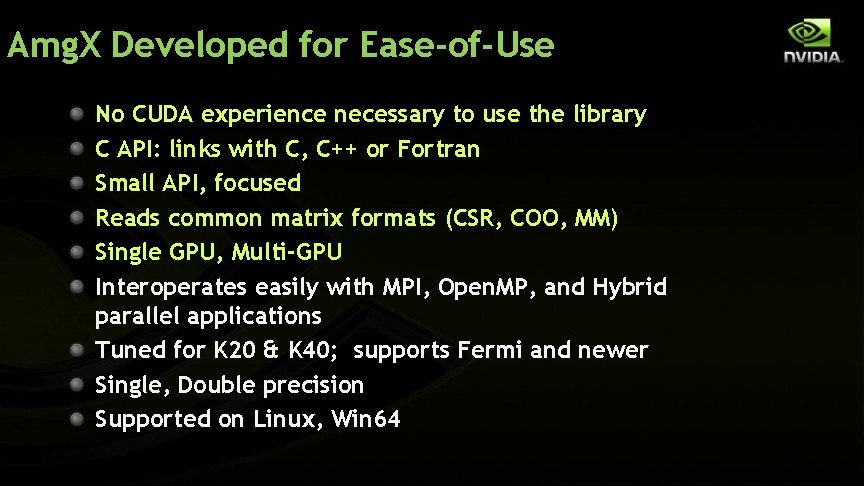

AmgX Developed for Ease of Use

- No CUDA experience necessary to use the library
- C API: links with C, C++, or Fortran
- Small, focused API
- Reads common matrix formats (CSR, COO, MM)
- Single GPU and multi-GPU
- Interoperates easily with MPI, OpenMP, and hybrid parallel applications
- Tuned for K20 and K40; supports Fermi and newer
- Single and double precision
- Supported on Linux and Win64
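For readers new to the formats named above, the sketch below converts coordinate (COO) triplets, which is also the layout of a Matrix Market "coordinate" file, into compressed sparse row (CSR) arrays and applies a CSR matrix-vector product, the core kernel of Krylov solvers. It is a plain-Python illustration of the data structures only; coo_to_csr and csr_matvec are illustrative names, not AmgX calls.

```python
def coo_to_csr(n_rows, rows, cols, vals):
    """Convert COO triplets to CSR arrays (row_ptr, col_idx, values).

    Entries keep their input order within each row; duplicate (row, col)
    pairs are kept as separate entries rather than summed.
    """
    # Count entries per row, then prefix-sum into row pointers.
    row_ptr = [0] * (n_rows + 1)
    for r in rows:
        row_ptr[r + 1] += 1
    for i in range(n_rows):
        row_ptr[i + 1] += row_ptr[i]

    # Scatter each triplet into the next free slot of its row.
    next_slot = row_ptr[:-1]          # slicing makes a copy
    col_idx = [0] * len(vals)
    values = [0.0] * len(vals)
    for r, c, v in zip(rows, cols, vals):
        k = next_slot[r]
        col_idx[k] = c
        values[k] = v
        next_slot[r] += 1
    return row_ptr, col_idx, values


def csr_matvec(row_ptr, col_idx, values, x):
    """y = A x for a CSR matrix: one sparse dot product per row."""
    return [
        sum(values[k] * x[col_idx[k]] for k in range(row_ptr[i], row_ptr[i + 1]))
        for i in range(len(row_ptr) - 1)
    ]


# 3x3 matrix [[4, 0, 1], [0, 3, 0], [1, 0, 2]] given as unsorted COO triplets.
row_ptr, col_idx, values = coo_to_csr(
    3, rows=[2, 0, 1, 0, 2], cols=[0, 0, 1, 2, 2], vals=[1.0, 4.0, 3.0, 1.0, 2.0]
)
print(row_ptr, col_idx, values)  # [0, 2, 3, 5] [0, 2, 1, 0, 2] [4.0, 1.0, 3.0, 1.0, 2.0]
print(csr_matvec(row_ptr, col_idx, values, [1.0, 1.0, 1.0]))  # [5.0, 3.0, 3.0]
```

CSR stores each row's nonzeros contiguously, which is why GPU sparse kernels can assign rows to threads or warps without searching through unsorted triplets.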

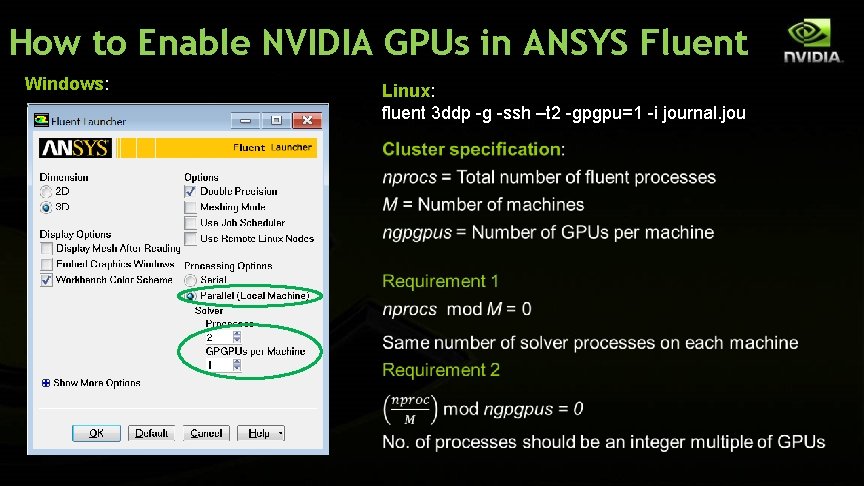

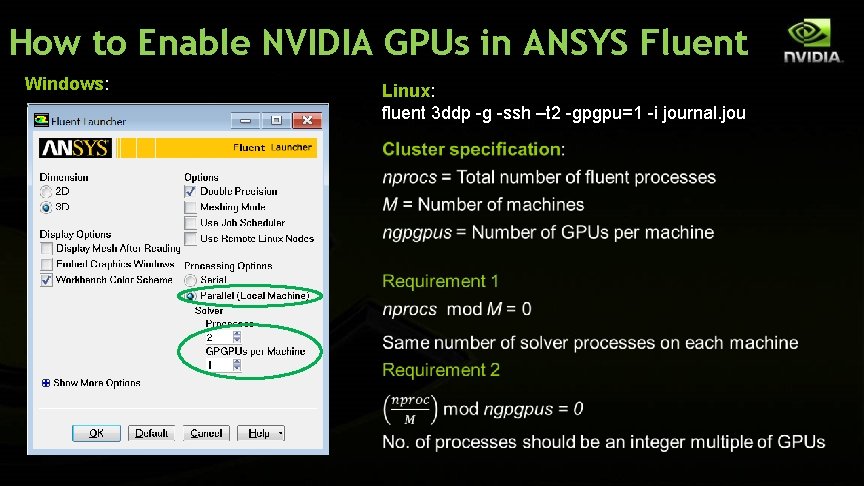

How to Enable NVIDIA GPUs in ANSYS Fluent

- Windows: select the GPU options in the Fluent Launcher
- Linux: fluent 3ddp -g -ssh -t2 -gpgpu=1 -i journal.jou

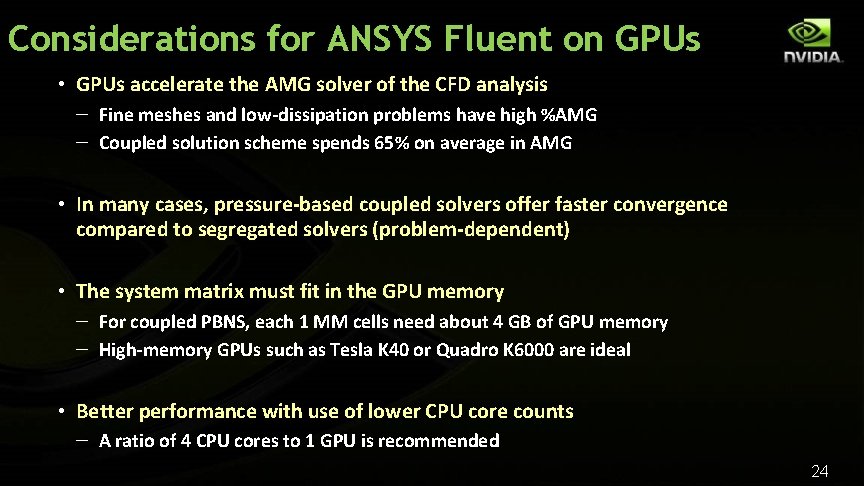

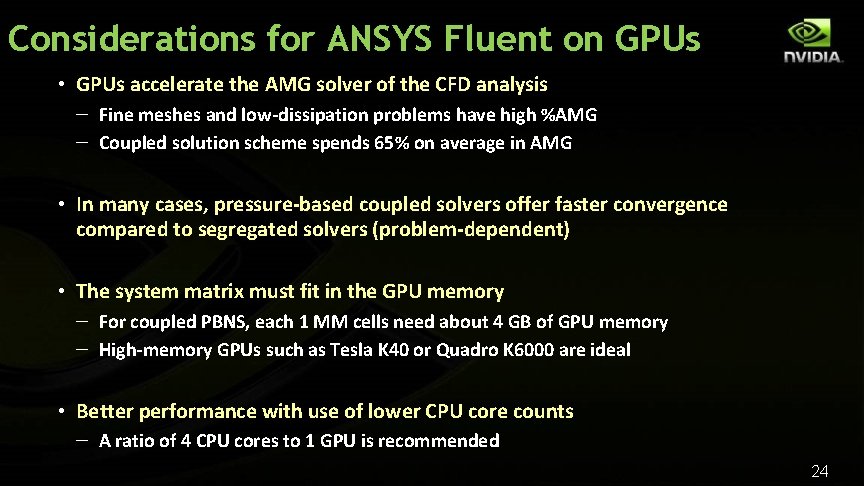

Considerations for ANSYS Fluent on GPUs

• GPUs accelerate the AMG solver of the CFD analysis
  – Fine meshes and low-dissipation problems have a high %AMG
  – The coupled solution scheme spends 65% of its time in AMG on average
• In many cases, pressure-based coupled solvers offer faster convergence than segregated solvers (problem-dependent)
• The system matrix must fit in GPU memory
  – For coupled PBNS, each 1 million cells needs about 4 GB of GPU memory
  – High-memory GPUs such as Tesla K40 or Quadro K6000 are ideal
• Better performance with lower CPU core counts
  – A ratio of 4 CPU cores to 1 GPU is recommended
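The memory rule of thumb above translates into a quick sizing estimate. This sketch assumes the ~4 GB per 1 million cells figure for coupled PBNS and a perfectly even partition of the matrix across GPUs; real partitions are never perfectly balanced, so treat the result as a lower bound. min_gpus_for_fluent and recommended_cpu_cores are hypothetical helpers.

```python
def min_gpus_for_fluent(cells, gpu_mem_gb, gb_per_million_cells=4):
    """Lower bound on GPU count so the coupled-PBNS system matrix fits in GPU
    memory, assuming ~gb_per_million_cells GB per 1M cells and even partitioning."""
    needed = cells * gb_per_million_cells        # GB, scaled by 1e6
    capacity = gpu_mem_gb * 1_000_000            # GB per GPU, same scaling
    return -(-needed // capacity)                # integer ceiling division


def recommended_cpu_cores(gpus, cores_per_gpu=4):
    """CPU cores for the recommended 4:1 core-to-GPU ratio."""
    return gpus * cores_per_gpu


for cells in (14_000_000, 111_000_000):
    g = min_gpus_for_fluent(cells, gpu_mem_gb=12)   # Tesla K40, 12 GB
    print(cells, g, recommended_cpu_cores(g))
```

For the 14M- and 111M-cell truck models that follow, this gives at least 5 and 37 K40s (about 56 GB and 444 GB of matrix data); the benchmarks used 12 and 48 GPUs, comfortably above these minimums.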

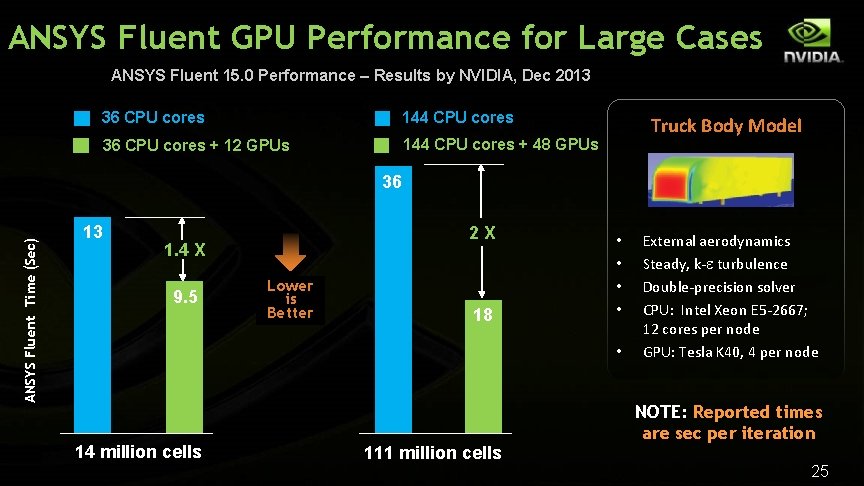

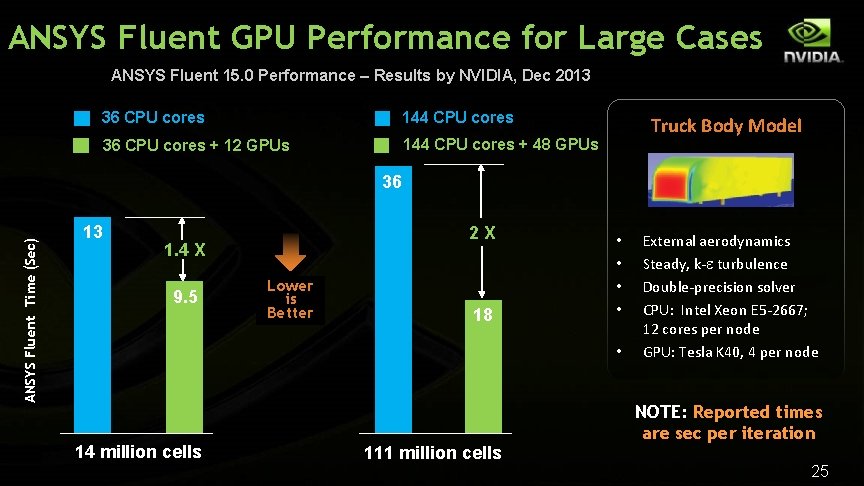

ANSYS Fluent GPU Performance for Large Cases

ANSYS Fluent 15.0 performance, results by NVIDIA, Dec 2013. Truck body model: external aerodynamics, steady, k-e turbulence, double-precision solver. CPU: Intel Xeon E5-2667, 12 cores per node; GPU: Tesla K40, 4 per node. Reported times are seconds per iteration (lower is better).

| Model | CPU only | CPU + GPU | Speedup |
|---|---|---|---|
| 14 million cells | 36 CPU cores: 13 s | 36 CPU cores + 12 GPUs: 9.5 s | 1.4X |
| 111 million cells | 144 CPU cores: 36 s | 144 CPU cores + 48 GPUs: 18 s | 2X |

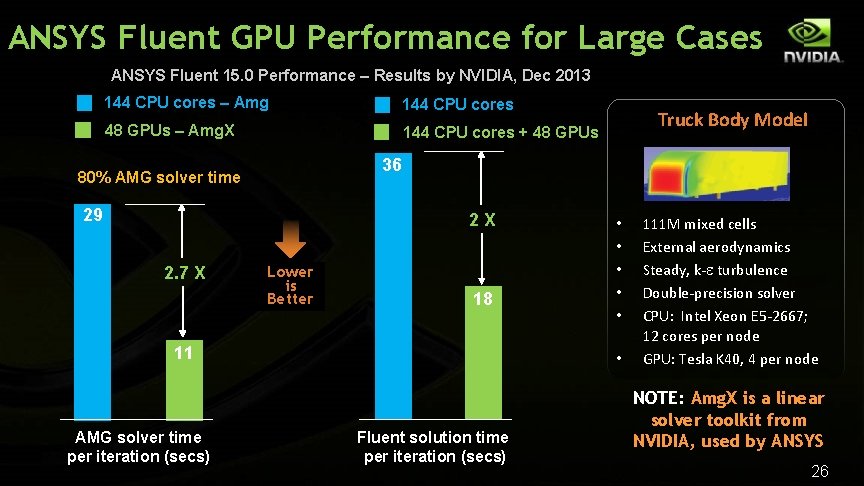

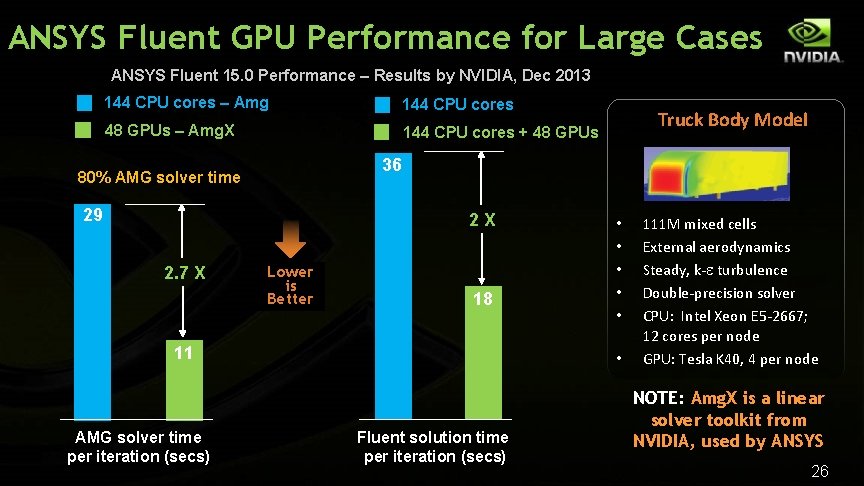

ANSYS Fluent GPU Performance for Large Cases

ANSYS Fluent 15.0 performance, results by NVIDIA, Dec 2013. Truck body model: 111M mixed cells, external aerodynamics, steady, k-e turbulence, double-precision solver. CPU: Intel Xeon E5-2667, 12 cores per node; GPU: Tesla K40, 4 per node. Times in seconds per iteration (lower is better).

| Configuration | Fluent solution time per iteration | AMG solver time per iteration |
|---|---|---|
| 144 CPU cores (AMG) | 36 s | 29 s (80% of solution time) |
| 144 CPU cores + 48 GPUs (AmgX) | 18 s (2X) | 11 s (2.7X) |

NOTE: AmgX is a linear solver toolkit from NVIDIA, used by ANSYS.
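The two charts are consistent with a simple time decomposition: the GPUs shorten only the AMG portion, and everything else is unchanged. A small arithmetic check using the 111M-cell times above (variable names are illustrative):

```python
# Per-iteration times (seconds) for the 111M-cell truck body model above.
total_cpu = 36.0   # 144 CPU cores: full Fluent iteration
amg_cpu = 29.0     # of which the AMG linear solve on the CPU
amg_gpu = 11.0     # AmgX on 48 GPUs

non_amg = total_cpu - amg_cpu          # work the GPUs do not touch
predicted_total = non_amg + amg_gpu    # CPU remainder + GPU solve

print(f"AMG share of CPU iteration: {amg_cpu / total_cpu:.0%}")
print(f"AMG speedup: {amg_cpu / amg_gpu:.1f}x")
print(f"predicted iteration: {predicted_total:.0f} s "
      f"({total_cpu / predicted_total:.1f}x overall)")
```

The predicted 18 s iteration and 2.0x overall speedup match the measured values. With the rounded 29 s and 11 s the AMG ratio computes to 2.6x rather than the slide's 2.7X, presumably because the chart labels are rounded.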

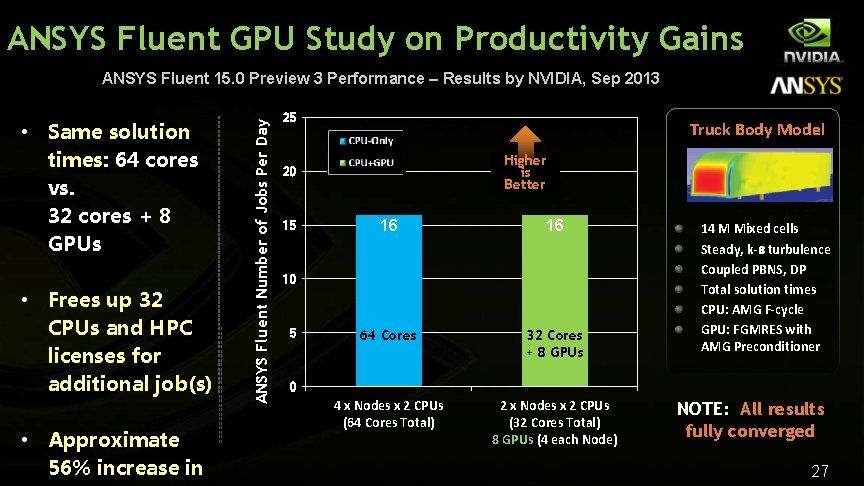

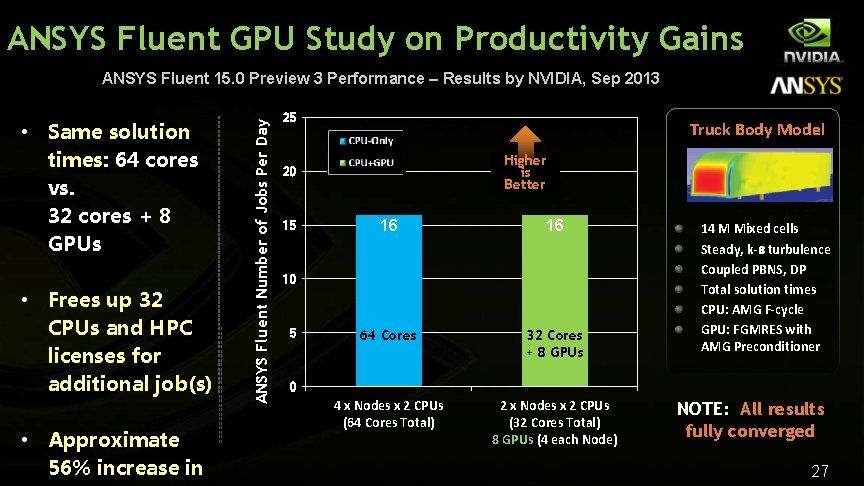

ANSYS Fluent GPU Study on Productivity Gains

• Same solution times: 64 cores vs. 32 cores + 8 GPUs
• Frees up 32 CPU cores and HPC licenses for additional job(s)
• Approximately 56% increase in ANSYS Fluent jobs per day

ANSYS Fluent 15.0 Preview 3 performance, results by NVIDIA, Sep 2013. Truck body model: 14M mixed cells, steady, k-e turbulence, coupled PBNS, double precision; total solution times, all results fully converged. CPU: AMG F-cycle; GPU: FGMRES with AMG preconditioner.

| Configuration | Jobs per day (higher is better) |
|---|---|
| 64 cores: 4 nodes x 2 CPUs | 16 |
| 32 cores + 8 GPUs: 2 nodes x 2 CPUs, 4 GPUs per node | 16 |
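The slide does not state how many jobs the freed 32 cores run, but the quoted gain implies it. A back-of-the-envelope check, assuming the 56% is measured against the 16 jobs/day of the 64-core baseline:

```python
baseline_jobs = 16      # jobs/day on 64 CPU cores
gpu_config_jobs = 16    # jobs/day on 32 cores + 8 GPUs (same solution time)
quoted_gain = 0.56      # ~56% more jobs/day from the same hardware pool

total_jobs = baseline_jobs * (1 + quoted_gain)
implied_from_freed_cores = total_jobs - gpu_config_jobs
print(f"total: {total_jobs:.1f} jobs/day, "
      f"implied from freed 32 cores: {implied_from_freed_cores:.1f} jobs/day")
```

The freed cores would need to contribute roughly 9 jobs/day, slightly more than half the 64-core rate, which is what sub-linear CPU scaling would suggest.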

Computational Fluid Dynamics: OpenFOAM

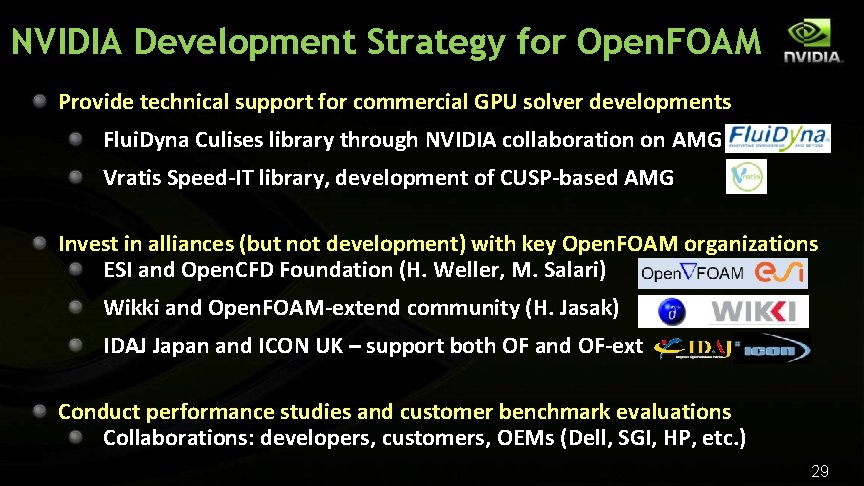

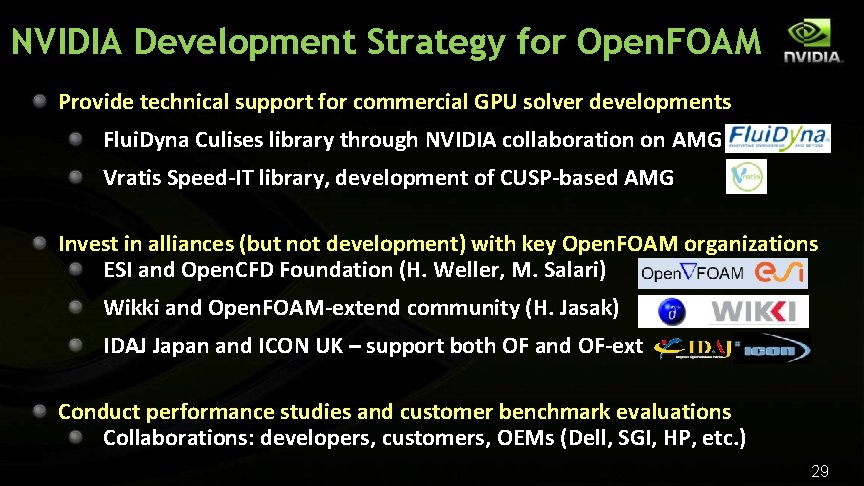

NVIDIA Development Strategy for OpenFOAM

- Provide technical support for commercial GPU solver developments
  - FluiDyna Culises library, through NVIDIA collaboration on AMG
  - Vratis Speed-IT library, development of CUSP-based AMG
- Invest in alliances (but not development) with key OpenFOAM organizations
  - ESI and OpenCFD Foundation (H. Weller, M. Salari)
  - Wikki and the OpenFOAM-extend community (H. Jasak)
  - IDAJ Japan and ICON UK, which support both OF and OF-ext
- Conduct performance studies and customer benchmark evaluations
  - Collaborations: developers, customers, OEMs (Dell, SGI, HP, etc.)

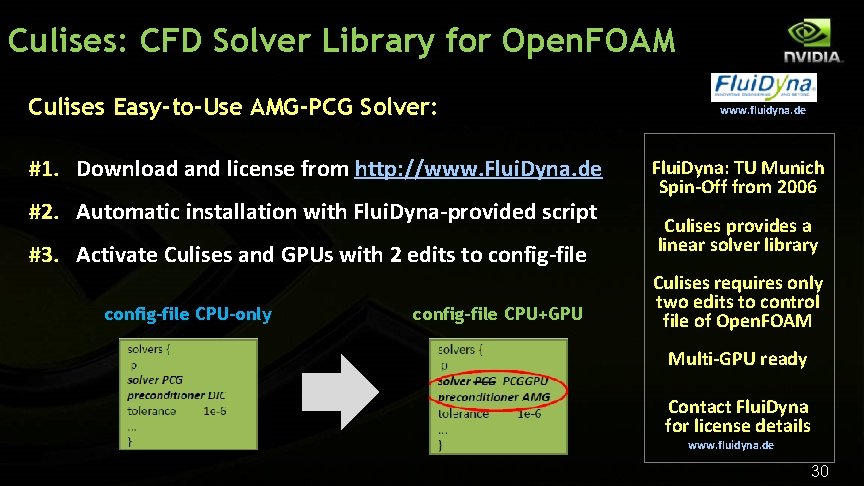

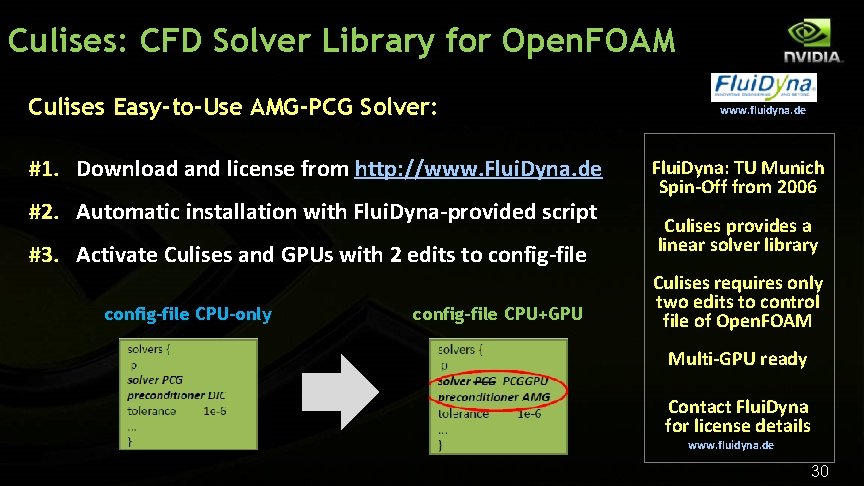

Culises: CFD Solver Library for OpenFOAM

Culises, an easy-to-use AMG-PCG solver:

1. Download and license from http://www.FluiDyna.de
2. Automatic installation with a FluiDyna-provided script
3. Activate Culises and GPUs with 2 edits to the config file

- FluiDyna: TU Munich spin-off from 2006
- Culises provides a linear solver library
- Culises requires only two edits to the OpenFOAM control file
- Multi-GPU ready
- Contact FluiDyna for license details: www.fluidyna.de

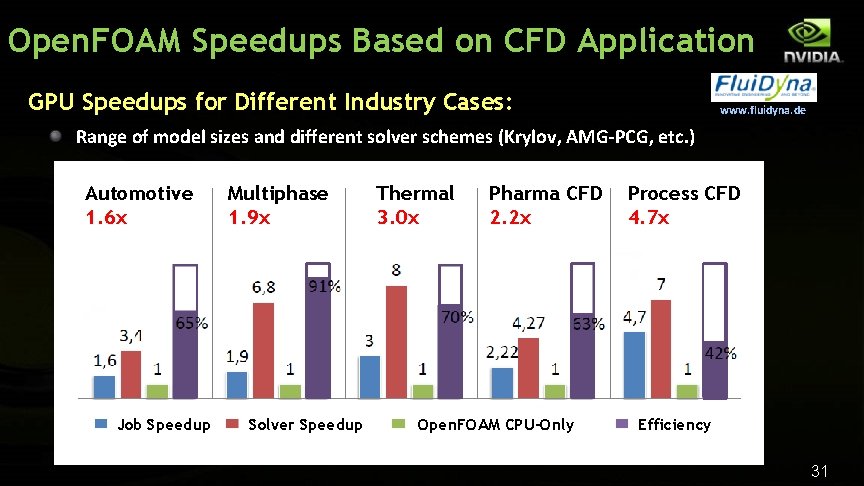

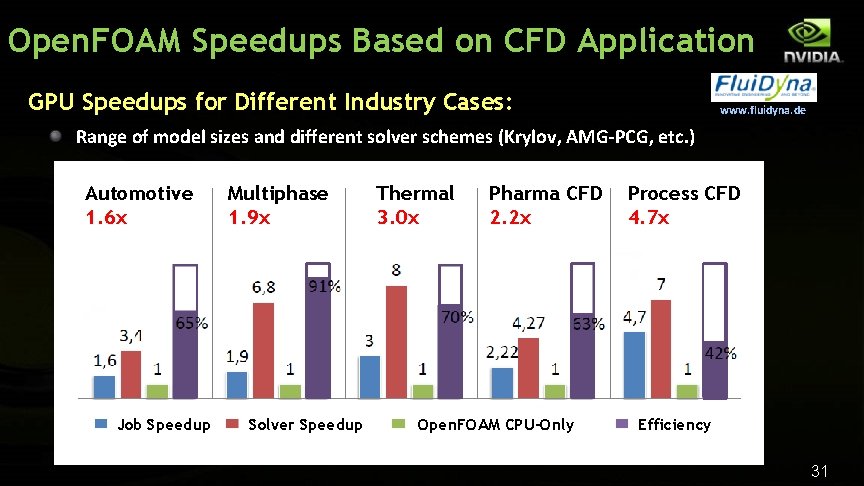

OpenFOAM Speedups Based on CFD Application

GPU speedups relative to CPU-only OpenFOAM for different industry cases (www.fluidyna.de), covering a range of model sizes and solver schemes (Krylov, AMG-PCG, etc.):

- Automotive: 1.6x
- Multiphase: 1.9x
- Thermal: 3.0x
- Pharma CFD: 2.2x
- Process CFD: 4.7x

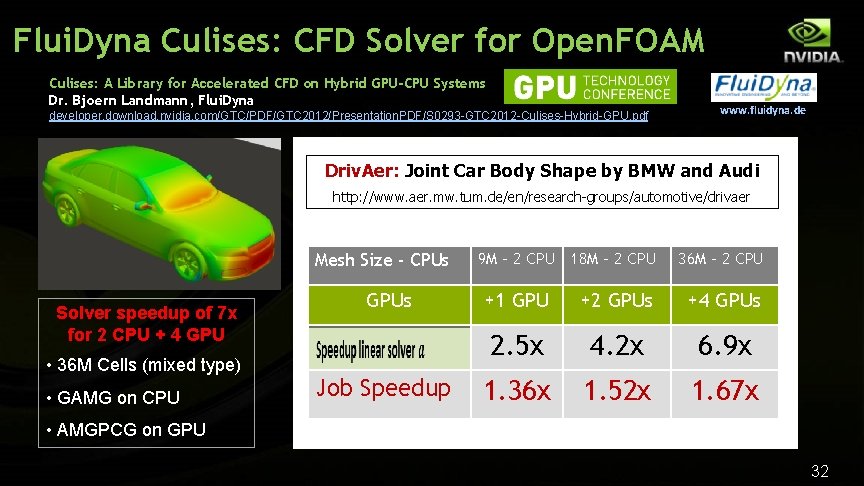

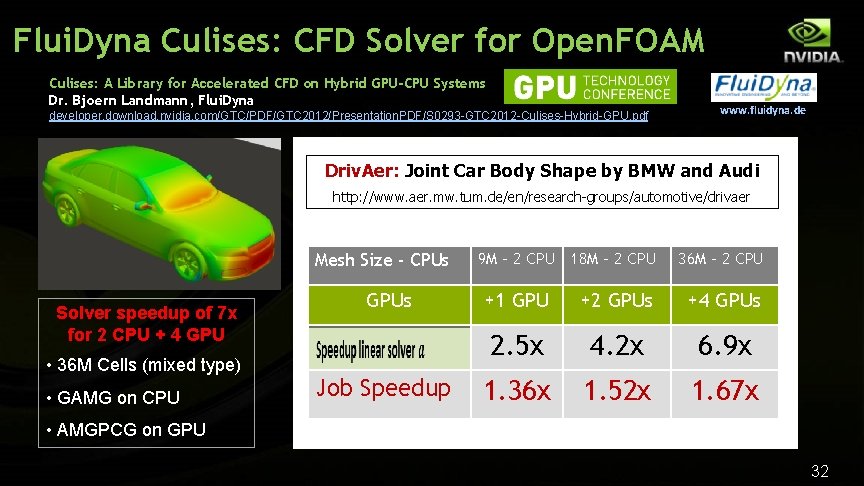

FluiDyna Culises: CFD Solver for OpenFOAM

Source: "Culises: A Library for Accelerated CFD on Hybrid GPU-CPU Systems", Dr. Bjoern Landmann, FluiDyna, developer.download.nvidia.com/GTC/PDF/GTC2012/PresentationPDF/S0293-GTC2012-Culises-Hybrid-GPU.pdf (www.fluidyna.de)

DrivAer: joint car body shape by BMW and Audi (http://www.aer.mw.tum.de/en/research-groups/automotive/drivaer). GAMG on CPU; AMGPCG on GPU. Solver speedup of 7x for 2 CPUs + 4 GPUs on 36M cells (mixed type).

| Mesh size and CPUs | GPUs | Solver speedup | Job speedup |
|---|---|---|---|
| 9M cells, 2 CPUs | +1 GPU | 2.5x | 1.36x |
| 18M cells, 2 CPUs | +2 GPUs | 4.2x | 1.52x |
| 36M cells, 2 CPUs | +4 GPUs | 6.9x | 1.67x |
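The gap between solver speedup and job speedup is Amdahl's law again. Solving job = 1 / ((1 - f) + f / solver) for f estimates how much of the CPU-only runtime the linear solver occupied; this is an inference from the reported ratios, not a figure published by FluiDyna, and solver_fraction is an illustrative name.

```python
def solver_fraction(job_speedup, solver_speedup):
    """Estimate the share f of CPU-only runtime spent in the linear solver by
    inverting Amdahl's law: job = 1 / ((1 - f) + f / solver)."""
    return (1.0 - 1.0 / job_speedup) / (1.0 - 1.0 / solver_speedup)


# (mesh, solver speedup, job speedup) from the DrivAer table above.
cases = [("9M", 2.5, 1.36), ("18M", 4.2, 1.52), ("36M", 6.9, 1.67)]
for mesh, solver_x, job_x in cases:
    print(f"{mesh}: linear solver ~{solver_fraction(job_x, solver_x):.0%} of CPU-only runtime")
```

The estimates come out at roughly 44%, 45%, and 47% for the 9M, 18M, and 36M meshes, which is why even a 6.9x faster solver yields only about 1.67x per job.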

Computational Structural Mechanics: ANSYS Mechanical

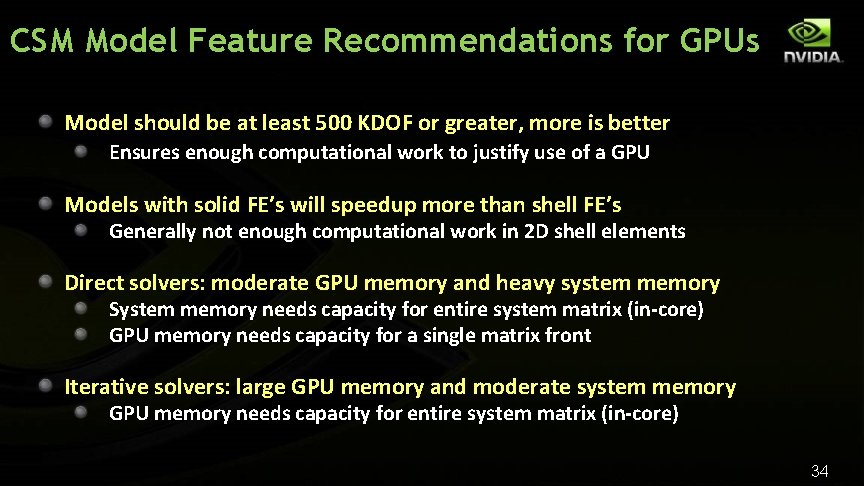

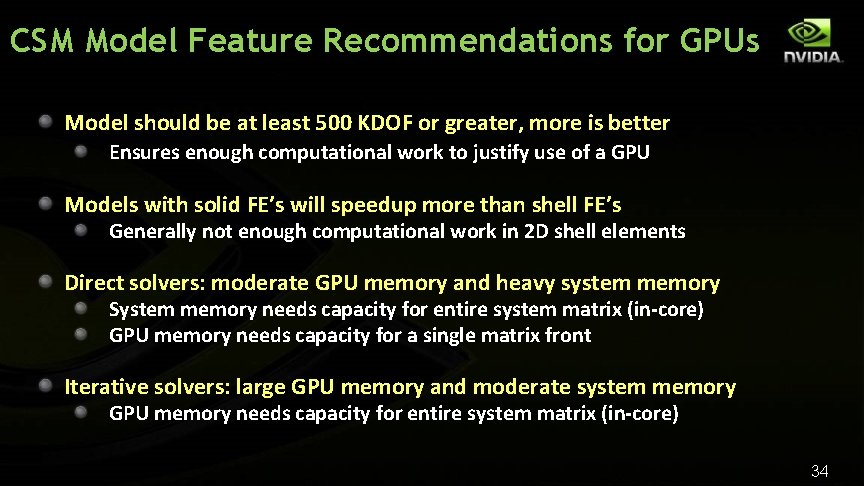

CSM Model Feature Recommendations for GPUs

- Models should have at least 500K DOF; larger is better
  - Ensures enough computational work to justify use of a GPU
- Models with solid FEs will speed up more than those with shell FEs
  - 2D shell elements generally do not generate enough computational work
- Direct solvers: moderate GPU memory and heavy system memory
  - System memory needs capacity for the entire system matrix (in-core)
  - GPU memory needs capacity for a single matrix front
- Iterative solvers: large GPU memory and moderate system memory
  - GPU memory needs capacity for the entire system matrix (in-core)
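Whether a single front fits in GPU memory follows from its dimension. A sketch, assuming a dense front stored in double precision (8 bytes per entry), packed as a triangle when symmetric; real solvers also need workspace, so this is a lower bound, and front_memory_gb is a hypothetical helper.

```python
def front_memory_gb(front_size, symmetric=True, bytes_per_entry=8):
    """Approximate storage (GB) for one dense frontal matrix.

    Assumes double precision (8 bytes/entry) and, for symmetric fronts, packed
    triangular storage of n(n+1)/2 entries; solver workspace is ignored.
    """
    n = front_size
    entries = n * (n + 1) // 2 if symmetric else n * n
    return entries * bytes_per_entry / 1e9


for n in (18_000, 42_000):
    print(n, f"{front_memory_gb(n):.2f} GB symmetric,",
          f"{front_memory_gb(n, symmetric=False):.2f} GB unsymmetric")
```

The 18K and 42K front sizes match the MSC Nastran cases later in this deck; by this estimate a 42K front needs about 7.06 GB even as a packed symmetric triangle (14.11 GB unsymmetric), versus about 1.30 GB for an 18K front.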

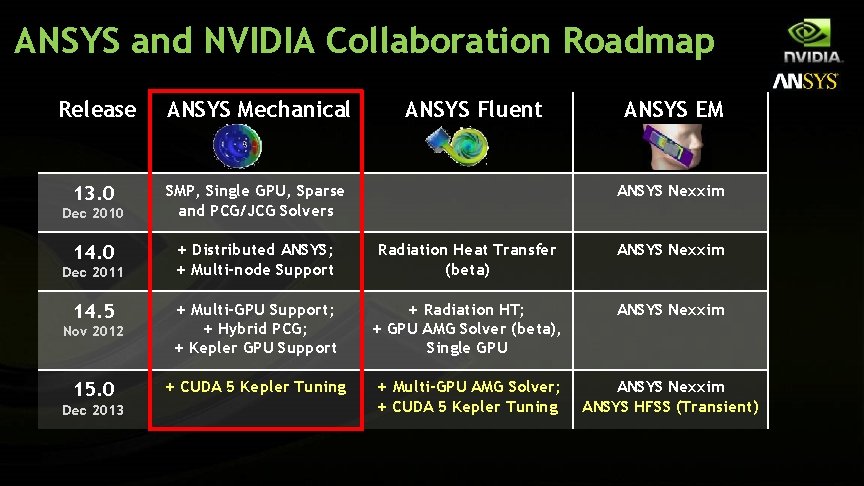


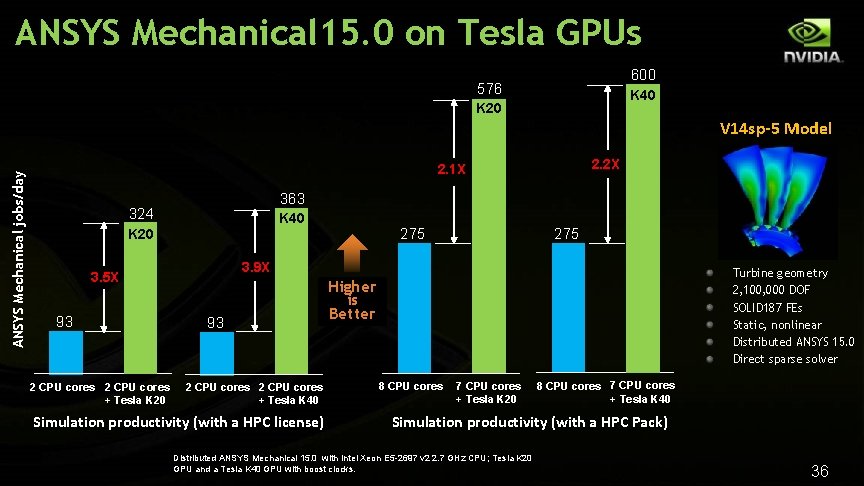

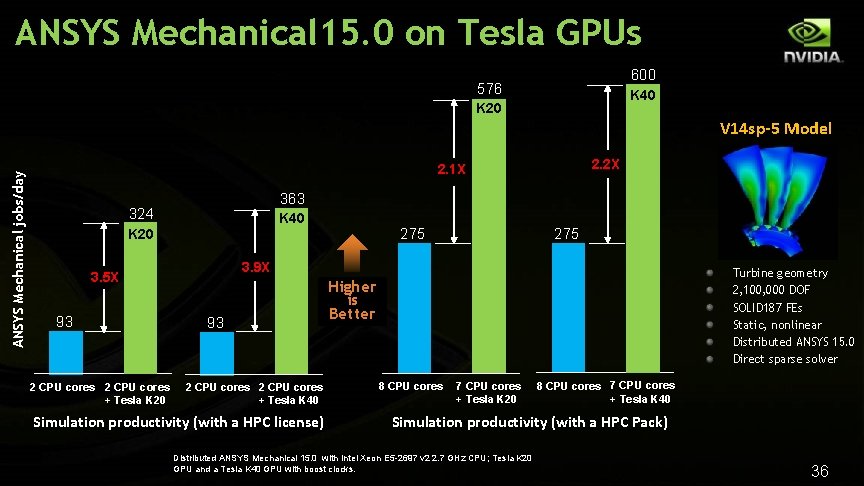

ANSYS Mechanical 15.0 on Tesla GPUs

V14sp-5 model: turbine geometry, 2,100,000 DOF, SOLID187 FEs, static nonlinear, Distributed ANSYS 15.0, direct sparse solver. Distributed ANSYS Mechanical 15.0 with Intel Xeon E5-2697 v2 2.7 GHz CPU; Tesla K20 GPU and Tesla K40 GPU with boost clocks.

| License | Configuration | ANSYS Mechanical jobs/day (higher is better) |
|---|---|---|
| HPC license | 2 CPU cores | 93 |
| HPC license | 2 CPU cores + Tesla K20 | 324 (3.5X) |
| HPC license | 2 CPU cores + Tesla K40 | 363 (3.9X) |
| HPC Pack | 8 CPU cores | 275 |
| HPC Pack | 7 CPU cores + Tesla K20 | 576 (2.1X) |
| HPC Pack | 7 CPU cores + Tesla K40 | 600 (2.2X) |

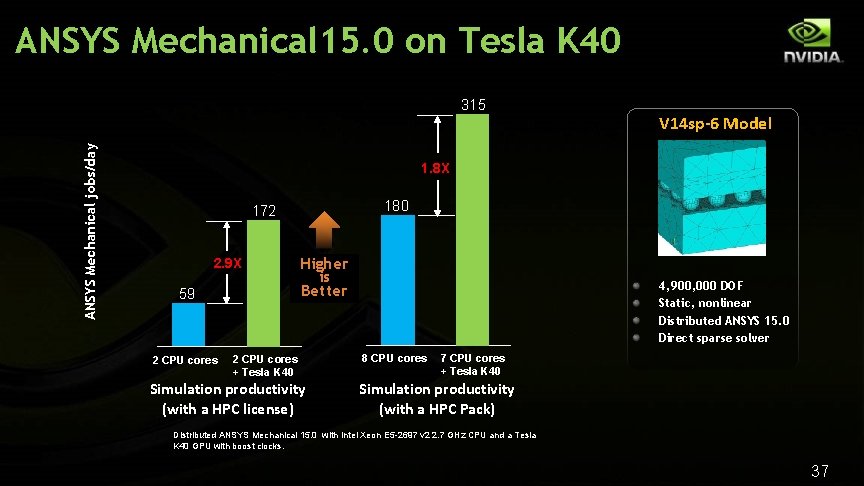

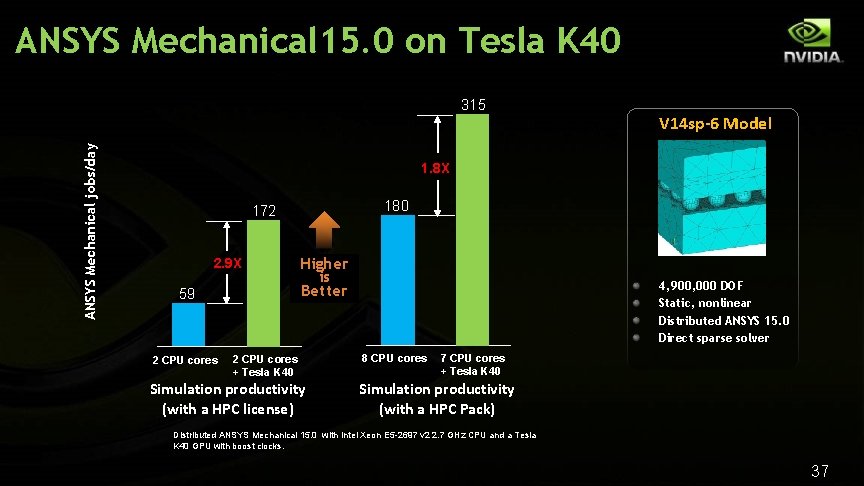

ANSYS Mechanical 15.0 on Tesla K40

V14sp-6 model: 4,900,000 DOF, static nonlinear, Distributed ANSYS 15.0, direct sparse solver. Distributed ANSYS Mechanical 15.0 with Intel Xeon E5-2697 v2 2.7 GHz CPU and Tesla K40 GPU with boost clocks.

| License | Configuration | ANSYS Mechanical jobs/day (higher is better) |
|---|---|---|
| HPC license | 2 CPU cores | 59 |
| HPC license | 2 CPU cores + Tesla K40 | 172 (2.9X) |
| HPC Pack | 8 CPU cores | 180 |
| HPC Pack | 7 CPU cores + Tesla K40 | 315 (1.8X) |

Computational Structural Mechanics: Abaqus/Standard

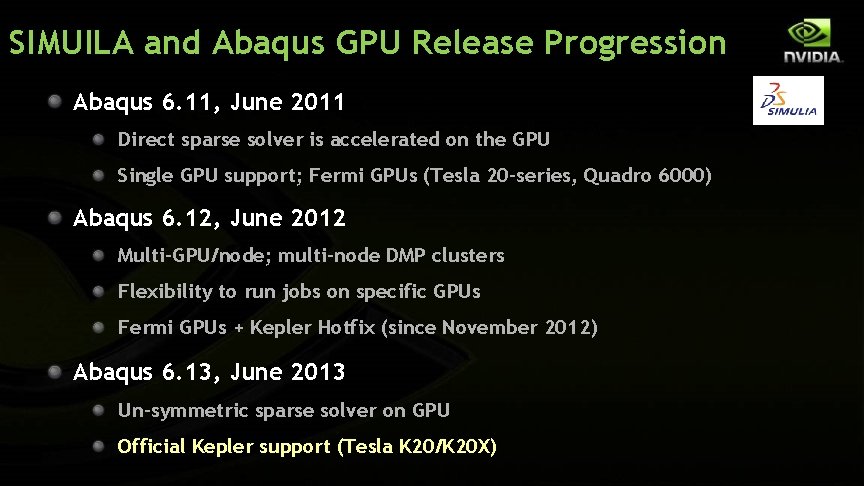

SIMULIA and Abaqus GPU Release Progression

- Abaqus 6.11, June 2011
  - Direct sparse solver is accelerated on the GPU
  - Single GPU support; Fermi GPUs (Tesla 20-series, Quadro 6000)
- Abaqus 6.12, June 2012
  - Multi-GPU per node; multi-node DMP clusters
  - Flexibility to run jobs on specific GPUs
  - Fermi GPUs + Kepler hotfix (since November 2012)
- Abaqus 6.13, June 2013
  - Unsymmetric sparse solver on GPU
  - Official Kepler support (Tesla K20/K20X)

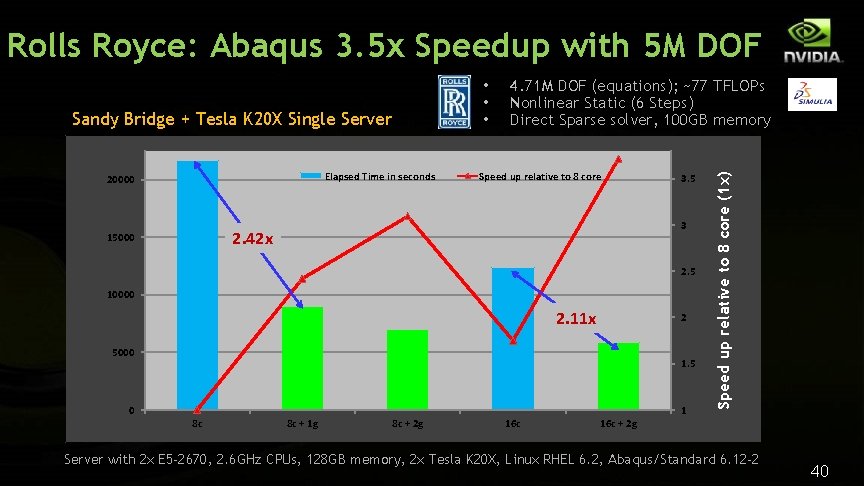

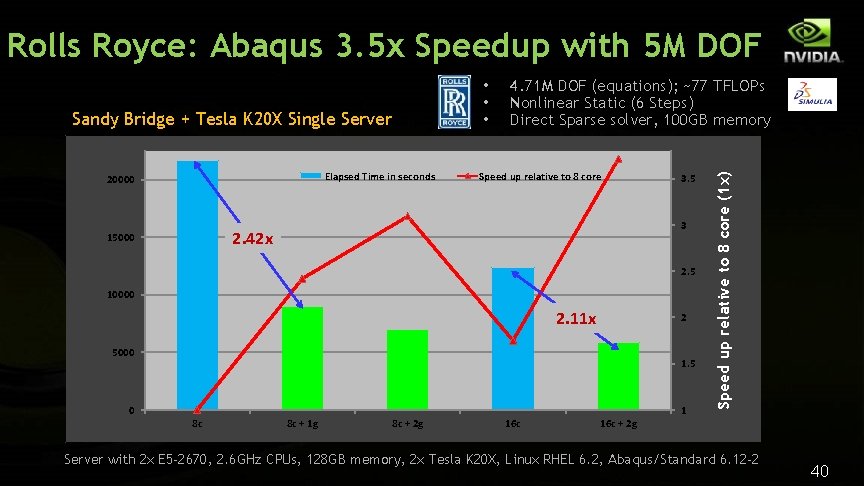

Rolls Royce: Abaqus 3.5x Speedup with 5M DOF

Sandy Bridge + Tesla K20X, single server:

- 4.71M DOF (equations); ~77 TFLOPs
- Nonlinear static (6 steps)
- Direct sparse solver, 100 GB memory

Elapsed time in seconds was measured for 8c, 8c + 1g, 8c + 2g, 16c, and 16c + 2g. Speedups relative to 8 cores reach 3.5x, with intermediate values of 2.11x and 2.42x. Server with 2x E5-2670 2.6 GHz CPUs, 128 GB memory, 2x Tesla K20X, Linux RHEL 6.2, Abaqus/Standard 6.12-2.

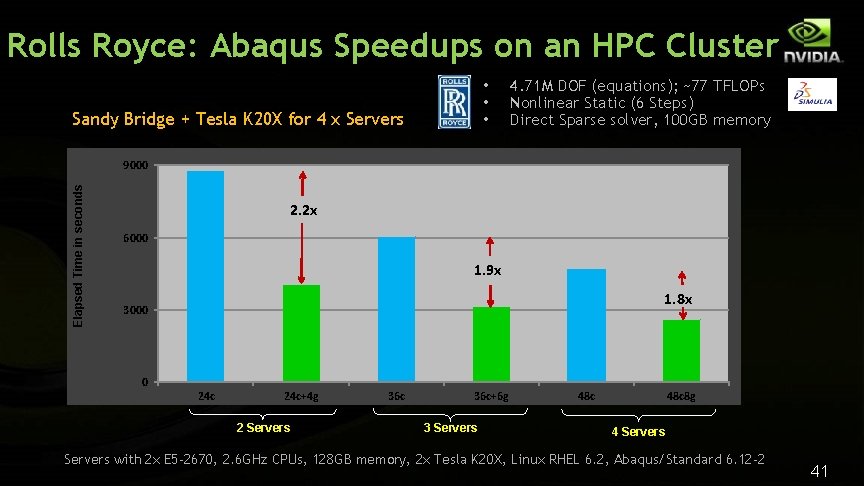

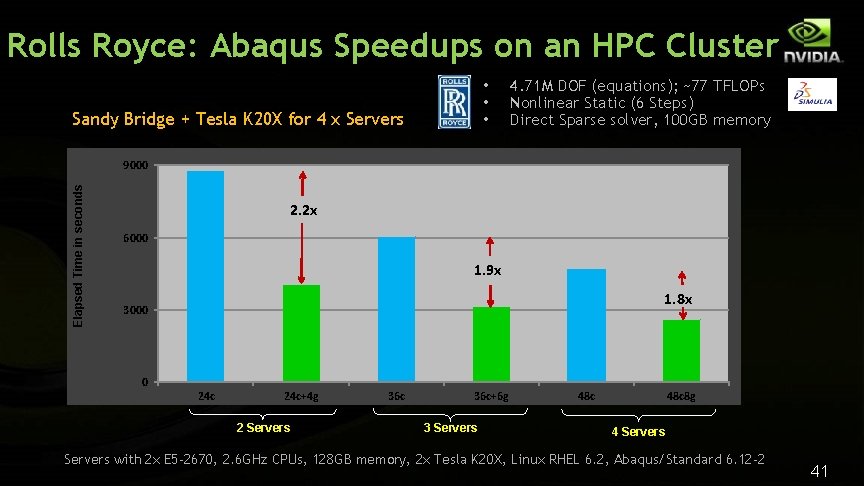

Rolls Royce: Abaqus Speedups on an HPC Cluster

Sandy Bridge + Tesla K20X on up to 4 servers:

- 4.71M DOF (equations); ~77 TFLOPs
- Nonlinear static (6 steps)
- Direct sparse solver, 100 GB memory

Elapsed time in seconds was measured for 2 servers (24c + 4g), 3 servers (36c + 6g), and 4 servers (48c + 8g); reported speedups range from 1.8x to 2.2x. Servers with 2x E5-2670 2.6 GHz CPUs, 128 GB memory, 2x Tesla K20X, Linux RHEL 6.2, Abaqus/Standard 6.12-2.

Computational Structural Mechanics: MSC Nastran

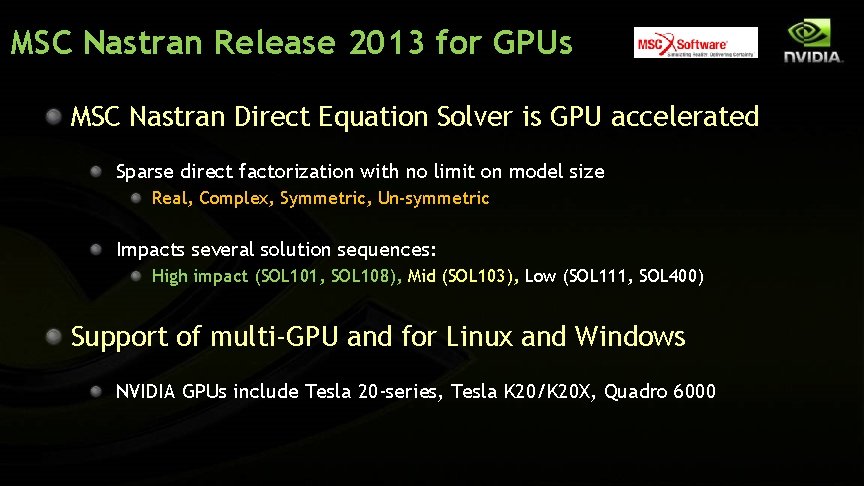

MSC Nastran Release 2013 for GPUs

- The MSC Nastran direct equation solver is GPU-accelerated
  - Sparse direct factorization with no limit on model size
  - Real, complex, symmetric, unsymmetric
- Impacts several solution sequences: high impact (SOL 101, SOL 108), mid (SOL 103), low (SOL 111, SOL 400)
- Supports multi-GPU, on Linux and Windows
- NVIDIA GPUs include Tesla 20-series, Tesla K20/K20X, Quadro 6000

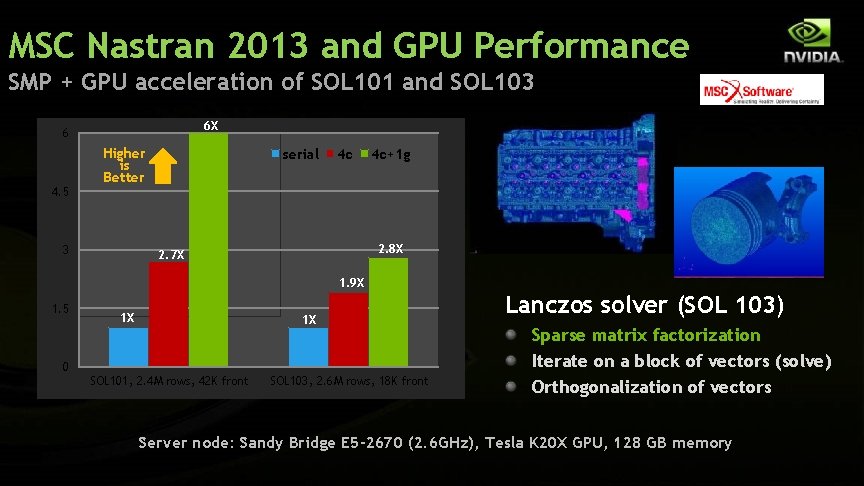

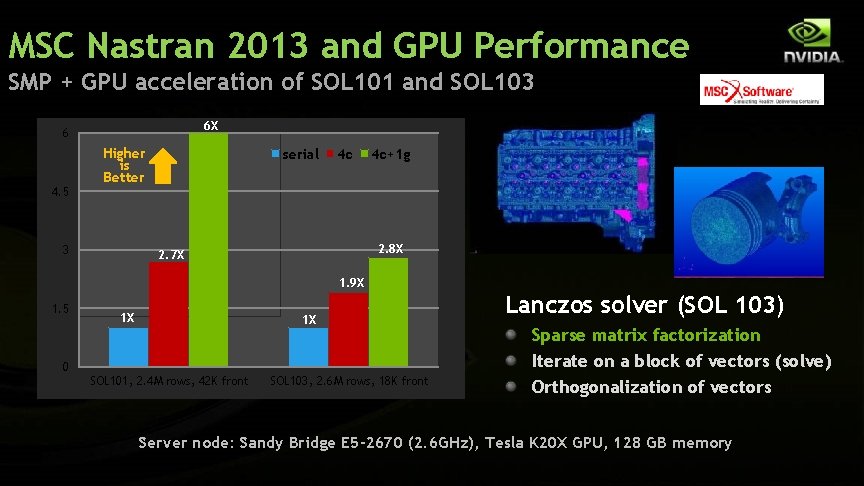

MSC Nastran 2013 and GPU Performance

SMP + GPU acceleration of SOL 101 and SOL 103, measured for serial, 4c, and 4c + 1g runs (higher is better):

- SOL 101 (2.4M rows, 42K front) and SOL 103 (2.6M rows, 18K front): reported speedups over serial range from 1.9X up to 6X
- Lanczos solver (SOL 103) steps: sparse matrix factorization, iteration on a block of vectors (solve), orthogonalization of vectors

Server node: Sandy Bridge E5-2670 (2.6 GHz), Tesla K20X GPU, 128 GB memory.

MSC Nastran 2013 and NVH Simulation on GPUs

Coupled structural-acoustics simulation with SOL 108 (European auto OEM):

- 710K nodes, 3.83M elements
- 100 frequency increments (FREQ1)
- Direct sparse solver

Elapsed time (minutes; lower is better) was measured for serial, 1c + 1g, 4c (smp), 4c + 1g, 8c (dmp=2), and 8c + 2g (dmp=2). Relative to serial (1X), the reported speedups are 2.7X, 4.8X, 5.2X, 5.5X, and 11.1X, the largest for 8c + 2g (dmp=2). Server node: Sandy Bridge 2.6 GHz, 2x 8 cores, 2x Tesla K20X GPUs, 128 GB memory.

Computational Structural Mechanics: Altair OptiStruct

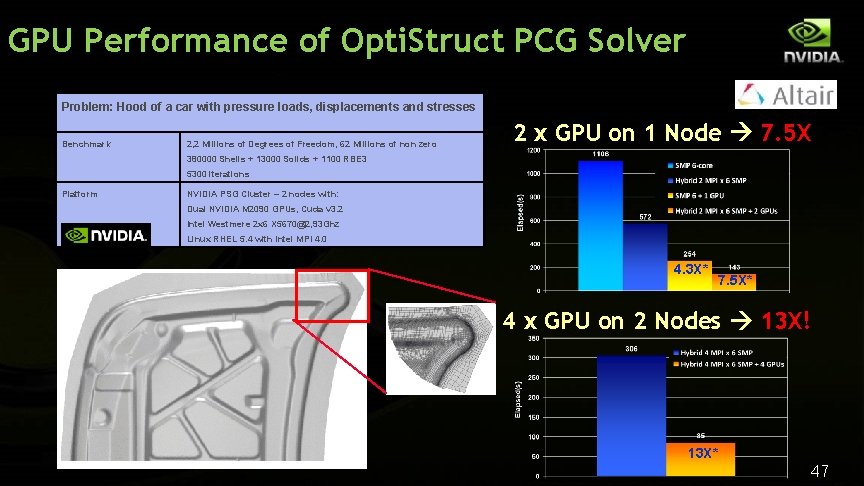

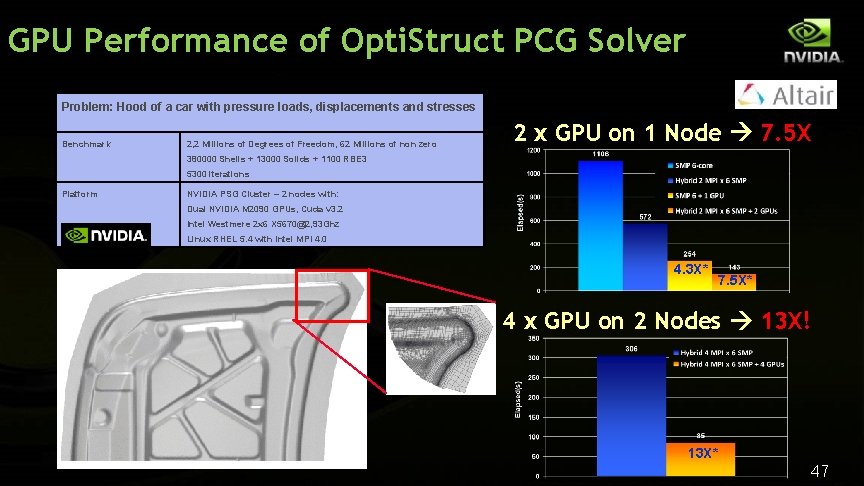

GPU Performance of OptiStruct PCG Solver

- Problem: hood of a car with pressure loads; displacements and stresses
- Benchmark: 2.2 million degrees of freedom, 62 million non-zeros; 380,000 shells + 13,000 solids + 1,100 RBE3; 5,300 iterations
- Platform: NVIDIA PSG cluster, 2 nodes, each with dual NVIDIA M2090 GPUs (CUDA v3.2), Intel Westmere 2x 6-core X5670 @ 2.93 GHz, Linux RHEL 5.4 with Intel MPI 4.0
- Reported speedups: 4.3X, 7.5X with 2 GPUs on 1 node, and 13X with 4 GPUs on 2 nodes

Summary of GPU Progress for CAE

- GPUs provide significant speedups for solver-intensive simulations
  - Improved product quality with higher-fidelity modeling
  - Shorter product engineering cycles with faster simulation turnaround
- Simulations recently considered impractical are now possible
  - FEA: more DOFs per model, more complex material behavior, FSI
  - CFD: unsteady RANS and LES simulations practical in cost and time
  - Effective parameter optimization from a large increase in the number of jobs
