Dynamic GPGPU Power Management Using Adaptive Model Predictive Control

- Slides: 53

Dynamic GPGPU Power Management Using Adaptive Model Predictive Control
Abhinandan Majumdar*, Leonardo Piga†, Indrani Paul†, Joseph L. Greathouse†, Wei Huang†, David H. Albonesi*
*Computer Systems Laboratory, Cornell University
†Advanced Micro Devices, Inc.

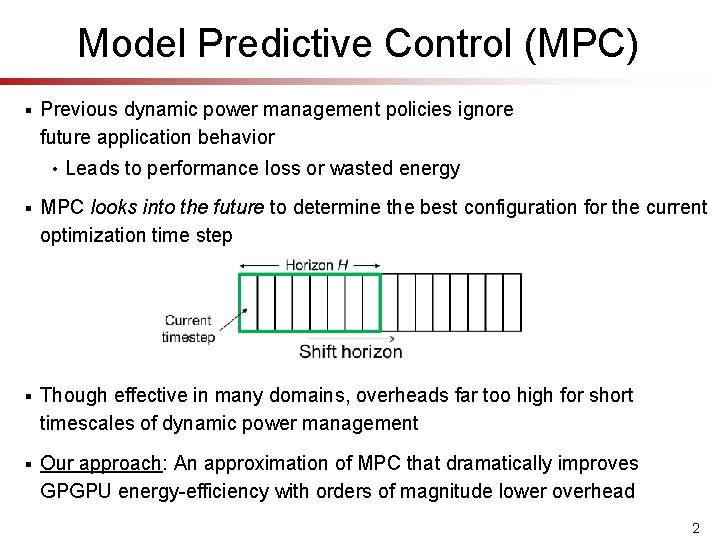

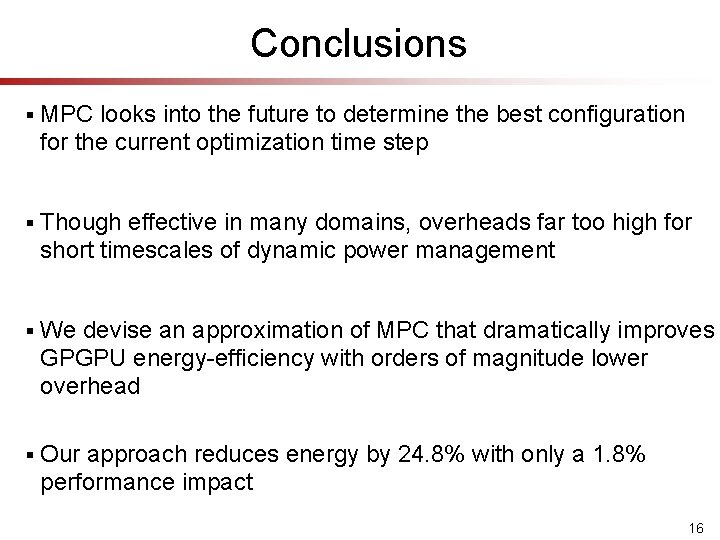

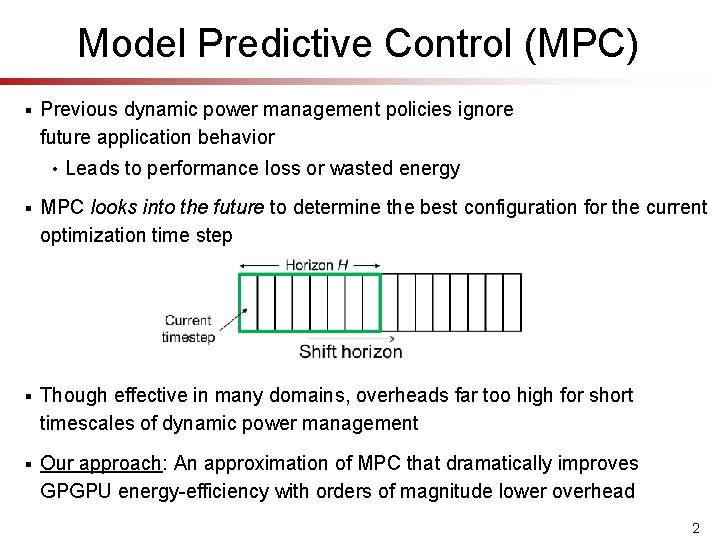

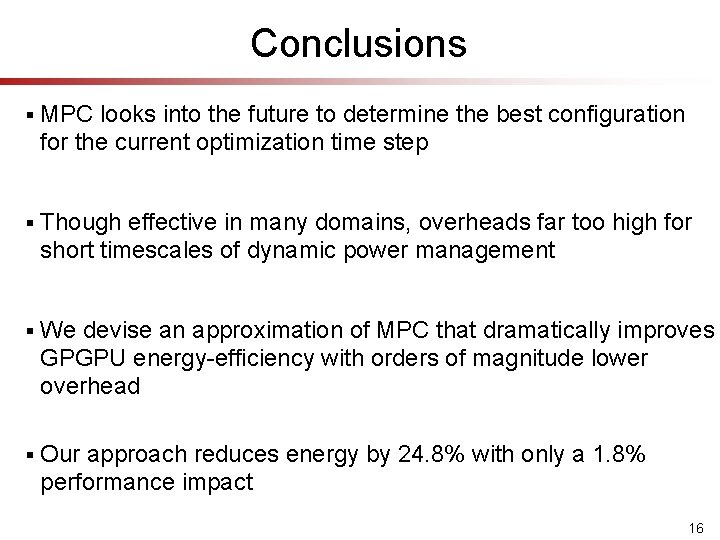

Model Predictive Control (MPC)
§ Previous dynamic power management policies ignore future application behavior
  • This leads to performance loss or wasted energy
§ MPC looks into the future to determine the best configuration for the current optimization time step
§ Though MPC is effective in many domains, its overheads are far too high for the short timescales of dynamic power management
§ Our approach: an approximation of MPC that dramatically improves GPGPU energy efficiency with orders-of-magnitude lower overhead

Dynamic GPGPU Power Management
§ Attempts to maximize performance within power constraints
§ Hardware knobs
  • Number of active GPU compute units (CUs)
  • DVFS states
§ Goal: reduce the energy of GPU application phases compared to the baseline power manager while matching its performance

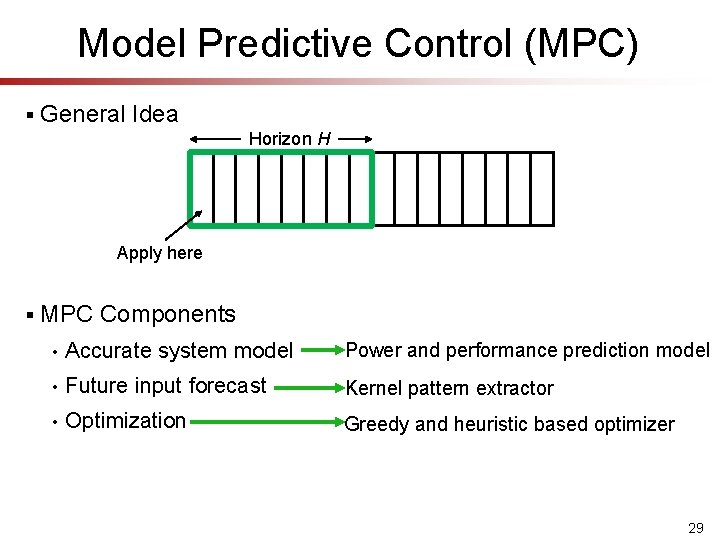

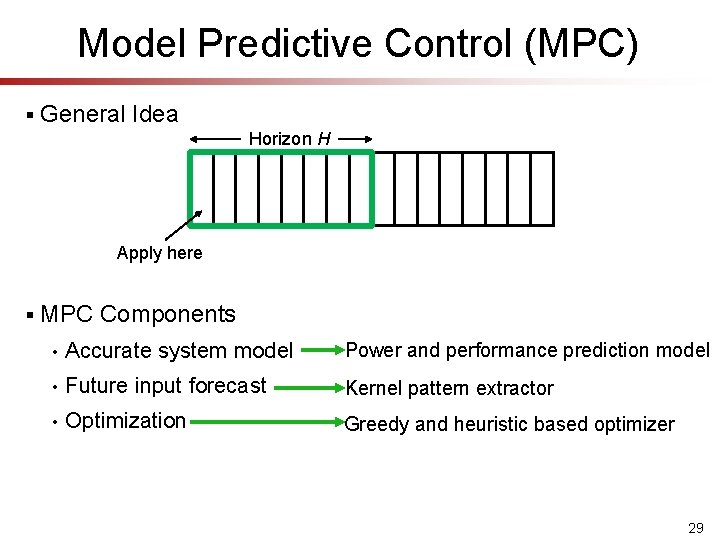

Applying MPC to Dynamic Power Management
§ General idea: optimize the settings over a horizon of H upcoming GPU kernels, then apply the chosen setting to the current kernel only
§ MPC has high overhead
  • Complexity scales exponentially with H
  • Minimizing energy under a performance cap with discrete HW settings is fundamentally NP-hard
  • Usually requires dedicated optimization solvers, such as CVX or lp_solve
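To make "complexity scales exponentially with H" concrete, here is a minimal brute-force MPC step in Python. It assumes, for illustration only, that the performance cap is expressed as a total time budget for the horizon and that a predictor has already produced per-kernel energy and time estimates for every configuration; `brute_force_mpc` and the toy numbers are hypothetical, not the paper's implementation.

```python
from itertools import product


def brute_force_mpc(energy, runtime, time_budget):
    """One exhaustive MPC step over a horizon of H kernels (illustrative sketch).

    energy[k][c], runtime[k][c]: predicted energy and execution time of the k-th
    future kernel (k = 0 is the kernel about to run) under HW configuration c.
    time_budget: total execution time allowed for the H kernels (assumed cap).
    Returns (configuration to apply to kernel 0, number of plans evaluated).
    """
    horizon, n_cfg = len(energy), len(energy[0])
    best_plan, best_energy, evaluated = None, float("inf"), 0
    # One configuration per kernel: n_cfg ** horizon candidate plans.
    for plan in product(range(n_cfg), repeat=horizon):
        evaluated += 1
        total_time = sum(runtime[k][c] for k, c in enumerate(plan))
        if total_time > time_budget:
            continue  # plan violates the performance cap
        total_energy = sum(energy[k][c] for k, c in enumerate(plan))
        if total_energy < best_energy:
            best_plan, best_energy = plan, total_energy
    # Receding horizon: only the first kernel's setting is applied; the
    # window then shifts by one kernel and the search is repeated.
    first = best_plan[0] if best_plan is not None else None
    return first, evaluated


# Toy horizon of 3 kernels, 2 settings each (0 = fast/high power, 1 = slow/low power).
energy = [[10, 5], [10, 6], [10, 6]]
runtime = [[1.0, 1.1], [1.0, 2.0], [1.0, 2.0]]
print(brute_force_mpc(energy, runtime, time_budget=3.5))  # -> (1, 8)

# With 336 HW configurations per kernel, the plan count is 336 ** H.
for h in range(1, 5):
    print(h, 336 ** h)
```

Even at H = 3, exhaustive search would evaluate 37,933,056 plans per step, which is why the approximations that follow avoid enumerating the full cross product.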

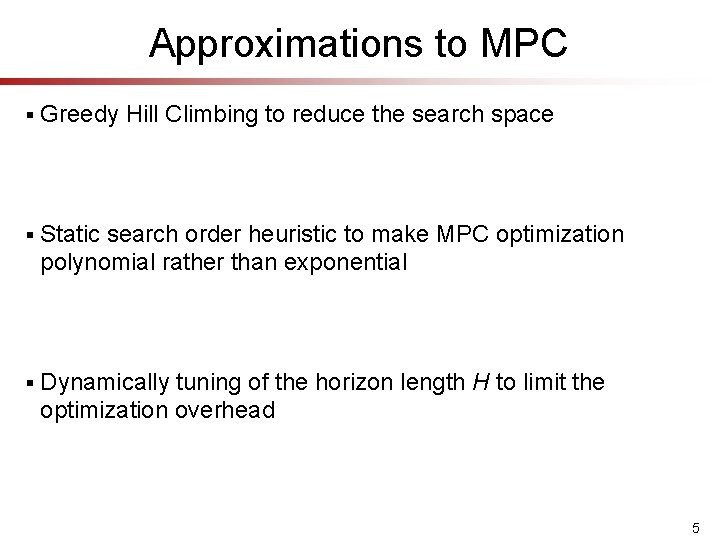

Approximations to MPC
§ Greedy hill climbing to reduce the search space
§ A static search-order heuristic that makes the MPC optimization polynomial rather than exponential in H
§ Dynamic tuning of the horizon length H to limit the optimization overhead

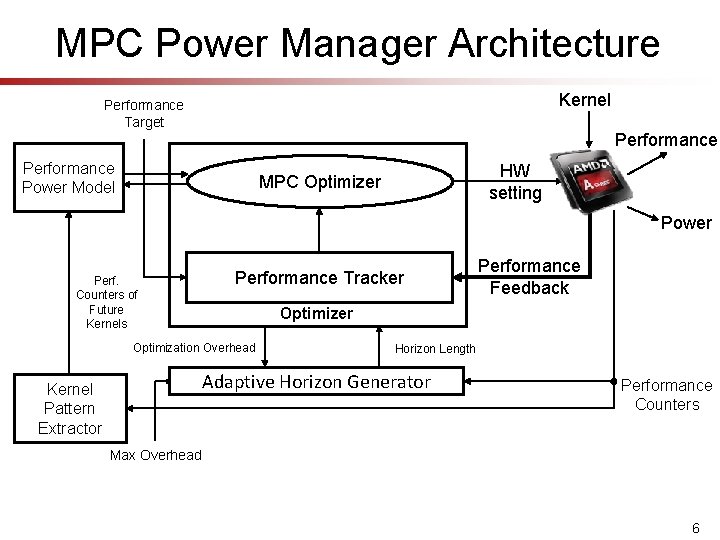

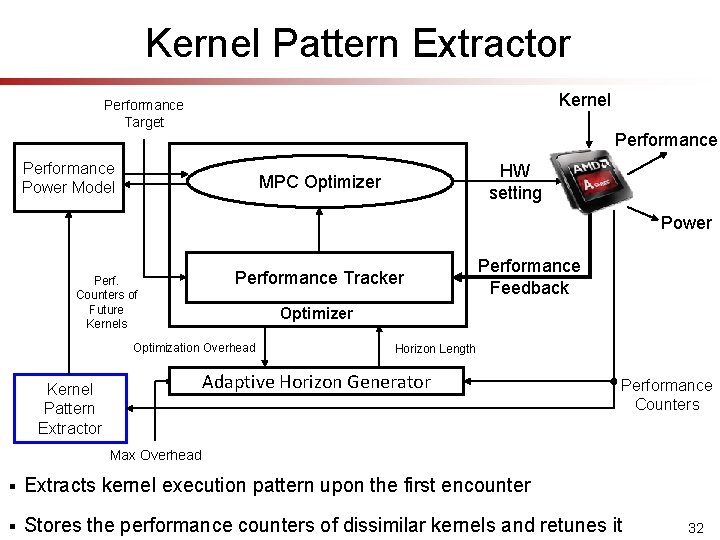

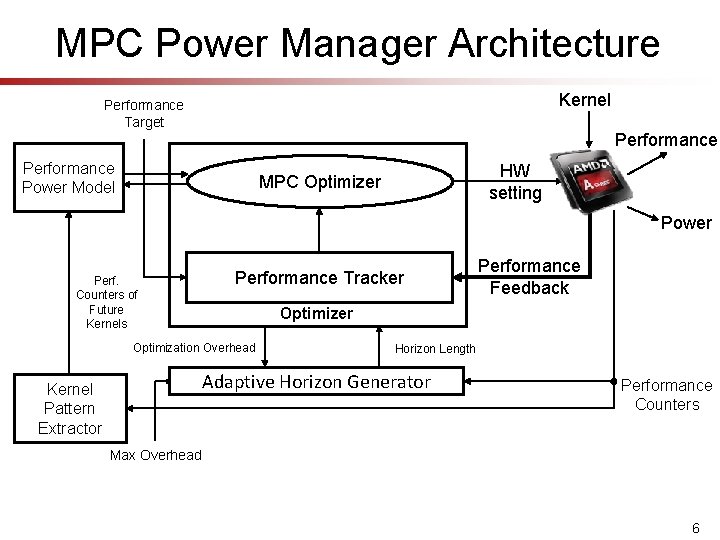

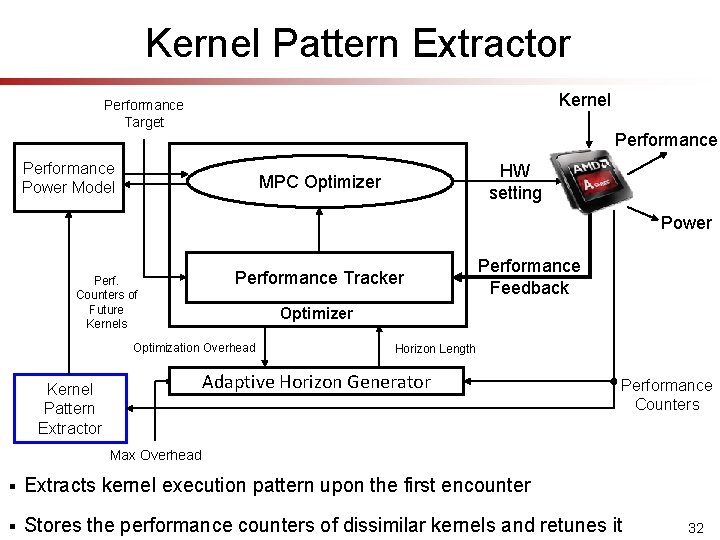

MPC Power Manager Architecture
[Figure: block diagram. Components: Kernel Pattern Extractor, Performance/Power Model, MPC Optimizer, Performance Tracker, Adaptive Horizon Generator. Signals: performance counters, perf. counters of future kernels, HW setting, predicted power, kernel performance, target performance, performance feedback, optimization overhead, max overhead, horizon length.]

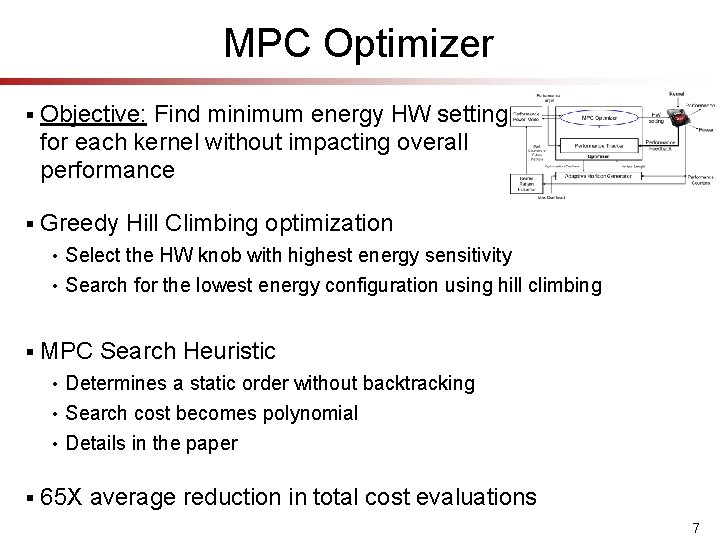

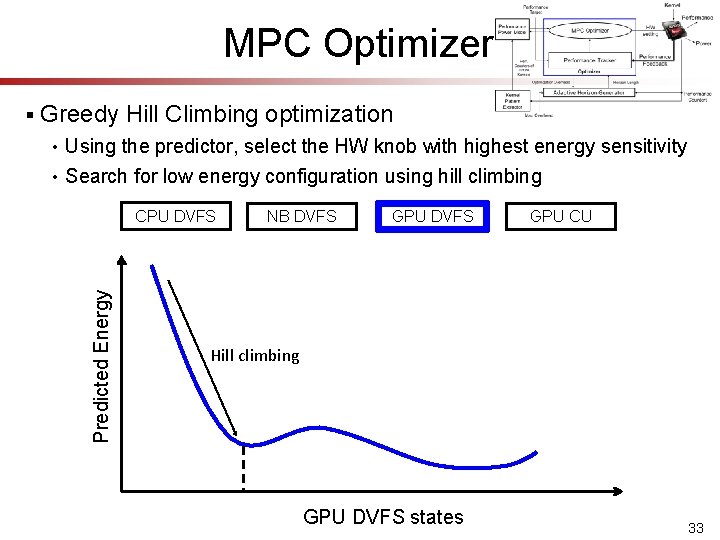

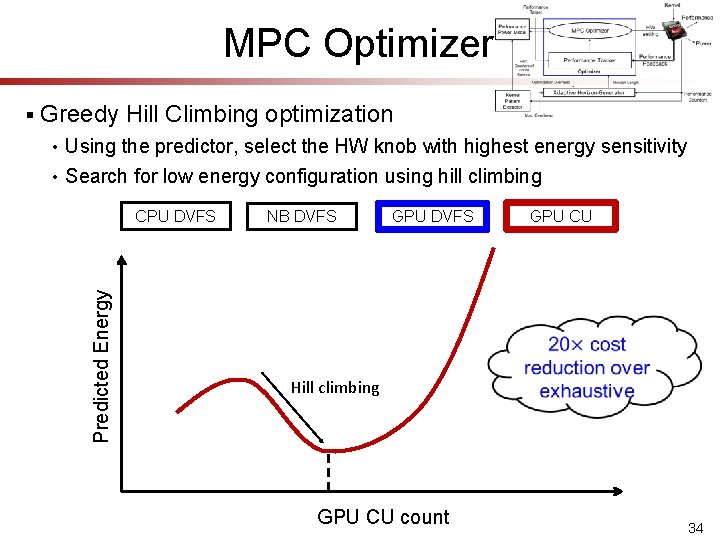

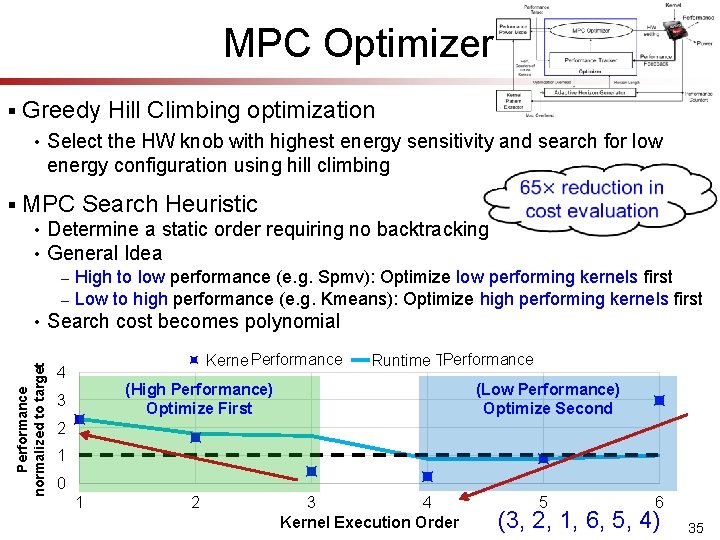

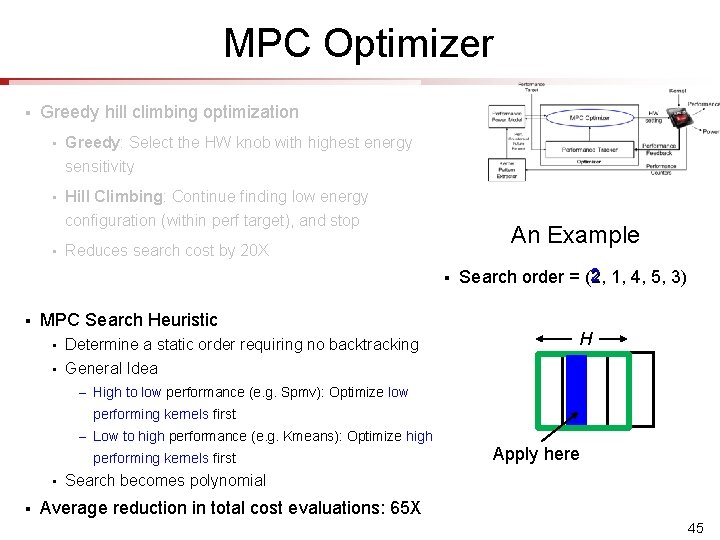

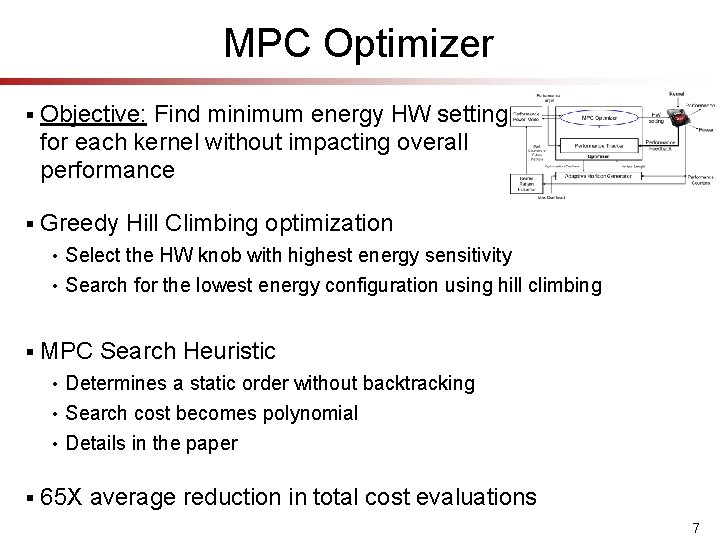

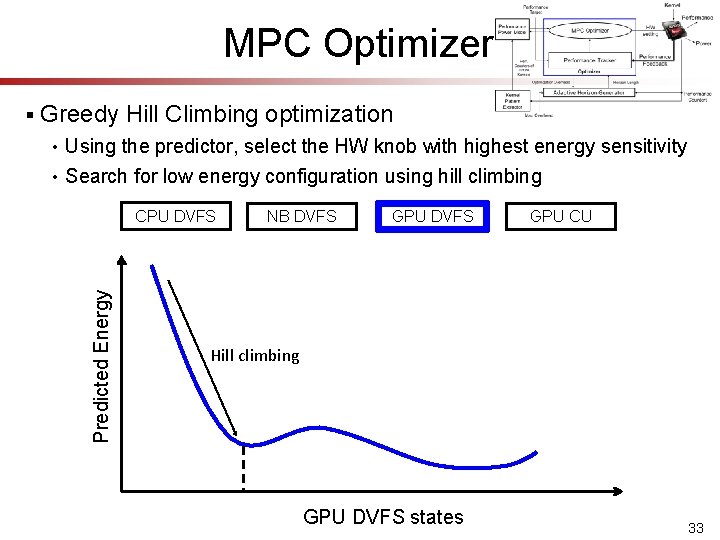

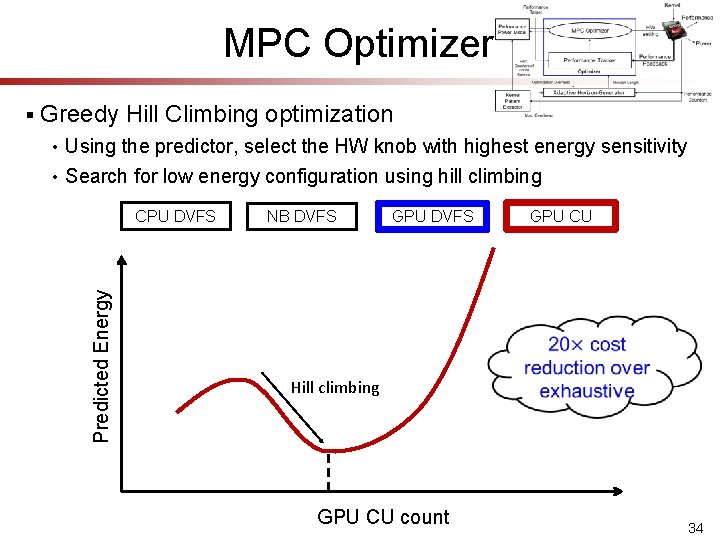

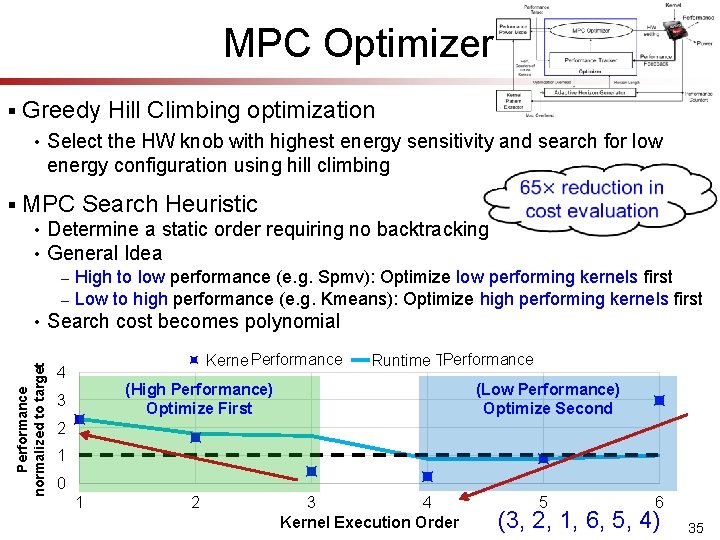

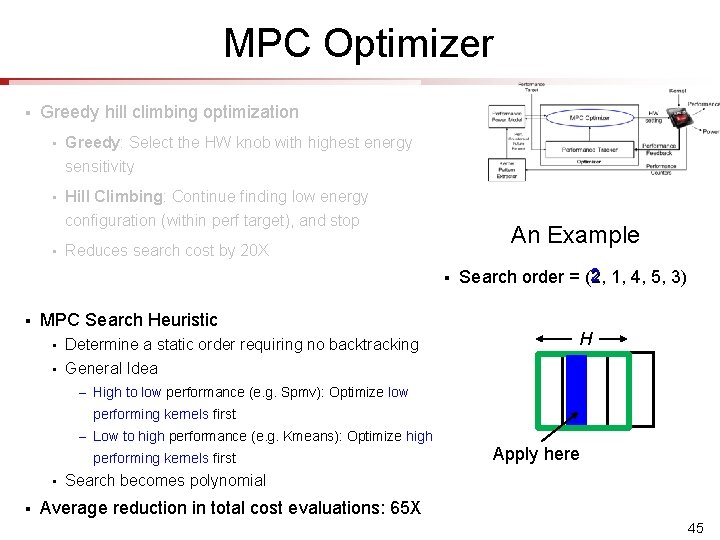

MPC Optimizer
§ Objective: find the minimum-energy HW setting for each kernel without impacting overall performance
§ Greedy hill climbing optimization
  • Select the HW knob with the highest energy sensitivity
  • Search for the lowest-energy configuration using hill climbing
§ MPC search heuristic
  • Determines a static search order without backtracking
  • Search cost becomes polynomial
  • Details in the paper
§ 65× average reduction in total cost evaluations
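A minimal Python sketch of greedy hill climbing as the slide describes it. Several details are assumptions, not from the slides: each knob is an ordered list of levels indexed 0..n-1, "energy sensitivity" is measured as the largest predicted energy drop from a one-level step at the starting point, and each knob is climbed to a local minimum before moving to the next. `greedy_hill_climb` and `toy_predict` are hypothetical names; `toy_predict` stands in for the trained power/performance model.

```python
def greedy_hill_climb(start, levels, predict, time_limit):
    """Greedy, per-knob hill climbing over discrete HW settings (sketch).

    start: dict knob -> starting level index
    levels: dict knob -> number of levels available for that knob
    predict: function(config dict) -> (predicted energy, predicted time)
    time_limit: largest predicted time allowed for this kernel
    """
    cfg = dict(start)
    best_e, _ = predict(cfg)

    def neighbors(c, knob):
        # Configurations one level up or down on a single knob.
        for step in (-1, 1):
            lvl = c[knob] + step
            if 0 <= lvl < levels[knob]:
                n = dict(c)
                n[knob] = lvl
                yield n

    def sensitivity(knob):
        # Largest predicted energy drop from one step on this knob.
        return max((best_e - predict(n)[0] for n in neighbors(cfg, knob)), default=0)

    # Greedy: visit knobs from most to least energy-sensitive.
    for knob in sorted(levels, key=sensitivity, reverse=True):
        improved = True
        while improved:  # hill climb on this knob until no neighbor helps
            improved = False
            for n in neighbors(cfg, knob):
                e, t = predict(n)
                if t <= time_limit and e < best_e:
                    cfg, best_e, improved = n, e, True
                    break
    return cfg, best_e


def toy_predict(c):
    # Hypothetical model: energy is lowest at the middle GPU DVFS level and
    # falls with fewer CUs; time grows as either knob is lowered.
    energy = 60 + 15 * abs(c["gpu_dvfs"] - 1) + 10 * c["cu"]
    t = 100 + 10 * (2 - c["gpu_dvfs"]) + 5 * (3 - c["cu"])
    return energy, t


print(greedy_hill_climb({"gpu_dvfs": 2, "cu": 3}, {"gpu_dvfs": 3, "cu": 4}, toy_predict, 115))
# -> ({'gpu_dvfs': 1, 'cu': 2}, 80)
```

Each knob is searched along one dimension instead of over the full cross product, so the number of model evaluations grows with the number of levels per knob rather than with their product.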

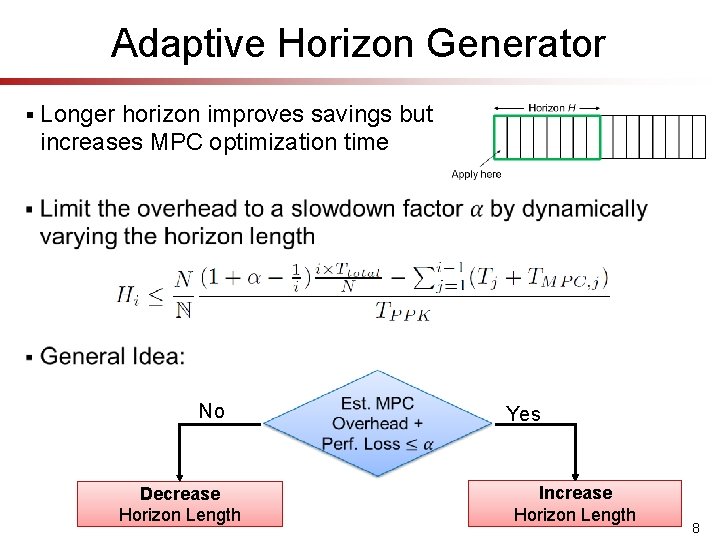

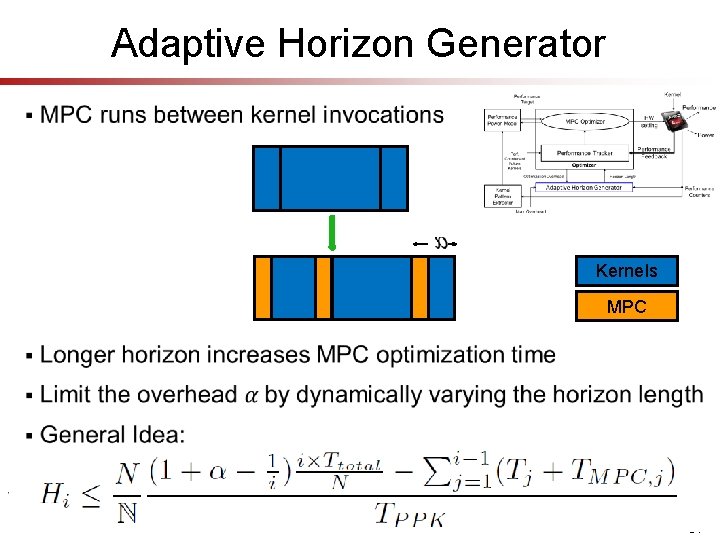

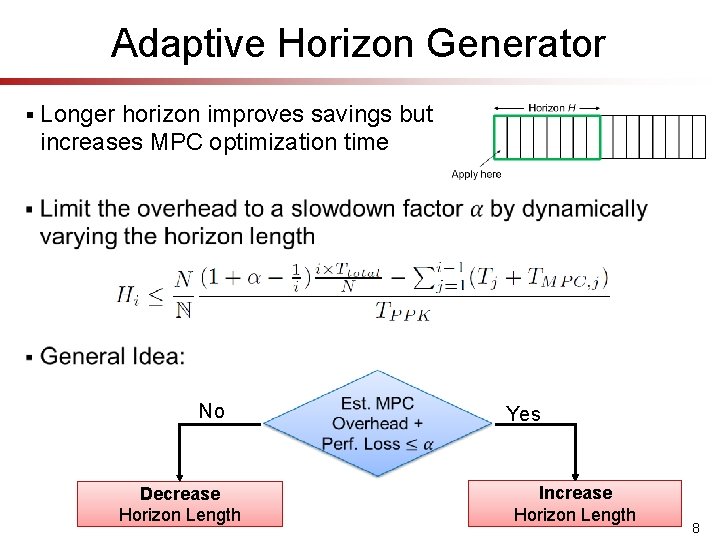

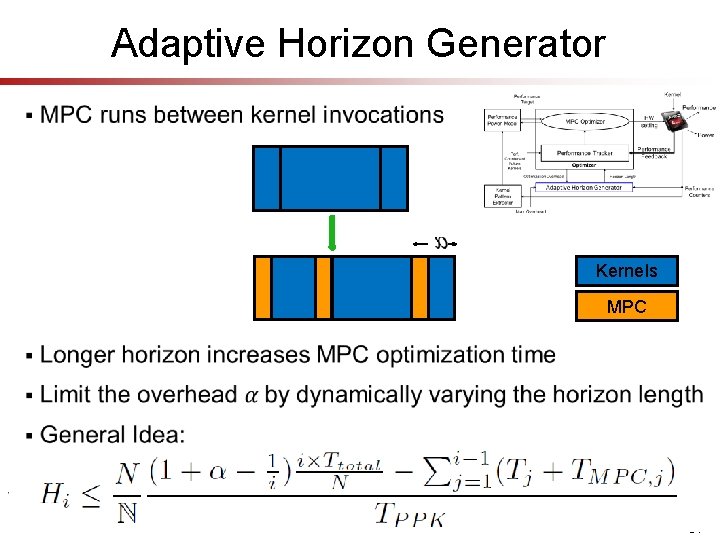

Adaptive Horizon Generator
§ A longer horizon improves savings but increases MPC optimization time
§ Is the optimization overhead within the maximum allowed?
  • No: decrease the horizon length
  • Yes: increase the horizon length
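The adaptive horizon generator takes the measured optimization overhead and a max overhead as inputs (both appear on the architecture slide) and grows or shrinks the horizon. A minimal sketch of that rule follows; the step size of 1, the bounds `h_min`/`h_max`, the linear toy overhead model, and the name `next_horizon` are all assumptions for illustration.

```python
def next_horizon(horizon, overhead, max_overhead, h_min=1, h_max=None):
    """Pick the horizon length for the next MPC step (hypothetical rule).

    overhead: measured optimization overhead of the last step.
    max_overhead: largest overhead the manager is willing to pay.
    """
    if overhead > max_overhead:
        horizon -= 1  # too expensive: look less far ahead
    else:
        horizon += 1  # within budget: look further ahead for more savings
    if h_max is not None:
        horizon = min(horizon, h_max)
    return max(h_min, horizon)


# Toy run: assume overhead grows by 0.5 (arbitrary units) per kernel in the horizon.
h = 1
history = []
for _ in range(10):
    h = next_horizon(h, overhead=0.5 * h, max_overhead=3.0)
    history.append(h)
print(history)  # -> [2, 3, 4, 5, 6, 7, 6, 7, 6, 7]
```

In this toy run the horizon climbs until the overhead limit is reached and then oscillates around it; a real implementation might add hysteresis or a larger decrease step to damp that.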

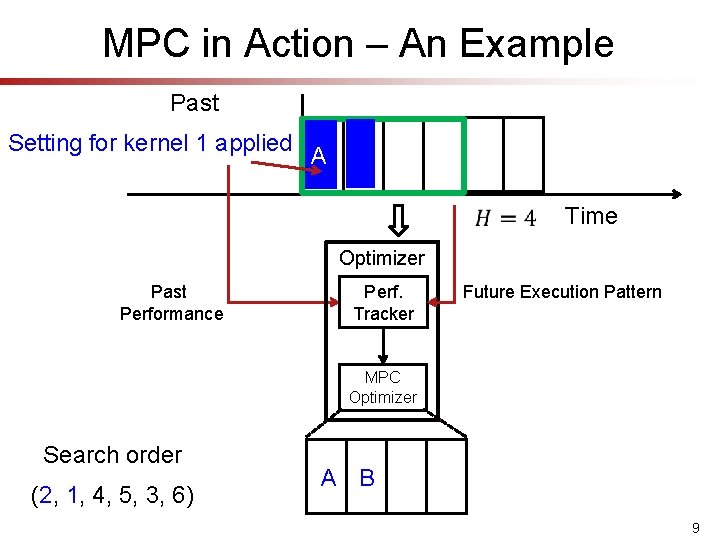

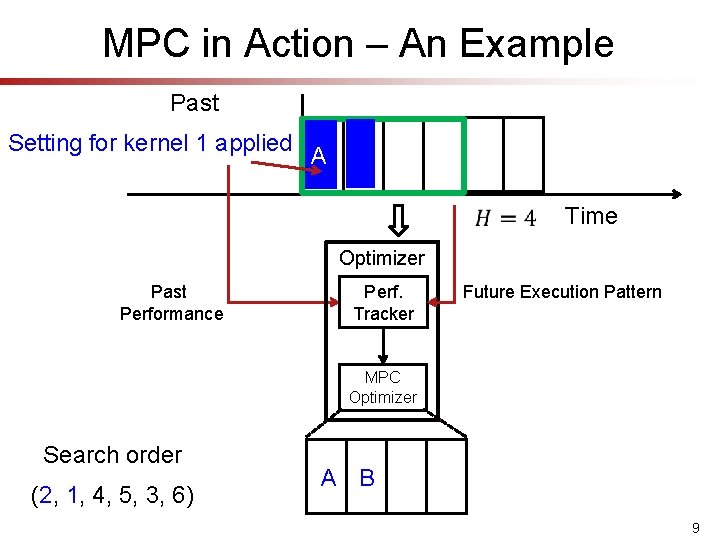

MPC in Action – An Example
[Figure: kernel timeline split into past and future. The Perf. Tracker supplies past performance, and the future execution pattern feeds the MPC Optimizer, which searches the horizon in the order (2, 1, 4, 5, 3, 6). The setting for kernel 1 is applied.]

MPC in Action – An Example
[Figure: after kernel 1 runs, the Perf. Tracker supplies updated past performance and the horizon shifts to the updated future execution pattern. The MPC Optimizer again searches in the order (2, 1, 4, 5, 3, 6), and the setting for kernel 2 is applied.]

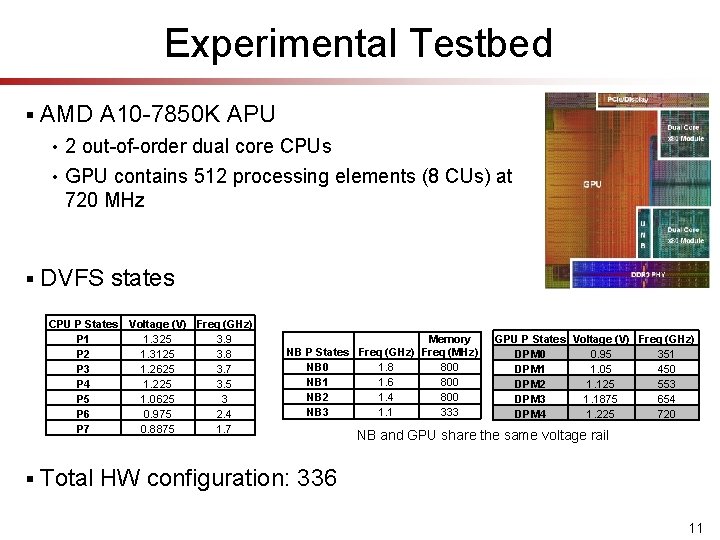

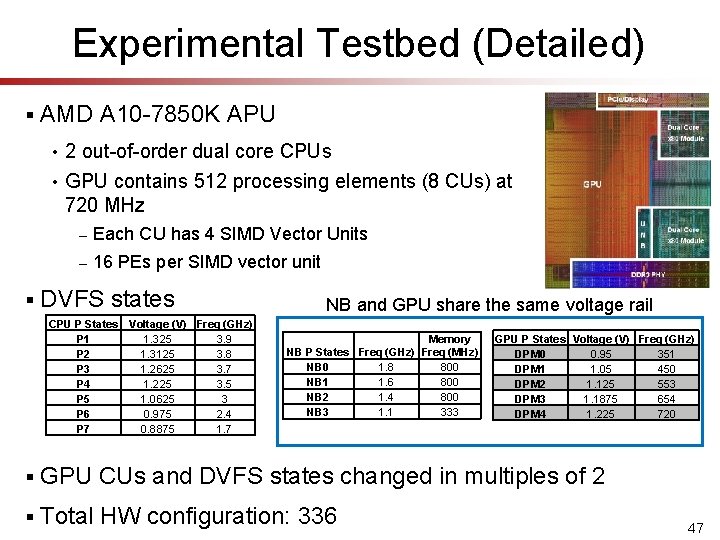

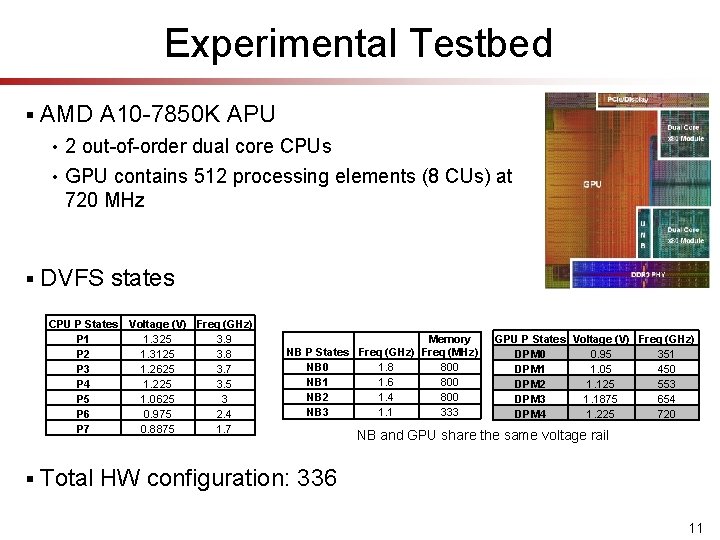

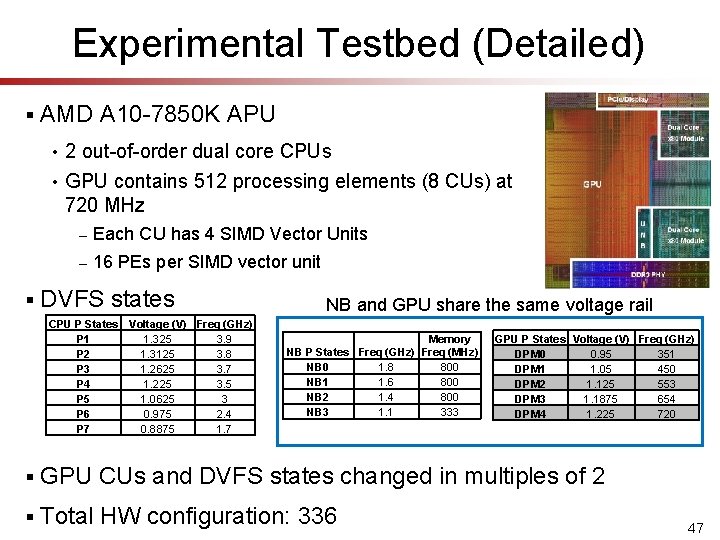

Experimental Testbed
§ AMD A10-7850K APU
  • 2 out-of-order dual-core CPUs
  • GPU contains 512 processing elements (8 CUs) at 720 MHz
§ DVFS states

| CPU P-state | Voltage (V) | Frequency (GHz) |
|---|---|---|
| P1 | 1.325 | 3.9 |
| P2 | 1.3125 | 3.8 |
| P3 | 1.2625 | 3.7 |
| P4 | 1.225 | 3.5 |
| P5 | 1.0625 | 3.0 |
| P6 | 0.975 | 2.4 |
| P7 | 0.8875 | 1.7 |

| NB P-state | NB frequency (GHz) | Memory frequency (MHz) |
|---|---|---|
| NB0 | 1.8 | 800 |
| NB1 | 1.6 | 800 |
| NB2 | 1.4 | 800 |
| NB3 | 1.1 | 333 |

| GPU P-state | Voltage (V) | Frequency (MHz) |
|---|---|---|
| DPM0 | 0.95 | 351 |
| DPM1 | 1.05 | 450 |
| DPM2 | 1.125 | 553 |
| DPM3 | 1.1875 | 654 |
| DPM4 | 1.225 | 720 |

§ NB and GPU share the same voltage rail
§ Total HW configurations: 336

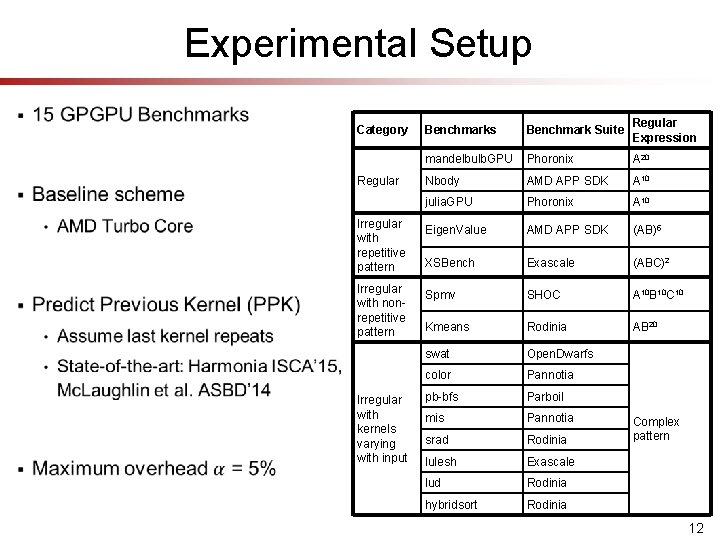

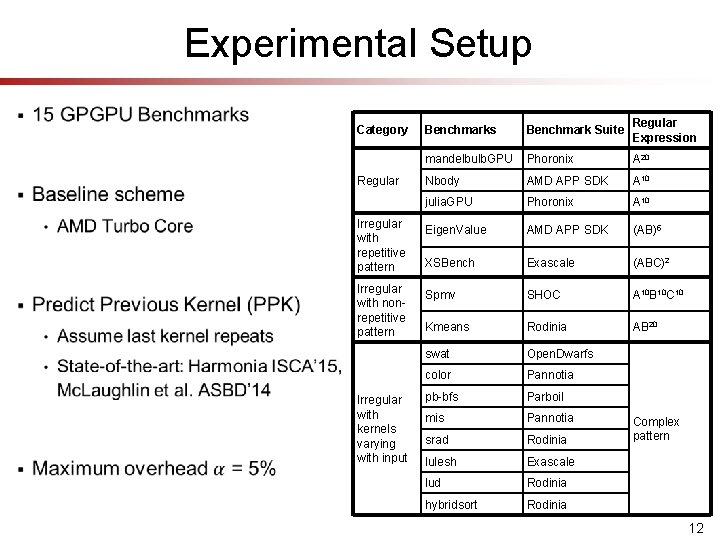

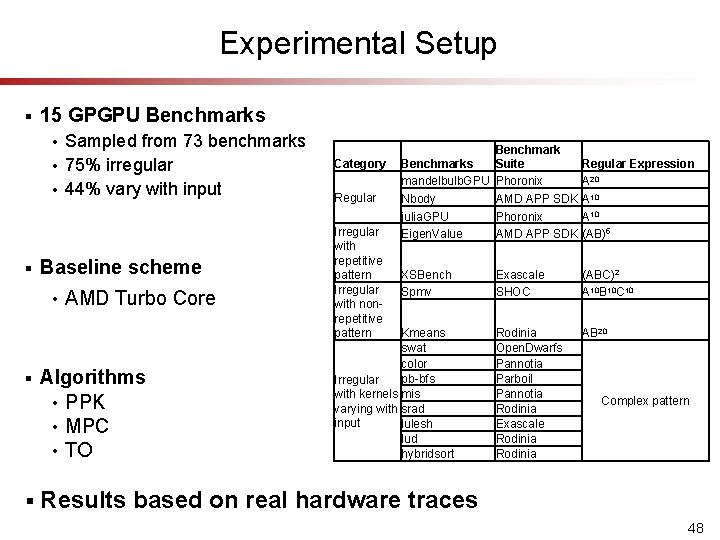

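Experimental Setup

| Category | Benchmark | Suite | Kernel pattern (regular expression) |
|---|---|---|---|
| Regular | mandelbulbGPU | Phoronix | A^20 |
| | NBody | AMD APP SDK | A^10 |
| | juliaGPU | Phoronix | A^10 |
| Irregular with repetitive pattern | EigenValue | AMD APP SDK | (AB)^5 |
| | XSBench | Exascale | (ABC)^2 |
| | Spmv | SHOC | A^10 B^10 C^10 |
| | Kmeans | Rodinia | AB^20 |
| Irregular with non-repetitive pattern, or with kernels varying with input | swat | OpenDwarfs | Complex pattern |
| | color | Pannotia | Complex pattern |
| | pb-bfs | Parboil | Complex pattern |
| | mis | Pannotia | Complex pattern |
| | srad | Rodinia | Complex pattern |
| | lulesh | Exascale | Complex pattern |
| | lud | Rodinia | Complex pattern |
| | hybridsort | Rodinia | Complex pattern |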

Energy-Performance Gains
[Figure: Energy Savings (%) and Relative Performance per benchmark, plus Irregular-all, Irregular-var-input, and Average; series: Predict Previous Kernel and MPC. Annotated values: 24.8%, 19.5%, -1.8%, -10%.]

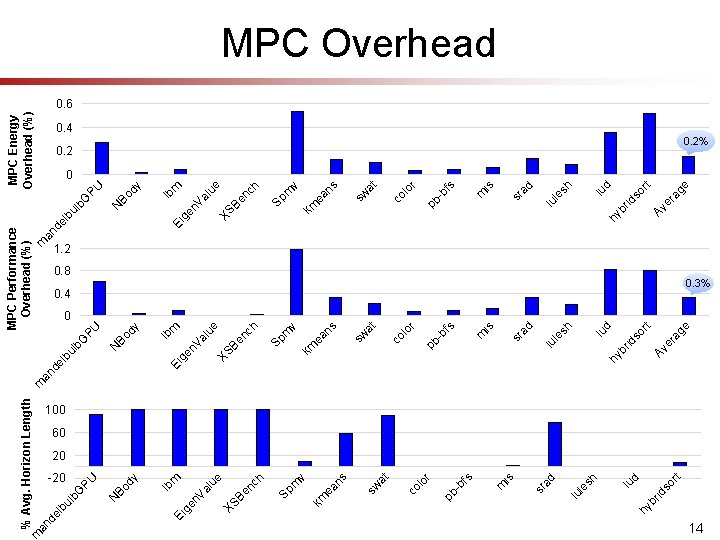

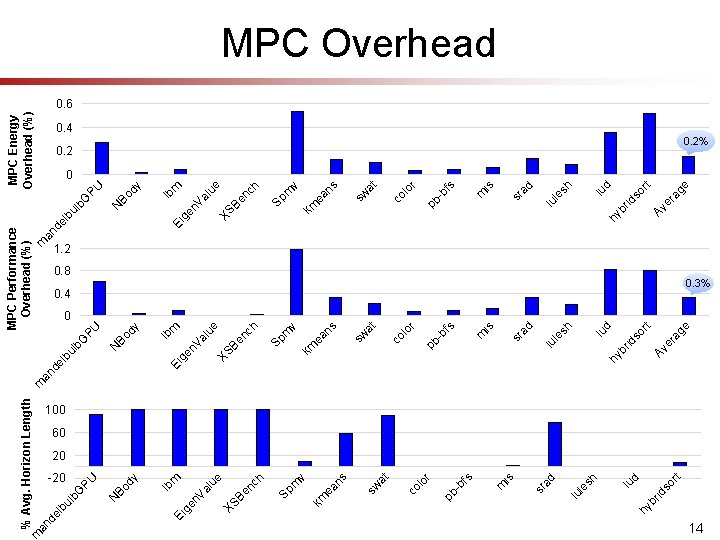

MPC Overhead
[Figure: average horizon length, MPC performance overhead (%), and MPC energy overhead (%) per benchmark and on average; annotated averages: 0.2% and 0.3%.]

Ramification of Prediction Inaccuracy
§ RF (Random Forest model): 12% power error, 25% performance error
§ 15% power, 10% performance error: Wu et al. [HPCA 2015]
§ 5% power, 5% performance error: Paul et al. [ISCA 2015]
[Figure: Energy Savings (%) and Relative Performance per benchmark with Perfect, RF, 15% Power/10% Perf, and 5% Power/5% Perf predictions.]

Conclusions
§ MPC looks into the future to determine the best configuration for the current optimization time step
§ Though MPC is effective in many domains, its overheads are far too high for the short timescales of dynamic power management
§ We devise an approximation of MPC that dramatically improves GPGPU energy efficiency with orders-of-magnitude lower overhead
§ Our approach reduces energy by 24.8% with only a 1.8% performance impact

Questions

Backup Slides

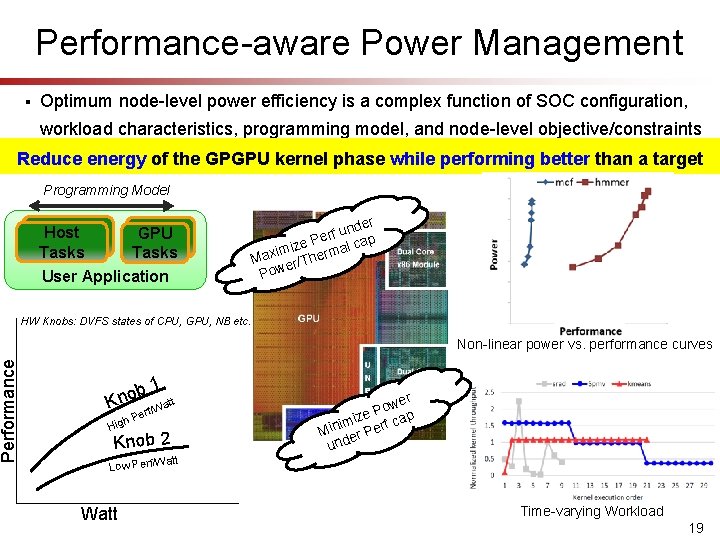

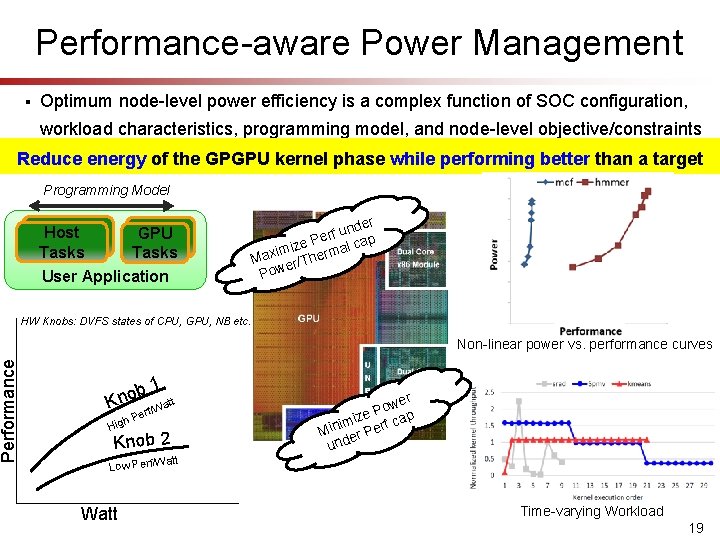

Performance-aware Power Management
§ Optimum node-level power efficiency is a complex function of SoC configuration, workload characteristics, programming model, and node-level objectives/constraints
[Figure: node-level objectives (maximize performance under a power/thermal cap; minimize power under a performance cap; performance: reduce the energy of the GPGPU kernel phase while performing better than a target), the programming model (a user application split into host tasks and GPU tasks), HW knobs (DVFS states of CPU, GPU, NB, etc.) with non-linear power vs. performance curves (Knob 1 vs. Knob 2, regions of high and low Perf/Watt), and a time-varying workload.]

Model Predictive Control (MPC)
§ MPC looks into the future to determine the best configuration for the current optimization time step
[Figure: a horizon of H time steps starting at the current time step; the horizon shifts forward after each step.]
§ Though MPC is effective in many domains, its overheads are far too high for the short timescales of dynamic power management
§ Our approach: an approximation of MPC that dramatically improves GPGPU energy efficiency with orders-of-magnitude lower overhead

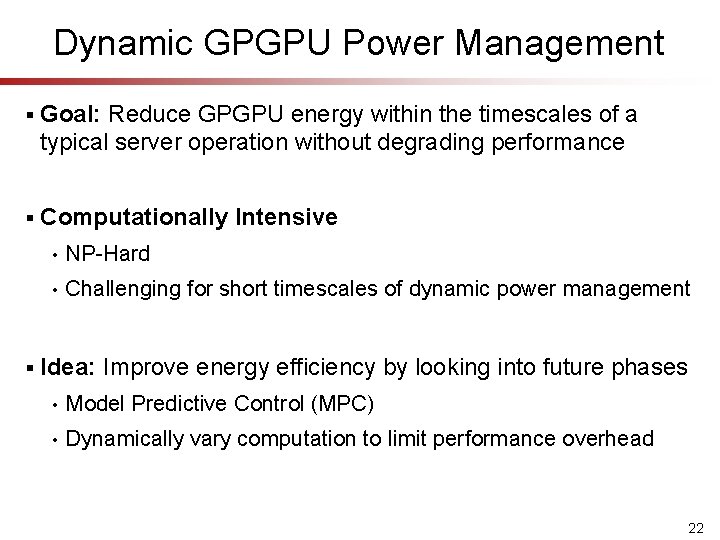

Dynamic GPGPU Power Management
§ CPU and GPU consume significant power in servers
§ Previous approaches to dynamic power management are locally predictive and ignore future kernel behavior
  • Performance loss or wasted energy
§ Model Predictive Control proactively looks ahead into the future
§ Applying MPC is challenging at the short timescales of dynamic power management
§ Goal: approximations to MPC that save GPGPU energy within the timescales of typical server operation without degrading performance

Dynamic GPGPU Power Management
§ Goal: reduce GPGPU energy within the timescales of typical server operation without degrading performance
§ Computationally intensive
  • NP-hard
  • Challenging for the short timescales of dynamic power management
§ Idea: improve energy efficiency by looking into future phases
  • Model Predictive Control (MPC)
  • Dynamically vary the amount of optimization computation to limit the performance overhead

Traditional Energy Management
§ Static
  • Predefined set of decisions
§ Reactive
  • Act after sensing a change in behavior
§ Locally predictive
  • Predict the immediate behavior
§ Result: performance loss or wasted energy
Proactive Energy Management
§ Adapt from the past and look ahead into the future

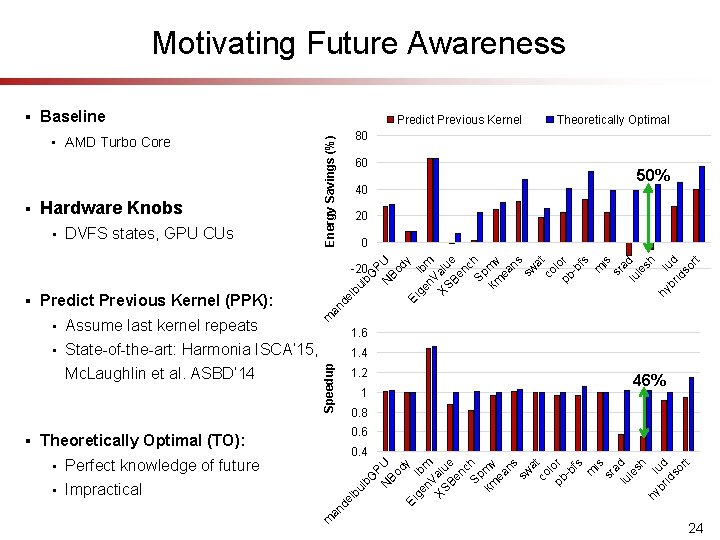

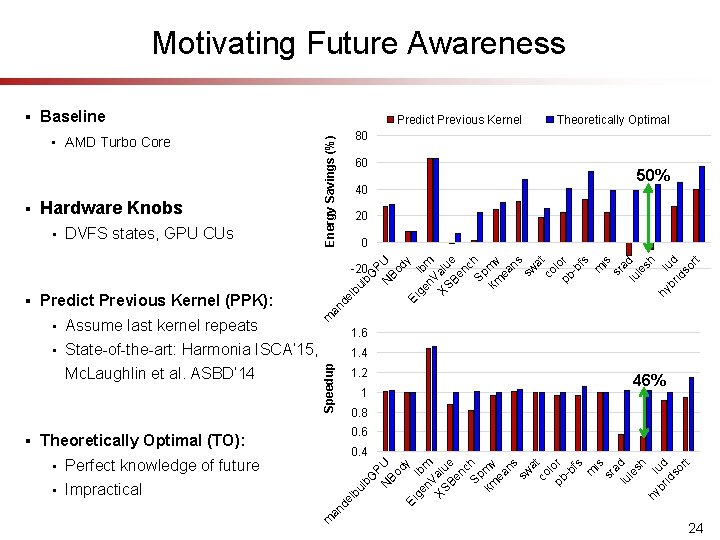

Motivating Future Awareness
§ Baseline
  • AMD Turbo Core
§ Hardware knobs
  • DVFS states, GPU CUs
§ Predict Previous Kernel (PPK)
  • Assume the last kernel repeats
  • State of the art: Harmonia [ISCA'15], McLaughlin et al. [ASBD'14]
§ Theoretically Optimal (TO)
  • Perfect knowledge of the future
  • Impractical
[Figure: Energy Savings (%) and Speedup per benchmark for PPK and TO; annotated values: 50% and 46%.]

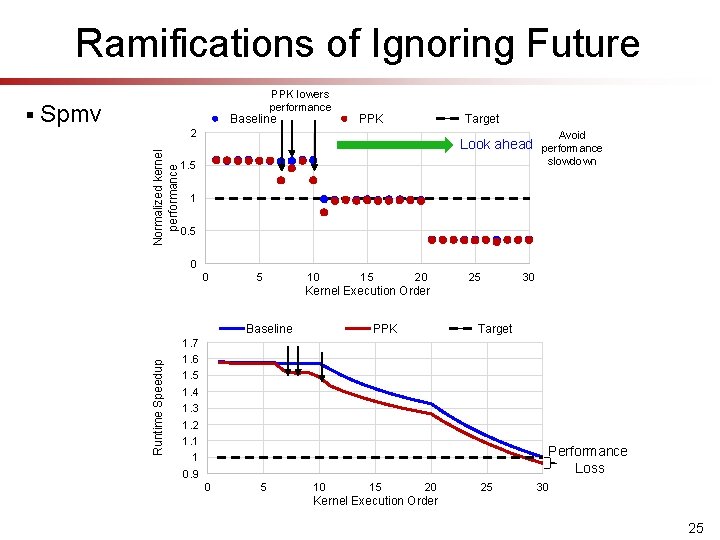

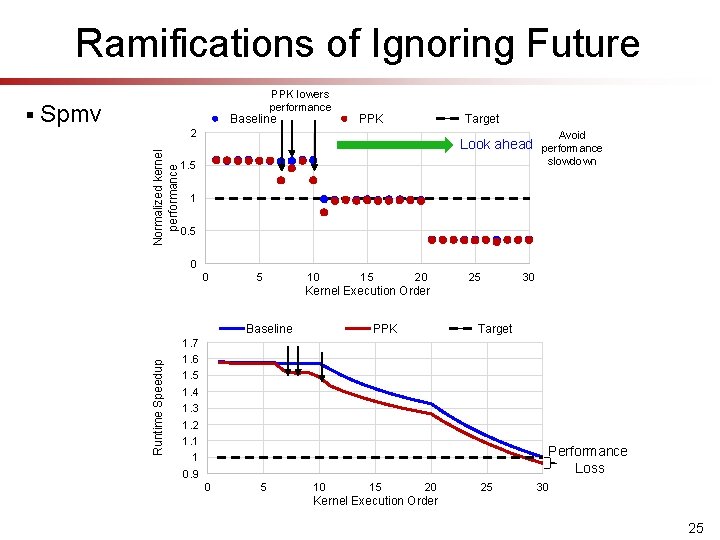

Ramifications of Ignoring Future: Spmv
§ PPK lowers performance
[Figure: normalized kernel performance and runtime speedup vs. kernel execution order (0 to 30) for Baseline, PPK, and Target. Annotations: looking ahead would avoid the performance slowdown; PPK's runtime speedup falls below the target (performance loss).]

Ramifications of Ignoring Future: Kmeans
§ PPK spends a lot of energy
[Figure: normalized kernel performance and runtime speedup vs. kernel execution order (0 to 20) against the target, and runtime energy savings (%) for PPK and TO. Annotation: looking ahead would allow catching up on performance later.]

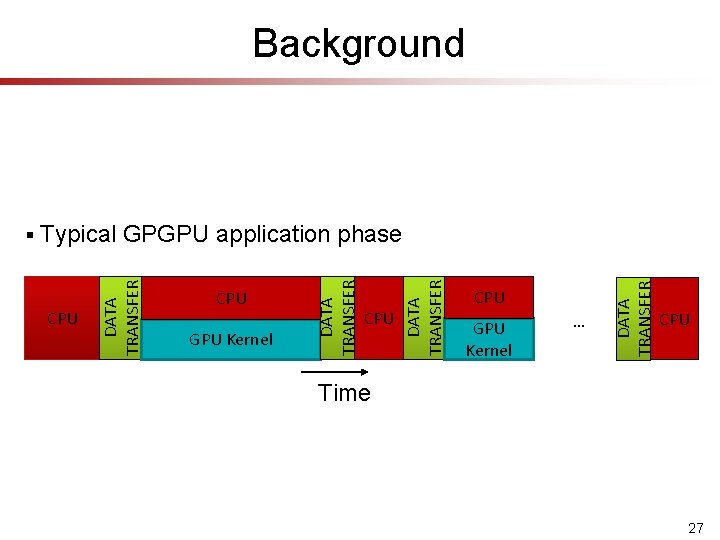

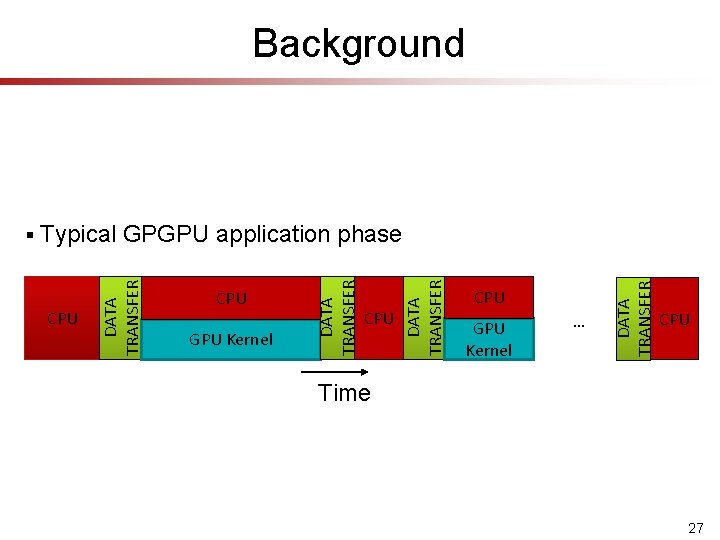

Background
[Figure: a typical GPGPU application phase over time, alternating CPU execution, data transfers, and GPU kernels.]

Kernel Performance Scaling
§ The energy-optimal configuration differs across kernels

Model Predictive Control (MPC)
§ General idea: optimize over a horizon of H steps, then apply the result to the current step only
§ MPC components
  • Accurate system model: power and performance prediction model
  • Future input forecast: kernel pattern extractor
  • Optimization: greedy and heuristic-based optimizer

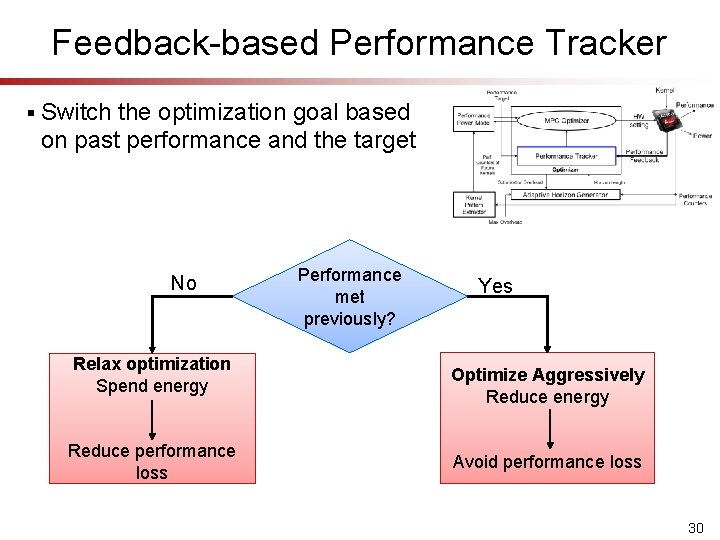

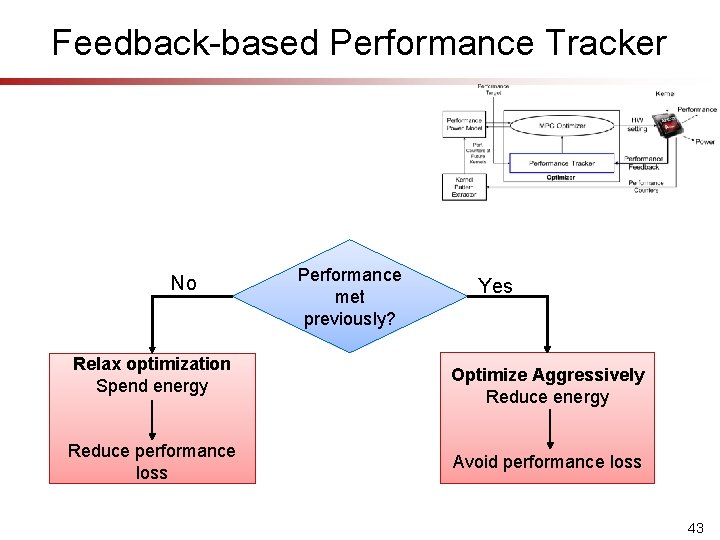

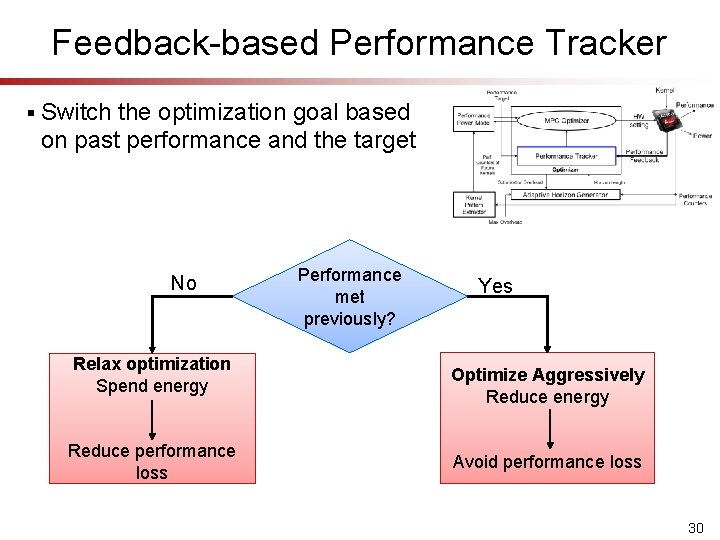

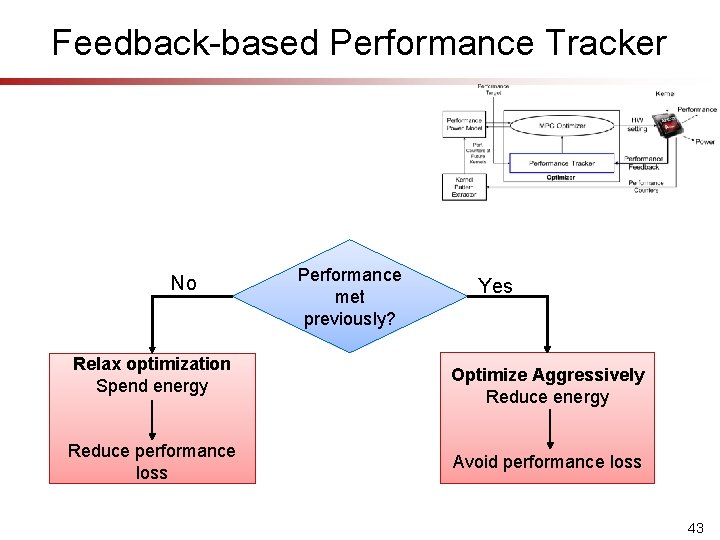

Feedback-based Performance Tracker
§ Switch the optimization goal based on past performance and the target
§ Was the performance target met previously?
  • Yes: optimize aggressively, reducing energy while avoiding performance loss
  • No: relax the optimization, spending energy to reduce performance loss
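A minimal sketch of the feedback rule above, assuming performance is tracked as cumulative measured time versus cumulative baseline (target) time. `PerformanceTracker`, its methods, and the `time_budget` slack rule are hypothetical names and policies chosen for illustration, not taken from the paper.

```python
class PerformanceTracker:
    """Feedback-based performance tracker (sketch; policy details are assumed)."""

    def __init__(self):
        self.actual_time = 0.0  # measured time of the kernels executed so far
        self.target_time = 0.0  # time the baseline would take for those kernels

    def record(self, measured, baseline):
        self.actual_time += measured
        self.target_time += baseline

    def performance_met(self):
        return self.actual_time <= self.target_time

    def mode(self):
        # Target met so far: optimize aggressively for energy.
        # Behind target: relax, spending energy to recover lost performance.
        return "aggressive" if self.performance_met() else "relax"

    def time_budget(self, baseline_future):
        # Time allowed for the next horizon: its baseline time plus accumulated
        # slack (ahead of target) or minus the accumulated deficit (behind).
        return baseline_future + (self.target_time - self.actual_time)


tracker = PerformanceTracker()
tracker.record(measured=1.0, baseline=1.5)
print(tracker.mode(), tracker.time_budget(5.0))  # -> aggressive 5.5
tracker.record(measured=2.0, baseline=1.0)
print(tracker.mode(), tracker.time_budget(5.0))  # -> relax 4.5
```

Passing the resulting budget to the optimizer is one way the "spend energy" and "reduce energy" modes could translate into a looser or tighter performance constraint for the next horizon.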

Performance/Power Model
[Figure: MPC power manager architecture with the Performance/Power Model highlighted.]
§ Trained offline using the Random Forest learning algorithm
§ Estimates performance and power for any HW configuration

Kernel Pattern Extractor
[Figure: MPC power manager architecture with the Kernel Pattern Extractor highlighted.]
§ Extracts the kernel execution pattern upon its first encounter
§ Stores the performance counters of dissimilar kernels and retunes it
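The slides say only that the extractor captures the kernel execution pattern on first encounter. As a hypothetical illustration, the sketch below summarizes a sequence of kernel IDs in the same notation the benchmark table uses (A^20, (AB)^5, A^10 B^10 C^10): it first looks for the shortest unit that repeats exactly, and otherwise falls back to run-length encoding. `describe_pattern` is an invented name, and this is not presented as the paper's method.

```python
def describe_pattern(seq):
    """Summarize a list of single-letter kernel IDs as a pattern string (sketch)."""
    n = len(seq)
    # Shortest period p such that seq is seq[:p] repeated at least twice.
    for p in range(1, n // 2 + 1):
        if n % p == 0 and seq[:p] * (n // p) == seq:
            unit = "".join(seq[:p])
            return (unit if p == 1 else f"({unit})") + f"^{n // p}"
    # Otherwise fall back to run-length encoding of consecutive repeats.
    runs = []
    for k in seq:
        if runs and runs[-1][0] == k:
            runs[-1][1] += 1
        else:
            runs.append([k, 1])
    return " ".join(k if count == 1 else f"{k}^{count}" for k, count in runs)


print(describe_pattern(list("A" * 20)))                        # -> A^20
print(describe_pattern(list("AB" * 5)))                        # -> (AB)^5
print(describe_pattern(list("A" * 10 + "B" * 10 + "C" * 10)))  # -> A^10 B^10 C^10
print(describe_pattern(list("A" + "B" * 20)))                  # -> A B^20
```

Once a pattern like this is known, the counters stored for each distinct kernel (A, B, C) can be replayed as the forecast of future kernels in the MPC horizon.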

MPC Optimizer
§ Greedy hill climbing optimization
  • Using the predictor, select the HW knob with the highest energy sensitivity
  • Search for a low-energy configuration using hill climbing
[Figure: predicted energy for each knob (CPU DVFS, NB DVFS, GPU CU, GPU DVFS); hill climbing along the GPU DVFS states.]

MPC Optimizer
§ Greedy hill climbing optimization
  • Using the predictor, select the HW knob with the highest energy sensitivity
  • Search for a low-energy configuration using hill climbing
[Figure: predicted energy for each knob (CPU DVFS, NB DVFS, GPU CU, GPU DVFS); hill climbing along the GPU CU count.]

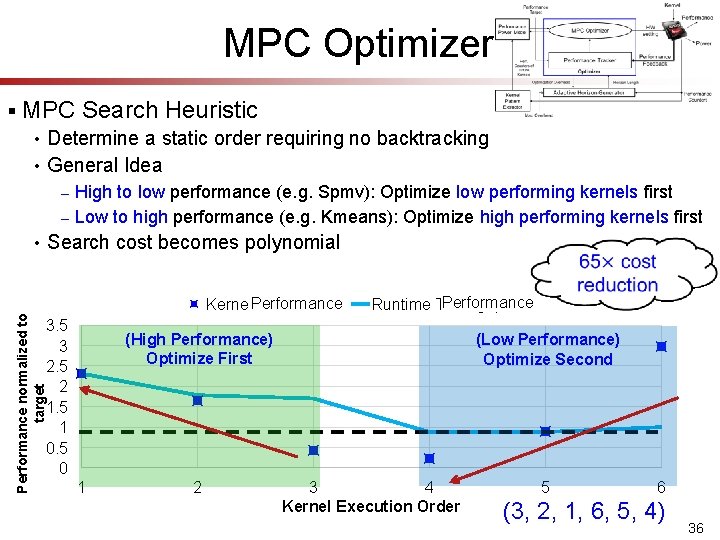

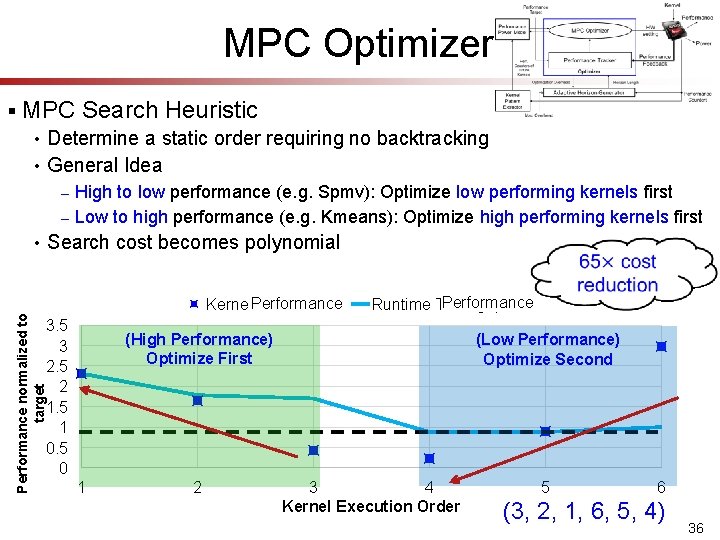

MPC Optimizer
§ Greedy hill climbing optimization
  • Select the HW knob with the highest energy sensitivity and search for a low-energy configuration using hill climbing
§ MPC search heuristic
  • Determine a static order requiring no backtracking
  • General idea
    – Performance normalized to target
    – High to low performance (e.g., Spmv): optimize low-performing kernels first
    – Low to high performance (e.g., Kmeans): optimize high-performing kernels first
  • Search cost becomes polynomial
[Figure: kernel throughput and runtime throughput (performance) vs. kernel execution order 1 to 6, annotated "(Low Performance) Optimize Second" and "(High Performance) Optimize First"; search order (3, 2, 1, 6, 5, 4).]

MPC Optimizer
§ MPC search heuristic
  • Determine a static order requiring no backtracking
  • General idea
    – Performance normalized to target
    – High to low performance (e.g., Spmv): optimize low-performing kernels first
    – Low to high performance (e.g., Kmeans): optimize high-performing kernels first
  • Search cost becomes polynomial
[Figure: kernel throughput and runtime throughput (performance) vs. kernel execution order 1 to 6, annotated "(High Performance) Optimize First" and "(Low Performance) Optimize Second"; search order (3, 2, 1, 6, 5, 4).]
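The general idea of the search heuristic can be sketched as a single sort over the horizon, which is what removes backtracking. The slides do not say how the "high to low" or "low to high" trend is detected; this sketch assumes it compares the mean normalized performance of the first and second halves of the horizon. `search_order` is a hypothetical name, and the sketch is not expected to reproduce the exact orders shown in the slide figures.

```python
def search_order(norm_perf):
    """Static, no-backtracking visit order for kernels in the horizon (sketch).

    norm_perf[i]: predicted performance of the (i+1)-th kernel in the horizon,
    normalized to the performance target. Returns 1-based kernel positions.
    """
    n = len(norm_perf)
    if n < 2:
        return list(range(1, n + 1))
    half = n // 2
    head = sum(norm_perf[:half]) / half          # assumed trend test
    tail = sum(norm_perf[half:]) / (n - half)
    positions = range(n)
    if head > tail:
        # High to low performance (e.g. Spmv): optimize low-performing kernels first.
        order = sorted(positions, key=lambda i: norm_perf[i])
    else:
        # Low to high performance (e.g. Kmeans): optimize high-performing kernels first.
        order = sorted(positions, key=lambda i: norm_perf[i], reverse=True)
    return [i + 1 for i in order]


print(search_order([3.0, 2.5, 2.0, 1.5, 1.0, 0.5]))  # -> [6, 5, 4, 3, 2, 1]
print(search_order([1.0, 3.0, 2.0, 0.5]))            # -> [4, 1, 3, 2]
```

For a strictly monotone horizon, both rules visit the later kernels first. Ties keep their original order because Python's `sorted()` is stable, including with `reverse=True`.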

Adaptive Horizon Generator
[Figure: the MPC horizon over the kernel sequence.]

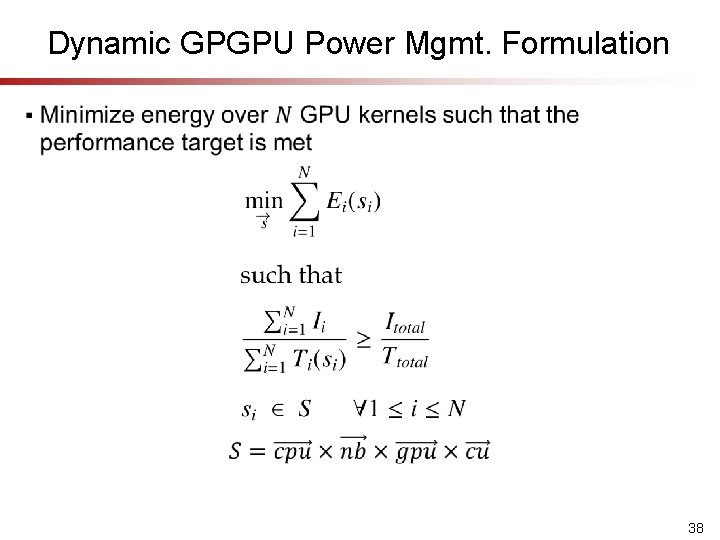

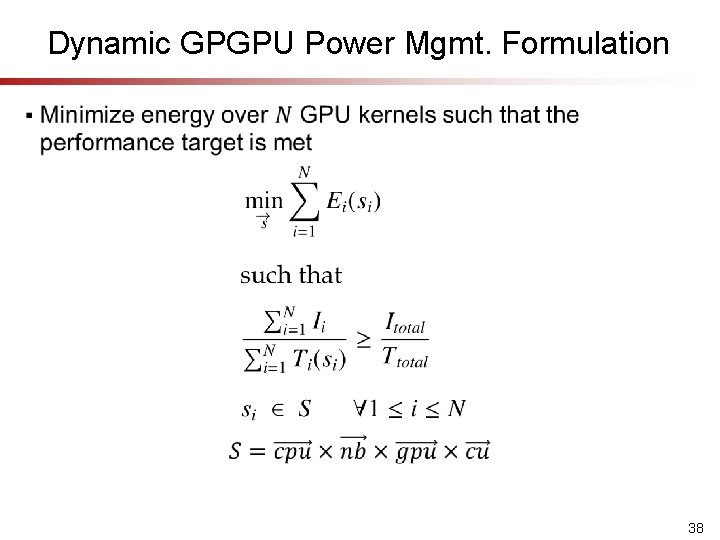

Dynamic GPGPU Power Management Formulation

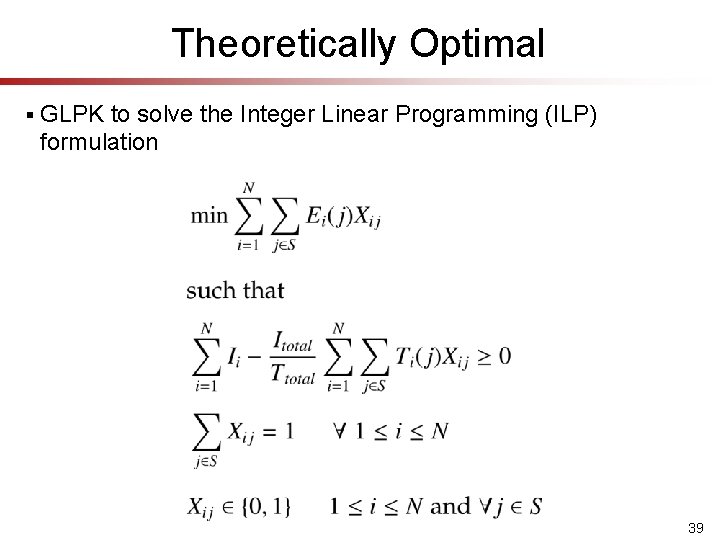

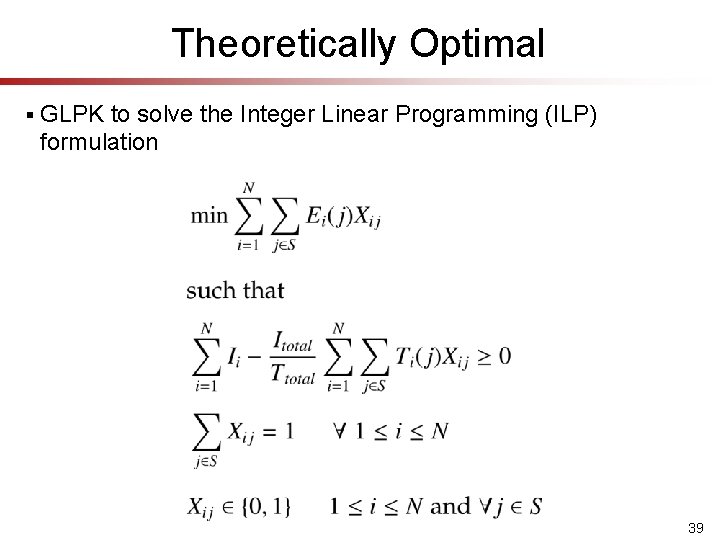

Theoretically Optimal
§ Uses GLPK to solve the integer linear programming (ILP) formulation
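The formulation slide's equations are not recoverable from this text. As a hedged sketch of what "minimize energy under a performance cap with discrete HW settings" typically looks like as an ILP, assume N kernels indexed by i, a set C of HW configurations, energy E(i,c) and execution time T(i,c) of kernel i under configuration c (known exactly for TO), a baseline total time T_base, and a binary variable x(i,c) that is 1 when kernel i runs in configuration c. These symbols are illustrative and not taken from the paper.

```latex
\begin{aligned}
\min_{x}\quad & \sum_{i=1}^{N} \sum_{c \in \mathcal{C}} E_{i,c}\, x_{i,c} \\
\text{s.t.}\quad & \sum_{i=1}^{N} \sum_{c \in \mathcal{C}} T_{i,c}\, x_{i,c} \le T_{\text{base}}, \\
& \sum_{c \in \mathcal{C}} x_{i,c} = 1 \quad \forall i \in \{1, \dots, N\}, \\
& x_{i,c} \in \{0, 1\} \quad \forall i,\ \forall c \in \mathcal{C}.
\end{aligned}
```

Each kernel picks exactly one configuration, and all choices share a single time budget, so this is a multiple-choice knapsack problem. That problem is NP-hard, which matches the NP-hardness of the exact optimization noted earlier in the deck.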

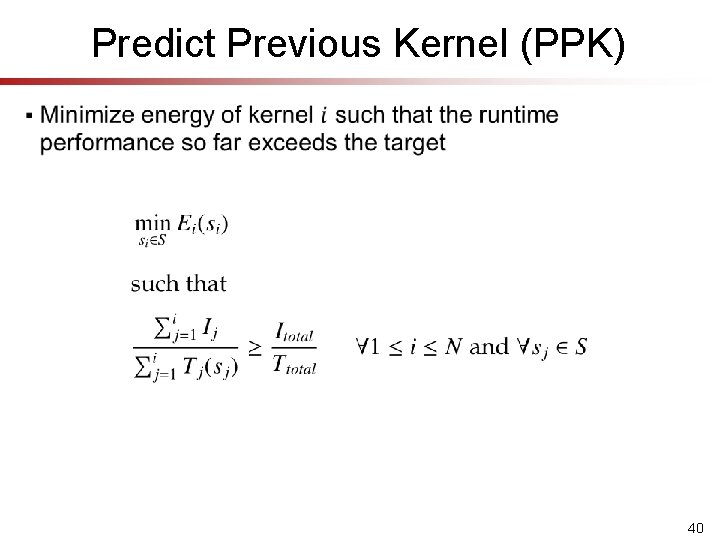

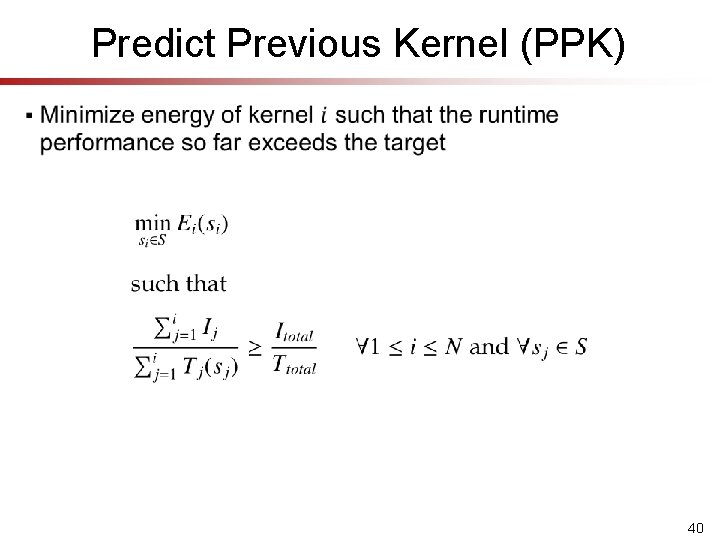

Predict Previous Kernel (PPK)
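PPK, described on the Motivating Future Awareness slide as "assume the last kernel repeats", can be sketched as a one-step forecaster. The snippet below is a simplification: it assumes kernels are identified by labels rather than by performance counters, and counts how often that forecast is wrong on kernel patterns written in the benchmark table's notation. `ppk_forecast` and `ppk_mispredictions` are hypothetical names.

```python
def ppk_forecast(history):
    """PPK: forecast that the next kernel repeats the most recent one."""
    return history[-1] if history else None


def ppk_mispredictions(seq):
    """Count kernels whose PPK forecast (from the kernels before them) is wrong."""
    return sum(1 for k in range(1, len(seq)) if ppk_forecast(seq[:k]) != seq[k])


print(ppk_mispredictions(list("A" * 20)))                        # -> 0
print(ppk_mispredictions(list("AB" * 5)))                        # -> 9
print(ppk_mispredictions(list("A" * 10 + "B" * 10 + "C" * 10)))  # -> 2
```

Each misprediction means PPK tunes the hardware for the wrong kernel. A regular pattern such as A^20 is never mispredicted, whereas (AB)^5 is mispredicted at every transition.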

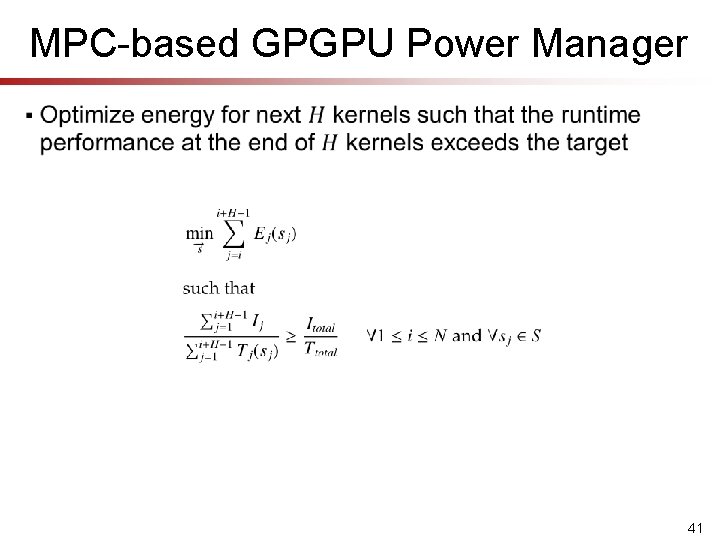

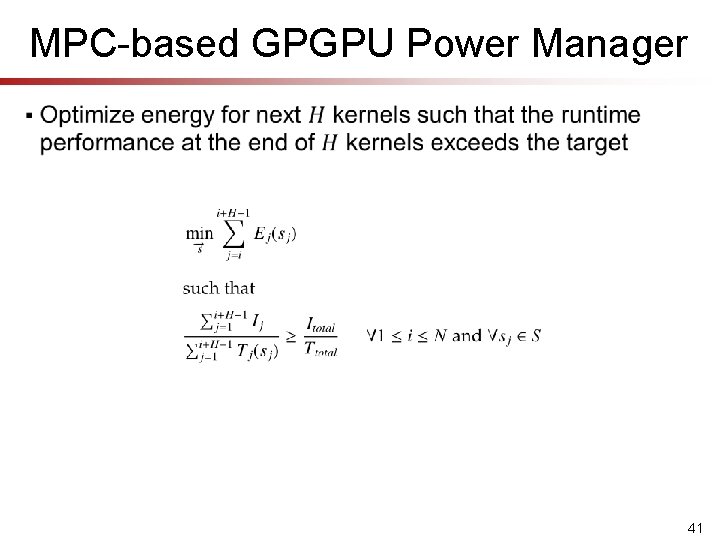

MPC-based GPGPU Power Manager

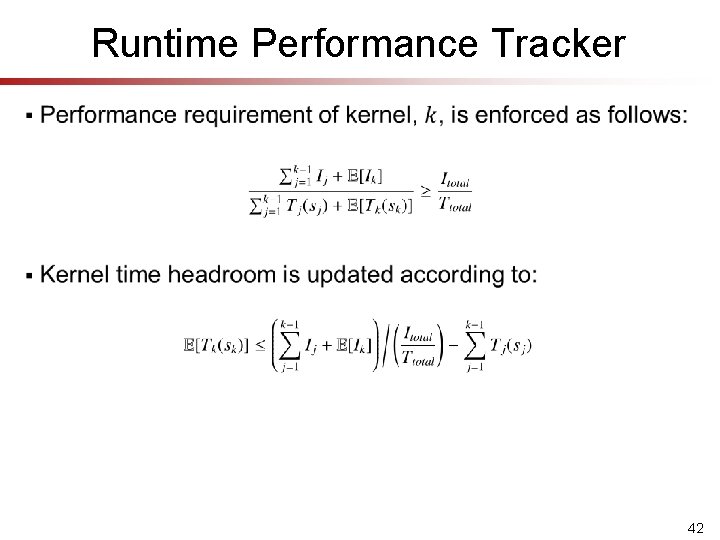

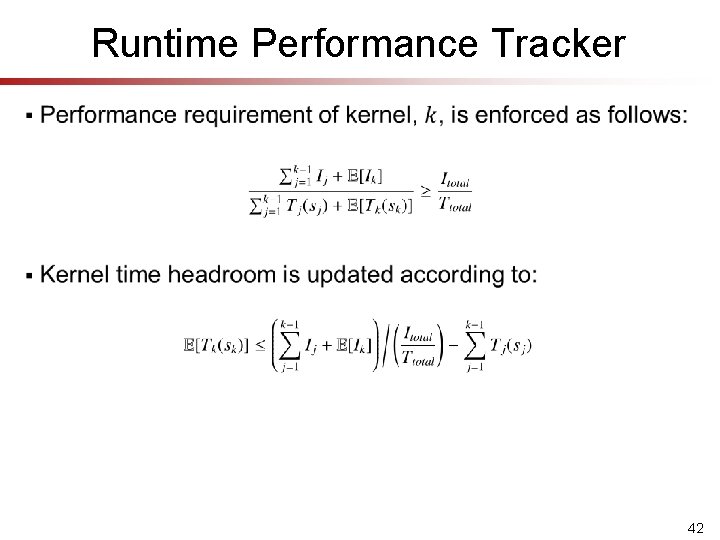

Runtime Performance Tracker

Feedback-based Performance Tracker
§ Was the performance target met previously?
  • Yes: optimize aggressively, reducing energy while avoiding performance loss
  • No: relax the optimization, spending energy to reduce performance loss

MPC Optimizer
§ Greedy hill climbing optimization
  • Select the HW knob with the highest energy sensitivity and search for a low-energy configuration using hill climbing
§ MPC search heuristic
  • Determine a static order requiring no backtracking
  • General idea
    – High to low performance (e.g., Spmv): optimize low-performing kernels first
    – Low to high performance (e.g., Kmeans): optimize high-performing kernels first
  • Search cost becomes polynomial
§ 65× average reduction in total cost evaluations
[Figure: an example horizon of H kernels searched in the order (2, 1, 4, 5, 3); the result is applied to the current kernel.]

MPC Optimizer
§ Greedy hill climbing optimization
  • Greedy: select the HW knob with the highest energy sensitivity
  • Hill climbing: keep finding lower-energy configurations (within the performance target), then stop
  • Reduces search cost by 20×
§ MPC search heuristic
  • Determine a static order requiring no backtracking
  • General idea
    – High to low performance (e.g., Spmv): optimize low-performing kernels first
    – Low to high performance (e.g., Kmeans): optimize high-performing kernels first
  • Search becomes polynomial
§ Average reduction in total cost evaluations: 65×
[Figure: an example horizon of H kernels searched in the order (2, 1, 4, 5, 3); the result is applied to the current kernel.]

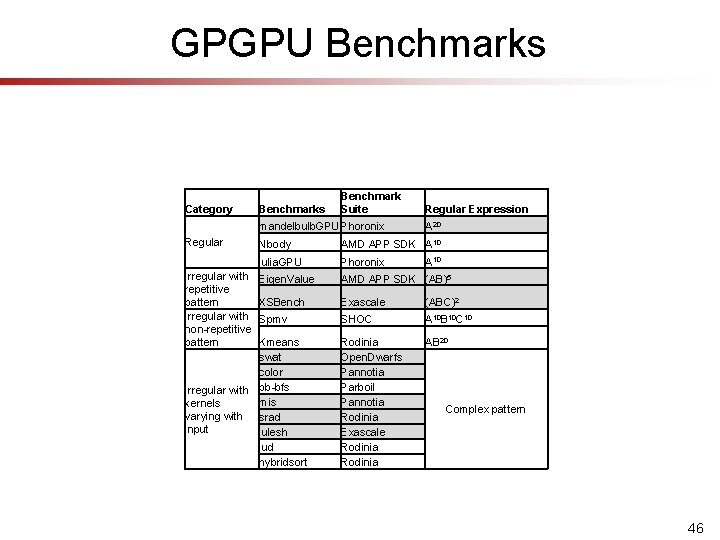

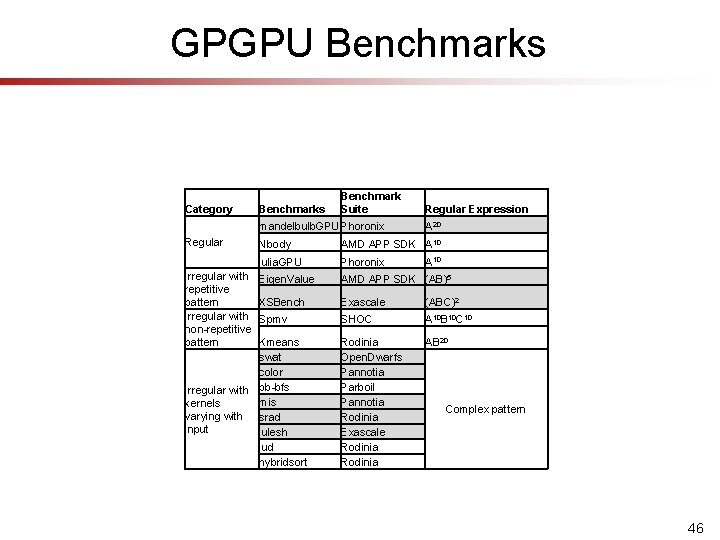

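GPGPU Benchmarks

| Category | Benchmark | Suite | Kernel pattern (regular expression) |
|---|---|---|---|
| Regular | mandelbulbGPU | Phoronix | A^20 |
| | NBody | AMD APP SDK | A^10 |
| | juliaGPU | Phoronix | A^10 |
| Irregular with repetitive pattern | EigenValue | AMD APP SDK | (AB)^5 |
| | XSBench | Exascale | (ABC)^2 |
| | Spmv | SHOC | A^10 B^10 C^10 |
| | Kmeans | Rodinia | AB^20 |
| Irregular with non-repetitive pattern, or with kernels varying with input | swat | OpenDwarfs | Complex pattern |
| | color | Pannotia | Complex pattern |
| | pb-bfs | Parboil | Complex pattern |
| | mis | Pannotia | Complex pattern |
| | srad | Rodinia | Complex pattern |
| | lulesh | Exascale | Complex pattern |
| | lud | Rodinia | Complex pattern |
| | hybridsort | Rodinia | Complex pattern |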

Experimental Testbed (Detailed)
§ AMD A10-7850K APU
  • 2 out-of-order dual-core CPUs
  • GPU contains 512 processing elements (8 CUs) at 720 MHz
    – Each CU has 4 SIMD vector units
    – 16 PEs per SIMD vector unit
§ DVFS states

| CPU P-state | Voltage (V) | Frequency (GHz) |
|---|---|---|
| P1 | 1.325 | 3.9 |
| P2 | 1.3125 | 3.8 |
| P3 | 1.2625 | 3.7 |
| P4 | 1.225 | 3.5 |
| P5 | 1.0625 | 3.0 |
| P6 | 0.975 | 2.4 |
| P7 | 0.8875 | 1.7 |

§ NB and GPU share the same voltage rail

| NB P-state | NB frequency (GHz) | Memory frequency (MHz) |
|---|---|---|
| NB0 | 1.8 | 800 |
| NB1 | 1.6 | 800 |
| NB2 | 1.4 | 800 |
| NB3 | 1.1 | 333 |

| GPU P-state | Voltage (V) | Frequency (MHz) |
|---|---|---|
| DPM0 | 0.95 | 351 |
| DPM1 | 1.05 | 450 |
| DPM2 | 1.125 | 553 |
| DPM3 | 1.1875 | 654 |
| DPM4 | 1.225 | 720 |

§ GPU CUs and DVFS states changed in multiples of 2
§ Total HW configurations: 336
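One reading of the slide's numbers reproduces the 336 total, sketched below. It assumes "changed in multiples of 2" means keeping every other GPU DPM state (DPM0, DPM2, DPM4) and CU counts of 2, 4, 6, and 8, and that every NB/GPU pairing is allowed despite the shared voltage rail. The slides do not spell out either point, so treat this enumeration as a consistency check rather than the authors' definition.

```python
from itertools import product

# DVFS and CU settings listed on the testbed slide.
cpu_pstates = ["P1", "P2", "P3", "P4", "P5", "P6", "P7"]
nb_pstates = ["NB0", "NB1", "NB2", "NB3"]
# Assumption: "changed in multiples of 2" keeps every other GPU DPM state
# and every other CU count.
gpu_dpm = ["DPM0", "DPM2", "DPM4"]
gpu_cus = [2, 4, 6, 8]

configs = list(product(cpu_pstates, nb_pstates, gpu_dpm, gpu_cus))
print(len(configs))  # -> 336 (7 x 4 x 3 x 4)

# Sanity check on the GPU width: 8 CUs x 4 SIMD units x 16 PEs per SIMD unit.
print(8 * 4 * 16)  # -> 512
```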

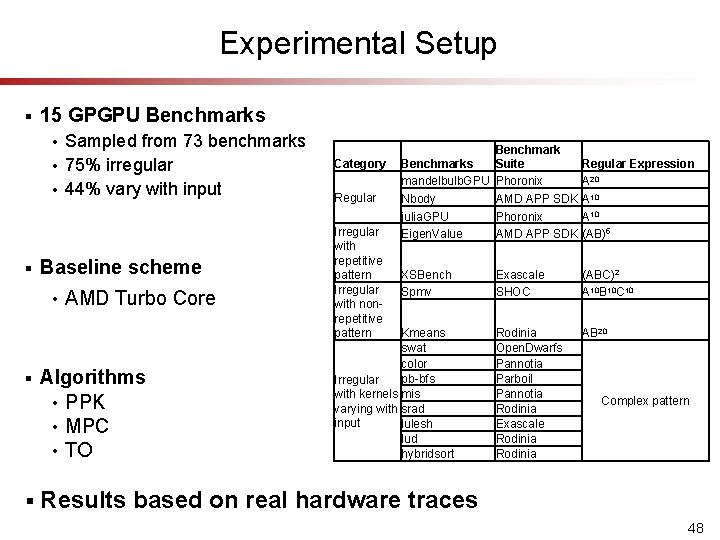

Experimental Setup
§ 15 GPGPU benchmarks sampled from 73 benchmarks
  • 75% irregular
  • 44% vary with input
§ Baseline scheme
  • AMD Turbo Core
§ Algorithms
  • PPK
  • MPC
  • TO

| Category | Benchmark | Suite | Kernel pattern (regular expression) |
|---|---|---|---|
| Regular | mandelbulbGPU | Phoronix | A^20 |
| | NBody | AMD APP SDK | A^10 |
| | juliaGPU | Phoronix | A^10 |
| Irregular with repetitive pattern | EigenValue | AMD APP SDK | (AB)^5 |
| | XSBench | Exascale | (ABC)^2 |
| | Spmv | SHOC | A^10 B^10 C^10 |
| | Kmeans | Rodinia | AB^20 |
| Irregular with non-repetitive pattern, or with kernels varying with input | swat | OpenDwarfs | Complex pattern |
| | color | Pannotia | Complex pattern |
| | pb-bfs | Parboil | Complex pattern |
| | mis | Pannotia | Complex pattern |
| | srad | Rodinia | Complex pattern |
| | lulesh | Exascale | Complex pattern |
| | lud | Rodinia | Complex pattern |
| | hybridsort | Rodinia | Complex pattern |

§ Results based on real hardware traces

[Figure: Speedup and Energy Savings (%) per benchmark, plus Irregular-all, Irregular-var-input, and Average, for MPC, Polynomial MPC w/ Theoretical Optimal, and Theoretically Optimal; annotated values: 92% and 93%.]

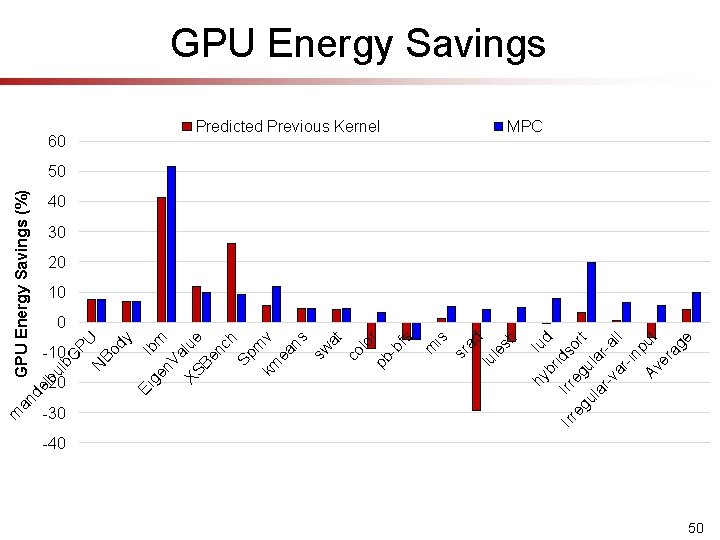

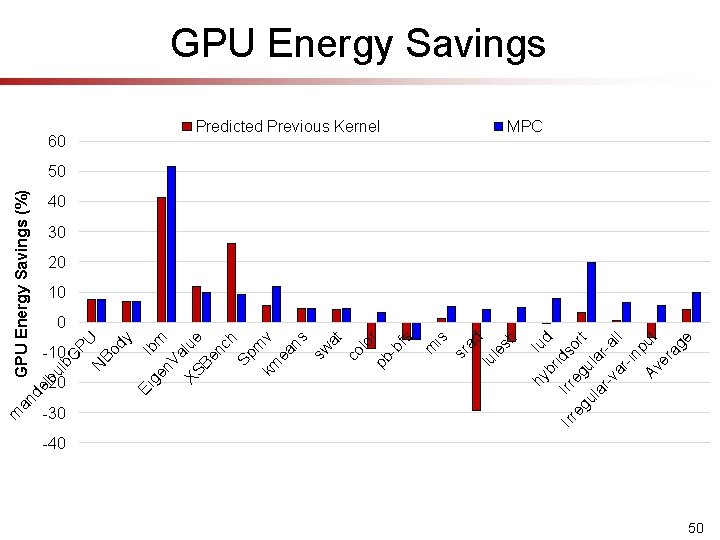

GPU Energy Savings
[Figure: GPU Energy Savings (%) per benchmark, plus Irregular-all, Irregular-var-input, and Average, for Predicted Previous Kernel and MPC.]

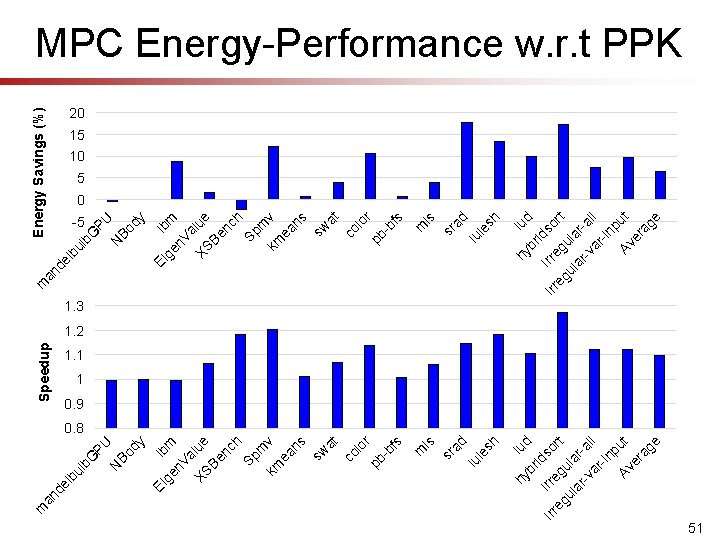

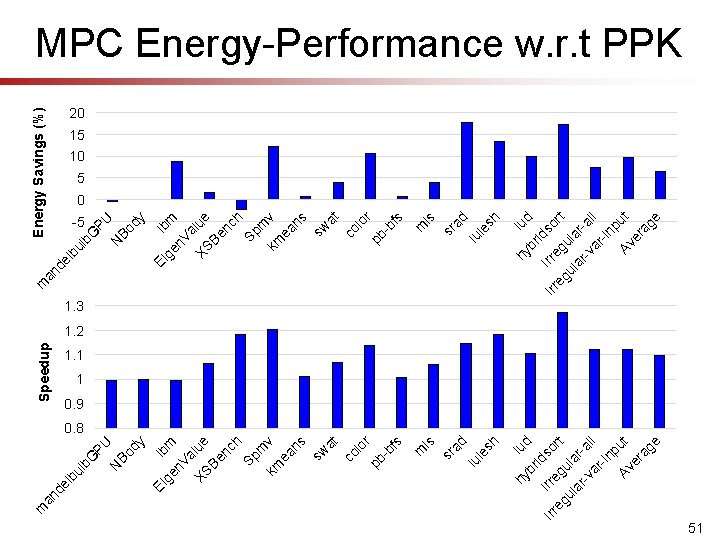

MPC Energy-Performance w.r.t. PPK
[Figure: Energy Savings (%) and Speedup of MPC relative to PPK per benchmark, plus Irregular-all, Irregular-var-input, and Average.]

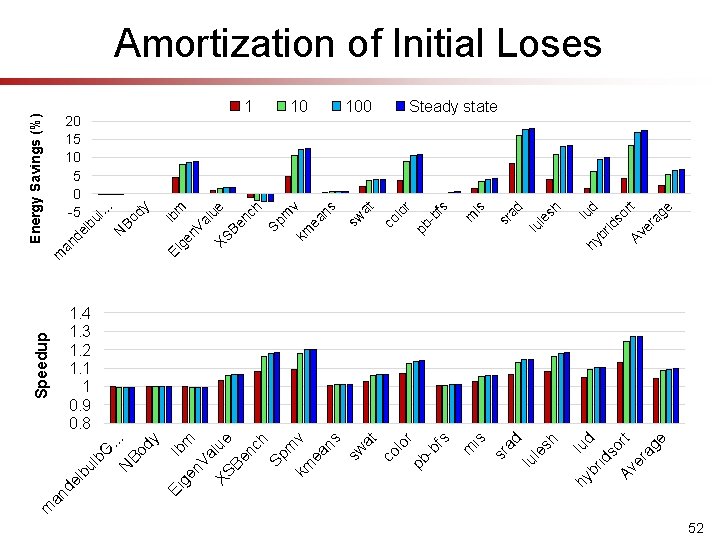

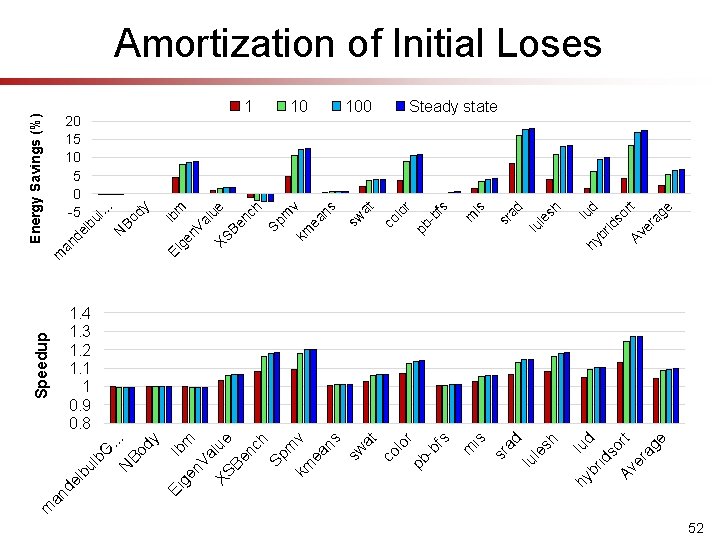

Amortization of Initial Losses
[Figure: Energy Savings (%) and Speedup per benchmark and on average, including steady-state values.]

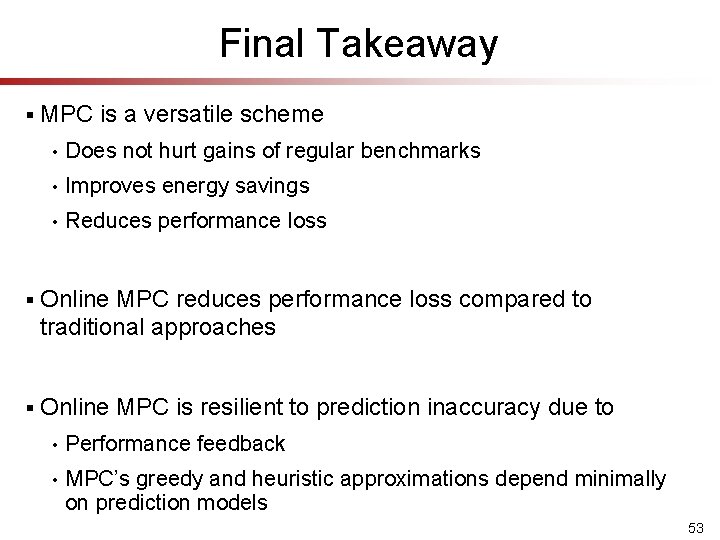

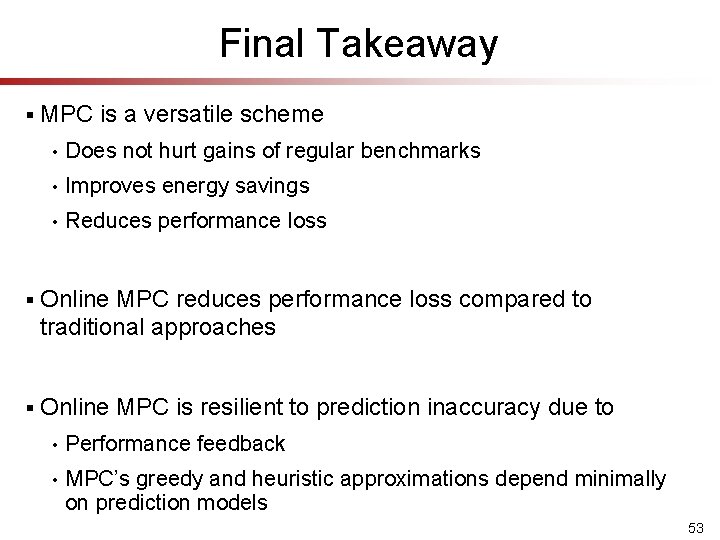

Final Takeaway
§ MPC is a versatile scheme
  • Does not hurt the gains of regular benchmarks
  • Improves energy savings
  • Reduces performance loss
§ Online MPC reduces performance loss compared to traditional approaches
§ Online MPC is resilient to prediction inaccuracy due to
  • Performance feedback
  • MPC's greedy and heuristic approximations, which depend minimally on prediction models