
- Slides: 21

Panasas Update on CAE Strategy

Stan Posey, Director, Vertical Marketing and Business Development
sposey@panasas.com
www.panasas.com

Panasas Company Overview

- Company: Silicon Valley-based; private, venture-backed; 150 people worldwide
- Technology: Parallel file system and storage appliances for HPC clusters
- History: Founded 1999 by CMU Prof. Garth Gibson, co-inventor of RAID
- Alliances: ISVs; Dell and SGI now resell Panasas; Microsoft and WCCS
- Extensive recognition and awards for HPC breakthroughs:
  - Six Panasas customers won awards at the SC07 conference
  - Panasas parallel I/O and storage enabling petascale computing
  - Storage system selected for LANL’s $110 MM hybrid “Roadrunner”: petaflop IBM system with 16,000 AMD CPUs + 16,000 IBM Cells, 4x over LLNL BG/L
  - Panasas CTO Gibson leads SciDAC’s Petascale Data Storage Institute

IDC HPC User Forum, Norfolk, VA, 14 Apr 08

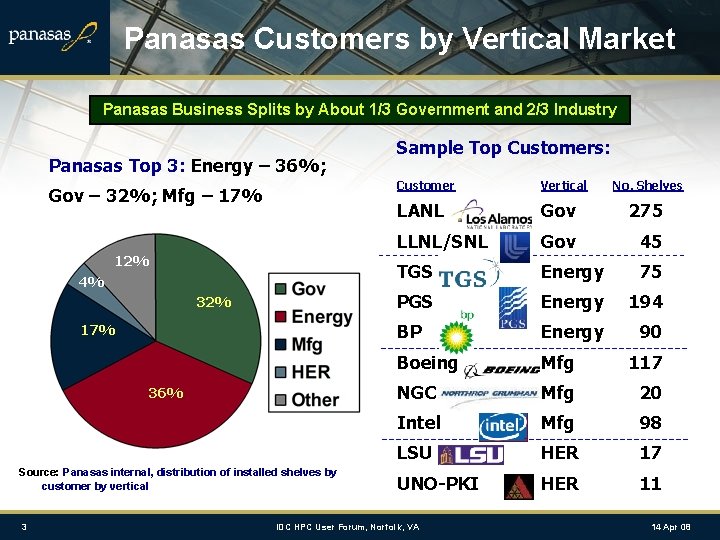

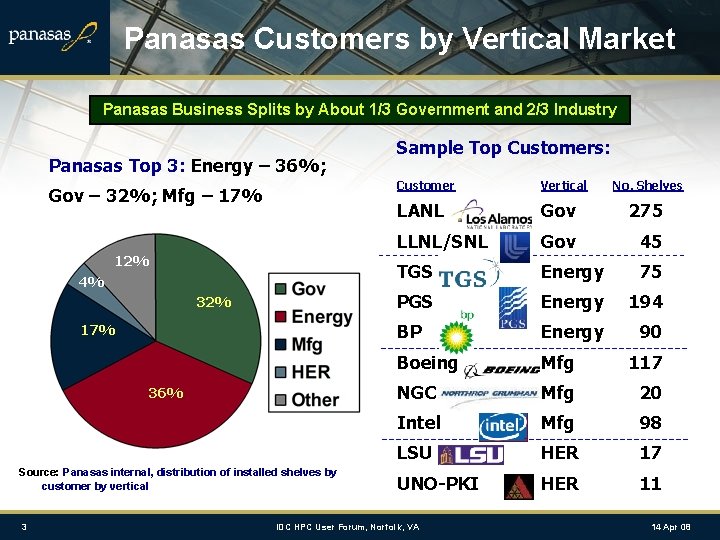

Panasas Customers by Vertical Market

Panasas business splits about 1/3 government and 2/3 industry.

Panasas top 3: Energy 36%; Gov 32%; Mfg 17%

Chart slices: 12%; 4%; 32% (Gov); 17% (Mfg); 36% (Energy)

Source: Panasas internal, distribution of installed shelves by customer by vertical

Sample top customers:

| Customer | Vertical | No. Shelves |
|---|---|---|
| LANL | Gov | 275 |
| LLNL/SNL | Gov | 45 |
| TGS | Energy | 75 |
| PGS | Energy | 194 |
| BP | Energy | 90 |
| Boeing | Mfg | 117 |
| NGC | Mfg | 20 |
| Intel | Mfg | 98 |
| LSU | HER | 17 |
| UNO-PKI | HER | 11 |

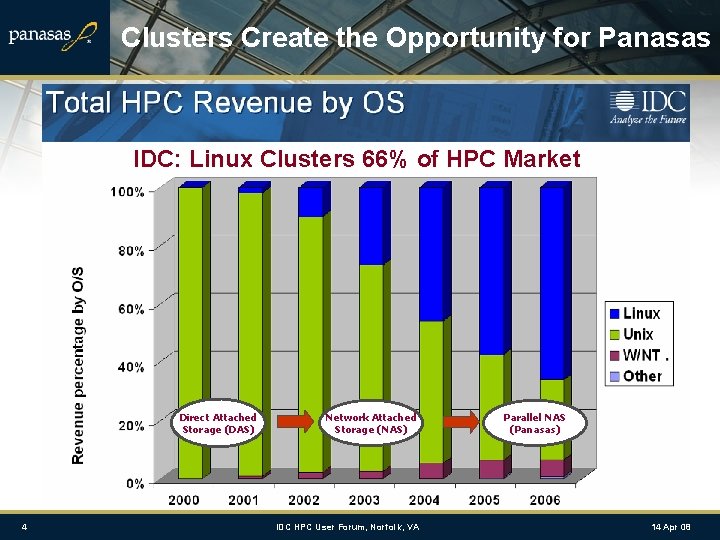

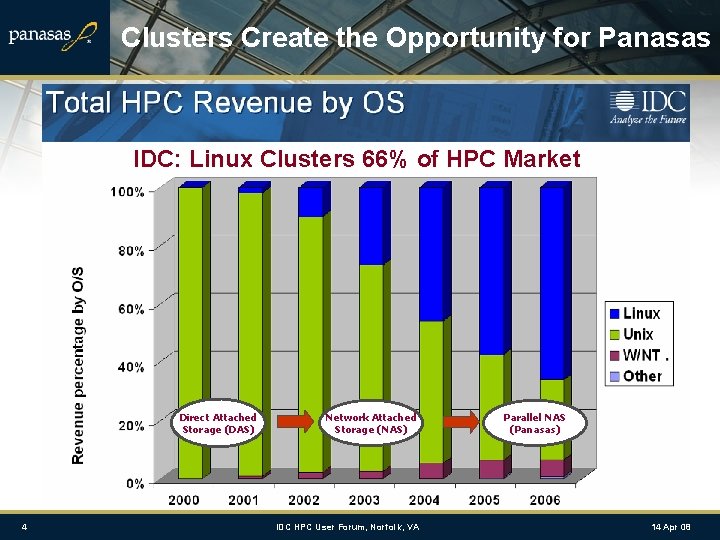

Clusters Create the Opportunity for Panasas

IDC: Linux clusters are 66% of the HPC market

Figure labels: Direct Attached Storage (DAS); Network Attached Storage (NAS); Parallel NAS (Panasas)

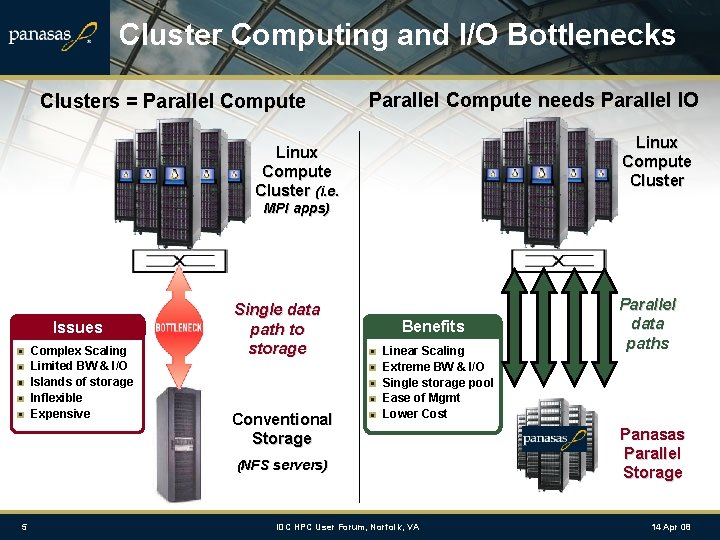

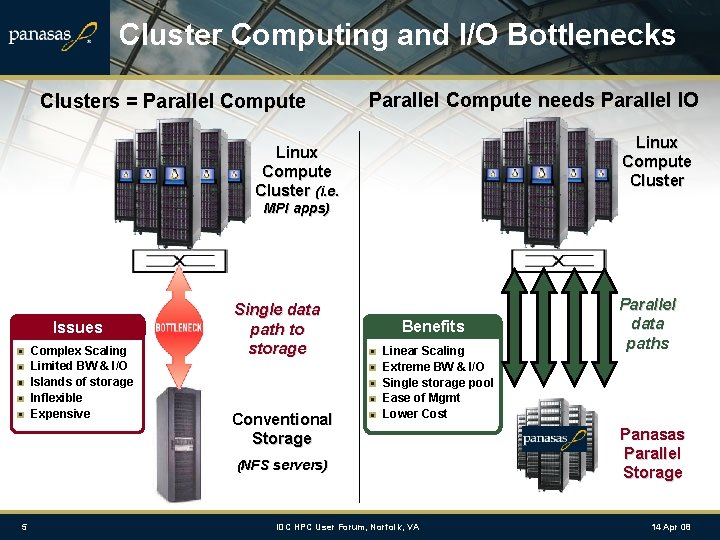

Cluster Computing and I/O Bottlenecks

Clusters = parallel compute needs parallel I/O

Linux compute cluster (i.e. MPI apps)

- Conventional storage (NFS servers): single data path to storage
  - Issues: complex scaling; limited BW & I/O; islands of storage; inflexible; expensive
- Panasas parallel storage: parallel data paths
  - Benefits: linear scaling; extreme BW & I/O; single storage pool; ease of management; lower cost
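The contrast between a single data path and parallel data paths can be illustrated with a toy throughput model. This is a minimal sketch, not a Panasas sizing method: the function names and every bandwidth figure are hypothetical, and real systems are also limited by the network fabric, metadata traffic, and access patterns.

```python
def single_path_bandwidth(n_clients, client_bw, server_bw):
    """Aggregate throughput when all clients share one storage server."""
    return min(n_clients * client_bw, server_bw)


def parallel_path_bandwidth(n_clients, client_bw, n_targets, target_bw):
    """Aggregate throughput when clients spread I/O across several storage targets."""
    return min(n_clients * client_bw, n_targets * target_bw)


if __name__ == "__main__":
    # Hypothetical figures in MB/s, chosen only for illustration.
    CLIENT_BW, SERVER_BW, TARGET_BW, N_TARGETS = 100, 400, 400, 10
    for n in (1, 4, 16, 64):
        single = single_path_bandwidth(n, CLIENT_BW, SERVER_BW)
        parallel = parallel_path_bandwidth(n, CLIENT_BW, N_TARGETS, TARGET_BW)
        print(f"{n:3d} clients: single path {single:5d} MB/s, "
              f"parallel paths {parallel:5d} MB/s")
```

With these assumed numbers the single path saturates once four clients are active, while the parallel configuration keeps scaling until the combined bandwidth of the storage targets is reached.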

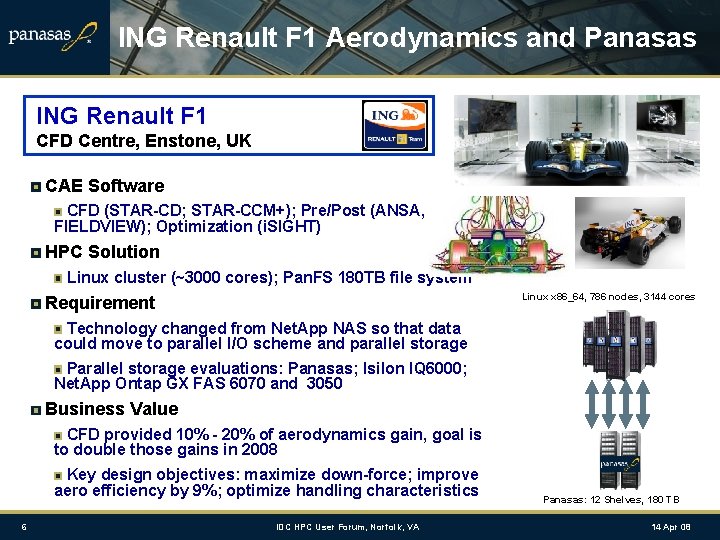

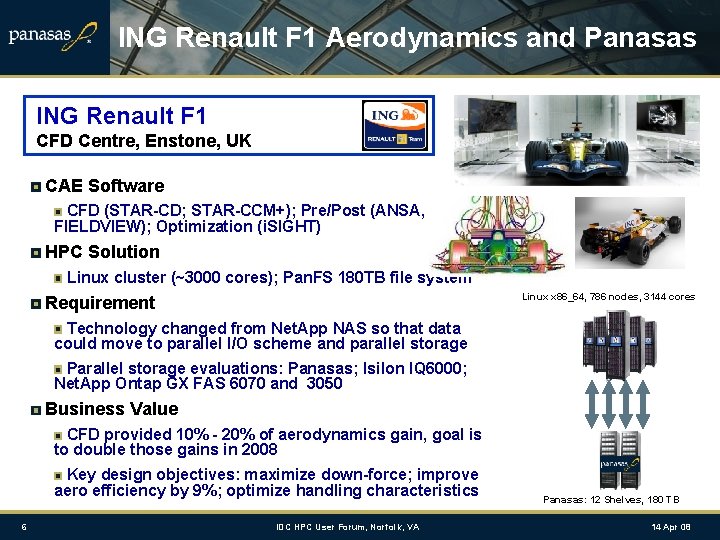

ING Renault F1 Aerodynamics and Panasas

ING Renault F1 CFD Centre, Enstone, UK

- CAE Software: CFD (STAR-CD; STAR-CCM+); Pre/Post (ANSA, FIELDVIEW); Optimization (iSIGHT)
- HPC Solution: Linux cluster (~3000 cores); PanFS 180 TB file system
- Requirement: Technology changed from NetApp NAS so that data could move to a parallel I/O scheme and parallel storage
  - Parallel storage evaluations: Panasas; Isilon IQ 6000; NetApp Ontap GX FAS 6070 and 3050
- Business Value: CFD provided 10%–20% of aerodynamics gain; goal is to double those gains in 2008
  - Key design objectives: maximize down-force; improve aero efficiency by 9%; optimize handling characteristics

System labels: Linux x86_64, 786 nodes, 3144 cores; Panasas: 12 shelves, 180 TB

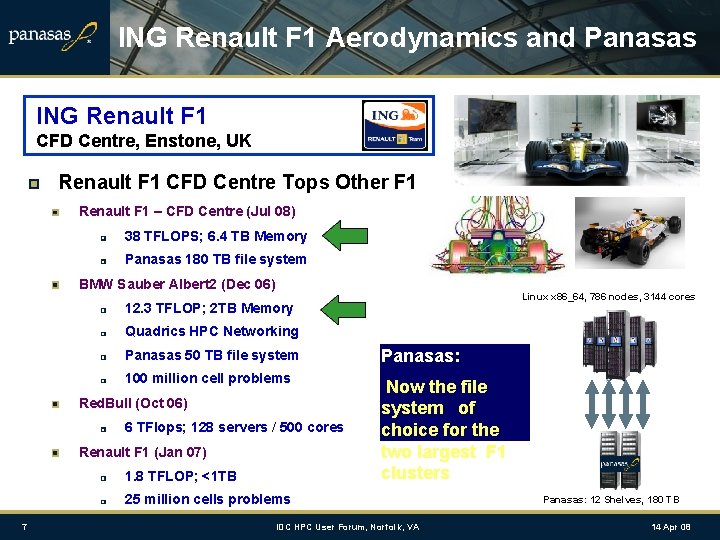

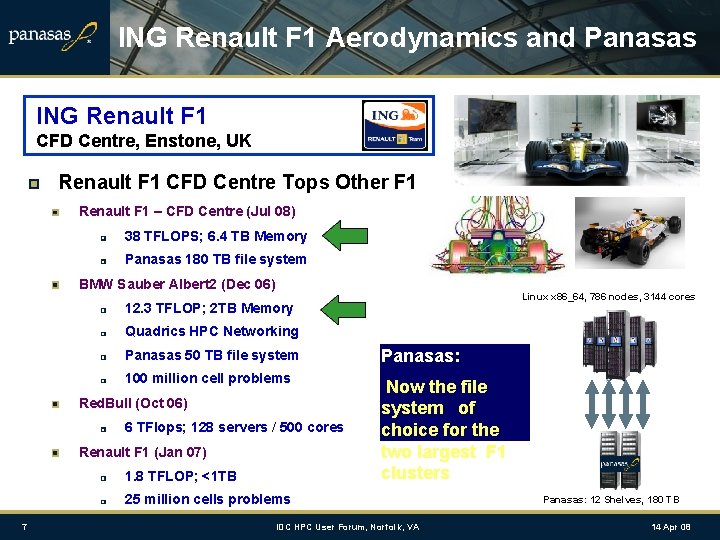

ING Renault F1 Aerodynamics and Panasas

ING Renault F1 CFD Centre, Enstone, UK

Renault F1 CFD Centre Tops Other F1

- Renault F1 CFD Centre (Jul 08): 38 TFLOPS; 6.4 TB memory; Linux x86_64, 786 nodes, 3144 cores; Panasas 180 TB file system (12 shelves)
- BMW Sauber Albert 2 (Dec 06): 12.3 TFLOPS; 2 TB memory; Quadrics HPC networking; Panasas 50 TB file system; 100 million cell problems
- Red Bull (Oct 06): 6 TFLOPS; 128 servers / 500 cores
- Renault F1 (Jan 07): 1.8 TFLOPS; <1 TB; 25 million cell problems

Panasas: now the file system of choice for the two largest F1 clusters

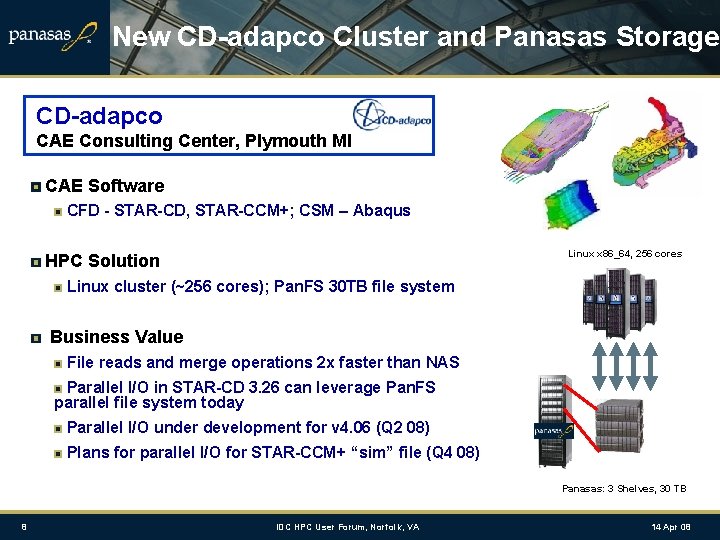

New CD-adapco Cluster and Panasas Storage

CD-adapco CAE Consulting Center, Plymouth MI

- CAE Software: CFD (STAR-CD, STAR-CCM+); CSM (Abaqus)
- HPC Solution: Linux cluster (~256 cores); PanFS 30 TB file system
- Business Value:
  - File reads and merge operations 2x faster than NAS
  - Parallel I/O in STAR-CD 3.26 can leverage the PanFS parallel file system today
  - Parallel I/O under development for v4.06 (Q2 08)
  - Plans for parallel I/O for the STAR-CCM+ “sim” file (Q4 08)

System labels: Linux x86_64, 256 cores; Panasas: 3 shelves, 30 TB

Honeywell Aerospace and Panasas Storage

Honeywell Aerospace Turbomachinery, Locations in US

- Profile: Use of HPC for design of small gas turbine engines and engine components for GE, Rolls Royce, and others
- Challenge: Deploy CAE simulation software for improvements in aerodynamic efficiency, noise reductions, combustion, etc. Provide HPC cluster environment to support distributed users for CFD (FLUENT, CFX) and CSM (ANSYS, LS-DYNA)
- HPC Solution: Linux clusters (~452 cores) total, Panasas on latest 256; Panasas parallel file system, 5 storage systems, 50 TB
- Business Value: CAE scalability with Panasas allows improved LES simulation turn-around for combustors; enables efficiency improvements and reduction of tests

System labels: Linux x86_64, 256 cores; Panasas: 5 shelves, 50 TB

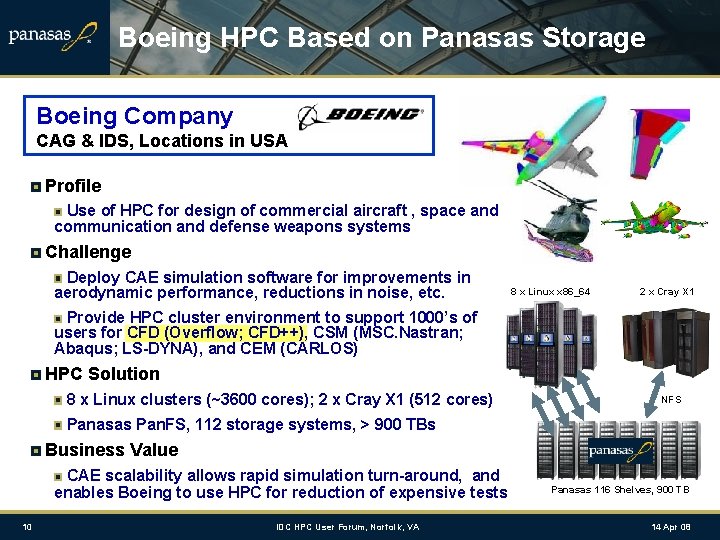

Boeing HPC Based on Panasas Storage

Boeing Company CAG & IDS, Locations in USA

- Profile: Use of HPC for design of commercial aircraft, space and communication, and defense weapons systems
- Challenge: Deploy CAE simulation software for improvements in aerodynamic performance, reductions in noise, etc. Provide HPC cluster environment to support 1000’s of users for CFD (Overflow; CFD++), CSM (MSC.Nastran; Abaqus; LS-DYNA), and CEM (CARLOS)
- HPC Solution: 8 x Linux clusters (~3600 cores); 2 x Cray X1 (512 cores); Panasas PanFS, 112 storage systems, > 900 TB
- Business Value: CAE scalability allows rapid simulation turn-around, and enables Boeing to use HPC for reduction of expensive tests

System labels: 8 x Linux x86_64; 2 x Cray X1; NFS; Panasas: 116 shelves, 900 TB

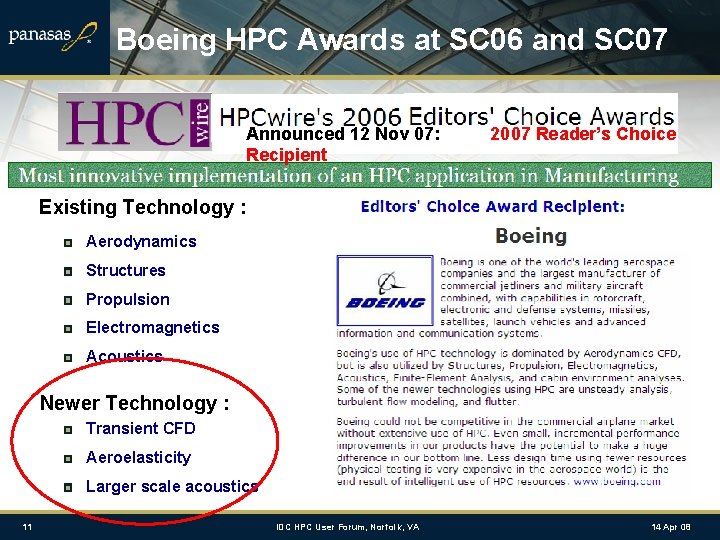

Boeing HPC Awards at SC06 and SC07

Announced 12 Nov 07: Recipient, 2007 Reader’s Choice

- Existing technology: aerodynamics; structures; propulsion; electromagnetics; acoustics
- Newer technology: transient CFD; aeroelasticity; larger-scale acoustics

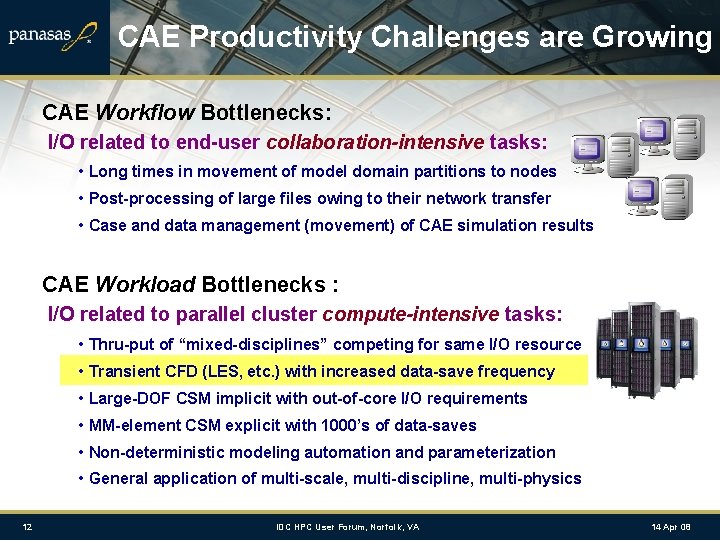

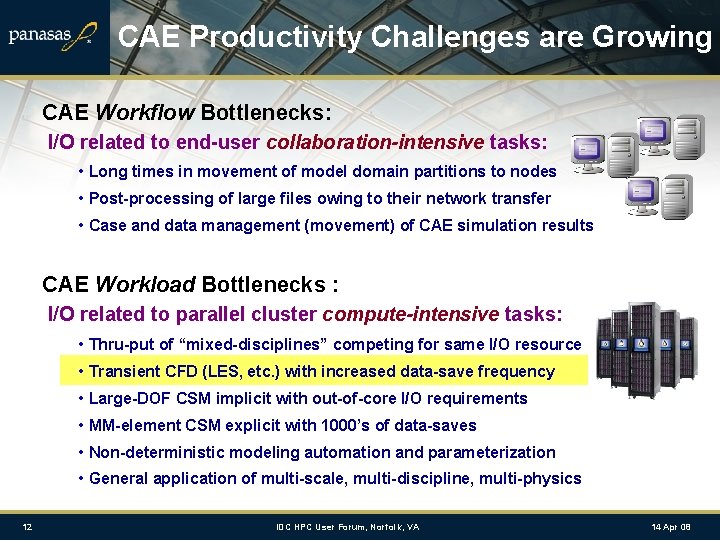

CAE Productivity Challenges are Growing

CAE workflow bottlenecks: I/O related to end-user collaboration-intensive tasks:

- Long times in movement of model domain partitions to nodes
- Post-processing of large files owing to their network transfer
- Case and data management (movement) of CAE simulation results

CAE workload bottlenecks: I/O related to parallel cluster compute-intensive tasks:

- Throughput of “mixed-disciplines” competing for the same I/O resource
- Transient CFD (LES, etc.) with increased data-save frequency
- Large-DOF CSM implicit with out-of-core I/O requirements
- MM-element CSM explicit with 1000’s of data-saves
- Non-deterministic modeling automation and parameterization
- General application of multi-scale, multi-discipline, multi-physics

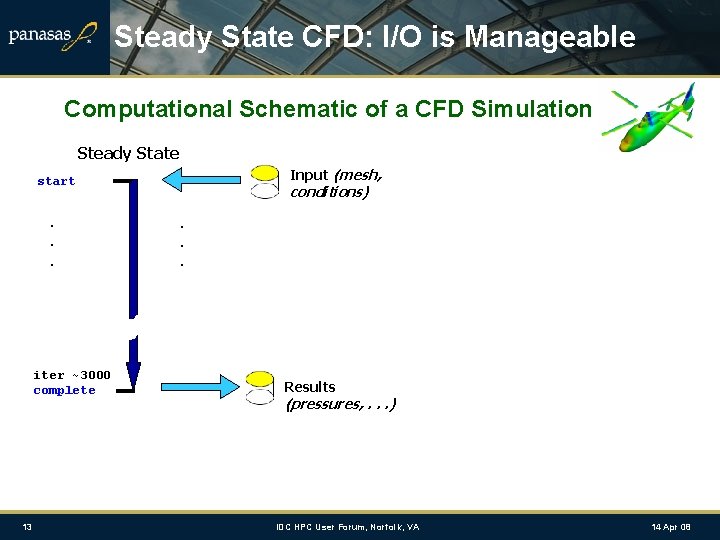

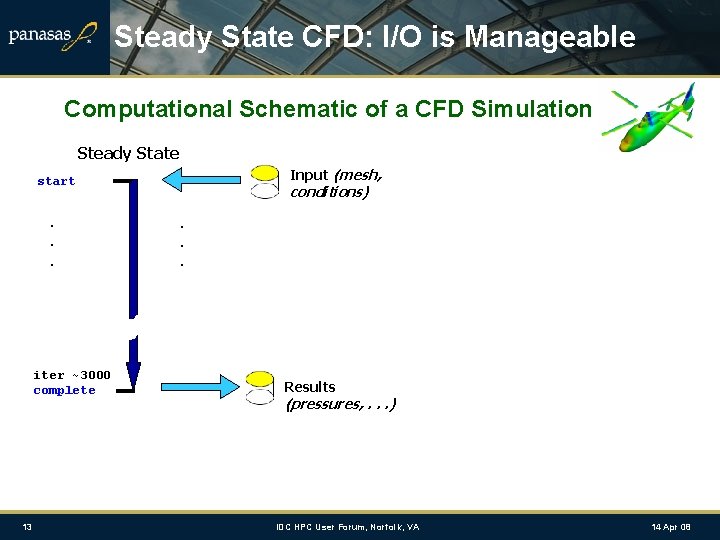

Steady State CFD: I/O is Manageable

Computational schematic of a CFD simulation

- Steady state: start ... iter ~3000 ... complete
- Input (mesh, conditions) ... Results (pressures, ...)

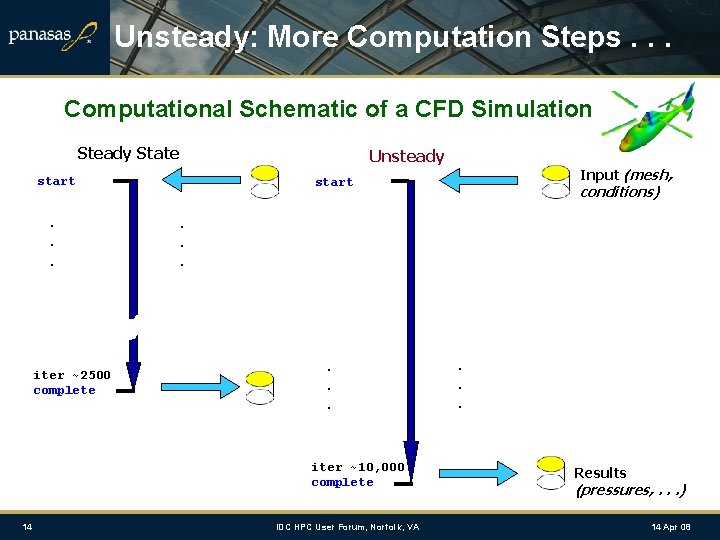

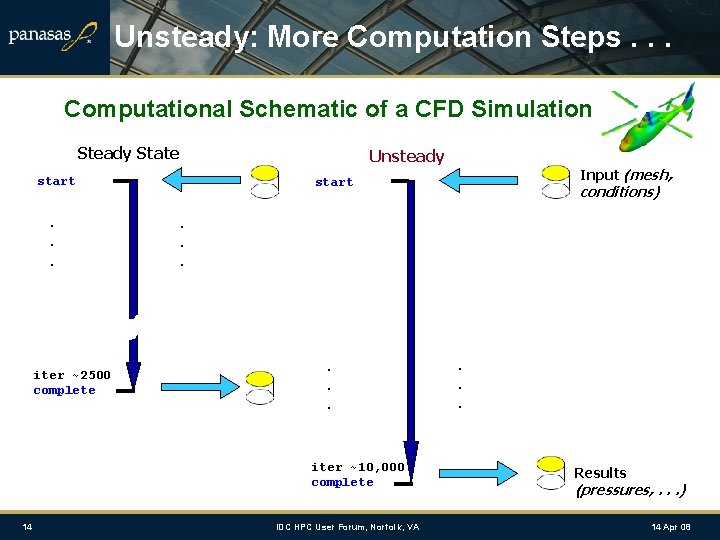

Unsteady: More Computation Steps ...

Computational schematic of a CFD simulation

- Steady state: start ... iter ~2500 ... complete
- Unsteady: start ... iter ~10,000 ... complete
- Input (mesh, conditions) ... Results (pressures, ...)

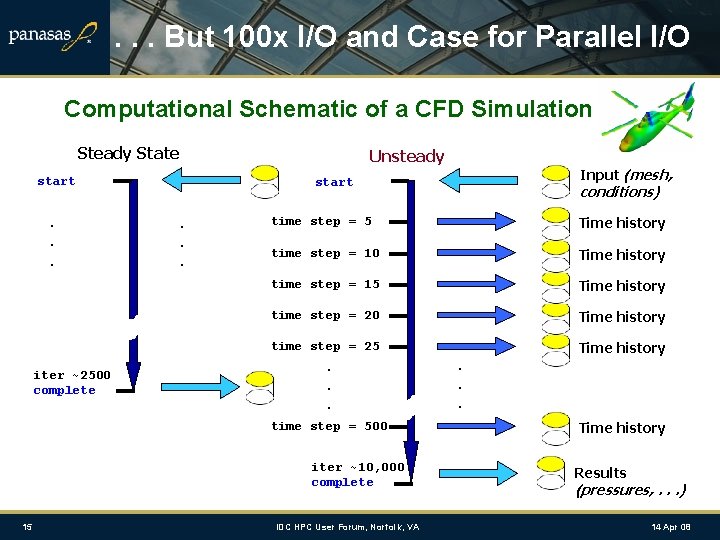

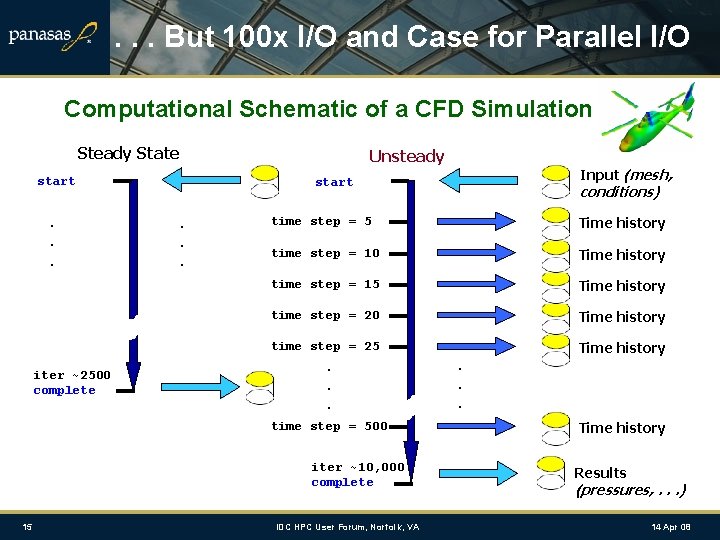

... But 100x I/O and Case for Parallel I/O

Computational schematic of a CFD simulation

- Steady state: start ... iter ~2500 ... complete
- Unsteady: start ... iter ~10,000 ... complete, with a time history saved at time step = 5, 10, 15, 20, 25, ..., 500
- Input (mesh, conditions) ... Time history; Results (pressures, ...)
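The "100x" figure is consistent with a simple count of result writes. A minimal sketch, assuming the time history is saved every 5 time steps through step 500 (the slide elides the steps between 25 and 500) and that the steady-state run writes its results once at completion. File sizes are not given in the slides, so only the number of writes is compared.

```python
SAVE_INTERVAL = 5   # time steps between time-history saves (from the slide)
LAST_STEP = 500     # final time step shown on the slide

# Assumption: saves continue at a regular interval through the elided steps.
unsteady_saves = len(range(SAVE_INTERVAL, LAST_STEP + 1, SAVE_INTERVAL))
steady_saves = 1    # assumption: one results write when the run completes

ratio = unsteady_saves / steady_saves
print(f"unsteady saves: {unsteady_saves}, steady saves: {steady_saves}, "
      f"ratio: {ratio:.0f}x")
```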

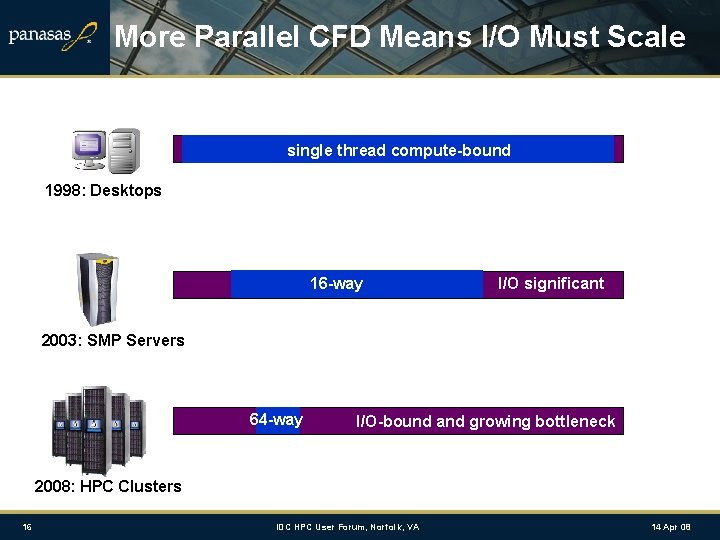

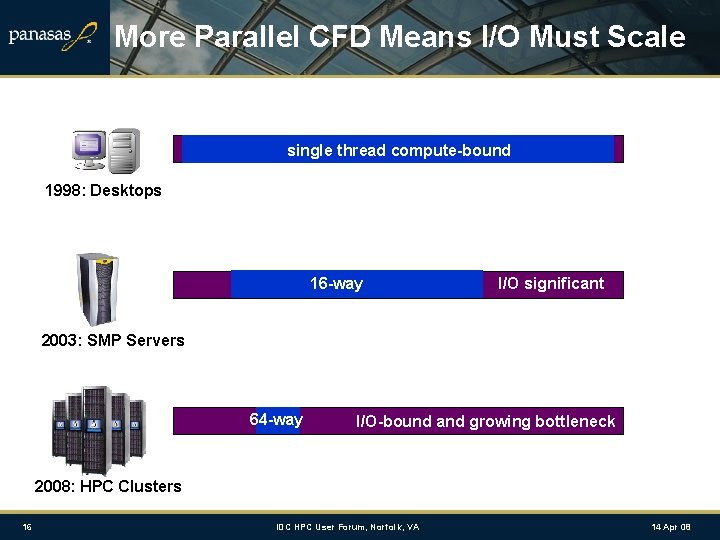

More Parallel CFD Means I/O Must Scale

- 1998: Desktops; single thread; compute-bound
- 2003: SMP servers; 16-way; I/O significant
- 2008: HPC clusters; 64-way; I/O-bound and growing bottleneck

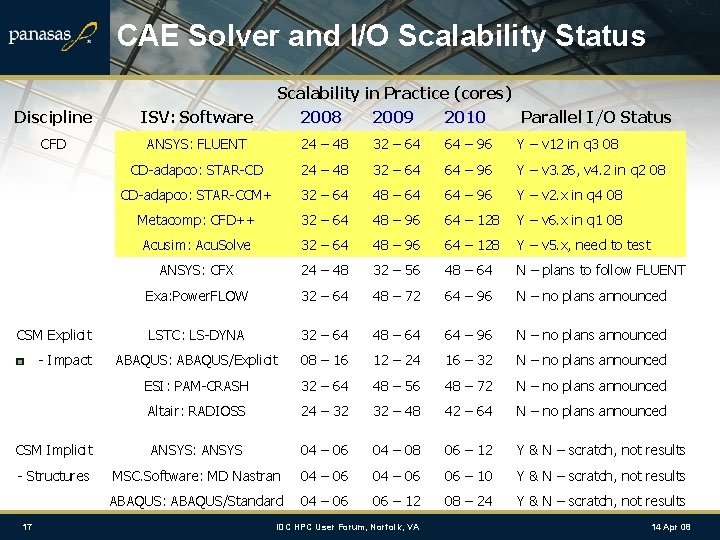

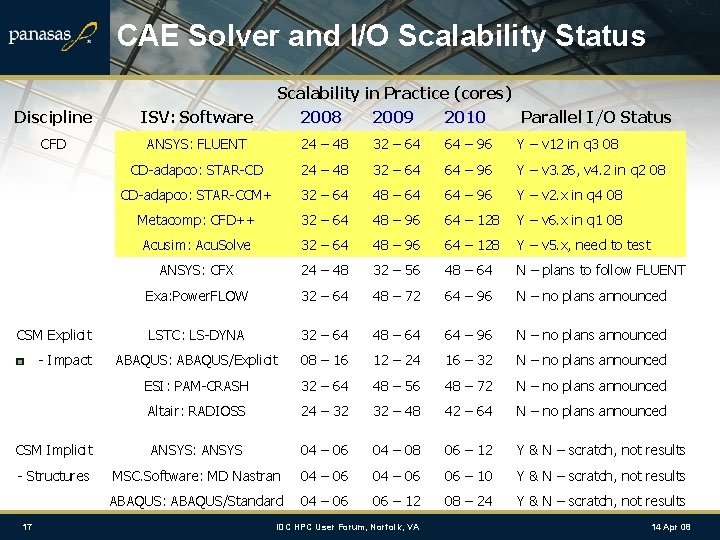

CAE Solver and I/O Scalability Status

Scalability in practice (cores) by year, and parallel I/O status:

| Discipline | ISV: Software | 2008 | 2009 | 2010 | Parallel I/O Status |
|---|---|---|---|---|---|
| CFD | ANSYS: FLUENT | 24–48 | 32–64 | 64–96 | Y: v12 in Q3 08 |
| CFD | CD-adapco: STAR-CD | 24–48 | 32–64 | 64–96 | Y: v3.26, v4.2 in Q2 08 |
| CFD | CD-adapco: STAR-CCM+ | 32–64 | 48–64 | 64–96 | Y: v2.x in Q4 08 |
| CFD | Metacomp: CFD++ | 32–64 | 48–96 | 64–128 | Y: v6.x in Q1 08 |
| CFD | Acusim: AcuSolve | 32–64 | 48–96 | 64–128 | Y: v5.x, need to test |
| CFD | ANSYS: CFX | 24–48 | 32–56 | 48–64 | N: plans to follow FLUENT |
| CFD | Exa: PowerFLOW | 32–64 | 48–72 | 64–96 | N: no plans announced |
| CSM Explicit - Impact | LSTC: LS-DYNA | 32–64 | 48–64 | 64–96 | N: no plans announced |
| CSM Explicit - Impact | ABAQUS: ABAQUS/Explicit | 8–16 | 12–24 | 16–32 | N: no plans announced |
| CSM Explicit - Impact | ESI: PAM-CRASH | 32–64 | 48–56 | 48–72 | N: no plans announced |
| CSM Explicit - Impact | Altair: RADIOSS | 24–32 | 32–48 | 42–64 | N: no plans announced |
| CSM Implicit - Structures | ANSYS: ANSYS | 4–6 | 4–8 | 6–12 | Y & N: scratch, not results |
| CSM Implicit - Structures | MSC.Software: MD Nastran | 4–6 | 6–10 | | Y & N: scratch, not results |
| CSM Implicit - Structures | ABAQUS: ABAQUS/Standard | 4–6 | 6–12 | 8–24 | Y & N: scratch, not results |

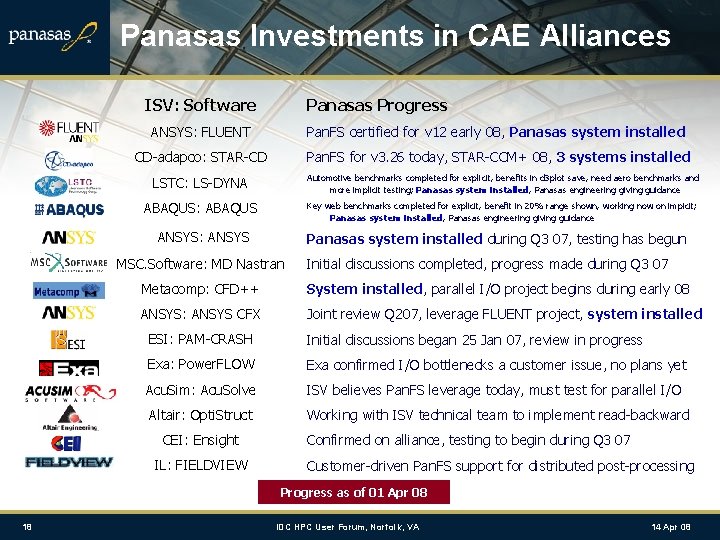

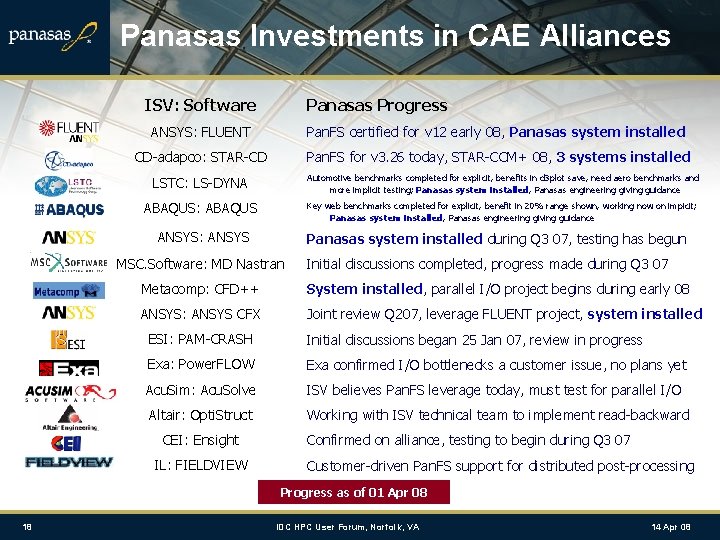

Panasas Investments in CAE Alliances

| ISV: Software | Panasas Progress |
|---|---|
| ANSYS: FLUENT | PanFS certified for v12 early 08; Panasas system installed |
| CD-adapco: STAR-CD | PanFS for v3.26 today, STAR-CCM+ 08; 3 systems installed |
| LSTC: LS-DYNA | Automotive benchmarks completed for explicit, benefits in d3plot save; need aero benchmarks and more implicit testing; Panasas system installed, Panasas engineering giving guidance |
| ABAQUS: ABAQUS | Key web benchmarks completed for explicit, benefit in 20% range shown; working now on implicit; Panasas system installed, Panasas engineering giving guidance |
| ANSYS: ANSYS | Panasas system installed during Q3 07; testing has begun |
| MSC.Software: MD Nastran | Initial discussions completed; progress made during Q3 07 |
| Metacomp: CFD++ | System installed; parallel I/O project begins during early 08 |
| ANSYS: ANSYS CFX | Joint review Q2 07; leverage FLUENT project; system installed |
| ESI: PAM-CRASH | Initial discussions began 25 Jan 07; review in progress |
| Exa: PowerFLOW | Exa confirmed I/O bottlenecks a customer issue; no plans yet |
| AcuSim: AcuSolve | ISV believes PanFS leverage today; must test for parallel I/O |
| Altair: OptiStruct | Working with ISV technical team to implement read-backward |
| CEI: Ensight | Confirmed on alliance; testing to begin during Q3 07 |
| IL: FIELDVIEW | Customer-driven PanFS support for distributed post-processing |

Progress as of 01 Apr 08

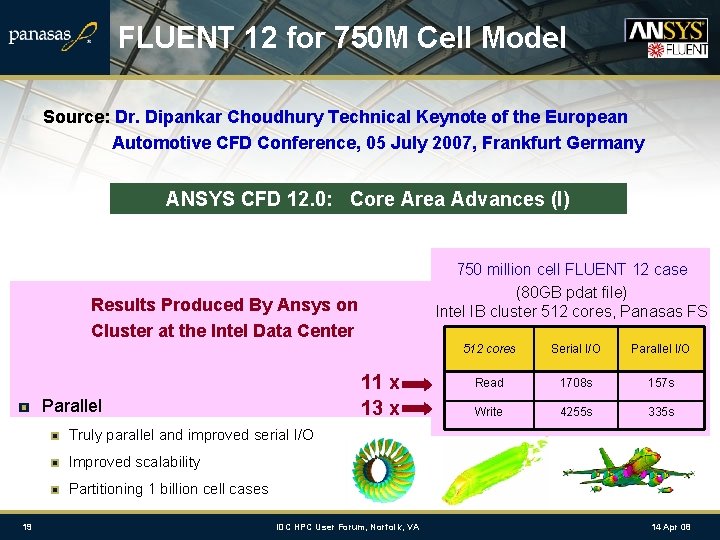

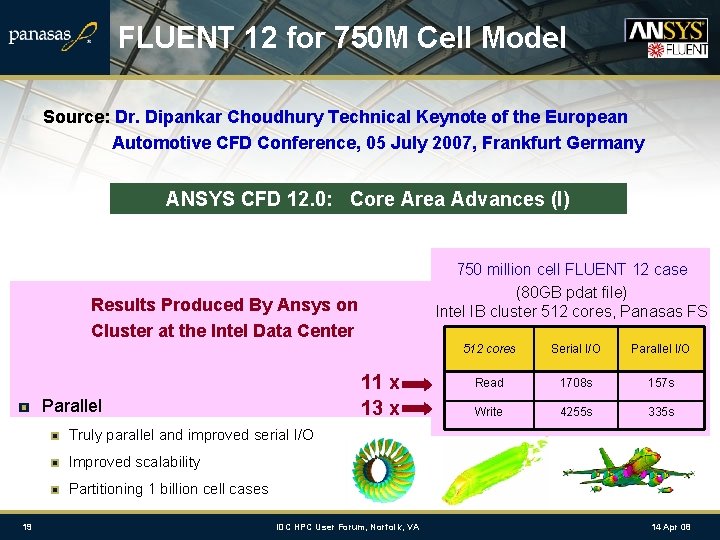

FLUENT 12 for 750 M Cell Model

Source: Dr. Dipankar Choudhury, technical keynote of the European Automotive CFD Conference, 05 July 2007, Frankfurt, Germany

ANSYS CFD 12.0: Core Area Advances (I)

- Truly parallel and improved serial I/O
- Improved scalability
- Partitioning 1 billion cell cases

750 million cell FLUENT 12 case (80 GB pdat file); Intel IB cluster, 512 cores, Panasas FS. Results produced by ANSYS on a cluster at the Intel Data Center.

| Parallel, 512 cores | Serial I/O | Parallel I/O | Speedup |
|---|---|---|---|
| Read | 1708 s | 157 s | 11x |
| Write | 4255 s | 335 s | 13x |
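The 11x and 13x factors on the slide can be checked directly from the reported timings. A minimal sketch: the dictionary layout and variable names are illustrative, and only the four timings come from the slide.

```python
# Timings in seconds, taken from the slide (512 cores, 750 million cell case).
timings = {
    "read": {"serial": 1708, "parallel": 157},
    "write": {"serial": 4255, "parallel": 335},
}

# Speedup = serial I/O time divided by parallel I/O time.
speedups = {op: t["serial"] / t["parallel"] for op, t in timings.items()}

for op, s in speedups.items():
    print(f"{op}: {s:.1f}x faster with parallel I/O")
```

Rounded to whole numbers, the read and write ratios match the 11x and 13x shown on the slide.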

Panasas HPC Focus and Vision

HPC Technology Advancement | ISV Alliances | Industry

- Standards-based core technologies with HPC productivity focus: scalable I/O and storage solutions for HPC computation and collaboration
- Investments in ISV alliances and HPC applications development: joint development on performance and improved application capabilities
- Established and growing industry influence and advancement: valued contributions to customers, industry, and research organizations

Thank you for this opportunity

Q&A

For more information, call Panasas at:

- 1-888-PANASAS (US & Canada)
- 00 (800) PANASAS2 (UK & France)
- 00 (800) 787-702 (Italy)
- +001 (510) 608-7790 (All Other Countries)

www.panasas.com

Stan Posey, Director, Vertical Marketing and Business Development
sposey@panasas.com