Semi-Supervised Approaches for Learning to Parse Natural Languages


Slides are from Rebecca Hwa and Ray Mooney.

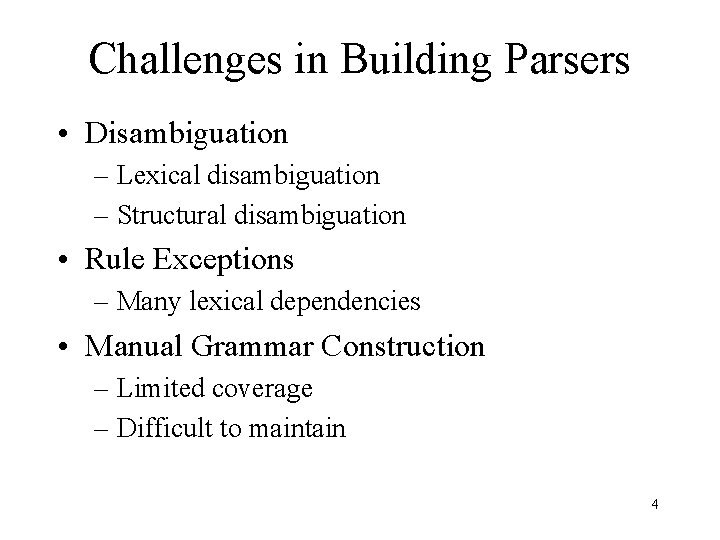

The Role of Parsing in Language Applications

- As a stand-alone application
  - Grammar checking
- As a pre-processing step
  - Question answering
  - Information extraction
- As an integral part of a model
  - Speech recognition
  - Machine translation

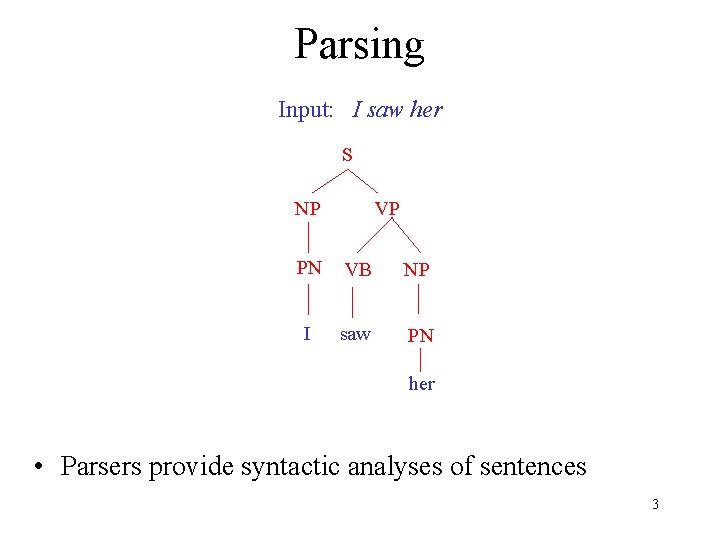

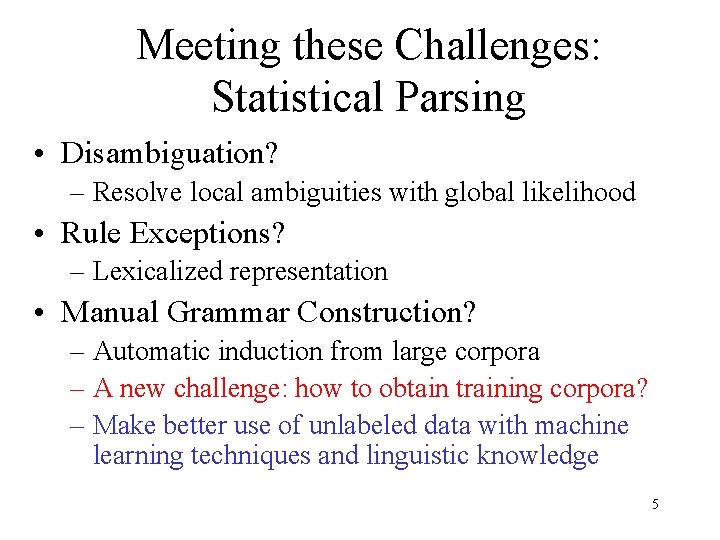

Parsing

- Parsers provide syntactic analyses of sentences.

Input: I saw her

Parse: [S [NP [PN I]] [VP [VB saw] [NP [PN her]]]]

Challenges in Building Parsers

- Disambiguation
  - Lexical disambiguation
  - Structural disambiguation
- Rule exceptions
  - Many lexical dependencies
- Manual grammar construction
  - Limited coverage
  - Difficult to maintain

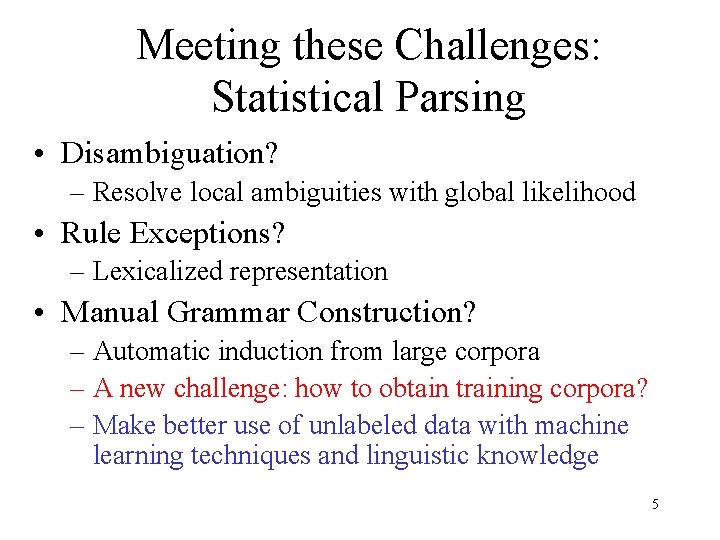

Meeting these Challenges: Statistical Parsing

- Disambiguation?
  - Resolve local ambiguities with global likelihood
- Rule exceptions?
  - Lexicalized representation
- Manual grammar construction?
  - Automatic induction from large corpora
  - A new challenge: how to obtain training corpora?
  - Make better use of unlabeled data with machine learning techniques and linguistic knowledge

Roadmap

- Parsing as a learning problem
- Semi-supervised approaches
  - Sample selection
  - Co-training
  - Corrected co-training
- Conclusion and further directions

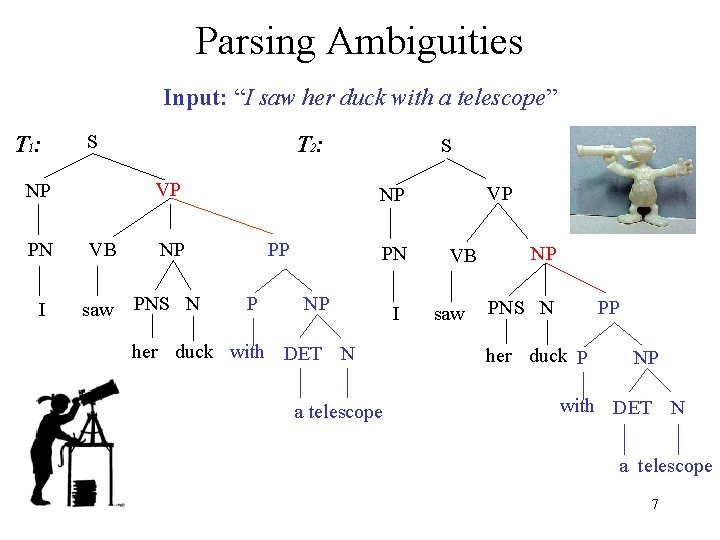

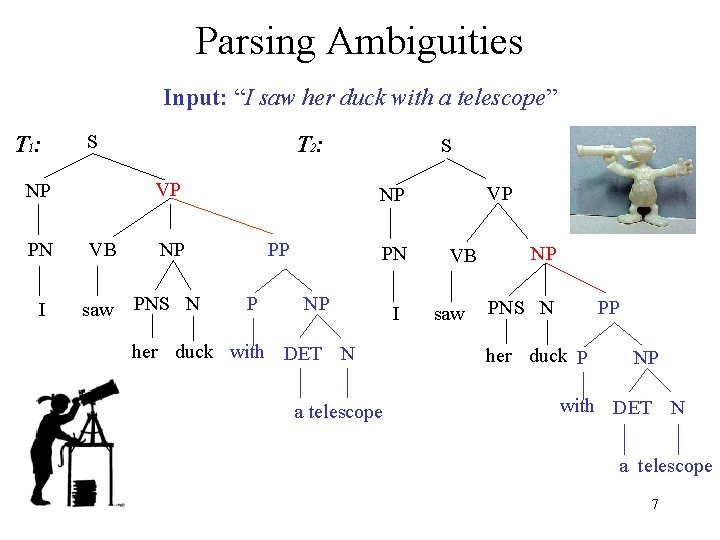

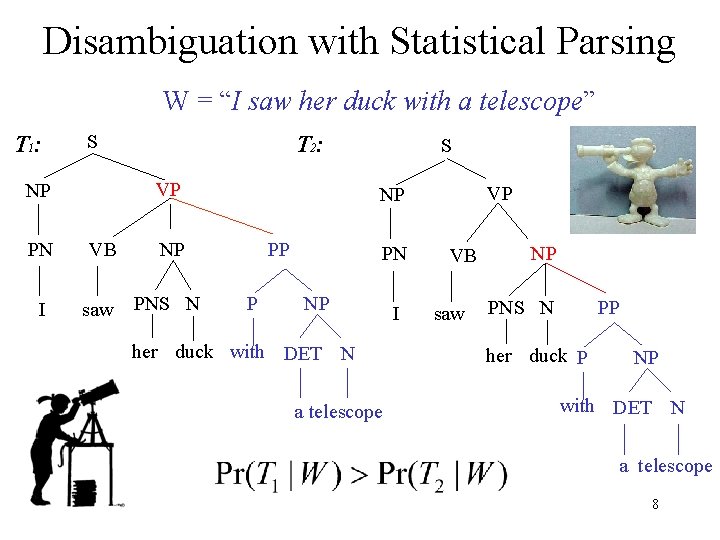

Parsing Ambiguities

Input: “I saw her duck with a telescope”

T1 (the PP attaches to the VP): [S [NP [PN I]] [VP [VB saw] [NP [PNS her] [N duck]] [PP [P with] [NP [DET a] [N telescope]]]]]

T2 (the PP attaches to the NP): [S [NP [PN I]] [VP [VB saw] [NP [PNS her] [N duck] [PP [P with] [NP [DET a] [N telescope]]]]]]

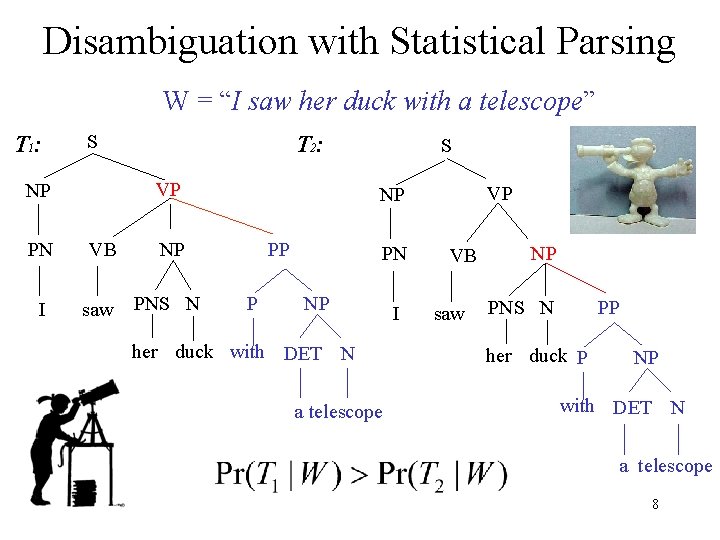

Disambiguation with Statistical Parsing

W = “I saw her duck with a telescope”

T1: [S [NP [PN I]] [VP [VB saw] [NP [PNS her] [N duck]] [PP [P with] [NP [DET a] [N telescope]]]]]

T2: [S [NP [PN I]] [VP [VB saw] [NP [PNS her] [N duck] [PP [P with] [NP [DET a] [N telescope]]]]]]

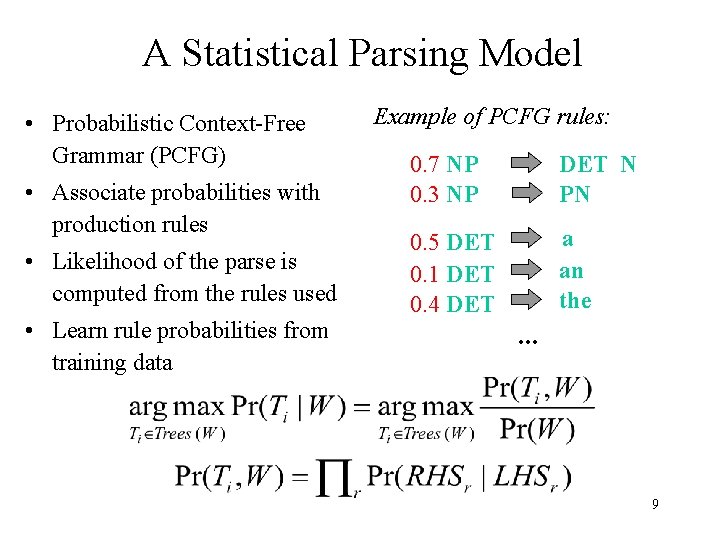

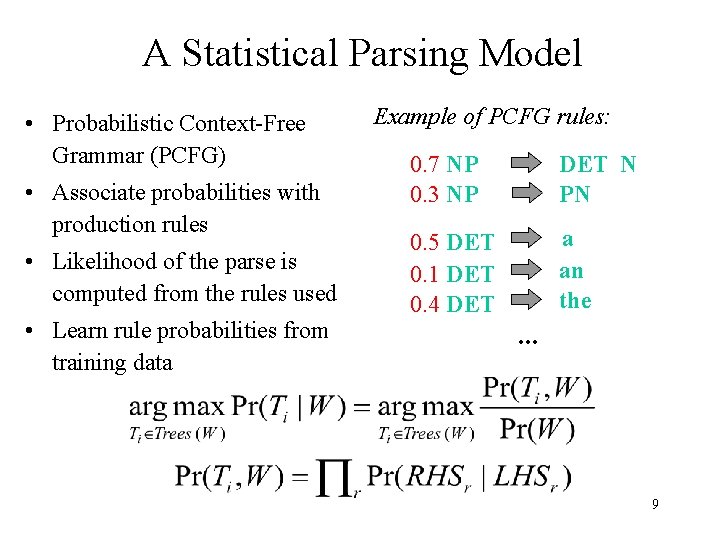

A Statistical Parsing Model

- Probabilistic Context-Free Grammar (PCFG)
- Associate probabilities with production rules
- Likelihood of the parse is computed from the rules used
- Learn rule probabilities from training data

Example of PCFG rules:

- 0.7 NP → DET N
- 0.3 NP → PN
- 0.5 DET → a
- 0.1 DET → an
- 0.4 DET → the
- ...

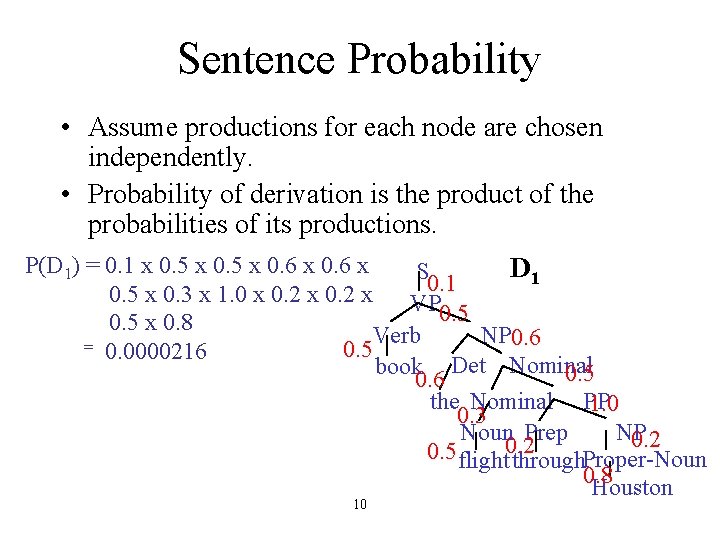

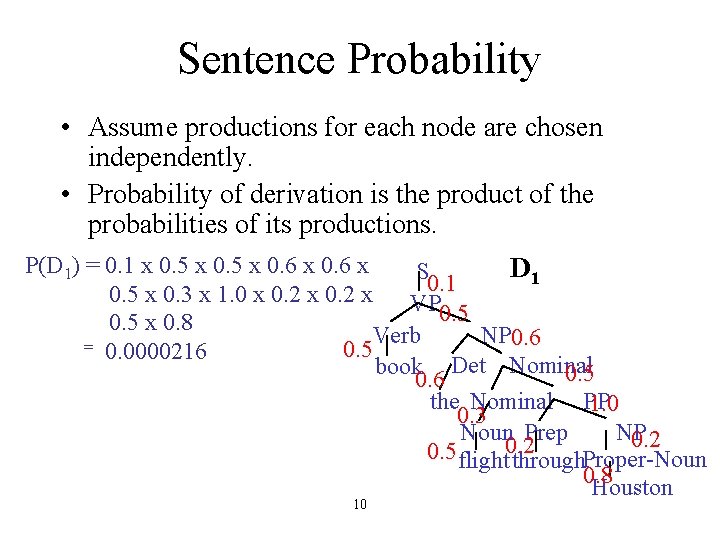

Sentence Probability

- Assume productions for each node are chosen independently.
- Probability of a derivation is the product of the probabilities of its productions.

D1: [S [VP [Verb book] [NP [Det the] [Nominal [Nominal [Noun flight]] [PP [Prep through] [NP [Proper-Noun Houston]]]]]]]

P(D1) = 0.1 × 0.5 × 0.5 × 0.6 × 0.6 × 0.5 × 0.3 × 1.0 × 0.2 × 0.2 × 0.5 × 0.8 = 0.0000216

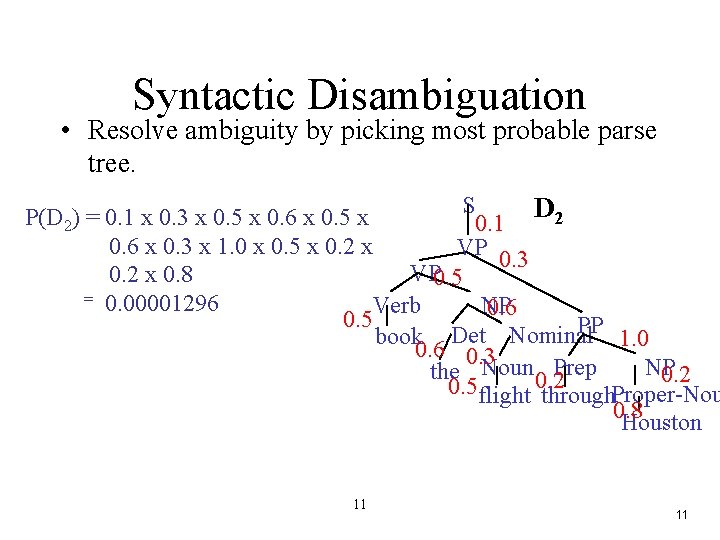

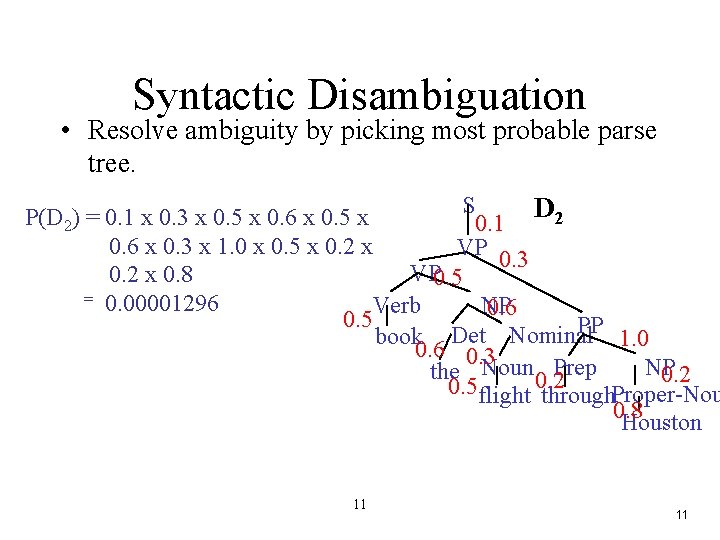

Syntactic Disambiguation

- Resolve ambiguity by picking the most probable parse tree.

D2: [S [VP [VP [Verb book] [NP [Det the] [Nominal [Noun flight]]]] [PP [Prep through] [NP [Proper-Noun Houston]]]]]

P(D2) = 0.1 × 0.3 × 0.5 × 0.6 × 0.5 × 0.6 × 0.3 × 1.0 × 0.5 × 0.2 × 0.2 × 0.8 = 0.00001296

Since P(D1) = 0.0000216 is larger than P(D2), D1 is the preferred parse.

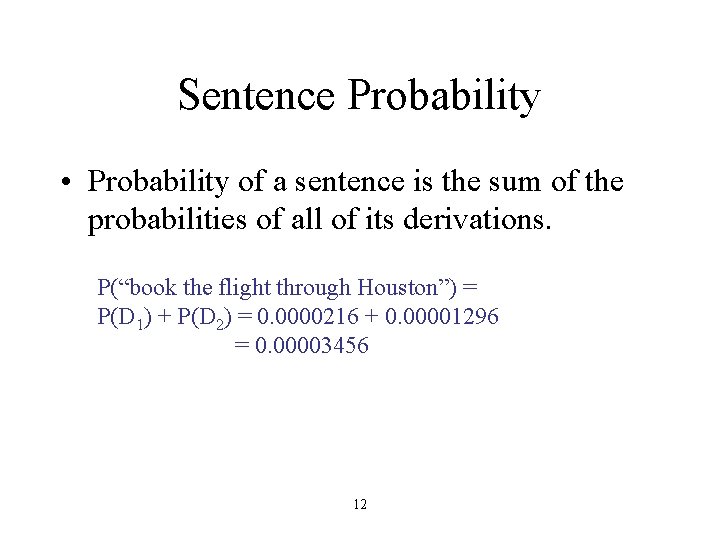

Sentence Probability

- Probability of a sentence is the sum of the probabilities of all of its derivations.

P(“book the flight through Houston”) = P(D1) + P(D2) = 0.0000216 + 0.00001296 = 0.00003456
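The arithmetic on the last three slides can be checked with a few lines of Python. This is only a sketch: the two factor lists are the rule probabilities multiplied along D1 and D2, with the factors that the slide text does not show clearly filled in so that each product matches the stated total. The names `D1_FACTORS`, `D2_FACTORS`, `derivation_probability`, and `sentence_probability` are made up for the example.

```python
import math

# Rule probabilities multiplied along each derivation. Factors that are hard to
# read in the slide text are filled in so the products match the stated totals.
D1_FACTORS = [0.1, 0.5, 0.5, 0.6, 0.6, 0.5, 0.3, 1.0, 0.2, 0.2, 0.5, 0.8]
D2_FACTORS = [0.1, 0.3, 0.5, 0.6, 0.5, 0.6, 0.3, 1.0, 0.5, 0.2, 0.2, 0.8]


def derivation_probability(factors):
    """Product of the probabilities of the productions used in one derivation."""
    return math.prod(factors)


def sentence_probability(derivations):
    """Sum of the probabilities of all derivations of the sentence."""
    return sum(derivation_probability(f) for f in derivations)


p_d1 = derivation_probability(D1_FACTORS)
p_d2 = derivation_probability(D2_FACTORS)
p_sentence = sentence_probability([D1_FACTORS, D2_FACTORS])
best = "D1" if p_d1 > p_d2 else "D2"  # disambiguation: keep the likelier parse
print(best, p_d1, p_d2, p_sentence)
```

Floating-point results will differ from the slide values only in the last few digits.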

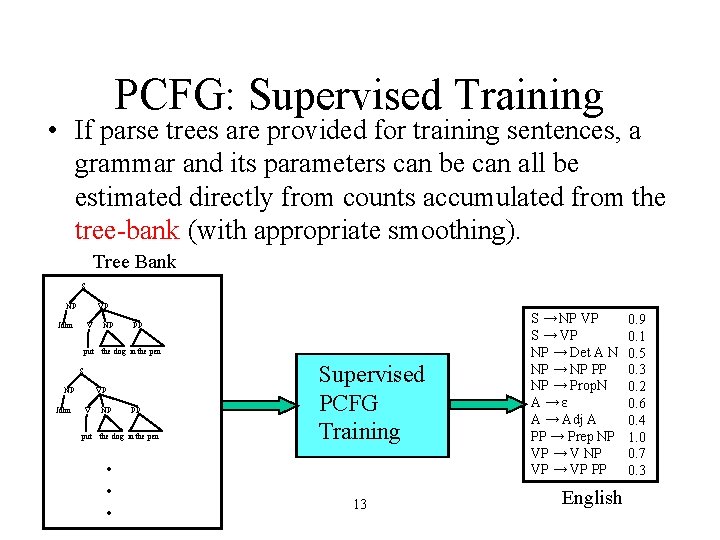

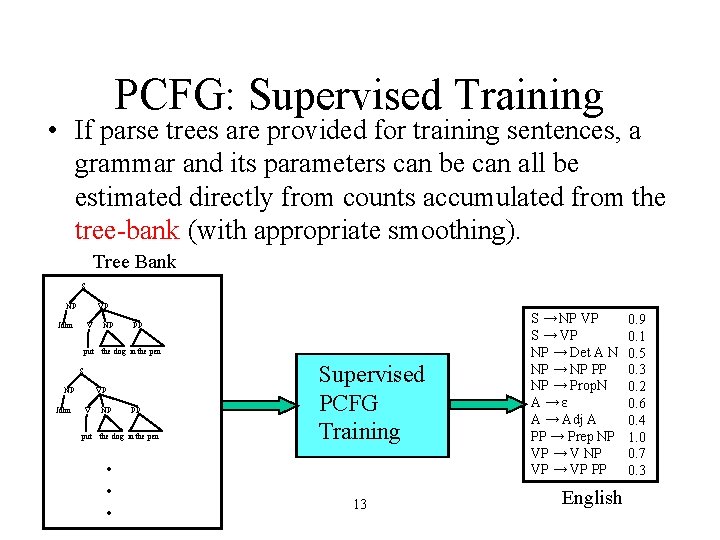

PCFG: Supervised Training

- If parse trees are provided for training sentences, a grammar and its parameters can all be estimated directly from counts accumulated from the treebank (with appropriate smoothing).

Treebank (input to supervised PCFG training):

- [S [NP John] [VP [V put] [NP the dog] [PP in the pen]]]
- ...

Resulting grammar for English (output):

- S → NP VP (0.9)
- S → VP (0.1)
- NP → Det A N (0.5)
- NP → NP PP (0.3)
- NP → PropN (0.2)
- A → ε (0.6)
- A → Adj A (0.4)
- PP → Prep NP (1.0)
- VP → … (0.7)
- VP → VP PP (0.3)

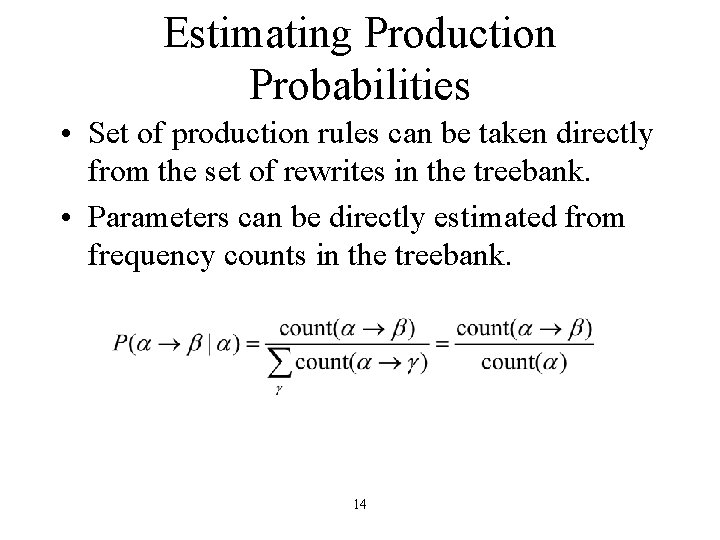

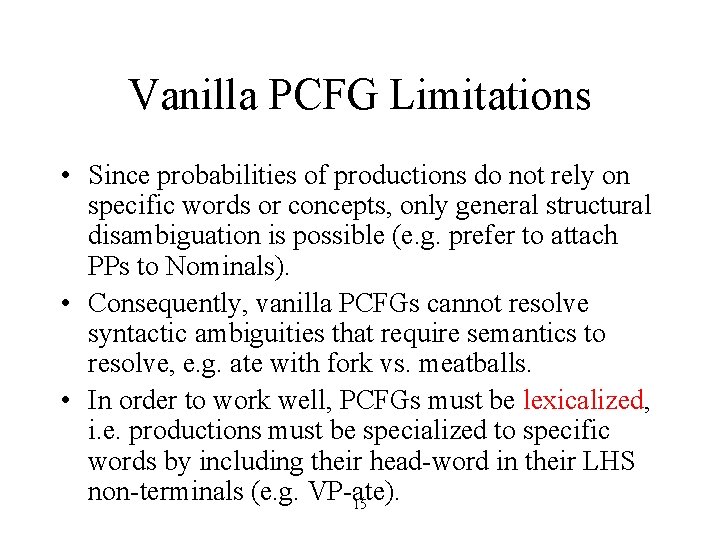

Estimating Production Probabilities

- The set of production rules can be taken directly from the set of rewrites in the treebank.
- Parameters can be directly estimated from frequency counts in the treebank.
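As a rough illustration of estimating from frequency counts, the sketch below reads rewrites off a toy treebank and sets each rule probability to count(LHS → RHS) / count(LHS). The parenthesized tree format, the two toy trees, and the helper names are assumptions made for this example, and no smoothing is applied.

```python
from collections import Counter, defaultdict


def parse_bracketed(text):
    """Parse '(S (NP ...) (VP ...))' into nested (label, children) tuples."""
    tokens = text.replace("(", " ( ").replace(")", " ) ").split()
    pos = 0

    def read():
        nonlocal pos
        assert tokens[pos] == "("
        label = tokens[pos + 1]
        pos += 2
        children = []
        while tokens[pos] != ")":
            if tokens[pos] == "(":
                children.append(read())
            else:
                children.append(tokens[pos])  # a word
                pos += 1
        pos += 1  # consume ")"
        return (label, children)

    return read()


def count_rules(tree, counts):
    """Count every rewrite LHS -> RHS that occurs in the tree."""
    label, children = tree
    rhs = tuple(c[0] if isinstance(c, tuple) else c for c in children)
    counts[(label, rhs)] += 1
    for child in children:
        if isinstance(child, tuple):
            count_rules(child, counts)


def estimate_pcfg(treebank):
    """Relative-frequency (maximum likelihood) estimate of rule probabilities."""
    counts = Counter()
    for text in treebank:
        count_rules(parse_bracketed(text), counts)
    lhs_totals = defaultdict(int)
    for (lhs, _), n in counts.items():
        lhs_totals[lhs] += n
    return {rule: n / lhs_totals[rule[0]] for rule, n in counts.items()}


# Hypothetical two-sentence treebank, for illustration only.
TOY_TREEBANK = [
    "(S (NP (PropN John)) (VP (V put) (NP (Det the) (N dog)) (PP (Prep in) (NP (Det the) (N pen)))))",
    "(S (NP (PropN John)) (VP (V saw) (NP (Det the) (N dog))))",
]
probs = estimate_pcfg(TOY_TREEBANK)
print(probs[("NP", ("Det", "N"))])  # 3 of the 5 NP rewrites
```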

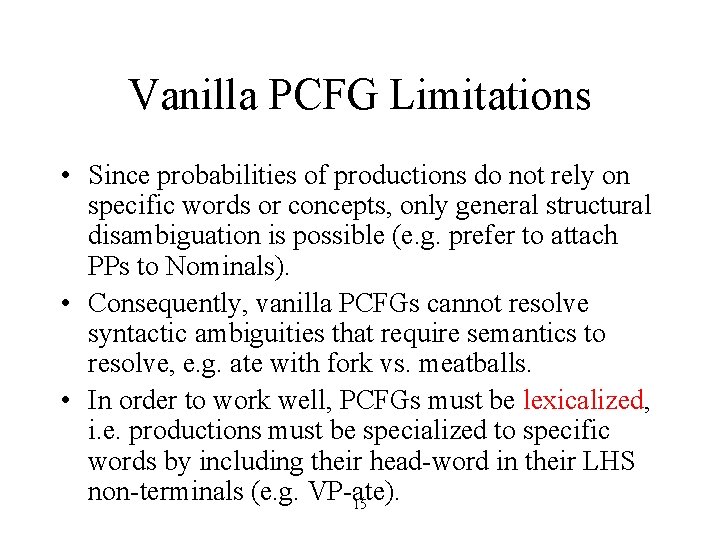

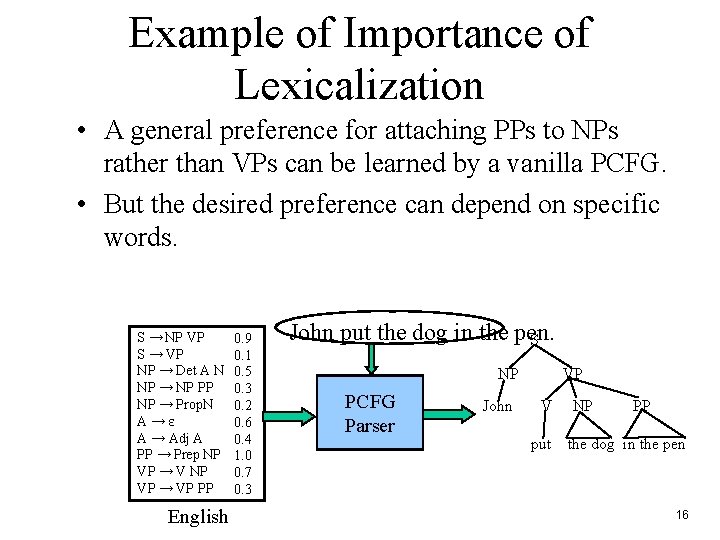

Vanilla PCFG Limitations

- Since probabilities of productions do not rely on specific words or concepts, only general structural disambiguation is possible (e.g. prefer to attach PPs to Nominals).
- Consequently, vanilla PCFGs cannot resolve syntactic ambiguities that require semantics to resolve, e.g. "ate with fork" vs. "ate with meatballs".
- In order to work well, PCFGs must be lexicalized, i.e. productions must be specialized to specific words by including their head-word in their LHS non-terminals (e.g. VP-ate).

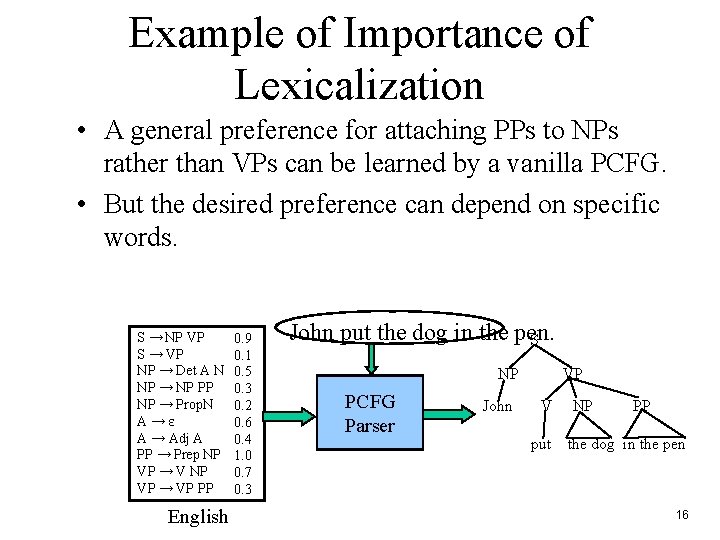

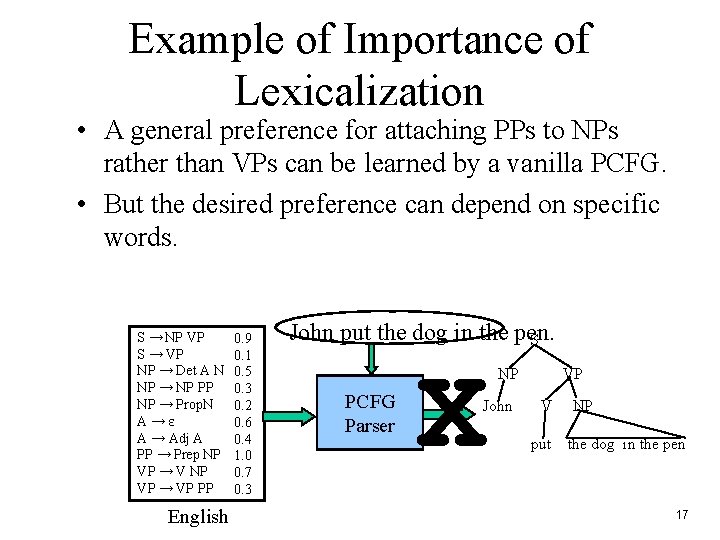

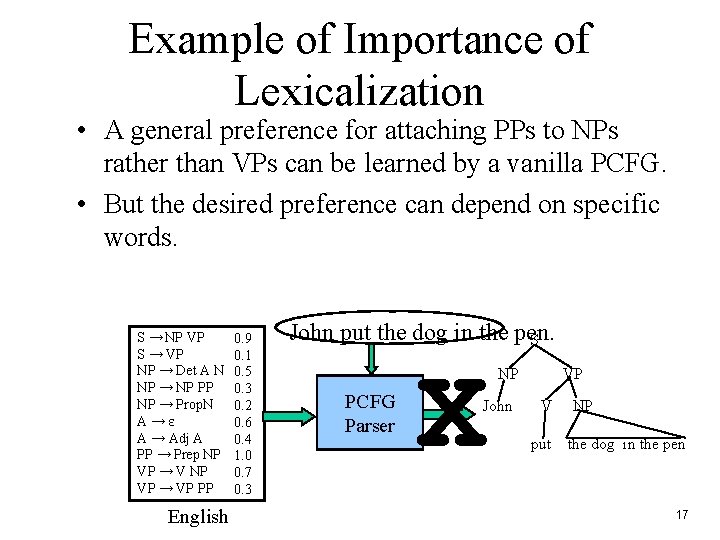

Example of Importance of Lexicalization

- A general preference for attaching PPs to NPs rather than VPs can be learned by a vanilla PCFG.
- But the desired preference can depend on specific words.

Grammar (English): S → NP VP (0.9); S → VP (0.1); NP → Det A N (0.5); NP → NP PP (0.3); NP → PropN (0.2); A → ε (0.6); A → Adj A (0.4); PP → Prep NP (1.0); VP → … (0.7); VP → VP PP (0.3)

Input to the PCFG parser: John put the dog in the pen.

Parse with the PP attached to the VP: [S [NP John] [VP [V put] [NP the dog] [PP in the pen]]]

Example of Importance of Lexicalization (continued)

With the same grammar and the same input, "John put the dog in the pen.", the vanilla PCFG parser can instead return the parse marked X on the slide, which groups "in the pen" with the NP:

[S [NP John] [VP [V put] [NP the dog in the pen]]]

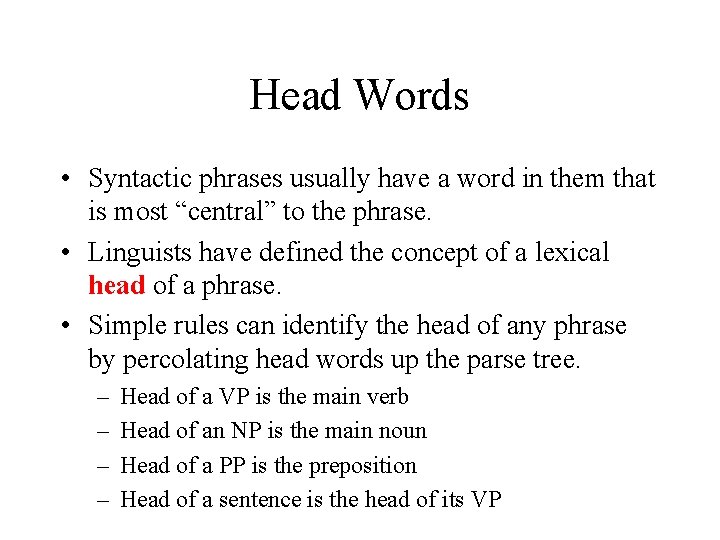

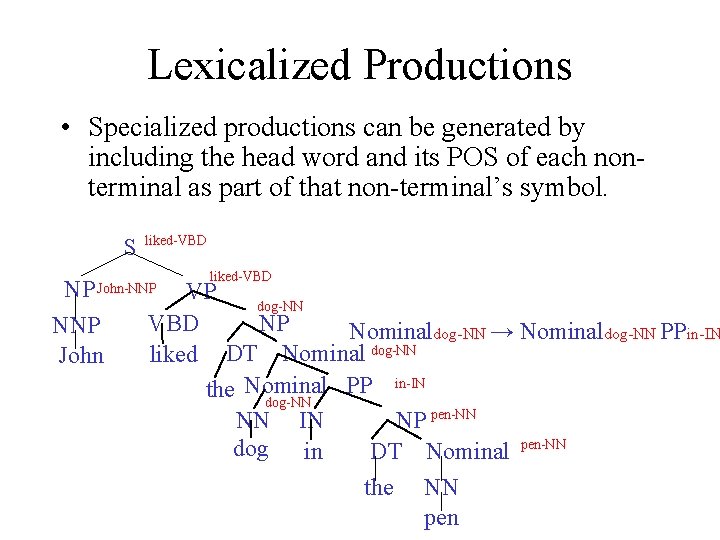

Head Words

- Syntactic phrases usually have a word in them that is most “central” to the phrase.
- Linguists have defined the concept of a lexical head of a phrase.
- Simple rules can identify the head of any phrase by percolating head words up the parse tree.
  - Head of a VP is the main verb
  - Head of an NP is the main noun
  - Head of a PP is the preposition
  - Head of a sentence is the head of its VP
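The four percolation rules above can be sketched as a small recursive function. The tree encoding (`(label, children)` tuples, with `(tag, word)` at the leaves), the `HEAD_CHILD` table, and the rightmost-child fallback are simplifying assumptions for illustration; real head tables are considerably more detailed.

```python
# Which child category supplies the head for each phrase type (simplified).
HEAD_CHILD = {
    "S": ["VP"],         # head of a sentence is the head of its VP
    "VP": ["V", "VP"],   # head of a VP is the main verb
    "NP": ["N", "NP"],   # head of an NP is the main noun
    "PP": ["Prep"],      # head of a PP is the preposition
}


def head_word(tree):
    """Return the lexical head of a tree by percolating head words upward."""
    label, children = tree
    if isinstance(children, str):  # preterminal: (tag, word)
        return children
    for wanted in HEAD_CHILD.get(label, []):
        for child in children:
            if child[0] == wanted:
                return head_word(child)
    return head_word(children[-1])  # fallback (assumption): rightmost child


np_dog = ("NP", [("Det", "the"), ("N", "dog")])
pp_pen = ("PP", [("Prep", "in"), ("NP", [("Det", "the"), ("N", "pen")])])
sentence = ("S", [("NP", [("PropN", "John")]),
                  ("VP", [("V", "put"), np_dog, pp_pen])])

print(head_word(sentence), head_word(np_dog), head_word(pp_pen))
```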

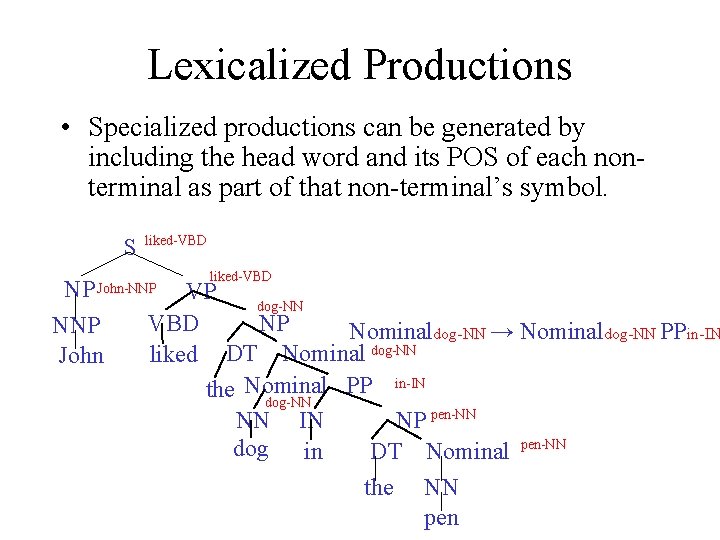

Lexicalized Productions

- Specialized productions can be generated by including the head word and its POS tag in each non-terminal's symbol.

Example ("John liked the dog in the pen"), with each non-terminal written as Category(head-POS):

[S(liked-VBD) [NP(John-NNP) [NNP John]] [VP(liked-VBD) [VBD liked] [NP(dog-NN) [DT the] [Nominal(dog-NN) [Nominal(dog-NN) [NN dog]] [PP(in-IN) [IN in] [NP(pen-NN) [DT the] [Nominal(pen-NN) [NN pen]]]]]]]]

Sample lexicalized production: Nominal(dog-NN) → Nominal(dog-NN) PP(in-IN)

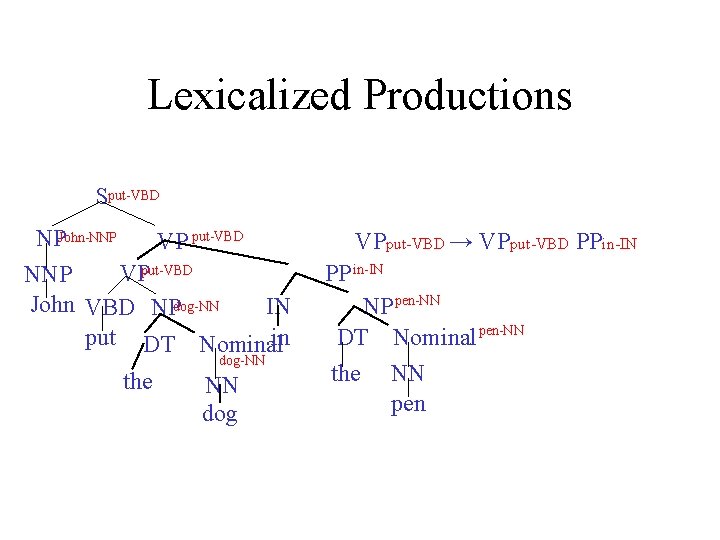

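Lexicalized Productions

Example ("John put the dog in the pen"), with each non-terminal written as Category(head-POS):

[S(put-VBD) [NP(John-NNP) [NNP John]] [VP(put-VBD) [VP(put-VBD) [VBD put] [NP(dog-NN) [DT the] [Nominal(dog-NN) [NN dog]]]] [PP(in-IN) [IN in] [NP(pen-NN) [DT the] [Nominal(pen-NN) [NN pen]]]]]]

Sample lexicalized production: VP(put-VBD) → VP(put-VBD) PP(in-IN)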

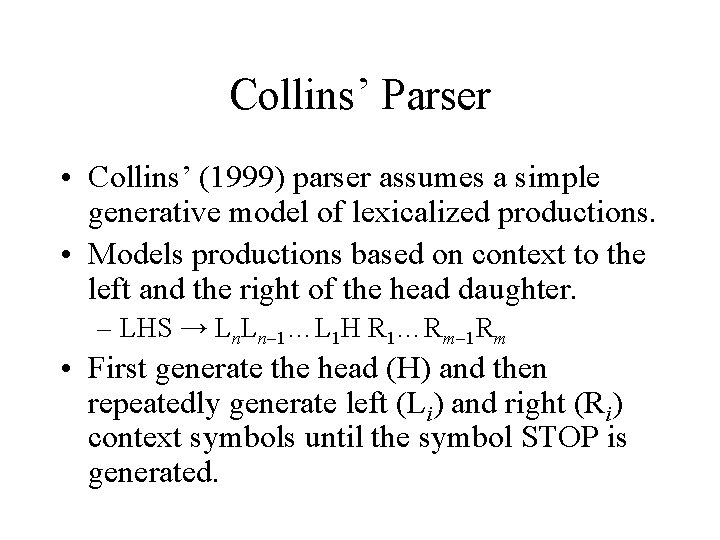

Parameterizing Lexicalized Productions

- Accurately estimating parameters on such a large number of very specialized productions could require enormous amounts of treebank data.
- Need some way of estimating parameters for lexicalized productions that makes reasonable independence assumptions so that accurate probabilities for very specific rules can be learned.

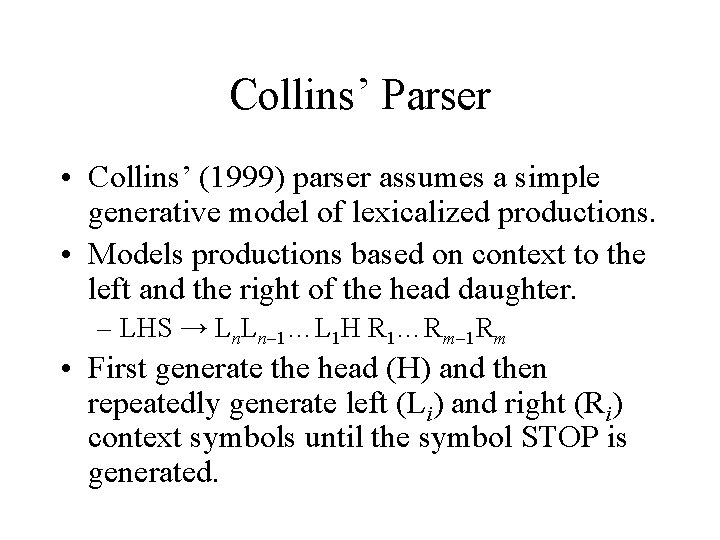

Collins’ Parser

- Collins’ (1999) parser assumes a simple generative model of lexicalized productions.
- Models productions based on context to the left and the right of the head daughter.
  - $\mathrm{LHS} \rightarrow L_n L_{n-1} \ldots L_1 \, H \, R_1 \ldots R_{m-1} R_m$
- First generate the head ($H$) and then repeatedly generate left ($L_i$) and right ($R_i$) context symbols until the symbol STOP is generated.

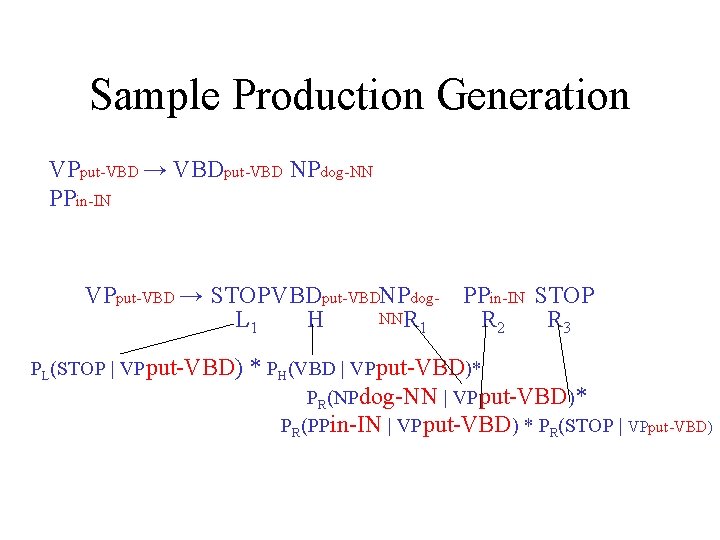

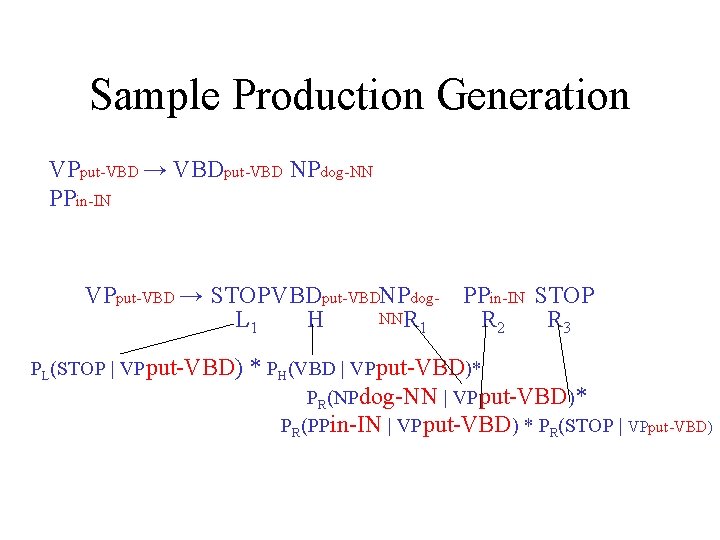

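Sample Production Generation

The production $\mathrm{VP}_{\text{put-VBD}} \rightarrow \mathrm{VBD}_{\text{put-VBD}}\ \mathrm{NP}_{\text{dog-NN}}\ \mathrm{PP}_{\text{in-IN}}$ is generated as a head plus left and right context, with STOP closing each side:

$$\mathrm{VP}_{\text{put-VBD}} \rightarrow \underbrace{\mathrm{STOP}}_{L_1}\ \underbrace{\mathrm{VBD}_{\text{put-VBD}}}_{H}\ \underbrace{\mathrm{NP}_{\text{dog-NN}}}_{R_1}\ \underbrace{\mathrm{PP}_{\text{in-IN}}}_{R_2}\ \underbrace{\mathrm{STOP}}_{R_3}$$

Its probability is the product:

$$P_L(\mathrm{STOP} \mid \mathrm{VP}_{\text{put-VBD}}) \cdot P_H(\mathrm{VBD} \mid \mathrm{VP}_{\text{put-VBD}}) \cdot P_R(\mathrm{NP}_{\text{dog-NN}} \mid \mathrm{VP}_{\text{put-VBD}}) \cdot P_R(\mathrm{PP}_{\text{in-IN}} \mid \mathrm{VP}_{\text{put-VBD}}) \cdot P_R(\mathrm{STOP} \mid \mathrm{VP}_{\text{put-VBD}})$$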
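The product for this production can be written out as a short function. This is a sketch of the simplified model as the slide presents it, where every symbol is conditioned only on the parent; the parameter values in `P_HEAD`, `P_LEFT`, and `P_RIGHT` are invented for illustration and are not estimates from any treebank.

```python
import math


def production_probability(parent, head, left, right, p_head, p_left, p_right):
    """Probability of a production: head first, then left and right context,
    each side closed by a STOP symbol."""
    prob = p_head[(head, parent)]
    for symbol in list(left) + ["STOP"]:
        prob *= p_left[(symbol, parent)]
    for symbol in list(right) + ["STOP"]:
        prob *= p_right[(symbol, parent)]
    return prob


PARENT = "VP(put-VBD)"
# Hypothetical parameter values, chosen only to make the arithmetic visible.
P_HEAD = {("VBD", PARENT): 0.8}
P_LEFT = {("STOP", PARENT): 0.9}
P_RIGHT = {
    ("NP(dog-NN)", PARENT): 0.1,
    ("PP(in-IN)", PARENT): 0.2,
    ("STOP", PARENT): 0.5,
}

p = production_probability(
    PARENT, "VBD", left=[], right=["NP(dog-NN)", "PP(in-IN)"],
    p_head=P_HEAD, p_left=P_LEFT, p_right=P_RIGHT,
)
print(p)  # 0.8 * 0.9 * 0.1 * 0.2 * 0.5
```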

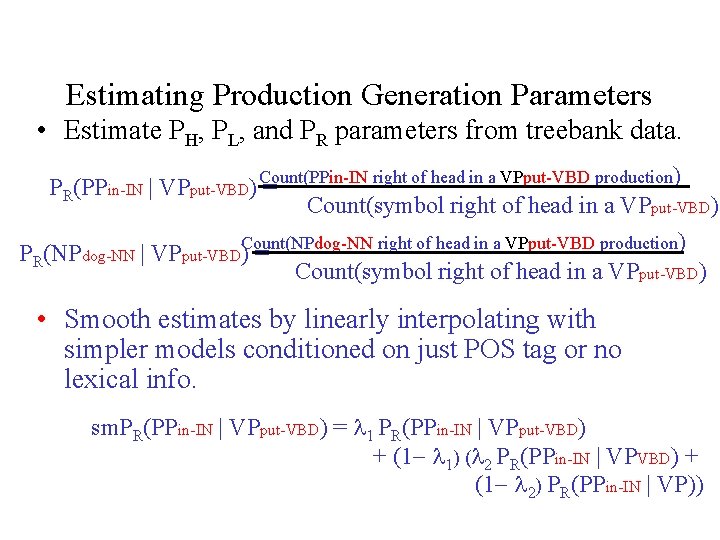

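Estimating Production Generation Parameters

- Estimate $P_H$, $P_L$, and $P_R$ parameters from treebank data.

$$P_R(\mathrm{PP}_{\text{in-IN}} \mid \mathrm{VP}_{\text{put-VBD}}) = \frac{\mathrm{Count}(\mathrm{PP}_{\text{in-IN}} \text{ right of head in a } \mathrm{VP}_{\text{put-VBD}} \text{ production})}{\mathrm{Count}(\text{symbol right of head in a } \mathrm{VP}_{\text{put-VBD}})}$$

$$P_R(\mathrm{NP}_{\text{dog-NN}} \mid \mathrm{VP}_{\text{put-VBD}}) = \frac{\mathrm{Count}(\mathrm{NP}_{\text{dog-NN}} \text{ right of head in a } \mathrm{VP}_{\text{put-VBD}} \text{ production})}{\mathrm{Count}(\text{symbol right of head in a } \mathrm{VP}_{\text{put-VBD}})}$$

- Smooth estimates by linearly interpolating with simpler models conditioned on just the POS tag or no lexical info.

$$\mathrm{sm}P_R(\mathrm{PP}_{\text{in-IN}} \mid \mathrm{VP}_{\text{put-VBD}}) = \lambda_1 P_R(\mathrm{PP}_{\text{in-IN}} \mid \mathrm{VP}_{\text{put-VBD}}) + (1 - \lambda_1)\bigl(\lambda_2 P_R(\mathrm{PP}_{\text{in-IN}} \mid \mathrm{VP}_{\text{VBD}}) + (1 - \lambda_2) P_R(\mathrm{PP}_{\text{in-IN}} \mid \mathrm{VP})\bigr)$$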
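A small sketch of the two steps on this slide: a relative-frequency estimate, then linear interpolation with the two simpler models. The counts and the interpolation weights `lam1` and `lam2` are hypothetical; the slides do not say how the weights are set.

```python
import math


def relative_frequency(count_event, count_context):
    """Count-based estimate; returns 0.0 when the context was never observed."""
    return count_event / count_context if count_context else 0.0


def interpolate(p_lexical, p_pos, p_plain, lam1, lam2):
    """lam1 * P(x | VP, head word, POS)
       + (1 - lam1) * (lam2 * P(x | VP, POS) + (1 - lam2) * P(x | VP))"""
    return lam1 * p_lexical + (1 - lam1) * (lam2 * p_pos + (1 - lam2) * p_plain)


# Hypothetical counts: the fully lexicalized context was never seen in training,
# so its estimate is 0 and the backed-off models supply the probability mass.
p_lexical = relative_frequency(0, 0)   # P_R(PP_in-IN | VP_put-VBD)
p_pos = relative_frequency(30, 100)    # P_R(PP_in-IN | VP_VBD)
p_plain = relative_frequency(40, 200)  # P_R(PP_in-IN | VP)

smoothed = interpolate(p_lexical, p_pos, p_plain, lam1=0.6, lam2=0.5)
print(smoothed)
```

With these numbers the smoothed estimate is 0.4 × (0.5 × 0.3 + 0.5 × 0.2) = 0.1, even though the lexicalized estimate alone is zero.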

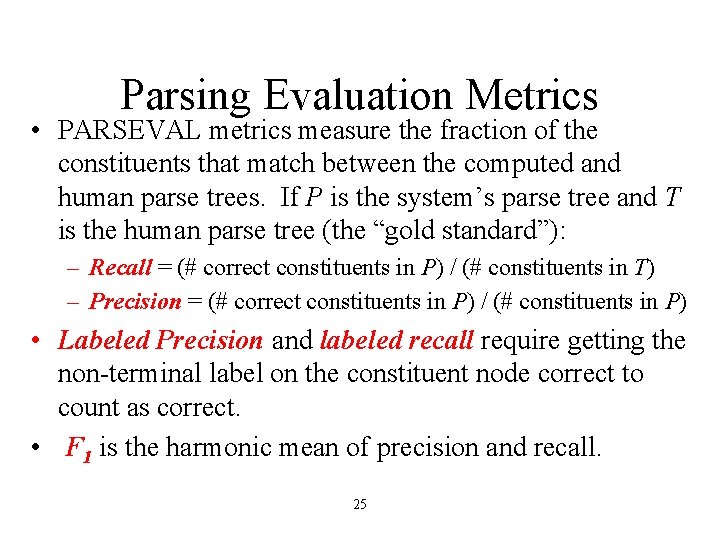

Parsing Evaluation Metrics

- PARSEVAL metrics measure the fraction of the constituents that match between the computed and human parse trees. If P is the system’s parse tree and T is the human parse tree (the “gold standard”):
  - Recall = (# correct constituents in P) / (# constituents in T)
  - Precision = (# correct constituents in P) / (# constituents in P)
- Labeled precision and labeled recall require getting the non-terminal label on the constituent node correct to count as correct.
- F1 is the harmonic mean of precision and recall.
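The labeled metrics can be sketched by representing each constituent as a `(label, start, end)` triple over word positions and comparing the two sets. The representation and the example spans are assumptions for illustration; using sets also ignores repeated identical constituents, which a full PARSEVAL scorer handles more carefully.

```python
import math


def parseval(predicted, gold):
    """Labeled precision, recall and F1 over (label, start, end) constituents."""
    predicted, gold = set(predicted), set(gold)
    correct = len(predicted & gold)
    precision = correct / len(predicted) if predicted else 0.0
    recall = correct / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# "John put the dog in the pen": word positions 0..6, spans are [start, end).
gold = [("S", 0, 7), ("NP", 0, 1), ("VP", 1, 7),
        ("NP", 2, 4), ("PP", 4, 7), ("NP", 5, 7)]
# A parse that wrongly attaches the PP inside the object NP adds one bad span.
predicted = gold + [("NP", 2, 7)]

precision, recall, f1 = parseval(predicted, gold)
print(precision, recall, f1)
```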

Treebank Results

- Results of current state-of-the-art systems on the English Penn WSJ treebank are slightly greater than 90% labeled precision and recall.

Supervised Learning Avoids Manual Construction

- Training examples are pairs of problems and answers
- Training examples for parsing: a collection of (sentence, parse tree) pairs (a treebank)
  - From the treebank, get maximum likelihood estimates for the parsing model
- New challenge: treebanks are difficult to obtain
  - Needs human experts
  - Takes years to complete

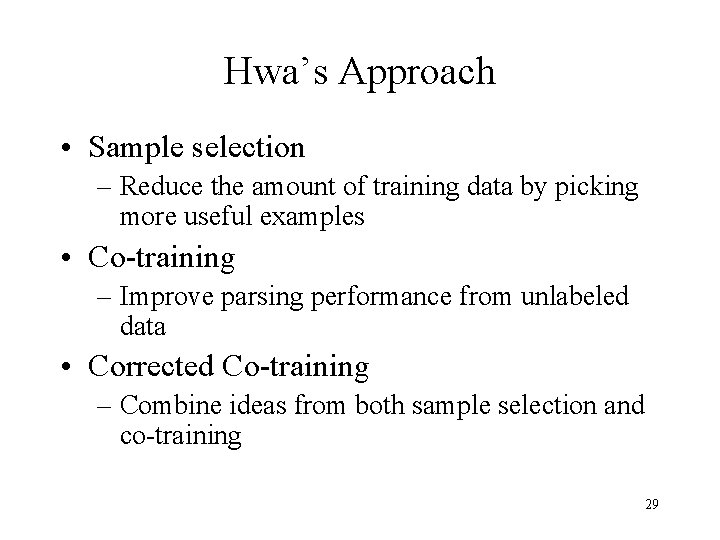

Learning to Classify vs. Learning to Parse

Learning to Classify

- Train a model to decide: should a prepositional phrase modify the verb before it or the noun?
- Training examples:
  - (v, saw, duck, with, telescope)
  - (n, saw, duck, with, feathers)
  - (v, saw, stars, with, telescope)
  - (n, saw, stars, with, Oscars)
  - …

Learning to Parse

- Train a model to decide: what is the most likely parse for a sentence W?
- Training examples:
  - [S [NP-SBJ [NNP Ford] [NNP Motor] [NNP Co.]] [VP [VBD acquired] [NP [CD 5] [NN %]] [PP [IN of] [NP [DT the] [NNS shares]] [PP [IN in] [NP [NNP Jaguar] [NNP PLC]]]]] .]
  - [S [NP-SBJ [NNP Pierre] [NNP Vinken]] [VP [MD will] [VP [VB join] [NP [DT the] [NN board]] [PP [IN as] [NP [DT a] [NN director]]]]] .]
  - …

Hwa’s Approach

- Sample selection
  - Reduce the amount of training data by picking more useful examples
- Co-training
  - Improve parsing performance from unlabeled data
- Corrected co-training
  - Combine ideas from both sample selection and co-training

Roadmap

- Parsing as a learning problem
- Semi-supervised approaches
  - Sample selection
    - Overview
    - Scoring functions
    - Evaluation
  - Co-training
  - Corrected co-training
- Conclusion and further directions

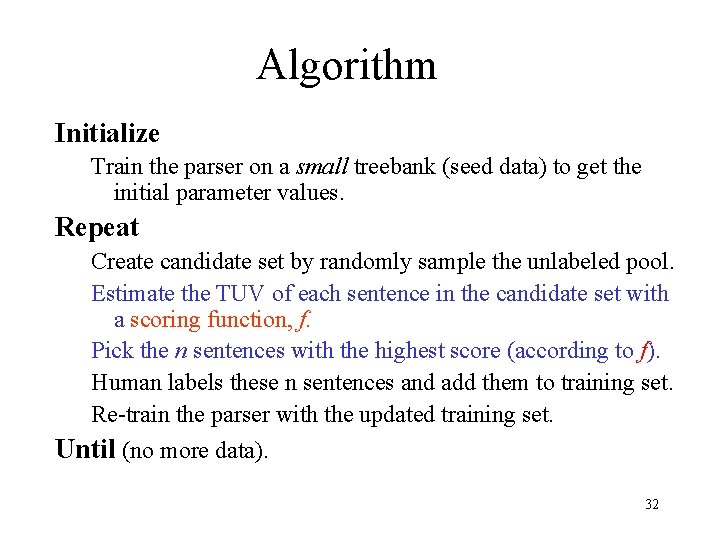

Sample Selection

- Assumption
  - Have lots of unlabeled data (cheap resource)
  - Have a human annotator (expensive resource)
- Iterative training session
  - Learner selects sentences to learn from
  - Annotator labels these sentences
- Goal: predict the benefit of annotation
  - Learner selects sentences with the highest Training Utility Values (TUVs)
  - Key issue: scoring function to estimate TUV

Algorithm

Initialize:

- Train the parser on a small treebank (seed data) to get the initial parameter values.

Repeat:

- Create a candidate set by randomly sampling the unlabeled pool.
- Estimate the TUV of each sentence in the candidate set with a scoring function, f.
- Pick the n sentences with the highest score (according to f).
- A human labels these n sentences and adds them to the training set.
- Re-train the parser with the updated training set.

Until no more data.
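The loop above might look roughly like this in Python. Everything passed in (`train`, `score`, `annotate`) is a placeholder for a real parser trainer, scoring function f, and human annotator, and the default candidate-set size is an assumption; the toy callbacks at the bottom only exercise the control flow.

```python
import random


def sample_selection(seed, pool, train, score, annotate,
                     n=100, candidates=500, rng=None):
    """Active-learning loop: repeatedly label the n highest-scoring candidates."""
    rng = rng or random.Random(0)
    labeled, pool = list(seed), list(pool)
    parser = train(labeled)                      # initialize on the seed data
    while pool:                                  # until no more data
        # Randomly sample a candidate set (by index) from the unlabeled pool.
        idx = rng.sample(range(len(pool)), min(candidates, len(pool)))
        # Rank candidates by the scoring function f, highest first.
        idx.sort(key=lambda i: score(parser, pool[i]), reverse=True)
        chosen = set(idx[:n])
        # The human labels the chosen sentences; they join the training set.
        labeled.extend(annotate(pool[i]) for i in sorted(chosen))
        pool = [s for i, s in enumerate(pool) if i not in chosen]
        parser = train(labeled)                  # re-train
    return parser, labeled


# Toy stand-ins: the "parser" is just the training-set size, f is sentence length.
toy_pool = ["a b", "a b c d", "a", "a b c", "a b c d e"]
parser, labeled = sample_selection(
    seed=[("seed sentence", "SEED-TREE")],
    pool=toy_pool,
    train=lambda data: len(data),
    score=lambda parser, s: len(s.split()),
    annotate=lambda s: (s, "TREE"),
    n=2, candidates=3,
)
print(parser, len(labeled))
```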

Scoring Function

- Approximate the TUV of each sentence
  - True TUVs are not known
- Need relative ranking
- Ranking criteria
  - Knowledge about the domain
    - e.g., sentence clusters, sentence length, …
  - Output of the hypothesis
    - e.g., error-rate of the parse, uncertainty of the parse, …

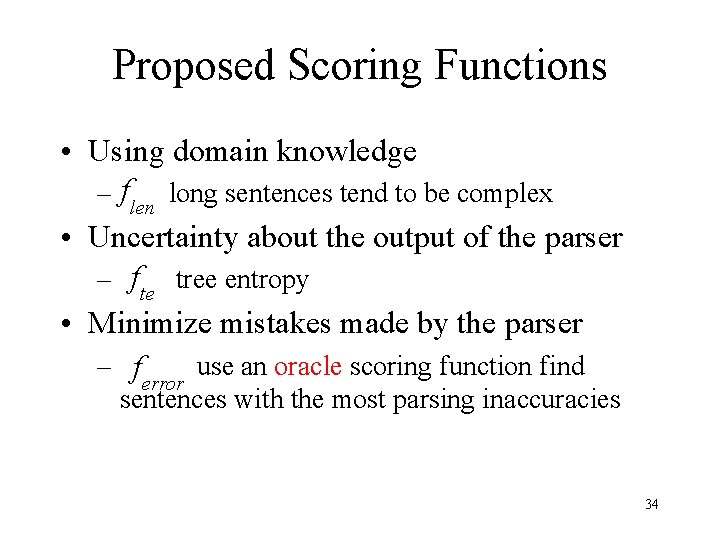

Proposed Scoring Functions

- Using domain knowledge
  - f_len: long sentences tend to be complex
- Uncertainty about the output of the parser
  - f_te: tree entropy
- Minimize mistakes made by the parser
  - f_error: use an oracle scoring function to find the sentences with the most parsing inaccuracies

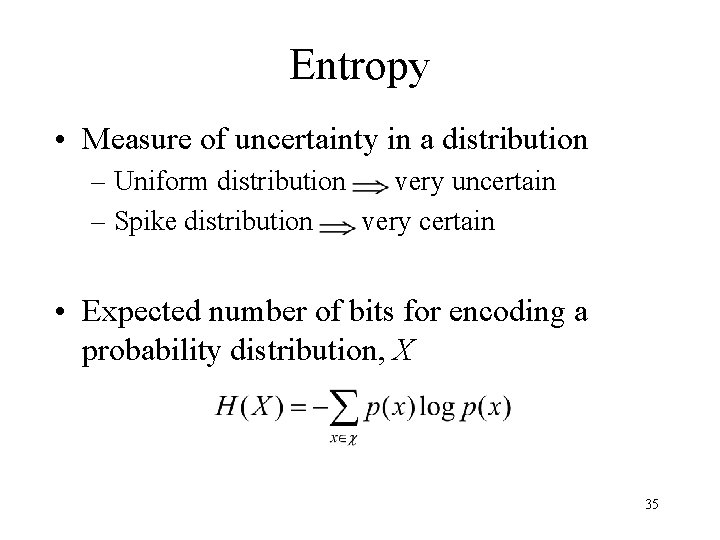

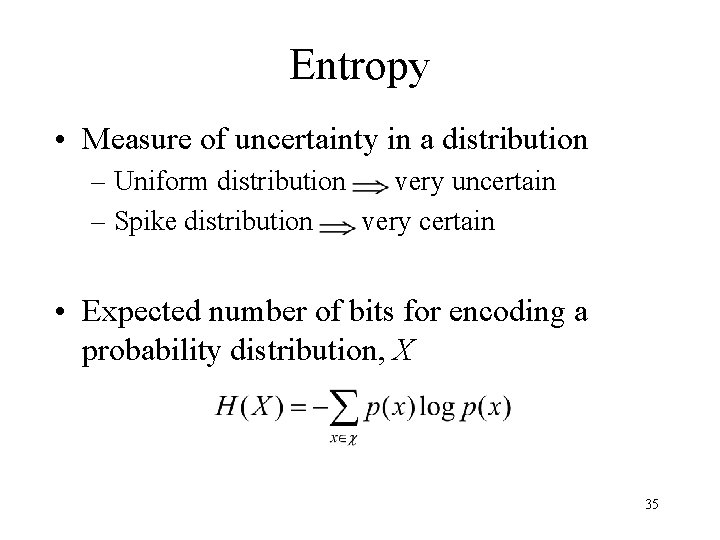

Entropy

- Measure of uncertainty in a distribution
  - Uniform distribution: very uncertain
  - Spike distribution: very certain
- Expected number of bits needed to encode an outcome of a random variable X drawn from the distribution
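The formula on this slide did not survive in the text. The standard Shannon definition, which is presumably what was shown, is:

```latex
H(X) = -\sum_{x} p(x)\,\log_2 p(x)
```

For a uniform distribution over $n$ outcomes this gives $H(X) = \log_2 n$, the maximum; for a spike distribution that puts all mass on one outcome it gives $H(X) = 0$.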

Tree Entropy Scoring Function

- Distribution over parse trees for sentence W
- Tree entropy: uncertainty of the parse distribution
- Scoring function: ratio of the actual parse tree entropy to that of a uniform distribution
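The slide's formulas are not in the text, so the following is one reading of the description: normalize the parser's scores P(T, W) into a distribution P(T | W), take its entropy, and divide by log2 of the number of parses, which is the entropy of a uniform distribution over the same parses. The function names are made up for the example.

```python
import math


def tree_entropy(parse_probs):
    """Entropy in bits of P(T | W), computed from unnormalized scores P(T, W)."""
    total = sum(parse_probs)
    return -sum((p / total) * math.log2(p / total) for p in parse_probs if p > 0)


def f_te(parse_probs):
    """Tree entropy divided by the entropy of a uniform distribution
    over the same number of parses (0.0 when there is only one parse)."""
    n = len(parse_probs)
    if n < 2:
        return 0.0
    return tree_entropy(parse_probs) / math.log2(n)


# The two derivations of "book the flight through Houston" from earlier slides.
score = f_te([0.0000216, 0.00001296])
print(score)
```

A score near 1 means the parser is nearly as uncertain as it could be for that sentence, which makes the sentence a good candidate for annotation; a score near 0 means one parse dominates.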

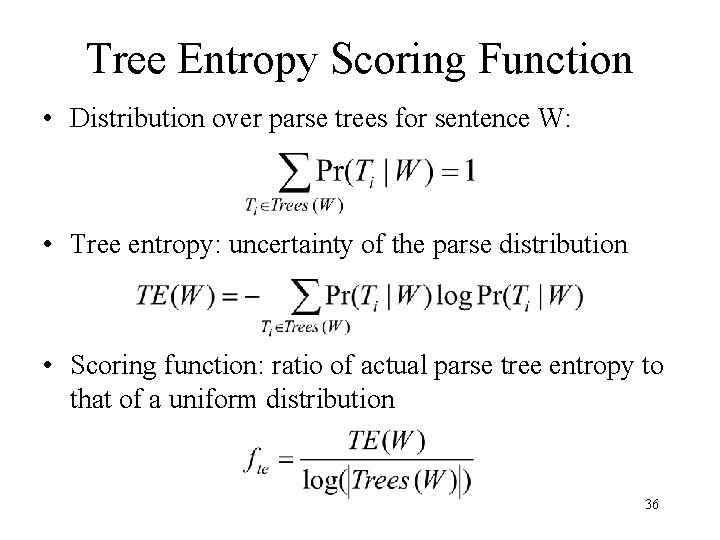

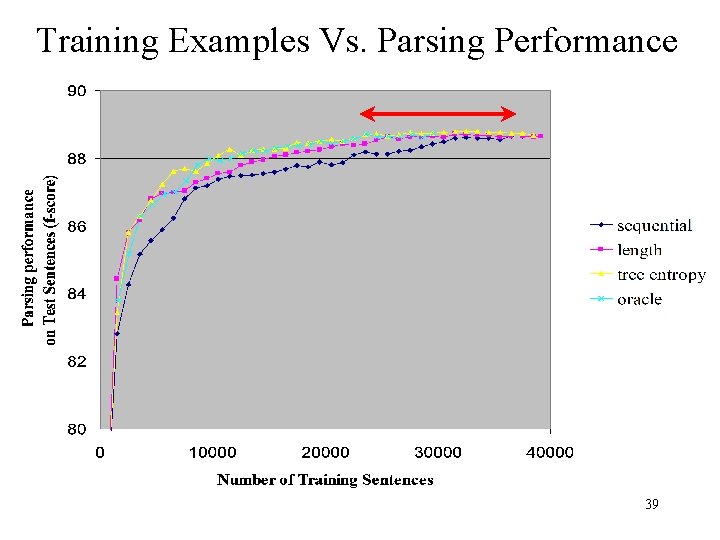

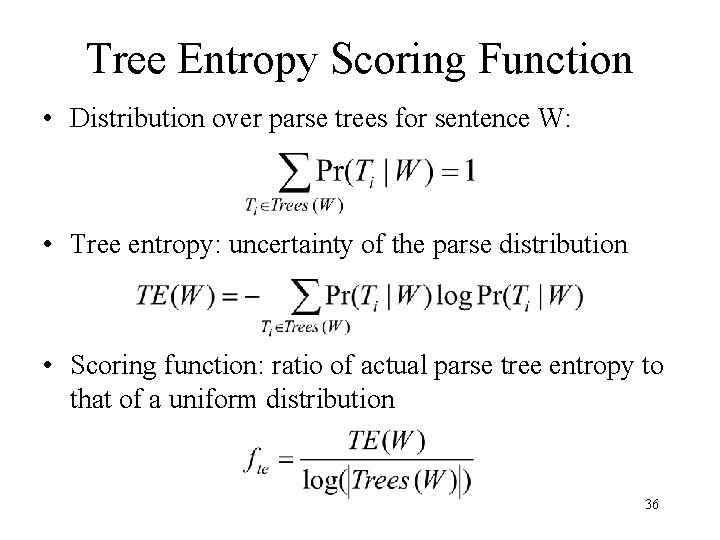

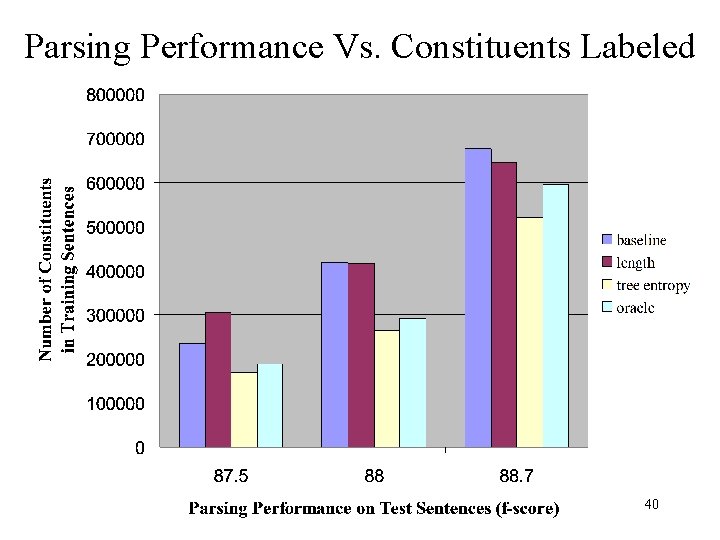

Experimental Setup

- Parsing model: Collins Model 2
- Candidate pool: WSJ sec 02-21, with the annotation stripped
- Initial labeled examples: 500 sentences
- Per iteration: add 100 sentences
- Testing metric: f-score (precision/recall)
- Test data: ~2000 unseen sentences (from WSJ sec 00)
- Baseline: annotate data in sequential order

Training Examples vs. Parsing Performance

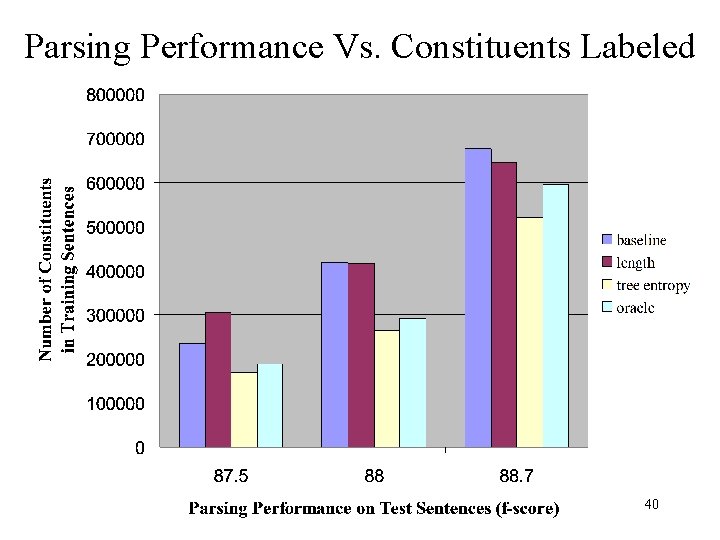

Parsing Performance vs. Constituents Labeled


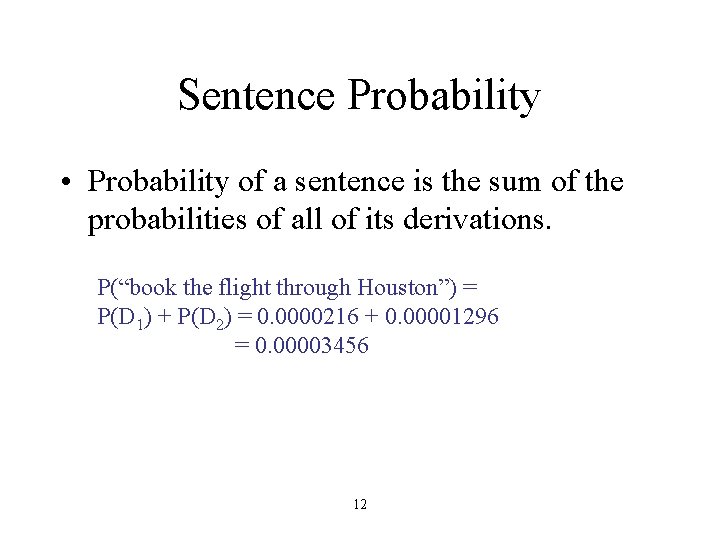

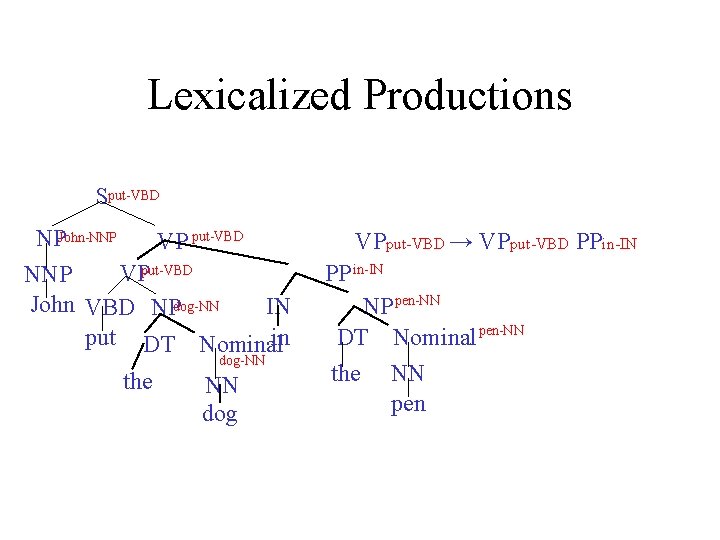

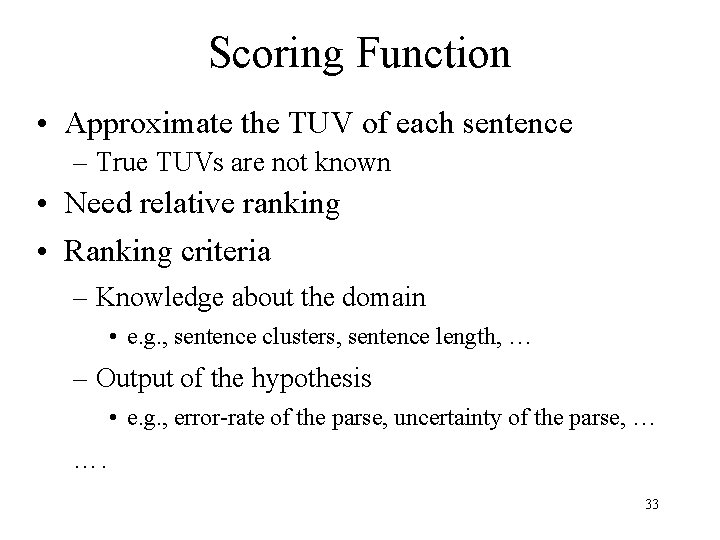

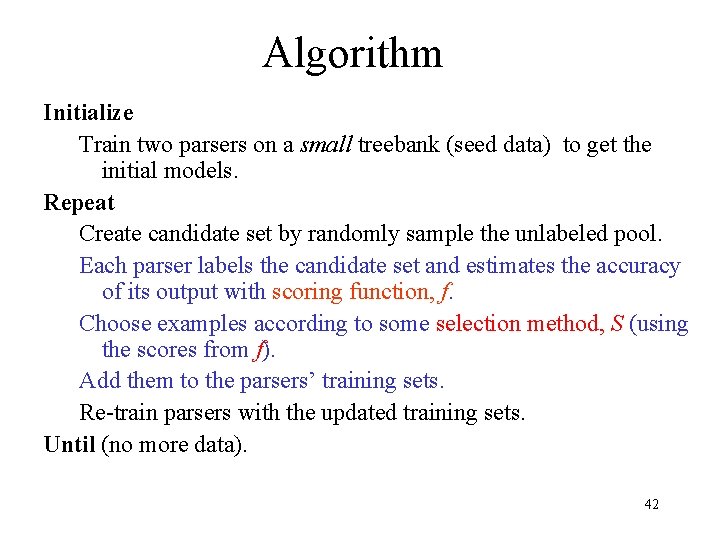

Co-Training [Blum and Mitchell, 1998]

- Assumptions
  - Have a small treebank
  - No further human assistance
  - Have two different kinds of parsers
- A subset of each parser’s output becomes new training data for the other
- Goal: select sentences that are labeled with confidence by one parser but labeled with uncertainty by the other parser.

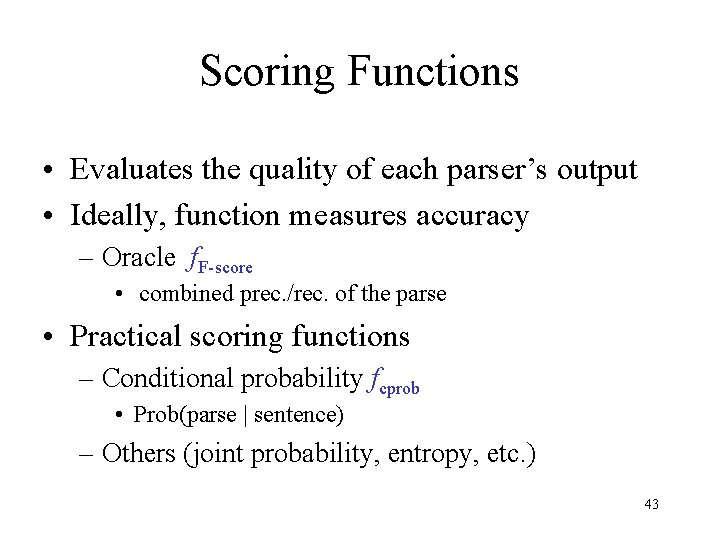

Algorithm

Initialize:

- Train two parsers on a small treebank (seed data) to get the initial models.

Repeat:

- Create a candidate set by randomly sampling the unlabeled pool.
- Each parser labels the candidate set and estimates the accuracy of its output with a scoring function, f.
- Choose examples according to some selection method, S (using the scores from f).
- Add them to the parsers’ training sets.
- Re-train the parsers with the updated training sets.

Until no more data.
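A minimal sketch of this loop, assuming each parser's selected output is added to the other parser's training set (as the co-training slide describes) and that candidates not selected in a round are simply dropped, which the slides do not specify. `train_a`, `train_b`, `label_and_score`, and `select` are hypothetical callbacks; the toy versions below only demonstrate the data flow.

```python
import random


def co_train(seed, pool, train_a, train_b, label_and_score, select,
             candidates=500, rng=None):
    """Two parsers teach each other from an unlabeled pool."""
    rng = rng or random.Random(0)
    data_a, data_b = list(seed), list(seed)
    pool = list(pool)
    parser_a, parser_b = train_a(data_a), train_b(data_b)
    while pool:
        rng.shuffle(pool)
        cand, pool = pool[:candidates], pool[candidates:]
        # Each parser labels every candidate and scores its own output.
        out_a = [label_and_score(parser_a, s) for s in cand]   # (parse, score)
        out_b = [label_and_score(parser_b, s) for s in cand]
        scores_a = [score for _, score in out_a]
        scores_b = [score for _, score in out_b]
        # A acts as teacher for B, then B acts as teacher for A.
        for i in select(scores_a, scores_b):
            data_b.append((cand[i], out_a[i][0]))
        for i in select(scores_b, scores_a):
            data_a.append((cand[i], out_b[i][0]))
        parser_a, parser_b = train_a(data_a), train_b(data_b)
    return parser_a, parser_b, data_a, data_b


def toy_label_and_score(parser, sentence):
    # Toy behaviour: parser "A" is confident on long sentences, "B" never is.
    score = len(sentence) if parser == "A" else -len(sentence)
    return f"{parser}:{sentence}", score


def toy_select(teacher_scores, student_scores):
    return [i for i, t in enumerate(teacher_scores) if t > 3]


SEED = [("seed sentence", "seed parse")]
_, _, data_a, data_b = co_train(
    seed=SEED, pool=["aa", "bbbbb"],
    train_a=lambda data: "A", train_b=lambda data: "B",
    label_and_score=toy_label_and_score, select=toy_select,
)
print(data_a, data_b)
```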

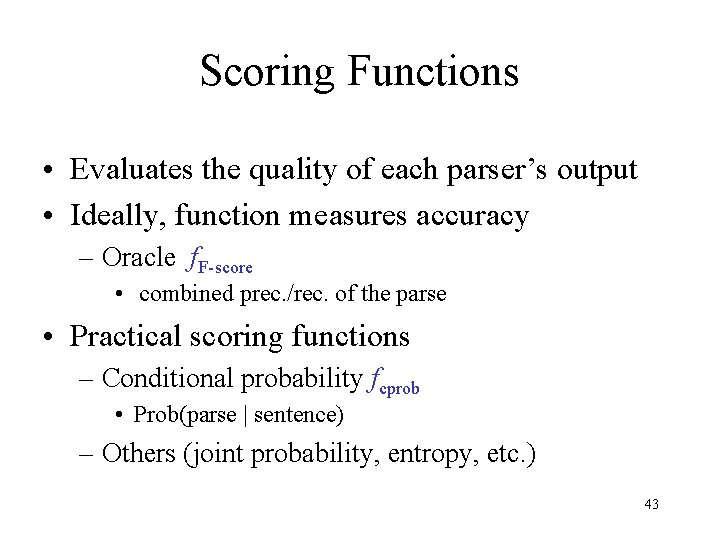

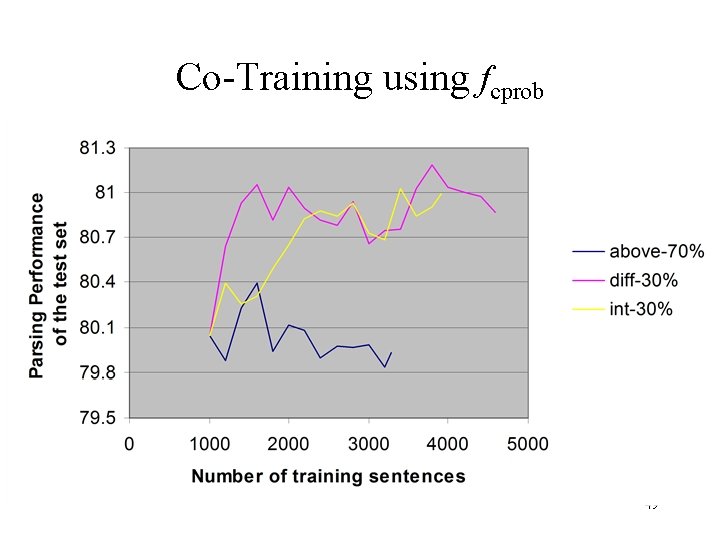

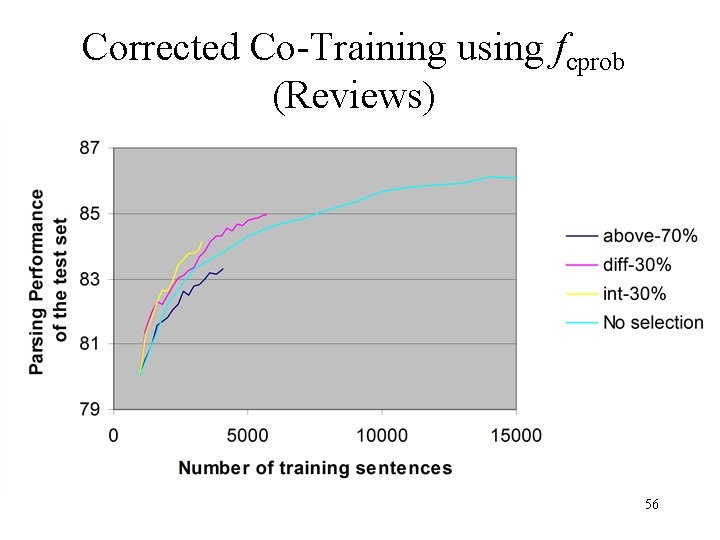

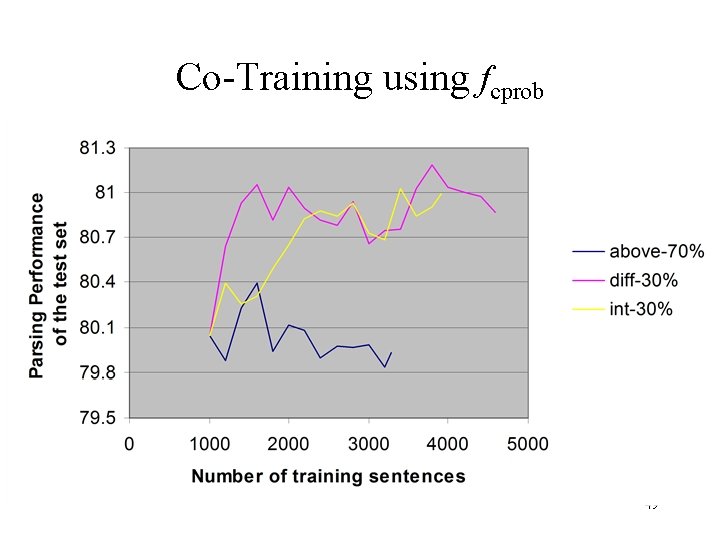

Scoring Functions

- Evaluates the quality of each parser’s output
- Ideally, the function measures accuracy
  - Oracle f_F-score
    - combined precision/recall of the parse
- Practical scoring functions
  - Conditional probability f_cprob
    - Prob(parse | sentence)
  - Others (joint probability, entropy, etc.)

Selection Methods

- Above-n: S_above-n
  - The score of the teacher’s parse is greater than n
- Difference: S_diff-n
  - The score of the teacher’s parse is greater than that of the student’s parse by n
- Intersection: S_int-n
  - The score of the teacher’s parse is one of its n% highest while the score of the student’s parse for the same sentence is one of the student’s n% lowest
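The three selection methods can be sketched as functions over two parallel score lists, one from the teacher and one from the student, each returning the indices of the selected sentences. The function names, the strict inequalities, and the rounding of n% to a whole number of sentences are assumptions made for this example.

```python
def select_above_n(teacher, student, n):
    """S_above-n: the teacher's score is greater than n."""
    return [i for i, t in enumerate(teacher) if t > n]


def select_diff_n(teacher, student, n):
    """S_diff-n: the teacher's score exceeds the student's by more than n."""
    return [i for i, (t, s) in enumerate(zip(teacher, student)) if t - s > n]


def select_int_n(teacher, student, n):
    """S_int-n: in the teacher's top n% and in the student's bottom n%."""
    k = max(1, round(len(teacher) * n / 100))
    by_teacher = sorted(range(len(teacher)), key=lambda i: teacher[i], reverse=True)
    by_student = sorted(range(len(student)), key=lambda i: student[i])
    return sorted(set(by_teacher[:k]) & set(by_student[:k]))


# Hypothetical scores for four candidate sentences.
teacher_scores = [0.9, 0.4, 0.8, 0.2]
student_scores = [0.3, 0.5, 0.7, 0.1]

above = select_above_n(teacher_scores, student_scores, 0.5)
diff = select_diff_n(teacher_scores, student_scores, 0.3)
inter = select_int_n(teacher_scores, student_scores, 50)
print(above, diff, inter)
```

In this toy data only sentence 0 is both scored highly by the teacher and poorly by the student, so the difference and intersection methods pick it alone, while above-n also accepts sentence 2.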


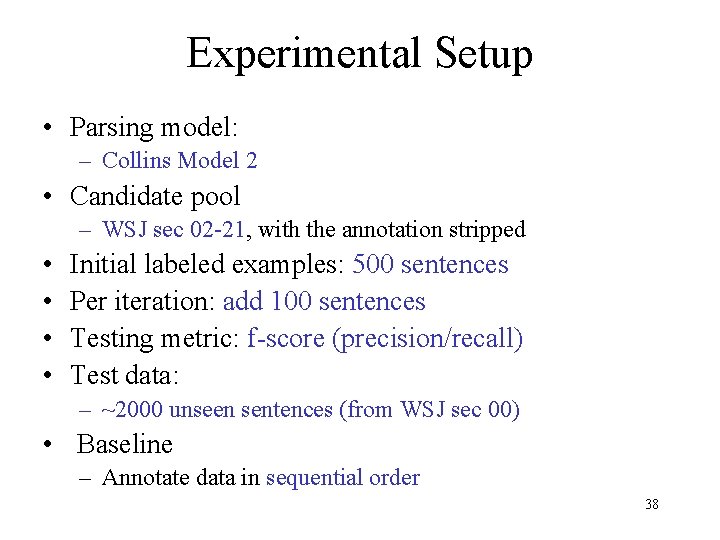

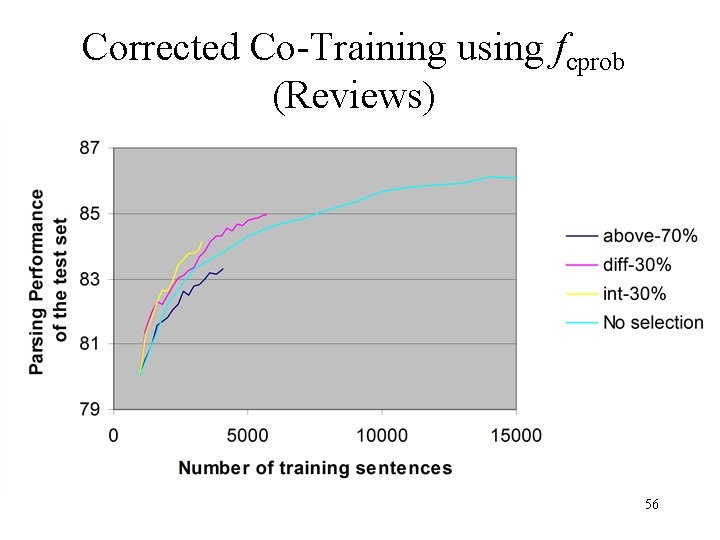

Experimental Setup

- Co-training parsers:
  - Lexicalized Tree Adjoining Grammar parser [Sarkar, 2002]
  - Lexicalized Context Free Grammar parser [Collins, 1997]
- Seed data: 1000 parsed sentences from WSJ sec 02
- Unlabeled pool: rest of the WSJ sec 02-21, stripped
- Consider 500 unlabeled sentences per iteration
- Development set: WSJ sec 00
- Test set: WSJ sec 23
- Results: graphs for the Collins parser

Co-Training using f_cprob

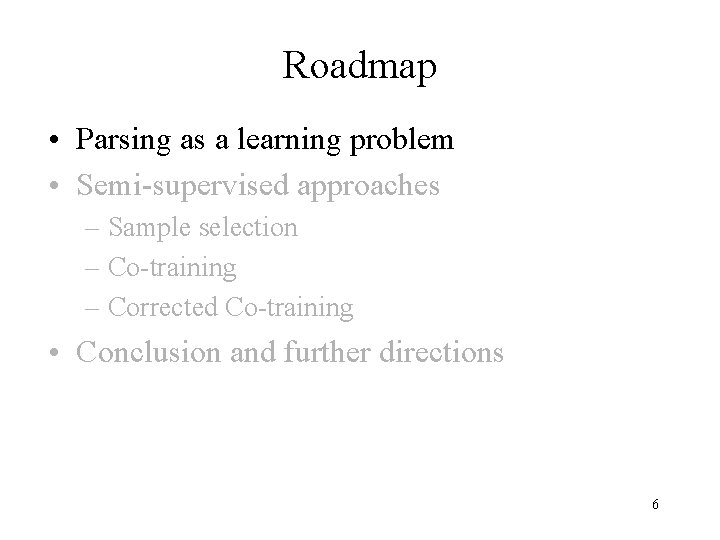

Roadmap

- Parsing as a learning problem
- Semi-supervised approaches
  - Sample selection
  - Co-training
  - Corrected co-training
- Conclusion and further directions

Corrected Co-Training

- A human reviews and corrects the machine outputs before they are added to the training set
- Can be seen as a variant of sample selection [cf. Muslea et al., 2000]
- Applied to base NP detection [Pierce & Cardie, 2001]

Algorithm

Initialize:

- Train two parsers on a small treebank (seed data) to get the initial models.

Repeat:

- Create a candidate set by randomly sampling the unlabeled pool.
- Each parser labels the candidate set and estimates the accuracy of its output with a scoring function, f.
- Choose examples according to some selection method, S (using the scores from f).
- A human reviews and corrects the chosen examples.
- Add them to the parsers’ training sets.
- Re-train the parsers with the updated training sets.

Until no more data.
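The only change from plain co-training is the review step, so a sketch of a single round is enough to show it. `correct` stands in for the human reviewer and, like the other names here, is hypothetical; the round returns the corrected examples for each parser's training set and assumes, as in co-training, that each parser's selected output goes to the other parser.

```python
def corrected_co_training_round(cand, out_a, out_b, select, correct):
    """One round: select examples, have a human correct them, and return
    (new examples for parser A, new examples for parser B)."""
    scores_a = [score for _, score in out_a]
    scores_b = [score for _, score in out_b]
    # Parser A's selected output is corrected, then given to parser B ...
    new_for_b = [(cand[i], correct(cand[i], out_a[i][0]))
                 for i in select(scores_a, scores_b)]
    # ... and parser B's selected output is corrected, then given to parser A.
    new_for_a = [(cand[i], correct(cand[i], out_b[i][0]))
                 for i in select(scores_b, scores_a)]
    return new_for_a, new_for_b


# Toy inputs: (parse, score) pairs from each parser for two candidate sentences.
cand = ["aa", "bbbbb"]
out_a = [("A:aa", 2), ("A:bbbbb", 5)]
out_b = [("B:aa", -2), ("B:bbbbb", -5)]

new_for_a, new_for_b = corrected_co_training_round(
    cand, out_a, out_b,
    select=lambda teacher, student: [i for i, t in enumerate(teacher) if t > 3],
    correct=lambda sentence, parse: parse.upper(),  # stand-in for human review
)
print(new_for_a, new_for_b)
```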

Selection Methods and Corrected Co-Training

- Two scoring functions: f_F-score, f_cprob
- Three selection methods: S_above-n, S_diff-n, S_int-n

Corrected Co-Training using f_cprob (Reviews)