SUPERVISED SEMISUPERVISED AND UNSUPERVISED APPROACHES FOR WORD SENSE


- Slides: 56

SUPERVISED, SEMI-SUPERVISED AND UNSUPERVISED APPROACHES FOR WORD SENSE DISAMBIGUATION

Slides by Arindam Chatterjee & Salil Joshi

Under the guidance of Prof. Pushpak Bhattacharyya

May 01, 2010

ROADMAP 1. 2. 3. 4. 5. SUPERVISED, SEMI-SUPERVISED AND UNSUPERVISED APPROACHES IN WSD

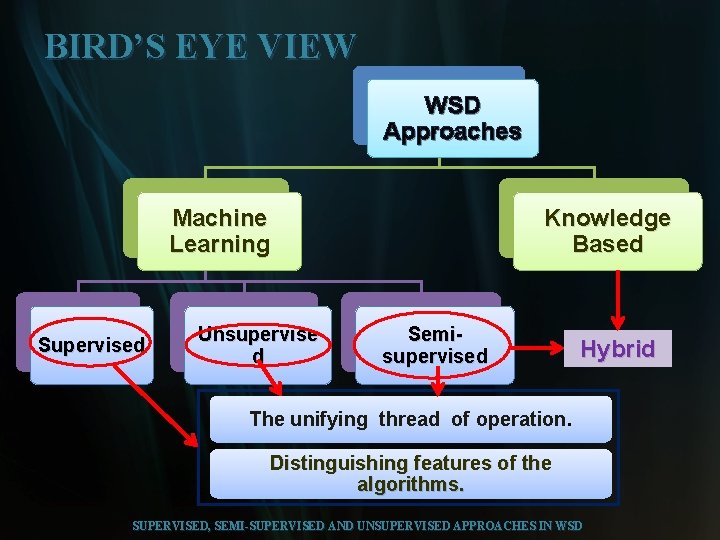

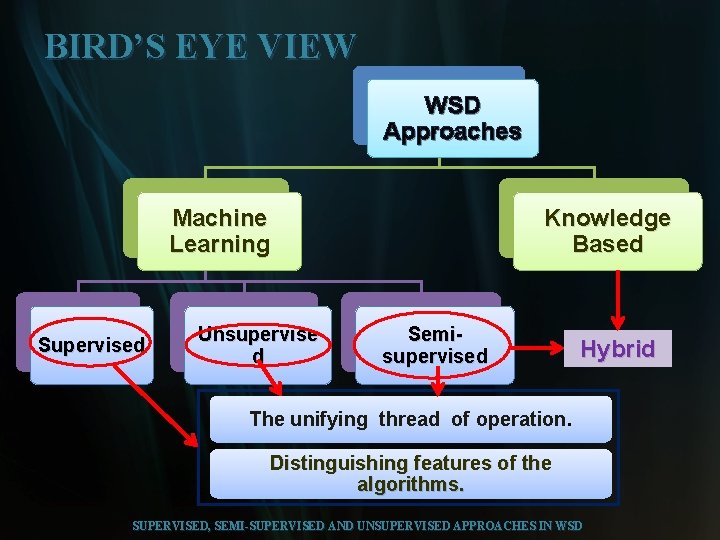

BIRD’S EYE VIEW

WSD Approaches:

- Machine Learning: Supervised, Semi-supervised, Unsupervised
- Knowledge Based
- Hybrid

The unifying thread of operation. Distinguishing features of the algorithms.

SUPERVISED APPROACHES

SUPERVISED APPROACHES

WSD as classification: classes = senses. Each instance (word) is classified based on its feature vector.

- Training phase: a model is trained from the training data, i.e. training instances (words) labeled with senses such as "money, finance", "water, river", "money, finance", "blood, plasma".
- Testing phase: the trained model assigns each instance to Class 1 (Sense 1), Class 2 (Sense 2) or Class 3 (Sense 3).

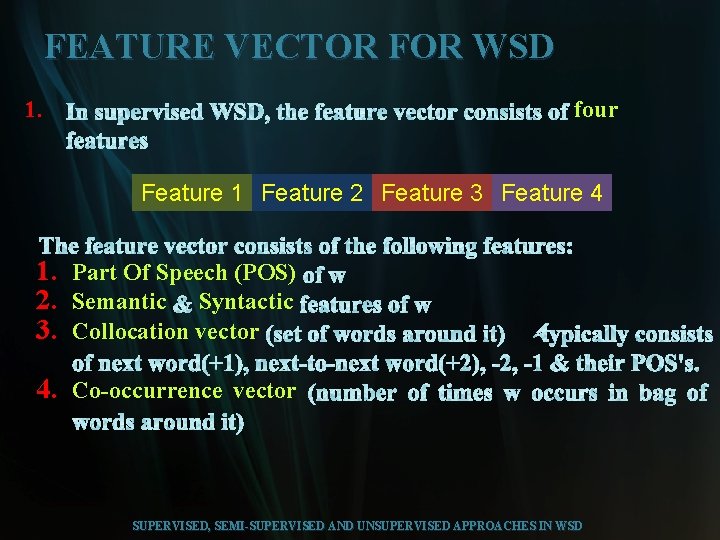

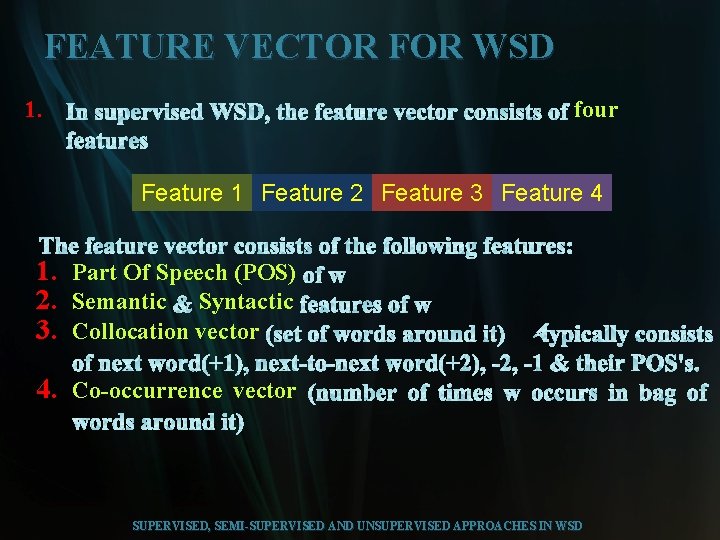

FEATURE VECTOR FOR WSD

The feature vector has four features (Feature 1 to Feature 4):

1. Part of speech (POS)
2. Semantic and syntactic features
3. Collocation vector
4. Co-occurrence vector

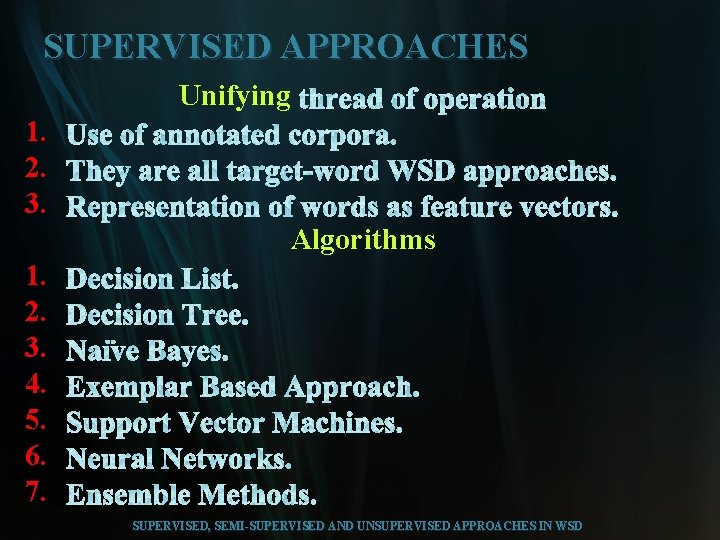

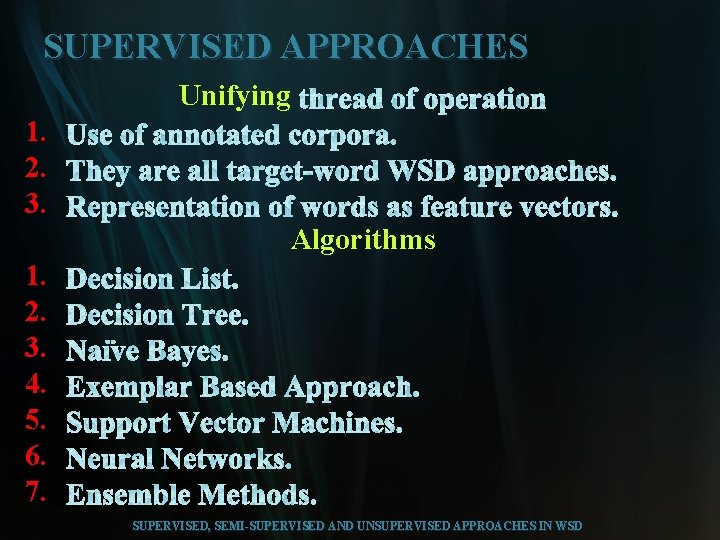

SUPERVISED APPROACHES Unifying 1. 2. 3. Algorithms 1. 2. 3. 4. 5. 6. 7.

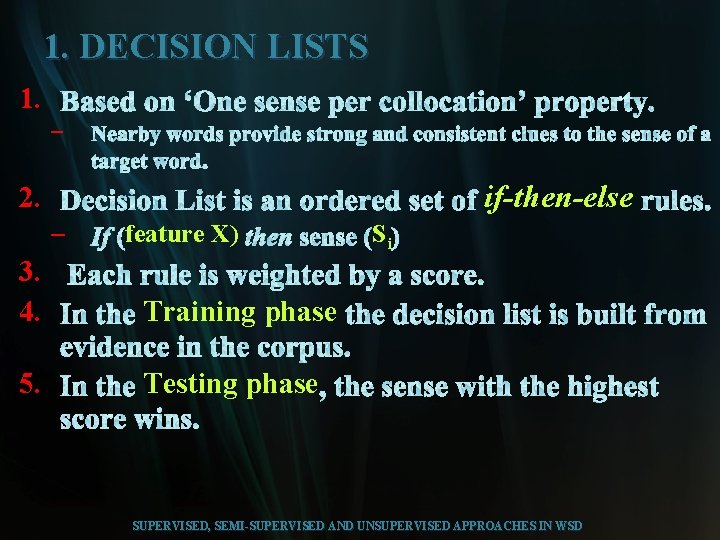

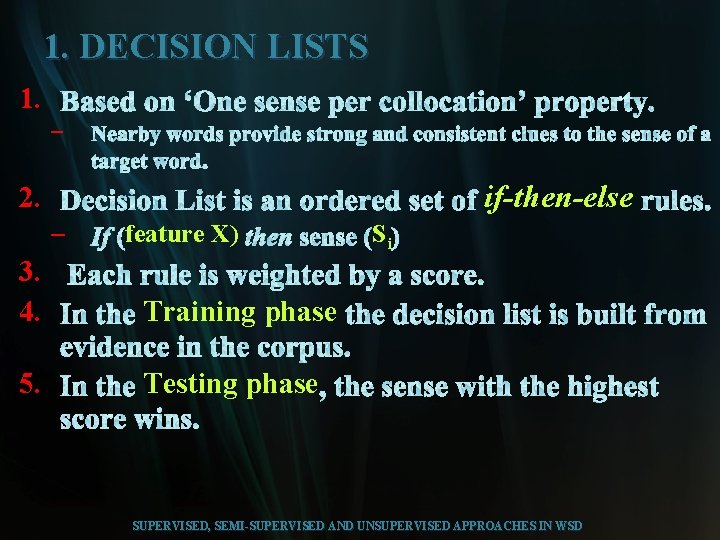

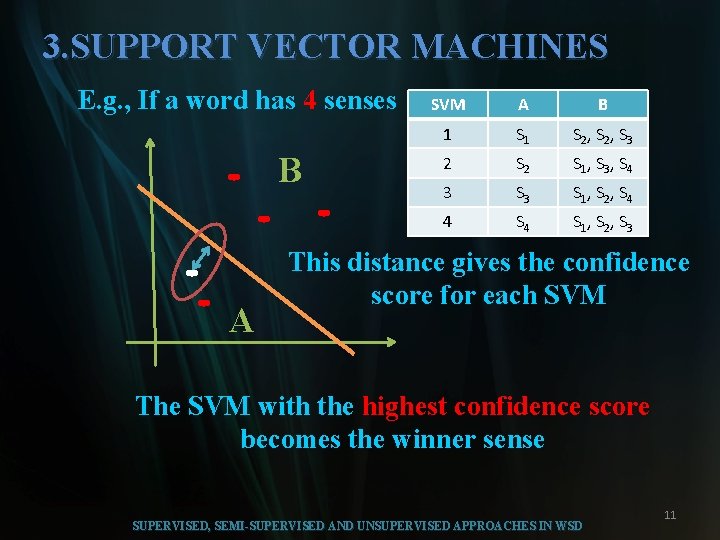

1. DECISION LISTS 1. – if-then-else 2. – feature X) 3. 4. Training phase 5. Testing phase Si

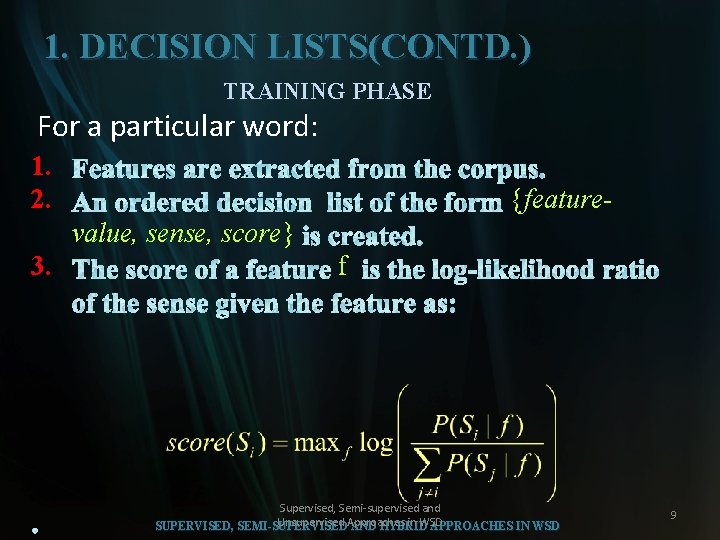

1. DECISION LISTS (CONTD.) TRAINING PHASE For a particular word: 1. 2. {feature-value, sense, score} 3. f
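The training phase produces {feature-value, sense, score} rules. The scoring formula did not survive on the slide, so the sketch below assumes the smoothed log-likelihood ratio used in Yarowsky's decision lists (reference 23). The function name, the smoothing constant `alpha` and the toy examples are illustrative assumptions, not the authors' code.

```python
import math
from collections import Counter, defaultdict

def train_decision_list(examples, alpha=0.1):
    """Build (feature, sense, score) rules sorted by descending score.

    score = log((count(f, s) + alpha) / sum over other senses (count(f, t) + alpha))
    This log-likelihood ratio is an assumption; the slide's formula is lost.
    """
    counts = defaultdict(Counter)  # feature -> sense -> co-occurrence count
    senses = set()
    for features, sense in examples:
        senses.add(sense)
        for f in features:
            counts[f][sense] += 1
    rules = []
    for f, by_sense in counts.items():
        for s in sorted(senses):
            own = by_sense[s] + alpha
            rest = sum(by_sense[t] + alpha for t in senses if t != s)
            rules.append((f, s, math.log(own / rest)))
    rules.sort(key=lambda r: r[2], reverse=True)
    return rules

# Hypothetical sense-tagged contexts for the word "bank".
examples = [
    (["account", "money"], "FINANCE"),
    (["account", "loan"], "FINANCE"),
    (["river", "water"], "RIVER"),
    (["money", "river"], "RIVER"),
]
rules = train_decision_list(examples)
scores = {(f, s): score for f, s, score in rules}
```

Features seen with only one sense ("account", "river") get the highest scores, while "money", seen once with each sense, scores zero and ends up in the middle of the list.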

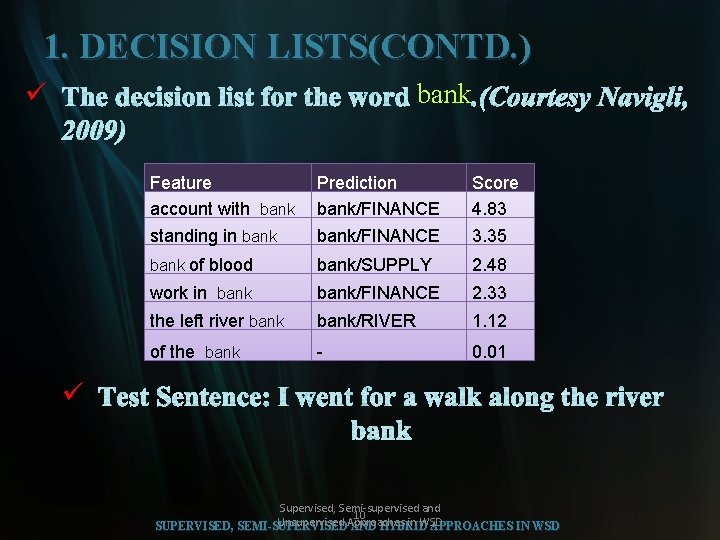

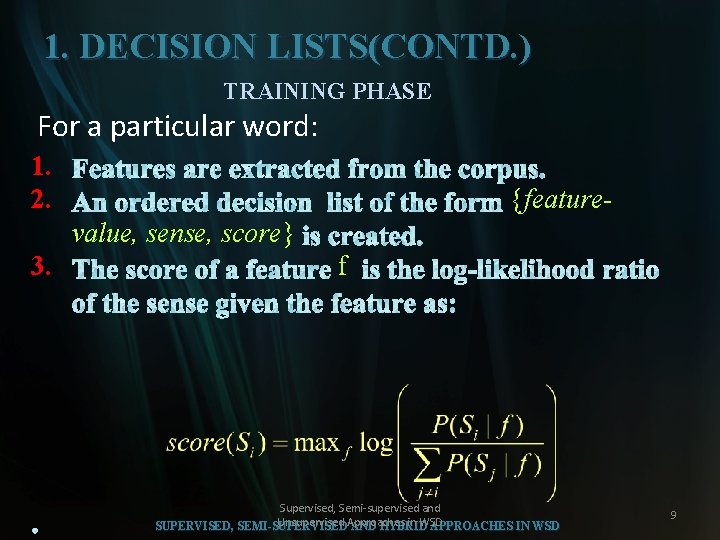

1. DECISION LISTS (CONTD.)

Example decision list for the word "bank":

| Feature | Prediction | Score |
|---|---|---|
| account with bank | bank/FINANCE | 4.83 |
| standing in | bank/FINANCE | 3.35 |
| bank of blood | bank/SUPPLY | 2.48 |
| work in | bank/FINANCE | 2.33 |
| the left river | bank/RIVER | 1.12 |
| of the bank | - | 0.01 |
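In the testing phase, the rules are tried in order of decreasing score and the first one that matches the context decides the sense. A minimal sketch, with the feature strings copied from the slide as extracted; the substring matching and the name `classify` are simplifying assumptions.

```python
# (feature, predicted sense, score); None means the rule makes no prediction.
DECISION_LIST = [
    ("account with bank", "bank/FINANCE", 4.83),
    ("standing in", "bank/FINANCE", 3.35),
    ("bank of blood", "bank/SUPPLY", 2.48),
    ("work in", "bank/FINANCE", 2.33),
    ("the left river", "bank/RIVER", 1.12),
    ("of the bank", None, 0.01),
]

def classify(context, rules=DECISION_LIST):
    """Return (sense, score) of the highest-scoring rule found in the context."""
    text = context.lower()
    for feature, sense, score in sorted(rules, key=lambda r: r[2], reverse=True):
        if feature in text:  # assumption: plain substring match
            return sense, score
    return None, 0.0  # no rule fired

finance = classify("John has an account with bank of India")
supply = classify("She donated at the bank of blood")
no_match = classify("The bank was closed")
```

Because only the single best matching rule is used, a low-scoring feature can never outvote a high-scoring one.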

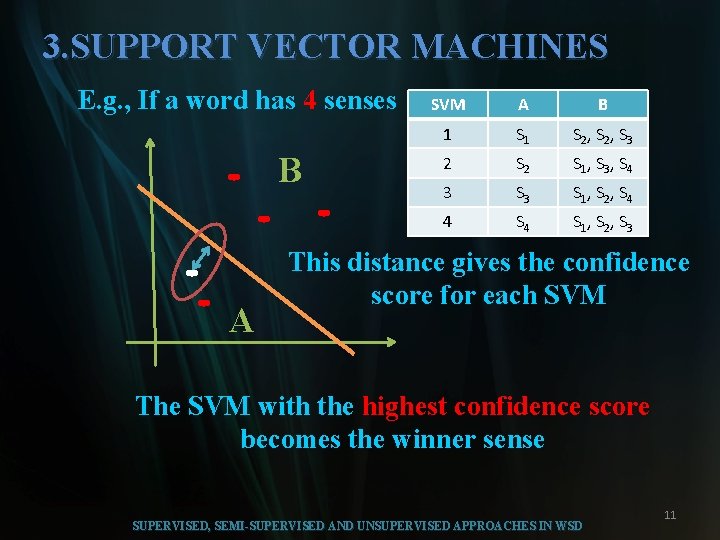

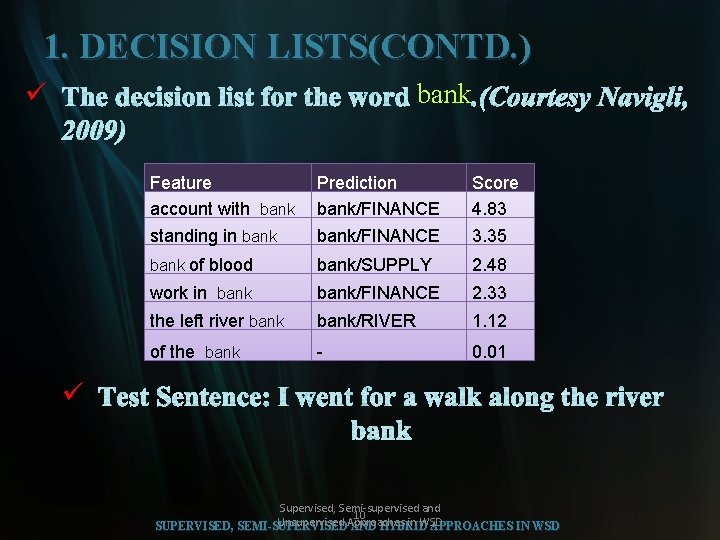

2. SUPPORT VECTOR MACHINES

E.g., if a word has 4 senses, one binary SVM is trained per sense, separating class A (that sense) from class B (all other senses):

| SVM | A | B |
|---|---|---|
| 1 | S1 | S2, S3, S4 |
| 2 | S2 | S1, S3, S4 |
| 3 | S3 | S1, S2, S4 |
| 4 | S4 | S1, S2, S3 |

The distance of the test instance from the separating hyperplane gives the confidence score for each SVM. The sense of the SVM with the highest confidence score becomes the winner sense.
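The one-SVM-per-sense scheme can be sketched as follows. The hyperplanes (`w`, `b`) here are made-up numbers standing in for trained SVMs, and the function names are hypothetical; only the "largest distance wins" rule comes from the slide.

```python
import math

def signed_distance(w, b, x):
    """Signed distance of point x from the hyperplane w.x + b = 0."""
    dot = sum(wi * xi for wi, xi in zip(w, x))
    norm = math.sqrt(sum(wi * wi for wi in w))
    return (dot + b) / norm

def winner_sense(svms, x):
    """Score x with every one-vs-rest SVM and return the most confident sense."""
    scores = {sense: signed_distance(w, b, x) for sense, (w, b) in svms.items()}
    return max(scores, key=scores.get), scores

# Hypothetical hyperplanes for a word with four senses (not trained models).
svms = {
    "S1": ([1.0, 0.0], -0.5),
    "S2": ([0.0, 1.0], -0.5),
    "S3": ([-1.0, 0.0], -0.5),
    "S4": ([0.0, -1.0], -0.5),
}
sense, scores = winner_sense(svms, [2.0, 0.5])
```

With these numbers the instance lies farthest on the positive side of the S1 hyperplane, so S1 wins.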

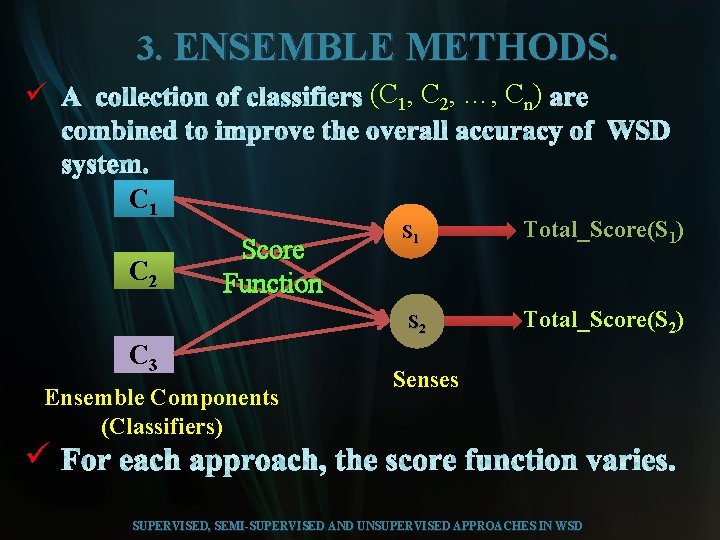

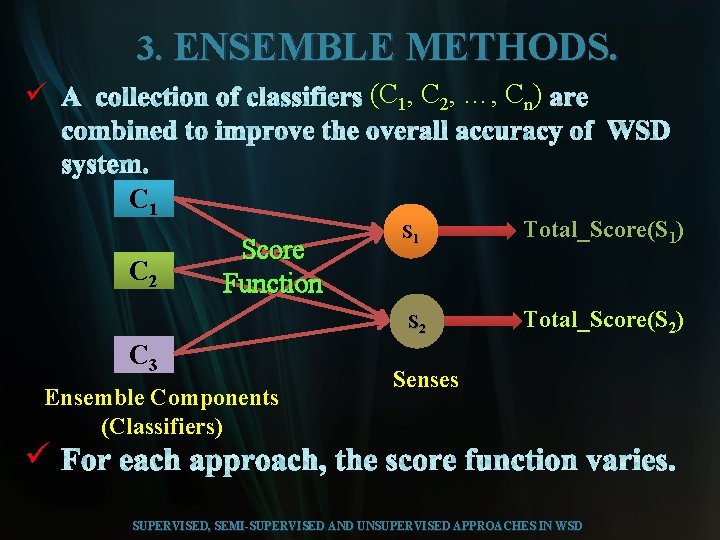

3. ENSEMBLE METHODS

Ensemble components (classifiers): (C1, C2, …, Cn)

The outputs of the classifiers C1, C2, C3 are combined by a score function, which yields Total_Score(S1) for sense S1 and Total_Score(S2) for sense S2.

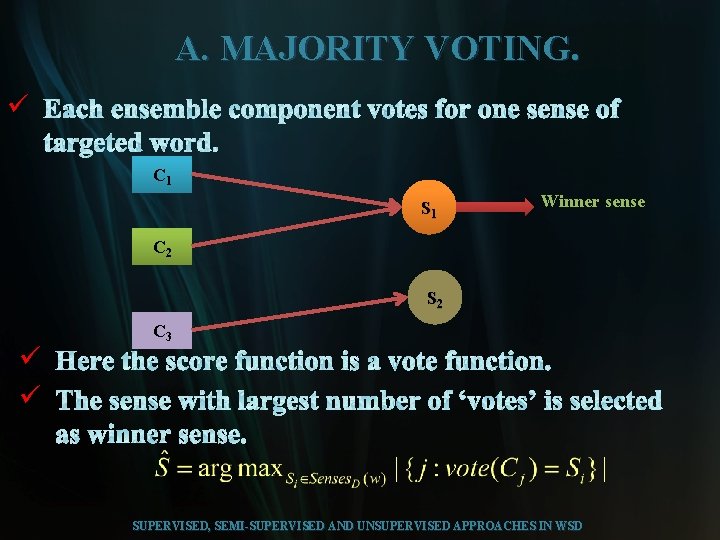

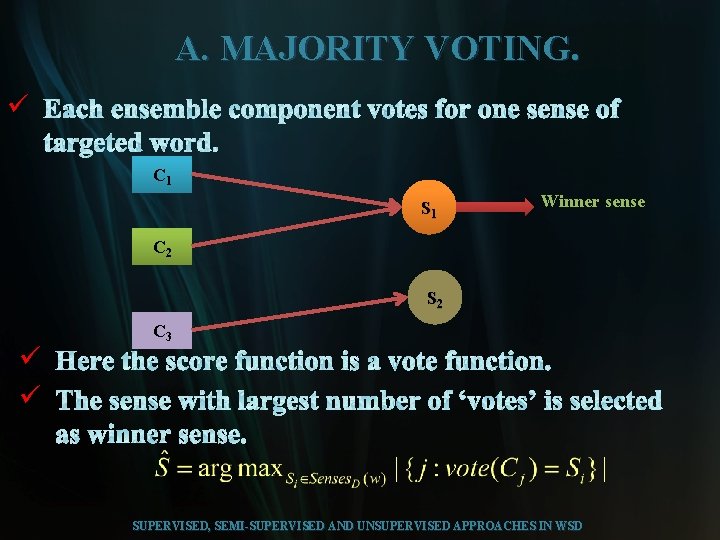

A. MAJORITY VOTING

Each classifier (C1, C2, C3) votes for one sense (S1 or S2). The sense with the most votes is the winner sense; in the slide's diagram this is S1.
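Majority voting reduces to counting votes. A minimal sketch; the votes below are hypothetical, and ties are broken in favor of the sense that was voted for first, which is an arbitrary choice.

```python
from collections import Counter

def majority_vote(votes):
    """Return the sense predicted by the largest number of classifiers."""
    tally = Counter(votes)
    # most_common keeps first-seen order among equal counts.
    return tally.most_common(1)[0][0]

# Hypothetical predictions of classifiers C1, C2 and C3.
votes = ["S1", "S2", "S1"]
winner = majority_vote(votes)
```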

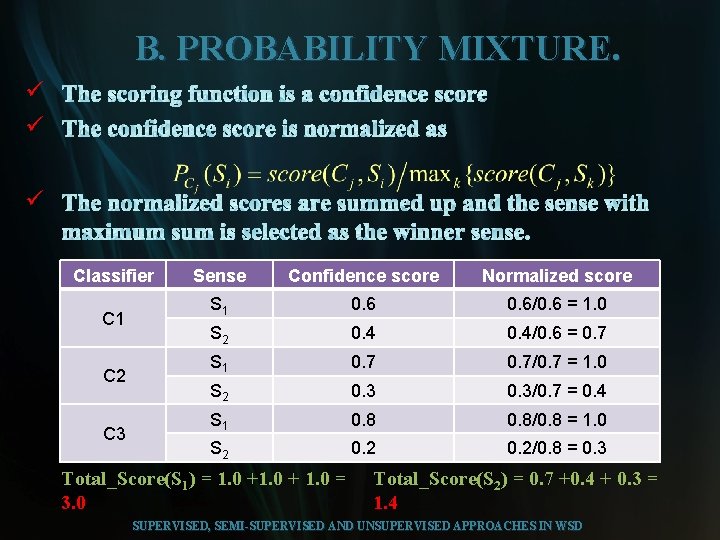

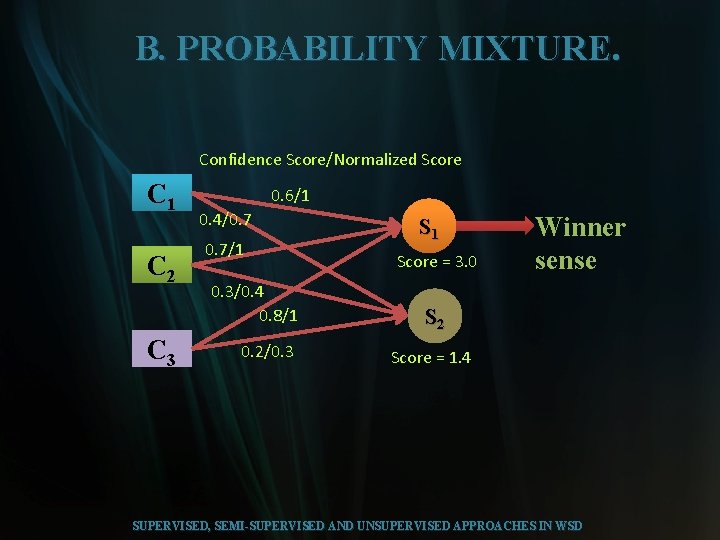

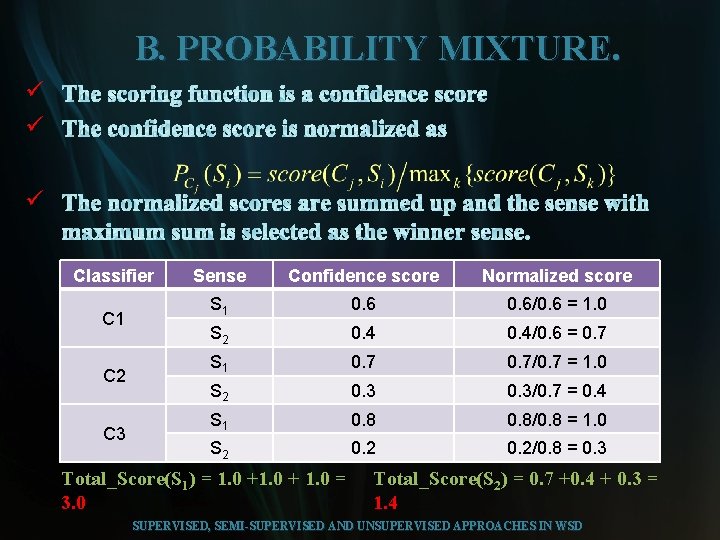

B. PROBABILITY MIXTURE

Each classifier's confidence scores are normalized by dividing by that classifier's highest score, and the normalized scores are summed per sense.

| Classifier | Sense | Confidence score | Normalized score |
|---|---|---|---|
| C1 | S1 | 0.6 | 0.6/0.6 = 1.0 |
| C1 | S2 | 0.4 | 0.4/0.6 ≈ 0.7 |
| C2 | S1 | 0.7 | 0.7/0.7 = 1.0 |
| C2 | S2 | 0.3 | 0.3/0.7 ≈ 0.4 |
| C3 | S1 | 0.8 | 0.8/0.8 = 1.0 |
| C3 | S2 | 0.2 | 0.2/0.8 ≈ 0.3 |

Total_Score(S1) = 1.0 + 1.0 + 1.0 = 3.0

Total_Score(S2) = 0.7 + 0.4 + 0.3 = 1.4

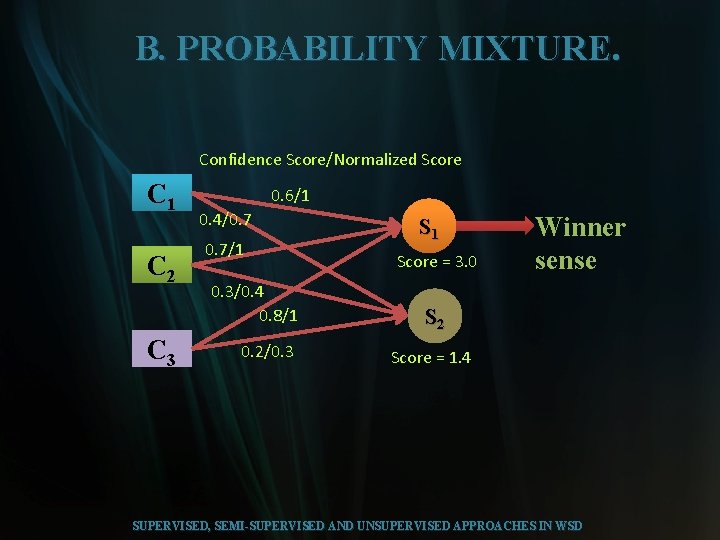

B. PROBABILITY MIXTURE

Confidence score / normalized score per classifier:

- C1: S1 0.6/1.0, S2 0.4/0.7
- C2: S1 0.7/1.0, S2 0.3/0.4
- C3: S1 0.8/1.0, S2 0.2/0.3

S1 score = 3.0 (winner sense); S2 score = 1.4.
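The probability mixture computation can be sketched with the confidence scores from the slide. The function name is hypothetical. Note that the code sums unrounded normalized scores, so the S2 total comes out near 1.35 rather than the slide's 1.4, which adds values rounded to one decimal.

```python
def probability_mixture(confidences):
    """Normalize each classifier's scores by its top score and sum per sense."""
    totals = {}
    for scores in confidences.values():
        top = max(scores.values())
        for sense, score in scores.items():
            totals[sense] = totals.get(sense, 0.0) + score / top
    return max(totals, key=totals.get), totals

# Confidence scores of the three classifiers, as given on the slide.
confidences = {
    "C1": {"S1": 0.6, "S2": 0.4},
    "C2": {"S1": 0.7, "S2": 0.3},
    "C3": {"S1": 0.8, "S2": 0.2},
}
winner, totals = probability_mixture(confidences)
```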

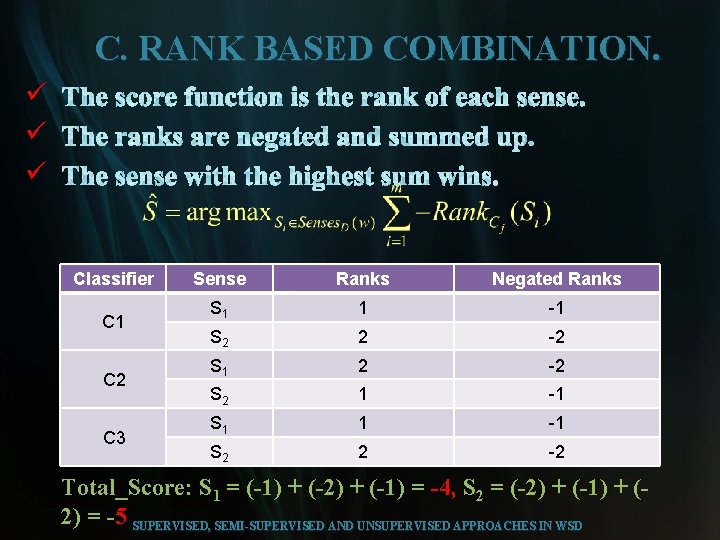

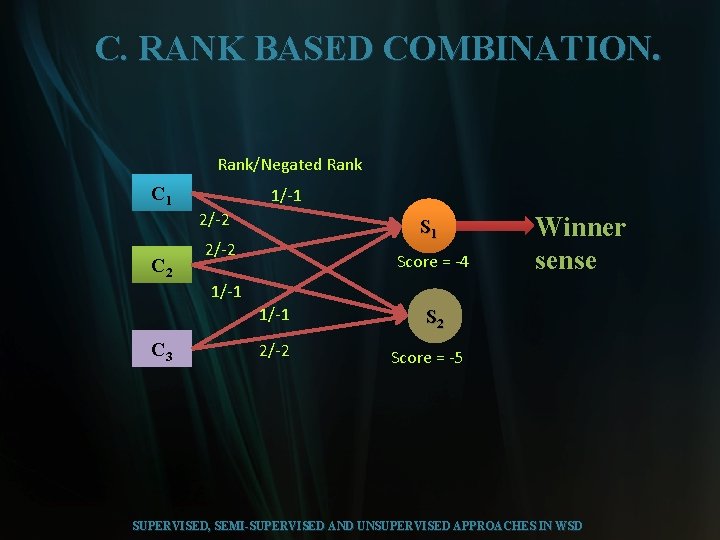

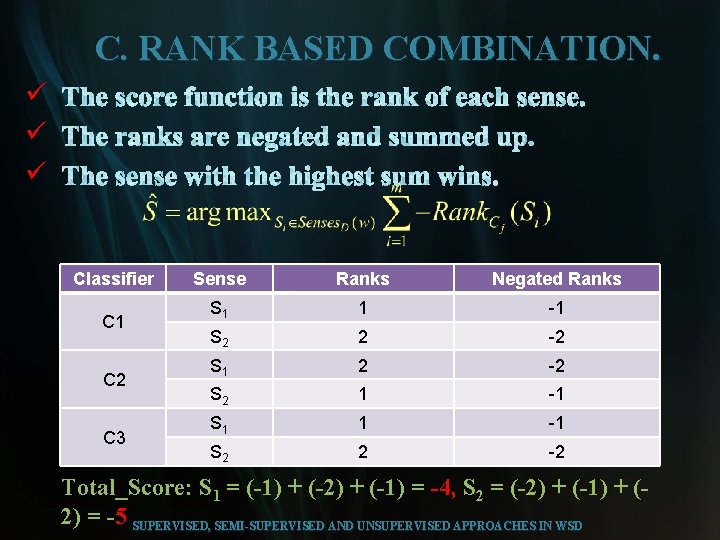

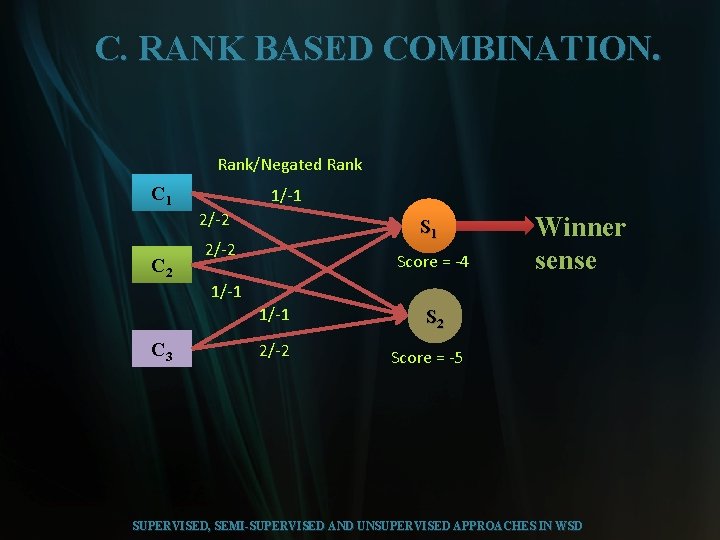

C. RANK BASED COMBINATION

| Classifier | Sense | Rank | Negated rank |
|---|---|---|---|
| C1 | S1 | 1 | -1 |
| C1 | S2 | 2 | -2 |
| C2 | S1 | 2 | -2 |
| C2 | S2 | 1 | -1 |
| C3 | S1 | 1 | -1 |
| C3 | S2 | 2 | -2 |

Total_Score: S1 = (-1) + (-2) + (-1) = -4, S2 = (-2) + (-1) + (-2) = -5

C. RANK BASED COMBINATION

Rank / negated rank per classifier:

- C1: S1 1/-1, S2 2/-2
- C2: S1 2/-2, S2 1/-1
- C3: S1 1/-1, S2 2/-2

S1 score = -4 (winner sense); S2 score = -5.
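The rank-based combination sums negated ranks, so the sense that is ranked near the top most consistently gets the highest (least negative) total. A short sketch using the ranks from the slide; the function name is hypothetical.

```python
def rank_based_combination(ranks):
    """Sum the negated ranks of each sense over all classifiers."""
    totals = {}
    for per_sense in ranks.values():
        for sense, rank in per_sense.items():
            totals[sense] = totals.get(sense, 0) - rank
    return max(totals, key=totals.get), totals

# Rank given to each sense by each classifier (1 = best), as on the slide.
ranks = {
    "C1": {"S1": 1, "S2": 2},
    "C2": {"S1": 2, "S2": 1},
    "C3": {"S1": 1, "S2": 2},
}
winner, totals = rank_based_combination(ranks)
```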

SEMI-SUPERVISED APPROACHES

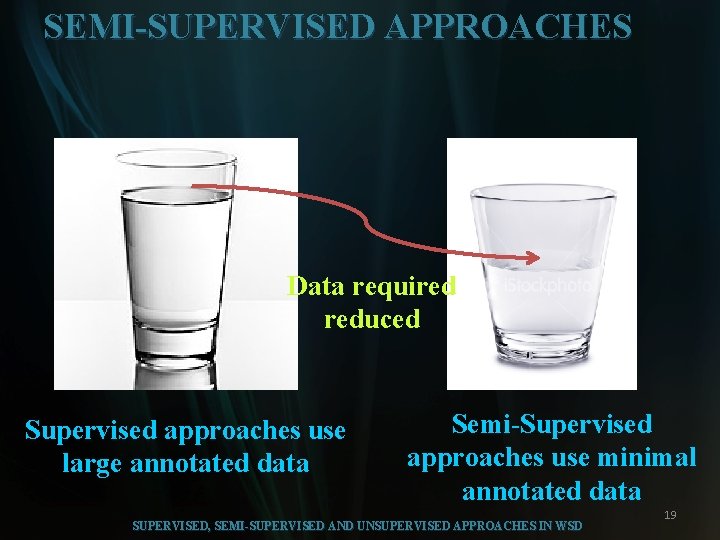

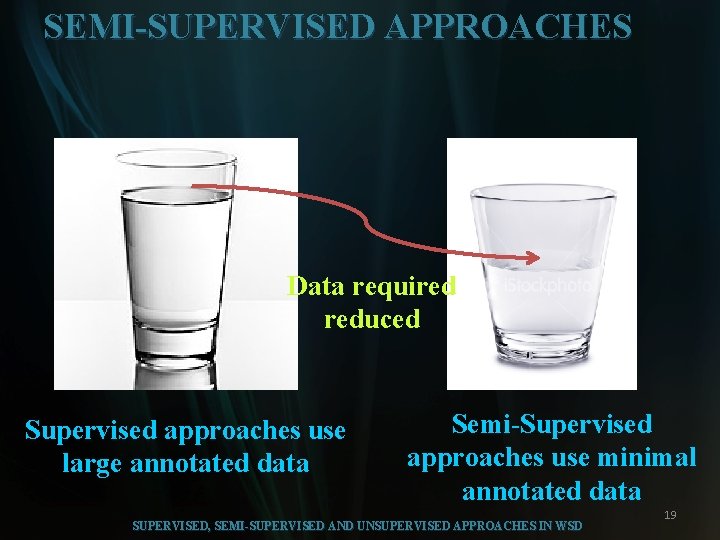

SEMI-SUPERVISED APPROACHES

The amount of annotated data required is reduced:

- Supervised approaches use large amounts of annotated data.
- Semi-supervised approaches use minimal annotated data.

SEMI-SUPERVISED APPROACHES Unifying 1. 2. Algorithms 1. 2.

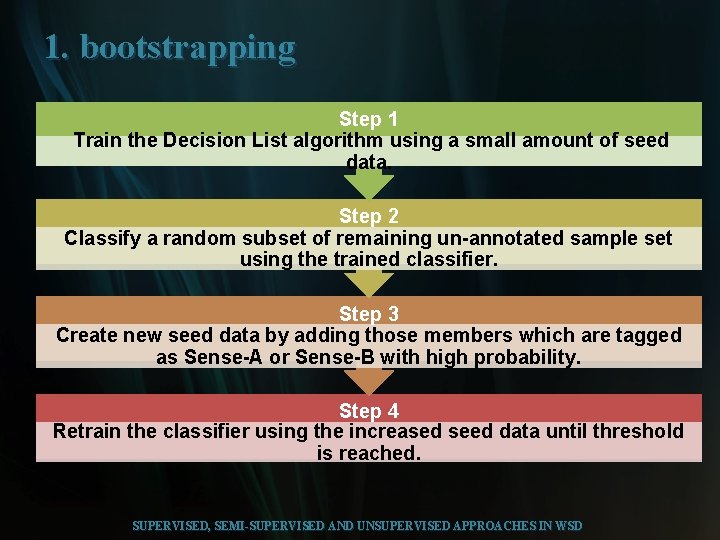

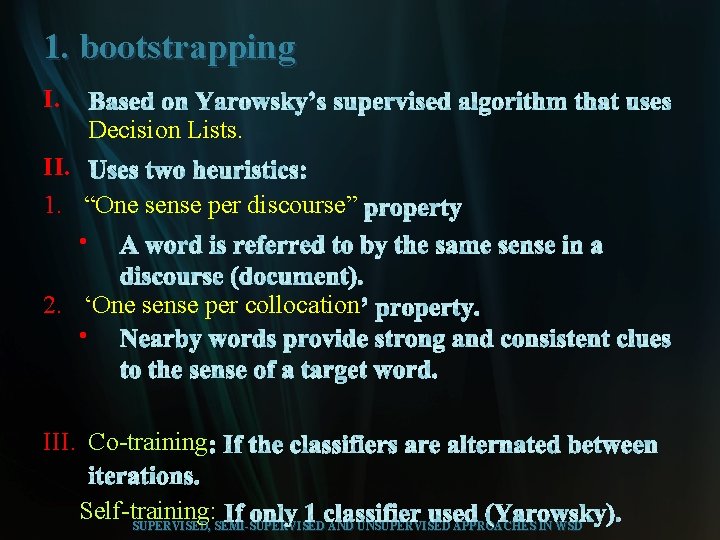

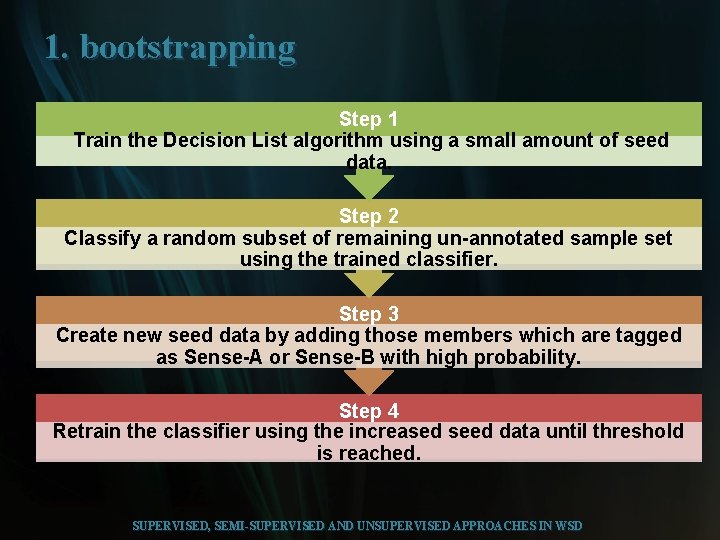

1. BOOTSTRAPPING

- Step 1: Train the decision list algorithm using a small amount of seed data.
- Step 2: Classify a random subset of the remaining un-annotated sample set using the trained classifier.
- Step 3: Create new seed data by adding those members which are tagged as Sense-A or Sense-B with high probability.
- Step 4: Retrain the classifier using the increased seed data, and repeat until the threshold is reached.
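The four steps can be sketched as a self-training loop. Several things here are assumptions made to keep the example small and deterministic: the classifier is a toy one that scores each context word by a smoothed log ratio of sense counts and uses the single strongest word; every remaining sample is classified in each round instead of a random subset; and the loop stops when no sample passes the confidence threshold. All names and data are hypothetical.

```python
import math
from collections import Counter, defaultdict

def train(labeled):
    """Step 1 / Step 4: count how often each context word occurs with each sense."""
    counts = defaultdict(Counter)
    for words, sense in labeled:
        for w in words:
            counts[w][sense] += 1
    return counts

def predict(counts, words, senses=("A", "B"), alpha=0.1):
    """Return (sense, confidence) based on the single strongest context word."""
    best_sense, best_score = None, 0.0
    for w in words:
        if w not in counts:
            continue
        score = math.log((counts[w][senses[0]] + alpha) / (counts[w][senses[1]] + alpha))
        if abs(score) > abs(best_score):
            best_sense = senses[0] if score > 0 else senses[1]
            best_score = score
    return best_sense, abs(best_score)

def bootstrap(seed, unlabeled, threshold=1.0, max_rounds=10):
    labeled, pool = list(seed), list(unlabeled)
    for _ in range(max_rounds):
        model = train(labeled)
        confident, rest = [], []
        for words in pool:                      # Step 2: classify unlabeled samples
            sense, conf = predict(model, words)
            if sense is not None and conf >= threshold:
                confident.append((words, sense))
            else:
                rest.append(words)
        if not confident:                       # nothing new was learned: stop
            break
        labeled += confident                    # Step 3: grow the seed data
        pool = rest
    return labeled, pool

seed = [(["life", "animal"], "A"), (["manufacturing", "car"], "B")]
unlabeled = [["animal", "species"], ["car", "equipment"],
             ["species", "growth"], ["equipment", "factory"], ["unknown"]]
labeled, remaining = bootstrap(seed, unlabeled)
tags = {tuple(words): sense for words, sense in labeled}
```

In this toy run, "species" and "equipment" are only learned in the first round, which lets the second round label the contexts that contain them; the context with no known word is never labeled.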

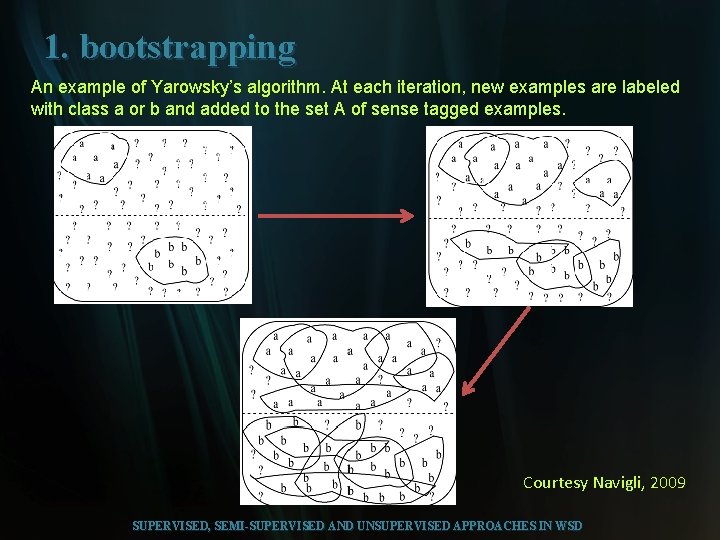

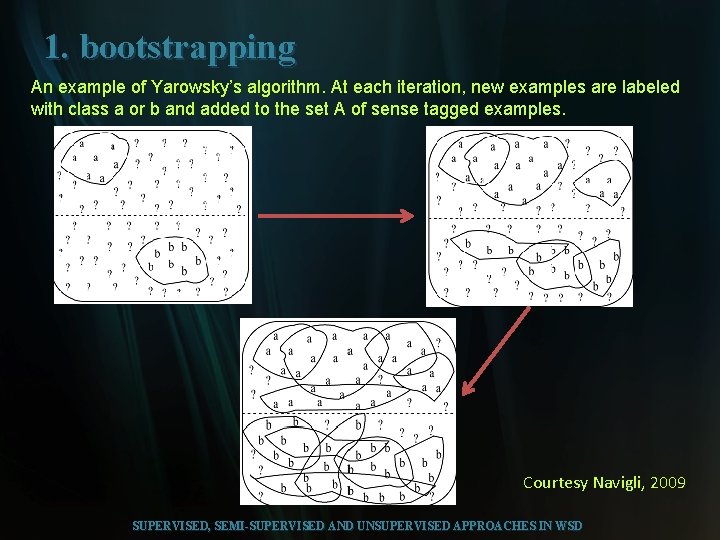

1. BOOTSTRAPPING

Figure: an example of Yarowsky’s algorithm. At each iteration, new examples are labeled with class a or b and added to the set A of sense-tagged examples. (Courtesy: Navigli, 2009)

UNSUPERVISED APPROACHES

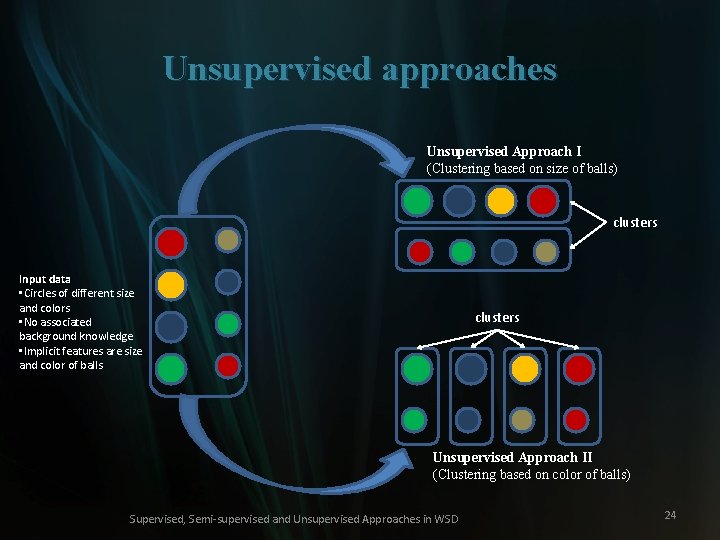

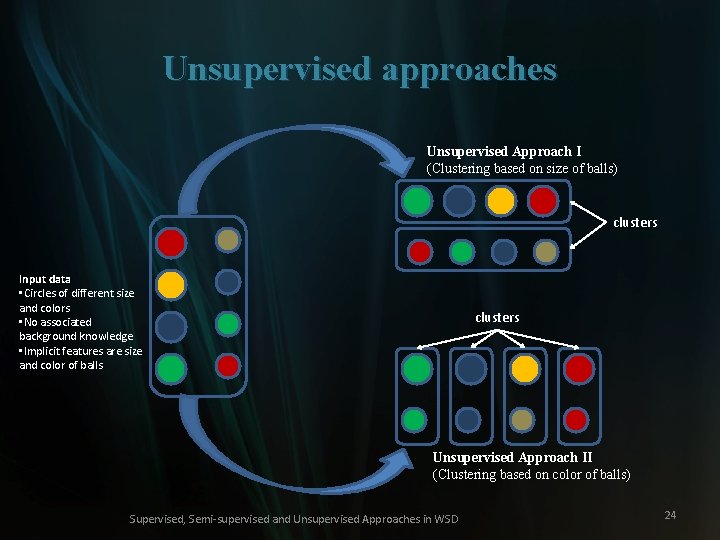

UNSUPERVISED APPROACHES

Input data:

- Circles (balls) of different sizes and colors
- No associated background knowledge
- Implicit features are the size and color of the balls

Unsupervised Approach I: clusters formed based on the size of the balls.

Unsupervised Approach II: clusters formed based on the color of the balls.
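The point of the ball analogy is that the same unlabeled data yields different clusters depending on which implicit feature is used. The sketch below illustrates this with simple grouping by a categorical feature, which is a stand-in for a real clustering algorithm; the data and names are made up.

```python
from collections import defaultdict

def cluster_by(items, feature):
    """Group items that share the same value of the chosen feature."""
    clusters = defaultdict(list)
    for item in items:
        clusters[item[feature]].append(item["id"])
    return dict(clusters)

# Hypothetical balls with two implicit features and no labels.
balls = [
    {"id": 1, "size": "small", "color": "red"},
    {"id": 2, "size": "large", "color": "red"},
    {"id": 3, "size": "small", "color": "blue"},
    {"id": 4, "size": "large", "color": "blue"},
]
by_size = cluster_by(balls, "size")    # Approach I
by_color = cluster_by(balls, "color")  # Approach II
```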

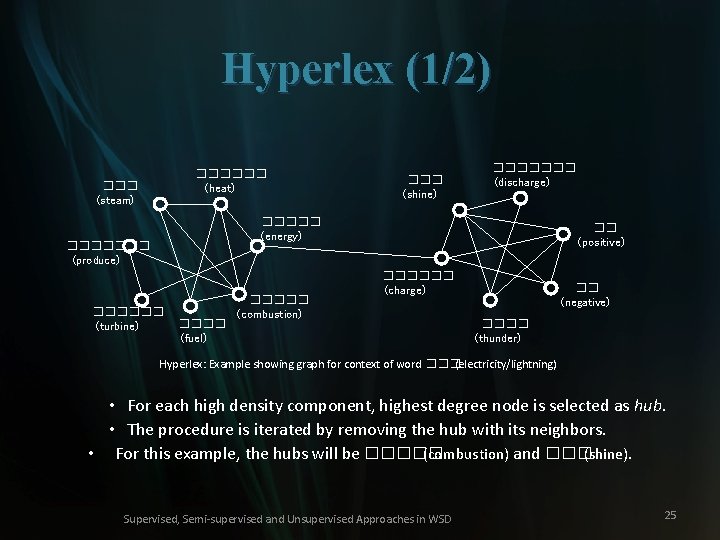

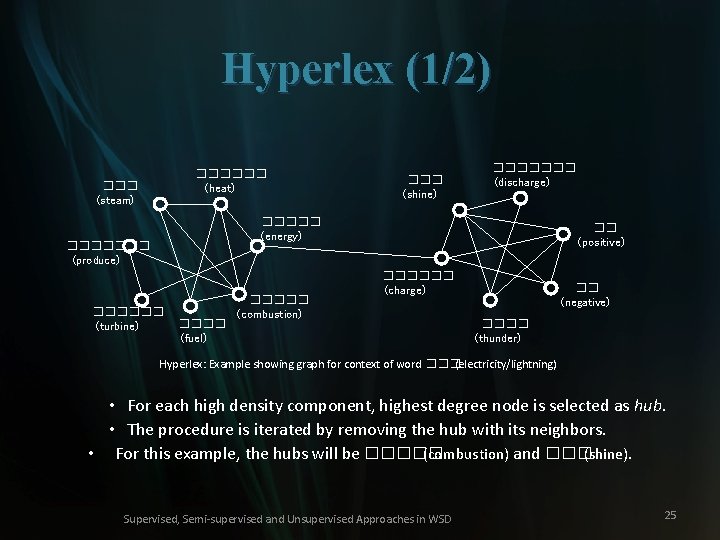

HyperLex (1/2)

Nodes of the co-occurrence graph (English glosses of the Hindi context words): heat, steam, shine, discharge, energy, produce, turbine, fuel, combustion, positive, charge, negative, thunder.

HyperLex: example showing the graph for the context of the Hindi word meaning "electricity/lightning".

- For each high density component, the highest degree node is selected as hub.
- The procedure is iterated by removing the hub with its neighbors.
- For this example, the hubs will be "combustion" and "shine".

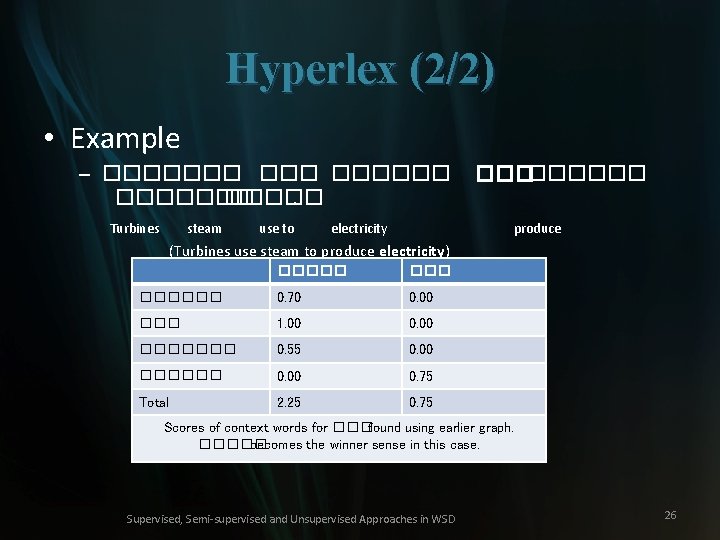

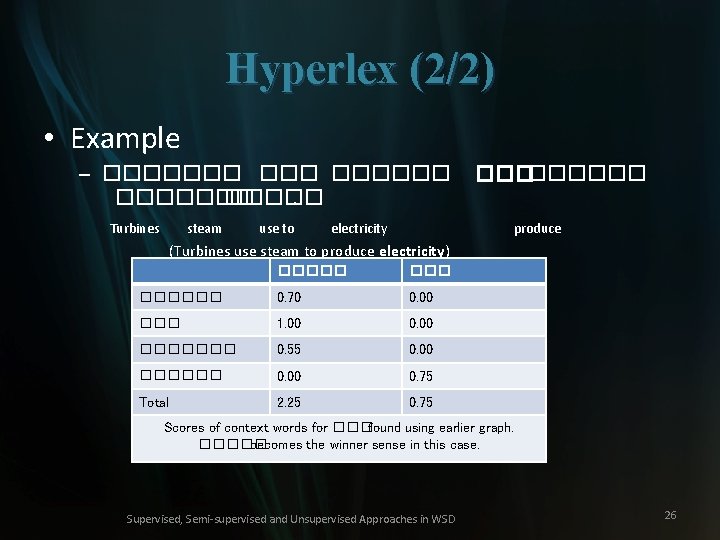

HyperLex (2/2)

Example (Hindi sentence; word-by-word gloss: "Turbines steam use to electricity produce"): Turbines use steam to produce electricity.

Scores of the context words for the target word (electricity/lightning), found using the earlier graph:

| Context word | Hub "combustion" | Hub "shine" |
|---|---|---|
| 1 | 0.70 | 0.00 |
| 2 | 1.00 | 0.00 |
| 3 | 0.55 | 0.00 |
| 4 | 0.00 | 0.75 |
| Total | 2.25 | 0.75 |

The hub "combustion" becomes the winner sense in this case.
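Hub selection and sense scoring can be sketched as two small functions. The edge list below is a hypothetical graph over the glossed context words, chosen only so that the hubs come out as on the slide; the per-word scores are the ones from the table. How HyperLex derives those scores from the graph is not reproduced here, and all names are assumptions.

```python
def select_hubs(edges, min_degree=2):
    """Repeatedly pick the highest-degree node, then remove it and its neighbors."""
    adj = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    hubs = []
    while adj:
        hub = max(sorted(adj), key=lambda n: len(adj[n]))  # ties: alphabetical
        if len(adj[hub]) < min_degree:
            break
        hubs.append(hub)
        removed = adj[hub] | {hub}
        adj = {n: nbrs - removed for n, nbrs in adj.items() if n not in removed}
    return hubs

def pick_sense(context_scores):
    """Sum each hub's scores over the context words and return the best hub."""
    totals = {}
    for per_hub in context_scores.values():
        for hub, score in per_hub.items():
            totals[hub] = totals.get(hub, 0.0) + score
    return max(totals, key=totals.get), totals

# Hypothetical co-occurrence edges (not taken from the slide's figure).
edges = [("combustion", w) for w in
         ["heat", "steam", "energy", "produce", "turbine", "fuel"]]
edges += [("shine", w) for w in
          ["discharge", "positive", "charge", "negative", "thunder"]]
edges += [("heat", "energy"), ("positive", "charge"), ("negative", "charge")]
hubs = select_hubs(edges)

# Scores of the four context words against each hub, from the slide's table.
context_scores = {
    "word1": {"combustion": 0.70, "shine": 0.00},
    "word2": {"combustion": 1.00, "shine": 0.00},
    "word3": {"combustion": 0.55, "shine": 0.00},
    "word4": {"combustion": 0.00, "shine": 0.75},
}
winner, totals = pick_sense(context_scores)
```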

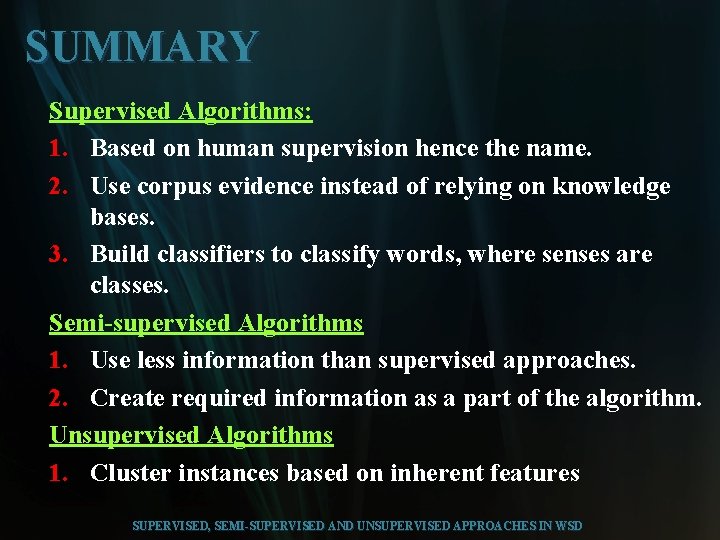

SUMMARY

Supervised algorithms:

1. Are based on human supervision, hence the name.
2. Use corpus evidence instead of relying on knowledge bases.
3. Build classifiers to classify words, where senses are classes.

Semi-supervised algorithms:

1. Use less information than supervised approaches.
2. Create the required information as a part of the algorithm.

Unsupervised algorithms:

1. Cluster instances based on inherent features.

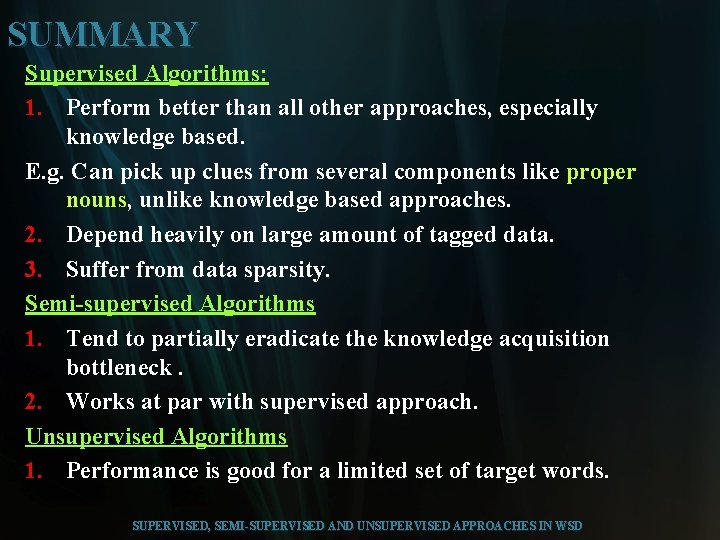

SUMMARY

Supervised algorithms:

1. Perform better than all other approaches, especially knowledge-based ones. E.g., they can pick up clues from several components like proper nouns, unlike knowledge-based approaches.
2. Depend heavily on a large amount of tagged data.
3. Suffer from data sparsity.

Semi-supervised algorithms:

1. Tend to partially eradicate the knowledge acquisition bottleneck.
2. Work on par with supervised approaches.

Unsupervised algorithms:

1. Perform well for a limited set of target words.

REFERENCES

1. AGIRRE, E., AND MARTINEZ, D. Exploring automatic word sense disambiguation with decision lists and the web. In Proc. of COLING-2000 (2000).
2. BOSER, B. E., GUYON, I. M., AND VAPNIK, V. N. A training algorithm for optimal margin classifiers. In Proceedings of the Fifth Annual Workshop on Computational Learning Theory (1992), pp. 144-152.
3. COST, S., AND SALZBERG, S. A weighted nearest neighbor algorithm for learning with symbolic features. Machine Learning 10, 1 (1993), 57-78.
4. ESCUDERO, G., MARQUEZ, L., AND RIGAU, G. Naive Bayes and exemplar-based approaches to word sense disambiguation revisited. arXiv preprint cs/0007011 (2000).
5. FELLBAUM, C., ET AL. WordNet: An electronic lexical database. MIT Press, Cambridge, MA, 1998.
6. FREUND, Y., SCHAPIRE, R., AND ABE, N. A short introduction to boosting. Journal-Japanese Society for Artificial Intelligence 14 (1999), 771-780.
7. KHAPRA, M., BHATTACHARYYA, P., CHAUHAN, S., NAIR, S., AND SHARMA, A. Domain specific iterative word sense disambiguation in a multilingual setting.
8. KILGARRIFF, A., AND GREFENSTETTE, G. Introduction to the special issue on the web as corpus. Computational Linguistics 29, 3 (2003), 333-347.

REFERENCES (CONTD.)

9. KILGARRIFF, A., AND YALLOP, C. What's in a thesaurus. In Proceedings of the Second International Conference on Language Resources and Evaluation (2000), pp. 1371-1379.
10. LITTLESTONE, N. Learning quickly when irrelevant attributes abound: A new linear-threshold algorithm. Machine Learning 2, 4 (1988), 285-318.
11. MALLERY, J. C. Thinking about foreign policy: Finding an appropriate role for artificially intelligent computers. Cambridge: Master's Thesis, MIT Political Science Department (1988).
12. MCCULLOCH, W. S., AND PITTS, W. A logical calculus of the ideas immanent in nervous activity. Bulletin of Mathematical Biology 5, 4 (1943), 115-133.
13. MILLER, G., BECKWITH, R., FELLBAUM, C., GROSS, D., AND MILLER, K. J. WordNet: an on-line lexical database. International Journal of Lexicography 3, 4 (1990), 235-312.
14. NAVIGLI, R. Word sense disambiguation: A survey. ACM Comput. Surv. 41, 2 (2009).
15. NAVIGLI, R., AND VELARDI, P. Learning domain ontologies from document warehouses and dedicated web sites. Computational Linguistics 30, 2 (2004), 151-179.

REFERENCES (CONTD.)

16. NG, H. T., ET AL. Exemplar-based word sense disambiguation: Some recent improvements. In Proceedings of the Second Conference on Empirical Methods in Natural Language Processing (1997), pp. 208-213.
17. PEDERSEN, T. A simple approach to building ensembles of naive Bayesian classifiers for word sense disambiguation. In Proceedings of the 1st North American Chapter of the Association for Computational Linguistics Conference (2000), pp. 63-69.
18. QUINLAN, J. R. Induction of decision trees. Machine Learning 1, 1 (1986), 81-106.
19. QUINLAN, J. R. C4.5: Programs for machine learning. Morgan Kaufmann, 1993.
20. ROGET, P. M. Roget's International Thesaurus, 1st ed. Cromwell, New York, 1911.
21. ROTH, D., YANG, M., AND AHUJA, N. A SNoW-based face detector. In Neural Information Processing (2000), vol. 12.
22. SCHAPIRE, R. E., AND SINGER, Y. Improved boosting algorithms using confidence-rated predictions. Machine Learning 37, 3 (1999), 297-336.
23. YAROWSKY, D. Decision lists for lexical ambiguity resolution: Application to accent restoration in Spanish and French. In Proceedings of the 32nd Annual Meeting of the Association for Computational Linguistics (1994), pp. 88-95.
24. YAROWSKY, D. Unsupervised word sense disambiguation rivaling supervised methods. In Proceedings of the 33rd Annual Meeting of the Association for Computational Linguistics (1995), pp. 189-196.

THANK YOU

APPENDIX

![1 WSD VARIANTS ü Lexical Sample Targeted WSD ü Allwords WSD 1. WSD : VARIANTS ü Lexical Sample [Targeted WSD]: § ü All-words WSD: §](https://slidetodoc.com/presentation_image/44c4331b01608a5066301b571059bc28/image-34.jpg)

1. WSD: VARIANTS

- Lexical Sample [Targeted WSD]
- All-words WSD

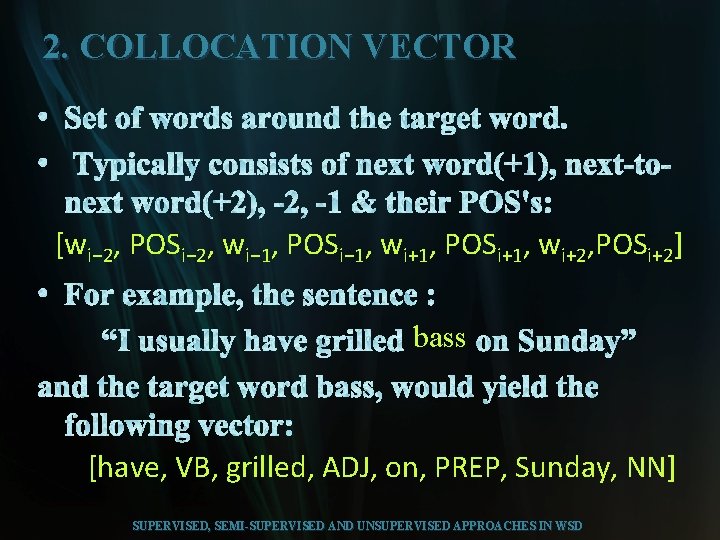

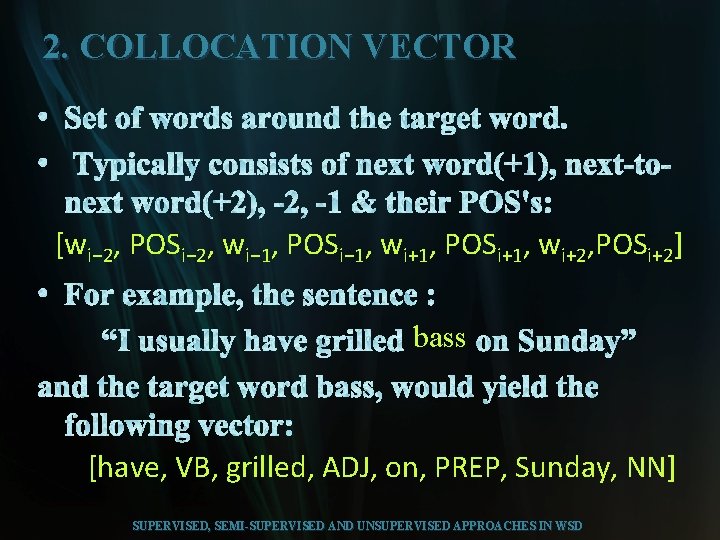

2. COLLOCATION VECTOR

$[w_{i-2}, POS_{i-2}, w_{i-1}, POS_{i-1}, w_{i+1}, POS_{i+1}, w_{i+2}, POS_{i+2}]$

Example for the target word "bass": [have, VB, grilled, ADJ, on, PREP, Sunday, NN]
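The collocation vector takes the two words on each side of the target together with their POS tags. A minimal sketch, assuming the sentence is already tokenized and POS-tagged; the leading token "I", the padding with `None` at sentence boundaries and the function name are assumptions made for illustration.

```python
def collocation_vector(tagged, i, window=2):
    """Return [w(i-2), POS(i-2), w(i-1), POS(i-1), w(i+1), POS(i+1), w(i+2), POS(i+2)]."""
    vector = []
    for offset in range(-window, window + 1):
        if offset == 0:
            continue  # the target word itself is not part of the vector
        j = i + offset
        if 0 <= j < len(tagged):
            vector.extend(tagged[j])
        else:
            vector.extend((None, None))  # pad beyond the sentence boundary
    return vector

# Hypothetical tagged sentence around the target word "bass" (index 3).
tagged = [("I", "PRP"), ("have", "VB"), ("grilled", "ADJ"),
          ("bass", "NN"), ("on", "PREP"), ("Sunday", "NN")]
vector = collocation_vector(tagged, 3)
```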

3. DECISION TREES

4. NAÏVE BAYES

$$\hat{s} = \arg\max_{s \in \text{senses}} \Pr(s) \prod_{i=1}^{n} \Pr(V_w^i \mid s)$$
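The Naïve Bayes decision rule picks the sense that maximizes the prior times the product of the per-feature likelihoods. The sketch below works in log space and uses add-one smoothing, which is an assumption not stated on the slide; the training examples and function names are hypothetical.

```python
import math
from collections import Counter, defaultdict

def train_nb(examples):
    """Collect sense counts and per-sense feature counts from tagged examples."""
    sense_counts = Counter()
    feature_counts = defaultdict(Counter)
    vocabulary = set()
    for features, sense in examples:
        sense_counts[sense] += 1
        for f in features:
            feature_counts[sense][f] += 1
            vocabulary.add(f)
    return sense_counts, feature_counts, vocabulary

def classify_nb(model, features):
    """Return argmax over senses of log Pr(s) + sum of log Pr(feature | s)."""
    sense_counts, feature_counts, vocabulary = model
    total = sum(sense_counts.values())
    best_sense, best_logp = None, -math.inf
    for s in sorted(sense_counts):
        logp = math.log(sense_counts[s] / total)
        denom = sum(feature_counts[s].values()) + len(vocabulary)
        for f in features:
            logp += math.log((feature_counts[s][f] + 1) / denom)  # add-one smoothing
        if logp > best_logp:
            best_sense, best_logp = s, logp
    return best_sense

# Hypothetical sense-tagged contexts for the word "bank".
examples = [
    (["account", "money"], "FINANCE"),
    (["loan", "money"], "FINANCE"),
    (["river", "water"], "RIVER"),
]
model = train_nb(examples)
finance = classify_nb(model, ["money", "account"])
river = classify_nb(model, ["water"])
```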

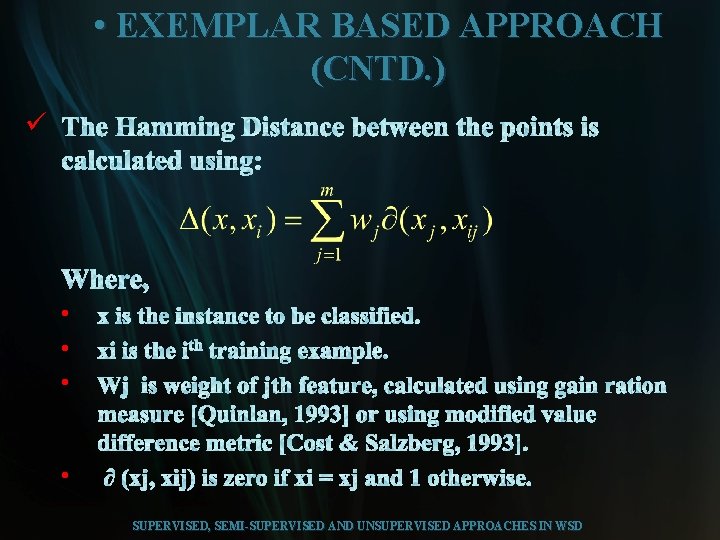

5. EXEMPLAR BASED APPROACH

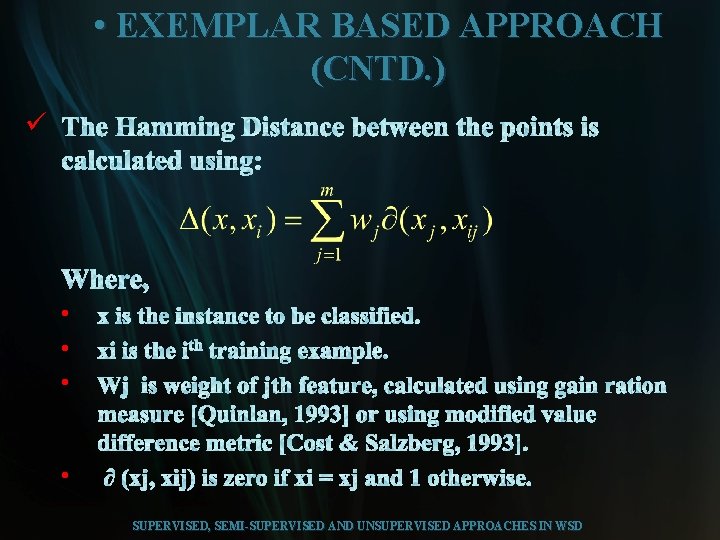

5. EXEMPLAR BASED APPROACH (CONTD.)

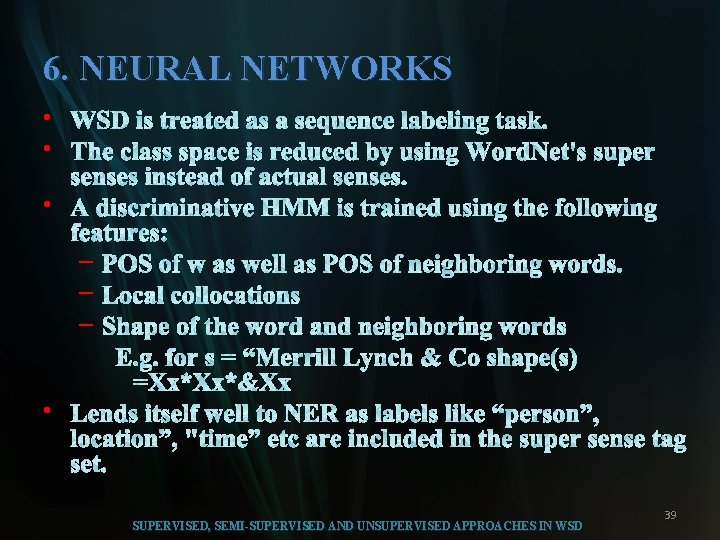

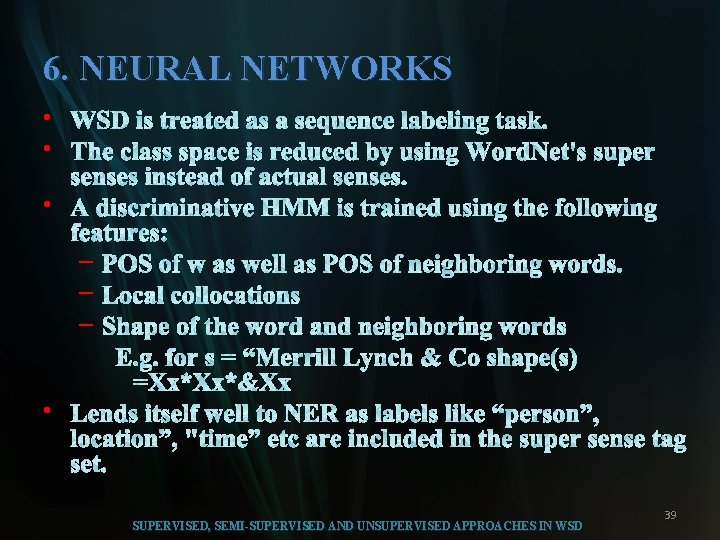

6. NEURAL NETWORKS

7. MONOSEMOUS RELATIVES

8. AN ITERATIVE APPROACH TO WSD

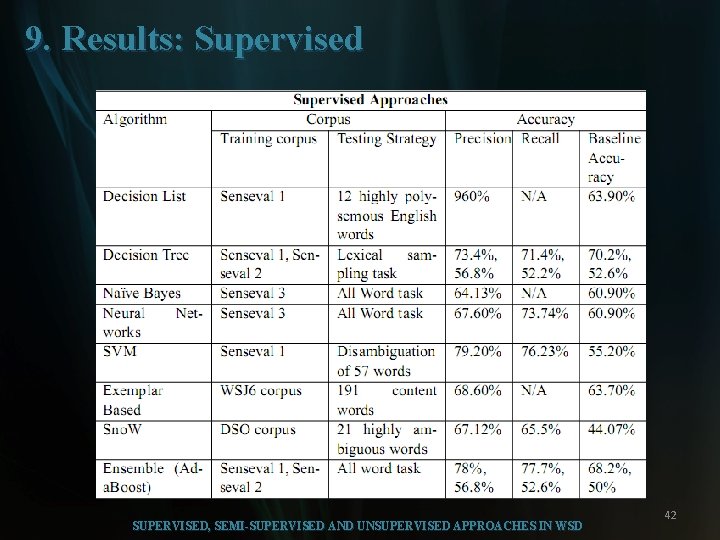

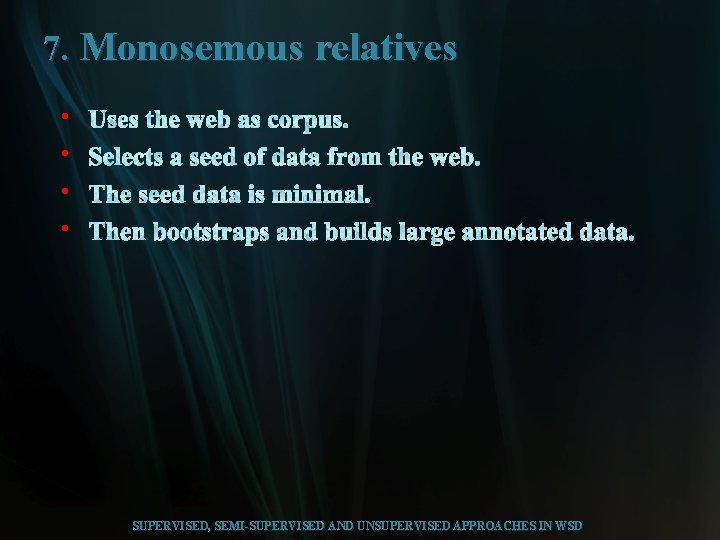

9. RESULTS: SUPERVISED

10. RESULTS: SEMI-SUPERVISED

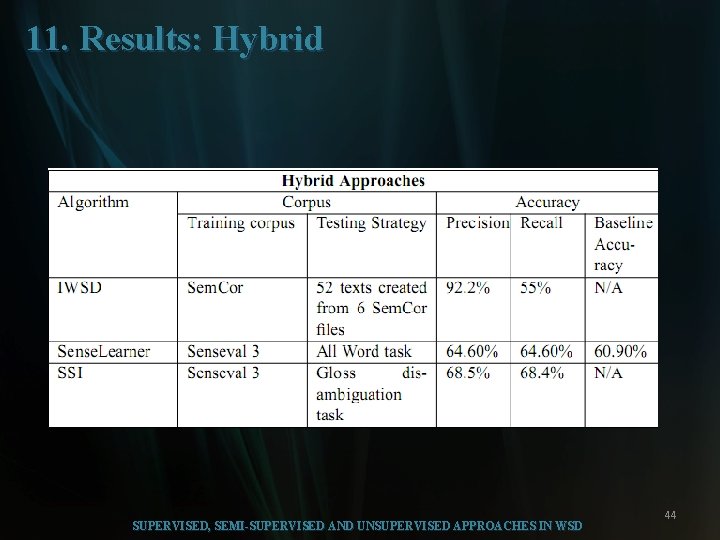

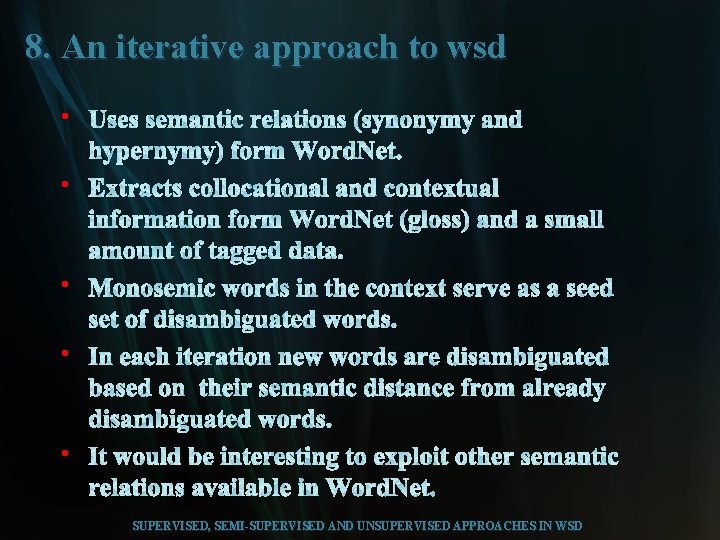

11. RESULTS: HYBRID

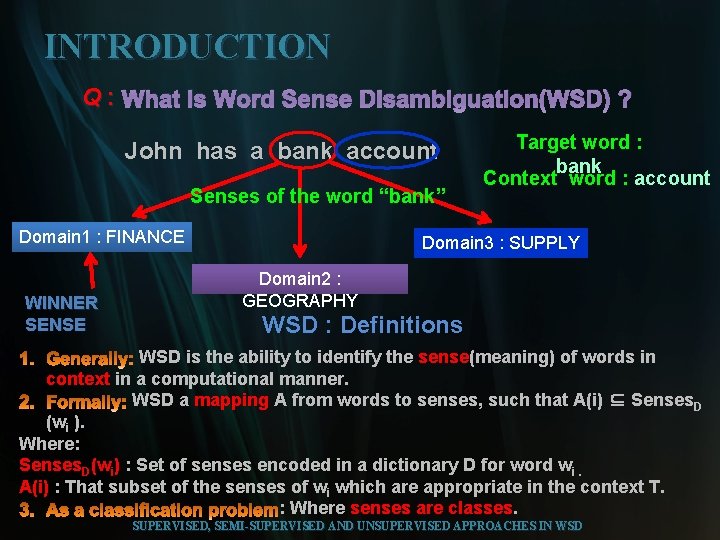

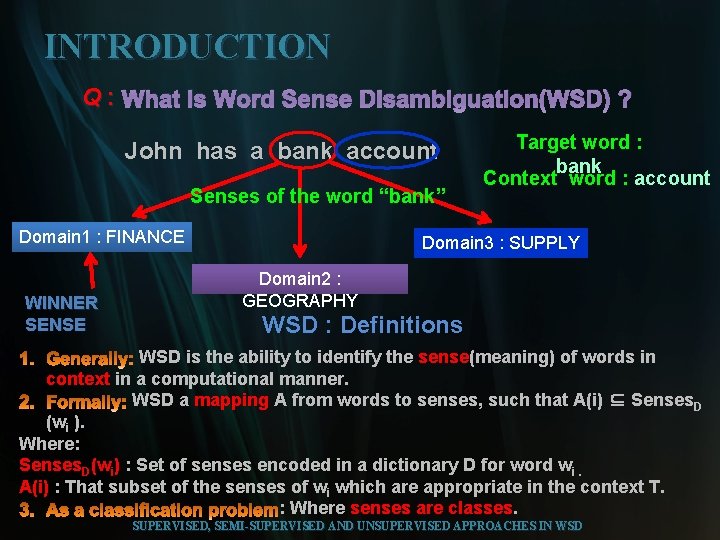

INTRODUCTION

Q: What is Word Sense Disambiguation (WSD)?

Example: "John has a bank account."

- Target word: bank
- Context word: account
- Senses of the word "bank": Domain 1: FINANCE (winner sense), Domain 2: GEOGRAPHY, Domain 3: SUPPLY

WSD: Definitions

- WSD is the ability to identify the sense (meaning) of words in context in a computational manner.
- WSD is a mapping A from words to senses, such that $A(i) \subseteq Senses_D(w_i)$, where:
  - $Senses_D(w_i)$: the set of senses encoded in a dictionary D for word $w_i$.
  - $A(i)$: the subset of the senses of $w_i$ which are appropriate in the context T.
- WSD can be viewed as a classification task, where senses are classes.

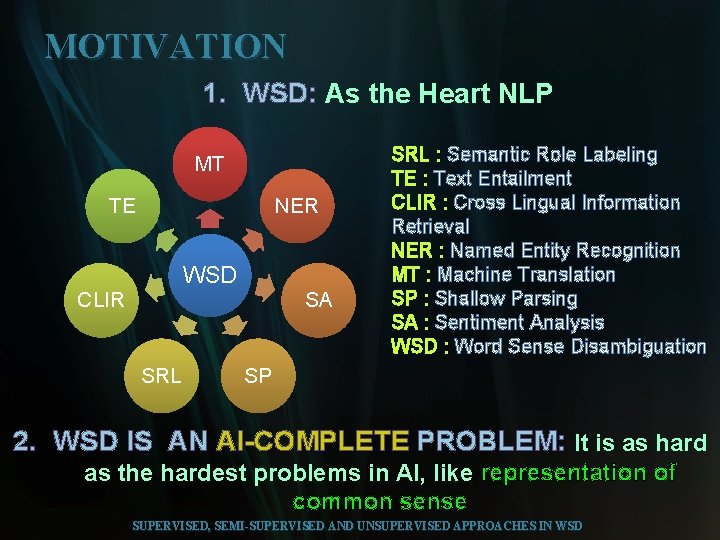

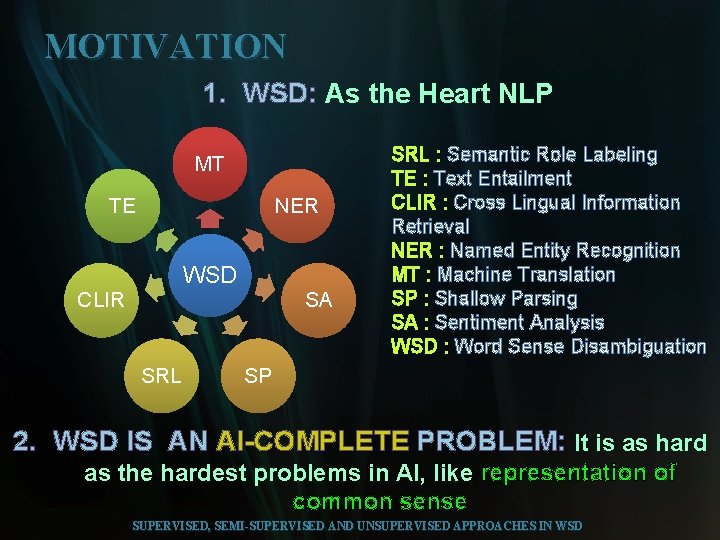

MOTIVATION

1. WSD as the heart of NLP. The diagram places WSD at the center of the following tasks:
   - SRL: Semantic Role Labeling
   - TE: Text Entailment
   - CLIR: Cross Lingual Information Retrieval
   - NER: Named Entity Recognition
   - MT: Machine Translation
   - SP: Shallow Parsing
   - SA: Sentiment Analysis
2. WSD is an AI-complete problem: it is as hard as the hardest problems in AI, like the representation of common sense.

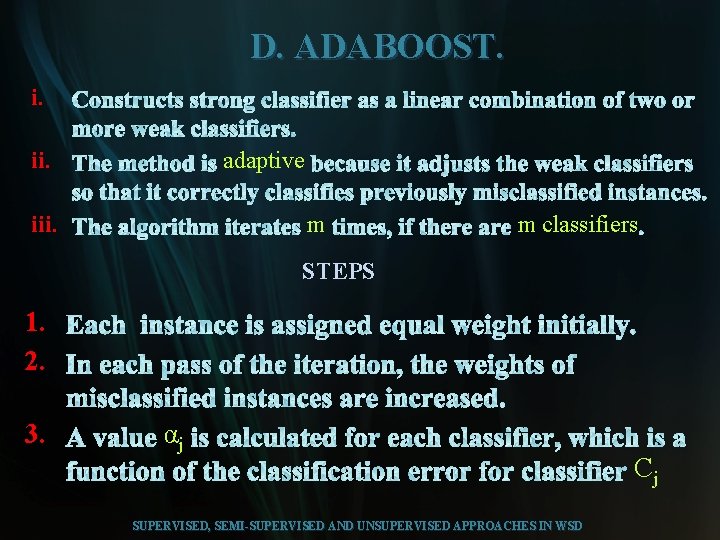

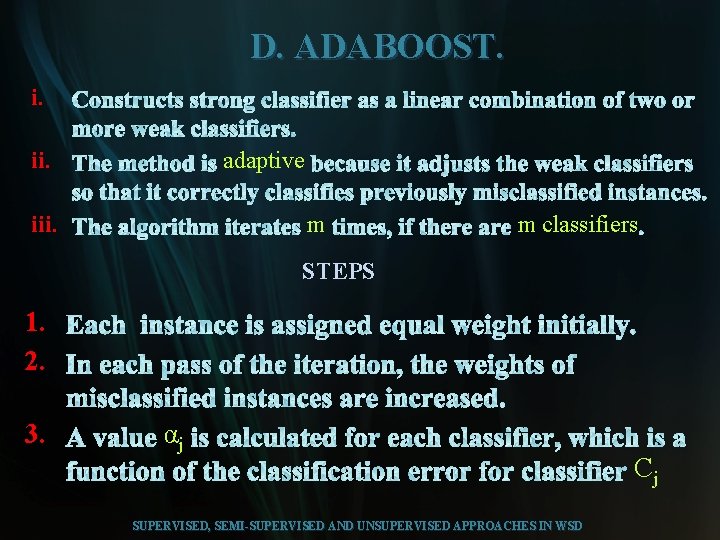

D. ADABOOST. i. ii. adaptive iii. m m classifiers STEPS 1. 2. 3. αj Cj

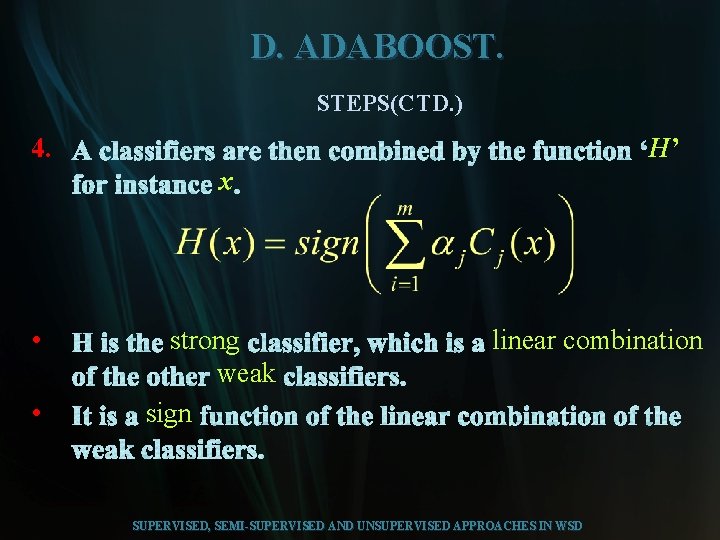

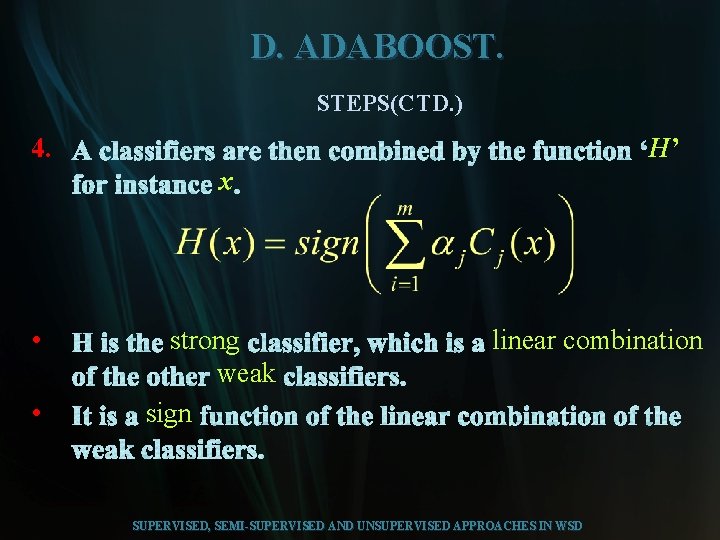

D. ADABOOST. STEPS (CONTD.) 4. H’ x • • strong weak sign linear combination
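The final step combines the weak classifiers into a strong one through the sign of a weighted linear combination. The sketch below assumes the textbook AdaBoost formulation (see reference 6), with H(x) = sign(Σ αj Cj(x)) and αj = ½ ln((1 − ej) / ej); the weak classifiers and their error rates are made up, and the adaptive reweighting of training samples is not shown.

```python
import math

def alpha(error):
    """Weight of a weak classifier with weighted training error `error`."""
    return 0.5 * math.log((1.0 - error) / error)

def strong_classifier(weak_classifiers, alphas):
    """Build H(x) = sign(sum_j alpha_j * C_j(x)) from weak classifiers C_j."""
    def H(x):
        total = sum(a * c(x) for a, c in zip(alphas, weak_classifiers))
        return 1 if total >= 0 else -1
    return H

# Hypothetical weak classifiers (threshold rules) and their error rates.
weak = [
    lambda x: 1 if x > 0 else -1,
    lambda x: 1 if x > 2 else -1,
    lambda x: 1 if x > 3 else -1,
]
errors = [0.1, 0.3, 0.4]
alphas = [alpha(e) for e in errors]
H = strong_classifier(weak, alphas)
prediction = H(1)  # C1 says +1, C2 and C3 say -1
```

For x = 1 the most accurate weak classifier outweighs the other two, so the strong classifier returns +1 even though the unweighted majority says -1.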

FUTURE DIRECTIONS

1. Development of better sense recognition systems.
2. Eradication of the knowledge acquisition bottleneck.
3. More attention needs to be paid to domain-specific approaches in WSD.
4. If larger annotated corpora can be built, the accuracy of supervised approaches will improve further.

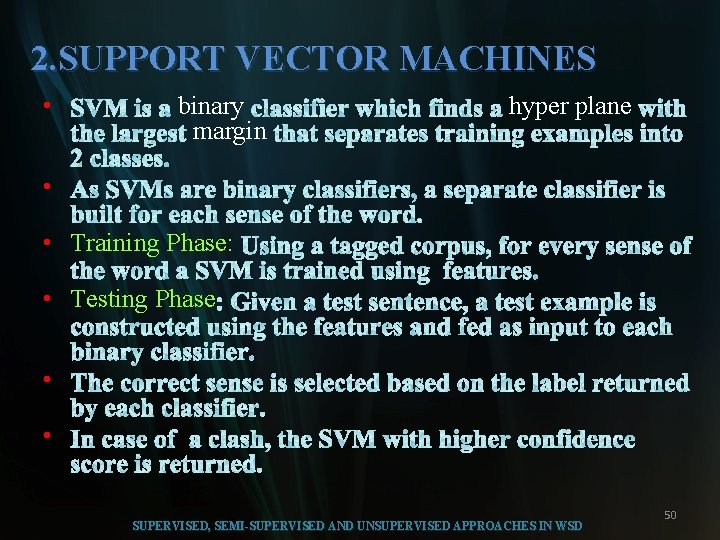

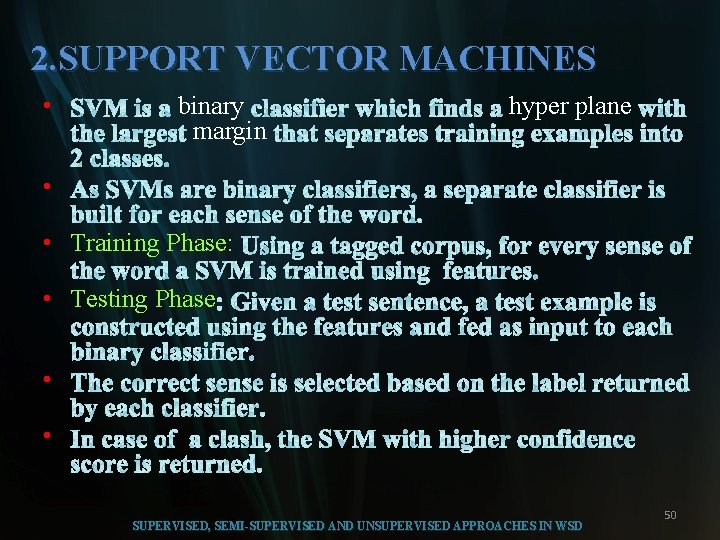

2. SUPPORT VECTOR MACHINES • binary margin hyperplane • Training Phase • Testing Phase

HYBRID APPROACHES

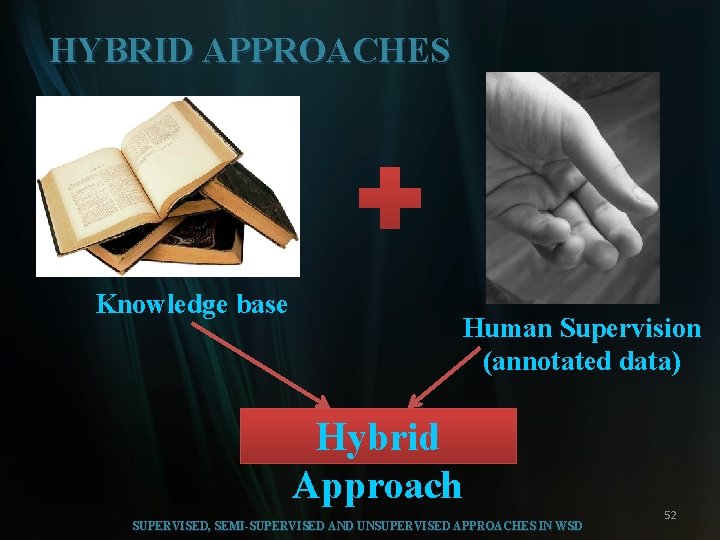

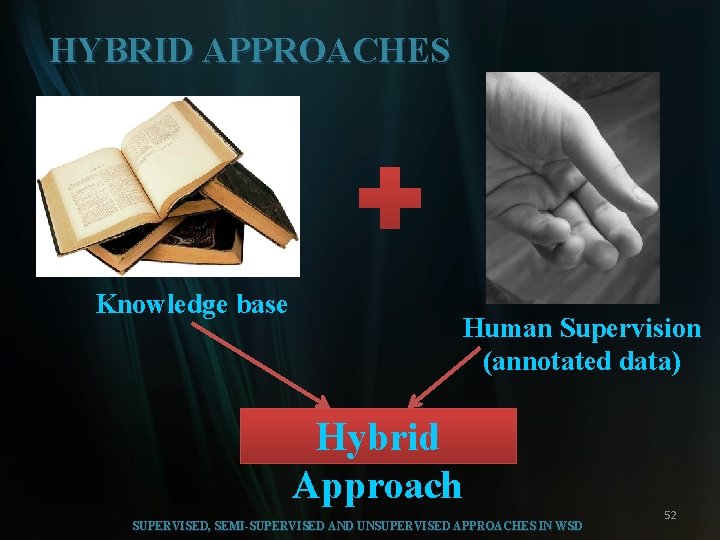

HYBRID APPROACHES

Knowledge base + human supervision (annotated data) → hybrid approach

HYBRID APPROACHES Unifying 1. 2. Algorithms 1. 2.

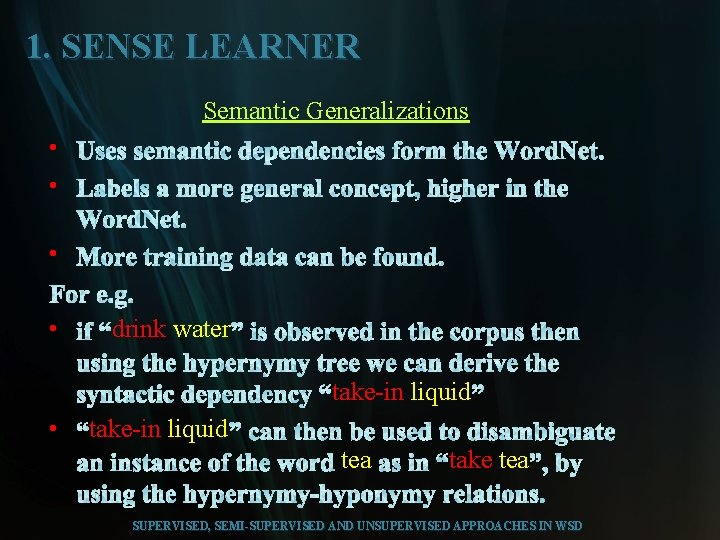

1. SENSE LEARNER

- semantic language model
- semantic generalizations

Semantic Language Model

- word & sense

1. SENSE LEARNER Semantic Generalizations • drink water take-in liquid • take-in liquid tea take tea

1. BOOTSTRAPPING

I. Decision Lists.

II. 1. "One sense per discourse" 2. "One sense per collocation"

III. Co-training

Self-training: