Neural Networks and Deep Learning Eli Gutin MIT


Neural Networks and Deep Learning
Eli Gutin, MIT 15.S60 (adapted from 2016 course by Iain Dunning)

Goals today
- Go over basics of neural nets
- Introduce TensorFlow
- Introduce Deep Learning
- Look at key applications
- Practice coding in Python
- Become familiar with Deep Reinforcement Learning (if time permits!)

Plan for today
1. Theory: Supervised learning & NNs; Coding: classification problem
2. Theory: Unsupervised learning; Coding: auto-encoder
3. Theory: Deep Learning; Coding: MNIST with ConvNets
4. Theory: Deep Reinforcement Learning

Supervised Learning and Neural Nets

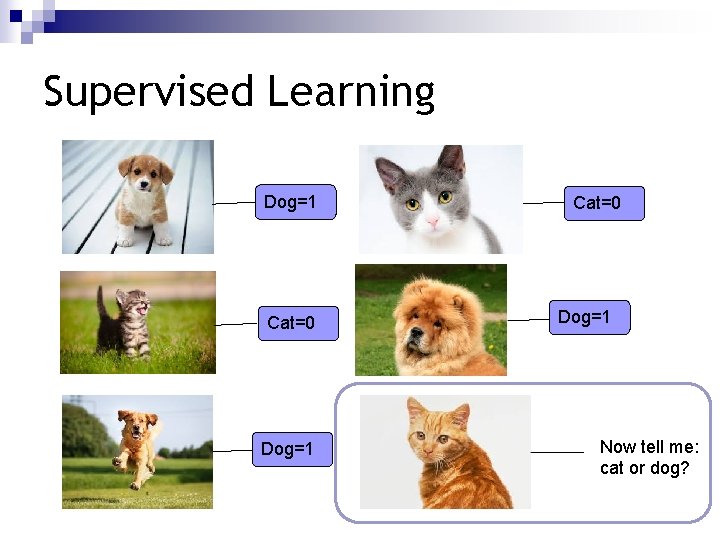

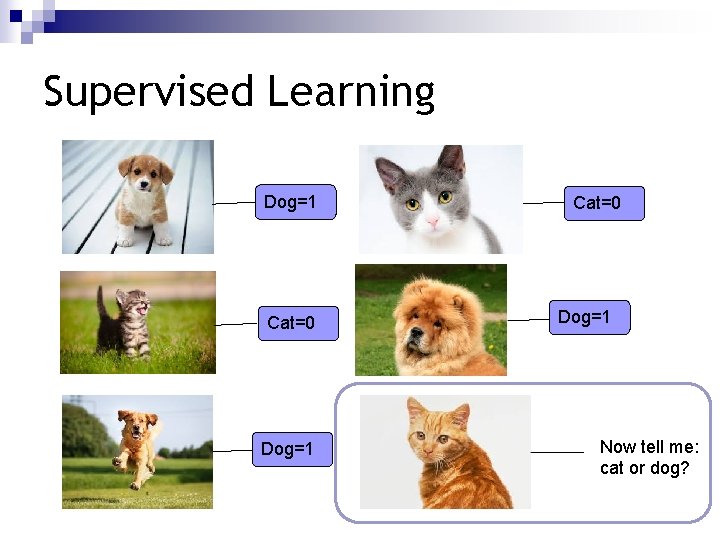

Motivation
- Artificial intelligence…
- Basic example: can the computer distinguish between cats and dogs?

Supervised Learning
Labeled examples: Dog = 1, Cat = 0, Dog = 1
Now tell me: cat or dog?

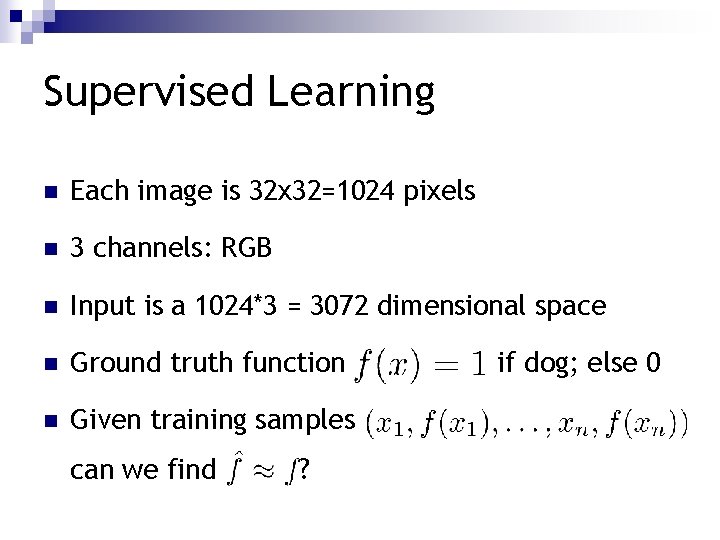


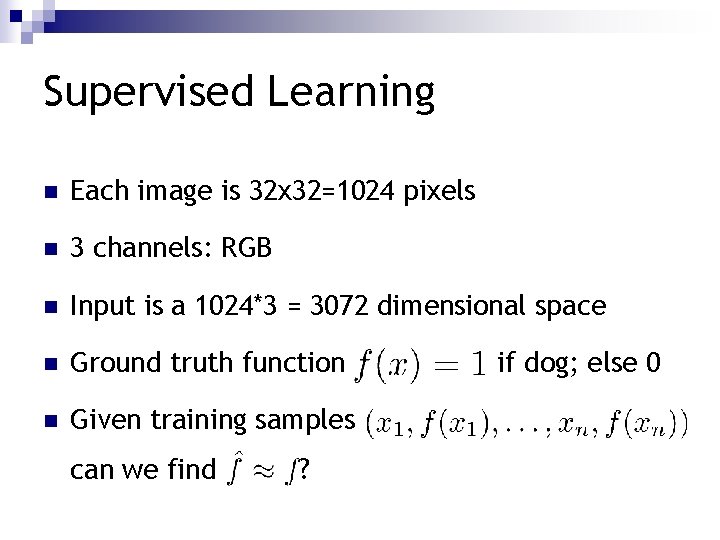

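Supervised Learning
- Each image is 32 × 32 = 1024 pixels
- 3 channels: RGB
- Input is a 1024 × 3 = 3072-dimensional space
- Ground truth function $f: \mathbb{R}^{3072} \to \{0, 1\}$, with $f(x) = 1$ if $x$ is a dog; else 0
- Given training samples $(x_1, f(x_1)), \dots, (x_n, f(x_n))$, can we find $\hat f \approx f$?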

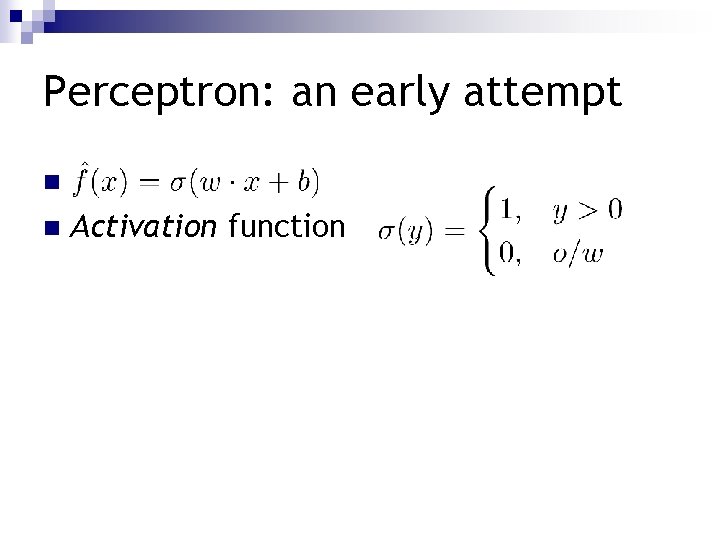

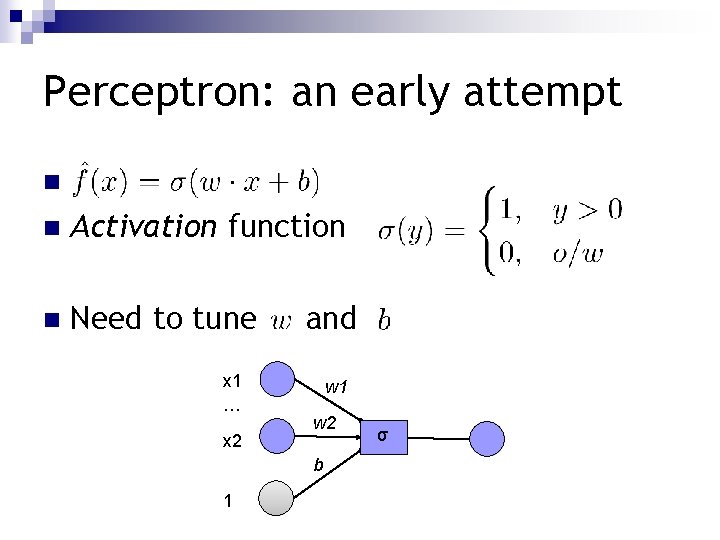

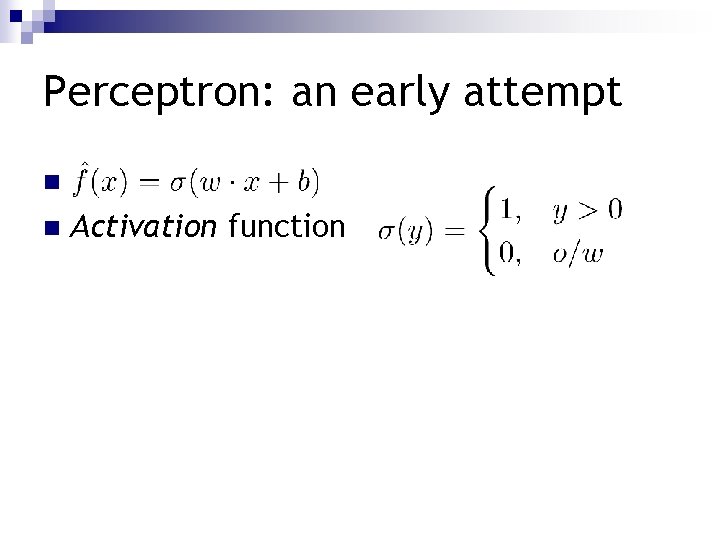

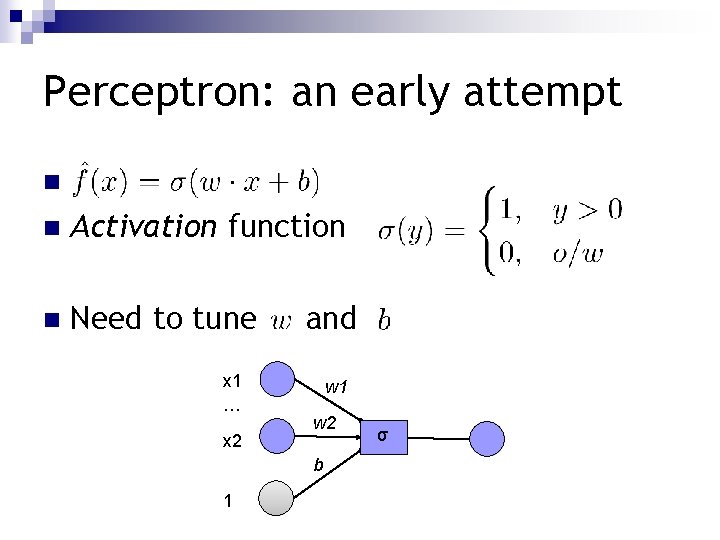
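To make the dimension arithmetic concrete, here is a small Python sketch (plain lists, no libraries) that flattens a hypothetical 32 × 32 RGB image into the 3072-dimensional input vector. The nested-list layout `image[row][col] = (r, g, b)` is an assumption for illustration.

```python
HEIGHT, WIDTH, CHANNELS = 32, 32, 3

def flatten_image(image):
    """Flatten image[row][col] = (r, g, b) into a single list of numbers."""
    return [value for row in image for pixel in row for value in pixel]

# All-black dummy image, only used to check the dimension arithmetic.
image = [[(0, 0, 0) for _ in range(WIDTH)] for _ in range(HEIGHT)]
x = flatten_image(image)
print(HEIGHT * WIDTH, len(x))  # 1024 pixels, 3072 inputs
```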


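Perceptron: an early attempt
- $\hat f(x) = \sigma(w \cdot x + b)$, with inputs $x_1, x_2, \dots$, weights $w_1, w_2, \dots$, a bias $b$ (the weight on a constant input 1), and activation function $\sigma$
- Need to tune $w$ and $b$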

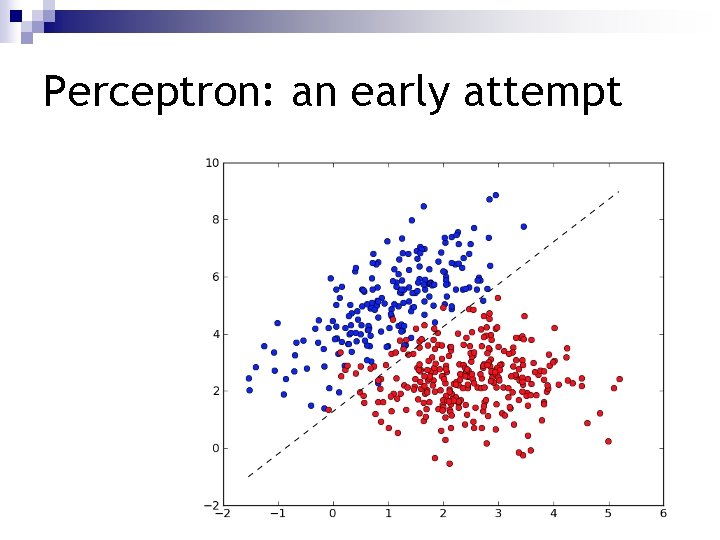

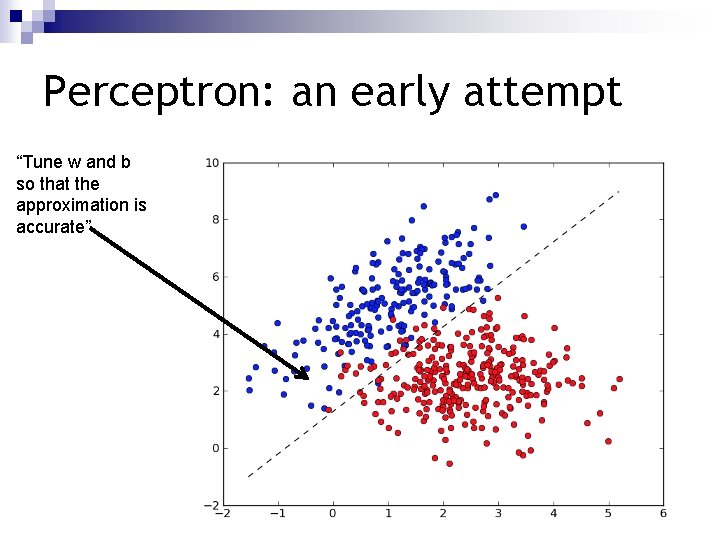

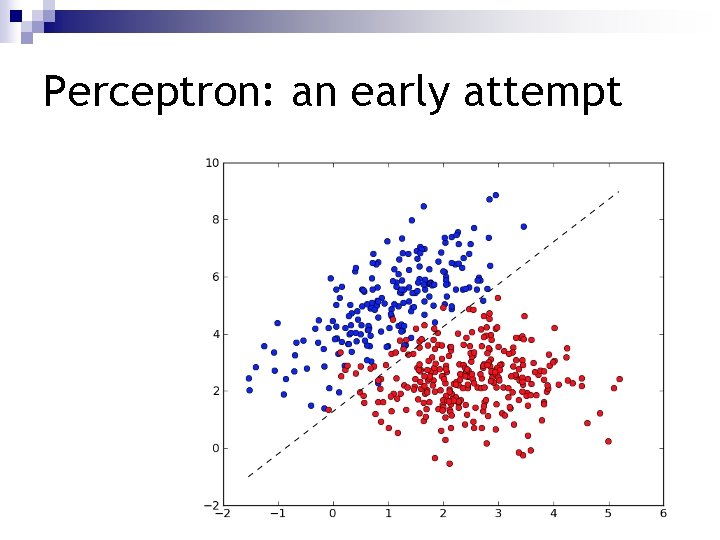

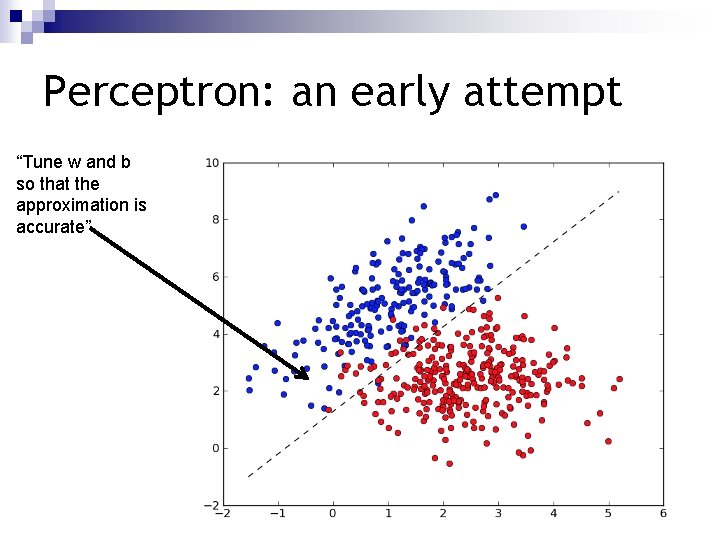


Perceptron: an early attempt
“Tune w and b so that the approximation is accurate”

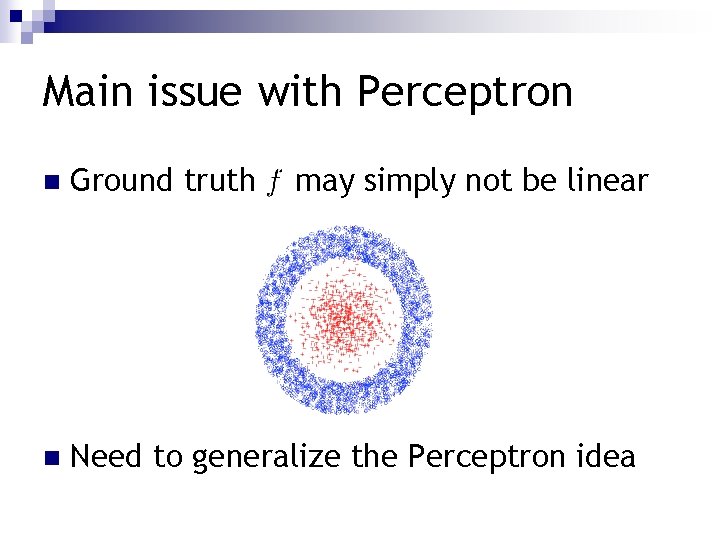

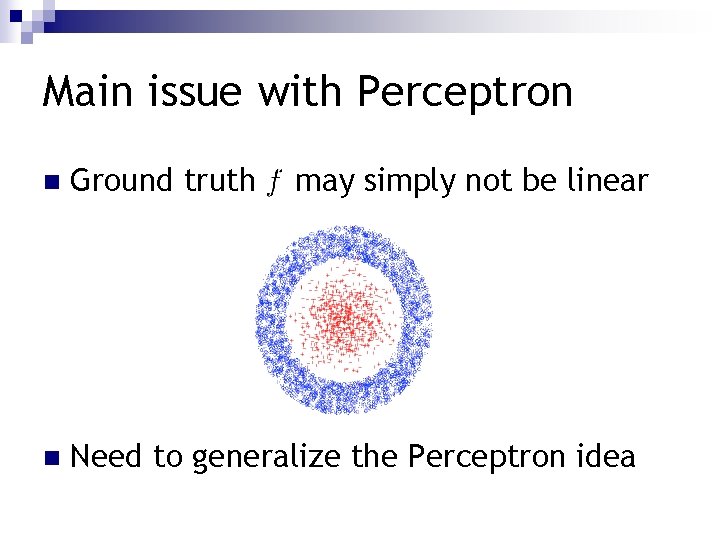
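One classic way to "tune w and b" is Rosenblatt's perceptron learning rule, which the slides do not spell out; the sketch below is an illustration under that assumption, trained on the AND function as hypothetical toy data. Whenever a sample is misclassified, the weights and bias are nudged toward the correct answer.

```python
def step(z):
    """Step activation of the classic perceptron: 1(z > 0)."""
    return 1 if z > 0 else 0

def predict(w, b, x):
    return step(sum(wi * xi for wi, xi in zip(w, x)) + b)

def train_perceptron(samples, epochs=20, lr=1.0):
    """Perceptron learning rule: move w and b toward each misclassified sample."""
    n = len(samples[0][0])
    w, b = [0.0] * n, 0.0
    for _ in range(epochs):
        errors = 0
        for x, y in samples:
            err = y - predict(w, b, x)  # -1, 0 or +1
            if err != 0:
                errors += 1
                w = [wi + lr * err * xi for wi, xi in zip(w, x)]
                b += lr * err
        if errors == 0:  # every training point is classified correctly
            break
    return w, b

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(AND)
print(w, b, [predict(w, b, x) for x, _ in AND])
```

Because AND is linearly separable, the rule is guaranteed to stop after finitely many mistakes.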

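Main issue with Perceptron
- Ground truth may simply not be linear: a perceptron can only separate the two classes with a line (hyperplane), and XOR is the classic counterexample
- Need to generalize the Perceptron idea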

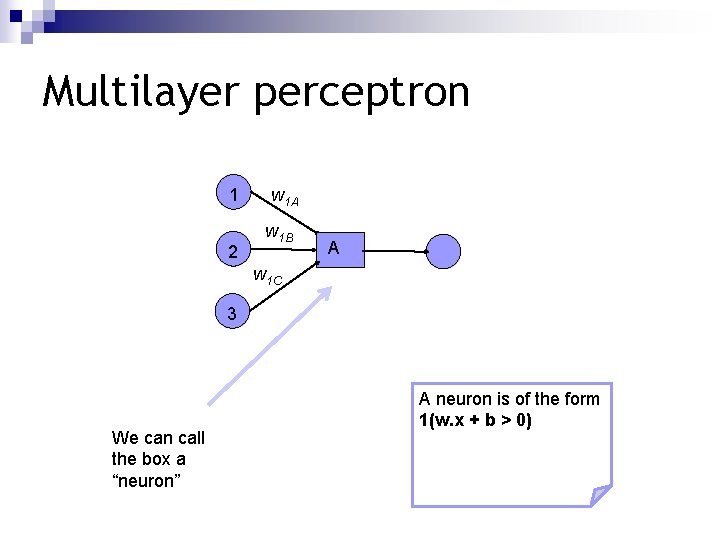

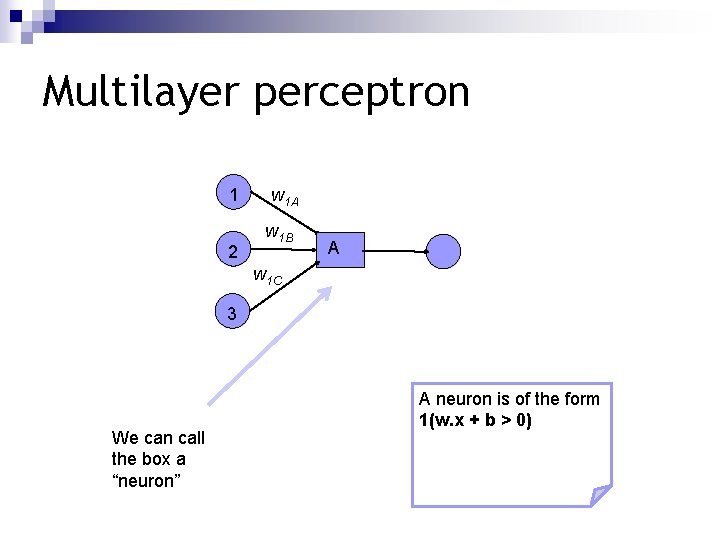
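XOR shows why a single perceptron is not enough: no single line separates its positive and negative points. The sketch below wires two layers of step neurons by hand (weights picked for illustration, not learned) to compute XOR as AND(OR, NAND), which is exactly the kind of generalization the multilayer perceptron provides.

```python
def step(z):
    return 1 if z > 0 else 0

def neuron(w, b, x):
    """A single neuron of the form 1(w . x + b > 0)."""
    return step(sum(wi * xi for wi, xi in zip(w, x)) + b)

def xor_net(x):
    # Hidden layer: two hand-picked neurons.
    h_or = neuron((1, 1), -0.5, x)     # fires if x1 OR x2
    h_nand = neuron((-1, -1), 1.5, x)  # fires unless x1 AND x2
    # Output layer: AND of the two hidden neurons.
    return neuron((1, 1), -1.5, (h_or, h_nand))

for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, xor_net(x))
```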

Multilayer perceptron
(Diagram: inputs 1, 2, 3 connected to a neuron box through weights such as $w_{1A}$, $w_{1B}$, $w_{1C}$)
- We can call the box a “neuron”
- A neuron is of the form $\mathbb{1}(w \cdot x + b > 0)$

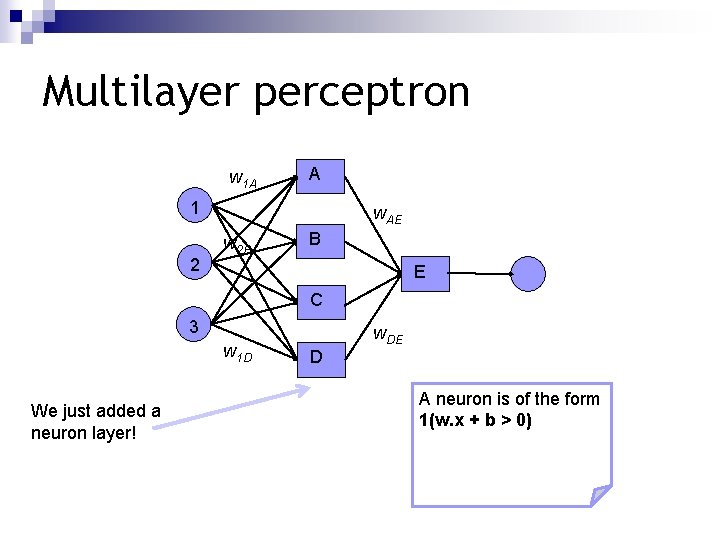

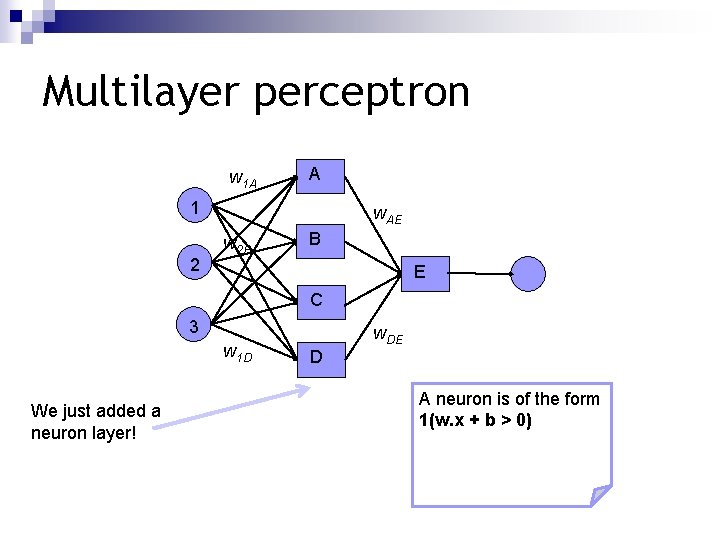


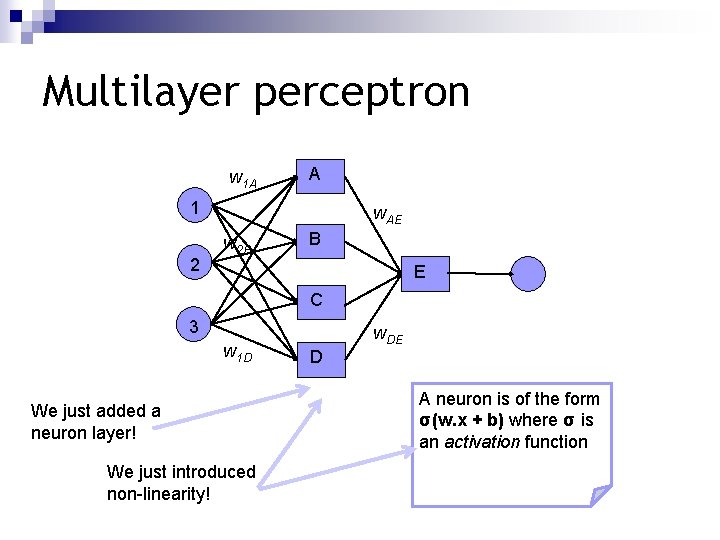

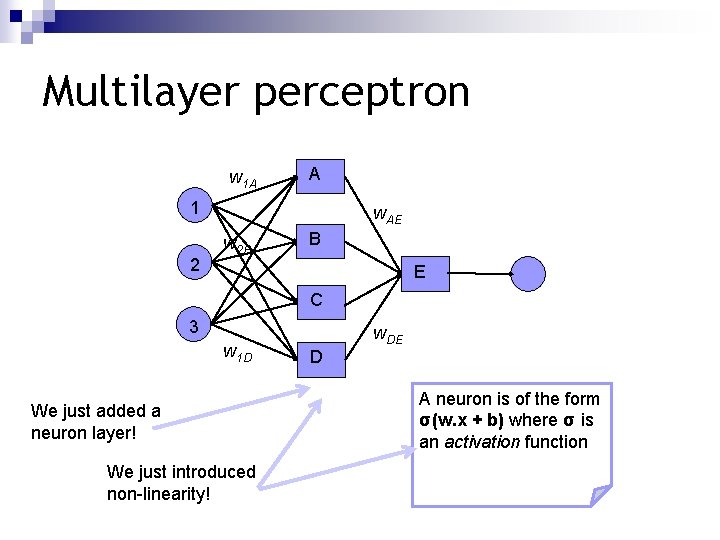

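Multilayer perceptron
(Diagram: inputs 1, 2, 3 feed hidden neurons A, B, C, D through weights such as $w_{1A}$, $w_{2B}$, $w_{1D}$; the hidden neurons feed an output neuron E through weights such as $w_{AE}$, $w_{DE}$)
- We just added a neuron layer!
- We just introduced non-linearity!
- A neuron is of the form $\sigma(w \cdot x + b)$, where $\sigma$ is an activation function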

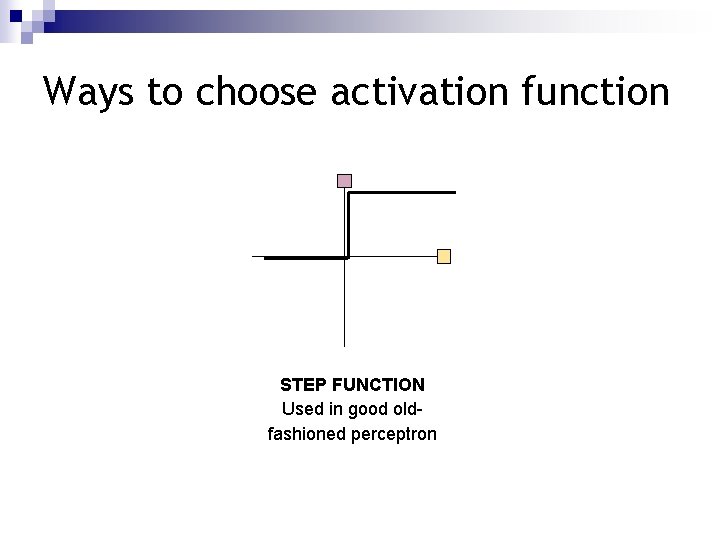

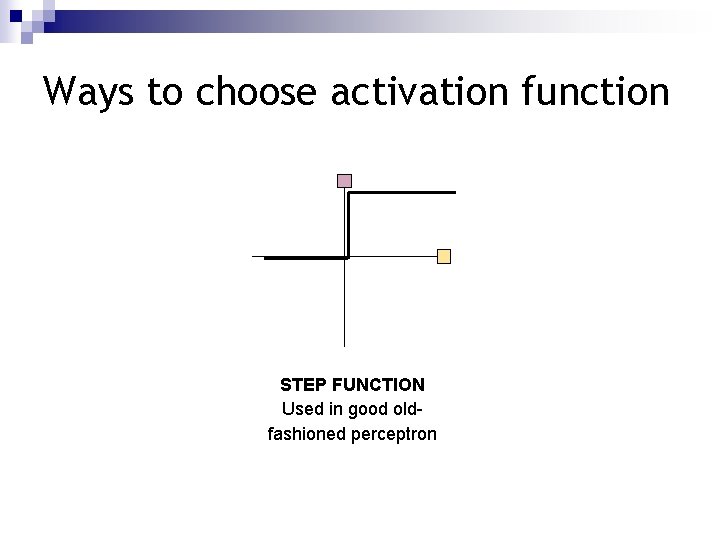

Ways to choose activation function
- STEP FUNCTION: used in the good old-fashioned perceptron
- LINEAR: like linear regression (only used for the final layer)
- LOGISTIC / SIGMOIDAL / TANH: smooth, differentiable, saturating functions
- RECTIFIED LINEAR (ReLU): cheap to compute, popular lately

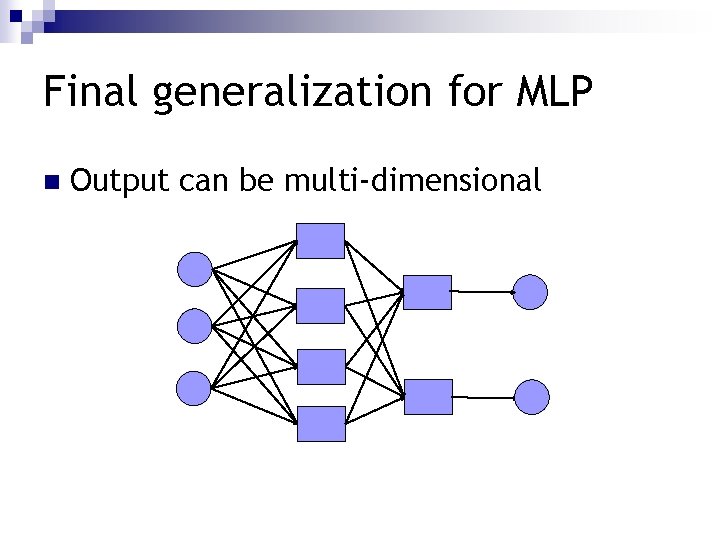

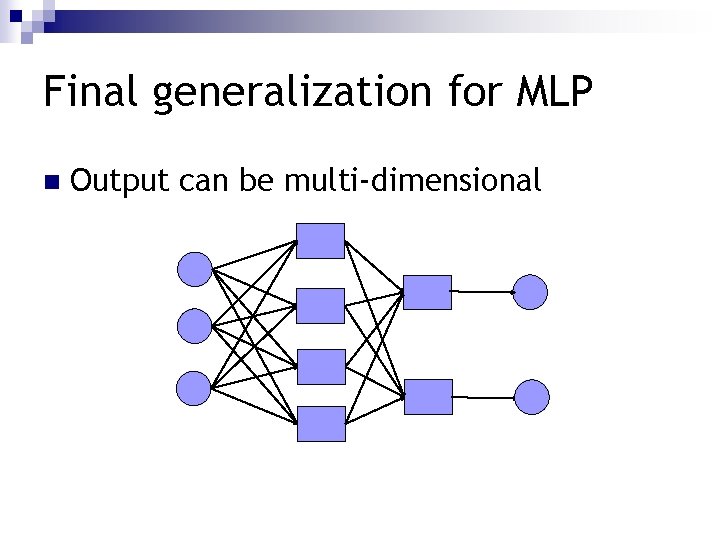
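The activation functions listed above, written out as plain Python functions for reference. The sigmoid is written in a numerically stable form (no overflow for large negative inputs); that detail is an implementation choice, not something the slides specify.

```python
import math

def step(z):
    """Good old-fashioned perceptron: 1 if z > 0 else 0."""
    return 1.0 if z > 0 else 0.0

def linear(z):
    """Identity; typically only used in the final layer (e.g. regression)."""
    return z

def sigmoid(z):
    """Logistic function: smooth, differentiable, saturates at 0 and 1."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # avoids overflow in exp(-z) for very negative z
    return e / (1.0 + e)

def tanh(z):
    """Like the sigmoid, but saturates at -1 and 1."""
    return math.tanh(z)

def relu(z):
    """Rectified linear unit: max(0, z), cheap to compute."""
    return max(0.0, z)

for f in (step, linear, sigmoid, tanh, relu):
    print(f.__name__, [round(f(z), 3) for z in (-2.0, 0.0, 2.0)])
```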

Final generalization for MLP
- Output can be multi-dimensional
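A minimal sketch of the forward pass of a multilayer perceptron, assuming each layer is given as a (weight matrix, bias vector, activation) triple. Every neuron computes σ(w · x + b), and the last layer here has two outputs to show a multi-dimensional output. The layer sizes and weights are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def identity(z):
    return z

def dense(x, W, b, activation):
    """One layer: neuron j outputs activation(W[j] . x + b[j])."""
    return [activation(sum(wij * xi for wij, xi in zip(row, x)) + bj)
            for row, bj in zip(W, b)]

def mlp_forward(x, layers):
    """Feed x through a list of (W, b, activation) layers, in order."""
    for W, b, activation in layers:
        x = dense(x, W, b, activation)
    return x

# Hypothetical weights: 3 inputs -> 4 sigmoid hidden neurons (A..D) -> 2 linear outputs.
layers = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5], [-0.7, 0.2, 0.4], [0.1, 0.1, 0.1]],
     [0.0, 0.1, -0.1, 0.2], sigmoid),
    ([[1.0, -1.0, 0.5, 0.2], [-0.3, 0.6, 0.9, -0.4]],
     [0.0, 0.0], identity),
]
output = mlp_forward([1.0, 2.0, 3.0], layers)
print(output)  # a 2-dimensional output
```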

Universal approximation theorem
- Theorem: 2-layer NNs (a single hidden layer) with sigmoid activation functions can approximate any continuous function on a compact domain to arbitrary accuracy, given enough hidden neurons (Kurt Hornik: “Approximation Capabilities of Multilayer Feedforward Networks”, 1991)
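The theorem is an existence result. The sketch below is not Hornik's proof; it is a hand-built illustration of the intuition in one input dimension: a very steep sigmoid behaves like a step, and a single hidden layer of such steps, weighted by the jumps of the target function, traces out the function. The target (sin on [0, π]), the 100 hidden neurons, and the steepness 2000 are arbitrary choices.

```python
import math

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def build_one_hidden_layer_net(f, lo, hi, n_hidden, steepness):
    """Hand-build a 1-hidden-layer sigmoid net approximating f on [lo, hi].

    Hidden neuron i is a steep sigmoid 'step' at the midpoint m_i between grid
    points x_{i-1} and x_i; its output weight is the jump f(x_i) - f(x_{i-1}).
    """
    xs = [lo + (hi - lo) * i / n_hidden for i in range(n_hidden + 1)]
    bias_out = f(xs[0])
    hidden = [((xs[i - 1] + xs[i]) / 2, f(xs[i]) - f(xs[i - 1]))
              for i in range(1, n_hidden + 1)]

    def net(x):
        return bias_out + sum(v * sigmoid(steepness * (x - m)) for m, v in hidden)
    return net

net = build_one_hidden_layer_net(math.sin, 0.0, math.pi, n_hidden=100, steepness=2000.0)
grid = [math.pi * k / 1000 for k in range(1001)]
max_err = max(abs(net(x) - math.sin(x)) for x in grid)
print(f"max error on [0, pi]: {max_err:.4f}")
```

More hidden neurons (and steeper sigmoids) shrink the error further, which is the flavor of the theorem.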


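How do we optimize the neural net’s parameters?
- Quantify how bad the current net is via a loss function
- Training data is $(x_1, y_1), \dots, (x_n, y_n)$. Parameters $\theta$ (all weights and biases); the net computes $\hat f_\theta(x)$
- Loss function is $L(\theta) = \sum_{i=1}^{n} \ell(\hat f_\theta(x_i), y_i)$
  - E.g. square loss $\ell(\hat y, y) = (\hat y - y)^2$
- Need to minimize the loss function: $\min_\theta L(\theta)$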
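A minimal sketch of minimizing a square loss by gradient descent, assuming the simplest possible "network", a single linear neuron ŷ = w·x + b, and noise-free toy data generated from y = 2x + 1. It uses the mean rather than the sum of the per-sample losses, which has the same minimizer. The learning rate and step count are arbitrary.

```python
def mse_loss(w, b, data):
    """Mean square loss of the linear model y_hat = w * x + b."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def gradient_descent(data, lr=0.05, steps=5000):
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(steps):
        # Partial derivatives of the mean square loss w.r.t. w and b.
        dw = sum(2 * (w * x + b - y) * x for x, y in data) / n
        db = sum(2 * (w * x + b - y) for x, y in data) / n
        # Step against the gradient.
        w -= lr * dw
        b -= lr * db
    return w, b

# Toy training data generated from y = 2x + 1 (no noise).
data = [(x, 2 * x + 1) for x in range(5)]
w, b = gradient_descent(data)
print(round(w, 4), round(b, 4), mse_loss(w, b, data))
```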

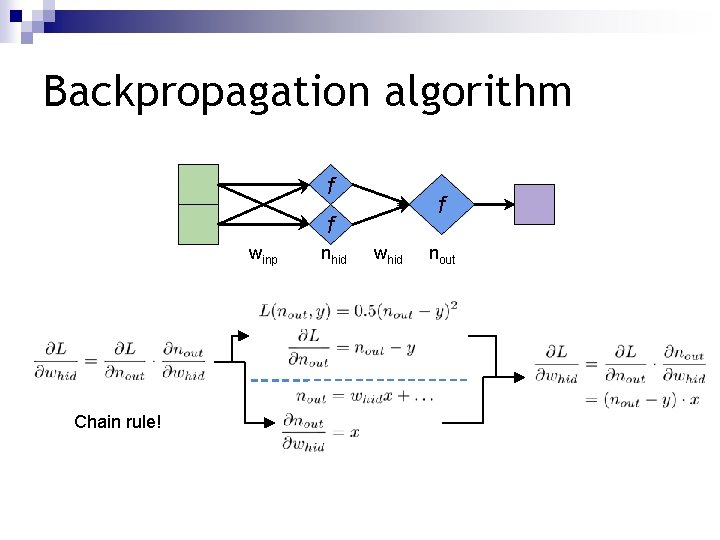

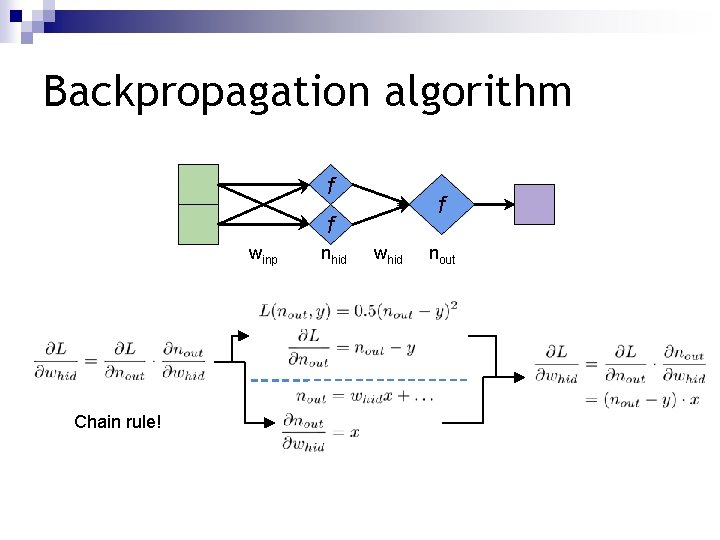

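Backpropagation algorithm
(Diagram: inputs → weights $w_{inp}$ → $n_{hid}$ hidden neurons with activation $f$ → weights $w_{hid}$ → $n_{out}$ output neurons with activation $f$)
- Chain rule!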

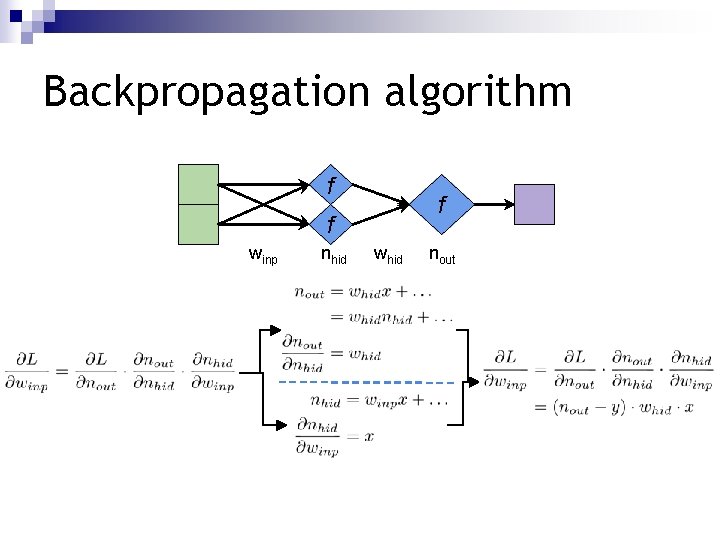

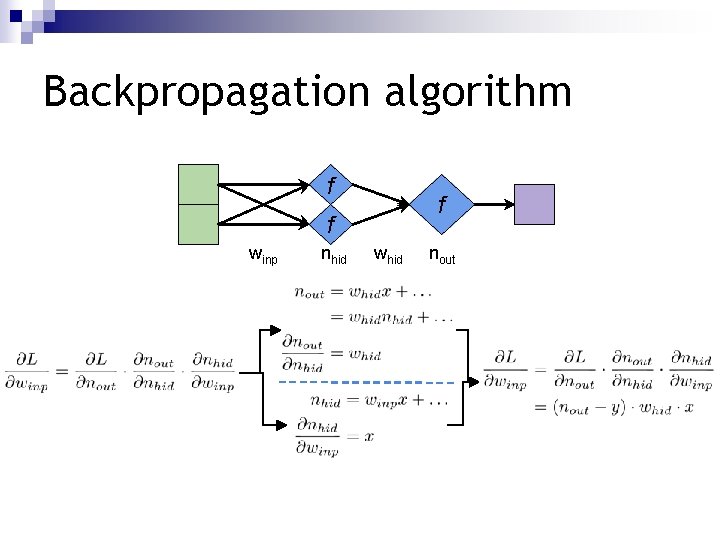


Notes on backpropagation
- Can calculate derivatives automatically
- Opportunities for parallelism
- Re-using intermediate values and other efficiencies
- Painful DIY - if only there was a framework...
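To see what the DIY version looks like, here is a hand-written backpropagation sketch for a tiny network (2 inputs, 3 tanh hidden neurons, 1 linear output, square loss). The architecture and random weights are hypothetical. Gradients are computed output-first with the chain rule, re-using the forward pass's intermediate values, and they are compared against a finite-difference estimate as a sanity check.

```python
import math
import random

def forward(params, x):
    """One hidden tanh layer, one linear output: y_hat = w2 . tanh(W1 x + b1) + b2."""
    W1, b1, w2, b2 = params
    z = [sum(wij * xj for wij, xj in zip(row, x)) + bi for row, bi in zip(W1, b1)]
    h = [math.tanh(zi) for zi in z]
    y_hat = sum(wi * hi for wi, hi in zip(w2, h)) + b2
    return h, y_hat

def loss(params, x, y):
    """Square loss for a single training sample."""
    return (forward(params, x)[1] - y) ** 2

def backprop(params, x, y):
    """Gradients of the square loss by the chain rule, from the output backwards."""
    W1, b1, w2, b2 = params
    h, y_hat = forward(params, x)                  # intermediate values re-used below
    d_yhat = 2.0 * (y_hat - y)                     # dL/dy_hat
    d_w2 = [d_yhat * hi for hi in h]               # dL/dw2
    d_b2 = d_yhat                                  # dL/db2
    d_h = [d_yhat * wi for wi in w2]               # dL/dh
    d_z = [dh * (1.0 - hi ** 2) for dh, hi in zip(d_h, h)]  # tanh'(z) = 1 - tanh(z)^2
    d_W1 = [[dz * xj for xj in x] for dz in d_z]   # dL/dW1
    d_b1 = list(d_z)                               # dL/db1
    return d_W1, d_b1, d_w2, d_b2

rng = random.Random(0)
n_in, n_hid = 2, 3
params = ([[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hid)],
          [rng.uniform(-1, 1) for _ in range(n_hid)],
          [rng.uniform(-1, 1) for _ in range(n_hid)],
          rng.uniform(-1, 1))
x, y = [0.5, -1.2], 0.3
d_W1, d_b1, d_w2, d_b2 = backprop(params, x, y)

def numeric_d_W1(i, j, eps=1e-6):
    """Central finite difference for dL/dW1[i][j], as an independent check."""
    def shifted(delta):
        W1 = [row[:] for row in params[0]]
        W1[i][j] += delta
        return loss((W1,) + params[1:], x, y)
    return (shifted(eps) - shifted(-eps)) / (2 * eps)

print(d_W1[0][1], numeric_d_W1(0, 1))
```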

Implementing Neural Nets with TensorFlow

Frameworks for Implementing Neural Networks

Today: Google TensorFlow
- Use TF to describe computations as a graph
- TF schedules computations on devices: CPU, GPU…
- Performs automatic differentiation (like JuMP!)
- Python is easy to use
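As a toy illustration of "describe computations as a graph, then differentiate automatically", here is a tiny reverse-mode automatic differentiation sketch over scalars. This is not TensorFlow's API or implementation; the `Node` class and `backward` function are hypothetical names, and only +, * and tanh are supported.

```python
import math

class Node:
    """A scalar value in a computation graph that remembers how it was computed."""

    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # pairs of (parent_node, local_derivative)
        self.grad = 0.0

    def __add__(self, other):
        return Node(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        return Node(self.value * other.value, ((self, other.value), (other, self.value)))

def tanh(node):
    t = math.tanh(node.value)
    return Node(t, ((node, 1.0 - t * t),))

def backward(output):
    """Reverse-mode differentiation: visit nodes in reverse topological order."""
    order, seen = [], set()

    def visit(node):
        if id(node) not in seen:
            seen.add(id(node))
            for parent, _ in node.parents:
                visit(parent)
            order.append(node)  # parents are appended before their children

    visit(output)
    output.grad = 1.0
    for node in reversed(order):
        for parent, local in node.parents:
            parent.grad += node.grad * local  # chain rule

# Describe the computation as a graph: y = tanh(w * x + b)
w, x, b = Node(0.5), Node(2.0), Node(-0.3)
y = tanh(w * x + b)
backward(y)
print(y.value, w.grad, x.grad, b.grad)
```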

Project 1 - Nonlinear classification
- Goal: approximate an unknown non-linear classifier from data
- Plan: generate data, create ANN, fit it
- Live coding in IPython - follow along!
- Play with extending the code
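A sketch of the "generate data" step, assuming a hypothetical non-linear ground truth (label 1 inside a circle of radius 0.5, else 0) on the square [-1, 1]²; the classifier actually used in the live-coding session is not given here. Labels are one-hot encoded as [N, Y] so they can be compared with a two-class softmax output.

```python
import random

def ground_truth(x1, x2):
    """Hypothetical non-linear classifier: 1 inside a circle of radius 0.5."""
    return 1 if x1 ** 2 + x2 ** 2 < 0.25 else 0

def generate_data(n, seed=0):
    rng = random.Random(seed)
    X, Y = [], []
    for _ in range(n):
        x1, x2 = rng.uniform(-1, 1), rng.uniform(-1, 1)
        label = ground_truth(x1, x2)
        X.append((x1, x2))
        Y.append([1 - label, label])  # one-hot: [N, Y]
    return X, Y

X, Y = generate_data(1000)
print(sum(y[1] for y in Y), "of", len(Y), "points are inside the circle")
```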

Project 1: Non-linear classification
(Diagram: INPUT → HIDDEN LAYER of neurons with activation f → OUTPUT LAYER → SOFTMAX → OUTPUT, a probability for Y and for N)

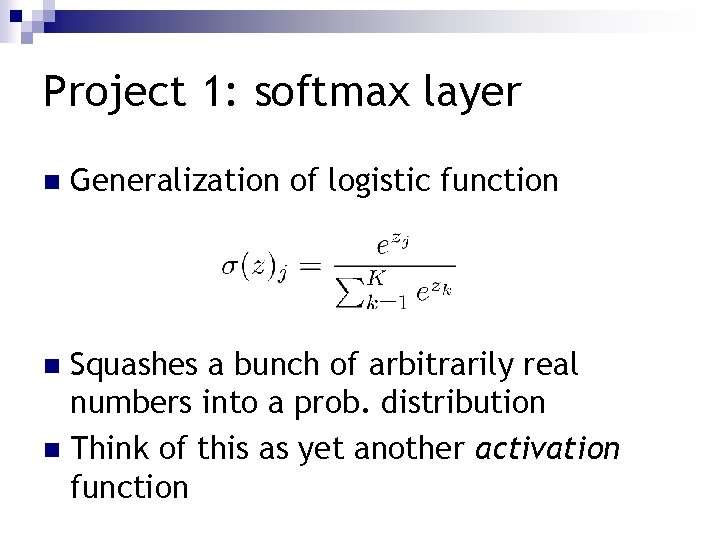

Project 1: softmax layer
- Generalization of the logistic function: $\mathrm{softmax}(z)_j = \dfrac{e^{z_j}}{\sum_k e^{z_k}}$
- Squashes a vector of arbitrary real numbers into a probability distribution
- Think of this as yet another activation function
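A minimal softmax in plain Python. Subtracting the largest score before exponentiating is a standard numerical-stability trick (an implementation choice): the shift cancels in the ratio, so the result is unchanged.

```python
import math

def softmax(z):
    """Map arbitrary real scores to a probability distribution."""
    m = max(z)  # shift for numerical stability; cancels in the ratio
    exps = [math.exp(zj - m) for zj in z]
    total = sum(exps)
    return [e / total for e in exps]

p = softmax([2.0, 1.0, -1.0])
print([round(pj, 3) for pj in p], sum(p))
```

With two scores [z, 0], softmax returns the logistic function of z as its first entry, which is the sense in which it generalizes the logistic function.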

Project 1: cross-entropy loss
- Since we’re comparing a probability distribution $\hat y$ to the true one-hot encoding $y$, we’ll use the cross-entropy function as the loss: $L(y, \hat y) = -\sum_j y_j \log \hat y_j$
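A minimal sketch of the cross-entropy loss for a single sample; the small `eps` clamp that avoids log(0) is an implementation choice, not part of the definition.

```python
import math

def cross_entropy(y_true, p_pred, eps=1e-12):
    """-sum_j y_j * log(p_j); eps guards against log(0)."""
    return -sum(yj * math.log(max(pj, eps)) for yj, pj in zip(y_true, p_pred))

y = [0, 1, 0]  # true class is index 1
print(cross_entropy(y, [0.1, 0.8, 0.1]))  # confident and right: small loss
print(cross_entropy(y, [0.7, 0.2, 0.1]))  # confident and wrong: larger loss
```

With a one-hot target only the log-probability of the true class survives, so the loss is simply minus the log of the probability assigned to the right answer.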

Project 1: extensions
- Try changing…
  - the number of training points
  - the number of hidden neurons
  - the input layer activation function
  - Gradient Descent’s learning rate
  - the optimization algorithm
  - etc.

Unsupervised learning & data representation
http://www.mit.edu/~9.520/fall16/Classes/ldr.html


Successful ML ingredients
1. Learning algorithms
2. Lots of labeled(!!) data
3. Computational power

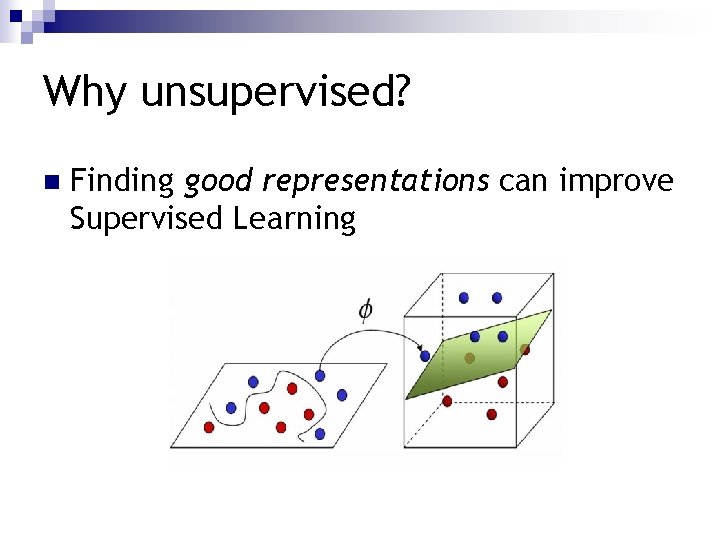

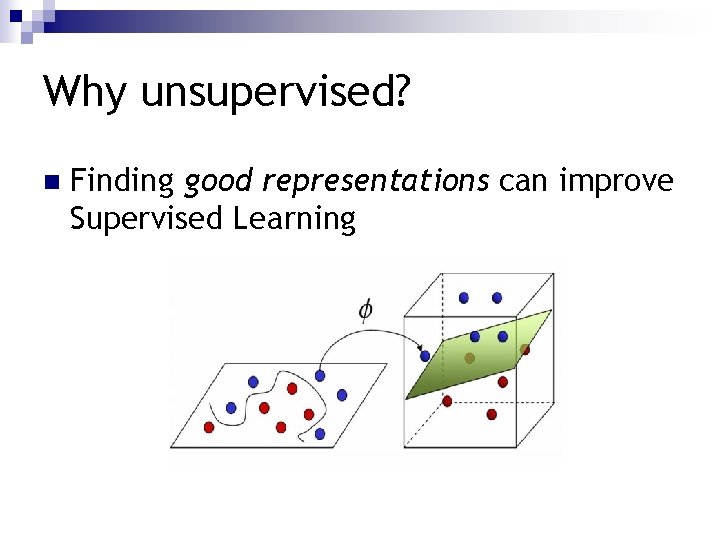

Why unsupervised?
- Labeled data is expensive to get
  - Unlabeled data is cheaper / widely available
- Different goals:
  - Cluster analysis
  - Embedding data in new vector spaces
  - Compression…
- Finding good representations can improve Supervised Learning


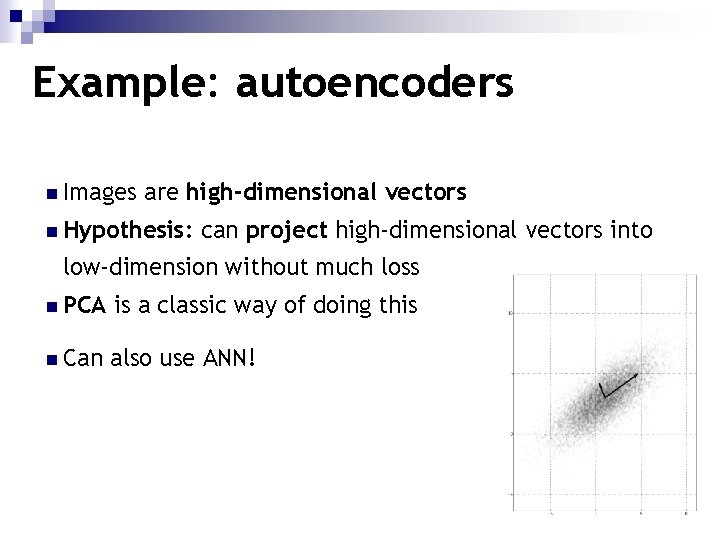

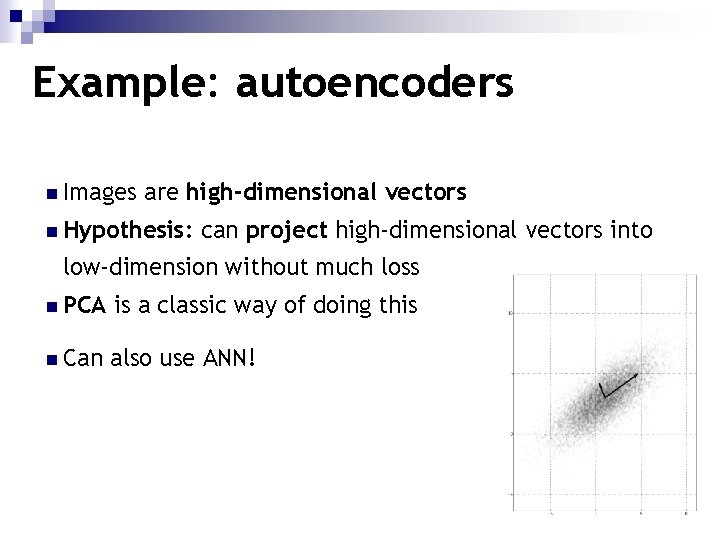

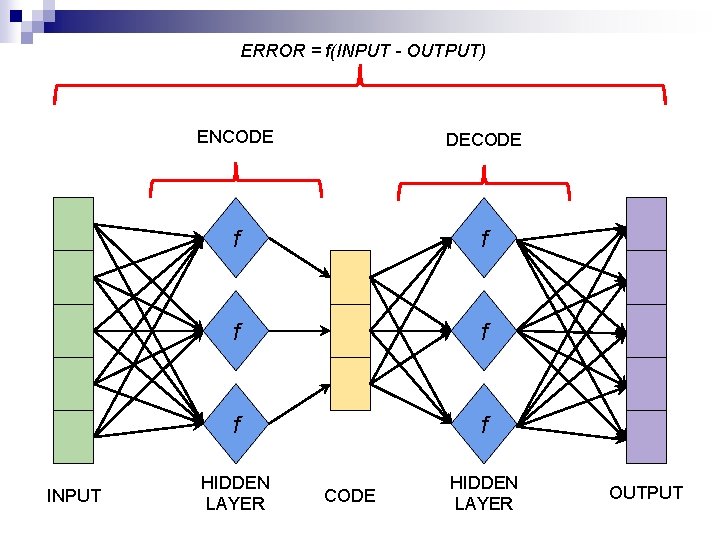

Example: autoencoders
- Images are high-dimensional vectors
- Hypothesis: can project high-dimensional vectors into a low-dimensional space without much loss
- PCA is a classic way of doing this
- Can also use an ANN!
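For comparison with the neural-network approach, here is PCA in its smallest form: a sketch that projects toy 2-D points (hypothetical data lying near the diagonal) onto their first principal component and reconstructs them. Power iteration on the covariance matrix is one of several ways to find that component; it is chosen here only because it needs no libraries.

```python
import math

def top_principal_component(points, iters=100):
    """First principal component of 2-D points via power iteration on the covariance."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    centered = [(x - mx, y - my) for x, y in points]
    # 2 x 2 sample covariance matrix.
    cxx = sum(x * x for x, _ in centered) / n
    cxy = sum(x * y for x, y in centered) / n
    cyy = sum(y * y for _, y in centered) / n
    v = (1.0, 0.0)
    for _ in range(iters):
        # Multiply by the covariance matrix, then renormalize.
        v = (cxx * v[0] + cxy * v[1], cxy * v[0] + cyy * v[1])
        norm = math.hypot(*v)
        v = (v[0] / norm, v[1] / norm)
    return v, centered

points = [(t, t + 0.1 * (-1) ** i) for i, t in enumerate(range(-5, 6))]
v, centered = top_principal_component(points)
codes = [x * v[0] + y * v[1] for x, y in centered]  # encode: 2-D -> 1-D
recon = [(c * v[0], c * v[1]) for c in codes]        # decode: 1-D -> 2-D
print(v, max(math.dist(p, r) for p, r in zip(centered, recon)))
```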

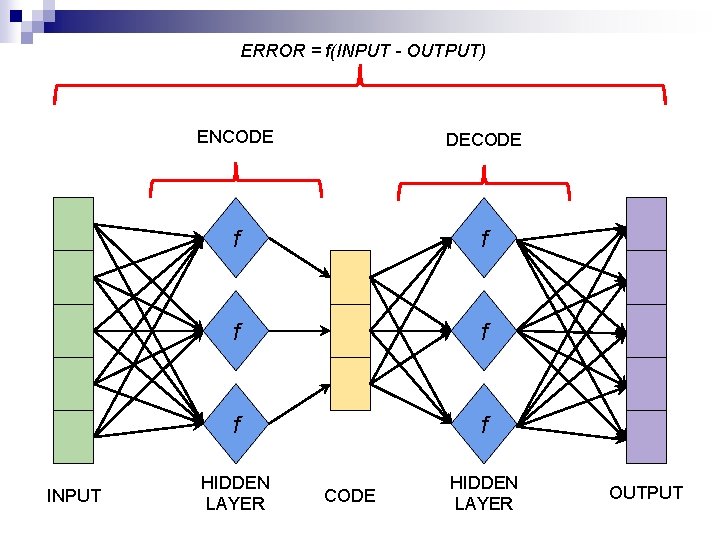

(Diagram: INPUT → ENCODE → HIDDEN LAYER → CODE → DECODE → HIDDEN LAYER → OUTPUT, with activation f in each layer)
ERROR = f(INPUT - OUTPUT)

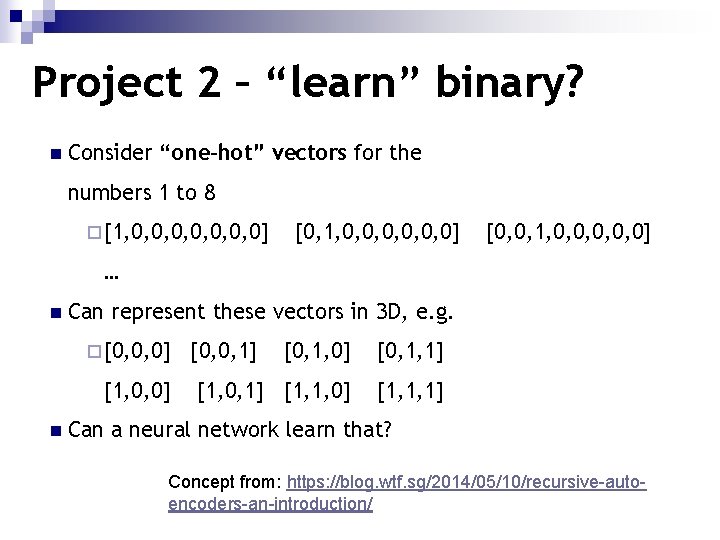

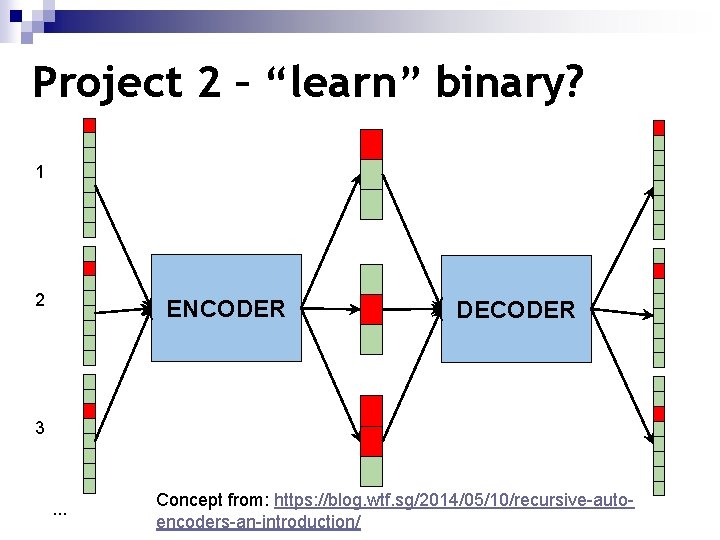

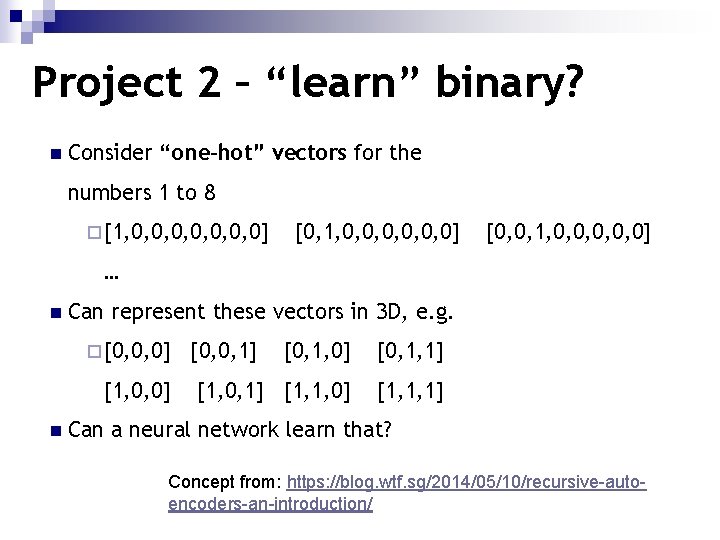

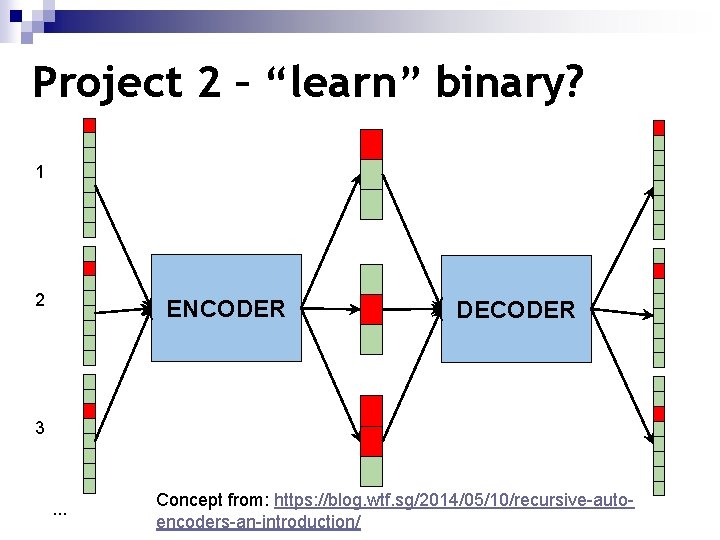

Project 2 – “learn” binary?
- Consider “one-hot” vectors for the numbers 1 to 8
  - [1, 0, 0, 0, 0, 0, 0, 0], [0, 1, 0, 0, 0, 0, 0, 0], [0, 0, 1, 0, 0, 0, 0, 0], …
- Can represent these vectors in 3D, e.g.
  - [0, 0, 0], [1, 0, 0], [0, 0, 1], [0, 1, 0], [0, 1, 1], [1, 0, 1], [1, 1, 0], [1, 1, 1]
- Can a neural network learn that?
Concept from: https://blog.wtf.sg/2014/05/10/recursive-autoencoders-an-introduction/

Project 2 – “learn” binary?
(Diagram: input units 1, 2, 3, … → ENCODER → code → DECODER → output units 1, 2, 3, …)
Concept from: https://blog.wtf.sg/2014/05/10/recursive-autoencoders-an-introduction/
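A minimal sketch of the 8 → 3 → 8 autoencoder in plain Python. Several design choices are assumptions, not the course's code: sigmoid hidden units, a softmax output with cross-entropy loss, separate encoder and decoder weights with biases, learning rate 0.5, and one gradient step per example. The learned 3-D codes need not come out as exact binary vectors.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

N, H = 8, 3  # 8-dim one-hot inputs squeezed through a 3-unit code
rng = random.Random(0)
W1 = [[rng.uniform(-0.5, 0.5) for _ in range(N)] for _ in range(H)]  # encoder weights
b1 = [0.0] * H
W2 = [[rng.uniform(-0.5, 0.5) for _ in range(H)] for _ in range(N)]  # decoder weights
b2 = [0.0] * N
data = [[1.0 if i == k else 0.0 for i in range(N)] for k in range(N)]

def encode(x):
    return [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]

def decode(h):
    return softmax([sum(w * hk for w, hk in zip(row, h)) + b for row, b in zip(W2, b2)])

def total_loss():
    """Cross-entropy between every input and its reconstruction."""
    return -sum(math.log(decode(encode(x))[x.index(1.0)]) for x in data)

initial_loss = total_loss()
lr = 0.5
for _ in range(2000):
    for x in data:
        h = encode(x)
        p = decode(h)
        d_out = [pj - xj for pj, xj in zip(p, x)]  # gradient of softmax + cross-entropy
        d_h = [sum(d_out[j] * W2[j][k] for j in range(N)) for k in range(H)]
        d_z1 = [d_h[k] * h[k] * (1.0 - h[k]) for k in range(H)]  # sigmoid' = h (1 - h)
        for j in range(N):  # decoder update
            for k in range(H):
                W2[j][k] -= lr * d_out[j] * h[k]
            b2[j] -= lr * d_out[j]
        for k in range(H):  # encoder update
            for i in range(N):
                W1[k][i] -= lr * d_z1[k] * x[i]
            b1[k] -= lr * d_z1[k]
final_loss = total_loss()
print(initial_loss, final_loss)
print([[round(v) for v in encode(x)] for x in data])  # learned 3-D codes, rounded
```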

Project 2 - Assignments - Try...
- … changing the activation functions
- … using fewer hidden units: what can you recover?
- … using multiple layers
- … using different weights for encoding and decoding, or adding biases

Recent work on representations: word2vec
- Given a text corpus, embed words in n-dimensional space
- “Similar” words should be “close” in this space
- Use a (fairly simple) neural network to encode
- Results are…
Paper: http://arxiv.org/pdf/1301.3781.pdf
TensorFlow example: https://www.tensorflow.org/versions/master/tutorials/word2vec/index.html

PARIS - FRANCE + ITALY = ROME
SUSHI - JAPAN + GERMANY = BRATWURST
BIGGER - BIG + COLD = COLDER

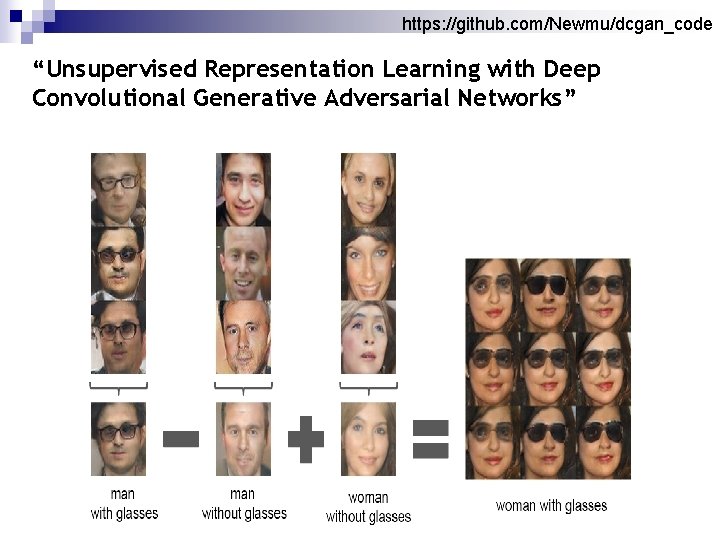

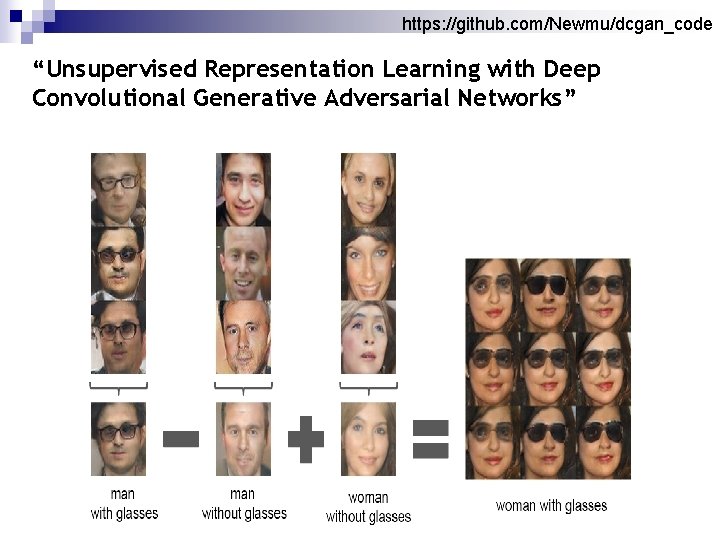
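The analogy arithmetic amounts to: form vec(a) − vec(b) + vec(c), then return the nearest remaining word by cosine similarity. The 3-D vectors below are made up by hand for illustration; real word2vec vectors are learned from a corpus and have many more dimensions.

```python
import math

# Toy, hand-made 3-D "embeddings" (hypothetical, not learned).
# Dimensions loosely mean: [is_french, is_italian, is_city]
vectors = {
    "PARIS": [1.0, 0.0, 1.0],
    "FRANCE": [1.0, 0.0, 0.0],
    "ITALY": [0.0, 1.0, 0.0],
    "ROME": [0.0, 1.0, 1.0],
    "MILAN": [0.0, 0.9, 0.8],
    "LYON": [0.9, 0.0, 0.8],
}

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def analogy(a, b, c):
    """Word closest (by cosine) to vec(a) - vec(b) + vec(c), excluding a, b, c."""
    target = [x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c])]
    candidates = (w for w in vectors if w not in (a, b, c))
    return max(candidates, key=lambda w: cosine(vectors[w], target))

print(analogy("PARIS", "FRANCE", "ITALY"))  # ROME
```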

https://github.com/Newmu/dcgan_code
“Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks”

Deep Learning with ConvNets
Based on: https://adeshpande3.github.io/A-Beginner's-Guide-To-Understanding-Convolutional-Neural-Networks/

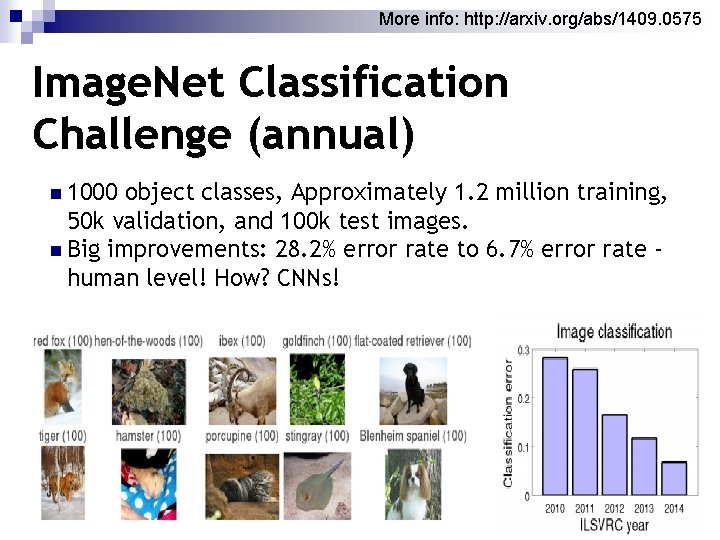

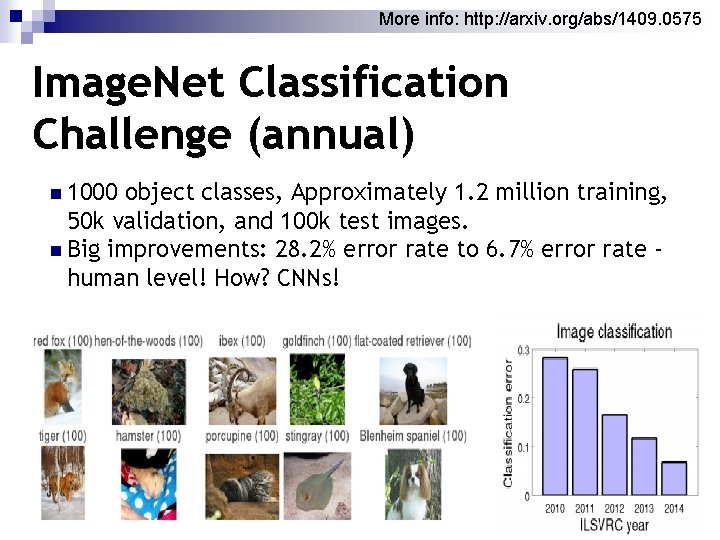

More info: http://arxiv.org/abs/1409.0575
ImageNet Classification Challenge (annual)
- 1000 object classes; approximately 1.2 million training, 50k validation, and 100k test images
- Big improvements: from a 28.2% to a 6.7% error rate, approaching human level! How? CNNs!

Deep Learning for image processing
- Back to cats and dogs…
- Input dimension is ~200k (256 × 256 × 3)!!
- Challenges:
  - computationally intensive
  - questionable performance with shallow architectures…
  - … would require an astronomical amount of data

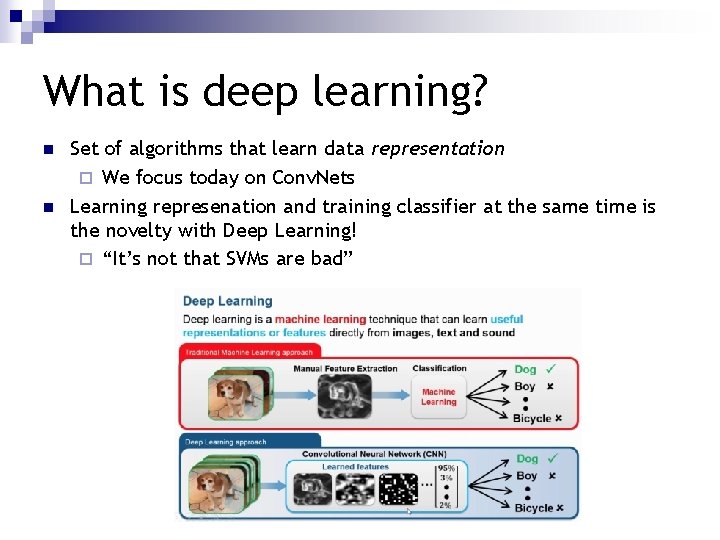

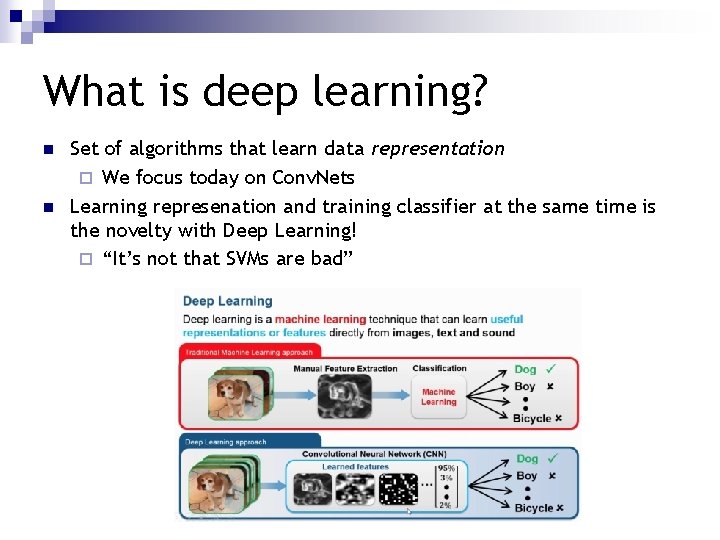

What is deep learning?
- Set of algorithms that learn data representations
  - We focus today on ConvNets
- Learning the representation and training the classifier at the same time is the novelty of Deep Learning!
  - “It’s not that SVMs are bad”

Basic ingredients of ConvNets
- Lots of layers: more layers usually perform better!
- Most commonly used:
  - Fully Connected Layers (MLPs - already covered today)
  - Convolution
  - Pooling
  - Dropout
  - Others: batch norm, ReLU, etc.

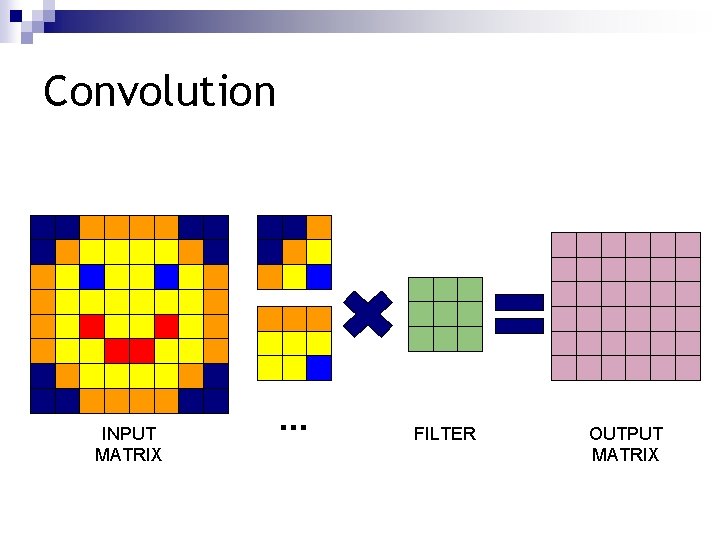

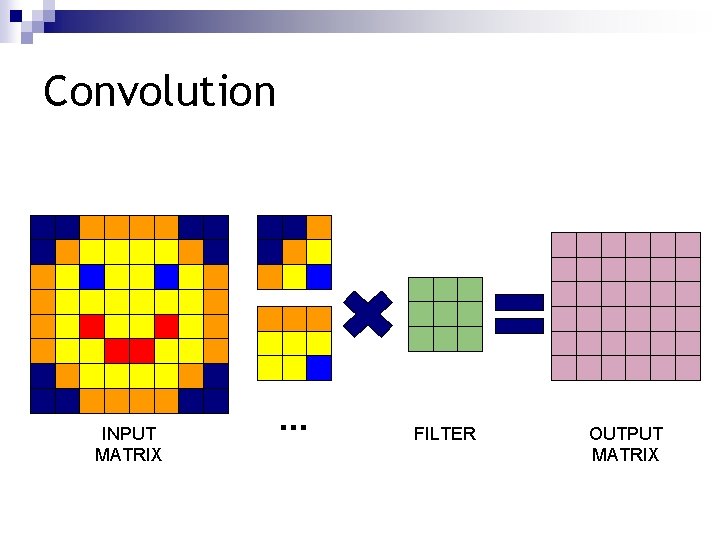

Convolution
(Diagram: a FILTER slides over the INPUT MATRIX to produce the OUTPUT MATRIX)

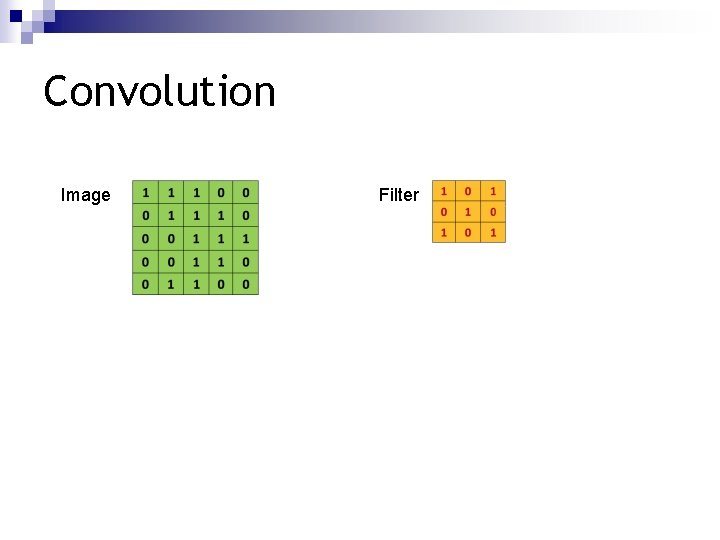

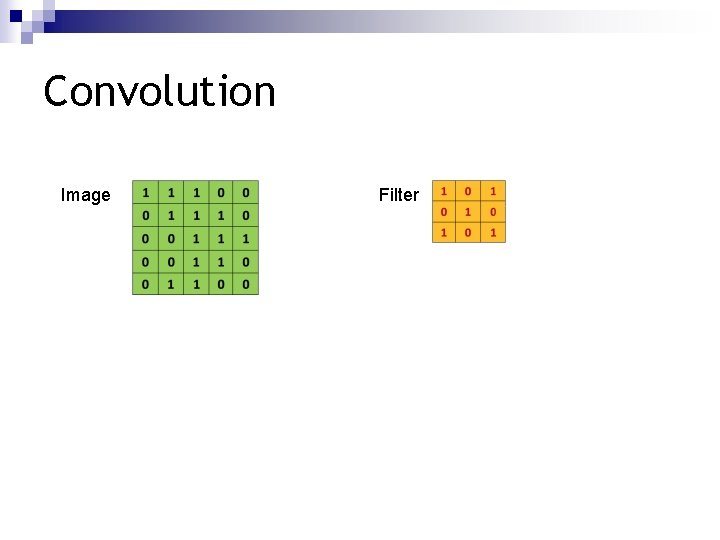

Convolution
(Diagram: image and filter)


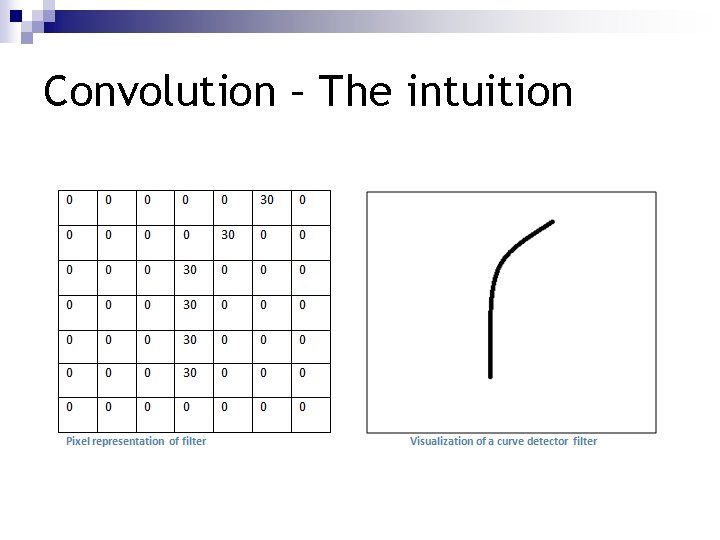

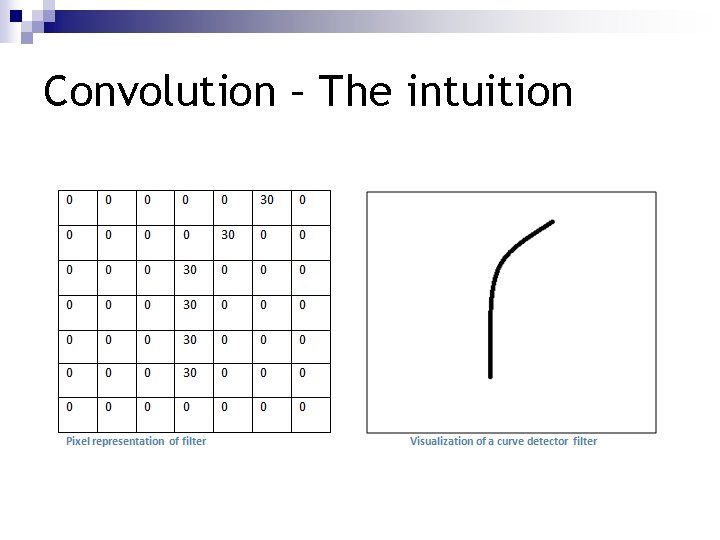

Convolution – The intuition

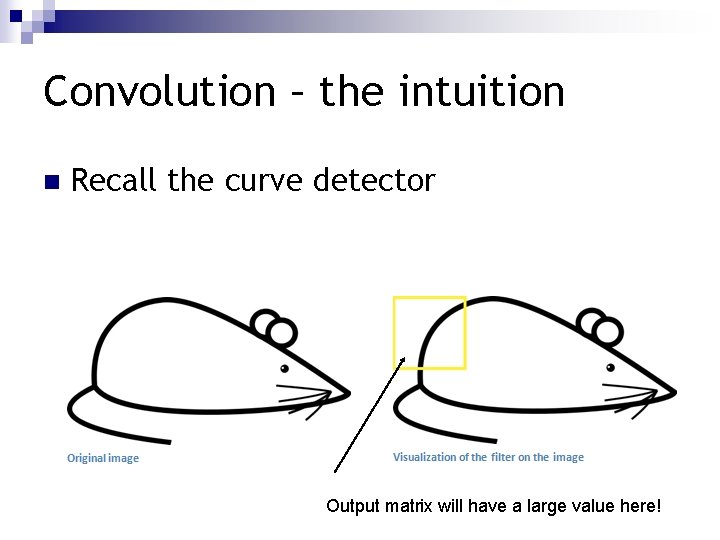

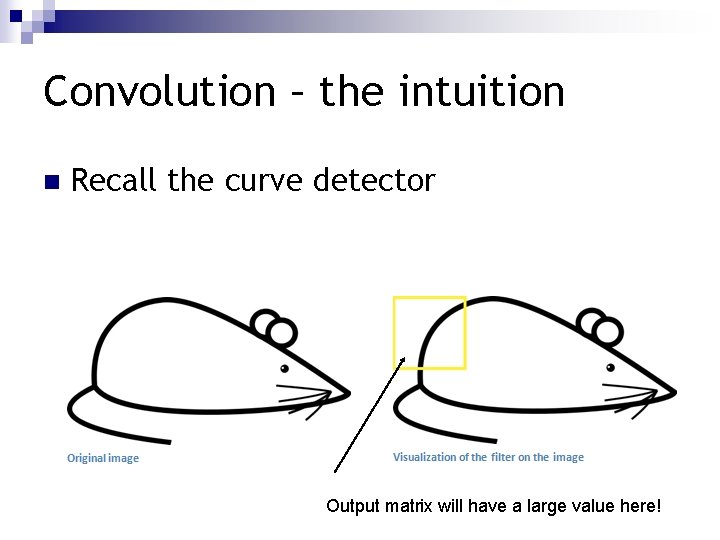

Convolution – the intuition
- Recall the curve detector
- Output matrix will have a large value here!

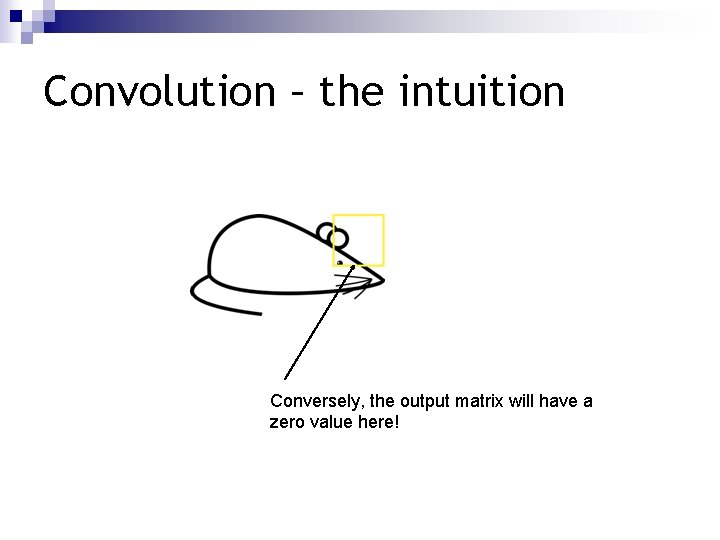

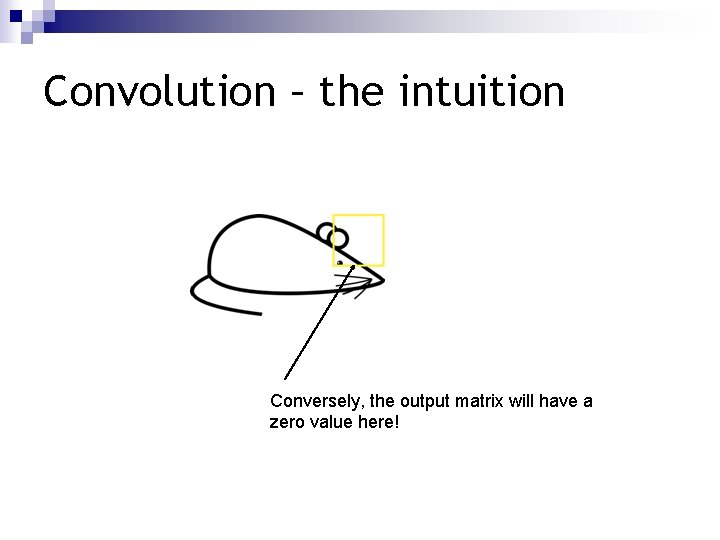

Convolution – the intuition
Conversely, the output matrix will have a zero value here!
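A minimal sketch of the convolution step in plain Python, using "valid" positions only (no padding, stride 1). Like most CNN libraries, it computes a cross-correlation (the filter is not flipped). The vertical-line filter and the 5 × 5 input are hypothetical; the output has a large value where the pattern matches and zero elsewhere, as in the curve-detector intuition.

```python
def convolve2d(image, kernel):
    """'Valid' 2-D convolution as used in CNNs (cross-correlation, filter not flipped).

    Output size is (H - kh + 1) x (W - kw + 1).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

# Hypothetical 3 x 3 filter that responds to a vertical line.
vertical_line = [[0, 1, 0],
                 [0, 1, 0],
                 [0, 1, 0]]
# 5 x 5 input containing a vertical line in column 1.
image = [[0, 1, 0, 0, 0] for _ in range(5)]
feature_map = convolve2d(image, vertical_line)
for row in feature_map:
    print(row)  # [3, 0, 0]: large where the line is, zero elsewhere
```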

Convolution - summary
- Different filters (each acting as a feature detector) produce a feature map
- Shallow layers detect low-level features
- Deeper layers learn more complicated features

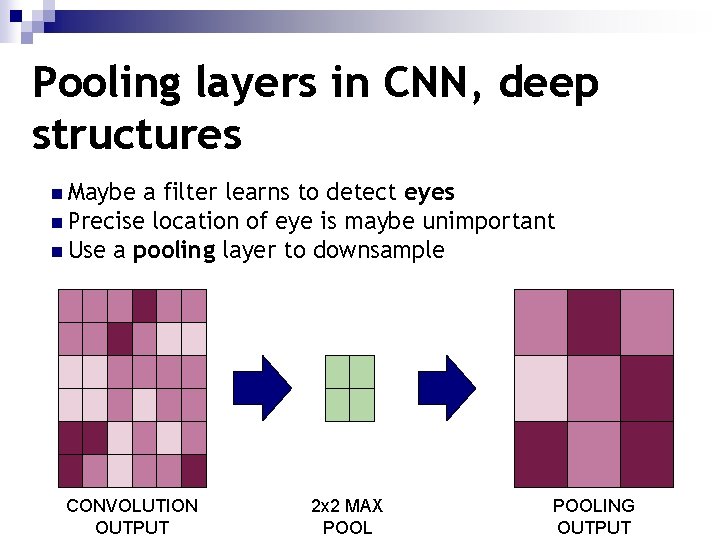

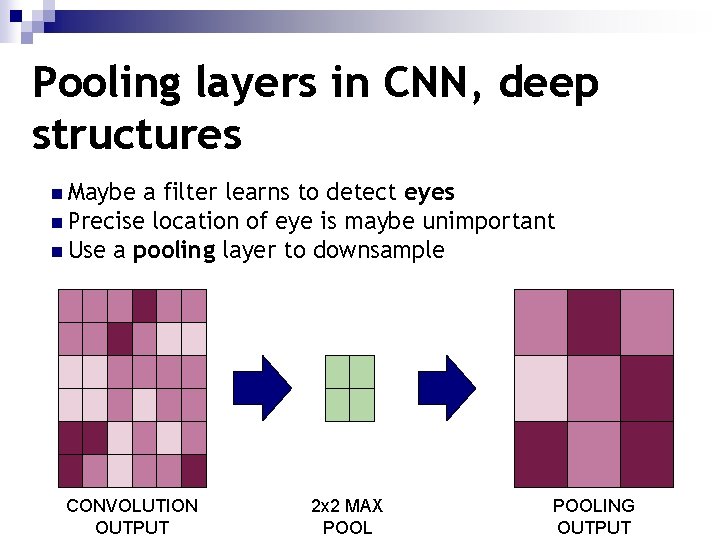

Pooling layers in CNN, deep structures
- Maybe a filter learns to detect eyes
- Precise location of eye is maybe unimportant
- Use a pooling layer to downsample
(Diagram: CONVOLUTION OUTPUT → 2 × 2 MAX POOLING → OUTPUT)

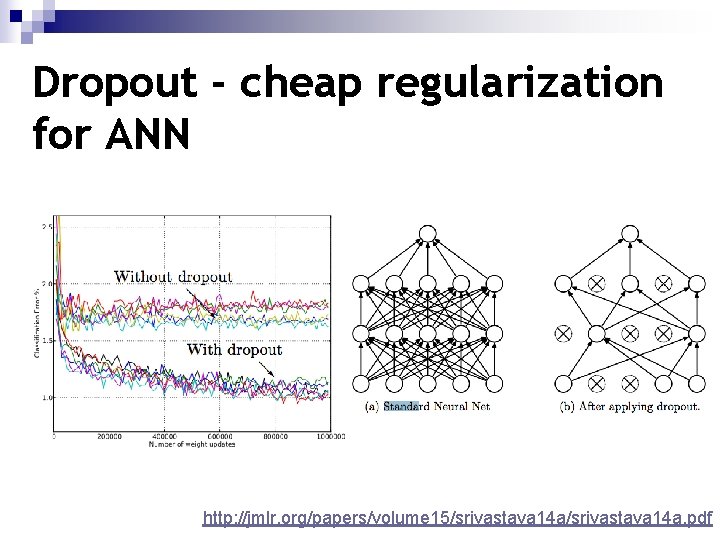

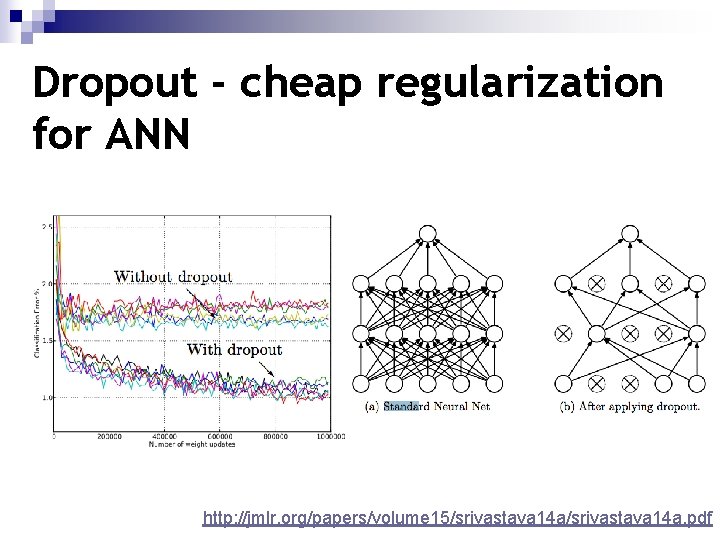
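A minimal sketch of 2 × 2 max pooling with stride 2 on a hypothetical 4 × 4 convolution output; each output value is the maximum of one non-overlapping window.

```python
def max_pool2d(fmap, size=2, stride=2):
    """Downsample a feature map by taking the max over each size x size window."""
    out_h = (len(fmap) - size) // stride + 1
    out_w = (len(fmap[0]) - size) // stride + 1
    return [[max(fmap[i * stride + di][j * stride + dj]
                 for di in range(size) for dj in range(size))
             for j in range(out_w)]
            for i in range(out_h)]

conv_output = [[1, 3, 2, 1],
               [4, 6, 5, 0],
               [7, 2, 9, 8],
               [1, 0, 3, 4]]
print(max_pool2d(conv_output))  # [[6, 5], [7, 9]]
```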

Dropout - cheap regularization for ANN
http://jmlr.org/papers/volume15/srivastava14a.pdf

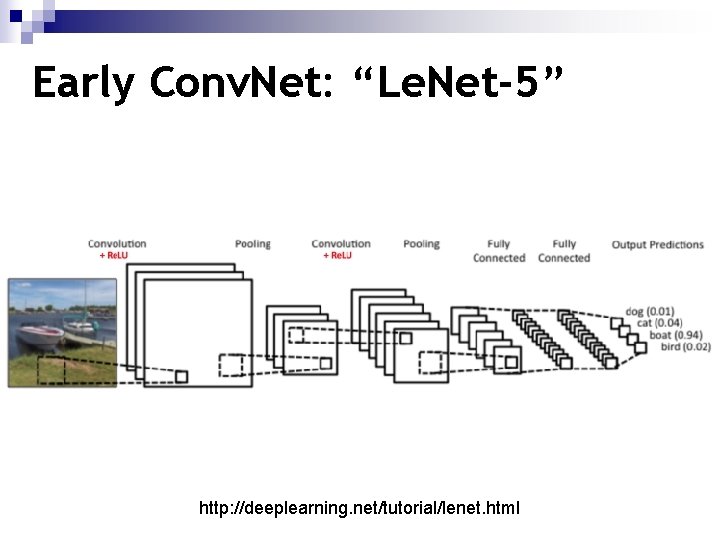

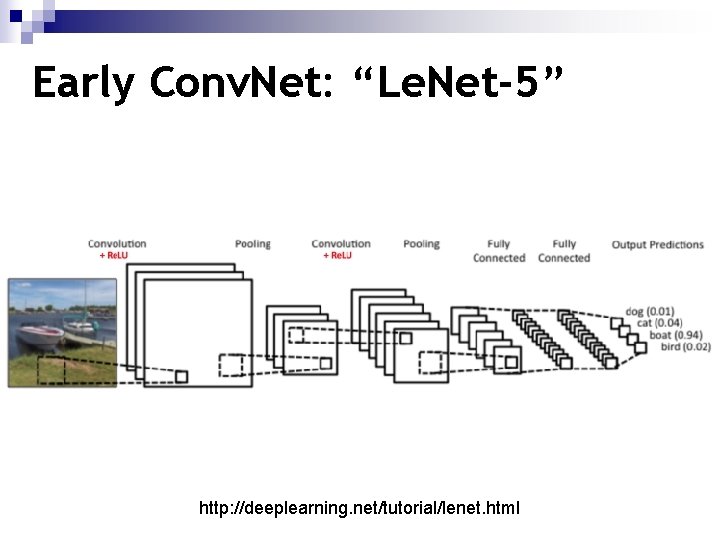
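A minimal sketch of dropout on one layer's activations. It uses the common "inverted dropout" variant, which scales the surviving units by 1/(1 − p) during training so that nothing changes at test time; the linked paper instead scales the weights at test time, which is equivalent in expectation. The drop probability and inputs are illustrative.

```python
import random

def dropout(activations, p_drop, rng, training=True):
    """Inverted dropout: zero each unit with probability p_drop during training and
    scale survivors by 1 / (1 - p_drop). At test time the layer is the identity."""
    if not training or p_drop == 0.0:
        return list(activations)
    keep = 1.0 - p_drop
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(42)
h = [1.0] * 10
print(dropout(h, 0.5, rng))                  # some zeros, survivors scaled to 2.0
print(dropout(h, 0.5, rng, training=False))  # unchanged at test time
```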

Early ConvNet: “LeNet-5”
http://deeplearning.net/tutorial/lenet.html

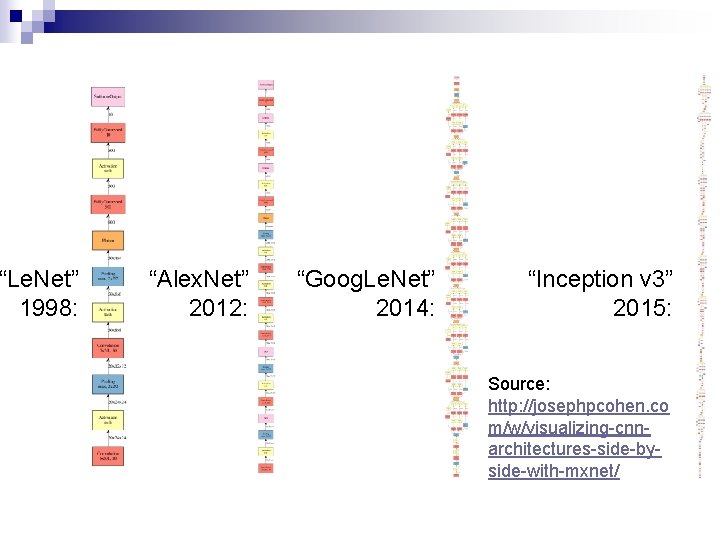

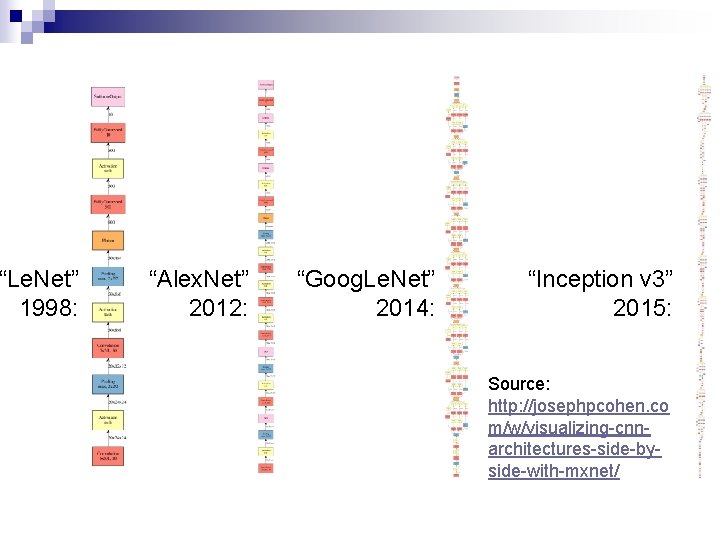

- “LeNet” 1998
- “AlexNet” 2012
- “GoogLeNet” 2014
- “Inception v3” 2015
Source: http://josephpcohen.com/w/visualizing-cnn-architectures-side-by-side-with-mxnet/

Project 3 - MNIST Digit Recognition
- Classify hand-written images of digits 0 to 9
- Classic “MNIST” data set used widely in ML research
- Will use convolutional neural networks!

Project 3 - Assignments - Try...
- … changing the filter sizes, max pool size
- … adding or removing layers
- … using SGD
- … varying the amount of dropout (with iteration?)
- … varying the batch size
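When changing filter and pooling sizes, it helps to track the spatial size of each feature map. A sketch using the usual formula (n − f + 2p) / s + 1, rounded down; the two conv/pool blocks and the 64 feature maps below describe a hypothetical MNIST ConvNet, not necessarily the one used in the project.

```python
def conv_output_size(n, filter_size, padding=0, stride=1):
    """Spatial size after a convolution or pooling layer: (n - f + 2p) // s + 1."""
    return (n - filter_size + 2 * padding) // stride + 1

# Hypothetical MNIST ConvNet: 28 x 28 input, two (5x5 'same' conv, 2x2 max pool) blocks.
n = 28
n = conv_output_size(n, 5, padding=2)  # 'same' padding keeps 28
n = conv_output_size(n, 2, stride=2)   # 2x2 max pool -> 14
n = conv_output_size(n, 5, padding=2)  # 14
n = conv_output_size(n, 2, stride=2)   # 7
print(n, n * n * 64)  # 7, and the flattened size with 64 feature maps
```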

Deep Reinforcement Learning

Learning to play from frames?
https://www.youtube.com/watch?v=Q70ulPJW3Gk

Challenges of Reinforcement Learning
- Can we learn the right moves from frames/scores?
- Need lots of accurately labeled data for ML
- However, in video games we have
  - Sparse, noisy signal
  - Delayed observations
  - Data is not guaranteed to be independent

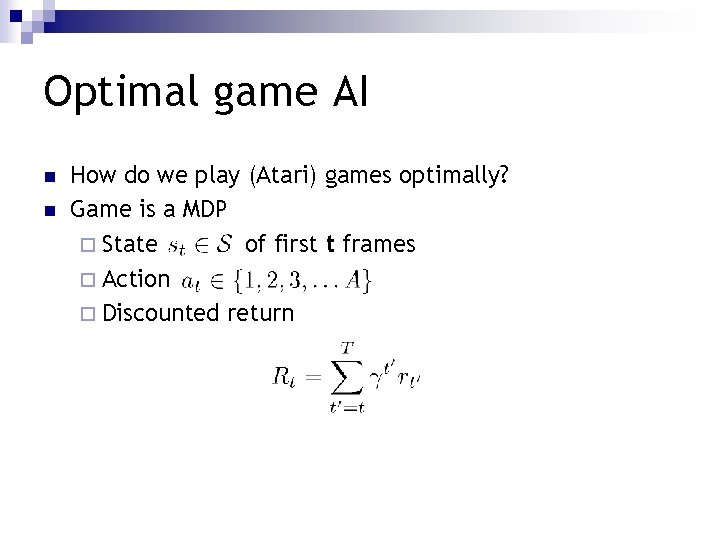

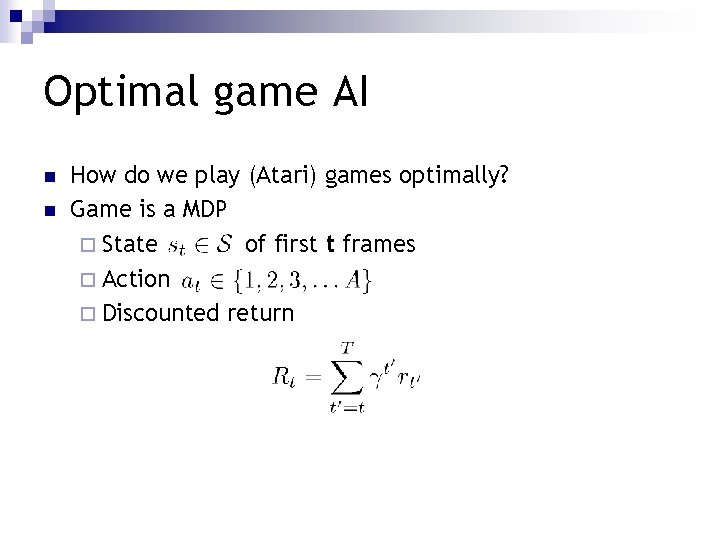

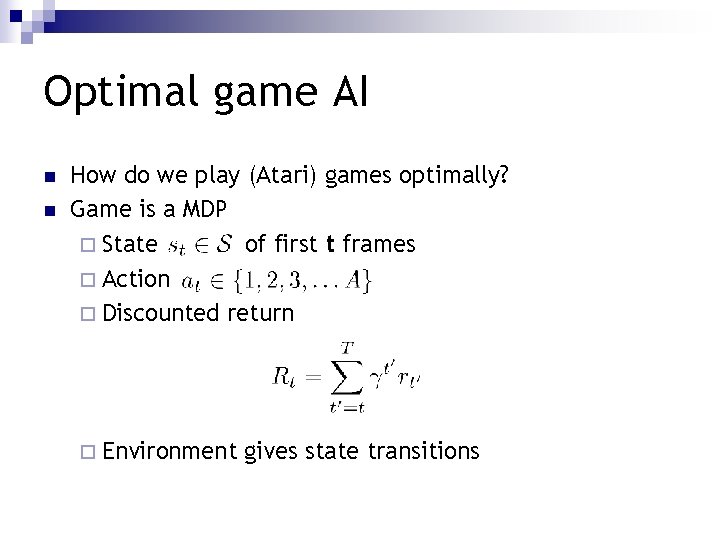


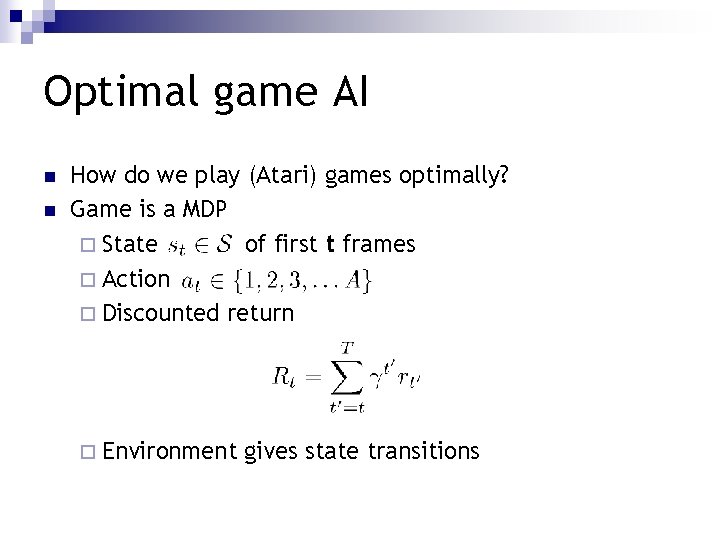

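Optimal game AI
- How do we play (Atari) games optimally?
- Game is an MDP
  - State: the first $t$ frames, $s_t = (x_1, \dots, x_t)$
  - Action $a_t$
  - Discounted return $R_t = \sum_{t'=t}^{T} \gamma^{t'-t} r_{t'}$ (discount factor $\gamma$)
  - Environment gives state transitions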

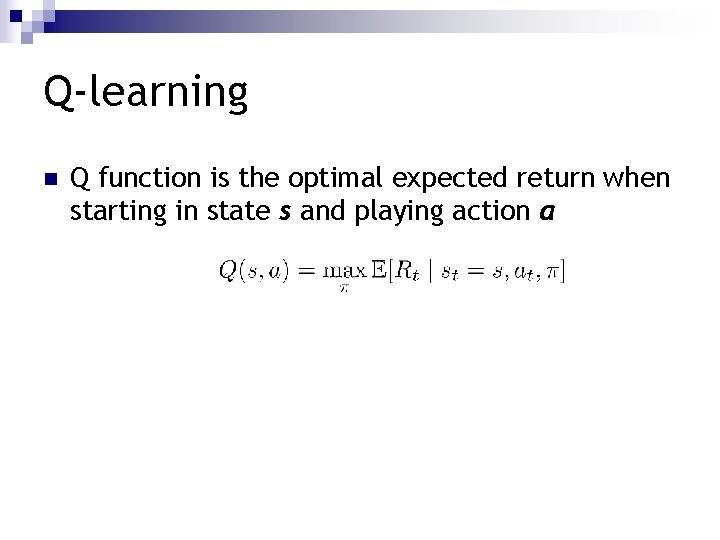

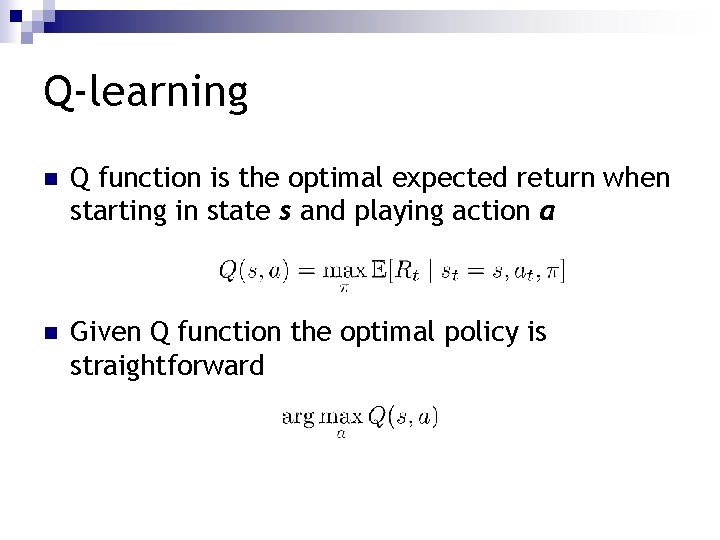

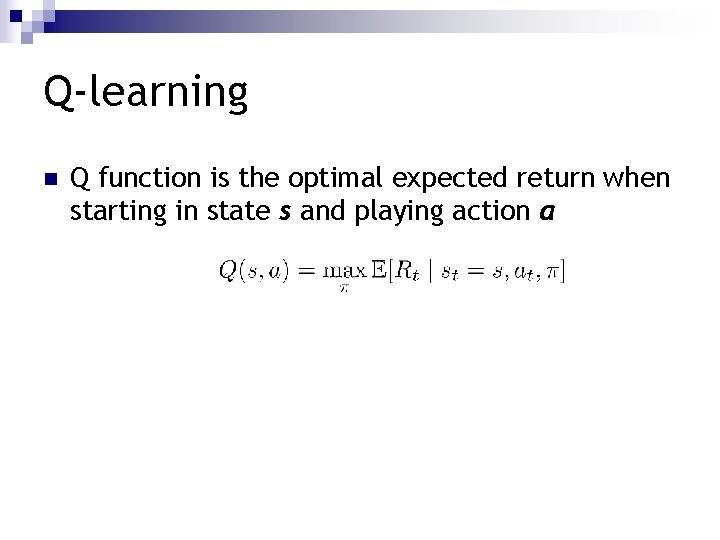

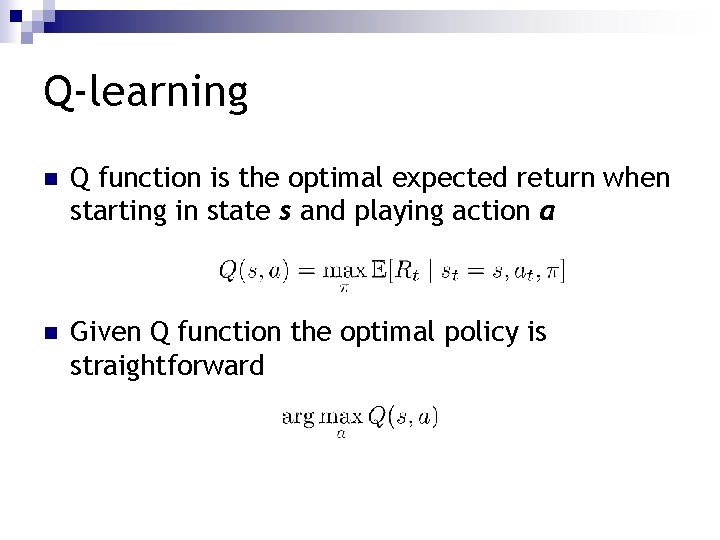
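A minimal sketch of the discounted return $R_t = \sum_{t'=t}^{T} \gamma^{t'-t} r_{t'}$, computed for every time step with one backward pass over a hypothetical episode's rewards (using $R_t = r_t + \gamma R_{t+1}$).

```python
def discounted_return(rewards, gamma=0.99):
    """R_t for every t, via the recursion R_t = r_t + gamma * R_{t+1}."""
    returns = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns

# Sparse reward: nothing until the last frame (hypothetical episode).
print(discounted_return([0, 0, 0, 1], gamma=0.9))
```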


Q-learning
- Q function $Q^*(s, a)$ is the optimal expected return when starting in state $s$ and playing action $a$
- Given the Q function, the optimal policy is straightforward: $\pi^*(s) = \arg\max_a Q^*(s, a)$

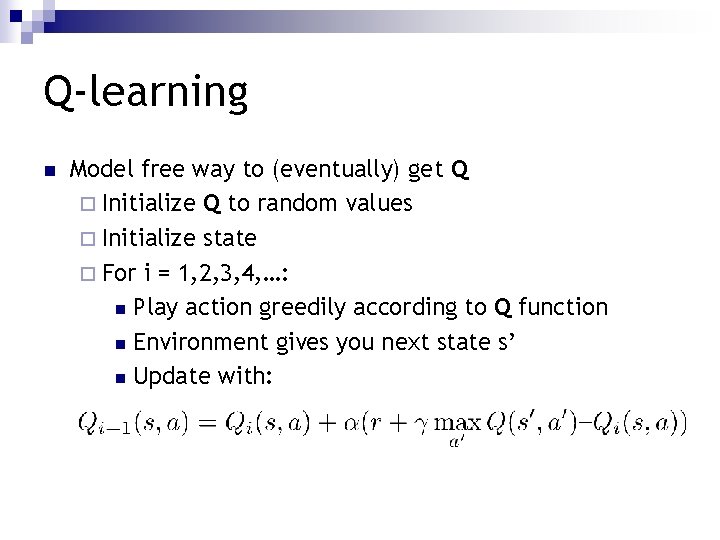

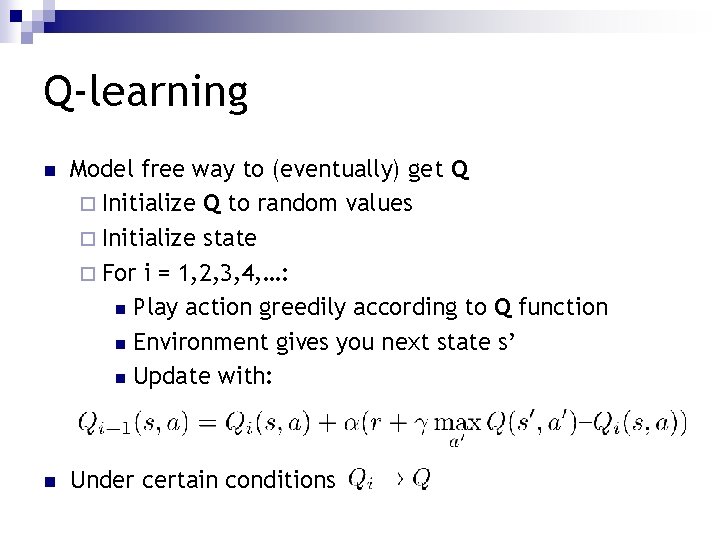

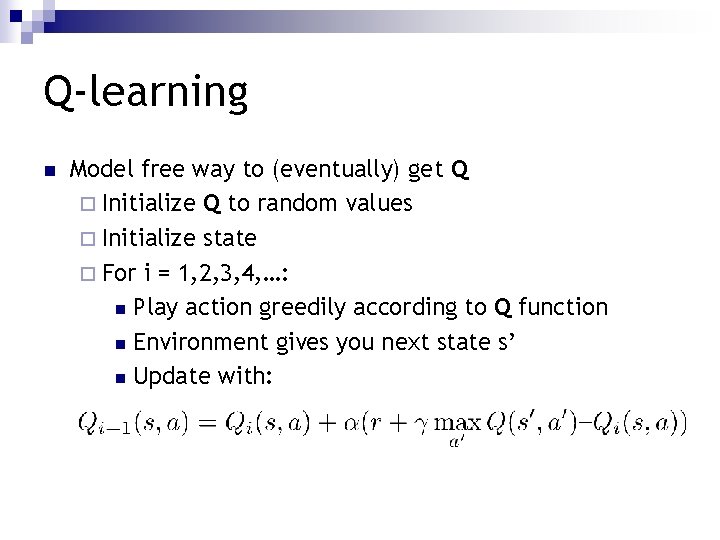

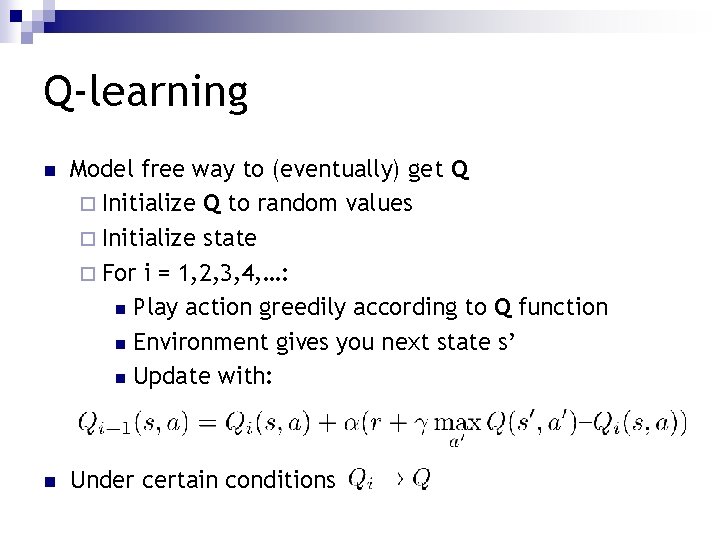


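Q-learning
- Model-free way to (eventually) get Q
  - Initialize Q to random values
  - Initialize state $s$
  - For i = 1, 2, 3, 4, …:
    - Play action $a$ greedily according to the Q function
    - Environment gives you reward $r$ and next state $s'$
    - Update with $Q(s, a) \leftarrow Q(s, a) + \alpha \left( r + \gamma \max_{a'} Q(s', a') - Q(s, a) \right)$, then set $s \leftarrow s'$
- Under certain conditions (every state-action pair keeps being visited and the learning rate $\alpha$ decays appropriately), Q converges to the optimal Q function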

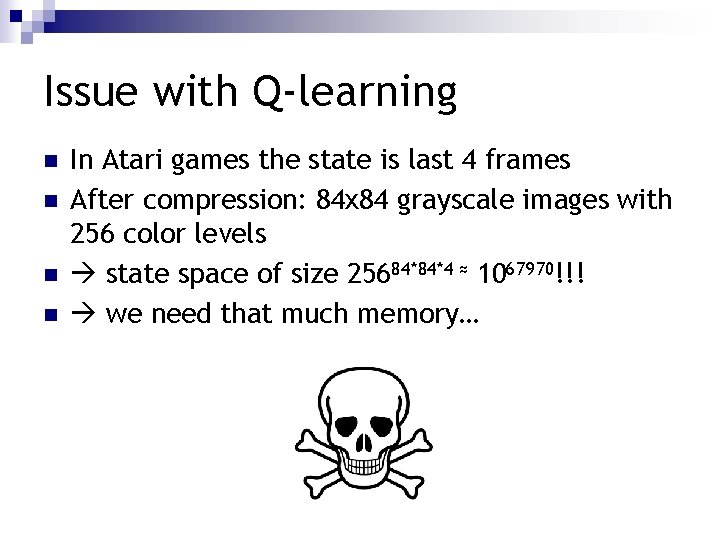

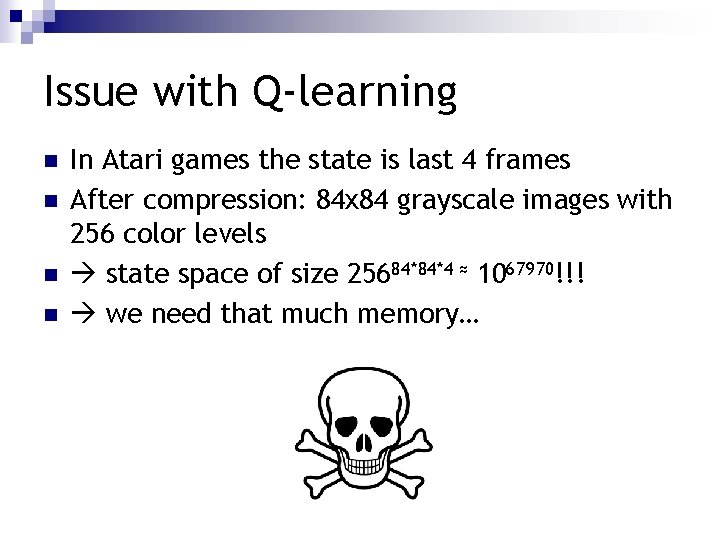
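A minimal tabular Q-learning sketch on a hypothetical 5-state chain (move left or right; reward 1 for reaching the right end, which ends the episode). Two choices go beyond the slide and are assumptions: actions are chosen ε-greedily rather than purely greedily, so every action keeps being tried, and terminal transitions use the target r with no future term. The greedy policy argmax_a Q(s, a) is read off at the end.

```python
import random

N_STATES = 5        # states 0..4 on a line; state 4 is the goal
GOAL = N_STATES - 1
ACTIONS = (0, 1)    # 0 = move left, 1 = move right

def env_step(s, a):
    """Hypothetical environment: reward 1 (and episode end) on reaching GOAL."""
    s_next = max(0, s - 1) if a == 0 else min(GOAL, s + 1)
    reward = 1.0 if s_next == GOAL else 0.0
    return s_next, reward, s_next == GOAL

def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    # Initialize Q to small random values.
    Q = {(s, a): rng.uniform(0.0, 0.01) for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        s, done = 0, False
        while not done:
            # Epsilon-greedy: usually greedy w.r.t. Q, sometimes a random action.
            if rng.random() < epsilon:
                a = rng.choice(ACTIONS)
            else:
                a = max(ACTIONS, key=lambda act: Q[(s, act)])
            s_next, r, done = env_step(s, a)
            # Q-learning update; a terminal transition has no future term.
            target = r if done else r + gamma * max(Q[(s_next, b)] for b in ACTIONS)
            Q[(s, a)] += alpha * (target - Q[(s, a)])
            s = s_next
    return Q

Q = q_learning()
greedy_policy = [max(ACTIONS, key=lambda a: Q[(s, a)]) for s in range(GOAL)]
print(greedy_policy)  # argmax_a Q(s, a) for each non-terminal state
```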

Issue with Q-learning
- In Atari games the state is the last 4 frames
- After compression: 84 × 84 grayscale images with 256 gray levels
- State space of size $256^{84 \times 84 \times 4} \approx 10^{67970}$!!!
- A tabular Q function would need that much memory…

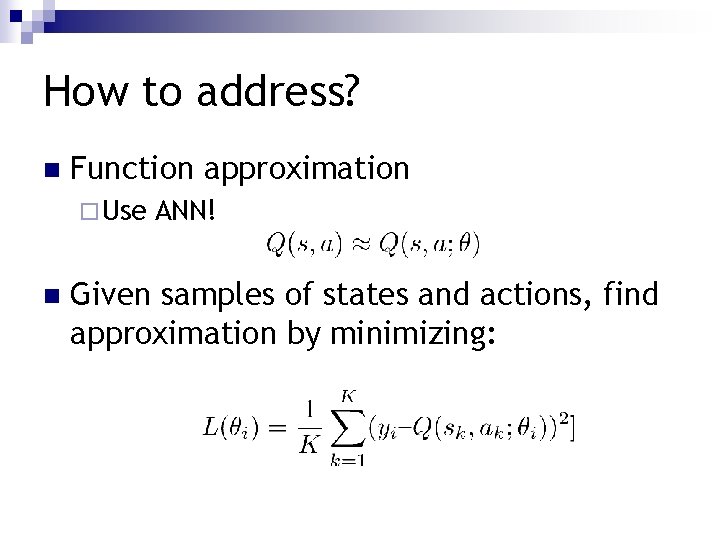

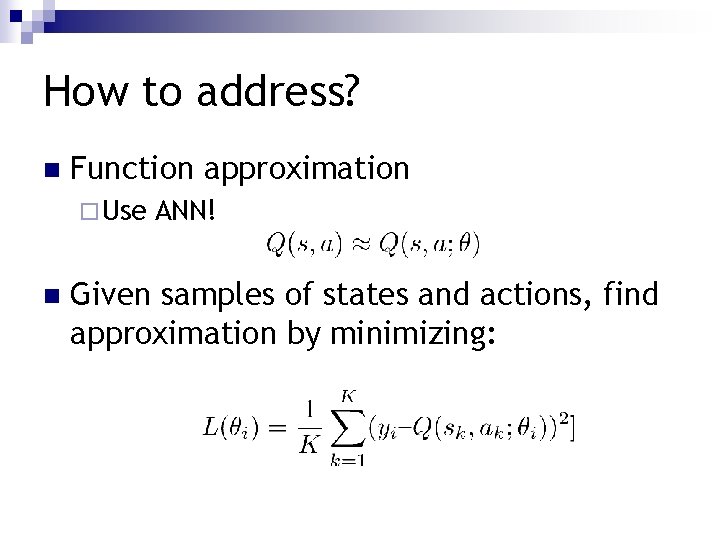
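The state-space arithmetic, checked in Python. The exact integer 256^28224 has tens of thousands of digits, so the sketch works with its base-10 exponent instead.

```python
import math

pixels = 84 * 84 * 4  # last 4 frames of 84 x 84 grayscale pixels
levels = 256          # gray levels per pixel
# The number of distinct states is levels ** pixels; take its base-10 exponent.
exponent = pixels * math.log10(levels)
print(pixels, int(exponent))  # 28224 67970, i.e. about 10^67970 states
```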

How to address? n Function approximation ¨ Use ANN!

How to address? n Function approximation ¨ Use n ANN! Given samples of states and actions, find approximation by minimizing:

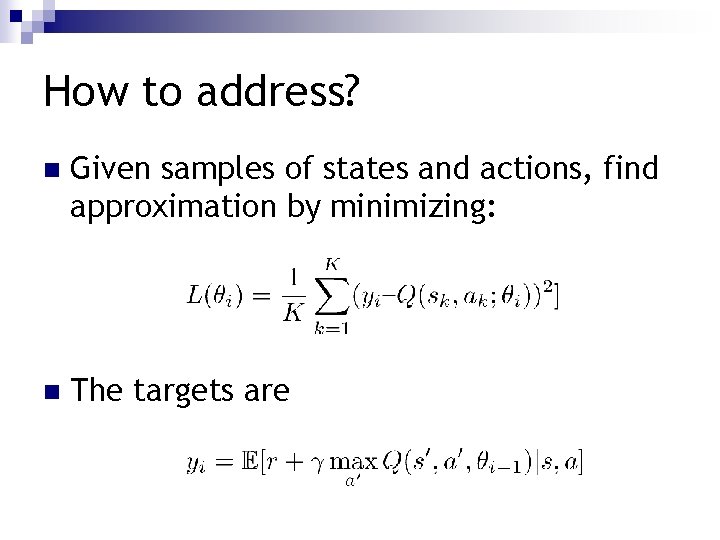

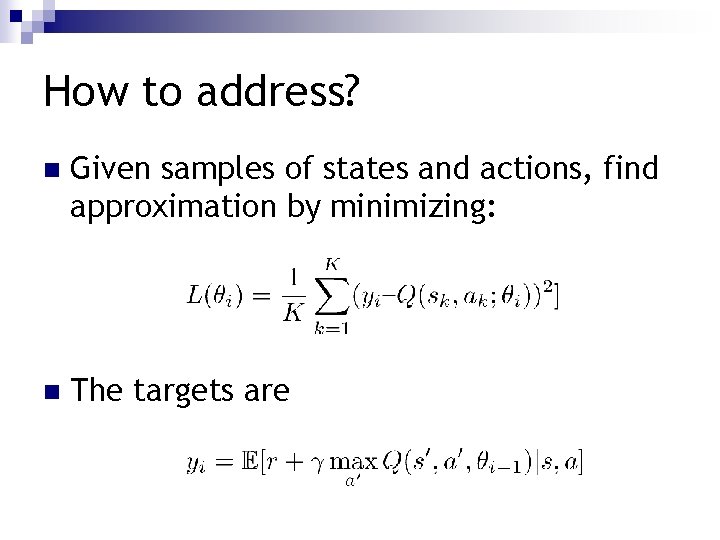

How to address? n Given samples of states and actions, find approximation by minimizing:

How to address? n Given samples of states and actions, find approximation by minimizing: n The targets are
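A minimal sketch of building the regression targets and the squared loss for a batch of transitions, given Q-values for the next states (in the formulation above, these come from the previous parameters θ_{i−1}). Setting y = r for transitions that end the episode is an assumption taken from common practice; the names `dqn_targets` and `squared_loss` are hypothetical.

```python
def dqn_targets(batch, q_next, gamma=0.99):
    """Targets y = r + gamma * max_a' Q(s', a') for a batch of transitions.

    batch:  list of (reward, done) pairs
    q_next: list of Q-value lists for the next states
    Transitions that end the episode get y = r (an assumption).
    """
    return [r if done else r + gamma * max(q) for (r, done), q in zip(batch, q_next)]

def squared_loss(targets, q_taken):
    """Mean of (y - Q(s, a; theta))^2 over the batch."""
    return sum((y - q) ** 2 for y, q in zip(targets, q_taken)) / len(targets)

batch = [(1.0, False), (0.0, True)]
q_next = [[0.5, 2.0], [3.0, 1.0]]
y = dqn_targets(batch, q_next, gamma=0.5)
print(y, squared_loss(y, [1.0, 0.0]))  # [2.0, 0.0] 0.5
```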

The DQN algorithm
- How to get some independence in the samples?
- DQN uses experience replay of the last 1M frames
- Samples (before, action, reward, after) tuples uniformly from that experience
- Then trains the Q-network…

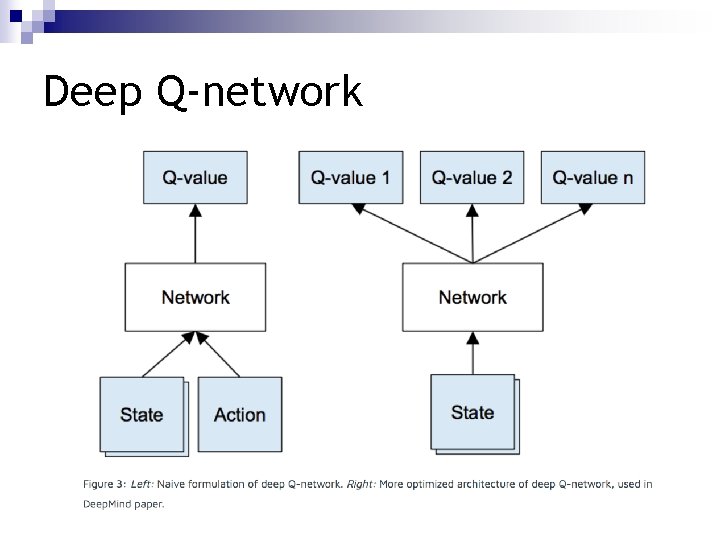

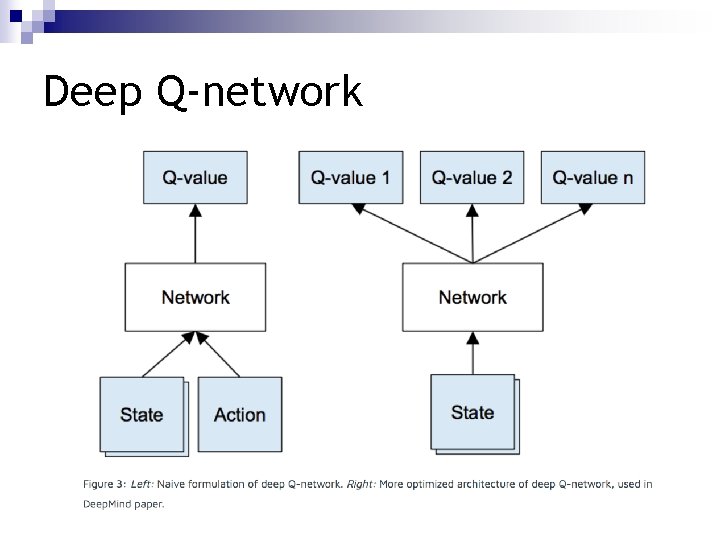
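A minimal sketch of an experience-replay memory: a fixed-capacity buffer (1M transitions by default, matching the slide) that drops the oldest entries and samples (before, action, reward, after) tuples uniformly at random. The class and method names are hypothetical.

```python
import random
from collections import deque

class ReplayBuffer:
    """Fixed-capacity memory of transitions, sampled uniformly at random."""

    def __init__(self, capacity=1_000_000):
        # A deque with maxlen silently discards the oldest item when full.
        self.buffer = deque(maxlen=capacity)

    def add(self, before, action, reward, after):
        self.buffer.append((before, action, reward, after))

    def sample(self, batch_size, rng=random):
        # Uniform sampling without replacement breaks up the correlation
        # between consecutive frames.
        indices = rng.sample(range(len(self.buffer)), batch_size)
        return [self.buffer[i] for i in indices]

    def __len__(self):
        return len(self.buffer)

buffer = ReplayBuffer(capacity=3)
for t in range(5):
    buffer.add(t, 0, 0.0, t + 1)
print(len(buffer), buffer.sample(2, random.Random(0)))
```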

Deep Q-network

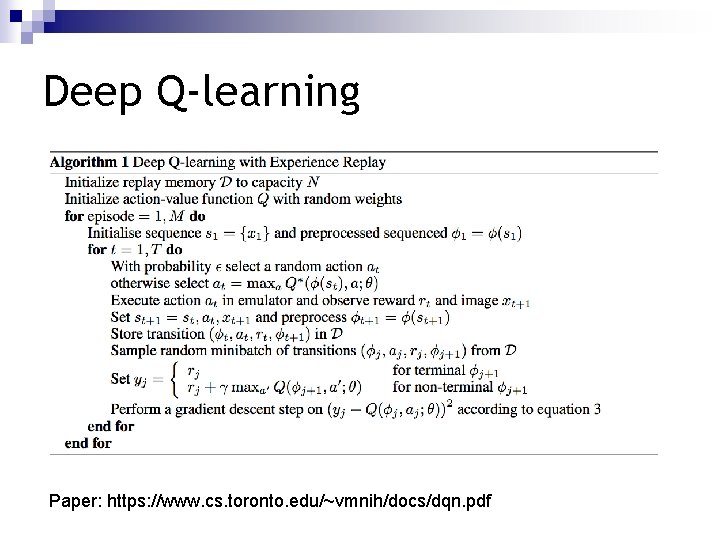

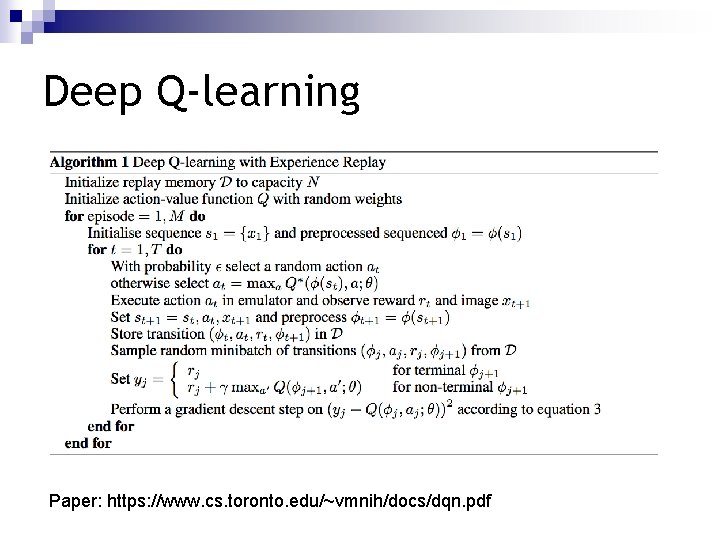

Deep Q-learning
Paper: https://www.cs.toronto.edu/~vmnih/docs/dqn.pdf

Deep convolutional Q-network
- This is the architecture that DeepMind had in mind

Thank you!