Prototype-Driven Learning for Sequence Models (Aria Haghighi and Dan Klein)


Prototype-Driven Learning for Sequence Models. Aria Haghighi and Dan Klein, University of California, Berkeley. Slides prepared by Andrew Carlson for the Semisupervised NL Learning Reading Group.

Motivation: learn models with the least effort • Supervised learning requires many labeled examples • Unsupervised learning requires a carefully designed model – it does not necessarily minimize total effort • Prototype-driven learning can require less total effort

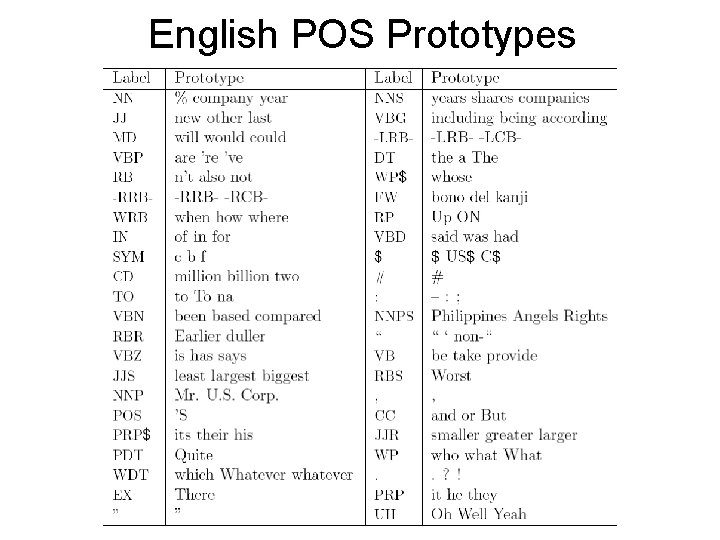

Prototype-driven learning • Specify prototypical examples for each target label • Example: for POS tagging, list the target tags and a few examples of each tag
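In code, a prototype list can be as simple as a mapping from each label to a handful of words. The sketch below is a minimal illustration assuming a plain Python dict; `PROTOTYPES` and `invert_prototypes` are hypothetical names, and apart from *said* for VBD (the example used in the "General approach" slide) the entries are placeholders rather than the paper's actual list.

```python
# Hypothetical prototype list: label -> a few prototypical words.
# Only "said" -> VBD comes from the slides; the other entries are placeholders.
PROTOTYPES: dict[str, list[str]] = {
    "VBD": ["said", "was", "had"],
    "NN": ["company", "year", "market"],
    "DT": ["the", "a", "an"],
}

def invert_prototypes(protos: dict[str, list[str]]) -> dict[str, str]:
    """Map each prototype word back to the label it exemplifies."""
    word_to_label: dict[str, str] = {}
    for label, words in protos.items():
        for word in words:
            # A word listed under two different labels would be ambiguous.
            if word in word_to_label and word_to_label[word] != label:
                raise ValueError(f"{word!r} is a prototype for two labels")
            word_to_label[word] = label
    return word_to_label

PROTO_LABEL = invert_prototypes(PROTOTYPES)
print(PROTO_LABEL["said"])  # VBD
```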

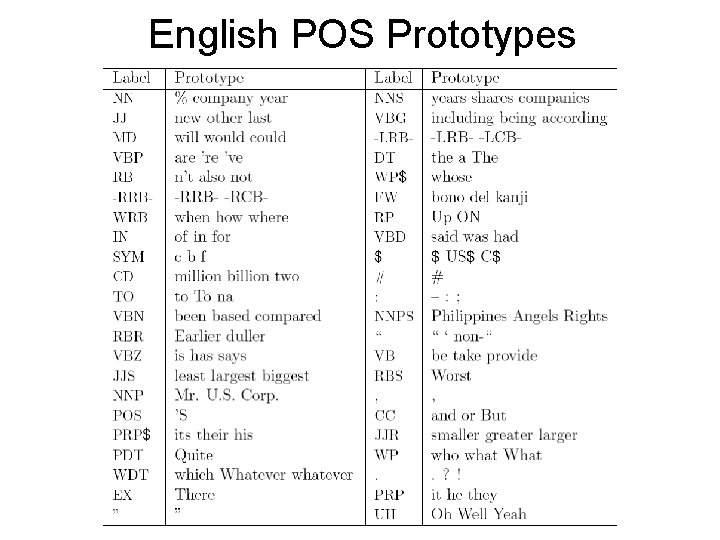

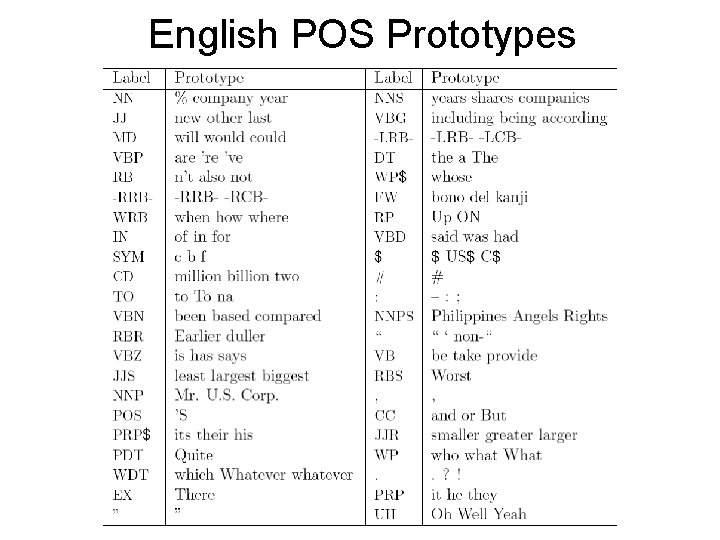

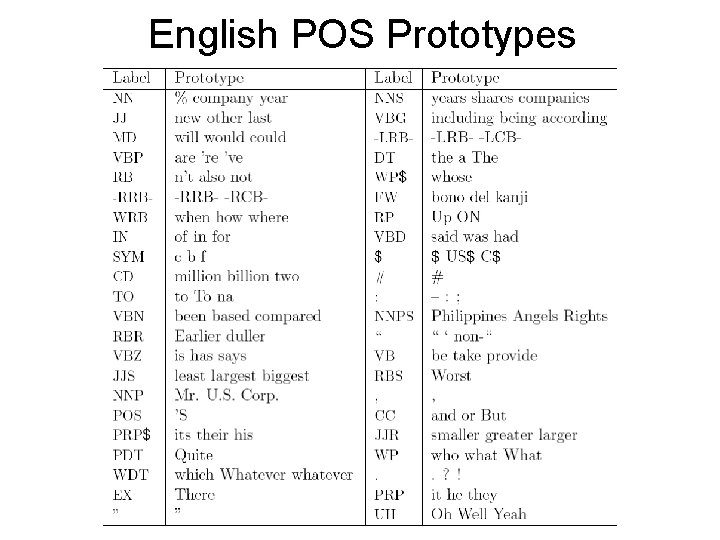

English POS Prototypes

Arguments for prototype-driven learning • It is the minimum one would have to provide to a human annotator • Pedagogical use • Natural language exhibits proform and prototype effects


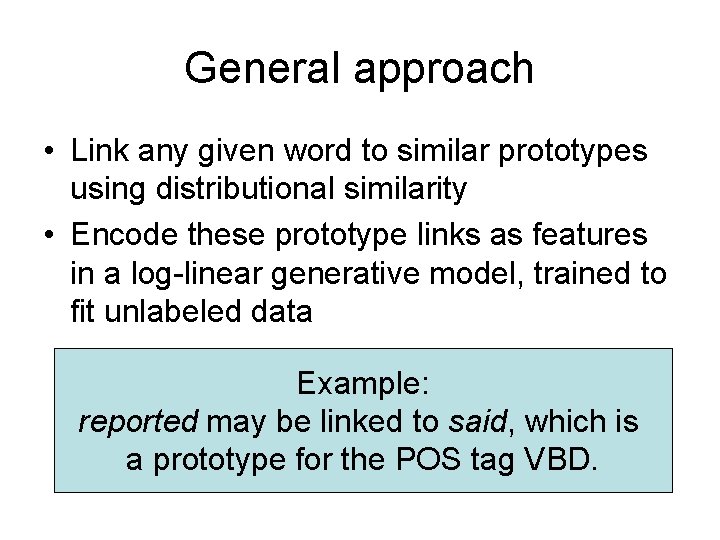

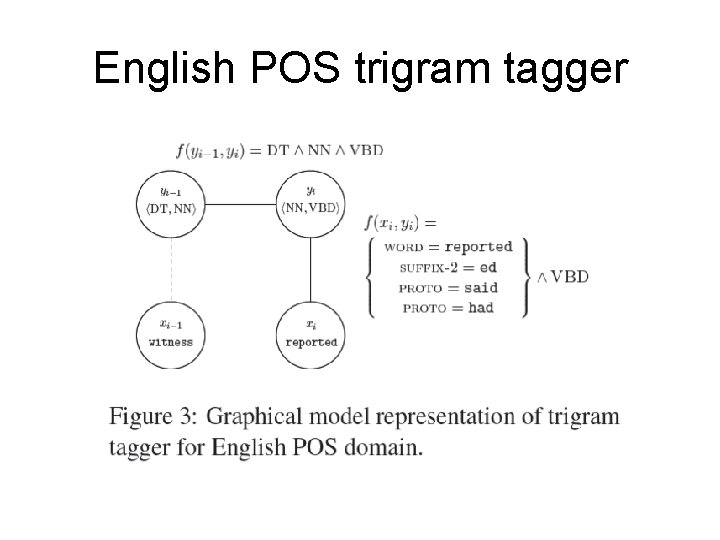

General approach • Link any given word to similar prototypes using distributional similarity • Encode these prototype links as features in a log-linear generative model, trained to fit unlabeled data • Example: reported may be linked to said, which is a prototype for the POS tag VBD.
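The linking step can be sketched as a nearest-prototype search over context vectors. This is a minimal sketch assuming sparse count vectors compared by cosine similarity with a fixed cutoff; the toy counts, `threshold=0.5`, and the function names are assumptions for illustration, and the slides do not specify how the paper builds or compares its vectors.

```python
import math

def cosine(u: dict[str, float], v: dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(val * v.get(key, 0.0) for key, val in u.items())
    norm_u = math.sqrt(sum(val * val for val in u.values()))
    norm_v = math.sqrt(sum(val * val for val in v.values()))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)

def similar_prototypes(word, vectors, prototypes, threshold=0.5):
    """Prototypes whose context vector has cosine >= threshold with `word`'s, best first."""
    if word not in vectors:
        return []
    scored = [(cosine(vectors[word], vectors[p]), p) for p in prototypes if p in vectors]
    return [p for score, p in sorted(scored, reverse=True) if score >= threshold]

# Toy context counts (hypothetical): "L:" = word to the left, "R:" = word to the right.
vectors = {
    "reported": {"L:he": 3, "R:that": 4},
    "said": {"L:he": 5, "R:that": 6, "R:,": 1},
    "the": {"L:of": 4, "R:company": 3},
}
print(similar_prototypes("reported", vectors, ["said", "the"]))  # ['said']
```

With these made-up counts, *reported* shares its contexts with *said* but none with *the*, so only *said* is returned.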

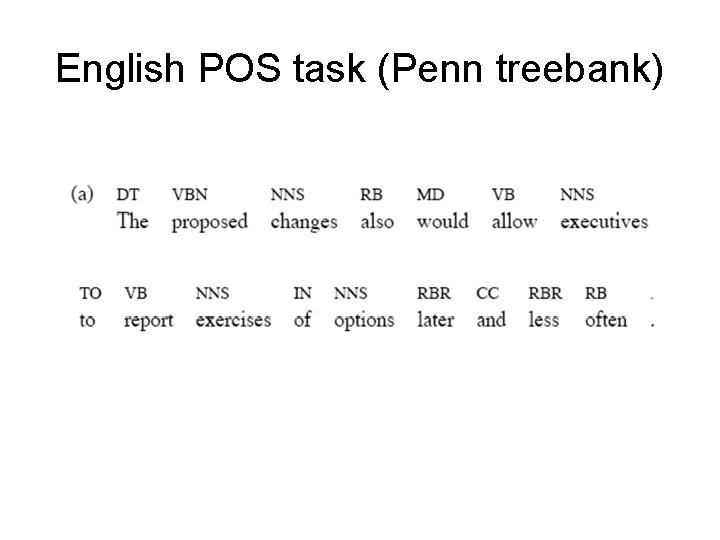

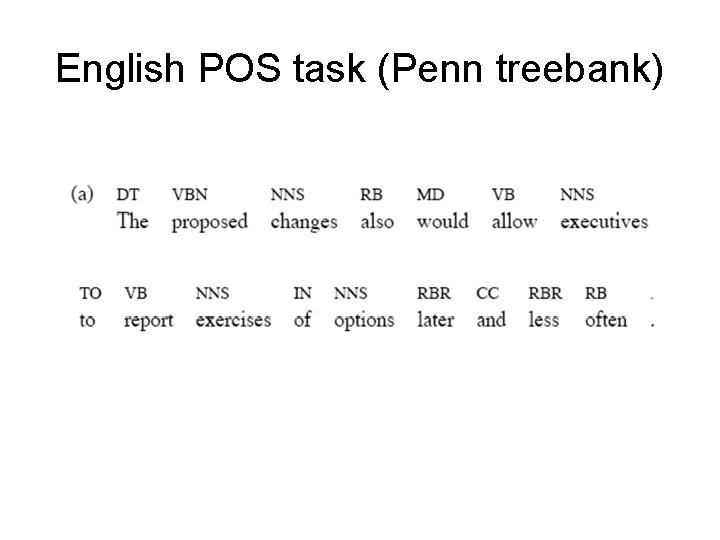

English POS task (Penn Treebank)

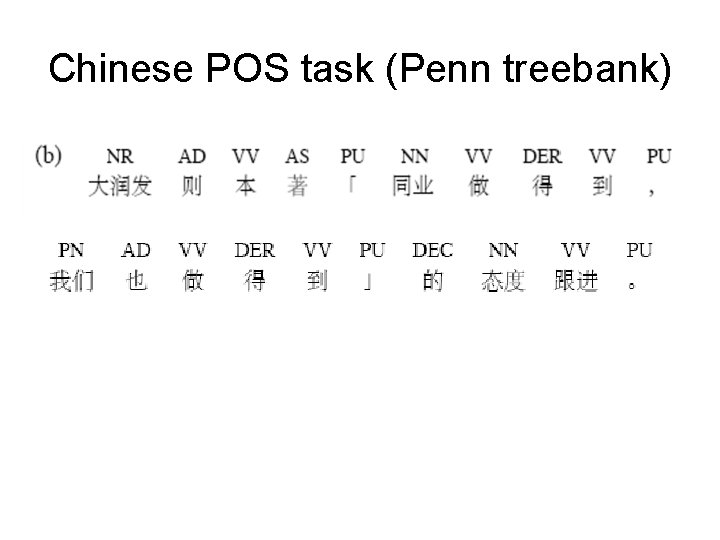

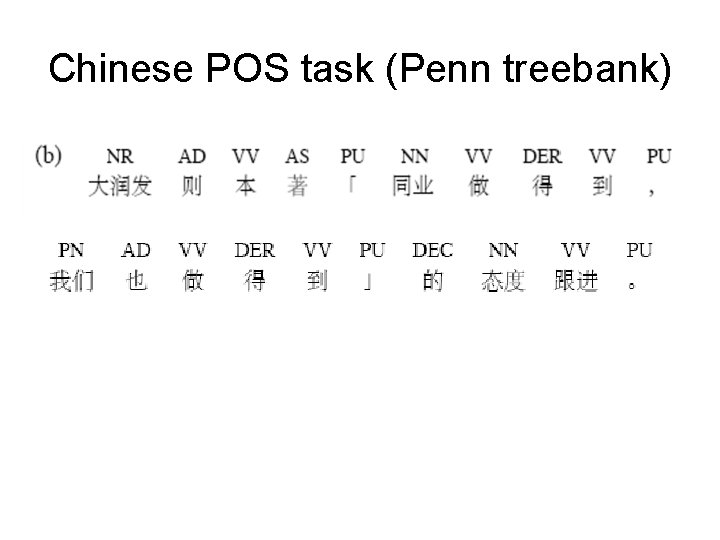

Chinese POS task (Penn Treebank)

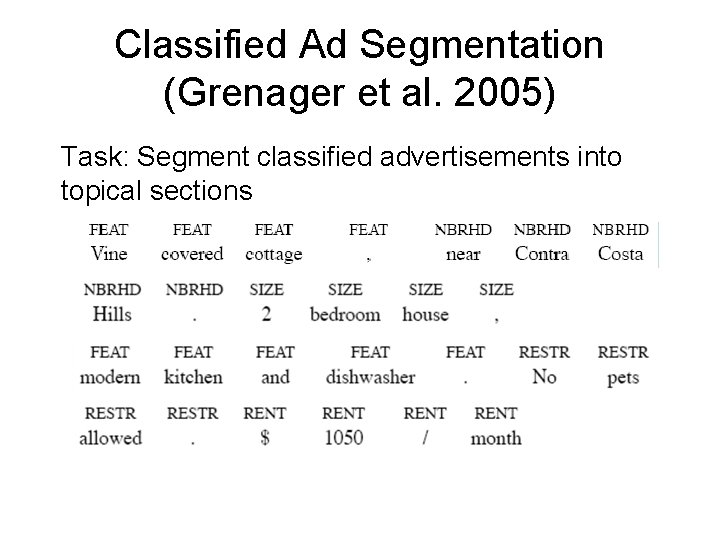

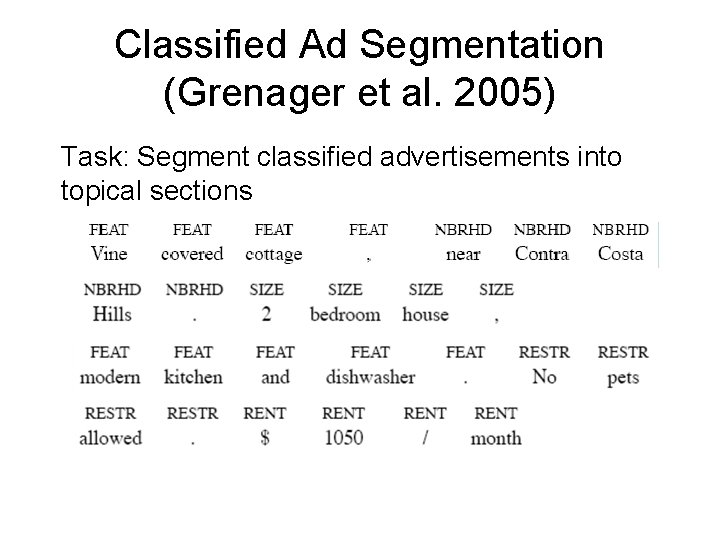


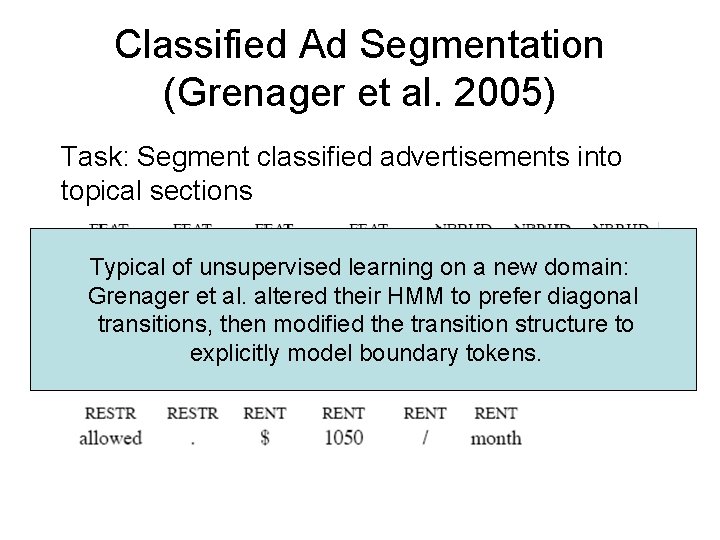

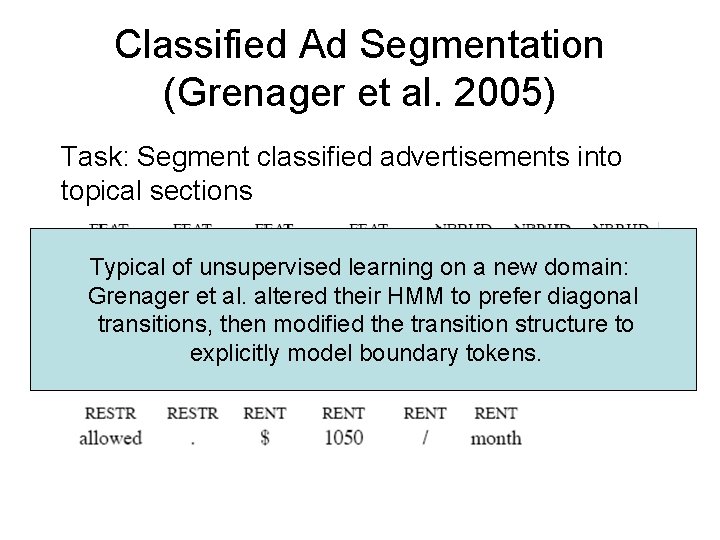

Classified Ad Segmentation (Grenager et al. 2005) • Task: segment classified advertisements into topical sections • Typical of unsupervised learning on a new domain: Grenager et al. altered their HMM to prefer diagonal transitions (staying in the same section), then modified the transition structure to explicitly model boundary tokens.

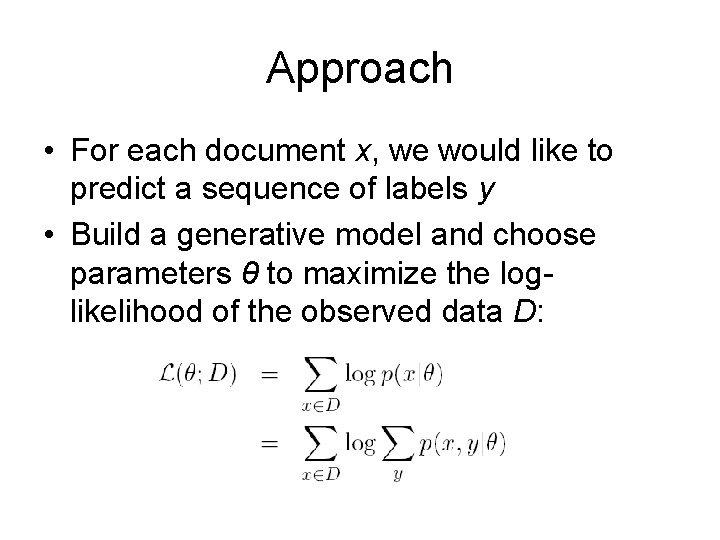

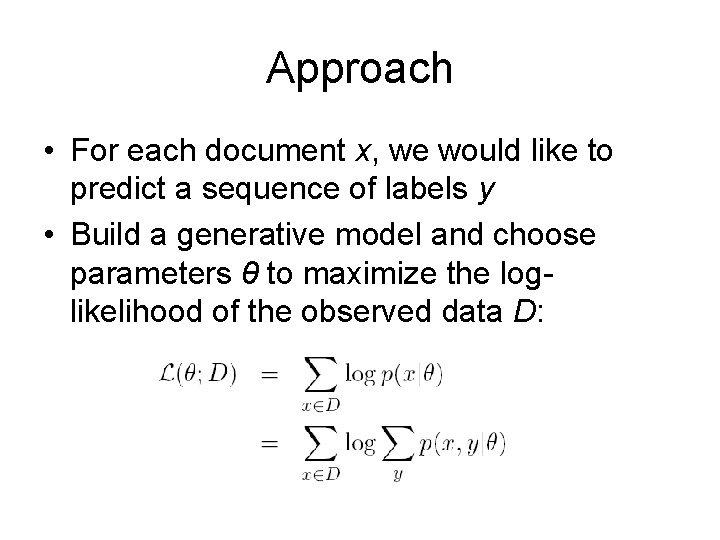

Approach • For each document x, we would like to predict a sequence of labels y • Build a generative model and choose parameters θ to maximize the log-likelihood of the observed data D:
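Written in standard notation, the objective marginalizes the joint model over all possible label sequences y. This is a reconstruction of the usual form of the marginal log-likelihood for a generative model trained on unlabeled data, not a copy of the slide's equation image:

```latex
\mathcal{L}(\theta; D) \;=\; \sum_{x \in D} \log p(x; \theta)
                       \;=\; \sum_{x \in D} \log \sum_{y} p(x, y; \theta)
```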

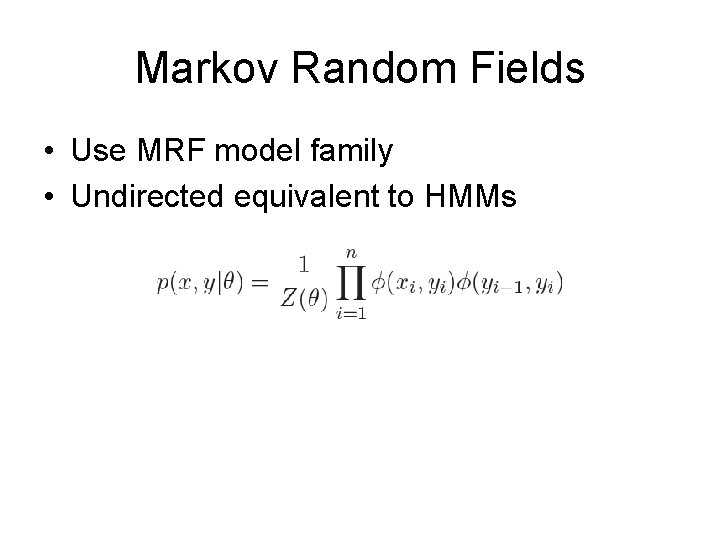

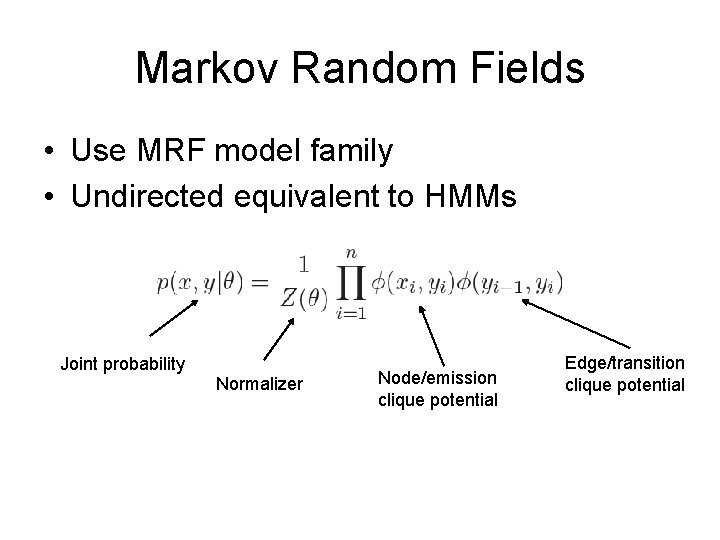

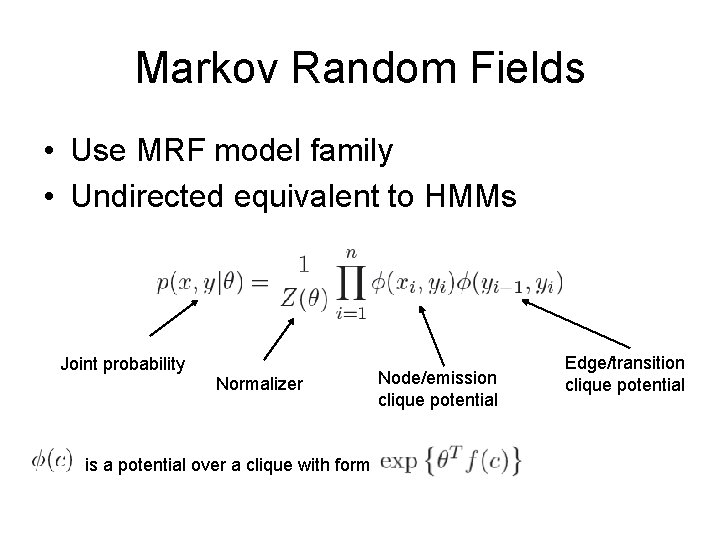

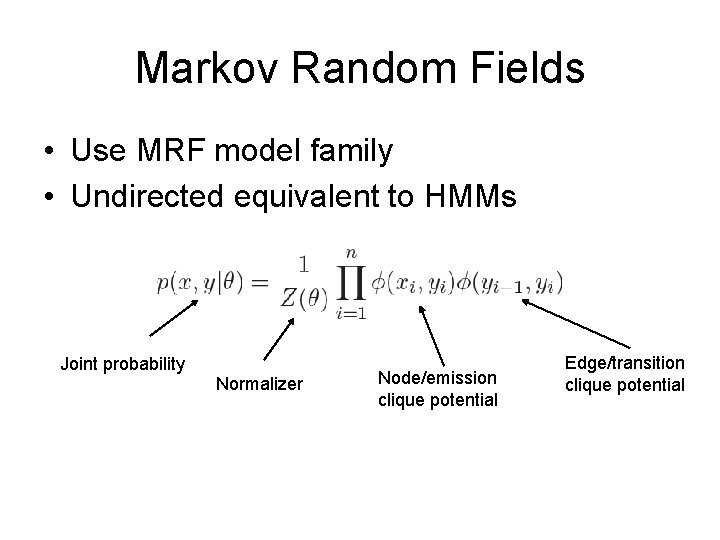

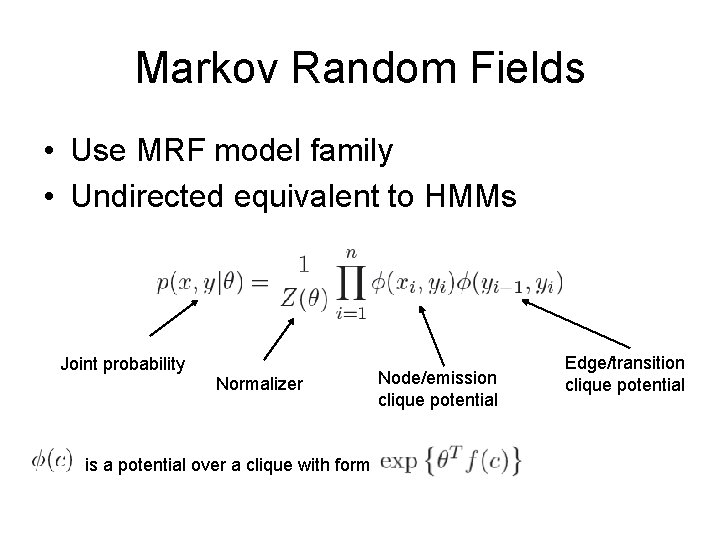


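Markov Random Fields • Use the MRF model family • Undirected equivalent of HMMs • The joint probability is a product of clique potentials divided by a normalizer; each potential φ(c) over a clique c has the log-linear form exp(θᵀf(c)) • Node (emission) clique potentials score a word together with its label; edge (transition) clique potentials score adjacent labels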
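A brute-force sketch makes the factorization concrete. It assumes a first-order chain (one label of history, whereas the English tagger uses tag trigrams), indicator features whose weights live in a hypothetical `theta` dict, and a normalizer Z that sums over every word sequence and label sequence of the same length as x. Enumerating Z like this is only feasible for a toy vocabulary; a practical implementation would need dynamic programming instead.

```python
import itertools
import math

def log_potential(x, y, theta):
    """Sum of the weights of the indicator features that fire on (x, y).

    Emission cliques contribute theta[("EMIT", label, word)];
    transition cliques contribute theta[("TRANS", prev_label, label)].
    Missing keys have weight 0, i.e. potential exp(0) = 1.
    """
    total = 0.0
    for i, (word, label) in enumerate(zip(x, y)):
        total += theta.get(("EMIT", label, word), 0.0)
        if i > 0:
            total += theta.get(("TRANS", y[i - 1], label), 0.0)
    return total

def log_normalizer(n, theta, vocab, labels):
    """log Z: enumerate every (word sequence, label sequence) pair of length n."""
    return math.log(sum(
        math.exp(log_potential(xs, ys, theta))
        for xs in itertools.product(vocab, repeat=n)
        for ys in itertools.product(labels, repeat=n)
    ))

def joint_probability(x, y, theta, vocab, labels):
    """p(x, y) = (product of clique potentials) / Z."""
    return math.exp(log_potential(x, y, theta) - log_normalizer(len(x), theta, vocab, labels))

vocab, labels = ["he", "said"], ["PRP", "VBD"]
theta = {("EMIT", "VBD", "said"): 2.0, ("TRANS", "PRP", "VBD"): 1.0}
print(joint_probability(("he", "said"), ("PRP", "VBD"), theta, vocab, labels))
```

With an empty `theta`, every potential is 1 and each of the 16 (x, y) pairs gets probability 1/16; the two weights above raise the probability of *he/PRP said/VBD*.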

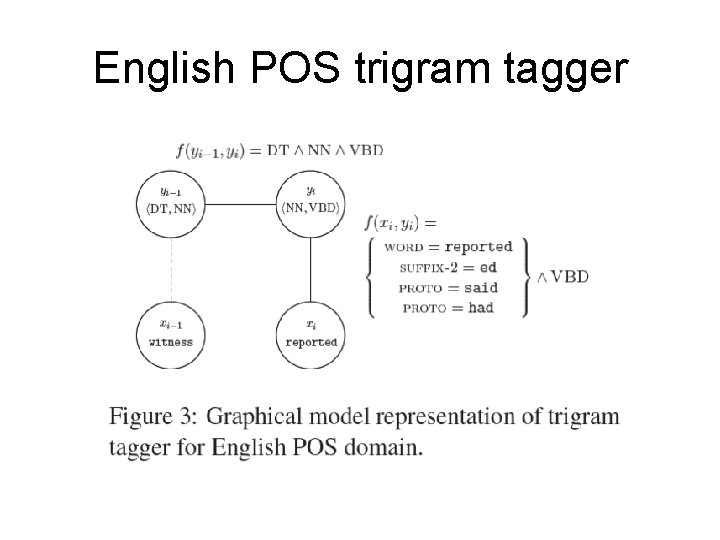

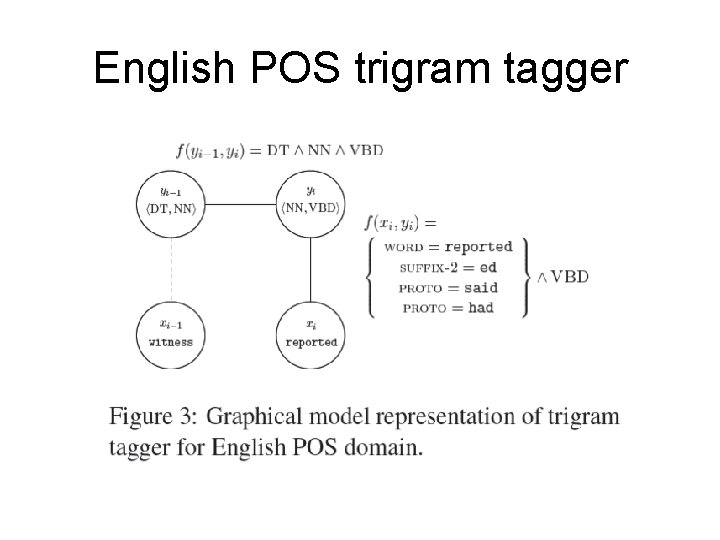

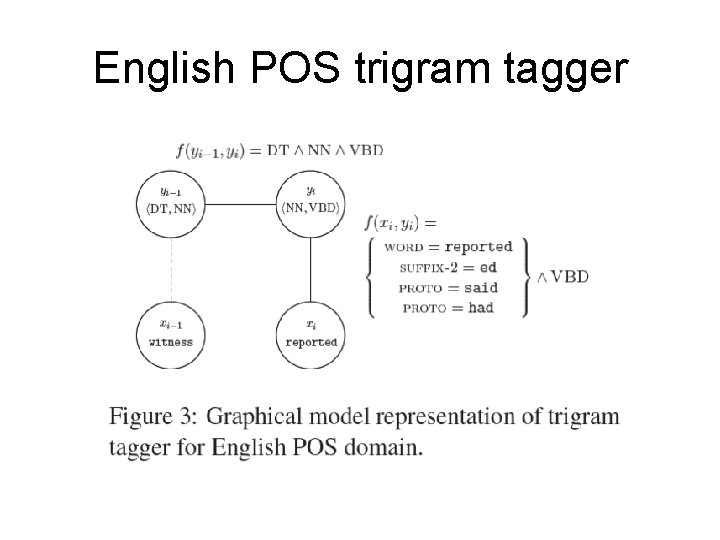

English POS trigram tagger

Using distributional similarity and prototypes • Add a node feature PROTO = z for all prototypes z similar to the word at that node • For POS tagging, similarity is based on positional context vectors • For the classified ad task, position is ignored and a wider window is used
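The two kinds of context vector can be sketched with simple co-occurrence counts. This is a minimal sketch, not the paper's construction: `context_vectors` is a hypothetical helper, the window sizes are illustrative, and any weighting or dimensionality reduction the paper applies is omitted. With `positional=True` each context word is keyed by its signed offset; with `positional=False` distance and direction are dropped, as for the classified ads.

```python
from collections import Counter

def context_vectors(sentences, positional=True, window=1):
    """Count the context words around each token type.

    positional=True keys each context by signed offset (e.g. "-1:he");
    positional=False ignores distance and direction (just "he").
    """
    vectors: dict[str, Counter] = {}
    for sent in sentences:
        for i, word in enumerate(sent):
            vec = vectors.setdefault(word, Counter())
            for offset in range(-window, window + 1):
                j = i + offset
                if offset == 0 or j < 0 or j >= len(sent):
                    continue  # skip the word itself and out-of-sentence positions
                key = f"{offset:+d}:{sent[j]}" if positional else sent[j]
                vec[key] += 1
    return vectors

sents = [["he", "said", "that"], ["she", "reported", "that"]]
print(context_vectors(sents)["said"])                              # positional, POS-style
print(context_vectors(sents, positional=False, window=2)["that"])  # wider bag, ad-style
```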

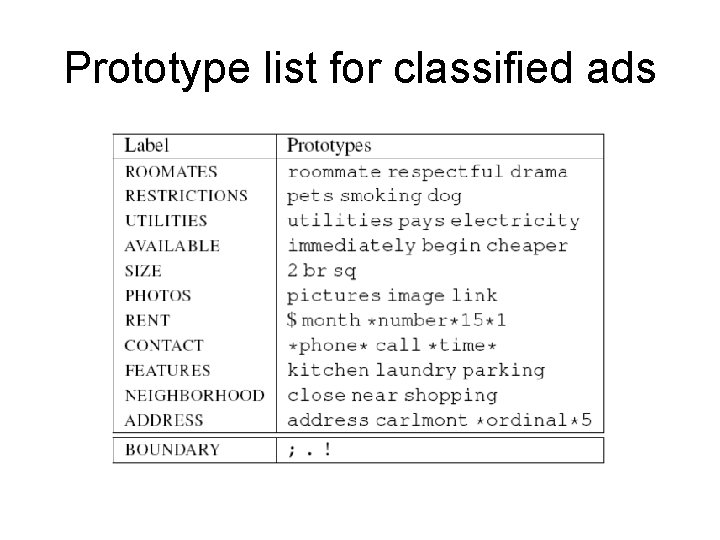

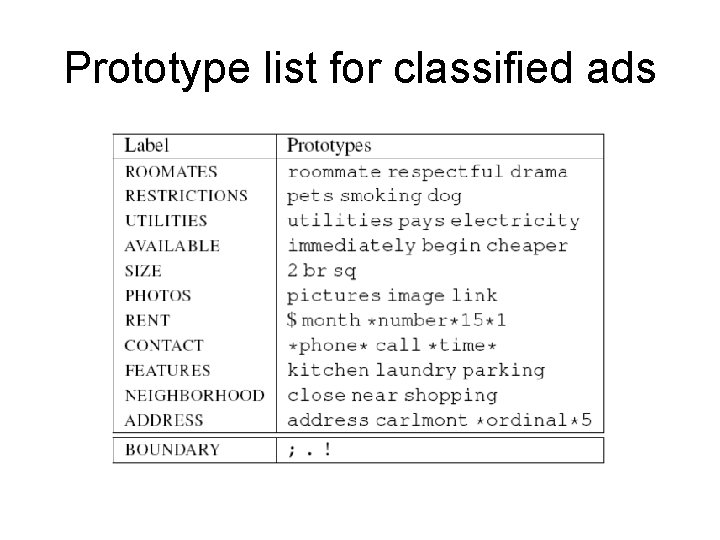

Prototype list for classified ads

Parameter estimation • See the paper • Gradient-based optimization (L-BFGS), with forward-backward to compute expectations and Viterbi to decode
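Viterbi decoding, the last step listed above, can be sketched in log space. This minimal sketch assumes a first-order chain with precomputed log-potentials (`emit` and `trans` are hypothetical inputs); a trigram tagger would run the same recursion over pairs of labels. Forward-backward and the L-BFGS updates are not shown.

```python
def viterbi(emit, trans, labels):
    """Best label sequence under a first-order chain, in log space.

    emit[i][y]          log-potential of label y at position i
    trans[(y_prev, y)]  log-potential of the transition y_prev -> y
    """
    best = [{y: emit[0][y] for y in labels}]  # best score of any path ending in y
    back = [{}]                               # back-pointers to the previous label
    for i in range(1, len(emit)):
        scores, pointers = {}, {}
        for y in labels:
            prev = max(labels, key=lambda p: best[i - 1][p] + trans[(p, y)])
            scores[y] = best[i - 1][prev] + trans[(prev, y)] + emit[i][y]
            pointers[y] = prev
        best.append(scores)
        back.append(pointers)
    # Trace back from the best final label.
    path = [max(labels, key=lambda y: best[-1][y])]
    for i in range(len(emit) - 1, 0, -1):
        path.append(back[i][path[-1]])
    return path[::-1]

labels = ["NN", "VBD"]
emit = [{"NN": 2.0, "VBD": 0.0}, {"NN": 0.5, "VBD": 1.0}]
trans = {("NN", "NN"): 0.0, ("NN", "VBD"): 1.0, ("VBD", "NN"): 0.0, ("VBD", "VBD"): -1.0}
print(viterbi(emit, trans, labels))  # ['NN', 'VBD']
```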

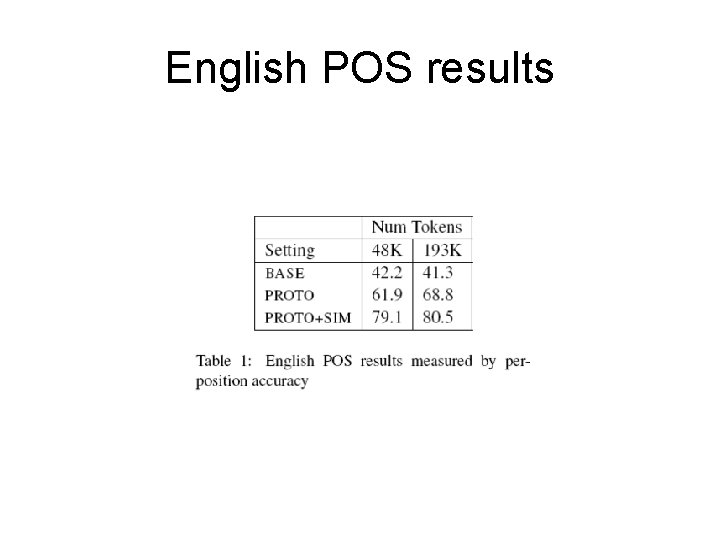

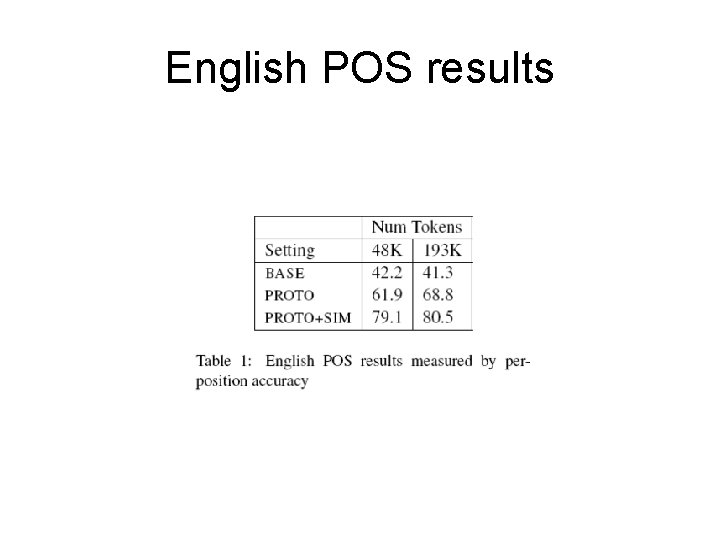

English POS tagging • Used the WSJ portion of the Penn Treebank • Used two data sizes: 48K and 193K tokens

Baseline features • BASE features – Node features: exact word, character suffixes, init-caps, contains-hyphen, contains-digit – Edge features: tag trigrams
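The BASE features can be sketched as functions emitting feature-name strings. The naming scheme (`WORD=`, `SUFFIX=`, `TRIGRAM=` and so on) and the suffix lengths of 1 to 3 are assumptions; the slide does not say which suffix lengths were used.

```python
def base_node_features(word: str, max_suffix: int = 3) -> list[str]:
    """BASE node features for one token (feature names are illustrative)."""
    feats = [f"WORD={word}"]                          # exact word
    for k in range(1, min(max_suffix, len(word)) + 1):
        feats.append(f"SUFFIX={word[-k:]}")           # character suffixes
    if word[:1].isupper():
        feats.append("INIT_CAPS")                     # init-caps
    if "-" in word:
        feats.append("HAS_HYPHEN")                    # contains-hyphen
    if any(ch.isdigit() for ch in word):
        feats.append("HAS_DIGIT")                     # contains-digit
    return feats

def trigram_edge_feature(y2: str, y1: str, y0: str) -> str:
    """Edge feature over a tag trigram (y_{i-2}, y_{i-1}, y_i)."""
    return f"TRIGRAM={y2}|{y1}|{y0}"

print(base_node_features("Mid-90s"))
# ['WORD=Mid-90s', 'SUFFIX=s', 'SUFFIX=0s', 'SUFFIX=90s', 'INIT_CAPS', 'HAS_HYPHEN', 'HAS_DIGIT']
```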


Building the prototype list • Automatically extracted the prototype list • For each label, selected the three most frequent words that were not given another label more often • Yes, this does use labeled data! The authors did it this way to give repeatable results and to avoid excessive tuning.
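The selection rule can be sketched over labeled (word, tag) pairs, which is exactly why this step uses labeled data. Two details are assumptions: words are ranked by how often they occur with the label in question, and a word whose top labels are tied is eligible for each tied label; alphabetical order breaks frequency ties. `extract_prototypes` is a hypothetical name.

```python
from collections import Counter, defaultdict

def extract_prototypes(tagged_tokens, per_label=3):
    """Per label, the most frequent words not given another label more often."""
    label_counts = defaultdict(Counter)  # word -> Counter({label: count})
    for word, label in tagged_tokens:
        label_counts[word][label] += 1

    candidates = defaultdict(list)  # label -> [(count with label, word)]
    for word, counts in label_counts.items():
        top = max(counts.values())
        for label, count in counts.items():
            if count == top:  # no other label was given to this word more often
                candidates[label].append((count, word))

    return {
        label: [word for _, word in sorted(pairs, key=lambda p: (-p[0], p[1]))[:per_label]]
        for label, pairs in candidates.items()
    }

tokens = ([("the", "DT")] * 5 + [("a", "DT")] * 3 + [("said", "VBD")] * 4
          + [("that", "IN")] * 3 + [("that", "DT")] + [("was", "VBD")] * 2)
print(extract_prototypes(tokens))  # {'DT': ['the', 'a'], 'VBD': ['said', 'was'], 'IN': ['that']}
```

Here *that* is tagged DT once, but IN more often, so it is a candidate only for IN.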

English POS Prototypes

Using the prototypes • Restricting prototype words to their respective labels improved performance, but did not help similar non-prototype words • Solution: add PROTO features to similar words
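The contrast on this slide can be sketched with two small helpers: a hard constraint that only affects the prototype tokens themselves, and soft `PROTO=z` features that also reach similar non-prototype words such as *reported*. Both function names and the `similar` mapping (word to linked prototypes, for example precomputed by distributional similarity) are hypothetical.

```python
def proto_features(word, similar):
    """Soft linking: one PROTO=z node feature per prototype z linked to `word`."""
    return [f"PROTO={z}" for z in similar.get(word, [])]

def allowed_labels(word, proto_label, labels):
    """Hard constraint: a prototype word may only take its own label."""
    if word in proto_label:
        return [proto_label[word]]
    return list(labels)

labels = ["NN", "VBD"]
proto_label = {"said": "VBD"}                        # prototype word -> its label
similar = {"said": ["said"], "reported": ["said"]}   # hypothetical similarity links

print(allowed_labels("reported", proto_label, labels))  # ['NN', 'VBD']: constraint does not reach it
print(proto_features("reported", similar))              # ['PROTO=said']: feature does
```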

English POS trigram tagger

English POS results

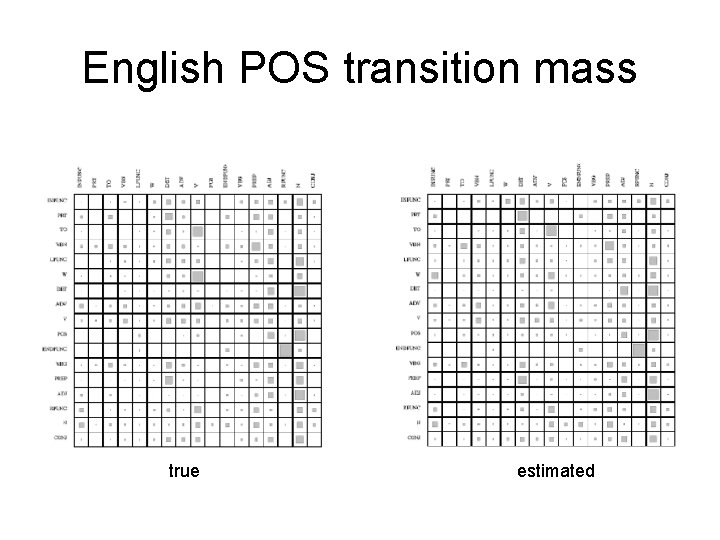

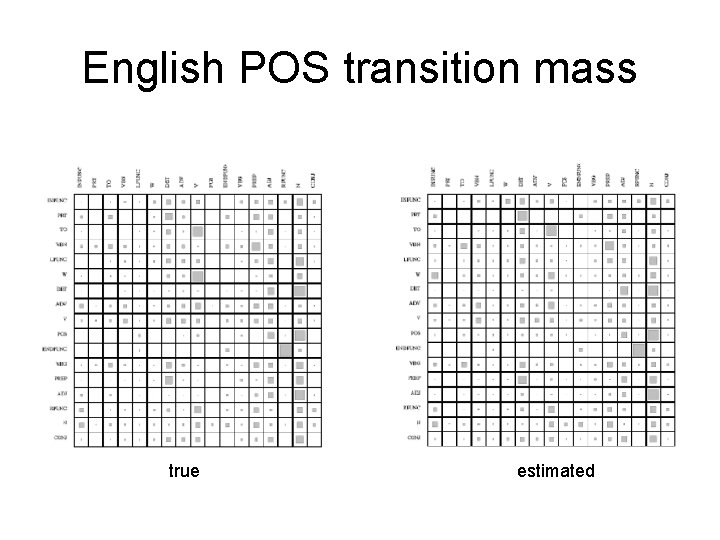

English POS transition mass: true vs. estimated
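The slides do not say exactly how this transition mass is computed; one plausible reading is the share of all adjacent label pairs that go from label a to label b, computed once over gold tags and once over the model's predicted tags. A minimal sketch under that assumption (`transition_mass` is a hypothetical name, and the tag sequences are toy data):

```python
from collections import Counter

def transition_mass(tag_sequences):
    """Share of all adjacent label pairs (a, b) across the given sequences."""
    counts = Counter()
    for tags in tag_sequences:
        counts.update(zip(tags, tags[1:]))  # adjacent (previous, next) label pairs
    total = sum(counts.values())
    return {pair: count / total for pair, count in counts.items()} if total else {}

gold = [["DT", "NN", "VBD"], ["DT", "NN"]]
predicted = [["DT", "NN", "NN"], ["DT", "NN"]]
print(transition_mass(gold))
print(transition_mass(predicted))
```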

Chinese POS results • Reduces the error rate relative to BASE by 35%, but results are not as good as for English • Reasons: the task is harder, and less data was available for distributional similarity

Classified ad segmentation • For distributional similarity, used context vectors but ignored distance and direction • Added a special BOUNDARY state to handle tokens that indicate transitions • Special model tweaking: a departure from the “least effort” motivation

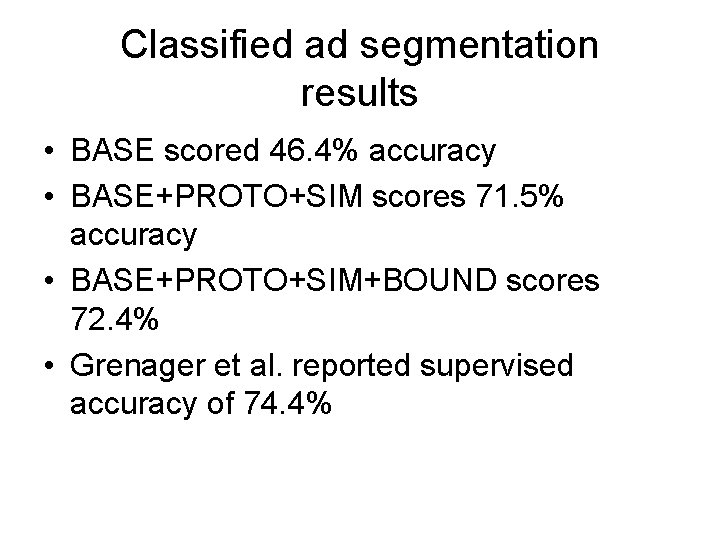

Classified ad segmentation results • BASE scores 46.4% accuracy • BASE+PROTO+SIM scores 71.5% accuracy • BASE+PROTO+SIM+BOUND scores 72.4% • Grenager et al. reported supervised accuracy of 74.4%
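For a sense of scale, the accuracies above can be converted into relative error reductions over BASE. This is derived arithmetic on the reported numbers, not a figure from the slides; `relative_error_reduction` is a hypothetical helper.

```python
def relative_error_reduction(base_acc: float, new_acc: float) -> float:
    """Fraction of the baseline's errors removed (accuracies given in percent)."""
    base_err = 100.0 - base_acc
    new_err = 100.0 - new_acc
    return (base_err - new_err) / base_err

print(f"{relative_error_reduction(46.4, 71.5):.1%}")  # 46.8%  (BASE -> BASE+PROTO+SIM)
print(f"{relative_error_reduction(46.4, 72.4):.1%}")  # 48.5%  (BASE -> BASE+PROTO+SIM+BOUND)
```

By the same arithmetic, BASE+PROTO+SIM+BOUND is 2.0 points short of the supervised 74.4%.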

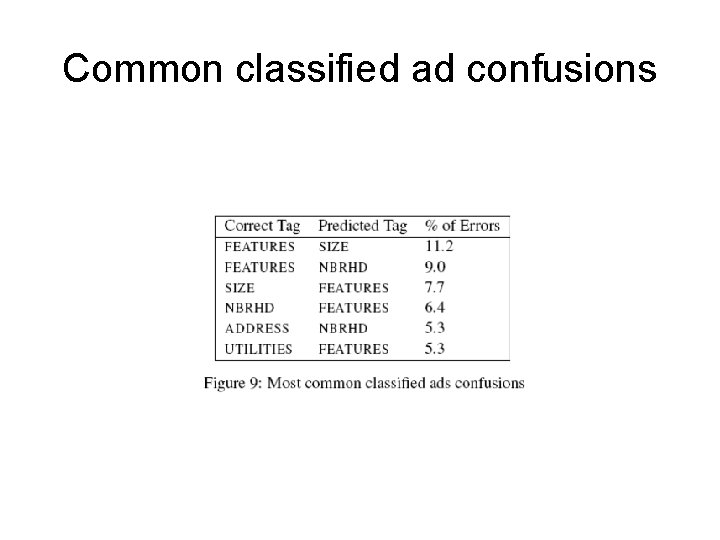

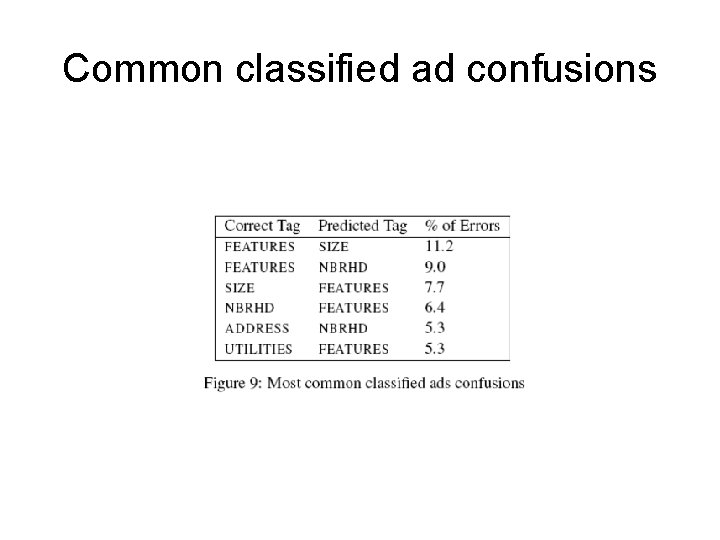

Common classified ad confusions

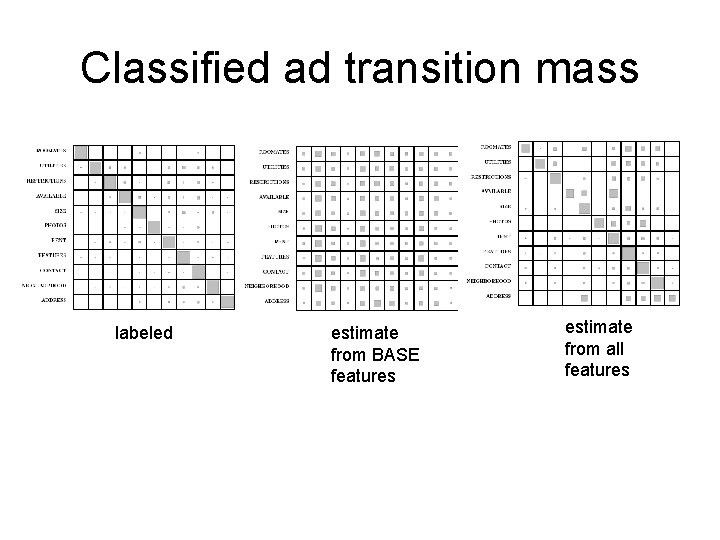

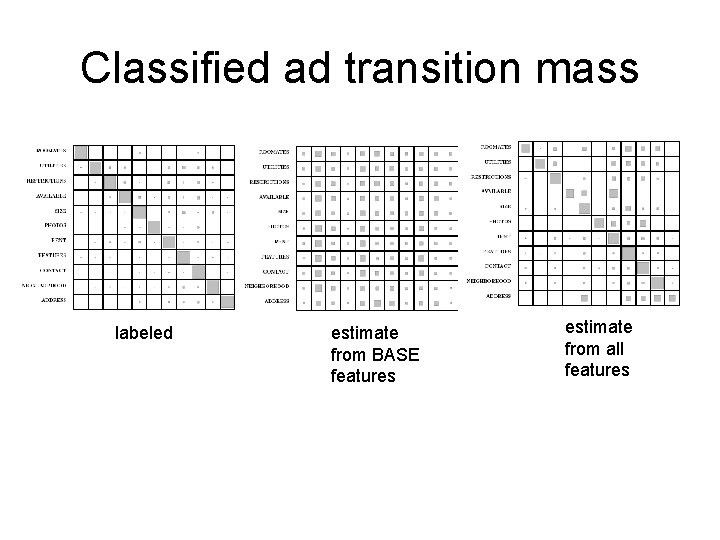

Classified ad transition mass: labeled vs. estimate from BASE features vs. estimate from all features

Conclusions • Prototype-driven learning provides a compact and declarative way to specify a target labeling scheme. • Distributional similarity features seem to work well in linking words to prototypes. • Bridges the gap between unsupervised, sequence-free distributional clustering approaches and supervised sequence model learning.