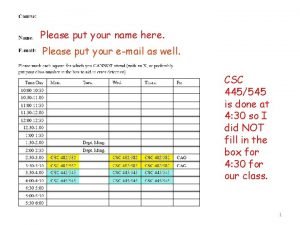

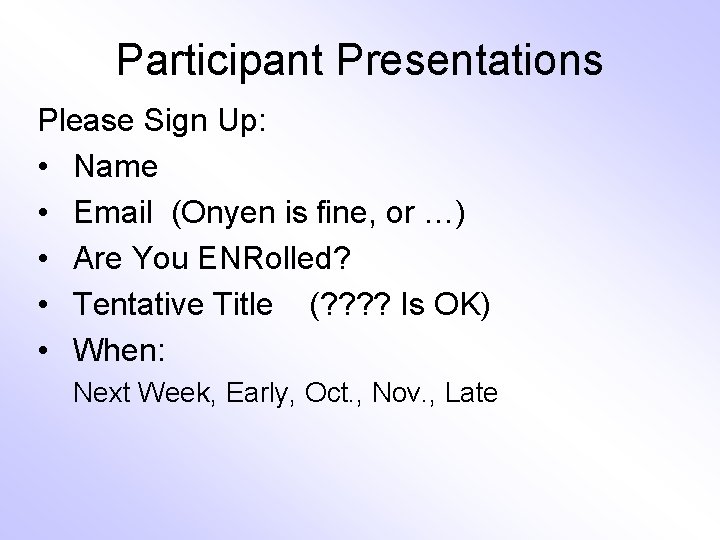


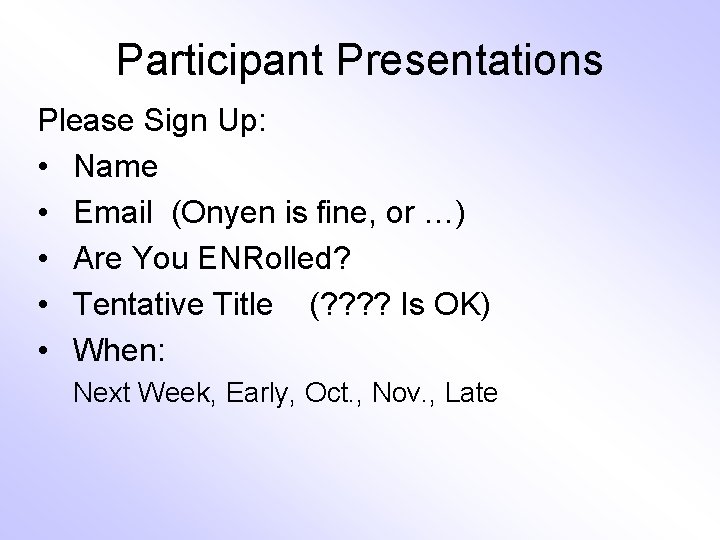

Participant Presentations

Please Sign Up:
- Name
- Email (Onyen is fine, or …)
- Are You Enrolled?
- Tentative Title (?? Is OK)
- When: Next Week, Early, Oct., Nov., Late

Object Oriented Data Analysis

Three Major Parts of OODA Applications:
- I. Object Definition: “What are the Data Objects?”
- II. Exploratory Analysis: “What Is Data Structure / Drivers?”
- III. Confirmatory Analysis / Validation: Is it Really There (vs. Noise Artifact)?

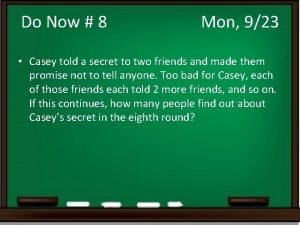

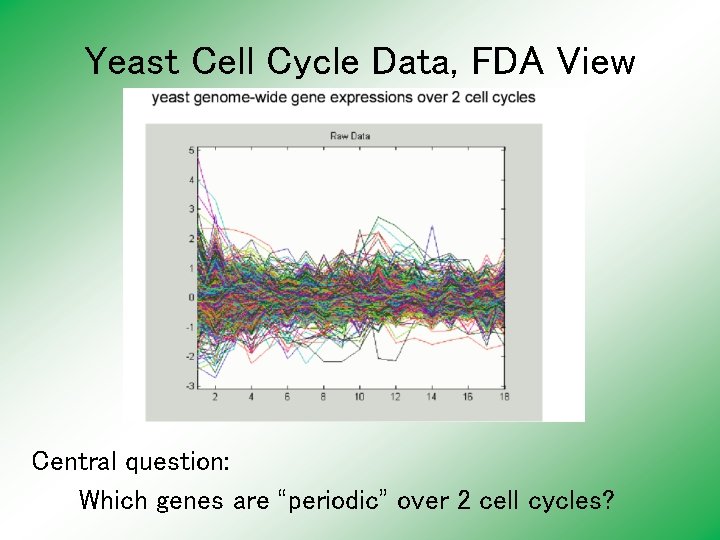

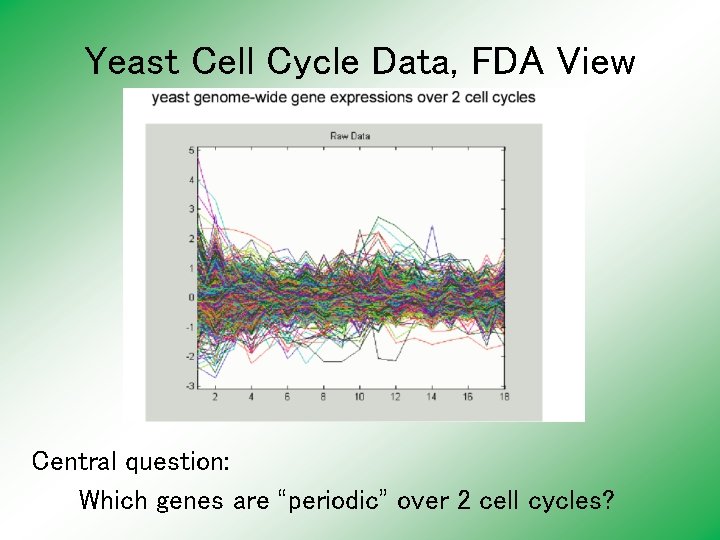

Yeast Cell Cycle Data, FDA View Central question: Which genes are “periodic” over 2 cell cycles?

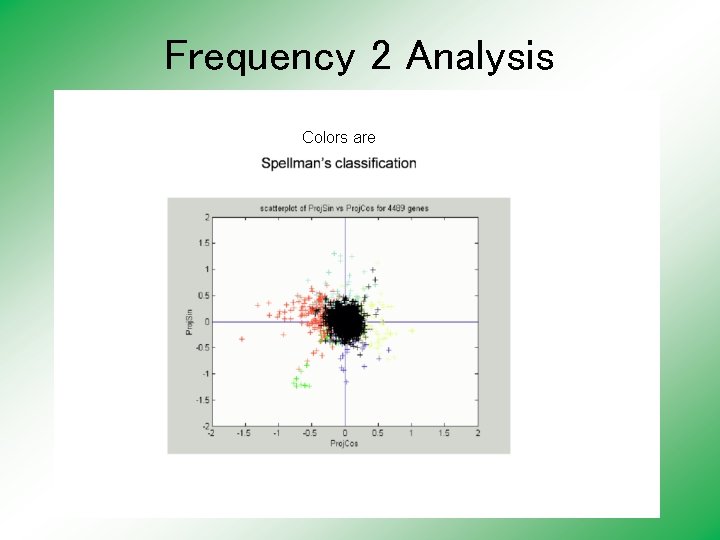

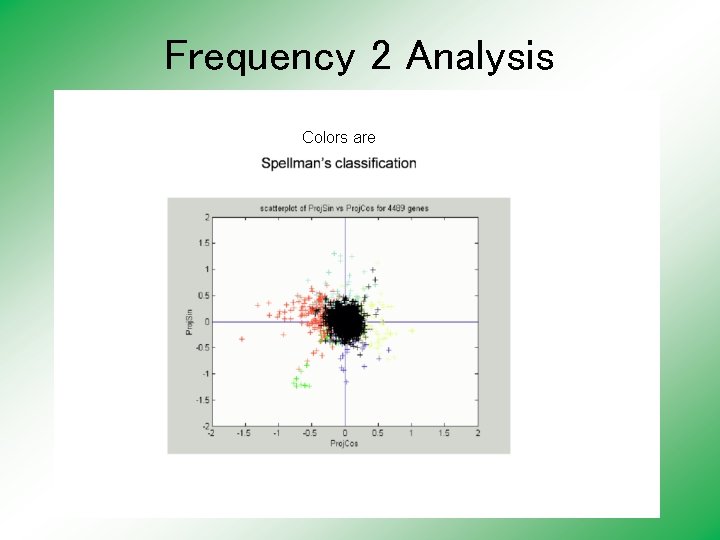

Frequency 2 Analysis Colors are

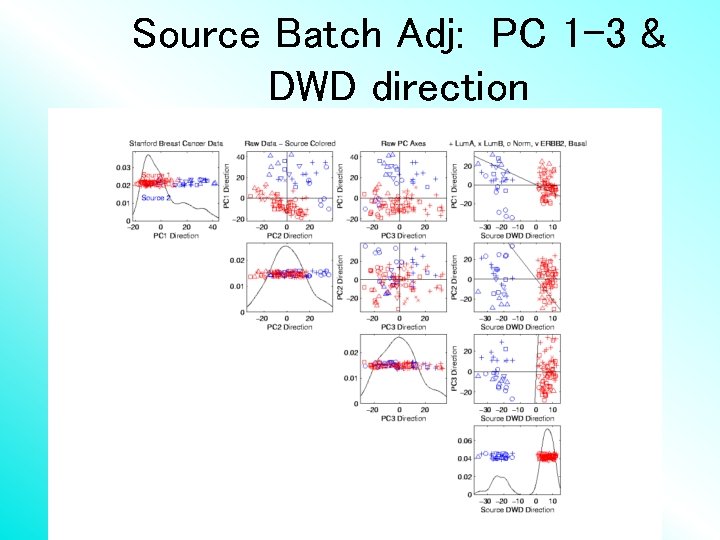

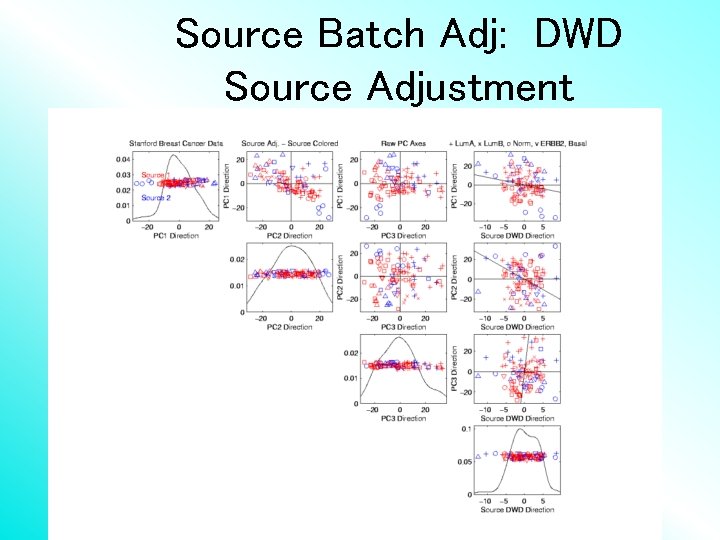

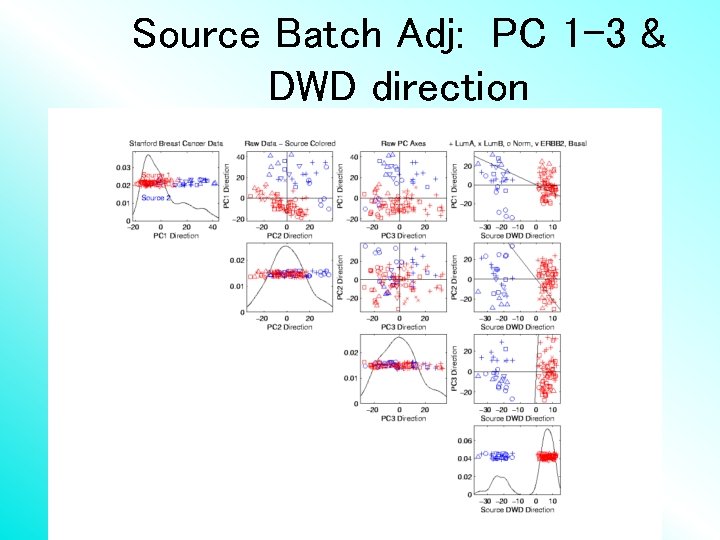

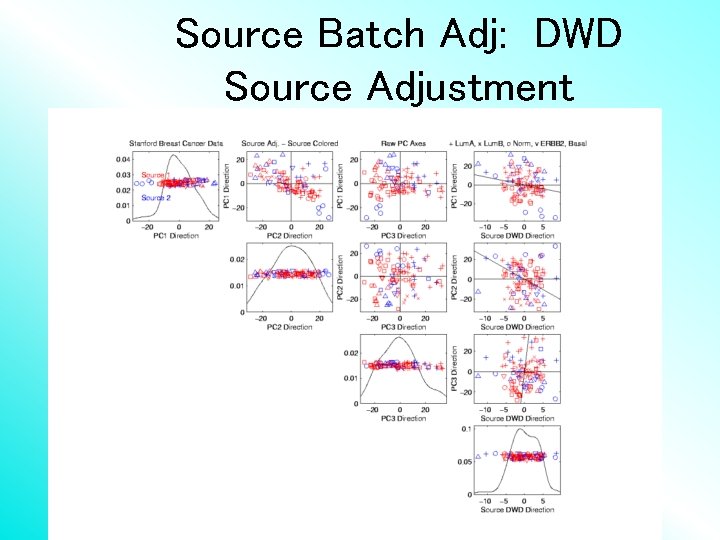

Batch and Source Adjustment

- For Stanford Breast Cancer Data (C. Perou)
- Analysis in Benito, et al (2004) https://genome.unc.edu/pubsup/dwd/
- Adjust for Source Effects: Different sources of mRNA
- Adjust for Batch Effects: Arrays fabricated at different times

Source Batch Adj: PC 1-3 & DWD direction

Source Batch Adj: DWD Source Adjustment

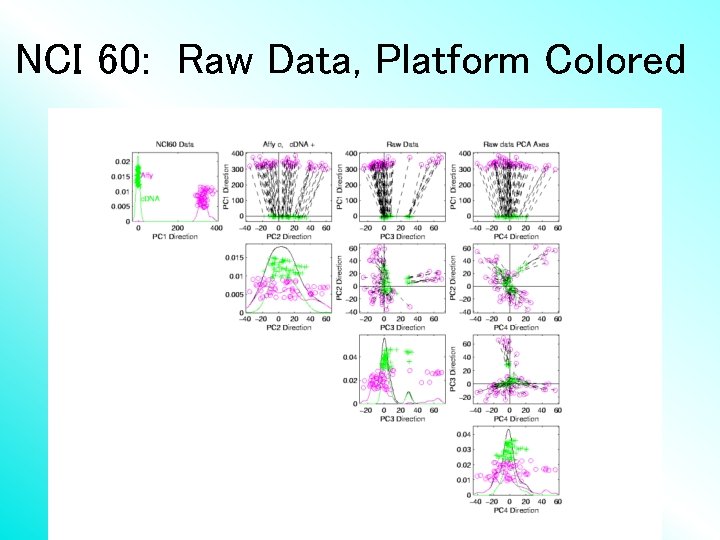

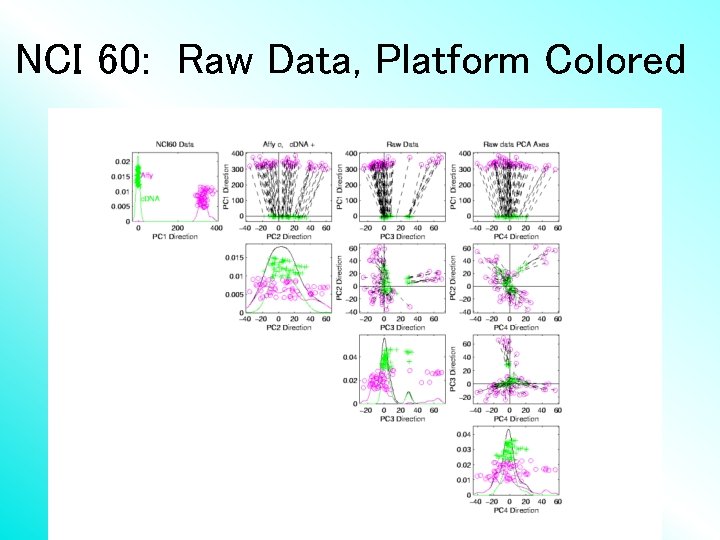

NCI 60: Raw Data, Platform Colored

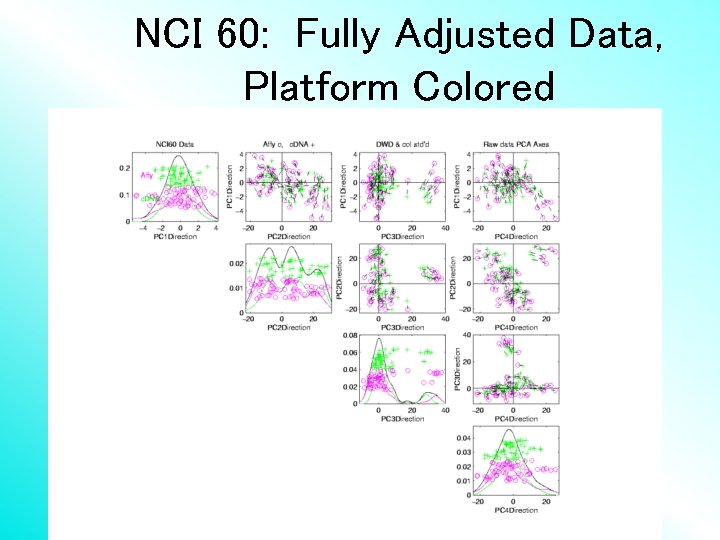

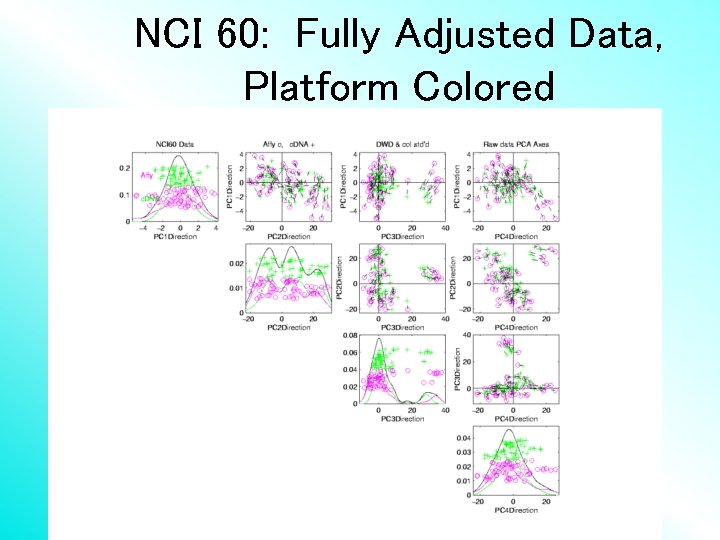

NCI 60: Fully Adjusted Data, Platform Colored

Object Oriented Data Analysis

Three Major Parts of OODA Applications:
- I. Object Definition: “What are the Data Objects?”
- II. Exploratory Analysis: “What Is Data Structure / Drivers?”
- III. Confirmatory Analysis / Validation: Is it Really There (vs. Noise Artifact)?

Recall Drug Discovery Data •

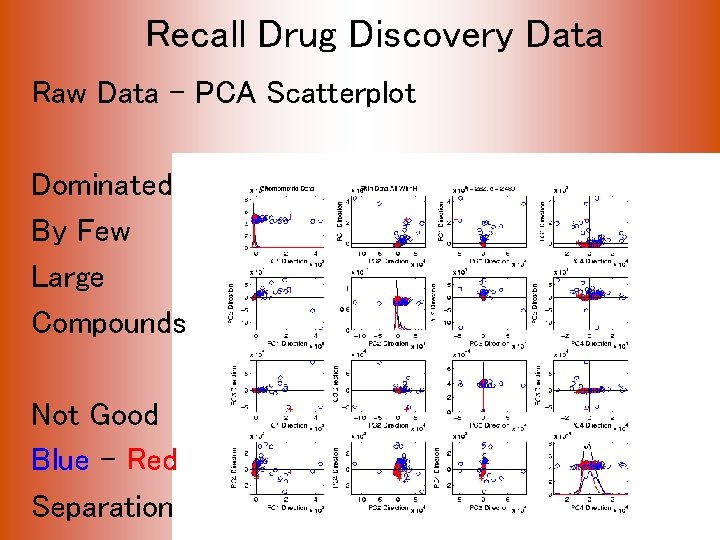

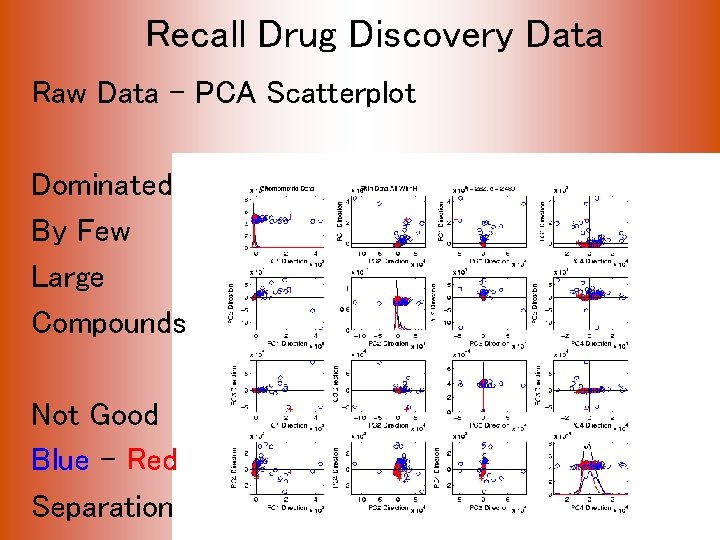

Recall Drug Discovery Data Raw Data – PCA Scatterplot Dominated By Few Large Compounds Not Good Blue - Red Separation

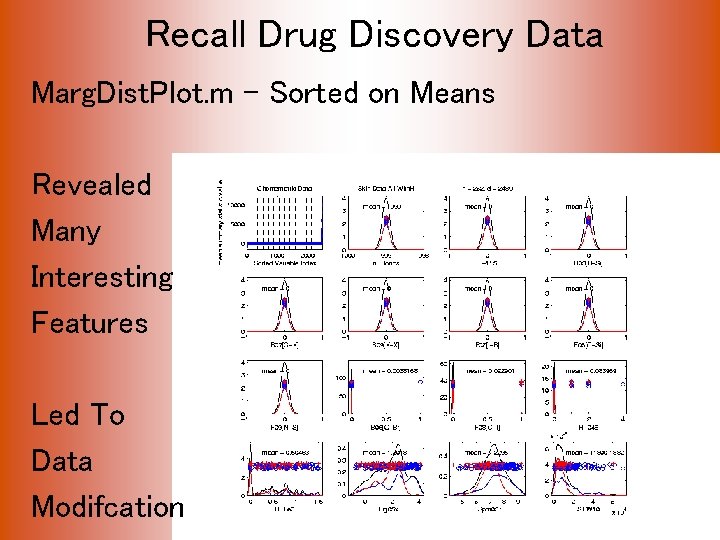

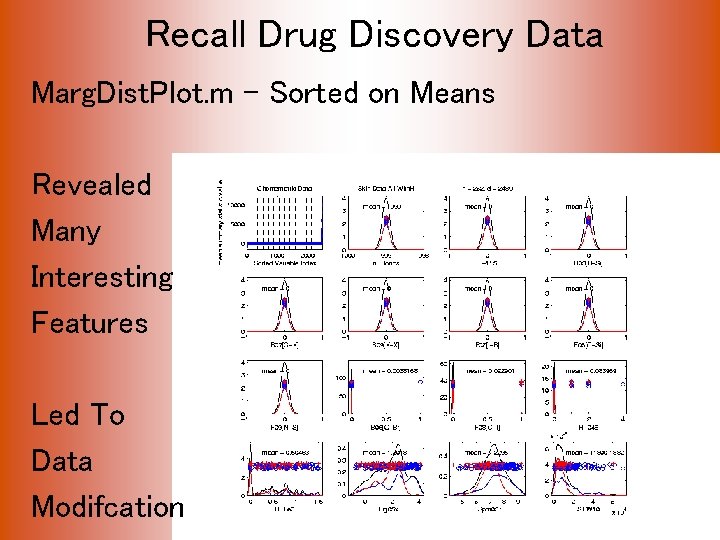

Recall Drug Discovery Data: MargDistPlot.m, Sorted on Means. Revealed Many Interesting Features. Led To Data Modification

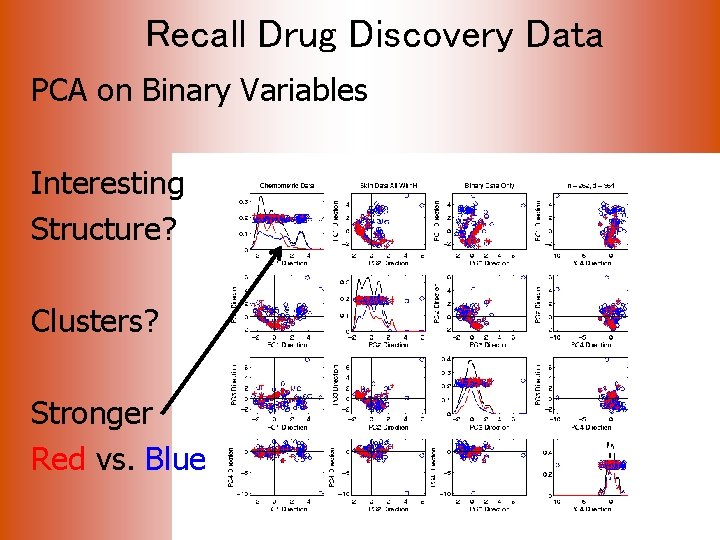

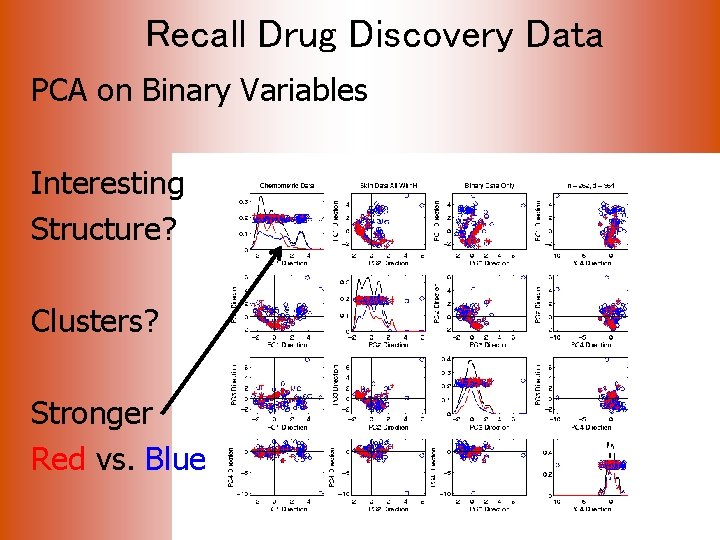

Recall Drug Discovery Data PCA on Binary Variables Interesting Structure? Clusters? Stronger Red vs. Blue

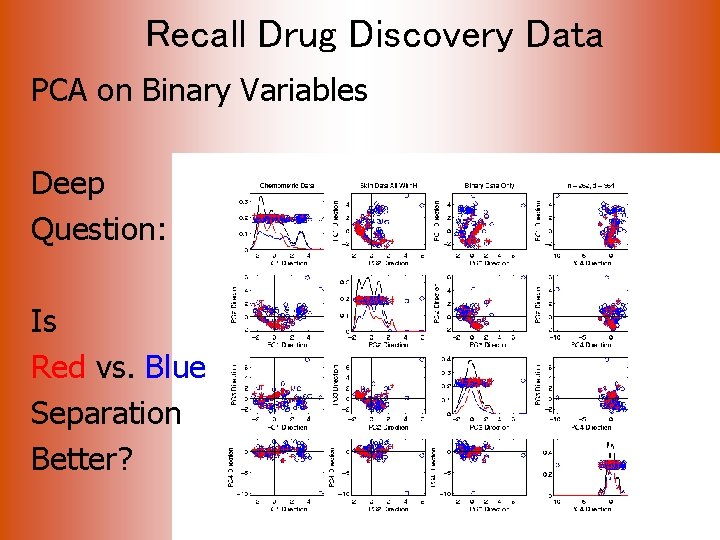

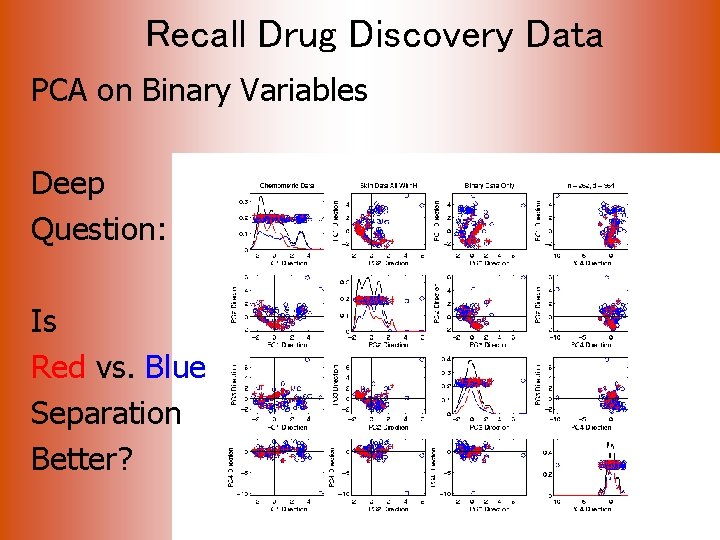

Recall Drug Discovery Data PCA on Binary Variables Deep Question: Is Red vs. Blue Separation Better?

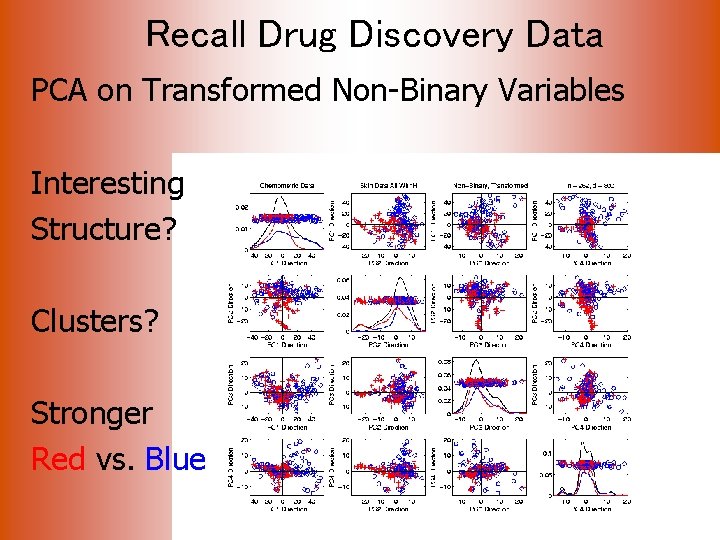

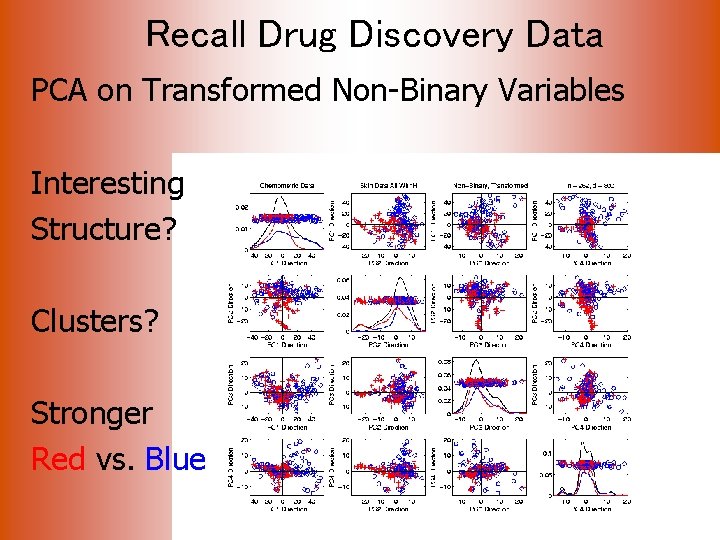

Recall Drug Discovery Data PCA on Transformed Non-Binary Variables Interesting Structure? Clusters? Stronger Red vs. Blue

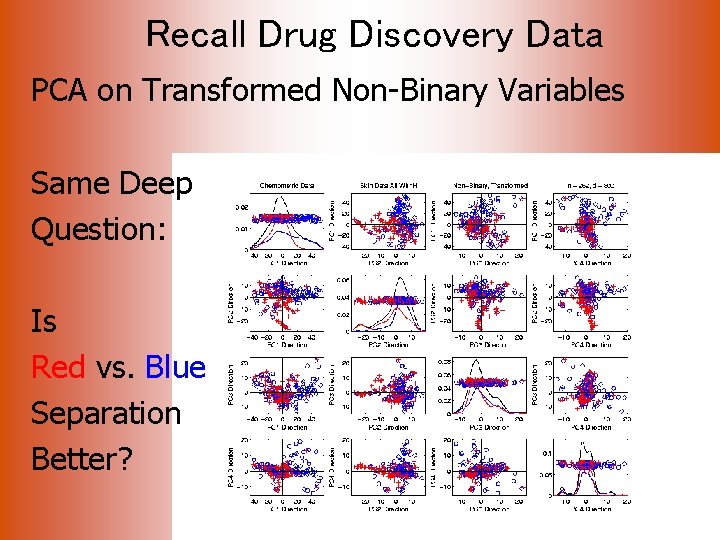

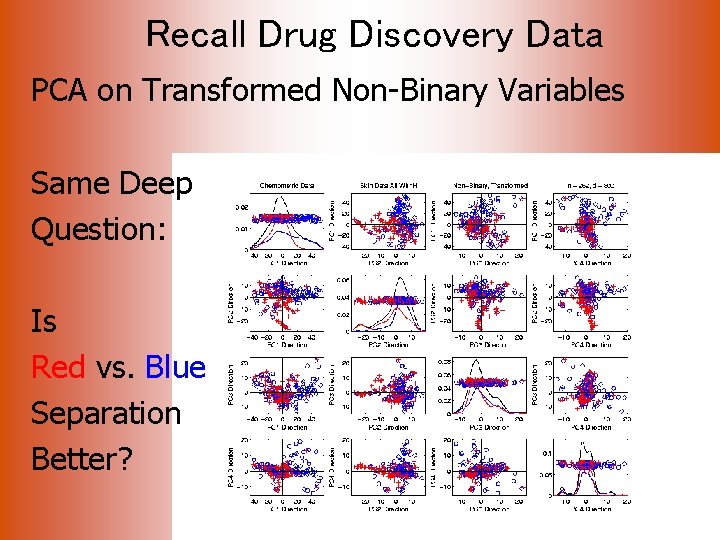

Recall Drug Discovery Data PCA on Transformed Non-Binary Variables Same Deep Question: Is Red vs. Blue Separation Better?

Recall Drug Discovery Data

Question: When Is Red vs. Blue Separation Better?

Visual Approach:
- Train DWD to Separate
- Project, and View How Separated
- Useful View, Add Orthogonal PC Directions

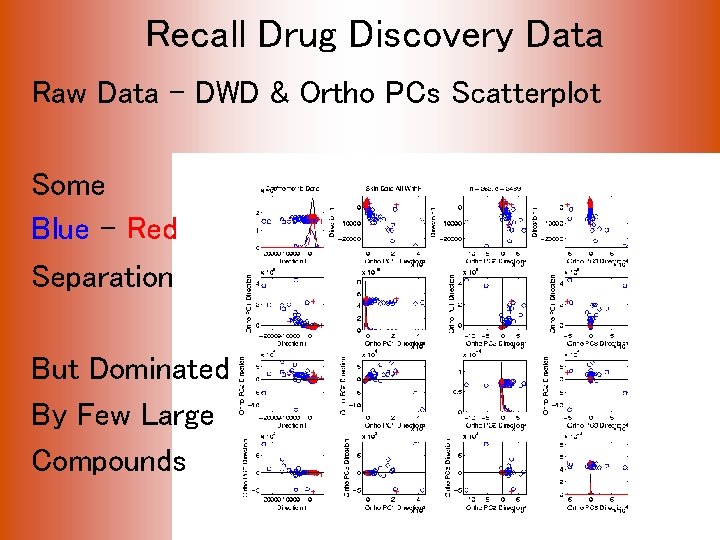

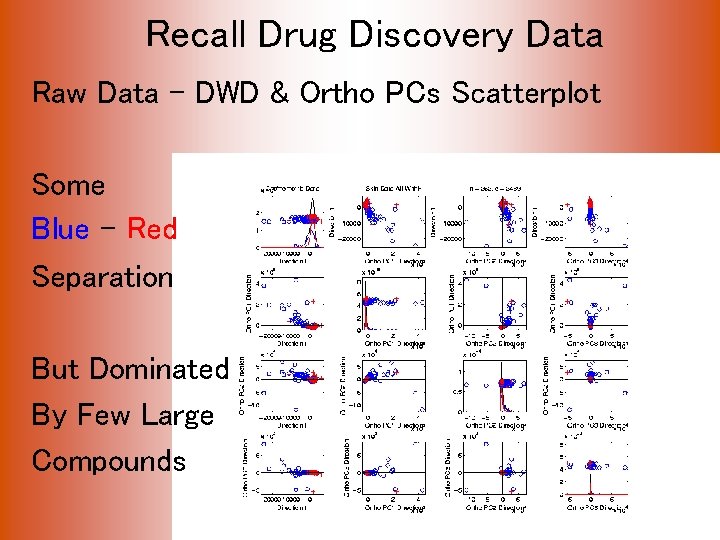

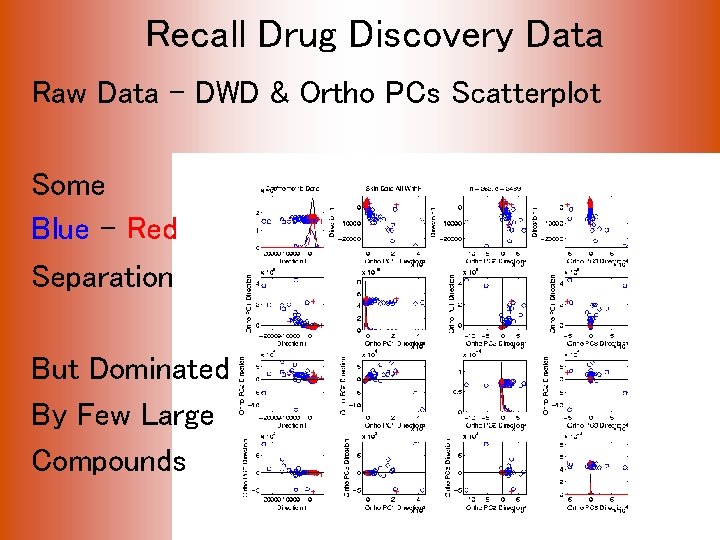

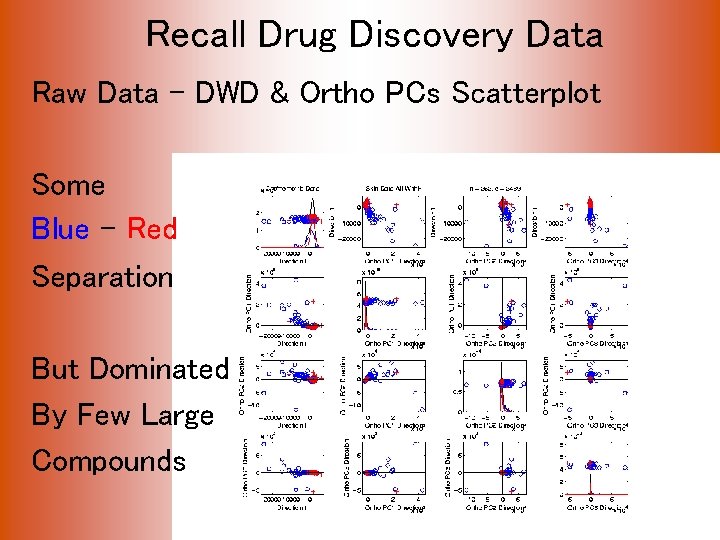

Recall Drug Discovery Data Raw Data – DWD & Ortho PCs Scatterplot Some Blue - Red Separation But Dominated By Few Large Compounds

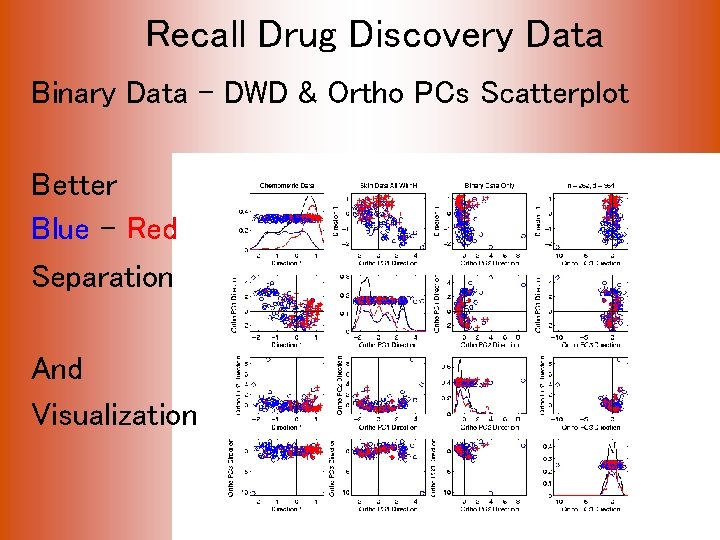

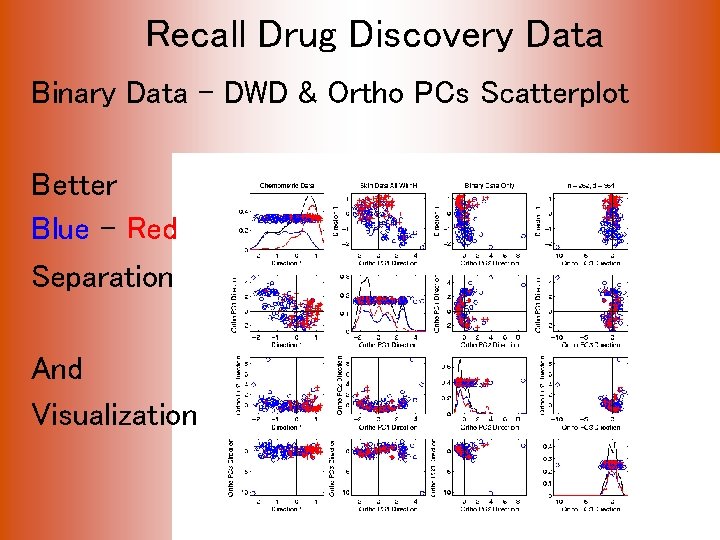

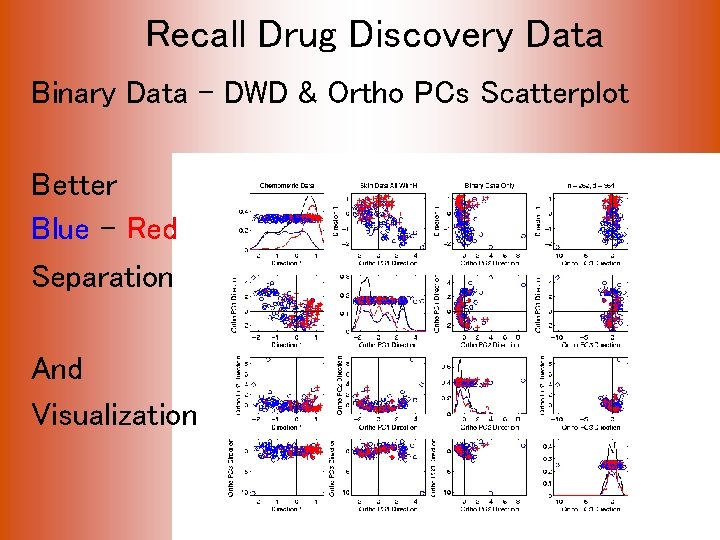

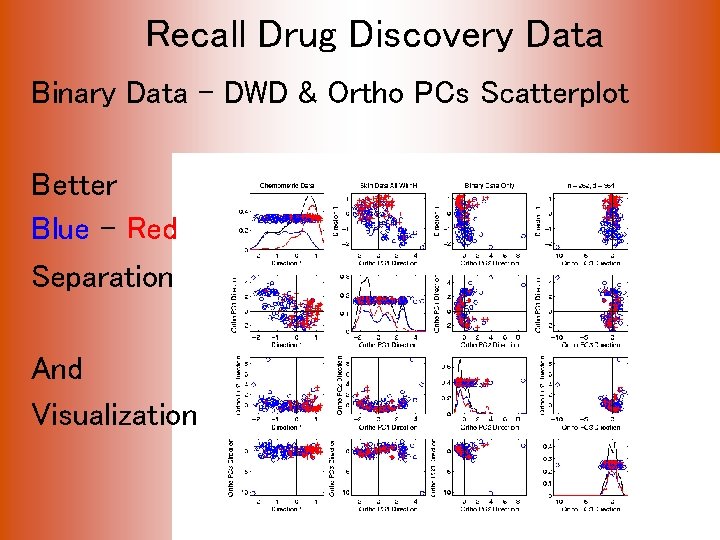

Recall Drug Discovery Data Binary Data – DWD & Ortho PCs Scatterplot Better Blue - Red Separation And Visualization

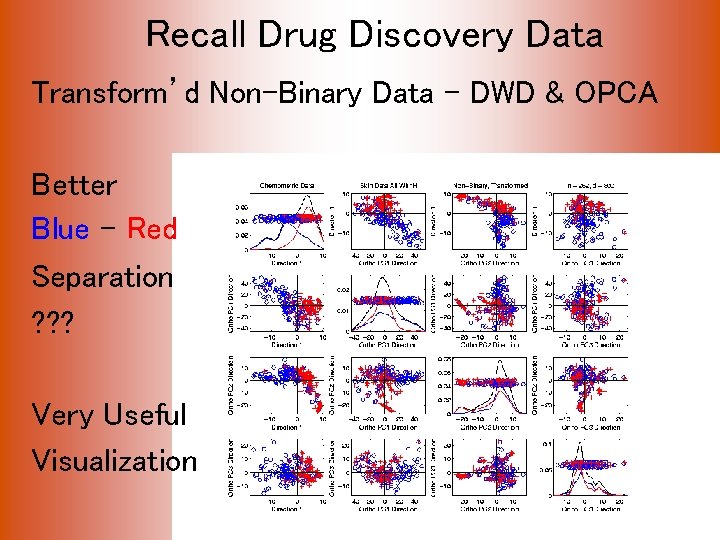

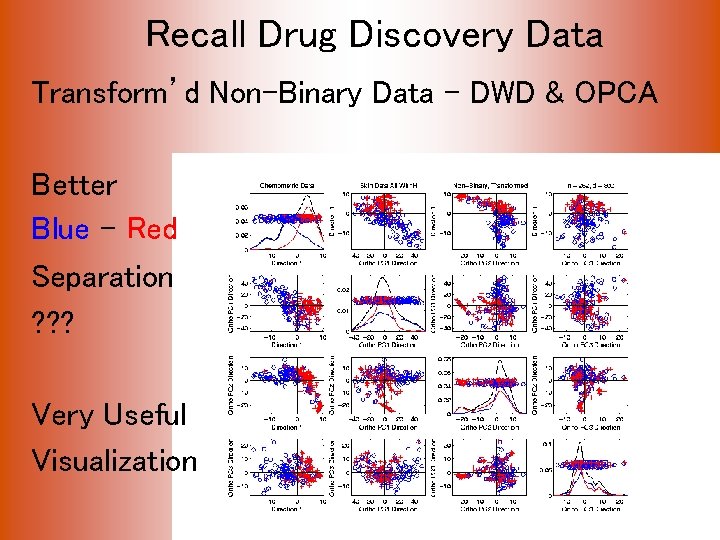

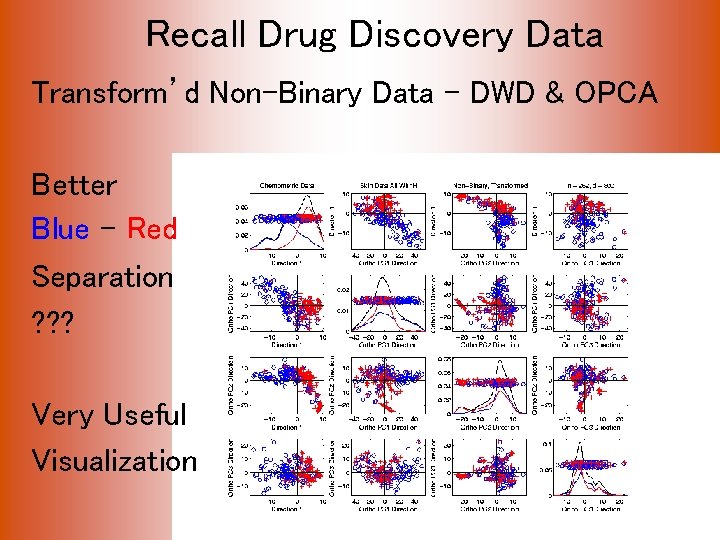

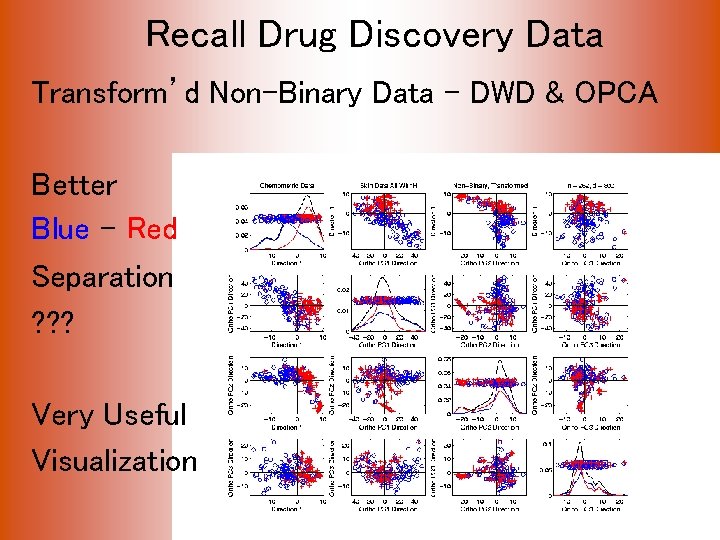

Recall Drug Discovery Data: Transform’d Non-Binary Data, DWD & OPCA. Better Blue - Red Separation??? Very Useful Visualization

Caution DWD Separation Can Be Deceptive Since DWD is Really Good at Separation Important Concept: Statistical Inference is Essential

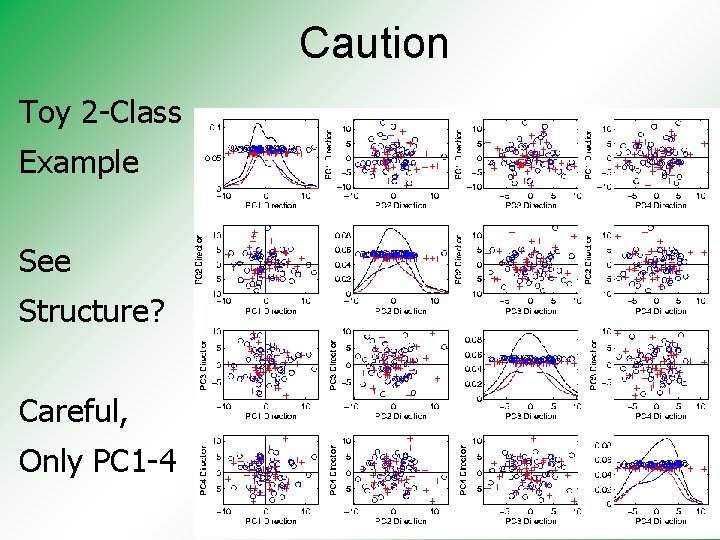

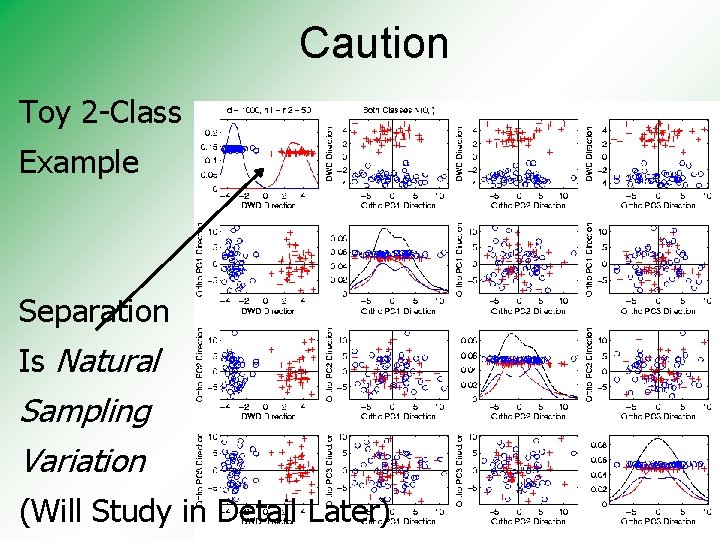

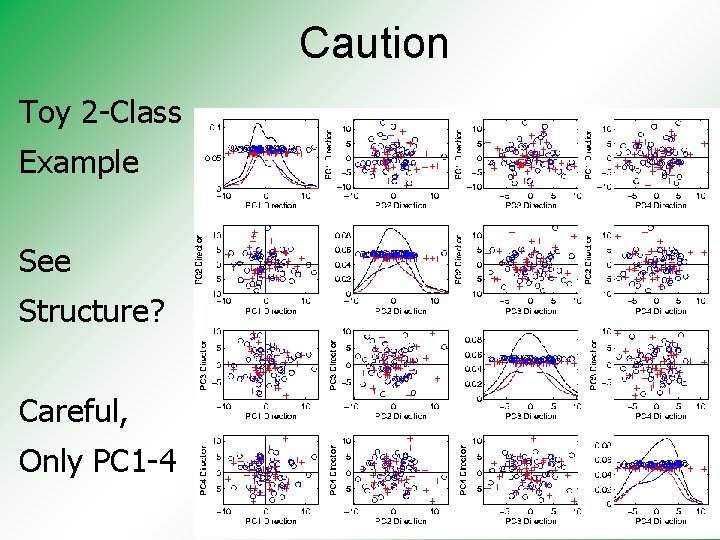

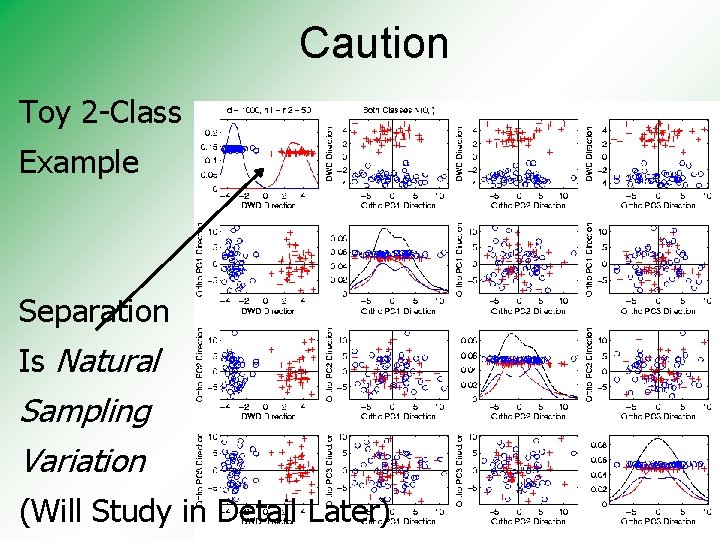

Caution: Toy 2-Class Example. See Structure? Careful, Only PC 1-4

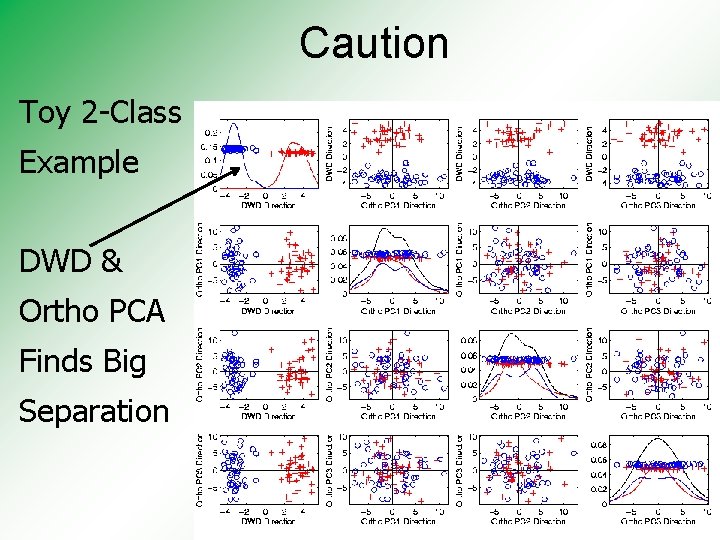

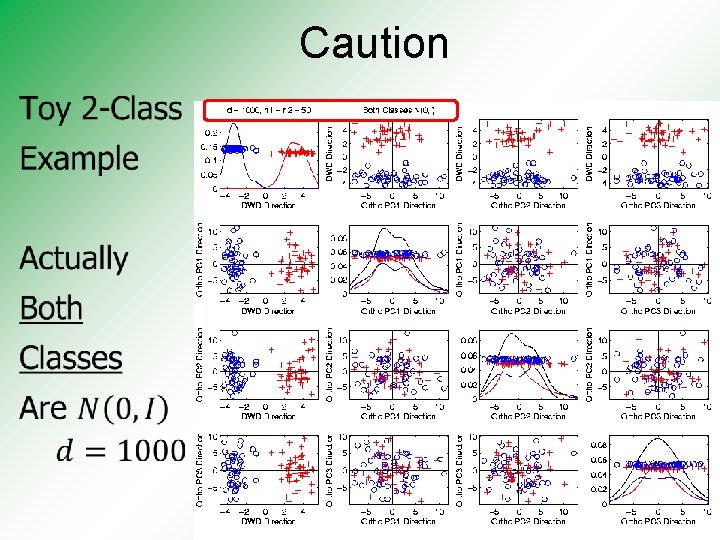

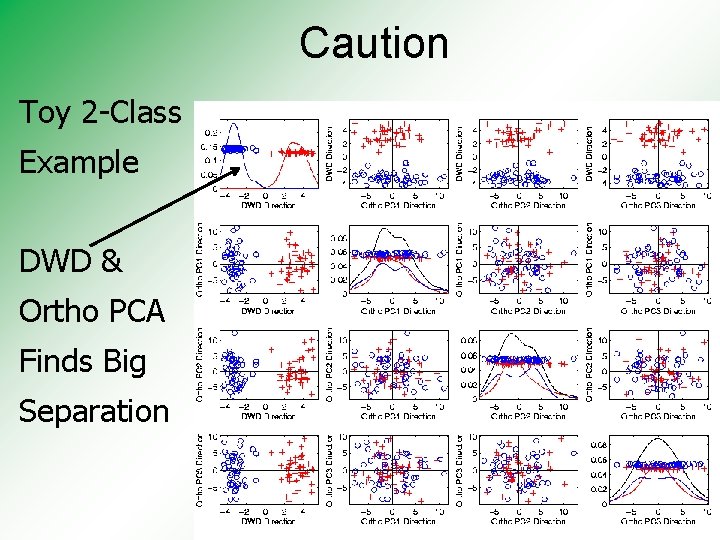

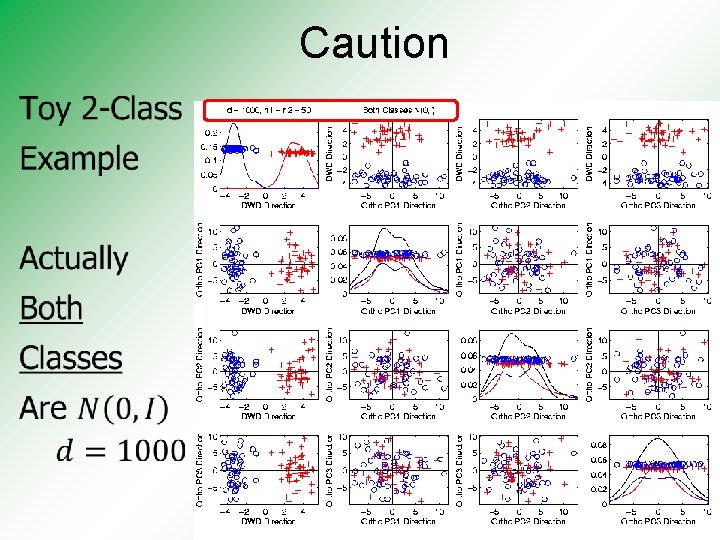

Caution: Toy 2-Class Example. DWD & Ortho PCA Finds Big Separation

Caution •

Caution: Toy 2-Class Example. Separation Is Natural Sampling Variation (Will Study in Detail Later)
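The caution above can be illustrated with a minimal sketch. It assumes pure Gaussian noise with arbitrary class labels, and it swaps in the simple mean-difference direction for DWD (so this is not the toy example from the slides, only an analogue). The function name and parameters are hypothetical. Even though there is no real class difference, the projected classes come out cleanly separated when the dimension is much larger than the sample size.

```python
import numpy as np

def mean_difference_projections(d=2000, n_per_class=20, seed=0):
    """Project pure-noise data onto its own mean-difference direction."""
    rng = np.random.default_rng(seed)
    X = rng.standard_normal((2 * n_per_class, d))   # no class signal at all
    labels = np.repeat([1, -1], n_per_class)        # arbitrary labels
    # Direction trained on the same data it is used to view (as in the slides).
    w = X[labels == 1].mean(axis=0) - X[labels == -1].mean(axis=0)
    w /= np.linalg.norm(w)
    scores = X @ w
    return scores[labels == 1], scores[labels == -1]

plus, minus = mean_difference_projections()
gap = plus.min() - minus.max()
print(f"smallest +1 score minus largest -1 score: {gap:.2f}")
```

A positive gap means the two label groups do not overlap at all in the projection, which is exactly the kind of visual impression that needs a formal test before it is believed.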

Caution Main Lesson Again: DWD Separation Can Be Deceptive Since DWD is Really Good at Separation Important Concept: Statistical Inference is Essential III. Confirmatory Analysis

DiProPerm Hypothesis Test

Context: 2-sample means, $H_0: \mu_{+1} = \mu_{-1}$ vs. $H_1: \mu_{+1} \neq \mu_{-1}$ (in High Dimensions)

Approach taken here: Wei et al (2013). Focus on Visualization via Projection (Thus Test Related to Exploration)

Challenges:
- Distributional Assumptions
- Parameter Estimation
- HDLSS space is slippery

Suggested Approach: Permutation test (A flavor of classical “non-parametrics”)

DiProPerm Hypothesis Test

Suggested Approach:
- Find a DIrection (separating classes)
- PROject the data (reduces to 1 dim)
- PERMute (class labels, to assess significance, with recomputed direction)
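The three steps can be sketched as follows. This is a minimal illustration under stated assumptions, not the posted Matlab software: the direction is the mean difference (one of the choices listed in this deck) rather than DWD, the 1-d summary is the difference of projected class means, and the Z-score is taken to be the observed value standardized by the permutation mean and standard deviation. All function names are hypothetical.

```python
import numpy as np

def mean_diff_direction(X, y):
    """DIrection: unit vector along the difference of class means."""
    w = X[y == 1].mean(axis=0) - X[y == -1].mean(axis=0)
    return w / np.linalg.norm(w)

def projected_mean_difference(X, y):
    """PROject onto the direction, then summarize by the mean difference."""
    scores = X @ mean_diff_direction(X, y)
    return scores[y == 1].mean() - scores[y == -1].mean()

def diproperm(X, y, n_perm=1000, seed=0):
    """PERMute labels, recomputing the direction for every permutation."""
    rng = np.random.default_rng(seed)
    observed = projected_mean_difference(X, y)
    null = np.array([
        projected_mean_difference(X, rng.permutation(y))
        for _ in range(n_perm)
    ])
    p_value = np.mean(null >= observed)           # proportion at least as large
    z_score = (observed - null.mean()) / null.std(ddof=1)
    return observed, null, p_value, z_score

# Usage on pure noise: labels carry no information.
rng = np.random.default_rng(1)
y = np.repeat([1, -1], 15)
noise = rng.standard_normal((30, 200))
obs, null, p, z = diproperm(noise, y)
print(f"observed = {obs:.3f}, p-value = {p:.3f}, Z = {z:.2f}")
```

Recomputing the direction inside the loop is the key design choice: the null distribution then reflects how much separation the direction-finding step can manufacture from noise alone.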

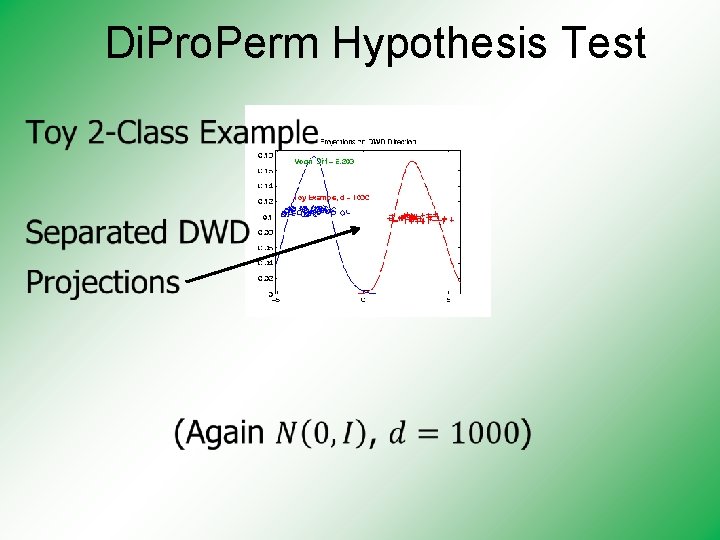

DiProPerm Hypothesis Test

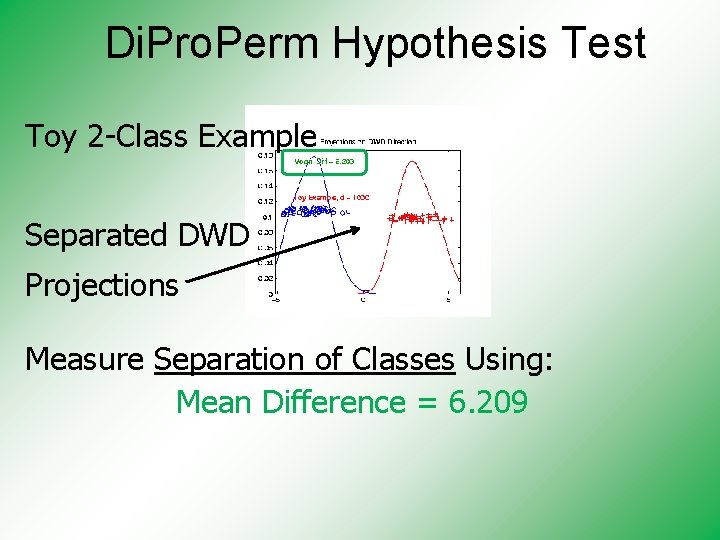

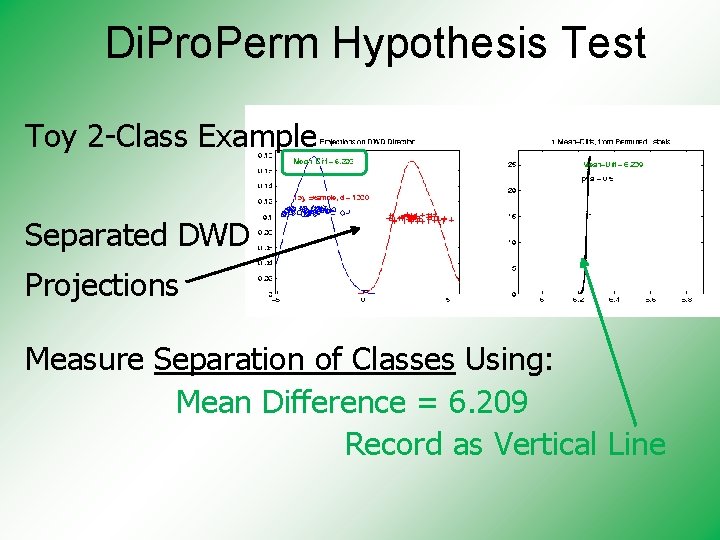

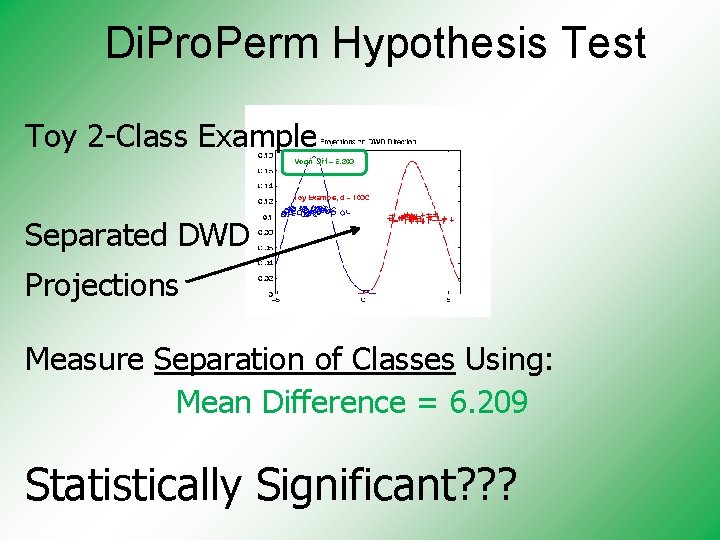

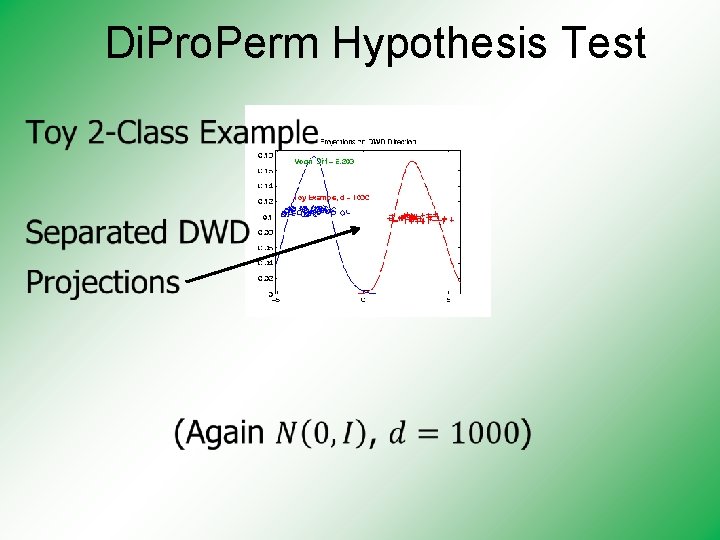

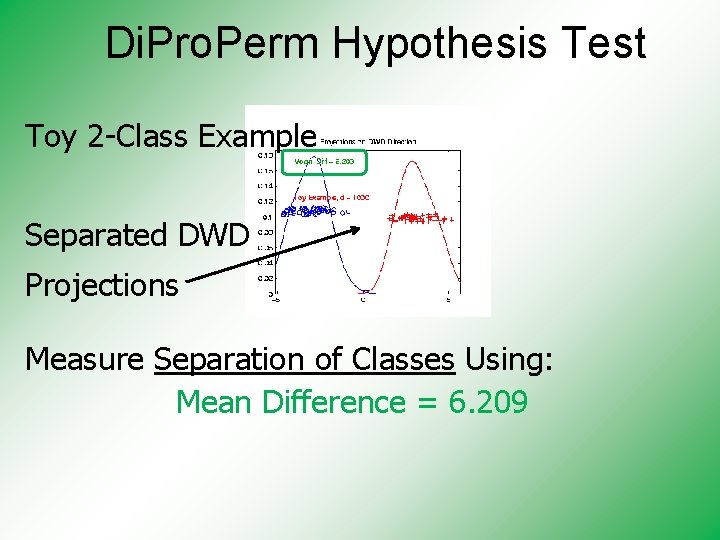

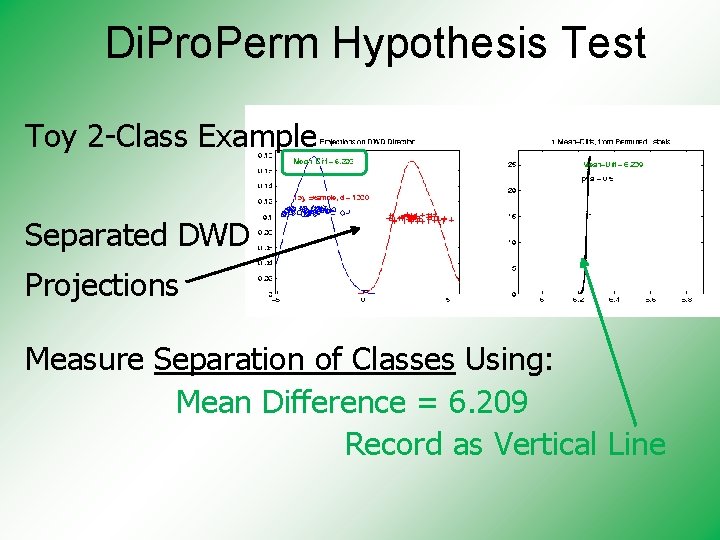

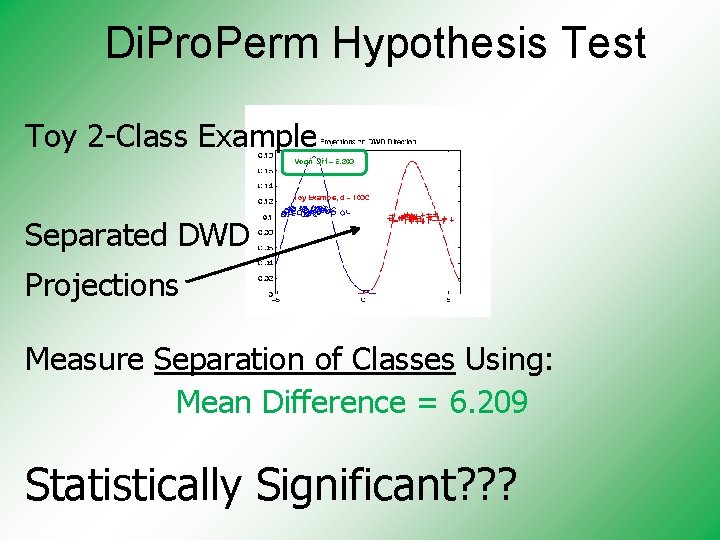

DiProPerm Hypothesis Test

Toy 2-Class Example, Separated DWD Projections:
- Measure Separation of Classes Using: Mean Difference = 6.209
- Record as Vertical Line
- Statistically Significant???

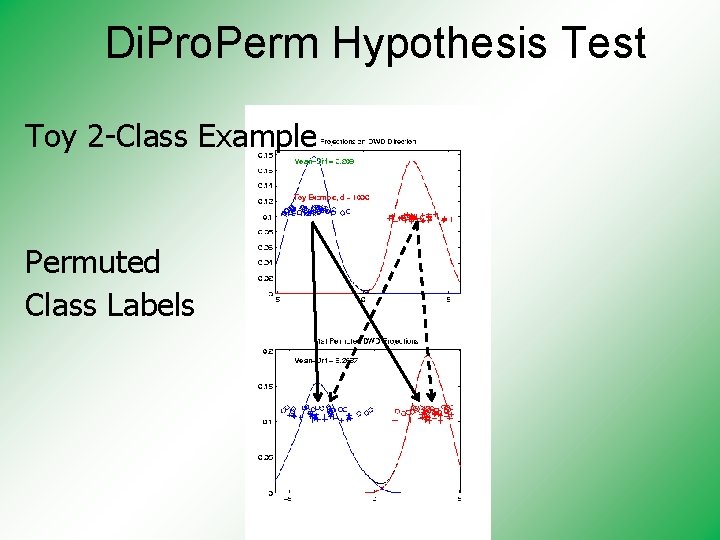

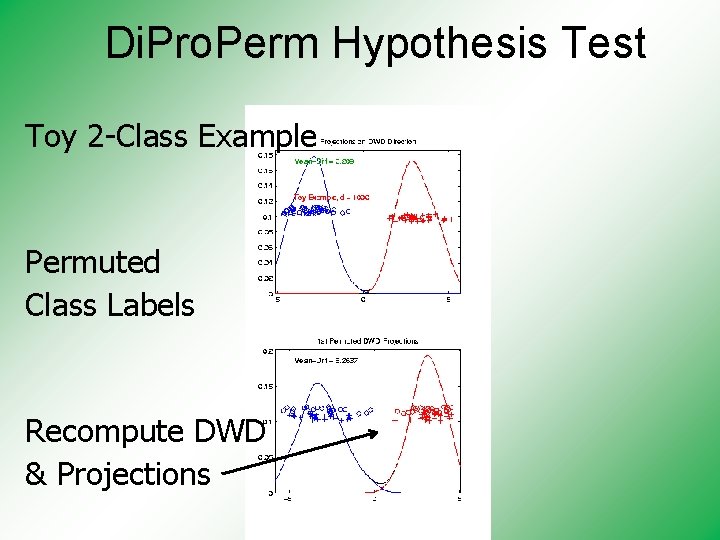

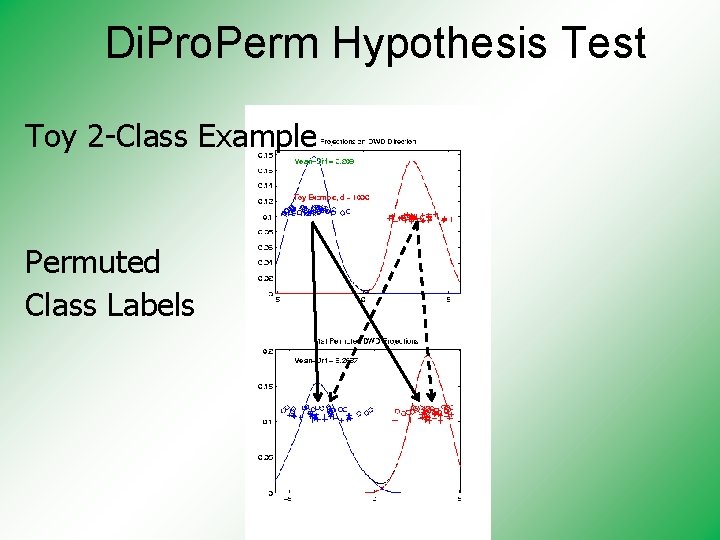

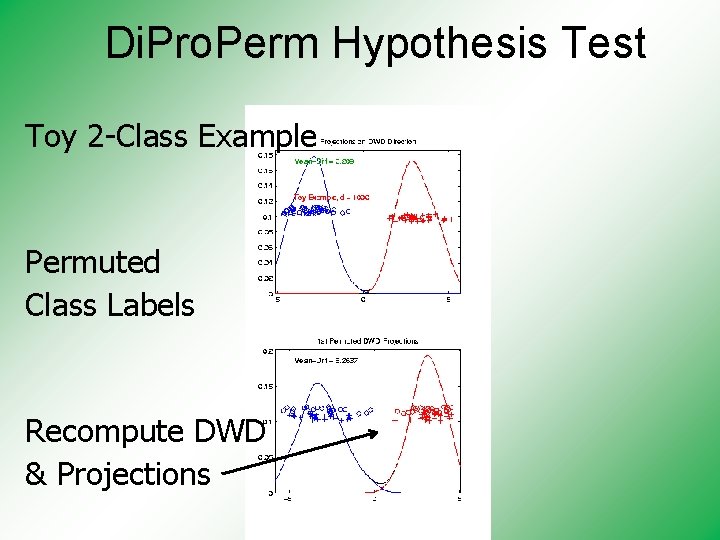

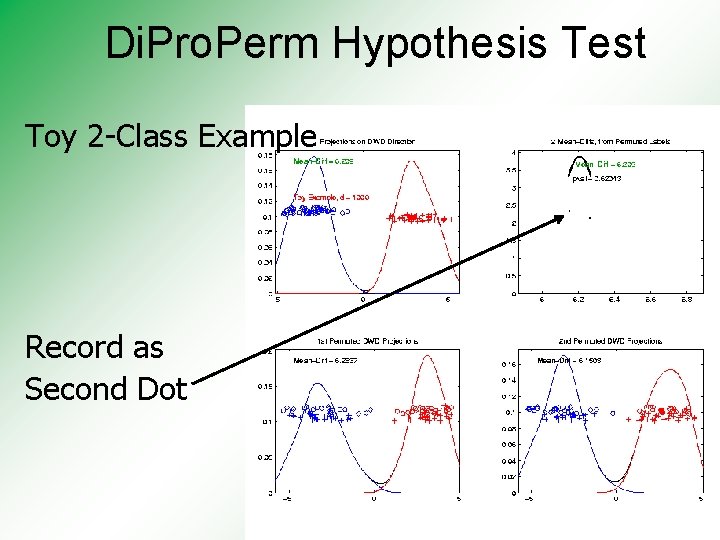

DiProPerm Hypothesis Test

Toy 2-Class Example:
- Permuted Class Labels
- Recompute DWD & Projections

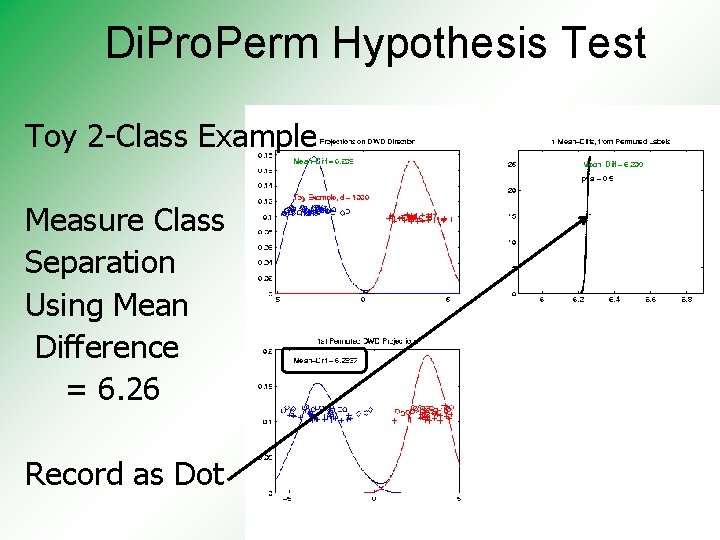

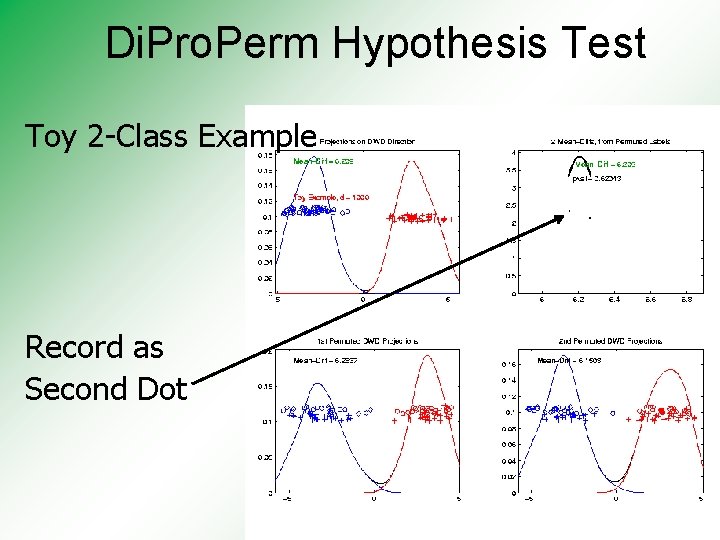

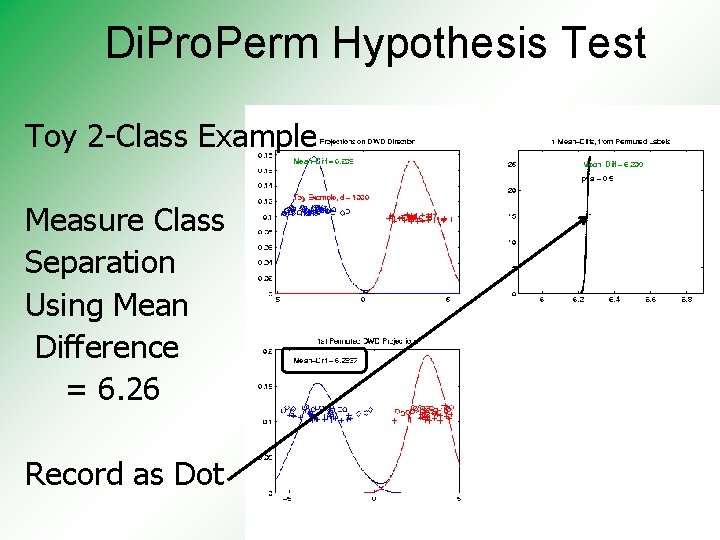

DiProPerm Hypothesis Test

Toy 2-Class Example:
- Measure Class Separation Using Mean Difference = 6.26
- Record as Dot

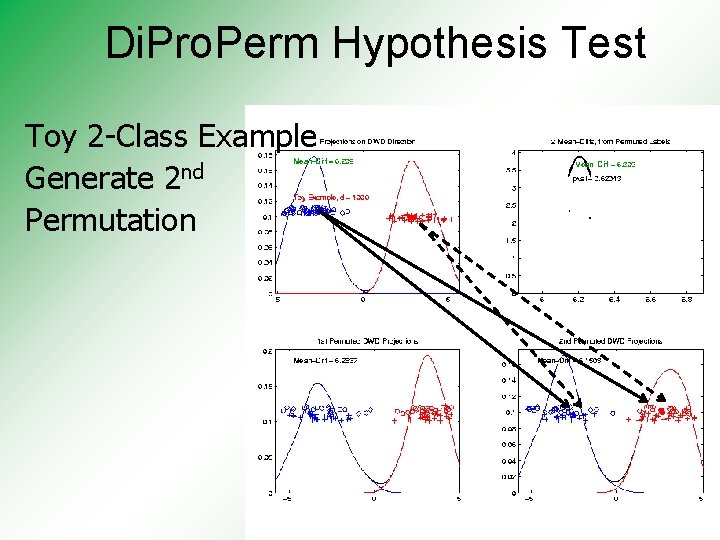

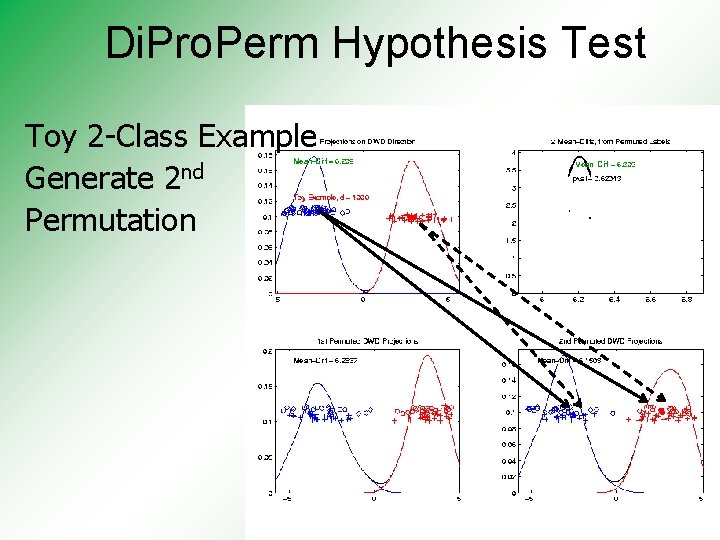

DiProPerm Hypothesis Test: Toy 2-Class Example. Generate 2nd Permutation

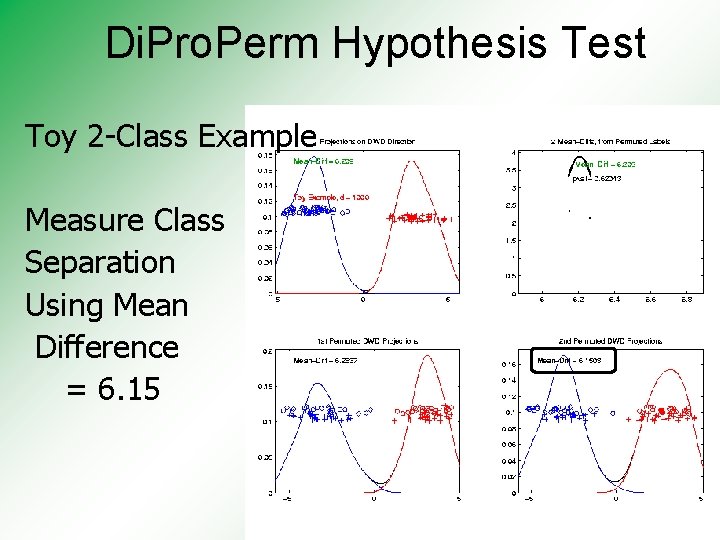

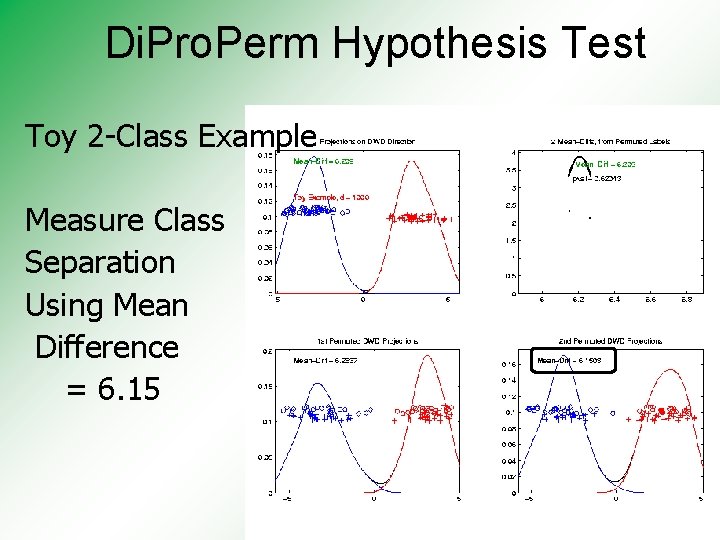

DiProPerm Hypothesis Test

Toy 2-Class Example:
- Measure Class Separation Using Mean Difference = 6.15
- Record as Second Dot
- ... Repeat This 1,000 Times To Generate Null Distribution

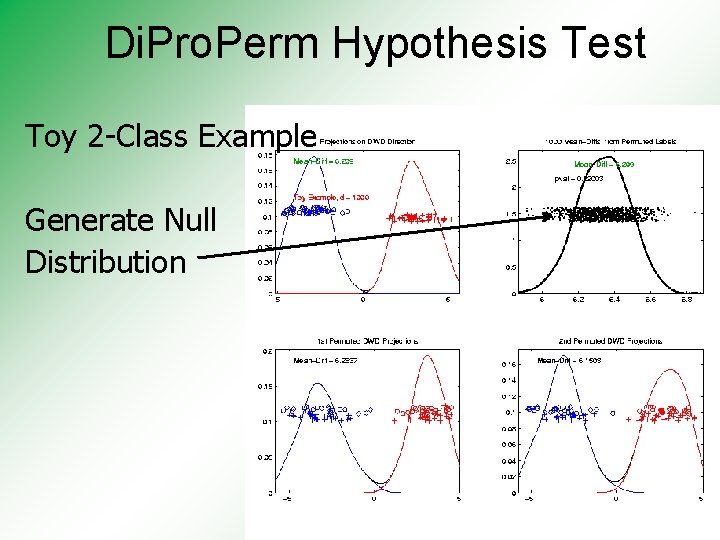

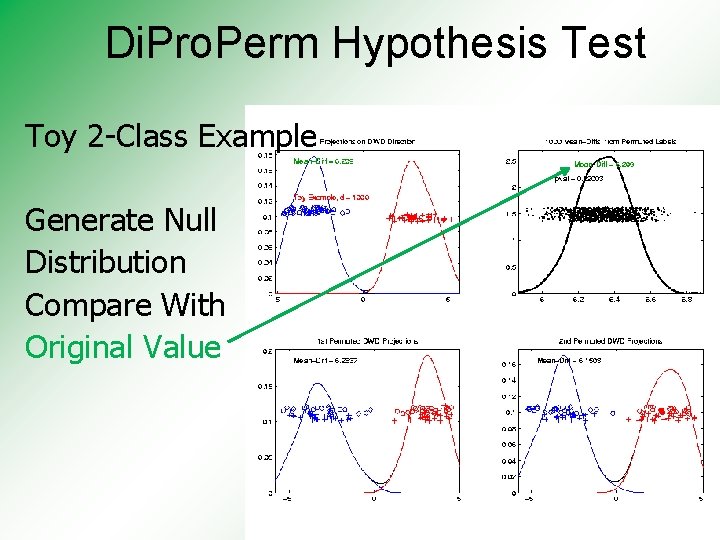

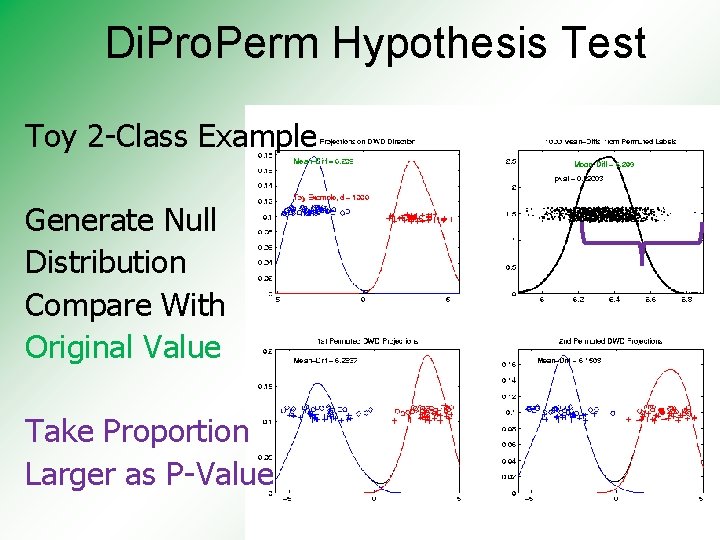

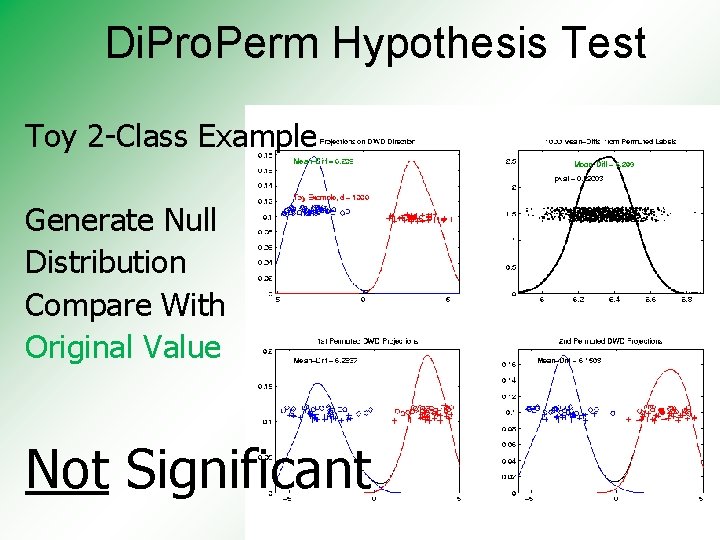

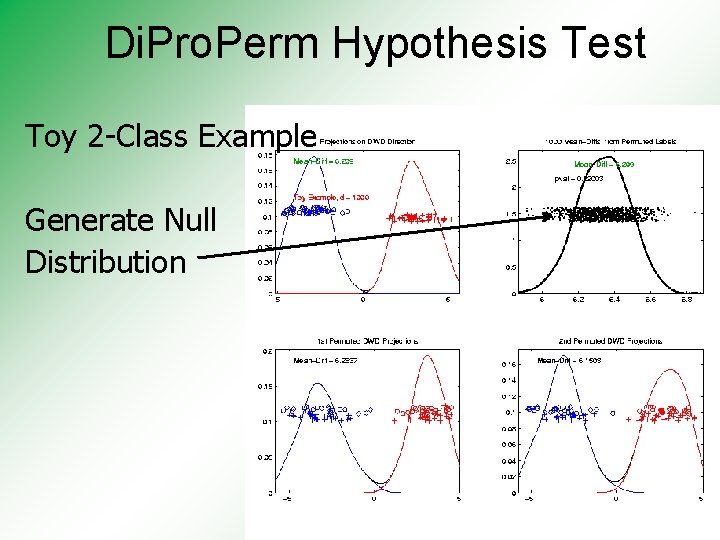

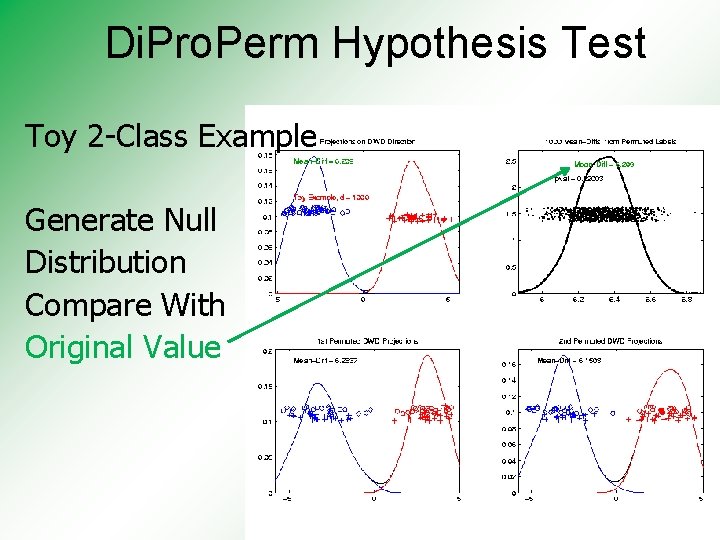

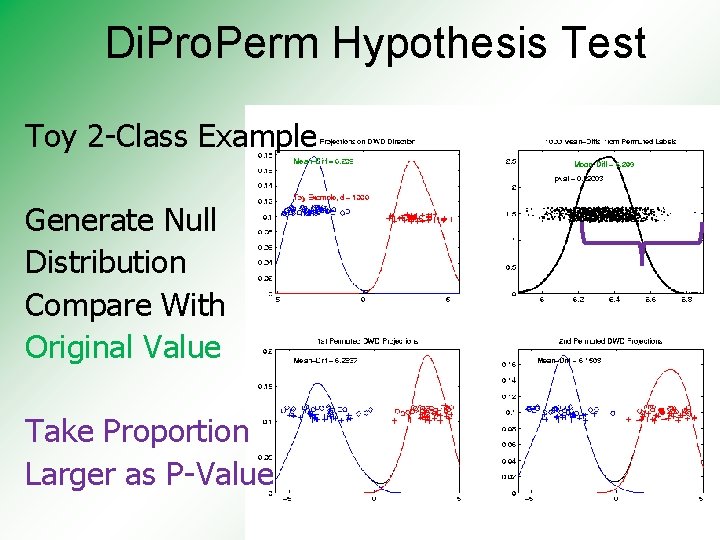

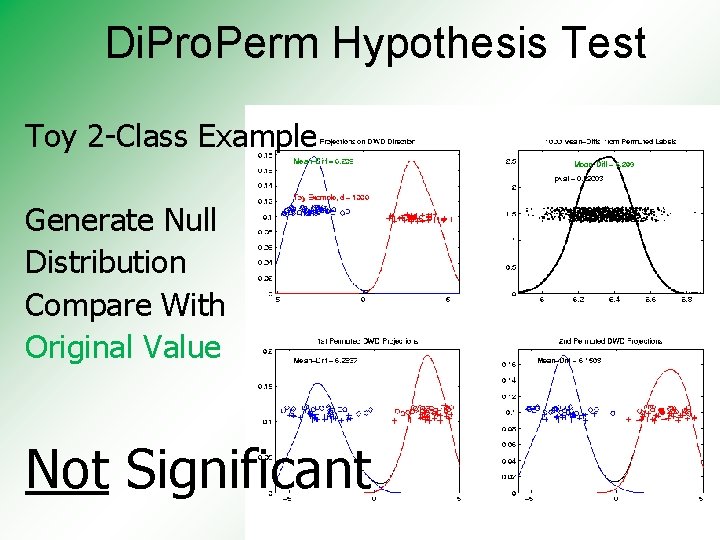

DiProPerm Hypothesis Test

Toy 2-Class Example:
- Generate Null Distribution
- Compare With Original Value
- Take Proportion Larger as P-Value
- Not Significant

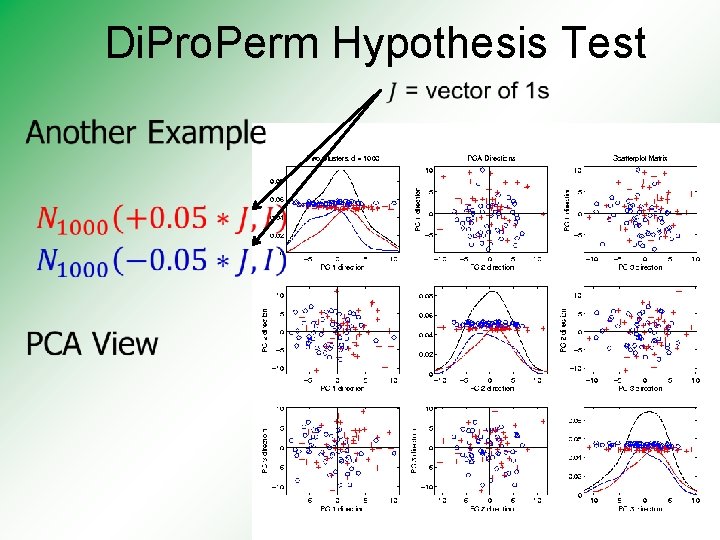

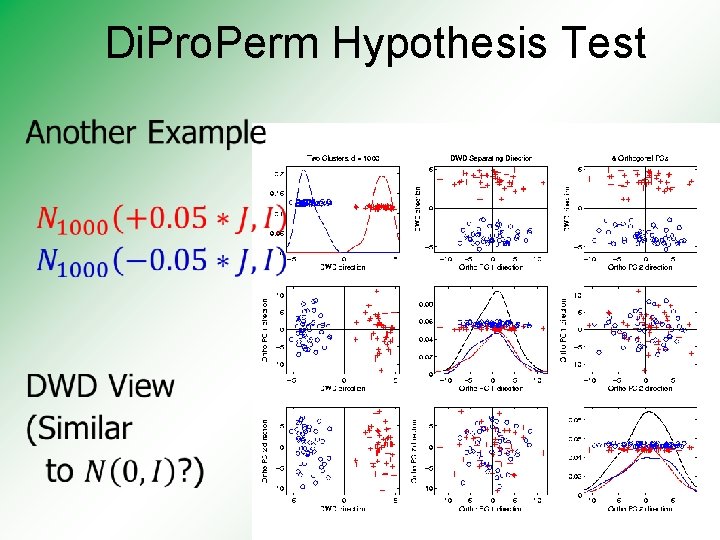

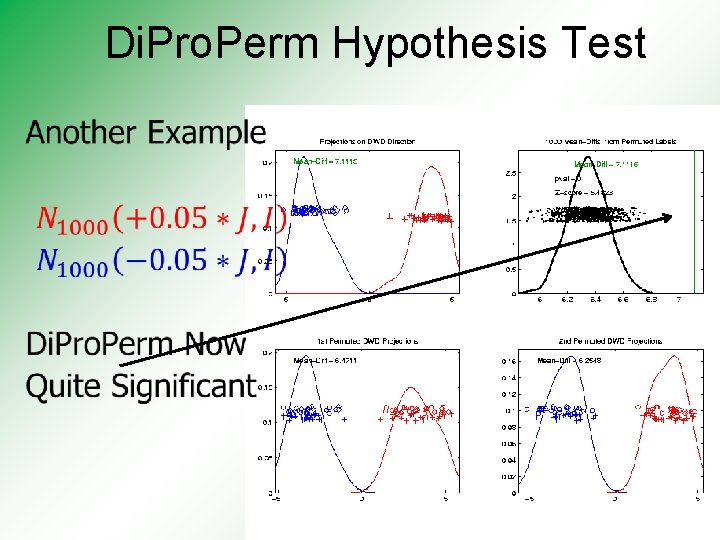

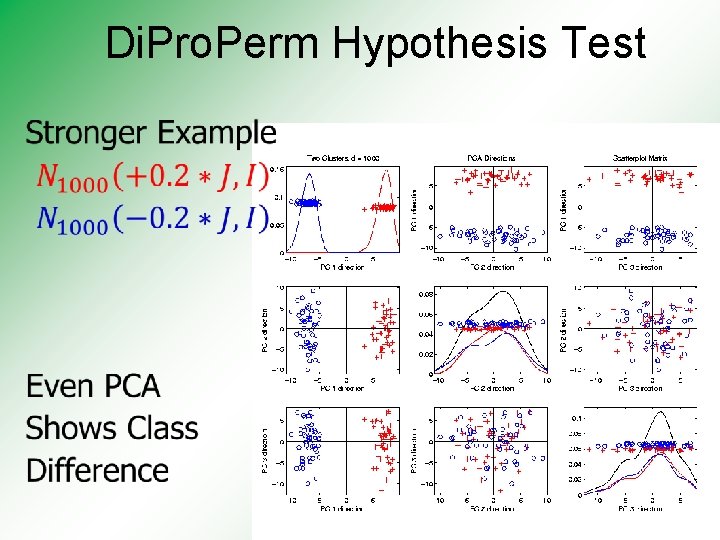

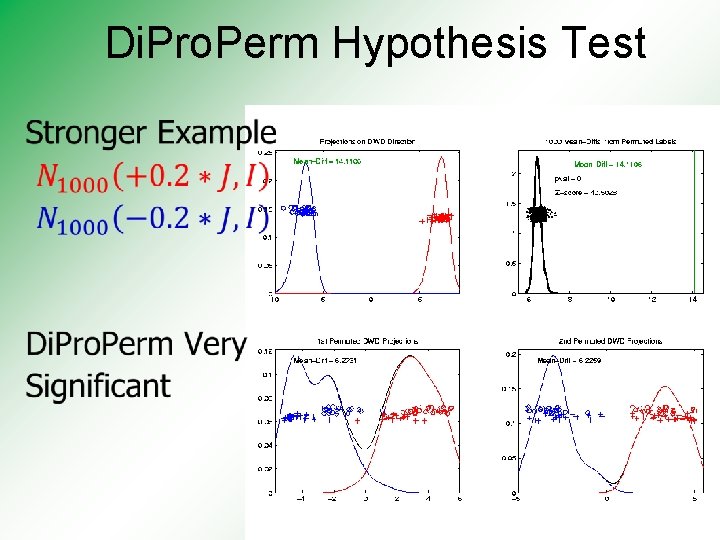

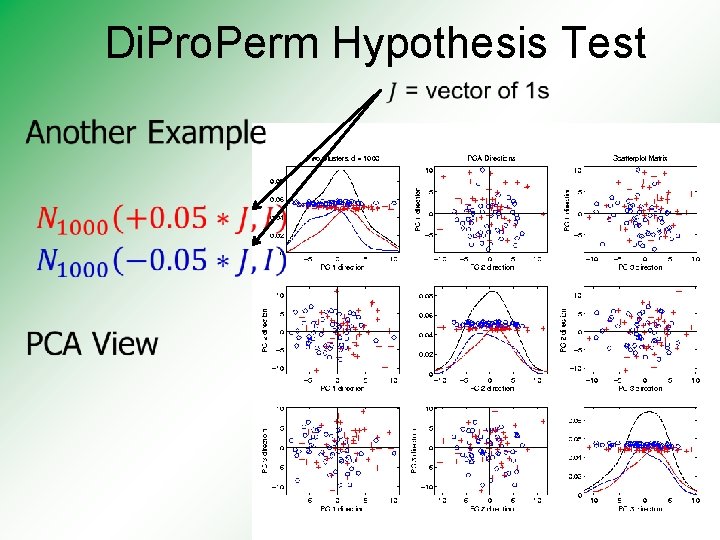

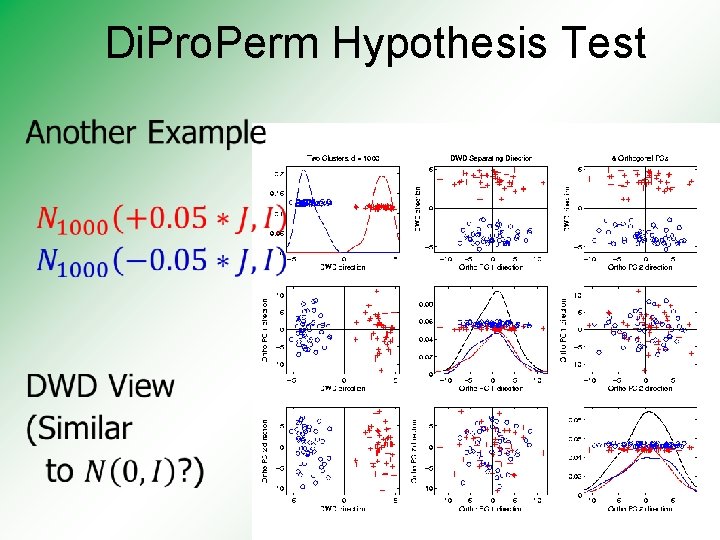

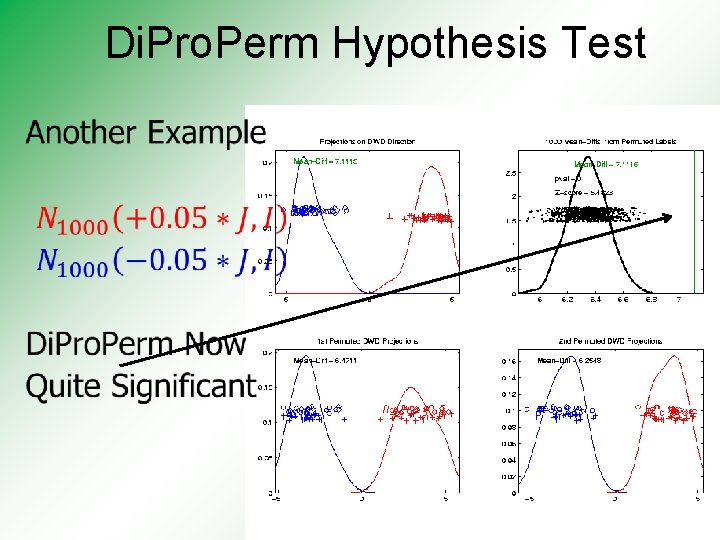

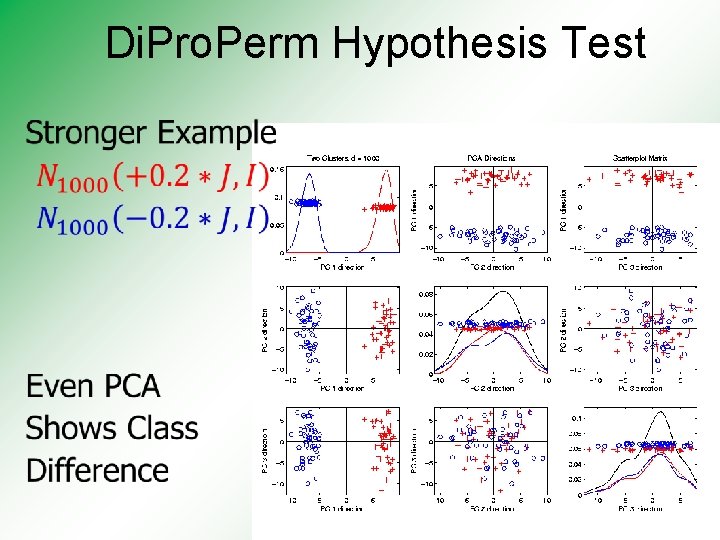

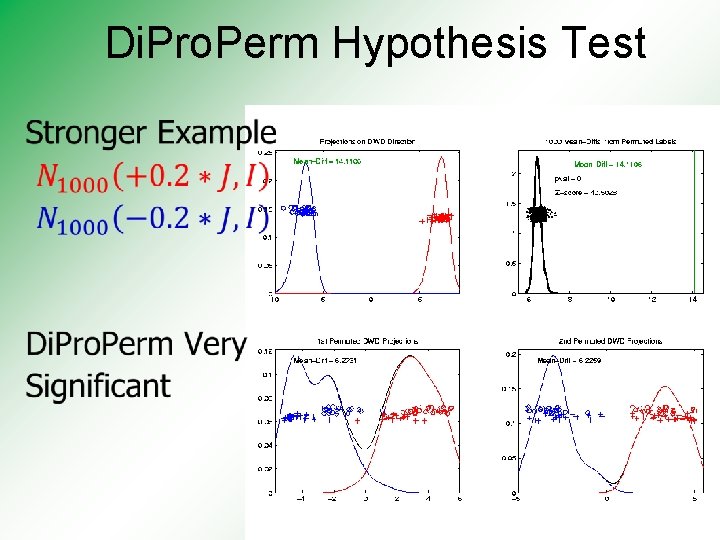

DiProPerm Hypothesis Test

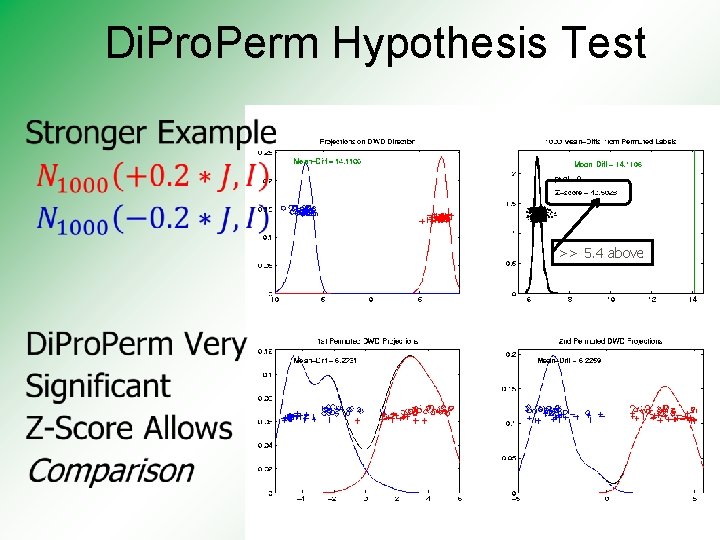

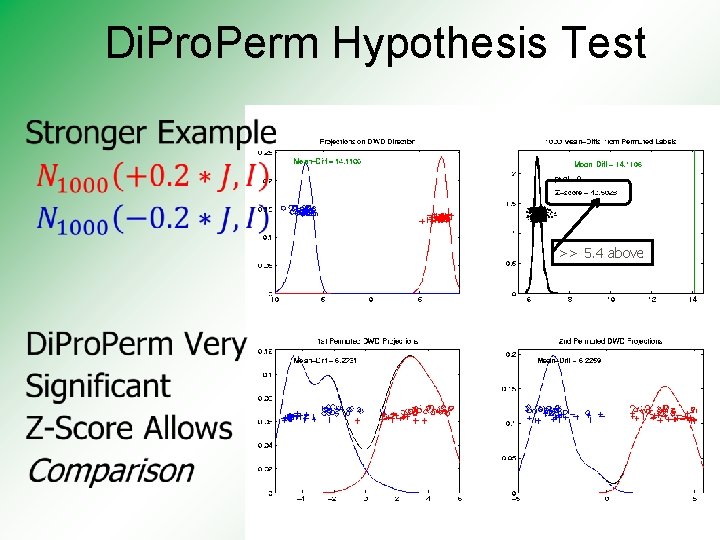

DiProPerm Hypothesis Test

DiProPerm Hypothesis Test >> 5.4 above

DiProPerm Hypothesis Test

Real Data Example: Autism
- Caudate Shape (sub-cortical brain structure)
- Shape summarized by 3-d locations of 1032 corresponding points
- Autistic vs. Typically Developing

(Thanks to Josh Cates)

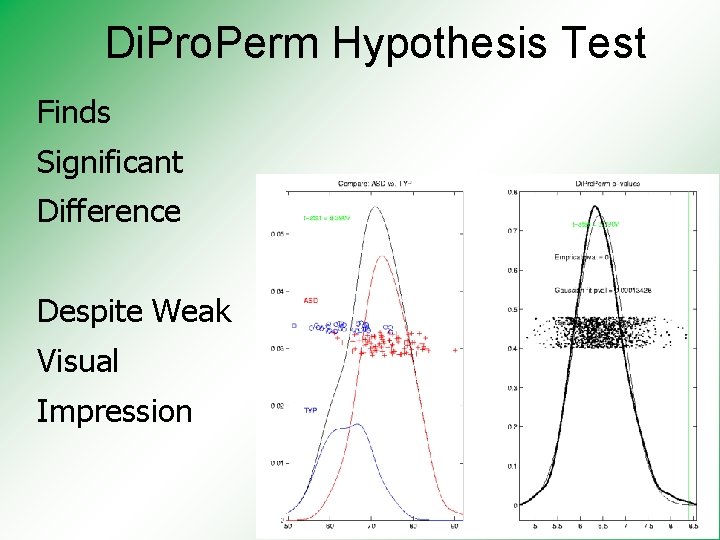

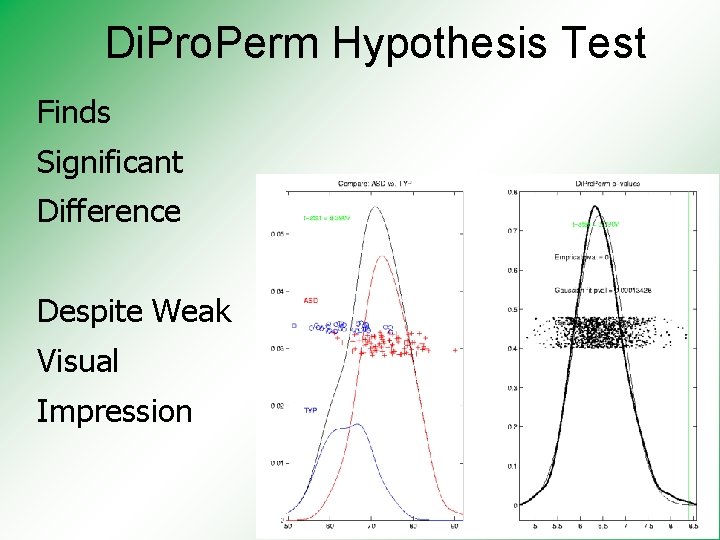

DiProPerm Hypothesis Test: Finds Significant Difference Despite Weak Visual Impression

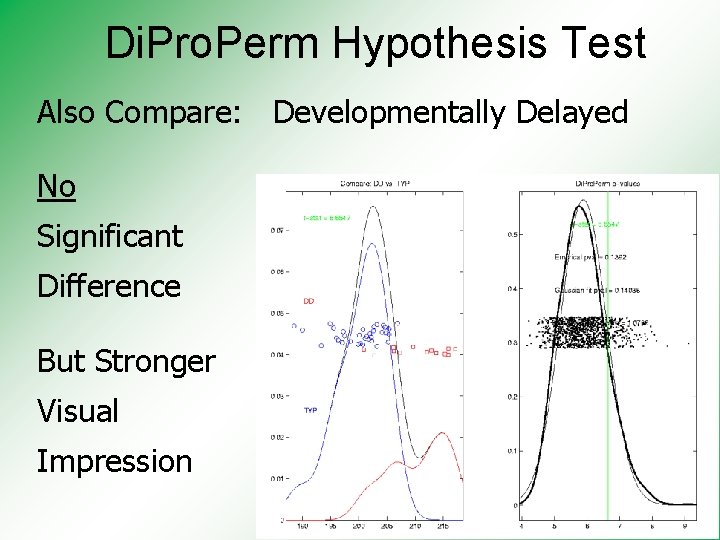

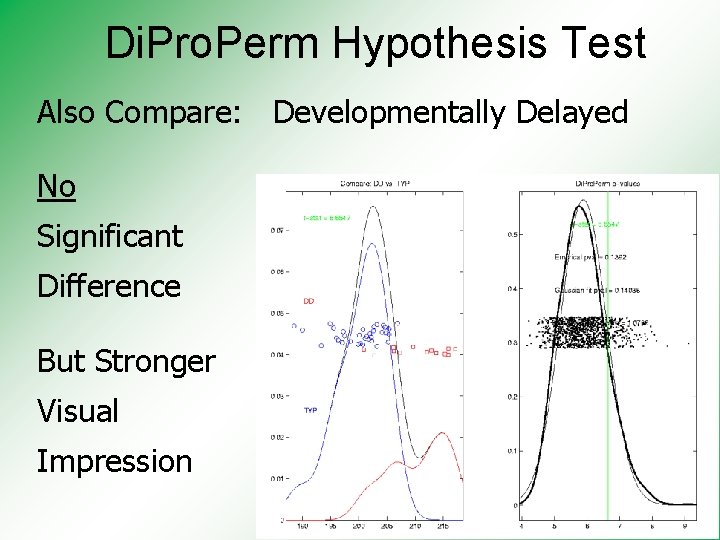

DiProPerm Hypothesis Test: Also Compare: Developmentally Delayed. No Significant Difference But Stronger Visual Impression

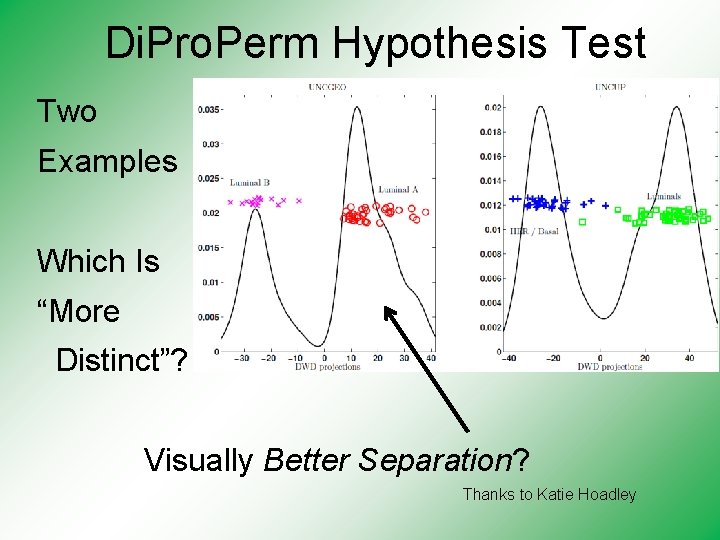

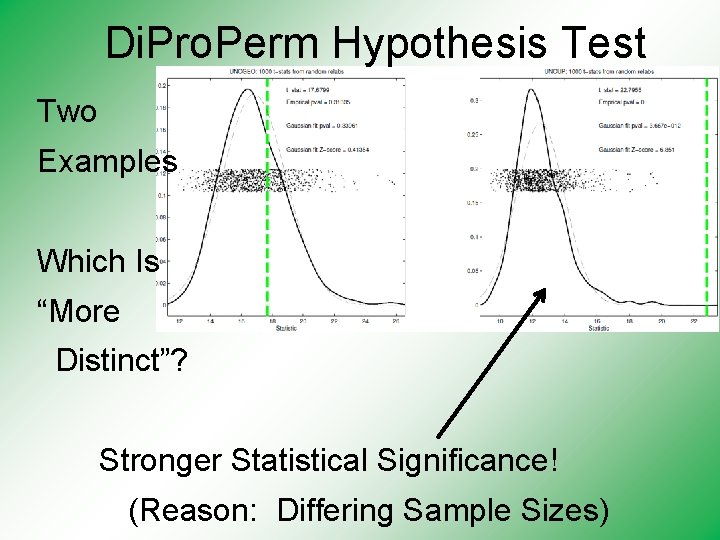

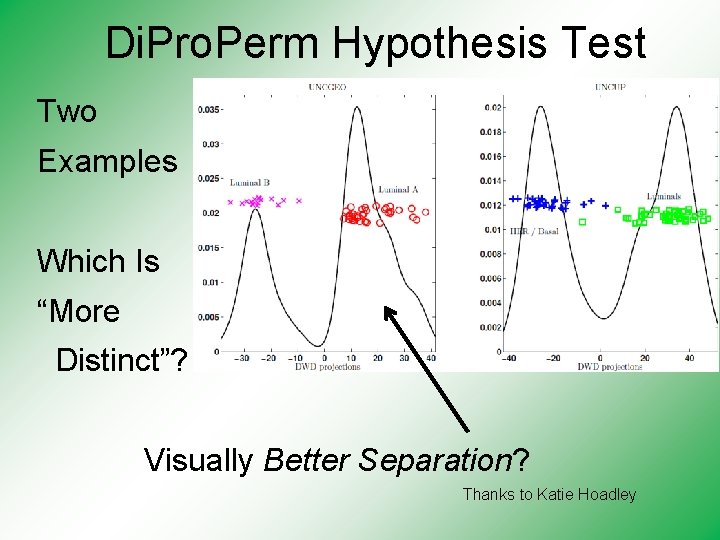

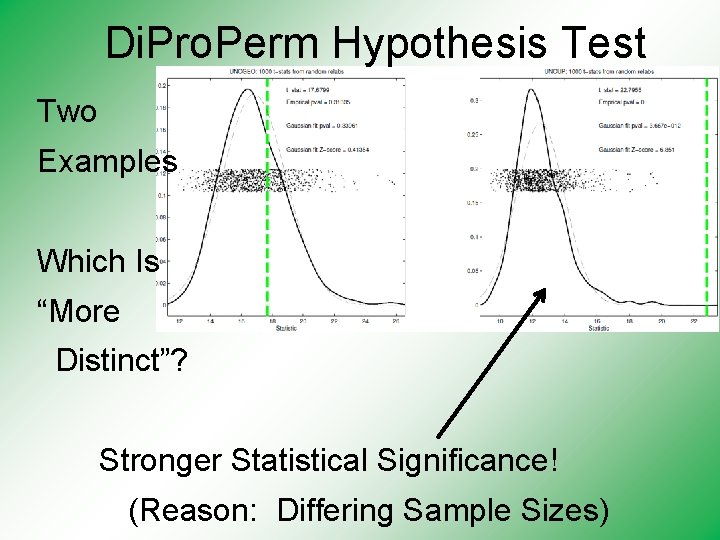

DiProPerm Hypothesis Test

Two Examples: Which Is “More Distinct”? (Thanks to Katie Hoadley)
- Visually Better Separation?
- Stronger Statistical Significance! (Reason: Differing Sample Sizes)

DiProPerm Hypothesis Test

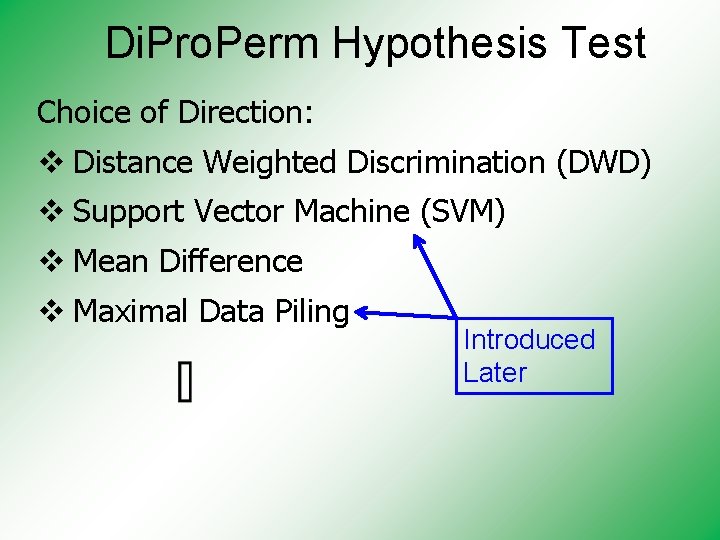

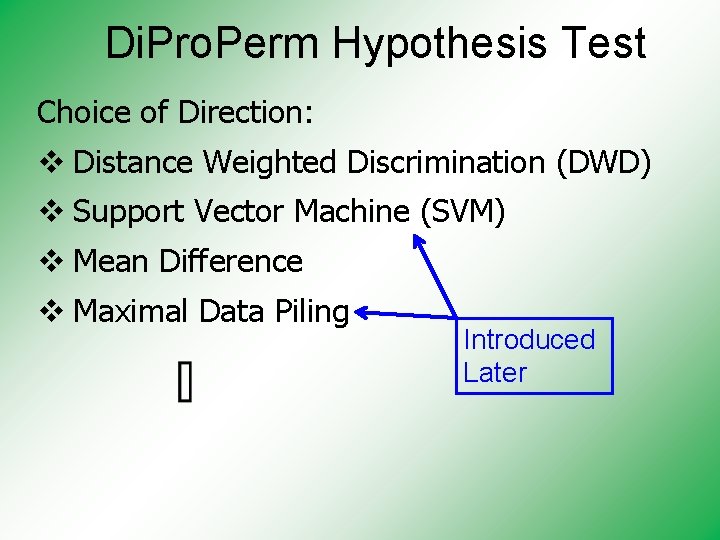

DiProPerm Hypothesis Test

Choice of Direction:
- Distance Weighted Discrimination (DWD)
- Support Vector Machine (SVM)
- Mean Difference
- Maximal Data Piling (Introduced Later)

DiProPerm Hypothesis Test

Choice of 1-d Summary Statistic:
- 2-sample t-stat
- Mean difference
- Median difference
- Area Under ROC Curve

Surprising Comparison Coming Later
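The four 1-d summary statistics listed above can be sketched as small functions of the two sets of projected scores. The function names are hypothetical, and the unequal-variance (Welch) form of the t-statistic is an assumption, since the deck does not say which form is used.

```python
import numpy as np

def mean_difference(a, b):
    return np.mean(a) - np.mean(b)

def median_difference(a, b):
    return np.median(a) - np.median(b)

def two_sample_t(a, b):
    # Welch (unequal variance) form; an assumption, not taken from the slides.
    se = np.sqrt(np.var(a, ddof=1) / len(a) + np.var(b, ddof=1) / len(b))
    return (np.mean(a) - np.mean(b)) / se

def area_under_roc(a, b):
    # Probability that a score from a exceeds a score from b, ties count 1/2.
    a = np.asarray(a, dtype=float)[:, None]
    b = np.asarray(b, dtype=float)[None, :]
    return np.mean((a > b) + 0.5 * (a == b))

plus_scores = np.array([3.0, 4.0, 5.0])
minus_scores = np.array([0.0, 1.0, 2.0])
for stat in (mean_difference, median_difference, two_sample_t, area_under_roc):
    print(stat.__name__, round(float(stat(plus_scores, minus_scores)), 4))
```

Any of these can replace the mean difference inside a permutation loop, as long as the same statistic is used for the observed value and for every permuted value.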

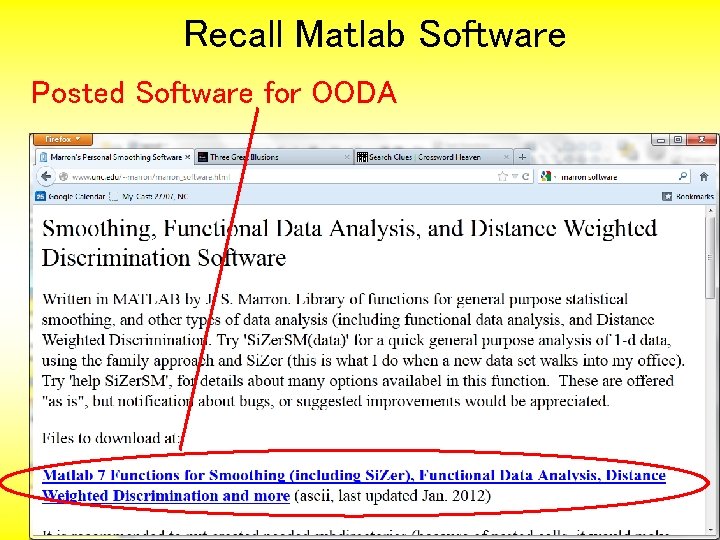

Recall Matlab Software Posted Software for OODA

DiProPerm Hypothesis Test: Matlab Software: DiProPermSM.m, In BatchAdjust Directory

Recall Drug Discovery Data Raw Data – DWD & Ortho PCs Scatterplot Some Blue - Red Separation But Dominated By Few Large Compounds

Recall Drug Discovery Data Binary Data – DWD & Ortho PCs Scatterplot Better Blue - Red Separation And Visualization

Recall Drug Discovery Data: Transform’d Non-Binary Data, DWD & OPCA. Better Blue - Red Separation??? Very Useful Visualization

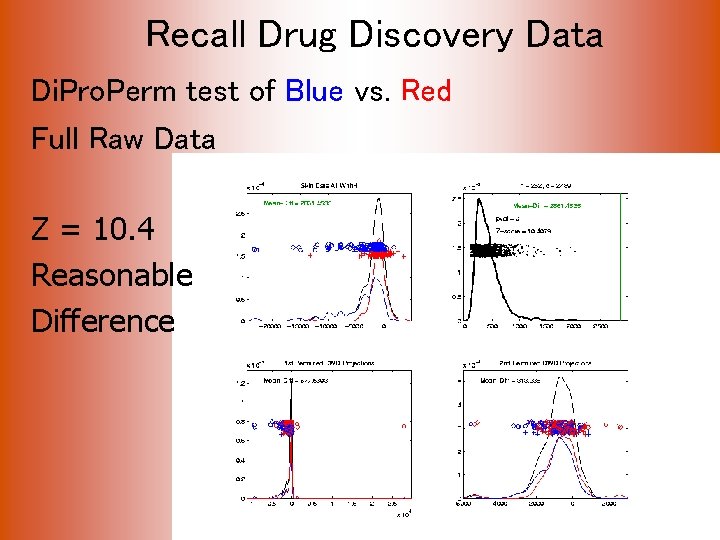

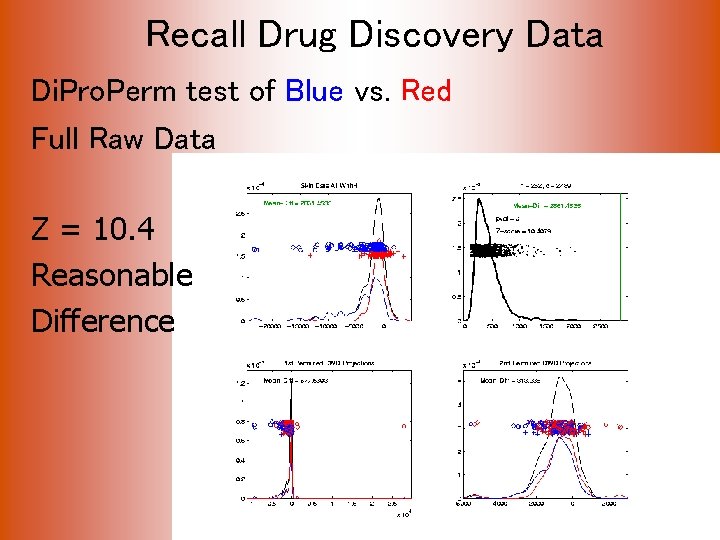

Recall Drug Discovery Data: DiProPerm test of Blue vs. Red, Full Raw Data. Z = 10.4, Reasonable Difference

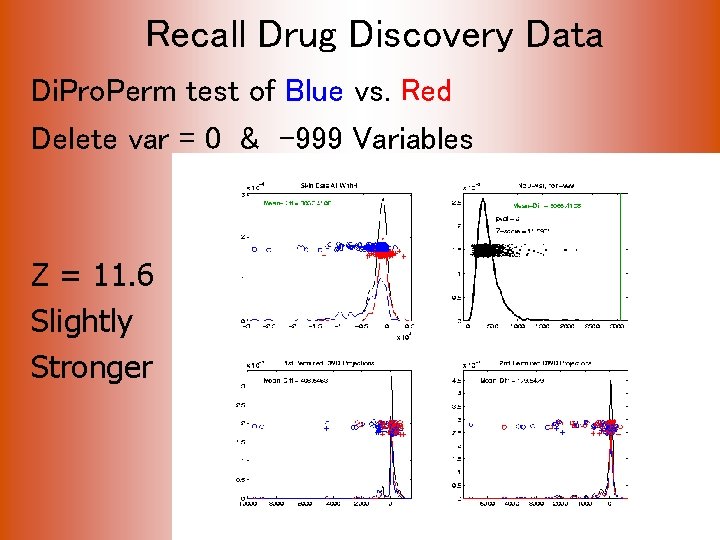

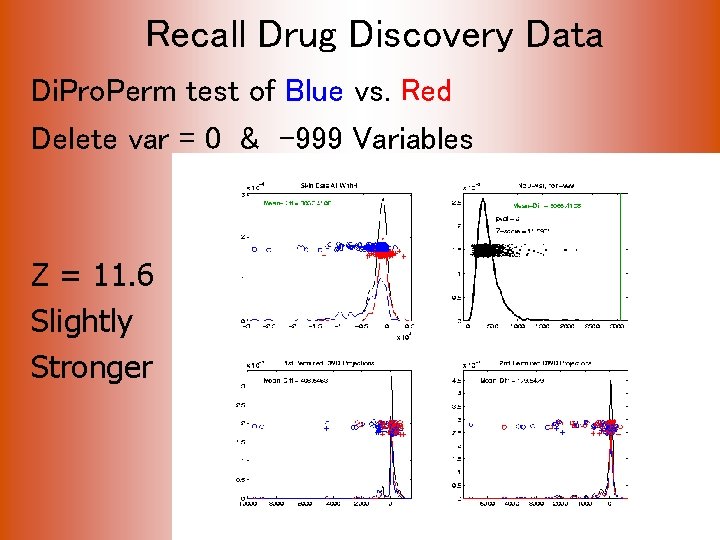

Recall Drug Discovery Data: DiProPerm test of Blue vs. Red, Delete var = 0 & -999 Variables. Z = 11.6, Slightly Stronger

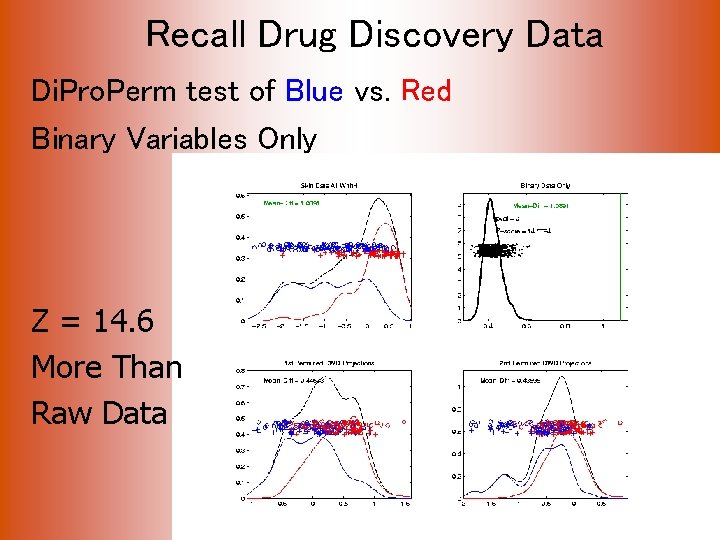

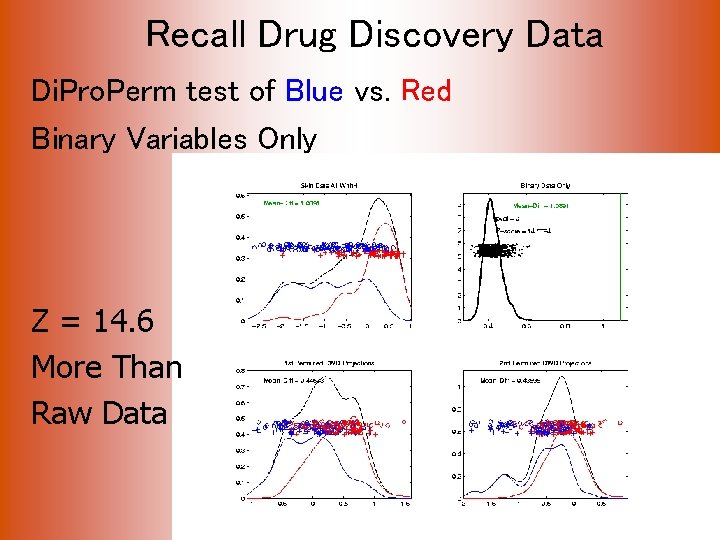

Recall Drug Discovery Data: DiProPerm test of Blue vs. Red, Binary Variables Only. Z = 14.6, More Than Raw Data

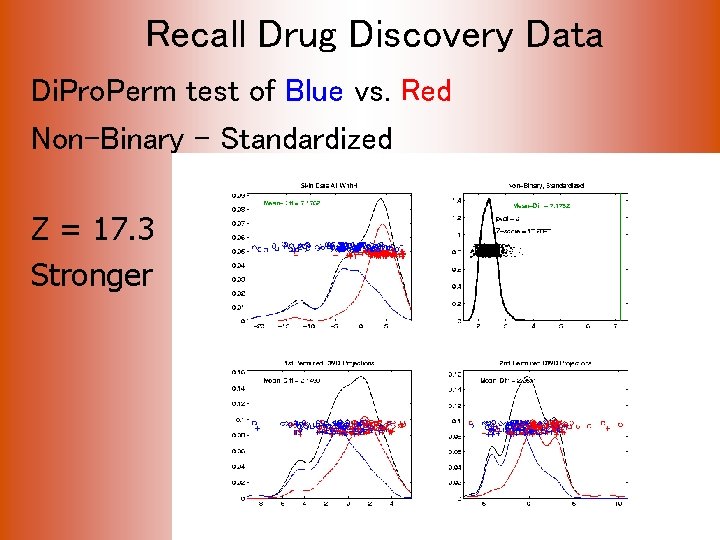

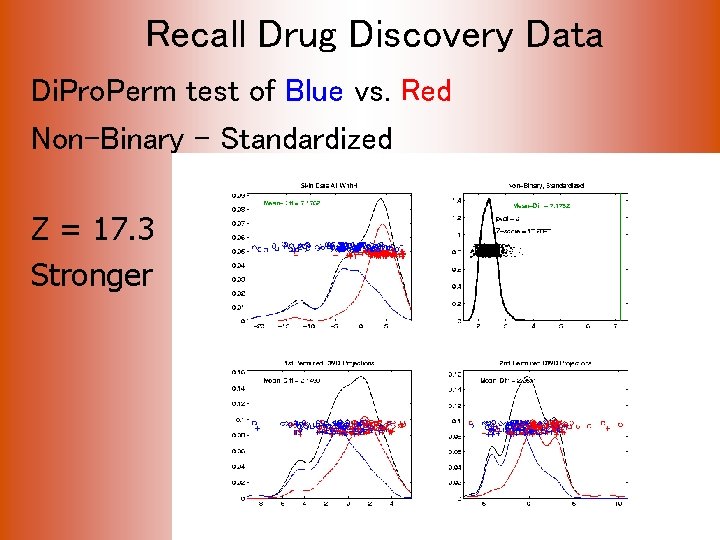

Recall Drug Discovery Data: DiProPerm test of Blue vs. Red, Non-Binary, Standardized. Z = 17.3, Stronger

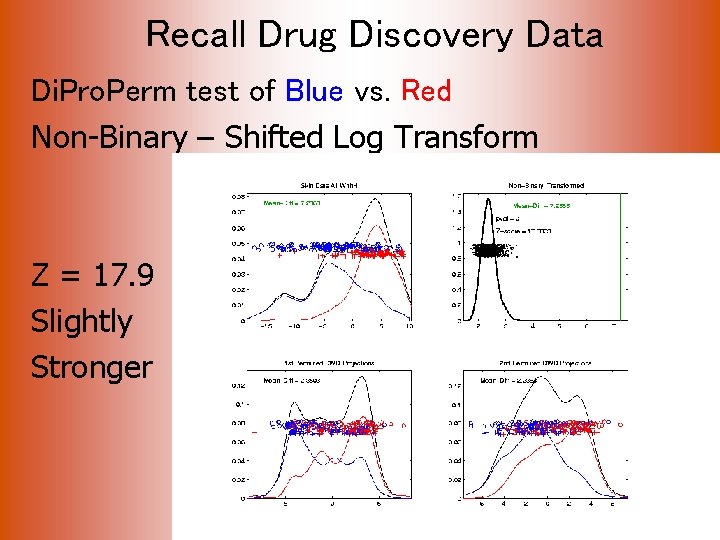

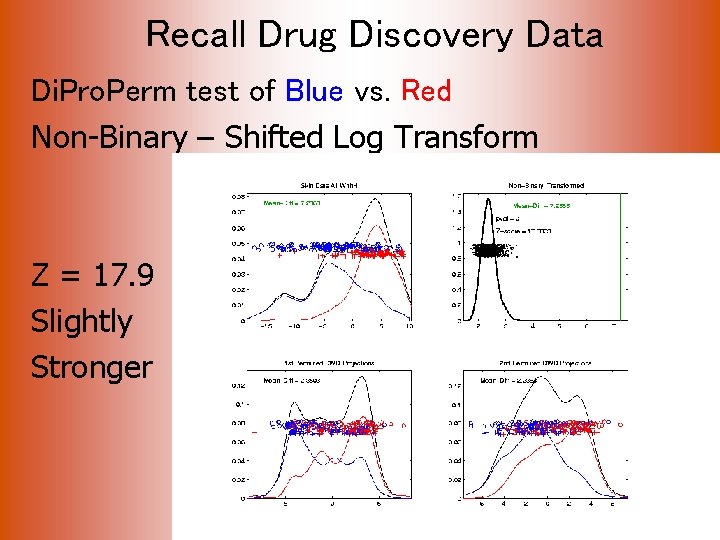

Recall Drug Discovery Data: DiProPerm test of Blue vs. Red, Non-Binary, Shifted Log Transform. Z = 17.9, Slightly Stronger

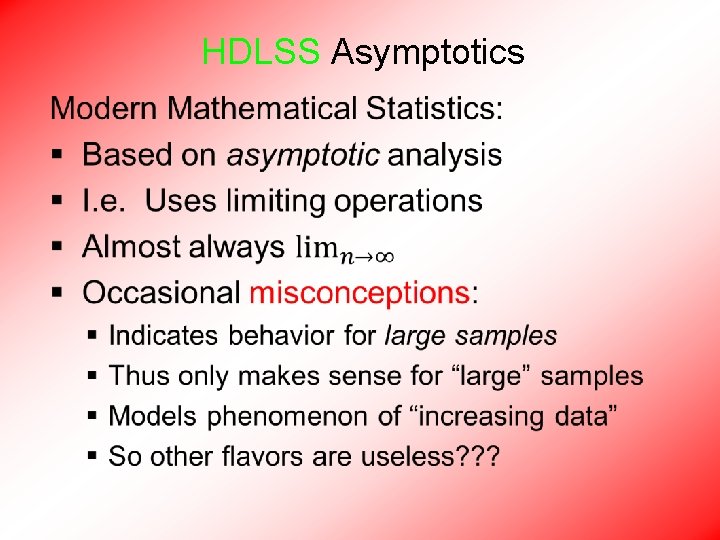

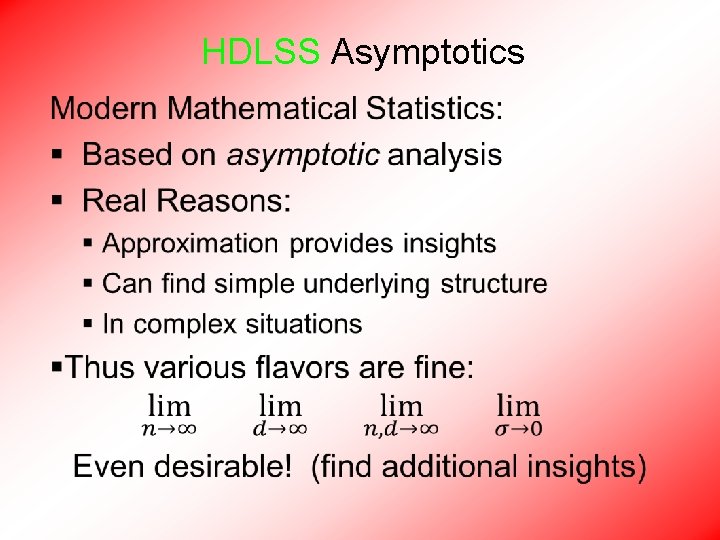

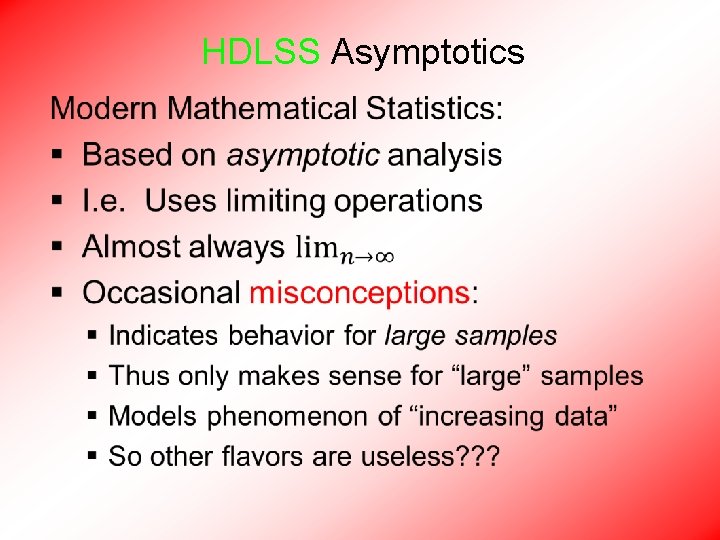

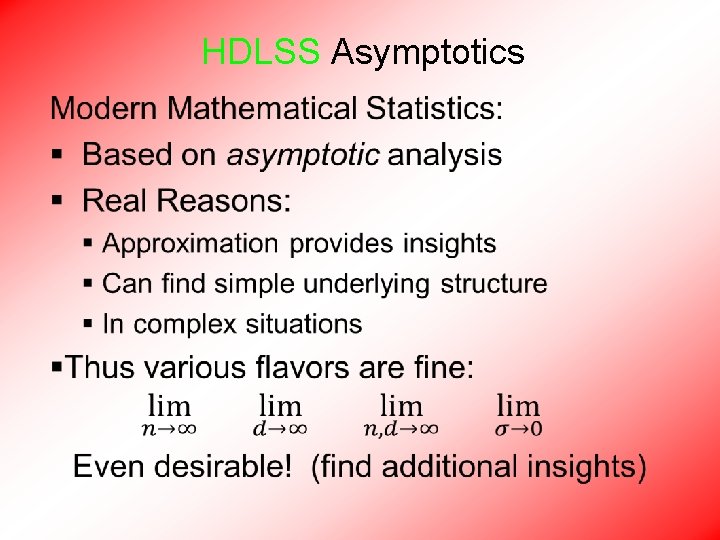

HDLSS Asymptotics •


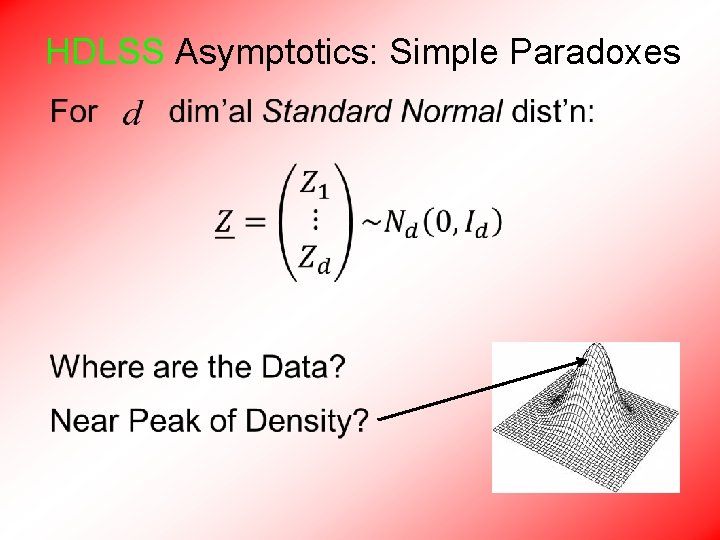

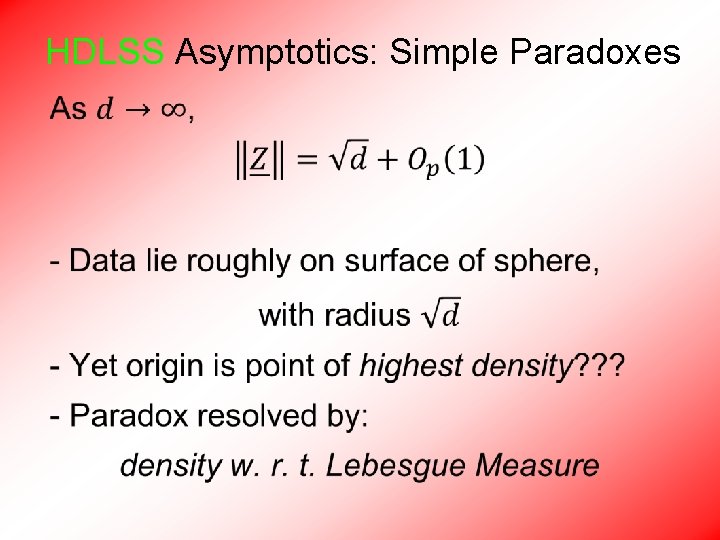

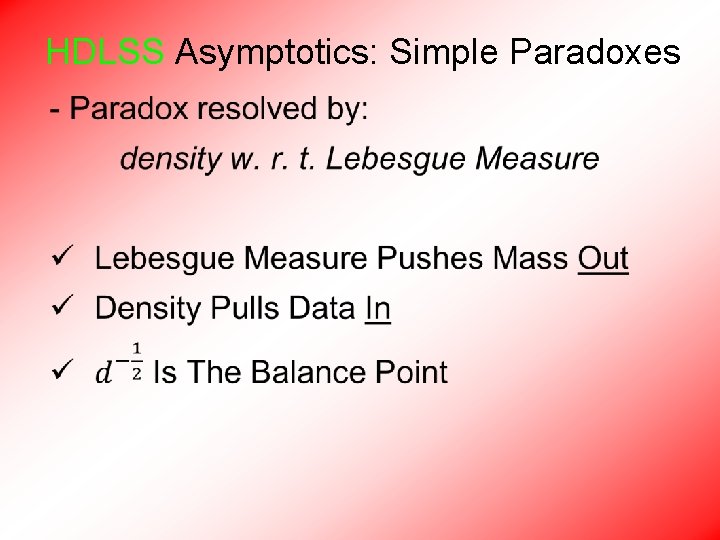

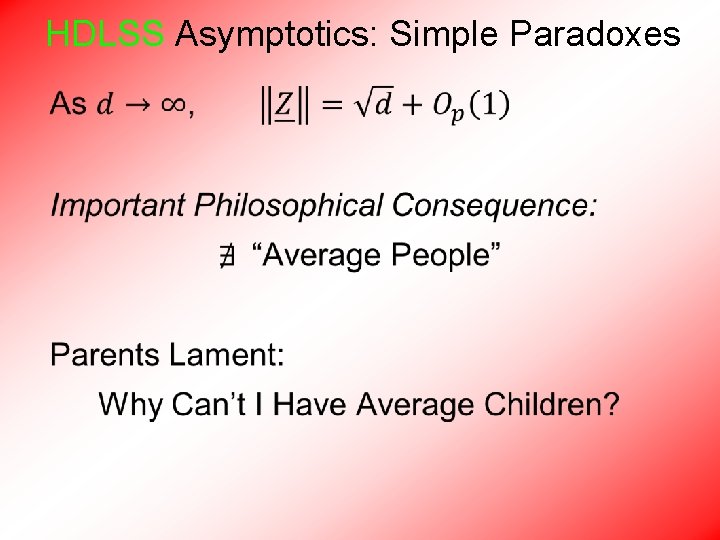

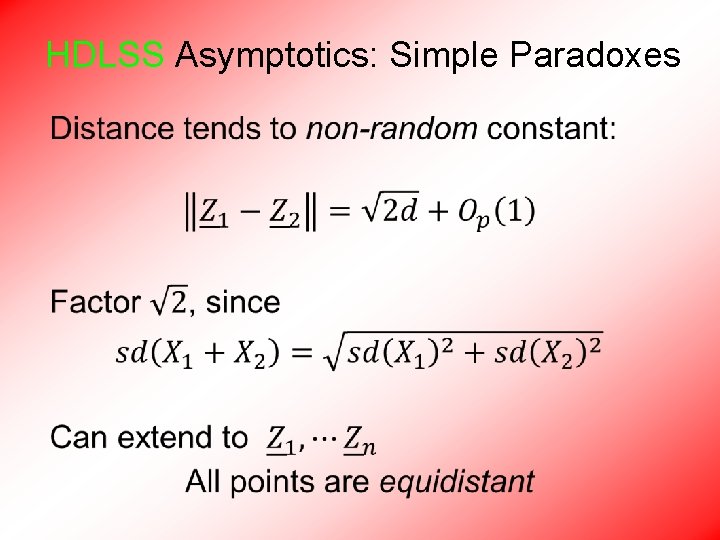

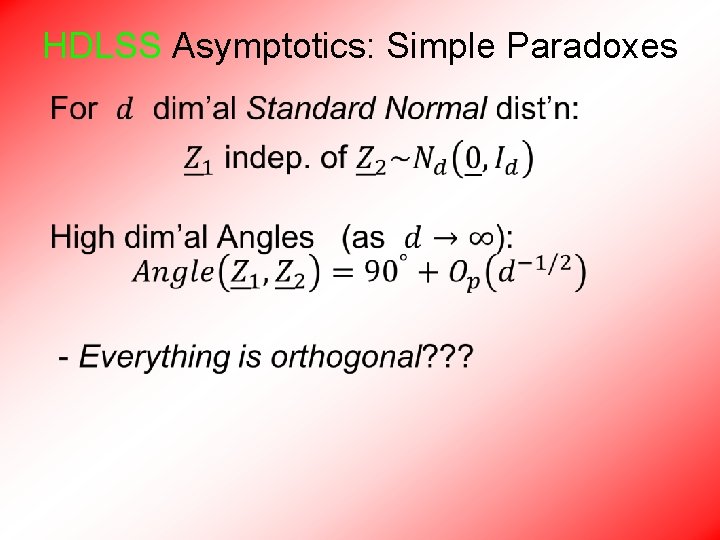

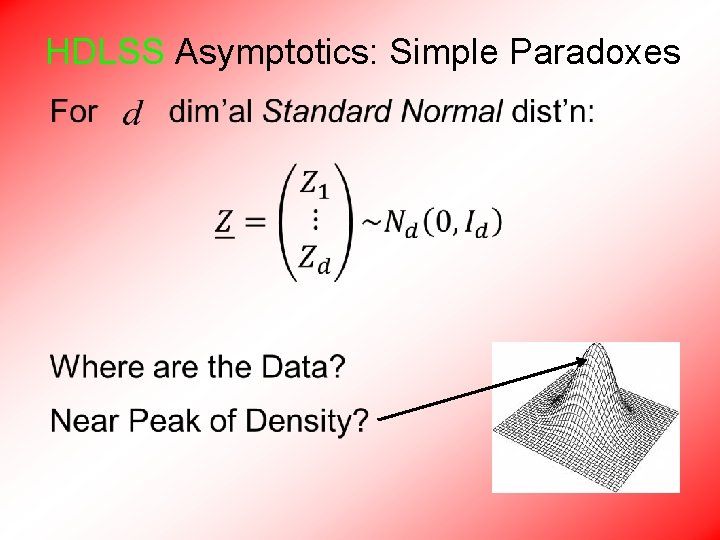

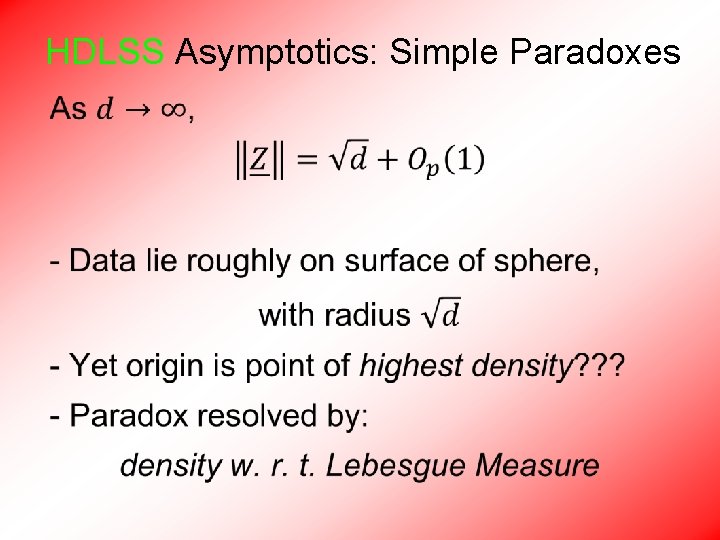

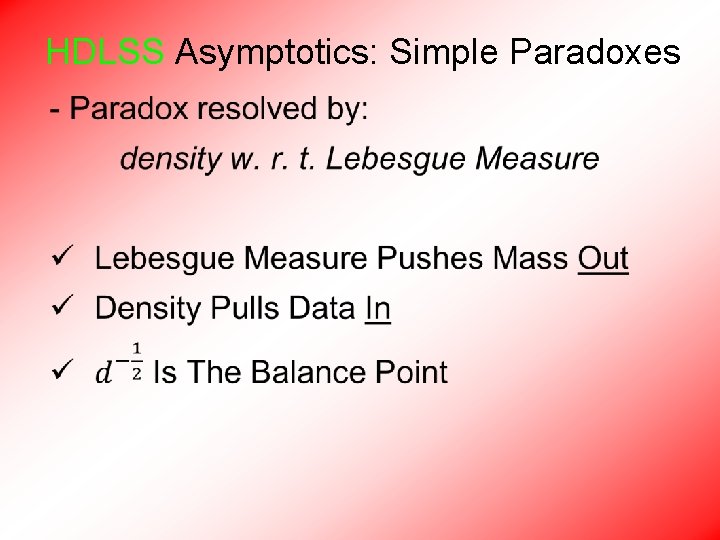

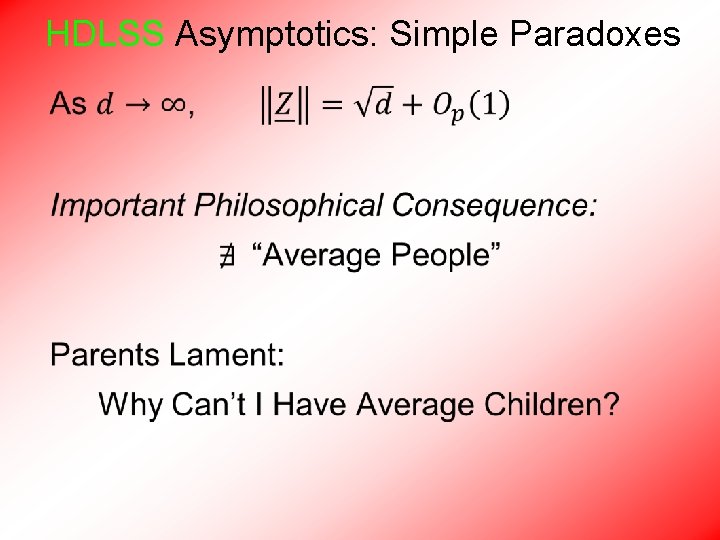

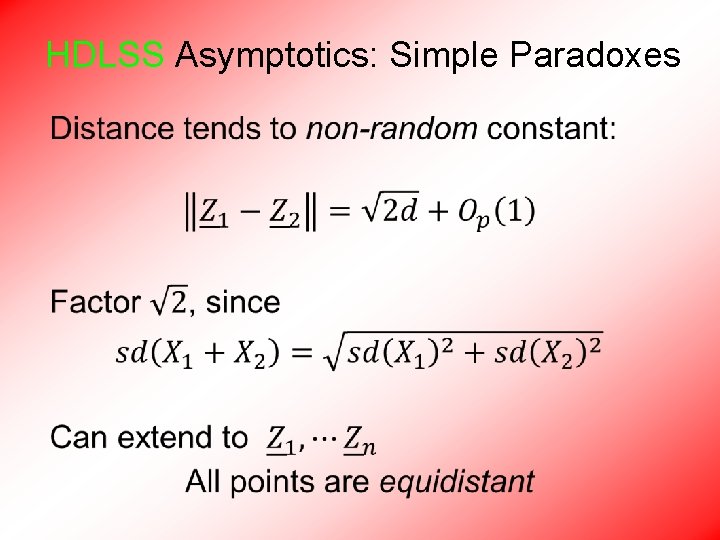

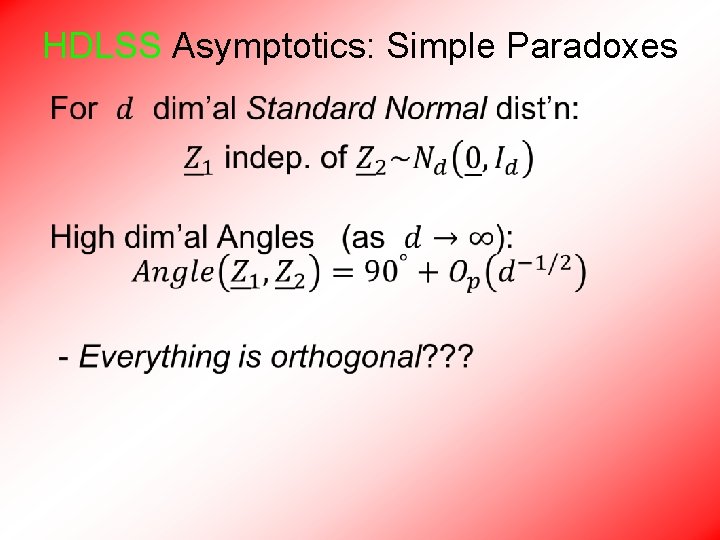

HDLSS Asymptotics: Simple Paradoxes •


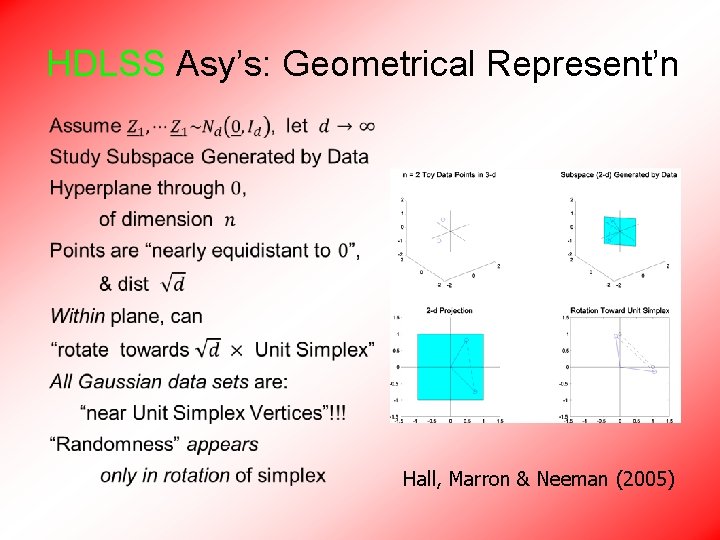

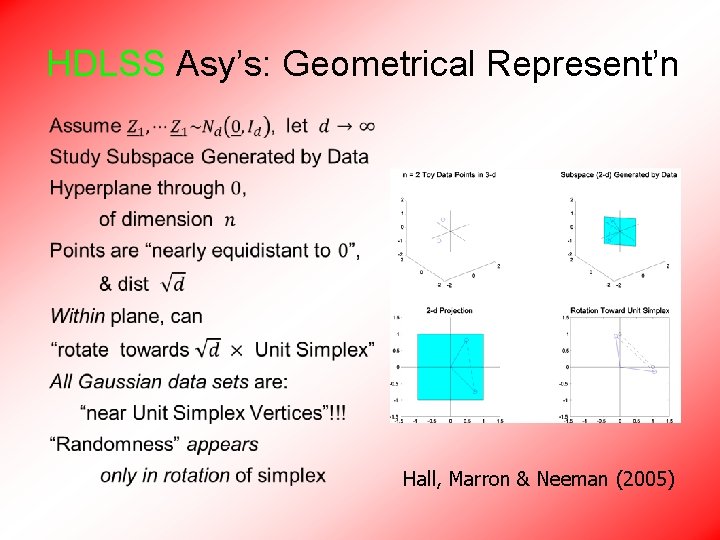

HDLSS Asy’s: Geometrical Represent’n • Hall, Marron & Neeman (2005)

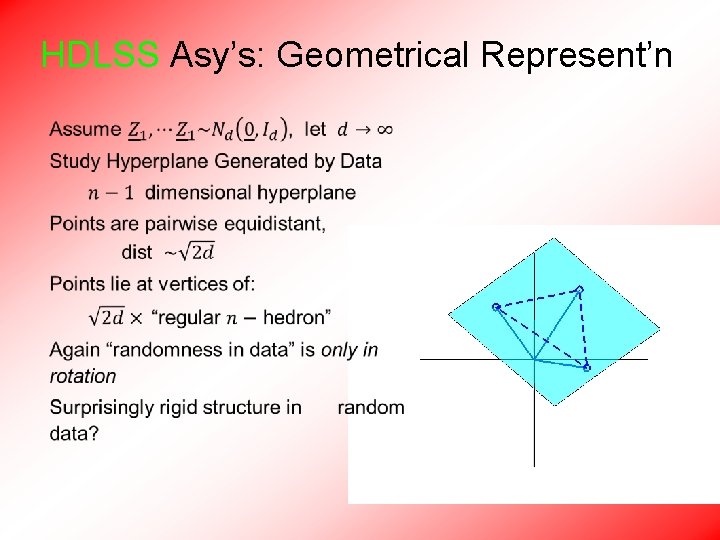

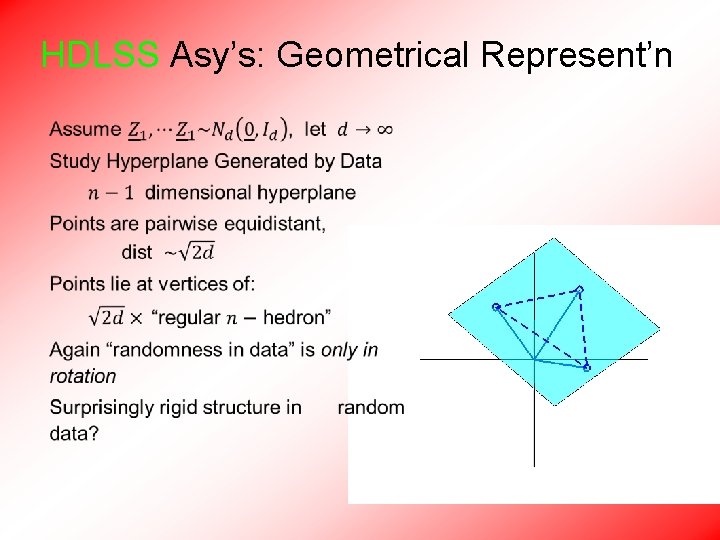

HDLSS Asy’s: Geometrical Represent’n •

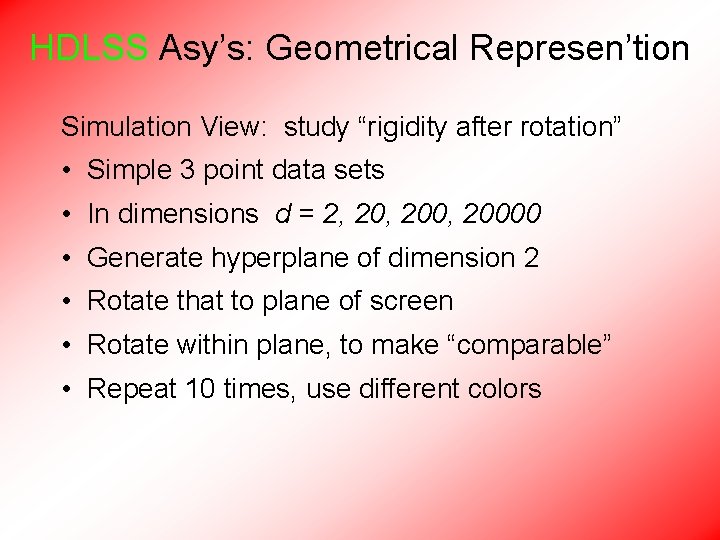

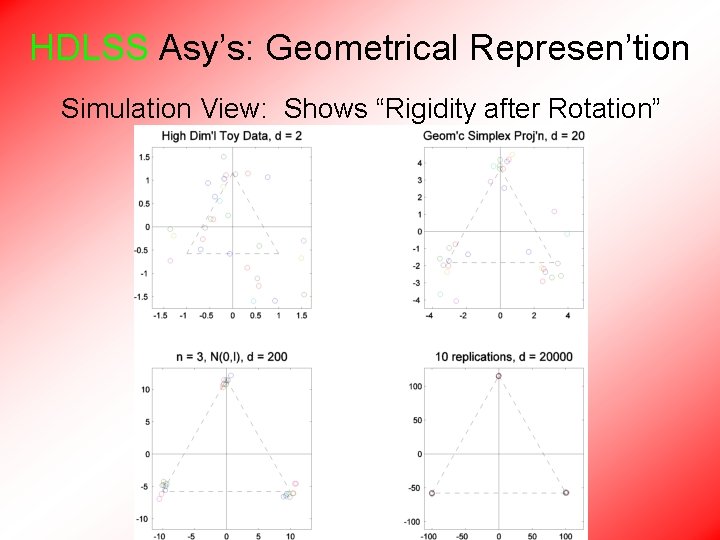

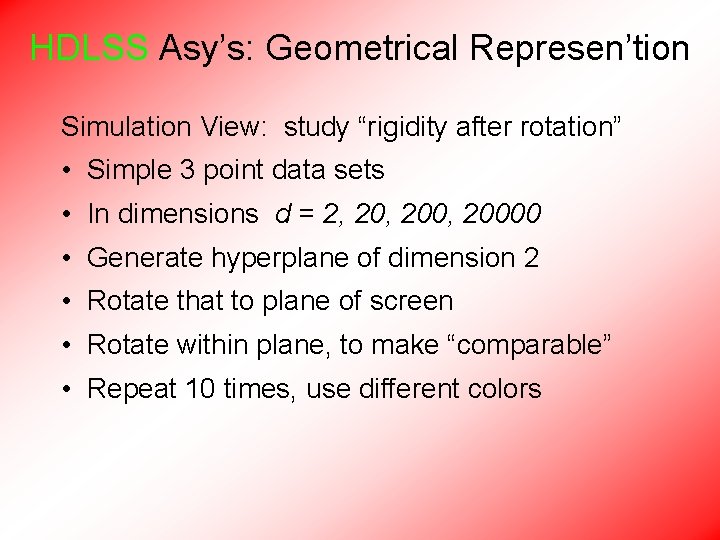

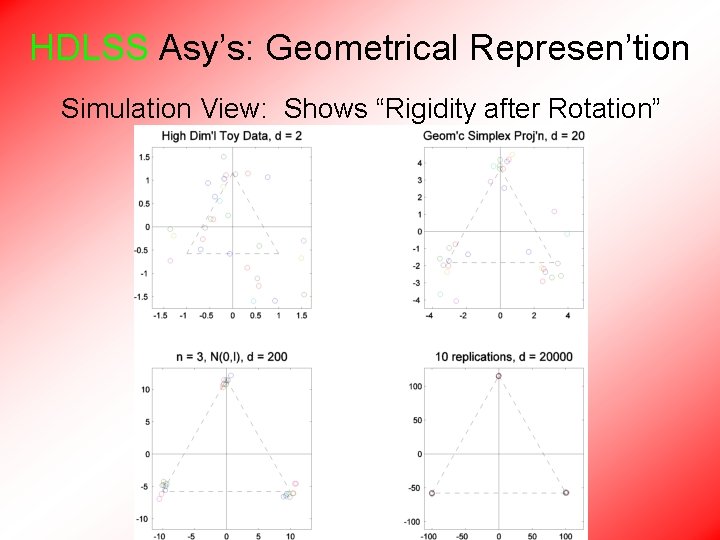

HDLSS Asy’s: Geometrical Represent’n

Simulation View: study “rigidity after rotation”
- Simple 3 point data sets
- In dimensions d = 2, 200, 20000
- Generate hyperplane of dimension 2
- Rotate that to plane of screen
- Rotate within plane, to make “comparable”
- Repeat 10 times, use different colors

Simulation View: Shows “Rigidity after Rotation”
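A rough numerical analogue of this simulation is sketched below. It assumes standard Gaussian entries (the slides do not state the distribution used) and, instead of rotating each 3 point data set to the screen, it reports the three pairwise distances divided by $\sqrt{d}$, which determine the triangle up to rotation. The function name is hypothetical. Under this assumption the scaled distances settle near $\sqrt{2}$ as d grows, so every data set looks like the same equilateral triangle.

```python
import numpy as np

def scaled_pairwise_distances(d, n_points=3, seed=0):
    """Pairwise distances of n_points standard Gaussian vectors, over sqrt(d)."""
    rng = np.random.default_rng(seed)
    X = rng.standard_normal((n_points, d))
    i, j = np.triu_indices(n_points, k=1)
    return np.linalg.norm(X[i] - X[j], axis=1) / np.sqrt(d)

for d in (2, 200, 20000):
    print(f"d = {d}")
    for rep in range(10):                      # 10 repetitions, as on the slide
        dists = scaled_pairwise_distances(d, seed=rep)
        print("   ", np.round(dists, 3))
```

For d = 2 the triangles vary wildly from one repetition to the next, while for d = 20000 they are nearly identical, which is the “rigidity” the slide refers to.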

HDLSS Asy’s: Geometrical Represent’n. Straightforward Generalizations: non-Gaussian data: only need moments?

HDLSS Asy’s: Geometrical Represent’n

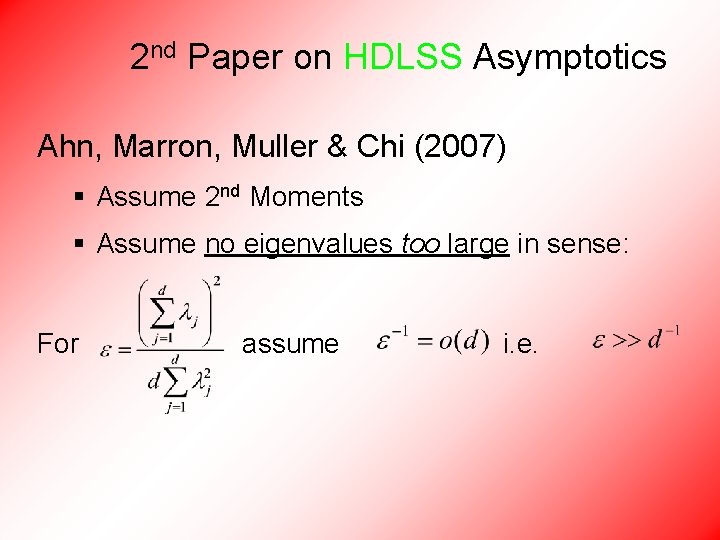

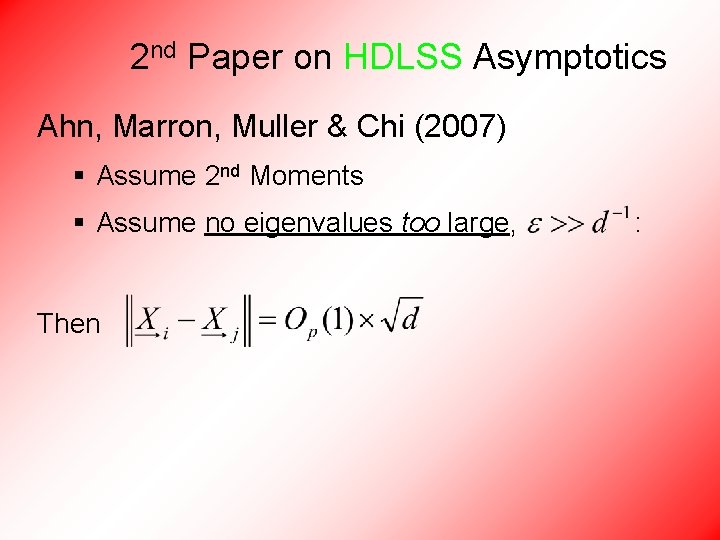

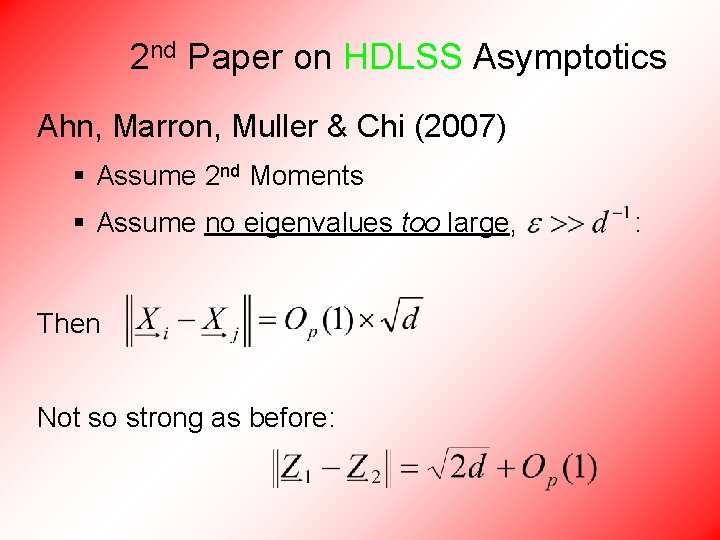

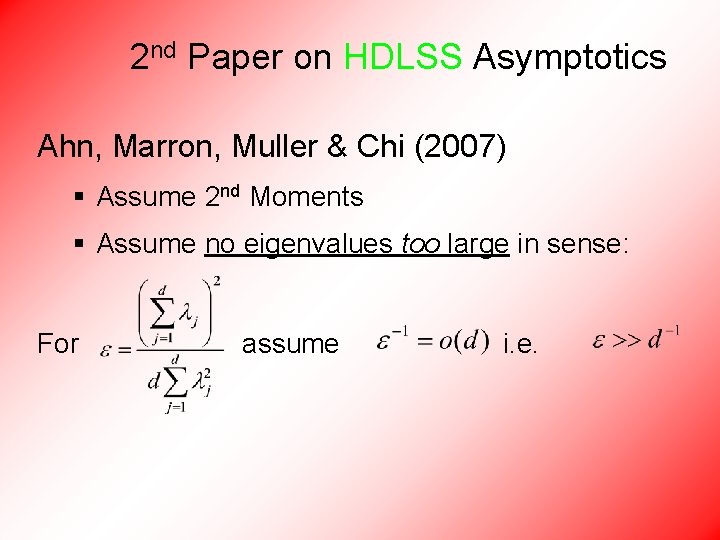

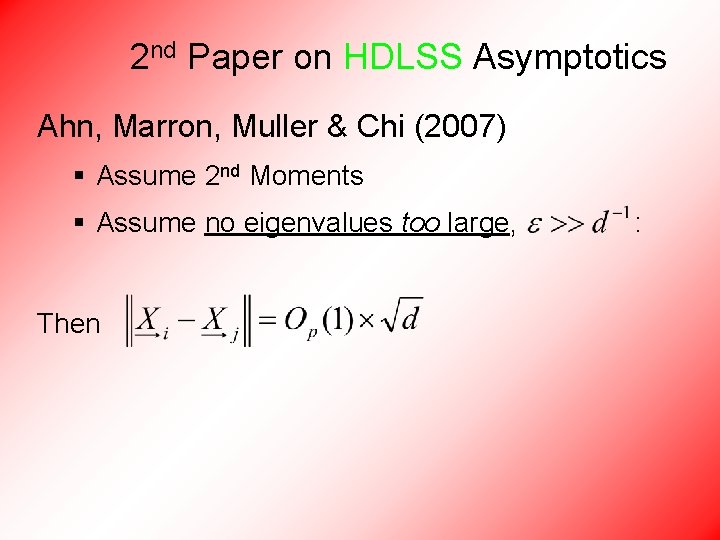

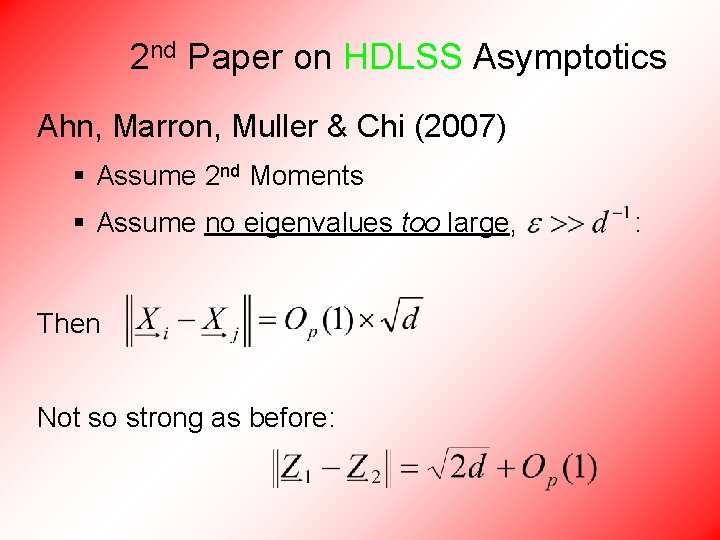

2nd Paper on HDLSS Asymptotics: Ahn, Marron, Muller & Chi (2007)
- Assume 2nd Moments
- Assume no eigenvalues too large

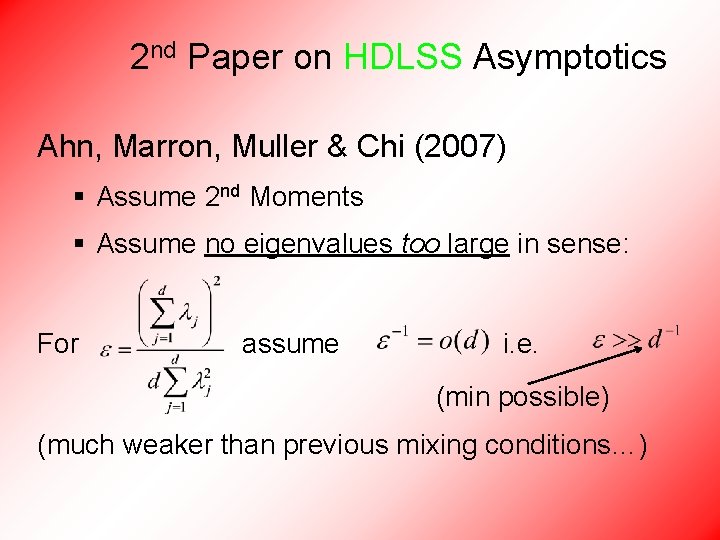

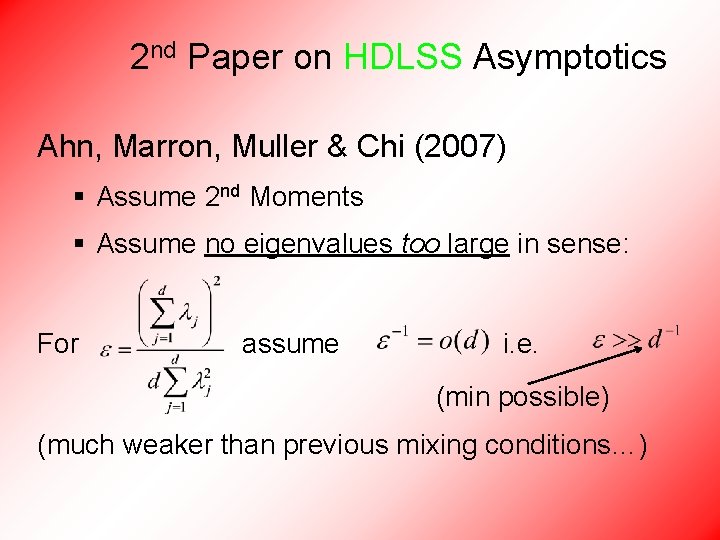

2nd Paper on HDLSS Asymptotics: Ahn, Marron, Muller & Chi (2007)
- Assume 2nd Moments
- Assume no eigenvalues too large in sense: For … assume … i.e. … (min possible)

(much weaker than previous mixing conditions…)

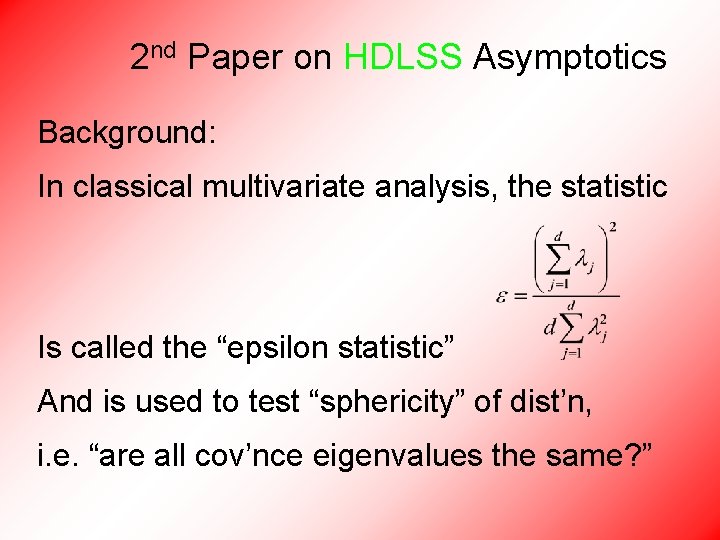

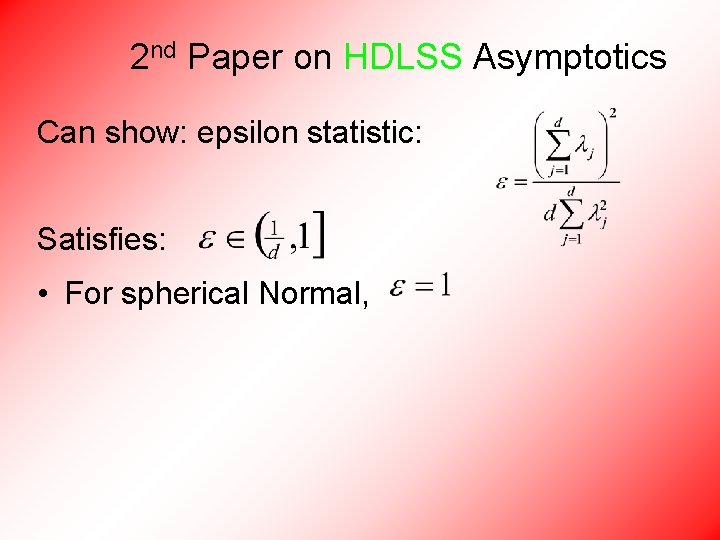

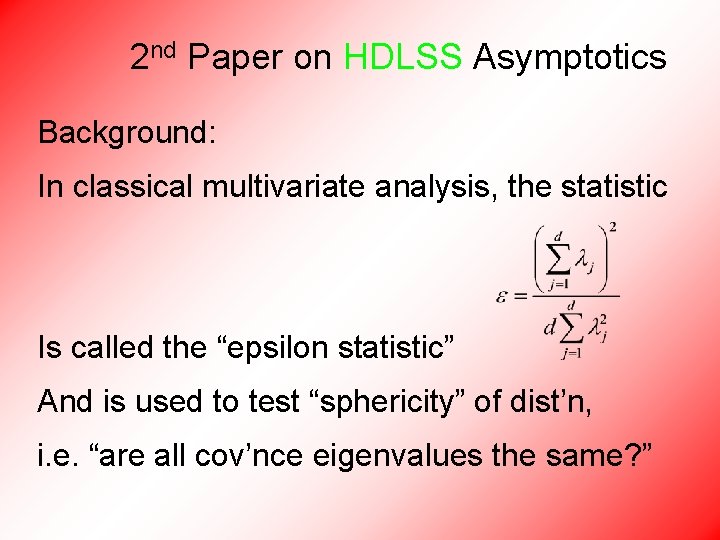

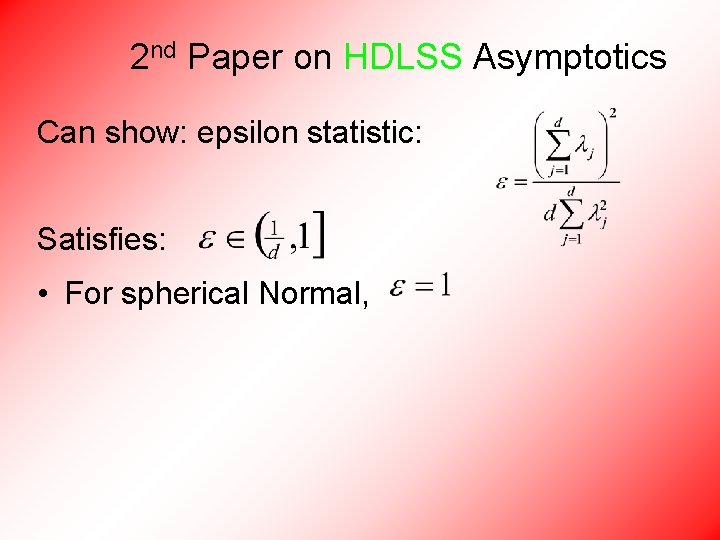

2nd Paper on HDLSS Asymptotics. Background: In classical multivariate analysis, the statistic … is called the “epsilon statistic”, and is used to test “sphericity” of dist’n, i.e. “are all cov’nce eigenvalues the same?”

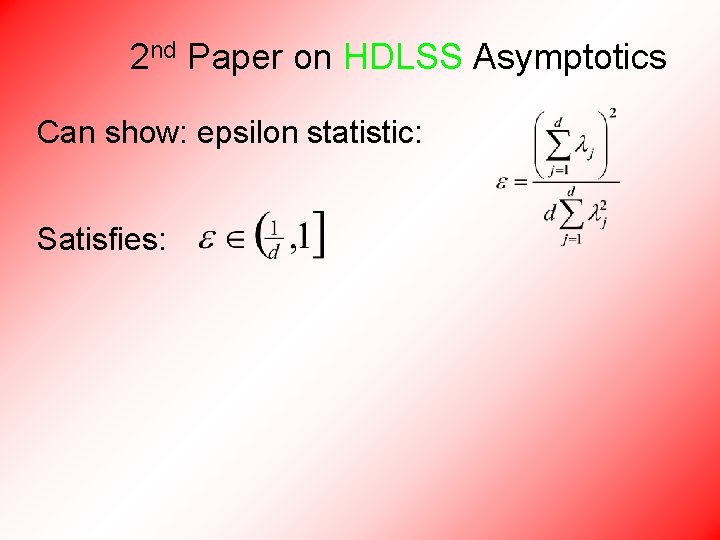

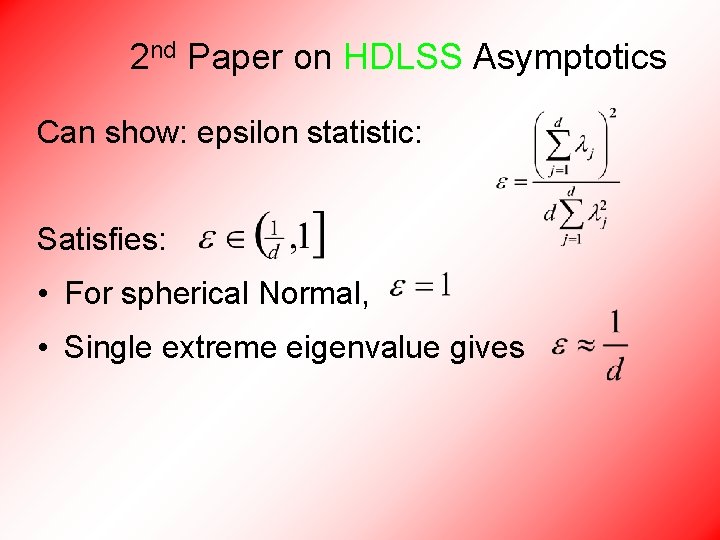

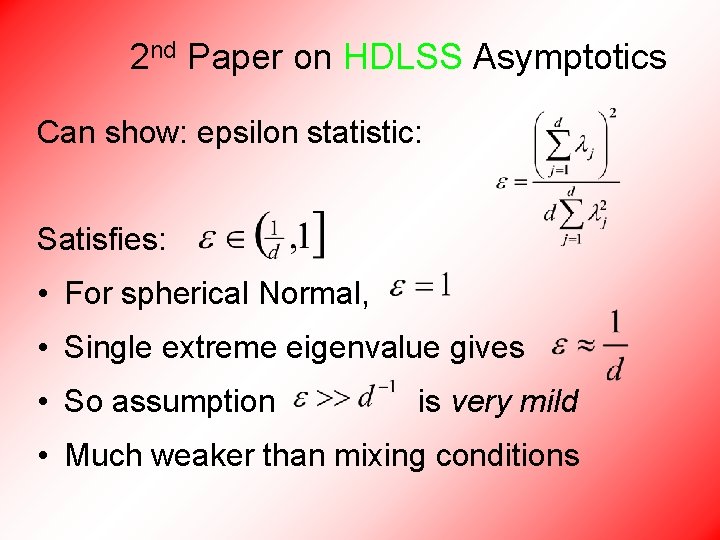

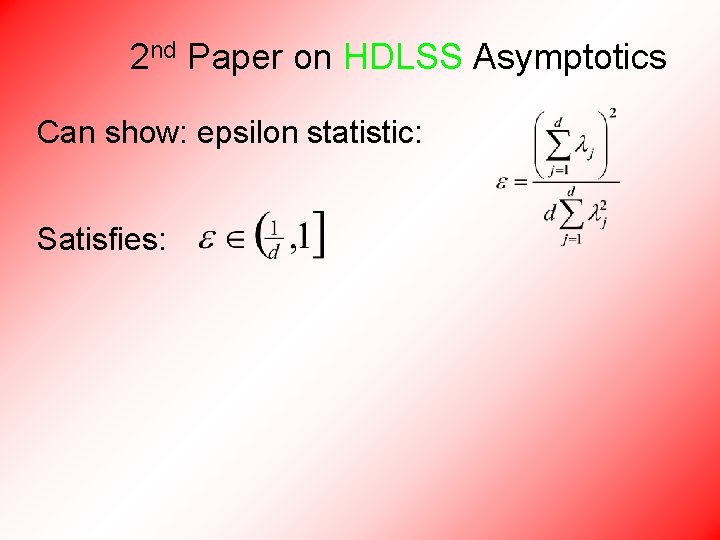

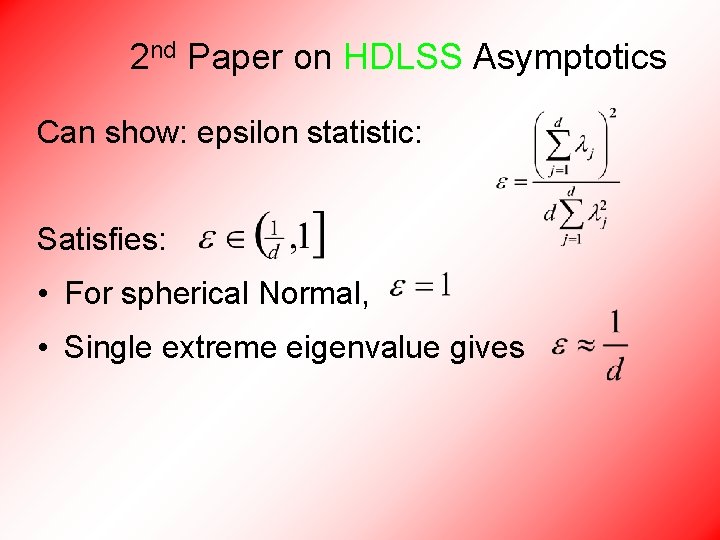

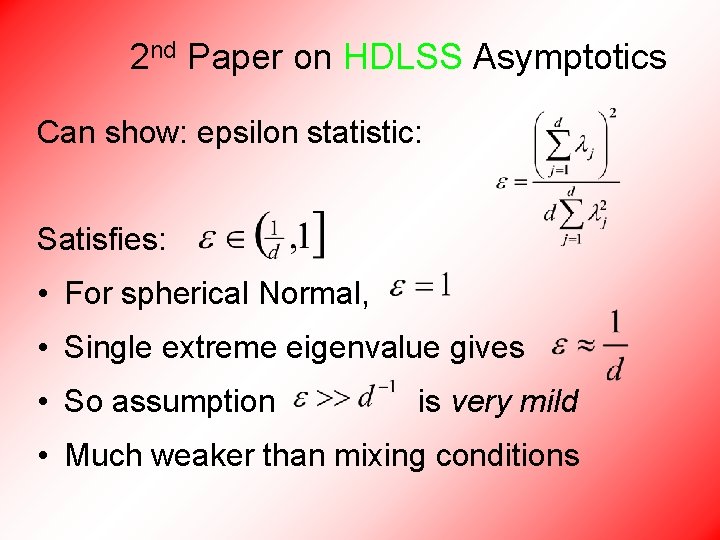

2nd Paper on HDLSS Asymptotics

Can show: epsilon statistic … Satisfies: …
- For spherical Normal, …
- Single extreme eigenvalue gives …
- So assumption is very mild
- Much weaker than mixing conditions
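The formulas on these slides did not survive extraction, so the following is only a sketch under an assumption: that the “epsilon statistic” is the classical sphericity measure $\varepsilon = (\sum_j \lambda_j)^2 / (d \sum_j \lambda_j^2)$ computed from the covariance eigenvalues $\lambda_1, \dots, \lambda_d$. For that measure, $1/d \le \varepsilon \le 1$, with $\varepsilon = 1$ when all eigenvalues are equal and $\varepsilon = 1/d$ when a single eigenvalue carries all the variance. The function name is hypothetical.

```python
import numpy as np

def epsilon_statistic(eigenvalues):
    """Classical sphericity measure (assumed form): (sum lam)^2 / (d * sum lam^2)."""
    lam = np.asarray(eigenvalues, dtype=float)
    return lam.sum() ** 2 / (lam.size * np.sum(lam ** 2))

d = 50
spherical = np.ones(d)                 # all eigenvalues equal
single_spike = np.zeros(d)
single_spike[0] = 1.0                  # one eigenvalue carries everything

print("spherical:    ", epsilon_statistic(spherical))
print("single spike: ", epsilon_statistic(single_spike), "vs 1/d =", 1 / d)
```

Read this way, an assumption that keeps $\varepsilon$ well above its minimum only rules out covariance structures dominated by very few eigenvalues, which matches the “very mild” remark above.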

2nd Paper on HDLSS Asymptotics: Ahn, Marron, Muller & Chi (2007)
- Assume 2nd Moments
- Assume no eigenvalues too large

Then: …

Not so strong as before: …

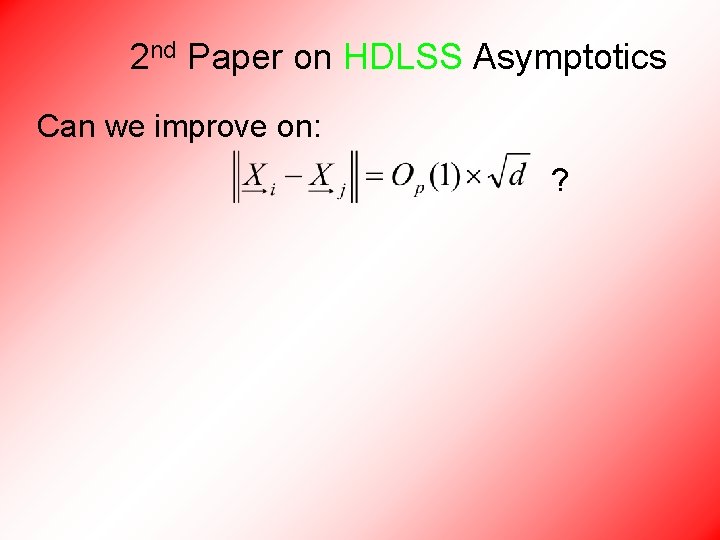

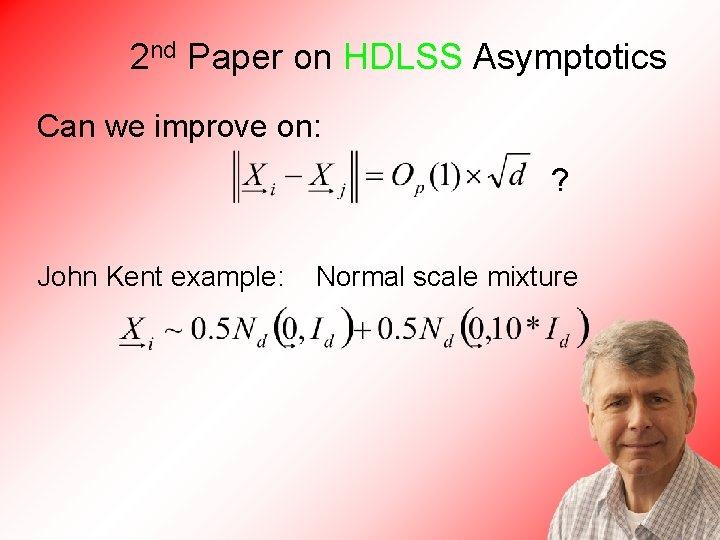

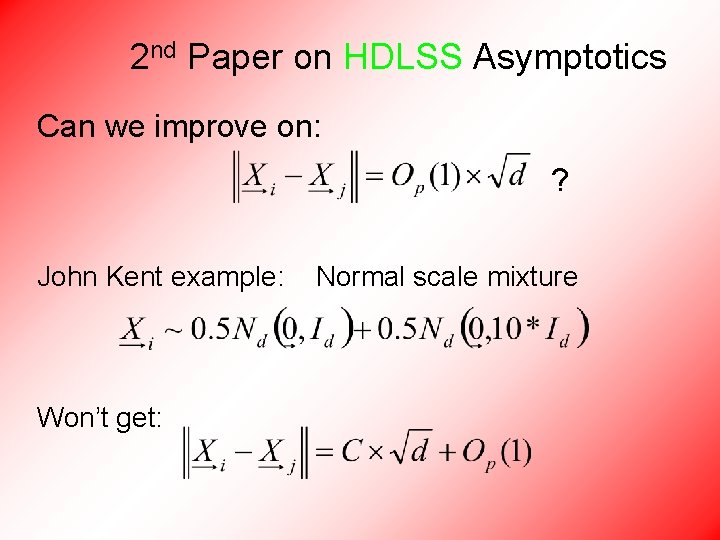

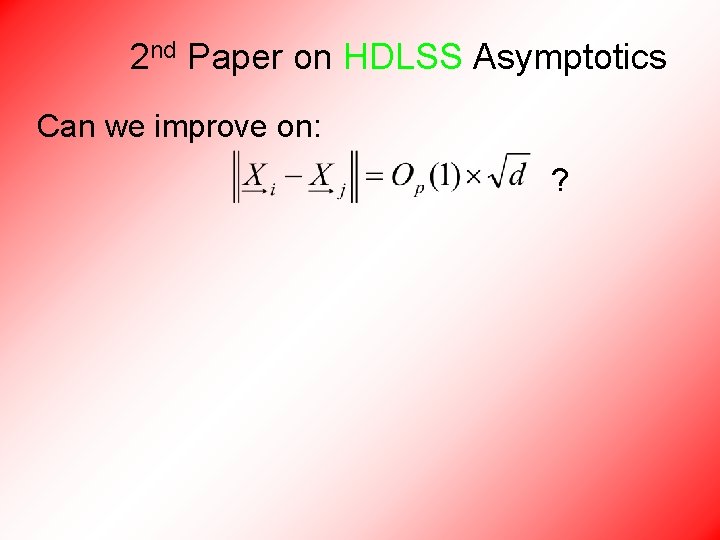

2nd Paper on HDLSS Asymptotics. Can we improve on: … ?

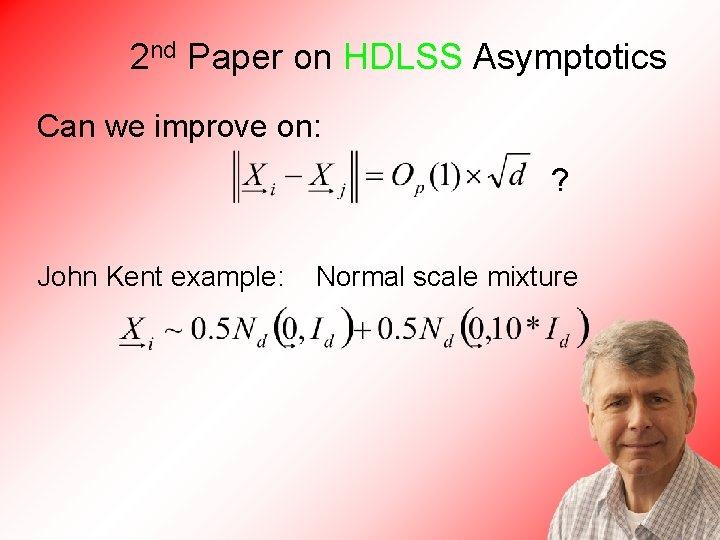

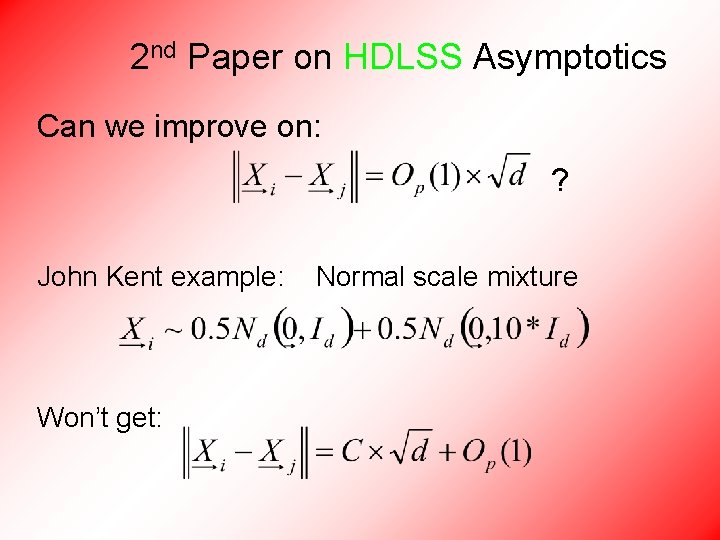

2nd Paper on HDLSS Asymptotics. Can we improve on: … ?

John Kent example: Normal scale mixture …

Won’t get: …

3rd Paper on HDLSS Asymptotics: Yata & Aoshima (2012)

Get Geometrical Representation using:
- 4th Moment Assumption
- Stronger Covariance Matrix (only) Assum’n

2nd Paper on HDLSS Asymptotics

Notes on Kent’s Normal Scale Mixture:
- Data Vectors are indep’dent of each other
- But entries of each have strong depend’ce
- However, can show entries have cov = 0!
- Recall statistical folklore: Covariance = 0 does not imply Independence

0 Covariance is not independence

Simple Example:
- Random Variables X and Y
- Make both Gaussian (Note: Not Using Multivariate Gaussian)
- With strong dependence
- Yet 0 covariance

Given c, define …

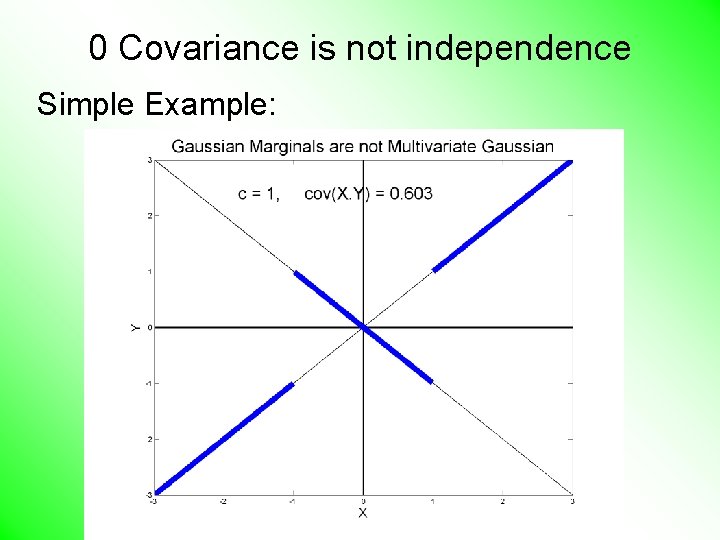

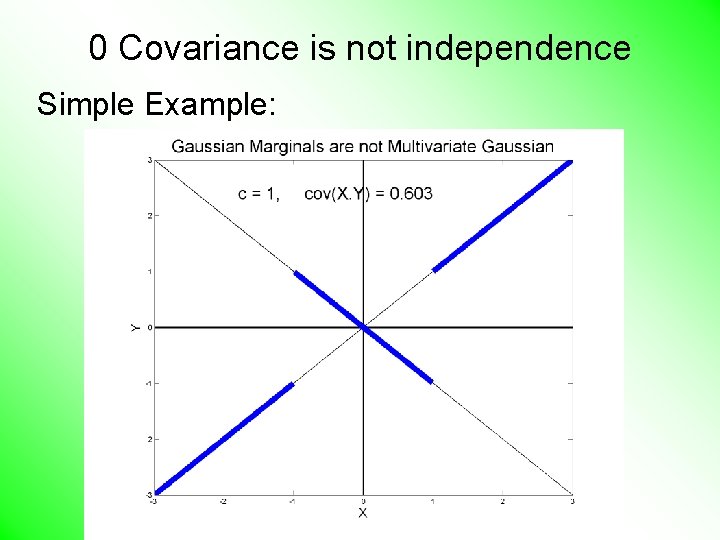

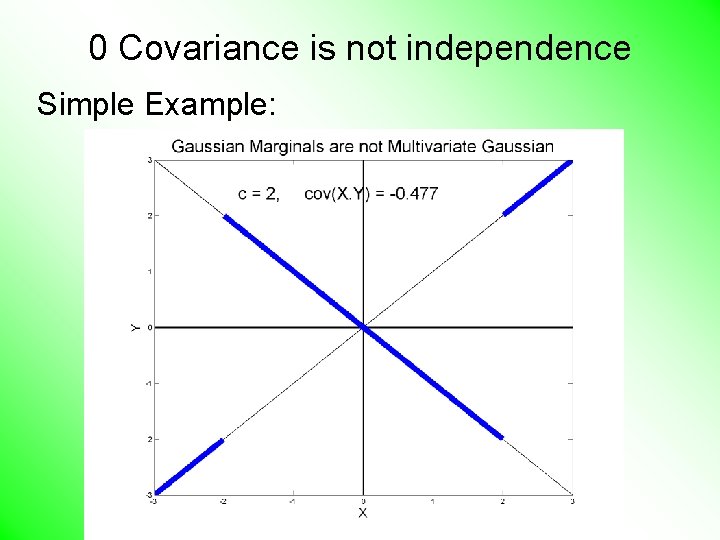

0 Covariance is not independence: Simple Example

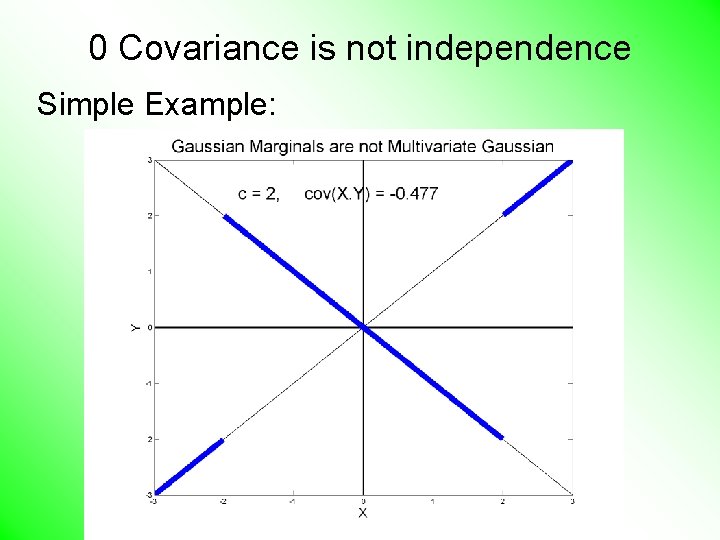

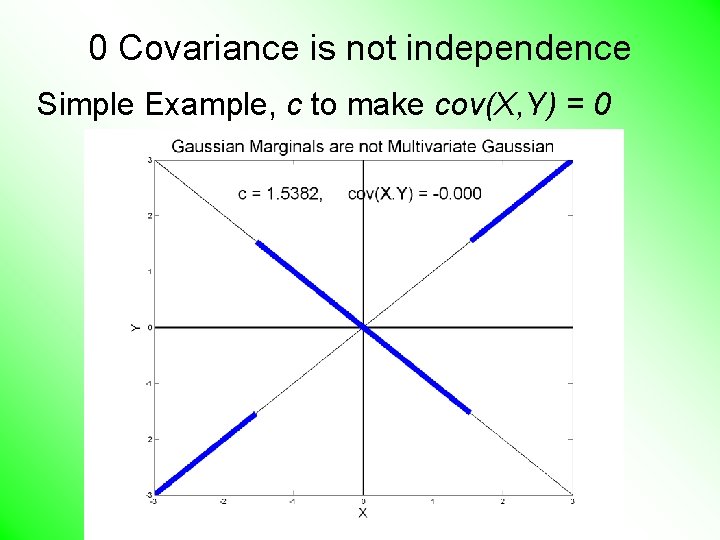

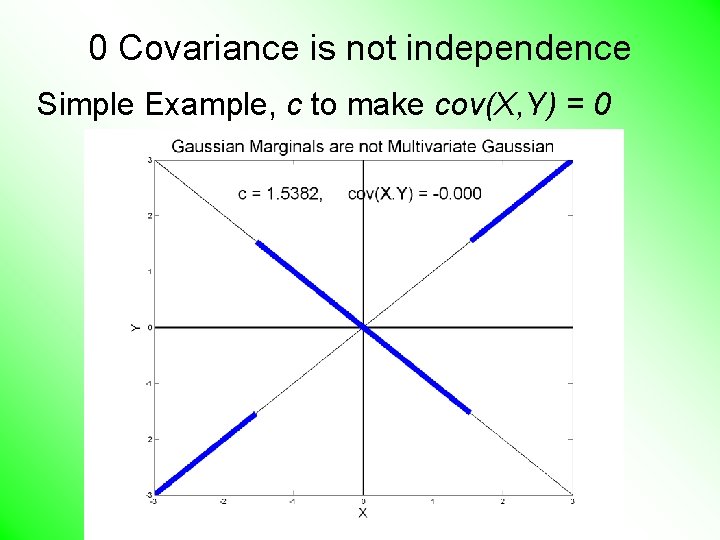

0 Covariance is not independence: Simple Example, choose c to make cov(X, Y) = 0

0 Covariance is not independence

Simple Example:
- Distribution is degenerate
- Supported on diagonal lines
- Not abs. cont. w.r.t. 2-d Lebesgue meas.
- For small c and for large c, cov(X, Y) has opposite signs
- By continuity, there is a c with cov(X, Y) = 0

0 Covariance is not independence

Result: Joint distribution of X and Y:
- Has Gaussian marginals
- Has cov(X, Y) = 0
- Yet strong dependence of X and Y
- Thus not multivariate Gaussian

Shows Multivariate Gaussian means more than Gaussian Marginals
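The defining formula for Y is missing from the text above, so the sketch below assumes the standard construction consistent with the listed properties: X is standard Gaussian, and Y = X when |X| ≤ c, Y = -X when |X| > c. Under that assumption Y is also standard Gaussian, (X, Y) lives on the two diagonal lines, and cov(X, Y) = 2 E[X² 1(|X| ≤ c)] - 1, which moves continuously from -1 to +1 as c grows. Function names are hypothetical.

```python
import math
import numpy as np

def covariance_for_cutoff(c):
    """cov(X, Y) for Y = X if |X| <= c else -X, with X standard Gaussian."""
    phi = math.exp(-0.5 * c * c) / math.sqrt(2.0 * math.pi)
    # E[X^2 1(|X| <= c)] = (2*Phi(c) - 1) - 2*c*phi(c), and 2*Phi(c) - 1 = erf(c/sqrt(2))
    inner = math.erf(c / math.sqrt(2.0)) - 2.0 * c * phi
    return 2.0 * inner - 1.0

def zero_covariance_cutoff(lo=0.0, hi=5.0, tol=1e-10):
    """Bisection for the c with cov(X, Y) = 0 (covariance is increasing in c)."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if covariance_for_cutoff(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

c = zero_covariance_cutoff()
rng = np.random.default_rng(0)
x = rng.standard_normal(200_000)
y = np.where(np.abs(x) <= c, x, -x)
print(f"c = {c:.4f}")
print(f"sample cov(X, Y) = {np.cov(x, y)[0, 1]:.4f}")
print("|Y| equals |X| for every draw:", bool(np.allclose(np.abs(x), np.abs(y))))
```

The last line makes the dependence explicit: knowing X fixes |Y| exactly, even though the covariance is essentially zero.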

HDLSS Math. Stat. of PCA Consistency & Strong Inconsistency (Study Properties of PCA, In Estimating Eigen-Directions & -Values) [Assume Data are Mean Centered]

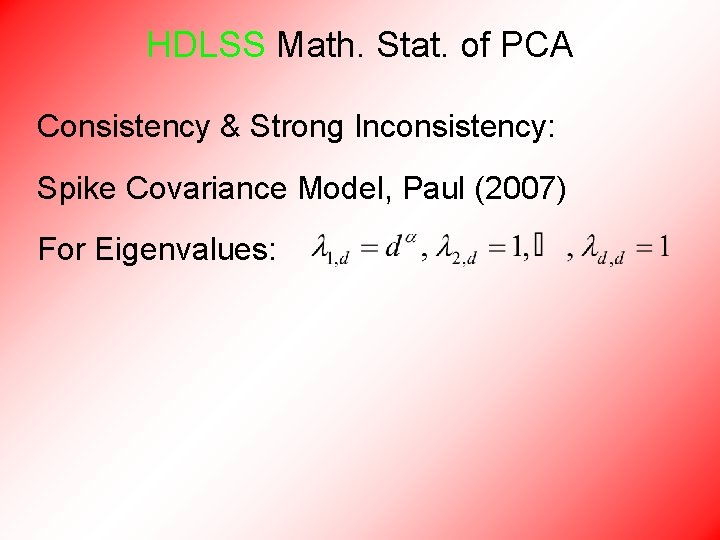

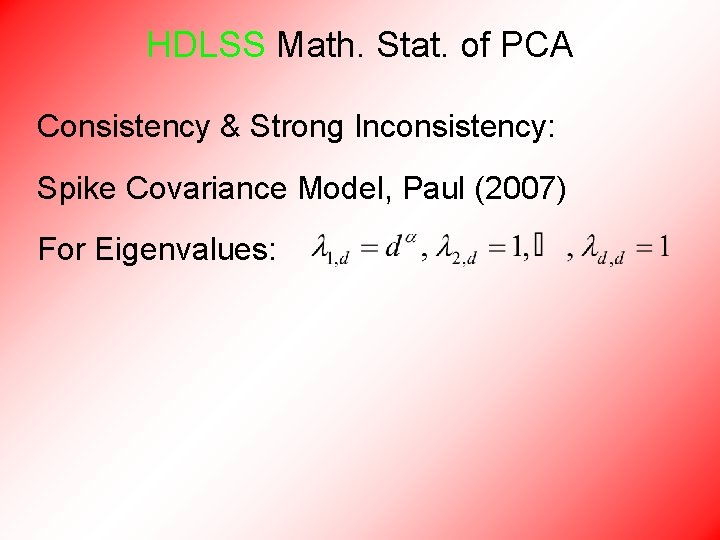

HDLSS Math. Stat. of PCA Consistency & Strong Inconsistency: Spike Covariance Model, Paul (2007) For Eigenvalues:

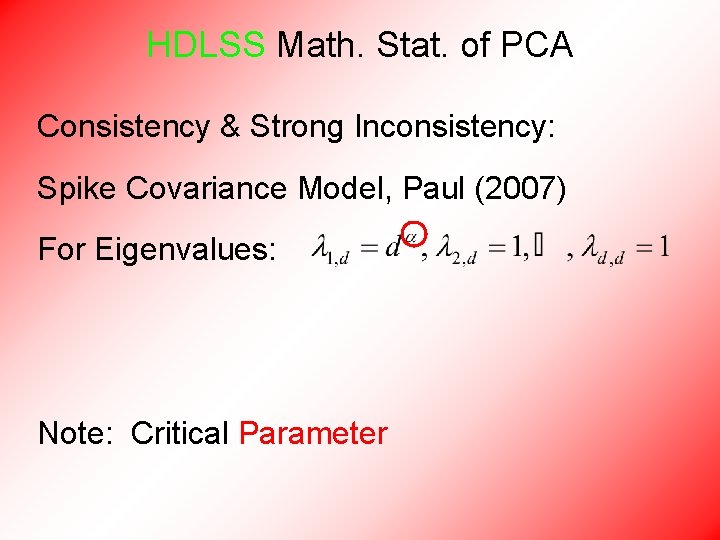

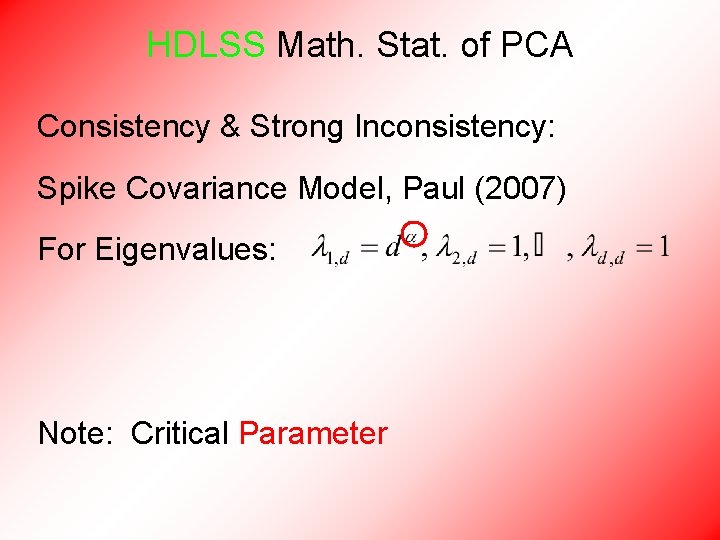

HDLSS Math. Stat. of PCA Consistency & Strong Inconsistency: Spike Covariance Model, Paul (2007) For Eigenvalues: Note: Critical Parameter

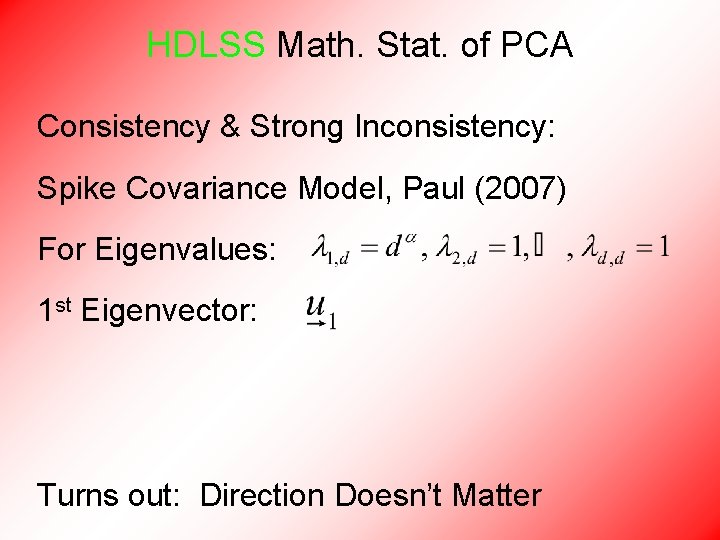

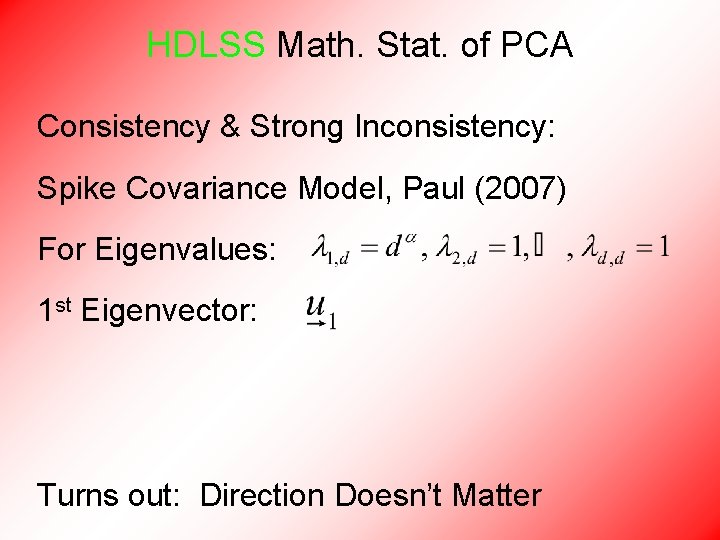

HDLSS Math. Stat. of PCA Consistency & Strong Inconsistency: Spike Covariance Model, Paul (2007) For Eigenvalues: 1st Eigenvector: Turns out: Direction Doesn’t Matter

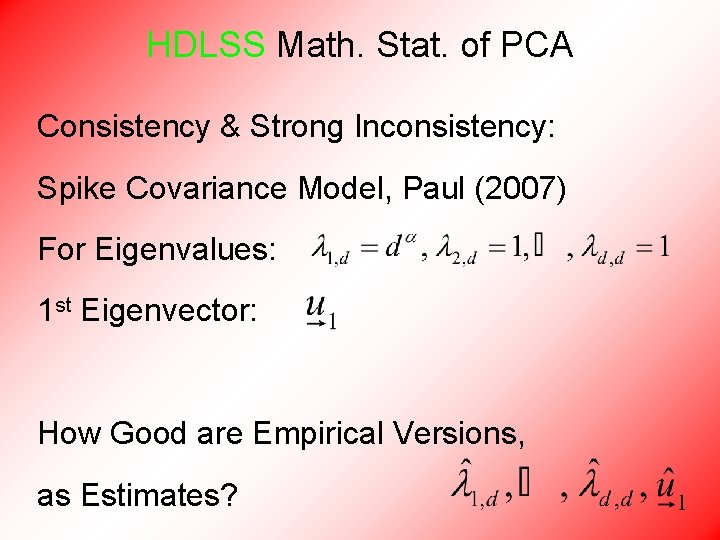

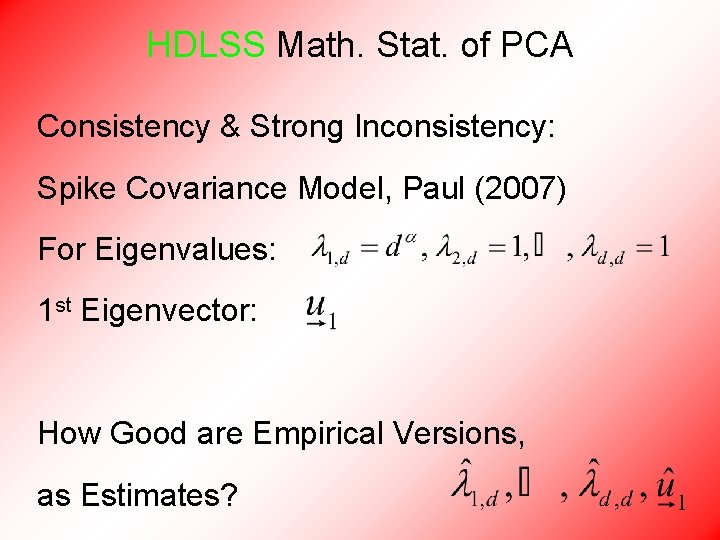

HDLSS Math. Stat. of PCA Consistency & Strong Inconsistency: Spike Covariance Model, Paul (2007) For Eigenvalues: 1st Eigenvector: How Good are Empirical Versions, as Estimates?

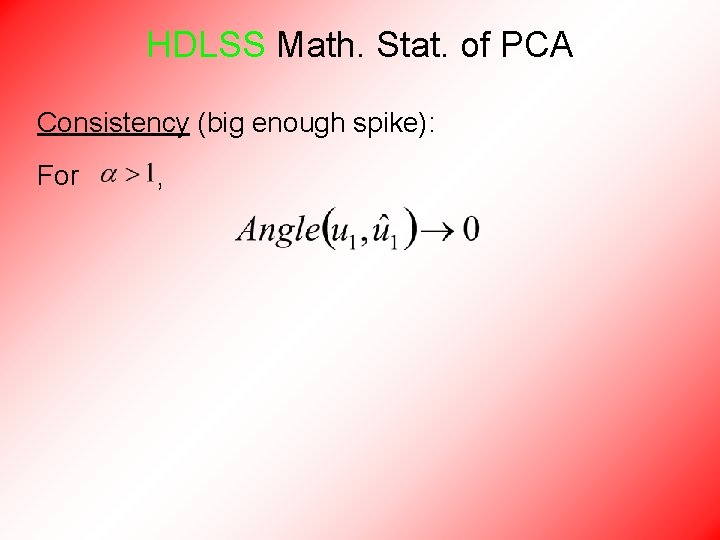

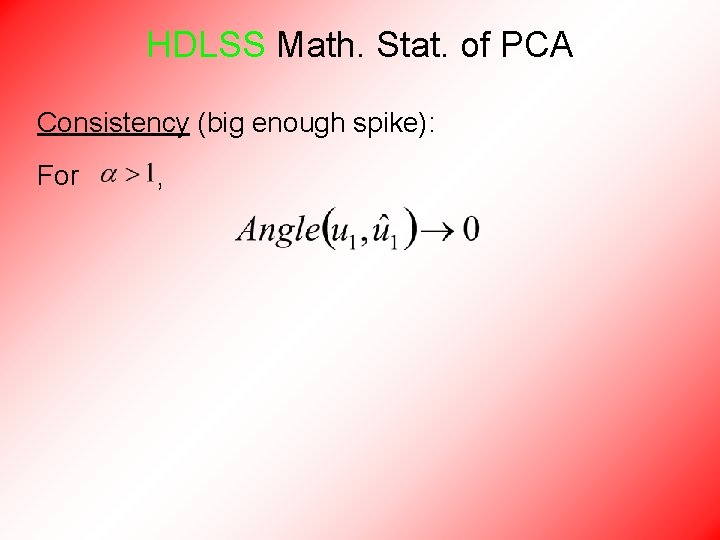

HDLSS Math. Stat. of PCA

- Consistency (big enough spike): For …, …
- Strong Inconsistency (spike not big enough): For …, …

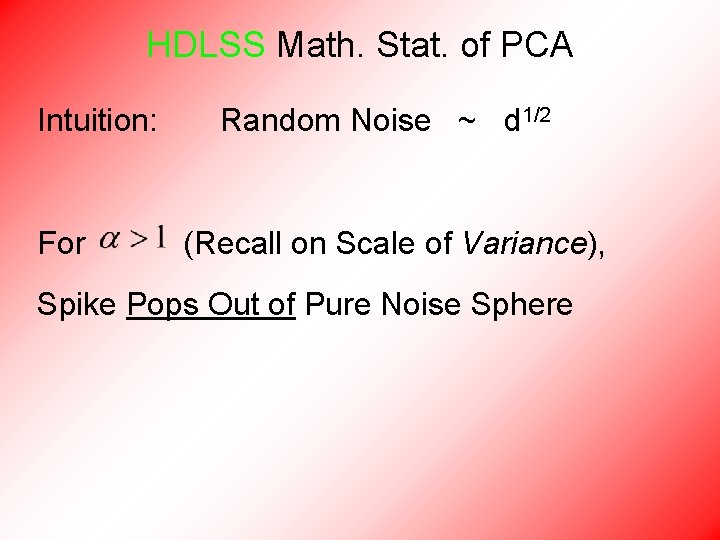

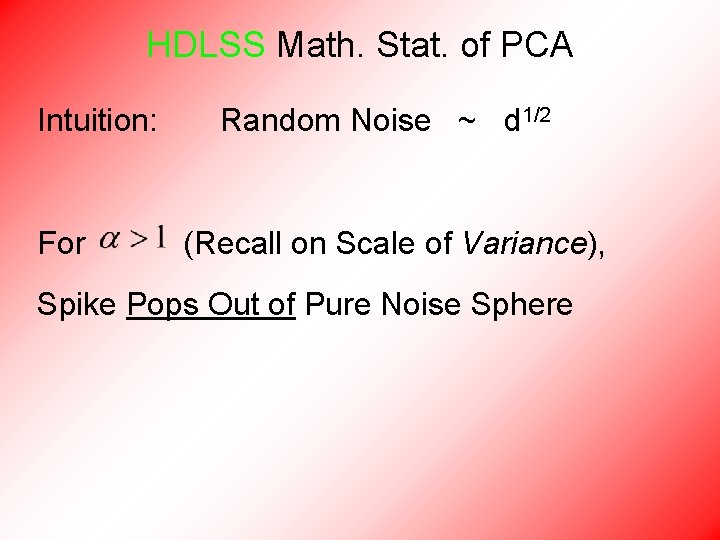

HDLSS Math. Stat. of PCA

Intuition: Random Noise ~ $d^{1/2}$
- For … (Recall on Scale of Variance), Spike Pops Out of Pure Noise Sphere
- For …, Spike Contained in Pure Noise Sphere
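The intuition can be checked numerically with a small sketch. The exact spike model is not recoverable from the text here, so the code assumes one large eigenvalue $\lambda_1 = d^{\alpha}$ along the first coordinate and unit eigenvalues elsewhere, with Gaussian, mean-zero data; the symbol $\alpha$ and the function name are illustrative assumptions. It reports the angle between the first empirical PC direction and the true spike direction.

```python
import numpy as np

def first_pc_angle_degrees(d, n, alpha, seed=0):
    """Angle between the first sample eigenvector and the true spike direction."""
    rng = np.random.default_rng(seed)
    X = rng.standard_normal((n, d))
    X[:, 0] *= np.sqrt(float(d) ** alpha)      # variance d^alpha on coordinate 1
    # Data are generated with mean zero, so no centering step is applied.
    _, _, vt = np.linalg.svd(X, full_matrices=False)
    cosine = min(abs(vt[0, 0]), 1.0)           # true direction is e_1
    return float(np.degrees(np.arccos(cosine)))

d, n = 5000, 10
print("alpha = 1.5:", round(first_pc_angle_degrees(d, n, 1.5), 2), "degrees")
print("alpha = 0.2:", round(first_pc_angle_degrees(d, n, 0.2), 2), "degrees")
```

With the large spike the angle is close to 0 (the spike pops out of the noise sphere), while with the small spike it is close to 90 degrees (the spike is buried in the noise), in line with the consistency versus strong inconsistency contrast.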

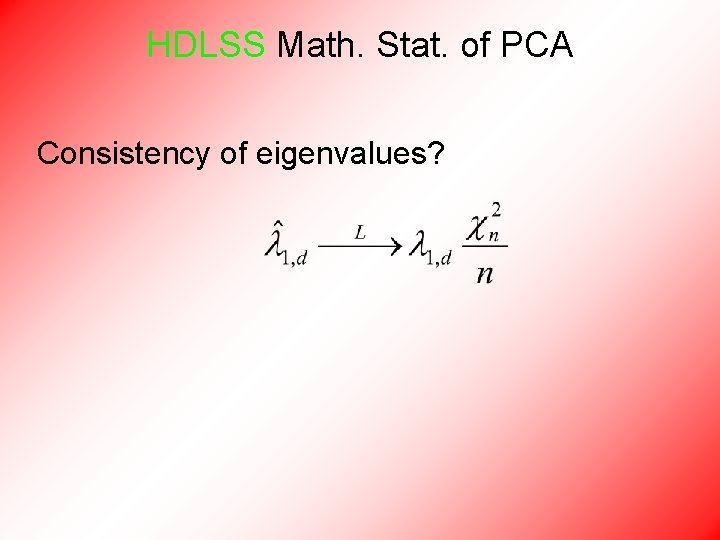

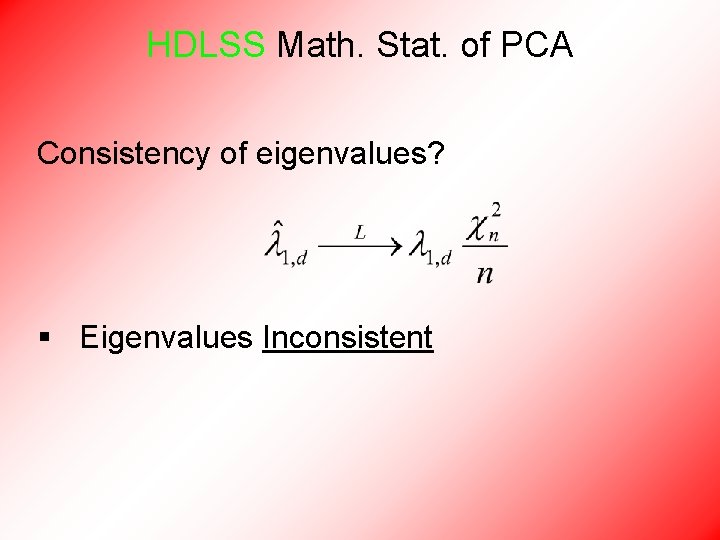

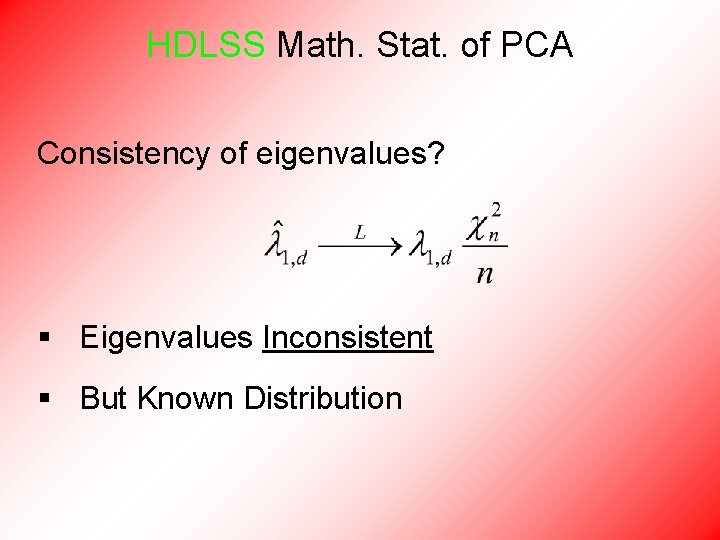

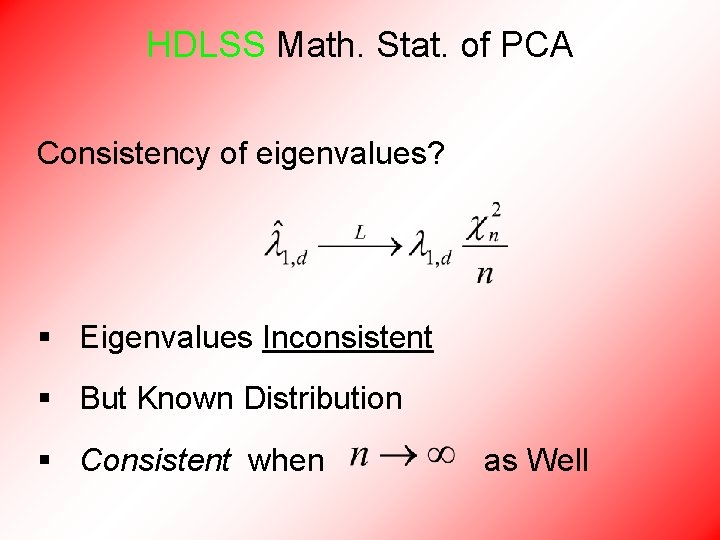

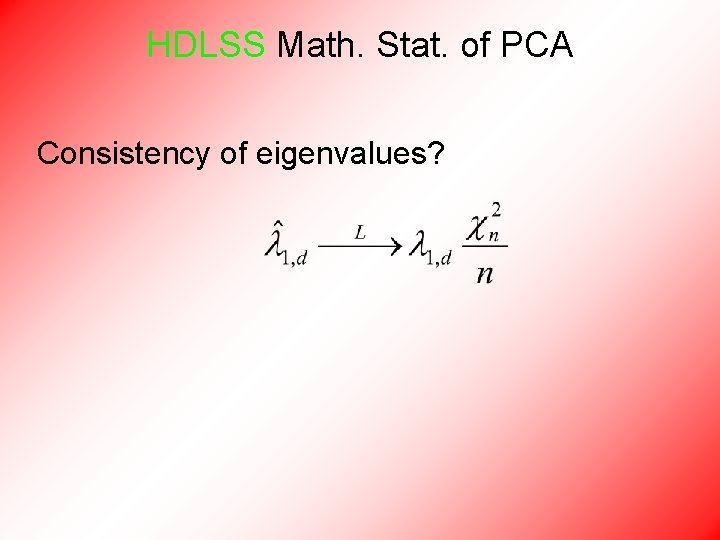

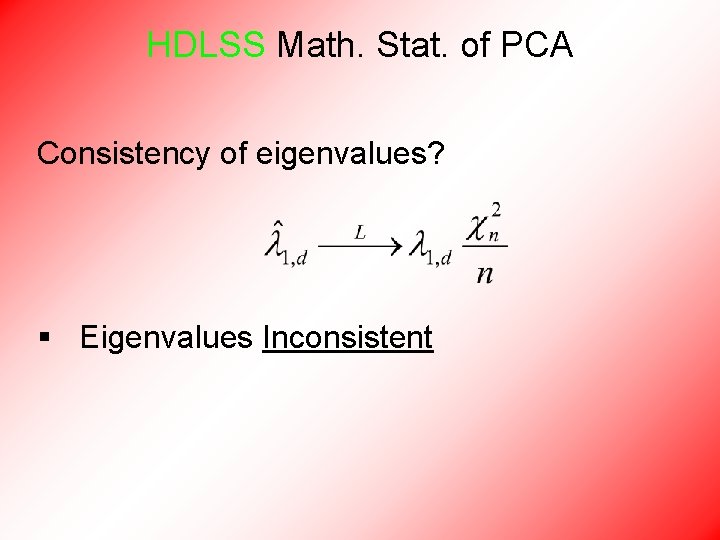

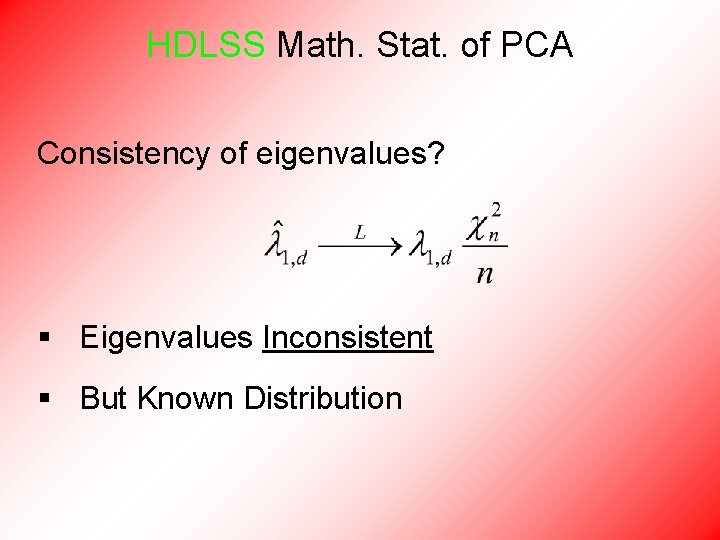

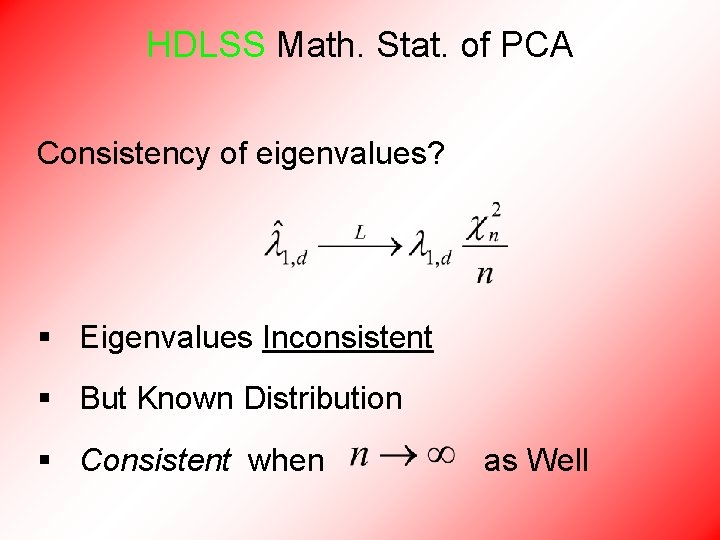

HDLSS Math. Stat. of PCA

Consistency of eigenvalues?
- Eigenvalues Inconsistent
- But Known Distribution
- Consistent when … as Well

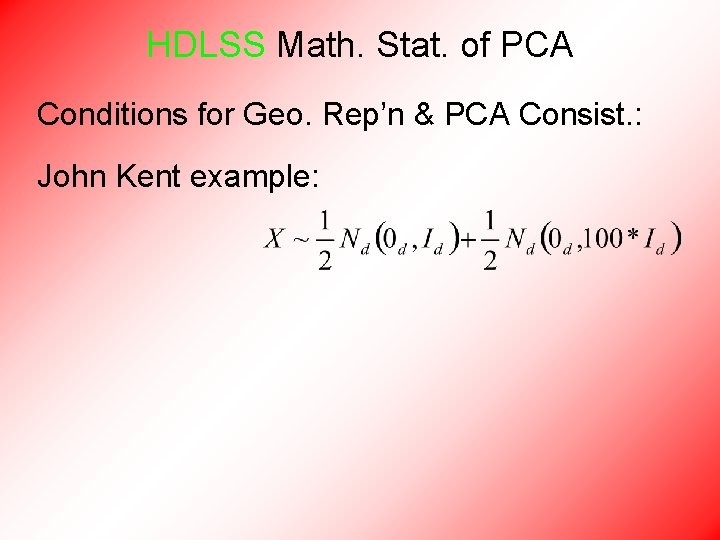

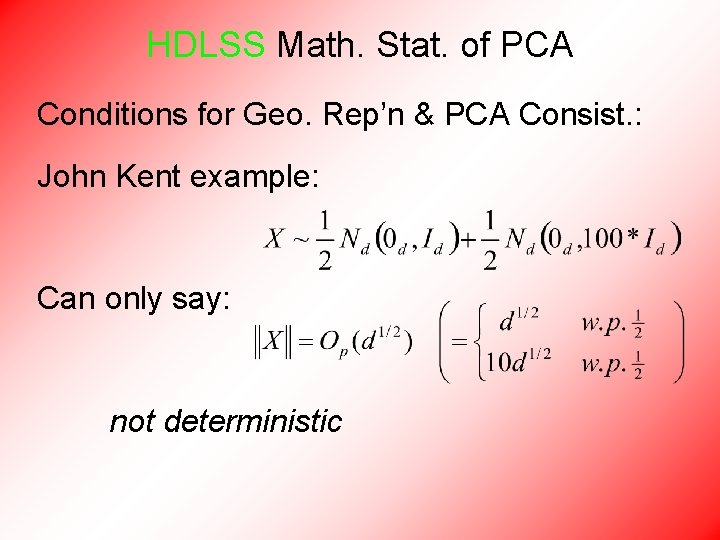

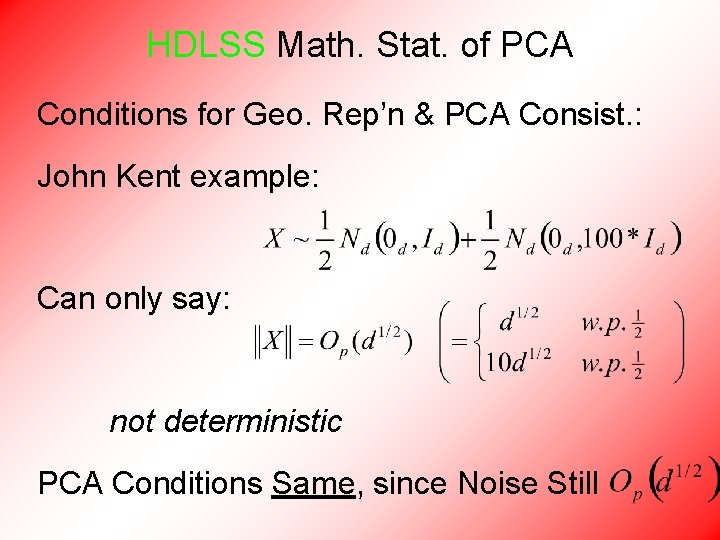

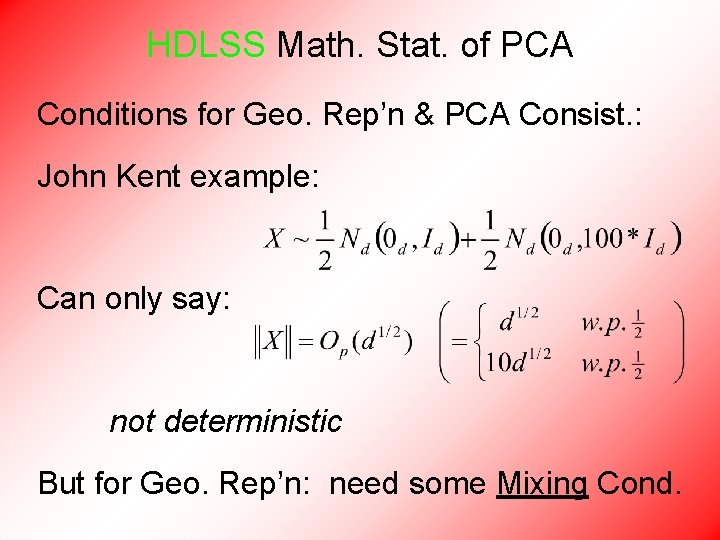

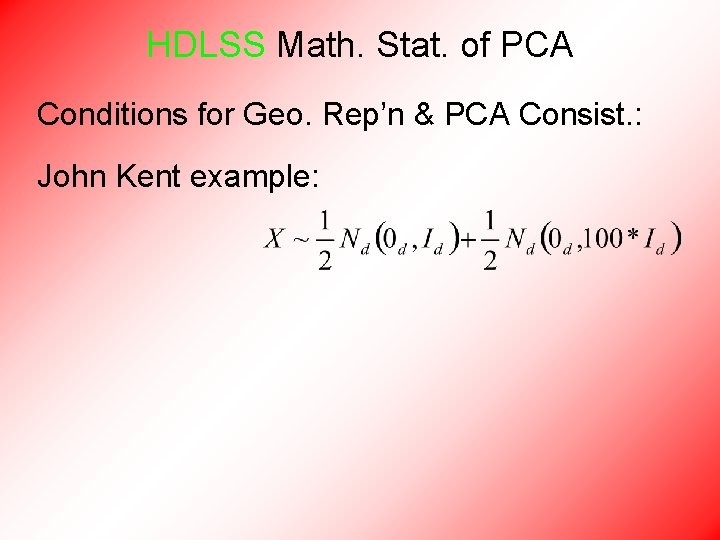

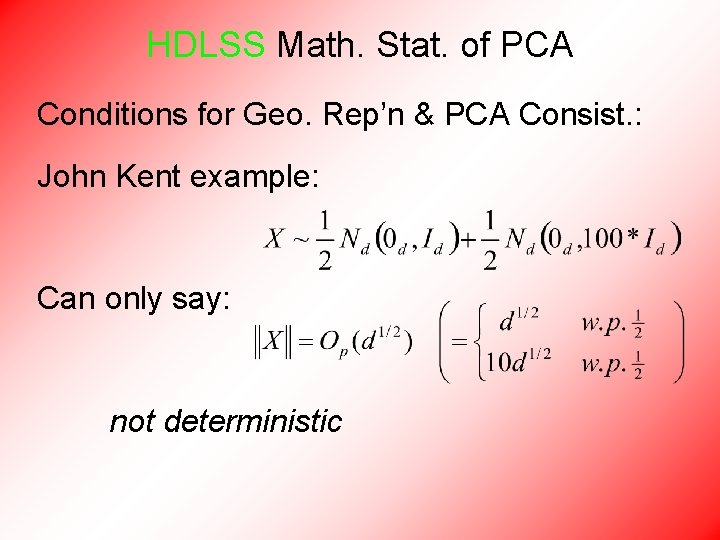

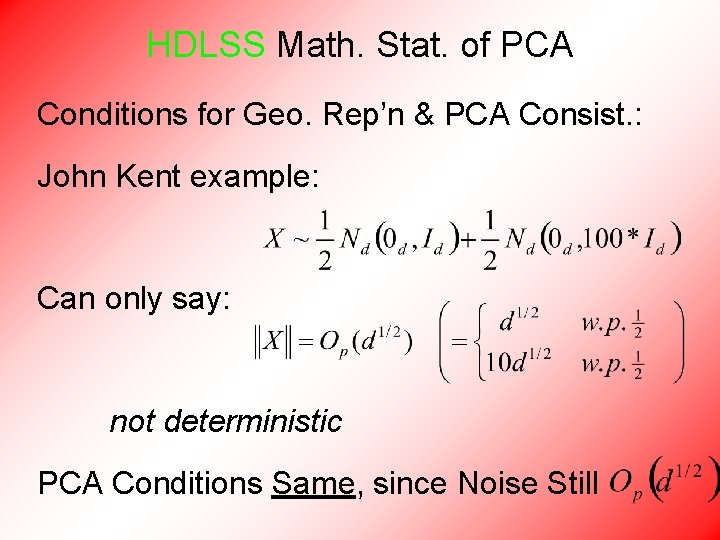

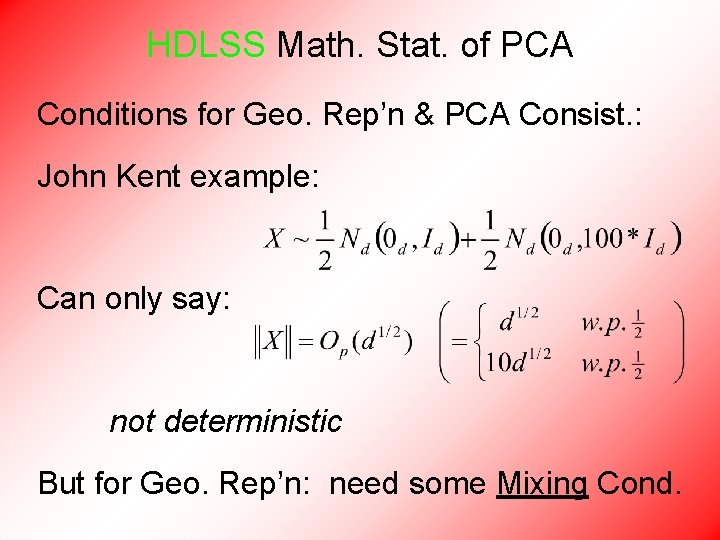

HDLSS Math. Stat. of PCA

Conditions for Geo. Rep’n & PCA Consist.:
- John Kent example: …
- Can only say: … not deterministic
- PCA Conditions Same, since Noise Still …
- But for Geo. Rep’n: need some Mixing Cond.

HDLSS Math. Stat. of PCA Conditions for Geo. Rep’n: Conclude: Need some Mixing Condition

Mixing Conditions Idea From Probability Theory:

Mixing Conditions •


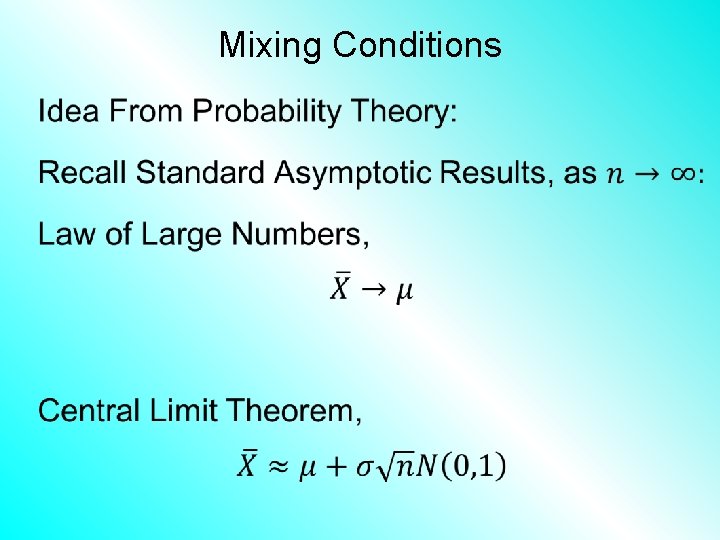

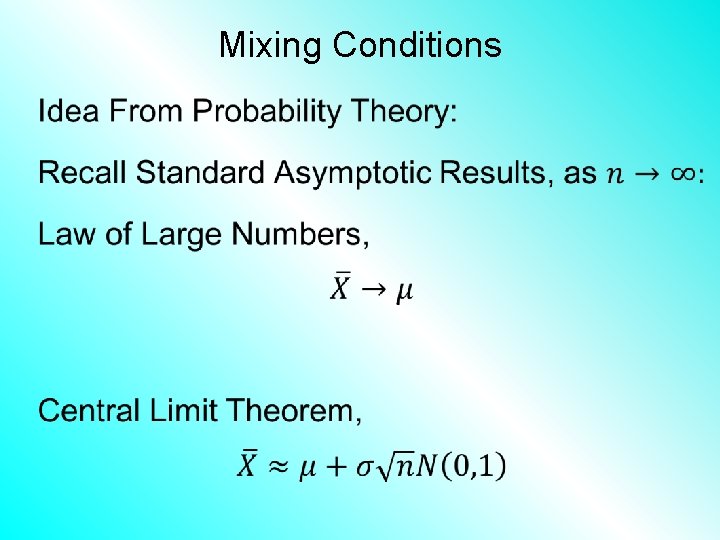

Mixing Conditions: Idea From Probability Theory: Law of Large Numbers, Central Limit Theorem, Both have Technical Assumptions (Usually Ignore???)

Mixing Conditions •

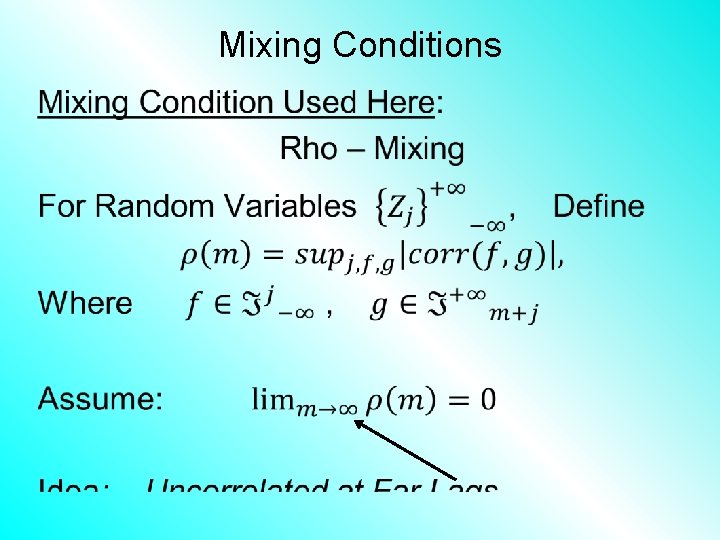

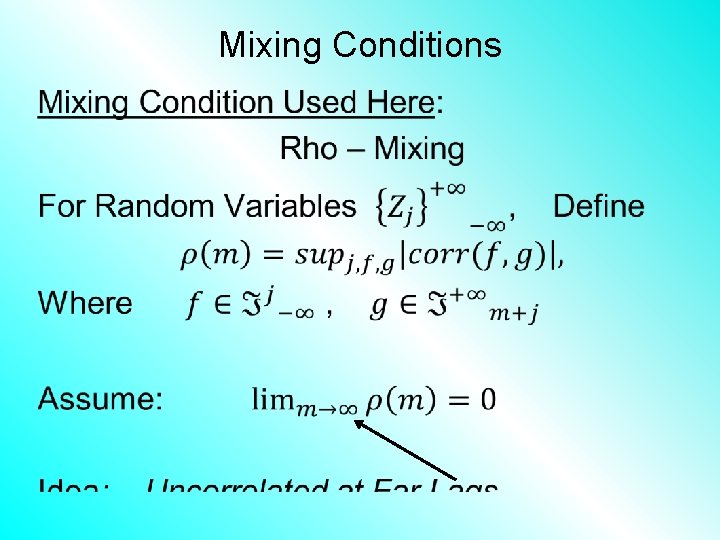

Mixing Conditions Idea From Probability Theory: Mixing Conditions: Explore Weaker Assumptions, to Still Get Law of Large Numbers, Central Limit Theorem

Mixing Conditions •


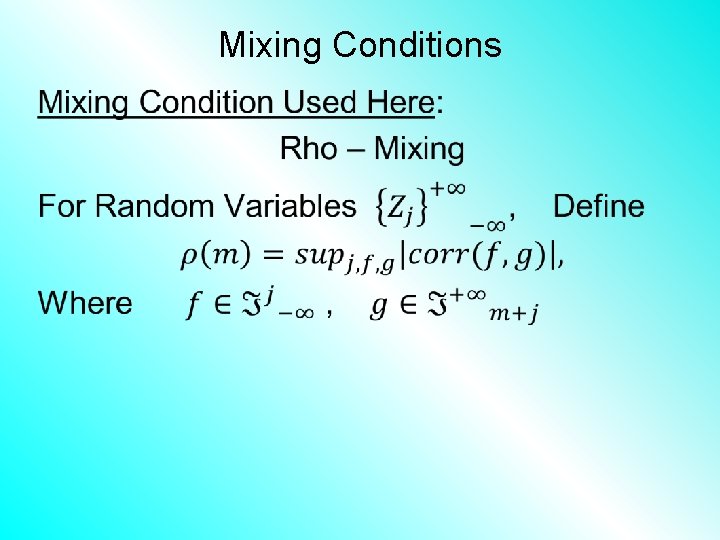

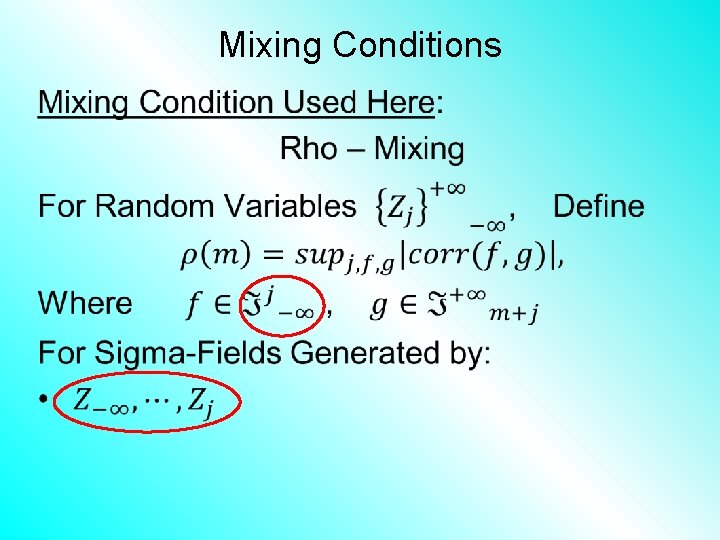

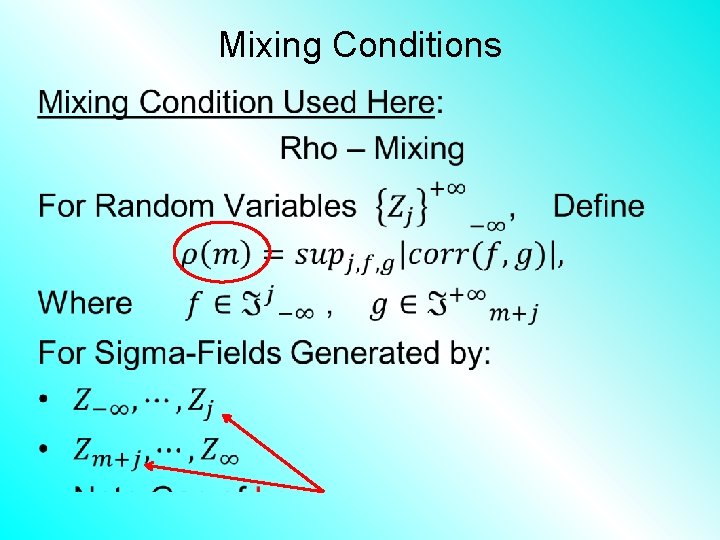

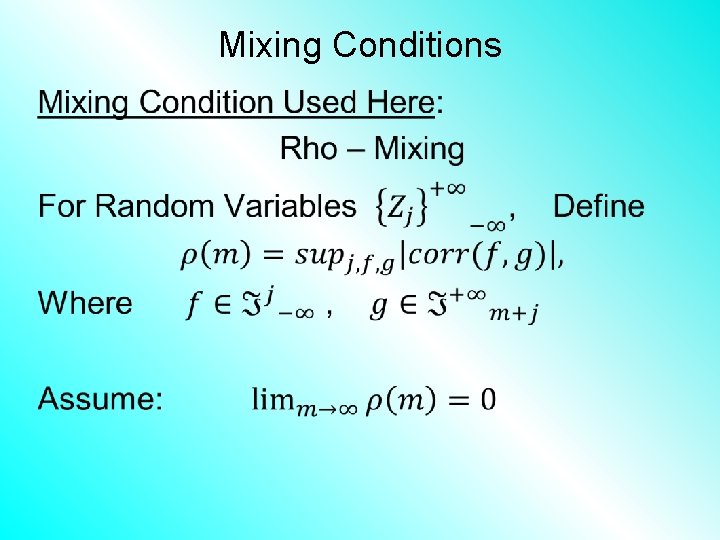

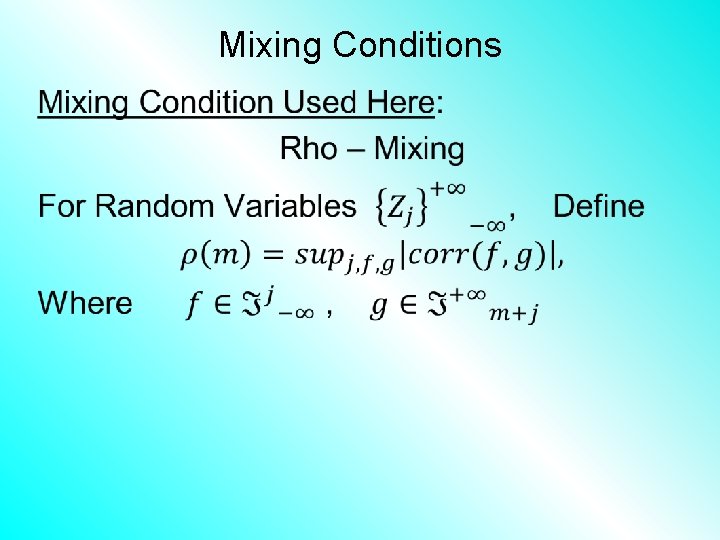

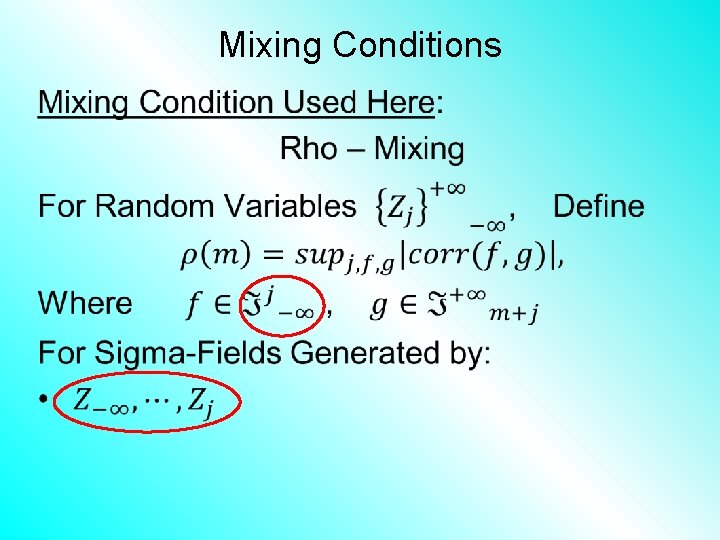

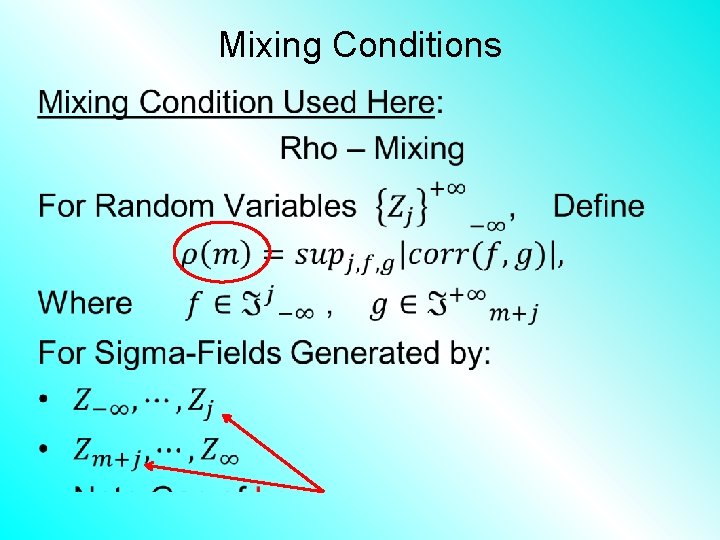

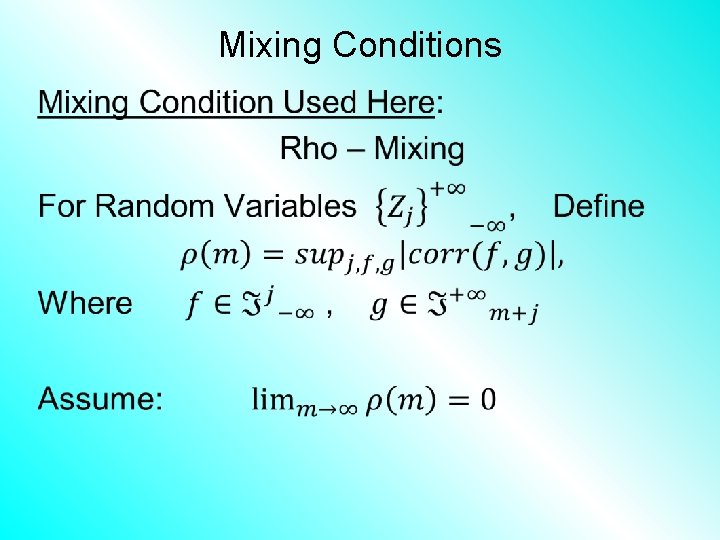

Mixing Conditions Mixing Condition Used Here: Rho – Mixing

Mixing Conditions •


HDLSS Math. Stat. of PCA •

HDLSS Math. Stat. of PCA

Conditions for Geo. Rep’n: Hall, Marron and Neeman (2005). Drawback: Strong Assumption (In JRSS-B, since Biometrika Refused)

Conditions for Geo. Rep’n: Series of Technical Improvements:
- Ahn, Marron, Muller & Chi (2007)
- Aoshima (2010), Yata & Aoshima (2012) (Fully Covariance Based, No Mixing)

Conditions for Geo. Rep’n: Tricky Point: Classical Mixing Conditions Require Notion of Time Ordering. Not Always Clear, e.g. Microarrays

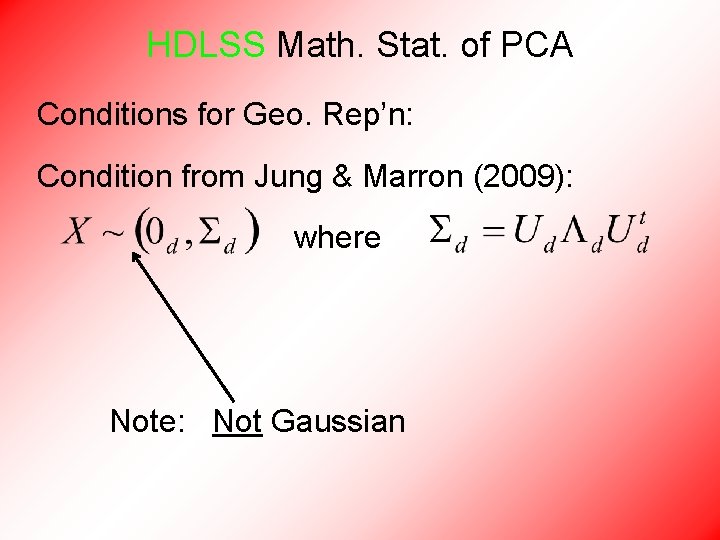

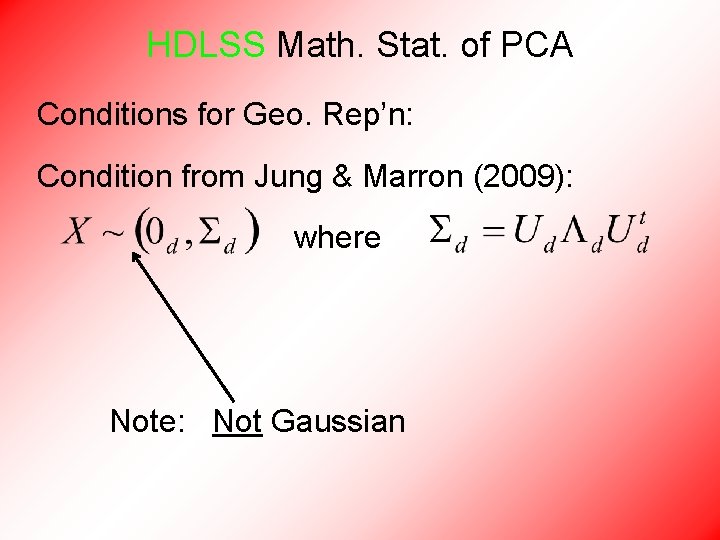

HDLSS Math. Stat. of PCA Conditions for Geo. Rep’n: Condition from Jung & Marron (2009): where Note: Not Gaussian

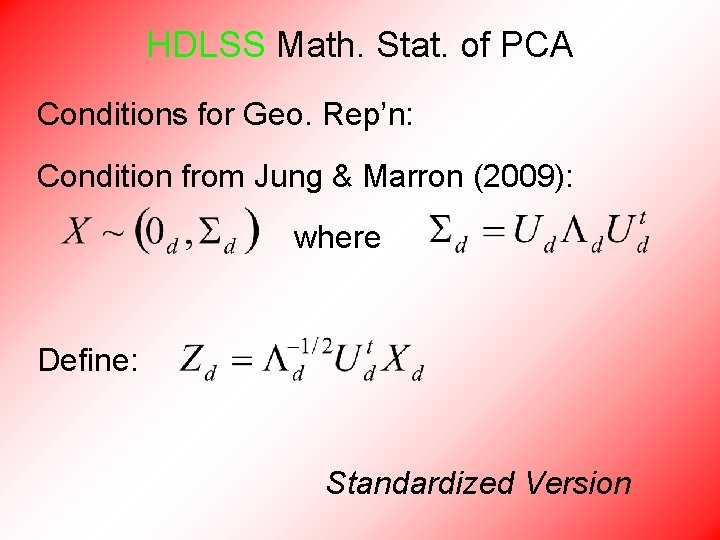

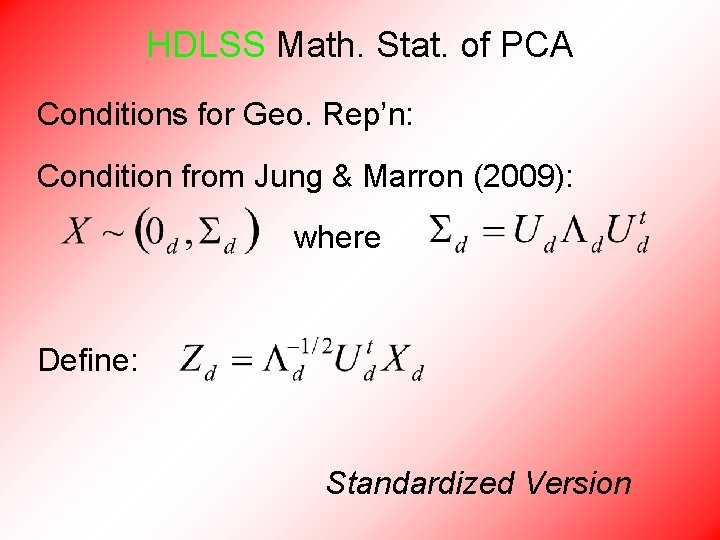

HDLSS Math. Stat. of PCA Conditions for Geo. Rep’n: Condition from Jung & Marron (2009): where Define: Standardized Version

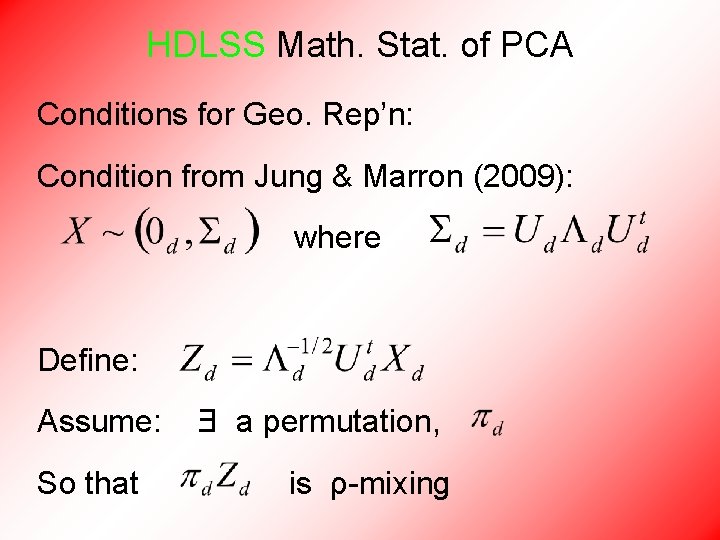

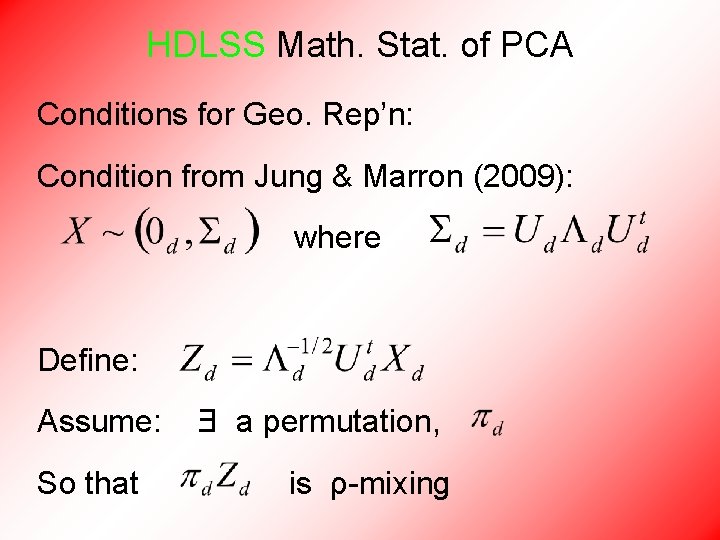

HDLSS Math. Stat. of PCA Conditions for Geo. Rep’n: Condition from Jung & Marron (2009): … where … Define: … Assume: ∃ a permutation, so that … is ρ-mixing

Change onyen password

Change onyen password All the signs for driving

All the signs for driving Will you please be quiet please raymond carver

Will you please be quiet please raymond carver Please help yourself to refreshments

Please help yourself to refreshments Welcome please sign in

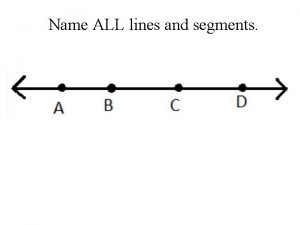

Welcome please sign in Opposite rays

Opposite rays Formal and informal emails

Formal and informal emails Email greetings in french

Email greetings in french Uat kickoff meeting agenda

Uat kickoff meeting agenda Jvp up to mandible คือ

Jvp up to mandible คือ Trousseau’s sign

Trousseau’s sign 2na + glucose/18

2na + glucose/18 Brudzinskis sign

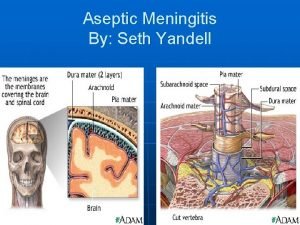

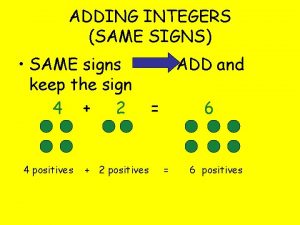

Brudzinskis sign Same sign add different signs subtract

Same sign add different signs subtract Transfer lifting repositioning

Transfer lifting repositioning Tlr participant worksheet answers

Tlr participant worksheet answers Participant sample

Participant sample Contoh lrs

Contoh lrs Participant oriented evaluation approach

Participant oriented evaluation approach Participant tracking system

Participant tracking system Normative theory in communication

Normative theory in communication Dsf.ca/participant

Dsf.ca/participant Participant-driven research

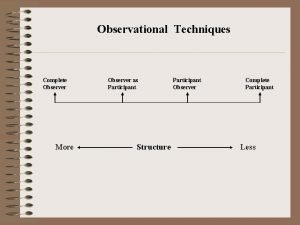

Participant-driven research Covert and overt observation

Covert and overt observation Observation advantages and disadvantages

Observation advantages and disadvantages Participant media

Participant media H2020 participant portal

H2020 participant portal Advantages of overt observation

Advantages of overt observation Democratic participant media theory

Democratic participant media theory Job analysis in hrm

Job analysis in hrm Participant tracking software

Participant tracking software Participant diary/log

Participant diary/log Daina middleton participant

Daina middleton participant Normative theory of mass communication

Normative theory of mass communication Ethnography participant observation

Ethnography participant observation Samhsa anger management

Samhsa anger management Eacea participant portal

Eacea participant portal Safe at home participant

Safe at home participant Complaint against depository participant

Complaint against depository participant Complete participant

Complete participant Non participant observation

Non participant observation What is social function of narrative text

What is social function of narrative text Rhoderick nuncio

Rhoderick nuncio Participant expectations

Participant expectations Eacea participant portal

Eacea participant portal Proximadistal

Proximadistal Please put here

Please put here Please write down your name

Please write down your name Please write your name

Please write your name Craft of scientific presentations

Craft of scientific presentations Introduction to mental health awareness presentation

Introduction to mental health awareness presentation Worst powerpoint

Worst powerpoint Advantages of multimedia presentation

Advantages of multimedia presentation Slidetodoc

Slidetodoc Ventajas y desventajas de corel presentations

Ventajas y desventajas de corel presentations -is not one of the purposes for giving oral presentations.

-is not one of the purposes for giving oral presentations. Verbal support meaning

Verbal support meaning How to make a tok presentation

How to make a tok presentation Best and worst powerpoint presentations

Best and worst powerpoint presentations Anna ritchie allan

Anna ritchie allan Setting up ria

Setting up ria Boardworks ltd

Boardworks ltd Situation complication question answer

Situation complication question answer Internet presentations

Internet presentations The most dangerous game ppt

The most dangerous game ppt Cue cards for speech

Cue cards for speech The end pictures for presentations

The end pictures for presentations Useful phrases presentation

Useful phrases presentation Efficient elements powerpoint

Efficient elements powerpoint You exec presentation

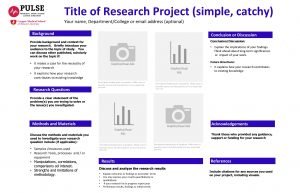

You exec presentation Research project title

Research project title Research project title

Research project title Space exploration merit badge powerpoint

Space exploration merit badge powerpoint Customer service presentations

Customer service presentations Catalyst 37xx stack

Catalyst 37xx stack Bad powerpoint presentations examples

Bad powerpoint presentations examples Really bad powerpoint

Really bad powerpoint Youexec

Youexec Roof ppt presentations

Roof ppt presentations Yoursite.com

Yoursite.com Horse topics for presentations

Horse topics for presentations Types of oral presentations

Types of oral presentations Hello my name is in sign language

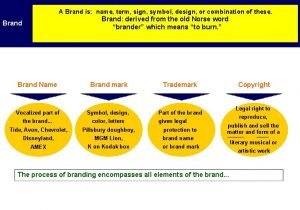

Hello my name is in sign language Is a name term sign symbol

Is a name term sign symbol Brand name selection

Brand name selection First name and last name example

First name and last name example Name above every other name

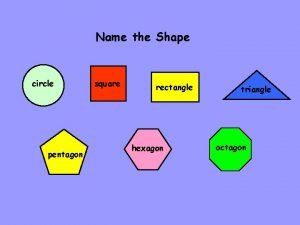

Name above every other name Circle square triangle rectangle hexagon

Circle square triangle rectangle hexagon Class person string name

Class person string name Whats her name

Whats her name Name of presentation company name

Name of presentation company name Name of presentation company name

Name of presentation company name Name teachers name class date

Name teachers name class date Name

Name What's your name is

What's your name is Name class subject school

Name class subject school Student id name department name

Student id name department name Global user

Global user Lecturer's name or lecturer name

Lecturer's name or lecturer name Inception deck template

Inception deck template Name date class teacher

Name date class teacher First name last name tpu

First name last name tpu Jordan stock name

Jordan stock name You ____ pay attention in the class

You ____ pay attention in the class Tuesday please

Tuesday please Could you please tell me where is my uncle's room

Could you please tell me where is my uncle's room Yes clean your room

Yes clean your room Type your answer....

Type your answer.... Turn on microphone

Turn on microphone Please wait. the webinar will begin soon

Please wait. the webinar will begin soon Don't throw sausage pizza away

Don't throw sausage pizza away Thank you julie

Thank you julie Excuse me would you please tell me

Excuse me would you please tell me Lemon andersen please don't take my air jordans lyrics

Lemon andersen please don't take my air jordans lyrics What would juliet rather do than marry paris

What would juliet rather do than marry paris Please reported speech

Please reported speech Please mute your mic

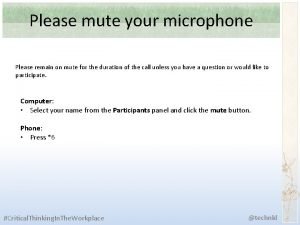

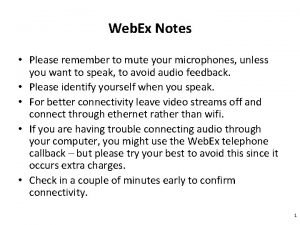

Please mute your mic Please mute your microphone

Please mute your microphone Please miss

Please miss Please dont eat my cookie

Please dont eat my cookie Please sit down and your seat belts

Please sit down and your seat belts Come in and ....................... a seat!

Come in and ....................... a seat! Officious fool

Officious fool Makalah passive voice dalam bahasa inggris

Makalah passive voice dalam bahasa inggris Please sit down and your seat belts

Please sit down and your seat belts Good morning please have a seat

Good morning please have a seat Theobald von bethmann hollweg

Theobald von bethmann hollweg Please silence your phone

Please silence your phone Please feel free to modify

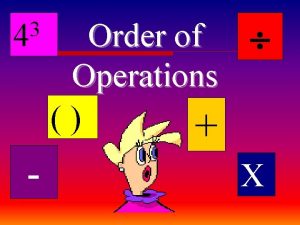

Please feel free to modify Pemdas meaning

Pemdas meaning Please mute your microphone

Please mute your microphone This lesson is important. please pay

This lesson is important. please pay Please put your homework my desk

Please put your homework my desk How to activate set top box

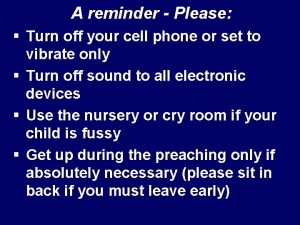

How to activate set top box Please turn off your cell phone in church

Please turn off your cell phone in church Please excuse my dear aunt sally

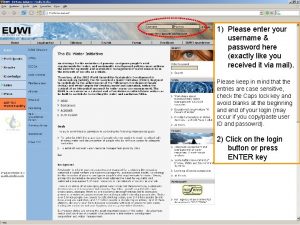

Please excuse my dear aunt sally Please enter username and password

Please enter username and password Please use english only

Please use english only Please help yourself to refreshments

Please help yourself to refreshments Please mute yourself

Please mute yourself Read the sentences carefully

Read the sentences carefully Would you mind ____ the window?

Would you mind ____ the window? Could you please tell me where is my uncle's room

Could you please tell me where is my uncle's room Please clean your room

Please clean your room Please clean your room before we leave for school

Please clean your room before we leave for school No speak english

No speak english Present continuous hold

Present continuous hold Please don't throw sausage pizza away

Please don't throw sausage pizza away Missionary and cannibal game

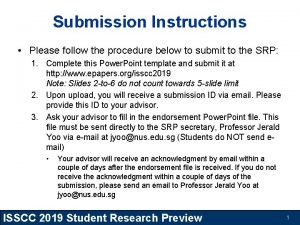

Missionary and cannibal game Follow the procedure below

Follow the procedure below Begin please

Begin please Do not distribute

Do not distribute Please read carefully the instructions

Please read carefully the instructions Please mute

Please mute Please fill all fields

Please fill all fields Whats pemdas

Whats pemdas The next please

The next please Your homework please

Your homework please