Formal Technical Reviews
Annette Tetmeyer
Fall 2009

- Slides: 86

Outline

- Overview of FTR and relationship to software quality improvement
- History of software quality improvement
- Impact of quality on software products
- The FTR process
- Beyond FTR
- Discussion and questions

Formal Technical Review

What is Formal Technical Review (FTR)?

Definition (Philip Johnson): A method involving a structured encounter in which a group of technical personnel analyzes or improves the quality of the original work product as well as the quality of the method.

Software Quality Improvement

- Improve the quality of the original work
  - Find defects early (less costly)
  - Reduce defects
- Leads to improved productivity
  - Benefits from reducing rework build throughout the project
- Phases: requirements, design, coding, testing

Software Quality Improvement (2/4)

- Survey regarding when reviews are conducted
  - Design or requirements: 40%
  - Code review: 30%
- Code reviews pay off even if the code is being tested later (Fagan)

Software Quality Improvement (3/4)

- Improve the quality of the method
  - Improve team communication
  - Enhance team learning

Software Quality Improvement (4/4)

- Which impacts overall quality the most?
  - To raise the quality of the finished product
  - To improve developer skills

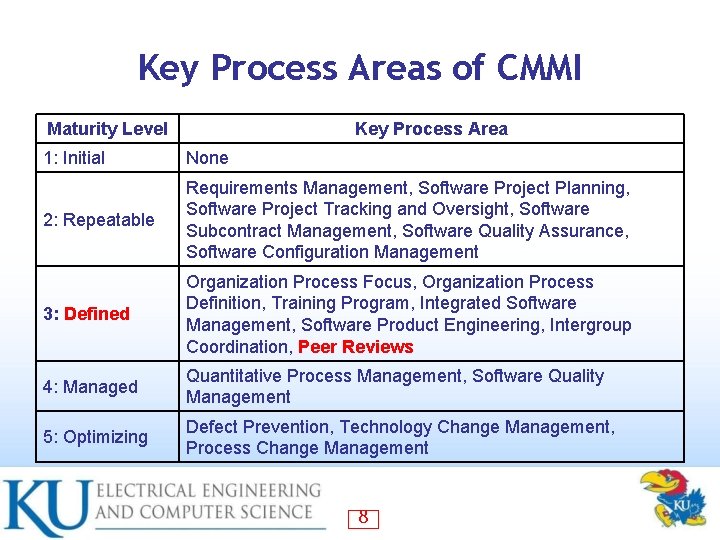

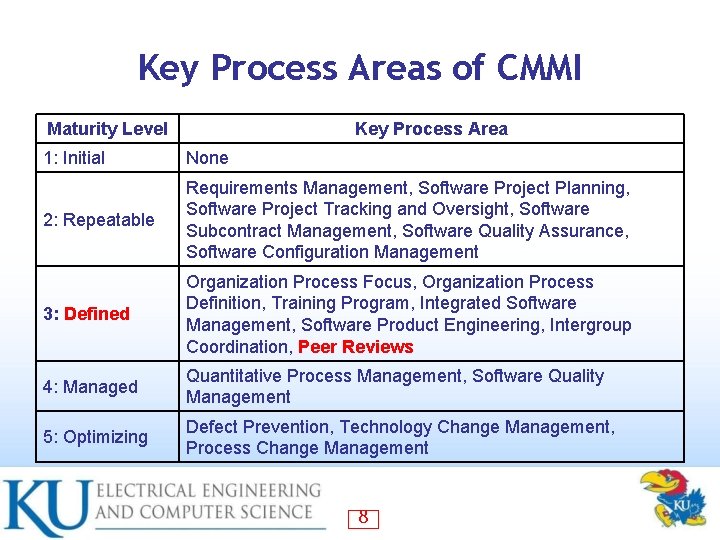

Key Process Areas of CMMI

| Maturity Level | Key Process Areas |
| --- | --- |
| 1: Initial | None |
| 2: Repeatable | Requirements Management, Software Project Planning, Software Project Tracking and Oversight, Software Subcontract Management, Software Quality Assurance, Software Configuration Management |
| 3: Defined | Organization Process Focus, Organization Process Definition, Training Program, Integrated Software Management, Software Product Engineering, Intergroup Coordination, Peer Reviews |
| 4: Managed | Quantitative Process Management, Software Quality Management |
| 5: Optimizing | Defect Prevention, Technology Change Management, Process Change Management |

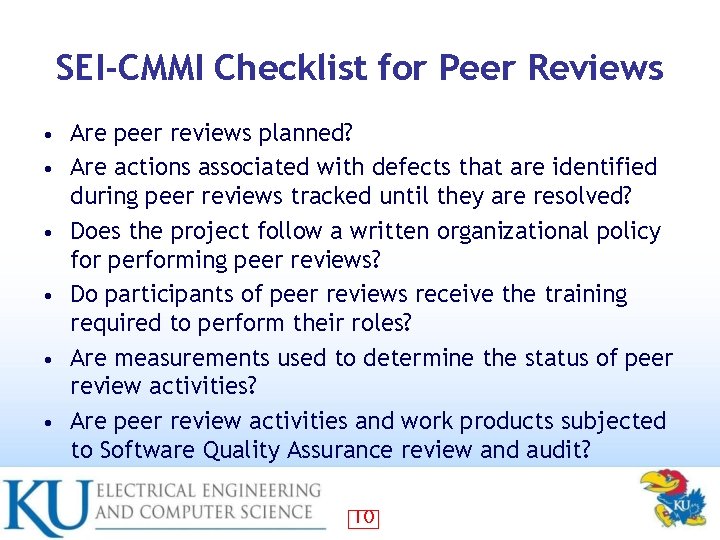

Peer Reviews and CMMI

- CMMI does not dictate specific techniques, but instead requires that:
  - A written policy about peer reviews exists
  - Resources, funding, and training are provided
  - Peer reviews are planned
  - The peer review procedures to be used are documented

SEI-CMMI Checklist for Peer Reviews

- Are peer reviews planned?
- Are actions associated with defects that are identified during peer reviews tracked until they are resolved?
- Does the project follow a written organizational policy for performing peer reviews?
- Do participants of peer reviews receive the training required to perform their roles?
- Are measurements used to determine the status of peer review activities?
- Are peer review activities and work products subjected to Software Quality Assurance review and audit?

Researchers and Influencers

- Fagan
- Johnson
- Ackerman
- Gilb and Graham
- Weinberg
- Wiegers

Inspection, Walkthrough or Review?

An inspection is ‘a visual examination of a software product to detect and identify software anomalies, including errors and deviations from standards and specifications’

Inspection, Walkthrough or Review? (2/2)

A walkthrough is ‘a static analysis technique in which a designer or programmer leads members of the development team and other interested parties through a software product, and the participants ask questions and make comments about possible errors, violation of development standards, and other problems’

A review is ‘a process or meeting during which a software product is presented to project personnel, managers, users, customers, user representatives, or other interested parties for comment or approval’

Source: IEEE Std. 1028-1997

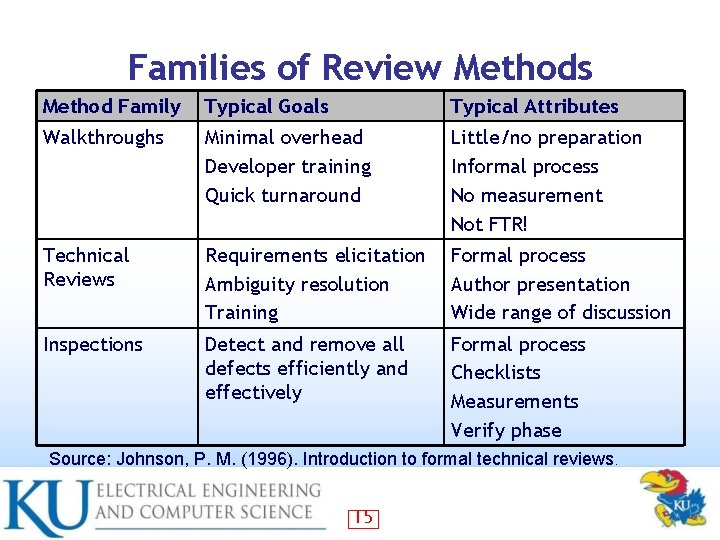

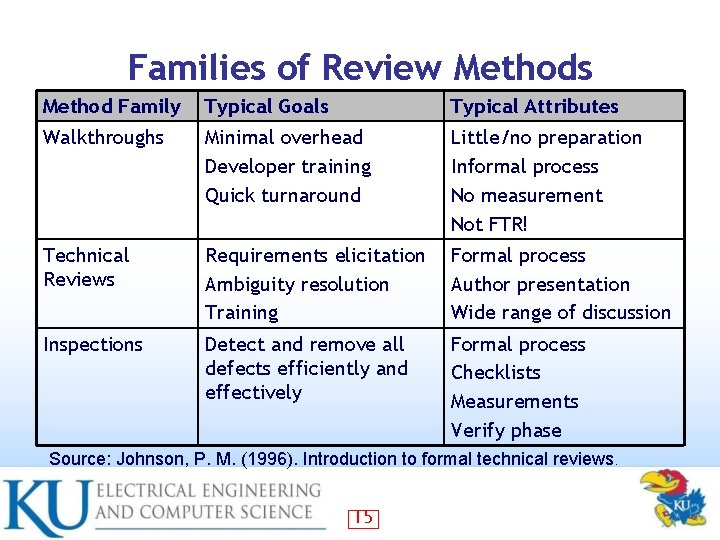

Families of Review Methods

| Method Family | Typical Goals | Typical Attributes |
| --- | --- | --- |
| Walkthroughs | Minimal overhead; developer training; quick turnaround | Little/no preparation; informal process; no measurement; not FTR! |
| Technical Reviews | Requirements elicitation; ambiguity resolution; training | Formal process; author presentation; wide range of discussion |
| Inspections | Detect and remove all defects efficiently and effectively | Formal process; checklists; measurements; verify phase |

Source: Johnson, P. M. (1996). Introduction to formal technical reviews.

Informal vs. Formal

- Informal
  - Spontaneous
  - Ad-hoc
  - No artifacts produced
- Formal
  - Carefully planned and executed
  - Reports are produced

In reality, there is also a middle ground between informal and formal techniques

Cost-Benefit Analysis

- Fagan reported that IBM inspections found 90% of all defects for a 9% reduction in average project cost
- Johnson estimates that rework accounts for 44% of development cost
- Finding defects, finding defects early, and reducing rework can impact the overall cost of a project

Cost of Defects

- What is the annual cost of software defects in the US? $59 billion
- Estimated that $22 billion could be avoided by introducing a best-practice defect detection infrastructure

Source: NIST, The Economic Impact of Inadequate Infrastructure for Software Testing, May 2002

Cost of Defects

- Gilb project with a jet manufacturer
- Initial analysis estimated that 41,000 hours of effort would be lost through faulty requirements
- Manufacturer concurred because:
  - 10 people on the project using 2,000 hours/year
  - Project is already one year late (20,000 hours)
  - Project is estimated to take one more year (another 20,000 hours)
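The manufacturer's reasoning can be checked with a few lines of arithmetic. This is only a sketch using the figures quoted on the slide; the variable names are illustrative and not part of Gilb's analysis.

```python
# Figures quoted on the slide; the variable names are illustrative only.
PEOPLE = 10
HOURS_PER_PERSON_PER_YEAR = 2_000

# Effort consumed by the whole team in one project-year.
hours_per_year = PEOPLE * HOURS_PER_PERSON_PER_YEAR

hours_already_late = 1 * hours_per_year   # project is already one year late
hours_still_to_go = 1 * hours_per_year    # one more year is expected
total_overrun = hours_already_late + hours_still_to_go

print(hours_per_year)   # 20000
print(total_overrun)    # 40000
```

The 40,000-hour overrun is close to the 41,000 hours predicted by the initial analysis, which appears to be why the manufacturer accepted the estimate.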

Jet Propulsion Laboratory Study

- Average two-hour inspection exposed four major and fourteen minor faults
- Savings estimated at $25,000 per inspection
- Additional studies showed the number of faults detected decreases exponentially by phase
  - Detecting early saves time and money

Software Inspections

Why are software inspections not widely used?

- Lack of time
- Not seen as a priority
- Not seen as value added (measured by LOC)
- Lack of understanding of formalized techniques
- Improper tools used to collect data
- Lack of training of participants
- Pits programmer against reviewers

Twelve Reasons Conventional Reviews are Ineffective

1. The reviewers are swamped with information.
2. Most reviewers are not familiar with the product design goals.
3. There are no clear individual responsibilities.
4. Reviewers can avoid potential embarrassment by saying nothing.
5. The review is a large meeting; detailed discussions are difficult.
6. Presence of managers silences criticism.

Twelve Reasons Conventional Reviews are Ineffective (continued)

7. Presence of uninformed reviewers may turn the review into a tutorial.
8. Specialists are asked general questions.
9. Generalists are expected to know specifics.
10. The review procedure reviews code without respect to structure.
11. Unstated assumptions are not questioned.
12. Inadequate time is allowed.

From class website: sw-inspections.pdf (Parnas)

Fagan’s Contributions

- Design and code inspections to reduce errors in program development (1976)
- A systematic and efficient approach to improving programming quality
- Continuous improvement: reduce initial errors and follow up with additional improvements
- Beginnings of formalized software inspections

Fagan’s Six Major Steps

1. Planning
2. Overview
3. Preparation
4. Examination
5. Rework
6. Follow-up

Can steps be skipped or combined? How many person-hours are typically involved?

Fagan’s Six Major Steps (2/2)

1. Planning: Form team, assign roles
2. Overview: Inform team about product (optional)
3. Preparation: Independent review of materials
4. Examination: Inspection meeting
5. Rework: Author verifies and corrects defects
6. Follow-up: Moderator checks and verifies corrections
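One way to make the ordering of the six steps concrete is to model them as an ordered enumeration. The sketch below is an illustration only: the `FaganStep` and `next_step` names are hypothetical, and the only rule it encodes beyond the order itself is that the overview step is marked optional on the slide.

```python
from enum import IntEnum


class FaganStep(IntEnum):
    """The six steps, numbered in the order given on the slide."""
    PLANNING = 1
    OVERVIEW = 2
    PREPARATION = 3
    EXAMINATION = 4
    REWORK = 5
    FOLLOW_UP = 6


# The slide marks only the overview as optional.
OPTIONAL_STEPS = {FaganStep.OVERVIEW}


def next_step(current, skip_optional=False):
    """Return the step that follows `current`, or None after follow-up."""
    for step in FaganStep:
        if step <= current:
            continue
        if skip_optional and step in OPTIONAL_STEPS:
            continue
        return step
    return None


print(next_step(FaganStep.PLANNING).name)                      # OVERVIEW
print(next_step(FaganStep.PLANNING, skip_optional=True).name)  # PREPARATION
```

A real inspection tool would also need to loop back from follow-up to rework when corrections are rejected; that is left out here to keep the sketch small.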

Fagan’s Team Roles

- Fagan recommends that a good-size team consists of four people
- Moderator: the key person, manages team and offers leadership
- Readers, reviewers and authors
  - Designer: programmer responsible for producing the program design
  - Coder/Implementer: translates the design to code
  - Tester: writes and executes test cases

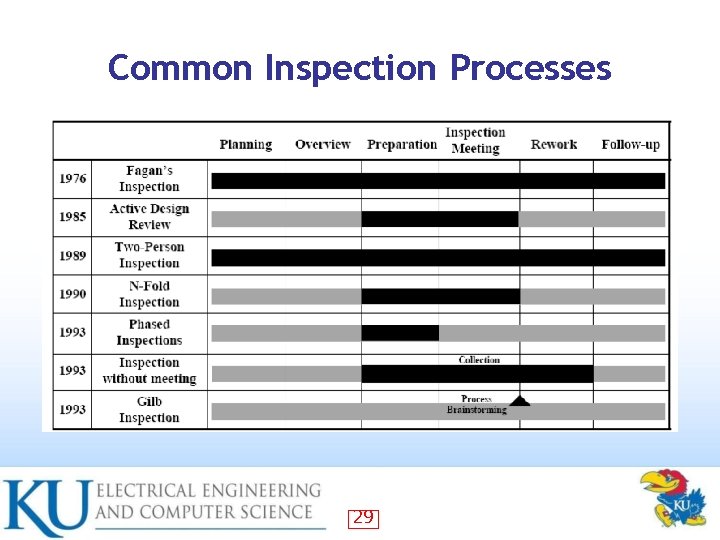

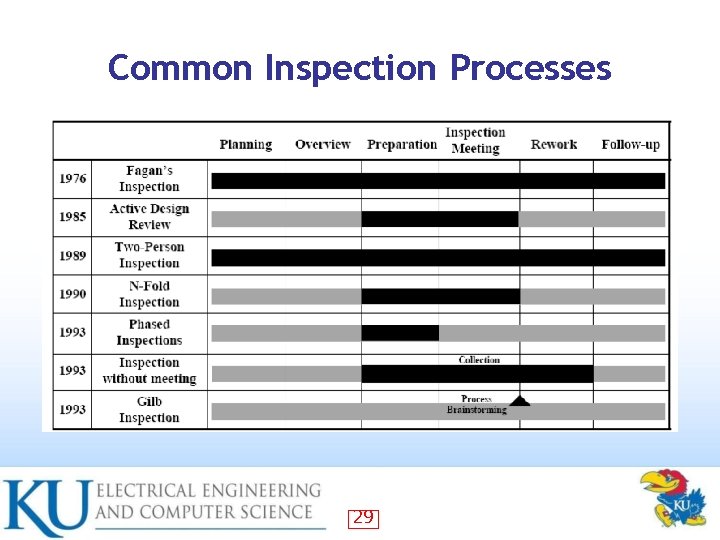

Common Inspection Processes

Active Design Reviews

- Parnas and Weiss (1985)
- Rationale
  - Reviewers may be overloaded during preparation phase
  - Reviewers lack familiarity with goals
  - Large team meetings can have drawbacks
- Several brief reviews rather than one large review
- Focus on a certain part of the project
- Used this approach for the design of a military flight navigation system

Two Person Inspection

- Bisant and Lyle (1989)
- One author, one reviewer (eliminate moderator)
- Ad-hoc preparation
- Noted immediate benefits in program quality and productivity
- May be more useful in small organizations or small projects

N-fold Inspection

- Martin and Tsai (1990)
- Rationale
  - A single team finds only a fraction of defects
  - Different teams do not duplicate efforts
- Follows Fagan inspection steps
- N teams inspect in parallel
- Results from teams are merged
- After merging results, only one team continues on
- Team size 3-4 people (author, moderator, reviewers)
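The merge step of an N-fold inspection can be sketched as a set union over the teams' findings. This is a minimal illustration, not Martin and Tsai's procedure: it assumes each defect already carries a shared identifier (such as `"D1"`), whereas in practice matching reports from different teams takes manual effort. The function name `merge_team_findings` is hypothetical.

```python
def merge_team_findings(team_findings):
    """Merge defect reports from N teams that inspected in parallel.

    `team_findings` is a list of sets of defect identifiers, one set per team.
    Returns the merged set and the subset found by exactly one team.
    """
    merged = set().union(*team_findings)
    # Count how many teams reported each defect.
    counts = {d: sum(d in found for found in team_findings) for d in merged}
    found_by_one_team = {d for d, c in counts.items() if c == 1}
    return merged, found_by_one_team


teams = [{"D1", "D2", "D3"}, {"D2", "D4"}, {"D3", "D5", "D6"}]
merged, found_by_one_team = merge_team_findings(teams)
print(len(merged))                 # 6
print(sorted(found_by_one_team))   # ['D1', 'D4', 'D5', 'D6']
```

In this made-up example no single team finds more than half of the six defects, and four of them are reported by only one team, which is the situation the N-fold rationale describes.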

Phased Inspection

- Knight and Myers (1993)
- Combines aspects of active design, Fagan, and N-fold
- Mini-inspections or “phases” with specific goals
  - Use checklists for inspection
  - Can have single-inspector or multiple-inspector phases
- Team size 1-2 people

Inspection without Meeting

- Research by Votta (1993) and Johnson (1998)
  - Does every inspection need a meeting?
- Builds on the fact that most defects are found in preparation for the meeting (90/10)
- Is synergy as important to finding defects as stated by others?
- Collection occurs after preparation
- Rework follows

Gilb Inspections

- Gilb and Graham (1993)
- Similar to Fagan inspections
- Process brainstorming meeting immediately following the inspection meeting

Other Inspections

- Structured Walkthrough (Yourdon, 1989)
- Verification-Based Inspection (Dyer, 1992)

Inspection, Walkthrough or Review?

- Some researchers interpret Fagan’s work as a combination of all three
- Fagan’s work does present many of the elements associated with FTR
- FTR may be seen as a variant of Fagan inspections (Johnson, Tjahjono 1998)

Formal Technical Review (FTR)

- Process
  - Phases and procedures
- Roles
  - Author, Moderator, Reader, Reviewer, Recorder
- Objectives
  - Defect removal, requirements elicitation, etc.
- Measurements
  - Forms, consistent data collection, etc.

FTR Process

- How much to review
- Review pacing
- When to review
- Pre-meeting preparation
- Meeting pace

How Much to Review?

- Tied into meeting time (hours)
- Should be manageable
- Break into chunks if needed

Review Pacing

- How long should the meeting last?
- Based on:
  - Lines per hour?
  - Pages?
  - Specific time frame?
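If pacing is based on lines per hour, the number of review sessions follows from the size of the work product and a cap on meeting length. The sketch below is one possible calculation; the function name `plan_sessions` is hypothetical, and the rate of 150 lines per hour and the two-hour cap in the example are placeholder values, not recommendations from the slides.

```python
import math


def plan_sessions(total_lines, lines_per_hour, max_hours_per_meeting):
    """Return (number_of_sessions, lines_per_session) for a work product.

    All three inputs are planning assumptions supplied by the team.
    """
    if total_lines < 0 or lines_per_hour <= 0 or max_hours_per_meeting <= 0:
        raise ValueError("sizes and rates must be positive")
    lines_per_session = int(lines_per_hour * max_hours_per_meeting)
    if lines_per_session < 1:
        raise ValueError("pace is too slow to cover a single line per session")
    sessions = math.ceil(total_lines / lines_per_session)
    return sessions, lines_per_session


# Placeholder figures: 1,000 lines, 150 lines/hour, meetings capped at 2 hours.
print(plan_sessions(1000, 150, 2))   # (4, 300)
```

The same calculation works for pages instead of lines; only the unit of the rate changes.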

When to Review?

- How much work should be completed before the review?
- Set out review schedule with project planning
- Again, break into manageable chunks
- Prioritize based on impact of code module to overall project

Pre-Meeting Preparation

- Materials to be given to reviewers
- Time expectations prior to the meeting
- Understand the roles of participants
- Training for team members on their various roles
- Expected end product

Pre-Meeting Preparation (2/2)

- How is document examination conducted?
  - Ad-hoc
  - Checklist
  - Specific reading techniques (scenarios or perspective-based reading)

Preparation is crucial to effective reviews

FTR Team Roles

- Select the correct participants for each role
- Understand team review psychology
- Choose the correct team size

FTR Team Roles (2/2)

- Author
- Moderator
- Reader
- Reviewer
- Recorder (optional?)

Who should not be involved and why?

Team Participants

- Must be actively engaged
- Must understand the “bigger picture”

Team Psychology

- Stress
- Conflict resolution
- Perceived relationship to performance reviews

Team Size

- What is the ideal size for a team?
  - Less than 3?
  - 3-6?
  - Greater than 6?
- What is the impact of large, complex projects?
- How to work with globally distributed teams?

FTR Objectives

- Review meetings can take place at various stages of the project lifecycle
- Understand the purpose of the review
  - Requirements elicitation
  - Defect removal
  - Other
- Goal of the review is not to provide solutions
  - Raise issues, don’t resolve them

FTR Measurements

- Documentation and use
- Sample forms
- Inspection metrics

Documentation

- Forms used to facilitate the process
- Documenting the meeting
- Use of standards
- How is documentation used by:
  - Managers
  - Developers
  - Team members

Sample Forms

- NASA Software Formal Inspections Guidebook
- Sample checklists
  - Architecture design
  - Detailed design
  - Code inspection
  - Functional design
  - Software requirements

Refer to sample forms distributed in class

Inspection Metrics

- How to gather and classify defects?
- How to collect?
- What to do with collected metrics?
- What metrics are important?
  - Defects per reviewer?
  - Inspection rate?
  - Estimated defects remaining?
- Historical data
- Future use (or misuse) of data
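The three candidate metrics named above can each be computed from data a recorder would already collect. The sketch below is illustrative only. The slides do not say how remaining defects should be estimated; the two-reviewer capture-recapture (Lincoln-Petersen) estimate used here is one commonly cited option and is an assumption of this example, as are all function names and the sample data.

```python
def inspection_rate(lines_inspected, meeting_hours):
    """Lines inspected per meeting hour."""
    if meeting_hours <= 0:
        raise ValueError("meeting_hours must be positive")
    return lines_inspected / meeting_hours


def defects_per_reviewer(findings):
    """Map each reviewer to the number of distinct defects they reported."""
    return {reviewer: len(defects) for reviewer, defects in findings.items()}


def estimate_remaining(found_a, found_b):
    """Capture-recapture estimate of defects not yet found.

    Assumes two reviewers worked independently. Returns None when the
    reviewers share no defects, because the estimate is then undefined.
    """
    overlap = len(found_a & found_b)
    if overlap == 0:
        return None
    estimated_total = len(found_a) * len(found_b) / overlap
    found_so_far = len(found_a | found_b)
    return max(0.0, estimated_total - found_so_far)


# Made-up sample data for two reviewers.
findings = {"reviewer_a": {"D1", "D2", "D3", "D4"}, "reviewer_b": {"D3", "D4", "D5"}}
print(inspection_rate(300, 2))           # 150.0
print(defects_per_reviewer(findings))    # {'reviewer_a': 4, 'reviewer_b': 3}
print(estimate_remaining(findings["reviewer_a"], findings["reviewer_b"]))  # 1.0
```

Per-reviewer counts are the metric most open to the misuse the slide warns about, so a team may prefer to report only the aggregate figures.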

Inspection Metrics (2/2)

- Tools for collecting metrics
- Move beyond spreadsheets and word processors
- Primary barriers to using:
  - Cost
  - Quality
  - Utility

Beyond the FTR Process

- Impact of reviews on the programmer
- Post-meeting activities
- Review challenges
- Survey of reviews and comparisons
- Future of FTR

Impact on the Programmer

- Should reviews be used as a measure of performance during appraisal time?
- Can it help to improve commitment to their work?
- Will it make them a better reviewer when roles are reversed?
- Improve teamwork?

Post-Meeting Activities

- Defect correction
  - How to ensure that identified defects are corrected?
  - What metrics or communication tools are needed?
- Follow-up
  - Feedback to team members
  - Additional phases of reviews
- Data collection for historical purposes
- Gauging review effectiveness

Review Challenges

- Distributed, global teams
- Large teams
- Complex projects
- Virtual vs. face-to-face meetings

Survey of Reviews

- Reviews were integrated into software development with a range of goals
  - Early defect detection
  - Better team communication
- Review approaches vary widely
  - Tending towards nonsystematic methods and techniques

Source: Ciolkowski, M., Laitenberger, O., & Biffl, S. (2003). Software reviews, the state of the practice. Software, IEEE, 20(6), 46-51.

Survey of Reviews (2/2)

- What were common review goals?
  - Quality improvement
  - Project status evaluation
  - Means to enforce standards
- Common obstacles
  - Time pressures
  - Cost
  - Lack of training (most train by participation)

Do We Really Need a Meeting?

- “Phantom Inspector” (Fagan)
  - The “synergism” among the review team that can lead to the discovery of defects not found by any of the participants working individually
- Meetings are perceived as higher quality
- What about false positives and duplicates?

A Study of Review Meetings

- The need for face-to-face meetings has never been questioned
- Meetings are expensive!
  - Simultaneous attendance of all participants
  - Preparation
  - Readiness of work product under review
  - High quality moderation
  - Team personalities
- Adds to project time and cost (15-20% overhead)

A Study of Review Meetings (2/3)

- Studied the impact of:
  - Real (face-to-face) vs. nominal (individual) groups
  - Detection effectiveness (number of defects detected)
  - Detection cost
- Significant differences were expected

A Study of Review Meetings (3/3)

- Results
  - Defect detection effectiveness was not significantly different for either group
  - Cost was less for nominal than for real groups (average time to find defects was higher)
  - Nominal groups generated more issues, but had higher false positives and more duplication

Do Openness and Anonymity Impact Meetings?

- “Working in a group helped me find errors faster and better.”
- “Working in a group helped me understand the code better.”
- “You spent less time arguing the issue validity when working alone.”
- “I could get a lot more done in a given amount of time when working by myself.”

Study on Successful Industry Uses

- Lack of systematic execution during preparation and detection
  - 60% don’t prepare at all, only 50% use a checklist, less than 10% use advanced reading techniques
- Reviews are not part of an overall improvement program
  - Only 23% try to optimize the review process

Study on Successful Industry Uses (2/3)

- Factors for sustained success
  - Top-management support is required
  - Need evidence (external, internal) to warrant using reviews
  - Process must be repeatable and measurable for continuous improvement
  - Techniques need to be easily adaptable to changing needs

Study on Successful Industry Uses (3/3)

- Repeatable success tends to use well-defined techniques
- Reported success (NASA, Motorola, IBM)
  - 95% defect detection rates before testing
  - 50% overall cost reduction
  - 50% reduction in delivery time

Future of FTR

1. Provide tighter integration between FTR and the development method
2. Minimize meetings and maximize asynchronicity in FTR
3. Shift the focus from defect removal to improved developer quality
4. Build organizational knowledge bases on review
5. Outsource review and in-source review knowledge
6. Investigate computer-mediated review technology
7. Break the boundaries on review group size

Discussion

- Testing is commonly outsourced, but what about reviews?
- What are the implications of outsourcing one over the other?
- What if code production is outsourced? What do you review and how?
- What is the relationship between reviews and testing?

Discussion (continued)

- Relationship between inspections and testing
- Do anonymous review tools impact the quality of the review process?
- How often to review?
- When to re-review?
- How to estimate number of defects expected?

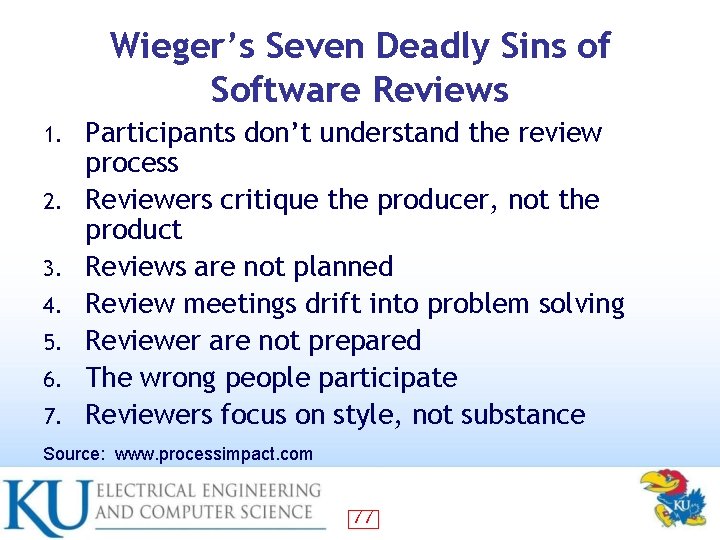

Wiegers’ Seven Deadly Sins of Software Reviews

1. Participants don’t understand the review process
2. Reviewers critique the producer, not the product
3. Reviews are not planned
4. Review meetings drift into problem solving
5. Reviewers are not prepared
6. The wrong people participate
7. Reviewers focus on style, not substance

Source: www.processimpact.com

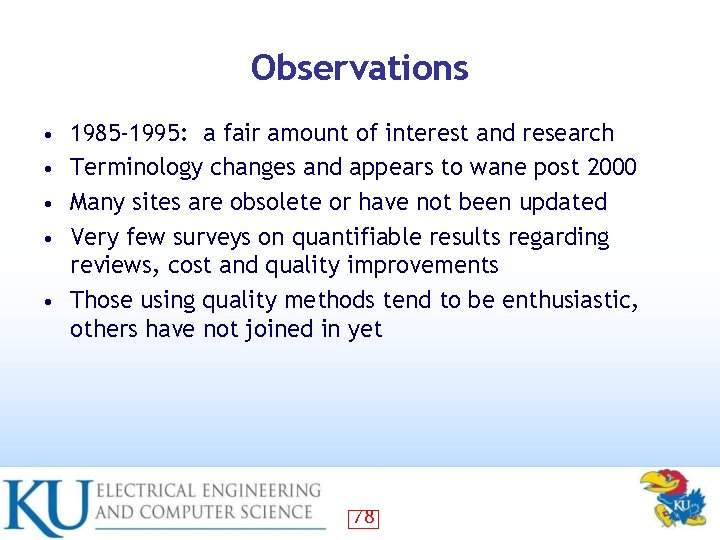

Observations

- 1985-1995: a fair amount of interest and research
- Terminology changes, and interest appears to wane post-2000
- Many sites are obsolete or have not been updated
- Very few surveys on quantifiable results regarding reviews, cost and quality improvements
- Those using quality methods tend to be enthusiastic; others have not joined in yet

Questions

References

Full references handout provided in class

References and Resources

- Ackerman, A. F., Buchwald, L. S., & Lewski, F. H. (1989). Software inspections: an effective verification process. Software, IEEE, 6(3), 31-36.
- Aurum, A., Petersson, H., & Wohlin, C. (2002). State-of-the-art: software inspections after 25 years. Software Testing, Verification and Reliability, 12(3), 133-154.
- Boehm, B., & Basili, V. R. (2001). Top 10 list [software development]. Computer, 34(1), 135-137.
- Ciolkowski, M., Laitenberger, O., & Biffl, S. (2003). Software reviews, the state of the practice. Software, IEEE, 20(6), 46-51.
- D'Astous, P., Détienne, F., Visser, W., & Robillard, P. N. (2004). Changing our view on design evaluation meetings methodology: a study of software technical review meetings. Design Studies, 25(6), 625-655.

- Denger, C., & Shull, F. (2007). A Practical Approach for Quality-Driven Inspections. Software, IEEE, 24(2), 79-86.
- Fagan, M. E. (1976). Design and code inspections to reduce errors in program development. IBM Systems Journal, 15(3), 182-211.
- Freedman, D. P., & Weinberg, G. M. (2000). Handbook of Walkthroughs, Inspections, and Technical Reviews: Evaluating Programs, Projects, and Products. Dorset House Publishing Co., Inc.
- IEEE Standard for Software Reviews and Audits (2008). IEEE Std 1028-2008, 1-52.
- Johnson, P. M. (1996). Introduction to formal technical reviews, from http://www.ccs.neu.edu/home/lieber/com3205/f02/lectures/Reviews.ppt

- Johnson, P. M. (1998). Reengineering inspection. Commun. ACM, 41(2), 49-52.
- Johnson, P. M. (2001). You can’t even ask them to push a button: Toward ubiquitous, developer-centric, empirical software engineering. Retrieved from http://www.itrd.gov/subcommittee/sdp/vanderbilt/position_papers/philip_johnson_you_cant_even_ask.pdf, accessed on October 7, 2009.
- Johnson, P. M., & Tjahjono, D. (1998). Does every inspection really need a meeting? Empirical Software Engineering, 3(1), 9-35.
- Neville-Neil, G. V. (2009). Kode Vicious Kode reviews 101. Commun. ACM, 52(10), 28-29.

- NIST (May 2002). The economic impact of inadequate infrastructure for software testing. Retrieved October 8, 2009, from http://www.nist.gov/public_affairs/releases/n02-10.htm.
- Parnas, D. L., & Weiss, D. M. (1987). Active design reviews: principles and practices. J. Syst. Softw., 7(4), 259-265.
- Porter, A., Siy, H., & Votta, L. (1995). A review of software inspections. University of Maryland at College Park.
- Porter, A. A., & Johnson, P. M. (1997). Assessing software review meetings: results of a comparative analysis of two experimental studies. Software Engineering, IEEE Transactions on, 23(3), 129-145.

- Rombach, D., Ciolkowski, M., Jeffery, R., Laitenberger, O., McGarry, F., & Shull, F. (2008). Impact of research on practice in the field of inspections, reviews and walkthroughs: learning from successful industrial uses. SIGSOFT Softw. Eng. Notes, 33(6), 26-35.
- Votta, L. G. (1993). Does every inspection need a meeting? SIGSOFT Softw. Eng. Notes, 18(5), 107-114.

Interesting Websites

- Gilb: Extreme Inspection
  - http://www.result-planning.com/Inspection
- Collaboration Tools
  - http://www.resultplanning.com/Site+Content+Overview
  - http://www.sdtcorp.com/pdf/ReviewPro.pdf