Copyright 2003 Mani Srivastava: High-level Synthesis: Scheduling, Allocation


- Slides: 68

High-level Synthesis: Scheduling, Allocation, Assignment

Note: Several slides in this lecture are from Prof. Miodrag Potkonjak, UCLA CS.

Mani Srivastava, UCLA EE Department, Room 6731-H Boelter Hall. Email: mbs@ee.ucla.edu. Tel: 310-267-2098. WWW: http://www.ee.ucla.edu/~mbs

Overview

- High-Level Synthesis
- Scheduling, Allocation and Assignment
- Estimations
- Transformations

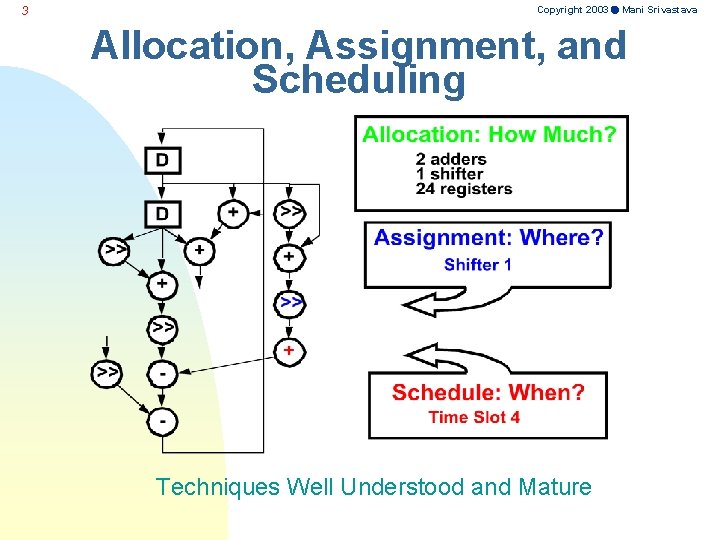

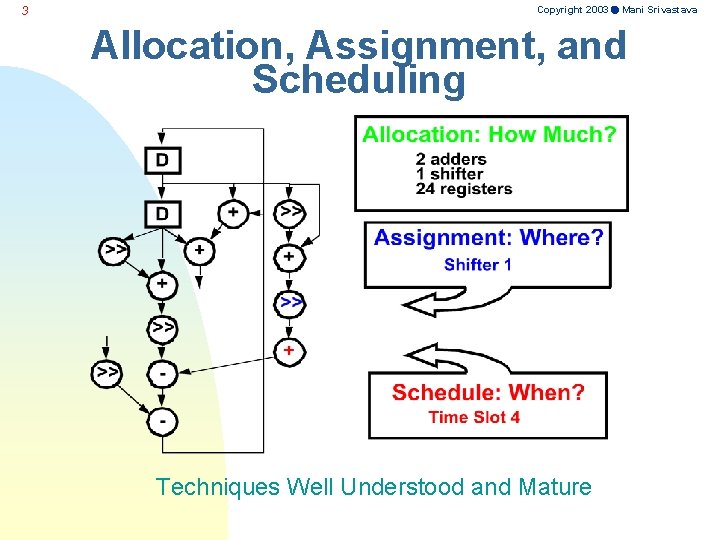

Allocation, Assignment, and Scheduling: Techniques Well Understood and Mature

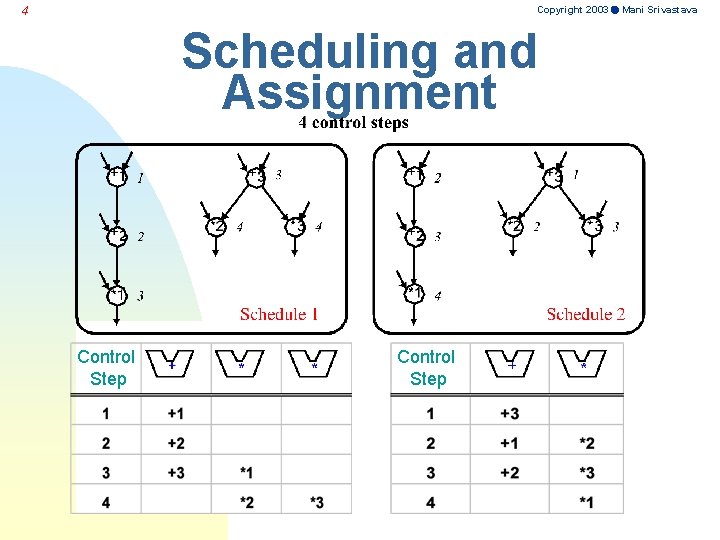

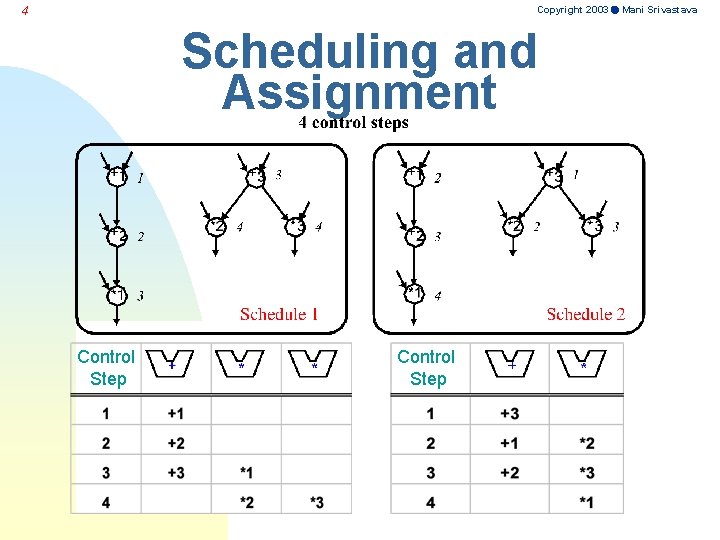

Scheduling and Assignment: Control Step

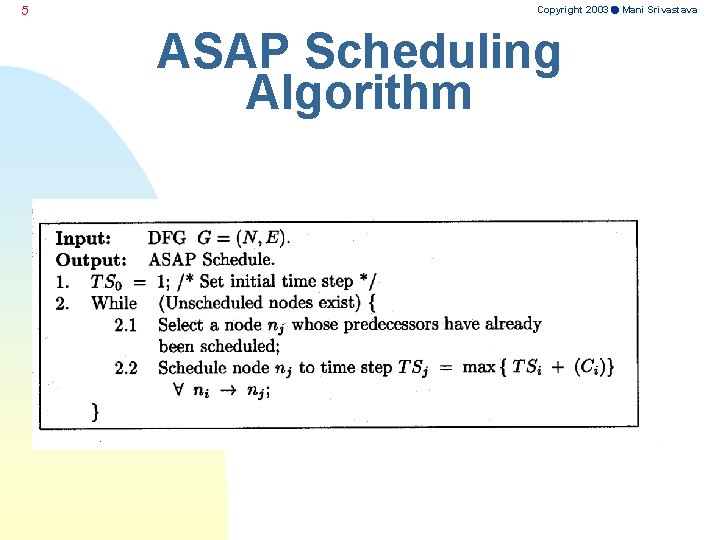

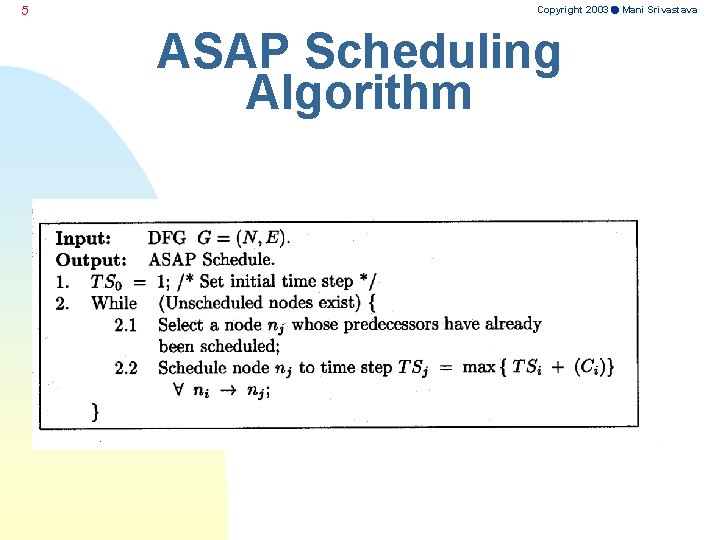

ASAP Scheduling Algorithm

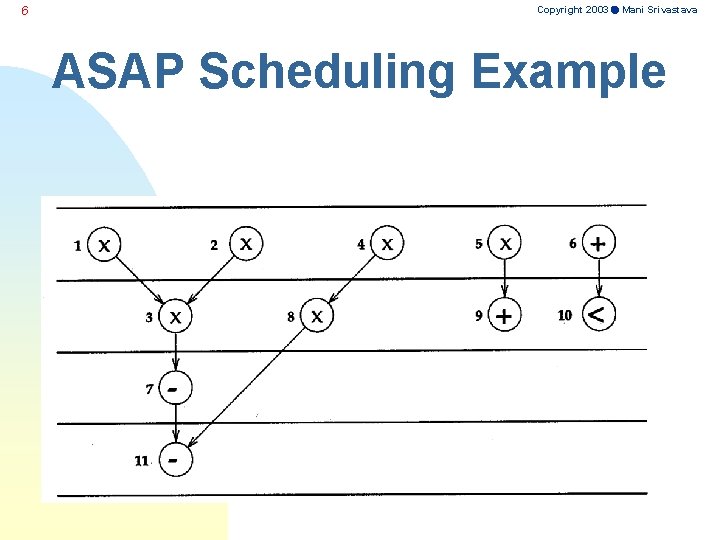

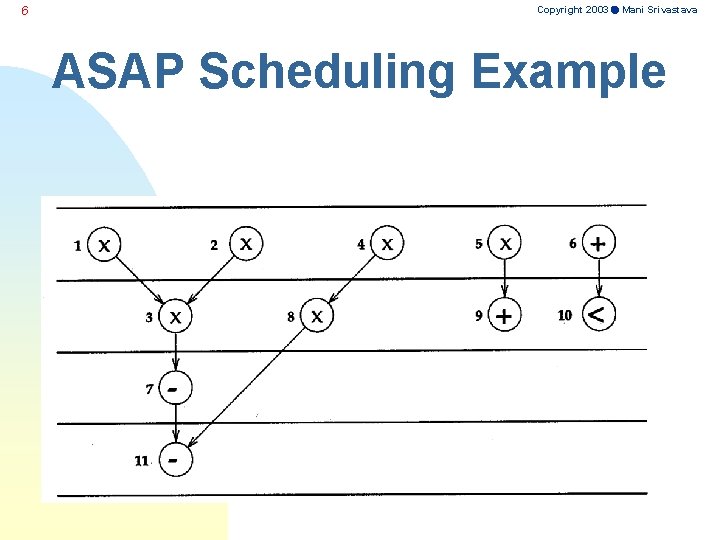
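The ASAP rule can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the sequencing graph is given as an edge list, delays are integer numbers of control steps, and the function `asap` and the six-operation graph are hypothetical (they are not the graph in the slide figures). Each operation starts as soon as its latest-finishing predecessor is done. Operations without predecessors start in step 1.

```python
from collections import defaultdict

def asap(ops, edges, delay):
    """ASAP schedule: earliest start step (1-based) of every operation.

    ops   -- list of operation names
    edges -- (pred, succ) pairs of the sequencing graph
    delay -- dict: operation -> execution delay in control steps
    """
    preds = defaultdict(list)
    for u, v in edges:
        preds[v].append(u)
    start, remaining = {}, list(ops)
    while remaining:
        ready = [v for v in remaining if all(p in start for p in preds[v])]
        if not ready:
            raise ValueError("sequencing graph contains a cycle")
        for v in ready:
            # Start right after the last predecessor finishes (step 1 if none).
            start[v] = max((start[p] + delay[p] for p in preds[v]), default=1)
            remaining.remove(v)
    return start

# Hypothetical example graph (not the one in the slide figures).
OPS = ["m1", "m2", "m3", "m4", "s1", "a1"]
EDGES = [("m1", "m3"), ("m2", "m3"), ("m3", "s1"), ("m4", "s1")]
DELAY = {v: 1 for v in OPS}

print(asap(OPS, EDGES, DELAY))
```

With unit delays, `m3` starts in step 2, `s1` starts in step 3, and every other operation starts in step 1.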

ASAP Scheduling Example

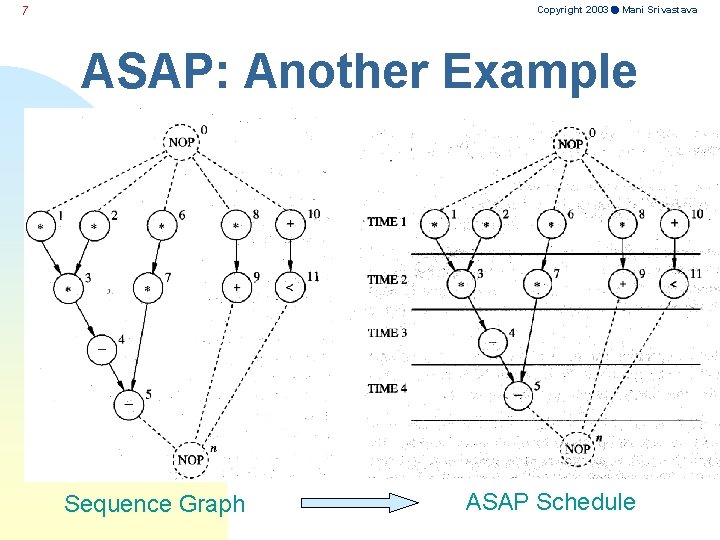

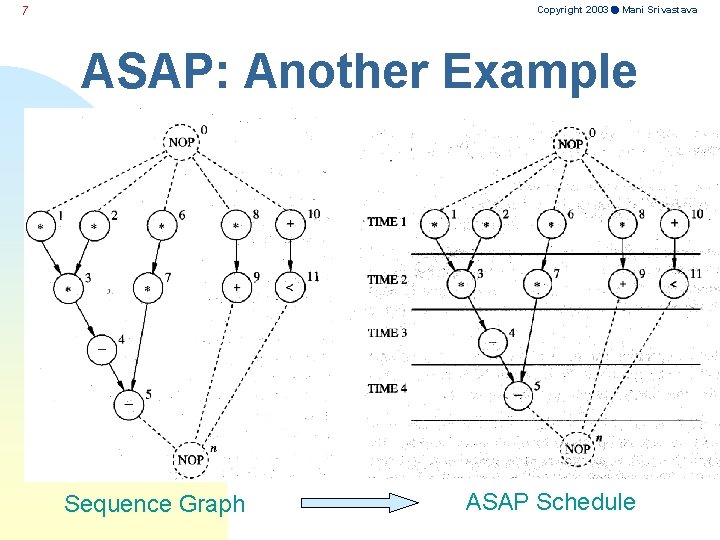

ASAP: Another Example (Sequence Graph, ASAP Schedule)

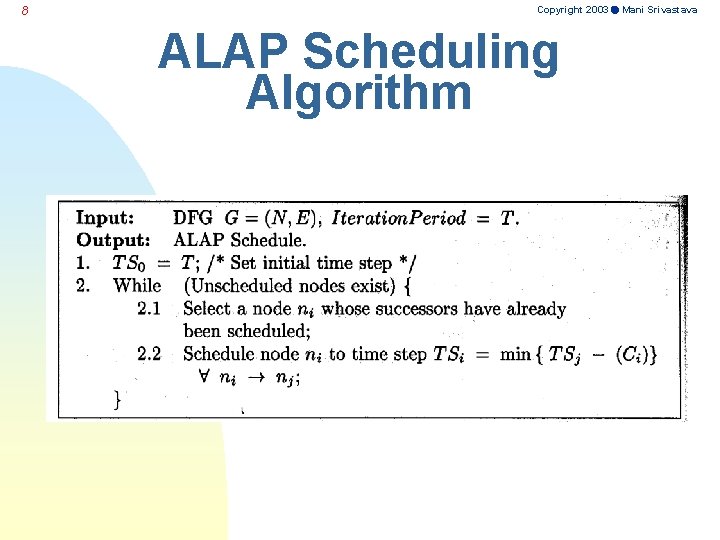

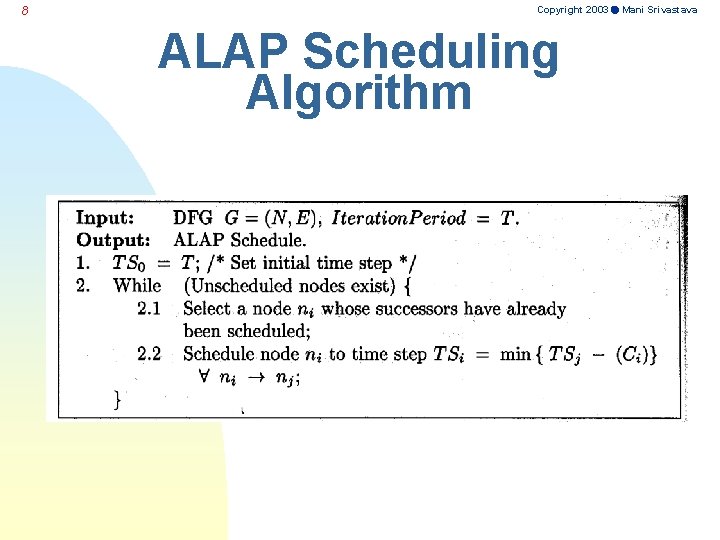

ALAP Scheduling Algorithm

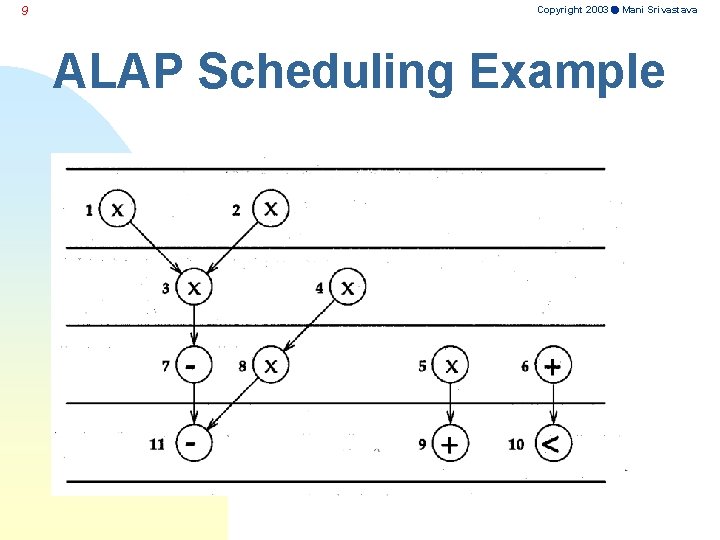

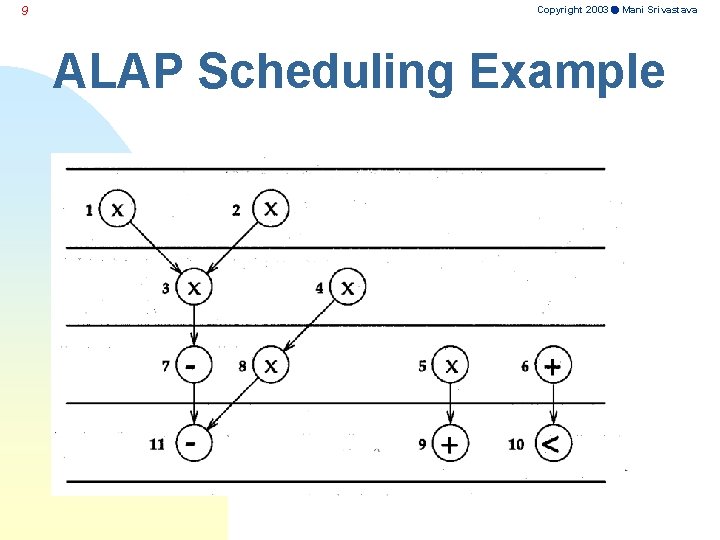
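ALAP is the mirror image of ASAP: it works backwards from a latency bound. The Python sketch below uses the same assumptions as an ASAP sketch (edge list, integer delays, hypothetical function and graph names). Each operation finishes just before its earliest successor starts. Sinks finish by the latency bound. The difference ALAP minus ASAP is an operation's mobility.

```python
from collections import defaultdict

def alap(ops, edges, delay, latency):
    """ALAP schedule: latest start step (1-based) of every operation such that
    all operations finish within `latency` control steps."""
    succs = defaultdict(list)
    for u, v in edges:
        succs[u].append(v)
    start, remaining = {}, list(ops)
    while remaining:
        ready = [v for v in remaining if all(s in start for s in succs[v])]
        if not ready:
            raise ValueError("sequencing graph contains a cycle")
        for v in ready:
            # Finish just before the earliest successor starts; operations
            # without successors must finish by the latency bound.
            finish_by = min((start[s] for s in succs[v]), default=latency + 1)
            start[v] = finish_by - delay[v]
            remaining.remove(v)
    if min(start.values(), default=1) < 1:
        raise ValueError("latency bound is too tight")
    return start

# Hypothetical example graph (not the one in the slide figures).
OPS = ["m1", "m2", "m3", "m4", "s1", "a1"]
EDGES = [("m1", "m3"), ("m2", "m3"), ("m3", "s1"), ("m4", "s1")]
DELAY = {v: 1 for v in OPS}

print(alap(OPS, EDGES, DELAY, latency=4))
```

With a latency bound of 4, `s1` and `a1` start in step 4, `m3` and `m4` start in step 3, and `m1` and `m2` start in step 2.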

ALAP Scheduling Example

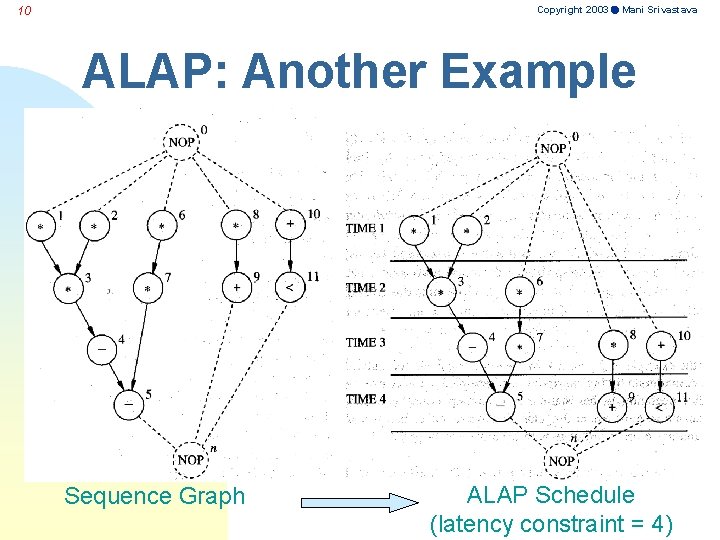

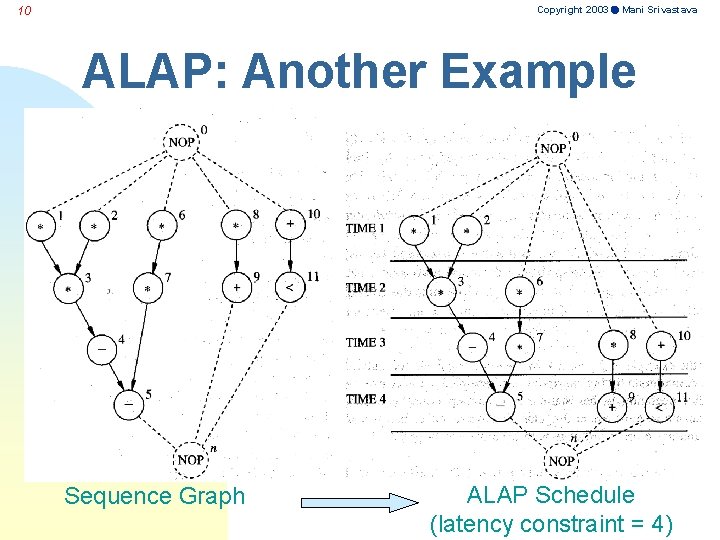

ALAP: Another Example (Sequence Graph, ALAP Schedule, latency constraint = 4)

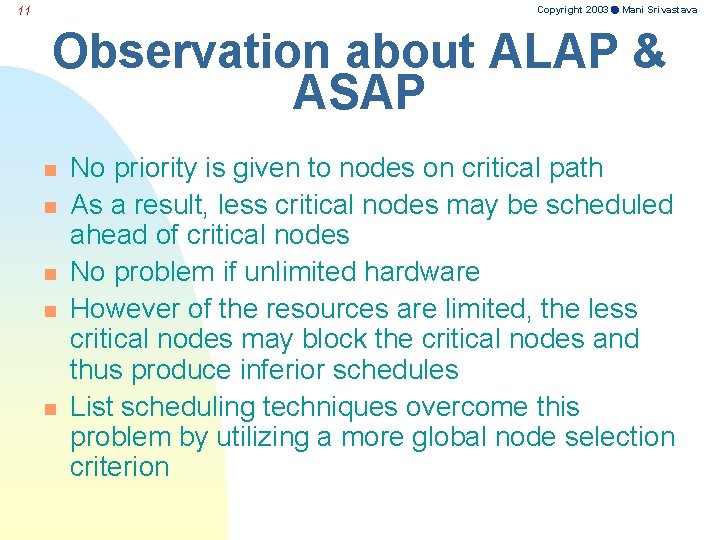

Observation about ALAP & ASAP

- No priority is given to nodes on the critical path.
- As a result, less critical nodes may be scheduled ahead of critical nodes.
- This is no problem if hardware is unlimited.
- However, if resources are limited, the less critical nodes may block the critical nodes and thus produce inferior schedules.
- List scheduling techniques overcome this problem by using a more global node-selection criterion.

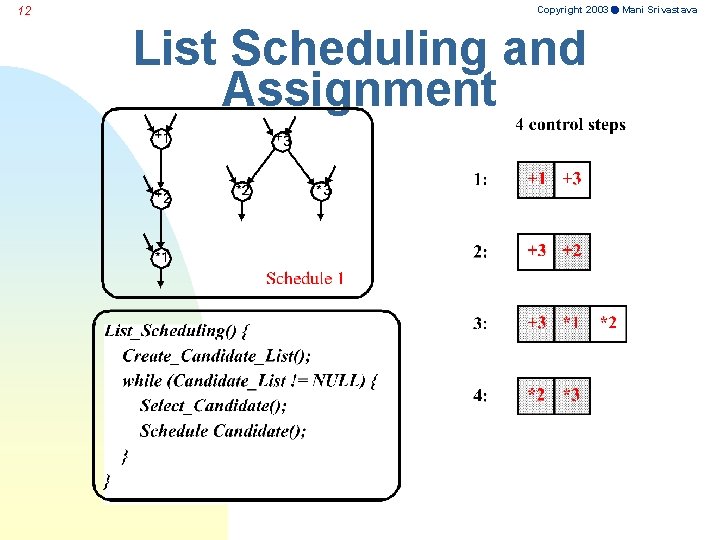

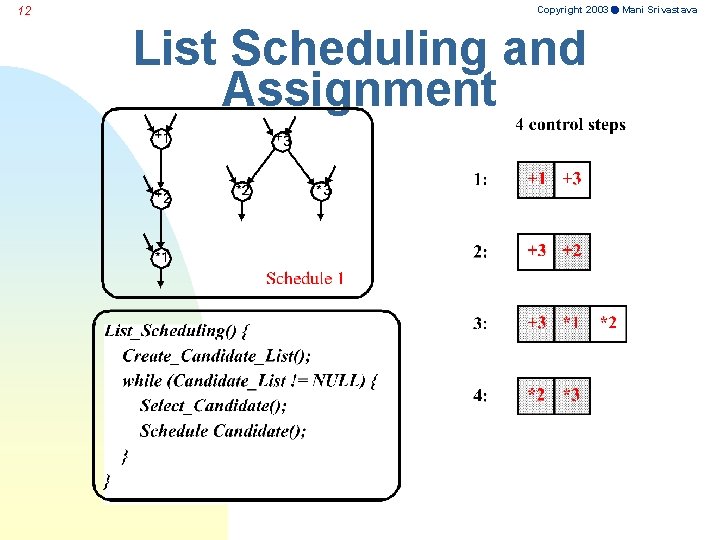

List Scheduling and Assignment

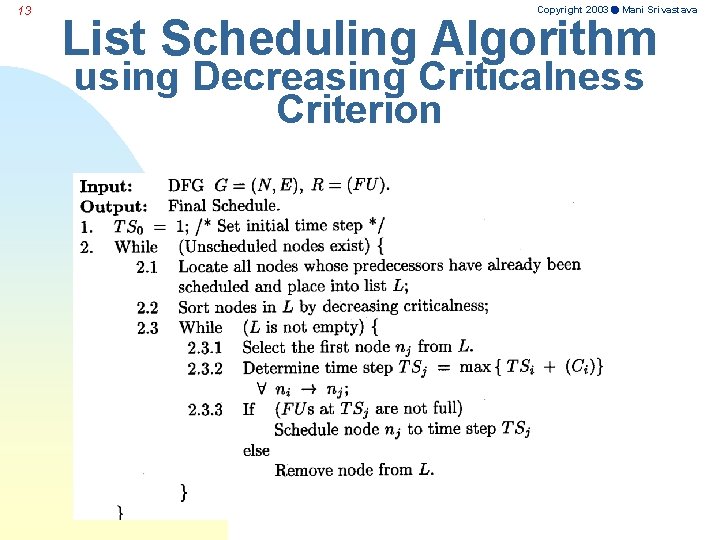

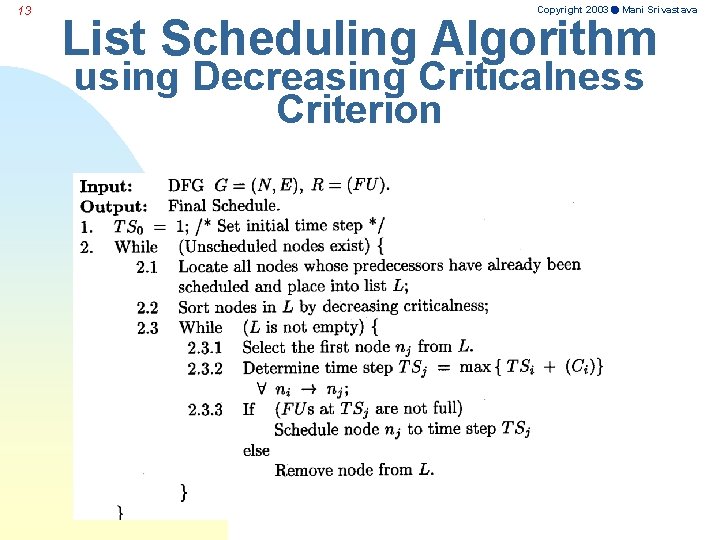

List Scheduling Algorithm using Decreasing Criticalness Criterion

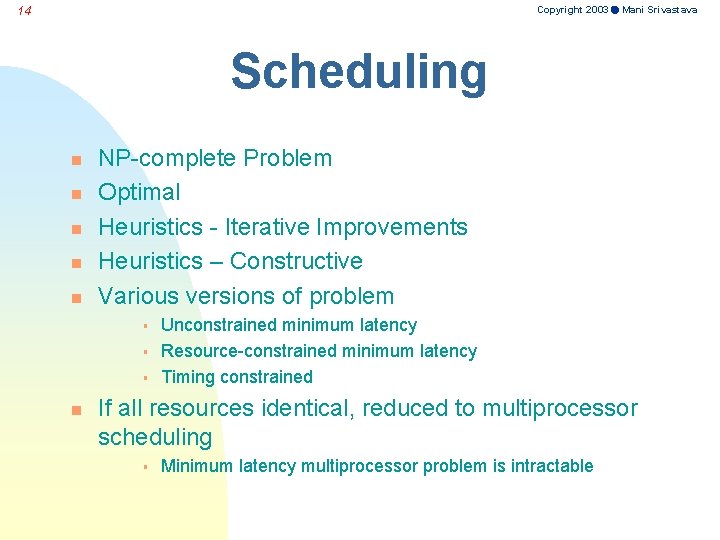

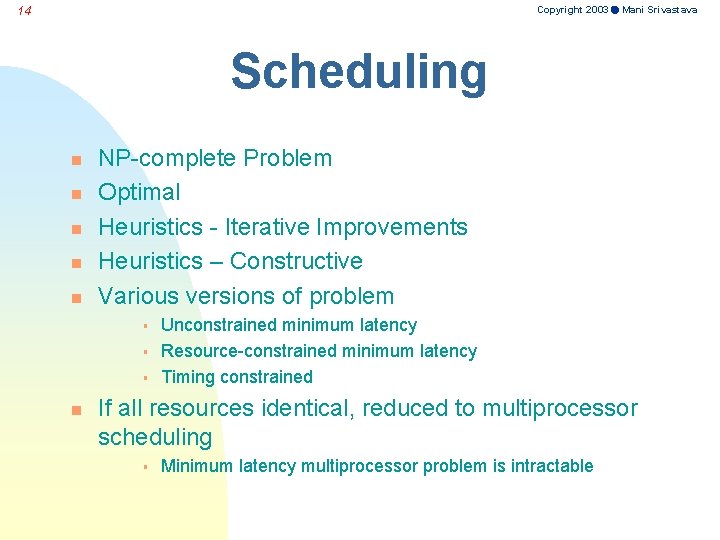
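The following is a sketch of resource-constrained list scheduling. It makes three assumptions. First, every operation takes one cycle. Second, "criticalness" is measured as the number of operations on the longest path from an operation to a sink; the slide's exact criterion is only in the figure. Third, the names `list_schedule`, `RTYPE` and the example graph are hypothetical. In each control step, the ready operations are sorted by decreasing criticalness and assigned while units of their resource type remain free.

```python
from collections import defaultdict

def list_schedule(ops, edges, rtype, limits):
    """Resource-constrained list scheduling with unit-delay operations (assumed).

    rtype  -- dict: operation -> resource type
    limits -- dict: resource type -> number of available units (each > 0)
    Priority ("criticalness"): number of operations on the longest path
    from the operation to a sink.
    """
    succs, preds = defaultdict(list), defaultdict(list)
    for u, v in edges:
        succs[u].append(v)
        preds[v].append(u)

    prio = {}
    def path_len(v):
        if v not in prio:
            prio[v] = 1 + max((path_len(s) for s in succs[v]), default=0)
        return prio[v]

    start, step = {}, 1
    while len(start) < len(ops):
        # Ready: unscheduled ops whose predecessors all finished before `step`.
        ready = [v for v in ops if v not in start
                 and all(p in start and start[p] < step for p in preds[v])]
        ready.sort(key=path_len, reverse=True)   # most critical first (stable)
        used = defaultdict(int)
        for v in ready:
            if used[rtype[v]] < limits[rtype[v]]:
                start[v] = step
                used[rtype[v]] += 1
        step += 1
    return start

# Hypothetical example: four multiplies and two ALU operations.
OPS = ["m1", "m2", "m3", "m4", "s1", "a1"]
EDGES = [("m1", "m3"), ("m2", "m3"), ("m3", "s1"), ("m4", "s1")]
RTYPE = {"m1": "mul", "m2": "mul", "m3": "mul", "m4": "mul",
         "s1": "alu", "a1": "alu"}

print(list_schedule(OPS, EDGES, RTYPE, {"mul": 1, "alu": 1}))
```

With a single multiplier, the four multiplies are serialized and `s1` lands in step 5. With two multipliers, the same graph fits in 3 steps.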

Scheduling

- NP-complete problem
- Optimal
- Heuristics: iterative improvements
- Heuristics: constructive
- Various versions of the problem
  - Unconstrained minimum latency
  - Resource-constrained minimum latency
  - Timing constrained
- If all resources are identical, the problem reduces to multiprocessor scheduling
  - The minimum-latency multiprocessor problem is intractable

Scheduling: Optimal Techniques

- Integer Linear Programming
- Branch and Bound

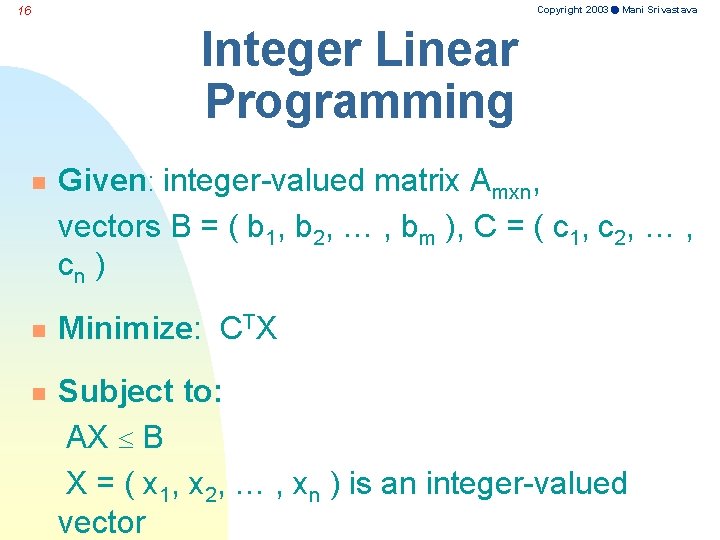

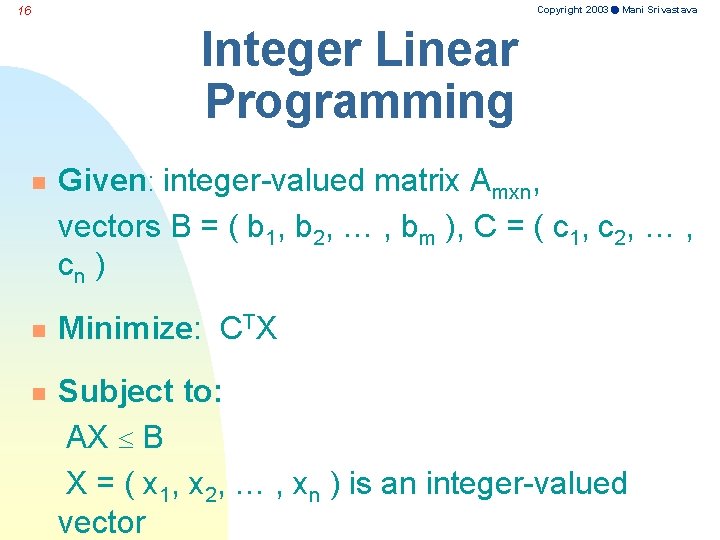

Integer Linear Programming

- Given: an integer-valued matrix $A_{m \times n}$ and vectors $B = (b_1, b_2, \dots, b_m)$, $C = (c_1, c_2, \dots, c_n)$
- Minimize: $C^T X$
- Subject to: $AX \le B$, where $X = (x_1, x_2, \dots, x_n)$ is an integer-valued vector

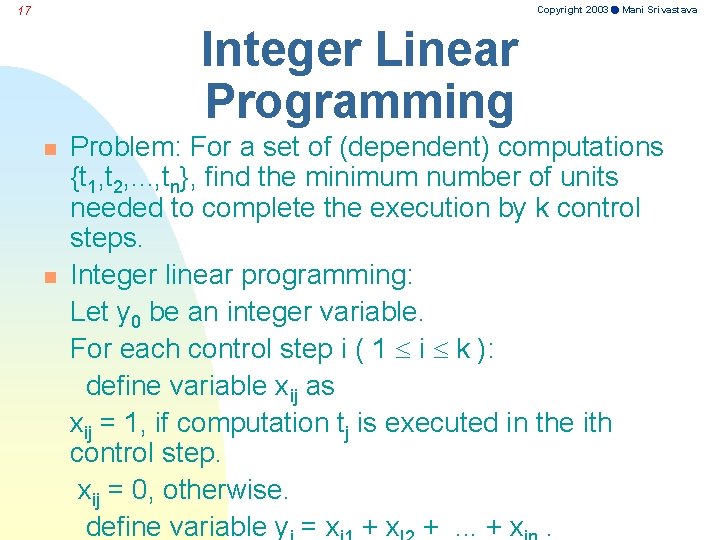

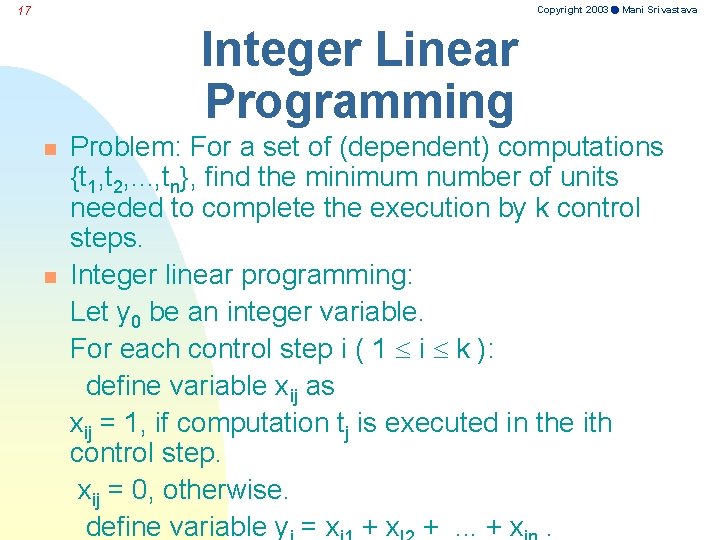

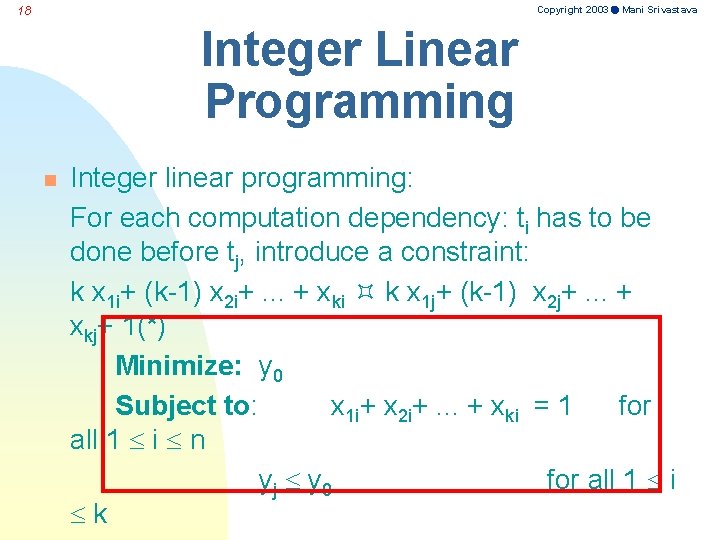

Integer Linear Programming

- Problem: For a set of (dependent) computations $\{t_1, t_2, \dots, t_n\}$, find the minimum number of units needed to complete the execution within $k$ control steps.
- Integer linear programming formulation:
  - Let $y_0$ be an integer variable.
  - For each control step $i$ ($1 \le i \le k$):
    - define variable $x_{ij}$ as $x_{ij} = 1$ if computation $t_j$ is executed in the $i$th control step, and $x_{ij} = 0$ otherwise;
    - define variable $y_i = x_{i1} + \dots + x_{in}$.

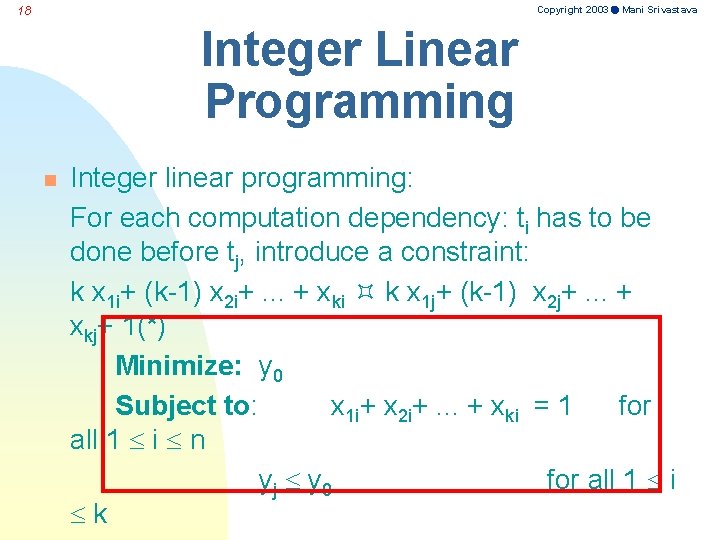

Integer Linear Programming

- For each computation dependency ($t_i$ has to be done before $t_j$), introduce the constraint

  $$k x_{1i} + (k-1) x_{2i} + \dots + x_{ki} \ge k x_{1j} + (k-1) x_{2j} + \dots + x_{kj} + 1 \qquad (*)$$

- Minimize: $y_0$
- Subject to:
  - $x_{1i} + x_{2i} + \dots + x_{ki} = 1$ for all $1 \le i \le n$
  - $y_j \le y_0$ for all $1 \le j \le k$
  - the dependency constraints $(*)$

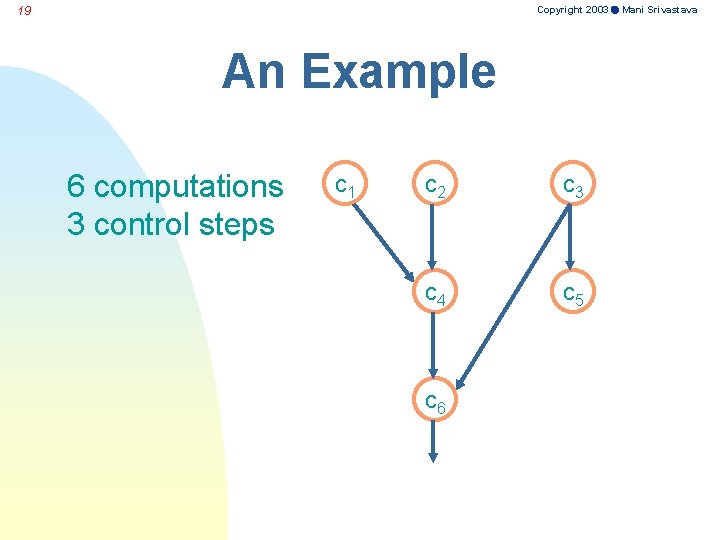

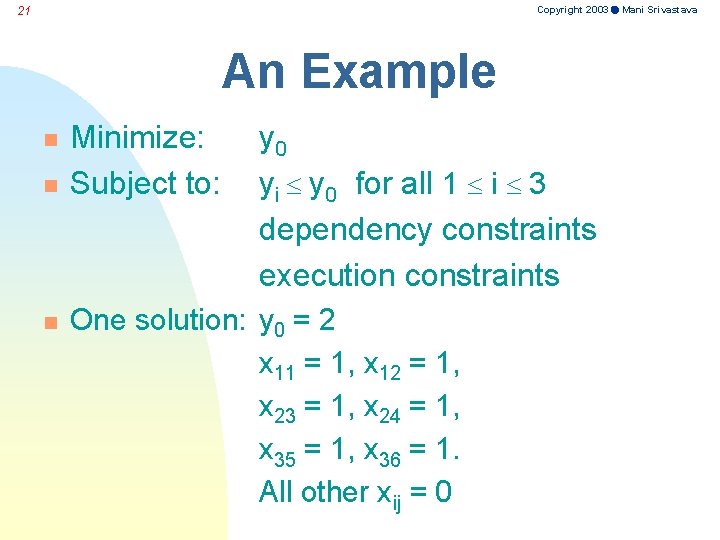

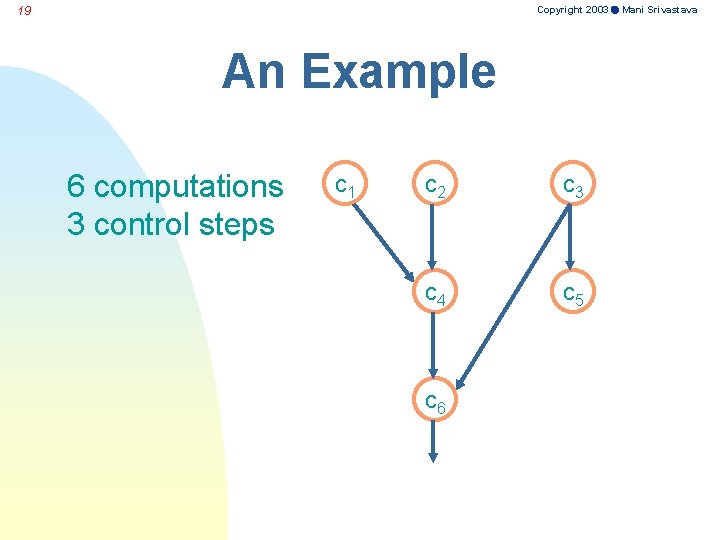

An Example

- 6 computations ($c_1, c_2, c_3, c_4, c_5, c_6$), 3 control steps

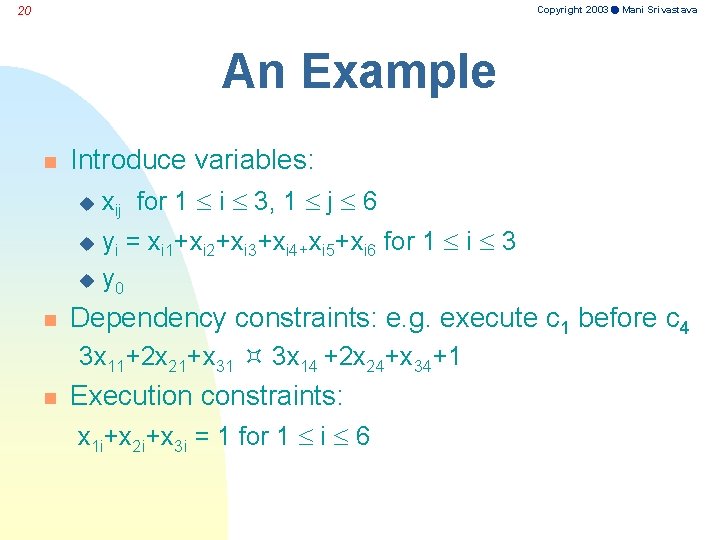

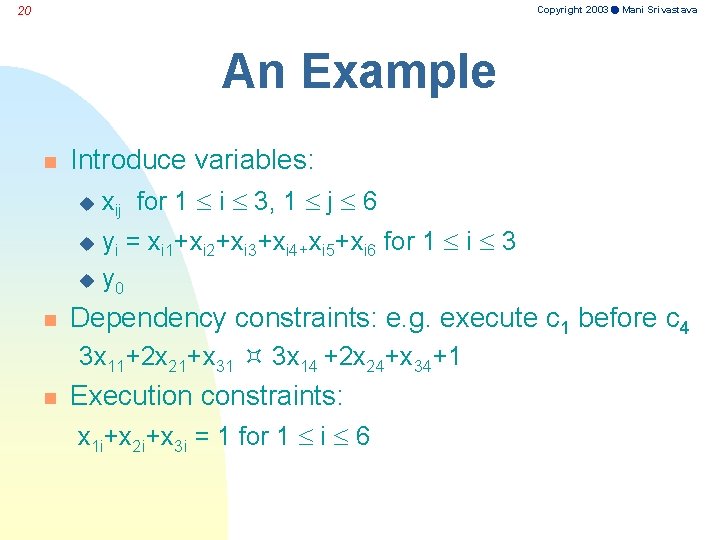

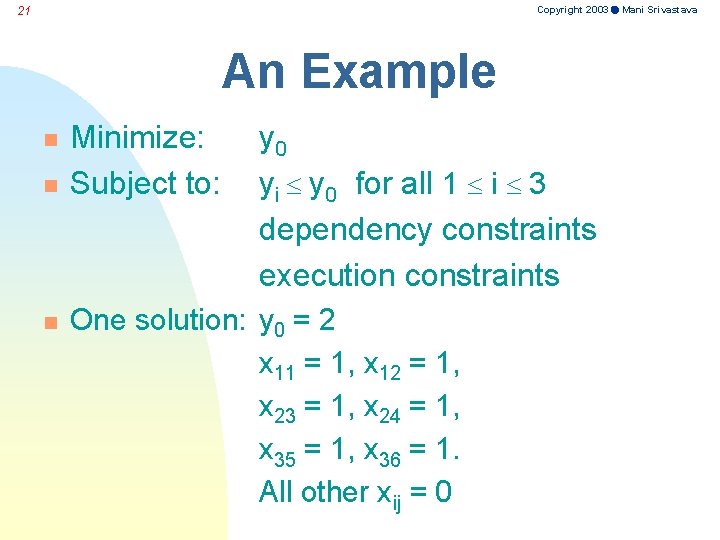

An Example

- Introduce variables:
  - $x_{ij}$ for $1 \le i \le 3$, $1 \le j \le 6$
  - $y_i = x_{i1} + x_{i2} + x_{i3} + x_{i4} + x_{i5} + x_{i6}$ for $1 \le i \le 3$
  - $y_0$
- Dependency constraints, e.g., execute $c_1$ before $c_4$:

  $$3x_{11} + 2x_{21} + x_{31} \ge 3x_{14} + 2x_{24} + x_{34} + 1$$

- Execution constraints: $x_{1i} + x_{2i} + x_{3i} = 1$ for $1 \le i \le 6$

An Example

- Minimize: $y_0$
- Subject to:
  - $y_i \le y_0$ for all $1 \le i \le 3$
  - dependency constraints
  - execution constraints
- One solution: $y_0 = 2$, with $x_{11} = 1$, $x_{12} = 1$, $x_{23} = 1$, $x_{24} = 1$, $x_{35} = 1$, $x_{36} = 1$, and all other $x_{ij} = 0$.

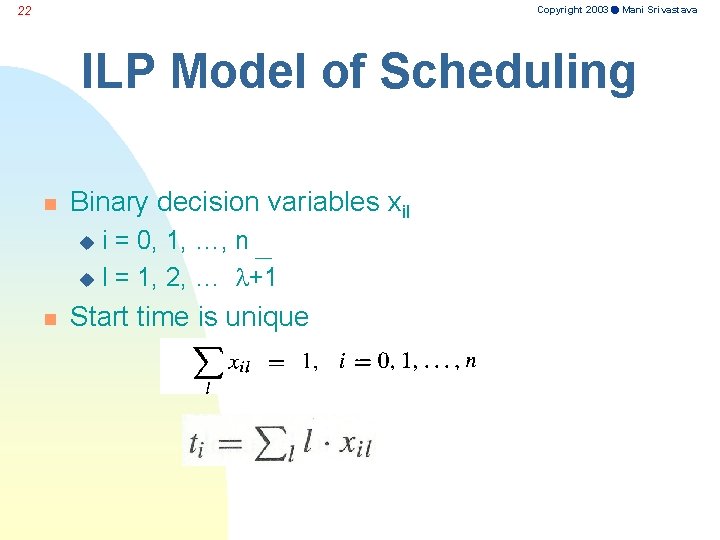

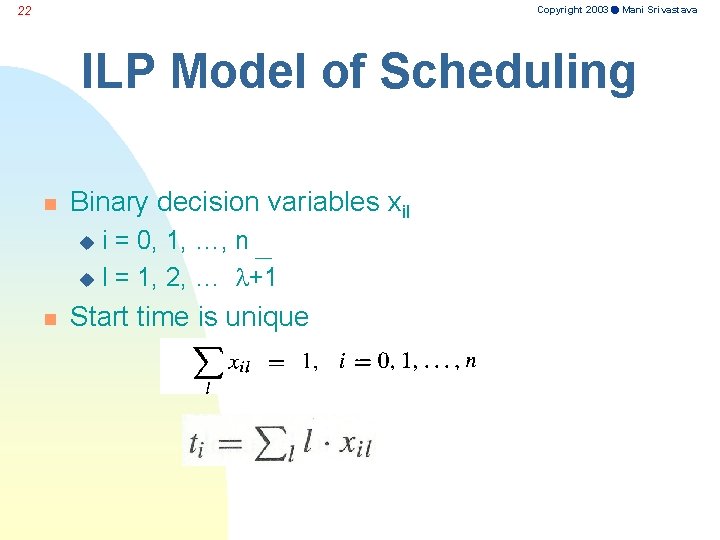

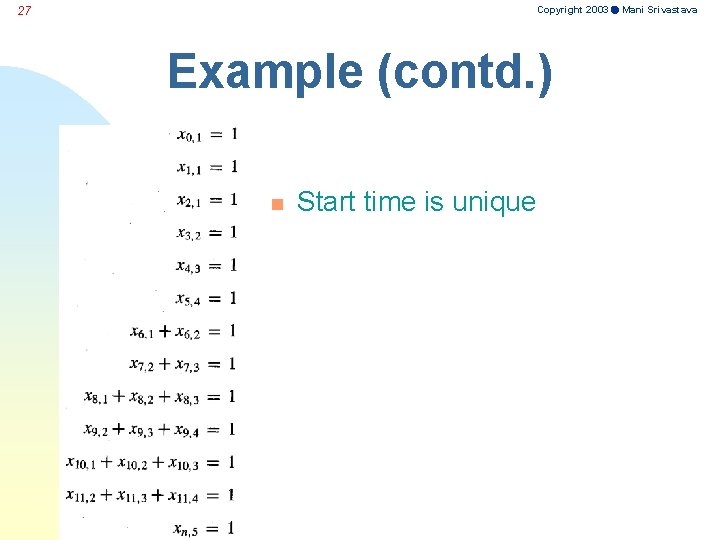
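An instance this small can be checked by exhaustive search instead of an ILP solver. The Python sketch below enumerates every assignment of $c_1, \dots, c_6$ to the 3 control steps, which satisfies the execution constraints by construction. It keeps the assignments that satisfy the dependency constraints in the weighted-sum form $(*)$ and then minimizes $y_0$. Only the $c_1 \to c_4$ dependency quoted on the slide is encoded, because the remaining edges of the example graph appear only in the figure. The names `DEPS`, `make_x`, `feasible` and `solve` are hypothetical.

```python
from itertools import product

K, N = 3, 6        # 3 control steps, computations c1..c6
DEPS = [(1, 4)]    # c1 must execute before c4 (the only edge quoted on the slide)

def make_x(step_of):
    """Build the 0/1 matrix x[i][j] (1-based) from step_of[j] = step of c_j."""
    x = [[0] * (N + 1) for _ in range(K + 1)]
    for j, i in step_of.items():
        x[i][j] = 1
    return x

def weight(x, j):
    """k*x_1j + (k-1)*x_2j + ... + 1*x_kj: a larger value means an earlier step."""
    return sum((K - i + 1) * x[i][j] for i in range(1, K + 1))

def feasible(x):
    once = all(sum(x[i][j] for i in range(1, K + 1)) == 1 for j in range(1, N + 1))
    ordered = all(weight(x, a) >= weight(x, b) + 1 for a, b in DEPS)
    return once and ordered

def y0(x):
    """Smallest y0 with y_i = x_i1 + ... + x_iN <= y0 for every step i."""
    return max(sum(x[i][1:]) for i in range(1, K + 1))

def solve():
    best = None
    for steps in product(range(1, K + 1), repeat=N):
        x = make_x({j: steps[j - 1] for j in range(1, N + 1)})
        if feasible(x) and (best is None or y0(x) < best[0]):
            best = (y0(x), steps)
    return best

slide_x = make_x({1: 1, 2: 1, 3: 2, 4: 2, 5: 3, 6: 3})   # the slide's solution
print(feasible(slide_x), y0(slide_x), solve())
```

The slide's solution passes the check with $y_0 = 2$. Six computations in three steps cannot do better than 2 units, so the search also finds a minimum of 2.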

ILP Model of Scheduling

- Binary decision variables $x_{il}$
  - $i = 0, 1, \dots, n$
  - $l = 1, 2, \dots, \lambda + 1$
- Start time is unique

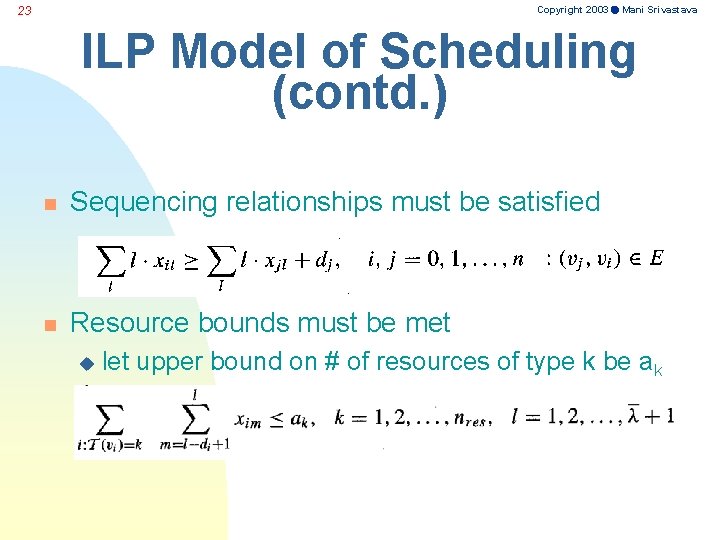

ILP Model of Scheduling (contd.)

- Sequencing relationships must be satisfied
- Resource bounds must be met
  - let the upper bound on the number of resources of type $k$ be $a_k$

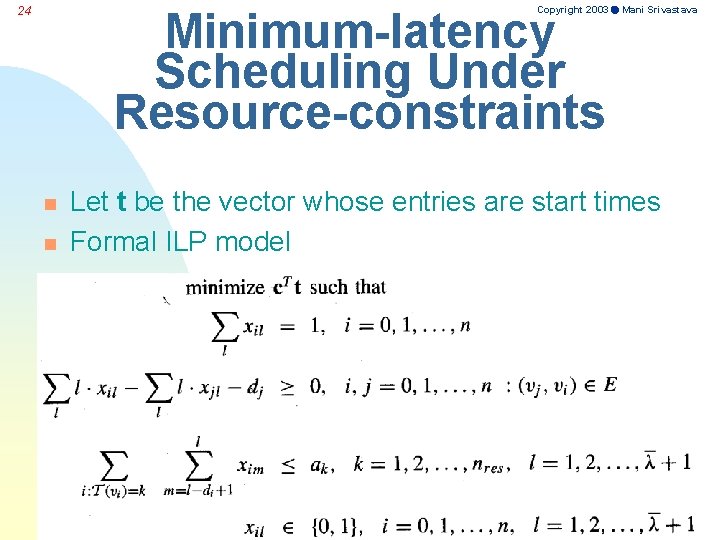

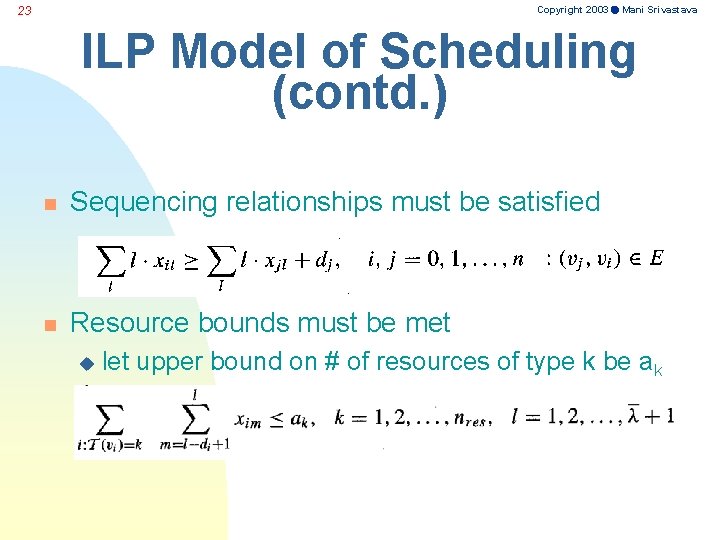

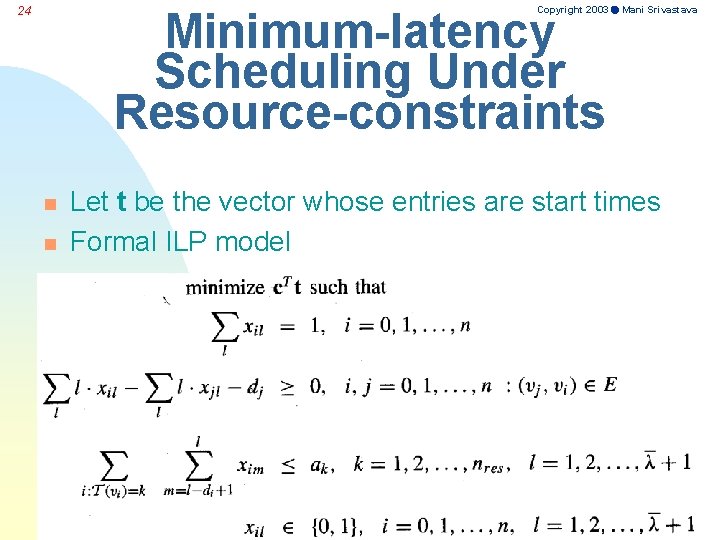

Minimum-latency Scheduling Under Resource Constraints

- Let $\mathbf{t}$ be the vector whose entries are the start times
- Formal ILP model

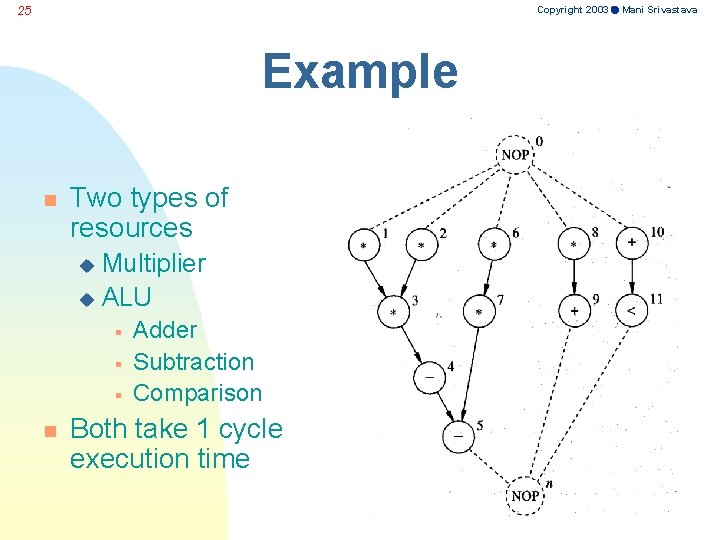

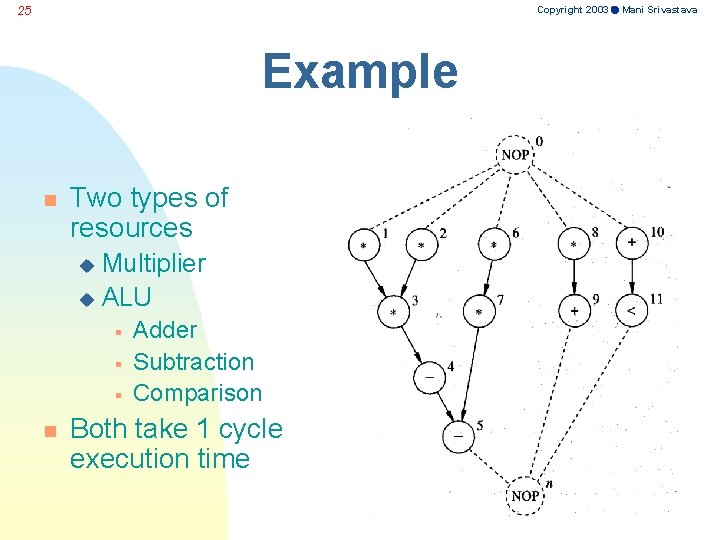

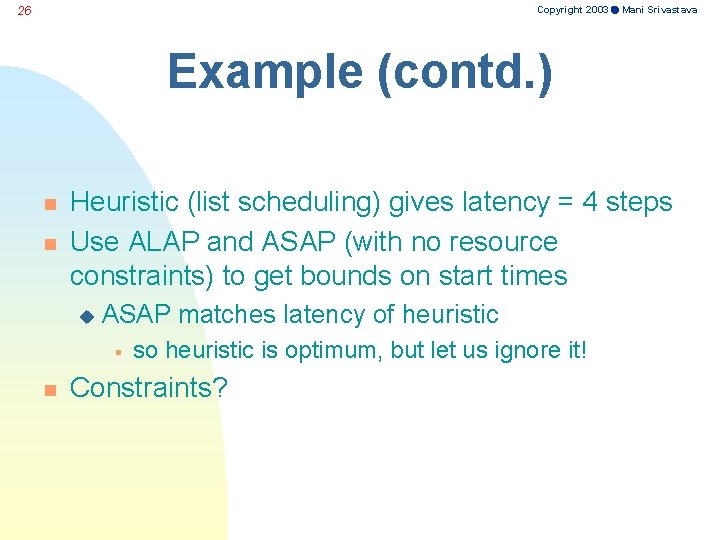
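For reference, a common way to write this model is shown below. It follows De Micheli's textbook notation, which these slides appear to use. Because the slide equations appear only in the figures, the symbols here are assumptions: $d_j$ is the delay of operation $v_j$, $\mathcal{T}(v_i)$ is its resource type, $E$ is the set of sequencing edges, $n_{res}$ is the number of resource types, and $\lambda$ is the latency bound.

```latex
\begin{aligned}
\min\ & \mathbf{c}^{T}\mathbf{t}, \qquad t_i = \sum_{l} l \, x_{il} \\
\text{s.t.}\ & \sum_{l} x_{il} = 1, && i = 0, 1, \dots, n \\
& \sum_{l} l \, x_{il} - \sum_{l} l \, x_{jl} \ge d_j, && \forall (v_j, v_i) \in E \\
& \sum_{i:\, \mathcal{T}(v_i) = k} \; \sum_{m = l - d_i + 1}^{l} x_{im} \le a_k, && k = 1, \dots, n_{res}, \; l = 1, \dots, \lambda + 1 \\
& x_{il} \in \{0, 1\}
\end{aligned}
```

The first constraint is "start time is unique". The second constraint requires each operation to start only after its predecessor finishes. The third constraint counts, at every step $l$, the operations of type $k$ that started within the last $d_i$ steps and are therefore still occupying a unit.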

Example

- Two types of resources
  - Multiplier
  - ALU
    - Adder
    - Subtraction
    - Comparison
- Both take one cycle to execute

Example (contd.)

- Heuristic (list scheduling) gives latency = 4 steps
- Use ALAP and ASAP (with no resource constraints) to get bounds on start times
  - ASAP matches the latency of the heuristic
    - so the heuristic is optimum, but let us ignore that!
- Constraints?

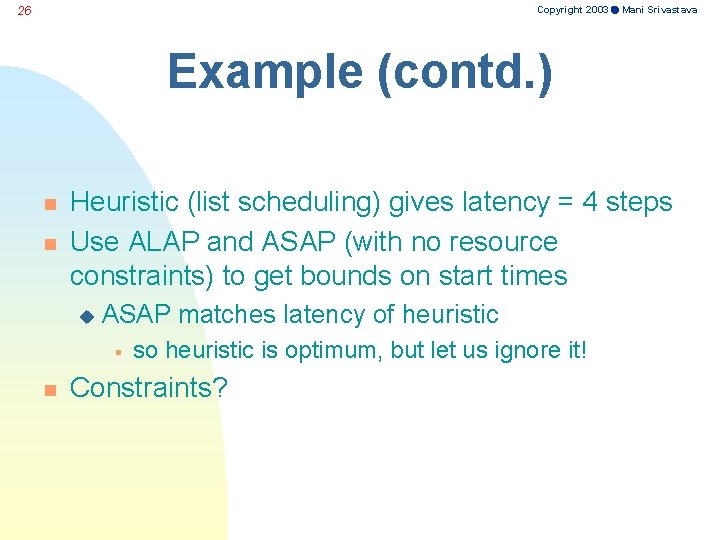

Example (contd.)

- Start time is unique

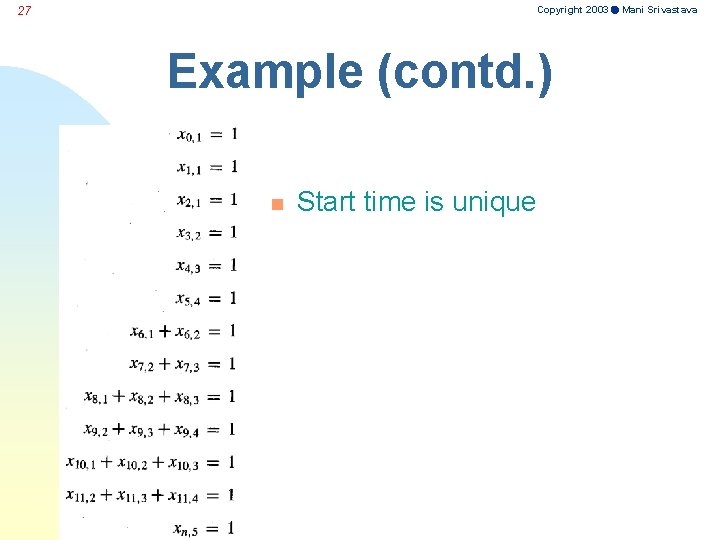

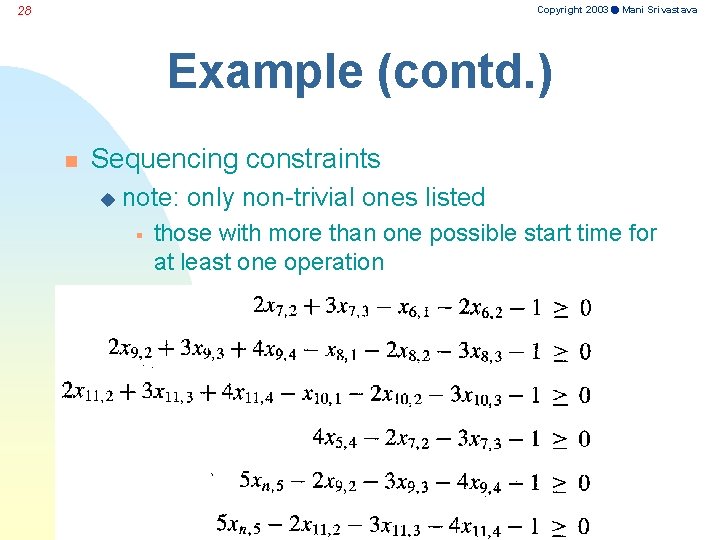

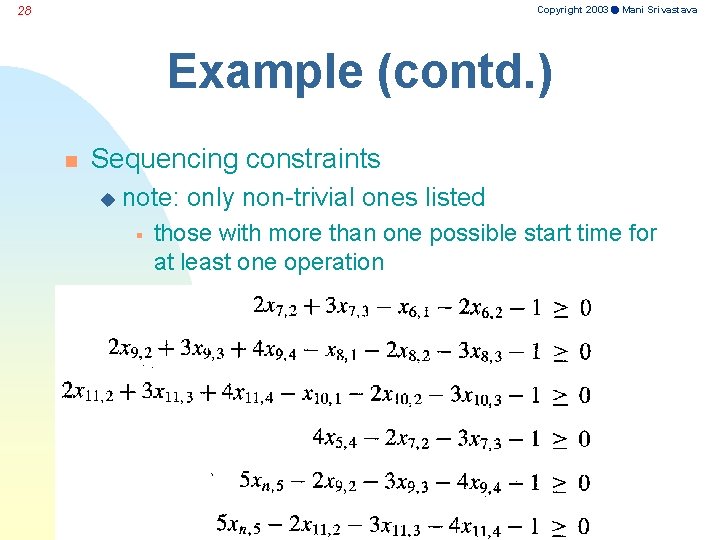

Example (contd.)

- Sequencing constraints
  - note: only the non-trivial ones are listed
    - those with more than one possible start time for at least one operation

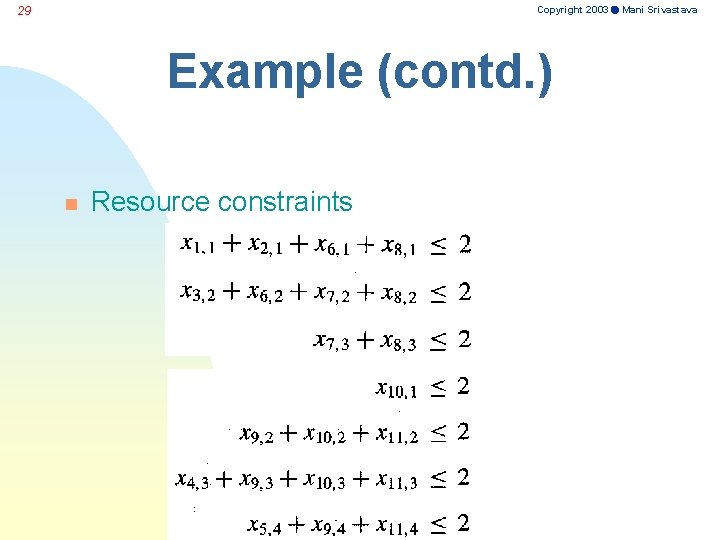

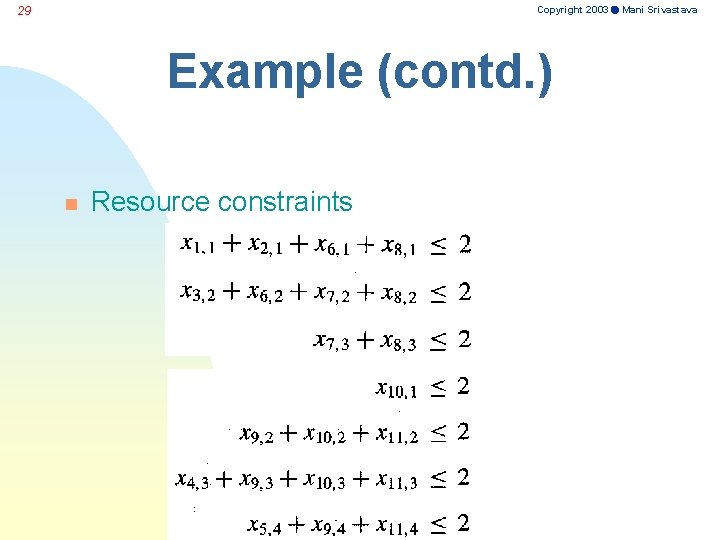

Example (contd.)

- Resource constraints

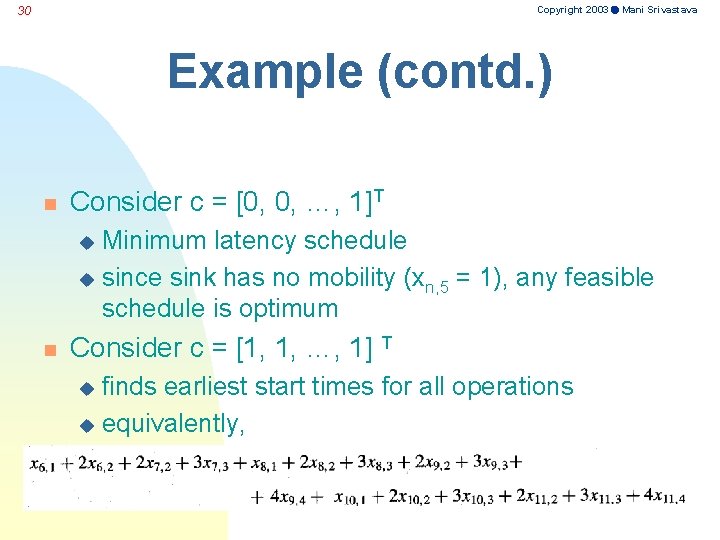

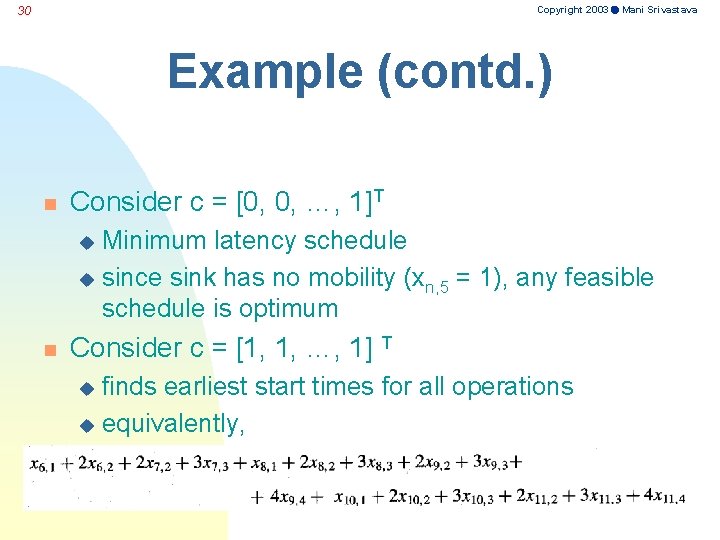

Example (contd.)

- Consider $\mathbf{c} = [0, 0, \dots, 1]^T$
  - Minimum-latency schedule
  - since the sink has no mobility ($x_{n,5} = 1$), any feasible schedule is optimum
- Consider $\mathbf{c} = [1, 1, \dots, 1]^T$
  - finds the earliest start times for all operations
  - equivalently, minimizes $\sum_i \sum_l l \cdot x_{il}$

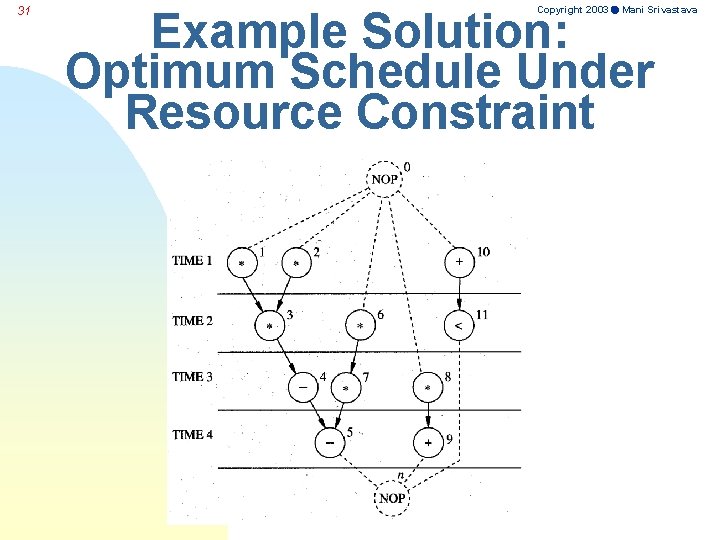

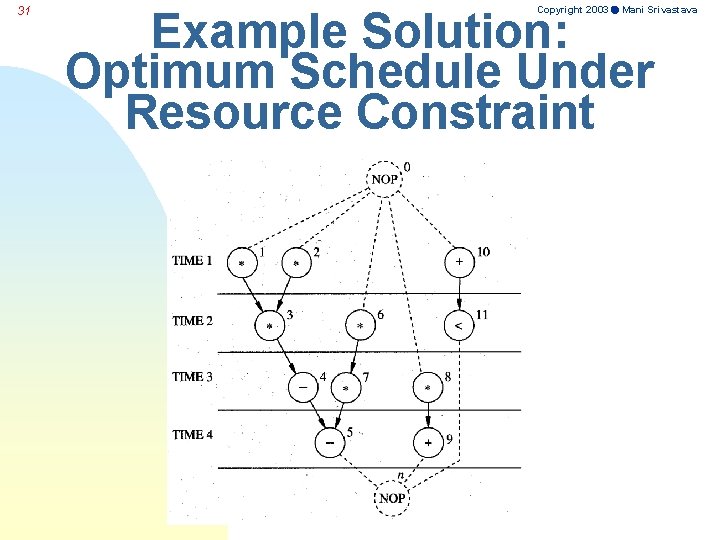

Example Solution: Optimum Schedule Under Resource Constraint

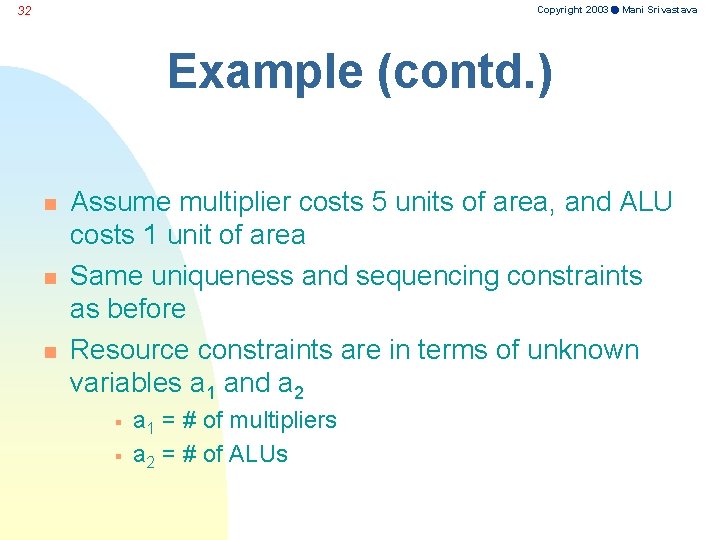

Example (contd.)

- Assume a multiplier costs 5 units of area and an ALU costs 1 unit of area
- Same uniqueness and sequencing constraints as before
- Resource constraints are in terms of the unknown variables $a_1$ and $a_2$
  - $a_1$ = number of multipliers
  - $a_2$ = number of ALUs

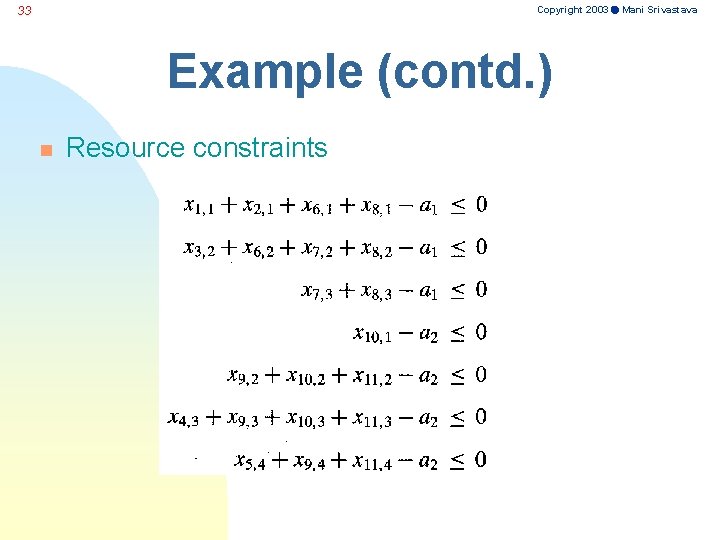

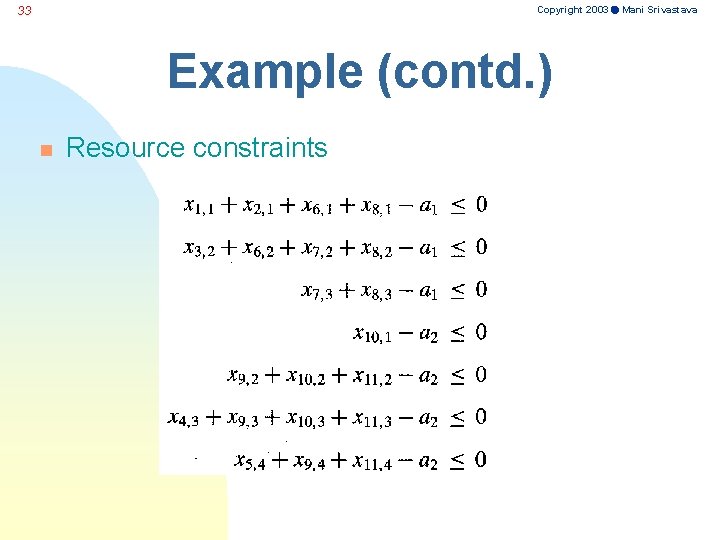

Example (contd.)

- Resource constraints

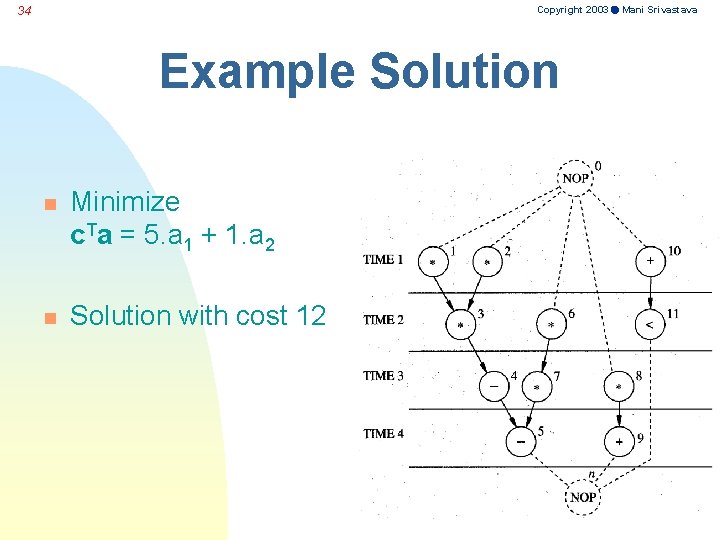

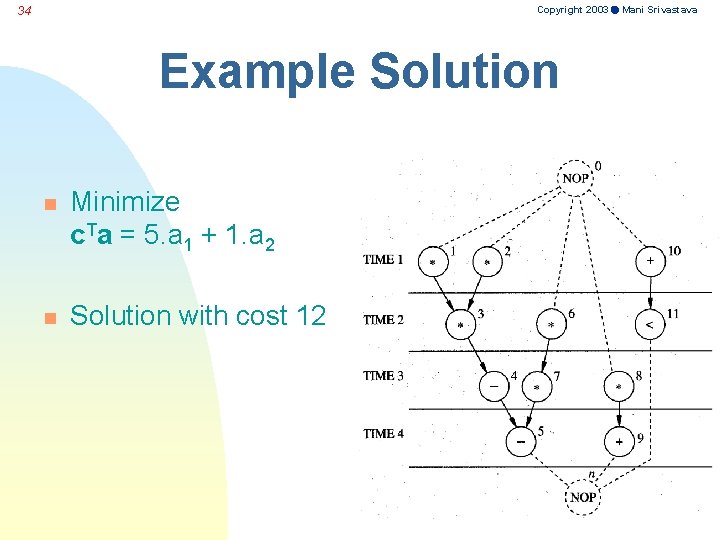

Example Solution

- Minimize $\mathbf{c}^T \mathbf{a} = 5 \cdot a_1 + 1 \cdot a_2$
- Solution with cost 12

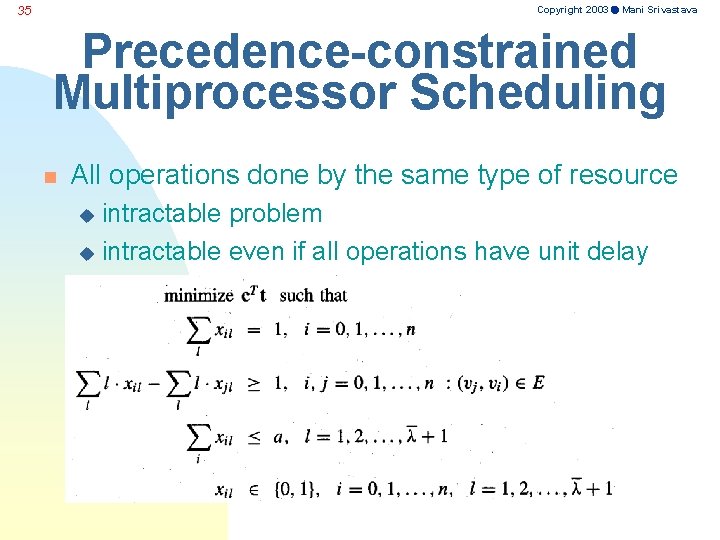

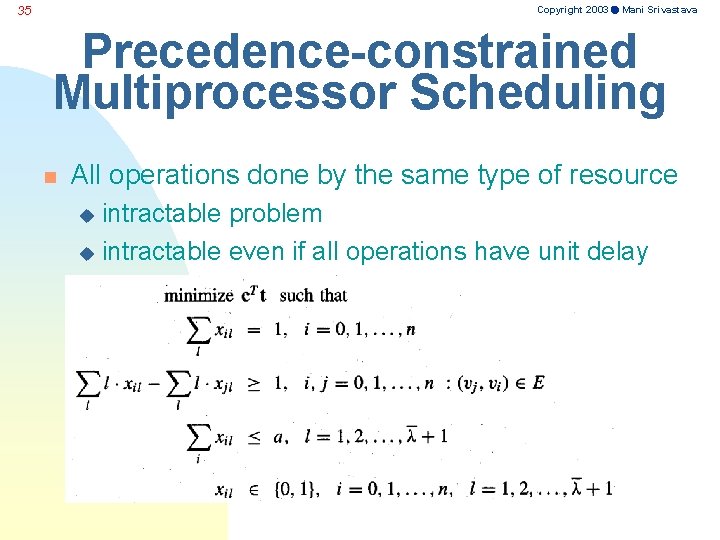

Precedence-constrained Multiprocessor Scheduling

- All operations are done by the same type of resource
  - intractable problem
  - intractable even if all operations have unit delay

Scheduling: Iterative Improvement

- Kernighan-Lin (deterministic)
- Simulated Annealing
- Lottery Iterative Improvement
- Neural Networks
- Genetic Algorithms
- Taboo Search

Scheduling: Constructive Techniques

- Most Constrained
- Least Constraining

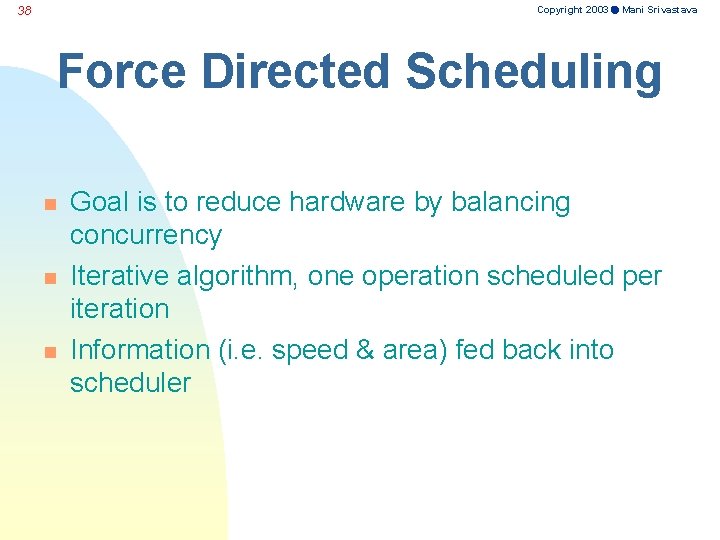

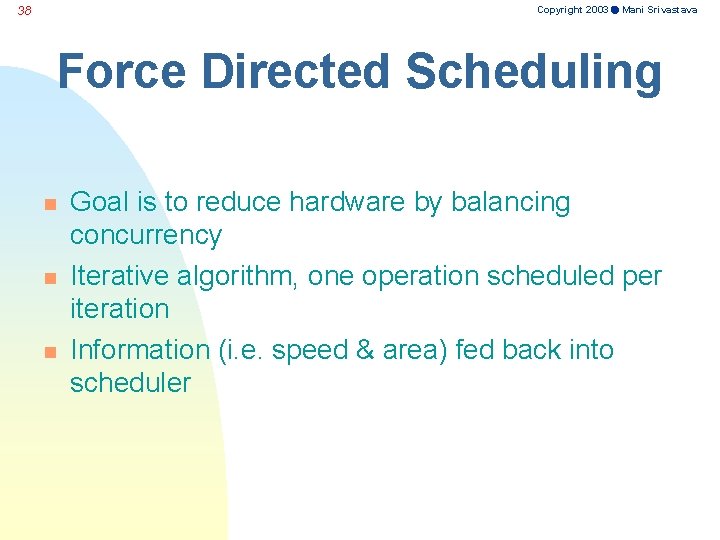

Force Directed Scheduling

- Goal is to reduce hardware by balancing concurrency
- Iterative algorithm, one operation scheduled per iteration
- Information (i.e., speed & area) fed back into scheduler

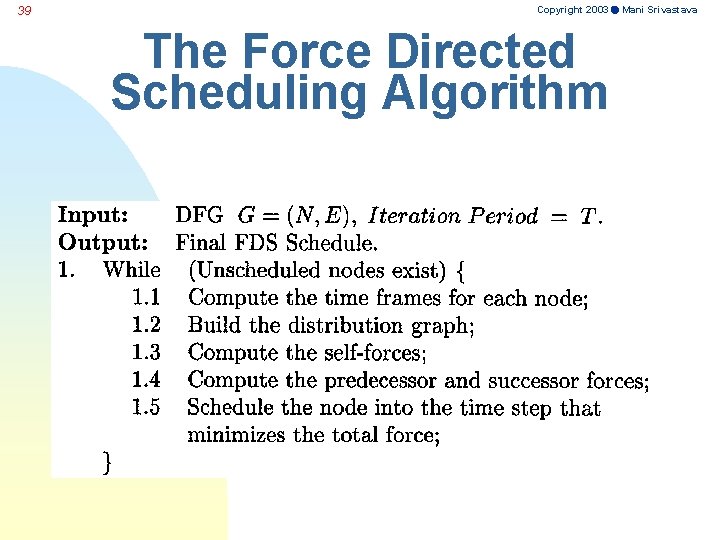

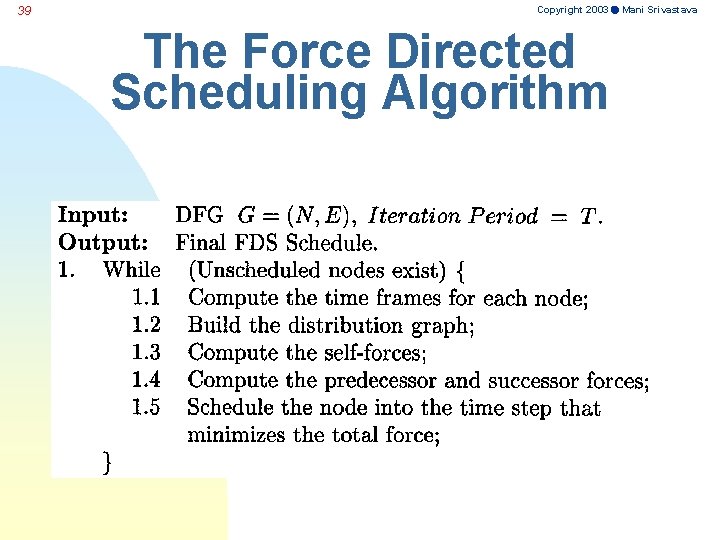

The Force Directed Scheduling Algorithm

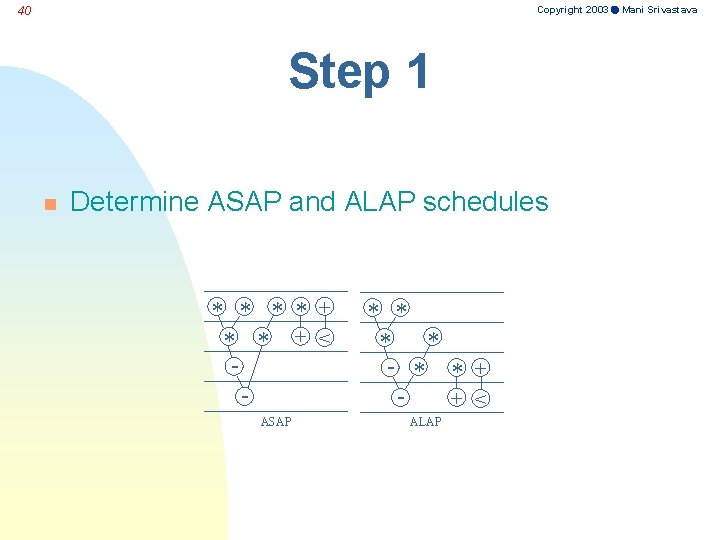

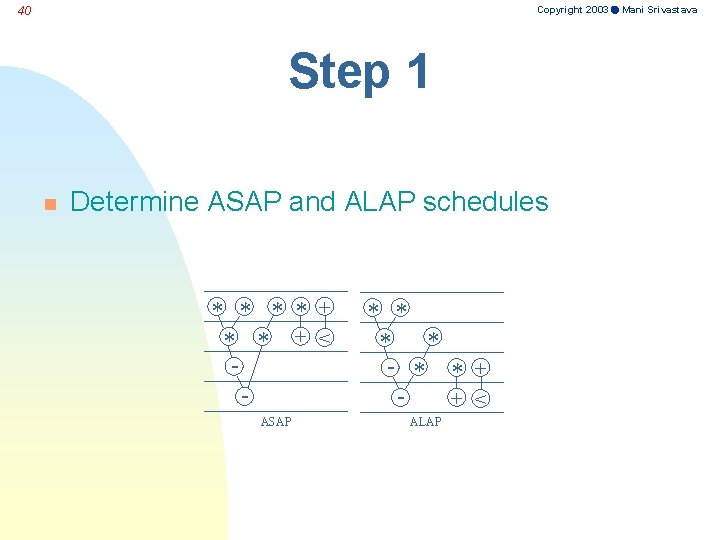

Step 1

- Determine ASAP and ALAP schedules

(Figure panels: ASAP schedule; ALAP schedule.)

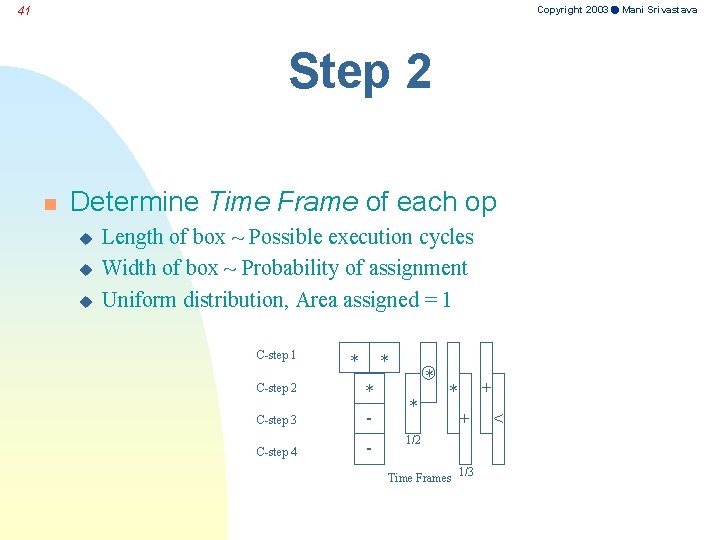

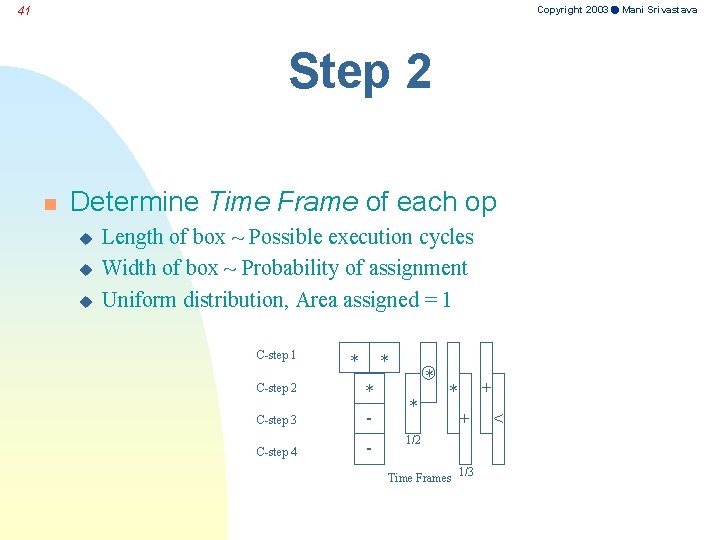

Step 2

- Determine the time frame of each operation
  - Length of box ~ possible execution cycles
  - Width of box ~ probability of assignment
  - Uniform distribution, area assigned = 1

(Figure: time frames over C-steps 1 to 4, with assignment probabilities 1/2 and 1/3.)

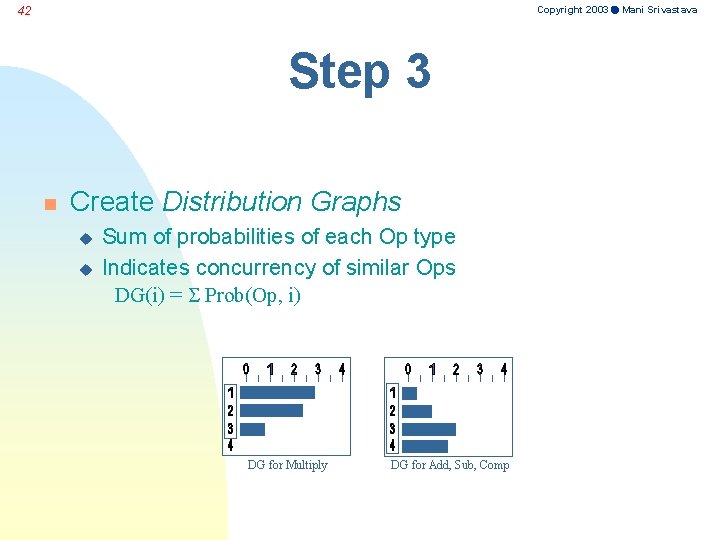

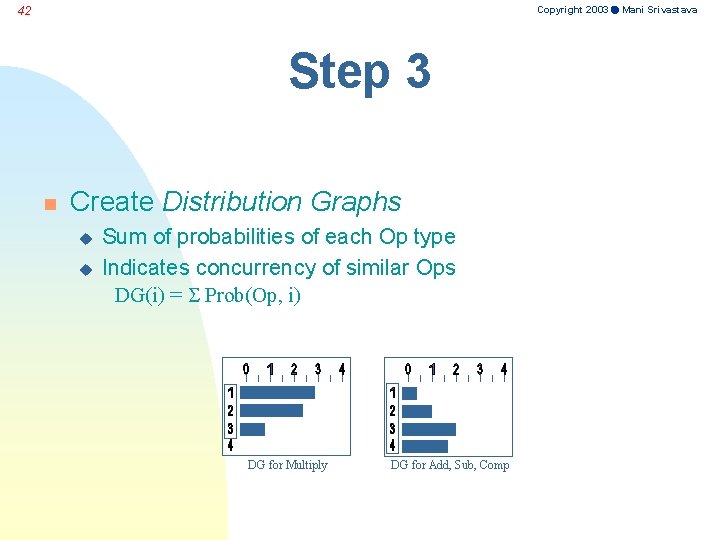

Step 3

- Create distribution graphs
  - Sum of probabilities of each op type
  - Indicates concurrency of similar ops
  - $DG(i) = \sum_{\text{Op}} \text{Prob}(\text{Op}, i)$

(Figure panels: DG for Multiply; DG for Add, Sub, Comp.)

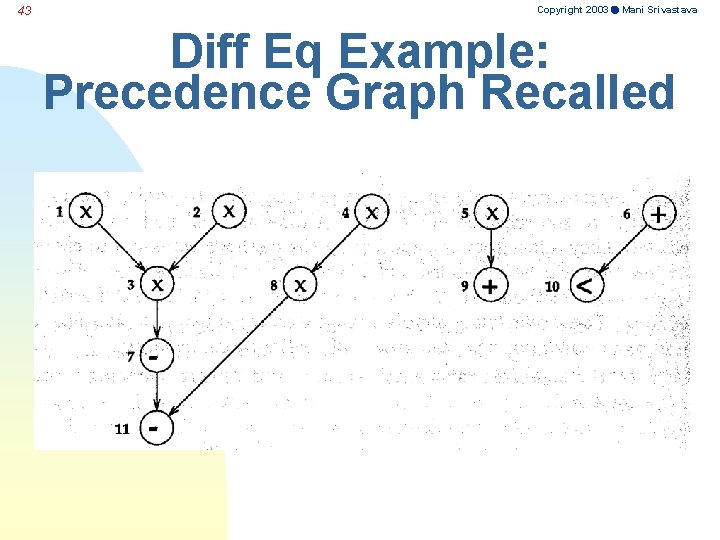

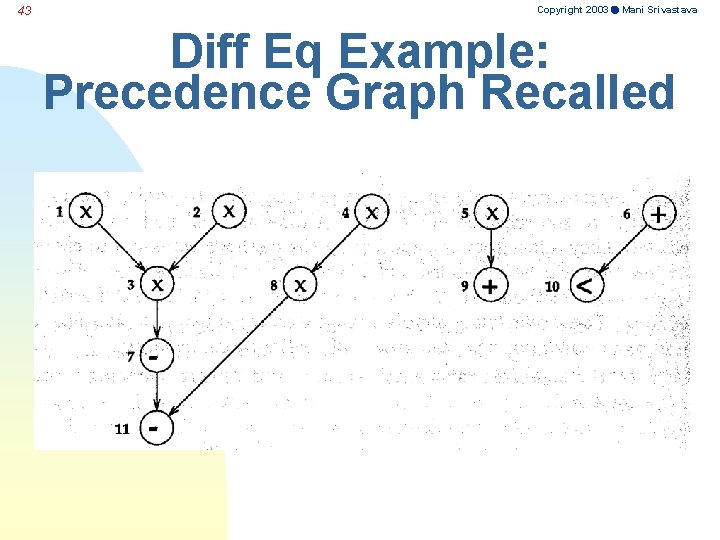
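Steps 2 and 3 can be sketched in Python as follows. The sketch assumes, as the slides do, a uniform probability over each operation's time frame. The ASAP/ALAP start steps below come from a small hypothetical six-operation graph with unit delays and a latency bound of 4, not from the diff-eq example. The names `time_frames` and `distribution_graph` are illustrative. Exact fractions keep the probabilities readable.

```python
from collections import defaultdict
from fractions import Fraction

def time_frames(asap, alap):
    """Time frame of each operation: every C-step from its ASAP to its ALAP start."""
    return {v: list(range(asap[v], alap[v] + 1)) for v in asap}

def distribution_graph(frames, optype, kind):
    """DG(i) = sum over ops of type `kind` of Prob(op, i), assuming each op is
    uniformly likely to start in any C-step of its time frame."""
    dg = defaultdict(Fraction)
    for v, frame in frames.items():
        if optype[v] == kind:
            for i in frame:
                dg[i] += Fraction(1, len(frame))
    return dict(dg)

# Hypothetical ASAP/ALAP start steps (latency bound 4), unit-delay ops.
ASAP = {"m1": 1, "m2": 1, "m3": 2, "m4": 1, "s1": 3, "a1": 1}
ALAP = {"m1": 2, "m2": 2, "m3": 3, "m4": 3, "s1": 4, "a1": 4}
OPTYPE = {"m1": "mul", "m2": "mul", "m3": "mul", "m4": "mul",
          "s1": "alu", "a1": "alu"}

frames = time_frames(ASAP, ALAP)
print(distribution_graph(frames, OPTYPE, "mul"))
```

Here the multiply DG is 4/3, 11/6 and 5/6 in C-steps 1 to 3. It sums to 4, one unit of area per multiply, and its peak in step 2 shows where multiplier concurrency is highest.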

Diff Eq Example: Precedence Graph Recalled

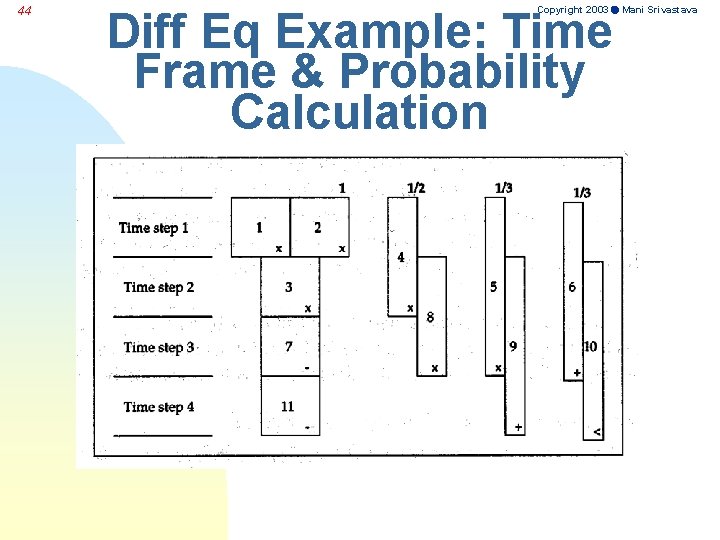

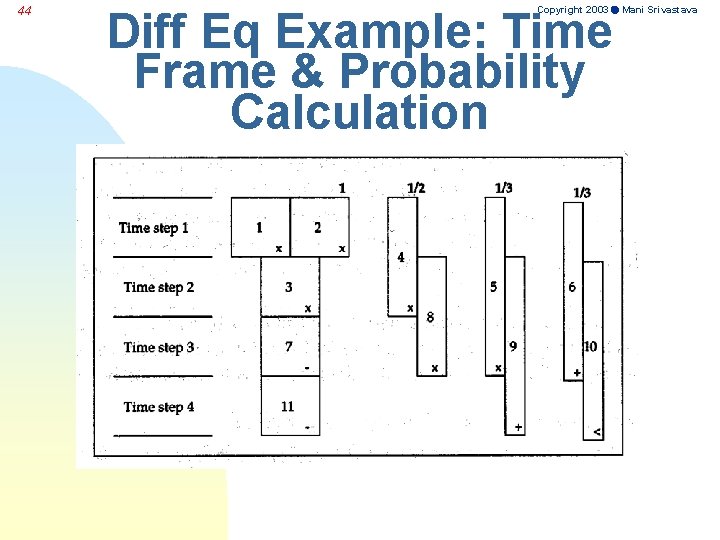

Diff Eq Example: Time Frame & Probability Calculation

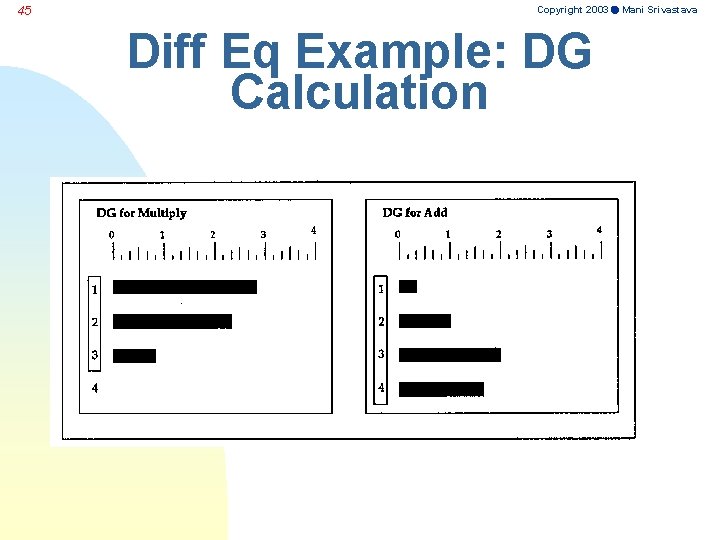

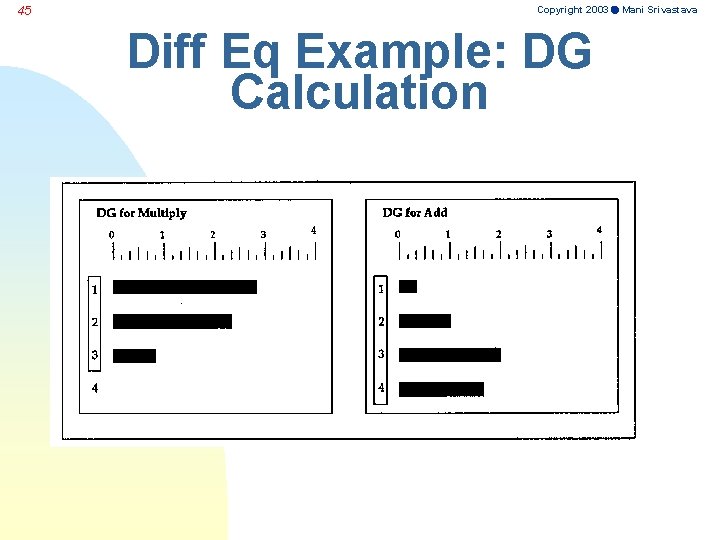

Diff Eq Example: DG Calculation

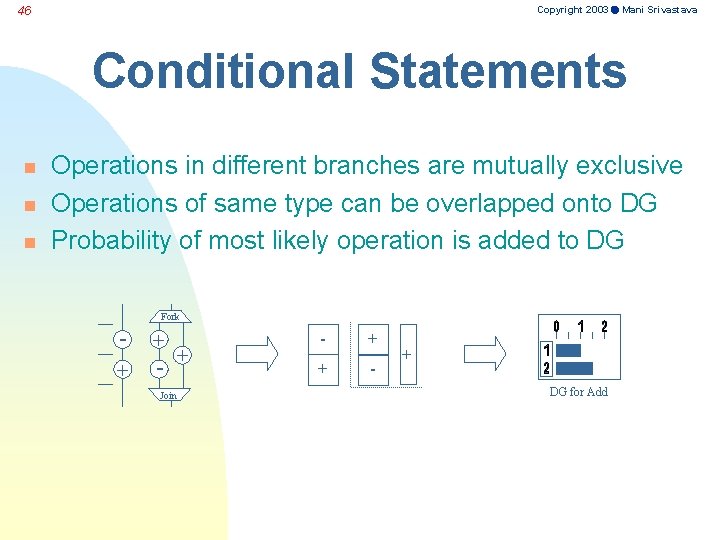

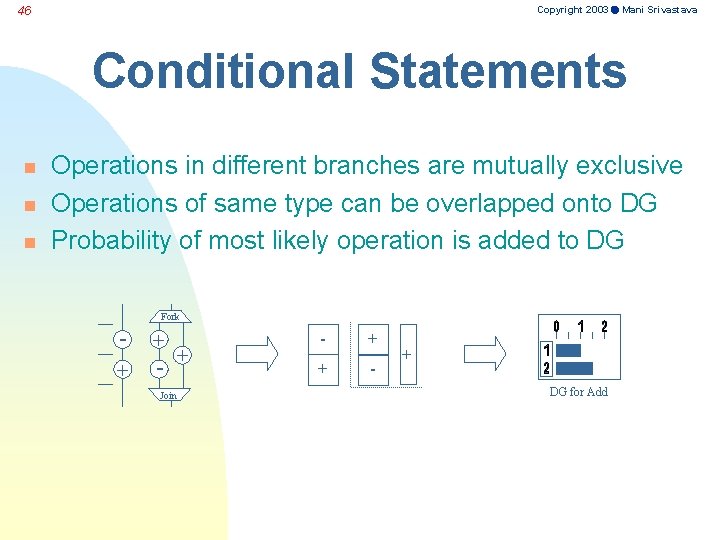

Conditional Statements

- Operations in different branches are mutually exclusive
- Operations of the same type can be overlapped onto the DG
- Probability of the most likely operation is added to the DG

(Figure: Fork/Join with + and - operations in each branch, and the resulting DG for Add.)

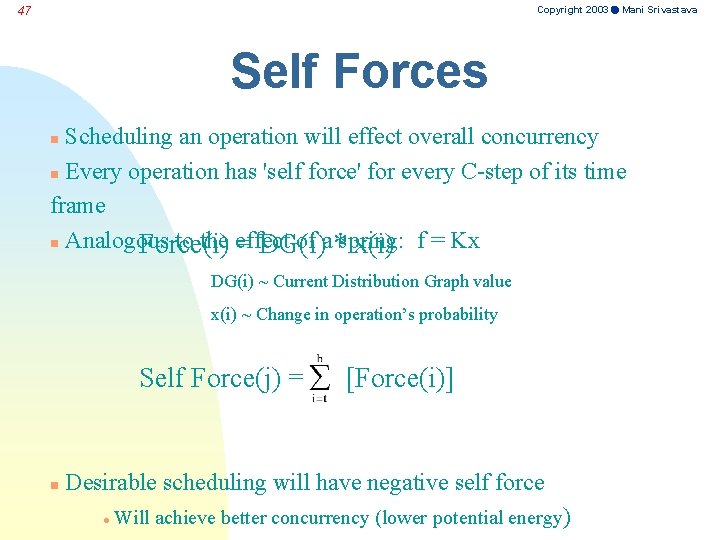

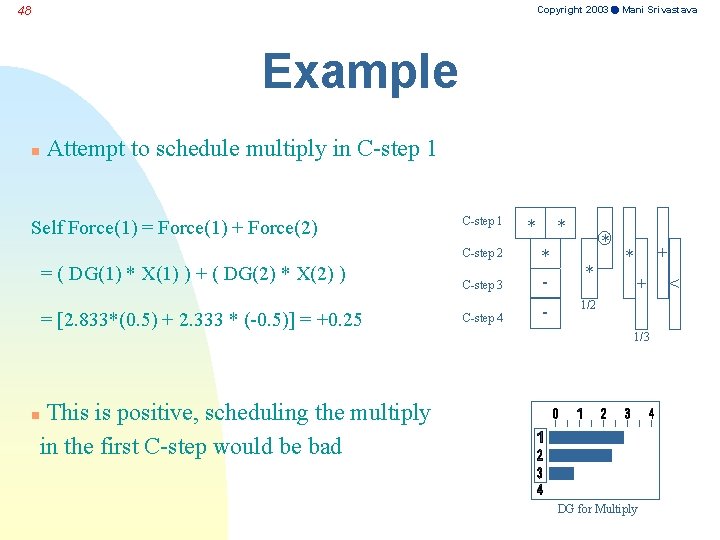

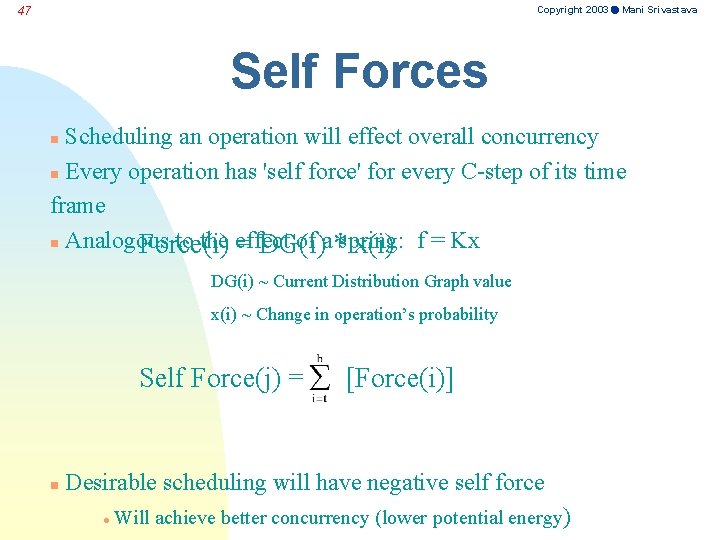
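The Python sketch below shows one way to build a DG when some operations sit in mutually exclusive branches. It assumes a reading of the slide: in each C-step, only the largest branch contribution is added, rather than the sum over all branches, because only one branch executes. The function name and the three hypothetical add operations are illustrative.

```python
from collections import defaultdict
from fractions import Fraction

def dg_with_branches(common, branches):
    """DG for one operation type when some operations sit in mutually
    exclusive branches of a conditional.

    common   -- list of {c_step: probability} dicts, one per op outside the conditional
    branches -- list of branches, each a list of {c_step: probability} dicts
    Only one branch executes, so for each C-step only the largest branch
    contribution is added (assumed reading of "probability of most likely
    operation is added to DG").
    """
    dg = defaultdict(Fraction)
    for probs in common:
        for i, p in probs.items():
            dg[i] += p
    steps = {i for branch in branches for op in branch for i in op}
    for i in steps:
        dg[i] += max(sum((op.get(i, 0) for op in branch), Fraction(0))
                     for branch in branches)
    return dict(dg)

# Hypothetical adds: one outside the conditional, one in each branch.
COMMON = [{1: Fraction(1, 2), 2: Fraction(1, 2)}]
BRANCHES = [[{2: Fraction(1)}], [{2: Fraction(1, 2), 3: Fraction(1, 2)}]]
print(dg_with_branches(COMMON, BRANCHES))
```

In C-step 2, the branches contribute 1 and 1/2, so only 1 is added, giving 3/2 instead of the 2 a plain sum would give.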

Self Forces

- Scheduling an operation will affect overall concurrency
- Every operation has a "self force" for every C-step of its time frame
- Analogous to the effect of a spring: $f = Kx$
  - $\text{Force}(i) = DG(i) \cdot x(i)$
  - $DG(i)$ ~ current distribution graph value
  - $x(i)$ ~ change in the operation's probability
  - $\text{Self Force}(j) = \sum_i \text{Force}(i)$, summed over the C-steps $i$ of the operation's time frame
- Desirable scheduling will have negative self force
  - will achieve better concurrency (lower potential energy)

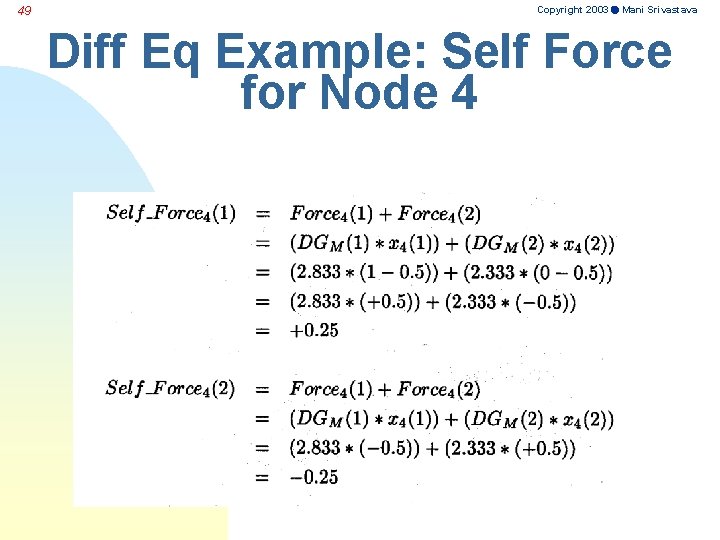

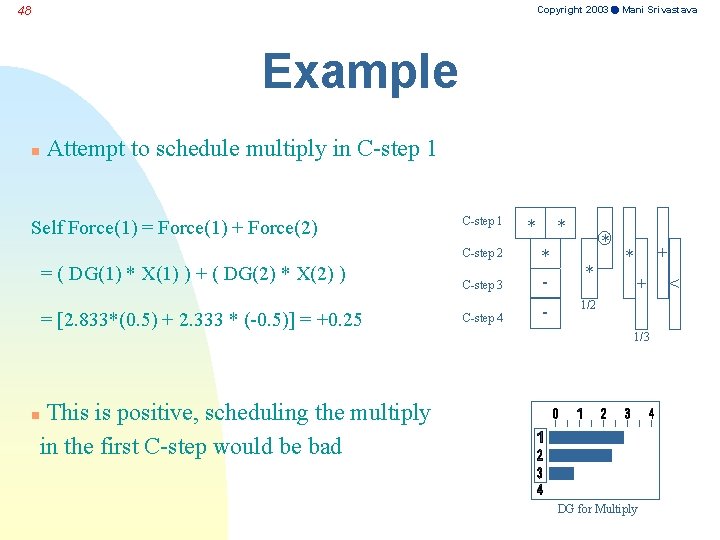

Example

- Attempt to schedule the multiply in C-step 1:

  $$\text{Self Force}(1) = \text{Force}(1) + \text{Force}(2) = DG(1)\,x(1) + DG(2)\,x(2) = 2.833 \times 0.5 + 2.333 \times (-0.5) = +0.25$$

- This is positive, so scheduling the multiply in the first C-step would be bad

(Figure: time frames over C-steps 1 to 4 and the DG for Multiply.)

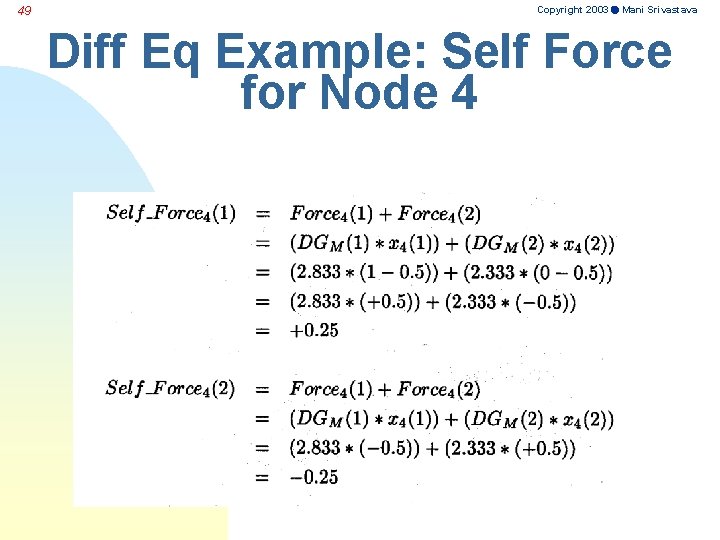
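The self-force arithmetic above can be reproduced in Python. The sketch assumes that the slide's rounded DG values 2.833 and 2.333 are exactly 17/6 and 7/3, that is, sums of 1, 1/2 and 1/3 probabilities. It also takes the operation's time frame to be C-steps 1 and 2, which is consistent with x(1) = 0.5 and x(2) = -0.5. The function name `self_force` is illustrative.

```python
from fractions import Fraction

def self_force(dg, frame, step):
    """Self force of fixing an operation, uniformly spread over the C-steps in
    `frame`, into C-step `step`: sum of DG(i) * x(i), where x(i) is the change
    in the operation's probability of being in C-step i."""
    p_old = Fraction(1, len(frame))
    return sum(dg[i] * ((1 if i == step else 0) - p_old) for i in frame)

# Assumption: the slide's 2.833 and 2.333 are the rounded values 17/6 and 7/3.
DG_MUL = {1: Fraction(17, 6), 2: Fraction(7, 3)}
FRAME = [1, 2]

for step in FRAME:
    print(step, float(self_force(DG_MUL, FRAME, step)))
```

This prints 0.25 for C-step 1, matching the slide, and -0.25 for C-step 2, before any predecessor or successor forces are taken into account.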

Diff Eq Example: Self Force for Node 4

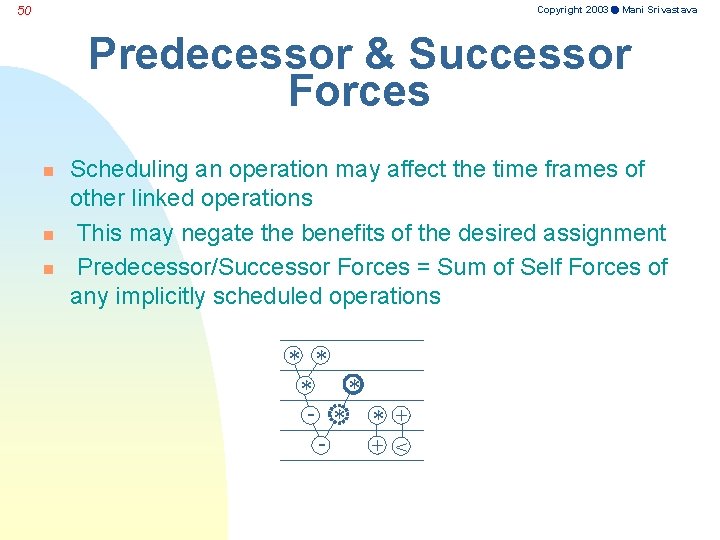

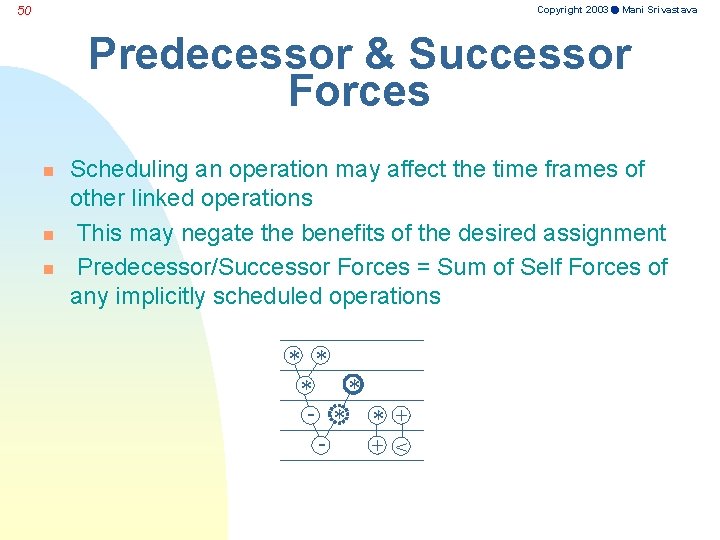

Predecessor & Successor Forces

- Scheduling an operation may affect the time frames of other linked operations
- This may negate the benefits of the desired assignment
- Predecessor/successor forces = sum of the self forces of any implicitly scheduled operations

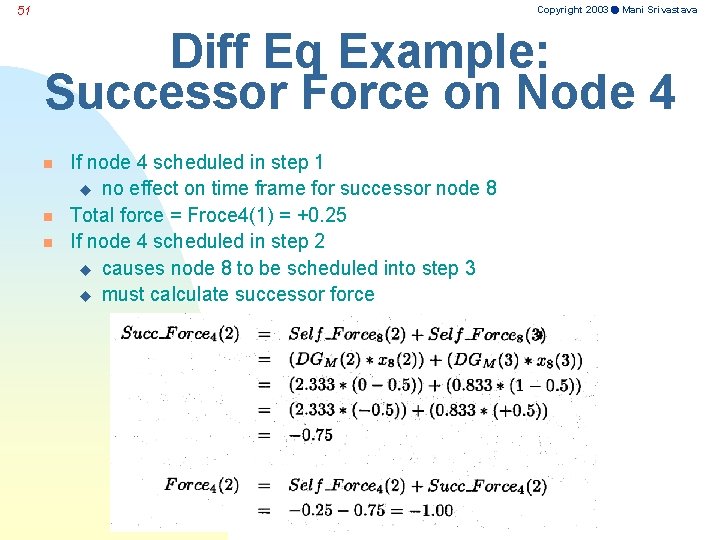

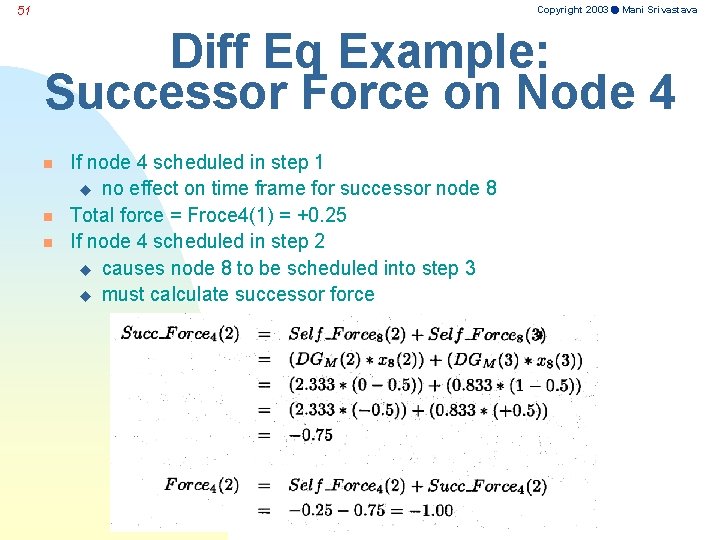

Diff Eq Example: Successor Force on Node 4

- If node 4 is scheduled in step 1
  - no effect on the time frame of successor node 8
  - total force = $\text{Force}_4(1) = +0.25$
- If node 4 is scheduled in step 2
  - causes node 8 to be scheduled into step 3
  - must calculate the successor force

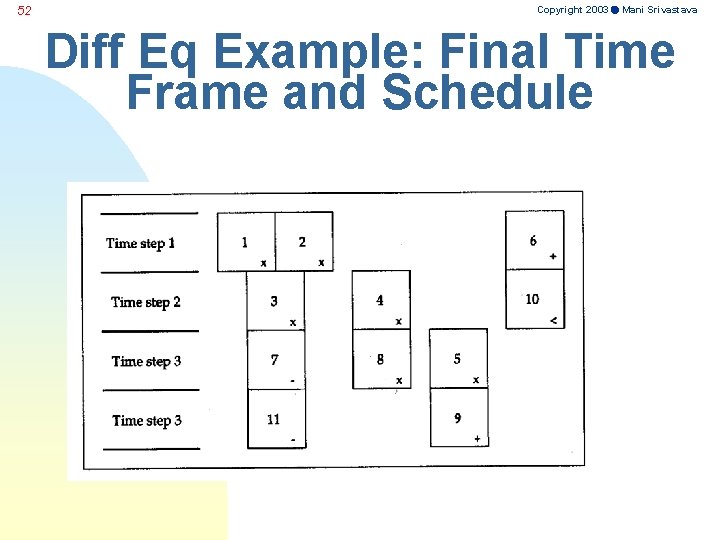

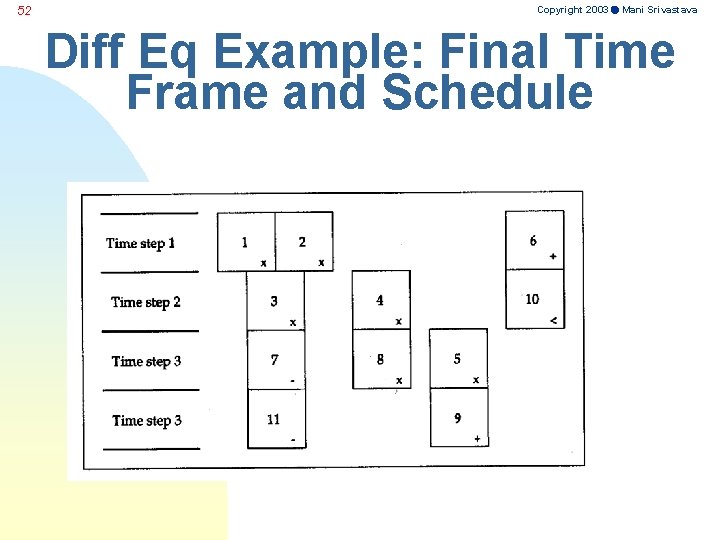
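An implicitly scheduled predecessor or successor has its time frame narrowed instead of fixed to a single step. Its force can therefore be computed with the same DG(i) · x(i) rule over its old frame. The Python sketch below generalizes the self-force formula accordingly. The successor's DG and frames are hypothetical numbers, not node 8's actual values from the figure. Fixing to a single step, as in the self force, is the special case `new_frame = [step]`.

```python
from fractions import Fraction

def frame_change_force(dg, old_frame, new_frame):
    """Force caused by narrowing an operation's time frame from old_frame to
    new_frame (a subset): sum over old_frame of DG(i) * (new prob - old prob),
    with uniform probabilities over each frame."""
    p_old = Fraction(1, len(old_frame))
    p_new = Fraction(1, len(new_frame))
    return sum(dg[i] * ((p_new if i in new_frame else 0) - p_old)
               for i in old_frame)

def total_force(self_force, implied):
    """Self force plus the forces of all implicitly scheduled predecessors
    and successors; implied is a list of (dg, old_frame, new_frame)."""
    return self_force + sum(frame_change_force(*args) for args in implied)

# Hypothetical successor: its frame shrinks from C-steps {2, 3} to {3}.
DG_SUCC = {2: Fraction(3, 2), 3: Fraction(1, 2)}
succ = frame_change_force(DG_SUCC, [2, 3], [3])
print(float(succ), float(total_force(Fraction(1, 4), [(DG_SUCC, [2, 3], [3])])))
```

A negative successor force here would offset a positive self force, which is exactly why the slides insist on adding it before comparing candidate C-steps.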

Diff Eq Example: Final Time Frame and Schedule

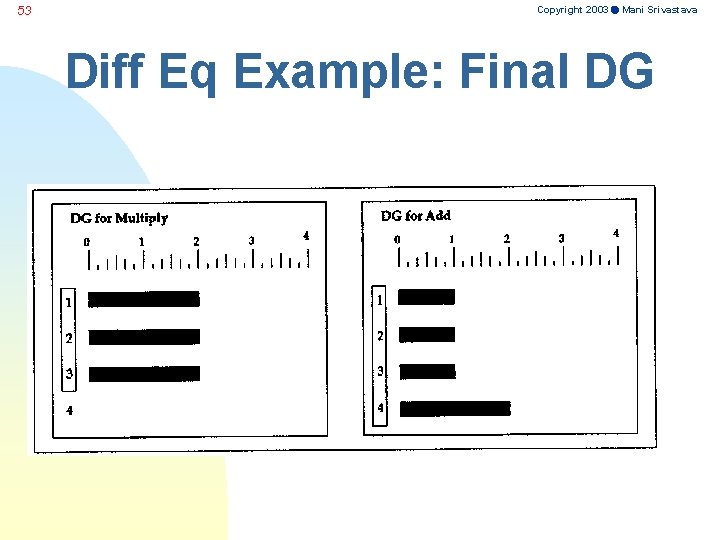

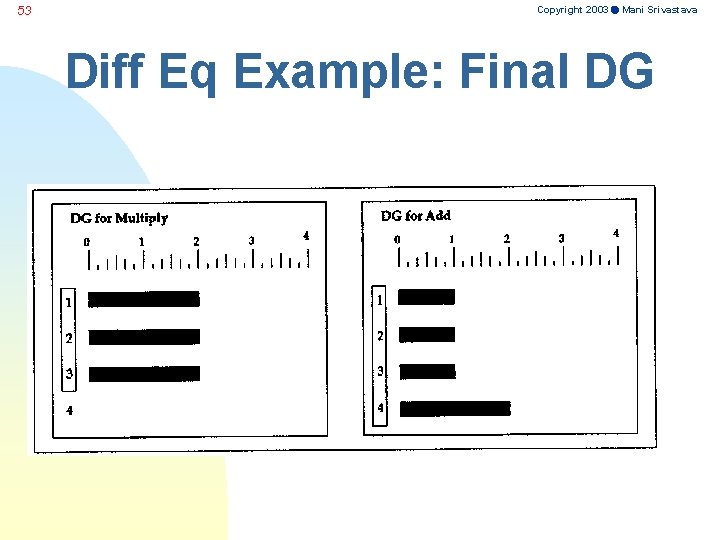

Diff Eq Example: Final DG

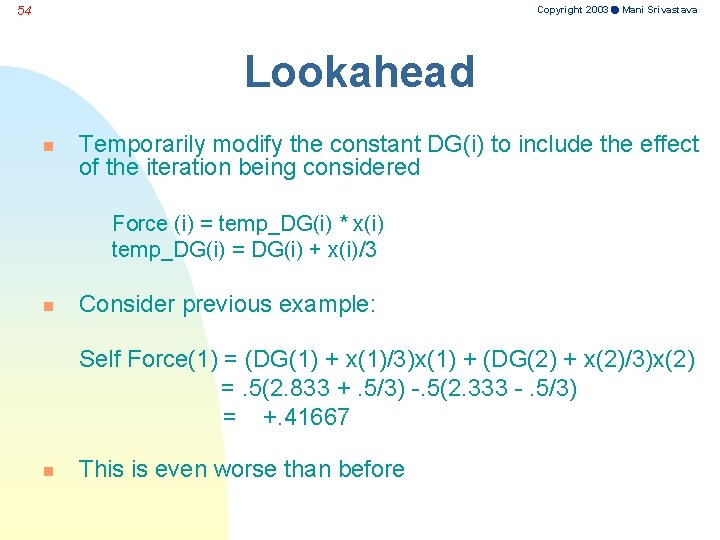

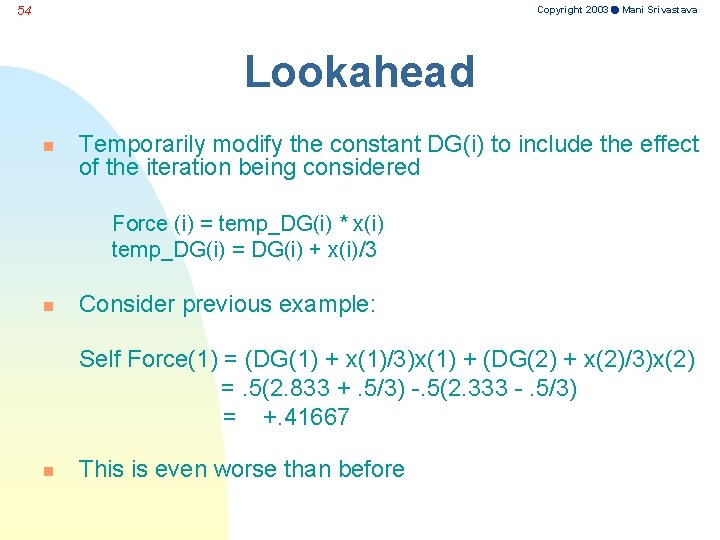

Lookahead

- Temporarily modify the constant $DG(i)$ to include the effect of the iteration being considered:

  $$\text{Force}(i) = \text{temp\_DG}(i) \cdot x(i), \qquad \text{temp\_DG}(i) = DG(i) + x(i)/3$$

- Consider the previous example:

  $$\text{Self Force}(1) = \left(DG(1) + \tfrac{x(1)}{3}\right) x(1) + \left(DG(2) + \tfrac{x(2)}{3}\right) x(2) = 0.5\left(2.833 + \tfrac{0.5}{3}\right) - 0.5\left(2.333 - \tfrac{0.5}{3}\right) = +0.41667$$

- This is even worse than before

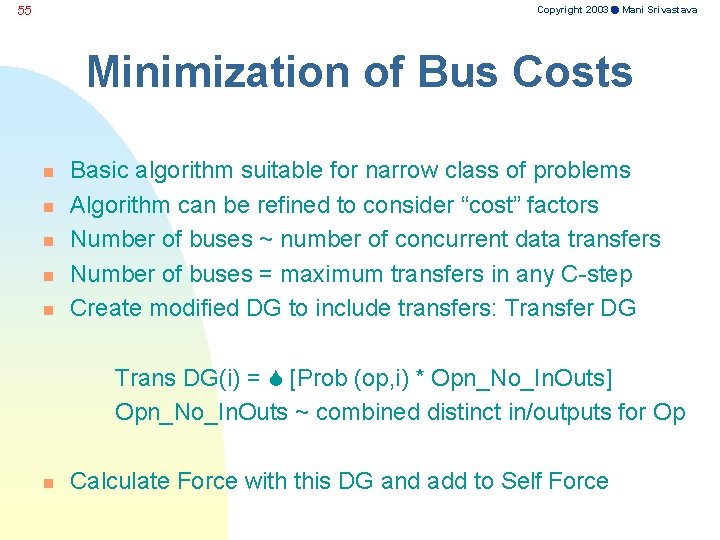

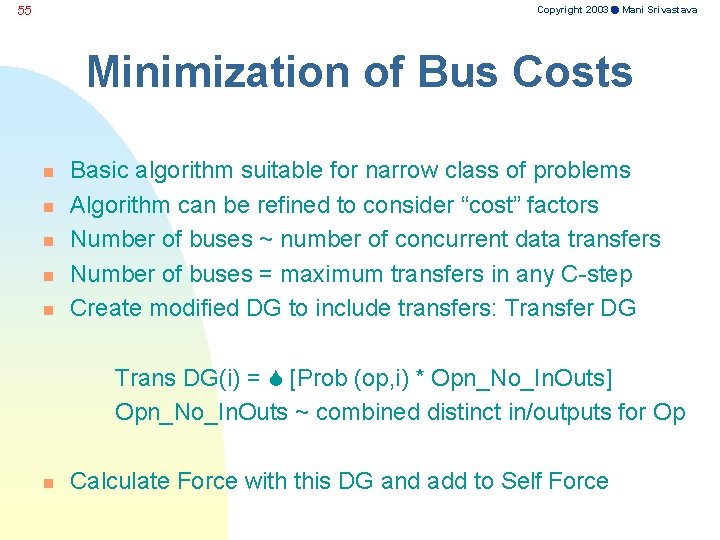
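The lookahead variant changes only the DG factor. The Python sketch below reuses the assumption that the slide's 2.833 and 2.333 are exactly 17/6 and 7/3 and that the frame is C-steps 1 and 2. The name `lookahead_self_force` is illustrative.

```python
from fractions import Fraction

def lookahead_self_force(dg, frame, step):
    """Self force with lookahead: each DG(i) is temporarily replaced by
    temp_DG(i) = DG(i) + x(i)/3 before multiplying by x(i)."""
    p_old = Fraction(1, len(frame))
    total = Fraction(0)
    for i in frame:
        x = (1 if i == step else 0) - p_old   # change in the op's probability
        total += (dg[i] + x / 3) * x
    return total

DG_MUL = {1: Fraction(17, 6), 2: Fraction(7, 3)}  # assumed exact 2.833, 2.333
print(float(lookahead_self_force(DG_MUL, [1, 2], 1)))
```

For C-step 1 the exact result is 5/12, about 0.41667 as on the slide. The same function gives -1/12 for C-step 2.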

Minimization of Bus Costs

- Basic algorithm suitable for a narrow class of problems
- Algorithm can be refined to consider “cost” factors
- Number of buses ~ number of concurrent data transfers
- Number of buses = maximum transfers in any C-step
- Create a modified DG to include transfers: Transfer DG

  $$\text{Trans DG}(i) = \sum_{\text{op}} \text{Prob}(\text{op}, i) \cdot \text{Opn\_No\_InOuts}$$

  - Opn_No_InOuts ~ combined distinct inputs/outputs for the op
- Calculate the force with this DG and add it to the self force

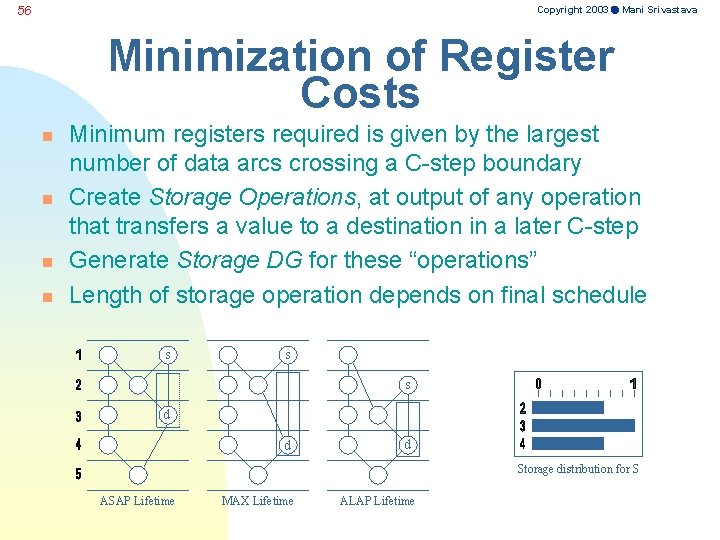

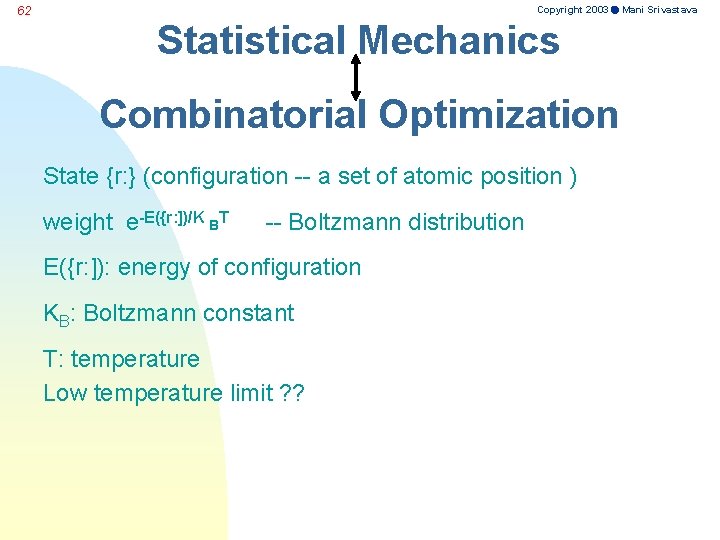

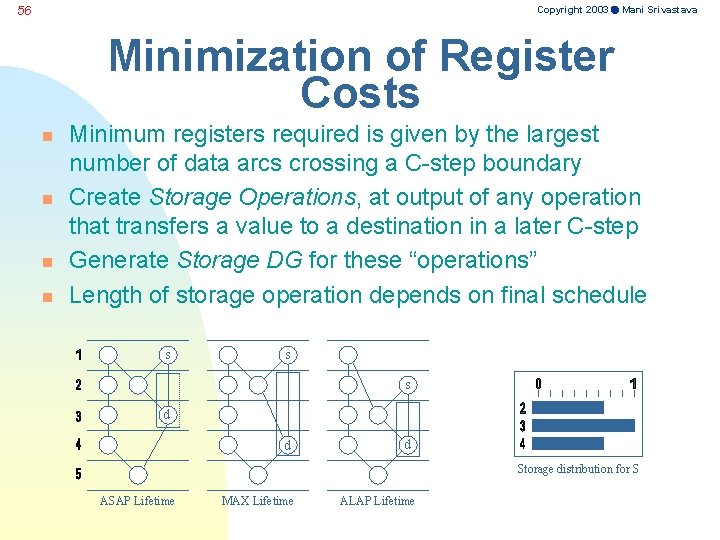
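The transfer DG can be sketched in Python as follows. The sketch assumes uniform probabilities over each operation's time frame and a hypothetical per-operation count `n_inouts` of distinct inputs and outputs. The two-operation example and the name `transfer_dg` are illustrative.

```python
from collections import defaultdict
from fractions import Fraction

def transfer_dg(frames, n_inouts):
    """Trans DG(i) = sum over ops of Prob(op, i) * Opn_No_InOuts(op),
    with Prob(op, i) uniform over the op's time frame."""
    dg = defaultdict(Fraction)
    for v, frame in frames.items():
        for i in frame:
            dg[i] += Fraction(n_inouts[v], len(frame))
    return dict(dg)

# Hypothetical: two binary ops (2 inputs + 1 output each).
FRAMES = {"m1": [1, 2], "a1": [1]}
N_INOUTS = {"m1": 3, "a1": 3}
tdg = transfer_dg(FRAMES, N_INOUTS)
print(tdg)
print("peak expected transfers:", float(max(tdg.values())))
```

Once every frame has shrunk to a single C-step, each DG entry is an exact transfer count. Its maximum is then the bus count given on the slide.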

Minimization of Register Costs

- The minimum number of registers required is given by the largest number of data arcs crossing a C-step boundary
- Create storage operations at the output of any operation that transfers a value to a destination in a later C-step
- Generate a storage DG for these “operations”
- The length of a storage operation depends on the final schedule

(Figure: storage distribution for S, showing its ASAP lifetime, MAX lifetime, and ALAP lifetime.)

![Minimization of Register Costs (contd.)](https://slidetodoc.com/presentation_image_h/a991bca35216f55e0428185f625037dd/image-57.jpg)

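Minimization of Register Costs (contd.)

- avg life = (formula given in the slide image)
- storage DG(i) = (formula given in the slide image) when there is no overlap between the ASAP and ALAP lifetimes
- storage DG(i) = (formula given in the slide image) when there is overlap
- Calculate the “storage” force and add it to the self force
- Result on the example: ASAP schedule, 7 registers minimum; force-directed schedule, 5 registers minimum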

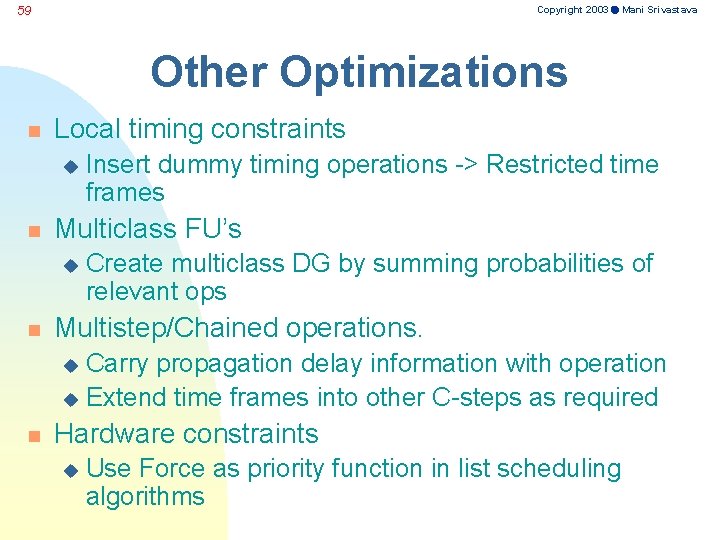

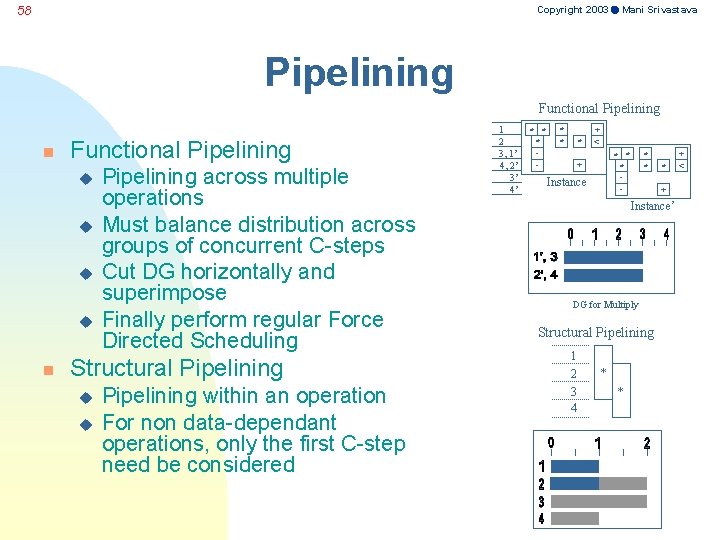

Pipelining

- Functional pipelining
  - Pipelining across multiple operations
  - Must balance distribution across groups of concurrent C-steps
  - Cut the DG horizontally and superimpose
  - Finally perform regular force-directed scheduling
- Structural pipelining
  - Pipelining within an operation
  - For non-data-dependent operations, only the first C-step need be considered

(Figure panels: functional pipelining, where C-steps 3 and 4 of one instance overlap C-steps 1' and 2' of the next instance (Instance'), with the DG for Multiply; structural pipelining over C-steps 1 to 4.)
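"Cut DG horizontally and superimpose" amounts to folding the DG modulo the interval between successive instances. In the slide's figure, step 3 overlaps 1' and step 4 overlaps 2', which corresponds to a new instance every 2 C-steps. The Python sketch below applies this folding. The function name `fold_dg`, the parameter `interval`, and the DG values are hypothetical.

```python
def fold_dg(dg, interval):
    """Functional pipelining: cut a DG (list indexed from C-step 1) into
    groups of `interval` C-steps and superimpose them, because a new
    instance starts every `interval` steps."""
    folded = [0] * interval
    for idx, value in enumerate(dg):   # idx 0 is C-step 1
        folded[idx % interval] += value
    return folded

# Hypothetical 4-step multiply DG; a new instance starts every 2 steps,
# so steps 3 and 4 of one instance overlap steps 1' and 2' of the next.
print(fold_dg([2, 1, 1, 2], 2))
```

Force-directed scheduling then balances this folded DG. A flat folded DG means the overlapping instances share the multipliers evenly.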

Other Optimizations

- Local timing constraints
  - Insert dummy timing operations -> restricted time frames
- Multiclass FUs
  - Create a multiclass DG by summing the probabilities of relevant ops
- Multistep/chained operations
  - Carry propagation delay information with the operation
  - Extend time frames into other C-steps as required
- Hardware constraints
  - Use force as the priority function in list scheduling algorithms

Scheduling using Simulated Annealing

Reference: Devadas, S.; Newton, A. R. Algorithms for hardware allocation in data path synthesis. IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, vol. 8, no. 7, pp. 768-781, July 1989.

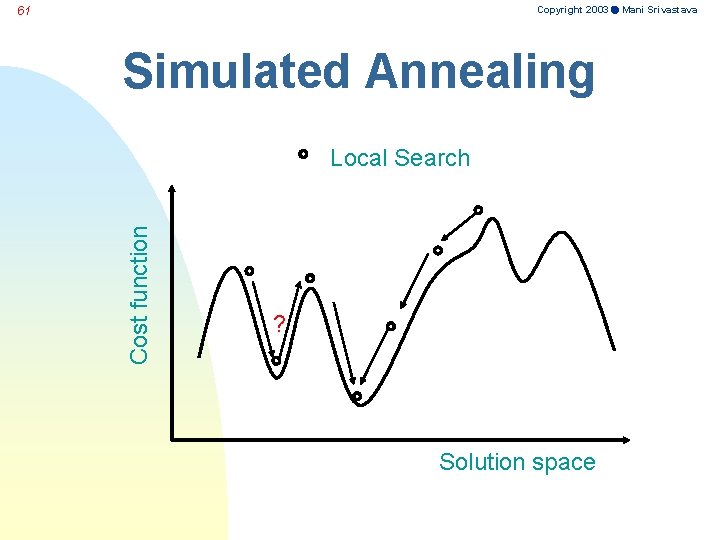

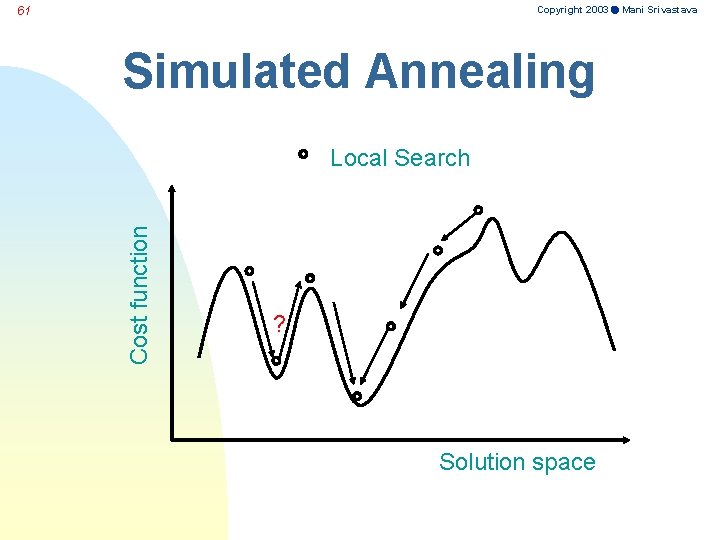

Simulated Annealing

(Figure: cost function over the solution space, illustrating local search.)

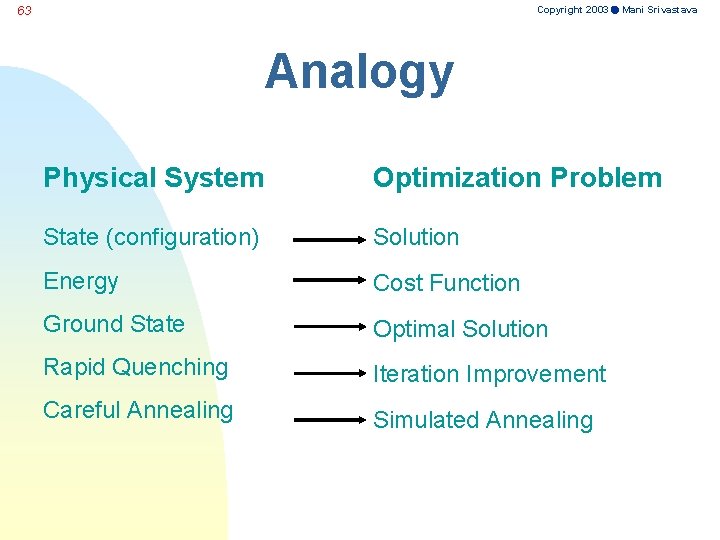

Statistical Mechanics and Combinatorial Optimization

- State $\{r_i\}$ (configuration: a set of atomic positions)
- Weight $e^{-E(\{r_i\})/K_B T}$: Boltzmann distribution
  - $E(\{r_i\})$: energy of the configuration
  - $K_B$: Boltzmann constant
  - $T$: temperature
- Low-temperature limit?

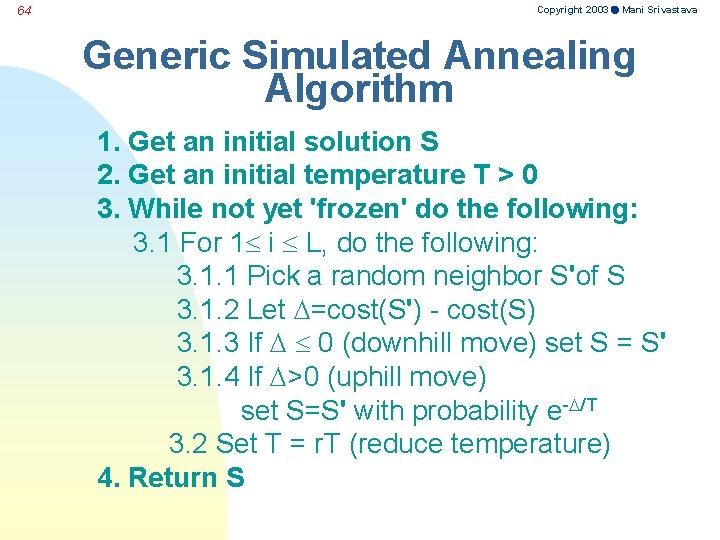

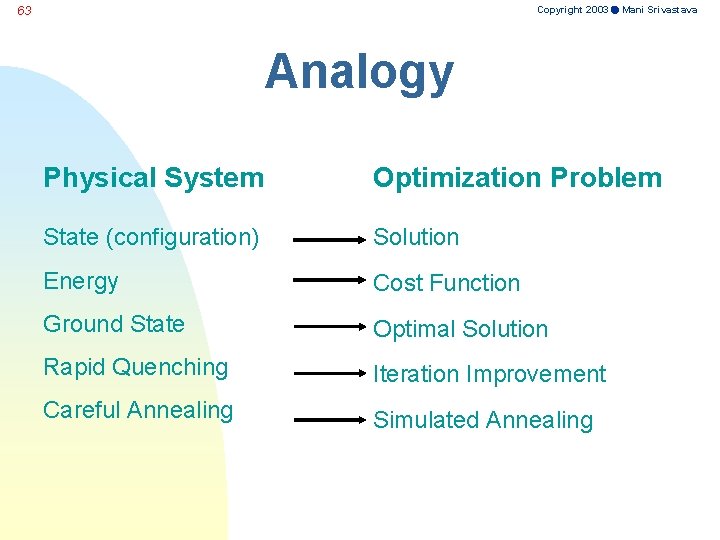

Analogy

| Physical System | Optimization Problem |
|---|---|
| State (configuration) | Solution |
| Energy | Cost function |
| Ground state | Optimal solution |
| Rapid quenching | Iterative improvement |
| Careful annealing | Simulated annealing |

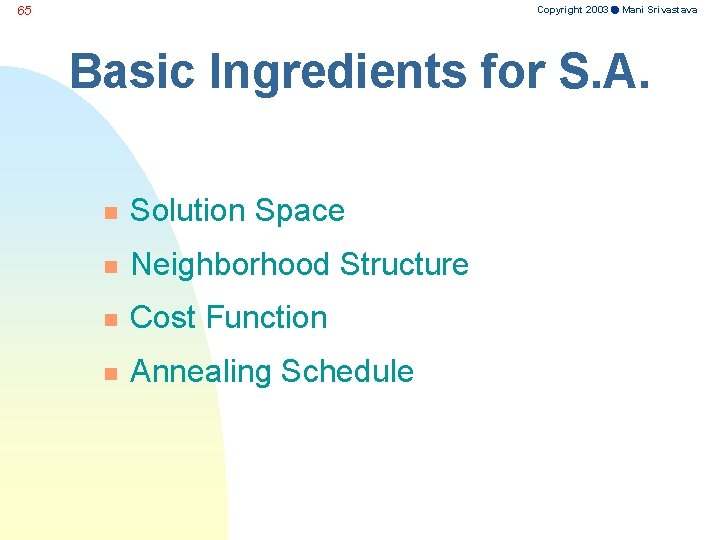

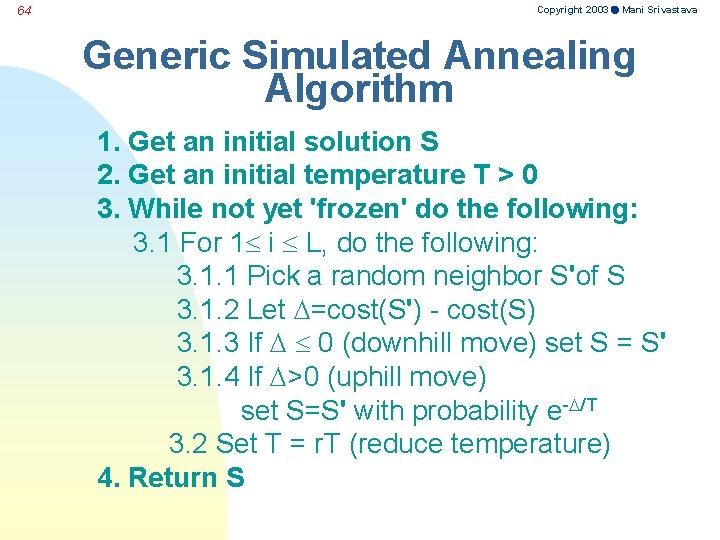

Generic Simulated Annealing Algorithm

1. Get an initial solution $S$.
2. Get an initial temperature $T > 0$.
3. While not yet "frozen", do the following:
   - 3.1 For $1 \le i \le L$, do the following:
     - 3.1.1 Pick a random neighbor $S'$ of $S$.
     - 3.1.2 Let $\Delta = \text{cost}(S') - \text{cost}(S)$.
     - 3.1.3 If $\Delta \le 0$ (downhill move), set $S = S'$.
     - 3.1.4 If $\Delta > 0$ (uphill move), set $S = S'$ with probability $e^{-\Delta/T}$.
   - 3.2 Set $T = rT$ (reduce temperature).
4. Return $S$.

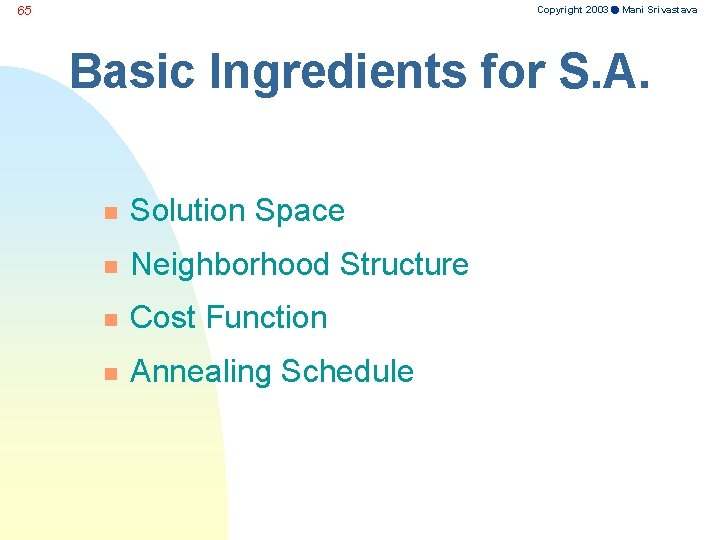
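The generic algorithm above is sketched below in Python. It runs on a toy one-dimensional problem rather than on a schedule. The function name `simulated_annealing`, the toy cost `(x - 7)**2`, the ±1 neighbor move, and the defaults for r, L and the freezing threshold are all illustrative assumptions. None of them come from the Devadas and Newton paper. A scheduler would plug in its own solution representation, `neighbor` move and `cost`.

```python
import math
import random

def simulated_annealing(s, cost, neighbor, t0, r=0.9, moves_per_t=50,
                        t_frozen=1e-3, rng=None):
    """Generic simulated annealing following steps 1-4 above.
    neighbor(s, rng) must return a random neighbor of s."""
    rng = rng or random.Random()
    t = t0                                    # step 2: initial temperature
    while t > t_frozen:                       # step 3: until 'frozen'
        for _ in range(moves_per_t):          # step 3.1: L moves per temperature
            s_new = neighbor(s, rng)          # 3.1.1
            delta = cost(s_new) - cost(s)     # 3.1.2
            # 3.1.3 accept downhill; 3.1.4 accept uphill with prob e^(-delta/T)
            if delta <= 0 or rng.random() < math.exp(-delta / t):
                s = s_new
        t *= r                                # step 3.2: reduce temperature
    return s                                  # step 4

# Toy problem (hypothetical): minimize (x - 7)^2 over integers 0..20.
def cost(x):
    return (x - 7) ** 2

def neighbor(x, rng):
    return min(20, max(0, x + rng.choice((-1, 1))))

print(simulated_annealing(20, cost, neighbor, t0=10.0, rng=random.Random(1)))
```

With a near-zero starting temperature, the uphill test never fires and the loop degenerates into the "rapid quenching" iterative improvement from the analogy table. Higher temperatures let it escape local minima at the cost of more moves.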

Basic Ingredients for S.A.

- Solution Space
- Neighborhood Structure
- Cost Function
- Annealing Schedule

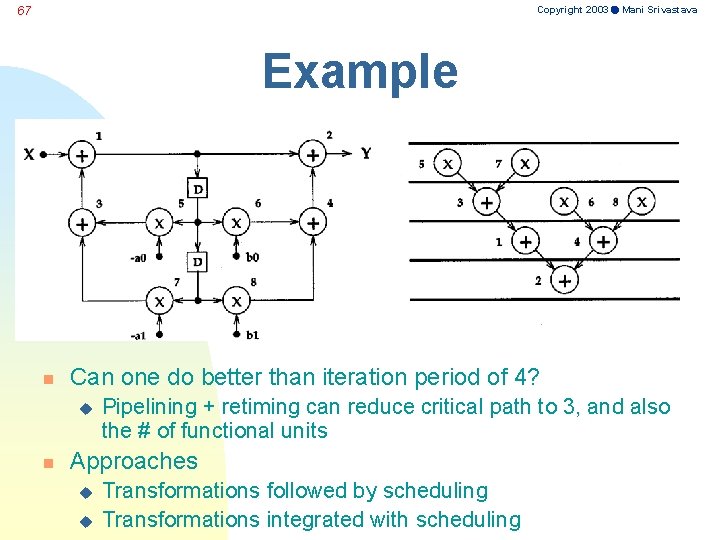

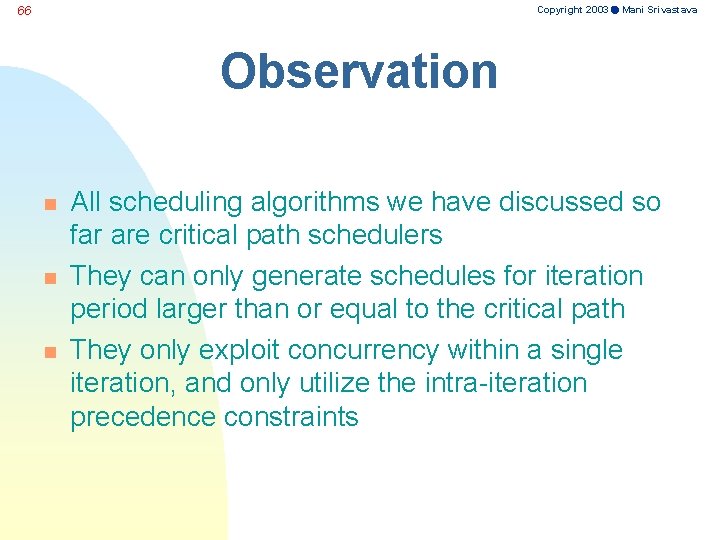

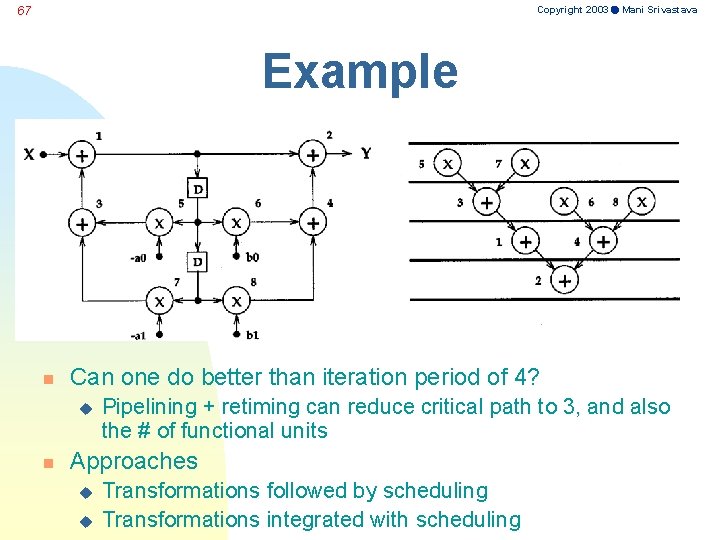

Observation

- All scheduling algorithms we have discussed so far are critical path schedulers
- They can only generate schedules for iteration periods larger than or equal to the critical path
- They only exploit concurrency within a single iteration, and only utilize the intra-iteration precedence constraints

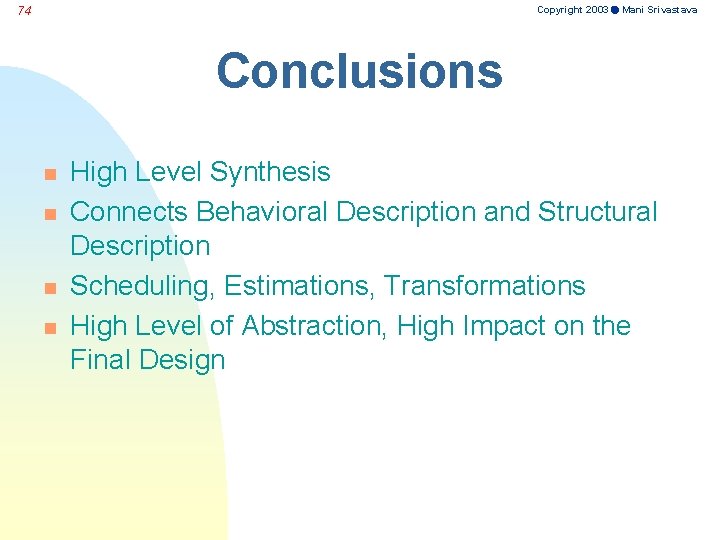

Example

- Can one do better than an iteration period of 4?
  - Pipelining + retiming can reduce the critical path to 3, and also the number of functional units
- Approaches
  - Transformations followed by scheduling
  - Transformations integrated with scheduling

Conclusions

- High-Level Synthesis connects the behavioral description and the structural description
- Scheduling, estimations, transformations
- High level of abstraction, high impact on the final design