
T3 - Memory

Index

- Memory management concepts
- Basic services
  - Program loading in memory
  - Dynamic memory
  - HW support
    - For memory assignment
    - For address translation
- Services to optimize physical memory usage
  - COW
  - Virtual memory
  - Prefetch
- Linux on Pentium

CONCEPTS

- Physical memory vs. logical memory
- Process address space
- Assignment of addresses to processes
- Operating system tasks
- Hardware support

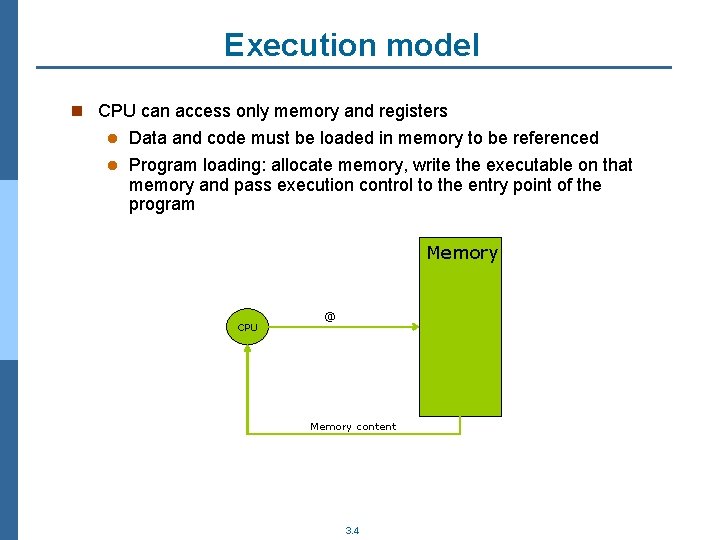

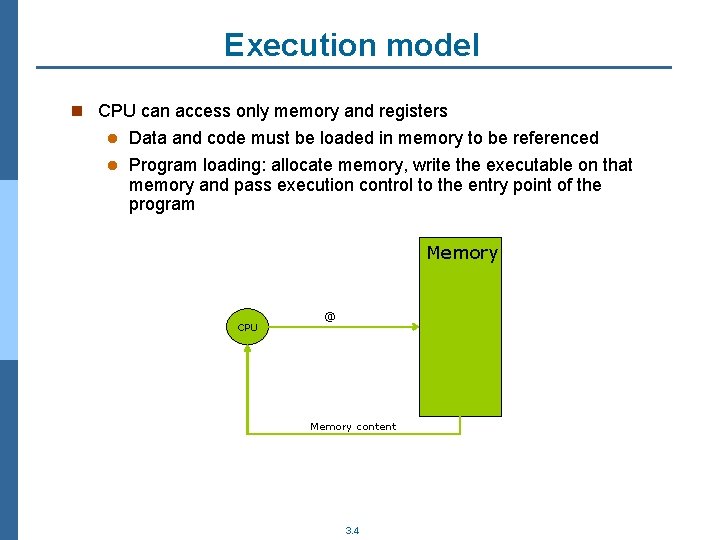

Execution model

- The CPU can only access memory and registers
  - Data and code must be loaded in memory before they can be referenced
  - Program loading: allocate memory, write the executable into that memory, and transfer control to the entry point of the program

(Figure: the CPU sends an address (@) to memory, which returns the memory content.)

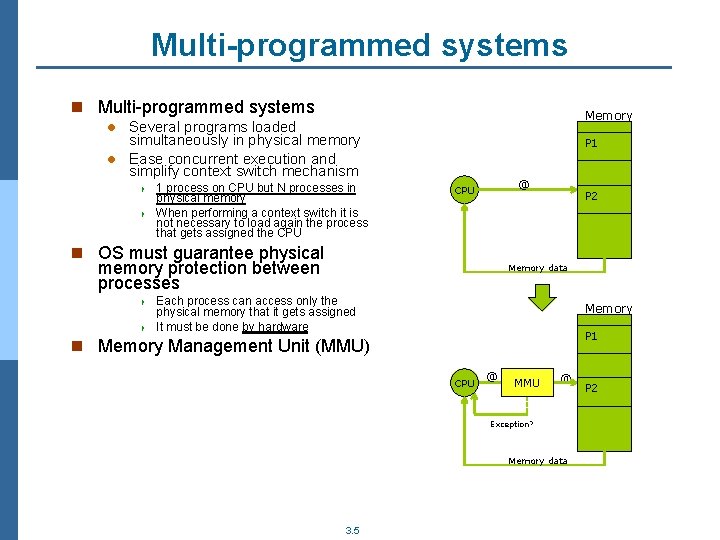

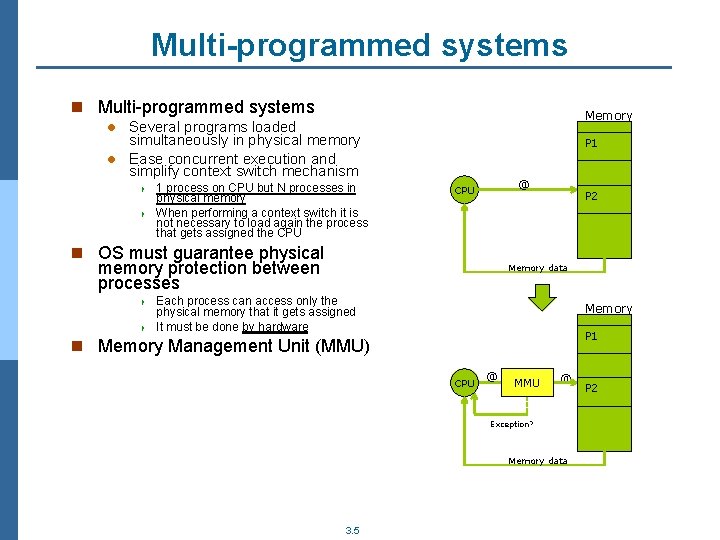

Multi-programmed systems

- Several programs are loaded simultaneously in physical memory
  - Eases concurrent execution and simplifies the context switch mechanism
    - Only 1 process runs on the CPU, but N processes are in physical memory
    - When performing a context switch, it is not necessary to reload the process that gets the CPU
- The OS must guarantee physical memory protection between processes
  - Each process can access only the physical memory assigned to it
  - It must be done by hardware: the Memory Management Unit (MMU)

(Figure: processes P1 and P2 are both in memory. Without an MMU, the CPU sends addresses directly to memory; with an MMU, every address (@) goes through the MMU, which either forwards it to memory or raises an exception.)

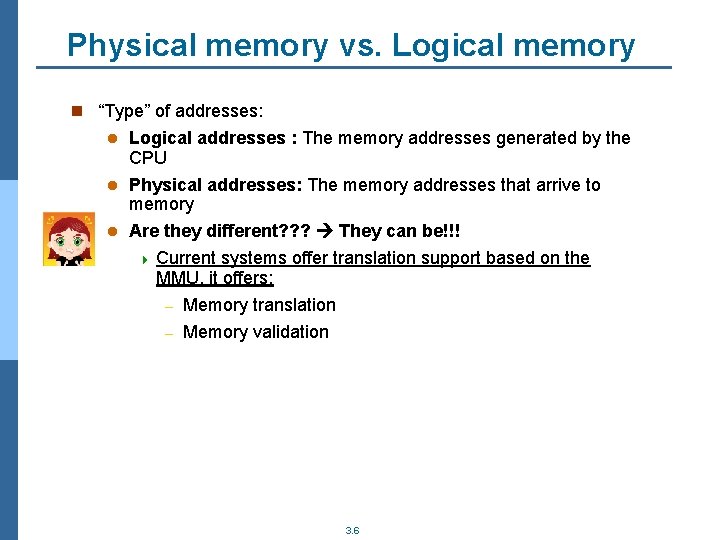

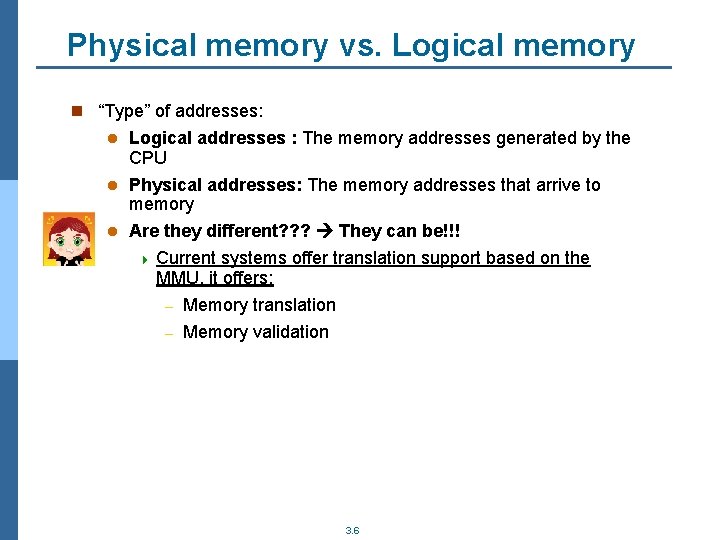

Physical memory vs. logical memory

- "Types" of addresses:
  - Logical addresses: the memory addresses generated by the CPU
  - Physical addresses: the memory addresses that reach memory
- Are they different? They can be!
  - Current systems provide translation support based on the MMU, which offers:
    - Memory translation
    - Memory validation

![Address spaces](https://slidetodoc.com/presentation_image_h/14874195ee55d3aa839985de2b28f4d7/image-7.jpg)

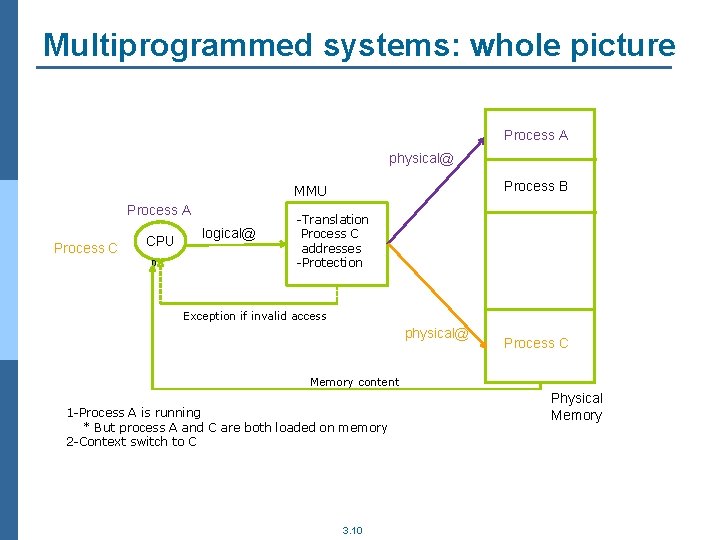

Address spaces

- Address space: range of addresses [@first_address…@last_address]
- The concept is applied in different contexts:
  - Processor address space
  - Process logical address space
    - Subset of logical addresses that a process can reference (the OS kernel decides which addresses are valid for each process)
  - Process physical address space
- Relationship between logical and physical addresses:
  - Without translation: logical address space == physical address space
  - With translation, it can be done at different moments:
    - Option 1, during program loading: the kernel decides where to place the process in memory and translates the references when the program is loaded
    - Option 2, during program execution: each issued reference is translated at runtime (this is the normal behavior in current systems)

Assignment of addresses to processes

- Other choices exist, but current general-purpose systems translate the addresses of instructions and data at runtime
- Since logical addresses are decoupled from physical addresses:
  - Many processes can use the same logical addresses without problem
    - fork! Parent and child have the same logical address space without conflict
    - The compiler can translate program references to memory without worrying about other programs' references or about which physical addresses will be available when the process starts execution
  - Processes can change their position in memory without changing their logical address space
    - Example: paging (explained in the EC course)
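The fork case can be observed from user space. The sketch below assumes a POSIX system; the global `counter` and the function `fork_same_address_demo` are illustrative names. Both processes print the same logical address `&counter`, yet the child's write is not visible in the parent, because that logical address is translated to different physical memory once the child writes.

```c
#include <stdio.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

static int counter = 0;   /* global: same logical address in parent and child */

/* Returns 1 if the child's write to counter (42) is invisible to the parent,
 * even though both processes use the same logical address &counter. */
int fork_same_address_demo(void) {
    fflush(stdout);                       /* avoid duplicating buffered output */
    pid_t pid = fork();
    if (pid < 0)
        return -1;
    if (pid == 0) {                       /* child */
        counter = 42;
        printf("child : &counter=%p counter=%d\n", (void *)&counter, counter);
        fflush(stdout);
        _exit(counter);                   /* report the value the child saw */
    }
    int status;
    if (waitpid(pid, &status, 0) < 0)
        return -1;
    printf("parent: &counter=%p counter=%d\n", (void *)&counter, counter);
    return WIFEXITED(status) && WEXITSTATUS(status) == 42 && counter == 0;
}
```

Both lines print the same pointer value; only the contents differ.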

Multi-programmed systems with MMU support

- Collaboration between the MMU (HW) and the kernel (SW)
- MMU:
  - Implements the mechanism to detect illegal accesses:
    - Address outside the process logical address space
    - Valid address but invalid access
  - Throws an exception to the OS if a problem is detected during address translation
- Kernel:
  - Configures the MMU
  - Handles the exception according to the situation
    - For example, if the logical address is not valid, it can kill the process (SIGSEGV signal)

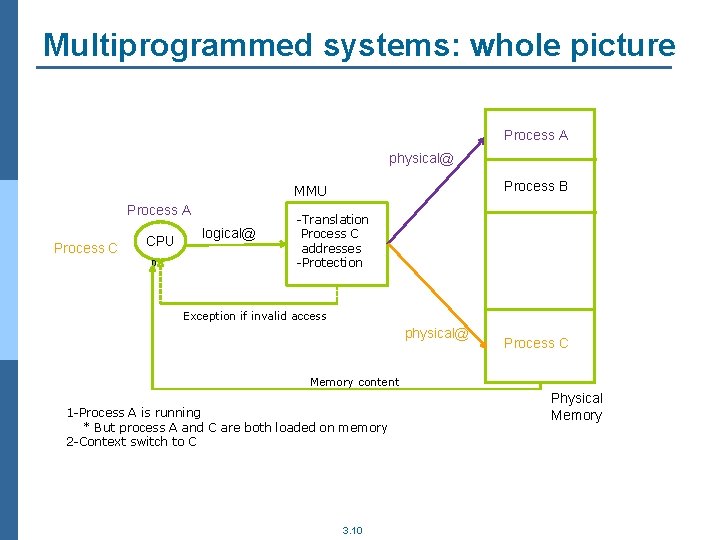

Multi-programmed systems: whole picture

(Figure: processes A, B, and C are loaded in physical memory. The CPU issues logical addresses; the MMU translates them into physical addresses (translation), checks them (protection), and raises an exception on an invalid access. Step 1: process A is running, but processes A and C are both loaded in memory. Step 2: context switch to C.)

When does the OS need to update the MMU?

- Case 1: when assigning memory
  - Initialization when assigning new memory (mutation: execlp)
  - Changes in the address space: it grows or shrinks
- Case 2: when switching contexts
  - For the process that leaves the CPU: if it has not finished, keep in its data structures (PCB) the information needed to configure the MMU when it resumes execution
  - For the process that resumes execution: configure the MMU
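Both cases can be modeled in a few lines. This is a hypothetical simulation, not kernel code: the "MMU" is reduced to one page-table base register (`mmu_pt_base`, playing the role of a register such as CR3 on x86), and `struct pcb`, `on_new_address_space`, and `context_switch` are illustrative names.

```c
#include <stdint.h>

/* Hypothetical PCB: keeps what the MMU needs when the process resumes. */
struct pcb {
    int pid;
    uintptr_t pt_base;          /* location of this process's page table */
};

static uintptr_t mmu_pt_base;   /* stand-in for the hardware PT base register */
static int tlb_flushes;         /* counts simulated TLB invalidations */

/* Case 1: the running process gets a new address space (e.g. after exec). */
void on_new_address_space(struct pcb *p, uintptr_t new_pt) {
    p->pt_base = new_pt;        /* keep the description in the PCB ... */
    mmu_pt_base = new_pt;       /* ... and reconfigure the MMU right away */
    tlb_flushes++;
}

/* Case 2: context switch from prev to next. */
void context_switch(struct pcb *prev, struct pcb *next) {
    prev->pt_base = mmu_pt_base;   /* save the MMU state of the leaving process */
    mmu_pt_base = next->pt_base;   /* load the MMU state of the resuming process */
    tlb_flushes++;                 /* cached translations belong to prev */
}
```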

OS tasks in memory management

- Program loading in memory
  - Once loaded, we have already seen how it works!
- Allocate/deallocate dynamic memory (requested through system calls)
- Shared memory between processes
  - COW: transparent sharing of read-only regions between processes
  - Shared memory explicitly requested through system calls (out of the scope of this course)
- Optimization services
  - COW
  - Virtual memory
  - Prefetch

OS BASIC SERVICES

- Program loading
- Dynamic memory
- Memory assignment
- Explicit shared memory between processes

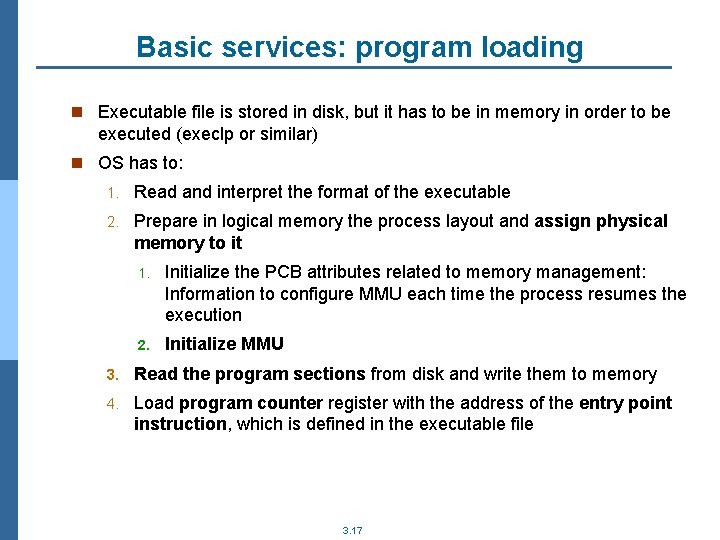

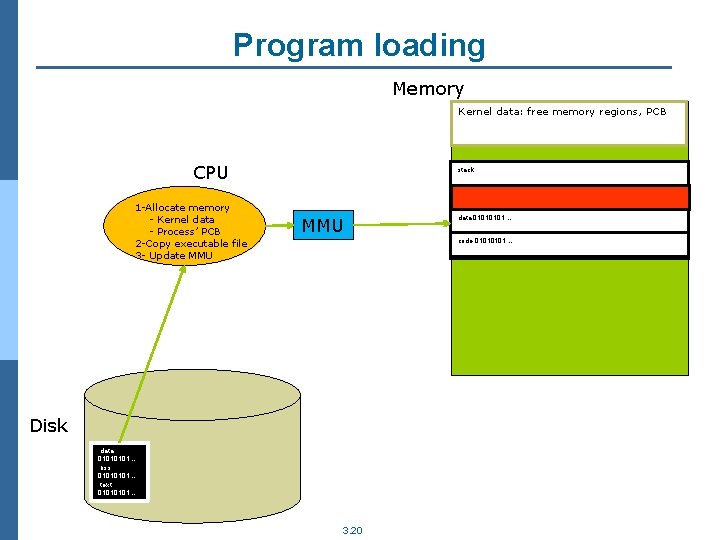

Basic services: program loading

- The executable file is stored on disk, but it has to be in memory to be executed (execlp or similar)
- The OS has to:
  1. Read and interpret the format of the executable
  2. Prepare the process layout in logical memory and assign physical memory to it
     - Initialize the PCB attributes related to memory management: the information needed to configure the MMU each time the process resumes execution
     - Initialize the MMU
  3. Read the program sections from disk and write them to memory
  4. Load the program counter register with the address of the entry-point instruction, which is defined in the executable file

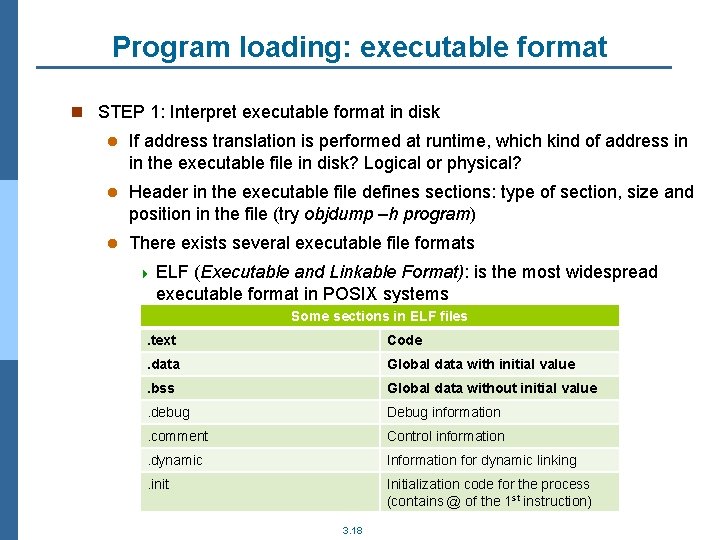

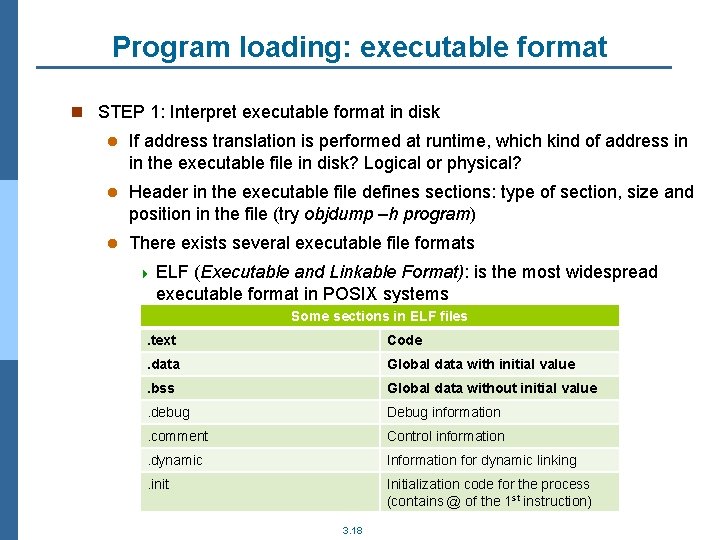

Program loading: executable format

- STEP 1: interpret the executable format on disk
  - If address translation is performed at runtime, which kind of address is stored in the executable file on disk? Logical or physical?
  - A header in the executable file defines the sections: type of section, size, and position in the file (try `objdump -h program`)
  - Several executable file formats exist
    - ELF (Executable and Linkable Format) is the most widespread executable format in POSIX systems

Some sections in ELF files:

| Section | Content |
|---|---|
| .text | Code |
| .data | Global data with initial value |
| .bss | Global data without initial value |
| .debug | Debug information |
| .comment | Control information |
| .dynamic | Information for dynamic linking |
| .init | Initialization code for the process, run before main (the entry-point address itself is stored in the ELF header) |
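A rough equivalent of `objdump -h` can be written with the structure definitions from `<elf.h>` (available on glibc-based Linux systems). This sketch assumes a 64-bit ELF file with the host's byte order; `list_elf_sections` is an illustrative name. It reads the ELF header, which holds the entry point and the location of the section table, then prints each section's name, size, and file offset.

```c
#include <elf.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Prints the section table of a 64-bit ELF file, roughly like `objdump -h`.
 * Returns the number of sections, or -1 on error. */
int list_elf_sections(const char *path) {
    int result = -1;
    Elf64_Shdr *sh = NULL;
    char *names = NULL;
    FILE *f = fopen(path, "rb");
    if (!f)
        return -1;

    Elf64_Ehdr eh;   /* ELF header: magic, class, entry point, section table location */
    if (fread(&eh, sizeof eh, 1, f) != 1 ||
        memcmp(eh.e_ident, ELFMAG, SELFMAG) != 0 ||
        eh.e_ident[EI_CLASS] != ELFCLASS64 ||
        eh.e_shentsize != sizeof(Elf64_Shdr) ||
        eh.e_shnum == 0 || eh.e_shstrndx >= eh.e_shnum)
        goto out;

    /* Section headers: type, size, and position of each section in the file. */
    sh = calloc(eh.e_shnum, sizeof *sh);
    if (!sh || fseek(f, (long)eh.e_shoff, SEEK_SET) != 0 ||
        fread(sh, sizeof *sh, eh.e_shnum, f) != eh.e_shnum)
        goto out;

    /* Section names live in their own string-table section (index e_shstrndx). */
    Elf64_Shdr *strtab = &sh[eh.e_shstrndx];
    names = malloc(strtab->sh_size + 1);
    if (!names || fseek(f, (long)strtab->sh_offset, SEEK_SET) != 0 ||
        fread(names, 1, strtab->sh_size, f) != strtab->sh_size)
        goto out;
    names[strtab->sh_size] = '\0';

    printf("entry point: 0x%lx\n", (unsigned long)eh.e_entry);
    for (int i = 0; i < eh.e_shnum; i++) {
        const char *name = sh[i].sh_name < strtab->sh_size ? names + sh[i].sh_name : "?";
        printf("%2d %-20s size 0x%08lx  file offset 0x%08lx\n", i, name,
               (unsigned long)sh[i].sh_size, (unsigned long)sh[i].sh_offset);
    }
    result = eh.e_shnum;
out:
    free(names);
    free(sh);
    fclose(f);
    return result;
}
```

On Linux, `list_elf_sections("/proc/self/exe")` lists the sections of the running program itself; the printed entry point is a logical address.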

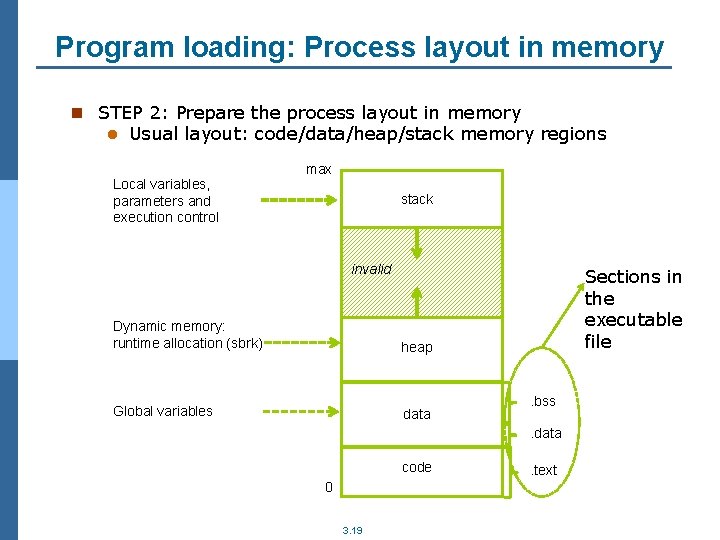

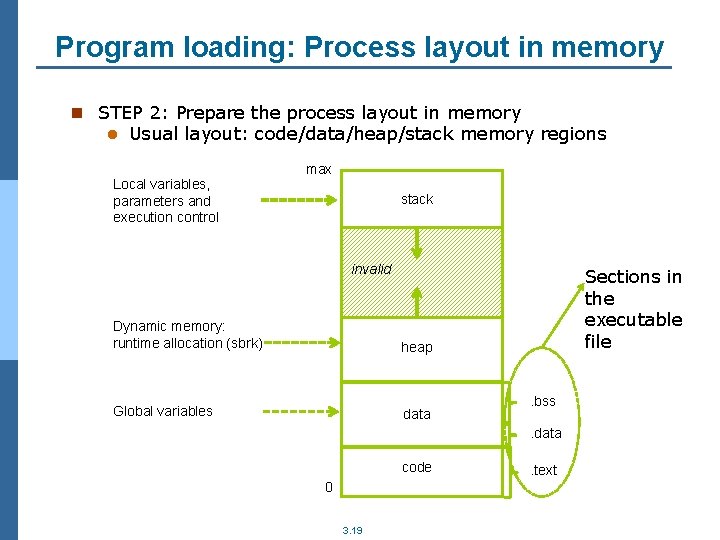

Program loading: process layout in memory

- STEP 2: prepare the process layout in memory
  - Usual layout: code/data/heap/stack memory regions

(Figure: layout from address 0 to max. Code (.text section of the executable file); data (global variables, from the .data and .bss sections); heap (dynamic memory, allocated at runtime with sbrk); an invalid gap; and, at the top, the stack (local variables, parameters, and execution control).)
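The layout can be checked by printing one address from each region. This is a sketch that assumes Linux on a typical 64-bit build; the variable and function names are illustrative. Exact values change between runs because of address-space layout randomization, but data, heap limit (`sbrk(0)`), and stack usually appear in that increasing order.

```c
#define _DEFAULT_SOURCE   /* exposes sbrk() in glibc */
#include <stdint.h>
#include <stdio.h>
#include <unistd.h>

int initialized_global = 7;   /* expected in .data */
int uninitialized_global;     /* expected in .bss  */

/* Prints one address per region and returns 1 if they follow the usual
 * order data < heap < stack. */
int show_layout(void) {
    int local = 0;                                   /* lives on the stack */
    uintptr_t code  = (uintptr_t)&show_layout;       /* .text */
    uintptr_t data  = (uintptr_t)&initialized_global;
    uintptr_t bss   = (uintptr_t)&uninitialized_global;
    uintptr_t heap  = (uintptr_t)sbrk(0);            /* current heap limit */
    uintptr_t stack = (uintptr_t)&local;

    printf("code  %#lx\ndata  %#lx\nbss   %#lx\nheap  %#lx\nstack %#lx\n",
           (unsigned long)code, (unsigned long)data, (unsigned long)bss,
           (unsigned long)heap, (unsigned long)stack);
    return data < heap && heap < stack;
}
```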

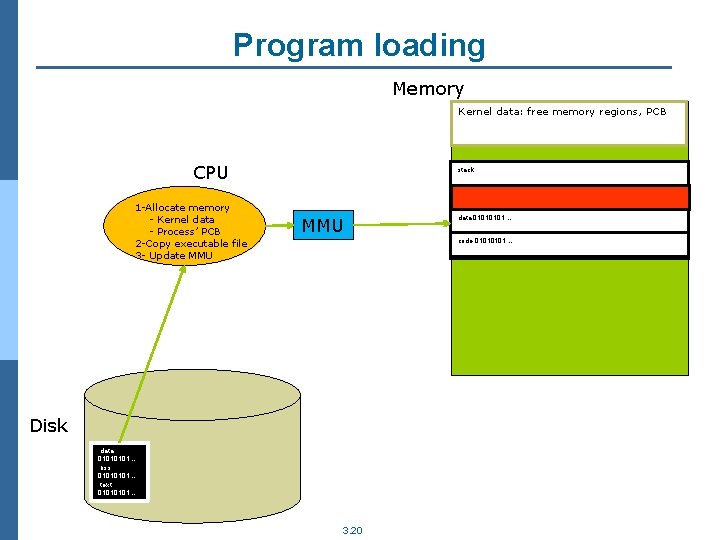

Program loading

(Figure: 1. allocate memory: kernel data (free memory regions) and the process PCB; 2. copy the executable file (.text, .data, and .bss sections) from disk into the code and data regions in memory; 3. update the MMU.)

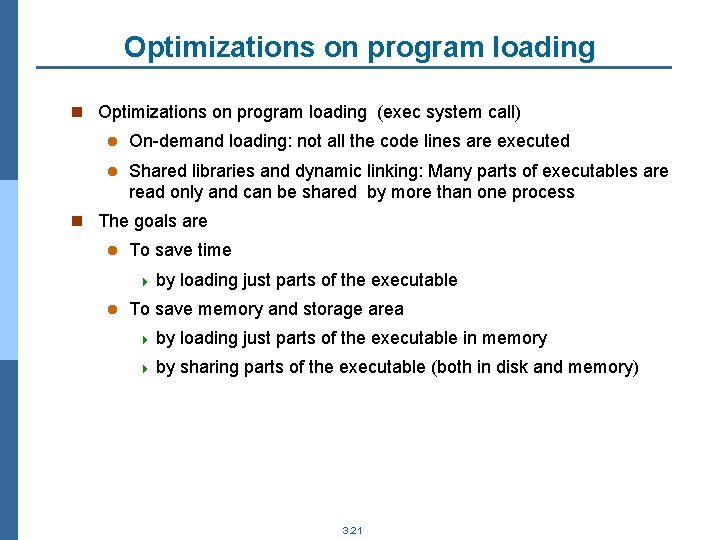

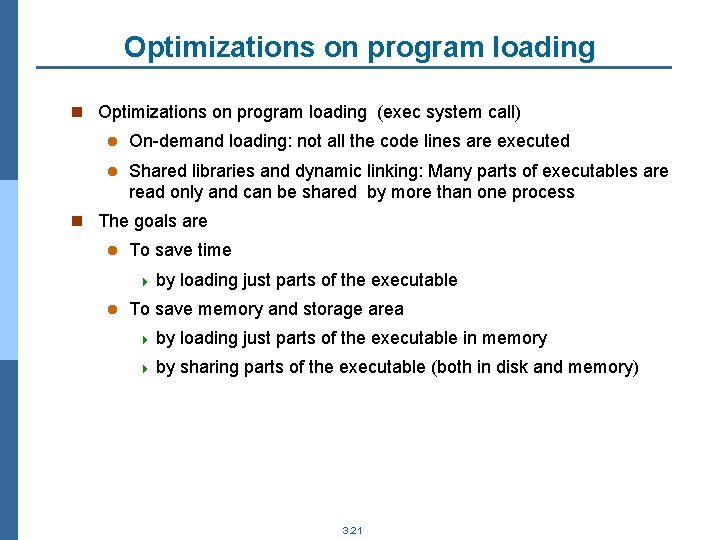

Optimizations on program loading

- Optimizations on program loading (exec system call):
  - On-demand loading: not all the code lines are executed
  - Shared libraries and dynamic linking: many parts of executables are read-only and can be shared by more than one process
- The goals are:
  - To save time
    - by loading just parts of the executable
  - To save memory and storage space
    - by loading just parts of the executable in memory
    - by sharing parts of the executable (both on disk and in memory)

Optimizations on program loading

- On-demand loading
  - Loading of routines is delayed until they are called
  - It requires a mechanism to detect whether an address is already in memory or not:
    - The real MMU information is stored in the PCB
    - The MMU exception code validates the memory address; if it is correct, it:
      - updates the memory content
      - updates the MMU and the PCB attributes
      - restarts the instruction

Optimizations on program loading

- Shared libraries and dynamic linking
  - Libraries can be generated in two different ways: static and dynamic versions
  - Executables can use the static or the dynamic version of libraries (the default is dynamic)
    - Static: the library code is included in the executable file
    - Dynamic: executable files (on disk) do not contain the dynamic library code, just a reference to it (a stub)
      - That saves a lot of disk space! (The link phase is delayed until runtime)
      - When executed, that code loads the library if it is not already loaded in memory and updates the process code to replace the call to the stub with the call to the routine in the shared library
  - Processes can share the memory areas that hold the same code (it is read-only), including the code of libraries
    - It saves a lot of memory space

BASIC SERVICES: DYNAMIC MEMORY

Dynamic memory allocation/deallocation

- System calls to ask for extra memory space or to reduce a previously reserved memory area
  - Heap area: region in the process address space that holds dynamic memory allocations
- Required when the size of a variable depends on runtime parameters
  - In this situation, it is not desirable to fix sizes at compile time: that causes over-allocation (wasted memory) or under-allocation (runtime error)
- Optimization
  - Physical memory assignment can be delayed until the first write access to the region
    - A zero-filled region is temporarily assigned to serve read accesses (it depends on the interface)

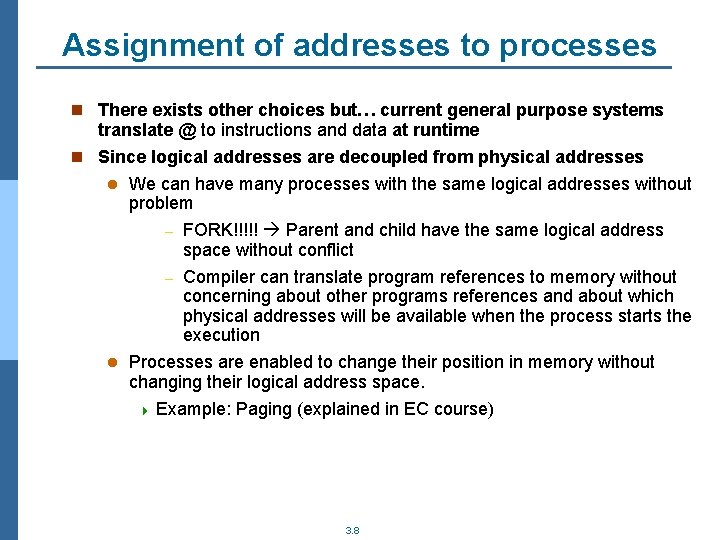

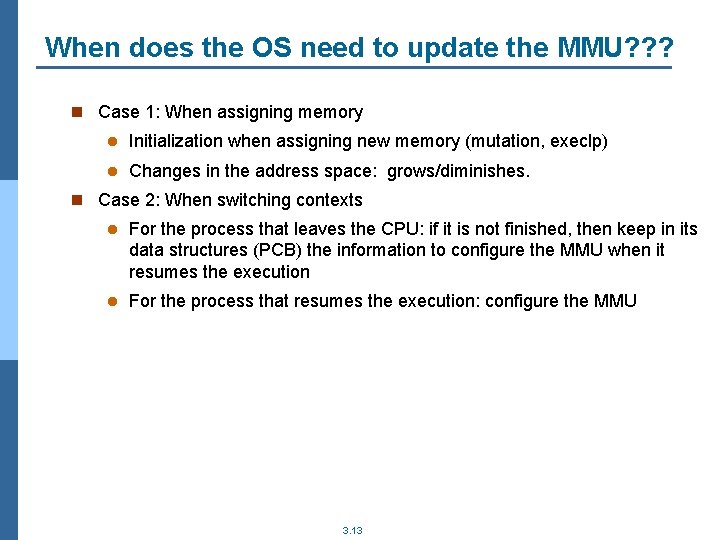

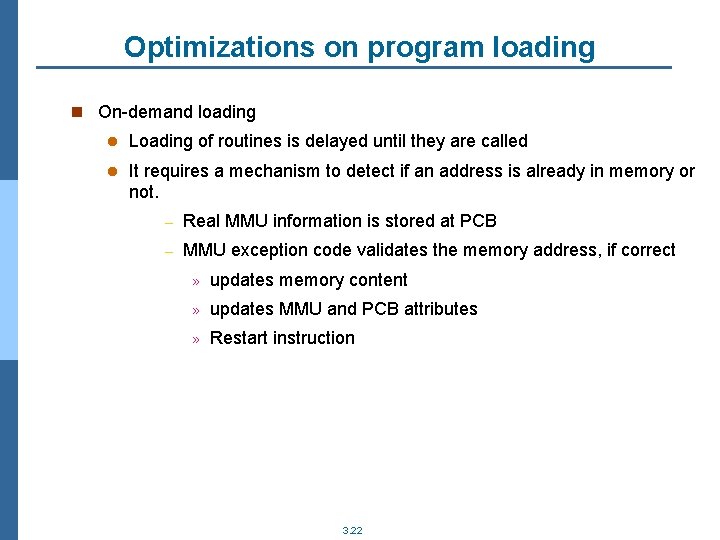

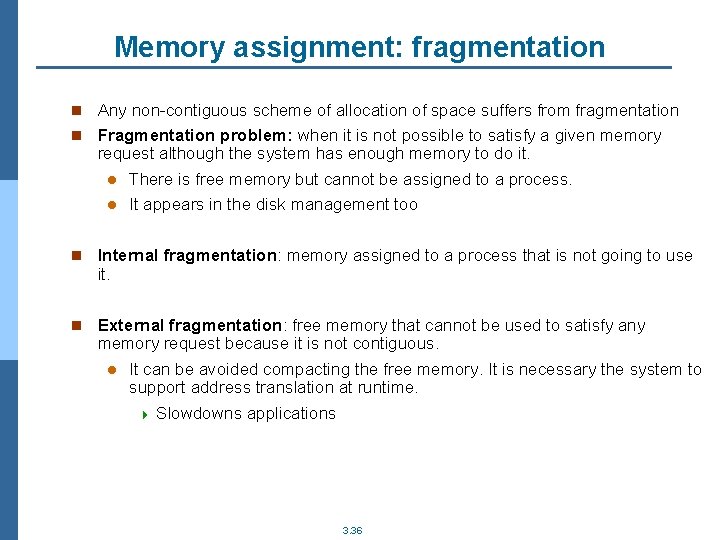

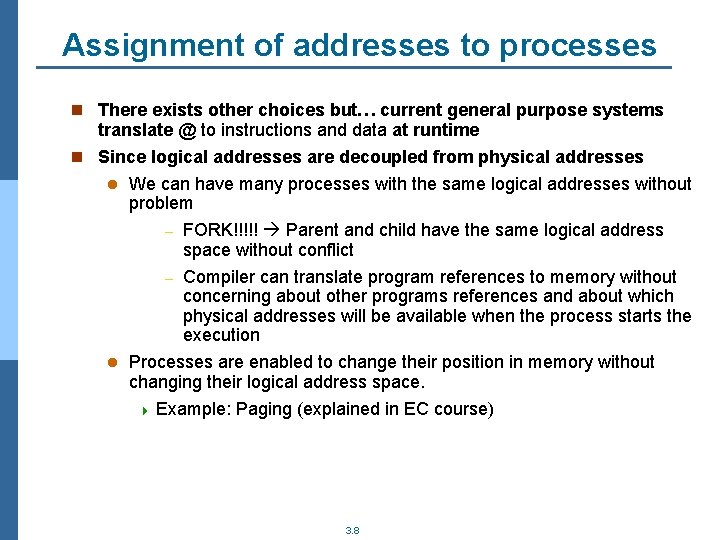

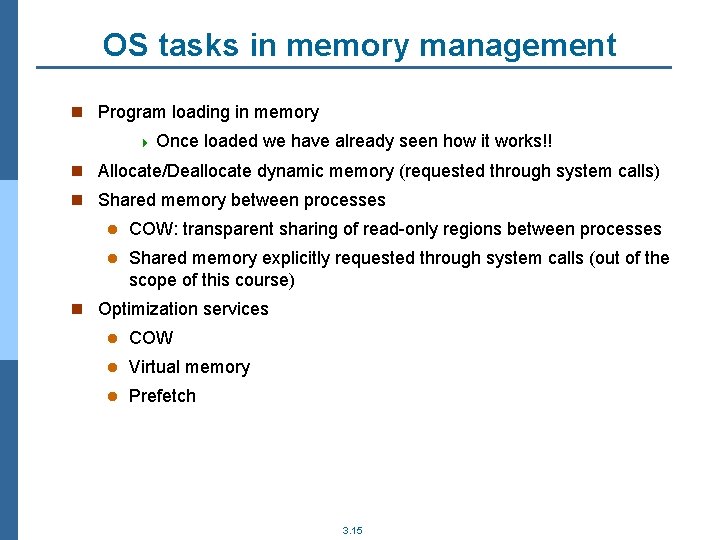

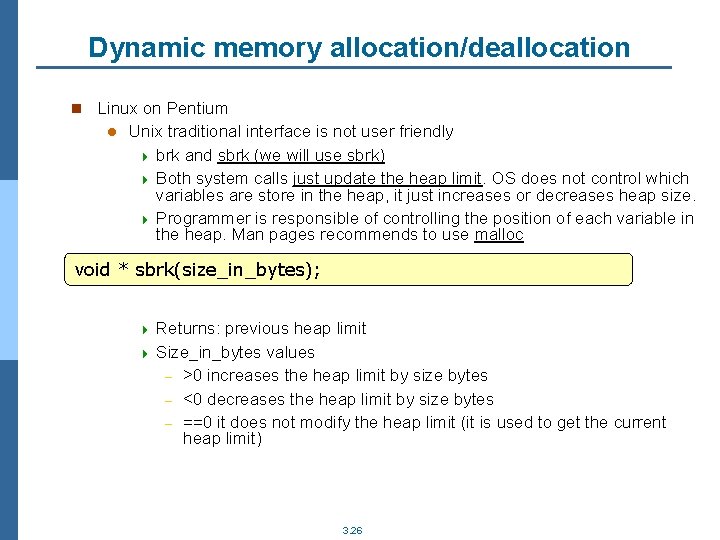

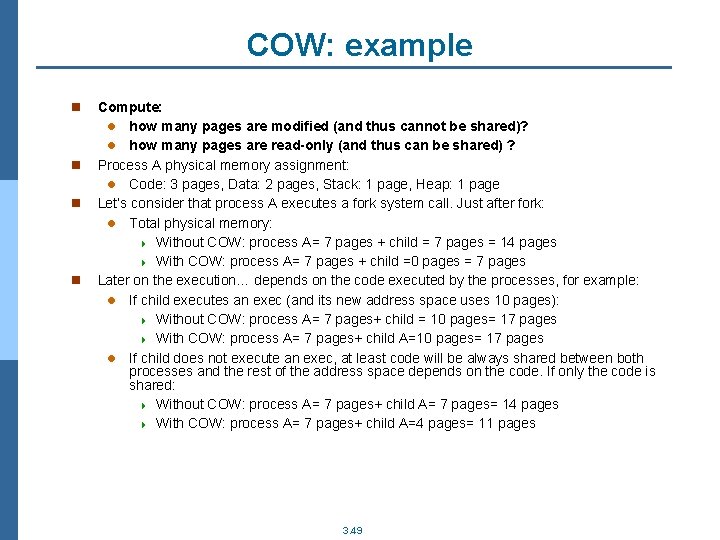

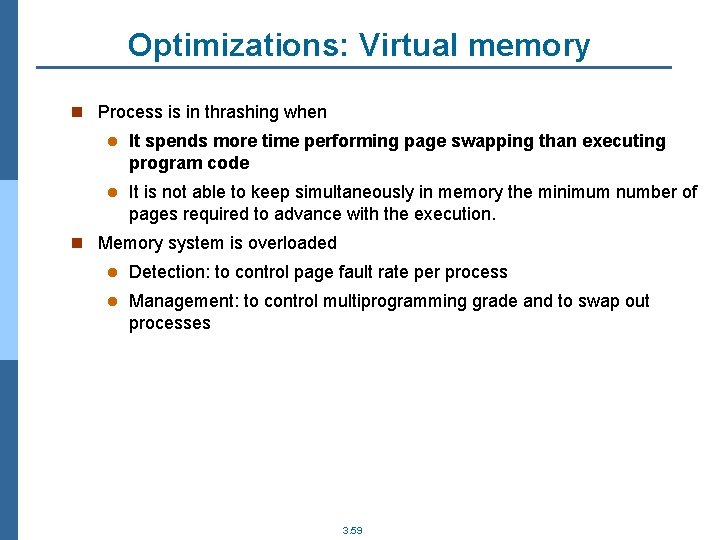

Dynamic memory allocation/deallocation

- Linux on Pentium
  - The traditional Unix interface is not user-friendly
    - brk and sbrk (we will use sbrk)
    - Both system calls just update the heap limit. The OS does not control which variables are stored in the heap; it just increases or decreases the heap size.
    - The programmer is responsible for controlling the position of each variable in the heap. The man pages recommend using malloc.

`void *sbrk(size_in_bytes);` Returns: the previous heap limit

- size_in_bytes values:
  - \>0: increases the heap limit by size bytes
  - <0: decreases the heap limit by size bytes
  - ==0: does not modify the heap limit (used to get the current heap limit)
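The three cases of the sbrk argument can be exercised directly. This sketch assumes Linux with glibc, where `sbrk` takes an `intptr_t` increment; `sbrk_demo` is an illustrative name. It queries the limit, grows it, checks that the return value is the previous limit, and shrinks it back.

```c
#define _DEFAULT_SOURCE   /* exposes sbrk() in glibc */
#include <stdint.h>
#include <unistd.h>

/* Grows the heap by n bytes, checks the documented return values, and shrinks
 * it back. Returns 1 if sbrk behaved as described, 0 otherwise, -1 on failure. */
int sbrk_demo(intptr_t n) {
    char *start = sbrk(0);          /* 0: query the current heap limit */
    char *prev  = sbrk(n);          /* >0: grow; returns the previous limit */
    if (prev == (char *)-1)
        return -1;
    char *after_grow = sbrk(0);
    sbrk(-n);                       /* <0: shrink by the same amount */
    char *after_shrink = sbrk(0);
    return prev == start && after_grow == start + n && after_shrink == start;
}
```

No stdio call is made between the sbrk calls, so no library allocation can move the heap limit in the middle of the check.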

![Sbrk: example](https://slidetodoc.com/presentation_image_h/14874195ee55d3aa839985de2b28f4d7/image-24.jpg)

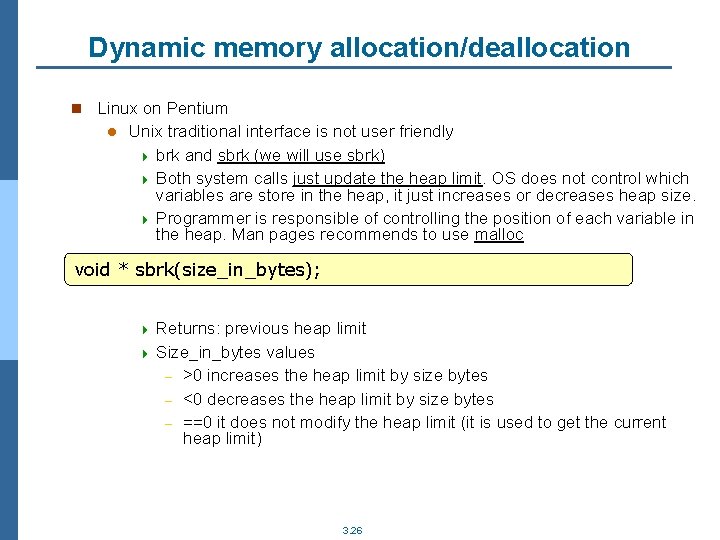

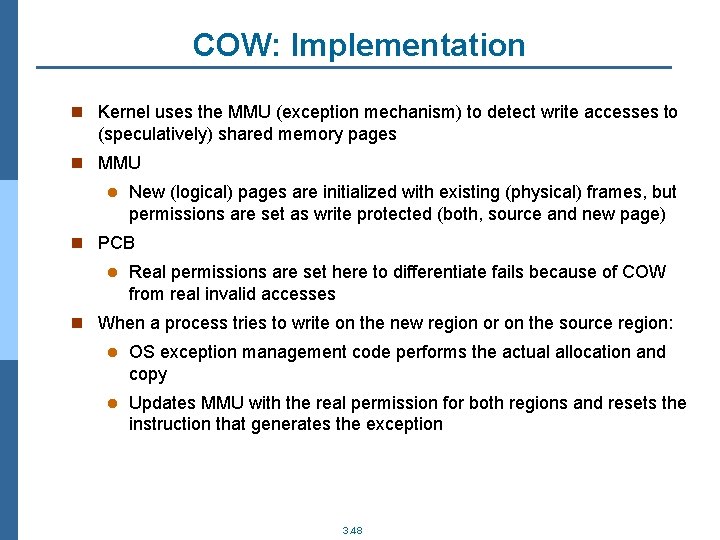

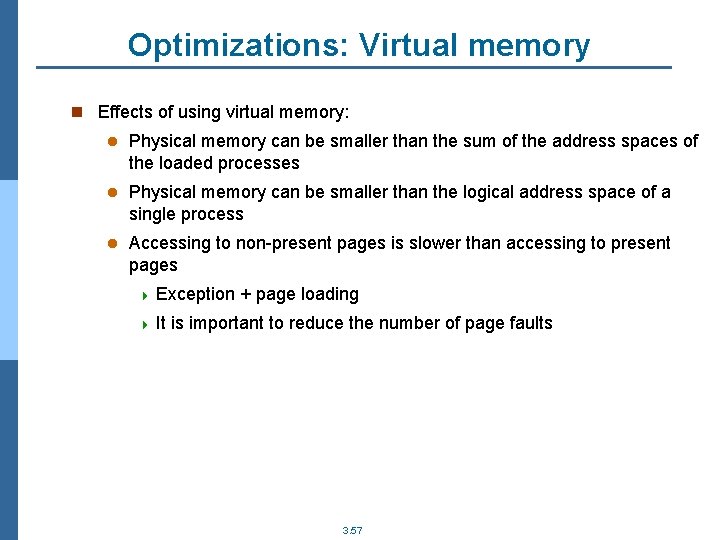

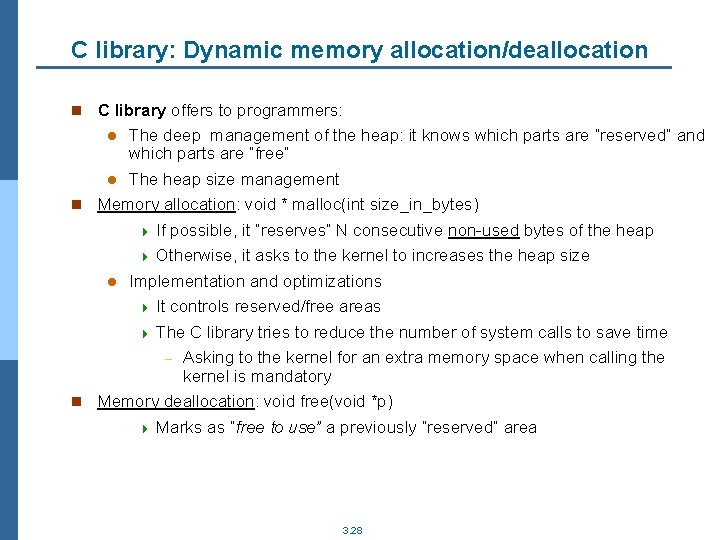

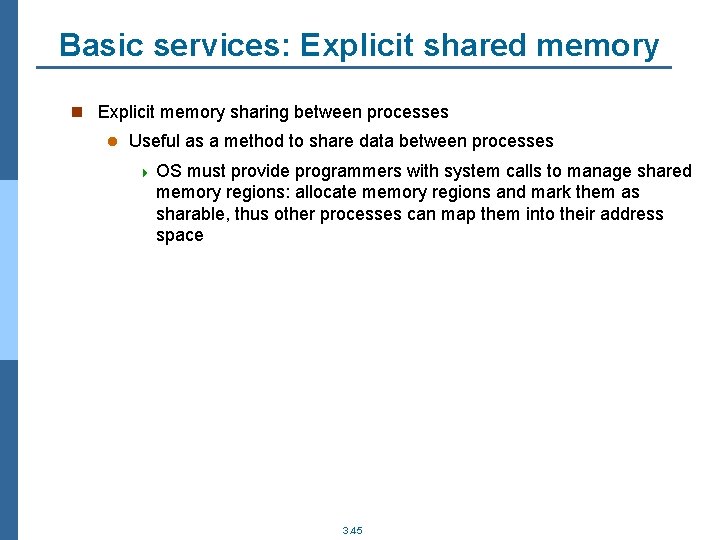

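Sbrk: example

```c
#define _DEFAULT_SOURCE                        /* exposes sbrk() in glibc */
#include <stdint.h>
#include <stdlib.h>
#include <unistd.h>

int main(int argc, char *argv[])
{
    int procs_nb = argc > 1 ? atoi(argv[1]) : 0;
    int *pids;

    if (procs_nb <= 0) return 1;
    pids = sbrk(procs_nb * sizeof(int));       /* grow heap: one pid per child */
    if (pids == (void *)-1) return 1;
    for (int i = 0; i < procs_nb; i++) {
        pids[i] = fork();
        if (pids[i] == 0) {
            /* …. (child code) */
            exit(0);                           /* child must not keep forking */
        }
    }
    sbrk(-(intptr_t)(procs_nb * sizeof(int))); /* shrink heap back */
    return 0;
}
```

(Figure: address space from 0 to max with CODE, DATA, HEAP, and STACK regions; the heap grows and shrinks with sbrk.)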

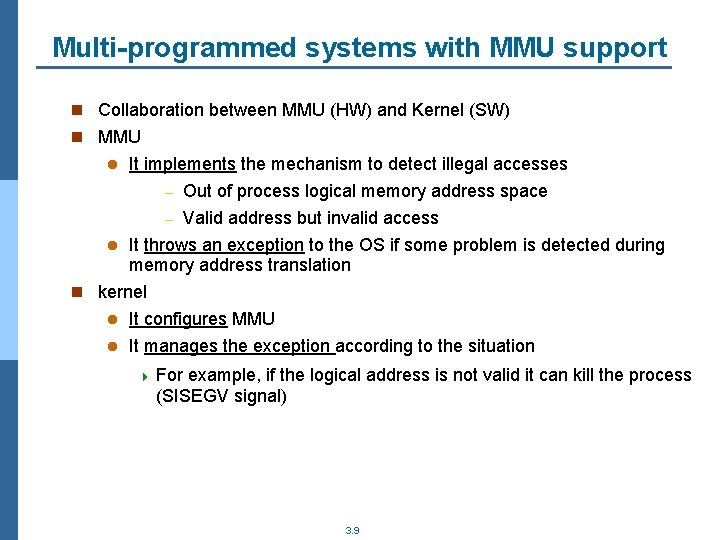

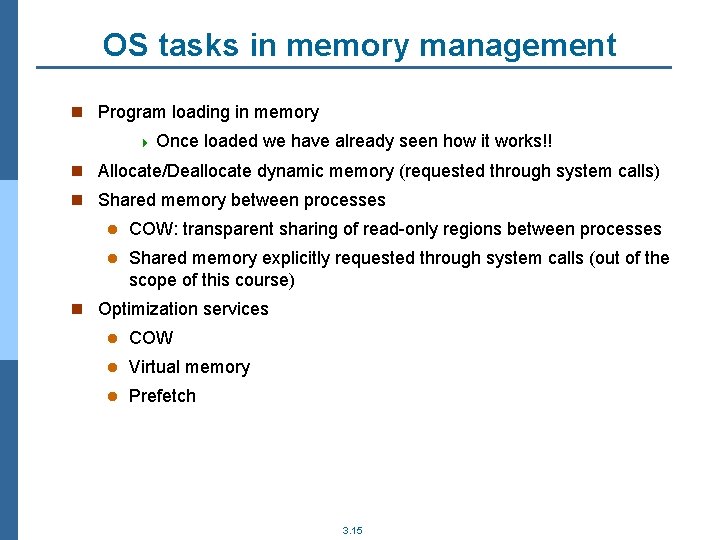

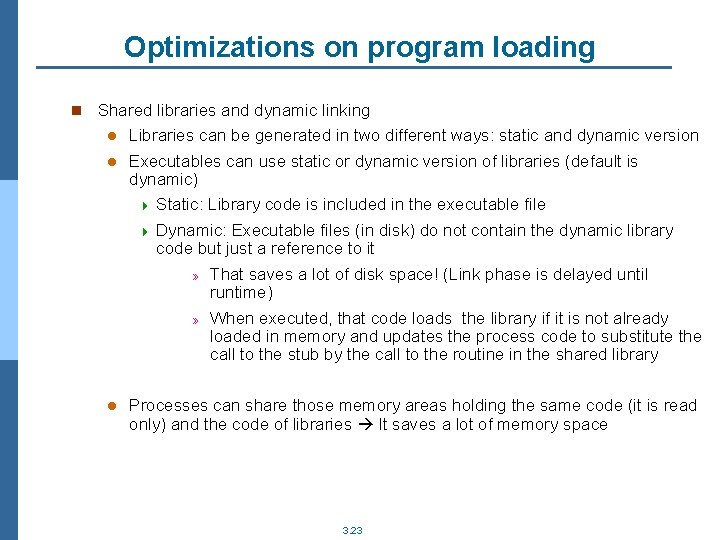

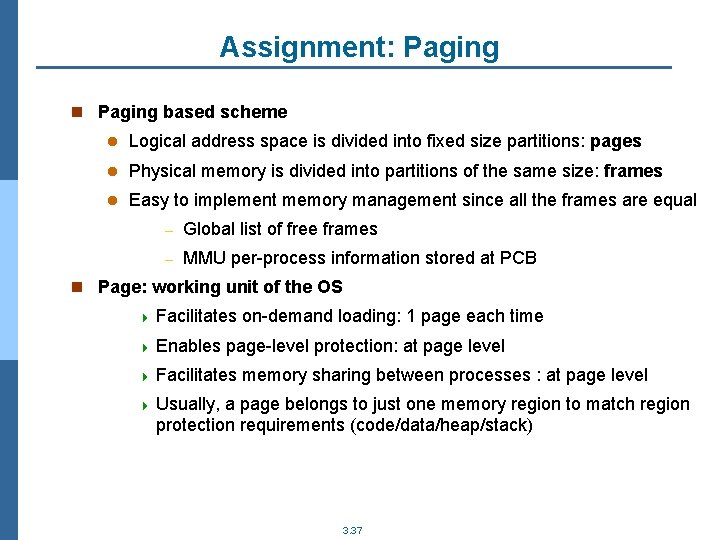

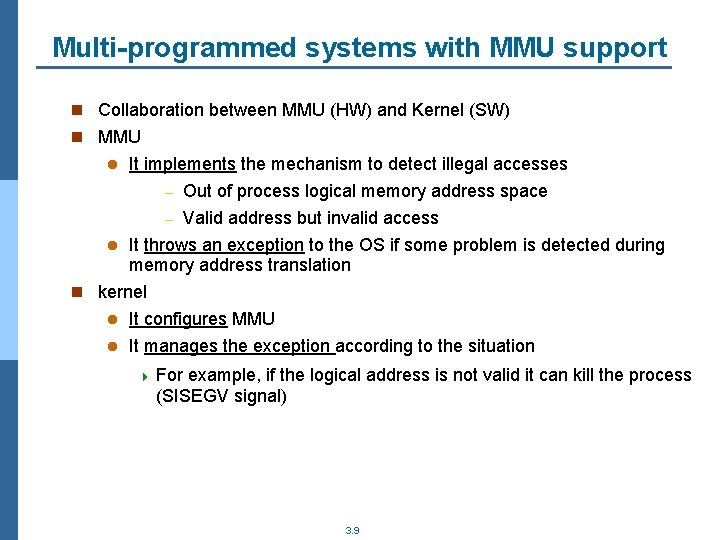

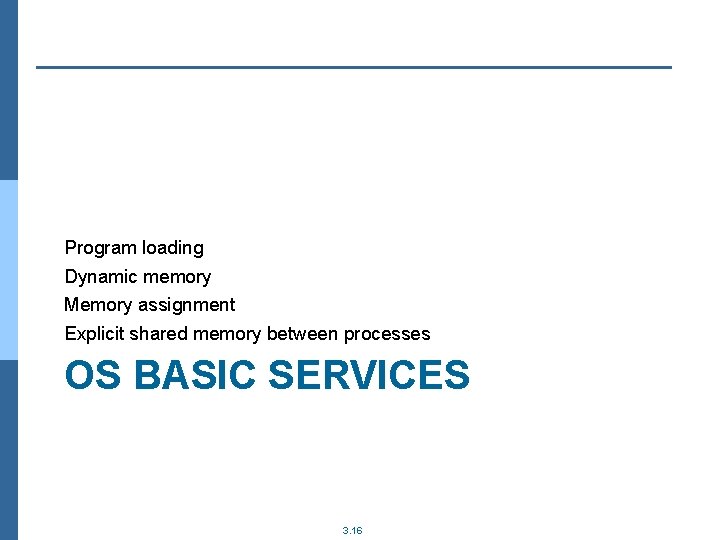

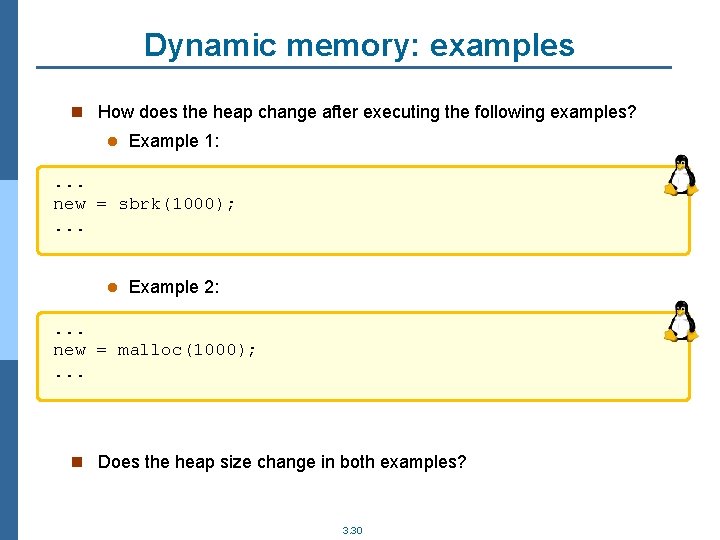

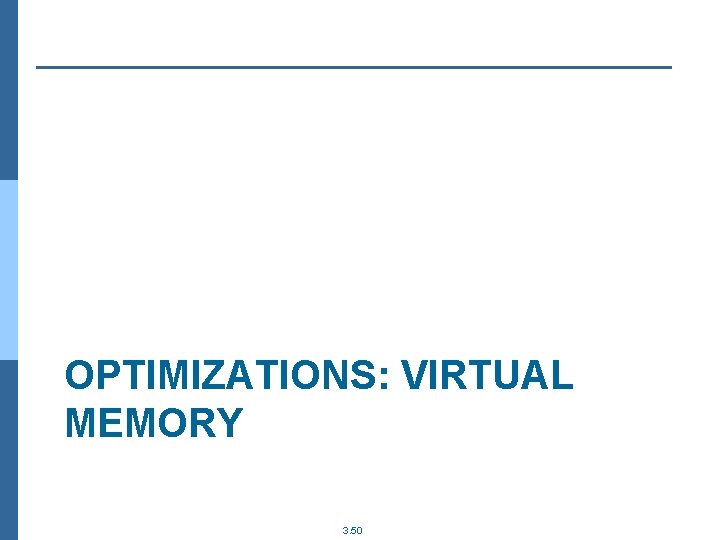

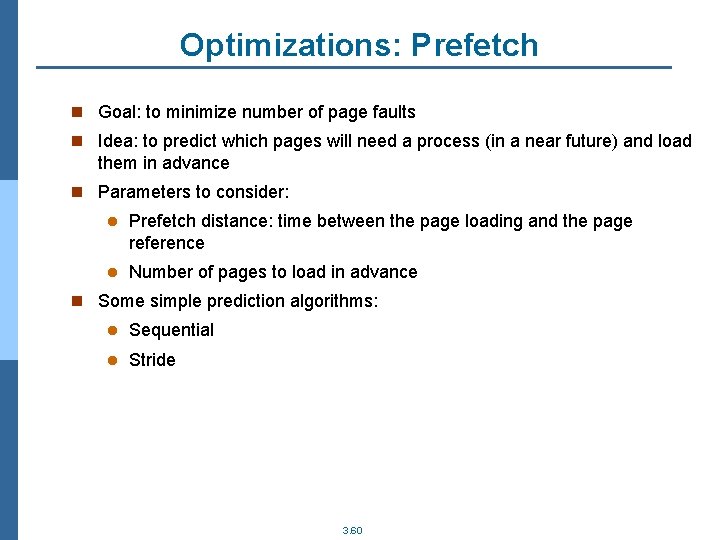

C library: dynamic memory allocation/deallocation

- The C library offers programmers:
  - Detailed management of the heap: it knows which parts are "reserved" and which are "free"
  - Heap size management
- Memory allocation: `void *malloc(size_t size_in_bytes)`
  - If possible, it "reserves" N consecutive unused bytes of the heap
  - Otherwise, it asks the kernel to increase the heap size
  - Implementation and optimizations:
    - It keeps track of reserved/free areas
    - It tries to reduce the number of system calls to save time
      - When calling the kernel is unavoidable, it asks for extra memory space beyond the current request
- Memory deallocation: `void free(void *p)`
  - Marks a previously "reserved" area as "free to use"
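The "fewer system calls" optimization can be illustrated with a bump allocator on top of sbrk. This is not how glibc's malloc is implemented: `toy_malloc`, `TOY_CHUNK`, and the counters are hypothetical, and the allocator never reuses memory (there is no free list), so it shows only the batching idea. It grows the heap in 64 KiB steps and serves small requests from that area without entering the kernel.

```c
#define _DEFAULT_SOURCE   /* exposes sbrk() in glibc */
#include <stddef.h>
#include <stdint.h>
#include <unistd.h>

#define TOY_CHUNK (64 * 1024)     /* hypothetical growth step */

static char *toy_cur;             /* first unused byte obtained from the kernel */
static char *toy_end;             /* heap limit after our last sbrk call */
static int toy_sbrk_calls;        /* number of system calls issued */

/* Reserves n bytes (rounded up to 16) and calls sbrk only when the
 * previously obtained area is exhausted. */
void *toy_malloc(size_t n) {
    n = (n + 15) & ~(size_t)15;
    if (toy_cur == NULL || (size_t)(toy_end - toy_cur) < n) {
        size_t grow = (n > TOY_CHUNK ? n : TOY_CHUNK) + 16;   /* ask for extra */
        char *p = sbrk((intptr_t)grow);
        toy_sbrk_calls++;
        if (p == (char *)-1)
            return NULL;
        if (p != toy_end)   /* not contiguous with our area: start a new one */
            toy_cur = (char *)(((uintptr_t)p + 15) & ~(uintptr_t)15);
        toy_end = p + grow;
    }
    void *r = toy_cur;
    toy_cur += n;
    return r;
}
```

After the first call, about 580 further 100-byte requests fit in the first chunk before a second system call is needed.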

![malloc/free: example](https://slidetodoc.com/presentation_image_h/14874195ee55d3aa839985de2b28f4d7/image-26.jpg)

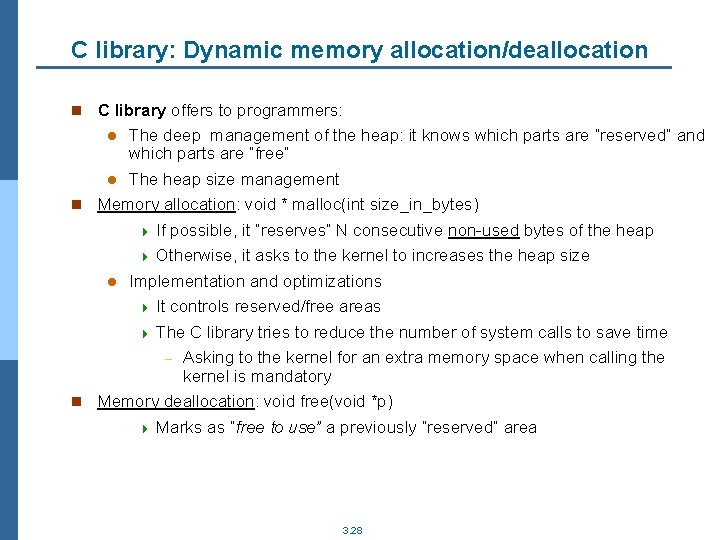

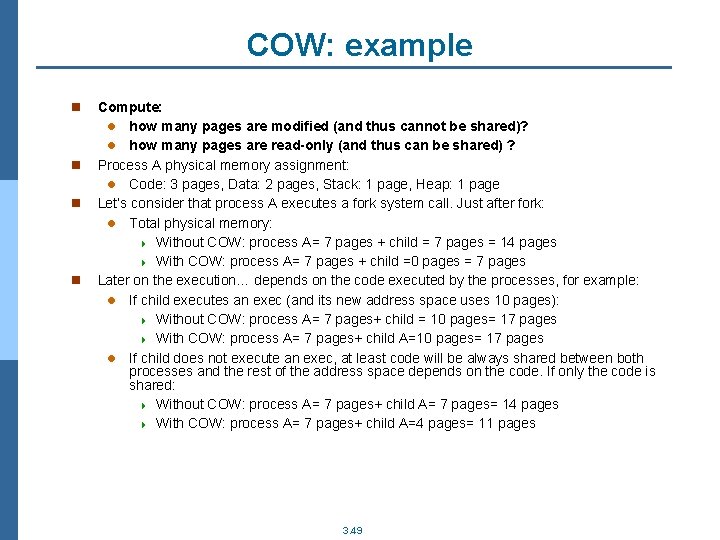

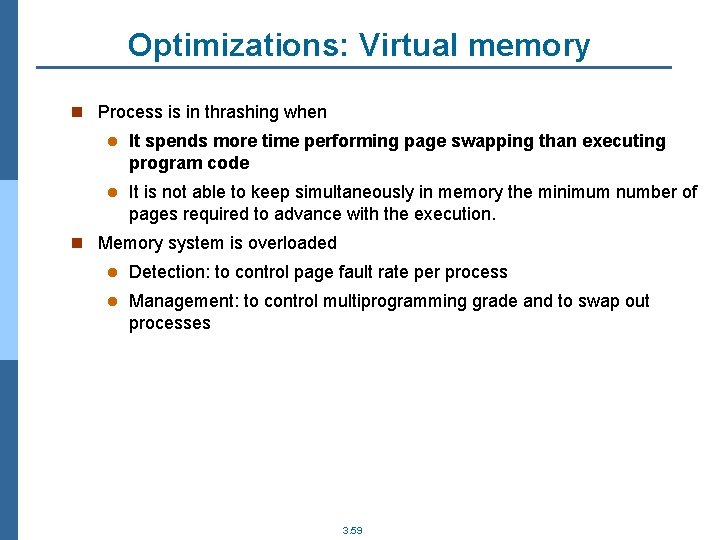

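malloc/free: example

```c
#include <stdlib.h>
#include <unistd.h>

int main(int argc, char *argv[])
{
    int procs_nb = argc > 1 ? atoi(argv[1]) : 0;
    int *pids;

    if (procs_nb <= 0) return 1;
    pids = malloc(procs_nb * sizeof(int));   /* one pid per child */
    if (pids == NULL) return 1;
    for (int i = 0; i < procs_nb; i++) {
        pids[i] = fork();
        if (pids[i] == 0) {
            /* …. (child code) */
            exit(0);                         /* child must not keep forking */
        }
    }
    free(pids);                              /* base address returned by malloc */
    return 0;
}
```

- The malloc interface is like the sbrk interface. The free interface needs, as its input parameter, a pointer to the base address of the region.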

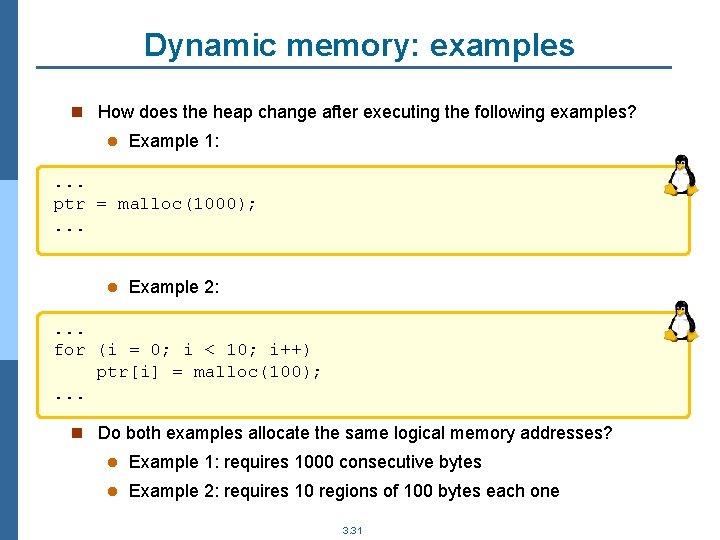

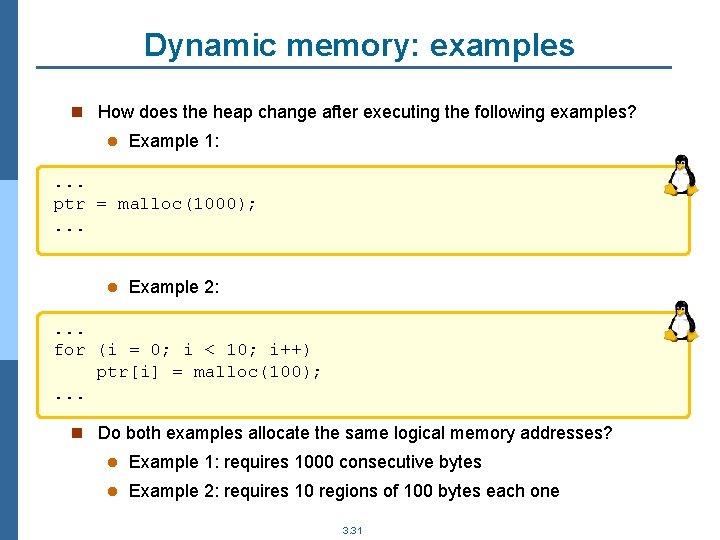

Dynamic memory: examples

- How does the heap change after executing the following examples?
  - Example 1: `... new = sbrk(1000); ...`
  - Example 2: `... new = malloc(1000); ...`
- Does the heap size change in both examples?

Dynamic memory: examples

- How does the heap change after executing the following examples?
  - Example 1: `... ptr = malloc(1000); ...`
  - Example 2: `... for (i = 0; i < 10; i++) ptr[i] = malloc(100); ...`
- Do both examples allocate the same logical memory addresses?
  - Example 1 requires 1000 consecutive bytes
  - Example 2 requires 10 regions of 100 bytes each

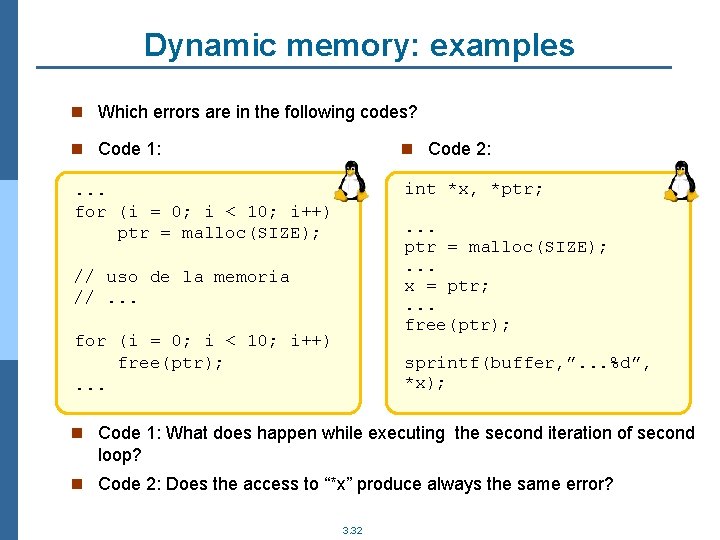

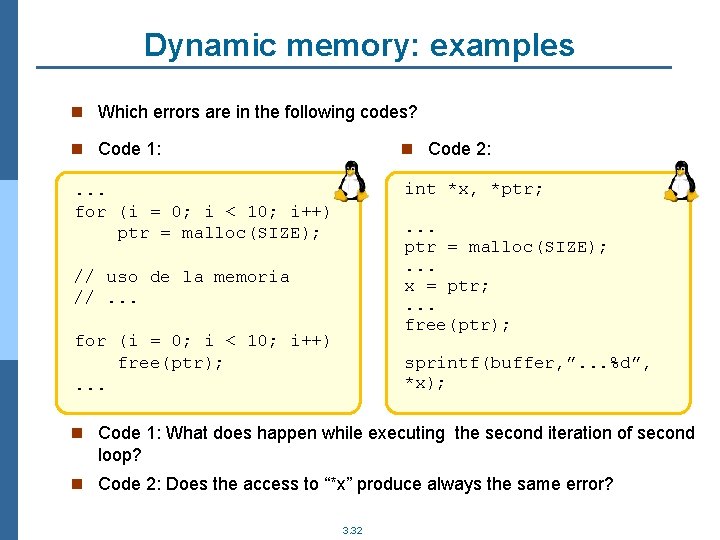

Dynamic memory: examples

- Which errors are in the following codes?

Code 1:

```c
...
for (i = 0; i < 10; i++)
    ptr = malloc(SIZE);
// use of the memory
// ...
for (i = 0; i < 10; i++)
    free(ptr);
...
```

Code 2:

```c
int *x, *ptr;
...
ptr = malloc(SIZE);
...
x = ptr;
...
free(ptr);
...
sprintf(buffer, "...%d", *x);
```

- Code 1: what happens while executing the second iteration of the second loop?
- Code 2: does the access to `*x` always produce the same error?

BASIC SERVICES: MEMORY ASSIGNMENT

- Fixed partitions: paging
- Variable partitions: segmentation

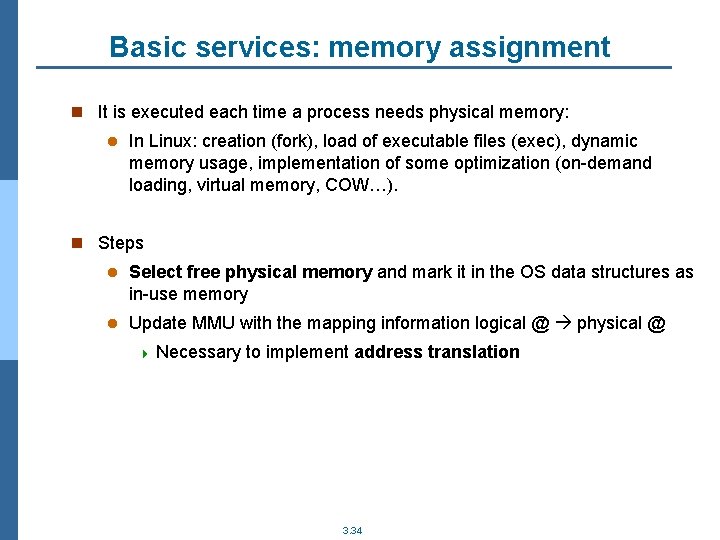

Basic services: memory assignment

- It is executed each time a process needs physical memory:
  - In Linux: process creation (fork), loading of executable files (exec), dynamic memory usage, and the implementation of some optimizations (on-demand loading, virtual memory, COW…)
- Steps:
  - Select free physical memory and mark it as in use in the OS data structures
  - Update the MMU with the mapping information logical @ → physical @
    - Necessary to implement address translation
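The two steps can be sketched as a simulation, assuming a tiny machine with 8 frames and 16 logical pages per process. The names `frame_used`, `alloc_frame`, `assign_page`, and `struct pte` are hypothetical; `frame_used` plays the OS data structure and the `pte` array plays the MMU mapping.

```c
#include <stdint.h>

#define NFRAMES 8    /* hypothetical tiny physical memory */
#define NPAGES  16   /* hypothetical logical pages per process */

static uint8_t frame_used[NFRAMES];   /* OS data structure: 1 = in use */

struct pte { int valid; int frame; }; /* one MMU mapping: page -> frame */

/* Step 1: select a free frame and mark it as in use. Returns -1 if none. */
int alloc_frame(void) {
    for (int f = 0; f < NFRAMES; f++)
        if (!frame_used[f]) {
            frame_used[f] = 1;
            return f;
        }
    return -1;
}

/* Step 2: record the logical page -> physical frame mapping for the MMU. */
int assign_page(struct pte pt[NPAGES], int page) {
    int f = alloc_frame();
    if (f < 0)
        return -1;   /* no free memory (virtual memory would evict a victim) */
    pt[page].valid = 1;
    pt[page].frame = f;
    return f;
}
```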

Basic services: memory assignment

- First approach: contiguous assignment
  - The process physical address space is contiguous
    - The whole process is loaded into a partition that is selected at loading time
  - It is not flexible, and it complicates applying optimizations (for example, on-demand loading) and services such as dynamic memory
- Non-contiguous assignment
  - The process physical address space is not contiguous
  - Flexible
  - Increases the complexity of the OS and the MMU
- Based on:
  - Fixed partitions: paging
  - Variable partitions: segmentation
  - Combined schemes
    - For example, segmentation at a first level and paging at a second level
  - Explained in the EC course

Memory assignment: fragmentation

- Any non-contiguous allocation scheme suffers from fragmentation
- Fragmentation problem: a memory request cannot be satisfied even though the system has enough free memory in total
  - There is free memory, but it cannot be assigned to a process
  - It also appears in disk management
- Internal fragmentation: memory assigned to a process that the process is not going to use
- External fragmentation: free memory that cannot satisfy any memory request because it is not contiguous
  - It can be eliminated by compacting the free memory, which requires the system to support address translation at runtime
    - Compaction slows down applications
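Internal fragmentation is simple arithmetic: a request is rounded up to whole allocation units (for example, 4 KB pages), and the rest of the last unit is wasted. A minimal helper, with `internal_fragmentation` as an illustrative name and `unit` assumed to be nonzero:

```c
#include <stddef.h>

/* Bytes wasted inside the last unit when a request of `request` bytes is
 * served with allocation units of `unit` bytes (e.g. 4096-byte pages). */
size_t internal_fragmentation(size_t request, size_t unit) {
    size_t units = (request + unit - 1) / unit;   /* round up to whole units */
    return units * unit - request;
}
```

For example, 10000 bytes with 4096-byte pages need 3 pages (12288 bytes), so 2288 bytes are lost to internal fragmentation.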

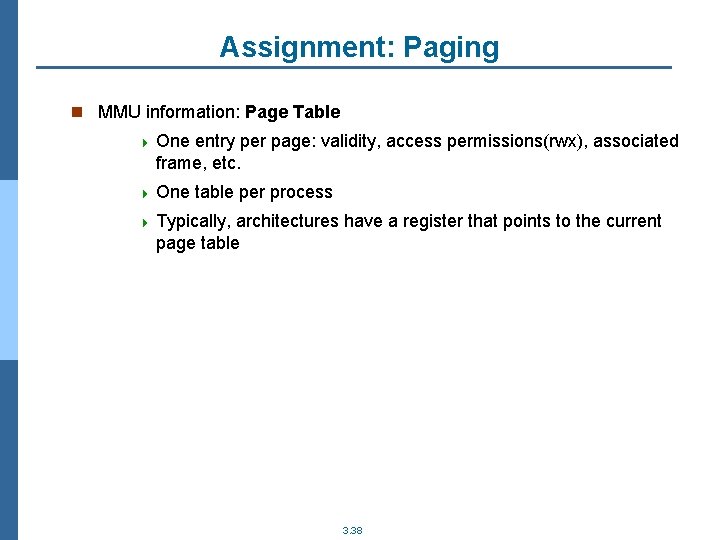

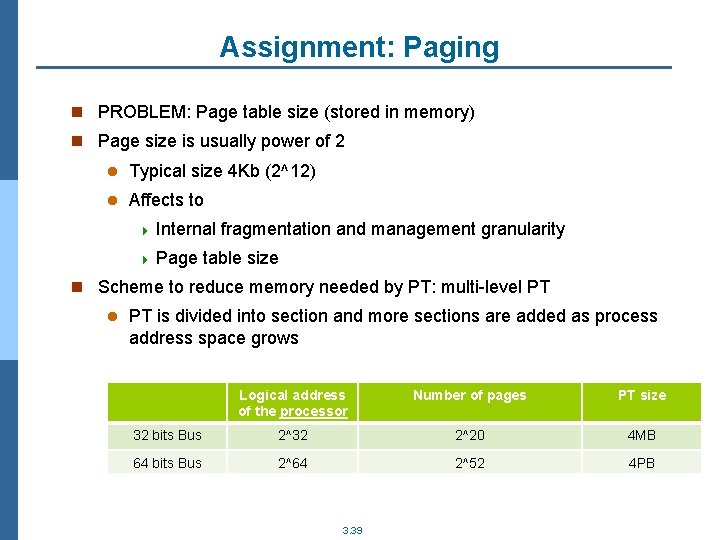

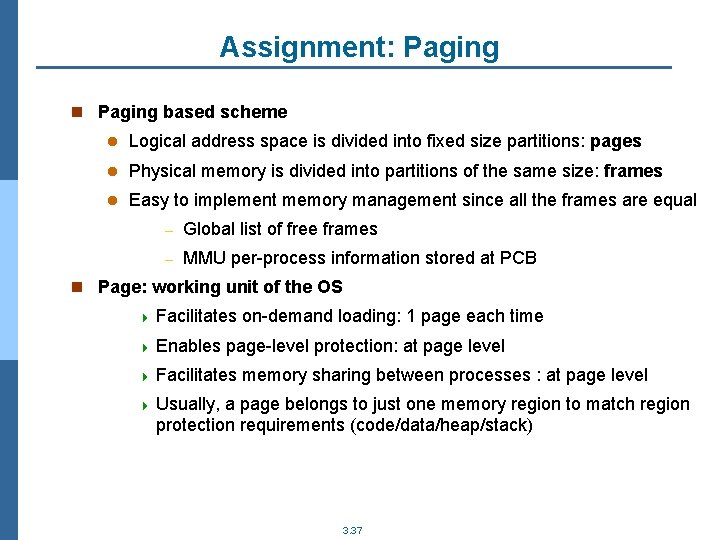

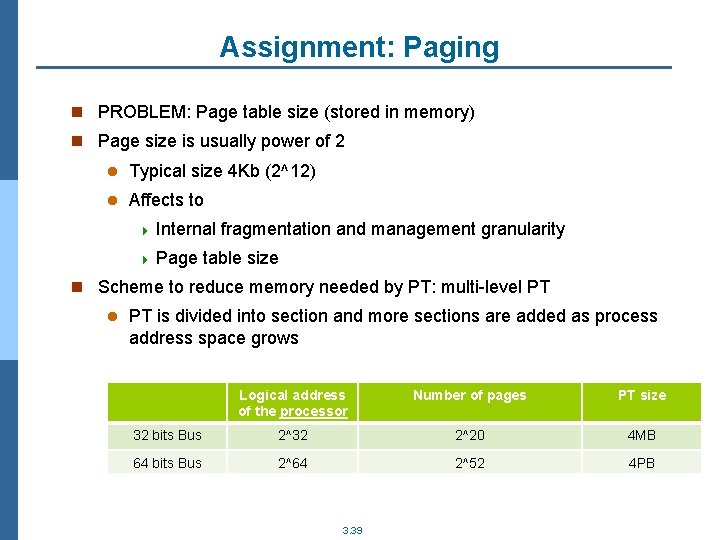

Assignment: paging

- Paging-based scheme:
  - The logical address space is divided into fixed-size partitions: pages
  - Physical memory is divided into partitions of the same size: frames
  - Memory management is easy to implement because all frames are equal:
    - Global list of free frames
    - Per-process MMU information stored in the PCB
- Page: working unit of the OS
  - Enables on-demand loading: one page at a time
  - Facilitates protection: at page level
  - Facilitates memory sharing between processes: at page level
  - Usually, a page belongs to just one memory region, to match the region's protection requirements (code/data/heap/stack)

Assignment: paging

- MMU information: page table
  - One entry per page: validity, access permissions (rwx), associated frame, etc.
  - One table per process
  - Typically, architectures have a register that points to the current page table
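Translation with a one-level page table splits the logical address into a page number and an offset. This sketch assumes 4 KB pages and a hypothetical 16-entry table; `struct pte` and `translate` are illustrative names, and returning -1 stands in for the MMU exception.

```c
#include <stdint.h>

#define PAGE_SHIFT 12                 /* 4 KB pages */
#define PAGE_SIZE  (1u << PAGE_SHIFT)
#define NPAGES     16                 /* hypothetical tiny address space */

struct pte {
    unsigned valid : 1;
    unsigned write : 1;               /* write permission */
    uint32_t frame;
};

/* Returns 0 and stores the physical address on success, or -1 where a real
 * MMU would raise an exception. */
int translate(const struct pte pt[NPAGES], uint32_t logical, int is_write,
              uint32_t *physical) {
    uint32_t page   = logical >> PAGE_SHIFT;       /* p: page number */
    uint32_t offset = logical & (PAGE_SIZE - 1);   /* o: offset in the page */
    if (page >= NPAGES || !pt[page].valid)
        return -1;                                 /* invalid page */
    if (is_write && !pt[page].write)
        return -1;                                 /* permission violation */
    *physical = (pt[page].frame << PAGE_SHIFT) | offset;
    return 0;
}
```

With page 1 mapped to frame 7, logical address 0x1234 (page 1, offset 0x234) becomes physical address 0x7234.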

Assignment: paging

- PROBLEM: page table size (the table is stored in memory)
- Page size is usually a power of 2
  - Typical size: 4 KB (2^12 bytes)
  - It affects:
    - Internal fragmentation and management granularity
    - Page table size
- Scheme to reduce the memory needed by the PT: multi-level PT
  - The PT is divided into sections, and more sections are added as the process address space grows

| Processor logical address | Addressable bytes | Number of pages | PT size |
|---|---|---|---|
| 32-bit bus | 2^32 | 2^20 | 4 MB |
| 64-bit bus | 2^64 | 2^52 | 16 PB |
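The page-table sizes follow from a flat table with one entry per page. Assuming 4 KB pages and 4-byte entries (the entry size implied by the 32-bit row), for an n-bit logical address:

$$
\text{PT size} = \frac{2^{n}}{2^{12}} \times 4\ \text{B}
$$

$$
n = 32:\quad 2^{20} \times 2^{2}\ \text{B} = 2^{22}\ \text{B} = 4\ \text{MB}
\qquad
n = 64:\quad 2^{52} \times 2^{2}\ \text{B} = 2^{54}\ \text{B} = 16\ \text{PB}
$$

With 8-byte entries, as used by x86-64 page tables, the 64-bit figure doubles to 32 PB. Either way, a flat table is infeasible, which is why multi-level tables allocate only the sections that the address space actually uses.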

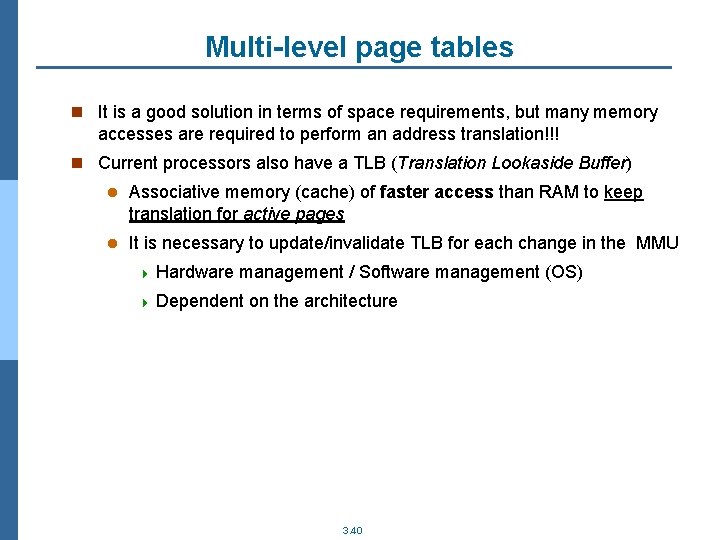

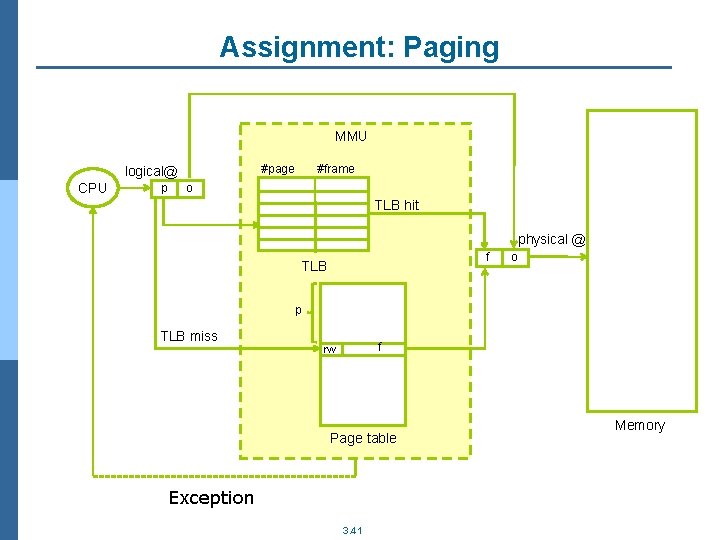

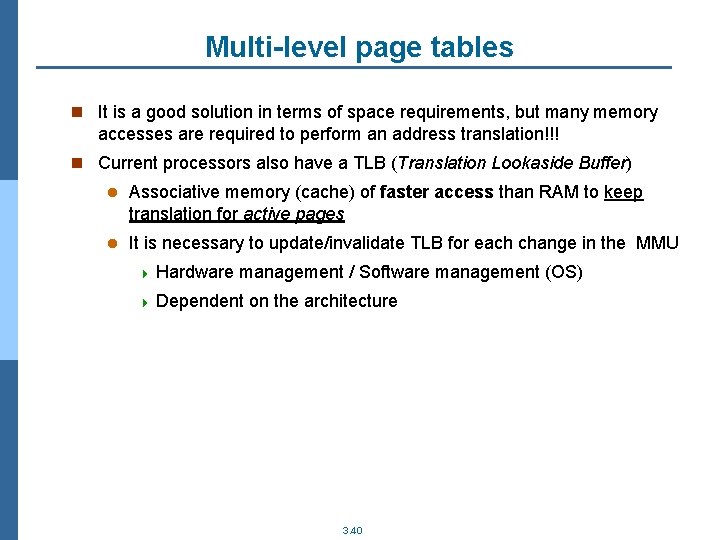

Multi-level page tables

- It is a good solution in terms of space requirements, but many memory accesses are required to perform an address translation!
- Current processors also have a TLB (Translation Lookaside Buffer)
  - Associative memory (cache), faster to access than RAM, that keeps the translations of active pages
  - The TLB must be updated/invalidated on every change in the MMU
    - Hardware management / software management (OS)
    - Depends on the architecture
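The effect of a TLB can be sketched with a small software cache in front of the page table. Real TLBs are usually (fully or set-) associative; this hypothetical sketch uses a direct-mapped 4-entry TLB for brevity, and the page table is replaced by a made-up mapping (`frame = page + 100`). `tlb_flush` shows the invalidation required when the MMU configuration changes.

```c
#include <stdint.h>

#define PAGE_SHIFT  12
#define TLB_ENTRIES 4   /* hypothetical tiny, direct-mapped TLB */

struct tlb_entry { int valid; uint32_t page; uint32_t frame; };

static struct tlb_entry tlb[TLB_ENTRIES];
static int tlb_hits, tlb_misses;

/* Stand-in for the (slow) page-table walk: hypothetical mapping. */
static uint32_t page_table_lookup(uint32_t page) { return page + 100; }

uint32_t tlb_translate(uint32_t logical) {
    uint32_t page = logical >> PAGE_SHIFT;
    uint32_t off  = logical & ((1u << PAGE_SHIFT) - 1);
    struct tlb_entry *e = &tlb[page % TLB_ENTRIES];   /* direct-mapped slot */
    if (e->valid && e->page == page) {
        tlb_hits++;                                   /* hit: no PT access */
    } else {
        tlb_misses++;                                 /* miss: walk the PT */
        e->valid = 1;
        e->page  = page;
        e->frame = page_table_lookup(page);
    }
    return (e->frame << PAGE_SHIFT) | off;
}

/* Must be called whenever the MMU configuration changes (e.g. context switch). */
void tlb_flush(void) {
    for (int i = 0; i < TLB_ENTRIES; i++)
        tlb[i].valid = 0;
}
```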

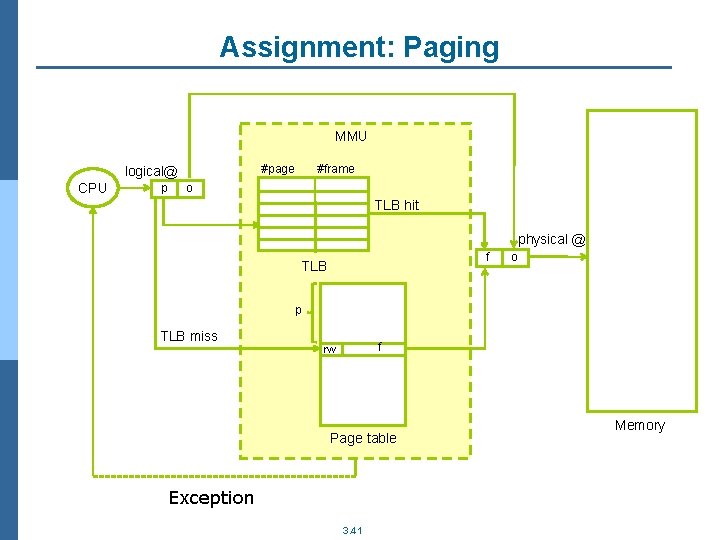

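Assignment: paging

(Figure: the CPU issues a logical @ = (page #p, offset o). The MMU looks up p in the TLB: on a TLB hit it obtains frame #f; on a TLB miss it reads the page table entry (f, rw permissions) or raises an exception. The physical @ = (f, o) is sent to memory.)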

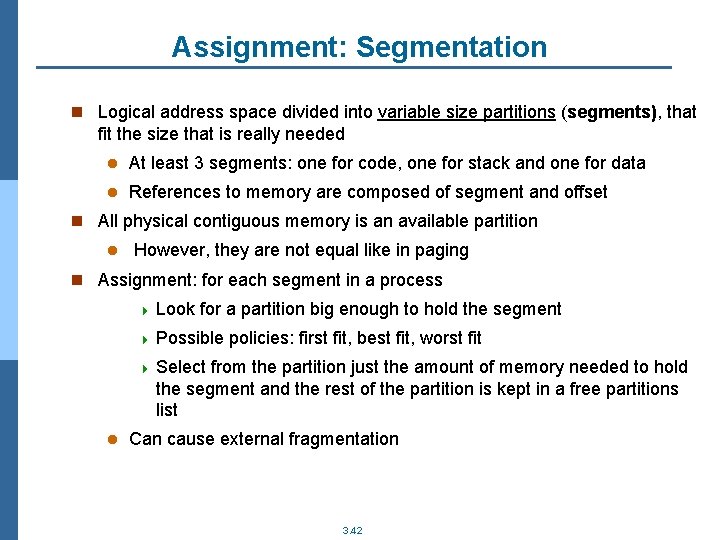

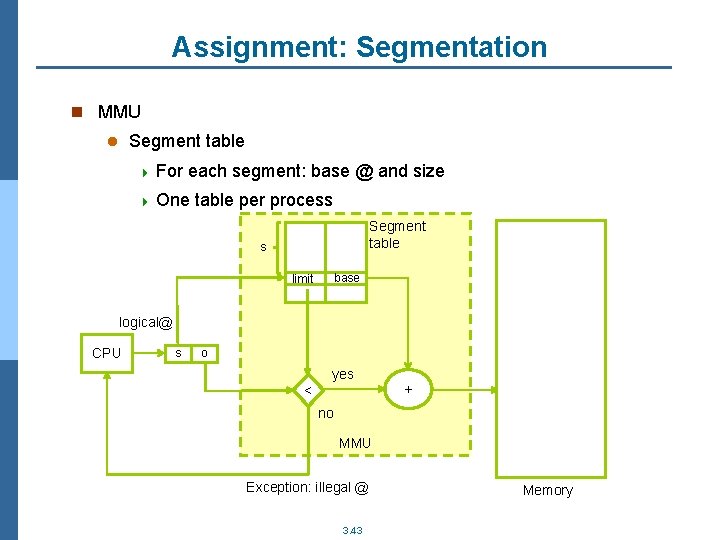

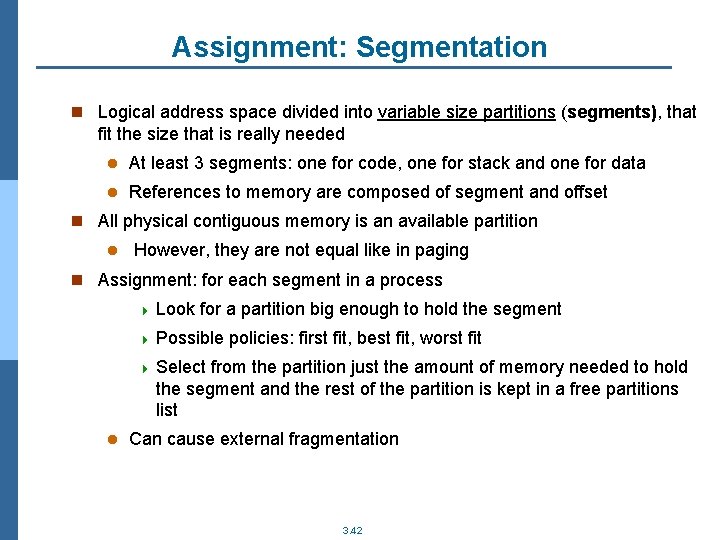

Assignment: segmentation

- The logical address space is divided into variable-size partitions (segments) that fit the size really needed
  - At least 3 segments: one for code, one for the stack, and one for data
  - References to memory are composed of a segment and an offset
- Every contiguous region of free physical memory is an available partition
  - However, partitions are not all equal as in paging
- Assignment: for each segment in a process
  - Look for a partition big enough to hold the segment
  - Possible policies: first fit, best fit, worst fit
  - Take from the partition just the amount of memory needed to hold the segment; the rest of the partition is kept in a list of free partitions
  - Can cause external fragmentation
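First fit and best fit over a list of free partitions can be sketched as follows (worst fit would pick the largest hole instead). `struct hole` and `alloc_segment` are hypothetical names, and the free list is a plain array; the leftover part of the chosen partition stays in the list as a smaller hole.

```c
#include <stddef.h>

struct hole { size_t base, size; };   /* one free partition */

/* Takes `size` bytes from the free-partition list using first fit
 * (best_fit == 0) or best fit. Returns the base address, or (size_t)-1 if
 * no single hole is big enough. */
size_t alloc_segment(struct hole *holes, int nholes, size_t size, int best_fit) {
    int chosen = -1;
    for (int i = 0; i < nholes; i++) {
        if (holes[i].size < size)
            continue;
        if (!best_fit) { chosen = i; break; }                   /* first fit */
        if (chosen < 0 || holes[i].size < holes[chosen].size)   /* best fit */
            chosen = i;
    }
    if (chosen < 0)
        return (size_t)-1;
    size_t base = holes[chosen].base;
    holes[chosen].base += size;   /* the rest stays as a smaller hole */
    holes[chosen].size -= size;
    return base;
}
```

In the usage below, 720 bytes remain free in total after two allocations, but a 600-byte request still fails because no single hole is large enough: external fragmentation.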

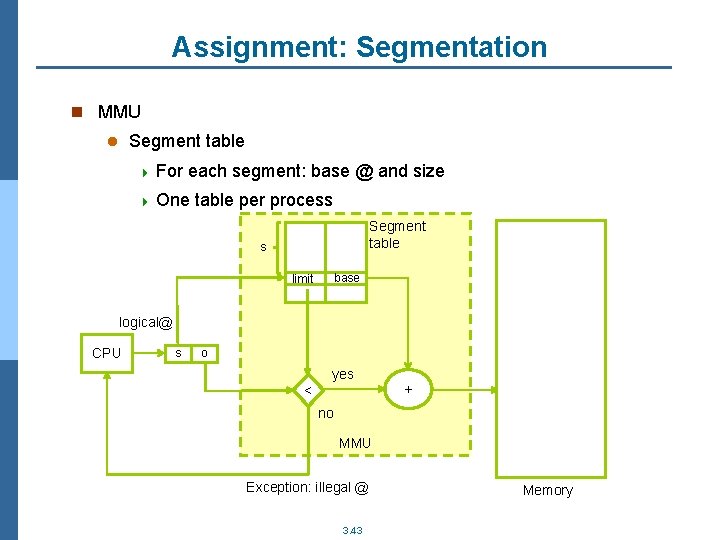

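Assignment: segmentation

- MMU: segment table
  - For each segment: base @ and size
  - One table per process

(Figure: the CPU issues a logical @ = (s, o). The MMU reads the limit and base of segment s from the segment table. If o < limit, the physical address base + o is sent to memory; otherwise, the MMU raises an exception: illegal @.)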
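The check in the figure (o < limit, then base + o) fits in one function. `struct segment` and `seg_translate` are hypothetical names; returning -1 stands in for the "illegal @" exception.

```c
#include <stdint.h>

struct segment { uint32_t base, limit; };   /* one segment-table entry */

/* Logical address = (segment s, offset o). Returns 0 and stores the physical
 * address, or -1 where the MMU would raise an "illegal @" exception. */
int seg_translate(const struct segment *table, uint32_t nsegs,
                  uint32_t s, uint32_t o, uint32_t *physical) {
    if (s >= nsegs || o >= table[s].limit)   /* o < limit? */
        return -1;
    *physical = table[s].base + o;           /* base + o */
    return 0;
}
```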

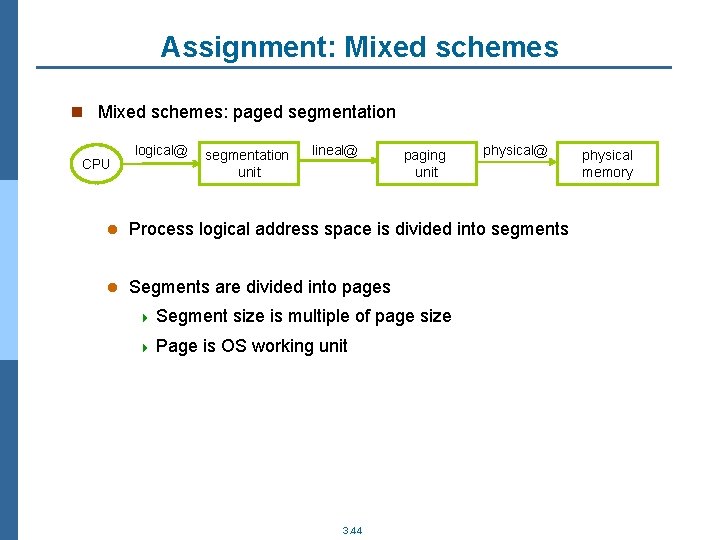

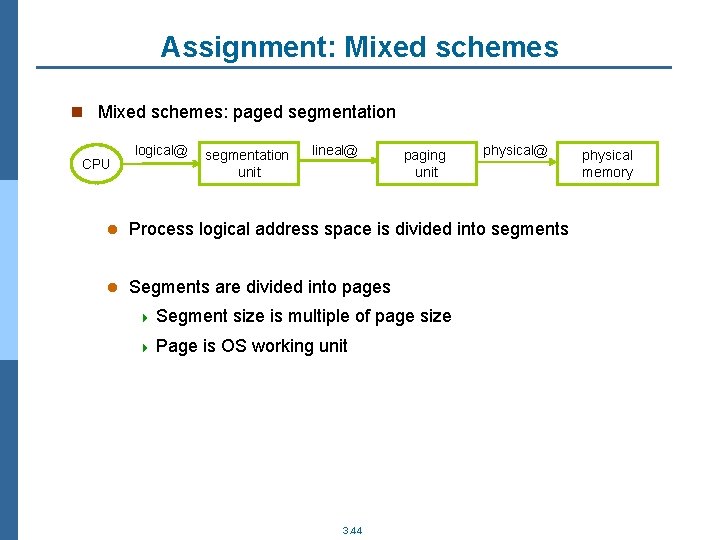

Assignment: mixed schemes

- Mixed schemes: paged segmentation
  - The process logical address space is divided into segments
  - Segments are divided into pages
    - Segment size is a multiple of the page size
    - The page is the OS working unit

(Figure: the CPU issues a logical @; the segmentation unit produces a linear @; the paging unit produces the physical @ sent to physical memory.)

Basic services: explicit shared memory

- Explicit memory sharing between processes
  - Useful as a method to share data between processes
    - The OS must provide programmers with system calls to manage shared memory regions: allocate memory regions and mark them as sharable, so that other processes can map them into their address space

SERVICES TO OPTIMIZE PHYSICAL MEMORY USAGE

- COW
- Virtual memory
- Prefetch

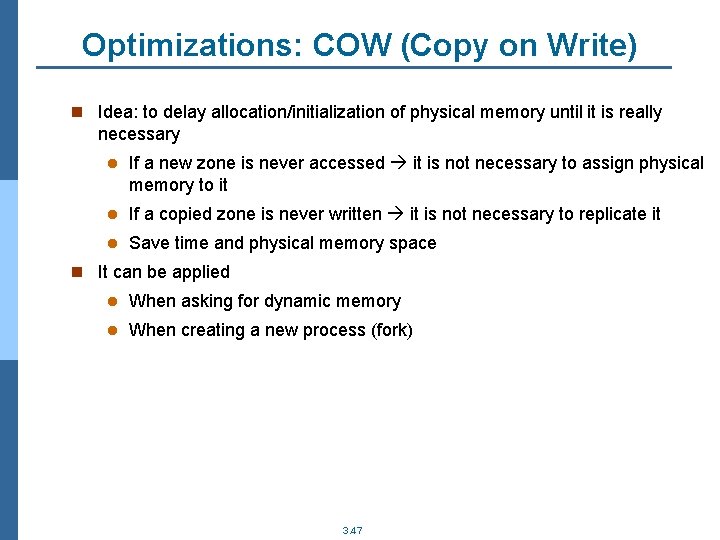

Optimizations: COW (Copy on Write)

- Idea: delay the allocation/initialization of physical memory until it is really necessary
  - If a new zone is never accessed, it is not necessary to assign physical memory to it
  - If a copied zone is never written, it is not necessary to replicate it
  - Saves time and physical memory space
- It can be applied:
  - When asking for dynamic memory
  - When creating a new process (fork)

COW: implementation

- The kernel uses the MMU (exception mechanism) to detect write accesses to (speculatively) shared memory pages
- MMU
  - New (logical) pages are mapped to existing (physical) frames, but permissions are set to write-protected (for both the source and the new page)
- PCB
  - The real permissions are stored here, to distinguish faults caused by COW from really invalid accesses
- When a process tries to write to the new region or to the source region:
  - The OS exception-handling code performs the actual allocation and copy
  - It updates the MMU with the real permissions for both regions and restarts the instruction that generated the exception
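The fault handling above can be simulated for a single page. Everything here is hypothetical: "physical memory" is a small array, `struct mapping` keeps both the permission enforced by the MMU and the real permission from the PCB, and a per-frame reference count decides whether a copy is needed. With the reference count, only a writer that still shares the frame gets a copy; the last owner just gets write permission back (a refinement over copying on every fault).

```c
#include <string.h>

#define PAGE_SIZE 64   /* hypothetical tiny pages */
#define NFRAMES   8

static char memory[NFRAMES][PAGE_SIZE];   /* simulated physical memory */
static int refcount[NFRAMES];             /* mappings that use each frame */
static int next_free;                     /* trivial frame allocator */

struct mapping {
    int frame;
    int mmu_writable;    /* permission enforced by the MMU */
    int real_writable;   /* real permission, kept in the PCB */
};

/* fork with COW: share the frame and write-protect both mappings. */
void cow_share(struct mapping *parent, struct mapping *child) {
    *child = *parent;
    refcount[parent->frame]++;
    parent->mmu_writable = child->mmu_writable = 0;
}

/* Write access. Returns 0 on success, -1 on a real protection error
 * (SIGSEGV in Linux) or when no frame is left. */
int cow_write(struct mapping *m, int offset, char value) {
    if (!m->mmu_writable) {                     /* MMU exception */
        if (!m->real_writable)
            return -1;                          /* really invalid access */
        if (refcount[m->frame] > 1) {           /* still shared: copy it */
            if (next_free >= NFRAMES)
                return -1;
            int f = next_free++;
            memcpy(memory[f], memory[m->frame], PAGE_SIZE);
            refcount[m->frame]--;
            refcount[f] = 1;
            m->frame = f;
        }
        m->mmu_writable = 1;                    /* restore the real permission */
    }
    memory[m->frame][offset] = value;           /* "restart" the write */
    return 0;
}
```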

COW: example

- Compute:
  - How many pages are modified (and thus cannot be shared)?
  - How many pages are read-only (and thus can be shared)?
- Process A physical memory assignment:
  - Code: 3 pages, Data: 2 pages, Stack: 1 page, Heap: 1 page
- Let's consider that process A executes a fork system call. Just after the fork, the total physical memory is:
  - Without COW: process A = 7 pages + child = 7 pages = 14 pages
  - With COW: process A = 7 pages + child = 0 pages = 7 pages
- Later in the execution… it depends on the code executed by the processes, for example:
  - If the child executes an exec (and its new address space uses 10 pages):
    - Without COW: process A = 7 pages + child = 10 pages = 17 pages
    - With COW: process A = 7 pages + child = 10 pages = 17 pages
  - If the child does not execute an exec, at least the code will always be shared between both processes, and the rest of the address space depends on the code. If only the code is shared:
    - Without COW: process A = 7 pages + child = 7 pages = 14 pages
    - With COW: process A = 7 pages + child = 4 pages = 11 pages

OPTIMIZATIONS: VIRTUAL MEMORY

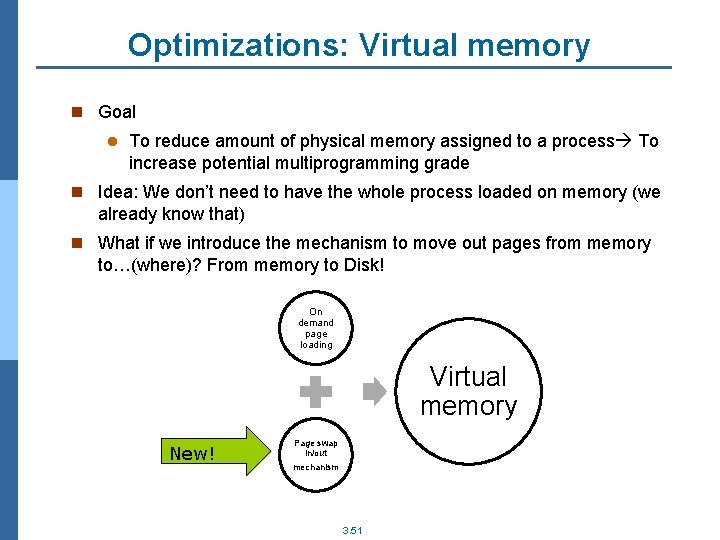

Optimizations: virtual memory

- Goal
  - To reduce the amount of physical memory assigned to a process
  - To increase the potential degree of multiprogramming
- Idea: we don't need to have the whole process loaded in memory (we already know that)
- What if we introduce a mechanism to move pages out of memory to… where? From memory to disk!
  - Virtual memory = on-demand page loading + (new!) page swap-in/swap-out mechanism

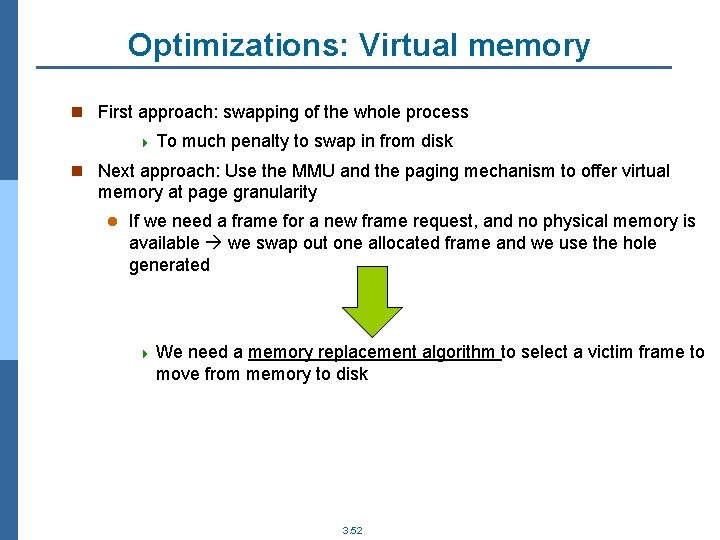

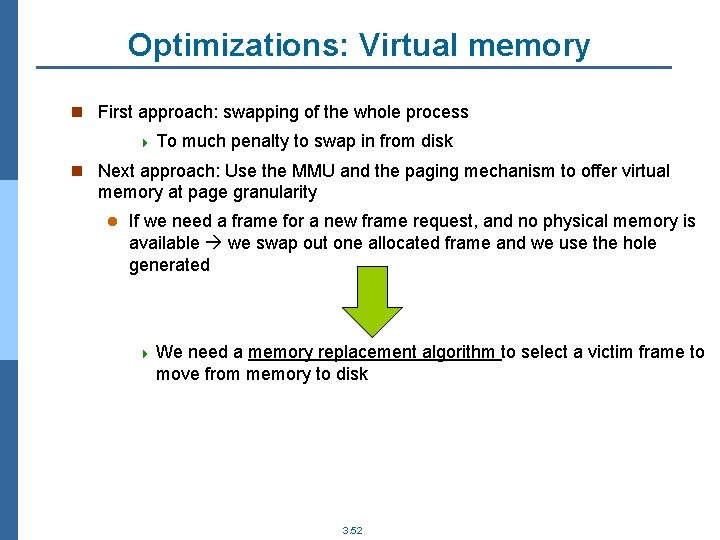

Optimizations: virtual memory

- First approach: swapping the whole process
  - Too much penalty to swap in from disk
- Next approach: use the MMU and the paging mechanism to offer virtual memory at page granularity
  - If we need a frame for a new request and no physical memory is available, we swap out one allocated frame and use the hole it leaves
    - We need a memory replacement algorithm to select a victim frame to move from memory to disk

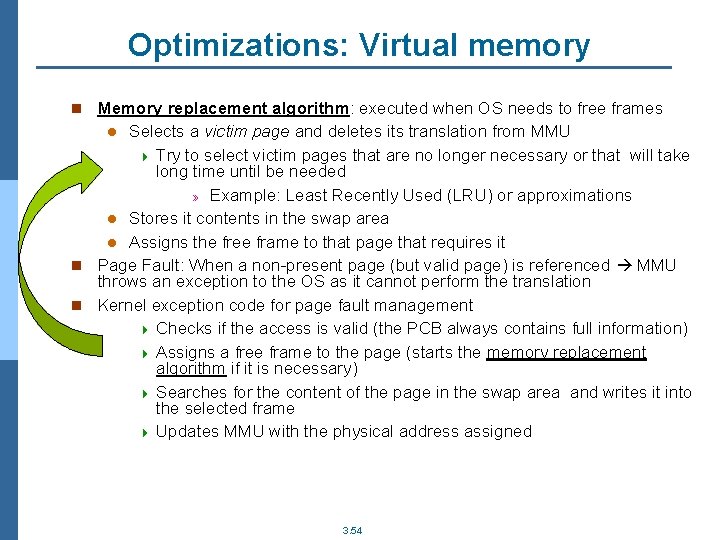

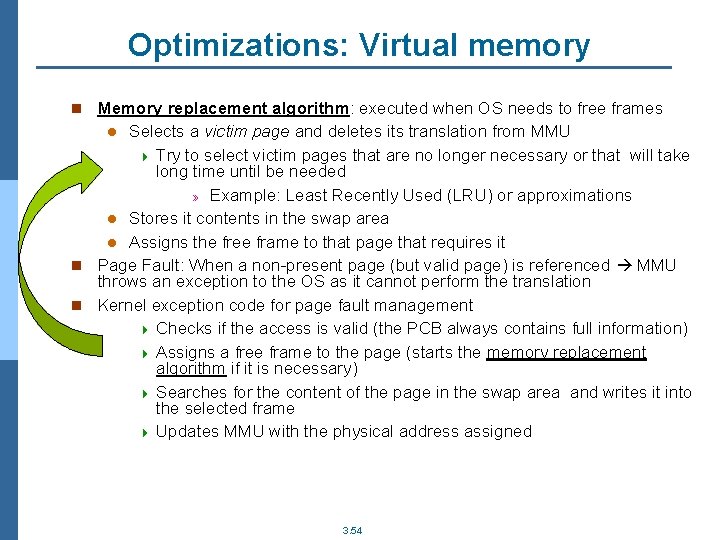

Optimizations: virtual memory

- Memory replacement algorithm: executed when the OS needs to free frames
  - Selects a victim page and deletes its translation from the MMU
    - Tries to select victim pages that are no longer necessary or that will not be needed for a long time
      - Example: Least Recently Used (LRU) or approximations
  - Stores its contents in the swap area
  - Assigns the freed frame to the page that requires it
- Page fault: when a non-present (but valid) page is referenced, the MMU throws an exception to the OS because it cannot perform the translation
- Kernel exception code for page fault management:
  - Checks whether the access is valid (the PCB always contains the full information)
  - Assigns a free frame to the page (starting the memory replacement algorithm if necessary)
  - Searches for the content of the page in the swap area and writes it into the selected frame
  - Updates the MMU with the assigned physical address
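Exact LRU can be simulated on a reference string to count page faults. Real kernels, including Linux per the summary slides, use LRU approximations because tracking the exact last use of every page is too expensive; this sketch (`lru_page_faults` and `MAX_FRAMES` are illustrative names) keeps a last-use timestamp per frame and evicts the oldest one.

```c
#define MAX_FRAMES 8

/* Counts page faults for a reference string using exact LRU replacement
 * with nframes frames. Returns -1 if nframes is out of range. */
int lru_page_faults(const int *refs, int n, int nframes) {
    int page[MAX_FRAMES], last_use[MAX_FRAMES];
    int used = 0, faults = 0;
    if (nframes < 1 || nframes > MAX_FRAMES)
        return -1;
    for (int t = 0; t < n; t++) {
        int hit = -1;
        for (int i = 0; i < used; i++)
            if (page[i] == refs[t]) { hit = i; break; }
        if (hit >= 0) {                 /* present page: just record the use */
            last_use[hit] = t;
            continue;
        }
        faults++;                       /* page fault */
        int slot;
        if (used < nframes) {
            slot = used++;              /* a free frame is available */
        } else {
            slot = 0;                   /* victim: least recently used page */
            for (int i = 1; i < used; i++)
                if (last_use[i] < last_use[slot])
                    slot = i;
        }
        page[slot] = refs[t];
        last_use[slot] = t;
    }
    return faults;
}
```

For the reference string 7 0 1 2 0 3 0 4 2 3 0 3 2 1 2 0 1 7 0 1 with 3 frames, this gives 12 page faults.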

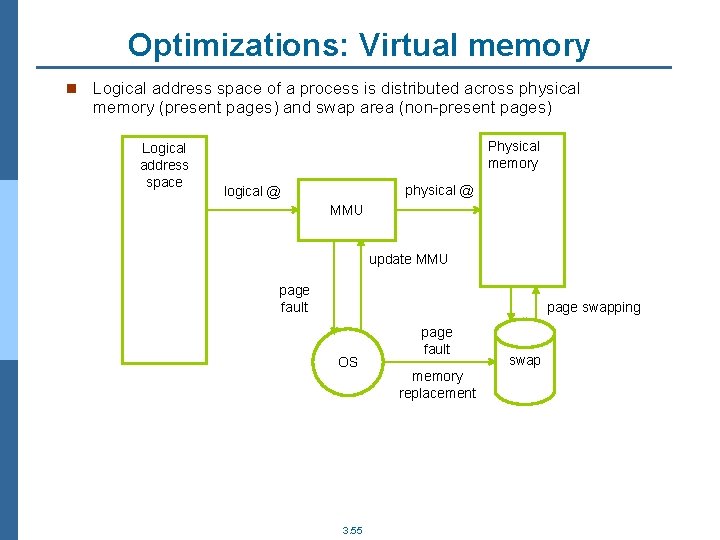

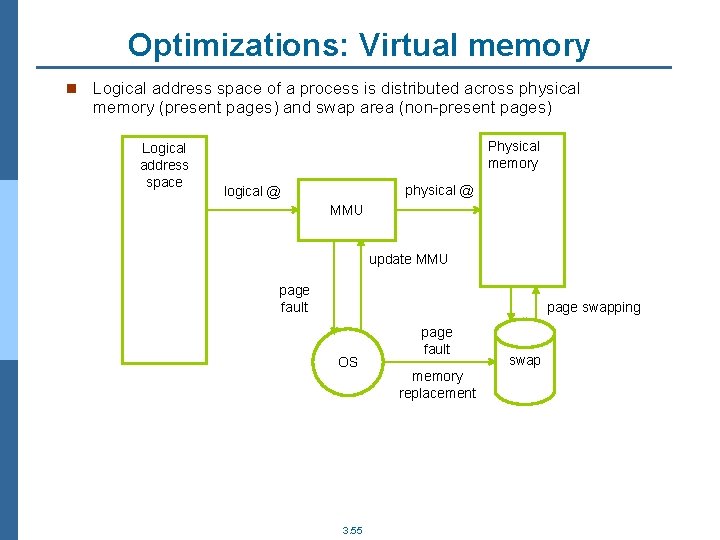

Optimizations: virtual memory

- The logical address space of a process is distributed across physical memory (present pages) and the swap area (non-present pages)

(Figure: the MMU translates logical @ into physical @. On a page fault, the OS runs memory replacement, swaps pages between physical memory and the swap area, and updates the MMU.)

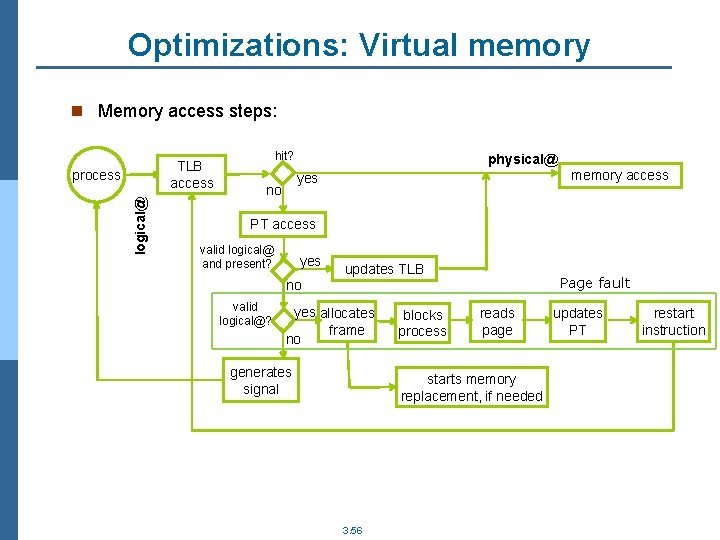

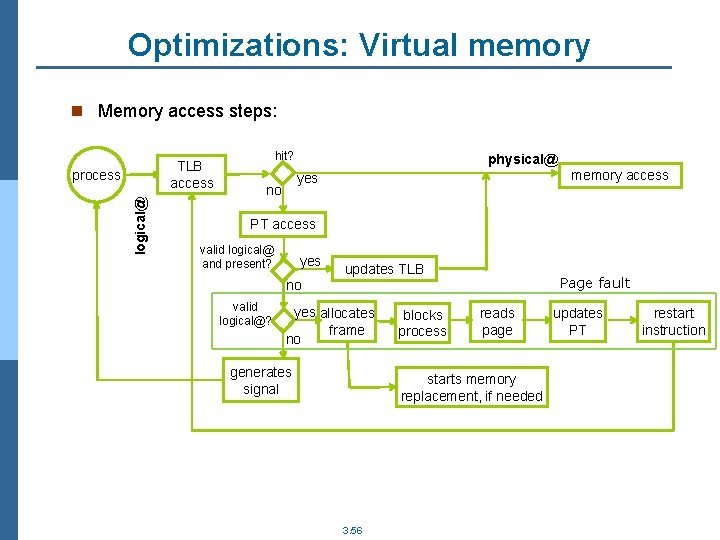

Optimizations: virtual memory

- Memory access steps:
  1. Access the TLB with the logical @. On a hit, get the physical @ and access memory.
  2. On a miss, access the PT. If the logical @ is valid and the page is present, update the TLB and access memory.
  3. Otherwise, a page fault occurs. If the logical @ is not valid, generate a signal.
  4. If it is valid: allocate a frame (starting memory replacement, if needed), block the process while the page is read, update the PT, and restart the instruction.

Optimizations: virtual memory

- Effects of using virtual memory:
  - Physical memory can be smaller than the sum of the address spaces of the loaded processes
  - Physical memory can be smaller than the logical address space of a single process
  - Accessing non-present pages is slower than accessing present pages
    - Exception + page loading
  - It is important to reduce the number of page faults

Optimizations: virtual memory

- A process is thrashing when:
  - It spends more time performing page swapping than executing program code
  - It is not able to keep simultaneously in memory the minimum number of pages required to make progress
- The memory system is overloaded
  - Detection: monitor the page fault rate per process
  - Management: control the degree of multiprogramming and swap out processes

Optimizations: prefetch

- Goal: minimize the number of page faults
- Idea: predict which pages a process will need (in the near future) and load them in advance
- Parameters to consider:
  - Prefetch distance: time between the page loading and the page reference
  - Number of pages to load in advance
- Some simple prediction algorithms:
  - Sequential
  - Stride
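Both prediction algorithms can be sketched from the last two page numbers a process touched. This is an illustrative sketch, not a specific kernel's policy: `predict_pages` is a hypothetical name, and `count` corresponds to the "number of pages to load in advance" parameter. Sequential predicts the following pages; stride repeats the last observed distance.

```c
/* Predicts the next `count` pages to prefetch, given the previous and the
 * last referenced page numbers. Sequential: last+1, last+2, ...
 * Stride: last+d, last+2d, ... with d = last - prev.
 * Returns the number of predictions written to out[]. */
int predict_pages(int prev, int last, int stride_mode, int count, int out[]) {
    int step = stride_mode ? last - prev : 1;
    if (step == 0)
        return 0;   /* no detectable pattern: do not prefetch */
    for (int i = 0; i < count; i++)
        out[i] = last + (i + 1) * step;
    return count;
}
```

After references to pages 10 and 14, stride prediction with `count = 2` suggests pages 18 and 22.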

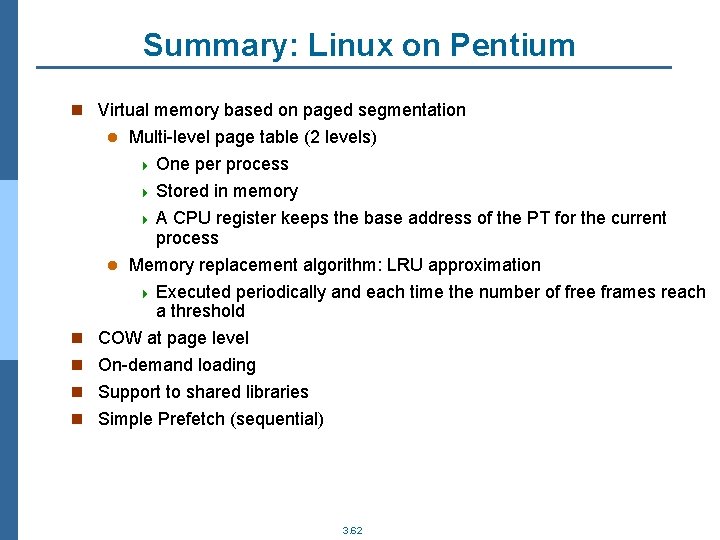

Summary: Linux on Pentium

- exec system call: loads a new program
  - PCB initialization with the description of the new address space, memory assignment, …
- Process creation (fork):
  - PCB initialization with the description of its address space (a copy of the parent's)
  - Uses COW
  - Creation and initialization of the new process's PT
    - The base address of the PT is kept in the PCB of the process
- Process scheduling
  - Context switch: updates the MMU with the base address of the current PT and invalidates the TLB
- exit:
  - Deletes the process PT and deallocates the process frames (if those frames are not in use by another process)

Summary: Linux on Pentium

- Virtual memory based on paged segmentation
  - Multi-level page table (2 levels)
    - One per process
    - Stored in memory
    - A CPU register keeps the base address of the PT of the current process
  - Memory replacement algorithm: LRU approximation
    - Executed periodically and each time the number of free frames reaches a threshold
- COW at page level
- On-demand loading
- Support for shared libraries
- Simple prefetch (sequential)

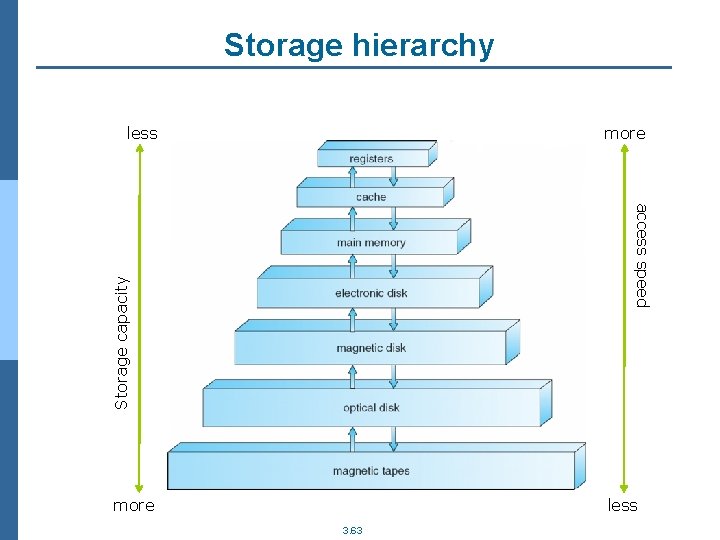

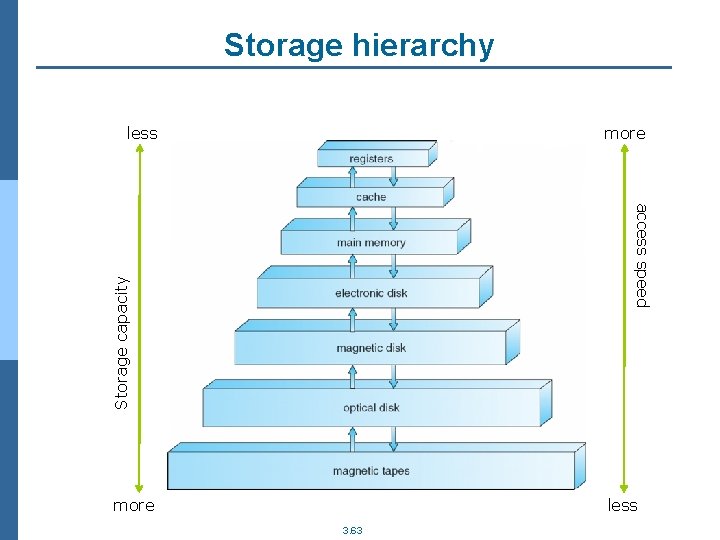

Storage hierarchy

(Figure: going down the hierarchy, storage capacity increases from less to more and access speed decreases from more to less.)