Virtual Memory CENG 331 Introduction to Computer Systems

- Slides: 80

Virtual Memory
CENG 331: Introduction to Computer Systems, 12th Lecture
Instructor: Erol Sahin
Acknowledgement: Most of the slides are adapted from the ones prepared by R. E. Bryant and D. R. O’Hallaron of Carnegie Mellon University.

Today

- Virtual memory (VM)
  - Overview and motivation
  - VM as tool for caching
  - VM as tool for memory management
  - VM as tool for memory protection
  - Address translation

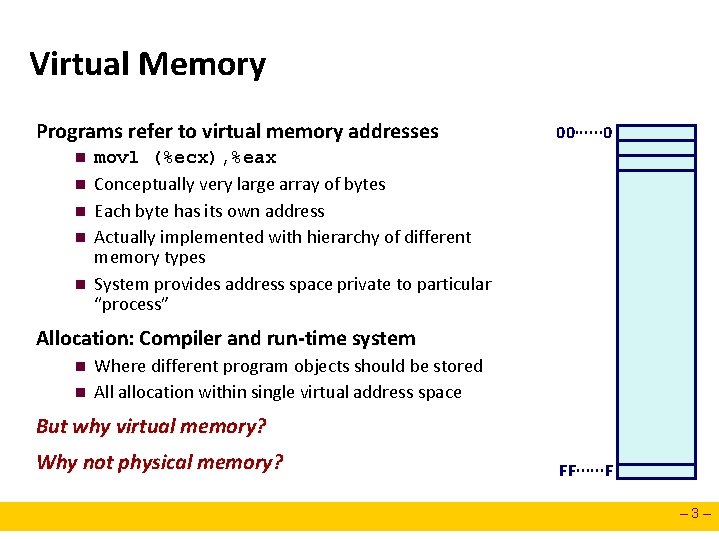

Virtual Memory

- Programs refer to virtual memory addresses
  - Example: movl (%ecx), %eax
  - Conceptually a very large array of bytes (addresses 00······0 through FF······F)
  - Each byte has its own address
  - Actually implemented with a hierarchy of different memory types
  - System provides an address space private to a particular "process"
- Allocation: compiler and run-time system
  - Where different program objects should be stored
  - All allocation within a single virtual address space
- But why virtual memory? Why not physical memory?

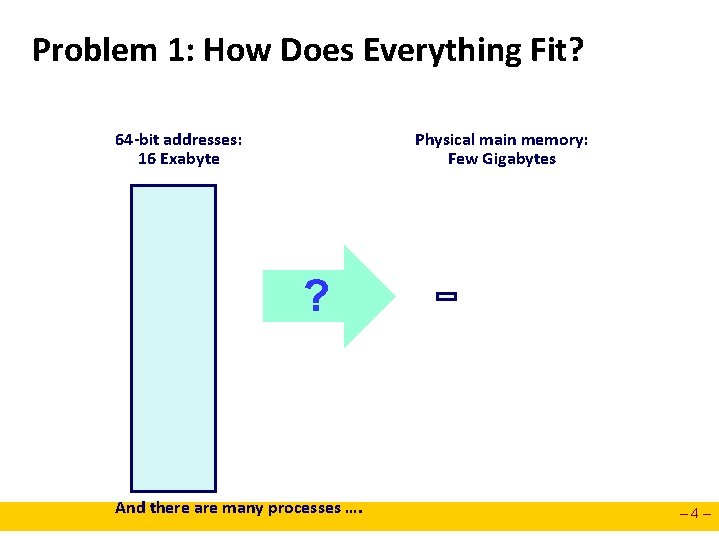

Problem 1: How Does Everything Fit?

- 64-bit addresses: 16 exabytes
- Physical main memory: a few gigabytes
- And there are many processes ...

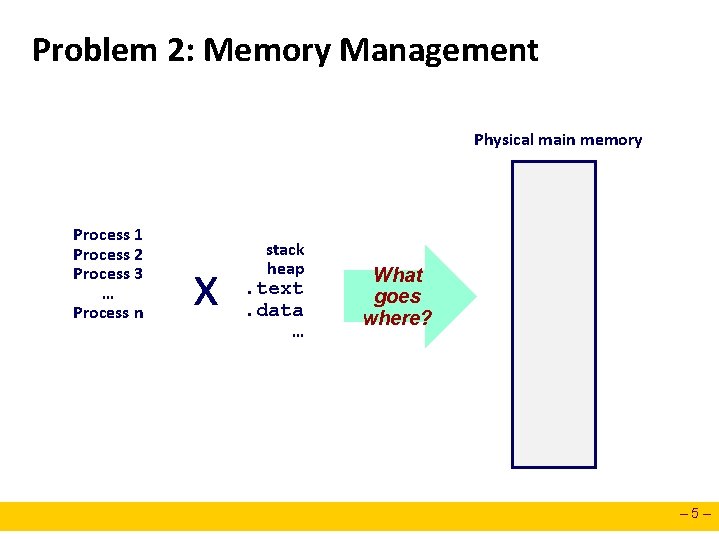

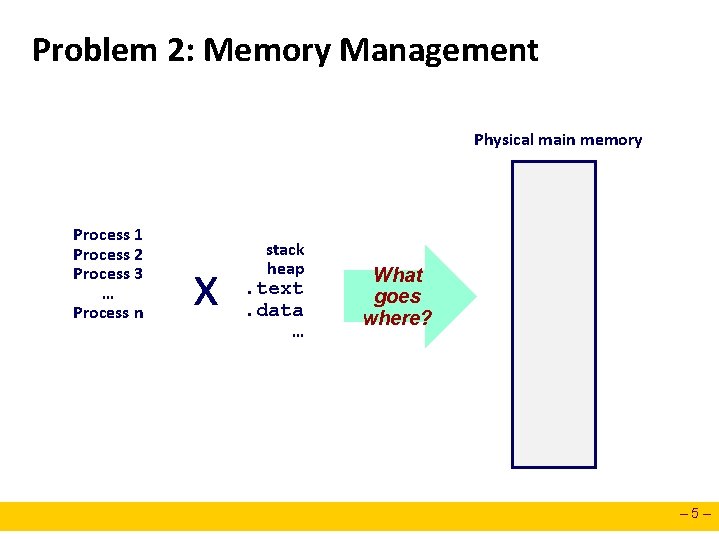

Problem 2: Memory Management

- Physical main memory is shared by Process 1, Process 2, Process 3, ..., Process n
- Each process has a stack, heap, .text, .data, ...
- What goes where?

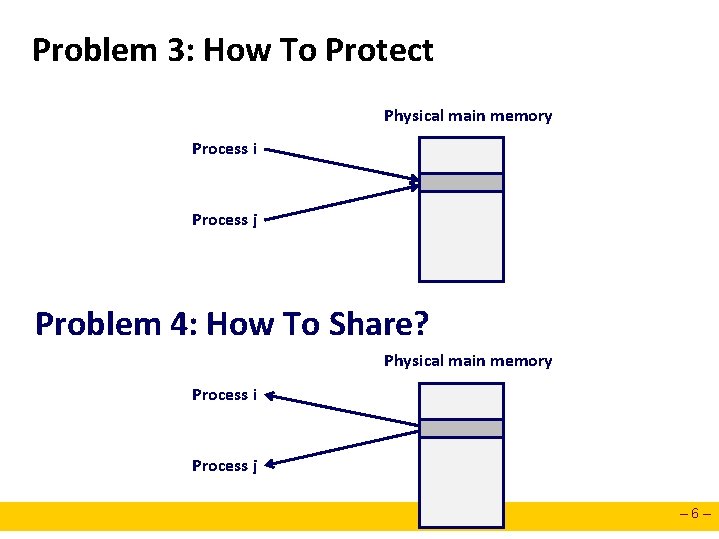

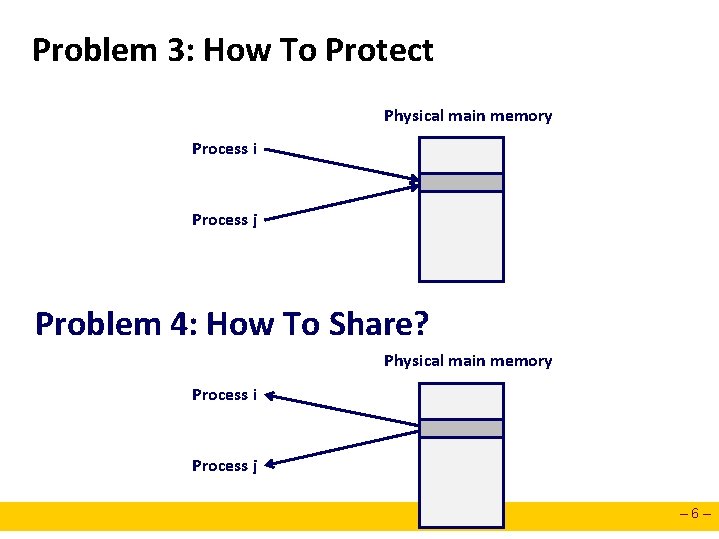

Problem 3: How To Protect?

[Figure: Process i and Process j placed in physical main memory.]

Problem 4: How To Share?

[Figure: Process i and Process j placed in physical main memory.]

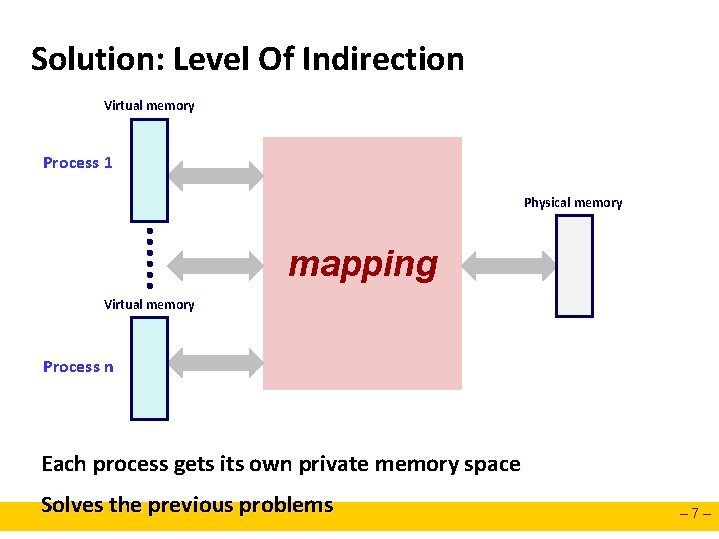

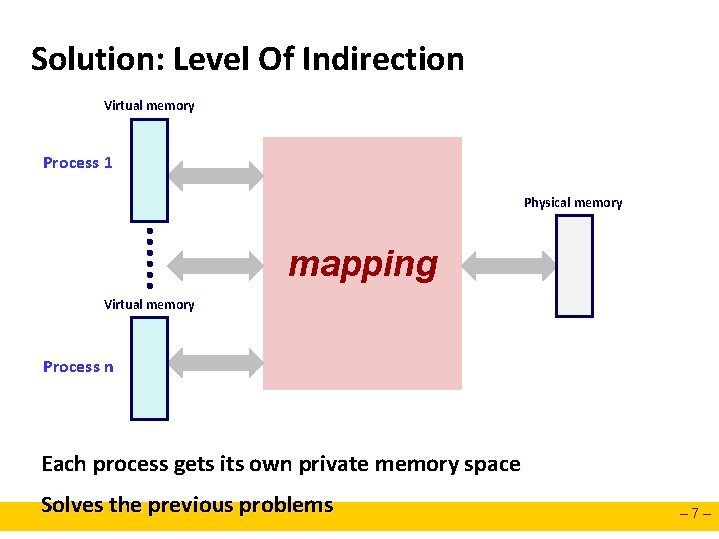

Solution: Level Of Indirection

- Each process gets its own private memory space
- Solves the previous problems

[Figure: the virtual memory of Process 1 through Process n, each mapped onto physical memory.]

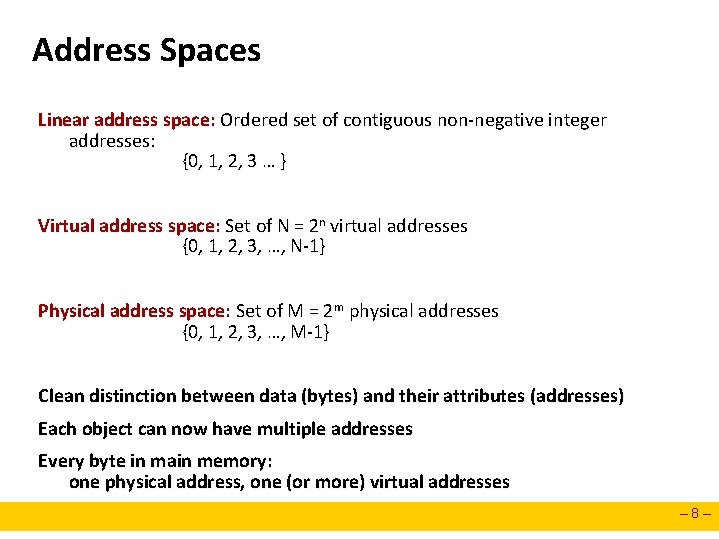

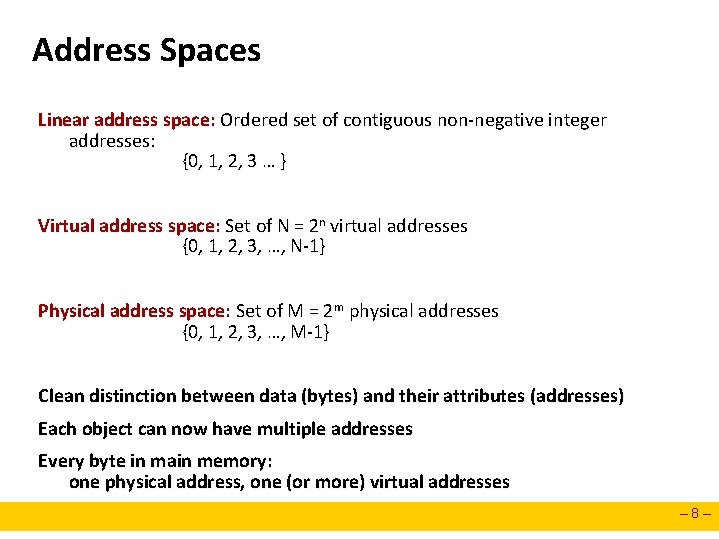

Address Spaces

- Linear address space: ordered set of contiguous non-negative integer addresses: {0, 1, 2, 3, ...}
- Virtual address space: set of $N = 2^n$ virtual addresses {0, 1, 2, 3, ..., N-1}
- Physical address space: set of $M = 2^m$ physical addresses {0, 1, 2, 3, ..., M-1}
- Clean distinction between data (bytes) and their attributes (addresses)
- Each object can now have multiple addresses
- Every byte in main memory: one physical address, one (or more) virtual addresses

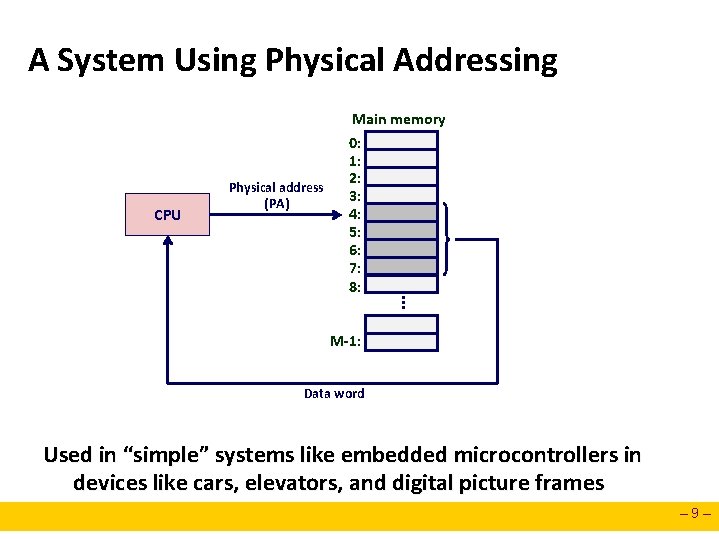

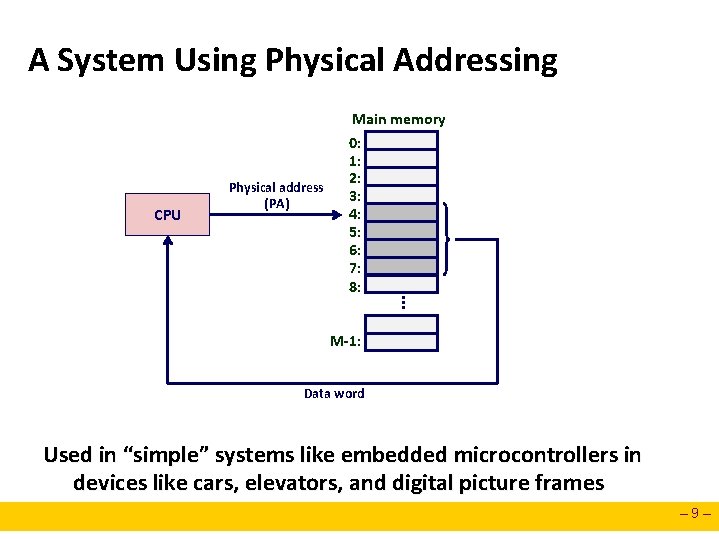

A System Using Physical Addressing

- Used in "simple" systems like embedded microcontrollers in devices like cars, elevators, and digital picture frames

[Figure: the CPU sends a physical address (PA) directly to main memory (addresses 0 through M-1), which returns a data word.]

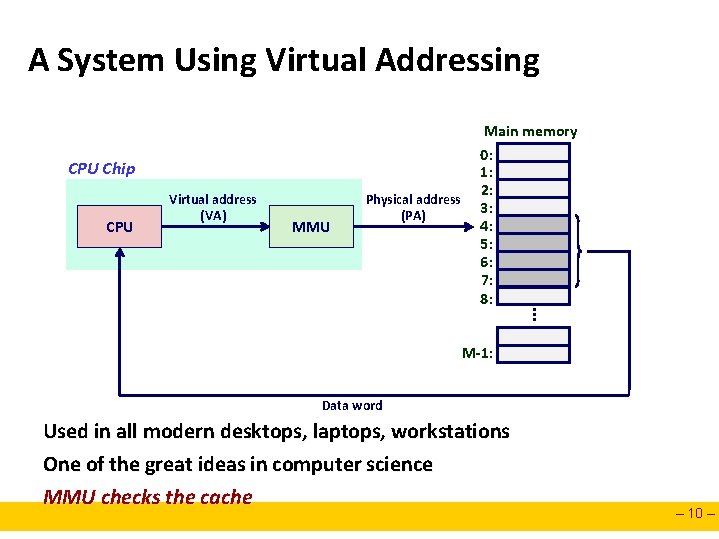

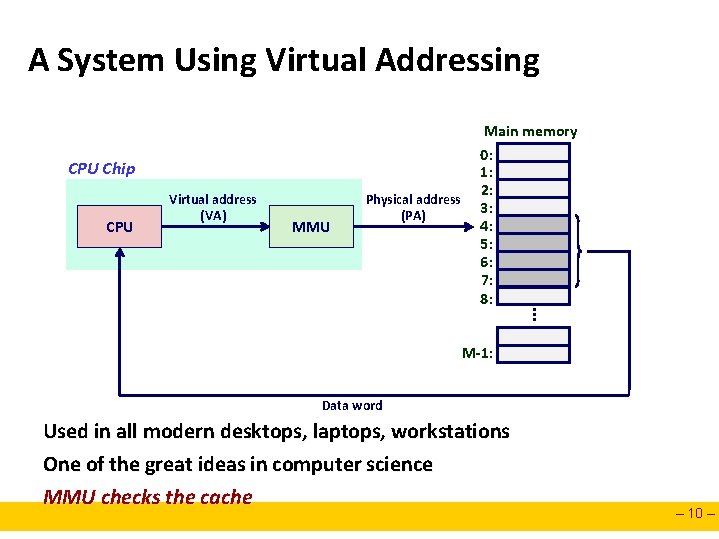

A System Using Virtual Addressing

- Used in all modern desktops, laptops, workstations
- One of the great ideas in computer science
- MMU checks the cache

[Figure: on the CPU chip, the CPU sends a virtual address (VA) to the MMU, which sends a physical address (PA) to main memory (addresses 0 through M-1); main memory returns a data word.]

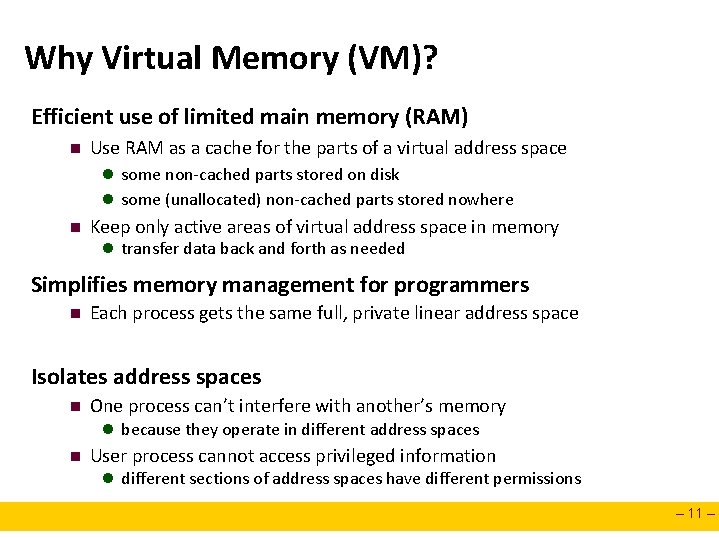

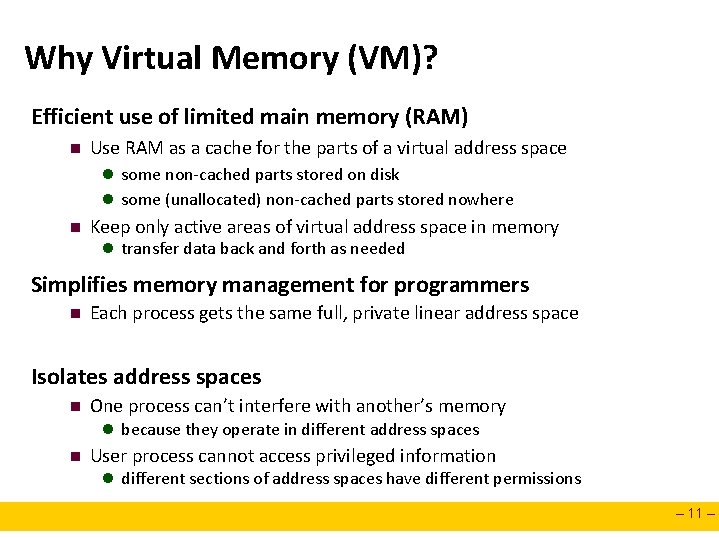

Why Virtual Memory (VM)?

- Efficient use of limited main memory (RAM)
  - Use RAM as a cache for the parts of a virtual address space
    - Some non-cached parts stored on disk
    - Some (unallocated) non-cached parts stored nowhere
  - Keep only active areas of virtual address space in memory
    - Transfer data back and forth as needed
- Simplifies memory management for programmers
  - Each process gets the same full, private linear address space
- Isolates address spaces
  - One process can't interfere with another's memory
    - Because they operate in different address spaces
  - User process cannot access privileged information
    - Different sections of address spaces have different permissions

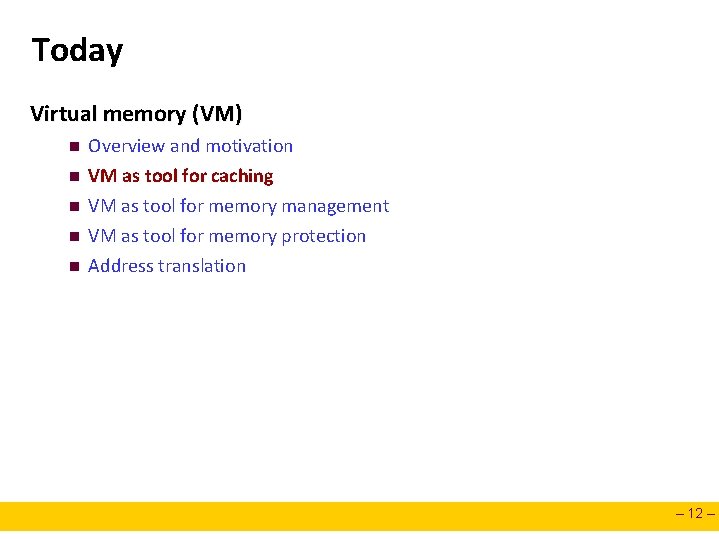

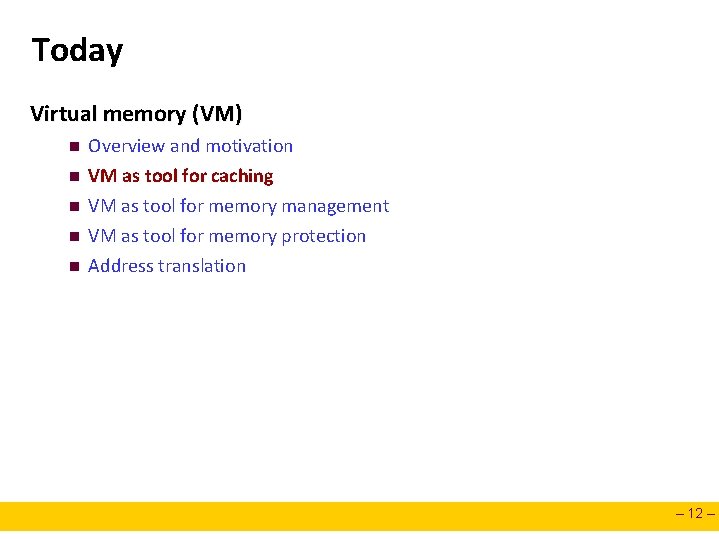


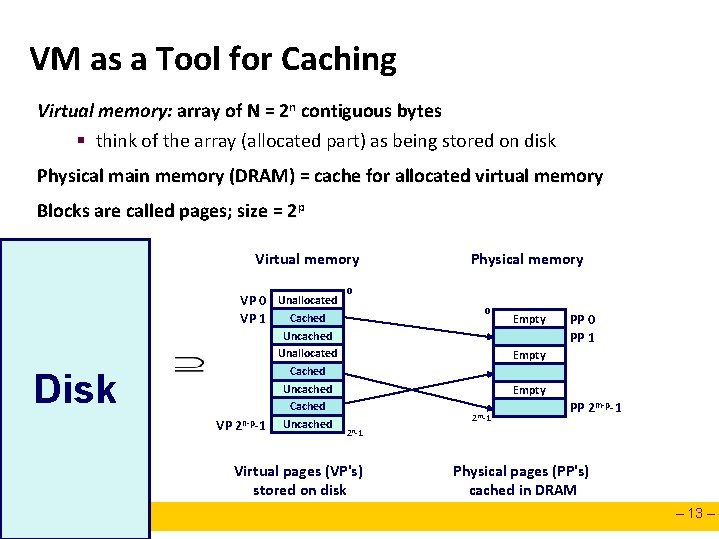

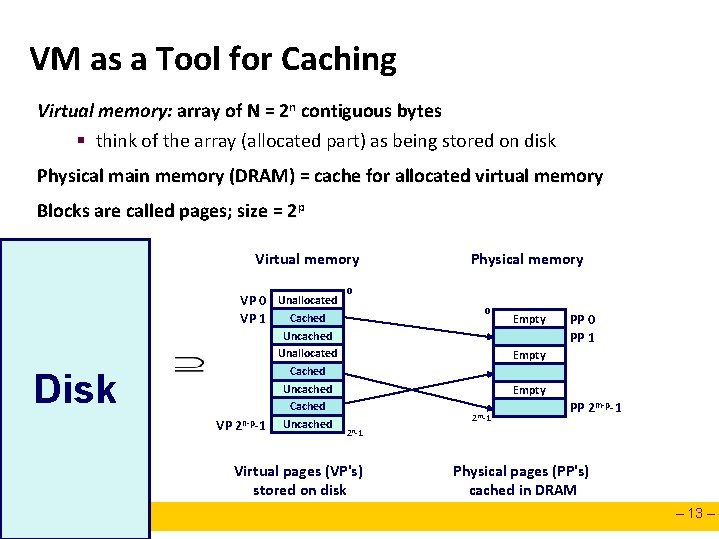

VM as a Tool for Caching

- Virtual memory: array of $N = 2^n$ contiguous bytes
  - Think of the array (allocated part) as being stored on disk
- Physical main memory (DRAM) = cache for allocated virtual memory
- Blocks are called pages; size = $2^p$

[Figure: virtual pages (VPs) VP 0 through VP $2^{n-p}-1$, covering addresses 0 to $2^n-1$, are stored on disk; each is unallocated, cached, or uncached. Physical pages (PPs) PP 0 through PP $2^{m-p}-1$, covering addresses 0 to $2^m-1$, are cached in DRAM; some are empty.]

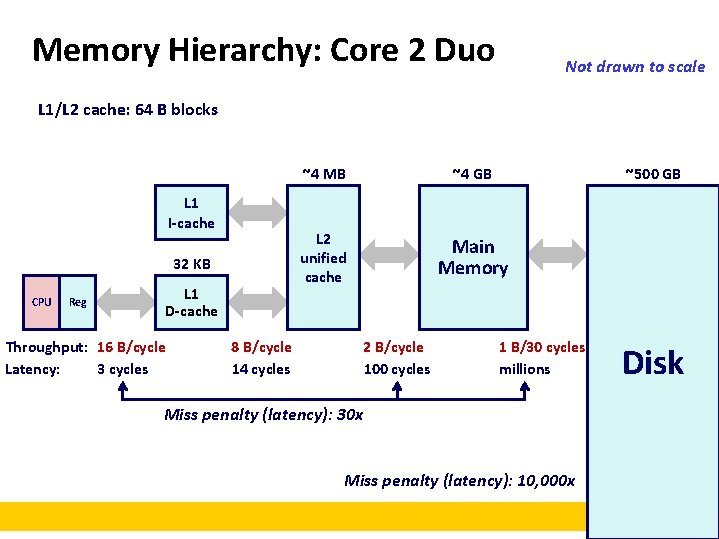

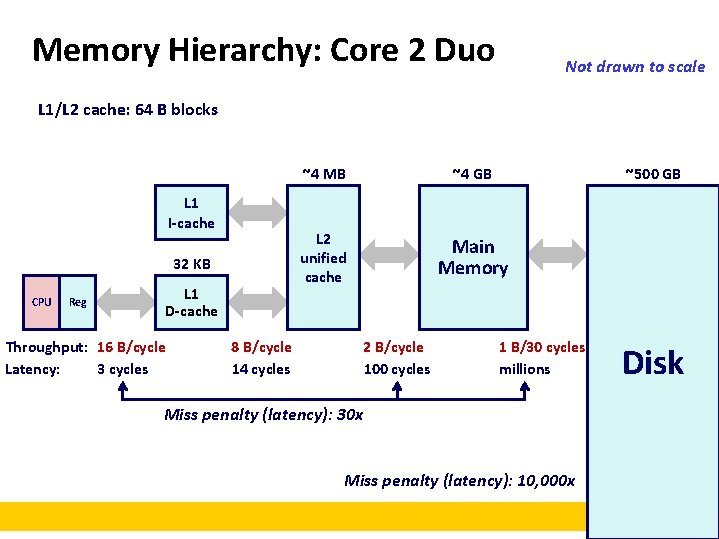

Memory Hierarchy: Core 2 Duo

Not drawn to scale. L1/L2 cache: 64 B blocks.

| Level | Size | Throughput | Latency |
|---|---|---|---|
| CPU registers | | | |
| L1 I-cache and L1 D-cache | 32 KB | 16 B/cycle | 3 cycles |
| L2 unified cache | ~4 MB | 8 B/cycle | 14 cycles |
| Main memory | ~4 GB | 2 B/cycle | 100 cycles |
| Disk | ~500 GB | 1 B/30 cycles | millions of cycles |

- Miss penalty (latency): 30x for main memory, 10,000x for disk

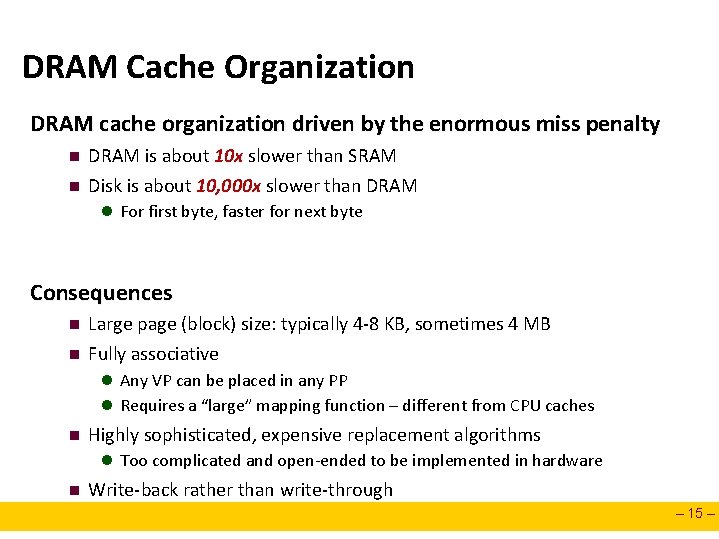

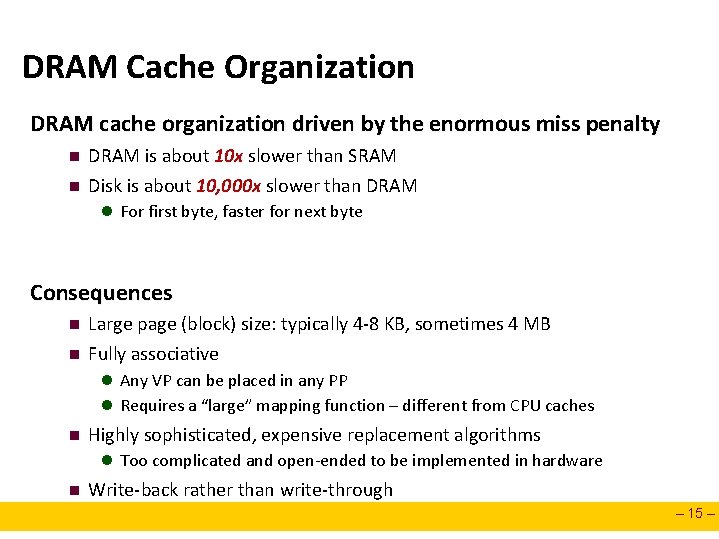

DRAM Cache Organization

- DRAM cache organization driven by the enormous miss penalty
  - DRAM is about 10x slower than SRAM
  - Disk is about 10,000x slower than DRAM
    - For first byte, faster for next byte
- Consequences
  - Large page (block) size: typically 4-8 KB, sometimes 4 MB
  - Fully associative
    - Any VP can be placed in any PP
    - Requires a "large" mapping function, different from CPU caches
  - Highly sophisticated, expensive replacement algorithms
    - Too complicated and open-ended to be implemented in hardware
  - Write-back rather than write-through

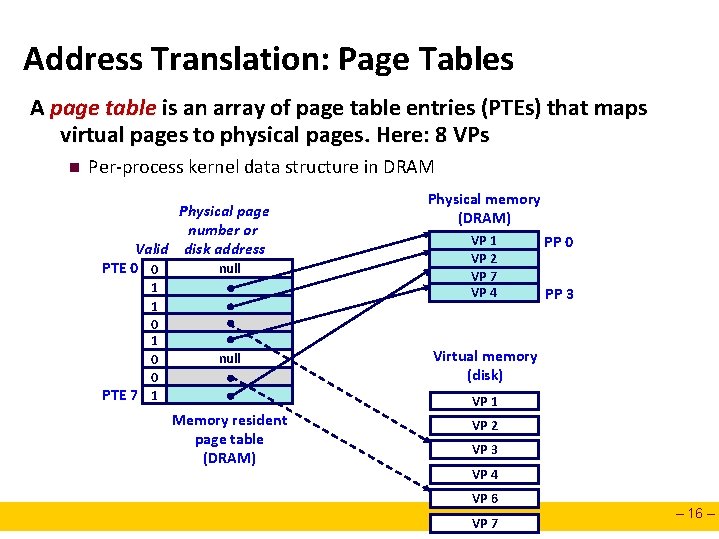

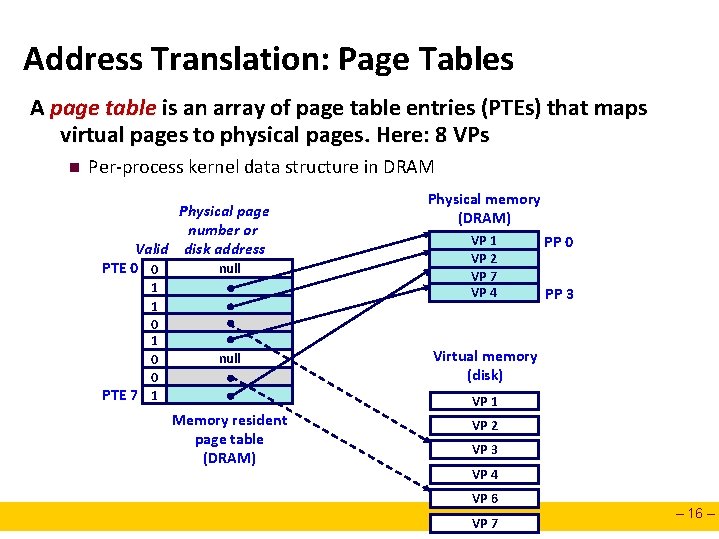

Address Translation: Page Tables

- A page table is an array of page table entries (PTEs) that maps virtual pages to physical pages. Here: 8 VPs
  - Per-process kernel data structure in DRAM

[Figure: a memory-resident page table (DRAM) with PTE 0 through PTE 7. Each PTE holds a valid bit plus a physical page number or disk address (null for unallocated pages). Physical memory (DRAM), PP 0 through PP 3, holds VP 1, VP 2, VP 7, and VP 4. Virtual memory (disk) holds VP 1, VP 2, VP 3, VP 4, VP 6, and VP 7.]

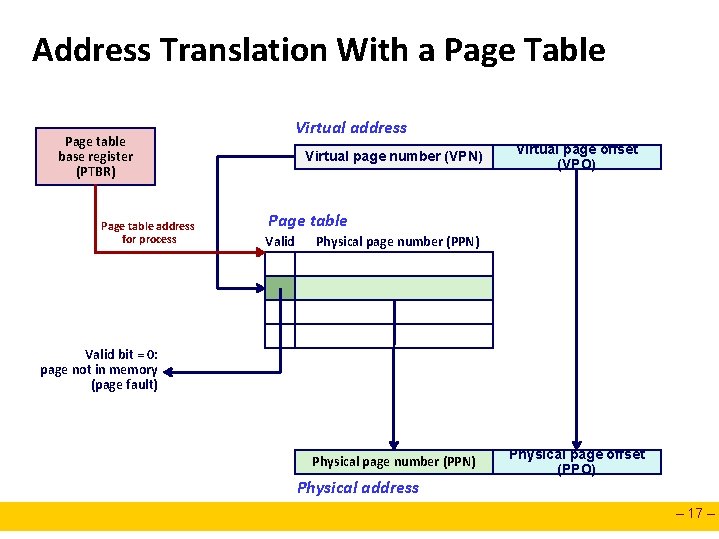

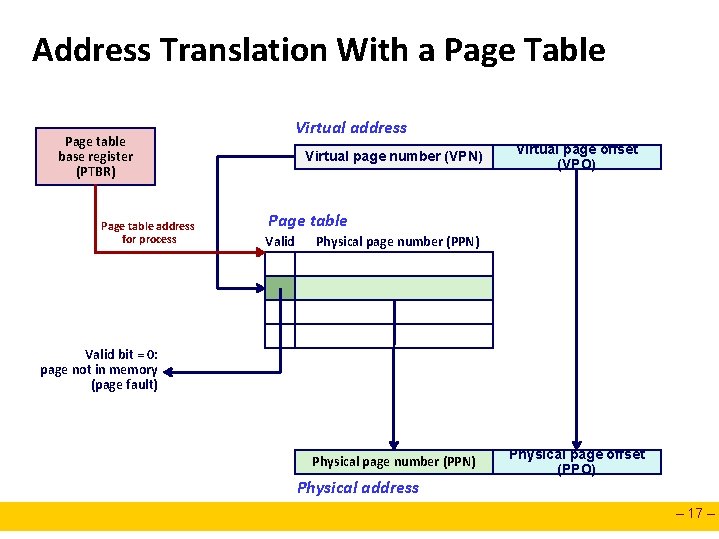

Address Translation With a Page Table

- Page table base register (PTBR): holds the page table address for the process
- Virtual address = virtual page number (VPN) + virtual page offset (VPO)
- The VPN selects a page table entry holding a valid bit and a physical page number (PPN)
  - Valid bit = 0: page not in memory (page fault)
- Physical address = physical page number (PPN) + physical page offset (PPO)
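The lookup described on this slide can be sketched in a few lines of Python. This is a minimal illustration, not how an MMU is built: the names `translate` and `PageFault`, and the representation of the page table as a dict of `(valid, ppn)` pairs, are assumptions made for the example.

```python
class PageFault(Exception):
    """Raised when the selected PTE has valid bit 0 (hypothetical name)."""


def translate(va, page_table, p):
    """Translate virtual address va using a single-level page table.

    page_table maps VPN -> (valid, ppn); p is the number of page offset bits.
    """
    vpn = va >> p                # upper bits select the PTE
    vpo = va & ((1 << p) - 1)    # lower p bits are the page offset
    valid, ppn = page_table[vpn]
    if not valid:
        raise PageFault(f"VPN {vpn:#x} is not in memory")
    # PPO is identical to VPO, so the offset is copied unchanged.
    return (ppn << p) | vpo


# Example with 4 KB pages (p = 12): VPN 0 is resident in PPN 5, VPN 1 is not.
example_table = {0: (1, 5), 1: (0, None)}
print(hex(translate(0x0ABC, example_table, 12)))
```

Only the page number changes during translation; the offset bits pass through untouched.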

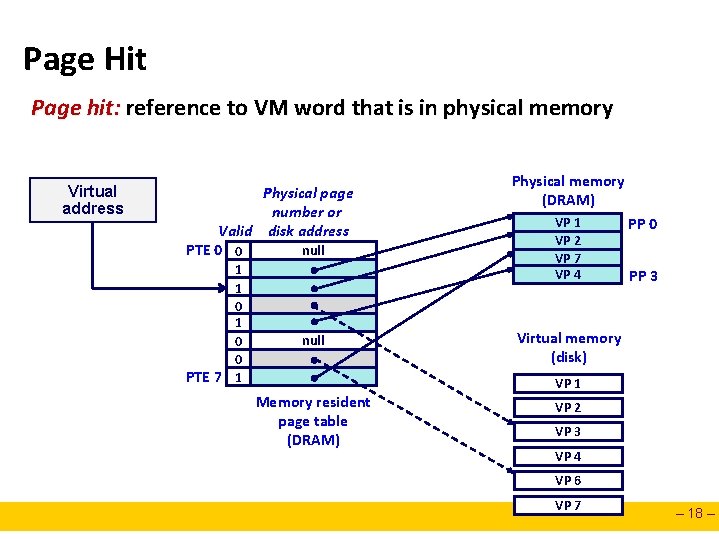

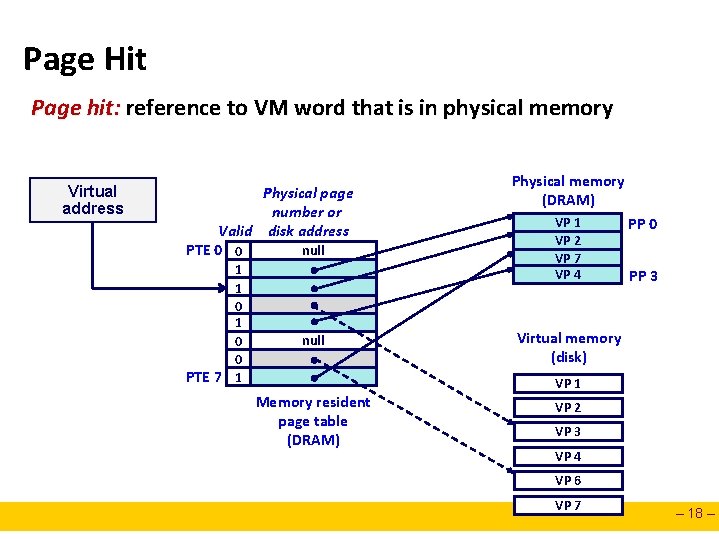

Page Hit

- Page hit: reference to a VM word that is in physical memory

[Figure: the same page table, physical memory, and disk layout as on the Page Tables slide; the virtual address selects a PTE whose valid bit is 1.]

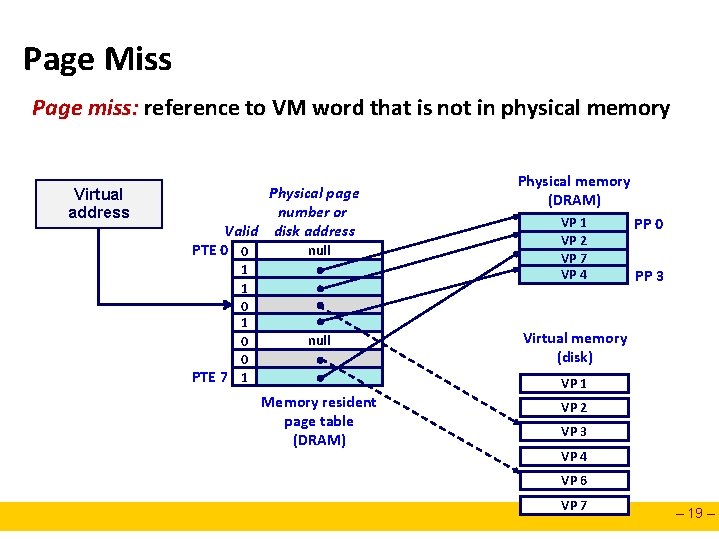

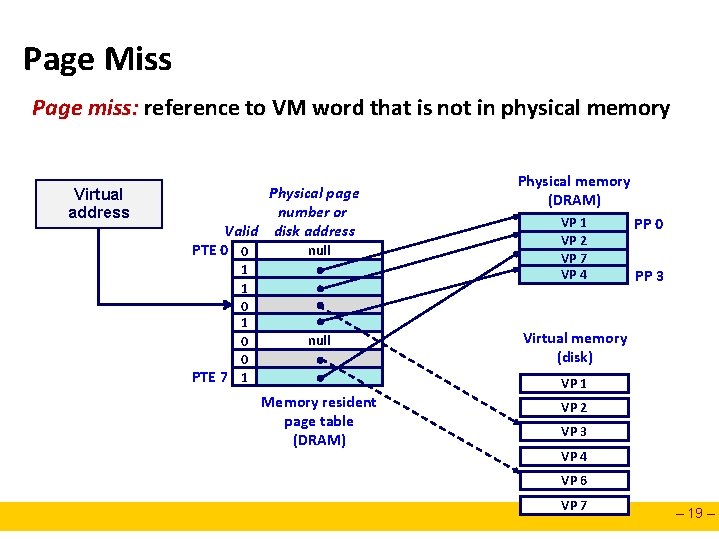

Page Miss

- Page miss: reference to a VM word that is not in physical memory

[Figure: the same page table, physical memory, and disk layout as on the Page Tables slide; the virtual address selects a PTE whose valid bit is 0.]

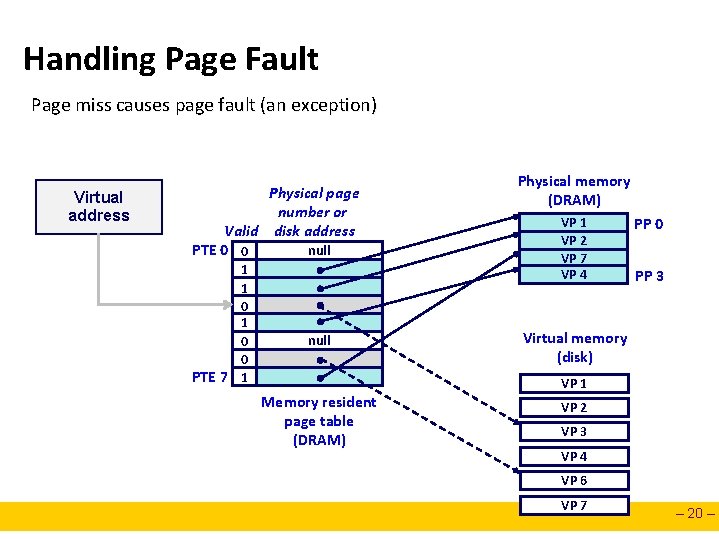

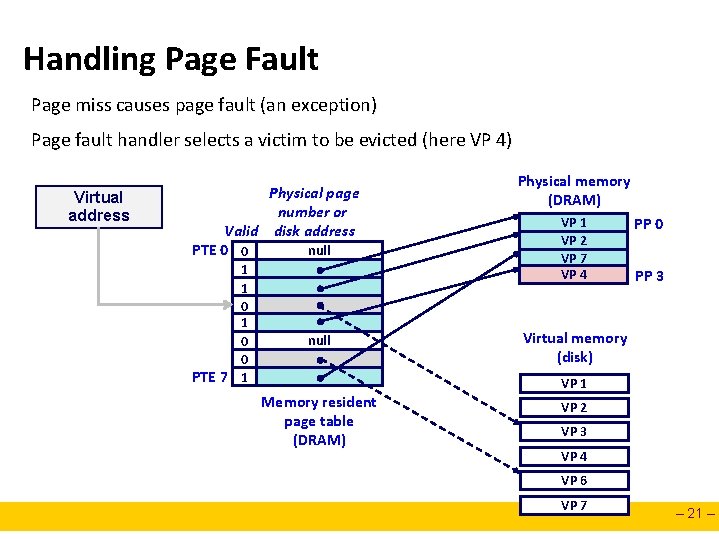

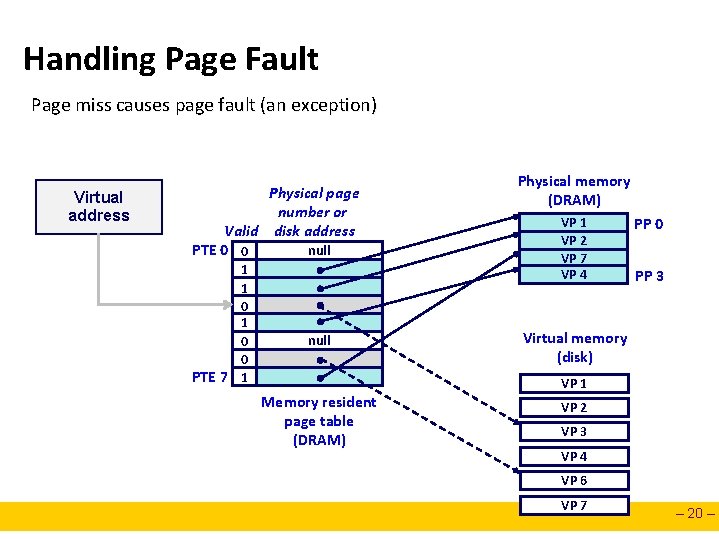

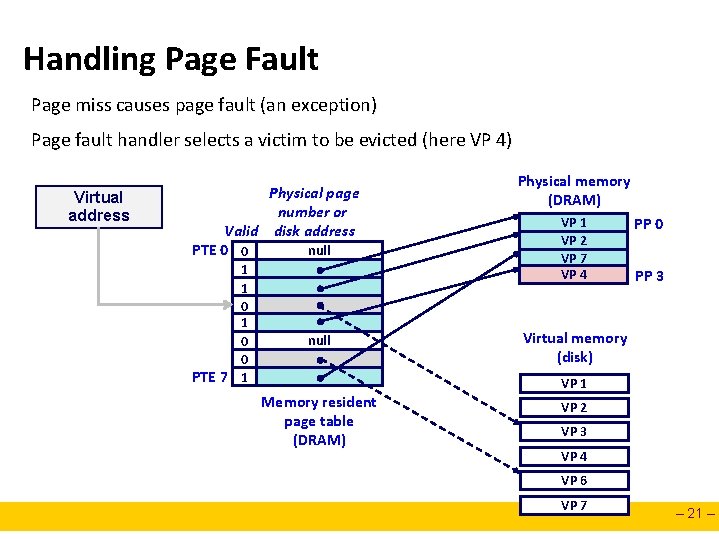


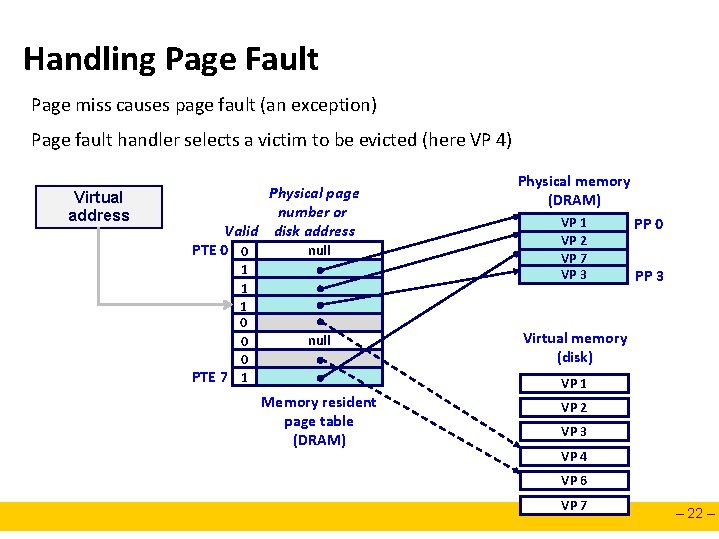

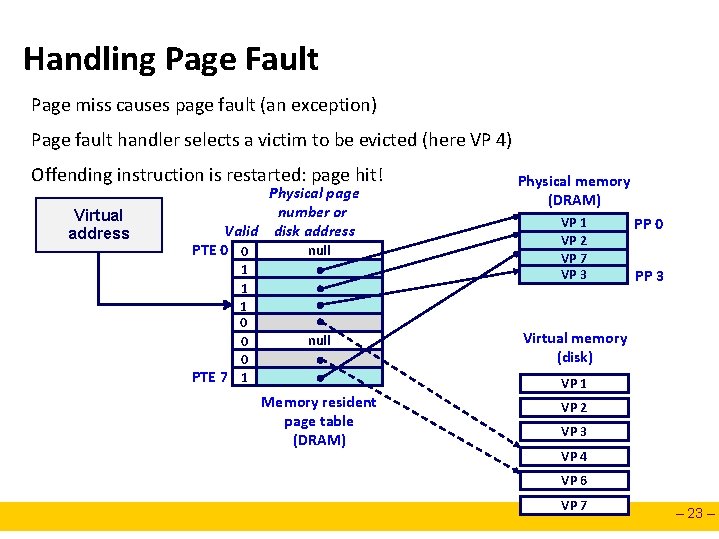

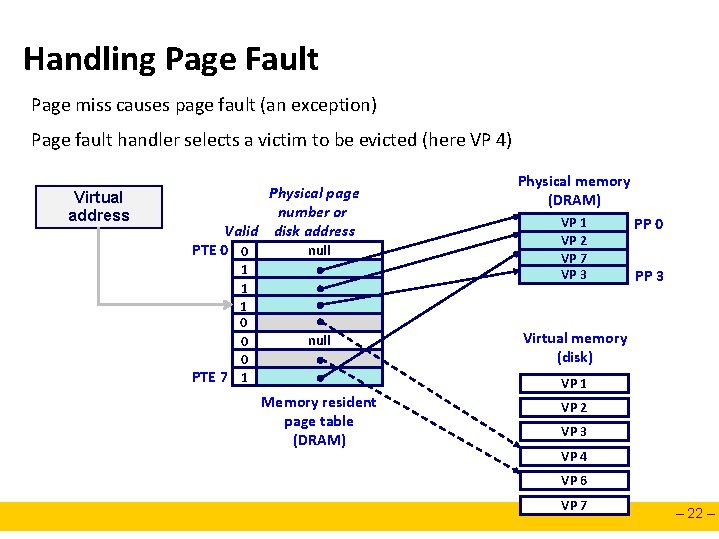


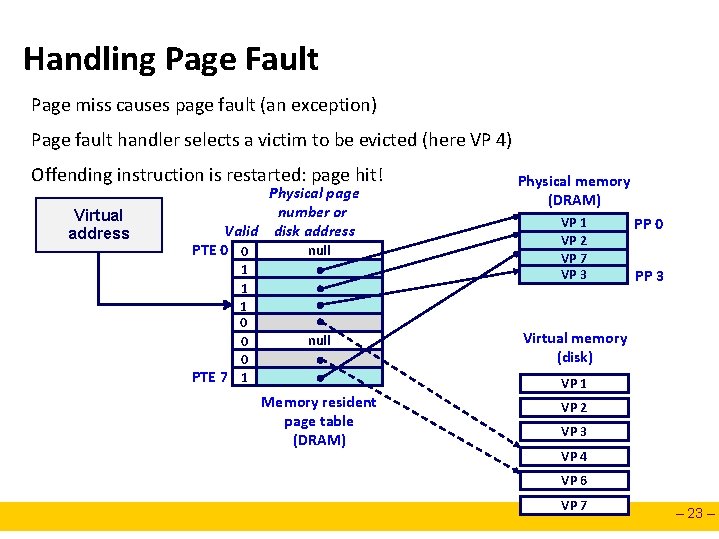


Handling Page Fault

- Page miss causes a page fault (an exception)
- Page fault handler selects a victim to be evicted (here VP 4)
- Offending instruction is restarted: page hit!

[Figure: after the fault is handled, physical memory (DRAM) holds VP 1, VP 2, VP 7, and VP 3 (in place of VP 4), and the page table is updated to match.]

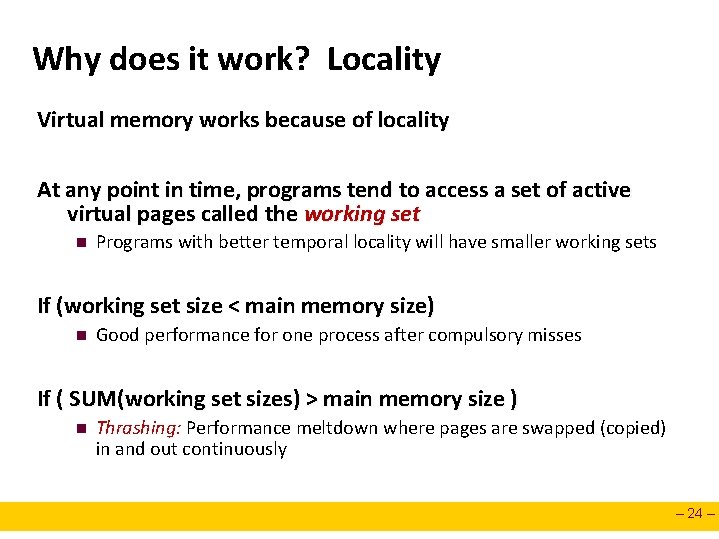

Why does it work? Locality

- Virtual memory works because of locality
- At any point in time, programs tend to access a set of active virtual pages called the working set
  - Programs with better temporal locality will have smaller working sets
- If (working set size < main memory size)
  - Good performance for one process after compulsory misses
- If (SUM(working set sizes) > main memory size)
  - Thrashing: performance meltdown where pages are swapped (copied) in and out continuously
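The two cases above can be observed with a small simulation. The sketch below counts page faults for a reference string under LRU replacement; LRU is an assumption chosen for the example (the slides do not name a replacement policy), and `count_page_faults` is a hypothetical helper.

```python
from collections import OrderedDict


def count_page_faults(refs, num_frames):
    """Count page faults for a sequence of virtual page numbers under LRU."""
    frames = OrderedDict()  # resident pages, least recently used first
    faults = 0
    for vp in refs:
        if vp in frames:
            frames.move_to_end(vp)           # page hit: mark as most recent
        else:
            faults += 1                      # page miss: bring the page in
            if len(frames) >= num_frames:
                frames.popitem(last=False)   # evict the least recently used page
            frames[vp] = True
    return faults


# A loop that touches a working set of 4 pages, 100 times over.
refs = [0, 1, 2, 3] * 100
print(count_page_faults(refs, 4))  # working set fits: compulsory misses only
print(count_page_faults(refs, 3))  # one frame short: every access faults
```

With four frames the program takes only its four compulsory misses; with three, the cyclic access pattern evicts each page just before it is needed again, which is the thrashing behavior the slide describes.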


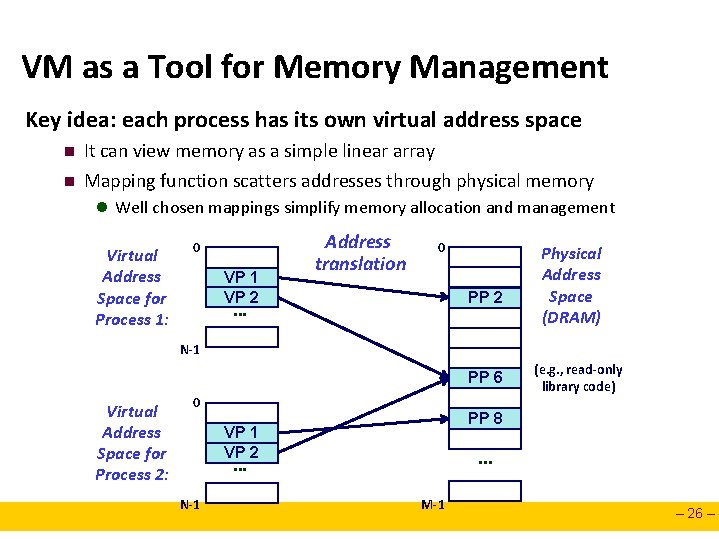

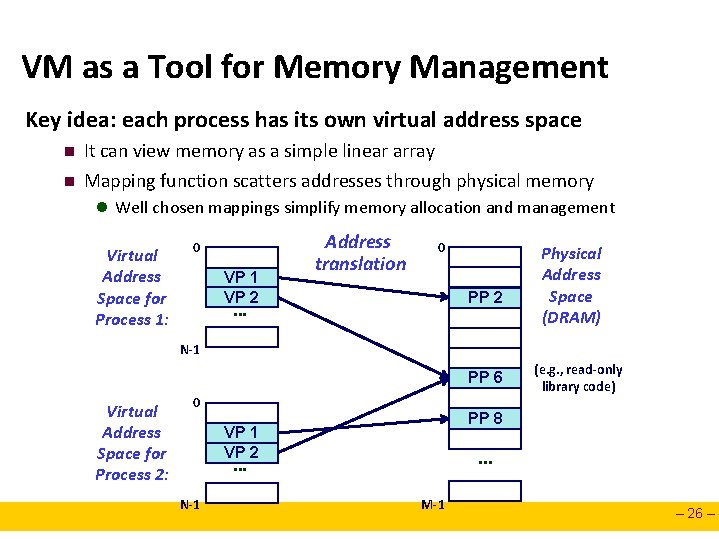

VM as a Tool for Memory Management

- Key idea: each process has its own virtual address space
  - It can view memory as a simple linear array
  - Mapping function scatters addresses through physical memory
    - Well-chosen mappings simplify memory allocation and management

[Figure: address translation maps VP 1 and VP 2 of Process 1 and of Process 2 (each virtual address space runs from 0 to N-1) onto PP 2, PP 6, and PP 8 in the physical address space (DRAM, 0 to M-1); one physical page holds, e.g., read-only library code.]

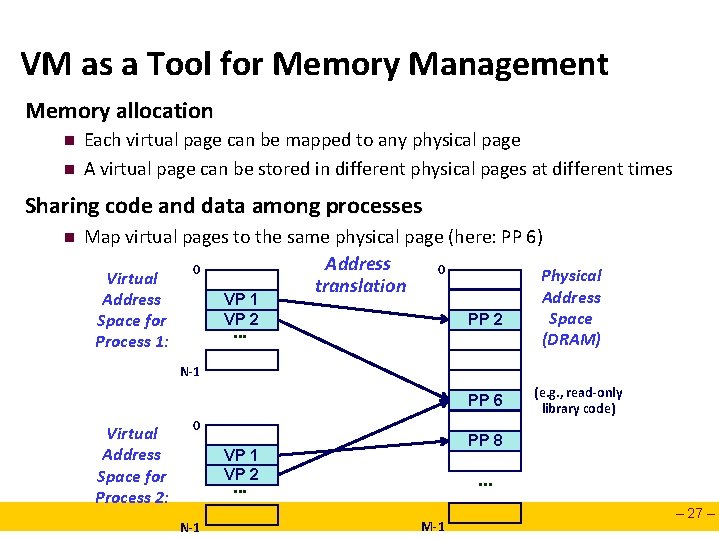

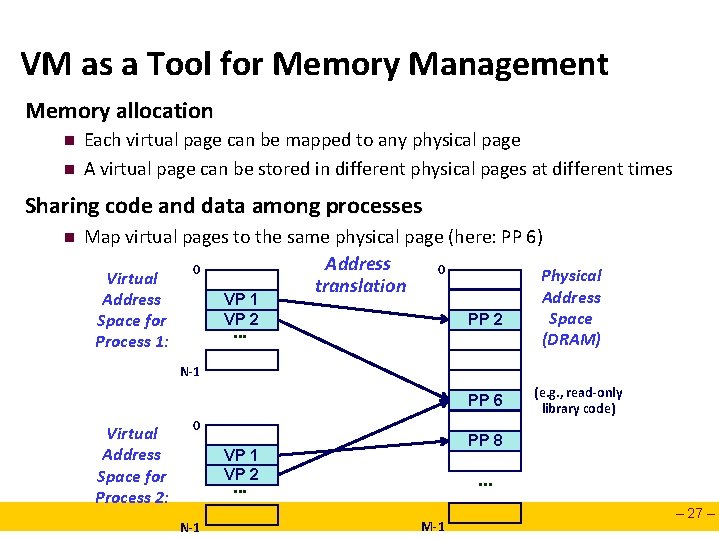

VM as a Tool for Memory Management

- Memory allocation
  - Each virtual page can be mapped to any physical page
  - A virtual page can be stored in different physical pages at different times
- Sharing code and data among processes
  - Map virtual pages to the same physical page (here: PP 6)

[Figure: address translation maps VP 1 and VP 2 of Process 1 and of Process 2 (each virtual address space runs from 0 to N-1) onto PP 2, PP 6, and PP 8 in the physical address space (DRAM, 0 to M-1); the shared page PP 6 holds, e.g., read-only library code.]

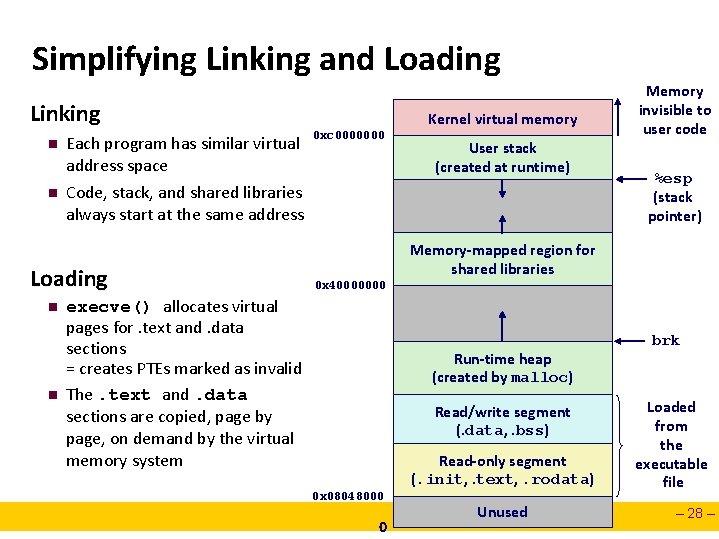

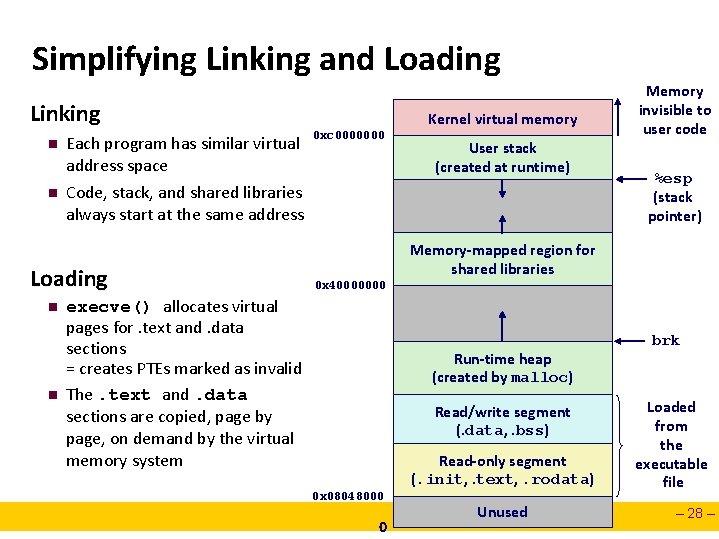

Simplifying Linking and Loading

- Linking
  - Each program has similar virtual address space
  - Code, stack, and shared libraries always start at the same address
- Loading
  - execve() allocates virtual pages for .text and .data sections = creates PTEs marked as invalid
  - The .text and .data sections are copied, page by page, on demand by the virtual memory system

[Figure: process address space, from high to low addresses: kernel virtual memory (memory invisible to user code) above 0xc0000000; user stack (created at runtime), with %esp as the stack pointer; memory-mapped region for shared libraries at 0x40000000; run-time heap (created by malloc), bounded by brk; read/write segment (.data, .bss) and read-only segment (.init, .text, .rodata), both loaded from the executable file, starting at 0x08048000; unused space down to address 0.]


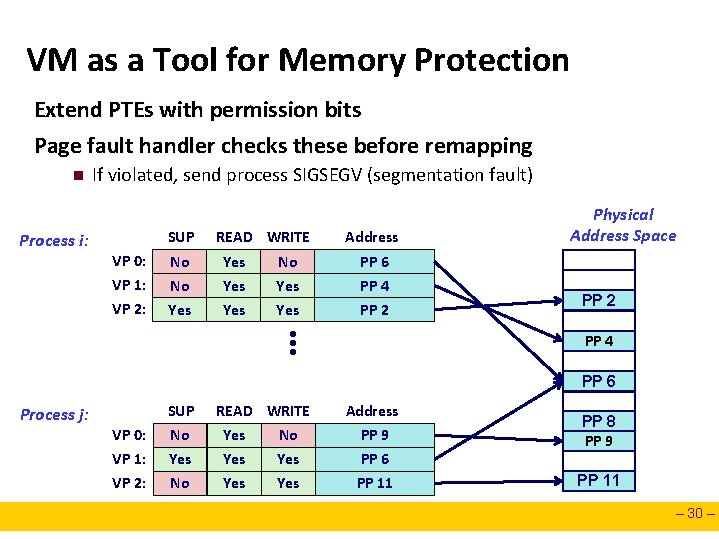

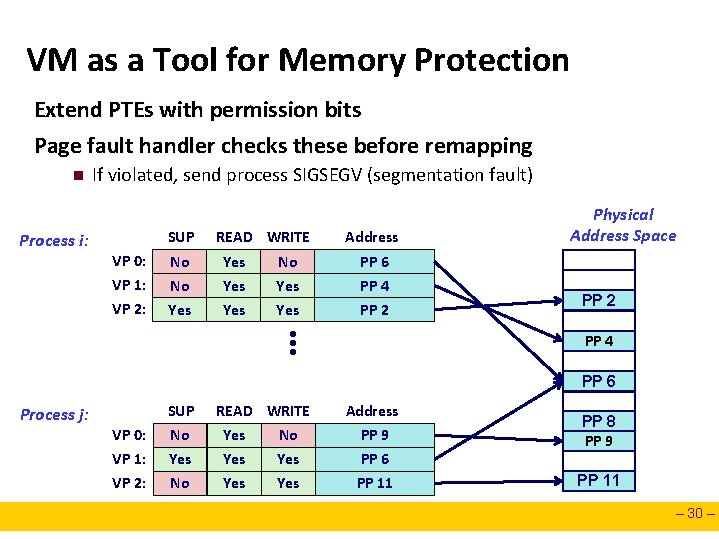

VM as a Tool for Memory Protection

- Extend PTEs with permission bits (SUP, READ, WRITE)
- Page fault handler checks these before remapping
  - If violated, send process SIGSEGV (segmentation fault)

Process i:

| Page | SUP | Address |
|---|---|---|
| VP 0 | No | PP 6 |
| VP 1 | No | PP 4 |
| VP 2 | Yes | PP 2 |

Process j:

| Page | SUP | Address |
|---|---|---|
| VP 0 | No | PP 9 |
| VP 1 | Yes | PP 6 |
| VP 2 | No | PP 11 |

[Figure: the physical address space contains PP 2, PP 4, PP 6, PP 8, PP 9, and PP 11.]
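A permission check of this kind might look like the following sketch. The `PTE` class, the `access_allowed` function, and the READ/WRITE values in the usage lines are illustrative assumptions; only the SUP/READ/WRITE bit names come from the slide.

```python
from dataclasses import dataclass


@dataclass
class PTE:
    """Simplified page table entry with the slide's permission bits."""
    sup: bool    # True: accessible only in supervisor (kernel) mode
    read: bool
    write: bool
    ppn: int


def access_allowed(pte, op, supervisor_mode):
    """Return True if operation op ('read' or 'write') is permitted."""
    if pte.sup and not supervisor_mode:
        return False              # user code touching a supervisor-only page
    if op == "read":
        return pte.read
    if op == "write":
        return pte.write
    raise ValueError(f"unknown operation: {op}")


# Hypothetical entries: a read-only user page and a supervisor-only page.
user_page = PTE(sup=False, read=True, write=False, ppn=6)
kernel_page = PTE(sup=True, read=True, write=True, ppn=2)
print(access_allowed(user_page, "write", supervisor_mode=False))
print(access_allowed(kernel_page, "read", supervisor_mode=True))
```

A failed check corresponds to the case where the process is sent SIGSEGV.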


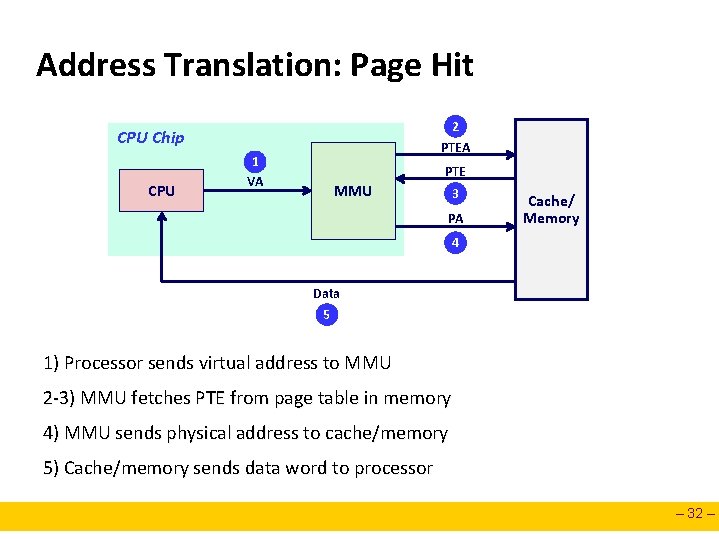

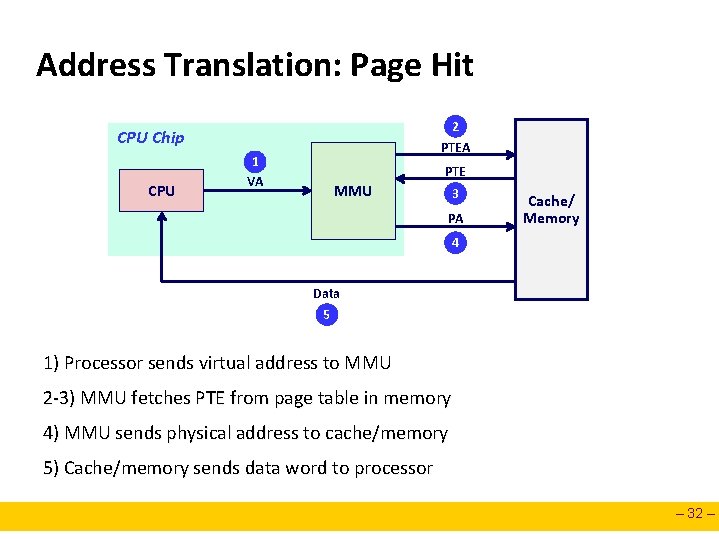

Address Translation: Page Hit

1. Processor sends virtual address (VA) to MMU
2. MMU sends the PTE address (PTEA) to cache/memory
3. Cache/memory returns the PTE to the MMU (steps 2-3: MMU fetches PTE from page table in memory)
4. MMU sends physical address (PA) to cache/memory
5. Cache/memory sends data word to processor

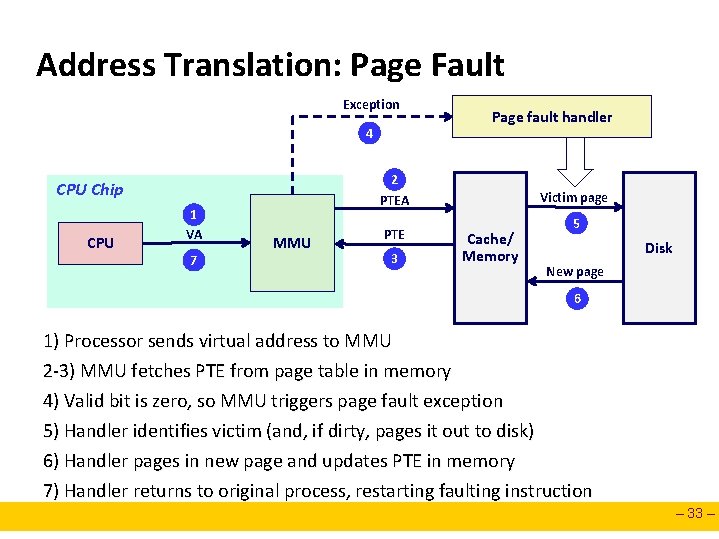

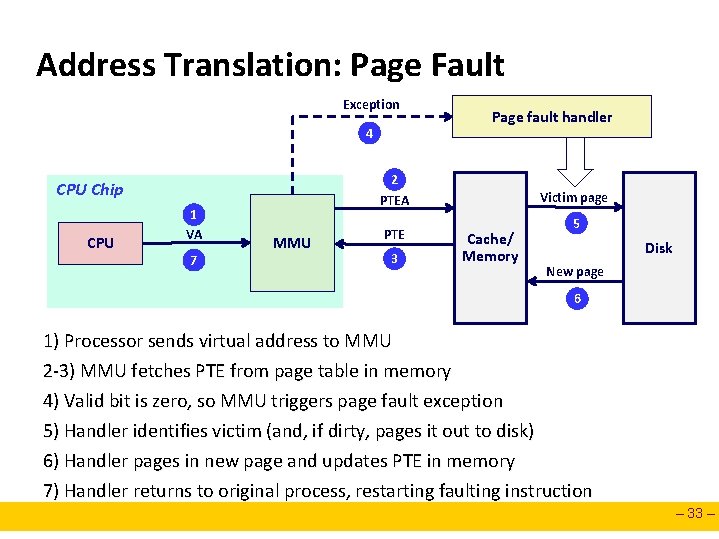

Address Translation: Page Fault

1. Processor sends virtual address (VA) to MMU
2. MMU sends the PTE address (PTEA) to cache/memory
3. Cache/memory returns the PTE to the MMU (steps 2-3: MMU fetches PTE from page table in memory)
4. Valid bit is zero, so MMU triggers page fault exception
5. Handler identifies victim page (and, if dirty, pages it out to disk)
6. Handler pages in new page and updates PTE in memory
7. Handler returns to original process, restarting faulting instruction

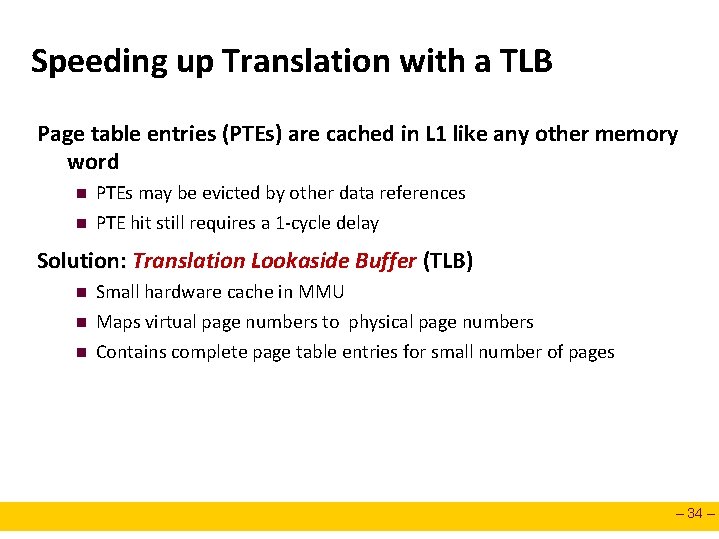

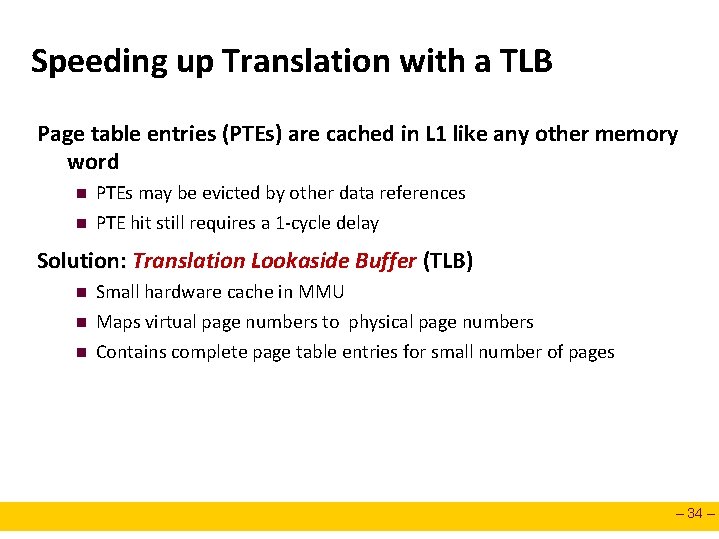

Speeding up Translation with a TLB

- Page table entries (PTEs) are cached in L1 like any other memory word
  - PTEs may be evicted by other data references
  - PTE hit still requires a 1-cycle delay
- Solution: Translation Lookaside Buffer (TLB)
  - Small hardware cache in MMU
  - Maps virtual page numbers to physical page numbers
  - Contains complete page table entries for small number of pages

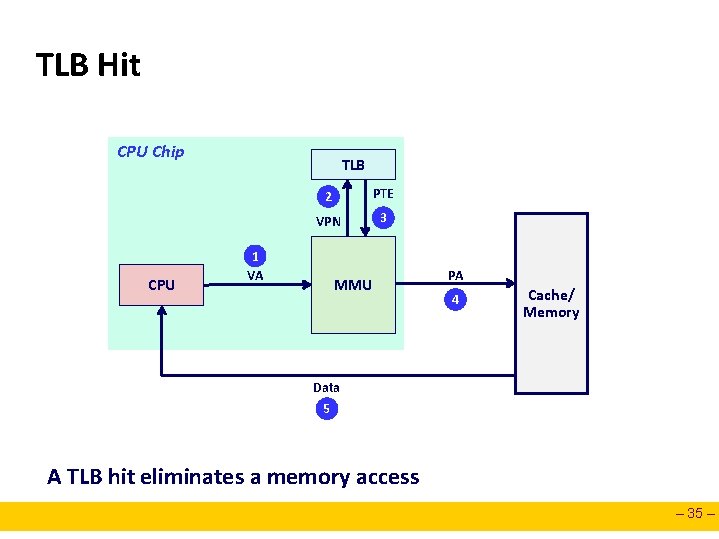

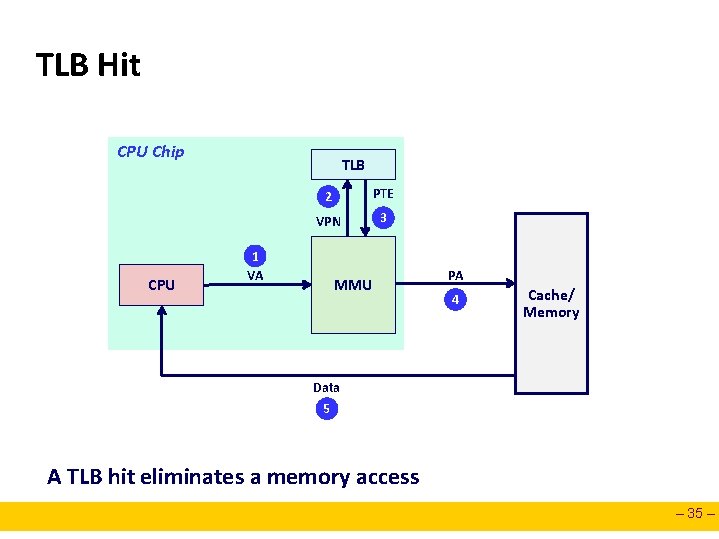

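TLB Hit

1. CPU sends the virtual address (VA) to the MMU
2. MMU sends the VPN to the TLB
3. TLB returns the PTE
4. MMU sends the physical address (PA) to cache/memory
5. Cache/memory returns the data to the CPU

- A TLB hit eliminates a memory access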

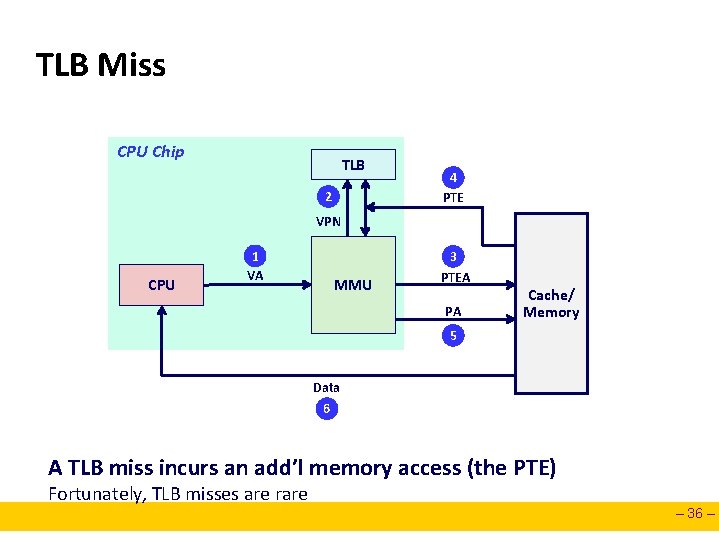

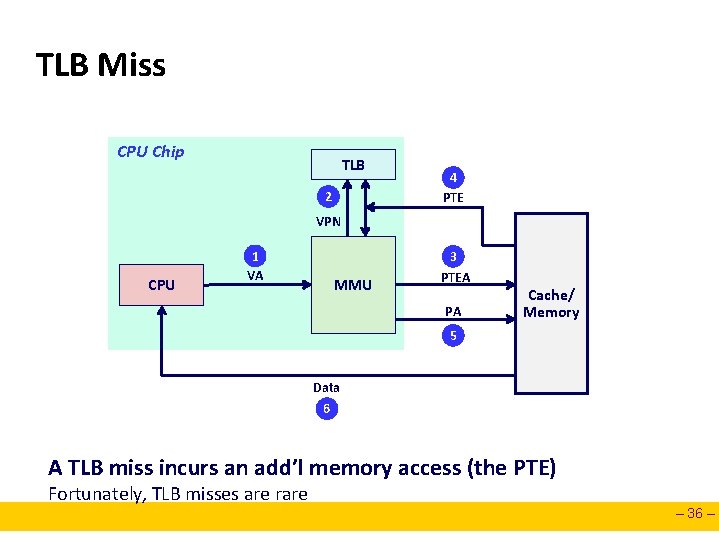

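TLB Miss

1. CPU sends the virtual address (VA) to the MMU
2. MMU sends the VPN to the TLB
3. MMU sends the PTE address (PTEA) to cache/memory
4. Cache/memory returns the PTE, which is placed in the TLB
5. MMU sends the physical address (PA) to cache/memory
6. Cache/memory returns the data to the CPU

- A TLB miss incurs an additional memory access (the PTE)
- Fortunately, TLB misses are rare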

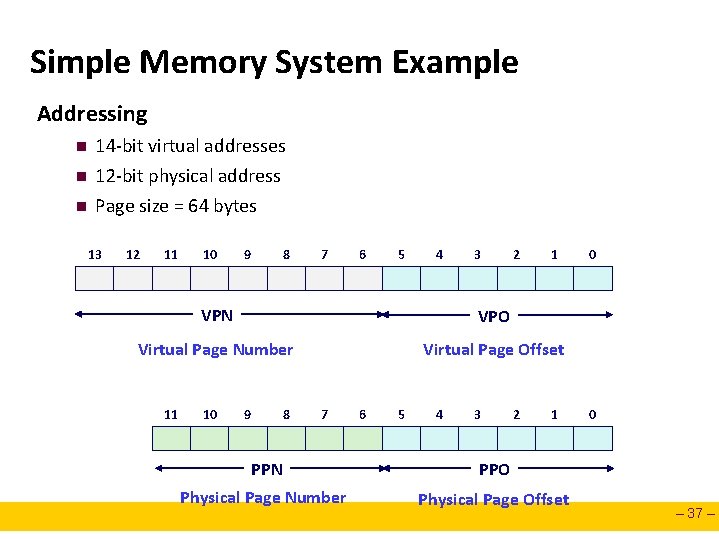

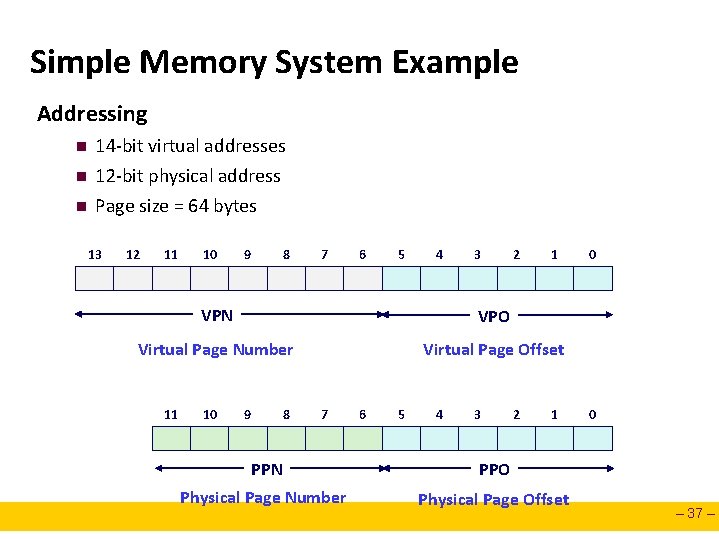

Simple Memory System Example

- Addressing
  - 14-bit virtual addresses
  - 12-bit physical address
  - Page size = 64 bytes
- Virtual address: bits 13-6 are the VPN (virtual page number), bits 5-0 are the VPO (virtual page offset)
- Physical address: bits 11-6 are the PPN (physical page number), bits 5-0 are the PPO (physical page offset)

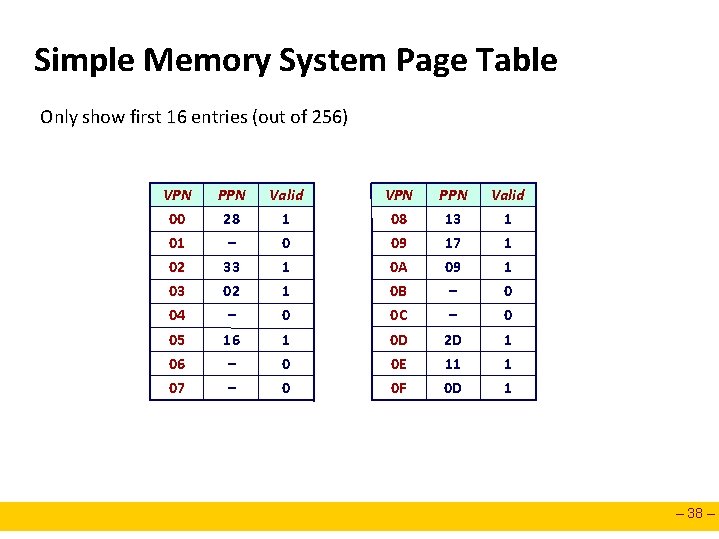

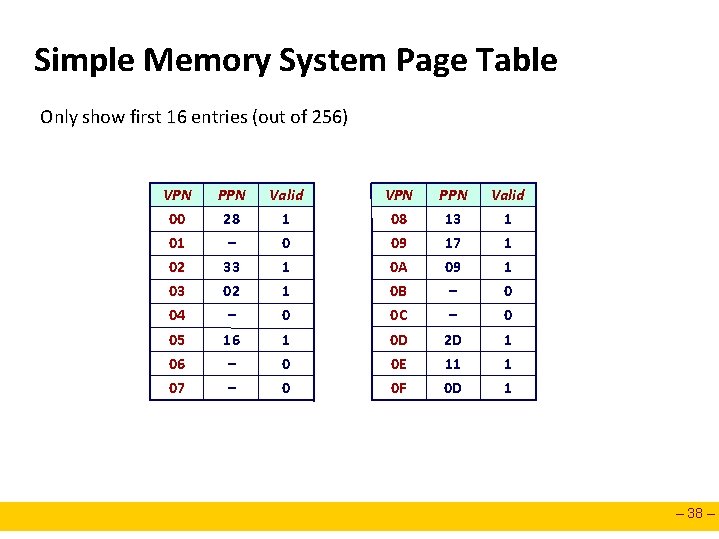

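Simple Memory System Page Table

Only show first 16 entries (out of 256)

| VPN | PPN | Valid |
|---|---|---|
| 00 | 28 | 1 |
| 01 | – | 0 |
| 02 | 33 | 1 |
| 03 | 02 | 1 |
| 04 | – | 0 |
| 05 | 16 | 1 |
| 06 | – | 0 |
| 07 | – | 0 |
| 08 | 13 | 1 |
| 09 | 17 | 1 |
| 0A | 09 | 1 |
| 0B | – | 0 |
| 0C | – | 0 |
| 0D | 2D | 1 |
| 0E | 11 | 1 |
| 0F | 0D | 1 |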

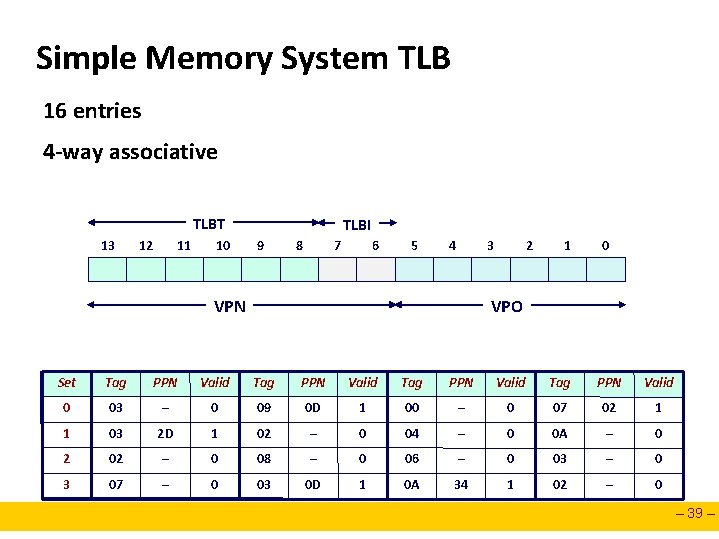

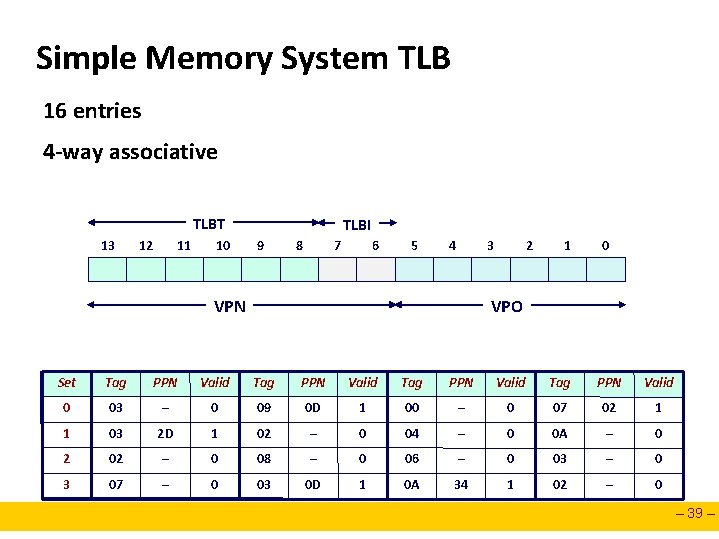

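Simple Memory System TLB

- 16 entries
- 4-way associative
- Virtual address: bits 13-8 are the TLBT (tag), bits 7-6 are the TLBI (index), bits 5-0 are the VPO; TLBT and TLBI together form the VPN

| Set | Tag | PPN | Valid | Tag | PPN | Valid | Tag | PPN | Valid | Tag | PPN | Valid |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 03 | – | 0 | 09 | 0D | 1 | 00 | – | 0 | 07 | 02 | 1 |
| 1 | 03 | 2D | 1 | 02 | – | 0 | 04 | – | 0 | 0A | – | 0 |
| 2 | 02 | – | 0 | 08 | – | 0 | 06 | – | 0 | 03 | – | 0 |
| 3 | 07 | – | 0 | 03 | 0D | 1 | 0A | 34 | 1 | 02 | – | 0 |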

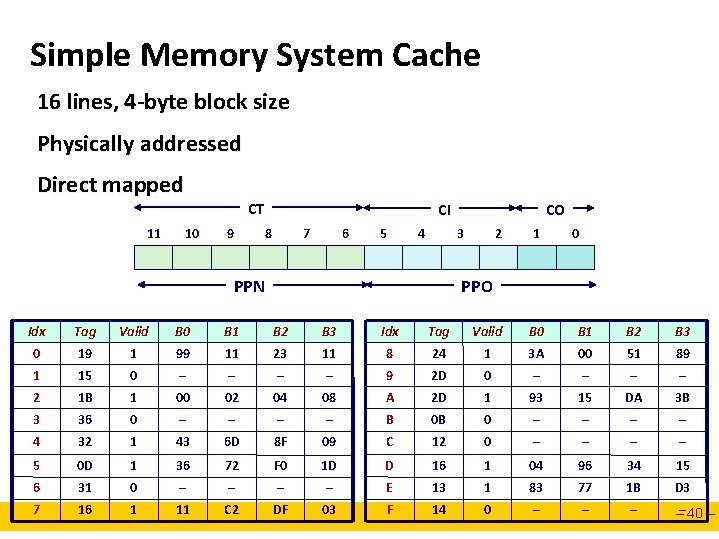

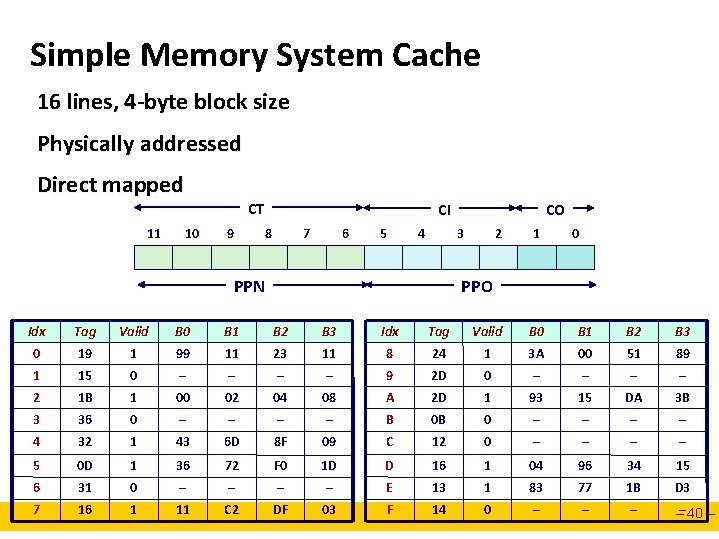

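Simple Memory System Cache

- 16 lines, 4-byte block size
- Physically addressed
- Direct mapped
- Physical address: bits 11-6 are the CT (tag, same bits as the PPN), bits 5-2 are the CI (index), bits 1-0 are the CO (offset); CI and CO together form the PPO

| Idx | Tag | Valid | B0 | B1 | B2 | B3 |
|---|---|---|---|---|---|---|
| 0 | 19 | 1 | 99 | 11 | 23 | 11 |
| 1 | 15 | 0 | – | – | – | – |
| 2 | 1B | 1 | 00 | 02 | 04 | 08 |
| 3 | 36 | 0 | – | – | – | – |
| 4 | 32 | 1 | 43 | 6D | 8F | 09 |
| 5 | 0D | 1 | 36 | 72 | F0 | 1D |
| 6 | 31 | 0 | – | – | – | – |
| 7 | 16 | 1 | 11 | C2 | DF | 03 |
| 8 | 24 | 1 | 3A | 00 | 51 | 89 |
| 9 | 2D | 0 | – | – | – | – |
| A | 2D | 1 | 93 | 15 | DA | 3B |
| B | 0B | 0 | – | – | – | – |
| C | 12 | 0 | – | – | – | – |
| D | 16 | 1 | 04 | 96 | 34 | 15 |
| E | 13 | 1 | 83 | 77 | 1B | D3 |
| F | 14 | 0 | – | – | – | – |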

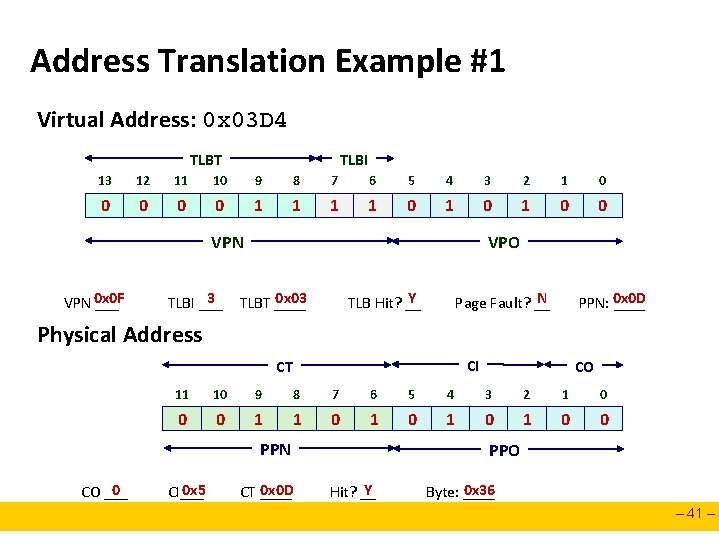

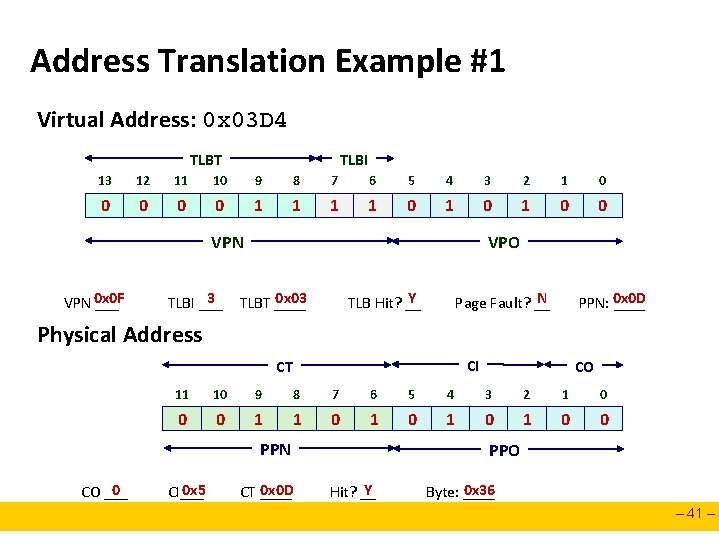

Address Translation Example #1

Virtual address: 0x03D4 = 00 0011 1101 0100 (bits 13 to 0)

- VPN: 0x0F
- TLBI: 3
- TLBT: 0x03
- TLB hit? Y
- Page fault? N
- PPN: 0x0D

Physical address: 0011 0101 0100 (bits 11 to 0)

- CO: 0
- CI: 0x5
- CT: 0x0D
- Hit? Y
- Byte: 0x36

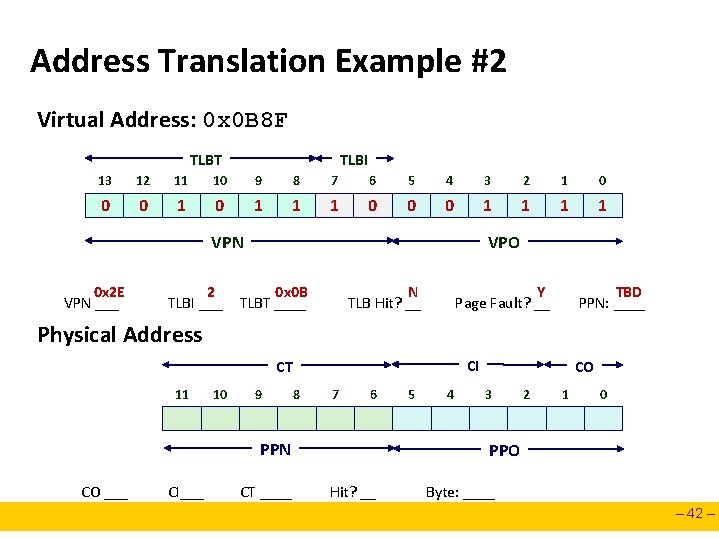

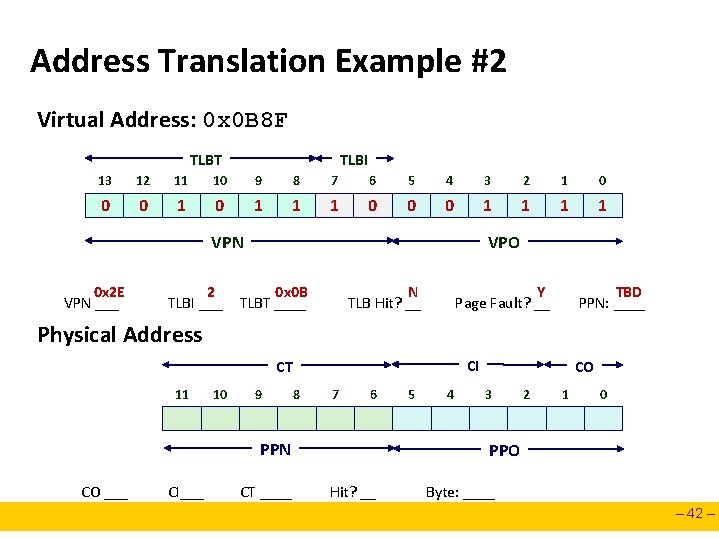

Address Translation Example #2

Virtual address: 0x0B8F = 00 1011 1000 1111 (bits 13 to 0)

- VPN: 0x2E
- TLBI: 2
- TLBT: 0x0B
- TLB hit? N
- Page fault? Y
- PPN: TBD

Physical address: not available until the page fault is handled, so CO, CI, CT, Hit?, and Byte are left blank.

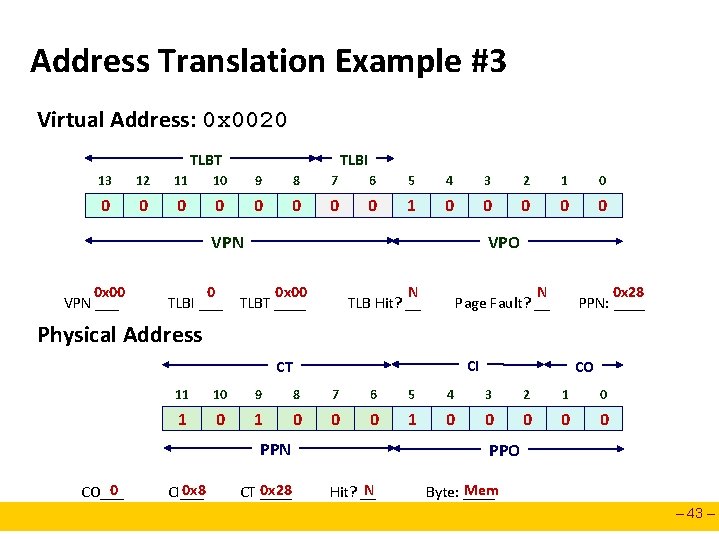

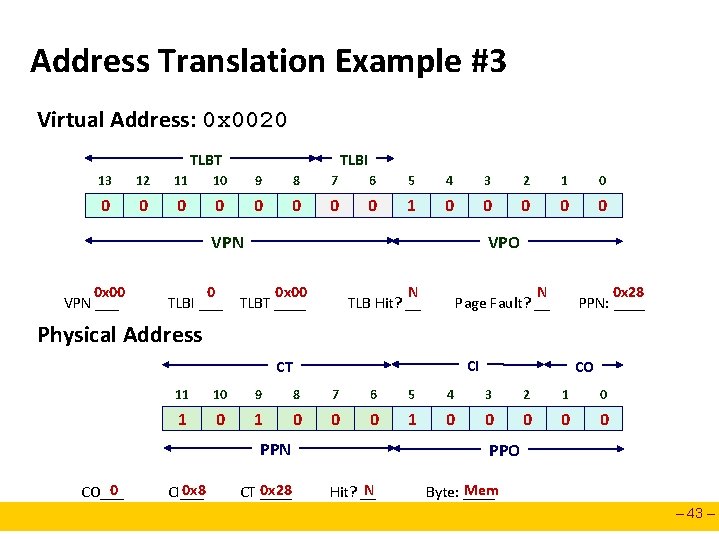

Address Translation Example #3

Virtual address: 0x0020 = 00 0000 0010 0000 (bits 13 to 0)

- VPN: 0x00
- TLBI: 0
- TLBT: 0x00
- TLB hit? N
- Page fault? N
- PPN: 0x28

Physical address: 1010 0010 0000 (bits 11 to 0)

- CO: 0
- CI: 0x8
- CT: 0x28
- Hit? N
- Byte: Mem (must be fetched from memory)
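The three examples can be replayed with a short Python model of the simple memory system. The sketch below encodes only the valid entries from the page table, TLB, and cache slides; the function name `access` and the dict layout are assumptions made for illustration. Because only the first 16 PTEs are shown, any VPN outside that range is treated as not resident, which matches what the slides state for Example #2.

```python
# Valid entries only: VPN -> PPN (first 16 PTEs of the page table slide).
PAGE_TABLE = {0x00: 0x28, 0x02: 0x33, 0x03: 0x02, 0x05: 0x16, 0x08: 0x13,
              0x09: 0x17, 0x0A: 0x09, 0x0D: 0x2D, 0x0E: 0x11, 0x0F: 0x0D}

# TLB: set index -> {tag: PPN} for valid entries.
TLB = {0: {0x09: 0x0D, 0x07: 0x02}, 1: {0x03: 0x2D},
       2: {}, 3: {0x03: 0x0D, 0x0A: 0x34}}

# Cache: line index -> (tag, [B0, B1, B2, B3]) for valid lines.
CACHE = {0x0: (0x19, [0x99, 0x11, 0x23, 0x11]),
         0x2: (0x1B, [0x00, 0x02, 0x04, 0x08]),
         0x4: (0x32, [0x43, 0x6D, 0x8F, 0x09]),
         0x5: (0x0D, [0x36, 0x72, 0xF0, 0x1D]),
         0x7: (0x16, [0x11, 0xC2, 0xDF, 0x03]),
         0x8: (0x24, [0x3A, 0x00, 0x51, 0x89]),
         0xA: (0x2D, [0x93, 0x15, 0xDA, 0x3B]),
         0xD: (0x16, [0x04, 0x96, 0x34, 0x15]),
         0xE: (0x13, [0x83, 0x77, 0x1B, 0xD3])}


def access(va):
    """Translate a 14-bit virtual address and look up the byte in the cache."""
    vpn, vpo = va >> 6, va & 0x3F          # 64-byte pages: 6 offset bits
    tlbi, tlbt = vpn & 0x3, vpn >> 2       # 4 sets: 2 index bits
    result = {"vpn": vpn, "tlbi": tlbi, "tlbt": tlbt}
    ppn = TLB[tlbi].get(tlbt)
    result["tlb_hit"] = ppn is not None
    if ppn is None:
        ppn = PAGE_TABLE.get(vpn)          # TLB miss: consult the page table
    result["page_fault"] = ppn is None
    if ppn is None:
        return result                      # no physical address yet
    pa = (ppn << 6) | vpo
    co, ci, ct = pa & 0x3, (pa >> 2) & 0xF, pa >> 6
    line = CACHE.get(ci)
    hit = line is not None and line[0] == ct
    result.update(ppn=ppn, pa=pa, co=co, ci=ci, ct=ct, cache_hit=hit,
                  byte=line[1][co] if hit else None)
    return result


for va in (0x03D4, 0x0B8F, 0x0020):
    print(hex(va), access(va))
```

Note that the cache is physically addressed, so the lookup can only begin once the PPN is known.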

Summary

- Programmer's view of virtual memory
  - Each process has its own private linear address space
  - Cannot be corrupted by other processes
- System view of virtual memory
  - Uses memory efficiently by caching virtual memory pages
    - Efficient only because of locality
  - Simplifies memory management and programming
  - Simplifies protection by providing a convenient interpositioning point to check permissions

Today

- Virtual memory (VM)
  - Overview and motivation
  - VM as tool for caching
  - VM as tool for memory management
  - VM as tool for memory protection
  - Address translation
  - Allocation, multi-level page tables
- Linux VM system

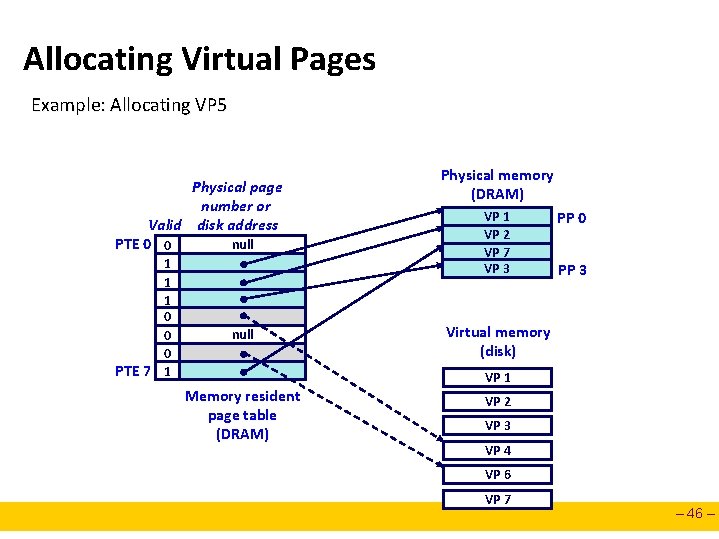

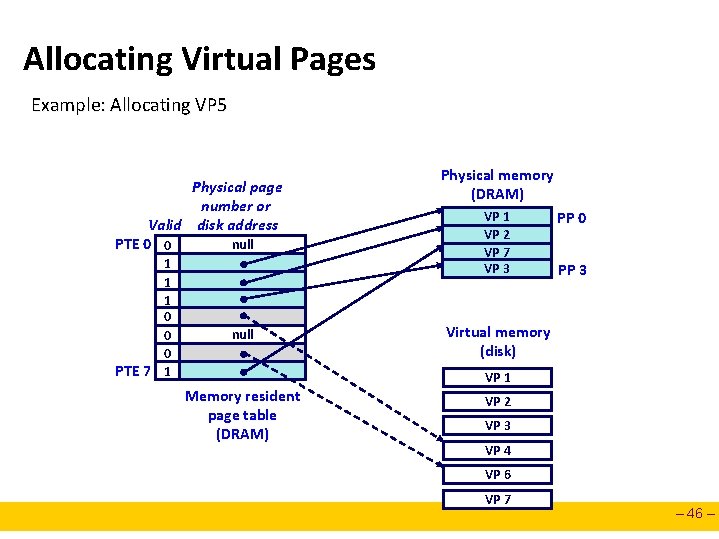


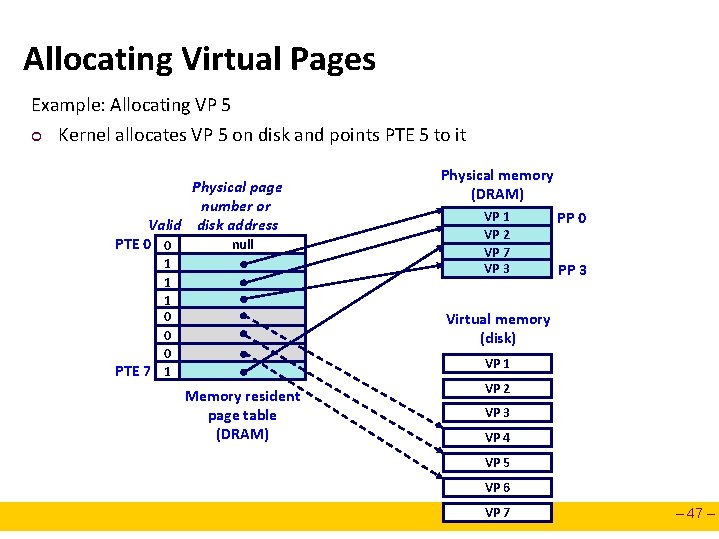

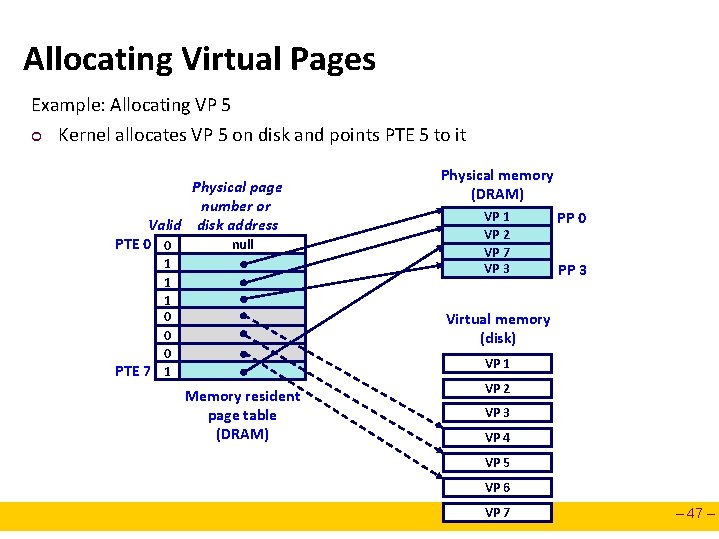

Allocating Virtual Pages

- Example: Allocating VP 5
  - Kernel allocates VP 5 on disk and points PTE 5 to it

[Figure: the memory-resident page table (DRAM), PTE 0 through PTE 7, now has a disk address in PTE 5. Physical memory (DRAM), PP 0 through PP 3, holds VP 1, VP 2, VP 7, and VP 3. Virtual memory (disk) holds VP 1, VP 2, VP 3, VP 4, VP 5, VP 6, and VP 7.]

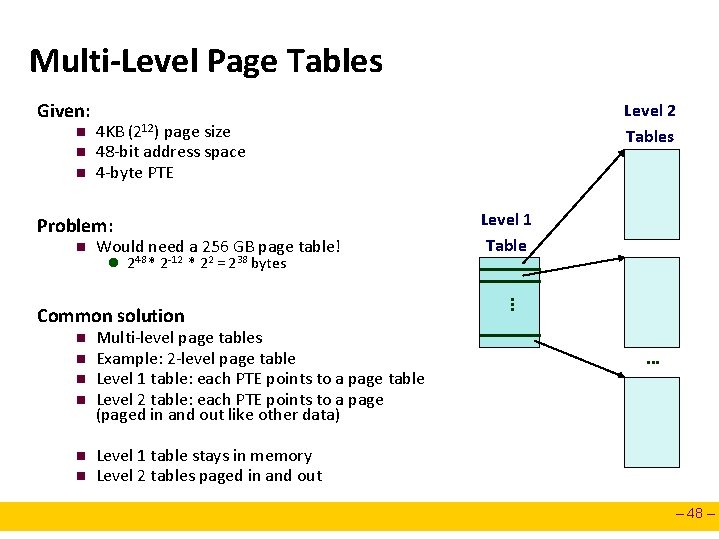

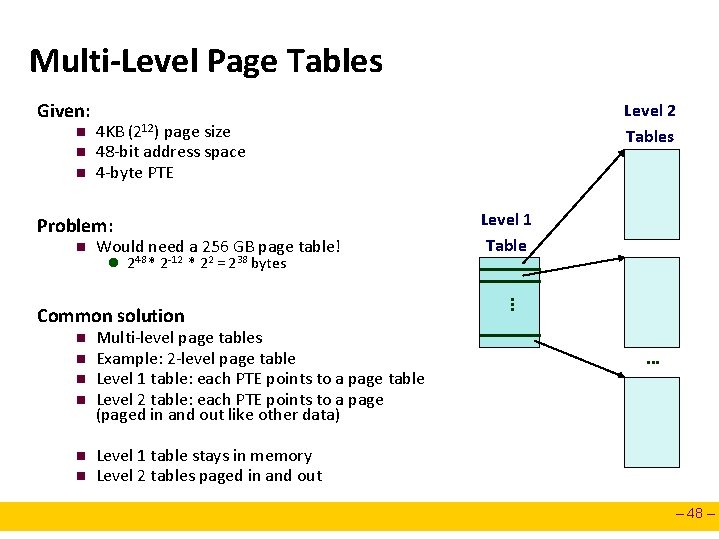

Multi-Level Page Tables

- Given:
  - 4 KB ($2^{12}$) page size
  - 48-bit address space
  - 4-byte PTE
- Problem:
  - Would need a 256 GB page table!
    - $2^{48} \cdot 2^{-12} \cdot 2^{2} = 2^{38}$ bytes
- Common solution
  - Multi-level page tables
  - Example: 2-level page table
    - Level 1 table: each PTE points to a page table
    - Level 2 table: each PTE points to a page (paged in and out like other data)
  - Level 1 table stays in memory
  - Level 2 tables paged in and out

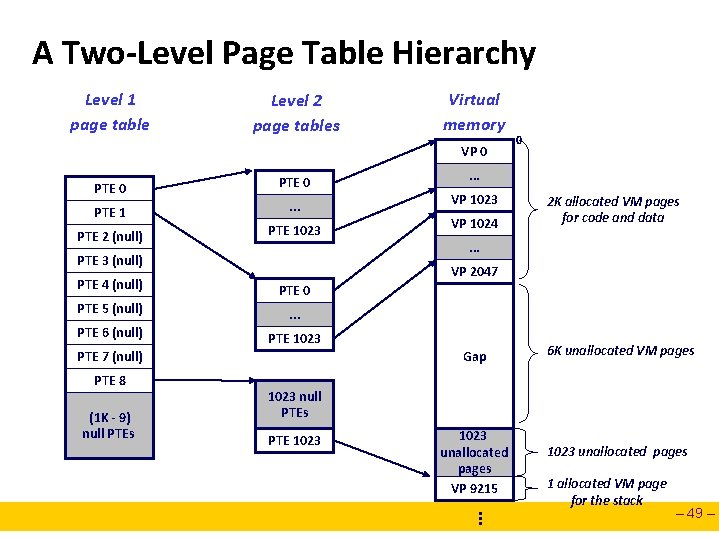

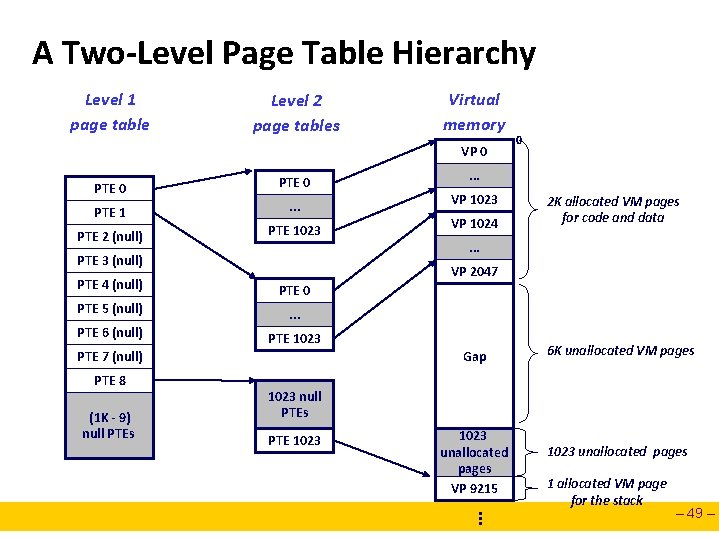

A Two-Level Page Table Hierarchy

- Level 1 page table
  - PTE 0 and PTE 1 each point to a level 2 page table
  - PTE 2 through PTE 7 are null
  - PTE 8 points to a level 2 page table
  - The remaining (1K - 9) PTEs are null
- Level 2 page tables (PTE 0 through PTE 1023 each)
  - The tables behind level 1 PTE 0 and PTE 1 map VP 0 through VP 2047: 2K allocated VM pages for code and data
  - The table behind level 1 PTE 8 holds 1023 null PTEs, and its PTE 1023 maps VP 9215: 1 allocated VM page for the stack
- Virtual memory
  - VP 0 through VP 2047: allocated for code and data
  - Gap: 6K unallocated VM pages
  - 1023 unallocated pages, followed by VP 9215 for the stack
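The saving in this example can be worked out directly. The sketch below assumes 1024 PTEs per table (as the figure shows) and 4-byte PTEs (taken from the preceding slide); the variable names are illustrative.

```python
PTES_PER_TABLE = 1024   # entries per level 1 or level 2 table (from the figure)
PTE_SIZE = 4            # bytes per PTE (assumed from the preceding slide)
TABLE_BYTES = PTES_PER_TABLE * PTE_SIZE

# Allocated virtual pages in the example: VP 0..2047 (code and data)
# plus VP 9215 (the single stack page).
allocated_vpns = set(range(0, 2048)) | {9215}

# A level 2 table is needed only if at least one of its pages is allocated.
level2_needed = {vpn // PTES_PER_TABLE for vpn in allocated_vpns}

two_level_bytes = (1 + len(level2_needed)) * TABLE_BYTES
flat_bytes = PTES_PER_TABLE * PTES_PER_TABLE * PTE_SIZE

print(sorted(level2_needed))   # which level 1 PTEs are non-null
print(two_level_bytes)         # level 1 table + needed level 2 tables
print(flat_bytes)              # one PTE for every possible virtual page
```

Only three level 2 tables exist (behind level 1 PTEs 0, 1, and 8), so the hierarchy occupies four tables in total instead of one table covering every possible page.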

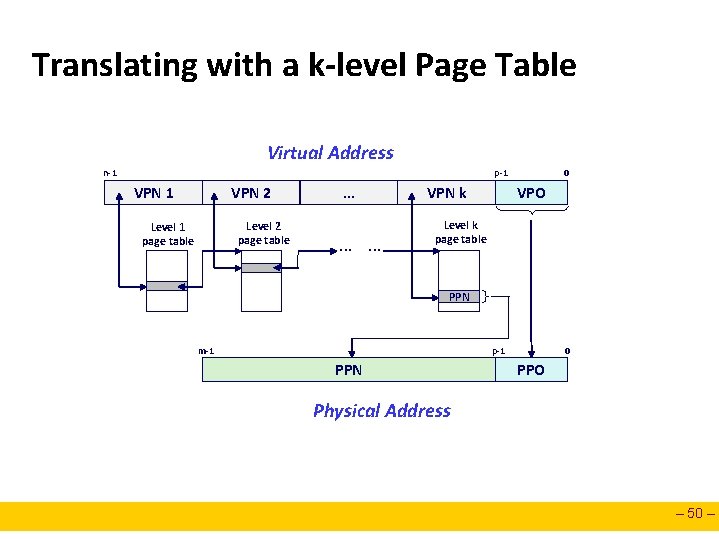

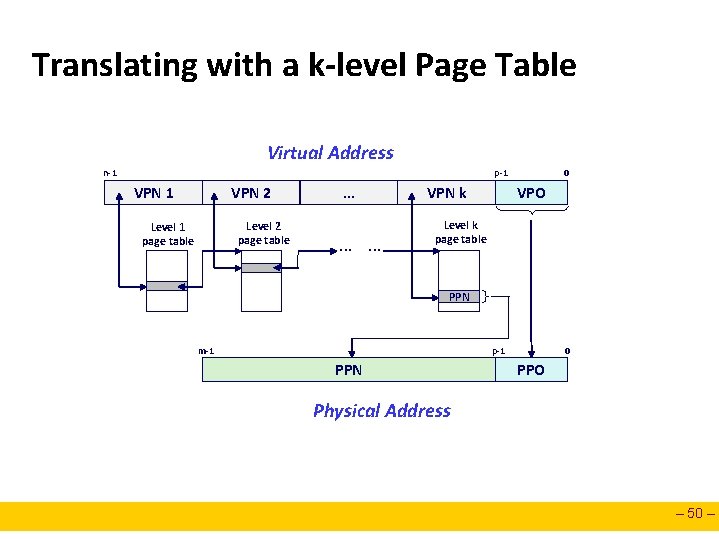

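Translating with a k-level Page Table

- Virtual address (bits n-1 to 0): VPN 1, VPN 2, ..., VPN k, followed by the VPO (bits p-1 to 0)
- VPN 1 indexes the level 1 page table, VPN 2 the level 2 page table, ..., and VPN k the level k page table, which supplies the PPN
- Physical address (bits m-1 to 0): PPN, followed by the PPO (bits p-1 to 0)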

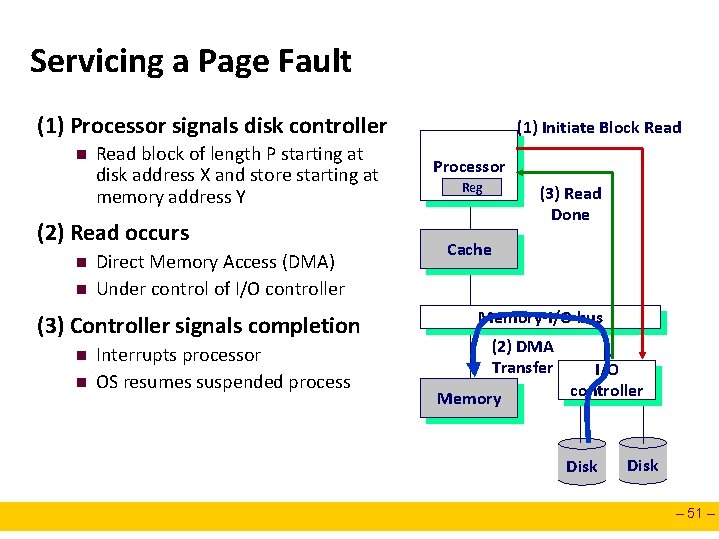

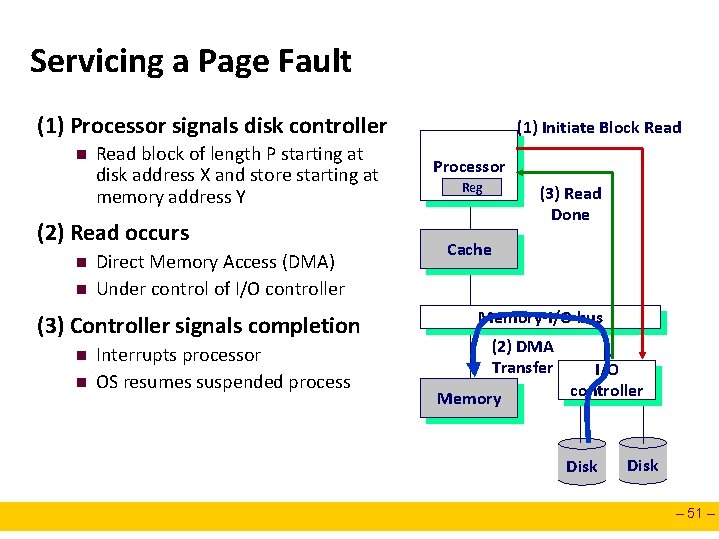

Servicing a Page Fault

1. Processor signals disk controller (initiate block read)
   - Read block of length P starting at disk address X and store starting at memory address Y
2. Read occurs (DMA transfer)
   - Direct Memory Access (DMA)
   - Under control of I/O controller
3. Controller signals completion (read done)
   - Interrupts processor
   - OS resumes suspended process

[Figure: the processor (registers, cache), memory, and the I/O controller with its disk are connected by the memory-I/O bus.]

Today

- Virtual memory (VM)
  - Multi-level page tables
- Linux VM system
- Case study: VM system on P6
- Performance optimization for VM system

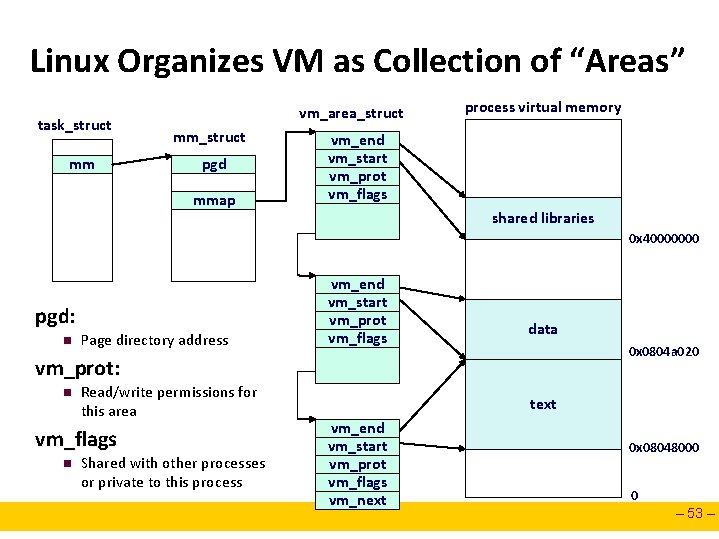

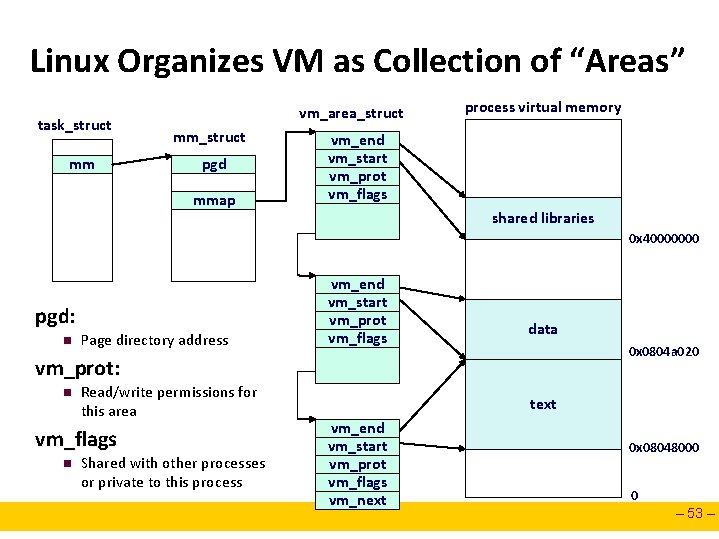

Linux Organizes VM as Collection of "Areas"

- task_struct: its mm field points to an mm_struct
- mm_struct: fields pgd and mmap; mmap points to the first vm_area_struct
- vm_area_struct: fields vm_end, vm_start, vm_prot, vm_flags, vm_next
- pgd:
  - Page directory address
- vm_prot:
  - Read/write permissions for this area
- vm_flags:
  - Shared with other processes or private to this process

[Figure: a list of vm_area_struct entries, chained by vm_next, describes the areas of process virtual memory: shared libraries at 0x40000000, data at 0x0804a020, and text at 0x08048000.]

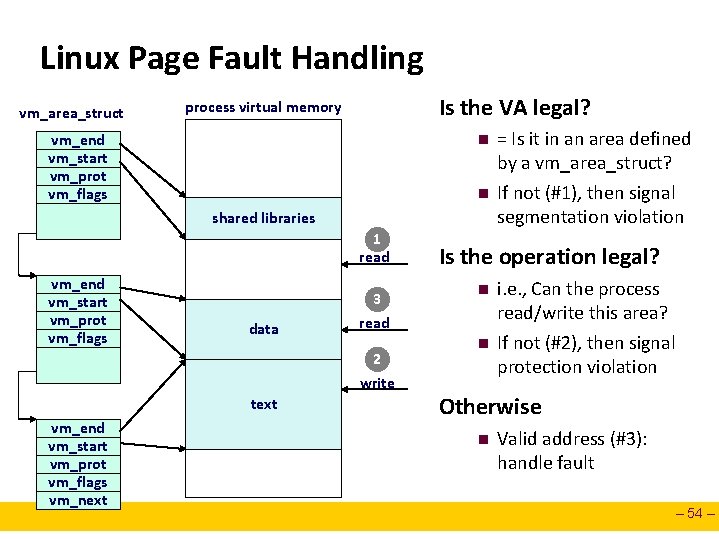

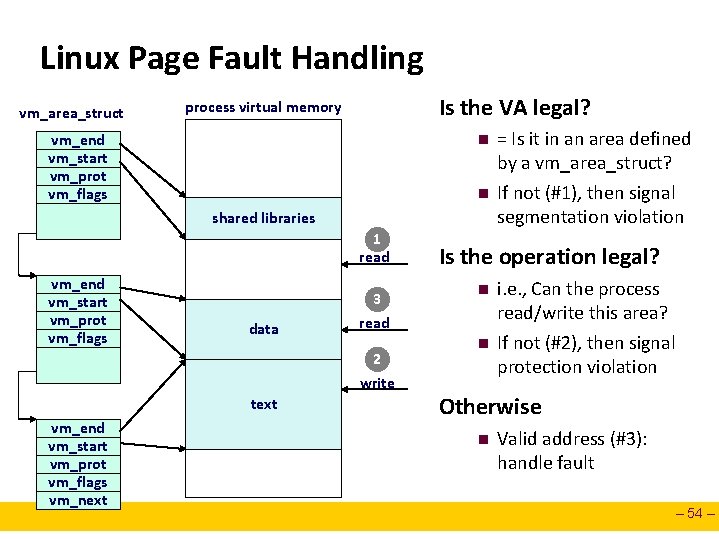

Linux Page Fault Handling

- Is the VA legal?
  - i.e., is it in an area defined by a vm_area_struct?
  - If not (#1), then signal segmentation violation
- Is the operation legal?
  - i.e., can the process read/write this area?
  - If not (#2), then signal protection violation
- Otherwise
  - Valid address (#3): handle fault

[Figure: three example accesses to process virtual memory (shared libraries, data, text), labeled 1 (read), 2 (write), and 3 (read), matching the three outcomes above.]
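The decision sequence above can be sketched as follows. This is not kernel code: `Area` is a simplified, hypothetical stand-in for vm_area_struct (with vm_prot reduced to two booleans), and the end addresses in the usage lines are made up for the example.

```python
from dataclasses import dataclass


@dataclass
class Area:
    """Simplified stand-in for vm_area_struct (illustrative only)."""
    vm_start: int
    vm_end: int       # exclusive upper bound
    readable: bool
    writable: bool


def classify_fault(areas, va, is_write):
    """Apply the two legality checks in the order given on the slide."""
    for area in areas:
        if area.vm_start <= va < area.vm_end:
            allowed = area.writable if is_write else area.readable
            return "handle fault" if allowed else "protection violation"
    return "segmentation violation"   # VA is not inside any area


# Start addresses follow the slide; end addresses are assumptions.
areas = [
    Area(0x08048000, 0x0804A000, readable=True, writable=False),  # text
    Area(0x0804A020, 0x0804B000, readable=True, writable=True),   # data
    Area(0x40000000, 0x40100000, readable=True, writable=False),  # shared libraries
]
print(classify_fault(areas, 0x30000000, is_write=False))
print(classify_fault(areas, 0x08048100, is_write=True))
print(classify_fault(areas, 0x0804A100, is_write=False))
```

A linear scan is used here for brevity; the point is only the order of the checks, not how the areas are searched.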

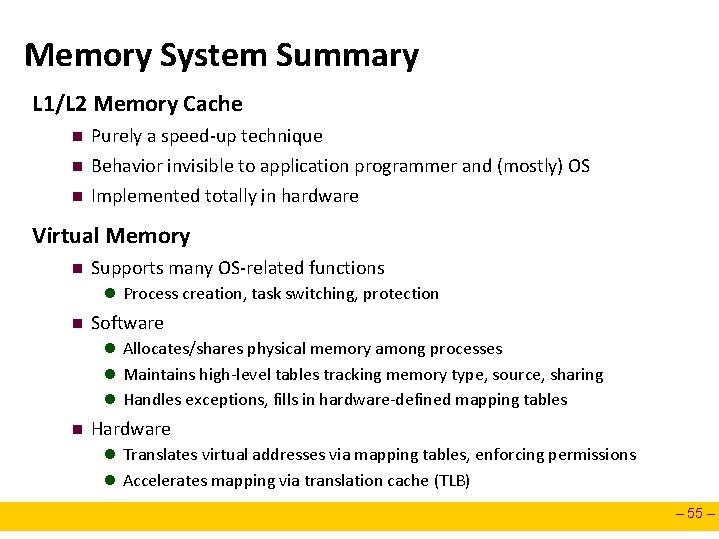

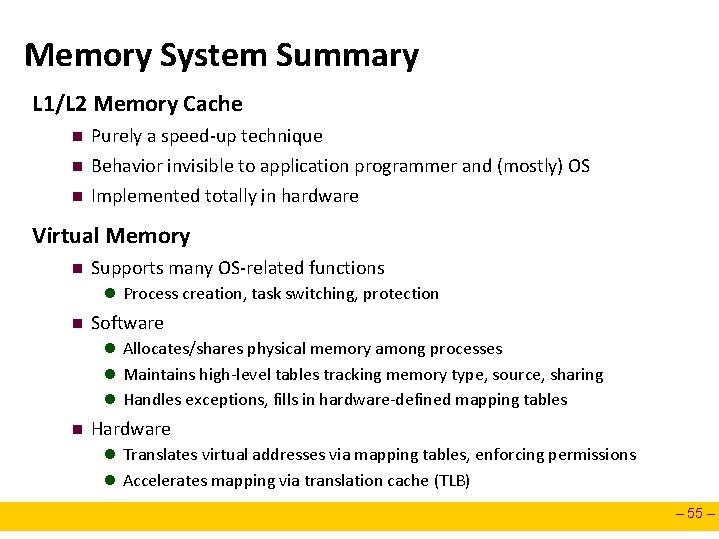

Memory System Summary

- L1/L2 memory cache
  - Purely a speed-up technique
  - Behavior invisible to application programmer and (mostly) OS
  - Implemented totally in hardware
- Virtual memory
  - Supports many OS-related functions
    - Process creation, task switching, protection
  - Software
    - Allocates/shares physical memory among processes
    - Maintains high-level tables tracking memory type, source, sharing
    - Handles exceptions, fills in hardware-defined mapping tables
  - Hardware
    - Translates virtual addresses via mapping tables, enforcing permissions
    - Accelerates mapping via translation cache (TLB)

Further Reading

- Intel TLBs:
  - Application Note: "TLBs, Paging-Structure Caches, and Their Invalidation", April 2007


Intel P6

- Internal designation for successor to Pentium
  - Which had internal designation P5
- Fundamentally different from Pentium
  - Out-of-order, superscalar operation
- Resulting processors
  - Pentium Pro (1996)
  - Pentium II (1997)
    - L2 cache on same chip
  - Pentium III (1999)

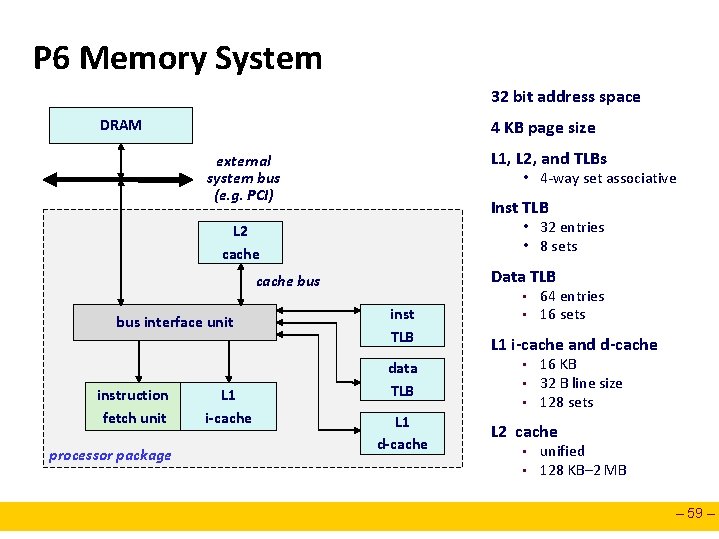

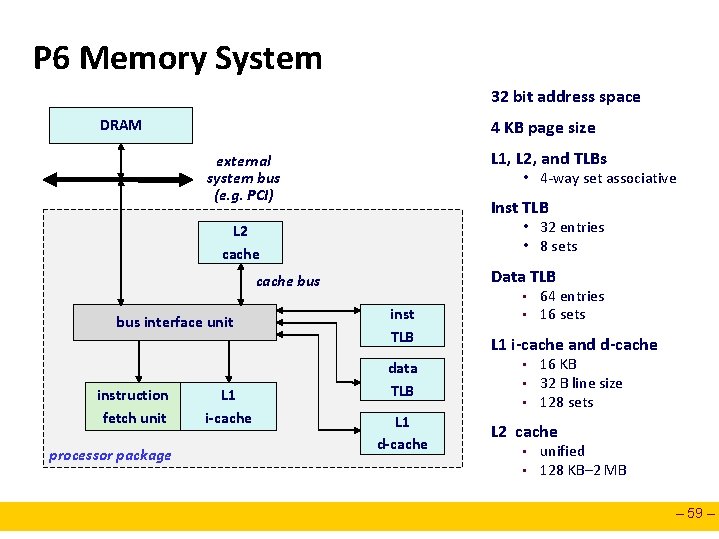

P6 Memory System

- 32-bit address space
- 4 KB page size
- L1, L2, and TLBs
  - 4-way set associative
- Inst TLB
  - 32 entries
  - 8 sets
- Data TLB
  - 64 entries
  - 16 sets
- L1 i-cache and d-cache
  - 16 KB
  - 32 B line size
  - 128 sets
- L2 cache
  - Unified
  - 128 KB to 2 MB

[Figure: the processor package contains the instruction fetch unit, inst TLB, data TLB, L1 i-cache, L1 d-cache, and bus interface unit; the cache bus connects to the L2 cache, and the external system bus (e.g., PCI) connects to DRAM.]

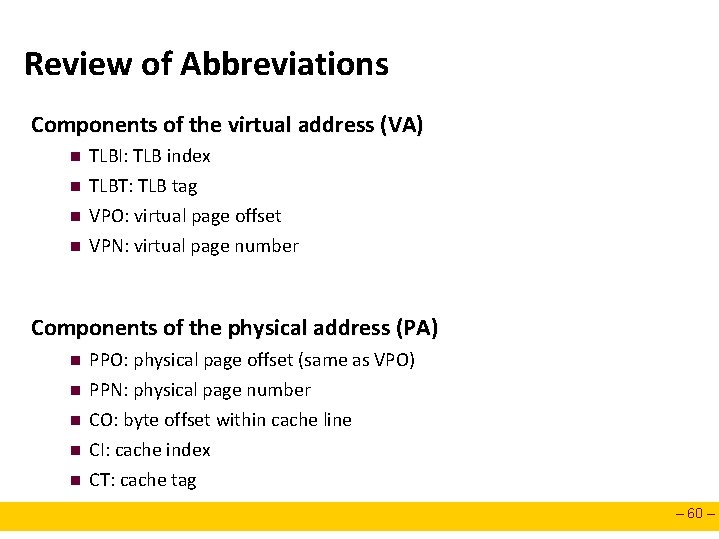

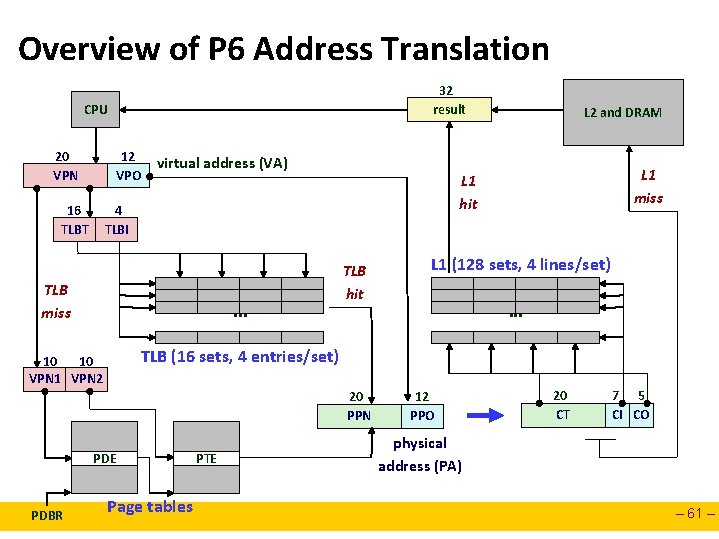

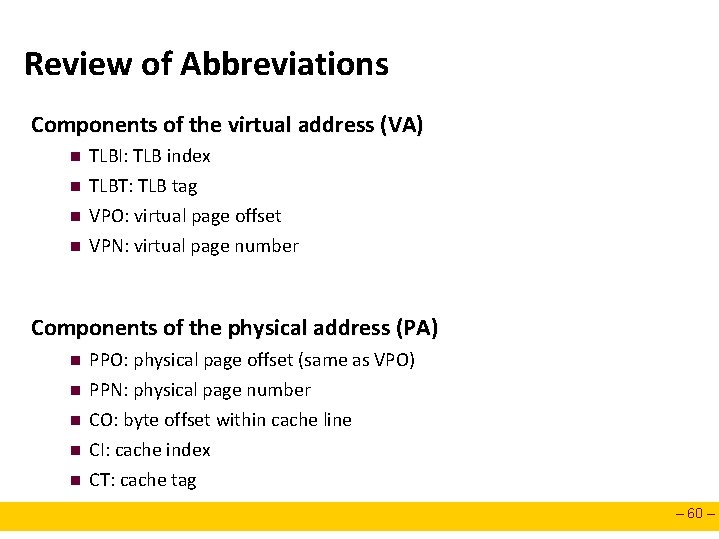

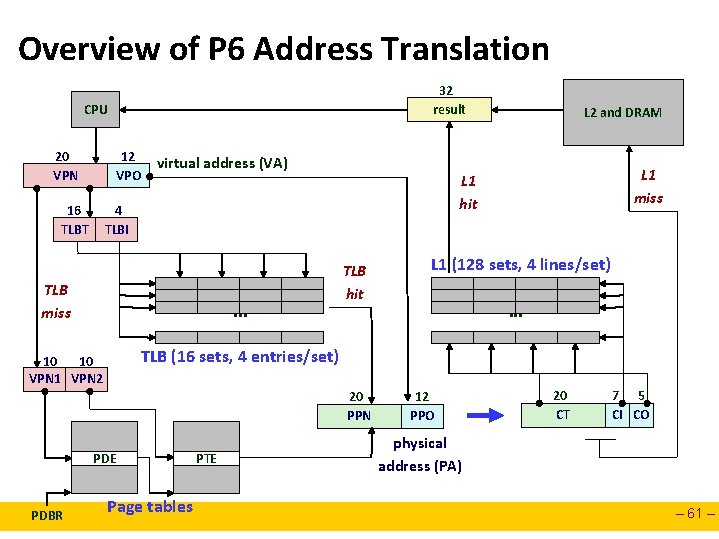

Review of Abbreviations

- Components of the virtual address (VA)
  - TLBI: TLB index
  - TLBT: TLB tag
  - VPO: virtual page offset
  - VPN: virtual page number
- Components of the physical address (PA)
  - PPO: physical page offset (same as VPO)
  - PPN: physical page number
  - CO: byte offset within cache line
  - CI: cache index
  - CT: cache tag

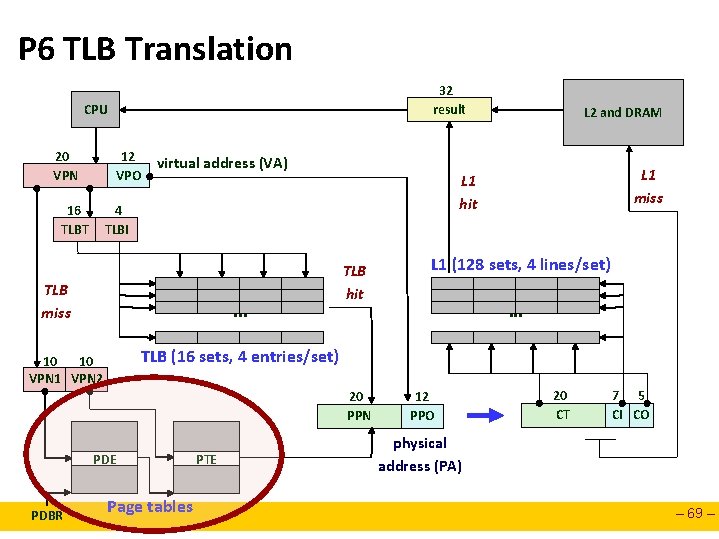

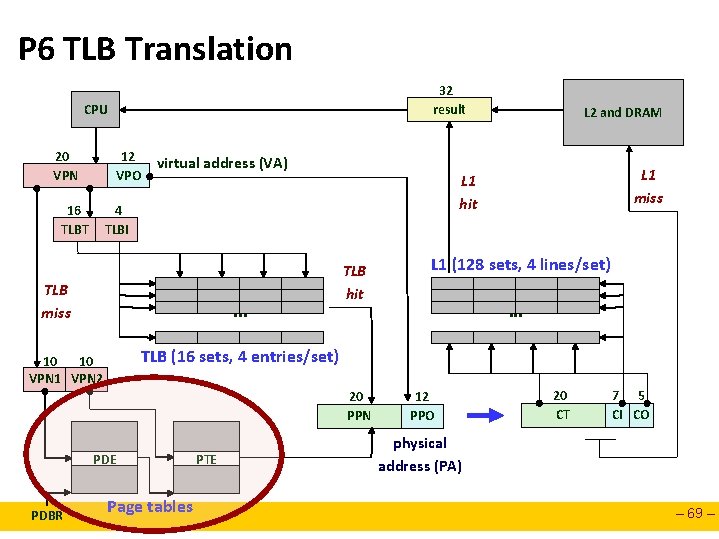

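Overview of P6 Address Translation

[Figure: the CPU issues a 32-bit virtual address (VA) made of a 20-bit VPN and a 12-bit VPO. The VPN splits into a 16-bit TLBT and a 4-bit TLBI for the TLB (16 sets, 4 entries/set). On a TLB hit, the TLB supplies the 20-bit PPN. On a TLB miss, the VPN splits into a 10-bit VPN1 and a 10-bit VPN2, which index the page tables (the page directory, located via PDBR, yields a PDE; the page table yields a PTE) to obtain the PPN. The physical address (PA) is the 20-bit PPN plus the 12-bit PPO, viewed by the cache as a 20-bit CT, 7-bit CI, and 5-bit CO. L1 (128 sets, 4 lines/set) returns the 32-bit result on an L1 hit; an L1 miss goes to L2 and DRAM.]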

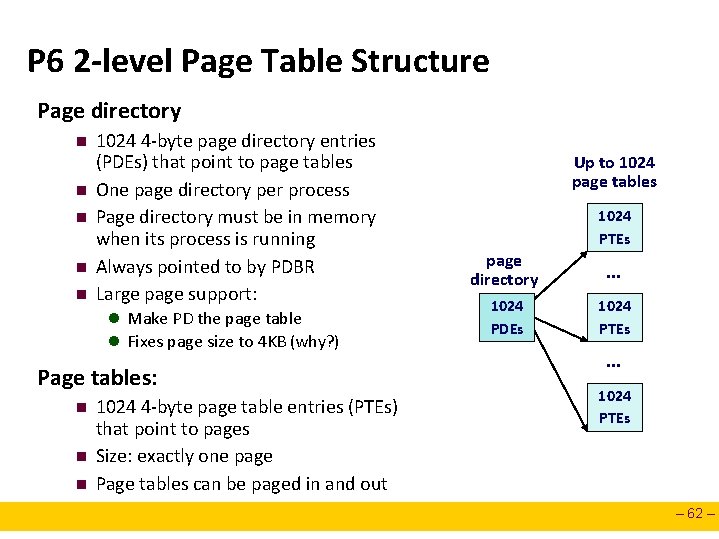

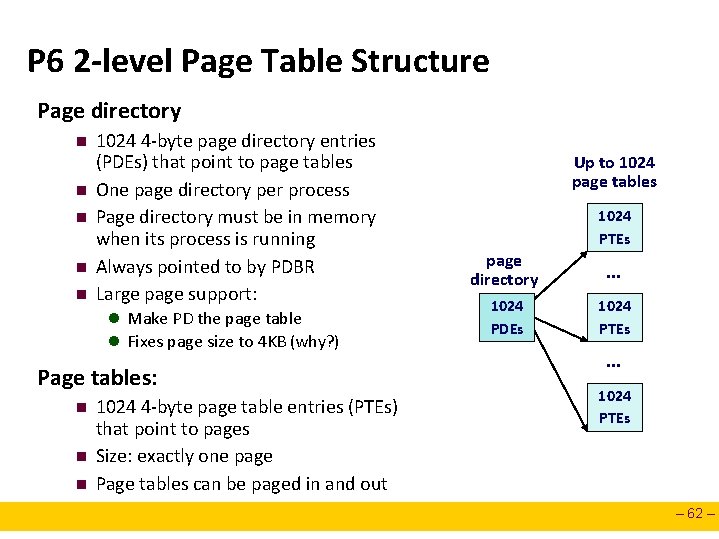

P6 2-level Page Table Structure

- Page directory
  - 1024 4-byte page directory entries (PDEs) that point to page tables
  - One page directory per process
  - Page directory must be in memory when its process is running
  - Always pointed to by PDBR
  - Large page support:
    - Make PD the page table
    - Fixes page size to 4 MB (why?)
- Page tables
  - 1024 4-byte page table entries (PTEs) that point to pages
  - Size: exactly one page
  - Page tables can be paged in and out

[Figure: one page directory with 1024 PDEs points to up to 1024 page tables, each with 1024 PTEs.]

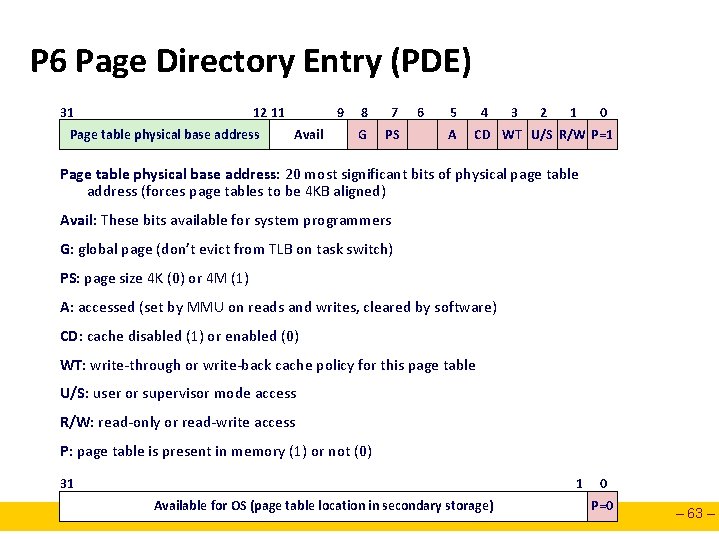

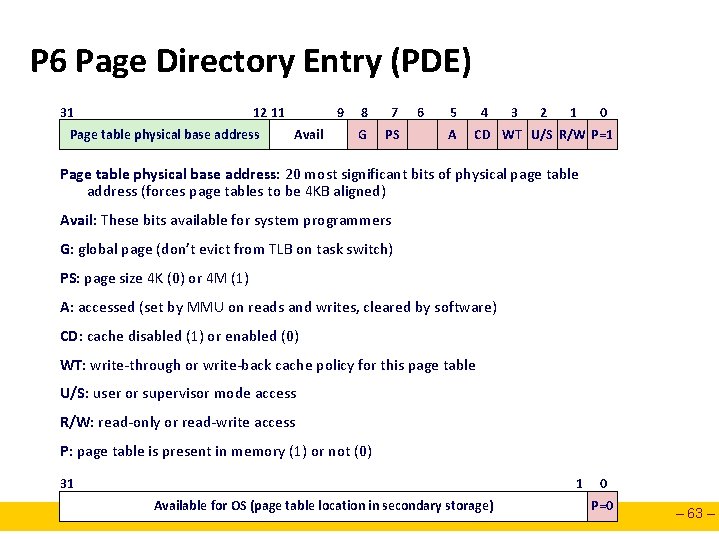

P6 Page Directory Entry (PDE)

Layout when P=1:

| Bits | Field |
|---|---|
| 31-12 | Page table physical base address |
| 11-9 | Avail |
| 8 | G |
| 7 | PS |
| 6 | (not named) |
| 5 | A |
| 4 | CD |
| 3 | WT |
| 2 | U/S |
| 1 | R/W |
| 0 | P=1 |

- Page table physical base address: 20 most significant bits of physical page table address (forces page tables to be 4 KB aligned)
- Avail: these bits available for system programmers
- G: global page (don't evict from TLB on task switch)
- PS: page size 4K (0) or 4M (1)
- A: accessed (set by MMU on reads and writes, cleared by software)
- CD: cache disabled (1) or enabled (0)
- WT: write-through or write-back cache policy for this page table
- U/S: user or supervisor mode access
- R/W: read-only or read-write access
- P: page table is present in memory (1) or not (0)

Layout when P=0: bits 31-1 are available for the OS (page table location in secondary storage); bit 0 is P=0.

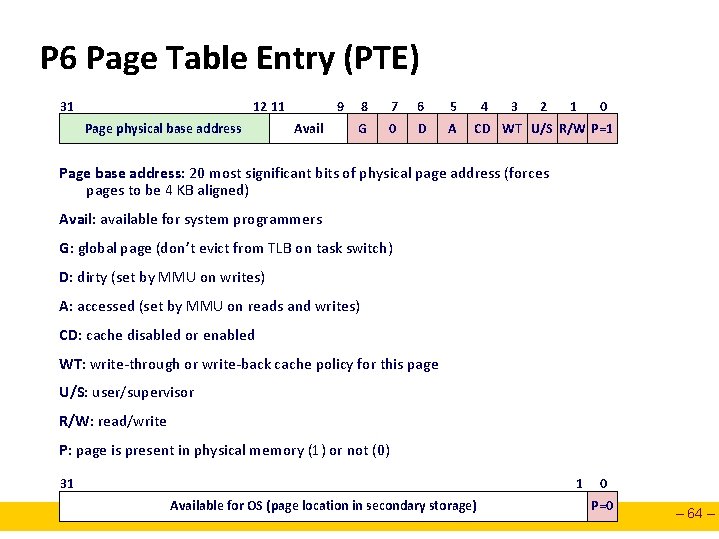

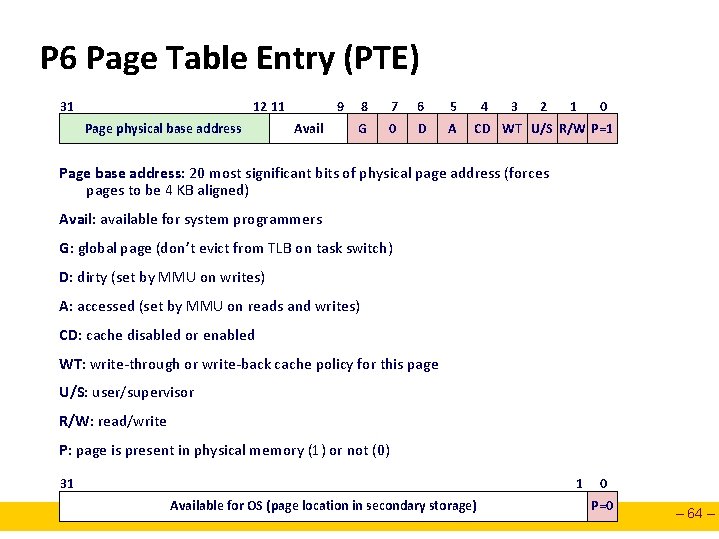

P6 Page Table Entry (PTE)

Layout when P=1:

| Bits | Field |
|---|---|
| 31-12 | Page physical base address |
| 11-9 | Avail |
| 8 | G |
| 7 | 0 |
| 6 | D |
| 5 | A |
| 4 | CD |
| 3 | WT |
| 2 | U/S |
| 1 | R/W |
| 0 | P=1 |

- Page base address: 20 most significant bits of physical page address (forces pages to be 4 KB aligned)
- Avail: available for system programmers
- G: global page (don't evict from TLB on task switch)
- D: dirty (set by MMU on writes)
- A: accessed (set by MMU on reads and writes)
- CD: cache disabled or enabled
- WT: write-through or write-back cache policy for this page
- U/S: user/supervisor
- R/W: read/write
- P: page is present in physical memory (1) or not (0)

Layout when P=0: bits 31-1 are available for the OS (page location in secondary storage); bit 0 is P=0.
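As an illustration of the layout, a 32-bit PTE value can be unpacked with shifts and masks. The function name `decode_pte` and the key names are hypothetical. The bit polarities for R/W (1 = writable) and U/S (1 = user) follow the usual x86 convention, which the slide itself does not spell out.

```python
def decode_pte(pte):
    """Unpack a 32-bit P6 page table entry into named fields."""
    if not pte & 0x1:
        # P=0: the remaining 31 bits are free for the OS to use.
        return {"present": False, "os_bits": pte >> 1}
    return {
        "present": True,
        "writable": bool(pte & (1 << 1)),        # R/W
        "user": bool(pte & (1 << 2)),            # U/S
        "write_through": bool(pte & (1 << 3)),   # WT
        "cache_disabled": bool(pte & (1 << 4)),  # CD
        "accessed": bool(pte & (1 << 5)),        # A
        "dirty": bool(pte & (1 << 6)),           # D
        "global": bool(pte & (1 << 8)),          # G
        "avail": (pte >> 9) & 0x7,               # bits 11-9
        "page_base": pte & 0xFFFFF000,           # bits 31-12, 4 KB aligned
    }


# A present, writable, user-accessible page that has been read and written.
fields = decode_pte(0x12345067)
print(hex(fields["page_base"]), fields["dirty"], fields["accessed"])
```

Because the low 12 bits hold flags rather than address bits, the page base is always a multiple of 4 KB, which is the alignment the slide mentions.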

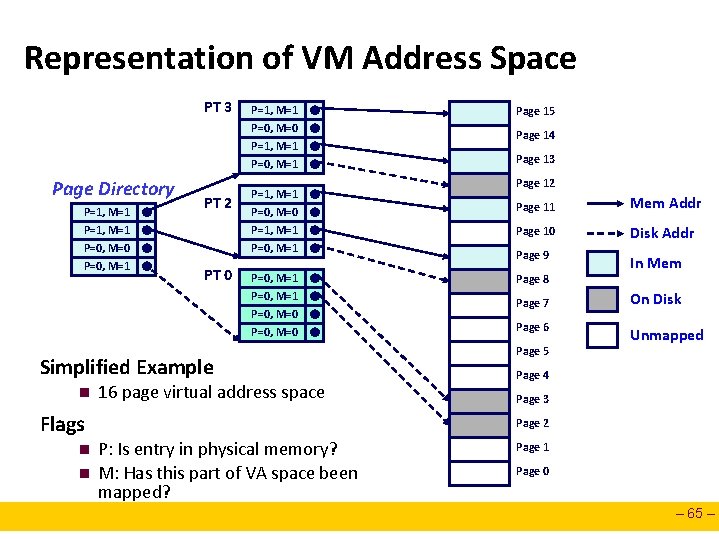

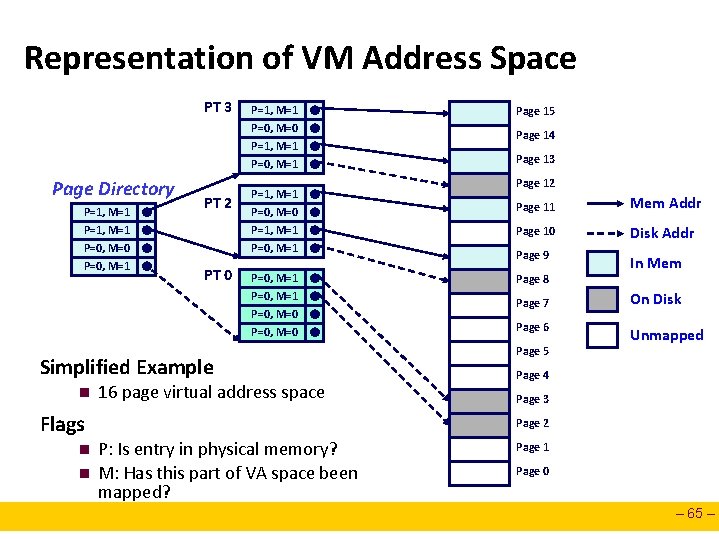

Representation of VM Address Space

- Simplified example
  - 16-page virtual address space (Page 0 through Page 15)
- Flags
  - P: Is entry in physical memory?
  - M: Has this part of VA space been mapped?
- Each entry is one of three kinds
  - In memory (P=1, M=1): holds a memory address
  - On disk (P=0, M=1): holds a disk address
  - Unmapped (P=0, M=0)

[Figure: a page directory points to page tables PT 0, PT 2, and PT 3; their entries carry the P and M flags above and lead to the 16 pages.]

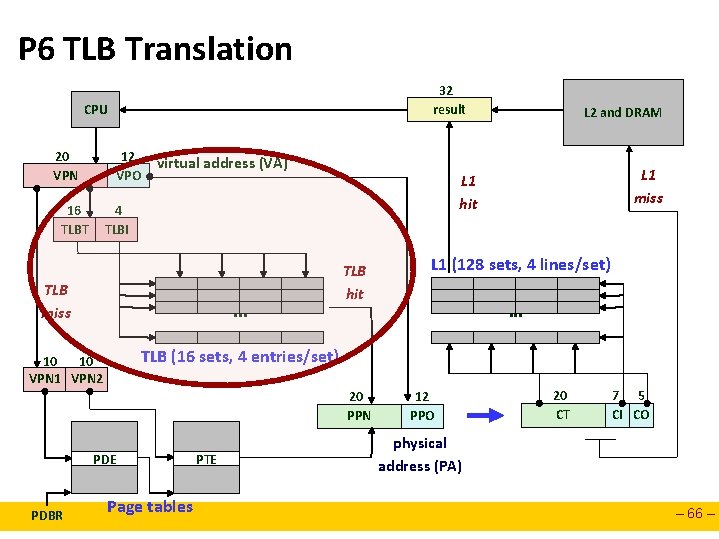

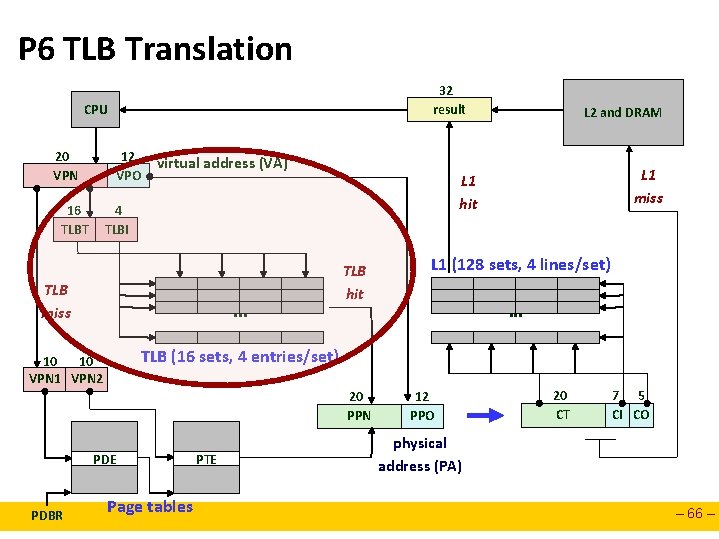

P6 TLB Translation

[Figure: the P6 address translation overview diagram shown earlier, repeated to introduce the TLB stage: the 20-bit VPN is split into a 16-bit TLBT and a 4-bit TLBI and looked up in the TLB (16 sets, 4 entries/set).]

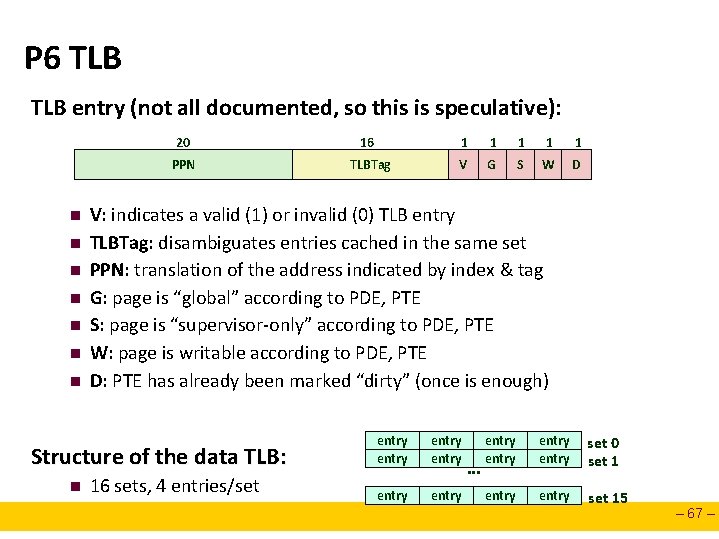

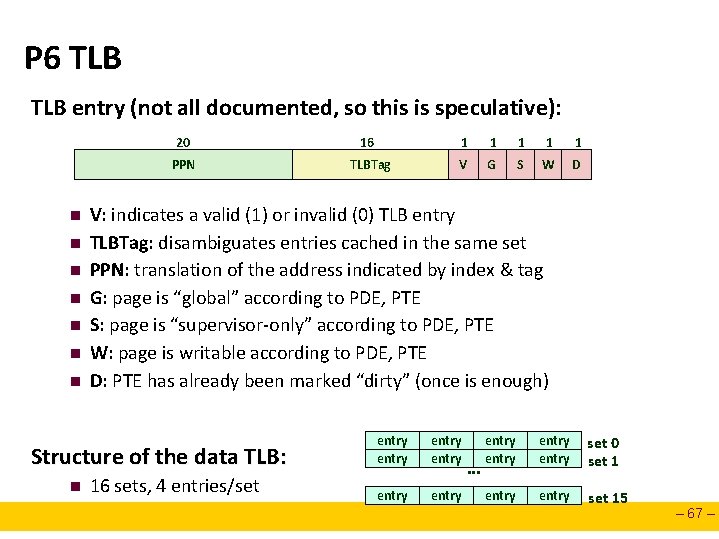

P6 TLB

- TLB entry (not all documented, so this is speculative): fields PPN (20 bits), TLBTag (16 bits), and the one-bit flags V, G, S, W, D
  - V: indicates a valid (1) or invalid (0) TLB entry
  - TLBTag: disambiguates entries cached in the same set
  - PPN: translation of the address indicated by index and tag
  - G: page is "global" according to PDE, PTE
  - S: page is "supervisor-only" according to PDE, PTE
  - W: page is writable according to PDE, PTE
  - D: PTE has already been marked "dirty" (once is enough)
- Structure of the data TLB:
  - 16 sets (set 0 through set 15), 4 entries/set

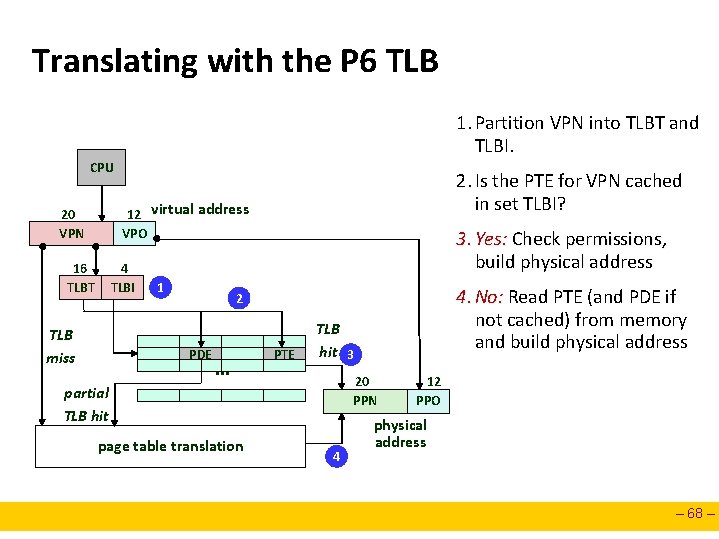

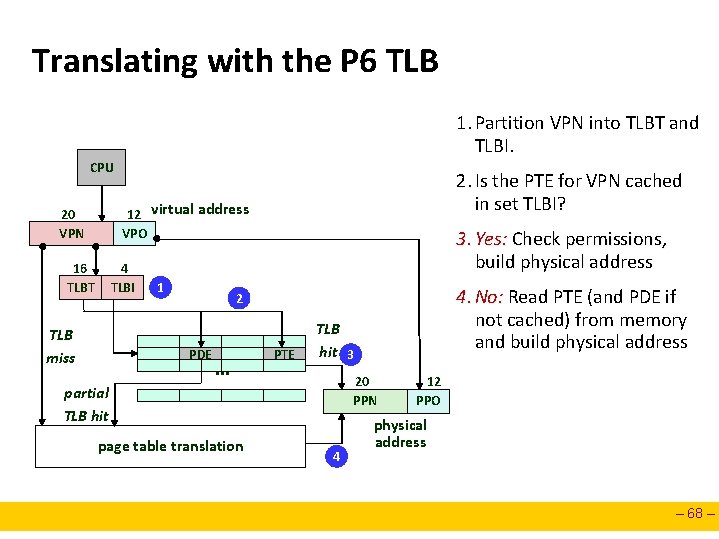

Translating with the P6 TLB

1. Partition VPN into TLBT and TLBI.
2. Is the PTE for VPN cached in set TLBI?
3. Yes: check permissions, build physical address.
4. No: read PTE (and PDE if not cached) from memory and build physical address.

[Figure: the 20-bit VPN of the virtual address is split into a 16-bit TLBT and a 4-bit TLBI. A TLB hit yields the 20-bit PPN directly; a TLB miss (or partial TLB hit) goes through page table translation (PDE, then PTE). The physical address is the 20-bit PPN plus the 12-bit PPO, which is copied from the 12-bit VPO.]


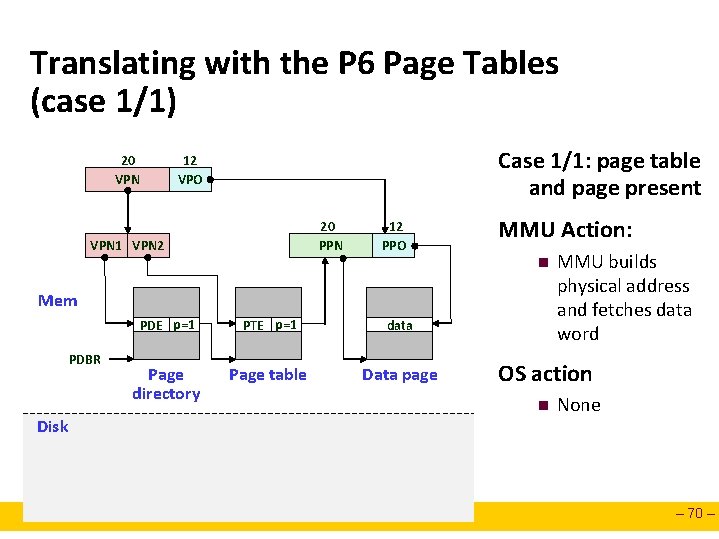

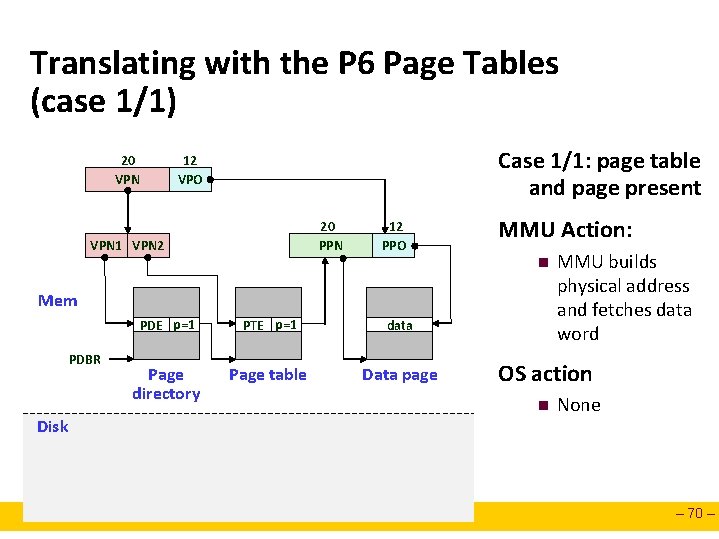

Translating with the P6 Page Tables (case 1/1)

- Case 1/1: page table and page present
- MMU action:
  - MMU builds physical address and fetches data word
- OS action:
  - None

[Figure: the 20-bit VPN splits into VPN1 and VPN2; PDBR locates the page directory, whose PDE (p=1) points to the page table, whose PTE (p=1) points to the data page. All three are in memory. The result is a 20-bit PPN plus 12-bit PPO.]

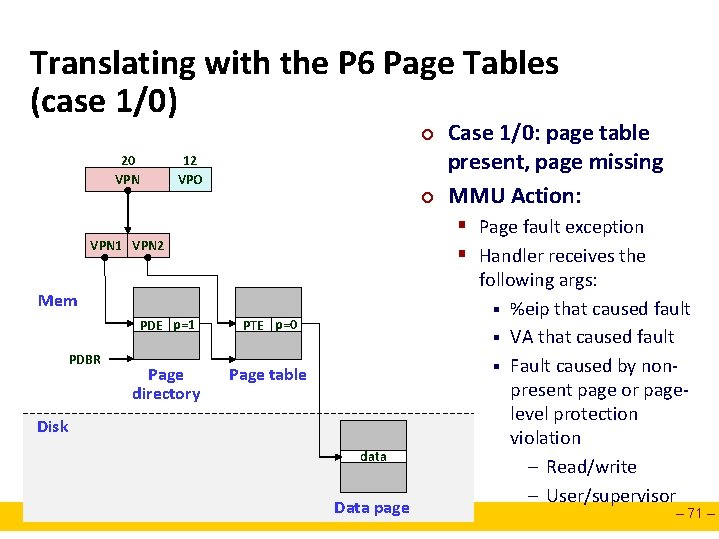

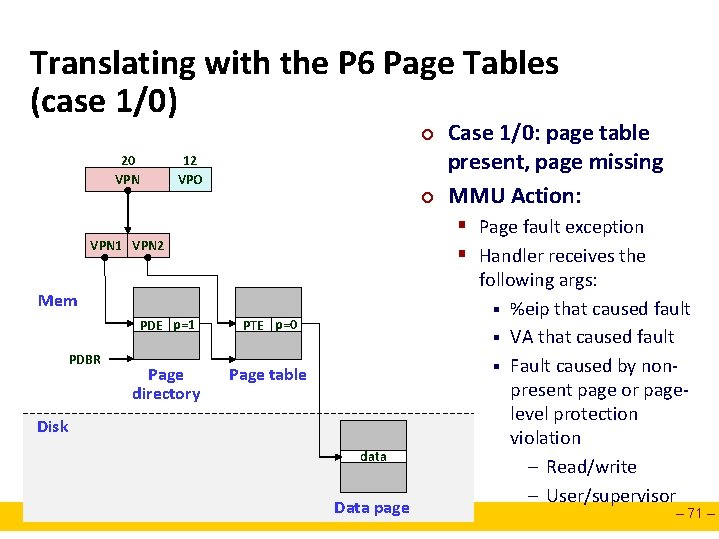

Translating with the P6 Page Tables (case 1/0)

- Case 1/0: page table present, page missing
- MMU action:
  - Page fault exception
  - Handler receives the following args:
    - %eip that caused fault
    - VA that caused fault
    - Fault caused by non-present page or page-level protection violation
      - Read/write
      - User/supervisor

[Figure: PDBR locates the page directory, whose PDE (p=1) points to the page table in memory; the PTE has p=0, and the data page is on disk.]

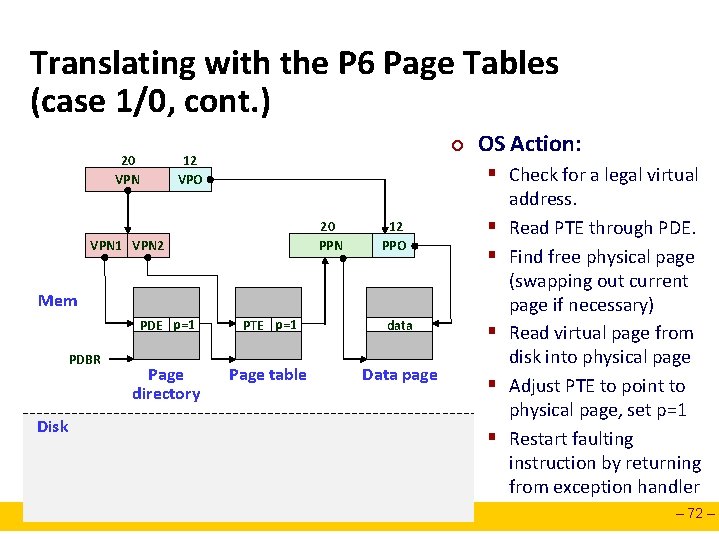

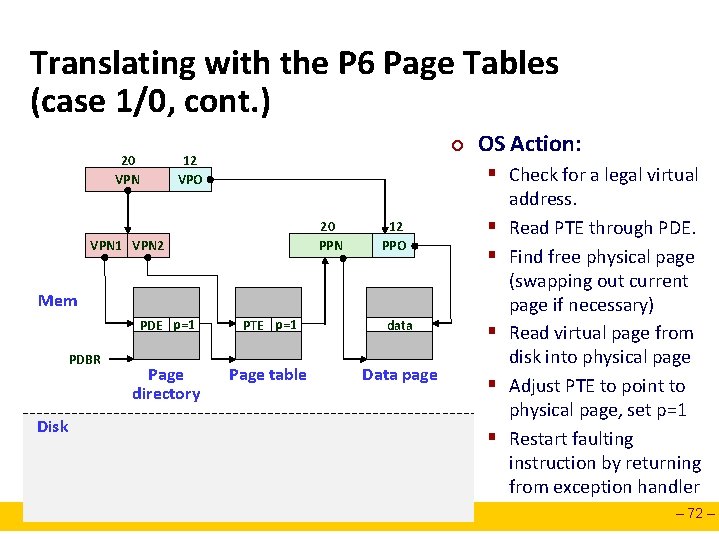

Translating with the P6 Page Tables (case 1/0, cont.)

- OS action:
  - Check for a legal virtual address
  - Read PTE through PDE
  - Find free physical page (swapping out current page if necessary)
  - Read virtual page from disk into physical page
  - Adjust PTE to point to physical page, set p=1
  - Restart faulting instruction by returning from exception handler

[Figure: after the OS action, the PDE (p=1), the PTE (p=1), and the data page are all in memory.]

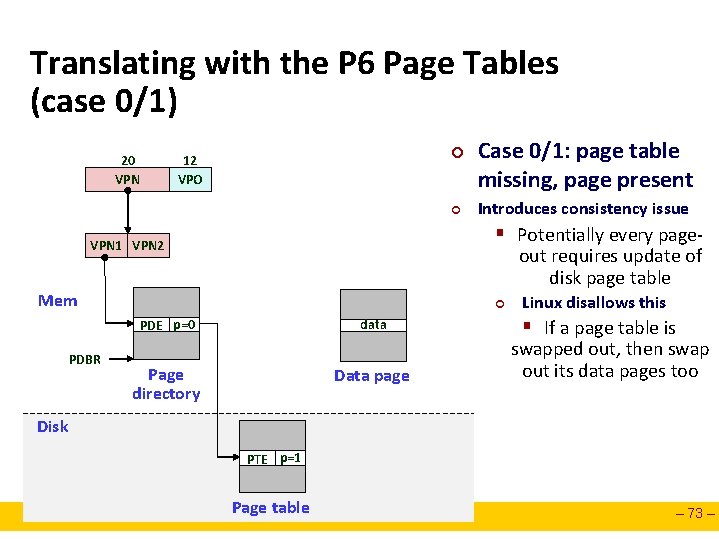

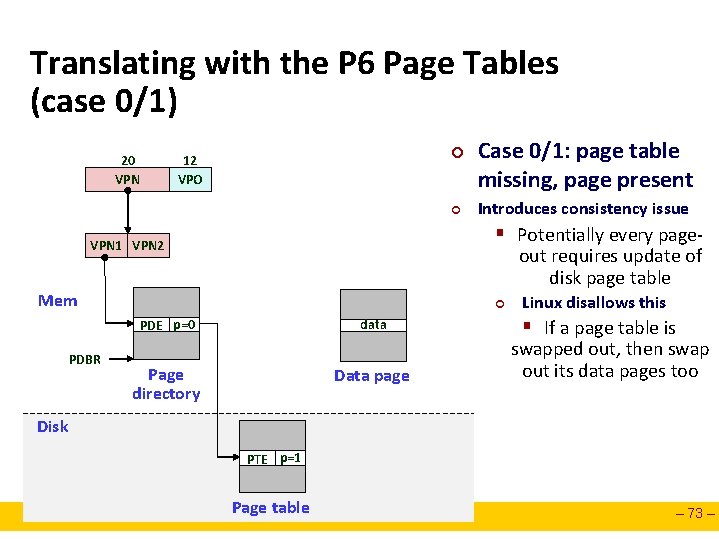

Translating with the P6 Page Tables (case 0/1)

- Case 0/1: page table missing, page present
- Introduces consistency issue
  - Potentially every page-out requires update of disk page table
- Linux disallows this
  - If a page table is swapped out, then swap out its data pages too

[Figure: the PDE has p=0; the page table (with PTE p=1) is on disk, while the data page is in memory.]

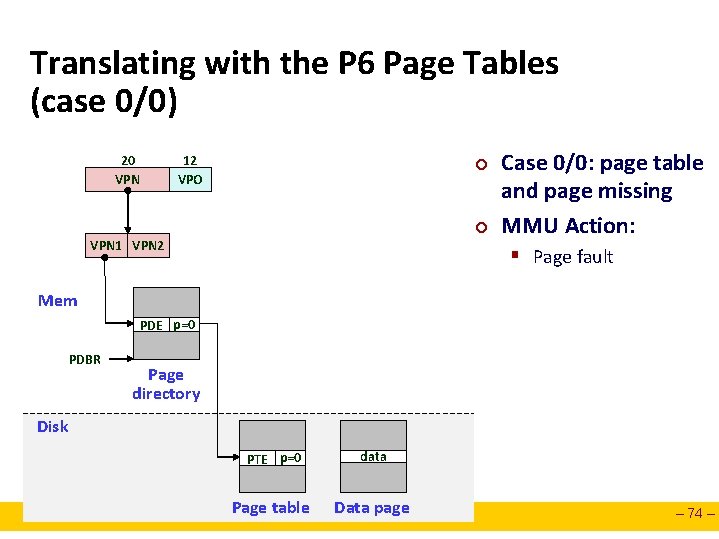

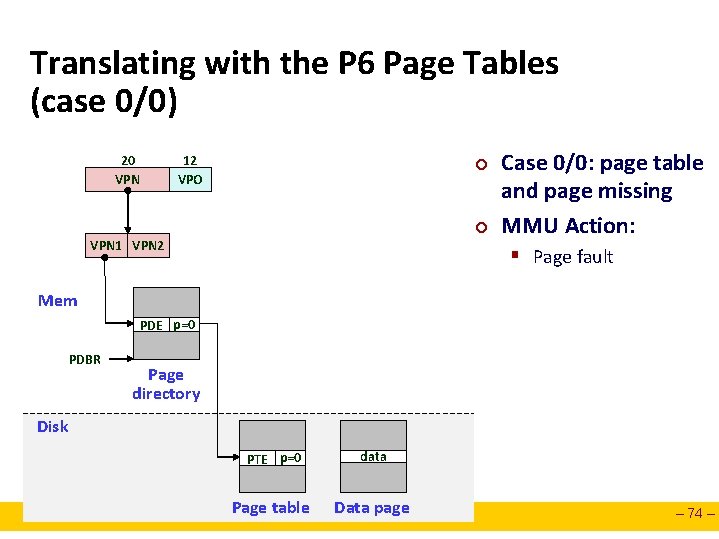

Translating with the P6 Page Tables (case 0/0)

- Case 0/0: page table and page missing
- MMU action:
  - Page fault

[Figure: the PDE has p=0; both the page table (with PTE p=0) and the data page are on disk.]

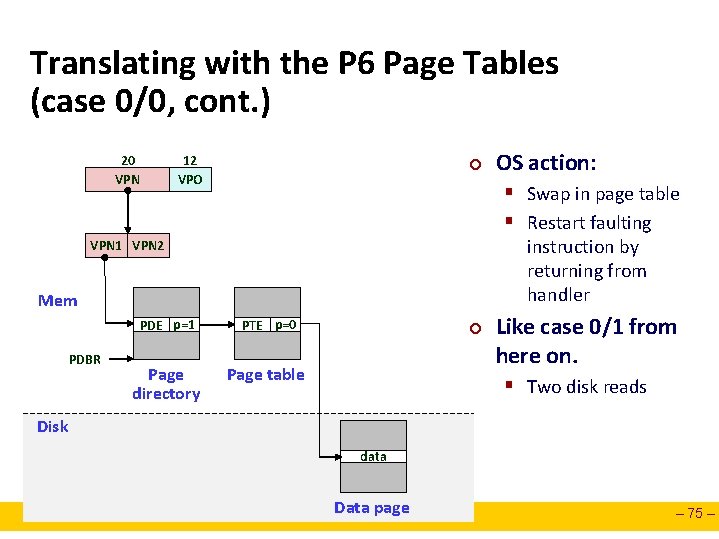

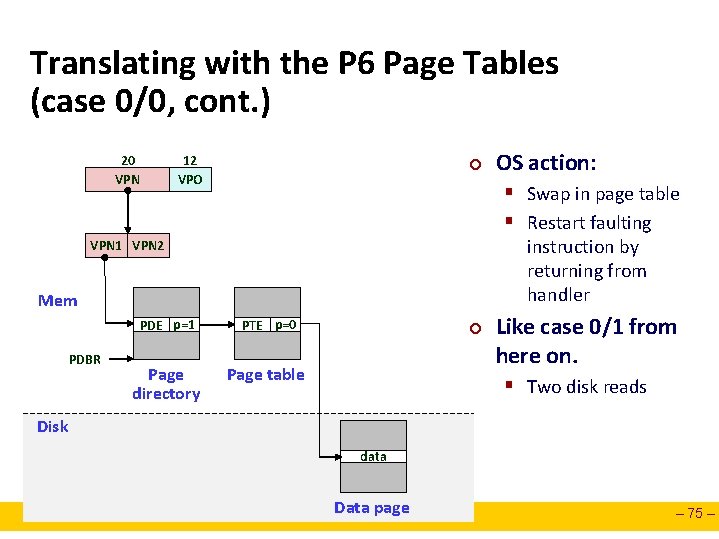

Translating with the P6 Page Tables (case 0/0, cont.)

- OS action:
  - Swap in page table
  - Restart faulting instruction by returning from handler
- Like case 1/0 from here on (the page table is now present, but the page is still missing)
  - Two disk reads

[Figure: after the page table is swapped in, the PDE has p=1 and the page table is in memory; its PTE still has p=0, and the data page is on disk.]
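The cases above differ only in which present bit the walk encounters first. A minimal sketch of a two-level walk with the 10/10/12 split follows; nested dicts stand in for the page directory and page tables, and a missing key models p=0. These representations and the names `split_va` and `walk` are assumptions for illustration.

```python
def split_va(va):
    """Split a 32-bit virtual address into (VPN1, VPN2, VPO)."""
    return va >> 22, (va >> 12) & 0x3FF, va & 0xFFF


def walk(page_directory, va):
    """Walk a two-level table; return (status, physical address or None)."""
    vpn1, vpn2, vpo = split_va(va)
    page_table = page_directory.get(vpn1)
    if page_table is None:
        return "page fault: page table missing", None   # PDE has p=0
    ppn = page_table.get(vpn2)
    if ppn is None:
        return "page fault: page missing", None         # PTE has p=0
    return "ok", (ppn << 12) | vpo                       # case 1/1


# One resident page table (behind PDE 1) with one resident page (PTE 2).
page_directory = {1: {2: 0xABCDE}}
print(walk(page_directory, 0x00402345))
print(walk(page_directory, 0x00403000))
print(walk(page_directory, 0x00000000))
```

In the page-table-missing case the OS would first bring in the page table, after which a retry of the same address falls into the page-missing case.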

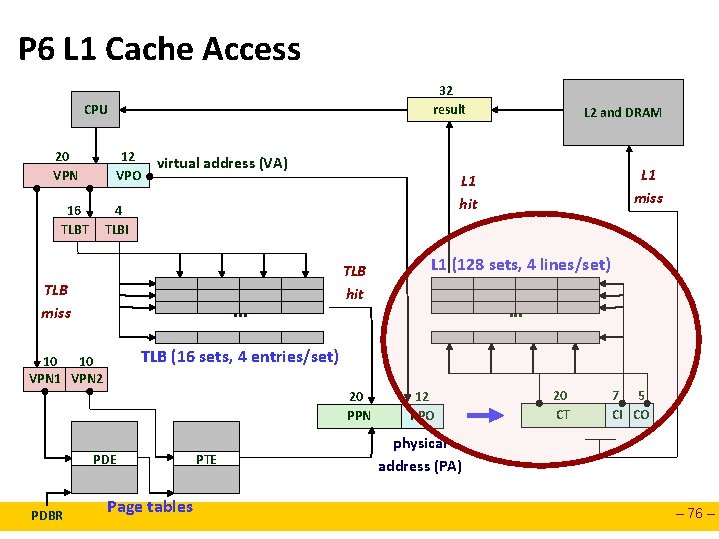

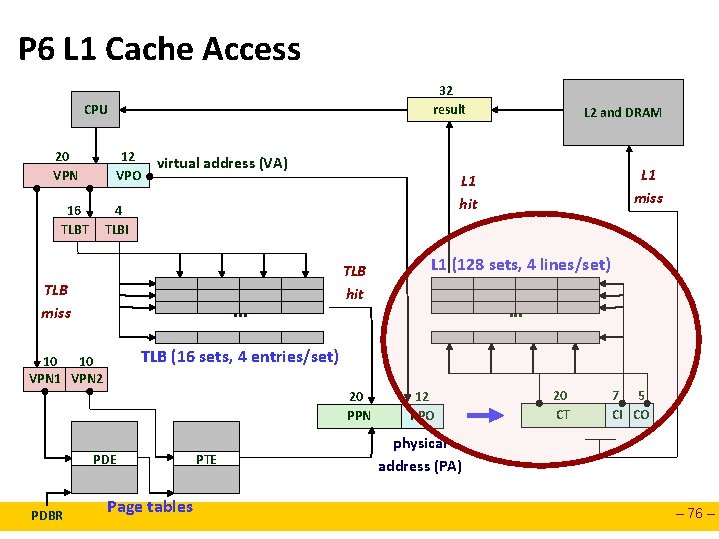

P6 L1 Cache Access

[Figure: the P6 address translation overview diagram shown earlier, repeated to introduce the cache stage: the physical address (PA) is split into a 20-bit CT, 7-bit CI, and 5-bit CO and looked up in L1 (128 sets, 4 lines/set); an L1 miss goes to L2 and DRAM.]

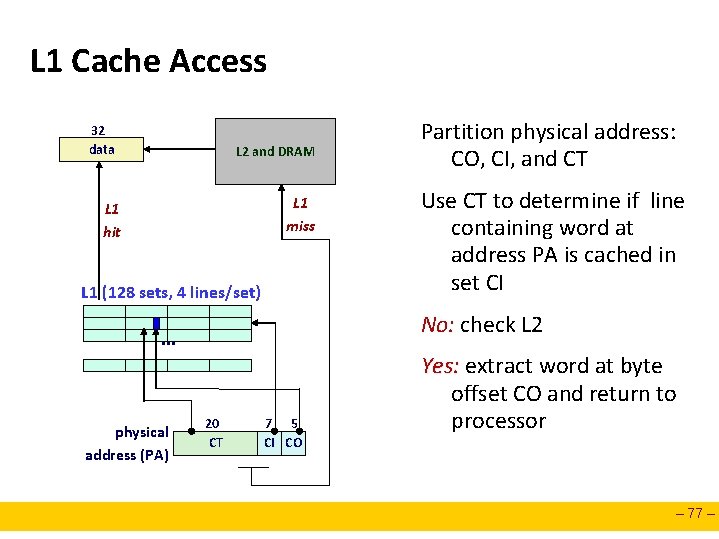

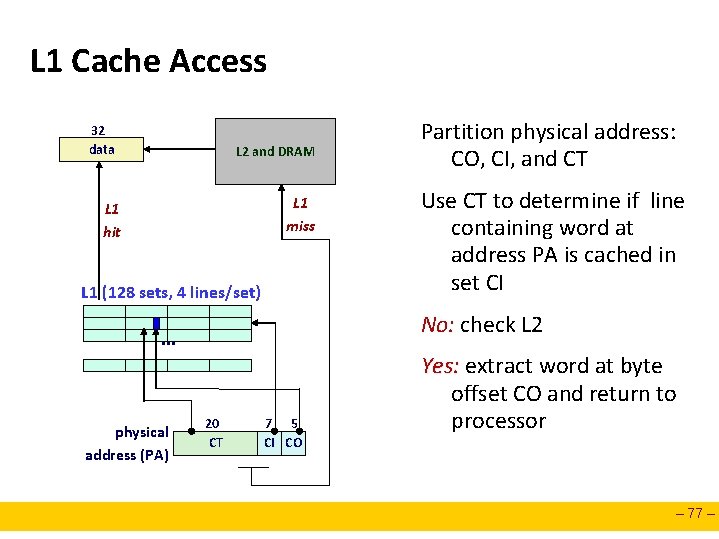

L1 Cache Access

- Partition physical address: CO, CI, and CT (5, 7, and 20 bits)
- Use CT to determine if line containing word at address PA is cached in set CI
- No: check L2
- Yes: extract word at byte offset CO and return to processor

[Figure: the physical address (PA) indexes L1 (128 sets, 4 lines/set); an L1 hit returns 32 bits of data, and an L1 miss goes to L2 and DRAM.]

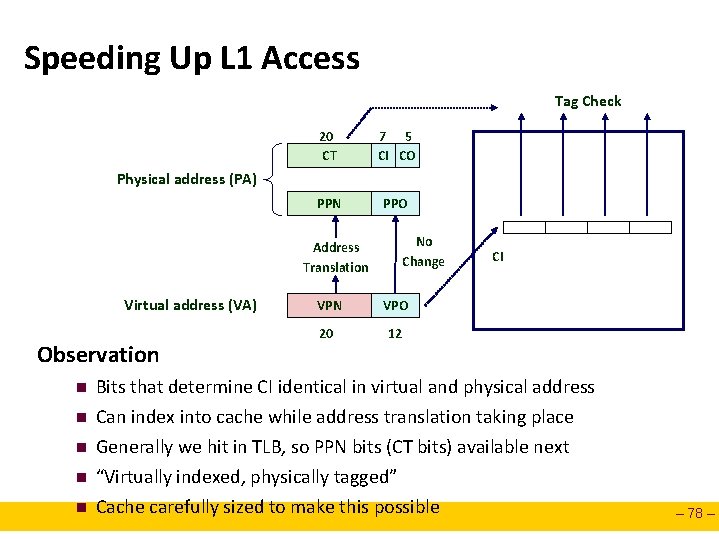

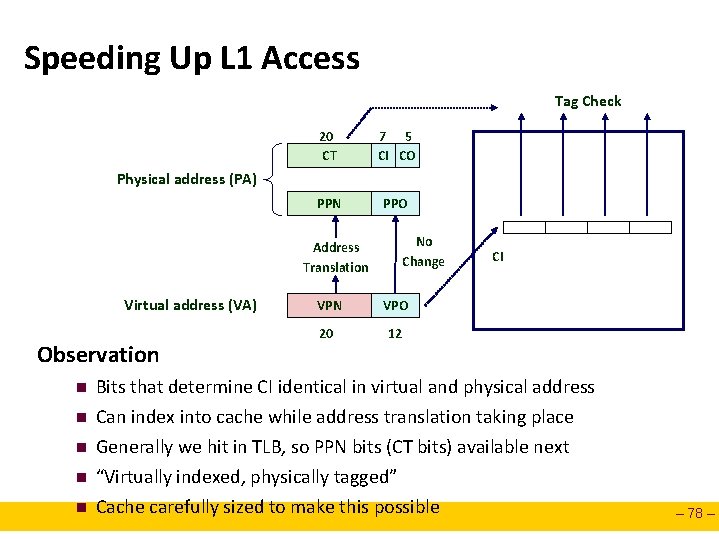

Speeding Up L1 Access

- Observation
  - Bits that determine CI are identical in virtual and physical address
  - Can index into cache while address translation is taking place
  - Generally we hit in TLB, so PPN bits (CT bits) are available next
  - "Virtually indexed, physically tagged"
  - Cache carefully sized to make this possible

[Figure: the virtual address (20-bit VPN, 12-bit VPO) goes through address translation to the physical address (20-bit PPN, 12-bit PPO). The offset bits pass through with no change, so the 7-bit CI and 5-bit CO are available immediately; the 20-bit CT from the PPN is used for the tag check.]
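The sizing argument can be checked numerically: with 5 CO bits and 7 CI bits, the index and offset together occupy exactly the 12 page offset bits, which translation never changes. The sketch below uses hypothetical names and an arbitrary example PPN.

```python
PAGE_OFFSET_BITS = 12   # 4 KB pages
CO_BITS = 5             # 32 B lines
CI_BITS = 7             # 128 sets


def cache_index(addr):
    """Extract the CI field from an address (virtual or physical)."""
    return (addr >> CO_BITS) & ((1 << CI_BITS) - 1)


# The index and offset fit entirely inside the page offset.
assert CO_BITS + CI_BITS == PAGE_OFFSET_BITS

va = 0x12345ABC
ppn = 0xFEDCB                                   # arbitrary translation result
pa = (ppn << PAGE_OFFSET_BITS) | (va & 0xFFF)   # offset bits are unchanged

# Same set is selected before and after translation.
print(cache_index(va), cache_index(pa))
```

If the cache had more sets or longer lines at the same associativity, CI would spill into the VPN bits and the lookup could no longer start before translation finished.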

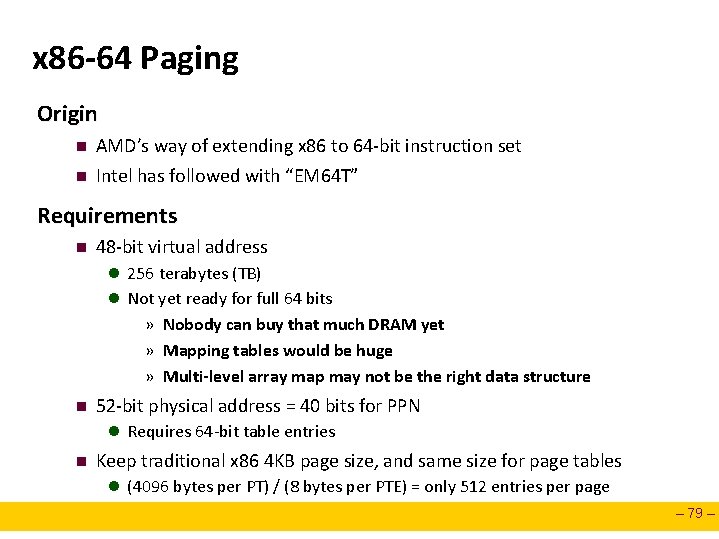

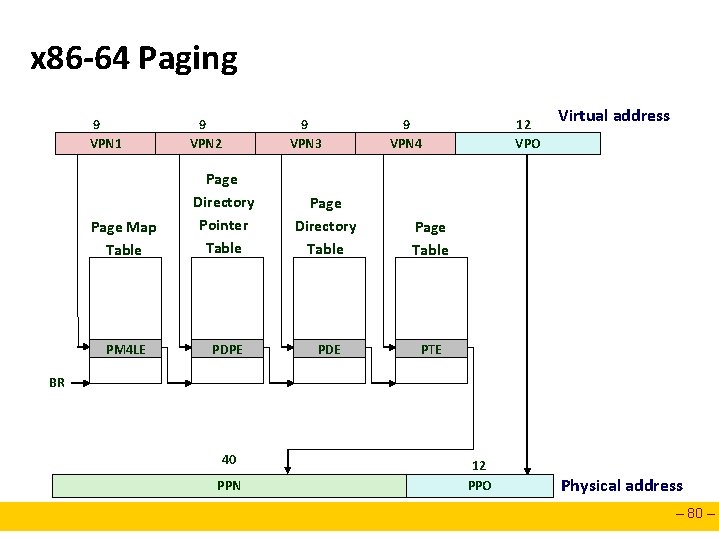

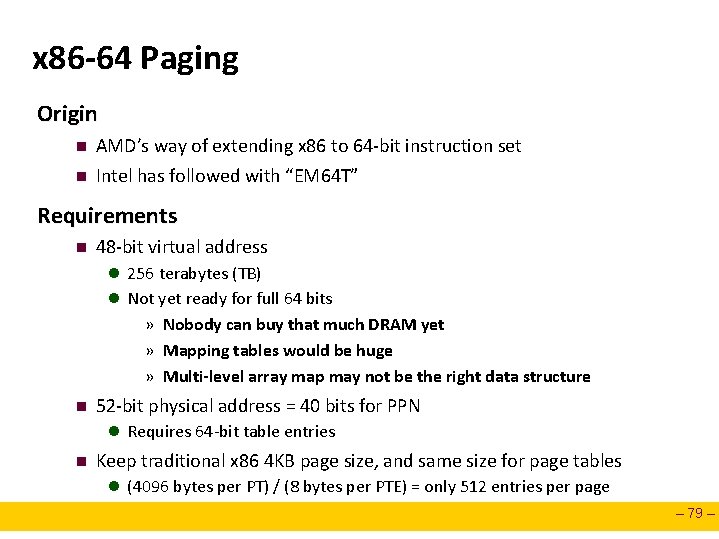

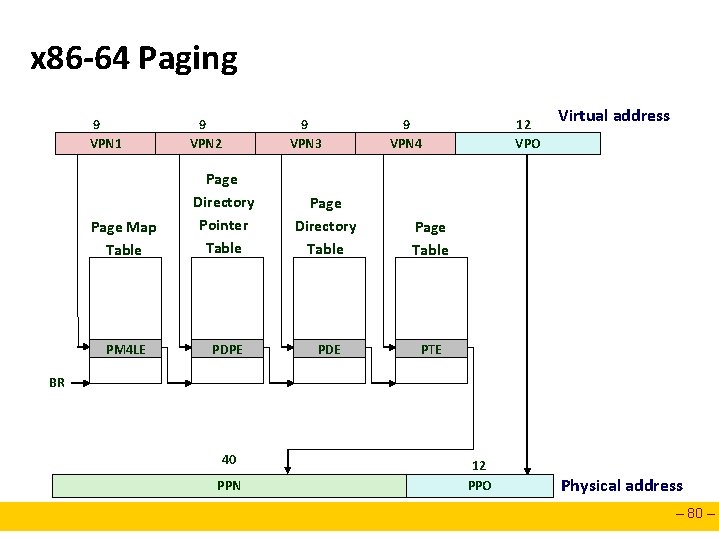

x86-64 Paging

- Origin
  - AMD's way of extending x86 to 64-bit instruction set
  - Intel has followed with "EM64T"
- Requirements
  - 48-bit virtual address
    - 256 terabytes (TB)
    - Not yet ready for full 64 bits
      - Nobody can buy that much DRAM yet
      - Mapping tables would be huge
      - Multi-level array map may not be the right data structure
  - 52-bit physical address = 40 bits for PPN
    - Requires 64-bit table entries
  - Keep traditional x86 4 KB page size, and same size for page tables
    - (4096 bytes per PT) / (8 bytes per PTE) = only 512 entries per page

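x86-64 Paging

- Virtual address: four 9-bit fields (VPN1, VPN2, VPN3, VPN4) followed by a 12-bit VPO
  - VPN1 indexes the Page Map Table (entry: PML4E)
  - VPN2 indexes the Page Directory Pointer Table (entry: PDPE)
  - VPN3 indexes the Page Directory Table (entry: PDE)
  - VPN4 indexes the Page Table (entry: PTE)
- A base register (BR) locates the Page Map Table
- Physical address: 40-bit PPN followed by a 12-bit PPO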
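The field split can be written out as a short sketch. The function name `split_x86_64_va` is hypothetical; the field widths (four 9-bit indices and a 12-bit offset) come from the slide.

```python
ENTRIES_PER_TABLE = 4096 // 8   # 4 KB table / 8-byte entries = 512 = 2**9


def split_x86_64_va(va):
    """Split a 48-bit virtual address into ([VPN1..VPN4], VPO)."""
    vpo = va & 0xFFF
    # Each 9-bit index selects one of 512 entries at its level.
    indices = [(va >> shift) & 0x1FF for shift in (39, 30, 21, 12)]
    return indices, vpo


# Build an address whose four indices are 1, 2, 3, 4 and whose offset is 0x567.
va = (1 << 39) | (2 << 30) | (3 << 21) | (4 << 12) | 0x567
print(split_x86_64_va(va))
```

Nine index bits per level is exactly what a 512-entry table needs, which is why four levels cover 4 x 9 + 12 = 48 bits.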