CSCE 430/830 Computer Architecture Memory Hierarchy: Virtual Memory

- Slides: 17

CSCE 430/830 Computer Architecture, Memory Hierarchy: Virtual Memory

- Lecturer: Prof. Hong Jiang
- Courtesy of Yifeng Zhu (U. Maine)
- Fall, 2006
- Portions of these slides are derived from: Dave Patterson © UCB

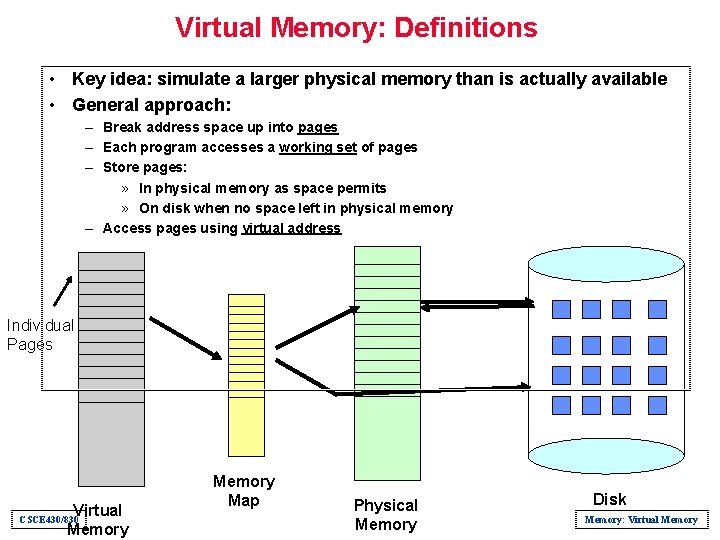

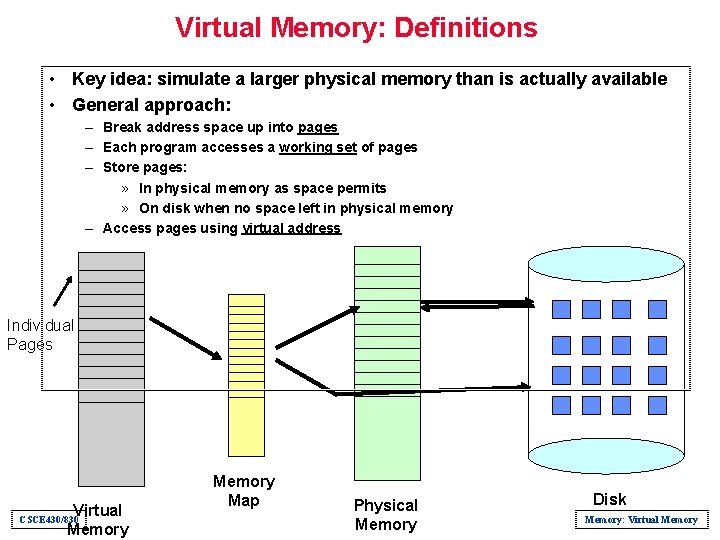

Virtual Memory: Definitions

- Key idea: simulate a larger physical memory than is actually available
- General approach:
  - Break the address space up into pages
  - Each program accesses a working set of pages
  - Store pages:
    - In physical memory as space permits
    - On disk when no space is left in physical memory
  - Access pages using virtual addresses

Figure labels: Individual Pages; Virtual Memory Map; Physical Memory; Disk.

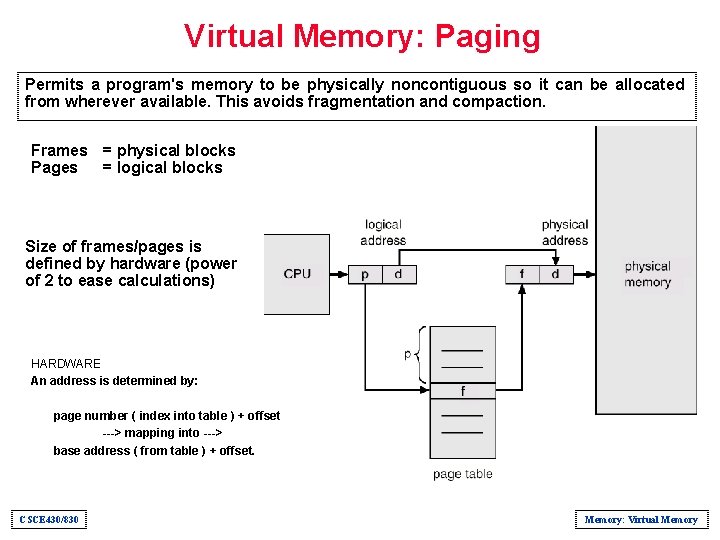

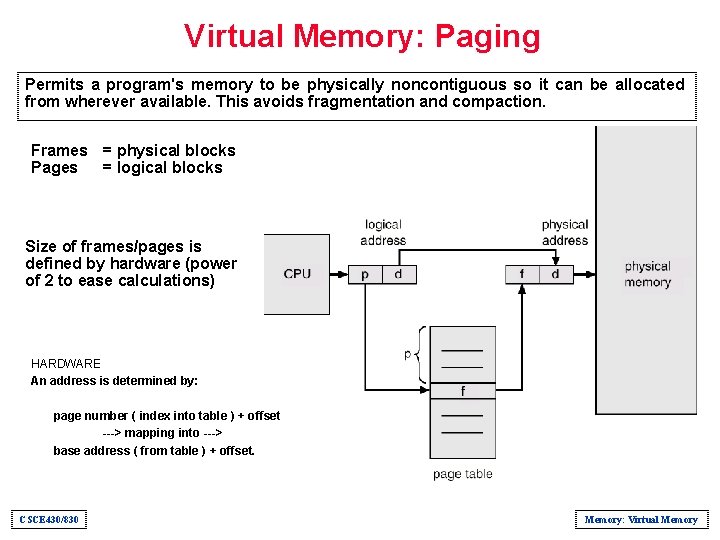

Virtual Memory: Paging

Paging permits a program's memory to be physically noncontiguous, so it can be allocated from wherever space is available. This avoids external fragmentation and compaction.

- Frames = physical blocks
- Pages = logical blocks
- The size of frames/pages is defined by hardware (a power of 2 to ease calculations)

An address is determined by: page number (index into table) + offset → mapping into → base address (from table) + offset.
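Because the page size is a power of 2, the page number and offset can be extracted with a shift and a mask. The following is a minimal sketch of that calculation; the 4 KB page size, the `page_table` contents, and the function names are assumptions made for illustration and do not come from the slides.

```python
PAGE_SIZE = 4096                            # assumed page size (a power of 2)
OFFSET_BITS = PAGE_SIZE.bit_length() - 1    # log2(PAGE_SIZE) = 12
OFFSET_MASK = PAGE_SIZE - 1                 # low 12 bits set

def split(addr):
    """Return (page number, offset) for a logical address."""
    return addr >> OFFSET_BITS, addr & OFFSET_MASK

def translate(addr, page_table):
    """Replace the page number with the frame number; keep the offset."""
    page, offset = split(addr)
    frame = page_table[page]                # page number indexes the table
    return (frame << OFFSET_BITS) | offset  # frame base address + offset

page_table = {0: 7, 1: 3}                   # hypothetical page -> frame mapping
physical = translate(0x1ABC, page_table)    # page 1, offset 0xABC -> frame 3
```

With a page size that is not a power of 2, the same split would need a division and a remainder instead of a shift and a mask.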

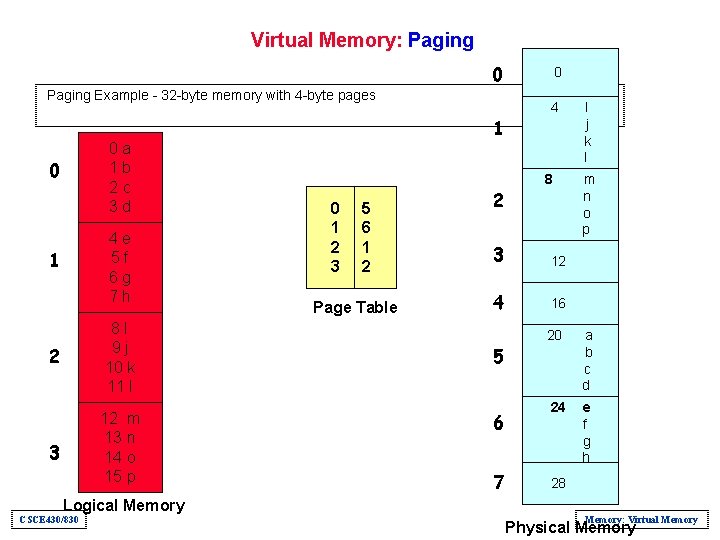

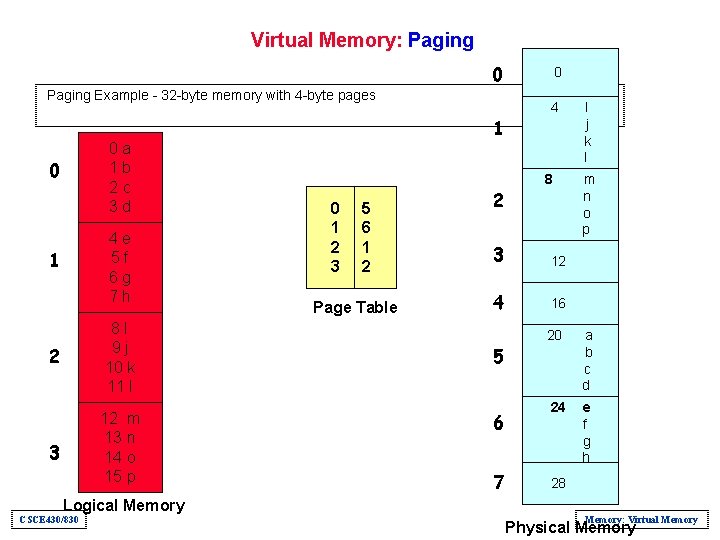

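Virtual Memory: Paging

Paging example: 32-byte memory with 4-byte pages

Logical memory:

| Page | Addresses | Contents |
|------|-----------|----------|
| 0    | 0-3       | a b c d  |
| 1    | 4-7       | e f g h  |
| 2    | 8-11      | i j k l  |
| 3    | 12-15     | m n o p  |

Page table:

| Page | Frame |
|------|-------|
| 0    | 5     |
| 1    | 6     |
| 2    | 1     |
| 3    | 2     |

Physical memory:

| Frame | Start address | Contents |
|-------|---------------|----------|
| 0     | 0             |          |
| 1     | 4             | i j k l  |
| 2     | 8             | m n o p  |
| 3     | 12            |          |
| 4     | 16            |          |
| 5     | 20            | a b c d  |
| 6     | 24            | e f g h  |
| 7     | 28            |          |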
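The example can be checked mechanically. This sketch reads the slide's page table as page 0 → frame 5, page 1 → frame 6, page 2 → frame 1, page 3 → frame 2, rebuilds the 32-byte physical memory from it, and translates a few logical addresses. The variable and function names are illustrative only.

```python
PAGE_SIZE = 4
page_table = [5, 6, 1, 2]           # index = page, value = frame (from the slide)
logical = "abcdefghijklmnop"        # contents of logical addresses 0..15

# Place every logical byte into the frame its page maps to.
physical = [None] * 32
for addr, byte in enumerate(logical):
    page, offset = divmod(addr, PAGE_SIZE)
    physical[page_table[page] * PAGE_SIZE + offset] = byte

def translate(addr):
    """Logical address -> physical address for this 4-byte-page example."""
    page, offset = divmod(addr, PAGE_SIZE)
    return page_table[page] * PAGE_SIZE + offset

# Logical address 13 holds 'n': page 3, offset 1 -> frame 2 -> address 9.
addr_of_n = translate(13)
```

Every translated address holds the same byte as the logical address it came from, while frames 0, 3, 4, and 7 stay empty.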

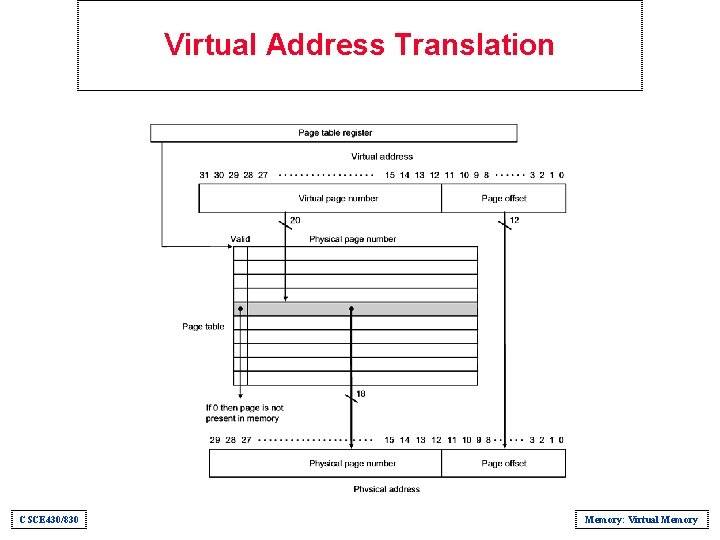

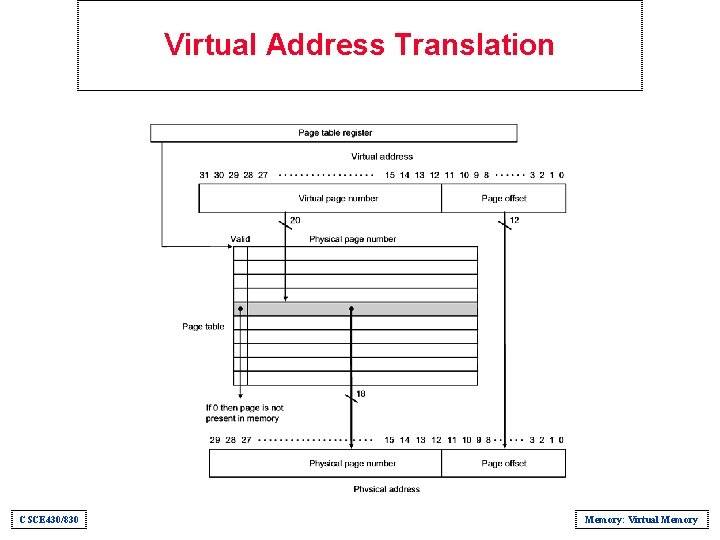

Virtual Address Translation

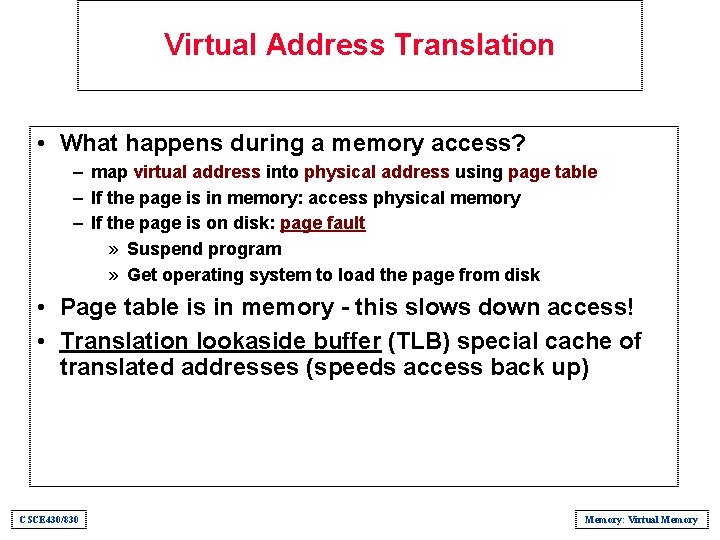

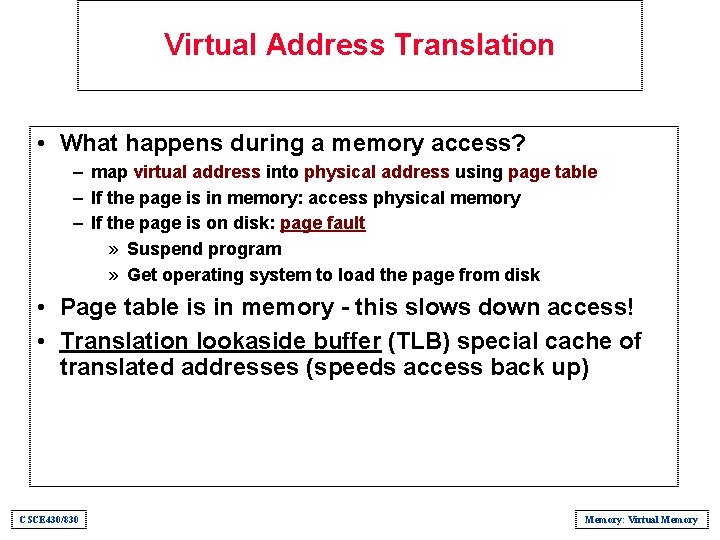

Virtual Address Translation

- What happens during a memory access?
  - Map the virtual address into a physical address using the page table
  - If the page is in memory: access physical memory
  - If the page is on disk: page fault
    - Suspend the program
    - Get the operating system to load the page from disk
- The page table is in memory, which slows down access!
- Translation lookaside buffer (TLB): a special cache of translated addresses (speeds access back up)
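The access sequence above can be sketched as a toy model: check the TLB first, fall back to the page table on a TLB miss, and take a page fault when the page is not resident. Everything here is an assumption made for illustration (the `MMU` class, LRU replacement in the TLB, first-loaded eviction of resident pages, the 4 KB page size); real hardware and operating systems differ in all of these.

```python
from collections import OrderedDict

class MMU:
    """Toy model: a TLB in front of an in-memory page table, with demand paging."""

    def __init__(self, num_frames, tlb_entries, page_size=4096):
        self.page_size = page_size
        self.page_table = {}                      # resident pages: page -> frame
        self.free_frames = list(range(num_frames))
        self.tlb = OrderedDict()                  # page -> frame, LRU first
        self.tlb_entries = tlb_entries
        self.stats = {"tlb_hit": 0, "tlb_miss": 0, "page_fault": 0}

    def translate(self, vaddr):
        page, offset = divmod(vaddr, self.page_size)
        if page in self.tlb:                      # fast path: TLB hit
            self.stats["tlb_hit"] += 1
            self.tlb.move_to_end(page)
            frame = self.tlb[page]
        else:                                     # slow path: consult page table
            self.stats["tlb_miss"] += 1
            if page not in self.page_table:       # page is on disk
                self._page_fault(page)
            frame = self.page_table[page]
            self.tlb[page] = frame
            if len(self.tlb) > self.tlb_entries:
                self.tlb.popitem(last=False)      # drop least recently used entry
        return frame * self.page_size + offset

    def _page_fault(self, page):
        """Stand-in for the OS handler: find a frame and 'load' the page."""
        self.stats["page_fault"] += 1
        if not self.free_frames:
            victim = next(iter(self.page_table))  # evict the oldest resident page
            self.free_frames.append(self.page_table.pop(victim))
            self.tlb.pop(victim, None)            # stale translation must go too
        self.page_table[page] = self.free_frames.pop(0)

mmu = MMU(num_frames=2, tlb_entries=1)
trace = [mmu.translate(a) for a in (0, 8, 4100, 0, 8192)]
```

Note that evicting a page also removes its TLB entry; otherwise the TLB would keep returning a frame that now holds a different page.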

TLB Structure

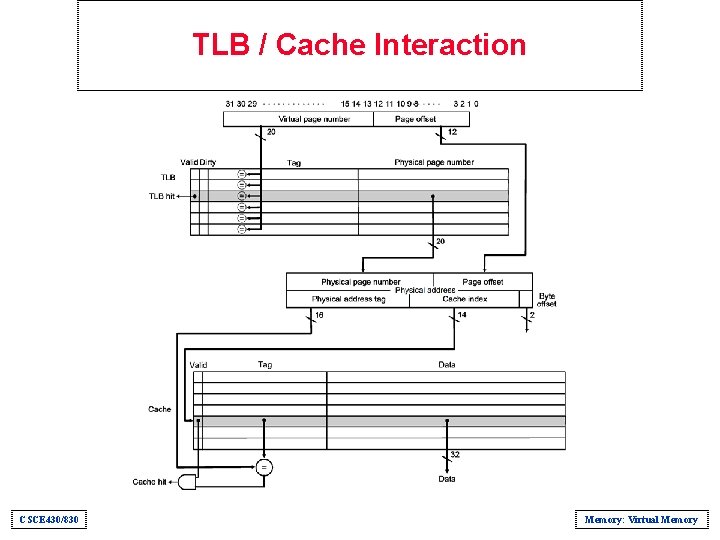

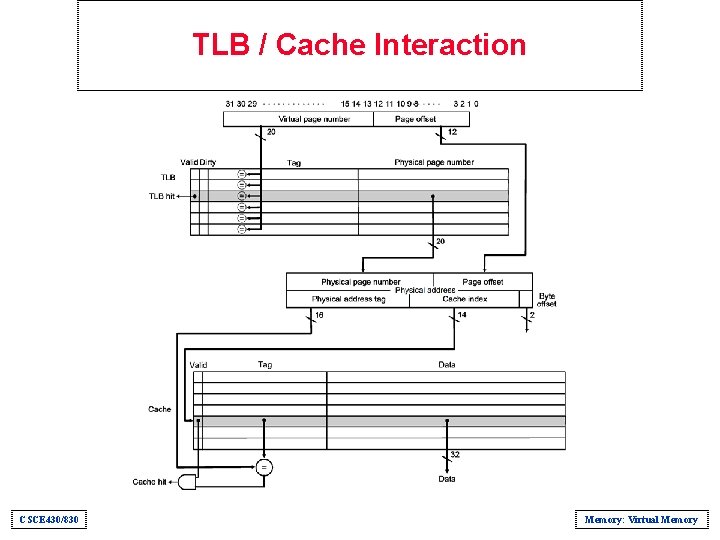

TLB / Cache Interaction

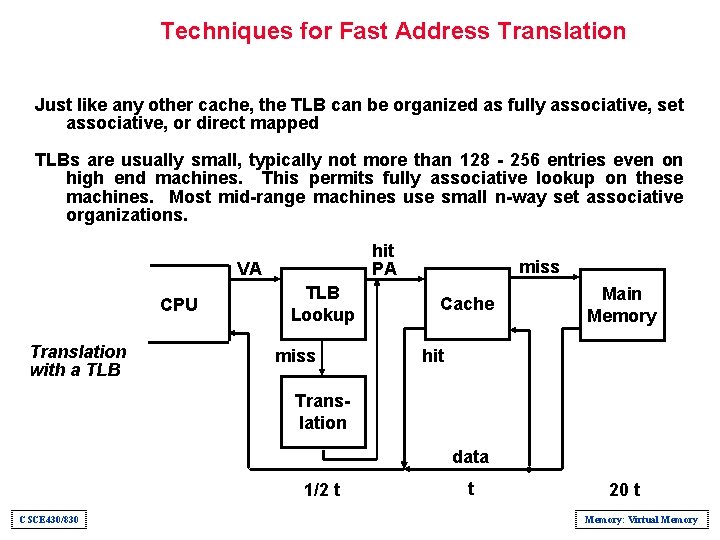

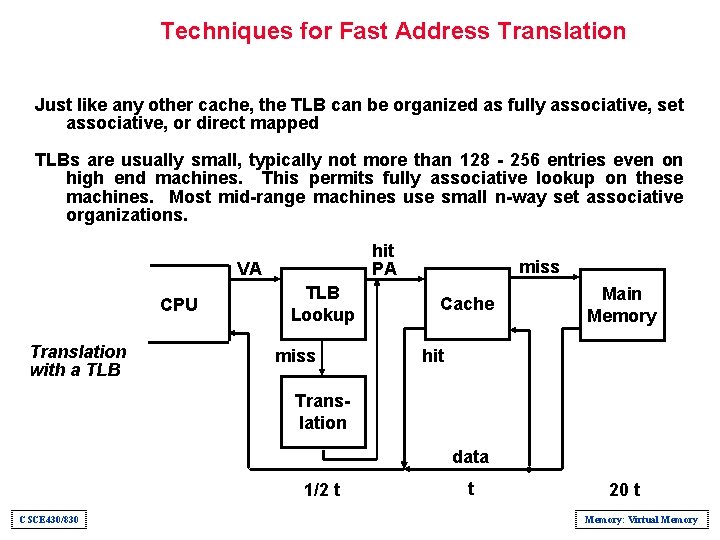

Techniques for Fast Address Translation

Just like any other cache, the TLB can be organized as fully associative, set associative, or direct mapped.

TLBs are usually small, typically not more than 128-256 entries even on high-end machines. This permits fully associative lookup on these machines. Most mid-range machines use small n-way set-associative organizations.

Figure (Translation with a TLB): CPU → VA → TLB lookup → PA → cache → main memory, with hit/miss paths, a translation step on a TLB miss, and relative access times 1/2 t, t, 20 t.
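The three organizations differ only in how many entries a virtual page number (VPN) may occupy. A minimal sketch, assuming a hypothetical `TLB` class with LRU replacement inside each set: `ways=1` gives a direct-mapped TLB, and `ways=entries` gives a fully associative one.

```python
class TLB:
    """Set-associative TLB model; each set keeps (tag, frame), LRU entry first."""

    def __init__(self, entries, ways):
        assert entries % ways == 0
        self.ways = ways
        self.num_sets = entries // ways
        self.sets = [[] for _ in range(self.num_sets)]

    def _locate(self, vpn):
        # Low part of the VPN selects the set; the rest is the tag.
        return self.sets[vpn % self.num_sets], vpn // self.num_sets

    def lookup(self, vpn):
        """Return the frame on a hit, or None on a miss."""
        entries, tag = self._locate(vpn)
        for i, (t, frame) in enumerate(entries):
            if t == tag:
                entries.append(entries.pop(i))   # mark most recently used
                return frame
        return None

    def insert(self, vpn, frame):
        entries, tag = self._locate(vpn)
        entries[:] = [e for e in entries if e[0] != tag]
        if len(entries) == self.ways:
            entries.pop(0)                       # evict least recently used
        entries.append((tag, frame))

direct = TLB(entries=4, ways=1)
full = TLB(entries=4, ways=4)
for tlb in (direct, full):
    tlb.insert(0, 10)
    tlb.insert(4, 11)        # VPN 4 maps to the same set as VPN 0 when ways=1
```

In the direct-mapped TLB the second insert evicts the first, because both VPNs index set 0; the fully associative TLB holds both.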

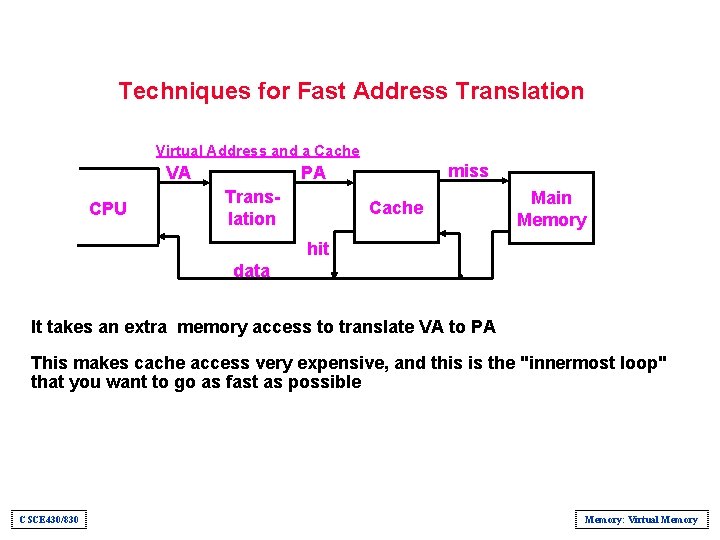

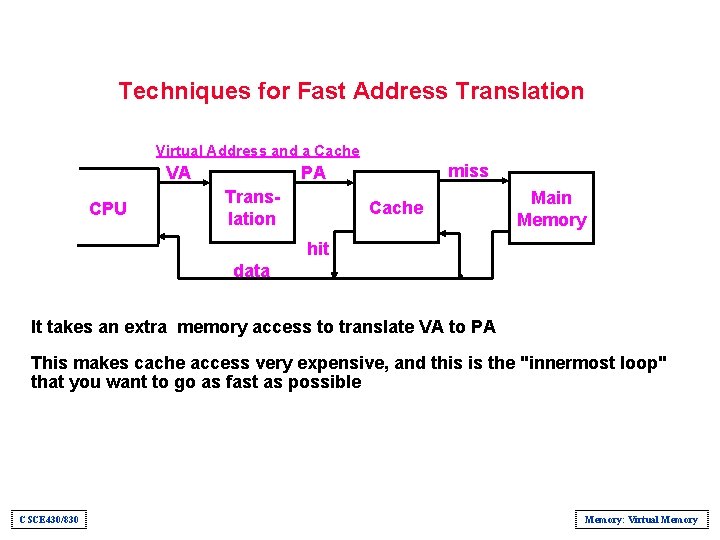

Techniques for Fast Address Translation

Virtual Address and a Cache

Figure: CPU → VA → translation → PA → cache → main memory (on a miss); data is returned on a hit.

It takes an extra memory access to translate a VA to a PA. This makes cache access very expensive, and this is the "innermost loop" that you want to go as fast as possible.
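A rough calculation shows how large the cost is. This sketch borrows the relative times from the earlier "Translation with a TLB" figure and assumes they mean 1/2 t for a TLB lookup, t for a cache access, and 20 t for main memory. It also assumes a single-level page table (one memory access per translation), a cache hit on every access, and a 99% TLB hit rate; none of these assumptions is stated on the slides.

```python
from fractions import Fraction

# Relative latencies in units of t (assumed reading of the figure labels).
T_TLB = Fraction(1, 2)
T_CACHE = Fraction(1)
T_MEM = Fraction(20)

def access_time_no_tlb():
    """Every access first reads the page table in memory, then hits the cache."""
    return T_MEM + T_CACHE

def access_time_with_tlb(tlb_hit_rate):
    """TLB lookup on every access; only TLB misses pay the page-table read."""
    miss_rate = 1 - tlb_hit_rate
    return T_TLB + miss_rate * T_MEM + T_CACHE

no_tlb = access_time_no_tlb()                        # 21 t
with_tlb = access_time_with_tlb(Fraction(99, 100))   # 17/10 t
```

Under these assumptions the TLB cuts the average cost of a cache hit from 21 t to 1.7 t.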

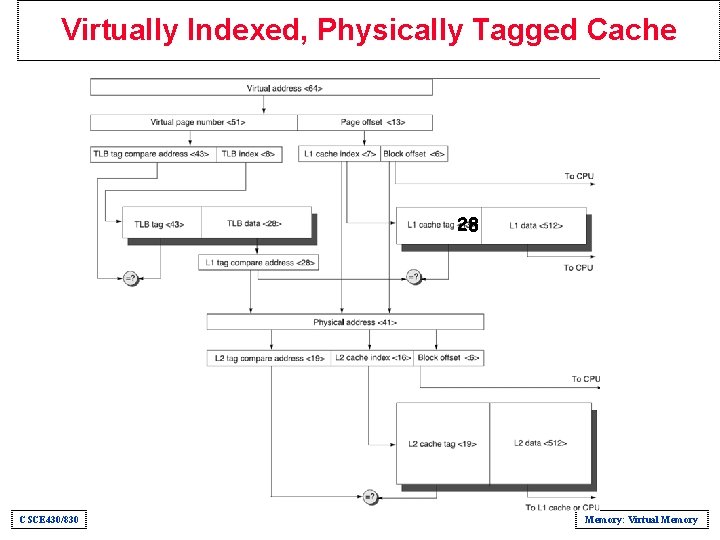

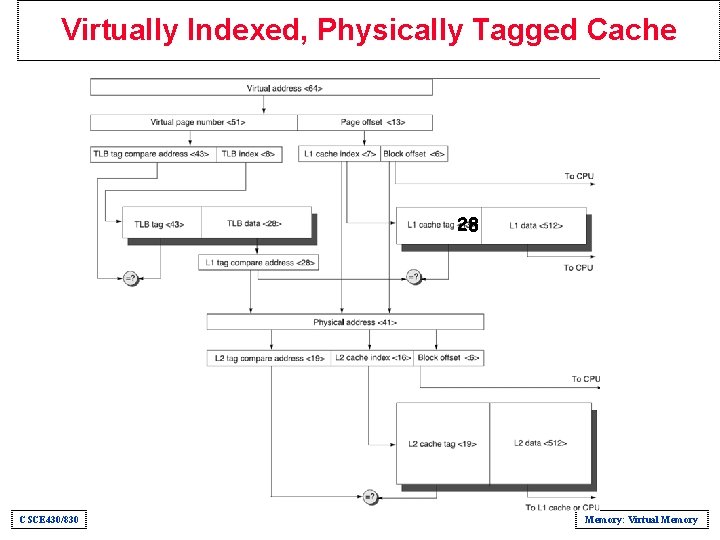

Virtual Memory Design

- Page size: 8 KB
- TLB is direct mapped with 256 entries
- L1 cache is direct mapped, 8 KB
- L2 cache is direct mapped, 4 MB
- L1 and L2 use 64-byte blocks
- The virtual address is 64 bits
- The physical address is 41 bits

Please show the overall picture of the memory hierarchy.
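A first step toward the picture is to work out how each address is divided into fields. The sketch below derives the bit widths from the parameters on this slide, assuming both caches are physically addressed (indexed and tagged with physical address bits); the variable names are illustrative.

```python
def bits(n):
    """Return log2(n) for a power of two."""
    b = n.bit_length() - 1
    assert n > 0 and 1 << b == n, "expected a power of two"
    return b

KB, MB = 1 << 10, 1 << 20
VA_BITS, PA_BITS = 64, 41
PAGE_SIZE, TLB_ENTRIES = 8 * KB, 256
L1_SIZE, L2_SIZE, BLOCK = 8 * KB, 4 * MB, 64

# Virtual address = virtual page number | page offset
page_offset = bits(PAGE_SIZE)                  # 13
vpn_bits = VA_BITS - page_offset               # 51

# Direct-mapped TLB: the VPN splits into tag | index
tlb_index = bits(TLB_ENTRIES)                  # 8
tlb_tag = vpn_bits - tlb_index                 # 43
ppn_bits = PA_BITS - page_offset               # 28 (TLB data: physical page number)

# Direct-mapped caches: physical address = tag | index | block offset
block_offset = bits(BLOCK)                     # 6
l1_index = bits(L1_SIZE // BLOCK)              # 7
l1_tag = PA_BITS - l1_index - block_offset     # 28
l2_index = bits(L2_SIZE // BLOCK)              # 16
l2_tag = PA_BITS - l2_index - block_offset     # 19
```

The L1 index and block offset together use 13 bits, exactly the page offset, so the L1 cache can be indexed before translation finishes.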

Virtually Indexed, Physically Tagged Cache

What motivation?

- Fast cache hit by parallel TLB access
- No virtual cache shortcomings

How could it be correct?

- Require cache way size <= page size; now the physical index comes from the page offset
- Then virtual and physical indices are identical ⇒ works like a physically indexed cache!
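The way-size condition can be written as a one-line check: the set index and block offset together span log2(way size) bits, and they must all fall inside the page offset. A minimal sketch; the function names and the 32 KB / 4 KB configurations are hypothetical, while the 8 KB case matches the design exercise on the previous slide.

```python
def log2_exact(n):
    """Return log2(n) for a power of two."""
    b = n.bit_length() - 1
    assert n > 0 and 1 << b == n, "expected a power of two"
    return b

def vipt_index_fits(cache_size, ways, page_size):
    """True if index + block-offset bits lie entirely within the page offset."""
    way_size = cache_size // ways
    return log2_exact(way_size) <= log2_exact(page_size)

KB = 1 << 10
ok_direct = vipt_index_fits(8 * KB, ways=1, page_size=8 * KB)    # way = 8 KB
too_big = vipt_index_fits(32 * KB, ways=2, page_size=4 * KB)     # way = 16 KB
ok_8way = vipt_index_fits(32 * KB, ways=8, page_size=4 * KB)     # way = 4 KB
```

Raising associativity shrinks the way size, which is one way a larger cache can still satisfy the condition.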

Virtually Indexed, Physically Tagged Cache

Virtual Memory and Protection

- Important function of virtual memory: protection
  - Allow sharing of a single main memory by multiple processes
  - Provide each process with its own address space
  - Protect each process from memory accesses by other processes
- Basic mechanism: two modes of operation
  - User mode: allows access only to the user address space
  - Supervisor (kernel) mode: allows access to the OS address space
- System call: allows the processor to change mode

Virtual Memory

Crosscutting Issues: The Design of Memory Hierarchies

- Superscalar CPU and Number of Ports to the Cache: the cache must provide sufficient peak bandwidth to benefit from multiple issue. Some processors increase the complexity of instruction fetch by allowing the instructions to be issued to be found on any boundary instead of, say, multiples of 4 words.
- Speculative Execution and the Memory System: speculative and conditional instructions generate exceptions (by generating invalid addresses) that would otherwise not occur, which in turn can overwhelm the benefits of speculation with exception-handling overhead. Such CPUs must be matched with non-blocking caches and should speculate only on L1 misses (because the penalty of an L2 miss is unbearable).
- Combining Instruction Cache with Instruction Fetch and Decode Mechanisms: increasing demand for ILP and clock rate has led to merging the first part of instruction execution with the instruction cache, by incorporating a trace cache (which combines branch prediction with instruction fetch) and storing the internal RISC operations in the trace cache (e.g., Pentium 4's NetBurst microarchitecture). A hit in the merged cache saves a portion of the instruction execution cycles.

Virtual Memory

Crosscutting Issues: The Design of Memory Hierarchies

- Embedded Computer Caches and Real-Time Performance: in real-time applications, variation in performance matters much more than average performance. Thus, caches that improve only average performance have to be used carefully. Instruction caches are often used because instruction accesses are highly predictable, whereas data caches are "locked down", forcing them to act as a small scratchpad memory under program control.
- Embedded Computer Caches and Power: it is much more power efficient to access on-chip memory than off-chip memory (which requires driving the pins and buses, activating external memory chips, etc.). Other techniques, such as way prediction, can be used to save power (by powering only half of a two-way set-associative cache).
- I/O and Consistency of Cached Data: the cache coherence problem must be addressed when I/O devices also share the same cached data.

Summary - Virtual Memory

- Bottom level of memory hierarchy for programs
- Used in all general-purpose architectures
- Relies heavily on OS for support