CS 3214 Computer Systems: Virtual Memory


- Slides: 34


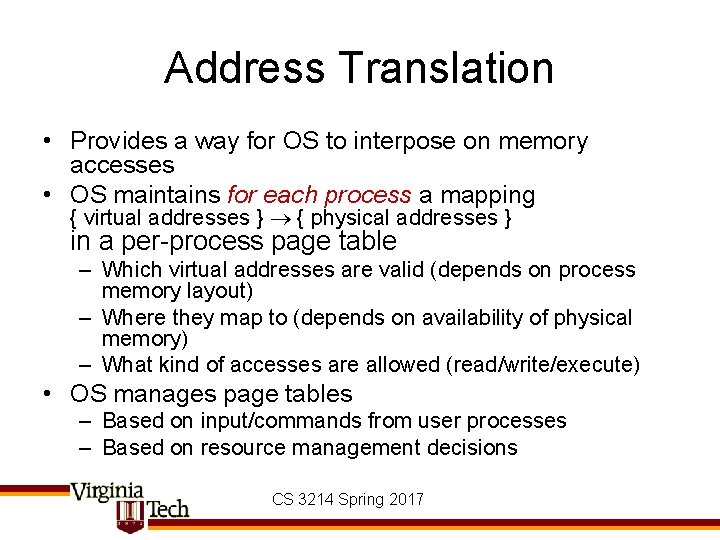

Virtual Memory

- Is not a “kind” of memory
- Is a technique that combines one or more of the following concepts:
  - Address translation (always)
  - Paging from/to disk (usually)
  - Protection (usually)
- Can make storage that isn’t physical DRAM appear as though it were

CS 3214 Spring 2017

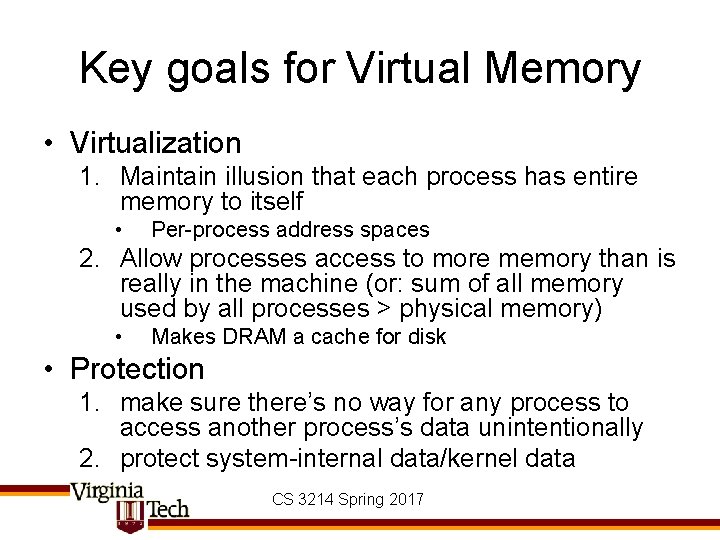

Key goals for Virtual Memory

- Virtualization
  1. Maintain the illusion that each process has the entire memory to itself
     - Per-process address spaces
  2. Allow processes access to more memory than is really in the machine (or: sum of all memory used by all processes > physical memory)
     - Makes DRAM a cache for disk
- Protection
  1. Make sure there’s no way for any process to access another process’s data unintentionally
  2. Protect system-internal data/kernel data

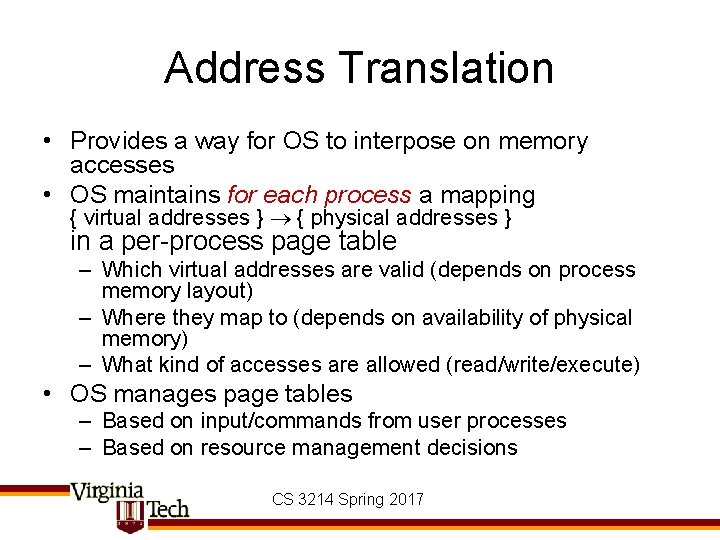

Address Translation

- Provides a way for the OS to interpose on memory accesses
- OS maintains for each process a mapping { virtual addresses } → { physical addresses } in a per-process page table
  - Which virtual addresses are valid (depends on process memory layout)
  - Where they map to (depends on availability of physical memory)
  - What kind of accesses are allowed (read/write/execute)
- OS manages page tables
  - Based on input/commands from user processes
  - Based on resource management decisions

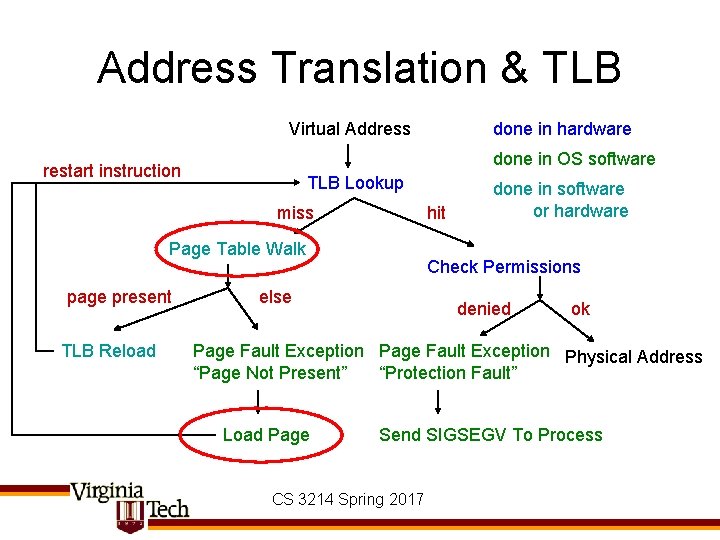

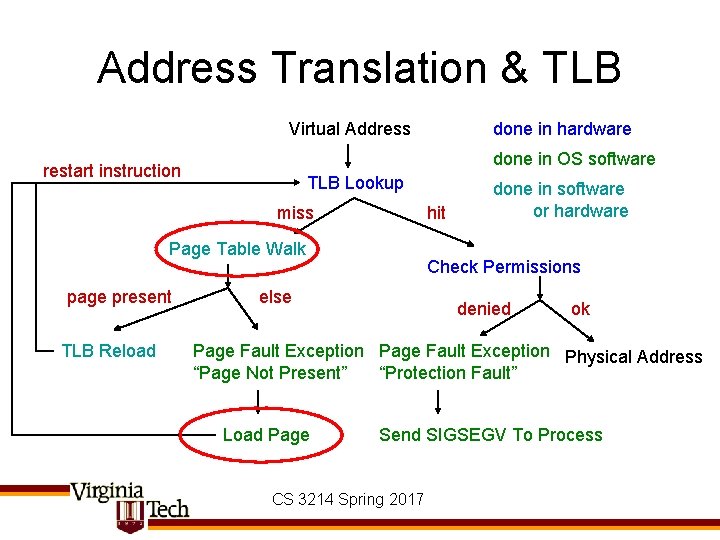

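Address Translation & TLB (flowchart; text recovered from the figure)

- Virtual address → TLB lookup (done in hardware)
  - Hit: check permissions
  - Miss: page table walk (done in software or hardware)
    - Page present: TLB reload, then restart instruction
    - Else: page fault exception, “Page Not Present”
- Check permissions
  - OK: physical address
  - Denied: page fault exception, “Protection Fault”
- Page fault exception (done in OS software)
  - “Page Not Present”: load page, then restart instruction
  - “Protection Fault”: send SIGSEGV to process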

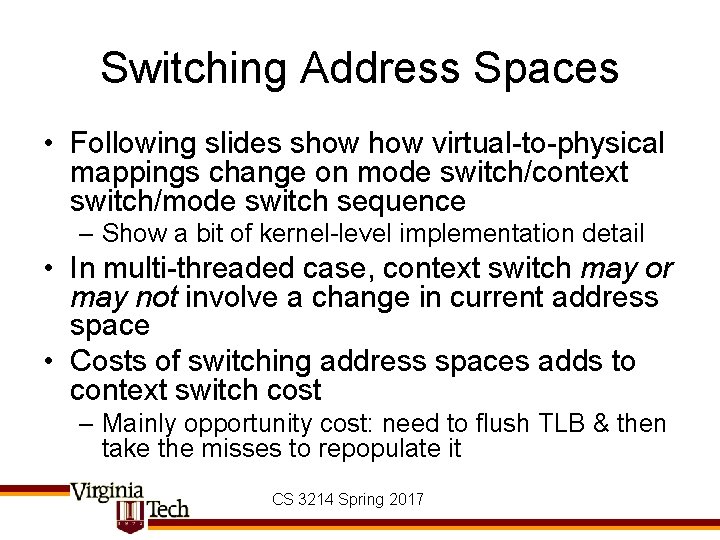

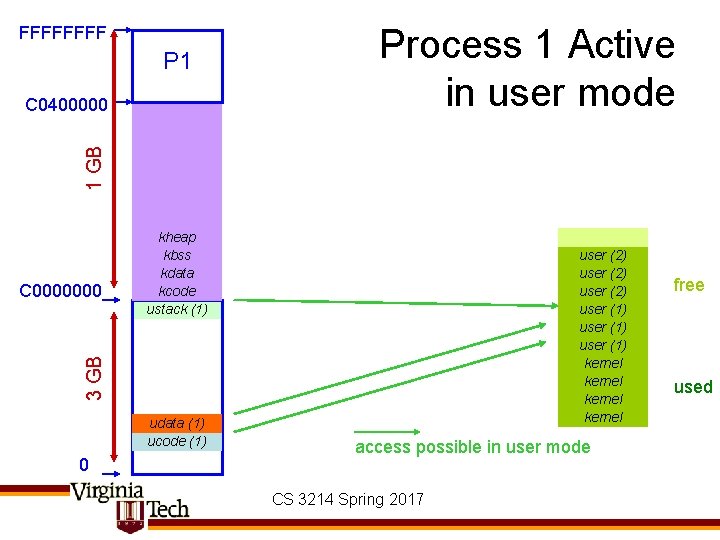

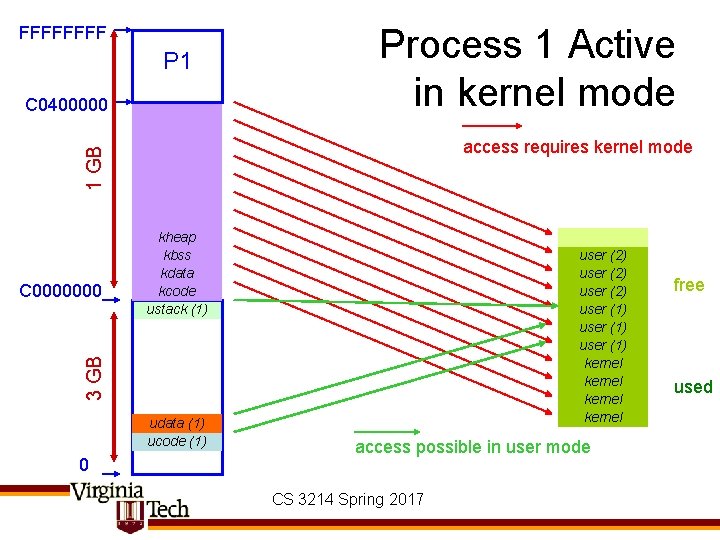

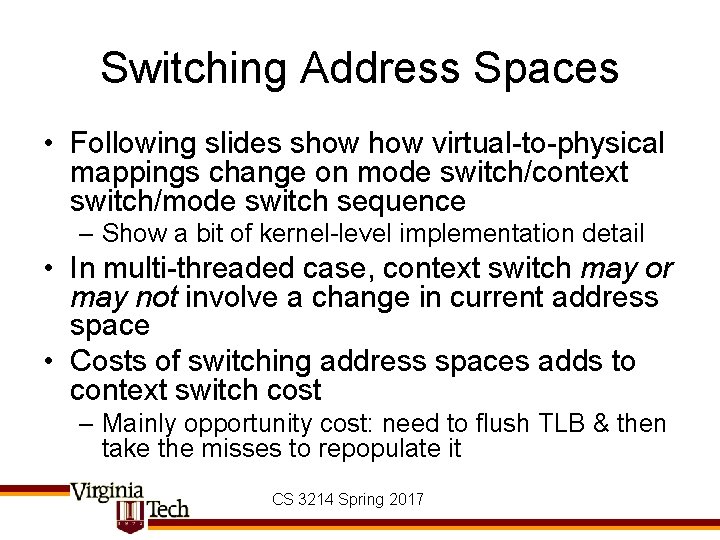

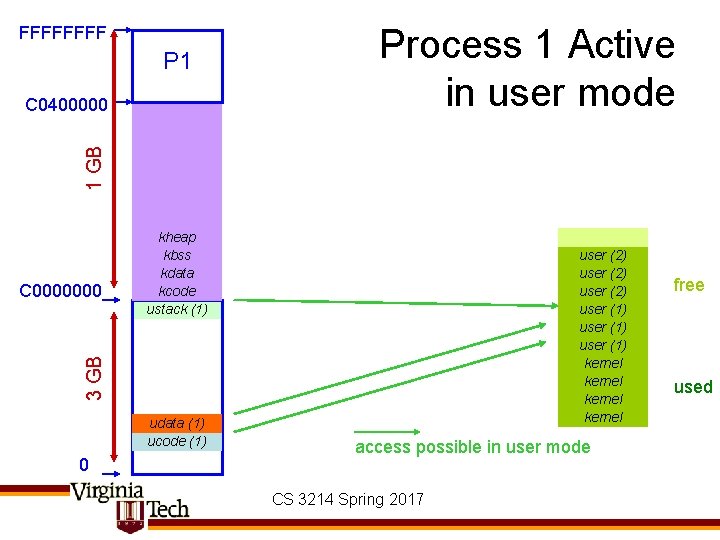

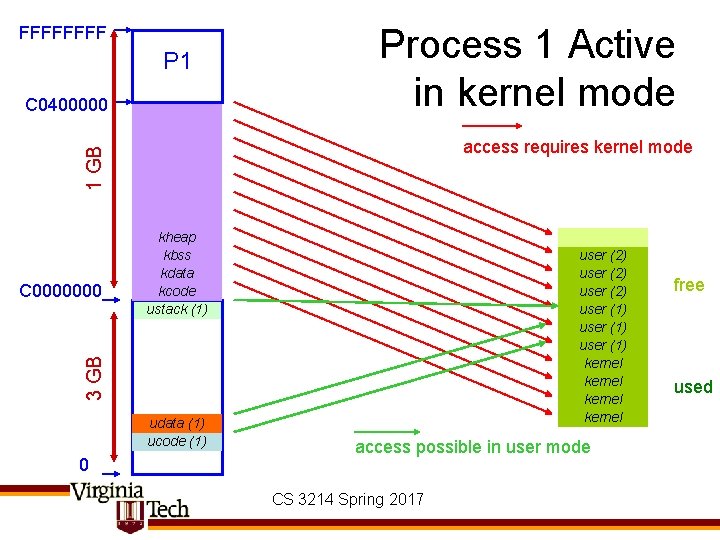

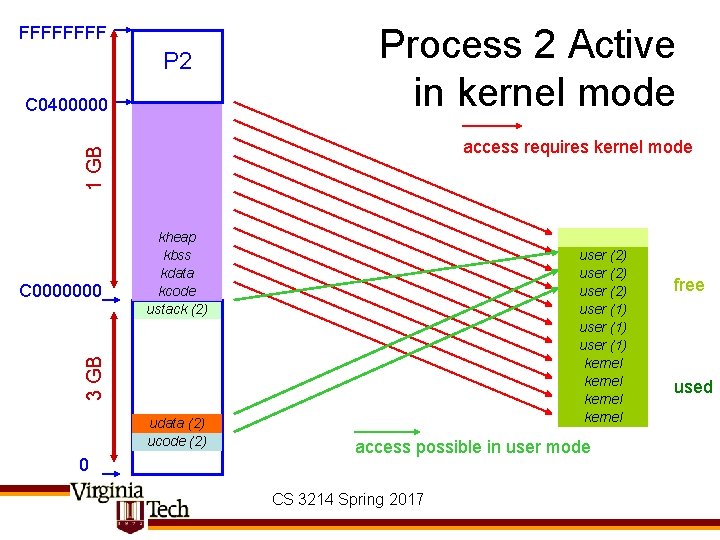

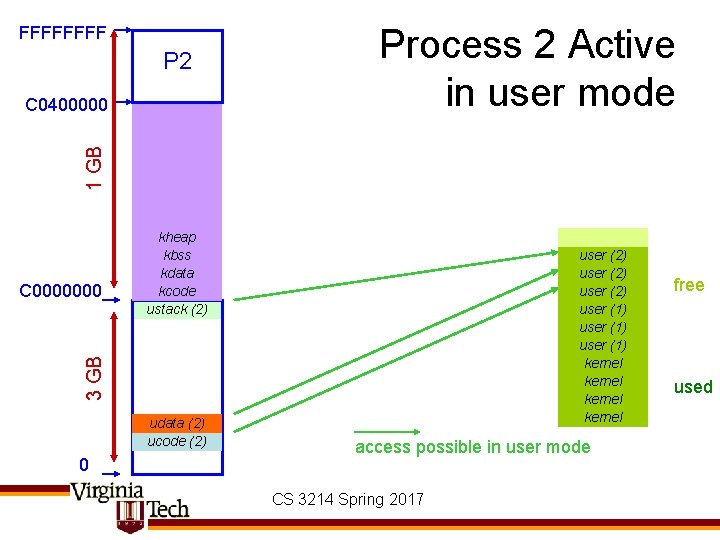

Switching Address Spaces

- The following slides show how virtual-to-physical mappings change during a mode switch/context switch/mode switch sequence
  - They show a bit of kernel-level implementation detail
- In the multi-threaded case, a context switch may or may not involve a change in the current address space
- The cost of switching address spaces adds to the context switch cost
  - Mainly opportunity cost: need to flush the TLB and then take the misses to repopulate it

Figure: Process 1 active in user mode. Virtual address space from 0 to FFFF: a 3 GB user region below C0000000 holding ucode (1), udata (1), ustack (1) (access possible in user mode), and a 1 GB kernel region from C0000000 holding kcode, kdata, kbss, kheap. Physical memory is shown as free and used frames holding kernel, user (1), and user (2) pages. Other labels: P1, C0400000.

Figure: Process 1 active in kernel mode. Same layout as before; the 1 GB kernel region from C0000000 is now accessible (access requires kernel mode), and the user region with ucode (1), udata (1), ustack (1) remains accessible as in user mode.

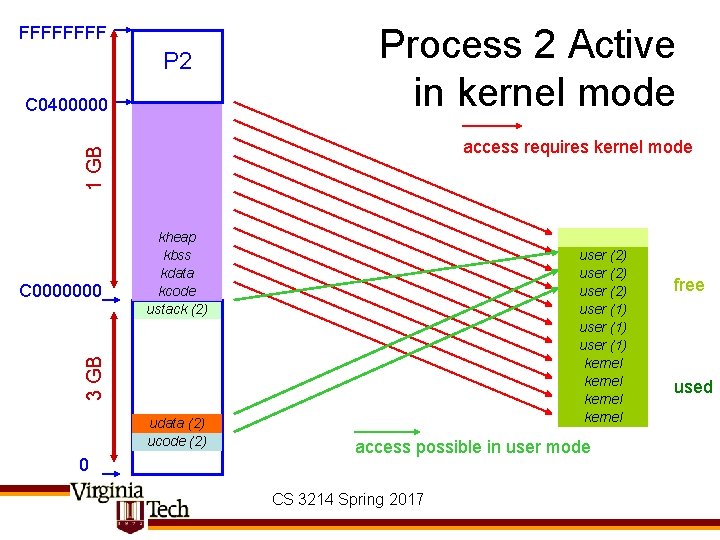

Figure: Process 2 active in kernel mode. The kernel region (kcode, kdata, kbss, kheap; access requires kernel mode) is unchanged; the user region below C0000000 now holds ucode (2), udata (2), ustack (2). Other labels: P2, C0400000.

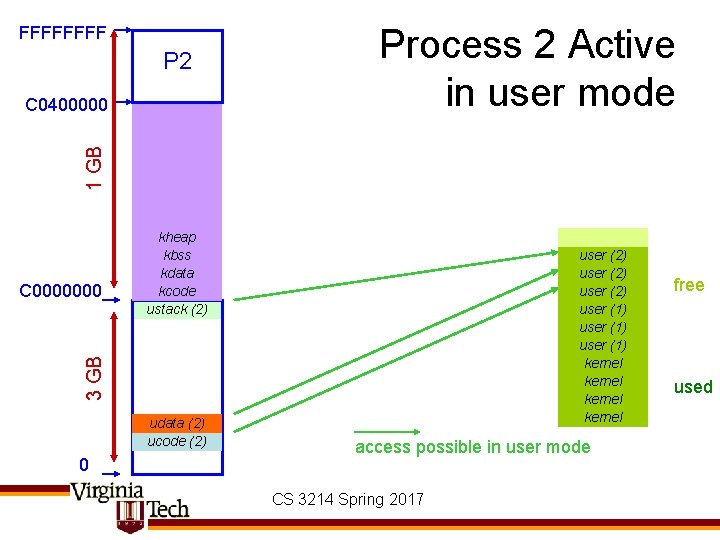

Figure: Process 2 active in user mode. The 3 GB user region below C0000000 holds ucode (2), udata (2), ustack (2) (access possible in user mode); the 1 GB kernel region from C0000000 holds kcode, kdata, kbss, kheap. Other labels: P2, C0400000.

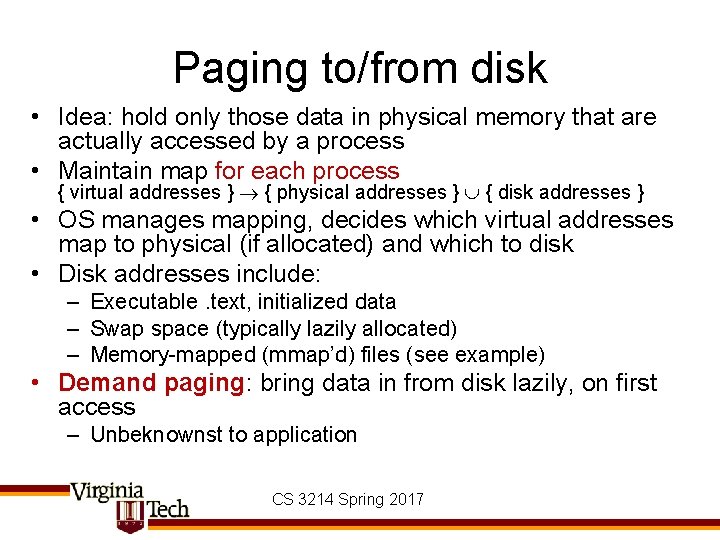

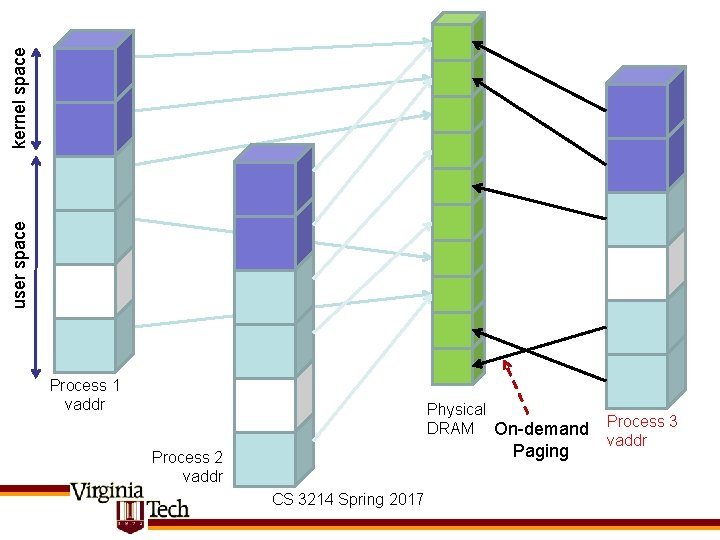

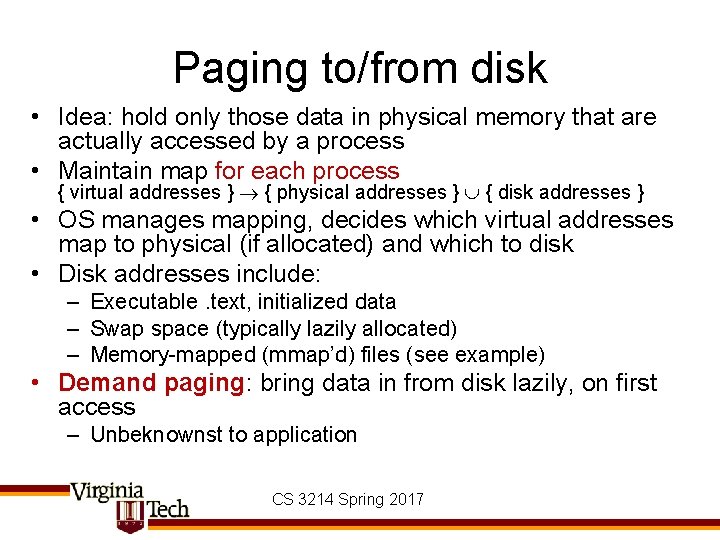

Paging to/from disk

- Idea: hold only those data in physical memory that are actually accessed by a process
- Maintain a map for each process: { virtual addresses } → { physical addresses } ∪ { disk addresses }
- OS manages the mapping, decides which virtual addresses map to physical memory (if allocated) and which to disk
- Disk addresses include:
  - Executable .text, initialized data
  - Swap space (typically lazily allocated)
  - Memory-mapped (mmap’d) files (see example)
- Demand paging: bring data in from disk lazily, on first access
  - Unbeknownst to the application

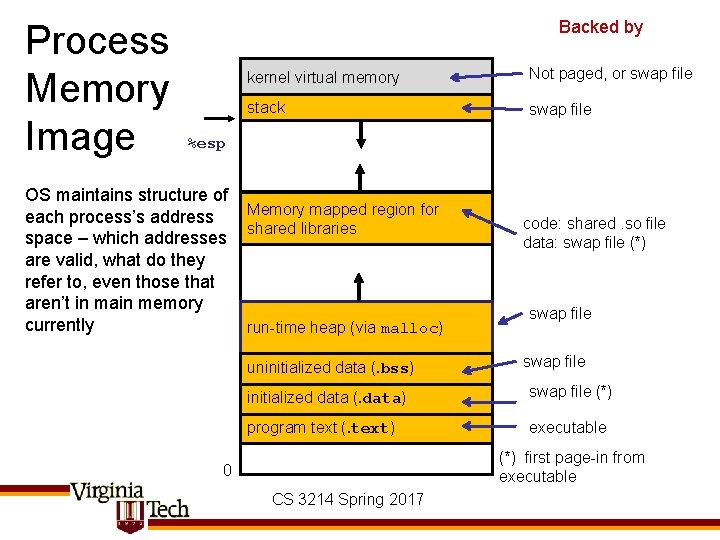

Process Memory Image (figure; regions listed from high to low addresses, with what backs each)

- kernel virtual memory: not paged, or swap file
- stack (%esp): swap file
- memory mapped region for shared libraries: code from shared .so file; data from swap file (*)
- run-time heap (via malloc): swap file
- uninitialized data (.bss): swap file
- initialized data (.data): swap file (*)
- program text (.text): executable
- (*) first page-in from executable

OS maintains the structure of each process’s address space: which addresses are valid and what they refer to, even for those that aren’t in main memory currently.

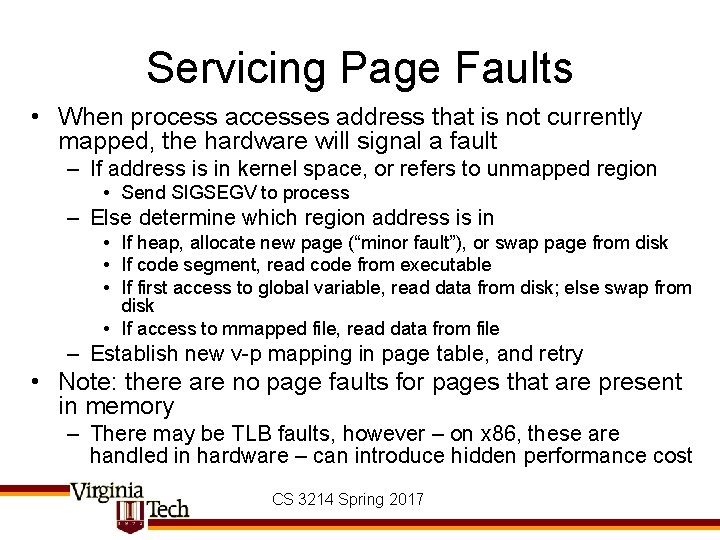

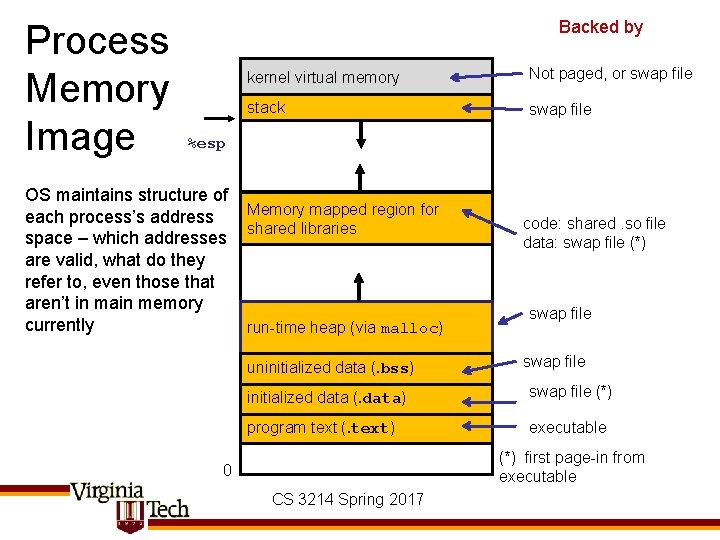

Servicing Page Faults

- When a process accesses an address that is not currently mapped, the hardware signals a fault
  - If the address is in kernel space, or refers to an unmapped region
    - Send SIGSEGV to process
  - Else determine which region the address is in
    - If heap, allocate new page (“minor fault”), or swap page in from disk
    - If code segment, read code from executable
    - If first access to global variable, read data from disk; else swap in from disk
    - If access to mmapped file, read data from file
  - Establish new v-p mapping in page table, and retry
- Note: there are no page faults for pages that are present in memory
  - There may be TLB faults, however; on x86, these are handled in hardware
  - They can introduce a hidden performance cost

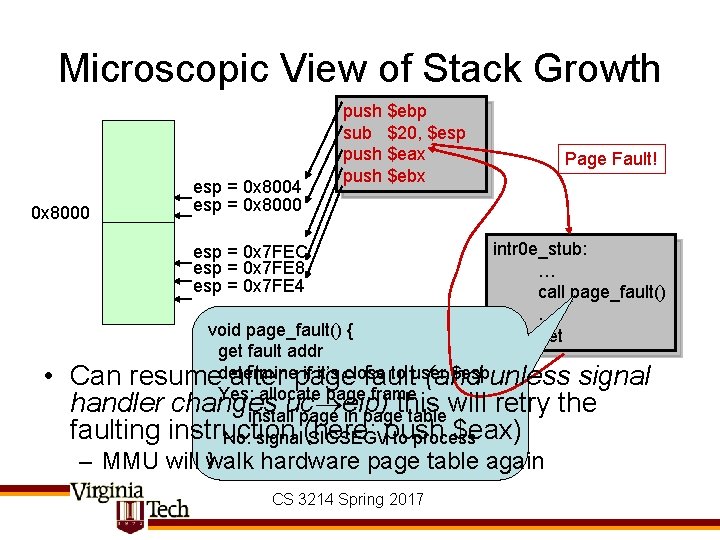

Microscopic View of Stack Growth (figure; text recovered from the slide)

- Instruction sequence and stack pointer, with a page boundary at 0x8000:
  - initially esp = 0x8004
  - push $ebp: esp = 0x8000
  - sub $20, $esp: esp = 0x7FEC
  - push $eax: esp = 0x7FE8 (page fault!)
  - push $ebx: esp = 0x7FE4
- Fault entry point: intr0e_stub: … call page_fault() … iret
- void page_fault():
  - get fault addr
  - determine if it’s close to user $esp
    - Yes: allocate page frame, install page in page table
    - No: send SIGSEGV to process
- Can resume after the page fault; unless a signal handler changes uc eip, this will retry the faulting instruction (here: push $eax)
  - MMU will walk the hardware page table again

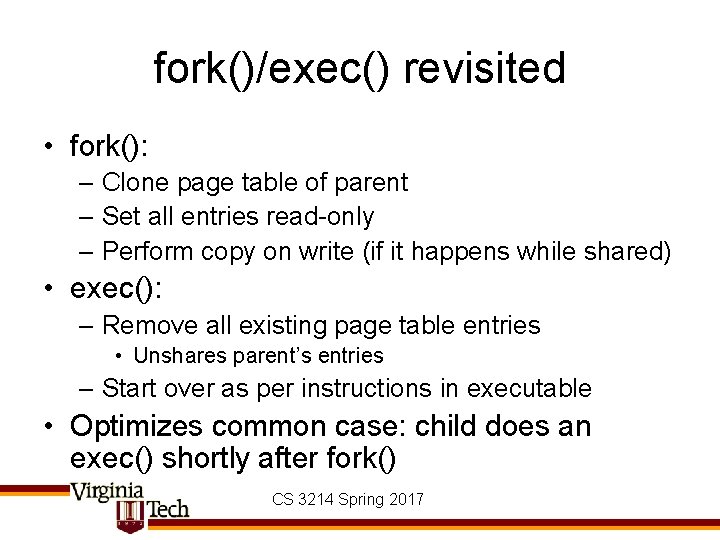

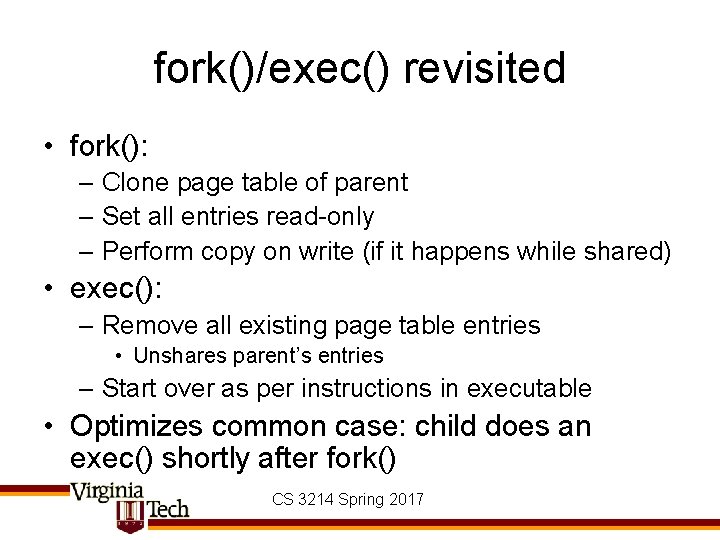

fork()/exec() revisited

- fork():
  - Clone page table of parent
  - Set all entries read-only
  - Perform copy on write (if a write happens while shared)
- exec():
  - Remove all existing page table entries
    - Unshares parent’s entries
  - Start over as per instructions in executable
- Optimizes the common case: child does an exec() shortly after fork()

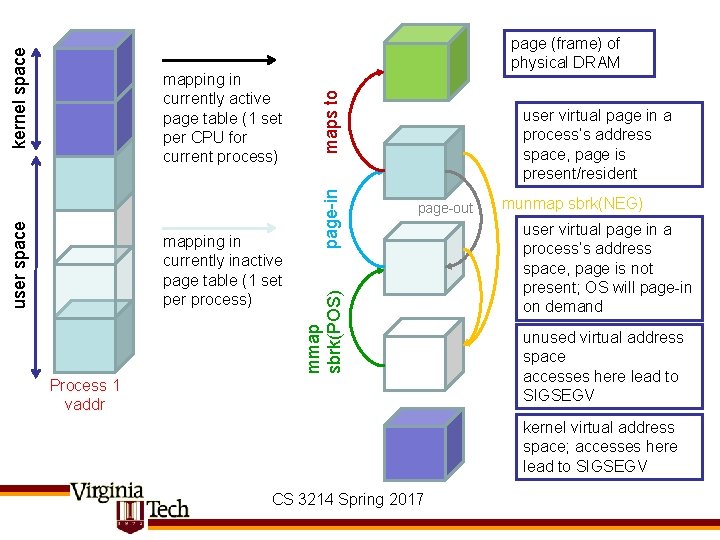

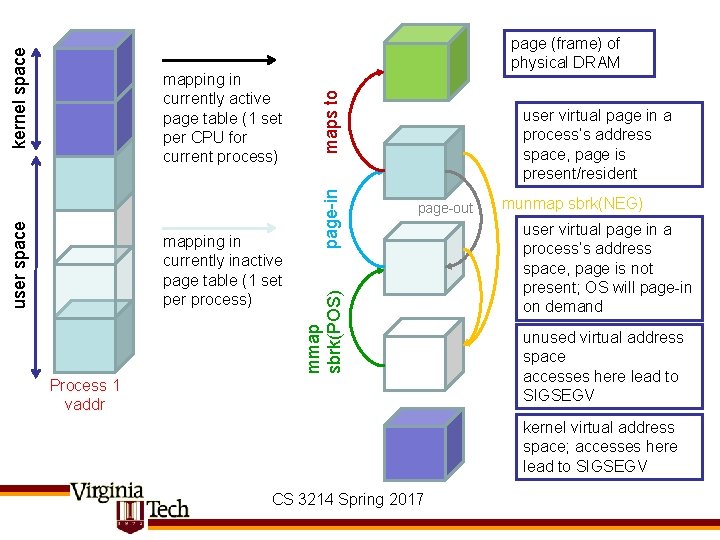

Figure legend (kernel space / user space; Process 1 vaddr):

- User virtual page in a process’s address space, page is present/resident
- User virtual page in a process’s address space, page is not present; OS will page-in on demand
- Unused virtual address space; accesses here lead to SIGSEGV
- Kernel virtual address space; accesses here lead to SIGSEGV
- Page (frame) of physical DRAM
- “Maps to” arrow: mapping in currently active page table (1 set per CPU for current process)
- “Maps to” arrow: mapping in currently inactive page table (1 set per process)
- Transitions: page-in, page-out; mmap, sbrk(POS); munmap, sbrk(NEG)

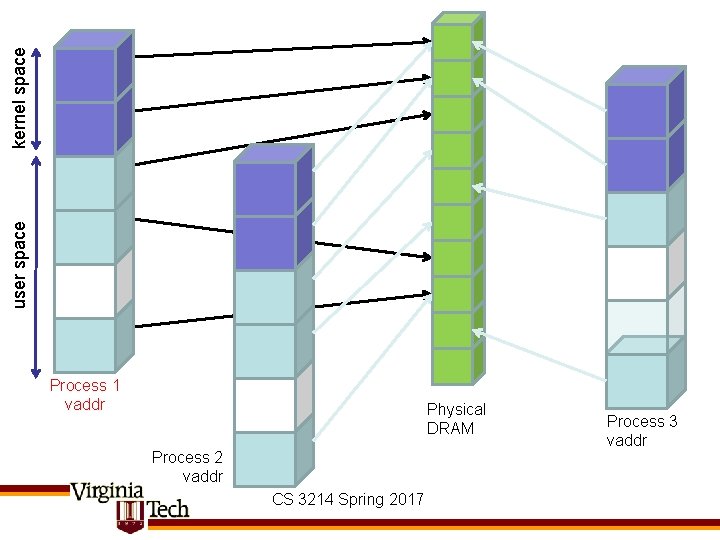

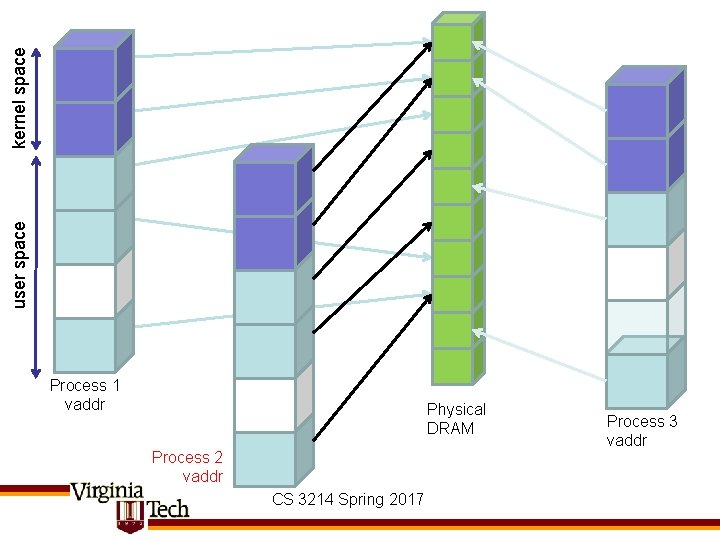

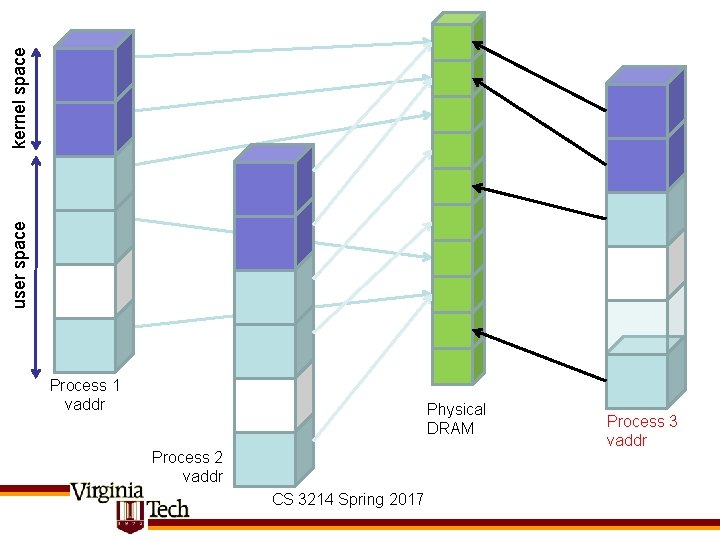

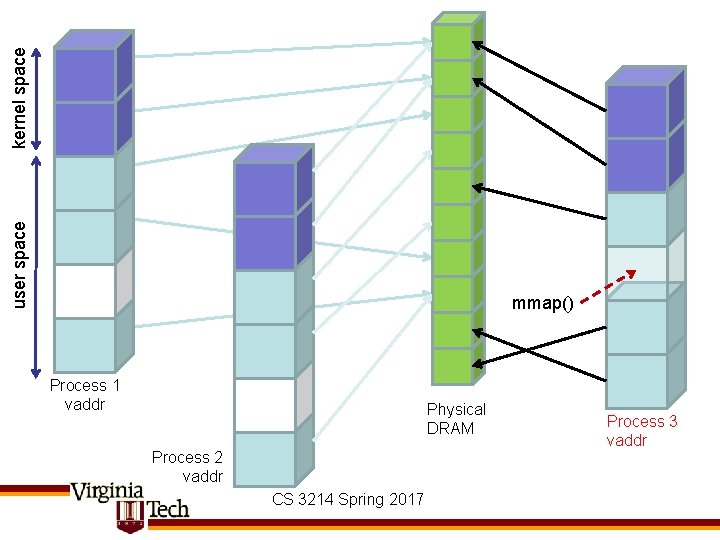

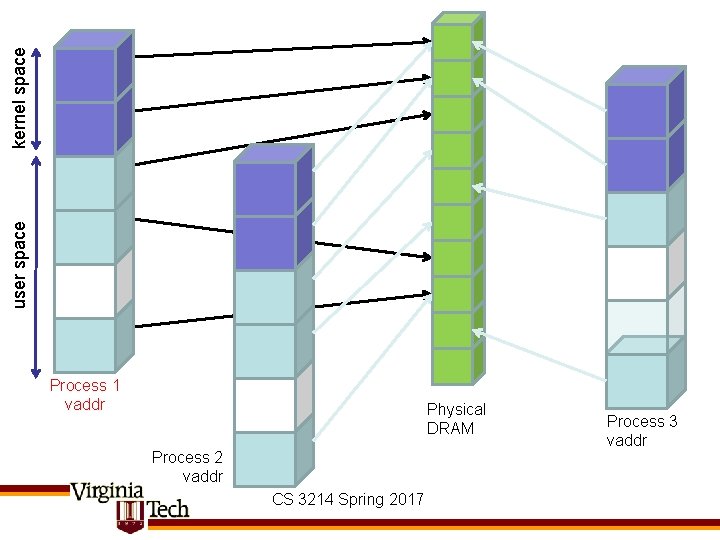

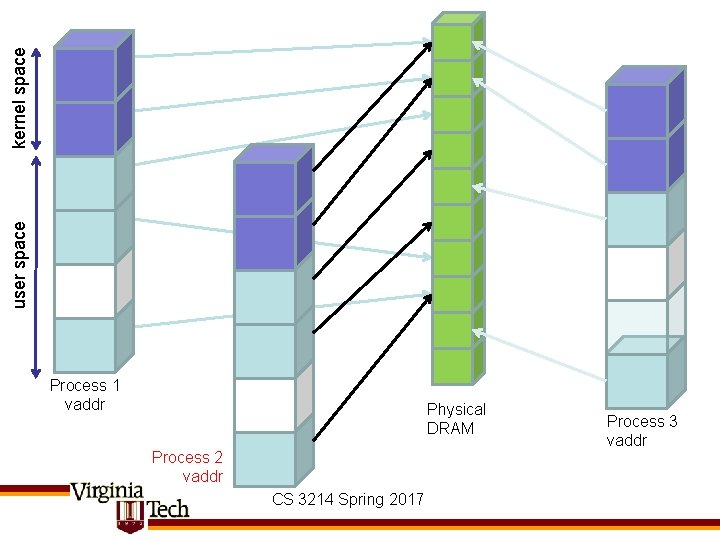

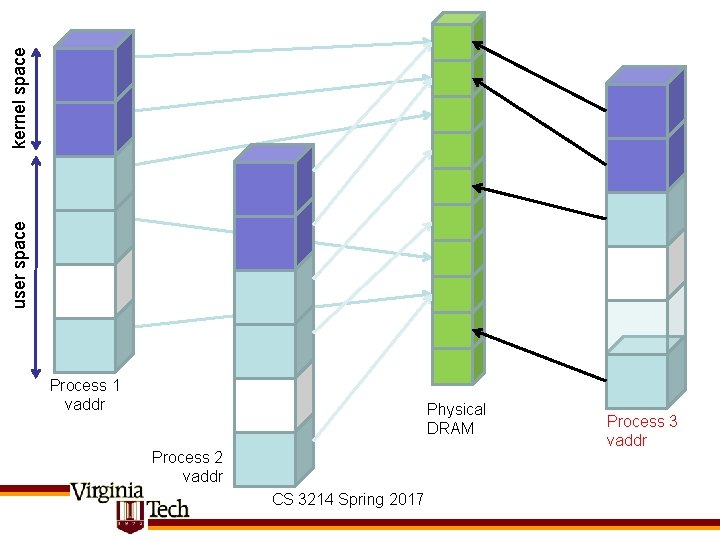

Figure (three animation frames): kernel space and user space of the virtual address spaces of Process 1, Process 2, and Process 3, mapped onto physical DRAM.

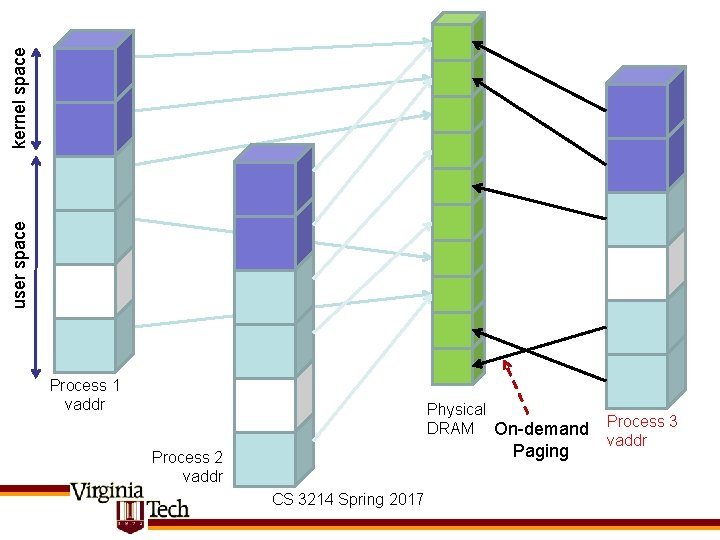

Figure: on-demand paging. Virtual address spaces of Process 1, Process 2, and Process 3 (kernel space, user space) mapped onto physical DRAM.

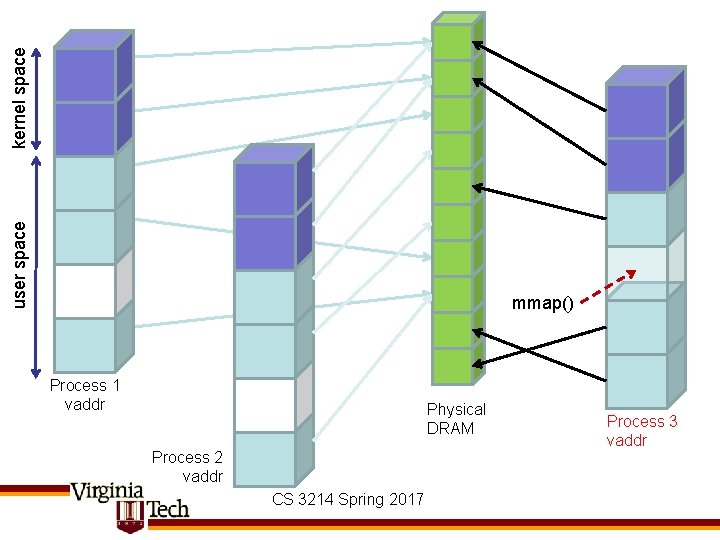

Figure: mmap(). Virtual address spaces of Process 1, Process 2, and Process 3 (kernel space, user space) mapped onto physical DRAM.

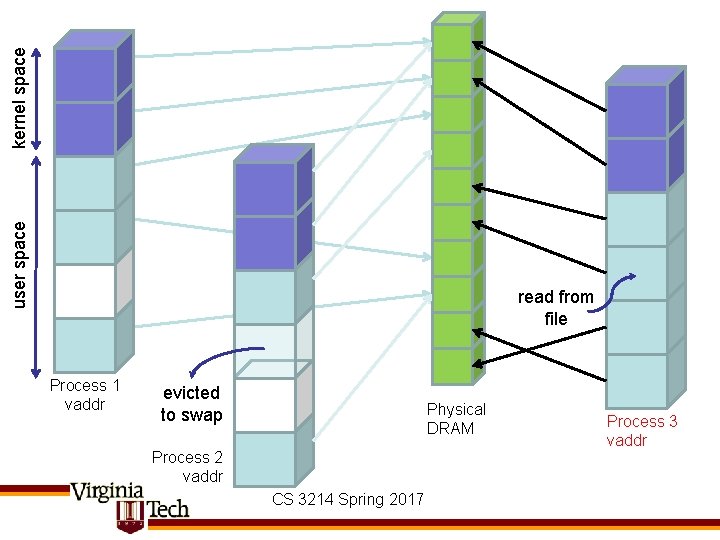

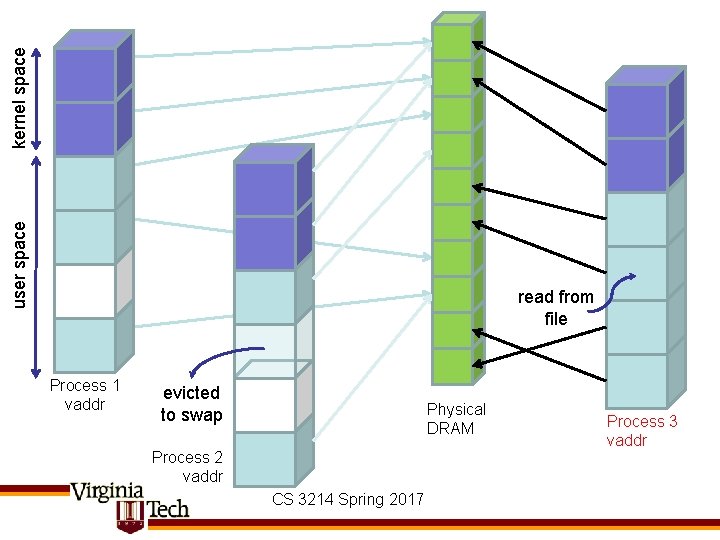

Figure: pages read from file and pages evicted to swap. Virtual address spaces of Process 1, Process 2, and Process 3 (kernel space, user space) mapped onto physical DRAM.

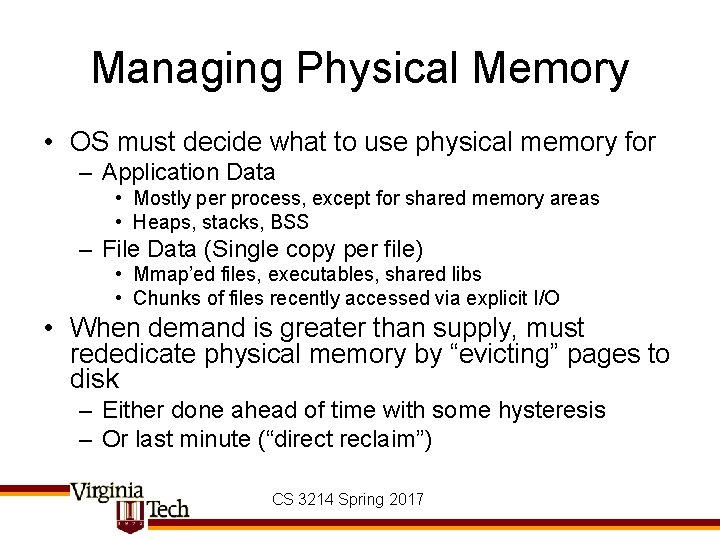

Managing Physical Memory

- OS must decide what to use physical memory for
  - Application data
    - Mostly per process, except for shared memory areas
    - Heaps, stacks, BSS
  - File data (single copy per file)
    - Mmap’ed files, executables, shared libs
    - Chunks of files recently accessed via explicit I/O
- When demand is greater than supply, must rededicate physical memory by “evicting” pages to disk
  - Either done ahead of time with some hysteresis
  - Or at the last minute (“direct reclaim”)

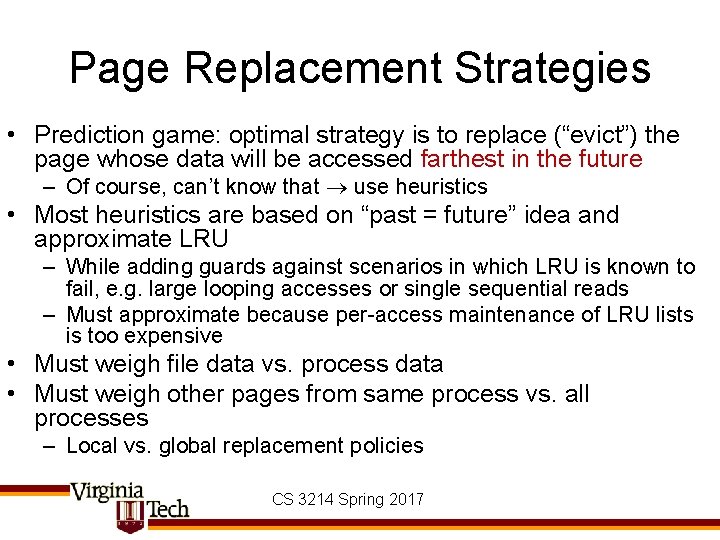

Page Replacement Strategies

- Prediction game: the optimal strategy is to replace (“evict”) the page whose data will be accessed farthest in the future
  - Of course, can’t know that, so use heuristics
- Most heuristics are based on the “past = future” idea and approximate LRU
  - While adding guards against scenarios in which LRU is known to fail, e.g. large looping accesses or single sequential reads
  - Must approximate because per-access maintenance of LRU lists is too expensive
- Must weigh file data vs. process data
- Must weigh other pages from the same process vs. all processes
  - Local vs. global replacement policies

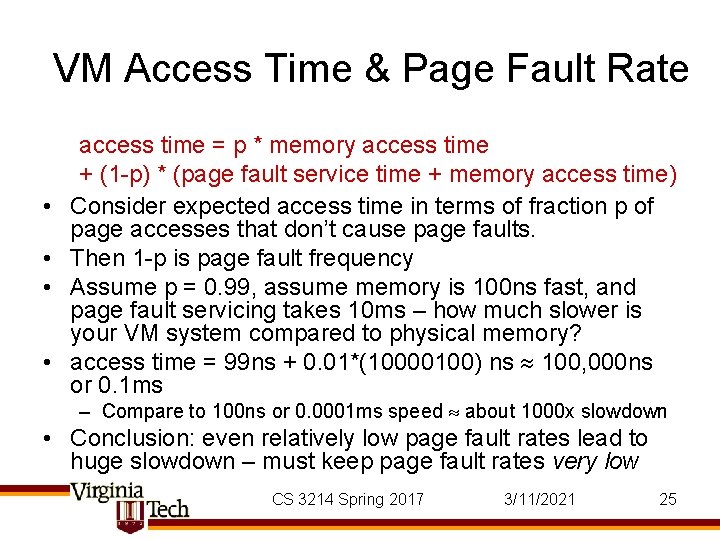

VM Access Time & Page Fault Rate

- Consider the expected access time in terms of the fraction p of page accesses that don’t cause page faults. Then 1 - p is the page fault frequency.

$$\text{access time} = p \cdot t_{\text{mem}} + (1 - p) \cdot (t_{\text{fault}} + t_{\text{mem}})$$

where $t_{\text{mem}}$ is the memory access time and $t_{\text{fault}}$ is the page fault service time.

- Assume p = 0.99, assume a memory access takes 100 ns, and page fault servicing takes 10 ms. How much slower is your VM system compared to physical memory?

$$\text{access time} = 99\,\text{ns} + 0.01 \cdot 10{,}000{,}100\,\text{ns} \approx 100{,}000\,\text{ns} = 0.1\,\text{ms}$$

  - Compare to 100 ns, or 0.0001 ms: about a 1000x slowdown
- Conclusion: even relatively low page fault rates lead to a huge slowdown
  - Must keep page fault rates very low

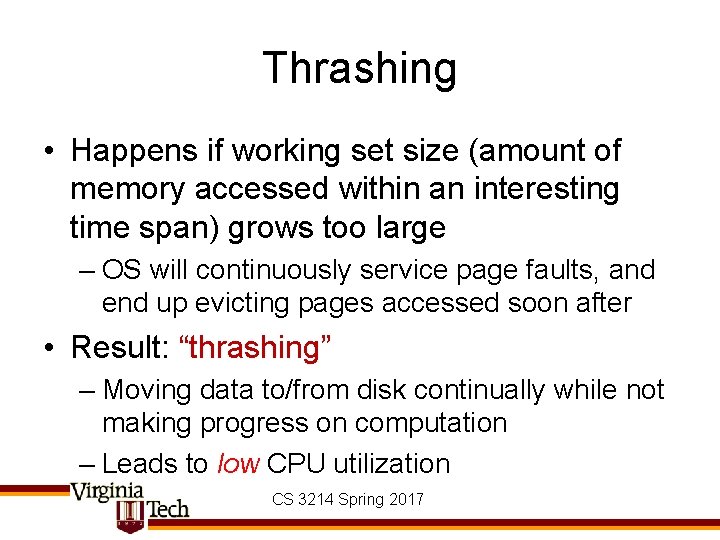

Thrashing

- Happens if the working set size (amount of memory accessed within an interesting time span) grows too large
  - OS will continuously service page faults, and end up evicting pages that are accessed soon after
- Result: “thrashing”
  - Moving data to/from disk continually while not making progress on computation
  - Leads to low CPU utilization

Prefetching

- All modern VM systems use prefetching
  - Usual strategy: detect sequential accesses to a file
    - Even if done via the virtual memory system & mmap’d files
  - Sometimes application-guided
    - Linux readahead(2) system call
    - E.g. Windows Vista remembers which data an application touched (speeds up startup time)

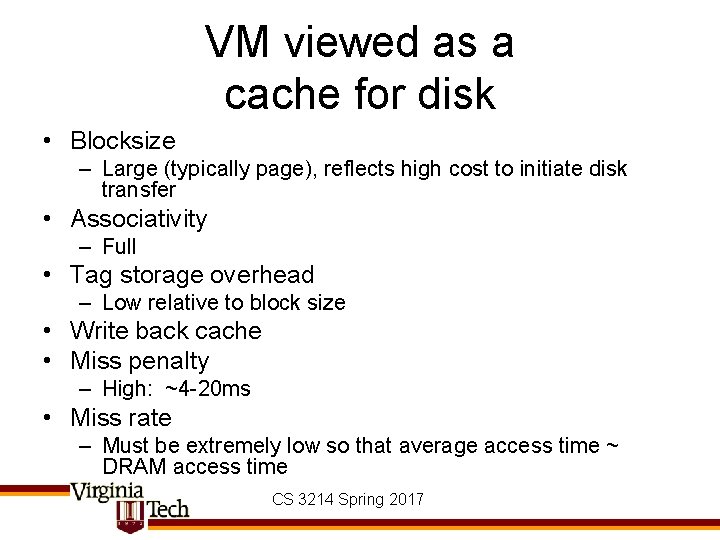

VM viewed as a cache for disk

- Block size
  - Large (typically a page), reflects the high cost to initiate a disk transfer
- Associativity
  - Full
- Tag storage overhead
  - Low relative to block size
- Write-back cache
- Miss penalty
  - High: ~4 to 20 ms
- Miss rate
  - Must be extremely low so that average access time ~ DRAM access time

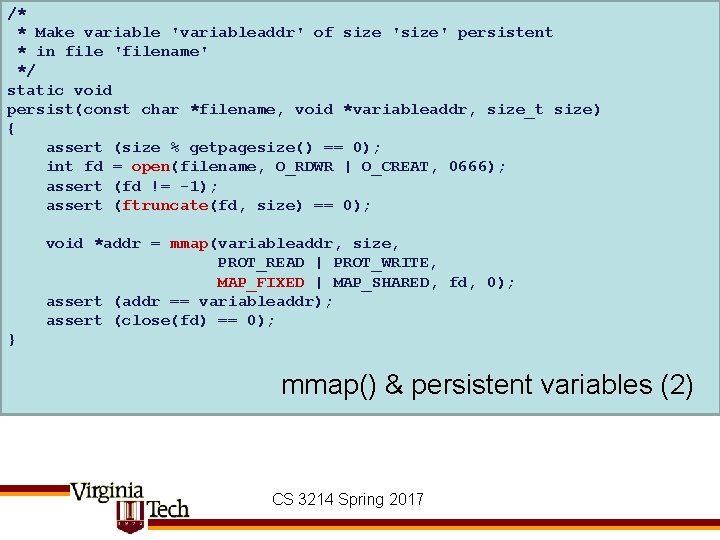

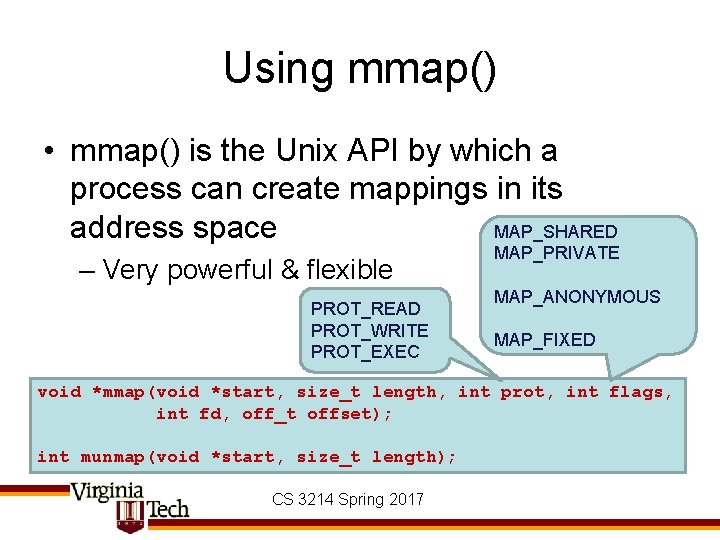

Using mmap()

- mmap() is the Unix API by which a process can create mappings in its address space
  - Very powerful & flexible
- Protection flags: PROT_READ, PROT_WRITE, PROT_EXEC
- Mapping flags: MAP_SHARED, MAP_PRIVATE, MAP_ANONYMOUS, MAP_FIXED

      void *mmap(void *start, size_t length, int prot, int flags, int fd, off_t offset);
      int munmap(void *start, size_t length);

![mmap() for file I/O (code slide)](https://slidetodoc.com/presentation_image_h/35afa15ec14eb33836cbc1bf376fb6f5/image-30.jpg)

mmap() for file I/O

    #include <assert.h>
    #include <fcntl.h>
    #include <stdio.h>
    #include <stdlib.h>
    #include <sys/mman.h>
    #include <unistd.h>

    int main(int ac, char *av[])
    {
        int fd = open(av[1], O_RDONLY);
        assert (fd != -1);
        off_t filesize = lseek(fd, 0, SEEK_END);
        assert (filesize != (off_t) -1);

        // round the mapping size up to a multiple of the page size
        size_t pgsize = getpagesize();
        size_t mapsize = (filesize + pgsize - 1) & ~(pgsize-1);
        void *addr = mmap(NULL, mapsize, PROT_READ, MAP_PRIVATE, fd, 0);
        if (addr == MAP_FAILED) {
            perror("mmap");
            exit(-1);
        }
        // the mapping stays valid after the descriptor is closed
        assert (close(fd) == 0);

        // access file data like memory
        char *start = addr, *end = start + filesize;
        while (start < end)
            fputc(*start++, stdout);
        return 0;
    }

![mmap() for parent/child communication (code slide)](https://slidetodoc.com/presentation_image_h/35afa15ec14eb33836cbc1bf376fb6f5/image-31.jpg)

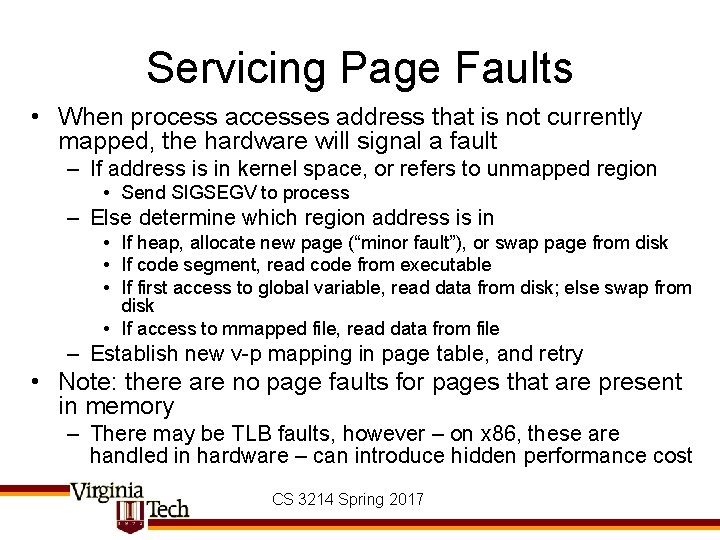

mmap() for parent/child communication

    #include <assert.h>
    #include <stdio.h>
    #include <string.h>
    #include <sys/mman.h>
    #include <sys/wait.h>
    #include <unistd.h>

    int main(int ac, char *av[])
    {
        size_t sz = getpagesize();
        // shared mapping by default; private if "-private" is given
        int sharedflag = ac < 2 || strcmp(av[1], "-private") ? MAP_SHARED : MAP_PRIVATE;
        void *addr = mmap(NULL, sz, PROT_READ|PROT_WRITE, MAP_ANONYMOUS | sharedflag, -1, 0);
        assert (addr != MAP_FAILED);
        printf("Memory mapped at %p\n", addr);

        int i, *ia = addr;
        if (fork() == 0) {
            // child writes into the mapped page
            for (i = 0; i < 10; i++)
                ia[i] = i;
        } else {
            // parent waits for the child, then reads what it wrote
            assert (wait(NULL) > 0);
            for (i = 0; i < 10; i++)
                printf("%d ", ia[i]);
            printf("\n");
        }
        return 0;
    }

![mmap() & shared semaphores (code slide)](https://slidetodoc.com/presentation_image_h/35afa15ec14eb33836cbc1bf376fb6f5/image-32.jpg)

mmap() & shared semaphores

    #include <assert.h>
    #include <semaphore.h>
    #include <stdio.h>
    #include <string.h>
    #include <sys/mman.h>
    #include <unistd.h>

    int main(int ac, char *av[])
    {
        size_t sz = getpagesize();
        int sharedflag = ac < 2 || strcmp(av[1], "-private") ? MAP_SHARED : MAP_PRIVATE;
        void *addr = mmap(NULL, sz, PROT_READ|PROT_WRITE, MAP_ANONYMOUS | sharedflag, -1, 0);
        assert (addr != MAP_FAILED);

        // the semaphore lives at the start of the mapped page
        sem_t *semp = (sem_t*) addr;
        assert(sem_init(semp, /* shared */ 1, /* initial value */ 0) == 0);

        // the integer array follows the semaphore
        int i, *ia = (int *) (semp + 1);
        if (fork() == 0) {
            for (i = 0; i < 10; i++)
                ia[i] = i;
            sem_post(semp);     // signal parent that the data is ready
        } else {
            sem_wait(semp);     // wait for the child's signal
            for (i = 0; i < 10; i++)
                printf("%d ", ia[i]);
            printf("\n");
        }
        return 0;
    }

![mmap() & persistent variables (code slide)](https://slidetodoc.com/presentation_image_h/35afa15ec14eb33836cbc1bf376fb6f5/image-33.jpg)

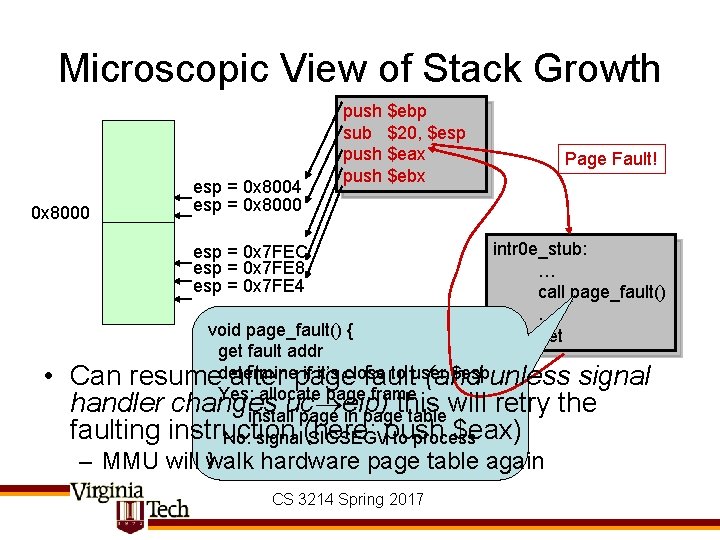

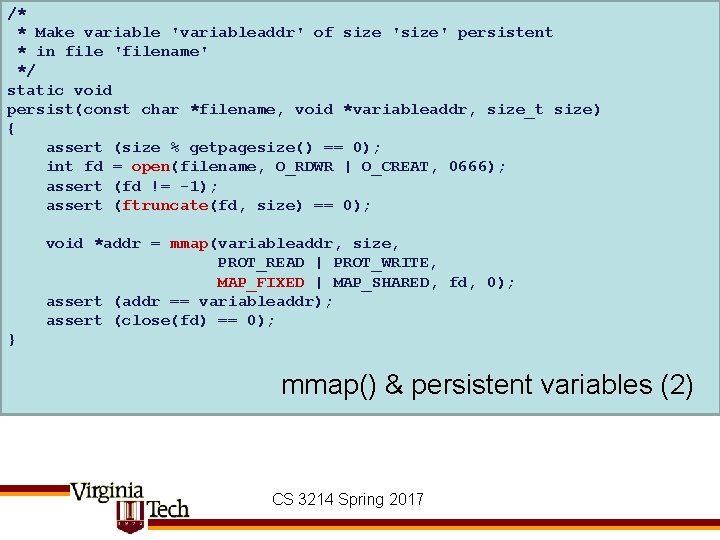

mmap() & persistent variables

    #include <stdio.h>
    #include <string.h>

    #define PGSIZE 4096
    char persistent_data[PGSIZE] __attribute__((aligned(PGSIZE)));

    // defined on the next slide
    static void persist(const char *filename, void *variableaddr, size_t size);

    int main(int ac, char *av[])
    {
        int i, do_read = ac < 2 || strcmp(av[1], "-read") == 0;

        persist(".persistent_data", persistent_data, sizeof (persistent_data));
        if (do_read) {
            // check the bound before reading the array element
            for (i = 0; i < PGSIZE && persistent_data[i] != 0; i++)
                fputc(persistent_data[i], stdout);
        } else {
            int c;
            for (i = 0; i < PGSIZE && (c = fgetc(stdin)) != EOF; i++)
                persistent_data[i] = c;
            memset(persistent_data + i, 0, PGSIZE - i); // zero rest
        }
        return 0;
    }

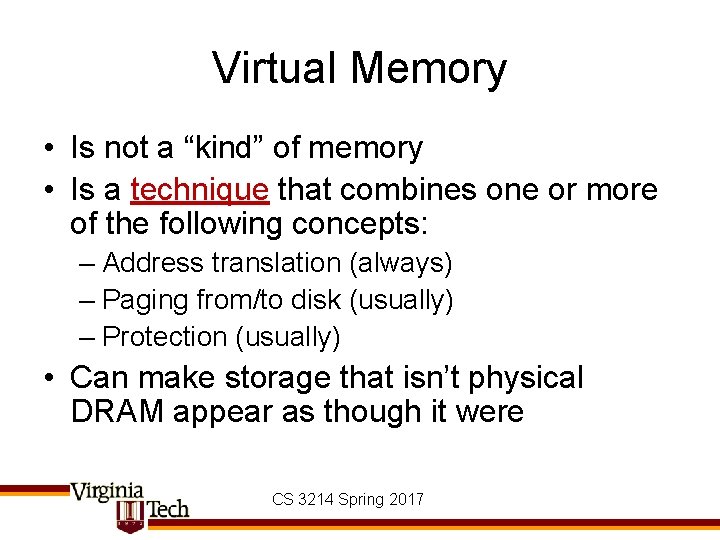

mmap() & persistent variables (2)

    #include <assert.h>
    #include <fcntl.h>
    #include <sys/mman.h>
    #include <unistd.h>

    /*
     * Make variable 'variableaddr' of size 'size' persistent
     * in file 'filename'
     */
    static void persist(const char *filename, void *variableaddr, size_t size)
    {
        assert (size % getpagesize() == 0);
        int fd = open(filename, O_RDWR | O_CREAT, 0666);
        assert (fd != -1);
        // make sure the file is large enough to back the whole variable
        assert (ftruncate(fd, size) == 0);
        // MAP_FIXED places the file mapping on top of the variable's pages
        void *addr = mmap(variableaddr, size, PROT_READ | PROT_WRITE,
                          MAP_FIXED | MAP_SHARED, fd, 0);
        assert (addr == variableaddr);
        assert (close(fd) == 0);
    }