Understanding the soundscape concept: the role of sound recognition and source identification

- Slides: 36

Understanding the soundscape concept: the role of sound recognition and source identification
David Chesmore
Audio Systems Laboratory, Department of Electronics, University of York
Audio lab

Overview of Presentation
• Role of soundscape analysis
• Instrument for Soundscape Recognition, Identification and Evaluation (ISRIE)
• Soundscape description language
• Applications
• Conclusions

Role of Soundscape Analysis
• Potential applications:
  – identifying relevant sound elements in a soundscape (e.g. high-intensity sounds)
  – determining positive and negative sounds
  – biodiversity studies
  – tranquil areas
  – preserving important soundscapes
  – planning and noise abatement studies

Soundscape Analysis Options
• Manual
  – Advantage: subjective
  – Disadvantages: time consuming, limited resources, subjective, very large storage requirements
• Automatic
  – Advantages: objective (once trained), continuous analysis possible, much reduced data storage requirements
  – Disadvantage: reliability of sound element classification

How to Automatically Classify Sounds?
• Major issues to address:
  – separation and localisation of sounds in the soundscape (especially with multiple simultaneous sounds)
  – classification of sounds depends on feature overlap and the number of elements
• Number of elements, localisation, etc. depend on the application

Instrument for Soundscape Recognition, Identification and Evaluation (ISRIE)
• ISRIE is a collaborative project between York, Southampton and Newcastle Universities
• 1 of 3 projects arising from the EPSRC Noisy Futures Sandpit
• York: sound separation + sound classification
• Southampton: applications + interface with users
• Newcastle: sound localisation + arrays

Aim of ISRIE
• Aim is to produce an instrument capable of automatically identifying sounds in a soundscape by:
  – separating sounds in 3-D
  – localising sounds from the 3-D field
  – classifying sounds into a restricted range of categories

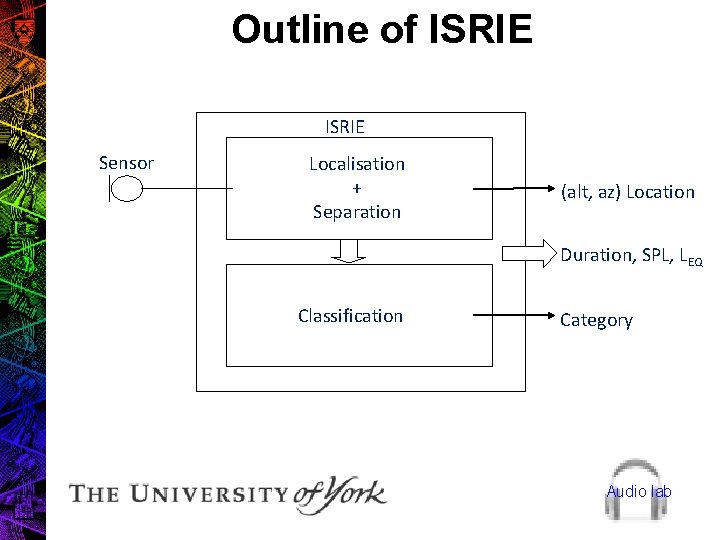

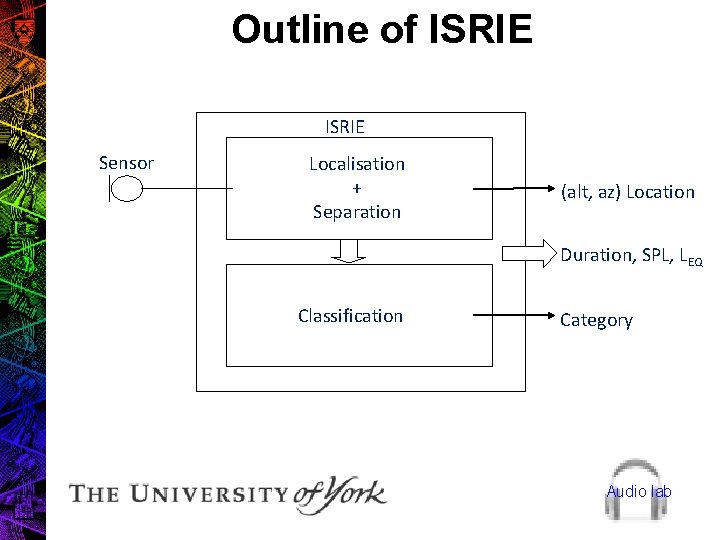

Outline of ISRIE
(Block diagram. Stages: Sensor; Localisation + Separation; Classification. Outputs: Location (alt, az); Duration, SPL, LEQ; Category.)

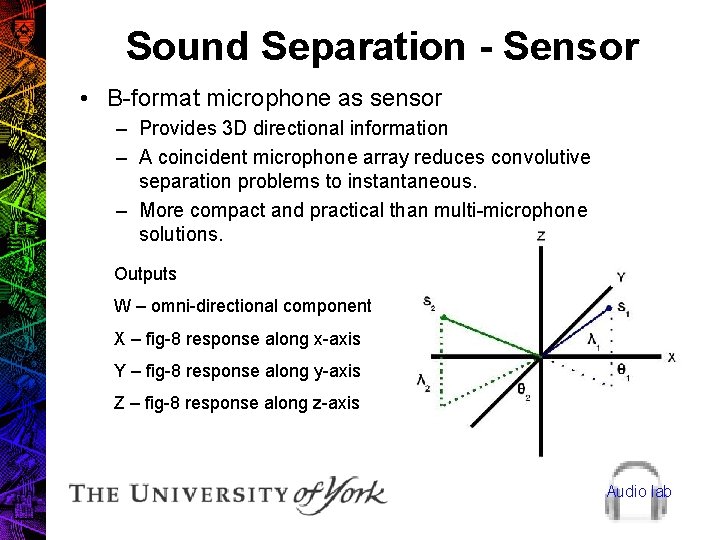

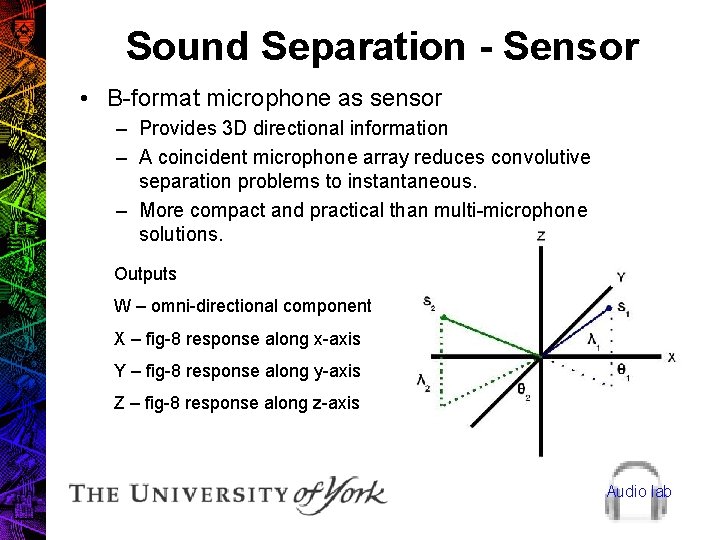

Sound Separation - Sensor
• B-format microphone as sensor
  – Provides 3-D directional information
  – A coincident microphone array reduces convolutive separation problems to instantaneous
  – More compact and practical than multi-microphone solutions
• Outputs
  – W: omni-directional component
  – X: figure-of-eight response along x-axis
  – Y: figure-of-eight response along y-axis
  – Z: figure-of-eight response along z-axis
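To make the four outputs concrete, here is a minimal sketch of encoding one mono sample into a virtual B-format signal. It assumes the common first-order convention in which W carries a 1/√2 weighting; the slides do not state which convention ISRIE uses, and the function name `encode_bformat` is illustrative only.

```python
import math

def encode_bformat(sample, azimuth_deg, elevation_deg):
    """Encode one mono sample arriving from (azimuth, elevation) into W, X, Y, Z.

    Assumed convention (not stated in the slides): W is weighted by 1/sqrt(2),
    X/Y/Z are figure-of-eight responses along the x, y and z axes.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample / math.sqrt(2.0)               # omni-directional component
    x = sample * math.cos(az) * math.cos(el)  # figure-of-eight along x
    y = sample * math.sin(az) * math.cos(el)  # figure-of-eight along y
    z = sample * math.sin(el)                 # figure-of-eight along z
    return w, x, y, z

# A source straight ahead excites only W and X.
print(encode_bformat(1.0, 0.0, 0.0))
```

Because the four capsules are treated as coincident, the mixture at each instant is a weighted sum of the sources with no relative delays, which is why the separation problem becomes instantaneous rather than convolutive.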

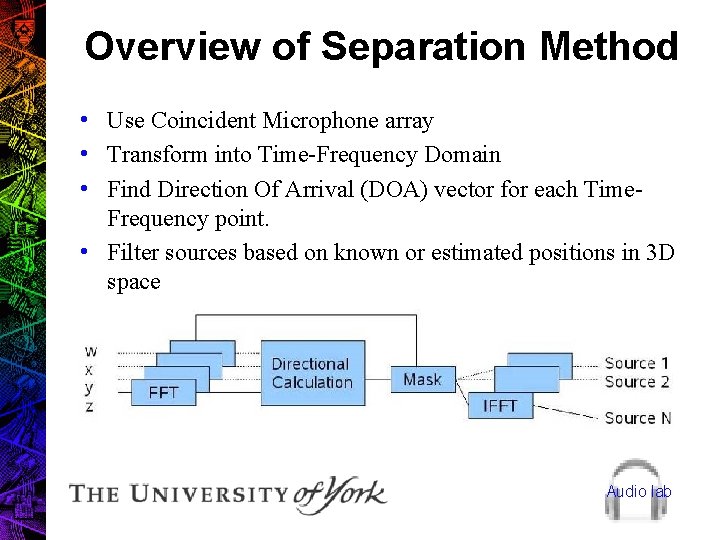

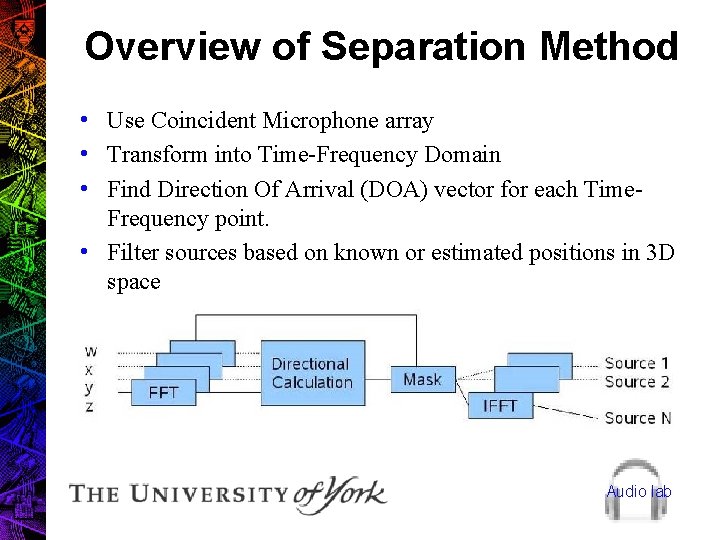

Overview of Separation Method
• Use coincident microphone array
• Transform into time-frequency domain
• Find Direction Of Arrival (DOA) vector for each time-frequency point
• Filter sources based on known or estimated positions in 3-D space
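The last two steps can be sketched as follows. This is an illustration rather than the ISRIE implementation: it assumes the DOA of a time-frequency point is taken from an intensity-style vector Re(conj(W)·[X, Y, Z]), and that filtering keeps points within a fixed angular tolerance of a known source direction. All function names are hypothetical.

```python
import math

def doa_of_bin(w, x, y, z):
    """Return (azimuth, elevation) in degrees for one set of complex
    time-frequency coefficients (W, X, Y, Z)."""
    # Intensity-style vector: real part of conj(W) times each dipole channel.
    ix = (w.conjugate() * x).real
    iy = (w.conjugate() * y).real
    iz = (w.conjugate() * z).real
    az = math.degrees(math.atan2(iy, ix))
    el = math.degrees(math.atan2(iz, math.hypot(ix, iy)))
    return az, el

def angular_distance(az1, el1, az2, el2):
    """Great-circle angle in degrees between two directions."""
    a1, e1, a2, e2 = (math.radians(v) for v in (az1, el1, az2, el2))
    cos_angle = (math.sin(e1) * math.sin(e2)
                 + math.cos(e1) * math.cos(e2) * math.cos(a1 - a2))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))

def mask_bins(bins, target_az, target_el, tolerance_deg):
    """Keep the W coefficient of each bin whose DOA lies within the tolerance
    of the target direction; zero every other bin."""
    kept = []
    for w, x, y, z in bins:
        az, el = doa_of_bin(w, x, y, z)
        near = angular_distance(az, el, target_az, target_el) <= tolerance_deg
        kept.append(w if near else 0j)
    return kept
```

The masked coefficients would then be inverse-transformed to obtain the separated source; that step depends on the transform chosen and is omitted here.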

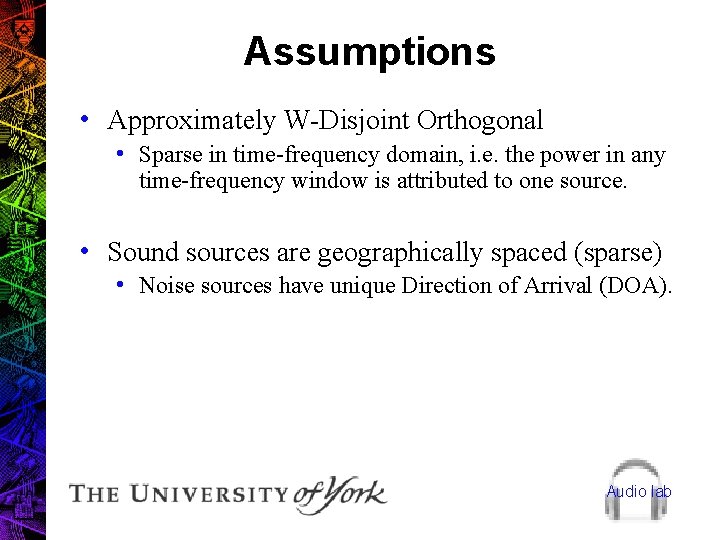

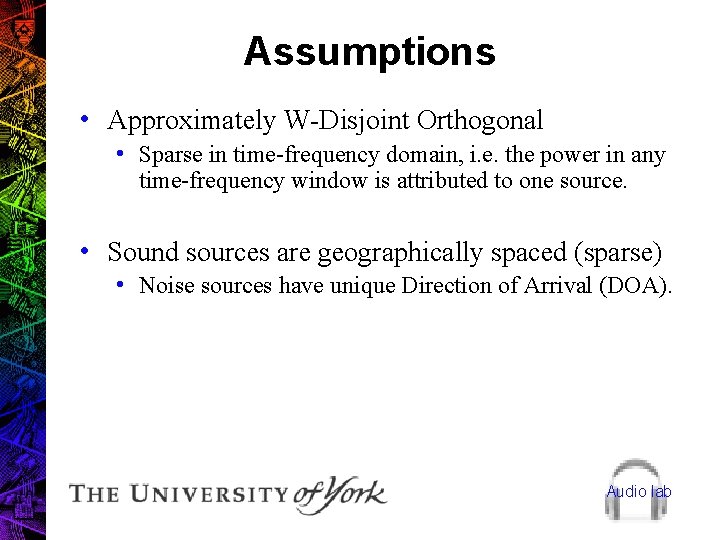

Assumptions
• Approximately W-disjoint orthogonal
• Sparse in time-frequency domain, i.e. the power in any time-frequency window is attributed to one source
• Sound sources are geographically spaced (sparse)
• Noise sources have unique Direction of Arrival (DOA)
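For reference, approximate W-disjoint orthogonality is commonly written as below, where S_i and S_j are the time-frequency representations of two different sources. The symbols are notation assumed here, not taken from the slides.

```latex
S_i(\tau, \omega)\, S_j(\tau, \omega) \approx 0
\quad \text{for all } (\tau, \omega),\ i \neq j
```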

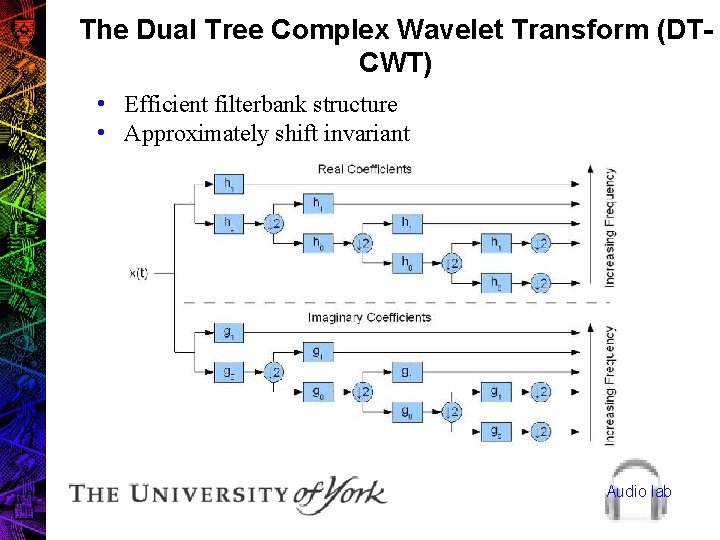

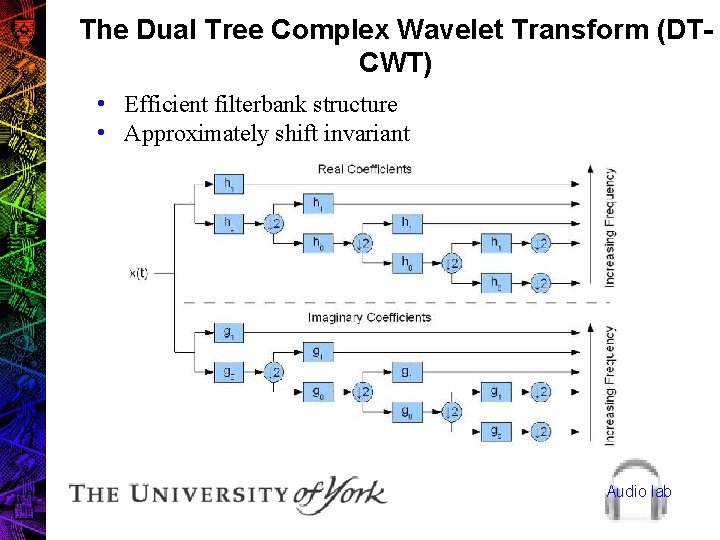

The Dual Tree Complex Wavelet Transform (DTCWT)
• Efficient filterbank structure
• Approximately shift invariant

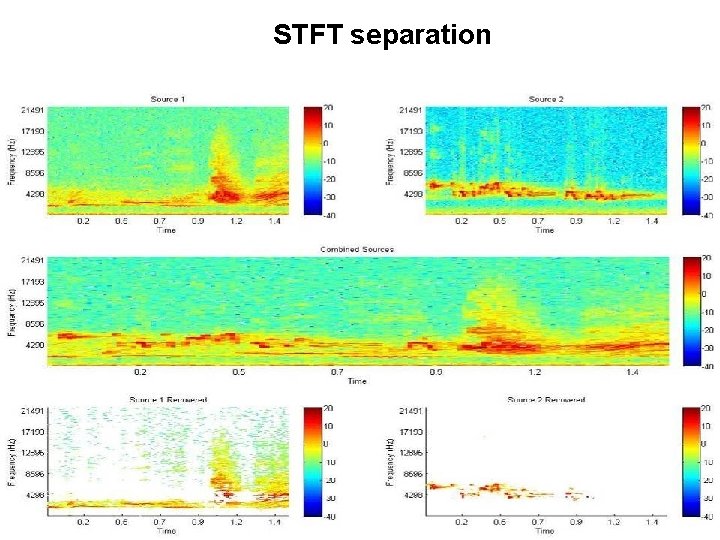

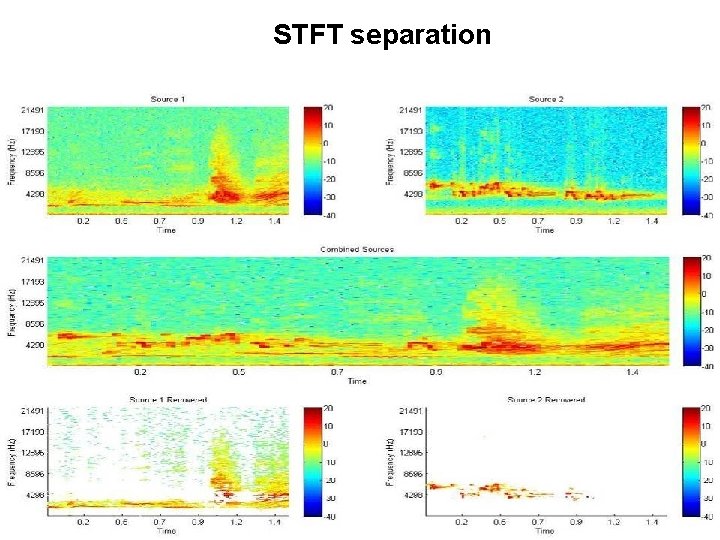

STFT separation

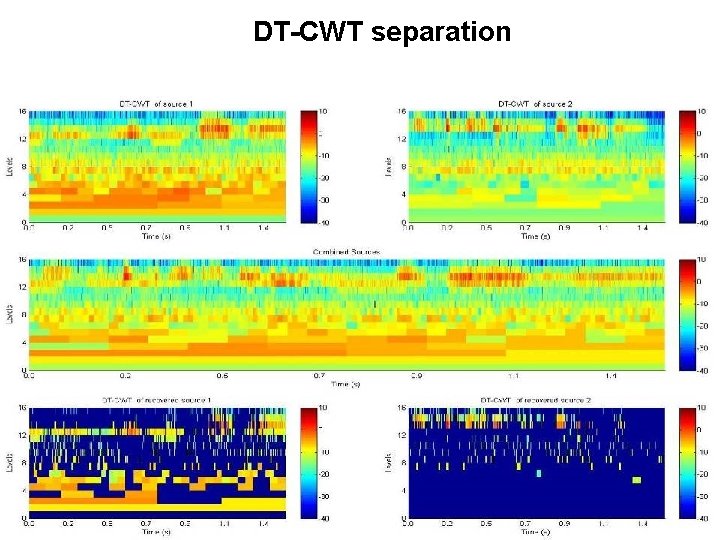

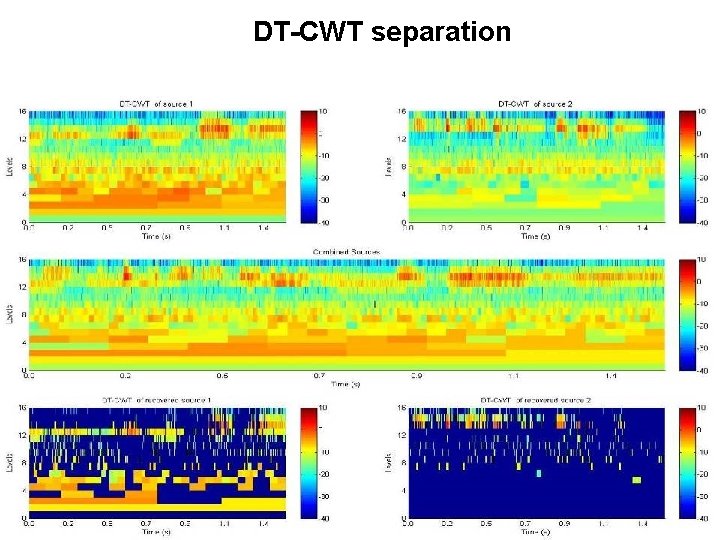

DT-CWT separation

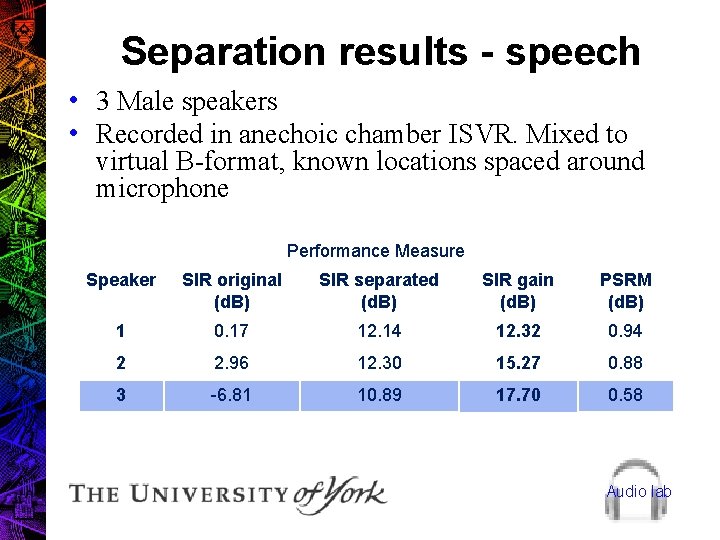

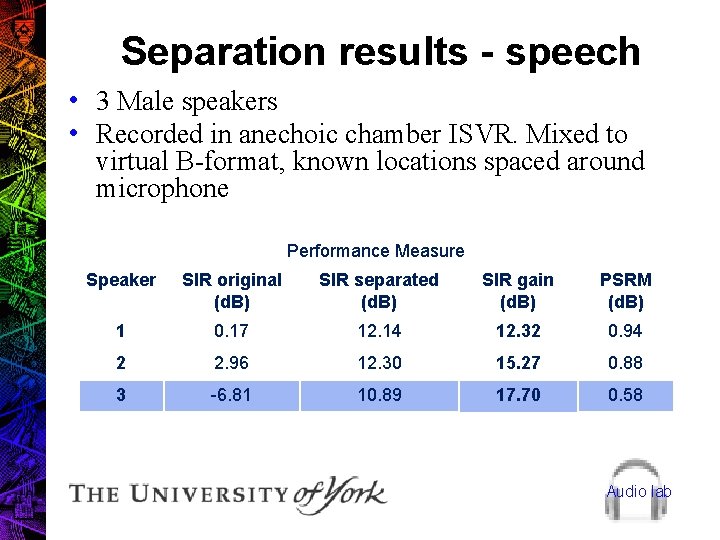

Separation results - speech
• 3 male speakers
• Recorded in the ISVR anechoic chamber. Mixed to virtual B-format, known locations spaced around the microphone

Performance measures:

| Speaker | SIR original (dB) | SIR separated (dB) | SIR gain (dB) | PSRM (dB) |
|---|---|---|---|---|
| 1 | 0.17 | 12.14 | 12.32 | 0.94 |
| 2 | 2.96 | 12.30 | 15.27 | 0.88 |
| 3 | -6.81 | 10.89 | 17.70 | 0.58 |

Source Estimation and Tracking
• Examples used known source locations. In many deployment scenarios, this is acceptable.
• More versatility could be provided by finding source locations and tracking
• Two approaches considered:
  – 3-D histogram approach
  – Clustering using plastic self-organising map
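A rough sketch of the histogram idea: accumulate per-point DOA estimates into direction bins and read source positions off the most populated bins. It uses a regular azimuth/elevation grid as a simplifying assumption, not the binning used in ISRIE; the bin width and function names are hypothetical.

```python
from collections import Counter

def doa_histogram(doas, bin_deg=5):
    """Count DOA estimates (azimuth, elevation in degrees) on a regular grid.

    Each estimate is snapped to the nearest multiple of bin_deg.
    """
    counts = Counter()
    for az, el in doas:
        key = (round(az / bin_deg) * bin_deg, round(el / bin_deg) * bin_deg)
        counts[key] += 1
    return counts

def strongest_directions(counts, n_sources):
    """Return the n_sources most populated bins, strongest first."""
    return [direction for direction, _ in counts.most_common(n_sources)]

# Toy data: two clusters of estimates plus one stray point between them.
estimates = [(0.4, -0.3)] * 5 + [(10.2, 19.6)] * 3 + [(5.0, 10.0)]
print(strongest_directions(doa_histogram(estimates), 2))
```

With real data a peak usually spreads over neighbouring bins, so a practical version would need smoothing or non-maximum suppression before picking peaks.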

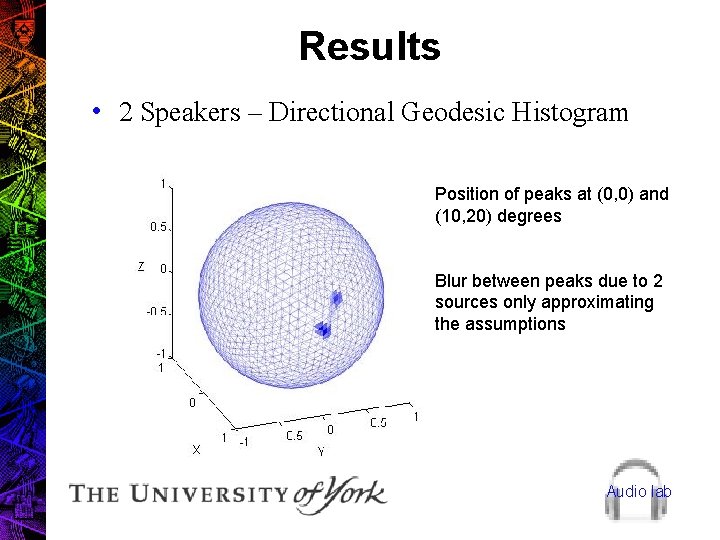

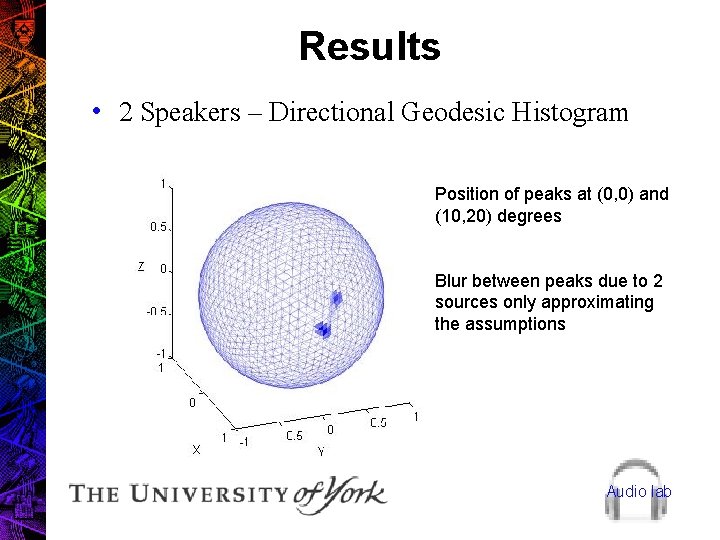

Results
• 2 speakers: directional geodesic histogram
• Position of peaks at (0, 0) and (10, 20) degrees
• Blur between peaks is due to the 2 sources only approximating the assumptions

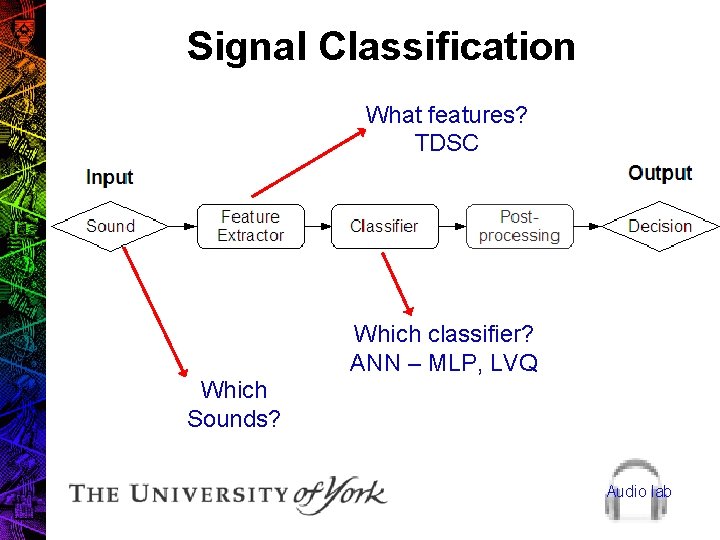

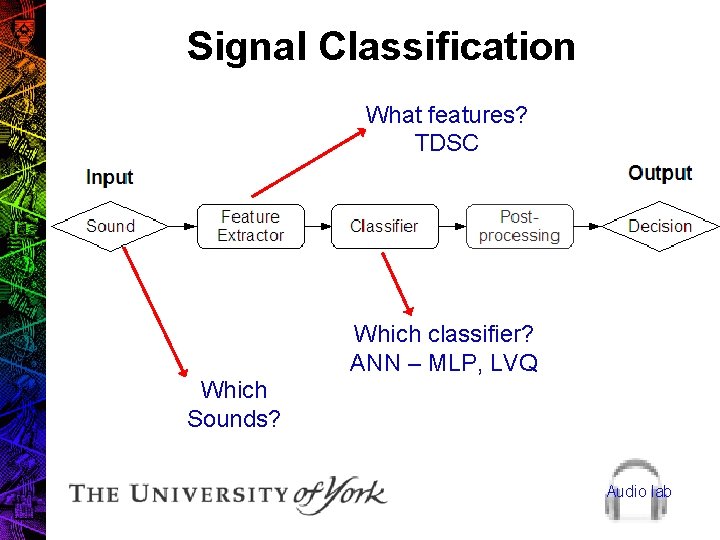

Signal Classification
• What features? TDSC
• Which classifier? ANN: MLP, LVQ
• Which sounds?

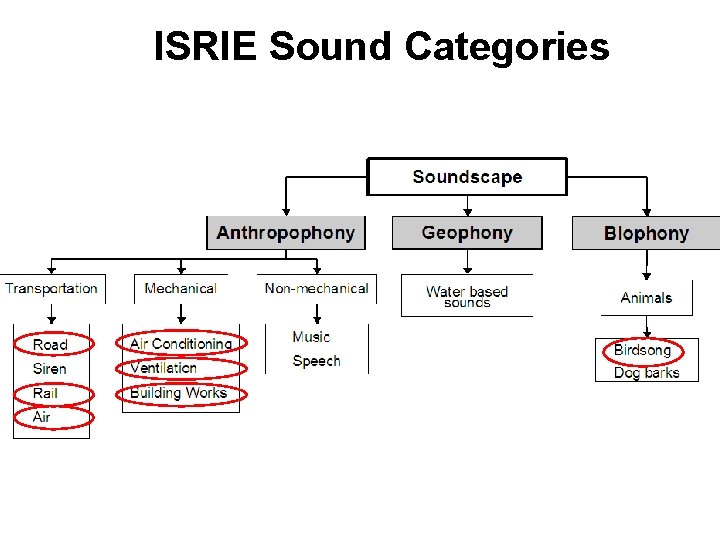

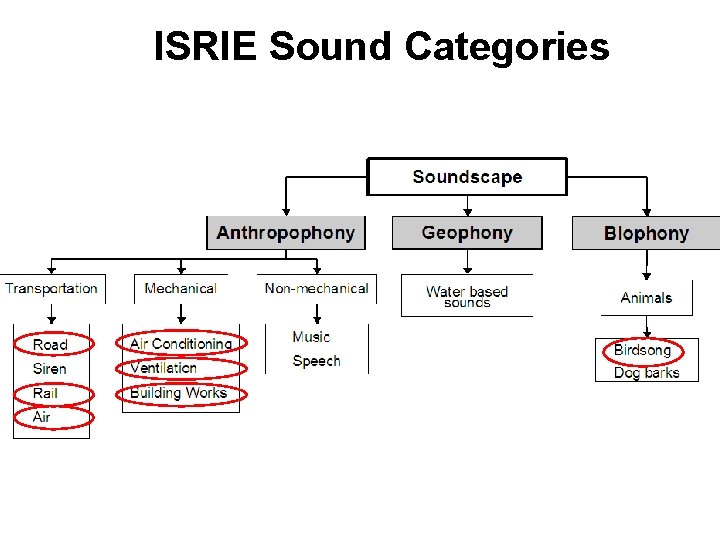

ISRIE Sound Categories

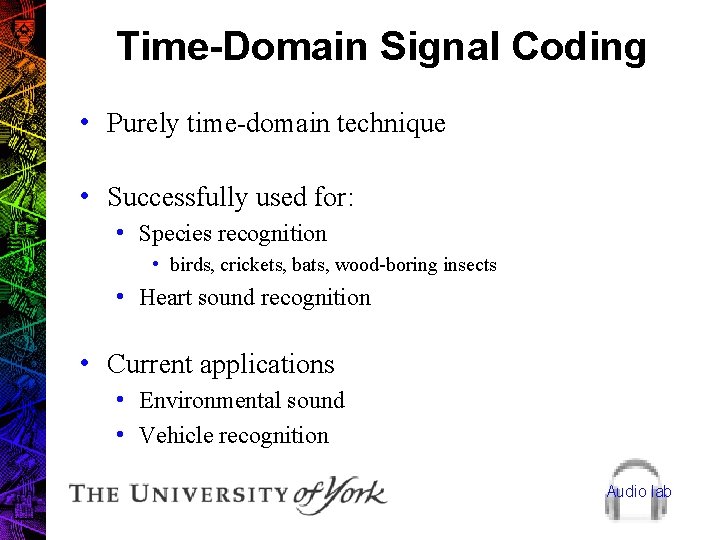

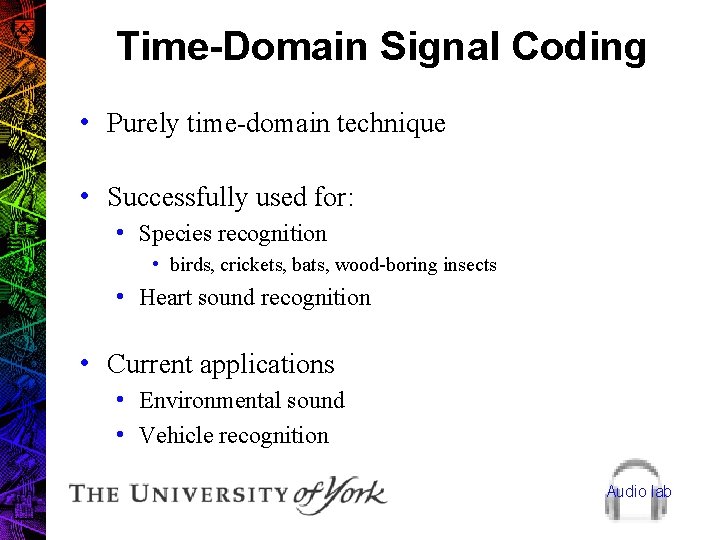

Time-Domain Signal Coding
• Purely time-domain technique
• Successfully used for:
  – Species recognition: birds, crickets, bats, wood-boring insects
  – Heart sound recognition
• Current applications:
  – Environmental sound
  – Vehicle recognition

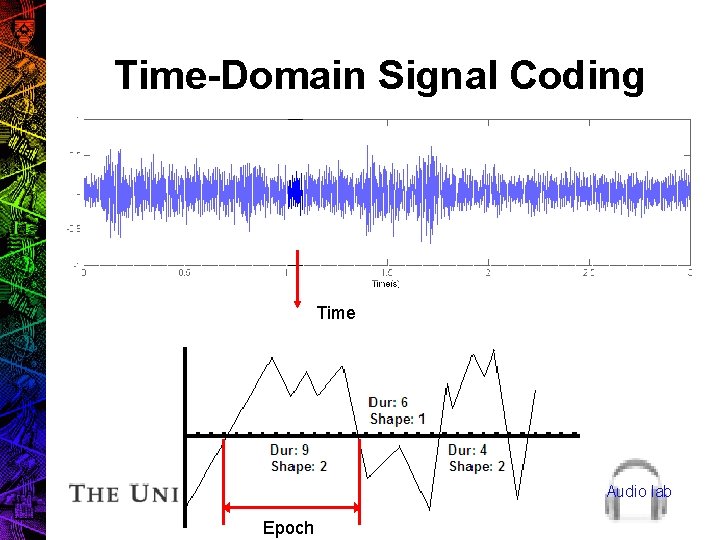

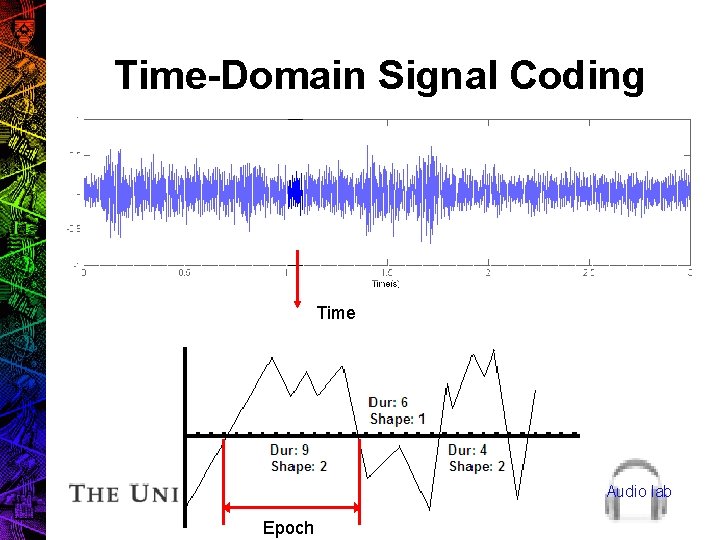

Time-Domain Signal Coding
(Figure: waveform against time, with one epoch marked.)
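As a minimal sketch of the coding step, the signal can be split into epochs at each sign change, with every epoch described by a duration and a shape. It assumes that duration is the epoch length in samples and that shape is the number of interior local minima of the absolute waveform; the deck does not define shape, so treat that as an assumption. Function names are hypothetical.

```python
def count_minima(segment):
    """Count interior local minima of the absolute value of one epoch."""
    mags = [abs(v) for v in segment]
    return sum(
        1
        for k in range(1, len(mags) - 1)
        if mags[k] < mags[k - 1] and mags[k] < mags[k + 1]
    )

def tdsc_epochs(samples):
    """Split a signal at sign changes and return (duration, shape) per epoch.

    Zero-valued samples are treated as positive. The final epoch is closed
    by the end of the signal rather than by a zero crossing.
    """
    epochs = []
    start = 0
    for i in range(1, len(samples) + 1):
        end_of_signal = i == len(samples)
        if end_of_signal or (samples[i] >= 0) != (samples[start] >= 0):
            segment = samples[start:i]
            epochs.append((len(segment), count_minima(segment)))
            start = i
    return epochs

print(tdsc_epochs([1, 3, 2, 4, 1, -1, -2, -1, 2, 1]))
```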

Multiscale TDSC (MTDSC)
• New method of D-S data presentation
• Replaces S-matrix, A-matrix or D-matrix
• Multiscale
  – Made from groups of epochs in powers of 2 (512, 256, etc.)
• Inspired by wavelets

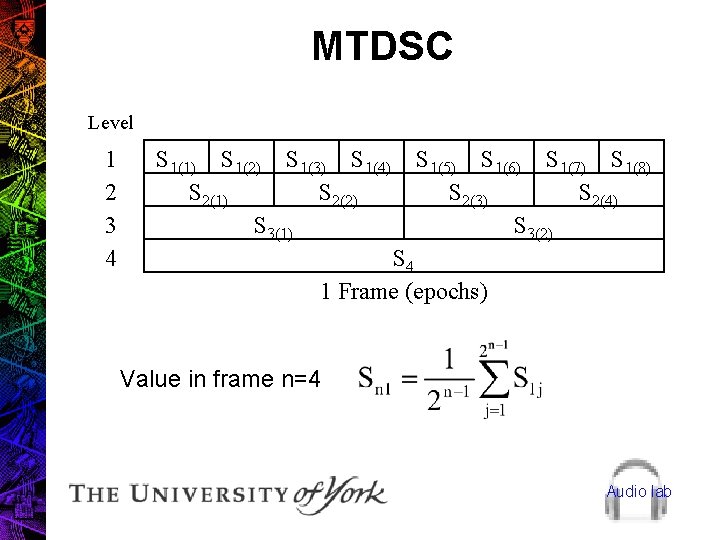

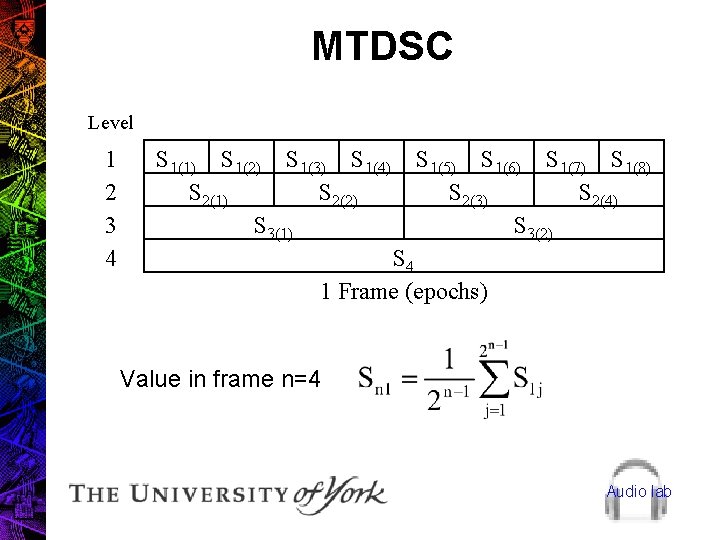

MTDSC
Level 1: S1(1) S1(2) S1(3) S1(4) S1(5) S1(6) S1(7) S1(8)
Level 2: S2(1) S2(2) S2(3) S2(4)
Level 3: S3(1) S3(2)
Level 4: S4
(Figure labels: 1 frame (epochs); value in frame; n = 4.)
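One way to read this layout is sketched below, under stated assumptions: each level summarises the same frame of epoch values with groups that double in size, and the summary statistic is the mean. The exact grouping used in MTDSC is not spelled out in the slides, and `multiscale_means` is a hypothetical name.

```python
def multiscale_means(shape_values, levels):
    """Return one list of group means per level, finest level first.

    Level 1 has 2**(levels - 1) groups; each later level halves the number
    of groups, so the last level is a single mean over the whole frame.
    """
    n = len(shape_values)
    finest_groups = 2 ** (levels - 1)
    if n == 0 or n % finest_groups != 0:
        raise ValueError("frame length must be a non-zero multiple of 2**(levels - 1)")
    pyramid = []
    for level in range(levels):
        groups = finest_groups // (2 ** level)
        size = n // groups
        pyramid.append(
            [sum(shape_values[g * size:(g + 1) * size]) / size for g in range(groups)]
        )
    return pyramid

# A frame of 8 epoch shape values summarised at 4 levels (8, 4, 2 and 1 values).
for row in multiscale_means([0, 2, 1, 3, 4, 0, 2, 2], 4):
    print(row)
```

Concatenating ("stacking") the rows gives a fixed-length feature vector of 8 + 4 + 2 + 1 = 15 values for this frame size.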

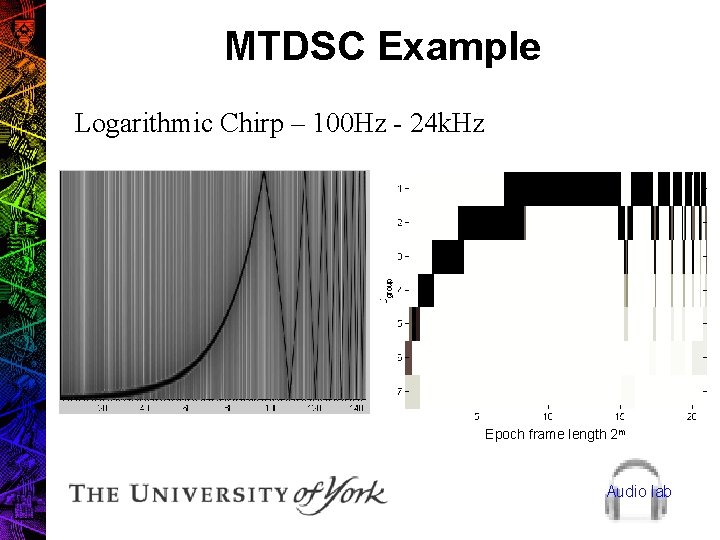

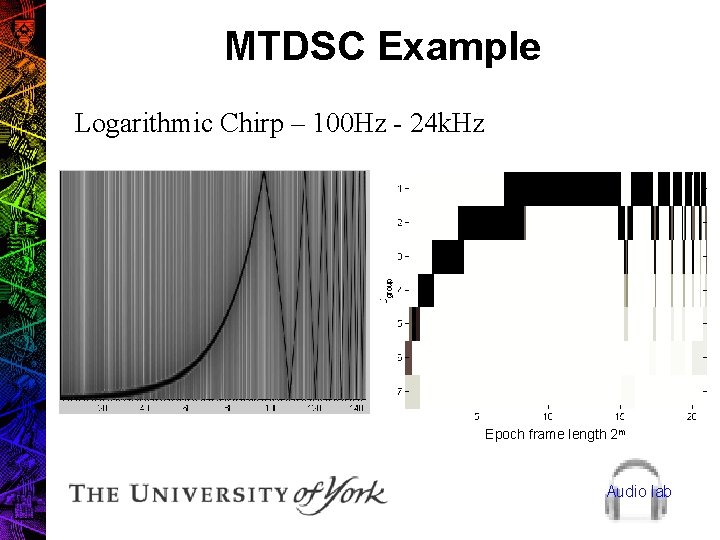

MTDSC Example
(Figure: logarithmic chirp, 100 Hz to 24 kHz; epoch frame length 2^m.)

MTDSC (cont)
• Currently use shape but will investigate:
  – epoch duration (zero-crossing interval) only
  – epoch duration and shape
  – epoch duration, shape and energy
• Currently use the mean; can also use variance and higher-order statistics for larger values of m (e.g. 9)

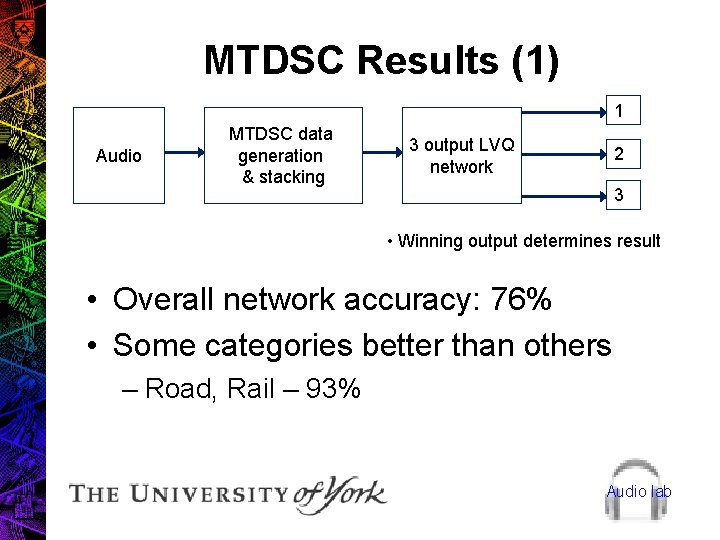

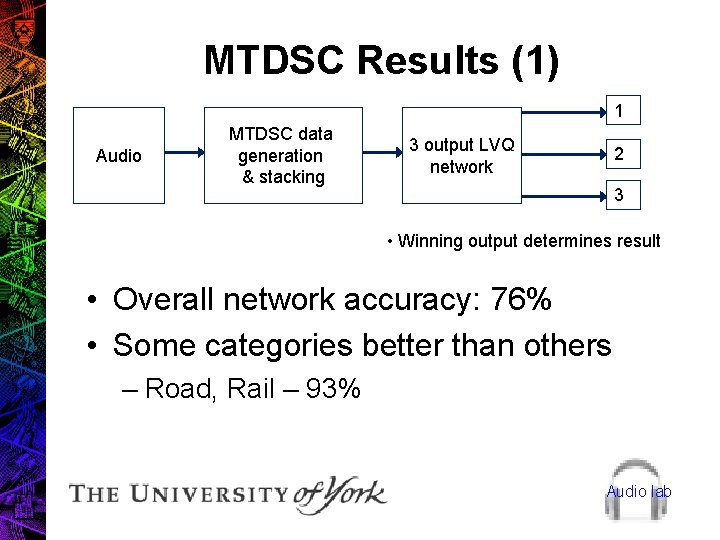

MTDSC Results (1)
(Diagram: Audio → MTDSC data generation & stacking → 3-output LVQ network, outputs 1, 2, 3.)
• Winning output determines result
• Overall network accuracy: 76%
• Some categories better than others
  – Road, Rail: 93%
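The "winning output" rule can be sketched with a generic LVQ1 scheme: the prototype nearest to the input wins, and in training the winner moves towards the input if its label is correct and away otherwise. This is textbook LVQ1, not the network used for these results. The learning rate, the prototype values and the "other" label are illustrative assumptions.

```python
def nearest_prototype(prototypes, x):
    """Return the index of the prototype closest to x (squared Euclidean distance)."""
    distances = [sum((p - v) ** 2 for p, v in zip(proto, x)) for proto in prototypes]
    return distances.index(min(distances))

def lvq1_step(prototypes, labels, x, target, rate=0.1):
    """Apply one LVQ1 update in place and return the index of the winner."""
    winner = nearest_prototype(prototypes, x)
    # Attract the winner if its label matches the target, repel it otherwise.
    sign = 1.0 if labels[winner] == target else -1.0
    prototypes[winner] = [
        p + sign * rate * (v - p) for p, v in zip(prototypes[winner], x)
    ]
    return winner

def classify(prototypes, labels, x):
    """The label of the winning prototype is the classification result."""
    return labels[nearest_prototype(prototypes, x)]

prototypes = [[0.0, 0.0], [10.0, 10.0], [0.0, 10.0]]
labels = ["road", "rail", "other"]
print(classify(prototypes, labels, [1.0, 2.0]))
```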

MTDSC Results (2)
• 3 different Japanese cicada species used for biodiversity studies (2 common, 1 rare) in northern Japan
• 21 test files from field recordings, including 1 with -6 dB SNR
• Backpropagation MLP classifier
• 20 out of 21 test files correctly classified
• ~95% accuracy

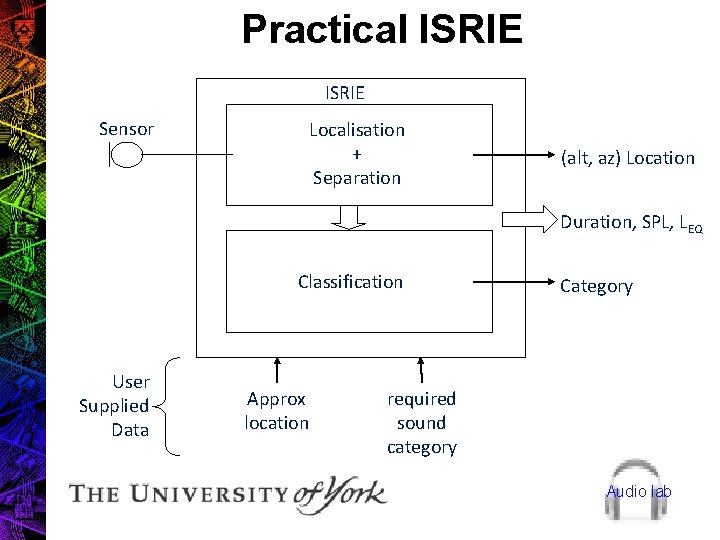

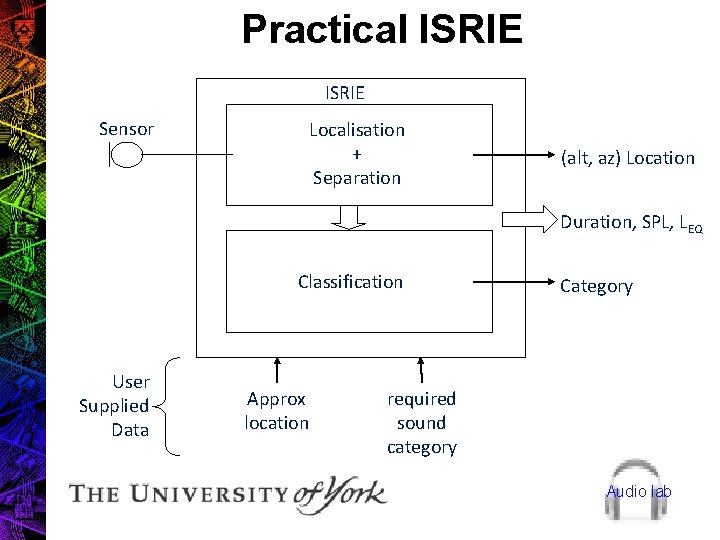

Practical ISRIE
(Block diagram. Stages: Sensor; Localisation + Separation; Classification. Outputs: Location (alt, az); Duration, SPL, LEQ; Category. User-supplied data: approximate location, required sound category.)

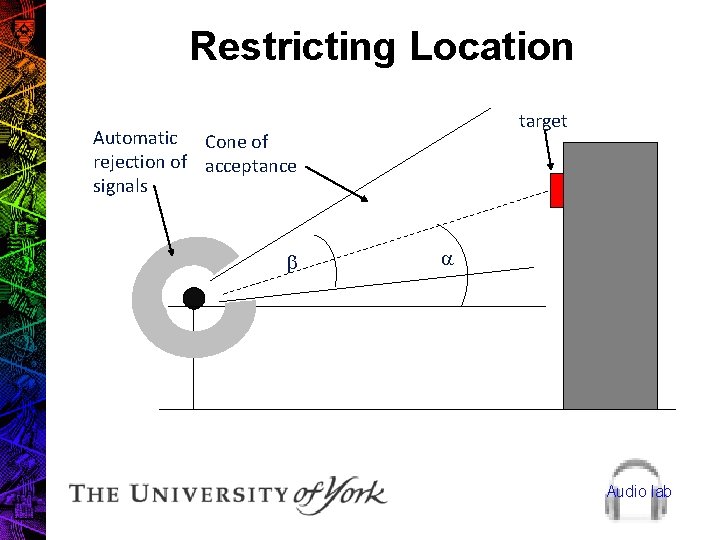

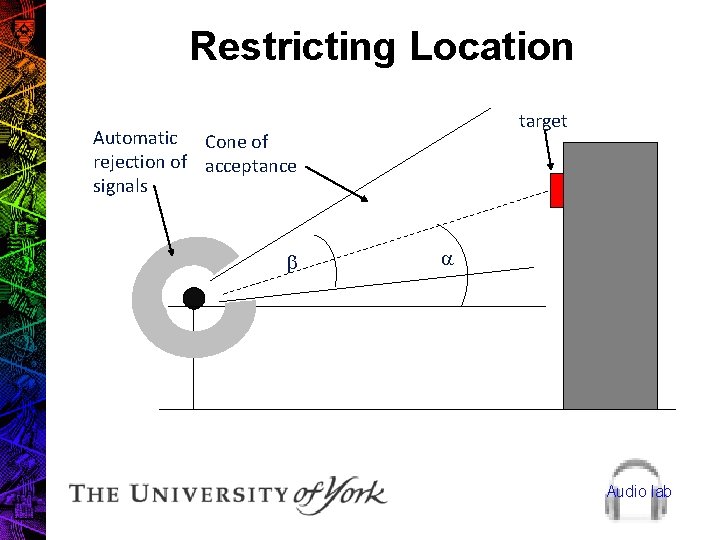

Restricting Location
(Figure: target inside a cone of acceptance, with angles a and b marked; automatic rejection of signals.)

Further Automated Analysis
• At present, ISRIE only provides a classified sound element in a small range of categories
• Can we create a soundscape description language (SDL)?
• Needs to be flexible enough to accommodate manually and automatically generated soundscapes
• Take inspiration from speech recognition, natural language, bioacoustics (e.g. automated ID of insects, birds, bats, cetaceans)

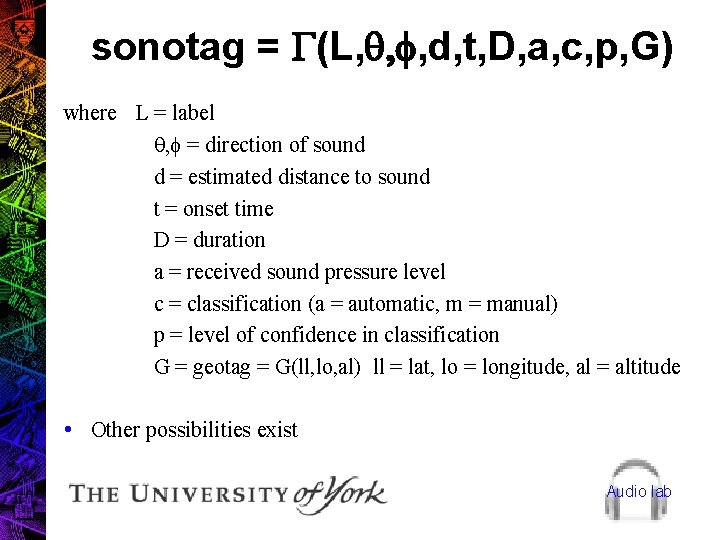

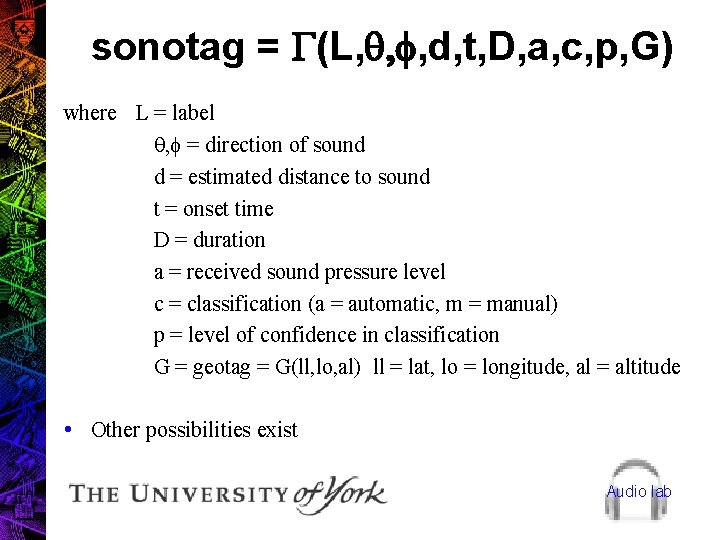

sonotag = G(L, θ, φ, d, t, D, a, c, p, G)
where
  L = label
  θ, φ = direction of sound
  d = estimated distance to sound
  t = onset time
  D = duration
  a = received sound pressure level
  c = classification (a = automatic, m = manual)
  p = level of confidence in classification
  G = geotag = G(ll, lo, al), with ll = latitude, lo = longitude, al = altitude
• Other possibilities exist
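A sonotag maps naturally onto a small record type. The sketch below is one possible representation, not part of the proposal: the field names, the string onset time and the 0 to 1 confidence range are assumptions, and units are left open because the slides do not give them. The sample values come from one of the example tags in this deck.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

@dataclass(frozen=True)
class Geotag:
    latitude: float
    longitude: float
    altitude: float

@dataclass(frozen=True)
class Sonotag:
    label: str
    direction: Optional[Tuple[float, float]]  # (theta, phi); None if unknown
    distance: float
    onset: str            # onset time, kept as written in the tag
    duration: float
    level: float          # received sound pressure level
    classification: str   # "a" = automatic, "m" = manual
    confidence: float     # assumed to lie in [0, 1]
    geotag: Geotag

    def __post_init__(self):
        if self.classification not in ("a", "m"):
            raise ValueError("classification must be 'a' or 'm'")
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must lie in [0, 1]")

    def to_text(self) -> str:
        """Render the tag in the G(...) notation; unknown direction becomes -1."""
        if self.direction is None:
            direction = "-1"
        else:
            direction = ", ".join(f"{v:g}" for v in self.direction)
        g = self.geotag
        return (
            f"G({self.label}, {direction}, {self.distance:g}, {self.onset}, "
            f"{self.duration:g}, {self.level:g}, {self.classification}, "
            f"{self.confidence:g}, ({g.latitude:g}, {g.longitude:g}, {g.altitude:g}))"
        )

tag = Sonotag("speaker 2", (0, 0), 1.5, "14:00", 5, 43, "a", 0.96, Geotag(53.9, -0.9, 10))
print(tag.to_text())
```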

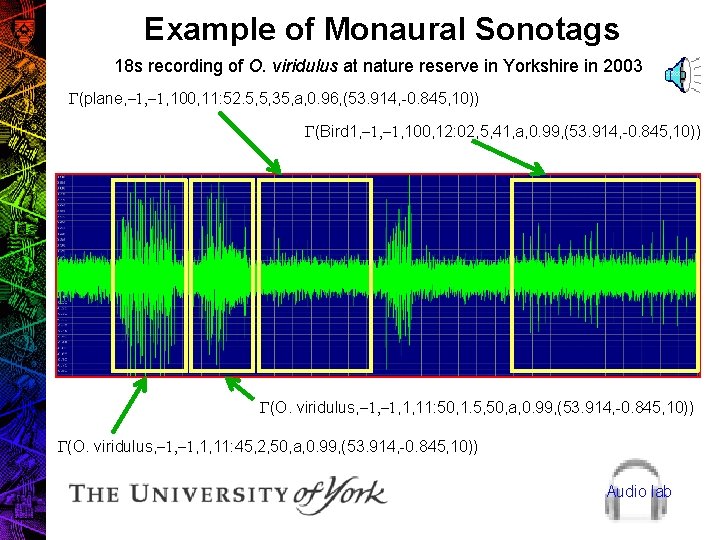

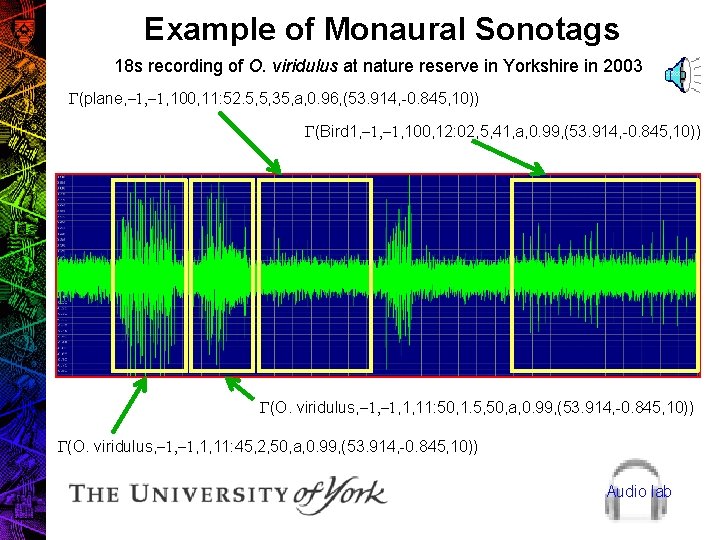

Example of Monaural Sonotags
18 s recording of O. viridulus at a nature reserve in Yorkshire in 2003
G(plane, -1, 100, 11:52.5, 5, 35, a, 0.96, (53.914, -0.845, 10))
G(Bird 1, -1, 100, 12:02, 5, 41, a, 0.99, (53.914, -0.845, 10))
G(O. viridulus, -1, 1, 11:50, 1.5, 50, a, 0.99, (53.914, -0.845, 10))
G(O. viridulus, -1, 1, 11:45, 2, 50, a, 0.99, (53.914, -0.845, 10))

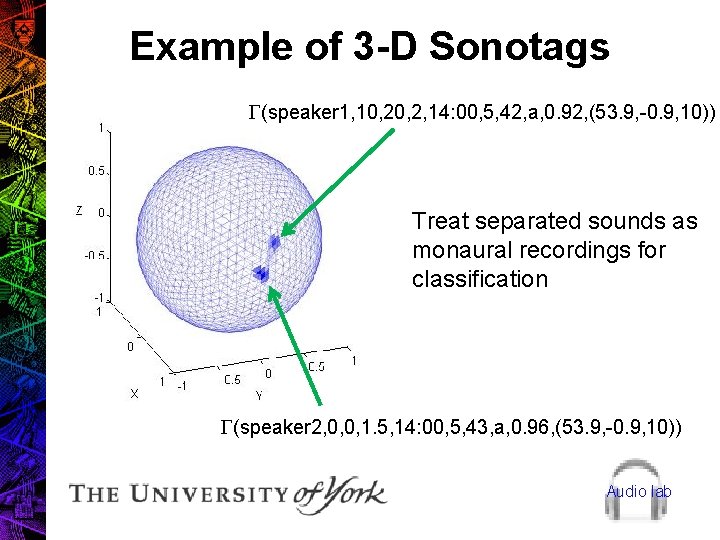

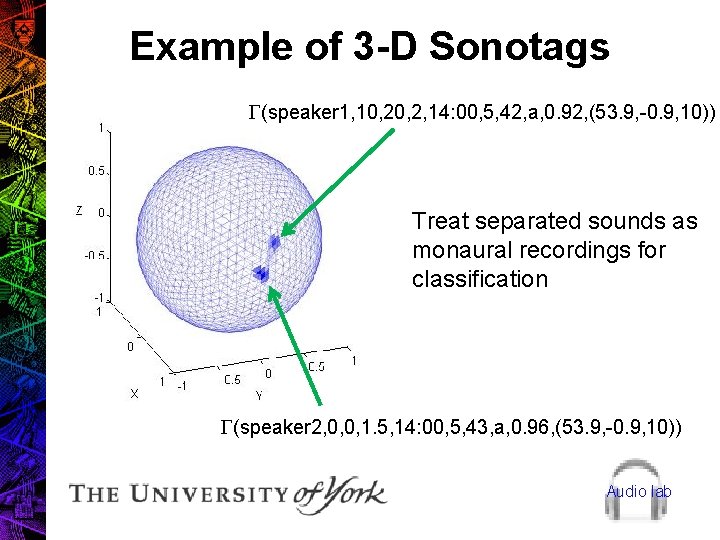

Example of 3-D Sonotags
G(speaker 1, 10, 2, 14:00, 5, 42, a, 0.92, (53.9, -0.9, 10))
G(speaker 2, 0, 0, 1.5, 14:00, 5, 43, a, 0.96, (53.9, -0.9, 10))
• Treat separated sounds as monaural recordings for classification

Applications (1)
• BS 4142 assessments
• PPG 24 assessments
• Noise nuisance applications
• Other acoustic consultancy problems
• Soundscape recordings
• Future noise policy

Applications (2)
• Biodiversity assessment, endangered species monitoring
• Alien invasive species (e.g. cane toad in Australia)
• Anthropogenic noise effects on animals
• Habitat fragmentation
• Tranquility studies

Conclusions
• ISRIE has been shown to be successful in separating and classifying urban sounds
• Much work is still to be done, especially in classification
• Automated soundscape description is possible but a flexible and formal framework is needed

Understanding the role of culture

Understanding the role of culture “a sound mind is in a sound body”

“a sound mind is in a sound body” Loud sound and soft sound

Loud sound and soft sound Worker role azure

Worker role azure Symbolischer interaktionismus krappmann

Symbolischer interaktionismus krappmann Statuses and their related roles determine

Statuses and their related roles determine Logical view of data in relational database model

Logical view of data in relational database model Similarities of ideal self and actual self

Similarities of ideal self and actual self Contoh selling concept

Contoh selling concept Hát kết hợp bộ gõ cơ thể

Hát kết hợp bộ gõ cơ thể Frameset trong html5

Frameset trong html5 Bổ thể

Bổ thể Tỉ lệ cơ thể trẻ em

Tỉ lệ cơ thể trẻ em Voi kéo gỗ như thế nào

Voi kéo gỗ như thế nào Chụp phim tư thế worms-breton

Chụp phim tư thế worms-breton Chúa sống lại

Chúa sống lại Các môn thể thao bắt đầu bằng tiếng đua

Các môn thể thao bắt đầu bằng tiếng đua Thế nào là hệ số cao nhất

Thế nào là hệ số cao nhất Các châu lục và đại dương trên thế giới

Các châu lục và đại dương trên thế giới Công thức tính độ biến thiên đông lượng

Công thức tính độ biến thiên đông lượng Trời xanh đây là của chúng ta thể thơ

Trời xanh đây là của chúng ta thể thơ Mật thư tọa độ 5x5

Mật thư tọa độ 5x5 Làm thế nào để 102-1=99

Làm thế nào để 102-1=99 độ dài liên kết

độ dài liên kết Các châu lục và đại dương trên thế giới

Các châu lục và đại dương trên thế giới Thơ thất ngôn tứ tuyệt đường luật

Thơ thất ngôn tứ tuyệt đường luật Quá trình desamine hóa có thể tạo ra

Quá trình desamine hóa có thể tạo ra Một số thể thơ truyền thống

Một số thể thơ truyền thống Cái miệng nó xinh thế chỉ nói điều hay thôi

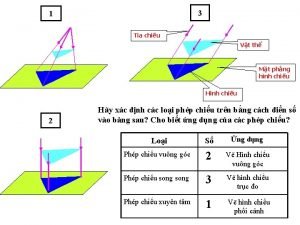

Cái miệng nó xinh thế chỉ nói điều hay thôi Vẽ hình chiếu vuông góc của vật thể sau

Vẽ hình chiếu vuông góc của vật thể sau Biện pháp chống mỏi cơ

Biện pháp chống mỏi cơ đặc điểm cơ thể của người tối cổ

đặc điểm cơ thể của người tối cổ V cc

V cc Vẽ hình chiếu đứng bằng cạnh của vật thể

Vẽ hình chiếu đứng bằng cạnh của vật thể Vẽ hình chiếu vuông góc của vật thể sau

Vẽ hình chiếu vuông góc của vật thể sau Thẻ vin

Thẻ vin đại từ thay thế

đại từ thay thế